Abstract

Most evolutionary algorithms have multiple parameters and their values drastically affect the performance. Due to the often complicated interplay of the parameters, setting these values right for a particular problem (parameter tuning) is a challenging task. This task becomes even more complicated when the optimal parameter values change significantly during the run of the algorithm since then a dynamic parameter choice (parameter control) is necessary. In this work, we propose a lazy but effective solution, namely choosing all parameter values (where this makes sense) in each iteration randomly from a suitably scaled power-law distribution. To demonstrate the effectiveness of this approach, we perform runtime analyses of the \((1+(\lambda ,\lambda ))\) genetic algorithm with all three parameters chosen in this manner. We show that this algorithm on the one hand can imitate simple hill-climbers like the \((1+1)\) EA, giving the same asymptotic runtime on problems like OneMax, LeadingOnes, or Minimum Spanning Tree. On the other hand, this algorithm is also very efficient on jump functions, where the best static parameters are very different from those necessary to optimize simple problems. We prove a performance guarantee that is comparable to the best performance known for static parameters. For the most interesting case that the jump size k is constant, we prove that our performance is asymptotically better than what can be obtained with any static parameter choice. We complement our theoretical results with a rigorous empirical study confirming what the asymptotic runtime results suggest.

1 Introduction

Evolutionary algorithms (EAs) are general-purpose randomized search heuristics. They are adapted to the particular problem to be solved by choosing suitable values for their parameters. This flexibility is a great strength on the one hand, but a true challenge for the algorithm designer on the other. Missing the right parameter values can lead to catastrophic performance losses.

Despite being a core topic of both theoretical and experimental research, general advice on how to set the parameters of an EA is still rare. The difficulty stems from the fact that different problems need different parameters, different instances of the same problem may need different parameters, and even during the optimization process on one instance the most profitable parameter values may change over time.

In an attempt to design a simple one-size-fits-all solution, Doerr et al. [22] proposed to use random parameter values chosen independently in each iteration from a power-law distribution (note that random mutation rates were used before [15, 16], but with different distributions and for different reasons). Mostly via mathematical means, this was shown to be highly effective for the choice of the mutation rate of the \((1 + 1)\) EA when optimizing the jump benchmark, which has the property that the optimal mutation rate depends strongly on the problem instance. More precisely, for a jump function with representation length n and jump size \(2 \le k = o(\sqrt{n})\), the standard mutation rate \(p=1/n\) gives an expected runtime of \((1+o(1)) e n^k\), where \(e \approx 2.718\) is Euler’s number. The asymptotically optimal mutation rate \(p = k/n\) leads to a runtime of \((1+o(1)) n^k (e/k)^k\). Deviating from the optimal rate by a small constant factor increases the runtime by a factor exponential in k. When using the mutation rate \(\alpha /n\), where \(\alpha \in [1..n/2]\) is sampled independently in each iteration from a power-law distribution with exponent \({\beta > 1}\), the runtime becomes \(\Theta (k^{\beta -0.5} n^k (e/k)^k)\), where the constants hidden by the asymptotic notation are independent of n and k. Consequently, apart from the small polynomial factor \(\Theta (k^{\beta -0.5})\), this randomized mutation rate gives the performance of the optimal mutation rate and in particular also achieves the super-exponential runtime improvement by a factor of \((e/k)^{\Theta (k)}\) over the standard rate 1/n.

The idea of choosing parameter values randomly according to a power-law distribution was quickly taken up by other works. In [32, 39], variants of the heavy-tailed mutation operator were proposed and analyzed on TwoMax, Jump, MaxCut, and several sub-modular problems. In [27, 30, 49], power-law mutation in multi-objective optimization was studied. In [12], the authors compared power-law mutation and artificial immune systems. In [2], heavy-tailed mutation was considered for the \({(1 + (\lambda , \lambda ))}\) GA, however, again only for a single parameter, this parameter being the mutation rate. Very recently, the first analysis of a heavy-tailed choice of a parameter of the selection operator was conducted [17].

While optimizing a single parameter is already non-trivial (and the latest work [2] showed that the heavy-tailed mutation rate can even give results better than any static mutation rate, that is, it can inherit advantages of dynamic parameter choices), the really difficult problem is finding good values for several parameters of an algorithm. Here the often intricate interplay between the different parameters can be a true challenge (see, e.g., [23] for a theory-based determination of the optimal values of three parameters).

The only attempt to choose more than one parameter randomly was made in [3] for the \({(1 + (\lambda , \lambda ))}\) GA, which has a three-dimensional parameter space spanned by the population size \(\lambda \), the mutation rate p, and the crossover bias c. For this algorithm, first proposed in [14], the product \(d = pcn\) of mutation rate, crossover bias, and representation length describes the expected distance of an offspring from the parent. It was argued heuristically in [3] that a reasonable parameter setting should have \(p = c\), that is, the same mutation rate and crossover bias. With this reduction of the parameter space to two dimensions, the parameter choice in [3] was made as follows. Independently (and independently in each iteration), both \(\lambda \) and d were chosen from a power-law distribution. Mutation rate and crossover bias were both set to \(\sqrt{d/n}\) to ensure \(p=c\) and \(pcn = d\). When using unbounded power-law distributions with exponents \(\beta _\lambda =2+\varepsilon \) and \(\beta _d=1+\varepsilon '\), where \(\varepsilon , \varepsilon ' > 0\) are arbitrarily small constants, this randomized way of setting the parameters gave an expected runtime of \(e^{O(k)} (\frac{n}{k})^{(1+\varepsilon )k/2}\) on jump functions with jump size \(k \ge 3\). This is very similar (slightly better for \(k < \frac{1}{\varepsilon }\), slightly worse for \(k>\frac{1}{\varepsilon }\)) to the runtime of \((\frac{n}{k})^{(k+1)/2} e^{O(k)}\) obtainable with the optimal static parameters. This is a surprisingly good performance for a parameter-less approach, in particular when compared to the runtime of \(\Theta (n^k)\) of many classic evolutionary algorithms. Note that both for the static and the dynamic parameters only upper bounds were proven, hence we cannot rigorously conclude which algorithm performs better on jump functions. The proofs of these upper bounds, however, suggest to us that they are tight.

Our Results: While the work [3] showed that in principle it can be profitable to choose more than one parameter randomly from a power-law distribution, it relied on the heuristic assumption that one should take the mutation rate equal to the crossover bias. There is nothing wrong with using such heuristic insight, however, one has to question if an algorithm user (different from the original developers of the \({(1 + (\lambda , \lambda ))}\) GA) would have easily found this relation \(p=c\).

In this work, we show that such heuristic preparations are not necessary: One can simply choose all three parameters of the \({(1 + (\lambda , \lambda ))}\) GA from (scaled) power-law distributions and obtain a runtime comparable to the ones seen before. More precisely, when using the power-law exponents \(2+\varepsilon \) for the distribution of the population size and \(1 + \varepsilon '\) for the distributions of the parameters p and c and scaling the distributions for p and c by dividing by \(\sqrt{n}\) (to obtain a constant distance of parent and offspring with constant probability), we obtain the same \(e^{O(k)} (\frac{n}{k})^{(1+\varepsilon )k/2}\) runtime guarantee as in [3]. From our theoretical results one can see that the exact choice of \(\varepsilon '\) affects the asymptotic runtime neither on easy functions such as OneMax nor on hard functions such as \(\textsc {Jump} _k\). Hence if an algorithm user chose all exponents as \(2+\varepsilon \), which is a natural choice as it leads to a constant expectation and a super-constant variance, as usually desired from a power-law distribution, the resulting runtimes would still be \(O(n \log n)\) for OneMax and \(e^{O(k)} (\frac{n}{k})^{(1+\varepsilon )k/2}\) for jump functions with gap size k.

With this approach, the only remaining design choice is the scaling of the distributions. It is clear that this cannot be completely avoided simply because of the different scales of the parameters (mutation rates are in [0, 1], population sizes are positive integers). However, we argue that here very simple heuristic arguments can be employed. For the population size, being a positive integer, we simply use a power-law distribution on the positive integers. For the mutation rate and the crossover bias, we definitely need some scaling as both numbers have to be in [0, 1]. Recalling that (and this is visible right from the algorithm definition) the expected distance of offspring from their parents in this algorithm is \(d = pcn\) and recalling further the general recommendation that EAs should generate offspring with constant Hamming distance from the parent with reasonable probability (this is, for example, implicit both in the general recommendation to use a mutation rate of 1/n and in the heavy-tailed mutation operator proposed in [22]), a scaling leading to a constant expected value of d appears to be a good choice. We obtain this by taking both p and c from power-law distributions on the positive integers scaled down by a factor of \(\sqrt{n}\). This again appears to be the most natural choice. We note that if an algorithm user missed this scaling and scaled down both p and c by a factor of n (e.g., to obtain an expected constant number of bits flipped in the mutation step), then our runtime estimates would increase by a factor of \(n^{\frac{\beta _p + \beta _c}{2} - 1}\), which is still not much compared to the roughly \(n^{k/2}\) runtimes we have and the \(\Theta (n^k)\) runtimes of many simple evolutionary algorithms.

Our precise result is a mathematical runtime analysis of this heavy-tailed algorithm for arbitrary parameters of the three heavy-tailed distributions (power-law exponent and upper bound on the range of positive integers it can take, including the case of no bound) on a set of “easy” problems (OneMax, LeadingOnes, the minimum spanning tree problem, and the partition problem) and on Jump functions. We show that on easy problems the heavy-tailed \({(1 + (\lambda , \lambda ))}\) GA is asymptotically not worse than the \((1 + 1)\) EA, and that on Jump functions it significantly outperforms the \((1 + 1)\) EA for a wide range of the parameters of the power-law distributions. These results show that the absolutely best performance can be obtained by correctly guessing suitable upper bounds on the ranges. Since guessing these parameters wrong can lead to significant performance losses, whereas the gains from these optimal parameter values are not so high, we would rather advertise our parameter-less “layman recommendation” to use unrestricted ranges and power-law exponents slightly more than two for the population size and slightly more than one for the other parameters. These recommendations are supported by the empirical study shown in Sect. 4.

Our work also provides an example where a dynamic (here simply randomized) parameter choice provably gives an asymptotic runtime improvement. This improvement is significantly more pronounced than the \(o(\sqrt{\log n})\) factor speed-up observed in [2, 13] for the optimization of OneMax via the \({(1 + (\lambda , \lambda ))}\) GA.

We note that our situation is different, e.g., from the optimization of jump functions via the \((1 + 1)\) EA. Here the mutation rate \(\frac{k}{n}\) is asymptotically optimal [22] for \(\textsc {Jump} _k\). Clearly, for the easy OneMax-type part of the optimization process, the mutation rate \(\frac{1}{n}\) would be superior, but the damage from using the larger rate \(\frac{k}{n}\) only leads to a lower-order increase of the runtime.

We prove that this is different for the optimization of the jump functions via the \({(1 + (\lambda , \lambda ))}\) GA. Since this effect is already visible for constant values of k, and is in fact most pronounced there, we assume that k is constant to ease the presentation. We note that only for constant k the different variants of the \({(1 + (\lambda , \lambda ))}\) GA had a polynomial runtime, so clearly, k constant (and not too large) is the most interesting case.

For constant k, our result is \(e^{O(k)} (\frac{n}{k})^{(1+\varepsilon )k/2}\). The best runtime that could be obtained with a static mutation rate was \(e^{O(k)} n^{(k + 1)/2} k^{-k/2}\). Hence by choosing \(\varepsilon \) sufficiently small, our upper bound is asymptotically smaller than the best upper bound for static parameters. Unfortunately, no lower bounds were proven in [6] for static parameters. To rigorously support our claim that dynamic parameter choices can asymptotically outperform static ones when optimizing jump functions via the \({(1 + (\lambda , \lambda ))}\) GA, in Sect. 3.3 we prove such a lower bound. Since this is not the main topic of this work, we shall not go as far as proving that the upper bound for static parameters is tight, but we content ourselves with a simpler proof of a weaker lower bound, which however suffices to support our claim of the superiority of dynamic parameter choices.

In summary, our results demonstrate that choosing all parameters of an algorithm randomly according to a simple (scaled) power-law can be a good way to overcome the problem of choosing appropriate fixed or dynamic parameter values. We are optimistic that this approach will lead to a good performance also for other algorithms and other optimization problems.

2 Preliminaries

In this section we collect definitions and tools which we use in the paper. To avoid misreading of our results, we note that we use the following notation. By \({\mathbb {N}}\) we denote the set of all positive integer numbers and by \({\mathbb {N}}_0\) we denote the set of all non-negative integer numbers. We write [a..b] to denote an integer interval including its borders and [a, b] to denote a real-valued interval including its borders. For any probability distribution \(\mathcal {L}\) and random variable X, we write \(X\sim \mathcal {L}\) to indicate that X follows the law \(\mathcal {L}\). We denote the binomial law with parameters \(n \in {\mathbb {N}}\) and \(p \in [0,1]\) by \({{\,\textrm{Bin}\,}}\left( n, p\right) \). We denote the geometric distribution taking values in \(\{1, 2, \dots \}\) with success probability \(p \in [0,1]\) by \({{\,\textrm{Geom}\,}}(p)\). We denote by \(T_I\) and \(T_F\) the number of iterations and the number of fitness evaluations performed until some event holds (which is always specified in the text).

2.1 Objective Functions

In this paper we consider five benchmark functions and problems, namely OneMax, LeadingOnes, the minimum spanning tree problem, the partition problem and \(\textsc {Jump} _k\). All of them are pseudo-Boolean functions, that is, they are defined on the set of bit strings of length n and return a real number.

OneMax returns the number of one-bits in its argument, that is, \(\textsc {OneMax} (x) = \textsc {OM} (x) = \sum _{i = 1}^n x_i\). It is one of the most intensively studied benchmarks in evolutionary computation. Many evolutionary algorithms can find the optimum of OneMax in time \(O(n \log n)\) [4, 34, 37, 47]. The \({(1 + (\lambda , \lambda ))}\) GA with a fitness-dependent or self-adjusting choice of the population size [13, 14] or with a heavy-tailed random choice of the population size [1] is capable of solving OneMax in linear time when the other two parameters are chosen suitably depending on the population size.

LeadingOnes returns the number of leading ones in a bit string. More formally, we maximize the function

\[\textsc {LeadingOnes} (x) = \sum _{i = 1}^n \prod _{j = 1}^i x_j.\]

The runtime of the most classic EAs is at least quadratic on LeadingOnes. More precisely, the runtime of the \((1 + 1)\) EA is \(\Theta (n^2)\) [20, 41], the runtime of the \((\mu +1)\) EA is \(\Theta (n^2 + \mu n\log (n))\) [47], the runtime of the \((1+\lambda )\) EA is \(\Theta (n^2 + \lambda n)\) [34] and the runtime of the \((\mu +\lambda )\) EA is \(\Omega (n^2 + \frac{\lambda n}{\max \{1,\log (\lambda / n)\}})\) [9]. It was shown in [5] that the \({(1 + (\lambda , \lambda ))}\) GA with standard parameters (\(\lambda \in [1..\frac{n}{2}]\), \(p = \frac{\lambda }{n}\) and \(c = \frac{1}{\lambda }\)) also has a \(\Theta (n^2)\) runtime on LeadingOnes.
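As a small illustration of the two benchmarks introduced so far, the following sketch evaluates OneMax and LeadingOnes on bit strings given as Python sequences of 0/1 values. The function names `one_max` and `leading_ones` are ours and chosen only for this example.

```python
from typing import Sequence


def one_max(x: Sequence[int]) -> int:
    """Number of one-bits in the bit string x."""
    return sum(x)


def leading_ones(x: Sequence[int]) -> int:
    """Length of the longest prefix of x consisting only of ones."""
    count = 0
    for bit in x:
        if bit != 1:
            break
        count += 1
    return count
```

For instance, the string 1101 has OneMax value 3 and LeadingOnes value 2.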

In the minimum spanning tree problem (MST for brevity) we are given an undirected graph \(G = (V, E)\) with positive integer edge weights defined by a weight function \(\omega : E \rightarrow {\mathbb {N}}_{\ge 1}\). We assume that this graph does not have parallel edges or loops. The aim is to find a connected subgraph of minimum weight. By n we denote the number of vertices and by m we denote the number of edges in G.

This problem can be solved by minimizing the following fitness function defined on all subgraphs \(G' = (V, E')\) of the given graph G.

where \(cc(G')\) is the number of connected components in \(G'\) and \(W_{{{\,\textrm{total}\,}}}\) is the total weight of the graph G, that is, the sum of all edge weights. This definition of the fitness guarantees that any connected graph has a better (in this case, smaller) fitness than any unconnected graph and any tree has a better fitness than any graph with cycles.

The natural representation for subgraphs used in [38] is via bit strings of length m, where each bit corresponds to some particular edge in graph G. An edge is present in subgraph \(G'\) if and only if its corresponding bit is equal to one. In [38] it was shown that the \((1 + 1)\) EA solves the MST problem with the mentioned representation and fitness function in an expected number of \(O(m^2 \log (W_{{{\,\textrm{total}\,}}}))\) iterations.

In the partition problem we have a set of n objects with positive integer weights \(w_1, w_2, \dots , w_n\) and our aim is to split the objects into two sets (usually called bins) such that the total weight of the heavier bin is minimal. Without loss of generality we assume that the weights are sorted in a non-increasing order, that is, \(w_1 \ge w_2 \ge \cdots \ge w_n\). By w we denote the total weight of all objects, that is, \(w = \sum _{i = 1}^n w_i\). By a \((1 + \delta )\) approximation (for any \(\delta > 0\)) we mean a solution in which the weight of the heavier bin is at most by a factor of \((1 + \delta )\) greater than in an optimal solution.

Each partition into two bins can be represented by a bit string of length n, where each bit corresponds to some particular object. The object is put into the first bin if and only if the corresponding bit is equal to one. As fitness f(x) of an individual x we consider the total weight of the objects in the heavier bin in the partition which corresponds to x.
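The fitness just described can be sketched in a few lines. The helper name `partition_fitness` is hypothetical; the code only assumes that `x` is a 0/1 sequence and `weights` a sequence of positive integers of the same length.

```python
from typing import Sequence


def partition_fitness(x: Sequence[int], weights: Sequence[int]) -> int:
    """Total weight of the heavier bin (to be minimized).

    Object i goes into the first bin if and only if x[i] == 1.
    """
    if len(x) != len(weights):
        raise ValueError("x and weights must have the same length")
    first_bin = sum(w for bit, w in zip(x, weights) if bit == 1)
    second_bin = sum(weights) - first_bin
    return max(first_bin, second_bin)
```

With weights 5, 4, 3, 2, the bit string 1001 yields two bins of weight 7 each, so the fitness is 7, which is optimal for this instance since the total weight is 14.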

In [46] it was shown that the \((1 + 1)\) EA finds a \((\frac{4}{3} + \varepsilon )\) approximation for any constant \(\varepsilon > 0\) of any partition problem in linear time and that it finds a \(\frac{4}{3}\) approximation in time \(O(n^2)\).

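The function \(\textsc {Jump} _k\) (where k is a positive integer parameter) is defined via OneMax as follows.

\[\textsc {Jump} _k(x) = \begin{cases} \textsc {OM} (x) + k, & \text {if } \textsc {OM} (x) \in [0..n - k] \cup \{n\}, \\ n - \textsc {OM} (x), & \text {otherwise.} \end{cases}\]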

A plot of \(\textsc {Jump} _k\) is shown in Fig. 1. The main feature of \(\textsc {Jump} _k\) is a set of local optima at distance k from the global optimum and a valley of extremely low fitness in between. Most EAs optimizing \(\textsc {Jump} _k\) first reach the local optima and then have to perform a jump to the global one, which turns out to be a challenging task for most classic algorithms. In particular, for all values of \(\mu \) and \(\lambda \) it was shown that \((\mu +\lambda )\) EA and \((\mu ,\lambda )\) EA have a runtime of \(\Omega (n^k)\) fitness evaluations when they optimize \(\textsc {Jump} _k\) [20, 26]. Using a mutation rate of \(\frac{k}{n}\) [22], choosing it from a power-law distribution [22], or setting it dynamically with a stagnation detection mechanism [28, 42,43,44] reduces the runtime of the \((1 + 1)\) EA by a \(k^{\Theta (k)}\) factor, however, for constant k the runtime of the \((1 + 1)\) EA remains \(\Theta (n^k)\). Many crossover-based algorithms have a better runtime on \(\textsc {Jump} _k\), see [18, 19, 31, 35, 40, 50] for results on algorithms different from the \({(1 + (\lambda , \lambda ))}\) GA. Those beating the \({\tilde{O}}(n^{k-1})\) runtime shown in [19] may appear somewhat artificial and overfitted to the precise definition of the jump function, see [48]. Outside the world of genetic algorithms, the estimation-of-distribution algorithm cGA and the ant-colony optimizer 2-MMAS\(_{ib}\) were shown to optimize jump functions with small \(k=O(\log n)\) in time \(O(n \log n)\) [7, 25, 33]. Runtime analyses for artificial immune systems, hyperheuristics, and the Metropolis algorithm exist [10, 11, 36], but their runtime guarantees are asymptotically weaker than \(O(n^k)\) for constant k.
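To make the shape of the fitness landscape concrete, here is a minimal sketch of a jump function. It assumes the standard definition from the literature (fitness \(\textsc {OM} (x) + k\) outside the gap and \(n - \textsc {OM} (x)\) inside it); the function name `jump` is ours.

```python
from typing import Sequence


def jump(x: Sequence[int], k: int) -> int:
    """Jump_k under the standard definition (an assumption of this sketch).

    Strings with at most n - k ones, and the all-ones string, get fitness
    OneMax(x) + k. Strings inside the gap get the low fitness n - OneMax(x).
    """
    n = len(x)
    om = sum(x)
    if om <= n - k or om == n:
        return om + k
    return n - om
```

For n = 6 and k = 2, a string with four ones is a local optimum of fitness 6, a string with five ones lies in the valley and has fitness 1, and the all-ones string has fitness 8.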

2.2 Power-Law Distributions

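We say that an integer random variable X follows a power-law distribution with parameters \(\beta \) and u if

\[\Pr [X = i] = \begin{cases} C_{\beta , u} i^{-\beta }, & \text {if } i \in [1..u], \\ 0, & \text {otherwise.} \end{cases}\]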

Here \(C_{\beta , u} = (\sum _{j = 1}^u j^{-\beta })^{-1}\) denotes the normalization coefficient. We write \(X \sim {{\,\textrm{pow}\,}}(\beta , u)\) and call u the bounding of X and \(\beta \) the power-law exponent.

The main feature of this distribution is that while having a decent probability to sample \(X = \Theta (1)\) (where the asymptotic notation is used for \(u \rightarrow +\infty \)), we also have a good (inverse-polynomial instead of negative-exponential) probability to sample a super-constant value. The following lemmas show the well-known properties of the power-law distributions. Their proofs can be found, for example, in [3].
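A bounded power-law distribution is straightforward to sample from. The sketch below assumes that \(\Pr [X = i]\) is proportional to \(i^{-\beta }\) on \([1..u]\), in line with the normalization coefficient \(C_{\beta , u}\) above; the names `norm_coeff` and `make_sampler` are ours. It precomputes cumulative weights, so it is only practical for moderate u.

```python
import random
from itertools import accumulate
from typing import Callable


def norm_coeff(beta: float, u: int) -> float:
    """Normalization coefficient C_{beta,u} = 1 / sum_{j=1}^{u} j^(-beta)."""
    return 1.0 / sum(j ** (-beta) for j in range(1, u + 1))


def make_sampler(beta: float, u: int, rng: random.Random) -> Callable[[], int]:
    """Return a function that samples X ~ pow(beta, u) on {1, ..., u}."""
    values = range(1, u + 1)
    # Cumulative (unnormalized) weights; random.choices normalizes internally.
    cum_weights = list(accumulate(j ** (-beta) for j in values))

    def sample() -> int:
        return rng.choices(values, cum_weights=cum_weights, k=1)[0]

    return sample
```

For example, with `sample = make_sampler(2.5, 100, random.Random(0))`, most calls of `sample()` return 1 or 2, but larger values up to 100 appear with inverse-polynomial rather than exponentially small probability.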

Lemma 1

(Lemma 1 in [3]) For all positive integers a and b such that \(b \ge a\) and for all \(\beta > 0\), the sum \(\sum _{i = a}^b i^{-\beta }\) is

- \(\Theta ((b + 1)^{1 - \beta } - a^{1 - \beta })\), if \(\beta < 1\),
- \(\Theta (\log (\frac{b + 1}{a}))\), if \(\beta = 1\), and
- \(\Theta (a^{1 - \beta } - (b + 1)^{1 - \beta })\), if \(\beta > 1\),

where \(\Theta \) notation is used for \(b \rightarrow +\infty \).

Lemma 2

(Lemma 2 in [3]) The normalization coefficient \(C_{\beta , u} = (\sum _{j = 1}^u j^{-\beta })^{-1}\) of the power-law distribution with parameters \(\beta \) and u is

- \(\Theta (u^{\beta - 1})\), if \(\beta < 1\),
- \(\Theta (\frac{1}{\log (u) + 1})\), if \(\beta = 1\), and
- \(\Theta (1)\), if \(\beta > 1\),

where \(\Theta \) notation is used for \(u \rightarrow +\infty \).

Lemma 3

(Lemma 3 in [3]) The expected value of the random variable \(X\sim {{\,\textrm{pow}\,}}(\beta , u)\) is

- \(\Theta (u)\), if \(\beta < 1\),
- \(\Theta (\frac{u}{\log (u) + 1})\), if \(\beta = 1\),
- \(\Theta (u^{2 - \beta })\), if \(\beta \in (1, 2)\),
- \(\Theta (\log (u) + 1)\), if \(\beta = 2\), and
- \(\Theta (1)\), if \(\beta > 2\),

where \(\Theta \) notation is used for \(u \rightarrow +\infty \).
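The different regimes of Lemma 3 can be observed numerically. The following sketch computes the exact expectation of a bounded power-law variable, assuming \(\Pr [X = i]\) is proportional to \(i^{-\beta }\) on \([1..u]\); the function name `power_law_mean` is ours.

```python
def power_law_mean(beta: float, u: int) -> float:
    """Exact E[X] for X ~ pow(beta, u), computed by direct summation."""
    norm = sum(i ** (-beta) for i in range(1, u + 1))
    return sum(i ** (1.0 - beta) for i in range(1, u + 1)) / norm


# Growing u by a factor of 100 barely changes the mean for beta > 2,
# but multiplies it by roughly 100^(2 - beta) for beta in (1, 2).
ratio_heavy = power_law_mean(1.5, 100_000) / power_law_mean(1.5, 1_000)
ratio_light = power_law_mean(2.5, 100_000) / power_law_mean(2.5, 1_000)
```

Here `ratio_heavy` is close to 10, matching the \(\Theta (u^{2 - \beta })\) case with \(\beta = 1.5\), while `ratio_light` stays close to 1, matching the \(\Theta (1)\) case.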

Lemma 4

If \(X\sim {{\,\textrm{pow}\,}}(\beta , u)\), then \(E[X^2]\) is

- \(\Theta (u^2)\), if \(\beta < 1\),
- \(\Theta (\frac{u^2}{\log (u) + 1})\), if \(\beta = 1\),
- \(\Theta (u^{3 - \beta })\), if \(\beta \in (1, 3)\),
- \(\Theta (\log (u) + 1)\), if \(\beta = 3\), and
- \(\Theta (1)\), if \(\beta > 3\),

where \(\Theta \) notation is used for \(u \rightarrow +\infty \).

Lemma 4 simply follows from Lemma 1.
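To spell out this step for \(\beta > 2\), here is a brief sketch. It assumes \(\Pr [X = i] = C_{\beta , u} i^{-\beta }\) for \(i \in [1..u]\) and uses only Lemmas 1 and 2. The remaining cases \(\beta \le 2\) have a non-positive exponent \(\beta - 2\) and can be handled by comparing the sum with an integral, which we do not detail here.

\[E[X^2] = \sum _{i = 1}^u i^2 \cdot C_{\beta , u} i^{-\beta } = C_{\beta , u} \sum _{i = 1}^u i^{-(\beta - 2)}.\]

Since \(\beta > 2\), Lemma 2 gives \(C_{\beta , u} = \Theta (1)\). Applying Lemma 1 with \(a = 1\), \(b = u\), and the exponent \(\beta - 2 > 0\) gives

\[\sum _{i = 1}^u i^{-(\beta - 2)} = \begin{cases} \Theta ((u + 1)^{3 - \beta } - 1) = \Theta (u^{3 - \beta }), & \text {if } \beta \in (2, 3), \\ \Theta (\log (u + 1)) = \Theta (\log (u) + 1), & \text {if } \beta = 3, \\ \Theta (1 - (u + 1)^{3 - \beta }) = \Theta (1), & \text {if } \beta > 3, \end{cases}\]

which matches the corresponding cases of Lemma 4.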

2.3 The Heavy-Tailed \({(1 + (\lambda , \lambda ))}\) GA

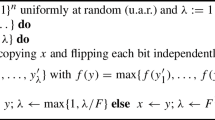

We now define the heavy-tailed \({(1 + (\lambda , \lambda ))}\) GA. The main difference from the standard \({(1 + (\lambda , \lambda ))}\) GA is that at the start of each iteration the mutation rate p, the crossover bias c, and the population size \(\lambda \) are randomly chosen as follows. We sample \(p \sim n^{-1/2}{{\,\textrm{pow}\,}}(\beta _p, u_p)\) and \(c \sim n^{-1/2}{{\,\textrm{pow}\,}}(\beta _c, u_c)\). The population size is chosen via \(\lambda \sim {{\,\textrm{pow}\,}}(\beta _\lambda , u_\lambda )\). Here the upper limits \(u_\lambda \), \(u_p\) and \(u_c\) can be any positive integers, except we require \(u_p\) and \(u_c\) to be at most \(\sqrt{n}\) (so that we choose both p and c from interval (0, 1]). The power-law exponents \(\beta _\lambda \), \(\beta _p\) and \(\beta _c\) can be any non-negative real numbers. We call these parameters of the power-law distribution the hyperparameters of the heavy-tailed \({(1 + (\lambda , \lambda ))}\) GA and we give recommendations on how to choose these hyperparameters in Sect. 3.3. The pseudocode of this algorithm is shown in Algorithm 1. We note that it is not necessary to store the whole offspring population, since only the best individual has a chance to be selected as a mutation or crossover winner. Hence also large values for \(\lambda \) are algorithmically feasible.
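The description above can be turned into a short sketch. It follows the standard formulation of the \({(1 + (\lambda , \lambda ))}\) GA (mutation phase with a common number \(\ell \sim {{\,\textrm{Bin}\,}}(n, p)\) of flipped bits, biased uniform crossover with the parent, elitist selection), so details may differ from Algorithm 1. All names, the default hyperparameters, and the stopping rule via a known `target` fitness are assumptions made for illustration. The offspring populations are not stored; only the current best of each phase is kept.

```python
import math
import random
from itertools import accumulate


def make_sampler(beta, u, rng):
    """Sampler for pow(beta, u): Pr[X = i] proportional to i^(-beta), i in [1..u]."""
    values = range(1, u + 1)
    cum = list(accumulate(i ** (-beta) for i in values))
    return lambda: rng.choices(values, cum_weights=cum, k=1)[0]


def heavy_tailed_ga(f, n, target, max_iters, rng,
                    beta_lam=2.5, beta_p=1.5, beta_c=1.5, u_lam=64):
    """Maximize f on {0,1}^n; returns (x, f(x), number of fitness evaluations)."""
    u_pc = max(1, math.isqrt(n))  # bound of sqrt(n) keeps p and c in (0, 1]
    sample_lam = make_sampler(beta_lam, u_lam, rng)
    sample_p = make_sampler(beta_p, u_pc, rng)
    sample_c = make_sampler(beta_c, u_pc, rng)

    x = [rng.randint(0, 1) for _ in range(n)]
    fx, evals = f(x), 1
    for _ in range(max_iters):
        if fx == target:
            break
        # Fresh random parameters in every iteration.
        lam = sample_lam()
        p = sample_p() / math.sqrt(n)
        c = sample_c() / math.sqrt(n)

        # Mutation phase: all lam offspring flip the same number ell of bits.
        ell = sum(rng.random() < p for _ in range(n))
        mut, mut_f = None, None
        for _ in range(lam):
            y = list(x)
            for i in rng.sample(range(n), ell):
                y[i] ^= 1
            fy = f(y)
            evals += 1
            if mut is None or fy > mut_f:
                mut, mut_f = y, fy

        # Crossover phase: take each bit from the mutation winner w.p. c.
        cross, cross_f = None, None
        for _ in range(lam):
            y = [b if rng.random() < c else a for a, b in zip(x, mut)]
            fy = f(y)
            evals += 1
            if cross is None or fy > cross_f:
                cross, cross_f = y, fy

        # Elitist selection: accept the crossover winner if it is not worse.
        if cross_f >= fx:
            x, fx = cross, cross_f
    return x, fx, evals
```

A call such as `heavy_tailed_ga(sum, 10, target=10, max_iters=20000, rng=random.Random(1))` runs the sketch on OneMax with n = 10. The finite bound `u_lam=64` is chosen here only to keep the precomputed sampler small.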

Concerning the scalings of the power-law distributions, we find it natural to choose the integer parameter \(\lambda \) from a power-law distribution without any normalization. For the scalings of the power-law distributions determining the parameters p and c, we argued already in the introduction that the scaling factor of \(n^{-1/2}\) is natural as it ensures that the Hamming distance between parent and offspring, whose expectation is pcn for this algorithm, is one with constant probability. We see that there is some risk that an algorithm user misses this argument and, for example, chooses a scaling factor of \(n^{-1}\) for the mutation rate, which leads to the Hamming distance between parent and mutation offspring being one with constant probability. A completely different alternative would be to choose \(c \sim \frac{1}{{{\,\textrm{pow}\,}}(\beta _m, u_m)}\), inspired by the recommendation “\(c := 1/(pn)\)” made for static parameters in [14]. Without proof, we note that these and many similar strategies increase the runtime by at most a factor of \(\Theta (n^c)\), c a constant independent of n and k, thus not changing the general \(n^{(0.5+\varepsilon ) k}\) runtime guarantee proven in this work.

The following theoretical results exist for the \({(1 + (\lambda , \lambda ))}\) GA. With optimal static parameters the algorithm solves OneMax in approximately \(O(n\sqrt{\log (n)})\) fitness evaluations [14]. The runtime becomes slightly worse on the random satisfiability instances due to a weaker fitness-distance correlation [8]. In [5] it was shown that the runtime of the \({(1 + (\lambda , \lambda ))}\) GA on LeadingOnes is the same as the runtime of the most classic algorithms, that is, \(\Theta (n^2)\), which means that it is not slower than most other EAs despite the absence of a strong fitness-distance correlation. The analysis of the \({(1 + (\lambda , \lambda ))}\) GA with static parameters on \(\textsc {Jump} _k\) in [6] showed that the \({(1 + (\lambda , \lambda ))}\) GA (with uncommon parameters) can find the optimum in \(e^{O(k)}(\frac{n}{k})^{(k + 1)/2}\) fitness evaluations, which is roughly a square root of the \(\Theta (n^k)\) runtime of many classic algorithms on this function.

Concerning dynamic parameter choices, a fitness-dependent parameter choice was shown to give linear runtime on OneMax [14], which is the best known runtime for crossover-based algorithms on OneMax. In [13], it was shown that also the self-adjusting approach of controlling the parameters with a simple one-fifth rule can lead to this linear runtime. The adapted one-fifth rule with a logarithmic cap lets the \({(1 + (\lambda , \lambda ))}\) GA outperform the \((1 + 1)\) EA on random satisfiability instances [8].

Choosing \(\lambda \) from a power-law distribution and taking \(p = \frac{\lambda }{n}\) and \(c = \frac{1}{\lambda }\) lets the \({(1 + (\lambda , \lambda ))}\) GA optimize OneMax in linear time [2]. Also, as mentioned in the introduction, with randomly chosen parameters (but with some dependencies between several of them) the \({(1 + (\lambda , \lambda ))}\) GA can optimize \(\textsc {Jump} _k\) in time \(e^{O(k)}(\frac{n}{k})^{(1 + \varepsilon )k/2}\) [3]. For LeadingOnes it was shown in [5] that the runtime of the \({(1 + (\lambda , \lambda ))}\) GA is \(\Theta (n^2)\) and that any dynamic choice of \(\lambda \) does not change this asymptotic runtime.

In our proofs we use the following language (also for the \({(1 + (\lambda , \lambda ))}\) GA with static parameters). When we analyse the \({(1 + (\lambda , \lambda ))}\) GA on Jump and the algorithm has already reached the local optimum, then we call the mutation phase successful if all k zero-bits of x are flipped to ones in the mutation winner \(x'\). We call the crossover phase successful if the crossover winner has a greater fitness than x.

2.4 Useful Tools

An important tool in our analysis is Wald’s equation [45] as it allows us to express the expected number of fitness evaluations through the expected number of iterations and the expected cost of one iteration.

Lemma 5

(Wald’s equation) Let \((X_t)_{t \in {\mathbb {N}}}\) be a sequence of real-valued random variables and let T be a positive integer random variable. Let also all following conditions be true.

1. All \(X_t\) have the same finite expectation.
2. For all \(t \in {\mathbb {N}}\) we have \(E[X_t \mathbb {1}_{\{T \ge t\}}] = E[X_t] \Pr [T \ge t]\).
3. \(\sum _{t = 1}^{+\infty } E[|X_t| \mathbb {1}_{\{T \ge t\}}] < \infty \).
4. \(E[T]\) is finite.

Then we have

\[E\left[ \sum _{t = 1}^T X_t\right] = E[T] \cdot E[X_1].\]

In our analysis of the heavy-tailed \({(1 + (\lambda , \lambda ))}\) GA we use the following multiplicative drift theorem.

Theorem 6

(Multiplicative Drift [21]) Let \(S \subset {\mathbb {R}}\) be a finite set of positive numbers with minimum \(s_{\min }\). Let \(\{X_t\}_{t \in {\mathbb {N}}_0}\) be a sequence of random variables over \(S \cup \{0\}\). Let T be the first point in time t when \(X_t = 0\), that is,

\[T = \min \{t \in {\mathbb {N}}_0 : X_t = 0\},\]

which is a random variable. Suppose that there exists a constant \(\delta > 0\) such that for all \(t \in {\mathbb {N}}_0\) and all \(s \in S\) such that \(\Pr [X_t = s] > 0\) we have

\[E[X_t - X_{t + 1} \mid X_t = s] \ge \delta s.\]

Then for all \(s_0 \in S\) such that \(\Pr [X_0 = s_0] > 0\) we have

\[E[T \mid X_0 = s_0] \le \frac{1 + \ln (s_0 / s_{\min })}{\delta }.\]

We use the following well-known relation between the arithmetic and geometric means.

Lemma 7

For all positive a and b it holds that \(a + b \ge 2\sqrt{ab}\).

3 Runtime Analysis

In this section we perform a runtime analysis of the heavy-tailed \({(1 + (\lambda , \lambda ))}\) GA on the easy problems OneMax, LeadingOnes, and Minimum Spanning Tree as well as the more difficult Jump problem. We show that this algorithm can efficiently escape local optima and that it is capable of solving Jump functions much faster than the known mutation-based algorithms and most of the crossover-based EAs. At the same time it does not fail on easy functions like OneMax, unlike the \({(1 + (\lambda , \lambda ))}\) GA with those static parameters which are optimal for Jump [6].

From the results of this section we distill the recommendations to use \(\beta _p\) and \(\beta _c\) slightly greater than one and to use \(\beta _\lambda \) slightly greater than two. We also suggest to use almost unbounded power-law distributions, taking \(u_c = u_p = \sqrt{n}\) and \(u_\lambda = 2^n\). These recommendations are justified in Corollary 16.

3.1 Easy Problems

In this subsection we show that the heavy-tailed \({(1 + (\lambda , \lambda ))}\) GA has a reasonably good performance on the easy problems OneMax, LeadingOnes, minimum spanning tree, and partition.

3.1.1 OneMax

The following result shows that the heavy-tailed \({(1 + (\lambda , \lambda ))}\) GA, just like the simple \((1 + 1)\) EA, solves the OneMax problem in \(O(n\log (n))\) iterations.

Theorem 8

If \(\beta _\lambda > 1\), \(\beta _p > 1\), and \(\beta _c > 1\), then the heavy-tailed \({(1 + (\lambda , \lambda ))}\) GA finds the optimum of OneMax in \(O(n\log (n))\) iterations. The expected number of fitness evaluations is

-

\(O(n\log (n))\), if \(\beta _\lambda > 2\),

-

\(O(n\log (n)(\log (u_\lambda ) + 1))\), if \(\beta _\lambda = 2\), and

-

\(O(nu_\lambda ^{2 - \beta _\lambda } \log (n))\), if \(\beta _\lambda \in (1, 2)\).
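The three regimes in Theorem 8 mirror the growth of the expected population size \(E[\lambda ]\). The sketch below computes \(E[\lambda ]\) exactly, assuming that the bounded power-law distribution assigns to each integer \(i \in [1..u_\lambda ]\) a probability proportional to \(i^{-\beta _\lambda }\); this definition and the function names are assumptions made for illustration.

```python
def power_law_pmf(beta, u):
    """Probabilities of i = 1..u, proportional to i^(-beta)."""
    weights = [i ** (-beta) for i in range(1, u + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def expected_lambda(beta, u):
    """Exact E[lambda] for lambda drawn from the bounded power law."""
    pmf = power_law_pmf(beta, u)
    return sum(i * p for i, p in enumerate(pmf, start=1))


def expected_evaluations_per_iteration(beta, u):
    """Each iteration costs 2 * lambda fitness evaluations."""
    return 2.0 * expected_lambda(beta, u)
```

With \(\beta _\lambda = 2.5\) the expectation stays bounded as \(u_\lambda \) grows, whereas with \(\beta _\lambda = 1.5\) it grows roughly like \(u_\lambda ^{1/2}\), in line with the term \(u_\lambda ^{2 - \beta _\lambda }\).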

The central argument in the proof of Theorem 8 is the observation that the heavy-tailed \({(1 + (\lambda , \lambda ))}\) GA performs an iteration equivalent to one of the \((1 + 1)\) EA with a constant probability, which is shown in the following lemma.

Lemma 9

If \(\beta _p\), \(\beta _c\) and \(\beta _\lambda \) are all strictly greater than one, then with probability \(\rho = \Theta (1)\) the heavy-tailed \({(1 + (\lambda , \lambda ))}\) GA chooses \(p = c = \frac{1}{\sqrt{n}}\) and \(\lambda = 1\) and performs an iteration of the \((1 + 1)\) EA with mutation rate \(\frac{1}{n}\).

Proof

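Since we choose p, c and \(\lambda \) independently, by the definition of the power-law distribution and by Lemma 2 we have

$$\begin{aligned} \rho = \Pr \left[ p = \frac{1}{\sqrt{n}}\right] \cdot \Pr \left[ c = \frac{1}{\sqrt{n}}\right] \cdot \Pr [\lambda = 1] = \Theta (1). \end{aligned}$$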

If we have \(\lambda = 1\), then we have only one mutation offspring which is automatically chosen as the mutation winner \(x'\). Note that although we first choose \(\ell \sim {{\,\textrm{Bin}\,}}(n, p)\) and then flip \(\ell \) random bits in x, the distribution of \(x'\) in the search space is the same as if we flipped each bit independently with probability p (see Section 2.1 in [14] for more details).

In the crossover phase we create only one offspring y by applying the biased crossover to x and \(x'\). Each bit of this offspring is different from the bit in the same position in x if and only if it was flipped in \(x'\) (with probability p) and then taken from \(x'\) in the crossover phase (with probability c). Therefore, y is distributed in the search space as if we generated it by applying the standard bit mutation with mutation rate pc to x. Hence, we can consider such an iteration of the heavy-tailed \({(1 + (\lambda , \lambda ))}\) GA as an iteration of the \((1 + 1)\) EA which uses a standard bit mutation with mutation rate \(pc = \frac{1}{n}\). \(\square \)
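The equivalence used in this proof can be checked empirically. The following sketch, written under the assumption that mutation flips \(\ell \sim {{\,\textrm{Bin}\,}}(n, p)\) distinct random positions and that the biased crossover takes each bit from \(x'\) with probability c, estimates the per-bit flip rate of the offspring y (before selection) for \(\lambda = 1\). The function names are hypothetical.

```python
import random


def one_iteration_lambda_one(x, p, c, rng):
    """Offspring y of one iteration with lambda = 1 (selection not applied)."""
    n = len(x)
    # Mutation phase: ell ~ Bin(n, p), then flip ell distinct random positions.
    ell = sum(1 for _ in range(n) if rng.random() < p)
    flipped = set(rng.sample(range(n), ell))
    x_prime = [1 - b if i in flipped else b for i, b in enumerate(x)]
    # Crossover phase: take each bit from x' with probability c, else from x.
    return [xp if rng.random() < c else b for b, xp in zip(x, x_prime)]


def empirical_flip_rate(n, p, c, trials, seed=0):
    """Fraction of bits in which y differs from the all-zeros parent x."""
    rng = random.Random(seed)
    x = [0] * n
    flips = sum(sum(one_iteration_lambda_one(x, p, c, rng)) for _ in range(trials))
    return flips / (n * trials)
```

For \(n = 100\) and \(p = c = \frac{1}{\sqrt{n}} = 0.1\) the estimated rate should be close to \(pc = \frac{1}{n} = 0.01\).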

We are now in a position to prove Theorem 8.

Proof of Theorem 8

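By Lemma 9 with probability at least \(\rho \), which is at least some constant independent of the problem size n, the heavy-tailed \({(1 + (\lambda , \lambda ))}\) GA performs an iteration of the \((1 + 1)\) EA. Hence, the probability P(i) to increase the fitness in one iteration if we have already reached fitness i is

$$\begin{aligned} P(i) \ge \rho \cdot \frac{n - i}{n}\left( 1 - \frac{1}{n}\right) ^{n - 1} \ge \frac{\rho (n - i)}{en}. \end{aligned}$$

Hence, we estimate the total runtime in terms of iterations as a sum of the expected runtimes until we leave each fitness level. Denoting by \(T_I\) the number of iterations until the optimum is found, we obtain

$$\begin{aligned} E[T_I] \le \sum _{i = 0}^{n - 1} \frac{1}{P(i)} \le \frac{en}{\rho } \sum _{i = 0}^{n - 1} \frac{1}{n - i} \le \frac{en(\ln (n) + 1)}{\rho } = O(n\log (n)). \end{aligned}$$

To compute the expected number of fitness evaluations until we find the optimum we use Wald’s equation (Lemma 5). Since in each iteration of the heavy-tailed \({(1 + (\lambda , \lambda ))}\) GA we make \(2\lambda \) fitness evaluations, for the number \(T_F\) of fitness evaluations we have

$$\begin{aligned} E[T_F] = E[T_I] \cdot E[2\lambda ] = 2E[T_I]E[\lambda ]. \end{aligned}$$

By Lemma 3 we have

$$\begin{aligned} E[\lambda ] = {\left\{ \begin{array}{ll} \Theta (1), &{} \text {if } \beta _\lambda > 2, \\ \Theta (\log (u_\lambda ) + 1), &{} \text {if } \beta _\lambda = 2, \\ \Theta (u_\lambda ^{2 - \beta _\lambda }), &{} \text {if } \beta _\lambda \in (1, 2). \end{array}\right. } \end{aligned}$$

Therefore,

$$\begin{aligned} E[T_F] = {\left\{ \begin{array}{ll} O(n\log (n)), &{} \text {if } \beta _\lambda > 2, \\ O(n\log (n)(\log (u_\lambda ) + 1)), &{} \text {if } \beta _\lambda = 2, \\ O(nu_\lambda ^{2 - \beta _\lambda }\log (n)), &{} \text {if } \beta _\lambda \in (1, 2). \end{array}\right. } \end{aligned}$$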

\(\square \)

Theorem 8 shows that the heavy-tailed \({(1 + (\lambda , \lambda ))}\) GA can fall back to a \((1 + 1)\) EA behavior and turn into a simple hill climber. Since we do not have a matching lower bound, our analysis leaves open the question to what extent the heavy-tailed \({(1 + (\lambda , \lambda ))}\) GA benefits from iterations in which it samples parameter values different from the ones used in Lemma 9. On the one hand, in [2] it was shown that if we choose only the parameter \(\lambda \) from the power-law distribution and set the other parameters to their optimal values in the \({(1 + (\lambda , \lambda ))}\) GA (namely, \(p = \frac{\lambda }{n}\) and \(c = \frac{1}{\lambda }\) [14]), then we have a linear runtime on OneMax. This indicates that there is a chance that the heavy-tailed \({(1 + (\lambda , \lambda ))}\) GA with an independent choice of three parameters can also have an \(o(n\log (n))\) runtime on this problem. On the other hand, the probability that we choose p and c close to their optimal values is not high, hence we have to rely on making good progress when using non-optimal parameter values. Our experiments presented in Sect. 4.1 suggest that such parameters do not yield the desired progress speed and that the heavy-tailed \({(1 + (\lambda , \lambda ))}\) GA has an \(\Omega (n\log (n))\) runtime (see Fig. 3). For this reason, we rather believe that the heavy-tailed \({(1 + (\lambda , \lambda ))}\) GA proposed in this work performs worse on OneMax than the one proposed in [2]. Since our new algorithm has a massively better performance on jump functions, we feel that losing a logarithmic factor in the runtime on OneMax is not too critical.
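For readers who want to experiment with this behavior, here is a minimal sketch of the heavy-tailed \({(1 + (\lambda , \lambda ))}\) GA. Algorithm 1 is not restated in this section, so the sketch rests on assumptions taken from the verbal description: \(p = i_p/\sqrt{n}\) and \(c = i_c/\sqrt{n}\) with \(i_p, i_c\) drawn from power laws on \([1..\lfloor \sqrt{n}\rfloor ]\), \(\ell \sim {{\,\textrm{Bin}\,}}(n, p)\), biased crossover, and elitist selection that accepts offspring of equal fitness. The bound `u_lam` is kept small here for speed instead of the suggested \(2^n\), and all names are hypothetical.

```python
import random


def sample_power_law(beta, u, rng):
    """Sample i in [1..u] with probability proportional to i^(-beta)."""
    weights = [i ** (-beta) for i in range(1, u + 1)]
    return rng.choices(range(1, u + 1), weights=weights)[0]


def one_max(x):
    return sum(x)


def heavy_tailed_ga(f, n, target, beta_p, beta_c, beta_lam, u_lam, max_iters, seed=0):
    """Return (iterations, evaluations) once f reaches target, or None on timeout."""
    rng = random.Random(seed)
    u_pc = max(1, int(n ** 0.5))  # assumed u_p = u_c = sqrt(n), rounded down
    x = [rng.randint(0, 1) for _ in range(n)]
    fx, evaluations = f(x), 1
    for iteration in range(1, max_iters + 1):
        if fx == target:
            return iteration - 1, evaluations
        p = sample_power_law(beta_p, u_pc, rng) / n ** 0.5
        c = sample_power_law(beta_c, u_pc, rng) / n ** 0.5
        lam = sample_power_law(beta_lam, u_lam, rng)
        # Mutation phase: lam offspring, each flipping the same number ell of bits.
        ell = sum(1 for _ in range(n) if rng.random() < p)
        best_mutant, best_mutant_fitness = None, None
        for _ in range(lam):
            y = x[:]
            for i in rng.sample(range(n), ell):
                y[i] = 1 - y[i]
            fy = f(y)
            if best_mutant is None or fy > best_mutant_fitness:
                best_mutant, best_mutant_fitness = y, fy
        # Crossover phase: each bit is taken from the mutation winner w.p. c.
        best_child, best_child_fitness = None, None
        for _ in range(lam):
            y = [m if rng.random() < c else b for b, m in zip(x, best_mutant)]
            fy = f(y)
            if best_child is None or fy > best_child_fitness:
                best_child, best_child_fitness = y, fy
        evaluations += 2 * lam
        # Elitist selection: never accept a worse individual.
        if best_child_fitness >= fx:
            x, fx = best_child, best_child_fitness
    return (max_iters, evaluations) if fx == target else None
```

A call such as `heavy_tailed_ga(one_max, 30, 30, 1.2, 1.2, 2.5, 30, 100000)` runs the sketch on OneMax with \(n = 30\); it is meant for small experiments only and makes no claim to reproduce the experimental setup of Sect. 4.1.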

Lemma 9 also allows us to transform any upper bound on the runtime of the \((1 + 1)\) EA which was obtained via the fitness level argument or via drift with the fitness into the same asymptotic runtime for the heavy-tailed \({(1 + (\lambda , \lambda ))}\) GA. We give three examples in the following subsections.

3.1.2 LeadingOnes

For the LeadingOnes problem, we now show that arguments analogous to the ones in [41] can be used to prove an \(O(n^2)\) runtime guarantee also for the heavy-tailed \({(1 + (\lambda , \lambda ))}\) GA.

Theorem 10

If \(\beta _\lambda > 1\), \(\beta _p > 1\), and \(\beta _c > 1\), then the expected runtime of the heavy-tailed \({(1 + (\lambda , \lambda ))}\) GA on LeadingOnes is \(O(n^2)\) iterations. In terms of fitness evaluations the expected runtime is

Proof

The probability that the heavy-tailed \({(1 + (\lambda , \lambda ))}\) GA improves the fitness in one iteration is at least the probability that it performs an iteration of the \((1 + 1)\) EA that improves the fitness. By Lemma 9 the probability that the heavy-tailed \({(1 + (\lambda , \lambda ))}\) GA performs an iteration of the \((1 + 1)\) EA is \(\Theta (1)\). The probability that the \((1 + 1)\) EA increases the fitness in one iteration is at least the probability that it flips the first zero-bit in the string and does not flip any other bit, which is \(\frac{1}{n}(1 - \frac{1}{n})^{n - 1} \ge \frac{1}{en}\). Hence, the probability that the heavy-tailed \({(1 + (\lambda , \lambda ))}\) GA increases the fitness in one iteration is \(\Omega (\frac{1}{n})\).
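The elementary estimate \(\frac{1}{n}(1 - \frac{1}{n})^{n - 1} \ge \frac{1}{en}\) used above, together with the resulting fitness-level bound, can be checked numerically. The sketch below is only a sanity check with hypothetical function names; the value of \(\rho \) passed to it is an arbitrary placeholder, since the proof only uses that \(\rho \) is a constant.

```python
import math


def single_bit_flip_probability(n):
    """Probability that bit mutation with rate 1/n flips one fixed bit and no other."""
    return (1.0 / n) * (1.0 - 1.0 / n) ** (n - 1)


def leading_ones_iteration_bound(n, rho):
    """Fitness levels: at most n improvements, each with probability >= rho / (e n)."""
    return n * (math.e * n / rho)
```

The second function returns \(\frac{en^2}{\rho }\), which is the \(O(n^2)\) bound on the number of iterations derived in the proof.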

Therefore, the expected number of iterations before the heavy-tailed \({(1 + (\lambda , \lambda ))}\) GA improves the fitness is O(n) iterations. Since there will be no more than n improvements in fitness before we reach the optimum, the expected total runtime of the heavy-tailed \({(1 + (\lambda , \lambda ))}\) GA on LeadingOnes is at most \(O(n^2)\) iterations. Since by Lemma 3 with \(\beta _\lambda > 1\) the expected cost of one iteration is

by Wald’s equation (Lemma 5) the expected total runtime in terms of fitness evaluations is

\(\square \)

3.1.3 Minimum Spanning Tree Problem

We proceed with the runtime on the minimum spanning tree problem. Reusing some of the arguments from [38] and some more from the later work [21], we show that the expected runtime of the heavy-tailed \({(1 + (\lambda , \lambda ))}\) GA admits the same upper bound \(O(m^2\log (W_{{{\,\textrm{total}\,}}}))\) as the \((1 + 1)\) EA.

Theorem 11

If \(\beta _\lambda > 1\), \(\beta _p > 1\), and \(\beta _c > 1\), then the expected runtime of the heavy-tailed \({(1 + (\lambda , \lambda ))}\) GA on the minimum spanning tree problem is \(O(m^2 \log (W_{{{\,\textrm{total}\,}}}))\) iterations. In terms of fitness evaluations it is

Proof

In [38] it was shown that starting with a random subgraph of G, the \((1 + 1)\) EA finds a spanning tree in \(O(m\log (n))\) iterations. We now briefly adjust these arguments to the heavy-tailed \({(1 + (\lambda , \lambda ))}\) GA. If \(G'\) is disconnected, then the probability to reduce the number of connected components is at least the probability that the heavy-tailed \({(1 + (\lambda , \lambda ))}\) GA performs an iteration of the \((1 + 1)\) EA multiplied by the probability that an iteration of the \((1 + 1)\) EA adds an edge which connects two connected components (and does not add or remove other edges from the subgraph \(G'\)). The latter probability is at least \(\frac{cc(G') - 1}{m} (1 - \frac{1}{m})^{m - 1} \ge \frac{cc(G') - 1}{em}\), since there are at least \(cc(G') - 1\) edges which we can add to connect a pair of connected components. Therefore, by the fitness level argument we have that the expected number of iterations before the heavy-tailed \({(1 + (\lambda , \lambda ))}\) GA finds a connected graph is \(O(m \log (n))\).

If the algorithm has found a connected graph, with probability \(\Omega (\frac{|E'| - (n - 1)}{m})\) the heavy-tailed \({(1 + (\lambda , \lambda ))}\) GA performs an iteration of the \((1 + 1)\) EA that removes an edge participating in a cycle (since there are at least \((|E'| - (n - 1))\) such edges). Therefore, in \(O(m\log (m))\) iterations the heavy-tailed \({(1 + (\lambda , \lambda ))}\) GA finds a spanning tree (possibly not a minimum one). Note that \(O(m\log (m)) = O(m\log (n))\), since we do not have loops or parallel edges and thus \(m \le \frac{n(n - 1)}{2}\).

Once the heavy-tailed \({(1 + (\lambda , \lambda ))}\) GA has obtained a spanning tree, it cannot accept any subgraph that is not a spanning tree. Therefore, we can use the multiplicative drift argument from [21]. Namely, we define a potential function \(\Phi (G')\) that is equal to the weight of the current tree minus the weight of the minimum spanning tree. In [21] it was shown that for every iteration t of the \((1 + 1)\) EA, we have

where \(G'_t\) denotes the current graph at the start of iteration t. By Lemma 9 and since the weight of the current graph cannot increase in one iteration, for the heavy-tailed \({(1 + (\lambda , \lambda ))}\) GA we have

for some \(\rho \), which is a constant independent of m and W. Since the edge weights are integers, we have \(\Phi (G'_t) \ge 1 =:s_{\min }\) for all t such that \(G'_t\) is not an optimal solution. We also have \(\Phi (G'_0) \le W_{{{\,\textrm{total}\,}}}\) by the definition of \(W_{{{\,\textrm{total}\,}}}\). Therefore, by the multiplicative drift theorem (Theorem 6) we have that the expected runtime until we find the optimum starting from a spanning tree is at most

Together with the runtime to find a spanning tree, we obtain a total expected runtime of

iterations. By Lemma 3 and by Wald’s equation (Lemma 5) the expected number of fitness evaluations is therefore

\(\square \)

3.1.4 Approximations for the Partition Problem

We finally regard the partition problem. We use similar arguments as in [46] (slightly modified to exploit multiplicative drift analysis) to show that the heavy-tailed \({(1 + (\lambda , \lambda ))}\) GA also finds a \((\frac{4}{3} + \varepsilon )\) approximation in linear time. For \(\frac{4}{3}\) approximations we improve the \(O(n^2)\) runtime result of [46] and show that both the \((1 + 1)\) EA and the heavy-tailed \({(1 + (\lambda , \lambda ))}\) GA succeed in \(O(n\log (w))\) fitness evaluations.

Theorem 12

If \(\beta _\lambda > 2\), \(\beta _p > 1\), and \(\beta _c > 1\), then the heavy-tailed \({(1 + (\lambda , \lambda ))}\) GA finds a \((\frac{4}{3} + \varepsilon )\) approximation to the partition problem in an expected number of O(n) iterations. The expected number of fitness evaluations is

The heavy-tailed \({(1 + (\lambda , \lambda ))}\) GA and the \((1 + 1)\) EA also find a \(\frac{4}{3}\) approximation in an expected number of \(O(n\log (w))\) iterations. The expected number of fitness evaluations for the heavy-tailed \({(1 + (\lambda , \lambda ))}\) GA is

Proof

We first recall the definition of a critical object from [46]. Let \(\ell \ge \frac{w}{2}\) be the fitness of the optimal solution. Let \(i_1< i_2< \dots < i_k\) be the indices of the objects in the heavier bin. Then we call the object r in the heavier bin the critical one if it is the object with the smallest index such that

In other words, the critical object is the object in the heavier bin such that the total weight of all previous (non-lighter) objects in that bin is not greater than \(\ell \), but the total weight of all previous objects together with the weight of this object is greater than \(\ell \). We call the weight of the critical object the critical weight. We also call the objects in the heavier bin which have index at least r the light objects. This notation is illustrated in Fig. 2.
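The definition of the critical object can be turned into a few lines of code. The sketch below assumes that the weights are given in non-increasing order and uses 0-based indices (so the paper's object 1 has index 0); the brute-force computation of \(\ell \) is only feasible for tiny instances and, like all names here, is an illustrative assumption.

```python
from itertools import combinations


def optimal_makespan(weights):
    """Brute-force optimum: smallest possible weight of the heavier bin."""
    total = sum(weights)
    best = total
    for size in range(len(weights) + 1):
        for subset in combinations(range(len(weights)), size):
            load = sum(weights[i] for i in subset)
            best = min(best, max(load, total - load))
    return best


def critical_object(weights, heavier_bin, ell):
    """Index of the critical object, or None if the bin weighs at most ell.

    `weights` must be sorted in non-increasing order; `heavier_bin` holds
    the indices of the objects currently placed in the heavier bin.
    """
    prefix = 0
    for i in sorted(heavier_bin):
        prefix += weights[i]
        if prefix > ell:
            return i
    return None


def light_objects(weights, heavier_bin, ell):
    """Objects of the heavier bin whose index is at least the critical index."""
    r = critical_object(weights, heavier_bin, ell)
    return [] if r is None else [i for i in sorted(heavier_bin) if i >= r]
```

For the weights `[5, 4, 3, 2, 2]` we have \(w = 16\) and \(\ell = 8\). If the heavier bin holds the objects with indices 0, 1 and 3, then the prefix weight exceeds \(\ell \) for the first time at index 1, so the critical weight is 4 and the light objects are those with indices 1 and 3.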

We now show that at some moment the critical weight becomes at most \(\frac{w}{3}\) and does not exceed this value in the future. For this we consider two cases.

Case 1: \(w_2 > \frac{w}{3}\). Note that in this case we also have \(w_1 > \frac{w}{3}\), since \(w_1 \ge w_2\) and the weight of all other objects is \(w - w_1 - w_2 < \frac{w}{3}\). If the two heaviest objects are in the same bin, then the weight of this (heavier) bin is at least \(\frac{2w}{3}\). In any partition in which these two objects are separated the weight of the heavier bin is at most \(\max \{w - w_1, w - w_2\} < \frac{2w}{3}\), therefore if the algorithm generates such a partition it would replace a partition in which the two heaviest objects are in the same bin. For the same reason, once we have a partition with the two heaviest objects in different bins, we cannot accept a partition in which they are in the same bin.

The probability of separating the two heaviest objects into two different bins is at least the probability that the heavy-tailed \({(1 + (\lambda , \lambda ))}\) GA performs an iteration of the \((1 + 1)\) EA (which by Lemma 9 is \(\Theta (1)\)) multiplied by the probability that in this iteration we move one of these two objects into a different bin and do not move the second object. This is at least

Consequently, in an expected number of O(n) iterations the two heaviest objects will be separated into different bins.

Note that the weight of the heaviest object cannot be greater than the weight of the heavier bin (even in the optimal solution), hence we have \({w_2 \le w_1 \le \ell }\). Therefore, when the two heaviest objects are separated into different bins neither of them can be the critical one. Hence, the critical weight is now at most \(w_3 < \frac{w}{3}\).

Case 2: \(w_2 \le \frac{w}{3}\). Since the heaviest object can never be the critical one, the critical weight is at most \(w_2 \le \frac{w}{3}\).

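Once the critical weight is at most \(\frac{w}{3}\), we define a potential function

$$\begin{aligned} \Phi (x_t) = \max \left\{ 0, f(x_t) - \ell - \frac{w}{6}\right\} , \end{aligned}$$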

where \(x_t\) is the current individual of the heavy-tailed \({(1 + (\lambda , \lambda ))}\) GA at the beginning of iteration t. Note that this potential function does not increase due to the elitist selection of the \({(1 + (\lambda , \lambda ))}\) GA.

We now show that as long as \(\Phi (x_t) > 0\), any iteration which moves any light object to the lighter bin and does not move other objects reduces the fitness (and the potential). Recall that the weight of each light object is at most \(\frac{w}{3}\). Then the weight of the bin which was heavier before the move is reduced by the weight of the moved object. The weight of the other bin becomes at most

Therefore, the weight of both bins becomes smaller than the weight of the bin which was heavier before the move, hence such a partition is accepted by the algorithm.

Now we estimate the expected decrease of the potential in one iteration. Recall that by Lemma 9 the probability that the heavy-tailed \({(1 + (\lambda , \lambda ))}\) GA performs an iteration of the \((1 + 1)\) EA is at least some \(\rho = \Theta (1)\). The probability that in such an iteration we move only one particular object is \(\frac{1}{n}(1 - \frac{1}{n})^{n - 1} \ge \frac{1}{en}\). Hence we have two options.

-

If there is at least one light object with weight at least \(\Phi (x_t)\), then by moving it we decrease the potential to zero, since the weight of the heavier bin becomes not greater than \(\ell + \frac{w}{6}\) and the weight of the lighter bin also cannot become greater than \(\ell + \frac{w}{6}\) as it was shown earlier. Hence, we have

$$\begin{aligned} E\left[ \Phi (x_t) - \Phi (x_{t + 1}) \mid \Phi (x_t) = s\right] \ge \frac{s\rho }{en}. \end{aligned}$$

-

Otherwise, the move of any light object decreases the potential by the weight of the moved object, since the heavy bin will remain the heavier one after such a move. The total weight of the light objects is at least \(f(x_t) - \ell \ge \Phi (x_t)\). Let L be the set of indices of the light objects. Then we have

$$\begin{aligned} E\left[ \Phi (x_t) - \Phi (x_{t + 1}) \mid \Phi (x_t) = s\right] \ge \rho \sum _{i \in L} \frac{w_i}{en} \ge \frac{s\rho }{en}. \end{aligned}$$

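Now we are in a position to use the multiplicative drift theorem (Theorem 6). Note that the value of the potential function is at most \(\frac{w}{2}\) and its minimum positive value is at least \(\frac{1}{6}\) (since \(f(x_t)\) and \(\ell \) are integer values and \(\frac{w}{6}\) is an integer multiple of \(\frac{1}{6}\)). Therefore, denoting by \(T_I\) the smallest t such that \(\Phi (x_t) = 0\), we have

$$\begin{aligned} E[T_I] \le \frac{en}{\rho }\left( 1 + \ln (3w)\right) = O(n\log (w)). \end{aligned}$$

When \(\Phi (x_t) = 0\), we have

$$\begin{aligned} f(x_t) \le \ell + \frac{w}{6} \le \ell + \frac{\ell }{3} = \frac{4}{3}\ell , \end{aligned}$$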

which means that \(x_t\) is a \(\frac{4}{3}\) approximation of the optimal solution.

To show that we obtain a \((\frac{4}{3} + \varepsilon )\) approximation in expected linear time for all constants \(\varepsilon > 0\), we use a modified potential function \(\Phi _\varepsilon \), which is defined by

For this potential function the drift is at least as large as for \(\Phi \), but its smallest non-zero value is \(\frac{\varepsilon w}{2}\). Hence, by the multiplicative drift theorem (Theorem 6) the expectation of the first time \(T_I(\varepsilon )\) when \(\Phi _\varepsilon \) turns to zero is at most

When \(\Phi _\varepsilon (x_t) = 0\), we have

therefore \(x_t\) is a \((\frac{4}{3} + \varepsilon )\) approximation.

By Lemma 3 and by Wald’s equation (Lemma 5) we also have the following estimates on the runtimes \(T_F\) and \(T_F(\varepsilon )\) in terms of fitness evaluations.

\(\square \)

3.2 Jump Functions

In this subsection we show that the heavy-tailed \({(1 + (\lambda , \lambda ))}\) GA performs well on jump functions, hence there is no need to rely on the informal argument from [3] for choosing the mutation rate p and the crossover bias c identical. The main result is the following theorem, which estimates the expected runtime until we leave the local optimum of \(\textsc {Jump} _k\).
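As a reminder of the benchmark, the following sketch implements \(\textsc {Jump} _k\) in its common form for maximization. The formal definition is given elsewhere in the paper, so the variant below (fitness \(\textsc {OneMax} (x) + k\) outside the valley and at the optimum, \(n - \textsc {OneMax} (x)\) inside the valley) is an assumption, as are the function names.

```python
def jump(x, k):
    """Jump_k (common form, to be maximized) for a list x of 0/1 values."""
    n, om = len(x), sum(x)
    if om == n or om <= n - k:
        return om + k  # outside the valley, including the global optimum
    return n - om      # inside the fitness valley


def is_local_optimum(x, k):
    """Points with exactly n - k one-bits form the local optimum."""
    return sum(x) == len(x) - k
```

With \(n = 10\) and \(k = 3\), a point with seven one-bits has fitness 10, the points with eight or nine one-bits have fitness 2 and 1, and the all-ones string has fitness 13. Leaving the local optimum therefore requires flipping exactly the k missing bits at once.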

Theorem 13

Let \(k \in [2..\frac{n}{4}]\), \(u_p \ge \sqrt{2k}\), and \(u_c \ge \sqrt{2k}\). Assume that we use the heavy-tailed \({(1 + (\lambda , \lambda ))}\) GA (Algorithm 1) to optimize \(\textsc {Jump} _k\), starting already in the local optimum. Then the expected number of fitness evaluations until the optimum is found is shown in Table 1, where \(p_{pc}\) denotes the probability that both p and c are in \([\sqrt{\frac{k}{n}}, \sqrt{\frac{2k}{n}}]\). Table 2 shows estimates for \(p_{pc}\).

The proof of Theorem 13 follows from similar arguments as in [3, Theorem 6], the main differences being highlighted in the following two lemmas.

Lemma 14

Let \(k \le \frac{n}{4}\). If \(u_p \ge \sqrt{2k}\) and \(u_c \ge \sqrt{2k}\), then the probability \(p_{pc} = \Pr [p \in [\sqrt{\frac{k}{n}}, \sqrt{\frac{2k}{n}}] \wedge c \in [\sqrt{\frac{k}{n}}, \sqrt{\frac{2k}{n}}]]\) is as shown in Table 2.

Proof

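Since we choose p and c independently, we have

$$\begin{aligned} p_{pc} = \Pr \left[ p \in \left[ \sqrt{\frac{k}{n}}, \sqrt{\frac{2k}{n}}\right] \right] \cdot \Pr \left[ c \in \left[ \sqrt{\frac{k}{n}}, \sqrt{\frac{2k}{n}}\right] \right] . \end{aligned}$$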

By the definition of the power-law distribution and by Lemmas 1 and 2, we have

We can estimate \(\Pr [c \in [\sqrt{\frac{k}{n}}, \sqrt{\frac{2k}{n}}]]\) in the same manner, which gives us the final estimate of \(p_{pc}\) shown in Table 2. \(\square \)
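The probability \(p_{pc}\) can also be computed exactly for concrete parameters. The sketch below assumes that \(p = i/\sqrt{n}\) with an integer i drawn from a power law on \([1..u_p]\) (and likewise for c), so that \(p \in [\sqrt{k/n}, \sqrt{2k/n}]\) is equivalent to \(k \le i^2 \le 2k\). This parametrization and all names are assumptions made for illustration.

```python
def power_law_pmf(beta, u):
    """Probabilities of i = 1..u, proportional to i^(-beta)."""
    weights = [i ** (-beta) for i in range(1, u + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def good_multipliers(k, u):
    """Integers i in [1..u] with sqrt(k) <= i <= sqrt(2k), i.e. k <= i^2 <= 2k."""
    return [i for i in range(1, u + 1) if k <= i * i <= 2 * k]


def interval_probability(k, beta, u):
    """Probability that the sampled multiplier falls into the good range."""
    pmf = power_law_pmf(beta, u)
    return sum(pmf[i - 1] for i in good_multipliers(k, u))


def p_pc(k, beta_p, beta_c, u_p, u_c):
    """Product form of p_pc, using the independence of p and c."""
    return interval_probability(k, beta_p, u_p) * interval_probability(k, beta_c, u_c)
```

Note that the value does not depend on n under this parametrization, only on k, the exponents and the upper bounds.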

Now we proceed with an estimate of the probability to find the optimum in one iteration after choosing p and c.

Lemma 15

Let \(k \in [2..\frac{n}{4}]\). Let \(\lambda \), p and c be already chosen in an iteration of the heavy-tailed \({(1 + (\lambda , \lambda ))}\) GA and let \(p, c \in [\sqrt{\frac{k}{n}}, \sqrt{\frac{2k}{n}}]\). If the current individual x of the heavy-tailed \({(1 + (\lambda , \lambda ))}\) GA is in the local optimum of \(\textsc {Jump} _k\), then the probability that the algorithm generates the global optimum in one iteration is at least \(e^{-\Theta (k)}\min \{1, (\frac{k}{n})^k \lambda ^2 \}\).

Proof

The probability \(P_{pc}(\lambda )\) that we find the optimum in one iteration is the probability that we have a successful mutation phase and a successful crossover phase in the same iteration. If we denote the probability of a successful mutation phase by \(p_M\) and the probability of a successful crossover phase by \(p_C\), then we have \(P_{pc}(\lambda ) = p_M p_C\). Then with \(q_\ell \) being some constant which denotes the probability that the number \(\ell \) of bits we flip in the mutation phase is in [pn, 2pn], by Lemmas 3.1 and 3.2 in [6] we have

If \(\lambda \ge \sqrt{\frac{n}{k}}^k\), then we have

Otherwise, if \(\lambda < \sqrt{\frac{n}{k}}^k\), then both minima are equal to their second argument. Thus, we have

Bringing the two cases together, we finally obtain

\(\square \)

Now we are in position to prove Theorem 13.

Proof of Theorem 13

Let the current individual x of the heavy-tailed \({(1 + (\lambda , \lambda ))}\) GA be already in the local optimum. Let F be the event that the algorithm finds the optimum in one iteration and let P be its probability. By the law of total probability this probability is at least

$$\begin{aligned} P \ge p_{(F \mid pc)} \cdot p_{pc}, \end{aligned}$$

where \(p_{(F \mid pc)}\) denotes \(\Pr [F \mid p, c \in [\sqrt{\frac{k}{n}}, \sqrt{\frac{2k}{n}}]]\) and \(p_{pc}\) denotes \(\Pr [p, c \in [\sqrt{\frac{k}{n}}, \sqrt{\frac{2k}{n}}]]\).

The number \(T_I\) of iterations until we jump to the optimum follows a geometric distribution \({{\,\textrm{Geom}\,}}(P)\) with success probability P. Therefore,

$$\begin{aligned} E[T_I] = \frac{1}{P} \le \frac{1}{p_{(F \mid pc)} \, p_{pc}}. \end{aligned}$$

Since in each iteration the heavy-tailed \({(1 + (\lambda , \lambda ))}\) GA performs \(2\lambda \) fitness evaluations (with \(\lambda \) chosen from the power-law distribution), by Wald’s equation (Lemma 5) the expected number \(E[T_F]\) of fitness evaluations the algorithm makes before it finds the optimum is

$$\begin{aligned} E[T_F] = E[T_I] \cdot E[2\lambda ] \le \frac{2E[\lambda ]}{p_{(F \mid pc)} \, p_{pc}}. \end{aligned}$$

In the remainder we show how \(E[\lambda ]\), \(p_{(F \mid pc)}\) and \(p_{pc}\) depend on the hyperparameters of the algorithm.

First we note that \(p_{pc}\) was estimated in Lemma 14. Also, by Lemma 3 the expected value of \(\lambda \) is

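Finally, we compute the conditional probability of F via the law of total probability.

$$\begin{aligned} p_{(F \mid pc)} = \sum _{i = 1}^{u_\lambda } \Pr [\lambda = i] \, P_{pc}(i), \end{aligned}$$

where \(P_{pc}(i)\) is as defined in Lemma 15, in which it was shown that \(P_{pc}(i) \ge e^{-\Theta (k)}\min \{1, (\frac{k}{n})^k i^2 \}\). We consider two cases depending on the value of \(u_\lambda \).

Case 1: when \(u_\lambda \le (\frac{n}{k})^{k/2}\). In this case we have \(P_{pc}(i) \ge e^{-\Theta (k)}(\frac{k}{n})^k i^2\) for all \(i \in [1..u_\lambda ]\), hence

$$\begin{aligned} p_{(F \mid pc)} \ge e^{-\Theta (k)}\left( \frac{k}{n}\right) ^k \sum _{i = 1}^{u_\lambda } \Pr [\lambda = i] \, i^2 = e^{-\Theta (k)}\left( \frac{k}{n}\right) ^k E[\lambda ^2]. \end{aligned}$$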

By Lemma 4 we estimate \(E[\lambda ^2]\) and obtain

Case 2: when \(u_\lambda > (\frac{n}{k})^{k/2}\). In this case we have \(P_{pc}(i) \ge e^{-\Theta (k)}(\frac{k}{n})^k i^2\), when \(i \le (\frac{n}{k})^{k/2}\) and we have \(P_{pc}(i) \ge e^{-\Theta (k)}\), when \(i > (\frac{n}{k})^{k/2}\). Therefore, we have

Estimating the sums via Lemma 1, we obtain

Gathering the estimates for the two cases and the estimates of \(E[\lambda ]\) and \(p_{pc}\) together, we obtain the runtimes listed in Table 1. \(\square \)
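The interplay between the cost \(2E[\lambda ]\) of an iteration and the success probability can be explored numerically. The sketch below evaluates \(\sum _i \Pr [\lambda = i] \min \{1, (\frac{k}{n})^k i^2\}\) and the ratio of \(2E[\lambda ]\) to this sum. It deliberately omits the \(e^{-\Theta (k)}\) and \(p_{pc}\) factors, assumes a power law with probabilities proportional to \(i^{-\beta _\lambda }\), and uses hypothetical function names, so it only illustrates the shape of the bound and not the entries of Table 1.

```python
def power_law_pmf(beta, u):
    """Probabilities of i = 1..u, proportional to i^(-beta)."""
    weights = [i ** (-beta) for i in range(1, u + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def success_factor(n, k, lam):
    """The factor min{1, (k/n)^k * lam^2} from Lemma 15, without e^{-Theta(k)}."""
    return min(1.0, (k / n) ** k * lam ** 2)


def conditional_success_proxy(n, k, beta_lam, u_lam):
    """Sum over i of Pr[lambda = i] * min{1, (k/n)^k i^2}."""
    pmf = power_law_pmf(beta_lam, u_lam)
    return sum(p * success_factor(n, k, i) for i, p in enumerate(pmf, start=1))


def evaluations_proxy(n, k, beta_lam, u_lam):
    """2 E[lambda] divided by the proxy above (shape of E[T_F] only)."""
    pmf = power_law_pmf(beta_lam, u_lam)
    mean_lam = sum(i * p for i, p in enumerate(pmf, start=1))
    return 2.0 * mean_lam / conditional_success_proxy(n, k, beta_lam, u_lam)
```

The factor saturates at \(\lambda = (\frac{n}{k})^{k/2}\), which is exactly the threshold separating the two cases of the proof. A heavier tail (smaller \(\beta _\lambda \)) puts more probability mass on large \(\lambda \) and thus increases the success proxy, at the price of a larger \(E[\lambda ]\).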

3.3 Recommended Hyperparameters

In this subsection we summarize the results of our runtime analysis to identify the most preferable parameters of the power-law distributions for practical use. We state the runtime with such parameters on OneMax and \(\textsc {Jump} _k\) in Corollary 16. We then also prove a lower bound on the runtime of the \({(1 + (\lambda , \lambda ))}\) GA with static parameters to show that when k is constant (that is, the most interesting case, since only then we have a polynomial runtime), the performance of the heavy-tailed \({(1 + (\lambda , \lambda ))}\) GA is asymptotically better than the best performance we can obtain with static parameters.

Corollary 16

Let \(\beta _\lambda = 2 + \varepsilon _\lambda \) and \(\beta _p = 1 + \varepsilon _p\) and \(\beta _c = 1 + \varepsilon _c\), where \(\varepsilon _\lambda , \varepsilon _p, \varepsilon _c > 0\) are some constants. Let also \(u_\lambda \) be at least \(2^n\) and \(u_p = u_c = \sqrt{n}\). Then the expected runtime of the heavy-tailed \({(1 + (\lambda , \lambda ))}\) GA is \(O(n \log (n))\) fitness evaluations on OneMax and \(e^{O(k)}(\frac{n}{k})^{(1 + \varepsilon _\lambda )k/2}\) fitness evaluations on \(\textsc {Jump} _k\), \(k \in [2..\frac{n}{4}]\).

This corollary follows from Theorems 8 and 13. We only note that for the runtime on \(\textsc {Jump} _k\) the same arguments as in Theorem 8 show us that the runtime until we reach the local optimum is at most \(O(n\log (n))\), which is small compared to the runtime until we reach the global optimum. Also we note that when \(\beta _p\) and \(\beta _c\) are both greater than one and \(u_p = u_c =\sqrt{n} \ge \sqrt{2k}\), by Lemma 14 we have \(p_{pc} = \Theta (k^{-\frac{\varepsilon _p + \varepsilon _c}{2}})\), which is implicitly hidden in the \(e^{O(k)}\) factor of the runtime on \(\textsc {Jump} _k\). We also note that \(u_\lambda = 2^n\) guarantees that \(u_\lambda > (\frac{n}{k})^{k/2}\), which yields the runtimes shown in the right column of Table 1.

Corollary 16 shows that when we have (almost) unbounded distributions and use power-law exponents slightly greater than one for all parameters except the population size, for which we use a power-law exponent slightly greater than two, we have a good performance both on easy monotone functions, which give us a clear signal towards the optimum, and on the much harder jump functions, without any knowledge of the jump size.

We now also show that the proposed choice of the hyperparameters gives us a better performance than any static parameter choice on \(\textsc {Jump} _k\) for constant k. As we have already noted in the introduction, only for such values of k different variants of the \({(1 + (\lambda , \lambda ))}\) GA and many other classic EAs have a polynomial runtime, hence this case is the most interesting to consider. We prove the following theorem which holds for any static parameter choice of the \({(1 + (\lambda , \lambda ))}\) GA, even when we use different population sizes \(\lambda _M\) and \(\lambda _C\) in the mutation and in the crossover phases respectively.

Theorem 17

Let n be sufficiently large. Then the expected runtime of the \({(1 + (\lambda , \lambda ))}\) GA with any static parameters p, c, \(\lambda _M\) and \(\lambda _C\) on \(\textsc {Jump} _k\) with \(k \le \frac{n}{512}\) is at least \(B :=\frac{1}{91\sqrt{\ln (n/k)}}(\frac{2n}{k})^{(k + 1)/2}\).
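To get a feeling for the magnitude of this lower bound, the expression for B can be evaluated directly. The helper below is a plain transcription of the formula in Theorem 17; its name is hypothetical, and it is only meaningful in the regime \(k \le \frac{n}{512}\) required by the theorem (for which the logarithm is positive).

```python
import math


def static_lower_bound(n, k):
    """B = (2n/k)^((k+1)/2) / (91 * sqrt(ln(n/k))) from Theorem 17."""
    return (2.0 * n / k) ** ((k + 1) / 2.0) / (91.0 * math.sqrt(math.log(n / k)))
```

For constant k the bound grows like \(n^{(k + 1)/2}\) up to a \(\sqrt{\log n}\) factor, which can be compared with the exponent \((1 + \varepsilon _\lambda )k/2\) in Corollary 16.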

Before we prove Theorem 17, we give a short sketch of the proof to ease the further reading. First we show that with high probability the \({(1 + (\lambda , \lambda ))}\) GA with static parameters starts at a point with approximately \(\frac{n}{2}\) one-bits. In the second step we handle a wide range of parameter settings and show that for them we cannot obtain a runtime better than B by showing that the probability to find the optimum in one iteration is at most 1/B. For the remaining settings we then show that we are not likely to observe an \(\Omega (n)\) progress in one iteration, hence with high probability there is an iteration in which the fitness is \(\frac{n}{2} + \Omega (n)\) and at the same time is \(n - k - \Omega (n)\). From that point on, a progress of \(\Omega (k\log (\frac{n}{k}))\) in one iteration is very unlikely, hence with high probability the \({(1 + (\lambda , \lambda ))}\) GA does not reach the local optima of \(\textsc {Jump} _k\) (nor the global one) within \(\Omega (\frac{n}{k\log (\frac{n}{k})})\) iterations, which corresponds to \(\Omega (\frac{(\lambda _M + \lambda _C)n}{k\log (\frac{n}{k})})\) fitness evaluations by the definition of the algorithm. For the narrowed range of parameters this yields the lower bound.

To transform these informal arguments into a rigorous proof we use several auxiliary tools. The first of them is Lemma 14 from [29], which we formulate as follows (see Footnote 2).

Lemma 18

(Lemma 14 in [29]) Let x be a bit string of length n with exactly m one-bits in it. Let y be an offspring of x obtained by flipping each bit independently with probability \(\frac{r}{n}\), where \(r \le \frac{n}{2}\). Let also \(m'\) be a random variable denoting the number of one-bits in y. Then for any \(\Delta \ge 0\) we have

We also use the following lemma, which bounds the probability to make a jump to a certain point.

Lemma 19

If we are in distance \(d \le \frac{n}{2}\) from the unique optimum of any function, then the probability P that the \({(1 + (\lambda , \lambda ))}\) GA with mutation rate p, crossover bias c and population sizes for the mutation and crossover phases \(\lambda _M\) and \(\lambda _C\) respectively finds the optimum in one iteration is at most

A very similar, but less general result has been proven in [6, Theorem 16].

Proof

Without loss of generality we assume that the unique optimum is the all-ones bit string. Hence, the current individual has exactly d zero-bits. Let \(p_\ell \) be the probability that we choose \(\ell \) as the number of bits to flip at the start of the iteration of the \({(1 + (\lambda , \lambda ))}\) GA. Let also \(p_m(\ell )\) be the probability (conditional on the chosen \(\ell \)) that the mutation winner has all zero-bits flipped to ones. Note that this is necessary for crossover to be able to create the global optimum. Let \(p_c(\ell )\) be the probability that, conditional on the chosen \(\ell \) and on the event that all d zero-bits are flipped in the mutation winner, we then flip the \(\ell - d\) zeros of the mutation winner in at least one crossover offspring. Then by the law of total probability we have

$$\begin{aligned} P = \sum _{\ell = 0}^{n} p_\ell \, p_m(\ell ) \, p_c(\ell ). \qquad \text {(1)} \end{aligned}$$

For \(\ell < d\) the probability that we flip all d zero-bits in the mutation winner is zero. For \(\ell = d\) we flip all d zero-bits in one particular mutation offspring with probability \(q_m(\ell ) = \left( {\begin{array}{c}n\\ d\end{array}}\right) ^{-1}\). Since we create all \(\lambda _M\) offspring independently, the probability that we flip all d zero-bits in at least one offspring is

where we used Bernoulli's inequality. Since creating such an offspring in the mutation phase already yields the optimum, we assume that we do not need to perform the crossover and therefore \(p_c(\ell ) = 1\) in this case.

When \(\ell > d\), the probability to flip all d zero-bits in one offspring is

The probability to do so in one of \(\lambda _M\) independently created offspring is thus

The probability that in one crossover offspring we take from the current individual all \(\ell - d\) bits which are zeros in the mutation winner and take from the mutation winner all d bits which are zeros in the current individual is \(q_c(\ell ) = c^d (1 - c)^{\ell - d}\). Consequently, the probability that we do this in at least one of \(\lambda _C\) independently created individuals is

Recall that \(\ell \) is chosen from the binomial distribution \({{\,\textrm{Bin}\,}}(n, p)\), thus we have \(p_\ell = \left( {\begin{array}{c}n\\ \ell \end{array}}\right) p^{\ell }(1 - p)^{n - \ell }\). Putting all the estimates above into (1) we obtain

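We now consider the function \(f_d(x) = x^d (1 - x)^{n - d}\) on the interval \(x \in [0, 1]\). To find its maximum, we consider its value at the ends of the interval (which is zero at both ends) and at the roots of its derivative, which is

$$\begin{aligned} f_d'(x) = dx^{d - 1}(1 - x)^{n - d} - (n - d)x^d(1 - x)^{n - d - 1} = x^{d - 1}(1 - x)^{n - d - 1}(d - nx). \end{aligned}$$

Hence, the only root of the derivative in the open interval (0, 1) is \(x = \frac{d}{n}\). Since \(f_d(x)\) is a smooth function, it reaches its maximum there, which is

$$\begin{aligned} f_d\left( \frac{d}{n}\right) = \left( \frac{d}{n}\right) ^d \left( 1 - \frac{d}{n}\right) ^{n - d}. \end{aligned}$$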

Since we assume that \(d \le \frac{n}{2}\), we conclude that for all \(x \in [0, 1]\) we have

Hence we have both \(p^d (1 - p)^{n - d} \le (\frac{d}{2n})^d\) and \((1 - pc)^{n - d} (pc)^d \le (\frac{d}{2n})^d\), from which we conclude

Since P cannot exceed one, we also have

\(\square \)
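As a numerical sanity check of the calculus step in the proof above, the following sketch (an illustration only; the helper names, the grid resolution and the choice \(n = 40\) are assumptions made for the example) locates the maximum of \(f_d(x) = x^d (1 - x)^{n - d}\) on a grid and compares the maximal value with the bound \((\frac{d}{2n})^d\) for all \(d \le \frac{n}{2}\).

```python
def f(x: float, n: int, d: int) -> float:
    """f_d(x) = x^d (1 - x)^(n - d) on [0, 1]."""
    return x ** d * (1.0 - x) ** (n - d)


def grid_argmax(n: int, d: int, steps: int = 10_000) -> float:
    """Location of the maximum of f_d on a uniform grid over [0, 1]."""
    grid = [i / steps for i in range(steps + 1)]
    return max(grid, key=lambda x: f(x, n, d))


def bound(n: int, d: int) -> float:
    """The upper bound (d / (2n))^d used in the proof for d <= n/2."""
    return (d / (2.0 * n)) ** d


if __name__ == "__main__":
    n = 40  # illustrative problem size
    for d in (1, 5, 10, 20):
        x_star = grid_argmax(n, d)
        print(d, x_star, d / n, f(d / n, n, d), bound(n, d))
```

For \(d = \frac{n}{2}\) the two values coincide (both equal \(2^{-n}\)), so the bound cannot be improved in general by a constant factor inside the base.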

An important corollary of Lemma 19 is the following lower bound for the case when the population sizes are too small.

Corollary 20

Consider the run of the \({(1 + (\lambda , \lambda ))}\) GA with static parameters on \(\textsc {Jump} _k\) with \(k < \frac{n}{2}\). Let the population sizes which are used for the mutation and crossover phases be \(\lambda _M\) and \(\lambda _C\) respectively. Let also the current individual x be a point outside the fitness valley, but with at least \(\frac{n}{2}\) one-bits. Then if \(\lambda _M\lambda _C < \ln (\frac{n}{k})(\frac{2n}{k})^{k - 1}\), then the expected runtime until we find the global optimum is at least \(\frac{1}{2\sqrt{\ln (n/k)}}(\frac{2n}{k})^{\frac{k + 1}{2}}\).

Proof

Since the algorithm has already found a point outside the fitness valley, it will never accept a point inside it as the current individual x. Hence, unless we find the optimum, the distance to it from the current individual is at least k and at most \(\frac{n}{2}\).

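We now consider the term \((\frac{d}{2n})^d\), which is used in the bound given in Lemma 19, as a function of d and maximize it for \(d \in [k, \frac{n}{2}]\). For this purpose we consider its values at the endpoints of the interval and at the zeros of its derivative, which is

\[ \frac{\partial }{\partial d} \left( \frac{d}{2n}\right) ^d = \left( \frac{d}{2n}\right) ^d \left( \ln \left( \frac{d}{2n}\right) + 1\right) . \]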

Hence, the derivative is equal to zero only when \(\frac{d}{2n} = e^{-1}\), that is, when \(d = \frac{2n}{e}\). Since we only consider d which are at most \(\frac{n}{2}\), the derivative does not have roots in this range. We also note that for \(d < \frac{2n}{e}\) the derivative is negative, hence the maximal value of \((\frac{d}{2n})^d\) is reached when \(d = k\). Therefore, by Lemma 19 we have

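Since \(\lambda _M\lambda _C \le \ln (\frac{n}{k})(\frac{2n}{k})^{k - 1}\) and since for all \(x \ge 2\) we have \(\ln (x) < \frac{x}{2}\), we compute

\[ 2\lambda _M\lambda _C \left( \frac{k}{2n}\right) ^k \le 2\ln \left( \frac{n}{k}\right) \left( \frac{2n}{k}\right) ^{k - 1} \left( \frac{k}{2n}\right) ^k = \frac{k}{n} \ln \left( \frac{n}{k}\right) < \frac{1}{2}. \]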

Therefore, the minimum in (2) is equal to the second argument.
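The two elementary facts used in this part of the proof can be checked numerically. The sketch below is illustrative only (the function names and the tested values of n are assumptions): it verifies that \((\frac{d}{2n})^d\) is decreasing for \(d \le \frac{n}{2}\), and that with the largest population-size product allowed by Corollary 20 the quantity \(2\lambda _M\lambda _C (\frac{k}{2n})^k\) equals \(\frac{k}{n}\ln (\frac{n}{k})\) and stays below \(\frac{1}{2}\).

```python
from math import log


def valley_term(d: int, n: int) -> float:
    """The term (d / (2n))^d from the bound of Lemma 19."""
    return (d / (2.0 * n)) ** d


def success_parameter(n: int, k: int) -> float:
    """2 * lam_M * lam_C * (k / (2n))^k for the largest product allowed
    by the corollary, lam_M * lam_C = ln(n/k) * (2n/k)^(k-1)."""
    lam_product = log(n / k) * (2.0 * n / k) ** (k - 1)
    return 2.0 * lam_product * (k / (2.0 * n)) ** k


if __name__ == "__main__":
    n = 100  # illustrative problem size
    for k in (1, 2, 5, 10, 25, 49):
        print(k, valley_term(k, n), success_parameter(n, k), (k / n) * log(n / k))
```

Since \(\frac{\ln x}{x}\) is maximized at \(x = e\) with value \(\frac{1}{e} < \frac{1}{2}\), the printed success parameters never exceed roughly 0.37.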

Thus, the runtime \(T_I\) (in terms of iterations) stochastically dominates a geometric distribution with parameter \(2\lambda _M\lambda _C \left( \frac{k}{2n}\right) ^k \le \frac{1}{2}\). Hence \(E[T_I] \ge 2\), which implies that the expected number of unsuccessful iterations is \(E[T_I] - 1 \ge \frac{E[T_I]}{2}\). Since in each unsuccessful iteration we have exactly \(\lambda _M + \lambda _C\) fitness evaluations, we have

By Lemma 7 we obtain

\(\square \)

In the following lemma we show that too large population sizes also yield a too large expected runtime.

Lemma 21

Consider the run of the \({(1 + (\lambda , \lambda ))}\) GA with static parameters on \(\textsc {Jump} _k\) with \(k < \frac{n}{2}\). Let the population sizes which are used for the mutation and crossover phases be \(\lambda _M\) and \(\lambda _C\) respectively. Let also the current individual x be a point outside the fitness valley, but with at least \(\frac{n}{2}\) one-bits. Then if \(\lambda _M\lambda _C > \frac{1}{\ln (\frac{n}{k})}(\frac{2n}{k})^{k + 1}\), then the expected runtime until we find the global optimum is at least \(\frac{1}{16\sqrt{\ln (n/k)}}(\frac{2n}{k})^{\frac{k + 1}{2}}\).

Proof

By Lemma 7 we have that the cost of one iteration is

hence to prove this lemma it is enough to consider only the first iteration of the algorithm, which already takes at least \(\frac{2}{\sqrt{\ln (n/k)}}(\frac{2n}{k})^{\frac{k + 1}{2}}\) fitness evaluations plus one evaluation for the initial individual.

We first show that if \(\lambda _M \ge 2^{\frac{n}{2}} - 2\), then we are not likely to sample the optimum before making \(2^{\frac{n}{2}} - 1\) fitness evaluations. For this we note that the initial individual and all mutation offspring in the first iteration are sampled independently of the fitness function, thus they are random points in the search space. Therefore, for each of these individuals the probability to be the optimum is \(2^{-n}\). Consequently, by the union bound, when we create the initial individual and \(2^{\frac{n}{2}} - 2\) mutation offspring, the probability that at least one of them is the optimum is at most

Hence, with probability at least \((1 - 2^{-\left( \frac{n}{2} + 1\right) })\) we have to make \(2^\frac{n}{2} - 1\) or more fitness evaluations, which implies that

if \(n \ge 3\). Without proof we note that \(\frac{1}{16\sqrt{\ln (n/k)}}(\frac{2n}{k})^{\frac{k + 1}{2}}\) is increasing in k for \(k \le \frac{n}{2}\). Hence, for \(k \le \frac{n}{2}\) we have that

In the rest of the proof we assume that \(\lambda _M < 2^{\frac{n}{2}} - 2\). Since the mutation winner is chosen based on the fitness, we cannot use the same argument with random points in the search space for the crossover phase. However, we can consider an artificial process, which in parallel runs the crossover phase for each mutation offspring seen as winner. If none of these parallel processes has generated the optimum within m crossover offspring samples, then also the true process has not done so within a total of \(1 + \lambda _M + m\) fitness evaluations. We note that in the parallel crossover phases, since no selection has been made, again all offspring are uniformly distributed in \(\{0,1\}^n\).

Let us fix \(m = 2^{\frac{n}{2} - 1}\). By the union bound, the probability that one of \(1 + \lambda _M + m\lambda _M\) individuals generated by the artificial process is the optimum is at most

At the same time, if the original \({(1 + (\lambda , \lambda ))}\) GA creates \(1 + \lambda _M + m \ge m\) individuals, it also performs at least m fitness evaluations. Hence, the expected number of fitness evaluations is at least

\(\square \)
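The uniformity argument used in the proof above can be illustrated by exhaustive enumeration on a toy instance. The sketch below is not part of the original analysis; the function name and the size \(n = 4\) are assumptions chosen for the example, and it only covers a single offspring obtained by flipping a fixed number \(\ell \) of uniformly chosen positions in a uniformly random parent. It counts how often each bit string arises and confirms that all \(2^n\) strings are equally likely.

```python
from collections import Counter
from itertools import combinations, product


def offspring_counts(n: int, ell: int) -> Counter:
    """Count how often each string arises when a uniformly random parent
    has exactly ell uniformly chosen positions flipped."""
    counts = Counter()
    for parent in product((0, 1), repeat=n):
        for positions in combinations(range(n), ell):
            child = list(parent)
            for i in positions:
                child[i] ^= 1  # flip the selected bit
            counts[tuple(child)] += 1
    return counts


if __name__ == "__main__":
    n = 4  # toy size; the enumeration grows as 2^n * C(n, ell)
    for ell in range(n + 1):
        counts = offspring_counts(n, ell)
        # Every one of the 2^n strings should appear equally often.
        print(ell, len(counts), set(counts.values()))
```

Because each individual is uniform, the probability that any fixed one of them is the optimum is \(2^{-n}\), and the union bound over all sampled individuals applies regardless of the dependencies between them.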

We are now in a position to prove Theorem 17.

Proof of Theorem 17

Initialization. Recall that the initial individual is sampled uniformly at random, hence the number of one-bits in it follows a binomial distribution \({{\,\textrm{Bin}\,}}(n, \frac{1}{2})\). By the symmetry argument we have that the number of one-bits X in the initial individual is at least \(\frac{n}{2}\) with probability at least \(\frac{1}{2}\). By Chernoff bounds (see, e.g., Theorem 1.10.1 in [24]) we also have that the probability that X is greater than \(\frac{n}{2} + \frac{n}{8}\) is at most

Hence, with probability at least \(\frac{1}{2} - e^{-\Theta (n)}\) the initial individual has a number of one-bits (and hence, the fitness) in \([\frac{n}{2}, \frac{5n}{8}]\). We now condition on this event (see Footnote 3).
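The two initialization estimates can be compared with exact binomial tails. The following sketch is illustrative only: the function names are assumptions, and the bound \(e^{-n/96}\) comes from one standard multiplicative Chernoff form, \(\Pr [X \ge (1 + \delta )E[X]] \le e^{-\delta ^2 E[X]/3}\) with \(\delta = \frac{1}{4}\), which may differ from the exact inequality invoked in the proof.

```python
from math import comb, exp


def upper_tail(n: int, lo: int) -> float:
    """Exact Pr[X >= lo] for X ~ Bin(n, 1/2), using integer arithmetic."""
    return sum(comb(n, i) for i in range(lo, n + 1)) / 2 ** n


def chernoff_bound(n: int) -> float:
    """Assumed Chernoff form exp(-delta^2 * mu / 3) with delta = 1/4, mu = n/2."""
    return exp(-n / 96.0)


if __name__ == "__main__":
    for n in (8, 64, 256, 512):
        at_least_half = upper_tail(n, (n + 1) // 2)    # Pr[X >= n/2]
        above_5n8 = upper_tail(n, (5 * n) // 8 + 1)    # Pr[X > 5n/8]
        print(n, at_least_half, above_5n8, chernoff_bound(n))
```

The exact tail decays much faster than this Chernoff estimate, but only the exponential decay in n matters for the argument.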

Narrowing the reasonable population sizes. Since we condition on starting in distance \(d \le \frac{n}{2}\) from the optimum of \(\textsc {Jump} _k\), by Corollary 20 and Lemma 21 we have that if we choose \(\lambda _M\) and \(\lambda _C\) such that \(\lambda _M\lambda _C \ge \frac{1}{\ln (n/k)}(\frac{2n}{k})^{k + 1}\) or \(\lambda _M\lambda _C \le \ln (\frac{n}{k})(\frac{2n}{k})^{k - 1}\), then the expected runtime is at least \(\frac{1}{16\sqrt{\ln (n/k)}}(\frac{2n}{k})^{\frac{k + 1}{2}} = \frac{91B}{16}\). Hence, in the rest of the proof we assume that \(\ln (\frac{n}{k})(\frac{2n}{k})^{k - 1}< \lambda _M \lambda _C < \frac{1}{\ln (n/k)}(\frac{2n}{k})^{k + 1}\).

We note that by Lemma 7 this assumption also implies that the cost of one iteration is

Narrowing the reasonable mutation rate and crossover bias. We now show that using a too large mutation rate or crossover bias also yields a runtime which is greater than \((\frac{2n}{k})^{\frac{k + 1}{2}}\) and therefore greater than B. Conditional on the current individual x being in distance \(d \le \frac{n}{2}\) from the optimum, by Lemma 19 we have that if \(pc \ge \frac{1}{2}\) (and therefore, \(p \ge \frac{1}{2}\)), then we have

Therefore, the expected number of fitness evaluations until we find the optimum is at least

Since we already assume that \(\lambda _M \lambda _C \le \frac{1}{\ln (n/k)}(\frac{2n}{k})^{k + 1}\), by Lemma 7 we have

Therefore, we have

We note that for \(k \in [1, \frac{n}{512}]\) the term \(\left( \frac{k}{2n}\right) ^{k + 1}\) is decreasing in k (we omit the proof of this fact, which follows from considering the derivative). Consequently, if we assume that \(k \le \frac{n}{512}\), then we have

Hence, using \(pc \ge \frac{1}{2}\) gives an expected runtime of at least B.

Making linear progress. For the rest of the proof we assume that we have population sizes such that \(\lambda _M \lambda _C \in [\ln (\frac{n}{k})(\frac{2n}{k})^{k - 1}, \frac{1}{\ln (n/k)}(\frac{2n}{k})^{k + 1}]\) and p and c such that \(pc < \frac{1}{2}\). We now show that, before the algorithm has made \((\frac{2n}{k})^{\frac{k + 1}{2}}\) fitness evaluations, at some iteration the current individual x has fitness in \([\frac{n}{2} + \frac{n}{8}, \frac{n}{2} + \frac{n}{4}]\). For this we show that, conditional on \(f(x) \ge \frac{n}{2}\), the algorithm is unlikely to increase the fitness by at least \(\frac{n}{8}\) in a single iteration for a very long time.

For this purpose we consider a modified iteration of the \({(1 + (\lambda , \lambda ))}\) GA, where in the crossover phase we create not only \(\lambda _C\) offspring by crossing the current individual x with the mutation winner \(x'\), but we create \(\lambda _M \cdot \lambda _C\) offspring by performing crossover between x and each mutation offspring \(\lambda _C\) times. The best offspring in this modified iteration cannot be worse than the best offspring in a non-modified iteration. Hence the probability that we increase the fitness by at least \(\frac{n}{8}\) is at most the probability that the best offspring of this modified iteration is better than the current individual x by at least \(\frac{n}{8}\).

Consider one particular offspring \(y'\) created in this modified iteration. Recall that its parent was created by first choosing a number \(\ell \) from the binomial distribution \({{\,\textrm{Bin}\,}}(n, p)\) and then flipping \(\ell \) bits, therefore it is distributed as if we created it by flipping each bit independently with probability p. Then when we create \(y'\) we take each flipped bit from its parent with probability c, hence in the resulting offspring each bit is flipped with probability pc, independently of other bits. Consequently the distribution of \(y'\) is the same as if we created it via the standard bit mutation with probability of flipping each bit equal to pc. Note that this argument works only when we consider one particular individual, since the mutation offspring are dependent on each other (since they have the same number \(\ell \) of bits flipped) and therefore, their offspring are all also dependent.
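The reduction to standard bit mutation with rate pc can be verified exactly for small n. The sketch below is an illustration under stated assumptions (the function names and the sample values of n, p and c are not from the source). It computes the distribution of the number of bits in which a single crossover offspring differs from x under the two-stage process, \(\ell \sim {{\,\textrm{Bin}\,}}(n, p)\) followed by keeping each flipped bit with probability c, and compares it with \({{\,\textrm{Bin}\,}}(n, pc)\). Since the flipped positions are chosen uniformly at random, the distribution of this number determines the distribution of the offspring.

```python
from math import comb


def binom_pmf(n: int, p: float, j: int) -> float:
    """Pr[Bin(n, p) = j]."""
    return comb(n, j) * p ** j * (1.0 - p) ** (n - j)


def two_stage_pmf(n: int, p: float, c: float, j: int) -> float:
    """Pr[one crossover offspring differs from x in exactly j bits]:
    ell ~ Bin(n, p) bits are flipped, each is then kept with probability c."""
    return sum(
        binom_pmf(n, p, ell) * binom_pmf(ell, c, j) for ell in range(j, n + 1)
    )


if __name__ == "__main__":
    n, p, c = 12, 0.3, 0.4  # illustrative parameter values
    for j in range(n + 1):
        print(j, two_stage_pmf(n, p, c, j), binom_pmf(n, p * c, j))
```

This check concerns one offspring in isolation; as noted above, different offspring of the same iteration share the same \(\ell \) and are therefore not independent.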

To estimate the probability that the fitness of \(y'\) exceeds the fitness of x by at least \(\frac{n}{8}\), we use Lemma 18 with \(r = pcn\) (note that since \(pc < \frac{1}{2}\), we have \(r \le \frac{n}{2}\), thus we satisfy the conditions of Lemma 18). Since we are conditioning on \(m = f(x) \ge \frac{n}{2}\), we have \((n - 2m)\frac{r}{n} \le 0\). Hence, with \(\Delta = \frac{n}{8}\) we obtain

After \(\frac{n}{k}\) modified iterations we have created \(\frac{\lambda _M \lambda _C n}{k}\) offspring. Therefore, by the union bound, the probability that the fitness of at least one of them exceeds the fitness of its parent by at least \(\frac{n}{8}\) is at most \(\frac{\lambda _M \lambda _C n}{k}e^{-\frac{n}{70}}\). Since we also have \(\lambda _M \lambda _C \le \frac{1}{\ln (n/k)}(\frac{2n}{k})^{k + 1}\), this probability is at most

We also note that the term \(\left( \frac{2n}{k}\right) ^{k + 2}\) is increasing in k for \(k \in [1, \frac{n}{512}]\) (we omit the proof, since it trivially follows from considering its derivative). Therefore, for such k this probability is at most

Let \(T_{5n/8}\) be the first iteration when we have x with at least \(\frac{5n}{8}\) one-bits. If \(T_{5n/8} \le \frac{n}{k}\), then with probability \(1 - e^{-\Theta (n)}\) none of the offspring created up to this moment improved the fitness by more than \(\frac{n}{8}\). Hence, we have that x has at most \(\frac{5n}{8} + \frac{n}{8} = \frac{3n}{4}\) one-bits. Otherwise, if \(T_{5n/8} > \frac{n}{k}\), by this iteration we already make at least

fitness evaluations and thus the runtime exceeds \((\frac{2n}{k})^{\frac{k + 1}{2}}\). Hence, in the rest of the proof we assume that at some point we have a current individual x with fitness in \([\frac{5n}{8}, \frac{3n}{4}]\).

Slow progress towards the local optimum. We now show that after reaching fitness at least \(\frac{5n}{8}\), the \({(1 + (\lambda , \lambda ))}\) GA makes progress of at most \(\delta :=\frac{26}{3}(k + 2) \ln (\frac{n}{k})\) per iteration.

For this purpose we again consider an iteration of the modified algorithm, which generates \(\lambda _M\lambda _C\) offspring in each iteration. Recall that each offspring created here can be considered as one created by standard bit mutation with mutation rate pc. We apply Lemma 18 to one particular offspring \(y'\) with \(r = pcn\) and \(\Delta = \frac{r}{4} + \delta \) and obtain

For all \(\delta > 0\) and \(r > 0\) we bound the argument of the exponent as follows.

Recall that \(\delta = \frac{26}{3}(k + 2) \ln (\frac{n}{k})\). Hence, we have

By the union bound the probability that we create such offspring in \(\frac{n/4 - k}{\delta }\) iterations is at most

If we start at some point x with fitness \(f(x) \le \frac{3n}{4}\) and we do not improve the fitness by at least \(\delta \) for \(\frac{n/4 - k}{\delta }\) iterations, then we reach neither the local optima nor the global optimum within this number of iterations (note that for the considered values of \(k \le \frac{n}{32}\) and \(\delta \) the value of \(\frac{n/4 - k}{\delta }\) is at least one). During these iterations we perform at least \((\lambda _M + \lambda _C)\frac{n/4 - k}{\delta }\) fitness evaluations. Since we have already shown that \(\lambda _M + \lambda _C \ge 2\sqrt{\ln (\frac{n}{k})}(\frac{n}{k})^{\frac{k - 1}{2}}\), this is at least