Abstract

How to efficiently and reliably spread information in a system is one of the most fundamental problems in distributed computing. Recently, inspired by biological scenarios, several works focused on identifying the minimal communication resources necessary to spread information under faulty conditions. Here we study the self-stabilizing bit-dissemination problem, introduced by Boczkowski, Korman, and Natale in [SODA 2017]. The problem considers a fully-connected network of n agents, with a binary world of opinions, one of which is called correct. At any given time, each agent holds an opinion bit as its public output. The population contains a source agent which knows which opinion is correct. This agent adopts the correct opinion and remains with it throughout the execution. We consider the basic \(\mathcal {PULL}\) model of communication, in which each agent observes relatively few randomly chosen agents in each round. The goal of the non-source agents is to quickly converge on the correct opinion, despite having an arbitrary initial configuration, i.e., in a self-stabilizing manner. Once the population converges on the correct opinion, it should remain with it forever. Motivated by biological scenarios in which animals observe and react to the behavior of others, we focus on the extremely constrained model of passive communication, which assumes that when observing another agent the only information that can be extracted is the opinion bit of that agent. We prove that this problem can be solved in a number of rounds that is poly-logarithmic in n, with high probability, while sampling a logarithmic number of agents in each round. Previous works solved this problem faster and using fewer samples, but they did so by decoupling the messages sent by agents from their output opinion, and hence do not fit the framework of passive communication. Moreover, these works use complex recursive algorithms with refined clocks that are unlikely to be used by biological entities. In contrast, our proposed algorithm has a natural appeal as it is based on letting agents estimate the current tendency direction of the dynamics, and then adapt to the emerging trend.

1 Introduction

1.1 Background and motivation

Disseminating information from one or several sources to the whole population is a fundamental building block in a myriad of distributed systems [16, 18, 21, 32, 34], including in multiple natural systems [28, 38, 39]. This task becomes particularly challenging when the system is prone to faults [15, 25, 29, 30], or when the agents or their interactions are constrained [5, 14]. Among others, these issues find relevance in insect populations [38], chemical reaction networks [17], and mobile sensor networks [41]. In particular, in many biological systems, the internal computational abilities of individuals are impressively diverse, whereas the communication capacity is highly limited [10, 28, 38]. An extreme situation, often referred to as passive communication [40], is when information is gained by observing the behavior of other animals, which, in some cases, may not even intend to communicate [19, 33]. Such public information can reflect the quality of possible behavioral options, and hence allows individuals to improve their fitness when it is used properly [20].

Consider, for example, the following scenario that serves as an inspiration for our model. A group of n animals is scattered around an area searching for food. Assume that one side of the area, say, either the eastern side or the western side, is preferable (e.g., because it contains more food or because it is less likely to attract predators). However, only a few animals know which side is preferable. These knowledgeable animals will therefore spend most of their time in the preferable side of the area. Other animals would like to exploit the knowledge held by the knowledgeable animals, but they are unable to distinguish them from others. Instead, what they can do, is to scan the area in order to roughly estimate how many animals are on each side, and, if they wish, move between the two sides. Can the group of non-knowledgeable animals manage to locate themselves on the preferable side relatively fast, despite initially being spread in an arbitrary way while being completely uncorrelated?

The scenario above illustrates the notion of passive communication. The decision that an animal must make at any given time is to specify on which side of the area it should forage. This choice would be visible by others, and would in fact be the only information that an animal could reveal. Moreover, it cannot avoid revealing it. Knowledgeable animals have a clear incentive to remain on the preferable side, promoting this choice passively. On the other hand, uninformed animals may choose a side solely for communication purposes, regardless of which they think is best. However, such communication has to be limited in time, since all animals need to eventually converge towards the desirable side.

1.2 Informal description of the problem

This paper studies the self-stabilizing bit-dissemination problem, introduced by Boczkowski, Korman, and Natale in [14], with the aim of solving it using passive communication. The problem considers a fully-connected network of n agents, and a binary world of opinions, say \(\{0,1\}\) (in the motivating example above, the opinion corresponds to being either on the eastern side of an area, or the western side). One of these opinions is called correct and the other is called wrong. Execution proceeds in synchronous rounds (though agents do not have knowledge about the round number). At any given round t, each agent i holds an opinion bit \(\in \{0,1\}\) (viewed as its output variable). The population contains one source agent which knows which opinion is correct. This agent adopts the correct opinion and remains with it throughout the execution. The goal of the remaining agents, i.e., the non-source agents, is to converge on the opinion held by the source. Because the source does not change its opinion, it may be thought of as representing the environment and thus, not participating in the protocol. Instead, the protocol is executed by the non-source agents.

We study the basic \(\mathcal {PULL}\) model of communication [15, 21, 34], in which in each round, each agent sees the information held by \(\ell \) other agents, chosen uniformly at random, where \(\ell \) is small compared to n. Although the sampling is done by non-source agents, the \(\ell \) random agents are chosen from the whole population of agents (including the source). Importantly, however, agents are unable to distinguish the case of sampling the source from the case of sampling a non-source agent. Specifically, we consider the passive communication model which assumes that the only information that can be obtained by sampling an agent is its opinion bit.

The convergence time of the protocol is the first round at which the configuration of opinions reaches a consensus on the correct opinion and remains unchanged forever after.

We consider the self-stabilization framework [4, 24] to model the lack of global organization inherent to biological systems. We assume that the source has pertinent knowledge about which opinion is correct. Conversely, all other agents cannot be sure of their opinion, hence, we assume that their opinion may be corrupt, and even set by an adversary. In the self-stabilizing setting, the goal of the non-source agents is to quickly converge on the correct opinion, despite having an arbitrary initial configuration, set by an adversary.

Our framework and proofs could be extended to allow for a constant number of sources. In this case, however, it must be guaranteed that all source agents agree on which opinion is the correct one. Indeed, as mentioned in Sect. 1.4, when there are conflicts between sources, the problem cannot be solved efficiently in the passive communication model, even if significantly more agents support one opinion.

1.3 Previous works

The self-stabilizing bit-dissemination problem was introduced in [14], with the aim of minimizing the message size. As mentioned therein, if all agents share the same notion of global time, then convergence can be achieved in \(\mathcal {O}(\log n)\) time w.h.p. even under passive communication. The idea is that agents divide the time horizon into phases of length \(T=4\log n\). In the first half of each phase, if a non-source agent observes an opinion 0, then it copies it as its new opinion, but if it sees 1 it ignores it. In the second half, it does the opposite, namely, it adopts the output bit 1 if and only if it sees an opinion 1. Now, consider the first phase. If the source supports opinion 0 then at the end of the first half, every output bit would be 0 w.h.p., and the configuration would remain that way forever. Otherwise, if the source supports 1, then at the end of the second half all output bits would be 1 w.h.p., and remain 1 forever.
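The clock-based protocol just described can be illustrated with a small simulation. This is only a sketch under stated assumptions: the function names are hypothetical, each agent samples a single agent per round, the logarithm is taken in base 2 and rounded up, and the shared global time is passed explicitly as the argument `t`.

```python
import math
import random


def phase_length(n):
    """Phase length T = 4 * ceil(log2 n); the base of the logarithm is an assumption."""
    return 4 * math.ceil(math.log2(n))


def clocked_round(t, source, opinions, rng):
    """One synchronous round at global time t; every agent samples one agent."""
    n = len(opinions) + 1                      # non-source agents plus the source
    T = phase_length(n)
    target = 0 if (t % T) < T // 2 else 1      # bit that may be copied in this half
    population = [source] + opinions           # opinions frozen at the start of the round
    new_opinions = []
    for own in opinions:
        seen = population[rng.randrange(n)]    # uniform over all n agents
        new_opinions.append(target if seen == target else own)
    return new_opinions


def run_phases(source, opinions, phases, rng):
    """Run the protocol for a given number of full phases, starting at time 0."""
    n = len(opinions) + 1
    for t in range(phases * phase_length(n)):
        opinions = clocked_round(t, source, opinions, rng)
    return opinions
```

For instance, `run_phases(1, [0] * 199, 6, random.Random(0))` runs six phases from the all-wrong configuration with n = 200. The sketch relies entirely on the agents agreeing on `t`, which is exactly what the self-stabilizing setting does not provide.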

The aforementioned protocol indicates that the self-stabilizing bit-dissemination problem could be solved efficiently by running a self-stabilizing clock-synchronization protocol in parallel to the previous example protocol. This parallel execution amounts to adding one bit to the message size of the clock-synchronization protocol. The main technical contribution of [14], as well as the focus of the subsequent work [11], was solving the self-stabilizing clock-synchronization problem using as few bits per message as possible. In fact, the authors of [11] managed to do so using 1-bit messages. This construction thus implies a solution to the self-stabilizing bit-dissemination problem using 2 bits per message. A recursive procedure, similar to the one established in [14], then allowed the 2 bits to be further compressed into 1-bit messages.

Importantly, however, the protocols in [11, 14] do not fit the framework of passive communication. Indeed, in the corresponding protocols the message revealed by an agent is not necessarily the same as its opinion bit, which is kept in the protocols of [11, 14] as an internal variable. Moreover, these works use complex recursive algorithms with refined clocks that are unlikely to be used by biological entities. Instead, we are interested in identifying algorithms that have a more natural appeal.

1.4 On the difficulties resulting from using passive communication

At first glance, to adhere to the passive communication model, one may suggest that agents simply choose their opinion to be the 1-bit messages used in [11], just for the purpose of communication, until a consensus is reached, and then switch their opinions to be the correct bit, once it is identified. There are, however, two difficulties to consider regarding this approach. First, in our setting, the source agent does not change its opinion (which, in the case of [11], may prevent the protocol from reaching a consensus at all). Second, even assuming that the protocol functions properly despite the source having a stable opinion, it is not clear how to transition from the “communication” phase (where agents use their opinion to operate the protocol, e.g., for synchronizing clocks) to the “consensus” phase (where all opinions must be equal to the correct bit at every round). For instance, the first agents to make the transition may disrupt other agents still in the first phase.

To further illustrate the difficulty of self-stabilizing information spread under passive communication, let us consider a more general problem called majority bit-dissemination. As explained in [14], this problem could be solved efficiently when separating the messages from the opinions but, as shown below, could not be solved efficiently under passive communication. In this problem, the population contains \(k\ge 1\) source agents which may not necessarily agree on which opinion is correct. Specifically, in addition to its opinion, each source-agent stores a preference bit \(\in \{0,1\}\). Let \(k_i\) be the number of source agents whose preference is i. Assume that sufficiently more source agents share a preference i over \(1-i\) (e.g., at least twice as many), and call i the correct bit. Then, w.h.p., all agents (including the sources that might have the opposite preference) should converge their opinions on the correct bit in poly-logarithmic time, and remain with that opinion for polynomial time. The authors of [14] showed that the self-stabilizing majority bit-dissemination problem could be solved in logarithmic time, using messages of size 3 bits, and the authors of [11] showed how to reduce the message size to 1. As mentioned, the messages in these protocols do not necessarily coincide with the opinions, which were stored as internal variables, and hence the protocols in [11, 14] are not based on passive communication. In fact, the following simple argument implies that this problem could not be solved in poly-logarithmic time under the model of passive communication, even if the sample size is n (i.e., all agents are being observed in each round)!

Assume by contradiction that there exists a self-stabilizing algorithm that efficiently solves the majority bit-dissemination problem using passive communication. Let us run this algorithm on a scenario with \(k_1=n/2\) and \(k_0=n/4\). Since \(k_1\gg k_0\), then after a poly-logarithmic time, w.h.p., all agents would have opinion 1, and would remain with that opinion for polynomial time. Denote by \(t_0\) the first time after convergence, and let s denote the internal state of one of the n/4 non-source agents at time \(t_0\). Similarly, let \(s'\) denote the internal state at time \(t_0\) of one of the n/4 source agents with preference 0. Now consider a second scenario, where we have \(k_0=n/4\) and \(k_1=0\). An adversary sets the internal states of agents (including their opinions) as follows. The internal states of the \(k_0\) source agents (with preference 0) are all set to be \(s'\). Moreover, their opinions (that these sources must publicly declare on) are all 1. Next, the adversary sets the internal states of all non-source agents to be s, and their opinions to be 1. We now compare the execution of the algorithm in the first scenario (starting at time \(t_0\)) with the execution in the second scenario (starting at time 0, i.e., after the adversary manipulated the states). Note that both scenarios start with all opinions being 1. Hence, since we consider the passive communication model, all observations in the first round of the corresponding executions, would be unanimously 1. Moreover, as long as no agent changes its opinion in both scenarios, all observations would continue to be unanimously 1. Furthermore, it is given that from time \(t_0\), w.h.p., all agents in the first scenario remain with opinion 1 for a polynomially long period. Therefore, using a union bound argument, it is easy to see that also in the second scenario, w.h.p., all agents would remain with opinion 1 for polynomial time. 
This contradicts the fact that in the second scenario, w.h.p., the agents should converge on the opinion 0 in poly-logarithmic time.

Note that the aforementioned impossibility result does not preclude the possibility of solving the self-stabilizing bit-dissemination problem in the passive communication model, which does not involve a conflict between sources.

1.5 Problem definition

Let \(\mathcal {Y}= \{0,1\}\) be the opinion space. The population contains n agents, for some \(n \in \mathbb {N}\), with one specific agent, called source, and \(n-1\) non-source agents. The source agent holds an opinion \(z \in \mathcal {Y}\) which is called the correct opinion. Informally, the source agent may be observed by other agents but does not actively participate in the protocol. In particular, its opinion, which is assumed to be set by an adversary, remains fixed throughout the execution. Instead, the protocol is run by the set \(I = \{1,\ldots ,n-1\}\) of non-source agents.

Execution proceeds in discrete, synchronous rounds \(t=0,1,\ldots \). Each non-source agent is seen as a state machine over \(\mathcal {Y}\times \Sigma \), where \(\Sigma \) depends on the protocol. We write \(\gamma _t^{(i)} = (Y_t^{(i)}, \sigma _t^{(i)}) \in \mathcal {Y}\times \Sigma \) to denote the state of Agent i in round t. Specifically, we refer to \(Y_t^{(i)} \in \mathcal {Y}\) as the opinion of Agent i in round t, and to \(\sigma _t^{(i)}\) as its internal memory state. A configuration \(C \in \mathcal {Y}\times \left( \mathcal {Y}\times \Sigma \right) ^I\) of the system consists of the correct opinion, together with the state of every non-source agent. We write \(C_t\) to denote the configuration of the system in round t.

Non-source agents do not “communicate” in the classical sense. Instead, in every round t, they consecutively perform the two following operations. Note that both operations occur within a single round. Hence, since we are interested in the measure of the number of rounds, we assume that these operations occur instantaneously.

1. Sampling. Every agent i receives an opinion sample \(S_t^{(i)} \in \mathcal {Y}^\ell \), where \(\ell \) is a parameter called sample size. Every element in \(S_t^{(i)}\) is equal to the correct opinion z with probability 1/n, and is equal to the opinion of a non-source agent chosen uniformly at random (u.a.r.) in I otherwise. This is equivalent to assuming that in each sample the opinion of an agent is sampled uniformly at random, where we consider all agents, including the source, as having equal probability of being sampled. We assume that all elements in \(S_t^{(i)}\) are drawn independently and that the opinion samples \(\left\{ S_t^{(i)} \right\} _{i \in I}\) are also independent of each other.

2. Computation. Every agent i adopts a new state \(\gamma _{t+1}^{(i)}\), based on its previous state \(\gamma _t^{(i)}\) and the opinion sample \(S_t^{(i)}\), according to a transition function

$$\begin{aligned} f: (\mathcal {Y}\times \Sigma ) \times \mathcal {Y}^\ell \rightarrow (\mathcal {Y}\times \Sigma ), \end{aligned}$$

which depends on the protocol. The transition function might be randomized.

An execution of a protocol on an initial configuration \(C_0\) is a random sequence of configurations \(\{C_t\}_{t \in \mathbb {N}}\), where every \(C_t\) is obtained from \(C_{t-1}\) by applying the two aforementioned steps simultaneously on all (non-source) agents in parallel.
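The two operations above can be sketched as a generic simulation harness. The names `draw_sample`, `run_round` and `copy_first` are hypothetical and introduced only for illustration; the transition function `f` is supplied by the protocol, and the source is represented simply by its opinion `z`.

```python
import random


def draw_sample(z, opinions, ell, rng):
    """Draw ell independent opinions, uniformly over the source and all non-source agents."""
    n = len(opinions) + 1
    sample = []
    for _ in range(ell):
        j = rng.randrange(n)          # index n-1 stands for the source (probability 1/n)
        sample.append(z if j == n - 1 else opinions[j])
    return sample


def run_round(z, states, f, ell, rng):
    """Apply sampling and computation to all non-source agents in parallel.

    states is a list of (opinion, memory) pairs; f maps (state, sample) to a new state.
    """
    opinions = [y for y, _ in states]     # opinions frozen at the start of the round
    return [f(state, draw_sample(z, opinions, ell, rng)) for state in states]


def copy_first(state, sample):
    """Toy transition function: adopt the first sampled opinion, keep the memory."""
    return (sample[0], state[1])
```

Note that `f` only ever receives opinion bits, which is how the passive communication assumption shows up in this sketch.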

Using the terminology from [24], an execution is considered legal if, for every round t and every non-source agent i, the opinion of Agent i in round t is equal to the correct opinion, i.e., \(Y_t^{(i)} = z\). A configuration C is safe if every execution starting from C is legal. We say that an event happens with high probability (w.h.p.) if it happens with probability at least \(1-1/n^2\) as long as n is large enough. A protocol is said to solve the self-stabilizing bit-dissemination problem in time T if, for any initial configuration \(C_0\), the T’th configuration in an execution, namely \(C_T\), is safe w.h.p., where the probability is taken over the possible executions.

Important comments. Although our model is now already well-defined, let us make a few remarks to clarify it further.

- The indices are used for analysis purposes only; a non-source agent is aware neither of its index nor of the round number. Moreover, upon receiving an opinion sample \(S_t\), it does not know where the opinions in the sample come from (in particular, whether it is observing the correct opinion directly).

- Note that we do not require agents to irrevocably commit to their final opinion, but rather that they eventually converge on the correct opinion without necessarily being aware that convergence has happened.

- As mentioned, we consider the source as an agent that holds the correct opinion z throughout the execution. Despite the word “agent”, the source does not have an internal memory state, and does not run the protocol. This is justified by the fact that in our interpretation, the source represents an informed individual, unwilling to cooperate, and incentivized to always keep the correct opinion. Alternatively, the source can be thought of as representing the environment, and hence, sampling the source is equivalent to sampling the environment. Importantly, as made clear in our problem definition, self-stabilization is defined only with respect to the non-source agents. In other words, at the beginning of an execution, an adversary may choose the opinion \(Y_0^{(i)}\) and internal memory state \(\sigma _0^{(i)}\) of every non-source agent, as well as the correct opinion z. Since in our protocols the internal memory state of non-source agents does not include the possibility of indicating that the agent is a source, the adversary cannot “trick” non-source agents into believing that they are the source. The source itself should be seen as a part of the environment that the non-source agents are facing.

- One may consider a system where non-source agents suffer from transient faults that corrupt their internal states. As is common in self-stabilization contexts, we think of the initial configuration \(C_0\) as the last configuration for which a transient fault has occurred. In this respect, the convergence time corresponds to the time until the system fully recovers from the last transient fault.

- Our model is closely related to the message-passing setting, where the communication topology would be the complete graph over all agents (including the source). However, agents do not choose whether and where to send messages. Instead, following the \(\mathcal {PULL}\) model of communication, (non-source) agents observe \(\ell \) neighbors chosen uniformly at random. Note that, following the passive communication assumption, the only information that is revealed upon observing an agent is its opinion.

- Since all opinion samples are random by definition (and not chosen by an adversary), there is no need for any fairness assumption. Instead, our results will typically hold w.h.p.

Additional notations. For any \(k \in \mathbb {N}\) and \(p \in [0,1]\), we write \(\mathcal {B}(p)\) (resp. \({\mathcal {B}}_k(p)\)) to denote the Bernoulli (resp. Binomial) distribution with parameter p (resp. (k, p)). Moreover, we write \(\mathbb {P}(B_k(p) > B_k(q))\) to represent \(\mathbb {P}(X > Y)\) where \(X \sim {\mathcal {B}}_k(p), Y \sim {\mathcal {B}}_k(q)\), and X and Y are independent. Finally, we write

$$\begin{aligned} x_t := \frac{1}{n} \left( z + \sum _{i \in I} Y_t^{(i)} \right) \end{aligned}$$

to denote the proportion of agents with opinion 1 in round t among all agents (including the source agent).
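As a small numerical aid, the quantity \(\mathbb {P}(B_k(p) > B_k(q))\) can be computed exactly by summing over the two probability mass functions. The helper names below are hypothetical, and the sketch uses plain floating-point arithmetic, so it is meant for moderate values of k only.

```python
from math import comb


def binom_pmf(k, p, j):
    """P(X = j) for X ~ Binomial(k, p)."""
    return comb(k, j) * p**j * (1 - p)**(k - j)


def prob_greater(k, p, q):
    """Exact P(X > Y) for independent X ~ Binomial(k, p) and Y ~ Binomial(k, q)."""
    pmf_x = [binom_pmf(k, p, j) for j in range(k + 1)]
    pmf_y = [binom_pmf(k, q, j) for j in range(k + 1)]
    total = 0.0
    below = 0.0                      # P(Y < j), updated as j grows
    for j in range(k + 1):
        total += pmf_x[j] * below
        below += pmf_y[j]
    return total
```

In this notation, `prob_greater(k, p, q)` with `p > q` is the chance that a sample of size k drawn from a population with a larger fraction of 1's also shows strictly more 1's than a sample drawn from a population with a smaller fraction.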

1.6 Our results

We propose a simple algorithm that efficiently solves the self-stabilizing bit-dissemination problem in the passive communication model. The algorithm has a natural appeal as it is based on letting agents estimate the current tendency direction of the dynamics, and then adapt to the emerging trend. More precisely (but still, informally), each non-source agent counts the number of agents with opinion 1 it observes in the current round and compares it to the number observed in the previous round. If more 1’s are observed now, then the agent adopts the opinion 1, and similarly, if more 0’s are observed now, then it adopts the opinion 0 (if the same number of 1’s is observed in both rounds then the agent does not change its opinion). Intuitively, on the global level, this behavior creates a persistent movement of the average opinion of the non-source agents towards either 0 or 1, which “bounces” back when hitting the wrong opinion.

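More formally, the protocol uses \(\ell = c \log n\) samples, for a sufficiently large constant c. The internal state space is \(\Sigma = \{0,\ldots ,\ell \}\). For any opinion sample \(A \in \mathcal {Y}^\ell \), let \(\texttt {COUNT}(A)\) denote the number of 1-opinions in A. The transition function is as follows:

$$\begin{aligned} Y_{t+1} = {\left\{ \begin{array}{ll} 1 &{} \text {if } \texttt {COUNT}(S_t) > \sigma _t, \\ 0 &{} \text {if } \texttt {COUNT}(S_t) < \sigma _t, \\ Y_t &{} \text {otherwise,} \end{array}\right. } \qquad \sigma _{t+1} = \texttt {COUNT}(S_t). \end{aligned}$$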

As it turns out, one feature of the aforementioned protocol makes the analysis difficult: \(Y_{t}\) and \(Y_{t+1}\) are dependent, even when conditioning on \((x_{t-1},x_{t})\). This is because \(\sigma _{t}\) is used to compute both \(Y_{t}\) and \(Y_{t+1}\). For example, if the sample \(S_{t-1}\) at round \(t-1\) happens to contain more 1’s, then \(\sigma _t\) is larger. In this case, \(Y_{t}\) has a higher chance of being 1, and \(Y_{t+1}\) has a higher chance of being 0. For this reason we introduce a modified version of the protocol that solves this dependence issue. The idea is to divide the sample of round t into 2 samples of equal size. One sample will be used to compare with one sample of round \(t-1\), and the other sample will be used to compare with one sample of round \(t+1\). Note that this implies that the sample size is twice as big; however, since we are interested in the case \(\ell =O(\log n)\), this does not cause a problem. This modified protocol, called Follow the Emerging Trend (FET), is the one we shall actually analyze. Its transition function is specified below (Protocol 1).
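A minimal Python sketch of the FET transition for a single agent, read off from the description above, is the following. It should be taken as an illustration rather than the authors' exact pseudocode: the function names are hypothetical, and splitting the sample into its first and second halves is an assumption (any fixed split into two equal parts gives the same distribution, since the sampled opinions are independent).

```python
def count_ones(sample):
    """Number of 1-opinions in a sample of bits."""
    return sum(sample)


def fet_step(opinion, memory, sample):
    """One FET transition for a single agent.

    sample holds 2*ell opinion bits; memory is the count stored in the previous round.
    Returns (new_opinion, new_memory).
    """
    ell = len(sample) // 2
    first, second = sample[:ell], sample[ell:]   # assumed split: first half / second half
    current = count_ones(first)                  # compared with the count from the previous round
    if current > memory:
        new_opinion = 1
    elif current < memory:
        new_opinion = 0
    else:
        new_opinion = opinion                    # tie: keep the current opinion
    return new_opinion, count_ones(second)       # stored for the comparison in the next round
```

With this split, the half used to decide the opinion in the current round is never the half stored for the next comparison, which is the decoupling that motivates the modification.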

Note that, although we used time indices for clarity, the protocol does not require the agents to know t. The following theorem is the main result of the paper. Its proof is deferred to Sects. 2, 3, 5 and 6.

Theorem 1

Algorithm FET solves the self-stabilizing bit-dissemination problem in the passive communication model. It converges in \(O(\log ^{5/2} n)\) rounds on the correct opinion, with high probability, while relying on \(\ell ={\Theta }(\log n)\) samples in each round, and using \({\Theta }(\log \ell )\) bits of memory per agent.

The task of discovering and analyzing an algorithm using fewer than a logarithmic number of samples (e.g., a constant) appears challenging and is, therefore, left for future work. We note, however, that on a practical level, we believe that demonstrating the existence of algorithms utilizing logarithmic and constant sample sizes offers similar insights into the capacity of biological systems to efficiently spread information reliably.

Provided that \(\ell = \Theta (\log n)\), it is worth noting that all algorithms require \(\Omega (\log n / \log \log n)\) rounds to solve the bit-dissemination problem (even with active communications). This is because this is the time needed for merely spreading information from the source to the whole population. More precisely, with fewer rounds, the configurations in which the source has opinion 0 and 1 are perfectly indistinguishable for a non-empty subset of the agents. Therefore, although our upper bound on the convergence time of Protocol 1 may not be tight, one can only hope to decrease it by approximately a factor of \(\log ^{3/2} n\).
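The counting behind this lower bound can be sketched as follows. This is a heuristic reading of the argument rather than a full proof, and the symbol \(m_t\) (the number of agents whose state in round t may depend on the opinion of the source, with \(m_0 = 1\)) is introduced here only for illustration. An agent can start depending on the source only by sampling an agent that already does, which happens with probability at most \(\ell m_t / n\) in a given round, so

$$\begin{aligned} \mathbb {E}[m_{t+1} \mid m_t] \le m_t + n \cdot \frac{\ell \, m_t}{n} = (1+\ell )\, m_t, \qquad \text {hence} \qquad \mathbb {E}[m_t] \le (1+\ell )^t. \end{aligned}$$

For every agent to depend on the source we need \(m_t = n\), so \((1+\ell )^t\) must be of order n, that is, \(t = \Omega (\log n / \log \ell ) = \Omega (\log n / \log \log n)\) when \(\ell = \Theta (\log n)\).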

Finally, we consider the more general case with k opinions, for an arbitrary \(k \in \mathbb {N}\). Importantly, however, we restrict attention to the case that the agents agree on a labeling of the opinions. That is, we assume that the set of opinions is \(\mathcal {Y}= \{0,\ldots ,k-1\}\). The case in which there may be conflicts in the way agents view the labeling of opinions remains for future work, see Sect. 8. The following theorem is proved in Sect. 7.

Theorem 2

Let k be a positive integer and let \(m = \lceil \log _2 k \rceil \). When \(\mathcal {Y}= \{0,\ldots ,k-1\}\), there exists a protocol that solves the bit-dissemination problem in \(O(\log ^{5/2} n)\) rounds with high probability, while relying on \(\ell ={\Theta }(\log n)\) samples in each round and using \({\Theta }(m \log \ell )\) bits of memory.

Our analysis involves partitioning the configuration space and subsequently studying the dynamics’ behavior in each subset, using classical concentration and anti-concentration results. This approach required us to identify a proper partitioning for which the dynamics are analytically tractable both on each part of the partitioning and in between parts. While we find this approach interesting and challenging at times, it is not novel [23]. Moreover, it is anticipated that, in a specific setting, the method may necessitate customization, presenting challenges for its reuse in other related problems.

1.7 Other related works

In recent years, the study of population protocols has attracted significant attention in the distributed computing community [1,2,3, 6, 8]. These models often consider agents that interact under random meeting patterns while being restricted in both their memory and communication capacities. While these models are inspired by biological scenarios, in many cases, the algorithms used appear unlikely to be employed by biological ensembles. By now, we understand the computational power of such systems rather well, but apart from a few exceptions [7], this understanding is limited to non-faulty scenarios.

The framework of opinion dynamics corresponds to settings of multiple agents, where in each round, each agent samples one or more agents at random, extracts their opinions, and employs a certain rule for updating its opinion. The study of opinion dynamics crosses disciplines, and is highly active in physics and computer science; see, e.g., the review in [12]. Many of the models of opinion dynamics can be considered as following passive communication, since the information an agent reveals coincides with its opinion. Generally speaking, however, the typical scientific approach in opinion dynamics is to start with some simple update rule, and analyze the resulting dynamics, rather than tailoring an updating rule to solve a given distributed problem. For example, perhaps the most famous dynamics in the context of interacting particle systems concerns the voter model [36]. In theoretical computer science, extensive research has been devoted to analyzing the time to reach consensus, following different updating rules including the 3-majority [23], Undecided-State Dynamics [6], and others. In these works, consensus should be reached either on an arbitrary value, or on the majority (or plurality) opinion, as evident in the initial configuration.

In contrast, in many natural settings the group must converge on a particular consensus value that is a function of the environment. Moreover, agents have different levels of knowledge regarding the desired value, and the system must utilize the information held by the more knowledgeable individuals [9, 35, 37, 39]. As explained in more detail below, when communication is restricted, and the system is prone to faults, this task can become challenging.

Propagating information from one or more sources to the rest of the population has been the focus of a myriad of works in distributed computing. This dissemination problem has been studied under various models taking different names, including rumor spreading, information spreading, gossip, broadcast, and others, see e.g., [16, 18, 21, 31, 32, 34]. A classical algorithm in the \(\mathcal {PULL}\) model spreads the opinion of the source to all others in \(\Theta (\log n)\) rounds w.h.p., by letting each uninformed agent copy the opinion of an informed agent whenever seeing one for the first time, as observed in, e.g., [34]. Unfortunately, this elegant algorithm does not suit all realistic scenarios, since its soundness crucially relies on the absence of misleading information. To address such issues, rumor spreading has been studied under different models of faults. One line of research investigates the case that messages may be corrupted with some fixed probability [13, 27]. Another model of faults is self-stabilization [22], where the system must eventually converge on the opinion of the source regardless of the initial configuration of states. For example, the algorithm in [34] fails in this setting, since non-source agents may be initialized to “think” that they have already been informed by the correct opinion, while they actually hold the wrong opinion. For an introduction to self-stabilizing algorithms, see, e.g., [4, 24], and see [26] for another work solving self-stabilizing problems using weak communications.
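The classical copy-on-first-contact rule and its failure under adversarial initialization can be illustrated with a short simulation. This is a sketch under stated assumptions: the function name and the `max_rounds` cap are hypothetical, each agent samples one agent per round, and the sampled agent reveals an "informed" flag in addition to its opinion, so the rule is not passive communication.

```python
import random


def classic_pull(z, informed, opinions, rng, max_rounds=10_000):
    """Copy-on-first-contact rumor spreading with one sample per round.

    informed[i] and opinions[i] describe non-source agent i; the source is always
    informed and holds z. Returns the opinions once every agent is informed.
    """
    informed, opinions = list(informed), list(opinions)
    n = len(opinions) + 1
    for _ in range(max_rounds):
        if all(informed):
            break
        # States frozen at the start of the round; the last entry is the source.
        snapshot = list(zip(informed, opinions)) + [(True, z)]
        for i in range(n - 1):
            if not informed[i]:
                seen_informed, seen_opinion = snapshot[rng.randrange(n)]
                if seen_informed:
                    informed[i], opinions[i] = True, seen_opinion
    return opinions
```

Starting from a clean state (`informed` all `False`), the opinion of the source spreads to everyone. If an adversary instead sets every flag to `True` while all opinions are wrong, no agent ever updates, which is the failure mode described above.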

Finally, it is worth noting that the term “bio-inspired”, often used in the literature, typically refers to research focused on applications in artificial intelligence or swarm robotics. In contrast, our aim is to employ algorithmic tools to comprehend the behavior of biological ensembles. Consequently, many articles labeled as bio-inspired may be irrelevant to our context.

2 Proof of Theorem 1: an overview

The goal of this section is to prove Theorem 1. The \(O(\log \ell )\) bits upper bound on the memory complexity clearly follows from the fact that the internal state space \(\Sigma = \{0,\ldots ,\ell \}\) of the FET algorithm (Protocol 1) is only of size \(\ell +1\), as required to memorize the number of 1’s in a sample.

Since the protocol is symmetric with respect to the opinion of the source, we may assume without loss of generality that the source has opinion 1. The closure property is easy to verify. Indeed, if there exists a round t such that the system has reached consensus in round t, then all future samples will be equal to the correct opinion, and by construction of Protocol 1, this implies that every agent will keep the correct opinion.

Our goal is therefore to show that the FET algorithm converges to 1 fast, w.h.p., regardless of the initial configuration of non-source agents. Note that in order to obtain a running time of O(T) w.h.p., it is sufficient to show that the algorithm stabilizes in T rounds with probability at least \(1-1/n^\epsilon \), for some \(\epsilon >0\). Indeed, because of the self-stabilizing property of the algorithm, this bound holds from any configuration, so it can be applied to each of \(2/\epsilon \) consecutive phases of T rounds; the probability that the algorithm does not stabilize within \(2T/\epsilon \) rounds is therefore at most \((1/n^\epsilon )^{2/\epsilon }=1/n^{2}\).

Recall that \(x_t\) denotes the fraction of agents with opinion 1 at round t among the whole population (including the source). We shall extensively use the two dimensional grid \({\mathcal {G}}:=\{0,\frac{1}{n},\ldots ,\frac{n-1}{n},1\}^2\). When analyzing what happens at round \(t+2\), the x-axis of \({\mathcal {G}}\) would represent \(x_t\), and the y-axis would represent \(x_{t+1}\).

Observation 1

For any t, conditioning on \((x_t,x_{t+1}) = (\mathbf{x_{t}},\mathbf{x_{t+1}})\), a non-source agent i has opinion 1 on round \(t+2\) w.p.

Moreover, there are independent binary random variables \(X_1,\ldots ,X_n\) such that \(x_{t+2}\) is distributed as \(\frac{1}{n} \sum X_i\). Eventually,

The proof of Observation 1 is deferred to Sect. 4. A consequence of Observation 1, is that the execution of the algorithm induces a Markov chain on \({\mathcal {G}}\). This Markov chain has a unique absorbing state, (1, 1), since we assumed the source to have opinion 1. To prove Theorem 1 we therefore only need to bound the time needed to reach (1, 1).
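The transition probabilities behind Observation 1 compare two independent binomial counts. As an illustration only, the following sketch computes them exactly for small \(\ell \). It assumes, as in the proof in Sect. 4, that a non-source agent holding opinion 1 in round \(t+1\) has opinion 1 in round \(t+2\) w.p. \(\mathbb {P}(B_\ell (x_{t+1}) \ge B_\ell (x_t))\), and that an agent holding opinion 0 has opinion 1 w.p. \(\mathbb {P}(B_\ell (x_{t+1}) > B_\ell (x_t))\). All function names are hypothetical.

```python
from math import comb

def binom_pmf(k, p):
    """Return [P(B_k(p) = j) for j = 0..k]."""
    return [comb(k, j) * p**j * (1 - p)**(k - j) for j in range(k + 1)]

def compare_binomials(k, a, b):
    """Return (P(B_k(b) > B_k(a)), P(B_k(b) = B_k(a))) for independent counts."""
    pmf_a, pmf_b = binom_pmf(k, a), binom_pmf(k, b)
    tie = sum(u * v for u, v in zip(pmf_a, pmf_b))
    greater, below = 0.0, 0.0
    for j in range(k + 1):
        greater += pmf_b[j] * below   # here below = P(B_k(a) < j)
        below += pmf_a[j]
    return greater, tie

def opinion_one_probability(ell, x_t, x_t1, had_one):
    """Probability that a non-source agent has opinion 1 in round t+2.

    Assumption (taken from the proof of Observation 1): ties keep the
    agent's current opinion, so only agents already at 1 benefit from a tie.
    """
    greater, tie = compare_binomials(ell, x_t, x_t1)
    return greater + tie if had_one else greater
```

For instance, `opinion_one_probability(20, 0.4, 0.6, had_one=False)` gives the chance that an agent currently at 0 switches to 1 when the fraction of 1's rose from 0.4 to 0.6.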

2.1 Partitioning the grid into domains

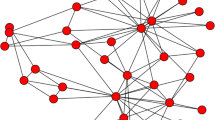

Let us fix \(\delta >0\) (\(\delta \) should be thought of as a very small constant) and \(\lambda _n = \frac{1}{\log ^{1/2+\delta }n}\). We partition \({\mathcal {G}}\) into domains as follows (see illustration on Fig. 1a).

Similarly, for the former 4 domains, we define \(\text {G}\textsc {reen}_{0}\), \(\text {P}\textsc {urple}_{0}\), \(\text {R}\textsc {ed}_{0}\) and \(\text {C}\textsc {yan}_{0}\) to be their symmetric equivalents (w.r.t the point (\(\frac{1}{2},\frac{1}{2}\))), and finally define: \(\text {G}\textsc {reen}_{} = \text {G}\textsc {reen}_{0} \cup \text {G}\textsc {reen}_{1}\), \(\text {P}\textsc {urple}_{} = \text {P}\textsc {urple}_{0} \cup \text {P}\textsc {urple}_{1}\), \(\text {R}\textsc {ed}_{} = \text {R}\textsc {ed}_{0} \cup \text {R}\textsc {ed}_{1}\), and \(\text {C}\textsc {yan}_{} = \text {C}\textsc {yan}_{0} \cup \text {C}\textsc {yan}_{1}\). We shall analyze each area separately, conditioning on the Markov chain to be at any point in that area, and focusing on the number of rounds required to escape the area, and the probability that this escape is made to a particular other area. Figure 1b represents a sketch of the proof of Theorem 1, which may help to navigate the intermediate results.

Fig. 1 (a) Partitioning the state space into domains. The x-axis (resp., y-axis) represents the proportion of agents with opinion 1 in round t (resp., \(t+1\)). The thick dashed line at the frontier between \(\text {P}\textsc {urple}_{1}\) and \(\text {R}\textsc {ed}_{1}\) is defined by \(x_{t+1} = (1-\lambda _n) x_t\). (b) Sketch of the proof of Theorem 1. All transitions occur w.p. at least \(1-1/n^{\Omega (1)}\). The process stays in a domain for as many rounds as indicated on the corresponding self-loop w.p. at least \(1-1/n^{\Omega (1)}\), and at most a constant number of rounds when no self-loop is represented. The source is assumed to have opinion 1.

As it happens, the dynamics starting from a point \((x_t,x_{t+1})\) depend strongly on the difference between \(x_t\) and \(x_{t+1}\). Roughly speaking, the larger \(|x_{t+1}-x_t|\) is, the faster the convergence. For this reason, we refer to \(|x_{t+1}-x_t|\) as the speed of the point \((x_t,x_{t+1})\). (This could also be viewed as the process’ “derivative” at time t.)

2.2 Dynamics at different domains

Let us now give an overview of the intermediate results. First we consider \(\text {G}\textsc {reen}_{}\), in which the speed of points is large. In Lemma 1 we show that from points in that domain, non-source agents reach a consensus in just one round w.h.p. In particular, if the Markov chain is at some point in \(\text {G}\textsc {reen}_{1}\), then the consensus will be on 1, and we are done. If, on the other hand, the Markov chain is in \(\text {G}\textsc {reen}_{0}\), then the consensus of non-source agents would be on 0. As we show later, in that case the Markov chain would reach \(\text {C}\textsc {yan}_{1}\) in one round w.h.p.

Lemma 1

(Green area) Assume that c is sufficiently large. If \((x_t,x_{t+1}) \in \text {G}\textsc {reen}_{1}\), then w.h.p., for every non-source agent i, \(Y_{t+2}^{(i)} = 1\). Similarly, if \((x_t,x_{t+1}) \in \text {G}\textsc {reen}_{0}\), then w.h.p., for every non-source agent i, \(Y_{t+2}^{(i)} = 0\).

The proof of Lemma 1 follows from a simple application of Hœffding’s inequality, and is deferred to Sect. 6.1. Next, we consider the area \(\text {P}\textsc {urple}_{}\), and show that the population goes from \(\text {P}\textsc {urple}_{}\) to \(\text {G}\textsc {reen}_{}\) in just one round, w.h.p. In \(\text {P}\textsc {urple}_{}\), the speed is relatively low, and \(x_t\) and \(x_{t+1}\) are quite far from 1/2. On the next round, we expect \(x_{t+2}\) to be close to 1/2, thus gaining enough speed in the process to join \(\text {G}\textsc {reen}_{}\). The proof of the following lemma is rather straightforward, and is deferred to Sect. 6.2.

Lemma 2

(Purple area) Assume that c is sufficiently large. If \((x_t,x_{t+1}) \in \text {P}\textsc {urple}_{1}\), then \((x_{t+1},x_{t+2}) \in \text {G}\textsc {reen}_{1}\) w.h.p. Similarly, if \((x_t,x_{t+1}) \in \text {P}\textsc {urple}_{0}\), then \((x_{t+1},x_{t+2}) \in \text {G}\textsc {reen}_{0}\) w.h.p.

Next, we bound the time that can be spent in \(\text {R}\textsc {ed}_{}\), by using the fact that as long as the process is in \(\text {R}\textsc {ed}_{1}\) (resp., \(\text {R}\textsc {ed}_{0}\)), \(x_t\) (resp., \((1-x_t)\)) decreases (deterministically) by at least a multiplicative factor of \((1-\lambda _n)\) at each round. After a poly-logarithmic number of rounds, the Markov chain must leave \(\text {R}\textsc {ed}_{}\) and in this case, we can show that it cannot reach \(\text {Y}\textsc {ellow}\) right away. The proof of the following lemma is again relatively simple, and is deferred to Sect. 6.3.

Lemma 3

(Red area) Consider the case that \((x_{t_0},x_{t_0+1}) \in \text {R}\textsc {ed}_{}\) for some round \(t_0\), and let \(t_1 = \min \{ t \ge t_0, (x_t,x_{t+1}) \notin \text {R}\textsc {ed}_{} \}\). Then \(t_1 < t_0 + \log ^{1/2+2\delta } n\), and \((x_{t_1},x_{t_1+1}) \notin \text {Y}\textsc {ellow}\cup \text {R}\textsc {ed}_{}\).
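To see where a bound of this order can come from, here is a back-of-the-envelope calculation for \(\text {R}\textsc {ed}_{1}\). It assumes that \(x_t\) stays above some threshold \(\theta \) while the chain is in \(\text {R}\textsc {ed}_{1}\); since the domain definitions are not reproduced here, \(\theta \) is a placeholder and not a quantity taken from the lemma.

$$\begin{aligned} x_{t_0+k} \le (1-\lambda _n)^k \, x_{t_0} \le e^{-k \lambda _n}, \qquad \text {so} \qquad x_{t_0+k} \ge \theta \implies k \le \frac{\ln (1/\theta )}{\lambda _n} = \ln (1/\theta ) \cdot \log ^{1/2+\delta } n. \end{aligned}$$

If \(1/\theta \) is poly-logarithmic in n, then \(\ln (1/\theta ) = O(\log \log n)\), which is smaller than \(\log ^{\delta } n\) for n large enough, in line with the \(\log ^{1/2+2\delta } n\) bound of Lemma 3.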

Next, we bound the time that can be spent in \(\text {C}\textsc {yan}_{1}\). (A similar result holds for \(\text {C}\textsc {yan}_{0}\).) Roughly speaking, this area corresponds to the situation in which, over the last two consecutive rounds, the population is in an almost-consensus over the wrong opinion. In this case, many agents (a constant fraction) see only 0 in their corresponding samples in the latter round. As a consequence, every one of them that sees at least one opinion 1 in the next round will adopt opinion 1. We can expect the number of such agents to be of order \(\ell =O(\log n)\) times the current number of agents with opinion 1. This means that, as long as the Markov chain is in \(\text {C}\textsc {yan}_{1}\), the value of \(x_t\) would grow by a logarithmic factor in each round. This implies that within \(\log (n)/\log (\log n)\) rounds, the Markov chain will leave the \(\text {C}\textsc {yan}_{1}\) area and go to \(\text {G}\textsc {reen}_{1} \cup \text {P}\textsc {urple}_{1}\). Informally, this phenomenon can be viewed as a form of “bouncing”: the population of non-sources reaches an almost consensus on the wrong opinion, and “bounces back”, by gradually increasing the fraction of agents with the correct opinion, up to an extent that is sufficient to enter \(\text {G}\textsc {reen}_{1} \cup \text {P}\textsc {urple}_{1}\). The proof of the following lemma is given in Sect. 6.4.

Lemma 4

(Cyan area) Consider the case that \((x_{t_0},x_{t_0+1}) \in \text {C}\textsc {yan}_{1}\) for some round \(t_0\), and let \(t_1 = \min \{ t \ge t_0, (x_t,x_{t+1}) \notin \text {C}\textsc {yan}_{1} \}\). Then with probability at least \(1-1/n^{\Omega (1)}\) we have (1) \(t_1 < t_0 + O(\log (n)/\log (\log n))\), and (2) \((x_{t_1},x_{t_1+1}) \in \text {G}\textsc {reen}_{1} \cup \text {P}\textsc {urple}_{1}\). Moreover, the same holds symmetrically for \(\text {C}\textsc {yan}_{0}\).
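The \(\log (n)/\log (\log n)\) order of magnitude can be illustrated by idealizing the growth in \(\text {C}\textsc {yan}_{1}\) as an exact multiplication of \(x_t\) by \(\ell \) in every round. This is a deterministic caricature, not the stochastic process analyzed in Sect. 6.4; the starting value \(1/n\), the exit threshold \(1/\log n\), the choice \(c=1\), and the function name are all assumptions made for the example.

```python
import math

def rounds_to_grow(n, ell, threshold):
    """Count rounds until x >= threshold, starting from x = 1/n and
    multiplying x by ell in every round (idealized growth, no randomness)."""
    x, rounds = 1.0 / n, 0
    while x < threshold:
        x *= ell
        rounds += 1
    return rounds

n = 10**6
ell = math.log(n)                       # sample size of order log n (c = 1 assumed)
rounds = rounds_to_grow(n, ell, threshold=1.0 / math.log(n))
bound = math.log(n) / math.log(math.log(n))
print(rounds, bound)
```

With these illustrative parameters the idealized process needs 5 rounds, while \(\log (n)/\log (\log n) \approx 5.26\).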

Finally, we consider the central area, namely, \(\text {Y}\textsc {ellow}\), where the speed is very low, and bound the time that can be spent there. The proof of the following lemma is more complex than the previous ones, and it appears in Sect. 5.

Lemma 5

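(Yellow area) Consider the case that \((x_{t_0},x_{t_0+1}) \in \text {Y}\textsc {ellow}\). Then, w.h.p., \( \min \{ t > t_0 \text { s.t. } (x_t,x_{t+1}) \notin \text {Y}\textsc {ellow}\} < t_0 + O(\log ^{5/2} n).\)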

2.3 Assembling the lemmas

Given the aforementioned lemmas, we have everything we need to prove our main result.

Proof of Theorem 1

Recall that without loss of generality, we assumed the source to have opinion 1, and that we already checked the closure property. The reader is strongly encouraged to refer to Fig. 1b to follow the ensuing arguments more easily.

- Let \(t_1 = \min \{ t \ge 0, (x_t,x_{t+1}) \notin \text {Y}\textsc {ellow}\}\). If \((x_0,x_1) \in \text {Y}\textsc {ellow}\), we apply Lemma 5 to get that

  $$\begin{aligned} {\left\{ \begin{array}{ll} t_1 < O(\log ^{5/2} n) ~\text {w.h.p., and } \\ (x_{t_1},x_{t_1+1}) \in \text {R}\textsc {ed}_{} \cup \text {C}\textsc {yan}_{} \cup \text {P}\textsc {urple}_{} \cup \text {G}\textsc {reen}_{}. \end{array}\right. } \end{aligned} \tag{3}$$

  Else, \((x_0,x_1) \notin \text {Y}\textsc {ellow}\) so \(t_1 = 0\), and Eq. (3) also holds.

- Let \(t_2 = \min \{ t \ge t_1, (x_t,x_{t+1}) \notin \text {R}\textsc {ed}_{} \}\). If \((x_{t_1},x_{t_1+1}) \in \text {R}\textsc {ed}_{}\), we apply Lemma 3 to get that

  $$\begin{aligned} {\left\{ \begin{array}{ll} t_2 < t_1 + \log ^{1/2 + 2\delta } n ~\text {w.h.p., and } \\ (x_{t_2},x_{t_2+1}) \in \text {C}\textsc {yan}_{} \cup \text {P}\textsc {urple}_{} \cup \text {G}\textsc {reen}_{}. \end{array}\right. } \end{aligned} \tag{4}$$

  Else, \((x_{t_1},x_{t_1+1}) \notin \text {R}\textsc {ed}_{}\) so \(t_1 = t_2\), and by Eq. (3), it implies that Eq. (4) also holds.

- Let \(t_3 = \min \{ t \ge t_2, (x_t,x_{t+1}) \notin \text {C}\textsc {yan}_{} \}\). If \((x_{t_2},x_{t_2+1}) \in \text {C}\textsc {yan}_{}\), we apply Lemma 4 to get that

  $$\begin{aligned} {\left\{ \begin{array}{ll} t_3 < t_2 + \log (n)/\log (\log n), ~\text { and } \\ (x_{t_3},x_{t_3+1}) \in \text {P}\textsc {urple}_{} \cup \text {G}\textsc {reen}_{} \hbox { w.p.~}\ \ge 1-1/n^{\Omega (1)}. \end{array}\right. } \end{aligned} \tag{5}$$

  Else, \((x_{t_2},x_{t_2+1}) \notin \text {C}\textsc {yan}_{}\) so \(t_2 = t_3\), and by Eq. (4), it implies that Eq. (5) also holds.

- Let \(t_4 = \min \{ t \ge t_3, (x_t,x_{t+1}) \in \text {G}\textsc {reen}_{} \}\). By Lemma 2, and by Eq. (5), we have that \(t_4 = t_3\) or \(t_4 = t_3+1\) w.h.p.

If \((x_{t_4},x_{t_4+1}) \in \text {G}\textsc {reen}_{1}\), then by Lemma 1 the consensus is reached on round \(t_4+1\). Otherwise, if \((x_{t_4},x_{t_4+1}) \in \text {G}\textsc {reen}_{0}\), by Lemma 1, we obtain that \(x_{t_4+2} = 1/n\) w.h.p. (meaning that all agents have opinion 0 except the source). Therefore, in this case, either \((x_{t_4+1},x_{t_4+2}) \in \text {G}\textsc {reen}_{0}\) or \((x_{t_4+1},x_{t_4+2}) \in \text {C}\textsc {yan}_{1}\) (because for a point \((x_t,x_{t+1})\) to be in any other area, it must be the case that \(x_{t+1} \ge 1/ \log (n)\), by definition). In the former case, we apply Lemma 1 again to get that \(x_{t_4+3} = 1/n\) w.h.p., which implies that \((x_{t_4+2},x_{t_4+3}) = (1/n,1/n) \in \text {C}\textsc {yan}_{1}\). As we did before, we apply Lemmas 4, 2 and 1 to show that, with probability at least \(1-1/n^{\Omega (1)}\), the system goes successively to \(\text {P}\textsc {urple}_{1} \cup \text {G}\textsc {reen}_{1}\), then to \(\text {G}\textsc {reen}_{1}\), and eventually reaches the absorbing state (1, 1) in fewer than \(\log (n)/\log (\log n)+2\) rounds.

Altogether, the convergence time is dominated by \(t_1\), and is hence \(O(\log ^{5/2} n)\) with probability at least \(1-1/n^{\epsilon }\), for some \(\epsilon >0\). As mentioned, this implies that for any given \(c>1\), the algorithm reaches consensus in \(O(\log ^{5/2} n)\) time with probability at least \(1-1/n^{c}\). In other words, there exists \(T = O(\log ^{5/2} n)\) such that w.h.p., the configuration \(C_{T}\) of the system in round T is safe, which concludes the proof of Theorem 1. \(\square \)

3 Probabilistic tools–competition between coins

Consider two coins such that one coin has a greater probability of yielding “heads”, and toss them k times each.

3.1 Lower bounds on the probability that the best coin wins

In Lemmas 6 and 7 we aim to lower bound the probability that the more likely coin yields more “heads”, or in other words, we lower bound the probability that the favorite coin wins. Lemma 6 is particularly effective when the difference between p and q is sufficiently large. Its proof is based on a simple application of Hœffding’s inequality.

Lemma 6

For every \(p,q \in [0,1]\) s.t. \(p < q\) and every integer k, we have

Proof

Let \(Y_i\), \(i \in \{ 1,\ldots ,k \}\) be i.i.d. random variables with

Then

Since each \(Y_i\) is bounded, and \(\mathbb {E}(Y_i) = (p - q)\), we can apply Hœffding’s inequality (Theorem 5) to get

\(\square \)
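For concreteness, the Hœffding step can be carried out as follows. Since the displayed definitions are not reproduced above, this sketch assumes \(Y_i = U_i - V_i\) with \(U_i \sim {\mathcal {B}}(p)\) and \(V_i \sim {\mathcal {B}}(q)\) independent, which is consistent with \(\mathbb {E}(Y_i) = p-q\) and \(Y_i \in [-1,1]\), and it uses the standard form of Hœffding’s inequality for bounded variables; the constant in the statement of Lemma 6 may differ.

$$\begin{aligned} \mathbb {P}\left( B_k(p) \ge B_k(q) \right) = \mathbb {P}\left( \sum _{i=1}^k Y_i \ge 0 \right) = \mathbb {P}\left( \sum _{i=1}^k \left( Y_i - \mathbb {E}(Y_i) \right) \ge k(q-p) \right) \le \exp \left( -\frac{2 k^2 (q-p)^2}{4k} \right) = \exp \left( -\frac{k (q-p)^2}{2} \right) . \end{aligned}$$

Under these assumptions, the favorite coin wins strictly with probability at least \(1 - \exp (-k(q-p)^2/2)\), which is close to 1 as soon as \(q-p \gg 1/\sqrt{k}\).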

Lemma 6 is not particularly effective when p and q are close to each other. For such cases, we shall use the following lemma.

Lemma 7

Let \(\lambda >0\). There exist \(\epsilon = \epsilon (\lambda )\) and \(K = K(\lambda )\), s.t. for every \(p,q \in [1/2-\epsilon ,1/2+\epsilon ]\) with \(p<q\), and every \(k > K\),

Proof

For every \(p,q \in [0,1]\), we have

so

Let us compute \(\mathbb {P}\left( B_k(q) = B_k(p) - d \right) \):

where

Since \(A_{k,d,p,q}\) is symmetric w.r.t. p, q, i.e., \(A_{k,d,p,q} = A_{k,d,q,p}\), we can simplify Eq. (6) as

Intuitively, the quantity

can be seen as the “advantage” given by playing with the better coin (q) in a k-coin-tossing contest, knowing that one coin landed “heads” d more times than the other. Before we continue, we need the following simple claim.

Claim 1

For every \(a,b \in [0,1]\) with \(a>b\), the sequence

is increasing in n.

Proof

Rewrite

and notice that, since \(a>b\), \(\left( (b/a)^n \right) \), \(n \in \mathbb {N}\) is a decreasing sequence. \(\square \)

Claim 2

Let \(0< \gamma < 1\) and \(d\in \mathbb {N}\). There exists \(\epsilon = \epsilon (\gamma ,d)\), such that for every \(p,q \in [1/2-\epsilon ,1/2+\epsilon ]\) with \(p<q\),

Proof

First, by a telescoping argument:

Note that

and that

Hence, since \(\gamma <1\), and provided that p, q are close enough to 1/2, we obtain

which completes the proof of Claim 2. \(\square \)

Next, let \(\lambda >0\) be as in the statement of Lemma 7, and let \(\lambda ' = \lambda +1\). Denote \(D = \lceil \lambda ' \rceil +1 > \lambda '\) and \(\gamma = \lambda '/D < 1\). By Claim 2, there exists \(\epsilon = \epsilon (\gamma ,D) = \epsilon (\lambda )\), s.t. for \(p,q \in [1/2-\epsilon ,1/2+\epsilon ]\),

Now we derive a lower bound on Eq. (7):

Since \(\lambda '>\lambda \), and since \(\mathbb {P}\left( |B_k(q) - B_k(p)| < D \right) \) tends to 0 as k tends to \(+\infty \), there exists \(K = K(\lambda )\) s.t. for all \(k>K\),

Eventually, we write

Hence,

which concludes the proof of Lemma 7. \(\square \)

3.2 Lower bounds on the probability that the worse coin wins

We now deal with the opposite problem, that is, to lower bound the probability that the underdog coin wins. Formally,

Lemma 8

For every \(p,q \in [0,1]\) s.t. \(p < q\) and every integer k, we have

where \(C = 0.4748\) and \(\sigma = \sqrt{p(1-p) + q(1-q)}\).

Proof

Let \(Y_i\), \(i \in \{ 1,\ldots ,k \}\) be i.i.d. random variables with

Let \(\mu = \mathbb {E}(Y_1)\), \(\sigma ^ 2 = \text {Var}(Y_1)\), and \(\rho = \mathbb {E}(|Y_1-\mu |^3)\). Writing the definitions and simplifying, we obtain

We have

where

By the Berry-Esseen theorem (Theorem 7),

implying that

and so

where, e.g., \(C = 0.4748\).

Claim 3

We have that \(\rho < \sigma ^2\).

Proof

Let \(f(p) = 2p^3 - p/2\) and \(g(p) = 1/4 - p^2\). We start by proving that for every \(p \in [-1/2,1/2]\), \(|f(p)| \le g(p)\). Since f is anti-symmetric, |f| is symmetric, and g is symmetric, we can restrict the analysis to [0, 1/2]. On this interval, \(|f(p)| = p/2-2p^3\), and

We can rewrite Eq. (10) as

Therefore,

which concludes the proof of Claim 3. \(\square \)
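One way to verify the inequality \(|f(p)| \le g(p)\) on \([0,1/2]\) is by factoring; this may or may not be the route taken in the display omitted above:

$$\begin{aligned} |f(p)| = \frac{p}{2} - 2p^3 = 2p \left( \frac{1}{4} - p^2 \right) = 2p \cdot g(p) \le g(p), \end{aligned}$$

where the last step uses \(0 \le 2p \le 1\) and \(g(p) \ge 0\) on \([0,1/2]\). Equality holds only at \(p = 1/2\), where both sides vanish.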

By Claim 3, we end up with

which concludes the proof of Lemma 8. \(\square \)

Just as Lemma 7 was a version of Lemma 6 optimized for cases where p and q are close to each other, Lemma 9 complements Lemma 8 in such situations.

Lemma 9

There exists a constant \(\alpha >1\), s.t. for every integer k, every \(p,q \in [1/3,2/3]\) with \(p<q\) and \(q-p \le 1/ \sqrt{k}\), we have

Proof

Recall that (see Eq. (7) in the proof of Lemma 7):

The following claim is analogous to Claim 2, but this time we are looking for an upper bound (instead of a lower bound) on the same quantity.

Claim 4

There exists a constant \(\alpha >1\), s.t. for every integer k, every \(p,q \in [1/3,2/3]\) with \(p<q\), and all \(d\in \mathbb {N}\),

As in the proof of Claim 2, we have

where \(\alpha \) is any upper bound on \(1/(q(1-p))\), e.g., \(\alpha = 9\). Hence,

which concludes the proof of Claim 4.

Using Claim 4 on Eq. (11), we obtain

Claim 5

For every \(p,q \in [1/3,2/3]\) with \(p<q\), and every integer k,

Proof

For \(i\in \{ 1,\ldots ,k \}\), let \(X_i^{(1)},X_i^{(2)} \sim {\mathcal {B}}(q)\) and \(Y_i \sim {\mathcal {B}}(1-p/q)\) be independent random variables. Let

Clearly, \(X^{(1)} \sim {\mathcal {B}}_k(q)\) and \(X^{(2)} \sim {\mathcal {B}}_k(q)\). Since for every i,

we obtain that \(\tilde{X}^{(2)} \sim {\mathcal {B}}_k(q\cdot (1- (1-p/q))) = {\mathcal {B}}_k(p)\). Similarly, for every i,

hence, we obtain that \(Z \sim {\mathcal {B}}_k(q\cdot (1-p/q)) = {\mathcal {B}}_k(q-p)\). We notice that \((X^{(1)},X^{(2)})\) are independent, as well as \((X^{(1)},\tilde{X}^{(2)})\). Hence

We have \(\mathbb {E}(Z) = k(q-p)\), and

which concludes the proof of Claim 5. \(\square \)
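The coupling used in the proof of Claim 5 can be sketched in code. The displayed definitions are not reproduced above, so the sketch assumes the thinning construction suggested by the stated distributions, namely \(\tilde{X}_i^{(2)} = X_i^{(2)} (1 - Y_i)\) and \(Z_i = X_i^{(2)} Y_i\), summed over i. These formulas and all identifiers are assumptions made for illustration.

```python
import random

def coupled_sample(k, p, q, rng):
    """Draw (X1, X2, X2_tilde, Z) coordinate by coordinate, for 0 < p < q <= 1.

    Assumed construction: X1, X2 ~ B_k(q); X2_tilde thins X2 so that it is
    B_k(p); Z collects the removed successes and is B_k(q - p).
    """
    x1 = x2 = x2_tilde = z = 0
    for _ in range(k):
        a = rng.random() < q            # X_i^(1) ~ B(q)
        b = rng.random() < q            # X_i^(2) ~ B(q)
        y = rng.random() < 1 - p / q    # Y_i ~ B(1 - p/q)
        x1 += a
        x2 += b
        x2_tilde += b and not y         # success w.p. q * (p/q) = p
        z += b and y                    # success w.p. q * (1 - p/q) = q - p
    return x1, x2, x2_tilde, z

rng = random.Random(0)
samples = [coupled_sample(50, 0.45, 0.55, rng) for _ in range(2000)]
```

By construction \(X^{(2)} = \tilde{X}^{(2)} + Z\) on every sample, so the comparisons of \(X^{(1)}\) with \(X^{(2)}\) and with \(\tilde{X}^{(2)}\) can only differ when \(Z \ge 1\).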

We note that

Eventually, Eq. (12) becomes

To conclude, we write

Hence,

which concludes the proof of Lemma 9. \(\square \)

4 Preliminaries

Remark 1

At various times throughout our analysis, we would like to calculate different statistical properties of the system at round \(t+2\), conditioning on \((\mathbf{x_{t}},\mathbf{x_{t+1}})\in {\mathcal {G}}\), as was done in e.g., Observation 1. For the sake of clarity of presentation, in all subsequent cases, we shall omit the conditioning notation. The reader should therefore keep in mind, that whenever such properties are calculated, they are actually done while conditioning on \(x_t = \mathbf{x_{t}}\) and \(x_{t+1} = \mathbf{x_{t+1}}\), where the point \((\mathbf{x_{t}},\mathbf{x_{t+1}})\) would always be clear from the context.

Proof of Observation 1

Let I be the set of agents (including the source). Let \(I_t^{1} \subset I\) be the set of all non-source agents with opinion 1 at round t. Recall that we condition on \(x_t = \mathbf{x_{t}}\) and \(x_{t+1} = \mathbf{x_{t+1}}\) (although we avoid writing this conditioning). In addition, the proof will proceed by conditioning on \(I_{t+1}^1= \mathbf{I_{t+1}^{1}}\). Since we shall show that the statements are true for every \(\mathbf{I_{t+1}^{1}}\), the observation will hold without this latter conditioning.

By definition of the protocol, and because it operates under the \(\mathcal {PULL}\) model, \({\textsf {tmp}}^{(i)}\) and \({\sigma }^{(i)}\) are obtained by sampling \(\ell \) agents uniformly at random in the population (with replacement) and counting how many have opinion 1. Therefore, conditioning on \((x_t,x_{t+1})\) and \(I_{t+1}^1\),

- (i) variables \(({\textsf {tmp}_{t+2}}^{(i)})_{i \in I}\) and \(({\sigma _{t+1}}^{(i)})_{i \in I}\) are mutually independent, thus variables \((Y_{t+2}^{(i)})_{i \in I}\) are mutually independent.

- (ii) for every \(i \in I\), \({\textsf {tmp}_{t+2}}^{(i)} \sim {\mathcal {B}}_\ell (x_{t+1})\), and \({\sigma _{t+1}}^{(i)} \sim {\mathcal {B}}_\ell (x_{t})\), so we can write for every non-source agent \(i \in I_{t+1}^1\),

  $$\begin{aligned} \mathbb {P}\left( Y_{t+2}^{(i)} = 1 \right) = \mathbb {P}\left( B_{\ell }\left( x_{t+1} \right) \ge B_{\ell }\left( x_t \right) \right) , \end{aligned}$$

  and for every non-source agent \(i \notin I_{t+1}^1\),

  $$\begin{aligned} \mathbb {P}\left( Y_{t+2}^{(i)} = 1 \right) = \mathbb {P}\left( B_{\ell }\left( x_{t+1} \right) > B_{\ell }\left( x_t \right) \right) . \end{aligned}$$

This establishes Eq. (1). Now, let us define independent binary random variables \((X_j)_{1 \le j \le n}\), taking values in \(\{0,1\}\), as follows:

- \(X_1 = 1\),

- for every j s.t. \(1 < j \le n \cdot x_{t+1}\), \(\mathbb {P}\left( X_j = 1 \right) = \mathbb {P}\left( B_{\ell }\left( x_{t+1} \right) \ge B_{\ell }\left( x_t \right) \right) \),

- for every j s.t. \(n \cdot x_{t+1} < j \le n\), \(\mathbb {P}\left( X_j = 1 \right) = \mathbb {P}\left( B_{\ell }\left( x_{t+1} \right) > B_{\ell }\left( x_t \right) \right) \).

We assumed the source to have opinion 1, so in round \(t+1\) there are \(nx_{t+1} -1\) non-source agents with opinion 1 and \(n(1-x_{t+1})\) non-source agents with opinion 0. Therefore, by (i) and (ii) and by construction of the \((X_j)_{1 \le j \le n}\), \(x_{t+2} = \frac{1}{n} \sum _{i \in I} Y_{t+2}^{(i)}\) is distributed as \(\frac{1}{n} \sum _{j=1}^n X_j\), which establishes the second statement in Observation 1. Computing the expectation (still conditioning on \(x_t\), \(x_{t+1}\)) is straightforward and does not depend on \(I_{t+1}^1\):

This establishes Eq. (2), and concludes the proof of Observation 1. \(\square \)
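The second statement of Observation 1 gives a direct way to sample \(x_{t+2}\) from \((x_t,x_{t+1})\) without simulating the individual samples of each agent. The sketch below is illustrative only: it follows the construction of \(X_1,\ldots ,X_n\) above, uses hypothetical function names, and assumes that \(n \cdot x_{t+1}\) is an integer that is at least 1 (the source holds opinion 1).

```python
import random
from math import comb

def prob_ge_and_gt(ell, a, b):
    """Return (P(B_ell(b) >= B_ell(a)), P(B_ell(b) > B_ell(a)))."""
    pa = [comb(ell, j) * a**j * (1 - a)**(ell - j) for j in range(ell + 1)]
    pb = [comb(ell, j) * b**j * (1 - b)**(ell - j) for j in range(ell + 1)]
    gt, below = 0.0, 0.0
    for j in range(ell + 1):
        gt += pb[j] * below             # here below = P(B_ell(a) < j)
        below += pa[j]
    tie = sum(u * v for u, v in zip(pa, pb))
    return gt + tie, gt

def sample_next_fraction(n, ell, x_t, x_t1, rng):
    """Draw x_{t+2} as (1/n) * sum of the independent X_j of Observation 1."""
    ge, gt = prob_ge_and_gt(ell, x_t, x_t1)
    ones = round(n * x_t1)              # agents with opinion 1 in round t+1, source included
    total = 1                           # X_1 = 1 stands for the source
    total += sum(rng.random() < ge for _ in range(ones - 1))
    total += sum(rng.random() < gt for _ in range(n - ones))
    return total / n
```

Calling `sample_next_fraction(100, 8, 1.0, 1.0, random.Random(1))` returns 1.0, reflecting the closure property: once the chain is at (1, 1), it stays there.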

Remark 2

From Observation 1, we obtain the following straightforward bounds: for every non-source agent i,

and

Because the source has opinion 1, the left-hand side in Eq. (15) is loose (specifically, \(-1/n\) is not necessary). Nevertheless, we will use this equation in the proofs, because it has a symmetric equivalent (w.r.t. the center of \({\mathcal {G}}\), \((\frac{1}{2},\frac{1}{2})\)) which will allow our statements about \(x_{t+2}\) to hold symmetrically for \(1-x_{t+2}\), despite the asymmetry induced by the source.

Remark 3

Eq. (2) in Observation 1 implies the following convenient bounds:

5 Escaping the yellow area

The goal of this section is to prove Lemma 5. It might be easier for the reader to think of the Yellow area as a square. Formally, let us define \(\text {Y}\textsc {ellow}'\) as the following square bounding box around \(\text {Y}\textsc {ellow}\):

Obviously, \(\text {Y}\textsc {ellow}\subset \text {Y}\textsc {ellow}'\), so in order to prove Lemma 5 it suffices to prove Lemma 10 below.

Lemma 10

Consider the case that \((x_{t_0},x_{t_0+1}) \in \text {Y}\textsc {ellow}'\). Then, w.h.p., \( \min \{ t > t_0 \text { s.t. } (x_t,x_{t+1}) \notin \text {Y}\textsc {ellow}' \} < t_0 + O(\log ^{5/2} n).\)

5.1 Effects of noise

We will need the following result to break ties.

Lemma 11

There exists a constant \(\beta > 0\) s.t. for n large enough, and if \(\mathbb {E}(x_{t+2}) \in [1/3,2/3]\), then

Proof

Consider \(X_1,\ldots ,X_n\) from the statement of Observation 1. We have (see the proof of Observation 1)

- \(X_1 = 1\),

- for every j s.t. \(1 < j \le n \cdot x_{t+1}\), \(\mathbb {P}\left( X_j = 1 \right) = \mathbb {P}\left( B_{\ell }\left( x_{t+1} \right) \ge B_{\ell }\left( x_t \right) \right) \),

- for every j s.t. \(n \cdot x_{t+1} < j \le n\), \(\mathbb {P}\left( X_j = 1 \right) = \mathbb {P}\left( B_{\ell }\left( x_{t+1} \right) > B_{\ell }\left( x_t \right) \right) \).

Let \(p = \mathbb {P}\left( B_{\ell }\left( x_{t+1} \right) \ge B_{\ell }\left( x_t \right) \right) \) and \(q = \mathbb {P}\left( B_{\ell }\left( x_{t+1} \right) > B_{\ell }\left( x_t \right) \right) \). By Observation 1,

By assumption on \(\mathbb {E}(x_{t+2})\), this implies that

Moreover, \(p-q = \mathbb {P}\left( B_\ell (x_{t+1}) = B_\ell (x_t) \right) \) which tends to 0 as n tends to infinity, i.e., p and q are arbitrarily close. Hence, for n large enough, the last equation implies that \(p\in [1/4,3/4]\) and \(q\in [1/4,3/4]\). Let \(Y_p = \sum _{i=2}^{n\cdot x_{t+1}} X_i\) and \(Y_q = \sum _{i=n\cdot x_{t+1}+1}^n X_i\). These two variables follow binomial distributions, and since \(p,q\in [1/4,3/4]\), there is a constant probability that \(Y_p \ge \mathbb {E}(Y_p)\), and there is a constant probability that \(Y_q \ge \mathbb {E}(Y_q)\) as well. Without loss of generality, we assume that \(x_{t+1} \ge 1/2\) and focus on \(Y_p\) (if \(x_{t+1} < 1/2\), then we could consider \(Y_q\) instead). Let \(m = n\cdot x_{t+1}-1\) be the number of samples of \(Y_p\). In this case, \(m \ge n/2-1\) tends to \(+\infty \) as n tends to \(+\infty \). Let \(\sigma _p = \sqrt{p(1-p)}\). By the central limit theorem (Theorem 6), the random variable

converges in distribution to \({\mathcal {N}}(0,1)\). Moreover, \(\text {Var}(Y_p) = m \sigma _p^2 = (n\cdot x_{t+1}-1)p(1-p) \ge (n/2-1)p(1-p) \ge np(1-p)/3 \), so for any \(\epsilon >0\) and n large enough,

By taking a small enough \(\epsilon \), and because p is bounded, we conclude the proof of Lemma 11 (the other inequality can be obtained symmetrically). \(\square \)

We can use the previous result to show that the Markov process \((x_t,x_{t+1})\) is sufficiently noisy so that it is never too likely to be at any given point (x, y).

Lemma 12

There is a constant \(c_1 = c_1(c) > 0\) (recall that the sample size is \(\ell = c \cdot \log n\)), such that for any \(a \in [1/2-4\delta ,1/2+4\delta ]\), and any round t s.t. \((x_t,x_{t+1}) \in \text {Y}\textsc {ellow}'\), we have either

or \((x_{t+1},x_{t+2}) \notin \text {Y}\textsc {ellow}'\) w.h.p.

Proof

If \(\mathbb {E}(x_{t+2}) \notin [1/3,2/3]\), then \((x_{t+1},x_{t+2}) \notin \text {Y}\textsc {ellow}'\) w.h.p. Otherwise, let \(a \in [1/2-4\delta ,1/2+4\delta ]\). If \(a > \mathbb {E}(x_{t+2})\), then by Lemma 11,

Similarly, if \(a \le \mathbb {E}(x_{t+2})\), Lemma 11 gives

which concludes the proof of Lemma 12. \(\square \)

5.2 General structure of the proof

In order to prove Lemma 10, we first partition \(\text {Y}\textsc {ellow}'\), as follows (for an illustration, see Fig. 2):

Similarly, we define \(\textbf{A}_0,\textbf{B}_0,\textbf{C}_0\) their symmetric equivalents (w.r.t the point (\(\frac{1}{2},\frac{1}{2}\))), and \(\textbf{A}= \textbf{A}_0 \cup \textbf{A}_1\), \(\textbf{B}= \textbf{B}_0 \cup \textbf{B}_1\), and \(\textbf{C}= \textbf{C}_0 \cup \textbf{C}_1\).

In the next lemma, we study the distribution of the future location of any point \((x_t,x_{t+1})\in \textbf{A}\). This area happens to be ideal to escape \(\text {Y}\textsc {ellow}'\), because it allows the Markov chain to quickly build up “speed”. Item (a) in the next lemma says that, with some probability that depends on the current speed, the following two events occur: (1) the speed in the following round increases by a factor of two, and (2) the process in the next round either remains in \(\textbf{A}\), or goes outside of \(\text {Y}\textsc {ellow}'\). Note that when the current speed is not too low, that is, when \(x_{t+1}-x_t > 1/\sqrt{n}\), this combined event happens with constant probability. Item (b) says that with constant probability, (1) the speed in the next round would not be too low, and (2) the process either remains in \(\textbf{A}\), or goes outside of \(\text {Y}\textsc {ellow}'\).

Lemma 13

If \((x_t,x_{t+1}) \in \textbf{A}\), and provided that \(\delta \) is small enough and n is large enough,

-

(a)

-

(b)

There exists a constant \(c_2 = c_2(c)>0\) s.t.

Proof

Without loss of generality, we assume that \((x_t,x_{t+1}) \in \textbf{A}_1\) (the same arguments apply to \(\textbf{A}_0\) symmetrically). We have, provided that \(\delta \) is small enough and n is large enough,

More precisely, in the second inequality, the term \(-1/n\) disappears within the \(6(x_{t+1} - x_t)\) lower bound. Indeed, we can take \(\lambda \gg 6\) (from Lemma 7) for this purpose. Moreover, we can assume \(x_{t+1} - x_t \ge 1/n\), by definition of \(\textbf{A}_1\), and ruling out the case \(x_t = x_{t+1}\) by Lemma 12.

Hence,

and by definition of \(\textbf{A}_1\), \((x_{t+1} - (2x_t - 1/2)) \ge 0\) and so

By Observation 1, we can apply Chernoff’s inequality (Theorem 4). Taking \(\epsilon = 2(x_{t+1}-x_t)/(4(x_{t+1}-x_t)+x_{t+1})\), we have

Since \(x_{t}\) and \(x_{t+1}\) are close to 1/2, we have for \(\delta \) small enough

Now, we show that the event “\(x_{t+2} - x_{t+1} > 2 (x_{t+1} - x_t)\)” suffices for \((x_{t+1},x_{t+2})\) to remain in \(\textbf{A}_1\) or leave \(\text {Y}\textsc {ellow}'\). \(\square \)

Claim 6

If \((x_t,x_{t+1}) \in \textbf{A}_1\) and \(x_{t+2} - x_{t+1} > 2 (x_{t+1} - x_t)\), then \((x_{t+1},x_{t+2})\in \textbf{A}_1\) or \((x_{t+1},x_{t+2})\notin \text {Y}\textsc {ellow}'\).

Proof

If \((x_{t+1},x_{t+2})\notin \text {Y}\textsc {ellow}'\), the result holds. Otherwise, \((x_{t+1},x_{t+2})\in \text {Y}\textsc {ellow}'\) and we have to prove that \((x_{t+1},x_{t+2})\) satisfies \(\textbf{A}_1\).(i) and \(\textbf{A}_1\).(ii). First we prove that \((x_{t+1},x_{t+2})\) satisfies \(\textbf{A}_1\).(i):

Then we prove that \((x_{t+1},x_{t+2})\) satisfies \(\textbf{A}_1\).(ii):

which concludes the proof of Claim 6. \(\square \)

Next, we apply Claim 6 to Eq. (18) to establish (a). Finally, \(x_{t+2} > x_{t+1} + 4 (x_{t+1}-x_t) + 1/ \sqrt{n}\) implies \(x_{t+2} - x_{t+1} > 2 (x_{t+1}-x_t)\) so we can use Claim 6,

where the existence of \(c_2\) is guaranteed by Lemma 11. This establishes (b).

Now, we can iteratively use the previous result to prove that any state in \(\textbf{A}\) has a reasonable chance to escape \(\text {Y}\textsc {ellow}'\).

Lemma 14

There is a constant \(c_3 = c_3(c)\) s.t. if \((x_{t_0},x_{t_0+1}) \in \textbf{A}\), then

Proof

Without loss of generality, we assume that \((x_{t_0},x_{t_0+1}) \in \textbf{A}_1\) (the same arguments apply to \(\textbf{A}_0\) symmetrically). Let us define event \(H_{t_0+1}\), that the system is either in \(\textbf{A}_1\) or out of \(\text {Y}\textsc {ellow}'\) in round \(t_0+1\), and that the “gap” \((x_{t_0+2}-x_{t_0+1})\) is not too small. Formally,

For \(t>t_0+1\), we define event \(H_t\), that the system is either in \(\textbf{A}_1\) or out of \(\text {Y}\textsc {ellow}'\) in round t, and that the gap \((x_{t+1}-x_t)\) doubles. Formally,

We start with the following observation, which results directly from the definition of \(H_t\) for \(t \ge t_0+1\):

For every \(t > t_0+1\) such that \((x_t,x_{t+1}) \in \textbf{A}_1\),

By Lemma 13 (b), \((x_{t_0+1},x_{t_0+2}) \in \textbf{A}_1\) and \(x_{t_0+2} - x_{t_0+1} > 1 / \sqrt{n}\) w.p. \(c_2>0\). Together with the last equation and using the union bound, we get

We have the following very rough upper bounds

Hence, we have proved that for every \(t_1 > t_0+1\) such that \((x_{t_1},x_{{t_1}+1}) \in \textbf{A}_1\),

By Eq. (19), this implies that for every \(t_1 > t_0+1\) such that \((x_{t_1},x_{{t_1}+1}) \in \textbf{A}_1\),

For \(t_1\) large enough (e.g., \(t_1 = t_0 + \log n\)), this implies that \((x_{t_1-1},x_{t_1}) \notin \text {Y}\textsc {ellow}'\), otherwise the gap \((x_{t_1}-x_{t_1-1})\) would be greater than \(8\delta \) which is the diameter of \(\text {Y}\textsc {ellow}'\). This concludes the proof of Lemma 14. \(\square \)
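The choice \(t_1 = t_0 + \log n\) can be sanity-checked numerically. The sketch below is only an illustration under simplifying assumptions: the gap starts at exactly \(1/\sqrt{n}\) and exactly doubles in each round (the proof only guarantees "at least"), and the value of \(\delta \) passed in is a hypothetical choice, not one fixed by the paper.

```python
import math

def rounds_to_exceed(n: int, delta: float) -> int:
    """Count doublings until a gap starting at 1/sqrt(n) exceeds 8*delta.

    8*delta is the diameter of the Yellow' area; once the gap is larger,
    the pair of consecutive states cannot lie inside Yellow'.
    """
    gap = 1.0 / math.sqrt(n)
    rounds = 0
    while gap <= 8 * delta:
        gap *= 2
        rounds += 1
    return rounds

# Hypothetical parameters, chosen only for illustration.
n, delta = 10**6, 0.01
print(rounds_to_exceed(n, delta), "doublings needed; log n =", math.log(n))
```

Since \(8\delta \le 1\), roughly \(\tfrac{1}{2}\log _2 n\) doublings always suffice, which is below \(\log n\) when the logarithm is taken in base e.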

We are left with proving that the system cannot be stuck in \(\textbf{B}\) or \(\textbf{C}\) for too long. We start with \(\textbf{B}\). The analysis of this area is relatively complex, because it is difficult to rule out the possibility that the Markov chain remains there at a low speed. We prove that any state in \(\textbf{B}\) must either make a step towards escaping \(\text {Y}\textsc {ellow}'\), or have a good chance of leaving \(\textbf{B}\). The proof of the following lemma is rather long and is deferred to Sect. 5.3.

Lemma 15

There are constants \(c_4,c_5>0\) such that if \((x_{t},x_{t+1}) \in \textbf{B}\), then either

(a) \(|x_{t+1}-1/2| > \left( 1+c_4/\sqrt{\ell } \right) |x_{t}-1/2|\), or

(b) \(\mathbb {P}\left( (x_{t+1},x_{t+2}) \notin \textbf{B} \right) > c_5\).

Now, we can iteratively use the previous result to prove that any state in \(\textbf{B}\) either leaves \(\textbf{B}\) or escapes \(\text {Y}\textsc {ellow}'\) in a reasonable amount of time.

Lemma 16

If \((x_{t_0},x_{t_0+1}) \in \textbf{B}\), then, w.h.p., \(\min \{ t \ge t_0, (x_{t},x_{t+1}) \notin \textbf{B}\} < t_0 + \frac{\sqrt{c}}{c_4} \cdot \log ^{3/2} n\).

Proof

Without loss of generality, we assume that \((x_{t_0},x_{t_0+1}) \in \textbf{B}_1\) (the same arguments apply to \(\textbf{B}_0\) symmetrically). For any round t, let \(H_t\) be the event that \((x_t,x_{t+1}) \in \textbf{B}\) and (a) of Lemma 15 holds. Let \(t_{\max } = t_0 + (\sqrt{c}/c_4) \cdot \log ^{3/2} n\), and let X be the number of rounds between \(t_0\) and \(t_{\max }\) for which \(H_t\) does not happen. Whenever (a) of Lemma 15 does not hold, (b) of Lemma 15 holds, and there is a constant probability of leaving \(\textbf{B}\), so

Note that

This, together with Eq. (20), implies that either (i) \(X < (t_{\max }-t_0)/4\), or w.h.p. (ii) there is a time \(t_0 \le t \le t_{\max }\) such that \((x_{t},x_{t+1}) \notin \textbf{B}\) (in which case Lemma 16 holds).

Now, consider case (i). Let \(u_t = x_t - 1/2\). By Lemma 12, after \(O(\log n)\) rounds, we have \(u_{t} > 1/ \sqrt{n}\) w.h.p., so, up to waiting a logarithmic number of rounds, we may assume that \(u_{t_0} > 1/ \sqrt{n}\).

Note that whenever \((x_t,x_{t+1}) \in \textbf{B}_1\), by definition \(x_{t+1}\ge x_t > 1/2\) and so \((x_{t+1},x_{t+2})\) cannot be in \(\textbf{B}_0\). This implies that the system must remain in \(\textbf{B}_1\) until it leaves \(\textbf{B}\). Also by definition of \(\textbf{B}_1\), if \((x_t,x_{t+1}) \in \textbf{B}_1\) then \(x_t \le x_{t+1}\), and so

Moreover, by the fact that we are in case (i), we have that the number of rounds \(t_0 \le t \le t_{\max }\) such that \(H_t\) happens is at least

Note that at each such round, by definition of \(H_t\) and (a) in Lemma 15,

Hence, by Eq. (21),

When n is large, this quantity is larger than 1, hence (i) is impossible unless \((x_t,x_{t+1}) \notin \textbf{B}\). This concludes the proof of Lemma 16. \(\square \)
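The contradiction in case (i) can be illustrated numerically. The following sketch rests on assumptions that are not spelled out in this section: the sample size is taken to be \(\ell = c \log n\) with natural logarithms, and the values of `c` and `c4` are hypothetical. In case (i), at least three quarters of the \((\sqrt{c}/c_4)\log ^{3/2} n\) rounds satisfy \(H_t\), each multiplying \(u_t\) by at least \(1+c_4/\sqrt{\ell }\), starting from \(u_{t_0} > 1/\sqrt{n}\).

```python
import math

def log_lower_bound(n: float, c: float, c4: float) -> float:
    """Natural log of the lower bound on u_t at time t_max in case (i).

    Assumes ell = c * ln(n) (an assumption of this sketch) and that at
    least 3/4 of the rounds multiply u_t by (1 + c4 / sqrt(ell)).
    """
    log_n = math.log(n)
    ell = c * log_n
    good_rounds = 0.75 * (math.sqrt(c) / c4) * log_n ** 1.5
    growth = good_rounds * math.log1p(c4 / math.sqrt(ell))
    return -0.5 * log_n + growth  # starting point is u_{t_0} > 1/sqrt(n)

# Hypothetical constants; a positive value means the bound exceeds 1,
# which is impossible for u_t = x_t - 1/2.
print(log_lower_bound(1e6, c=4.0, c4=0.5))
```

With these values the growth term is close to \(\tfrac{3}{4}\log n\), which dominates the \(-\tfrac{1}{2}\log n\) coming from the starting point.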

We are left with proving that the system cannot stay in \(\textbf{C}\) for too long. Fortunately, from this area, the Markov chain is naturally pushed towards \(\textbf{A}\), which makes the analysis simple.

Lemma 17

There is a constant \(c_6>0\) such that if \((x_{t},x_{t+1}) \in \textbf{C}\), then

Proof

Without loss of generality, we assume that \((x_t,x_{t+1}) \in \textbf{C}_1\) (the same arguments apply to \(\textbf{C}_0\) symmetrically). By Observation 1, we have

By Lemma 7 (taking \(\lambda > 2\)), this becomes

Case 1. If \((x_{t+1}-x_t) > 1/2 - x_{t+1}\), then Eq. (22) implies

so with constant probability \(x_{t+2} > 1/2\) and thus \((x_{t+1},x_{t+2}) \in \textbf{A}_1\) or is not in \(\text {Y}\textsc {ellow}'\).

Case 2. Else, if \((x_{t+1}-x_t) \le 1/2 - x_{t+1}\), Eq. (22) rewrites

Since \((x_t,x_{t+1}) \in \textbf{C}_1\), we have \(x_{t+1} \ge x_t\) and \(1/2 > x_{t+1}\). Moreover, for \(\ell \) large enough, \(1 - 2 \cdot \mathbb {P}\left( B_\ell (x_{t+1}) = B_\ell (x_{t}) \right) > 0\). Hence,

If \(\mathbb {E}(x_{t+2}) \notin [1/3,2/3]\), then \((x_{t+1},x_{t+2}) \notin \textbf{A}_1\) w.h.p. Otherwise, we can apply Lemma 11: with constant probability \(x_{t+2} > (1/2 + x_{t+1}) / 2\), i.e., \(x_{t+2} - x_{t+1} > 1/2 - x_{t+2}\). If so, Case 1 applies and with constant probability, \((x_{t+2},x_{t+3}) \in \textbf{A}_1\) or is not in \(\text {Y}\textsc {ellow}'\). This concludes the proof of Lemma 17. \(\square \)

Finally, we have all the necessary results to conclude the proof regarding the Yellow area.

Proof of Lemma 10

By Lemma 14, if \((x_{t_0},x_{t_0+1}) \in \textbf{A}\), then

By Lemma 17, this implies that if \((x_{t_0},x_{t_0+1}) \in \textbf{A}\cup \textbf{C}\),

By Lemma 16, w.h.p., whenever the process is in \(\textbf{B}\), it does not spend more than \((\sqrt{c} / c_4)\cdot \log ^{3/2} n\) consecutive rounds there. This means that for any constant \(c'>0\), during \(c'\log ^{5/2} n\) consecutive rounds, w.h.p., we must either leave \(\text {Y}\textsc {ellow}'\) or be in \(\textbf{A}\cup \textbf{C}\) on at least \(\frac{c'\log ^{5/2} n}{(\sqrt{c} / c_4)\cdot \log ^{3/2} n} = \frac{c' c_4}{\sqrt{c}} \cdot \log n\) distinct rounds. By Eq. (23), the probability that the system fails to escape \(\text {Y}\textsc {ellow}'\) on every one of these occasions is at most \((1-c_3 \cdot c_6)^{(c' c_4/\sqrt{c}) \cdot \log n}\). Taking \(c'\) to be sufficiently large concludes the proof of Lemma 10. \(\square \)
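To make "sufficiently large" concrete, note that \((1-c_3 c_6)^{(c' c_4/\sqrt{c}) \log n} = n^{-a}\) with \(a = (c' c_4/\sqrt{c}) \cdot \ln \frac{1}{1-c_3 c_6}\) when the logarithm is natural. The sketch below computes this exponent and the smallest \(c'\) giving a target exponent. The base of the logarithm and all numerical constants are assumptions made for illustration.

```python
import math

def failure_exponent(c_prime, c, c3, c4, c6):
    """Return a such that (1 - c3*c6)**((c_prime*c4/sqrt(c)) * ln n) == n**(-a)."""
    attempts_per_log_n = c_prime * c4 / math.sqrt(c)
    return attempts_per_log_n * -math.log(1.0 - c3 * c6)

def min_c_prime(target_exponent, c, c3, c4, c6):
    """Smallest c' for which the failure probability is at most n**(-target_exponent)."""
    return target_exponent * math.sqrt(c) / (c4 * -math.log(1.0 - c3 * c6))

# Hypothetical constants, for illustration only.
c, c3, c4, c6 = 4.0, 0.1, 0.5, 0.2
print(min_c_prime(2.0, c, c3, c4, c6))
```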

5.3 Proof of Lemma 15—regarding Area B

The goal of this section is to prove Lemma 15, which concerns Area B inside the \(\text {Y}\textsc {ellow}'\) domain. Without loss of generality, we may assume that \((x_t,x_{t+1}) \in \textbf{B}_1\) (the same arguments apply to \(\textbf{B}_0\) symmetrically). Let us define

so that, conditioning on \((x_t,x_{t+1})\), \(\mathbb {E}(x_{t+2}) = g(x_t,x_{t+1})\) by Observation 1. Informally, our plan is the following. For all points \((x_t,x_{t+1})\) such that \(\mathbb {E}(x_{t+2}) = g(x_t,x_{t+1})\) is “small” compared to \(x_{t+1}\), the process loses speed and gets out of \(\textbf{B}\), corresponding to item (b) in the statement of Lemma 15. Otherwise, \(\mathbb {E}(x_{t+2}) = g(x_t,x_{t+1})\) is sufficiently “large” compared to \(x_{t+1}\), and the process makes a significant step towards escaping \(\text {Y}\textsc {ellow}'\), corresponding to item (a).

We will proceed in two steps: first, we analyze function g, and only then, we prove Lemma 15.

5.3.1 Analysis of g

We start with the following claim, which will be used to prove the subsequent claim.

Claim 7

Let \(x_0 \in [1/3,2/3]\). On the interval \([x_0,x_0+1 / \sqrt{\ell }]\), and for \(\ell \) large enough, \(y \mapsto g(x_0,y)-y\) is a strictly increasing function of y.

Proof

The key observation is that the derivative, w.r.t. x, of \(\mathbb {P}\left( B_{k}\left( x \right) > B_{k}\left( p \right) \right) \) in the neighborhood of p is relatively high. The following claim formalizes this idea.

Claim 8

There exists a constant \(\beta ' > 0\) such that for every k large enough, and every \(p,x \in [1/3,2/3]\) satisfying \(p \le x \le p + 1/ \sqrt{k}\),

Proof

Let \(h > 0\). We will proceed by using a coupling argument. Let \(X_i\), \(i\in \{ 1,\ldots ,k \}\), be i.i.d. random variables uniformly distributed over the interval [0, 1]. Let \(Y_1 = |\{ i \text { s.t. } X_i \le x \}|\) and \(Y_2 = |\{ i \text { s.t. } X_i \le x+h \}|\). By construction, \(Y_1 \sim {\mathcal {B}}_k(x)\) and \(Y_2 \sim {\mathcal {B}}_k(x+h)\). Next, let \(H = |\{ i \text { s.t. } x < X_i \le x+h \}|\). By construction, \(Y_2 = Y_1 + H \ge Y_1\). Let \(Z \sim {\mathcal {B}}_k(p)\) be a binomially distributed random variable, independent from \(Y_1\) and \(Y_2\). Now, we have:

Let \(J = \{ j \in \mathbb {N}\text { s.t. } kp \le j \le kp + \sqrt{k} \}\). We can rewrite the last equation as

The following result is a well-known fact.

Observation 2

There exists a constant \(\beta > 0\) such that for every k large enough, every \(p \in [1/3,2/3]\), and every i satisfying \(|i-kp| \le \sqrt{k}\), we have \(\mathbb {P}\left( B_k(p) = i \right) \ge \frac{\beta }{\sqrt{k}}\).

Proof

By the De Moivre-Laplace theorem, for any i in \(\{kp-\sqrt{k},\ldots ,kp+\sqrt{k}\}\),

where we used \(\approx \) in the sense that the ratio between the left-hand side and the right-hand side tends to 1 as k tends to infinity. Since \(|i-kp| \le \sqrt{k}\),

By Eq. (26), we can conclude the proof of Observation 2 for k large enough by taking, e.g.,

\(\square \)
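Observation 2 can also be checked numerically for moderate values of k. The sketch below evaluates \(\sqrt{k}\cdot \mathbb {P}(B_k(p) = i)\) over all integers i within \(\sqrt{k}\) of kp and reports the minimum. It is a sanity check for a few hand-picked values of k and p, not a proof, and the helper names are ours.

```python
import math

def binom_pmf(k: int, p: float, i: int) -> float:
    """P(B_k(p) = i), computed in log space to avoid overflow for large k."""
    log_pmf = (math.lgamma(k + 1) - math.lgamma(i + 1) - math.lgamma(k - i + 1)
               + i * math.log(p) + (k - i) * math.log(1.0 - p))
    return math.exp(log_pmf)

def min_scaled_pmf(k: int, p: float) -> float:
    """Smallest sqrt(k) * P(B_k(p) = i) over integers i with |i - k*p| <= sqrt(k)."""
    lo = math.ceil(k * p - math.sqrt(k))
    hi = math.floor(k * p + math.sqrt(k))
    return min(math.sqrt(k) * binom_pmf(k, p, i) for i in range(lo, hi + 1))

for k in (100, 400, 1600):
    for p in (1 / 3, 1 / 2, 2 / 3):
        print(k, round(p, 3), min_scaled_pmf(k, p))
```

For these inputs the minimum stays bounded away from zero as k grows, as the observation predicts.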

For \(j \in J\), by Observation 2, \(\mathbb {P}\left( Z = j \right) \ge \beta / \sqrt{k}\), for some constant \(\beta >0\). Moreover,

By the assumption in the claim, \(p \le x \le p + 1/ \sqrt{k}\), and so \(kp \le kx \le kp + \sqrt{k}\). Therefore, for \(j \in J\), \(|j-kx|\le \sqrt{k}\), and by Observation 2, we get that \(\mathbb {P}\left( Y_1 = j \right) \ge \beta / \sqrt{k}\). Hence, we can rewrite Eq. (25) as

Now, let us find a lower bound on \(\mathbb {P}\left( H \ge 1 \mid Y_1 = j \right) \), for \(j \in J\). Note that, by definition, \(Y_1=j\) if and only if \(|\{ i \text { s.t. } X_i > x \}| = k-j\). Since \(X_i, 1 \le i \le k\), is uniformly distributed over [0, 1],

Therefore, for every \(j\in J\)

This implies that

We have

Finally, we get from Eq. (27)

We can conclude the proof of Claim 8 for k large enough by taking, e.g., \(\beta ' = \frac{\beta ^2 (1-p)}{2(1-x)}\).
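The coupling used in this proof can be sketched in a few lines. The code draws the shared uniforms \(X_1,\ldots ,X_k\) once and derives \(Y_1\), \(Y_2\) and H from them, so the identity \(Y_2 = Y_1 + H\) holds on every draw. The function name and the parameter values are illustrative choices.

```python
import random

def coupled_counts(k: int, x: float, h: float, rng: random.Random):
    """One draw of (Y1, Y2, H) built from the same uniforms X_1..X_k.

    Y1 counts X_i <= x, Y2 counts X_i <= x + h, and H counts the X_i
    falling in (x, x + h], so Y2 = Y1 + H by construction.
    """
    xs = [rng.random() for _ in range(k)]
    y1 = sum(1 for u in xs if u <= x)
    y2 = sum(1 for u in xs if u <= x + h)
    h_count = sum(1 for u in xs if x < u <= x + h)
    return y1, y2, h_count

rng = random.Random(0)  # fixed seed so the run is reproducible
y1, y2, h_count = coupled_counts(k=100, x=0.5, h=0.05, rng=rng)
print(y1, y2, h_count)
```

Marginally, `y1` is distributed as \({\mathcal {B}}_k(x)\) and `y2` as \({\mathcal {B}}_k(x+h)\), but they are not independent: the coupling forces `y2 >= y1`.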

Now, we are ready to conclude the proof of Claim 7. We can rewrite Eq. (24) as

Hence,

The first term in Eq. (28) is equal to \(\mathbb {P}\left( B_\ell \left( y \right) = B_\ell \left( x_0 \right) \right) \), which is positive. Moreover, \(\mathbb {P}\left( B_\ell \left( y \right) \ge B_\ell \left( x_0 \right) \right) \) is obviously increasing in y, so the second term is also non-negative. By Claim 8, the third term in Eq. (28) satisfies

where the last inequality comes from the fact that \(x_0\in [1/3,2/3]\) and \(y\in [x_0,x_0+1/\sqrt{\ell }] \subseteq [1/4,3/4]\). For \(\ell \) large enough, this implies that

which concludes the proof of Claim 7.

5.3.2 Finishing the proof

The next claim concerns the fixed points of g(x, y) as a function of y.

Claim 9

For any given \(x \in [1/2+4/n,1/2+4\delta ]\), the equation \(y = g(x,y)\), viewed as an equation in y, has at most one solution on the interval \([x,x+1/ \sqrt{\ell }]\). Moreover, if it has no solution, then \(g(x,x+1/\sqrt{\ell }) < x+1/ \sqrt{\ell }\).

Proof

First, we claim that \(g(x,x) < x\). Let \(p = \mathbb {P}(B_\ell (x) > B_\ell (x))\) and \(q = \mathbb {P}(B_\ell (x) = B_\ell (x))\). We rearrange the definition of g slightly to obtain \(g(x,x) = p + x\cdot q + \frac{p}{n}\). Moreover, \(x = x \cdot (2 p + q) \ge \left( 1+8/n \right) \cdot p + x\cdot q > g(x,x)\), where the first inequality is because \(x \ge 1/2+4/n\). Next, let \(h(y) = g(x,y)-y\). Function h is continuous, and what we just showed implies \(h(x) < 0\). Moreover, by Claim 7, we know that h is strictly increasing on \([x,x+1/\sqrt{\ell }]\). Therefore, either \(h(x+1/ \sqrt{\ell }) \ge 0\), in which case there is a unique \(y^\star \in [x,x+1/\sqrt{\ell }]\) such that \(h(y^\star ) = 0\); or \(h(x+1/ \sqrt{\ell }) < 0\), i.e., \(g(x,x+1/\sqrt{\ell }) < x+1/ \sqrt{\ell }\), in which case the equation \(y = g(x,y)\) has no solution on the interval. \(\square \)
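The first step of this proof, \(g(x,x) < x\), can be checked numerically using the expression \(g(x,x) = p + x\cdot q + p/n\) given above, where p and q refer to two independent copies of \(B_\ell (x)\) and hence satisfy \(2p+q = 1\). The values of \(\ell \) and n below are hypothetical and only serve as an illustration.

```python
import math

def tie_probability(ell: int, x: float) -> float:
    """q = P(B = B') for two independent Binomial(ell, x) variables."""
    return sum(
        (math.comb(ell, i) * x**i * (1 - x) ** (ell - i)) ** 2
        for i in range(ell + 1)
    )

def g_diagonal(ell: int, x: float, n: int) -> float:
    """g(x, x) = p + x*q + p/n, with p = P(B > B') = (1 - q) / 2 by symmetry."""
    q = tie_probability(ell, x)
    p = (1.0 - q) / 2.0
    return p + x * q + p / n

ell, n = 30, 1000  # hypothetical sample size and population size
for x in (0.5 + 4 / n, 0.55, 0.6):
    print(x, g_diagonal(ell, x, n), g_diagonal(ell, x, n) < x)
```

At \(x = 1/2\) the inequality fails, since \(g(1/2,1/2) = 1/2 + p/n\); this is why the claim requires x to be bounded away from 1/2 by a term of order 1/n.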

For every \(x \in [1/2+4/n,1/2+4\delta ]\), let f(x) be the solution of the equation \(y = g(x,y)\) in the interval \([x,x+1/\sqrt{\ell }]\) if it exists, and \(f(x) = x+1/\sqrt{\ell }\) otherwise. Note that by Claim 9, with this definition we always have

Claim 10

For any \(x \in [1/2+4/n,1/2+4\delta ]\), it holds that

where \(\alpha > 1\) is the constant from Lemma 9 (Sect. 3).

Proof

If f(x) is not a solution to \(y = g(x,y)\), then by definition \(f(x) = x+1/ \sqrt{\ell }\), i.e.,

and so the statement holds. Otherwise, \(f(x) = g(x,f(x))\) and f(x) belongs to \([x,x+1/\sqrt{\ell }]\). By Lemma 9, there exists \(\alpha >0\) s.t.

This can be plugged into the definition of g (Eq. (24)), evaluated at \(y = f(x)\), to give

which we can rewrite,

This gives

where the last inequality comes from \(\mathbb {P}\left( B_\ell (f(x)) = B_\ell (x) \right) < 1/2\) (which is true when \(\ell \) is large enough), and from the fact that \(f(x)>x\). Since \((x-1/2) \ge 4/n\), this implies

as desired. This completes the proof of Claim 10. \(\square \)

Next, rewriting \(f(x)-x = \left( f(x)-1/2 \right) - \left( x-1/2 \right) \), we get from Claim 10 that for every \(x \in [1/2+4/n,1/2+4\delta ]\),