Abstract

This article shows how to construct an overlay network of constant degree and diameter \(O(\log n)\) in \(O(\log n)\) time starting from an arbitrary weakly connected graph. We assume a synchronous communication network in which nodes can send messages to nodes they know the identifier of, and new connections can be established by sending node identifiers. Suppose the initial network’s graph is weakly connected and has constant degree. In that case, our algorithm constructs the desired topology with each node sending and receiving only \(O(\log n)\) messages in each round in \(O(\log n)\) time w.h.p., which beats the currently best \(O(\log ^{3/2} n)\) time algorithm of Götte et al. (International colloquium on structural information and communication complexity (SIROCCO), Springer, 2019). Since the problem cannot be solved faster than by using pointer jumping for \(O(\log n)\) rounds (which would even require each node to communicate \(\Omega (n)\) bits), our algorithm is asymptotically optimal. We achieve this speedup by using short random walks to repeatedly establish random connections between the nodes that quickly increase the conductance of the graph using an observation of Kwok and Lau (Approximation, randomization, and combinatorial optimization. Algorithms and techniques (APPROX/RANDOM 2014), Schloss Dagstuhl-Leibniz-Zentrum fuer Informatik, 2014). Additionally, we show how our algorithm can be used to efficiently solve graph problems in hybrid networks (Augustine et al. in Proceedings of the fourteenth annual ACM-SIAM symposium on discrete algorithms, SIAM, 2020). Motivated by the idea that nodes possess two different modes of communication, we assume that communication over the initial edges is unrestricted, whereas only polylogarithmically many messages can be sent over edges that have been established throughout an algorithm’s execution. 
For an (undirected) graph G with arbitrary degree, we show how to compute connected components, a spanning tree, and biconnected components in \(O(\log n)\) time w.h.p. Furthermore, we show how to compute an MIS in \(O(\log d + \log \log n)\) time w.h.p., where d is the initial degree of G.


1 Introduction

Many modern distributed systems (especially those which operate via the internet) are not concerned with the physical infrastructure of the underlying network. Instead, these large-scale distributed systems form logical networks that are often referred to as overlay networks or peer-to-peer networks. In these networks, nodes are considered as connected if they know each other’s IP addresses. Examples include overlay networks like Chord [55], Pastry [51], and skip graphs [3]. This work considers the fundamental problem of constructing an overlay network of low diameter as fast as possible from an arbitrary initial state. Note that \(\Omega (\log n)\) is the obvious lower bound for the problem: If the nodes initially form a line, it takes \(\Omega (\log n)\) rounds for the two endpoints to learn about each other, even if every node could introduce all of its neighbors to each other in each round.
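A small centralized simulation illustrates this bound under the idealized assumption that nodes may introduce all of their neighbors to each other in every round, without any message limit. The distance up to which a node knows the other nodes can at most double per round, so the endpoints of an n-node line need exactly \(\lceil \log _2(n-1)\rceil \) rounds. The function name is hypothetical and only serves this illustration.

```python
import math

def rounds_until_endpoints_meet(n: int) -> int:
    """Rounds until node 0 knows node n-1 on the path 0 - 1 - ... - (n-1).

    In each synchronous round, every node introduces all of its neighbors to
    each other, so every node learns the neighbors of its neighbors.
    """
    known = [{u for u in (v - 1, v + 1) if 0 <= u < n} for v in range(n)]
    rounds = 0
    while n > 1 and (n - 1) not in known[0]:
        # New knowledge is computed from the old sets only (synchronous round).
        known = [known[v].union(*(known[u] for u in known[v])) - {v}
                 for v in range(n)]
        rounds += 1
    return rounds

for n in (2, 9, 10, 65):
    print(n, rounds_until_endpoints_meet(n), math.ceil(math.log2(n - 1)))
```

After r rounds, a node knows exactly the nodes within distance \(2^r\), which is why the simulated round count matches the logarithm.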

To the best of our knowledge, the first overlay construction algorithm with polylogarithmic time and communication complexity that can handle (almost) arbitrary initial states has been proposed by Angluin et al. [2]. The authors assume a weakly connected graph of initial degree d. If in each round, each node can send and receive at most d messages, and new edges can be established by sending node identifiers, their algorithm transforms the graph into a binary search tree of depth \(O(\log n)\) in \(O(d+\log ^2 n)\) time, w.h.p. A low-depth tree can easily be transformed into many other topologies, and fundamental problems such as sorting or routing can be easily solved from such a structure.

This idea has sparked a line of research investigating how quickly such overlays can be constructed. Table 1 provides an overview of the works that can be compared with our result. For example, [4] gives an \(O(\log n)\) time algorithm for initial graphs with outdegree 1. If the initial degree is d and nodes can send and receive \(O(d\log n)\) messages, there is a deterministic \(O(\log ^2 n)\) time algorithm [30]. Very recently, this has been improved to \(O(\log ^{3/2} n)\), w.h.p. [31]. However, to the best of our knowledge, there is no \(O(\log n)\)-time algorithm that can construct a well-defined overlay with logarithmic communication starting from an arbitrary initial topology. This article finally closes the gap and presents the first algorithm that achieves these bounds, w.h.p.

All of the previous algorithms (i.e., [2, 4, 28, 30, 31]) essentially employ the same high-level approach as [2]: they alternately group and merge so-called supernodes (i.e., sets of nodes that act in coordination) until only a single supernode remains. However, these supernodes need to be consolidated after being grouped with adjacent supernodes to distinguish internal from external edges. This consolidation step makes it difficult to improve the runtime further using this approach. This work presents a radically different approach, arguably simpler than existing solutions. Instead of arranging the nodes into supernodes (and paying a price in complexity and runtime for their maintenance), we establish random connections between the nodes by performing short random walks of constant length. Each node starts a small number of short random walks, connects itself with the respective endpoints, and drops all other connections. Then, it repeats the procedure on the newly obtained graph.
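The high-level loop can be sketched as a centralized Python simulation. This is a simplified illustration under stated assumptions, not the article's algorithm: the parameter names `walks_per_node` and `walk_length` are hypothetical, and the degree regularization and lazy self-loops that the analysis relies on are omitted. In particular, this toy version has no safeguard against disconnecting the graph when too few walks are used.

```python
import random
from collections import deque

def random_walk_round(adj, walks_per_node, walk_length, rng):
    """One rewiring round of the high-level idea (simplified sketch).

    adj maps each node to a list of neighbors (undirected multigraph, every
    edge stored in both directions, no isolated nodes). Each node starts
    `walks_per_node` walks of `walk_length` steps and connects to every
    endpoint; all old edges are dropped.
    """
    new_adj = {v: [] for v in adj}
    for v in adj:
        for _ in range(walks_per_node):
            cur = v
            for _ in range(walk_length):
                cur = rng.choice(adj[cur])
            # Store the new edge in both directions to keep the graph undirected.
            new_adj[v].append(cur)
            new_adj[cur].append(v)
    return new_adj

def diameter(adj):
    """Largest BFS eccentricity, or float('inf') if the graph is disconnected."""
    best = 0
    for src in adj:
        dist = {src: 0}
        queue = deque([src])
        while queue:
            x = queue.popleft()
            for y in adj[x]:
                if y not in dist:
                    dist[y] = dist[x] + 1
                    queue.append(y)
        if len(dist) < len(adj):
            return float("inf")
        best = max(best, max(dist.values()))
    return best

# Usage: start from a 64-node path and apply a few rewiring rounds.
rng = random.Random(42)
graph = {i: [j for j in (i - 1, i + 1) if 0 <= j < 64] for i in range(64)}
for rnd in range(5):
    print(rnd, diameter(graph))
    graph = random_walk_round(graph, walks_per_node=8, walk_length=3, rng=rng)
```

The printed diameters depend on the seed and parameters; they only give a rough impression of how quickly random connections shrink the diameter of a long path.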

The approach is based on classical overlay maintenance algorithms for unstructured networks such as, for example, [41] or [29], as well as practical libraries for overlays like JXTA [48]. Note that our analysis significantly differs from [41] and [29], as we do not assume that nodes arrive one after the other. Instead, we assume an arbitrary initial graph with possibly small conductance. Using novel techniques by Kwok and Lau [39] combined with elementary probabilistic arguments, we show that short random walks incrementally increase the conductance of the graph. Once the conductance is constant, the graph’s diameter must be \(O(\log n)\). Note that such a graph can easily be transformed into many other overlay networks, such as a sorted ring, e.g., by performing a BFS and applying the algorithm of Aspnes and Wu [4] to the BFS tree or by using the techniques by Gmyr et al. [30].
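Since the argument is phrased in terms of conductance, a brute-force computation on toy graphs may help build intuition. The sketch assumes the standard volume-based definition \(\Phi (G) = \min _{S:\, \textrm{vol}(S) \le \textrm{vol}(V)/2} \vert c(S,\overline{S})\vert / \textrm{vol}(S)\); the formal definition used in the analysis is not part of this section and may be normalized differently. The function name is hypothetical.

```python
from itertools import combinations

def conductance(adj):
    """Brute-force conductance of a small undirected multigraph.

    adj maps each node to a list of neighbors, with every undirected edge
    listed in both directions. Minimizes cut(S) / vol(S) over all nonempty
    S with vol(S) <= vol(V) / 2. Exponential in n: toy graphs only.
    """
    nodes = list(adj)
    total_vol = sum(len(adj[v]) for v in nodes)
    best = float("inf")
    for k in range(1, len(nodes)):
        for subset in combinations(nodes, k):
            s = set(subset)
            vol = sum(len(adj[v]) for v in s)
            if vol == 0 or 2 * vol > total_vol:
                continue
            # Edges leaving S; self-loops stay inside S and are not counted.
            cut = sum(1 for v in s for w in adj[v] if w not in s)
            best = min(best, cut / vol)
    return best

cycle8 = {i: [(i - 1) % 8, (i + 1) % 8] for i in range(8)}
k4 = {i: [j for j in range(4) if j != i] for i in range(4)}
print(conductance(cycle8), conductance(k4))
```

For the 8-node cycle, the minimum is attained by four consecutive nodes (cut 2, volume 8), giving 0.25, whereas the complete graph on four nodes has conductance 2/3. The well-connected graph thus has both the larger conductance and the smaller diameter.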

1.1 Related work

The research on overlay construction is not limited to the examples in the introduction. Since practical overlay networks are often characterized by dynamic changes coming from churn or adversarial behavior, many papers aim to reach and maintain a valid topology of the network in the presence of faults. These works can be roughly categorized into two areas. On the one hand, there are so-called self-stabilizing overlay networks, which try to detect invalid configurations locally and recover the system into a stable state (see, e.g., [21] for a comprehensive survey). However, since most of these solutions focus on a very general context (such as asynchronous message passing and arbitrarily corrupted memory), only a few algorithms provably achieve polylogarithmic runtimes [14, 35], and most have no bounds on the communication complexity. On the other hand, there are overlay construction algorithms that use only polylogarithmic communication per node and proceed in synchronous rounds. This category includes algorithms that maintain an overlay topology under randomized or adversarial errors. These works focus on quickly reconfiguring the network to distribute the load evenly (under churn) or to reach an unpredictable topology (in the presence of an adversary) [9, 10, 18, 32]. However, a common assumption is that the overlay starts in some well-defined initial state. Gilbert et al. [28] combine fast overlay construction with adversarial churn. They present a construction algorithm that tolerates adversarial churn as long as the network remains connected and there eventually is a period of length \(\Omega (\log ^2 n)\) in which no churn happens. The exact length of this period depends on the goal topology. Further, Augustine et al. [6] consider \(\widetilde{O}(d)\)-time algorithms for so-called graph realization problems, which aim to construct graphs of any given degree distribution as fast as possible. They assume, however, that the network starts as a line, which makes the construction of the graphs considered in this work considerably more straightforward.

One of the main difficulties in designing algorithms that construct overlay networks quickly lies in the nodes’ limited communication capabilities. In a broader context, our algorithm therefore touches on a fundamental question in designing efficient algorithms for overlay networks: How can we exploit the fact that we can (theoretically) communicate with every node in the system but are restricted to sending and receiving \(O(\log n)\) messages? Recently, the impact of this restriction has been studied in the so-called Node-Capacitated Clique (NCC) model [7], in which the nodes are connected as a clique and can send and receive at most \(O(\log n)\) messages in each round. The authors present \(\widetilde{O}(a)\)-time algorithms, where a is the arboricity of G, for local problems such as MIS, matching, or coloring, an \(\widetilde{O}(D+a)\)-time algorithm for computing a BFS tree, and an \(\widetilde{O}(1)\)-time algorithm for the minimum spanning tree (MST) problem. Robinson [50] investigates the information the nodes need to learn to solve graph problems and derives a lower bound for constructing spanners in the NCC. Interestingly, his result implies that spanners with constant stretch require polynomial time in the NCC and are much harder to compute than MSTs. As pointed out in [20], the NCC can simulate PRAM algorithms efficiently under certain limitations. If the input graph’s degree is polylogarithmic, for example, we easily obtain polylogarithmic time algorithms for (minimum) spanning forests [17, 34, 49]. Notably, Liu et al. [43] recently proposed an \(O(\log D + \log \log _{m/n} n)\)-time algorithm for computing connected components in the CRCW PRAM model, which would likely also solve overlay construction. Assadi et al. [5] achieve a comparable result in the MPC model (which uses \(O(n^{\delta })\) communication per node) with a runtime logarithmic in the input graph’s spectral expansion. 
Note, however, that the NCC, the MPC model, and PRAMs are arguably more powerful than the overlay network model considered in this article, since nodes can reach any other node (or, in the case of PRAMs, processors can contact arbitrary memory cells). Simulating these models naively in our model would incur an \(\Omega (\log n)\) overhead, which is prohibitive if we aim for a runtime of \(O(\log n)\). Also, if the degree is unbounded (our assumption for the hybrid model), simulating PRAM algorithms, which typically have work \(\Theta (m)\), becomes completely infeasible. Furthermore, since many PRAM algorithms are very complicated, it is unclear whether their techniques can be applied to our model. Last, there is a hybrid network model by Augustine et al. [8] that combines global (overlay) communication with classical distributed models such as \(\textsf{CONGEST}\) or \(\textsf{LOCAL}\). Here, in a single round, each node can communicate with all its neighbors in a communication graph G and, in addition, can send and receive a limited number of messages to and from any node in the system. So far, most research on hybrid networks has focused on shortest-path problems [8, 20, 38]. For example, in general graphs, APSP can be solved exactly and optimally (up to polylogarithmic factors) in \(\widetilde{O}(\sqrt{n})\) time, and SSSP can be computed exactly in \(\widetilde{O}\left( \min \left\{ n^{2/5}, \sqrt{D}\right\} \right) \) time. Whereas even an \(\Omega (\sqrt{n})\)-approximation for APSP takes time \(\widetilde{\Omega }(\sqrt{n})\), a constant approximation of SSSP can be computed in \(\widetilde{O}(n^\varepsilon )\) time [8, 38]. Note that these algorithms require very high (local) communication. If the initial graph is very sparse, then SSSP can be solved in (small) polylogarithmic time and with limited local communication, exploiting the power of the NCC [20].

1.2 Our contribution

The main goal of this article is to construct a well-formed tree, which is a rooted tree of degree \(O(\log n)\) and diameter \(O(\log n)\) that contains all nodes of G. We chose this structure because any well-behaved overlay of logarithmic degree and diameter (e.g., butterfly networks, path graphs, sorted rings, trees, regular expanders, De Bruijn graphs, etc.) can be constructed from a well-formed tree in \(O(\log n)\) rounds, w.h.p. Distributed algorithms can use these overlays for common tasks like aggregation, routing, or sampling in logarithmic time. We present an algorithm that constructs such a tree in \(O(\log {n})\) time, w.h.p., in the P2P-\(\textsf{CONGEST}\) model. In addition to their initial neighborhood, we only assume that all nodes know an approximation of \(\log {n}\), i.e., a very loose polynomial upper bound on n, the number of nodes. All nodes must know the same approximation, as we use it to synchronize between the different stages of the algorithm. More precisely, all nodes know a common value \(L \in \Theta (\log {n})\) that affects the runtime. Further, all nodes must know a common value \(\Lambda \in \Omega (\log n)\) that controls how many overlay edges the nodes create, which in turn affects the algorithm’s success probability. We believe that the common knowledge of these values is a realistic assumption, as a node may simply use the length of its identifier as an approximation of \(\log {n}\). Our main result is the following:

Theorem 1.1

(Main theorem) Let \(G = (V, E)\) be a weakly connected directed graph with degree O(d) and assume each node knows a runtime bound \(L \in \Theta (\log n)\) and security parameter \(\Lambda \in \Omega (\log n)\). There is a randomized algorithm in the P2P-\(\textsf{CONGEST}\) model that with probability \(1-e^{-\Theta (\Lambda )}\)

1. constructs a well-formed tree \(T_G = (V,T_V)\) in O(L) rounds, and

2. lets each node send at most \(O(d\Lambda )\) messages per round.

Note that for \(L,\Lambda \in \Theta (\log n)\), the algorithm completes in \(O(\log n)\) time, and each node sends \(O(d\log n)\) messages per round, w.h.p. We present the promised algorithm in Sect. 3. Note that we can use techniques from [30] or [4] to further refine our construction and reduce the degree of the well-formed tree to O(1). However, since the main focus of this article is the novel idea of using random walks, we omit further details here and refer the reader to [30] and [4].

Our result comes with various applications and implications for several distributed computation problems. First, we point out the following immediate implications that simply follow from the fact that any initial overlay topology can be turned into a well-formed overlay in \(O(\log n)\) time.

1. Every monitoring problem presented in [30] where the goal is to observe the properties of the input graph can be solved in \(O(\log n)\) time, w.h.p., instead of \(O(\log ^2 n)\) deterministically. These problems include monitoring the graph’s node and edge count, its bipartiteness, and the approximate (and even exact) weight of an MST.

2. For the churn-resistant overlays from [6, 9, 10, 18, 32], the common assumption is that the graph starts in a well-initialized overlay. This assumption can be dropped.

3. For most algorithms presented for the NCC (and hybrid networks that model the global network by the NCC) [7, 8, 20], the rather strong assumption that all node identifiers are known may be dropped. Instead, suppose the initial knowledge graph has degree \(O(\log n)\). In that case, we can construct a butterfly network in \(O(\log n)\) time, which suffices for most primitives to work (note that all presented algorithms have a runtime of \(\Omega (\log n)\) anyway).

In Sect. 4, we then give further applications of the algorithm in the hybrid model. For an (undirected) graph G with arbitrary degree, we show how to compute connected components (cf. Sect. 4.1), a maximal independent set (cf. Sect. 4.2), a spanning tree (cf. Sect. 4.3), and biconnected components (cf. Sect. 4.4). As already pointed out, all of the following algorithms can be performed in the hybrid network model of Augustine et al. [8], which yields a variety of novel contributions for these networks. The local capacity of each edge is O(1), which corresponds to the classic \(\textsf{CONGEST}\) model. For each algorithm, we give a bound on the required global capacity. Note that our algorithms can likely be optimized to require a smaller global capacity using more sophisticated techniques. We remark that all of the following algorithms can be adapted to achieve the same runtimes in the NCC\(_0\) model if the initial degree is constant. In the following, we briefly summarize the results.

1.2.1 Connected components

As a first application of our algorithm, in Sect. 4.1, we show how to establish a well-formed tree on each connected component of G (if G is not connected initially).

Theorem 1.2

Let \(G = (V, E)\) be a directed graph, and assume that every connected component has a (known) size of \(O(n')\). There is a randomized algorithm that constructs a well-formed tree on each connected component of (the undirected version of) G in \(O(\log {n'})\) rounds, w.h.p., in the hybrid model. The algorithm requires global capacity \(O(\log ^2 n)\), w.h.p.

If the graph is connected, i.e., there is exactly one component with n nodes, this theorem simply translates Theorem 1.1 to the hybrid model. Note that in the hybrid model, a node \(v \in V\) can no longer create \(O(d(v)\log n)\) overlay edges, which was possible in the P2P-\(\textsf{CONGEST}\) model. Thus, high-degree nodes cannot simply simulate the algorithm from before. Sect. 4.1 therefore presents an adaptation of our main algorithm that circumvents the problems introduced by potentially high node degrees. Here, we first need to transform the graph into a sparse spanner using the efficient spanner construction of Miller et al. [46], later refined by Elkin and Neiman [19]. In their algorithm, each node \(v \in V\) draws an exponential random variable \(\delta _v\) and broadcasts it to all nodes in the graph. Let \(u^*_v\) be the node that maximizes \(\delta _{u^*_v} - \textsf{dist}(u^*_v,v) \), where \(\textsf{dist}(u^*_v,v) \) is the hop distance between \(u^*_v\) and v. Then, v joins the cluster of \(u^*_v\) by storing its identifier and adding the first edge over which it received the broadcast from \(u^*_v\) to the spanner. Finally, the nodes share the identifier of their cluster with their neighbors and add edges between the clusters to obtain a connected graph. It is known that the resulting subgraph of G has very few edges, as each node has only a few neighbors in different clusters. This follows from fundamental properties of the exponential distribution.

We show that if the size of each connected component in G is bounded by \(n'\), it suffices to draw variables \(\delta _v\) that are conditioned on being smaller than \(4\log {n'}\), i.e., truncated exponential random variables. With this small change, we speed up the algorithm to \(O(\log {n'})\) rounds while still producing a sparse subgraph with few edges. This graph can then be rearranged into a connected \(O(\log n)\)-degree network, allowing us to apply our main algorithm of Theorem 1.1 with parameters \(L \in O(\log {n'})\) and \(\Lambda \in O(\log {n})\). Note that the message complexity per node is still logarithmic in n, the size of the original graph, and not in \(n'\).
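The clustering step can be simulated centrally on small graphs. This is a sketch under stated assumptions: the rate `beta` is a hypothetical parameter, the logarithm is taken to be natural, truncation is implemented by rejection sampling, ties for the "first edge" are broken by the smallest neighbor identifier, and the inter-cluster edges of the final step are not computed. All function names are hypothetical.

```python
import math
import random
from collections import deque

def bfs_dist(adj, src):
    """Hop distances from src (undirected graph as adjacency lists)."""
    dist = {src: 0}
    queue = deque([src])
    while queue:
        x = queue.popleft()
        for y in adj[x]:
            if y not in dist:
                dist[y] = dist[x] + 1
                queue.append(y)
    return dist

def truncated_exp(rng, beta, bound):
    """Exp(beta) sample conditioned on being smaller than bound (rejection)."""
    while True:
        x = rng.expovariate(beta)
        if x < bound:
            return x

def exp_shift_clusters(adj, beta, n_prime, rng):
    """Centralized sketch of exponential-shift clustering with truncation.

    Each node u draws delta[u] < 4 ln(n'); each node v joins the node u that
    maximizes delta[u] - dist(u, v). parent[v] is a neighbor of v one hop
    closer to v's center (None for centers); these are the intra-cluster
    spanner edges.
    """
    bound = 4 * math.log(n_prime)
    delta = {u: truncated_exp(rng, beta, bound) for u in adj}
    dist = {u: bfs_dist(adj, u) for u in adj}  # all-pairs BFS: toy sizes only
    cluster, parent = {}, {}
    for v in adj:
        # Candidates are all nodes in v's connected component.
        center = max((u for u in adj if v in dist[u]),
                     key=lambda u: delta[u] - dist[u][v])
        cluster[v] = center
        if center == v:
            parent[v] = None
        else:
            # "First edge" of the broadcast: smallest-id neighbor one hop closer.
            parent[v] = min(w for w in adj[v]
                            if dist[center].get(w) == dist[center][v] - 1)
    return delta, cluster, parent

# Usage on a 4 x 4 grid (nodes 0..15).
grid = {4 * i + j: [4 * a + b for a, b in ((i - 1, j), (i + 1, j), (i, j - 1), (i, j + 1))
                    if 0 <= a < 4 and 0 <= b < 4]
        for i in range(4) for j in range(4)}
delta, cluster, parent = exp_shift_clusters(grid, 0.5, 16, random.Random(7))
print(sorted(set(cluster.values())))
```

With continuous random variables, ties occur with probability zero, so every cluster contains its center and every parent lies in the same cluster as its child; the parent edges therefore form one tree per cluster.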

1.2.2 Maximal independent set (MIS)

In the MIS problem, we ask for a set \(S \subseteq V\) such that (1) no two nodes in S are adjacent in the initial graph G and (2) every node \(v \in V \setminus S\) has a neighbor in S. We present an efficient MIS algorithm that combines the shattering technique [12, 23] with our overlay construction algorithm to solve the MIS problem in almost \(O(\log d)\) time, w.h.p. This technique shatters the graph into small components of undecided nodes in \(O(\log d)\) time, w.h.p. We then compute a well-formed tree of depth \(O(\log {d}+\log \log n)\) in each of these small components. This takes \(O(\log {d}+\log \log n)\) rounds, w.h.p., using the algorithm of Theorem 1.2. In each component, we can now independently compute MIS solutions using \(O(\log {n})\) parallel executions of the \(\textsf{CONGEST}\) algorithm by Metivier et al. [47]. As we will see, at least one of these executions must finish after \(O(\log d +\log \log n)\) rounds, w.h.p., so the nodes only need to agree on a solution. The problem is that the nodes cannot locally check which executions have finished. However, we can use our well-formed tree to broadcast information on the executions to all nodes in the component in \(O(\log {d}+\log \log n)\) time. This leads to an \(O(\log d + \log \log n)\) time algorithm, where d is the initial graph’s degree. In Sect. 4.2, we provide the necessary details omitted in this overview and show that:

Theorem 1.3

Let \(G = (V, E)\) be a weakly connected directed graph. There is a randomized algorithm that computes an MIS of G in \(O(\log d + \log \log n)\) rounds, w.h.p., in the hybrid model. The algorithm requires global capacity \(O(\log ^2 n)\), w.h.p.
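The randomized \(\textsf{CONGEST}\) building block can be illustrated by a centralized simulation in the spirit of Metivier et al. [47]: every undecided node draws a random value, local minima join the MIS, and their neighbors drop out. This sketch models a single execution only; the shattering phase, the \(O(\log n)\) parallel executions, and the agreement via the well-formed tree are not modeled, and the function name is hypothetical.

```python
import random

def random_priority_mis(adj, rng):
    """Centralized simulation of a random-priority MIS algorithm.

    adj maps each node to a set of neighbors (simple undirected graph, no
    self-loops). In every round, each undecided node draws a uniform value;
    nodes whose value is smaller than that of every undecided neighbor join
    the MIS, and their undecided neighbors drop out. Returns (mis, rounds).
    """
    undecided, mis, rounds = set(adj), set(), 0
    while undecided:
        rounds += 1
        value = {v: rng.random() for v in undecided}
        winners = {v for v in undecided
                   if all(value[v] < value[w] for w in adj[v] if w in undecided)}
        mis |= winners
        # Winners and their undecided neighbors are decided from now on.
        decided = set(winners)
        for v in winners:
            decided.update(w for w in adj[v] if w in undecided)
        undecided -= decided
    return mis, rounds

# Usage on a random graph with 30 nodes.
rng = random.Random(3)
n = 30
adj = {v: set() for v in range(n)}
for v in range(n):
    for w in range(v + 1, n):
        if rng.random() < 0.15:
            adj[v].add(w)
            adj[w].add(v)
mis, rounds = random_priority_mis(adj, rng)
print(sorted(mis), rounds)
```

Two adjacent nodes can never both be strict local minima, so the output is independent; every removed node is either a winner or a neighbor of one, so it is also maximal.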

1.2.3 Spanning trees

A spanning tree \((V, E_S)\) is a subgraph of G with \(n-1\) edges that connects all its nodes. In Sect. 4.3, we compute a (not necessarily minimum) spanning tree of the initial graph G. We show how to obtain this spanning tree by unwinding the random walks over which the additional edges that make up our well-formed tree have been established. More precisely, for each edge (v, w) used by our algorithm, the identifier of w must have been sent to v along some random walk \((v_1 = w, \dots , v_\ell = v)\). Given that (v, w) is part of the spanning tree, we show how to replace (v, w) by some edge \((v_i,v_{i+1})\). By recursively applying this trick, we show that:

Theorem 1.4

Let \(G = (V, E)\) be a weakly connected directed graph. There is a randomized algorithm that constructs a spanning tree of (the undirected version of) G in \(O(\log n)\) rounds, w.h.p., in the hybrid model. The algorithm requires global capacity \(O(\log ^2 n)\), w.h.p.

We remark that it is unclear whether our algorithm also helps compute a minimum spanning tree, which is easily possible with earlier overlay construction algorithms. It seems that in order to do so, we would need different techniques.
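The unwinding idea can be illustrated by a centralized, single-level sketch. Because consecutive nodes of each walk are adjacent in G, the walks behind the overlay edges connect everything that the overlay connects, and a union-find pass keeps only the walk edges that merge two components. The data layout `(v, w, walk)` and the function name are hypothetical; the recursive, distributed replacement of Sect. 4.3 is not modeled here.

```python
def unwind_to_spanning_tree(nodes, overlay_edges):
    """Extract a spanning tree of G from the walks behind overlay edges.

    overlay_edges: list of (v, w, walk), where walk = [w, ..., v] is the path
    in G along which w's identifier reached v. Kruskal-style union-find keeps
    a walk edge only if it merges two components.
    """
    parent = {v: v for v in nodes}

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving
            x = parent[x]
        return x

    tree = []
    for _v, _w, walk in overlay_edges:
        for a, b in zip(walk, walk[1:]):
            ra, rb = find(a), find(b)
            if ra != rb:
                parent[ra] = rb
                tree.append((a, b))
    return tree

# Usage: G has edges 0-1, 1-2, 2-3, 3-4, 1-3; the overlay edges span all nodes.
g_edges = {(0, 1), (1, 2), (2, 3), (3, 4), (1, 3)}
overlay = [(4, 0, [0, 1, 3, 4]), (2, 0, [0, 1, 2]), (1, 4, [4, 3, 1])]
tree = unwind_to_spanning_tree(range(5), overlay)
print(tree)
```

If the overlay edges form a spanning tree of the overlay, the selected walk edges form a spanning tree of G with exactly \(n-1\) edges.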

1.2.4 Biconnected components

We call an undirected graph H biconnected if every two nodes of H are connected by two node-disjoint paths. Intuitively, biconnected graphs are guaranteed to remain connected even if a single node fails. Our goal is to find the biconnected components of G, which are the maximal biconnected subgraphs of G. Note that cut vertices, i.e., nodes whose removal increases the number of connected components, are contained in multiple biconnected components. In Sect. 4.4, we show how to adapt the PRAM algorithm of Tarjan and Vishkin [56] for computing the biconnected components of a graph to the hybrid model. The algorithm relies on a spanning tree computation, which allows us to use Theorem 1.4 to achieve a runtime of \(O(\log n)\), w.h.p.

Theorem 1.5

Let \(G = (V, E)\) be a weakly connected directed graph. There is a randomized algorithm that computes the biconnected components of (the undirected version of) G in \(O(\log n)\) rounds, w.h.p., in the hybrid model. Furthermore, the algorithm computes whether G is biconnected and, if not, determines its cut nodes and bridge edges. The algorithm requires global capacity \(O(\log ^5 n)\), w.h.p.
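To make the notions of cut nodes and bridge edges concrete, the following sketch uses the classical sequential DFS low-link technique of Hopcroft and Tarjan. This is a swapped-in sequential method for illustration only, not the Tarjan-Vishkin PRAM algorithm that Sect. 4.4 adapts; it is recursive and therefore only suitable for small graphs. The function name is hypothetical.

```python
def cut_vertices_and_bridges(adj):
    """Sequential DFS low-link computation (Hopcroft-Tarjan style).

    adj maps each node to an iterable of neighbors of a simple undirected
    graph. Returns (cut_vertices, bridges), bridges as frozensets {u, w}.
    """
    disc, low = {}, {}
    cuts, bridges = set(), set()
    timer = [0]

    def dfs(u, parent):
        disc[u] = low[u] = timer[0]
        timer[0] += 1
        children = 0
        for w in adj[u]:
            if w == parent:
                continue
            if w in disc:
                low[u] = min(low[u], disc[w])  # back edge to an ancestor
            else:
                children += 1
                dfs(w, u)
                low[u] = min(low[u], low[w])
                # w's subtree cannot bypass u: u separates it (non-root case).
                if parent is not None and low[w] >= disc[u]:
                    cuts.add(u)
                # w's subtree cannot even reach u via a back edge: bridge.
                if low[w] > disc[u]:
                    bridges.add(frozenset((u, w)))
        # A DFS root is a cut vertex iff it has at least two DFS children.
        if parent is None and children > 1:
            cuts.add(u)

    for v in adj:
        if v not in disc:
            dfs(v, None)
    return cuts, bridges

# Usage: two triangles sharing node 2, plus a pendant edge 4-5.
adj = {0: [1, 2], 1: [0, 2], 2: [0, 1, 3, 4], 3: [2, 4], 4: [2, 3, 5], 5: [4]}
cuts, bridges = cut_vertices_and_bridges(adj)
print(cuts, bridges)
```

In this example, node 2 belongs to both triangles and node 4 to the second triangle and the bridge {4, 5}, matching the remark that cut vertices lie in multiple biconnected components.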

2 Mathematical preliminaries

Before we formally define the problems considered in this article, we first review some basic concepts from graph theory, probability theory, and in particular, the analysis of random walks.

2.1 Basic terms from graph theory

We begin with some general terms that will be used throughout this article. Recall that \(G = (V,E)\) is a directed graph with \(n := \vert V\vert \) nodes and \(m := \vert E\vert \) edges. A node’s outdegree denotes the number of outgoing edges, i.e., the number of identifiers it stores. Analogously, its indegree denotes the number of incoming edges, i.e., the number of nodes that store its identifier. A node’s degree is the sum of its in- and outdegree, and the graph’s degree is the maximum degree of any node, which we denote by d. We say that a graph is weakly connected if there is a (not necessarily directed) path between all pairs of nodes. For a node pair \(v,w \in V\), we let \(\textsf{dist}(v,w)\) be the length of a shortest path between v and w in the undirected version of G, i.e., the minimum number of hops to get from v to w. A graph’s diameter is the length of the longest shortest path in G. Last, for a graph G we define \(G^{\ell } := (V,E_{\ell })\) to be the \(\ell \)-walk graph of G, i.e., \(G^\ell \) is the multigraph where each edge \((v,w) \in E_{\ell }\) corresponds to an \(\ell \)-step walk from v to w in G. Note that a walk can visit the same edge more than once and several walks may connect the same pair of nodes, so \(G^{\ell }\) is in general a multigraph (possibly with self-loops). For a subset \(S \subseteq V\), we denote \(\overline{S} := V \setminus S\). We define the cut \(c(S,\overline{S})\) as the set of all edges \((v,w) \in E\) with \(v \in S\) and \(w \in \overline{S}\). We define the number of edges that cross a cut \(c(S,\overline{S})\) as \(O_S:=\vert c(S,\overline{S})\vert \).
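For small graphs, \(G^\ell \) and \(O_S\) can be enumerated directly, which helps when checking these definitions by hand. In this sketch (hypothetical helper names), a graph is a dictionary mapping each node to the list of its out-neighbors, with repeated entries standing for parallel edges.

```python
def walk_graph(adj, ell):
    """Edge multiset of the ell-walk graph G^ell (toy graphs only).

    Every ell-step walk from v to w in G yields one edge (v, w), so
    parallel edges and self-loops are expected in G^ell.
    """
    edges = []
    for v in adj:
        endpoints = [v]
        for _ in range(ell):
            # Extend every walk by one step; multiplicities count distinct walks.
            endpoints = [w for x in endpoints for w in adj[x]]
        edges.extend((v, w) for w in endpoints)
    return edges

def cut_size(edges, S):
    """O_S: number of edges (v, w) with v in S and w outside S."""
    return sum(1 for v, w in edges if v in S and w not in S)

# Usage: the 4-cycle 0-1-2-3-0, each undirected edge stored in both directions.
c4 = {0: [1, 3], 1: [0, 2], 2: [1, 3], 3: [2, 0]}
e2 = walk_graph(c4, 2)
print(len(e2), e2.count((0, 0)), e2.count((0, 2)))  # 16 2 2
print(cut_size(walk_graph(c4, 1), {0, 1}))          # 2
```

In the 2-walk graph of the 4-cycle, node 0 has two self-loops (walks 0-1-0 and 0-3-0) and two parallel edges to node 2, illustrating why \(G^\ell \) must be treated as a multigraph.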

2.2 Probability theory and combinatorics

In this section, we establish well-known bounds and tail estimates from probability theory and useful facts from combinatorics that help us analyze certain random events in our algorithms. The first bound is Markov’s inequality, which estimates the probability of a random variable reaching a certain value based on its expectation. It holds:

Lemma 2.1

(Markov’s inequality) Let X be a non-negative random variable and \(a>0\). Then it holds:

\[\textbf{Pr}\left[ X \ge a\right] \le \frac{\mathbb {E}(X)}{a}.\]

While this inequality applies to any non-negative random variable, it is often far from tight. For more precise bounds, we heavily use the well-known Chernoff bound, another standard tool for analyzing distributed algorithms. In particular, we will use the following version:

Lemma 2.2

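(Chernoff bound) Let \(X = \sum _{i=1}^n X_i\) for independent random variables \(X_i \in \{0,1\}\), and let \(\mathbb {E}(X) \le \mu _H\) and \(\delta \ge 1\). Then

\[\textbf{Pr}\left[ X \ge (1+\delta )\mu _H\right] \le e^{-\delta \mu _H/3}.\]

Similarly, for \(\mathbb {E}(X) \ge \mu _L\) and \(0 \le \delta \le 1\) we have

\[\textbf{Pr}\left[ X \le (1-\delta )\mu _L\right] \le e^{-\delta ^2\mu _L/2}.\]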

Further, we use the union bound, which helps us bound the probability of many (possibly correlated) events as long as each of these events has a very small probability of happening. It holds:

Lemma 2.3

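(Union bound) Let \(\mathcal {B} := B_1, \dots , B_m\) be a set of m (possibly dependent) events. Then, the probability that any of the events in \(\mathcal {B}\) happens can be bounded as follows:

\[\textbf{Pr}\left[ \bigcup _{i=1}^{m} B_i\right] \le \sum _{i=1}^{m} \textbf{Pr}\left[ B_i\right] .\]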
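As a worked instance, suppose there are \(m = n^{c}\) bad events, each occurring with probability at most \(n^{-c'}\). The union bound then yields the first bound below, whereas for the \(2^n\) subsets of V the same per-event bound is useless, as the second expression shows:

```latex
\textbf{Pr}\left[ \bigcup_{i=1}^{n^{c}} B_i \right]
  \le \sum_{i=1}^{n^{c}} \textbf{Pr}\left[ B_i \right]
  \le n^{c} \cdot n^{-c'} = n^{-(c'-c)},
\qquad
2^{n} \cdot n^{-c'} \ge 1 \quad \text{for all sufficiently large } n.
```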

This bound is particularly helpful when dealing with a polynomial number of bad events, say \(n^{c}\) many, each of which does not happen with high probability, say with probability at least \(1-n^{-c'}\) for some tunable constant \(c'\). If we choose this constant \(c'\) big enough, the union bound trivially implies that the probability of any bad event happening is at most \(n^{-c''}\) for the constant \(c'' := c'-c\). Thus, if we can show that a specific event holds for a single node w.h.p., the union bound implies that it holds for all nodes w.h.p. However, if the number of events in question is superpolynomial, i.e., bigger than \(n^c\) for any constant c, the union bound alone is not enough to show that these events do not happen w.h.p. In this work, we need to make statements about events that correspond to all possible subsets of nodes of a random graph. Since there are exponentially many of these subsets, we need to apply the union bound more carefully. Indeed, we can show the following technical lemma:

Lemma 2.4

(Cut-based union bound) Let \(G := (V,E)\) be a (multi-)graph with n nodes and m edges, and let \(\mathcal {B} := \{\mathcal {B}_S \mid S \subseteq V \wedge \vert S\vert \le n/2\}\) be a set of bad events.

Suppose that the following three properties hold:

1. G has at most \(m \in O(n^{c_1})\) edges for some constant \(c_1>1\).

2. For each \(\mathcal {B}_S\) it holds \({\textbf{Pr}\left[ \mathcal {B}_S\right] } \le e^{-c_2 O_S}\) for some constant \(c_2>0\).

3. The minimum cut of G is at least \(\Lambda := 4\frac{c_1}{c_2}c_3\log n\) edges for some tunable constant \(c_3>1\).

Then, the probability any of the events in \(\mathcal {B}\) happens can be bounded by:

In other words, w.h.p. no event from \(\mathcal {B}\) occurs.

Proof

The core of this lemma is a celebrated result of Karger [36] that bounds the number of cuts with at most \(\alpha \Lambda \) crossing edges by \(O(n^{2\alpha })\) (and therefore also the number of subsets \(S \subset V\) with \(\vert S\vert \le n/2\), as one side of each cut must have at most n/2 nodes). More precisely, it holds:

Theorem 2.1

(Theorem 3.3 in [36], simplified) Let G be an undirected, unweighted (multi-)graph and let \(\Lambda >1\) be the size of a minimum cut in G. For an even parameter \(\alpha \ge 1\), the number of cuts with at most \(\alpha \Lambda \) edges is bounded by \(n^{2\alpha } \).

Thus, if the probability of a bad event for a set S exponentially depends on \(O_S\) (and not some constant c), a careful application of the union bound will give us the desired result. The idea behind the proof is to divide all subsets into groups based on the number of their outgoing edges. Then, we use Karger’s Theorem to bound the number of sets in a group and use the union bound for each group individually.

More precisely, let \(\textsf{Pow}(V)\) denote the set of all subsets of V. Then, we define \(\mathcal {S}_\alpha \subseteq \textsf{Pow}(V)\) to be the set of all sets S whose cut has size \(O_S \in [\alpha \Lambda ,2\alpha \Lambda )\). Using this definition, we can show that the following holds by using the union bound and regrouping the sum:

Note that the upper limit \(\frac{m}{\Lambda }\) of the outermost sum is derived from the fact that at most m edges may cross any cut in the graph. Further, note that for each \(\alpha > 1\) it holds that \(\mathcal {S}_{\alpha } \subset \{S \subset V \mid O_S \le 2\alpha \Lambda \}\) by definition and therefore \(\vert \mathcal {S}_{\alpha }\vert \le \vert \{S \subset V \mid O_S \le 2\alpha \Lambda \}\vert \). Now we can apply Theorem 2.1 and see that

Here, inequality (8) follows from Theorem 2.1, and everything else from the definitions of \(\mathcal {S}_{\alpha }\) and \(\mathcal {B}_S\). Finally, our specific choice of \(\Lambda \ge 4\frac{c_1}{c_2}c_3\log n\) comes into play. Plugging it into the exponent of our bound, we get:

To complete the proof, we need to bound \(\frac{m}{\Lambda }\). Per definition, it holds that \(m \le n^{c_1}\). Back in the formula, we get

This proves the lemma. \(\square \)

2.3 Random walks on regular graphs

Finally, we observe the behavior of (short) random walks on regular graphs and establish useful definitions and results. Starting with the most basic definition, a random walk on a graph \(G := (V, E)\) is a stochastic process \(\left( v_i \right) _{i \in \mathbb {N}}\) that starts at some node \(v_0 \in V\) and in each step moves to some neighbor of the current node. If G is \(\Delta \)-regular, the probability of moving from v to its neighbor w is \(\frac{e(v,w)}{\Delta }\). Here, e(v, w) denotes the number of edges between v and w, as G is a multigraph. We say that a \(\Delta \)-regular graph G (and the corresponding walk on its nodes) is lazy if each node has at least \(\frac{\Delta }{2}\) self-loops (and therefore a random walk stays at its current node with probability at least \(\frac{1}{2}\) in each step). For \(v,w \in V\) let \(X^\ell _v(w, G)\) be the indicator for the event that an \(\ell \)-step random walk in G which started in v ends in w. Analogously, let \(X^1_v(w, G^\ell )\) be the indicator that a 1-step random walk in \(G^\ell \) which started in v ends in w. If we consider a fixed node v that is clear from the context, we may drop the subscript and write \(X^1(w, G^\ell )\) instead. Further, let \(P_\ell (v,w)\) be the exact number of walks of length \(\ell \) between v and w in G. Note that it holds \(P_1(v,w) = e(v,w)\). Given these definitions, the probability to move from v to w in \(G^\ell \) is given by the following lemma.

Lemma 2.5

Let G be a \(\Delta \)-regular graph and \(G^\ell \) its \(\ell \)-walk graph for some \(\ell > 1\). Then it holds:

Proof

The statement can be proved by induction over \(\ell \), the length of the walk. For the base case, we need to show that a 1-step random walk in G is equivalent to picking an outgoing edge in \(G^1 := G\) uniformly at random. This follows directly from the definition of a random walk. Now suppose that performing an \((\ell -1)\)-step random walk in G is equivalent to performing a 1-step walk in \(G^{\ell -1}\). Consider a node \(w \in V\) and let \(N_w\) denote the set containing its neighbors in G and w itself. By the law of total probability, it holds:

Using the induction hypothesis, we can substitute \({\textbf{Pr}\left[ X^{\ell -1}(u,G) = 1\right] }\) for \({\textbf{Pr}\left[ X^{1}(u,G^{\ell -1}) = 1\right] }\) and get:

Recall that G is a multigraph, so there can be more than one edge between u and w, and e(u, w) denotes the number of edges between u and w for every \(u \in N_w\). Since we defined that \(w \in N_w\), the value e(w, w) counts w’s self-loops. Since G is \(\Delta \)-regular, the probability that a random walk at node u moves to w is exactly \(\frac{e(u,w)}{\Delta }\). Back in the formula, we get:

Finally, note that \(\sum _{u \in N_w} {P_{\ell -1}(v,u)}\cdot {e(u,w)}\) counts all walks of length exactly \(\ell \) from v to w in G. This follows because each walk \(P := (e_1, \ldots , e_\ell )\) from v to w can be decomposed uniquely into a walk \(P' := (e_1, \ldots , e_{\ell -1})\) of length \(\ell -1\) from v to some neighbor of w (or w itself) and the final edge (or self-loop) \(e_\ell \). Thus, it follows that:

This was to be shown.

\(\square \)

In other words, the multigraph \(G^\ell \) is \(\Delta ^\ell \)-regular and has edge (v, w) for every walk of length \(\ell \) between v and w.
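The following Python sketch is a small sanity check of Lemma 2.5 on a toy graph. It counts the walks \(P_\ell (v,w)\) by extending shorter walks with one final edge, as in the decomposition used in the proof, and compares \(\frac{P_\ell (v,w)}{\Delta ^\ell }\) with the exact endpoint distribution of an \(\ell \)-step random walk. The example graph (a triangle whose nodes each have two self-loops, so \(\Delta = 4\)) and all function names are illustrative assumptions.

```python
from fractions import Fraction

# Hypothetical toy graph: adj[v][w] = e(v, w), self-loops on the diagonal (lazy, 4-regular).
ADJ = [[2, 1, 1],
       [1, 2, 1],
       [1, 1, 2]]
DELTA = 4

def walk_counts(adj, ell):
    """P_ell(v, w): number of walks of length ell from v to w."""
    n = len(adj)
    P = [[int(v == w) for w in range(n)] for v in range(n)]  # one walk of length 0 from v to v
    for _ in range(ell):
        # Each longer walk is a shorter walk to some u followed by a final edge (u, w).
        P = [[sum(P[v][u] * adj[u][w] for u in range(n)) for w in range(n)]
             for v in range(n)]
    return P

def walk_distribution(adj, delta, v, ell):
    """Exact probability that an ell-step random walk from v ends in each node."""
    n = len(adj)
    dist = [Fraction(int(u == v)) for u in range(n)]
    for _ in range(ell):
        dist = [sum(dist[u] * Fraction(adj[u][w], delta) for u in range(n))
                for w in range(n)]
    return dist

def lemma_2_5_holds(adj, delta, ell):
    P = walk_counts(adj, ell)
    return all(walk_distribution(adj, delta, v, ell)[w] == Fraction(P[v][w], delta ** ell)
               for v in range(len(adj)) for w in range(len(adj)))

print(all(lemma_2_5_holds(ADJ, DELTA, ell) for ell in range(1, 6)))  # True
```

Since every row of the walk-count matrix sums to \(\Delta ^\ell \), the same computation also illustrates that \(G^\ell \) is \(\Delta ^\ell \)-regular.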

Our analysis will heavily rely on the conductance of the communication graph. The conductance of a set \(S \subset V\) is the ratio of the number of its outgoing edges to the number of all edges incident to its nodes. The conductance \(\Phi _{G}\) of a graph G is the minimum conductance over all subsets that contain at most n/2 nodes. Formally, the conductance is defined as follows:

Definition 1

(Conductance) Let \(G := (V,E)\) be a connected \(\Delta \)-regular graph and \(S \subset V\) with \(\vert S\vert \le \frac{\vert V\vert }{2}\) be any subset of G with at most half its nodes. Then, the conductance \(\Phi _{G}(S) \in (0,1)\) of S is

We further need the notion of small-set conductance, which is a natural generalization of conductance. Instead of denoting the minimum conductance of all sets of size at most n/2, the small-set conductance only considers sets of size at most \(\frac{\delta \vert V\vert }{2}\) for some \(\delta \in (0,1]\). Analogous to the conductance, it is defined as follows:

Definition 2

(Small-set conductance) Let \(G := (V,E)\) be a connected \(\Delta \)-regular graph and \(S \subset V\) with \(\vert S\vert \le \frac{\delta \vert V\vert }{2}\) be any subset of G. The small-set conductance \(\Phi _{\delta }\) of G is

We further need two well-known facts about the conductance. First, we see that we can relate the minimum conductance of a \(\Delta \)-regular graph to its minimum cut. It holds:

Lemma 2.6

(Minimum conductance) Let \(G := (V,E)\) be any \(\Delta \)-regular connected graph with minimum cut \(\Lambda \ge 1\). Then for all \(\delta \in (0,1]\) it holds:

Proof

Consider the set S with \(\vert S\vert \le \frac{\delta n}{2}\) that minimizes \(\Phi (S')\) among all sets \(S'\) with \(\vert S'\vert \le \frac{\delta }{2}n\). Then, by the definitions of \(\Phi _\delta (G)\), S, and \(\Lambda \), it holds that:

\(\square \)
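The definitions of (small-set) conductance can be checked by brute force on a toy graph. The Python sketch below assumes the standard form \(\Phi _G(S) = \frac{O_S}{\Delta \vert S\vert }\) for \(\Delta \)-regular graphs, where \(O_S\) is the number of edges leaving S, and checks the inequality \(\Phi _\delta (G) \ge \frac{2\Lambda }{\delta \Delta n}\), which follows from \(O_S \ge \Lambda \) and \(\vert S\vert \le \frac{\delta n}{2}\) along the lines of the proof above (we assume this is the form Lemma 2.6 takes). The ring graph and all function names are hypothetical.

```python
from fractions import Fraction
from itertools import combinations

def ring(n, lam, delta):
    """Hypothetical test graph: an n-cycle (n >= 3) with lam copies per edge, padded with self-loops."""
    adj = [[0] * n for _ in range(n)]
    for v in range(n):
        adj[v][(v + 1) % n] += lam
        adj[(v + 1) % n][v] += lam
    for v in range(n):
        adj[v][v] += delta - sum(adj[v])
    return adj

def outgoing(adj, S):
    """O_S: number of edges from nodes in S to nodes outside S."""
    return sum(adj[v][w] for v in S for w in range(len(adj)) if w not in S)

def conductance(adj, delta, S):
    return Fraction(outgoing(adj, S), delta * len(S))  # assumed Phi(S) = O_S / (delta |S|)

def small_set_conductance(adj, delta, frac):
    """Phi_frac(G): minimum of Phi(S) over nonempty S with |S| <= frac * n / 2."""
    n = len(adj)
    return min(conductance(adj, delta, set(S))
               for size in range(1, int(frac * n / 2) + 1)
               for S in combinations(range(n), size))

def min_cut(adj):
    n = len(adj)
    return min(outgoing(adj, set(S))
               for size in range(1, n) for S in combinations(range(n), size))

G = ring(6, lam=1, delta=4)          # 6-cycle with 2 self-loops per node
cut = min_cut(G)
for frac in (Fraction(1), Fraction(1, 2)):
    assert small_set_conductance(G, 4, frac) >= Fraction(2 * cut) / (frac * 4 * 6)
print(cut, small_set_conductance(G, 4, Fraction(1)))  # 2 1/6
```

On this ring the bound is tight for \(\delta = 1\): a contiguous arc of three nodes has only the two minimum-cut edges leaving it.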

Second, we show that a constant conductance implies a logarithmic diameter if the graph is regular. It holds:

Lemma 2.7

(High conductance implies low diameter) Let \(G := (V,E)\) be any lazy bi-directed \(\Delta \)-regular graph with conductance \(\Phi \), then the diameter of G is at most \(O(\Phi ^{-2}\log n)\).

Proof

We will prove this lemma by analyzing the distribution of random walks on G. Let \(v,w \in V\) be two nodes of G and let \(\ell >0\) be an integer. We denote by \(p^{\ell }(v,w)\in [0,1]\) the probability that an \(\ell \)-step random walk that starts in v ends in w. Note that \(p^{\ell }(v,w)>0\) implies that there must exist a walk of length \(\ell \) from v to w. Following this argument, if \(p^{\ell }(v,w)>0\) holds for all pairs \(v,w \in V\), then the graph’s diameter is at most \(\ell \). Thus, in the following, we will show that for \(\ell \in \Omega (\Phi _G^{-2}\log {n})\) we have \(p^{\ell }(v,w)>0\) for all pairs of nodes. First, we note that a sharp upper bound on the probabilities also implies a lower bound.

Claim 1

Let \(v \in V\) be a node with \(p^{\ell }(v,w) \le \frac{1}{n}+\frac{1}{n^2}\) for all \(w \in V\). Then, it holds

Proof

As each random walk must end at some node \(w \in V\), we have:

Together with our upper bound of \(\frac{1}{n}+\frac{1}{n^2}\), we can now derive the following lower bound

\(\square \)
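The arithmetic behind Claim 1 can be verified exactly: if every endpoint has probability at most \(\frac{1}{n}+\frac{1}{n^2}\), the other \(n-1\) endpoints absorb at most \((n-1)(\frac{1}{n}+\frac{1}{n^2}) = 1-\frac{1}{n^2}\) of the probability mass, which leaves at least \(\frac{1}{n^2}\) for any fixed w. This Python sketch (function name hypothetical) checks that value with exact fractions.

```python
from fractions import Fraction

def claim1_lower_bound(n):
    """Smallest p^ell(v, w) compatible with p^ell(v, x) <= 1/n + 1/n^2 for all x."""
    upper = Fraction(1, n) + Fraction(1, n * n)
    # The total mass is 1; the remaining n - 1 endpoints take at most (n - 1) * upper of it.
    return 1 - (n - 1) * upper

print([claim1_lower_bound(n) for n in (2, 3, 10)])
# [Fraction(1, 4), Fraction(1, 9), Fraction(1, 100)]
```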

Thus, a low enough maximal probability implies a positive lower bound. Our next goal is to find such a precise upper bound. We will use well-known concepts from the analysis of Markov chains to do this. We define \(\pi := \left( \pi _v\right) _{v \in V}\) as the stationary distribution of a random walk on G. For any \(\Delta \)-regular graph, it holds:

For a connected, bidirected, non-bipartite graph, the distribution of possible endpoints of a random walk converges towards its stationary distribution. For a fixed \(\ell \), we define the relative pointwise distance as:

This definition describes how far the distribution is from the stationary distribution after \(\ell \) steps. Given this definition, it is easy to see that the following claim holds:

Claim 2

Suppose that \(\rho _G(\ell ) < \frac{1}{n}\), then it holds for all \(v,w \in V\) that

Proof

For contradiction, assume that the statement is false. Then, there is a pair \(v',w' \in V\) with

for some \(c>1\). Further, it must hold that

This is the desired contradiction. \(\square \)

Thus, we will determine an upper bound for \(\rho _G(\ell )\) in the remainder. In an influential article, Jerrum and Sinclair proved that the relative pointwise distance after \(\ell \) steps is closely tied to the graph’s conductance. In particular, they showed that:

Lemma 2.8

(Theorem 3.4 in [54], simplified) Let G be lazy, regular, and connected. Further, let \(\pi \) be the stationary distribution of a random walk on G. Then for any node \(v \in V\), the relative pointwise distance satisfies

With \(\pi ^* := \max _{v \in V}\{\pi _v\}\)

In the original lemma, the underlying Markov chain must be ergodic, i.e., every state is reachable from every other state (and, since the chain is lazy, it is also aperiodic), and time-reversible, i.e., it holds \(p^\ell (v,w) = p^\ell (w,v)\). The first property is implied by the fact that the graph is connected, so every node is reachable. The latter follows from the facts that the graph is bidirected and regular, so it holds \(p_1(v,w) = \frac{e(v,w)}{\Delta } = \frac{e(w,v)}{\Delta } = p_1(w,v)\). Further, note that for our graph, we have \(\pi ^* = \frac{1}{n}\). Plugging this and \(\ell := 4\Phi ^{-2}\log {n}\) into the formula, we get:

Here, inequality (57) follows from the well-known fact that \(\left( 1-\frac{1}{x}\right) ^x \le e^{-1}\) for any \(x>1.5\), which clearly applies as \(\Phi _G^2 < 1\). Thus, following Claims 1 and 2, all probabilities are strictly positive after \(\ell \) steps of the random walk, and the diameter is at most \(\ell \). \(\square \)
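To see the numbers in this last step, the following Python sketch evaluates \(n\left( 1-\frac{\Phi ^2}{2}\right) ^\ell \) for \(\ell = 4\Phi ^{-2}\ln n\), i.e., the right-hand side of Lemma 2.8 with \(\pi ^* = \frac{1}{n}\). It assumes that the simplified lemma has the familiar Jerrum–Sinclair form \(\rho _G(\ell ) \le \frac{(1-\Phi ^2/2)^\ell }{\pi ^*}\) and reads log as the natural logarithm; the function name is hypothetical. It checks that the value stays below \(\frac{1}{n}\), which is the hypothesis of Claim 2.

```python
import math

def relative_distance_bound(phi, n):
    """n * (1 - phi^2/2)^ell with ell = 4 ln(n) / phi^2 (assumed form of Lemma 2.8, pi* = 1/n)."""
    ell = 4 * math.log(n) / phi ** 2
    return n * (1 - phi ** 2 / 2) ** ell

for phi in (0.05, 0.3, 0.9):
    for n in (10, 10 ** 3, 10 ** 6):
        # With x = 2 / phi^2 the bound equals n * ((1 - 1/x)^x)^(2 ln n) < n * e^(-2 ln n) = 1/n.
        assert relative_distance_bound(phi, n) < 1 / n
```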

For our analysis, it will be crucial to observe the (small-set) conductance of \(G^\ell \) for a constant \(\ell \). However, the standard Cheeger inequality (see, e.g., [53] for an overview), which is most commonly used to bound a graph’s conductance with the help of the graph’s eigenvalues, does not help us derive a meaningful lower bound for \(\Phi _{G^\ell }\). In particular, it only states that \(\Phi _{G^\ell } = \Theta (\ell \Phi _{G}^{2})\). Thus, it only provides a useful bound if \(\ell = \Omega (\Phi _{G}^{-1})\), which is too large for our purposes, as \(\Phi _{G}^{-1}\) is only constant if \(\Phi _{G}\) is constant. More recent Cheeger inequalities shown in [42] relate the conductance of smaller subsets to higher eigenvalues of the random walk matrix. At first glance, this seems helpful, as one could use these to show that at least the small sets become more densely connected and then continue the argument inductively. Still, even with this approach, constant-length walks are out of the question, as these new Cheeger inequalities introduce an additional tight \(O(\log n)\) factor in the approximation for these small sets. Thus, the random walks would need to be of length \(\Omega (\log n)\), which is still too long to achieve our bounds. Instead, we use the following result by Kwok and Lau [39], which states that \(\Phi _{G^\ell }\) improves even for constant values of \(\ell \). Given this bound, we can show that benign graphs increase their (expected) conductance from iteration to iteration. In the following, we prove Lemma 2.9 by outlining the proofs of Theorems 1 and 3 in [39]. It holds that:

Lemma 2.9

(Conductance of \(G^\ell \), based on Theorems 1 and 3 in [39]) Let \(G = (V,E)\) be any connected \(\Delta \)-regular lazy graph with conductance \(\Phi _{G}\) and let \(G^\ell \) be its \(\ell \)-walk graph. For a set \(S \subset V\), define \(\Phi _{G^\ell }(S)\) as the conductance of S in \(G^\ell \). Then, it holds:

Further, if \(\vert S\vert \le \delta n\) for any \(\delta \in (0,\frac{1}{2}]\), we have

Proof

Before we go into the details, we need another batch of definitions from the study of random walks and Markov chains. Let \(G:=(V,E)\) be a \(\Delta \)-regular, lazy graph and let \(A_G \in \mathbb {R}^{n \times n}\) be the stochastic random walk matrix of G. Each entry \(A_G(v,w)\) of the matrix has the value \(\frac{e(v,w)}{\Delta }\), where e(v, w) denotes the number of edges between v and w (or self-loops if \(v=w\)). Likewise, \(A^\ell _G\) is the random walk matrix of \(G^\ell \), where each entry has the value \(\frac{P_\ell (v,w)}{\Delta ^\ell }\). Note that both \(A_G\) and \(A^\ell _G\) are doubly stochastic, meaning that each of their rows and columns sums up to 1. For these types of weighted matrices, Kwok and Lau define the expansion \(\varphi (S)\) of a subset \(S \subset V\) as follows:

For regular graphs (and only those), this value is equal to the conductance \(\Phi _{G}(S)\) of S, which we defined before. The following elementary calculation verifies this claim:

Therefore, the claims that Kwok and Lau make for the expansion also hold for the conductance of regular graphs (see Footnote 5). The proof in [39] is based on the function \(C^{(\ell )}(\vert S\vert )\) introduced by Lovász and Simonovits [44]. Consider a set \(S \subset V\); then Lovász and Simonovits define the following curve that bounds the distribution of random walk probabilities for the nodes of S.

Here, the vector \(p_S\) is the so-called characteristic vector of S with \((p_S)_i = \frac{1}{\vert S\vert }\) for each \(v_i \in S\) and 0 otherwise. Further, the term \((A^{\ell } p_S)_i\) denotes the \(i^{th}\) entry of the vector \(A^{\ell } p_S\). Lovász and Simonovits used this curve to analyze the mixing time of Markov chains. Kwok and Lau noticed that it also holds that:
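In line with the proof of Claim 4 below, we read the curve as the maximum of \(\sum _{i=1}^n \delta _i (A^{\ell } p_S)_i\) over \(\delta \in [0,1]^n\) with \(\sum _{i=1}^n \delta _i = \vert S\vert \). Because \(\vert S\vert \) is an integer, this maximum is attained by putting weight 1 on the \(\vert S\vert \) largest entries of \(A^{\ell } p_S\). The Python sketch below computes \(C^{(\ell )}(\vert S\vert )\) this way with exact fractions; the adjacency-matrix representation (with \(A(v,w) = \frac{e(v,w)}{\Delta }\)), the toy ring, and the function name are assumptions for illustration.

```python
from fractions import Fraction

def ls_curve(adj, delta, S, ell):
    """C^(ell)(|S|) = max sum_i d_i (A^ell p_S)_i over d in [0,1]^n with sum_i d_i = |S|.

    adj[v][w] = e(v, w) of a delta-regular bidirected multigraph, so A(v, w) = e(v, w) / delta.
    """
    n = len(adj)
    p = [Fraction(1, len(S)) if v in S else Fraction(0) for v in range(n)]  # p_S
    for _ in range(ell):
        p = [sum(Fraction(adj[v][u], delta) * p[u] for u in range(n)) for v in range(n)]
    # Unit weights and integer capacity |S|: the optimum takes the |S| largest entries.
    return sum(sorted(p, reverse=True)[:len(S)])

# Hypothetical lazy 4-regular ring on 6 nodes: one edge to each neighbour, 2 self-loops.
ADJ6 = [[2 if v == w else int((v - w) % 6 in (1, 5)) for w in range(6)] for v in range(6)]
print(ls_curve(ADJ6, 4, {0, 1, 2}, 1))  # 5/6
```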

Lemma 2.10

(Lemma 6 in [39]) It holds:

Based on this observation, they deduce that a bound on \(1-C^{(\ell )}(\vert S\vert )\) doubles as a bound on \(\Phi _{G^\ell }(S)\). In particular, they can show the following bounds for \(C^{(\ell )}(\vert S\vert )\):

Lemma 2.11

(Lemma 7 in [39]) It holds

We refer the interested reader to Lemma 7 of [39] for the full proof with all necessary details. For the next step, we need the following well-known inequality:

Lemma 2.12

For any \(t>1\) and \(z \le \frac{1}{2}\), it holds:

Now assume that G does not already have a constant conductance of \(\Phi (G) = \frac{1}{2}\). Plugging this assumption and the two insights by Kwok and Lau together, we get

The last inequality follows from the fact that \(\sqrt{\ell }\Phi _{G}\) is at most \(\frac{1}{2}\). The second part of Lemma 2.9 can be derived similarly. Again, we use an auxiliary lemma by Kwok and Lau:

Lemma 2.13

(Lemma 10 in [39]) Let S be a set of size at most \(\delta n\) with \(\delta \in [0,\frac{1}{4})\). Then, it holds:

Proof

Analogously to the previous case, we get for \(\Phi _{2\delta }(G) \le \frac{1}{4}\) that

In the last inequality, we used Lemma 2.12 with \(z=2\Phi _{2\delta }(G)\). \(\square \)

Finally, these two lower bounds are too loose for graphs (and subsets) that already have good conductance. For these, we require that \(\Phi _{G^\ell }(S)\) is at least as large as \(\Phi _{G}(S)\). Note that this does not necessarily hold for all graphs; here, we must use the fact that our graphs are lazy. We show this in the following lemma:

Lemma 2.14

Let \(G := (V,E)\) be any connected \(\Delta \)-regular lazy graph with conductance \(\Phi _{G}\) and let \(G^\ell \) be its \(\ell \)-walk graph. For a set \(S \subset V\), let \(\Phi _{G^\ell }(S)\) denote the conductance of S in \(G^\ell \). Then, it holds:

Proof

Our technical argument is based on the following recursive relation between \(C^{(\ell +1)}\) and \(C^{(\ell )}\), which was (in part) already shown in [44]:

Lemma 2.15

(Lemma 1.4 in [44]) It holds

Here, we use the abbreviation \(\hat{\vert S\vert } := \min \{\vert S\vert , n-\vert S\vert \}\). The remainder of the proof is based on two claims. First, we claim that \(C^{(\ell )}(\vert S\vert )\) is monotonically non-increasing in \(\ell \).

Claim 3

It holds \(C^{(\ell )}(\vert S\vert ) \le C^{(\ell -1)}(\vert S\vert )\)

Proof

This fact was already remarked in [44] based on an alternative formulation. Nevertheless, we give a short argument here. Since \(C^{(\ell )}\) is concave, it holds for all values \(\gamma , \beta \ge 0\) with \(\gamma \le \beta \) that

And thus, together with Lemma 2.15, we get:

Here, we chose \(\beta =2\Phi _{G}\) and \(\gamma =0\) and applied Eq. 81. This proves the first claim. \(\square \)

Second, we claim that \(C^{(1)}(\vert S\vert )\) is equal to \(1-\Phi _{G}(S)\) as long as the graph we observe is lazy.

Claim 4

It holds \(C^{(1)}(\vert S\vert ) = 1-\Phi _{G}(S)\)

Proof

For this claim (which was not explicitly shown in [39], but is implied in [44]), we observe

and find the assignment of the \(\delta \)’s that maximizes the sum. Lovász and Simonovits already remarked that it is maximized by setting \(\delta _i=1\) for all \(v_i \in S\). However, we prove it here since there is no explicit lemma or proof to point to in [44]. First, we show that all entries \((A_Gp_S)_i\) for nodes \(v_i \in S\) are at least \(\frac{1}{2\vert S\vert }\) and all entries \((A_Gp_S)_{i'}\) for nodes \(v_{i'} \not \in S\) are at most \(\frac{1}{2\vert S\vert }\). We begin with the nodes in S. Given that G is \(\Delta \)-regular and lazy, we have for all \(v_i \in S\) that

Here, \({p_S}_i = \frac{1}{\vert S\vert }\) holds because \(v_i \in S\). The inequality \(A_G(v_i,v_i) \ge \frac{1}{2}\) follows from the fact that G is lazy, i.e., each node has at least \(\frac{\Delta }{2}\) self-loops. As a result, the entry \((A_Gp_S)_i\) for \(v_i \in S\) has a value of at least \(\frac{1}{2\vert S\vert }\), even if \(v_i\) has no neighbors in S. On the other hand, we have for all nodes \(v_{i'} \not \in S\) that

This follows from excluding all entries \(p_j\) with \(v_j \not \in S\), for which \(p_j=0\). Further, since G is \(\Delta \)-regular and lazy, each node \(v_{i'} \not \in S\) has at most \(\frac{\Delta }{2}\) edges to nodes in S.

Thus, the value \((A_Gp_S)_i\) of any \(v_i \in S\) is at least as large as the value \((A_Gp_S)_{i'}\) of any \(v_{i'} \not \in S\). By a simple greedy argument, we now see that \(\sum _{i=1}^n \delta _i (A_G p_S)_i\) is maximized by picking \(\delta _i = 1\) for all nodes in S: To see this, suppose that there is a choice of the \(\delta \)’s that maximizes \(\sum _{i=1}^n \delta _i (A_Gp_S)_i\) with \(\delta _i < 1\) for some \(v_i \in S\). Since no \(\delta \) can be larger than 1 and \(\sum _{i = 1}^n \delta _i = \vert S\vert \), there must be a \(v_{i'} \not \in S\) with \(\delta _{i'}>0\). Since \((A_Gp_S)_i \ge (A_Gp_S)_{i'}\), decreasing \(\delta _{i'}\) and increasing \(\delta _{i}\) by the same amount does not decrease the sum. Thus, choosing \(\delta _i=1\) for all \(v_i \in S\) must maximize the term \(\sum _{i=1}^n \delta _i (A_Gp_S)_i\). This yields:

Here, \({O_S}\) denotes the number of edges leaving S. Given that the graph is \(\Delta \)-regular, the term \(\Delta \vert S\vert -{O_S}\) counts all edges from nodes in S to nodes in S (including self-loops). This was to be shown. \(\square \)

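Combining the two claims with Lemma 2.10, we obtain \(\Phi _{G^\ell }(S) \ge 1-C^{(\ell )}(\vert S\vert ) \ge 1-C^{(1)}(\vert S\vert ) = \Phi _{G}(S)\), so the lemma follows. \(\square \)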
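Claims 3 and 4 can be checked exhaustively on small graphs. The Python sketch below (toy ring graphs and helper names are assumptions) recomputes the curve as the sum of the \(\vert S\vert \) largest entries of \(A^{\ell } p_S\) and verifies, for all sets with \(\vert S\vert \le \frac{n}{2}\), that \(C^{(1)}(\vert S\vert ) = 1-\Phi _{G}(S)\) and that the curve does not increase with \(\ell \). Removing the self-loops makes Claim 4 fail, which illustrates why laziness is needed.

```python
from fractions import Fraction
from itertools import combinations

def ring(n, delta):
    """Hypothetical test graph: an n-cycle (n >= 3) plus delta - 2 self-loops per node."""
    return [[delta - 2 if v == w else int((v - w) % n in (1, n - 1)) for w in range(n)]
            for v in range(n)]

def curve(adj, delta, S, ell):
    """C^(ell)(|S|): sum of the |S| largest entries of A^ell p_S."""
    n = len(adj)
    p = [Fraction(1, len(S)) if v in S else Fraction(0) for v in range(n)]
    for _ in range(ell):
        p = [sum(Fraction(adj[v][u], delta) * p[u] for u in range(n)) for v in range(n)]
    return sum(sorted(p, reverse=True)[:len(S)])

def check_claims(adj, delta, max_ell=4):
    n = len(adj)
    for size in range(1, n // 2 + 1):
        for S in map(set, combinations(range(n), size)):
            out = sum(adj[v][w] for v in S for w in range(n) if w not in S)  # O_S
            if curve(adj, delta, S, 1) != 1 - Fraction(out, delta * len(S)):  # Claim 4
                return False
            values = [curve(adj, delta, S, ell) for ell in range(1, max_ell + 1)]
            if any(b > a for a, b in zip(values, values[1:])):                # Claim 3
                return False
    return True

print(check_claims(ring(6, 4), 4), check_claims(ring(6, 2), 2))  # True False
```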

Thus, \(\Phi _{G^\ell }(S)\) is at least as big as \(\Phi _{G}(S)\). Together with the previous lemmas, this proves Lemma 2.9. \(\square \)

3 The overlay construction algorithm

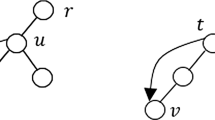

In this section, we present our algorithm to construct a well-formed tree in \(O(\log n)\) time, w.h.p., and prove the correctness of Theorem 1.1. To the best of our knowledge, our approach differs from all previous algorithms for our problem [3, 4, 30, 31] in that it does not use any form of clustering to contract large portions of the graph into supernodes. On a high level, our algorithm progresses through \(O(\log n)\) iterations, where the next graph is obtained by establishing random edges on the current graph. More precisely, each node of a graph simply starts a few random walks of constant length and connects itself with the respective endpoints. The next graph only contains the newly established edges. We will show that after \(O(\log n)\) iterations of this simple procedure, we reach a graph with diameter \(O(\log n)\). One can easily verify that this strategy does not work on arbitrary graphs, as the graph’s degree distribution and other properties significantly impact the distribution of random walks. However, as it turns out, we only need to ensure that the initial graph has some nice properties to obtain well-behaved random walks. More precisely, throughout our algorithm, we maintain that the graph is benign, which we define as follows.

Definition 3

(Benign graphs) Let \(G:= (V, E)\) be an undirected multigraph and \(\Lambda \in \Omega (\log n)\) and \(\Delta \ge 64\Lambda \) be two values (with big enough constants hidden by the \(\Omega \)-notation). Then, we call G benign if and only if it has the following three properties:

1. (G is \(\Delta \)-regular) Every node \(v \in V\) has exactly \(\Delta \) in- and outgoing edges (which may be self-loops).

2. (G is lazy) Every node \(v \in V\) has at least \(\frac{\Delta }{2}\) self-loops.

3. (G has a \(\Lambda \)-sized minimum cut) Every cut \(c(S,\overline{S})\) with \(S \subset V\) has at least \(\Lambda \) edges.
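A brute-force checker for Definition 3 can be handy when experimenting with small instances. The Python sketch below stores a multigraph as an adjacency matrix with adj[v][w] = e(v, w) and self-loops on the diagonal (an assumed representation, with a hypothetical function name). It only tests the three listed properties and ignores the asymptotic requirements \(\Lambda \in \Omega (\log n)\) and \(\Delta \ge 64\Lambda \).

```python
from itertools import combinations

def is_benign(adj, delta, lam):
    """Check the three properties of Definition 3 on a small multigraph (brute force)."""
    n = len(adj)
    if any(adj[v][w] != adj[w][v] for v in range(n) for w in range(n)):
        return False                                       # edges must be bidirected
    regular = all(sum(row) == delta for row in adj)        # property 1
    lazy = all(2 * adj[v][v] >= delta for v in range(n))   # property 2
    # Property 3: every cut is counted once by taking the smaller side, |S| <= n / 2.
    min_cut = min(sum(adj[v][w] for v in S for w in range(n) if w not in S)
                  for size in range(1, n // 2 + 1)
                  for S in map(set, combinations(range(n), size)))
    return regular and lazy and min_cut >= lam

# A 6-cycle whose edges are copied lam = 2 times, padded with 4 self-loops (Delta = 8).
G = [[4 if v == w else 2 * int((v - w) % 6 in (1, 5)) for w in range(6)] for v in range(6)]
print(is_benign(G, 8, 2))  # True
print(is_benign(G, 8, 5))  # False: the minimum cut has only 4 edges
```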

The properties of benign graphs are carefully chosen to be as weak as possible while still ensuring the correct execution of our algorithm. A degree of \(\Delta \in \Omega (\log n)\) is necessary to keep the graph connected. If we only had a constant degree, a standard result from random graphs implies that, w.h.p., there would be nodes disconnected from the graph when sampling new neighbors. If the graphs were not lazy, many theorems from the analysis of Markov chains would not hold as the graph could be bipartite, which would greatly complicate the analysis. This assumption only slows down random walks by a factor of 2. Lastly, the \(\Lambda \)-sized cut ensures that the graph becomes more densely connected in each iteration, w.h.p. In fact, with constant-sized cuts, we cannot easily ensure this property when using random walks of constant length.

3.1 Algorithm description

We will now describe the algorithm in more detail. Recall that throughout this section, we will assume the P2P-\(\textsf{CONGEST}\) model. Further, we assume that the initial graph has maximum degree d and is connected. Given these preconditions, the algorithm has four input parameters \(\ell ,\Delta ,\Lambda \), and L known to all nodes. Recall that \(L \in \Theta (\log n)\) is an upper bound on \(\log n\) and determines the runtime. The value \(\ell \in \Omega (1)\) denotes the length of the random walks, \(\Delta \in O(\log n)\) is the desired degree, and \(\Lambda \in O(\log n)\) denotes the (approximate) size of the minimum cut. All of these parameters are tunable and the hidden constants need to be chosen big enough for the algorithm to succeed, w.h.p. In particular, the value of \(\Lambda \) will determine the success probability. We discuss this in more detail in the analysis.

Our algorithm consists of three stages. In the first stage, we ensure that the initial graph is benign by adding additional edges to each node. In the second stage, which is the main part of the algorithm, we repeatedly increase the graph’s conductance via random walks while ensuring that it stays benign. Finally, in the last stage, we exploit the graph’s logarithmic diameter to construct a well-formed tree in \(O(\log n)\) time. This stage primarily uses techniques from [3, 4, 30, 31]. We now describe each stage in more detail.

Stage 1: Initialization

Before the first iteration, we need to prepare the initial communication graph to comply with parameters \(\Delta \) and \(\Lambda \), i.e., we must turn it into a benign graph. W.l.o.g., we can assume that G is a simple bidirected graph. Otherwise, all of its multi-edges can be merged into a single edge, and every directed edge \((v,w) \in E\) can be bidirected by sending v’s identifier to w. Next, we deal with the graph’s regularity. Arguably, the easiest way to make a graph of maximum degree d regular is to add \(d-d(v)\) self-loops to each node \(v \in V\), so that all nodes have the same degree. However, this is not possible in general, as in our model, each node can only send and receive \(O(d(v)\log {n})\) messages per round, which might be lower than d. Thus, we need another approach. For this, we use the concept of virtual nodes \(V'\), which are simulated by the nodes of graph G. A virtual node \(v' \in V'\) is simulated by \(v \in V\) and has its own virtual identifier of size \(O(\log {n})\). This virtual identifier consists of the original node’s identifier combined with a locally unique identifier. For the simulation, any message intended for \(v'\) is first sent to v (using v’s identifier) and then locally passed to \(v'\). Given this concept, we show that the nodes of graph \(G:= (V, E)\) can simulate a graph \(G':= (V', E')\) with \(\vert V'\vert = 2\vert E\vert \) that only consists of virtual nodes and edges between them. We construct \(G'\) through the following process:

1. First, for each edge \(\{v,w\} \in E\), v and w create and simulate virtual nodes \(v'\) and \(w'\), respectively, and add them to \(V'\).

2. Second, v and w connect the virtual nodes \(v'\) and \(w'\) via an edge \(\{v',w'\}\) by exchanging the respective identifiers. This ensures that for each edge \(\{v,w\} \in E\), there is an edge \(\{v',w'\} \in E'\).

3. Finally, all virtual nodes of a real node v are connected in a cycle. For this, v first sorts its virtual nodes in an arbitrary order \( v'_1, \dots , v'_{d(v)}\). Then, it adds bidirected edges between \(v'_1\) and \(v'_{d(v)}\) and between every consecutive pair of nodes in the order.

The resulting graph \(G'\) is connected, and each node \(v' \in V'\) has at most 3 edges. To be precise, it has exactly one edge to a virtual node \(w' \in V'\) simulated by some node \(w \ne v\), and at most two connections to its predecessor and successor in the cycle of virtual nodes simulated by v. Further, each original node v simulates exactly d(v) virtual nodes, so as long as each virtual node receives at most \(O(\log n)\) messages (which is the case in our algorithm), this simulation is possible in our model. Later, we will show how to revert the simulation and obtain a well-formed tree for the original node set V.
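The three construction steps can be sketched on a single machine as follows. In this Python sketch, the virtual node (v, i) stands for v’s i-th incident edge, which is a hypothetical encoding of “original identifier combined with a locally unique identifier”; function names are also illustrative. The usage example checks \(\vert V'\vert = 2\vert E\vert \), maximum degree 3, and connectivity.

```python
from collections import defaultdict

def simulate_virtual_graph(edges):
    """Build G' from a simple connected graph G given as a list of edges (v, w)."""
    slots = defaultdict(list)   # real node -> its virtual nodes in creation order
    vadj = defaultdict(set)     # virtual node -> set of virtual neighbours
    for v, w in edges:
        vv, ww = (v, len(slots[v])), (w, len(slots[w]))
        slots[v].append(vv)     # step 1: one virtual node per endpoint of each edge
        slots[w].append(ww)
        vadj[vv].add(ww)        # step 2: one virtual edge per original edge
        vadj[ww].add(vv)
    for nodes in slots.values():
        # Step 3: connect the virtual nodes of each real node in a cycle.
        for a, b in zip(nodes, nodes[1:] + nodes[:1]):
            if a != b:          # a real node of degree 1 has a single virtual node
                vadj[a].add(b)
                vadj[b].add(a)
    return dict(vadj)

def is_connected(adj):
    """Depth-first search from an arbitrary node."""
    start = next(iter(adj))
    seen, stack = {start}, [start]
    while stack:
        for u in adj[stack.pop()]:
            if u not in seen:
                seen.add(u)
                stack.append(u)
    return len(seen) == len(adj)

edges = [(0, 1), (0, 2), (0, 3), (0, 4), (1, 2)]
g_virtual = simulate_virtual_graph(edges)
print(len(g_virtual), max(len(nb) for nb in g_virtual.values()), is_connected(g_virtual))  # 10 3 True
```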

Given that the graph now has a maximum degree of 3, we need to make it regular and increase its degree and minimum cut. This is quite simple, as we can assume \(6\Lambda \le \Delta \) by choosing \(\Delta \) big enough. Given this assumption, the graph can be turned benign in 2 steps:

1. First, all edges are copied \(\Lambda \) times to obtain the desired minimum cut. After this step, each node has at least \(\Lambda \) edges to other nodes, and at least \(\Lambda \) edges cross each cut.

2. Then, each node adds self-loops until its degree is \(\Delta \). Since each node has at most \(3\Lambda \le \frac{\Delta }{2}\) edges to other nodes (as we chose \(6\Lambda \le \Delta \)), this is always possible and leaves each node with at least \(\frac{\Delta }{2}\) self-loops.
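The two steps translate directly into code. This Python sketch (representation and function name are assumptions) takes a virtual graph of maximum degree 3 as a dictionary of neighbour sets and returns, for each node, a dictionary of edge multiplicities in which the entry for the node itself counts its self-loops.

```python
def make_benign(vadj, lam, delta):
    """Stage 1 on the virtual graph (sketch): copy every edge lam times, then add self-loops."""
    assert 6 * lam <= delta, "the construction assumes 6 * Lambda <= Delta"
    mult = {v: {w: lam for w in nbrs} for v, nbrs in vadj.items()}  # step 1: lam copies
    for v, row in mult.items():
        # At most 3 * lam <= delta / 2 edges lead to other nodes, so >= delta / 2 self-loops remain.
        row[v] = delta - sum(row.values())                           # step 2: pad to degree delta
    return mult

k4 = {v: {w for w in range(4) if w != v} for v in range(4)}  # toy virtual graph, degree 3
benign = make_benign(k4, lam=2, delta=12)
print(all(sum(row.values()) == 12 and 2 * row[v] >= 12 for v, row in benign.items()))  # True
```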

Thus, the resulting graph is benign. Further, note that the resulting graph is a multi-graph while G is a simple graph.

Stage 2: Construction of a Low Diameter Overlay

Let now \(G_0 = (V', E_0)\) be the resulting benign graph. The algorithm proceeds in iterations \(1, \dots , L\). In iteration i, a new communication graph \(G_i = (V', E_i)\) is created by sampling \(\frac{\Delta }{8}\) new neighbors via random walks of length \(\ell \) on \(G_{i-1}\). Each node \(v \in V'\) creates \(\frac{\Delta }{8}\) messages containing its own identifier, which we call tokens. Each token is randomly forwarded for \(\ell \) rounds in \(G_{i-1}\). More precisely, each node that receives a token picks one of its incident edges in \(G_{i-1}\) uniformly at random and sends the token to the corresponding node (see Footnote 6). This happens independently for each token. If v receives fewer than \(\frac{3}{8}\Delta \) tokens after \(\ell \) steps, it sends its identifier back to all the tokens’ origins to create bidirected edges. Otherwise, it picks \(\frac{3}{8}\Delta \) tokens at random (without replacement; see Footnote 7) and only replies to their origins. Since the origin’s identifier is stored in the token, both cases can be handled in one communication round. Finally, each node adds self-loops until its degree is \(\Delta \) again. The whole procedure is given in Fig. 1 as the method CreateExpander(\(G_0,\ell ,\Delta ,\Lambda ,L\)). The subroutine MakeBenign(\(G_0,\ell ,\Delta ,\Lambda \)) adds edges and self-loops to make the graph comply with Definition 3 (i.e., it implements the first stage). Our main observation is that after \(L = O(\log n)\) iterations, the resulting graph \(G_L\) has constant conductance, w.h.p., which implies that its diameter is \(O(\log n)\). Furthermore, the degree of \(G_L\) is \(O(\Delta )\) by construction. Finally, if we added virtual nodes in the first stage, we can now merge them back into a single node (with all connections of all its virtual nodes). For this, we simply transform each edge \((v',w') \in E_L\) between two virtual nodes \(v',w'\) into an edge (v, w) between the original nodes \(v,w \in V\) that simulate them. 
This produces a graph with the same degree distribution as G and can only decrease the diameter further. We denote this graph as \( G'_L\).
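The following Python sketch simulates one iteration of Stage 2 on a single machine, ignoring message passing and the details of Fig. 1. Each node sends \(\frac{\Delta }{8}\) tokens on \(\ell \)-step random walks, every endpoint accepts at most \(\frac{3}{8}\Delta \) of them, and all nodes are padded back to degree \(\Delta \) with self-loops. The list-of-endpoints representation, the function name, and the handling of a token that ends at its own origin (it is recorded like any other accepted token, which adds self-loop entries) are assumptions. Because each node gains at most \(\frac{\Delta }{8}+\frac{3}{8}\Delta = \frac{\Delta }{2}\) non-padding entries, the result is again \(\Delta \)-regular and lazy, which the usage example checks.

```python
import random

def expander_iteration(nbrs, delta, ell, rng):
    """One iteration of Stage 2 (sketch). nbrs[v] lists the endpoint of each of v's
    delta incident edges; a self-loop appears as v itself."""
    n = len(nbrs)
    arrived = [[] for _ in range(n)]              # origins of the tokens that end at each node
    for origin in range(n):
        for _ in range(delta // 8):               # delta/8 tokens per node
            pos = origin
            for _ in range(ell):                  # ell-step walk on the current graph
                pos = rng.choice(nbrs[pos])       # uniformly random incident edge
            arrived[pos].append(origin)
    new = [[] for _ in range(n)]
    cap = 3 * delta // 8
    for v in range(n):
        tokens = arrived[v]
        accepted = tokens if len(tokens) <= cap else rng.sample(tokens, cap)  # no replacement
        for origin in accepted:                   # reply to the origin: bidirected edge
            new[v].append(origin)
            new[origin].append(v)
    for v in range(n):
        new[v].extend([v] * (delta - len(new[v])))  # pad with self-loops up to degree delta
    return new

rng = random.Random(1)
n, delta, lam = 40, 32, 4
g = [[(v - 1) % n] * lam + [(v + 1) % n] * lam + [v] * (delta - 2 * lam) for v in range(n)]
for _ in range(5):
    g = expander_iteration(g, delta, ell=4, rng=rng)
print(all(len(row) == delta and 2 * row.count(v) >= delta for v, row in enumerate(g)))  # True
```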

Stage 3: Finalization

To obtain a well-formed tree \(T_G\), we perform a BFS on \( G'_L\) starting from the node with the lowest identifier. Since a node cannot locally check whether it has the lowest identifier, the implementation of this step is slightly more complex. The algorithm proceeds for \(L \in O(\log n)\) rounds. In the first round, every node creates a token message that contains its identifier and sends the token to all its neighbors. In each remaining round \(2, \ldots , L\), every node that receives one or more tokens only forwards the token with the lowest identifier to all its neighbors and drops all others. Since the graph’s diameter is \(O(\log n)\), all nodes must have received the lowest identifier at least once after these \(L \in O(\log n)\) rounds. Finally, each node v marks the edge (v, w) over which it first received the token with the lowest identifier. Ties can be broken arbitrarily. If the node itself has the smallest identifier, it does not mark any edge. All marked edges then constitute a tree T with degree \(O(\Delta )\) and diameter \(O(\log n)\). Note that this process requires \(O(\Delta )\) messages per node and round, as each node sends at most one token to each of its neighbors per round. To transform this tree T into a well-formed tree, we perform the merging step of the algorithm of [30, Theorem 2]. This deterministic subroutine transforms any tree of degree \(O(\Delta )\) into a well-formed tree of degree \(O(\log n)\) in \(O(\log n)\) rounds.
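The token flooding can be simulated round by round. In this Python sketch, as a slight variant of the description above, a node forwards a token only when it is smaller than every identifier the node has seen so far; the marked edge of each node is the one over which the smallest identifier first arrived, so the marked edges form a BFS tree rooted at the node with the lowest identifier. The graph and function name are hypothetical.

```python
def min_id_flooding(adj, rounds):
    """Simulate the token flooding of Stage 3 (sketch) on an undirected graph.

    adj maps each node identifier to its neighbours. Returns the smallest identifier each
    node learned and the marked (parent) edge of each node.
    """
    best = {v: v for v in adj}          # smallest identifier seen so far
    parent = {v: None for v in adj}     # neighbour over which `best` first arrived
    outbox = {v: v for v in adj}        # round 1: every node sends its own identifier
    for _ in range(rounds):
        inbox = {v: [] for v in adj}
        for v, token in outbox.items():
            for w in adj[v]:
                inbox[w].append((token, v))
        outbox = {}
        for w, msgs in inbox.items():
            if not msgs:
                continue
            token, sender = min(msgs)   # lowest token; ties broken by the sender's identifier
            if token < best[w]:
                best[w], parent[w] = token, sender
                outbox[w] = token       # forward to all neighbours in the next round
    return best, parent

graph = {5: [3, 9], 3: [5, 7], 9: [5, 7], 7: [3, 9, 1], 1: [7]}
best, parent = min_id_flooding(graph, rounds=3)
print(parent)  # {5: 3, 3: 7, 9: 7, 7: 1, 1: None}
```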

To make this article self-contained, we sketch the approach of [30, Theorem 2] in the remainder. The algorithm first transforms T into a constant-degree child-sibling tree [4], in which each node arranges its children as a path and only keeps an edge to one of them. For each inner node \(w \in V\), let \(w_1, \dots , w_{d^{+}(w)}\) denote its children in T sorted by increasing identifier. Now w only keeps the child with the lowest identifier and delegates the others as follows: Each \(w_i \in N(w)\) with \(i>1\) changes its parent to its predecessor \(w_{i-1}\) on the path, and each \(w_i\) stores its successor \(w_{i+1}\) as a sibling (if it exists). In the resulting tree, each node stores at most three identifiers: a parent and possibly a sibling and a child. Since we can interpret the sibling of a node as a second child, we obtain a binary tree. This transformation takes O(1) rounds and requires \(O(\Delta )\) communication, as each node needs to send two identifiers to each of its children.
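The child-sibling transformation is purely local, as this Python sketch illustrates (the input format and function name are assumptions). Each node keeps only its lowest child, every other child re-parents to its predecessor on the path, and the child and sibling pointers become the at most two children of a node in the resulting binary tree.

```python
def child_sibling(children):
    """Child-sibling transformation (sketch).

    children maps every node to the list of its children in T (leaves map to []).
    Returns the new parent, child, and sibling pointers.
    """
    parent, child, sibling = {}, {}, {}
    for w, kids in children.items():
        kids = sorted(kids)                       # increasing identifier
        child[w] = kids[0] if kids else None      # w keeps only its lowest child
        for i, k in enumerate(kids):
            parent[k] = w if i == 0 else kids[i - 1]                  # predecessor on the path
            sibling[k] = kids[i + 1] if i + 1 < len(kids) else None   # successor on the path
    return parent, child, sibling

tree = {0: [3, 1, 2], 1: [5, 4], 2: [], 3: [], 4: [], 5: []}
parent, child, sibling = child_sibling(tree)
print(sorted(parent.items()))  # [(1, 0), (2, 1), (3, 2), (4, 1), (5, 4)]
```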

Note that the tree’s diameter has now been increased by a factor of \(O(\Delta )\). Based on this binary tree, we construct a ring of virtual nodes using the so-called Euler tour technique (see, e.g., [20, 30, 56]). Consider the depth-first traversal of the tree that visits the children of each node in order of increasing identifier. A node occurs at most three times in this traversal. Let each node act as a distinct virtual node for each such occurrence and let \(k\le 3n\) be the number of virtual nodes. More specifically, every node v executes the following steps:

1. v creates virtual nodes \(v^{0}, \dots , v^{d(v)-1}\), where \(v^i\) has the virtual identifier \(id(v^i) := v \circ i\). Intuitively, the node \(v^0\) corresponds to the traversal visiting v from its parent. Analogously, each \(v^{i}\) corresponds to the traversal returning to v from child \(w_i\).

2. v sends the identifiers of \(v^0\) and \(v^{d(v)-1}\) to its parent. Note that \(v^0\) and \(v^{d(v)-1}\) may be the same virtual node if v has no children.

3. Let \(w_{i}^0\) and \(w_{i}^{d(w_i)-1}\) be the identifiers received from \(w_i\), i.e., the \(i^{th}\) child of v. Then v sets \(w_{i}^0\) as the successor of \(v^{i-1}\) and \(w_{i}^{d(w_i)-1}\) as the predecessor of \(v^i\). In other words, \(v^{i-1}\) and \(v^i\) are connected to the first and last virtual node of \(w_i\), respectively.

4. Finally, each virtual node introduces itself to its predecessor and successor.
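The local rules of this Euler tour construction can be checked with a short centralized simulation. The sketch assumes the binary tree is given as an ordered list of (at most two) children per node, and `euler_tour_ring` and `walk_ring` are hypothetical helper names. Following step 1, node v owns d(v) virtual nodes, so the root (which has no parent) owns one fewer; reducing the index modulo d(v) closes the ring at the root, which is our reading since the steps do not spell out the root case.

```python
def euler_tour_ring(children, root):
    """Connect the virtual nodes of a binary tree into a ring (centralised sketch).

    children: hypothetical input, mapping each node to its ordered list of children.
    Virtual node (v, i) stands for v^i; node v owns d(v) of them, where d(v) is its
    tree degree, so the root owns one fewer and its last index wraps around to v^0.
    Returns succ, mapping each virtual node to its successor on the ring.
    """
    def d(v):
        return len(children[v]) + (0 if v == root else 1)

    succ = {}
    for v, kids in children.items():
        for i, w in enumerate(kids, start=1):
            succ[(v, i - 1)] = (w, 0)                # v^{i-1} -> first virtual node of w_i
            succ[(w, d(w) - 1)] = (v, i % d(v))      # last virtual node of w_i -> v^i
    return succ


def walk_ring(succ, start):
    """Follow successor pointers once around the ring and list the real nodes visited."""
    order, node = [], start
    for _ in range(len(succ)):
        order.append(node[0])
        node = succ[node]
    assert node == start, "successor pointers do not form a single ring"
    return order


tree = {1: [2, 4], 2: [], 4: [9], 9: []}
print(walk_ring(euler_tour_ring(tree, root=1), (1, 0)))  # → [1, 2, 1, 4, 9, 4]
```

The printed sequence is exactly the depth-first traversal of the tree, and the ring has \(2(n-1) \le 3n\) virtual nodes, matching the bound on k.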

Therefore, the nodes can connect their virtual nodes into a ring in O(1) rounds by sending at most two messages per edge in each round. Next, we use the pointer jumping technique (see, e.g., [20, 30, 56]) to quickly add chords (i.e., shortcut edges) to the ring. To be precise, the virtual nodes execute the following protocol for \(L \in O(\log n)\) rounds:

1. Let \(l_0\) and \(r_0\) be the predecessor and successor of v in the ring. In the first round of pointer jumping, v sends \(l_0\) to \(r_0\) and vice versa.

2. In round \(t>0\), each node receives the identifiers that \(l_{t-1}\) and \(r_{t-1}\) sent in the previous round. It sets \(l_{t}\) to the identifier received from \(l_{t-1}\) and \(r_t\) to the identifier received from \(r_{t-1}\). Finally, it sends \(l_t\) to \(r_t\) and vice versa.

A simple induction reveals that the distance (w.r.t. the ring) between a node and its pointers \(l_t\) and \(r_t\) doubles from round to round (until the distance exceeds the number k of virtual nodes). Based on this observation, it is not hard to show that after L rounds, the graph's diameter has been reduced to \(O(\log n)\), while the degree has grown to \(O(\log n)\). A final BFS from the node with the lowest identifier then yields our desired well-formed tree \(T_G\), which concludes the algorithm.
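The doubling argument can be observed in a centralized simulation of the protocol. In the sketch below, the ring of k virtual nodes is labeled \(0, \dots , k-1\) in ring order (an assumption for illustration; in the algorithm, the order comes from the Euler tour), and `pointer_jumping` and `diameter` are hypothetical helper names. In each round, every node replaces \(l_{t-1}\) by the left pointer of \(l_{t-1}\) and \(r_{t-1}\) by the right pointer of \(r_{t-1}\), which is exactly what it learns from the identifiers its current neighbors send.

```python
from collections import deque


def pointer_jumping(k, rounds):
    """Simulate pointer jumping on a ring of k virtual nodes labelled 0, ..., k-1.

    Returns (edges, right): all undirected edges known after `rounds` rounds
    (ring edges plus chords) and the final right pointers r_t.
    """
    left = [(v - 1) % k for v in range(k)]     # l_0: predecessor on the ring
    right = [(v + 1) % k for v in range(k)]    # r_0: successor on the ring
    edges = {frozenset((v, right[v])) for v in range(k)}
    for _ in range(rounds):
        # l_t := the left pointer of l_{t-1} (sent by l_{t-1}); symmetrically for r_t
        left = [left[left[v]] for v in range(k)]
        right = [right[right[v]] for v in range(k)]
        edges |= {frozenset((v, right[v])) for v in range(k) if right[v] != v}
        edges |= {frozenset((v, left[v])) for v in range(k) if left[v] != v}
    return edges, right


def diameter(k, edges):
    """Diameter of the graph on nodes 0, ..., k-1, via BFS from every node."""
    adj = {v: set() for v in range(k)}
    for edge in edges:
        a, b = tuple(edge)
        adj[a].add(b)
        adj[b].add(a)
    worst = 0
    for source in range(k):
        dist = {source: 0}
        queue = deque([source])
        while queue:
            u = queue.popleft()
            for w in adj[u]:
                if w not in dist:
                    dist[w] = dist[u] + 1
                    queue.append(w)
        worst = max(worst, max(dist.values()))
    return worst


edges, right = pointer_jumping(64, rounds=5)
print(right[0], diameter(64, edges))
```

After t rounds, every right pointer jumps exactly \(2^t\) positions, so every ring distance can be covered using at most \(\log_2 k\) chords, while each node gains at most two new neighbors per round.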

With further techniques that exploit the structure of the chords, the degree can be reduced to 6. For details, we refer to [30, Theorem 2]. Another possibility to achieve a constant degree is the algorithm by Aspnes and Wu [4]. This algorithm requires a graph of outdegree 1, which is simply a by-product of the BFS, and runs in \(O(W+\log n)\) time, w.h.p. The term W denotes the length of the node identifiers, which is also \(O(\log n)\) in our case. Although the algorithm is simple, elegant, and has the desired runtime, we do not use it as a black box, as it would lead to problems in some applications. For some of the applications, we need to create a well-formed tree for subgraphs with \(n' < n\) nodes and require that the runtime be logarithmic in \(n'\) rather than n. Without additional analysis, the algorithm by Aspnes and Wu would still take \(O(\log n)\) time, w.h.p., whereas the approach sketched above always finishes in \(O(\log {n'})\) time.

3.2 Analysis of CreateExpander

The main challenge of our analysis is to show that after \(L \in O(\log n)\) iterations, the final graph \(G_{L}\) has a diameter of \(O(\log n)\). We will conduct an induction over the graphs \(G_1, \ldots , G_L\) to show this. Our main insight is that—given the communication graph is benign—we can use short random walks of constant length to iteratively increase the graph’s conductance until we reach a graph of low diameter. In the following, we will abuse notation and refer to the virtual nodes \(V'\) used in this stage of the algorithm as V. We show the following:

Lemma 3.1

Let \(G_0 := (V,E_0)\) be a benign (multi-)graph with n nodes and \(O(n^3)\) edges. Let \(L:=3\log n\) and \(\Lambda := 6400\lambda \log n\) for some tunable \(\lambda >1\). Then, it holds:

Intuitively, this makes sense as the conductance is a graph property that measures how well-connected a graph is, and—since the random walks monotonically converge to the uniform distribution—the newly sampled edges can only increase the graph’s connectivity. Our formal proof is structured into four steps: First, we show that w.h.p. each node receives and sends at most \(O(\Delta )\) messages in each round. Thus, no messages are dropped during the execution of the algorithm. With this technicality out of the way, we show that the conductance of \(G_{i+1}\) increases by a factor of \(\Omega (\sqrt{\ell })\) w.h.p. if \(G_i\) is benign. Furthermore, we show that each \(G_{i+1}\) is benign if \(G_i\) is benign. Finally, we use the union bound to tie these facts together and prove Theorem 1.1.

3.2.1 Bounding the communication complexity

Before we go into the proof's more intricate details, let us first prove that all messages are successfully sent during the algorithm's execution. Remember that we assume the nodes have a communication capacity of \(O(\log n)\). Thus, a node can only send and receive \(O(\log n)\) messages per round; excess messages are dropped arbitrarily. To prove that no message is dropped, we must show that no node receives more than \(O(\log n)\) random walk tokens in a single step. This follows from a well-known fact about the distribution of random walks:

Lemma 3.2

(Also shown in [16, 18, 52]) For a node \(v \in V\) and an integer t, let X(v, t) be the random variable that denotes the number of tokens at node v in round t. Then, it holds \({\textbf{Pr}\left[ X(v,t) \ge \frac{3\Delta }{8}\right] } \le e^{-\frac{\Delta }{12}}\).

The lemma follows from the fact that each node receives \(\frac{\Delta }{8}\) tokens in expectation, given that all neighbors received \(\frac{\Delta }{8}\) tokens in the previous round. This holds because \(G_i\) is regular. Since all nodes start with \(\frac{\Delta }{8}\) tokens, this expectation holds in every round by induction. Since all walks are independent, a simple application of the Chernoff bound with \(\delta =2\) (cf. Lemma 2.2) yields the result as

\[{\textbf{Pr}\left[ X(v,t) \ge (1+2)\frac{\Delta }{8}\right] } \le e^{-\frac{2}{3}\cdot \frac{\Delta }{8}} = e^{-\frac{\Delta }{12}}.\]

Note that this lemma also directly implies that, w.h.p., all random walks create an edge, as every possible endpoint receives fewer than \(\frac{3\Delta }{8}\) tokens and therefore replies to all of them. For our concrete value of \(\Delta \), it holds:

Lemma 3.3

Let \(\Delta \ge \Lambda := 6400 \lambda \log n\) for some tunable parameter \(\lambda > 1\). Then, for any round t it holds with probability at least \(1-{n^{-8\lambda }}\) that every node holds fewer than \(\frac{3}{8}\Delta \) tokens.

Proof

This follows directly from Lemma 3.2. Denote by X(v, t) the number of tokens that node \(v \in V\) receives after t steps. Consider the event that node v receives at least \(\frac{3}{8}\Delta \) tokens. By Lemma 3.2, the probability of this event is bounded by

\[{\textbf{Pr}\left[ X(v,t) \ge \frac{3}{8}\Delta \right] } \le e^{-\frac{\Delta }{12}}.\]

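Now we use that \(\Delta \) is at least as big as \(\Lambda := 6400\lambda \log n\). Plugging this into the formula yields

\[{\textbf{Pr}\left[ X(v,t) \ge \frac{3}{8}\Delta \right] } \le e^{-\frac{6400\lambda \log n}{12}} \le n^{-9\lambda }.\]

Finally, let \(\mathcal {B}\) be the event that any node receives at least \(\frac{3}{8}\Delta \) tokens. By the union bound over all n nodes, we see

\[{\textbf{Pr}\left[ \mathcal {B}\right] } \le n \cdot n^{-9\lambda } \le n^{-8\lambda }.\]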

\(\square \)

Therefore, all nodes receive fewer than \(\frac{3\Delta }{8}\) tokens in each round, and the algorithm stays within the congestion bounds of our model with probability at least \(1-{n^{-8\lambda }}\). Since all iterations take \(\ell \cdot L \in O(\log n)\) rounds in total, the union bound over all rounds implies that no node receives too many messages in any round, w.h.p.
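To get a feeling for the slack in Lemma 3.2, one can model the number of tokens at a node as a sum of many independent indicators with mean \(\frac{\Delta }{8}\) and compare the exact upper tail with the bound \(e^{-\Delta /12}\). The binomial model below, the number of `trials`, and the helper names are assumptions made only for this illustration; they are not part of the analysis.

```python
import math


def binomial_upper_tail(trials, p, threshold):
    """Exact Pr[X >= threshold] for X ~ Binomial(trials, p), terms computed in log space."""
    log_p, log_q = math.log(p), math.log1p(-p)
    total = 0.0
    for j in range(threshold, trials + 1):
        log_term = (math.lgamma(trials + 1) - math.lgamma(j + 1) - math.lgamma(trials - j + 1)
                    + j * log_p + (trials - j) * log_q)
        total += math.exp(log_term)
    return total


def token_tail_vs_bound(delta, trials=1000):
    """Compare Pr[X >= 3*delta/8] for a binomial model with mean delta/8 to exp(-delta/12).

    `trials` is a hypothetical number of independent walks that could reach the node.
    """
    assert delta % 8 == 0, "choose delta divisible by 8 so that 3*delta/8 is an integer"
    mean = delta / 8
    exact = binomial_upper_tail(trials, mean / trials, 3 * delta // 8)
    return exact, math.exp(-delta / 12)


for delta in (16, 48, 96):
    exact, bound = token_tail_vs_bound(delta)
    print(f"delta={delta:3d}  exact tail={exact:.3e}  Chernoff bound={bound:.3e}")
```

Under this model, the exact tail is orders of magnitude below the Chernoff bound, which is why a constant as large as 6400 in \(\Lambda \) leaves ample room for the union bound.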

3.2.2 Bounding the conductance of \(G_i\)

In this section, we show that the graph's conductance increases by a factor of \(\Omega (\sqrt{\ell })\) from \(G_i\) to \(G_{i+1}\) w.h.p. if \(G_i\) is benign. More formally, we show the following:

Lemma 3.4

Let \(\lambda > 0\) be a parameter and let \(G_i\) and \(G_{i+1}\) be the graphs created in iteration i and \(i+1\), respectively. Finally, assume that \(G_i\) is benign with a minimum cut of at least \(\Lambda \ge 6400 \lambda \log n\) and degree \(\Delta > 64\Lambda \). Then, it holds with probability at least \(1-n^{-7\lambda }\) that

In particular, for any \(\ell \ge (2 \cdot 640)^2\), it holds

Our first observation is the fact that random walks of length \(\ell \) are distributed according to 1-step walks in \(G_i^{\ell }\). In particular, if we consider a subset \(S \subset V\) and pick a node \(v \in S\) uniformly at random, then \(\Phi _{G_i^\ell }(S)\) denotes the probability that a random walk started at v ends outside of the subset after \(\ell \) steps.
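The correspondence between \(\Phi _{G_i^\ell }(S)\) and the escape probability of an \(\ell \)-step walk can be checked exactly on a small example. The sketch below uses a hypothetical lazy 4-regular multigraph (a cycle on 8 nodes in which every node has weight 2 on itself) and exact fractions; all function names are illustrative, and the degree is read off the first row, which assumes the graph is regular.

```python
from fractions import Fraction


def lazy_cycle(n):
    """Hypothetical example: a 4-regular lazy multigraph on a cycle of n >= 3 nodes.

    A[v][w] counts the edge slots from v to w; each node has weight 2 on itself
    (so a walk stays put with probability 1/2) and one edge to each ring neighbour.
    """
    A = [[0] * n for _ in range(n)]
    for v in range(n):
        A[v][v] += 2
        A[v][(v + 1) % n] += 1
        A[v][(v - 1) % n] += 1
    return A


def walk_counts(A, ell):
    """Return A^ell, i.e., the edge multiplicities of the multigraph G^ell."""
    n = len(A)
    power = [[int(i == j) for j in range(n)] for i in range(n)]
    for _ in range(ell):
        power = [[sum(power[i][m] * A[m][j] for m in range(n)) for j in range(n)]
                 for i in range(n)]
    return power


def conductance_power(A, S, ell):
    """Phi_{G^ell}(S): edges of G^ell leaving S divided by |S| * Delta^ell."""
    delta = sum(A[0])
    power = walk_counts(A, ell)
    leaving = sum(power[v][w] for v in S for w in range(len(A)) if w not in S)
    return Fraction(leaving, len(S) * delta ** ell)


def escape_probability(A, S, ell):
    """Pr[an ell-step walk from a uniformly random node of S ends outside S]."""
    n, delta = len(A), sum(A[0])
    dist = [Fraction(1, len(S)) if v in S else Fraction(0) for v in range(n)]
    for _ in range(ell):
        dist = [sum(dist[u] * Fraction(A[u][w], delta) for u in range(n)) for w in range(n)]
    return sum(dist[w] for w in range(n) if w not in S)


A, S = lazy_cycle(8), {0, 1, 2, 3}
for ell in (1, 2, 4):
    print(ell, conductance_power(A, S, ell), escape_probability(A, S, ell))
```

The two functions compute the same quantity in two ways: by counting the edges of \(G^\ell \) that leave S and by propagating the walk distribution step by step.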

Lemma 3.5

Let G be a \(\Delta \)-regular graph and \(S \subset V\) be any subset of nodes with \(\vert S\vert \le \frac{n}{2}\), and suppose each node in S starts \(\frac{\Delta }{8}\) random walks. Let \(\mathcal {Y}_S\) count the \(\ell \)-step random walks that start at some node \(v \in S\) and end at some node \(w \in V \setminus S\). Then, it holds:

Proof

First, we observe that we can express \(\mathcal {Y}_S\) as the sum of binary random variables, one for each walk. For each \(v_i \in S\), let \(Y_i^1, \dots , Y_i^{\Delta /8}\) be indicator variables, where \(Y_i^k\) indicates whether the \(k^{th}\) token started by \(v_i\) ended in \(\overline{S} := V\setminus S\) after \(\ell \) steps. Given this definition, we see that

\[\mathcal {Y}_S = \sum _{v_i \in S} \sum _{k=1}^{\Delta /8} Y_i^k.\]

Recall that an \(\ell \)-step random walk in \(G_i\) corresponds to a 1-step random walk in \(G^\ell _i\). This means that for each of its \(\frac{\Delta }{8}\) tokens node \(v_j\) picks one of its outgoing edges in \(G^\ell _i\) uniformly at random and sends the token along this edge (which corresponds to an \(\ell \)-step walk). For ease of notation, let \(O^\ell _j\) be the number of edges of node \(v_j \in S\) in \(G^\ell _i\) where the other endpoint is not in S, i.e.,

Now consider the \(k^{th}\) random walk started at \(v_j\) and observe \(Y_j^k\). Note that it holds:

Here, Eq. (116) follows from Lemma 2.5. Let \({O}^\ell _S\) be the number of all outgoing edges from the whole set S in \(G_i^{\ell }\). It holds that \(O^\ell _S := \sum _{v_j \in S} {O}^\ell _j\). Recall that the definition of \(\Phi _{G_i^\ell }\) is the ratio of edges leading out of S and all edges with at least one endpoint in S. Given that \(G_i^{\ell }\) is a \(\Delta ^{\ell }\)-regular graph, a simple calculation yields:

In the last line, we used that \(\Phi _{G_i^\ell }(S) := \frac{O^\ell _S}{\vert S\vert \Delta ^{\ell }}\) by definition, as \({O}^\ell _S\) counts the edges from S with an endpoint outside of S and \(\vert S\vert \Delta ^\ell \) counts the total number of edges with an endpoint in S, since \(G_i^{\ell }\) is a \(\Delta ^{\ell }\)-regular graph. This proves the lemma. \(\square \)
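Spelled out, the calculation behind Lemma 3.5 can be written as follows. This is a reconstruction from the definitions above, assuming (as in the proof) that each token picks a uniformly random edge of the \(\Delta ^{\ell }\)-regular graph \(G_i^\ell \), so that \({\textbf{Pr}\left[ Y_j^k = 1\right] } = O^\ell _j/\Delta ^{\ell }\):

\[
\mathbb {E}[\mathcal {Y}_S] = \sum _{v_j \in S} \sum _{k=1}^{\Delta /8} {\textbf{Pr}\left[ Y_j^k = 1\right] } = \sum _{v_j \in S} \frac{\Delta }{8}\cdot \frac{O^\ell _j}{\Delta ^{\ell }} = \frac{\Delta }{8}\cdot \frac{O^\ell _S}{\Delta ^{\ell }} = \frac{\Delta \vert S\vert }{8}\cdot \frac{O^\ell _S}{\vert S\vert \Delta ^{\ell }} = \frac{\Delta \vert S\vert }{8}\,\Phi _{G_i^\ell }(S).
\]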

Therefore, a lower bound on \(\Phi _{G_i^\ell }\) gives us a lower bound on the expected number of tokens that leave any set S. As long as \(G_i\) is regular and lazy, Lemma 2.9 provides a suitable lower bound for \(\Phi _{G_i^\ell }\). In fact, given that the random walks are independent, we can even show the following:

Lemma 3.6

Let \(S \subset V\) be a set of nodes with \(O_S\) outgoing edges. Then, it holds:

Proof

This follows from the Chernoff bound and the fact that the random walks are independent. Recall that for each set S with \(s := \vert S\vert \), the number of outgoing edges \(\mathcal {Y}_S := \sum ^{s}_{i=1} \sum _{k=1}^{\Delta /8} Y_i^k\) in \(G_{i+1}\) is determined by a series of independent binary variables. Thus, by the Chernoff bound, it holds that

By choosing  , we get

Therefore, it remains to show that \(\mathbb {E}[\mathcal {Y}_S] \ge \frac{O_S}{8}\) holds, and we are done. By Lemma 3.5, we have for all sets with \(O_S\) outgoing edges that

This proves the lemma. \(\square \)

Recall that we need to show that every subset \(S \subset V\) with \(\vert S\vert \le n/2\) has a conductance of \(\Omega (\sqrt{\ell }\Phi _{G_i})\) in \(G_{i+1}\) in order to prove that \(\Phi _{G_{i+1}} = \Omega (\sqrt{\ell }\Phi _{G_i})\). In the following, we want to prove that, w.h.p., for every set S, \(\Omega (\Delta \vert S\vert \min \{1, \sqrt{\ell }\Phi _{G_i}\})\) tokens start at some node \(v \in S\) and end at some node \(w \in \overline{S}\) after \(\ell \) steps. These tokens are counted by the random variable \(\mathcal {Y}_S\), which we analyzed above. In particular, given that a set S has \(O_S\) outgoing edges, the value of \(\mathcal {Y}_S\) falls far below its expectation only with probability \(e^{-\Omega (O_S)}\). Thus, we can apply Lemma 2.4 and show that, for a big enough \(\Lambda \), all sets have many tokens that escape.

Lemma 3.7

Let \(G_i\) be a benign graph with \(\Lambda \ge 6400\lambda \log n\). Then, it holds

Proof

For a set \(S \subset V\), we define \(\mathcal {B}_S\) to be the event that S has bad conductance, i.e., that \(\mathcal {Y}_S\), the number of tokens that leave S, is smaller than \(\min \left\{ \frac{\Delta \vert S\vert }{32},\frac{\Delta \vert S\vert }{640}\sqrt{\ell }\Phi _G \right\} \). We let \(\mathcal {B}_1 = \bigcup _{S \subset V} \mathcal {B}_S\) be the event that there exists a set S with bad conductance, i.e., that some \(\mathcal {B}_S\) occurs. By Lemma 3.6, we know that the probability of \(\mathcal {B}_S\) depends exponentially on \(O_S\). Thus, we want to use Lemma 2.4 to show that no \(\mathcal {B}_S\) occurs w.h.p. Therefore, we must show that the three conditions mentioned in the lemma are fulfilled. The first and third properties follow directly from the definition of benign graphs, as the graph is polynomial in size and has a logarithmic minimum cut with a tunable constant. For the concrete constants, it holds:

1. G has at most \(m \in O(n^{c_1})\) edges for some constant \(c_1>1\): Recall that we limited ourselves to simple initial graphs with \(O(n^2)\) edges and copied each edge \(O(\Lambda )\) times. Since \(\Lambda \in o(n)\), we have strictly fewer than \(n^{3}\) edges. Thus, we have \(c_1 := 3\).

2. For each \(\mathcal {B}_S\) it holds \({\textbf{Pr}\left[ \mathcal {B}_S\right] } \le e^{-c_2 O_S}\) for some constant \(c_2>0\): By Lemma 3.6, a bad event \(\mathcal {B}_S\) for a set \(S \subseteq V\) happens with probability at most \(e^{-\frac{O_S}{64}}\). Thus, we have \(c_2 := \frac{1}{64}\).

3.