Abstract

Answering a pair of questions of Conrey, Gabbard, Grant, Liu, and Morrison, we prove that a triplet of dice drawn from the multiset model is intransitive with probability \(1/4+o(1)\) and that the probability that a random pair of dice tie is \((\alpha +o(1))n^{-1}\) for an explicitly defined constant \(\alpha \). This extends and sharpens the recent results of Polymath regarding the balanced sequence model. We further show that the distribution of larger tournaments converges to a universal tournamenton in both models. This limit arises naturally from the discrete spectrum of a certain skew-symmetric operator (given by the kernel in the title acting on \(L^2([-1,1])\)). The limit exhibits a degree of symmetry and can be used to prove that, for instance, the limiting probability that \(A_i\) beats \(A_{i+1}\) for \(1\le i\le 4\) and that \(A_5\) beats \(A_1\) is \(1/32+o(1)\). Furthermore, the limiting tournamenton has range contained in the discrete set \(\{0,1\}\). This shows that the associated tournamenton is non-quasirandom in a dramatic fashion, vastly extending work of Cornacchia and Hązła regarding the continuous analogue of the balanced sequence model. The proof is based on a reduction to conditional central limit theorems (related to work of Polymath), the use of a “Poissonization” style method to reduce to computations with independent random variables, and the systematic use of switching-based arguments to extract cancellations in Fourier estimates when establishing local limit-type estimates.



1 Introduction

We consider the following pair of models of random dice.

Definition 1.1

An n-sided die is a sequence of numbers \((a_1,\ldots ,a_n)\in [n]^{n}\) such that \(\sum _{j=1}^na_j = n(n+1)/2\). In the multiset model, the faces of a die \((a_1,\ldots ,a_n)\) are sampled as a uniformly random nondecreasing sequence in \([n]^n\) satisfying \(\sum _{j=1}^na_j = n(n+1)/2\). In the balanced sequence model, the faces of a die \((a_1,\ldots ,a_n)\) are sampled as a uniformly random sequence in \([n]^n\) such that \(\sum _{j=1}^na_j = n(n+1)/2\).
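To make Definition 1.1 concrete, here is a minimal rejection-sampling sketch for both models. It is our illustration rather than a procedure from the paper, and the helper names are hypothetical. The multiset sampler assumes the standard stars-and-bars bijection between sorted n-subsets of \(\{1,\ldots ,2n-1\}\) and nondecreasing sequences in \([n]^n\), so every nondecreasing sequence is equally likely before we condition on the sum.

```python
import random


def sample_balanced(n, rng):
    """Rejection sampler for the balanced sequence model (Definition 1.1)."""
    target = n * (n + 1) // 2
    while True:
        faces = [rng.randint(1, n) for _ in range(n)]  # uniform sequence in [n]^n
        if sum(faces) == target:                       # keep only the required face sum
            return faces


def sample_multiset(n, rng):
    """Rejection sampler for the multiset model (Definition 1.1)."""
    target = n * (n + 1) // 2
    while True:
        # Stars and bars: a sorted n-subset s_1 < ... < s_n of {1, ..., 2n-1}
        # maps bijectively to the nondecreasing sequence b_i = s_i - (i - 1) in [n].
        s = sorted(rng.sample(range(1, 2 * n), n))
        faces = [s_i - i for i, s_i in enumerate(s)]  # enumerate is 0-based, so subtract i
        if sum(faces) == target:
            return faces


rng = random.Random(2024)
print(sample_multiset(10, rng))
print(sample_balanced(10, rng))
```

Rejection is only practical for small n, since the probability of hitting the exact face sum decays as n grows.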

We also require a notion of when one die is said to “beat” another die.

Definition 1.2

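An n-sided die \((a_1,\ldots ,a_n)\) beats another die \((b_1,\ldots ,b_n)\) if
$$\begin{aligned} \sum _{i,j=1}^n\mathbbm {1}_{a_i>b_j}>\sum _{i,j=1}^n\mathbbm {1}_{a_i<b_j}. \end{aligned}$$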

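Furthermore, we say that die \((a_1,\ldots ,a_n)\) ties die \((b_1,\ldots ,b_n)\) if
$$\begin{aligned} \sum _{i,j=1}^n\mathbbm {1}_{a_i>b_j}=\sum _{i,j=1}^n\mathbbm {1}_{a_i<b_j}. \end{aligned}$$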
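A small sketch of Definition 1.2, assuming it compares the number of face pairs won with the number lost. The hypothetical helper `margin` also counts tied pairs as 1/2 and subtracts \(n^2/2\), which matches the victory margin described in the remarks after Theorem 1.4; its sign decides the outcome, since wins, ties, and losses add up to \(n^2\).

```python
from fractions import Fraction


def margin(a, b):
    """Victory margin of die a over die b: #(a_i > b_j) + #(a_i == b_j)/2 - n^2/2."""
    n = len(a)
    wins = sum(1 for x in a for y in b if x > y)
    ties = sum(1 for x in a for y in b if x == y)
    return Fraction(2 * wins + ties - n * n, 2)  # exact value in Z/2


def outcome(a, b):
    """'beats', 'ties', or 'loses', following Definition 1.2."""
    m = margin(a, b)
    if m > 0:
        return "beats"
    return "ties" if m == 0 else "loses"


print(outcome([1, 1, 4], [2, 2, 2]), margin([1, 1, 4], [2, 2, 2]))  # loses -3/2
```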

Our goal is to study dice tournaments. Specifically, we sample m independent random n-sided dice, either all from the multiset model or all from the balanced sequence model, and consider the outcome of each pair. We will think of m as fixed while n is tending to infinity.

The phenomenon of intransitive dice is exemplified by an example constructed by Efron in the 1960s [11]: consider the dice

Efron observed that in this example A beats B, B beats C, C beats D, and D beats A: peculiarly, the relation “beats” is not transitive. This phenomenon attracted a substantial amount of popular interest [12, 23], including an appearance in Martin Gardner’s column in Scientific American [11].
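The face values of the example are not reproduced above. The sketch below uses the commonly quoted version of Efron’s dice, which we assume is the intended one (the labelling in the original may differ). Note that these dice do not satisfy the constraints of Definition 1.1; the code only checks that each die in the cycle wins with probability 2/3.

```python
from fractions import Fraction

# Commonly quoted faces of Efron's dice (an assumption: the original display is missing).
EFRON = {
    "A": [4, 4, 4, 4, 0, 0],
    "B": [3, 3, 3, 3, 3, 3],
    "C": [6, 6, 2, 2, 2, 2],
    "D": [5, 5, 5, 1, 1, 1],
}


def win_probability(x, y):
    """Probability that a roll of die x shows a strictly larger face than a roll of die y."""
    wins = sum(1 for s in x for t in y if s > t)
    return Fraction(wins, len(x) * len(y))


for p, q in [("A", "B"), ("B", "C"), ("C", "D"), ("D", "A")]:
    print(p, "beats", q, "with probability", win_probability(EFRON[p], EFRON[q]))
```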

Until recently, mathematical work had largely focused on constructing tournaments with various properties [1,2,3, 10, 18, 28]; for instance, work of Moon and Moser [18] established that for any tournament T there exists a set of dice (not necessarily satisfying the sum constraints of Definition 1.1) realizing T. However, there has recently been significant interest in understanding random models of intransitive dice due to a set of conjectures raised in work of Conrey et al. [7].

In the work of Conrey et al. [7], the authors considered dice drawn from the multiset model. While this is a natural model for dice, one may ask why they did not consider the “most natural” model of dice, in which there is no additional condition on the sum. In that case it is straightforward to observe empirically (and can be proven rigorously) that with high probability whether die A beats die B is determined simply by comparing the sums of the faces of the two dice. Conrey et al. [7] conducted empirical simulations in the multiset model. Based on these experimental results, they conjectured ([7, Conjectures 1,2,3]) that as \(n\rightarrow \infty \): (a) the probability that a pair of dice tie is o(1); (b) for a random triplet of dice A, B, and C, the probability that A beats B, B beats C, and C beats A is \(1/8+o(1)\); and (c) the tournament associated to dice is quasirandom. (Conrey et al. [7] equivalently formulate (c) in terms of the probabilities of various m-die tournaments.)

The first rigorous progress towards these conjectures was made by Polymath [22], who considered n-sided dice drawn not from the multiset model but from the balanced sequence model in Definition 1.1. In this model, Polymath [22] proved both conjectures (a) and (b) by showing that for almost all dice A drawn from the balanced sequence model, approximately half of the dice from the balanced sequence model beat A. However, based on numerical calculations, Polymath conjectured that (c) is false (see the discussion surrounding [22, Conjecture 1.3]). This suspicion was later confirmed by Cornacchia and Hązła [8] for a continuous analogue of the balanced sequence model, in which die faces are sampled uniformly at random from [0, 1]. They studied four-cycle counts and proved that there exists a small absolute constant \(\varepsilon > 0\) such that, for n large, the probability that A beats B, B beats C, C beats D, and D beats A is at least \(1/16 + \varepsilon \) (higher than if the underlying tournament were quasirandom). Finally, in the work of Hązła et al. [14], the phenomenon of intransitivity was investigated for die faces drawn independently at random from a fixed continuous distribution \(\rho \). Remarkably, intransitivity turns out to be extremely delicate: under mild conditions on \(\rho \), the only distribution exhibiting any form of intransitivity is the uniform distribution. In all the rigorous work on probabilistic models of intransitive dice, local central limit theorem techniques have been crucial, aided by the fact that the die faces are independent up to conditioning on a simple linear relation. This is no longer true in the original multiset model of Conrey et al. [7], and this has been a key obstacle to extending these results to the original model.

Our main result is a complete characterization of the tournament associated with intransitive dice. Our results are sufficiently strong to naturally explain the results of Polymath [22] and Cornacchia and Hązła [8] and point to a number of surprising phenomena which are not immediately obvious numerically.

In order to state our main result we will require the definition of a certain operator on \(L^2([-1,1])\).

Definition 1.3

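Consider the skew-symmetric kernel \(f:[-1,1]^2\rightarrow \mathbb {R}\) defined by
$$\begin{aligned} f(x,y) = \frac{\mathbbm {1}_{x\ge y}-\mathbbm {1}_{x\le y}}{4}-\frac{3(x-y)(1+xy)}{8}. \end{aligned}$$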

Define the operator \(\mathcal {A}:L^2([-1,1])\rightarrow L^2([-1,1])\) (with the Lebesgue measure) by
$$\begin{aligned} (\mathcal {A}h)(x) = \int _{-1}^1f(x,y)h(y)\,dy. \end{aligned}$$

Let \(\sigma _1\ge \sigma _2\ge \cdots \ge 0\) be real numbers such that \(\{\pm i\sigma _\ell :\ell \ge 1\}\) forms the discrete spectrum of \(\mathcal {A}\).

Remark

Since \(\mathcal {A}\) is real and skew-symmetric, its spectrum is purely imaginary and its nonzero eigenvalues come in conjugate pairs \(\pm i\sigma \). Furthermore, based on numerical computation, a closed-form expression for \(\sigma _j\) appears unlikely.
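The \(\sigma _\ell \) can nevertheless be approximated numerically. The sketch below (ours, not the authors’ computation) discretizes \(\mathcal {A}\) with the midpoint (Nyström) rule. It assumes the kernel \(f(x,y)=\frac{\mathbbm {1}_{x\ge y}-\mathbbm {1}_{x\le y}}{4}-\frac{3(x-y)(1+xy)}{8}\), which is half the \(n\rightarrow \infty \) limit of the formula M1 in Lemma 2.6; the grid size N is an arbitrary choice.

```python
import numpy as np


def kernel_f(x, y):
    """Assumed kernel of Definition 1.3 (half the n -> infinity limit of Lemma 2.6, M1)."""
    return np.sign(x - y) / 4 - 3 * (x - y) * (1 + x * y) / 8


def approx_sigmas(N=400, count=10):
    """Approximate sigma_1 >= sigma_2 >= ... by discretizing A on N midpoints of [-1, 1]."""
    h = 2.0 / N
    x = -1 + h * (np.arange(N) + 0.5)              # midpoint grid on [-1, 1]
    K = kernel_f(x[:, None], x[None, :]) * h       # midpoint-rule (Nystrom) matrix
    eig = np.linalg.eigvals(K)                     # real skew-symmetric: eigenvalues are +-i*sigma
    sig = np.sort(eig.imag[eig.imag > 0])[::-1]    # keep one representative per conjugate pair
    return K, eig, sig[:count]


K, eig, sig = approx_sigmas()
print(np.round(sig, 4))
print(np.round(np.arange(1, sig.size + 1) * sig, 3))  # t * sigma_t, cf. Lemma 2.6 (M9)
```

The discretized sign part is exactly \(T_N/(2N)\) for the matrix \(T_N\) in the proof of Lemma 2.6, and the remaining polynomial part has rank 2, so by interlacing the printed values of \(t\sigma _t\) should stay within constant factors of \(1/(2\pi )\), in line with M9.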

Our main result captures the precise probability distribution associated with the dice tournament.

Theorem 1.4

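Fix \(m\ge 2\) and independently sample n-sided dice \(A_1,\ldots ,A_m\), either all from the multiset model or all from the balanced sequence model. Let \(G^{(j)}\) for \(1\le j\le m\) be independent infinite vectors of independent standard Gaussians and for \(1\le j<k\le m\) let
$$\begin{aligned} H_{jk} = \sum _{\ell \ge 1}\sigma _\ell \big (G_{2\ell -1}^{(j)}G_{2\ell }^{(k)}-G_{2\ell }^{(j)}G_{2\ell -1}^{(k)}\big ), \end{aligned}$$and set \(H_{kj}=-H_{jk}\).

Then for any digraph D on vertices [m], writing \((j,k)\in E(D)\) for a directed edge from j to k,
$$\begin{aligned} \mathbb {P}\big [A_j\text { beats }A_k\text { for all }(j,k)\in E(D)\big ] = \mathbb {P}\big [H_{jk}>0\text { for all }(j,k)\in E(D)\big ]+o(1). \end{aligned}$$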

Remark

\(H_{jk}\) is defined by a convergent sum almost surely due to the bound \(\sum _{\ell \ge t}\sigma _\ell ^2=O(1/t)\), which follows from Lemma 2.6 (M9), and an application of Borel–Cantelli to the random events \(\mathcal {E}_t\) defined by \(|\sum _{t\le \ell <2t}\sigma _\ell (G_{2\ell -1}^{(j)}G_{2\ell }^{(k)}-G_{2\ell }^{(j)}G_{2\ell -1}^{(k)})|\ge t^{-1/4}\) for t ranging over powers of 2. Indeed, \(\mathbb {P}[\mathcal {E}_t]=O(t^{-1/2})\) by the Chebyshev inequality, which has finite sum over powers of 2, so almost surely only finitely many \(\mathcal {E}_t\) occur; since \(t^{-1/4}\) is summable over powers of 2, the convergence follows. Alternatively, conditional on \(G^{(j)}\), each individual \(H_{jk}\) is a centered Gaussian whose (random) variance \(\sum _{\ell \ge 1}\sigma _\ell ^2\big ((G_{2\ell -1}^{(j)})^2+(G_{2\ell }^{(j)})^2\big )\) is an almost surely convergent weighted sum of chi-squared random variables.

Remark

The proof of Theorem 1.4 actually shows something stronger: \((H_{jk})_{1\le j<k\le m}\) is the limiting distribution of \((c\cdot \textrm{margin}_{jk}/n)_{1\le j<k\le m}\), where \(c=1/2\) for the multiset model and \(c=1\) for the balanced sequence model, and where \(\textrm{margin}_{jk}\) is the amount by which die \(A_j\) beats \(A_k\), i.e., the number of pairs of faces on which \(A_j\) beats \(A_k\) (counting tied pairs as 1/2) minus \(n^2/2\); this quantity may be negative.

We note that the statement of Theorem 1.4 may appear slightly strange and difficult to work with; however, a number of combinatorial consequences follow in a routine manner from Theorem 1.4.

Corollary 1.5

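Sample m independent random n-sided dice \(A_1,\ldots , A_m\), either all from the multiset model or all from the balanced sequence model. For any digraph D on vertices [m], let \(D_v\) denote the digraph obtained from D by reversing all edges incident to the vertex \(v\in [m]\). We have
$$\begin{aligned} \mathbb {P}\big [A_j\text { beats }A_k\text { for all }(j,k)\in E(D)\big ]=\mathbb {P}\big [A_j\text { beats }A_k\text { for all }(j,k)\in E(D_v)\big ]+o(1) \end{aligned}$$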

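for all \(v\in [m]\). Furthermore, let \(D'\) denote the digraph obtained by reversing all the edges of D. We have
$$\begin{aligned} \mathbb {P}\big [A_j\text { beats }A_k\text { for all }(j,k)\in E(D)\big ]=\mathbb {P}\big [A_j\text { beats }A_k\text { for all }(j,k)\in E(D')\big ]+o(1). \end{aligned}$$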
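Both operations in Corollary 1.5 correspond to symmetries of the Gaussian limit: negating \(G^{(v)}\) negates every \(H_{vk}\), while swapping the coordinates \(2\ell -1\leftrightarrow 2\ell \) in every \(G^{(j)}\) negates all \(H_{jk}\). The sketch below (ours) checks this on a truncation of the sum defining \(H_{jk}\). The truncation level L and the stand-in values \(\sigma _\ell =1/\ell \) are hypothetical choices; the symmetries hold for any \(\sigma \), so together they should make each of the 8 orientations of a labelled triangle, in particular the directed 3-cycle, occur with probability 1/8.

```python
import numpy as np


def truncated_H(G, sigma):
    """Truncated H_{jk} = sum_l sigma_l (G^{(j)}_{2l-1} G^{(k)}_{2l} - G^{(j)}_{2l} G^{(k)}_{2l-1}).

    G has shape (..., m, 2L) and sigma has shape (L,); the result H[..., j, k]
    is antisymmetric in (j, k), so H_{kj} = -H_{jk} and the diagonal is 0.
    """
    odd, even = G[..., 0::2], G[..., 1::2]  # coordinates 2l-1 and 2l (1-indexed)
    return (np.einsum("...jl,l,...kl->...jk", odd, sigma, even)
            - np.einsum("...jl,l,...kl->...jk", even, sigma, odd))


rng = np.random.default_rng(0)
L = 20
sigma = 1.0 / np.arange(1, L + 1)  # hypothetical stand-in for sigma_l (only the 1/l decay of M9)
G = rng.standard_normal((40_000, 3, 2 * L))
H = truncated_H(G, sigma)

# D -> D_v: negating G^{(v)} (here v is the first vertex, index 0) reverses every edge at v.
G_v = G[:1000].copy()
G_v[:, 0, :] *= -1
H_v = truncated_H(G_v, sigma)

# D -> D': swapping coordinates 2l-1 <-> 2l in every G^{(j)} reverses all edges.
G_rev = np.empty_like(G[:1000])
G_rev[..., 0::2], G_rev[..., 1::2] = G[:1000, :, 1::2], G[:1000, :, 0::2]
H_rev = truncated_H(G_rev, sigma)

# Directed 3-cycle 1 -> 2 -> 3 -> 1; the two symmetries force probability 1/8.
cycle = (H[:, 0, 1] > 0) & (H[:, 1, 2] > 0) & (H[:, 2, 0] > 0)
print(cycle.mean())
```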

From Theorem 1.4 we see that the probability a pair of dice tie is o(1). Then, considering D to be a directed cycle on 3 vertices and comparing to \(D_v\), along with using permutation symmetry, we immediately see that all labelled 3-vertex tournaments appear asymptotically with the same probability. Thus a random triplet of dice is intransitive with probability \(1/4+o(1)\). This immediately implies the conjectures of Conrey et al. [7, Conjectures 1, 2] (and recovers the results of Polymath which proved these two facts in the balanced sequence model).

We can deduce that a forest with e edges occurs with probability \(2^{-e}+o(1)\) by iteratively applying Corollary 1.5 to a leaf (and using that ties occur negligibly often). We can also deduce that any orientation of a labeled \((2k+1)\)-cycle occurs with the same limiting probability \(2^{-(2k+1)}+o(1)\) by repeatedly applying the two operations specified in Corollary 1.5. These facts are perhaps surprising given the results of Cornacchia and Hązła [8] showing a lack of quasirandomness for continuous dice models. We conjecture, however, that these symmetries, together with permutation symmetry, account for all equalities between the limiting probabilities of complete tournaments.

For our next corollary, we will require the tournament analogue of a graphon. We refer the reader to [29, Chapter 4] for a more extensive discussion of graphons.

Definition 1.6

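Given two measurable functions \(U,W:[0,1]^2\rightarrow \mathbb {R}\), define the cut metric as
$$\begin{aligned} \delta _\square (U,W) = \inf _{\phi }\sup _{S,T\subseteq [0,1]}\bigg |\int _{S\times T}\big (U(x,y)-W(\phi (x),\phi (y))\big )\,dx\,dy\bigg |, \end{aligned}$$

where the infimum is taken over all invertible measure-preserving maps \(\phi :[0,1]\rightarrow [0,1]\) and the supremum over measurable sets \(S,T\). We define the space of tournamentons \(T_0\) to be the set of all measurable functions \(T:[0,1]^2\rightarrow [0,1]\) such that \(T(x,y) = 1-T(y,x)\), and let \(\widetilde{T_0}\) denote the space of tournamentons modulo identifying tournamentons with cut distance 0.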

As is standard, one can identify a graph G with an associated graphon, and similarly for a tournamenton, by embedding the adjacency matrix into \([0,1]^2\) (for the tournamenton this requires putting values of 1/2 on the diagonal); we will carry out this transformation without comment.

Corollary 1.7

Consider the directed graph \(T_n\) whose vertex set is either (a) all nondecreasing sequences \((a_1,\ldots ,a_n)\) in \([n]^n\) such that \(\sum _{j=1}^na_j = n(n+1)/2\) or (b) all sequences \((a_1,\ldots ,a_n)\) in \([n]^n\) such that \(\sum _{j=1}^na_j = n(n+1)/2\), and in which there is a directed edge from one sequence to another if the corresponding die beats the other.

Then \(T_n\) converges under the cut metric to a tournamenton \(\mathcal {T}\) (which is the same in cases (a) and (b)). Furthermore, the preimage of the set \(\{0,1\}\) under \(\mathcal {T}\) has measure 1.

Remark

Technically \(T_n\) may not be a tournament but a partial tournament due to ties, so a priori we only have convergence to a partial tournamenton; however, a consequence of Theorem 1.4 discussed above is that ties occur with probability o(1) so we will obtain a genuine tournamenton in the limit.

Note that the density of digraph D in the tournament \(T_n\) is precisely the probability that the associated digraph of dice beating other dice occurs when sampling from either the multiset model (case (a)) or the balanced sequence model (case (b)). Thus the density of digraph D in the limit tournament \(\mathcal {T}\) is the limiting probability described by Theorem 1.4.

The claim that the preimage of the set \(\{0,1\}\) has measure 1 is equivalent to the fact that for every \(\varepsilon >0\) there is a k such that a \(\mathcal {T}\)-random tournament (defined analogously to a W-random graph [29, Section 4.4]) on k vertices lies in a set of size \(2^{\varepsilon k^2}\) with probability at least \(1-\varepsilon \). This equivalence is detailed in Lemma 8.1; we will prove Corollary 1.7 through this equivalence and prove that one can take a polynomial relation between k and \(\varepsilon \). The fact that \(\mathcal {T}\ne 1/2\) corresponds to a lack of quasirandomness. We also establish that the directed 4-cycle in particular occurs with limiting probability greater than 1/16 in Proposition 8.2, and show that all digraphs D have positive density in \(\mathcal {T}\) in Proposition 8.3.

Finally, we also precisely quantify the probability that a given pair of dice are tied beyond the o(1) guaranteed as a consequence of Theorem 1.4.

Theorem 1.8

Let A and B be dice drawn independently from the multiset model. Let \(\alpha = 2^{-5/2}\pi ^{-1/2}\mathbb {E}[(\sum _{\ell \ge 1}\sigma _\ell ^2(Z_\ell ^2+Z_\ell '^2))^{-1/2}]\), where \(Z_\ell ,Z_\ell '\sim \mathcal {N}(0,1)\) are independent. We have
$$\begin{aligned} \mathbb {P}[A\text { ties }B] = \alpha n^{-1}+O(n^{-1-c}) \end{aligned}$$

for some absolute constant \(c=c_{1.8}>0\). If instead A and B are drawn independently from the balanced sequence model, then
$$\begin{aligned} \mathbb {P}[A\text { ties }B] = 2\alpha n^{-1}+O(n^{-1-c}). \end{aligned}$$

Remark

This can be heuristically reconstructed from the second remark after Theorem 1.4 with \(m=2\): \(H_{12}\) is the limiting distribution of \(c\cdot \textrm{margin}_{12}/n\) (where \(c=1/2\) for the multiset model and \(c=1\) for the balanced sequence model). If we imagine that the mass of this distribution is discretized in the obvious way along the possible values of \(\textrm{margin}_{12}\) in the lattice \(\mathbb {Z}/2\), we obtain the above. In fact, one can use the techniques in Sect. 9 to show a local limit theorem for \(\textrm{margin}_{12}\):
$$\begin{aligned} \mathbb {P}[\textrm{margin}_{12}=x] = \frac{c}{2n}f_{H_{12}}\Big (\frac{cx}{n}\Big )+o(n^{-1}) \end{aligned}$$

uniformly for \(x\in \mathbb {Z}/2\), where \(f_{H_{12}}\) is the probability density function of \(H_{12}\). We do not prove this here since the technical details are quite involved, but note that Theorem 1.8 is the \(x=0\) case.

We interpret the constant \(\alpha \) as the (inverse) standard deviation around the best linear approximant (in the sense of Ordinary Least Squares) to a conditioned Brownian motion at the end of Sect. 9.
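Given approximations of the \(\sigma _\ell \) (for instance from a discretization of \(\mathcal {A}\)), \(\alpha \) can be estimated by Monte Carlo directly from its definition in Theorem 1.8. The sketch below is ours and the function name is hypothetical. It truncates the sum to the supplied \(\sigma _\ell \), which makes the sum inside the expectation slightly too small and therefore biases the estimate upwards.

```python
import numpy as np


def alpha_estimate(sigma, samples=200_000, seed=0):
    """Monte Carlo estimate of alpha = 2^{-5/2} pi^{-1/2} E[V^{-1/2}],
    where V = sum_l sigma_l^2 (Z_l^2 + Z_l'^2) over a finite list of sigma_l."""
    rng = np.random.default_rng(seed)
    sigma = np.asarray(sigma, dtype=float)
    Z = rng.standard_normal((samples, sigma.size))
    Zp = rng.standard_normal((samples, sigma.size))
    V = (sigma**2 * (Z**2 + Zp**2)).sum(axis=1)  # weighted sum of chi-squared variables
    return 2**-2.5 * np.pi**-0.5 * np.mean(V**-0.5)


# Sanity check: for sigma = (1, 1), V is chi-squared with 4 degrees of freedom,
# E[V^{-1/2}] = sqrt(pi/8), and hence alpha = 1/16 exactly.
print(alpha_estimate([1.0, 1.0]))
```

The prefactor is the density of a centered Gaussian at 0, \((2\pi )^{-1/2}\), times the lattice spacing 1/4 of \(H_{12}\) in the multiset model, matching the heuristic in the remark above.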

In general, a tournament T with exactly t ties among m dice and \(\binom{m}{2}-t\) prescribed outcomes for the other match-ups, where m and \(0\le t\le \binom{m}{2}\) are fixed, should occur with probability \((c_T+o(1))n^{-t}\). We do not pursue such a general statement here, though similar techniques may apply, and a probabilistic interpretation of the constant \(c_T\) should arise from Theorem 1.4 as in the case \((m,t)=(2,1)\) above.

1.1 First steps, proof outline, and organization

Our techniques at a high level involve Fourier analysis in the style of local limit theorems. In particular, we study various “conditional Fourier coefficients” in detail to show that the normalized joint distribution of “victory margins” (see the second remark following Theorem 1.4) converges to \((H_{jk})_{1\le j<k\le m}\). We also use a more detailed analysis involving additional control on the “coarseness” of certain modified statistics of random dice to get very good local control of the event that there is a precise tie. We defer a more detailed proof outline to Sect. 3 after developing the basic tools to attack the problem in Sect. 2.

The first step in proving Theorem 1.4 (in the multiset model) relies on observing that while the die faces in the multiset model are not independent, the frequency statistics admit a natural “near-independent” model. This ultimately relies on a well-known bijection between the multiset model and the simple random walk; the details appear in Lemma 2.2. We note that in the context of the balanced sequence model, Lemma 2.2 reduces to the “Poissonization” trick. Given this, we interpret the “beats” relation through frequency counts (Lemmas 2.3, 2.5), and the operator in Definition 1.3 arises naturally. These initial steps are carried out in Sect. 2 and provide the key starting point for understanding the necessary distributions from a Fourier perspective.

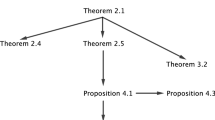

Given the setup in Sect. 2, we provide a heuristic outline of the argument for Theorems 1.4 and 1.8 and an overview of the various consequences in Sect. 3. We then collect a list of technical preliminaries which will be used throughout the paper in Sect. 4. We prove various Fourier coefficient bounds used in the proofs of Theorems 1.4 and 1.8 in Sect. 5. We prove Theorem 1.4 in Sect. 6, modulo a technical ingredient proven in Sect. 7, and then collect various consequences following from Theorem 1.4 in Sect. 8. Finally we prove Theorem 1.8 in Sect. 9.

1.2 Notation

We write \(f=O(g)\) to mean that \(f\le Cg\) for some absolute constant C, and \(g=\Omega (f)\) and \(f\lesssim g\) to mean the same. We write \(f=o(g)\) if for all \(c > 0\) we have \(f\le cg\) once the implicit growing parameter (typically n) grows large enough, and \(g=\omega (f)\) means the same. We also use \(\asymp \) to denote two quantities which are within absolute constants. Subscripts imply a dependence of these implicit constants on those parameters. We use \(\overset{d.}{=},\overset{d.}{\rightarrow }\) for distributional equality and limits, respectively.

For \(\mu \in \mathbb {R}^d\) and positive semidefinite \(\Sigma \in \mathbb {R}^{d\times d}\) we let \(\mathcal {N}(\mu ,\Sigma )\) be the Gaussian vector with mean \(\mu \) and covariance matrix \(\Sigma \). For finite matrices M we will use \(M_{ij}\) to denote the entry in the (i, j) position. Throughout this paper all logarithms are base e.

2 Count statistics of balanced sequence model and multiset model

Our approach to the multiset model is to find a sampling procedure derived from a sequence of independent random variables. We will require the notion of a frequency statistic, which will be crucial for our purposes.

Definition 2.1

Given an n-sided die \(A = (a_1,\ldots ,a_n)\), define the frequency counts of A to be
$$\begin{aligned} \widetilde{a}_i = |\{j\in [n]:a_j = i\}| \end{aligned}$$

for \(1\le i\le n\).

The key point is the following distributional claim regarding the frequency counts of a die drawn from either the multiset or the balanced sequence model, which relates these models to a suitably conditioned sequence of independent geometric random variables (in the multiset case) or independent Poisson random variables (in the balanced sequence case). In the balanced sequence case this is essentially equivalent to the “Poissonization” trick.

Lemma 2.2

We have the following:

-

If B is drawn from the multiset model we have

$$\begin{aligned} (\widetilde{b}_1,\ldots ,\widetilde{b}_n) \overset{d.}{=} (G_1,\ldots ,G_n), \end{aligned}$$where \(G_j\) are sampled as follows: draw independent \(\textrm{Geom}(1/2)\) random variables \(G_j\) supported on \(\{0,1,2,\ldots \}\) and then condition on \(\sum _{j=1}^nG_j = n\) and \(\sum _{j=1}^njG_j = n(n+1)/2\).

-

If B is drawn from the balanced sequence model we have

$$\begin{aligned} (\widetilde{b}_1,\ldots ,\widetilde{b}_n) \overset{d.}{=} (P_1,\ldots ,P_n), \end{aligned}$$where \(P_j\) are sampled as follows: draw independent \(\textrm{Pois}(1)\) random variables \(P_j\) and then condition on \(\sum _{j=1}^nP_j = n\) and \(\sum _{j=1}^njP_j = n(n+1)/2\).

Proof

We consider the first case. Notice that a die from the multiset model can equivalently be sampled by taking a uniformly random right-up walk from (1, 1) to \((n+1,n)\), conditional on the area under the walk being \(n(n+1)/2\), and recording the height of each rightward step. Indeed, there is a standard bijection between nondecreasing integer sequences \((b_1,\ldots ,b_n)\) with \(1\le b_j\le n\) and such walks: each \(b_j\) corresponds to a rightward step from \((j,b_j)\) to \((j+1,b_j)\); furthermore, the area under the walk is \(b_1+\cdots +b_n\). Drawing such a walk is equivalent to taking an infinite random walk started at (1, 1) which takes steps in the directions (1, 0) or (0, 1), each with probability 1/2, conditioning on it passing through both \((n+1,n)\) and \((n+1,n+1)\), and then truncating appropriately.

Now define \(G_j\) as the length of the horizontal segment on the line \(y=j\) in this conditioned infinite random walk. In the unconditioned infinite walk starting at (1, 1), the lengths (which might be 0) of these horizontal segments are, by definition, independent geometric random variables with parameter 1/2. Conditioning on the walk passing through the segment from \((n+1,n)\) to \((n+1,n+1)\) is equivalent to conditioning on \(\sum _{j=1}^nG_j = n\), and the conditioning on area is equivalent to \(\sum _{j=1}^njG_j = n(n+1)/2\). Under the bijection above, these conditioned \(G_j\) correspond exactly to the \(\widetilde{b}_j\).

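The second case is simpler. Notice that if one draws n faces from [n] uniformly at random then we have the proportionality
$$\begin{aligned} \mathbb {P}\big [(\widetilde{b}_j)_{1\le j\le n}=(c_j)_{1\le j\le n}\big ] = \frac{n!}{n^n\prod _{j=1}^nc_j!}\propto \prod _{j=1}^n\frac{1}{c_j!} \end{aligned}$$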

for tuples \((c_j)_{1\le j\le n}\in \{0,\ldots ,n\}^n\) with sum n. The result then follows since \(\mathbb {P}[\textrm{Pois}(1)=k]=\frac{e^{-1}}{k!}\) for integers \(k\ge 0\), and since conditioning on the faces summing to \(n(n+1)/2\) corresponds to conditioning on \(\sum _{j=1}^njP_j=n(n+1)/2\). \(\square \)
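For small n, both parts of Lemma 2.2 can be checked by brute-force enumeration. The sketch below (ours, with hypothetical helper names) verifies for n = 5 that in the balanced sequence model the number of sequences with frequency vector c times \(\prod _j c_j!\) is constant (so the law is proportional to the Poisson weights \(\prod _j 1/c_j!\)), and that in the multiset model each admissible frequency vector arises exactly once while its conditioned geometric weight \(\prod _j 2^{-(c_j+1)}\) has the constant exponent 2n.

```python
import itertools
from collections import Counter
from math import factorial, prod


def freq(faces, n):
    """Frequency counts (Definition 2.1): entry i-1 counts the faces equal to i."""
    counts = [0] * n
    for f in faces:
        counts[f - 1] += 1
    return tuple(counts)


def lemma_2_2_check(n):
    target = n * (n + 1) // 2
    # Balanced sequence model: number of sequences with each frequency vector.
    balanced = Counter(freq(s, n) for s in itertools.product(range(1, n + 1), repeat=n)
                       if sum(s) == target)
    # Multiset model: each nondecreasing sequence has its own frequency vector.
    multiset = Counter(freq(s, n) for s in itertools.combinations_with_replacement(range(1, n + 1), n)
                       if sum(s) == target)
    # Poisson(1) weights are proportional to prod_j 1/c_j!, so count * prod_j c_j!
    # must be constant (it is n!, the multinomial numerator).
    poisson_ratio = {cnt * prod(factorial(c) for c in vec) for vec, cnt in balanced.items()}
    # Geom(1/2) weights are 2^{-sum_j (c_j + 1)}; the exponent is 2n whenever sum_j c_j = n.
    geometric_exponent = {sum(c + 1 for c in vec) for vec in multiset}
    return balanced, multiset, poisson_ratio, geometric_exponent


balanced, multiset, poisson_ratio, geometric_exponent = lemma_2_2_check(5)
print(poisson_ratio, geometric_exponent)  # {120} {10}
```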

The precise reason this description is useful is that given a die B one can define a linear function of the frequency count statistics of another die A which captures precisely whether A beats B or not. An equivalent computation appears in the work of Polymath [22, Section 4]; the formulation presented there however is more naturally a linear function of the “die faces” instead of the “frequency count statistics”.

Lemma 2.3

We have that a die A with sides \((a_1,\ldots ,a_n)\) beats a die B with sides \((b_1,\ldots ,b_n)\) if and only if

and ties if and only if the sum on the left is 0.

Proof

Notice that

Since A is an n-sided die with sum of faces \(n(n+1)/2\) we have

and therefore the event that A beats B is precisely equivalent to

whereas A and B being tied corresponds to the left side being equal to 0. \(\square \)

We cast this condition in an equivalent form which will be useful for computations involving Gaussians.

Definition 2.4

Let \(I_n\) be the \(n\times n\) identity matrix. Let \(\vec {v}_1,\vec {v}_2\in \mathbb {R}^n\) be defined by \(v_{1i}=1/\sqrt{n}\) for \(1\le i\le n\) and \(v_{2i}=(i-(n+1)/2)/\sqrt{n(n^2-1)/12}\) for \(1\le i\le n\). Note these are orthogonal unit vectors. Let \(M_n\in \mathbb {R}^{n\times n}\) be defined via \((M_n)_{ij}=\mathbbm {1}_{i<j}+(\mathbbm {1}_{i=j}/2)\) and \(M_n^*\in \mathbb {R}^{n\times n}\) via
$$\begin{aligned} M_n^* = (I_n-\vec {v}_1\vec {v}_1^\intercal -\vec {v}_2\vec {v}_2^\intercal )M_n(I_n-\vec {v}_1\vec {v}_1^\intercal -\vec {v}_2\vec {v}_2^\intercal ). \end{aligned}$$

Equivalently, we re-express the (asymmetric) bilinear form \(M_n\) in a basis including \(\vec {v}_1,\vec {v}_2\) on both sides, zero out the rows and columns corresponding to \(\vec {v}_1,\vec {v}_2\), and then convert back. Finally, define \(\sigma _{n,1}\ge \cdots \ge \sigma _{n,\lfloor n/2\rfloor }\) to be such that \(\{\pm i\sigma _{n,\ell }:\ell \in [\lfloor n/2\rfloor ]\}\) is the spectrum of \(M_n^{*}\) (or the spectrum minus a copy of 0 if n is odd).
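A numerical sketch of Definition 2.4, reading “zero out the rows and columns corresponding to \(\vec {v}_1,\vec {v}_2\)” as conjugation by the orthogonal projection \(P=I_n-\vec {v}_1\vec {v}_1^\intercal -\vec {v}_2\vec {v}_2^\intercal \). The function names are ours. The check confirms that \(M_n^*\) is skew-symmetric and annihilates \(\vec {1}\) and \((1,2,\ldots ,n)^\intercal \).

```python
import numpy as np


def m_star(n):
    """M_n^* = P M_n P with P the orthogonal projection onto span(v1, v2)^perp."""
    i = np.arange(1, n + 1)
    v1 = np.ones(n) / np.sqrt(n)
    v2 = (i - (n + 1) / 2) / np.sqrt(n * (n**2 - 1) / 12)
    M = np.triu(np.ones((n, n)), k=1) + 0.5 * np.eye(n)  # (M_n)_{ij} = 1_{i<j} + 1_{i=j}/2
    P = np.eye(n) - np.outer(v1, v1) - np.outer(v2, v2)
    return P @ M @ P


def sigmas_n(n):
    """sigma_{n,1} >= ... >= sigma_{n,floor(n/2)}, where the spectrum of M_n^* is {+-i sigma_{n,l}}."""
    mags = np.sort(np.abs(np.linalg.eigvals(m_star(n)).imag))[::-1]
    return mags[0::2][: n // 2]  # each magnitude occurs twice (a conjugate pair); keep one


print(np.round(sigmas_n(10), 4))
```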

The following lemma introduces this discrete variant of the kernel which appears in the title of the paper.

Lemma 2.5

Given dice A, B with frequency vectors \(\widetilde{a},\widetilde{b}\in \{0,\ldots ,n\}^n\), we have that A beats B if and only if
$$\begin{aligned} \widetilde{b}^\intercal M_n^*\widetilde{a}>0. \end{aligned}$$

Proof

This is immediate since a simple manipulation of Lemma 2.3 shows the condition that A beats B is equivalent to \((\widetilde{b}-\sqrt{n}\vec {v}_1)^\intercal M_n(\widetilde{a}-\sqrt{n}\vec {v}_1)>0\), and since \(\vec {v}_1^\intercal (\widetilde{a}-\sqrt{n}\vec {v}_1) = \vec {v}_2^\intercal (\widetilde{a}-\sqrt{n}\vec {v}_1)=\vec {v}_1^\intercal (\widetilde{b}-\sqrt{n}\vec {v}_1) = \vec {v}_2^\intercal (\widetilde{b}-\sqrt{n}\vec {v}_1) = 0\). \(\square \)
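Lemma 2.5 can be checked numerically. By our reading of the “simple manipulation” above, for dice satisfying the sum constraint the bilinear form \(\widetilde{b}^\intercal M_n^*\widetilde{a}\) should equal exactly the victory margin of A over B (face pairs won, plus tied pairs counted as 1/2, minus \(n^2/2\)), so its sign decides the outcome. The rejection sampler and other helpers are hypothetical.

```python
import random

import numpy as np


def m_star(n):
    """M_n^* from Definition 2.4, built as P M_n P with P projecting away v1 and v2."""
    i = np.arange(1, n + 1)
    v1 = np.ones(n) / np.sqrt(n)
    v2 = (i - (n + 1) / 2) / np.sqrt(n * (n**2 - 1) / 12)
    M = np.triu(np.ones((n, n)), k=1) + 0.5 * np.eye(n)
    P = np.eye(n) - np.outer(v1, v1) - np.outer(v2, v2)
    return P @ M @ P


def freq_vector(faces, n):
    """Frequency counts as a float vector: entry i-1 counts the faces equal to i."""
    return np.bincount(np.asarray(faces) - 1, minlength=n).astype(float)


def margin_direct(a, b):
    """(#pairs with a_i > b_j) + (#tied pairs)/2 - n^2/2."""
    wins = sum(x > y for x in a for y in b)
    ties = sum(x == y for x in a for y in b)
    return wins + ties / 2 - len(a) ** 2 / 2


def sample_balanced(n, rng):
    """Rejection sampler for the balanced sequence model."""
    target = n * (n + 1) // 2
    while True:
        faces = [rng.randint(1, n) for _ in range(n)]
        if sum(faces) == target:
            return faces


n, rng = 12, random.Random(1)
Ms = m_star(n)
for _ in range(5):
    a, b = sample_balanced(n, rng), sample_balanced(n, rng)
    bilinear = freq_vector(b, n) @ Ms @ freq_vector(a, n)  # b~^T M_n^* a~
    print(margin_direct(a, b), round(bilinear, 10))
```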

Finally, we record some properties of \(M_n^*\) as well as \(\mathcal {A}\) (Definition 1.3). We are brief with the details, as this mostly amounts to calculation with explicit functions and operators.

Lemma 2.6

There exists \(C = C_{2.6}>0\) such that the following holds. Let \(M_n^*\) be as in Definition 2.4, \(x = (n + 1 - 2i)/(n-1)\) and \(y = (n + 1-2j)/(n-1)\). Then we have the following:

- M1: $$\begin{aligned} (M_n^*)_{ij} = \frac{\mathbbm {1}_{x\ge y}-\mathbbm {1}_{x\le y}}{2} - \frac{3(x-y)(1-1/n)}{4} - \frac{3xy(x-y)(n-1)^2}{4n(n+1)}. \end{aligned}$$

- M2: \(M_n^*\) is skew-symmetric.

- M3: \(\Vert M_n^*\Vert _{1\rightarrow \infty }\le C_{2.6}\) (i.e., the entries are of bounded size).

- M4: \(\Vert M_n^*\Vert _{1\rightarrow 2}=\Vert M_n^{*\intercal }\Vert _{1\rightarrow 2}\le C_{2.6}\sqrt{n}\) (i.e., the row and column \(L^2\)-norms are \(O(\sqrt{n})\)).

- M5: \(\Vert M_n^*\Vert _F/n\in [C_{2.6}^{-1},C_{2.6}]\).

- M6: For fixed \(t\ge 1\) and n sufficiently large, $$\begin{aligned} \sum _{\ell \ge t}\sigma _{n,\ell }^2\le C_{2.6}n^2/t. \end{aligned}$$

- M7: For \(1\le j,k\le n\) and all \(i\notin [j,k]\cup [k,j]\), we have \(|(M_n^*)_{ji}-(M_n^*)_{ki}|\le C_{2.6}|j-k|/n\).

We also have the following properties of \(\mathcal {A}\).

- M8: For each fixed \(t\ge 1\), \((\sigma _{n,\ell }/n)_{1\le \ell \le t}\rightarrow (\sigma _\ell )_{1\le \ell \le t}\) as \(n\rightarrow \infty \).

- M9: For all \(t\ge 1\) we have \(t\sigma _t\in [C_{2.6}^{-1},C_{2.6}]\) (i.e., \(t\sigma _t\) is bounded above and below by absolute constants).

Proof

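Via a direct, albeit tedious, computation, one has that if \(x = (n + 1 - 2i)/(n-1)\) and \(y = (n + 1-2j)/(n-1)\) then
$$\begin{aligned} (M_n^*)_{ij} = \frac{\mathbbm {1}_{x\ge y}-\mathbbm {1}_{x\le y}}{2} - \frac{3(x-y)(1-1/n)}{4} - \frac{3xy(x-y)(n-1)^2}{4n(n+1)}. \end{aligned}$$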

The properties M2 to M5 and M7 all follow immediately via direct inspection.
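The closed form M1 can be confirmed numerically against the projection form of \(M_n^*\) in Definition 2.4. The sketch below (ours, with hypothetical function names) also checks M2 and the explicit entry bound \(1/2+3/2+3/2=7/2\) that follows from M1, a concrete instance of M3.

```python
import numpy as np


def m_star(n):
    """M_n^* = P M_n P, with P projecting away v1 and v2 (Definition 2.4)."""
    i = np.arange(1, n + 1)
    v1 = np.ones(n) / np.sqrt(n)
    v2 = (i - (n + 1) / 2) / np.sqrt(n * (n**2 - 1) / 12)
    M = np.triu(np.ones((n, n)), k=1) + 0.5 * np.eye(n)
    P = np.eye(n) - np.outer(v1, v1) - np.outer(v2, v2)
    return P @ M @ P


def m1_formula(n):
    """Closed form M1 of Lemma 2.6 with x = (n+1-2i)/(n-1) and y = (n+1-2j)/(n-1)."""
    i = np.arange(1, n + 1)
    x = (n + 1 - 2 * i) / (n - 1)
    X, Y = x[:, None], x[None, :]
    return (np.sign(X - Y) / 2                      # (1_{x>=y} - 1_{x<=y}) / 2
            - 3 * (X - Y) * (1 - 1 / n) / 4
            - 3 * X * Y * (X - Y) * (n - 1) ** 2 / (4 * n * (n + 1)))


for n in (5, 12, 40):
    print(n, np.abs(m_star(n) - m1_formula(n)).max())
```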

To prove M6, it suffices to show that there is a rank \(t+4\) (say) approximation of \(M_n^*\), call it \(R_t\), such that \(\Vert M_n^*-R_t\Vert _F^2\lesssim n^2/t\). This follows from considering a rank t approximation for \(M_n\) and then plugging it into Definition 2.4. An appropriate rank t approximation for \(M_n\) with square-error \(O(n^2/t)\) can be formed by removing square matrices of 1s from the right isosceles triangle above the main diagonal of \(M_n\) in a dyadic fashion.
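The dyadic construction in the proof of M6 can be made explicit. In the sketch below (ours; it assumes n is a power of 2), level \(\ell \) places \(2^\ell \) all-ones blocks strictly above the diagonal, so depth d gives rank at most \(2^d-1\). What remains uncovered is \(2^d\) small triangles plus the diagonal of 1/2s, with squared error at most \(n^2/2^d\).

```python
import numpy as np


def dyadic_approximation(n, depth):
    """Cover the strict upper triangle of M_n by 2^depth - 1 all-ones blocks (n a power of 2)."""
    R = np.zeros((n, n))
    for level in range(depth):
        size = n >> level  # segment length at this level
        half = size // 2
        for start in range(0, n, size):
            # rows in the first half of the segment, columns in the second half
            R[start:start + half, start + half:start + size] = 1.0
    return R


n, depth = 64, 3
M = np.triu(np.ones((n, n)), k=1) + 0.5 * np.eye(n)  # (M_n)_{ij} = 1_{i<j} + 1_{i=j}/2
R = dyadic_approximation(n, depth)
err = np.sum((M - R) ** 2)
print(np.linalg.matrix_rank(R), err, n**2 / 2**depth)
```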

To prove the convergence given in M8, we proceed by an argument identifying matrices with operators on \(L^2([-1,1])\) via step functions. In particular, we identify the matrices \(M_n^*\) with the kernels
$$\begin{aligned} M_n^{(*)}(x,y) = \frac{n}{2}(M_n^*)_{ij}\quad \text {for }x\in [1-2i/n,1-2(i-1)/n),\ y\in [1-2j/n,1-2(j-1)/n), \end{aligned}$$

and note that the action of \(M_n^*\) on \(\mathbb {R}^n\) corresponds exactly to the action of the kernel \(M_n^{(*)}(x,y)\) on step functions, where the index \(i\in [n]\) has been mapped to the interval \([1-2i/n,1-2(i-1)/n)\). These have the same spectrum: the multiplicative factor of n/2 corresponds to the fact that the step function which is 1 on a single interval of length 2/n has norm \((2/n)^{1/2}\) in the continuous formulation, while it has norm 1 when viewed as a vector in \(\mathbb {R}^n\).

In general, given a kernel \(K:[-1,1]^2\rightarrow \mathbb {R}\) one can define the integral operator
$$\begin{aligned} (\widetilde{K}h)(x) = \int _{-1}^1K(x,y)h(y)\,dy, \end{aligned}$$

and we have \(\Vert \widetilde{K}\Vert _{L^2([-1,1])\rightarrow L^2([-1,1])}\le \Vert K\Vert _{L^2([-1,1]^2)}\) by Cauchy–Schwarz (see e.g. [13, Example 9.23]). Via this identification, since the kernels \(M_n^{(*)}(x,y)/n\) converge in \(L^2([-1,1]^2)\), the bound above gives the convergence \(\widetilde{M_n^{(*)}}/n\rightarrow \widetilde{M^{(*)}}:=\mathcal {A}\) in operator norm, where the corresponding kernel is
$$\begin{aligned} M^{(*)}(x,y) = f(x,y) = \frac{\mathbbm {1}_{x\ge y}-\mathbbm {1}_{x\le y}}{4}-\frac{3(x-y)(1+xy)}{8}. \end{aligned}$$

Given this, since \(\mathcal {A},M_n^{(*)},M_n^*\) are skew-symmetric (hence normal) operators, it is easy to see that the normalized eigenvalues of \(M_n^*\) converge to those specified by Definition 1.3 (as norm convergence of normal operators implies convergence of the spectrum). This proves M8.

Finally, we prove M9. We first note that \(\mathcal {A}\) is a skew-symmetric perturbation of the integral operator associated to the function \(g(x,y) = \frac{\mathbbm {1}_{x\ge y}-\mathbbm {1}_{x\le y}}{4}\), with the rank of the perturbation bounded by 2. We claim that it suffices to prove that the t-th singular value of \(\widetilde{g}\) scales as \(\Theta (1/t)\). Indeed, apply the generalized Weyl inequality to the Hermitian operator \(\widetilde{g}^\dagger \widetilde{g}\), using that \(\mathcal {A}^\dagger \mathcal {A}\) is a bounded-rank perturbation of it.

To compute the spectrum of \(\widetilde{g}\) (and thus that of \(\widetilde{g}^\dagger \widetilde{g}\)), note that the matrix given by \((T_n)_{ij} = \mathbbm {1}_{i\ge j} - \mathbbm {1}_{i\le j}\) has characteristic polynomial \(\frac{(-1)^n((\lambda +1)^n+(\lambda -1)^n)}{2}\); this is easily proven via row operations and induction. It follows that the eigenvalues of \(T_n\) are \((1+\exp (\pi i (2j-1)/n))/(1-\exp (\pi i (2j-1)/n))\) for \(1\le j\le n\). Thus the jth largest eigenvalue in magnitude scales as \(\Theta (n/j)\). The desired result then follows by rescaling and taking \(n\rightarrow \infty \). \(\square \)
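Both claims about \(T_n\) are easy to check numerically. The sketch below (ours) compares `np.poly(T_n)`, which returns the coefficients of \(\det (\lambda I-T_n)=((\lambda +1)^n+(\lambda -1)^n)/2\) (that is, \((-1)^n\) times the polynomial stated above, which is \(\det (T_n-\lambda I)\)), and compares the computed eigenvalues with \((1+\omega )/(1-\omega )\) for \(\omega =\exp (\pi i(2j-1)/n)\).

```python
import numpy as np


def T_matrix(n):
    """(T_n)_{ij} = 1_{i>=j} - 1_{i<=j}: +1 below the diagonal, -1 above it, 0 on it."""
    i = np.arange(n)
    return np.greater_equal.outer(i, i).astype(float) - np.less_equal.outer(i, i).astype(float)


def predicted_eigenvalues(n):
    """(1 + w) / (1 - w) with w = exp(pi i (2j - 1) / n), 1 <= j <= n; these are purely imaginary."""
    w = np.exp(1j * np.pi * (2 * np.arange(1, n + 1) - 1) / n)
    return (1 + w) / (1 - w)


n = 9
T = T_matrix(n)
char_poly = (np.poly1d([1, 1]) ** n + np.poly1d([1, -1]) ** n).coeffs / 2  # det(lambda I - T_n)
print(np.allclose(np.poly(T), char_poly))
print(np.sort(np.abs(predicted_eigenvalues(n)))[::-1])  # magnitudes decay like n / j
```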

3 Outline of the remainder of the proof

We now outline the remainder of the proofs of Theorems 1.4 and 1.8 in the multiset model; the balanced sequence model is very similar modulo adjusting various constant factors arising due to \(\textrm{Var}[\textrm{Geom}(1/2)] = 2\textrm{Var}[\textrm{Pois}(1)]=2\). We also discuss the various deductions which follow from Theorem 1.4. Consider a set of m dice \(A_1,\ldots ,A_m\) and let \(\widetilde{a}_k=(\widetilde{a}_{kj})_{1\le j\le n}\) be the n-dimensional vector corresponding to the frequency counts of \(A_k\) for \(1\le k\le m\).

3.1 Theorem 1.4 and its consequences

By Lemma 2.5, writing \(\widetilde{x}_j=\widetilde{a}_j\), we have that \(A_j\) beats \(A_k\) if and only if \(\widetilde{x}_k^\intercal M_n^*\widetilde{x}_j>0\). Note that the constraints that \(\widetilde{x}_j\) satisfies are precisely \((1,\ldots ,1)^\intercal \widetilde{x}_j = n\) and \((1,2,\ldots ,n)^\intercal \widetilde{x}_j = n(n+1)/2\) (equivalently, \(\widetilde{x}_j-\vec {1}\) is orthogonal to \(\vec {v}_1,\vec {v}_2\)). By construction we have \(M_n^*\vec {1} = \vec {0}\) and \(M_n^*(1,2,\ldots ,n)^\intercal = \vec {0}\). Therefore, for the sake of heuristic reasoning, we can pretend that the conditioning in Lemma 2.2 does not affect the probability distribution of \(\widetilde{x}_k^\intercal M_n^*\widetilde{x}_j\) and instead suppose that the \(\widetilde{x}_\ell \) are replaced by \(X_\ell \), n-dimensional vectors whose entries are independent \(\textrm{Geom}(1/2)\) random variables. Now \(X_k^\intercal M_n^*X_j\) is a bilinear polynomial in independent random variables. Tools such as the invariance principle of Mossel et al. [19] imply that its distribution is close to the distribution obtained when the \(X_\ell \) are replaced by \(Z_\ell \), where each entry of \(Z_\ell \) is an independent normal of variance \(\textrm{Var}[\textrm{Geom}(1/2)]=2\). Given this, we can convert to a Gaussian quadratic form. This is invariant under orthogonal transformations, so a singular value decomposition of the skew-symmetric matrix \(M_n^*\) and an appropriate variant of the spectral theorem quickly lead to the distribution in Theorem 1.4. In particular, the coefficients \(\sigma _\ell \) in Theorem 1.4 arise precisely from an application of Lemma 2.6.
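One concrete way the \(\sigma _{n,\ell }\) enter this heuristic: for independent standard Gaussian vectors W and Z, the variance of \(W^\intercal M_n^*Z\) is \(\Vert M_n^*\Vert _F^2\), and since \(M_n^*\) is normal with eigenvalues \(\pm i\sigma _{n,\ell }\), this equals \(2\sum _\ell \sigma _{n,\ell }^2\). The sketch below (ours; the sample size and n are arbitrary) checks both facts numerically.

```python
import numpy as np


def m_star(n):
    """M_n^* = P M_n P, with P projecting away v1 and v2 (Definition 2.4)."""
    i = np.arange(1, n + 1)
    v1 = np.ones(n) / np.sqrt(n)
    v2 = (i - (n + 1) / 2) / np.sqrt(n * (n**2 - 1) / 12)
    M = np.triu(np.ones((n, n)), k=1) + 0.5 * np.eye(n)
    P = np.eye(n) - np.outer(v1, v1) - np.outer(v2, v2)
    return P @ M @ P


n = 60
Ms = m_star(n)
ev = np.linalg.eigvals(Ms)
fro2 = np.sum(Ms ** 2)
# For a normal matrix, ||M||_F^2 = sum |lambda|^2 = 2 * sum_l sigma_{n,l}^2.
print(fro2, np.sum(np.abs(ev) ** 2))

rng = np.random.default_rng(0)
Z = rng.standard_normal((40_000, n))
W = rng.standard_normal((40_000, n))
values = np.sum((W @ Ms) * Z, axis=1)  # W^T M_n^* Z for each sample
print(values.var() / fro2)             # should be close to 1
```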

In order to make this heuristic rigorous, we need to precisely understand the joint distribution of \((1,\ldots ,1)^\intercal X_j\), \((1,2,\ldots ,n)^\intercal X_j\), and the desired quadratic forms. We proceed using the Fourier transform (characteristic function), computing the multidimensional Fourier coefficients of the joint distribution of the quadratic forms conditional on these linear equalities. This conditional expectation can be recast using Bayes’ theorem and converted to an expression involving joint Fourier coefficients of both the quadratic and the linear forms, which we can control using the techniques in Sect. 5. Our proof here is closely related to the work of Polymath [22], which similarly used local central limit theorem techniques to decouple various linear conditions; however, the implementation is rather different.

We write this more explicitly. For the sake of this discussion, let \(\mathcal {E}\) denote the event that all m samples \(X_\ell \) for \(1\le \ell \le m\) satisfy \((1,\ldots ,1)^\intercal X_\ell = n\) and \((1,2,\ldots ,n)^\intercal X_\ell = n(n+1)/2\). We then must compute

for all choices of \(\theta =(\theta _{jk})_{1\le j<k\le m}\), where \(\Vert \theta \Vert _\infty \) is roughly \(\widetilde{O}(1/n)\).

Via applying Bayes’ rule, this amounts to computing

since then considering \(\theta =0\) gives an estimate for \(\mathbb {E}[\mathbbm {1}_{\mathcal {E}}]=\mathbb {P}[\mathcal {E}]\) and we can divide to obtain the conditional expectation. At this juncture, much as in the work of Polymath [22], we rely on the Fourier inversion formula to convert the indicator \(\mathbbm {1}_{\mathcal {E}}\) into an explicit integral formula in terms of additional Fourier terms involving the above linear forms. (In the multiset model this conversion is available to us only because of the key Lemma 2.2; even in the balanced sequence model we use the setup of Lemma 2.2 to prove Theorem 1.4.)
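The lattice Fourier inversion used here (stated formally as Theorem 4.9, whose display is not reproduced in this section) can be illustrated in dimension \(d=1\). Assuming it takes the form \(\mathbb{E}[X\mathbbm{1}_{T=t_0}]=\frac{1}{2\pi}\int_{-\pi}^{\pi}\mathbb{E}[Xe^{i\xi(T-t_0)}]\,d\xi\) for bounded integer-valued T (our reading), the sketch below evaluates the right-hand side numerically. Because T is bounded, the integrand is a trigonometric polynomial, so an N-point Riemann sum is exact once N exceeds every \(|t-t_0|\) in the support. The function name and the two-dice example are hypothetical.

```python
import cmath
import math


def expectation_on_event(joint, t0, N):
    """Compute E[X * 1{T = t0}] for integer-valued T by Fourier inversion on Z.

    `joint` lists (t, x, p) with P[T = t, X = x] = p. We use the N-point Riemann sum of
    (1/2pi) * int_{-pi}^{pi} E[X exp(i xi (T - t0))] d xi, which is exact whenever
    |t - t0| < N for every t in the support (all nonzero frequencies average to zero).
    """
    total = 0j
    for k in range(N):
        xi = -math.pi + 2 * math.pi * k / N
        total += sum(p * x * cmath.exp(1j * xi * (t - t0)) for t, x, p in joint)
    return (total / N).real


# Hypothetical example: T = sum of two fair dice, X = value of the first die.
joint = [(d1 + d2, d1, 1 / 36) for d1 in range(1, 7) for d2 in range(1, 7)]
print(expectation_on_event(joint, 7, 16), 21 / 36)
```

Taking \(X\equiv 1\) recovers \(\mathbb{P}[T=t_0]\), which is the role of the \(\theta=0\) case above.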

In particular, by applying Fourier inversion on the lattices we will find

In order to prove the desired result, we split the integral into several regions. If any \(|\xi _{1j}|\ge n^{-1/2}(\log n)^7\) or \(|\xi _{2j}|\ge n^{-3/2}(\log n)^6\), we prove that the corresponding term in the integral is super-polynomially small using Lemmas 5.6–5.8. Specifically, we condition on the outcomes of all dice other than the j-th, and then the corresponding Fourier integral is simply a product of independent terms handled by these lemmas. To prove these lemmas, we extract cancellation in a systematic and clean manner by considering pairs and triplets of indices and performing “switches” between them in order to extract Boolean randomness. These switches yield sufficient conditions on the relevant coefficient sequences for the arguments to go through, and these conditions live purely in “physical space” (whereas the approach taken in the work of Polymath [22] naturally leads one to consider how various coefficients are distributed with respect to angles on the torus). Finally, in the region where \(|\xi _{1j}|\le n^{-1/2}(\log n)^{7}\) and \(|\xi _{2j}|\le n^{-3/2}(\log n)^{6}\) we apply a Lindeberg exchange argument (see [16], and also the related proof of the invariance principle [19]) to replace the geometric random variables with Gaussians of the same variance. Using the rapid decay of the Gaussian Fourier coefficients and Gaussian rotational symmetry, one can verify that the Fourier coefficients match those of the associated Gaussian prediction, and the desired result then follows via Lévy continuity and similar techniques which convert Fourier control back to physical space control.

In order to prove Corollary 1.5, we directly cite Theorem 1.4 and use symmetries of the Gaussian distribution under negation. For Corollary 1.7, note that convergence to a tournamenton follows from general machinery, since the probability of each fixed digraph converges. To deduce that the associated tournamenton is \(\{0,1\}\)-valued, we reduce to proving that, with probability at least \(1-\varepsilon \), a random tournament on M dice lies within a specific set of complete tournaments of size \(2^{\varepsilon M^2}\). This is shown using Theorem 1.4: note that we can simulate the limiting tournament on M vertices by sampling the Gaussians \(G_\ell ^{(j)}\) for \(1\le j\le M\) and \(\ell \ge 1\), computing the various \(H_{jk}\), and checking their signs. By revealing, for each j, the first \(2M^{1/2}\) Gaussians \(Z_\ell ^{(j)}\) up to a rounding error of \(M^{-10}\), we obtain at most \(\exp (O(M^{3/2}\log M))\) buckets where almost all the probability mass lies; with good probability this also determines the outcome of almost all match-ups in the tournament (this deduction requires Gaussian anticoncentration results such as Theorem 4.3 to see that it is unlikely that many match-ups are “too close to call” due to the rounding error). Then revealing the outcomes of the remaining match-ups introduces \(\exp (o(M^2))\) total buckets that contain almost all the probability mass, and which uniquely determine the outcome of the M-die tournament.
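The simulation described above can be sketched as follows, under two loudly flagged assumptions. First, the limiting constants \(\sigma_\ell\) (which come from Lemma 2.6 and are not reproduced here) are replaced by the hypothetical placeholder \(\sigma_\ell=1/\ell\). Second, each \(2\times 2\) block of the skew-symmetric normal form is taken to be \(\begin{pmatrix}0&\sigma_\ell\\-\sigma_\ell&0\end{pmatrix}\), so the truncated form is \(H_{jk}\approx\sum_{\ell\le t}\sigma_\ell(G^{(j)}_{2\ell-1}G^{(k)}_{2\ell}-G^{(j)}_{2\ell}G^{(k)}_{2\ell-1})\), and we record \(j\to k\) when \(H_{jk}>0\) (orientation convention assumed). The printed frequency depends on the placeholder and should not be read as a value from the paper.

```python
import random


def hypothetical_sigma(ell):
    # HYPOTHETICAL placeholder for the limiting constants sigma_ell of Lemma 2.6.
    return 1.0 / ell


def sample_H(M, t, rng, sigma=hypothetical_sigma):
    """Sample the truncated skew-symmetric array H[j][k] for M dice using t blocks."""
    G = [[rng.gauss(0.0, 1.0) for _ in range(2 * t)] for _ in range(M)]
    H = [[0.0] * M for _ in range(M)]
    for j in range(M):
        for k in range(j + 1, M):
            h = sum(
                sigma(l + 1) * (G[j][2 * l] * G[k][2 * l + 1] - G[j][2 * l + 1] * G[k][2 * l])
                for l in range(t)
            )
            H[j][k], H[k][j] = h, -h  # skew-symmetry of the bilinear form
    return H


def is_cyclic_triple(H):
    """A 3-vertex tournament is a directed 3-cycle iff every vertex has out-degree 1."""
    outdeg = [sum(1 for k in range(3) if k != j and H[j][k] > 0) for j in range(3)]
    return outdeg == [1, 1, 1]


rng = random.Random(0)
trials = 5000
freq = sum(is_cyclic_triple(sample_H(3, 20, rng)) for _ in range(trials)) / trials
print(f"empirical frequency of a directed 3-cycle: {freq:.3f}")
```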

Given the non-quasirandomness of the associated tournament from Corollary 1.7 and the underlying symmetries in Corollary 1.5 it also follows from a simple Cauchy–Schwarz argument that the limiting probability A beats B, B beats C, C beats D, and D beats A is strictly larger than 1/16 (see Proposition 8.2). Finally, we note that via carefully choosing various Gaussians \(Z_\ell ^{(j)}\) to lie in certain ranges one can prove that the limiting probability of any fixed M-die tournament occurring is strictly positive (see Proposition 8.3). This allows one to quickly deduce a number of prior results as discussed in the introduction.

3.2 Proof of Theorem 1.8

To compute the probability that two dice tie, we proceed via a more delicate route. As discussed in the remark following Theorem 1.8, one can see this as a (special case of a) local limit theorem version of Theorem 1.4 with two dice.

We use ideas closely related to those in the proof of Theorem 1.4, as well as additional Fourier coefficient estimates (Lemmas 5.4, 5.5) which use the extra condition that certain associated coefficient sequences “resemble a simple random walk at all scales” in a coarse sense. It follows that for almost all outcomes of die \(A_1\), with frequency counts \(\widetilde{x}_1\), the probability that a random die \(A_2\) ties \(A_1\) is proportional to \(\Vert M_n^*\widetilde{x}_1\Vert _2^{-1}\). (We note that such a result for the balanced sequence model is essentially implicit in the work of Polymath [22], although not stated in this manner; however, again, our work proceeds through frequency counts rather than independent die faces, which are not available in the multiset model.)

Therefore the natural approach at this point would be to prove a limit theorem for \(\Vert M_n^{*}X\Vert _2^2\), where X is a sequence of geometric random variables conditional on the two linear constraints \((1,\ldots ,1)^\intercal X=n,(1,2,\ldots ,n)^\intercal X=n(n+1)/2\). While this appears to be possible, note that \(\Vert M_n^*\vec {x}\Vert _2^2\) is a genuinely quadratic polynomial in the underlying random variables (rather than multilinear, as in the case of Theorem 1.4), and hence a direct approach would appear to require the tools of Berkowitz [4], developed in the context of local central limit theorems for clique counts in dense random graphs, which would greatly complicate the situation.

To circumvent this, we proceed indirectly so as to only require linear Fourier estimates. The basic idea is that given a sufficiently good upper bound on \(\Vert M_n^*X\Vert _3\) (conditional on our two linear constraints), by sampling a fixed number of random coordinates \(j_1,\ldots ,j_T\) for some large constant T we have

holds with high probability as \(T\rightarrow \infty \). Therefore the question can be reduced to understanding the linear statistics \((\langle e_{j_\ell },M_n^{*}X \rangle )_{1\le \ell \le T}\) jointly conditional on our two linear constraints. This can be handled by precisely the techniques discussed in Sect. 3.1 for Theorem 1.4. The estimates are necessarily a bit delicate since the function \(y\mapsto 1/y\) is unbounded near 0, and thus care must be taken to rule out the pathological cases where \(\Vert M_n^*X\Vert _2\) is small with unusually large probability.
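A minimal sketch of the coordinate-sampling idea, assuming the omitted display is an estimate of the form \(\Vert v\Vert_2^2\approx\frac{n}{T}\sum_{\ell=1}^T\langle e_{j_\ell},v\rangle^2\) for \(v=M_n^*X\) (our reading, not a quotation). The estimator is unbiased for \(\Vert v\Vert_2^2\), and it concentrates as T grows provided no small set of coordinates carries most of the mass, which is presumably the role of the upper bound on \(\Vert M_n^*X\Vert_3\). The function name `sampled_norm_sq` is our own.

```python
import random


def sampled_norm_sq(v, T, rng):
    """Estimate ||v||_2^2 by (n / T) * sum of v_j^2 over T uniformly random coordinates j."""
    n = len(v)
    return n / T * sum(v[rng.randrange(n)] ** 2 for _ in range(T))


v = [1 + (j % 3) for j in range(3000)]  # entries 1, 2, 3 repeating
exact = sum(x * x for x in v)           # 1000 * (1 + 4 + 9) = 14000
print(exact, sampled_norm_sq(v, 20000, random.Random(1)))
```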

4 Preliminaries

We briefly collect a series of preliminaries which will be used throughout the proof. First we will require a version of the classical Bernstein inequality, which generalizes the Chernoff bound.

Theorem 4.1

([26, Theorem 2.8.1]) For a random variable X define the \(\psi _1\)-norm

There is an absolute constant \(c = c_{4.1}> 0\) such that the following holds. If \(X_1,\ldots ,X_N\) are independent mean-zero random variables then

for all \(t\ge 0\).

Next we will require the Azuma–Hoeffding inequality (see [15, Theorem 2.25]).

Lemma 4.2

(Azuma–Hoeffding inequality) Let \(X_0, \ldots , X_n\) form a martingale sequence such that \(|X_k-X_{k-1}|\le c_k\) almost surely. Then

Remark

We will refer to \(\sum _{k=1}^nc_k^2\) as the variance proxy in such a situation.
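For orientation, the sketch below compares the standard two-sided form of the Azuma–Hoeffding bound, \(\mathbb{P}[|X_n-X_0|\ge t]\le 2\exp(-t^2/(2\sum_k c_k^2))\), with a Monte Carlo estimate for a \(\pm 1\) simple random walk, where every \(c_k=1\) and the variance proxy is n. The display in Lemma 4.2 is not reproduced here, so this standard form is our assumption about its shape; the function names are our own.

```python
import math
import random


def azuma_bound(t, c):
    """Two-sided Azuma-Hoeffding bound 2 exp(-t^2 / (2 sum_k c_k^2)); sum_k c_k^2 is the variance proxy."""
    return 2.0 * math.exp(-t * t / (2.0 * sum(ck * ck for ck in c)))


def empirical_tail(n, t, trials, rng):
    """Fraction of length-n simple random walks (steps +-1, so c_k = 1) with |X_n - X_0| >= t."""
    hits = 0
    for _ in range(trials):
        s = sum(rng.choice((-1, 1)) for _ in range(n))
        hits += abs(s) >= t
    return hits / trials


n, t = 100, 20
print(empirical_tail(n, t, 5000, random.Random(2)), azuma_bound(t, [1] * n))
```

The bound is far from tight here, which is typical: it only uses the bounded-increment structure, not the exact distribution of the steps.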

Furthermore we will require the Carbery–Wright theorem [5], which shows that low-degree polynomials of Gaussians are anticoncentrated; we will only require the quadratic case.

Theorem 4.3

(see e.g. [17, Theorem 1.4]) Fix an integer \(d\ge 1\). There exists a constant \(C_d\) such that the following holds. For any \(\varepsilon >0\), if \((G_i)_{1\le i\le n}\) are independent Gaussian random variables, and P is a polynomial of degree at most d then

We will also require the invariance principle of Mossel et al. [19]. The version in Theorem 4.5 below is stated as [20, (11.66)] (with the necessary hypercontractivity following from [19, Proposition 3.16]).

Definition 4.4

Given a multilinear polynomial \(g(x_1,\ldots ,x_n) = \sum _{S\subseteq [n]} a_S\prod _{i\in S}x_i\), for \(t=1,\ldots ,n\) the influence of the variable \(x_t\) is defined as

Theorem 4.5

Fix \(M\ge 1\); there exists \(M'>0\) such that the following holds. Let g be an n-variable multilinear polynomial of degree at most k. Let \(\vec {y}\) be a vector of i.i.d. random variables such that \(\mathbb {E}[y_i] = 0\), \(\mathbb {E}[y_i^2] = 1\) and \(\mathbb {E}[|y_i|^3]\le M\). Let \(\vec {z}\sim \mathcal {N}(0,1)^{\otimes n}\) be a vector of independent standard Gaussian random variables. Then for any three-times-differentiable function \(\psi :\mathbb {R}\rightarrow \mathbb {R}\), we have

Next, we will require the following concentration inequality for low-degree polynomials of Gaussians; the Rademacher case is stated as [20, Theorem 9.23] and the Gaussian case follows by taking limits and applying the central limit theorem.

Theorem 4.6

Let f be a polynomial in n variables of degree at most d. Let \(\vec {x}=(x_1,\ldots ,x_n)\) be a vector of independent standard Gaussian random variables. Then for any \(t\ge (2e)^{d/2}\),

We also require a statement allowing one to quantify the convergence in distribution of a random variable given convergence of the associated Fourier transform. The following result is immediate from [21, p. 104, Theorem 1]; this is an essentially standard inequality used in the proof of the Berry–Esseen theorem.

Theorem 4.7

There exists an absolute constant \(C = C_{4.7}>0\) such that the following statement holds. Consider a pair of random variables X and Y and a parameter \(T>0\). We have that

Next we will require a multidimensional version of Esseen’s concentration inequality.

Theorem 4.8

([24, Lemma 7.17]) There exists an absolute constant \(C = C_{4.8}>0\) such that the following statement holds. Given a random variable X in \(\mathbb {R}^d\), we have that

Finally we will require the following consequence of Fourier inversion on lattices.

Theorem 4.9

Given bounded random variables \(T\in \mathbb {Z}^d\) and \(X\in \mathbb {R}\), possibly dependent, we have

5 Fourier coefficient bounds

5.1 Coefficient sequence

For the purposes of proving various central limit theorem and local central limit theorems, we will consider sums

with coefficient sequences \((c_j)_{1\le j\le n}\) which are more general than those arising from \(\big (\sum _{1\le k<j}\widetilde{b}_k + \frac{\widetilde{b}_j}{2} - (j-1/2)\big )_{1\le j\le n}\), which come out of Lemma 2.3. The following definitions for such sequences arise from the proof; roughly, a sequence is well-bounded if it does not deviate much more than a simple random walk would, and it is coarse if it further resembles such a simple random walk at finer scales.

Definition 5.1

We say a coefficient sequence \((c_j)_{1\le j\le n}\) is well-bounded if the following conditions hold:

- S1: \(|c_j|\le \sqrt{n}\log n\);
- S2: \(\sum _{j=1}^nc_j = 0\);
- S3: \(|c_j-c_k|\le \sqrt{|j-k|}(\log n)^2\) for all \(1\le j,k\le n\);

and we say it is coarse if it is well-bounded and additionally the following hold:

- S4: \(\min _{a,b\in \mathbb {R}}\sum _{j=1}^n(c_j-aj-b)^2\ge n^2/(\log n)^2\);
- S5: There are at least \(n/\log n\) indices j such that \(c_j = c_{j+1} = c_{j+2} - 1/2\);
- S6: For each integer \(y\in [n^{1/4},n/(\log n)^2]\) there are at least \(n/\log n\) indices \(1\le j\le n-2y\) such that \(|c_j-2c_{j+y}+c_{j+2y}|\ge \sqrt{y}\).
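The well-boundedness conditions S1–S3 are easy to test mechanically. The sketch below does so by brute force, assuming log denotes the natural logarithm and checking S2 only up to a floating-point tolerance; the name `is_well_bounded` is our own, and the coarseness conditions S4–S6 are not checked.

```python
import math


def is_well_bounded(c, tol=1e-9):
    """Check S1-S3 of Definition 5.1 for c = (c_1, ..., c_n) by brute force (O(n^2)).

    Assumes log is the natural logarithm; S2 is checked up to the tolerance `tol`.
    """
    n = len(c)
    L = math.log(n)
    s1 = all(abs(cj) <= math.sqrt(n) * L for cj in c)
    s2 = abs(sum(c)) <= tol
    s3 = all(
        abs(c[j] - c[k]) <= math.sqrt(k - j) * L ** 2
        for j in range(n)
        for k in range(j + 1, n)
    )
    return s1 and s2 and s3


zigzag = [0.5 if j % 2 == 0 else -0.5 for j in range(100)]
print(is_well_bounded(zigzag))  # prints: True
```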

5.2 Fourier estimates

We now bound various Fourier expressions that will serve as a key input to our argument. We first define the basic setup.

Definition 5.2

Let \(\Delta \) be a distribution which is either \(\textrm{Geom}(1/2)\) or \(\textrm{Pois}(1)\). Sample \(X_j\sim \Delta \) independently for \(1\le j\le n\) and fix a sequence \((c_j)_{1\le j\le n}\). Define the random variables

We will be interested in Fourier coefficients of the form \(\mathbb {E}\exp (i\vec {\Theta }\cdot (T_1,T_2,T_3))\). Our general approach will be to reduce essentially to expressions involving Rademacher random variables and then to apply various basic bounds. (Note that we are not conditioning on the sum variable \(T_1\) or “area” variable \(T_2\) at this stage.)

Fact 5.3

Given \(R\sim \textrm{Ber}(1/2)\), \(R\sim \textrm{Geom}(1/2)\), or \(R\sim \textrm{Pois}(1)\) and real \(|\Theta |\le 3\pi /2\) we have

for some appropriate absolute constant \(c_{5.3}>0\).

Proof

This follows immediately from the explicit computation that

and some simple bounds based on Taylor series. \(\square \)
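The explicit characteristic functions behind Fact 5.3 are \((1+e^{i\Theta})/2\), of modulus \(|\cos(\Theta/2)|\), for \(\textrm{Ber}(1/2)\); \(1/(2-e^{i\Theta})\) for \(\textrm{Geom}(1/2)\) supported on \(\{0,1,2,\ldots\}\) (mean 1, variance 2); and \(\exp(e^{i\Theta}-1)\) for \(\textrm{Pois}(1)\). The sketch below evaluates them and reports, on a grid of \(\Theta\in(0,3\pi/2]\), the largest c with \(|\mathbb{E}e^{i\Theta R}|\le e^{-c\Theta^2}\); we assume the omitted display has this shape, and a grid check is a sanity check rather than a proof. It also shows why the range stops short of \(2\pi\): in the Bernoulli case the modulus returns to 1 at \(\Theta=2\pi\).

```python
import cmath
import math


def char_modulus(dist, theta):
    """|E exp(i theta R)| from the closed-form characteristic functions."""
    if dist == "bernoulli":  # R ~ Ber(1/2): (1 + e^{i theta}) / 2 has modulus |cos(theta / 2)|
        return abs(math.cos(theta / 2))
    if dist == "geometric":  # P[R = k] = 2^{-(k+1)}, k >= 0: E e^{i theta R} = 1 / (2 - e^{i theta})
        return abs(1 / (2 - cmath.exp(1j * theta)))
    if dist == "poisson":  # R ~ Pois(1): E e^{i theta R} = exp(e^{i theta} - 1)
        return abs(cmath.exp(cmath.exp(1j * theta) - 1))
    raise ValueError(f"unknown distribution: {dist}")


def best_constant(dist, grid=20000):
    """Largest c with |E e^{i theta R}| <= exp(-c theta^2) on a grid of theta in (0, 3 pi / 2].

    The modulus is even in theta, so positive theta suffice. A grid check, not a proof.
    """
    c = math.inf
    for k in range(1, grid + 1):
        theta = 1.5 * math.pi * k / grid
        m = char_modulus(dist, theta)
        if m > 0:  # m == 0 imposes no constraint on c
            c = min(c, -math.log(m) / theta ** 2)
    return c


for dist in ("bernoulli", "geometric", "poisson"):
    print(dist, round(best_constant(dist), 4))
```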

We first handle \(\vec {\Theta }\) where \(|\Theta _3|\) is large, since this case is the most involved and serves as a basis for the other proofs. The key idea, used in all the estimates below, is to extract independent random variables which isolate the effect of exactly one of the \(\Theta _j\).

Lemma 5.4

Suppose that \(\vec {\Theta } = (\Theta _1,\Theta _2,\Theta _3)\) is such that \(n^{-1/2}(\log n)^2\le |\Theta _3|\le \pi \). Then given Definition 5.2 and that \((c_j)_{1\le j\le n}\) is coarse, we have

Remark

This estimate, as well as Lemma 5.5, is only needed to establish Theorem 1.8.

Proof

Since the coefficient sequence \((c_j)_{1\le j\le n}\) is coarse, using S5 there exists a 4-separated set of indices J (i.e., the difference of distinct elements is at least 4) such that \(|J|=\Omega (n/\log n)\) and such that for \(j\in J\) we have \(c_j = c_{j+1} = c_{j+2}-1/2\). We now claim that

where R, W, and Z are independent random variables defined via \(R=\textrm{Ber}(1/2)\),

for \((k_1,k_2,k_3)\in \mathbb {Z}_{\ge 0}^3\). Indeed, to see this let \(2q=\min \{\mathbb {P}[(X_1,X_2,X_3) = (0,2,0)], \mathbb {P}[(X_1,X_2,X_3) = (1,0,1)]\}\) and consider the following procedure: sample \((X_1,X_2,X_3)\), but if either of the tuples \((x_1,x_2,x_3)\in \{(0,2,0),(1,0,1)\}\) is drawn then with probability \(q/\mathbb {P}[(X_1,X_2,X_3)=(x_1,x_2,x_3)]\) enter a “resampling phase” where we decide with probability 1/2 whether to output (0, 2, 0) or (1, 0, 1), overwriting the old value to produce a tuple \((X_1',X_2',X_3')\). (So, the “resampling phase” occurs with chance 2q by the law of total probability.) We see the distributional equality \((X_1',X_2',X_3')\overset{d.}{=}(X_1,X_2,X_3)\) by construction, but \((X_1',X_2',X_3')\) is easily seen to be captured by the formula (5.1).

Note that (5.1) holds even if we shift indices. Furthermore, as the indices in J are 4-separated, we have the distributional equality

Notice by the triangle inequality and independence that

The first and second lines follow from independence and the triangle inequality, the third follows from

and the fourth follows from Fact 5.3. In the final line we have used independence and Bernstein’s inequality, which implies that \(\#\{j\in J:W_j=1\}=\sum _{j\in J}\mathbbm {1}_{W_j=1}\le cn/\log n\) occurs with super-polynomially small probability for some small absolute constant \(c>0\). \(\square \)
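The resampling construction behind (5.1) can be checked exactly in the geometric case. The sketch below, with our own helper names, builds the law of Z from the law of \((X_1,X_2,X_3)\) by removing mass q from each of (0, 2, 0) and (1, 0, 1), where 2q is the smaller of their probabilities, and confirms that \((1-W)Z+W((0,2,0)+R(1,-2,1))\) with \(W\sim\textrm{Ber}(2q)\) and \(R\sim\textrm{Ber}(1/2)\) recovers the original law. It also checks the elementary identities that let R isolate \(\Theta_3\): \((1,-2,1)\) is orthogonal to \((1,1,1)\) and to \((j,j+1,j+2)\), and pairs with \((c_j,c_{j+1},c_{j+2})\) to give 1/2 when \(c_j=c_{j+1}=c_{j+2}-1/2\). The precise form of W and Z in the omitted display is assumed from the prose, not quoted.

```python
from fractions import Fraction
from itertools import product

P0, P1 = (0, 2, 0), (1, 0, 1)  # P1 - P0 = (1, -2, 1)


def geom_pmf3(k):
    """P[(X1, X2, X3) = k] for i.i.d. X_i with P[X_i = m] = 2^{-(m+1)}, m >= 0."""
    return Fraction(1, 2 ** (sum(k) + 3))


def split_law(pmf):
    """Return (q, z_pmf) with law = (1 - W) Z + W (P0 + R (P1 - P0)), W ~ Ber(2q), R ~ Ber(1/2)."""
    q = min(pmf(P0), pmf(P1)) / 2

    def z_pmf(k):
        return (pmf(k) - (q if k in (P0, P1) else 0)) / (1 - 2 * q)

    return q, z_pmf


def mixture_pmf(q, z_pmf, k):
    """Law of (1 - W) Z + W (P0 + R (P1 - P0)) at k: the W = 1 branch puts mass q on P0 and on P1."""
    return (1 - 2 * q) * z_pmf(k) + (q if k in (P0, P1) else 0)


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


q, z = split_law(geom_pmf3)
box = list(product(range(6), repeat=3))
print(q, all(mixture_pmf(q, z, k) == geom_pmf3(k) for k in box))  # prints: 1/64 True
```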

We next handle the case of intermediate \(|\Theta _3|\). The remaining unhandled range will be in some sense controllable by an appropriate central limit theorem.

Lemma 5.5

Suppose that \(\vec {\Theta } = (\Theta _1,\Theta _2,\Theta _3)\) is such that \(n^{-1}(\log n)^3\le |\Theta _3|\le n^{-1/2}(\log n)^2\). Then given Definition 5.2 and that \((c_j)_{1\le j\le n}\) is coarse, we have

Proof

Let \(y\in [n^{1/4},n/(\log n)^2]\) be an integer to be chosen later based on \(n,|\Theta _3|\). Since \((c_j)_{1\le j\le n}\) is a coarse sequence, by S6 there exists a set of indices J of size \(\Omega (n/\log n)\) such that the sets \(J, J+y, J+2y\) are disjoint and for each \(j\in J\) we have \(\sqrt{y}\le |c_j-2c_{j+y}+c_{j+2y}|\le 2\sqrt{y}(\log n)^2\) (the second inequality follows from two applications of S3). Therefore proceeding in an essentially identical manner to Lemma 5.4 (in particular writing \((X_j,X_{j+y},X_{j+2y})=(1-W_j)Z_j+W_j((0,2,0)+R_j(1,-2,1))\) for \(j\in J\) similar to the proof of the previous lemma), we have that

The reasoning is essentially identical to that in the proof of Lemma 5.4. We need that \(|\Theta _3(c_j-2c_{j+y}+c_{j+2y})|\le \sqrt{y}(\log n)^2|\Theta _3|\le 3\pi /2\) in order to apply Fact 5.3. If we additionally have that \(\Theta _3^2y\cdot n/(\log n)\ge (\log n)^2\), then using \(|J|=\Omega (n/\log n)\) we can conclude the final estimate in a similar manner to the proof of Lemma 5.4. Thus it suffices to choose an integer y satisfying

and \(y\in [n^{1/4},n/(\log n)^2]\). For n sufficiently large, such a y exists by the given bounds on \(|\Theta _3|\). \(\square \)
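The existence of y can be checked numerically. The sketch below encodes the constraints stated in the proof, namely \(y\in[n^{1/4},n/(\log n)^2]\), \(\sqrt{y}(\log n)^2|\Theta_3|\le 3\pi/2\), and \(\Theta_3^2y\,n/\log n\ge(\log n)^2\), assuming natural logarithms (the omitted display is not reproduced), and returns the smallest admissible integer. Because of the polylogarithmic factors, feasibility across the whole range of \(|\Theta_3|\) only kicks in for very large n; the function name is hypothetical.

```python
import math


def choose_y_lemma55(n, theta3):
    """Smallest integer y satisfying the constraints in the proof of Lemma 5.5, or None.

    Constraints (log = natural log, an assumption):
      n^{1/4} <= y <= n / (log n)^2,
      sqrt(y) (log n)^2 |theta3| <= 3 pi / 2,
      theta3^2 * y * n / log n >= (log n)^2.
    """
    L = math.log(n)
    lo = max(n ** 0.25, L ** 3 / (n * theta3 ** 2))
    hi = min(n / L ** 2, (1.5 * math.pi / (L ** 2 * abs(theta3))) ** 2)
    y = math.ceil(lo)
    return y if y <= hi else None


print(choose_y_lemma55(10 ** 40, 1e-30))
```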

We now prove a similar estimate for the case where \(|\Theta _2|\) is near the maximum size. The proof is once again rather similar, but in this case we only need to consider consecutive pairs of indices \((j,j+1)\) in order to extract the necessary effect.

Lemma 5.6

Suppose that \(\vec {\Theta } = (\Theta _1,\Theta _2,\Theta _3)\) is such that \(n^{-1/2}\log n\le |\Theta _2|\le \pi \), \(|\Theta _3|\le n^{-1}(\log n)^3\) and \((c_j)_{1\le j\le n}\) satisfies S1 and S3. Then given Definition 5.2, we have

Remark

Lemmas 5.6–5.8 are needed for both Theorems 1.4 and 1.8. Note that these lemmas do not need an assumption on coarseness of \((c_j)_{1\le j\le n}\).

Proof

Since the coefficient sequence \((c_j)_{1\le j\le n}\) satisfies S3 we have \(|c_j-c_{j+1}|\le (\log n)^2\). Let \(J\subseteq [n]\) be a 2-separated set of indices of size \(\Omega (n)\). Furthermore note that \((X_1,X_2) \overset{d.}{=}\ (1-W)Z + W((1,0) + R(-1,1))\), where R, W, Z are independent random variables with \(R=\textrm{Ber}(1/2)\), \(W = \textrm{Ber}(\mathbb {P}[(X_1,X_2)=(1,0)])\), and

for \((k_1,k_2)\in \mathbb {Z}_{\ge 0}^2\), similarly to the proof of Lemma 5.4.

Now for each index in J, we write \((X_j,X_{j+1}) = (1-W_j)Z_j + W_j((1,0) + R_j(-1,1))\). Notice by the triangle inequality and independence that

The first and second lines follow from the triangle inequality, the third follows from \((1,1)\cdot (-1,1) = 0\), \((j,j+1)\cdot (-1,1) = 1\), and \((c_j,c_{j+1})\cdot (-1,1) = c_{j+1}-c_j\) for \(j\in J\), and the fourth from Fact 5.3 as well as \(2|c_{j+1}-c_j||\Theta _3|\le 2n^{-1}(\log n)^5\le |\Theta _2|/2\). In the final line we have once again used Bernstein’s inequality. \(\square \)

We next handle intermediate \(|\Theta _2|\). In the remaining range central limit theorem type estimates become effective.

Lemma 5.7

Suppose that \(\vec {\Theta } = (\Theta _1,\Theta _2,\Theta _3)\) is such that \(n^{-3/2}(\log n)^6\le |\Theta _2|\le n^{-1/2}\log n\), \(|\Theta _3|\le n^{-1}(\log n)^3\) and \((c_j)_{1\le j\le n}\) satisfies S1 and S3. Then given Definition 5.2, we have

Proof

Let \(1\le y\le n/8\) be an integer to be chosen later based on \(n,|\Theta _2|\). Consider \(J = \{\lfloor n/2\rfloor -2y,\lfloor n/2\rfloor -2y + 1,\ldots ,\lfloor n/2\rfloor -y\}\). We have

for all \(j\in J\). We ensure that y is chosen so that \(\sqrt{y}(\log n)^5/n\le cy|\Theta _2|\) for an appropriately small absolute constant \(c>0\) and so that \(y|\Theta _2|\le 1/8\) as well. We also guarantee \(y\ge (\log n)^2\).

We now write \((X_j,X_{n-j}) = (1-W_j)Z_j + W_j((1,0) + R_j(-1,1))\) for \(j\in J\) in a similar manner to the proof of Lemma 5.6, and find

We used that \((n-2j)\Theta _2\) dominates \(2(c_{n-j}-c_j)\Theta _3\) in the second-to-last line, as well as \(2|(n-2j)\Theta _2|\le 1\), to apply Fact 5.3. For the last line, we note that \(\sum _{j\in J}\mathbbm {1}_{W_j = 1}\lesssim y\) occurs with super-polynomially small probability (since \(y\ge (\log n)^2\)) and we use the estimate \(y^3\Theta _2^2\ge (\log n)^2\).

To finish the proof, we check that it is possible to choose an integer \(1\le y\le n/8\) with \(y\ge (\log n)^{2/3}|\Theta _2|^{-2/3}\) and \(y\ge (\log n)^2\) as well as \(y\le |\Theta _2|^{-1}/8\) and \(y\ge c^{-2}(\log n)^{10}/(n^2\Theta _2^2)\). For n sufficiently large, the bounds on \(|\Theta _2|\) imply this is possible. \(\square \)
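The constraints just listed can likewise be checked numerically. In the sketch below, c stands for the unspecified small absolute constant of the proof, and the value 0.1 is purely hypothetical; logarithms are assumed natural, and the function name is our own. As with Lemma 5.5, the check only succeeds once n is very large, reflecting the polylogarithmic factors.

```python
import math


def choose_y_lemma57(n, theta2, c=0.1):
    """Smallest integer y meeting the constraints at the end of the proof of Lemma 5.7, or None.

    Lower bounds: y >= (log n)^{2/3} |theta2|^{-2/3}, y >= (log n)^2,
                  y >= c^{-2} (log n)^{10} / (n^2 theta2^2), y >= 1.
    Upper bounds: y <= n / 8, y <= |theta2|^{-1} / 8.
    c = 0.1 is a HYPOTHETICAL stand-in for the unspecified small constant.
    """
    L = math.log(n)
    t = abs(theta2)
    lo = max(L ** (2 / 3) * t ** (-2 / 3), L ** 2, L ** 10 / (c ** 2 * n ** 2 * t ** 2), 1.0)
    hi = min(n / 8, 1 / (8 * t))
    y = math.ceil(lo)
    return y if y <= hi else None


print(choose_y_lemma57(10 ** 40, 1e-30))
```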

We are finally in a position to handle the cases where \(|\Theta _1|\) is large. The remaining region will be handled by central limit theorem style techniques.

Lemma 5.8

Suppose that \(\vec {\Theta } = (\Theta _1,\Theta _2,\Theta _3)\) is such that \(n^{-1/2}(\log n)^7\le |\Theta _1|\le 5\pi /4\), \(|\Theta _2|\le n^{-3/2}(\log n)^6\), and \(|\Theta _3|\le n^{-1}(\log n)^3\) and \((c_j)_{1\le j\le n}\) satisfies S1 and S3. Then given Definition 5.2, we have

Proof

Note that \(|\Theta _2|n + |\Theta _3|\max _{1\le j\le n}|c_j|\le 2(\log n)^6n^{-1/2}\). Therefore we have

where we have simply noted that \(\Theta _1\) dominates \(\Theta _2j+2\Theta _3c_j\) and applied Fact 5.3. \(\square \)

We now prove the desired estimate in the remaining region, which is controlled via a central limit theorem. For completeness we provide a short proof via an argument closely related to the Lindeberg exchange method [16] and the proof of the Gaussian invariance principle [19] (see Theorem 4.5). This reduces computing the necessary integrals to a purely Gaussian integration problem.

Lemma 5.9

Suppose that \(\vec {\Theta } = (\Theta _1,\Theta _2,\Theta _3)\) is such that \(|\Theta _1|\le n^{-1/2}(\log n)^7\), \(|\Theta _2|\le n^{-3/2}(\log n)^6\), \(|\Theta _3|\le n^{-1}(\log n)^3\), and \((c_j)_{1\le j\le n}\) satisfies S1 and S2. Given Definition 5.2, we further define

where we independently sample \(X_j'\sim \mathcal {N}(0,\textrm{Var}[\Delta ])\). Then we have

Remark

If \(\Delta =\textrm{Geom}(1/2)\) then \((\mathbb {E}[\Delta ],\textrm{Var}[\Delta ])=(1,2)\), and if \(\Delta =\textrm{Pois}(1)\) then \((\mathbb {E}[\Delta ],\textrm{Var}[\Delta ])=(1,1)\).

Proof

Notice that by iteratively replacing \(X_i\) by \(X_i'\) and applying the triangle inequality we have

To justify the second-to-last inequality, we use that \(|\exp (ix) - 1 - ix + x^2/2|\le |x|^3\) for \(x\in \mathbb {R}\) from Taylor’s theorem and that the first and second moments of \(X_j-1\) and \(X_j'\) match. \(\square \)
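A one-dimensional numerical illustration of the exchange step, restricted (as a simplifying assumption) to a single linear statistic \(\sum_jc_j(X_j-1)\) with \(\Delta=\textrm{Geom}(1/2)\) rather than the full triple \((T_1,T_2,T_3)\) of Lemma 5.9. Since the first two moments of \(X_j-1\) and of \(X_j'\sim\mathcal{N}(0,2)\) agree, the Taylor bound \(|e^{ix}-1-ix+x^2/2|\le|x|^3/6\) and telescoping over the n factors (all of modulus at most 1) give \(|\prod_j\varphi_{X-1}(\Theta c_j)-\prod_j\varphi_{X'}(\Theta c_j)|\le\sum_j|\Theta c_j|^3(\mathbb{E}|X-1|^3+\mathbb{E}|X'|^3)/6\). The sketch checks this inequality; the coefficient sequence is arbitrary and the helper names are our own.

```python
import cmath
import math


def phi_geom_centered(s):
    """E exp(i s (X - 1)) for P[X = k] = 2^{-(k+1)}, k >= 0 (so X - 1 has mean 0, variance 2)."""
    return cmath.exp(-1j * s) / (2 - cmath.exp(1j * s))


def phi_gauss(s, var=2.0):
    """E exp(i s G) for G ~ N(0, var)."""
    return math.exp(-var * s * s / 2)


def abs3_geom_centered(K=400):
    """E|X - 1|^3 for the geometric law above (series truncated after K terms)."""
    return sum(abs(k - 1) ** 3 / 2 ** (k + 1) for k in range(K))


def abs3_gauss(var=2.0):
    """E|G|^3 = 2 sqrt(2 / pi) var^{3/2} for G ~ N(0, var)."""
    return 2 * math.sqrt(2 / math.pi) * var ** 1.5


def exchange_gap(theta, c):
    """Return the gap between the two characteristic functions and the Lindeberg-type bound."""
    p_geom, p_gauss = 1 + 0j, 1.0
    for cj in c:
        p_geom *= phi_geom_centered(theta * cj)
        p_gauss *= phi_gauss(theta * cj)
    m3 = abs3_geom_centered() + abs3_gauss()
    bound = sum(abs(theta * cj) ** 3 for cj in c) * m3 / 6
    return abs(p_geom - p_gauss), bound


c = [math.sin(j) for j in range(1, 401)]  # an arbitrary bounded coefficient sequence
gap, bound = exchange_gap(0.05, c)
print(gap, bound)
```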

6 Translating Fourier information

We now translate Fourier information into probabilistic information in order to prove Theorem 1.4. We defer the proof of the following lemma, which shows that certain coefficient sequences that will arise in our computation are well-bounded with very high probability, until the next section.

Lemma 6.1

Fix m and let \(1\le k^*\le m\). Consider \(\Theta \ne 0\) and \(\theta =(\theta _{jk})_{1\le j<k\le m}\) with \(\Vert \theta \Vert _\infty \le \Theta \). Consider independent random variables \(X_j^{(k)}\sim \Delta \) for \(1\le k\le m\) and \(1\le j\le n\), where \(\Delta \in \{\textrm{Geom}(1/2),\textrm{Pois}(1)\}\). Finally, let

Then with probability \(1-n^{-\omega (1)}\) we have that \((c_j^{(k^*)})_{1\le j\le n}\) is well-bounded (Definition 5.1).

Now we prove Theorem 1.4, which we recall for convenience.

Theorem 1.4

Fix \(m\ge 2\) and independently sample n-sided dice \(A_1,\ldots ,A_m\), either all from the multiset model or all from the balanced sequence model. Let \(G^{(j)}\) for \(1\le j\le m\) be infinite vectors of standard Gaussians and for \(1\le j<k\le m\) let

Then for any digraph D on vertices [m],

Proof of Theorem 1.4 given Lemma 6.1

Sample m dice \(A_1,\ldots ,A_m\), either all from the multiset model or all from the balanced sequence model, with \(A_j=(a_{j1},\ldots ,a_{jn})\in [n]^n\). Let the frequency counts of die \(A_k\) be \(\widetilde{a}_{ki}=|\{j:a_{kj}=i\}|\). We are given the tournament D on [m] and wish to understand the chance that \(A_i\) beats \(A_j\) precisely when ij is a directed edge. (Note that we may assume D is a full tournament, since partial tournaments follow by summing appropriately.)

If we are in the multiset model let \(\Delta =\textrm{Geom}(1/2)\) and if we are in the balanced sequence model let \(\Delta =\textrm{Pois}(1)\). Consider m independent copies of the setup in Definition 5.2 (ignoring the sequence \(c_j\) and random variable \(T_3\)), denoted by \((X_j^{(k)})_{1\le j\le n}\) and \((T_j^{(k)})_{1\le j\le 2}\) for \(1\le k\le m\), corresponding to the k-th die. Note that \((\widetilde{a}_{ki})_{1\le i\le n}\) is distributed as \((X_j^{(k)})_{1\le j\le n}\) conditional on \(T_1^{(k)}=T_2^{(k)}=0\) by Lemma 2.2.
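The fact quoted from Lemma 2.2 can be confirmed by brute force for small n. The elementary reason is that i.i.d. \(\textrm{Geom}(1/2)\) counts have probability \(\prod_i2^{-(x_i+1)}=2^{-2n}\) whenever \(\sum_ix_i=n\), so conditioning yields the uniform law on frequency vectors (one per nondecreasing sequence), while i.i.d. \(\textrm{Pois}(1)\) counts have probability \(e^{-n}\prod_i1/x_i!\), proportional to the number \(n!/\prod_ix_i!\) of face sequences with those counts. The sketch below, with our own helper names, enumerates all sequences for \(n=5\) and compares the exact distributions.

```python
from collections import Counter
from fractions import Fraction
from itertools import product
from math import factorial, prod


def counts(seq, n):
    """Frequency counts (x_1, ..., x_n) of a face sequence with faces in [n]."""
    return tuple(seq.count(i) for i in range(1, n + 1))


def normalize(weights):
    """Turn nonnegative weights into an exact probability distribution."""
    total = sum(weights.values())
    return {k: Fraction(v) / total for k, v in weights.items()}


def die_model(n, multiset):
    """Exact law of the frequency counts of a random n-sided die (faces in [n], sum n(n+1)/2)."""
    w = Counter()
    for seq in product(range(1, n + 1), repeat=n):
        if sum(seq) != n * (n + 1) // 2:
            continue
        if multiset and list(seq) != sorted(seq):
            continue  # multiset model: one nondecreasing sequence per frequency vector
        w[counts(seq, n)] += 1
    return normalize(w)


def conditioned_iid(n, weight):
    """Law of i.i.d. counts conditioned on sum_i x_i = n and sum_i i x_i = n(n+1)/2."""
    w = {}
    for x in product(range(n + 1), repeat=n):
        if sum(x) == n and sum(i * xi for i, xi in enumerate(x, 1)) == n * (n + 1) // 2:
            w[x] = weight(x)
    return normalize(w)


def geom_weight(x):
    """prod_i 2^{-(x_i + 1)}: i.i.d. Geom(1/2) on {0, 1, 2, ...}."""
    return Fraction(1, 2 ** (sum(x) + len(x)))


def pois_weight(x):
    """prod_i 1 / x_i!: i.i.d. Pois(1) up to the constant factor e^{-n}."""
    return Fraction(1, prod(factorial(xi) for xi in x))


n = 5
print(die_model(n, multiset=True) == conditioned_iid(n, geom_weight))   # prints: True
print(die_model(n, multiset=False) == conditioned_iid(n, pois_weight))  # prints: True
```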

Let

for \(1\le k_1<k_2\le m\) (recall \(\vec {v}_1\) from Definition 2.4), and for \(\theta =(\theta _{k_1k_2})_{1\le k_1<k_2\le m}\) let

By Theorem 4.9 with \(T:=(T_b^{(k)})_{k\in [m],b\in [2]}\) and indexing the coordinates of \(\vec {\xi }\) by \((\xi _{kb})_{k\in [m],b\in [2]}\), we have

We fix some \(\theta \) satisfying \(\Vert \theta \Vert _\infty \le n^{-1}(\log n)^3\). Given this condition, we will now estimate the integrand and show that it is very small unless \(\Vert \vec {\xi }_{\cdot 1}\Vert _\infty =\widetilde{O}(n^{-1/2})\) and \(\Vert \vec {\xi }_{\cdot 2}\Vert _\infty =\widetilde{O}(n^{-3/2})\).

We now collect terms so as to express the argument in the exponential as a linear function of \(X^{(k^*)}\) with coefficients depending on \(X^{(k)}\) for \(k\ne k^*\). We see

for some \(\widetilde{Y}_{k^*}\) that depends only on \((X^{(k)})_{k\ne k^*}\). Consider \(\Theta =(\log n)^3/n\), and define

for \(k^*\in [m]\) and \(j\in [n]\). We have

Now we apply Lemmas 5.6–5.8 to gain control over \(\xi \). In order to use these, we need each \((c_j^{(k^*)})_{1\le j\le n}\) for \(k^*\in [m]\) to be a well-bounded coefficient sequence. By Lemma 6.1, this occurs with probability \(1-n^{-\omega (1)}\) over \((X^{(k)})_{k\ne k^*}\).

So if \(n^{-1/2}\log n\le |\xi _{k^*2}|\le \pi \) then by Lemma 5.6 we have that (6.3) is of magnitude \(n^{-\omega (1)}\): condition on an outcome of \((X^{(k)})_{k\ne k^*}\) for which \(c_j^{(k^*)}\) is well-bounded using Lemma 6.1, and then apply Lemma 5.6. We are using that \(\Theta =n^{-1}(\log n)^3\). Similarly, if \(n^{-3/2}(\log n)^6\le |\xi _{k^*2}|\le n^{-1/2}\log n\) then by Lemmas 5.7 and 6.1 we see that (6.3) is of magnitude \(n^{-\omega (1)}\). Finally, if \(|\xi _{k^*2}|\le n^{-3/2}(\log n)^6\) and \(n^{-1/2}(\log n)^7\le |\xi _{k^*1}|\le \pi \) then Lemmas 5.8 and 6.1 show (6.3) is of magnitude \(n^{-\omega (1)}\).

Combining these observations with (6.1) and (6.2) we see

where \(\tau _1=n^{-1/2}(\log n)^7\) and \(\tau _2=n^{-3/2}(\log n)^6\). Additionally, the product in the region of integration is interpreted as corresponding to the choice of \(b\in \{1,2\}\), i.e., the region is defined by \(|\xi _{k1}|\le \tau _1\) and \(|\xi _{k2}|\le \tau _2\).

Recall also that we assumed \(\Vert \theta \Vert _\infty \le n^{-1}(\log n)^3\). We can now use an approach similar to the proof of Lemma 5.9 (or [16, 19]) to exchange the variables \(X_j^{(k)}\) with shifted Gaussians \(Z_j^{(k)}+1\), where \(Z_j^{(k)}\sim \mathcal {N}(0,\textrm{Var}[\Delta ])\). Note that

Now since \(X_j^{(k)}-1\) are independent and mean 0, and have variance \(\textrm{Var}[\Delta ]\), we are in position to apply Theorem 4.5. We first compute that the influences for the degree 2 multilinear polynomial corresponding to (6.5) are bounded by

using Lemma 2.6 (specifically, M4).

Let \(Z_j^{(k)}\sim \mathcal {N}(0,\textrm{Var}[\Delta ])\) be independent Gaussians and let

By Theorem 4.5 and (6.4) we have

Note that the latter two sums in \(\widetilde{Z}\), which involve \(\vec {\xi }\), only depend on \(Z^{(k)}\cdot \vec {v}_1\) and \(Z^{(k)}\cdot \vec {v}_2\), whereas the bilinear forms only depend on the projection of \(Z^{(k)}\) to the orthogonal complement of \(\textrm{span}_{\mathbb {R}}\{\vec {v}_1,\vec {v}_2\}\) (by Definition 2.4). Therefore the first sum is independent of the latter two. This means that the integrand in (6.6) is the product of some constant and some multivariate Gaussian characteristic function.

Now, if \(\Vert \xi _{\cdot 1}\Vert _\infty \ge \tau _1\) or \(\Vert \xi _{\cdot 2}\Vert _\infty \ge \tau _2\), then it is easy to see that there is some \(k^*\in [m]\) with

We therefore deduce that for such \(\vec {\xi }\),

Furthermore, since the integrand is proportional to the characteristic function of some multivariate Gaussian, it is easy to see that the integral over all such \(\vec {\xi }\), extended to infinity, is still of size \(n^{-\omega (1)}\). So, from (6.6) we deduce

Plugging in \(\theta =\vec {0}\) and dividing, and noting that the integral in the last line is of order \(\Theta ((n^{-1/2}\cdot n^{-3/2})^m)\) (treating m as fixed), we deduce

for \(\Vert \theta \Vert _\infty \le n^{-1}(\log n)^3\).

We wish to show

since Lemma 2.5 (and the facts \(\vec {v}_1^\intercal M_n^*=0\) and \(M_n^*\vec {v}_1=0\)) shows \(A_j\) beats \(A_k\) precisely when \(Y_{jk}>0\). Now let \(G^{(j)}\) and \(H_{jk}\) be as in Theorem 1.4. From (6.7) and Lévy continuity, we see it is enough to show

as \(n\rightarrow \infty \). (Simple inspection of the proof shows that this would also imply the second remark following Theorem 1.4.) Note that we may assume \(\textrm{Var}[\Delta ]=1\) since \(Z_\ell ^{(j)}\sim \mathcal {N}(0,\textrm{Var}[\Delta ])\) and we are now in a scale-invariant situation with respect to \(\Delta \).

We are now purely in a setting of joint convergence of certain bilinear forms of standard Gaussian vectors. Thus, the problem will ultimately reduce to limiting spectral properties of the operators \(M_n^*\). By a variant of the spectral theorem, since \(M_n^*\) is skew-symmetric by Lemma 2.6 (M2), we can write \(M_n^*=Q_n\Sigma _nQ_n^\intercal \), where \(Q_n\) is orthogonal and \(\Sigma _n\) consists of diagonal \(2\times 2\) blocks of the form

for \(\ell \in [\lfloor n/2\rfloor ]\), and possibly a single 0 in the final diagonal entry if n is odd. By orthogonal invariance of Gaussian vectors, applying the orthogonal matrix \(Q_n\), our distribution is the same as

where \(G^{(j)}\) are independent standard Gaussian vectors. We have

We have that for any constant \(t\ge 1\), \((\sigma _{n,\ell }/n)_{1\le \ell \le t}\rightarrow (\sigma _\ell )_{1\le \ell \le t}\) as \(n\rightarrow \infty \) by Lemma 2.6 (M8).

Now consider some fixed \(t\ge 1\) (which we will take to be growing slowly at the end of this argument). Using \(\sum _{\ell \ge t}(\sigma _{n,\ell }/n)^2=O(1/t)\) and Chebyshev’s inequality, we easily see that with probability \(1-O(t^{-1/2})\) the sum in (6.8) over indices \(\ell \ge t\) contributes at most \(O(t^{-1/4})\). Furthermore, \((\sigma _{n,\ell }/n)_{1\le \ell \le t}\rightarrow (\sigma _\ell )_{1\le \ell \le t}\) as \(n\rightarrow \infty \), as noted above. Hence, we deduce that with probability at least \(1-O(t^{-1/2})\),

is within \(\ell ^\infty \) distance \(O(t^{-1/4})\) of a random vector which converges to

in distribution. Finally, taking \(t\rightarrow \infty \) slowly gives the desired result, recalling from the remark following Theorem 1.4 that almost surely the appropriate sums converge as \(t\rightarrow \infty \). \(\square \)
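The Chebyshev step in the proof just completed can be written out explicitly. Since the display (6.8) is not reproduced here, this is a sketch under an assumption: for a fixed pair \(j\ne k\), we take the \(\ell \)-th term of (6.8) to be \((\sigma _{n,\ell }/n)B_\ell \) with \(B_\ell =G^{(j)}_{2\ell -1}G^{(k)}_{2\ell }-G^{(j)}_{2\ell }G^{(k)}_{2\ell -1}\), which is what a block \(\begin{pmatrix}0&\sigma \\ -\sigma &0\end{pmatrix}\) would produce (the sign convention is a hypothetical choice). Each \(B_\ell \) has mean 0 and variance 2, and the \(B_\ell \) for distinct \(\ell \) involve disjoint coordinates, so they are uncorrelated. Hence

```latex
% Assumption: the l-th term of (6.8) is (sigma_{n,l}/n) B_l with B_l as in the text.
\operatorname{Var}\Big[\sum_{\ell\ge t}\frac{\sigma_{n,\ell}}{n}B_\ell\Big]
  = 2\sum_{\ell\ge t}\Big(\frac{\sigma_{n,\ell}}{n}\Big)^2 = O(t^{-1}),
\qquad
\mathbb{P}\Big[\Big|\sum_{\ell\ge t}\frac{\sigma_{n,\ell}}{n}B_\ell\Big|\ge t^{-1/4}\Big]
  \le \frac{O(t^{-1})}{t^{-1/2}} = O(t^{-1/2}).
```

Since m is fixed, a union bound over the \(\binom{m}{2}\) pairs \((j,k)\) keeps the failure probability at \(O(t^{-1/2})\).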

7 Properties of coefficient sequences

We next prove Lemma 6.1.

Proof of Lemma 6.1

By definition we have

hence \(\sum _{j=1}^nc_j^{(k^*)}=0\) immediately follows, establishing S2. Note also that \(c_j^{(k^*)}\) is a weighted sum of independent random variables \(X-1\), where \(X\sim \Delta \). Since \(\mathbb {E}[\Delta ]=1\) and \(\Delta \) is either Poisson or geometric, we easily see that \(c_j^{(k^*)}\) is a sum of independent mean 0 random variables with bounded \(\Vert X-1\Vert _{\psi _1}\). Additionally, the coefficients in \(c_j^{(k^*)}\) are of the form \(\theta _{kk^*}(M_n^*)_{jj'}/(2\Theta )\) and \(\theta _{k^*k}(M_n^*)_{j'j}/(2\Theta )\), which by definition and Lemma 2.6 (M3) are bounded in magnitude.

Hence we can apply Bernstein’s inequality (Theorem 4.1) to obtain

Choose \(t=\sqrt{n}\log n\), which implies that the event \(|c_j^{(k^*)}|\ge \sqrt{n}\log n\) occurs with probability at most \(\exp (-\Omega ((\log n)^2))\). Taking a union bound over n events for \(1\le j\le n\), we obtain S1 with probability \(1-n^{-\omega (1)}\).
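The arithmetic behind this choice of t can be checked directly. The sketch below assumes the standard form of Bernstein’s inequality for independent mean-zero variables with \(\Vert Y_i\Vert _{\psi _1}\le K\), namely \(\mathbb {P}[|\sum _ia_iY_i|\ge t]\le 2\exp (-c\min (t^2/(K^2\Vert a\Vert _2^2),t/(K\Vert a\Vert _\infty )))\) with an unspecified absolute constant c; Theorem 4.1 may be stated differently, and the helper names are hypothetical. With n coefficients of size O(1), the exponent is exactly of order \((\log n)^2\), because \(\sqrt{n}\ge \log n\).

```python
import math


def bernstein_exponent(t, weights, K=1.0):
    """Exponent min(t^2 / (K^2 ||a||_2^2), t / (K ||a||_inf)) in the standard
    Bernstein bound P(|sum_i a_i Y_i| >= t) <= 2 exp(-c * exponent) for
    independent mean-zero Y_i with ||Y_i||_{psi_1} <= K (c is unspecified)."""
    l2_sq = sum(a * a for a in weights)
    linf = max(abs(a) for a in weights)
    return min(t * t / (K * K * l2_sq), t / (K * linf))


def union_bound(n, exponent, c=1.0):
    """Union bound n * 2 exp(-c * exponent) over the indices 1 <= j <= n.
    Setting c = 1 is purely illustrative."""
    return 2 * n * math.exp(-c * exponent)


# Worst case of the argument: n coefficients, all of size O(1) (here exactly 1).
for n in (10**3, 10**4, 10**5):
    t = math.sqrt(n) * math.log(n)
    e = bernstein_exponent(t, [1.0] * n)
    print(n, e / math.log(n) ** 2, union_bound(n, e))
```

The printed ratio \(e/(\log n)^2\) stays at 1, and the union bound decays faster than any fixed power of n, which is the \(1-n^{-\omega (1)}\) statement.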

Finally, S3 is similar. It suffices to show that for each pair \(1\le j_1<j_2\le n\) the bound \(|c_{j_1}-c_{j_2}|\le \sqrt{|j_1-j_2|}(\log n)^2\) holds with probability \(1-n^{-\omega (1)}\), as then a union bound over the fewer than \(n^2\) pairs will finish the proof. To do this, we will exploit cancellations in \((M_n^*)_{j_1j'}-(M_n^*)_{j_2j'}\). In particular, we have

By Lemma 2.6 (M7) we have that \(|(M_n^*)_{j_1j'}-(M_n^*)_{j_2j'}|=O(|j_1-j_2|/n)\) for all but \(O(|j_1-j_2|)\) values of \(j'\), for which the value is O(1). Since \(M_n^*\) is skew-symmetric (M2), the same occurs when we transpose the matrix. Therefore we can use Bernstein’s inequality (Theorem 4.1) again, this time deducing

Taking \(t=\sqrt{|j_1-j_2|}(\log n)^2\ge (\log n)^2\) and taking a union bound, we deduce the desired conclusion. \(\square \)
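The variance bookkeeping behind this last application of Bernstein’s inequality can be spelled out. Write \(d=|j_1-j_2|\ge 1\) and let \(a_{j'}\) (our notation) denote the coefficient of \(X_{j'}-1\) in \(c_{j_1}-c_{j_2}\). Assuming, as the argument above indicates, that \(|a_{j'}|=O(d/n)\) for all but O(d) indices \(j'\) and \(|a_{j'}|=O(1)\) otherwise, and using the standard sub-exponential form of Bernstein’s inequality with an absolute constant c, we get (using \(d\le n\))

```latex
% Assumption: |a_{j'}| = O(d/n) except for O(d) indices j', where |a_{j'}| = O(1).
\begin{aligned}
\sum_{j'}a_{j'}^2 &\le n\cdot O\Big(\frac{d^2}{n^2}\Big) + O(d)\cdot O(1) = O(d),
  \qquad \max_{j'}|a_{j'}| = O(1),\\
\mathbb{P}\big[|c_{j_1}-c_{j_2}|\ge t\big]
  &\le 2\exp\Big(-c\min\Big(\frac{t^2}{O(d)},\frac{t}{O(1)}\Big)\Big)
  = \exp\big(-\Omega((\log n)^2)\big)
  \quad\text{for } t=\sqrt{d}\,(\log n)^2,
\end{aligned}
```

because \(t^2/d=(\log n)^4\) and \(t\ge (\log n)^2\). Summing over fewer than \(n^2\) pairs still gives a failure probability of \(n^{-\omega (1)}\).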

We now prove that the coefficient sequence coming from Lemma 2.3 is typically coarse. This will be used to prove Theorem 1.8 later. We note that the idea of breaking into various intervals and extracting tuples of coefficients with the desired properties also appears in the work of Polymath, in particular in [22, Lemma 5.10]; however the proofs here are simpler as we require only a “physical space” condition on the coefficients.

Lemma 7.1

Let \(\Delta \in \{\textrm{Geom}(1/2),\textrm{Pois}(1)\}\). Let \(\widetilde{X}_1,\ldots ,\widetilde{X}_n\sim \Delta \) be independent, and then condition on \(\sum _{j=1}^n\widetilde{X}_j=n\) and \(\sum _{j=1}^nj\widetilde{X}_j=n(n+1)/2\). If

then with probability \(1-n^{-\omega (1)}\) the sequence \((c_j)_{1\le j\le n}\) is coarse (Definition 5.1).

Proof

We will prove that every property except S2 holds in the unconditioned independent model with probability \(1-n^{-\omega (1)}\). Then note that

from Lemma 9.1 (which is proved only using results up to Sect. 5) or from the line before (6.7) in the proof of Theorem 1.4.

Thus the failure probability of any property in the conditional model is at most \((n^{-\omega (1)})/(\Omega (n^{-2}))=n^{-\omega (1)}\) by Bayes’ rule. So it suffices to consider the independent model, noting that S2 follows from the conditions \(\sum _{j=1}^n\widetilde{X}_j=n\) and \(\sum _{j=1}^nj\widetilde{X}_j=n(n+1)/2\).
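The conditioning step is the elementary bound below. Here, in our own notation, \(\mathcal {B}\) is the event that a given property fails and \(\mathcal {C}\) is the conditioning event \(\{\sum _j\widetilde{X}_j=n,\ \sum _jj\widetilde{X}_j=n(n+1)/2\}\), whose probability is \(\Omega (n^{-2})\) by the estimate cited above.

```latex
\mathbb{P}[\mathcal{B}\mid\mathcal{C}]
  = \frac{\mathbb{P}[\mathcal{B}\cap\mathcal{C}]}{\mathbb{P}[\mathcal{C}]}
  \le \frac{\mathbb{P}[\mathcal{B}]}{\mathbb{P}[\mathcal{C}]}
  \le \frac{n^{-\omega(1)}}{\Omega(n^{-2})} = n^{-\omega(1)}.
```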

S1 and S3 are simple Bernstein inequality calculations, similar to the proof of Lemma 6.1, and we omit the details. For S5, note that \(c_j=c_{j+1}=c_{j+2}-1/2\) follows if \(\widetilde{X}_j=\widetilde{X}_{j+1}=0\) and \(\widetilde{X}_{j+2}=1\). Let J be a 3-separated set of size \(\Omega (n)\), so that the triples \(\{j,j+1,j+2\}\) for \(j\in J\) are disjoint. Then each \(j\in J\) satisfies the condition required by S5 with probability \(\Omega (1)\), independently over \(j\in J\). Thus Bernstein’s inequality or a Chernoff bound easily implies S5.
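The “probability \(\Omega (1)\)” for S5 is an explicit constant. The Python sketch below computes \(\mathbb {P}[\widetilde{X}_j=\widetilde{X}_{j+1}=0,\ \widetilde{X}_{j+2}=1]\) for both choices of \(\Delta \) and the resulting multiplicative Chernoff bound \(\mathbb {P}[S\le \mu /2]\le e^{-\mu /8}\) for the number S of successes over the 3-separated set \(J=\{1,4,7,\ldots \}\). It assumes \(\textrm{Geom}(1/2)\) is supported on \(\{0,1,2,\ldots \}\) (so it has mean 1); the set J and all function names are our own choices for illustration.

```python
import math


def pmf_poisson1(k):
    """Pois(1): P(k) = e^{-1} / k!."""
    return math.exp(-1) / math.factorial(k)


def pmf_geom_half(k):
    """Geom(1/2) on {0, 1, 2, ...} (assumed convention, mean 1): P(k) = 2^{-(k+1)}."""
    return 0.5 ** (k + 1)


def s5_pattern_prob(pmf):
    """P[X_j = 0, X_{j+1} = 0, X_{j+2} = 1] for i.i.d. X_i with the given pmf."""
    return pmf(0) * pmf(0) * pmf(1)


def s5_chernoff(n, pmf):
    """Use the 3-separated set J = {1, 4, 7, ...} inside [1, n - 2]; the triples
    {j, j+1, j+2} are disjoint, so the pattern events are independent.
    Returns (|J|, expected count mu, Chernoff bound exp(-mu/8) on P[count <= mu/2])."""
    size = len(range(1, n - 1, 3))
    mu = size * s5_pattern_prob(pmf)
    return size, mu, math.exp(-mu / 8)
```

For \(\textrm{Pois}(1)\) the pattern probability is \(e^{-3}\), and for \(\textrm{Geom}(1/2)\) it is \(1/16\); in both cases \(\mu =\Theta (n)\), so the failure probability \(e^{-\mu /8}\) is exponentially small in n.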

For S6, consider the set J of all multiples of 4y in \(\{1,\ldots ,n-4y\}\), which has size \(\Omega (n/y)\). For each \(j\in J\), the probability that \(|c_j-2c_{j+y}+c_{j+2y}|\ge \sqrt{y}\) is seen to be \(\Omega (1)\) by the central limit theorem, and these events are independent over \(j\in J\). Thus by Bernstein or Chernoff, with probability at least \(1-\exp (-\Omega (n/y))\) there are at least \(\Omega (n/y)\) many \(j\in J\) satisfying the condition required by S6. We can repeat the argument for the translations of J by \(\{1,2,\ldots ,y\}\) and take a union bound, which yields \(\Omega (n)\) many indices j with probability \(1-n^{-\omega (1)}\) as desired.

Finally, we consider S4. We can mimic the proof of S6 above except with \(y=\lfloor n/(\log n)^{3/2}\rfloor \) and still deduce that with probability \(1-n^{-\omega (1)}\), there are at least \(\Omega (n)\) indices \(1\le j\le n-2y\) with \(|c_j-2c_{j+y}+c_{j+2y}|\ge \sqrt{y}\). We can pass to a subset J of size \(\Omega (n)\) with the property that \(j-j'\notin \{\pm y,\pm 2y\}\) for all \(j,j'\in J\). For each \(j\in J\) we have

using the inequality \(x_1^2+x_2^2+x_3^2\ge (x_1-2x_2+x_3)^2/6\), which follows from the Cauchy–Schwarz inequality. Hence we deduce

for all \(a,b\in \mathbb {R}\). The result follows. \(\square \)
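Two elementary facts underlie the last step of this proof. First, by Cauchy–Schwarz with the vector \((1,-2,1)\), \((x_1-2x_2+x_3)^2\le 6(x_1^2+x_2^2+x_3^2)\), and the constant \(6=1^2+2^2+1^2\) is attained at \(x\propto (1,-2,1)\). Second, the second difference \(x_1-2x_2+x_3\) of equally spaced values is unchanged when an affine function \(a+bj\) is subtracted, which is presumably why the conclusion holds for all \(a,b\in \mathbb {R}\) (the displays are not reproduced here). A small Python check of both facts, with hypothetical helper names:

```python
import random


def second_difference(x1, x2, x3):
    """x1 - 2*x2 + x3; it vanishes on equally spaced values of an affine a + b*j."""
    return x1 - 2 * x2 + x3


def ratio(x1, x2, x3):
    """(x1 - 2x2 + x3)^2 / (x1^2 + x2^2 + x3^2).

    By Cauchy-Schwarz with the vector (1, -2, 1) this is at most 1 + 4 + 1 = 6,
    with equality exactly when (x1, x2, x3) is proportional to (1, -2, 1)."""
    return second_difference(x1, x2, x3) ** 2 / (x1 * x1 + x2 * x2 + x3 * x3)


def max_ratio(samples=100_000, seed=1):
    """Largest ratio over random Gaussian triples (a crude numerical check)."""
    rng = random.Random(seed)
    return max(ratio(rng.gauss(0, 1), rng.gauss(0, 1), rng.gauss(0, 1))
               for _ in range(samples))
```

Random triples never exceed 6, while `ratio(1.0, -2.0, 1.0)` equals 6, so any bound of this shape needs a denominator of at least 6.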

8 Consequences of Theorem 1.4

We now derive the claimed symmetry facts from the statement of Theorem 1.4.

Proof of Corollary 1.5

For the first consequence, note that the Gaussian distribution is invariant under negation, and therefore the result for reversing the edges at vertex u follows by negating the Gaussian vector \(G^{(u)}\) in Theorem 1.4. For the second consequence, simply replace every die with its “complement”, i.e., we map \((a_1,\ldots ,a_n)\) to \((n+1-a_n,\ldots ,n+1-a_1)\). (In the limiting expression of Theorem 1.4, this corresponds to switching \(G_{2\ell -1}^{(j)}\) and \(G_{2\ell }^{(j)}\) for all \(1\le j\le m\) and \(\ell \ge 1\).) \(\square \)
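The complement map is easy to check on small examples. It sends a die to a die, since \(\sum _j(n+1-a_j)=n(n+1)-n(n+1)/2=n(n+1)/2\), and \(n+1-a>n+1-b\) exactly when \(a<b\), so it reverses every comparison. The Python sketch below assumes the usual convention that A beats B when, over all \(n^2\) pairs of faces, a face of A exceeds a face of B more often than the reverse; the example dice and function names are our own.

```python
def beats(a, b):
    """Assumed convention: +1 if a beats b, -1 if b beats a, 0 for a tie,
    comparing all n^2 pairs of faces."""
    wins = sum(1 for x in a for y in b if x > y)
    losses = sum(1 for x in a for y in b if x < y)
    return (wins > losses) - (wins < losses)


def complement(a):
    """(a_1, ..., a_n) -> (n+1-a_n, ..., n+1-a_1); nondecreasing input stays nondecreasing."""
    n = len(a)
    return tuple(n + 1 - x for x in reversed(a))


def is_die(a):
    """Faces in [n] summing to n(n+1)/2, as in Definition 1.1."""
    n = len(a)
    return all(1 <= x <= n for x in a) and sum(a) == n * (n + 1) // 2


# Three 6-sided dice in the multiset model (faces sum to 21).
dice = [(1, 1, 4, 5, 5, 5), (2, 2, 3, 4, 5, 5), (1, 3, 3, 4, 4, 6)]
```

For every pair of dice in the list, `beats(complement(a), complement(b))` equals `-beats(a, b)`, which is the edge reversal used above.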

Now we turn to Corollary 1.7. We require the following lemma relating a tournamenton having image in the set \(\{0,1\}\) to the distribution of its M-vertex subtournaments. Recall that for a tournamenton \(\mathcal {T}\), a \(\mathcal {T}\)-random tournament on M vertices is obtained by sampling M points \(x_1,\ldots ,x_M\) from [0, 1] independently and uniformly at random, and then, for all \(1\le i<j\le M\), independently placing a directed edge from vertex i to vertex j with probability \(\mathcal {T}(x_i,x_j)\) (and otherwise one from j to i).
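The sampling procedure just recalled can be sketched in a few lines of Python. The function name is hypothetical, and T is any Python function of two points in [0, 1] returning the probability of the edge from the first to the second.

```python
import random


def sample_T_random_tournament(T, M, rng=random):
    """Sample a T-random tournament on vertices 0..M-1.

    T(x, y) is the probability of the edge x -> y; for a tournamenton,
    T(x, y) + T(y, x) = 1 almost everywhere.  Returns the points and the
    set of directed edges (i, j), one per unordered pair."""
    xs = [rng.random() for _ in range(M)]      # M i.i.d. uniform points in [0, 1)
    edges = set()
    for i in range(M):
        for j in range(i + 1, M):
            if rng.random() < T(xs[i], xs[j]):
                edges.add((i, j))              # edge i -> j
            else:
                edges.add((j, i))              # otherwise edge j -> i
    return xs, edges
```

With the \(\{0,1\}\)-valued kernel `T = lambda x, y: 1.0 if x < y else 0.0`, the output is the transitive tournament ordered by the points `xs`; with the constant kernel `0.5` it is a uniformly random tournament, whose entropy is the full \(\binom{M}{2}\) bits, which is the kind of behavior Lemma 8.1 rules out for small families \(\mathcal {F}_M\).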

Lemma 8.1

Fix a tournamenton \(\mathcal {T}\). Suppose that for every \(\varepsilon >0\), for all M sufficiently large there is a set \(\mathcal {F}_M\) of M-vertex tournaments with \(|\mathcal {F}_M|\le 2^{\varepsilon M^2}\) such that a \(\mathcal {T}\)-random tournament on M vertices lies in \(\mathcal {F}_M\) with probability at least \(1-\varepsilon \). Then \(\mu (\{(x,y):\mathcal {T}(x,y)\notin \{0,1\}\}) = 0\), where \(\mu \) is the Lebesgue measure on \([0,1]^2\).

Proof

Suppose that \(\mu (\{(x,y):\mathcal {T}(x,y)\notin \{0,1\}\})>0\). Then there exists \(\delta >0\) such that

Sample an M-vertex \(\mathcal {T}\)-random tournament, and define \(x_1,\ldots ,x_M\) as above. Let

We have that \(\mathbb {E}|X_M|\ge \delta \binom{M}{2}\) from (8.1), and thus by applying the Azuma–Hoeffding inequality (Lemma 4.2) to the Doob martingale formed by revealing \(x_1,\ldots ,x_M\) in order, we see that \(\mathbb {P}[|X_M|\ge \delta M^2/4]\ge 1-\delta \) for M sufficiently large as a function of \(\delta \).
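The Azuma–Hoeffding step can be made quantitative. Since the display defining \(X_M\) is not reproduced here, this sketch assumes \(X_M\) is the set of pairs \(\{i,j\}\) with \(\mathcal {T}(x_i,x_j)\in [\delta ,1-\delta ]\). Changing a single \(x_i\) alters \(|X_M|\) by at most \(c_i=M-1\), so with \(\lambda =\delta \binom{M}{2}-\delta M^2/4\) we get

```latex
% Assumption: X_M = \{\{i,j\} : \mathcal{T}(x_i,x_j) \in [\delta, 1-\delta]\}; Lipschitz constants c_i = M-1.
\lambda = \frac{\delta M(M-2)}{4},
\qquad
\mathbb{P}\Big[|X_M| < \frac{\delta M^2}{4}\Big]
  \le \exp\Big(-\frac{\lambda^2}{2\sum_{i=1}^{M}c_i^2}\Big)
  = \exp\Big(-\frac{\delta^2 M (M-2)^2}{32(M-1)^2}\Big)
  \le \exp\Big(-\frac{\delta^2 M}{128}\Big)\quad (M\ge 3),
```

which is at most \(\delta \) once \(M\ge 128\,\delta ^{-2}\log (1/\delta )\).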

This means there is an event \(\mathcal {E}\) occurring with probability at least \(1-\delta \) over the randomness of \(x_1,\ldots ,x_M\) such that conditional on \(\mathcal {E}\), the entropy of our \(\mathcal {T}\)-random tournament is at least \(H(\textrm{Ber}(\delta ))\cdot |X_M|\gtrsim \delta ^2\,M^2\).

But by initial assumption there is an event \(\mathcal {F}\) holding with probability \(1-\varepsilon \) such that the original M-vertex tournament is in \(\mathcal {F}_M\). We see that the entropy of the \(\mathcal {T}\)-random tournament must be at most \(H(\varepsilon )+\log _2|\mathcal {F}_M|+\varepsilon \log _2(2^{M^2})\lesssim \varepsilon M^2\). Taking \(\varepsilon \) much smaller than \(\delta ^2\) and M sufficiently large, we obtain a contradiction. \(\square \)
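The two entropy estimates in this proof can be written out with explicit constants. This sketch assumes, as above, that \(X_M\) is the set of pairs \(\{i,j\}\) with \(\mathcal {T}(x_i,x_j)\in [\delta ,1-\delta ]\); it writes \(\mathbf {T}\) for the sampled tournament and h for the binary entropy in bits, and takes \(\delta ,\varepsilon \le 1/2\), so that \(h(\delta )\ge \delta \). Given \(x_1,\ldots ,x_M\), the edges are independent, and each pair in \(X_M\) contributes at least \(h(\delta )\).

```latex
% Assumptions: X_M as in the lead-in; \mathcal{E} = \{|X_M| \ge \delta M^2/4\}; \delta, \varepsilon \le 1/2.
\begin{aligned}
H(\mathbf{T}) &\ge H(\mathbf{T}\mid x_1,\dots,x_M)
  \ge \mathbb{P}[\mathcal{E}]\, h(\delta)\,\frac{\delta M^2}{4}
  \ge (1-\delta)\,\frac{\delta^2 M^2}{4} \ge \frac{\delta^2 M^2}{8},\\
H(\mathbf{T}) &\le h(\varepsilon) + \log_2|\mathcal{F}_M| + \varepsilon\binom{M}{2}
  \le 1 + \tfrac{3}{2}\,\varepsilon M^2 .
\end{aligned}
```

Choosing \(\varepsilon =\delta ^2/32\) gives \(1+\tfrac{3}{2}\varepsilon M^2<\delta ^2M^2/8\) as soon as \(M>4/\delta \), which is the contradiction.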

We now are in position to prove Corollary 1.7.

Proof of Corollary 1.7

We first note that \(T_n\) converges to a limit tournamenton \(\mathcal {T}\), since Theorem 1.4 implies that for each fixed digraph D the associated densities converge. Thus the result follows via convergence of subgraph densities implying convergence in cut metric (see [9], where this theory is worked out in the case of directed graphs; the theory for tournamentons follows as a direct consequence via, say, [25, Theorem 4.1], which characterizes a directed graph limit being a tournamenton in terms of certain subgraph counts vanishing).

The more difficult part of Corollary 1.7 is verifying the conditions of Lemma 8.1. Fix m dice, where we will consider m large, and consider the random series \(H_{jk}\) from Theorem 1.4. For these m dice, reveal \(G_\ell ^{(j)}\) for \(\ell \le 2\lfloor m^{1/2}\rfloor \), round each value to the nearest multiple of \(m^{-25}\), and label each vertex with the corresponding tuple of rounded values. Call the collection of these labels L(G), which depends only on \(G_\ell ^{(j)}\) for \(\ell \le 2\lfloor m^{1/2}\rfloor \). Note that with probability \(1-\exp (-\Omega (m))\) all these sampled Gaussians are bounded by m in absolute value, and hence there is a set \(\mathcal {L}\) of at most \(\exp (O(m^{3/2}\log m))\) different possible labelings such that \(L(G)\in \mathcal {L}\) under this event. Furthermore, given these labels L(G), the value

is pinned down to within an interval \(I_{jk}(G)\) (defined whenever \(L(G)\in \mathcal {L}\)) of length at most \(m^{-20}\), say, for all \(1\le j<k\le m\).
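The size of \(\mathcal {L}\) and the accompanying probability bound come from a direct count. There are \(m\cdot 2\lfloor m^{1/2}\rfloor \le 2m^{3/2}\) revealed Gaussians; on the event that all of them lie in \([-m,m]\), each rounded value is one of the \(2m^{26}+1\) multiples of \(m^{-25}\) in \([-m,m]\). Using the standard Gaussian tail bound \(\mathbb {P}[|G|>s]\le 2e^{-s^2/2}\),

```latex
|\mathcal{L}| \le (2m^{26}+1)^{2m^{3/2}}
  = \exp\big(2m^{3/2}\log(2m^{26}+1)\big) = \exp\big(O(m^{3/2}\log m)\big),
\qquad
\mathbb{P}\big[\exists\, j,\ell:\ |G^{(j)}_\ell| > m\big]
  \le 2m^{3/2}\cdot 2e^{-m^2/2} \le e^{-\Omega(m)}.
```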

Note that \(H_{jk}-H_{jk}^*\) has variance \(O(m^{-1/2})\), and hence with probability \(1-\exp (-m^{\Omega (1)})\) all these infinite tails have magnitude at most, say, \(m^{-1/5}\) by Theorem 4.6.

Let \(\mathcal {L}'\) be the set of labelings \(L(G)\in \mathcal {L}\) such that the interval \(I_{jk}(G)\) intersects \([-m^{-1/5},m^{-1/5}]\) for at most \(m^{2-1/20}\) many choices of \(1\le j<k\le m\). Let B(G) be the set of (j, k) where there is an intersection. Note that B(G) depends only on L(G) whenever \(L(G)\in \mathcal {L}\). Combining the observations above, there is an event \(\mathcal {E}\) which occurs with probability \(1-\exp (-m^{\Omega (1)})\) such that the following holds if we assume \(\mathcal {E}\):

-

\(L(G)\in \mathcal {L}\), where \(\mathcal {L}\) is a deterministic set of size \(\exp (O(m^{3/2}\log m))\);

-

If \(L(G)\in \mathcal {L}'\) then the digraph \(D(G):=\{(j,k):H_{jk}>0\}\) depends only on the identity of L(G) and on whether (j, k) or (k, j) is in D(G) for all \((j,k)\in B(G)\). Here \(\mathcal {L}'\) is the deterministic subset of \(\mathcal {L}\) defined above.

If we can show that \(L(G)\in \mathcal {L}'\) with probability \(1-O(m^{-1/20})\), say, then by Theorem 1.4 this will establish the hypotheses of Lemma 8.1 and hence this will finish the proof. Indeed, then we know that with high probability the digraph D(G) can be determined by revealing \(L(G)\in \mathcal {L}\) (with \(\exp (O(m^{3/2}\log m))\) choices), which determines B(G), and then revealing whether \((j,k)\in D(G)\) for all \((j,k)\in B(G)\), which has at most \(2^{m^{2-1/20}}\) choices. This will establish the hypothesis of Lemma 8.1 for \(M=\Omega (\varepsilon ^{-20})\), say.
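The exponent \(\varepsilon ^{-20}\) can be traced through the following count. Write \(\mathcal {F}_m\) (our notation) for the set of digraphs obtained by choosing a labeling in \(\mathcal {L}\) and then an orientation of each of the at most \(m^{2-1/20}\) pairs in B(G):

```latex
\log_2|\mathcal{F}_m| \le \log_2|\mathcal{L}| + m^{2-1/20}
  = O(m^{3/2}\log m) + m^{2-1/20}
  \le 2m^{2-1/20} \le \varepsilon m^2 ,
```

where the middle inequality holds for m larger than an absolute constant and the last one once \(m\ge (2/\varepsilon )^{20}\). Likewise the failure probability \(O(m^{-1/20})+\exp (-m^{\Omega (1)})\) is at most \(\varepsilon \) once \(m\ge C\varepsilon ^{-20}\) for a suitable constant C, matching \(M=\Omega (\varepsilon ^{-20})\).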

Finally, by Theorem 4.3, for fixed \(1\le j<k\le m\) the probability that \(|H_{jk}^*|=O(m^{-1/5})\) is at most \(O(m^{-1/10})\). Therefore, by linearity of expectation and Markov’s inequality, \(|B(G)|\le m^{2-1/20}\) with probability \(1-O(m^{-1/20})\). The result follows. \(\square \)
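The Markov step above, written out. Since each interval \(I_{jk}(G)\) has length at most \(m^{-20}\), membership of (j, k) in B(G) forces \(|H_{jk}^*|\le m^{-1/5}+m^{-20}=O(m^{-1/5})\), so

```latex
\mathbb{E}|B(G)| \le \binom{m}{2}\cdot O(m^{-1/10}) = O(m^{2-1/10}),
\qquad
\mathbb{P}\big[|B(G)| > m^{2-1/20}\big]
  \le \frac{O(m^{2-1/10})}{m^{2-1/20}} = O(m^{-1/20}).
```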

We now give a sketch of an alternative proof of Corollary 1.7 pointed out by the anonymous referee; this proof more directly stems from Theorem 1.4.

Sketch of alternative proof of Corollary 1.7

By using measure-isomorphisms, note that one can view digraphons as measurable functions \(W:X^2\rightarrow [0,1]\), where \(\Omega = (X,\mu )\) is any atomless standard probability space. Consider \(X = \mathbb {R}^{\mathbb {N}^{+}}\) equipped with the Borel \(\sigma \)-algebra (of the product topology). Let \(\mu \) be the distribution of an infinite vector of independent standard Gaussians and define \(W:X^2\rightarrow [0,1]\) by

By Theorem 1.4, a digraph on m vertices sampled from W has the desired subgraph densities. Therefore \(\mathcal {T}\) coincides with W (up to weak isomorphism), since convergence of tournamentons in cut metric is equivalent to convergence of digraph homomorphism densities. Furthermore, W is obviously a \(\{0,1\}\)-valued tournamenton, and thus the desired result follows. \(\square \)