Abstract

The Erdős–Taylor theorem (Acta Math Acad Sci Hungar, 1960) states that if \(\textsf{L}_N\) is the local time at zero, up to time 2N, of a two-dimensional simple, symmetric random walk, then \(\tfrac{\pi }{\log N} \,\textsf{L}_N\) converges in distribution to an exponential random variable with parameter one. This can be equivalently stated in terms of the total collision time of two independent simple random walks on the plane. More precisely, if \(\textsf{L}_N^{(1,2)}=\sum _{n=1}^N \mathbb {1}_{\{S_n^{(1)}= S_n^{(2)}\}}\), then \(\tfrac{\pi }{\log N}\, \textsf{L}^{(1,2)}_N\) converges in distribution to an exponential random variable of parameter one. We prove that for every \(h \geqslant 3\), the family \( \big \{ \frac{\pi }{\log N} \,\textsf{L}_N^{(i,j)} \big \}_{1\leqslant i<j\leqslant h}\), of logarithmically rescaled, two-body collision local times between h independent simple, symmetric random walks on the plane converges jointly to a vector of independent exponential random variables with parameter one, thus providing a multivariate version of the Erdős–Taylor theorem. We also discuss connections to directed polymers in random environments.

1 Introduction

Let \(S^{(1)},\dots ,S^{(h)}\) be independent, simple, symmetric random walks on \(\mathbb {Z}^2\) starting at the origin. We will use \(\textrm{P}_x\) and \(\textrm{E}_x\) to denote the probability and expectation with respect to the law of the simple random walk when starting from \(x \in \mathbb {Z}^2\) and we will omit the subscripts when the walk starts from 0. For \(1\leqslant i <j \leqslant h\) we define the collision local time between \(S^{(i)}\) and \(S^{(j)}\) up to time N by

\[
\textsf{L}_N^{(i,j)}:=\sum _{n=1}^N \mathbb {1}_{\{S_n^{(i)}= S_n^{(j)}\}}.
\]

Notice that given \(1\leqslant i<j\leqslant h\), \(\textsf{L}_N^{(i,j)}\) has the same law as the number of returns to zero, before time 2N, for a single simple, symmetric random walk S on \(\mathbb {Z}^2\), that is \( \textsf{L}_N^{(i,j)}{\mathop {=}\limits ^{\text {law}}}\textsf{L}_N:=\sum _{n=1}^N \mathbb {1}_{\{S_{2n}=0\}}\). This equality is a consequence of the independence of \(S^{(i)}\), \(S^{(j)}\) and the symmetry of the simple random walk. A first moment calculation shows that

\[
\textrm{E}\big [\textsf{L}_N^{(i,j)}\big ]=\sum _{n=1}^N \textrm{P}(S_{2n}=0) \sim \frac{\log N}{\pi }, \qquad \text {as } N\rightarrow \infty , \tag{1.1}
\]

see Sect. 2 for more details. It was established by Erdős and Taylor, 60 years ago [10], that under normalisation (1.1), \(\textsf{L}_N\) satisfies the following limit theorem.

Theorem A

([10]) Let \(\textsf{L}_N:=\sum _{n=1}^N \mathbb {1}_{\{S_{2n}=0\}}\) be the local time at zero, up to time 2N, of a two-dimensional, simple, symmetric random walk \((S_n)_{n\geqslant 1}\) starting at 0. Then, as \(N \rightarrow \infty \),

\[
\frac{\pi }{\log N}\,\textsf{L}_N \xrightarrow {\;(d)\;} Y,
\]

where Y has an exponential distribution with parameter 1.

Theorem A was recently generalised in [17]. In particular,

Theorem B

[17] Let \(h \in \mathbb {N}\) with \(h \geqslant 2\) and \(S^{(1)},\ldots ,S^{(h)}\) be h independent two-dimensional, simple random walks, all starting at zero. Then, for \(\beta \) such that \(|\beta | \in (0,1)\), the total collision time \(\sum _{1\leqslant i<j\leqslant h} \textsf{L}_N^{(i,j)}\) satisfies

\[
\textrm{E}\Big [ e^{\frac{\pi \beta }{\log N} \sum _{1\leqslant i<j\leqslant h} \textsf{L}_N^{(i,j)}} \Big ] \xrightarrow [N\rightarrow \infty ]{} \Big ( \frac{1}{1-\beta } \Big )^{\frac{h(h-1)}{2}}
\]

and, consequently,

\[
\frac{\pi }{\log N} \sum _{1\leqslant i<j\leqslant h} \textsf{L}_N^{(i,j)} \xrightarrow {\;(d)\;} \Gamma \big (\tfrac{h(h-1)}{2},1\big ),
\]

where \( \Gamma \big (\tfrac{h(h-1)}{2},1\big )\) denotes a Gamma variable, which has a density \(\Gamma (h(h-1)/2)^{-1} x^{\tfrac{h(h-1)}{2}-1} e^{-x}\); \(\Gamma (\cdot )\), in the expression of the density, denotes the Gamma function.
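
As a numerical sanity check of the Gamma limit, the sketch below compares the Laplace transform of a \(\Gamma (k,1)\) variable, computed by a plain trapezoid rule, with the closed form \((1-\beta )^{-k}\), which is also the k-th power of the Laplace transform of an \(\text {Exp}(1)\) variable. The function name, the truncation point and the step count are illustrative choices, not part of the original text.

```python
import math

def gamma_mgf_numeric(k, beta, upper=200.0, steps=200_000):
    """Trapezoid approximation of E[exp(beta * X)] for X ~ Gamma(k, 1), beta < 1."""
    dx = upper / steps
    total = 0.0
    for i in range(steps + 1):
        x = i * dx
        # Gamma(k, 1) density multiplied by the exponential tilt exp(beta * x)
        f = x ** (k - 1) * math.exp(-(1.0 - beta) * x) / math.gamma(k)
        total += f * (0.5 if i in (0, steps) else 1.0)
    return total * dx

h = 3                          # number of walks (illustrative)
k = h * (h - 1) // 2           # number of pairs
beta = 0.4
numeric = gamma_mgf_numeric(k, beta)
closed_form = (1.0 - beta) ** (-k)
print(numeric, closed_form)
```

Since \((1-\beta )^{-k}\) factorises over the k pairs, this is the identity that makes a limit of independent exponentials plausible.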

Given the fact that a gamma distribution \(\Gamma (k,1)\), with parameter \(k\geqslant 1\), arises as the distribution of the sum of k independent random variables each one distributed according to an exponential random variable with parameter one (denoted as \(\textrm{Exp}(1)\)), Theorem B raises the question as to whether the joint distribution of the individual rescaled collision times \(\Big \{\frac{\pi }{\log N} \, \textsf{L}_N^{(i,j)}\Big \}_{1\leqslant i<j \leqslant h}\) converges to that of a family of independent \(\text {Exp}(1)\) random variables. This is what we prove in this work. In particular,

Theorem 1.1

Let \(h \in \mathbb {N}\) with \(h\geqslant 2\) and \(\varvec{\beta }:=\{\beta _{i,j}\}_{1\leqslant i<j \leqslant h} \in \mathbb {R}^{\frac{h(h-1)}{2}}\) with \(|\beta _{i,j}| <1\) for all \(1\leqslant i<j\leqslant h\). Then we have that

\[
\textrm{E}\Big [ e^{\sum _{1\leqslant i<j\leqslant h} \frac{\pi \beta _{i,j}}{\log N}\, \textsf{L}_N^{(i,j)}} \Big ] \xrightarrow [N\rightarrow \infty ]{} \prod _{1\leqslant i<j\leqslant h} \frac{1}{1-\beta _{i,j}} \tag{1.2}
\]

and, consequently,

\[
\Big \{ \frac{\pi }{\log N}\, \textsf{L}_N^{(i,j)} \Big \}_{1\leqslant i<j\leqslant h} \xrightarrow {\;(d)\;} \big \{ Y^{(i,j)} \big \}_{1\leqslant i<j\leqslant h},
\]

where \(\big \{ Y^{(i,j)} \big \}_{1\leqslant i<j\leqslant h}\) are independent and identically distributed random variables following an \(\text {Exp}(1)\) distribution.
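
To make the objects in Theorem 1.1 concrete, here is a minimal simulation sketch that samples h independent planar simple random walks and records all pairwise collision local times. The function name, the seed and the values of h and N are illustrative assumptions. Because the normalisation is only logarithmic in N, a simulation of this size should not be expected to reproduce the limiting exponential law accurately.

```python
import math
import random
from itertools import combinations

STEPS = [(1, 0), (-1, 0), (0, 1), (0, -1)]

def collision_local_times(h, N, rng):
    """Return {(i, j): L_N^{(i,j)}} for h independent planar simple random walks from 0."""
    pos = [(0, 0)] * h
    counts = {pair: 0 for pair in combinations(range(1, h + 1), 2)}
    for _ in range(N):
        # move every walk by one independent nearest-neighbour step
        new_pos = []
        for (x, y) in pos:
            dx, dy = rng.choice(STEPS)
            new_pos.append((x + dx, y + dy))
        pos = new_pos
        # record which pairs occupy the same site at this time
        for (i, j) in counts:
            if pos[i - 1] == pos[j - 1]:
                counts[(i, j)] += 1
    return counts

N = 2000
rng = random.Random(0)
sample = collision_local_times(h=3, N=N, rng=rng)
rescaled = {pair: math.pi * c / math.log(N) for pair, c in sample.items()}
print(sample)
print(rescaled)
```

Repeating the experiment over many seeds gives an empirical joint law of the rescaled family, which can be compared with the product of exponentials.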

An intuitive way to understand the convergence of the individual collision times, or equivalently of the local time of a planar walk, to an exponential variable is the following. By (1.1), the number of visits to zero of a planar walk, which starts at zero, is \(O(\log N)\) and, thus, much smaller than the time horizon 2N. Typically, also, these visits happen within a short time, much smaller than 2N, so that every time the random walk is back at zero, the probability that it will return there again before time 2N is not essentially altered. This results in the local time \(\textsf{L}_N\) being asymptotic to a geometric random variable with parameter of order \((\log N)^{-1}\) (as also manifested by (1.1)), which when rescaled suitably converges to an exponential random variable.
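
The last step of this heuristic, that a geometric variable with a small parameter converges to an exponential after rescaling, can be checked directly. The sketch below uses a generic small parameter p as a stand-in for the order \((\log N)^{-1}\) parameter mentioned above; the function name and the values of p are illustrative.

```python
import math

def rescaled_geometric_tail(p, t):
    """P(p * G > t) for G geometric on {0, 1, 2, ...} with P(G >= k) = (1 - p)**k."""
    k = math.floor(t / p) + 1      # G > t/p  iff  G >= floor(t/p) + 1
    return (1.0 - p) ** k

t = 1.0
for p in (1e-1, 1e-2, 1e-4):
    # the middle column approaches exp(-t) as p decreases
    print(p, rescaled_geometric_tail(p, t), math.exp(-t))
```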

The fact that the joint distribution of \( \Big \{ \tfrac{\pi }{\log N} \,\textsf{L}_N^{(i,j)} \Big \}_{1\leqslant i<j\leqslant h} \) converges to that of independent exponentials is much less apparent as the collision times have obvious correlations. A way to understand this is, again, through the fact that collisions happen at time scales much shorter than the time horizon N and, thus, it is not really possible to actually distinguish which pairs collide when collisions take place. More crucially, the logarithmic scaling, as indicated via (1.1), introduces a separation of scales between collisions of different pairs of walks, which is what, essentially, leads to the asymptotic factorisation of the Laplace transform (1.2). This intuition is reflected in the two main steps of our proof, which are carried out in Sects. 3.3 and 3.4.

Even though the Erdős–Taylor theorem appeared a long time ago, the multivariate extension that we establish here appears to be new. In [11] it was shown that the law of \(\tfrac{\pi }{\log N}\, \textsf{L}^{(1,2)}_N\), conditioned on \(S^{(1)}\), converges a.s. to that of an \(\textrm{Exp}(1)\) random variable. This implies that \(\big \{ \tfrac{\pi }{\log N} \,\textsf{L}^{(1,i)}_N\big \}_{1<i\leqslant h}\) converge to independent exponentials. However, it does not address the full independence of the family of all pairwise collisions \(\big \{ \tfrac{\pi }{\log N} \,\textsf{L}^{(i,j)}_N\big \}_{1\leqslant i<j\leqslant h}\).

In the continuum, phenomena of independence in functionals of planar Brownian motions have appeared in works around log-scaling laws; see [18] (where the term log-scaling laws was introduced) as well as [19] and [14]. These works are mostly concerned with the problem of identifying the limiting distribution of windings of a planar Brownian motion around a number of points \(z_1,\ldots ,z_k\), different from the starting point of the Brownian motion, or the winding around the origin of the differences \(B^{(i)}-B^{(j)}\) between k independent Brownian motions \(B^{(1)},\ldots ,B^{(k)}\), all starting from different points, which are also different from zero. Without getting into details, we mention that the results of [14, 18, 19] establish that the windings (as well as some other functionals that fall within the class of log-scaling laws) converge, when logarithmically scaled, to independent Cauchy variables. [14] outlines a proof that the local times of the differences \(B^{(i)}-B^{(j)}, 1\leqslant i<j\leqslant k\), on the unit circle \(\{z\in \mathbb {R}^2 :|z|=1\}\) converge, jointly, to independent exponentials \(\textrm{Exp}(1)\), when logarithmically scaled, in a fashion similar to the scaling of Theorem 1.1. The methods employed in the above works rely heavily on continuous techniques (Itô calculus, time changes etc.), which do not have discrete counterparts. In fact, the passage from continuous to discrete is not straightforward either at a technical level (see e.g. the discussion on page 41 of [14] and [15]) or at a phenomenological level (see e.g. the discussion on page 736 of [18]).

The approach we follow towards Theorem 1.1 starts with expanding the joint Laplace transform in the form of chaos series, which take the form of Feynman-type diagrams. To control (and simplify) these diagrams, we start by inputting a renewal representation as well as a functional analytic framework. The renewal theoretic framework was originally introduced in [3] in the context of scaling limits of random polymers (we will come back to the connection with polymers later on) and it captures the stream of collisions within a single pair of walks. The functional analytic framework can be traced back to works on spectral theory of delta-Bose gases [8, 9] and was also recently used in works on random polymers [4, 12, 17]. The core of this framework is to establish operator norm bounds for the total Green’s functions of a set of planar random walks conditioned on a subset of them starting at the same location and on another subset of them ending up at the same location. Roughly speaking, the significance of these operator estimates is to control the redistribution of collisions when walks switch pairs. The operator framework (together with the renewal one) allows us to reduce the number of Feynman-type diagrams that need to be considered. For the reduced Feynman diagrams, one, then, needs to look into the logarithmic structure, which induces a separation of scales and leads to the fact that, asymptotically, the structure of the Feynman diagrams becomes that of the product of Feynman diagrams corresponding to Laplace transforms of single pairs of random walks.

Relations to random polymers. Exponential moments of collision times arise naturally when one looks at moments of partition functions of the model of directed polymer in a random environment (DPRE); we refer to [5] for an introduction to this model. For a family of i.i.d. variables \(\big ( \omega _{n,x} :n\in \mathbb {N}, x\in \mathbb {Z}^2 \big )\) with log-moment generating function \(\lambda (\beta )\), the partition function of the directed polymer measure is defined as

where \(\textrm{E}_{x}\) is the expected value with respect to a simple, symmetric walk starting at \(x\in \mathbb {Z}^2\). In the case that \(\omega _{n,x}\) is a standard normal variable, an explicit computation, for \(\beta _N:=\beta \sqrt{\tfrac{\pi }{ \log N}}\), gives that

A corollary of our Theorem 1.1 is that the h-th moment of the DPRE partition function converges to \((1-\beta ^2)^{-h(h-1)/2}\); a result that was previously obtained in [17] by combining upper bounds on moments, established in [17], with results on the distributional convergence of the partition function established in [1]. Using Theorem 1.1, we can further extend this to convergence of mixed moments. More precisely, for \(\beta _{i,N}:=\beta _i \sqrt{\tfrac{\pi }{\log N}}\) and \(i=1,\ldots ,h\), we have that

where the equality is again via an explicit computation as in (1.4) when the disorder is standard normal and the convergence follows from Theorem 1.1 after specialising the parameters \(\beta _{i,j}\) to the particular case of \(\beta _i\beta _j\).
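
The explicit computation referred to here rests on a single-site Gaussian identity: if several walks with inverse temperatures \(\beta _1,\ldots ,\beta _m\) sit at the same site, then for standard normal \(\omega \) and \(\lambda (\beta )=\beta ^2/2\) the averaged weight equals \(\exp \big (\sum _{i<j}\beta _i\beta _j\big )\), i.e. one factor per colliding pair. The sketch below verifies this by quadrature; the helper name, the integration window and the sample values of the \(\beta _i\) are illustrative assumptions.

```python
import math
from itertools import combinations

def gaussian_expectation(f, lo=-12.0, hi=12.0, steps=48_000):
    """Trapezoid approximation of E[f(omega)] for a standard normal omega."""
    dx = (hi - lo) / steps
    total = 0.0
    for i in range(steps + 1):
        w = lo + i * dx
        weight = 0.5 if i in (0, steps) else 1.0
        total += weight * f(w) * math.exp(-w * w / 2.0) / math.sqrt(2.0 * math.pi)
    return total * dx

betas = [0.3, 0.5, 0.2]   # hypothetical inverse temperatures of walks sharing a site
lhs = gaussian_expectation(lambda w: math.exp(sum(b * w - b * b / 2.0 for b in betas)))
rhs = math.exp(sum(bi * bj for bi, bj in combinations(betas, 2)))
print(lhs, rhs)
```

Taking the product of this identity over all space-time sites is what turns moments of partition functions into exponential moments of pairwise collision local times.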

Moment estimates on polymer partition functions are important in establishing fine properties, such as structure of maxima, of the field of partition functions \(\big \{ \sqrt{\log N } \big ( \log Z_{N,\beta }(x) -{\mathbb {E}}[\log Z_{N,\beta }(x) ] \big ):x\in \mathbb {Z}^2 \big \}\), which is known to converge to a log-correlated Gaussian field [2]. We refer to [6] for more details. We expect the independence structure of the collision local times, that we establish here, to be useful towards these investigations. An interesting problem, in relation to this (but also of broader interest), is how large the number h of random walks can be (depending on N) before we start seeing correlations in the limit of the rescaled collisions. The work of Cosco–Zeitouni [6] has shown that there exists \(\beta _0 \in (0,1)\) such that for all \(\beta \in (0,\beta _0)\) and \(h=h_N \in \mathbb {N}\) such that

one has that

with \(c(\beta ) \in (0,\infty )\) and \(0\leqslant \epsilon _N =\epsilon (\beta ,N)\downarrow 0\) as \(N \rightarrow \infty \). This suggests that the threshold might be \(h=h_N=O(\sqrt{\log N})\). More recent results [7] imply that the independence fails when the number of walks is \( \gg \log N\). The question of whether there is a critical constant \(c_{crit.}\) such that for a number of walks larger than \(c_{crit.}\log N\) the independence of the collisions fails is an open and interesting problem.

Outline. The structure of the article is as follows: In Sect. 2 we set the framework of the chaos expansion, its graphical representations in terms of Feynman-type diagrams, as well as the renewal and functional analytic frameworks. In Sect. 3 we carry out the approximation steps, which lead to our theorem. At the beginning of Sect. 3 we also provide an outline of the scheme.

We close by mentioning our convention on the constants: whenever a constant depends on specific parameters, we will indicate this at the beginning of the statements but then drop the dependence, while if no dependence on parameters is indicated, then they will be understood as absolute constants.

2 Chaos expansions and auxiliary results

In this section we will introduce the framework, within which we work, and which consists of chaos expansions for the joint Laplace transform

\[
M^{\varvec{\beta }}_{N,h}:=\textrm{E}\Big [ e^{\sum _{1\leqslant i<j\leqslant h} \frac{\pi \beta _{i,j}}{\log N}\, \textsf{L}_N^{(i,j)}} \Big ] \tag{2.1}
\]

for a fixed collection of numbers \(\varvec{\beta }:=\{\beta _{i,j}\}_{1\leqslant i<j \leqslant h} \in \mathbb {R}^{\frac{h(h-1)}{2}}\) with \(|\beta _{i,j} | \in (0,1)\) for all \(1 \leqslant i<j \leqslant h\). We denote by

\[
{\bar{\beta }}:=\max _{1\leqslant i<j\leqslant h} |\beta _{i,j}| <1 \tag{2.2}
\]

and define

\[
\sigma _N^{i,j}:=e^{\beta ^{i,j}_N}-1, \qquad \text {with} \quad \beta ^{i,j}_N:=\frac{\pi \beta _{i,j}}{\log N}. \tag{2.3}
\]

Convention: From now on we will be assuming that all parameters \(\beta _{i,j}\) are nonnegative. We will return to the general case at the very end when discussing the proof of Theorem 1.1.

We will use the notation \(q_n(x):=\textrm{P}(S_n=x)\) for the transition probability of the simple, symmetric random walk. The expected collision local time between two independent simple, symmetric random walks will be

\[
R_N:=\sum _{n=1}^N \sum _{x\in \mathbb {Z}^2} q_n(x)^2=\sum _{n=1}^N q_{2n}(0) \tag{2.4}
\]

and by Proposition 3.2 in [3] we have that in the two-dimensional setting

\[
R_N=\frac{\log N}{\pi }+\frac{\alpha }{\pi }+o(1), \tag{2.5}
\]

as \(N\rightarrow \infty \), with \(\alpha =\gamma +\log 16 -\pi \simeq 0.208\) and \(\gamma \simeq 0.577\) is the Euler constant.
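
The asymptotics of \(R_N\) can be checked numerically. The sketch below uses the classical formula \(q_{2n}(0)=\big (\binom{2n}{n}4^{-n}\big )^2\) for the planar simple random walk and compares the exact sum with \((\log N+\alpha )/\pi \). The function name and the choice of N are illustrative.

```python
import math

def expected_collision_time(N):
    """R_N = sum_{n=1}^N q_{2n}(0), with q_{2n}(0) = (binom(2n, n) / 4**n)**2."""
    u = 1.0                            # binom(2n, n) / 4**n at n = 0
    total = 0.0
    for n in range(1, N + 1):
        u *= (2 * n - 1) / (2 * n)     # ratio of consecutive central binomial weights
        total += u * u
    return total

EULER_GAMMA = 0.5772156649015329
ALPHA = EULER_GAMMA + math.log(16) - math.pi

N = 100_000
exact = expected_collision_time(N)
approx = (math.log(N) + ALPHA) / math.pi
print(exact, approx, exact - approx)
```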

2.1 Chaos expansion for two-body collisions and renewal framework

We start with the Laplace transform of the simple case of two-body collisions \( \textrm{E}\Big [e^{\beta ^{i,j}_N\, \textsf{L}^{(i,j)}_N}\Big ]\) and deduce its chaos expansion as follows:

where in the last equality we used the Markov property, in the third we expanded the product and in the second we used the simple fact that

\[
e^{\beta ^{i,j}_N \mathbb {1}_{\{S_n^{(i)}=S_n^{(j)}\}}}=1+\sigma ^{i,j}_N\, \mathbb {1}_{\{S_n^{(i)}=S_n^{(j)}\}},
\]

with \(\sigma ^{i,j}_{N}\) defined in (2.3). We will express (2.6) in terms of the following quantity \(U^{\beta }_N(n,x)\), which plays an important role in our formulation. For \(\sigma _N:=\sigma _N(\beta ):=e^{\frac{\pi \beta }{\log N}}-1\) and \((n,x)\in \mathbb {N}\times \mathbb {Z}^2\), we define

and \(U_N^{\beta }(n,x):=\mathbb {1}_{\{x=0\}}\), if \(n=0\). Moreover, for \(n\in \mathbb {N}\) we define

\(U^{\beta }_N(n,x)\) represents the Laplace transform of the two-body collisions, scaled by \(\beta \), between a pair of random walks that are constrained to end at the spacetime point \((n,x) \in \{1,\dots ,N\}\times \mathbb {Z}^2\), starting from (0, 0). In particular, for any \(1\leqslant i <j\leqslant h\), we can write (2.6) as

We will call \(U^{\beta }_N(n,x)\) a replica and for \(\sigma _N(\beta )=e^{\frac{\pi \beta }{\log N}}-1\) we will graphically represent \(\sigma _N(\beta )\, U^{\beta }_N(n,x)\) as

In the second line we have assigned weights \(q_{n'-n}(x'-x)\) to the solid lines going from (n, x) to \((n',x')\) and we have assigned the weight \(\sigma _N(\beta )=e^{\frac{\pi \beta }{\log N}}-1\) to every solid dot.
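
The algebra behind the chaos expansion is the identity \(e^{\beta \mathbb {1}}=1+(e^{\beta }-1)\mathbb {1}\) for an indicator, followed by expanding the product over times. The sketch below checks this on a fixed, hypothetical collision pattern: the sum over all k-subsets of times of \(\sigma ^k\) times the product of indicators reproduces \(e^{\beta \cdot \#\text {collisions}}\). The pattern and the value of \(\beta \) are illustrative.

```python
import math
from itertools import combinations

def chaos_expansion(indicators, beta):
    """Sum over k and over k-subsets of times of sigma**k * product of the indicators."""
    sigma = math.exp(beta) - 1.0
    total = 0.0
    for k in range(len(indicators) + 1):
        for subset in combinations(indicators, k):
            total += sigma ** k * math.prod(subset)   # empty product equals 1
    return total

indicators = [1, 0, 1, 1, 0, 0, 1, 0]   # hypothetical collision pattern over 8 time steps
beta = 0.3
direct = math.exp(beta * sum(indicators))
expanded = chaos_expansion(indicators, beta)
print(direct, expanded)
```

Taking expectations term by term and applying the Markov property to each product of indicators then yields the diagrammatic weights described above.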

\(U^{\beta }_N(n)\) and \(U^{\beta }_N(n,x)\) admit a very useful probabilistic interpretation in terms of certain renewal processes. More specifically, consider the family of i.i.d. random variables \((T^{\scriptscriptstyle (N)}_i, X^{\scriptscriptstyle (N)}_i)_{i \geqslant 1}\) with law

and \(R_N\) defined in (2.4). Define the random variables \(\tau ^{\scriptscriptstyle (N)}_k:=T_1^{\scriptscriptstyle (N)}+\dots +T_k^{\scriptscriptstyle (N)}\), \(S^{\scriptscriptstyle (N)}_k:=X^{\scriptscriptstyle (N)}_1+\dots +X^{\scriptscriptstyle (N)}_k\), if \(k \geqslant 1\), and \((\tau _0,S_0):=(0,0)\), if \(k=0\). It is not difficult to see that \(U^{\beta }_N(n,x) \) and \(U^{\beta }_N(n) \) can, now, be written as

This formalism was developed in [3] and is very useful in obtaining sharp asymptotic estimates. In particular, it was shown in [3] that the rescaled process \(\Big (\frac{\tau ^{(N)}_{\lfloor s\log N\rfloor }}{N}, \frac{S^{(N)}_{\lfloor s\log N\rfloor }}{\sqrt{N}} \Big )\) converges in distribution for \(N\rightarrow \infty \) with the law of the marginal limiting process for \(\tfrac{\tau ^{(N)}_{\lfloor s\log N\rfloor }}{N}\) being the Dickman subordinator, which was defined in [3] as a truncated, zero-stable Lévy process.
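
A numerical sketch of the renewal structure follows. It assumes, as a reading of the definitions above, that \(U^{\beta }_N(n)\) is the sum over \(k\geqslant 1\) and over collision times \(0<n_1<\dots <n_k=n\) of \(\sigma _N^k \prod _j q_{2(n_j-n_{j-1})}(0)\), so that it satisfies the renewal equation \(U(n)=\sigma _N\sum _{m<n}U(m)\,q_{2(n-m)}(0)\) with \(U(0)=1\). Under this assumption \(\sum _{n=0}^N U(n)\) is the two-body Laplace transform, and it is sandwiched between \(1+\sigma _N R_N\) and \((1-\sigma _N R_N)^{-1}\). Function names and parameter values are illustrative.

```python
import math

def return_probabilities(N):
    """q_{2n}(0) for n = 0, ..., N, for the planar simple random walk."""
    u = [1.0]
    for n in range(1, N + 1):
        u.append(u[-1] * (2 * n - 1) / (2 * n))
    return [v * v for v in u]

def renewal_sums(beta, N):
    """Return (sum_{n=0}^N U(n), sigma_N * R_N) via the renewal equation for U."""
    sigma = math.exp(math.pi * beta / math.log(N)) - 1.0
    q = return_probabilities(N)
    U = [1.0] + [0.0] * N
    for n in range(1, N + 1):
        # condition on the time m of the previous collision (m = 0 is the start)
        U[n] = sigma * sum(U[m] * q[n - m] for m in range(n))
    R_N = sum(q[1:])
    return sum(U), sigma * R_N

beta, N = 0.5, 2000
laplace, sigma_R = renewal_sums(beta, N)
print(laplace, 1.0 + sigma_R, 1.0 / (1.0 - sigma_R), 1.0 / (1.0 - beta))
```

The upper bound simply drops the constraint that the total renewal time is at most N. The distance to the limit \(1/(1-\beta )\) closes only at a logarithmic rate in N.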

An estimate that follows easily from this framework, which is useful for our purposes here, is the following: for \(\beta <1\), it holds

where we used the fact that

2.2 Chaos expansion for many-body collisions

We now move to the expansion of the Laplace transform \(M^{\varvec{\beta }}_{N,h}\) of the many-body collisions. The goal is to obtain an expansion in the form of products of certain Markovian operators. The desired expression will be presented in (2.17). This expansion will be instrumental in obtaining some important estimates in Sect. 2.3.

The first steps are similar to those in the expansion for the two-body collisions above. In particular, we have

where the last sum is over k distinct elements of the set

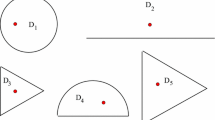

Fig. 1. This is a graphical representation of expansion (2.11) corresponding to the collisions of four random walks, each starting from the origin. Each solid line will be marked with the label of the walk that it corresponds to throughout the diagram. Each solid dot, which marks a collision among a subset A of the random walks, is given a weight \(\prod _{ i,j\in A} \sigma _N^{i,j}\). Any solid line between points (m, x), (n, y) is assigned the weight of the simple random walk transition kernel \(q_{n-m}(y-x)\). The hollow dots are assigned weight 1 and they mark the places where we simply apply the Chapman–Kolmogorov formula

The graphical representation of expansion (2.11) is depicted in Fig. 1. There, we have marked with black dots the space-time points (n, x) where some of the walks collide and we have assigned to each the weight \(\prod _{1\leqslant i<j \leqslant h}\sigma _N^{i,j} \mathbb {1}_{\{S_n^{(i)}=S_n^{(j)}=x \}}\).
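
The Chapman–Kolmogorov formula \(q_{m+n}(x)=\sum _{y} q_m(y)\,q_n(x-y)\) applied at the hollow dots, and its special case \(\sum _{x} q_n(x)^2=q_{2n}(0)\) that underlies the collision weights, can be verified exactly for small times. The sketch below builds the kernels by direct enumeration; the function names and the choice of m and n are illustrative.

```python
from collections import defaultdict
from math import comb

STEPS = [(1, 0), (-1, 0), (0, 1), (0, -1)]

def transition_kernel(n):
    """q_n as a dict {x: P(S_n = x)} for the planar simple random walk from 0."""
    q = {(0, 0): 1.0}
    for _ in range(n):
        nxt = defaultdict(float)
        for (x, y), p in q.items():
            for dx, dy in STEPS:
                nxt[(x + dx, y + dy)] += p / 4.0
        q = dict(nxt)
    return q

def convolve(qa, qb):
    """Spatial convolution of two kernels, i.e. the Chapman-Kolmogorov sum."""
    out = defaultdict(float)
    for (x1, y1), p in qa.items():
        for (x2, y2), r in qb.items():
            out[(x1 + x2, y1 + y2)] += p * r
    return dict(out)

m, n = 3, 5
q_n = transition_kernel(n)
q_mn = transition_kernel(m + n)
ck = convolve(transition_kernel(m), q_n)
collision = sum(p * p for p in q_n.values())       # sum_x q_n(x)^2
return_prob = (comb(2 * n, n) / 4 ** n) ** 2       # q_{2n}(0)
print(collision, return_prob)
```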

We now want to write the above expansion as a convolution of Markovian operators, following the Markov property of the simple random walks. We can partition the time interval \(\{0,1,\ldots ,N\}\) according to the times when collisions take place; these are depicted in Fig. 1 by vertical lines. In between two successive times m, n, the walks will move from their locations \((x^{(i)})_{i=1,\ldots ,h}\) at time m to their new locations \((y^{(i)})_{i=1,\ldots ,h}\) at time n (some of which might coincide) according to their transition probabilities, giving a total weight to this transition of \(\prod _{i=1}^h q_{n-m}(y^{(i)}-x^{(i)})\). We, now, want to encode in this product the coincidences that may take place within the sets \((x^{(i)})_{i=1,\ldots ,h}\) and \((y^{(i)})_{i=1,\ldots ,h}\). To this end, we consider partitions I of the set of indices \(\{1,\ldots ,h\}\), which we denote by \(I\vdash \{1,\ldots ,h\}\). We also denote by |I| the number of parts of I. Given a partition \(I \vdash \{1,\dots ,h\}\), we define an equivalence relation \({\mathop {\sim }\limits ^{I}}\) in \(\{1,\dots ,h\}\) such that \(k {\mathop {\sim }\limits ^{I}} \ell \) if and only if k and \(\ell \) belong to the same part of partition I. Given a vector \(\varvec{y}=(y_1,\dots ,y_h) \in (\mathbb {Z}^2)^h\) and \(I \vdash \{1,\dots ,h\}\), we shall use the notation \(\varvec{y}\sim I\) to mean that \(y_k=y_\ell \) for all pairs \(k {\mathop {\sim }\limits ^{I}}\ell \). We use the symbol \(\circ \) to denote the one-part partition, that is, \(\circ :=\{1,\dots ,h\}\), and \(*\) to denote the partition consisting only of singletons, that is \(*:=\bigsqcup _{i=1}^h \{i\}\). Moreover, given \(I \vdash \{1,\dots ,h\}\) such that \(|I|=h-1\) and \(I=\{i,j\}\sqcup \bigsqcup _{k \ne i,j} \{k\}\), by slightly abusing notation, we may identify and denote I by its non-trivial part \(\{i,j\}\).
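
The partition formalism is easy to make concrete. The sketch below enumerates all partitions \(I\vdash \{1,\ldots ,h\}\), tests the constraint \(\varvec{y}\sim I\), and confirms that the partitions with \(|I|=h-1\) are in bijection with the pairs \(\{i,j\}\). The function names and the sample vectors are illustrative.

```python
def set_partitions(elements):
    """Yield all partitions of a list, each as a list of blocks."""
    if not elements:
        yield []
        return
    first, rest = elements[0], elements[1:]
    for partition in set_partitions(rest):
        # put `first` into one of the existing blocks ...
        for idx in range(len(partition)):
            yield partition[:idx] + [[first] + partition[idx]] + partition[idx + 1:]
        # ... or let it form a new singleton block
        yield [[first]] + partition

def respects(y, partition):
    """True if y ~ I: y_k == y_l whenever k and l lie in the same block (1-based labels)."""
    return all(y[k - 1] == y[block[0] - 1] for block in partition for k in block)

h = 4
partitions = list(set_partitions(list(range(1, h + 1))))
pairwise = [I for I in partitions if len(I) == h - 1]   # exactly one pair {i, j} coincides
print(len(partitions), len(pairwise))

y = [(0, 0), (0, 0), (1, 2), (3, 4)]
print(respects(y, [[1, 2], [3], [4]]), respects(y, [[1, 3], [2], [4]]))
```

Note that \(\varvec{y}\sim I\) only forces equalities within parts; it does not require points in different parts to be distinct, so every vector respects the all-singletons partition \(*\).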

Given this formalism, we denote the total transition weight of the h walks, from points \(\varvec{x}=(x^{(1)},\ldots ,x^{(h)})\in (\mathbb {Z}^2)^h\), subject to constraints \(\varvec{x}\sim I\) at time m, to points \(\varvec{y}=(y^{(1)},\ldots ,y^{(h)})\in (\mathbb {Z}^2)^h\), subject to constraints \(\varvec{y}\sim J\) at time n, by

We will call this operator the constrained evolution. Furthermore, for a partition \(I \vdash \{1,\dots ,h\}\) and \(\varvec{\beta }=\{\beta _{i,j}\}_{1\leqslant i<j \leqslant h}\) we define the mixed collision weight subject to I as

with \(\sigma _N^{i,j} \) as defined in (2.3). We can then rewrite (2.11) in the form

Fig. 2. This is a simplified version of the graphical representation in Fig. 1 of the expansion (2.14), where we have grouped together the blocks of consecutive collisions between the same pair of random walks. These are now represented by the wiggle lines (replicas) and we call the evolution in strips that contain only one replica a replica evolution (although strip seven is the beginning of another wiggle line, we have not represented it as such since we have not completed the picture beyond that point). The wiggle lines (replicas) between points (n, x), (m, y), corresponding to collisions of a single pair of walks \(S^{(k)}, S^{(\ell )}\), are assigned weight \(U_N^{\beta _{k,\ell }}(m-n, y-x)\). A solid line between points (m, x), (n, y) is assigned the weight of the simple random walk transition kernel \(q_{n-m}(y-x)\).

We want to make one more simplification in this representation, which, however, contains an important structural feature. This is to group together consecutive constrained evolution operators \(\sigma _N(I_i) Q^{I_{i-1};I_i}_{n_i-n_{i-1}}(\varvec{x}_{i-1},\varvec{x}_i) \) for which \(I_{i-1}=I_i\) and \(|I_i|=h-1\). An example in Fig. 1 is the sequence of evolutions in the first three strips and another one is the group of evolutions in strips five and six. Such groupings can be captured by the following definition: For a partition \(I\vdash \{1,\dots ,h\}\) of the form \(I=\{k,\ell \} \sqcup \bigsqcup _{j\ne k,\ell }\{j\}\) and \(\varvec{x}=(x^{(1)},\dots ,x^{(h)})\), \(\varvec{y}=(y^{(1)},\dots ,y^{(h)}) \in (\mathbb {Z}^2)^h\), we define the replica evolution as

with \(U^{\beta }_{N}(n,y^{(k)}-x^{(k)})\) defined in (2.7). We name this replica evolution since in the time interval [0, n] we see a stream of collisions between only two of the random walks. The simplified version of expansion (2.14) (and Fig. 1) is presented in Fig. 2.

In order to re-express (2.14) with the reduction of the replica evolution (2.15), we need to introduce one more formalism, which is

where we recall that |J| is the number of parts of J and so \(|J|=h-1\) means that J has the form \(\{k,\ell \}\sqcup \bigsqcup _{i\ne k,\ell } \{i\}\), corresponding to a pairwise collision, while \(|J|<h-1\) means that there are multiple collisions (the latter would correspond to the end of the eighth strip in Fig. 1). In other words, the operator \(P_n^{I;J}\) groups together each replica evolution with its preceding constrained evolution.

We, finally, arrive at the desired expression for the Laplace transform of the many-body collisions:

2.3 Functional analytic framework and some auxiliary estimates

Let us start with some, fairly easy, bounds on operators Q and \(\textsf{U}\) (with the estimate on the latter being an upgrade of estimate (2.9)).

Lemma 2.1

Let the operators \(Q^{I;J}_n, \textsf{U}^J_n\) be defined in (2.12) and (2.15), respectively. For all partitions \(I\ne J\) with \(|J|=h-1\), \({{\bar{\beta }}}<1\) defined in (2.2) and \(\sigma _N(I)\) defined in (2.13), we have the bounds

for any \({\bar{\beta }}\,' \in ({{\bar{\beta }}}, 1)\) and all large enough N.

Proof

We start by proving the first bound in (2.18). By definition (2.15) we have that

by using that \(\sum _{z \in \mathbb {Z}^2} q_n(z)=1\) to sum all the kernels \(q_n(y^{(j)}-x^{(j)})\) for \(j\ne k,\ell \) and \(\sum _{z \in \mathbb {Z}^2}U^{\beta }_N(n,z)=U^{\beta }_N(n)\). Moreover, by definition (2.7) and (2.3), since \(\beta _{k,\ell } \leqslant \bar{\beta }\), we have

and by (2.10) we have that for any \({{\bar{\beta }}}\,'\in ({{\bar{\beta }}}, 1)\) and all N large enough

Therefore,

For the second bound in (2.18) we recall from (2.12) that when \(J=\{k,\ell \} \sqcup \bigsqcup _{j \ne k,\ell } \{j\}\), then

since \(\varvec{y}\sim J\) means that \(y_k=y_\ell \). Therefore, \(\sigma _N(J) =\sigma _N(\beta _{k,\ell })\leqslant \sigma _N({{\bar{\beta }}})\). We, now, use that \(\sum _{z \in \mathbb {Z}^2}q_n(z)=1\) in order to sum the kernels \(q_n(y^{(j)}-x^{(j)}), \, j \ne k,\ell \), while we also have by Cauchy–Schwarz that

by (2.10), for all N large enough, thus establishing the second bound in (2.18). \(\square \)

Next, in Proposition 2.2, we are going to recall some norm estimates from [17] on the Laplace transform of operators \(P^{I;J}_{n}\), defined in (2.16). For this, we need to set up the functional analytic framework. We start by defining \((\mathbb {Z}^2)^h_I:=\{\varvec{y}\in (\mathbb {Z}^2)^h: \varvec{y}\sim I\}\) and, for \(q \in (1,\infty )\), the \(\ell ^q((\mathbb {Z}^2)^h_I)\) space of functions \(f:(\mathbb {Z}^2)^h_I \rightarrow \mathbb {R}\) which have finite norm

For \(q \in (1,\infty )\) and for an operator \(\mathsf T:\ell ^q\big ((\mathbb {Z}^2)^h_J\big )\rightarrow \ell ^q\big ((\mathbb {Z}^2)^h_I\big )\) with kernel \(\mathsf T(\varvec{x},\varvec{y})\), one can define the pairing

The operator norm will be given by

for \(p,q \in (1,\infty )\) conjugate exponents, i.e. \(\frac{1}{p}+\frac{1}{q}=1\).

We introduce the weighted Laplace transforms of operators \(Q^{I;J}\) and \(\textsf{U}^J\). In particular, let w(x) be any continuous function in \(L^\infty (\mathbb {R}^2)\cap L^1(\mathbb {R}^2)\) such that \(\log w(x)\) is Lipschitz (one can think of \(w(x)=e^{-|x|}\)) and define \(w_N(x):=w(x/\sqrt{N})\). Also, for a function \(g:\mathbb {R}^2\rightarrow \mathbb {R}\) we define the tensor product \(g^{\otimes h}(x_1,\ldots ,x_h)=g(x_1)\cdots g(x_h)\). The weighted Laplace transforms are now defined as

The passage to a Laplace transform will help to estimate convolutions involving operators \(Q^{I;J}_{n}(\varvec{x},\varvec{y})\) and \(\textsf{U}^{J}_{n}(\varvec{x},\varvec{y})\) and the introduction of the weight comes in handy in improving integrability when these operators are applied to functions which are not in \(\ell ^1((\mathbb {Z}^2)^h)\). We will see this in Lemma 2.3 below.

We also define the Laplace transform operator of the combined evolution (2.16):

For our purposes, it will be sufficient to take \(\lambda =0\) and consider operators \({\widehat{\textsf{Q}}}^{I;J}_{N,0}, {\widehat{\textsf{U}}}^J_{N,0}\) and \( {{\widehat{\textsf{P}}}}^{I;J}_{N,0}\).

Using the above formalism we summarise in the next proposition some key estimates of [17] (see Propositions 3.2–3.4 and the proof of Theorem 1.3 therein), which are refinements of estimates in [4] (Section 6) and [8] (Section 3).

Proposition 2.2

Consider the operators \({\widehat{\textsf{Q}}}^{I;J}_{N,0}\) and \( {{\widehat{\textsf{P}}}}^{I;J}_{N,0}\) defined in (2.21) and (2.22) with \(\lambda =0\) and a weight function \(w\in L^\infty (\mathbb {R}^2)\cap L^1(\mathbb {R}^2)\) such that \(\log w(x)\) is Lipschitz. Then there exists a constant \(C=C(h,{\bar{\beta }},w) \in (0, \infty )\) (recall \({{\bar{\beta }}}\) from (2.2)) such that for all \(p,q\in (1,\infty )\) with \(\frac{1}{p}+\frac{1}{q}=1\) and all partitions \(I,J \vdash \{1,\dots ,h\}\), such that \(|I|,|J|\leqslant h-1\) and \(I \ne J\) when \(|I|=|J|=h-1\), we have that

Moreover, if \(g \in \ell ^q(\mathbb {Z}^2)\),

for \(g^{\otimes h}(x_1,\ldots ,x_h):=g(x_1)\cdots g(x_h)\).

Let us now present the following lemma, which demonstrates how the above functional analytic framework will be used. This lemma will be useful in the first approximation, which we will perform in the next section, in showing that contributions from multiple, i.e. three or more, collisions are negligible.

Lemma 2.3

Let \(H_{r,N}\) be the \(r^{th}\) term in the expansion (2.17), that is,

and \(H^{\mathsf {(multi)}}_{r,N}\) be the corresponding term with the additional constraint that there is at least one multiple collision (i.e. at some point, three or more walks meet), that is,

Then the following bounds hold:

for any \(p,q\in (1,\infty )\) with \(\tfrac{1}{p}+\tfrac{1}{q}=1\) and a constant C that depends on h and \( {{\bar{\beta }}}\) but is independent of N, r, p, q.

Proof

We start by considering \(w(x)=e^{-|x|}, w_N(x):=w(\tfrac{x}{\sqrt{N}})\) and \(w_N^{\otimes h}(x_1,\ldots ,x_h)=\prod _{i=1}^h w_N(x_i)\) and by including in the expression (2.25) the term

thus rewriting \(H_{r,N}\) as

We can extend the summation on \(\varvec{x}_0\) from \(\varvec{x}_0=0\) to \(\varvec{x}_0\in (\mathbb {Z}^2)^h\) by introducing a delta function \(\delta _0^{\otimes h}\) at zero. Then

We can, now, bound the last expression by extending the temporal range of summations from \(1\leqslant n_1<\dots <n_r\leqslant N\) to \(n_i-n_{i-1} \in \{1,\dots , N\}\) for all \(i=1,\ldots ,r\). Recalling the definition of the Laplace transforms of the operators (2.21), (2.22), we, thus, obtain the upper bound

which we can write in the more compact and useful notation, using the brackets (2.19), as

We note, here, that in the right-hand side we set the \(I_0\) partition to be equal to \(I_0=\{1\}\sqcup \cdots \sqcup \{h\}\). In this case \({\widehat{\textsf{P}}}^{I_{0};I_1}={\widehat{\textsf{P}}}^{*;I_1} ={\widehat{\textsf{Q}}}^{*;I_1} \) by definition (2.22). The delta function \(\delta _0^{\otimes h}(\varvec{x}_0)\) will force all points of \(\varvec{x}_0\) to coincide at zero, thus, forcing \(I_0\) to be equal to the partition \(\circ =\{1,\ldots ,h\}\) but, at the stage of operators, we do not yet need to enforce this constraint. At this stage we can proceed with the estimate using the operator norms (2.20) as

By (2.24) of Proposition 2.2 we have that

and by (2.23) we have that for all \(1\leqslant i \leqslant r-1\),

Inserting these estimates in (2.27) we deduce that

for a constant \(C=C(h,{\bar{\beta }})\in (0,\infty )\), not depending on p, q, r, N. We now notice that for any partition \(I\vdash \{1,\ldots ,h\}\), it holds that \(\sigma _N(I)\leqslant C/ \log N\) (recall definitions (2.13) and (2.3)), so

Moreover, by Riemann summation, \(N^{-h/q}\left\Vert w_N\right\Vert _{\ell ^q}^{h}\) is bounded uniformly in N. Therefore, applying these on (2.28) we arrive at the bound

for a new constant \(C=C(h,{\bar{\beta }})\in (0,\infty )\), which is the first claimed estimate in (2.26). For the second estimate in (2.26) we follow the same steps until we arrive at the bound

Then we notice that for a partition \(I\vdash \{1,\ldots ,h\}\) with \(|I|<h-1\) it will hold that \(\sigma _N(I)\leqslant C (\log N)^{-2}\) (recall definitions (2.13) and (2.3)). This fact, together with the fact that there are r possible choices among the partitions \(I_1,\ldots ,I_r\) that can be chosen so that \(|I_j|<h-1\), leads to the second bound in (2.26). \(\square \)
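
The counting behind the bound \(\sigma _N(I)\leqslant C(\log N)^{-2}\) can be made explicit. Assuming, as the figure captions suggest, that \(\sigma _N(I)\) is the product of \(\sigma _N^{i,j}\) over all pairs \(i<j\) in the same part of I, each such pair contributes a factor of order \((\log N)^{-1}\). The sketch below lists the block-size profiles of partitions of \(\{1,\ldots ,h\}\) and records the minimal number of coinciding pairs for each number of parts. The function names and the choice h = 6 are illustrative.

```python
from math import comb

def integer_partitions(n, largest=None):
    """Yield block-size profiles of partitions of an n-element set (non-increasing tuples)."""
    if largest is None:
        largest = n
    if n == 0:
        yield ()
        return
    for first in range(min(n, largest), 0, -1):
        for tail in integer_partitions(n - first, first):
            yield (first,) + tail

def colliding_pairs(block_sizes):
    """Number of pairs i < j lying in a common block."""
    return sum(comb(s, 2) for s in block_sizes)

h = 6
min_pairs = {}
for sizes in integer_partitions(h):
    parts = len(sizes)
    min_pairs[parts] = min(min_pairs.get(parts, float("inf")), colliding_pairs(sizes))
print(min_pairs)
```

For \(|I|=h-1\) there is exactly one coinciding pair, while every partition with \(|I|<h-1\) has at least two, which is the source of the extra factor \((\log N)^{-1}\) in the multiple-collision estimate.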

3 Approximation steps and proof of the theorem

In this section we prove Theorem 1.1 through a series of approximations on the chaos expansion (2.11), (2.17). The first step, in Sect. 3.1, is to establish that the series in the chaos expansion (2.17) can be truncated up to a finite order and that the main contribution comes from diagrams where, at any fixed time, at most two walks collide. The second step, in Sect. 3.2, is to show that the main contribution to the expansion, and to diagrams like the one in Fig. 3, comes when all jumps between marked dots (see Fig. 3) happen within the diffusive scale. The third step, in Sect. 3.3, captures the important feature of scale separation. This is intrinsic to the two-dimensionality and can be seen as the main feature that leads to the asymptotic independence of the collision times. With reference to Fig. 3, this says that the time between two consecutive replicas, say \(a_4-b_3\) in Fig. 3, must be much larger than the time between the previous replicas, say \(b_3-b_2\). This then leads to the next step in Sect. 3.4, see also Fig. 4, which is that we can rewire the links so that the solid lines connect only replicas between the same pairs of walks. The final step, which is performed in Sect. 3.5, is to reverse all the above approximations within the rewired diagrams, at which we arrived in the previous step. The summation, then, of all rewired diagrams leads, in the limit, to the right-hand side of (1.2), thus completing the proof of the theorem.

3.1 Reduction to 2-body collisions and finite order chaoses

In this step, we use the functional analytic framework and estimates of the previous section to show that for each \(r \geqslant 1\), \(H_{r,N}\) decays exponentially in r, uniformly in \(N \in \mathbb {N}\) and that it is concentrated on configurations which contain only two-body collisions between the h random walks.

Proposition 3.1

There exist constants \(\textsf{a}\in (0,1)\) and \({\bar{C}}=C(h,{\bar{\beta }},\textsf{a}) \in (0,\infty )\) such that for all \(r \geqslant 1\),

Proof

We use the estimates in (2.26) and make the choice \(q=q_N:=\frac{\textsf{a}}{C_1} \log N \) with \(\textsf{a}\in (0,1)\) and a constant \(C_1\) such that \(\frac{Cpq}{\log N} <\textsf{a}\) (recall that \(\tfrac{1}{p}+\tfrac{1}{q}=1\)). Moreover, this choice of q implies that

Therefore, choosing \({\bar{C}}=\, e^{\frac{C_1\, (h+1)}{\textsf{a}}}\) implies the first estimate in (3.1).

The second estimate follows from the same procedure and the same choice of \(q=q_N:=\frac{\textsf{a}}{C_1} \log N \) in the second bound of (2.26). \(\square \)
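The elementary computation behind the choice of \(q_N\) can be spelled out as follows. This is only a sketch under the assumption that the relevant power of N is \((h+1)/q\), which we read off from the stated value of \({\bar{C}}\); the constants are those of the proof above.

```latex
% With q_N = (a / C_1) log N, the N-dependence of N^{1/q_N} disappears:
N^{1/q_N} \;=\; \exp\Big(\frac{\log N}{q_N}\Big) \;=\; \exp\Big(\frac{C_1}{\textsf{a}}\Big),
\qquad\text{hence}\qquad
N^{(h+1)/q_N} \;=\; e^{\frac{C_1\,(h+1)}{\textsf{a}}} \;=\; {\bar{C}} .
% Moreover p_N = q_N/(q_N-1) \to 1 as N \to \infty, and
\frac{C\, p_N\, q_N}{\log N} \;=\; \frac{C\,\textsf{a}}{C_1}\, p_N \;<\; \textsf{a}
\qquad\text{as soon as}\qquad C_1 > C\, p_N .
```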

Proposition 3.2

If \(M^{\varvec{\beta }}_{N,h}\) is the joint Laplace transform of the collision local times \( \Big \{ \tfrac{\pi }{\log N} \,\textsf{L}_N^{(i,j)} \Big \}_{1\leqslant i<j\leqslant h} \), (2.1) and \(H_{r,N}\) is the \(r^{th}\) term in its chaos expansion (2.25), then for any \(\epsilon >0\) there exists \(K=K_\epsilon \) such that

uniformly for all \(N \in \mathbb {N}\).

Proof

By Proposition 3.1, \(H_{r,N}\) decays exponentially in r, uniformly in \(N \in \mathbb {N}\), and therefore

which means that we can truncate the expansion of \(M^{\varvec{\beta }}_{N,h}\) to a finite number of terms K depending only on \(\epsilon \). \(\square \)
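The truncation argument can be made concrete with a short numerical sketch. It assumes, for illustration only, a geometric bound of the form \(|H_{r,N}|\leqslant {\bar{C}}\,\textsf{a}^r\); the function names and the sample values of the constants are hypothetical and do not come from the estimates above.

```python
def geometric_tail(c_bar: float, a: float, k: int) -> float:
    """Closed form of sum_{r > k} c_bar * a**r for 0 < a < 1."""
    return c_bar * a ** (k + 1) / (1.0 - a)


def truncation_order(c_bar: float, a: float, eps: float) -> int:
    """Smallest K >= 0 such that the tail sum_{r > K} c_bar * a**r is <= eps.

    Assumption (illustrative): the r-th chaos term is bounded by c_bar * a**r,
    uniformly in N, so K depends on eps only.
    """
    if not (0.0 < a < 1.0) or c_bar <= 0.0 or eps <= 0.0:
        raise ValueError("need 0 < a < 1, c_bar > 0 and eps > 0")
    k = 0
    while geometric_tail(c_bar, a, k) > eps:
        k += 1
    return k


# Hypothetical constants, chosen only to illustrate the dependence on eps.
print(truncation_order(c_bar=10.0, a=0.5, eps=1e-3))  # 14
```

Since the assumed bound does not depend on N, neither does the resulting K, which is the point of the uniformity statement.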

By Proposition 3.1 we can focus on only two-body collisions, since higher order collisions bear a negligible contribution as \(N\rightarrow \infty \). Let us introduce some notation to conveniently describe the expansion of \(H_{r,N}\), after the reduction to only two-body collisions, which we will use in the sequel. Given \(r\geqslant 1\) we will denote by \(a_i,b_i \in \mathbb {N}\cup \{0\}\), \(a_i\leqslant b_i\), \(i=1,\dots ,r\) the times where replicas start and end respectively, see (2.15) and Fig. 2, where replicas are represented by wiggle lines. Thus, \(a_i\) will be the time marking the beginning of the \(i^{th}\) wiggle line and \(b_i\) the time marking its end. Note that, \(a_1=0\). Moreover, we use the notation \(\vec {\varvec{x}}=(\varvec{x}_1,\varvec{x}_2,\dots ,\varvec{x}_r) \in (\mathbb {Z}^{2})^{hr}\) to denote the starting points of the r replicas and \(\vec {\varvec{y}}=(\varvec{y}_1,\dots ,\varvec{y}_r) \in (\mathbb {Z}^{2})^{hr}\) the corresponding ending points. Again, notice that \(\varvec{x}_1=\varvec{0}\). We then define the set

We also define a set of finite sequences of partitions

Using the notational conventions outlined above we can write \( H_{r,N}= H^{(2)}_{r,N}+H^{\mathsf {(multi)}}_{r,N}\) with

In the next sections we will focus on \(H^{(2)}_{r,N}\), which by Proposition 3.1 contains the main contributions.

3.2 Diffusive spatial truncation

In this step we show that we can introduce diffusive spatial truncations in all the kernels appearing in (3.3) which originate from the diffusive behaviour of the simple random walk in \(\mathbb {Z}^2\). For a vector \(\varvec{x}=(x^{(1)},\dots ,x^{(h)}) \in (\mathbb {Z}^2)^h\), we shall use the notation

where \(|\cdot |\) denotes the usual Euclidean norm on \(\mathbb {R}^2\). For each \(r \in \mathbb {N}\), define \(H^{\mathsf {(diff)}}_{r,R,N}\) to be the sum in (3.3) where \(\mathsf C_{r,N}\) is replaced by

and similarly we define

where

Note that then we have that

We have the following Proposition.

Proposition 3.3

For all \(r\geqslant 1\) we have that

Proof

We use the bounds established in Lemma 3.4, below, and (2.18) to show (3.6). We can use a union bound for (3.5) to obtain that

We split the sum on the last two lines of (3.7) according to the two indicator functions that appear therein. By repeated successive application of the bounds from (2.18) for \(j<i\leqslant r\) and then by using (3.10), which reads as

we deduce that

We then continue the summation using the bounds from (2.18), to obtain that the right-hand side of the inequality in (3.8) is bounded by

Similarly, for the sum involving the second indicator function in (3.7) we obtain by using (2.18) and (3.9) of Lemma 3.4 that

Therefore, the right-hand side of the inequality in (3.7) is bounded by

where the \(\left( {\begin{array}{c}h\\ 2\end{array}}\right) ^r\) factor comes from the fact that there are at most \(\left( {\begin{array}{c}h\\ 2\end{array}}\right) ^r\) choices for the sequence \((I_1,\dots ,I_r) \in {\mathcal {I}}^{(2)}\). Thus, recalling (3.7) we get that

\(\square \)
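The combinatorial factor \(\left( {\begin{array}{c}h\\ 2\end{array}}\right) ^r\) used in the proof above can be checked by brute force for small h and r. The sketch below assumes that a sequence in \({\mathcal {I}}^{(2)}\) is a list of r pairs in which consecutive pairs differ; the function name is ours.

```python
from itertools import combinations, product
from math import comb


def two_body_sequences(h: int, r: int) -> list:
    """All sequences of r pairs {k, l} of walk labels in {1, ..., h}
    such that consecutive pairs are different (assumed definition)."""
    pairs = list(combinations(range(1, h + 1), 2))
    return [
        seq
        for seq in product(pairs, repeat=r)
        if all(seq[i] != seq[i + 1] for i in range(r - 1))
    ]


h, r = 4, 3
count = len(two_body_sequences(h, r))
print(count, comb(h, 2) ** r)  # 150 216
```

Under this assumption the exact count is \(\left( {\begin{array}{c}h\\ 2\end{array}}\right) \big (\left( {\begin{array}{c}h\\ 2\end{array}}\right) -1\big )^{r-1}\), so the bound used in the proof is crude but sufficient for fixed r.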

Lemma 3.4

Let \(I,J \vdash \{1,\ldots ,h\}\) such that \(|I|=|J|=h-1\) and \(I \ne J\). For large enough \(R \in (0,\infty )\) and uniformly in \(\varvec{x}\in (\mathbb {Z}^2)_I^h\) we have that for a constant \(\kappa =\kappa (h,{\bar{\beta }}) \in (0,\infty )\),

and

Proof

We start with the proof of (3.9). Since \(|J|=h-1\), let us assume without loss of generality that \(J=\{k,\ell \} \sqcup \bigsqcup _{j \ne k,\ell }\{j\}\). In this case, \(Q^{I;J}_n(\varvec{x},\varvec{y})\) contains \(h-2\) random walk jumps with free endpoints \(y^{(j)}\), \(j \ne k,\ell \), that is

Moreover, J imposes the constraint that \(y^{(k)}=y^{(\ell )}\), which appears in \(Q^{I;J}_n(\varvec{x},\varvec{y})\) through the product of transition kernels

recall (2.12). The constraint \(\left\Vert \varvec{x}-\varvec{y}\right\Vert _{\infty }>R \sqrt{n}\) implies that there exists \(1\leqslant j \leqslant h\) such that \(|x^{(j)}-y^{(j)}| > R\, \sqrt{n}\). We distinguish two cases:

(1) There exists \(j \ne k,\ell \) such that \(|x^{(j)}-y^{(j)}| > R\, \sqrt{n}\), or

(2) \(|x^{(j)}-y^{(j)}| > R\, \sqrt{n}\) for \(j=k\) or \(j=\ell \).

In both cases, we can use \(\sum _{z \in \mathbb {Z}^2} q_n(z)=1\) to sum the kernels \(q_n(y^{(i)}-x^{(i)})\) with \(i \notin \{ j, k,\ell \}\) to which we do not impose any super-diffusive constraints. By symmetry and translation invariance we can upper bound the left-hand side of (3.9) by

Looking at the first summand in (3.11) we have by Cauchy-Schwarz that

since \(\sum _{z \in \mathbb {Z}^2}q^2_{n}(z)=q_{2n}(0)\). Let us recall the deviation estimate for the simple random walk, which can be found in [16], Proposition 2.1.2, that is

for a constant \(c\in (0,\infty )\) and all \(R \in (0,\infty )\), large enough. By using bound (3.12) and subsequently (3.13) on the first summand of (3.11) we get that

We recall from (2.4) and (2.5) that \(R_N=\sum _{n=1}^N q_{2n}(0) {\mathop {\approx }\limits ^{N \rightarrow \infty }} \frac{\log N}{\pi }\), therefore,

for some \({{\bar{\beta }}}' \in ({{\bar{\beta }}},1)\). The second summand in the parenthesis in (3.11) can be bounded via Cauchy-Schwarz by

For the first term in (3.15), using that \(\sup _{z \in \mathbb {Z}^2 }q_n(z)\leqslant \frac{C}{n}\) we get

For the second term in (3.15), we have that for all \(u \in \mathbb {Z}^2\)

Thus, by (3.15) together with (3.16) we conclude that for the second summand in (3.11) we have

Therefore, recalling (3.14) we deduce that there exists a constant \(\kappa (h,{\bar{\beta }}) \in (0,\infty )\) such that

We move to the proof of (3.10). Similar to the proof of (3.9), we can bound the left-hand side of (3.10) by

For the first summand in (3.17), by (3.13) we have that

and \(\sum _{1\leqslant n \leqslant N,\, w \in \mathbb {Z}^2} U_N^{{\bar{\beta }}}(n,w)\leqslant \frac{1}{1-{\bar{\beta }}'}\), therefore

For the second summand, we use the renewal representation of \(U^{{\bar{\beta }}}_N(\cdot ,\cdot )\) introduced in (2.8). In particular, we have that

Then, by conditioning on the times \((T^{\scriptscriptstyle (N)}_i)_{1 \leqslant i \leqslant k}\) for which \(\tau _k^{\scriptscriptstyle (N)}=T^{\scriptscriptstyle (N)}_1+\dots +T^{\scriptscriptstyle (N)}_k\) we have that

Note that when we condition on \(\cap _{i=1}^k\big \{T^{(\scriptscriptstyle N)}_i=n_i\big \}\), \(S_k^{\scriptscriptstyle (N)}\) is a sum of k independent random variables \((\xi _i)_{1 \leqslant i \leqslant k}\) taking values in \(\mathbb {Z}^2\), with law

The proof of Proposition 3.5 in [17] showed that there exists a constant \(C \in (0,\infty )\) such that for all \(\lambda \geqslant 0\)

uniformly over the values \(n_1,\ldots ,n_k\). Therefore, by (3.21) with \(\lambda =\frac{1}{\sqrt{n}}\) and Markov’s inequality we obtain that

Thus, looking back at (3.20) we have that for all \(k \geqslant 0\),

therefore, plugging the last inequality into (3.19), we get that

therefore by (3.18) and (3.22) we have that there exists a constant \(\kappa (h,{{\bar{\beta }}})\in (0,\infty )\) such that

for large enough \(R \in (0,\infty )\), thus concluding the proof of (3.10). \(\square \)
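The identity \(\sum _{z \in \mathbb {Z}^2}q^2_{n}(z)=q_{2n}(0)\), used in the Cauchy-Schwarz step of the proof above, can be verified exactly for small n. The following sketch computes the n-step kernel of the simple random walk on \(\mathbb {Z}^2\) by repeated convolution with rational arithmetic; the helper name `srw_kernel` is ours.

```python
from collections import defaultdict
from fractions import Fraction

# The four nearest-neighbour steps of the simple random walk on Z^2.
STEPS = [(1, 0), (-1, 0), (0, 1), (0, -1)]


def srw_kernel(n: int) -> dict:
    """Exact n-step transition probabilities q_n(z) from the origin,
    returned as a dict {z: probability} with Fraction values."""
    dist = {(0, 0): Fraction(1)}
    for _ in range(n):
        nxt = defaultdict(Fraction)  # missing keys default to Fraction(0)
        for (x, y), p in dist.items():
            for dx, dy in STEPS:
                nxt[(x + dx, y + dy)] += p / 4
        dist = dict(nxt)
    return dist


for n in range(1, 6):
    q_n = srw_kernel(n)
    lhs = sum(p * p for p in q_n.values())   # sum_z q_n(z)^2
    rhs = srw_kernel(2 * n)[(0, 0)]          # q_{2n}(0)
    assert lhs == rhs
```

The identity is the Chapman-Kolmogorov relation combined with the symmetry \(q_n(z)=q_n(-z)\).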

3.3 Scale separation

In this step we show that given \(r \in \mathbb {N}\), \(r \geqslant 2\), the main contribution to \(H^{\mathsf {(diff)}}_{r,N}\) comes from configurations where \(a_{i+1}-b_i>M(b_i-b_{i-1})\) for all \(1\leqslant i \leqslant r-1\) and large M, as \(N\rightarrow \infty \). Recall from (3.4) that

Define the set

with the convention \(b_0:=0\) and accordingly define

We then have the following approximation proposition:

Proposition 3.5

For all fixed \(r\in \mathbb {N}\), \(r \geqslant 2\) and \(M \in (0,\infty )\),

Proof

Fix \(M>0\). Let us begin by showing (3.25) for the simplest case which is \(r=2\). We have

We can bound \(\sigma _N(I_2)\) by \(\frac{\pi {{\bar{\beta }}}\,'}{\log N}\), for some \({{\bar{\beta }}}\,'\in ({{\bar{\beta }}},1)\) and use (2.18) to bound the last replica, i.e. the sum over \((b_2,\varvec{y}_2)\), thus getting

Notice that at this stage we can sum out the spatial endpoints of the free kernels in (3.26) and bound the coupling strength \(\beta _{k,\ell }\) of any replica \(\textsf{U}^{I_1}_{b_1}(0,\varvec{y}_1)\) with \(I_1=\{k,\ell \}\sqcup \bigsqcup _{j\ne k,\ell }\{j\}\) by \({{\bar{\beta }}}\) to obtain

For the last inequality we also have used that the number of possible partitions \((I_1,I_2)\in {\mathcal {I}}^{(2)}\) is bounded by \(\left( {\begin{array}{c}h\\ 2\end{array}}\right) ^2\). For every fixed value of \(b_1\) in (3.27), we use Cauchy-Schwarz for the sum over \((a_2,x_2) \in (0,(1+M)b_1]\times \mathbb {Z}^2\) in (3.27) to obtain that

We can bound the leftmost parenthesis in the last line of (3.28) by \(R^{1/2}_N=\Big (\sum _{n=1}^N q_{2n}(0)\Big )^{1/2}=O(\sqrt{\log N})\). For the other term we have

Therefore, using (3.28) and (3.29) along with \(\sum _{0\leqslant b_1\leqslant N,\,y_1 \in \mathbb {Z}^2} U^{{{\bar{\beta }}}}_N(b_1,y_1) \leqslant (1-{{\bar{\beta }}}\,')^{-1}\) in (3.27) we obtain that

Let us show how this argument can be extended to work for general \(r \in \mathbb {N}\). The key observation is that for every fresh collision between two random walks, that is \(I_{i+1}=\{k,\ell \} \sqcup \bigsqcup _{j \ne k,\ell } \{j\} \), happening at time \(0<a_{i+1}\leqslant N\), we have \(I_i \ne I_{i+1}\), therefore one of the two colliding walks with labels \(k,\ell \) has to have travelled freely, for time at least \(a_{i+1}-b_{i-1}\) from its previous collision. More precisely, every term in the expansion of \( H^{\mathsf {(diff)}}_{r,N,R} - H^{\mathsf {(main)}}_{r,N,R,M}\) contains for every \(1\leqslant i\leqslant r-1\) a product of the form

see Fig. 3. Recall from (3.2) and (3.24) that we have the expansion

where by definition (3.23) we have that

We will start the summation of (3.30) from the end until we find the index \(1\leqslant i \leqslant r-1\) for which the sum over \(a_{i+1}\) is restricted to \(\big (b_i,b_i+M(b_i-b_{i-1})\big ]\), in agreement with (3.31), using (2.18) to bound the contribution of the sums over \(b_{j},a_{j+1}\) and the corresponding spatial points for \(i<j\leqslant r-1\). Next, notice that we can bound the contribution of the sum over \(a_{i+1} \in \big (b_i,b_i+M(b_i-b_{i-1})\big ]\) and \(x_{i+1} \in \mathbb {Z}^2\), using a change of variables, by a factor of

using Cauchy–Schwarz as in (3.28) and (3.29). The remaining sums over \(b_j,a_{j-1}\), \(1\leqslant j\leqslant i\) can be bounded again via (2.18). Therefore, taking into account that by (3.31) there are \(r-1\) choices for the index i such that the sum over \(a_{i+1}\) is restricted to \(\big (b_i,b_i+M(b_i-b_{i-1})\big ]\), we can give an upper bound to \(H^{\mathsf {(diff)}}_{r,N,R}- H^{\mathsf {(main)}}_{r,N,R,M}\) as follows:

where we also used that the number of distinct sequences \((I_1,\dots ,I_r) \in {\mathcal {I}}^{(2)}\) is bounded by \(\left( {\begin{array}{c}h\\ 2\end{array}}\right) ^r\). \(\square \)
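The logarithmic growth \(R_N=\sum _{n=1}^N q_{2n}(0) \approx \frac{\log N}{\pi }\), which enters the proof above through the factor \(R_N^{1/2}=O(\sqrt{\log N})\), is easy to observe numerically. The sketch below relies on the classical closed form \(q_{2n}(0)=\big (\left( {\begin{array}{c}2n\\ n\end{array}}\right) 4^{-n}\big )^2\) for the simple random walk on \(\mathbb {Z}^2\); the function name is ours.

```python
import math


def return_sum(N: int) -> float:
    """R_N = sum_{n=1}^N q_{2n}(0) for the simple random walk on Z^2,
    using q_{2n}(0) = (C(2n, n) / 4**n)**2."""
    total = 0.0
    c = 1.0  # C(2n, n) / 4**n at n = 0
    for n in range(1, N + 1):
        c *= (2 * n - 1) / (2 * n)  # ratio of consecutive central terms
        total += c * c
    return total


for N in (10**2, 10**3, 10**4, 10**5):
    ratio = return_sum(N) / (math.log(N) / math.pi)
    print(N, round(ratio, 4))  # the ratio approaches 1 slowly
```

The convergence is slow because \(R_N\) and \(\frac{\log N}{\pi }\) differ by a term of order one, which is only divided by \(\log N\) in the ratio.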

A diagrammatic representation of a configuration of collisions between 4 random walks in \(H^{(2)}_{4,N}\) with \(I_1=\{2,3\}\), \(I_2=\{1,2\}\), \(I_3=\{3,4\}\) and \(I_4=\{2,3\}\). Wiggly lines represent replica evolution, see (2.15)

3.4 Rewiring

Recall the expansion of \(H^{\mathsf {(main)}}_{r,N,R,M} \),

We also remind the reader that we may identify a partition \(I=\{k,\ell \}\sqcup \bigsqcup _{j\ne k,\ell }\{j\}\) with its non-trivial part \(\{k,\ell \}\). Moreover, if \(\big (\{i_1,j_1\},\cdots ,\{i_r,j_r\}\big ) \in {\mathcal {I}}^{(2)}\) we will use the notation

with the convention that \(\textsf{p}(m)=0\) if \(\{i_k,j_k\} \ne \{i_m,j_m\}\) for all \(1\leqslant k <m\). Given this definition, the time \(b_{\textsf{p}(m)}\) represents the last time walks \({i_m,j_m}\) collided before their new collision at time \(a_m\). Note that since we always have \(\{i_k,j_k\} \ne \{i_{k+1},j_{k+1}\}\) by construction, \(\textsf{p}(m)<m-1\).
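As an illustration of the index \(\textsf{p}(m)\), the sketch below computes it for the configuration of Fig. 3. It assumes that \(\textsf{p}(m)\) is the largest \(k<m\) with \(\{i_k,j_k\}=\{i_m,j_m\}\), with value 0 if there is no such k, which is how we read the description above; the function name is ours.

```python
def last_same_pair(pairs):
    """Return [p(1), ..., p(r)], where p(m) is the largest k < m such that
    the k-th and m-th colliding pairs coincide, and 0 if no such k exists."""
    last_seen = {}
    result = []
    for m, pair in enumerate(pairs, start=1):
        key = frozenset(pair)
        result.append(last_seen.get(key, 0))
        last_seen[key] = m
    return result


# Sequence of colliding pairs from Fig. 3: I_1, I_2, I_3, I_4.
fig3 = [{2, 3}, {1, 2}, {3, 4}, {2, 3}]
print(last_same_pair(fig3))  # [0, 0, 0, 1]
```

In this example only the fourth collision involves a pair that has met before, so rewiring reconnects it to time \(b_1\), while the other replicas are reconnected to time 0.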

Consider a sequence of partitions \(\big (\{i_1,j_1\},\dots ,\{i_r,j_r\}\big ) \in {\mathcal {I}}^{(2)}\) and let \(m \in \{2,\dots ,r\}\). The goal of this step will be to show that we can make the replacement of weight

by inducing an error which is negligible when \(M \rightarrow \infty \). We iterate this procedure for all partitions \(I_1,\dots ,I_r\). We call the procedure described above rewiring, see Figs. 3 and 4. The first step towards the full rewiring is to show the following lemma which quantifies the error of a single replacement (3.33).

Lemma 3.6

Let \(r\geqslant 2\) be fixed and \(m \in \{2,\dots ,r\}\) with \(I_m=\{i_m,j_m\}\). Then, for every fixed \(R \in (0,\infty )\) and uniformly in \((\vec {a},\vec {b},\vec {\varvec{x}},\vec {\varvec{y}})\, \in \,\mathsf C^{\mathsf {(main)}}_{r,N,R,M}\) and all sequences of partitions \((I_1,\dots ,I_r) \in {\mathcal {I}}^{(2)}\),

where \(o_{M}(1)\) denotes a quantity such that \(\lim _{M \rightarrow \infty } o_{M}(1)=0\).

Proof

We will show that

and by symmetry we will get (3.34). To this end, we invoke the local limit theorem for simple random walks, which we recall from [16]. In particular, by Theorem 2.3.11 [16], we have that there exists \(\rho >0\) such that for all \(n\geqslant 0\) and \(x \in \mathbb {Z}^2\) with \(|x|<\rho \, n\),

where \(g_t(x)=\frac{e^{-\frac{|x|^2}{2t}}}{2 \pi t}\) denotes the 2-dimensional heat kernel and

The last constraint in (3.36) is a consequence of the periodicity of the simple random walk. Let us proceed with the proof of Lemma 3.6. First, we derive two inequalities which are going to be useful for the approximations using the local limit theorem.

Auxiliary inequality 1. We claim that

To this end, we start with the fact that

which we will apply repeatedly. Starting with \(k:=m-1\), we have that

We can then estimate the second term in the right-hand-side as

where in the last step we used (3.38) with \(k:=m-2\). Inserting this into (3.39) we have that

We next decompose \(M(b_{m-2}-b_{m-3})\) as we did in (3.39) for \(M(b_{m-1}-b_{m-2})\) and iterating this procedure up to \(\textsf{p}(m)\) we obtain that

where in the last step we reduced \(M(b_{j}-a_{j})\) to \((M-1)(b_{j}-a_{j})\) and then telescoped.
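The effect of scale separation on the time gaps can be tested on random configurations. The sketch below assumes that the inequality just derived takes the form \(a_m-b_{m-1}>(M-1)\,(b_{m-1}-b_{p})\) for every \(p<m-1\), which is the form consistent with how (3.37) is invoked later in the proof; the sampling scheme and the function names are ours and serve only as an illustration.

```python
import random


def sample_times(r: int, M: int, rng: random.Random):
    """Random integer times 0 = a_1 <= b_1 < a_2 <= b_2 < ... <= b_r with
    a_{i+1} - b_i > M * (b_i - b_{i-1}) and the convention b_0 = 0.
    Lists are 1-indexed: a[0] is unused and b[0] = 0."""
    a = [None, 0]
    b = [0, rng.randint(0, 5)]
    for i in range(1, r):
        a.append(b[i] + M * (b[i] - b[i - 1]) + rng.randint(1, 5))
        b.append(a[i + 1] + rng.randint(0, 5))
    return a, b


def check_gap_inequality(a, b, M: int) -> bool:
    """Check a_m - b_{m-1} > (M - 1) * (b_{m-1} - b_p) for all 2 <= m <= r
    and all 0 <= p <= m - 2 (assumed form of the inequality)."""
    r = len(b) - 1
    return all(
        a[m] - b[m - 1] > (M - 1) * (b[m - 1] - b[p])
        for m in range(2, r + 1)
        for p in range(0, m - 1)
    )


rng = random.Random(0)
assert all(check_gap_inequality(*sample_times(6, 10, rng), 10) for _ in range(200))
```

The check succeeds for every sample because the gaps \(b_k-b_{k-1}\) grow at least geometrically with ratio M, so their sum up to \(b_{m-1}\) is dominated by the last gap times \(M/(M-1)\).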

Auxiliary inequality 2. As a second step, we will prove that

To this end, by the reverse triangle inequality we have that

Note that by the diffusivity constraints of \(\mathsf C^{\mathsf {(main)}}_{r,N,R,M}\) we have that

By Cauchy-Schwarz on the right hand side of (3.42), (3.37) and the fact that \(m\leqslant r\), we furthermore have that

Combining (3.43) and (3.41) we arrive at (3.40).

Now, we are ready to show approximation (3.35). By (3.36) we have

Let us look at each term on the right hand side of (3.44), separately. First, we have

and by using (3.40) we have

Therefore, by (3.40), again, we get

Similarly, we can get a lower bound of

since \( a_m-b_{\textsf{p}(m)}<\big (1+\frac{1}{M-1}\big )(a_m-b_{m-1})\) by (3.37). The second term in (3.44) can be handled by (3.37) as

For the last term in (3.44) we have that

where we used (3.40) along with the inequality \((x+y)^4\leqslant 8(x^4+y^4)\) for \(x,y \in \mathbb {R}\). Therefore,

where we used in the last inequality that \(a_m-b_{m-1}>M(b_{m-1}-b_{m-2})\geqslant M\) by (3.23). \(\square \)
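The local limit theorem invoked in the proof above can be sanity-checked numerically. The sketch below compares the exact kernel \(q_n(x)\) with its leading-order approximation \(2\,g_{n/2}(x)\) on the sublattice where \(n+x^{(1)}+x^{(2)}\) is even. The exact kernel is obtained from the classical observation that the rotated coordinates \((x^{(1)}+x^{(2)},\,x^{(1)}-x^{(2)})\) of the planar walk are two independent one-dimensional simple random walks; the function names are ours.

```python
import math


def srw_1d(n: int, k: int) -> float:
    """P(one-dimensional simple random walk is at k after n steps)."""
    if abs(k) > n or (n + k) % 2:
        return 0.0
    return math.comb(n, (n + k) // 2) / 2**n


def srw_2d(n: int, x: int, y: int) -> float:
    """Exact q_n((x, y)) for the simple random walk on Z^2, via the
    45-degree rotation (x + y, x - y) into two independent 1-d walks."""
    return srw_1d(n, x + y) * srw_1d(n, x - y)


def heat_kernel(t: float, x: float, y: float) -> float:
    """Two-dimensional heat kernel g_t(x) = exp(-|x|^2 / (2t)) / (2 pi t)."""
    return math.exp(-(x * x + y * y) / (2.0 * t)) / (2.0 * math.pi * t)


n, x, y = 200, 4, 6
ratio = srw_2d(n, x, y) / (2.0 * heat_kernel(n / 2, x, y))
print(ratio)           # close to 1 when |x| is of order sqrt(n)
print(srw_2d(n, 1, 0))  # 0.0, the parity constraint
```

The factor 2 and the vanishing on the odd sublattice both reflect the periodicity of the walk.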

Figure 3 after rewiring. We use blue lines to represent the new kernels produced by rewiring. The dashed lines represent remaining free kernels from the rewiring procedure as well as kernels coming from using the Chapman–Kolmogorov formula for the simple random walk

3.5 Final step

Now that we have Lemma 3.6 at our disposal, we can prove the main approximation result of this step. Recall from (3.32) that

Define \(H^{\mathsf {(rew)}}_{r,N,R,M}\) to be the resulting sum after rewiring has been applied to every term of \(H^{\mathsf {(main)}}_{r,N,R,M}\), that is, given a sequence of partitions \((I_1,\dots ,I_r)\in {\mathcal {I}}^{(2)}\) and \((\vec {a},\vec {b},\vec {\varvec{x}},\vec {\varvec{y}})\, \in \,\mathsf C^{\mathsf {(main)}}_{r,N,R,M}\), we apply the kernel replacement (3.33) to all partitions \(I_1,\dots ,I_r\) starting from \(I_r\) and moving backward. We remind the reader that we may denote a partition \(I=\{i,j\} \sqcup \bigsqcup _{k \ne i,j}\{k\} \in {\mathcal {I}}^{(2)}\) by its non-trivial part \(\{i,j\}\), see subsection 2.2.

Proposition 3.7

Fix \(0\leqslant r\leqslant K\). We have that

and

Proof

Equation (3.45) is a consequence of Lemma 3.6 and the fact that \(r\leqslant K\), while expansion (3.46) is a direct consequence of the rewiring procedure we described in the previous step, see also Figs. 3 and 4. \(\square \)

Next, we derive upper and lower bounds for \(H^{\mathsf {(rew)}}_{r,N,R,M}\). We begin with the upper bound.

Proposition 3.8

We have that

Proof

Fix \(r\geqslant 1\) and from (3.46) recall that

For the sake of obtaining an upper bound on \(H^{\mathsf {(rew)}}_{r,N,R,M}\) we can sum \((\vec {a},\vec {b},\vec {\varvec{x}},\vec {\varvec{y}})\) in (3.47) over \(\mathsf C_{r,N}\), see definition in (3.2), instead of \(\mathsf C^{\mathsf {(main)}}_{r,N,R,M}\). We start the summation of the right hand side of (3.47) from the end. Using that for \(n \in \mathbb {N}\), \(\sum _{z \in \mathbb {Z}^2} q_n(z)=1\) we deduce that

We leave the sum \(\sum _{b_r \in [a_r,N], \, y^{(i_r)}_r \in \mathbb {Z}^2} U_N\big (b_r-a_r,y_r^{(i_r)}-x_r^{(i_r)}\big )\) intact and move on to the time interval \([b_{r-1},a_r]\). We use again that for \(n \in \mathbb {N}\), \(\sum _{z \in \mathbb {Z}^2} q_n(z)=1\), to deduce that

Again, we leave the sum \(\sum _{a_r \in \, (b_{r-1},b_r], \,x_r^{(i_r)} \in \mathbb {Z}^2}\, q_{a_r-b_{\textsf{p}(r)}}^2\big (x^{(i_r)}_r- y^{(i_{r})}_{\textsf{p}(r)} \big )\) intact. We can iterate this procedure inductively since due to rewiring all the spatial variables \(y^{(\ell )}_{r-1}\), \(\ell \ne i_{r-1},j_{r-1}\) are free, that is, there are no outgoing laces starting off \(y^{(\ell )}_{r-1}\), \(\ell \ne i_{r-1},j_{r-1}\) at time \(b_{r-1}\). The summations we have performed correspond to getting rid of the dashed lines in Fig. 4. Iterating this procedure inductively then implies the following upper bound for \(H^{\mathsf {(rew)}}_{r,N,R,M}\).

\(\square \)

In the next proposition we derive complementary lower bounds for \(H^{\mathsf {(rew)}}_{r,N,R,M}\). Given \(0 \leqslant r \leqslant K\) and a sequence of partitions \(\vec {I}=(\{i_1,j_1\},\dots ,\{i_r,j_r\}) \in {\mathcal {I}}^{(2)}\) we define the set \(\mathsf C^{\mathsf {(rew)}}_{r,N,R,M}(\vec {I}\,)\) to be \(\mathsf C^{\mathsf {(main)}}_{r,N,R,M}\) where for every \(2\leqslant m \leqslant r\) we replace the diffusivity constraint \(\left\Vert \varvec{x}_m-\varvec{y}_{m-1}\right\Vert _{\infty } \leqslant R \sqrt{a_m-b_{m-1}}\) by the constraints

This replacement transforms the diffusivity constraints imposed on the jumps of two walks \(\{i_m,j_m\}\) from their respective positions at time \(b_{m-1}\) to time \(a_m\), which is the time they (re)start colliding, to a diffusivity constraint connecting their common position at time \(b_{\textsf{p}(m)}\), which is the last time they collided before time \(a_m\), to their common position at time \(a_m\) when they start colliding again.

We have the following Lemma.

Lemma 3.9

Let \(0 \leqslant r \leqslant K\) and \(M>2K\). For all \(\vec {I}=\big (\{i_1,j_1\},\dots ,\{i_r,j_r\}\big ) \in \, {\mathcal {I}}^{(2)}\) we have that

Proof

Fix \(0\leqslant r \leqslant K\), a sequence \(\vec {I}=\big (\{i_1,j_1\}, \dots , \{i_r,j_r\}\big ) \in {\mathcal {I}}^{(2)}\) and \((\vec {a},\vec {b},\vec {\varvec{x}},\vec {\varvec{y}}) \in \mathsf C^{\mathsf {(rew)}}_{r,N,R,M}(\vec {I}\,)\). Moreover, let \(2\leqslant m \leqslant r\). By symmetry it suffices to prove that

Indeed, by the definition of \(\mathsf C^{\mathsf {(rew)}}_{r,N,R,M}(\vec {I}\,)\) and (3.40) we have that for \((\vec {a},\vec {b},\vec {\varvec{x}},\vec {\varvec{y}}) \in \mathsf C^{\mathsf {(rew)}}_{r,N,R,M}(\vec {I}\, )\),

Moreover by (3.37) we have that

Therefore,

Combining inequalities (3.48) and (3.49) we get the result. \(\square \)

Proposition 3.10

Let \(0 \leqslant r \leqslant K\). For \(M>2K\) we have that

with \(C_{K,M}:=\sqrt{1-\frac{1}{M}}\bigg (1-\sqrt{\frac{2K-1}{M-1}}\bigg )\).

Proof

Recall from (3.46) that

By Lemma 3.9 we have that

The first step in getting a lower bound for \(H^{\mathsf {(rew)}}_{r,N,R,M}\) is to get rid of the dashed lines, see Fig. 4. We follow the steps we took in the proof of Proposition 3.8 for the upper bound. In particular, we start the summation of (3.50) beginning from the end. Using that for \(n \in \mathbb {N}\) and \(R\in (0,\infty )\)

by (3.13), we get that

We leave the sum

as is and move on to the time interval \([b_{r-1},a_r]\). We use (3.51) to deduce that

Again, we leave the sum \(\sum _{a_r \in \, (b_{r-1},b_r], \,x_r^{(i_r)} \in \mathbb {Z}^2}\, q_{a_r-b_{\textsf{p}(r)}}^2\big (x^{(i_r)}_r-y^{(i_{r})}_{\textsf{p}(r)}\big )\) intact. We can continue this procedure since due to rewiring all the spatial variables \(y^{(\ell )}_{r-1}\), \(\ell \ne i_{r-1},j_{r-1}\) are free, i.e. there are no outgoing laces starting off \(y^{(\ell )}_{r-1}\), \(\ell \ne i_{r-1},j_{r-1}\) at time \(b_{r-1}\), and there are no diffusivity constraints linking \(x^{(i_r)}_r=x_r^{(j_r)}\) with \(y^{(i_r)}_{r-1},y^{(j_r)}_{r-1}\) by definition of \(\mathsf C^{\mathsf {(rew)}}_{r,N,R,M}(\vec {I})\). Iterating this procedure we obtain that

with \(C_{K,M}=\sqrt{1-\frac{1}{M}}\bigg (1-\sqrt{\frac{2K-1}{M-1}}\bigg )\). \(\square \)
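The role of the condition \(M>2K\) and the behaviour of the constant \(C_{K,M}\) as \(M\rightarrow \infty \) can be seen from a direct evaluation. The sketch below only evaluates the formula for \(C_{K,M}\) stated above; the function name and the restriction to \(K\geqslant 1\) are ours.

```python
import math


def c_km(K: int, M: float) -> float:
    """C_{K,M} = sqrt(1 - 1/M) * (1 - sqrt((2K - 1) / (M - 1))),
    evaluated for K >= 1 and M > 2K, where it lies in (0, 1)."""
    if K < 1 or M <= 2 * K:
        raise ValueError("need K >= 1 and M > 2K")
    return math.sqrt(1.0 - 1.0 / M) * (1.0 - math.sqrt((2 * K - 1) / (M - 1)))


K = 5
for M in (11, 10**2, 10**4, 10**8):
    print(M, c_km(K, M))  # increases towards 1 as M grows, for fixed K
```

For fixed K the constant tends to 1 as \(M\rightarrow \infty \), so the factor lost by shrinking the diffusive window disappears in the limit.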

Graphical representation of a term of the chaos representation of \(\prod _{1\leqslant i<j \leqslant h}\textrm{E}\Big [e^{\frac{\pi \beta _{i,j}}{\log N}\, \textsf{L}_N^{(i,j)}}\Big ]\) for \(h=3\). This diagram captures the main contribution, which is when all a’s and b’s are distinct. Configurations where more than one pair of walks collide at the same time \(n\leqslant N\) have lower order contributions

To proceed with the last steps of our proof it is useful to record two chaos expansions of \(\prod _{1\leqslant i<j \leqslant h} \textrm{E}\Big [e^{\frac{\pi \beta _{i,j}}{\log N}\, \textsf{L}_N^{(i,j)}}\Big ]\). These read as

This follows from (2.6) and (2.7) and by grouping together intervals [a, b] where one observes collisions of only a single pair of random walks. Figure 5 presents a graphical representation of these chaos expansions.

Proposition 3.11

We have that

Proof

We are going to prove this Proposition by means of the lower and upper bounds established in Propositions 3.8 and 3.10. By Proposition 3.8 we have that

Summing the spatial points on the right hand side of (3.54) we obtain that

Using (3.52) one can deduce that

Next, by Proposition 3.10 we have that

Lifting the diffusivity conditions imposed on the right-hand side of (3.57) can be done using arguments already present in Lemma 3.4. More specifically, we use that for \(0 \leqslant m \leqslant N\), \(w \in \mathbb {Z}^2\) and \(1\leqslant i<j \leqslant h\),

where in the first inequality we used (3.22) from Lemma 3.4 with a suitable constant \(\kappa ({{\bar{\beta }}}) \in (0,\infty )\). Similarly we have that

by tuning the constant \(\kappa \) if needed. Therefore, we finally obtain that

The last restriction we need to lift is the restriction \(a_{i+1}-b_i>M(b_i-b_{i-1}), \, 1\leqslant i \leqslant r-1\). This can be done via the arguments used in Proposition 3.5, so we do not repeat them here, but only note that there exists a constant \({\widetilde{C}}_{K}={\widetilde{C}}_K({{\bar{\beta }}},h) \in (0,\infty )\) such that for all \(0 \leqslant r \leqslant K\), the sum corresponding to the right hand side of (3.58), but with its temporal range of summation restricted so that there exists \(1\leqslant i\leqslant r-1\) with \(a_{i+1}-b_i\leqslant M(b_i-b_{i-1})\), satisfies the bound

where \(\epsilon _{N,M}\) is such that \(\lim _{N \rightarrow \infty } \epsilon _{N,M}=0\) for any fixed \(M \in (0,\infty )\). Therefore, the resulting lower bound on \(H^{\mathsf {(rew)}}_{r,N,R,M}\) will be

Note that

where \(A_N^{(1)}\) denotes the part of the chaos expansion of \(\prod _{1\leqslant i<j \leqslant h}\textrm{E}\Big [e^{\frac{\pi \beta _{i,j}}{\log N}\, \textsf{L}^{(i,j)}_N}\Big ]\) where there exists a time \(n\leqslant N\) at which multiple pairs collide. Moreover, \(A^{(2)}_{N,K}\) denotes the corresponding sum on the right hand side of (3.60) but from \(r=K+1\) to \(\infty \), that is

Next, we will give bounds for \(A^{(1)}_{N}\) and \(A_{N,K}^{(2)}\). Beginning with \(A_{N,K}^{(2)}\), let \(\rho _K:=\left\lfloor {\frac{K}{2\left( {\begin{array}{c}h\\ 2\end{array}}\right) }}\right\rfloor \, \). Since we are summing over \(r>K\), there has to be a pair \(1\leqslant i<j \leqslant h\) which has recorded more than \(\rho _K\) collisions. We recall from (2.8) that \(U^{\beta }_N(\cdot )\) admits the renewal representation

There are \(\left( {\begin{array}{c}h\\ 2\end{array}}\right) \) choices for the pair with more than \(\rho _K\) collisions. We can also use the bound (2.9) to bound the contribution of the rest \(\left( {\begin{array}{c}h\\ 2\end{array}}\right) -1\) pairs in \(A^{(2)}_{N,K}\). Therefore, we can write

uniformly in N, where \({\bar{\beta }}' \in ({{\bar{\beta }}},1)\).

Let us now proceed with estimating \(A_N^{(1)}\) and showing that it makes a negligible contribution. \(A_N^{(1)}\) consists of configurations where \({\mathfrak {m}}\geqslant 2\) pairs \(\{i_1,j_1\}, \{i_2,j_2\},\ldots ,\{i_{{\mathfrak {m}}}, j_{{\mathfrak {m}}} \}\) collide at the same time \(n\leqslant N\). Referring to Fig. 5, a case of \({\mathfrak {m}}=2\) could correspond to a situation when, for example, \(a_3=a_4\). Let n be the first time a multiple pair-collision takes place. We can choose the pairs \(\{i_1,j_1\}, \{i_2,j_2\},\ldots ,\{i_{{\mathfrak {m}}}, j_{{\mathfrak {m}}} \}\), which collide at that time, in \(\left( {\begin{array}{c}h\\ 2\end{array}}\right) \cdot \big (\left( {\begin{array}{c}h\\ 2\end{array}}\right) -1\big ) \cdots \big (\left( {\begin{array}{c}h\\ 2\end{array}}\right) -{\mathfrak {m}}+1\big ) / {\mathfrak {m}}! < \left( {\begin{array}{c}h\\ 2\end{array}}\right) ^{{\mathfrak {m}}}\) ways. We can use bound (2.9) to bound the contribution to \(A^{(1)}_{N}\) from the rest of the \(\left( {\begin{array}{c}h\\ 2\end{array}}\right) -{\mathfrak {m}}\) pairs by \(c(1-{{\bar{\beta }}}')^{-{h\atopwithdelims ()2}+{\mathfrak {m}}}\) and the contribution from collisions involving pairs \(\{i_1,j_1\}, \{i_2,j_2\},\ldots ,\{i_{{\mathfrak {m}}}, j_{{\mathfrak {m}}} \}\) beyond time n by \(c(1-{{\bar{\beta }}}')^{-{\mathfrak {m}}}\). More precisely, we obtain that

Schematically (making reference again to Fig. 5), we have summed out everything except the ‘wiggle’ lines which span [0, n] and which correspond to the pairs that simultaneously collide at time n and then summed out the spatial dependence of the latter. We will next use Proposition 1.5 of [3], which provides the estimate

where the second inequality follows by the local limit theorem. Therefore, from this, (2.8) and (2.10) we get that

for some \({\bar{\beta }}' \in ({{\bar{\beta }}},1)\). Since \({\bar{\beta }}'<1\) we have that \(\sum _{k \geqslant 0} k \cdot ({\bar{\beta }}')^k < \infty \) and we deduce that there exists a constant \(C=C({\bar{\beta }}')\) such that \(\big (U^{\bar{\beta }}_N\big )^{{\mathfrak {m}}}(n) \leqslant \frac{C^{{\mathfrak {m}}}}{n^{{\mathfrak {m}}}\,(\log N)^{{\mathfrak {m}}}}\). Since \(\sum _{n \geqslant 1} \frac{1}{n^{{\mathfrak {m}}}}<\infty \), for \({{\mathfrak {m}}}\geqslant 2\), there exists a (different) constant \(C=C({\bar{\beta }}')\in (0,\infty )\) such that

The two bounds above, in combination with (3.59) and (3.60), allow us to write:

which together with upper bound (3.56) entail that

\(\square \)

We are now ready to put all pieces together and prove the main result of the paper, Theorem 1.1.

Proof of Theorem 1.1

The joint convergence statement (1.3) will follow from the convergence of the joint Laplace transform (1.2) for \(|\beta _{i,j}|<1\) and general results on the relation between convergence of Laplace transforms and distributional convergence. Let us sketch this argument: Since \(\big (\tfrac{\pi }{\log N} \textsf{L}_N^{(i,j)} \big )_{1\leqslant i<j\leqslant h}\) are non-negative random variables, it suffices by the Cramér-Wold device (see [13], Corollary 4.5) to establish the distributional convergence of every nonnegative linear combination, i.e. \(\textsf{L}_N^{\varvec{\beta }}:=\sum _{i<j} \tfrac{\beta _{i,j} \pi }{\log N} \textsf{L}_N^{(i,j)}\) with \(\beta _{i,j}\geqslant 0\). The fact that \(\textsf{L}_N^{\varvec{\beta }}\) has exponential moments both positive (as follows from our estimates) and negative (as is clear from the nonnegativity of \(\textsf{L}_N^{\varvec{\beta }}\)) implies that the sequence \(\textsf{L}_N^{\varvec{\beta }}\) is tight. Tightness together with exponential moments imply that subsequential limits of the Laplace transforms exist, with the convergence being uniform in the vicinity of 0 in the complex plane. This leads to the analyticity of the limiting Laplace transforms in the neighbourhood of 0. We will establish that the Laplace transform (1.2) with positive parameters converges to that of the corresponding linear combination of independent exponential distributions with parameter 1, which is also analytic in the vicinity of zero (see right-hand side of (1.2)). Since the limiting Laplace transforms agree on an interval with non-empty interior (in this case determined by the conditions \(\beta _{i,j}\in [0,1)\)), by analyticity they have to agree in the whole domain of analyticity, which includes an open disc containing 0.
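For orientation, we recall the Laplace transform of the limiting object. The computation below is standard; identifying the resulting product with the right-hand side of (1.2) is our reading of the statement, since (1.2) is not reproduced in this section, and the symbols \(X_{i,j}\) are introduced here only for illustration.

```latex
% For X ~ Exp(1) and \beta < 1:
\mathrm{E}\big[e^{\beta X}\big]
  \;=\; \int_0^\infty e^{\beta x}\, e^{-x}\, \mathrm{d}x
  \;=\; \frac{1}{1-\beta}
  \;=\; \sum_{k\geqslant 0} \beta^k \quad (|\beta|<1).
% For independent X_{i,j} ~ Exp(1), 1 \leqslant i<j \leqslant h, and \beta_{i,j} < 1:
\mathrm{E}\Big[\exp\Big(\sum_{1\leqslant i<j\leqslant h} \beta_{i,j}\, X_{i,j}\Big)\Big]
  \;=\; \prod_{1\leqslant i<j\leqslant h} \frac{1}{1-\beta_{i,j}} .
```

Both expressions are analytic in each \(\beta _{i,j}\) on the disc \(|\beta _{i,j}|<1\), which is the property used in the analyticity argument above.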

Therefore, it only remains to establish the convergence of the joint Laplace transform (1.2) for \(\beta _{i,j}\in [0,1)\). Let us do so relying on the results we have proven so far.

Let \(\epsilon >0\). There exists a large \(K=K_\epsilon \in \mathbb {N}\) such that, uniformly in \(N \in \mathbb {N}\),

by Proposition 3.2. We have

By Propositions 3.1 and 3.3 we have that

Moreover, by Proposition 3.5 we have that

Last, by Proposition 3.7 we have that

therefore

By Proposition 3.11 we have that

Therefore, by (3.61), (3.62) and (3.63) we obtain that

\(\square \)

Notes

(1.5) could also be achieved if one refined the results of [1] to joint convergence of \(Z_{N,\beta _{1,N}}(x), \dots ,Z_{N,\beta _{h,N}}(x)\) and combined them with the moment estimates of [17]. However, the independence of the collision times that we establish here cannot be recovered from joint moments of partition functions, as these only give rise to Laplace transforms whose parameters have the restricted form \(\{\beta _i\beta _j\}_{1\leqslant i<j\leqslant h}\) instead of the general form \(\{\beta _{i,j}\}_{1\leqslant i<j\leqslant h}\) needed for the joint convergence of the vector \(\{\textsf{L}_N^{(i,j)}\}_{1\leqslant i <j \leqslant h}\). The structural features of the collisions that we unveil in the present work are crucial for establishing the independence property.

The notation \(\circ \), which here denotes the one-part partition, should not be confused with the \(\circ \) that appears in the figures, where it merely marks the places where we apply the Chapman–Kolmogorov formula.

References

Caravenna, F., Sun, R., Zygouras, N.: Universality in marginally relevant disordered systems. Ann. Appl. Prob. 27, 3050–3112 (2017)

Caravenna, F., Sun, R., Zygouras, N.: The two-dimensional KPZ equation in the entire subcritical regime. Ann. Prob. 48(3), 1086–1127 (2020)

Caravenna, F., Sun, R., Zygouras, N.: The Dickman subordinator, renewal theorems and disordered systems. Electron. J. Prob. 24 (2019)

Caravenna, F., Sun, R., Zygouras, N.: The critical \(2d\) stochastic heat flow. Invent. Math. 1–136 (2023)

Comets, F.: Directed Polymers in Random Environments. Lecture Notes in Mathematics, vol. 2175. Springer, Cham (2017)

Cosco, C., Zeitouni, O.: Moments of partition functions of \(2d\) Gaussian polymers in the weak disorder regime – I. Commun. Math. Phys. 403(1), 417–450 (2023)

Cosco, C., Zeitouni, O.: Moments of partition functions of 2D Gaussian polymers in the weak disorder regime – II, arXiv:2305.05758

Dell’Antonio, G.F., Figari, R., Teta, A.: Hamiltonians for systems of N particles interacting through point interactions. Ann. de l’IHP Physique théorique 60(3), 253–290 (1994)

Dimock, J., Rajeev, S.: Multi-particle Schrödinger operators with point interactions in the plane. J. Phys. A Math. Gen. 37(39), 9157 (2004)

Erdős, P., Taylor, S.J.: Some problems concerning the structure of random walk paths. Acta Math. Acad. Sci. Hungar. 11, 137–162 (1960)

Gärtner, J., Sun, R.: A quenched limit theorem for the local time of random walks on \(Z^2\). Stoch. Process. Their Appl. 119(4), 1198–1215 (2009)

Gu, Y., Quastel, J., Tsai, L.C.: Moments of the 2D SHE at criticality. Prob. Math. Phys. 2(1), 179–219 (2021)

Kallenberg, O.: Foundations of Modern Probability. Springer, Berlin (1997)

Knight, F.B.: Some remarks on mutual windings. In: Séminaire de probabilités de Strasbourg, vol. 27, pp. 36–43. Springer (1993)

Knight, F.B.: Erratum to: “Some remarks on mutual windings”. Séminaire de probabilités de Strasbourg, Springer 28, 334 (1994)

Lawler, G.F., Limic, V.: Random Walk: A Modern Introduction. Cambridge Studies in Advanced Mathematics, vol. 123. Cambridge University Press, London (2010)

Lygkonis, D., Zygouras, N.: Moments of the 2d directed polymer in the subcritical regime and a generalisation of the Erdős–Taylor theorem. Commun. Math. Phys. 1–38 (2023)

Pitman, J., Yor, M.: Asymptotic laws of planar Brownian motion. Ann. Probab. 14(3), 733–779 (1986)

Yor, M.: Étude asymptotique des nombres de tours de plusieurs mouvements browniens complexes corrélés. In: Random Walks, Brownian Motion, and Interacting Particle Systems, Prog. Probab., vol. 28, pp. 441–455. Birkhäuser (1991)

Acknowledgements

We thank Francesco Caravenna and Rongfeng Sun for useful comments on the draft. D. L. acknowledges financial support from EPSRC through Grant EP/HO23364/1 as part of the MASDOC DTC at the University of Warwick. N. Z. was supported by EPSRC through Grant EP/R024456/1.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Lygkonis, D., Zygouras, N. A multivariate extension of the Erdős–Taylor theorem. Probab. Theory Relat. Fields 189, 179–227 (2024). https://doi.org/10.1007/s00440-024-01267-3

DOI: https://doi.org/10.1007/s00440-024-01267-3

Keywords

- Planar random walk collisions

- Erdős–Taylor theorem

- Schrödinger operators with point interactions

- Directed polymer in random environment