Abstract

Objectives

To use machine learning classifiers (MLCs) to seek differences in visual fields (VFs) between normal eyes and eyes of HIV+ patients; to find the effect of immunodeficiency on VFs and to compare the effectiveness of MLCs to commonly-used Statpac global indices in analyzing standard automated perimetry (SAP).

Methods

The high CD4 group consisted of 70 eyes of 39 HIV-positive patients with good immune status (CD4 counts were never <100/ml). The low CD4 group had 59 eyes of 38 HIV-positive patients with CD4 cell counts <100/ml at some period of time lasting for at least 6 months. The normal group consisted of 61 eyes of 52 HIV-negative individuals. We used a Humphrey Visual Field Analyzer, SAP full threshold program 24-2, and routine settings for evaluating VFs. We trained and tested support vector machine (SVM) classifiers to distinguish fields of normal subjects from those of the high and low CD4 groups separately. Receiver operating characteristic (ROC) curves measured the discrimination of each classifier, and areas under the ROC curves (AUROCs) were statistically compared.

Results

Low CD4 HIV patients: with SVM, the AUROC was 0.790 ± 0.042. SVM and MD each significantly differed from chance decision, with p < .00005. High CD4 HIV patients: the SVM AUROC of 0.664 ± 0.047 and MD were each significantly better than chance (p = .041, p = .05 respectively).

Conclusions

Eyes from both low and high CD4 HIV+ patients have VF defects indicating retinal damage. A generalized learning classifier, SVM, and a Statpac classifier, MD, are effective at detecting HIV eyes that have field defects, even when these defects are subtle.

With the improvement brought about by highly active antiretroviral therapy (HAART) in the health of human immunodeficiency virus (HIV)-positive patients, it has become important to determine whether damage continues to occur under HAART, which implies good immune control, and to identify methods of finding such damage. In HIV patients without history or evidence of retinitis, previous studies by us and others have disclosed structural damage to the retina and functional deficits resulting from such damage [1–13]. Individuals with HIV retinopathy without retinitis and with low CD4 T-lymphocyte counts show deficits in visual function, even though the central vision may be preserved [1, 2]. In the era before HAART became available, damage was suggested in such eyes by studies showing that HIV-positive patients have reduced sensitivity in the field of vision [3, 4], decreased color and contrast sensitivity in the test of central vision [5, 6], and altered retinal processing with electrophysiological testing [7]. Further studies from our group have shown that there are particular topographic patterns of this visual field (VF) loss [8]. Retinal cotton wool spots, microaneurysms, capillary drop-out, and ischemia are assumed to damage the ganglion cell layer and retinal nerve fiber layer (RNFL) [1, 9, 10].

Even in the HAART era, it has been shown that damage still occurs in HIV patients managed by HAART [11]. Using high-resolution optical coherence tomography and scanning laser polarimetry, we found thinning in the retinal nerve fiber layer of HIV patients with low CD4 counts [12, 13]. Multifocal electroretinogram (mfERG) showed abnormalities in the second order kernel (inner retina) in HIV populations [14]. Second order kernel abnormalities in mfERGs indicated that not only low CD4 patients but also high CD4 patients underwent detectable electrophysiological alteration and maybe even damage in the inner retina [15].

HIV patients with high CD4 counts may also have symptoms of retinopathy. Although most of these patients do not have visual symptoms, a few patients observe visual field changes. Automated perimetry is currently the most widely used method to detect functional deficits that anatomic changes in this population might cause. Because these changes are usually subtle, the deficits are difficult to detect by human observers, including perimetric experts.

Pattern recognition techniques, especially machine learning classifiers (MLCs), have been previously applied to ophthalmologic problems, such as the interpretation and classification of visual fields [16, 17], detection of visual field progression [18, 19], assessment of the structure of the optic nerve head [20, 21], measurement of retinal nerve fiber layer thickness [22, 23], and separation of noise from visual field information [24]. From previous studies in glaucoma, we found support vector machine (SVM) to be particularly effective for discriminating between normal and glaucomatous visual fields [16, 25]. MLCs can be trained to distinguish the group identity of patterns, sometimes with greater sensitivity than a human expert [25–28].

In this study, we applied SVM with the Gaussian kernel to determine whether visual fields in HIV subjects differ from visual fields in normal subjects. Since the immune function presumably was better in the high CD4 group, we expected HIV retinopathy damage to be less in the high CD4 group than in the low CD4 group. We assumed that there was enough information in the visual fields to distinguish low CD4 patients from HIV-negative patients, and we anticipated that there might be enough information to discriminate high CD4 patients from HIV-negatives. The Statpac global indices, mean deviation (MD) and pattern standard deviation (PSD), are widely available and in common use to interpret automated perimetry for glaucoma. We compared MLCs to MD and PSD in the ability to separate fields of low CD4 and of high CD4 HIV patients from those of normal subjects.

Machine learning classifiers (MLCs) have evolved to approach the theoretical limit in finding the differences between classes. With these theoretically more effective MLCs, we (1) seek differences in visual fields between normal eyes and eyes of HIV patients, (2) try to find the effect of immunodeficiency on visual fields, as reflected in CD4 count, and (3) compare the effectiveness of MLCs to commonly-used Statpac global indices in analyzing standard automated perimetry (SAP).

Methods

Patients

The HIV-positive patients come from an Institutional Review Board-approved, National Institutes of Health-sponsored longitudinal study of HIV disease at the University of California, San Diego (UCSD). The research followed the tenets of the Declaration of Helsinki. Non-HIV controls were age-matched healthy participants in the HIV study as well as non-glaucomatous age-matched healthy controls from the National Eye Institute-sponsored ongoing longitudinal Diagnostic Innovations in Glaucoma Study (DIGS).

The patients were divided into three groups. The high CD4 group (H) consisted of HIV-positive patients with good immune status. Their medical records showed that their CD4 counts were never valued at <100 (1.0 × 10⁹/l). The low CD4 group (L) were HIV-positive patients with CD4 cell counts measured at <100 (1.0 × 10⁹/l) at some period of time in their medical history lasting for at least 6 months. Out of 59 eyes in this subgroup, 32 had signs of HIV retinopathy at the time of examination (n = 6) or based on their medical records (n = 26). None of the eyes had evidence of retinopathy caused by another virus. All HIV patients were on HAART prior to the time of the examination, and a substantial portion of these patients had a recovery in their CD4 counts. The HIV individuals had no confounding ocular disease or eye surgery. The normal group (N) consisted of HIV-negative patients without evidence of ocular damage. This normal control group comprised 17% with a lifestyle similar to the HIV groups, and 83% from DIGS.

Ophthalmologic evaluation

All patients had a complete ocular examination, including indirect ophthalmoscopy and morning intraocular pressure measurement. The exclusion criteria were inability to perform visual field testing, corrected visual acuity worse than 20/40, spherical refraction beyond ±5 diopters, cylindrical correction greater than 3 diopters, unclear ocular media, concurrent or healed CMV retinitis (a fellow eye without retinitis was eligible), scotopic pupil size <3 mm, glaucoma or suspicion of glaucoma by disk or field or intraocular pressure greater than 21 mmHg on two visits, and diseases that can cause retinopathy, like diabetes or uncontrolled hypertension.

Visual field testing and data input for the classifier

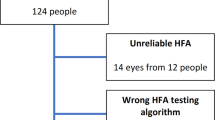

We used a Humphrey Visual Field Analyzer (model 620; Carl Zeiss Meditec, Dublin, CA, USA), standard automated perimetry (SAP) full threshold program 24-2, and routine settings for evaluating visual fields. Visual fields were taken within 1 week of the ophthalmologic examination. The examination was paused for a while before evaluating the second eye. Only reliable VFs, defined as those with less than 33% false-positives, 33% false-negatives, and 33% fixation losses, were used. Thus, eight eyes had to be excluded from analysis. Naïve visual fields were not analyzed; fields were included only after initial practice.

The absolute sensitivity (in decibels) of 52 visual field locations (the 54 test locations excluding the two located in the blind spot) formed a feature vector in 52-dimensional input space for each of the 124 SAP fields of normal and HIV eyes [16, 25]. SAP fields from left eyes were mirrored into right-eye format so that all fields appeared as right eyes when input to the SVMs.
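
The construction of the feature vector can be illustrated with a minimal Python sketch. The grid coordinates are an assumption based on the usual 24-2 layout (6-degree spacing, with the two blind-spot points at x = +15°, y = ±3° in right-eye format); the function names and the dictionary input format are hypothetical and are not taken from the study software.

```python
# Hypothetical sketch: build a 52-element feature vector in right-eye format.
# Assumption: usual 24-2 layout, rows of 4, 6, 8, 9, 9, 8, 6, 4 points.

def grid_24_2_right_eye():
    """Return the 54 (x, y) test locations in degrees, top row first."""
    half_rows = [(21, -9, 9), (15, -15, 15), (9, -21, 21), (3, -27, 21)]
    rows = half_rows + [(-y, x0, x1) for (y, x0, x1) in reversed(half_rows)]
    return [(x, y) for (y, x0, x1) in rows for x in range(x0, x1 + 1, 6)]

# Assumed blind-spot locations for a right eye.
BLIND_SPOT = {(15, 3), (15, -3)}

def feature_vector(sensitivities, eye):
    """Map one field to 52 sensitivities (dB) in right-eye format.

    sensitivities: dict from (x, y) as tested to sensitivity in dB.
    eye: "right" or "left"; left eyes are mirrored about the vertical axis.
    """
    vec = []
    for (x, y) in grid_24_2_right_eye():
        if (x, y) in BLIND_SPOT:
            continue  # drop the two blind-spot locations
        src = (x, y) if eye == "right" else (-x, y)
        vec.append(sensitivities[src])
    return vec
```

Mirroring left eyes lets a single classifier treat nasal and temporal locations consistently across both eyes.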

Machine learning classifiers

Pattern recognition can use methods of machine learning classifiers. Support vector machine (SVM) is a machine classification method that seeks the boundary that best separates the two classes, relying on the sparse set of samples that are most difficult to separate (the support vectors) [29, 30]. SVM learning adapts to the data and often outperforms other classifiers; the use of sparse data helps SVM to learn efficiently. Support vector techniques have been used for various clinical medicine classification applications, including the detection of glaucoma and HIV-related ocular disease [16, 21, 31]. The support vector method was implemented by using Platt's sequential minimal optimization algorithm in commercial software (MATLAB, version 7.0; MathWorks, Natick, MA). For classification of the SAP data, Gaussian (nonlinear) kernels of various widths were tested, and the chosen Gaussian kernel width was the one that gave the highest area under the receiver operating characteristic (AUROC) curve, using 10-fold cross-validation to separate training and test samples.
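
As an illustration of the kernel and of the width selection described above, a minimal Python sketch follows. It is not the MATLAB implementation used in the study: the 2·width² scaling is one common convention and is an assumption here, and `cv_auroc` is a hypothetical callable standing in for "train and test the SVM by 10-fold cross-validation at this width and return the AUROC".

```python
import math

def gaussian_kernel(u, v, width):
    """Gaussian (RBF) kernel between two feature vectors.

    The 2 * width**2 scaling is an assumed convention, not the study's.
    """
    sq_dist = sum((a - b) ** 2 for a, b in zip(u, v))
    return math.exp(-sq_dist / (2.0 * width ** 2))

def select_width(candidate_widths, cv_auroc):
    """Return the candidate width with the highest cross-validated AUROC.

    cv_auroc: hypothetical callable, width -> AUROC.
    """
    return max(candidate_widths, key=cv_auroc)
```

A wider kernel gives a smoother decision boundary, and a narrower one fits local structure more closely, which is why the width is chosen on held-out performance.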

Training and testing machine learning classifiers

In this study, we used 10-fold cross validation, which randomly split each class into ten equal subsets. The classifier was trained on a set that combined nine of the ten partitions, and the 10th partition served as the testing set. This procedure was performed ten times, with each partition having a chance to serve as the test set.
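
The partitioning described above can be sketched in Python as follows. This is an illustrative version with hypothetical function names; because group sizes such as 61 or 59 eyes are not divisible by ten, the folds here are only as equal as possible, which is an assumption about how "equal subsets" was handled.

```python
import random

def stratified_folds(labels, k=10, seed=0):
    """Randomly split the indices of each class into k near-equal folds."""
    rng = random.Random(seed)
    folds = [[] for _ in range(k)]
    for cls in sorted(set(labels)):
        idx = [i for i, y in enumerate(labels) if y == cls]
        rng.shuffle(idx)
        for j, i in enumerate(idx):
            folds[j % k].append(i)  # deal indices round-robin into folds
    return folds

def train_test_splits(labels, k=10, seed=0):
    """Yield (train, test) index lists; each fold is the test set once."""
    folds = stratified_folds(labels, k, seed)
    for t in range(k):
        test = folds[t]
        train = [i for f in range(k) if f != t for i in folds[f]]
        yield train, test
```

Each eye therefore appears in exactly one test set, and the classifier that scores it has never seen it during training.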

Performance measure of trained machine learning classifiers

Receiver operating characteristic (ROC) curves display the discrimination of each classifier as the separation threshold is moved from one end of the data to the other. We generated an ROC curve to represent a chance decision to permit comparison of the machine learning classifiers against chance; a predictor with performance equal to chance will have AUROC = 0.5, while the ideal classifier will give AUROC = 1.0. We computed p-values to test the null hypothesis that the AUROCs of the classifiers being compared did not differ [25, 32]. We trained and tested SVM to distinguish fields of normal subjects from those of the high CD4 group, and fields of normal subjects from those of the low CD4 group.
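
For readers unfamiliar with the measure, the AUROC equals the probability that a randomly chosen abnormal eye receives a higher classifier score than a randomly chosen normal eye, with ties counted as one half. The Python sketch below computes it directly from that definition; the function name is hypothetical, and the sketch does not reproduce the statistical comparison of correlated AUROCs used in the study [32].

```python
def auroc(scores_pos, scores_neg):
    """Area under the ROC curve from two lists of classifier scores.

    Higher scores are assumed to indicate the positive (abnormal) class.
    """
    wins = 0.0
    for p in scores_pos:
        for n in scores_neg:
            if p > n:
                wins += 1.0   # positive correctly ranked above negative
            elif p == n:
                wins += 0.5   # ties count as half
    return wins / (len(scores_pos) * len(scores_neg))
```

For an index such as MD, where lower values are more abnormal, the values would be negated before being passed in as scores.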

Dimension reduction by feature selection

We trained the machine learning classifiers with the full feature set (SVM full) and, in an effort to improve performance, with a performance-peaking subset of near-optimal features derived with feature selection [21]. To create small subsets with the best features, we used backward elimination (SVM back) with SVM. Previous research found backward elimination to work better than forward selection on visual field data [21]. Backward elimination started with the full feature set. The feature that, when removed, either maximally increased or minimally decreased the SVM performance was removed, and the process was repeated sequentially down to one feature. A near-optimal feature set could then be determined by choosing the reduced feature set with peak performance.
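
The backward elimination procedure can be sketched in Python as below. This is a generic illustration, not the study code: `score` is a hypothetical callable that, in the setting of this paper, would train and test the SVM by cross-validation on the given subset of field locations and return the AUROC.

```python
def backward_elimination(n_features, score):
    """Greedy backward elimination from the full set down to one feature.

    score: hypothetical callable, tuple of feature indices -> performance.
    Returns (history, peak): history lists (subset, score) for every subset
    size, and peak is the entry with the highest score.
    """
    current = list(range(n_features))
    history = [(tuple(current), score(tuple(current)))]
    while len(current) > 1:
        best_subset, best_score = None, None
        for f in current:
            candidate = tuple(x for x in current if x != f)
            s = score(candidate)
            # keep the removal that helps most (or hurts least)
            if best_score is None or s > best_score:
                best_subset, best_score = candidate, s
        current = list(best_subset)
        history.append((best_subset, best_score))
    peak = max(history, key=lambda h: h[1])
    return history, peak
```

With 52 field locations, this greedy search evaluates on the order of 52²/2 subsets instead of all 2⁵² possible subsets, at the cost of not guaranteeing the globally best subset.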

Results

There were 132 subjects (118 men, 14 women, 190 eyes) in the study. There were 70 Hispanic, 48 Caucasian, 12 African-American and two Asian-Pacific patients in this cohort. The normal group consisted of 52 HIV-negative individuals (61 eyes) with a mean age ± standard deviation of 48.5 ± 8.2 years and mean spherical equivalent in diopters (Dsph) of −0.70 ± 0.43 Dsph. The high CD4 group had 39 patients (70 eyes) with mean age of 47.1 ± 8.1 years and mean spherical equivalent −1.20 ± 0.46 Dsph. There were 38 patients (59 eyes) in the low CD4 group, with mean age of 46.5 ± 7.8 years and mean spherical equivalent −0.91 ± 0.57 Dsph. The AUROCs and their standard deviations for the various combinations of CD4 level and feature set size are shown in Table 1, which also gives the p-values for selected comparisons.

HIV patients with low CD4 counts

With SVM full, the AUROC was 0.790 ± 0.042 (Table 1, Fig. 1a). It significantly differed from chance decision with p < 0.00005 (Table 1). Backward elimination selected a peak-performing 11-feature subset (arrow in Fig. 2a). The AUROC for SVM back significantly improved to 0.833 ± 0.037 (p = .050), compared to SVM full. The bold dashed curve was the average of curves generated by the standard method of backward elimination [29] (Fig. 2). The eight most significant field locations were mapped to the standard visual field display. Eight were chosen to match the size of the best feature subset for high CD4 patients (see below). The majority of the top eight features were located near the blind spot, with a preponderance superiorly and temporally (Fig. 3a).

Fig. 1 Receiver operating characteristic curves (ROCs) for support vector machine (SVM) and the Statpac global indices, mean deviation (MD) and pattern standard deviation (PSD), in human immunodeficiency virus (HIV)-positive patients. SVM full are ROCs generated by SVM trained on all 52 field locations. SVM back are ROCs generated from the subset with the peak performance. The chance curve is the effect of SVM learning to distinguish classes with data randomly distributed between them. a ROCs from distinguishing low CD4 eyes from normal. b ROCs from distinguishing high CD4 eyes from normal

Fig. 2 Performance curves measuring area under the receiver operating characteristic curve (AUROC) for the best feature combination for each size subset of features generated by backward elimination between one feature and all 52 features. The bold curve averages the curves (thin dark gray curves) derived from the standard backward elimination. The peak (arrow) is the subset size with the best performance. a Curves generated by backward elimination applied to low CD4 vs normal eyes. b Curves generated by backward elimination applied to high CD4 vs normal eyes

Fig. 3 Ranking by backward elimination. a Location of field defect in low CD4 group showing that the top eight field locations tend to be clustered superior temporally, close to the blind spot. b Location of field defect in high CD4 group showing that the top eight field locations tend to be without discernible pattern

MD and PSD produced AUROCs of 0.813 ± 0.039 and 0.723 ± 0.047, respectively. MD was better than PSD (p = .03). SVM back was significantly more effective than PSD (p = .004) (Table 1), but not significantly better than MD (p = .41).

HIV patients with high CD4 counts

The AUROC was 0.664 ± 0.047 with SVM trained on the full feature set of 52 SAP locations (Table 1, Fig. 1b). It was significantly better than chance (p = 0.041). Backward elimination produced subsets that peaked at eight features. The AUROC with the eight-feature subset was 0.733 ± 0.044. This peak is indicated by the arrow in Fig. 2b. The top eight visual field locations were diffusely scattered (Fig. 3b).

The Statpac indices, MD and PSD, generated AUROCs of 0.651 ± 0.48 and 0.587 ± 0.50 respectively. SVM back was significantly better than PSD (p = .0007) but not MD (p = .10).

Discussion

This study in the HAART era confirmed the reports in previous publications that eyes from HIV patients with low CD4 T-lymphocyte counts have retinopathy damage that affects the visual field [3, 4, 8]. SVM trained with the full set of visual field locations, optimized SVM trained on the best subset of visual field locations, MD and PSD all distinguished visual fields of HIV subjects with low CD4 counts from fields in normal eyes. SVM and optimized SVM conferred no advantage over MD. A larger number of examples in each group is necessary to determine if optimized SVM differs from MD.

MD outperformed PSD in low CD4 eyes (p = .03). As a mass output measure of decreased field sensitivity, MD does not indicate if the depressions are focal, regional, or diffuse. PSD, designed to suppress global depression, is more responsive to local and regional field depression. PSD is more sensitive than MD to glaucomatous field defects, which tend to be regional [25]. The better performance of MD in low CD4 HIV eyes suggests that the field defects may be diffusely scattered, and less likely to be focal or regional. Also, since these patients were not old, the ability of PSD to account for cataracts was not beneficial.

Optimizing SVM significantly improved its performance on low CD4 eyes (p = .05), reducing the likelihood that the choice of the top field locations was due to the vagaries of the data set. The standard backward elimination curve found no increase in accuracy with the use of more than the top 11 locations (Fig. 2a). The eight most important field locations for distinguishing the low CD4 HIV eyes from normal tended to be superior, temporal, and close to the blind spot (Fig. 3a). This tendency located the retinal damage in low CD4 eyes to regions mostly close to the optic nerve, inferior, and nasal. It is not clear whether the damage was most prominent near the disk, or whether it was just more easily detected there.

SVM, optimized SVM, and MD were able to distinguish eyes from HIV patients with high CD4 T-lymphocyte counts from normal eyes, though with less assurance than with low CD4. PSD was no better than chance in making the distinction in high CD4 eyes (p = .17), though it was better than chance for low CD4 eyes (p = .002). The diminished assurance indicated that the visual field defects were fewer and less deep in high CD4 eyes than in low CD4 eyes. It is unclear if the smaller difference from normal in the high CD4 eyes is due to resolution of some defects in the high CD4 group, or if a relatively greater depression in the field around the optic nerve is present in the low CD4 subjects. Because this is a cross-sectional analysis, answering this question would require longitudinal observation and a larger data set. A comparison of field defects between those whose CD4 counts remained <100 at the time of testing and those whose CD4 counts recovered would be interesting, as it would address whether these defects are reversible with a recovery in CD4 counts.

Optimizing SVM did not significantly improve performance on high CD4 eyes compared to SVM full. This observation diminished the veracity of the ranking for high CD4 and made the location of the significant defects uncertain. The eight most important field locations were scattered without a pattern.

Our previous observation at the beginning of the HAART era showed a pattern of visual loss with sparing of the papillomacular bundles and associated damage to the inferior retina external to the posterior pole [8]. Similarly, the papillomacular area was spared in this cohort, as was the inferior retina outside the arcades. The diffuse pattern of damage has also been shown when analyzing one eye per patient only, in a similar but not identical HIV-positive cohort [32]. It is tempting to speculate that HAART may have an effect on the extent of retinal damage; longitudinal observation could shed more light on this complex problem.

HIV retinopathy is a microvasculopathy that causes peripapillary hemorrhages, microangiopathy, and cotton-wool spots in retinae that have not been secondarily infected [9, 10, 33, 34]. Inner retinal thinning was previously reported with OCT and scanning laser polarimetry of low CD4 eyes, with inferior thinning being more prominent [12, 13]. RNFL thinning was found even in patients with good immune status in the HAART era [35]. Retinal microinfarctions may be responsible for the RNFL defects and field deficits. Similar findings were also reported in HIV-positive children using the third-generation OCT [36]. Although fields from high CD4 eyes appear mostly normal to human perimetric experts, this study found that the trained machine learning classifiers and MD could each distinguish between eyes from high CD4 patients and normal eyes.

Pattern recognition has proved extremely useful in this clinical scenario. Even if testing the visual field in HIV patients currently does not have the same relevance for managing patients as it does in glaucoma, it has served to uncover information about the disease process. To assist the management of patients with HIV, a future approach could be the establishment of threshold values for MD or for SVM that would enable identification of individuals who have retinal damage.

SVM, especially when the feature set is optimized by dimensionality reduction, is a sensitive classification method that approaches the performance of the theoretical optimal classifier for classifying visual fields [16, 18, 24, 25]. Optimized machine learning classifiers appear to be a valid approach to detecting subtle abnormalities in medical tests with complex multidimensional measurements. This concept was demonstrated in our previous report analyzing complex datasets from mfERG in HIV-positive patients [15].

In summary, we have confirmed that eyes from low CD4 HIV patients have visual field measurements indicating retinal damage, and that high CD4 eyes also have retinal damage. We have demonstrated that a generalized learning classifier, SVM, is effective at learning which eyes have field defects, even when these defects are subtle, and we have discovered that MD, a statistical classifier tuned to visual field data, is also effective in distinguishing both high CD4 fields and low CD4 fields from normal. An important message to people at risk of HIV and to their providers is that HIV infection may produce ocular damage under HAART, even if there is good immune status.

References

Goldberg DE, Smithen LM, Angelilli A, Freeman WR (2005) HIV-associated retinopathy in the HAART era. Retina 25(5):633–649

Quiceno JI, Capparelli E, Sadun AA, Maunguia D, Grant I, Listhaus A, Crapotta J, Lambert B, Freeman WR (1992) Visual dysfunction without retinitis in patients with acquired immunodeficiency syndrome. Am J Ophthalmol 113:8–13

Plummer DJ, Sample PA, Arevalo JF, Grant I, Quiceno JI, Dua R, Freeman WR (1996) Visual field loss in HIV-positive patients without infectious retinopathy. Am J Ophthalmol 122:542–549

Geier SA, Nohmeier C, Lachenmayr BJ, Klaus V, Goebel FD (1995) Deficits in perimetric performance in patients with symptomatic human immunodeficiency virus infection or acquired immunodeficiency syndrome. Am J Ophthalmol 119:335–344

Geier SA, Kronawitter U, Bogner JR, Hammel G, Berninger T, Klauss V, Goebel FD (1993) Impairment of colour contrast sensitivity and neuroretinal dysfunction in patients with symptomatic HIV infection or AIDS. Br J Ophthalmol 77:716–720

Geier SA, Hammel G, Bogner JR, Kronawitter U, Berninger T, Goebel FD (1994) HIV-related ocular microangiopathic syndrome and color contrast sensitivity. Invest Ophthalmol Vis Sci 35:3011–3021

Iragui VJ, Kalmijn J, Plummer DJ, Sample PA, Trick GL, Freeman WR (1996) Pattern electroretinograms and visual evoked potentials in HIV infection: evidence for asymptomatic retinal and postretinal impairment in the absence of infectious retinopathy. Neurology 47:1452–1456

Sample PA, Plummer DJ, Mueller AJ, Matsubara KI, Sadun A, Grant I, Freeman WR (1999) Pattern of early visual field loss in HIV-infected patients. Arch Ophthalmol 117:755–760

Kozak I, Bartsch DU, Cheng L, Freeman WR (2006) In vivo histology of cotton-wool spots using high-resolution optical coherence tomography. Am J Ophthalmol 141(4):748–750

Kozak I, Bartsch DU, Cheng L, Freeman WR (2007) Hyper-reflective sign in resolved cotton wool spots using high resolution optical coherence tomography and OCT ophthalmoscope. Ophthalmology 114:537–543

Shah KH, Holland GN, Yu F, Van Natta M, Nusinowitz S (2006) Contrast sensitivity and color vision in HIV-infected individuals without infectious retinopathy. Am J Ophthalmol 142(2):284–292

Kozak I, Bartsch DU, Cheng L, Kosobucki BR, Freeman WR (2005) Objective analysis of retinal damage in HIV-positive patients in the HAART era using OCT. Am J Ophthalmol 139(2):295–301

Kozak I, Bartsch DU, Cheng L, McCutchan A, Weinreb RN, Freeman WR (2007) Scanning laser polarimetry demonstrates retinal nerve fiber layer damage in human immunodeficiency virus positive patients without infectious retinitis. Retina 27(9):1267–1273

Falkenstein IA, Bartsch DU, Azen SP, Dustin L, Sadun AA, Freeman WR (2008) Multifocal electroretinography in HIV-positive patients without infectious retinitis. Am J Ophthalmol 146(4):579–588

Goldbaum MH, Falkenstein I, Kozak I, Hao J, Bartsch DU, Sejnowski T, Freeman WR (2008) Analysis of support vector machine shows HIV-positive subjects without infectious retinitis have mfERG deficiencies compared to normal eyes. Trans Am Ophthalmol Soc 106:196–204

Goldbaum MH, Sample PA, White H, Colt B, Raphaelian P, Fechtner RD, Weinreb RN (1994) Interpretation of automated perimetry for glaucoma by neural network. Invest Ophthalmol Vis Sci 35:3362–3373

Mutlukan E, Keating K (1994) Visual field interpretation with a personal computer based neural network. Eye 8:321–323

Brigatti L, Nouri-Mahdavi K, Weitzman M, Caprioli J (1997) Automatic detection of glaucomatous visual field progression with neural networks. Arch Ophthalmol 115:725–728

Sample PA, Boden C, Zhang Z, Pascual J, Lee TW, Zangwill LM, Weinreb RN, Crowston JG, Hoffmann EM, Medeiros FA, Sejnowski T, Goldbaum MH (2005) Unsupervised machine learning with independent component analysis to identify areas of progression in glaucomatous visual fields. Invest Ophthalmol Vis Sci 46:3684–3692

Brigatti L, Hoffman BA, Caprioli J (1996) Neural networks to identify glaucoma with structural and functional measurements. Am J Ophthalmol 121:511–521

Bowd C, Chan K, Zangwill LM, Goldbaum MH, Lee TW, Sejnowski TJ, Weinreb RN (2002) Comparing neural network and linear discriminant functions for glaucoma detection using confocal scanning laser ophthalmoscopy of the optic disc. Invest Ophthalmol Vis Sci 43:3444–3454

Zangwill LM, Chan K, Bowd C, Hao J, Lee TW, Weinreb RN, Sejnowski T, Goldbaum MH (2004) Comparing confocal scanning laser ophthalmoscopy measurements of the optic nerve head and peripapillary retina for detecting glaucoma using machine learning classifiers. Invest Ophthalmol Vis Sci 45:3144–3151

Bowd C, Medeiros FA, Zhang Z, Zangwill LM, Hao J, Lee TW, Sejnowski TJ, Weinreb RN, Goldbaum MH (2005) Relevance vector machine and support vector machine classifier analysis of scanning laser polarimetry retinal nerve fiber layer measurements. Invest Ophthalmol Vis Sci 46:1322–1329

Henson DB, Spenceley SE, Bull DR (1997) Artificial neural network analysis of noisy visual field data in glaucoma. Artif Intell Med 10:99–113

Goldbaum MH, Sample PA, Chan K, Williams J, Lee TW, Blumenthal E, Girkin CA, Zangwill LM, Bowd C, Sejnowski T, Weinreb RN (2002) Comparing machine learning classifiers for diagnosing glaucoma from standard automated perimetry. Invest Ophthalmol Vis Sci 43:162–169

Sample PA, Chan K, Boden C, Lee TW, Blumenthal EZ, Weinreb RN, Bernd A, Pascual J, Hao J, Sejnowski T, Goldbaum MH (2004) Using unsupervised learning with variational bayesian mixture of factor analysis to identify patterns of glaucomatous visual field defects. Invest Ophthalmol Vis Sci 45(8):2596–2605

Goldbaum MH, Sample PA, Zhang Z, Chan K, Hao J, Lee TW, Boden C, Bowd C, Bourne R, Zangwill L, Sejnowski T, Spinak D, Weinreb RN (2005) Using unsupervised learning with independent component analysis to identify patterns of glaucomatous visual field defects. Invest Ophthalmol Vis Sci 46:3676–3683

Goldbaum MH, Jang G-J, Bowd C, Hao J, Zangwill LM, Liebmann J, Girkin C, Jung TP, Weinreb RN, Sample PA (2009) Patterns of glaucomatous visual field loss in SITA fields automatically identified using independent component analysis. Trans Am Ophthalmol Soc 107:136–144

Vapnik V (1998) Statistical learning theory. Wiley, New York

Vapnik V (2000) The nature of statistical learning theory, 2nd edn. Springer, New York

Kozak I, Sample PA, Hao J, Freeman WR, Weinreb RN, Lee TW, Goldbaum MH (2007) Machine learning classifiers detect subtle field defects in eyes of HIV individuals. Trans Am Ophthalmol Soc 105:111–118

DeLong ER, DeLong DM, Clarke-Pearson DL (1988) Comparing the areas under two or more correlated receiver operating characteristic curves: a nonparametric approach. Biometrics 44:837–845

Kupperman BD, Petty JG, Richman DD, Mathews WC, Fullerton SC, Rickman LS, Freeman WR (1993) The correlation between CD4+ counts and the prevalence of cytomegalovirus retinitis and human immunodeficiency virus-related non-infectious retinal vasculopathy in patients with acquired immunodeficiency syndrome. Am J Ophthalmol 115:575–582

Newsome DA, Green WR, Miller ED, Kiesslin LA, Morgan B, Jabs DA, Polk BF (1984) Microvascular aspects of acquired immunodeficiency syndrome retinopathy. Am J Ophthalmol 98:590–601

Besada E, Shechtman D, Black G, Hardigan PC (2007) Laser scanning confocal ophthalmoscopy and polarimetry of human immunodeficiency virus patients without retinopathy, under antiretroviral therapy. Optom Vis Sci 84(3):189–196

Moschos MM, Mostrou G, Psimenidou E, Spoulou V, Theodoridou M (2007) Objective analysis of retinal function in HIV-positive children without retinitis using optical coherence tomography. Ocul Immunol Inflamm 15:319–323

Acknowledgments

Supported in part by University of California San Diego; an unrestricted grant from Research to Prevent Blindness, New York City, NY; NIH Grants EY13928 (MHG), EY07366 (WRF), and EY08208 (PAS); and research support from Carl Zeiss Meditec Inc. (PAS).

Open Access

This article is distributed under the terms of the Creative Commons Attribution Noncommercial License which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

Additional information

Published in part in the Transactions of the American Ophthalmological Society, 2008

Rights and permissions

Open Access This is an open access article distributed under the terms of the Creative Commons Attribution Noncommercial License (https://creativecommons.org/licenses/by-nc/2.0), which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

About this article

Cite this article

Goldbaum, M.H., Kozak, I., Hao, J. et al. Pattern recognition can detect subtle field defects in eyes of HIV individuals without retinitis under HAART. Graefes Arch Clin Exp Ophthalmol 249, 491–498 (2011). https://doi.org/10.1007/s00417-010-1511-x

DOI: https://doi.org/10.1007/s00417-010-1511-x