Abstract

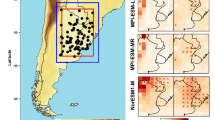

Projections of future climate change in the mean state and seasonal cycle of temperature over South America during the twenty-first century are discussed. Our analysis uses one simulation from each of seven Atmosphere-Ocean General Circulation Models that participated in the Intergovernmental Panel on Climate Change (IPCC) project and provided at least one simulation of the twentieth century (20c3m) and one simulation for each of the three Special Report on Emissions Scenarios (SRES) A2, A1B, and B1. We developed a statistical method based on neural networks and Bayesian statistics to evaluate the models’ skill in simulating late twentieth century temperature over continental areas. Criteria called model weight indices (MWIs) are computed to compare, over such large regions, how well each model captures the large-scale temperature structures and how much it contributes to the multi-model combination. As the study demonstrates, the use of neural networks optimized by Bayesian statistics leads to two major results. First, the MWIs can be interpreted as optimal weights for a linear combination of the climate models. Second, comparing the neural network projection of twenty-first century conditions with a linear combination of these conditions identifies the regions that will most probably change, according to model biases and model ensemble variance. Model simulations are particularly poor in the southern tip of South America, along the Chilean and Peruvian coasts, and along the northern coasts of South America (Venezuela, Guiana). Overall, our results represent an upper bound of potential temperature warming for each scenario. Spatially, our major findings for SRES A2 are that tropical South America could warm by about 4°C, while southern South America (SSA) would undergo an average warming of nearly 2–3°C. Interestingly, this annual mean temperature trend is modulated by the seasonal cycle in contrasting ways depending on the region.
In SSA, the amplitude of the seasonal cycle tends to increase, while in northern South America it would be reduced, leading to much milder winters. We show that all the scenarios have similar patterns and differ only in amplitude. SRES A1B differs from SRES A2 mainly in the late twenty-first century, reaching roughly 80–90% of the SRES A2 amplitude. SRES B1, however, diverges from the other scenarios as early as 2025. For the late twenty-first century, SRES B1 displays amplitudes about half those of SRES A2.

References

Allen MR, Stott PA, Mitchell JFB, Schnur R, Delworth TL (2000) Quantifying the uncertainty in forecasts of anthropogenic climate change. Nature 407:617–620

Boulanger J-P, Leloup J, Penalba O, Rusticucci M, Lafon F, Vargas W (2005) Low-frequency modes of observed precipitation variability over the La Plata basin. Clim Dyn 24:393–413. DOI 10.1007/s00382-004-0514-x

Coelho CAS, Pezzulli S, Balmaseda M, Doblas-Reyes FJ, Stephenson DB (2004) Forecast calibration and combination: a simple Bayesian approach for ENSO. J Clim 17:1504–1516

Collins WD et al (2005) The community climate system model, version 3. J Clim (in press)

Degallier N, Favier C, Boulanger J-P, Menkes C, Oliveira C, Rubens Costa Lima J, Mondet B (2005) Early determination of the reproductive number for vector-borne diseases: the case of dengue in Brazil. (in press)

Delworth et al (2005) GFDL’s CM2 global coupled climate models—Part 1: Formulation and simulation characteristics. J Clim (in press)

Forest CE, Stone PH, Sokolov AP, Allen MR, Webster MD (2002) Quantifying uncertainties in climate system properties with the use of recent climate observations. Science 295:113–117

Giorgi F, Mearns LO (2002) Calculation of average, uncertainty range and reliability of regional climate changes from AOGCM simulations via the “reliability ensemble averaging” (REA) method. J Clim 15(10):1141–1158

Giorgi F et al (2001) Regional climate information: evaluation and projections. In: Houghton JT et al (eds) Climate change 2001: the scientific basis. Contribution of working group I to the 3rd assessment report of the intergovernmental panel on climate change, Chap 10. Cambridge University Press, Cambridge, pp 583–638

Gnanadesikan et al (2005) GFDL’s CM2 global coupled climate models—Part 2: The baseline ocean simulation (in press)

Gordon C, Cooper C, Senior CA, Banks HT, Gregory JM, Johns TC, Mitchell JFB, Wood RA (2000) The simulation of SST, sea ice extents and ocean heat transports in a version of the Hadley Centre coupled model without flux adjustments. Clim Dyn 16:147–168

Haak H et al (2003) Formation and propagation of great salinity anomalies. Geophys Res Lett 30:1473. DOI 10.1029/2003GL17065

Johns TC, Carnell RE, Crossley JF, Gregory JM, Mitchell JFB, Senior CA, Tett SFB, Wood RA (1997) The second Hadley Centre coupled ocean–atmosphere GCM: model description, spinup and validation. Clim Dyn 13:103–134

Jones PD, Moberg A (2003) Hemispheric and large-scale surface air temperature variations: an extensive revision and an update to 2001. J Clim 16:206–223

MacKay DJC (1992) Bayesian interpolation. Neural Comput 4:415–447

Marsland et al (2003) The Max-Planck-Institute global ocean/sea ice model with orthogonal curvilinear coordinates. Ocean Model 5:91–127

Nabney IT (2002) Netlab: algorithms for pattern recognition. Advances in Pattern Recognition. Springer, Berlin Heidelberg New York, pp 420

Nakicenovic N, Alcamo J, Davis G, de Vries B, Fenhann J, Gaffin S, Gregory K, Grübler A, Jung TY, Kram T, La Rovere EL, Michaelis L, Mori S, Morita T, Pepper W, Pitcher H, Price L, Riahi K, Roehrl A, Rogner H-H, Sankovski A, Schlesinger M, Shukla P, Smith S, Swart R, van Rooijen S, Victor N, Dadi Z (2000) IPCC special report on emissions scenarios. Cambridge University Press, Cambridge, pp 599

New MG, Hulme M, Jones PD (2000) Representing twentieth-century space–time climate variability. Part II: Development of 1901–1996 monthly grids of terrestrial surface climate. J Clim 13:2217–2238

Reilly J, Stone PH, Forest CE, Webster MD, Jacoby HD, Prinn RG (2001) Uncertainty in climate change assessments. Science 293(5529):430–433

Roeckner et al (2003) The atmospheric general circulation model ECHAM5 Report No. 349OM

Ruosteenoja K, Carter TR, Jylhä K, Tuomenvirta H (2003) Future climate in world regions: an intercomparison of model-based projections for the new IPCC emissions scenarios. The Finnish Environment 644. Finnish Environment Institute, 83 pp

Salas-Mélia D, Chauvin F, Déqué M, Douville H, Gueremy JF, Marquet P, Planton S, Royer JF, Tyteca S (2004) XXth century warming simulated by ARPEGE-Climat-OPA coupled system

Stouffer et al. (2005) GFDL’s CM2 global coupled climate models—Part 4: Idealized climate response (in press)

Tebaldi C, Smith RL, Nychka D, Mearns LO (2005) Quantifying uncertainty in projections of regional climate change: a Bayesian approach to the analysis of multi-model ensembles (in press)

Wigley TML, Raper SCB (2001) Interpretation of high projections for global-mean warming. Science 293:451–454

Wittenberg et al (2005) GFDL’s CM2 global coupled climate models—Part 3: Tropical Pacific climate and ENSO (in press)

Acknowledgements

We wish to thank the Institut de Recherche pour le Développement (IRD), the Institut Pierre-Simon Laplace (IPSL), and the Centre National de la Recherche Scientifique (CNRS; Programme ATIP-2002) for their financial support, which was crucial in developing the authors’ collaboration. We are also grateful to the European Commission for funding the CLARIS Project (Project 001454), within whose framework the present study was undertaken. We are grateful to the University of Buenos Aires and the Department of Atmosphere and Ocean Sciences for welcoming Jean-Philippe Boulanger. We thank Tim Mitchell and David Viner for providing the CRU TS2.0 datasets. We also thank the European project CLARIS (http://www.claris-eu.org) for facilitating access to the IPCC simulation outputs. We thank the international modeling groups for providing their data for analysis, the Program for Climate Model Diagnosis and Intercomparison (PCMDI) for collecting and archiving the model data, the JSC/CLIVAR Working Group on Coupled Modelling (WGCM) and their Coupled Model Intercomparison Project (CMIP) and Climate Simulation Panel for organizing the model data analysis activity, and the IPCC WG1 TSU for technical support. The IPCC Data Archive at Lawrence Livermore National Laboratory is supported by the Office of Science, U.S. Department of Energy. Special thanks go to Alfredo Rolla for his strong support in downloading all the IPCC model outputs.

Appendix: The multi-layer perceptron (MLP)

1.1 General description

The MLP is probably the most widely used architecture for practical applications of neural networks (Nabney 2002). From a computational point of view, the MLP can be described by a set of functions, built from relatively simple arithmetic formulae, that link different elements (neurons), together with a set of methods to optimize these functions on a dataset. In the present study, we focus only on a two-layer network architecture (Fig. 19). Its simplest element is the neuron, which is connected to all the neurons in the layer above it (the hidden layer if the neuron belongs to the input layer, or the output layer if the neuron belongs to the hidden layer). Each neuron has a value, and each connection is associated with a weight (Fig. 20).

Schematic representation of a two-layer MLP. \(\xi_u\) is the input vector and \(o_u\) its corresponding output (the example shown has a three-value input vector and a two-value output vector). The units called bias are not connected to any lower layer; their values are always equal to −1, and they represent the threshold of the layer immediately above.

As shown in Fig. 19, in the MLP we considered, the neurons are organized in layers: an input layer (the values of all the input neurons except the bias are specified by the user), a hidden layer, and an output layer. Each neuron in one layer is connected to all the neurons in the next layer. More specifically, given the input vector \((\xi_i)_{i=1,\dots,I}\), the first layer of the network forms H linear combinations of the input vector (H being the number of neurons in the hidden layer) to give the following set of intermediate activation variables:

\[ h_j^{(1)} = \sum_{i=1}^{I} w_{ji}^{(1)}\,\xi_i + b_j^{(1)}, \qquad j = 1, \dots, H, \]

where \(w_{ji}^{(1)}\) is the weight of the connection from input neuron i to hidden neuron j and \(b_j^{(1)}\) corresponds to the bias of the input layer. Each activation variable is then transformed by a non-linear activation function, which in most cases (including ours) is the hyperbolic tangent: \(v_j = \tanh(h_j^{(1)})\), \(j = 1, \dots, H\). Finally, the \(v_j\) are transformed to give a second set of activation variables associated with the neurons of the output layer:

\[ h_k^{(2)} = \sum_{j=1}^{H} w_{kj}^{(2)}\,v_j + b_k^{(2)}, \qquad k = 1, \dots, K, \]

where K is the number of output neurons, \(w_{kj}^{(2)}\) is the weight of the connection from hidden neuron j to output neuron k, and \(b_k^{(2)}\) corresponds to the bias of the hidden layer. In most cases (including ours), the output of each neuron in the output layer is obtained from its activation variable through the linear (identity) function \(y_k = h_k^{(2)}\). Other, more complex functions may be used depending on the problem under consideration.

The weights and biases are initialized by drawing them at random from a zero-mean, isotropic Gaussian distribution whose unit variance is scaled by the inverse of the fan-in of the hidden or output units, as appropriate. During the training phase, the neural network compares its outputs with the correct answers (a set of observations used as the target output vector) and adjusts its weights to minimize an error function. In our case, the weights and biases are optimized with the scaled conjugate gradient method, the error gradients being computed by back-propagation.
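The initialization step can be sketched as follows. We assume that the fan-in counts the bias unit, so that parameters feeding the hidden layer are drawn with variance 1/(I + 1) and those feeding the output layer with variance 1/(H + 1); this is our reading of the Netlab convention, not a detail stated in the text. The function `init_mlp` is hypothetical, and the training itself (scaled conjugate gradient) is not shown.

```python
import random


def init_mlp(n_in: int, n_hidden: int, n_out: int, seed: int = 0):
    """Initialize a two-layer MLP from a zero-mean isotropic Gaussian.

    Assumption: the unit variance is divided by the fan-in including the bias,
    i.e. variance 1/(n_in + 1) for parameters feeding the hidden layer and
    1/(n_hidden + 1) for parameters feeding the output layer.
    Layout: w1[j][i] connects input i to hidden neuron j; w2[k][j] connects
    hidden neuron j to output neuron k.
    """
    rng = random.Random(seed)
    sd1 = (n_in + 1) ** -0.5      # standard deviation, first layer
    sd2 = (n_hidden + 1) ** -0.5  # standard deviation, second layer
    w1 = [[rng.gauss(0.0, sd1) for _ in range(n_in)] for _ in range(n_hidden)]
    b1 = [rng.gauss(0.0, sd1) for _ in range(n_hidden)]
    w2 = [[rng.gauss(0.0, sd2) for _ in range(n_hidden)] for _ in range(n_out)]
    b2 = [rng.gauss(0.0, sd2) for _ in range(n_out)]
    return w1, b1, w2, b2


# Illustrative sizes only: e.g. one input per climate model, 5 hidden neurons, 1 output.
w1, b1, w2, b2 = init_mlp(n_in=7, n_hidden=5, n_out=1)
```

Scaling by the fan-in keeps the initial activations \(h_j^{(1)}\) of order one regardless of the number of inputs, so the tanh units start in their near-linear range.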

This architecture is capable of universal approximation: given a sufficiently large number of hidden neurons, and enough data to constrain the weights, the MLP can model any smooth function. The interested reader can find an exhaustive description of the MLP network, its architecture, initialization, and training methods in Nabney (2002). Our study made use of the Netlab software (Nabney 2002).

1.2 Bayesian approach for selecting the “best” MLP architecture

When fitting a model to data, it is usual to write the model as y = f(x, w) + ε, where y are the observations, x the inputs, f the model, w the parameters to optimize (the weights, in our case), and ε the remaining error (model-data misfit). The more complex the model (i.e., the more parameters it has), the smaller the error, with the usual drawback of overfitting: the model fits both the “true” signal and its noise. Overfitting is usually revealed by very poor performance of the model on unseen data (data not included in the training phase). Therefore, optimizing the model parameters by minimizing the residual ε alone may actually lead to poor model performance. One way to avoid this problem is to also account for the uncertainty in the model parameters, and a Bayesian approach is well suited to this. Although two kinds of Bayesian approaches have been shown to be effective (the Laplace approximation and Monte Carlo techniques), we only consider the first one. Nabney (2002) offers an exhaustive discussion of this subject; the following summary outlines what is needed to follow our approach.

First, following the notation of Nabney (2002), consider two models \(M_1\) and \(M_2\) (in our case, two MLPs that differ only in the number of neurons in the hidden layer, \(M_2\) having more neurons than \(M_1\)). Using Bayes’ theorem, the posterior probability of each model given the data D is:

\[ p(M_i|D) = \frac{p(D|M_i)\,p(M_i)}{p(D)}, \qquad i = 1, 2. \]

Without any a priori reason to prefer either model (equal priors \(p(M_i)\)), the models should be compared through the probability \(p(D|M_i)\), known as the evidence, which can be written (MacKay 1992) as \(p(D|M_i) = \int p(D|w, M_i)\,p(w|M_i)\,dw\). Assuming that, for either model, there exists a best choice of parameters \(\hat{w}_i\) around which the posterior probability is strongly peaked, the previous equation can be simplified to:

\[ p(D|M_i) \simeq p(D|\hat{w}_i, M_i)\, p(\hat{w}_i|M_i)\, \Delta w_i^{\text{posterior}}, \]

where the last term represents the width (a volume in parameter space) of the posterior peak, over which the probability is approximated as uniform. Assuming that the prior probability \(p(\hat{w}_i|M_i)\) has been initialized so that it is uniform over a volume \(\Delta w_i^{\text{prior}}\) of parameter space, i.e., \(p(\hat{w}_i|M_i) = 1/\Delta w_i^{\text{prior}}\), we can rewrite the previous equation as:

\[ p(D|M_i) \simeq p(D|\hat{w}_i, M_i)\, \frac{\Delta w_i^{\text{posterior}}}{\Delta w_i^{\text{prior}}}. \]

The new equation is the product of two terms that evolve in opposite directions as the complexity of the model increases. The first term on the right-hand side increases (i.e., the model-data misfit decreases) as the model complexity increases. The second term, the Occam factor, is always lower than 1 and decreases approximately exponentially with the number of parameters (Nabney 2002), which penalizes the most complex models. Taking the weight uncertainty into account should therefore reduce the overfitting problem. We now explain how this can be done.
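The trade-off between goodness of fit and the Occam factor can be illustrated numerically. The sketch below assumes, purely for illustration, that each of the W parameters contributes the same posterior-to-prior width ratio, so that the log Occam factor is W times the log of that ratio; the log-likelihood values are hypothetical, not results from this study, and the helper names are our own. Posterior model probabilities are then obtained from Bayes’ theorem with equal model priors.

```python
import math


def log_evidence_occam(log_best_fit: float, n_params: int, ratio: float) -> float:
    """Approximate log p(D|M): best-fit log likelihood plus log Occam factor.

    Assumes (illustration only) that each of the n_params parameters contributes
    the same posterior/prior width ratio, so log Occam = n_params * log(ratio).
    """
    return log_best_fit + n_params * math.log(ratio)


def posterior_model_probs(log_evidences):
    """p(M_i|D) for equal model priors, via Bayes' theorem (log-sum-exp for stability)."""
    m = max(log_evidences)
    weights = [math.exp(e - m) for e in log_evidences]
    total = sum(weights)
    return [w / total for w in weights]


# Hypothetical numbers: M2 has twice as many parameters and fits the data better.
e1 = log_evidence_occam(log_best_fit=-120.0, n_params=20, ratio=0.5)
e2 = log_evidence_occam(log_best_fit=-112.0, n_params=40, ratio=0.5)
p1, p2 = posterior_model_probs([e1, e2])
print(f"log evidence M1={e1:.2f}, M2={e2:.2f}; p(M1|D)={p1:.3f}")
```

Despite its better fit, the more complex model M2 ends up with the lower log evidence in this example, because its Occam factor is much smaller.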

For a given number of units in the hidden layer, an optimum set of weights and biases can be calculated by maximum likelihood. This optimum set of parameters (weights and biases) is the one most likely to have generated the observations. A Bayesian approach (or a quasi-Bayesian approach, since the non-linear nature of neural networks makes full Bayesian inference difficult) can then be used to infer the two classes of errors: model-data misfit and parameter uncertainty.

According to Bayes’ theorem, for a given MLP architecture, the posterior density of the parameters w given a dataset D is:

\[ p(w|D) = \frac{p(D|w)\,p(w)}{p(D)}. \]

As a first step, we only consider the terms that depend on the weights. Up to the additive constant \(\log p(D)\), which does not depend on w, the negative log posterior is \(E = -\log p(w|D) = -\log p(D|w) - \log p(w)\).

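The likelihood \(p(D|w)\) represents the model-data fit error, which can be modeled by a Gaussian function of the form:

\[ p(D|w) = \left(\frac{\beta}{2\pi}\right)^{N/2} \exp\left(-\frac{\beta}{2}\sum_{n=1}^{N}\left[f(x_n, w) - y_n\right]^2\right), \]

where β represents the inverse variance of the model-data fit error, f is the MLP function of the inputs \(x_n\) and weights w, \(y_n\) are the observations, and N is the number of observations.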
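Taking the negative logarithm of this Gaussian likelihood gives \(\beta E_D + \frac{N}{2}\log(2\pi/\beta)\), where \(E_D\) is half the sum of squared misfits. A minimal sketch, assuming the network outputs \(f(x_n, w)\) have already been computed (the function name is ours):

```python
import math
from typing import Sequence


def neg_log_likelihood(pred: Sequence[float], obs: Sequence[float], beta: float) -> float:
    """-log p(D|w) under Gaussian noise with inverse variance beta.

    pred[n] = f(x_n, w) (network outputs), obs[n] = y_n (observations).
    Returns beta * E_D + (N / 2) * log(2 * pi / beta), with E_D = 0.5 * sum (f - y)^2.
    """
    n = len(obs)
    e_d = 0.5 * sum((f - y) ** 2 for f, y in zip(pred, obs))
    return beta * e_d + 0.5 * n * math.log(2.0 * math.pi / beta)


# Usage with hypothetical predictions and observations (noise standard deviation 0.5).
nll = neg_log_likelihood([0.1, -0.3, 0.5], [0.0, 0.0, 0.7], beta=4.0)
```

Only the \(\beta E_D\) term depends on the weights, which is why minimizing the sum-of-squares error is equivalent to maximizing this likelihood for fixed β.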

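The requirement for small weights (i.e., avoiding overfitting) suggests a Gaussian distribution for the weights of the form:

\[ p(w) = \left(\frac{\alpha}{2\pi}\right)^{W/2} \exp\left(-\frac{\alpha}{2}\lVert w \rVert^2\right), \]

where W is the total number of weights and biases and α represents the inverse variance of the weight distribution. α and β are known as hyperparameters. Therefore, to compare different MLP architectures, we first need to optimize the MLP weights, biases, and hyperparameters for each architecture. This optimization can be performed with the evidence procedure, an iterative algorithm for which we again refer the reader to Nabney (2002). Briefly, if we consider a model (for a given architecture) to be determined by its two hyperparameters, then, as before, two models can be compared through their respective maximized evidence \(p(D|\alpha, \beta)\), whose logarithm can be written in the form:

\[ \log p(D|\alpha,\beta) = -\alpha E_W - \beta E_D - \frac{1}{2}\log|A| + \frac{W}{2}\log\alpha + \frac{N}{2}\log\beta - \frac{N}{2}\log 2\pi, \]

where \(E_D = \frac{1}{2}\sum_{n=1}^{N}[f(x_n, w) - y_n]^2\) and \(E_W = \frac{1}{2}\lVert w \rVert^2\) are evaluated at the most probable weights, N is the number of observations, and A is the Hessian matrix of the total error function \(\beta E_D + \alpha E_W\) (a function of α and β).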
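The sketch below evaluates the standard Laplace-approximation log evidence \(\log p(D|\alpha,\beta)\) from \(E_W\), \(E_D\), the Hessian A, the number of weights W and the number of observations N. It assumes the eigenvalues of A are available, so that \(\log|A|\) is the sum of their logarithms, and uses natural logarithms; the function and argument names are hypothetical. For a model that is linear in its weights, with Gaussian prior and noise, the Laplace approximation is exact, which gives a simple check against the closed-form marginal likelihood.

```python
import math
from typing import Sequence


def log_evidence(alpha: float, beta: float, e_w: float, e_d: float,
                 hessian_eigs: Sequence[float], n_weights: int, n_data: int) -> float:
    """Laplace-approximation log evidence log p(D | alpha, beta).

    log p = -alpha*E_W - beta*E_D - 0.5*log|A| + (W/2) log alpha
            + (N/2) log beta - (N/2) log(2*pi)
    e_w = 0.5 * ||w||^2 and e_d = 0.5 * sum of squared misfits, both at the most
    probable weights; hessian_eigs are the (positive) eigenvalues of
    A = beta * grad grad E_D + alpha * I.
    """
    log_det_a = sum(math.log(lam) for lam in hessian_eigs)
    return (-alpha * e_w - beta * e_d - 0.5 * log_det_a
            + 0.5 * n_weights * math.log(alpha)
            + 0.5 * n_data * math.log(beta)
            - 0.5 * n_data * math.log(2.0 * math.pi))


# Check on a one-weight linear model y = w + noise with a single observation y = 1:
# alpha = beta = 1 gives w_MP = 0.5, E_W = E_D = 0.125 and A = alpha + beta = 2.
# The exact marginal is y ~ N(0, 1/alpha + 1/beta) = N(0, 2).
approx = log_evidence(1.0, 1.0, 0.125, 0.125, [2.0], n_weights=1, n_data=1)
exact = -0.5 * math.log(2.0 * math.pi * 2.0) - 1.0 ** 2 / (2.0 * 2.0)
print(approx, exact)
```

For a non-linear MLP the same formula is only approximate, since the posterior is replaced by a Gaussian centered on the most probable weights.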

Based on this equation, the evidence procedure is used to optimize the weights and hyperparameters of each candidate architecture, and the optimized log evidence of each model is then computed so that the architectures can be compared.
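The evidence procedure alternates between re-training the weights for fixed α and β and re-estimating the hyperparameters. One re-estimation step, in the form given by MacKay (1992), is sketched below. It assumes the eigenvalues of \(\beta\nabla\nabla E_D\) at the current weights are known, and it omits the weight re-training between updates (scaled conjugate gradient in this study); the names are illustrative.

```python
from typing import Sequence, Tuple


def reestimate_hyperparameters(alpha: float, data_hessian_eigs: Sequence[float],
                               e_w: float, e_d: float,
                               n_data: int) -> Tuple[float, float, float]:
    """One hyperparameter update of the evidence procedure (MacKay 1992).

    data_hessian_eigs: eigenvalues of beta * grad grad E_D at the current weights.
    gamma is the effective number of well-determined parameters.
    """
    gamma = sum(lam / (lam + alpha) for lam in data_hessian_eigs)
    alpha_new = gamma / (2.0 * e_w)             # from 2 * alpha * E_W = gamma
    beta_new = (n_data - gamma) / (2.0 * e_d)   # from 2 * beta * E_D = N - gamma
    return alpha_new, beta_new, gamma


# Hypothetical values for a single update step.
alpha_new, beta_new, gamma = reestimate_hyperparameters(
    1.0, [3.0, 1.0], e_w=0.5, e_d=2.0, n_data=10)
```

In a full loop, the weights would be re-trained with the new α and β, \(E_W\), \(E_D\) and the eigenvalues recomputed, and the update repeated until the hyperparameters converge.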

1.3 Model weight indices

Interestingly, the concept of hyperparameters introduced above can be generalized by assigning a separate hyperparameter to each input neuron. This hyperparameter represents the inverse variance of the weights fanning out from the input neuron to the hidden neurons. A high hyperparameter value means that these weights are small, close to zero, and therefore that the corresponding input is less important. The hyperparameter thus indicates the importance of the input to the trained output. Based on this information, we introduced the MWI, defined as the variance of the weights fanning out from a neuron (i.e., the inverse of the corresponding input hyperparameter) normalized by the sum of the weight variances of all the model inputs. The MWI lies between 0 and 1 and indicates the relative importance of the different models to the trained output. By analogy, each MWI can be compared to the linear weight applied to a model when combining the models linearly. We will show that, although the MLP reproduces the observations better than any linear combination of the models, a linear combination based on the MWIs also has some skill in reproducing them. The linear combination of models based on MWIs is thus a simplified linear version of the neural network.
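The MWI definition translates directly into code. In the sketch below, `input_alphas` holds one hyperparameter per model input (this per-input prior is commonly known as automatic relevance determination, ARD); the values and function names are hypothetical, and the second function shows the MWI-weighted linear combination of model outputs at a single grid point.

```python
from typing import List, Sequence


def model_weight_indices(input_alphas: Sequence[float]) -> List[float]:
    """MWI_i = (1 / alpha_i) / sum_j (1 / alpha_j).

    alpha_i is the hyperparameter (inverse weight variance) of model input i,
    so 1 / alpha_i is the variance of the weights fanning out from that input.
    """
    variances = [1.0 / a for a in input_alphas]
    total = sum(variances)
    return [v / total for v in variances]


def mwi_combination(model_values: Sequence[float], mwis: Sequence[float]) -> float:
    """MWI-weighted linear combination of the model values at one grid point."""
    return sum(w * x for w, x in zip(mwis, model_values))


# Hypothetical hyperparameters for three model inputs and their temperatures (deg C).
mwis = model_weight_indices([1.0, 2.0, 4.0])
combined = mwi_combination([20.0, 22.0, 21.0], mwis)
```

Because the MWIs sum to 1, the combination is a weighted average of the models, with inputs whose fan-out weights have larger variance receiving more weight.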

Cite this article

Boulanger, JP., Martinez, F. & Segura, E.C. Projection of future climate change conditions using IPCC simulations, neural networks and Bayesian statistics. Part 1: Temperature mean state and seasonal cycle in South America. Clim Dyn 27, 233–259 (2006). https://doi.org/10.1007/s00382-006-0134-8

DOI: https://doi.org/10.1007/s00382-006-0134-8