Abstract

Point clouds consist of 3D data points and are among the most considerable data formats for 3D representations. Their popularity is due to their broad application areas, such as robotics and autonomous driving, and their employment in basic 3D vision tasks such as segmentation, classification, and detection. However, processing point clouds is challenging compared to other visual forms such as images, mainly due to their unstructured nature. Deep learning (DL) has been established as a powerful tool for data processing, reporting remarkable performance enhancements compared to traditional methods for all basic 2D vision tasks. However new challenges are emerging when it comes to processing unstructured 3D point clouds. This work aims to guide future research by providing a systematic review of DL on 3D point clouds, holistically covering all 3D vision tasks. 3D technologies of point cloud formation are reviewed and compared to each other. The application of DL methods for point cloud processing is discussed, and state-of-the-art models’ performances are compared focusing on challenges and solutions. Moreover, in this work the most popular 3D point cloud benchmark datasets are summarized based on their task-oriented applications, aiming to highlight existing constraints and to comparatively evaluate them. Future research directions and upcoming trends are also highlighted.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Point clouds constitute an alternative data format for 3D scenes’ representation. Their popularity is attributed to the increasing availability of point cloud capturing devices and the wide range of their application in various scientific fields [1, 2]. Different sensing devices are currently available, accompanied by algorithms, for the detailed acquisition of point clouds in a wide range of computational and economical costs. Note that Apple has included Light Detection And Ranging (LiDAR) capabilities in its latest hardware to help the camera autofocus faster and capture details even in low-lighting conditions. A point cloud consists of thousands of unorganized colored 3D points that identify objects’ shapes. Each point is denoted by a set of three Cartesian coordinates (X, Y, Z), providing at the same time additional information, such as intensity or reflectance, when active sensors are used to generate it, geometric information, scale, as well as distance and speed estimations [3]. Point cloud representations allow for adaptive storing space and imagining details of varying levels by controlling the number of points based on the desired density [4]. This flexible control stems from the unstructured nature of point clouds, lacking a strict topology and thus enabling their easy formatting to properly adapt to any real-time application. However, these same advantages denote substantial challenges related to point cloud management, associated with data sparsity, unstructured nature, uneven distributions, redundant data, modeling errors, and noise artifacts.

Point clouds are generated by 3D laser scanners, referring mainly to LiDAR technology [5] and Red Green Blue-Depth (RGB-D) cameras [6] with different resolutions and sensor restrictions, or by photogrammetry software [7]. Each laser scan measurement is represented by a point, while all scans register to form the entire scene. Point clouds having temporal dimensions are referred to as dynamics and consist of a sequence of static point clouds. Dynamic point clouds can be generated at speed by mounting sensors on mobile mapping devices, i.e., ground vehicles or Unmanned Aerial Vehicle (UAVs) [8].

Point clouds are employed in a great variety of applications, such as 3D object recognition [9], robotics [10] for simultaneous localization and mapping (SLAM) [11], odometry (visual odometry and LiDAR odometry), autonomous driving [12], change detection [13], remote sensing [14], medical treatment [15], image matching [16], shape analysis [17], etc. To enrich the quality of low-density point clouds, up-sampling is performed by combining point clouds with the corresponding 2D images of objects [18]. This combination may seem ideal, especially for objects’ representation that their original 2D images are available and can be used as input data. However, the fact that 2D and 3D images obtain different characteristics makes it challenging; 3D point clouds are unstructured and have thousands of points with geometry and attribute information, while 2D images are in a limited structured grid shape. Moreover, a point cloud denotes the 3D external surface of objects, in contrast to 2D images which project the 3D world on a 2D plane. These inherent differences need to be considered when developing point cloud processing methods [19]. Thereby, from point cloud formation and processing, several challenges are emerging; robust and accurate methods as well as efficient algorithms need to be considered.

Deep learning (DL) methods are proven powerful tools for data processing in computer vision due to their capability for automatic feature extraction and high reported performance. For this reason, combined with the simultaneous development of powerful Graphics Processing Units (GPUs) and the existence of suitable training datasets, DL has been adapted for point cloud processing and analysis for all popular 3D vision tasks: semantic segmentation [20], classification [3], object detection [21], as well as 3D registration [22], completion [23] and compression [24, 25]. It was in 2017 when, for the first time, PointNet [26], a deep network, was introduced directly to sets of points, without any pre-processing or conversion to other forms, followed by PointNet + + [27] to resolve drawbacks of PointNet and form the basis for upcoming deep networks. These works set the start of a new 3D point cloud processing era. In the following years, related research focused on point cloud generation, processing, and the description of specific datasets for various applications [28,29,30]. A recent method proposed a new point cloud re-identification network (PointReIDNet) consisting of a global semantic module and a local feature extraction module, able to decrease the 3D shape representation parameters from 2.3 M to 0.35 M [31]. However, DL application on raw 3D point clouds remains challenging; the limited scale of existing datasets, the high dimensionality and the irregular nature of unstructured 3D point clouds pose the basic limitations in the utilization of DL methods for the direct processing of point clouds. In recent years, there has been a plethora of available point cloud datasets derived from various sensors, such as Structure from Motion (SfM), RGB-D cameras, and LiDAR systems. Many existing available datasets include real single-sensor data such as Argoverse [32], real multi-sensor data such as KITTI [33], and synthetic data such as Apollo [34]. Available benchmark datasets, however, decrease as their size and complexity increase, consist of real or virtual scenes, and focus on different tasks. Yet, the existence of large-scale multi-sensory datasets is crucial for DL applications, that need great amounts of ground truth labels for training deep networks.

To this end, this study aims to provide an exhausting overview and present the current status of DL methods on 3D point cloud processing. This work covers a wide range of aspects, summarized in the following distinct points: (1) a comparison of existing point clouds acquisition technologies, (2) a holistic review of all 3D point cloud related vision tasks, (3) the presentation of available point cloud datasets, (4) the presentation of emerging challenges by using point clouds in DL applications, in contrast to the use of other image data formats, e.g., 2D images, (5) proposed solutions to face these challenges and (6) future research directions. The review is based on a holistic taxonomical classification of DL methods for 3D point clouds as illustrated in Fig. 1. This work to the best of the authors’ knowledge is the first to particularly focus on DL algorithms for all basic 3D point cloud related tasks, including classification, segmentation, detection and tracking, registration, completion and compression, as well as the first work that integrates all aforementioned research aspects. These tasks are the most commonly addressed in research and applications related to 3D computer vision and point cloud processing, contributing towards extracting meaningful information from point cloud data. Their selection is based on their significance in applications employing 3D point clouds, such as robotics and autonomous vehicles, as defined from the investigation of the high-frequency terms used in deep learning on point clouds based on relevant papers of the examined literature. This work comprises a Systematic literature review (SLR) that aims to cover a wide range of 3D point clouds related aspects, focusing on all fundamental concepts and tasks, so as to provide a complete road map for people newly introduced to this research field.

The rest of this paper is organized as follows. Section 2 provides the motivation and the contributions of this work. Section 3 presents the research strategy followed in this work. Section 4 describes point cloud formation technologies, including a brief comparison between them. Section 5 focuses on the relationship between computer vision and point clouds, presenting DL methods on 3D point clouds, and various challenges. Section 6 reviews the literature on point cloud learnable methods and techniques for main vision tasks. Section 7 summarizes available point cloud datasets. Section 8 includes an exhausting discussion based on the research findings and provides future research directions. Finally, Sect. 9 concludes the paper.

2 Motivation and contribution

During the last decade, sensory 3D point cloud data acquisition has increased, allowing users to visualize highly detailed and realistic scenes easily, as well as to manipulate, explore and analyze them to the extent needed for various tasks, identifying potential issues, and concluding to better decisions. However, since these data are complex and large-scale for their manipulation, more robust and efficient methods are required. At the same time, DL methods are established as a powerful tool for point cloud processing and analysis. Researchers are turning to the investigation of robust and efficient DL algorithms for point cloud data inputs for various vision tasks, and simultaneously various point cloud datasets are developing.

Several similar review articles can be found in the recent literature, however, there is a lack of a complete investigation of DL on point clouds. Guo et al. [35] in their review, cover only three major tasks, i.e., classification, segmentation, and object detection and tracking. The authors focus on the comprehensive comparison of existing DL methods on several datasets, providing evaluation results for all corresponding tasks. Wang et al. [36] review urban reconstruction algorithms and evaluate their performance in the context of architectural modeling, focusing on LiDAR capturing technologies. In [37], technical developments of RGB-D sensors and consequent data processing methods to handle various challenges, such as missing depth, are reviewed, while [38] deals with novel developments in high resolution synthetic aperture radar (SAR) interferometry. Ahmed et al. [39] provide a comprehensive overview of various 3D representations, discuss DL methods for each representation and compare algorithms based on certain datasets. Liu et al. [40] focus on feature learning methods for point clouds and analyze their advantages and disadvantages, including the three basic vision tasks and corresponding datasets. In [41], Vinodkumar et al., present a review of DL-based tasks for 3D point clouds, including segmentation, detection, and classification. Evaluation performance is reported, as well as the used datasets. Ioannidou et al. [42] survey methods that apply DL on 3D data and classify them according to the way the input data is treated before being inserted into the DL models. Camuffo et al. [43] review DL-based semantic scene understanding, compression, and completion, introducing a new taxonomy classification based on the characteristics of the acquisition setup and the data peculiarities. Bello et al. [44] provide a review of DL-based classification segmentation and detection, including popular benchmark point cloud datasets. Xiao et al. [45] focus on unsupervised point cloud representation learning using DL. In general, more review articles published over the years, yet they focus only on specific tasks, such as registration [46], classification [3, 47], completion [23], compression [48], and segmentation [20]. In [44], Bello et al. compile a review for DL on 3D point clouds, focusing on DL state-of-the-art approaches for raw point cloud data.

Table 1 includes the basic features of the aforementioned related works regarding DL methods on point clouds. There are also other proposed surveys about point clouds in the literature not included in Table 1 since their index is far non-comparable to the proposed study. Such indicative works include the survey implemented by Xiao et al. [49] focusing on label-efficient learning of point clouds, the survey of Li et al. [50] for DL for scene flow estimation on point clouds, the review of Grill et al. [51] for point cloud segmentation and classification algorithms, and the review on DL-based semantic segmentation for point clouds of Zhang et al. [20]. Therefore, the most contextual similar works were considered at this point, aiming to comparatively highlight the contribution of the present review work (Ours) versus previous ones.

According to Table 1, the present work aims to fill the identified research gap, by providing: an overview of the main 3D point cloud generation technologies and comparing the quality of point clouds among different acquisition sensors; discussing all DL-based 3D vision tasks; highlighting challenges and constraints from earlier traditional methods; reporting solutions to face the challenges stemming from DL methods; providing the corresponding datasets for each task; comparing point cloud data to other visual data forms; providing deeper insights and underlining differences, advantages, and drawbacks of each modality; providing a critical evaluation of point clouds’ utilization for different tasks and datasets; and, finally, suggesting future research directions and trends in the field.

To the best of the authors’ knowledge, this review is the first to holistically cover DL-based tasks, including segmentation, classification, detection and tracking, registration, completion, and compression, and to combine point cloud fundamentals, DL research advances on point clouds for all tasks, challenges, solutions, datasets, and future research directions, as opposed to already existing reviews. Performance comparison results of DL algorithms on 3D point cloud processing tasks can be found in [35, 43, 44].

3 Research methodology

Within the context of this work, a systematic literature review took place by using the Kitchenham approach [52] to identify the status of research in DL on 3D point clouds, based on six basic research questions:

RQ1: What are the challenges regarding point cloud data processing?

RQ2: What are the challenges that DL models face with 3D point cloud data?

RQ3: What is the status of 3D point cloud datasets for DL-based applications?

RQ4: In which applications does it make sense to apply point clouds?

RQ5: To what extent do different sensors affect the point cloud resolution?

RQ6: Under what conditions does the use of point clouds provide benefits against 2D images?

We performed a search of peer-reviewed journal publications in the Scopus database using the query “( TITLE-ABS-KEY ( point AND cloud) AND TITLE-ABS-KEY ( deep AND learning)) AND ( LIMIT-TO ( DOCTYPE, "ar") OR LIMIT-TO ( DOCTYPE, "cp") OR LIMIT-TO ( DOCTYPE, "ch")) AND ( LIMIT-TO ( LANGUAGE, "English")) AND ( EXCLUDE ( PUBYEAR, 2023))”. The process returned 3370 documents. Figure 2 summarizes the number and the proportion of total published works on the subject per year from 2013 to 2022, to illustrate a full period of 10 years. References from 2023 were also considered in this work, as indicated in the query above; however, they were not illustrated in the graph as related research in 2023 is ongoing. Although DL methods have been applied to point clouds only just in the last decade, the even increasing number of publications, arithmetically and proportionally, shows an overall upward trend, indicating the significance of this research topic. Figure 3 illustrates a tag of high-frequency used keyword terms in DL on point clouds literature, based on their occurrence. The font size indicates the frequency of the used terms based on the keywords of the papers. As it can be observed, most of the literature focuses on deep learning networks. In addition, segmentation classification and object detection tasks, are the most used for various applications.

4 Point cloud essentials

This section summarizes the form and characteristics of 3D point clouds. Working principles of the main technologies for point cloud data acquisition and generation are also discussed. The section concludes with the comparison of point clouds with alternative visual data formats.

4.1 Point cloud data acquisition and generation technologies

Point clouds can be captured with either laser scanners or photogrammetry. Currently, there are several frequently used techniques able to generate a 3D point cloud using 3D laser scanners. Point clouds quality depends on the technology that is adopted for its acquisition since each technology has its own features and peculiarities.

4.1.1 LiDARs

LiDAR is an active remote sensing technology that employs a laser beam to sense objects through ultraviolet visible or near-infrared sources and measures the distance between an object and the scanner. This is achieved through multiple light waves (pulses) that scan the scene from side to side. LiDAR technology can cover large areas from the ground and above, when mounted on aerial vehicles, at flight height between 100–1000 m, while the angle scan is from 40° to 75° maximum with rhythm 20–40 Hz. A typical range of pulse is 10 ns with repetition 5–33 kHz-max50Khz and frequency 10 kHz, i.e., 10,000 points per second [53]. Information of distance and direction are recorded to generate a point in 3D space and the differences in the pulse, return times, and wavelengths are used to generate the 3D representation of the target and calculate the exact distance from the objects [54, 55].

LiDARs can be classified based on their functionality and their inherent characteristics in three broader categories. Based on their functionality, they can be divided into Airborne (ALS) and terrestrial LiDAR. ALS LiDARs are mounted on aerial vehicles and can be further classified as topographic, to monitor the topography in terms of geomorphology, and bathymetric, to measure the depth of water and locate objects in the bottom of water bodied, e.g., oceans, lakes, etc. Terrestrial LiDARs are mounted on stable places, e.g., a tripod, or on moving vehicles, and can be classified as static, when it is portable and located at fixed points, or mobile when it is mounted on moving platforms. A third category, includes all other LiDAR types designated for special applications, including Differential Absorption LiDAR (DIAL) for sensing the ozone, Raman LiDAR for monitoring water vapor and aerosol, Wind LiDAR to measure wind data, Spaceborne LiDAR for out-of-space detection and tracking, and airborne High Spectral Resolution LiDAR (HSRL) for aerosols and clouds characterization. Figure 4 illustrates the classification of LiDARs.

A laser mapping LiDAR system comprises (1) the LiDAR unit itself, which emits rapid pulses of infrared laser light to scan the scene, (2) a Global Positioning System (GPS), (3) an inertial measurements unit (IMU) and (4) a computer for controlling the system and storing the data. GPS and IMU combination allows identifying accurately the location of the laser at the time, at which the corresponding pulse is transmitted, while by using another GPS the ground truth is measured. IMU is responsible for accurate elevation calculations using orientation to accurately determine the actual position of the pulse on the ground. Figure 5 shows a typical LiDAR unit. The unit consists of a laser rangefinder and a scan system. The rangefinder system includes a laser transmitter, photodetector, optics and microcontroller, and signal processing electronics. Different azimuths and vertical angles of laser beams are steered from the scan system. The operation of a typical LiDAR is based on the scanning of its field of view with one or more laser beams, via a beam steering system which is produced by a laser diode with modulated amplitude, emitting at near-infrared wavelength. Laser beams are reflected from the environment backwards to the scanner. The returning signal is sensed by the photodetector. The signal is filtered by fast electronics and the difference between transmitted and received signal is estimated. The exact range is calculated based on this difference by the sensor model. Signal processing is used to compensate for differences in variations of the transmitted and reflected signals because of surface materials. The outputs of LiDAR are 3D point clouds corresponding to scanned environments, and intensities corresponding to the reflected laser energies [56].

4.1.2 RGB-D cameras

RGB-D cameras are a type of depth camera able to provide both depth and color data from their field of view, towards a point cloud generation in real-time [37, 57]. Microsoft Kinect, as the first RGB-D sensor commercially released, paved the way for range sensing technologies to flood the market and promote research, providing cheap and powerful tools for static and dynamic scene reconstruction.

RGB-D images can be captured with either active or passive sensing. Passive ranging is feasible due to the input combination of two (stereo or binocular) or multiple cameras (monochrome or color). For estimating the depth of a scene, the triangulation process is employed [58]. Active sensing can be classified in structured light (SL) and time of flight (ToF) cameras. SL techniques refer to the process of projecting a distinctive pattern in the scene and therefore, adding known features to enable feature matching and compute the depth even for areas in the image that lack discriminative features. ToF cameras emit a pulse of light and estimate distance by the round-trip time. In both cases, depth information is retrievable through a depth map/image acquired from infrared measurements.

Figure 6 illustrates the taxonomy of the RGB-D cameras, while Fig. 7 shows the typical workflow of point cloud generation using an RGB-D camera. Color and depth data are captured concurrently by the different sensor types. The color images are transformed, while infrared images lead to 3D mapping. Then, the camera’s position and orientation are determined relative to the desired object (target) and the pose is estimated from 2D images using pixel correspondence and 3D object points [59]. The intrinsic parameters of the camera contribute to pixel-by-pixel point cloud projection from the depth images to 3D points. In the next step, the camera is calibrated to correct possible errors, while in real-time applications it is calibrated to obtain the desired coordinate system. Thereupon the features are extracted, and homologous points are detected between previous and current frames at each given time and matched. Then, a low-resolution sparse point cloud is generated. The local coordinates of the point cloud are converted into a global coordinate system with the aim of co-linearity equations. From the sparse cloud arises a denser point cloud whose density is based on the frame's number; moreover, when the depth map is combined with color information, the point cloud obtains color [60].

RGB-D video allows capturing active depth when the sensor is moving in a static scene. By fusing the captured frames, the scene’s reconstruction is possible. Multiple RGB-D cameras could also be employed to enable dynamic scene reconstruction. The recent advancements in DL made monocular depth estimation also possible [61]. Prior information, such as relations between geometric structures, is used to conclude from a single image into depth information. Depending on the used ground image, monocular DL-based depth estimation can be classified into supervised [62], unsupervised [63] and semi-supervised [64]. DL models for monocular depth estimation are usually jointly trained in the framework of other basic tasks, such as segmentation, therefore depth estimation is not examined separately in this work as an independent task.

4.1.3 Radars

Synthetic Aperture Radar (SAR) is a significant active microwave imaging sensor [65]. A SAR point cloud generation system processes SAR data acquired from multiple spatially separated SAR apertures so as to calculate the exact 3D positions of all scatterers in the image scene. Aperture is the opening used to collect the reflected energy and form an image. Interferometric Synthetic Aperture Radar (InSAR) is a geodetic radar technique for remote sensing applications generating maps of deformation on surfaces or digital elevations by comparing two or more SAR images.

SAR techniques stand out for their simple design process, their flexibility to change any scanning scheme, and the high computation efficiency for processing. However, data acquisition is generally slow, many antenna pairs or scan positions are required and are more suitable for stationary or slowly moving targets. It should be noted here that mm-Wave radars in general, e.g. Multi-input Multi-output (MIMO) [66] can be used for (range, azimuth and elevation) point cloud generation to detect moving targets. Point clouds generated by mm-Wave radars are attracting growing attention from academia and industry [67] due to their excellent performance and capabilities. Frequency-Modulated Continuous Wave Radars (FMCW) are another category of radar sensors that radiate continuous transmission power. FMCWs can alter their operating frequency during the measurement, offering more robust sensing [68].

Figure 8 illustrates the operational principles of SAR; p1 and p2 are the phases of two reflected signals, λ refers to the wavelength of a signal and Δp is the displacement (between two different phases).

4.1.4 Photogrammetry

Photogrammetry is an alternative method to generate 3D models, by using photographs instead of light to collect data, and methods from optics and projective geometry [69]. Photogrammetry needs a conventional camera to capture the images, a computer, and specialized software to create the 3D representation of the objects. Photogrammetry, the same as laser scanning, can be terrestrial, based on photos taken from the ground, or aerial, based on photos taken from an aerial vehicle with a mounted camera.

The most common aerial vehicles for point cloud capturing, are the Unmanned Aerial Vehicles (UAVs), namely drones. A UAV is an aircraft without a pilot that has an assistive onboard system that is controlled remotely or autonomously [70]. Various categories of drones differ in terms of flexibility, accuracy, weight, and performance in altering weather conditions. A general categorization is to classify them according to their flight mechanism into Multi-Rotor Fixed-Wing and Hybrid-Wing drones [71]. The choice depends on the intended use and application requirements. Their detecting and surveying systems usually incorporate high resolution visual cameras, RADAR and LiDAR. This technology is the most popular due to the handy, low acquisition and operating cost for point cloud generation through both commercial and open-source photogrammetric software packages [72]. The primary data are obtained by the sensors mounted on UAVs (vertical and oblique overlapping images) and then the point cloud data are extracted after the data post-processing.

Figure 9 shows the data post-processing procedure for the point cloud generation. Images are inserted into the software, the homologous points are detected and matched, and images are aligned and oriented. In the next step, input Ground Control Points (GCPs), i.e., markers with known coordinates, are defined to geo-reference. At this stage, error resolution is also computed. A sparse point cloud is consequently generated using Structure from Motion (SfM), followed by a denser cloud that is created by Multi-view Stereo (MVS), with a metric value. In this phase, for each image, the corresponding depth map is calculated. Dense point cloud can be extracted and stored in.las or.laz file format for further processing. Moreover, a mosaic (orhomosaic) arises when a dense point cloud is converted to mesh with texture. Finally, from the orthomosaic, the Digital Surface Model (DSM) and Digital Elevation Model (DEM) are also extracted.

4.1.5 Comparison and evaluation of different point cloud acquisition and generation technologies

The main advantages of LiDAR technology are high accuracy, fast data acquisition, fast processing time, automated procedures therefore independent from human interventions, independent functionality from bad weather conditions, independent from lighting conditions, e.g., sun inclination as well as during night-time. Since point clouds have high data density, they can be used as input data to create several elevation models, such as DSM, Digital Terrain Model (DTM) and DEM. DSM is a digital representation of the heights of the surface of earth, including man-made structures and above-ground features. DTM is a bare-earth topographic representation of earth’s surface, while DEM is a superset of DSM and DTM. However, disadvantages of LiDAR technology also exist. It functions better for static objects, and for moving objects it needs to be combined with other technologies, e.g., camera, GPS, IMU, to establish a complete mapping system. Even though it can penetrate dense foliage, as the rays of light, it cannot penetrate very dense structures. Finally, it has high operational costs due to costly equipment and the need for experienced operators able to interpret and analyze the captured data. Additional limitations are accuracy problems caused by reflective surfaces; in extreme weather conditions data collection can be interrupted; high dependency of its accuracy on the quality and calibration of the scanning system, the GPS, and IMU components.

RGB-D technology has advantages such as affordable acquisition, computational cheap 3D reconstruction methods, and low power consumption translated to high autonomy. However, in many cases, final images may comprise missing values, translated to holes, which must be filled, or depth maps of low resolutions, which must be up-sampled. Moreover, disadvantages at sensory level are observed. RGB-D sensors may fail to capture objects and surfaces with reflections, transparencies, absorptive materials, motion blurred, noisy characteristics and errors can be displayed (systematic and random) due to strong light and to their limited scanning speed. Additionally, low-cost RGB-D sensors cannot provide high quality data. In this case, a metrological analysis of their performance needs to be considered. Moreover, the detection of point correspondences between two cameras during triangulation with passive RGB-D sensors is also challenging since it needs adequate local intensities and variations of colors in images. Therefore, passive sensor data can provide accurate depth information only in rich textured areas within a scene. For areas with less information (fewer features), active sensors provide better depth measurements. Moreover, since with ToF cameras depth is estimated by the round-trip time of emitted light, measurements are not affected at all by the lack of features on the scene.

By conducting a direct comparison between point cloud generation techniques from LiDAR data and image data (photogrammetry), a set of definitive conclusions emerge. Point cloud quality from LiDAR (aerial and terrestrial) depends on scan frequency, point density and flying height. Point cloud quality from images using SfM is affected by the ground sample distance (GSD), flight altitude and image content. Moreover, the point clouds created from LiDAR are denser (2–100 ppsm) than that from images (1 ppsm or less) [73]. By using UAV platforms, a 3D point cloud can be created with photogrammetry only when a second homologous point is found in another image or overlapping images. The main difference, however, that distinguishes photogrammetry from LiDAR is color, since photogrammetry results in a colorized point cloud. Yet, LiDAR point clouds can be more accurate, as already said, due to the fact that LiDAR emits light and reflects features (ground or surfaces), thus, the scene’s texture does not affect the modelling. Furthermore, in LiDAR each reflected point has a coordinate location (X, Y, Z) without having to find a second or third point in overlapping images. Due to the total amount of points sprayed at once, the LiDAR laser can penetrate below heavily vegetated areas and provide more accurate surface models. From the photorealism aspect, photogrammetry provides photorealistic mapping (orthomosaics, point clouds, textured mesh), while LIDAR provides a sparse laser point cloud which is colorized based on the intensity of reflection; yet, it is without contextual detail [74].

Compared to LiDAR, SAR tomography (TomoSAR) offers moderate accuracy on the order of 1 m, as it is reconstructed from spaceborne data. In contrast, ALS LiDARs provide much higher accuracy on the order of 0.1 m [75]. TomoSAR focuses on different objects than LiDAR due to its coherent imaging nature and side-looking geometry system. It can provide rich information and high-resolution reconstructions in complex scenes, such as buildings, by leveraging multiple viewing angles [76]. The combination of LiDAR and SAR sensors can provide 4D information from space [77]. However, TomoSAR does have some drawbacks, such as its limited orbit spread, the small image number, and multiple scattering, which can lead to location errors and outliers. Another advantage of LiDAR over Radar is the difference in wavelength; the lower wavelength in LiDAR enables the identification of extremely small objects, such as cloud particles. Additionally, it’s important to note that LiDAR performance declines in bad weather conditions, while radars can function effectively regardless of weather conditions. Finally, Radars are more robust to weather changes and possess day and night operational capabilities.

4.2 Point cloud formats

A point cloud is sparse, noisy, irregular, and represents objects’ shape, size, position and orientation in a scene. The term “cloud” refers to its collection of unorganized points and spatial coherence. However, it has unsharp boundaries, and consists of numerous and scattered points described by 3D coordinates (X, Y, Z) and attributes, such as intensity, while they can also contain additional information, e.g., color. In the case of different sensory combinations, a point cloud can also provide additional multispectral or thermal information. Figure 10 shows a point cloud sample from an archaeological site.

A variety of file formats for point cloud data storage is currently available. The two main categories of point cloud files are ASCII (XYZ, OBJ, PTX, and ASC) and binary (FLS, PCD, and LAS) or both binary and ASCII (e.g., PLY, FBX, and E57). The format selection depends on the data acquisition source and the intended use, e.g., for data meant to be saved for a long time, the best packing format is in ASCII file.

It should be noted that when dealing with point cloud processing using DL models, the input data can either be in its raw or transformed into a more easily handled data structure that suits the requirements of the DL model architecture. Commonly, used structures are volumetric [78], shell (or boundary), and depth maps.

4.3 Comparison of 3D point clouds with other visual data forms

Nowadays, there exist various representation types of the physical world, including 2D images, orhomosaics, depth images, meshes and 3D point clouds. While humans can perceive and understand any kind of representation through vision, the understanding of scenes in the computer vision field is achieved mainly by 2D images and 3D point clouds, used differently due to their distinct characteristics. Therefore, different visual forms are employed for different problems, due to their inherent differences. 2D images are presented in a regular grid, i.e., an RGB pixel array, while 3D point clouds consist of thousands of points where each point is encoded with spatial coordinates (X, Y, Z), including other information as well. Moreover, 2D images are captured by light rays using a lens and are projections of the 3D world on 2D planes, whereas 3D point clouds represent surfaces, are sparse and contain outliers.

RGB-D images combine four channels of which the three channels include the color (RGB) and the fourth channel represents the depth. In depth images each pixel describes the distance between the object (target) and the image plane [19]. Comparing 3D point clouds and depth maps, one could say that they have different goals and purposes. More specifically, a 3D point cloud has an irregular shape form, whereas a depth map conveys information about the distance. In terms of viewpoint, from a point cloud, it is visible each point used to create the image, while a depth map provides a view of the data points from a particular angle [79]. From the dimension aspect, point cloud images are visible into three axes (X, Y, Z), unlike depth maps which present information only from Z-axis. A depth image, if compared to a flat image, is more accurate and provides additional elements around and behind the target. Point clouds generated from images obtained from UAV platforms vary in terms of quality, outliers, and holes. Their quality depends on the spatial resolution of the images, which is affected by several parameters, such as the flying height, sensor characteristics, and weather conditions, as already mentioned.

Nowadays, there are cases, where 3D point clouds are complemented by 2D images and depth data in various applications, towards a better understanding of a scene. Recently, researchers have developed algorithms and applied learnable approaches using either LiDAR point clouds combined with digital images as input data [80], aerial images (orthophotos) fused with airborne LiDAR point clouds [81], LiDAR and depth data combinations [82]. However, images may present limitations as opposed to point clouds due to sun angle and viewing geometry, occlusions shadows, lack of texture, illumination, atmospheric conditions reflections, and image displacement in areas with steep terrain [83].

5 Computer vision and point cloud processing

Computer vision (CV) was developed in the late 1960s aiming to simulate the human visual system and through automated tasks to achieve, from images or videos, a high-level understanding. This was achieved by information extraction related to their structure. In the next decades, many algorithms and mathematical models have been applied to object and shape representation from various cues, such as shading, texture, and contours [84]. In the last decades, the need for 3D reconstruction and visualization of the real-world, including camera calibration, led to optimization methods and multi-view stereo techniques. However, images are limited from spectral characterization, sampling effectiveness, measurement accuracy, and operating conditions and the natural data process in raw form is also limited due to the parameters’ sensitivity, the algorithms’ strength, and the results’ accuracy [85].

To tackle these issues, classic machine learning methods were applied to various 3D point cloud related applications. Considering the rapid computer vision evolution, the needs, and requirements of high-precision data for real-world recording and modelling are increasing. By using 2D images the latter cannot be achieved, since 2D images do not provide depth and position information that are essential for advanced applications, e.g., robotics and autonomous driving. At the same time the enhancement of technologies for 3D geospatial acquisition of data from various 3D sensors brought to the fore a plethora of computer vision applications providing new data formats such as point clouds, for the rich representation of the scenes [86].

Point cloud processing for information extraction is a complex and challenging task due to its unordered structure and different sizes, which depend on the recorded scene. Moreover, matching between scenes is not feasible due to the lack of neighboring. However, traditional machine learning methods for the processing of point clouds depend on handcrafted features and specifically designed optimization methods. Point cloud features of static properties are invariant to transformations, therefore, application-oriented optimization methods need to be developed in each case, and generalization cannot be achieved [87]. Therefore, the need for developing enhanced and more efficient methods to process point cloud data is apparent. DL methods can automatically learn discriminative features, have proven their effectiveness, and therefore have been also adapted to point cloud processing. Recently, researchers and industrial organizations have employed DL techniques to handle point clouds. In deep learning, the features are learned automatically based on artificial neural networks during the training process. However, used methods depend on the application and the computer vision task and pose many challenges.

In the next subsection, the basic challenges of point cloud processing are reviewed, while in the following section, task-oriented challenges of DL methods for point cloud processing are analyzed.

5.1 A brief review of DL-based point cloud processing and corresponding challenges

Currently, the 3D representation of scenes via point clouds is promoted by a variety of different advanced sensors. Yet, data does not contain topology, and connectivity, including occlusions, can be affected by illumination, objects’ motion, noise of sensors, and sources of external radiation. The latter issues can lead to wrong coordinates’ estimation and thus, point clouds can be sparse due to the mostly concentrated points around key visual features, appearing holes and missing data due to unsampled areas around smooth regions [88, 89]. This data sparsity and uneven points’ distribution can get worse depending on the quality of the acquisition device or in cases of specific sensors, such as ToF sensors where occlusions and hidden surfaces deteriorate the generated point cloud.

Redundancy of data is another critical issue on point clouds. Point cloud representations can be highly redundant, compared to meshes representations, especially on planar surfaces. The latter results in large files that cannot be shared or stored. In such cases, the point cloud needs to be efficiently organized to provide good representations that could be feasibly processed. Finally, the last basic challenge in point cloud processing is the existence of noise in the resulting models. Illumination, radiation, motion blur, sensory noises, etc. can severely affect the point cloud, deriving false estimations of surfaces and flying pixels. These kinds of artifacts can be observed on vision-based acquisition devices, as well as in all devices where environmental changes can alter the quality of the derived data, e.g., in FMCW radar sensors.

Addressing such issues with traditional methods can lead to increased memory costs [89]. Computer vision can offer more powerful tools and point cloud processing techniques. DL methods are capable of confronting the limitations of traditional computer vision solutions using deep denoising [90], volumetric multi-view, and point-based methods [91]. In addition, DL methods for feature learning can be applied pointwise, such as Multilayer Perceptrons (MLPs) and Convolutional Neural Networks (CNNs) as the PointNet family [92], or on graph and hierarchical data structures [93] by either converting the point cloud into other formats or directly on the raw data [94]. Challenges related to resolution were faced by super-resolution methods aiming to upscale low-resolution representations [95]. The challenge imposed by the great number of points of a point cloud, in regular conditions, is handled by finding similarities or dissimilarities i.e., comparing corresponding pairs of pixels like in the case of images; however, on point clouds, there is no 3D dissimilarity measurement. The latter was tackled by using supervised, unsupervised and autoencoder methods [96,97,98]. Finally, additional methods have been developed focusing on capturing local structures and providing richer representations through sampling, grouping, and mapping functions [98, 99].

It should be noted that all aforementioned challenges are more general; specific challenges emerge when DL methods are used in different tasks, as reviewed in the following section.

6 DL-based computer vision tasks using point clouds

In this section, the main vision tasks are classified into six categories: registration, segmentation, classification, 3D object detection and tracking, compression and completion. The advantages, disadvantages and challenges of using point clouds in each task are discussed separately, aiming to deliver an in-depth understanding of the impact of DL on point clouds and the extent to which various point cloud management challenges have already been addressed. This analysis is significant as it can highlight research gaps, current trends, and future research directions in the field.

DL-based methods for geometric data pose challenges in terms of performing convolution. Numerous DL model architectures have been proposed aiming to learn geometric features from point clouds and implementing main DL operations on 3D points. The main idea is to interpret point clouds locally as structured data by considering each point concerning its neighboring points or achieving a learning process that remains invariant to the order of the point cloud. Based on this, feature learning on point clouds is classified according to Liu et al. [40] in raw point-based methods, where DL models directly use raw point cloud data and k-dimensional tree (Kd-tree) methods, where the point cloud is transformed into another representation before being inserted into the DL models.

Table 2 summarizes the main vision tasks as defined in this work, providing optical examples, definitions for every task as well as information about the type of input/output data.

6.1 Registration

In registration, two-point clouds that are acquired from different angle views of the same scene are aligned through a rigid transformation, aiming to obtain a common coordinate system [46].

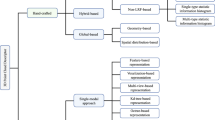

When point cloud data is captured by the same sensor, at different times, it may contain noise and outliers, while being partially overlapped due to varying viewpoints. In cases of cross-sensory data acquisition, different scales are introduced due to the physical metrics, making rigid motion prediction for aligning one point cloud into another challenging. Nowadays various challenges are being addressed by DL methods, including partial point cloud registration. To classify DL methods for the registration task, we can first distinguish them based on the origin of the data: same sensor or multi sensors [100]. Figure 11 illustrates the classification of DL methods for the registration task.

Methods for the same sensors are based on optimization, feature learning and end-to-end learning. Optimization methods utilize techniques such as Iterative Closest Point (ICP) [101], graphs [102], Gaussian mixture models (GMM) [103] and semi-definite registration [104]. One of the key advantages of this category is the presence of rigorous mathematical theories that guarantee their convergence. Additionally, these methods do not require training data and can generalize well to unknown scenes. To address challenges like noise, outliers’ density variations, and partial overlap, optimization methods are employed; nonetheless, the computation cost is increased.

Feature learning methods are used for accurate correspondence estimation, including learning on both volumetric and point cloud data. Volumetric methods involve converting point clouds into 3D volumetric data and then utilizing a Neural Network (NN). However, they require a large Graphic Process Unit (GPU) memory and are sensitive to rotation variations. Some representative algorithms in this category are PPFNet [105], SiamesePointNet [106] and deep closest point (DCP) [107]. These methods offer robust and accurate registration using a simple RANdom SAmple Consensus (RANSAC) iterative algorithm. Nonetheless, certain issues persist, such as the necessity for large training data and poor registration performance in unknown scenes.

In End-to-end learning methods, two-point clouds are inserted, and a transformation matrix that aligns them is obtained as output [113]. These methods encompass registration by regression and optimization. Regression transforms the registration problem into a regression task [114], combining the conventional optimization theories with deep neural networks (DNN), resulting in improved accuracy compared to previous methods. Algorithms for both regression and optimization have also been developed. End-to-end methods are developed especially for the task, and the optimization of the NN depends on the specific objective. Note as drawbacks that DL regression methods are considered “black boxes”, the coordinate measurements based on Euclidean space are sensitive to noise and density differences, and feature-metric registration focuses on local structure information.

Point cloud registration methods based οn cross-sensors face more challenges compared to single-sensor cases, requiring the use of advanced registration frameworks. The benefits of cross-sensor methods include leveraging advantages from combining different sensors, providing the best information for augmented reality applications; however, they also suffer from limitations such as lower accuracy and higher computational cost. These methods can be categorized into optimization-based, feature learning-based and pairwise global point cloud-based. Optimization-based methods aim to estimate the transformation matrix using optimization techniques [115] or deep networks [116]. While these methods are similar to the same sensor approaches, the computational cost problem remains an issue, and their performance with different datasets can be problematic. DL methods offer models focusing on various aspects of registration, including feature extraction and key point selection [117], key point detector [118], and the entire registration process embedded in a DL network [119]. Pairwise global point cloud-based methods [120] consist of hybrid methods that exploit pose-invariant features and feature descriptors for local features’ extraction. Additionally, there are End-to-End methods, which comprise both pose-invariant and pose-variant feature methods, with pose-invariant methods excelling [121]. Recently, a probability driven approach for point cloud registration has been proposed [122], outperforming state-of-the-art registration methods on registration accuracy.

6.1.1 Comparative discussion on image-based registration

When it comes to images, image registration involves aligning multitemporal and multimodal images, as well as images from different viewpoints. Image registration methods aim to address specific challenges such as finding similarity measurements, especially for multimodal images, reducing the computational cost, particularly in real-time applications, improving quality of images and handling deformations. Moreover, traditional methods often suffer from good generalization and usually converge to local minima [46]. To address these challenges, DL models, such as CNN, RNN, Autoencoder, Reinforcement Learning (RL), Generative Adversarial Network (GAN), as well as regular intensity-based similarity metrics like sum-of-square distance (SSD) and mean square distance (MSD), have been extended to tackle the geometric computer vision task of registration [46]. However, when dealing with multimodal images, the results were found to be poor. To address the metric problem, handcrafted descriptors were applied, but these descriptors were error-prone, and deep similarity metric methods slowed down the registration process. Additionally, the image alignment’s quality directly impacted the accuracy of the models. To tackle accuracy problems, special data augmentation techniques were proposed [123]. Despite presenting satisfactory results, these techniques posed difficulties in optimization and did not reduce the computational cost. DL methods mainly focused on rigid registration, since non-rigid registration models involved high dimensionality and non-linearity. With DL methods, an improvement of 20%–30% was observed [124] compared to traditional methods.

The most significant shortcoming of DL lies in the limitation of the transformation model from high to low dimensionality [125]. The high dimensionality of the output parametric space, coupled with the scarcity of datasets for training, containing ground truth transformations, and the challenges of regularization in predicted transformations are tackled through supervised transformation prediction and the use of data augmentation methods [126]. However, these approaches insert additional errors, like the bias of unrealistic artificial transformations and shifts of image domain between the testing and training phases. Additional problems arise when the transformation fails to captivate the wide range of variations found in real image registration scenarios, leading to potential mismatches between image pairs. To address this issue, transformation generation models [127] are employed, and to overcome the scarcity of training datasets, unsupervised transformation prediction is applied [128].

6.2 Segmentation

Point cloud segmentation is utilized for scene understanding and to determine the shape, size, and other assets of objects in 3D data [129]. During segmentation, a point cloud is divided into different segments (subsets) with identical attributes; in other words, points are clustered based on similar characteristics into homogenous regions. Segmentation is an essential task in 3D point cloud processing since it is the first step for detecting objects in a scene that cannot be directly discerned from a raw point cloud directly [130].

Three types of segmentation can be distinguished: semantic, instance, and part segmentation. In semantic segmentation, objects are grouped into predefined categories. Instance segmentation is a specialized form of semantic segmentation that detects instances of objects with the same semantic meaning and defines their boundaries. Object part segmentation addresses the challenge of providing pixel-level semantic annotations that imply fine-grained object parts, instead of just object labels. Semantic, instance and part segmentation are applied at scene, object, and part levels, respectively. All forms of segmentation present challenges related to comprehending details of the global geometric structure for every point, defining surface descriptors that describe the object’s parts, as well as developing robust algorithms to compute these features [131]. Segmentation methods learn point distribution patterns from annotated datasets and make predictions. Previous traditional segmentation methods have encountered challenges in defining feature calculation units and developing suitable feature descriptors for classifier training. However, handcrafted features act as a limitation factor for the generalization performance of algorithms in complex scenes. In contrast to traditional machine learning methods, DL methods address the aforementioned challenges by employing DNN training to encode point features and make predictions, or to design effective backbones, leveraging the unique characteristics of point clouds.

The classes of segmentation methods are illustrated in Fig. 12. Semantic segmentation includes projection-based methods, further categorized into multi-view, spherical and cylindrical methods. Other methods involve discretization-based methods (Dense or Sparse), point-based methods (Point-wise multi-layer perceptron (MPL), Point convolution, or RNN, Graph), Transformer-based and hybrid-based methods. Instance segmentation methods are categorized in proposal and proposal-free methods, while the last category is part segmentation. In their simplest form, these methods often apply pre-trained CNN models, e.g., AlexNet, VGG, GoogLeNet, etc., on various image datasets. In what follows, each category of Fig. 13 is examined separately.

6.2.1 Semantic segmentation-projection-based methods

In projection-based methods, point clouds are projected into 2D images. These methods are efficient in terms of computational complexity and can result in improvement of performance for various 3D tasks by capturing several views of the area of interest. Predictions are then made based on the outputs, either through fusion or majority voting. However, multi-view segmentation methods are easily affected by viewpoint selection and occlusions, and they do not exploit geometric and structural information due to information loss [132]. On the other hand, spherical methods achieve fast and accurate segmentation, making them suitable even for the segmentation of LiDAR point clouds in real-time [133]. Semantic labels of 2D range images are assigned to 3D point clouds to enhance the discretization of errors and improve the quality of outputs. Spherical projection retains more information compared to multi-view methods, making it suitable for labeling LiDAR point clouds. Nevertheless, discretization errors and occlusion issues persist. Methods based on cylindrical coordinates have recently proven to be really effective in representing LiDAR point clouds for various tasks [134]. Despite their sparsity and density effectiveness, they still encounter noise issues [135].

6.2.2 Semantic segmentation-discretization-based methods

Discretization-based methods transform a point cloud into a discrete representation structure either dense, referring to voxels or octrees, or sparse, referring to permutohedral lattices. Then, dense or sparse convolution can be easily employed. In dense methods the space taken by point clouds is divided into volumetric occupancy grids and all points that belong to the same cell are assigned to the same label. Subsequently, predictions are made for each voxel center using a convolutional architecture. Previous methods voxelized the point clouds as dense grids; however, these methods were obstructed by the granularity of the voxels and the boundary artifacts due to the partitioning of the point cloud. In practice, there is no selection of a suitable grid resolution. To address these issues, advanced methods exploit the scalability of fully CNNs, allowing them to handle even large-scale point clouds [136]. Moreover, these methods can train volumetric networks with different spatial sizes point clouds. The latter can lead to high computational costs due to the high resolution and the loss of details. To mitigate this, trilinear interpolation models that can learn automatically or 3D convolution filters have been used [137]. The natural sparsity of point cloud models results in a relatively small number of filled cells in volumetric representations. To resolve this, sparse convolutional networks have been proposed [138]. These networks reduce memory and computational costs by limiting the output of convolutions to be related solely to occupied voxels. In this way, these methods can process efficiently high-dimensional and spatially sparse data.

6.2.3 Semantic segmentation-Point-based methods

Point-based networks operate on unstructured point clouds, avoiding some limitations posed by previous methods, such as projection and discretization. In this category, point cloud data processing is conducted directly. These methods are divided into point-wise MLP, point convolution, RNN based, and graph-based methods.

Point-wise MLP methods utilize the joint MLP as the main unit of their network due to its superior effectiveness. However, the extracted features by these methods may not fully describe the local geometry and common interactions between points. To address this, various networks have been proposed, involving attention aggregation, neighboring feature pooling, and local–global feature concatenation [139], which enable better local structure learning and wider point capturing. Point convolution methods apply specific 3D convolution operators tailored to continuous or discrete point clouds. The definition of the 3D continuous convolution kernels is done on a continuous space, where the weights for neighboring points are associated to spatial distribution. Ιn discrete point clouds, CNNs are specified on regular grids, and the neighboring points' weights are associated with the offsets regarding the center point [140]. RNN networks can model the interdependency of acquired point cloud at different times. PointRNN leveraged this idea [141], while other solutions have been proposed by combining CNNs and recurrent architectures [142], capturing inherent context features from point clouds [143], exploring several RNN architectures [144], and using dynamic models [145]. Additionally, graph methods have also been developed for capturing the shape and geometric structure of 3D point clouds [146].

6.2.4 Transformer-based methods

Transformer-based methods are decoder-encoder structures that consist of input embedding, positional (order) encoding, and self-attention, enabling the learning context and tracking relationships in sequential data. Particularly, self-attention plays the most important role as it generates sophisticated input attention features, according to the global context, and consequently the output attention also learns the global context [147]. These methods are suitable for processing of point clouds due to their natural independence of the input order. In these frameworks, Natural Language Processing (NLP) methods provide better performance than CNN, allowing for parallel processing and are much faster than any other model with similar performance [148].

6.2.5 Hybrid-based methods

Hybrid-based methods are popular and involve the utilization of over-segmentation or point cloud segmentation algorithms [146] as a pre-segmentation stage to reduce the data volume. However, reducing the amount of data may lead to a slight loss of accuracy. Moreover, additional methods are learning multi-modal features from 3D scans and leverage all available information. For example, 3D-multi-view networks combining RGB and geometric features [149], 3D CNN stream and a back-projection layer to learn 2D embeddings and 3D geometric features [150], or a unified point-based framework for learning 2D textural appearance, 3D structures and global context features from point clouds. These networks are directly applied to extract local geometric features and global context from a sparse sampling of point sets without voxelization. In contrast, other techniques like Multi-view PointNet (MVPNet) [151] combine appearance features from 2D multi-view images and spatial geometric features in the canonical point cloud space.

6.2.6 Instance segmentation

Instance segmentation focuses on distinguishing points of different semantic meanings and separating instances accordingly. It combines the advantages of semantic segmentation and object detection; however, it requires more accuracy and granularity due to points, compared to semantic segmentation methods. Instance segmentation presents some significant challenges, such as difficulty in segmenting smaller objects, dealing with occlusions, inaccurate depth estimation, and handling of aerial images. Existing DL-based instance segmentation methods can be categorized into proposal and proposal-free approaches [35].

Proposal methods transform the problem of instance segmentation in 3D object detection and prediction of instance mask [152]. For this purpose, several methods have been introduced [153, 154], with Generative Shape Proposal Network (GSPN) [150] being the first reported approach. However, these techniques are computationally expensive, require substantial memory and rely on large amounts of data, presenting challenges in their implementation. Proposal-free methods [155, 156] consider instance segmentation as a successive step of clustering at a pixel level to generate instances after semantic segmentation, without involving any object detection module. Existing methods assume that points within the same instances can have alike features, thus focusing on discriminative feature learning and grouping of points. Group Proposal Network (SGPN) was the first proposal-free method [157] reported in the literature. Proposal-free methods do not require computationally costly region-proposal components. However, they exhibit lower objectiveness in instance segmentation since they do not clearly identify boundaries of objects. Essentially, these methods rely on grouping/clustering techniques at a pixel level to generate instances, covering potential gaps through the use of semantic segmentation methods.

6.2.7 Part segmentation

In part segmentation, semantic annotations indicate fine-grained object parts at the pixel level, rather than just object labels. The difficulties in this task are related to 3D shapes. For instance, parts of shapes having the same semantic label exhibit big geometric variations and ambiguity, and the total parts having the same semantic meaning can differ significantly. These challenges have been partially faced by volumetric CNNs [158], Synchronized Spectral CNN [159], Shape Fully Convolutional Networks [160], and part decomposition networks [161], which have reported improvements in part segmentation outcomes. However, they have stated sensitivity to initial parameters and limitations in learning local features.

6.2.8 Semantic segmentation

As a general conclusion, DL-based methods for semantic segmentation on point clouds offer numerous benefits, even for very large-scale point clouds. Instance segmentation requires more discriminative features, while the combination of semantic and instance segmentation can enable label prediction simultaneously [162]. Such an approach can be particularly useful for still images displaying many overlapping objects in a scene, as it allows models to be better trained in real-world scenarios, effectively handling dense objects and significant overlaps between them [163]. Furthermore, image segmentation in various fields, such as medicine, highlights the need for large-scale annotated 3D image datasets, which can be challenging to create, compared to datasets in lower dimensional counterparts.

6.2.9 Comparative discussion on image-based segmentation

Image segmentation methods on 2D data have been developed using interpretable deep models [164], weakly-supervised and unsupervised learning [165], unsupervised learning [166], self-supervised learning [167] and Reinforcement Learning [168]. However, challenges regarding the kind of information used with interpretable models, their behavior, dynamics and the efficiency related to accuracy and computational cost remain. Image segmentation algorithms depend on the spatial properties of image intensity values. However, these intensities are not purely quantitative and can be influenced by a variety of factors, including hardware, protocols, and noise. Researchers have made attempts to address these challenges by using traditional methods [169]. Unfortunately, these methods have had limited success, as they often require manual interventions for abnormal cases and lack the necessary robustness to handle sensitive input data effectively. To overcome these limitations, more advanced approaches have been developed, such as U-net [170] and DeepLab [171].

These methods leverage large amounts of image data to enhance performance, increase robustness, and obtain more reliable estimates. Additionally, they help mitigate the computational cost by combining differently constructed architectures in various applications, particularly in the field of medicine. Methods based on RGB images in general, lack information to achieve semantic segmentation of complex scenes. It should be noted that RGB-D semantic segmentation providing additional depth information, was concluded to reach to better segmentation results [172].

6.3 Classification

Point cloud classification refers to the assignment of predefined category labels to groups of points within a point cloud, determining which points belong to which objects. In the past, methods for point cloud classification relied on handcrafted features and traditional classifiers for point cloud preprocessing, as well as machine learning techniques, like unsupervised, supervised, or a combination of them [173]. However, unsupervised methods were limited by their dependency on thresholds, leading to poor adaptability. Supervised methods struggled to learn high-level features, making it challenging to achieve significant improvements in classification accuracy. Although combining these methods improved classification accuracy to some extent, they still inherited certain limitations [174]. Nowadays, DL methods have proven their powerful capabilities for representation learning directly from the data. The latter has led to significant advancements in the field of 3D point cloud classification.

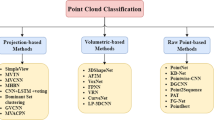

DL-based methods for classification of point clouds can be divided in projection-based, volumetric-based and point-based methods according to the different input data formats used by the neural networks, as illustrated in Fig. 13. It should be noted that many of these classification methods share, to some extent, similar concepts with segmentation methods.

6.3.1 Projection-based methods

Multi-view methods [175] involve projecting 3D shapes into multiple views and extracting view-wise features, which are then fused to achieve precise shape classification. However, these methods often encounter information loss. One of the main challenges for these methods lies in the way to combine the several view-wise features in a discriminative global representation. As a result, several methods have been suggested aiming towards improving the accuracy of recognition [176, 177].

6.3.2 Volumentic-based methods

Volumetric-based methods voxelize point clouds into 3D grids and utilize 3D CNN for shape classification [178]. The main challenge with this approach is the scaling of dense 3D data, as both memory footprint and computations increase exponentially with the resolution. To address these concerns, researchers have introduced OctNet [137], which reduces the computational and memory costs, as well as the runtime, especially for high-resolution point clouds. However, despite these efforts, volumetric-based methods are not appropriate for handling large-scale point clouds because of the persistently high computational cost, which has not yet been efficiently resolved.

6.3.3 Point-based methods

Point-based methods are applied directly for processing raw points without voxelization or projection, enabling high precision and efficiency due to the irregularity of the distribution of point clouds and the scenes’ complexity [179]. These methods encompass point-wise MLP, convolution, graph, and hierarchical data structure-based approaches. It is noted that point-wise MLP, convolution and graph methods share similarities with the segmentation methods, albeit with some variations.

Point-wise MLP methods aggregate global features using a symmetric aggregation function. However, applying DL methods for images directly to a 3D point cloud is challenging because of their irregular data nature. Unlike images where kernels are described on a 2D grid structure, designing point clouds convolutional kernels is complex due to their irregularity. Numerous methods have been developed based on point convolutional kernels, which can be categorized into continuous and discrete convolution methods. Continuous methods define a convolutional kernel in a continuous space, where the neighboring points’ weights are determined based on their spatial distribution regarding the center point. These methods can be translated as a weighted sum over a given subset [180, 181]. 3D discrete methods describe convolutional kernels on conventional grids, and the neighboring points’ weights are determined based on the offsets from the center point [182]. In graph NNs each point is treated as a vertex in a graph, and directed edges are generated for the graph based on the neighboring points of each vertex. Then, feature learning is applied in either the spectral or spatial domain for effectively capturing the local structure data of point clouds [183]. However, the receptive field size of many graph NNs is often not enough for capturing comprehensive contextual information. Graph-based methods in the spatial domain define operations like convolution and pooling, while convolutions are defined as spectral filtering, implemented through signals’ multiplication on the graph with eigenvectors of the graph Laplacian matrix. On the other hand, hierarchical methods employ networks constructed using different hierarchical data structures, wherein the learning of point features is done hierarchically from leaves to the root node of a tree structure [184]. Recently, a unified representation of image, text, and 3D point cloud was introduced, namely ULIP, pre-trained by using object triplets from all three modalities [185]. The ULIP reported state-of-the-art performances in standard 3D classification and zero-shot 3D classification, bringing multi-modal point cloud classification in the forefront of point cloud related research. A 3D point cloud classification method based on dynamic coverage of local area was presented in [186], introducing a new type of convolution to aggregate local features. For point cloud classification and segmentation, it was also proposed a new space-cover CNN (SC-CNN) [187], towards implementing a depth-wise separable convolution to the point cloud using a space-cover operator. The latter approach was proven capable of better perceiving the shape information of point clouds and improving the robustness of the DL model.

6.3.4 Comparative discussion on image-based classification

Classification of 2D images using DL refers to the training process of a model to classify the images into predetermined classes. CNNs are widely employed in image classification due to their ability to capture and learn hierarchical features. Due to their structure, their powerful feature learning abilities, as well as the availability of GPU computing, outperform in most cases the traditional machine learning techniques. Therefore, CNNs have reported significant performances in various large-scale identification computer vision tasks. Despite their great achievements, DL models for 2D image classification still face challenges to tackle, such as insufficient data because due to the fact that DL models require large amounts of labeled data for effective training, overfitting issues especially when the model is complex having a large number of hyperparameters, resulting in capturing noise instead of general patterns. Addressing these challenges requires a combination of advanced algorithmic improvements, data curation strategies and deployment of robust image classification models that could be applied to a wider range of cases.

6.4 Object detection and tracking

Object detection and tracking task also involves 3D scene flow estimation. Given the arbitrary nature of point cloud data, the aim of object detection is the identification and localization of instances of predefined categories, providing their geometric 3D location, orientation, and semantic instance label. This information is embodied by a bounding box encompassing the target, indicating the object’s center position, and orientation size [188, 189]. Figure 14 shows the categorization of object detection and tracking methods.

6.4.1 3D object detection

Object detection finds applications in various real-world scenarios, including autonomous driving, surveillance, transportation, scene analysis from drones, and robotic vision [40]. However, specific challenges arise in this task, such as simultaneous classification and localization, real-time processing, handling multiple spatial scales and aspect ratios, dealing with multiple feature maps, limited data, and addressing class imbalances [190]. To address the issue of simultaneous classification and localization, specific methods use multi-task loss functions, penalizing misclassifications and localization errors. Convolutional Neural Networks are employed to handle false classifications and misalignment of bounding boxes. For real-time detection, speed methods like Yolo or Faster R-CNN are applied to mitigate the problem to a certain extent. It is worth to note that maintaining real-time speed (e.g., at least 24 fps) can be challenging when processing video shoots continuously. Currently, YOLO v3 offers object detection at multiple scales. However, there are still challenges that need improvement, such as achieving real-time detection with high-level classification and localization accuracy, as well as ensuring continuity in video tracking between frames, rather than processing them separately [191]. In addition, during object tracking, several challenges arise, including smooth object motion without abrupt changes, dealing with sudden and gradual changes in both object and scene backgrounds, maintaining camera stability, handling varying numbers and sizes of objects, and addressing occlusion limitations [192]. These challenges are tackled by using region proposal [193, 194] and single-shot proposal methods [195]. Region proposal methods suggest possible regions (proposals) that may contain objects and subsequently the extraction of region-wise features towards determining the class label of each proposal.