Abstract

Reconstructing flame from captured images is a difficult and computationally expensive problem. Reconstruction from color images preserves the colorful appearance, which is beneficial for visually realistic flame modeling. Most existing color-image-based methods rebuild three density fields from the RGB intensities; however, these methods suffer from color distortion because the RGB intensities are highly correlated. This paper presents a novel method for 3D flame reconstruction using color temperature. A color-temperature mapping is calculated to avoid color distortion; it maps the RGB intensities into a color temperature and its joint intensity. We improve the multiplication reconstruction with a visual hull restriction so that the energy distribution is more reasonable and impossible zones are avoided. Experimental results indicate that our approach efficiently generates visually plausible 3D flame and produces better color restoration.


1 Introduction

Fire and flame play an essential role in virtual environments. Flame is an inherently dynamic phenomenon with sparse density, uneven particle distribution and self-illumination, so generating computer-animated flame is a difficult and computationally expensive problem.

Flame simulation methods currently focus on dynamic texture, particle systems, physics-based simulation and image-based reconstruction [1]. Dynamic texture exploits the temporal stationarity of flame image sequences. It has advantages in the extrapolation and synthesis of 2D dynamic flame; however, the flame information from the third dimension is lost. A particle system that generates random particles is easy to implement for simulating turbulent flame, but the particle movement is too random to describe the movement of flame accurately. Physics-based simulation follows the governing physical equations and is therefore closer to the real development of flame; however, it is difficult to capture the flame's high-frequency details due to numerical dissipation. Unlike traditional flame simulations, image-based 3D reconstruction [2] captures multi-view images directly from real flame and generates 3D flame models from these data. This method is not only useful for reconstructing visually realistic 3D flame, but it also deepens our understanding of flame details.

Image-based reconstruction methods have obvious advantages for modeling visually realistic flame. However, they also have two significant problems. First, two-view reconstruction methods, such as multiplication and flame sheet generation [3], are easy to implement but yield poor visual effects, whereas multi-view (\({>}\)2) reconstruction methods generate accurate 3D models but are highly time-consuming due to their computational complexity. Thus, it is preferable to develop a reconstruction algorithm that works with a simple system setup, which shortens the setup time and lowers cost, and that still produces visually plausible results. Second, most of the existing color-image-based methods [3–5] reconstruct three density fields from RGB intensities according to the order of their appearance. These methods suffer from color distortion due to the high correlation of RGB intensities: a minor reconstruction error in one of the RGB components results in an apparent color difference in the final rendered image.

The motivation for this study is the fact that there is a one-to-one mapping between the temperature and the color of a luminous flame according to the principle of color pyrometry [4]. A novel method for 3D flame reconstruction with color temperature is presented in this paper. A multi-camera digital imaging system is designed to capture flame color images synchronously, and the captured images are preprocessed to meet the linear optical model. Then, we calculate the color-temperature mapping to convert the RGB intensities into the color temperature and its joint intensity for flame reconstruction. Finally, a 3D flame is reconstructed with color temperature using the multiplication reconstruction and visual hull technology. Three different flame scenes are tested to verify the efficiency of our method.

The main contributions of this paper are as follows. First, the color temperature is calculated to avoid color distortion in flame reconstruction. Second, the multiplication reconstruction is restricted using a visual hull, which discards the impossible zones as a shape constraint and compensates for the removed intensity to maintain photo-consistency. To the best of our knowledge, this is the first study to introduce color temperature into flame reconstruction.

The remainder of the paper is organized as follows. The next section reviews related work. Section 3 gives a concise introduction to the image formation model and an overview of our method. Sections 4, 5 and 6 describe data preprocessing, color-temperature conversion and 3D flame generation, respectively. Section 7 introduces the experimental setup. Results and conclusions are given in Sects. 8 and 9.

2 Related work

Image-based flame reconstruction has been an active research area for the past few years. Some researchers [6, 7] focus on the characterization of flame geometry based on images. Bheemul et al. [6] introduced an instrumentation system for the three-dimensional quantitative characterization of flame geometry, where a set of geometric parameters including volume, surface area, orientation, length and circularity are obtained. Upton et al. [7] put forward an optical acquisition system which collects projection data from 12 directions to measure the flame surface of the turbulent reacting flow. It is effective to observe the details of turbulent combustion, but difficult to calibrate since the two views are imaged to each of the six cameras using a mirror array.

Furthermore, the image-based reconstruction methods [8–10] were developed to acquire and rebuild physical parameters of flame. Atcheson et al. [8] addressed the first time-resolved schlieren tomography system for capturing 3D refractive index values, which directly correspond to physical properties of the flame with moderate hardware requirements. Ishino et al. [9] further designed a 40-lens camera to measure the distribution of local burning velocity which is important to develop data driven flame simulation on a turbulent flame. In addition, a lot of work is about flame temperature field measurement, such as [4, 10] and [11].

More important is the image-based 3D flame intensity and density field reconstruction because flame is a typical inhomogeneous participating media. Ishino and Ohiwa [12] introduced a custom-made camera which has 40 lenses to simultaneously capture flame images and to reconstruct the instantaneous 3D emission distribution of flame. Gilabert et al. [13] employed a digital imaging technique to reconstruct gray-scale sections of flame from three 2D images taken by three identical charge-coupled device (CCD) monochromatic cameras. They [14] further presented a 3D imaging system with three RGB CCD cameras to acquire the luminosity distribution of combustion flame. It is useful to quantitatively characterize the internal structures of flame. In 2003, Hasinoff and Kutulakos [3] presented a photo-consistent reconstruction method which reduces fire reconstruction to the convex combination of sheet-like density fields. It is easy to implement two-view reconstruction, but multi-view (more than two views) reconstruction has very high computational complexity. A year later, Ihrke and Magnor [5] proposed a tomographic method for reconstructing a volumetric model from multiple color images of flame, which restricted the solution by visual hull. Their approach reconstructs the actual 3D distribution of flame intensity, but does not consider the relationship of the RGB intensities.

In this paper, we do not focus on simulating the physical properties of fire; instead, we focus on generating visually plausible flame animations that can be rendered from arbitrary viewpoints. The three-dimensional flame is reconstructed using the multiplication reconstruction and visual hull technology for shape constraint and photo-consistency. Unlike existing color-image-based methods, our method accounts for the relationship among the RGB intensities by using color temperature to generate the 3D flame.

3 Method overview

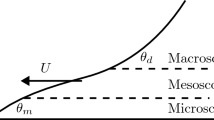

In this section, a concise introduction to the image formation model and an overview of the modeling method are provided. Under the linear optical model (see Fig. 1), which was introduced by Hasinoff et al. [3], there is a linear relationship between the intensity and the density of the flame.

At a given image point, the image irradiance \(\hat{I}\) can be expressed as the radiance from luminous flame material integrated along the ray through that pixel, plus the contribution of the background radiance:

where \(D(t)\) is the flame’s density field along the ray; \(J\) is the total effect of emission and in-scatter less absorption and out-scatter; \(L\) defines the interval \([0, L]\) along the ray where the field is nonzero; \(\hat{I}_{bg}\) is the radiance of the background; and \(\tau \) models transparency:

where \(\sigma \) is a positive constant dependent on the medium. To simplify the model, we further ignore refraction, neglect scattering, assume constant self-emission, darken background \(\hat{I}_{bg}=0\), and linearize the model as

where \(I_{p}\) is pixel \(p\)’s intensity. Since flame irradiance is proportional to the intensity of pixels when we assume a linear camera response, the flame is modeled as a 3D density field \(D\) based on flame reaction products, and image intensity is related to the density of luminous particles in the flame.
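The three displayed equations referred to in this section did not survive in the text above. As a hedged reconstruction from the symbol definitions given here and from the image formation model of Hasinoff and Kutulakos [3], they plausibly take the following form. The dependence of \(J\) on \(t\), the dummy variable \(s\) and the exact notation are assumptions, and the original layout may differ.

```latex
\begin{align}
\hat{I} &= \int_{0}^{L} D(t)\, J(t)\, \tau(t)\, \mathrm{d}t \;+\; \hat{I}_{bg}\, \tau(L), \tag{1}\\
\tau(t) &= \exp\!\left(-\sigma \int_{0}^{t} D(s)\, \mathrm{d}s\right), \tag{2}\\
I_{p}   &= \int_{0}^{L} D(t)\, \mathrm{d}t. \tag{3}
\end{align}
```

Under the simplifications listed above (no refraction or scattering, constant self-emission, dark background), Eq. (1) reduces to the line integral in Eq. (3), which is what makes each pixel intensity a linear function of the density field.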

Color-image-based reconstruction, which keeps the color information, has an advantage for modeling visually realistic flame. Most existing color-image-based methods rebuild three density fields from the RGB intensities according to Eq. (3). However, a minor reconstruction error in one of the RGB components results in an apparent color difference in the final rendered image. Considering the high correlation of the RGB intensities, a novel flame generation method with color temperature is proposed in this paper. This method comprises three processes: data preprocessing, color-temperature mapping and 3D flame reconstruction, as illustrated in Fig. 2.

The first step of data preprocessing is essential to meet the assumption of a linear optical model. The second step calculates color-temperature mapping which maps the RGB intensities into the color temperature and its joint intensity. In the third step, a 3D flame is reconstructed with color temperature using the multiplication reconstruction and visual hull technology. These steps will be described in detail in the following sections.

4 Data preprocessing

According to the linear optical model, the sum of the image intensities obtained from any view is constant for a given flame density field, as shown in Fig. 3, where \(D\) is a given flame density field and \(I_{1}\) and \(I_{2}\) are the image intensities obtained when the camera is located at different angles; ideally, \(I_{1}\) should equal \(I_{2}\). In practice, there are some small differences between \(I_{1}\) and \(I_{2}\) in the same epipolar plane. Potential sources of this error are background noise, camera calibration errors, saturation of the captured image, a nonlinear camera response curve, and absorption and scattering by the flame. Eliminating the error entirely is not easy within the limitations of current data capture equipment and methodology. Therefore, the captured images must be preprocessed using background subtraction, epipolar plane matching and epipolar line equalization before the 3D flame is generated.

Background subtraction eliminates the majority of the noise and isolates the target flame. In this paper, Otsu’s method [15] is used to perform clustering-based image thresholding automatically and to remove the background noise, as shown in Fig. 4. This improves the precision of the color-temperature conversion and of the 3D flame reconstruction.
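As an illustration of this step, the following is a minimal sketch of Otsu thresholding on an 8-bit grayscale image, written with NumPy only. The function names `otsu_threshold` and `subtract_background` are hypothetical, and the choice to zero every pixel at or below the threshold is an assumption about how the background is removed, not a description of the authors' implementation.

```python
import numpy as np

def otsu_threshold(gray):
    """Return the 8-bit level that maximizes the between-class variance."""
    hist = np.bincount(gray.ravel(), minlength=256).astype(float)
    prob = hist / hist.sum()
    levels = np.arange(256)
    w0 = np.cumsum(prob)              # weight of the class "level <= t"
    mu = np.cumsum(prob * levels)     # cumulative first moment up to t
    mu_total = mu[-1]
    w1 = 1.0 - w0                     # weight of the class "level > t"
    valid = (w0 > 0) & (w1 > 0)       # skip thresholds with an empty class
    between = np.zeros(256)
    between[valid] = (mu_total * w0[valid] - mu[valid]) ** 2 / (w0[valid] * w1[valid])
    return int(np.argmax(between))

def subtract_background(gray):
    """Zero every pixel at or below the Otsu threshold (assumed policy)."""
    out = gray.copy()
    out[gray <= otsu_threshold(gray)] = 0
    return out
```

For a color frame, one plausible use is to compute the threshold on a luminance image and apply the resulting mask to all three channels, so that the channel ratios of the flame pixels are left untouched.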

Epipolar plane matching is used to locate the same object in different images, which is crucial for reconstructing the 3D flame data field. In Fig. 5, \(M\) represents a point in space, and \(V_{1}\) and \(V_{2}\) are the image planes of two cameras. Based on the projection matrices of the two cameras, we obtain the projected pixel coordinates of \(M\) in the image planes \(V_{1}\) and \(V_{2}\), namely \(m_{1}(x_{1}, y_{1})\) and \(m_{2}(x_{2}, y_{2})\).

We assume that all cameras are located in the same horizontal plane, are equidistant from the target flame, and have the same pitch angle in world coordinates. Therefore, the epipolar lines \(y = y_{1}\) in \(V_{1}\) and \(y = y_{2}\) in \(V_{2}\) lie in the same epipolar plane, as shown by the dashed lines in Fig. 5. Likewise, the pixels of \(y = y_{1}+n\) in \(V_{1}\) and \(y = y_{2}+n\) in \(V_{2}\) lie in the same epipolar plane, where \(n\) is an arbitrary integer.

Epipolar line equalization ensures that the sum of the pixel intensities of each epipolar line, such as \(y = y_{1}\) and \(y = y_{2}\) (see Fig. 5), is a constant in the same epipolar plane in order to meet the requirement of the linear optical model. The sums of pixel intensities over each epipolar line are averaged in the same epipolar plane formulated as:

where \(\bar{I}\) is the mean intensity of the epipolar lines, \(N\) is the number of viewpoints, and \(I_{i}\) is the sum of the pixel intensities of the \(i\)th epipolar line. Furthermore, the pixel intensity for each epipolar line is corrected with \(\bar{I}\), as follows:

where \(p(j)\) is the initial intensity of the \(j\)th pixel, \(p_{\mathrm{new}}(j)\) is the corrected one, and \(M\) is the total number of pixels on this epipolar line. For color images, it is necessary to equalize the RGB intensities, respectively, which further exacerbates the problem of color distortion. In the following section, the color temperature of flame is introduced to avoid color distortion in flame reconstruction.
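The two equalization formulas are not reproduced in the text above, so the following is only a sketch under stated assumptions. It takes \(\bar{I}\) to be the arithmetic mean of the per-view line sums \(I_{i}\), and it assumes the correction is a proportional rescaling of each pixel so that every line sums to \(\bar{I}\); the original correction may instead be additive. The function name `equalize_epipolar_lines` is hypothetical.

```python
import numpy as np

def equalize_epipolar_lines(lines):
    """Rescale matched epipolar lines (one 1D array per view) to a common sum.

    Assumption: the correction is multiplicative, p_new(j) = p(j) * I_bar / I_i.
    """
    sums = np.array([np.sum(line, dtype=float) for line in lines])
    mean_sum = sums.mean()                        # I_bar: mean of the N line sums
    equalized = []
    for line, line_sum in zip(lines, sums):
        scale = mean_sum / line_sum if line_sum > 0 else 0.0
        equalized.append(np.asarray(line, dtype=float) * scale)
    return equalized
```

Applied to one channel at a time, this rescaling changes the R, G and B sums by different factors, which is exactly the per-channel distortion the paragraph above describes.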

5 Color-temperature mapping

There is a one-to-one mapping between the temperature and the color of a luminous flame according to the radiation thermometry principle [16]. In this section, the color of an ideal blackbody is obtained from Planck’s blackbody law and the three-primary-color principle (T2RGB), and a closest point search method is designed to calculate the color temperature of flame images (RGB2T). The mapping is then assessed on test data.

5.1 Radiation thermometry principle for flame

According to Planck’s radiation law [17], the radiant exitance of an ideal surface radiator with wavelength \(\lambda \) (nm) and temperature \(T\) (K) can be expressed as

where \(C_{1}\) and \(C_{2}\) are the first and second radiation constants, \(\varepsilon (\lambda , T)\) is the emissivity of the radiator, and \(\varepsilon =1\) when the radiator is an ideal blackbody. Then the radiant exitance of the radiator over the camera response band \((\lambda _{1},\lambda _{2})\) can be described as

To an image sensor (CCD or CMOS), the irradiance provokes photoelectric conversion which generates an image. In paraxial geometrical optics, the irradiance \(E\) that the image plane receives can be described as [16]

where \(K_{T}(\lambda )\) denotes the optical system’s transmittance, which is a function of wavelength, and \({2a}/{f'}\) is the relative aperture. Furthermore, the output current \(I\) of the CCD photo-generated charge depends not only on the irradiance \(E\) but also on the exposure time \(t\), namely \(I=\mu E t\), where \(\mu \) denotes the coefficient of photoelectric conversion. Let \(Y(\lambda )\) be the spectral response function of a monochrome CCD in the visible range (380 nm, 780 nm); then the pixel gray-scale value can be obtained as

where \(\eta \) denotes the conversion factor between the output current and the image gray value. A color CCD has three spectral response functions, \(r(\lambda ), g(\lambda )\) and \(b(\lambda )\), so the output RGB gray-level values of a CCD pixel can be described as [18]

where \(A=({\eta \mu t}/4) \cdot K_{T}(\lambda )\cdot {[ {2a}/{f'} ]}^{2}\) and can be obtained by calibration.

The uniqueness of the temperature determines the uniqueness of the object’s radiation spectrum, which in turn determines the uniqueness of the color generated by that spectrum. Therefore, there is a one-to-one mapping between the measured temperature \(T\) and the output R, G, B gray-level values of a CCD pixel [16, 18, 19].
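To make the quantities in this subsection concrete, the sketch below evaluates Planck's law in its standard form, \(M(\lambda, T) = \varepsilon\, C_{1} \lambda^{-5} / (e^{C_{2}/(\lambda T)} - 1)\), and integrates it numerically over a wavelength band. It is assumed here that the missing displayed equations take this standard form. The function names, the 1 nm step and the trapezoidal rule are illustrative choices, and the camera response functions and the calibration factor \(A\) are deliberately left out because their values are not given in the text.

```python
import numpy as np

C1 = 3.741771852e-16   # first radiation constant 2*pi*h*c^2, in W m^2
C2 = 1.438776877e-2    # second radiation constant h*c/k, in m K

def spectral_exitance(wavelength_nm, temperature_k, emissivity=1.0):
    """Spectral radiant exitance (W m^-3) of a gray or black surface radiator."""
    lam = np.asarray(wavelength_nm, dtype=float) * 1e-9   # nm -> m
    return emissivity * C1 / (lam ** 5 * np.expm1(C2 / (lam * temperature_k)))

def band_exitance(temperature_k, lo_nm=380.0, hi_nm=780.0, step_nm=1.0):
    """Radiant exitance (W m^-2) integrated over [lo_nm, hi_nm], trapezoidal rule."""
    lam_nm = np.arange(lo_nm, hi_nm + step_nm, step_nm)
    m = spectral_exitance(lam_nm, temperature_k)
    return float(np.sum(0.5 * (m[1:] + m[:-1]) * np.diff(lam_nm * 1e-9)))
```

Because the band exitance increases monotonically with temperature while the spectral shape shifts toward shorter wavelengths, both the brightness and the channel ratios of a pixel carry temperature information, which is the basis of the one-to-one mapping stated above.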

However, for a flame it is difficult to find a real surface, because a flame is a volume radiator. The intensity of a pixel in the flame image is determined by the integral of the radiant energy of small radiators with different temperatures along the viewing ray, as illustrated by Fig. 6a. The 3D flame is divided into voxels, and the radiant exitance \(M_{i}(T)\) of the \(i\)th voxel with temperature \(T\) can be calculated using Eq. 7. Then the radiant exitance that pixel \(j\) receives from voxel \(i\) can be formulated as [10, 19]

where \(W(i \rightarrow j)\) is the weight of voxel \(i\) in the intensity of pixel \(j\), and the radiant exitance that pixel \(j\) receives from all voxels can be described as

Assume that the accumulated radiant exitance \(M_{j}\) equals the radiant exitance of an ideal surface radiator with temperature \(T\), namely \(M_{j}=M(T)\). Then the same irradiance is received on the image plane, and the CCD outputs the same pixel intensity. The volume flame can therefore be approximated by an ideal surface radiator in the viewing direction according to the radiation thermometry principle [10, 16, 18, 19], as shown in Fig. 6b. This radiator meets the following assumptions: its surface can be divided into several facets which have a one-to-one correspondence with the camera’s pixels; each pixel only accepts the radiation energy of its corresponding facet; and each facet has a temperature \(T\).

Therefore, there is a one-to-one mapping between the pixel’s intensity and the facet’s temperature. The intensity of the flame image can be considered as another “form” of the temperature field distribution [10], and the facet’s temperature \(T\) can be regarded as the color temperature of the corresponding pixel color. This temperature is only a relative temperature with respect to blackbody radiation rather than the real one.

5.2 Color calculation of the ideal blackbody

The spectrum emitting from an ideal blackbody is entirely determined by its temperature. According to the definition of RGB color space [20], the RGB coordinates of an ideal blackbody at temperature \(T\) would be given by:

where \(\bar{r}(\lambda ), \bar{g}(\lambda )\) and \(\bar{b}(\lambda )\) are the sensitivity functions for the R, G and B sensors, respectively.

To simplify the integral calculation and avoid the device dependence of the RGB color space, a discrete method [22] is adopted to compute the spectral color. We first compute the tristimulus values in the CIE \(XYZ\) color space and then transform the CIE perceptual color \(XYZ\) into device parameters \(RGB\) with specific primary color chromaticities. The CIE \(XYZ\) components of an ideal blackbody at temperature \(T\) are calculated through

Here, \(\bar{x}_{\lambda },\bar{y}_{\lambda }\) and \(\bar{z}_{\lambda }\) are the CIE color matching functions. They are constructed by measuring the mean color perception of a sample of human observers over the visual range [21]. Since the perceived color only depends upon the relative magnitudes of \(X, Y\) and \(Z\), the chromaticity coordinates are defined as

Furthermore, we convert perceptual color (CIE \(XYZ\)) to device color (RGB) by solving \((r, g, b)\) in Eq. 16.

where \((x_{r}, x_{g}, x_{b}), (y_{r}, y_{g}, y_{b})\) and \((z_{r}, z_{g}, z_{b})\) are known as the equipment specified color parameters; \((r, g, b)\) are the weight of red, green and blue primaries which yield the desired \(x, y\) and \(z\).
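The conversion from chromaticity to primary weights described above amounts to solving a 3×3 linear system whose columns are the chromaticities of the three primaries. The sketch below does this with NumPy. The primaries used are the sRGB/Rec. 709 chromaticities, which is an assumption made for illustration since the device parameters used in the paper are not stated, and the function name `chromaticity_to_rgb` is hypothetical.

```python
import numpy as np

# Assumed primaries: sRGB / Rec. 709 chromaticities (x, y); not the paper's device.
PRIMARIES_XY = {"r": (0.64, 0.33), "g": (0.30, 0.60), "b": (0.15, 0.06)}

def chromaticity_to_rgb(x, y, primaries=PRIMARIES_XY):
    """Solve [x, y, z]^T = P [r, g, b]^T for the primary weights (r, g, b)."""
    z = 1.0 - x - y
    columns = []
    for key in ("r", "g", "b"):
        px, py = primaries[key]
        columns.append([px, py, 1.0 - px - py])
    matrix = np.array(columns).T      # rows: x, y, z; columns: red, green, blue
    return np.linalg.solve(matrix, np.array([x, y, z]))
```

A negative weight in the result means that the requested chromaticity lies outside the gamut of the chosen primaries; how such colors are handled (for example by desaturating toward white) is a separate design decision that this sketch does not make.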

The temperature of radiators with observable color (minimum visible red) is more than 800 K [23], and the color temperature of common light sources does not exceed 10,000 K. The colors of the ideal blackbody are therefore computed between 1,000 and 10,000 K, as shown in Fig. 7.

5.3 Closest point search for color temperature calculation

The color temperature of a flame is the temperature of an ideal blackbody radiator that radiates light of comparable hue to that of the flame. Given the color of a flame image, its color temperature can in theory be resolved from Eq. (7) with the Newton iteration method. However, this is an ill-posed problem because it involves a large spectral width. Instead of solving the complex nonlinear equations, a closest point search method is proposed to compute the flame color temperature approximately (\( RGB2T \)).

The closeness of the two colors can be represented by the distance between two points in the normalized RGB space [24]. Normalized RGB is obtained from the RGB values by a simple normalization procedure

In normalized RGB space, we look up the point on the blackbody color temperature curve that is closest to the target point and take the temperature of that point as the temperature of the target color. The pseudocode of this algorithm is as follows.
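The pseudocode itself is not reproduced in the text, so the following is a sketch of one way such a search could work rather than the authors' algorithm. It assumes the blackbody curve is given as a table (`temps`, `curve`) of temperatures and normalized RGB colors, and that the distance from the target to the curve is unimodal along the table, which is what allows a ternary search with logarithmic cost. Both the table layout and the unimodality assumption are hypothetical.

```python
import numpy as np

def normalize_rgb(rgb):
    """Map (R, G, B) to (r, g, b) with r + g + b = 1; black maps to zeros."""
    rgb = np.asarray(rgb, dtype=float)
    total = rgb.sum()
    return rgb / total if total > 0 else np.zeros(3)

def closest_temperature(rgb, temps, curve):
    """Ternary search for the curve point nearest to the target color.

    temps: sorted 1D array of temperatures; curve: (n, 3) normalized RGB colors.
    Assumes the distance to the target is unimodal along the curve.
    """
    target = normalize_rgb(rgb)

    def dist(i):
        return float(np.linalg.norm(curve[i] - target))

    lo, hi = 0, len(temps) - 1
    while hi - lo > 2:
        third = (hi - lo) // 3
        m1, m2 = lo + third, hi - third
        if dist(m1) < dist(m2):
            hi = m2 - 1               # the minimum lies strictly left of m2
        else:
            lo = m1 + 1               # the minimum lies strictly right of m1
    best = min(range(lo, hi + 1), key=dist)
    return temps[best]
```

If the unimodality assumption is in doubt for a given curve, a brute-force `argmin` over the whole table gives the same answer at linear cost and is a useful cross-check.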

Unlike the direct search method, which has a time complexity of \(O(n)\), the closest point search method is easy to implement and has a time complexity of \(O(\log n)\). The conversion between flame color and temperature is a lossy process, which is acceptable when the error is within the permissible range. Taking two flame datasets as examples, we first convert the flame images into the combination of color temperature and its joint intensity (\(\sqrt{R^{2}+G^{2}+B^{2}}\)) by the closest point search method (\( RGB2TI \)), and then convert the color temperature and joint intensity back to RGB images (\( TI2RGB _{\mathrm{converted}}\)) by the blackbody radiation color calculation. Figure 8b, f shows that the temperature distributions calculated from Fig. 8a, e are basically in accordance with the real flames. Figure 8c, g shows that the images reconstructed from Fig. 8b, f closely match the raw flames (Fig. 8a, e), and the conversion errors (Fig. 8d, h) are visually acceptable. Therefore, color-temperature conversion is an effective way to map the RGB intensities into the color temperature and its joint intensity in flame reconstruction.
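The round trip described above can be sketched as follows, again assuming a tabulated blackbody curve (`temps`, `curve`) supplied by the caller. The function names `rgb_to_ti` and `ti_to_rgb` are hypothetical, and a brute-force nearest-point lookup is used here for brevity in place of the faster search.

```python
import numpy as np

def rgb_to_ti(rgb, temps, curve):
    """Return (color temperature, joint intensity) for one RGB pixel."""
    rgb = np.asarray(rgb, dtype=float)
    intensity = float(np.linalg.norm(rgb))        # sqrt(R^2 + G^2 + B^2)
    total = rgb.sum()
    if total <= 0:
        return None, 0.0                          # black pixel: no temperature
    idx = int(np.argmin(np.linalg.norm(curve - rgb / total, axis=1)))
    return temps[idx], intensity

def ti_to_rgb(temperature, intensity, temps, curve):
    """Rebuild RGB: blackbody color at `temperature`, scaled to `intensity`."""
    idx = int(np.argmin(np.abs(np.asarray(temps) - temperature)))
    color = curve[idx]
    return color * intensity / np.linalg.norm(color)
```

A pixel whose color lies on the tabulated curve is recovered exactly. A pixel off the curve keeps its joint intensity but is pulled onto the nearest blackbody color, which is the source of the conversion residuals discussed above.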

Instances of flame color-temperature conversion. The first dataset comes from Reelfire 2 [25], and the second comes from the Alcohol dataset. a, e are the original RGB images; b, f are the temperature fields, with equipotential lines, calculated using the closest point search method; c, g show the results of converting the temperature field back to a color image; d, h are the residuals \(\mathbf{a}{-}\mathbf{c}\) and \(\mathbf{e}{-}\mathbf{g}\) (\( RGB {-} RGB _{\mathrm{converted}}\)), shown in grayscale

6 Three-dimensional flame reconstruction

Flame reconstruction is equivalent to a computerized tomography problem when fire is modeled as a 3D density field (see Fig. 1). Since each epipolar plane is independent of the others under the assumption of the linear flame imaging model, the 3D reconstruction can be regarded as \(L\) independent 2D reconstruction problems, where \(L\) is the number of pixel rows of the captured image.

In this section, a reconstruction method with color temperature is proposed since the intensity of the flame image can be considered as another “form” of the temperature field distribution, where the initial 3D field is generated using multiplication and the projected results are restricted using visual hull technology, as shown in Fig. 9.

Firstly, the initial 3D data field is reconstructed rapidly using multiplication [3] from two orthogonal views. Given images \(I_{1}\) and \(I_{2}\) corresponding to the row and column sums of the field, respectively, the multiplication reconstruction can be described as \(D = I_{1}I_{2}^{T}\) (see Fig. 10). Unlike existing methods, we use the color temperature and its joint intensity instead of the RGB intensities to generate the 3D data field by multiplication. Multiplication reconstruction is a true 3D approach, but it generates significant artifacts during view synthesis because the result represents the most spread-out and least-coherent solution. It is therefore imperative to constrain the multiplication solution further.
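For one epipolar plane, the multiplication reconstruction is an outer product of the two matched image lines. The sketch below adds a normalization by the line sum so that the row and column sums of the slice reproduce the two inputs. That normalization is an assumption added here (it presumes the two lines have already been equalized to the same sum) and is not stated in the text; the function name is hypothetical.

```python
import numpy as np

def multiplication_reconstruction(i1, i2):
    """Build a 2D slice D from two orthogonal epipolar lines.

    D is proportional to the outer product i1 * i2^T. Dividing by sum(i1)
    (assumed equal to sum(i2) after equalization) makes the row sums equal
    i1 and the column sums equal i2.
    """
    i1 = np.asarray(i1, dtype=float)
    i2 = np.asarray(i2, dtype=float)
    total = i1.sum()
    if total <= 0:
        return np.zeros((i1.size, i2.size))
    return np.outer(i1, i2) / total
```

Stacking one such slice per image row gives the initial 3D field. Because every nonzero pair of pixels produces a nonzero voxel, the slice fills the whole bounding rectangle of the flame, which is why the additional constraint is needed.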

Secondly, the initial 3D field is projected onto a new image plane, which is calculated for a new rendering viewpoint. There are two reasons for projecting the data field onto a 2D image plane before applying the visual hull constraint. First, it makes it possible to compensate for the removed intensities and so maintain photo-consistency. Second, the color temperature is more meaningful in a 2D image than in 3D, since it is a relative temperature of integrated brightness. The field data projection is shown in detail in Fig. 11, where \(I_{1}\) and \(I_{2}\) are the input images and \(O\) is the center of the data field in an epipolar plane. Given a viewing angle, we assume an imaging plane \(I_{\mathrm{new}}\) that passes through the center of the 3D field and is perpendicular to the optical axis. Here \(l\) is the line of intersection of \(I_{\mathrm{new}}\) and the epipolar plane, and \(V_{i}\) is the field data of voxel \(i\). Assuming that \(V_{i}\) is projected onto \(l\) at \(P_{i}\), where \(P_{j} \le P_{i} < P_{j+1}\), the projected results can be described as

where \(D_{j}\) is the projection received by pixel \(j\), and \(d_{\mathrm{pixel}}\) is the unit pixel size.
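The projection formula is missing from the text, so the sketch below shows one plausible reading of it: each voxel value is split linearly between the two pixels \(j\) and \(j+1\) that bracket its projected position on \(l\). The orthographic geometry, the centering convention, the clamping of out-of-range positions to the line ends and the function name `project_field` are all assumptions made for illustration.

```python
import numpy as np

def project_field(field, angle_deg, n_pixels, d_pixel=1.0):
    """Project a 2D slice onto a line through its center (n_pixels >= 2).

    Each voxel is splatted onto its two neighboring pixels with linear weights,
    so the total intensity of the slice is preserved.
    """
    field = np.asarray(field, dtype=float)
    rows, cols = field.shape
    theta = np.deg2rad(angle_deg)
    ys, xs = np.mgrid[0:rows, 0:cols]
    x = xs - (cols - 1) / 2.0                     # voxel centers relative to O
    y = ys - (rows - 1) / 2.0
    pos = (x * np.cos(theta) + y * np.sin(theta)) / d_pixel + (n_pixels - 1) / 2.0
    pos = np.clip(pos, 0.0, n_pixels - 1.0)       # clamp to the ends of the line
    j = np.minimum(np.floor(pos).astype(int), n_pixels - 2)
    frac = pos - j                                # share that goes to pixel j + 1
    line = np.zeros(n_pixels)
    np.add.at(line, j.ravel(), (field * (1.0 - frac)).ravel())
    np.add.at(line, (j + 1).ravel(), (field * frac).ravel())
    return line
```

With `angle_deg=0` and `n_pixels` equal to the number of columns, the result is simply the column sums of the slice, which is a convenient sanity check against one of the input views.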

Finally, the 3D visual hull is computed from the 2D silhouettes of the four-view images by rapid octree construction [26]. The visual hull is projected onto the target image plane, and the projected data that lie outside the projected silhouette are removed. Most importantly, the intensity of the removed pixels is reallocated to the remaining pixels on the same epipolar line, in proportion to their intensities, to maintain photo-consistency.
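The removal and compensation step for a single epipolar line can be sketched as below. It assumes the projected silhouette is available as a Boolean mask per line and interprets "in proportion to the remainder" as a uniform rescaling of the surviving pixels so that the line sum is unchanged. The function name `constrain_line` is hypothetical.

```python
import numpy as np

def constrain_line(line, inside):
    """Zero pixels outside the silhouette and rescale the rest to keep the sum.

    line: projected intensities on one epipolar line;
    inside: Boolean mask, True where the pixel lies inside the silhouette.
    """
    line = np.asarray(line, dtype=float)
    inside = np.asarray(inside, dtype=bool)
    kept = np.where(inside, line, 0.0)
    kept_total = kept.sum()
    removed = line.sum() - kept_total
    if kept_total <= 0:
        return kept                   # nothing left to carry the removed intensity
    return kept * (1.0 + removed / kept_total)
```

Rescaling rather than adding a constant keeps zero pixels at zero and preserves the relative profile inside the silhouette, so the shape constraint and the photo-consistency constraint are satisfied together.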

The pseudo codes of 3D flame reconstruction algorithm are as follows.

The multiplication reconstruction with color temperature is restricted by using visual hull technology, where the culled intensities are compensated for photo-consistency while the impossible zones are discarded for shape constraint. Figure 12 shows that visual hull constraint reduces the artifacts and maintains the shape of real flames during view synthesis.

Steps of visual hull generation and constraint: the data come from Candle dataset, frame 45, and the 50\(^{\circ }\) angle of view is synthesized. a generate 3D flame field with multiplication, b calculate 3D visual hull, c project the visual hull onto the target image plane and binarization, d remove the outside of silhouette and e compensate the inside of silhouette

7 Experimental setup

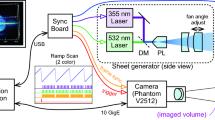

A multi-camera digital imaging system comprised of four optical RGB cameras and an auxiliary ring is used to acquire 2D color images of flame simultaneously from four directions, as depicted in Fig. 13.

On this platform, the cameras are located on the auxiliary ring at 45\(^{\circ }\) intervals. The target flame is placed at the center of the circle \(O\), which is equidistant from each camera. In addition, the pitch angles of the cameras are basically equal, which makes it easy to match the epipolar planes. The physical devices are shown in Fig. 14.

In our setup, the cameras are Point Grey Flea 2 series 08S2C color cameras with a standard resolution of 1,032 \(\times \) 776, connected to a computer via IEEE-1394b interfaces. The auxiliary ring is a double ring with a diameter of 1.1 m; the cameras are fixed on the inner ring, which is rotatable. We can adjust the positions of the cameras by rotating the inner ring while maintaining their relative positions. A black cloth encloses the acquisition environment to shield it from other light sources. Additionally, we add a cover to the alcohol lamp to avoid specular reflection and highlights. The Flea 2 cameras support synchronization by both software trigger and external trigger; we use the software provided by the manufacturer for software trigger synchronization. The extrinsic and intrinsic parameters of all cameras are calibrated with standard techniques [27].

8 Results and discussion

Three different flame scenes were tested in this paper, showing the combustion of a candle, alcohol and a mixture of both (“Mixture”). Experiments were performed on a machine with dual core CPU 2.66 GHz, 2.67 GHz and 2 GB of memory. For each of the three scenes, we captured 100 frames of 640 \(\times \) 480 images at a frame rate of 30 fps.

The reconstructed results were compared with the ground truth on the Candle and Alcohol datasets (see Fig. 15). The first column shows the images captured from real fire, the second column shows the results generated by our method, and the last column shows the reconstruction errors in grayscale. The first and third rows are the input viewpoints, and the second and fourth rows are the extrapolated viewpoints for (a) and (b), respectively. To preserve visual realism, the reconstructed densities must reproduce the input images. Figure 15 shows that the generated flames are basically consistent with the captured flames and that the reconstructed results reproduce the input images. The method is therefore effective for reconstructing different flames with photo-consistency.

Figure 16 shows the rendering results for views other than the four input views. In Fig. 16, all views can be rendered without obvious over-fitting. The method is therefore a true 3D method with good view extrapolation.

Figure 17 shows the comparison of reconstructed results before and after the visual constraint. It is obvious that the restricted flames (Fig. 17d, i) are closer to the real flames (Fig. 17a, f) than the multiplication reconstructed results (Fig. 17b, g). The basic shape of the flame was retained when we restricted the multiplication reconstruction with visual hull (Fig. 17c, h), and the reconstruction errors (Fig. 17e, j) are visually acceptable. So the visual hull constraint is essential to generate visually plausible 3D flame in this paper.

Visual hull constraint. a, f are the ground truth images we captured, b, g are the results reconstructed with multiplication in an interpolated view, c, h are the visual hulls generated from four views, d, i are the results after removing the outside of the silhouette and compensating the inside of the silhouette, and e, j are the reconstruction errors in grayscale

Figure 18 shows the comparison results of flame reconstruction using RGB intensities and color temperature. There were obvious color differences in the reconstructed results when using RGB intensities (Fig. 18a) due to the high correlation of RGB intensities. A minor change in one of RGB components may result in an apparent color difference in the final rendered image. However, flame reconstruction with color temperature (Fig. 18b) can acquire better color consistency in rendered images. These results show that flame reconstruction using color temperature is effective to solve the color distortion problem in flame reconstruction because the color temperature fixed the RGB coupling relationship.

Table 1 lists the average reconstruction time per frame (ms), recording the time cost of each data processing stage for the three datasets. In Table 1, the average generation time is about 4.5 s for the Mixture dataset with resolution 310 \(\times \) 200, and only 2.4 s for the Candle dataset with resolution 240 \(\times \) 200. The total time of flame reconstruction is no more than 5 s per frame, which is much less than that of the flame sheet decomposition (hours) [3] and of the algebraic tomographic reconstruction algorithm (minutes or dozens of minutes) [13, 28].

To describe the performance more clearly, we converted the Table 1 data into a three-layer pie chart, with one layer per dataset (Candle, Alcohol and Mixture). Figure 19 shows that the visual hull constraint was the most time-consuming part of our method, taking about half of the total time, and that the color-temperature conversion was the second. This information suggests that performance could be further improved for interactive applications by optimizing these two parts. Comparing the color-temperature conversion times across the three datasets, we concluded that the time consumed increases with image complexity.

In summary, four observations can be made from our experiments. First, our 3D method has excellent view extrapolation. Second, the visual hull constraint compensated the culled intensity for photo-consistency while discarding the impossible zones for shape constraint. Third, better color consistency was achieved in the rendered images because the color temperature fixes the relationship among the RGB intensities. Fourth, our method is time-saving (no more than 5 s per frame) and easy to implement (only four views), which makes it cost efficient.

Our method still has some limitations, namely difficulty in handling flames with holes and the inability of our color-temperature conversion to process a color that deviates too far from the blackbody radiation curve. The experiments conducted herein dealt only with ideal blackbody colors.

9 Conclusions and future work

In this paper, a novel 3D flame reconstruction method using color temperature has been presented. First, a color-temperature mapping of the flame was calculated to map the RGB intensities into the color temperature and its joint intensity, which solves the color distortion problem in flame reconstruction. Second, the multiplication reconstruction with color temperature was restricted by the visual hull, which compensated the culled intensity for photo-consistency while discarding the impossible zones for shape constraint. Three different flame scenes were tested. Experimental results showed that our approach is time-saving (\(<\)5 s per frame) and generates visually plausible 3D flames efficiently, without apparent color distortions.

Future work includes calibrating the color temperatures to the real temperatures, applying our method to interactive flame simulation, and exploring realistic flame rendering based on the color temperature field.

References

Wu, Z., Zhou, Z., Wu, W.: Realistic fire simulation: a survey. In: Computer-Aided Design and Computer Graphics (CAD/Graphics), pp. 333–340 (2011)

Zhao, Q.P.: Data acquisition and simulation of natural phenomena. Sci. China Inf. Sci. 54(4), 683–716 (2011)

Hasinoff, S.W., Kutulakos, K.N.: Photo-consistent 3D fire by flame-sheet decomposition. In: IEEE International Conference on Computer Vision, pp. 1184–1191 (2003)

Hossain, M.M., Lu, G., Yan, Y.: Measurement of flame temperature distribution using optical tomographic and two-color pyrometric techniques. In: IEEE International Instrumentation and Measurement Technology Conference (I2MTC), pp. 1856–1860 (2012)

Ihrke, I., Magnor, M.: Image-based tomographic reconstruction of flames. In: Proceedings of ACM SIGGRAPH/Eurographics Symposium on Computer Animation, pp. 365–373 (2004)

Bheemul, H.C., Lu, G., Yan, Y.: Three-dimensional visualization and quantitative characterization of gaseous flames. Meas. Sci. Technol. 13, 1643–1650 (2002)

Upton, T.D., Verhoeven, D.D., Hudgins, D.E.: High-resolution computed tomography of a turbulent reacting flow. Exp. Fluids 50(1), 125–134 (2011)

Atcheson, B., Ihrke, I., Heidrich, W., Tevs, A., Bradley, D., Magnor, M., Seidel, H.P.: Time-resolved 3d capture of non-stationary gas flows. ACM Trans. Graph. 27(5), 1–10 (2008)

Ishino, Y., Takeuchi, K., Shiga, S., Ohiwa, N.: Measurement of instantaneous 3D-distribution of local burning velocity on a turbulent premixed flame by non-scanning 3D-CT reconstruction. Proc. Eur. Combust. Meet. 810178, 1–6 (2009)

Wang, X., Wu, Z., Zhou, Z., Wang, Y., Wu, W.: Temperature field reconstruction of combustion flame based on high dynamic range images. Opt. Eng. 52(4), 43601:1–43601:10 (2013)

Fu, T., Wang, Z., Cheng, X.: Temperature measurements of diesel fuel combustion with multicolor pyrometry. J. Heat Transf. 132(5), 051602:1–051602:7 (2010)

Ishino, Y., Ohiwa, N.: Three-Dimensional computerized tomographic reconstruction of instantaneous distribution of emission intensity in turbulent premixed flames. In: Lean Combustion Technology, vol. 2, pp. 1–6 (2001)

Gilabert, G., Lu, G., Yan, Y.: Three dimensional visualisation and reconstruction of the luminosity distribution of a flame using digital imaging techniques. J. Phys. Conf. Ser. 15, 167–171 (2005)

Gilabert, G., Lu, G., Yan, Y.: Three-dimensional tomographic reconstruction of the luminosity distribution of a combustion flame. IEEE Trans. Instrum. Meas. 56(4), 1300–1306 (2007)

Sezgin, M.: Survey over image thresholding techniques and quantitative performance evaluation. J. Electron. Imaging 13(1), 146–168 (2004)

Zhao, H., Feng, H., Xu, Z., Li, Q.: Research on temperature distribution of combustion flames based on high dynamic range imaging. Opt. Laser Technol. 39(7), 1351–1359 (2007)

Rybicki, G.B., Lightman, A.P.: Radiative Processes in Astrophysics. Wiley, London (2004)

Luo, Z., Zhou, H.C.: A combustion-monitoring system with 3-D temperature reconstruction based on flame-image processing technique. IEEE Trans. Instrum. Meas. 56(5), 1877–1882 (2007)

Zhang, X., Cheng, Q., Lou, C., Zhou, H.: An improved colorimetric method for visualization of 2-D, inhomogeneous temperature distribution in a gas fired industrial furnace by radiation image processing. In: Proceedings of the Combustion Institute, vol. 33, no. 2, pp. 2755–2762 (2011)

Tkalcic, M., Tasic, J.F.: Colour spaces: perceptual, historical and applicational background. In: Eurocon 2003, vol. 1, pp. 1–5 (2003)

Walker, J.: Colour rendering of spectra. http://www.fourmilab.ch/documents/specrend/ (2003). Accessed Apr 25, 2014

Hunt, R., Pointer, M.R.: A colour-appearance transform for the CIE 1931 standard colorimetric observer. Color Res. Appl. 10(3), 165–179 (1985)

Pardo, P.J., Cordero, E.M., Suero, M.I., Pérez, L.: Influence of the correlated color temperature of a light source on the color discrimination capacity of the observer. J. Opt. Soc. Am. A Opt. Image Sci. Vis. 29(2), A209–A215 (2012)

Oleari, C., Melgosa, M., Huertas, R.: Euclidean color-difference formula for small-medium color differences in log-compressed OSA-UCS space. J. Opt. Soc. Am. A Opt. Image Sci. Vis. 26(1), 121–134 (2009)

Artbeats: ReelFire 2. http://www.artbeats.com/collections/214-ReelFire-2. Accessed Apr 25, 2014

Szeliski, R.: Rapid octree construction from image sequences. Comput. Vis. Image Underst. 58(1), 23–32 (1993)

Zhang, Z.: A flexible new technique for camera calibration. IEEE Trans. Pattern Anal. Mach. Intell. 22(11), 1330–1334 (2000)

Gregson, J., Krimerman, M., Hullin, M.B., Heidrich, W.: Stochastic tomography and its applications in 3d imaging of mixing fluids. ACM Trans. Graph. 31(4), 52 (2012)

Acknowledgments

This work was supported by the National 863 Program of China (Grant No. 2012AA011803) and the National Natural Science Foundation of China (Grant No. 61300066). We thank Voicu Popescu of Purdue University for his lectures about academic writing.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution License which permits any use, distribution, and reproduction in any medium, provided the original author(s) and the source are credited.

About this article

Cite this article

Wu, Z., Zhou, Z., Tian, D. et al. Reconstruction of three-dimensional flame with color temperature. Vis Comput 31, 613–625 (2015). https://doi.org/10.1007/s00371-014-0987-5

DOI: https://doi.org/10.1007/s00371-014-0987-5