Abstract

This contribution presents a model order reduction framework for the efficient, real-time solution of trimmed, multi-patch isogeometric Kirchhoff-Love shells. In several scenarios, such as design and shape optimization, multiple simulations need to be performed for a given set of physical or geometrical parameters. This step can be computationally expensive, in particular for real-world, practical applications. We are interested in geometrical parameters and take advantage of the flexibility of splines in representing complex geometries. In this case, the operators are geometry-dependent and generally depend on the parameters in a non-affine way. Moreover, the solutions obtained from trimmed domains may vary highly with respect to different values of the parameters. Therefore, we employ a local reduced basis method based on clustering techniques and the Discrete Empirical Interpolation Method to construct affine approximations and efficient reduced order models. In addition, we discuss the application of the reduction strategy to parametric shape optimization. Finally, we demonstrate the performance of the proposed framework on parameterized Kirchhoff-Love shells through benchmark tests on trimmed, multi-patch meshes including a complex geometry. The proposed approach is accurate and achieves a significant reduction of the online computational cost in comparison to the standard reduced basis method.


1 Introduction

In the past decades, the integration of geometric design and finite element analysis has attracted a lot of attention within the computational engineering community. The introduction of Isogeometric Analysis (IGA) [1] paved the way for an integrated framework from Computer Aided Design (CAD) to numerical simulation followed by a wide range of successful applications in several fields. Adopting the isogeometric paradigm, the effort involved in meshing and geometry clean-up can be circumvented by employing the same representation for both geometric description and numerical analysis. The prevailing technology for geometric representation in CAD is based on splines, namely B-splines and in particular non-uniform rational B-splines (NURBS). The reader is further referred to [1, 2] for a detailed overview of the method.

In the context of shell analysis, IGA offers clear advantages. In particular, Kirchhoff-Love shell formulations are based on fourth order partial differential equations (PDEs) and require \(C^1\)-continuity that hinders the use of classical \(C^0\)-continuous finite elements. Due to the higher continuity of splines, isogeometric discretizations are well suited for the modeling of higher-order PDEs. An overview of isogeometric methods for Kirchhoff-Love shell analysis is given in [3].

Nevertheless, real-world applications of complex geometries require the treatment of further issues. Typically, complex shapes are represented in CAD using Boolean operations such as intersection and union. This results in trimmed meshes, where the underlying discretization is unfitted with respect to the physical object. First, tackling trimmed surfaces needs to be properly addressed in the numerical simulation. A detailed review on trimming and related challenges such as numerical integration, conditioning, and others, is given in [4]. We also refer to [5, 6] in the context of trimmed shell analysis. Besides trimming, complex shapes are commonly represented by multiple, non-conforming patches in CAD and require suitable coupling strategies. In particular for shells, \(C^1\)-continuity between adjacent patches is required for the analysis. There are several methods in the literature to achieve displacement and rotational continuity in a weak sense, such as the penalty, Nitsche’s, and mortar methods. A comprehensive overview of these methods in the context of IGA is given in [7]. We also refer to previous works on mortar methods [8,9,10,11], penalty approaches [12,13,14], and Nitsche’s method [15,16,17,18] including their application to the analysis of trimmed shells. For the case of conforming patches, \(C^1\)-continuity across interfaces can be even imposed strongly, as shown recently in [19].

On the other hand, there exist several applications where the analysis needs to be performed rapidly for many different parametric configurations. This is, for example, the case in design and shape optimization, where the geometry is updated on the fly within the optimization loop. The reader is further referred to [20,21,22] for an overview of isogeometric shape optimization. To achieve a computational speedup, research efforts have been devoted to the development of efficient reduced order models (ROMs) for the solution of parameterized problems. In particular, we refer to previous works in the context of combining IGA with ROMs [23,24,25]. Most of these works employ the reduced basis method to construct projection-based ROMs in combination with hyper-reduction techniques such as the Empirical Interpolation Method [26] to handle geometrical parameterizations for non-affine problems. A detailed overview of reduced basis methods is given in [27, 28]. We also refer to [29, 30] in the context of isogeometric ROMs for multi-patch geometries. Nevertheless, the study of efficient ROMs in the context of isogeometric shell analysis is a subject that still lacks thorough investigation. The application of reduced basis methods to isogeometric Kirchhoff-Love shells has been explored in [31] for simple geometries. However, a general ROM framework suitable for trimmed, multi-patch geometries of industrial relevance is still missing.

This contribution aims to investigate the use of efficient ROM strategies for fast isogeometric Kirchhoff-Love shell analysis formulated on parameterized trimmed and multi-patch geometries. Based on our previous work [32], we employ a local reduced basis method to construct efficient ROMs for problems formulated on parameterized unfitted geometries obtained by trimming operations. Local ROMs have been a subject of previous research works within the model reduction community [33,34,35,36]. The method we use in this work is based on extension and parameter-based clustering of snapshots to apply reduction techniques such as the Proper Orthogonal Decomposition and Discrete Empirical Interpolation Method [37, 38].

We recast the Kirchhoff-Love shell formulation into parameterized trimmed geometries within a multi-patch setting. The proposed reduction framework enables an efficient offline/online decomposition and is agnostic to the underlying coupling method, therefore different strategies can be in principle considered to enforce displacement and rotational continuity in a weak sense. In addition, we discuss the application of the reduction framework to parametric shape optimization problems. We also refer to previous works on ROM-based parametric optimization [39,40,41].

The manuscript is structured as follows: Sect. 2 provides a brief overview of basic concepts related to B-splines and trimming formulated on parameterized domains. In Sect. 3 we present the parameterized formulation for the Kirchhoff-Love shell, while Sect. 4 provides the necessary definitions related to the coupling of multi-patches. We discuss the local reduced basis method in Sect. 5 and its application to parametric shape optimization in Sect. 6. In Sect. 7 we study several numerical experiments including non-conforming discretizations and a complex geometry to assess the performance of the proposed framework. The main conclusions that can be drawn from this study are finally summarized in Sect. 8.

2 Parameterized trimmed geometries in isogeometric analysis

In this section, we provide a brief review of some basic concepts related to B-splines and trimming in isogeometric analysis. In particular, we formulate the trimming operation in the context of parameterized geometries for single-patch domains. For a detailed review of splines in the context of isogeometric analysis, the reader is referred to [1, 2, 42]. Moreover, we refer to [4] for a comprehensive review of trimming in isogeometric analysis.

2.1 B-spline basis functions

To illustrate the basic concept of B-splines, we introduce a knot vector in the parametric space [0, 1] as a non-decreasing sequence of real values denoted by \(\Xi = \{\xi _1,\dots ,\xi _{n+p+1}\}\). Here, the integer n denotes the number of basis functions and p the degree. We further introduce a univariate B-spline basis function \(b^j_{i_j,p_j}\) where \(p_j\) denotes the degree and \(i_j\) the index of the function in the jth parametric direction. The B-spline functions \({\mathcal {B}}_{{\varvec{i}},{\varvec{p}}}(\varvec{\xi })\) can be easily defined in multiple dimensions exploiting the tensor product of univariate B-splines:

\[ {\mathcal {B}}_{{\varvec{i}},{\varvec{p}}}(\varvec{\xi }) = \prod _{j=1}^{{\hat{d}}} b^j_{i_j,p_j}(\xi ^j), \tag{1} \]

where \({\hat{d}}\) is the dimension of the parametric space. Moreover, the vector \({\textbf{i}}=(i_1,\dots ,i_{{\hat{d}}})\) is a multi-index denoting the position in the tensor-product structure and \({\textbf{p}}=(p_1,\dots ,p_{{\hat{d}}})\) the polynomial degree corresponding to the parametric coordinate \(\varvec{\xi }=(\xi ^1,\dots ,\xi ^{{\hat{d}}})\). For simplicity, we will assume from now on that the vector \(\textbf{p}\) is identical in all parametric directions and can be replaced by a scalar value p. The B-spline basis is \(C^{p-k}\)-continuous at every knot, where k is the multiplicity of the knot, and is \(C^{\infty }\) elsewhere. The concept of B-splines can be easily extended to rational B-splines and the interested reader is further referred to [2] for a detailed exposition.
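As a minimal illustration of the tensor-product construction, the following sketch evaluates univariate B-splines with the Cox-de Boor recursion and multiplies them across parametric directions. It assumes open knot vectors and the same scalar degree p in every direction; the function names `bspline_basis` and `tensor_basis` are hypothetical and are not taken from any particular isogeometric library.

```python
import numpy as np

def bspline_basis(i, p, knots, xi):
    """Value at xi of the i-th (0-based) univariate B-spline of degree p (Cox-de Boor)."""
    if p == 0:
        # Half-open knot spans; the last non-empty span is closed at the right end.
        if knots[i] <= xi < knots[i + 1]:
            return 1.0
        if xi == knots[-1] and knots[i] < knots[i + 1] == knots[-1]:
            return 1.0
        return 0.0
    left = right = 0.0
    d1 = knots[i + p] - knots[i]
    if d1 > 0.0:  # skip terms with zero denominator (repeated knots)
        left = (xi - knots[i]) / d1 * bspline_basis(i, p - 1, knots, xi)
    d2 = knots[i + p + 1] - knots[i + 1]
    if d2 > 0.0:
        right = (knots[i + p + 1] - xi) / d2 * bspline_basis(i + 1, p - 1, knots, xi)
    return left + right

def tensor_basis(idx, p, knot_vectors, xi):
    """Multivariate B-spline: product of univariate B-splines, one per direction."""
    return float(np.prod([bspline_basis(i, p, kv, x)
                          for i, kv, x in zip(idx, knot_vectors, xi)]))

# Illustrative data (assumed): quadratic open knot vector with one interior knot.
knots = [0.0, 0.0, 0.0, 0.5, 1.0, 1.0, 1.0]
n = len(knots) - 2 - 1  # number of univariate basis functions for p = 2
```

For instance, `tensor_basis((0, 0), 2, [knots, knots], (0.3, 0.7))` evaluates the bivariate function associated with the multi-index (0, 0) at the parametric point (0.3, 0.7).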

2.2 Parameterized trimming

In the following, we briefly present the mathematical foundation of trimming in isogeometric analysis and recast its formulation into the context of parameterized geometries. For this purpose, we adopt the notation previously introduced in [43, 44].

Let us first define the parameterized physical domain \(\Omega ({\varvec{\mu }}) \subset {\mathbb {R}}^d\) described by the geometrical parameters \({\varvec{\mu }} \in {\mathcal {P}} \subset {\mathbb {R}}^M\), where d is the dimension of the physical space for our problem, \({\mathcal {P}}\) is the space of parameters, and M is the number of parameters. We will show later how to obtain \(\Omega ({\varvec{\mu }})\) through trimming operations. Now we consider the non-trimmed, single-patch physical domain \(\Omega _0 \subset {\mathbb {R}}^d\) and its counterpart in the parameter space \({\hat{\Omega }}_0 = [0,1]^{{\hat{d}}}\). In the following, we assume that the non-trimmed domain \(\Omega _0\) is parameter-independent without loss of generality. In principle, the extension to the parameter dependent case is straightforward. The multi-patch setting will be discussed below in Sect. 4.

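Given a control point mesh \(\mathbf{P_i}\), a spline geometric map \({\textbf{F}}: {\hat{\Omega }}_0 \rightarrow \Omega _0\) can be defined as

\[ {\textbf{F}}(\varvec{\xi }) = \sum _{{\textbf{i}}} {\mathcal {B}}_{{\textbf{i}},p}(\varvec{\xi })\, \mathbf{P_i}. \tag{2} \]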

As a result, by an abuse of notation, the non-trimmed physical domain is obtained by \(\Omega _0 = {\textbf{F}}({\hat{\Omega }}_0)\). In what follows we are interested in parameterizing the trimmed regions. For this purpose, we introduce K parameter-dependent trimmed regions \(\Omega _1({\varvec{\mu }}),\dots ,\Omega _K({\varvec{\mu }}) \subset {\mathbb {R}}^d\) that are cut away by the trimming operation. The obtained physical domain can then be expressed as:

\[ \Omega ({\varvec{\mu }}) = \Omega _0 \setminus \overline{\bigcup _{i=1}^{K} \Omega _i({\varvec{\mu }})}. \tag{3} \]

The concept is illustrated in Fig. 1. Following this definition, the boundary of the domain consists of a trimmed part \(\partial {\Omega ({{\varvec{\mu }}})}\backslash \partial {\Omega _0}\) and a part that corresponds to the original domain \(\partial {\Omega ({{\varvec{\mu }}})}\cap \partial {\Omega _0}\). For the sake of simplicity, we will assume from now on that Dirichlet boundary conditions are not applied on the trimming boundary. The interested reader may refer to [45, 46] regarding the weak imposition of Dirichlet boundary conditions on the trimming boundary in combination with stabilization techniques.

Furthermore, it should be noted that the elements and basis functions are defined on the non-trimmed domain \(\Omega _0\) and the original domain remains unchanged by the trimming operation. Thus, we define the B-spline space \(S^p_{0}\), which is constructed upon \(\Omega _0\) and is independent of the parameters \({{\varvec{\mu }}}\), as

\[ S^p_{0} = \text {span}\left\{ {\mathcal {B}}_{{\textbf{i}},p} \circ {\textbf{F}}^{-1} \right\} . \tag{4} \]

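Let us now rewrite the trimming operation in the parametric domain \({\hat{\Omega }}_0\). The counterparts of the parameter-dependent trimmed regions \(\Omega _i({\varvec{\mu }}), i=1,\dots ,K\) (see also (3)) in the parametric domain are denoted as \({\hat{\Omega }}_i({\varvec{\mu }}) \subset {\hat{\Omega }}_0\), and it holds that \(\Omega _i({\varvec{\mu }}) = {\textbf{F}}({\hat{\Omega }}_i({\varvec{\mu }})), i=1,\dots ,K\). Thus, Eq. (3) can be reinterpreted as

\[ \Omega ({\varvec{\mu }}) = {\textbf{F}}({\hat{\Omega }}({\varvec{\mu }})), \quad {\hat{\Omega }}({\varvec{\mu }}) = {\hat{\Omega }}_0 \setminus \overline{\bigcup _{i=1}^{K} {\hat{\Omega }}_i({\varvec{\mu }})}. \tag{5} \]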

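Let us now introduce the B-spline space of degree p restricted to the trimmed domain \({\Omega }({{\varvec{\mu }}})\):

\[ S^p({\Omega }({{\varvec{\mu }}})) = \text {span}\left\{ {\mathcal {B}}_{{\textbf{i}},p} \circ {\textbf{F}}^{-1}\big \vert _{\Omega ({{\varvec{\mu }}})} \, : \, \text {supp}({{\mathcal {B}}_{{\textbf{i}},p}}) \cap {\hat{\Omega }}({{\varvec{\mu }}}) \ne \varnothing \right\} , \tag{6} \]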

where \(\text {supp}({{\mathcal {B}}_{{\textbf{i}},p}})\) is the support of the non-trimmed basis functions. We remark that for numerical integration, a re-parameterization of cut elements is performed in the trimmed parametric domain \({\hat{\Omega }}({{\varvec{\mu }}})\). In this work we follow the high-order reparameterization procedure discussed in [47]. The trimming operation can be understood as restricting the map \({\textbf{F}}\) to an active region of the original domain, which is the visible part after the trimmed regions are cut away. Indeed, only the basis functions whose support intersects \({\hat{\Omega }}({{\varvec{\mu }}})\) are active. The remaining basis functions are inactive and do not contribute to the solution discretization of the problem. Moreover, the active basis functions and the dimension of the space may change for different values of the parameters \({{\varvec{\mu }}}\). Therefore, to apply reduced order modeling techniques we will rely on the definition of the non-trimmed domain \(\Omega _0\) and associated B-spline space \(S^p_{0}\) following [32]. This aspect will be further discussed in Sect. 5.
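The dependence of the active basis functions on the parameters can be made concrete with a small univariate sketch. It assumes, purely for illustration, that the trimmed parametric domain is an open interval \((\mu , 1)\), i.e. that the region \([0,\mu ]\) is cut away; the helper `active_functions` is hypothetical. A basis function is marked active when its support, spanned by the knots \(\xi _i\) to \(\xi _{i+p+1}\), intersects the active interval.

```python
def active_functions(knots, p, active_interval):
    """Indices (0-based) of B-splines whose support intersects the open active interval."""
    lo, hi = active_interval
    n = len(knots) - p - 1  # number of basis functions on the non-trimmed domain
    # Support of function i is [knots[i], knots[i + p + 1]].
    return [i for i in range(n) if knots[i] < hi and knots[i + p + 1] > lo]

# Illustrative data (assumed): quadratic open knot vector with three interior knots.
knots = [0.0, 0.0, 0.0, 0.25, 0.5, 0.75, 1.0, 1.0, 1.0]
p = 2

# Two values of the (hypothetical) trimming parameter mu give spaces of different dimension.
active_a = active_functions(knots, p, (0.3, 1.0))
active_b = active_functions(knots, p, (0.6, 1.0))
```

In this toy setting, moving the trimming boundary from 0.3 to 0.6 deactivates one further basis function, so solution vectors computed for the two parameter values have different lengths.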

3 Parameterized Kirchhoff-Love shell formulation

In the following we introduce the weak formulation of the Kirchhoff-Love shell problem following closely the notation set in [13, 18]. Let us consider a parameterized single-patch computational domain \(\Omega ({{\varvec{\mu }}}) \subset {\mathbb {R}}^3\) representing a two-dimensional manifold with smooth boundary \(\Gamma ({{\varvec{\mu }}}) = \partial \Omega ({{\varvec{\mu }}})\). The boundary is partitioned such that both displacements and rotations, respectively, as well as their energetically conjugate shear forces and bending moments can be prescribed on the boundary. Thus, we partition \(\Gamma ({{\varvec{\mu }}})\) into a Dirichlet boundary \(\Gamma _D({{\varvec{\mu }}}) = \Gamma _{D,u}({{\varvec{\mu }}}) \cup \Gamma _{D,\theta }({{\varvec{\mu }}})\) associated with prescribed transverse displacements and normal rotations as well as a Neumann boundary \(\Gamma _N({{\varvec{\mu }}}) = \Gamma _{N,s}({{\varvec{\mu }}}) \cup \Gamma _{N,b}({{\varvec{\mu }}})\) associated with applied transverse shear forces and bending moments, respectively. Note that it holds \(\Gamma ({{\varvec{\mu }}}) = \overline{\Gamma _D({{\varvec{\mu }}}) \cup \Gamma _N({{\varvec{\mu }}})}\), \(\Gamma _{D,u}({{\varvec{\mu }}}) \cap \Gamma _{N,s}({{\varvec{\mu }}})= \varnothing\) and \(\Gamma _{D,\theta }({{\varvec{\mu }}}) \cap \Gamma _{N,b}({{\varvec{\mu }}}) = \varnothing\). Now let us further introduce a set of corners \(\chi ({{\varvec{\mu }}})\subset \Gamma ({{\varvec{\mu }}})\) that can be decomposed into a Dirichlet part \(\chi _D ({{\varvec{\mu }}})= \chi ({{\varvec{\mu }}})\cap \Gamma _D({{\varvec{\mu }}})\) and a Neumann part \(\chi _N({{\varvec{\mu }}}) = \chi ({{\varvec{\mu }}})\cap \Gamma _N({{\varvec{\mu }}})\). Note that \(\chi ({{\varvec{\mu }}})= \chi _D({{\varvec{\mu }}}) \cup \chi _N({{\varvec{\mu }}})\) and \(\chi _D ({{\varvec{\mu }}})\cap \chi _N ({{\varvec{\mu }}})= \varnothing\).
Let us also assume an applied body load \(\tilde{\textbf{f}} \in [L^2(\Omega ({{\varvec{\mu }}}))]^3\), a prescribed bending moment \(\tilde{{B}}_{nn} \in L^2(\Gamma _{N,b}({{\varvec{\mu }}}))\), an applied twisting moment \({\tilde{S}}\big \vert _C \in {\mathbb {R}}\) at corner \(C \in \chi _N\), and a prescribed transverse shear or ersatz traction \(\tilde{\textbf{T}} \in L^2(\Gamma _{N,s}({{\varvec{\mu }}}))^3\) as defined in [18].

Hereinafter, for the sake of simplicity of exposition, and without loss of generality, we consider that no normal rotations are prescribed on the boundary, i.e., \(\Gamma _{D,\theta }({{\varvec{\mu }}})=\emptyset\), and that only homogeneous Dirichlet boundary conditions are applied on \(\Gamma _{D}({{\varvec{\mu }}})=\Gamma _{D,u}({{\varvec{\mu }}})\).

We further recall the spline geometric map \({\textbf{F}}\) in (2) and construct a covariant basis, where the basis vectors are defined as

\[ {\textbf{a}}_{\alpha } = {{\textbf{F}}}_{,\alpha }, \quad \alpha = 1,2. \tag{7} \]

Here \({{\textbf{F}}}_{,\alpha }\) denotes the partial derivative of the spline geometric mapping with respect to the \(\alpha\)th curvilinear coordinate. The midsurface normal vector \({\textbf{a}}_{3}\) can be constructed as a normalized cross-product of the two in-plane vectors \({\textbf{a}}_{\alpha }\), that is

\[ {\textbf{a}}_{3} = \frac{{\textbf{a}}_{1} \times {\textbf{a}}_{2}}{\Vert {\textbf{a}}_{1} \times {\textbf{a}}_{2}\Vert }. \tag{8} \]

Then, we can construct the contravariant basis vectors that satisfy the Kronecker relationship as \(\textbf{a} _{\alpha }\cdot \textbf{a}^{\beta } = \delta _{\alpha }^{\beta }\). Note that it holds \({\textbf{a}}_3 = {\textbf{a}}^3\). The reader is further referred to [18] for an elaborate discussion on fundamentals of differential geometry that are relevant to the Kirchhoff-Love shell formulation.
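The construction of the local bases at a single surface point can be sketched as follows. The sketch assumes that the two tangent vectors \({\textbf{a}}_{1}\), \({\textbf{a}}_{2}\) (the partial derivatives of the geometric map) are already available as arrays; the contravariant vectors are obtained by inverting the covariant metric \(a_{\alpha \beta } = {\textbf{a}}_{\alpha }\cdot {\textbf{a}}_{\beta }\), which is one standard way to satisfy the Kronecker relationship. The function name `shell_basis` and the sample vectors are illustrative assumptions.

```python
import numpy as np

def shell_basis(F_1, F_2):
    """Covariant vectors, unit normal, and contravariant vectors at one midsurface point."""
    a_cov = np.stack([F_1, F_2]).astype(float)   # rows: a_1, a_2
    normal = np.cross(a_cov[0], a_cov[1])
    a3 = normal / np.linalg.norm(normal)         # normalized cross product
    metric = a_cov @ a_cov.T                     # a_{alpha beta} = a_alpha . a_beta
    a_contra = np.linalg.solve(metric, a_cov)    # rows: a^1, a^2 (metric inverse applied)
    return a_cov, a3, a_contra

# Illustrative tangent vectors (assumed): a skewed, non-orthonormal in-plane basis.
a_cov, a3, a_contra = shell_basis(np.array([2.0, 0.0, 0.0]), np.array([1.0, 1.0, 0.0]))
kronecker = a_cov @ a_contra.T                   # should be the 2x2 identity
```

Because the tangent vectors are generally neither orthogonal nor of unit length, the contravariant vectors differ from the covariant ones, while the normal is shared by both bases.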

Let us now introduce a discrete space \(V_h({{\varvec{\mu }}})\) to approximate our problem:

where the spline space \(S^p({{{{\Omega }}({{\varvec{\mu }}})}})^3\) has at least \(C^1\)-continuity, as typically required by isogeometric Kirchhoff-Love formulations (see, e.g., [3]). Higher continuity requirements will be discussed in Sect. 4.2 for the case of Nitsche’s interface coupling for multi-patch domains.

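Now let us define the discrete weak formulation of the parameterized Kirchhoff-Love shell problem as: find \({{\varvec{u}}}_h({{\varvec{\mu }}}) \in V_h({{\varvec{\mu }}})\) such that

\[ a({{\varvec{u}}}_h({{\varvec{\mu }}}),{\varvec{v}}_h;{\varvec{\mu }}) = f({\varvec{v}}_h;{\varvec{\mu }}) \quad \forall \, {\varvec{v}}_h \in V_h({{\varvec{\mu }}}). \tag{10} \]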

The parameterized bilinear form \(a(\cdot ,\cdot ;{\varvec{\mu }})\) is given as:

and the parameterized linear functional \(f(\cdot ;{\varvec{\mu }})\) reads:

Note that \(\theta _n({\varvec{v}}_h) = - {\varvec{n}}\cdot \nabla {{\varvec{v}}}^{\top }_h{\textbf{a}}_3\) is the normal rotation, \({\varvec{n}}\) is the unit outward normal vector to the boundary \(\Gamma ( \varvec{\mu })\), and the membrane and bending strain tensors are defined, respectively, as:

where \(\text {sym}(\cdot )\) denotes the symmetric part of a tensor, \(\nabla ^*\) is the surface gradient, and \(P= {\textbf{I}} - {\textbf{a}}_3 \otimes {\textbf{a}}_3\) is the in-plane projector, with \(\textbf{I}\) the identity tensor.

Let us recall that in Kirchhoff-Love shell kinematics we assume that the transverse shear strains vanish, i.e., the normal vectors remain straight and normal during deformation. The rotations are therefore constrained as follows:

Now, let us define the energetically conjugate stresses for both the membrane and bending strains. For this purpose, we assume a linear elastic constitutive model and define the following fourth-order elasticity tensor:

where repeated indices imply summation from 1 to 3, and E and \(\nu\) are the elasticity modulus and Poisson’s ratio, respectively. Assuming a constant thickness t and performing through-thickness integration we obtain the membrane and bending stresses as:

For a rigorous derivation of the weak formulation the reader is further referred to [18].

4 Multi-patch geometries

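Let us now consider that the parameterized domain \(\Omega ({\varvec{\mu }})\) is split into \(N_p\) non-overlapping subdomains, i.e., patches, such that

\[ \overline{\Omega ({\varvec{\mu }})} = \bigcup _{k=1}^{N_p} \overline{\Omega _{k}({\varvec{\mu }}_{k})}, \tag{17} \]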

where \(\Omega _{k}({\varvec{\mu }}_{k}) \cap \Omega _{l}({\varvec{\mu }}_{l}) = \varnothing\) for \(l \ne k\). Here the patches \({\Omega }_{k}({\varvec{\mu }}_{k})\) are in principle trimmed and their definition follows the setting introduced in Sect. 2.2. For ease of exposition, we assume that the parameters associated to the kth patch coincide with the parameters describing the global computational domain such that \({\varvec{\mu }}_{k} = {\varvec{\mu }}\) for \(k=1,\dots ,N_p\), although in principle different choices are possible (see [30] for more details).

Furthermore, we define the common interface \(\gamma _{j}({{\varvec{\mu }}})\), between two adjacent patches such that

where \(N_{\Gamma }\) is the number of interfaces. We note that the interface can be either trimmed or non-trimmed. The B-spline space (4) can be now rewritten as

where \({\mathcal {B}}_{{\textbf{i}},p}^{k}\) and \({\textbf{F}}_k\) are the B-spline functions and the geometric map associated to the kth patch, respectively. The dimension of the associated space is denoted as \({\mathcal {N}}_{k,0}=\text {dim}(S^p_{k,0})\). And, analogously to (6), the B-spline space of degree p corresponding to the trimmed patch \(\Omega _{k}({\varvec{\mu }})\) now reads

where \({\hat{\Omega }}_{k}({{\varvec{\mu }}})\) is the kth parametric trimmed domain in (5). Following the choice (9), the multi-patch discrete space can be then written as

As in the single-patch case, and unless stated otherwise, we assume the spaces \(V_{h,k}({{\varvec{\mu }}})\) to be \(C^1\)-continuous within each patch. Nevertheless, the multi-patch space \(V_{h}({{\varvec{\mu }}})\) is discontinuous across the interfaces \(\gamma _{j}({{\varvec{\mu }}})\). The continuity required by problem (10) will be imposed in a weak sense. Thus, let us now define the coupling conditions for each interface \(\gamma _{j}({{\varvec{\mu }}})\). First, we denote the displacement fields restricted to \(\partial {\Omega _{k}}({{\varvec{\mu }}})\) and \(\partial {\Omega _{l}}({{\varvec{\mu }}})\) as \({\varvec{u}}_{k}({{\varvec{\mu }}})\) and \({\varvec{u}}_{l}({{\varvec{\mu }}})\), respectively. Then the coupling conditions can be expressed as

which, by means of the standard jump operators, can be rewritten as

There exist several coupling strategies for the imposition of the above continuity constraints in a weak sense. In the numerical experiments of Sect. 7, we use both the super-penalty [13] and Nitsche’s [18] methods to achieve displacement and rotational continuity, both described below. In principle, the reduced order modeling techniques to be applied (Sect. 5) are also suitable for other coupling strategies, such as the mortar method where Lagrange multipliers can be eliminated in the context of the reduced basis method [48].

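The weak formulation (10) of the parameterized problem can be extended to the multi-patch case by enriching the bilinear form with additional terms that weakly enforce the coupling conditions. Thus, the shell multi-patch problem reads: find \({{\varvec{u}}}_h({{\varvec{\mu }}}) \in V_{h}({{\varvec{\mu }}})\) such that

\[ \sum _{k=1}^{N_p} a_k({{\varvec{u}}}_h({{\varvec{\mu }}}),{\varvec{v}}_h;{\varvec{\mu }}) + \sum _{j=1}^{N_{\Gamma }} a_j^{\Gamma }({{\varvec{u}}}_h({{\varvec{\mu }}}),{\varvec{v}}_h;{\varvec{\mu }}) = \sum _{k=1}^{N_p} f_k({\varvec{v}}_h;{\varvec{\mu }}) \quad \forall \, {\varvec{v}}_h \in V_{h}({{\varvec{\mu }}}), \tag{24} \]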

where \(a_k\) and \(f_k\) are the bilinear and linear parameterized forms (11) and (12), respectively, restricted to the patch k, while the coupling terms \(a_j^{\Gamma }\) will be further discussed in Sects. 4.1 and 4.2. The discretization yields the following parameterized linear system of dimension \({\mathcal {N}}_h({{\varvec{\mu }}})=\text {dim}\left( V_h({{\varvec{\mu }}})\right)\)

\[ {\textbf{K}}({{\varvec{\mu }}})\, {\textbf{u}}({{\varvec{\mu }}}) = {\textbf{f}}({{\varvec{\mu }}}), \tag{25} \]

where \({\textbf{K}}\) \(\in {\mathbb {R}}^{{\mathcal {N}}_h({{\varvec{\mu }}})\times {\mathcal {N}}_h({{\varvec{\mu }}})}\) is the global stiffness matrix, \({\textbf{f}} \in {\mathbb {R}}^{{\mathcal {N}}_h({{\varvec{\mu }}})}\) is the force vector, and \({\mathcal {N}}_h({{\varvec{\mu }}})\) is the global number of degrees of freedom. The problem (24)–(25) is the high-fidelity or full order model (FOM) upon which we aim to construct a reduced order model (ROM) for fast parametric simulations.

In what follows, we describe the super-penalty [13] and Nitsche’s [18] coupling methods (Sects. 4.1 and 4.2, respectively). While the first approach is simpler and more efficient, its application is limited to the case in which each interface can be associated to a parametric face for at least one of the two contiguous patches at the interface. On the other hand, Nitsche’s method overcomes this limitation, but is more involved as it requires further terms and third-order derivatives, and, consequently, \(C^2\)-continuous discretization spaces.

4.1 Projected super-penalty approach

In what follows, we will briefly present the projected super-penalty approach that we will use to impose the continuity constraints (23) for some of the numerical experiments in Sect. 7. The reader is further referred to [13, 49] for a more detailed overview of the coupling approach.

At each interface \(\gamma _j({{\varvec{\mu }}})\), for \(j=1,\dots ,N_{\Gamma }\), let us first choose arbitrarily one of the neighboring patches as active. This active patch must be chosen such that \(\gamma _j({{\varvec{\mu }}})\) is fully contained in one of its four patch parametric faces. We extract the patch knot vector associated to that face and remove the first and last knots, denoting the resulting vector as \(\Xi _j\). Then, using \(\Xi _j\) we construct a one-dimensional lower degree spline space \(S^{p-2}\left( \gamma _j(\varvec{\mu })\right)\). Finally, we denote as \(\Pi _j\) the \(L^2\)-projection, related to the interface \(\gamma _j(\varvec{\mu })\), onto the reduced vector space \(S^{p-2}\left( \gamma _j(\varvec{\mu })\right) ^d\) for displacements, and onto \(S^{p-2}\left( \gamma _j(\varvec{\mu })\right)\) for normal rotations. The discretized bilinear form is enriched with penalty terms that weakly enforce the coupling conditions (23) and exploit the properties of the \(L^2\)-projection. Thus, these coupling terms read

The parameters of the penalty method are chosen as:

where \(h_j\) denotes the interface mesh size and the measure \(|\gamma _j(\varvec{\mu })|\) is the length of the coupling interface \(\gamma _j(\varvec{\mu })\). The exponent factor \(c_{\text {exp}}\) is chosen as \(c_{\text {exp}}=p-1\) in the numerical experiments of Sect. 7, which yields optimal convergence of the method in the \(H^2\) norm. A detailed discussion on the choice of \(c_{\text {exp}}\) in relation to optimal convergence and conditioning of the underlying system of equations is given in [13].

We remark that this coupling approach is locking-free at interfaces and the choice of penalty parameters can be done automatically based on the problem setup. Nevertheless, the computational cost grows for higher order splines (\(p > 3\)) and the condition number related to the chosen penalty parameters may affect the accuracy of the solution. In addition, the coupling at interfaces where both sides are trimmed is still an open issue. For this reason, we will consider Nitsche’s method for the coupling of patches in more general cases of complex geometries.

Remark 1

To overcome this problem, in [13] the internal knots of the trimming interfaces are neglected for the computation of the intersection mesh at the interface, which can yield sub-optimal results in particular for non-smooth interfaces.

4.2 Nitsche’s method

Let us now introduce Nitsche’s method for recovering \(C^1\)-continuity at the multi-patch interfaces. A detailed overview of the method is given in [18]. The coupling conditions are imposed in a weak sense by augmenting the discretized bilinear form with penalty, consistency, and symmetry terms. Thus, the coupling terms in (24) read

Here, \(\langle \cdot \rangle _{\delta }\) denotes the average operator, \(B_{nn}={\varvec{n}} \cdot B({\varvec{u}}_h) {\varvec{n}}\) is the bending moment and \(\textbf{T}\) is the ersatz force, defined in [18] as

where \({\varvec{b}} = - \nabla {\textbf{a}}_3\) is the curvature tensor, \({\varvec{t}}\) is the counter-clockwise positively-oriented unit tangent vector to \(\Gamma (\varvec{\mu })\), and \(B_{nt}({\varvec{u}}_h) = {\varvec{n}} \cdot B({\varvec{u}}_h) {\varvec{t}}\) is the twisting moment. The average operator is defined as:

where a is an arbitrary function defined over \(\Omega ({\varvec{\mu }})\), and \(\left. a\right| _{\Omega _k({\varvec{\mu }})}\) and \(\left. a\right| _{\Omega _l({\varvec{\mu }})}\) its restrictions to the patches \(\Omega _k({\varvec{\mu }})\) and \(\Omega _l({\varvec{\mu }})\) at the interface \(\gamma _j({\varvec{\mu }})\). In the numerical experiments of Sect. 7, the penalty constants are chosen as

We remark that this coupling approach is variationally consistent and stable (see Remark 3 below). In addition, its discretized weak formulation results in a well-conditioned linear system and is more robust with respect to the chosen parameters compared to penalty approaches. Nevertheless, it is easy to see that the ersatz force \(\textbf{T}\) requires third-order derivatives (terms \(\nabla \cdot B({\varvec{u}}_h)\) and \(\partial B_{nt}({\varvec{u}}_h)/\partial {\varvec{t}}\) in Eq. (29)), which implies the necessity of \(C^2\)-continuous discretization spaces. Furthermore, the number of terms and the order of the involved derivatives make the implementation of this method more involved than that of the super-penalty approach (recall Sect. 4.1).

Remark 2

The ersatz force \(\textbf{T}\) in Eq. (29) involves third-order derivatives (terms \(\nabla \cdot B({\varvec{u}}_h)\) and \(\partial B_{nt}({\varvec{u}}_h)/\partial {\varvec{t}}\)), which implies the necessity of \(C^2\)-continuous discretization spaces. In particular, and as detailed in [18], the patch approximation spaces \(V_{h,k}({{\varvec{\mu }}})\) in (21) must be chosen such that

where \(u_1, u_2, u_3\) are contravariant components of the displacement, i.e., \({{\varvec{u}}_h} = u_1{\textbf{a}}^1 + u_2{\textbf{a}}^2 + u_3{\textbf{a}}^3\). Consequently, in the numerical examples included in Sect. 7, whenever Nitsche’s method is applied, \(C^2\)-continuous spline spaces are considered.

Remark 3

As discussed, for instance, in [50], in the case of trimmed domains that present small elongated cut elements at the interface, instability effects may arise in the evaluation of the normal fluxes in the formulation (28). This problem can be easily overcome in the case in which one of the patches is not trimmed at the interface, by just selecting \(\delta =1\) in (30), i.e., computing the flux on the non-trimmed side only. However, this is not possible when both patches are trimmed and both present small elongated cut elements at the interface.

A possible alternative is the use of stabilization techniques that have been recently proposed for the Nitsche method in the context of isogeometric discretizations [45, 50, 51]. However, and to the best of our knowledge, no mathematically sound stabilization method has been proposed yet for isogeometric Kirchhoff-Love shells. It is our belief that the minimal stabilization technique proposed in our previous work [50] may be handy in stabilizing the problem. Nevertheless, this study is out of the scope of this paper and has not been addressed yet. In the numerical experiments included in Sect. 7, no numerical instabilities were found.

5 Local reduced basis method

We are interested in problems where a large number of solution evaluations are required for problem (25) for different values of the parameter vector \(\varvec{\mu }\). The use of reduced order modeling techniques, such as the reduced basis method, can speed up the computation of parameterized problems. The main idea is based on an offline/online split: In the offline phase, the first step is to compute snapshots of the FOM and extract a set of reduced basis functions using techniques such as the greedy algorithm or the Proper Orthogonal Decomposition method. Then, a ROM is constructed by orthogonal projection onto the subspace spanned by the reduced basis. In the online phase, a reduced problem is solved to obtain the solution for any given parameter. The reader is further referred to [27, 28] for more details on the reduced basis method. Nevertheless, the application of standard reduced order modeling techniques on parameterized trimmed domains entails several challenges:
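The offline/online split can be sketched on a toy problem. The example below is a minimal illustration under strong simplifying assumptions, and it is not the shell model of this work: the full order operator is taken as affine in a single scalar parameter, \({\textbf{K}}(\mu ) = {\textbf{K}}_0 + \mu {\textbf{K}}_1\), with hypothetical matrices, so that no hyper-reduction is needed. The reduced basis is extracted by Proper Orthogonal Decomposition through a singular value decomposition of the snapshot matrix.

```python
import numpy as np

def pod_basis(snapshots, tol=1e-12):
    """POD basis retaining all but a fraction tol of the snapshot energy."""
    U, s, _ = np.linalg.svd(snapshots, full_matrices=False)
    energy = np.cumsum(s**2) / np.sum(s**2)
    r = min(int(np.searchsorted(energy, 1.0 - tol)) + 1, len(s))
    return U[:, :r]

# Toy full order model (assumed): K(mu) = K0 + mu * K1 with a fixed load vector.
N = 50
K0 = 2.0 * np.eye(N) - np.eye(N, k=1) - np.eye(N, k=-1)
K1 = np.eye(N)
f = np.ones(N)

def solve_fom(mu):
    return np.linalg.solve(K0 + mu * K1, f)

# Offline phase: compute snapshots, extract the basis, project the operators once.
train = np.linspace(1.0, 10.0, 15)
S = np.column_stack([solve_fom(mu) for mu in train])
V = pod_basis(S)
K0_r, K1_r, f_r = V.T @ K0 @ V, V.T @ K1 @ V, V.T @ f

def solve_rom(mu):
    # Online phase: assemble and solve a small r x r system, then lift the solution.
    return V @ np.linalg.solve(K0_r + mu * K1_r, f_r)

mu_test = 3.3   # parameter value not contained in the training set
rel_err = np.linalg.norm(solve_rom(mu_test) - solve_fom(mu_test)) / np.linalg.norm(solve_fom(mu_test))
```

The online cost depends only on the number of retained modes, which is why the affine structure of the operators matters: without it, the projection would have to be repeated for every new parameter value.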

- The spline space \(V_h({{\varvec{\mu }}})\) and its dimension \({\mathcal {N}}_h({{\varvec{\mu }}})\) depend on the geometric parameters \({{\varvec{\mu }}}\). In particular, the set of active basis functions may change for different snapshots depending on the value of the parameters \({{\varvec{\mu }}}\). This impedes the construction of snapshots matrices to extract a reduced basis, since snapshots may be vectors of different length, and requires suitable snapshots extension [52].

- The efficiency of the offline/online decomposition is based on the assumption that operators depend affinely on the parameters. In case of geometrically parameterized problems, this assumption does not always hold and has to be recovered by constructing affine approximations with efficient hyper-reduction techniques [26, 38].

- The solution manifold obtained by extended snapshots is highly nonlinear with respect to the parameters \({\varvec{\mu }}\). The same holds also for the affine approximations of the parameter-dependent operators. The approximation of a nonlinear manifold with a single, linear reduced basis space may yield a very high dimension of the basis. This requires tailored strategies to ensure the efficiency of the method.

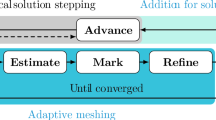

In the following we will briefly review a local reduced basis method to construct efficient ROMs on parameterized trimmed domains based on clustering strategies and the Discrete Empirical Interpolation Method (DEIM). The reader is further referred to our previous work [32] for a more detailed exposition. The main steps of the local method that will be discussed in the following sections are summarized as follows:

1. perform a trivial extension of snapshots and define the extended FOM on a common, background mesh,

2. cluster the parameters and associated snapshots in order to construct DEIM and reduced basis approximations,

3. in the offline phase, train local DEIM approximations and reduced bases for each cluster combination and perform the projection,

4. choose the cluster with the smallest distance to a given parameter during the online phase and solve a reduced problem of sufficiently small dimension.

5.1 Snapshots extension

Let us first discuss the construction of snapshots. The domain at hand \(\Omega ({\varvec{\mu }})\) depends on \({\varvec{\mu }}\), and thus the support of the B-spline basis functions is also parameter-dependent. Since both the spline space \(V_h({{\varvec{\mu }}})\) and its dimension \({\mathcal {N}}_h({{\varvec{\mu }}})\) depend on the geometric parameters \({{\varvec{\mu }}}\), the dimension of each solution snapshot may differ from one parameter to the other. Therefore, the first step is to perform a suitable extension of the solution vector \({\textbf{u}}({\varvec{\mu }})\) such that all solution vectors have the same length. As the original non-trimmed domain \(\Omega _0\) is independent of the parameters, the natural choice is to use it as a common background domain where snapshots are extended. In this work, we consider a zero extension although other choices are also possible [52]. This extension is performed over the non-trimmed, multi-patch space \(V_{h,0}=\oplus _{k=1}^{N_p} (S^p_{k,0})^3\) (recall definition (19)), whose dimension is \({\mathcal {N}}_{h,0}=\text {dim}\left( V_{h,0}\right)\).

With these definitions at hand, the extended full order problem in (25) becomes:

\[\widehat{\textbf{K}}({\varvec{\mu }})\,\widehat{\textbf{u}}({\varvec{\mu }}) = \widehat{\textbf{f}}({\varvec{\mu }}), \tag{33}\]

where \(\widehat{\textbf{K}}({\varvec{\mu }}) \in {\mathbb {R}}^{{\mathcal {N}}_{h,0}\times {\mathcal {N}}_{h,0}}\) is the extended stiffness matrix, \(\widehat{\textbf{u}}({\varvec{\mu }}) \in {\mathbb {R}}^{{\mathcal {N}}_{h,0}}\) is the extended solution vector and \(\widehat{\textbf{f}}({\varvec{\mu }}) \in {\mathbb {R}}^{{\mathcal {N}}_{h,0}}\) is the extended right-hand side vector. Therefore, the size of the above extended problem is \({\varvec{\mu }}\)-independent and is suitable for the computation of snapshots.
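The zero extension described above can be illustrated with a minimal sketch. The function name `extend_snapshot`, the index lists of active degrees of freedom and the toy sizes are hypothetical and only serve the illustration; in practice the active set would come from the trimming information of the discretization.

```python
import numpy as np

def extend_snapshot(u_active, active_dofs, n_background):
    """Zero-extend a trimmed solution vector onto the background space."""
    u_ext = np.zeros(n_background)
    # Active DOFs keep their values, inactive DOFs stay at zero.
    u_ext[np.asarray(active_dofs)] = u_active
    return u_ext

# Two hypothetical snapshots with different numbers of active DOFs.
n_background = 6
snap_a = extend_snapshot(np.array([1.0, 2.0, 3.0]), [0, 2, 5], n_background)
snap_b = extend_snapshot(np.array([4.0, 5.0, 6.0, 7.0]), [1, 2, 3, 4], n_background)

# Both extended vectors have length n_background, so they can be stacked
# column-wise into a snapshot matrix.
S = np.column_stack([snap_a, snap_b])
```

After the extension, snapshots of originally different length share the dimension of the background space, which is what makes the assembly of a snapshot matrix possible.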

5.2 Clustering strategy

In what follows we will briefly review the clustering strategy to construct local ROMs. The main idea is to build multiple approximations based on smaller subspaces instead of one global space. Then in the online phase, the closest cluster is selected for a given parameters vector \({\varvec{\mu }}\) and a local reduced problem is solved.

For geometrically parameterized problems on trimmed domains we opt for a parameter-based clustering [32]. We seek a partition of the parameter space \({\mathcal {P}}\) in \(N_c\) subspaces, such that

In this work, we will use the k-means clustering algorithm [53] to partition the parameter space although other partitioning strategies are also possible [33, 54, 55]. The main idea is to assign a given parameter vector \({{\varvec{\mu }}}\) to the cluster that minimizes the maximum distance \({\mathcal {D}}({{\varvec{\mu }}},k)\) between boundaries of the trimmed regions \(\Omega _i({\varvec{\mu }}), \ i=1,\dots ,K\), in (3), where \(\exists C > 0\)

and \(\bar{{\varvec{\mu }}}_k\) is the centroid of the kth cluster.\(^{1}\) Then, the parameter space \({\mathcal {P}}\) is partitioned into \(N_c\) subspaces as

Thus, the k-means clustering minimizes the distance between each parameter vector and the cluster’s centroid with respect to the Euclidean norm \(\left\Vert \cdot \right\Vert _2\). This strategy partitions trimmed discretizations that have similar active and inactive regions.

To perform such a partition, we work with a discrete counterpart of the continuous space \({\mathcal {P}}\). Thus, we create a sufficiently fine and properly selected training sample set \({\mathcal {P}}^s=\{{\varvec{\mu }}_1,\dots ,{\varvec{\mu }}_{N_s}\} \subset {\mathcal {P}}\) of dimension \(N_s=\text {dim}({\mathcal {P}}^s)\), for which the \(N_c\) centroids are sought and updated iteratively until the algorithm converges. We refer the interested reader to [34, Algorithm 5] for a detailed overview of the k-means algorithm. Once the parameter space partition (34) is created, the training set \({\mathcal {P}}^s\) can be clustered accordingly as

\[{\mathcal {P}}^s = \bigcup _{k=1}^{N_c}{\mathcal {P}}^s_{k}. \tag{37}\]

Note that a suitable number of clusters \(N_c\) should be chosen in advance to perform the k-means algorithm. The suitability of this choice can be evaluated a posteriori by considering the k-means variance as

In particular, the k-means variance is expected to decrease with increasing number of clusters and the smallest integer can be chosen as \(N_c\) at the transition between a steep slope and a plateau [56]. Note that in the numerical experiments of Sect. 7, this transition occurs at \(N_c \le 10\).
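The clustering of the training set and the a posteriori check of \(N_c\) can be sketched as follows. This is a plain Lloyd iteration with the Euclidean distance, written only for illustration; the function name `kmeans`, the random initialization and the definition of the variance as the within-cluster sum of squared distances are assumptions of this sketch and need not coincide with the algorithm of [34] or with the exact form of (38).

```python
import numpy as np

def kmeans(samples, n_clusters, n_iter=100, seed=0):
    """Plain Lloyd iteration with Euclidean distance (illustrative only)."""
    rng = np.random.default_rng(seed)
    start = rng.choice(len(samples), size=n_clusters, replace=False)
    centroids = samples[start].copy()
    for _ in range(n_iter):
        # Assign every sample to its closest centroid.
        dist = np.linalg.norm(samples[:, None, :] - centroids[None, :, :], axis=2)
        labels = dist.argmin(axis=1)
        updated = centroids.copy()
        for k in range(n_clusters):
            members = samples[labels == k]
            if len(members) > 0:  # keep the old centroid if a cluster empties
                updated[k] = members.mean(axis=0)
        shift = np.max(np.abs(updated - centroids))
        centroids = updated
        if shift < 1e-12:
            break
    dist = np.linalg.norm(samples[:, None, :] - centroids[None, :, :], axis=2)
    labels = dist.argmin(axis=1)
    # Assumed variance measure: within-cluster sum of squared distances.
    variance = float(np.sum((samples - centroids[labels]) ** 2))
    return centroids, labels, variance

# Hypothetical one-dimensional training set on [0, 0.1].
samples = np.linspace(0.0, 0.1, 50).reshape(-1, 1)
variances = [kmeans(samples, n_c)[2] for n_c in range(1, 7)]
```

Plotting `variances` against the number of clusters gives the curve on which the transition between the steep slope and the plateau is sought.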

Once the clustering is performed, local ROMs are constructed in the offline phase. The construction of the localized ROM will be discussed in Sects. 5.4 and 5.5, while a detailed overview of the offline phase of the algorithm is given in [32, Algorithms 1–2]. Afterwards, in the online phase, for a given parameters vector \({\varvec{\mu }}\), we determine the corresponding cluster \({\mathcal {P}}_k\) according to (36) and select its associated local ROM for solving a reduced problem. This online phase of the algorithm is also presented in more detail in [32, Algorithm 3].
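The online cluster selection amounts to a nearest-centroid search, as the following sketch suggests. The centroid values and the function name `select_cluster` are hypothetical, and the plain Euclidean distance is used here as a stand-in for the distance appearing in (36).

```python
import numpy as np

def select_cluster(mu, centroids):
    """Return the index of the centroid closest to the parameter mu."""
    mu = np.atleast_1d(np.asarray(mu, dtype=float))
    distances = np.linalg.norm(centroids - mu, axis=1)
    return int(np.argmin(distances))

# Hypothetical centroids of four clusters on a one-dimensional parameter space.
centroids = np.array([[0.0125], [0.0375], [0.0625], [0.0875]])
k = select_cluster(0.0319, centroids)
```

The returned index would then be used to look up the local reduced basis and the local affine approximation stored for that cluster in the offline phase.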

5.3 Proper orthogonal decomposition

In the following we will briefly recall the Proper Orthogonal Decomposition (POD) that we will use for the construction of the ROM later on. The POD is based on the singular value decomposition algorithm (SVD) and aims to extract a set of orthonormal basis functions [57]. The SVD of a matrix \({{\mathbb {S}}} \in {\mathbb {R}}^{m \times n}\) reads

\[{\mathbb {S}} = {\mathbb {U}}\,\varvec{\Sigma }\,{\mathbb {Z}}^{T}, \tag{39}\]

where the orthogonal matrices \({\mathbb {U}}=[{\varvec{\zeta }}_1,\dots ,{\varvec{\zeta }}_{m}] \in {\mathbb {R}}^{m \times m}\), \({\mathbb {Z}}=[{\varvec{\psi }}_1,\dots ,{\varvec{\psi }}_{n}] \in {\mathbb {R}}^{n \times n}\) have columns containing the left and right singular vectors of \({{\mathbb {S}}}\), respectively, \(\varvec{\Sigma }\in {\mathbb {R}}^{m \times n}\) is a rectangular diagonal matrix that contains the singular values \(\sigma _1 \ge \sigma _2 \ge \dots \ge \sigma _r\), and \(r \le \min (m, n)\) is the rank of \({\mathbb {S}}\). The POD basis of dimension N is then defined as the set of the first N left singular vectors of \({\mathbb {S}}\), i.e., those associated with the N largest singular values. We can choose the dimension of the basis N such that the error in the POD basis is smaller than a prescribed tolerance \(\varepsilon _{\text {POD}}\) [28], namely N is the smallest integer such that

Note that the POD basis is orthonormal by construction and the basis functions can be understood as modes that retain most of the energy of the original system. The dimension of the latter is then reduced such that the energy captured by the neglected modes is smaller than or equal to \(\varepsilon _{\text {POD}}\) in (40).
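A minimal sketch of the POD truncation is given below. The selection rule used here, namely the smallest N whose retained relative energy is at least \(1-\varepsilon _{\text {POD}}^2\), is a common choice in the reduced basis literature and is an assumption of this sketch; it may differ in detail from the criterion (40). The function name `pod_basis` and the synthetic snapshot matrix are hypothetical.

```python
import numpy as np

def pod_basis(S, eps_pod):
    """Return the first N left singular vectors of S and all singular values."""
    U, sigma, _ = np.linalg.svd(S, full_matrices=False)
    # Relative energy retained by the first i modes, i = 1, ..., r.
    energy = np.cumsum(sigma**2) / np.sum(sigma**2)
    # Assumed criterion: smallest N with energy >= 1 - eps_pod^2.
    N = int(np.searchsorted(energy, 1.0 - eps_pod**2)) + 1
    N = min(N, sigma.size)
    return U[:, :N], sigma

# Synthetic snapshot matrix of rank three.
rng = np.random.default_rng(0)
S = rng.standard_normal((50, 3)) @ rng.standard_normal((3, 20))
V, sigma = pod_basis(S, eps_pod=1e-6)

# Relative projection error of the snapshots onto the POD space.
rel_err = np.linalg.norm(S - V @ (V.T @ S)) / np.linalg.norm(S)
```

For a snapshot matrix of low numerical rank, as in this toy case, the truncated basis has at most as many columns as the rank and reproduces the snapshots up to the prescribed tolerance.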

5.4 Local reduced basis problem

In order to construct an efficient ROM, we seek local reduced bases \({\textbf{V}}_k \in {\mathbb {R}}^{{\mathcal {N}}_{h,0}\times N_k}\) for every cluster k, where \(N_k\) is the dimension of the local reduced space, which is ideally small, i.e., \(N_k \ll {\mathcal {N}}_{h,0}\). The reduced basis is constructed in the offline phase separately for each cluster based on the strategy discussed in Sect. 5.2. In this work we will consider the POD, briefly recalled in Sect. 5.3, to construct each reduced basis \({\textbf{V}}_k\). Note that other techniques can in principle also be chosen for the construction, e.g., the greedy algorithm [30].

To construct a POD basis, let us again consider a fine training sample set \({\mathcal {P}}^s = \{{\varvec{\mu }}_1,\dots ,{\varvec{\mu }}_{N_s}\} \subset {\mathcal {P}}\) with dimension \(N_s=\text {dim}({\mathcal {P}}^s)\) introduced in Sect. 5.2. Then we form the snapshots matrix \({{\mathbb {S}}} \in {\mathbb {R}}^{{\mathcal {N}}_{h,0} \times N_s}\) as

\[{\mathbb {S}} = \left[ \widehat{\textbf{u}}_1 \,|\, \dots \,|\, \widehat{\textbf{u}}_{N_s}\right] , \tag{41}\]

where the vectors \(\widehat{\textbf{u}}_j \in {\mathbb {R}}^{{\mathcal {N}}_{h,0}}\) denote the extended solutions \(\widehat{\textbf{u}}{({\varvec{\mu }}_j)}\) for \(j=1,\dots ,N_s\). These snapshots are also partitioned into \(N_c\) submatrices as \(\lbrace {\textbf{S}}_u^1,\dots ,{\textbf{S}}_u^{N_c}\rbrace\), according to \({\mathcal {P}}^s = \cup _{k=1}^{N_c}{\mathcal {P}}^s_{k}\) in (37). The local reduced basis \({\textbf{V}}_k\) is then extracted from each cluster \({\textbf{S}}_u^k\), separately applying the POD as discussed in Sect. 5.3. Thus, the basis reads:

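Let us now derive the local reduced basis problem. For any \({{\varvec{\mu }}} \in {\mathcal {P}}_k\), the solution \(\widehat{{\textbf{u}}}({\varvec{\mu }})\) can be approximated using the local reduced basis \({\textbf{V}}_k\), as

\[\widehat{{\textbf{u}}}({\varvec{\mu }}) \approx {\textbf{V}}_k\, {\textbf{u}}_N({\varvec{\mu }}), \tag{43}\]

where \({\textbf{u}}_N({\varvec{\mu }})\in {\mathbb {R}}^{N_k}\) is the solution vector of the reduced problem. A projection-based ROM can be obtained from (33) by enforcing the residual to be orthogonal to the subspace spanned by \({\textbf{V}}_k\), such that

\[{\textbf{V}}_k^{T}\left( \widehat{{\textbf{f}}}({\varvec{\mu }}) - \widehat{{\textbf{K}}}({\varvec{\mu }})\, {\textbf{V}}_k\, {\textbf{u}}_N({\varvec{\mu }})\right) = {\textbf{0}}. \tag{44}\]

Thus, the local reduced basis problem reads:

\[{\textbf{K}}_N({\varvec{\mu }})\, {\textbf{u}}_N({\varvec{\mu }}) = {\textbf{f}}_N({\varvec{\mu }}), \tag{45}\]

while the reduced matrix and vector are defined as

\[{\textbf{K}}_N({\varvec{\mu }}) = {\textbf{V}}_k^{T}\, \widehat{{\textbf{K}}}({\varvec{\mu }})\, {\textbf{V}}_k, \qquad {\textbf{f}}_N({\varvec{\mu }}) = {\textbf{V}}_k^{T}\, \widehat{{\textbf{f}}}({\varvec{\mu }}). \tag{46}\]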

The size of the reduced problem (45) is \(N_k \ll {\mathcal {N}}_{h,0}\), which makes it suitable for fast online computation given many different parameters \({\varvec{\mu }} \in {\mathcal {P}}_k\). However, the solution of the reduced problem requires the assembly of the parameter-dependent operators \(\widehat{{\textbf{K}}}({\varvec{\mu }})\) and \(\widehat{{\textbf{f}}}({\varvec{\mu }})\). An important assumption for the efficiency of the reduced basis method in general is therefore that the operators depend affinely on the parameters \({{\varvec{\mu }}}\). This assumption is not always fulfilled in the presence of geometrical parameters, so we will build affine approximations to recover the affine dependence. Since we aim to approximate a manifold of extended operators that is nonlinear with respect to \({\varvec{\mu }}\), the dimension of the approximation space may be high. For this reason, the clustering strategy of Sect. 5.2 will also be considered to construct local affine approximations. Note that from now on we assume for ease of exposition that the clustering is performed only once for constructing both the reduced bases and affine approximations, although in principle this could be chosen differently [32]. We now introduce the following local affine approximation for any \({{\varvec{\mu }}} \in {\mathcal {P}}_k\):

\[\widehat{{\textbf{K}}}({\varvec{\mu }}) \approx \sum _{q=1}^{Q_a^k} \theta _{a,q}^{k}({\varvec{\mu }})\, \widehat{{\textbf{K}}}_q^k, \qquad \widehat{{\textbf{f}}}({\varvec{\mu }}) \approx \sum _{q=1}^{Q_f^k} \theta _{f,q}^{k}({\varvec{\mu }})\, \widehat{{\textbf{f}}}_q^k, \tag{47}\]

where \(\theta _{a,q}^{k}: {\mathcal {P}}_k \rightarrow {\mathbb {R}}\), for \(q=1,\dots ,Q_{a}^k\), and \(\theta _{f,q}^k : {\mathcal {P}}_k \rightarrow {\mathbb {R}}\), for \(q=1,\dots ,Q_f^k\), are \({\varvec{\mu }}\)-dependent functions, whereas \(\widehat{{\textbf{K}}}_q^k \in {\mathbb {R}}^{{\mathcal {N}}_{h,0}\times {\mathcal {N}}_{h,0}}\) and \(\widehat{{\textbf{f}}}_q^k \in {\mathbb {R}}^{{\mathcal {N}}_{h,0}}\) are \({{\varvec{\mu }}}\)-independent forms.\(^{2}\) Since the latter forms do not depend on the parameters \({{\varvec{\mu }}}\), they can be pre-computed and stored in the offline phase. Then, the online assembly requires only the evaluation of \(\theta _{a,q}^{k}, \theta _{f,q}^k\), which is inexpensive assuming that \(Q_{a}^k, Q_f^k \ll {\mathcal {N}}_{h,0}\). To obtain the affine approximation in the form of (47), we will employ the Discrete Empirical Interpolation Method in combination with radial basis function interpolation. This hyper-reduction strategy will be further discussed in Sect. 5.5. Once the affine approximation is recovered, inserting (47) into (46) yields, for any given parameter \({{\varvec{\mu }}} \in {\mathcal {P}}_k\):

\[{\textbf{K}}_N({\varvec{\mu }}) \approx \sum _{q=1}^{Q_a^k} \theta _{a,q}^{k}({\varvec{\mu }})\, {\textbf{V}}_k^{T}\, \widehat{{\textbf{K}}}_q^k\, {\textbf{V}}_k, \qquad {\textbf{f}}_N({\varvec{\mu }}) \approx \sum _{q=1}^{Q_f^k} \theta _{f,q}^{k}({\varvec{\mu }})\, {\textbf{V}}_k^{T}\, \widehat{{\textbf{f}}}_q^k, \tag{48}\]

where \({\textbf{K}}_N({\varvec{\mu }}) \in {\mathbb {R}}^{N_k \times N_k}\) and \({{\textbf{f}}}_N({\varvec{\mu }}) \in {\mathbb {R}}^{N_k}\) are the reduced matrix and right-hand side vector, respectively. We remark that in (48) only the coefficients \(\theta _{a,q}^{k}, \theta _{f,q}^k\) depend on the parameters \({\varvec{\mu }}\) and are evaluated online, while all other quantities are assembled and stored in the offline phase. Then, during the online phase, for any given parameter \({\varvec{\mu }}\) the respective cluster is selected as in (36) and the local reduced problem in (45) is solved considering the approximation assembly in (48). Finally, the high-fidelity approximation of the solution can be recovered through (43). We remark that the efficiency of the overall method depends on the size of the local reduced problem \(N_k\), on the number of local affine terms \(Q_{a}^k, Q_f^k\), as well as on the efficient online evaluation of the coefficients \(\theta _{a,q}^{k}, \theta _{f,q}^k\). The latter aspects will be further elaborated in Sect. 5.5.
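The offline projection of the affine terms and the online assembly and solution can be sketched as follows. The function names, the toy operators and the coefficient values are hypothetical; the sketch only assumes the Galerkin projection and the affine structure described in this section.

```python
import numpy as np

def project_affine_terms(V, K_terms, f_terms):
    """Offline: project the mu-independent terms onto the local basis V."""
    KN_terms = [V.T @ Kq @ V for Kq in K_terms]
    fN_terms = [V.T @ fq for fq in f_terms]
    return KN_terms, fN_terms

def solve_online(theta_a, theta_f, KN_terms, fN_terms):
    """Online: combine the projected terms and solve the small system."""
    KN = sum(t * Kq for t, Kq in zip(theta_a, KN_terms))
    fN = sum(t * fq for t, fq in zip(theta_f, fN_terms))
    return np.linalg.solve(KN, fN)

# Toy full order operators with an exactly affine parameter dependence.
rng = np.random.default_rng(1)
n = 8
A = rng.standard_normal((n, n))
K_terms = [A @ A.T + n * np.eye(n), np.diag(np.arange(1.0, n + 1))]
f_terms = [rng.standard_normal(n)]
mu = 0.3
theta_a, theta_f = [1.0, mu], [1.0 + mu]

# Full order reference solution for this parameter value.
K_full = theta_a[0] * K_terms[0] + theta_a[1] * K_terms[1]
u_full = np.linalg.solve(K_full, theta_f[0] * f_terms[0])

# Orthonormal basis whose span contains the reference solution.
V, _ = np.linalg.qr(np.column_stack([u_full, rng.standard_normal((n, 2))]))
KN_terms, fN_terms = project_affine_terms(V, K_terms, f_terms)
u_N = solve_online(theta_a, theta_f, KN_terms, fN_terms)
u_rb = V @ u_N  # lift the reduced solution back to the full space
```

In this toy setting the basis is built so that it contains the full order solution, hence the lifted reduced solution reproduces it up to round-off. The online stage only touches objects of the reduced size.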

5.5 Local discrete empirical interpolation method

In this section we will briefly present the hyper-reduction strategy based on the Discrete Empirical Interpolation Method (DEIM) for matrices and vectors. The reader is further referred to [38] for a detailed presentation of the method.

As discussed before, the first crucial step for the efficiency of the ROM involves constructing local affine approximations in the form of Eq. (47). These are constructed separately for each cluster during the offline phase. For ease of exposition, we consider the same training sample set \({\mathcal {P}}^s = \{{\varvec{\mu }}_1,\dots ,{\varvec{\mu }}_{N_s}\} \subset {\mathcal {P}}\) of dimension \(N_s\) as the one in (41), although other choices are also possible [32]. Then we form the snapshots matrices \({{\textbf{S}}_a} \in {\mathbb {R}}^{{{{\mathcal {N}}^2_{h,0}}} \times N_{s}}\) and \({{\textbf{S}}_f} \in {\mathbb {R}}^{{\mathcal {N}}_{h,0} \times N_{s}}\)

\[{\textbf{S}}_a = \left[ \widehat{\textbf{k}}_1 \,|\, \dots \,|\, \widehat{\textbf{k}}_{N_s}\right] , \qquad {\textbf{S}}_f = \left[ \widehat{\textbf{f}}_1 \,|\, \dots \,|\, \widehat{\textbf{f}}_{N_s}\right] , \tag{49}\]

where the vectors \(\widehat{\textbf{k}}_i = \text {vec}(\widehat{{\textbf{K}}} ({\varvec{\mu }}_i))\in {\mathbb {R}}^{{\mathcal {N}}^2_{h,0}}\) and \(\widehat{\textbf{f}}_i=\widehat{\textbf{f}}({\varvec{\mu }}_i)\in {\mathbb {R}}^{{\mathcal {N}}_{h,0}}\), with \(i=1,\dots ,N_s\), denote the vectorization of the extended stiffness matrix and the extended right-hand side vector, respectively. Following the training sample set partitioning \({\mathcal {P}}^s = \cup _{k=1}^{N_c}{\mathcal {P}}^s_{k}\) in (37), the snapshots matrices \({\textbf{S}}_a\) and \({\textbf{S}}_f\) are also partitioned into \(N_c\) submatrices \(\lbrace {\textbf{S}}_a^1,\dots ,{\textbf{S}}_a^{N_c}\rbrace\) and \(\lbrace {\textbf{S}}_f^1,\dots ,{\textbf{S}}_f^{N_c}\rbrace\), accordingly. Then, we apply the POD to each submatrix to obtain the matrices \(\widehat{{\textbf{K}}}_q^k\) and vectors \(\widehat{{\textbf{f}}}_q^k\) in (47). Here, the number of affine terms \(Q_a^k\) and \(Q_f^k\) can be determined by prescribing a tolerance \(\varepsilon _{\text {POD}}\) as in (40). It should be remarked that the latter should in general be lower than the tolerance used to construct the local reduced basis, so that the accuracy of the DEIM approximation does not impede the overall accuracy of the ROM [28].

Now, let us discuss how to efficiently compute the parameter-dependent coefficients \(\theta _{a,q}^{k} ({\varvec{\mu }})\) and \(\theta _{f,q}^{k} ({\varvec{\mu }})\) in (47) for each cluster. For this purpose, we will use the so-called magic points [58] according to the empirical interpolation procedure [26]. For each local affine approximation k, a collection of \(Q_a^k, Q_f^k\) entries is selected based on a greedy algorithm that minimizes the interpolation error over the snapshots [28]. In what follows, the selected magic points are denoted as \({\mathcal {J}}_a^k, {\mathcal {J}}_f^k\) for the stiffness matrix and right-hand side vector, respectively. These entries fulfill exactly the following interpolation constraints for the stiffness matrix and right-hand side vector for each \({\varvec{\mu }}\in {\mathcal {P}}_k\):

We remark that the two right-hand sides in the equations above require the online assembly of a collection of \(Q_a^k\)/\(Q_f^k\) FOM matrix/vector entries for a given \({{\varvec{\mu }}} \in {\mathcal {P}}_k\), which can be costly and intrusive. Therefore, we will opt for interpolating with radial basis functions (RBFs) following our previous work [32]. Similar to the construction of local affine approximations, local RBF-interpolants are constructed separately for each cluster k. The main idea is to compute offline the values of \(\theta _{a,q}^{k} ({\varvec{\mu }})\) and \(\theta _{f,q}^{k} ({\varvec{\mu }})\) as in (50), for each training sample \({{\varvec{\mu }}} \in {\mathcal {P}}^s_k\), and train a fast interpolant using these computations. Then, in the online phase the local interpolants can be evaluated rapidly for any given \({{\varvec{\mu }}} \in {\mathcal {P}}_k\), with the coefficients \(\theta _{a,q}^{k} ({\varvec{\mu }})\) in (47) approximated as

\[\theta _{a,q}^{k}({\varvec{\mu }}) \approx \sum _{j=1}^{N_s^k} \omega _{a,q,j}^{k}\, \phi _{q,j}^k\left( \left\Vert {\varvec{\mu }}-{\varvec{\mu }}_j\right\Vert _2\right) , \tag{51}\]

where \(N_s^k=\text {dim}({\mathcal {P}}^s_{k})\) is the number of training samples associated to the kth cluster, \(\phi _{q,j}^k\) is the radial basis function associated to the jth center parameter point \({\varvec{\mu }}_j\) and \(\left\Vert \cdot \right\Vert _2\) denotes the Euclidean norm. For the numerical experiments in Sect. 7 we will use cubic RBFs, although other types of functions can be also chosen [59]. During the offline phase, the unknown weights \(\omega _{a,q,j}^{k}\) are computed separately for each cluster such that they fulfill the interpolation constraint exactly for \({{\varvec{\mu }}_k} \in {\mathcal {P}}^s_{k}\)

The procedure is identical for the coefficients \({\theta }^k_{f,q}\) associated to the right-hand side vector and is therefore omitted here.
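The offline training and online evaluation of a cubic RBF interpolant for one coefficient can be sketched as below. The functions `fit_cubic_rbf` and `eval_cubic_rbf` and the training data are hypothetical. Note also that this sketch augments the interpolant with a linear polynomial term, which guarantees a uniquely solvable system for the cubic kernel; this augmentation is an assumption of the sketch and is not stated in the text.

```python
import numpy as np

def fit_cubic_rbf(mu_train, theta_train):
    """Offline: weights of a cubic RBF interpolant with linear polynomial tail."""
    n, d = mu_train.shape
    r = np.linalg.norm(mu_train[:, None, :] - mu_train[None, :, :], axis=2)
    P = np.hstack([np.ones((n, 1)), mu_train])  # basis of linear polynomials
    A = np.block([[r**3, P], [P.T, np.zeros((d + 1, d + 1))]])
    rhs = np.concatenate([theta_train, np.zeros(d + 1)])
    coeffs = np.linalg.solve(A, rhs)
    return coeffs[:n], coeffs[n:]

def eval_cubic_rbf(mu, mu_train, weights, poly):
    """Online: evaluate the interpolant at a new parameter value."""
    mu = np.atleast_1d(np.asarray(mu, dtype=float))
    r = np.linalg.norm(mu_train - mu, axis=1)
    return float(weights @ r**3 + poly[0] + poly[1:] @ mu)

# Hypothetical training values of one coefficient theta on a 1D cluster.
mu_train = np.linspace(0.0, 1.0, 9).reshape(-1, 1)
theta_train = np.sin(3.0 * mu_train[:, 0])
weights, poly = fit_cubic_rbf(mu_train, theta_train)
theta_at_nodes = np.array(
    [eval_cubic_rbf(m, mu_train, weights, poly) for m in mu_train]
)
```

The interpolant reproduces the training values at the center points, which mirrors the interpolation constraint imposed on the weights. One such interpolant would be trained per coefficient and per cluster.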

So far we have presented two approximations with respect to the FOM in (25), namely the local reduced problem as well as affine approximations of the extended stiffness matrix \(\widehat{\textbf{K}}({\varvec{\mu }})\) and right-hand side vector \(\widehat{\textbf{f}}({\varvec{\mu }})\). The construction of localized reduced bases and local affine approximations via DEIM makes it possible to limit the dimension of the bases and the number of affine terms, respectively. Moreover, the RBF-interpolation of the coefficients in (51) enables a rapid evaluation in the online phase. It should be noted that the localized method requires additional offline effort to construct and store multiple bases. Nevertheless, its main advantage is the reduction of the online computational cost.
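For completeness, the greedy selection of interpolation entries can be illustrated with the classical DEIM index selection. The sketch below follows the standard algorithm from the DEIM literature and is not necessarily identical to the procedure used in [28, 38]; the function names and the random test basis are hypothetical.

```python
import numpy as np

def deim_indices(U):
    """Greedy selection of one interpolation index per basis vector."""
    idx = [int(np.argmax(np.abs(U[:, 0])))]
    for q in range(1, U.shape[1]):
        # Interpolate the new basis vector at the indices selected so far.
        c = np.linalg.solve(U[idx, :q], U[idx, q])
        residual = U[:, q] - U[:, :q] @ c
        # The next index is where the interpolation residual is largest.
        idx.append(int(np.argmax(np.abs(residual))))
    return np.array(idx)

def deim_coefficients(U, idx, sampled_entries):
    """Coefficients that match the given entries at the selected indices."""
    return np.linalg.solve(U[idx, :], sampled_entries)

# Hypothetical orthonormal basis with four terms and a vector in its span.
rng = np.random.default_rng(3)
U, _ = np.linalg.qr(rng.standard_normal((30, 4)))
idx = deim_indices(U)
coeffs_true = np.array([1.0, -2.0, 0.5, 3.0])
g = U @ coeffs_true
theta = deim_coefficients(U, idx, g[idx])
```

Only as many entries of the vector as there are basis terms are needed to recover the coefficients. In the approach described above, these entries are not assembled online but replaced by the RBF interpolants of the coefficients.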

6 ROM-based parametric shape optimization

In this section, we will briefly review the application of the discussed reduction strategies to optimization problems, following closely the notation in [57]. Such problems require several evaluations of the solution and the objective function to be minimized, which can be expensive. Therefore, these can benefit from reduced basis approximations.

First, let us assume that \(\varvec{\mu }\) controls the shape of the computational domain at hand \(\Omega (\varvec{\mu })\) for the optimization process. As a first step, a reduced model is constructed offline for the design variables \(\varvec{\mu }\) following the approach presented in Sect. 5. Then, the optimization is performed online. In what follows we will directly use the discrete reduced approximation \({{\textbf{u}}}_N={{\textbf{u}}}_N(\varvec{\mu })\) of Eq. (43) for our exposition.

The parametric optimization problem that we will consider in this paper is the compliance minimization under a given volume constraint, which is a common choice in structural optimization. The minimization of the compliance implies that the structure deforms less, i.e., it becomes stiffer. Let us now formulate the optimization problem at hand as:

where \(V({\varvec{\mu }})\) is the volume of the domain \(\Omega ({\varvec{\mu }})\), \(V_0\) a prescribed maximum volume, and \(\varvec{\mu }_{min}, \varvec{\mu }_{max}\) the lower and upper bounds for the design variable \(\varvec{\mu }\), respectively. Here, the cost functional \(J_N({\textbf{u}}_N,\varvec{\mu })\) is obtained by evaluating the reduced basis approximation of the problem solution. For the compliance case, the reduced objective function reads

Remark 4

In the case of the extended FOM problem, the compliance functional (54) can be written as

that, after introducing the approximations (43) and (46), becomes

where the orthogonality of the basis \({\textbf{V}}_k\) was considered.

There are several ways to solve the optimization problem (53)–(54). In the following we will consider a gradient-based approach, where the parameters \(\varvec{\mu }\) are updated in an iterative fashion depending on the gradient of the cost functional \(J_N\). The gradient can be evaluated either analytically or based on a suitable approximation, e.g., with a finite difference scheme. The latter yields a black-box optimization approach that simply requires the reduced solution and the evaluation of the objective function. Nevertheless, the reduced model is perfectly suitable for computing parametric sensitivities due to its differentiability and affine parametric dependence [28, Proposition 5.3]. Let us now reformulate the parameterized reduced problem as

With these definitions at hand and following [28, Proposition 11.3], the gradient of the objective function \({\tilde{J}}_N(\varvec{\mu })=J_N({\textbf{u}}_N,\varvec{\mu })\) reads:

The evaluation of this gradient requires the solution of M sensitivity equations

where D denotes the Fréchet derivative. Instead of directly solving the above equations at each step of the optimization process, we define an additional adjoint problem and denote its solution as \(\overline{\textbf{u}}_N =\overline{\textbf{u}}_N(\varvec{\mu })\) such that

It should be remarked that this approach requires in general the construction of a reduced model for the adjoint problem, which implies additional offline cost. This can be constructed following the approach in Sect. 5. Given the reduced adjoint approximation \(\overline{\textbf{u}}_N\), the gradient in Eq. (58) can be reformulated as:

Note that the evaluation of the above derivatives with respect to \(\varvec{\mu }\) is inexpensive assuming that the operators depend affinely on the parameters.

Considering the compliance case, the solution of the adjoint problem can be directly obtained from the reduced solution \({{\textbf{u}}}_N\). Thus, by inserting Eqs. (54) and (57) into Eq. (60), the adjoint problem for the compliance case reads:

Finally, inserting Eq. (62) into (61), and expanding the derivative \(D_{\varvec{\mu }}{\textbf{G}}_N({\textbf{u}}_N,\varvec{\mu })\), the gradient reads:

Under the assumption of affine parametric dependence in (47) and considering (34) and (43), we rewrite the gradient for \(\varvec{\mu } \in {\mathcal {P}}_k\), as

The derivatives \(\partial {\theta _{a,q}^k(\varvec{\mu })}/ \partial \varvec{\mu }\) are simple and inexpensive to evaluate by just differentiating the expression (51). The procedure for \(\partial {\theta _{f,q}^k(\varvec{\mu })}/ \partial \varvec{\mu }\) is identical.
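The evaluation of the compliance gradient from the affine terms can be sketched for a scalar parameter as follows. The sketch assumes the compliance in the form \(J_N = {\textbf{f}}_N^{T}{\textbf{u}}_N\) with a symmetric reduced matrix, for which the standard self-adjoint result is \(\text {d}J_N/\text {d}\mu = 2\, {\textbf{u}}_N^{T}\, \partial {\textbf{f}}_N/\partial \mu - {\textbf{u}}_N^{T}\, (\partial {\textbf{K}}_N/\partial \mu )\, {\textbf{u}}_N\); the scaling may differ from the one used in (64). The coefficient functions, their derivatives and the toy operators are hypothetical, with simple analytic functions standing in for the RBF interpolants.

```python
import numpy as np

def compliance_and_gradient(mu, theta_a, dtheta_a, theta_f, dtheta_f,
                            KN_terms, fN_terms):
    """Compliance f_N^T u_N and its derivative for a scalar parameter mu."""
    KN = sum(t(mu) * Kq for t, Kq in zip(theta_a, KN_terms))
    fN = sum(t(mu) * fq for t, fq in zip(theta_f, fN_terms))
    # Only the scalar coefficients are differentiated with respect to mu.
    dKN = sum(dt(mu) * Kq for dt, Kq in zip(dtheta_a, KN_terms))
    dfN = sum(dt(mu) * fq for dt, fq in zip(dtheta_f, fN_terms))
    uN = np.linalg.solve(KN, fN)
    J = float(fN @ uN)
    # Self-adjoint case: no additional adjoint solve is needed.
    dJ = float(2.0 * dfN @ uN - uN @ dKN @ uN)
    return J, dJ

# Toy reduced affine terms (symmetric positive definite matrices).
rng = np.random.default_rng(2)
A = rng.standard_normal((4, 4))
KN_terms = [A @ A.T + 4.0 * np.eye(4), np.diag([1.0, 2.0, 3.0, 4.0])]
fN_terms = [rng.standard_normal(4)]
theta_a = [lambda mu: 1.0, lambda mu: mu**2]
dtheta_a = [lambda mu: 0.0, lambda mu: 2.0 * mu]
theta_f = [lambda mu: 1.0 + mu]
dtheta_f = [lambda mu: 1.0]

args = (theta_a, dtheta_a, theta_f, dtheta_f, KN_terms, fN_terms)
J, dJ = compliance_and_gradient(0.5, *args)

# Central finite difference as an independent check of the derivative.
h = 1e-6
dJ_fd = (compliance_and_gradient(0.5 + h, *args)[0]
         - compliance_and_gradient(0.5 - h, *args)[0]) / (2.0 * h)
```

The analytic derivative reuses the reduced solution already computed for the objective, whereas the finite difference check needs additional reduced solves, which is consistent with the lower number of function evaluations reported for exact sensitivities.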

7 Numerical results

In this section we present some numerical experiments for the Kirchhoff-Love shell problem to assess the capabilities of the presented ROM framework for parameterized trimmed and multi-patch geometries. In what follows we apply the two presented strategies to enforce interface coupling conditions in a weak sense, namely the projected super-penalty (Sect. 4.1) and Nitsche’s method (Sect. 4.2). The numerical experiments are carried out using the open-source Octave/Matlab isogeometric package GeoPDEs [60] in combination with the re-parameterization tool for integration of trimmed geometries presented in our previous works [43, 47], while the ROM construction exploits the open-source library redbKIT [61]. We remark that the stiffness matrix is preconditioned with a diagonal scaling to avoid large condition numbers and loss of accuracy due to small trimmed elements as discussed in [62].

7.1 Scordelis-Lo roof with holes

The first numerical example is a single-patch, trimmed variant of the Scordelis-Lo roof. The geometry and material properties are adopted from the well known benchmark, see, e.g., [63] for more details. Thus, the Young’s modulus is \(E=4.32 \cdot 10^8\) Pa, the Poisson’s ratio \(\nu =0\), and the thickness \(t=0.25\) m. The shell structure is subjected to self-weight with a vertical loading of \(f_z = -90\) N/m\(^2\) as depicted in Fig. 2. Rigid diaphragm boundary conditions are imposed on the curved ends of the shell, that is, we fix the displacements in the xz-plane. In this example, we cut out two circular holes in the parametric domain as shown in Fig. 2. The radius of the holes in the parametric domain is fixed as \(r = 0.2\). Their location is parameterized, where \(\mu \in {\mathcal {P}}=[0, 0.1]\) is a geometric parameter representing the location of the center of each circle that moves along the diagonal of the unit square. The geometry of the shell structure in the physical space is then obtained by an additional mapping as mentioned in Sect. 2. The shell is discretized with cubic \(C^2\)-continuous B-splines and the dimension of the non-trimmed space is \({\mathcal {N}}_{h,0} = 1083\).

Let us now construct a ROM with the local reduced basis method presented in Sect. 5. For this purpose, we use a training sample of dimension \(N_s = 500\) obtained by Latin Hypercube sampling [64] for the construction of the reduced basis. Note that this sampling is also suitable for more general problems with higher dimension of the parameter space [28]. Figure 3a depicts the decay of the singular values of the POD for different numbers of clusters. We remark that the POD basis is constructed such that it minimizes the squared projection error with respect to the matrix norm that represents the algebraic counterpart of the \(H^2\) norm. Moreover, the number of clusters for the DEIM approximations is fixed to 16 for all computations. It can be observed that the decay is more rapid with localized ROMs. Now we employ a test sample of dimension \(N_t = 30\) with a uniform random distribution to perform the error analysis whose results are presented in Fig. 3b. We observe that increasing the number of clusters further reduces the dimension of the reduced basis, while an accuracy of \(10^{-5}\) is achieved in the \(H^2\) norm. The optimal number of clusters is selected as \(N_c=8\) based on the k-means variance in Fig. 4. Note that the computation of the optimal number of clusters is performed offline and requires 0.21 s for the problem at hand. Figure 5 depicts the vertical displacement solutions, where the local ROM with \(N_c = 8\) clusters is compared to the FOM. The online CPU time for the ROM is 0.12 s including the closest cluster iterations, whereas the assembly and solution with the FOM requires 2.32 s. This results in a speedup of \(19 \times\). Note that the trimming operation for computing the FOM or visualizing the results requires an additional 0.854 s. We remark that the offline time to compute the snapshots for the POD basis is 3 min exploiting the affine approximation (47). The offline time to compute the snapshot matrices and vectors for the DEIM approximations is 1.75 h given a training sample of 2000 snapshots. We remark that this includes the trimming operation for each snapshot and can be further optimized with the use of parallelization.

Moreover, we perform optimization using both the local ROM and the FOM. The upper and lower bounds for the design variable are defined by the parameterization at hand, that is, we seek the optimal shape within the bounds \(0 \le \mu \le 0.1\) during the optimization. For this problem we do not consider any volume constraints. First, we solve the optimization problem with the FOM using a finite difference scheme to compute sensitivities. The solution requires 7 iterations and 23 function evaluations in total. Then, we employ the local ROM with \(N_c=8\) clusters for the optimization. The ROM with approximate sensitivities requires 17 function evaluations, whereas the ROM with exact sensitivities requires 15. Note that a forward finite difference scheme is employed for the computation of the approximate sensitivities during the optimization. The solution is slightly faster for the ROM with exact sensitivities, taking 76.1 ms versus 79.5 ms for the solution with the finite difference approximation of the gradient. In all cases the optimal shape is obtained for \(\mu = 0.0319\) after 7 iterations. Figure 6 depicts the optimization results. The optimization history is shown in Fig. 6a by depicting the evolution of the relative compliance during the optimization. For the optimal solution, the compliance is reduced by 8% with respect to the initial configuration (i.e., for \(\mu =0\)). Finally, Fig. 6b shows the vertical displacement solution for the final optimal shape, comparing ROM and FOM solutions.

Example 7.1: Optimization results depicting: a the evolution of the relative compliance during the optimization comparing the FOM, the ROM with approximate sensitivities, and the ROM with exact sensitivities; and b the vertical displacement for the final optimal shape with \(\mu = 0.0319\) using the FOM (top) and the local ROM (bottom). (Colour figure online)

7.2 Multi-patch simple geometries

In this section we will assess the capabilities of the ROM framework for multi-patch geometries. For this purpose we employ two simple geometries, namely the multi-patch Scordelis-Lo roof and two non-conforming planar patches. The coupling of patches is achieved in both cases using the projected super-penalty method discussed in Sect. 4.1.

7.2.1 Non-trimmed multi-patch Scordelis-Lo roof

This example is intended to test the ROM framework on a non-trimmed, multi-patch setting. For this purpose, we employ again the Scordelis-Lo roof and split the geometry into two subdomains. The geometric setup is depicted in Fig. 7. We employ the projected super-penalty method to enforce interface coupling conditions. The material parameters, loading, and boundary conditions are the same as in the previous example. The shell structure is modeled with two conforming subdomains and the parameterization of the multi-patch design is shown in Fig. 7b, c for a coarse geometry. The common interface is depicted in red. Note that the geometry is parameterized by moving the depicted control points in the vertical direction, where the geometric parameter \(\mu \in {\mathcal {P}} = [0,10]\) defines their position in the y-direction prescribing the curvature of the shell structure. We remark that \(\mu =0\) corresponds to the original coordinates of the Scordelis-Lo roof benchmark. In Fig. 7b, c we denote for simplicity \(P(\mu ) = P(x,y+\mu ,z)\). The parameters are first defined on a coarse mesh and then the geometry is further refined for the analysis as discussed in [30]. The geometry is discretized with quadratic \(C^1\)-continuous B-splines for the analysis resulting in \({\mathcal {N}}_{h}=1944\) degrees of freedom.

Now let us construct a ROM for the multi-patch geometry. For this purpose, we employ a training sample of dimension \(N_s=500\) obtained by Latin Hypercube sampling. First, the affine approximations are constructed with the DEIM. Figure 8 depicts the error of the DEIM approximations in the \(L^{\infty }\) norm for both the right-hand side vector and the stiffness matrix. It can be observed that the error already decays rapidly with one global approximation. The error reaches an accuracy of \(10^{-7}\) with \(Q_a=8\) and \(Q_f=5\) affine terms for the stiffness matrix and the right-hand side vector, respectively. As a further step, a reduced basis is constructed with the POD. Figure 9 shows the decay of the singular values and the relative error of the reduced solution in the \(H^2\) norm. The error analysis is performed using a test sample of dimension \(N_t=30\) drawn from a uniform random distribution. Similarly to the DEIM approximations, the decay is already rapid with only one global reduced basis space, and the error reaches an accuracy of \(10^{-7}\) for a reduced basis space of dimension \(N=7\). In Fig. 10 we compare the vertical displacement solution of the ROM with the solution of the full order model for three different parameter values.
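The sampling of the parameter interval described above can be sketched as follows. This is a minimal illustration, not the implementation used for the reported results: the function names `latin_hypercube_1d` and `uniform_random` and the fixed seed are assumptions introduced here, and only the sizes \(N_s=500\), \(N_t=30\) and the interval \([0,10]\) are taken from the text.

```python
import random

def latin_hypercube_1d(n_samples, lower, upper, rng):
    """Draw n_samples points in [lower, upper), one per equal-width stratum."""
    width = (upper - lower) / n_samples
    # Place one uniformly distributed point inside each stratum.
    points = [lower + (i + rng.random()) * width for i in range(n_samples)]
    rng.shuffle(points)  # remove the ordering induced by the strata
    return points

def uniform_random(n_samples, lower, upper, rng):
    """Draw n_samples independent, uniformly distributed points."""
    return [rng.uniform(lower, upper) for _ in range(n_samples)]

rng = random.Random(0)  # hypothetical seed, fixed only for reproducibility
train = latin_hypercube_1d(500, 0.0, 10.0, rng)  # training sample, N_s = 500
test = uniform_random(30, 0.0, 10.0, rng)        # test sample, N_t = 30
```

In one dimension, Latin Hypercube sampling reduces to stratified sampling, which guarantees that the training points cover the whole interval, whereas the independent uniform test points may cluster.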

Moreover, we perform optimization considering a volume constraint. The initial volume is set as \(V_0= 1.9 \cdot 10^3\) and we seek the optimal shape for the design variable bounded by \(0 \le \mu \le 10\). The optimization is completed after 4 iterations and 14 function evaluations. The ROM-based optimization is computed in 67 ms, while the optimization with the high fidelity solution is solved in 518 ms. This implies a speedup of \(7.76\times\). In both cases the exact gradients are computed at every optimization step. At the final iteration, the compliance is reduced by \(16.45\%\) compared to the initial configuration. Figure 11 depicts the vertical displacement solutions for the optimal shape obtained for \(\mu = 5.3127\) with both the ROM and full order solution, while Table 1 summarizes the main results and obtained computation times. We conclude that this verifies the suitability of the ROM framework for multi-patch geometries coupled along non-trimmed interfaces.

7.2.2 Trimmed non-conforming planar patches

In this example we aim to assess the capabilities of the ROM for trimmed multi-patch geometries with both conforming and non-conforming discretizations. In particular, we investigate the behavior of the local ROM for patches that are coupled along parameterized trimming interfaces and are expected to behave poorly with a standard global reduced basis. For this purpose we consider a planar setting, where the computational domain is a unit square and is subdivided into two patches coupled along a curved trimming interface. We employ the projected super-penalty method to enforce interface coupling conditions. The geometric setup and parameterization are depicted in Fig. 12 for a coarse geometry, while the interface is shown in red. The geometric parameter \(\mu \in {\mathcal {P}}=[0.25,0.75]\) defines the position of the control point \(P(\mu )\) that prescribes the curvature of the trimming interface. The material parameters are the Young’s modulus \(E=10^6\) Pa, the Poisson’s ratio \(\nu =0.3\), and the thickness \(t=0.022\) m. The non-conforming discretization is generated by shifting the internal knots of the original knot vector at the trimming interface by a factor \(\frac{\sqrt{2}}{100}\). Note that since both patches are trimmed, the internal knots of the coupling curve are neglected for the construction of the interface knot vector \(\Xi _j\) following the discussion in Remark 1. The geometry is discretized with quadratic \(C^1\)-continuous B-splines and the dimension of the non-trimmed space is \({\mathcal {N}}_{h,0}=1944\). The applied boundary conditions and loading are adopted from a manufactured smooth solution given in [13] such that \((u_x,u_y,u_z) = (0,0, \sin (\pi x) \sin (\pi y))\).

Now let us construct local ROMs for both the conforming and non-conforming case. For this purpose, we employ a training sample of dimension \(N_s = 500\) that we obtain by Latin Hypercube sampling. First, we investigate the k-means variance in Eq. (38) in order to choose the number of clusters. As can be seen in Fig. 13, the k-means variance does not decrease significantly beyond \(N_c=10\) clusters. To perform the error analysis, we consider a testing sample of dimension \(N_t=30\), which is drawn from a uniform random distribution. The relative error in the \(H^2\) norm is depicted in Fig. 14 for \(N_c=8,10\) and the results of both discretizations are compared to each other. As expected, the size of the reduced basis decreases for an increasing number of clusters. We observe that the non-conformity slightly affects the maximum number of reduced basis functions N, while the error is of the same magnitude in all cases. It can be concluded that the ROM framework is suitable for multi-patch geometries coupled at trimmed interfaces with both conforming and non-conforming discretizations.
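The selection of the number of clusters from the k-means variance can be illustrated with a small, self-contained sketch. It makes several assumptions that are not taken from the paper: the clustered data are scalar, evenly spaced stand-ins on \([0.25,0.75]\) rather than the actual quantities entering Eq. (38), the variance is taken as the within-cluster sum of squared distances, and the helper `kmeans_1d` with its deterministic initialization is hypothetical.

```python
def kmeans_1d(values, n_clusters, n_iter=100):
    """Lloyd's algorithm on scalars; returns (centroids, within-cluster sum of squares)."""
    data = sorted(values)
    n = len(data)
    # Deterministic initialization at evenly spaced positions of the sorted data.
    centroids = [data[(2 * k + 1) * n // (2 * n_clusters)] for k in range(n_clusters)]
    labels = [0] * n
    for _ in range(n_iter):
        # Assignment step: index of the nearest centroid for every point.
        new_labels = [min(range(n_clusters), key=lambda k: (x - centroids[k]) ** 2)
                      for x in data]
        # Update step: each centroid becomes the mean of its members.
        for k in range(n_clusters):
            members = [x for x, lab in zip(data, new_labels) if lab == k]
            if members:  # an empty cluster keeps its previous centroid
                centroids[k] = sum(members) / len(members)
        if new_labels == labels:
            break  # assignments are stable, so the iteration has converged
        labels = new_labels
    variance = sum((x - centroids[lab]) ** 2 for x, lab in zip(data, labels))
    return centroids, variance

# Hypothetical scalar stand-in data on the parameter interval [0.25, 0.75].
samples = [0.25 + 0.5 * i / 199 for i in range(200)]
variances = {k: kmeans_1d(samples, k)[1] for k in (1, 2, 4, 8)}
```

Plotting `variances` against the number of clusters gives the kind of curve used to pick \(N_c\): the value beyond which the variance no longer decreases significantly.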

7.3 Joint of intersecting tubes

The last example aims to demonstrate the capabilities of the presented framework for complex geometries. For this purpose we consider the geometry of three intersecting tubes (see Fig. 15) that represent a generic configuration for, e.g., joints in steel support truss structures (see also [14, 17] for a similar variant). We remark that optimizing such large-scale structures of industrial relevance is a challenging task, where the shape parameters of each joint element may differ and the ROM can be reused for several online evaluations. The optimization of such problems requires further measures for efficiency (e.g. domain decomposition strategies), which are beyond the scope of the present work. For this particular geometry, both sides are trimmed at all interfaces, which hinders the use of the projected super-penalty approach. Therefore, we employ Nitsche’s method to enforce interface coupling conditions. The material parameters are the Young’s modulus \(E= 3 \cdot 10^6\), the Poisson’s ratio \(\nu =0.3\), and the thickness \(t=0.2\). The radius of the main tube is \(R_1=1\). Homogeneous Dirichlet boundary conditions are applied on the top and bottom sections of the main tube, while periodic boundary conditions are applied at the cylinders’ closure. A vertical load \(f_z = 10\) is applied on all tubes. The geometry is discretized with cubic \(C^2\)-continuous B-splines for the analysis and results in \({\mathcal {N}}_{h,0}=2673\) degrees of freedom. In the following we will demonstrate the capabilities of the ROM for two test cases of geometrical parameterization.

7.3.1 Parameterization of radius

First, we consider a geometrical parameter \(\mu \in {\mathcal {P}}=[0.6,0.8]\) that represents the radius of the connecting skewed tubes. The geometry setup and parameterization are depicted in Fig. 15. Note that the angles of the connecting tubes with respect to the horizontal plane are fixed to \(45^{\circ }\) and \(-30^{\circ }\) for the top and bottom tubes, respectively (see Fig. 16). In Fig. 17, the trimming configuration, including the trimmed parametric domains and quadrature points, is shown for a particular value of \({\varvec{\mu }}\). To construct the ROM, we employ a training sample of dimension \(N_s = 1000\) that we obtain by Latin Hypercube sampling. Note that this refers to the global dimension before clustering, while the snapshots are computed in parallel to speed up the offline phase. The k-means variance is depicted in Fig. 18a for an increasing number of clusters. We observe that the variance does not change significantly beyond 10 clusters, thus \(N_c=10\) is chosen in what follows. The error analysis is performed using a test sample of dimension \(N_t = 30\) drawn from a uniform random distribution. Figure 18b shows the error convergence for \(N_c=10\), while in Fig. 19 the displacement solution obtained with the ROM is compared to the FOM for three parameter values from the test sample. The main results and computation times are summarized in Table 2.

Example 7.3.1: Trimming configuration for \(\mu =0.7465\). On the left, trimmed patches in the physical domain; in the center and on the right, trimmed parametric domains (trimmed elements are shaded). Black dots depict quadrature points in the cut elements, crosses (in the physical domain) quadrature points at interfaces

7.3.2 Parameterization of angle

Let us now consider a geometrical parameter that represents the angle of the connecting tubes with respect to the horizontal plane. In this case, the radius of the intersecting tubes is fixed as \(R_2=0.8\) and the absolute value of the angle varies as \(\mu \in {\mathcal {P}}=[25.7^{\circ },36^{\circ }]\). Note that here the angles of both tubes are symmetric with respect to the horizontal plane. The geometrical variation of the angle is illustrated in Fig. 20a. Training and test samples are generated similarly to the previous test case for the construction of the ROM and the error analysis, respectively. The number of clusters is chosen as \(N_c=10\) based on the k-means variance in Fig. 20b. Figure 21 shows the absolute and relative error in the \(H^2\) norm with respect to the maximum number of reduced functions over all clusters, while Fig. 22 compares the displacement solutions using the ROM with the FOM for three parameter values of the test sample. Moreover, we employ the ROM to solve an optimization problem that minimizes the compliance within the parameter space \({\mathcal {P}}\) without volume constraints. Instead, we consider a displacement constraint such that \(\max ({\textbf{u}}(\varvec{\mu })) \le 2.5 \cdot 10^{-3}\). That is, the compliance is minimized such that the maximum displacement does not exceed the prescribed value. We remark that we also employ the ROM for the computation of the displacement constraint to speed up the optimization. Note that the initial shape at the beginning of the optimization corresponds to the minimum angle with \(\mu =25.7^{\circ }\). The optimization using exact sensitivities requires 8 iterations and 29 function evaluations. Figure 23a depicts the evolution of the relative compliance during the optimization. At the final iteration, the compliance is decreased by 17.9% compared to the initial configuration. Note that the optimization with approximate sensitivities using a forward finite difference scheme requires 8 iterations and 21 function evaluations to reach almost identical results. Figure 23b depicts the displacement solution for the optimal shape with \(\mu =32.22^{\circ }\) and Table 3 summarizes the main results and computation times. It is remarked that the online computation time corresponds to the cluster with the maximum number of basis functions, thus the online cost might differ from one parameter to the other.
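To make the distinction between exact and approximate sensitivities concrete, the following sketch compares an analytical derivative with a forward finite difference. The quadratic function `compliance` is a purely hypothetical stand-in for the reduced objective (it is not the shell compliance, and its minimizer is arbitrary), and the step size is an assumed value.

```python
def compliance(mu):
    """Hypothetical smooth stand-in for the objective as a function of the angle."""
    return (mu - 30.0) ** 2 + 5.0

def exact_sensitivity(mu):
    """Analytical derivative of the stand-in objective."""
    return 2.0 * (mu - 30.0)

def forward_difference(f, mu, step=1e-6):
    """Approximate df/dmu using one additional function evaluation."""
    return (f(mu + step) - f(mu)) / step

mu0 = 25.7  # initial angle of the optimization, taken from the text
approx = forward_difference(compliance, mu0)
exact = exact_sensitivity(mu0)
error = abs(approx - exact)  # first-order truncation error plus round-off
```

The forward scheme needs one extra evaluation of the objective per design variable and gradient, which is affordable when each evaluation is a cheap online ROM solve.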

8 Conclusion

In this work we present a parametric model reduction framework for trimmed, multi-patch isogeometric Kirchhoff-Love shells. The proposed strategy is suitable for fast simulations on parameterized geometries represented by multiple, non-conforming patches where the trimming interfaces change for different values of the geometric parameters. Following our previous work [30], efficient ROMs are constructed using a local reduced basis method and hyper-reduction with DEIM. The latter enables an efficient offline/online split with low online cost, which is advantageous for solving parametric optimization problems.

We have investigated the capabilities of the proposed framework through several numerical experiments. To this end, we considered trimmed, multi-patch geometries with parameterized interfaces, in both conforming and non-conforming configurations, coupled with the projected super-penalty and Nitsche methods. We observed a high accuracy of the local ROMs, while the solution evaluation in the online phase was obtained with low computational cost. Moreover, we validated the proposed approach for parametric optimization problems and applied it to a complex geometry.

Overall, the ROM framework is a cost-effective alternative for isogeometric Kirchhoff-Love shell analysis of complex geometries and for optimization problems. The application to more complex optimization problems, including a higher number of design parameters, and the extension to Reissner-Mindlin shell formulations are future research directions to explore. Regarding the ROM, a further subject of future work is error certification using greedy algorithms and tailored a posteriori error estimators.

Data availability

Data will be made available on request.

Notes

Hereinafter, and for the sake of conciseness, whenever it is clear from the context, the range \(1,\dots ,N_c\) of the cluster index k will be omitted.

Henceforward, and for the sake of clarity, whenever it is clear from the context, the ranges \(1,\dots ,Q_{a}^k\) and \(1,\dots ,Q_{f}^k\) of the index q referred to the terms of the local affine approximation (47) will be omitted.

References

Hughes TJR, Cottrell JA, Bazilevs Y (2005) Isogeometric analysis: CAD, finite elements, NURBS, exact geometry and mesh refinement. Comput Methods Appl Mech Eng 194:4135–4195

Cottrell JA, Hughes TJR, Reali A (2007) Studies of refinement and continuity in isogeometric structural analysis. Comput Methods Appl Mech Eng 196:4160–4183

Kiendl J, Bletzinger K-U, Linhard J, Wüchner R (2009) Isogeometric shell analysis with Kirchhoff-Love elements. Comput Methods Appl Mech Eng 198(49):3902–3914