Abstract

The present study aims to develop a sustainable framework employing brain-inspired neural networks for solving boundary value problems in Engineering Mechanics. Spiking neural networks, known as the third generation of artificial neural networks, are proposed for physics-based artificial intelligence. The underlying differential equations are solved with a physics-informed strategy using a new spike-based pseudo-explicit integration scheme built on spiking recurrent neural networks. We additionally propose a third-generation spike-based Legendre Memory Unit that handles long sequences. These third-generation networks can be implemented on emerging neuromorphic hardware, resulting in lower energy and memory consumption. The proposed framework, although implicit, is viewed as a pseudo-explicit scheme since it requires few or no online training steps to achieve a converged solution, even for unseen loading sequences. The proposed framework is deployed in a Finite Element solver for plate structures undergoing cyclic loading, and a Xylo-Av2 SynSense neuromorphic chip is used to assess its energy performance. An acceleration of more than 40% compared to classical Finite Element Method simulations and the capability of online training are observed. We also see a reduction in energy consumption to the order of one-thousandth.

1 Introduction

The successful application of Artificial Neural Networks (ANNs), or the so-called Deep Neural Networks (DNNs), in engineering sciences and applied mathematics has brought a sea change in the field of computational science. They have been reliably implemented in the fields of fluid dynamics [1,2,3,4], material parameter identification [5,6,7], uncertainty quantification [8, 9], biomechanics [10, 11] for biomedical applications [12,13,14], and structural mechanics [15,16,17], to highlight a few examples. Efforts to determine and reduce the remarkable energy consumption of ANNs, and especially of deep learning algorithms, have been published in recent years [18]. Tools for estimating the energy consumption of neural networks, which forms an important part of the overall energy demand in industry, were investigated in [19,20,21]. Initial strategies to reduce the energy demand of second-generation ANNs were developed in [22,23,24,25], which propose techniques to overcome the high energy requirement by removing or dropping out unnecessary neurons and synaptic connections [26, 27]. However, the energy savings of these efforts were drastically surpassed by the introduction of brain-inspired AI such as spiking neural networks (SNNs) [28]. SNNs mimic biological neurons by sending discrete electrical signals (spikes) and operating continuously in time. This is where neuromorphic hardware becomes important: information is transmitted only when a spike appears; otherwise, the synaptic weights and memory are not accessed. This makes SNNs far more efficient than deep learning using second-generation networks [29]. However, second-generation networks are well established in engineering science, so data-driven and physics-informed methods must be embedded in a new sustainable SNN framework. This is where the present study comes in. The present study aims to provide a framework for introducing SNNs into the Finite Element Method (FEM), which is essential in Engineering Mechanics. 
To the best knowledge of the authors, brain-inspired neural network-enhanced FEM for mechanical boundary value problems is not available in the literature so far. It will be demonstrated in this investigation how the FEM profits from the efficiency of SNNs such as significant energy and memory saving.

In solid mechanics, second-generation networks have been implemented predominantly to improve or replace classical material models. In the so-called model-free data-driven paradigm [30,31,32,33], the stress–strain pair corresponding to material deformation is mapped under physical constraints to the closest stress–strain pair appearing in the data set. This leads to the complete bypassing of empirical material modeling while solving boundary value problems. Alternative approaches, comprising the studies [34,35,36,37,38,39,40,41,42,43], keep the postulation of the empirical material model and learn a surrogate nonlinear mapping between strain and stress (and other possible state variables). Further, machine learning techniques were also deployed in combination with the Finite Element Method (FEM) at the element level to trace the nonlinear evolution of internal force and structural stiffness [17, 44,45,46]. In the studies mentioned above, the learning quality of the NN is governed by minimizing the norm of the distance between the expected output and the output predicted by the NN. Such algorithms will be referred to as ‘data-dominant’ or ‘data-driven’ algorithms in the present study.

To establish the fundamentals of physical laws/constraints in ANNs, a new stream of neural networks termed Physics Informed Neural Networks (PINNs) was introduced recently. The DNNs belonging to this class adopt an enhanced training procedure with additional loss terms that either correspond to the residual of the governing equation [47, 48] or enforce the physics in a weak sense through regularization terms penalizing violations of physical constraints, e.g., constraints corresponding to convexity for hyperelasticity, thermodynamics of dissipative materials, and stable stiffness computation [17, 44, 49,50,51,52]. Although the application of neural networks for solving ODEs and PDEs is not entirely new [53,54,55], the availability of modern frameworks supporting parallel CPU and GPU processing (TensorFlow [56] and Theano [57]) encourages more research in this direction. The described PINN framework has found successful applications for solving forward and inverse nonlinear systems of PDEs in studies [58, 59]. A multi-network PINN framework was introduced by Haghighat et al. [60] to characterize the material behavior and explore its application in generating a nonlinear surrogate for sensitivity analysis. The advantage of requiring small or even no labeled datasets has bolstered research in the domains of fluid mechanics [61,62,63], solid mechanics [64,65,66,67], and porous media [68, 69], to name a few. Further, an unsupervised automated material discovery tool was proposed in the studies [70, 71] to completely circumvent stress–strain labeled data pairs by satisfying the momentum balance equation and assumed kinetics.

Against the backdrop of the outlined studies, and encouraged by the promising developments in the domain of PINNs, we recently developed a Recurrent Enhanced Implicit Integration Scheme (REIIS) [72]. The proposed framework comprises a combination of the LSTM (Long-Short Term Memory) layers and Feed Forward layers to predict the equivalent plastic strain rate obeying Karush–Kuhn–Tucker (KKT) conditions. The NN model in the framework was pre-trained on virtual experiments consisting of Finite Element (FE) simulations and KKT conditions to learn the nonlinear evolution of the equivalent plastic strain rate. After the model was trained to the desired accuracy, transfer learning was implemented and it was deployed as a nonlinear solver in the plastic-corrector of the integration scheme [72].

Although ANNs have been successfully implemented in the applications mentioned above, their use creates several computational bottlenecks, such as high memory access for model initialization, access latency, and throughput [73], which lead to an increased demand for computational resources. Further, since most ANNs are trained on GPUs, the movement of data between memory and the processors has become a point of concern, especially for applications such as image recognition and natural language processing [74, 75]. This inefficiency of traditional NNs can be attributed to the suboptimal nature of matrix multiplication, which induces dependence on data communication and memory. In contrast, the human brain performs similar tasks while consuming significantly less energy [75]. Biological neurons interact with each other through sparse signals instead of dense communication, which fundamentally enables them to function more efficiently. The class of neural networks that equips neurons with such a transmission mechanism is referred to as spiking neural networks (SNNs) [76]. These sparse signals are efficiently processed by brain-inspired neuromorphic hardware/chips such as Loihi [77], SpiNNaker [78], SynSense [79], etc. These chips can be analog, digital, or mixed and essentially comprise small units mimicking the neurons in the brain. Very Large Scale Integration (VLSI) is performed by the chips to utilize these units for computation, whereas a layer of logical gates is used to implement algorithms on neuromorphic chips. This helps SNNs fulfill their potential and reduce the energy consumption by a factor of up to \(10^{3}\) [77, 80, 81].

Applications of SNNs include image processing [82], image recognition [83], image segmentation [84], and localization [85]. Also, since they can inherently handle sequential data, they are used for temporal data processing using spiking LSTM variants [86, 87]. To take advantage of the well-established machine learning frameworks (TensorFlow [56], Keras [88], and PyTorch [89]), in certain studies a pre-trained DNN is converted to an SNN [90, 91]. In the present study, we are interested in deploying SNNs for regression tasks; however, so far SNNs have found limited applications in regression problems. Piecewise constant functions were utilized for processing inter-spike interval temporal encoding [92]. In [93], a DeepONet consisting of SNNs was proposed to regress on one-dimensional functions. Further, in [94] gradient descent was employed to learn spike times, and in [95] classification problems were recast as regression tasks. More recently, an LSTM spiking variant of SNN was introduced to predict history-dependent 1-D material behavior in [96], and in [97] a Spike Marching Scheme was introduced to efficiently integrate time-dependent ODEs and PDEs.

In the present study, we extend our previous work concerning the REIIS scheme to take advantage of third-generation SNNs. We propose a multilayered spiking variant of the Legendre Memory Unit (LMU) [98] combined with a traditional Feed-Forward Neural Network (FFNN) to act as a nonlinear solver for computing the equivalent plastic strain rate satisfying KKT conditions. In comparison to an LSTM [99] cell, the memory cell developed in the LMU is independent of the number of hidden neurons initialized in a layer and is instead initialized through an ODE that approximates the linear transfer function for a continuous delay [98]. Such an attribute allows LMUs to outperform equivalently-sized LSTMs on sequences having long windows of time [98]. Further, the LMUs enable the proposed model to become independent of the step size, since the LMUs learn scale-invariant features from the data set. Another important point concerning SNNs is that their neurons communicate with each other through sparse binary signals. We establish an encoding–decoding strategy to handle the nonlinear input–output sequences as described in Sect. 4. Further, we pre-train our model through virtual FE experiments with a combination of data-driven and physics-guiding loss terms, propelling the model to make an informed prediction when deployed in the FE solver. Since the nonlinear evolution of the equivalent plastic strain rate remains the same for deformation at every Gaussian point, the pre-training equips the model to make an informed prediction and allows it to reach a converged solution by penalizing the loss corresponding to the yield condition when deployed in the FE solver. This gives the framework the ability to adapt/tune itself to unseen deformation sequences; this ability is referred to as Self-Learning and makes the framework independent of labeled datasets. We label such a framework as a Spike-based Pseudo Explicit Integration Scheme (SPEIS). 
To the best of the authors’ knowledge, a physics-based self-learning framework consisting of recurrent-based SNNs is new in the literature and it proposes the following developments in comparison to the current literature:

- A sustainable neural network enhanced Finite Element theory for mechanical boundary value problems.
- A hybrid framework of SNNs (third-generation) and ANNs (second-generation) acting as a nonlinear surrogate and solver simultaneously.
- The Spike-based LMU (SLMU) enables the model to learn scale-invariant features from the dataset, resulting in the reduction of the number of online training steps required to reach a converged solution.
- Neuronal plasticity in the form of an adaptive threshold [100] is included in the Spike-based LMU layer and is guided by the physics-based loss terms.
- The energy consumption of the SLMU model is compared to its non-spiking variant using KerasSpiking V0.3.1.
- A numerical validation is performed by deploying the third-generation networks on Xylo-Av2 neuromorphic hardware.
- The Spike-based LMU model requires no or very few online training steps to reach a converged solution for completely unseen input sequences.

Although we benchmark the Spike-based model on neuromorphic hardware to estimate the energy consumption, it is deployed on the CPU when it is used in combination with the FE solver. This ensures a fair comparison of computational efficiency between the SPEIS framework and the classical integration scheme. The predictive capability of the proposed SPEIS framework is examined on geometrically nonlinear plate elements undergoing viscoplastic deformations. The first-order shear deformation moderate rotation plate/shell theory [101] along with the Lemaitre–Chaboche viscoplastic material model [102] is utilized as the basis for developing the FE solver. A detailed description of the development of the physics-based framework and the Spike-based LMU is given in the sections below.

The present study is organized as follows. In Sect. 2, a brief introduction of the geometrically nonlinear theory along with the Lemaitre–Chaboche [102] viscoplastic model is described. An introduction to SNNs and the nonlinear surrogate is presented in Sect. 3. The hybrid combination of Spike-based LMU and dense networks is introduced along with the fundamentals of PINNs in Sect. 4, which is followed by sampling, pre-training, SPEIS framework, physics-based online training, and hyperparameter identification. A variety of boundary value problems corresponding to steel, copper, and aluminum materials are presented for cyclic loading followed by discussions in Sect. 6. Finally, the conclusion of the current work is presented in Sect. 7.

2 Motivation and problem description

Here we present a concise summary of large-deformation elastic–viscoplastic solid mechanics, as implemented in [101, 103,104,105]. The strong form of mechanical equilibrium on a continuous domain can be written as:

where \({{{\textbf{S}}}}\) represents the second Piola–Kirchhoff stress (PK) tensor, \({\textbf{b}}\) represents the body forces, and \(\Omega _{0}\) is the volumetric domain. The above-mentioned partial differential equation (PDE) is subject to boundary conditions prescribed as traction or displacement. The domains \(\Omega _{S}\) and \(\Omega _{b}\) are non-overlapping i.e. \(\Omega _{S} \cap \Omega _{b} = \emptyset ,\) but belong to the same volumetric domain \(\Omega _{S} \cup \Omega _{b} = \Omega _{0}.\) In this work, large deformations arise due to moderately large mid-surface rotations; thus, in the following, we briefly mention the kinematics of the plate. For a detailed description, the reader is referred to the studies [101, 106, 107]. Let us consider a plate \({\textbf{P}},\) with mid-surface \(\Omega _{p}\) and constant thickness t. The cartesian coordinate system \((x_{1}, x_{2}, x_{3})\) having unit vectors \(({\textbf{e}}_{\textbf{1}}, {\textbf{e}}_{\textbf{2}}, {\textbf{e}}_{\textbf{3}})\) is introduced such that \({\textbf{e}}_{\textbf{1}}\) and \({\textbf{e}}_{\textbf{2}}\) lie on the mid-surface \(\Omega _{p}.\) The displacement vector \({\textbf{u}}\) in its vector form is given as follows.

where \({\textbf{W}}\) represents a skew tensor responsible for the rotation of the fibers orthogonal to the mid-plane. The Green-Lagrange strain tensor thus can be computed as shown below:

In the case of small-strain solid mechanics, the strain tensor can be additively decomposed into elastic \(({{\textbf{E}}}_{\textbf{e}})\) and plastic strains \(({{\textbf{E}}}_{\textbf{p}}),\) which can be written in its rate form as follows:

The inelastic or plastic strain rate \(({\dot{\textbf{E}}}_{p})\) is computed from the Lemaitre–Chaboche [102] model using the following evolution equation

where \(\dot{\bar{\boldsymbol{\varepsilon}}}_p\) represents the rate of an equivalent plastic strain having the following form.

Correspondingly the evolution of the back-stress tensor and isotropic hardening is computed as

The terms k, \({\textbf{X}},\) and R represent the initial yield stress, back-stress tensor, and isotropic hardening, respectively, while the terms K, a, s, \(b_{1},\) \(b_{2},\) k, and \(\tilde{n}\) are material parameters obtained from tension tests; the values for Aluminum and Copper are listed in Table 1 [108]. The operator \(()^{\prime }\) computes the deviatoric part of the tensor and the invariant \({J_{2}({{\textbf{S}}^{\prime } - {\textbf{X}}^{\prime })}}\) represents the equivalent Huber–Mises–Hencky stress computed as \(J_{2}({{{{{\textbf{S}}^{\prime }}-{\textbf{X}}^{\prime }}}}) = \sqrt{\frac{3}{2}({{{{{\textbf{S}}^{\prime }}-{\textbf{X}}^{\prime }}}}): ({{{{{\textbf{S}}^{\prime }}-{\textbf{X}}^{\prime }}}})}.\) The angle brackets in Eq. (8) are referred to as Macaulay brackets and are defined as \(\langle x \rangle = \frac{1}{2}(x + |x|).\) Thus, once the inelastic part of the strain is computed by iteratively solving Eqs. (7)–(10), the stress is calculated by the generalized Hooke’s law

where \({\mathbb{C}}\) is the elastic stiffness operator, defined as,

where \({\textbf{I}}\) and \({\mathbb{I}}\) represent the 2nd and 4th order identity tensors, \(\kappa\) represents the bulk modulus and \(\mu\) is the shear modulus. The stress computed by the above set of equations must satisfy the strong form of the mechanical equilibrium as mentioned in Eqs. (1)–(3). These solutions obtained by solving the outlined non-linear system of equations [109] act as a reference for pre-training and for assessing the qualitative learnings of the SPEIS framework.
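The tensor operations named in this section can be illustrated with a minimal sketch. It assumes 3×3 tensors stored as NumPy arrays and an isotropic elastic law of the form \({\textbf{S}} = \kappa \,\text{tr}({\textbf{E}}_{e}){\textbf{I}} + 2\mu {\textbf{E}}_{e}^{\prime }\) with \({\textbf{E}}_{e} = {\textbf{E}} - {\textbf{E}}_{p}\), which is one common reading of a stiffness built from \(\kappa\), \(\mu\), and the identity tensors. The function names are hypothetical and are not taken from the FE solver used in the study.

```python
import numpy as np

def deviator(T):
    """Deviatoric part T' = T - tr(T)/3 * I of a 3x3 tensor."""
    return T - np.trace(T) / 3.0 * np.eye(3)

def j2_equivalent(S, X):
    """Equivalent stress sqrt(3/2 (S'-X'):(S'-X')) as defined in the text."""
    D = deviator(S) - deviator(X)
    return np.sqrt(1.5 * np.tensordot(D, D))  # tensordot with 2 axes = double contraction

def macaulay(x):
    """Macaulay bracket <x> = (x + |x|) / 2."""
    return 0.5 * (x + np.abs(x))

def hooke_isotropic(E_total, E_plastic, kappa, mu):
    """Assumed isotropic law: S = kappa tr(Ee) I + 2 mu dev(Ee), with Ee = E - Ep."""
    Ee = E_total - E_plastic
    return kappa * np.trace(Ee) * np.eye(3) + 2.0 * mu * deviator(Ee)
```

For a uniaxial stress state with zero back stress, `j2_equivalent` returns the axial stress itself, which is a quick sanity check on the factor 3/2.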

3 Preliminaries on spiking neural networks

SNNs, also termed third-generation neural networks, can be perceived as history-dependent ANNs. The memory effect is introduced in these neurons through various biological processes. In the present study, we briefly introduce the Leaky Integrate-and-Fire (LIF) model, which is one of the most widely used models for describing the dynamics of the neuron membrane potential [110, 111]. The LIF neuron is used as a building block in the current research and plays a role similar to that of densely connected feed-forward neural networks (FFNNs) in the second-generation counterpart.

We introduce the following notation in the current work. An arbitrary sequence s is denoted by a superscript [s] and the tth time-step in a sequence is denoted by a superscript t.

Fig. 1 Representative model of an SNN. The dotted lines denote that there is no transfer of information between the neurons at the time-instant, and the arrow indicates that a signal of 1 is passed at the given instant. The superscripts x and y denote the dimensions of the input and the output features. \({\textbf{z}}_{(d)}\) represents the output of the \(d^{th}\) hidden layer in the neural network model

Fig. 2 Processing of input information in an ANN and an SNN. The subscripts x and y denote the dimensions of the input and the output features; the output in an ANN follows dense matrix multiplication. \(u_{1}, u_{2},\ldots u_{x}\) represent the input vector entering the neuron. \(V_{thr}\) represents the threshold membrane potential of the neuron and \(\phi _{s}\) represents the spiking activation

To this end, the output of a neuron l from an arbitrary hidden layer d of an SNN (see Figs. 1, 2) at a certain time-step t can be mathematically written as follows:

where \(\phi _{s}\) represents the activation function and is written as

Here, \(V^{t}_{l, (d)}\) represents the membrane potential of the respective neuron, \(\beta _{l, (d)}\) denotes the membrane decay rate, and \(\sum _{j}{W}_{lj, (d)}{z}^{{t}}_{j,(d-1)}\) stands for the term which can be directly compared to the matrix multiplication occurring in the second-generation neural networks. The activation function presented in Eq. (14) governs the discrete pulses or spikes emitted by the neurons depending on its membrane potential, and the term \(\phi _{s}(V^{{t-1}}_{l, (d)})V^{thr}_{l, (d)}\) translates to a reset mechanism which reduces the potential of the activated neuron back to the threshold potential \((V^{thr}_{l, (d)}).\) The decay rate and the threshold potential can either be pre-defined or can be trained to best suit the physical problem at hand. Thus, the learnable parameters of the above transformation are

To compare the forward pass of the SNN with classical ANN, the forward pass of a simple FFNN can be written as follows:

Thus, if we compare Eqs. (14) and (16), we see that the most fundamental transformation in SNNs is inherently time-dependent while its traditional counterpart is not. This follows directly from the fact that Eq. (13) is obtained by discretizing an ODE through an explicit forward Euler scheme [100]. Another important difference is in the way the information is processed and propagated at each neuron. In SNNs, the information is processed through sparse signals, rather than the traditional approach of using dense signals. The sparsity is introduced by the activation function, which sends a signal of one or zero depending upon the membrane potential reached by the respective neuron, as mentioned above. This is where neuromorphic hardware becomes important: information is transmitted only when a spike appears; otherwise, the synaptic weights and memory are not accessed. This allows the SNN framework to be energy-efficient.
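The time-dependence and sparsity discussed above can be made concrete with a minimal sketch of one LIF layer. It assumes the reset-by-subtraction update \(V^{t}_{l,(d)} = \beta _{l,(d)} V^{t-1}_{l,(d)} + \sum _{j} W_{lj,(d)} z^{t}_{j,(d-1)} - \phi _{s}(V^{t-1}_{l,(d)}) V^{thr}_{l,(d)}\) suggested by the terms described in this section, with a Heaviside spike \(\phi _{s}\) that fires when the potential exceeds the threshold. The function name `lif_layer` and the array layout are hypothetical.

```python
import numpy as np

def lif_layer(z_prev, W, beta, v_thr):
    """Run one LIF layer over a sequence.

    z_prev : (T, n_in) output of the previous layer at each time step
    W      : (n_out, n_in) synaptic weights
    beta   : membrane decay rate (scalar or (n_out,))
    v_thr  : threshold potential (scalar or (n_out,))
    Returns the binary spikes and the membrane potentials, both (T, n_out).
    """
    T, n_out = z_prev.shape[0], W.shape[0]
    V = np.zeros(n_out)      # membrane potential V^{t-1}
    spk = np.zeros(n_out)    # spike emitted at the previous step, phi_s(V^{t-1})
    spikes = np.zeros((T, n_out))
    potentials = np.zeros((T, n_out))
    for t in range(T):
        # decay + weighted input - reset of neurons that fired at t-1
        V = beta * V + W @ z_prev[t] - spk * v_thr
        spk = (V > v_thr).astype(float)
        spikes[t], potentials[t] = spk, V
    return spikes, potentials
```

With a single neuron, weight 0.75, no leak (`beta = 1`), unit threshold, and a constant unit input, the potential accumulates and resets so that the output is the sparse train 0, 1, 1, 0, 1.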

Since the activation function showcased in Eq. (14) is non-differentiable, the method of surrogate gradients [75, 112, 113] is used in place of the spiking activation during the backward pass of the model. In this study, we employ the arctangent surrogate activation proposed in [96, 112]. This enables the model to update the learnable parameters using an approximation of the true gradient of the network’s performance with respect to the spikes. As a result, the SNN can maintain stable gradient dynamics and propagate information throughout the network.
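A minimal sketch of the surrogate idea follows. It assumes a commonly used arctangent form, \(g(x) = \frac{1}{\pi }\arctan (\frac{\pi \alpha }{2}x) + \frac{1}{2}\) with \(x = V - V^{thr}\), whose derivative replaces that of the Heaviside step in the backward pass. The slope parameter `alpha` and its default value are assumptions and are not taken from [96, 112].

```python
import numpy as np

def spike_forward(v, v_thr):
    """Forward pass: non-differentiable Heaviside spike."""
    return (v > v_thr).astype(float)

def atan_surrogate(v, v_thr, alpha=2.0):
    """Smooth stand-in for the spike, used only to define the gradient."""
    return np.arctan(np.pi * alpha * (v - v_thr) / 2.0) / np.pi + 0.5

def atan_surrogate_grad(v, v_thr, alpha=2.0):
    """Backward pass: d(atan_surrogate)/dv = (alpha/2) / (1 + (pi*alpha*x/2)^2)."""
    x = v - v_thr
    return (alpha / 2.0) / (1.0 + (np.pi * alpha * x / 2.0) ** 2)
```

The gradient peaks at the threshold with value `alpha / 2` and decays smoothly on both sides, so neurons close to firing receive the largest parameter updates.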

Since the SNNs are inherently time-dependent we compare the unrolled form of the SNNs with that of second-generation Recurrent Neural Networks (RNNs) as shown in Fig. 3. The RNNs make use of parameter sharing and recursions to keep a trace of the memory while processing path-dependent data. Thus, the output depends on the current input and all the evaluations made in the previous time steps. Mathematically, this transformation along with its trainable parameters can be presented as

If we compare the forward passes presented in Eqs. (13), (17) and (18), we realize that an additional memory term \({\textbf{h}}_{t+1}\) is computed in the framework of classical RNNs to maintain path-dependency, while the path-dependency in SNNs stems from the time-discretization of ODE used to describe the spiking model [110]. To capitalize on the benefits of previously developed recurrent frameworks for classical ANNs, we propose a spiking dynamic memory-based recurrent variant termed as Spiking Legendre Memory Unit (LMU) [98] to enable the SPEIS framework to efficiently handle nonlinear path-dependent behavior. A detailed description of the spiking variant of LMU along with the SPEIS framework is provided in the following subsections.

4 Neural network model formulation to compute equivalent plastic strain rate

The material model presented in Eqs. (7), (8), (9), and (10) can be solved using a variety of integration schemes. In the current work, however, we use the backward Euler implicit scheme to compute the inelastic response because it has a more robust and efficient convergence behavior [114]. These implicit formulations need to be solved through an iterative algorithm to compute the internal variables of the material model at the current time step. The scalar equation governing the behavior can be expressed as follows

If \(\varphi \le 0,\) then a pure elastic deformation is assumed and the process of solving the nonlinear set of the equations is skipped; if \(\varphi > 0,\) then a nonlinear solver like Newton–Raphson, Pegasus method, etc., is triggered to compute the solution at the given time-step. The variables \(\widehat{{\textbf{S}}}^{\prime }\ \text{and}\ \widehat{{\textbf{X}}}\) represent the trial stress and back stress tensor. The computational expense of these iterative solvers can increase depending on the assumptions and the variables used for modeling the nonlinear behavior.
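The branching logic described above can be sketched as follows. The yield residual is passed in as a hypothetical callable `phi(dp)` of the plastic increment, assumed continuous and negative at an upper bound `dp_max` whenever the trial state is plastic. A plain bisection is used here as a simple stand-in for the Newton–Raphson or Pegasus solvers named in the text.

```python
def plastic_increment(phi, dp_max, tol=1e-10, max_iter=200):
    """Return the plastic increment dp that satisfies phi(dp) = 0.

    phi    : callable, yield residual as a function of dp (hypothetical interface)
    dp_max : upper bound with phi(dp_max) <= 0 (assumption of this sketch)
    """
    if phi(0.0) <= 0.0:
        return 0.0  # purely elastic step: the nonlinear solve is skipped
    lo, hi = 0.0, dp_max
    if phi(hi) > 0.0:
        raise ValueError("phi(dp_max) must be non-positive to bracket the root")
    for _ in range(max_iter):
        mid = 0.5 * (lo + hi)
        if phi(mid) > 0.0:
            lo = mid   # still outside the yield surface: increase dp
        else:
            hi = mid
        if hi - lo < tol:
            break
    return 0.5 * (lo + hi)
```

Each call to `phi` inside the loop corresponds to one evaluation of the material equations, which is the cost the proposed network-based solver aims to reduce.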

Thus, in the current research, we propose a hybrid-loss-based Spiking LMU that acts as a data-driven model and as a physics-based nonlinear solver to predict the evolution of the equivalent plastic strain rate fulfilling the requirement showcased in Eq. (20). This implicitly computes the state variables \({\textbf{S}},\) \({\textbf{X}},\) and R at the current time step. Thus, the input to the model is proposed as follows \({\textbf{u}}^{[p]} = ([({\widehat{{\textbf{S}}}}^{\prime })^1, {\textbf{0}}, k, K, 0],\ldots ,[({\widehat{{\textbf{S}}}}^{\prime })^i, (\widehat{{\textbf{X}}})^i, k, K, R^{i}],\ldots ,[({\widehat{{\textbf{S}}}}^{\prime })^I, (\widehat{{\textbf{X}}})^I, k, K, R^{I}]),\) where \({\textbf{u}}^{[p]}\) represents the \(p^{th}\) input sequence, superscript i represents the input at an arbitrary time-step i, and I represents the superscript for the last time step. The corresponding output sequence is \({\textbf{v}}^{[p]} = ([\Delta {\bar{\varepsilon }}_{p}]^{{1}}, [\Delta {\bar{\varepsilon }}_{p}]^{{2}}, [\Delta {\bar{\varepsilon }}_{p}]^{{3}},\ldots ,[\Delta {\bar{\varepsilon }}_{p}]^{{I}}),\) which gives a labeled data pair \(({\textbf{u}}, {\textbf{v}})^{\{p\}}.\)

Learning lessons from the second-generation deep learning models, the input sequence in the present study is pre-processed, so that each of the five mentioned features has the same operational range. This aids in a smoother and more stable training process. The above is achieved by carrying out the following transformation:

The superscript \(()^{n}\) represents the normalized quantities while the terms with superscript \(()^{*}\) represent scalar quantities which are decided from preliminary assessment of the data set. In the present research, these were chosen to be the maximum values of the respective input quantities. The above transformation thus maps the input sequence to a normalized domain where the quantities have an operating range between 0 and 1. Further, the output does not undergo the mentioned linear transformation because it naturally stays within the scaled range. The ordered label pair used for pre-training thus can be given as \([{\textbf{u}}^{n}, {\textbf{v}}].\) Additionally, it should be noted that the value k, which denotes the material’s yield limit is a constant input particular to a material and thus, helps to implicitly trace the nonlinear evolution corresponding to different materials.
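The scaling described above can be sketched in a few lines, assuming each input sequence is stored as an array of shape (time steps, features) and that every feature is divided by a reference value. Taking the maximum absolute value per feature as the default reference is an assumption of this sketch; the text only states that maximum values of the input quantities were used.

```python
import numpy as np

def normalize_features(u, scale=None):
    """Scale each feature column of u by a reference value.

    u     : (T, n_features) input sequence
    scale : (n_features,) reference values; defaults to the column-wise max |u|
    Returns the normalized sequence and the scale, so that the same scale
    can be reused for unseen sequences.
    """
    if scale is None:
        scale = np.max(np.abs(u), axis=0)
    scale = np.where(scale == 0.0, 1.0, scale)  # leave all-zero features unchanged
    return u / scale, scale
```

Returning the scale alongside the normalized data matters in practice, since sequences encountered during deployment should be divided by the reference values fixed during pre-training rather than by their own maxima.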

The crucial point associated with SNNs for performing nonlinear regression concerns the encoding and decoding of real input data. In the present work, the encoding is achieved by passing the real data directly to a Spike-based LMU transformation, which learns to map these real values to an appropriate binary spike representation through the training process. The decoding of the binary spikes is performed by a recurrent variant of the spiking neural network, where the (real-valued) potential is taken as the output instead of its spikes. An overview and detailed description of other encoding–decoding strategies such as latency encoding, rate encoding, latency decoding, etc., can be found in [110]. Thus, the proposed Spike-based recurrent transformation that belongs to the class of physics-informed NNs can be represented through the following nonlinear transformations

where the input is first processed by the above-mentioned encoding transformation \(({\mathbf {\mathcal{S}}}_{enc}),\) followed by further spike-based transformations \(({\mathbf {\mathcal{S}}})\) to finally undergo the decoding process \(({\mathbf {\mathcal{F}}}_{dec}).\) The terms \(\theta _1,\) \(\theta _2,\) and \(\theta _3\) represent the trainable parameters of the model and are optimized based on a loss function expressed by

Equation (23) thus represents a combination of data-driven \(({\textbf{L}}_d)\) and physics-based \(({\textbf{L}}_p)\) loss terms. Section 4.2 below presents more details on these terms. Finally, the superscript n denoting the normalized quantities is dropped for convenience, and it is understood that only non-dimensional quantities are used for NN training and prediction.
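The structure of such a hybrid loss can be sketched as follows. The sketch assumes a mean-squared data term on the predicted plastic increments and a mean-squared physics term on a yield residual that is supplied by the caller. The weights `w_data` and `w_phys` and the squared-error form are assumptions of this illustration and are not taken from Eq. (23).

```python
import numpy as np

def hybrid_loss(dp_pred, dp_label, yield_residual, w_data=1.0, w_phys=1.0):
    """Combine a data-driven and a physics-based loss term.

    dp_pred        : predicted increments of equivalent plastic strain
    dp_label       : labeled increments from the FE reference solution
    yield_residual : residual of the yield condition evaluated at dp_pred
    Returns (total, L_d, L_p).
    """
    L_d = np.mean((np.asarray(dp_pred) - np.asarray(dp_label)) ** 2)
    L_p = np.mean(np.asarray(yield_residual) ** 2)
    return w_data * L_d + w_phys * L_p, L_d, L_p
```

Setting `w_data = 0` leaves only the physics term, which mirrors the label-free online update used once the model is deployed in the FE solver.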

4.1 Sampling data sequences

Since the SNNs and the spiking variant of LMU are inherently temporal, in the present study we collect the data in the form of sequences as referenced above. The quality of the response from the NN depends strongly on the sampling strategy adopted and the scope of the nonlinear effects covered by the data. Thus, we use the Finite Element simulation of geometrically nonlinear plate elements undergoing viscoplastic deformations (see Fig. 4). It can be seen that normal stresses and shear stress are extracted from different Gaussian points to avoid the inclusion of spurious stresses and the shear-locking phenomenon [101]. Further, due to geometrically nonlinear effects, the training data is extracted from layer 5 (see Fig. 4) and from the Gaussian points throughout the depth of the element.

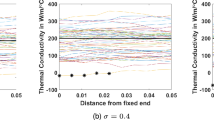

Now, we first concretize the bounds of the training range. The maximum equivalent Huber–Mises–Hencky stress developed is \(\sigma _{e} = 160.859 ~\text{MPa}\) for Aluminium and \(241.839~\text{MPa}\) for Copper materials. Also, since the Lemaitre Chaboche equations include rate dependency it becomes important to collect the data at different time increments. Thus, we collect the data in the range of \([10^{-3}, 10^{-6}]\)s. Another important point must be addressed concerning the computational feasibility of covering all the nonlinear effects through the BVP mentioned in Fig. 4. All the deformation patterns cannot be covered while training, thus to overcome this drawback we introduce a self-learning procedure that empowers the Spiking-NN to adapt itself to unseen data and reach a converged solution. More details concerning the online update can be found in Sect. 4.2 below. Thus, the training sequences consist of labeled pairs of deviatoric trial stress \((\widehat{{\textbf{S}}}^{\prime })^{[q]} = ([\widehat{{\textbf{S}}}^{\prime }]^{1}, [\widehat{{\textbf{S}}}^{\prime }]^{2},\ldots , [\widehat{{\textbf{S}}}^{\prime }]^{I}),\) back-stress tensor \(({\textbf{B}})^{[p]} = ([{\textbf{0}}], [\widehat{{\textbf{X}}}]^{2}, \ldots , [\widehat{{\textbf{X}}}]^{I}),\) isotropic hardening variable \((R)^{[q]} = ([0], [R^{1}],\ldots , [R^{I-1}]),\) and material properties \(M = (k, K)\) as input sequences while the increment of equivalent plastic strain is collected as the corresponding output sequence \(({E}_{p})^{[q]} = ([\Delta \bar{\varepsilon }_{p}^{1}, \Delta \bar{\varepsilon }_{p}^{2},\ldots , \Delta \bar{\varepsilon }_{p}^{I}]),\) where q represents an arbitrary sequence. The above-mentioned process is repeated for different strain rates and the training data in the form of labeled pairs is collected \(({\textbf{S}}, {\textbf{B}}, R, M, E_{p})^{N},\) where N represents the number of samples. 
In the present study, we extract 1000 sequences out of which 750 sequences are used for pre-training the NN model while the remaining 250 sequences are used for testing the performance of the spiking-LMU model.
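
The 750/250 split and the one-step lag of the isotropic hardening input can be sketched as follows. This is a minimal illustration rather than the authors' data pipeline: the helper names `lag_by_one` and `split_sequences`, the random shuffling, and the fixed seed are assumptions, and the integer identifiers merely stand in for the extracted sequences.

```python
import random


def lag_by_one(values, initial=0.0):
    """Shift a sequence by one step so that step t sees the value from t-1.

    Mirrors the input layout (R)^[q] = ([0], [R^1], ..., [R^(I-1)]).
    """
    return [initial] + list(values[:-1])


def split_sequences(sequences, n_train, seed=0):
    """Shuffle the sequences and split them into training and test sets.

    Shuffling and the seed are assumptions; the text only states the counts.
    """
    shuffled = list(sequences)
    random.Random(seed).shuffle(shuffled)
    return shuffled[:n_train], shuffled[n_train:]


# Placeholder identifiers standing in for the 1000 extracted sequences.
all_sequences = list(range(1000))
train, test = split_sequences(all_sequences, n_train=750)
```

Lagging the hardening variable keeps the input at step t consistent with the forward pass, which consumes \(R^{t-1}\) rather than \(R^{t}\).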

4.2 Physics informed spiking recurrent neural network approach

In the current section, we propose a Spiking variant of LMU enhanced pseudo explicit integration scheme which transforms the input sequence

to compute the increment of equivalent plastic strain at the respective time step

In one of the authors’ previous works [17], a second-generation LSTM network was employed to compute the viscoplastic material behavior undergoing kinematic hardening. In an LSTM cell [99] the short and long-term memory variables depend directly upon the number of initialized hidden cells. Since long-term memory is responsible for preserving the memory of a layer, increasing its capacity helps in processing even long sequences. This was achieved in the study [98] with the so-called LMU cell (Fig. 5), in which the processing of the long-term memory of the system is decoupled from the number of hidden units. Thus, with the current research, we introduce a possible spiking variant (third generation) of the LMU cell that could be deployed on neuromorphic hardware. In the following, we briefly introduce the nonspiking and spiking variants of LMU cells.

LMU cell: Rectangular blocks A and B denote linear transformations applied to the memory computed at the previous time step \(({\textbf{m}}^{t-1})\) and the signal \({{\textbf{u}}}^{t},\) which are used to compute the memory at the current time step. \({\textbf{e}}_x, {\textbf{e}}_m,\ \text{and}\ {\textbf{e}}_h\) represent learnable parameters of the LMU cell. \({\textbf{x}}^t,\) \({\textbf{h}}^{t}\) and \({\textbf{o}}^{t}\) represent the input, working memory, and output of the cell

The core concept of an LMU cell depends on the dynamic computation of its memory \({\textbf{m}}^{t},\) which is derived from the linear transfer function for the continuous delay and is best approximated by n coupled ordinary differential equations (ODEs)

where \({\textbf{m}}(t) \in {\mathbb{R}}^{n}\) denotes a state vector with n dimensions, \(({\textbf{A}}, {\textbf{B}})\) represent ideal state-space matrices derived from the Padé approximants as mentioned in studies [98, 115], and \({\textbf{u}}(t) \in {\mathbb{R}}\) represents an input signal which is orthogonalized across a sliding time window of length \(\theta .\) These n sets of equations are mapped to the memory of the cell at discrete time steps t and are expressed by

where \(\tilde{\textbf{A}}\) and \(\tilde{\textbf{B}}\) are discretized matrices and in the present work are computed using the zero-order hold (ZOH) method. The parameters of the memory \(\tilde{\textbf{A}},\) \(\tilde{\textbf{B}}\) and \(\theta\) can also be trained by back-propagating through the ODE solver to adapt itself to different time-scales, however, in the present research, we do not tune them and accept the discretization through ZOH. The signal \({\textbf{u}}^{t}\) writes to the memory in Eq. (27) and is computed using the equation

where \({\textbf{e}}_{\textbf{x}},\) \({\textbf{e}}_{\textbf{h}},\) and \({\textbf{e}}_{\textbf{m}}\) represent parameters used to dynamically project relevant information into its memory cell. The input provided to the model at the current time step is denoted by \({\textbf{x}}^{t},\) while \({\textbf{h}}^{t-1}\) represents the hidden state at the previous time step. Finally, the memory is passed to a simple recurrent hidden cell which nonlinearly transforms the memory to produce the output \({\textbf{o}}^{t}\) and the current state of the layer \({\textbf{h}}^{t},\) written as

where \({\textbf{W}}^h,\) \({\textbf{W}}^m,\) and b represent a set of trainable parameters to learn the required function. The spiking variant of the LMU cell (SLMU) can be computed by keeping the Eqs. (26), (27), and (28) unchanged and introducing the spikes in Eqs. (29) and (30) as

representing the update that would take place at any arbitrary neuron l of an arbitrary hidden layer d. j denotes the number of hidden neurons in dth layer and k denotes the number of units used to compute the memory. Here, \(\phi _{s}\) represents the activation function and is written in the form

Looking at Eqs. (31) and (32), we can deduce that the cell state \(h^t_{l,(d)}\) can be interpreted as the neuron’s potential \((V^t_{l, (d)})\) and is activated only when a certain threshold is exceeded. The corresponding set of learnable parameters for the proposed SLMU cell can be expressed by

SLMU based nonlinear solver: The input variables are assumed to be fed with dense connections (indicated by filled arrows) at a certain time step t. The first layer, named the Autoencoding SLMU layer, converts the real-valued data into a binary spike representation. The discrete lines indicate that no signal or 0 is passed, while the simple arrows indicate that the threshold is exceeded and a signal of 1 is transmitted. The black arrows placed on every neuron of the SLMU layer indicate the presence of recurrent connections. The isotropic hardening parameter \(R^{t}\) can be expressed using \(R^{t-1}\) and \((\Delta \varepsilon _p)_{c}^{t}\) as shown in Eq. (38)
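
The memory update of the LMU cell described in the text (a continuous delay system discretized with ZOH) can be sketched in NumPy as follows. The closed-form entries of \(({\textbf{A}}, {\textbf{B}})\) are those of the standard LMU formulation in [98], written here as \(\theta \dot{{\textbf{m}}}(t) = {\textbf{A}}{\textbf{m}}(t) + {\textbf{B}}u(t)\); whether this work scales the matrices in exactly the same way is an assumption. The function names, the truncated-Taylor matrix exponential, the values of `theta` and `dt`, and the simple threshold function `spike` are illustrative choices, not the authors' implementation.

```python
import numpy as np


def lmu_matrices(n):
    """Continuous-time LMU matrices (A, B) of order n, following [98]."""
    A = np.zeros((n, n))
    B = np.zeros((n, 1))
    for i in range(n):
        B[i, 0] = (2 * i + 1) * (-1.0) ** i
        for j in range(n):
            sign = -1.0 if i < j else (-1.0) ** (i - j + 1)
            A[i, j] = (2 * i + 1) * sign
    return A, B


def expm_taylor(M, terms=20, squarings=10):
    """Matrix exponential by scaling and squaring with a truncated Taylor series."""
    scaled = M / (2.0 ** squarings)
    result = np.eye(M.shape[0])
    term = np.eye(M.shape[0])
    for k in range(1, terms + 1):
        term = term @ scaled / k
        result = result + term
    for _ in range(squarings):
        result = result @ result
    return result


def zoh_discretize(A, B, theta, dt=1.0):
    """Zero-order-hold discretization of theta * dm/dt = A m + B u.

    Uses the augmented-matrix identity exp([[A, B], [0, 0]] * dt / theta),
    whose top blocks are the discrete matrices (A_d, B_d).
    """
    n = A.shape[0]
    augmented = np.zeros((n + 1, n + 1))
    augmented[:n, :n] = A * dt / theta
    augmented[:n, n:] = B * dt / theta
    exponential = expm_taylor(augmented)
    return exponential[:n, :n], exponential[:n, n:]


def memory_step(A_d, B_d, m_prev, u):
    """Discrete memory update m^t = A_d m^(t-1) + B_d u^t."""
    return A_d @ m_prev + B_d * u


def spike(potential, threshold=1.0):
    """Assumed threshold activation: emit 1 where the potential exceeds the threshold."""
    return (potential > threshold).astype(float)


A, B = lmu_matrices(4)
A_d, B_d = zoh_discretize(A, B, theta=10.0)
m = np.zeros((4, 1))
for _ in range(200):
    m = memory_step(A_d, B_d, m, u=1.0)  # constant input signal
```

For a constant unit input the memory settles at \((1, 0, 0, 0)\), since the first column of \({\textbf{A}}\) equals \(-{\textbf{B}}\); only the lowest-order Legendre coefficient is needed to represent a constant window.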

Further, the present study introduces a simpler variant than SLMU termed spiking recurrent neural network (SRNN) as a decoder. Mathematically, it is described in the form

If we compare the transformations in Eqs. (13) and (34) we realize that only an additional term corresponding to a recurrent weight \({\textbf{U}}_{l, (d)}\) and the output from the previous time step \(\phi _{s}(V^{t-1}_{k, (d)})\) is explicitly added to strengthen the sequential nature of the neuron further. Thus, the set of trainable parameters corresponding to the above transformation can be written as

Hence, in the present study, we combine the third-generation spike LMU and spike RNN layers with dense layers and propose a hybrid neural network model (consisting of second and third-generation neurons) to establish a data and physics-empowered solver (see Fig. 6). Normally, the traditional nonlinear solvers such as Newton–Raphson, Pegasus method, etc., require an initial guess or multiple initial guesses to search for the solution of a nonlinear equation or a set of nonlinear equations.

In the current study, the proposed spike-based NN model has the ability to not only reach a converged solution for a set of nonlinear equations but also remember the solution for the corresponding set of input variables through its learnable parameters \(\tilde{\theta }.\) This sets the solving capabilities of the NN apart from those of classical nonlinear solvers. Further, to take advantage of such a unique ability we pre-train the model shown in Fig. 6 with a variety of input and output labeled pairs following the sampling strategy presented in Sect. 4.1. Thus, the model is penalized to learn through the data and physical constraint, guiding the NN to the converged solution. This empowers the NN model to either directly guess the solution or learn the solution for an unknown set of input parameters through a process of online training which in the present study is referred to as self-learning.

We now focus on one forward pass through the model presented in Fig. 6. At a certain time step t, the input sequence is given as \(x = ([((\widehat{{\textbf{S}}}^{\prime })^t), \widehat{{\textbf{X}}}^{t}, R^{t-1}, k, K, ({\mathcal{S}}_{p})_{1}^{t}, ({\mathcal{S}}_{p})_{2}^{t}, ({\mathcal{S}}_{p})_{3}^{t}, ({\mathcal{S}}_{p})_{4}^{t}]).\) The internal parameters of the LMU cells are represented by \(({\mathcal{S}}_{p})_{i}^{t} = [h^{t-1}_{i}, m^{t-1}_{i}]\) where i takes the values \((1,\ldots ,3)\) and the internal parameters of the SRNN layer are represented by \(({\mathcal{S}}_p)_{4}^t = {h_{4}^{t-1}}.\) These parameters enable the model to handle the nonlinear evolution at each Gaussian point individually, since each integration point has a unique set of \(({\mathcal{S}}_p)_{i}^{t}\) values for a given time step t. Thus, all the spike-based LMU (SLMU) layers and the SRNN layer play a crucial role in limiting any loss of information that can occur while trying to establish the equilibrium at each Gaussian point. Furthermore, because the input variables are real-valued, they must be encoded to obtain a binary representation of the data. In the proposed study this is achieved by the first SLMU layer of the model, which serves as the auto-encoding layer and generates a binary (spike) representation of the input sequence at a given time t. The generated spikes then pass through the following SLMU layers, enriching the model with a complex higher-dimensional representation of the input sequence. Because the increment of equivalent plastic strain is also real-valued, the spikes are decoded by the SRNN layer, which uses the potential computed at the respective time steps as outputs. Finally, the decoded data is further processed through two dense layers before computing the output \(\Delta \bar{\varepsilon }_p\) and the internal parameters corresponding to the recurrent spike layers. This completes the forward pass of the model.

In the present study, the spiking NN model undergoes two learning processes: the pre-training process and the online training (self-learning) process. In the pre-training process, the model is trained by a combination of data-driven \(({\textbf{L}}_{d})\) and physics-based \(({\textbf{L}}_{p})\) loss functions. Mathematically, they are denoted as follows.

where \(\tilde{m}\) represents a particular sequence, \(l^{\tilde{m}}\) stands for the length of that sequence, T denotes the total number of sequences used for training, the subscripts a and c in Eqs. (37) and (38) denote the actual and computed values of strain, and t represents a certain time step of the sequence \(\tilde{m}.\) In the pre-training phase, the NN model presented in Fig. 6 is trained with both loss terms weighted equally, i.e. the coefficients of the loss terms are set to \(\lambda _1 = \lambda _2 = 1.\) More details about pre-training can be found in Sect. 4.3 below. The next phase starts when the spiking NN model is deployed in the integration scheme as shown in Algorithm 1. In the elastic predictor step, the trial stress \(\widehat{{\textbf{S}}},\) the deviatoric form of the trial stress \(\widehat{{\textbf{S}}}^{\prime }\) and the trial back-stress tensor \(\widehat{{\textbf{X}}}^{\prime }\) are computed. If \(\varphi < 0,\) the trial variables are accepted as the new updates as shown in Algorithm 1. If, on the other hand, the constraint is violated, we enter the plastic corrector step and need to solve a set of nonlinear equations to compute the state variables at the new time step t.
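
The structure of the combined loss can be sketched as follows. Since the loss equations themselves are not reproduced in this section, the normalization used here (squared error averaged over the time steps of each sequence, then over the sequences) is an assumption inferred from the symbol definitions, and the function names are hypothetical.

```python
def data_loss(actual, computed):
    """Squared error averaged over time steps, then over sequences.

    `actual` and `computed` are lists of sequences (lists of floats) holding
    the actual and computed strain increments; sequences may differ in length.
    """
    per_sequence = []
    for seq_a, seq_c in zip(actual, computed):
        squared = [(a - c) ** 2 for a, c in zip(seq_a, seq_c)]
        per_sequence.append(sum(squared) / len(squared))
    return sum(per_sequence) / len(per_sequence)


def total_loss(l_data, l_physics, lambda_1=1.0, lambda_2=1.0):
    """Weighted sum of the data-driven and physics-based loss terms."""
    return lambda_1 * l_data + lambda_2 * l_physics


# Two toy sequences of lengths 2 and 1.
example = data_loss([[1.0, 2.0], [3.0]], [[1.0, 4.0], [2.0]])
```

Averaging within each sequence first prevents long sequences from dominating the loss, which is one plausible reason for carrying the sequence length \(l^{\tilde{m}}\) explicitly.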

Since the present study concerns a plane stress problem, an iterative process needs to be adopted to satisfy \(\sigma _{33}=0.\) The details of this iterative procedure are not presented in Algorithm 1; only a pseudo implementation is highlighted. Further, instead of employing a nonlinear solver such as Newton–Raphson or secant-tangent to solve the mentioned nonlinear equation (see Eq. 38), we employ a spiking neural network as shown in Fig. 6 to obtain a converged solution. The pre-trained model takes the input sequence \({\textbf{u}}^{n}\) along with the internal parameters from the previous time steps as the input to predict the response at the current time step. Once \(\Delta \bar{\varepsilon }_{p}\) is predicted by the network, the physics-based loss term \({\textbf{L}}_{p}\) is computed as shown in Eq. (38). If \({\textbf{L}}_{p}\) satisfies the convergence criterion, i.e. is less than \(10^{-6},\) the predicted output is accepted as the solution for the current time step; otherwise a self-learning procedure is triggered which ensures that the physical constraint expressed by \({\textbf{L}}_{p}\) is satisfied to obtain the expected solution. This is achieved by tuning the weights of the dense layers on the physics-based loss term \({\textbf{L}}_{p}\) while freezing the weights of the SLMU and SRNN layers. Such a strategy is employed to avoid loss of information from the spiking layers and at the same time to be able to fine-tune the model for unknown sequences. To the best of the authors’ knowledge, such a self-learning framework with spiking variants of LMU and RNN transformation is novel in the literature, and it attempts to investigate the use of spiking networks in computational mechanics.
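
The accept-or-self-learn logic of the corrector step can be illustrated with a deliberately small toy problem. Everything specific in the sketch is an assumption made for illustration: the residual is a linear stand-in rather than the actual nonlinear constraint of Eq. (38), the whole network is collapsed into one frozen feature plus one trainable bias, and plain gradient descent with a hand-picked step size replaces whatever optimizer is used online. Only the tolerance of \(10^{-6}\) and the cap of 25 online steps are taken from the text.

```python
import math


def softplus(x):
    """Numerically stable softplus, log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def sigmoid(x):
    """Derivative of softplus (adequate for the moderate inputs used here)."""
    return 1.0 / (1.0 + math.exp(-x))


def physics_residual(delta_p, target=0.5):
    """Hypothetical stand-in for the residual behind L_p; NOT Eq. (38)."""
    return delta_p - target


def predict_with_self_learning(frozen_feature, bias, tol=1e-6, max_steps=25, lr=3.0):
    """Accept the prediction if L_p < tol, otherwise tune only the trainable bias.

    `frozen_feature` plays the role of the frozen spiking layers' output,
    `bias` the role of the trainable dense-layer weights.
    """
    steps = 0
    while True:
        pre_activation = frozen_feature + bias
        delta_p = softplus(pre_activation)
        residual = physics_residual(delta_p)
        loss = residual ** 2
        if loss < tol:
            return delta_p, bias, steps, True
        if steps == max_steps:
            return delta_p, bias, steps, False
        # Gradient of the loss with respect to the trainable bias only.
        bias -= lr * 2.0 * residual * sigmoid(pre_activation)
        steps += 1


delta_p, tuned_bias, n_steps, converged = predict_with_self_learning(0.0, 0.0)
```

If the first prediction already satisfies the tolerance, the function returns with zero online steps, which corresponds to the case in which the scheme behaves like an explicit one.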

4.3 Pre-training phase

Pre-training aims to guide the NN model with experiences collected through data and its corresponding physics to approximate a spike-based sequential model. This phase can be perceived to be the normal training phase of a NN model, where the freshly initialized parameters are optimized through loss functions presented in Eqs. (36), (37) and (38). The Adam optimizer [116] is used in the present study to tune the parameters of the model and learn the underlying physical behavior of the system.

In addition to the physical constraint highlighted in Eq. (38), a further constraint that needs to be satisfied during pre-training is the positivity of the equivalent plastic strain, i.e. \(\bar{\varepsilon }_{p} \ge 0.\) In [60], a penalty constraint was proposed which increased the loss of the system if a negative value was predicted. However, for the present physical behavior a negative prediction during pre-training causes non-convergence, since a negative number raised to a fractional power results in a complex number (see Eq. (38)). To circumvent the issue, we adopted a sigmoid activation in our study [17], as it always results in a positive output. Despite the positive results, several issues with diminishing gradients or the so-called plateau problem [117] were reported. Additionally, since the output values of the sigmoid activation are constrained between 0 and 1, it is inappropriate for BVPs where the increment \(\Delta \bar{\varepsilon }_p\) is greater than 1. Thus, to avoid the above-mentioned issues we employ a softplus [118] activation function in the output layer of the proposed model. Being smooth, without sharp corners or discontinuities, it yields a more stable and efficient gradient computation. Further, since it is monotonically increasing and, unlike the sigmoid, does not saturate for large positive inputs, the problem of vanishing gradients is mitigated in that regime.
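
The properties that motivate the choice of output activation can be checked numerically with a few lines of standard-library Python. The stable formulation of softplus used here is a common implementation choice and is not taken from the source.

```python
import math


def sigmoid(x):
    """Logistic sigmoid; its output is confined to the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def softplus(x):
    """Softplus log(1 + exp(x)), written to avoid overflow for large |x|."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def softplus_gradient(x):
    """The derivative of softplus is the sigmoid."""
    return sigmoid(x)


# Positive output even for strongly negative pre-activations.
small = softplus(-50.0)
# Unlike the sigmoid, the output can exceed 1.
large = softplus(5.0)
# The gradient approaches 1 (rather than 0) for large positive inputs.
slope = softplus_gradient(20.0)
```

For strongly negative inputs the softplus gradient still decays towards zero, so the advantage over the sigmoid concerns the positive side of the input range.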

The complete pre-training phase is described in Algorithm 2. The outermost loop describes the number of epochs for which the model is trained. The following for loop represents the batches of sequences used for training from the complete set of training sequences, while \({\textbf{u}}\) and \({\textbf{v}}\) thus represent the corresponding pre-processed batches of input sequences. The innermost for loop indicates the processing of information through time. A particular time step is represented by t, while the total number of time steps in a series is represented by l. Both the physics-based and data-driven loss terms are computed and added over all the time steps to update the parameters of the model. Naturally, since the training data is extracted for the converged solution, the internal parameters of the model are updated after each time step.

4.4 Hyperparameter tuning

In the present study, the number of SLMU layers, the number of hidden units in the SLMU layers, the number of units of the SRNN layer, the number of hidden dense layers, and the number of hidden units in the dense layers are chosen as the hyperparameters to be tuned. For all the other parameters, either the advised values are accepted, such as the learning rate of the Adam optimizer, which is chosen to be \(\eta = 0.001,\) or the choice is made depending on the physical constraint that needs to be satisfied, such as the activation function of the output layer, which is chosen to be the softplus activation.

To tune the mentioned hyperparameters we employ a Hyperband search algorithm [119] which results in efficient exploration and investigation of suitable architectures. Finally, the search domain along with the tuned values of hyperparameters can be seen in Table 2.
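
Hyperband [119] builds on the successive-halving idea: many configurations are evaluated with a small budget, only the best fraction is kept, and the survivors are re-evaluated with a larger budget. The sketch below shows a single such bracket with a toy objective; the full algorithm runs several brackets with different trade-offs between the number of configurations and the budget. The search space, the objective `toy_validation_loss`, and the choice `eta=3` are hypothetical and unrelated to the values in Table 2.

```python
def successive_halving(configs, evaluate, min_budget=1, eta=3):
    """Keep the best 1/eta of the configurations each round while growing the budget."""
    survivors = list(configs)
    budget = min_budget
    while len(survivors) > 1:
        # Lower validation loss is better.
        ranked = sorted(survivors, key=lambda cfg: evaluate(cfg, budget))
        keep = max(1, len(survivors) // eta)
        survivors = ranked[:keep]
        budget *= eta
    return survivors[0]


def toy_validation_loss(config, budget):
    """Hypothetical objective: best at 64 units, improving with training budget."""
    return (config["units"] - 64) ** 2 / 1000.0 + 1.0 / budget


candidates = [{"units": u} for u in (8, 16, 32, 64, 128, 256, 512, 1024, 2048)]
best = successive_halving(candidates, toy_validation_loss)
```

In an actual search, `evaluate` would train the hybrid model for `budget` epochs and return its validation loss.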

5 Case studies and results

The present study proposes a framework for implementing sustainable neuromorphic computing into the Finite Element Method. This includes the brain-inspired variant of third-generation recurrent neural networks referred to as SLMU layers and a physics-based self-learning integration scheme (SPEIS) to compute the nonlinear material response by eliminating/minimizing the iterations required in the classical implicit scheme. The result is significant energy saving in comparison to classical FEM or second-generation deep learning models. Furthermore, the new sustainable neural network approach still ensures higher simulation speed and better convergence behavior compared to classical FEM as observed in second-generation neural networks. Also, the model is regularized [120] through the spiking mechanism which assists in obtaining a robust response over unseen sequences. The SLMU layers used in combination with second-generation dense layers act as a nonlinear solver for evaluating the material response at the Gaussian integration points. Thus, the hybrid solver (see Fig. 6) combining the neural transformations across second and third generations is deployed in a Finite Element code to compute the geometrically and physically nonlinear viscoplastic behavior of plate structures made up of aluminum (see Fig. 7). To investigate and showcase the adaptability of the proposed solver, the results for test sequences and a series of BVPs where the deformation lies outside of the pre-training phase are discussed. Finally, a detailed comparison of the model’s energy consumption is presented when deployed on a CPU, GPU, Loihi, and Xylo-Av2 SynSense chip in the following subsection.

5.1 Case study: response on test sequences

The performance of the proposed hybrid model is first evaluated on two test sequences as shown in Figs. 8 and 9. These sequences correspond to the deformation presented in Fig. 4. Since the model is able to accurately predict the response for the test sequences it can be concluded that the necessary nonlinear behavior has been learned by the model through the proposed combination of physics-based and data-driven loss terms (see Sect. 4.3).

5.2 Case study: horizontal plate structure exhibiting physical nonlinear behavior

In this subsection, we present a boundary value problem (BVP) as shown in Fig. 10. The BVP consists of plate elements, each having 9 nodes and 13 integration points along the element and 5 integration points along its depth as shown in Fig. 4. To understand the learning ability of the SLMU model we start by analyzing its response only for physically nonlinear behavior.

The cyclic loading sequence applied for the respective BVP has a similar distribution as the pre-training phase, however, for certain Gaussian points the deformation pattern is completely unknown. This leads to a number of conclusions regarding the computational effort needed for the BVP, the self-learning ability of the recently developed SPEIS scheme (see Algorithm 1), and the impact of the internal parameters of the SLMU layers on the accuracy of computing the equivalent plastic strain rate, all of which are covered in more detail below (see Sect. 6.2). Furthermore, to compare the accuracy of the third-generation and second-generation networks, we compute the response of the SPEIS framework with the model shown in Fig. 6 and its corresponding second-generation variant.

The stress–strain curves corresponding to elements 1 and 100 are shown in Figs. 11 and 12 and it can be seen that the SLMU model and its second-generation variant are able to precisely compute the nonlinear response at the corresponding integration points. It can also be concluded that from the point of view of accuracy, the third-generation network was able to match the accuracy of its second-generation variant indicating smooth performance of the corresponding encoding and decoding strategies. Thus, for all the following BVPs the results are presented only with the SLMU model. Also, it must be noted that since the SLMU model is deployed in the corrector step of Algorithm 1 its influence is only visible in the nonlinear domain which has the following advantages:

1. Less computational effort in the corrector step, since the NN model requires few or no online training steps to achieve a converged solution.

2. With each converged solution, the NN model remembers the path and stores the corresponding knowledge in its internal parameters, which helps in avoiding iterations for other Gaussian points that may undergo similar nonlinear deformation patterns.

5.3 Case study: spatial plate structures with symmetric and unsymmetric loading exhibiting physical nonlinear behavior

In this subsection, the SPEIS framework is evaluated concerning its response to two spatial structures (see Figs. 13 and 14). The main purpose of these BVPs is to assess the response of the model over deformation patterns not seen during the pre-training phase and to understand the extent of the online training required to achieve a converged solution. The corresponding stress–strain curves can be seen in Figs. 15, 16, 17, and 18. A detailed discussion on accuracy can be found in Sect. 6.2.

Comparing the prediction of SLMU model (3rd gen) enhanced FE and LMU model (2nd gen) enhanced FE for element number 1 with the results from classical FEM (see Fig. 10)

Comparing the prediction of SLMU model (3rd gen) enhanced FE and LMU model (2nd gen) enhanced FE for element number 100 with the results from classical FEM (see Fig. 10)

Comparing the prediction of SLMU model (3rd gen) enhanced FE for element number 3 with the results from classical FEM (see Fig. 13)

Comparing the prediction of SLMU model (3rd gen) enhanced FE for element number 48 with the results from classical FEM (see Fig. 13)

Comparing the prediction of SLMU model (3rd gen) enhanced FE for element number 45 with the results from classical FEM (see Fig. 14)

Comparing the prediction of SLMU model (3rd gen) enhanced FE for element number 30 with the results from classical FEM (see Fig. 14)

Comparing the force vs. displacement response from classical FE solution and NN-Enhanced FE solution for a geometrically and physically nonlinear plate structure. The BVP presented in Fig. 10 is simulated encapsulating the nonlinear effects

5.4 Case study: horizontal plate structure exhibiting physical and geometrical nonlinear behavior

In this subsection, we investigate the response of the SPEIS framework when handling a combination of physical and geometrical nonlinear behavior. The geometrical nonlinear behavior arises through the moderate rotations included in Eq. (4) and through the nonlinear strain-displacement relationship shown in Eq. (5). A superposition of the physical and geometrical nonlinear effects can be observed clearly when the structure undergoes rotation and is loaded in the transverse direction until the Huber–Mises–Hencky stress reaches the yield limit. In such a scenario, the proposed SPEIS framework is able to compute the nonlinear material response without losing or hampering the effects arising from geometric nonlinearity. This can be observed through the force-displacement curve seen in Fig. 19 and through the stress–strain curves shown in Figs. 20 and 21.

Comparing the prediction of SLMU model (3rd gen) enhanced FE for element number 90 with the results from classical FEM (see Fig. 10)

Comparing the prediction of SLMU model (3rd gen) enhanced FE for element number 9 with the results from classical FEM (see Fig. 10)

6 Discussions

6.1 About the hybrid model and self-learning

In the present research, the term hybrid model is associated with the combined implementation of the third-generation SLMU layers, SRNN layer, and second-generation dense layers. During the pre-training phase, all the layers are initialized to be trainable and thus gain experience/knowledge from a combined loss function consisting of data-driven (see Eq. 37) and physics-based loss terms (see Eq. 38). Once the model is trained to the required accuracy, which is assumed to be \(5 \times 10^{-6},\) the pre-training phase is considered complete and the model is deployed in the SPEIS framework. Once the model is deployed, the trainable parameters of the third-generation SLMU layers and SRNN layer are frozen, while the second-generation dense layers are free to be trained. Such a strategy was adopted in the present research to preserve the knowledge/learning from the pre-training phase and use it to make informed predictions in the nonlinear plastic domain. If, after its deployment in the FE solver, the SLMU model is not able to directly predict the corresponding converged solution, the online training strategy is triggered and the NN model adapts itself to reach the converged solution. This ability of the SLMU model to automatically trigger online training is referred to as self-learning, and it is responsible for tracing the nonlinear evolution at each Gaussian point.

6.2 About accuracy

The accuracy in the present study is computed by comparing the stress response of the structures obtained from the Euler-Implicit Integration scheme (EIIS) and from the proposed SPEIS framework. Root Mean Square Error (RMSE) values are used as a measure for comparing the performance of the EIIS and the SPEIS framework for the Gaussian points that underwent maximum plastic deformation, as shown in Table 3. Also, to see the impact on the structure’s global equilibrium, we compare the RMSE values of the global displacement vector for the structures shown in Figs. 10, 13, and 14. The structure subjected to a combined geometrical and physical nonlinear deformation was predicted with the least error, given that it corresponds to the Gaussian point closest to the externally applied load in Fig. 21, which exhibits only geometrically nonlinear behavior. Additionally, the stress evolution in Fig. 20 shows that the SPEIS framework handled the combined nonlinear effects effectively (see Fig. 19), because the model was able to reach the necessary converged solution with a low number of online training steps, which in the current study is limited to 25. On the other hand, such a low number of online training steps can also introduce errors, as seen in Fig. 17. Thus, to minimize the loss of accuracy and the computational effort, the number of training steps can be optimized and the SLMU model can be periodically re-trained to achieve optimum performance.
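
The error measure can be sketched as follows. The function name and the plain-list inputs are illustrative; in practice `reference` would hold the EIIS stress (or displacement) history and `prediction` the corresponding SPEIS history at the same Gaussian point and time steps.

```python
import math


def rmse(reference, prediction):
    """Root mean square error between two equally long histories."""
    if len(reference) != len(prediction):
        raise ValueError("histories must have the same length")
    squared = [(r - p) ** 2 for r, p in zip(reference, prediction)]
    return math.sqrt(sum(squared) / len(squared))


# Toy histories, not values from Table 3.
error = rmse([0.0, 0.0], [3.0, 4.0])
```

Because RMSE carries the units of the compared quantity, stress and displacement errors are not directly comparable with each other.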

6.3 About computational effort

The term Spiking Pseudo Explicit Integration Scheme (SPEIS) reflects that the integration scheme, although inherently implicit, requires minimal or no training steps/iterations to arrive at a converged solution. This results in a reduced computational effort when the SPEIS framework is deployed in Finite Element simulations in comparison to its classical counterpart. In the present research, an in-house FE solver is developed in Python; hence the comparison of computation speed must be regarded as indicative only, since it depends upon the implementation of the solver.

For the BVP shown in Fig. 10 undergoing only physically nonlinear deformation, it was observed that the Finite Element solutions with the SLMU model and its second-generation counterpart were 41% and 29% faster in comparison to the traditional Finite Element solution. Such an acceleration is introduced by the elimination or reduction of the number of online training steps required to reach a converged solution. The higher computational effort required by the SLMU model in comparison to its traditional counterpart can be attributed to the loss of information/knowledge during the propagation and conversion of real-valued signals to spiking signals and vice versa. Furthermore, the SPEIS framework was able to achieve a computational gain of around 33% and 30% for the structures presented in Figs. 13 and 14, respectively. This indicates that the computational gain achieved by the SPEIS framework is more evident for complex BVPs, since the framework is able to make an informed prediction for Gaussian points undergoing nonlinear deformations.

In the case of a combined geometrical and physical nonlinear deformation behavior as shown in Fig. 19, the SPEIS framework had a computational gain of around 18% compared to the traditional FE solution. This is a result of the fact that both nonlinearities play a crucial role in determining the computational effort of the FE solver, and thus the SPEIS framework needs to adapt to and learn the deformation pattern produced by these combined nonlinear effects. The robust nature of the SPEIS framework can be seen from Figs. 20 and 21, where the internal response of the element undergoing maximum plastic deformation and maximum geometrical nonlinear effect is accurately predicted.

Finally, it must be noted that the SLMU model could be periodically re-trained for sequences and distributions where the model takes a significant number of online training steps to reach a converged solution. This would further help in minimizing the time required to reach a solution.

6.4 About deployment of spiking networks on a Xylo-Av2 neuromorphic chip

In the preceding subsections, we proposed algorithms, such as encoding and decoding strategies, to aid in converting real-valued signals into their corresponding spiking signals and vice versa. These algorithms play a crucial role in enabling third-generation spiking networks to be deployed for learning real-valued regression tasks. Thus, the following section investigates the applicability of these algorithms when the model is implemented on neuromorphic hardware, such as SynSense’s Xylo-Av2 chip. The Xylo family is designed for low-power continuous temporal processing tasks and hence it is chosen to learn the path-dependent (temporal) regression task. The Rockpool library [79] developed by SynSense is used to initialize the spiking model which is later deployed using Samna [79], a device toolchain, on the chip.

Since the chip is designed for low-power tasks, certain conditions must be fulfilled before the model can be deployed on it. The only types of neurons that can be used on the chip are Leaky Integrate-and-Fire (LIF) and Recurrent Leaky Integrate-and-Fire (RLIF) neurons. Thus, we replace the SLMU layers in the model presented in Fig. 6 with LIF and RLIF layers as shown in Fig. 22. Additionally, as the input and output of the chip are expected to be feed-forward in nature rather than recurrent, the LIF layer is utilized instead of the RLIF layer for autoencoding and decoding the respective real-valued and binary signals. Once the model is initialized, it first undergoes pre-training as described in Sect. 4.3. This pre-training is carried out on the CPU since no on-chip training is possible with the Xylo-Av2 chip. This means that once the model is trained, the learned parameters of only the spiking layers are deployed on the chip using Samna, while the remaining second-generation dense layers are deployed on the CPU. A complete forward pass of the proposed model for the Xylo-Av2 chip can be seen in Fig. 22, where the autoencoding takes place on the CPU since only binary signals are accepted by the chip as an input. In the following step, the information is processed on the chip and is decoded again into real-valued signals by the last LIF (decoding) layer. These decoded signals are further transformed using a sequence of dense layers to compute the required equivalent plastic strain increment.

The energy efficiency of the chip can be seen in Tables 4 and 5, where the combined energy consumption of these layers is presented. Total reduction factors of 111.55 and 3161.125 are observed when the energy values of the third-generation layers computed on the Xylo-Av2 chip are compared with the corresponding values from the GPU and CPU, respectively. For the entire hybrid model, reduction factors of 1.6140 and 1.6051 are observed, indicating the efficient performance of the proposed strategy for physics-based nonlinear regression. The proposed strategy is thus numerically validated on real neuromorphic hardware.
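The gap between the layer-wise and the hybrid reduction factors can be made explicit with a short worked relation. The notation below is introduced here for illustration only and assumes that a reduction factor is the plain ratio of a reference energy to the energy on the neuromorphic device, and that the dense layers consume the same energy \(E^{\text{dense}}\) in both configurations:

\[
R_{\text{spk}} = \frac{E^{\text{spk}}_{\text{ref}}}{E^{\text{spk}}_{\text{chip}}}, \qquad
R_{\text{hybrid}} = \frac{E^{\text{spk}}_{\text{ref}} + E^{\text{dense}}}{E^{\text{spk}}_{\text{ref}}/R_{\text{spk}} + E^{\text{dense}}}.
\]

Under these assumptions, \(R_{\text{hybrid}} \rightarrow 1\) as \(E^{\text{dense}}\) dominates, so a large \(R_{\text{spk}}\) for the spiking layers is compatible with a hybrid factor only slightly above one when the dense layers remain on conventional hardware.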

Fig. 22 Overview of the information flow when the proposed autoencoding strategy, combined with the decoding strategy, is implemented on the signal-processing Xylo-Av2 neuromorphic chip, which is represented by a high-level diagram of the main components of the Xylo development kit [79]

6.5 About energy consumption

In the present subsection, we conduct several power-profiling experiments with the SLMU model and its second-generation equivalent in order to determine the extent of the advantage provided by the SLMU model with regard to energy consumption per forward pass. The energy consumed during inference is estimated using KerasSpiking V0.3.1. The energy values for an Intel i7-4960X chip as CPU [121], an Nvidia GTX Titan Black as GPU [121], and the Intel Loihi chip [77] as the neuromorphic hardware are used in the current study. Table 6 displays the layer-by-layer breakdown of the energy consumed by the models when run on a CPU, GPU, Loihi, Loihi (SLMU layers) + GPU (Dense layers), and Loihi (SLMU layers) + CPU (Dense layers). A layer’s overall energy consumption represents the energy necessary to complete all of its synaptic operations and neuron updates. It should be emphasized that the overhead energy cost, i.e., the cost of deploying the model on/off the device, is not considered in the current study. Moreover, the energy values stored in KerasSpiking are taken from the results published in [77, 121]; the values obtained from it should therefore be regarded as estimates, as they rely on a number of assumptions.

In spiking layers, synaptic operations and neuron updates take place only for activated neurons, because the memory and neurons are accessed only when the threshold is surpassed. This is reflected in the energy consumption of the model, which is lowest when processed on a Loihi chip, as shown in Table 6. Furthermore, a layer-wise and total reduction in the model’s energy consumption can be observed in Table 7. When comparing the spiking model implemented on the Loihi chip with the non-spiking variant on GPU and CPU, we observe total reduction factors of 1188.2092 and 34,392.33, respectively (see Table 7). However, it should be noted that KerasSpiking assumes that the deployed hybrid NN model consists only of third-generation neurons. Consequently, the energy values for the second-generation dense layers do not accurately reflect the values for the suggested hybrid model. By deploying only the SLMU layers on the Loihi chip and the dense layers on the GPU/CPU, as demonstrated in Tables 6 and 7, a more reliable set of values can be obtained. Moreover, since the energy corresponding to one neuron update is used to compute the energy of the entire layer and of the NN, the real energy consumption of the hybrid NN can deviate from the values presented in Tables 6 and 7, which is a limitation of the present method.
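The kind of estimate discussed above can be sketched in a few lines of Python. This is not the KerasSpiking implementation: the classes `Device` and `Layer`, the function `layer_energy`, and all numerical values are hypothetical placeholders rather than the figures from [77, 121]. As a simplifying assumption, only synaptic operations are scaled by the spiking activity, while one neuron update per neuron is charged at every time step.

```python
from dataclasses import dataclass

@dataclass
class Device:
    energy_per_synop: float   # joules per synaptic operation (placeholder value)
    energy_per_neuron: float  # joules per neuron update (placeholder value)
    spiking: bool             # True if synapses are only used when a spike arrives

@dataclass
class Layer:
    n_neurons: int
    n_connections: int   # number of synapses feeding the layer
    input_rate: float    # mean fraction of inputs that spike per time step

def layer_energy(layer, device, n_steps=1):
    """Estimate the energy of one layer as synaptic plus neuron-update energy."""
    # Event-driven hardware only pays for synapses that carry a spike.
    activity = layer.input_rate if device.spiking else 1.0
    synop_energy = layer.n_connections * activity * device.energy_per_synop
    neuron_energy = layer.n_neurons * device.energy_per_neuron
    return n_steps * (synop_energy + neuron_energy)

# Hypothetical devices and layer, for illustration only.
conventional = Device(energy_per_synop=1e-9, energy_per_neuron=1e-9, spiking=False)
neuromorphic = Device(energy_per_synop=2e-11, energy_per_neuron=5e-11, spiking=True)
layer = Layer(n_neurons=100, n_connections=10_000, input_rate=0.05)

e_conv = layer_energy(layer, conventional)
e_neuro = layer_energy(layer, neuromorphic)
reduction_factor = e_conv / e_neuro
```

Because the estimate is a product of operation counts and per-operation energies, it inherits every assumption behind those per-operation values, which is why such figures are best read as estimates rather than measurements.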

6.6 About adaptive brain-inspired regularization

The output of a spiking neuron depends upon the potential reached by the neuron during a certain time increment. A neuron fires only when its potential exceeds the threshold potential \((V^{thr}),\) otherwise it remains deactivated. This means that, for a given forward pass, only a certain subset of neurons remains active, and this subset depends upon the threshold potential and the spiking input signal. Such a transformation can be directly compared to a dropout [122] layer, wherein a certain subset of neurons is randomly switched off with a certain probability. The authors first highlighted this effect of the spiking mechanism in [120]. In the present study, the threshold potential of each neuron is considered to be a trainable parameter; each neuron is thus able to adapt its threshold potential to the physical problem at hand, which further helps in regularizing the learning of the proposed SLMU model.
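The analogy with dropout can be illustrated by a small sketch, assuming randomly drawn membrane potentials and hand-picked thresholds. The function `active_fraction` and all values are hypothetical and serve only to show how per-neuron thresholds determine which neurons pass information on.

```python
import numpy as np

def active_fraction(potentials, thresholds):
    """Fraction of neurons whose potential reaches their own threshold."""
    return float(np.mean(potentials >= thresholds))

rng = np.random.default_rng(1)
potentials = rng.uniform(0.0, 2.0, size=64)  # hypothetical membrane potentials

# Per-neuron thresholds; in the study these are trainable, here they are fixed.
low_thresholds = np.full(64, 0.5)
high_thresholds = np.full(64, 1.5)

frac_low = active_fraction(potentials, low_thresholds)
frac_high = active_fraction(potentials, high_thresholds)

# The spike mask acts like an input-dependent (not random) dropout mask.
mask = (potentials >= high_thresholds).astype(float)
masked_output = mask * potentials
```

Unlike dropout, the mask here is deterministic for a given input and threshold, so adapting the thresholds during training changes which neurons are switched off.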

6.7 About convergence behavior

The SPEIS framework can typically arrive at a converged solution without any online training steps for input sequences whose patterns resemble the distribution found in the training data. However, for distributions that are unknown to the hybrid model, such as a new material or an unknown loading sequence, online training is triggered and the model adapts itself to satisfy the convergence criterion, i.e. the physical loss \((L_{p}),\) as shown in the plastic corrector step of Algorithm 1.
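The triggering logic can be illustrated with a deliberately simple toy problem. The sketch below is not Algorithm 1: the one-parameter model \(y = w x\), the residual \(y + y^{3} - x\), the tolerance, and the learning rate are all hypothetical. It only shows the pattern of accepting a prediction when the physical loss is already below the tolerance and taking gradient steps otherwise.

```python
def physics_loss(w, x):
    """Squared residual of the toy constraint y + y**3 = x for y = w * x."""
    y = w * x
    return (y + y**3 - x) ** 2

def physics_grad(w, x):
    """Analytical derivative of physics_loss with respect to w."""
    y = w * x
    residual = y + y**3 - x
    return 2.0 * residual * (1.0 + 3.0 * y**2) * x

def corrector_step(w, x, tol=1e-4, lr=0.01, max_steps=200):
    """Return the (possibly adapted) parameter and the number of online steps."""
    steps = 0
    while physics_loss(w, x) > tol and steps < max_steps:
        w -= lr * physics_grad(w, x)  # online training step on the physical loss
        steps += 1
    return w, steps

w_pretrained = 0.68  # hypothetical parameter, assumed tuned for inputs near x = 1

# Input resembling the training data: the loss is already below the tolerance.
w_seen, steps_seen = corrector_step(w_pretrained, x=1.0)

# Unseen input: online training is triggered until the loss drops below tol.
w_unseen, steps_unseen = corrector_step(w_pretrained, x=2.0)
```

In this toy setting the first call returns without any update, while the second adapts the parameter until the residual is satisfied, mirroring the "almost no or fewer online training steps" behaviour described above.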

It was observed that, during the online training process, the model faced issues of diminishing gradients, the so-called plateau problem [117], which affected the convergence of the FE solution. In the present research, we circumvent these issues by employing a cyclical learning rate scheduler, as shown in Table 8. This helps the model explore its parameter space more efficiently and reach the converged solution swiftly. The model is also less likely to get stuck in local minima because it periodically explores different regions of the parameter space with varied learning rates. This results in enhanced generalization and performance on unseen data.
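A cyclical learning rate can be sketched with the common triangular policy. The function name `triangular_clr` and the values of `base_lr`, `max_lr`, and `step_size` below are illustrative assumptions and are not the settings of Table 8. The scheduler variant used in the study may also differ.

```python
def triangular_clr(step, base_lr=1e-4, max_lr=1e-2, step_size=100):
    """Triangular cyclical learning rate with a period of 2 * step_size steps."""
    cycle = step // (2 * step_size)               # index of the current cycle
    x = abs(step / step_size - 2 * cycle - 1)     # 1 at cycle ends, 0 at the peak
    return base_lr + (max_lr - base_lr) * max(0.0, 1.0 - x)

# Learning rates over two full cycles (hypothetical settings).
schedule = [triangular_clr(s) for s in range(400)]
```

The rate rises linearly from `base_lr` to `max_lr` over `step_size` steps and falls back over the next `step_size` steps. The periodic increase is what can push the parameters off a plateau where a small fixed rate would stall.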

7 Conclusions

In the present study, a framework is proposed to implement spiking neural networks into numerical simulation methods such as the Finite Element Method. A crucial point of this achievement is the realistic transformation of physical values into binary signals and vice versa. Hence, in this study, we propose an autoencoding technique that transforms history-dependent real-valued signals into a unique spiking representation, allowing it to be applied to a class of deep learning systems known as Physics-Informed Neural Networks (PINNs). The first spiking layer of the NN model is used as the encoder, while the membrane potential from the last spiking layer is used to transform the spiking signals back to their real-valued representation.

We further propose a physics-based self-learning framework that is combined with a hybrid model, i.e. a combination of third-generation spiking Legendre Memory Unit (SLMU) layers, spiking recurrent layers (SRNNs), and dense transformations, to predict the rate-dependent nonlinear material response in the Finite Element Method (FEM) for plate structures. This hybrid model is first pre-trained using a weighted combination of data-driven and physics-based loss terms. Once trained, the model is deployed in the plastic-corrector step, where it acts as an informed nonlinear solver to minimize or eliminate the iterations required to reach the converged solution. The authors are aware that, while learning the evolution of the equivalent plastic strain rate during pre-training, the model may either directly memorize the data or overfit. This is where the self-learning ability of the model comes in: the physics-based loss term guides it to predict a converged solution even for a completely new distribution in the FE solver. Another important factor that influences the learning of the hybrid model is the computation and update of its internal variables. The LMU/SLMU cell proposed in the present study plays a crucial role in predicting the evolution of the nonlinear response at each Gaussian point. Since the internal variables of these layers are computed independently of the number of hidden neurons, more memory capacity can be provided to each SLMU layer to further enhance its learning capabilities.

By including spikes, the proposed model can be used with neuromorphic hardware such as the Loihi and Xylo-Av2 chips, allowing its energy consumption to be compared with that on the GPU and CPU. When the SLMU layers and decoder are deployed on Loihi and the dense layers are deployed on GPU and CPU, respectively, reduction factors of 12.9567 and 8.0734 per epoch are observed, while reduction factors of 1.6410 and 1.6051 are obtained when the model is deployed on Xylo. In terms of the hybrid model’s accuracy, Table 3 demonstrates that the RMSE values are comparable with those of the second-generation LMU model, highlighting strong approximation capabilities. The brain-inspired regularization introduced by the spiking mechanism in each of the third-generation layers also enhances its learning abilities.

Data availability

Data will be made available on reasonable request.

References

Brenner MP, Eldredge JD, Freund JB (2019) Perspective on machine learning for advancing fluid mechanics. Phys Rev Fluids 4:100501. https://doi.org/10.1103/PhysRevFluids.4.100501

Brunton SL, Noack BR, Koumoutsakos P (2020) Machine learning for fluid mechanics. Annu Rev Fluid Mech 52(1):477–508. https://doi.org/10.1146/annurev-fluid-010719-060214

Kutz JN (2017) Deep learning in fluid dynamics. J Fluid Mech 814:1–4. https://doi.org/10.1017/jfm.2016.803

Raissi M, Yazdani A, Karniadakis GE (2020) Hidden fluid mechanics: learning velocity and pressure fields from flow visualizations. Science 367(6481):1026–1030. https://doi.org/10.1126/science.aaw4741

Theocaris PS, Panagiotopoulos PD (1995) Plasticity including the Bauschinger effect, studied by a neural network approach. Acta Mech 113:63–75. https://doi.org/10.1007/BF01212634

Theocaris PS, Panagiotopoulos PD (1997) On the parameter identification problem for failure criteria in anisotropic bodies. Acta Mech 123:34–56. https://doi.org/10.1007/BF01178399

Meißner P, Watschke H, Winter J, Vietor T (2020) Artificial neural networks-based material parameter identification for numerical simulations of additively manufactured parts by material extrusion. Polymers 12:2949. https://doi.org/10.3390/polym12122949

Papadopoulos L, Bakalakos S, Nikolopoulos S, Kalogeris I, Papadopoulos V (2023) A computational framework for the indirect estimation of interface thermal resistance of composite materials using XPINNs. Int J Heat Mass Transf 200:123420. https://doi.org/10.1016/j.ijheatmasstransfer.2022.123420

Olivier A, Shields MD, Graham-Brady L (2021) Bayesian neural networks for uncertainty quantification in data-driven materials modeling. Comput Methods Appl Mech Eng 386:114079. https://doi.org/10.1016/j.cma.2021.114079

Dursun G, Tandale SB, Eschweiler J, Tohidnezhad M, Markert B, Stoffel M (2020) Recognition of tenogenic differentiation using convolutional neural network. Curr Dir Biomed Eng 6(3):200–204. https://doi.org/10.1515/cdbme-2020-3051

Dursun G, Tandale SB, Gulakala R, Eschweiler J, Tohidnezhad M, Markert B, Stoffel M (2021) Development of convolutional neural networks for recognition of tenogenic differentiation based on cellular morphology. Comput Methods Programs Biomed 208:106279. https://doi.org/10.1016/j.cmpb.2021.106279

Stoffel M, Weichert D, Müller-Rath R (2009) Modeling of articular cartilage replacement materials. Arch Mech 61(1):69–87

Stoffel M, Willenberg W, Azarnoosh M, Fuhrmann-Nelles N, Zhou B, Markert B (2017) Towards bioreactor development with physiological motion control and its applications. Med Eng Phys 39:106–112. https://doi.org/10.1016/j.medengphy.2016.10.010

Gamez C, Schneider-Wald B, Schuette A, Mack M, Hauk L, Khan AUM, Gretz N, Stoffel M, Bieback K, Schwarz ML (2020) Bioreactor for mobilization of mesenchymal stem/stromal cells into scaffolds under mechanical stimulation: preliminary results. PLoS One 15(1):0227553

Stoffel M, Bamer F, Markert B (2018) Artificial neural networks and intelligent finite elements in non-linear structural mechanics. Thin Walled Struct 131:102–106. https://doi.org/10.1016/j.tws.2018.06.035

Gorji MB, Mozaffar M, Heidenreich JN, Cao J, Mohr D (2020) On the potential of recurrent neural networks for modeling path dependent plasticity. J Mech Phys Solids 143:103972. https://doi.org/10.1016/j.jmps.2020.103972

Balkrishna Tandale S, Markert B, Stoffel M (2022) Intelligent stiffness computation for plate and beam structures by neural network enhanced finite element analysis. Int J Numer Methods Eng 123(17):4001–4031. https://doi.org/10.1002/nme.6996

Rodrigues CF, Riley G, Luján M (2018) SyNERGY: an energy measurement and prediction framework for Convolutional Neural Networks on Jetson TX1. In: Proceedings of the international conference on parallel and distributed processing techniques and applications (PDPTA). The Steering Committee of The World Congress in Computer Science, Computer Engineering and Applied Computing (WorldComp), pp 375–382

García-Martín E, Rodrigues CF, Riley G, Grahn H (2019) Estimation of energy consumption in machine learning. J Parallel Distrib Comput 134:75–88. https://doi.org/10.1016/j.jpdc.2019.07.007

Yang T-J, Chen Y-H, Emer J, Sze V (2017) A method to estimate the energy consumption of deep neural networks. In: 51st Asilomar conference on signals, systems, and computers, Pacific Grove, CA, USA, 1916–1920. https://doi.org/10.1109/ACSSC.2017.8335698

Strubell E, Ganesh A, McCallum A (2019) Energy and policy considerations for deep learning in NLP. arXiv preprint arXiv:1906.02243

Cai E, Juan DC, Stamoulis D, Marculescu D (2017) Neuralpower: predict and deploy energy-efficient convolutional neural networks. In: Asian conference on machine learning. PMLR, pp 622–637

Chen Y-H, Krishna T, Emer JS, Sze V (2017) Eyeriss: an energy-efficient reconfigurable accelerator for deep convolutional neural networks. IEEE J Solid State Circuits 52(1):127–138

Gauen K, Rangan R, Mohan A, Lu Y-H, Liu W, Berg AC (2017) Low-power image recognition challenge. In: 2017 22nd Asia and South Pacific design automation conference (ASP-DAC), Chiba, Japan, pp 99–104. https://doi.org/10.1109/ASPDAC.2017.7858303