Abstract

Three-dimensional background-oriented schlieren (3D-BOS) is an effective method for reconstructing 3D density fields from optically measured data, but it has limitations in measuring near-wall regions, where most of the light paths are blocked. This paper proposes a new extension, 3D-BOS using Mirror, which uses a wall as a mirror to provide sufficient light paths. First, the conventional formulations are modified so that the proposed method can handle the mirror reflections of the light paths. Subsequently, the proposed method is validated using artificially generated model data of an ideal axisymmetric distribution. The proposed method reconstructs the distribution as accurately as the conventional method for every number of cameras examined. Finally, the proposed method is experimentally demonstrated using a candle plume, in which it captures cylindrical low-density regions near the wall surface.

Graphical abstract

1 Introduction

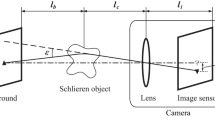

The schlieren method is a technique used to visualize light refraction caused by density gradients. It enables observation of the instantaneous flow geometry over a wide area without disturbing the flow and is an essential tool for studying compressible flows, such as jets, wakes, mixing layers, and shock waves (Van Dyke 1982; Settles 2001). A variant of the schlieren method, background-oriented schlieren (BOS) (Raffel 2015), has also been widely used. In BOS, the patterns on a background placed behind the flow appear to shift because of light refraction caused by density gradients. The magnitude of refraction can be obtained quantitatively by measuring the displacement of the patterns in an image captured with a camera. Although BOS has some disadvantages, such as blurring of the flow image owing to defocus and inferior spatial resolution compared with the schlieren method, it has gained interest because of its simple apparatus.

A problem with these visualization methods is that three-dimensional (3D) flows are difficult to capture because all refractions along the light path are integrated into visualized data. This can be solved by reconstructing the 3D density distribution using computed tomography (referred to as 3D-BOS in this paper). Numerous studies have explored applications from relatively simple flows, including (i) axisymmetric or (ii) steady/periodic flows measured using a single camera, to (iii) more complicated, non-axisymmetric, and unsteady flows measured using multiple cameras. Group (i) includes jets (Venkatakrishnan et al. 2011; van Hinsberg and Rösgen 2014; Tan et al. 2015), flows around wind tunnel models (Venkatakrishnan and Meier 2004; Venkatakrishnan and Suriyanarayanan 2009; Sourgen et al. 2012; Leopold et al. 2013), shock waves (Hayasaka et al. 2016), candle plumes (Guo and Liu 2017), and combustion flows (Wahls and Ekkad 2022a, b). Group (ii) includes non-axisymmetric jets (Venkatakrishnan 2005; Goldhahn and Seume 2007; Tipnis et al. 2013; Lang et al. 2017; Kirby 2018; Lee et al. 2021) and flows around non-axisymmetric wind tunnel model (Ota et al. 2011). Group (iii) includes natural convection (Zeb et al. 2011), flow around projectiles (Yamagishi et al. 2021), wing tip vortices (Klinge et al. 2004), cascade wake (Hartmann et al. 2015), jets (Adamczuk et al. 2013; Nicolas et al. 2016, 2017a, b; Hartmann and Seume 2016; Lanzillotta et al. 2019), candle plumes (Nicolas et al. 2016), flames (Atcheson et al. 2008; Grauer et al. 2018; Liu et al. 2021), and fuel sprays (Lee et al. 2012). In recent years, further studies have been conducted on the implementation in wind tunnels (Bathel et al. 2019, 2022; Weisberger et al. 2020), improvement of analysis methods (Unterberger et al. 2019; Grauer and Steinberg 2020; Unterberger and Mohri 2022; Cai et al. 2022), uncertainty analysis (Rajendran et al. 2020), velocity field estimation using physics-informed neural networks (Cai et al. 2021; Molnar et al. 2023a, b), high-speed measurements using various devices such as optical fibers (Liu et al. 2020; Ukai 2021; Gomez et al. 2022), and the use of speckle BOS (Amjad et al. 2023) and plenoptic BOS (Davis et al. 2021).

Despite the progress of these studies, applying the 3D-BOS method to measure density distributions near a wall is still challenging. This is because the wall blocks most of the light paths, so that refraction data cannot be obtained from enough directions to reconstruct the density distribution. This problem may arise for near-wall flows, such as transonic buffets (Kouchi et al. 2016), shock wave–boundary layer interactions (Bolton et al. 2017), and supersonic impinging jets (Akamine et al. 2021). In the third case, a supersonic jet impinges on an inclined flat plate, forming a 3D shock structure on the wall that interacts with a turbulent jet shear layer to produce intense acoustic waves. To further understand the noise source mechanism, it is necessary to measure the unsteady behavior of 3D shock structures and the large-scale turbulent fluctuations of shear layers. Such measurements have been difficult because, for example, all fluctuations on the wall are integrated into schlieren images and the shear layers slightly distant from the wall cannot be captured by measuring the wall pressures. These difficulties can be overcome if 3D-BOS can measure near-wall density distributions.

For near-wall density measurements based on the 3D-BOS method, modifications are required to provide sufficient light paths near the wall. One possible approach is the use of transparent walls; however, the light direction is still restricted because of the total internal reflection (the critical angle is approximately 42° for glass with a typical refractive index of 1.5). To the best of the authors’ knowledge, two extensions have been proposed (Hashimoto et al. 2017; Bühlmann 2020), although each has its limitations. The method of Hashimoto et al. is an application of near-field BOS (van Hinsberg and Rösgen 2014), in which a wall surface is used as a background of the BOS optical system. This has the advantage of enabling density measurements with only minor modifications from the conventional 3D-BOS. However, for example, Fig. 3 of van Hinsberg and Rösgen (2014) shows that the smallest detectable refraction angle of the near-field BOS rapidly deteriorates when the distance between the wall and density distribution approaches about 40 mm or less. The other method by Bühlmann uses the speckle BOS instead of conventional BOS. It uses a speckle pattern created by the reflection of the laser on the rough surface of the wall instead of the usual background pattern, enabling measurements up to the vicinity of the wall. However, Bühlmann also noted that a phenomenon called speckle decorrelation occurs, in which the speckle pattern not only shifts but also deforms when the refraction angle is large. A method for designing optical systems to avoid speckle decorrelation has not yet been established. A method that is more similar to the conventional BOS with higher sensitivity in the vicinity of the wall would be useful.
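The critical angle quoted above for a transparent wall follows from Snell's law. As a minimal sketch (the function name is illustrative and the surrounding medium is assumed to be air with a refractive index of 1.0), it can be checked as follows:

```python
import math

def critical_angle_deg(n_inside: float, n_outside: float = 1.0) -> float:
    """Critical angle for total internal reflection, sin(theta_c) = n_outside / n_inside."""
    if n_inside <= n_outside:
        raise ValueError("total internal reflection requires n_inside > n_outside")
    return math.degrees(math.asin(n_outside / n_inside))

# Glass with a typical refractive index of 1.5, surrounded by air (assumed n = 1.0)
theta_c = critical_angle_deg(1.5)
print(f"critical angle: {theta_c:.1f} deg")
```

Light travelling inside the glass at incidence angles above this value cannot leave through the surface, which is why a transparent wall still restricts the usable light directions.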

This paper proposes a new extension, 3D-BOS using Mirror, intended to overcome these limitations when measuring the density field near the wall surface. The proposed method uses the wall as a mirror to provide sufficient light paths for the reconstruction. Unlike the method of Hashimoto et al., the sensitivity in the near-wall region can be maintained by using the mirror image of a background placed away from the density distribution. Additionally, unlike Bühlmann’s method, most of the optical settings are the same as in the conventional 3D-BOS (Nicolas et al. 2017a). A major difference from the conventional method is that the light paths are reflected by the mirror. The refraction data for the reconstruction become complicated because the direct and mirror-reflected images are superposed. This paper first proposes a formulation for such complicated data. Next, the proposed method is validated using artificially generated model data of an ideal axisymmetric density distribution. The differences between the true and reconstructed distributions are compared with those of the conventional method. Finally, the proposed method is experimentally demonstrated by measuring the density distribution of a candle plume.

2 Methods

The proposed method, 3D-BOS using Mirror, shares most of the concepts of the measurement and analysis with the conventional 3D-BOS (Nicolas et al. 2016, 2017a). In this section, the methods are described in four parts to illustrate the similarities and differences between the conventional and proposed methods: (i) measurement setup (Sect. 2.1), (ii) measured data (Sect. 2.2), (iii) reconstruction analysis from the measured data (Sect. 2.3), and (iv) camera calibration in experiments (Sect. 2.4).

2.1 Measurement setup

Figure 1a shows a typical measurement setup for a conventional 3D-BOS. Around the target density distribution, multiple cameras are placed within 180° on one side, and background boards with patterns are placed on the other side. In contrast, the proposed method considers a case in which a flat wall is located adjacent to the density distribution, as shown in Fig. 1b. Unlike in the conventional method, the cameras cannot be placed behind the wall. Thus, the cameras are placed within 90° on one side and the background boards on the other side. Using the wall as a mirror to reflect light, the proposed method provides light paths from directions blocked by the wall (Cam 1 to Cam 11 in Fig. 1b). It may be desirable to provide light paths that are almost parallel to the mirror to accurately measure the density gradient normal to the mirror plane. However, depending on the location of the background boards and the sizes of the density distribution and mirror, there is a minimum camera angle relative to the mirror plane at which the reflected images of the background boards can be captured. In the experiment described in Sect. 3.2, an additional camera was placed almost parallel to the mirror (Cam 12 in Fig. 1b).

2.2 Measured data

The data measured in the setups described in Sect. 2.1 is explained using the schematic of the light paths shown in Fig. 2. Despite differences owing to the mirror reflection, both the conventional and proposed methods capture images with cameras and calculate the light direction vectors before and after passing through the reconstructed region including the target density distribution. The directional changes of those vectors are used to reconstruct the density distribution in Sect. 2.3. Specifically, the light direction vectors are calculated based on the following concepts.

Figure 2a shows the light paths in the conventional method. Images are captured by the cameras both when the density distribution is present (disturbed case) and when it is removed (quiescent case). The shifts of the background pattern between the two images due to light refraction are measured using methods such as optical flow. Figure 2a shows the case in which the pattern appearing at D1 in the disturbed case is shifted to Q1 in the quiescent case. Under a camera model that assumes all the projecting light paths pass through a pinhole O, the straight light paths Q1–O and D1–O are calculated from the pixel coordinates of Q1 and D1, respectively. In the quiescent case, by extending Q1–O, the location of point Q2 is obtained as the intersection with the background board. In the disturbed case, the unit light direction vector changes from the vector \({{\varvec{e}}}_{\mathrm{in}}\) at point D2, where the light enters the reconstructed region, to the vector \({{\varvec{e}}}_{\mathrm{out}}\) at point D3, where the light exits the reconstructed region, owing to the refraction caused by the density distribution between D2 and D3. Although the light path D2–D3 is actually a curved line, the displacement from a straight line is small because the refraction angle is small (Nicolas et al. 2016). Thus, by approximating the light path D2–D3 with a straight line that extends the line D1–O, the locations of points D2 and D3 are calculated as the intersections with the surfaces of the reconstructed region. From the directions of the straight lines D1–D2 and D3–Q2, the unit light direction vectors \({{\varvec{e}}}_{\mathrm{in}}\) and \({{\varvec{e}}}_{\mathrm{out}}\) are obtained, respectively.
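The geometric steps above can be sketched in a few lines. This is an illustrative sketch rather than the implementation used in this study: it assumes an axis-aligned box for the reconstructed region, a planar background board, and pixel rays already converted to direction vectors through the pinhole O. All function and variable names are hypothetical.

```python
import numpy as np

def unit(v):
    v = np.asarray(v, dtype=float)
    return v / np.linalg.norm(v)

def ray_plane(origin, direction, plane_point, plane_normal):
    """Point where the ray origin + t*direction (t > 0) meets a plane."""
    denom = np.dot(direction, plane_normal)
    if abs(denom) < 1e-12:
        raise ValueError("ray is parallel to the plane")
    t = np.dot(np.asarray(plane_point, dtype=float) - origin, plane_normal) / denom
    if t <= 0:
        raise ValueError("plane is behind the ray origin")
    return origin + t * direction

def ray_box(origin, direction, box_min, box_max):
    """Entry and exit points of a ray through an axis-aligned box (slab method)."""
    t_near, t_far = -np.inf, np.inf
    for o, d, lo, hi in zip(origin, direction, box_min, box_max):
        if abs(d) < 1e-12:
            if o < lo or o > hi:
                raise ValueError("ray misses the box")
            continue
        t0, t1 = sorted(((lo - o) / d, (hi - o) / d))
        t_near, t_far = max(t_near, t0), min(t_far, t1)
    if t_near > t_far or t_far < 0:
        raise ValueError("ray misses the box")
    return origin + t_near * direction, origin + t_far * direction

def conventional_vectors(pinhole, dir_disturbed, dir_quiescent,
                         box_min, box_max, bg_point, bg_normal):
    """Return D2, D3, Q2, e_in, e_out for one pixel (straight-path approximation)."""
    pinhole = np.asarray(pinhole, dtype=float)
    d_d, d_q = unit(dir_disturbed), unit(dir_quiescent)
    q2 = ray_plane(pinhole, d_q, bg_point, unit(bg_normal))  # Q1-O extended to the board
    d2, d3 = ray_box(pinhole, d_d, box_min, box_max)         # D1-O extended through the region
    e_in = d_d                                               # direction of line D1-D2
    e_out = unit(q2 - d3)                                    # direction of line D3-Q2
    return d2, d3, q2, e_in, e_out

# Hypothetical example: camera on the -z side, background board at z = 10
d2, d3, q2, e_in, e_out = conventional_vectors(
    pinhole=[0.0, 0.0, -10.0],
    dir_disturbed=[0.0, 0.0, 1.0],
    dir_quiescent=[0.001, 0.0, 1.0],
    box_min=[-0.5, -0.5, -0.5], box_max=[0.5, 0.5, 0.5],
    bg_point=[0.0, 0.0, 10.0], bg_normal=[0.0, 0.0, 1.0],
)
```

The difference between `e_out` and `e_in` is the quantity that the reconstruction in Sect. 2.3 relates to the density gradient along the path.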

Figure 2b shows the light paths in the proposed method. The light paths are reflected at the mirror located at a surface of the reconstructed region. As in the conventional method, the lines D1–O and Q1–O are first extended to calculate points D2 and D3 in the disturbed case and Q2 in the quiescent case. By reflecting and extending the line Q1–Q2, the location of point Q3 is obtained as the intersection with the background board. The intersection point D4 with the surface of the reconstructed region can also be determined by reflecting and extending the line D1–D3 based on the approximation of the conventional method that the light paths in the reconstructed region can be considered straight lines. From the directions of the straight lines D1–D2 and D4–Q3, the unit light direction vectors \({{\varvec{e}}}_{\mathrm{in}}\) and \({{\varvec{e}}}_{\mathrm{out}}\) are obtained, respectively. These vectors represent the light directions at points D2 and D4 where the light path enters and exits the reconstructed region, respectively.
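The reflection step used to obtain Q3 (and likewise D4) can be sketched as follows. The sketch assumes an ideal planar mirror with a known unit normal; the function names and the example geometry are hypothetical.

```python
import numpy as np

def reflect_direction(e, e_n):
    """Mirror-reflect a direction e about a plane with unit normal e_n."""
    e, e_n = np.asarray(e, dtype=float), np.asarray(e_n, dtype=float)
    return e - 2.0 * np.dot(e, e_n) * e_n

def ray_plane(origin, direction, plane_point, plane_normal):
    """Point where the ray origin + t*direction meets a plane."""
    origin = np.asarray(origin, dtype=float)
    direction = np.asarray(direction, dtype=float)
    plane_normal = np.asarray(plane_normal, dtype=float)
    denom = np.dot(direction, plane_normal)
    if abs(denom) < 1e-12:
        raise ValueError("ray is parallel to the plane")
    t = np.dot(np.asarray(plane_point, dtype=float) - origin, plane_normal) / denom
    return origin + t * direction

# Hypothetical geometry: mirror plane z = 0.5, background board at x = 6
mirror_point, e_n = [0.0, 0.0, 0.5], [0.0, 0.0, 1.0]
pinhole = np.array([-5.0, 0.0, -5.0])
d_q = np.array([1.0, 0.0, 1.0]) / np.sqrt(2.0)    # direction of line Q1-O

q2 = ray_plane(pinhole, d_q, mirror_point, e_n)   # Q2: hit point on the mirror
d_ref = reflect_direction(d_q, e_n)               # direction after reflection
q3 = ray_plane(q2, d_ref, [6.0, 0.0, 0.0], [1.0, 0.0, 0.0])  # Q3 on the board
print(q2, d_ref, q3)
```

Because the reflection preserves inner products, reflecting two vectors leaves their dot product unchanged, a property the formulation in Sect. 2.3.3 relies on.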

2.3 Reconstruction of density distribution

2.3.1 Fundamentals

This section describes the method of reconstructing the density distribution using the changes between the light direction vectors \({{\varvec{e}}}_{\mathrm{in}}\) and \({{\varvec{e}}}_{\mathrm{out}}\) obtained in Sect. 2.2. In this study, the proposed method was formulated based on a direct approach (Nicolas et al. 2016; Grauer et al. 2018) among the various conventional 3D-BOS methods. In both the conventional and proposed methods, a measurement vector \({\varvec{b}}\) defined in Sects. 2.3.2 and 2.3.3 is first calculated from the light direction vectors \({{\varvec{e}}}_{\mathrm{in}}\) and \({{\varvec{e}}}_{\mathrm{out}}\) corresponding to each pixel on the image plane of each camera. Next, a reconstructed region is defined that includes the target density distribution. A vector \({\varvec{\rho}}\) denotes the density vector, which is reshaped from the density values on a grid discretizing the reconstructed region, and a matrix \(A\) represents the transformation from \({\varvec{\rho}}\) to \({\varvec{b}}\). The vector \({\varvec{\rho}}\) is calculated such that the norm of the difference between \({\varvec{b}}\) and \(A{\varvec{\rho}}\), \({\Vert {\varvec{b}}-A{\varvec{\rho}}\Vert }_{2}^{2}\), is minimized:

The second term is a regularization term to keep the solution smooth. The regularization parameter \(\lambda\) is determined using the L-curve method for every data point (Nicolas et al. 2016), which was \(\lambda ={10}^{-4}\) in Sect. 3.1 and \(\lambda ={10}^{-5}\) in Sect. 3.2. This paper uses an iterative method called LSQR (Paige and Saunders 1982) to solve the minimization problem in Eq. (1). The obtained density distribution has an arbitrary constant corresponding to the constant of integration. The constants are determined to minimize the difference from the true distribution in Sect. 3.1, and to make the densities at the outer surfaces of the reconstructed region become the atmospheric density in Sect. 3.2.
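As an illustration of the regularized minimization and of fixing the additive constant afterwards, the following toy sketch assumes an objective of the form \({\Vert {\varvec{b}}-A{\varvec{\rho}}\Vert }_{2}^{2}+\lambda {\Vert L{\varvec{\rho}}\Vert }_{2}^{2}\) with a first-difference smoothing operator \(L\). Both the operator and the one-dimensional test problem are assumptions made for this example. A dense least-squares solve from NumPy stands in for LSQR, which would be the appropriate choice for the large sparse systems of the actual method.

```python
import numpy as np

def solve_regularized(A, b, L, lam):
    """Minimize ||b - A rho||^2 + lam * ||L rho||^2 via a stacked least-squares system."""
    A_aug = np.vstack([A, np.sqrt(lam) * L])
    b_aug = np.concatenate([b, np.zeros(L.shape[0])])
    rho, *_ = np.linalg.lstsq(A_aug, b_aug, rcond=None)
    return rho

# Toy 1D problem: the "measurement" is the gradient of a smooth low-density dip
n = 50
x = np.linspace(-0.5, 0.5, n)
rho_true = 1.0 - np.exp(-(x / 0.15) ** 2)
D = np.diff(np.eye(n), axis=0)        # (n-1) x n forward-difference operator
b = D @ rho_true                      # gradients carry no information on the constant

rho = solve_regularized(D, b, D, lam=1e-4)
rho += rho_true[0] - rho[0]           # fix the constant using a known boundary value
max_err = np.max(np.abs(rho - rho_true))
print(f"max abs error: {max_err:.2e}")
```

Since only gradients are measured, the solver returns the profile up to an additive constant; pinning one boundary value, as done for the atmospheric density in Sect. 3.2, removes the ambiguity.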

The matrix \(A\) and vector \({\varvec{b}}\) in Eq. (1) were derived from the following two basic equations. One is the ray equation in geometric optics (Eq. 2.27 in (Träger 2012)):

where \(s\) is the distance along the light path, \({\varvec{e}}\) is the unit light direction vector, and \(n\) is the refractive index. The other equation is the Gladstone–Dale law (Merzkirch 1987):

where \(G=2.26\times {10}^{-4}\) m3/kg is the Gladstone–Dale constant for dry air. In the succeeding subsections, the formulations of the conventional and proposed methods are explained based on these equations. For simplicity, the light beams were treated as the principal rays in this study.
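For reference, the two basic relations named above can be written out in their standard textbook forms using the symbols defined in the text. The equation numbers are assigned here on the assumption that they correspond to Eqs. (2) and (3):

```latex
\begin{align}
\frac{\mathrm{d}}{\mathrm{d}s}\left(n\,{\varvec{e}}\right) &= \nabla n, \tag{2}\\
n - 1 &= G\rho. \tag{3}
\end{align}
```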

2.3.2 Conventional 3D-BOS

Although the conventional and proposed methods share the basic Eqs. (2) and (3), they differ in the definition of the measurement vector \({\varvec{b}}\) and transformation matrix \(A\) in Eq. (1) owing to the mirror reflection of the light paths. This subsection summarizes the derivation of the measurement vector \({\varvec{b}}\) and transformation matrix \(A\) for the conventional method. Substituting Eq. (3) into Eq. (2) and integrating over the interval D2–D3 in Fig. 2a,

where \({n}_{0}\) is the refractive index of the surrounding atmosphere, and \({s}_{D2}\) and \({s}_{D3}\) are the distances measured along the light path from D1 to D2 and D3, respectively. By selecting two vectors \({{\varvec{e}}}_{\xi }\) and \({{\varvec{e}}}_{\eta }\) such that \(\left\{{{\varvec{e}}}_{\xi }, {{\varvec{e}}}_{\eta }, {{\varvec{e}}}_{\mathrm{in}}\right\}\) is an orthonormal basis, and transforming the coordinates of Eq. (4) to this basis by determining the inner product,

Only two of the three equations in Eq. (5) are independent because \({{\varvec{e}}}_{\mathrm{out}}\) is a unit vector, i.e., \({\left({{\varvec{e}}}_{\xi }\cdot {{\varvec{e}}}_{\mathrm{out}}\right)}^{2}+{\left({{\varvec{e}}}_{\eta }\cdot {{\varvec{e}}}_{\mathrm{out}}\right)}^{2}+{\left({{\varvec{e}}}_{\mathrm{in}}\cdot {{\varvec{e}}}_{\mathrm{out}}\right)}^{2}=1\). We select the first and second equations in Eq. (5).
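The integration and projection steps described above can be sketched as follows. This is a derivation under stated assumptions, not a verbatim restatement: the refractive index is taken as \({n}_{0}\) at both D2 and D3, the factor \(G/{n}_{0}\) is kept on the right-hand side, and the scaling of \({b}_{\xi }\) and \({b}_{\eta }\) follows from that choice.

```latex
\begin{align}
{\varvec{e}}_{\mathrm{out}} - {\varvec{e}}_{\mathrm{in}}
  &= \frac{G}{n_0}\int_{s_{D2}}^{s_{D3}} \nabla\rho \,\mathrm{d}s, \tag{4}\\[4pt]
b_{\xi} \equiv {\varvec{e}}_{\xi}\cdot{\varvec{e}}_{\mathrm{out}}
  &= \frac{G}{n_0}\int_{s_{D2}}^{s_{D3}} {\varvec{e}}_{\xi}\cdot\nabla\rho \,\mathrm{d}s, \nonumber\\
b_{\eta} \equiv {\varvec{e}}_{\eta}\cdot{\varvec{e}}_{\mathrm{out}}
  &= \frac{G}{n_0}\int_{s_{D2}}^{s_{D3}} {\varvec{e}}_{\eta}\cdot\nabla\rho \,\mathrm{d}s, \tag{5}\\
{\varvec{e}}_{\mathrm{in}}\cdot{\varvec{e}}_{\mathrm{out}} - 1
  &= \frac{G}{n_0}\int_{s_{D2}}^{s_{D3}} {\varvec{e}}_{\mathrm{in}}\cdot\nabla\rho \,\mathrm{d}s. \nonumber
\end{align}
```

The first two lines of Eq. (5) use \({{\varvec{e}}}_{\xi }\cdot {{\varvec{e}}}_{\mathrm{in}}={{\varvec{e}}}_{\eta }\cdot {{\varvec{e}}}_{\mathrm{in}}=0\), which holds because the basis is orthonormal.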

By using the scalars \({b}_{\xi }\) and \({b}_{\eta }\) on the left-hand side of the first and second equations in Eq. (5), the measurement vector \({\varvec{b}}\) in Eq. (1) can be constituted as follows. By writing \({{\varvec{e}}}_{\xi }={\left(\begin{array}{ccc}{e}_{\xi x}& {e}_{\xi y}& {e}_{\xi z}\end{array}\right)}^{T}\), the right-hand side of the first equation in Eq. (5) becomes

where the \(x, y,\) and \(z\) axes are those of the grid of the reconstructed region. By discretizing Eq. (6) and summarizing those for all the pixels of all the cameras,

The vector \({{\varvec{b}}}_{\xi }\) consists of the scalars \({b}_{\xi }\) of all the pixels of all the cameras. The vector \({\varvec{\rho}}\) is the density vector as in Eq. (1), which consists of the density values on the grid points. The numbers of elements of the vectors \({{\varvec{b}}}_{\xi }\) and \({\varvec{\rho}}\) are denoted by \({N}_{i}\) and \({N}_{j}\), respectively. The \({N}_{j}\times {N}_{j}\) matrices \({D}_{x}, {D}_{y},\) and \({D}_{z}\) are the operators that transform the density vector \({\varvec{\rho}}\) into vectors consisting of the density gradients \(\frac{\partial \rho }{\partial x},\frac{\partial \rho }{\partial y},\) and \(\frac{\partial \rho }{\partial z}\) on the grid points. In this paper, these are defined as the coefficient matrices for the second-order central difference or, at the end points, second order one-sided differences. The \({N}_{i}\times {N}_{j}\) matrix \(P\) is the operator that transforms the values on the grid points to the integral between D2 and D3. In this paper, this is defined as the coefficient matrix for the trapezoidal integration of the values on the light path linearly interpolated from the grid points. The \({N}_{i}\times {N}_{i}\) matrices \({E}_{\xi x}, {E}_{\xi y},\) and \({E}_{\xi z}\) are the diagonal matrices consisting of the scalars \({e}_{\xi x}, {e}_{\xi y},\) and \({e}_{\xi z}\) for all the pixels of all the cameras, respectively. For the scalar \({b}_{\eta }\) in Eq. (5), we can express the same form as in Eq. (7); thus,

where \(O\) is the \({N}_{i}\times {N}_{j}\) zero matrix, and the \({N}_{i}\times {N}_{i}\) matrices \({E}_{\eta x}, {E}_{\eta y},\) and \({E}_{\eta z}\) are the diagonal matrices consisting of the scalars \({e}_{\eta x}, {e}_{\eta y},\) and \({e}_{\eta z}\) for all the pixels of all the cameras, respectively, where \({{\varvec{e}}}_{\eta }={\left(\begin{array}{ccc}{e}_{\eta x}& {e}_{\eta y}& {e}_{\eta z}\end{array}\right)}^{T}\). The \(2{N}_{i}\)-vector on the left-hand side and \(2{N}_{i}\times {N}_{j}\) matrix on the right-hand side in Eq. (8) are the vector \({\varvec{b}}\) and matrix \(A\) in Eq. (1), respectively, for the conventional method.
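Under the same assumed scaling by \(G/{n}_{0}\), the component form and its discretization described above can be sketched as follows. The matrix dimensions agree with those stated in the text (\(P\) and \(O\) are \({N}_{i}\times {N}_{j}\), the \(D\) matrices are \({N}_{j}\times {N}_{j}\), and the \(E\) matrices are \({N}_{i}\times {N}_{i}\)); the exact arrangement of the factors is an assumption.

```latex
\begin{align}
b_{\xi} &= \frac{G}{n_0}\int_{s_{D2}}^{s_{D3}}
  \left( e_{\xi x}\frac{\partial\rho}{\partial x}
       + e_{\xi y}\frac{\partial\rho}{\partial y}
       + e_{\xi z}\frac{\partial\rho}{\partial z} \right)\mathrm{d}s, \tag{6}\\[4pt]
{\varvec{b}}_{\xi} &= \frac{G}{n_0}
  \left( E_{\xi x} P D_x + E_{\xi y} P D_y + E_{\xi z} P D_z \right){\varvec{\rho}}, \tag{7}\\[4pt]
\begin{pmatrix} {\varvec{b}}_{\xi} \\ {\varvec{b}}_{\eta} \end{pmatrix}
  &= \frac{G}{n_0}
  \begin{pmatrix} E_{\xi x} & E_{\xi y} & E_{\xi z} \\ E_{\eta x} & E_{\eta y} & E_{\eta z} \end{pmatrix}
  \begin{pmatrix} P & O & O \\ O & P & O \\ O & O & P \end{pmatrix}
  \begin{pmatrix} D_x \\ D_y \\ D_z \end{pmatrix}{\varvec{\rho}}. \tag{8}
\end{align}
```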

2.3.3 Proposed 3D-BOS using mirror

This subsection summarizes the derivation of the measurement vector \({\varvec{b}}\) and transformation matrix \(A\) for the proposed method. The major difference from the conventional method is an additional refraction in the light path after the mirror reflection, D3–D4, as shown in Fig. 2b. Substituting Eq. (3) into Eq. (2) and integrating between D2–D3 and D3–D4, respectively,

where \({s}_{D2},{s}_{D3},\) and \({s}_{D4}\) are the distances measured along the light path from D1 to D2, D3, and D4, respectively. The unit vectors \({{\varvec{e}}}_{D3}\) and \({{\varvec{e}}}_{D3}^{\prime}\) represent the light directions before and after the mirror reflection, and they have the following relationship (Householder transform):

where \({{\varvec{e}}}_{n}\) is the unit normal vector of the mirror plane. Using \({{\varvec{e}}}_{\xi }\) and \({{\varvec{e}}}_{\eta }\) defined as in the conventional methods, Eqs. (9a)–(9c) can be summarized into two equations as follows. By determining the inner product of Eq. (9a) and \({{\varvec{e}}}_{\xi }\),

By determining the inner product of Eq. (9b) and the vector \({{\varvec{e}}}_{\xi }^{\prime}={{\varvec{e}}}_{\xi }-2\left({{\varvec{e}}}_{\xi }\cdot {{\varvec{e}}}_{n}\right){{\varvec{e}}}_{n}\), which is the mirror reflection of \({{\varvec{e}}}_{\xi }\),

By determining the inner product of Eq. (9c) and \({{\varvec{e}}}_{\xi }^{\prime}\), and using that \({{\varvec{e}}}_{n}\) is a unit vector, i.e., \({\Vert {{\varvec{e}}}_{n}\Vert }_{2}^{2}={{\varvec{e}}}_{n}\cdot {{\varvec{e}}}_{n}=1\),

Substituting Eqs. (10c) and (10a) into Eq. (10b),

After performing Eq. (10d), and similarly for \({{\varvec{e}}}_{\eta }\) and its mirror reflection \({{\varvec{e}}}_{\eta }^{\prime}\), we obtain the following equations:

This is the equation for the proposed method corresponding to Eq. (5) in the conventional method.
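The chain of steps in this subsection can be written out as follows. This is a sketch that follows the verbal description, assuming the refractive index equals \({n}_{0}\) at D2, D3, and D4 and the same \(G/{n}_{0}\) scaling as in the conventional case; the equation labels are assigned to match the references in the text.

```latex
\begin{align}
{\varvec{e}}_{D3} - {\varvec{e}}_{\mathrm{in}}
  &= \frac{G}{n_0}\int_{s_{D2}}^{s_{D3}} \nabla\rho\,\mathrm{d}s, \tag{9a}\\
{\varvec{e}}_{\mathrm{out}} - {\varvec{e}}_{D3}^{\prime}
  &= \frac{G}{n_0}\int_{s_{D3}}^{s_{D4}} \nabla\rho\,\mathrm{d}s, \tag{9b}\\
{\varvec{e}}_{D3}^{\prime}
  &= {\varvec{e}}_{D3} - 2\left({\varvec{e}}_{D3}\cdot{\varvec{e}}_{n}\right){\varvec{e}}_{n}, \tag{9c}\\[4pt]
{\varvec{e}}_{\xi}\cdot{\varvec{e}}_{D3}
  &= \frac{G}{n_0}\int_{s_{D2}}^{s_{D3}} {\varvec{e}}_{\xi}\cdot\nabla\rho\,\mathrm{d}s, \tag{10a}\\
{\varvec{e}}_{\xi}^{\prime}\cdot{\varvec{e}}_{\mathrm{out}} - {\varvec{e}}_{\xi}^{\prime}\cdot{\varvec{e}}_{D3}^{\prime}
  &= \frac{G}{n_0}\int_{s_{D3}}^{s_{D4}} {\varvec{e}}_{\xi}^{\prime}\cdot\nabla\rho\,\mathrm{d}s, \tag{10b}\\
{\varvec{e}}_{\xi}^{\prime}\cdot{\varvec{e}}_{D3}^{\prime}
  &= {\varvec{e}}_{\xi}\cdot{\varvec{e}}_{D3}, \tag{10c}\\
{\varvec{e}}_{\xi}^{\prime}\cdot{\varvec{e}}_{\mathrm{out}}
  &= \frac{G}{n_0}\left(\int_{s_{D2}}^{s_{D3}} {\varvec{e}}_{\xi}\cdot\nabla\rho\,\mathrm{d}s
     + \int_{s_{D3}}^{s_{D4}} {\varvec{e}}_{\xi}^{\prime}\cdot\nabla\rho\,\mathrm{d}s\right), \tag{10d}\\[4pt]
b_{\xi} \equiv {\varvec{e}}_{\xi}^{\prime}\cdot{\varvec{e}}_{\mathrm{out}}
  &= \frac{G}{n_0}\left(\int_{s_{D2}}^{s_{D3}} {\varvec{e}}_{\xi}\cdot\nabla\rho\,\mathrm{d}s
     + \int_{s_{D3}}^{s_{D4}} {\varvec{e}}_{\xi}^{\prime}\cdot\nabla\rho\,\mathrm{d}s\right), \nonumber\\
b_{\eta} \equiv {\varvec{e}}_{\eta}^{\prime}\cdot{\varvec{e}}_{\mathrm{out}}
  &= \frac{G}{n_0}\left(\int_{s_{D2}}^{s_{D3}} {\varvec{e}}_{\eta}\cdot\nabla\rho\,\mathrm{d}s
     + \int_{s_{D3}}^{s_{D4}} {\varvec{e}}_{\eta}^{\prime}\cdot\nabla\rho\,\mathrm{d}s\right). \tag{11}
\end{align}
```

Equation (10c) follows by expanding the product of the two reflected vectors: the cross terms contribute \(-4\left({{\varvec{e}}}_{\xi }\cdot {{\varvec{e}}}_{n}\right)\left({{\varvec{e}}}_{D3}\cdot {{\varvec{e}}}_{n}\right)\), which is cancelled by the last term \(+4\left({{\varvec{e}}}_{\xi }\cdot {{\varvec{e}}}_{n}\right)\left({{\varvec{e}}}_{D3}\cdot {{\varvec{e}}}_{n}\right){\Vert {{\varvec{e}}}_{n}\Vert }_{2}^{2}\).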

The right-hand side of the first equation in Eq. (11) can be expressed as follows, similar to Eq. (6) in the conventional method:

where \({{\varvec{e}}}_{\xi }^{\prime}={\left(\begin{array}{ccc}{e}_{\xi x}^{\prime}& {e}_{\xi y}^{\prime}& {e}_{\xi z}^{\prime}\end{array}\right)}^{T}\), and the \(x, y,\) and \(z\) axes are those of the grid of the reconstructed region. By discretizing Eq. (12) and summarizing those for all the pixels of all the cameras,

The matrices \({D}_{x}, {D}_{y}, {D}_{z}, P, {E}_{\xi x}, {E}_{\xi y}\), and \({E}_{\xi z}\) are the same as in Eq. (7). The \({N}_{i}\times {N}_{j}\) matrix \({P}^{\prime}\) is the operator that transforms the values on the grid points to the integral between D3 and D4. The \({N}_{i}\times {N}_{i}\) matrices \({E}_{\xi x}^{\prime}, {E}_{\xi y}^{\prime},\) and \({E}_{\xi z}^{\prime}\) are the diagonal matrices consisting of the scalars \({e}_{\xi x}^{\prime}, {e}_{\xi y}^{\prime},\) and \({e}_{\xi z}^{\prime}\) for all the pixels of all the cameras, respectively. For the scalar \({b}_{\eta }\) in Eq. (11), we can express this in the same form as in Eq. (13); thus,

where the \({N}_{i}\times {N}_{i}\) matrices \({E}_{\eta x}^{\prime}, {E}_{\eta y}^{\prime},\) and \({E}_{\eta z}^{\prime}\) are the diagonal matrices consisting of the scalars \({e}_{\eta x}^{\prime}, {e}_{\eta y}^{\prime},\) and \({e}_{\eta z}^{\prime}\) for all the pixels of all the cameras, respectively, where \({{\varvec{e}}}_{\eta }^{\prime}={\left(\begin{array}{ccc}{e}_{\eta x}^{\prime}& {e}_{\eta y}^{\prime}& {e}_{\eta z}^{\prime}\end{array}\right)}^{T}\). The \(2{N}_{i}\)-vector on the left-hand side and \(2{N}_{i}\times {N}_{j}\) matrix on the right-hand side in Eq. (14) are the vector \({\varvec{b}}\) and matrix \(A\) in Eq. (1), respectively, for the proposed method.
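With the same assumed \(G/{n}_{0}\) scaling and factor arrangement as in the conventional case, the discretized form described above can be sketched as the sum of a direct-path contribution (through \(P\)) and a reflected-path contribution (through \({P}^{\prime}\)):

```latex
\begin{align}
b_{\xi} &= \frac{G}{n_0}\left[
  \int_{s_{D2}}^{s_{D3}} \left( e_{\xi x}\frac{\partial\rho}{\partial x}
     + e_{\xi y}\frac{\partial\rho}{\partial y}
     + e_{\xi z}\frac{\partial\rho}{\partial z} \right)\mathrm{d}s
  + \int_{s_{D3}}^{s_{D4}} \left( e_{\xi x}^{\prime}\frac{\partial\rho}{\partial x}
     + e_{\xi y}^{\prime}\frac{\partial\rho}{\partial y}
     + e_{\xi z}^{\prime}\frac{\partial\rho}{\partial z} \right)\mathrm{d}s \right], \tag{12}\\[4pt]
{\varvec{b}}_{\xi} &= \frac{G}{n_0}\left[
  \left(E_{\xi x}P + E_{\xi x}^{\prime}P^{\prime}\right) D_x
  + \left(E_{\xi y}P + E_{\xi y}^{\prime}P^{\prime}\right) D_y
  + \left(E_{\xi z}P + E_{\xi z}^{\prime}P^{\prime}\right) D_z \right]{\varvec{\rho}}, \tag{13}\\[4pt]
\begin{pmatrix} {\varvec{b}}_{\xi} \\ {\varvec{b}}_{\eta} \end{pmatrix}
  &= \frac{G}{n_0}
  \begin{pmatrix}
    E_{\xi x}P + E_{\xi x}^{\prime}P^{\prime} & E_{\xi y}P + E_{\xi y}^{\prime}P^{\prime} & E_{\xi z}P + E_{\xi z}^{\prime}P^{\prime} \\
    E_{\eta x}P + E_{\eta x}^{\prime}P^{\prime} & E_{\eta y}P + E_{\eta y}^{\prime}P^{\prime} & E_{\eta z}P + E_{\eta z}^{\prime}P^{\prime}
  \end{pmatrix}
  \begin{pmatrix} D_x \\ D_y \\ D_z \end{pmatrix}{\varvec{\rho}}. \tag{14}
\end{align}
```

Setting \({P}^{\prime}\) to the zero matrix removes the reflected-path terms, leaving only the direct-path contribution of the conventional formulation.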

2.4 Camera calibration

Before the analysis described in Sects. 2.2 and 2.3, camera calibration was performed to obtain the locations and attitudes of the cameras, background boards, and mirror. Similar to the camera calibration for the conventional method (Le Sant et al. 2014), a marker board of known size was placed near the target density distribution and several images were captured with the cameras while changing the attitude of the marker board, as shown in Case A of Fig. 3a. The pixel coordinates of the markers were detected from the images, providing the intrinsic parameters, locations, and attitudes of the cameras relative to the marker boards (Zhang 2000), and thus the relative locations and attitudes between the cameras. Furthermore, as shown in Case B in Fig. 3b, the locations and attitudes of the background board were obtained by attaching the markers to the background board and applying the same analysis.

The proposed method additionally requires the location and attitude of the mirror. In this study, the location and attitude of the mirror were obtained by fixing the marker board and capturing several images while changing the mirror attitudes (Takahashi et al. 2012, 2016), as shown in Case C in Fig. 3c.

To further reduce the error in the location and attitude of the mirror, we captured the mirror-reflected images while changing the attitude of the marker board placed near the density distribution, as shown in Case D in Fig. 3d, to obtain information about the locations of the light paths reflected by the mirror. A parameter optimization called the bundle adjustment (Hartley and Zisserman 2004) was then performed to minimize the difference between the pixel coordinates of the measured markers and those calculated with the obtained parameters for all the data in Cases A–D.

In Cases A and D, a circle grid (3 rows × 7 columns asymmetric grid with 2 mm dot diameter and 5 mm column spacing) was used because the markers were not in focus, and in Cases B and C, ChArUco markers (Garrido-Jurado et al. 2014) with a chessboard square length of 10 mm and marker square size of 5 mm were used for robust detection. Both marker types were detected using the OpenCV library implementation. In Zhang’s method, an ideal simple camera was assumed to stabilize the estimation of the intrinsic parameters: the scale factors of the pixel coordinates in the horizontal and vertical directions were equal (\(\alpha =\beta\)), the skew factor between the two axes on the image plane was \(\gamma =0\), the pixel coordinates of the pinhole \(\left({u}_{0}, {v}_{0}\right)\) were fixed to the image center, and the lens distortion was ignored.
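The simplified camera model described above reduces the intrinsic parameters to a single scale factor. A minimal sketch of the resulting intrinsic matrix and projection is given below; the function names are hypothetical, and the image center is assumed to be half the image size in each direction.

```python
import numpy as np

def intrinsic_matrix(alpha, width, height):
    """Pinhole intrinsics with alpha = beta, zero skew, principal point at the image center."""
    u0, v0 = width / 2.0, height / 2.0   # assumption: center taken as half the image size
    return np.array([[alpha, 0.0, u0],
                     [0.0, alpha, v0],
                     [0.0, 0.0, 1.0]])

def project(K, point_cam):
    """Project a 3D point given in camera coordinates (z > 0) to pixel coordinates."""
    p = K @ np.asarray(point_cam, dtype=float)
    return p[:2] / p[2]                  # lens distortion is ignored

# Values borrowed from the model data test: alpha = 300, 51 x 29 pixels
K = intrinsic_matrix(300.0, 51, 29)
print(project(K, [0.0, 0.0, 10.0]))      # a point on the optical axis maps to the image center
print(project(K, [0.5, 0.0, 10.0]))
```

With only one free intrinsic parameter per camera, the subsequent bundle adjustment has fewer unknowns to estimate from the marker images.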

3 Results and discussion

3.1 Model data tests

The proposed method, 3D-BOS using Mirror, was validated by examining whether the reconstructed distribution agrees with the true distribution for artificially generated model data. First, model data of an ideal axisymmetric distribution were generated. By setting cameras virtually, the values \({b}_{\xi }\) and \({b}_{\eta }\) were calculated for the model data using the right-hand sides of the first and second equations of Eq. (5) for the conventional method and Eq. (11) for the proposed method. With the calculated \({b}_{\xi }\) and \({b}_{\eta }\), we reconstructed the distribution using the conventional and proposed methods, respectively. The differences between the reconstructed and true distributions were compared for the conventional and proposed methods to examine whether the differences between these two methods affected the reconstructed results. Because the number of cameras is known to affect the reconstruction error (Nicolas et al. 2016), we varied the number of cameras in the range \({N}_{\mathrm{cam}}=3-24\).

The model data were defined as an axisymmetric distribution around the \(y\)-axis in the region \(\left[-0.5, 0.5\right]\times \left[-0.5, 0.5\right]\times \left[-0.5, 0.5\right]\) with a radial profile:

where \(r= \sqrt{{x}^{2}+{z}^{2}}\), and the radius and boundary thickness of the cylinder were set at \({r}_{e}=0.25\) and \({\delta }_{e}=0.08\), respectively. The grid resolution was heuristically determined to be 31 × 31 × 31. The isosurfaces in Fig. 4a show the 3D distribution of the model data, and the gray surface (\(z=0.5\)) indicates the mirror plane when the proposed method was examined. Figure 4b shows the distribution on the plane perpendicular to the \(y\)-axis in Fig. 4a. The distributions on the horizontal and vertical dashed lines are plotted on the upper and right sides, respectively.

Figure 5a and b show examples of virtual camera configurations for the conventional and proposed methods, respectively. The red and blue lines indicate the image plane and its normal vector, and the thin gray lines are the light paths indicating the angle of view for each camera. The orange lines show the reconstructed region, and the thick gray line in Fig. 5b indicates the mirror plane. In the conventional method, \({N}_{\mathrm{cam}}\) cameras were equally spaced on a semicircle of a radius of 10 centered at the origin. In the proposed method, \({N}_{\mathrm{cam}}\) cameras were equally spaced within the range of 10 to 75 degrees to the \(z\)-axis, on a circle of a radius of 10 centered at the point \(\left(x, y, z\right)=(0, 0, 0.5)\) located on the mirror plane. The resolution of each camera was \(51\times 29\) pixels, and the scale factor to the pixel coordinates (Sect. 2.4) was set at \(\alpha =300\).
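The virtual camera placement for the proposed method can be sketched as below. Several details are assumptions made for illustration and are not stated in the text: the cameras are taken to lie in the \(x\)-\(z\) plane, the angle is measured from the \(-z\) direction (the mirror normal pointing toward the reconstructed region), and the end angles of 10° and 75° are included.

```python
import math

def camera_positions(n_cam, radius=10.0, center=(0.0, 0.0, 0.5),
                     angle_min_deg=10.0, angle_max_deg=75.0):
    """Equally spaced camera positions on a circular arc facing the mirror plane z = 0.5."""
    cx, cy, cz = center
    positions = []
    for k in range(n_cam):
        frac = k / (n_cam - 1) if n_cam > 1 else 0.5
        ang = math.radians(angle_min_deg + frac * (angle_max_deg - angle_min_deg))
        # Assumption: angle measured from the -z direction, within the x-z plane
        positions.append((cx + radius * math.sin(ang), cy, cz - radius * math.cos(ang)))
    return positions

cams = camera_positions(8)
for x, y, z in cams:
    print(f"({x:7.3f}, {y:6.3f}, {z:7.3f})")
```

All positions lie on the side of the mirror plane where the reconstructed region is located, so every camera sees both the direct and the mirror-reflected light paths.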

Using these configurations, the values \({b}_{\xi }\) and \({b}_{\eta }\) were computed with the right-hand side of the first and second equations in Eq. (5) for the conventional method and Eq. (11) for the proposed method. It should be noted that the principal rays (i.e., infinite depth of focus) were assumed for simplicity. The Gauss–Kronrod quadrature was used to calculate each integral, and the density gradient was obtained analytically from Eq. (15) as

Figure 5c and d show the horizontal components, \({b}_{\xi }\), of each camera in the conventional and proposed methods, respectively, when the number of cameras was \({N}_{\mathrm{cam}}=8\); the vertical components, \({b}_{\eta }\), whose values were approximately zero, are not shown. For the conventional method, Fig. 5c shows red and blue vertical lines, which were caused by refraction at the edge of the cylindrical distribution shown in Fig. 4a. Because of the axial symmetry, the same distributions were obtained for all the cameras. In contrast, for the proposed method, Fig. 5d shows a more complicated distribution, where the direct and mirror-reflected images overlapped depending on the camera directions.

Figure 6 shows the distribution reconstructed by solving Eq. (1) with the proposed method with \({N}_{\mathrm{cam}}=8\) cameras. The 3D isosurfaces in Fig. 6a show an axisymmetric distribution as in Fig. 4a. Figure 6b shows the slice view as in Fig. 4b. In the upper and right plots, the orange lines coincide with the black lines, indicating that the reconstructed distribution agreed with the true distribution. The near-wall cylindrical distribution could be accurately reconstructed from the complicated data in Fig. 5d using the proposed method.

Figure 7 shows that the proposed method can accurately reconstruct the distribution with different numbers of cameras. Figure 7a shows the relative error \({\Vert {\varvec{\rho}}-{{\varvec{\rho}}}_{\mathrm{true}}\Vert }_{2}/{\Vert {{\varvec{\rho}}}_{\mathrm{true}}\Vert }_{2}\) between the true distribution \({{\varvec{\rho}}}_{\mathrm{true}}\) and reconstructed distribution \({\varvec{\rho}}\). The horizontal axis is the number of cameras. For both the conventional and proposed methods, the error decreased to approximately 2%–3% as the number of cameras increased. The two curves nearly coincided, indicating that there is no significant error inherent to the proposed method.
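The error metric plotted in Fig. 7a, together with the constant offset chosen to minimize the difference from the true distribution (Sect. 2.3.1), can be sketched as follows. The function names and the synthetic arrays are hypothetical and serve only to illustrate the arithmetic.

```python
import numpy as np

def align_constant(rho, rho_true):
    """Shift rho by the constant that minimizes the L2 difference from rho_true."""
    rho, rho_true = np.asarray(rho, dtype=float), np.asarray(rho_true, dtype=float)
    return rho + np.mean(rho_true - rho)

def relative_error(rho, rho_true):
    """Relative L2 error ||rho - rho_true||_2 / ||rho_true||_2 over all grid points."""
    rho, rho_true = np.ravel(rho), np.ravel(rho_true)
    return np.linalg.norm(rho - rho_true) / np.linalg.norm(rho_true)

# Synthetic check on a 31 x 31 x 31 grid: a uniform 2% overestimate gives a 2% error
rho_true = np.ones((31, 31, 31))
rho = 1.02 * rho_true
err = relative_error(rho, rho_true)
err_aligned = relative_error(align_constant(rho, rho_true), rho_true)
print(f"{err:.3f} -> {err_aligned:.3f} after removing the constant offset")
```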

Figure 7b shows the reconstructed distributions for \({N}_{\mathrm{cam}}=3, 4, 6, 8,\) and \(24\) cameras. The left two columns show the reconstructed distributions of the conventional method, and the right two columns show those of the proposed method. The profiles along the vertical dashed lines in the first and third columns are plotted in the second and fourth columns, where the orange and black lines represent the reconstructed and true distributions, respectively. For \({N}_{\mathrm{cam}}=8\) and \({N}_{\mathrm{cam}}=24\), the reconstructed distributions agreed well with the true distributions for both the conventional and proposed methods, indicating that the distributions were accurately reconstructed. Additionally, for \({N}_{\mathrm{cam}}=3, 4,\) or \(6\), the reconstructed distributions of the proposed method did not exhibit significant errors compared with the conventional method. Thus, the proposed method could reconstruct the distribution as accurately as the conventional method for all the numbers of cameras examined. The remaining errors may be attributed to the discretization and approximation common to both the conventional and proposed methods.

In addition to the basic validation with the simple cylindrical distribution described above, we investigated whether the proposed 3D-BOS using a mirror could reconstruct more complicated model data. Future applications of the proposed method, such as supersonic jets impinging on an inclined flat plate, will involve wall-attached asymmetric density distributions. Additional model data were therefore reconstructed to investigate the applicability of the method to such distributions. The model data were defined using the following profile:

where \(\zeta\) is the distance on a line inclined at 30° relative to the mirror plane (the origin is the center of the mirror plane), and \(r\) and \(\theta\) are the radial distance and azimuthal angle around the \(\zeta\)-axis, respectively. The mean radius of the cylinder was \({r}_{0}=10\) mm, the thickness was \({\delta }_{e}=3\) mm, the azimuthal wavenumber was \(n=8\), the amplitude was \(\Delta r=1\) mm, the length of the cylinder was \({\zeta }_{0}=20\) mm, and the boundary thickness in the \(\zeta\)-direction was \({\delta }_{\zeta }=3\) mm. As a realistic setting, the grid and camera resolutions were the same as those employed in the experiments in Sect. 3.2. The grid discretized the 60 mm × 60 mm × 30 mm region into \(81\times 81\times 41\) voxels (0.75 mm per side). A total of \({N}_{\mathrm{cam}}=12\) cameras were equally spaced within the range of 10–75° of the \(z\)-axis on a circle with a radius of 500 mm from the mirror center. The resolution of each camera was \(180\times 135\) pixels, and the scale factor of the pixel coordinates (Sect. 2.4) was set to \(\alpha =925\).

Figure 8a shows the 3D isosurface of the true distribution. The slice view of the center plane is shown on the left side of Fig. 8b, and the profile along the dashed line is shown on the right side. Figure 8c shows the distributions of \({b}_{\xi }\) and \({b}_{\eta }\) (horizontal and vertical components, respectively) obtained through numerical integration as in the case of the simple model data described above. Using these data, the distributions shown in Fig. 8e and f were reconstructed using the proposed method. Features such as the overall shape and azimuthal wavenumber were qualitatively captured. Although factors such as the camera layout still need to be optimized, this result indicates the applicability of the proposed method to a wall-attached asymmetric density distribution.

3.2 Experimental demonstration for a candle plume

As an experimental demonstration of the proposed method, 3D-BOS using Mirror, the density distribution of a candle plume was measured. A candle plume was also used for demonstration in a previous study of the conventional 3D-BOS (Nicolas et al. 2016), because the density gradient is large, making it easy to measure, and the density distribution is simple and almost cylindrical immediately above the flame.

The experimental setup is shown in Fig. 9a. A 200 mm × 200 mm, 2 mm-thick first-surface mirror was used as the wall, and a candle was placed at a distance of approximately 20 mm from the wall. Twelve cameras (The Imaging Source, DMK33GX273) and background boards were placed around the candle. The parameters of the BOS optical systems were determined by considering the sensitivity and diameter of the circle of confusion, as in the conventional method (Nicolas et al. 2017a), because mirror reflection does not affect these factors. The distance from the camera to the candle was \(m\approx 500\) mm and the distance from the candle to the background was \(l\approx 300\) mm. The focal length was \(f=25\) mm and the f-number was \({f}_{\#}\approx 8\). The diameter of the circle of confusion at the density distribution (Nicolas et al. 2017a) was \(\frac{fl}{\left(m+l\right) {f}_{\#}}\approx 1.2\) mm. Although the camera had a resolution of 1440 × 1080 pixels, the resolution was reduced to 1/8 (i.e., 180 × 135 pixels at maximum) after the optical flow analysis described below was conducted, resulting in a scale factor of the pixel coordinates (Sect. 2.4) of \(\alpha \approx 925\). The exposure time was 2 ms and the frame rate was 1 Hz. A total of 231 images were captured by synchronizing all the cameras with a pulsed signal. For the background, the semi-randomly distributed dots proposed by Nicolas et al. (2017a) were used (dot diameter = 0.4 mm, average dot spacing = 0.6 mm, and standard deviation of dot displacement = 0.1 mm). A K-type thermocouple was placed approximately 80 mm above the candle to measure the temperature.
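The optical parameters quoted above can be cross-checked numerically. The sketch below is illustrative rather than the authors' code. It assumes that the circle of confusion at the plume equals the aperture diameter \(f/{f}_{\#}\) scaled by \(l/(m+l)\), i.e., that the denominator contains the camera-to-background distance \(m+l\). With the stated values, this assumption reproduces the quoted diameter of approximately 1.2 mm. The function name is hypothetical.

```python
def circle_of_confusion(f_mm, f_number, m_mm, l_mm):
    """Blur diameter (mm) at the measured volume for a camera focused on the background.

    Assumption: aperture diameter f / f_number scaled by l / (m + l), where m is the
    camera-to-object distance and l is the object-to-background distance.
    """
    aperture_mm = f_mm / f_number
    return aperture_mm * l_mm / (m_mm + l_mm)


# Values stated in the text: f = 25 mm, f-number 8, m = 500 mm, l = 300 mm.
d_mm = circle_of_confusion(25.0, 8.0, 500.0, 300.0)
print(round(d_mm, 2))  # 1.17, i.e. approximately 1.2 mm

# Reducing the 1440 x 1080 sensor resolution to 1/8 gives the working resolution.
reduced = (1440 // 8, 1080 // 8)
print(reduced)  # (180, 135)
```

The blur diameter of roughly 1.2 mm is larger than the 0.75 mm voxel side used later, which is consistent with the remark that the full camera resolution is not needed for the reconstruction.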

Before the measurement, the camera calibration described in Sect. 2.4 was performed by capturing a total of 1754 images, as shown in the bottom row of Fig. 3. Figure 9b shows the obtained locations and attitudes of the cameras, background boards, and mirror: the thick gray lines are the mirror and background boards, the thin gray lines indicate the angle of view of the cameras, and the red and blue lines represent the image plane of each camera and its normal vector, respectively. The 60 mm × 45 mm × 45 mm region immediately above the candle, indicated by the orange lines, was set as the reconstructed region. The grid resolution was heuristically determined to be 81 × 61 × 61 (0.75 mm-side voxels).
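The quoted grid sizes follow from the region dimensions and the voxel side. The sketch below assumes that the counts are numbers of grid nodes (number of intervals plus one), which matches the stated values. The helper name `grid_shape` is hypothetical.

```python
def grid_shape(region_mm, voxel_mm):
    """Number of grid nodes per axis, assuming nodes on both ends of each side."""
    return tuple(round(length / voxel_mm) + 1 for length in region_mm)


# Experimental region of 60 mm x 45 mm x 45 mm with 0.75 mm voxels.
print(grid_shape((60.0, 45.0, 45.0), 0.75))  # (81, 61, 61)

# Model-data region of 60 mm x 60 mm x 30 mm used in Sect. 3.1.
print(grid_shape((60.0, 60.0, 30.0), 0.75))  # (81, 81, 41)
```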

Figure 9c shows an example image captured with Cam 6 in the disturbed case. The direct and mirror-reflected images of the thermocouple and flame appear on the right and left sides, respectively. Behind them, the dots drawn on the background board appear, as shown in the enlarged part (100 × 100 pixels). The shifts in these dots were measured using the Farnebäck method, an optical flow algorithm implemented in the OpenCV library, with an averaging window size of 15 × 15 pixels. To reduce the excess resolution, the images were resampled by averaging each 8 × 8 pixel window. The displacement due to thermal deformation of the mirror was subtracted, resulting in the distribution shown in Fig. 9d. Only the distributions of the horizontal displacements are shown; the vertical components, which were approximately zero, are not shown. Similar to Fig. 5d of the model data, the direct and mirror-reflected images were superposed, producing a complicated distribution that differed for each camera. Excluding the shaded areas in which the flame or thermocouple prevented the dot-shift measurements, we calculated the values \({b}_{\xi }\) and \({b}_{\eta }\) using the left-hand side of Eq. (11).
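The dot-shift measurement and the subsequent 8 × 8 resampling can be sketched as follows. This is an illustrative outline, not the authors' pipeline. Only the 15 × 15 window and the 8 × 8 averaging come from the text. The remaining Farnebäck parameters (pyramid scale, levels, iterations, polynomial settings) are placeholder assumptions, and the function names are hypothetical. The mirror-deformation correction and masking of the flame and thermocouple are not included.

```python
import numpy as np


def farneback_shift(reference, disturbed, winsize=15):
    """Dense dot shift in pixels between two 8-bit grayscale images, shape (H, W, 2)."""
    import cv2  # imported lazily so that block_average works without OpenCV

    # Positional arguments: prev, next, flow, pyr_scale, levels, winsize,
    # iterations, poly_n, poly_sigma, flags. All but winsize are assumed values.
    return cv2.calcOpticalFlowFarneback(
        reference, disturbed, None, 0.5, 3, winsize, 3, 5, 1.1, 0
    )


def block_average(field, block=8):
    """Average an (H, W) or (H, W, C) array over non-overlapping block x block windows."""
    field = np.asarray(field, dtype=float)
    # Trim edges so that both image dimensions are multiples of the block size.
    h = field.shape[0] // block * block
    w = field.shape[1] // block * block
    trimmed = field[:h, :w]
    new_shape = (h // block, block, w // block, block) + trimmed.shape[2:]
    return trimmed.reshape(new_shape).mean(axis=(1, 3))


# Synthetic flow field at the full sensor resolution, uniform 1.5-pixel horizontal shift.
flow = np.zeros((1080, 1440, 2))
flow[..., 0] = 1.5
coarse = block_average(flow)
print(coarse.shape)  # (135, 180, 2)
```

Averaging the displacement field after the optical flow step, rather than downsampling the raw images first, keeps the fine dot pattern available to the flow estimator while still reducing the data to the 180 × 135 resolution used in the reconstruction.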

Figure 10 shows examples of the instantaneous density distributions reconstructed from \({b}_{\xi }\) and \({b}_{\eta }\) by solving Eq. (1) using the proposed method. Figure 11 shows the time-averaged density distribution. Both show the value \(\rho -{\rho }_{0}\), where \({\rho }_{0}\) is the atmospheric density. In the instantaneous distributions, the high-temperature, low-density region caused by the candle plume appeared circular (Fig. 10a and b) or deformed (Fig. 10c and d). The time-averaged distribution in Fig. 11 captures a nearly cylindrical distribution of the candle plume. These results show that the proposed method can experimentally reconstruct the almost cylindrical density distribution near the wall.

The white circles in Figs. 10a, c, and 11a show the location of the thermocouple determined from the images. The temperature history measured using this thermocouple is shown in the upper part of Fig. 12, and the density history converted using the ideal-gas equation of state is shown in the lower part of Fig. 12 as a blue line. The orange line in Fig. 12 shows the density history immediately below the thermocouple reconstructed with the proposed method. Both plots show fluctuating but almost steady densities. The time-averaged density difference \(\rho -{\rho }_{0}\) was approximately \(-0.81\) kg/m³ for the thermocouple and \(-0.88\) kg/m³ for the proposed method, a difference of approximately 9%. This difference may be attributed to the composition of the candle plume. These plots were obtained using the gas constant of air, \(R\approx 287.1\) J/(kg·K), to convert the thermocouple temperature to density, and the Gladstone–Dale constant of air, \(G=2.26\times {10}^{-4}\) m³/kg, to calculate Eq. (11), although the candle plume contained combustion gas. If all the oxygen in the air were consumed in the combustion of the paraffin wax of the candle, the gas constant would be \(R\approx 288.4\) J/(kg·K), a change of approximately 0.5%, and the Gladstone–Dale constant would be \(G\approx 2.41\times {10}^{-4}\) m³/kg, a change of approximately 7%; these values were roughly estimated based on the studies of Merzkirch (1987) and Qin et al. (2002). Considering these uncertainties, the densities obtained from the thermocouple and reconstructed with the proposed method were consistent.
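The percentages quoted in this comparison, and the temperature-to-density conversion, can be checked with a short calculation. The sketch below is not the authors' code. The ambient pressure of 101325 Pa is an assumption (the text does not state the pressure used), and the function names are hypothetical.

```python
def density_from_temperature(temperature_k, pressure_pa=101325.0, gas_constant=287.1):
    """Ideal-gas density rho = p / (R T) in kg/m^3; the pressure is an assumed value."""
    return pressure_pa / (gas_constant * temperature_k)


def relative_change(new, old):
    """Magnitude of the relative change from old to new."""
    return abs(new - old) / abs(old)


# Gas constant: air versus fully burnt mixture, J/(kg K).
change_r = relative_change(288.4, 287.1)
# Gladstone-Dale constant: air versus fully burnt mixture, m^3/kg.
change_g = relative_change(2.41e-4, 2.26e-4)
# Time-averaged density difference: proposed method versus thermocouple, kg/m^3.
change_rho = relative_change(-0.88, -0.81)

print(f"{100 * change_r:.2f}%")    # 0.45%, quoted as approximately 0.5%
print(f"{100 * change_g:.1f}%")    # 6.6%, quoted as approximately 7%
print(f"{100 * change_rho:.1f}%")  # 8.6%, quoted as approximately 9%
```

The roughly 7% uncertainty in the Gladstone–Dale constant alone is of the same order as the 9% discrepancy, which supports the statement that the two density estimates are consistent.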

4 Conclusions

This paper proposes a new extension of the conventional 3D-BOS, 3D-BOS using Mirror, to measure three-dimensional near-wall density distributions by using the wall as a mirror to reflect light. The modifications to the formulation of the conventional method to incorporate the mirror-reflected light paths were presented. A validation using artificially generated model data of an ideal axisymmetric distribution showed that the proposed method could reconstruct the density distribution as accurately as the conventional 3D-BOS for all the numbers of cameras examined. In an experimental demonstration using a candle plume, the proposed method reconstructed the almost cylindrical density distribution near the wall.

Future work will include improving the results using newer optical flow algorithms, a more realistic treatment of light rays as conical beams, and further parametric studies on grid resolutions and camera configurations. It is also expected that the proposed method can be applied to unknown density distributions such as those of supersonic jets impinging on an inclined flat plate.

Availability of data and materials

The data that support the findings of this study are available from the corresponding author upon reasonable request.

References

Adamczuk RR, Hartmann U, Seume J (2013) Experimental demonstration of analyzing an engine’s exhaust jet with the background-oriented Schlieren method. In: AIAA Ground Testing Conference, San Diego, CA, USA. AIAA Paper, pp 2013–2488

Akamine M, Okamoto K, Teramoto S, Tsutsumi S (2021) Experimental study on effects of plate angle on acoustic waves from supersonic impinging jets. J Acoust Soc Am 150:1856–1865. https://doi.org/10.1121/10.0006236

Amjad S, Soria J, Atkinson C (2023) Three-dimensional density measurements of a heated jet using laser-speckle tomographic background-oriented Schlieren. Exp Thermal Fluid Sci 142:110819. https://doi.org/10.1016/j.expthermflusci.2022.110819

Atcheson B, Ihrke I, Heidrich W et al (2008) Time-resolved 3d capture of non-stationary gas flows. ACM Trans Graph 27(5):132

Bathel BF, Weisberger J, Jones SB (2019) Development of tomographic background-oriented Schlieren capability at NASA Langley Research Center. In: AIAA Aviation 2019 Forum, Dallas, Texas, USA. AIAA Paper, pp 2019–3288

Bathel BF, Weisberger JM, Ripley WH, Jones SB (2022) Preparations for tomographic background-oriented Schlieren at the 31-Inch Mach 10 Wind Tunnel. In: AIAA AVIATION 2022 Forum, Chicago, IL, USA & Virtual. AIAA Paper, pp 2022–3475

Bolton JT, Thurow BS, Alvi FS, Arora N (2017) Single camera 3D measurement of a shock wave-turbulent boundary layer interaction. In: 55th AIAA Aerospace Sciences Meeting, Grapevine, Texas, USA. AIAA Paper, pp 2017–0985

Bühlmann P (2020) Laser speckle background oriented Schlieren imaging for near-wall measurements. Doctoral dissertation, ETH Zurich

Cai S, Wang Z, Fuest F et al (2021) Flow over an espresso cup: inferring 3-D velocity and pressure fields from tomographic background oriented Schlieren via physics-informed neural networks. J Fluid Mech. https://doi.org/10.1017/jfm.2021.135

Cai H, Song Y, Ji Y et al (2022) Direct background-oriented Schlieren tomography using radial basis functions. Opt Exp 30:19100–19120. https://doi.org/10.1364/OE.459872

Davis JK, Clifford CJ, Kelly DL, Thurow BS (2021) Tomographic background oriented Schlieren using plenoptic cameras. Meas Sci Technol 33:025203. https://doi.org/10.1088/1361-6501/ac3b09

Garrido-Jurado S, Muñoz-Salinas R, Madrid-Cuevas FJ, Marín-Jiménez MJ (2014) Automatic generation and detection of highly reliable fiducial markers under occlusion. Pattern Recogn 47:2280–2292. https://doi.org/10.1016/j.patcog.2014.01.005

Goldhahn E, Seume J (2007) The background oriented schlieren technique: sensitivity, accuracy, resolution and application to a three-dimensional density field. Exp Fluids 43:241–249. https://doi.org/10.1007/s00348-007-0331-1

Gomez M, Grauer SJ, Ludwigsen J et al (2022) Megahertz-rate background-oriented Schlieren tomography in post-detonation blasts. Appl Opt 61:2444–2458. https://doi.org/10.1364/AO.449654

Grauer SJ, Steinberg AM (2020) Fast and robust volumetric refractive index measurement by unified background-oriented Schlieren tomography. Exp Fluids 61:80. https://doi.org/10.1007/s00348-020-2912-1

Grauer SJ, Unterberger A, Rittler A et al (2018) Instantaneous 3D flame imaging by background-oriented schlieren tomography. Combust Flame 196:284–299. https://doi.org/10.1016/j.combustflame.2018.06.022

Guo G-M, Liu H (2017) Density and temperature reconstruction of a flame-induced distorted flow field based on background-oriented Schlieren (BOS) technique. Chin Phys B 26:064701. https://doi.org/10.1088/1674-1056/26/6/064701

Hartley R, Zisserman A (2004) Multiple view geometry in computer vision. Cambridge University Press, Cambridge

Hartmann U, Adamczuk R, Seume J (2015) Tomographic background oriented Schlieren applications for turbomachinery (Invited). In: 53rd AIAA Aerospace Sciences Meeting, Kissimmee, Florida, USA. AIAA Paper, pp 2015–1690

Hartmann U, Seume JR (2016) Combining ART and FBP for improved fidelity of tomographic BOS. Meas Sci Technol 27:097001. https://doi.org/10.1088/0957-0233/27/9/097001

Hashimoto Y, Fujii K, Kameda M (2017) Modified application of algebraic reconstruction technique to near-field background-oriented Schlieren images for three-dimensional flows. Trans Jpn Soc Aeronaut Space Sci 60:85–92. https://doi.org/10.2322/tjsass.60.85

Hayasaka K, Tagawa Y, Liu T, Kameda M (2016) Optical-flow-based background-oriented Schlieren technique for measuring a laser-induced underwater shock wave. Exp Fluids 57:179. https://doi.org/10.1007/s00348-016-2271-0

Kirby R (2018) Tomographic background-oriented Schlieren for three-dimensional density field reconstruction in asymmetric shock-containing jets. In: 2018 AIAA Aerospace Sciences Meeting, Kissimmee, Florida, USA. AIAA Paper, pp 2018–0008

Klinge F, Kirmse T, Kompenhans J (2004) Application of quantitative background oriented Schlieren (BOS): investigation of a Wing Tip Vortex in a Transonic Wind Tunnel. In: PSFVIP-4. Chamonix, France

Kouchi T, Yamaguchi S, Koike S et al (2016) Wavelet analysis of transonic buffet on a two-dimensional airfoil with vortex generators. Exp Fluids 57:166. https://doi.org/10.1007/s00348-016-2261-2

Lang HM, Oberleithner K, Paschereit CO, Sieber M (2017) Measurement of the fluctuating temperature field in a heated swirling jet with BOS tomography. Exp Fluids 58:88. https://doi.org/10.1007/s00348-017-2367-1

Lanzillotta L, Léon O, Donjat D, Le Besnerais G (2019) 3D density reconstruction of a screeching supersonic jet by synchronized multi-camera Background Oriented Schlieren. In: 8th European Conference for Aeronautics and Aerospace Sciences (EUCASS)

Le Sant Y, Todoroff V, Bernard-Brunel A et al (2014) Multi-camera calibration for 3DBOS. In: 17th International Symposium on Applications of Laser Techniques to Fluid Mechanics. Lisbon, Portugal

Lee J, Kim N, Min K (2012) Measurement of spray characteristics using the background-oriented Schlieren technique. Meas Sci Technol 24:025303. https://doi.org/10.1088/0957-0233/24/2/025303

Lee C, Ozawa Y, Haga T et al (2021) Comparison of three-dimensional density distribution of numerical and experimental analysis for twin jets. J Vis 24:1173–1188. https://doi.org/10.1007/s12650-021-00765-z

Leopold F, Ota M, Klatt D, Maeno K (2013) Reconstruction of the unsteady supersonic flow around a spike using the colored background oriented Schlieren technique. J Flow Control Meas Vis 1:69–76. https://doi.org/10.4236/jfcmv.2013.12009

Liu H, Shui C, Cai W (2020) Time-resolved three-dimensional imaging of flame refractive index via endoscopic background-oriented Schlieren tomography using one single camera. Aerosp Sci Technol 97:105621. https://doi.org/10.1016/j.ast.2019.105621

Liu H, Huang J, Li L, Cai W (2021) Volumetric imaging of flame refractive index, density, and temperature using background-oriented Schlieren tomography. Sci China Technol Sci 64:98–110. https://doi.org/10.1007/s11431-020-1663-5

Merzkirch W (1987) Flow visualization, 2nd edn. Academic Press, Orlando

Molnar JP, Venkatakrishnan L, Schmidt BE et al (2023b) Estimating density, velocity, and pressure fields in supersonic flows using physics-informed BOS. Exp Fluids 64:14. https://doi.org/10.1007/s00348-022-03554-y

Molnar JP, Grauer SJ, Léon O et al (2023a) Physics-informed background-oriented Schlieren of turbulent underexpanded jets. In: AIAA SciTech 2023 Forum, National Harbor, MD, USA & Online. AIAA Paper, pp 2023–2441

Nicolas F, Todoroff V, Plyer A et al (2016) A direct approach for instantaneous 3D density field reconstruction from background-oriented schlieren (BOS) measurements. Exp Fluids 57:13. https://doi.org/10.1007/s00348-015-2100-x

Nicolas F, Donjat D, Léon O et al (2017a) 3D reconstruction of a compressible flow by synchronized multi-camera BOS. Exp Fluids 58:46. https://doi.org/10.1007/s00348-017-2325-y

Nicolas F, Donjat D, Plyer A et al (2017b) Experimental study of a co-flowing jet in ONERA’s F2 research wind tunnel by 3D background oriented schlieren. Meas Sci Technol 28:085302. https://doi.org/10.1088/1361-6501/aa7827

Ota M, Hamada K, Kato H, Maeno K (2011) Computed-tomographic density measurement of supersonic flow field by colored-grid background oriented Schlieren (CGBOS) technique. Meas Sci Technol 22:104011. https://doi.org/10.1088/0957-0233/22/10/104011

Paige CC, Saunders MA (1982) LSQR: an algorithm for sparse linear equations and sparse least squares. ACM Trans Math Softw 8:43–71. https://doi.org/10.1145/355984.355989

Qin X, Xiao X, Puri IK, Aggarwal SK (2002) Effect of varying composition on temperature reconstructions obtained from refractive index measurements in flames. Combust Flame 128:121–132. https://doi.org/10.1016/S0010-2180(01)00338-8

Raffel M (2015) Background-oriented Schlieren (BOS) techniques. Exp Fluids 56:1–17. https://doi.org/10.1007/s00348-015-1927-5

Rajendran LK, Zhang J, Bhattacharya S et al (2020) Uncertainty quantification in density estimation from background-oriented Schlieren measurements. Meas Sci Technol 31:054002. https://doi.org/10.1088/1361-6501/ab60c8

Settles GS (2001) Schlieren and shadowgraph techniques. Springer, Berlin Heidelberg

Sourgen F, Leopold F, Klatt D (2012) Reconstruction of the density field using the colored background oriented Schlieren technique (CBOS). Opt Lasers Eng 50:29–38. https://doi.org/10.1016/j.optlaseng.2011.07.012

Takahashi K, Nobuhara S, Matsuyama T (2016) Mirror-based camera pose estimation using an orthogonality constraint. IPSJ Trans Comput Vis Appl 8:11–19. https://doi.org/10.2197/ipsjtcva.8.11

Takahashi K, Nobuhara S, Matsuyama T (2012) A new mirror-based extrinsic camera calibration using an orthogonality constraint. In: 2012 IEEE Conference on Computer Vision and Pattern Recognition. IEEE, Providence, RI, USA, pp 1051–1058

Tan DJ, Edgington-Mitchell D, Honnery D (2015) Measurement of density in axisymmetric jets using a novel background-oriented Schlieren (BOS) technique. Exp Fluids 56:204. https://doi.org/10.1007/s00348-015-2076-6

Tipnis TJ, Finnis MV, Knowles K, Bray D (2013) Density measurements for rectangular free jets using background-oriented Schlieren. Aeronaut J 117:771–785. https://doi.org/10.1017/S0001924000008447

Träger F (ed) (2012) Springer handbook of lasers and optics. Springer, Berlin Heidelberg

Ukai T (2021) The principle and characteristics of an image fibre background oriented Schlieren (Fibre BOS) technique for time-resolved three-dimensional unsteady density measurements. Exp Fluids 62:170. https://doi.org/10.1007/s00348-021-03251-2

Unterberger A, Mohri K (2022) Evolutionary background-oriented Schlieren tomography with self-adaptive parameter heuristics. Opt Exp 30:8592–8614. https://doi.org/10.1364/OE.450036

Unterberger A, Kempf A, Mohri K (2019) 3D evolutionary reconstruction of scalar fields in the gas-phase. Energies 12:2075. https://doi.org/10.3390/en12112075

Van Dyke M (1982) An album of fluid motion. Parabolic Press, Stanford

van Hinsberg NP, Rösgen T (2014) Density measurements using near-field background-oriented Schlieren. Exp Fluids 55:1720. https://doi.org/10.1007/s00348-014-1720-x

Venkatakrishnan L (2005) Density Measurements in an axisymmetric underexpanded jet by background-oriented Schlieren technique. AIAA J 43:1574–1579. https://doi.org/10.2514/1.12647

Venkatakrishnan L, Meier GEA (2004) Density measurements using the background oriented Schlieren technique. Exp Fluids 37:237–247. https://doi.org/10.1007/s00348-004-0807-1

Venkatakrishnan L, Suriyanarayanan P (2009) Density field of supersonic separated flow past an afterbody nozzle using tomographic reconstruction of BOS data. Exp Fluids 47:463–473. https://doi.org/10.1007/s00348-009-0676-8

Venkatakrishnan L, Wiley A, Kumar R, Alvi F (2011) Density field measurements of a supersonic impinging jet with Microjet control. AIAA J 49:432–438. https://doi.org/10.2514/1.J050511

Wahls BH, Ekkad SV (2022a) A new technique using background oriented Schlieren for temperature reconstruction of an axisymmetric open reactive flow. Meas Sci Technol 33:055202. https://doi.org/10.1088/1361-6501/ac51a5

Wahls BH, Ekkad SV (2022b) Temperature reconstruction of an axisymmetric enclosed reactive flow using simultaneous background oriented schlieren and infrared thermography. Meas Sci Technol 33:115201. https://doi.org/10.1088/1361-6501/ac83e2

Weisberger JM, Bathel BF, Jones SB et al (2020) Preparations for tomographic background-oriented Schlieren measurements in the 11-by-11-foot transonic wind tunnel. In: AIAA AVIATION 2020 FORUM. AIAA Paper, pp 2020–3102

Yamagishi M, Yahagi Y, Ota M et al (2021) Quantitative density measurement of wake region behind reentry capsule (Improvements in accuracy of 3D reconstruction by evaluating the view-angle of measurement system). J Fluid Sci Technol 16:JFST0021. https://doi.org/10.1299/jfst.2021jfst0021

Zeb MF, Ota M, Maeno K (2011) Quantitative measurement of heat flow in natural heat convection using color-stripe background oriented Schlieren (CSBOS) method. J JSEM 11:s141–s146. https://doi.org/10.11395/jjsem.11.s141

Zhang Z (2000) A flexible new technique for camera calibration. IEEE Trans Pattern Anal Mach Intell 22:1330–1334. https://doi.org/10.1109/34.888718

Acknowledgements

The authors gratefully acknowledge financial support from the Japan Society for the Promotion of Science (Grant numbers JP20K14645, JP21H01529).

Funding

Open access funding provided by The University of Tokyo. This study was supported by the Japan Society for the Promotion of Science (Grant numbers JP20K14645, JP21H01529).

Author information

Contributions

MA: Conceptualization, methodology, code validation, experiments, data analysis, visualization, writing, review, funding acquisition. ST and KO: discussions, review, supervision, funding acquisition.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical approval

Not applicable.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Akamine, M., Teramoto, S. & Okamoto, K. Formulation and demonstrations of three-dimensional background-oriented schlieren using a mirror for near-wall density measurements. Exp Fluids 64, 134 (2023). https://doi.org/10.1007/s00348-023-03672-1
