Abstract

Considerable progress has recently been made in the development of low-cost PIV (Particle Image Velocimetry) for industrial and educational applications. This paper presents the use of two low-cost action cameras for stereoscopic planar PIV. A continuous wave laser or, alternatively, an LED was used for illumination and pulsed by a frequency generator. A slight detuning of the light pulse frequency relative to the camera frame rate minimizes systematic errors caused by the rolling shutter effect and allows both cameras to be synchronized in postprocessing, without the need for hardware synchronization. The setup was successfully qualified on a rotating particle pattern in planar and stereoscopic configurations as well as on the jet of an aquarium pump. Since action cameras are intended for outdoor activities, they are small, very robust and operate autonomously. In conjunction with the synchronization and image pre-processing scheme presented herein, these cameras enable stereoscopic PIV in harsh environments and even on moving experiments.




1 Introduction

Access to experimental flow data is of crucial importance in a wide range of fields in engineering, science and education. Experimental methods have therefore established themselves, alongside numerical simulations, as a foundation of fluid dynamics research.

One of the major experimental techniques that provide highly resolved flow data is Particle Image Velocimetry (PIV). Since its origin in the 1980s, PIV has become a well-established velocity measurement technique that has significantly advanced the study of fluid flows (Adrian 2005). Extensions of the basic two-dimensional technique allow all three velocity components to be measured in a planar measurement domain, which is known as stereoscopic PIV (Arroyo and Greated 1991), or even in a three-dimensional domain with techniques such as tomographic PIV (Elsinga et al. 2006) and 3D particle tracking velocimetry (3D-PTV) (Maas et al. 1993; Nishino et al. 1989; Shnapp et al. 2019; Cierpka et al. 2013). A recent overview of modern algorithms can be found in Kähler et al. (2016).

PIV is especially well suited for flow visualization and can provide fast and simple access to flow velocities and related properties. It is therefore also ideal for educational purposes, where it can provide easy access to complex flow phenomena that would otherwise be hard to understand (Minichiello et al. 2020). Furthermore, velocity measurements in harsh industrial environments or in complex moving or rotating systems are often necessary to investigate the underlying flow physics.

All this has led to a wide application of PIV in experimental fluid mechanics research; however, the price of even a simple scientific PIV setup can easily add up to 60,000 to 100,000 €. These high system costs are the main obstacle to a further spread of PIV into industrial applications and undergraduate education, both areas where experimental flow visualization would be of great benefit. Robust, low-cost PIV techniques for flow measurements are therefore desirable.

In recent years, efforts have been made to lower the cost of a PIV setup and to expand its range of application in the above-mentioned fields. The cost of a PIV setup can be split into two major parts: first, the cost of commercial evaluation software, and second, the cost of the hardware, consisting mainly but not exclusively of the laser, cameras and computing hardware. To circumvent the former, many open-source tools such as the OpenPIV Python package (Liberzon et al. 2021), PIVlab (Thielicke and Stamhuis 2014), JPIV (Vennemann 2007) or a plugin for ImageJ (Tseng 2011), among others, have been made available. A free mobile phone application called 'smartPIV' provides mobile phone-based planar PIV, even for non-expert users (Cierpka and Mäder 2019).

For the latter, several approaches targeting different parts of the experimental hardware have been proposed and successfully tested. For illumination, Willert et al. (2010) suggested the use of a high-performance LED instead of a laser, while Cierpka et al. (2016) successfully employed mobile phone cameras for particle image recording in planar PIV. The application of mobile phone cameras has proven promising and was recently extended to tomographic PIV (Aguirre-Pablo et al. 2017). The time coding in that case was achieved with differently colored LEDs that were switched on consecutively, so that the different color channels of a single image of the mobile phone camera represent the different frames. The authors reconstructed velocity fields from the colored shadow images using commercially available software. In addition to the fact that optical access has to be available for all four cameras, the tomographic reconstruction is computationally expensive. Furthermore, the resolution is drastically decreased, since for a Bayer mosaic camera only every fourth pixel (red and blue channels) or every second pixel (green channel) is used.

To the authors' knowledge, a stereoscopic PIV setup that benefits from the low cost of mass-produced hardware has not yet been presented. Compared with tomographic approaches, such a setup offers a higher possible seeding concentration, a higher resolution due to the higher seeding concentration and the simultaneous use of all pixels, and the need for only one optical access. However, in contrast to tomographic PIV, the full velocity vector is measured only in a plane instead of a volume. This work therefore proposes a stereoscopic PIV setup that uses low-cost action cameras together with a novel technique for camera synchronization that utilizes the light source for timing. The technique is robust against motion blur and against systematic errors caused by the rolling shutter and can be used for planar as well as stereoscopic PIV. A major benefit of the system is that the cameras can be operated autonomously without any wiring. Thus, they could even be used in pressure vessels (Cierpka et al. 2019) or on moving experiments (Hoff and Harlander 2019; Kinzel et al. 2009).

The manuscript is structured as follows: first, the camera characteristics are presented and assessed; second, the image reconstruction and synchronization technique is explained, followed by a benchmark experiment using a well-known planar rotational motion. Finally, the stereoscopic setup is described and its suitability is assessed for a free jet flow in water.

2 Camera qualification

2.1 Field of view and spatial resolution

In a first step, the action cameras used (GoPro Hero 5) had to be qualified. The camera uses an image sensor with a pixel size of 1.55 \(\mu\)m \(\times\) 1.55 \(\mu\)m and offers various acquisition modes, ranging from single images and time series of images to videos with frame rates of up to 240 Hz at different resolutions and fields of view (FOV). While the camera can record videos with a resolution of up to 3840 \(\times\) 2160 pixels at a frame rate of 30 Hz, the resolution is significantly reduced to 1280 \(\times\) 720 pixels and the FOV decreases if a frame rate of 240 Hz is chosen. On the one hand, the smaller area of active pixels reduces the achievable measurement resolution; on the other hand, it limits the barrel distortion of the camera's fish-eye objective, which increases for wider FOV.

Choosing the highest possible frame rate allows larger velocities to be measured (Cierpka et al. 2016). Therefore, the following investigations are based on the 240 Hz video mode. To quantify the spatial resolution and the quality of the optics, camera calibrations were performed using a multi-plane calibration target (type 204-15 LaVision GmbH). The camera was positioned with its optical axis perpendicular to the calibration target at distances l between 130 mm and 500 mm. A sketch of the setup can be seen in Fig. 1. The distance \(l = 130\) mm is the minimum distance between camera and calibration target at which a focused image is obtained, whereas at a distance of \(l = 500\) mm the whole calibration target is projected onto the camera sensor.

Figure 2 shows the horizontal and vertical spatial resolution (a) and the FOV (b) of the camera as a function of the distance l between the camera and the calibration target. The horizontal and vertical spatial resolutions at the same distance are approximately equal, ranging from 0.07 mm per pixel at a distance of 130 mm to 0.29 mm per pixel at a distance of 500 mm. This allows an area of approximately 230 mm \(\times\) 125 mm to be measured at a distance of 300 mm, which is sufficient for many typical PIV setups, especially for educational purposes.
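The relation between working distance, spatial resolution and FOV can be estimated with a few lines of code. The sketch below assumes that the resolution varies linearly between the two values quoted above (0.07 mm/px at 130 mm and 0.29 mm/px at 500 mm) and multiplies it by the 1280 \(\times\) 720 active pixels of the 240 Hz mode; the helper names are hypothetical, and a real setup should take its resolution from its own calibration.

```python
import numpy as np

# Assumption: linear interpolation between the two resolutions quoted in the text;
# a real setup should use the resolution obtained from its own calibration.
DISTANCES_MM = np.array([130.0, 500.0])
RESOLUTION_MM_PER_PX = np.array([0.07, 0.29])
SENSOR_PX = (1280, 720)  # active pixels (width, height) in the 240 Hz video mode


def resolution_at(distance_mm):
    """Spatial resolution in mm per pixel (np.interp clamps outside 130 to 500 mm)."""
    return float(np.interp(distance_mm, DISTANCES_MM, RESOLUTION_MM_PER_PX))


def field_of_view(distance_mm):
    """Approximate field of view (width, height) in mm for the 240 Hz mode."""
    res = resolution_at(distance_mm)
    return SENSOR_PX[0] * res, SENSOR_PX[1] * res


if __name__ == "__main__":
    w, h = field_of_view(300.0)
    print(f"{resolution_at(300.0):.3f} mm/px -> FOV {w:.0f} mm x {h:.0f} mm")
```

At 300 mm this linear estimate gives roughly 219 mm \(\times\) 123 mm, somewhat smaller than the approximately 230 mm \(\times\) 125 mm read from Fig. 2, so the interpolation should only be used as a first guess when planning a setup.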

In addition to the FOV and the spatial resolution, the rms of the calibration error was evaluated. The calibration was performed using the third-order polynomial approach for image mapping (Soloff et al. 1997). The results are presented in Fig. 3, which shows the rms of the calibration error as a function of the distance l.

It can be seen that the rms of the calibration fit ranges from 0.09 pixel at a working distance of 135 mm to 0.17 pixel at a working distance of 215 mm. The rms is the root-mean-square deviation between the detected marker positions and the marker positions predicted by the calibration function, i.e. it quantifies how well the calibration model maps the true distortions. The large scatter in the rms of the calibration fit, especially for small working distances, results from the fact that the calibration target was too large to fit completely on the image sensor, so that with increasing working distance more calibration markers entered the images at their borders, as can be seen in the corners of the calibration image for l = 145 mm in Fig. 4 (top). Due to the fish-eye wide-field objective lens of the camera, the optical distortion is largest at the margins of the FOV. To correct these distortions with a mapping function, calibration markers should also be present at the edges.

Figure 4 shows calibration images for distances of l = 145 mm and 260 mm. Since only one calibration target with constant marker spacing was used for the whole depth range, fewer calibration markers were imaged at small working distances, and the distance between neighboring markers in the image was large (top). This can result in a larger rms error and a significant scatter. With increasing working distance, the FOV increases while the magnification decreases, yielding more calibration markers with smaller spacing on the image sensor (bottom). Hence, the effect of new markers entering the FOV and of the large marker spacing becomes less significant. This effect could be reduced further by using a calibration target adapted to the actual FOV. Nevertheless, the rms errors show that a reliable calibration is possible.
This demonstrates that the optics are suitable for use with common calibration approaches, particularly considering that the action camera is a mass-produced consumer product with a wide-field objective lens.
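To make the meaning of the calibration rms concrete, the following sketch fits a third-order polynomial mapping from target coordinates (mm) to image coordinates (px) by linear least squares and reports the root-mean-square distance between detected and predicted marker positions. It is only an illustration: the multi-plane mapping of Soloff et al. also includes the out-of-plane coordinate, whereas this sketch fits a single plane, and the synthetic marker grid, scale factor and distortion coefficient are hypothetical values, not the authors' data or the DAVIS implementation.

```python
import numpy as np


def poly3_terms(X, Y):
    """Design matrix with all monomials X**i * Y**j, i + j <= 3 (10 columns)."""
    return np.column_stack([X**i * Y**j for i in range(4) for j in range(4 - i)])


def fit_mapping(world_xy, image_xy):
    """Least-squares third-order polynomial mapping from target (mm) to image (px)."""
    A = poly3_terms(world_xy[:, 0], world_xy[:, 1])
    coeffs, *_ = np.linalg.lstsq(A, image_xy, rcond=None)  # one column per image axis
    return coeffs


def calibration_rms(world_xy, image_xy, coeffs):
    """Root mean square distance between detected and predicted marker positions (px)."""
    predicted = poly3_terms(world_xy[:, 0], world_xy[:, 1]) @ coeffs
    return float(np.sqrt(np.mean(np.sum((predicted - image_xy) ** 2, axis=1))))


if __name__ == "__main__":
    # Hypothetical markers on a 15 mm grid with a mild barrel distortion plus detection noise
    gx, gy = np.meshgrid(np.arange(-60.0, 61.0, 15.0), np.arange(-45.0, 46.0, 15.0))
    world = np.column_stack([gx.ravel(), gy.ravel()])
    r2 = np.sum(world**2, axis=1)
    image = np.column_stack([640 + 5.8 * world[:, 0] * (1 - 1e-5 * r2),
                             360 + 5.8 * world[:, 1] * (1 - 1e-5 * r2)])
    image += np.random.default_rng(1).normal(scale=0.1, size=image.shape)
    print(f"rms = {calibration_rms(world, image, fit_mapping(world, image)):.3f} px")
```

Because the rms is dominated by the markers that the polynomial cannot fit, a target that leaves the distorted image margins empty (as at small working distances) constrains the high-order terms poorly, which is consistent with the scatter discussed above. For large coordinate ranges, normalizing X and Y before building the design matrix improves the numerical conditioning.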

2.2 Image acquisition

In addition to the quality of the optics, several other camera attributes must be considered. The most important are the rolling shutter, the settings of the virtual aperture of the camera, the recording frequency and the ability to synchronize two cameras and the light source in the stereoscopic setup. Like most consumer cameras, the GoPro Hero 5 uses a rolling shutter. This means that, in contrast to a global shutter, the pixels are not read out simultaneously but row by row in a continuous process. This can result in a systematic error in the velocity estimation and, more decisively, complicates conventional camera synchronization.

The virtual aperture is a software function that controls the exposure time of the camera to protect the image from overexposure. By default, the exposure time is adjusted automatically. However, this function, which is often helpful in everyday use, can result in a loss of information if the aperture closes before the pixels can be read out by the camera. Hence, the automatic adjustment of the virtual aperture must be deactivated in the advanced settings of the camera.

Another factor that strongly influences the accuracy of the measurement is the exact knowledge of the recording frequency and its temporal stability. Therefore, the readout frequency of two cameras was characterized in an experiment. The experimental setup consisted of the two cameras and a pulsed high-performance LED (Thorlabs SOLIS-525C with the DC2200 LED driver). The LED was adjusted so that the pulse frequency (\(f_{{p}}\)) equals the nominal frame rate (\(f_{{r}}\)) of the camera, namely 240 Hz. Since the exposure time of the camera cannot be adjusted, pulsed illumination, controlled in the current setup by the LED driver, was used to avoid motion blur. The duration of the light pulses (\(t_{i}\)) was set to 50 \(\mu\)s, which is substantially shorter than the time between consecutive images. This results in a 'freezing' effect of the particle image motion, since the image sensor is illuminated only for very short times. In addition, pulsed illumination is necessary when more than one camera is used, since the cameras cannot be synchronized, as will be explained in Sect. 3.2.

Because the pixels are read out continuously by the rolling shutter, a small black stripe appears in the image where the sensor is insensitive to light during the readout process. If the camera frame rate and the pulse frequency align perfectly, the black stripe should remain at the same position over a whole time series. By tracking the position of the black stripe over a large number of frames, the actual readout frame rate can be estimated. It turned out that the frame rate of both cameras is not only lower than the nominal frame rate of \(f_{r}\) = 240 Hz but also differs between the cameras by \(\pm\) 0.001 Hz. However, the frame rate of both cameras is constant in time. In some images no black stripe could be identified, which indicates that the rolling shutter has a short reset time \(t_{reset}\) after the readout of the last pixel row.
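The frame rate estimation from the stripe drift can be sketched as follows. Assuming that the readout sweeps all \(N\) rows within one frame period (reset time neglected), the stripe moves by \(N\,(f_{r}/f_{p}-1)\) rows per light pulse, so a linear fit to the unwrapped stripe positions yields \(f_{r}\). The stripe positions here are simulated with a hypothetical helper; in practice they would be detected in the recorded frames, e.g. from the minimum of the row-averaged intensity. The drift per pulse must stay below half the sensor height for the unwrapping to work.

```python
import numpy as np


def simulated_stripe_rows(f_r, f_p, n_rows, n_pulses):
    """Hypothetical helper: sensor row being read out at each light pulse.

    Assumes the readout sweeps all rows during one frame period (reset time neglected).
    """
    t_pulse = np.arange(n_pulses) / f_p             # pulse instants in s
    return np.mod(t_pulse * f_r, 1.0) * n_rows      # elapsed fraction of frame -> row


def estimate_frame_rate(stripe_rows, f_p, n_rows):
    """Estimate the actual frame rate from the drift of the black stripe."""
    phase = 2.0 * np.pi * np.asarray(stripe_rows, dtype=float) / n_rows
    rows = np.unwrap(phase) * n_rows / (2.0 * np.pi)      # undo wrap-around at sensor edge
    drift = np.polyfit(np.arange(rows.size), rows, 1)[0]  # rows per pulse
    return f_p * (1.0 + drift / n_rows)                   # drift = n_rows * (f_r / f_p - 1)


if __name__ == "__main__":
    rows = simulated_stripe_rows(f_r=239.99, f_p=240.0, n_rows=720, n_pulses=2400)
    print(f"estimated frame rate: {estimate_frame_rate(rows, 240.0, 720):.4f} Hz")
```

Fitting a straight line over many pulses, rather than using two frames, averages out the uncertainty of locating the stripe in individual images.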

At first glance, the deviation seems small, but it adds up over longer observation times; furthermore, the cameras would require continuous synchronization if used in a stereoscopic setup. In addition to the frame rate, the possibility of starting the recording on both cameras simultaneously was examined. The cameras can be controlled using voice commands, a smartphone app or a remote controller; for this investigation, the remote controller was used. Even though both cameras were connected to the remote and received the same signal, it was not possible to reliably start the recording on both cameras at the same time. For this reason, and because of the deviation between the frame rates of the cameras, a new way to synchronize the cameras had to be found.

3 Image capturing

3.1 Camera recording and synchronization

Since a conventional synchronization between cameras and light source could not be established, a technique based on the light pulse frequency \(f_{p}\) was developed and applied. As described in the section above, a light pulse can be used to create a snapshot of the scene if there is no other source of illumination. If the light source runs at a frequency equal to or lower than the frame rate of the cameras, the rolling shutter can read out the whole active sensor before it is illuminated again. Since the cameras record at slightly different frame rates, in practice a light pulse frequency slightly below the lower of the two frame rates, e.g. 211 Hz, must be used.

As is inherent to all PIV setups with a pulsed light source, the illumination determines the timing scheme. Thus, the frame rate does not need to be constant in time as long as it remains higher than the pulse frequency of the LED. However, the difference in frequencies results in a number of black pixel rows. This black region in the images is caused, first, by the readout of the camera, when the pixels are not sensitive to light, and second, by the times when the light source is switched off due to the different frequencies. The height of the black region depends on the ratio of the camera frame rate to the light pulse frequency: the lower the light pulse frequency at a constant camera frame rate, the larger the black region. Since the light pulses also occur during the image readout, the information obtained from one illumination may be split onto two camera frames. Therefore, an image reconstruction method is required to match those parts of the images and reconstruct the image information (see Sect. 3.2 for details). It should also be mentioned at this point that the measurable velocity range is limited by the time delay between frames. As the light pulse frequency has to be smaller than the maximum camera frame rate, the time delay is given by \(1/f_{p}\), which is of the order of \(\varDelta t \sim 5\) ms. As the cameras cannot be synchronized and the frame rate might not be constant, frame straddling, the technique typically used to reduce \(\varDelta t\), is not applicable.
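The dependence of the time delay and the black region on the two frequencies can be quantified with a short calculation. Assuming again that the readout sweeps all \(N\) rows within one frame period, the sensor advances by \(N f_{r}/f_{p}\) rows during one pulse period, so about \(N(f_{r}/f_{p}-1)\) rows are read out twice and stay dark. The sketch below uses the nominal 240 Hz frame rate (the real one is slightly lower) and does not include the few rows of the readout artifact itself; the function name is hypothetical.

```python
def pulse_timing(f_r, f_p, n_rows):
    """Return (dt, black_rows) for pulsed illumination of a rolling-shutter camera.

    dt is the time between reconstructed images; black_rows is the number of rows
    read out twice within one pulse period (readout artifact rows not included).
    Assumes the readout sweeps all rows during one frame period (reset time neglected).
    """
    if not 0 < f_p <= f_r:
        raise ValueError("need 0 < f_p <= f_r")
    dt = 1.0 / f_p
    black_rows = n_rows * (f_r / f_p - 1.0)
    return dt, black_rows


if __name__ == "__main__":
    for f_p in (230.0, 211.0, 180.0):
        dt, black = pulse_timing(240.0, f_p, 720)
        print(f"f_p = {f_p:.0f} Hz: dt = {dt * 1e3:.2f} ms, black band ~ {black:.0f} rows")
```

For \(f_{p}\) = 211 Hz this gives \(\varDelta t \approx\) 4.74 ms and a band of roughly 99 of the 720 rows, illustrating the trade-off: a larger margin between \(f_{p}\) and \(f_{r}\) makes the scheme more tolerant to frame-rate deviations, but increases both \(\varDelta t\) and the dark part of each camera frame.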

The image reconstruction is explained in the following, and a sketch of the main part of the image reconstruction scheme is provided in Fig. 5. All parts of the scheme were implemented as MATLAB scripts; however, Python with the relevant scientific packages, for example, can also be used for this purpose. For this method to work, a few precautions must be taken. First, there must be no illumination other than the pulsed LED. Second, the cameras must start recording before the LED is activated. In this way, the first frame of each camera that contains illuminated pixels can be used as a reference point for the image reconstruction. This frame number will most likely differ between the two cameras.
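Finding this reference frame only requires the mean intensity of each frame and a background threshold. The sketch below assumes the frames of one video are available as a NumPy array of shape (frames, height, width) and uses synthetic data in which the LED is switched on during different frames of the two cameras. Assuming neither camera misses a pulse, counting the reconstructed images from this frame onwards pairs images that belong to the same light pulse, independent of the differing frame numbers.

```python
import numpy as np


def first_lit_frame(frames, threshold):
    """Index of the first frame whose mean intensity exceeds a background threshold.

    frames: array of shape (n_frames, height, width).
    """
    means = np.asarray(frames, dtype=float).mean(axis=(1, 2))
    lit = np.flatnonzero(means > threshold)
    if lit.size == 0:
        raise ValueError("no illuminated frame found")
    return int(lit[0])


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    dark = rng.normal(2.0, 0.5, size=(10, 72, 128))  # sensor noise only
    cam1, cam2 = dark.copy(), dark.copy()
    cam1[3:] += 50.0                                  # LED on from frame 3 of camera 1
    cam2[5:] += 50.0                                  # ... but from frame 5 of camera 2
    print(first_lit_frame(cam1, 10.0), first_lit_frame(cam2, 10.0))  # -> 3 5
```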

3.2 Image reconstruction

After recording, all frames of the videos are extracted and saved as images. Next, a mask was created to define a region of interest, which increases the robustness of the image reconstruction.

Fig. 5 Sketch of the timing and image reconstruction scheme. The two left columns show the timing of the light source and the camera. The green dashes depict the light pulses; the illumination time \(t_{i}\) of a light pulse is much shorter than \(1/f_{p}\). The black horizontal dashes in the camera timing column indicate the timing of the rolling shutter, which directly corresponds to the shutter position on the camera sensor. The recording time \(1/f_{r}\) of a single frame consists of the readout time \(t_{read}\) of the camera sensor and the reset time \(t_{reset}\) of the rolling shutter. Since the reset time is much shorter than the readout time, it does not affect the main principle of the image reconstruction and was therefore not investigated further. The third column shows the camera readout resulting from the relative timing between LED and camera. Green represents the part of the sensor that was illuminated by a light pulse. The black areas result from the requirement \(f_{r}>f_{p}\): some sensor parts are read out twice during the period \(1/f_{p}\). The different hatchings illustrate the relation between the camera readout and the reconstructed frames in column four, which are later used for the PIV evaluation. The developed algorithm merges those parts of the camera readout that belong to the same light pulse. In this way, instantaneous snapshots with a temporal spacing of \(1/f_{p}\) are obtained. However, because the pixels are insensitive to light during readout, a small reconstruction artifact that contains no information remains.

Subsequently, the actual image reconstruction routine can be started. First, the routine calculates the average pixel intensity of the whole image. If this average is below a background threshold, the image is considered not illuminated and is skipped. When the first illuminated image is detected, it is stored in a buffer and the algorithm calculates the average of each row. The row averages are compared with a threshold to determine the edge of the illumination, and the image is forwarded in the buffer. The algorithm then continues with the next camera image and again calculates the row averages to determine the edge of the illumination. A new image containing the information of one light pulse is then generated by combining the part below the edge of the previous image with the part above the edge of the current image, as displayed in Fig. 5. The reconstructed image is stored, while the part of the raw image used for the reconstruction is set to zero and the image is forwarded in the buffer for the reconstruction of the next image. If the illumination coincides with the reset time of the rolling shutter, no reconstruction is required: the image is saved directly, and the related buffer entry is set to zero. This procedure continues until the desired number of images is saved or no further camera frames are available.
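The routine described above can be sketched in Python. This is not the authors' MATLAB implementation, only a minimal version under stated assumptions: frames are 2D NumPy arrays, the region-of-interest mask is omitted, a row counts as lit if its average exceeds the threshold, and a lit run touching the first row completes the pending pulse, whereas a separate lit run touching the last row starts a new one. The function names and the synthetic test frames are hypothetical.

```python
import numpy as np


def lit_runs(lit):
    """(top_end, bottom_start) of the illuminated runs touching the first and last row.

    Rows [0, top_end) and [bottom_start, n) are lit; top_end = 0 / bottom_start = n if none.
    """
    n = lit.size
    if lit.all():
        return n, 0
    top_end = int(np.argmin(lit))                  # first dark row
    bottom_start = n - int(np.argmin(lit[::-1]))   # one past the last dark row
    return top_end, bottom_start


def reconstruct(frames, threshold):
    """Merge rolling-shutter frames into one image per light pulse (sketch)."""
    images, pending = [], None                     # pending: lower part of an open pulse
    for frame in frames:
        lit = frame.mean(axis=1) > threshold       # row averages vs. threshold
        if not lit.any():
            continue                               # frame not illuminated: skip
        n = lit.size
        top_end, bottom_start = lit_runs(lit)
        if pending is None and lit.all():
            images.append(frame.copy())            # pulse fell into the reset time
            continue
        if pending is not None:
            pending[:top_end] = frame[:top_end]    # upper part of the same pulse
            images.append(pending)
            pending = None
        if top_end < bottom_start < n:             # separate lit run at the bottom
            pending = np.zeros_like(frame)
            pending[bottom_start:] = frame[bottom_start:]  # lower part of the next pulse
    return images


if __name__ == "__main__":
    pulses = [np.full((10, 4), v) for v in (50.0, 60.0, 70.0)]  # hypothetical pulse images
    frames = np.zeros((5, 10, 4))
    frames[1, 6:] = pulses[0][6:]   # pulse 0 occurs while row 6 is read out
    frames[2, :6] = pulses[0][:6]   # ... its upper part arrives in the next frame
    frames[2, 8:] = pulses[1][8:]   # pulse 1 at row 8; rows 6-7 read out twice (dark)
    frames[3, :8] = pulses[1][:8]
    frames[4] = pulses[2]           # pulse 2 coincides with the reset time
    out = reconstruct(frames, threshold=5.0)
    print(len(out), all(np.array_equal(o, p) for o, p in zip(out, pulses)))  # -> 3 True
```

Because each output image corresponds to exactly one light pulse, the k-th reconstructed image of one camera can be paired with the k-th image of the other camera. The real routine additionally has to cope with the dark readout-artifact rows at the edge, which this sketch simply leaves at zero.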

To give a quick impression of the result of the reconstruction, Fig. 6 compares a reconstructed image (a) with an image recorded using continuous wave (cw) illumination (b). The images show the blade of an optical chopper with a printed particle pattern mounted on it.

In the reconstructed image, a black stripe can be seen (white arrow in Fig. 6). This stripe is a reconstruction artifact caused by the insensitivity of the pixel rows during readout and corresponds to the rolling shutter position at the time of the light pulse. While this must be considered a disadvantage, the reconstructed image has the advantage of strongly reduced motion blur compared to the cw image; however, its brightness is lower. Also, a temporal allocation, which is essential for stereoscopic processing, is only possible in the pulsed illumination mode. The coordinate system used in the following and the direction of rotation \(\omega\) are indicated in image (b).

4 PIV measurements

4.1 2D-PIV of a rotating disk

To assess the suitability of the reconstruction method, an experiment was set up using only one camera, an LED and an optical chopper. To evaluate images with a known displacement, a printed particle pattern with a radius of roughly 40 mm was attached to the chopping blade of an optical chopper. The chopping blade is driven by a small closed-loop controlled electric motor that provides a uniform, clockwise rotation. The rotation rate n can be preset precisely, and the theoretical velocity can be easily calculated as \(u_{\text{circ}}(r)=2\pi r n\), where \(u_{\text{circ}}\) denotes the circumferential velocity of the rotating blade as a function of the radius r at a fixed rotation rate n. For the pulsed illumination, the LED driver was set to a pulse frequency of \(f_{p}\) = 211 Hz and an illumination time of \(t_{i} = 20\,\mu\)s. Rotation rates n in the range of 1.5 to 4 Hz were investigated, resulting in velocities \(u_{\text{circ}}\) of approximately 0.375 m/s and 1 m/s, respectively, at a radius of \(r\) = 40 mm.
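The expected velocities and the resulting particle displacement between two reconstructed images follow directly from \(u_{\text{circ}}=2\pi r n\) and \(\varDelta t = 1/f_{p}\). A minimal calculation for the two extreme rotation rates at \(r\) = 40 mm (the function names are illustrative only):

```python
import math


def u_circ(r_m, n_hz):
    """Circumferential velocity u_circ = 2*pi*r*n in m/s (r in m, n in Hz)."""
    return 2.0 * math.pi * r_m * n_hz


def displacement_per_pulse(r_m, n_hz, f_p):
    """Displacement in m between two reconstructed images (dt = 1/f_p)."""
    return u_circ(r_m, n_hz) / f_p


if __name__ == "__main__":
    for n in (1.5, 4.0):
        u = u_circ(0.04, n)
        d = displacement_per_pulse(0.04, n, 211.0)
        print(f"n = {n} Hz: u_circ(40 mm) = {u:.3f} m/s, displacement = {d * 1e3:.2f} mm")
```

This yields about 0.377 m/s and 1.005 m/s, i.e. displacements of roughly 1.8 mm and 4.8 mm between consecutive reconstructed images; converted to pixels with the spatial resolution of the respective setup, this indicates whether the chosen interrogation windows can capture the motion.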

In addition to the comparison with the theoretical values, the performance of the cw illumination mode was assessed for the same setup. This mode has already been applied for PIV measurements with mobile phone cameras (Cierpka et al. 2016). Here, the PIV processing was performed using the commercial software DAVIS (LaVision GmbH); however, other PIV software, in particular open-source software, can also be used. For the PIV processing, the correlation averaging approach was used, because the velocity is constant in time and to compensate for the reconstruction artifacts. For the initial pass, an interrogation window size of 128 \(\times \) 128 pixels with an overlap of 50 \(\%\) was chosen. The final pass was performed with an interrogation window size of 24 \(\times\) 24 pixels and an overlap of 75 \(\%\).
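The idea behind correlation averaging (also called ensemble or sum-of-correlation) is that the correlation maps of all image pairs of one interrogation window are averaged before the peak is searched, so that noise and image regions without information, such as the reconstruction artifact, are suppressed. The sketch below shows this for a single window with FFT-based correlation and a three-point Gaussian sub-pixel fit. It is a generic illustration under simplifying assumptions (no multi-pass, no window deformation, synthetic Gaussian particle images), not the DAVIS implementation.

```python
import numpy as np


def cross_correlation(a, b):
    """Zero-mean circular cross-correlation via FFT; zero shift at the array center."""
    a = a - a.mean()
    b = b - b.mean()
    corr = np.fft.irfft2(np.conj(np.fft.rfft2(a)) * np.fft.rfft2(b), s=a.shape)
    return np.fft.fftshift(corr)


def gauss_peak_offset(c_minus, c_0, c_plus):
    """Three-point Gaussian sub-pixel estimator (all values must be positive)."""
    lm, l0, lp = np.log(c_minus), np.log(c_0), np.log(c_plus)
    return (lm - lp) / (2.0 * (lm - 2.0 * l0 + lp))


def ensemble_displacement(window_pairs):
    """Correlation averaging: average the maps of all pairs, then locate one peak."""
    corr = np.mean([cross_correlation(a, b) for a, b in window_pairs], axis=0)
    corr = corr - corr.min() + 1e-12                  # positive values for the log fit
    iy, ix = np.unravel_index(np.argmax(corr), corr.shape)
    cy, cx = corr.shape[0] // 2, corr.shape[1] // 2  # zero-displacement position
    dy = iy - cy + gauss_peak_offset(corr[iy - 1, ix], corr[iy, ix], corr[iy + 1, ix])
    dx = ix - cx + gauss_peak_offset(corr[iy, ix - 1], corr[iy, ix], corr[iy, ix + 1])
    return dy, dx


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    yy, xx = np.mgrid[0:32, 0:32]

    def render(pos, sigma=1.0):
        """Hypothetical synthetic particle image: Gaussian spots at the given positions."""
        return sum(np.exp(-((yy - y) ** 2 + (xx - x) ** 2) / (2 * sigma**2)) for y, x in pos)

    pairs = []
    for _ in range(5):
        pos = rng.uniform(0, 32, size=(40, 2))
        pairs.append((render(pos), render(pos + [3.0, 2.0])))
    print(ensemble_displacement(pairs))  # close to (3, 2) pixels
```

The peak must not lie at the window border for the three-point fit; in a multi-pass scheme, the large initial windows keep the residual displacement well inside the small final windows.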

The vector field for the pulsed illumination mode and the rotation rate \(n\) = 4 Hz is shown in Fig. 7. The visualized data were interpolated onto a polar grid using cubic interpolation. As expected, the velocity increases linearly with increasing radius. However, small irregularities can be spotted at the center; these are caused by the mounting screws of the chopping blade. A quantitative comparison of the velocities obtained in the pulsed illumination mode, in the cw illumination mode and from theory is presented in Fig. 8.

The figure shows circumferentially averaged profiles of the measured velocity for r between 25 mm and 35 mm on the left y-axis (blue), as well as the absolute averaged deviation between the measured and the theoretical velocity on the right y-axis (red). The averaged velocity profile, especially for the pulsed illumination mode, agrees well with the theoretical values. The pulsed illumination mode outperforms the cw illumination mode for the displayed radii, with a deviation from the theoretical value of at most 1 mm/s, which is about ten times smaller than the deviation of the cw mode. Relative to the absolute velocity, this corresponds to a deviation of less than 0.2 \(\%\) for the pulsed mode and 2 \(\%\) for the cw mode. In addition to the circumferentially averaged profile, non-averaged horizontal profiles of the velocities and their deviations from the theoretical values are shown in Fig. 9.
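A circumferentially averaged profile of this kind can be computed from any planar vector field by projecting the velocity onto the azimuthal direction and averaging in radial bins. The sketch below assumes the vectors are given on a grid with the origin at the rotation axis; it returns the mean radius of each bin, so that the theoretical value \(2\pi r n\) can be evaluated at exactly that radius. The synthetic rigid-body rotation in the usage part is hypothetical and only checks the bookkeeping.

```python
import numpy as np


def circumferential_profile(x, y, ux, uy, r_edges):
    """Mean radius and mean circumferential speed in radial bins.

    x, y in m (origin at the rotation axis); ux, uy in m/s. Empty bins give NaN.
    """
    x, y, ux, uy = (np.ravel(a) for a in (x, y, ux, uy))
    r = np.hypot(x, y)
    with np.errstate(divide="ignore", invalid="ignore"):
        u_theta = np.abs(x * uy - y * ux) / r          # |u . e_theta|
    idx = np.digitize(r, r_edges) - 1
    r_mean = np.array([r[idx == k].mean() for k in range(len(r_edges) - 1)])
    u_mean = np.array([u_theta[idx == k].mean() for k in range(len(r_edges) - 1)])
    return r_mean, u_mean


if __name__ == "__main__":
    n = 4.0
    omega = 2.0 * np.pi * n
    g = np.arange(-0.04, 0.0400001, 0.0005)
    x, y = np.meshgrid(g, g)
    ux, uy = omega * y, -omega * x                     # clockwise rigid-body rotation
    r_mean, u_mean = circumferential_profile(x, y, ux, uy, np.linspace(0.025, 0.035, 11))
    u_theory = 2.0 * np.pi * r_mean * n
    print(np.max(np.abs(u_mean - u_theory) / u_theory))  # relative deviation, ~0 here
```

Applied to measured data, the printed quantity corresponds to the relative deviations quoted above (below 0.2 % for the pulsed mode and about 2 % for the cw mode).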

Fig. 9 Horizontal profiles of the vertical velocity \(u_{y}\) of the chopping blade (a) and its deviation from the theoretical value (b) at a rotation rate of \(n\) = 4 Hz. The dashed lines represent the cw mode data for \(x<\) 0 (red) and \(x>\) 0 (green), the solid lines the pulsed mode data for \(x<\) 0 (blue) and \(x>\) 0 (cyan), and the dotted black line the theoretical velocity.

In plot (a), the absolute values of the vertical velocity \(|\,u_{y}\,|\) are plotted over the distance from the center \(|\,x\,|\). The coordinates correspond to the coordinate system introduced in Fig. 6. Solid lines represent the pulsed mode, dashed lines the cw mode and the dotted line the theoretical value. The velocity profiles of both modes agree reasonably well with the theoretical value; however, the velocity profile of the pulsed mode achieves a better match, especially for larger velocities.

A detailed view of the deviation of the velocity profiles from the theoretical values (\(|u_{y}|-|u_{y,\text{t}}|\)) is shown in plot (b). The deviation for the pulsed mode lies predominantly around 5 mm/s with a maximum of \(\approx \) 8 mm/s, and no trend can be discerned. In contrast, the deviation of the cw mode is consistently higher, with a maximum of \(\approx\) 26 mm/s, and a linear increase of the deviation with increasing \(|\,x\,|\) can be distinguished. This is caused by the rolling shutter, which moves either against or along the vertical displacement. As a result, displacements in the positive y-direction for \(x<0\), i.e. vertically upward, are underestimated, and displacements in the negative y-direction for \(x>0\), i.e. vertically downward, are overestimated. Furthermore, the particle images are elongated in the vertical direction due to motion blur, which increases the overestimation of the vertical velocity for \(x>0\) and the underestimation for \(x<0\), respectively, as can be clearly seen in Fig. 9b.
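A simple timing model illustrates the rolling shutter bias in the cw mode. Assuming that row \(r\) of frame \(k\) is exposed at \(t = kT + rT/N\) (readout over the full frame period \(T\), reset neglected), a particle that moves by \(d\) rows per frame along the readout direction is captured later in the next frame, and the measured displacement becomes \(d/(1-d/N)\). This is a sketch of the mechanism, not a model fitted to the measurements; the function name is hypothetical.

```python
def rolling_shutter_factor(d_rows_per_frame, n_rows):
    """Measured/true displacement ratio for cw illumination and a rolling shutter.

    d_rows_per_frame > 0: motion along the readout direction (down the sensor).
    Assumes the readout sweeps all n_rows during one frame period (reset neglected).
    """
    # Row r is exposed at t = k*T + r*T/n_rows, so a particle moving with the shutter
    # is captured later in the next frame and the effective time step grows.
    return 1.0 / (1.0 - d_rows_per_frame / n_rows)


if __name__ == "__main__":
    for d in (-20.0, 0.0, 20.0):
        print(f"d = {d:+.0f} rows/frame: measured/true = {rolling_shutter_factor(d, 720):.4f}")
```

For 20 rows per frame on a 720-row sensor, motion along the shutter is overestimated by about 2.9 % and motion against it underestimated by about 2.7 %. Since the relative error scales with the displacement, the absolute error grows with the velocity and hence with \(|\,x\,|\) on the rotating disk, which is consistent with the linear trend of the cw deviation. With pulsed illumination, all rows of a reconstructed image are exposed at the same instant, so this bias does not arise.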

4.2 Stereoscopic PIV of a tilted rotating disk

To assess the suitability not only for planar motion but also for displacements perpendicular to the measurement plane, a stereoscopic setup with two GoPro Hero 5 cameras was installed. A schematic top view and a photograph of the setup are presented in Figs. 10 and 11, respectively.

Both cameras were positioned such that the z-distance between the particle pattern and the camera optics was 300 mm. The cameras were arranged with a stereo angle \(\alpha \approx \) 30\(^{\circ }\), which lies in the range of optimal stereo angles according to the documentation of the DAVIS software. The pulsed LED was placed between the cameras so that the particle pattern was evenly illuminated. In contrast to the 2D-PIV experiment, the optical chopper was mounted on a precision rotation stage, which allowed it to be tilted precisely by an angle \(\beta\) of up to \(\pm \) 5\(^{\circ }\) with a sensitivity of \(\approx \) 0.04\(^{\circ }\).
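Why the stereo angle matters can be illustrated with the classic angular reconstruction, which is a simpler technique than the calibration-based mapping used in DAVIS and is shown here only for illustration. Assuming that each camera \(i\) sees the in-plane projection \(X_i = \varDelta x + \varDelta z\tan\theta_i\) of a displacement, with viewing angles \(\theta_{1,2} = \pm\alpha/2\), the two projections can be solved for \(\varDelta x\) and \(\varDelta z\). The function name and the sign convention are assumptions of this sketch.

```python
import math


def stereo_reconstruct(X1, X2, theta1_deg, theta2_deg):
    """Classic angular reconstruction of (dx, dz) from two projected displacements.

    X_i is the displacement seen by camera i, mapped back to the object plane:
    X_i = dx + dz * tan(theta_i), with theta_i the viewing angle of camera i.
    """
    t1, t2 = math.tan(math.radians(theta1_deg)), math.tan(math.radians(theta2_deg))
    dz = (X1 - X2) / (t1 - t2)
    dx = (X2 * t1 - X1 * t2) / (t1 - t2)
    return dx, dz


if __name__ == "__main__":
    t = math.tan(math.radians(15.0))  # alpha = 30 deg -> +/-15 deg per camera
    print(stereo_reconstruct(1.0 + 0.5 * t, 1.0 - 0.5 * t, 15.0, -15.0))  # ~ (1.0, 0.5)
    # An in-plane error of 0.1 in one view alone shifts dz by about 0.19
    print(stereo_reconstruct(1.1 + 0.5 * t, 1.0 - 0.5 * t, 15.0, -15.0))
```

For \(\alpha \approx\) 30°, an error \(e\) in the in-plane displacement of only one view changes \(\varDelta z\) by \(e/(2\tan 15^{\circ}) \approx 1.9\,e\), i.e. the out-of-plane component is considerably more sensitive to inconsistencies between the two views than the in-plane components.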

At the beginning of the experiment, calibration videos of a 3D calibration target (type 204-15 LaVision GmbH) were recorded. Afterwards, the chopper blade with the particle pattern was translated into the calibration plane, thus defining the zero-tilt position of the rotation stage. Subsequently, the LED was set to a pulse frequency of 211 Hz and an illumination time of 30 \(\mu\)s, and videos were recorded for tilt angles \(\beta = -5^{\circ}, -3^{\circ}, -1^{\circ}, 0^{\circ}, 1^{\circ}, 3^{\circ}, 5^{\circ}\) and rotation rates of \(n\,=\, 4\) Hz and 8 Hz. Again, to allow for a successful camera synchronization, the cameras were started slightly ahead of the LED. Due to the tilt of the optical chopper, the motion of the particle pattern was no longer parallel to the calibration plane but contained an orthogonal component. The analytical description is given by \(u_{x}(y)=2\pi y n\cos\beta\) for the horizontal component and \(u_{z}(y)=-2\pi y n\sin\beta\) for the orthogonal component.
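Evaluating these expressions shows the magnitude of the out-of-plane velocities that the stereoscopic setup has to resolve. The sketch simply implements the two formulas above for a given vertical position, rotation rate and tilt angle (the function name is illustrative):

```python
import math


def tilted_disk_velocity(y_m, n_hz, beta_deg):
    """Analytical u_x and u_z (m/s) of the disk tilted by beta, at vertical position y (m)."""
    beta = math.radians(beta_deg)
    u_x = 2.0 * math.pi * y_m * n_hz * math.cos(beta)
    u_z = -2.0 * math.pi * y_m * n_hz * math.sin(beta)
    return u_x, u_z


if __name__ == "__main__":
    for beta in (-5.0, -1.0, 0.0, 1.0, 5.0):
        u_x, u_z = tilted_disk_velocity(0.035, 4.0, beta)
        print(f"beta = {beta:+.0f} deg: u_x = {u_x * 1e3:.1f} mm/s, u_z = {u_z * 1e3:.1f} mm/s")
```

At \(y\) = 35 mm and \(n\) = 4 Hz, a tilt of 5° gives \(|u_{z}| \approx\) 77 mm/s, while \(u_{x}\) changes by less than 0.4 %; one step of the rotation stage (0.04°) changes \(u_{z}\) there by roughly 0.6 mm/s.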

After the video recording, the reconstruction algorithm explained in Sect. 3 was applied to the videos of both cameras. As the first processing step, a polynomial-based calibration was performed using images extracted from the calibration video. To improve the calibration and to account for misalignment between the particle pattern and the calibration target, the initial calibration was refined using stereo self-calibration (Wieneke 2005). For the self-calibration, the particle images of the velocity measurement with a tilt angle of \(\beta \) = 0\(^{\circ }\) were used. The self-calibration correlates images taken at the same time by different cameras to correct misalignment. Therefore, an exact synchronization of the cameras is crucial to successfully apply the self-calibration and improve the calibration fit.

Prior to the PIV interrogation, the image quality was improved by applying a sliding average filter. For the stereo-PIV interrogation, the sum-of-correlation approach was chosen because the motion is constant in time. For the initial pass, an interrogation window size of 128 \(\times \) 128 pixels with an overlap of 50 \(\%\) was used. The final interrogation pass was performed with an interrogation window size of 24 \(\times\) 24 pixels and an overlap of 75 \(\%\). Figure 12 presents the resulting vector field for \(n\) = 4 Hz and \(\beta \) = 5\(^{\circ }\). The arrows visualize the in-plane velocity (\(u_{x}\) and \(u_{y}\)) and the background color the \(u_{z}\) velocity.

Fig. 12 Velocity field determined from the pulsed mode images of the tilted chopper blade. The images were recorded using a light pulse frequency \(f_{p}\,=\,211\) Hz, a rotation rate of \(n\,=\,4\,\) Hz and a tilt angle of \(\beta\) = 5\(^{\circ }\). The arrows visualize the in-plane velocity (\(u_{x}\) and \(u_{y}\)) and the background color the out-of-plane velocity \(u_{z}\).

It can be seen that \(|\,u_{z}\,|\) increases with \(|\, y\,|\) as predicted by the equation; however, \(|\,u_{z}\,|\) is not distributed as symmetrically as predicted. The in-plane velocity (\(u_{x}\) and \(u_{y}\)) is evenly distributed across the whole circumference; the effect of a 5\(^\circ\) tilt on the in-plane components (a reduction by the factor \(\cos\beta\)) is too small to be visible in this graph. To quantify the deviation between the measured velocity \(u_{z}\) and its theoretical value, Fig. 13 compares the experimentally determined values with the corresponding theoretical values for \(y\) = −35 to −15 mm in plot (a) and \(y\) = 15 to 35 mm in plot (b). Every velocity profile deviates slightly from theory, independent of its tilt angle. Comparing both plots shows that the absolute values of all velocities are underestimated for \(y<\) 0 and overestimated for \(y>\) 0; this indicates a systematic error in the \(u_{z}\) velocity component.

This systematic error is caused by a slightly non-planar disk: in some regions the printed paper is corrugated, so the printed particle images lie in a plane that is slightly offset from the calibration plane. This issue cannot be corrected by self-calibration, as the affected region rotates with the disk. The deviation is negligible for the in-plane velocity components but causes a systematic error in the out-of-plane component, because the in-plane displacements used to reconstruct the out-of-plane component do not correspond to the calibration plane. This effect only occurs in the plane that contains the stereo angle between the two cameras, i.e. it only affects \(u_z\) if \(|u_x |> 0\); the systematic error therefore depends on y and on the direction of rotation, as can be seen in Fig. 12. This problem would not occur in real measurements, as the laser light sheet always illuminates the same plane. Since the systematic error is the same for every tilt angle, the results can be corrected by subtracting the velocity profile for \(\beta \) = 0\(^{\circ }\) from each velocity profile. A detailed view of the vertical \(u_{z}\) velocity profile is shown in Fig. 14, where \(u_{z}\) is plotted over the vertical distance from the center \(|\,y\,|\). The velocity is, to good approximation, symmetric about the center and increases linearly from \(\approx\) 3 mm/s to \(\approx\) 12 mm/s with increasing distance.
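Because the theoretical \(u_{z}\) vanishes for \(\beta\) = 0°, the measured zero-tilt profile can be read as the systematic error itself. A minimal sketch of the correction follows, assuming all profiles are sampled at the same \(y\) positions and stored in a dictionary keyed by tilt angle; the data layout, the function name and the synthetic bias are hypothetical.

```python
import numpy as np

def correct_profiles(profiles, zero_key=0):
    """Subtract the zero-tilt u_z profile from every measured u_z profile.

    profiles: dict mapping tilt angle beta (deg) -> 1D array of u_z (mm/s),
    all sampled at the same y positions. The beta = 0 profile is treated as
    the systematic error, since the theoretical u_z is zero there.
    """
    reference = np.asarray(profiles[zero_key], dtype=float)
    return {beta: np.asarray(uz, dtype=float) - reference
            for beta, uz in profiles.items()}

# Synthetic check: theory plus a hypothetical bias that is identical for all tilts
y = np.linspace(-35.0, 35.0, 8)   # mm
bias = 0.3 * y + 2.0              # hypothetical systematic error, mm/s
theory = {b: -2 * np.pi * y * 4.0 * np.sin(np.deg2rad(b)) for b in (-5, 0, 5)}
measured = {b: theory[b] + bias for b in theory}
corrected = correct_profiles(measured)
print(np.allclose(corrected[5], theory[5]))
```

The correction only works because the bias is independent of the tilt angle; a bias that changed with \(\beta\) would leave a residual after subtraction.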

Fig. 15 presents the profiles after subtraction of the zero-tilt velocity profile; with this correction, the experimental data agree very well with the theoretical values.

Corrected vertical velocity profiles of \(u_{z}\) for different tilt angles \(\beta\) and rotation rate \(n\) = 4 Hz. Solid lines are experimentally determined, dashed lines mark the theoretical value. The values are corrected by subtracting the velocity profile for tilt angle \(\beta \) = 0\(^{\circ }\)

Furthermore, the magnitude of the \(u_{z}\) component investigated with the rotating disk is comparatively small because of the limited tilt range (\(|\beta |\le\) 5\(^{\circ }\)). Table 2 shows that for larger \(u_{z}/u_{x}\) ratios the relative deviation between the uncorrected measured and the predicted velocity decreases rapidly, from \(\approx\) 65 \(\,\%\) for \(\beta \;=\;\pm \) 1\(^{\circ }\) down to \(\approx \) 13 \(\,\%\) for \(\beta \;=\;\pm \) 5\(^{\circ }\). The absolute deviations of \(u_{z}\) at \(y\) = \(\pm \) 30 mm range from −9.61 mm/s to −6.75 mm/s.
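The rapid decrease of the relative deviation follows from simple arithmetic: if the absolute deviation stays roughly constant while the theoretical \(|u_{z}|\) grows with \(\sin\beta\), the relative deviation scales with \(1/\sin\beta\). The sketch below assumes that the magnitude of the absolute deviation lies in the quoted range of 6.75 to 9.61 mm/s for every tilt angle and evaluates the resulting relative deviation at \(y\) = 30 mm and \(n\) = 4 Hz; under this assumption the bounds bracket the \(\approx\) 65 % and \(\approx\) 13 % values given above.

```python
import numpy as np

Y_MM, N_HZ = 30.0, 4.0         # evaluation position (mm) and rotation rate (Hz)
DEV_MIN, DEV_MAX = 6.75, 9.61  # magnitude range of the absolute u_z deviation, mm/s

def relative_deviation_range(beta_deg):
    """Bounds of |deviation| / |u_z,theory| for a given tilt angle."""
    uz_theory = abs(2.0 * np.pi * Y_MM * N_HZ * np.sin(np.deg2rad(beta_deg)))
    return DEV_MIN / uz_theory, DEV_MAX / uz_theory

for beta in (1, 3, 5):
    lo, hi = relative_deviation_range(beta)
    print(f"beta = ±{beta} deg: {100 * lo:.0f} % to {100 * hi:.0f} %")
```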

4.3 Stereoscopic PIV on a free jet

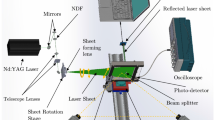

In addition to the quantitative assessment on the tilted rotating disk, the stereoscopic setup was tested on the jet of an aquarium pump. A schematic top view of the measurement setup is shown in Fig. 16.

The aquarium has a size of approximately \(20\times 20\times 30\) cm\(^3\) and was filled with water up to a height of 18 cm. A conventional aquarium pump with a diameter \(d_{r}\,\approx \) 5 cm was placed in the middle of the glass wall according to Fig. 16 and operated at a rotation rate \(n\) = 1000 Hz, which corresponds to a Reynolds number \(\mathrm{Re} = \frac{\rho\, n\, \pi\, d_{r}^{2}}{\eta} = 7.85\times 10^{6}\) at the pump propeller, with \(\rho\) and \(\eta\) being the density and the dynamic viscosity of water, respectively. The light sheet was positioned about 1 cm from the opposite side of the aquarium, perpendicular to the main flow direction of the jet. The cameras were positioned at a stereo angle of 30\(^{\circ }\) and at a distance of 30 cm from the light sheet plane. The light sheet was generated by a cw laser that was pulsed by a function generator at a frequency of \(f_{p}\) = \(211\) Hz with an illumination time of \(t_{i} = 100\,\mu\)s in each period.
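The stated Reynolds number can be checked with a few lines of Python: \(\pi\, d_{r}\, n\) is the propeller tip speed, so Re is tip speed times diameter over kinematic viscosity. The water properties \(\rho\) = 1000 kg/m³ and \(\eta\) = 1.0 mPa s are assumptions (the text does not state them); with them the quoted value is reproduced. The same sketch gives the fraction of each pulse period during which the laser is on.

```python
import math

def propeller_reynolds(rho, n_hz, d_m, eta):
    """Re = rho * n * pi * d**2 / eta, i.e. tip speed (pi * d * n) times d over eta / rho."""
    return rho * n_hz * math.pi * d_m ** 2 / eta

# Assumed water properties near room temperature (not stated in the text)
RHO_WATER = 1000.0   # kg/m^3
ETA_WATER = 1.0e-3   # Pa s

re = propeller_reynolds(RHO_WATER, n_hz=1000.0, d_m=0.05, eta=ETA_WATER)
duty_cycle = 211.0 * 100e-6  # f_p * t_i: fraction of each period the laser is on
print(f"Re = {re:.3g}")
print(f"illumination duty cycle = {duty_cycle:.1%}")
```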

Example particle images of the jet flow. Image a was recorded by the camera in forward-scatter arrangement, image b by the camera in backward-scatter arrangement. The horizontal black stripe in the image center is a reconstruction artifact. The minimum image of 100 frames that did not contain any reconstruction artifacts was already subtracted

In these images, the background was already subtracted. For the evaluation, videos with a length of 4 \(\min\) were recorded, corresponding to just above 50,000 images per camera. The background-subtracted particle images (see Fig. 17) were evaluated with a sliding sum-of-correlation approach with a filter length of two. This approach was chosen because the expected displacement was small and because it can compensate for the particle image information missing due to the reconstruction artifact. As a general remark, no reliable velocity determination is possible in the region of the black stripe caused by reconstruction artifacts, and this region should be masked out. This is easily possible, as its position is known to the reconstruction algorithm. Recently, a very powerful masking tool has also been developed that takes the intensity distribution of the surrounding pixels into account and builds a random intensity mask; for details, the interested reader is referred to Anders et al. (2019). Since the corrupted region usually extends over only a few lines, the interrogation windows are larger than the corrupted region in that direction. Using spectral random masking would therefore only decrease the signal-to-noise ratio of the corresponding interrogation window but still allow for a valid velocity determination. However, this masking procedure was not applied in the current study.
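The background subtraction and the sliding summation of correlation planes can each be sketched in a few lines of Python. The sketch assumes that the background is the pixel-wise minimum over artifact-free reference frames and that a "filter length of two" means that each output plane is the sum of the correlation planes of two consecutive image pairs; both interpretations and the function names are assumptions. The per-pair correlation planes are taken as given (e.g. from an FFT-based cross-correlation), and `sliding_window_view` requires NumPy 1.20 or newer.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def subtract_min_background(frames, reference_frames):
    """Remove a static background from a stack of frames.

    frames, reference_frames: arrays of shape (n_frames, height, width).
    The background is the pixel-wise minimum of the reference frames;
    negative results are clipped to zero.
    """
    background = np.min(reference_frames, axis=0)
    return np.clip(frames.astype(float) - background, 0.0, None)

def sliding_sum(planes, length=2):
    """Sum every run of `length` consecutive correlation planes.

    planes: array of shape (n_pairs, h, w) with one correlation plane per pair.
    Returns an array of shape (n_pairs - length + 1, h, w).
    """
    windows = sliding_window_view(planes, length, axis=0)  # window axis appended last
    return windows.sum(axis=-1)
```

Compared with a full ensemble average, the short sliding window keeps the temporal information while still filling in the rows lost to the reconstruction stripe in any single pair.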

Since the displacement was small, the correlation was not performed between directly consecutive images but with a step width of three to increase the dynamic velocity range. This way, the maximum displacement was increased to \(\approx \) 13 pixels. The interrogation window size was 64 \(\times \) 64 pixels with an overlap of 50 \(\%\) for the initial pass and 32 \(\times \) 32 pixels with an overlap of 75 \(\%\) for the final passes. The averaged and an instantaneous vector field of the flow are shown in Fig. 18.
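The effect of the step width and of the overlap can be made explicit with a small sketch (hypothetical helper names). Correlating frame \(i\) with frame \(i+3\) triples the displacement compared with consecutive frames, so a maximum displacement of \(\approx\) 13 pixels corresponds to roughly 4.3 pixels between consecutive frames; the overlap fixes the vector spacing. Whether every frame or only every third frame starts a pair is not stated in the text; the helper below pairs every frame.

```python
def frame_pairs(n_frames, step=3):
    """Index pairs (i, i + step) for correlating frames `step` apart.

    A larger step increases the particle displacement within each pair and
    thus the dynamic velocity range.
    """
    return [(i, i + step) for i in range(n_frames - step)]

def grid_spacing(window_px, overlap):
    """Vector spacing in pixels for a window size and fractional overlap."""
    return window_px * (1.0 - overlap)

max_disp_px, step = 13.0, 3
print(f"displacement between consecutive frames: {max_disp_px / step:.1f} px")
print(f"vector spacing, initial pass: {grid_spacing(64, 0.50):.0f} px")
print(f"vector spacing, final pass:   {grid_spacing(32, 0.75):.0f} px")
print(frame_pairs(8))
```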

Vector fields of the jet flow. The vector field in subfigure a shows the averaged value while in subfigure b an instantaneous vector field is displayed. Missing vectors were interpolated (Garcia 2010)

The averaged velocity field is the mean of almost 17,000 instantaneous velocity fields. The temporal average clearly shows that the largest positive out-of-plane component is located in the center and that the flow is deflected to the sides and flows back at the edges, as one would expect for a jet flow near a wall. The instantaneous velocity field shows the turbulence of the flow and the inhomogeneous distribution of \(u_{z}\). The magnitude of the instantaneous velocity \(u_{z}\) is about ten times larger than that of the averaged velocity \(\left\langle u_{z}\right\rangle\), indicating a highly unsteady flow; nevertheless, the jet is clearly recognizable, which demonstrates that the presented method is also capable of providing instantaneous velocity fields. Horizontally and vertically averaged profiles of the averaged velocity field and the probability density functions (PDF) of all velocity components are shown in Figs. 19 and 20.
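The averaged field, the averaged profiles and the PDFs are standard ensemble statistics. A minimal NumPy sketch for one velocity component follows, assuming the instantaneous fields are stacked in an array of shape (fields, y, x) with NaN marking invalid vectors; the layout and function name are hypothetical, and "vertically averaged" is taken to mean averaged along \(y\) (a profile over \(x\)).

```python
import numpy as np

def field_statistics(u, bins=50):
    """Ensemble statistics of one velocity component.

    u: array of shape (n_fields, ny, nx); NaN marks invalid vectors.
    Returns the temporal mean field, the vertically averaged profile
    (function of x), the horizontally averaged profile (function of y),
    and a histogram normalised to a probability density.
    """
    mean_field = np.nanmean(u, axis=0)          # (ny, nx)
    profile_x = np.nanmean(mean_field, axis=0)  # averaged over y
    profile_y = np.nanmean(mean_field, axis=1)  # averaged over x
    values = u[np.isfinite(u)]                  # all valid instantaneous vectors
    pdf, edges = np.histogram(values, bins=bins, density=True)
    return mean_field, profile_x, profile_y, pdf, edges
```

Pooling all valid instantaneous vectors into one histogram is what allows the PDF to show both the slightly shifted maximum and the asymmetric tails discussed below.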

As already indicated by the averaged velocity field, the velocity \(u_{z}\) is largest in the middle of the FOV and decreases towards the borders, where the back-flow sets in. This is more distinct along the x-axis because the measurement region extends further in this direction; in the y-direction, it was limited by the required illumination. The maximum of the PDF is slightly shifted towards small negative \(u_{z}\) values, although the number of vectors with a high \(u_{z}\) magnitude is larger for positive \(u_{z}\) values. This is in line with the expectations for such a flow. The velocity \(u_{x}\) in the vertically averaged profile and the velocity \(u_{y}\) in the horizontally averaged profile are close to zero at the center and increase in magnitude towards the boundary of the FOV. In the vertically averaged profile, the effect of the sidewalls, which deflect the flow in the x-direction, becomes apparent in \(u_{x}\). This effect is less apparent for \(u_{y}\) in the horizontally averaged profile, again due to the small extent of the FOV in this direction. One would expect the velocity \(u_{y}\) in the vertically averaged profile and the velocity \(u_{x}\) in the horizontally averaged profile to be close to zero along the whole profile. However, the velocity is slightly positive along most of the profiles. This is because the vector field is not exactly centered on the jet and because the measurement plane is bounded by solid walls on three sides and a free surface on top. This is also observable in the PDFs of \(u_{x}\) and \(u_{y}\), whose maxima are slightly shifted towards small positive velocities. However, the velocity vectors are evenly distributed around the maximum, as expected.

5 Conclusion and outlook

A low-cost stereoscopic PIV setup using two action cameras was proposed and successfully tested. Since action cameras cannot be synchronized with the illumination and capture their images in rolling shutter mode, a novel approach was developed that combines pulsed illumination at a slightly detuned frequency with image reconstruction.

It was shown that the pulsed illumination is robust against motion blur, minimizes errors due to the rolling shutter and therefore outperforms the continuous illumination used in many low-cost planar PIV setups. At the same time, using an LED or a cw laser that can be pulsed by a standard frequency generator keeps the costs in the order of several hundred euros, i.e. two orders of magnitude lower than for a high-performance PIV system.

The presented reconstruction scheme allows for composing images in which the particles in the entire field of view were illuminated at the same time instant. Small reconstruction artifacts remain, but their influence can be neglected when an ensemble-averaging evaluation, e.g. sliding sum-of-correlation, is applied. Moreover, starting the image acquisition before the first illumination pulse allows for a subsequent synchronization of several cameras. Stereoscopic measurements on a rotating particle pattern and on a jet flow demonstrated the capability of this approach for reliable three-component velocity measurements in a plane, even in unsteady flows.
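The principle of synchronizing cameras by starting them before the first pulse can be sketched in strongly simplified form; this is not the reconstruction scheme of Sect. 3. Because every camera is already recording when the first pulse arrives, the first illuminated image row marks the same physical instant in all videos. The sketch assumes a known frame rate and a constant line readout time of the rolling shutter (both hypothetical parameters) and returns the time of the first row whose mean intensity exceeds a threshold; the difference of these times between two cameras estimates their relative offset, and a constant offset of this simplified timing model cancels in the difference when both cameras use identical settings.

```python
import numpy as np

def first_pulse_time(frames, frame_rate_hz, line_time_s, threshold):
    """Estimate when a rolling-shutter camera first recorded the light pulse.

    frames: array (n_frames, height, width). Row r of frame k is assumed to be
    exposed at the reference time k / frame_rate_hz + r * line_time_s.
    Returns that time for the earliest row whose mean intensity exceeds
    `threshold`.
    """
    row_means = frames.mean(axis=2)            # (n_frames, height)
    lit = np.argwhere(row_means > threshold)   # (frame, row) indices in row-major order
    if lit.size == 0:
        raise ValueError("no illuminated row found")
    k, r = lit[0]                              # earliest frame, then earliest row in it
    return k / frame_rate_hz + r * line_time_s

def camera_offset(frames_a, frames_b, frame_rate_hz, line_time_s, threshold):
    """Time offset of camera b relative to camera a (hypothetical helper)."""
    return (first_pulse_time(frames_b, frame_rate_hz, line_time_s, threshold)
            - first_pulse_time(frames_a, frame_rate_hz, line_time_s, threshold))
```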

Further developments can be made by using more than two cameras and applying the reconstructed and synchronized images to particle tracking velocimetry. In this way, the presented approach promises volumetric flow measurements in industrial and educational applications at reasonable cost.

References

Adrian RJ (2005) Twenty years of particle image velocimetry. Exp Fluids 39:159–169. https://doi.org/10.1007/s00348-005-0991-7

Aguirre-Pablo AA, Alarfaj MK, Li EQ, Hernández-Sánchez JF, Thoroddsen ST (2017) Tomographic particle image velocimetry using smartphones and colored shadows. Sci Rep 7:1–18. https://doi.org/10.1038/s41598-017-03722-9

Anders S, Noto D, Seilmayer M, Eckert S (2019) Spectral random masking: a novel dynamic masking technique for PIV in multiphase flows. Exp Fluids 60:68. https://doi.org/10.1007/s00348-019-2703-8

Arroyo M, Greated C (1991) Stereoscopic particle image velocimetry. Meas Sci Technol 2:1181. https://doi.org/10.1088/0957-0233/2/12/012

Cierpka C, Mäder P (2019) SmartPIV - Smartphone-based flow visualization for education. In: 27. Fachtagung “Experimentelle Strömungsmechanik”, Erlangen, Germany, September 3–5

Cierpka C, Lütke B, Kähler CJ (2013) Higher order multi-frame particle tracking velocimetry. Exp Fluids 54:1533. https://doi.org/10.1007/s00348-013-1533-3

Cierpka C, Hain R, Buchmann NA (2016) Flow visualization by mobile phone cameras. Exp Fluids 57:108. https://doi.org/10.1007/s00348-016-2192-y

Cierpka C, Kästner C, Resagk C, Schumacher J (2019) On the challenges for reliable measurements of convection in large aspect ratio Rayleigh-Bénard cells in air and sulfur-hexafluoride. Exp Therm Fluid Sci 109:109841. https://doi.org/10.1016/j.expthermflusci.2019.109841

Elsinga GE, Scarano F, Wieneke B, van Oudheusden BW (2006) Tomographic particle image velocimetry. Exp Fluids 41:933–947. https://doi.org/10.1007/s00348-006-0212-z

Garcia D (2010) Robust smoothing of gridded data in one and higher dimensions with missing values. Comput Stat Data Anal 54:1167–1178. https://doi.org/10.1016/j.csda.2009.09.020

Hoff M, Harlander U (2019) Stewartson-layer instability in a wide-gap spherical Couette experiment: Rossby number dependence. J Fluid Mech 878:522–543. https://doi.org/10.1017/jfm.2019.636

Kähler CJ, Astarita T, Vlachos P, Sakakibara J, Hain R, Discetti S, La Foy R, Cierpka C (2016) Main results of the fourth international PIV challenge. Exp Fluids 57:97. https://doi.org/10.1007/s00348-016-2173-1

Kinzel M, Holzner M, Lüthi B, Tropea C, Kinzelbach W, Oberlack M (2009) Experiments on the spreading of shear-free turbulence under the influence of confinement and rotation. Exp Fluids 47:801–809. https://doi.org/10.1007/s00348-009-0724-4

Liberzon A, Käufer T, Bauer A, Vennemann P, Zimmer E (2021) OpenPIV python package. https://doi.org/10.5281/zenodo.593157

Maas HG, Gruen A, Papantoniou DA (1993) Particle tracking velocimetry in three-dimensional flows. Exp Fluids 15(2):133–146. https://doi.org/10.1007/BF00190953

Minichiello A, Armijo D, Mukherjee S, Caldwell L, Kulyukin V, Truscott T, Elliott J, Bhouraskar A (2020) Developing a mobile application-based particle image velocimetry tool for enhanced teaching and learning in fluid mechanics: a design-based research approach. Comput Appl Eng Educ. https://doi.org/10.1002/cae.22290

Nishino K, Kasagi N, Hirata M (1989) Three-dimensional particle tracking velocimetry based on automated digital image processing. J Fluids Eng 111(4):384–391. https://doi.org/10.1115/1.3243657

Shnapp R, Shapira E, Peri D, Bohbot-Raviv Y, Fattal E, Liberzon A (2019) Extended 3D-PTV for direct measurements of Lagrangian statistics of canopy turbulence in a wind tunnel. Sci Rep. https://doi.org/10.1038/s41598-019-43555-2

Soloff SM, Adrian RJ, Liu ZC (1997) Distortion compensation for generalized stereoscopic particle image velocimetry. Meas Sci Technol 8:1441. https://doi.org/10.1088/0957-0233/8/12/008

Thielicke W, Stamhuis E (2014) PIVlab - towards user-friendly, affordable and accurate digital particle image velocimetry in MATLAB. J Open Res Softw. https://doi.org/10.5334/jors.bl

Tseng Q (2011) Study of multicellular architecture with controlled microenvironment. PhD thesis, University of Grenoble

Vennemann P (2007) JPIV-software package for particle image velocimetry

Wieneke B (2005) Stereo-PIV using self-calibration on particle images. Exp Fluids 39:267–280. https://doi.org/10.1007/s00348-005-0962-z

Willert CE, Stasicki B, Klinner J, Moessner S (2010) Pulsed operation of high-power light emitting diodes for imaging flow velocimetry. Meas Sci Technol 21:075402. https://doi.org/10.1088/0957-0233/21/7/075402

Acknowledgements

The authors acknowledge financial support by the Thüringer Ministerium für Wirtschaft, Wissenschaft und Digitale Gesellschaft. The authors also want to thank Matthias Tuchscherer for his support on the camera characterization and Thomas Fuchs for fruitful discussions.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Table 1 lists the velocities \(u_{x}\), \(u_{x}^{*}\), \(u_{x}^{'}\), \(u_{z}\), \(u_{z}^{*}\) and \(u_{z}^{'}\) as well as the ratio \(u_{z}/u_{x}\) for the different tilt angles at the distances \(y\) = −30 mm and 30 mm. The asterisk denotes velocities that were corrected by adding the \(u_{z}\)(\(\beta \) = 0) velocity profile to the measured values (for \(u_{x}^{*}\)) or by subtracting it from them (for \(u_{z}^{*}\)). The prime denotes the theoretical velocity.

Table 2 contains the deviation between experimentally determined velocity \(u_{z}\) and the theoretical value \(u_{z}^{'}\), the absolute deviation between the corrected velocity \(u_{z}^{*}\) and the theoretical value \(u_{z}^{'}\), the relative deviations between \(u_{z}\) and \(u_{z}^{'}\), \(u_{z}^{*}\) and \(u_{z}^{'}\) as well as between \(u_{x}\) and \(u_{x}^{'}\) for the different tilt angles and the distances \(y\) = −30 mm and 30 mm.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Käufer, T., König, J. & Cierpka, C. Stereoscopic PIV measurements using low-cost action cameras. Exp Fluids 62, 57 (2021). https://doi.org/10.1007/s00348-020-03110-6

DOI: https://doi.org/10.1007/s00348-020-03110-6