Abstract

We use structured monochromatic volume illumination with spatially varying intensity profiles to achieve 3D intensity particle tracking velocimetry using a single video camera. The video camera records the 2D motion of a 3D particle field within a fluid, which is illuminated perpendicularly to the viewing direction with depth gradients of the illumination intensity. This allows us to encode the depth position, perpendicular to the camera sensor plane, in the intensity of each particle image. The light intensity field is calibrated using a 3D laser-engraved glass cube containing a known spatial distribution of 1100 defects. This calibration is used to correct for the distortions and divergence of the projected light. We use a sequence of changing light patterns, with numerous sub-gradients in the intensity, to achieve a resolution of 200 depth levels.


1 Introduction

Tomographic PIV with four cameras is becoming one of the standard techniques in 3D velocimetry (Elsinga et al. 2006; Westerweel et al. 2013). The recent addition of the shake-the-box algorithm (Schanz et al. 2016) greatly speeds up the calculations and changes the basic nature of the method from a correlation technique to high-resolution particle tracking. However, this technique uses particle tracks over numerous time steps, thus requiring multiple specialized video cameras to implement the 3D tracking. In principle, only two cameras are needed to track particles in 3D, but ambiguities due to the overlap of numerous images severely limit the total number of particles that can be reconstructed.

Efforts to reduce the cost of multi-camera systems have already been carried out. Aguirre-Pablo et al. (2017) produced instantaneous tomographic PIV measurements of a vortex ring without the need for specialized equipment by recording with multiple smartphone cameras and using high-power LEDs in a back-lit configuration. This dramatically reduced the cost of the hardware for these volumetric three-component (3D-3C) velocity measurements. Despite these cost-cutting efforts, the need for multiple cameras still makes such setups complex.

Cierpka and Kähler (2012) provide an in-depth review of other 3D-3C velocity measurement methods used in microfluidics, including multiple and single camera techniques. Other studies proposing to use a single camera for 3D-3C velocity field measurements include: using a three pin-hole aperture (Willert and Gharib 1992; Pereira et al. 1998; Rohaly and Hart 2006; Tien et al. 2014), image splitters to produce multiple views on a single sensor (Kreizer and Liberzon 2011; Gao et al. 2012; Peterson et al. 2012; Maekawa and Sakakibara 2018), defocused PTV (Wu et al. 2005; Toprak et al. 2007), optical aberrations (Hain and Kähler 2006; Cierpka et al. 2010), scanning laser sheets or scanning laser volumes (Hoyer et al. 2005; Casey et al. 2013), plenoptic (light-field) cameras (Cenedese et al. 2012; Rice et al. 2018; Shi et al. 2018; Skupsch and Brücker 2013) or color-coded illumination (Murai et al. 2015; Ido et al. 2003; Malfara et al. 2007; McGregor et al. 2007, 2008; Dennis and Siddiqui 2017; Ruck 2011; Watamura et al. 2013; Xiong et al. 2017; Zibret et al. 2004).

However, such techniques reduce the effective camera sensor resolution or have a low aspect ratio between the \(x,\;y\) and z direction of the reconstructed volume, resulting in relatively low depth resolution or low temporal resolution. The majority of these techniques have an error in the depth position estimation between 3 and 15% with respect to the total reconstructed depth, and the ones with the smallest error sacrifice the size of the volume reconstruction, e.g., using image splitting.

Herein we describe a simple technique using one monochromatic camera and structured illumination to track numerous particles in time in a fully 3D volume of \(60 \times 60 \times 50\;{\mathrm{{mm}}^3}\), achieving 200 levels of depth resolution with an estimated depth position error of approximately 0.5% of the total reconstructed depth in z. The proposed technique does not have the drawback of ghost particles, inherent to current tomographic PIV reconstruction algorithms. Distortions and light divergence are corrected with a calibration using a 3D laser-engraved glass cube.

2 Experimental setup

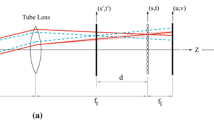

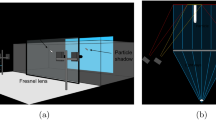

We use a common consumer LCD projector (Epson EX9200 Pro) to illuminate particles seeded in a transparent acrylic tank containing a liquid that is refractive index-matched to BK7 glass. A mixture of silicone-based heat transfer fluids number 510 and 710 is used as the working fluid. The motion of the illuminated particles is recorded by a 5.5 Mpx sCMOS B/W video camera (pco.edge 5.5) with high quantum efficiency, capable of recording 16-bit images. This camera is placed perpendicularly to the projected illumination, as sketched in Fig. 1. We use a Nikkor 25–85 mm macro-lens with the focal length set at 35 mm and the aperture set at F# 16 to bring the entire illuminated volume into focus. We use green light of different intensities from the projector to minimize possible chromatic aberrations due to diffraction from the particle or through the walls of the acrylic container. The illuminated volume for the present experiments was approximately \(60 \times 60 \times 50\;{\mathrm{{mm}}^{3}}\). One of the main advantages of using a projector for the illumination is the flexibility of the structured light, which makes it simple to modify and adjust the illuminated volume size, as well as to control the projected frame rate and intensity patterns. There is, however, a trade-off between the size of the illuminated volume and the brightness recorded from the particles.

2.1 Illumination sequence

The illumination sequence used is shown in Fig. 2. The basic principle is to change the illumination to refine the location of the particles in subsequent video images. Each illumination cycle starts with projecting a single frame of uniform lighting to calibrate the intrinsic brightness of each particle, see Fig. 2a. Subsequently, we include a step-like structured lighting, or linear discrete gradient over the entire depth, to get an approximate depth location (\(L_{10}\)) (ten sectors in this experiment), see Fig. 2b. The following frame is a mirrored image of the previous one to minimize any error, see Fig. 2c. Finally, this is followed by a stack of multiple intensity gradients to refine these locations (\(L_{20}\)) (20 levels for each sector), see Fig. 2d. This image is projected for five subsequent frames. Thus, the projected image sequence allows us to obtain 200 digital depth levels of resolution in z, the direction not visible to the camera. The total length of the projected video sequence is eight frames, allowing for a sequence of five separate accurate depth estimates to follow particle motion. This illumination cycle is repeated in a loop and allows us to continuously track particles in time. We use white polyethylene spherical particles (Cospheric) of size 125–150 \(\upmu\)m and density of 1.25 g/cm\(^3\). This material has a matte surface and allows the particle to scatter enough light to be detected by the camera sensor, while minimizing the contamination of the signal intensity of neighboring particles.
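The bookkeeping of one illumination cycle can be summarized in a few lines. The sketch below is illustrative only: the names are hypothetical, and the 50 mm depth is taken from the illuminated volume quoted in Sect. 2.

```python
# Illustrative bookkeeping for one illumination cycle (all names are hypothetical).
N_SECTORS = 10      # coarse sectors resolved by the step-like frames (L10)
N_FINE = 20         # fine levels resolved inside each sector (L20)
DEPTH_MM = 50.0     # illuminated depth along z, as quoted in Sect. 2

# One cycle: uniform frame, coarse staircase, mirrored staircase, five fine-gradient frames.
CYCLE = ["uniform", "coarse", "coarse_mirrored"] + ["fine"] * 5


def depth_levels():
    """Total number of digital depth levels encoded by one cycle."""
    return N_SECTORS * N_FINE


def sector_thickness_mm():
    """Physical thickness of one coarse sector."""
    return DEPTH_MM / N_SECTORS


def level_thickness_mm():
    """Physical thickness of one fine depth level."""
    return DEPTH_MM / depth_levels()
```

With these numbers, each coarse sector spans 5 mm and each of the 200 digital levels corresponds to a nominal 0.25 mm slab, before any correction for the divergence of the projected light.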

We have not attempted to optimize the illumination sequence and the intensity-gradient structure. There is clearly a plethora of possibilities. Perhaps it is not necessary to repeat the uniform and coarse illumination as often after the first sequence, and a larger number of subsequent fine-gradient structures could be used instead. The depth resolution of particles which loiter around the peaks and troughs of the profiles in Fig. 2d is likely to be less precise than that of the particles in the sharp gradients. This could be addressed by shifting these gradients by half a wavelength in the z-direction between some of the adjacent frames.

3 Calibration

3.1 Light intensity calibration with glass cube and particles

This technique relies on the intensity of light scattered by the particles. Thus, it is crucial to minimize any noise or fluctuation in the observed intensity due to size dispersion, surface roughness or imperfections of the particles. A test of the temporal consistency of the projected light intensity profile is carried out by placing a white matte target at \(45^{\circ }\) between the camera and a projected static continuous intensity gradient (see Figs. S1 and S2). Temporal statistics of the intensity presented in Fig. S1b, show very constant illumination values in time, with a standard deviation \(< \pm 1\)% of the total range of intensities within each gradient. The spatial variations in illumination intensity due to proximity to the projector are taken into consideration when we calibrate the intrinsic light intensity of each particle and study the intensity parameters proposed in Sect. 4.1.

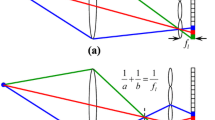

First, a master light curve is produced from the statistics of thousands of particles in the field of view of the camera, with no motion applied to the fluid. A sequence of uniform-intensity 8-bit images is projected to illuminate all the particles. The response signal is recorded with the PCO camera. This is repeated for 20 different intensity levels, from 84 counts to 255, the maximum count of an 8-bit monochromatic image. The lower illumination limit is set by the darkest particle which is still visible, since tracking will fail if particles become as dark as the background. The digital 2D planar pixel coordinates (\(x^{\prime }, y^{\prime }\)) of the particles are obtained using Trackmate (Tinevez et al. 2017), an open-source particle tracking software available in Fiji. Trackmate was originally intended for cell detection and tracking, among other biomedical and biological applications. However, the flexibility and robustness of this program make it a very good choice for 2D particle tracking in a flow field. The main detection algorithm is based on Laplacian of Gaussian segmentation. The particle-linking algorithm is based on the linear assignment problem formulation of Jaqaman et al. (2008). In this way, many of the features of every single particle can be measured, such as the effective particle intensity (\(I_\mathrm{{o}}\)) and the value of the maximum intensity pixel (\(I_\mathrm{{max}}\)) within each particle, for every illumination intensity level. Such parameters are described in further detail in Sect. 3.2. The response signal for each individual particle at all levels is then normalized by the value obtained when the image of 255 counts is projected. The mean response signal (see Sect. 3.2) and a second-degree polynomial fit are presented in Fig. 3, together with the standard deviation. This curve serves primarily to calibrate the projector intensity response, thereby allowing us to determine the depth level (\(z^{\prime }\)) in which the particle is contained using the algorithm described in Sect. 4.1.
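As a minimal sketch of how such a master light curve could be built, the snippet below fits a second-degree polynomial to a normalized response sampled at 20 projected levels between 84 and 255 counts. The response values are synthetic stand-ins, not measured data, and the function name is hypothetical.

```python
import numpy as np


def fit_master_curve(projected_counts, normalized_response, degree=2):
    """Least-squares polynomial fit of normalized response vs. projected counts."""
    coeffs = np.polyfit(projected_counts, normalized_response, degree)
    return np.poly1d(coeffs)


# 20 projected intensity levels from 84 to 255 counts (spacing of 9 counts).
levels = np.linspace(84, 255, 20)

# Synthetic stand-in for the mean particle response, normalized to 1 at 255 counts.
# The quadratic shape is an assumption made only for this illustration.
response = (levels / 255.0) ** 2

curve = fit_master_curve(levels, response)
```

Evaluating `curve(counts)` gives the expected normalized particle response for a given projected level. In practice, the fit would use the mean of the per-particle normalized responses rather than a synthetic curve.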

The particle image density (particles per pixel) in our technique is limited by the capability of the 2D particle tracking algorithm to distinguish individual particles, since we are using single camera images. The images recorded herein have a relatively low particle image density (0.002 ppp) to minimize errors in the particle tracking algorithm at this initial stage. However, the image density can be increased substantially when using advanced 2D PTV algorithms such as the one proposed by Fuchs et al. (2017). In their study, experimental results show an image density of 0.02 ppp for a near-wall turbulent boundary layer. Cowen and Monismith (1997) state that the optimal particle concentration for 2D PTV is between 0.005 and 0.02 ppp. Keep in mind that for the PIV correlation technique \(\sim \;\)6–10 particles are recommended for each interrogation window, which in principle gives a similar number of vectors per volume for PIV and PTV.

With a particle image density of 0.002 ppp, we can get approximately 1200 particles (vectors) at every time step in the whole volume, representing approximately 0.01 vectors/\(\hbox {mm}^3\) in the current setup. There is clearly scope for increasing the particle image density, which will proportionally increase the number of tracks.

3.2 Intensity of isolated particle images

To test the potential of this technique, we first illuminate a slowly moving field of a few particles with a uniform volume illumination. This is used to quantify how constant the scattered light from individual particles remains as their images are shifted over pixel boundaries on the CMOS sensor. Figure 4 shows a sequence of real pixel intensities for a typical particle. It shows clearly how the distribution of intensities spreads among the pixels, making them vary strongly from frame to frame. For this randomly selected particle in Fig. 4, the peak 8-bit pixel intensities take the following values: 176, 188, 170, 183, 187, 175, 187, 186 and 190 varying over a min–max range of \(\simeq 11\)%. The effective particle intensity must therefore be estimated from a weighted integral over the particle area, thereby incorporating both its intensity C and its width \(\sigma\).

The use of a 2D Gaussian profile when studying particle image properties reduces random noise in the sensor, as studied in detail by Massing et al. (2016). Therefore, we fit the intensity profile of each particle in every frame with a 2D Gaussian shape,
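A form consistent with the parameters named in the next paragraph (magnitude \(C\), width \(\sigma\) and subpixel peak location \((x_\mathrm{{o}},y_\mathrm{{o}})\)) is the isotropic Gaussian below. The factor of 2 in the exponent is an assumed convention for the width and is not confirmed by the text.

\[
I(x,y) = C\,\exp \left[ -\frac{(x-x_\mathrm{{o}})^2+(y-y_\mathrm{{o}})^2}{2\sigma ^2}\right]
\]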

This profile is fit by shifting the subpixel peak location \((x_\mathrm{{o}},y_\mathrm{{o}})\) and adjusting the magnitude C and width \(\sigma\). The least-squares best fit only uses pixels around the peak, where the intensity exceeds a certain threshold. Figure 5 shows these best fits for C and \(\sigma\) following this particle over a number of subsequent video frames. As expected, the peak intensity and the width are anti-correlated. However, empirically we find the best intensity estimate by combining the central intensity and the image width as

\[
I_\mathrm{{o}} = C\sigma ^{3/2}.
\]

Repeated tests show that the variation of this quantity is within bounds of \(\pm \;\)2%. This parameter helps us to determine the intrinsic brightness of every particle, regardless of its location or size, both of which produce variations in the intensity of the recorded pixels at identical illumination intensities (more details on this process are presented in Sect. 4.1). A variation of \(\pm \;\)2% corresponds to a band of about 4% of the full intensity range, so roughly 25 intensity levels can be separated within each gradient. Theoretically, with ten sub-gradients of the illumination intensity, we should therefore be able to distinguish 250 depth levels for the particles.
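The fit and the combined quantity \(C\sigma ^{3/2}\) plotted in Fig. 5 can be illustrated with a short sketch. It swaps in a linear least-squares fit of the logarithm of the pixel intensities, which is a simple stand-in and not necessarily the fitting procedure used in the experiments. The function name, the threshold value and the width convention (a factor of 2 in the exponent) are assumptions.

```python
import numpy as np


def fit_gaussian_spot(patch, threshold):
    """Fit I = C*exp(-((x-xo)^2 + (y-yo)^2) / (2*sigma^2)) to pixels above threshold.

    Linearizes the problem by fitting log(I) = a + b*x + c*y + d*(x^2 + y^2).
    The threshold must be positive so that the logarithm is defined.
    """
    ys, xs = np.nonzero(patch > threshold)          # row (y) and column (x) indices
    vals = patch[ys, xs].astype(float)
    A = np.column_stack([np.ones_like(vals), xs, ys, xs**2 + ys**2])
    a, b, c, d = np.linalg.lstsq(A, np.log(vals), rcond=None)[0]
    sigma = np.sqrt(-1.0 / (2.0 * d))               # d = -1 / (2*sigma^2)
    xo, yo = -b / (2.0 * d), -c / (2.0 * d)         # subpixel peak location
    C = np.exp(a - d * (xo**2 + yo**2))             # peak magnitude
    return C, sigma, xo, yo


# Synthetic, noise-free particle image used only to exercise the fit.
yy, xx = np.mgrid[0:9, 0:9]
patch = 180.0 * np.exp(-((xx - 4.3) ** 2 + (yy - 3.8) ** 2) / (2 * 1.6**2))

C, sigma, xo, yo = fit_gaussian_spot(patch, threshold=20.0)
I_o = C * sigma**1.5   # combined intensity estimate, C * sigma^(3/2)
```

On noisy images the log-linear fit weights faint pixels too heavily, so a nonlinear least-squares fit on the raw intensities would be the more careful choice. A different width convention only rescales \(I_\mathrm{{o}}\) by a constant, which cancels after normalization.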

Fig. 5 The best fit values of C, \(\sigma\) and \(C\sigma ^{3/2}\) for the particle in Fig. 4 over nine consecutive frames with uniform illumination. The values are normalized by their corresponding averages

3.3 The 3D calibration cube

Here we describe the correction for the projector light divergence and perspective distortion. To calibrate the real space coordinates (\(x,\;y,\;z\)) from the projected frames and correct lens distortions, a 3D laser-engraved calibration cube of \(80 \times 80\times 80\;{\mathrm{{mm}}^{3}}\) was designed in-house. The 3D laser engraving of glass is a well-known industrial process used for the production of trophies, awards and souvenirs. A high-power laser produces micro-cracks in a structured way inside the glass, at the location where the focal point of the laser is placed. The material of the cube used herein is BK7 optical glass, which has a refractive index of 1.519 at \(20\;^{\circ }\mathrm{{C}}\) and a wavelength of 527 nm (SCHOTT glass AG). We take advantage of this process to produce a 3D array of micro-cracks in ten different planes, containing 110 micro-cracks each. Each plane is rotated with respect to the previous one by \({32.7^{\circ }}\) to ensure that no crack blocks the illumination from the projector or the view from the camera of any other crack. This allows us to simulate a static particle field of 1100 particles, as shown in Fig. 6.

Fig. 7 a Typical frame from the PCO camera of the calibration cube illuminated by a uniform intensity frame. Note the different intensity levels for each particle. b XY view of the cube reconstruction. c XZ (top view) of the cube reconstruction. Note the volume distortion due to the projection divergence. d 3D view of the reconstructed cube, particles are colored by their depth position (\(z^{\prime }\))

A light-intensity calibration (described in Sect. 3.1) is applied using the same 20 intensity levels to obtain the digital depth position (\(z^{\prime }\)), implementing the algorithm described in Sect. 4.1. The digital coordinates (\(x^{\prime },\;y^{\prime }\)) of the particles in the cube are obtained with subpixel accuracy using Trackmate. Subsequently, the video sequence with multiple frames and gradients (see Fig. 2) is projected onto the static cube in the same way as for the real particle field. Both calibrations (light intensity and spatial calibration) allow us to reconstruct the 3D pattern of the simulated particles in the cube, see Fig. 7. Subsequently, a 3D mapping function is obtained to correct distortions and connect the digital space coordinates (\(x^{\prime },\;y^{\prime },\;z^{\prime }\)) to real space coordinates (\(x,\;y,\;z\)) as described in Sect. 4.2.

The 3D calibration glass cube used herein represents a simple approach for spatial mapping and for correcting illumination and imaging distortions, i.e., achieving a “set-and-shoot” solution. However, the need for a refractive index-matched liquid can be a severe hindrance. When such a liquid cannot be used, one can instead use a planar dotted calibration target placed at \({45^{\circ }}\) between the projector and the camera, translating it across the reconstructed volume to produce the 3D mapping functions. This process and algorithm will be more complex and could be a significant source of error.

4 Computational algorithm

4.1 Obtaining the depth position \(z^{\prime }\)

To obtain the depth position \(z^{\prime }\) for every particle, it is necessary to analyze its recorded intensity in space and time. For the current setup, the particles are not of uniform size, having a range \(\sim \;\) 4–8 pixels in diameter. Therefore, we compare three different parameters within each particle: maximum pixel intensity (\(I_{\mathrm{{max}}}\)), Gaussian-weighted average intensity (\(I_\mathrm{{w}}\)) and the effective particle intensity (\(I_\mathrm{{o}}\)) defined in Sect. 3.2.

First, these parameters are normalized with the maximum value of each illumination cycle. This allows us to quantify the intrinsic intensity for every single particle during multiple illumination cycles and thereby deduce the corresponding depth position. A plot comparing the statistical dispersion of the above parameters in a light intensity calibration, expressed as the mean absolute deviation (MAD), is presented in Fig. 8. The MAD is defined as

\[
\mathrm{{MAD}} = \frac{1}{N}\sum _{i=1}^{N}\left| I_i-{\overline{I}}\right| ,
\]

where N is the number of sampled particles, I is the parameter being studied (\(I_{\mathrm{{max}}}\), \(I_\mathrm{{w}}\) or \(I_\mathrm{{o}}\)) and \({\overline{I}}\) is the mean of that parameter. This measure of variability is used because it is resilient to outlier data points and gives more weight to the data points closer to the fit, making it a robust way to identify the parameter with the smallest dispersion.
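The mean absolute deviation, read here as the average of \(|I_i-{\overline{I}}|\) over the N sampled particles, can be computed as in the following minimal sketch. The function name and the sample values are hypothetical.

```python
def mean_absolute_deviation(values):
    """Average absolute distance of each sample from the sample mean."""
    n = len(values)
    mean = sum(values) / n
    return sum(abs(v - mean) for v in values) / n


# Hypothetical normalized intensities of four particles at one illumination level.
samples = [1.0, 2.0, 3.0, 4.0]
mad = mean_absolute_deviation(samples)
```

Because deviations enter linearly rather than squared, a single outlying particle shifts this measure less than it would shift a standard deviation.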

From Fig. 8, we can clearly see that \(I_\mathrm{{o}}\) presents the lowest dispersion values. Therefore, \(I_\mathrm{{o}}\) is the parameter used in the remainder of the analysis, providing a low-error reconstruction. Hence, a master light curve using \(I_\mathrm{{o}}\) is obtained as described in Sect. 3.1.

We now describe the general algorithm used to determine the depth \(z^{\prime }\) of each particle. A flowchart summarizing the process is provided in the Supplemental materials.

Each particle is assigned to a bin depending on its \(I_\mathrm{{o}}\) value at every projected frame. Such bins are created based on the theoretical camera response obtained from the light calibration curve shown in Fig. 3. In the first video frame (Fig. 2a), a solid green color is projected to calibrate the intrinsic intensity of the particle; thus, it does not require a bin. For the second video frame (Fig. 2b), ten equally distributed bins are created with their midpoints (\(I_{\mathrm{{mid}}}\)) running from 84 to 255 intensity counts in steps (\(2 \delta _\mathrm{{s}}\)) of 18 counts, after normalization by the maximum intensity of an 8-bit image (255 counts). The upper and lower limits are defined by \({{L_{im}}}=I_{\mathrm{{mid}}}\pm \delta _\mathrm{{s}}\). The third projected frame (Fig. 2c) is a mirrored version of frame 2. Frames 4–8 (Fig. 2d) consist of 20 bins (84–255 counts) with \(2\delta _\mathrm{{s}}=9\) counts.

Every particle is then allocated to the corresponding depth bin (\(L_{10}\) for projected frames 2 and 3 and \(L_{20}\) for projected frames 4–8) at every recorded video frame by evaluating the master light curve (see Sect. 3.1) with the detected \(I_\mathrm{{o}}\).
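The bin allocation can be sketched as follows, assuming the detected \(I_\mathrm{{o}}\) has already been converted to an equivalent projected intensity in counts through the master light curve. The function name is hypothetical, and the sketch works directly in counts rather than in values normalized by 255.

```python
import math


def assign_bin(counts, n_bins, step, first_mid=84.0):
    """Return the 0-based bin whose limits I_mid +/- step/2 contain `counts`.

    Bin midpoints are first_mid, first_mid + step, ...; returns None when the
    value falls outside all n_bins bins.
    """
    half = step / 2.0
    index = math.floor((counts - (first_mid - half)) / step)
    return index if 0 <= index < n_bins else None


# Fine frames: 20 bins with a spacing of 9 counts (midpoints 84, 93, ..., 255).
fine_low = assign_bin(84.0, n_bins=20, step=9.0)
fine_high = assign_bin(255.0, n_bins=20, step=9.0)

# Coarse frames: 10 bins with a spacing of 18 counts.
coarse = assign_bin(102.0, n_bins=10, step=18.0)
```

For the mirrored coarse frame (Fig. 2c) the resulting index would need to be reversed before it is compared with the index from Fig. 2b.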

It is important to mention that LCD projectors have a transition time between projected frames. Therefore, because the camera and the projector are not synchronized, a single transition frame is periodically recorded by the PCO camera for every projected frame. This frame is discarded for the depth estimation; however, the 2D information of the particles in those frames is still evaluated. In future implementations, this transition frame can be eliminated with appropriate synchronization and exposure timing.

One of the main advantages of oversampling the projected video frames (four frames in the recorded video at 60 fps represent one projected frame at 15 fps for this iteration) is that we can use temporal statistics for correcting spurious depth estimations in \(L_{10}\) and \(L_{20}\). These spurious depth estimations are mainly due to overlapping particles, as seen in Fig. 9, where clear deviations occur intermittently when the tracking jumps to erroneous particles. To fully define the digital depth position \(z^{\prime }\) at any time, it is necessary to define \(L_{10}\) and \(L_{20}\) for every particle at every time. Therefore, if the statistical mode frequency of \(L_{10}\) from projected frames 2 and 3 is \(\mathrm{{Mo}}(L_{10})\ge 4\), \(L_{10}\) is defined during that illumination cycle. Additionally, if \(\mathrm{{max}}(L_{20})-\mathrm{{min}}(L_{20})<10\), i.e., when the particle stays in the same \(L_{10}\) bin, \(L_{20}\) is defined and the digital depth (\(z^{\prime }\)) can be initially estimated for that particle and illumination cycle. This is valid for particles which do not have very high velocities in the depth direction (\(z^{\prime }\)).

However, if \(\mathrm{{max}}(L_{20})-\mathrm{{min}}(L_{20})\ge 10\) for a single illumination cycle, it is assumed that the particle has crossed the boundary of a bin in \(L_{10}\). Therefore, to establish the depth in those cases, it is necessary to look into the last and first few frame levels (\(L_{10}\) and \(L_{20}\)) from the previous and next illumination cycle, respectively. The linkage of temporal information allows us to define most of the remaining depth positions for every particle.
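The per-cycle decision rule can be sketched as below. The function name is hypothetical. The way the coarse and fine levels are combined, \(z^{\prime }=20\,L_{10}+L_{20}\), is an assumption that gives 200 levels and presumes the fine levels increase in the same direction in every sector. The \(L_{10}\) samples from the mirrored frame are assumed to have been un-mirrored beforehand.

```python
from collections import Counter


def resolve_cycle(l10_samples, l20_samples, levels_per_sector=20):
    """Return per-frame z' estimates for one cycle, or None if undecided.

    None means the neighboring illumination cycles must be consulted.
    """
    if not l10_samples or not l20_samples:
        return None
    sector, frequency = Counter(l10_samples).most_common(1)[0]
    if frequency < 4:
        return None   # the coarse sector is not established in this cycle
    if max(l20_samples) - min(l20_samples) >= 10:
        return None   # the particle probably crossed a sector boundary
    # Assumed combination of coarse sector and fine level into one depth index.
    return [sector * levels_per_sector + fine for fine in l20_samples]


steady = resolve_cycle([3, 3, 3, 3, 2, 3], [5, 5, 6, 6, 7])
unsettled = resolve_cycle([3, 3, 2, 2, 1, 4], [5, 6])
crossing = resolve_cycle([3] * 6, [1, 2, 18])
```

Cycles that return `None` are the ones that need the levels from the previous and next illumination cycles, as described above.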

Furthermore, the initial estimation of the \(z^{\prime }\) component of the particle trajectories is refined by a bisquare-weighted least-squares fit \(z^{\prime }=f(t)\). This iterative fitting method assigns smaller weights to the positions that are far away from the original fit. The quadratic polynomial fit is obtained for every single particle. A comparison of a few particle trajectories with the quadratic polynomial fit is shown in Fig. 9. The few outliers do not significantly distort the true trajectory.
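A bisquare-weighted fit of this kind can be sketched with iteratively reweighted least squares. The tuning constant 4.685 and the residual scale estimate (median absolute residual divided by 0.6745) are common defaults assumed here, not values taken from the text, and the trajectory is synthetic.

```python
import numpy as np


def bisquare_polyfit(t, z, degree=2, tuning=4.685, iterations=20):
    """Polynomial fit with Tukey bisquare weights (iteratively reweighted)."""
    t = np.asarray(t, dtype=float)
    z = np.asarray(z, dtype=float)
    weights = np.ones_like(z)
    coeffs = np.polyfit(t, z, degree)              # ordinary least squares start
    for _ in range(iterations):
        residuals = z - np.polyval(coeffs, t)
        scale = np.median(np.abs(residuals)) / 0.6745
        if scale < 1e-12:                          # already an essentially exact fit
            break
        u = residuals / (tuning * scale)
        weights = np.where(np.abs(u) < 1.0, (1.0 - u**2) ** 2, 0.0)
        # np.polyfit minimizes sum((w * residual)^2), so pass sqrt of the weights.
        coeffs = np.polyfit(t, z, degree, w=np.sqrt(weights))
    return coeffs, weights


# Synthetic depth track with one spurious jump, used only to exercise the fit.
t = np.arange(20.0)
true_z = 100.0 + 2.0 * t - 0.05 * t**2
z = true_z + 0.1 * np.sin(3.0 * t)
z[7] += 40.0

coeffs, weights = bisquare_polyfit(t, z)
plain = np.polyfit(t, z, 2)
```

In this example the jump at index 7 receives zero weight, so the robust fit stays close to the underlying trajectory while the plain least-squares fit is pulled toward the outlier.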

Particles moving into the test volume, during the frames with the finest intensity gradients, can be dealt with by tracking them backwards in time, starting from subsequent uniform lighting.

4.2 Mapping functions

Here we describe the mapping from digital space (\(x^{\prime },\;y^{\prime },\;z^{\prime }\)) to real space coordinates (\(x,\;y,\;z\)). Using the data collected in Sect. 3.3, it is observed that the reconstructed cube has distortions due to the divergence of the illumination and lens aberrations (see Fig. 7c). Therefore, we can link the digital coordinates with the real space using the known coordinates of the particle field of the cube. It is assumed that the real space coordinate \(z=f(x^{\prime },\;z^{\prime })\), since the projected light is vertical and the projected pattern does not vary along the vertical axis y. The mapping function is obtained by a bisquare-weighted polynomial 3D surface fit of degree 2 in \(x^{\prime }\) and \(z^{\prime }\). The polynomial model is presented in Eq. S4 in the Supplementary Information. A 4D non-linear regression fit is used for mapping \(x=f(x^{\prime },\;y^{\prime },\;z)\) and \(y=f(x^{\prime },\;y^{\prime },\;z)\) as specified in the Supplementary Information Eqs. S5 and S6.
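The depth mapping can be illustrated with a second-degree polynomial surface in \(x^{\prime }\) and \(z^{\prime }\). Since Eq. S4 is not reproduced here, the full six-term quadratic below is an assumed model, the fit is an ordinary (unweighted) least-squares fit rather than the bisquare-weighted one, and the calibration points are synthetic.

```python
import numpy as np


def design_matrix(xp, zp):
    """Columns of a full quadratic surface in x' and z' (assumed model)."""
    xp = np.asarray(xp, dtype=float)
    zp = np.asarray(zp, dtype=float)
    return np.column_stack([np.ones_like(xp), xp, zp, xp**2, xp * zp, zp**2])


def fit_depth_map(xp, zp, z_true):
    """Least-squares coefficients of z = f(x', z')."""
    target = np.asarray(z_true, dtype=float)
    coeffs, *_ = np.linalg.lstsq(design_matrix(xp, zp), target, rcond=None)
    return coeffs


def apply_depth_map(coeffs, xp, zp):
    """Evaluate the fitted surface at digital coordinates (x', z')."""
    return design_matrix(xp, zp) @ coeffs


# Synthetic calibration points: depth scale grows slightly with x' (divergence).
grid_x, grid_z = np.meshgrid(np.linspace(0, 2000, 11), np.linspace(0, 199, 10))
xp, zp = grid_x.ravel(), grid_z.ravel()
z_true = 0.25 * zp * (1.0 + 1e-4 * xp)

coeffs = fit_depth_map(xp, zp, z_true)
z_mapped = apply_depth_map(coeffs, xp, zp)
```

In practice the known crack coordinates of the calibration cube would play the role of `z_true`, and robust weights would be added to the least-squares step to suppress misdetected points.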

The polynomial coefficients and the fidelity of fit information of the mapping functions are summarized in Table S1 in Supplementary Information. A flowchart summarizing the algorithm process for the 3D reconstruction is presented in Online Supplemental Materials Fig. S3.

After applying the mapping functions in the cube (see Fig. 10), we find that the error in the 3D reconstruction of the cube has an \(|\mathrm{{RMS}}_\mathrm{{e}}|=0.273\) mm, where the depth component of the error (\(e_z\)) is the greatest component with \(\mathrm{{RMS}}_{e_{z}}=0.271\) mm. These error estimation values are obtained from the goodness of fit of the mapping functions, where the function \(z(x^{\prime },\;z^{\prime })\) is obtained from the known coordinates of 1000 detected particles from the calibration cube. This provides simultaneously an error estimation of the detected depth (\(z^{\prime }\)). This value represents approximately 0.5% of the 50 mm depth from the reconstructed volume.

5 Tracking results

5.1 Experiments in a rotational flow

We produce a rotational flow in a tank full of the heat transfer fluid mix with a disk attached to a controllable speed motor, as depicted in Fig. 11. This flow field is usually referred to as the von Kármán pump. The liquid is seeded with white polyethylene particles. The acrylic tank of \(120\times 120\times 250\;{\mathrm{{mm}}^{3}}\) is filled with a mixture of heat transfer fluid 510 and 710. The refractive index of the mixture is 1.515 measured at 22 \(^\circ\)C. This is to match the refractive index of the BK7 glass cube used in the calibration.

First, the light intensity calibration is carried out as described in Sect. 3.1. The motion of the particles is then tracked using the Trackmate software, providing us with the 2D digital coordinates (\(x^{\prime },\;y^{\prime }\)) with subpixel accuracy, as shown in Fig. 12. Spatial calibration using the glass calibration cube is carried out as described in Sect. 3.3.

The disk is rotated at 60 rpm and the 2D trajectories in time allow us to obtain the intensity profile for every particle in each recorded frame. Thus, using the algorithm described in Sect. 4.1, we can obtain the corresponding depth position \(z^{\prime }\). Spurious 3D reconstructions are filtered out by keeping only the trajectories whose \(z^{\prime }(t)\) polynomial fit, described at the end of Sect. 4.1, has \(R^2>0.9\). Subsequently, the mapping function defined in Sect. 4.2 is applied to the digital coordinates (\(x^{\prime },\;y^{\prime },\;z^{\prime }\)) to transform them to the real world coordinates (\(x,\;y,\;z\)). Pathlines of 960 unique particles colored by their velocity magnitude are presented in Fig. 13a–d. The corresponding velocity histograms for \(u,\;v\) and w are presented in Fig. 14, showing the statistical “smoothness” of the results in light of the multi-scale reconstruction steps. An animated video of the 3D pathlines is presented in the Supplemental Material.

6 Concluding remarks

Herein, we have demonstrated the implementation of intensity 3D PTV with a single monochromatic sCMOS video camera and a consumer-grade LCD projector as a light source. We reconstruct and track particles in 3D inside a liquid by structuring the projected light with numerous depth gradients in intensity. This new methodology dramatically increases the depth resolution of previous single camera 3D PTV systems, up to 200 levels, with a low error in the 3D depth position estimation of \(\sim \;0.5\%\) of the total depth, while increasing the reconstructed volume size and at the same time minimizing the complexity of the hardware setup.

One of the drawbacks of the proposed technique is the need for optical access for whole volume illumination from the projector, perpendicular to the camera. This may limit the complexity of the possible geometries to be studied. However, this might be overcome by taking advantage of the flexibility in the projected illumination pattern (temporally and spatially), and designing the structured light for specific problems. For example, one could adjust the incoming light to correct for aberrations from the container shape.

In the current implementation, the main limitation is the frame rate of the projected patterns (15 fps for the current setup). We estimate that the maximum velocity of the particles in the depth direction z could be \(w \le 37\) mm/s in the current configuration. This is to ensure that the particle does not travel through more than one complete sector \(L_{10} = 5\) mm between two frames of the early part of the illumination cycle. However, the projector used herein is capable of a 60 fps refresh rate. With perfect synchronization of the camera and the projector, we could therefore, in principle, obtain four times higher depth velocity (\(w\le 150\) mm/s) with the current hardware.
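The quoted limits are consistent with a particle being allowed to cross at most one 5 mm sector over the time spanned by two projected frames. This reading is an interpretation that reproduces the numbers, not a derivation given in the text. The function name below is hypothetical.

```python
def max_depth_velocity(sector_mm, projected_fps, frames_spanned=2):
    """Largest depth velocity (mm/s) that keeps travel within one sector.

    Assumes the particle may cross at most `sector_mm` during
    `frames_spanned` projected frames (an interpretation of the quoted limits).
    """
    return sector_mm * projected_fps / frames_spanned


w_current = max_depth_velocity(5.0, 15.0)    # projector pattern rate used here
w_possible = max_depth_velocity(5.0, 60.0)   # full projector refresh rate
```

This gives 37.5 mm/s at 15 fps and 150 mm/s at 60 fps, matching the factor of four stated above.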

Conceptually, the present technique is very simple and, in principle, using a fast high-sensitivity low-noise camera, one could achieve finer depth levels than the in-plane pixel spacing. This will, however, depend more on the quality of the structured light than on the sensor sensitivity.

It remains to optimize the projected illumination pattern and frame sequence. Numerous possibilities can be tested. In principle, one only needs to project the uniform lighting (Fig. 2a) and coarse levels (Fig. 2c) when new particles enter the volume. We can certainly repeat the fine gradients (Fig. 2d) over longer sequences of frames. While this would complicate the tracking algorithm, it might help to track forwards and backwards in time from the latest reference illuminations.

A reviewer has suggested that the use of a typical color camera with a Bayer filter array could allow us to combine some of the frames of the illumination sequence, shown in Fig. 2, in the different color fields. For example, by combining a red frame Fig. 2a, a blue frame Fig. 2c and a green frame Fig. 2d, one can code the intrinsic particle intensity and rough depth location in the red and blue, while retaining a more refined depth location in the green pixels. This would of course reduce the in-plane spatial resolution, while reducing the needed number of frames in the sequence to only one, thereby greatly increasing the temporal resolution. However, when using chromatic illumination, one has to consider carefully the effects of color cross-talk, errors from pixel demosaicing and chromatic aberrations (Aguirre-Pablo et al. 2017). Even when using the RAW format, the disparate spatial samplings of the Gaussian particle images could introduce large errors. This could be overcome with a three-chip color camera, but that would require a much more expensive camera and would also violate the spirit of our single-sensor technique.

Fig. 14 Histograms of the different components of velocity a u, b v and c w. The u and v components in the sensor plane are given in pixel shifts over the time step, while the depth velocity is given in the units of mm/s. The u and w velocity components in the horizontal plane are close to symmetric, while the vertical v component is predominantly upwards, driven by the von Kármán pump. Keep in mind that the y coordinate points downwards and \(\Delta t=\) 1/60 s

This inexpensive technique will enable industrial, scientific and educational institutions to experimentally study the 3D structure of fluid flows for energy, biological, engineering and medical applications.

References

Aguirre-Pablo AA, Alarfaj MK, Li EQ, Hernández-Sánchez JF, Thoroddsen ST (2017) Tomographic particle image velocimetry using smartphones and colored shadows. Sci Rep 7(1):3714

Casey TA, Sakakibara J, Thoroddsen ST (2013) Scanning tomographic particle image velocimetry applied to a turbulent jet. Phys Fluids 25(2):025102

Cenedese A, Cenedese C, Furia F, Marchetti M, Moroni M, Shindler L (2012) 3D particle reconstruction using light field imaging. In: International symposium on applications of laser techniques to fluid mechanics

Cierpka C, Kähler CJ (2012) Particle imaging techniques for volumetric three-component (3D3C) velocity measurements in microfluidics. J Vis 15(1):1–31

Cierpka C, Segura R, Hain R, Kähler CJ (2010) A simple single camera 3C3D velocity measurement technique without errors due to depth of correlation and spatial averaging for microfluidics. Meas Sci Technol 21(4):045401

Cowen E, Monismith S (1997) A hybrid digital particle tracking velocimetry technique. Exp Fluids 22(3):199–211

Dennis K, Siddiqui K (2017) A multicolor grid technique for volumetric velocity measurements. In: ASME 2017 fluids engineering division summer meeting. American Society of Mechanical Engineers, pp V01BT06A020

Elsinga GE, Scarano F, Wieneke B, van Oudheusden BW (2006) Tomographic particle image velocimetry. Exp Fluids 41(6):933–947

Fuchs T, Hain R, Kähler CJ (2017) Non-iterative double-frame 2D/3D particle tracking velocimetry. Exp Fluids 58(9):119

Gao Q, Wang HP, Wang JJ (2012) A single camera volumetric particle image velocimetry and its application. Sci China Technol Sci 55(9):2501–2510

Hain R, Kähler CJ (2006) 3D3C time-resolved measurements with a single camera using optical aberrations. In: 13th Int. Symp. on applications of laser techniques to fluid mechanics, vol 136

Hoyer K, Holzner M, Lüthi B, Guala M, Liberzon A, Kinzelbach W (2005) 3D scanning particle tracking velocimetry. Exp Fluids 39(5):923

Ido T, Shimizu H, Nakajima Y, Ishikawa M, Murai Y, Yamamoto F (2003) Single-camera 3-D particle tracking velocimetry using liquid crystal image projector. In: ASME/JSME 2003 4th joint fluids summer engineering conference. American Society of Mechanical Engineers, pp 2257–2263

Jaqaman K, Loerke D, Mettlen M, Kuwata H, Grinstein S, Schmid SL, Danuser G (2008) Robust single-particle tracking in live-cell time-lapse sequences. Nat Methods 5(8):695

Kreizer M, Liberzon A (2011) Three-dimensional particle tracking method using FPGA-based real-time image processing and four-view image splitter. Exp Fluids 50(3):613–620

Maekawa A, Sakakibara J (2018) Development of multiple-eye PIV using mirror array. Meas Sci Technol 29:064011

Malfara R, Bailly Y, Prenel J-P, Cudel C (2007) Evaluation of the rainbow volumic velocimetry (RVV) process by synthetic images. J Flow Vis Image Process 14(1):1–15

Massing J, Kaden D, Kähler C, Cierpka C (2016) Luminescent two-color tracer particles for simultaneous velocity and temperature measurements in microfluidics. Meas Sci Technol 27(11):115301

McGregor TJ, Spence DJ, Coutts DW (2007) Laser-based volumetric colour-coded three-dimensional particle velocimetry. Opt Lasers Eng 45(8):882–889

McGregor TJ, Spence DJ, Coutts DW (2008) Laser-based volumetric flow visualization by digital color imaging of a spectrally coded volume. Rev Sci Instrum 79(1):013710

Murai Y, Yonezawa N, Oishi Y, Tasaka Y, Yumoto T (2015) Color particle image velocimetry improved by decomposition of RGB distribution integrated in depth direction. In: ASME/JSME/KSME 2015 joint fluids engineering conference. American Society of Mechanical Engineers, pp V01AT20A003

Pereira F, Modarress D, Gharib M, Dabiri D, Jeon D (1998) Aperture coded camera for three dimensional imaging. US Patent 7006132

Peterson K, Regaard B, Heinemann S, Sick V (2012) Single-camera, three-dimensional particle tracking velocimetry. Opt Exp 20(8):9031–9037

Rice BE, McKenzie JA, Peltier SJ, Combs CS, Thurow BS, Clifford CJ, Johnson K (2018) Comparison of 4-camera tomographic PIV and single-camera plenoptic PIV. In: 2018 AIAA aerospace sciences meeting, pp 2036

Rohaly J, Hart DP (2006) Monocular three-dimensional imaging. US Patent 8675291

Ruck B (2011) Colour-coded tomography in fluid mechanics. Opt Laser Technol 43(2):375–380

Schanz D, Gesemann S, Schröder A (2016) Shake-the-box: Lagrangian particle tracking at high particle image densities. Exp Fluids 57(5):70

Shi S, Ding J, Atkinson C, Soria J, New TH (2018) A detailed comparison of single-camera light-field PIV and tomographic PIV. Exp Fluids 59(3):46

Skupsch C, Brücker C (2013) Multiple-plane particle image velocimetry using a light-field camera. Opt Exp 21(2):1726–1740

Tien WH, Dabiri D, Hove JR (2014) Color-coded three-dimensional micro particle tracking velocimetry and application to micro backward-facing step flows. Exp Fluids 55:1684

Tinevez J-Y, Perry N, Schindelin J, Hoopes GM, Reynolds GD, Laplantine E, Bednarek SY, Shorte SL, Eliceiri KW (2017) TrackMate: an open and extensible platform for single-particle tracking. Methods 115:80–90

Toprak E, Balci H, Blehm BH, Selvin PR (2007) Three-dimensional particle tracking via bifocal imaging. Nano Lett 7(7):2043–2045

Watamura T, Tasaka Y, Murai Y (2013) LCD-projector-based 3D color PTV. Exp Thermal Fluid Sci 47:68–80

Westerweel J, Elsinga GE, Adrian RJ (2013) Particle image velocimetry for complex and turbulent flows. Ann Rev Fluid Mech 45:409–436

Willert C, Gharib M (1992) Three-dimensional particle imaging with a single camera. Exp Fluids 12(6):353–358

Wu M, Roberts JW, Buckley M (2005) Three-dimensional fluorescent particle tracking at micron-scale using a single camera. Exp Fluids 38(4):461–465

Xiong J, Idoughi R, Aguirre-Pablo AA, Aljedaani AB, Dun X, Fu Q, Thoroddsen ST, Heidrich W (2017) Rainbow particle imaging velocimetry for dense 3D fluid velocity imaging. ACM Trans Graph (TOG) 36(4):36

Zibret D, Bailly Y, Prenel J-P, Malfara R, Cudel C (2004) 3D flow investigations by rainbow volumic velocimetry (RVV): recent progress. J Flow Vis Image Process 11(3):223–238

Acknowledgements

This study was supported by King Abdullah University of Science and Technology (KAUST) under Grant no. URF/1/2621-01-01.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Aguirre-Pablo, A.A., Aljedaani, A.B., Xiong, J. et al. Single-camera 3D PTV using particle intensities and structured light. Exp Fluids 60, 25 (2019). https://doi.org/10.1007/s00348-018-2660-7

DOI: https://doi.org/10.1007/s00348-018-2660-7