Abstract

Objectives

The assessment of lumbar central canal stenosis (LCCS) is crucial for diagnosing and planning treatment for patients with low back pain and neurogenic pain. However, manual assessment methods are time-consuming, variable, and require axial MRIs. The aim of this study is to develop and validate an AI-based model that automatically classifies LCCS using sagittal T2-weighted MRIs.

Methods

A pre-existing 3D AI algorithm was utilized to segment the spinal canal and intervertebral discs (IVDs), enabling quantitative measurements at each IVD level. Four musculoskeletal radiologists graded 683 IVD levels from 186 LCCS patients using the 4-class Lee grading system. A second consensus reading was conducted by readers 1 and 2, which, along with automatic measurements, formed the training dataset for a multiclass (grade 0–3) and binary (grade 0–1 vs. 2–3) random forest classifier with tenfold cross-validation.

Results

The multiclass model achieved a Cohen’s weighted kappa of 0.86 (95% CI: 0.82–0.90), comparable to readers 3 and 4 with 0.85 (95% CI: 0.80–0.89) and 0.73 (95% CI: 0.68–0.79), respectively. The binary model demonstrated an AUC of 0.98 (95% CI: 0.97–0.99), sensitivity of 93% (95% CI: 91–96%), and specificity of 91% (95% CI: 87–95%). In comparison, readers 3 and 4 achieved specificities of 98% and 99% and sensitivities of 74% and 54%, respectively.

Conclusion

Both the multiclass and binary models, while only using sagittal MR images, perform on par with experienced radiologists who also had access to axial sequences. This underscores the potential of this novel algorithm in enhancing diagnostic accuracy and efficiency in medical imaging.

Key Points

Question How can the classification of lumbar central canal stenosis (LCCS) be made more efficient?

Findings Multiclass and binary AI models, using only sagittal MR images, performed on par with experienced radiologists who also had access to axial sequences.

Clinical relevance Our AI algorithm accurately classifies LCCS from sagittal MRI, matching experienced radiologists. This study offers a promising tool for automated LCCS assessment from sagittal T2 MRI, potentially reducing the reliance on additional axial imaging.


Introduction

Lumbar central canal stenosis (LCCS) is a condition where the spinal canal is narrowed, resulting in compression of the dural sac, possibly causing low back pain and radiating pain [1,2,3]. It has a prevalence of 11% in the general population and is most often observed in elderly patients [4, 5]. Magnetic resonance imaging (MRI) is primarily used to diagnose LCCS since it provides excellent soft tissue contrast, enabling a clear distinction of the intervertebral discs (IVDs), nerves, and the osseous spinal canal [6]. Grading systems such as the Lee and Schizas systems have been developed to classify the severity of LCCS consistently and reliably [7,8,9,10]. Alternatively, several studies have proposed quantitative diagnostic criteria with cutoff values for different severities of LCCS [11]. Quantitative measurements provide a more objective approach to classifying LCCS and reduce ambiguity. However, manual quantitative measurements are time-consuming and are, therefore, rarely used in routine clinical practice [7].

Despite its efficacy in diagnosing LCCS, MRI has its limitations. Notably, MRI is expensive and time-consuming, often requiring multiple sequences to obtain axial images at different vertebral levels, orthogonal to the spinal canal. This can lead to situations where certain vertebral levels lack axial images or where the images obtained are not precisely parallel to the IVD. Consequently, developing an automatic grading system that relies solely on sagittal images for evaluating LCCS across all levels would be beneficial. Such a system would streamline the diagnostic process by automatically measuring LCCS, which reduces reporting time while ensuring objective and consistent evaluation of the condition’s severity.

Several deep learning systems have been developed for grading LCCS automatically, using methods such as convolutional neural networks [12,13,14,15,16]. However, employing neural networks for LCCS classification poses challenges in terms of interpretability and explainability of predictions. Integrating additional automatic quantitative measurements could provide further insight into the classification process. A recent study by Bharadwaj et al addressed this issue by developing a decision tree classifier to predict LCCS classifications, using the ratio between the cross-sectional areas of the dural sac and the IVDs [17]. However, this classifier solely relied on one novel metric, overlooking other conventional metrics with established correlations to LCCS, such as the dural sac AP-diameter and cerebrospinal fluid (CSF) signal loss [8, 18]. Incorporating these metrics could enhance the classifier’s robustness and comprehensiveness, offering a better understanding of LCCS severity and supporting clinical decision-making.

The purpose of this study was to develop and validate a novel algorithm that classifies LCCS. This algorithm automatically extracts quantitative metrics related to LCCS based on 3D segmentations, which are used to classify LCCS on lumbar sagittal MRI scans.

Methods

Data selection

Lumbar spine MRI studies were collected at the Radboud University Medical Center in the Netherlands. The complete dataset consisted of all MRI studies of patients suffering from low back pain and/or neurogenic leg pain based on the MRI study description, acquired between 2014 and 2019 on a 1.5-T Siemens MRI system. This retrospective study was approved by the institutional review board (IRB 2016-2275). Exemption from informed consent was granted due to the use of retrospectively deidentified MRI examinations.

The following exclusion criteria were applied:

- No LCCS according to the radiology report. In practice, MRI studies were retained when the report contained “canal stenosis” and excluded when the report contained “no canal stenosis” or “no spinal canal stenosis”.

- Studies that do not contain at least one T2 sagittal and one T2 axial series.

- Studies where accurate LCCS assessment was deemed infeasible due to, for example, post-operative changes, metastases, or poor image quality.

This resulted in 1107 eligible MRI studies, of which a random subset was drawn. Given the labor-intensive nature of data annotation, a sample size of 200 studies was selected. If a patient received more than one MRI examination, only the earliest MRI study was included to avoid post-treatment scans. The exclusion of post-operative scans was motivated by the often-compromised visibility caused by scar tissue and metal artifacts, which can significantly hinder accurate LCCS assessment. All 200 MRI studies underwent manual review by experienced radiologists (readers 1 and 2; R1 and R2) to assess the feasibility of accurately evaluating LCCS. Subsequently, 14 MRI studies were excluded from the analysis: eleven due to post-operative changes, one due to the presence of metastases, one due to misalignment between the sagittal and axial sequences, and one because it had been misclassified as a lumbar acquisition. In total, 186 MRI studies from unique patients suspected of LCCS were included, resulting in a total of 312 axial T2 series of various vertebral levels, and 186 sagittal T2 series (Fig. 1).

In accordance with the CLAIM 2024 (Checklist for Artificial Intelligence in Medical Imaging) guidelines [19], we ensured comprehensive reporting and transparency throughout the study. Detailed adherence to each item in the CLAIM 2024 checklist is documented in Appendix A.

Manual data labeling

All MRI studies underwent independent manual labeling by four experienced radiologists: R1, R2, R3, and R4, with 27, 25, 15, and 14 years of experience, respectively. The severity of LCCS was assessed on both axial and sagittal images using the classification system described by Lee et al with grade 0 being ‘no stenosis,’ grade 1 ‘mild stenosis,’ grade 2 ‘moderate stenosis,’ and grade 3 ‘severe stenosis’ [8]. The highest observed grade was annotated for each IVD level visible in the available T2 axial images. Additionally, a mid-sagittal image containing disc numbers was included to ensure consistent identification of discs.

Following individual readings, R1 and R2 conducted a second consensus reading for all the IVD levels where they had assigned different grades. This consensus reading was regarded as the ground truth. The grading process was executed on the medical imaging annotating platform Grand-Challenge.org.

For binary classification, the grades from the consensus reading were ‘binarized’. Lee grades 0 and 1 were considered indicative of ‘no stenosis’ and grades 2 and 3 were classified as ‘stenosis’.
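The binarization rule above can be written as a one-line mapping. The following is a minimal sketch rather than the study's code; the function name and the example grades are hypothetical.

```python
def binarize_lee_grade(grade: int) -> int:
    """Map a Lee grade (0-3) to 0 ('no stenosis') or 1 ('stenosis')."""
    if grade not in (0, 1, 2, 3):
        raise ValueError(f"Lee grade must be 0, 1, 2, or 3, got {grade!r}")
    # Grades 0 and 1 count as 'no stenosis'; grades 2 and 3 as 'stenosis'.
    return int(grade >= 2)


# Made-up consensus grades for five IVD levels (illustration only).
consensus_grades = [0, 1, 2, 3, 1]
binary_labels = [binarize_lee_grade(g) for g in consensus_grades]
```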

Deep learning segmentation

An existing lumbar spine segmentation algorithm, capable of segmenting the vertebrae, IVDs, and spinal canal, was employed to generate the required segmentation masks [20]. This U-net-based algorithm uses a 3D patch-based iterative scheme to segment one pair of vertebrae and the corresponding inferior IVD at a time, together with the segment of the spinal canal covered by the image patch [20]. It was trained and validated on the publicly available multicenter SPIDER dataset, which includes 447 sagittal lumbar MRI series from 218 patients with low back pain [21]. The reported Dice scores for the spinal canal and IVD segmentations were 0.92 (SD 0.04) and 0.84 (SD 0.10) with 100% and 98.7% detection, respectively, in sagittal T2 scans. The segmentation of the spinal canal corresponds to the anatomical dural sac. An example of an automatically generated segmentation mask is illustrated in Fig. 2. Additional examples of successful as well as faulty segmentation masks can be found in Appendix B.

Fig. 2 An MRI study paired with its automatically generated segmentation mask. a Mid-sagittal slice from a series of sagittal T2-weighted MRI images. b Axial slice at the L4-L5 intervertebral disc level. c Reconstructed axial view, derived from the sagittal MRI series, corresponding to the level of image b

Automatic measurements

The following metrics were automatically extracted using the segmentation masks:

1. Dural sac cross-sectional area (CSA), defined as the cross-sectional area of the dural sac measured in an axial view of the spine (Fig. 3). The CSA, expressed in square millimeters, was calculated by summing up the surface area of all voxels of the spinal canal mask.

2. Dural sac antero-posterior diameter (APD), expressed in millimeters, was defined as the largest possible antero-posterior distance of the spinal canal mask (Fig. 3).

3. CSF signal loss (FSL) was calculated by determining the average signal strength of all MRI voxels within the spinal canal mask of an angled axial plane.
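The three definitions above can be illustrated on a single axial plane. This is a minimal sketch under stated assumptions, not the study's implementation: the function name is hypothetical, array axis 0 is assumed to run antero-posterior and axis 1 left-right, and the APD is taken as the largest first-to-last mask extent along the antero-posterior axis.

```python
import numpy as np


def measure_axial_plane(canal_mask, image, spacing_ap_mm, spacing_lr_mm):
    """Return CSA (mm^2), APD (mm), and FSL (mean signal) for one axial plane."""
    mask = np.asarray(canal_mask, dtype=bool)
    image = np.asarray(image, dtype=float)
    if not mask.any():
        return {"CSA": 0.0, "APD": 0.0, "FSL": float("nan")}

    # CSA: number of mask voxels times the in-plane area of one voxel.
    csa = float(mask.sum() * spacing_ap_mm * spacing_lr_mm)

    # APD: for every left-right position, the first-to-last mask extent
    # along the antero-posterior axis; the largest extent is kept.
    ap_index = np.arange(mask.shape[0])[:, None]
    first = np.where(mask, ap_index, mask.shape[0]).min(axis=0)
    last = np.where(mask, ap_index, -1).max(axis=0)
    extent_voxels = np.clip(last - first + 1, 0, None)  # empty columns -> 0
    apd = float(extent_voxels.max() * spacing_ap_mm)

    # FSL: average MRI signal of all voxels inside the mask.
    fsl = float(image[mask].mean())
    return {"CSA": csa, "APD": apd, "FSL": fsl}


# Toy 5 x 5 plane with a plus-shaped mask and 0.5 mm in-plane spacing.
toy_mask = np.zeros((5, 5), dtype=bool)
toy_mask[1:4, 2] = True
toy_mask[2, 1] = True
toy_mask[2, 3] = True
toy_image = np.full((5, 5), 10.0)
toy_image[2, 2] = 20.0
plane_metrics = measure_axial_plane(toy_mask, toy_image, 0.5, 0.5)
```

In the toy plane, five voxels of 0.25 mm² each make up the mask, and the longest antero-posterior run spans three voxels.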

To accurately perform these measurements, the axial view of the spinal canal should be parallel to the IVD of that specific level. This is accomplished by rotating and resampling the MRI volume separately for each IVD level (Fig. 4). The 3D spatial angulation required for rotating and resampling the MRI volume was determined by fitting a 3D plane through each IVD using principal component analysis (PCA) [22]. The third axis of the PCA, which is perpendicular to the 3D plane, was used to determine the angulation of the IVD. The middle slice of each IVD, alongside 20 slices above and below the middle slice, was extracted for automatic measurements. With a standardized slice thickness of 0.5 mm, this was equal to a total range of 2 cm around the center of each IVD. This range was chosen to ensure that an average healthy IVD is entirely included in the measurements and that, for degenerative pathological IVDs, the endplates are incorporated as well. Measurements of the most caudal 15 mm of the spinal canal segmentation were discarded. This was based on the naturally decreased size of the dural sac in this area, which could lead to false positives for stenoses if included.
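The plane fit described above can be sketched with a singular value decomposition of the centered IVD voxel coordinates, which is one common way to compute a PCA. The function name, the voxel spacing argument, and the toy mask are assumptions for illustration; the rotation and resampling steps are not shown.

```python
import numpy as np


def fit_disc_plane(ivd_mask, spacing_mm=(1.0, 1.0, 1.0)):
    """Fit a plane through a 3D IVD mask; return (centroid, unit normal)."""
    coords = np.argwhere(np.asarray(ivd_mask, dtype=bool)) * np.asarray(spacing_mm)
    if coords.shape[0] < 3:
        raise ValueError("at least three mask voxels are needed to fit a plane")
    centroid = coords.mean(axis=0)
    # Rows of vt are the principal axes, ordered by decreasing variance.
    _, _, vt = np.linalg.svd(coords - centroid, full_matrices=False)
    normal = vt[2]  # third axis: direction of least variance, normal to the disc
    return centroid, normal / np.linalg.norm(normal)


# Toy disc: wide along axes 0 and 1, thin along axis 2.
toy_disc = np.zeros((20, 20, 20), dtype=bool)
toy_disc[5:15, 5:15, 9:11] = True
disc_centroid, disc_normal = fit_disc_plane(toy_disc)
```

The sign of the normal is arbitrary in a PCA, so code that derives an angulation from it would typically fix the orientation first, for example by flipping the vector so that it points cranially.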

Fig. 4 Visualization of the angulations of all measurements. Angulation was determined for each intervertebral disc, with absolute measurements extracted from the green volumes, utilizing only the measurements from the most stenotic plane within that volume. These absolute measurements were then compared to the cranially adjacent mid-vertebral measurements (presented by red lines) to derive the relative measurement values (the ratio-based values)

The three metrics (CSA, APD, and FSL) were quantified across all 40 axial planes for each IVD, orthogonal to the spinal canal. Subsequently, only the measurements of the most stenotic plane were utilized. The most stenotic plane was determined through a cumulative ranked system that integrates all three metrics. Axial planes were individually ranked for each metric, and the plane with the lowest cumulative rank was identified as the most stenotic. Only the measurements from this most stenotic plane were used for LCCS classification.
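The cumulative ranking can be sketched as follows. This is an illustration under assumptions, not the study's code: lower CSA, APD, and FSL values are all assumed to indicate more stenosis, ties are broken by plane order, and the function name and example values are hypothetical.

```python
import numpy as np


def most_stenotic_plane(csa, apd, fsl):
    """Return the index of the plane with the lowest summed rank over all metrics."""
    metrics = np.column_stack([csa, apd, fsl]).astype(float)
    # Double argsort converts values to ranks per column (0 = smallest value).
    order = metrics.argsort(axis=0, kind="stable")
    ranks = order.argsort(axis=0, kind="stable")
    cumulative_rank = ranks.sum(axis=1)
    return int(cumulative_rank.argmin())


# Made-up measurements for four axial planes around one IVD.
csa_values = [100.0, 60.0, 80.0, 120.0]
apd_values = [12.0, 8.0, 7.0, 13.0]
fsl_values = [300.0, 150.0, 200.0, 310.0]
most_stenotic_index = most_stenotic_plane(csa_values, apd_values, fsl_values)
```

Here the second plane has the smallest CSA and FSL and the second-smallest APD, giving it the lowest cumulative rank.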

For each of the three aforementioned metrics, two measurements were taken: an absolute value and a ratio-based value. These additional relative metrics were included to correct for size differences between vertebral levels, as well as anatomical differences between patients. The ratios were calculated between the IVD level measurements and the corresponding reference measurements (Fig. 4). Reference measurements were taken at the mid-vertebral level of each cranially adjacent vertebra for all IVDs. The angulation of the reference measurement was computed by taking the average of the angulations of the two neighboring IVDs. Examples of the angled IVD planes and the corresponding reference measurements are shown in Fig. 4. These relative metrics are reported as rCSA, rAPD, and rFSL.
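As a small worked example of the ratio-based values, each relative metric is the IVD-level measurement divided by the reference measurement at the cranially adjacent mid-vertebral level. The function name and the numbers below are hypothetical.

```python
def relative_metrics(ivd_level, reference_level):
    """Divide each IVD-level metric by its mid-vertebral reference value."""
    return {
        f"r{name}": ivd_level[name] / reference_level[name]
        for name in ("CSA", "APD", "FSL")
    }


# Made-up values: the dural sac at the disc level is half the reference size.
ivd_measurements = {"CSA": 60.0, "APD": 6.0, "FSL": 150.0}
reference_measurements = {"CSA": 120.0, "APD": 12.0, "FSL": 300.0}
ratios = relative_metrics(ivd_measurements, reference_measurements)
```

Together with the three absolute values, these ratios give six features per IVD level.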

Training data

The total dataset consists of 683 IVD levels from 186 patients suspected of LCCS. For each IVD level, the dataset includes six automatic measurements alongside the ground truth Lee grade, serving as the label. Table 1 provides a comprehensive overview of the entire dataset.

Classification

During the development phase, experiments were conducted with several machine learning models, including logistic regression, decision trees, and random forests, ultimately selecting random forests based on superior performance. The results of these experiments are shown in Appendix C. Two random forest classification models, one for multiclass and one for binary classification, were developed using scikit-learn 1.3.2 [23]. Both models underwent training via tenfold cross-validation, employing a 90-10 training-validation split strategy. The binary model utilized a standard tenfold split, whereas the multiclass model employed a stratified tenfold split to address discrepancies in class sizes.

The random forest models were trained under specific settings chosen after performing a random grid search. For the multiclass classification, the parameters were set to 1 sample per leaf, 208 trees, and a maximum depth of 46, whereas for binary classification, they were set to 23 samples per leaf, 29 trees, and a maximum depth of 72.
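The reported settings map onto scikit-learn as sketched below. Only the three hyperparameters per model and the tenfold split types come from the text; the synthetic data, the shuffling of the folds, and the fixed random seeds are assumptions made so the sketch runs on its own.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import KFold, StratifiedKFold, cross_val_predict

# Synthetic stand-in data: 200 IVD levels, six features, Lee grades 0-3.
rng = np.random.default_rng(0)
n_levels = 200
grades = np.arange(n_levels) % 4
features = rng.normal(size=(n_levels, 6)) - 0.8 * grades[:, None]
binary_labels = (grades >= 2).astype(int)

# Hyperparameters as reported in the text.
multiclass_model = RandomForestClassifier(
    n_estimators=208, min_samples_leaf=1, max_depth=46, random_state=0
)
binary_model = RandomForestClassifier(
    n_estimators=29, min_samples_leaf=23, max_depth=72, random_state=0
)

# Stratified tenfold split for the multiclass model, standard tenfold split
# for the binary model; every level is predicted once by a model that did
# not see it during training.
multiclass_pred = cross_val_predict(
    multiclass_model, features, grades,
    cv=StratifiedKFold(n_splits=10, shuffle=True, random_state=0),
)
binary_prob = cross_val_predict(
    binary_model, features, binary_labels,
    cv=KFold(n_splits=10, shuffle=True, random_state=0),
    method="predict_proba",
)[:, 1]
```

Collecting out-of-fold predictions this way yields one prediction per IVD level, which can then be compared with the consensus grades.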

The multiclass model was trained with the consensus reading grades as labels and the six specified metrics as features. Conversely, the binary model utilized binarized consensus reading grades alongside the same six metrics. To assess the impact of individual metrics on model performance, an ablation study was performed. Evaluations were conducted with varying feature subsets, deliberately omitting certain metrics in each iteration.

Statistical analysis

The performance of the multiclass random forest model, in comparison to the ground truth, was quantified using Cohen’s quadratic weighted kappa score (κw). Levels of agreement were interpreted as: κw ≤ 0 ‘poor’ agreement, 0.01–0.20 ‘slight,’ 0.21–0.40 ‘fair,’ 0.41–0.60 ‘moderate,’ 0.61–0.80 ‘substantial,’ and 0.81–1.00 ‘almost perfect’ agreement [24, 25]. The κw was calculated based on the predictions on the validation sets during tenfold cross-validation. The κw of R3 and R4, compared with the consensus reading, were calculated and used to compare the model’s performance with the radiologists’ performance.
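For reference, the quadratic weighted kappa can be computed directly from the confusion matrix as one minus the ratio of weighted observed to weighted chance-expected disagreement, with weights (i − j)² / (k − 1)² for k classes. The sketch below is a plain NumPy illustration with hypothetical names; scikit-learn's `cohen_kappa_score` with `weights="quadratic"` offers the same statistic.

```python
import numpy as np


def quadratic_weighted_kappa(rater_a, rater_b, n_classes=4):
    """Cohen's kappa with quadratic disagreement weights for grades 0..n_classes-1."""
    a = np.asarray(rater_a, dtype=int)
    b = np.asarray(rater_b, dtype=int)
    observed = np.zeros((n_classes, n_classes))
    np.add.at(observed, (a, b), 1)  # confusion matrix of the two raters
    # Expected counts if both raters graded independently with the same marginals.
    expected = np.outer(observed.sum(axis=1), observed.sum(axis=0)) / observed.sum()
    idx = np.arange(n_classes)
    weights = (idx[:, None] - idx[None, :]) ** 2 / (n_classes - 1) ** 2
    # Undefined (division by zero) when both raters use a single identical grade.
    return 1.0 - (weights * observed).sum() / (weights * expected).sum()


kappa_perfect = quadratic_weighted_kappa([0, 1, 2, 3], [0, 1, 2, 3])
kappa_chance = quadratic_weighted_kappa([0, 0, 1, 1], [0, 1, 0, 1])
```

Identical gradings give a kappa of 1, while gradings that agree no more often than chance give 0.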

The performance of all binary random forest models was evaluated using the area under the receiver operating characteristic curve (AUC). AUC values were interpreted as: 0.50–0.59 ‘fail,’ 0.60–0.69 ‘poor,’ 0.70–0.79 ‘fair,’ 0.80–0.89 ‘good,’ and 0.90–1 ‘excellent’ [26]. To compute this metric, probability predictions generated from the validation folds during cross-validation were compared to the ground truth. Similarly, the binarized R3 and R4 gradings were compared to the ground truth to calculate the sensitivity and specificity. These metrics served as a benchmark to compare the binary model’s performance against that of radiologists. The comparison used not only the model’s optimal sensitivity and specificity (determined by Youden’s J index [27]) but also its sensitivities and specificities at thresholds chosen to match the performance of each of the two readers. Lastly, the accuracy, positive predictive value (PPV), and negative predictive value (NPV) were computed for the algorithm (thresholded at Youden’s J index) and the two readers.
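Youden's J index is sensitivity plus specificity minus one, and the optimal threshold is the one that maximizes it. The sketch below sweeps every observed probability as a candidate threshold; the function name and the example data are hypothetical, and a prediction counts as positive when its probability is at or above the threshold.

```python
import numpy as np


def youden_optimal_threshold(y_true, y_prob):
    """Return (threshold, J, sensitivity, specificity) at the maximum Youden's J."""
    y_true = np.asarray(y_true, dtype=bool)
    y_prob = np.asarray(y_prob, dtype=float)
    best = None
    for threshold in np.unique(y_prob):
        predicted = y_prob >= threshold
        sensitivity = (predicted & y_true).sum() / y_true.sum()
        specificity = (~predicted & ~y_true).sum() / (~y_true).sum()
        j_index = sensitivity + specificity - 1.0
        if best is None or j_index > best[1]:
            best = (float(threshold), float(j_index),
                    float(sensitivity), float(specificity))
    return best


# Made-up out-of-fold probabilities for seven IVD levels (1 = stenosis).
example_truth = [0, 0, 1, 0, 0, 1, 1]
example_prob = [0.1, 0.2, 0.3, 0.4, 0.5, 0.7, 0.9]
opt_threshold, opt_j, opt_sens, opt_spec = youden_optimal_threshold(
    example_truth, example_prob
)
```

The same sweep can be reused to find the threshold whose sensitivity or specificity is closest to that of a given reader.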

Results

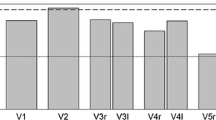

Cross-validation of the multiclass random forest model resulted in a mean κw of 0.86 (95% CI: 0.82–0.90). R3 and R4 obtained κw scores of 0.85 (95% CI: 0.80–0.89) and 0.73 (95% CI: 0.68–0.79), respectively. The confusion matrices of the algorithm, R3, and R4 are shown in Fig. 5.

The mean AUC during cross-validation of the binary random forest model was 0.98 (95% CI: 0.97–0.99) compared to binarized consensus grades. Analysis of the model’s performance at the optimal threshold of 0.46, determined by maximizing Youden’s J index, resulted in a sensitivity of 93% (95% CI: 91–96%) and a specificity of 91% (95% CI: 87–95%) with a J index of 0.84. R3 and R4 obtained specificity scores of 98 and 99% and sensitivity scores of 74 and 54%, respectively. Table 2 shows the sensitivity and specificity of the binary model thresholded at the closest matching sensitivity and specificity of the two readers. Figure 6 shows the receiver operating characteristic (ROC) curve of all binary models, including the performance of R3 and R4. Finally, the algorithm demonstrated an accuracy of 0.93, a PPV of 0.84, and an NPV of 0.96. In comparison, R3 and R4 showed accuracies of 0.91 and 0.86, PPVs of 0.94 and 0.97, and NPVs of 0.90 and 0.84, respectively.

To test the contribution of the various metrics included in the presented models, an ablation study was performed. Table 3 shows performance of the various multiclass and binary models when certain features are removed from the training data.

Discussion

This study introduces a new AI-based algorithm designed to accurately classify LCCS at any IVD level, employing only sagittal lumbar MR images. The findings demonstrate almost perfect agreement with the expert radiologists’ consensus, which was graded using both sagittal and axial MR images. The multiclass model showed a Cohen’s weighted kappa (κw) of 0.86 (95% CI: 0.82–0.90), while the binary model (grades 0 and 1 ‘no stenosis’; grades 2 and 3 ‘stenosis’) exhibited a sensitivity of 93% (95% CI: 91–96%) and specificity of 91% (95% CI: 87–95%). For comparison, R3 and R4 obtained κw scores of 0.85 (95% CI: 0.80–0.89) and 0.73 (95% CI: 0.68–0.79) in multiclass grading, and specificities of 98% and 99% and sensitivities of 74% and 54% in binary grading, respectively. Finally, the binary model demonstrated an accuracy of 0.93, compared to 0.91 and 0.86 for R3 and R4, with comparable PPVs and NPVs. These findings indicate close alignment between both AI models and the radiologists’ consensus gradings, supporting the efficacy of the proposed approach.

Previous studies have focused on LCCS detection and grading, utilizing various AI methods. Many of these studies relied on opaque black box models, such as convolutional neural network classifiers, yielding κw scores ranging from 0.80 to 0.82 in multiclass classification and AUC values of 0.95–0.97 in binary classification [12,13,14,15,16]. In contrast, our approach emphasizes explainability and efficiency by employing a more understandable methodology that uses quantitative measurements while requiring less training data. By leveraging these measurements, our model offers traceable predictions, enhancing interpretability [28].

Bharadwaj et al developed a decision tree model based on the ratio between the cross-sectional areas of the dural sac and the IVD, with axial MRI slices as input [17]. Their model achieved a κw of 0.80 (90% CI: 0.76–0.82), lower than our method, which exclusively utilizes sagittal MR images. Moreover, our research dataset exhibits a higher prevalence of moderate to severe LCCS grades, accounting for 28% of cases, in contrast to Bharadwaj’s dataset, in which such cases comprised only 15%. This highlights the robustness and applicability of our approach across a broad spectrum of LCCS severity levels.

A notable strength of our method is that it leverages six distinct measurements related to LCCS, namely the absolute and relative surface area (CSA), AP-diameter (APD), and signal intensity (FSL) of the dural sac [11, 18]. These measurements, while informative, are labor-intensive and, therefore, rarely conducted in clinical settings [7]. Our algorithm automatically performs these measurements at any specified level of the lumbar spine. Among the metrics included, FSL emerged as the most influential factor in automatic prediction. Despite the pivotal role FSL plays in determining the Lee grade [8], and its demonstrated effectiveness in LCCS assessment [18], to our knowledge no research has been published on using automatic FSL measurements for LCCS classification. This underscores the novelty and potential impact of our approach in advancing LCCS diagnosis and management.

Furthermore, by exclusively utilizing sagittal lumbar MRI scans as input, our algorithm can automatically classify LCCS across all vertebral levels without the need for axial sequences. In clinical practice, axial sequences are angulated such that the MR volume is parallel to the IVD. However, in cases where multiple IVD levels are encompassed within the same MR volume, achieving parallel alignment for all IVDs becomes challenging due to the spine’s natural curvature. Our approach addresses this challenge by aligning the MRI volume parallel to the segmented IVD in 3D. This method of extracting IVD angulation was successfully used in a recent study on automatic Cobb angle measurements, demonstrating comparable accuracy to human readers [29].

This study has several limitations. First, the absence of an external test set for validating the model is notable. The reliance on data solely from one hospital for the tenfold cross-validation may restrict the broader applicability of the findings. Furthermore, even though the prevalence of stenotic IVD levels is higher compared to other studies, the size of the dataset is relatively small, possibly limiting the generalizability, especially for the T12-L1 IVD level or higher. To ensure the robustness of the algorithm, it should be trained on more data, which should include post-operative MRI scans and be sourced from different MRI scanners across various hospitals. In this study, we demonstrated that this novel method is effective with the current dataset. However, future research should focus on testing the presented algorithm on larger multicenter datasets to ensure its robustness across diverse patient populations and imaging conditions.

Second, while the presented algorithm accurately classifies LCCS, it does not encompass other pathologies often associated with LCCS, such as lateral recess stenosis and foraminal stenosis. Given their significance in treatment decision-making, accurate assessment of these pathologies is essential. A potential solution could involve developing a model where the width of the neural foramina is measured based on vertebrae and IVD segmentation masks, which would require additional research.

Last, relying solely on sagittal MR images inevitably means working with lower axial resolutions compared to axially obtained MR images. This may reduce the precision of axial measurements compared to those derived from axial MR images. Despite this limitation, our study demonstrates that the lower axial resolution remains adequate for accurate LCCS classification.

In summary, this study introduces a novel AI-based algorithm designed for both multiclass and binary LCCS classification, demonstrating performance comparable to experienced radiologists. Through the integration of automatic quantitative measurements, our approach not only achieves high accuracy but also enhances the interpretability and traceability of LCCS predictions, distinguishing it from opaque models. The algorithm presented holds promise as a valuable tool for automating LCCS assessment from sagittal T2 MRI scans, potentially reducing reporting time and streamlining the diagnostic process.

Abbreviations

- AP: Antero-posterior

- APD: Antero-posterior diameter

- AUC: Area under the receiver operating characteristic curve

- CLAIM: Checklist for artificial intelligence in medical imaging

- CSF: Cerebrospinal fluid

- κw: Cohen’s quadratic weighted kappa score

- CSA: Cross-sectional area

- FSL: Fluid signal loss

- IVD: Intervertebral disc

- LCCS: Lumbar central canal stenosis

- MRI: Magnetic resonance imaging

- NPV: Negative predictive value

- PPV: Positive predictive value

- PCA: Principal component analysis

- SD: Standard deviation

References

1. Ota Y, Connolly M, Srinivasan A et al (2020) Mechanisms and origins of spinal pain: from molecules to anatomy, with diagnostic clues and imaging findings. Radiographics 40:1163–1181. https://doi.org/10.1148/rg.2020190185

2. Lee SY, Kim T-H, Oh JK et al (2015) Lumbar stenosis: a recent update by review of literature. Asian Spine J 9:818

3. Van Der Graaf JW, Kroeze RJ, Buckens CFM et al (2023) MRI image features with an evident relation to low back pain: a narrative review. Eur Spine J 32:1830–1841. https://doi.org/10.1007/s00586-023-07602-x

4. Jensen RK, Jensen TS, Koes B, Hartvigsen J (2020) Prevalence of lumbar spinal stenosis in general and clinical populations: a systematic review and meta-analysis. Eur Spine J 29:2143–2163. https://doi.org/10.1007/s00586-020-06339-1

5. Kalichman L, Cole R, Kim DH et al (2009) Spinal stenosis prevalence and association with symptoms: the Framingham Study. Spine J 9:545–550. https://doi.org/10.1016/j.spinee.2009.03.005

6. de Schepper EI, Overdevest GM, Suri P et al (2013) Diagnosis of lumbar spinal stenosis: an updated systematic review of the accuracy of diagnostic tests. Spine (Phila Pa 1976) 38:E469–E481. https://doi.org/10.1097/BRS.0b013e31828935ac

7. Andreisek G, Deyo RA, Jarvik JG et al (2014) Consensus conference on core radiological parameters to describe lumbar stenosis-an initiative for structured reporting. Eur Radiol 24:3224–3232

8. Lee GY, Lee JW, Choi HS et al (2011) A new grading system of lumbar central canal stenosis on MRI: an easy and reliable method. Skelet Radiol 40:1033–1039. https://doi.org/10.1007/s00256-011-1102-x

9. Schizas C, Theumann N, Burn A et al (2010) Qualitative grading of severity of lumbar spinal stenosis based on the morphology of the dural sac on magnetic resonance images. Spine (Phila Pa 1976) 35:1919–1924

10. Ko Y, Lee E, Lee JW et al (2020) Clinical validity of two different grading systems for lumbar central canal stenosis: Schizas and Lee classification systems. PLoS One 15:e0233633

11. Steurer J, Roner S, Gnannt R, Hodler J (2011) Quantitative radiologic criteria for the diagnosis of lumbar spinal stenosis: a systematic literature review. BMC Musculoskelet Disord 12:175. https://doi.org/10.1186/1471-2474-12-175

12. Hallinan JTPD, Zhu L, Yang K et al (2021) Deep learning model for automated detection and classification of central canal, lateral recess, and neural foraminal stenosis at lumbar spine MRI. Radiology 300:130–138. https://doi.org/10.1148/radiol.2021204289

13. Won D, Lee HJ, Lee SJ, Park SH (2020) Spinal stenosis grading in magnetic resonance imaging using deep convolutional neural networks. Spine (Phila Pa 1976) 45:804–812. https://doi.org/10.1097/brs.0000000000003377

14. Windsor R, Jamaludin A, Kadir T, Zisserman A (2024) Automated detection, labelling and radiological grading of clinical spinal MRIs. Sci Rep 14:14993. https://doi.org/10.1038/s41598-024-64580-w

15. Lu J-T, Pedemonte S, Bizzo B et al (2018) Deep spine: automated lumbar vertebral segmentation, disc-level designation, and spinal stenosis grading using deep learning. PMLR 85:403–419

16. Tumko V, Kim J, Uspenskaia N et al (2024) A neural network model for detection and classification of lumbar spinal stenosis on MRI. Eur Spine J 33:941–948. https://doi.org/10.1007/s00586-023-08089-2

17. Bharadwaj UU, Christine M, Li S et al (2023) Deep learning for automated, interpretable classification of lumbar spinal stenosis and facet arthropathy from axial MRI. Eur Radiol 33:3435–3443. https://doi.org/10.1007/s00330-023-09483-6

18. Hızal M, Özdemir F, Kalaycıoğlu O, Işık C (2021) Cerebrospinal fluid signal loss sign: assessment of a new radiological sign in lumbar spinal stenosis. Eur Spine J 30:3297–3306. https://doi.org/10.1007/s00586-021-06929-7

19. Tejani AS, Klontzas ME, Gatti AA et al (2024) Checklist for artificial intelligence in medical imaging (CLAIM): 2024 update. Radiol Artif Intell 6:e240300. https://doi.org/10.1148/ryai.240300

20. van der Graaf JW, van Hooff ML, Buckens CF et al (2024) Lumbar spine segmentation in MR images: a dataset and a public benchmark. Sci Data 11:264

21. van der Graaf JW, van Hooff ML, Buckens CF et al (2023) SPIDER. Lumbar spine segmentation in MR images: a dataset and a public benchmark. https://doi.org/10.5281/zenodo.10159290

22. Abdi H, Williams LJ (2010) Principal component analysis. WIREs Comput Stat 2:433–459. https://doi.org/10.1002/wics.101

23. Pedregosa F, Varoquaux G, Gramfort A et al (2011) Scikit-learn: machine learning in Python. J Mach Learn Res 12:2825–2830

24. Cohen J (1968) Weighted kappa: nominal scale agreement provision for scaled disagreement or partial credit. Psychol Bull 70:213–220. https://doi.org/10.1037/h0026256

25. Landis JR, Koch GG (1977) The measurement of observer agreement for categorical data. Biometrics 33:159–174

26. Nahm FS (2022) Receiver operating characteristic curve: overview and practical use for clinicians. Korean J Anesthesiol 75:25

27. Ruopp MD, Perkins NJ, Whitcomb BW, Schisterman EF (2008) Youden Index and optimal cut‐point estimated from observations affected by a lower limit of detection. Biom J 50:419–430. https://doi.org/10.1002/bimj.200710415

28. Marcus E, Teuwen J (2024) Artificial intelligence and explanation: how, why, and when to explain black boxes. Eur J Radiol 173:111393. https://doi.org/10.1016/j.ejrad.2024.111393

29. van der Graaf JW, van Hooff ML, van Ginneken B et al (2024) Development and validation of AI-based automatic measurement of coronal Cobb angles in degenerative scoliosis using sagittal lumbar MRI. Eur Radiol 34:5748–5757

Funding

This study received funding from Radboud AI for Health (ICAI).


Ethics declarations

Guarantor

The scientific guarantor of this publication is M.d.K.

Conflict of interest

The authors of this manuscript declare relationships with the following companies: B.v.G. is CSO of Thirona; N.L. is employed at Stryker. The remaining authors declare no conflicts of interest.

Statistics and biometry

One of the authors has significant statistical expertise.

Informed consent

Written informed consent was not required for this study because it was exempted, given the use of retrospective anonymized MRI examinations. This retrospective study was approved by the institutional review board at Radboud University Medical Center (IRB 2016-2275).

Ethical approval

Institutional Review Board approval was obtained.

Study subjects or cohorts overlap

No study subjects or cohorts have been previously reported.

Methodology

- Retrospective

- Experimental

- Performed at one institution

Additional information

Publisher’s Note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

van der Graaf, J.W., Brundel, L., van Hooff, M.L. et al. AI-based lumbar central canal stenosis classification on sagittal MR images is comparable to experienced radiologists using axial images. Eur Radiol (2024). https://doi.org/10.1007/s00330-024-11080-0


DOI: https://doi.org/10.1007/s00330-024-11080-0