Abstract

Irrigation water is an expensive and limited resource and optimal scheduling can boost water efficiency. Scheduling decisions often need to be made several days prior to an irrigation event, so a key aspect of irrigation scheduling is the accurate prediction of crop water use and soil water status ahead of time. This prediction relies on several key inputs including initial soil water status, crop conditions and weather. Since each input is subject to uncertainty, it is important to understand how these uncertainties impact soil water prediction and subsequent irrigation scheduling decisions. This study aims to develop an uncertainty-based analysis framework for evaluating irrigation scheduling decisions under uncertainty, with a focus on the uncertainty arising from short-term rainfall forecasts. To achieve this, a biophysical process-based crop model, APSIM (The Agricultural Production Systems sIMulator), was used to simulate root-zone soil water content for a study field in south-eastern Australia. Through the simulation, we evaluated different irrigation scheduling decisions using ensemble short-term rainfall forecasts. This modelling produced an ensemble of simulations of soil water content, as well as ensemble simulations of irrigation runoff and drainage. This enabled quantification of risks of over- and under-irrigation. These ensemble estimates were interpreted to inform the timing of the next irrigation event to minimize both the risks of stressing the crop and/or wasting water under uncertain future weather. With extension to include other sources of uncertainty (e.g., evapotranspiration forecasts, crop coefficient), we plan to build a comprehensive uncertainty framework to support on-farm irrigation decision-making.

Introduction

Irrigation comprises 70% of freshwater consumption globally, with irrigated agriculture contributing 40% of total food production worldwide (The World Bank 2017). Irrigation scheduling, i.e., determining when and how much to irrigate, is thus a critical decision to promote efficient irrigation water use and good agricultural productivity (Pereira 1999). Surface irrigation is the most common irrigation method worldwide. Because it allows only limited control of irrigation depth (Brouwer et al. 1989), the question of irrigation timing becomes the most critical.

On-farm scheduling of surface irrigation often relies on the knowledge and experience of farmers, and is often guided by visual inspection of crop status (e.g., colour, curling of leaves) (Brouwer et al. 1989; Martin 2009). Empirical irrigation calendars have been developed for major crops under various climatic and soil conditions. These can be adopted as a rough guide to scheduling in a cropping season (Brouwer et al. 1989). Alternatively, on-farm irrigation scheduling is also commonly informed by simple calculations of crop water demand based on reference crop evapotranspiration (ET0) (Allen et al. 1998; Brouwer et al. 1989).

In contrast to the on-farm implementations, recent research has greatly advanced approaches to precise irrigation scheduling, which can be broadly classified into model-based and monitoring-based approaches (Gu et al. 2020). The model-based approaches often focus on estimating crop evapotranspiration (ETc) and then predicting the soil water balance and root-zone soil moisture contents. The prediction is then used to determine future irrigation timing based on when the total soil water depletion exceeds a user-defined threshold, or management allowable depletion (MAD) (Allen et al. 1998). The monitoring-based approaches can inform scheduling decisions by either monitoring the soil water to estimate available water for the crop (Campbell et al. 1982; Topp and Davis 1985), or monitoring crop status, such as canopy temperature and other canopy measurements, to infer crop water stress (Goldhamer and Fereres 2001; Sezen et al. 2014). Traditional monitoring-based methods have the key drawbacks of often being invasive and being labour intensive. These issues have been substantially resolved with the development of automated monitoring and remote sensing techniques (Jones 2004).
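The threshold logic described above can be illustrated with a minimal sketch. It assumes a highly simplified daily water balance (depletion increases with ETc and decreases with rainfall, with drainage, runoff and capillary rise ignored); the function name and all parameter names are hypothetical and are not taken from any of the cited studies.

```python
from typing import Optional, Sequence


def first_irrigation_day(
    depletion0_mm: float,
    etc_mm: Sequence[float],
    rain_mm: Sequence[float],
    taw_mm: float,
    mad_fraction: float,
) -> Optional[int]:
    """Return the first day (1-based) on which depletion exceeds the MAD threshold.

    Assumption: depletion is updated as D_t = max(0, D_{t-1} + ETc_t - P_t),
    which ignores drainage, runoff and capillary rise.
    """
    threshold_mm = mad_fraction * taw_mm  # allowable depletion in mm
    depletion = depletion0_mm
    for day, (etc, rain) in enumerate(zip(etc_mm, rain_mm), start=1):
        depletion = max(0.0, depletion + etc - rain)
        if depletion > threshold_mm:
            return day
    return None  # threshold not reached within the forecast horizon


# Example: 100 mm total available water, 40% allowable depletion
day = first_irrigation_day(30.0, [6, 6, 6, 6], [0, 10, 0, 0], 100.0, 0.4)
```

In this example the 10 mm of rain on the second day delays the threshold exceedance from Day 2 to Day 4, which is the kind of sensitivity to rainfall that motivates the use of forecasts.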

The model-based approaches show greater potential to provide operational tools to support on-farm irrigation scheduling due to their capability for making predictions and testing management options (George et al. 2000; Gu et al. 2020). However, a major challenge to implement the model-based approach arises from the high uncertainty associated with the modelling process. For example, models require accurate observations and forecasts of recent and future weather conditions to estimate the atmospheric evaporative demand (Allen et al. 1998; Rhenals and Bras 1981). Uncertainties in future weather can arise from uncertain weather forecasts (Slingo and Palmer 2011), while historical weather observations can be subject to instrumental errors, limited representation of spatial variability and issues in quality control/assurance (Wright 2008). Model-based irrigation scheduling also relies on good information on the characteristics of soil (e.g., soil hydraulic parameters) and crop (e.g., growing stages and crop coefficient) for estimating soil drainage, actual soil evaporation and crop transpiration (Allen et al. 1998; Pereira et al. 2003). Crop characteristics can display a wide variation with location, climate and growth stage (Guerra et al. 2016), while soil properties are also highly variable (Ratliff et al. 1983; Rawls et al. 1982). The soil water modelling also relies on knowing soil water content to define the initial conditions, which is often obtained from soil water monitoring. While soil water monitoring can be practically performed at point scale, there is high uncertainty in spatial representativeness due to variability (Grayson and Western 1998; Or and Hanks 1993; Paraskevopoulos and Singels 2014). To enable effective scheduling decision-making under these uncertainties, a quantitative uncertainty-based analysis framework is critical for evaluating different scheduling decisions.

Within all the sources of uncertainty that need to be considered in irrigation scheduling, the uncertainty in future weather conditions attracts the most attention. The recent rapid development of weather forecasting has greatly facilitated research on irrigation scheduling under uncertain future weather (Azhar and Perera 2011; Jones et al. 2020; Perera et al. 2016). Most modelling studies that have incorporated short-term forecasts focus on rainfall—which is generally considered the most important weather input to irrigation scheduling—and have generally achieved a saving in irrigation water while improving rainfall utilization efficiency (Cai et al. 2011; Cao et al. 2019; Gowing and Ejieji 2001; Wang and Cai 2009). Cao et al. (2019) combined the forecasted probabilities of different rain intensities (no rain, light rain and heavy rain) for three days into the future to derive an expected rainfall amount. The expected rainfall was used to drive a rule-based irrigation scheduling approach and led to a 0–100 mm saving in irrigation water, and a 0–60 mm reduction in drainage, when compared with conventional scheduling not considering weather forecast. Wang and Cai (2009) explored the value of 7-day probabilistic rainfall forecasts for real-time irrigation decision making. The forecasted rainfall probability was linked to rule-based irrigation scheduling to inform future irrigations, leading to a 16% increase in the modelled crop profit compared with relying on real-time soil moisture information alone. Cai et al. (2011) used probabilistic rainfall forecasts to optimize the irrigation amount, with an objective function that considers both the expected incremental crop yield gain and the irrigation operational costs. 
Specifically, the forecast 7-day future rainfall probabilities were combined with the historical average intensities for different rainfall probabilities to produce historical ensemble rainfall forecasts (referred to as the ‘imperfect forecast’ in their paper). These ensemble forecasts were then used to derive ensemble simulations of the incremental crop yield gain (representing the accumulation of crop profit over the season), and then aggregated to their expected value as input to the objective function for irrigation scheduling optimization. Informed by the historical ensemble rainfall forecasts, the optimal irrigation decisions derived were found to lead to 2.4–8.5% gain in crop profit and 11.0–26.9% water saving, relative to irrigation scheduling assuming no rainfall for the future seven days. In an extension of Cai et al. (2011), Hejazi et al. (2014) also optimized irrigation scheduling. In that study, each weather input (for rainfall, minimum temperature and maximum temperature) took the weighted average of ensemble forecasts from a bias-corrected regional climate model. As summarized above, a number of studies have used probabilistic or ensemble weather forecasts (mostly of rainfall) to incorporate the uncertainties in future weather into irrigation scheduling, which in turn helped identify the best irrigation decisions, with benefits illustrated by the modelled irrigation water efficiency, crop yield or profit.

While optimization appears intuitively attractive, application of the existing studies for practical on-farm irrigation scheduling remains limited. An important reason for this is they fail to recognise varying risk preferences between irrigators, which suggests that risk oriented approaches may be more appropriate for practical management. Specifically, existing studies have all recommended the ‘optimal’ irrigation decisions based on objective functions that are often an aggregation over the modelled risks and profits. In operational situations, the key considerations for irrigation decision making extend well beyond risks and profits to include individual farmers’ experience and management preference (e.g., a desire to completely eliminate crop water stress or to intentionally apply some stress to achieve certain quality characteristics for the crop), as well as integration with other on-farm operations (e.g., fertilization, spraying). Therefore, the ‘optimized’ decisions may not represent the true interests and objectives of farmers (Cai et al. 2011). Considering these issues, we argue that operational irrigation decision making would benefit more from having transparent information on the modelled outcomes of different irrigation decisions and their associated uncertainty, rather than direct recommendations of the optimal irrigation decisions. To achieve this, we need an uncertainty-based analysis framework to:

1) Incorporate multiple sources of uncertainty in irrigation scheduling modelling. This should include, but not be limited to, utilizing ensemble weather forecasts to better incorporate the uncertainty in future weather.

2) Enable evaluation and comparison of different irrigation scheduling decisions under uncertainty.

Nested in the larger problem of lacking a quantitative, uncertainty-based analysis framework, the use of weather forecasts to represent uncertainties in future weather also needs to be improved. Very few studies have fully incorporated the uncertainty in ensemble forecasts to evaluate the performance of irrigation decisions (Cai et al. 2011). In most existing studies, weather forecasts, although initially obtained in ensemble or probabilistic forms, have been used only as a deterministic input to irrigation scheduling models (Cao et al. 2019; Hejazi et al. 2014; Wang and Cai 2009), which has greatly limited the exploration of uncertainties. Further, only Hejazi et al. (2014) attempted bias correction of the weather forecasts. There is a general lack of quality control of the weather forecasts used, raising questions regarding how well they can represent the expected future weather and associated uncertainty.

This study aims to establish a quantitative, uncertainty-based analysis framework to support short-term in-season irrigation scheduling by quantifying the risks of over- or under-irrigation arising from various irrigation scheduling options, while incorporating uncertainty in future weather conditions. As a first step towards developing a full uncertainty framework, we consider the uncertainty contributed solely by unknown future rainfall, which represents one of the biggest sources of uncertainty in making scheduling decisions. In doing so uncertainty in future weather is incorporated using short-term ensemble rainfall forecasts. The key novelty of this study is developing an uncertainty-based framework to quantitatively evaluate risks associated with different irrigation decisions. Within this framework, this study also incorporates high-quality short-term ensemble rainfall forecasts developed through post-processing of numerical weather forecasts, to improve the forecast accuracy and uncertainty representation. The key question this study addresses is: how does uncertainty in rainfall forecasts translate to risks of over- or under-irrigation with different scheduling decisions?

Materials and methods

Overview

In this study, we focused on surface irrigation systems and assumed that each irrigation replenishes the soil moisture deficit to field capacity. Thus, irrigation timing was the key variable in scheduling decisions. The framework was based on model simulations of root-zone soil water content. Specifically, the crop model APSIM (Agricultural Production Systems sIMulator, see details in “The APSIM crop model”) was used to derive ensemble simulations of soil water content for the 9-day future period starting from each day in the cropping season. The simulations combined: (1) ensemble short-term rainfall forecast for the 9-day future period (“Ensemble short-term rainfall forecasts”); and (2) different irrigation timings ranging from Day 1 to Day 9 into the future (“Ensemble simulations to evaluate risks from different irrigation decisions”). The ensemble soil water simulations were then used to evaluate the risks of over- or under-irrigation (i.e., wasting water or stressing the crop) for different irrigation timings for each day in the season. A schematic of the simulation is shown in Fig. 1.

Schematic of the risk analysis framework to evaluate irrigation options. APSIM refers to the crop model, Agricultural Production Systems sIMulator, which is detailed in “The APSIM crop model”

Study field

To illustrate this risk analysis framework, we focused on one maize field within the Goulburn-Murray Irrigation District (GMID) in south-east Australia (field centered at 36.17°S, 145.02°E) over the 2019–2020 summer cropping season. The cropping season for summer maize in this region generally stretches from November to May, and ran from 29 November 2019 to 6 April 2020 for our particular case study. The study field was located on the borderline between temperate and arid steppe climate regions (Peel et al. 2007). The long-term annual mean rainfall is 447 mm based on observations from 1964 to 2021 at the closest public weather station (Kyabram, Australian Bureau of Meteorology #80091, 19 km away).

We established continuous in-field monitoring of the climate and soil water content over the study period, which was used to define the initial conditions to model irrigation scheduling for each day in the cropping season (detailed in “Ensemble simulations to evaluate risks from different irrigation decisions”). We monitored the air temperature, solar radiation, relative humidity and wind speed at 2 m above ground from a standing weather station, along with a tipping-bucket rain gauge installed at ground level. The weather station was installed near the field boundary to avoid canopy interference with the solar radiation sensor and rain gauge. We also installed a Sentek drill-and-drop soil moisture probe within one crop row, which we assumed was representative of the soil water status of the entire cropping field (see further discussion on this assumption in “Discussion and conclusion”). The soil water probe measured soil water content continuously for each 10 cm layer down to 90 cm. The measurements from each layer were calibrated against soil samples taken next to the probe, towards the end of the season. All climate variables were monitored every 6 min and the soil water was monitored at a 30-min interval. To facilitate the APSIM simulations, which were all at a daily step, we processed the 6-min raw measurements for solar radiation, air temperature and rainfall into the daily average solar radiation (MJ/m2), the daily maximum and minimum temperature (°C), and the daily total rainfall (mm). We took the mid-day values of the sub-daily soil water content measurements as representative daily values.
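The conversion from sub-daily records to daily model inputs can be sketched as follows. This is an illustration only, not the processing code used in the study: the record layout and function names are hypothetical, and solar radiation is left out because its conversion depends on the sensor's output units.

```python
from collections import defaultdict
from datetime import date, datetime
from typing import Dict, Iterable, List, Optional, Tuple


def aggregate_daily(
    records: Iterable[Tuple[datetime, float, float]]
) -> Dict[date, Dict[str, float]]:
    """Aggregate (timestamp, air_temp_c, rain_mm) records into daily values."""
    by_day: Dict[date, List[Tuple[float, float]]] = defaultdict(list)
    for timestamp, temp_c, rain_mm in records:
        by_day[timestamp.date()].append((temp_c, rain_mm))
    daily = {}
    for day, values in sorted(by_day.items()):
        temps = [t for t, _ in values]
        daily[day] = {
            "tmax_c": max(temps),                  # daily maximum temperature
            "tmin_c": min(temps),                  # daily minimum temperature
            "rain_mm": sum(r for _, r in values),  # daily total rainfall
        }
    return daily


def midday_value(
    readings: Iterable[Tuple[datetime, float]], day: date
) -> Optional[float]:
    """Return the reading closest to 12:00 on the given day (None if no data)."""
    noon = datetime(day.year, day.month, day.day, 12, 0)
    same_day = [(ts, v) for ts, v in readings if ts.date() == day]
    if not same_day:
        return None
    return min(same_day, key=lambda item: abs(item[0] - noon))[1]
```

With 30-min soil water readings, `midday_value` simply picks the 12:00 reading; the nearest-to-noon rule only matters when that reading is missing.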

The APSIM crop model

To simulate crop water use and thus soil water content, we used APSIM (Agricultural Production Systems sIMulator, v7.10), which is widely applied for simulating agricultural systems using information on climate, plant phenology, soil properties and irrigation (Holzworth et al. 2014). For this study, we focused on the crop and soil water modules within the model. The Maize crop module (APSIM 2021b; Carberry and Abrecht 1991; Carberry et al. 1989; Keating et al. 1991; Keating and Wafula 1992) was applied to represent the phenology, biomass accumulation, root depth and crop water use.

The SoilWat module (APSIM 2021c), which conceptualizes each soil layer as a bucket (Jones and Kiniry 1986; Littleboy et al. 1992), was used to model the fluctuation of soil water with time within each soil layer, and the vertical movement of water between layers, in response to irrigation, rainfall and evapotranspiration. The module partitions total irrigation and rainfall to evapotranspiration, runoff, infiltration and drainage, each of which is defined and conceptualized in APSIM as follows:

- Evapotranspiration includes evaporation from the soil surface and transpiration from the crop. The upper limit of evapotranspiration (potential evapotranspiration) is first estimated from climate data with the Priestley and Taylor model (Priestley and Taylor 1972). This potential evapotranspiration is then converted to (1) actual soil evaporation, depending on the wetness of the soil surface layer; and (2) crop transpiration, through daily vapour pressure deficit, soil water deficit, as well as the biomass accumulation and transpiration efficiency, which are both determined by the Maize crop module based on simulated phenology (APSIM 2021b).

- Runoff represents the residual water leaving the soil surface. It is generated using the USDA curve number approach in SoilWat, and is thus dependent on the amount of irrigation and/or rainfall (APSIM 2021c).

- Infiltration represents the water movement from each layer to the next layer below, depending on the saturation status of layers, the water content gradient between the layers and the diffusivity (APSIM 2021c).

- Drainage is the amount of water leaving the bottom of the soil store (i.e., the deepest layer), and is dependent on a parameter representing drainage proportion for the bottom layer and the moisture of that layer (APSIM 2021c).
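To make the runoff component more concrete, the sketch below implements the standard USDA (SCS) curve number equation in metric units. It is an assumption-laden illustration: SoilWat additionally adjusts the curve number for factors such as soil wetness and surface cover, which is not reproduced here, and the function name and the default initial abstraction ratio of 0.2 are choices made for this example.

```python
def curve_number_runoff(
    water_input_mm: float, curve_number: float, ia_ratio: float = 0.2
) -> float:
    """Daily runoff (mm) from the standard SCS curve number equation.

    water_input_mm: rainfall plus irrigation reaching the surface (mm)
    curve_number:   dimensionless CN in the range (0, 100]
    ia_ratio:       initial abstraction as a fraction of retention S
    """
    if not 0.0 < curve_number <= 100.0:
        raise ValueError("curve_number must be in (0, 100]")
    # Potential maximum retention S (mm)
    retention_mm = 25400.0 / curve_number - 254.0
    initial_abstraction_mm = ia_ratio * retention_mm
    if water_input_mm <= initial_abstraction_mm:
        return 0.0  # all water is retained or infiltrates
    excess = water_input_mm - initial_abstraction_mm
    return excess * excess / (excess + retention_mm)


# Example: a 50 mm event on a soil with CN = 75 yields roughly 9 mm of runoff
runoff = curve_number_runoff(50.0, 75.0)
```

Because runoff grows nonlinearly with the water input, a large irrigation followed by forecast rain can generate disproportionately more runoff than either event alone.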

To simulate soil water, APSIM requires the following inputs:

[1] The sowing and harvesting dates, which were provided by the field owner for this study.

[2] The daily climate data consisting of solar radiation, maximum and minimum air temperature, and rainfall, which were all obtained from our in-field monitoring.

[3] The initial soil water status, which was extracted from our calibrated in-field soil water observations at the start of the season.

[4] Timing and amount of each irrigation event. For calibration purposes, these were back-calculated from our in-field observations of soil water (calibrated) and rainfall. The back-calculated irrigation amounts were found to underestimate the actual irrigation amount reported by the farmer (~ 40 mm estimated compared to ~ 70 mm applied). However, we assumed a minimal effect of this uncertainty on the modelled irrigation amount, which we will explicitly address in future studies (see the “Discussion and conclusion” section). For the forecast evaluations, irrigation was handled differently, as detailed below.

To simulate the soil water content for each soil layer, APSIM requires several key soil water parameters, e.g., bulk density (BD), field capacity (DUL) and crop wilting point (LL15). To determine these parameters, we first chose a soil type from the in-built soil database ApSoil (Clay loam Lismore #827) that was representative of our study field. Note that this selection is not based on geographical proximity of the soil sample to our study field, but on whether the soil parameters can reproduce the range of in-field soil water observations obtained from our study site. We chose to focus on matching simulations to observations for the top three soil layers: from the soil surface to depths of 150 mm, 300 mm and 700 mm, as the total depth of 700 mm was the approximate depth of the root zone at crop maturity inferred from our soil water observations. We then further improved the representativeness of the soil parameters by adjusting the values of DUL and LL15 for each layer. These adjustments were informed by a manual model calibration process, which used the abovementioned model inputs ([1]–[4]) and aimed to achieve a good match between the modelled and the observed soil water contents over the full cropping season. Since the study does not intend to investigate the effect of nutrient availability, we applied a sufficiently large initial soil nitrogen. Specifically, we set the initial NO3 and NH4 for each of the three soil layers to 50 ppm and 3 ppm respectively, with no fertilizer applied during the season. We also assumed a large initial surface residue of organic matter (e.g., stubble from the previous season) of 1000 kg/ha (APSIM 2021a). We confirmed via a preliminary model simulation that the crop was not nutrient-limited with these settings.

Ensemble short-term rainfall forecasts

Ensemble short-term rainfall forecasts were generated for the study field over the cropping season as a critical input in this risk evaluation framework. There are four steps involved in generating these rainfall forecasts:

1) We obtained the raw hourly forecasts from the ACCESS-G2 model, for the future 9-day period across all of Australia. This deterministic model was the Australian Bureau of Meteorology’s operational numerical weather prediction model for the study period.

2) The raw forecasts were then aggregated to match the spatial and temporal resolutions of the daily precipitation data (0.05° × 0.05° grid, approximately 5 km) across Australia, derived from historical observations by the Australian Water Availability Project (AWAP).

3) The raw daily forecasts were individually calibrated by using the Seasonally Coherent Calibration (SCC) model (Wang et al. 2019; Yang et al. 2021; Zhao et al. 2021) to improve their match with the AWAP data for our study field site, and to generate 100 ensemble forecast members for the future 9-day period.

4) The forecast ensemble members at different lead times were stochastically linked using the Schaake Shuffle technique (Clark et al. 2004) to form forecast ensemble time series with appropriate serial correlations between days.

This process yielded a 100-member ensemble of daily rainfall forecasts for the study field, for the future 9 day period. The process was repeated starting from each day in the cropping season.
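The reordering idea behind the Schaake Shuffle in step 4 can be sketched in a few lines. This is a minimal illustration, not the implementation used in the study: it assumes the calibrated members and a set of historical observed trajectories (the template) are supplied as equally sized lists per lead time, and all names are hypothetical.

```python
from typing import List, Sequence


def schaake_shuffle(
    forecast: Sequence[Sequence[float]], template: Sequence[Sequence[float]]
) -> List[List[float]]:
    """Reorder ensemble members so their rank structure follows a template.

    forecast[lead][i]: calibrated member values, independent between leads
    template[lead][j]: value of historical trajectory j at the same lead
    Returns shuffled[lead][j], where member j inherits the ranks of
    historical trajectory j at every lead time.
    """
    shuffled = []
    for fc_values, hist_values in zip(forecast, template):
        n = len(fc_values)
        if len(hist_values) != n:
            raise ValueError("forecast and template need the same ensemble size")
        sorted_fc = sorted(fc_values)
        # Indices of historical trajectories ordered from smallest to largest.
        # Python's sort is stable, so ties (e.g. dry days) keep index order.
        order = sorted(range(n), key=lambda j: hist_values[j])
        reordered = [0.0] * n
        for rank, j in enumerate(order):
            reordered[j] = sorted_fc[rank]
        shuffled.append(reordered)
    return shuffled


# Example with three members and two lead times
result = schaake_shuffle([[5, 1, 3], [0, 9, 4]], [[10, 20, 30], [3, 1, 2]])
# result == [[1, 3, 5], [9, 0, 4]]
```

The marginal distribution at each lead time is unchanged by the shuffle; only the pairing of values across days is altered, so that each member forms a plausible multi-day rainfall sequence.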

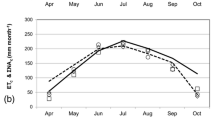

Figure 2 summarizes the performance of rainfall forecasts with three performance metrics (see the Appendix for details of the estimation for each metric):

- The bias in average daily precipitation in the raw and calibrated forecasts;

- The continuous ranked probability score (CRPS) skill score to measure skill in each of the raw and calibrated forecasts relative to ensemble climatology reference forecasts; and

- The alpha index (α) to summarize the reliability of the calibrated forecasts.

The a) bias, b) continuous ranked probability score (CRPS) skill score and c) alpha index (α) for the ensemble rainfall forecasts. These summarize the forecast accuracy, skill and reliability, respectively. For both a) and b), the corresponding performance of the raw forecasts is also shown for comparison. Please see the Appendix for detailed definitions and methods of calculation

The calibrated precipitation forecasts demonstrate better accuracy than the raw forecasts. The raw forecasts overpredict precipitation by more than 0.15 mm/day for lead times of 1–7 days (i.e., Days 1–7) (Fig. 2a). Calibration with the SCC model reduced the biases in the raw forecasts by over 40% for Days 1–7. In this study, we used the long-term (30-year) AWAP data to derive the climatology of observations for forecast calibration. The remaining biases in the calibrated forecasts mainly reflect the deviation of precipitation observations during the evaluation period (4/2016–3/2020) from the 30-year climatology (4/1990–3/2020).

The calibrated forecasts are much more skillful than the raw forecasts. Specifically, the raw forecasts have increasingly negative CRPS skill scores (meaning worse performance than climatology forecasts) beyond Day 1 due to both systematic and erratic errors, as well as the lack of uncertainty representation in the raw (deterministic) forecasts (Fig. 2b). Calibration with the SCC model substantially improved forecast skill, with the calibrated forecasts outperforming the climatology forecasts across all lead times due to correction of both the bias and ensemble spread. The high α-index values of the calibrated forecasts suggest that they have high reliability. The α-index remains above 0.95 for all lead times, indicating good quantification of forecast uncertainties, with the distribution of the calibrated ensemble forecasts being neither too narrow nor too wide (Fig. 2c).
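For readers unfamiliar with the CRPS skill score, the sketch below shows one common way to estimate it from an ensemble. It is an illustration under stated assumptions and may differ in detail from the estimation described in the Appendix: it uses the standard empirical ensemble estimator of the CRPS, expresses the skill score as a fraction rather than a percentage, and uses hypothetical function names.

```python
from typing import Sequence


def crps_ensemble(members: Sequence[float], observation: float) -> float:
    """Empirical CRPS of one ensemble forecast against one observation.

    Uses CRPS = mean|x_i - y| - 0.5 * mean|x_i - x_j|.
    For a single-member (deterministic) forecast this equals the absolute error.
    """
    n = len(members)
    mean_abs_error = sum(abs(x - observation) for x in members) / n
    mean_pair_diff = sum(abs(xi - xj) for xi in members for xj in members) / (n * n)
    return mean_abs_error - 0.5 * mean_pair_diff


def crps_skill_score(crps_forecast: float, crps_reference: float) -> float:
    """Skill relative to a reference: 1 is perfect, 0 matches the reference,
    and negative values are worse than the reference."""
    return 1.0 - crps_forecast / crps_reference


# Example: a two-member ensemble versus a deterministic forecast, observation = 2 mm
ensemble_score = crps_ensemble([1.0, 3.0], 2.0)       # 0.5
deterministic_score = crps_ensemble([4.0], 2.0)       # 2.0
skill = crps_skill_score(ensemble_score, deterministic_score)  # 0.75
```

In practice the CRPS values would be averaged over all forecast dates before the skill score is taken, with the climatology ensemble providing the reference value.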

Ensemble simulations to evaluate risks from different irrigation decisions

To evaluate risks from different irrigation decisions, we ran APSIM to generate an ensemble simulation of soil water contents for each of nine different irrigation timings within the future 9-day period. The simulations for each 9-day forecast period were made by running APSIM from the start of the season with forcing constructed by combining three sub-periods: (a) the antecedent period, including all days within the season prior to the 9-day window of interest; (b) the future 9-day period, for which irrigation decisions need to be made using forecasts; and (c) the post-decision period, with all days beyond the future 9-day period until the season ends. For the antecedent period, the observed climate data and the initial soil water content at the start of the season were used as input data, with irrigation determined using the automatic irrigation routines in APSIM (see paragraph below). For the future 9-day period, the climate input data comprised the ensemble rainfall forecasts and observed data for the remaining climate variables. Irrigation was assumed to occur only once during the 9 days, and individual simulations were performed for all possible irrigation timings from Day 1 to Day 9. The simulation for the post-decision period relied on the observed climate data with no irrigation applied. The simulation results from the post-decision period were used only for evaluating possible drainage following irrigation during the 9-day forecast period.

To ensure sufficient water supply to the crop before each 9-day forecast period (i.e., during the antecedent period), we did not use the actual irrigation events derived from the observed soil water. Instead, we set up ‘automatic irrigation’ within the antecedent period, which triggered an irrigation (filling to DUL) as soon as soil water dropped to a user-defined critical level (refill point). The critical refill point was estimated using a tension-based approach, by calculating the total readily available water over the top three soil layers that correspond to a soil matric potential of − 40 kPa. The estimation of soil water corresponding to − 40 kPa followed a regional guide on refill point estimation by crop and soil type (Giddings 2004).
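The trigger rule of the 'automatic irrigation' can be illustrated with a single-bucket sketch. This is not APSIM's routine: it assumes one lumped root-zone store, treats any water above DUL as lost, and uses hypothetical names and values throughout.

```python
from typing import List, Sequence, Tuple


def simulate_auto_irrigation(
    sw0_mm: float,
    dul_mm: float,
    refill_mm: float,
    daily_et_mm: Sequence[float],
    daily_rain_mm: Sequence[float],
) -> Tuple[List[float], List[float]]:
    """Single-bucket sketch of refill-point irrigation.

    Each day the store gains rain and loses ET (capped at DUL, floored at 0).
    If it is at or below the refill point, it is refilled to DUL that day.
    Returns (end-of-day soil water, irrigation applied), both in mm.
    """
    soil_water = sw0_mm
    sw_series, irrigation_series = [], []
    for et, rain in zip(daily_et_mm, daily_rain_mm):
        soil_water = min(dul_mm, max(0.0, soil_water + rain - et))
        applied = 0.0
        if soil_water <= refill_mm:
            applied = dul_mm - soil_water  # fill the deficit to DUL
            soil_water = dul_mm
        sw_series.append(soil_water)
        irrigation_series.append(applied)
    return sw_series, irrigation_series


# Example: 8 mm/day of ET with no rain triggers a refill on the third day
sw, irrigation = simulate_auto_irrigation(100.0, 100.0, 80.0, [8, 8, 8, 8], [0, 0, 0, 0])
# sw == [92.0, 84.0, 100.0, 92.0]; irrigation == [0.0, 0.0, 24.0, 0.0]
```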

While the simulations were run from the start of the season to define the antecedent soil water and crop conditions, the following 9-day forecast period was focused on evaluating each possible irrigation decision (timing of irrigation on Days 1–9). The simulations were driven by the 100 sets of weather inputs from the corresponding 9-day ensemble rainfall forecasts (“Ensemble short-term rainfall forecasts”). Since this study focuses on future rainfall uncertainty, our in-field climate observations for the 9-day period were used for all other required weather inputs (i.e., temperature and radiation) (“Study field”).
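The way the rainfall forcing is assembled for each ensemble member can be sketched as below. This is only an illustration of the splicing logic, with hypothetical function names and a zero-based index for the first day of the forecast window; it does not show how the series are passed to APSIM.

```python
from typing import List, Sequence


def build_rain_forcing(
    observed_rain_mm: Sequence[float],
    window_start: int,
    forecast_member_mm: Sequence[float],
    horizon: int = 9,
) -> List[float]:
    """Splice one forecast member into the observed seasonal rainfall series.

    Days before window_start keep observed rainfall (antecedent period),
    the next `horizon` days take the forecast member, and the remaining
    days keep observed rainfall (post-decision period).
    """
    if len(forecast_member_mm) != horizon:
        raise ValueError("forecast member length must equal the horizon")
    antecedent = list(observed_rain_mm[:window_start])
    post_decision = list(observed_rain_mm[window_start + horizon:])
    return antecedent + list(forecast_member_mm) + post_decision


def build_ensemble_forcing(
    observed_rain_mm: Sequence[float],
    window_start: int,
    ensemble_mm: Sequence[Sequence[float]],
    horizon: int = 9,
) -> List[List[float]]:
    """Return one spliced seasonal rainfall series per ensemble member."""
    return [
        build_rain_forcing(observed_rain_mm, window_start, member, horizon)
        for member in ensemble_mm
    ]
```

With 100 members and nine candidate irrigation days, this yields 900 model runs per forecast window, each differing only in the 9-day rainfall and the irrigation date.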

To evaluate each irrigation decision, we first extracted the following outputs from the 100 simulations:

1) Daily root-zone (70 cm) soil water content, within the 9-day period;

2) Daily runoff, within the 9-day period;

3) Daily drainage, within the 9-day period plus a 6-day lag period. The lag period was determined by a preliminary analysis in which we applied a large irrigation amount when the soil water content was near field capacity, and concluded that a 6-day period was required for drainage to complete.

We summarized the outcome of each irrigation decision by estimating the corresponding risks of stressing the crop and wasting water, specifically:

- Risk of crop stress: this was summarized in two ways, by the duration of crop stress and by the extent of stress. The stress duration was calculated as the total number of days for which the simulated soil water content was below the critical refill point (the same as that used to trigger the ‘automatic irrigation’) within the 9-day period. The stress extent was calculated by summing the differences between the soil water content and the critical refill point across all days within the stress duration, and thus had a unit of mm days. The stress extent was intended to account for different levels of cumulative stress; specifically, both a low and a high deficit below the refill point for one day give the same stress duration, but would likely have different impacts on the crop.

- Risk of wasting water: this was calculated as the sum of runoff and drainage after the irrigation event within the 9-day period. For runoff, the summation period ended on the last day of the 9-day period; for drainage, the summation period ended 6 days after the end of the 9-day period to allow for the abovementioned lag in drainage (item 3 in the abovementioned outputs from the 100 model simulations). Thus, this wastage risk had a unit of mm.
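The two risk summaries defined above can be sketched for a single ensemble member as follows. This is a minimal illustration rather than the code used in the study; the function names are hypothetical, and it assumes that the summation of runoff and drainage starts on the irrigation day itself.

```python
from typing import Sequence, Tuple


def stress_risk(sw_mm: Sequence[float], refill_mm: float) -> Tuple[int, float]:
    """Return (stress duration in days, stress extent in mm days).

    sw_mm holds the simulated daily root-zone soil water for the 9-day period.
    """
    deficits = [refill_mm - sw for sw in sw_mm if sw < refill_mm]
    return len(deficits), sum(deficits)


def wastage_risk(
    runoff_mm: Sequence[float],
    drainage_mm: Sequence[float],
    irrigation_day: int,
    horizon: int = 9,
    lag: int = 6,
) -> float:
    """Return runoff plus drainage (mm) following an irrigation event.

    irrigation_day is 1-based. Runoff is summed to the end of the horizon;
    drainage is summed to the end of the horizon plus the lag period.
    Assumption: the irrigation day itself is included in both sums.
    """
    start = irrigation_day - 1
    total_runoff = sum(runoff_mm[start:horizon])
    total_drainage = sum(drainage_mm[start:horizon + lag])
    return total_runoff + total_drainage


# Example for one member with a refill point of 80 mm and irrigation on Day 8
duration, extent = stress_risk([95, 90, 86, 83, 81, 79, 75, 120, 115], 80.0)
waste = wastage_risk([0] * 7 + [4, 1], [0] * 7 + [2, 3, 2, 1, 0, 0, 0, 0], 8)
# duration == 2, extent == 6.0, waste == 13
```

Applying these functions to all 100 members gives an empirical distribution of each risk for a given irrigation day, from which summary statistics such as the median or the probability of any stress can be read.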

The above procedure for running APSIM ensemble simulations and extracting simulation outputs was repeated to evaluate each decision to irrigate on Day 1 to Day 9, within each 9-day forecast period over the full cropping season. Figure 3 shows an example of the ensemble soil water simulations for one irrigation decision on Day 8 within a single 9-day period, which enables estimation of risks associated with this decision.

An example of ensemble soil water simulations for a specific irrigation decision (i.e., to irrigate on Day 8) within one 9-day period. The dotted lines indicate the field capacity and the critical refill point: the former (blue dotted line) will trigger risks of wastage, which were summarized by the sum of runoff over the 9-day period and drainage over the 9-day plus a 6-day lag period to account for drainage (blue arrow); the latter (red dotted line) will trigger risks of crop stress, which were summarized by both the duration and the cumulative amount of soil water below the refill point within the 9-day period (red arrow)

Results

In this section, we first present the detailed risk results for three 9-day forecast periods within the cropping season, starting on Day 42, Day 50 and Day 66 of the cropping season. Results for these particular days were chosen because they are representative examples of contrasting risk patterns expected in the next 9 days: (1) having both stress and wastage risks present; (2) having only the wastage risk present; (3) having only the stress risk present. Each case is discussed in detail in “Typical short-term risk profiles”. We then present a summary of the variation of risks across the full cropping season in “Variation of risks across the full season”.

Typical short-term risk profiles

The 9-day period starting on Day 42 of the cropping season experiences both stress and wastage risks, resulting in a trade-off that should be considered in deciding the irrigation timing. Since this is the first example we discuss, we present more detailed results on the two types of risks associated with different irrigation timings, as well as their derivation, to illustrate how the risks were calculated from the APSIM simulations. Figure 4 shows the 100-member ensemble rainfall forecasts for the 9-day period starting on Day 42 (panel a)), and the corresponding 100-member APSIM ensemble simulations of soil water content (middle column, panels b), e) and h)) and total runoff and drainage (right column, panels c), f) and i)). The simulations were performed for all possible irrigation timings, i.e., on Days 1 to 9; only three irrigation timings (Day 1, Day 5 and Day 9) are shown in Fig. 4 for illustration, one on each row. Note that within the 9 days there will be no stress after the irrigation event, so the period used to assess the stress risk should include all days within the 9-day period prior to the irrigation event. We thus considered analysis periods of varying lengths for different irrigation timings, i.e., from Day 1 until the day of irrigation, which ensured that the risk results were influenced purely by the irrigation timings assessed. The analysis period for the wastage risk included all days within the 9-day forecast period and a further 6 days after it to allow for delayed drainage to be considered (see details in Risk of wasting water in “Ensemble simulations to evaluate risks from different irrigation decisions”).

Fig. 4 The 9-day ensemble forecasts and simulations for Day 42 in the season, including rainfall forecasts (panel a)), the corresponding ensemble simulations of soil water content (panels b), e) and h)) and the ensemble simulations of waste (panels c), f) and i)). The simulated soil water contents and waste are shown for scenarios with irrigation occurring on Day 1, Day 5 or Day 9, in separate rows. The red and blue rectangles highlight the periods for which the stress and wastage risks were accounted for, respectively; see the text for explanation

With the ensemble APSIM simulations in Fig. 4, the distributions of stress and wastage risks for each irrigation timing are derived and summarized in Fig. 5. Specifically, for each irrigation timing, the stress duration and extent were summarized using the ensemble soil water simulations shown in the middle column of Fig. 4 (panels b), e) and h)). The wastage risk was summarized using the ensemble simulations of runoff and drainage shown in the right column of Fig. 4 (panels c), f) and i)). See “Ensemble simulations to evaluate risks from different irrigation decisions” for the detailed risk estimation approach.

Fig. 5 The ensemble simulations of: a) stress duration in days; b) stress extent in mm days; and c) waste extent in mm. All risks are plotted for different irrigation timing decisions, i.e., to water on Days 1–9 within the future 9 days starting on Day 42 in the season (x-axes). All boxplots are shown with no outliers and whiskers are 1.5 times the interquartile ranges

Figure 5 shows that the stress risk (considering both the stress duration and extent) can only be completely avoided if irrigation occurs on Day 1. Note that in summarising the highly skewed risks from individual simulations, the summary of risks in Fig. 5 (and the subsequent Figs. 6 and 7) only extends to 1.5 times the interquartile range (i.e., the end of the whiskers), and does not consider outliers. With irrigation from Day 2 onwards, both the stress duration and extent increase as irrigation is applied later. No wastage risk is produced unless irrigation is applied on either Day 8 or Day 9. With both risks likely to occur within the 9-day period, the irrigation decision needs to consider the trade-off between them. If the objective is to completely avoid both risks, the optimal day to irrigate would be Day 1.
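To make the whisker-based summary concrete, the sketch below computes boxplot statistics for one set of ensemble risk values. It assumes the common Tukey convention, in which the whiskers extend to the most extreme values lying within 1.5 times the interquartile range of the quartiles; the function name and the example values are hypothetical.

```python
import numpy as np

def whisker_summary(values):
    """Boxplot statistics with outliers excluded (Tukey convention assumed)."""
    v = np.asarray(values, dtype=float)
    q1, median, q3 = np.percentile(v, [25, 50, 75])
    iqr = q3 - q1
    lower_fence = q1 - 1.5 * iqr
    upper_fence = q3 + 1.5 * iqr
    # Values outside the fences are treated as outliers and ignored.
    inside = v[(v >= lower_fence) & (v <= upper_fence)]
    return {
        "lower_whisker": float(inside.min()),
        "q1": float(q1),
        "median": float(median),
        "q3": float(q3),
        "upper_whisker": float(inside.max()),
        "n_outliers": int(v.size - inside.size),
    }

# Hypothetical skewed risk values (e.g., waste in mm) from nine members.
summary = whisker_summary([0, 0, 0, 0, 1, 2, 3, 4, 50])
```

In this hypothetical case the single extreme member (50 mm) falls outside the upper fence of 7.5 mm, so the summarized risk extends only to 4 mm.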

Fig. 6 The ensemble simulations of: a) stress duration in days; b) stress extent in mm days; and c) waste extent in mm. All risks are plotted for different irrigation decisions, i.e., to water on Days 1–9 within the future 9 days starting on Day 50 in the season (x-axes). All boxplots are shown with no outliers and whiskers are 1.5 times the interquartile ranges

Fig. 7 The ensemble simulations of: a) stress duration in days; b) stress extent in mm days; and c) waste extent in mm. All risks are plotted for different irrigation decisions, i.e., to water on Days 1–9 within the future 9 days starting on Day 66 in the season (x-axes). All boxplots are shown with no outliers and whiskers are 1.5 times the interquartile ranges

Figure 6 shows the stress and wastage risks for the 9-day period starting on Day 50 of the season, derived from the ensemble APSIM simulations in the same way as illustrated in Fig. 4. For this period, wastage risk is present if irrigation occurs on Days 1 to 3. There is no risk of stress over the entire 9-day period, which suggests that the best decision is not to irrigate on any day. The difference in risk between different forecast starting days (e.g., comparing Day 42 with Day 50) is expected to be due to different initial soil water conditions and differences in the forecast rainfall, which will be discussed in more detail in “Variation of risks across the full season”.

Figure 7 shows the risks for the future 9-day period starting on Day 66 of the season. There is no risk of wastage over this entire period. No stress risk is produced if irrigation occurs on Day 1 or Day 2; from Day 3 onwards, the stress risk increases the later irrigation is applied. Therefore, if the aim is to completely avoid the stress risk, the best timing would be either Day 1 or Day 2.

Variation of risks across the full season

“Typical short-term risk profiles” illustrated how the APSIM ensemble simulations are used to derive the stress and wastage risks associated with different irrigation decisions for individual 9-day periods, and presented three typical periods over a cropping season with different types of risks occurring. In this section, we summarize the estimated risks over all 9-day forecast periods in the season in Figs. 8 and 9. Note that these results are not intended to present or recommend any specific irrigation strategy; instead, they focus on the expected short-term risks of under- and over-irrigation throughout the season under different soil water and rainfall conditions. As such, Figs. 8 and 9 highlight the critical periods of risk across this season, for which decisions on irrigation timing should be carefully considered.

Fig. 8 The probability of occurrence of the three risk types (stress only, waste only and both risks) for each day in the season. The probability of occurrence of each risk type for each day in the season was estimated by averaging the probability of that risk type occurring when irrigating on each of the future 9 days. For irrigation on each day within the 9 days, the probability of occurrence of the specific risk was obtained from the 100 ensemble soil water simulations corresponding to the 100 ensemble rainfall forecasts. The vertical dashed lines indicate Days 42, 50 and 66, which were used as examples in “Typical short-term risk profiles” to illustrate the three risk types

Fig. 9 a) The 75th percentile of forecast rainfall (with 1-day lead time) in mm; and b) the soil water at the start of each forecast period over the full cropping season. In both a) and b), the colours of the dots indicate the risk category: trade-off between stress and wastage risks, stress risk only, wastage risk only, or no risk. The vertical dashed lines indicate Days 42, 50 and 66, which were used as examples in “Typical short-term risk profiles” to illustrate the three risk types. Panels c) and d) show how the simulated average risk extents for each 9-day period vary with forecast rainfall and soil water content at the start of the period, where the risks are shown separately for stress in mm days (orange) and waste in mm (blue) when each risk is present. Panel e) plots the relationship between the forecast rainfall and initial soil water

Figure 8 presents the simulated probabilities of occurrence of the three risk types for each day in the season, specifically: the crop being stressed, water being wasted, and both crop stress and water waste occurring together. For irrigation on each day within the future 9-day period, the probability of each risk type (9 values in total) was estimated as the fraction of the corresponding 100 ensemble simulations that had a non-zero extent of the specific risk type. The simulations were produced from the corresponding 100 individual ensemble rainfall forecasts. For each risk type, the 9 probabilities of occurrence (corresponding to irrigation on each of Days 1–9 in the future) were then averaged to derive the final risk probability for each day in the season, as shown in Fig. 8. The risk probabilities in Fig. 8 suggest that there is some risk of wastage nearly all the time over the season, with peaks around periods of higher soil water content; in contrast, occurrences of stress risk are infrequent and are aligned in time with low soil water levels (Fig. 9b). Finally, the combination of stress and waste risks occurring together is expected for only a few days in the season. The more frequent occurrence of the waste risk is a result of the ensemble rainfall forecasts used, in which there are always a few ensemble members with significant rainfall. To provide a more comprehensive assessment of the expected risk patterns across the full season, instead of considering the full set of ensemble simulations, we focus our discussion on the 75th percentile of the estimated risk extents in the subsequent results (Fig. 9).
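The probability calculation described above can be sketched as follows. The snippet treats the three risk types as mutually exclusive categories (stress only, waste only, both), following the caption of Fig. 8; this interpretation, the function name and the small example arrays are assumptions for illustration.

```python
import numpy as np

def risk_probabilities(stress, waste):
    """Average probability of each risk type over the candidate irrigation days.

    stress, waste: arrays of shape (n_irrigation_days, n_members) holding the
    risk extent of each ensemble member for each candidate irrigation day.
    """
    s = np.asarray(stress, dtype=float) > 0   # member shows stress
    w = np.asarray(waste, dtype=float) > 0    # member shows waste
    per_day = {
        "stress_only": (s & ~w).mean(axis=1),  # fraction of members, per day
        "waste_only": (~s & w).mean(axis=1),
        "both": (s & w).mean(axis=1),
    }
    # Average the per-day probabilities across all candidate irrigation days.
    return {name: float(p.mean()) for name, p in per_day.items()}

# Hypothetical extents for 2 irrigation days and 4 ensemble members.
stress = [[0, 0, 2, 3], [1, 0, 0, 4]]
waste = [[0, 5, 0, 1], [0, 0, 0, 2]]
probabilities = risk_probabilities(stress, waste)
```

In the study, the arrays would have 9 rows (irrigation on Days 1–9) and 100 columns (ensemble members) for each forecast start day.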

The top two panels of Fig. 9 show: a) the 75th percentile of the ensemble rainfall forecasts for each day in the cropping season, with a 1-day forecast lead time (chosen because it provided the most accurate forecasts); and b) the initial soil water (as defined in “Ensemble simulations to evaluate risks from different irrigation decisions”) for each day in the cropping season. The time series in both panels a) and b) are overlaid with coloured dots indicating which of the risk types is expected over the corresponding 9-day forecast period, where each risk type was defined based on the 75th percentile of the specific risk from the 100 ensemble simulations. In contrast to Fig. 8, if considering only the higher probability of wastage (Fig. 9), almost half of the days in this cropping season (47.3%) have no stress or wastage risk for the future 9 days. This absence of risk occurs regardless of whether any irrigation is applied within the 9-day period, or on which day it is applied. A further 15.5% and 29.5% of days in the season have only the stress or only the wastage risk for the future 9-day period, respectively. Only a small proportion of days (7.8%) have both stress and wastage risks expected for the future 9 days. These periods with both risks present a trade-off situation that requires critical decisions on irrigation timing.

Panels c) and d) of Fig. 9 show the 75th percentile of the extent of each risk type averaged over each 9-day period, plotted against the rainfall forecasts and the initial soil water status. The extents of the stress and waste risks were each estimated by averaging the 75th percentile of the specific risk extent across the future 9-day period, and were summarized in mm days and mm, respectively. Higher stress risks generally occur on days when soil water is low, while waste risks generally increase with higher soil water content. For this particular study site and cropping season, the short-term rainfall forecasts do not appear to have a large influence on the extent of either the stress or the waste risk. In theory, the sizes of the stress and waste risks should be controlled by both the soil moisture and rainfall. The much higher sensitivity of these risks to soil moisture than to forecast rainfall is likely due to the limited number of high rainfall events in our forecasts and the wide range of soil moisture levels during dry days (Fig. 9e).
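One possible reading of this percentile-based summary is sketched below. The text does not state how the nine per-day percentiles are combined into a single risk type for a period, so the rule used here (a risk is present if its 75th percentile is non-zero for at least one candidate irrigation day) is an assumption, as are the function name and the example values.

```python
import numpy as np

def classify_period(stress, waste, q=75):
    """Classify one forecast period and average its percentile risk extents.

    stress, waste: arrays of shape (n_irrigation_days, n_members).
    Returns (risk type, mean stress extent, mean waste extent).
    """
    # q-th percentile across ensemble members, one value per irrigation day.
    s_q = np.percentile(np.asarray(stress, dtype=float), q, axis=1)
    w_q = np.percentile(np.asarray(waste, dtype=float), q, axis=1)
    has_stress = bool((s_q > 0).any())   # assumed rule: any day with risk
    has_waste = bool((w_q > 0).any())
    if has_stress and has_waste:
        label = "trade-off"
    elif has_stress:
        label = "stress only"
    elif has_waste:
        label = "waste only"
    else:
        label = "no risk"
    # Average the percentile extents over the candidate irrigation days.
    return label, float(s_q.mean()), float(w_q.mean())

# Hypothetical extents for 3 irrigation days and 4 ensemble members.
stress = [[0, 0, 0, 0], [0, 0, 0, 4], [0, 0, 4, 8]]
waste = [[0, 0, 0, 0], [0, 0, 0, 0], [0, 0, 0, 0]]
label, mean_stress, mean_waste = classify_period(stress, waste)
```

For these hypothetical values, the per-day 75th percentiles of stress are 0, 1 and 5 mm days, giving a "stress only" period with a mean stress extent of 2 mm days.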

Discussion and conclusion

The risk analysis framework presented in this study can be used in two ways to inform practical irrigation decision making. Firstly, the risks identified for individual 9-day periods within the season can help inform a farmer’s day-to-day irrigation decisions, as we illustrated with the three contrasting examples in “Typical short-term risk profiles”. Having the risk information, rather than only an optimized decision recommendation, allows farmers to bring other management considerations into their decision making. Secondly, assessing risks across multiple 9-day periods or over the full cropping season (“Variation of risks across the full season”) allows one to understand the occurrence of different types of risk, the amount of stress or waste expected, and the likely soil and weather conditions contributing to these risks. Such full-season analysis can identify the critical periods and the types of risk within a cropping season that require more attention in short-term irrigation decision making. When both risks are present, a comprehensive risk analysis such as the one we illustrated is particularly important for farmers to understand the uncertainty in the risks and to decide on an optimal irrigation timing.

This study addresses an important knowledge gap in providing decision support information based on high-quality short-term ensemble weather forecasts (Hejazi et al. 2014). Specifically, the analysis framework we developed provides an alternative view, compared with previous work on optimization for irrigation scheduling (Cai et al. 2011; Cao et al. 2019; Gowing and Ejieji 2001; Hejazi et al. 2014; Wang and Cai 2009). This framework can better facilitate operational irrigation scheduling by presenting the uncertainty in the outcomes of different irrigation decisions, while allowing flexibility for individual farmers to make their own decisions. As illustrated in “Typical short-term risk profiles”, the 9-day periods starting from Day 42 and Day 66 both involve increasing stress risks if irrigation is applied on a later day, so it is better to irrigate earlier in both cases if the aim is to completely avoid crop stress. We used a fixed soil water deficit (a constant refill point) to estimate stress. In real operation, as the crop grows and the root zone develops, a farmer may instead use variable refill points across a season, based on their experience with the local climate, crop water use conditions and growth stage of the crop, which implies that a time-variable representation of water stress would be required. A farmer can also choose to intentionally reduce irrigation at a key growth stage to induce a desired crop response through regulated crop stress. In this situation, the farmer may decide to irrigate on a later day with some minor stress expected, thus not following the optimal irrigation recommendations (Cai et al. 2011). A potential further extension of our framework lies in risk communication with farmers, which might better focus on impacts on profit (rather than the stress and waste results presented) arising from the effects of crop stress on water use, crop yield and quality (e.g., Cai et al. 2011; Wang and Cai 2009).

It is worth noting that the novelty of this study is the analysis framework developed, rather than the risk results presented. Specifically, all the risk results generated were based on a case study at a maize field in northern Victoria (as detailed in “Study field”), and only the uncertainty in future rainfall was considered. The risk results shown are specific to the study field and cropping season, which experienced only a few rain events. In contrast, for fields or seasons with more frequent and/or larger rainfall events, we can expect more frequent situations where the stress and waste risks occur together (i.e., a trade-off). The risk results are also dependent on the irrigation method. With surface irrigation, scheduling often consists of a near-constant irrigation depth across a season; such an irrigation strategy creates relatively large deficits followed by a large irrigation application, which means that stress risks only appear near the end of each irrigation cycle and waste risks tend to occur just after each irrigation. The pattern of risk would be markedly different with sprinkler or drip irrigation, which is often applied more frequently and with more flexibility to vary the irrigation depth across a season (Brouwer et al. 1989). Nevertheless, the framework we present is flexible enough to estimate irrigation risks for any cropping field with any irrigation method, while also incorporating other sources of uncertainty.

This study is the first step in establishing an uncertainty framework to evaluate and inform irrigation decisions, and thus several simplifications and assumptions were made. We focused on gravity-fed surface irrigation systems, and a critical assumption was that irrigation decisions were only about timing. Uncertainty in irrigation amounts can arise from multiple sources. First, in calibrating the APSIM model, we used irrigation amounts that were back-calculated from our calibrated soil water observations (“The APSIM crop model”), which were found to under-estimate the actual irrigation amounts that the farmer applied, possibly due to an unrepresentative monitoring site. We assumed that the under-estimation of these irrigation amounts compared to actual irrigation had minimal impacts on the modelling. Realistically, such under-estimation may cause the calibrated model to under-represent the variability in soil water contents, which in turn leads to under-estimation of both the stress and waste risks. Therefore, we recommend improved soil water measurements using more detailed site-specific calibration, multiple monitoring sites, and/or multiple sources of information including soil samples and irrigation records from farmers. Further, uncertainty in irrigation amounts can also affect the implementation of APSIM for soil water simulations when assessing different irrigation timings. Here we assumed that the irrigation amounts were known with precision and that each irrigation always refilled soil water to the field capacity (“Ensemble simulations to evaluate risks from different irrigation decisions”). However, such modelling is limited in representing realistic irrigation events, which may not precisely replace the deficit of the soil profile. Future work will need to incorporate this important uncertainty in irrigation amounts to better represent gravity-fed surface irrigation systems, as well as extending the analysis framework to different irrigation systems such as drip irrigation, for which irrigation amount is another critical decision variable apart from timing (Brouwer et al. 1989).

In this study, we generated probabilistic risks by considering only the uncertainty that arises from rainfall forecasts. We plan to incorporate other sources of uncertainty in our future expansion of the analysis framework, including:

1) Uncertainties in potential evapotranspiration forecasts. This is a key source of uncertainty which is especially relevant to the stress risks, as it affects the rate of soil water depletion in between irrigation and rainfall events (Perera et al. 2014). To represent realistic future weather conditions, any cross-correlation between multiple climate variables (e.g., temperature, rainfall and solar radiation) should also be taken into account when incorporating the uncertainty in future rainfall and potential evapotranspiration (Li et al. 2017).

2) Uncertainty in soil water modelling. Similar to 1), this also contributes to the uncertainty of estimated soil water depletion and thus has important impacts on the estimation of stress risks within each period of analysis (9 days in this study). The key issues here include uncertainty in model parameterization (e.g., crop water use characteristics and soil hydraulic properties) and alternative choices of crop growth and soil water models (e.g., SWAT, CropWat), which both influence the modelled rate of soil water depletion (Confalonieri et al. 2016; Sándor et al. 2017; Wallach and Thorburn 2017).

3) Uncertainty in initial soil water content estimates. Depending on how these are established, uncertainties may arise from (a) uncertainty in soil water observations, (b) model uncertainties, or (c) uncertainties in historical weather observations and irrigation estimates. In this study, all three factors can contribute to uncertainty in initial soil water status. The APSIM model calibration relied on field observations of soil water, which may be subject to instrumental/human errors as well as insufficient representation of the spatial heterogeneity of the cropping field (Grayson and Western 1998; Or and Hanks 1993; Paraskevopoulos and Singels 2014). When running ensemble simulations with the calibrated model, the initial soil water status for each forecast period (9 days) depended on both the model uncertainty and uncertainties in historical weather observations and irrigation estimates for the preceding period.

It is expected that adding these further sources of uncertainty will further expand the range of ensemble soil water simulations compared to those presented in this study (e.g., Fig. 4). As a result, we can expect more frequent occurrence of the risk trade-off situation (i.e., having both the stress and waste risks together) and less of the no-risk situation.

A comprehensive uncertainty framework can be established once these further sources of uncertainty are incorporated. Such a framework will represent uncertainties involved in irrigation decision making in a more realistic manner, and thus enable more effective comparison and optimization of irrigation decisions, which is highly beneficial to irrigation scheduling in practice.

References

Allen RG, Pereira LS, Raes D, Smith M (1998) FAO Irrigation and drainage paper No. 56. Rome: Food and Agriculture Organization of the United Nations 56(97):e156

APSIM (2021a) Introduction to Apsim UI. https://www.apsim.info/support/apsim-training-manuals/introduction-to-apsim-ui/

APSIM (2021b) Maize. https://www.apsim.info/documentation/model-documentation/crop-module-documentation/maize/

APSIM (2021c) SoilWat. https://www.apsim.info/documentation/model-documentation/soil-modules-documentation/soilwat/

Azhar AH, Perera BJC (2011) Prediction of rainfall for short term irrigation planning and scheduling—case study in Victoria, Australia. J Irrig Drain Eng 137(7):435–445

Brouwer C, Prins K, Heibloem M (1989) Irrigation water management: irrigation scheduling. International Institute for Land Reclamation and Improvement. Food and Agriculture Organization of the United Nations. Rome (Italy), Training manual, 4

Cai X, Hejazi MI, Wang D (2011) Value of probabilistic weather forecasts: assessment by real-time optimization of irrigation scheduling. J Water Resour Plan Manag 137(5):391–403. https://doi.org/10.1061/(ASCE)WR.1943-5452.0000126

Campbell G, Campbell M, Hillel D (1982) Irrigation scheduling using soil moisture measurements: theory and practice. Adv Irrig 1:25–42

Cao J, Tan J, Cui Y, Luo Y (2019) Irrigation scheduling of paddy rice using short-term weather forecast data. Agric Water Manag 213:714–723. https://doi.org/10.1016/j.agwat.2018.10.046

Carberry P, Abrecht D (1991) Tailoring crop models to the semiarid tropics. CSIRO Division of Tropical Crops and Pastures. Aitkenvale QLD 4814 (Australia).

Carberry P, Muchow R, McCown R (1989) Testing the CERES-Maize simulation model in a semi-arid tropical environment. Field Crop Res 20(4):297–315

Clark M, Gangopadhyay S, Hay L, Rajagopalan B, Wilby R (2004) The Schaake shuffle: a method for reconstructing space–time variability in forecasted precipitation and temperature fields. J Hydrometeorol 5(1):243–262

Confalonieri R, Orlando F, Paleari L, Stella T, Gilardelli C, Movedi E, Pagani V, Cappelli G, Vertemara A, Alberti L, Alberti P, Atanassiu S, Bonaiti M, Cappelletti G, Ceruti M, Confalonieri A, Corgatelli G, Corti P, Dell’Oro M, Acutis M (2016) Uncertainty in crop model predictions: what is the role of users? Environ Model Softw 81:165–173. https://doi.org/10.1016/j.envsoft.2016.04.009

George BA, Shende SA, Raghuwanshi NS (2000) Development and testing of an irrigation scheduling model. Agric Water Manag 46(2):121–136. https://doi.org/10.1016/S0378-3774(00)00083-4

Giddings J (2004) Readily available water (RAW). Retrieved from https://1040663.app.netsuite.com/core/media/media.nl?id=20653&c=1040663&h=ba8eb8d627b98c84ce25&_xt=.pdf

Goldhamer DA, Fereres E (2001) Irrigation scheduling protocols using continuously recorded trunk diameter measurements. Irrig Sci 20(3):115–125

Gowing JW, Ejieji CJ (2001) Real-time scheduling of supplemental irrigation for potatoes using a decision model and short-term weather forecasts. Agric Water Manag 47(2):137–153. https://doi.org/10.1016/S0378-3774(00)00101-3

Grayson RB, Western AW (1998) Towards areal estimation of soil water content from point measurements: time and space stability of mean response. J Hydrol 207(1):68–82. https://doi.org/10.1016/S0022-1694(98)00096-1

Gu Z, Qi Z, Burghate R, Yuan S, Jiao X, Xu J (2020) Irrigation scheduling approaches and applications: a review. J Irrig Drain Eng 146(6):04020007. https://doi.org/10.1061/(ASCE)IR.1943-4774.0001464

Guerra E, Ventura F, Snyder R (2016) Crop coefficients: a literature review. J Irrig Drain Eng 142(3):06015006

Hejazi MI, Cai X, Yuan X, Liang X-Z, Kumar P (2014) Incorporating reanalysis-based short-term forecasts from a regional climate model in an irrigation scheduling optimization problem. J Water Resour Plan Manag 140(5):699–713. https://doi.org/10.1061/(ASCE)WR.1943-5452.0000365

Holzworth DP, Huth NI, deVoil PG, Zurcher EJ, Herrmann NI, McLean G, Chenu K, van Oosterom EJ, Snow V, Murphy C, Moore AD, Brown H, Whish JPM, Verrall S, Fainges J, Bell LW, Peake AS, Poulton PL, Hochman Z, Keating BA (2014) APSIM – evolution towards a new generation of agricultural systems simulation. Environ Model Softw 62:327–350. https://doi.org/10.1016/j.envsoft.2014.07.009

Jones HG (2004) Irrigation scheduling: advantages and pitfalls of plant-based methods. J Exp Bot 55(407):2427–2436. https://doi.org/10.1093/jxb/erh213

Jones C, Kiniry J (1986) A simulation model of maize growth and development. Texas A & M University Press, College Station

Jones AS, Andales AA, Chávez JL, McGovern C, Smith GEB, David O, Fletcher SJ (2020) Use of predictive weather uncertainties in an irrigation scheduling tool part II: an application of metrics and adjoints. J Am Water Resour Assoc 56(2):201–211. https://doi.org/10.1111/1752-1688.12806

Keating B, Godwin D, Watiki J (1991) Optimising nitrogen inputs in response to climatic risk. Climatic risk in crop production-models and management for the semi-arid tropics and subtropics. CAB International, Wallingford

Keating B, Wafula B (1992) Modelling the fully expanded area of maize leaves. Field Crop Res 29(2):163–176

Li W, Duan Q, Miao C, Ye A, Gong W, Di Z (2017) A review on statistical postprocessing methods for hydrometeorological ensemble forecasting. Wires Water 4(6):e1246. https://doi.org/10.1002/wat2.1246

Littleboy M, Silburn D, Freebairn D, Woodruff D, Hammer G, Leslie J (1992) Impact of soil erosion on production in cropping systems. I. Development and validation of a simulation model. Soil Research 30(5):757–774

Martin EC (2009) Methods of determining when to irrigate. Arizona Water Series No. 30. extension.arizona.edu/pubs/az1220–2014.pdf

Or D, Hanks RJ (1993) Irrigation scheduling considering soil variability and climatic uncertainty: simulation and field studies. In: Russo D, Dagan G (eds) Water flow and solute transport in soils: developments and applications In Memoriam Eshel Bresler (1930–1991). Springer, Berlin Heidelberg, pp 262–282

Paraskevopoulos AL, Singels A (2014) Integrating soil water monitoring technology and weather based crop modelling to provide improved decision support for sugarcane irrigation management. Comput Electron Agric 105:44–53. https://doi.org/10.1016/j.compag.2014.04.007

Peel MC, Finlayson BL, McMahon TA (2007) Updated world map of the Köppen-Geiger climate classification. Hydrol Earth Syst Sci 11(5):1633–1644

Pereira L, Teodoro P, Rodrigues P, Teixeira J (2003) Irrigation scheduling simulation: the model ISAREG. Tools for drought mitigation in mediterranean regions. Springer, pp 161–180

Pereira LS (1999) Higher performance through combined improvements in irrigation methods and scheduling: a discussion. Agric Water Manag 40(2):153–169. https://doi.org/10.1016/S0378-3774(98)00118-8

Perera KC, Western AW, Nawarathna B, George B (2014) Forecasting daily reference evapotranspiration for Australia using numerical weather prediction outputs. Agric For Meteorol 194:50–63. https://doi.org/10.1016/j.agrformet.2014.03.014

Perera KC, Western AW, Robertson DE, George B, Nawarathna B (2016) Ensemble forecasting of short-term system scale irrigation demands using real-time flow data and numerical weather predictions. Water Resour Res 52(6):4801–4822. https://doi.org/10.1002/2015WR018532

Priestley C, Taylor R (1972) On the assessment of surface heat flux and evaporation using large-scale parameters. Mon Weather Rev 100(2):81–92

Ratliff LF, Ritchie JT, Cassel DK (1983) Field-measured limits of soil water availability as related to laboratory-measured properties. Soil Sci Soc Am J 47(4):770–775

Rawls WJ, Brakensiek DL, Saxton K (1982) Estimation of soil water properties. Trans ASAE 25(5):1316–1320

Rhenals AE, Bras RL (1981) The irrigation scheduling problem and evapotranspiration uncertainty. Water Resour Res 17(5):1328–1338. https://doi.org/10.1029/WR017i005p01328

Sándor R, Barcza Z, Acutis M, Doro L, Hidy D, Köchy M, Minet J, Lellei-Kovács E, Ma S, Perego A, Rolinski S, Ruget F, Sanna M, Seddaiu G, Wu L, Bellocchi G (2017) Multi-model simulation of soil temperature, soil water content and biomass in Euro-Mediterranean grasslands: uncertainties and ensemble performance. Eur J Agronomy 88:22–40. https://doi.org/10.1016/j.eja.2016.06.006

Sezen SM, Yazar A, Daşgan Y, Yucel S, Akyıldız A, Tekin S, Akhoundnejad Y (2014) Evaluation of crop water stress index (CWSI) for red pepper with drip and furrow irrigation under varying irrigation regimes. Agric Water Manag 143:59–70. https://doi.org/10.1016/j.agwat.2014.06.008

Slingo J, Palmer T (2011) Uncertainty in weather and climate prediction. Philos Trans R Soc A: Math Phys Eng Sci 369(1956):4751–4767

The World Bank (2017) World Development Indicators: Annual freshwater withdrawals, agriculture (% of total freshwater withdrawal). https://data.worldbank.org/indicator/er.h2o.fwag.zs

Topp GC, Davis JL (1985) Time-Domain Reflectometry (TDR) and its application to irrigation scheduling. In: Hillel D (ed) Advances in irrigation, vol 3. Elsevier, pp 107–127

Wallach D, Thorburn PJ (2017) Estimating uncertainty in crop model predictions: current situation and future prospects. Eur J Agronomy 88:A1–A7. https://doi.org/10.1016/j.eja.2017.06.001

Wang D, Cai X (2009) Irrigation scheduling—role of weather forecasting and farmers’ behavior. J Water Resour Plan Manag 135(5):364–372. https://doi.org/10.1061/(ASCE)0733-9496(2009)135:5(364)

Wang Q, Zhao T, Yang Q, Robertson D (2019) A seasonally coherent calibration (SCC) model for postprocessing numerical weather predictions. Mon Weather Rev 147(10):3633–3647

Wright W (2008) Observing the climate—challenges for the 21st century. World Meteorological Organization. https://public.wmo.int/en/bulletin/observing-climate%E2%80%94challenges-21st-century

Yang Q, Wang QJ, Hakala K (2021) Achieving effective calibration of precipitation forecasts over a continental scale. J Hydrol: Regional Stud 35:100818. https://doi.org/10.1016/j.ejrh.2021.100818

Zhao P, Wang QJ, Wu W, Yang Q (2021) Which precipitation forecasts to use? Deterministic versus coarser-resolution ensemble NWP models. Q J R Meteorol Soc 147(735):900–913

Acknowledgements

The authors would like to thank Dr Yating Tang and Shirui Hao for their assistance with modelling; Kevin Saillard, Kevin Clurey, Brett Williamson, Jackson Wilson, Zitian Gao, Rodger Young and Arash Parehkar for their help with fieldwork and data collection; Russell Pell for kindly providing the field for monitoring; and David Aughton for his guidance on the overall research direction.

Funding

Open Access funding enabled and organized by CAUL and its Member Institutions. This work was supported by the Australian Research Council and Rubicon Water through the linkage program [grant number LP170100710].

Ethics declarations

Conflict of interest

The authors have no competing interests to declare that are relevant to the content of this article.

Appendix: Evaluation of forecasting performance

Accuracy

We first evaluated the accuracy of the precipitation forecasts by calculating the bias in average precipitation of the raw and calibrated forecasts relative to AWAP data using:

$$\mathrm{Bias} = \frac{1}{T}\sum_{t=1}^{T}\left[x(t) - y(t)\right] \tag{1}$$

where Bias is the bias in average precipitation (mm/day); T is the total number of days in the validation period (4/2016–3/2020); x(t) is the forecast daily precipitation (mm/day) or wet-day occurrence (%) on day t of the 4-year period; and y(t) is the corresponding observation for the same day.
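As a minimal sketch of the bias calculation described above, assuming the bias is the plain average of forecast minus observation over the validation days, the following Python snippet may help. The function name `mean_bias` and the sample values are hypothetical and are not taken from the study.

```python
from statistics import fmean


def mean_bias(forecast, observed):
    """Average of (forecast - observation) over the validation days.

    Assumption: both inputs are equal-length sequences of daily values
    (e.g. precipitation in mm/day), one entry per day.
    """
    if len(forecast) != len(observed) or len(forecast) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    return fmean(f - o for f, o in zip(forecast, observed))


# Made-up daily precipitation values (mm/day), for illustration only.
forecast = [0.0, 2.5, 10.0, 0.5]
observed = [0.0, 4.0, 8.0, 0.0]
bias = mean_bias(forecast, observed)
```

A positive value indicates that the forecasts are wetter than the observations on average, and a negative value indicates that they are drier.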

Skill of the raw and calibrated forecasts

We used the continuous ranked probability score (CRPS) skill score to measure the skill of the raw and calibrated forecasts relative to ensemble climatology reference forecasts. CRPS has been widely used to measure the skill of probabilistic forecasts that provide predictive cumulative distribution functions. Specifically, CRPS was calculated using Eqs. 2 and 3:

$$\mathrm{CRPS} = \overline{\int_{-\infty}^{\infty}\left[F(t,x) - H\big(x - y(t)\big)\right]^{2}\,dx} \tag{2}$$

$$H\big(x - y(t)\big) = \begin{cases} 0, & x < y(t) \\ 1, & x \ge y(t) \end{cases} \tag{3}$$

where F(t,x) is the cumulative distribution function of an ensemble forecast, and y(t) is the observation at time t; H is the Heaviside step function (H = 1 if x − y(t) ≥ 0 and H = 0 otherwise); the overbar represents averaging across the T days. For deterministic forecasts, CRPS reduces to the absolute error.

After deriving the CRPS values for the raw, calibrated, and ensemble climatology reference forecasts, we calculated the CRPS skill score ($\mathrm{CRPS}_{SS}$) using:

$$\mathrm{CRPS}_{SS} = \frac{\mathrm{CRPS}_{\mathrm{reference}} - \mathrm{CRPS}_{\mathrm{forecasts}}}{\mathrm{CRPS}_{\mathrm{reference}}} \tag{4}$$

where $\mathrm{CRPS}_{\mathrm{reference}}$ is the CRPS value of the ensemble climatology reference forecasts, and $\mathrm{CRPS}_{\mathrm{forecasts}}$ is the CRPS value of the raw or calibrated forecasts. $\mathrm{CRPS}_{SS}$ measures the reduction in CRPS achieved by the forecasts relative to the ensemble climatology reference forecasts. Positive skill scores indicate better skill than the ensemble climatology reference forecasts, and negative scores indicate worse skill.
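The CRPS and skill score described above can be sketched in Python as follows. This is an illustration under stated assumptions, not the authors' implementation: F(t,x) is taken to be the empirical distribution of the ensemble members, for which the CRPS integral equals the mean absolute error of the members minus half the mean absolute difference between member pairs. The function names and sample values are hypothetical.

```python
def crps_ensemble(members, obs):
    """CRPS of one ensemble forecast against one observation.

    Assumption: the forecast CDF is the empirical CDF of `members`.
    For that CDF the integral of (F - H)^2 equals
    mean|x_i - obs| - 0.5 * mean|x_i - x_j|.
    """
    m = len(members)
    if m == 0:
        raise ValueError("ensemble must have at least one member")
    error_term = sum(abs(x - obs) for x in members) / m
    spread_term = sum(abs(a - b) for a in members for b in members) / (2 * m * m)
    return error_term - spread_term


def mean_crps(ensembles, observations):
    """Average CRPS across the T forecast days."""
    scores = [crps_ensemble(e, y) for e, y in zip(ensembles, observations)]
    return sum(scores) / len(scores)


def crps_skill_score(crps_forecasts, crps_reference):
    """Relative reduction in CRPS against the reference forecasts."""
    if crps_reference == 0:
        raise ValueError("reference CRPS must be non-zero")
    return (crps_reference - crps_forecasts) / crps_reference


# Made-up two-day example (mm/day), for illustration only.
ensembles = [[0.0, 2.0], [1.0, 1.0, 4.0]]
observations = [1.0, 1.0]
average_crps = mean_crps(ensembles, observations)
```

With a single member the spread term vanishes, so `crps_ensemble([3.0], 5.0)` returns the absolute error, consistent with the statement that CRPS reduces to the absolute error for deterministic forecasts.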

Reliability

One important advantage of SCC is its capability to convert deterministic raw forecasts into ensemble forecasts, with the ensemble spread representing forecast uncertainty. The reliability of ensemble forecasts refers to the consistency between forecast distributions and the frequency of associated events in the observations. To evaluate the reliability of the calibrated forecasts, we calculated the probability integral transform (π(t)) value using:

$$\pi(t) = F\big(t, y(t)\big) \tag{5}$$

where F(t,x) is the cumulative distribution function of the ensemble forecast, and y(t) is the observation. For reliable forecasts, π(t) follows a uniform distribution. To demonstrate the spatial patterns of reliability of calibrated forecasts across Australia, we then calculated an alpha index (α) to summarize the reliability in each grid cell using Eq. 6 below:

$$\alpha = 1 - \frac{2}{n}\sum_{t=1}^{n}\left|\pi^{*}(t) - \frac{t}{n+1}\right| \tag{6}$$

where π*(t) is the t-th value of π(t) sorted in ascending order, and n is the total number of days. The summation represents the total deviation of the calibrated forecasts from the corresponding uniform quantiles. Perfectly reliable forecasts have an α-index of 1, and reliability decreases as the α-index falls below 1.
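The reliability check described above can be illustrated with a short Python sketch. It rests on two assumptions that are not confirmed by the text: π(t) is taken as the fraction of ensemble members not exceeding the observation, and the α-index uses the commonly used form 1 − (2/n) Σ |π*(t) − t/(n+1)|. The sketch also ignores any special treatment of ties on zero-rainfall days. The function names and sample values are hypothetical.

```python
def pit_value(members, obs):
    """Empirical PIT: fraction of ensemble members <= the observation.

    Assumption: the forecast CDF is the empirical CDF of `members`.
    """
    if len(members) == 0:
        raise ValueError("ensemble must have at least one member")
    return sum(1 for x in members if x <= obs) / len(members)


def alpha_index(pit_values):
    """Alpha index: 1 minus twice the mean absolute deviation of the
    sorted PIT values from the uniform quantiles t / (n + 1)."""
    n = len(pit_values)
    if n == 0:
        raise ValueError("need at least one PIT value")
    ordered = sorted(pit_values)
    deviation = sum(
        abs(p - rank / (n + 1)) for rank, p in enumerate(ordered, start=1)
    )
    return 1.0 - 2.0 * deviation / n


# Made-up PIT values, for illustration only.
uniform_like = [0.75, 0.25, 0.5]   # matches the uniform quantiles exactly
clustered = [0.5, 0.5, 0.5]        # all observations fall at the median
alpha_uniform = alpha_index(uniform_like)
alpha_clustered = alpha_index(clustered)
```

PIT values that cluster around 0.5, as in the second example, suggest an ensemble spread that is too wide, and the α-index falls below 1 accordingly.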

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Guo, D., Wang, Q.J., Ryu, D. et al. An analysis framework to evaluate irrigation decisions using short-term ensemble weather forecasts. Irrig Sci 41, 155–171 (2023). https://doi.org/10.1007/s00271-022-00807-w

DOI: https://doi.org/10.1007/s00271-022-00807-w