Abstract

A mean-field selective optimal control problem of multipopulation dynamics via transient leadership is considered. The agents in the system are described by their spatial position and their probability of belonging to a certain population. The dynamics in the control problem is characterized by the presence of an activation function which tunes the control on each agent according to the membership to a population, which, in turn, evolves according to a Markov-type jump process. In this way, a hypothetical policy maker can select a restricted pool of agents to act upon based, for instance, on their time-dependent influence on the rest of the population. A finite-particle control problem is studied and its mean-field limit is identified via \(\varGamma \)-convergence, ensuring convergence of optimal controls. The dynamics of the mean-field optimal control is governed by a continuity-type equation without diffusion. Specific applications in the context of opinion dynamics are discussed with some numerical experiments.



1 Introduction

Multipopulation agent systems have drawn much attention over the last decades as a tool to describe the evolution of groups of individuals with some features that can change with time. These models find their application in contexts as varied as evolutionary population dynamics [11, 36, 48], economics [53], chemical reaction networks [39, 42, 44], and kinetic models of opinion formation [31, 50]. In these models, each agent carries a label that may describe, for instance, membership to a population (e.g., leaders or followers), or the strategy used in a game. While this label space is often discrete, for many applications (and also as a necessary condition for the existence of Nash equilibria [43]), it is useful to attach to each agent located at a point \(x\in {\mathbb {R}}^d\) a continuous variable which describes their mixed strategies or, referring back to the context of leaders and followers, their degree of influence. If U denotes the space of labels, this may be encoded by a probability measure \(\lambda \in {\mathcal {P}}(U)\). It is natural to postulate that \(\lambda \) can vary with time according to a spatially inhomogeneous Markov-type jump process with a transition rate \({\mathcal {T}}(x,\lambda ,({\varvec{x}},\varvec{\lambda }))\) that may depend on the position x of the agent and on the global state of the system \(({\varvec{x}},\varvec{\lambda })\), containing the positions and the labels of all the agents. Leadership may indeed be temporary and affected, for instance, by circumstances, need, location, and mutual distance among the agents.

Mean-field descriptions of such systems allow for an efficient treatment by replacing the many-agent paradigm with a kinetic one [21, 23]: the system is described by a limit PDE whose unknown is the distribution of agents together with their labels, such as the equations obtained in [6, 7, 41, 49] (see also [40] for a related Boltzmann-type approach).

A further step, which we take in this paper, is the extension of the mean-field point of view to the problem of controlling such systems, possibly in a selective way. The underlying idea is the presence of a policy maker whose control action, at any instant of time, concentrates on a subset of the population chosen according to the level of influence of the agents.

More precisely, in a population of N agents, the time-dependent state of the i-th agent is given by \(t\mapsto y_i(t)=(x_i(t),\lambda _i(t))\), where \(x_i\in {\mathbb {R}}^d\) and \(\lambda _i\in {\mathcal {P}}(U)\), for every \(i=1,\ldots ,N\), and evolves according to the controlled ODE system

where v is a velocity field, \(u_i\) is the control on the i-th agent, taking values in a compact convex subset K of \({\mathbb {R}}^{d}\), and \(h\ge 0\) is an activation function selecting the set of agents targeted by the decision of the policy maker, depending on their state and, possibly, on the global state of the system. The controls \(u_i\) are determined by minimizing the cost functional

where \(\varPsi ^N_t\) is the empirical measure defined as \(\varPsi ^N_t :=\frac{1}{N}\sum _{i=1}^{N} \delta _{y_i(t)}\) and \(\phi \) is a non-negative convex cost function, superlinear at infinity, with \(\phi (0)=0\); finally, the Lagrangian \({\mathcal {L}}_N(\cdot )\) is continuous and symmetric (see Definition 1 and Remark 2 below).

In this paper we show that the variational limit, in the sense of \(\varGamma \)-convergence [13, 26] in a suitable topology, of the functional introduced in (2) is given by

where \({\mathcal {L}}\) is a certain limit Lagrangian cost and where \(\varPsi _t\in {\mathcal {P}}({\mathbb {R}}^d\times {\mathcal {P}}(U))\) and w are coupled by the mean-field continuity equation

with the requirement that w be integrable with respect to the measure \(h\varPsi _t\otimes \mathrm {d}t\). From the point of view of the applications, we remark that our main result, Theorem 2, implies that a minimizing pair \((\varPsi ,w)\) for the optimal control problem (3) can be obtained as the limit of minimizers \(({\varvec{y}}^N,{\varvec{u}}^N)\) of the finite-particle optimal control problem (2) (a precise statement is given in Corollary 1).

In this sense, our result extends to the multipopulation setting the results of [32, 34], with the relevant feature that the activation function h allows the policy maker to tune the control action on a subset of the entire population which is not prescribed a priori, but rather depends on the evolution of the system. At each time \(t>0\), the policy maker can thus target its intervention on the most influential elements of the population, according to a threshold encoded by h. This is similar in spirit to a principle of sparse control, as considered, e.g., in [1, 22, 33]. Again, our model includes additional features; in particular, a control action only through leaders is already present in [33], where, however, the population of leaders is fixed a priori and discrete. A localized control action on a small time-varying subset \(\omega (t)\) of the state space of the system is presented in [22] as an infinite-dimensional generalization of [37]; there, no optimal control is considered and the evolution of \(\omega (t)\) is algorithmically constructed to reach a desired target, instead of being determined by the evolution itself. The numerical approach of [1] makes use of a selective state-dependent control specifically designed for the Cucker–Smale model. For other recent examples of localized/sparse intervention in mean-field control systems, we refer the reader to [3, 4, 18, 24, 38, 46, 51].

The role of the variable \(\lambda \) deserves some attention. It can generally be understood as a measure of the influence of an agent, which admits different interpretations depending on the context. Similar background variables have been used in the recent literature to describe wealth distribution [28, 30, 45], degree of knowledge [16, 17], degree of connectivity of an agent in a network [5, 15], and opinion formation [29], just to name a few. Compared to these other approaches, our mean-field approximation (3), (4) features a more profound interplay between the variable \(\lambda \) and the spatial distribution x of the agents, resulting in a higher flexibility of the model: not only does \(\lambda \) change in time, but its variation is driven by an optimality principle steered by the controls.

We present two applications in Sect. 5 in the context of opinion dynamics, where \(\lambda \) represents the transient degree of leadership of the agents. Specifically, in the first example we highlight the emergence of leaders and how this can be exploited by a policy maker; in the second, two competing populations of leaders with different targets and campaigning styles are considered, and the effect of the control action in favoring one of them is analyzed.

The plan of the paper is the following: in Sect. 2 we introduce the functional setting of the problem and list the standing assumptions on the velocity field v, on the transition operator \({\mathcal {T}}\), and on the cost functions \({\mathcal {L}}_N\), \({\mathcal {L}}\), and \(\phi \); in Sect. 3 we present the finite-particle control problem and discuss the existence of its solutions; in Sect. 4 we introduce the mean-field control problem and prove the main theorem on the \(\varGamma \)-convergence to the continuous problem. In Sect. 5 we discuss the applications mentioned above.

1.1 Technical Aspects

We highlight the main technical aspects of the proof of Theorem 2. The \(\varGamma \)-liminf inequality builds upon a compactness property of sequences of empirical measures \(\varPsi ^{N}_{t}\) with uniformly bounded cost \({\mathcal {E}}_{N}\). The hypotheses on the velocity field v and on the transition operator \({\mathcal {T}}\) in (1) (see Sect. 2) imply, by a Grönwall-type argument, a uniform-in-time estimate of the support of \(\varPsi ^{N}_{t}\), ensuring the convergence to a limit \(\varPsi _{t}\). The lower bound and the identification of the control field w are consequences of the convergence of \({\mathcal {L}}_{N}\) to \({\mathcal {L}}\) and of the convexity and superlinear growth of the cost function \(\phi \). As for the \(\varGamma \)-limsup inequality, we remark that the mere integrability of w (contrary to the situation considered in [32]) does not guarantee the existence of a flow map for the associated Cauchy problem

and therefore does not allow for a direct construction of a recovery sequence based on the analysis of (5) due to the lack of continuity with respect to the data. Following the main ideas of [34], we base our approximation strategy on the superposition principle [11, Theorem 5.2], [41, Theorem 3.11] (see also [9, 10, 20, 47]), which indeed selects a sequence of trajectories \({\varvec{z}}^{N}\) such that the corresponding empirical measures \(\varLambda ^{N}_{t} :=\frac{1}{N} \sum _{i=1}^{N} \delta _{z^{N}_{i}(t)}\) converge to \(\varPsi _{t}\) and the cost \({\mathcal {E}}_N({\varvec{z}}^N,{\varvec{u}}^N)\) converges to \({\mathcal {E}}(\varPsi ,w)\), where we have set \(u_{i}^{N}(t) :=w(t, z^{N}_{i}(t))\). The explicit dependence of (5) on the global state of the system calls for a further modification of the trajectories \({\varvec{z}}^{N}\). Here, the fact that h may take the value 0 introduces an additional technical difficulty as we cannot exploit the linear dependence on the controls in (1). To overcome this problem, we resort once again to the local Lipschitz continuity of v and of \({\mathcal {T}}\), and construct the trajectories \({\varvec{y}}^{N}\) by solving the Cauchy problem

By Grönwall estimates, we can conclude that the distance between \(\varLambda ^{N}_{t}\) and the empirical measure \(\varPsi ^{N}_{t}\) generated by \({\varvec{y}}^{N}\) vanishes as \(N\rightarrow \infty \), so that we obtain the desired convergences of \(\varPsi ^{N}_{t}\) to \(\varPsi _{t}\) and of \({\mathcal {E}}_{N}({\varvec{y}}^{N}, {\varvec{u}}^{N})\) to \({\mathcal {E}}(\varPsi , w)\). Let us also mention that the symmetry of the cost is used in a crucial way to deal with the initial conditions in (6).

2 Mathematical Setting

In this section we introduce the mathematical framework and notation to study our system.

Basic notation. Given a metric space \((X,{\mathsf {d}}_X)\), we denote by \({\mathcal {M}}(X)\) the space of signed Borel measures \(\mu \) on X with finite total variation \(\Vert \mu \Vert _{\mathrm {TV}}\), and by \({\mathcal {M}}_+(X)\) and \({\mathcal {P}}(X)\) the convex subsets of nonnegative measures and probability measures, respectively. We say that \(\mu \in {\mathcal {P}}_c(X)\) if \(\mu \in {\mathcal {P}}(X)\) and the support \(\mathrm {spt}\, \mu \) is a compact subset of X. For any \(K \subseteq X\), the symbol \({\mathcal {P}}(K)\) denotes the set of measures \(\mu \in {\mathcal {P}}(X)\) such that \(\mathrm {spt}\, \mu \subseteq K\). Moreover, \({\mathcal {M}}(X;{\mathbb {R}}^d)\) denotes the space of \({\mathbb {R}}^d\)-valued Borel measures with finite total variation.

As usual, if \((Z, {\mathsf {d}}_{Z})\) is another metric space, for every \(\mu \in {\mathcal {M}}_+(X)\) and every \(\mu \)-measurable function \(f:X\rightarrow Z\), we define the push-forward measure \(f_\#\mu \in {\mathcal {M}}_+(Z)\) by \((f_\#\mu )(B):=\mu (f^{-1}(B))\), for any Borel set \(B\subseteq Z\).

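For a Lipschitz function \(f:X\rightarrow {\mathbb {R}}\) we define its Lipschitz constant by

$$\begin{aligned} \mathrm {Lip}(f) :=\sup _{x, x'\in X,\, x\ne x'} \frac{|f(x)-f(x')|}{{\mathsf {d}}_X(x,x')}, \end{aligned}$$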

and we denote by \(\mathrm {Lip}(X)\) and \(\mathrm {Lip}_b(X)\) the spaces of Lipschitz and bounded Lipschitz functions on X, respectively. Both are normed spaces with the norm \(\Vert f\Vert _{\mathrm {Lip}} :=\Vert f\Vert _\infty + \mathrm {Lip}(f)\), where \(\Vert \cdot \Vert _\infty \) is the supremum norm. Furthermore, we use the notation \(\mathrm {Lip}_{1}(X)\) for the set of functions \(f \in \mathrm {Lip}_{b} (X)\) such that \(\mathrm {Lip}(f) \le 1\).

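In a complete and separable metric space \((X,{\mathsf {d}}_X)\), we shall use the Wasserstein distance \(W_1\) in the set \({\mathcal {P}}(X)\), defined as

$$\begin{aligned} W_1(\mu ,\nu ) :=\sup \Big \{ \int _X f\,\mathrm {d}(\mu -\nu ) : f\in \mathrm {Lip}_{1}(X) \Big \}. \end{aligned}$$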

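Notice that \(W_1(\mu ,\nu )\) is finite if \(\mu \) and \(\nu \) belong to the space

$$\begin{aligned} {\mathcal {P}}_1(X) :=\Big \{ \mu \in {\mathcal {P}}(X) : \int _X {\mathsf {d}}_X(x,{\bar{x}})\,\mathrm {d}\mu (x) <+\infty \text { for some } {\bar{x}}\in X \Big \} \end{aligned}$$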

and that \(({\mathcal {P}}_1(X),W_1)\) is a complete metric space if \((X,{\mathsf {d}}_X)\) is complete.
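To make the definition of \(W_1\) concrete, consider the special case \(X={\mathbb {R}}\) and two empirical measures with the same number N of atoms. In one dimension the monotone coupling, which matches atoms in increasing order, is optimal, so \(W_1\) reduces to the average distance between sorted atoms. The following is a minimal Python sketch of this special case only; the function name is ours and does not appear in the paper.

```python
from typing import Sequence

def w1_empirical_1d(xs: Sequence[float], ys: Sequence[float]) -> float:
    """W_1 between (1/N) sum_i delta_{x_i} and (1/N) sum_i delta_{y_i} on X = R."""
    if len(xs) != len(ys):
        raise ValueError("both empirical measures must have the same number of atoms")
    # In one dimension the monotone (sorted) coupling is optimal, so W_1 is the
    # average distance between the i-th smallest atoms of the two measures.
    return sum(abs(a - b) for a, b in zip(sorted(xs), sorted(ys))) / len(xs)

# Translating every atom by 0.5 moves the empirical measure by 0.5 in W_1,
# independently of the order in which the atoms are listed.
print(w1_empirical_1d([0.0, 1.0, 3.0], [1.5, 3.5, 0.5]))
```

Since the result depends only on the sorted atoms, it depends only on the empirical measures themselves, not on how the agents are labeled.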

If \((E, \Vert \cdot \Vert _{E})\) is a Banach space and \(\mu \in {\mathcal {M}}_+(E)\), we define the first moment \(m_1(\mu )\) as

$$\begin{aligned} m_1(\mu ) :=\int _E \Vert x\Vert _E \,\mathrm {d}\mu (x). \end{aligned}$$

Notice that for a probability measure \(\mu \) finiteness of the integral above is equivalent to \(\mu \in {\mathcal {P}}_1(E)\), whenever E is endowed with the distance induced by the norm \(\Vert \cdot \Vert _{E}\). Furthermore, the notation \(C^1_b(E)\) will be used to denote the subspace of \(C_b(E)\) of functions having bounded continuous Fréchet differential at each point. The symbol \(\nabla \) will be used to denote the Fréchet differential. In the case of a function \(\phi :[0,T]\times E \rightarrow {\mathbb {R}}\), the symbol \(\partial _t\) will be used to denote partial differentiation with respect to t.

Functional setting. We consider a set of pure strategies U, where U is a compact metric space, and we denote by \(Y:={\mathbb {R}}^{d} \times {\mathcal {P}}(U)\) the state-space of the system.

According to the functional setting considered in [11, 41], we consider the space \({\overline{Y}}:={\mathbb {R}}^{d} \times {\mathcal {F}}(U)\), where we have set (see, e.g., [8, 12] and [52, Chap. 3])

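The closure in (7) is taken with respect to the bounded Lipschitz norm \(\Vert \cdot \Vert _{\mathrm {BL}}\), defined for \(\mu \in {\mathcal {M}}(U)\) as

$$\begin{aligned} \Vert \mu \Vert _{\mathrm {BL}} :=\sup \Big \{ \int _U \varphi \,\mathrm {d}\mu : \varphi \in \mathrm {Lip}_b(U),\ \Vert \varphi \Vert _{\mathrm {Lip}}\le 1 \Big \}. \end{aligned}$$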

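We notice that, by definition of \(\Vert \cdot \Vert _{\mathrm {BL}}\), we always have

$$\begin{aligned} \Vert \mu \Vert _{\mathrm {BL}}\le \Vert \mu \Vert _{\mathrm {TV}} \qquad \text {for every } \mu \in {\mathcal {M}}(U); \end{aligned}$$

in particular, \(\Vert \lambda \Vert _{\mathrm {BL}} \le 1\) for every \(\lambda \in {\mathcal {P}}(U)\).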

Finally, we endow \({\overline{Y}}\) with the norm \(\Vert y\Vert _{{{\overline{Y}}}}=\Vert (x,\lambda )\Vert _{{{\overline{Y}}}}:=|x |+\Vert \lambda \Vert _{\mathrm {BL}}\).

For every \(R>0\), we denote by \(\mathrm {B}_R^Y\) the closed ball of radius R in Y, namely \(\mathrm {B}_R^Y=\{y\in Y:\Vert y\Vert _{{{\overline{Y}}}}\le R\}\) and notice that, in our setting, \(\mathrm {B}^{Y}_{R}\) is a compact set.

As in [41], we consider, for every \(\varPsi \in {\mathcal {P}}_{1} (Y)\), a velocity field \(v_{\varPsi } :Y \rightarrow {\mathbb {R}}^{d}\) such that

- (\(v_1\)): for every \(R>0\), \(v_{\varPsi } \in \mathrm {Lip} (\mathrm {B}^{Y}_{R}; {\mathbb {R}}^{d})\) uniformly with respect to \(\varPsi \in {\mathcal {P}}( \mathrm {B}^{Y}_{R})\), i.e., there exists \(L_{v, R}>0\) such that

$$\begin{aligned} | v_{\varPsi } (y_{1}) - v_{\varPsi }(y_{2}) | \le L_{v, R} \Vert y_{1} - y_{2} \Vert _{{\overline{Y}}} \qquad \text {for every }y_{1}, y_{2} \in \mathrm {B}^{Y}_{R}\,; \end{aligned}$$

- (\(v_2\)): for every \(R>0\) there exists \(L_{v, R}>0\) such that for every \(\varPsi _{1}, \varPsi _{2} \in {\mathcal {P}}(\mathrm {B}^{Y}_{R})\) and every \(y \in \mathrm {B}^{Y}_{R}\)

$$\begin{aligned} | v_{\varPsi _{1}} (y) - v_{\varPsi _{2}} (y) | \le L_{v, R} W_{1} (\varPsi _{1}, \varPsi _{2})\,; \end{aligned}$$

- (\(v_3\)): there exists \(M_{v}>0\) such that for every \(y \in Y\) and every \(\varPsi \in {\mathcal {P}}_{1}(Y)\)

$$\begin{aligned} | v_{\varPsi } (y) | \le M_{v} \big ( 1 + \Vert y \Vert _{{\overline{Y}}} + m_{1} ( \varPsi ) \big ). \end{aligned}$$
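As an illustration that is not taken from the paper, take d = 1 and a hypothetical two-label space U = {follower, leader}, identifying \(\lambda \) with the vector \((\lambda (\text {follower}), \lambda (\text {leader}))\). The field \(v_{\varPsi }(x,\lambda ) = \int \lambda '(\text {leader})\,(x'-x)\,\mathrm {d}\varPsi (x',\lambda ')\) attracts each agent toward the others, weighted by their leadership. It does not depend on the agent's own label and is 1-Lipschitz in x, and since \(\Vert \lambda '\Vert _{\mathrm {BL}} = 1\) for probabilities one gets \(|v_{\varPsi }(y)| \le |x| + m_1(\varPsi )\), so \((v_3)\) holds with \(M_v = 1\). The sketch below evaluates this hypothetical field on an empirical measure and checks the growth bound numerically.

```python
from typing import List, Tuple

# A state y = (x, lam): position x in R (d = 1) and lam = (p_follower, p_leader) in P(U),
# with the hypothetical two-label space U = {follower, leader}.
State = Tuple[float, Tuple[float, float]]

def velocity(y: State, agents: List[State]) -> float:
    """Hypothetical field v_Psi(y) = mean_j lam_j[leader] * (x_j - x) for the empirical measure Psi."""
    x, _ = y
    return sum(lam_j[1] * (x_j - x) for x_j, lam_j in agents) / len(agents)

def first_moment(agents: List[State]) -> float:
    """m_1(Psi) = mean_j (|x_j| + ||lam_j||_BL), using ||lam||_BL = 1 for every probability lam."""
    return sum(abs(x_j) + 1.0 for x_j, _ in agents) / len(agents)

agents = [(0.0, (1.0, 0.0)), (2.0, (0.2, 0.8)), (-1.0, (0.5, 0.5))]
y = (1.0, (0.9, 0.1))
v = velocity(y, agents)
# Growth bound (v_3) with M_v = 1, where ||y|| = |x| + ||lam||_BL = |x| + 1.
assert abs(v) <= 1.0 + (abs(y[0]) + 1.0) + first_moment(agents)
print(v)
```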

As for \({\mathcal {T}}\), for every \(\varPsi \in {\mathcal {P}}_{1} (Y)\) we assume that the operator \({\mathcal {T}}_{\varPsi } :Y \rightarrow {\mathcal {F}}(U)\) is such that

- (\({\mathcal {T}}_0\)): for every \((y , \varPsi ) \in Y \times {\mathcal {P}}_{1}( Y )\), the constants belong to the kernel of \({\mathcal {T}}_{\varPsi }(y)\), i.e.,

$$\begin{aligned} \left\langle {\mathcal {T}}_{\varPsi }(y), 1 \right\rangle _{{\mathcal {F}}(U), \mathrm {Lip}(U)} = 0, \end{aligned}$$

where \(\langle \cdot ,\cdot \rangle \) denotes the duality product;

- (\({\mathcal {T}}_1\)): there exists \(M_{{\mathcal {T}}}>0\) such that for every \(y \in Y\) and every \(\varPsi \in {\mathcal {P}}_{1}(Y)\)

$$\begin{aligned} \Vert {\mathcal {T}}_{\varPsi }(y) \Vert _{\mathrm {BL}} \le M_{{\mathcal {T}}} \big ( 1 + \Vert y \Vert _{{\overline{Y}}} + m_{1} ( \varPsi ) \big )\,; \end{aligned}$$

- (\({\mathcal {T}}_2\)): for every \(R>0\), there exists \(L_{{\mathcal {T}}, R}>0\) such that for every \((y_{1}, \varPsi _{1}), (y_{2}, \varPsi _{2}) \in \mathrm {B}^{Y}_{R} \times {\mathcal {P}}(\mathrm {B}^{Y}_{R})\)

$$\begin{aligned} \Vert {\mathcal {T}}_{\varPsi _{1}} ( y_{1} ) - {\mathcal {T}}_{\varPsi _{2}} (y_{2} ) \Vert _{\mathrm{BL}} \le L_{{\mathcal {T}}, R} \big ( \Vert y_{1} - y_{2} \Vert _{{\overline{Y}}} + W_{1} (\varPsi _{1}, \varPsi _{2}) \big )\,; \end{aligned}$$

- (\({\mathcal {T}}_3\)): for every \(R>0\) there exists \(\delta _{R}>0\) such that for every \( \varPsi \in {\mathcal {P}}_{1}(Y)\) and every \(y = (x, \lambda ) \in \mathrm {B}^{Y}_{R}\) we have

$$\begin{aligned} {\mathcal {T}}_{\varPsi } (y) + \delta _{R} \lambda \ge 0. \end{aligned}$$

For every \(y \in Y\) and every \(\varPsi \in {\mathcal {P}}_{1}(Y)\) we set \( b_{\varPsi }(y) :=\left( \begin{array}{cc} \displaystyle v_{\varPsi }(y) \\ \displaystyle {\mathcal {T}}_{\varPsi } (y) \end{array}\right) \), which is the velocity field driving the evolution; we also consider an activation function \(h_{\varPsi } :{\overline{Y}} \rightarrow [0,+\infty )\) satisfying:

- (\(h_{1}\)): \(h_{\varPsi }\) is bounded uniformly with respect to \(\varPsi \in {\mathcal {P}}_{1}(Y)\);

- (\(h_{2}\)): for every \(R>0\) there exists \(L_{h, R}>0\) such that for every \(\varPsi _{1}, \varPsi _{2} \in {\mathcal {P}}_{1}(\mathrm {B}^{Y}_{R})\) and every \(y_{1}, y_{2} \in \mathrm {B}^{Y}_{R}\)

$$\begin{aligned} | h_{\varPsi _{1}} (y_{1}) - h_{\varPsi _{2}} (y_{2}) | \le L_{h, R} \big ( \Vert y_{1} - y_{2} \Vert _{{\overline{Y}}} + W_{1}( \varPsi _{1}, \varPsi _{2}) \big ). \end{aligned}$$
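For instance (a hypothetical choice, not one made in the paper), with U = {follower, leader} one may activate the control only on agents whose leadership exceeds the population average, through a ramp of width \(\varepsilon \). Such an h takes values in [0, 1], so \((h_1)\) holds, and it is piecewise linear in \(\lambda (\text {leader})\) and in the average \(\int \lambda '(\text {leader})\,\mathrm {d}\varPsi \); for a finite U one can check that this yields Lipschitz dependence of the kind required in \((h_2)\). A minimal Python sketch:

```python
from typing import List, Tuple

State = Tuple[float, Tuple[float, float]]  # (x, (p_follower, p_leader)), hypothetical two-label U

def activation(y: State, agents: List[State], eps: float = 0.1) -> float:
    """Hypothetical h_Psi(y): ramps from 0 to 1 as the leader weight of y exceeds the population mean."""
    mean_lead = sum(lam[1] for _, lam in agents) / len(agents)
    ramp = (y[1][1] - mean_lead) / eps
    # Clipping keeps h in [0, 1], as in (h_1); the map is piecewise linear, hence Lipschitz.
    return min(1.0, max(0.0, ramp))

agents = [(0.0, (0.9, 0.1)), (1.0, (0.5, 0.5)), (2.0, (0.2, 0.8))]
vals = [activation(y, agents) for y in agents]
print(vals)  # agents below the mean leadership are not targeted by the control
```

The threshold depends on the whole population through \(\varPsi \), so the set of controlled agents is not fixed a priori but changes with the evolution of the labels.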

Remark 1

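We notice that assumptions \(({\mathcal {T}}_{0})\) and \(({\mathcal {T}}_{3})\) together imply that for every \(\varPsi \in {\mathcal {P}}_{1}(Y)\) and every \(y = (x, \lambda ) \in \mathrm {B}^{Y}_{R}\) we have \(\lambda + \delta _{R}^{-1}{\mathcal {T}}_{\varPsi } (y) \in {\mathcal {P}}(U)\): indeed, by \(({\mathcal {T}}_{3})\) this measure is nonnegative and, by \(({\mathcal {T}}_{0})\), its total mass equals that of \(\lambda \), namely 1 (see, for instance, [41, formula (3.13)]).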
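For a finite label set U, a natural (hypothetical, not from the paper) transition operator is \({\mathcal {T}}(\lambda ) = Q^{\top }\lambda \) for a rate matrix Q with nonnegative off-diagonal entries and zero row sums. Zero row sums give \(({\mathcal {T}}_0)\), and taking \(\delta \) equal to the largest exit rate \(\max _k |Q_{kk}|\) gives \(({\mathcal {T}}_3)\). The sketch checks numerically that \({\mathcal {T}}(\lambda ) + \delta \lambda \) is a nonnegative measure of total mass \(\delta \), i.e., that \(\lambda + \delta ^{-1}{\mathcal {T}}(\lambda )\) is a probability vector.

```python
from typing import List

def transition(lam: List[float], Q: List[List[float]]) -> List[float]:
    """T(lam)_k = sum_j lam_j Q[j][k] for a rate matrix Q (off-diagonal >= 0, zero row sums)."""
    n = len(lam)
    return [sum(lam[j] * Q[j][k] for j in range(n)) for k in range(n)]

# Hypothetical rates on U = {follower, leader}: follower -> leader at rate 0.3,
# leader -> follower at rate 0.1. In the model such rates may depend on (x, Psi).
Q = [[-0.3, 0.3],
     [0.1, -0.1]]
lam = [0.6, 0.4]
T = transition(lam, Q)

# (T_3) holds with delta equal to the largest exit rate, since
# T_k + delta * lam_k = sum_{j != k} lam_j Q[j][k] + (delta + Q[k][k]) lam_k >= 0.
delta = max(-Q[k][k] for k in range(len(Q)))

print(sum(T))                                   # (T_0): zero total mass, up to rounding
print([p + t / delta for p, t in zip(lam, T)])  # nonnegative entries summing to 1
```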

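In order to define the optimal control problems of Sects. 3 and 4, we have to introduce some further notation. For every \(N \in {\mathbb {N}}\), we define

$$\begin{aligned} {\mathcal {P}}^N(Y) :=\Big \{ \varPsi \in {\mathcal {P}}(Y) : \varPsi =\frac{1}{N}\sum _{i=1}^{N}\delta _{y_i} \text { for some } y_1,\ldots ,y_N\in Y \Big \}. \end{aligned}$$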

In particular, we notice that, up to a permutation, every N-tuple \({\varvec{y}}^{N}:=(y_{1}, \ldots , y_{N}) \in Y^{N}\) can be identified with an element \(\varPsi \in {\mathcal {P}}^{N}(Y)\). We now give the following two definitions (see also [34, Definition 2.1]).

Definition 1

For every \(N \in {\mathbb {N}}\), we say that a map \(F_{N} :Y \times Y^{N} \rightarrow [0,+\infty )\) is symmetric if \(F_{N}(y , {\varvec{y}}) = F_{N}( y, \sigma ({\varvec{y}}))\) for every \(y \in Y\), every \({\varvec{y}}\in Y^N\), and every permutation \(\sigma :Y^{N} \rightarrow Y^{N}\).

Remark 2

Notice that, by symmetry and by identifying \({\varvec{y}}^{N}:=(y_{1}, \ldots , y_{N}) \in Y^N\) with \(\varPsi ^{N} = \frac{1}{N} \sum _{i=1}^{N} \delta _{y_{i}}\), we may write \(F_{N}(y, \varPsi ^{N})\) for \(F_{N}(y, {\varvec{y}}^{N})\).
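A quick sanity check of Definition 1 and Remark 2, with a hypothetical symmetric map \(F_N(y,{\varvec{y}}) = \frac{1}{N}\sum _j |y - y_j|\) on positions in \({\mathbb {R}}\): evaluating it on every reordering of the agents produces a single value, which is why \(F_N\) can be regarded as a function of the empirical measure. This is an illustrative sketch, not a map used in the paper.

```python
import itertools
from typing import Sequence

def F_N(y: float, ys: Sequence[float]) -> float:
    """Hypothetical symmetric map: mean distance between y and the N agent positions."""
    return sum(abs(y - y_j) for y_j in ys) / len(ys)

ys = [0.0, 1.0, 4.0]
# Evaluate F_N on every permutation of the agents; by symmetry only one value appears
# (here the sums are exact in floating point, so no rounding differences arise).
values = {F_N(2.0, perm) for perm in itertools.permutations(ys)}
print(values)
```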

Definition 2

Let \(F_{N} :Y \times Y^{N} \rightarrow [0,+\infty )\) be symmetric. We say that \(F_{N}\) \({\mathcal {P}}_{1}\)-converges to \(F :Y \times {\mathcal {P}}_1(Y) \rightarrow [0,+\infty )\) uniformly on compact sets as \(N\rightarrow \infty \) if for every subsequence \(N_{k}\) and every sequence \(\varPsi _{k} \in {\mathcal {P}}^{N_{k}} (Y)\) converging to \(\varPsi \) in \({\mathcal {P}}_1(Y)\) w.r.t. the 1-Wasserstein distance we have

$$\begin{aligned} \lim _{k\rightarrow \infty }\,\sup _{y\in {\mathcal {K}}} \big | F_{N_k}(y,\varPsi _k)-F(y,\varPsi )\big | =0 \qquad \text {for every compact set } {\mathcal {K}}\subseteq Y. \end{aligned}$$

For the cost functionals of the finite-particle control problem and of its mean-field limit, we consider the functions \(\phi :{\mathbb {R}}^{d} \rightarrow [0, + \infty )\), \({\mathcal {L}}_{N} :Y \times Y^{N} \rightarrow [0,+\infty )\), and \({\mathcal {L}}:Y \times {\mathcal {P}}_{1}(Y) \rightarrow [0,+\infty )\) such that

- (\(\phi _{1}\)): \(\phi \) is convex and superlinear with \(\phi (0) = 0\);

- (\({\mathcal {L}}_{1}\)): \({\mathcal {L}}_{N}\) is continuous and symmetric;

- (\({\mathcal {L}}_{2}\)): \({\mathcal {L}}_{N}\) \({\mathcal {P}}_{1}\)-converges to \({\mathcal {L}}\) uniformly on compact sets;

- (\({\mathcal {L}}_{3}\)): for every \(R>0\), \({\mathcal {L}}\) is continuous on \(\mathrm {B}^{Y}_{R} \times {\mathcal {P}}(\mathrm {B}^{Y}_{R})\).

We conclude this section by recalling an existence result for ODEs on convex subsets of Banach spaces. We refer to [14, Sect. I.3, Theorem 1.4, Corollary 1.1] (see also [11, Theorem B.1] and [41, Corollary 2.3]) for a proof.

Theorem 1

Let \((E,\Vert \cdot \Vert _E)\) be a Banach space, C a closed convex subset of E, and let \(A(t,\cdot ):C\rightarrow E\), \(t\in [0,T]\), be a family of operators satisfying the following properties:

- (i) for every \(R>0\) there exists a constant \(L_R\ge 0\) such that for every \(c_1,\,c_2\in C\cap B_R\) and \(t\in [0,T]\)

$$\begin{aligned} \Vert A(t,c_1)-A(t,c_2)\Vert _E\le L_R\Vert c_1-c_2\Vert _E; \end{aligned}$$

- (ii) there exists \(M>0\) such that for every \(c\in C\) and every \(t\in [0,T]\) there holds

$$\begin{aligned} \Vert A(t,c)\Vert _E\le M(1+\Vert c\Vert _E); \end{aligned}$$

- (iii) for every \(c\in C\) the map \(t\mapsto A(t,c)\) belongs to \(L^1([0,T]; E)\);

- (iv) for every \(R>0\) there exists \(\theta >0\) such that

$$\begin{aligned} c\in C,\ \Vert c\Vert _E\le R\quad \Rightarrow \quad c+\theta A(t,c)\in C. \end{aligned}$$

Then for every \({\bar{c}}\in C\) there exists a unique absolutely continuous curve \(c:[0,T]\rightarrow C\) such that \(c_0={\bar{c}}\) and

$$\begin{aligned} \dot{c}_t = A(t,c_t) \end{aligned}$$

for a.e. \(t\in [0,T]\). Moreover, if \(c^1,\,c^2\) are the solutions starting from the initial data \({\bar{c}}^1,\,{\bar{c}}^2\in C\cap B_R\), respectively, there exists a constant \(L=L(M,R,T)>0\) such that

$$\begin{aligned} \Vert c^1_t-c^2_t\Vert _E\le L\,\Vert {\bar{c}}^1-{\bar{c}}^2\Vert _E \qquad \text {for every } t\in [0,T]. \end{aligned}$$
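Condition (iv) is what keeps a time discretization inside C: for \(0<\tau \le \theta \), \(c+\tau A(t,c) = (1-\tau /\theta )\,c + (\tau /\theta )\,(c+\theta A(t,c))\) is a convex combination of two points of C. The following Python sketch (not from the paper) illustrates this under the assumption that C is the probability simplex over a hypothetical two-label set and \(A(c)=Q^{\top }c\) for a hypothetical rate matrix Q, so that one may take \(\theta = 1/\max _k |Q_{kk}|\).

```python
from typing import List

def rate_field(lam: List[float], Q: List[List[float]]) -> List[float]:
    """A(c) = Q^T c: a mass-preserving field on the probability simplex (hypothetical rates Q)."""
    n = len(lam)
    return [sum(lam[j] * Q[j][k] for j in range(n)) for k in range(n)]

def euler_in_simplex(lam0: List[float], Q: List[List[float]], T_final: float, steps: int) -> List[float]:
    """Explicit Euler for c' = A(c); every step stays in C as long as tau <= theta from (iv)."""
    theta = 1.0 / max(-Q[k][k] for k in range(len(Q)))  # c + theta * A(c) lies in the simplex
    tau = T_final / steps
    if tau > theta:
        raise ValueError("step larger than theta: invariance of C is not guaranteed")
    lam = list(lam0)
    for _ in range(steps):
        a = rate_field(lam, Q)
        # c + tau*A(c) = (1 - tau/theta) c + (tau/theta)(c + theta*A(c)), a convex combination.
        lam = [p + tau * ak for p, ak in zip(lam, a)]
    return lam

Q = [[-0.3, 0.3], [0.1, -0.1]]
lam_T = euler_in_simplex([1.0, 0.0], Q, T_final=10.0, steps=100)
print(lam_T)  # approaches the stationary distribution (0.25, 0.75)
```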

3 The Finite Particle Control Problem

We now introduce the finite-particle control problem. We fix a compact and convex subset K of \({\mathbb {R}}^{d}\) of admissible controls with \(0 \in K\). For every \(N \in {\mathbb {N}}\) and every control function \(u_{i} \in L^{1}([0,T]; K)\), \(i=1, \ldots , N\), the dynamics of the N-particle system is driven by the Cauchy problem

where we have set \(\varPsi ^{N}_{t} :=\frac{1}{N} \sum _{i=1}^{N} \delta _{y_{i}(t)} \in {\mathcal {P}}^N (Y)\). For simplicity of notation, we set \({\varvec{u}}^{N}(t) :=( u_{1}(t), \ldots , u_{N}(t)) \in K^{N}\) for every \(t \in [0,T]\). In view of assumptions \((v_{1})\)–\((v_{3})\), \(({\mathcal {T}}_{0})\)–\(({\mathcal {T}}_{3})\), and \((h_{1})\)–\((h_{2})\), applying Theorem 1 we deduce that the Cauchy problem (8) admits a unique solution \({\varvec{y}}^{N} :=( y_{1}, \ldots , y_{N}) \in AC([0,T] ;Y^{N})\), which is also identified with the empirical measure \(\varPsi ^{N}_{t}\), up to a permutation. To ease the notation in our analysis, we give the following definition.
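To visualize the finite-particle dynamics, here is a hedged forward-Euler sketch. The system (8) itself is not reproduced here; the sketch assumes, consistently with the description in the Introduction, that the control acts on the position only and is multiplied by the activation, i.e., \(\dot{x}_i = v + h\,u_i\) and \(\dot{\lambda }_i = {\mathcal {T}}\), all evaluated at \((y_i(t),\varPsi ^N_t)\). The functions `v_field`, `T_field`, `h_field`, and `control` are hypothetical choices with d = 1 and U = {follower, leader}, not taken from the paper. With a step not exceeding the inverse of the largest exit rate, each \(\lambda _i\) remains a probability vector, in the spirit of Remark 1 and condition (iv) of Theorem 1.

```python
from typing import List, Tuple

# Hypothetical setting: d = 1 and U = {follower, leader}; lam = [lam(follower), lam(leader)].
State = Tuple[float, List[float]]

def v_field(y: State, agents: List[State]) -> float:
    """Hypothetical velocity: attraction toward the agents, weighted by their leader probability."""
    x = y[0]
    return sum(lam_j[1] * (x_j - x) for x_j, lam_j in agents) / len(agents)

def T_field(y: State, agents: List[State]) -> List[float]:
    """Hypothetical jump rates: agents far from the mean position become leaders faster."""
    x, lam = y
    mean_x = sum(x_j for x_j, _ in agents) / len(agents)
    up = 0.2 * min(1.0, abs(x - mean_x))  # follower -> leader rate, at most 0.2
    down = 0.1                            # leader -> follower rate
    flow = up * lam[0] - down * lam[1]    # net mass moving to "leader"
    return [-flow, flow]                  # zero total mass, as in (T_0)

def h_field(y: State, agents: List[State]) -> float:
    """Hypothetical activation: ramps from 0 to 1 as lam(leader) goes from 0.5 to 0.6."""
    return min(1.0, max(0.0, (y[1][1] - 0.5) / 0.1))

def control(y: State, target: float = 1.0) -> float:
    """Hypothetical feedback pushing toward `target`, clipped to K = [-1, 1]."""
    return min(1.0, max(-1.0, target - y[0]))

def simulate(agents: List[State], T_final: float = 5.0, steps: int = 500) -> List[State]:
    """Forward Euler for x' = v + h*u, lam' = T, driven by the current empirical measure."""
    tau = T_final / steps  # with the defaults tau = 0.01 <= 1/0.2, so each lam stays in P(U)
    for _ in range(steps):
        current = agents  # synchronous update: all agents see the same empirical measure
        agents = []
        for x, lam in current:
            y = (x, lam)
            dx = v_field(y, current) + h_field(y, current) * control(y)
            dlam = T_field(y, current)
            agents.append((x + tau * dx, [p + tau * d for p, d in zip(lam, dlam)]))
    return agents

final = simulate([(-1.0, [0.9, 0.1]), (0.0, [0.5, 0.5]), (2.0, [0.3, 0.7])])
for x, lam in final:
    print(f"x = {x:+.3f}, lam(leader) = {lam[1]:.3f}")
```

Updating all agents from the same snapshot `current` mirrors the fact that every particle is driven by the same empirical measure at each time.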

Definition 3

We say that \(({\varvec{y}}^{N}, {\varvec{u}}^{N}) \in AC([0,T]; Y^{N}) \times L^{1}([0,T]; K^{N})\) generates the pair \((\varPsi ^{N}, \varvec{\nu }^{N}) \in AC([0,T]; ( {\mathcal {P}}^{N}(Y) ; W_{1})) \times {\mathcal {M}}([0,T]\times {\overline{Y}}; {\mathbb {R}}^{d})\) if \(\varPsi ^{N}_{t} = \frac{1}{N} \sum _{i=1}^{N} \delta _{y_{i}(t)}\) and \(\varvec{\nu }^{N} = \varvec{\nu }^{N}_{t}\otimes {\mathcal {L}}_{T}\) with

$$\begin{aligned} \varvec{\nu }^{N}_{t} :=\frac{1}{N}\sum _{i=1}^{N} h_{\varPsi ^{N}_{t}}(y_{i}(t))\,u_{i}(t)\,\delta _{y_{i}(t)}, \end{aligned}$$

where \({\mathcal {L}}_{T}\) denotes the Lebesgue measure on \({\mathbb {R}}\) restricted to the interval [0, T].

In a similar way, if \({\varvec{y}}^{N}_{0}= (y_{0,1}, \ldots , y_{0,N}) \in Y^{N}\), we say that \({\varvec{y}}_{0}^{N}\) generates \(\varPsi ^{N}_{0} \in {\mathcal {P}}^{N}(Y)\) if \(\varPsi ^{N}_{0} = \frac{1}{N} \sum _{i=1}^{N} \delta _{y_{0,i}}\).

Given \({\varvec{y}}^{N}_{0} = (y_{0,1}, \ldots , y_{0,N}) \in Y^{N}\), we define the set of trajectory-control pairs solving the Cauchy problem (8) as

Given functions \(\phi \), \({\mathcal {L}}_{N}\), and \({\mathcal {L}}\) satisfying conditions \((\phi _{1})\), \(({\mathcal {L}}_{1})\), and \(({\mathcal {L}}_{2})\), for every initial condition \({\varvec{y}}^{N}_{0} \in Y^{N}\) and every \(({\varvec{y}}, {\varvec{u}}) \in AC([0,T]; Y^{N}) \times L^{1} ( [ 0,T] ; K^{N} ) \), we define the cost functional

where \((\varPsi ^{N}, \varvec{\nu }^{N})\) is the pair generated by \(({\varvec{y}}, {\varvec{u}})\). Therefore, the optimal control problem for the N-particle system reads as follows:

We now prove the existence of solutions of the minimum problem (11). First, we state the boundedness of the trajectories \({\varvec{y}}\) for given control and initial datum, which will also be useful in the \(\varGamma \)-convergence analysis of Sect. 4.

Proposition 1

For every \(N \in {\mathbb {N}}\), every initial datum \({\varvec{y}}^{N}_{0}= (y_{0,1}, \ldots , y_{0,N}) \in Y^{N}\), and every \(({\varvec{y}}^{N}, {\varvec{u}}^{N}) \in {\mathcal {S}}({\varvec{y}}^{N}_{0})\) we have

for a positive constant C independent of N.

Proof

Let \((\varPsi ^{N}, \varvec{\nu }^{N})\) be the pair generated by \(({\varvec{y}}^{N}, {\varvec{u}}^{N})\). Since the control \({\varvec{u}}^{N}\) takes values in \(K^{N}\) with K compact in \({\mathbb {R}}^{d}\) and in view of the assumptions \((v_{1})\), \(({\mathcal {T}}_{1})\), and \((h_{1})\), for every \(t \in [0, T]\) we estimate

for some positive constant \({\overline{C}}\) depending only on h and K. Taking the supremum over \(i\in \{1, \ldots , N\}\) in the previous inequality and applying Grönwall's inequality, we deduce (12). \(\square \)
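A possible way to spell out the Grönwall step is the following sketch. It assumes that (8) has the structure \(\dot{x}_i = v_{\varPsi ^N_t}(y_i) + h_{\varPsi ^N_t}(y_i)\,u_i\), \(\dot{\lambda }_i = {\mathcal {T}}_{\varPsi ^N_t}(y_i)\), and the constant written here also involves \(M_v\) and \(M_{{\mathcal {T}}}\). Set \(r(t) :=\max _i \Vert y_i(t)\Vert _{{\overline{Y}}}\), \(k_K :=\max _{k\in K}|k|\), and \(\Vert h\Vert _\infty :=\sup _{\varPsi ,y} h_{\varPsi }(y)\), finite by \((h_1)\). Since \(m_1(\varPsi ^N_t) = \frac{1}{N}\sum _j \Vert y_j(t)\Vert _{{\overline{Y}}} \le r(t)\), assumptions \((v_3)\), \(({\mathcal {T}}_1)\), and \((h_1)\) give, for every i,

$$\begin{aligned} \Vert y_i(t)\Vert _{{\overline{Y}}} &\le \Vert y_{0,i}\Vert _{{\overline{Y}}} + \int _0^t \Big ( |v_{\varPsi ^N_s}(y_i(s))| + \Vert {\mathcal {T}}_{\varPsi ^N_s}(y_i(s))\Vert _{\mathrm {BL}} + h_{\varPsi ^N_s}(y_i(s))\,|u_i(s)| \Big )\,\mathrm {d}s \\ &\le r(0) + \int _0^t \Big ( (M_v + M_{{\mathcal {T}}})\,(1 + 2r(s)) + \Vert h\Vert _\infty k_K \Big )\,\mathrm {d}s \le r(0) + {\overline{C}}\int _0^t (1 + r(s))\,\mathrm {d}s, \end{aligned}$$

with \({\overline{C}} :=2(M_v + M_{{\mathcal {T}}}) + \Vert h\Vert _\infty k_K\). Taking the maximum over i and applying Grönwall's inequality to \(1+r\) yields

$$\begin{aligned} 1 + r(t) \le \big (1 + r(0)\big )\,e^{{\overline{C}}t} \le \big (1 + r(0)\big )\,e^{{\overline{C}}T} \qquad \text {for every } t\in [0,T], \end{aligned}$$

a bound that does not depend on N.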

Proposition 2

For every \(N \in {\mathbb {N}}\) and every initial datum \({\varvec{y}}^{N}_{0} \in Y^{N}\), the minimum problem (11) admits a solution \(({\varvec{y}}^{N}, {\varvec{u}}^{N})\). If \((\varPsi ^{N}, \varvec{\nu }^{N})\) is the pair generated by \(({\varvec{y}}^{N}, {\varvec{u}}^{N})\), then also the pair \(({\varvec{y}}^{N}, {\tilde{{\varvec{u}}}}^{N})\) where

is a solution of (11). If the cost function \(\phi \) satisfies \(\{ \phi =0\} = \{0\}\), then every solution \(({\varvec{y}}^{N}, {\varvec{u}}^{N})\) of (11) satisfies \(u_{i}(t)=0\) a.e. on \(\{t\in [0,T]: \, h_{\varPsi ^{N}_{t}} (y_{i}(t)) = 0\}\) for \(i= 1, \ldots , N\).

Proof

Let us fix \(N \in {\mathbb {N}}\) and let \({\varvec{u}}_{k}^{N}= (u_{k,1}, \ldots , u_{k,N}) \in L^{1}([0,T]; K^{N})\) and \({\varvec{y}}_{k}^{N}= (y_{k,1}, \ldots , y_{k,N}) \in AC([0,T]; Y^{N})\) be a minimizing sequence for the cost functional \({\mathcal {E}}_{N}^{{\varvec{y}}^{N}_{0}}\). In particular, we may assume \(({\varvec{y}}_{k}^{N}, {\varvec{u}}_{k}^{N}) \in {\mathcal {S}}({\varvec{y}}^{N}_{0})\) for every k. Let us further denote by \((\varPsi ^{N}_{k},\varvec{\nu }^{N}_{k}) \in AC([0,T]; ({\mathcal {P}}^{N}(Y); W_{1})) \times {\mathcal {M}}([0,T]\times {\overline{Y}}; {\mathbb {R}}^{d})\) the pair generated by \(({\varvec{y}}_{k}^{N}, {\varvec{u}}_{k}^{N})\).

Since \({\varvec{u}}_{k}^{N}\) takes values in \(K^{N}\) and K is compact and convex in \({\mathbb {R}}^{d}\), up to a subsequence we have that \({\varvec{u}}_{k}^{N} \rightharpoonup {\varvec{u}}^{N}\) \(\hbox {weakly}^{*}\) in \(L^{\infty }([0,T]; K^{N})\). By Proposition 1, \({\varvec{y}}_{k}^{N}\) is bounded in \(C([0,T]; Y^{N})\). Let us fix \(R>0\) such that \(\Vert {\varvec{y}}_{k}^{N}(t) \Vert _{ {\overline{Y}}^{N} } \le R\) for \(t \in [0,T]\) and \(k \in {\mathbb {N}}\). Then, by \((v_{1})\), \(({\mathcal {T}}_{1})\), and \((h_{1})\), for every \(s<t \in [0,T]\), every \(i=1, \ldots , N\), and every k we have that

Thus, \({\varvec{y}}_{k}^{N}\) is bounded and equi-Lipschitz continuous in [0, T]. By the Ascoli-Arzelà theorem, \({\varvec{y}}_{k}^{N}\) converges uniformly to some \({\varvec{y}}^{N} \in C([0,T]; Y^{N})\) along a suitable subsequence, and \({\varvec{y}}^{N}(0) = {\varvec{y}}_{0}^{N}\). Furthermore, if \((\varPsi ^{N}, \varvec{\nu }^{N})\) is the pair generated by \(({\varvec{y}}^{N}, {\varvec{u}}^{N})\), we also deduce that \(\varPsi ^{N}_{k} \rightarrow \varPsi ^{N}\) in \(C([0,T]; ({\mathcal {P}}^{N}(Y); W_{1}))\). In view of \((v_{2})\), \(({\mathcal {T}}_{2})\), and \((h_{2})\), it is easy to see that \(({\varvec{y}}^{N}, {\varvec{u}}^{N}) \in {\mathcal {S}}({\varvec{y}}^{N}_{0})\).

Finally, the continuity of \({\mathcal {L}}_{N}\) and the convexity of \(\phi \) yield the lower semicontinuity of the cost functional \({\mathcal {E}}_{N}^{{\varvec{y}}_{0}^{N}}\), so that

and \(({\varvec{y}}^{N}, {\varvec{u}}^{N})\) is a solution of (11).

The second part of the statement follows from the structure of system (8). Indeed, if we define \({\tilde{{\varvec{u}}}}^{N}\) as in (13), the solution \({\varvec{y}}^{N}\) of (8) does not change and \(({\varvec{y}}^{N},{\tilde{{\varvec{u}}}}^{N})\in {\mathcal {S}}({\varvec{y}}_{0}^{N})\). Since the cost function \(\phi \) is non-negative with \(\phi (0) = 0\), it is easy to see that \({\mathcal {E}}_{N}^{{\varvec{y}}^{N}_{0}} ({\varvec{y}}^{N}, {\tilde{{\varvec{u}}}}^{N}) \le {\mathcal {E}}_{N}^{{\varvec{y}}^{N}_{0}} ({\varvec{y}}^{N}, {\varvec{u}}^{N})\). Finally, if \(\{\phi =0\} = \{0\}\), the minimality of \(({\varvec{y}}^{N}, {\varvec{u}}^{N})\) turns the previous inequality into an equality, which forces \(\phi (u_{i}(t)) = 0\), and hence \(u_{i}(t) = 0\), for a.e. t such that \(h_{\varPsi ^{N}_{t}} (y_{i}(t)) = 0\). Therefore \(u_{i}(t) = {\tilde{u}}_{i}(t)\) for a.e. \(t \in [0,T]\) and \(i=1, \ldots , N\), and the proof is concluded. \(\square \)
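The mechanism behind the second part of Proposition 2 can be checked on a time grid, using hypothetical values of h and u along a trajectory (d = 1) and the hypothetical cost \(\phi (u) = |u|^2\), which is convex, superlinear, and vanishes only at 0. Masking the control where h = 0 leaves every product \(h\,u\), and hence the dynamics, unchanged, while the control cost does not increase. A minimal Python sketch; the names are ours:

```python
from typing import List

def phi(u: float) -> float:
    """Hypothetical cost phi(u) = u^2: convex, superlinear, and {phi = 0} = {0}."""
    return u * u

def mask_inactive(u: List[float], h: List[float]) -> List[float]:
    """Replace u by u * 1_{h != 0} on a time grid, as in the second part of Proposition 2."""
    return [ui if hi != 0.0 else 0.0 for ui, hi in zip(u, h)]

h = [0.0, 0.5, 1.0, 0.0, 0.2]    # hypothetical activation values along a trajectory
u = [0.7, -0.3, 0.1, -0.9, 0.4]  # hypothetical control values in K = [-1, 1]
u_masked = mask_inactive(u, h)

# The dynamics only sees the products h * u, which coincide for u and u_masked ...
assert [hi * ui for hi, ui in zip(h, u)] == [hi * vi for hi, vi in zip(h, u_masked)]
# ... while the control cost decreases, strictly here since u != 0 where h = 0.
print(sum(map(phi, u_masked)), sum(map(phi, u)))
```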

4 Mean-Field Control Problem

Before introducing the mean-field optimal control problem and stating the main \(\varGamma \)-convergence result, we discuss the compactness of sequences of trajectory-control pairs \(({\varvec{y}}^{N}, {\varvec{u}}^{N})\) with bounded energy \({\mathcal {E}}^{{\varvec{y}}^{N}_{0}}_{N}\). To ease the notation, given a curve \(\varPsi \in C([0,T]; ({\mathcal {P}}_{1}(Y); W_{1}))\) we denote by \(h_{\varPsi }\) the map \((t, y) \mapsto h_{\varPsi _{t}}(y)\) and by \(h_{\varPsi } \varPsi \) the curve \(t \mapsto h_{\varPsi _{t}} \varPsi _{t}\), identified with the measure \(h_{\varPsi _{t}} \varPsi _{t}\otimes \mathrm {d}t\) on \([0,T]\times Y\). Similarly to (9), we define, for every \({\widehat{\varPsi }}_{0} \in {\mathcal {P}}_{c}(Y)\), the set

Proposition 3

For \(N \in {\mathbb {N}}\), let \({\varvec{y}}^{N}_{0} = (y_{0,1}, \ldots , y_{0,N}) \in Y^{N}\) and \(({\varvec{y}}^{N}, {\varvec{u}}^{N}) \in {\mathcal {S}}({\varvec{y}}^{N}_{0})\) with corresponding generated measures \(\varPsi ^{N}_{0} \in {\mathcal {P}}^{N}(Y)\) and \((\varPsi ^{N}, \varvec{\nu }^{N} ) \in AC([0,T]; ({\mathcal {P}}^{N}(Y); W_{1}))\times {\mathcal {M}}([0,T]\times {\overline{Y}} ; {\mathbb {R}}^{d})\). Assume that \(\varPsi ^{N}_{0} \rightarrow {\widehat{\varPsi }}_{0} \in {\mathcal {P}}_{c}(Y)\) in the 1-Wasserstein distance and that

Then, up to a subsequence, the curve \(\varPsi ^{N}\) converges uniformly in \(C([0,T]; ({\mathcal {P}}_{1}(Y); W_{1}))\) to \(\varPsi \in AC([0,T]; ({\mathcal {P}}_{1}(Y); W_{1}))\), \(\varvec{\nu }^{N}\) converges \(\hbox {weakly}^*\) to \(\varvec{\nu }\in {\mathcal {M}}([0,T]\times {\overline{Y}} ; {\mathbb {R}}^{d})\), and \((\varPsi , \varvec{\nu }) \in {\mathcal {S}}({\widehat{\varPsi }}_{0})\).

The proof of Proposition 3 is provided in Sect. 4.1.

Remark 3

Since \(\varvec{\nu }\ll h_{\varPsi }\varPsi \) for \((\varPsi , \varvec{\nu }) \in {\mathcal {S}}({\widehat{\varPsi }}_{0})\), there exists a function \(v \in L^{1}_{h_{\varPsi }\varPsi }([0,T] \times Y;{\mathbb {R}}^d)\) such that \(\varvec{\nu }= v h_{\varPsi }\varPsi \). Furthermore, if we consider \({\overline{v}}(t, y) :=v(t, y) {\mathbf {1}}_{\{ h_{\varPsi } \ne 0\}} (t, y)\), we still have \(\varvec{\nu }= {\overline{v}} h_{\varPsi }\varPsi \).

In view of the compactness result in Proposition 3, for \(\varPsi \in C([0,T]; ({\mathcal {P}}_{1}(Y); W_{1}))\) and \(\varvec{\nu }\in {\mathcal {M}}([0,T]\times {\overline{Y}} ; {\mathbb {R}}^{d})\) we define the cost functional for the mean-field control problem as

where we have set for \((\varLambda , \varvec{\mu }) \in {\mathcal {S}}({\widehat{\varPsi }}_{0})\)

With the above notation at hand, the mean-field optimal control problem reads as

In order to discuss the existence of solutions to (19), we introduce the auxiliary functionals

for every \(( \varLambda , \varvec{\mu }) \in AC([0,T]; ({\mathcal {P}}_{1}(Y); W_{1})) \times {\mathcal {M}}([0,T]\times {\overline{Y}} ; {\mathbb {R}}^{d}) \). In the next two propositions we show the existence of solutions to (19). We start by proving that, for every \((\varPsi , \varvec{\nu }) \in {\mathcal {S}}({\widehat{\varPsi }}_{0})\), the support of \(\varPsi _{t}\) is contained in a bounded subset of Y, uniformly with respect to t and to the choice of the pair.

Proposition 4

Let \({\widehat{\varPsi }}_{0} \in {\mathcal {P}}_{c}(Y)\). Then, there exist \(R>0\) and \(L>0\) such that for every \((\varPsi , \varvec{\nu }) \in {\mathcal {S}}({\widehat{\varPsi }}_{0})\) the curve \(t \mapsto \varPsi _{t}\) is L-Lipschitz continuous and satisfies \(\mathrm {spt}(\varPsi _{t}) \subseteq \mathrm {B}^{Y}_{R}\).

Proof

Let \((\varPsi , \varvec{\nu })\) be as in the statement of the proposition. In particular, we may write \(\varvec{\nu }= w h_{\varPsi } \varPsi \) for \(w \in L^{1}_{ h_{\varPsi } \varPsi } ([0, T] \times Y ; K)\) such that

Since \(\phi (0) = 0\) and \(\phi \ge 0\), without loss of generality we may suppose \(w (t, y) = 0\) in \(\{ (t, y) \in [0, T] \times Y: \, h_{\varPsi } (t, y) = 0\}\).

Let us first give a bound on the first moment \(m_{1}(\varPsi _{t})\). To do this, we fix a function \(\zeta \in C_{c}({\mathcal {F}}(U))\) such that \(0 \le \zeta \le 1\) and \(\zeta (\lambda ) = 1 \) for \(\lambda \in {\mathcal {P}}(U)\), which is possible since \({\mathcal {P}}(U)\) is a compact subset of \({\mathcal {F}}(U)\). For every \(n \in {\mathbb {N}}\) and every \(\varepsilon >0\), let us fix \(g_{\varepsilon }(x) :=\sqrt{|x|^{2} + \varepsilon ^{2}}\) and \(\theta _{n}(x) :=\theta (\frac{x}{n})\), where \(\theta \in C_{c} ({\mathbb {R}}^{d})\) is such that \(0 \le \theta \le 1\), \(|\nabla _{x} \theta | \le 1\) in \({\mathbb {R}}^{d}\), \(\theta (x) = 1 \) for \(|x| \le 1\), and \(\theta (x) = 0\) for \(|x| \ge 2\). Recalling that \(y= (x, \lambda )\), we have that the function \(\zeta g_{\varepsilon } \theta _{n} \in C_{c}({\overline{Y}})\) and

Since \(|\nabla _{x} \theta _{n}| \le \frac{1}{n}\), \(g_{\varepsilon }(x) \le |x| + \varepsilon \), and \((h_{1})\)–\((h_{2})\) hold, we continue in (21) with

for a positive constant C depending only on h and on K. Passing to the limit first as \(\varepsilon \rightarrow 0\) and then as \(n \rightarrow \infty \), and using \( (v_{3}) \) and \(({\mathcal {T}}_{1})\), we deduce from (22) that

Since \(\varPsi _{t} \in {\mathcal {P}}(Y)\) for every \(t \in [0, T]\), applying Grönwall's inequality to (23), we infer that

for some positive constant \({\overline{C}}\) only depending on h, K, v, and \({\mathcal {T}}\).
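For the reader's convenience, the Grönwall step can be spelled out. We assume that (23) has the integral form below, with C as in (22). The explicit right-hand side is one admissible bound of the type (24), obtained under that assumption.

```latex
% Assumed form of (23), with C the constant of (22):
m_{1}(\varPsi_{t}) \le m_{1}({\widehat{\varPsi}}_{0})
  + C \int_{0}^{t} \bigl(1 + m_{1}(\varPsi_{s})\bigr)\,\mathrm{d}s
  \qquad \text{for every } t \in [0,T].
% With f(t) := 1 + m_{1}(\varPsi_{t}) this reads f(t) \le f(0) + C \int_0^t f(s)\,ds,
% so the integral form of Gronwall's inequality gives f(t) \le f(0) e^{Ct}, that is,
m_{1}(\varPsi_{t}) \le \bigl(1 + m_{1}({\widehat{\varPsi}}_{0})\bigr)\, e^{C T} - 1
  \qquad \text{for every } t \in [0,T].
```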

We now prove the uniform bound of the support of \(\varPsi _{t}\). To do this, we will apply the superposition principle [11, Theorem 5.2]. The curve \(\varPsi \in AC([0,T]; ({\mathcal {P}}_{1}(Y); W_{1}))\) solves the continuity equation

where the velocity field \(b :[0,T]\times {\overline{Y}} \rightarrow {\overline{Y}}\) is defined as

and is extended to 0 in \({\overline{Y}} \setminus Y\). By (24), \( (v_{3}) \), \(({\mathcal {T}}_{1})\), and \((h_{1})\), and by the fact that \(w (t, y) \in K\), we can estimate

We are therefore in a position to apply [11, Theorem 5.2] with velocity field b. Hence, there exists \(\pi \in {\mathcal {P}}(C([0, T]; {\overline{Y}}))\) such that

where \(\mathrm {ev}_{t} ( \gamma ) :=\gamma (t)\) for every \( \gamma \in C([0, T]; {\overline{Y}})\) and every \(t \in [0, T]\). Moreover, \(\pi \) is concentrated on solutions of the Cauchy problems

For every \( \gamma \in C([0, T]; {\overline{Y}})\) solution of (29), for \(t \in [0, T]\) we have, by \((v_{2})\), \(({\mathcal {T}}_{2})\), and \((h_{2})\), that

where C is as in (22). Again by Grönwall's inequality, since \(y_{0} \in \mathrm {spt}({\widehat{\varPsi }}_{0}) \) and (24) holds, we deduce from (30) that there exists \(R>0\) independent of t such that every solution \(t \mapsto y(t)\) of the Cauchy problem (29) takes values in \(\mathrm {B}^{Y}_{R}\), so that \(\mathrm {spt}(\varPsi _{t}) \subseteq \mathrm {B}^{Y}_{R}\) by (28). This implies, together with \((v_{1})\), \(({\mathcal {T}}_{1})\), and \((h_{1})\), that

for every \(t \in [0, T]\) and every \(y \in \mathrm {spt}{\varPsi }_{t}\). Since \(\varPsi \) solves (25), we deduce that \(t \mapsto \varPsi _{t}\) is Lipschitz continuous, with Lipschitz constant L only depending on R. In particular, all the above computations are independent of the choice of \((\varPsi , \varvec{\nu }) \in {\mathcal {S}}({\widehat{\varPsi }}_{0})\). This concludes the proof of the proposition. \(\square \)
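The support bound only uses the following fact: the curves charged by \(\pi \) solve (29) with a velocity that grows at most linearly. From \(\Vert \gamma (t)\Vert \le \Vert \gamma (0)\Vert + C\int _{0}^{t}(1+\Vert \gamma (s)\Vert )\,\mathrm {d}s\), Grönwall's inequality gives \(1+\Vert \gamma (t)\Vert \le (1+\Vert \gamma (0)\Vert )e^{Ct}\).

The following is a minimal numerical sketch. It assumes a hypothetical planar field `velocity` with \(|b(t,y)|\le C(1+|y|)\), \(C=1\), in place of (26). Atoms of an initial measure supported in the ball of radius \(R_{0}\) are transported along explicit Euler characteristics. All of them stay within the Grönwall radius \((1+R_{0})e^{CT}-1\), so the transported measure remains supported in that ball.

```python
import math
import random

C, T, DT, R0 = 1.0, 1.0, 1e-3, 1.0  # hypothetical growth constant, horizon, step, initial radius


def velocity(t, p):
    """Hypothetical planar field with |b(t, p)| <= 0.5*|p| + 0.3 <= C * (1 + |p|)."""
    x, y = p
    return (-0.5 * y + 0.3 * math.cos(t), 0.5 * x)


def sup_norm_along_characteristic(p, steps):
    """Follow t -> gamma(t) from gamma(0) = p with explicit Euler; return sup_t |gamma(t)|."""
    t, sup_norm = 0.0, math.hypot(*p)
    for _ in range(steps):
        vx, vy = velocity(t, p)
        p = (p[0] + DT * vx, p[1] + DT * vy)
        t += DT
        sup_norm = max(sup_norm, math.hypot(*p))
    return sup_norm


rng = random.Random(0)
atoms = []
while len(atoms) < 200:  # rejection sampling of atoms of an initial measure in B_{R0}
    q = (rng.uniform(-R0, R0), rng.uniform(-R0, R0))
    if math.hypot(*q) <= R0:
        atoms.append(q)

gronwall_radius = (1.0 + R0) * math.exp(C * T) - 1.0  # from 1 + |y(t)| <= (1 + |y0|) e^{Ct}
worst = max(sup_norm_along_characteristic(q, round(T / DT)) for q in atoms)
print(worst, gronwall_radius)
```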

Proposition 5

For every \({\widehat{\varPsi }}_{0} \in {\mathcal {P}}_{c}(Y)\) the minimum problem (19) admits a solution.

Proof

The existence of a minimizer follows from the direct method of the calculus of variations. Let \((\varPsi _{k}, \varvec{\nu }_{k}) \in {\mathcal {S}}({\widehat{\varPsi }}_{0})\) be a minimizing sequence for (19). For every k, we may write \(\varvec{\nu }_{k} = w_{k} h_{\varPsi _{k}} \varPsi _{k}\) for \(w_{k} \in L^{1}_{ h_{\varPsi _{k}} \varPsi _{k}} ([0, T] \times Y ; K)\) such that

Without loss of generality we may suppose \(w_{k}(t, y) = 0\) in \(\{ (t, y) \in [0, T] \times Y: \, h_{\varPsi _{k}} (t, y) = 0\}\).

By Proposition 4, the measures \(\varPsi _{k, t}\) are supported in a ball \(\mathrm {B}^{Y}_{R}\) with R independent of k and t, and the curves \(\varPsi _{k}\) are equi-Lipschitz continuous. By the Ascoli-Arzelà theorem, there exists \(\varPsi \in AC([0, T]; ({\mathcal {P}}_{1}(Y); W_{1}))\) such that, up to a subsequence, \(\varPsi _{k}\) converges to \(\varPsi \) uniformly in \(C([0, T]; ({\mathcal {P}}_{1}(Y); W_{1}))\).

Since \(\varvec{\nu }_{k} = w_{k} h_{\varPsi _{k}}\varPsi _{k}\), where \(w_{k}\) takes values in the compact set K and \(h_{\varPsi _{k}}\) is bounded by \((h_{1})\), the measures \(\varvec{\nu }_{k}\) have uniformly bounded total variation. Hence, up to a subsequence, \(\varvec{\nu }_{k} \rightharpoonup \varvec{\nu }\) \(\hbox {weakly}^*\) in \({\mathcal {M}}([0, T]\times {\overline{Y}}; {\mathbb {R}}^{d})\). Let us define the auxiliary measure \(\varvec{\mu }_{k} :=w_{k} \varPsi _{k} \in {\mathcal {M}}([0, T]\times {\overline{Y}}; {\mathbb {R}}^{d})\). Since the measures \(\varvec{\mu }_{k}\) are bounded in total variation as well, up to a further subsequence we may assume that \(\varvec{\mu }_{k} \rightharpoonup \varvec{\mu }\) \(\hbox {weakly}^{*}\) in \({\mathcal {M}}([0, T] \times {\overline{Y}} ; {\mathbb {R}}^{d})\). Thus, thanks to \((h_{1})\), to the uniform convergence of \(\varPsi _{k}\) to \(\varPsi \), and to the fact that \(\mathrm {spt}(\varPsi _{k, t}) \subseteq \mathrm {B}^{Y}_{R}\), we also have that \(\varvec{\nu }= h_{\varPsi } \varvec{\mu }\). By definition of \(\varPhi _{\mathrm{min}}\) and of \(\overline{\varPhi }\) (see (17)–(18) and (20)), we have that for every k

so that

Applying [19, Corollary 3.4.2] we infer that \(\varvec{\mu }\ll \varPsi \) and

Since \(\varvec{\nu }= h_{\varPsi } \varvec{\mu }\), we also have that \(\varvec{\nu }\ll h_{\varPsi } \varPsi \). Moreover, since K is convex and compact with \(0 \in K\), we have that \(w :=\frac{\mathrm {d}\varvec{\mu }}{\mathrm {d}\varPsi } \in K\) for \(\varPsi \)-a.e. \((t, y) \in [0, T] \times {\overline{Y}}\) and

Thus, \( (\varPsi , \varvec{\nu }) \in {\mathcal {S}}({\widehat{\varPsi }}_{0})\) and, by (32),

Finally, by \(({\mathcal {L}}_{3})\), by the uniform convergence of \(\varPsi _{k}\) to \(\varPsi \), and by the uniform inclusion \(\mathrm {spt}(\varPsi _{k, t}) \subseteq \mathrm {B}^{Y}_{R}\), we get that

Combining (33) and (34) we infer that

which concludes the proof of the proposition. \(\square \)

We are now in a position to state our main \(\varGamma \)-convergence result.

Theorem 2

Let \({\widehat{\varPsi }}_{0} \in {\mathcal {P}}_{c}(Y)\). Then the following facts hold:

(\(\varGamma \)-liminf inequality) for every sequence \(({\varvec{y}}^{N}, {\varvec{u}}^{N}) \in AC([0,T]; Y^{N}) \times L^{1}([0,T]; K^{N})\) and \({\varvec{y}}^{N}_{0} \in Y^{N}\), let \((\varPsi ^{N}, \varvec{\nu }^{N})\in AC([0,T]; ({\mathcal {P}}_{1}(Y); W_{1})) \times {\mathcal {M}}([0,T]\times {\overline{Y}}; {\mathbb {R}}^{d})\) be the pair generated by \(({\varvec{y}}^{N}, {\varvec{u}}^{N})\) and let \(\varPsi _{0}^{N}\) be the measure generated by \({\varvec{y}}_{0}^{N}\). Assume that \(\varPsi ^{N}\) converges to \(\varPsi \) in \(C([0,T]; ({\mathcal {P}}_{1}(Y); W_{1}))\), that \(\varvec{\nu }^{N}\) converges \(\hbox {weakly}^{*}\) to \(\varvec{\nu }\) in \( {\mathcal {M}}([0,T]\times {\overline{Y}}; {\mathbb {R}}^{d})\), and that \(W_1(\varPsi ^{N}_{0},{{\widehat{\varPsi }}}_{0})\rightarrow 0\) as \(N\rightarrow \infty \). Then

(\(\varGamma \)-limsup inequality) for every \((\varPsi , \varvec{\nu }) \in {\mathcal {S}}({\widehat{\varPsi }}_{0})\) and every sequence of initial data \({\varvec{y}}_0^N \in Y^{N}\) such that the generated measures \(\varPsi _0^N\) satisfy \(W_1(\varPsi _0^N,{{\widehat{\varPsi }}}_0)\rightarrow 0\), there exists a sequence \(({\varvec{y}}^{N}, {\varvec{u}}^{N}) \in {\mathcal {S}}({\varvec{y}}^{N}_{0})\) with generated pairs \((\varPsi ^{N},\varvec{\nu }^{N})\in AC([0,T]; ({\mathcal {P}}_{1}(Y); W_{1})) \times {\mathcal {M}}([0,T]\times {\overline{Y}} ; {\mathbb {R}}^{d})\) such that \(\varPsi ^{N} \rightarrow \varPsi \) in \(C([0,T]; ({\mathcal {P}}_{1} (Y); W_{1}))\), \(\varvec{\nu }^{N} \rightharpoonup \varvec{\nu }\) \(\hbox {weakly}^{*}\) in \({\mathcal {M}}([0,T] \times {\overline{Y}}; {\mathbb {R}}^{d})\), as \(N\rightarrow \infty \), and

We provide the proof of Theorem 2 in Sect. 4.1.

As a corollary of Theorem 2, we obtain the convergence of minima and minimizers.

Corollary 1

Let \({\widehat{\varPsi }}_{0} \in {\mathcal {P}}_{c}(Y)\) and let \({\varvec{y}}^{N}_{0} \in Y^{N}\) be a fixed sequence of initial data with generated measure \(\varPsi ^{N}_{0} \in {\mathcal {P}}^{N}(Y)\) satisfying \(W_{1}(\varPsi ^{N}_{0}, {\widehat{\varPsi }}_{0}) \rightarrow 0\) as \(N \rightarrow \infty \). Then, for every sequence \(( {\varvec{y}}^{N} , {\varvec{u}}^{N} ) \in {\mathcal {S}} ({\varvec{y}}^{N}_{0})\) of solutions to (11) with generated pairs \((\varPsi ^{N}, \varvec{\nu }^{N})\), there exists a solution \((\varPsi , \varvec{\nu }) \in {\mathcal {S}}({\widehat{\varPsi }}_{0})\) to (19) such that, up to a subsequence, \(\varPsi ^{N} \rightarrow \varPsi \) in \(C([0, T]; ({\mathcal {P}}_{1}(Y); W_{1}))\), \(\varvec{\nu }^{N} \rightharpoonup \varvec{\nu }\) \(\hbox {weakly}^{*}\) in \({\mathcal {M}}([0, T]\times {\overline{Y}}; {\mathbb {R}}^{d})\), and

Proof

The result is standard in \(\varGamma \)-convergence theory (see, e.g., [13, 26]) and follows from the compactness result in Proposition 3 and from Theorem 2. \(\square \)

4.1 Proofs of Proposition 3 and Theorem 2

Before proving Proposition 3 and Theorem 2, we state two lemmas regarding the control part of the cost functional \({\mathcal {E}}_{N}^{{\varvec{y}}^{N}_{0}}\) and the functionals \( \overline{\varPhi }\) and \(\overline{\phi }\) defined in (20).

Lemma 1

Let \(N \in {\mathbb {N}}\), let \(({\varvec{y}}^{N}, {\varvec{u}}^{N}) \in AC([0,T]; Y^{N}) \times L^{1}([0,T]; K^{N})\), and let \((\varPsi ^{N},\varvec{\nu }^{N}) \in AC([0,T]; ({\mathcal {P}}^{N}(Y); W_{1}))\times {\mathcal {M}}([0,T]\times {\overline{Y}};{\mathbb {R}}^d)\) be the pair generated by \(({\varvec{y}}^{N},{\varvec{u}}^{N})\); finally, let

Then, for a.e. \(t \in [0,T]\) we have

If \({\varvec{y}}_{0}^{N} \in Y^{N}\) and the pair \(({\varvec{y}}^{N}, {\varvec{u}}^{N}) \in {\mathcal {S}} ({\varvec{y}}_{0}^{N})\) is such that \(u_{i}(t) = 0\) if \(h_{\varPsi ^{N}_{t}} (y_{i}(t)) = 0\) for \(i=1, \ldots , N\) and \(t \in [0,T]\), then for a.e. \(t \in [0,T]\), we have that

Proof

The proof of (38) can be found in [34, Lemma 6.2, formula (6.2)]. Arguing as in the proof of [34, Lemma 6.2, formula (6.3)] we may also prove (39). Referring to the notation in [34, Lemma 6.2], the only modification we have to make is that, whenever \(y_{i}(t) = y_{j}(t)\) for \(t \in S \subseteq [0,T]\) and for some \(i\ne j\), the equality \({\dot{y}}_{i}(t) = {\dot{y}}_{j}(t)\) for a.e. \(t \in S\) only implies that \(h_{\varPsi ^{N}_{t}} (y_{i}(t) ) u_{i}(t) = h_{\varPsi ^{N}_{t}} (y_{j}(t)) u_{j}(t)\) for a.e. \(t \in S\). Therefore, for a.e. \(t \in S \cap \{ h_{\varPsi ^{N}_{t}} (y_{i}(t)) \ne 0\}\) we have \(u_{i}(t) = u_{j}(t)\). Instead, for a.e. \(t \in S \cap \{ h_{\varPsi ^{N}_{t}} (y_{i}(t)) = 0\}\) we have \(u_{i}(t) = u_{j}(t) = 0\) by assumption. This implies that \(u_{i}(t) = u_{j}(t)\) a.e. in S, and the proof can be concluded as in [34, Lemma 6.2]. \(\square \)
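The role of the assumption in the second part of Lemma 1 can be illustrated at a frozen time. We assume, in the spirit of [34, Lemma 6.2], that (38) bounds the control cost evaluated on the generated measures by the average of the individual costs, and that (39) turns this bound into an equality.

Under that assumption, the key point is how coinciding agents are counted. Agents that share a position contribute to the measure only through the mean of their controls. By Jensen's inequality, the measure-level cost is therefore at most the particle-level one, with equality when coinciding agents carry equal controls.

The cost `phi` and the data below are hypothetical, and the activation is omitted.

```python
from collections import defaultdict


def phi(u):
    """Hypothetical convex control cost."""
    return u * u + abs(u)


def particle_cost(controls):
    """(1/N) sum_i phi(u_i): the cost of the finite-particle problem at a frozen time."""
    return sum(phi(u) for u in controls) / len(controls)


def measure_cost(positions, controls):
    """int phi(d mu / d Psi) d Psi for Psi = (1/N) sum_i delta_{y_i} and
    mu = (1/N) sum_i u_i delta_{y_i}: at each atom the density is the mean control there."""
    groups = defaultdict(list)
    for y, u in zip(positions, controls):
        groups[y].append(u)
    n = len(controls)
    return sum(len(us) / n * phi(sum(us) / len(us)) for us in groups.values())


positions = [0.0, 0.0, 1.0, 2.0, 2.0]
mixed = [1.0, -1.0, 0.5, 2.0, 0.0]  # coinciding agents with different controls
equal = [0.3, 0.3, 0.5, 1.0, 1.0]   # coinciding agents with equal controls
print(measure_cost(positions, mixed), particle_cost(mixed))
print(measure_cost(positions, equal), particle_cost(equal))
```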

Lemma 2

Let \({\widehat{\varPsi }}_{0} \in {\mathcal {P}}_{c}(Y)\) and \({\varvec{y}}^{N}_{0} \in Y^{N}\) be such that the generated measure \(\varPsi _{0}^{N}\) converges to \({\widehat{\varPsi }}_{0}\) in the 1-Wasserstein distance. Let \(({\varvec{y}}^{N}, {\varvec{u}}^{N}) \in {\mathcal {S}}({\varvec{y}}^{N}_{0})\) and let \((\varPsi ^{N}, \varvec{\nu }^{N}) \in AC([0,T]; ({\mathcal {P}}_{1}(Y); W_{1})) \times {\mathcal {M}}([0,T]\times {\overline{Y}}; {\mathbb {R}}^{d})\) be the corresponding generated measures, according to Definition 3. Assume that

and that \(\varPsi ^{N} \rightarrow \varPsi \) uniformly in \(C([0,T]; ({\mathcal {P}}_{1}(Y); W_{1}))\) and \(\varvec{\nu }^{N} \rightharpoonup \varvec{\nu }\) \(\hbox {weakly}^{*}\) in \({\mathcal {M}}([0,T]\times {\overline{Y}} ; {\mathbb {R}}^{d})\). Then, \(\varvec{\nu }\ll h_{\varPsi } \varPsi \), \(\frac{\mathrm {d}\varvec{\nu }}{\mathrm {d}h_{\varPsi } \varPsi } \in K\) for \(h_{\varPsi } \varPsi \)-a.e. \((t, y) \in [0,T]\times {\overline{Y}}\), and

Proof

We define the auxiliary measures \(\varvec{\mu }^{N}\) and \(\varvec{\mu }^{N}_{t}\) as in (37) and we notice that \(\varvec{\nu }^{N} = h_{\varPsi ^{N}} \varvec{\mu }^{N}\), \(\varvec{\nu }^{N}_{t} = h_{\varPsi ^{N}_{t}} \varvec{\mu }^{N}_{t}\) for \(t \in [0,T]\). In view of Proposition 1, both \(\varvec{\mu }^{N}\) and \(\varvec{\nu }^{N}\) are supported on a compact subset of \([0,T] \times Y\) independent of N and are bounded in \({\mathcal {M}}([0,T]\times {\overline{Y}};{\mathbb {R}}^{d})\). Hence, there exists \(\varvec{\mu }\in {\mathcal {M}}([0,T]\times {\overline{Y}}; {\mathbb {R}}^{d})\) such that, up to a not relabelled subsequence, \(\varvec{\mu }^{N} \rightharpoonup \varvec{\mu }\) \(\hbox {weakly}^{*}\) in \({\mathcal {M}}([0,T]\times {\overline{Y}}; {\mathbb {R}}^{d})\). Since \((h_{2})\) holds, \(\varPsi ^{N} \rightarrow \varPsi \) uniformly in \(C([0,T]; ({\mathcal {P}}_{1}(Y); W_{1}))\), and \(\varvec{\mu }^{N}\) and \(\varvec{\nu }^{N}\) are supported in a common compact set, in the limit we obtain \(\varvec{\nu }= h_{\varPsi } \varvec{\mu }\).

By Lemma 1 and by the boundedness of the energy \({\mathcal {E}}_{N}^{{\varvec{y}}^{N}_{0}}\), it is clear that

Hence, we can apply [19, Corollary 3.4.2] to infer that, in the limit, \(\varvec{\mu }\ll \varPsi \) and

Since \(\varvec{\nu }= h_{\varPsi } \varvec{\mu }\) and \(\varvec{\mu }\ll \varPsi \), we have that \(\varvec{\nu }\ll h_{\varPsi }\varPsi \). Furthermore, since K is convex and compact with \(0 \in K\), we have that \(\frac{\mathrm {d}\varvec{\mu }}{\mathrm {d}\varPsi }(t, y) \in K\) for \(\varPsi \)-a.e. \((t, y) \in [0,T] \times {\overline{Y}}\) and, denoting \(w :=\frac{\mathrm {d}\varvec{\mu }}{\mathrm {d}\varPsi }\),

so that \(\varvec{\nu }= w h_{\varPsi } \varPsi \) and \(\varPhi (w, \varPsi ) = \overline{\varPhi } ( \varPsi , \varvec{\mu })\). Finally, by definition of \(\varPhi _{\mathrm{min}}\) in (17) we get (40). \(\square \)

We now prove Proposition 3.

Proof of Proposition 3

Let \({\varvec{y}}^{N}_{0}\), \(({\varvec{y}}^{N}, {\varvec{u}}^{N})\), \((\varPsi ^{N}, \varvec{\nu }^{N})\), and \(\varPsi ^{N}_{0}\) be as in the statement of the proposition. Since \(W_1(\varPsi ^{N}_{0},{\widehat{\varPsi }}_{0})\rightarrow 0\) as \(N\rightarrow \infty \), by Proposition 1 we obtain that for every \(t\in [0,T]\) the probability measure \(\varPsi ^{N}_{t}\) has support contained in the compact set \(\mathrm {B}^{Y}_{R}\) for a suitable \(R>0\) independent of t and N. This implies that the curve \(\varPsi ^{N}\) takes values in a compact subset of \({\mathcal {P}}_{1}(Y)\) with respect to the 1-Wasserstein distance. Let us now show that the sequence \(\varPsi ^{N}\) is equi-continuous. Thanks to the assumptions \((v_{1})\), \(({\mathcal {T}}_{1})\), and \((h_{1})\), to the fact that \({\varvec{u}}^{N}(t) \in K^{N}\) and \(\mathrm {spt}( \varPsi ^{N}_{t}) \subseteq \mathrm {B}^{Y}_{R}\) for \(t \in [0,T]\), for every \(s<t \in [0,T]\) we estimate

for a positive constant C independent of t, s, and N. Therefore, the sequence \(\varPsi ^{N}\) is equi-continuous in \(C([0,T]; ({\mathcal {P}}_{1}(Y); W_{1}))\) and, by the Ascoli-Arzelà theorem, it converges, up to a subsequence, to a limit curve \(\varPsi \) in \(C([0,T]; ({\mathcal {P}}_{1}(Y); W_{1}))\). By (42), \(\varPsi \) is also Lipschitz continuous.
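The equicontinuity estimate rests on an elementary coupling bound. Transporting each atom \(y_{i}(s)\) onto \(y_{i}(t)\) gives \(W_{1}(\varPsi ^{N}_{t},\varPsi ^{N}_{s})\le \frac{1}{N}\sum _{i=1}^{N}\Vert y_{i}(t)-y_{i}(s)\Vert _{{\overline{Y}}}\). A uniform bound on the particle velocities then makes the right-hand side at most a constant times \(|t-s|\).

The following one-dimensional sketch uses hypothetical trajectories with speed at most 1. It relies on the fact that, on the real line, \(W_{1}\) between two empirical measures with the same number of equally weighted atoms is attained by the monotone (sorted) matching.

```python
import math


def w1_empirical_1d(a, b):
    """W_1 between (1/N) sum_i delta_{a_i} and (1/N) sum_i delta_{b_i} on the real line,
    computed through the monotone (sorted) matching, which is optimal in 1-D."""
    if len(a) != len(b):
        raise ValueError("both measures must have the same number of atoms")
    return sum(abs(x - y) for x, y in zip(sorted(a), sorted(b))) / len(a)


def positions(t, n=50):
    """Hypothetical trajectories y_i(t) = i/n + sin(t + i), each with speed at most 1."""
    return [i / n + math.sin(t + i) for i in range(n)]


s, t = 0.2, 0.5
ys, yt = positions(s), positions(t)
coupling_bound = sum(abs(x - y) for x, y in zip(yt, ys)) / len(ys)  # each atom moved onto itself
print(w1_empirical_1d(yt, ys), coupling_bound, abs(t - s))
```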

Since \({\varvec{u}}^{N}\) takes values in \(K^{N}\) with K compact and \(h_{\varPsi ^{N}}\) is bounded by \((h_{1})\), we have that, up to a further subsequence, \(\varvec{\nu }^{N} \rightharpoonup \varvec{\nu }\) \(\hbox {weakly}^*\) in \({\mathcal {M}}([0,T] \times {\overline{Y}}; {\mathbb {R}}^{d})\). Since the cost functional \({\mathcal {E}}_{N}^{{\varvec{y}}^{N}_{0}} ({\varvec{y}}^{N}, {\varvec{u}}^{N})\) is bounded, we deduce from Lemma 2 that \(\varvec{\nu }\ll h_{\varPsi }\varPsi \) and \(\frac{\mathrm {d}\varvec{\nu }}{\mathrm {d}h_{\varPsi }\varPsi } (t, y) \in K\) for \(h_{\varPsi }\varPsi \)-a.e. \((t, y) \in [0,T]\times {\overline{Y}}\).

We finally show that \((\varPsi , \varvec{\nu })\) solves the corresponding continuity equation in the sense of distributions. By the uniform convergence of \(\varPsi ^{N}\) to \(\varPsi \), we have that \(\varPsi _{0}= {\widehat{\varPsi }}_{0}\). For every test function \(\varphi \in C^{\infty }_{c}((0,T) \times {\overline{Y}})\), since \(({\varvec{y}}^{N}, {\varvec{u}}^{N}) \in {\mathcal {S}}({\varvec{y}}^{N}_{0})\), we have that, for every \(t \in [0,T]\),

Since \(\varPsi ^{N} \rightarrow \varPsi \) in \(C([0,T]; ({\mathcal {P}}_{1}(Y); W_{1}))\) and \(\varvec{\nu }^{N} \rightharpoonup \varvec{\nu }\) \(\hbox {weakly}^{*}\) in \({\mathcal {M}}([0,T]\times {\overline{Y}}; {\mathbb {R}}^{d})\), we only have to determine the limit of the second integral on the right-hand side of (43). To do this, we estimate

By the regularity of the test function \(\varphi \), by assumptions \((v_{2})\) and \(({\mathcal {T}}_{2})\), and by the uniform inclusion \(\mathrm {spt}(\varPsi ^{N}_{t}) \subseteq \mathrm {B}^{Y}_{R}\), we may estimate \(I_{1}^{N}\) with

for a positive constant \(L_{R}\) depending only on R. Since \(\varPsi ^{N} \rightarrow \varPsi \) in \(C([0,T]; ({\mathcal {P}}_{1}(Y); W_{1}))\), we deduce from the previous inequality that \(I_{1}^{N} \rightarrow 0\) as \(N\rightarrow \infty \). Again by \((v_{2})\) and \(({\mathcal {T}}_{2})\), the function \(y \mapsto \nabla \varphi (t, y) b_{\varPsi _{t}}(y)\) is Lipschitz continuous on \(\mathrm {B}^{Y}_{R}\) for every \(t \in [0,T]\), with a Lipschitz constant \(C_{R}>0\) independent of t. Since \(\mathrm {spt}(\varPsi _{t}), \mathrm {spt}(\varPsi ^{N}_{t}) \subseteq \mathrm {B}^{Y}_{R}\) for \(t \in [0,T]\), we estimate \(I^{N}_{2}\) with

and \(I^{N}_{2} \rightarrow 0\) as \(N\rightarrow \infty \). We can now pass to the limit in (44) to obtain that

which in turn implies, by passing to the limit in (43), that

By the arbitrariness of \(\varphi \in C^{\infty }_{c}((0,T)\times {\overline{Y}})\), we conclude that \((\varPsi , \varvec{\nu }) \in {\mathcal {S}}({\widehat{\varPsi }}_{0})\). This completes the proof. \(\square \)

Finally, we prove the \(\varGamma \)-convergence result.

Proof of Theorem 2

The proof follows the lines of [34, Theorem 3.2]. We divide the proof into two steps.

Step 1: \(\varGamma \)-liminf inequality. Let \((\varPsi , \varvec{\nu })\), \(({\varvec{y}}^{N}, {\varvec{u}}^{N})\), \({\varvec{y}}^{N}_{0}\), \((\varPsi ^{N},\varvec{\nu }^{N})\), and \(\varPsi ^{N}_{0}\) be as in the statement. If \(\liminf _{N\rightarrow \infty } {\mathcal {E}}_{N}^{{\varvec{y}}^{N}_{0}} ({\varvec{y}}^{N}, {\varvec{u}}^{N}) =+\infty \) there is nothing to show. Without loss of generality we may therefore assume that

which implies, by definition (10) of \({\mathcal {E}}_{N}^{{\varvec{y}}^{N}_{0}}\), that \(({\varvec{y}}^{N}, {\varvec{u}}^{N}) \in {\mathcal {S}}({\varvec{y}}^{N}_{0})\) for every N. Furthermore, by Proposition 1 there exists \(R>0\) independent of N and t such that \(\mathrm {spt}(\varPsi ^{N}_{t}) \subseteq \mathrm {B}^{Y}_{R}\). By Proposition 3 we have that the limit pair \((\varPsi , \varvec{\nu })\) belongs to \({\mathcal {S}}({\widehat{\varPsi }}_{0})\) and \(\mathrm {spt}(\varPsi _{t}) \subseteq \mathrm {B}^{Y}_{R}\) for every \(t \in [0,T]\). Applying Lemma 2 we infer that

Since \({\mathcal {L}}_{N}\) \({\mathcal {P}}_{1}\)-converges to \({\mathcal {L}}\) uniformly on compact sets and \(\mathrm {spt}(\varPsi ^{N}_{t}) , \mathrm {spt}(\varPsi _{t}) \subseteq \mathrm {B}^{Y}_{R}\), we get that

Combining (45) and (46) we conclude that

which is (35).

Step 2: \(\varGamma \)-limsup inequality. We will construct a sequence \(({\varvec{y}}^{N},{\varvec{u}}^{N})\) such that

and we recall that this condition is equivalent to (36).

Let \((\varPsi , \varvec{\nu }) \in {\mathcal {S}}({\widehat{\varPsi }}_{0})\) be such that \({\mathcal {E}}^{{\widehat{\varPsi }}_{0}}(\varPsi , \varvec{\nu }) <+\infty \), and let \(w \in L^{1}_{h_{\varPsi } \varPsi }([0,T]\times Y; K)\) be such that \(\varvec{\nu }= w h_{\varPsi } \varPsi \) and \(\varPhi _{\mathrm{min}}(\varPsi , \varvec{\nu }) = \varPhi ( w, \varPsi ) \). In particular, we may assume that \(w = 0\) on the set \(\{(t, y) \in [0,T] \times {\overline{Y}}: \, h_{\varPsi _{t}} (y) = 0 \}\).

As in [34, Theorem 3.2], the construction of a recovery sequence is based on the superposition principle [11, Theorem 5.2]. Indeed, the curve \(\varPsi \in AC([0,T]; ({\mathcal {P}}_{1}(Y); W_{1}))\) solves the continuity equation

where the velocity field \(b :[0,T]\times {\overline{Y}} \rightarrow {\overline{Y}}\) is defined by

and is extended to 0 in \({\overline{Y}} \setminus Y\). By Proposition 4, there exists \(R>0\) such that \(\mathrm {spt}(\varPsi _{t}) \subseteq \mathrm {B}^{Y}_{R}\) for every \(t \in [0, T]\), and arguing as in (27) we get that \(b \in L^{1}_{\varPsi } ([0, T] \times {\overline{Y}})\).

We are therefore in a position to apply [11, Theorem 5.2] with velocity field b. Setting

we infer that there exists a probability measure \(\pi \in {\mathcal {P}}(\varGamma )\) concentrated on \(\varDelta \) such that \(\varPsi _{t} = (\mathrm {ev}_{t})_{\#}\pi \) for every \(t \in [0,T]\). We further notice that Proposition 4, the boundedness of the control w, and assumptions \((v_{3})\), \(({\mathcal {T}}_{1})\), and \((h_{1})\) yield

We define the auxiliary functional

We notice that, by Fubini's theorem,

Furthermore, \({\mathcal {F}}\) is lower semicontinuous on \(\varDelta \). Indeed, let \( \gamma _{k} , \gamma \in \varDelta \) be such that \( \gamma _{k} \rightarrow \gamma \) uniformly in \(\varGamma \). Since w takes values in the compact set K, the functions \(w(\cdot , \gamma _{k} (\cdot ))\) are bounded in \(L^{\infty }([0,T]; {\mathbb {R}}^{d})\). Therefore, up to a subsequence, they converge \(\hbox {weakly}^*\) to some \(g \in L^{\infty }([0,T]; {\mathbb {R}}^{d})\), and, by convexity of \(\phi \), we have the lower semicontinuity estimate (see, e.g., [25, Theorem 3.23])

Since \( \gamma _{k} \in \varDelta \) for every k, for \(s<t \in [0,T]\) we can write

Passing to the limit in the previous equality we deduce, thanks to \( (v_{1}) \), \(({\mathcal {T}}_{2})\), and \((h_{2})\),

On the other hand, since \( \gamma \in \varDelta \), we have that

which implies, by the arbitrariness of s and t, that \(h_{\varPsi _{\tau }} ( \gamma (\tau )) g( \tau ) = h_{\varPsi _{\tau }} ( \gamma (\tau )) w( \tau , \gamma ( \tau ))\) for a.e. \( \tau \in [0,T]\). Hence, \(g(t) = w(t, \gamma (t))\) for a.e. \(t \in \{ s \in [0,T] : \, h_{\varPsi _{s}} ( \gamma (s)) \ne 0\}\), while \(w(t, \gamma (t)) = 0\) for \(t \in \{ s \in [0,T]: \, h_{\varPsi _{s}} ( \gamma (s)) = 0 \}\). Since \(\phi \ge 0\) and \(\phi (0) = 0\), we finally obtain

By Lusin's theorem, we can select an increasing sequence of compact sets \(\varDelta _{k} \Subset \varDelta _{k+1} \Subset \varDelta \) such that \(\pi ( \varDelta \setminus \varDelta _{k}) <\frac{1}{k}\) and \({\mathcal {F}}\) is continuous on \(\varDelta _{k}\). Setting

we have that

In particular, the first limit follows from (50) and from the narrow convergence of \(\overline{\pi }_{k}\) to \(\pi \). The second limit is a consequence of the first.
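The weak* lower semicontinuity invoked above to show that \({\mathcal {F}}\) is lower semicontinuous on \(\varDelta \) (the step citing [25, Theorem 3.23]) can be seen on the classical oscillating example. The choices \(T=1\), \(\phi (u)=u^{2}\), and the square-wave controls below are illustrative assumptions.

The controls \(g_{k}\) converge \(\hbox {weakly}^*\) to \(0\) in \(L^{\infty }\), whereas \(\int _{0}^{1}\phi (g_{k})\,\mathrm {d}t=1\) for every k. Hence the inequality \(\int \phi (g)\le \liminf _{k}\int \phi (g_{k})\) holds but can be strict. Convexity of \(\phi \) is what makes it hold in this direction.

```python
import math

M = 100_000  # midpoint quadrature nodes on [0, 1]
NODES = [(j + 0.5) / M for j in range(M)]


def g(k, t):
    """Square wave with k periods on [0, 1]; weakly* convergent to 0 as k grows."""
    return 1.0 if math.sin(2 * math.pi * k * t) >= 0 else -1.0


def pairing(k, test=lambda t: t):
    """Midpoint approximation of int_0^1 g_k(t) * test(t) dt."""
    return sum(g(k, t) * test(t) for t in NODES) / M


def cost(k, phi=lambda u: u * u):
    """Midpoint approximation of int_0^1 phi(g_k(t)) dt."""
    return sum(phi(g(k, t)) for t in NODES) / M


for k in (1, 10, 50):
    print(k, pairing(k), cost(k))  # the pairing behaves like -1/(4k); the cost is identically 1
```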

Since \(\varDelta _{k}\) is compact, we can select a sequence of curves \(\{( \gamma _{k} )_{i}^{m}: \, i =1, \ldots , m, \, m \in {\mathbb {N}}\} \subseteq \varDelta _{k} \) such that for every k the measures

satisfy

where the second equality is due to the fact that \({\mathcal {F}}\) is continuous and bounded on \(\varDelta _{k}\).
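Selecting finitely many curves \((\gamma _{k})^{m}_{i}\) in \(\varDelta _{k}\) is the usual approximation of a probability measure by empirical measures, tested here against a continuous functional. This deterministic sketch uses hypothetical ingredients:

- the curves \(\gamma _{x}(t)=xe^{-t}\), \(t\in [0,1]\), with x uniformly distributed in \([0,1]\), stand in for the curves charged by \(\overline{\pi }_{k}\);
- the m curves are selected through the quantiles \(x_{i}=(i-\frac{1}{2})/m\);
- \(F(\gamma )=\int _{0}^{1}\gamma (t)^{2}\,\mathrm {d}t\) plays the role of the continuous functional \({\mathcal {F}}\) on \(\varDelta _{k}\).

```python
import math

FACTOR = (1.0 - math.exp(-2.0)) / 2.0  # int_0^1 exp(-2t) dt


def functional(x):
    """F(gamma_x) = int_0^1 (x e^{-t})^2 dt for the hypothetical curve gamma_x(t) = x e^{-t}."""
    return x * x * FACTOR


def empirical_average(m):
    """int F d(pi^m), where pi^m = (1/m) sum_i delta_{gamma_{x_i}} and x_i = (i - 1/2)/m."""
    return sum(functional((i - 0.5) / m) for i in range(1, m + 1)) / m


exact = FACTOR / 3.0  # int F d(pi) with x uniform on [0, 1]
for m in (10, 100, 1000):
    print(m, abs(empirical_average(m) - exact))
```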

Let us fix a countable dense set \(D :=\{\varphi _{\ell } \}_{\ell \in {\mathbb {N}}}\) in \(C_{c}([0,T]\times {\overline{Y}}; {\mathbb {R}}^{d})\). We recall that, by construction, on the set \(\varDelta _{k}\) the function \( \gamma \mapsto {\mathcal {F}}(\gamma ) \) is continuous. Since \(\phi \) is superlinear, this implies that \(w(\cdot , \gamma _{j} (\cdot ) ) \rightarrow w(\cdot , \gamma (\cdot ))\) in \(L^{p}([0,T]; {\mathbb {R}}^{d})\) for every \(p<+\infty \) whenever \(\gamma _{j}, \gamma \in \varDelta _{k}\) with \(\gamma _{j} \rightarrow \gamma \). Hence, also the map

is continuous in \(\varDelta _{k}\) for every \(\ell \in {\mathbb {N}}\). Combining this fact with (52) and (53), we are able to select a suitable strictly increasing sequence m(k) such that for every \(m \ge m(k)\) it holds

where in the last inequality we have used that \(\overline{\pi }_{k}^{m}\) converges narrowly to \(\overline{\pi }_{k}\) as \(m\rightarrow \infty \) and that \(\overline{\pi }_{k}^{m}\) is concentrated on curves belonging to \(\varDelta _{k}\).
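The strictly increasing sequence m(k) selected above is used next in a diagonal fashion. For each N one takes \(\pi _{N} := \overline{\pi }_{k}^{N}\), where k is determined by \(m(k) \le N < m(k+1)\). In this way k tends to infinity with N, and both approximations, of \(\pi \) by \(\overline{\pi }_{k}\) and of \(\overline{\pi }_{k}\) by \(\overline{\pi }_{k}^{m}\), improve along the same sequence.

Locating k amounts to a binary search. The helper below is a hypothetical illustration, with `m[j]` storing \(m(j+1)\).

```python
from bisect import bisect_right


def block_index(n, m):
    """Return k with m(k) <= n < m(k+1), where m[j] stores m(j + 1) and the sequence is
    strictly increasing; the last block [m(K), infinity) is unbounded."""
    if n < m[0]:
        raise ValueError("n precedes the first block m(1)")
    return bisect_right(m, n)  # number of entries <= n, i.e. the 1-based index k


m = [1, 4, 10, 25]  # hypothetical values of m(1), m(2), m(3), m(4)
print([block_index(n, m) for n in (1, 3, 4, 9, 10, 30)])  # [1, 1, 2, 2, 3, 4]
```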

Therefore we set \(\pi _{N} :=\overline{\pi }_{k}^{N}\) for \(m(k) \le N < m(k+1)\) and obtain that

so that

We now construct the recovery sequence \(({\varvec{y}}^{N}, {\varvec{u}}^{N})\). First, we define the auxiliary curves \(\varLambda _{t}^{N} :=(\mathrm {ev}_{t})_{\#} \pi _{N} \in AC([0,T]; ({\mathcal {P}}_{1}(Y); W_{1}))\) and the corresponding curves \({\varvec{z}}^{N} = (z_{1}, \ldots , z_{N}) \in AC([0,T]; Y^{N})\) so that \(\varLambda _{t}^{N}=\frac{1}{N}\sum _{i=1}^{N} \delta _{z_{i}(t)}\). Then, we set \({\overline{u}}_{i} (t) :=w(t, z_{i}(t))\) for every \(t \in [0,T]\), every \(i=1, \ldots , N\), and every \(N \in {\mathbb {N}}\), and \(\overline{{\varvec{u}}}^{N} :=({\overline{u}}_{1}, \ldots , {\overline{u}}_{N}) \in L^{1}([0, T]; K^{N})\). In particular, each component of \({\varvec{z}}^{N}\) solves the ODE

with initial point \(z_{i}(0) \in \mathrm {spt}( {\widehat{\varPsi }}_{0})\). The curves \({\varvec{z}}^{N}\) have to be further modified, since in the ODE (59) the velocity field \(b_{\varPsi _{t}}\) still contains the state of the limit system \(\varPsi _{t}\) rather than \(\varLambda ^{N}\), and the initial data \({\varvec{z}}^{N}_{0} = (z_{1} (0), \ldots , z_{N}(0))\) do not coincide with \({\varvec{y}}^{N}_{0}\).

Since \(\varPsi ^{N}_{0}\) and \(\varLambda ^{N}_{0}\) are empirical measures with the same number N of equally weighted atoms, we can find a sequence of permutations \(\sigma ^{N} :Y^{N} \rightarrow Y^{N}\) such that

Let us further denote by \(\sigma ^{N}_{{\mathbb {R}}^{d}}:({\mathbb {R}}^{d})^{N} \rightarrow ({\mathbb {R}}^{d})^{N}\) the spatial component of \(\sigma ^{N}\). We set \(\overline{{\varvec{y}}}^{N}_{0} :=\sigma ^{N} ({\varvec{y}}^{N}_{0})\) and denote by \({\overline{y}}_{0, i}\) its i-th component. We define \(\overline{{\varvec{y}}}^{N} = ({\overline{y}}_{ 1}, \ldots , {\overline{y}}_{ N}) \in AC([0,T]; Y^{N})\) by solving for \(i=1, \ldots , N\) the Cauchy problems

where, as for the Cauchy problem in (8), we have set \(\varPsi ^{N}_{t} :=\frac{1}{N} \sum _{i=1}^{N} \delta _{{\overline{y}}_{i}(t)} \in {\mathcal {P}}^N (Y)\). By [41, Corollary 2.3], system (61) admits a unique solution and \((\overline{{\varvec{y}}}^{N}, \overline{{\varvec{u}}}^{N}) \in {\mathcal {S}}(\overline{{\varvec{y}}}^{N}_{0})\). Finally, we set \(({\varvec{y}}^{N}, {\varvec{u}}^{N}) :=( (\sigma ^{N})^{-1}( \overline{{\varvec{y}}}^{N}), (\sigma ^{N}_{{\mathbb {R}}^{d}})^{-1} (\overline{{\varvec{u}}}^{N})) \in {\mathcal {S}}({\varvec{y}}^{N}_{0})\), where, with a slight abuse of notation, we have denoted by \(\sigma ^{N}_{{\mathbb {R}}^{d}}\) the action of the permutation \(\sigma ^{N}\) on the \(({\mathbb {R}}^{d})^{N}\)-component of \({\varvec{y}}^{N}\).
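We assume that (60) expresses the classical fact that two empirical measures with the same number N of equally weighted atoms admit an optimal coupling induced by a permutation, a consequence of Birkhoff's theorem on doubly stochastic matrices. In other words, \(W_{1}(\varPsi ^{N}_{0},\varLambda ^{N}_{0})=\frac{1}{N}\sum _{i=1}^{N}\Vert y_{0,\sigma (i)}-z_{i}(0)\Vert _{{\overline{Y}}}\) for a suitable permutation \(\sigma \) of \(\{1,\ldots ,N\}\).

When N is tiny, such a permutation can be found by brute force. The sketch below uses hypothetical planar atoms and the Euclidean norm. In one dimension, the monotone rearrangement returns the same value.

```python
import itertools
import math


def optimal_permutation(ys, zs):
    """Brute-force the permutation sigma minimizing (1/N) sum_i |ys[sigma[i]] - zs[i]|.
    Exponential in N: only meant for tiny illustrative examples."""
    n = len(ys)

    def total(s):
        return sum(math.dist(ys[s[i]], zs[i]) for i in range(n))

    best = min(itertools.permutations(range(n)), key=total)
    return best, total(best) / n


ys = [(0.0, 0.0), (2.0, 0.0), (0.0, 1.0), (1.0, 1.0)]  # hypothetical atoms of Psi_0^N
zs = [(1.1, 1.0), (0.1, 0.0), (2.0, 0.2), (0.0, 0.9)]  # hypothetical atoms of Lambda_0^N
sigma, w1 = optimal_permutation(ys, zs)
print(sigma, w1)
```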

We denote by \((\varPsi ^{N}, \varvec{\nu }^{N})\) and \((\varLambda ^{N}, \varvec{\eta }^{N})\) the pairs generated by \((\overline{{\varvec{y}}}^{N}, \overline{{\varvec{u}}}^{N})\) and by \(({\varvec{z}}^{N}, \overline{{\varvec{u}}}^{N})\), respectively, and notice that, by invariance with respect to permutations, \((\varPsi ^{N}, \varvec{\nu }^{N})\) coincides with the pair generated by \(({\varvec{y}}^{N}, {\varvec{u}}^{N})\). We want to show that

To do this, we will prove that

and that

so that (62) follows by triangle inequality.

Let us consider the pair \((\varLambda ^{N}, \varvec{\eta }^{N})\). Since \(z_{i}(0)\in \mathrm {spt}( {\widehat{\varPsi }}_{0})\) for every \(i=1,\ldots ,N\) and \({\widehat{\varPsi }}_{0} \in {\mathcal {P}}_{c}(Y)\), Proposition 1 yields the existence of \(R>0\) independent of N and t such that \(\mathrm {spt}(\varLambda ^{N}_{t}) \subseteq \mathrm {B}^{Y}_{R}\) for every \(t\in [0,T]\). Repeating the computations performed in (42), we obtain that \(\varLambda ^{N}\) is equi-Lipschitz continuous with respect to t. The convergence in (57) implies that \(W_1(\varLambda ^{N}_{t},\varPsi _{t})\rightarrow 0\) for every \(t\in [0,T]\) as \(N\rightarrow \infty \), so that the Ascoli-Arzelà theorem yields \(\varLambda ^{N} \rightarrow \varPsi \) in \(C([0,T]; ({\mathcal {P}}_{1}(Y); W_{1}))\). This proves the second convergence in (63).

To prove the first convergence in (63), we estimate the distance between \(\overline{{\varvec{y}}}^{N}\) and \({\varvec{z}}^{N}\). First we notice that, up to possibly taking a larger R, we have that \(\Vert {\overline{y}}_{i}(t)\Vert _{{\overline{Y}}} \le R\) for every \(i=1,\ldots ,N\) for every \(N\in {\mathbb {N}}\) and for every \(t \in [0,T]\), so that \(\mathrm {spt}(\varPsi ^{N}_{t}) \subseteq \mathrm {B}^{Y}_{R}\). For every \(t \in [0,T]\) and every \(i=1, \ldots , N\) we have, by definition of \({\overline{y}}_{i}\) and \(z_{i}\) and by assumptions \((v_{2})\), \(({\mathcal {T}}_{2})\), and \((h_{2})\),

for some positive constant \(L_{R}\) independent of N. Hence, by the Grönwall and triangle inequalities, we deduce from (65) that

Summing (66) over \(i=1, \ldots , N\) and recalling (60), we infer that for every \(t \in [0, T]\)

Applying the Grönwall inequality once more to (67), we obtain for every \(t \in [0, T]\)

Since \(W_{1}( \varLambda ^{N}_{0}, {\widehat{\varPsi }}_{0}) \rightarrow 0\), \(W_{1}(\varPsi ^{N}_{0}, {\widehat{\varPsi }}_{0}) \rightarrow 0\), and the second limit in (63) holds, from (68) we conclude (63) and the convergence of \(\varPsi ^{N}\) to \(\varPsi \) in \(C([0, T]; ({\mathcal {P}}_{1}(Y); W_{1}))\).
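Per-particle estimates such as (66) are typically converted into Wasserstein bounds through a standard fact. For two empirical measures with the same number of equally weighted atoms, matching the atoms by index is an admissible transport plan, so \(W_1\le \frac{1}{N}\sum_i \Vert {\overline{y}}_i - z_i\Vert \).

The Python sketch below checks this numerically in a simplified setting. It is not part of the proof, and it makes three assumptions:

- the state space is replaced by the real line, where the optimal matching is obtained by sorting;
- random points stand in for \({\overline{y}}_i(t)\);
- random perturbations of those points stand in for \(z_i(t)\).

```python
import random

def w1_empirical_1d(a, b):
    """Exact W1 between two uniform empirical measures on R with the same
    number of atoms: on the line, the monotone (sorted) matching is optimal."""
    assert len(a) == len(b) and len(a) > 0
    return sum(abs(x - y) for x, y in zip(sorted(a), sorted(b))) / len(a)

def index_coupling_cost(a, b):
    """Cost of the admissible plan sending the i-th atom of a to the i-th atom of b."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)

random.seed(0)
N = 200
y_bar = [random.uniform(-1.0, 1.0) for _ in range(N)]   # stand-in for y_bar_i(t)
z = [y + random.gauss(0.0, 0.05) for y in y_bar]        # stand-in for z_i(t)

w1 = w1_empirical_1d(y_bar, z)
bound = index_coupling_cost(y_bar, z)
print(f"W1 = {w1:.4f} <= (1/N) sum |y_i - z_i| = {bound:.4f}")
```

The bound is insensitive to relabelling only on the left-hand side: \(W_1\) is invariant under permutations of the atoms, whereas the index coupling is not. This is why invariance with respect to permutations is invoked at the start of the argument.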

We now turn our attention to (64). The second convergence in (64) follows from a direct computation. Indeed, for every \(\varphi \in C_{c}([0,T] \times {{\overline{Y}}}; {\mathbb {R}}^{d})\) and every \(\varepsilon >0\) we can fix \(\varphi _{\ell } \in D\) such that \(\Vert \varphi - \varphi _{\ell }\Vert _{C([0,T]\times {\overline{Y}})} \le \varepsilon \) and estimate

for some positive constant C independent of \(\varepsilon \). We now estimate the right-hand side of (69). By definition of \(\pi _{N}\) and by (54) and (56), for every \(N \in [m(k), m(k+1))\) with \(k \ge \ell \) we have that

Passing to the limit as \(N\rightarrow \infty \) in the previous inequality, we get, by the boundedness of w, \(\varphi _{\ell }\), and h, that

Therefore, taking the limsup as \(N\rightarrow \infty \) in (69), we obtain

By the arbitrariness of \(\varepsilon \) and \(\varphi \) we infer that \(\varvec{\eta }^{N} \rightharpoonup \varvec{\nu }\) \(\hbox {weakly}^*\) in \({\mathcal {M}}([0,T]\times {\overline{Y}}; {\mathbb {R}}^{d})\).

We now turn to the first convergence in (64). For every \(\varphi \in C_{c}([0,T]\times {\overline{Y}}; {\mathbb {R}}^{d})\) we have that, using the definition of \(\varvec{\nu }^{N}\), of \(\varvec{\eta }^{N}\), and of the controls \({\varvec{u}}^{N}\),

To continue the estimate in (70), let us fix a modulus of continuity \(\omega _{\varphi }\) for the function \(\varphi \). Without loss of generality, we may assume \(\omega _{\varphi }\) to be increasing and concave. Thus, by \((h_{1})\), \((h_{2})\), by the fact that \(w(t, z_{i}(t)) \in K\) and \({\overline{y}}_{i}, z_{i} \in \mathrm {B}^{Y}_{R}\) for every \(t \in [0,T]\) and every \(i=1, \ldots , N\), and by the inequalities (67) and (68), we can further bound (70) by

where \(C>0\) is a constant independent of N. Therefore, by (63) we conclude that

which yields the first convergence in (64).

Finally, we prove (47). As already observed, \(({\varvec{y}}^{N}, {\varvec{u}}^{N}) \in {\mathcal {S}}({\varvec{y}}^{N}_{0})\) by construction, so that

Since \(\mathrm {spt}(\varPsi ^{N}_{t}) , \, \mathrm {spt}( \varPsi _{t}) \subseteq \mathrm {B}^{Y}_{R}\) for every \(t \in [0,T]\) and, by \(({\mathcal {L}}_{1})\) and \(({\mathcal {L}}_{2})\), \({\mathcal {L}}_{N}\) is continuous and \({\mathcal {L}}_{N}\) \({\mathcal {P}}_{1}\)-converges to \({\mathcal {L}}\) uniformly on compact sets, we have that

As for the second term on the right-hand side of (71), we recall that \({\varvec{u}}^{N} = (\sigma ^{N}_{{\mathbb {R}}^{d}})^{-1}(\overline{{\varvec{u}}}^{N})\) with \({\overline{u}}_{i}(t) = w(t, z_{i}(t))\) and that \(\varLambda ^{N}_{t} = ( \mathrm {ev}_{t})_{\#}\pi _{N}\), so that we can write

In view of (58), we infer that

which implies, together with (72), that

which is (47). This concludes the proof of the theorem. \(\square \)

5 Numerical Experiments

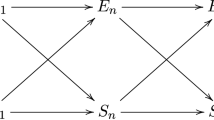

In this section we consider specific applications of our model in the context of opinion dynamics. In Sect. 5.1, we discuss the effects of controlling a single population of leaders. In Sect. 5.2, instead, two competing populations of leaders and a residual population of followers are considered, but the policy maker favors only one of the populations of leaders towards their goal.

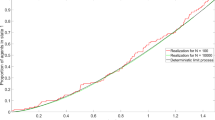

In both cases, for the continuity equation (4) we use a finite volume scheme with dimensional splitting for the state-space discretization, following an approach similar to the one employed in [6]. We introduce a discretization \(\varPsi ^n_i=\varPsi (t_n,y_i)\) of the density on a uniform grid, with parameters \(\varDelta x,\varDelta \lambda \) in the state space and \(\varDelta t\) in time. The resulting scheme reads

where \({\mathcal {T}}_{i\pm 1/2},{\mathcal {V}}_{i\pm 1/2}\) are suitable discretizations of the transition operator and of the non-local velocity flux, respectively, and \(w^{n}\) denotes the control computed at the corresponding time. Notice that the update of \(\varPsi \) follows a two-step approximation of the continuity equation (4), first in \(\lambda \) and then in x (see also [7] for a rigorous convergence result).
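The displayed scheme is not reproduced here. The following Python sketch only illustrates the generic mechanism of one dimensional-splitting step with first-order upwind fluxes: a sweep in \(\lambda \) followed by a sweep in x. It makes the following assumptions, none of which is the authors' discretization:

- The face speeds are given arrays that stand in for the discretized fluxes \({\mathcal {T}}_{i\pm 1/2}\) and \({\mathcal {V}}_{i\pm 1/2}\).
- Outer faces carry zero flux.
- The constant switching rates and the field \(-x\) are hypothetical.
- A control contribution \(w^n\) is omitted; it would be added to the x-speeds.

```python
def upwind_sweep(q, speed, dt, h):
    """One conservative first-order upwind step for q_t + (a q)_s = 0 in 1D.
    q holds n cell averages, speed holds n + 1 face values; the two outer
    faces carry zero flux, so the total mass is conserved."""
    n = len(q)
    flux = [0.0] * (n + 1)
    for k in range(1, n):                      # interior faces only
        a = speed[k]
        flux[k] = a * q[k - 1] if a > 0 else a * q[k]
    return [q[k] - dt / h * (flux[k + 1] - flux[k]) for k in range(n)]

def split_step(psi, lam_speed, x_speed, dt, dx, dlam):
    """Dimensional splitting: transport in lambda (rows of psi), then in x (columns).
    psi[i][j] ~ density in cell (x_i, lambda_j); lam_speed[i] has nl + 1 faces,
    x_speed[i][j] is the speed at x-face i for lambda-cell j."""
    nx, nl = len(psi), len(psi[0])
    half = [upwind_sweep(psi[i], lam_speed[i], dt, dlam) for i in range(nx)]
    out = [[0.0] * nl for _ in range(nx)]
    for j in range(nl):
        col = upwind_sweep([half[i][j] for i in range(nx)],
                           [x_speed[i][j] for i in range(nx + 1)], dt, dx)
        for i in range(nx):
            out[i][j] = col[i]
    return out

# Grid on [-1, 1] x [0, 1]; dt satisfies the CFL condition of both sweeps.
nx, nl = 40, 20
dx, dlam, dt = 2.0 / nx, 1.0 / nl, 0.02
x_faces = [-1.0 + i * dx for i in range(nx + 1)]
lam_faces = [j * dlam for j in range(nl + 1)]
alpha_F, alpha_L = 0.2, 0.5                    # hypothetical constant switching rates
lam_speed = [[alpha_L * (1.0 - l) - alpha_F * l for l in lam_faces] for _ in range(nx)]
x_speed = [[-xf for _ in range(nl)] for xf in x_faces]   # hypothetical field pulling x towards 0

psi = [[0.5 for _ in range(nl)] for _ in range(nx)]      # uniform density, total mass 1
for _ in range(50):
    psi = split_step(psi, lam_speed, x_speed, dt, dx, dlam)
mass = sum(map(sum, psi)) * dx * dlam
```

Because the boundary fluxes vanish, each sweep telescopes and the total mass is conserved up to rounding. Under the CFL restriction used here, the upwind update also preserves non-negativity.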

The control is realized by means of a nonlinear Model Predictive Control (MPC) technique. An open-loop optimal control action is synthesized over a prediction horizon \([0,T_p]\) by solving the optimal control problem (3), (4). Since the system dynamics and the running cost are prescribed, this optimization problem depends only on the initial state and on the horizon \(T_p\). The control \(w^*\), which is obtained for the whole horizon \([0,T_p]\), is applied over a possibly shorter control horizon \([0,T_c]\). At \(t=T_c\) the system is re-initialized with the state \(\varPsi (T_c)\) and the optimization is repeated. In this setting, to keep the solution of the dynamics efficient, we perform the MPC optimization with \(T_p=T_c=\varDelta t\). This choice of the horizons corresponds to an instantaneous relaxation towards the target state. For further discussion of the MPC literature we refer to [1, 27, 35] and references therein.
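The receding-horizon loop with \(T_p=T_c=\varDelta t\) can be illustrated on a toy problem. The Python sketch below is hypothetical throughout:

- A single scalar agent follows \(\dot x = v(x) + u\).
- The one-step cost is a quadratic tracking term plus \(\frac{\nu }{2}u^2\), used as a stand-in for the running cost and for \(\phi \).
- Because of these choices, the one-step problem has a closed-form minimizer.

```python
def one_step_control(x, v, x_goal, dt, nu):
    """Closed-form minimiser of J(u) = 0.5*(x + dt*(v + u) - x_goal)**2 + 0.5*nu*u**2,
    i.e. a (hypothetical) optimal control problem over the horizon T_p = dt."""
    return -dt * (x + dt * v - x_goal) / (nu + dt ** 2)

def receding_horizon(x0, x_goal, dt, nu, steps, velocity):
    """MPC loop with T_p = T_c = dt: optimise, apply on [t, t + dt], re-initialise."""
    x, traj = x0, [x0]
    for _ in range(steps):
        v = velocity(x)                             # uncontrolled drift at the current state
        u = one_step_control(x, v, x_goal, dt, nu)  # optimise over [t, t + T_p]
        x = x + dt * (v + u)                        # apply u over [t, t + T_c] (explicit Euler)
        traj.append(x)                              # the new state is the next initial state
    return traj

traj = receding_horizon(x0=-0.8, x_goal=0.5, dt=0.1, nu=0.01, steps=60,
                        velocity=lambda x: 0.0)
traj_drift = receding_horizon(x0=-0.8, x_goal=0.5, dt=0.1, nu=0.01, steps=60,
                              velocity=lambda x: -x)
```

Without drift, the distance to the goal contracts at each step by the factor \(\nu /(\nu +\varDelta t^2)\), which is the instantaneous relaxation mentioned above. With a competing drift, the loop settles at a compromise between the drift and the target. For the drift \(-x\) and the parameters above, this compromise is \(0.25/0.55\).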

5.1 A Leader–Follower Dynamics

In this setting, the set U consists of two elements, \(U:=\{F, L\}\), and is endowed with a two-valued distance

The space \({\mathcal {P}}_1(\{F,L\})\) is identified with the interval [0, 1]; accordingly, in the discrete model, \(\lambda _i\) is a scalar value describing the probability of the i-th particle of being a follower.
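Under this identification, \(\lambda \) corresponds to the measure \(\lambda \delta _F+(1-\lambda )\delta _L\). If the two-valued distance takes the value \(c\) on \(F\ne L\), then the Wasserstein distance reduces to \(c\,|\lambda _1-\lambda _2|\). The value \(c\) is an assumption here, since the displayed distance is not reproduced.

The short Python sketch below derives this from the transport plans: a plan is fixed by one free parameter, and its cost is affine in that parameter.

```python
def w1_two_point(lam1, lam2, c=1.0):
    """W1 between lam1*delta_F + (1 - lam1)*delta_L and lam2*delta_F + (1 - lam2)*delta_L
    on U = {F, L}, assuming d(F, L) = c.

    A transport plan is fixed by t = gamma(F, F): then gamma(F, L) = lam1 - t,
    gamma(L, F) = lam2 - t and gamma(L, L) = 1 - lam1 - lam2 + t.  Its cost
    c * (lam1 + lam2 - 2 t) is affine in t, so its minimum over the feasible
    interval [max(0, lam1 + lam2 - 1), min(lam1, lam2)] is at an endpoint."""
    t_low = max(0.0, lam1 + lam2 - 1.0)
    t_high = min(lam1, lam2)
    return min(c * (lam1 + lam2 - 2.0 * t) for t in (t_low, t_high))

for lam1, lam2 in [(0.3, 0.8), (1.0, 0.0), (0.25, 0.25)]:
    print(lam1, lam2, w1_two_point(lam1, lam2))
```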

In order to tune the influence of the control, the simplest possible choice is to fix \(h_\varPsi (x,\lambda )=h(\lambda )\) in (8) for a suitable bounded non-negative Lipschitz function \(h:[0,1]\rightarrow {\mathbb {R}}\). In applications where the policy maker aims at controlling only the population of leaders, h should ideally be non-increasing and equal to zero when \(\lambda \) is close to 1. As shown in Proposition 2, if the cost function \(\phi \) satisfies \(\{\phi =0\}=\{0\}\), the optimal control will steer only agents with small \(\lambda \).
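One admissible activation function can be sketched in Python. The sketch assumes, as the role of h in (8) suggests, that the control of agent i enters the dynamics multiplied by \(h(\lambda _i)\). The piecewise-linear profile and the thresholds `m1`, `m2` are hypothetical choices. The profile is bounded, non-negative, non-increasing, and Lipschitz with constant \(1/(m_2-m_1)\).

```python
def make_activation(m1, m2):
    """Hypothetical activation h: 1 for lam <= m1, 0 for lam >= m2, linear in between."""
    assert 0.0 <= m1 < m2 <= 1.0
    def h(lam):
        return min(1.0, max(0.0, (m2 - lam) / (m2 - m1)))
    return h

h = make_activation(0.1, 0.3)
lams = [0.0, 0.05, 0.2, 0.5, 0.95]          # probabilities of being a follower
controls = [1.0] * len(lams)                # the same raw control offered to every agent
effective = [h(l) * u for l, u in zip(lams, controls)]
print(effective)                            # agents with lam >= 0.3 feel no control
```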

It is natural to partition the total population into leaders and followers, according to \(\lambda \). Given \(\varPsi \in {\mathcal {P}}({\mathbb {R}}^d\times [0,1])\), and for a fixed Lipschitz function \(g:[0,1]\rightarrow [0,1]\), we define the followers and leaders distributions as

for each Borel set \(B \subset {\mathbb {R}}^d\). In particular, the sum \(\mu _\varPsi ^F(B)+\mu _\varPsi ^L(B)\) coincides with the first marginal of \(\varPsi \) and therefore it counts the total population contained in B. In the discrete setting, the leaders and followers distributions in (73) are given by

A typical choice for g is any Lipschitz regularization of the indicator function of the set \(\{\lambda \ge m\}\), with \(m\ge 0\) a small given threshold. Doing so amounts to classifying agents with small \(\lambda \) (and therefore high influence) as leaders and the remaining ones as followers. However, different and softer choices for g are possible. For instance, the choice \(g(\lambda )=\lambda \) allows one to measure the average degree of influence of an agent sitting in the region B on the remaining ones.
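The description above suggests that, for N equally weighted agents, the discrete distributions weight each agent in B by \(g(\lambda _i)\) and \(1-g(\lambda _i)\), respectively. The following Python sketch assumes this form and computes \(\mu ^F(B)\) and \(\mu ^L(B)\) in that way. It uses a hypothetical ramp as the Lipschitz regularization of the indicator of \(\{\lambda \ge m\}\), and it also evaluates the softer choice \(g(\lambda )=\lambda \). The agent data are made up for illustration.

```python
def g_reg(lam, m=0.1, delta=0.05):
    """Hypothetical Lipschitz regularisation of the indicator of {lam >= m}:
    0 for lam <= m, 1 for lam >= m + delta, linear in between."""
    return min(1.0, max(0.0, (lam - m) / delta))

def leader_follower_mass(xs, lams, in_B, g=g_reg):
    """Assumed discrete distributions with equal weights 1/N:
    mu_F(B) = (1/N) sum_{x_i in B} g(lam_i),  mu_L(B) = (1/N) sum_{x_i in B} (1 - g(lam_i))."""
    N = len(xs)
    muF = sum(g(l) for x, l in zip(xs, lams) if in_B(x)) / N
    muL = sum(1.0 - g(l) for x, l in zip(xs, lams) if in_B(x)) / N
    return muF, muL

xs = [-0.9, -0.2, 0.1, 0.4, 0.8]
lams = [0.0, 0.9, 0.12, 1.0, 0.5]
in_B = lambda x: 0.0 <= x <= 1.0            # the Borel set B = [0, 1]

muF, muL = leader_follower_mass(xs, lams, in_B)
muF_soft, muL_soft = leader_follower_mass(xs, lams, in_B, g=lambda l: l)
```

In both cases, \(\mu ^F(B)+\mu ^L(B)\) equals the fraction of agents lying in B, that is, the first marginal of the empirical measure evaluated on B.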

It is common in many-particle models to assume that each agent experiences a velocity which combines the action of the overall followers and leaders distributions. These velocities are therefore averages over the system, weighted by the probability \(\lambda \) that an agent located at x is a follower, and have the general form

where \(g_i:[0,1]\rightarrow {\mathbb {R}}\) (for \(i=1,2\)) are given Lipschitz continuous functions and \(K^{\star \bullet } :{\mathbb {R}}^{d} \rightarrow {\mathbb {R}}^{d}\) for \(\star ,\bullet \in \{F,L\}\) are suitable Lipschitz continuous interaction kernels. The choice \(g_1=g_2=g\), for which the velocities depend on \(\varPsi \) through the distributions \( \mu ^F_\varPsi \) and \(\mu ^L_\varPsi \), is quite natural in this kind of modeling. In the discrete setting, a velocity field of this kind reads as

Similar principles can be used to define the transition rates. Owing to the identification of \({\mathcal {P}}_1(\{F,L\})\) with [0, 1], the transition operator \({\mathcal {T}}_\varPsi (x,\lambda )\) will be identified with a scalar (see (76) below) instead of taking values in the two-dimensional space \({\mathcal {F}}(\{F,L\})\). Indeed, in this case \(({\mathcal {T}}_0)\) uniquely determines the second component of \({\mathcal {T}}_\varPsi \) once the first one is known. For instance, one can consider

with \(\alpha _\bullet \) having the typical form

and where \(g_3:[0,1]\rightarrow [0,1]\), \(H_\bullet :{\mathbb {R}}^d\rightarrow {\mathbb {R}}_+\), and \(\ell _\bullet :[0,1]\rightarrow [0,1]\) are given Lipschitz functions. Notice that condition \(({\mathcal {T}}_3)\) amounts to requiring that the conditions

are satisfied (equivalently, the evolution of \(\lambda \) is confined to [0, 1]). If one chooses \(g_3(\lambda )=\lambda \), then for fixed x and \(\varPsi \) the evolution of \(\lambda \) is governed by a linear master equation. Instead, for \(g_3=g\), the switching rates \(\alpha _F\) and \(\alpha _L\) are activated depending on the population to which an agent belongs. The function \(H_\bullet \) can be used to localize the effect of the overall distribution on the transition rates; with such a localization, an agent sitting at x interacts only with agents in a small neighborhood around x. Similarly, with a proper choice of \(\ell _\bullet \), one can tune the influence of the surrounding agents according to their probability of belonging to the population of followers or of leaders. The choice \(\ell _F=1-\ell _L=g\) corresponds to rates which depend on \(\varPsi \) through the distributions \(\mu ^F_\varPsi \) and \(\mu ^L_\varPsi \). Let us stress, however, that in general, even with these choices, it is not possible to decouple equation (14) into a system of equations for \(\mu ^F_\varPsi \) and \(\mu ^L_\varPsi \); these distributions can only be reconstructed after solving for \(\varPsi \). Some particular cases where decoupling is possible are discussed in [41, Proposition 4.8].
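The linear case \(g_3(\lambda )=\lambda \) can be illustrated by a two-state master equation with frozen rates. The Python sketch below assumes constant, hypothetical rates: `a` for the switch from leader to follower and `b` for the reverse switch. How \(\alpha _F\) and \(\alpha _L\) in (76) map onto `a` and `b` is not reproduced here.

The sketch checks two properties:

- the right-hand side points into [0, 1] at the endpoints, which is the mechanism behind the confinement of \(\lambda \);
- an explicit Euler step with \(\varDelta t\,(a+b)\le 1\) keeps \(\lambda \) in [0, 1] while relaxing it to the equilibrium \(a/(a+b)\).

```python
def master_rhs(lam, a, b):
    """Two-state linear master equation for the probability lam of being a follower:
    gain at rate a from the leaders, loss at rate b towards the leaders (hypothetical rates)."""
    return a * (1.0 - lam) - b * lam

def evolve(lam0, a, b, dt, steps):
    """Explicit Euler: lam_new = (1 - dt(a+b)) lam + dt(a+b) lam_star is a convex
    combination of lam and lam_star = a/(a+b) whenever dt*(a+b) <= 1."""
    assert dt * (a + b) <= 1.0
    lam, path = lam0, [lam0]
    for _ in range(steps):
        lam += dt * master_rhs(lam, a, b)
        path.append(lam)
    return path

a, b = 0.5, 0.2
inward = master_rhs(0.0, a, b) >= 0.0 and master_rhs(1.0, a, b) <= 0.0   # field points into [0, 1]
path_up = evolve(0.0, a, b, dt=0.1, steps=400)
path_down = evolve(1.0, a, b, dt=0.1, steps=400)
lam_star = a / (a + b)
```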

With the arguments of [41, Sect. 4], one can see that the choices of \(v_\varPsi \) and \({\mathcal {T}}_\varPsi \) made in (75) and (76) fit into our general framework. Let us remark that in [41, Sect. 4] only the case \(g(\lambda )=g_i(\lambda )=\lambda \), \(i=1,2,3\), was discussed, but the adaptation to the present, more general situation is straightforward.

A typical Lagrangian that we may consider should penalize the distance of the leaders from a desired goal. This may be encoded by a function of the form

where \({\bar{x}}\in {\mathbb {R}}^d\) is the position of the desired goal and \(\theta :[0,1]\rightarrow [0,1]\) is zero when \(\lambda \) is above a given threshold (a possible choice is even \(\theta (\lambda )=1-g(\lambda )\)). Moreover, a competing effect, depending on the overall distribution of the population, can be taken into account: leaders should stay as close as possible to the population of followers, in order to influence their behavior. This may be encoded by a function of the form

which favors leaders staying close to the barycenter of the followers distribution. Notice that the function \({\mathcal {L}}_2\) depends continuously on \(\varPsi \) as long as \(\mu _\varPsi ^F({\mathbb {R}}^d)>0\), which is always the case in practical situations. Hence, the Lagrangian of the system is the sum

for \(\alpha \in [0,1]\) a given constant.
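The interplay between the two terms can be sketched on an empirical measure in one dimension. The Python sketch below computes the followers' barycenter as \(\int x\,\mathrm{d}\mu ^F/\mu ^F({\mathbb {R}}^d)\), which is well defined only when \(\mu ^F({\mathbb {R}}^d)>0\), as remarked above.

The exact displayed forms of \({\mathcal {L}}_1\), \({\mathcal {L}}_2\), and their sum are not reproduced, so the sketch rests on these assumptions:

- squared distances;
- the weight \(\theta =1-g\);
- the convex combination \(\alpha {\mathcal {L}}_1+(1-\alpha ){\mathcal {L}}_2\), averaged over the agents.

```python
def followers_barycenter(xs, lams, g):
    """Barycentre of mu_F: sum_i g(lam_i) x_i / sum_i g(lam_i); needs mu_F(R^d) > 0."""
    mass = sum(g(l) for l in lams)
    if mass <= 0.0:
        raise ValueError("followers distribution has zero mass")
    return sum(g(l) * x for x, l in zip(xs, lams)) / mass

def lagrangian(xs, lams, x_goal, alpha, g):
    """Hypothetical averaged cost: alpha*theta(lam)*|x - x_goal|^2
    + (1 - alpha)*theta(lam)*|x - barycentre|^2, with theta = 1 - g."""
    b_F = followers_barycenter(xs, lams, g)
    total = 0.0
    for x, l in zip(xs, lams):
        theta = 1.0 - g(l)                  # only (probable) leaders are penalised
        total += theta * (alpha * (x - x_goal) ** 2 + (1.0 - alpha) * (x - b_F) ** 2)
    return total / len(xs)

g_soft = lambda l: l                        # the soft choice g(lambda) = lambda
xs = [-0.5, 0.0, 0.5, 1.0]
lams = [0.0, 1.0, 1.0, 0.0]                 # two leaders (lam = 0), two followers (lam = 1)
cost = lagrangian(xs, lams, x_goal=1.0, alpha=0.5, g=g_soft)
```

The explicit error raised for an empty followers population mirrors the continuity caveat on \({\mathcal {L}}_2\).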

Finally, a very simple and natural family of cost functions is

In particular, \(\phi _{p}\) is strictly convex and \(\{\phi _{p} = 0\} = \{0\}\), so that the conclusions of Proposition 2 hold true in the case \(h_{\varPsi } = h\) mentioned above. Namely, the optimal control \({\varvec{u}}\in L^{1}([0,T] ; ({\mathbb {R}}^{d})^{N})\) in the N-particle problem will act only on the population of leaders, while the evolution of the population of followers will be determined by the velocities and transition rates detailed above.
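The mechanism behind this conclusion can be seen on a one-step toy problem; Proposition 2 itself is not reproduced here. If \(h(\lambda _i)=0\), the control of agent i does not affect the dynamics but still costs \(\phi _p(u_i)\), which is positive unless \(u_i=0\). Hence the minimizer is \(u_i=0\).

The Python sketch below assumes \(\phi _p(u)=|u|^p/p\) with \(p>1\) as a hypothetical member of the family. It minimizes a tracking term plus \(\varDelta t\,\phi _p\) by ternary search, which is valid because the objective is strictly convex.

```python
def phi_p(u, p):
    """Hypothetical cost |u|^p / p (strictly convex for p > 1, vanishing only at 0)."""
    return abs(u) ** p / p

def argmin_convex(f, lo, hi, iters=200):
    """Ternary search for the minimiser of a strictly convex function on [lo, hi]."""
    for _ in range(iters):
        m1 = lo + (hi - lo) / 3.0
        m2 = hi - (hi - lo) / 3.0
        if f(m1) < f(m2):
            hi = m2
        else:
            lo = m1
    return 0.5 * (lo + hi)

def activated_step_control(x, x_goal, h_val, dt, p, bound=100.0):
    """Minimise 0.5*(x + dt*h_val*u - x_goal)^2 + dt*phi_p(u) over u in [-bound, bound]."""
    def J(u):
        return 0.5 * (x + dt * h_val * u - x_goal) ** 2 + dt * phi_p(u, p)
    return argmin_convex(J, -bound, bound)

u_follower = activated_step_control(-0.8, 0.5, h_val=0.0, dt=0.1, p=2.0)  # inactive agent
u_leader = activated_step_control(-0.8, 0.5, h_val=1.0, dt=0.1, p=2.0)    # fully active agent
```

For \(p=2\) and \(h=1\), the minimizer is \(-(x-{\bar{x}})/(1+\varDelta t)\), which gives a useful sanity check.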

5.1.1 Test 1: Opinion Dynamics with Emerging Leaders Population

We study the setting proposed in [2, 31] for opinion dynamics in the presence of leaders' influence, and we assume that \(x\in [-1,1]\), where \(\pm 1\) represent two opposite opinions. The interaction field \(v_{\varPsi }\) in (75) is characterized by bounded confidence kernels with the following structure

where \(\varepsilon \ge 0\) is a regularization parameter for the characteristic function \(\chi \) and \(\kappa _{\bullet \star }\) represent the confidence intervals with the following numerical values,

The weighting functions \(g_1,g_2\) are such that \( g_1(\lambda )\equiv g_2(\lambda )\equiv \ell (\lambda )\) with

The transition operator \({\mathcal {T}}_\varPsi (x,\lambda )\) in (76) is identified by the following quantities

where the functions \({\mathcal {D}}_F\) and \( {\mathcal {D}}_L\) represent the concentration of followers and leaders at position x and are defined by

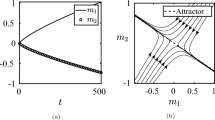

with \(G_F(\lambda ) = \ell (\lambda )\) and \(G_L(\lambda ) = 1-G_F(\lambda )\), where \(S_\bullet \) are normalization constants ensuring that the concentrations are bounded above by one, i.e., \({\mathcal {D}}_\bullet (x,\varPsi ) \in [0,1]\), which preserves the positivity of the rates \(\alpha _F\) and \(\alpha _L\), and with the following parameters