Abstract

We consider a partially hinged composite plate problem and we investigate qualitative properties, e.g. symmetry and monotonicity, of the eigenfunction corresponding to the density minimizing the first eigenvalue. The analysis is performed by showing related properties of the Green function of the operator and by applying polarization with respect to a fixed plane. As a by-product of the study, we obtain a Hopf type boundary lemma for the operator having its own theoretical interest. The statements are complemented by numerical results.


1 Introduction

Let \(\Omega =(0,\pi )\times (-\ell ,\ell )\subset {\mathbb R}^2\) with \(\ell >0\). We consider the weighted eigenvalue problem:

where \(\sigma \in [0,1)\) and, for \(\alpha ,\beta \in (0,+\infty )\) with \(\alpha <\beta \) fixed, p belongs to the following family of weights:

The interest in problem (1.1) is due to the fact that it describes the oscillating modes of the non-homogeneous partially hinged rectangular plate \(\Omega \) which, up to scaling, can model the decks of footbridges and suspension bridges, see [6, 23, 25] for more details; in particular, the partially hinged boundary conditions reflect the fact that bridge decks are supported by the ground only at the short edges. We also remark that, in this framework, \(\sigma \) represents the so-called Poisson ratio, which for most materials belongs to the interval [0, 1), p represents the density function of the plate, and the integral condition in (1.2) means that the total mass of the plate is preserved.

In order to study the stability properties of the plate, it is important to investigate the effect of the density function p on the eigenvalues, i.e. on the frequencies of the plate. In this respect, the starting point of the study is the minimization problem:

where \(\lambda _1(p)\) denotes the first eigenvalue of (1.1). There is a rich literature dealing with the second order Dirichlet version of (1.1)–(1.3), which is usually called the composite membrane problem; it corresponds to the problem of building a body of prescribed shape and mass out of given materials in such a way that the first frequency of the resulting membrane is as small as possible, see e.g. [14,15,16,17] and the monograph [27]. In the fourth order case, problem (1.3) is called the composite plate problem and has mainly been studied under clamped (Dirichlet) or hinged (Navier) boundary conditions, see e.g. [3, 18,19,20,21,22, 29]. As far as we are aware, the partially hinged composite plate problem (1.1)–(1.3) has only been studied in [9], see also [7] for results about higher eigenvalues; in [9] it is proved that the infimum in (1.3) is achieved by the piecewise constant density:

where \(\chi _{S}\) denotes the characteristic function of a suitable set \(S \subset \Omega \), see Proposition 2.6 in Sect. 2. This information is useful in engineering applications, since assembling two materials of constant density is simpler than manufacturing a material of variable density; however, the region S is given in terms of sub- and superlevel sets of the eigenfunction \(u_{{\widehat{p}}}\) of \(\lambda _1({\widehat{p}})\), which is not explicitly known. Hence, in order to obtain more precise information about the location of the two materials, it is important to study the qualitative properties of \(u_{{\widehat{p}}}\). In this field of research, typical results concern qualitative properties, such as symmetry or monotonicity, of the first eigenfunction corresponding to the minimizer of (1.3), see e.g. [3, 15] and references therein. From this point of view, a crucial obstruction when passing from the membrane to the plate problem, i.e. from the second to the fourth order case, is the loss of the maximum and comparison principles that usually enter the techniques used to prove symmetry results, such as reflection methods or moving plane techniques. Nevertheless, some interesting results have recently been obtained in [19, 20] for the fourth order equation by exploiting suitable choices of the boundary conditions and of the geometry of the domain for which proper comparison principles hold (e.g. Navier boundary conditions on sufficiently smooth domains or Dirichlet boundary conditions on balls).

Regarding problem (1.1), the above mentioned difficulties are further increased by the unusual boundary conditions, and only a few results about qualitative properties of the first eigenfunction were proved in [9] for \(p=p(y)\). An important step forward in the study of (1.1) was recently made in [8], where the Fourier expansion of the Green function of the operator in (1.1) was computed explicitly and shown to be positive, see Proposition 2.1 below. As a direct consequence of these results, the first eigenfunction of (1.1) is positive and the first eigenvalue is simple; neither fact is obvious when dealing with higher order PDEs. The main aim of the present paper is to investigate reflection and monotonicity properties of the Green function in order to exploit them, where possible, to deduce related properties of the eigenfunction \(u_{{\widehat{p}}}\). Broadly speaking, the idea is to replace maximum principle arguments, not available in this case, with arguments based on the explicit knowledge of the Green function. To the best of our knowledge, this idea was first exploited in [10, 24]; in particular, in [10] a variant of the moving plane method, relying on fine estimates for the Green function [11], was developed in order to prove Gidas–Ni–Nirenberg type symmetry results for higher order Dirichlet problems in the ball. A similar approach was recently adopted in [19] for the Dirichlet composite plate problem in the ball, in order to prove radial symmetry and monotonicity of the first eigenfunction. We notice that all the above mentioned proofs are based on polarization, a simple two-point rearrangement of functions which is well defined in first order Sobolev spaces, in spaces of continuous functions and in \(L^p\)-spaces, see e.g. [5, 12, 13, 31, 32].

However, since the Green function of (1.1) is only known through its Fourier expansion, it is hard in our case to obtain all the precise information available for the Green function of the Dirichlet problem in balls, see [26, Chap. 6], and, in turn, to adopt the moving plane method as done in [19]. Nevertheless, we still manage to apply polarization by fixing the reflection plane to be the line \(x=\pi /2\) and by exploiting suitable reflection properties of the Green function with respect to this line. More precisely, we first establish a duality principle which reduces our minimization problem in \(H^2\) to a maximization problem in \(L^{2}\) and then, with the help of polarization, we prove a partial symmetry result in the x-direction for the maximizers of the reduced problem. We remark that, in general, it is quite delicate to exploit polarization in the higher order case, since the polarization of an \(H^2\)-function is in general no longer in \(H^2\); the duality principle helps us overcome this difficulty, see Lemma 5.1. We refer the interested reader to [10], where a similar idea was originally exploited to prove partial symmetry of minimizers for subcritical higher order Sobolev embeddings into weighted \(L^p\) spaces. Unfortunately, reflecting with respect to a fixed plane does not yield monotonicity information about \(u_{{\widehat{p}}}\), as happens instead when applying the moving plane method. However, by a direct inspection of the derivatives of the Green function, we succeed in deducing some local information about the derivatives of \(u_{{\widehat{p}}}\). It is worth mentioning that, as a by-product of our analysis, we also obtain a Hopf type boundary lemma for the operator in (1.1), which is of independent theoretical interest, see Corollary 2.3.

The paper is organised as follows: in Sect. 2 we state our problem precisely together with our main results, while in Sect. 3 we complement the study with numerical results. The remaining sections are devoted to the proofs.

2 Main Results

The natural functional space in which to set problem (1.1) is

Note that the condition \(u=0\) is to be understood in the classical sense, because \(\Omega \) is a planar domain and the energy space \(H^2_*(\Omega )\) embeds into continuous functions. Furthermore, for fixed \(\sigma \in [0,1)\), \(H^2_*(\Omega )\) is a Hilbert space when endowed with the scalar product

with associated norm \( \Vert u\Vert _{H^2_*(\Omega )}^2=(u,u)_{H^2_*(\Omega )} \, \) which is equivalent to the usual norm in \(H^2(\Omega )\), see [23, Lemma 4.1]. Problem (1.1) in weak form reads

Hence, the first eigenvalue can be characterized as follows:

It is well known that the sign and simplicity properties of the first eigenfunction of a differential operator are closely related to the sign properties of its Green function. For fixed \(p=(\rho ,w)\in \overline{\Omega }\), the Green function of the operator in (1.1) is, by definition, the unique solution \(G(\cdot ,p)\in H^2_*(\Omega )\) to:

and it was recently computed in [8]; we recall the precise statement below.

Proposition 2.1

[8] There holds

where the \(\varphi _m\in C^2([-\ell , \ell ]\times [-\ell , \ell ])\) are strictly positive and strictly decreasing with respect to m, i.e.

Furthermore, \(G\in C^0(\overline{\Omega }\times \overline{\Omega })\) and

For the explicit (and very involved) expression of the functions \(\varphi _m\), we refer the interested reader to [8]. In the present paper we enrich the statement of Proposition 2.1 by showing that:

Theorem 2.2

For all \(y,w \in [-\ell ,\ell ]\), there holds:

where the derivatives of G in the x-direction are understood in the classical sense and \(G_x\in C^0(\overline{\Omega }\times \overline{\Omega })\). Furthermore, since \(G(x,y,\rho ,w)=G(\rho ,y,x,w)\), the above results also hold with the roles of x and \(\rho \) interchanged.

It is worth pointing out that neither the proof of (2.8) nor that of Theorem 2.2 follows trivially from (2.6); indeed, both require a careful inspection of each term of the expansion and sharp estimates. In this respect, the hardest part is the proof of (2.7), which follows only after lengthy computations.

A remarkable consequence of Proposition 2.1 is the validity of the positivity preserving property for the operator in (1.1) whereas Theorem 2.2 can be exploited to prove a Hopf type boundary lemma. For the sake of clarity we collect both statements in the following:

Corollary 2.3

If \(f\in L^2(\Omega )\) and \(u\in H^2_*(\Omega )\) is a (weak) solution to

then the following implication holds

where the derivatives of u in (2.9) are understood in the classical sense.

Coming back to problem (1.1), in what follows we will always assume

By exploiting Proposition 2.1 and Theorem 2.2, we also obtain the following statement about the first eigenfunction of (1.1):

Corollary 2.4

Let \(p\in P_{\alpha ,\beta }\) with \(P_{\alpha ,\beta }\) as in (1.2). Then, the first eigenvalue \(\lambda _1(p)\) of problem (1.1) is simple and the first eigenfunction \(u_{p}\) is of one sign in \(\Omega \). Furthermore, \(u_p \in C^{3,\gamma }(\overline{\Omega })\) for some \(0<\gamma <1\) and, assuming \(u_p\) positive, we have:

Next we set

Definition 2.5

A couple \( (\widehat{p},{\widehat{u}}) \in P_{\alpha ,\beta } \times H^2_*(\Omega )\) is called an optimal pair if \({\widehat{p}}\) achieves the infimum in (2.11) and \({\widehat{u}}\) is an eigenfunction associated with \(\lambda _1({\widehat{p}})\).

From [9, Theorem 3.2], suitably combined with Corollary 2.4, we have the following:

Proposition 2.6

[9] There exists an optimal pair \((\widehat{p},\widehat{u}) \in P_{\alpha ,\beta } \times H^2_*(\Omega )\) with \(\widehat{u}\) positive. Furthermore,

where \(\chi _{S}\) and \( \chi _{\Omega \setminus S}\) are the characteristic functions of the sets S and \(\Omega \setminus S\); \(S\subset \Omega \) is such that \(|S|=\frac{\beta -1}{\beta -\alpha }\,|\Omega |\) and \(S := \{ (x,y)\in \Omega \,:\,0<\widehat{u}(x,y) \leqslant \sqrt{t} \}\) for some \(t> 0\).
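As a quick consistency check of the measure prescribed for S, write the optimal weight as \(\widehat{p}=\alpha \chi _{S}+\beta \chi _{\Omega \setminus S}\) and assume, as the formula for \(|S|\) requires in order to have \(0<|S|<|\Omega |\), that \(\alpha <1<\beta \). Then \(\widehat{p}\) satisfies exactly the mass constraint \(\int _\Omega p\,dxdy=|\Omega |\) built into \(P_{\alpha ,\beta }\):

$$\begin{aligned} \int _\Omega \widehat{p}\,dxdy=\alpha |S|+\beta \big (|\Omega |-|S|\big )=\beta |\Omega |-(\beta -\alpha )\,\frac{\beta -1}{\beta -\alpha }\,|\Omega |=|\Omega |. \end{aligned}$$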

Proposition 2.6 gives the useful information that optimal plates, in the sense of Definition 2.5, are made of only two materials. However, the region S is given in terms of the optimal eigenfunction \(\widehat{u}\), which is not explicitly known; hence, in order to locate the materials, it is important to investigate symmetry and monotonicity properties of \(\widehat{u}\). To this aim, we set

and we denote by \(({\overline{x}}, y)\in \mathbb {R}^2\) the reflection of \((x,y)\in \mathbb {R}^2\) with respect to \(\partial \mathcal {H}_{\frac{\pi }{2}}\), i.e. \({\overline{x}}=\pi -x\). By exploiting related reflection properties of the Green function, see Lemma 6.4 in Sect. 6, we prove:

Theorem 2.7

Let \((\widehat{p},\widehat{u}) \in P_{\alpha ,\beta } \times H^2_*(\Omega )\) be an optimal pair with \(\widehat{u}\) positive. Then one of the following alternatives holds:

- (i) \(\widehat{u}(x,y)>\widehat{u}({\overline{x}},y)\) for all \((x,y) \in (0, \frac{\pi }{2})\times [-\ell ,\ell ]\,;\)
- (ii) \(\widehat{u}(x,y)<\widehat{u}({\overline{x}},y)\) for all \((x,y) \in (0, \frac{\pi }{2})\times [-\ell ,\ell ]\,;\)
- (iii) \(\widehat{u} (x,y)= \widehat{u}({\overline{x}},y)\) for all \((x,y) \in [0,\pi ]\times [-\ell ,\ell ]\).

In short, according to Theorem 2.7, two situations may occur: either \(u_{{\widehat{p}}}\) is symmetric w.r.t. \(\partial \mathcal {H}_{\frac{\pi }{2}}\), i.e. (iii) occurs, or it is “concentrated” on one of the two half plates delimited by \(\partial \mathcal {H}_{\frac{\pi }{2}}\), i.e. (i) or (ii) occurs. In particular, if (i) occurs then, by reflecting the optimal pair with respect to \(x=\frac{\pi }{2}\), we obtain an optimal pair for which (ii) occurs, so uniqueness of the optimal pair certainly fails. We notice that uniqueness is not guaranteed even in case (iii), since there could exist many weights symmetric with respect to \(x=\frac{\pi }{2}\) and having the form (2.12); this case could be ruled out by showing very precise monotonicity information about \(\widehat{u}\). Unfortunately, since the reflection plane is kept fixed, the polarization approach adopted in the proof of Theorem 2.7 says nothing about the monotonicity of \(\widehat{u}\); nevertheless, by direct inspection of the representation formula of solutions, we obtain the following local information.

Proposition 2.8

Let \((\widehat{p},\widehat{u}) \in P_{\alpha ,\beta } \times H^2_*(\Omega )\) be an optimal pair with \(\widehat{u}\) positive. Then \(\widehat{u}\) satisfies (2.10) and one of the following holds:

- if case (i) of Theorem 2.7 holds, then \(\widehat{u}_x\big (\frac{\pi }{2},y\big )<0\) for all \(y\in [-\ell ,\ell ]\);
- if case (ii) of Theorem 2.7 holds, then \(\widehat{u}_x\big (\frac{\pi }{2},y\big )>0\) for all \(y\in [-\ell ,\ell ]\);
- if case (iii) of Theorem 2.7 holds, then \(\widehat{u}_x\big (\frac{\pi }{2},y\big )=0\) for all \(y\in [-\ell ,\ell ]\).

In view of the results stated so far, piecewise constant densities symmetric with respect to \(x=\frac{\pi }{2}\), with the denser material \(\beta \) located near this line, are among the candidates for being optimal in the sense of Definition 2.5. Nevertheless, due to the high complexity of the analytic expression of the coefficients in (2.6), a theoretical proof of their optimality seems out of reach with our techniques; their optimality is, however, supported by the numerical results we provide in Sect. 3.

We conclude the section by pointing out that, even in the second order case, the picture of results about symmetry and monotonicity properties of minimizers of Poincaré inequalities on rectangular domains is far from complete when mixed boundary conditions are considered. See e.g. [4, Sect. 6], where the authors leave as an open problem the one-dimensionality of extremals for certain Poincaré inequalities arising in the study of the stationary Navier–Stokes equation in a square under mixed Dirichlet–Neumann boundary conditions. For results in this direction, but under Neumann boundary conditions, we refer the interested reader to [28, Chap. II.5], [30] and references therein.

3 Numerical Results

In this section we illustrate some numerical results which complement the statements of Theorem 2.7 and Proposition 2.8.

3.1 Numerical Algorithm to Solve (2.11)

In order to find an optimal weight, we adopt an algorithm based on the following rearrangement lemma.

Lemma 3.1

[7, Lemma 5.4] Let \(u\in H^2_*(\Omega )\) be strictly positive in \(\Omega \). Then, the problem

admits the solution \( p_u(x,y) = \alpha \chi _{S} (x,y)+ \beta \chi _{\Omega \setminus S}(x,y)\) for a.e. \((x,y)\in \Omega \), where \(S=S(u) \subset \Omega \) is such that \(|S|=\frac{\beta -1}{\beta -\alpha }\,|\Omega |\). Moreover, setting

we have that \( \{(x,y)\in \Omega \,:0<u(x,y)< \sqrt{t} \} \subseteq S \subseteq \{(x,y)\in \Omega \,: 0<u(x,y)\leqslant \sqrt{t}\}\,. \)
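On a uniform grid, the weight \(p_u\) of Lemma 3.1 amounts to a bathtub-type rearrangement: the lighter material \(\alpha \) is placed on the cells where u is smallest until the prescribed measure of S is reached, and \(\beta \) everywhere else. The Python sketch below is only an illustration of this step under our own assumptions. The function name `two_phase_weight` and the equal-area grid are hypothetical and do not come from [7]. The function also returns a threshold t with \(S\subseteq \{u\leqslant \sqrt{t}\}\). For the mass to be matched exactly, the number of cells times \(\frac{\beta -1}{\beta -\alpha }\) should be an integer.

```python
import numpy as np

def two_phase_weight(u, alpha, beta):
    """Hypothetical discrete version of p_u from Lemma 3.1 on equal-area cells.

    Puts alpha on the cells where u is smallest (the set S, with
    |S| = (beta-1)/(beta-alpha) |Omega|) and beta on the remaining cells.
    Returns the weight and a threshold t with S contained in {u <= sqrt(t)}.
    """
    u = np.asarray(u, dtype=float)
    k = int(round((beta - 1.0) / (beta - alpha) * u.size))  # number of cells in S
    order = np.argsort(u, axis=None, kind="stable")          # flattened, increasing u
    p = np.full(u.size, beta)
    p[order[:k]] = alpha                                     # lighter material where u is small
    t = float(u.ravel()[order[k - 1]] ** 2) if k > 0 else 0.0  # largest u^2 on S
    return p.reshape(u.shape), t
```

Ties at the threshold value are broken arbitrarily by the sort. This mirrors the two inclusions \(\{u<\sqrt{t}\}\subseteq S\subseteq \{u\leqslant \sqrt{t}\}\) in the lemma.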

To solve (2.11) we run the numerical scheme below, adapted from [7]; see also [18], where the algorithm was proposed for the clamped and simply supported problems, and [15] for related numerical results in the second order case.

- (i) We solve (1.1) numerically with an arbitrary weight \(p^{(i)}\) and determine the corresponding first eigenvalue \(\lambda ^{(i)}_1\) and first eigenfunction \(u^{(i)}\).
- (ii) We compute numerically \(t^{(i)}>0\) such that \(|S^{(i)}|=| \{(x,y)\in \Omega \,:0<u^{(i)}(x,y)\leqslant \sqrt{ t^{(i)}}\}|=\frac{\beta -1}{\beta -\alpha }|\Omega |\) and define the weight
$$\begin{aligned} p^{(i+1)}:=p_{u^{(i)}}=\alpha \chi _{S^{(i)}} (x,y)+ \beta \chi _{\Omega \setminus S^{(i)}}(x,y). \end{aligned}$$
- (iii) We solve (1.1) numerically with the weight \(p^{(i+1)}\) and determine the corresponding first eigenvalue \(\lambda ^{(i+1)}_1\) and first eigenfunction \(u^{(i+1)}\).
- (iv) Thanks to Lemma 3.1 we get
$$\begin{aligned} \Vert \sqrt{p^{(i+1)}}u^{(i)}\Vert _2^2\geqslant \Vert \sqrt{p^{(i)}}u^{(i)}\Vert _2^2. \end{aligned}$$
We can apply Lemma 3.1 with \(S=S^{(i)}\) as in step (ii), since each \(u^{(i)}\) solves the equation in (1.1) a.e. and is strictly positive, hence its level sets have zero measure.
- (v) By the characterization (2.5) we obtain
$$\begin{aligned} \lambda ^{(i+1)}_1= \min _{\begin{array}{c} u \in H^2_*(\Omega )\setminus \{0\} \end{array}} \frac{\Vert u\Vert _{H^2_*}^2}{\Vert \sqrt{p^{(i+1)}}\,u\Vert _{2}^2}=\frac{\Vert u^{(i+1)}\Vert _{H^2_*}^2}{\Vert \sqrt{p^{(i+1)}}\,u^{(i+1)}\Vert _{2}^2}\leqslant \frac{\Vert u^{(i)}\Vert _{H^2_*}^2}{\Vert \sqrt{p^{(i+1)}}\,u^{(i)}\Vert _{2}^2}\leqslant \frac{\Vert u^{(i)}\Vert _{H^2_*}^2}{\Vert \sqrt{p^{(i)}}\,u^{(i)}\Vert _{2}^2}=\lambda ^{(i)}_1. \end{aligned}$$
- (vi) Iterating the procedure, we obtain a nonincreasing sequence \(i\mapsto \lambda _1^{(i)}\) bounded from below by \(\lambda _{\alpha ,\beta }\); hence the sequence converges to some \(\overline{\lambda }_1\geqslant \lambda _{\alpha ,\beta }\).

The only drawback of this algorithm is that we do not know a priori whether \(\overline{\lambda }_1= \lambda _{\alpha ,\beta }\); from a numerical point of view, this issue may be circumvented by repeating the procedure with several different initial weights and checking that the same limit is always obtained. To find an approximate solution of (1.1) for a given weight, we expand the solutions in Fourier series, adopting as orthonormal basis of \(L^2(\Omega )\) the explicit eigenfunctions of (1.1) with \(p\equiv 1\), known from [23]; to obtain a numerical approximation, we truncate the series at some \(N \in {\mathbb N}_{+}\) and solve a linear system of 2N equations whose unknowns are the Fourier coefficients, see [7] for details.
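The monotonicity mechanism of steps (i)–(vi) can be reproduced on a much simpler model. The Python sketch below is a hypothetical one-dimensional toy and not the scheme used for the figures. It replaces the plate by a hinged beam on \((0,\pi )\), discretized by finite differences (the square of the Dirichlet second-difference matrix) instead of the Fourier expansion described above. It uses \(\alpha =0.5\) and \(\beta =2\) on 60 nodes, so that S contains an exact number of nodes. All function names are ours. As in step (v), the recorded eigenvalues form a nonincreasing sequence.

```python
import numpy as np

def first_eigenpair(A, p):
    """Smallest eigenpair of A u = lam * diag(p) u, with A SPD and p > 0."""
    s = 1.0 / np.sqrt(p)
    M = s[:, None] * A * s[None, :]           # P^{-1/2} A P^{-1/2}, symmetric
    vals, vecs = np.linalg.eigh(M)            # eigenvalues in ascending order
    u = s * vecs[:, 0]                        # back to u = P^{-1/2} w
    u *= np.sign(u[np.argmax(np.abs(u))])     # pick the positive eigenvector
    return vals[0], u

def two_phase(u, alpha, beta):
    """Discrete p_u: alpha on the k nodes where u is smallest, beta elsewhere."""
    k = int(round((beta - 1.0) / (beta - alpha) * u.size))
    p = np.full(u.size, beta)
    p[np.argsort(u, kind="stable")[:k]] = alpha
    return p

def toy_iteration(n=60, alpha=0.5, beta=2.0, iters=15, seed=0):
    """Hypothetical 1D analogue of steps (i)-(vi) for a hinged beam on (0, pi)."""
    h = np.pi / (n + 1)
    D = (2 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)) / h**2  # -d^2/dx^2, u = 0 at ends
    A = D @ D                                  # discrete hinged (Navier) beam operator
    rng = np.random.default_rng(seed)
    p = two_phase(rng.random(n), alpha, beta)  # arbitrary admissible starting weight
    history = []
    for _ in range(iters):
        lam, u = first_eigenpair(A, p)         # steps (i) and (iii)
        history.append(lam)
        p = two_phase(u, alpha, beta)          # step (ii)
    return np.array(history), p

history, p_final = toy_iteration()
```

Monotonicity holds here for the same reason as in step (v). Every weight produced by `two_phase` has the same number of \(\alpha \)-nodes, and the rearrangement maximizes \(\sum _j p_j u_j^2\) among such weights.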

3.2 Conclusions

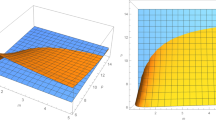

The level sets of \(u^{(1)}\) (left) and \({\overline{u}}\) (right) corresponding respectively to the densities \(p^{(1)}(x,y)\) and \({\overline{p}}(x,y)\). We assume \(N=20\) and (2.1)

In Figs. 1 and 2 we show some of our results on a plate having the following features:

More precisely, in Fig. 1 we compare the level sets of \(u^{(1)}\) (left) and of \({\overline{u}}\) (right) corresponding, respectively, to the weights \(p^{(1)}\) and \({\overline{p}}\) plotted below, i.e. the initial and the final weight of our algorithm: even though \(p^{(1)}\) and \(u^{(1)}\) are not \(\pi /2\)-symmetric, \({\overline{p}}\) and \({\overline{u}}\) are. A comparison between the level sets of \(u^{(1)}\) (black) and \({\overline{u}}\) (red) is given in Fig. 2. We started the algorithm from very different initial weights \(p^{(1)}\), both symmetric and non-symmetric; after some iterations, the procedure always converged to the same density \({\overline{p}}\), symmetric with respect to \(x=\pi /2\). Furthermore, we repeated the experiments decreasing the width \(\ell \) of the plate towards values consistent with common bridge design, e.g. \(\ell =\frac{\pi }{150}\). In all cases we observed the same kind of results, which suggest placing the denser material in the middle of the plate; we point out that, as \(\ell \) decreases, the weights \({\overline{p}}\) are equal to \(\beta \) on a region which is approximately a rectangle centered on the line \(x=\pi /2\).

In conclusion, the observed results lead us to state the following conjecture:

there exists an optimal pair \(({\widehat{p}}, {\widehat{u}})\) of (2.11) with \({\widehat{u}}\) symmetric w.r.t. \(x=\pi /2\) and s.t.

Clearly, if the above conjecture holds, by taking \(\widehat{p}=p_{\widehat{u}}\) as given in (2.12), we get that the corresponding optimal weight is symmetric w.r.t. \(x=\pi /2\) and it is equal to \(\beta \) in the central part of the plate.

4 Proof of Theorem 2.2

We define the series

with the \(\varphi _m\) as in (2.6), and we state two preliminary lemmas. Notice that we neglect the dependence on y, w since it does not play a role in the proofs.

Lemma 4.1

Let the series \(\mathcal {S}_1(z)\) be as in (4.15); then

Proof

We split the proof into three steps.

Step 1 Thanks to Proposition 2.1–(2.7) we know that \( 0<\varphi _{m}<\varphi _1\) \(\forall m>1\); hence we obtain

and, in turn, that

Since \(\arcsin \big (\frac{\pi ^2}{6}-1\big )<\frac{\pi }{4}\), through (4.17) we have

Step 2 We fix \(N\geqslant 3\) and write \(\mathcal {S}_1(z)= \sum _{m=1}^N \dfrac{\varphi _m}{m^2}\sin (mz)+\sum _{m=N+1}^\infty \dfrac{\varphi _m}{m^2}\sin (mz)\,. \) Then we exploit the elementary inequality \( \sin (mz)>\sin (z)\) for all \(z\in \big (0,\frac{\pi }{N+1}\big )\) and all \(m=2,\dots ,N\) (see [8, Lemma 6.3] for a proof), together with Proposition 2.1–(2.7), to get

On the other hand, by Proposition 2.1–(2.7), we get

By combining (4.19) and (4.20) we infer

where

Next we denote by \(z_N\) the unique solution to the equation:

the above definition makes sense for all \(N\geqslant 1\) since the map \(N\mapsto C_N\) is positive, strictly decreasing and \(0<C_N<1\). We prove that

When \(N=3\), \(z_3\approx 0.21<\frac{\pi }{5}\) and (4.22) follows. We complete the proof of (4.22) by showing that

To this purpose we notice that \(\sum \limits _{m=1}^N \frac{1}{m^2}> 1\) and \(\sum \limits _{m=N+1}^\infty \frac{1}{m^2}< \int _N^\infty \frac{1}{x^2}\,dx=\frac{1}{N}\), implying that

To tackle (4.23) we use the estimate:

Combining this with (4.24), (4.23) follows by noticing that \( \dfrac{1}{N}<\dfrac{3}{N+2}\) for all \(N\geqslant 4\). Finally, in view of (4.22), we get

Hence, by combining (4.18) with (4.26) written for all \(3\leqslant N \leqslant {\overline{N}}\), we obtain \( \mathcal {S}_1(z)>0\) \(\forall z\in \big [\frac{\pi }{{\overline{N}}+2},\frac{3}{4}\pi \big ]\) and passing to the limit as \({\overline{N}}\rightarrow +\infty \) we conclude that

Step 3 It remains to consider \(z\in \big (\frac{3}{4}\pi ,\pi \big )\). For \(N\geqslant 3\), odd integer, we set \({\overline{z}}=\pi -z\) and we rewrite the series as

By Proposition 2.1 we know that \(\varphi _m>0\) and that \(\varphi _m\) is strictly decreasing with respect to \(m\in \mathbb {N}^+\) for all \(y,w\in [-\ell ,\ell ]\); hence the following estimate holds:

Next, by exploiting the inequality

(see Lemma 7.1 in the Appendix for a proof) and (4.29), we get

for all \({\overline{z}}\in (0,\dfrac{\pi }{N+1})\). On the other hand, by the monotonicity of the \(\varphi _m\), we get

From (4.30)–(4.31), for all \(N\geqslant 3\) odd, we infer

where

Next we denote by \({\overline{z}}_N\) the unique solution to the equation

The above definition makes sense for all \(N\geqslant 3\), odd, since the map \(N\mapsto {\overline{C}}_N\) is positive, strictly decreasing and \(0<{\overline{C}}_N<1\).

We prove that \( {\overline{C}}_N< \sin \big (\frac{\pi }{N+3}\big )\) for all \(N\geqslant 3\), odd. To this aim we note that \( \frac{3}{4}+\sum \limits _{\begin{array}{c} m=3\\ \text {odd} \end{array}}^N \big [\frac{1}{m}-\frac{1}{m+1}\big ]^2> \frac{3}{4}\) and \(\sum \limits _{m=N+2}^\infty \frac{1}{m^2}< \int _{N+1}^\infty \frac{1}{x^2}\,dx=\frac{1}{N+1}, \) implying \( {\overline{C}}_N< \frac{4}{3(N+1)}\) \(\forall N\geqslant 3.\) Finally, by exploiting (4.25), we get \({\overline{C}}_N <\frac{4}{3(N+1)}\leqslant \frac{3}{N+3}\leqslant \sin \big (\frac{\pi }{N+3}\big )\) for all \(N\geqslant 3\).

Summarizing, from the above estimates we get

By repeating the above argument for all \(3 \leqslant N \leqslant {\overline{N}}\) with N and \({\overline{N}}\) odd and taking the union of the sets, we finally obtain \( \mathcal {S}_1(z)>0\) \(\forall z\in \big (\frac{3}{4}\pi ,\pi -\frac{\pi }{{\overline{N}}+3}\big ]\). Hence, passing to the limit as \({\overline{N}}\rightarrow +\infty \), and combining with (4.27) we obtain (4.16). \(\square \)
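The elementary numerical facts used in Steps 1–3 are easy to double-check by machine. The Python sketch below does so under two assumptions of ours, since the corresponding displays are not reproduced here. First, \(C_N\) is taken to be the ratio between the tail \(\sum _{m>N}m^{-2}\) and the partial sum \(\sum _{m\leqslant N}m^{-2}\); this reproduces the quoted value \(z_3\approx 0.21\). Second, the estimate (4.25) is taken to be the bound \(\sin (\pi /k)\geqslant 3/k\) for \(k\geqslant 6\), with equality at \(k=6\). A finite check of this kind is not a proof. It only guards against slips in the constants.

```python
import math
import numpy as np

# Step 1: the crude bound is useful where sin z > sum_{m>=2} 1/m^2 = pi^2/6 - 1.
c1 = math.pi**2 / 6 - 1
step1_ok = math.asin(c1) < math.pi / 4

# Step 2: sin(m z) > sin(z) for z in (0, pi/(N+1)) and m = 2..N ([8, Lemma 6.3]).
def sin_inequality_holds(N, samples=2000):
    z = np.linspace(0.0, math.pi / (N + 1), samples + 2)[1:-1]  # open interval
    m = np.arange(2, N + 1)[:, None]
    return bool(np.all(np.sin(m * z) > np.sin(z)))

def head(N):
    """Partial sum sum_{m=1}^N 1/m^2."""
    return sum(1.0 / m**2 for m in range(1, N + 1))

def C(N):
    """Assumed form of C_N: tail over partial sum of sum 1/m^2."""
    return (math.pi**2 / 6 - head(N)) / head(N)

z3 = math.asin(C(3))

TOL = 1e-12  # absorbs rounding in the equality case 3/6 = sin(pi/6)

def step2_chain(N):
    # C_N < 1/N < 3/(N+2) <= sin(pi/(N+2)), as used for N >= 4
    return C(N) < 1 / N < 3 / (N + 2) <= math.sin(math.pi / (N + 2)) + TOL

def step3_chain(N):
    # sum_{m>=N+2} 1/m^2 < 1/(N+1) and 4/(3(N+1)) <= 3/(N+3) <= sin(pi/(N+3))
    tail = math.pi**2 / 6 - head(N + 1)
    return (tail < 1 / (N + 1)
            and 4 / (3 * (N + 1)) <= 3 / (N + 3) <= math.sin(math.pi / (N + 3)) + TOL)
```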

Lemma 4.2

Let the series \(\mathcal {S}_2(z)\) be as in (4.15); then

Proof

It suffices to notice that:

with \(\mathcal {S}_1\) as given in (4.15). Then, the thesis comes from Lemma 4.1 since \(\mathcal {S}_1({\overline{z}})>0\) \(\forall \, {\overline{z}}\in (0, \pi )\). \(\square \)
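The proofs of Lemmas 4.1 and 4.2 only use that the \(\varphi _m\) are positive and decreasing in m, so both statements can be sanity-checked on a model sequence. The Python sketch below uses the hypothetical choice \(\varphi _m=1/m\). For this choice \(\mathcal {S}_1(z)=\sum _m \sin (mz)/m^3=z(z-\pi )(z-2\pi )/12\) on \([0,2\pi ]\), which gives an exact reference. We also assume that \(\mathcal {S}_2(z)=\sum _m(-1)^m\varphi _m m^{-2}\sin (mz)\), the series obtained from \(G_x(\pi ,\rho )\) if (2.6) is a sine expansion in x. The relation \(\mathcal {S}_2(z)=-\mathcal {S}_1(\pi -z)\) then holds term by term, because \(\sin (m(\pi -z))=(-1)^{m+1}\sin (mz)\).

```python
import numpy as np

M = 4000                      # truncation order of the series
m = np.arange(1, M + 1)
phi = 1.0 / m                 # hypothetical model: positive, strictly decreasing

def S1(z):
    """Truncated S_1(z) = sum_m phi_m / m^2 * sin(m z)."""
    z = np.atleast_1d(z)
    return (phi / m**2 * np.sin(np.outer(z, m))).sum(axis=1)

def S2(z):
    """Assumed alternating series sum_m (-1)^m phi_m / m^2 * sin(m z)."""
    z = np.atleast_1d(z)
    return ((-1.0) ** m * phi / m**2 * np.sin(np.outer(z, m))).sum(axis=1)
```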

Proof of Theorem 2.2 completed

We neglect the dependence on y, w since it does not affect the results. Differentiating (2.6) with respect to x we get

By (4.15), we may write

Therefore, the signs of \(G_x(0,\rho )\) and of \(G_x(\pi ,\rho )\) follow from Lemmas 4.1 and 4.2.

Next we turn to the sign of \(G_x\) for \(x=\frac{\pi }{2}\). Clearly, \(G_x\big (\frac{\pi }{2},\frac{\pi }{2}\big )=0\). For \(\rho \in (0,\frac{\pi }{2})\), we have

For \(\rho \in (\frac{\pi }{2}, \pi )\) we have

Since the \(\varphi _{2k}\) are decreasing with respect to k, see Proposition 2.1–(2.7), the sign of the above series follows by arguing as in the proofs of Lemmas 4.1 and 4.2 with minor changes.
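The case \(x=\frac{\pi }{2}\) can be reduced explicitly to a series of the type treated in Lemma 4.1. The computation below is a sketch under an assumption of ours. Consistently with the form of \(\mathcal {S}_1\) in Step 2 of Lemma 4.1, we take \(G_x(x,\rho )=c\sum _{m\geqslant 1}\frac{\varphi _m}{m^2}\cos (mx)\sin (m\rho )\) for some constant \(c>0\), with y, w suppressed. We also write \(\psi _k:=\varphi _{2k}\) and \(\mathcal {S}^{\psi }(z):=\sum _{k\geqslant 1}\frac{\psi _k}{k^2}\sin (kz)\); this notation is introduced here only. Since \(\cos (\frac{m\pi }{2})\) vanishes for odd m and equals \((-1)^k\) for \(m=2k\), and since \(\sin (k\pi -a)=-(-1)^k\sin a\), we get

$$\begin{aligned} G_x\big (\tfrac{\pi }{2},\rho \big )=\frac{c}{4}\sum _{k\geqslant 1}\frac{(-1)^k\psi _k}{k^2}\sin (2k\rho )=-\frac{c}{4}\,\mathcal {S}^{\psi }(\pi -2\rho )=\frac{c}{4}\,\mathcal {S}^{\psi }(2\rho -\pi ). \end{aligned}$$

The \(\psi _k\) are positive and decreasing by (2.7), and the argument of Lemma 4.1 only uses these two properties of the coefficients. It therefore gives \(\mathcal {S}^{\psi }>0\) on \((0,\pi )\). Under the above assumption, it follows that \(G_x(\frac{\pi }{2},\rho )<0\) for \(\rho \in (0,\frac{\pi }{2})\) and \(G_x(\frac{\pi }{2},\rho )>0\) for \(\rho \in (\frac{\pi }{2},\pi )\).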

5 Proof of Corollaries 2.3, 2.4 and duality principle

5.1 Proof of Corollary 2.3

Since u can be written as

both the sign and the monotonicity statements follow directly from the corresponding properties of the Green function given in Proposition 2.1 and Theorem 2.2.

5.2 Proof of Corollary 2.4

The proof that the first eigenfunction is of one sign, and hence that the first eigenvalue is simple, follows from the so-called dual cone decomposition technique, which relies on the positivity preserving property stated in Corollary 2.3. Since the proof is standard, we omit it and refer the interested reader to [9, Lemma 7.2], where the same issue, together with the simplicity of the first eigenvalue, was proved for a related fourth order eigenvalue problem in dimension 1. The regularity of \(u_p\) follows by combining elliptic regularity and embedding arguments. Indeed, it was proved in [23, Lemma 4.2] that the operator in (1.1) satisfies the complementing conditions of Agmon–Douglis–Nirenberg [2], hence elliptic regularity theory applies. In particular, from \(\lambda _1(p) p u_p\in L^{\infty }(\Omega )\) we infer that \(u_p \in W^{4,q}(\Omega )\) for all \(1<q< +\infty \); the thesis then follows by noticing that, for any \(q>2\), \(W^{4,q}(\Omega )\subset C^{3,\gamma }(\overline{\Omega })\) with \(\gamma =1-\frac{2}{q}\in (0,1)\), see [1, Theorem 5.4].

Now we turn to the sign of \((u_p)_x\) on the short edges. Recalling Theorem 2.2, we have

Similarly, we get \( (u_p)_x(\pi ,y)<0\) for all \(y \in [-\ell , \ell ] \) and this concludes the proof.

5.3 Duality Principle

Let \({\mathcal G}: L^{2}(\Omega ) \rightarrow H^2_*(\Omega )\) denote the solution operator for the biharmonic equation under partially hinged boundary conditions defined by

In terms of the Green function (2.6), we get the usual integral representation:

Next, inspired by [10, Lemma 12], we associate to (2.5) the following dual maximization problem:

By standard compactness arguments, the supremum in (4.3) is achieved; furthermore, if v is a maximizer then, by (4.2) and the positivity of G, \(|v|\in L^{2}(\Omega )\) is also a maximizer. Hence a maximizer of (4.3) can always be assumed nonnegative. Finally, we state:

Lemma 5.1

(Duality principle) Let \(p\in P_{\alpha , \beta }\), then \(\Theta _1(p)= \lambda _1^{-1}(p)\). Furthermore,

- (i) if \(u \in H^2_*(\Omega )\) is a positive minimizer of (2.5) with \(\Vert \sqrt{p}\,u\Vert _2=1\), then u is a maximizer for (4.3);
- (ii) if \(v \in L^{2}(\Omega )\) is a nonnegative maximizer of (4.3) with \(\Vert \sqrt{p}\,v\Vert _2=1\), then \(v \in H^2_*(\Omega )\) and it is a minimizer for (2.5), hence positive.

Proof

Let \(u \in H^2_*(\Omega )\) be a positive minimizer for (2.5) with \(\Vert \sqrt{p}\,u\Vert _2=1\). Then u solves problem (1.1); therefore \(u=\lambda _1 {\mathcal G}(p \,u)\). Multiplying both sides of this equality by \(p\,u\) and integrating over \(\Omega \), we get \( \lambda _1\int _\Omega {\mathcal G}(p \,u)\,p \,u\,dxdy=\int _\Omega p \,u^2\,dxdy=\Vert \sqrt{p}\,u\Vert _2^2=1, \) hence

Conversely, let \(v \in L^{2}(\Omega )\) be a nonnegative maximizer for (4.3) with \(\Vert \sqrt{p}\,v\Vert _2=1\). The corresponding Euler–Lagrange equation in weak form reads

which implies \({\mathcal G}(p\, v)=\Theta _1\,v\) a.e. in \(\Omega \). Therefore, since \(v=\frac{1}{\Theta _1}{\mathcal G}(p\, v) \in H^2_*(\Omega )\), we obtain by (4.1)

so that

By (4.4) and (4.5) we get \(\Theta _1(p)= \lambda _1^{-1}(p)\). But then the first inequality in (4.4) must be an equality, and (i) follows. Similarly, the first inequality in (4.5) must be an equality, and (ii) follows. \(\square \)
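Lemma 5.1 has a transparent finite-dimensional counterpart, sketched below in Python under our own discretization assumptions. A symmetric positive definite matrix A plays the role of the operator in (1.1); here it is, hypothetically, the finite-difference hinged beam matrix, chosen only for illustration. Its inverse \(A^{-1}\) plays the role of \({\mathcal G}\), and a positive vector p plays the role of the weight. The quantities \(\min _u u^TAu/u^TPu\) and \(\max _v v^TPA^{-1}Pv/v^TPv\) are then reciprocal, and the two extremals coincide up to normalization, because \(P^{-1/2}AP^{-1/2}\) and \(P^{1/2}A^{-1}P^{1/2}\) are inverse to each other. The function names are ours.

```python
import numpy as np

def hinged_beam_matrix(n, length=np.pi):
    """Hypothetical model operator: square of the Dirichlet second-difference matrix."""
    h = length / (n + 1)
    D = (2 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)) / h**2
    return D @ D

def primal_and_dual(A, p):
    """Return (lambda_1, minimizer u, Theta_1, maximizer v) for weight p > 0."""
    s, r = 1.0 / np.sqrt(p), np.sqrt(p)
    # primal, cf. (2.5): lambda_1 = min u^T A u / u^T P u
    lam, W = np.linalg.eigh(s[:, None] * A * s[None, :])
    lam1, u = lam[0], s * W[:, 0]
    # dual, cf. (4.3): Theta_1 = max v^T P A^{-1} P v / v^T P v
    Ainv = np.linalg.inv(A)
    th, V = np.linalg.eigh(r[:, None] * Ainv * r[None, :])
    theta1, v = th[-1], s * V[:, -1]
    return lam1, u, theta1, v
```

Because \(A^{-1}\) has positive entries for this model, the dual maximizer can be taken positive. This mirrors the role of the positivity of G in the continuous argument.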

6 Proof of Theorem 2.7

By Proposition 2.6 we know that there exists an optimal pair \((p_{\widehat{u}},{{\widehat{u}}}) \in P_{\alpha ,\beta } \times H^2_*(\Omega )\), with \( p_{\widehat{u}}\) as given in (2.12). For the sake of simplicity, in the following we denote this pair simply by \((p_u, u)\), hence

and \(t>0\) is fixed as in the statement of Proposition 2.6.

To begin with, we recall some notation about the polarization of a function, adapting the technique to our framework. Let \(\mathcal {H}_{\frac{\pi }{2}}\subset \mathbb {R}^2\) be the half-plane defined in (2.13); for every \((x,y)\in \mathbb {R}^2\) we denote by \(({\overline{x}}, y)\in \mathbb {R}^2\) the reflection of (x, y) with respect to \(\partial \mathcal {H}_{\frac{\pi }{2}}\), i.e. \(\overline{x}=\pi -x\). For every measurable function \(v: \Omega \rightarrow \mathbb {R}\) we define its polarization with respect to \(\mathcal {H}_{\frac{\pi }{2}}\) as \(v_{\mathcal {H}_{\frac{\pi }{2}}}: \Omega \rightarrow \mathbb {R}\) such that

In the sequel, for the sake of brevity, we will simply write \(\mathcal {H}\) instead of \(\mathcal {H}_{\frac{\pi }{2}}\) and \(v_{\mathcal {H}}\) instead of \(v_{\mathcal {H}_{\frac{\pi }{2}}}\). It is readily seen that the following pointwise identity holds:

Now, for \(t>0\) as fixed in (4.1), we also consider the weight:

By direct inspection, arguing as in the proof of Lemma 6.3 below, we have that \(\int _{\Omega }p_{u_{\mathcal {H}}}\,dxdy=\int _{\Omega }p\,dxdy=|\Omega |\), hence \(p_{u_{\mathcal {H}}}\in P_{\alpha , \beta }\). Next we state two technical lemmas whose proofs can be obtained by slightly modifying the proofs of similar statements in [19], hence we omit them.
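For readers who want to experiment, here is a small Python sketch of polarization on a grid. The defining formula is not reproduced in this section, so the sketch assumes the standard convention: \(\mathcal {H}=\{x<\frac{\pi }{2}\}\), and \(v_{\mathcal {H}}\) takes the larger of the two values \(v(x,y)\), \(v({\overline{x}},y)\) on \(\mathcal {H}\) and the smaller one on its reflection. The x-nodes are assumed symmetric about \(x=\frac{\pi }{2}\), so reversing the first array axis realizes the reflection. Polarization only swaps values within each pair \(\{(x,y),({\overline{x}},y)\}\). Quantities such as \(v(x,y)+v({\overline{x}},y)\) and \(\int _\Omega v^2\) are therefore unchanged, and polarizing twice gives the same result as polarizing once.

```python
import numpy as np

def polarize(v):
    """Polarization of grid samples v[i, j] ~ v(x_i, y_j) w.r.t. H = {x < pi/2}.

    Assumes x_i + x_{nx-1-i} = pi, so reversing axis 0 is the reflection
    (x, y) -> (pi - x, y). Hypothetical helper, for illustration only.
    """
    v = np.asarray(v, dtype=float)
    v_ref = v[::-1, :]                              # samples of v(xbar, y)
    nx = v.shape[0]
    in_H = (np.arange(nx) < (nx - 1) / 2)[:, None]  # rows with x < pi/2
    # larger value on the H side, smaller value on the reflected side
    return np.where(in_H, np.maximum(v, v_ref), np.minimum(v, v_ref))
```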

Lemma 6.1

[19, Lemma 5.3] The following identity holds:

Lemma 6.2

[19, Lemma 5.4] There holds:

- (i): if \( p_{u}(x,y)u(x,y)\equiv [p_u(x,y)\,u(x,y)]_\mathcal {H}\text { in } \Omega \), then \(u(x,y)\equiv u_\mathcal {H}(x,y)\text { in } \Omega \);

- (ii): if \( p_{u}({\overline{x}},y)u({\overline{x}},y)\equiv [p_u(x,y)\,u(x,y)]_\mathcal {H}\text { in } \Omega \), then \(u({\overline{x}},y)\equiv u_\mathcal {H}(x,y)\text { in } \Omega \).

Finally, we prove the identity:

Lemma 6.3

We have

Proof

We compute:

where the first equality in the last line follows from a change of variables. \(\square \)
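The mechanism behind Lemma 6.3 (and behind the mass identity for \(p_{u_{\mathcal H}}\) stated before Lemma 6.1) can be sketched as follows, assuming the standard convention that the polarization takes the larger of \(v(x,y)\) and \(v(\overline{x},y)\) on \(\Omega\cap\mathcal H\) and the smaller one on \(\Omega\setminus\mathcal H\). For any nonnegative Borel function \(F\), the change of variables \(x\mapsto\overline{x}\) maps \(\Omega\setminus\mathcal H\) onto \(\Omega\cap\mathcal H\) up to the null set \(\{x=\frac{\pi}{2}\}\), and the pair \(\{\max\{a,b\},\min\{a,b\}\}\) coincides with \(\{a,b\}\), so that

\[
\begin{aligned}
\int_\Omega F(v_{\mathcal H})\,dxdy
&=\int_{\Omega\cap\mathcal H}\big[F(v_{\mathcal H}(x,y))+F(v_{\mathcal H}(\overline{x},y))\big]\,dxdy\\
&=\int_{\Omega\cap\mathcal H}\big[F(\max\{v(x,y),v(\overline{x},y)\})+F(\min\{v(x,y),v(\overline{x},y)\})\big]\,dxdy\\
&=\int_{\Omega\cap\mathcal H}\big[F(v(x,y))+F(v(\overline{x},y))\big]\,dxdy=\int_\Omega F(v)\,dxdy .
\end{aligned}
\]

If, as the construction suggests, \(p_u\) and \(p_{u_{\mathcal H}}\) are obtained from \(u\) and \(u_{\mathcal H}\) through the same two-valued function \(P\) (hypothetical notation), then \(F(s)=P(s)\,s^2\) yields \(\Vert\sqrt{p_{u_\mathcal H}}\,u_\mathcal H\Vert_2=\Vert\sqrt{p_u}\,u\Vert_2\), and \(F(s)=P(s)\) yields \(\int_\Omega p_{u_{\mathcal H}}\,dxdy=\int_\Omega p_u\,dxdy\).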

We now prove some reflection properties of the Green function G that will be crucial in what follows.

Lemma 6.4

For \((x,y)\in \mathcal {H} \cap \overline{\Omega }\) and \((\rho ,w)\in \mathcal {H} \cap \overline{\Omega }\) we have:

- (i): \(G(x,y,\rho ,w)\geqslant \max \{G({\overline{x}},y,\rho ,w),G(x,y,\overline{\rho },w)\}\), with strict inequality if \(x,\rho \notin \{0,\frac{\pi }{2}\}\);

- (ii): \(G(x,y,\rho ,w)=G({\overline{x}},y,\overline{\rho },w)\);

- (iii): \(G({\overline{x}},y,\rho ,w)=G(x,y,\overline{\rho },w)\).

Proof

The variables y and w play no role, so we fix them and omit them from the notation throughout the proof.

Proof of (i)

We study the sign of

For \({\widetilde{x}}=2x\) and \(\widetilde{\rho }=2\rho \), we observe that

The proof of the above inequality can be obtained by repeating, with minor changes, the proof of (2.8) given in [8, Theorem 2.2]; the main ingredient is the monotonicity of the functions \(\varphi _{2k}\) with respect to k. This implies that \(G(x,\rho )-G({\overline{x}},\rho )>0\) for all \((x,\rho )\in (0,\pi /2)^2\). We observe that for \(x\in \{0,\pi /2\}\) or \(\rho \in \{0,\pi /2\}\) we have \(G(x,\rho )=G({\overline{x}},\rho )\), which gives \(G(x,\rho )\geqslant G({\overline{x}},\rho )\) for all \((x,\rho )\in [0,\pi /2]^2\).

Repeating the above argument with the roles of x and \(\rho \) interchanged, we obtain statement (i). \(\square \)
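The plate Green function G is not reproduced in this section, so as a purely illustrative stand-in we use the Green function of \(-u''=f\) on \((0,\pi)\) with \(u(0)=u(\pi)=0\), namely \(G_0(x,\rho)=\frac{2}{\pi}\sum_{k\geqslant1}\frac{\sin(kx)\sin(k\rho)}{k^2}=\frac{\min\{x,\rho\}(\pi-\max\{x,\rho\})}{\pi}\). It shares the sine structure in x and \(\rho\), and the sketch below checks numerically the analogue of the strict inequality in (i) on \((0,\frac{\pi}{2})^2\), together with the toy rescaling identity \(G_0(x,\rho)-G_0(\overline{x},\rho)=\frac12G_0(2x,2\rho)\), which mirrors the passage to \(\widetilde{x}=2x\), \(\widetilde{\rho}=2\rho\) above. This is an analogy under stated assumptions, not the proof of the lemma; the names `g0` and `g0_series` are hypothetical.

```python
import numpy as np

def g0(x, rho):
    """Toy kernel (NOT the plate Green function): Green function of -u'' = f
    on (0, pi) with u(0) = u(pi) = 0, in closed form."""
    return np.minimum(x, rho) * (np.pi - np.maximum(x, rho)) / np.pi

def g0_series(x, rho, n_terms=4000):
    """Same kernel via the truncated sine expansion (2/pi) sum_k sin(kx) sin(k rho) / k^2."""
    k = np.arange(1, n_terms + 1)
    return (2.0 / np.pi) * np.sum(np.sin(k * x) * np.sin(k * rho) / k**2)

# closed form and series agree up to the O(1/n_terms) truncation error
for a, b in [(0.3, 1.1), (1.0, 2.5), (2.9, 0.2)]:
    assert abs(g0(a, b) - g0_series(a, b)) < 1e-3

# grid strictly inside (0, pi/2)^2
s = np.linspace(0.01, np.pi / 2 - 0.01, 200)
X, R = np.meshgrid(s, s, indexing="ij")
diff = g0(X, R) - g0(np.pi - X, R)                  # G0(x, rho) - G0(xbar, rho)

print("strict inequality on (0, pi/2)^2:", diff.min() > 0)
print("only even modes survive:", np.allclose(diff, 0.5 * g0(2 * X, 2 * R)))
```

In the toy model the difference is explicit, \(\min\{x,\rho\}(\pi-2\max\{x,\rho\})/\pi\), which vanishes exactly when \(x\) or \(\rho\) lies in \(\{0,\frac{\pi}{2}\}\), matching the equality cases noted in the proof.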

Proof of (ii) and (iii)

For all \((x,\rho )\in [0,\pi ]^2\) we get

\(\square \)
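The three statements of Lemma 6.4 become transparent if one assumes, as the reference to the functions \(\varphi_{2k}\) in the proof of (i) suggests, that G admits an expansion \(G(x,y,\rho,w)=\sum_{k\geqslant 1}\varphi_k(y,w)\sin(kx)\sin(k\rho)\) with coefficients independent of x and \(\rho\) (the precise \(\varphi_k\) and normalization are not reproduced here). Since \(\sin(k\overline{x})=\sin(k\pi-kx)=(-1)^{k+1}\sin(kx)\), a sketch of the computation reads

\[
\begin{aligned}
G(\overline{x},y,\overline{\rho},w)&=\sum_{k\geqslant1}\varphi_k(y,w)\,(-1)^{2k+2}\sin(kx)\sin(k\rho)=G(x,y,\rho,w),\\
G(\overline{x},y,\rho,w)&=\sum_{k\geqslant1}\varphi_k(y,w)\,(-1)^{k+1}\sin(kx)\sin(k\rho)=G(x,y,\overline{\rho},w),\\
G(x,y,\rho,w)-G(\overline{x},y,\rho,w)&=\sum_{k\geqslant1}\varphi_k(y,w)\big[1-(-1)^{k+1}\big]\sin(kx)\sin(k\rho)=2\sum_{k\geqslant1}\varphi_{2k}(y,w)\sin(k\widetilde{x})\sin(k\widetilde{\rho}),
\end{aligned}
\]

with \(\widetilde{x}=2x\), \(\widetilde{\rho}=2\rho\). Only the even modes survive in the difference, which is why the rescaled variables and the behaviour of \(\varphi_{2k}\) in k enter the proof of (i).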

Thanks to Lemma 6.4 we obtain:

Lemma 6.5

Let \({\mathcal G}: L^{2}(\Omega ) \rightarrow H^2_*(\Omega )\) denote the solution operator defined by (4.1). Then,

and equality holds in (4.3) if and only if

Proof

We define

where each of g and h stands for either u or \(u_\mathcal {H}\). Then, by writing \(\Omega \times \Omega =[(\Omega \cap \mathcal {H}) \times (\Omega \cap \mathcal {H})] \cup [(\Omega \cap \mathcal {H}) \times (\Omega \setminus \mathcal {H})] \cup [(\Omega \setminus \mathcal {H}) \times (\Omega \cap \mathcal {H})]\cup [(\Omega \setminus \mathcal {H}) \times (\Omega \setminus \mathcal {H})]\) and changing variables appropriately, we get

By Lemma 6.1 and (4.2) we have

so that

Then, thanks to Lemma 6.4 (ii) and (iii) we conclude that

With similar arguments we get

and combining (4.4)–(4.5) we obtain

Now, by Lemma 6.4(i), we know that \(G(x,y,\rho ,w)-G(x,y,\overline{\rho },w)\geqslant 0\) while, by Lemma 6.1, we get

Finally, (4.3) follows by noticing that, through Lemma 6.1 and (4.2), we have

To prove the last part of the statement we set \(D_1:=\{(x,y)\in \Omega \cap \mathcal {H}:\, p_u(x,y)u(x,y)>p_u(\overline{x},y)u({\overline{x}},y)\}\) and \(D_2:=\{(\rho ,w)\in \Omega \cap \mathcal {H}:\, [p_u(\rho ,w)u(\rho ,w)]_{\mathcal {H}}>p_u(\rho ,w)u(\rho ,w)\}\). If equality holds in (4.3), we get

Now, (4.6) holds if and only if \(|D_1|=0\) or \(|D_2|=0\), that is, if and only if \([p_u(x,y)u(x,y)]_\mathcal {H}=p_u(\overline{x},y)u({\overline{x}},y)\) or \([p_{u}(x,y)u(x,y)]_\mathcal {H}=p_u(x,y)u(x,y)\) a.e. in \(\Omega \). \(\square \)

Proof of Theorem 2.7 completed

Thanks to Lemma 5.1, u is a maximizer for (4.3) with \(p=p_u\). Then, since \((p_u,u)\) is an optimal pair, \(u_\mathcal {H}\in L^2(\Omega )\) and \({p_{u_{\mathcal {H}}}}\in P_{\alpha , \beta }\), we infer that

Recalling that, by Lemma 6.3, \(\Vert \sqrt{p_{u_\mathcal {H}}}u_\mathcal {H}\Vert _2=\Vert \sqrt{p_u} u\Vert _2\), we deduce from the above that

Then, by Lemma 6.5, equality holds in (4.3) and, in view of Lemma 6.2, this implies \(u(x,y)=u_\mathcal {H}(x,y)\) or \(u({\overline{x}},y)= u_\mathcal {H}(x,y)\) a.e. in \(\Omega \). Since u is continuous, we obtain

Let us consider the first case of (4.7); then, it is readily seen that:

Indeed, if \(p_{u}(x_0,y_0)u(x_0,y_0)< p_u(\overline{x}_0,y_0)\,u({\overline{x}}_0,y_0)\) for some \((x_0,y_0)\in \overline{\Omega }\cap \mathcal {H}\), then, since \(u(x_0,y_0)\geqslant u({\overline{x}}_0,y_0)\) by (4.7), we must have \(p_{u}(x_0,y_0)=\alpha \) and \(p_{u}({\overline{x}}_0,y_0)=\beta \). But then \(u({\overline{x}}_0,y_0)\leqslant u( x_0,y_0) \leqslant \sqrt{t}\), so that \(p_{u}({\overline{x}}_0,y_0)=\alpha \), a contradiction.

Suppose now that there exists \((x_1,y_1)\in \overline{\Omega }\cap \mathcal {H}\) such that the strict inequality holds in the first case of (4.7); clearly \(x_1 \ne 0, \pi /2\). Then, by continuity, there exists a subset \(U\subset ( \overline{\Omega }\cap \mathcal {H})\) of positive measure such that \(u(x,y)>u({\overline{x}},y)\) for all \((x,y)\in U\) and, arguing as in the proof of (4.8), such that

Finally, by Lemma 6.4, (4.8) and (4.9), for all \((x,y) \in (0, \frac{\pi }{2})\times [-\ell ,\ell ]\) we obtain

implying that (i) or (iii) holds. Similarly, if we consider the second inequality in (4.7), we get that (ii) or (iii) holds. This concludes the proof. \(\square \)

7 Proof of Proposition 2.8

First, for all \( \rho \in (0,\pi )\) and \(y,w\in [-\ell ,\ell ]\), we note that

By exploiting the above equality we write

From Theorem 2.2 we know that \(G_x\big (\frac{\pi }{2},y,\rho ,w\big )<0\) for all \(\rho \in \big (0,\frac{\pi }{2}\big )\); then, if case (i) of Theorem 2.7 holds, by (4.8)–(4.9), we get \(p_u(\rho ,w)u(\rho ,w)>p_u(\overline{\rho },w)u(\overline{\rho },w)\) and, in turn, that \(u_x\big (\frac{\pi }{2},y\big )<0\) for all \(y\in [-\ell ,\ell ]\). Similarly, the reverse inequality holds if case (ii) occurs. Finally, when (iii) holds, then \(p_u(\rho ,w)u(\rho ,w)\equiv p_u(\overline{\rho },w)u(\overline{\rho },w)\) in \(\overline{\Omega }\), hence \(u_x\big (\frac{\pi }{2},y\big )=0\) for all \(y\in [-\ell ,\ell ]\).
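The mechanism of this proof, namely that the sign of \(u_x\big(\frac{\pi}{2},y\big)\) is dictated by the sign of the kernel derivative on the left half combined with a comparison of \(p_u u\) at \((\rho,w)\) and \((\overline{\rho},w)\), can be illustrated in a one-dimensional toy model. The sketch below is not the plate problem: it assumes the Dirichlet Green function \(G_0(x,\rho)=\min\{x,\rho\}(\pi-\max\{x,\rho\})/\pi\) of \(-u''=f\) on \((0,\pi)\), for which \(\partial_xG_0(\frac{\pi}{2},\rho)=-\rho/\pi<0\) and \(\partial_xG_0(\frac{\pi}{2},\overline{\rho})=-\partial_xG_0(\frac{\pi}{2},\rho)\) for \(\rho\in(0,\frac{\pi}{2})\), and a hypothetical right-hand side \(f(\rho)=1+\frac12\cos\rho\) playing the role of \(p_uu\), which satisfies \(f(\rho)>f(\overline{\rho})\) on \((0,\frac{\pi}{2})\). Here the solution is explicit, \(u(x)=-\frac{x^2}{2}+\frac12\cos x+\big(\frac{\pi}{2}+\frac1\pi\big)x-\frac12\), so that \(u'(\frac{\pi}{2})=\frac1\pi-\frac12<0\), in line with case (i).

```python
import numpy as np

def g0(x, r):
    """Toy kernel: Green function of -u'' = f on (0, pi) with u(0) = u(pi) = 0."""
    return np.minimum(x, r) * (np.pi - np.maximum(x, r)) / np.pi

def f(r):
    """Hypothetical right-hand side standing in for p_u u; f(r) > f(pi - r) on (0, pi/2)."""
    return 1.0 + 0.5 * np.cos(r)

N = 40000                                   # even, so no midpoint falls on pi/2
h = np.pi / N
r = (np.arange(N) + 0.5) * h                # midpoints of (0, pi)

# x-derivative of the kernel at x = pi/2; g0 is piecewise linear in x, so a tiny
# central difference is exact up to rounding as long as dx < h / 2
dx = 1e-7
gx = (g0(np.pi / 2 + dx, r) - g0(np.pi / 2 - dx, r)) / (2 * dx)

# u'(pi/2) = int_0^pi G_x(pi/2, r) f(r) dr, midpoint rule
ux_full = h * np.sum(gx * f(r))

# same quantity after folding (pi/2, pi) onto (0, pi/2) via r -> pi - r:
# u'(pi/2) = int_0^{pi/2} G_x(pi/2, r) [f(r) - f(pi - r)] dr, with G_x(pi/2, r) = -r/pi
left = r[: N // 2]
ux_folded = h * np.sum((-left / np.pi) * (f(left) - f(np.pi - left)))

exact = 1.0 / np.pi - 0.5                   # from the explicit solution above
print(ux_full, ux_folded, exact)
```

The folded integral is the toy counterpart of the rewriting used in the proof: a negative kernel derivative on the left half times a positive difference \(f(\rho)-f(\overline{\rho})\) forces a negative slope at the midline.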

References

Adams, R.A.: Sobolev Spaces. Academic Press, New York (1975)

Agmon, S., Douglis, A., Nirenberg, L.: Estimates near the boundary for solutions of elliptic partial differential equations satisfying general boundary value conditions I. Commun. Pure Appl. Math. 12, 623–727 (1959)

Anedda, C., Cuccu, F., Porru, G.: Minimization of the first eigenvalue in problems involving the bi-Laplacian. Rev. Mat. Teor. Apl. 16, 127–136 (2009)

Arioli, G., Gazzola, F., Koch, H.: Uniqueness and bifurcation branches for planar steady Navier-Stokes equations under Navier boundary conditions (2019) (preprint)

Bartsch, T., Weth, T., Willem, M.: Partial symmetry of least energy nodal solutions to some variational problems. J. Anal. Math. 96, 1–18 (2005)

Berchio, E., Buoso, D., Gazzola, F.: On the variation of longitudinal and torsional frequencies in a partially hinged rectangular plate. ESAIM Control Optim. Calc. Var. 24, 63–87 (2018)

Berchio, E., Falocchi, A.: Maximizing the ratio of eigenvalues of non-homogeneous partially hinged plates. J. Spectr. Theory (to appear). arXiv:1907.11097

Berchio, E., Falocchi, A.: A positivity preserving property result for the biharmonic operator under partially hinged boundary conditions. arXiv:2004.03862v1 (2020)

Berchio, E., Falocchi, A., Ferrero, A., Ganguly, D.: On the first frequency of reinforced partially hinged plates. Commun. Contemp. Math., 1950074 (2019)

Berchio, E., Gazzola, F., Weth, T.: Radial symmetry of positive solutions to nonlinear polyharmonic Dirichlet problems. J. Reine Angew. Math. 620, 165–183 (2008)

Boggio, T.: Sulle funzioni di Green d’ordine \(m\). Rend. Circ. Mat. Palermo 20, 97–135 (1905)

Brock, F.: Symmetry and monotonicity of solutions to some variational problems in cylinders and annuli. Electron. J. Differ. Equ. 108, 1–20 (2003)

Brock, F., Solynin, A.Y.: An approach to symmetrization via polarization. Trans. Am. Math. Soc. 352, 1759–1796 (2000)

Chanillo, S.: Conformal geometry and the composite membrane problem. Anal. Geom. Metr. Spaces 1, 31–35 (2013)

Chanillo, S., Grieser, D., Imai, M., Kurata, K., Ohnishi, I.: Symmetry breaking and other phenomena in the optimization of eigenvalues for composite membranes. Commun. Math. Phys. 214, 315–337 (2000)

Chanillo, S., Grieser, D., Kurata, K.: The free boundary problem in the optimization of composite membranes. In: Differential Geometric Methods in the Control of Partial Differential Equations (Boulder, CO, 1999). Contemporary Mathematics, vol. 268, pp. 61–81. American Mathematical Society, Providence (2000)

Chanillo, S., Kenig, C., To, T.: Regularity of the minimizers in the composite membrane problem in \(\mathbb{R}^2\). J. Funct. Anal. 255(9), 2299–2320 (2008)

Chen, W., Chou, C.-S., Kao, C.-Y.: Minimizing eigenvalues for inhomogeneous rods and plates. J. Sci. Comput. 69, 983–1013 (2016)

Colasuonno, F., Vecchi, E.: Symmetry in the composite plate problem. Commun. Contemp. Math. 21(2), 1850019 (2018)

Colasuonno, F., Vecchi, E.: Symmetry and rigidity for the hinged composite plate problem. J. Differ. Equ. 266(8), 4901–4924 (2019)

Colbois, B., Provenzano, L.: Eigenvalues of elliptic operators with density. Calc. Var. Partial Differ. Equ. 57, 36 (2018)

Cuccu, F., Emamizadeh, B., Porru, G.: Optimization problems for an elastic plate. J. Math. Phys. 47, 08290 (2006)

Ferrero, A., Gazzola, F.: A partially hinged rectangular plate as a model for suspension bridges. Disc. Cont. Dyn. Syst. A 35, 5879–5908 (2015)

Ferrero, A., Gazzola, F., Weth, T.: Positivity, symmetry and uniqueness for minimizers of second order Sobolev inequalities. Ann. Mat. Pura Appl. 186, 565–578 (2007)

Gazzola, F.: Mathematical Models for Suspension Bridges. MS&A, vol. 15. Springer, Cham (2015)

Gazzola, F., Grunau, H.C., Sweers, G.: Polyharmonic Boundary Value Problems. Lecture Notes in Mathematics, vol. 1991. Springer, Berlin (2010)

Henrot, A.: Extremum Problems for Eigenvalues of Elliptic Operators. Frontiers in Mathematics. Birkhäuser, Basel (2006)

Kawohl, B.: Rearrangements and Convexity of Level Sets in PDE. Lecture Notes in Mathematics, vol. 1150. Springer, Berlin (1985)

Lamberti, P.D., Provenzano, L.: A maximum principle in spectral optimization problems for elliptic operators subject to mass density perturbations. Eurasian Math. J. 4(3), 70–83 (2013)

Nazarov, A.I.: On the “one-dimensionality” of the extremal in the Poincaré inequality in the square. J. Math. Sci. 109(5), 1928–1939 (2002)

Smets, D., Willem, M.: Partial symmetry and asymptotic behavior for some elliptic variational problems. Calc. Var. 18, 57–75 (2003)

Weth, T.: Symmetry of solutions to variational problems for nonlinear elliptic equations via reflection methods. Jahresber. Dtsch. Math.-Ver. 112(3), 119–158 (2010)

Acknowledgements

The authors are members of the Gruppo Nazionale per l’Analisi Matematica, la Probabilità e le loro Applicazioni (GNAMPA) of the Istituto Nazionale di Alta Matematica (INdAM) and are partially supported by the INDAM-GNAMPA 2019 Grant: “Analisi spettrale per operatori ellittici con condizioni di Steklov o parzialmente incernierate” and by the PRIN project 201758MTR2: “Direct and inverse problems for partial differential equations: theoretical aspects and applications” (Italy).

Funding

Open access funding provided by Politecnico di Torino within the CRUI-CARE Agreement.


Appendix


Lemma 7.1

Let \(N\geqslant 3\) be an integer. For all \(z\in \big (0,\frac{\pi }{N+1}\big )\) and for all \(m=3,\dots ,N\), there holds

Proof

Clearly, \(\upsilon _m(0)=0\); we set \(a_m:=\big [\frac{1}{m^2}-\frac{1}{(m+1)^2}\big ]^2\) and we compute

Using the complex identities for the trigonometric functions we obtain

Hence \(\sin [(m+1) z]>\sin (m z)\) for \(z\in \big (0,\frac{\pi }{2m+1}\big )\) and \(\upsilon _m''(z)>0\) for \(z\in \big (0,\frac{\pi }{2m+1}\big )\); this readily implies that \(\upsilon _m(z)>0\) for \(z\in \big (0,\frac{\pi }{2m+1}\big ]\).
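The sign of \(\sin[(m+1)z]-\sin(mz)\) used here, and again in the next step, can be read off from the sum-to-product formula:

\[
\sin[(m+1)z]-\sin(mz)=2\cos\Big(\frac{(2m+1)z}{2}\Big)\sin\Big(\frac{z}{2}\Big).
\]

For \(z\in\big(0,\frac{3\pi}{2m+1}\big)\) we have \(\sin\big(\frac z2\big)>0\), while \(\frac{(2m+1)z}{2}\) lies in \(\big(0,\frac{\pi}{2}\big)\) for \(z<\frac{\pi}{2m+1}\) and in \(\big(\frac{\pi}{2},\frac{3\pi}{2}\big)\) for \(\frac{\pi}{2m+1}<z<\frac{3\pi}{2m+1}\); hence the difference is positive in the first range and negative in the second.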

For \(z\in \big (\frac{\pi }{2m+1},\frac{\pi }{m+1}\big ) \) we have

We study the sign of \(\overline{\upsilon }_m (z)\) for \(z\in \big (\frac{\pi }{2m+1},\frac{\pi }{m+1}\big )\). We have

since, by (7.47), we have \(\sin [(m+1) z]-\sin (m z)<0\) for \(z\in \big (\frac{\pi }{2m+1},\frac{3\pi }{2m+1}\big )\) and \(\frac{\pi }{m+1}<\frac{3\pi }{2m+1}\) for \(m\geqslant 3\). Thus, if \(\overline{\upsilon }_m \big (\frac{\pi }{m+1}\big )>0\) and \(\overline{\upsilon }_m \big (\frac{\pi }{2m+1}\big )>0\), we conclude that \(\overline{\upsilon }_m(z)>0\) for \(z\in \big (\frac{\pi }{2m+1},\frac{\pi }{m+1}\big )\) and, in turn, \(\upsilon _m(z)>0\) for all \(z\in \big (\frac{\pi }{2m+1},\frac{\pi }{m+1}\big )\).

Recalling that \(\sin \big (\frac{m\pi }{m+1}\big )=\sin \big (\frac{\pi }{m+1}\big )\) we get

Moreover \(\sin \big (\frac{m\pi }{2m+1}\big )=\sin \big (\frac{(m+1)\pi }{2m+1}\big )\) so that

Since \(\frac{\pi }{2}>\frac{m\pi }{2m+1}>\frac{\pi }{m+1}>0\) for all \(m\geqslant 3\) and the sine function is increasing on \(\big [0,\frac{\pi }{2}\big ]\), we have \(\sin \big (\frac{m\pi }{2m+1}\big )>\sin \big (\frac{\pi }{m+1}\big )>0\) for all \(m\geqslant 3\); moreover, \(\frac{1}{m^2}-\frac{1}{(m+1)^2}>\frac{1}{m^2}-\frac{1}{m(m+1)}>0\), implying \( \overline{\upsilon }_m \big (\frac{\pi }{2m+1}\big )>0\). This concludes the proof. \(\square \)
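The elementary inequalities invoked in this last step can be double-checked numerically for a range of m with the short script below. This is a sanity check, not a proof: the middle angle inequality \(\frac{m\pi}{2m+1}>\frac{\pi}{m+1}\) is equivalent to \(m^2-m-1>0\), and \(\frac{1}{m^2}-\frac{1}{m(m+1)}=\frac{1}{m^2(m+1)}>0\). The helper name `endpoint_inequalities_hold` is hypothetical.

```python
import math

def endpoint_inequalities_hold(m: int) -> bool:
    """Check the inequalities used at the end of the proof of Lemma 7.1 for one m."""
    a = math.pi / (m + 1)
    b = m * math.pi / (2 * m + 1)
    # pi/2 > m*pi/(2m+1) > pi/(m+1) > 0
    angles_ok = math.pi / 2 > b > a > 0
    # sine is increasing on [0, pi/2], so the angle ordering carries over
    sines_ok = math.sin(b) > math.sin(a) > 0
    # 1/m^2 - 1/(m+1)^2 > 1/m^2 - 1/(m(m+1)) > 0
    coeff_ok = (1 / m**2 - 1 / (m + 1) ** 2) > (1 / m**2 - 1 / (m * (m + 1))) > 0
    # pi/(m+1) < 3*pi/(2m+1), used earlier to stay inside the negativity range
    interval_ok = math.pi / (m + 1) < 3 * math.pi / (2 * m + 1)
    return angles_ok and sines_ok and coeff_ok and interval_ok

print(all(endpoint_inequalities_hold(m) for m in range(3, 1001)))
```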

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Berchio, E., Falocchi, A. About Symmetry in Partially Hinged Composite Plates. Appl Math Optim 84, 2645–2669 (2021). https://doi.org/10.1007/s00245-020-09722-y


DOI: https://doi.org/10.1007/s00245-020-09722-y