Abstract

We searched for evidence that the auditory organization of categories of sounds produced by actions includes a privileged or “basic” level of description. The sound events consisted of single objects (or substances) undergoing simple actions. Performance on sound events was measured in two ways: sounds were directly verified as belonging to a category, or sounds were used to create lexical priming. The category verification experiment measured the accuracy and reaction time to brief excerpts of these sounds. The lexical priming experiment measured reaction time benefits and costs caused by the presentation of these sounds prior to a lexical decision. The level of description of a sound varied in how specifically it described the physical properties of the action producing the sound. Both identification and priming effects were superior when a label described the specific interaction causing the sound (e.g. trickling) in comparison to the following: (1) more general descriptions (e.g. pour, liquid: trickling is a specific manner of pouring liquid), (2) more detailed descriptions using adverbs to provide detail regarding the manner of the action (e.g. trickling evenly). These results are consistent with neuroimaging studies showing that auditory representations of sounds produced by actions familiar to the listener activate motor representations of the gestures involved in sound production.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

There has been increasing interest in the properties of sound sources that listeners can identify and classify, both through behavioral research (Cabe and Pittenger 2000; Carello et al. 1998; Grassi 2005; Grassi and Casco 2010; Houben et al. 2004; Klatzky et al. 2000; Kunkler-Peck and Turvey 2000; Lakatos et al. 1997; Warren and Verbrugge 1984) and through neuroimaging research (Aglioti and Pazzaglia 2010; Alaerts et al. 2009; Aziz-Zadeh et al. 2004; Beauchamp et al. 2004; Bidet-Caulet et al. 2005; Chen et al. 2008; De Lucia et al. 2009; Gazzola et al. 2006; James et al. 2011; Kaplan and Iacobani 2007; Lewis et al. 2004, 2005; Lewis 2006; Lewis et al. 2011, 2012; Pizzamiglio et al. 2005; Sedda et al. 2011). Well-identified environmental sounds are desirable for research in experimental and clinical neuropsychology (Marcell et al. 2000, 2007). However, studies using environmental sounds often face the challenge that listeners can categorize one sound event in a variety of ways (e.g. causal physical properties, semantic knowledge, perceptual similarity), each of which has its own levels of organization. For instance, although the ability to identify the material of struck objects has been well studied, the evidence indicates that listeners do not reliably discriminate glass from metal, and plastic from wood (see Lemaitre and Heller 2012, for a review). Therefore, at this level of generality (wood, glass, metal, etc.), the conclusion might be that material discrimination based on acoustics is, on average, poor in human listeners. However, at a higher level of generality in the hierarchy of sound-producing physical events (resonant vs. non-resonant material), the conclusion would be that discrimination performance is quite good. This example illustrates that the question of how well listeners can identify a given sound source property can sometimes be reformulated as at what level of the hierarchy they can identify it.

In order to characterize the psychological organization of sound source properties, it is necessary to define the scope of the causal properties under examination. There are reasons to focus on the actions that generate the sound events, with an eye toward the physics of the causal events and how they influence the acoustics. Vanderveer (1979) found that people who are asked to identify sounds made by common objects would spontaneously describe the actions involved in generating the sounds. More recently, Lemaitre and Heller (2012) found that the actions producing simple sound events were better identified than the materials. Furthermore, several neuroimaging studies have shown that the sounds of actions (and in particular the sounds made by human gestures) activate motor and pre-motor areas as well as regions involved in multimodal or amodal processing of biological motion, whereas other types of sounds (meaningless noises, sounds of the environment) do not (Aglioti and Pazzaglia 2010; Aziz-Zadeh et al. 2004; Galati et al. 2008; Keysers et al. 2003; Lewis et al. 2005; Pizzamiglio et al. 2005). Other results have shown that subjects use sounds of impacted objects to plan grip gestures (Castiello et al. 2010; Sedda et al. 2011). This suggests that sound perception and action planning are robustly associated. One possible implication is that the most neurally and behaviorally accessible property of sound events may be the actions that generated them. Therefore, the current study focused on the perception of the actions and gestures producing the sound, rather than the properties of the objects (size, shape, etc.) producing the sounds.

Sound-generating physical events and actions can be described at different levels of generality (e.g. “continuous motion”, “friction”, “scraping”, etc.). Consider the following descriptions of the same sound produced by different listeners (Lemaitre et al. 2010; Houix et al. 2012): “a series of quiet, short and high-pitched sounds”, “drips”, “water”, “plumbing noise”. Yet it is unknown whether there is a taxonomy of sound events and whether there is a level that is the most accessible to listeners. This type of knowledge is a necessary foundation for understanding both the psychological organization of sound and the neural basis of the determination of sound source properties.

One way to approach this question is to find the entry level at which an object is identified, akin to the “basic level” of categories found by Rosch and Mervis (1975) and Rosch (1978). Adams and Janata (2002), using images and sounds of animals to investigate the neural bases of object categorization, compared identification at a basic level defined as common categories of animals (dogs, birds, etc.), and at a subordinate level corresponding to particular species (crow, sparrow, etc.). What could be a taxonomy of action sounds and what could be its basic level? Lemaitre et al. (2010) and Houix et al. (2012) studied empirically how listeners categorize a set of everyday sounds. Naïve subjects grouped together sounds without being told which criteria to use and then provided textual descriptions of their category. Sorting data were hierarchically clustered, and descriptions were submitted to lexical analysis. In the resulting taxonomy, the most general level included four categories of solids (e.g. cutting food), fluids (e.g. water leaking, the burner of a gas stove), and machines (e.g. a blender). The same experimental technique was applied to another sound set focusing exclusively on sounds made by solid objects. Analysis of these data revealed a first level separating sounds made either by the deformation of one object (e.g. crumpling) or the interaction of several objects (e.g. hitting). The interactions were then split between continuous (e.g. rubbing) and discrete interactions (e.g. hitting), and the deformations were split in deformations without (e.g. crumpling) and with destruction of the objects (e.g. tearing). Finally, the most specific level corresponded to the specific gestures producing the sounds. The lexical analysis highlighted the predominance of verbs used to describe the sounds. The resulting taxonomy was confirmed by studying vocal imitations of these sounds (Lemaitre et al. 2011). Subjects grouped together vocal imitations of a set of everyday sounds, without any criteria being specified. The resulting categories corresponded to the categories found with the original sounds. This taxonomy, with its focus on the actions creating the sounds, was the starting point of the current study. As will be detailed later in this paper, our taxonomy was also influenced by our interest in the intersection between perception of the actions producing the sounds and semantics and categorization.

Many studies of sound identification or categorization have considered the acoustic taxonomy proposed by Gaver (1993) as a starting point (Carello et al. 1998; Gygi et al. 2004; Houix et al. 2012; Keller and Stevens 2004; Lemaitre et al. 2010; Marcell et al. 2000; van den Doel and Pai 1998). A first level in this organization corresponds to the nature of the interacting substances: solids, liquids, and gases. The next level consists of “basic events” in each of the former categories. They are simple interactions, such as impacts for solids, and explosions for gases. From these basic events, a third and more complex level is derived by varying the properties of the objects or interactions. Although Gaver’s taxonomy was not accompanied by empirical data to validate its psychological relevance, Lemaitre et al. (2010) and Houix et al. (2012) found that their most specific level of simple gestures corresponded rather well with Gaver’s “basic events”.

Most theories of categorization posit some form of a hierarchical organization of the categories: a taxonomy. A taxonomy is a system by which categories are related to another by means of class inclusion. The quantity of properties shared by the items in a category defines different levels of categorization in a taxonomy. In Rosch’s proposal, three levels are distinguished, from the more general to the more specific: superordinate, basic, and subordinate levels. There are two properties of the basic level highlighted by Rosch et al. (1976) that are important for acoustic studies. First, identification at this level is faster than at other levels, and identification is more accurate at the basic level than at the superordinate level. Second, category labels and sensory representations are the most tightly coupled at the basic level, as evidenced by priming experiments. For instance, the participants in the Rosch et al. experiments indicated on which side of a card partially masked images of man-made objects were presented. Subjects made more errors when the images were preceded by the presentation of the category name at the superordinate level (e.g. furniture) than at the basic level (e.g. table) or the subordinate level (e.g. dining room table). The basic level is the most important because the categories at this level delineate the perceived structure of the world. Rosch et al. have shown that items within basic-level categories share more physical attributes in common, that subjects agree more on the sequences of movements necessary to interact with items at the basic level, that the shapes of objects are more similar within basic-level categories, and that line-drawings of the items are better identified at the basic level than at the other levels.

Rosch’s framework, encompassing the notions of graded category membership, hierarchical structure, and existence of a privileged level, has received considerable empirical support. Even though there are more current theories of categorization that do not assume an explicit hierarchy, categories are, nevertheless, loosely structured in coarse-to-fine levels, and there is typically a preferred, facilitated level for item naming and category and feature verification (Rogers and McClelland 2004).

If both identification time and priming effects show advantages for the basic level of words and pictures, do they show the same advantage for words and sounds? The answer is not clear from past research. First, with regard to the time course of identification, Ballas (1993) first showed that listeners needed less time to identify everyday sounds when they identified them accurately than when they found them ambiguous. Aramaki et al. (2010), Giordano et al. (2010), Marcell et al. (2000, 2007), and Saygin et al. (2005) replicated this result for different sets of sounds. However, none of these studies compared different levels of specificity of the sound descriptions. To our knowledge, only Adams and Janata (2002) showed that the time needed to identify sounds made by different objects was shorter when the objects were described at the basic level of a taxonomy. It should be noted that Gaver (1993) used the term “basic event” but it is not synonymous with Rosch’s (1978) “basic” level category.

Second, despite early negative results (Chiu and Schacter 1995; Stuart and Jones 1995), several studies have shown that the prior presentation of a sound can prime a lexical decision (“is this a word or not?”) when the sound and the word are semantically related. Priming has been reported by measuring event related potentials (amplitude of the N400 component Aramaki et al. 2010; Cummings et al. 2006; Galati et al. 2008; Orgs et al. 2006; Pizzamiglio et al. 2005; Schön et al. 2009), and reaction times (van Petten and Rheinfelder 1995). However, none of these past studies varied the specificity of the descriptions to test levels of categorization.

The goal of the present study was therefore to identify the basic level in a taxonomy of action sounds, using these two properties of the basic level defined by Rosch et al. (1976): identification time and priming effect. The first experiment (“classification verification experiment”) compared the accuracies and reaction times of listeners identifying the sounds at different levels in the taxonomy. The second experiment (“priming experiment”) compared the priming of a lexical decision task by the prior presentation of a sound, using a paradigm inspired by van Petten and Rheinfelder (1995). Our choice of experimental design was influenced by the fact that our sound stimuli, being recordings of everyday events, varied in acoustical factors such as rise time, level, and duration. These acoustic variations alone could influence reaction times. For instance, Grassi and Darwin 2006 and Grassi and Casco 2009 showed that listeners react faster to sounds with sharp attacks. To control for attack time and other acoustic variables, we only compared reactions times between conditions if they used the same target sounds. Therefore, differences in reaction time can be attributed to semantic context but not to acoustical factors.

Sounds and categories used in the study

A linguistic taxonomy of sound events

The first step was to establish a taxonomy of types of sound-producing interactions inspired by Gaver’s principles (1993) and empirical data from Lemaitre et al. (2010) and Houix et al. (2012) that were linguistically homogeneous and relied on conceptual categories familiar to lay listeners. The highest and most general level (L1—state of matter) was defined by the three states of matter (liquid, solid, and gas). In each of these categories, we selected three types of interactions that were identifiable: friction, deformation, impact, splash, drip, pour, whoosh, wind, and explosion. These nine categories formed the second highest-level L2 (types of interaction) in the taxonomy. The levels L1 and L2 were selected from the first two levels of Gaver’s taxonomy and Houix’s empirical taxonomy of everyday sounds. The list of hyponyms (for instance, scraping is an hyponym of friction) of these categories served as a basis to establish the variations of the types of interactions that formed the more detailed level L3 (specific actions). We used the Wordnet database of semantic relationships between words in English (Fellbaum 1998; Miller 1995) and the Merriam Webster Dictionary. Two categories were defined within each category at the level L2 (hence, there were 18 categories at level L3). All the descriptions at level L3 consisted of a verb in its radical + ING form (e.g. scraping). In particular, the six categories of specific actions made with solid objects were directly borrowed from the empirical results of Houix et al. (2012). Creating an even lower level with more detailed descriptions proved to be impossible with only one word. To form level L4a, we added an adverb of manner (e.g. scraping rapidly) to specify how the actions were made (“manner of the action”), and to form level L4b, we added a noun (e.g. scraping a board) to specify the object upon which the actions were executed (“object of the action”). There were 18 categories in levels L4a and L4b. We did not assume that either one had a greater level of specificity. The resulting taxonomy is sketched in Table 1.

Recordings

Three examples were recorded in each of the 18 categories reported in Table 1 resulting in fifty-four sounds. All the sounds but the explosions were created and recorded in an IAC sound-attenuated booth, with the walls covered with Auralex echo-absorbing foam wedges, using an Earthworks QTC30 1/4″ condenser microphone, a Tucker-Davis Technologies MA-3 microphone amplifier and an Olympus LS-10 digital recorder. All sounds were recorded with a 96 kHz sampling rate and a 32-bit resolution. The microphone was located approximately 30 cm away from the source. The explosion sounds were selected from commercial and non-commercial databases. The levels of the recorded sounds varied from 51 to 112 dB(A) and their effective duration (calculated with the Ircam Timbre toolbox, Peeters et al. 2011) varied from 106 to 2,780 ms.

Identification

The goals of the identification experiment were twofold. The first goal was to measure the performance of listeners identifying the sounds in different categories and levels to assess if one of the levels in the taxonomy could be considered as basic. These properties of the basic level are (Rosch et al. 1976):

-

Identification is faster at the basic level than at the super- or subordinate level.

-

Identification is better at the basic than at the superordinate level and is not better at the subordinate than at the basic level.

Because sounds develop over time, it is very likely that the amount of time required to identify a sound depends on the time course of each particular sound. Therefore, the second goal of identification experiment was to observe the different durations needed by listeners to correctly identify different sounds, by using a Rosch-like paradigm with each sound gated at different durations. These results were used to select sounds for the priming experiment, for which short excerpts were required.

Methods

Listeners

Seventy-five listeners (40 male and 35 female), between 18 and 38 years of age (median 21), were compensated for their participation with either pay or academic credit. All reported normal hearing and were English native speakers. Fourteen were randomly assigned to Group 1, 15 to Group 2, 16 to Group 3, 14 to Group 4, and 16 to Group 5.

Stimuli

The stimuli were the 54 sounds described above. Because of the large level differences between the recorded sounds (ranging 61 dB), the range of level variation was linearly compressed, so that the loudest sounds were played at a comfortable level (about 81 dB(A)), yet the quietest sounds were clearly audible, even when gated at the shortest duration. Playback levels (measured at listener’s position for the full duration) varied between 43 and 81 dB(A), and durations varied between 106 and 2,780 ms.

Apparatus

The digital files were converted to analog signals by an Echo AudioFire sound board, amplified by a Rotel RMB 1066 amplifier, and played through a pair of KRK ST6 loudspeakers. The listener was seated in the center of an IAC double-walled sound-attenuating booth with two speakers in the front corners, each located 1 m from the listener. Booth walls were covered in echo-absorbing foam. Stimulus presentation and response collection were programmed on an Apple Macintosh Dual 2-Ghz Power PC G5 with Matlab 7.5.0.338 using the Psychophysics Toolbox extensions version 3.0.8 (“Psychotoolbox”, Brainard 1997). The latency of the keyboard was estimated to be 15.4 ms on average (by comparing the timing of registered key presses to the timing of their recorded sound, using Psychtoolbox built-in functions), and the smallest detectable difference of reaction times with a 95 % confidence interval was estimated to be 13.8 ms.

Procedure

Groups 1 through 5 received labels from the levels L1 (state of matter), L2 (type of interaction), L3 (specific action), L4a (manner of the action), and L4b (object of the action), respectively. The experiment consisted of 4 blocks of 108 trials. In each trial, a row of crosses was first presented on the computer display for a duration that was randomly varied between 400 and 500 ms. Then, a word, consisting of one of the 3 (L1), 9 (L2), or 18 (L3, L4a, and L4b) labels of the sounds, was presented for 300 ms. A sound was presented 600 ms after the label was hidden. In each of the four blocks, the sounds were gated at, respectively, 100, 200, 400, and 800 ms. The participants’ task was to indicate whether the sound could have been caused by what the word described. Two response keys (“c” and “m”) could indicate “yes” or “no”. The mapping between key and answer was randomly assigned to each participant. They could answer as soon as the sound started. Each of the 54 sounds was presented twice during a block, once preceded by the correct label, once by an incorrect label. The incorrect label was randomly chosen in a category that was different from that of the sound, at the most general level (i.e. “dripping” could be paired with “tapping”, but not with “dribbling”). The principle was that the incorrect label should be unambiguously irrelevant: “tapping” is clearly not a correct description of the sound of dripping. The order of presentation of the sounds was randomized for each listener, with the constraint that the same sound could not be presented twice in a row. Prior to the main experiment, the participants were familiarized with the procedure by doing three blocks of six trials using different sounds and different labels (e.g. a door being shut, ice cubes poured in a glass, etc.). After the experiment, they were invited to comment on their experience. The participants were instructed to answer as quickly as possible, without making too many errors.

Results

Accuracy

Overall, both experimental factors had a significant effect on the accuracy scores: longer durations produced better accuracy, as did more specific labels (levels L3—specific action, L4a—manner of the action, and L4b—object of the action).

The accuracy scores, averaged across listeners, durations, and groups ranged from 57.3 (pouring 3) to 96.6 % (dripping 3). The accuracy scores averaged across sounds are represented on the upper panel of Fig. 1. They were submitted to a repeated-measure analysis of variance (ANOVA) with the gate durations as a within-subject factor and the levels of the description as a between-subject factor. All statistics are reported after Geisser-Greenhouse correction). The gate duration has the largest significant effect on accuracy (F(3, 210) = 116.3, p < .000001 partial η 2 = 31.5 %). Overall accuracy increased with gate duration, with averaged accuracy ranging from 71.4 % at the shortest gate duration to 85.7 % at the longest. The main effect of the level of specificity of the description was also significant (F(4, 70) = 6.164, p < .001, partial η 2 = 12.0 %). The interaction between level of specificity and gate duration was significant, although this contributed only marginally to the total variance of the data (F(12, 210) = 3.380, p < 0.001, partial η 2 = 3.7 %). Post hoc comparison using the Tukey HSD test revealed that accuracy for levels 3 (specific action), 4a (manner of the action), and 4b (object of the action) were not significantly different from each other (respectively, M = 82.2, 80.8, and 84.1 %, SD = 1.46, 1.57, and 1.46; for level 4b vs. 4a, p = .554; for level 4b vs. 3, p = .882), but were significantly different form levels 1 (state of matter) and 2 (type of interaction, M = 75.1 % for level 1, and M = 76.8 % for level 2; SD = 1.56 and 1.51; for level 4b vs. level 2 p = 0.008 and level 4b vs. level 1 p = 0.001).

Reaction times

Only the reaction times for the cases when the sound and the label were related and the answer was correct were analyzed. The percentage of correct identification and the reaction times (averaged for each sound across the three gate durations) were significantly and negatively correlated across all levels (r(N = 54) = −0.61, −0.41, −0.68, −0.64, and −0.58, respectively, p < .01). Overall, listeners made fewer errors when they answered more rapidly, indicating no trade-off between speed and accuracy.

Prior to the analysis, we excluded those reaction times that were greater than two standard deviations above the mean of the group (i.e. greater than 1920, 1993, 1202, and 1844 ms for the five groups). Reaction times are represented on the lower panel of Fig. 1.

The data were submitted to a repeated-measure analysis of variance (ANOVA), with the level of specificity as a between-subject factor, and the gate duration as a within-subject variable. The degrees of freedom were corrected with the Geisser-Greenhouse procedure to account for possible violations of the sphericity assumption, as were the p-values. The results of the ANOVA showed that the reaction times were significantly different for the different gate durations (F(3, 210) = 14.04, p < .000001, η 2 = 2.88 %), although the effect was small. The reaction times decreased as the duration of the excerpt increased. The level of specificity produced significantly different reaction times (F(4, 70) = 3.63, p = .01, η 2 = 14.1 %). Identification of the specific actions (L3) produced the shortest reaction times (M = 544 ms, SD = 34 ms). Post hoc comparisons using the Tukey HSD test indicated that the mean reaction time for this level was significantly different from the identification of the types of interaction (L2, M = 710 ms, SD = 36 ms, p = .011), as well as from the states of matter (L1, M = 689 ms, SD = 37 ms, p = .041), but not from the manner of the actions (L4a, M = 658 ms, SD = 37 ms, p = .172), and the objects of the actions (L4b, M = 607 ms, SD = 35 ms, p = .584). Interaction between the level of specificity and gate duration was not significant (F(12, 210) = 0.644, p = .737).

Discussion

The results of the identification experiment suggest that the level L3 (specific actions) in the taxonomy meets one criterion for a basic level in the sense of Rosch et al. (1976): more general descriptions resulted in more identification errors and slower reaction times, and more specific descriptions resulted in similar numbers of errors and longer identification times. The reaction time at level L2 (type of interaction) is almost 200 ms faster than level L3 (specific actions), whereas Rosch reported differences of about 60 ms. However, post hoc analyses have revealed that reaction times and accuracy scores were not different between levels L3 (specific actions), L4a (specifying the manner of the action), and L4b (specifying the object). The V-shaped pattern of the lower panel of Fig. 1 (the one predicted by Rosch) should therefore be interpreted as a discontinuity (a step) occurring at level L3 (specific actions).

The correlation between the reaction times and the accuracy scores is consistent with the results of Ballas (1993) and Saygin et al. (2005) who showed that the time needed to identify a sound depends on its causal uncertainty (the number of possible causes).

Furthermore, the patterns of results across levels have at least two implications. First, the task could be thought of as easiest at level L1 (state of matter, for which there were only three categories to discriminate and remember) or L2 (type of interaction, nine categories to discriminate). However, listeners were slower and less accurate at these levels than at level L3 (specific action, 18 categories to discriminate). The results of the experiment can therefore not simply be accounted for by the Hick-Hyman law (Hyman 1953), which would predict the opposite pattern of results. In particular, the difference in reaction times cannot be merely explained by the number of categories subjects had to discriminate. The discontinuity in the pattern of results at level L3 (specific actions) indicates that there is no linear relationship between reaction times and number of categories. Second, at level L3 (specific actions, where we observed the best performance), the descriptions were mainly verbs describing the action that caused the sound, whereas at level L2 (types of interaction), the descriptions were more conceptual categories. The results therefore indicate that sounds are best identified as the actions that caused them.

It is evident that the 54 sounds used in the experiment were not equivalently well identified. For example, the poorest performance was obtained at the shortest gate duration for the “pouring” and “scraping” sounds. These sounds have in common a very noisy spectrum. In the post-experimental interviews, the listeners indicated that, at the shortest gate duration, it was very difficult to interpret the cause of these sounds. Only as the sounds unfolded over time did they reveal their nature, either because of temporal patterns or because of information conveyed in the offset of the sounds. This information was used to select sounds for the following priming experiment that were well identified at moderate durations.

Semantic priming

To further test the hypothesis that the specific actions (level L3) share some properties of the basic level of the taxonomy, we used a priming paradigm inspired by Rosch et al. (1976) who showed (in her experiment 6) that when subjects decided whether two pictures were similar, their reaction times decreased when the name of the picture was briefly flashed before the image. The similarity decision was primed by the name of the objects in the picture. However, priming occurred only at the basic level.

The goal of our priming experiment was therefore to assess whether the categories of sounds we used could be primed by listening to them. We compared levels L2 (types of interaction) and L3 (specific actions). Levels L4 and L4b (specifying the object or the manner of the actions) could not be used in this framework because their categories were described by two words. Level L1 (states of matter) was not used because it produced poor performance in the identification experiment. We used an experimental design similar to van Petten and Rheinfelder (1995). The listeners performed a lexical decision task. A computer display very briefly flashed strings of letters, and listeners decided whether they formed a word or not. Prior to the word presentation, a brief sound was played. The sounds and the words could be related or unrelated (i.e. the word could describe the prior sound), and we observed the accuracy and the reaction times. Based on the results of Experiment 1, we expected L2 to function as if it were superordinate to L3.

Methods

Listeners

Sixty-one listeners took part in the experiments and were compensated for their participation with either pay or academic credit. Data from two of them were removed because their performances were below chance, indicating that they might have misunderstood the instructions. The fifty-nine remaining participants (28 male and 31 female) were between 18 and 45 years of age (median 21). All reported normal hearing and were English native speakers. None had previously participated in identification experiment.

Apparatus

The apparatus was the same as in the identification experiment.

Stimuli

The listeners were presented with a series of 24 different strings of letters, preceded by 24 different sounds. The sounds were selected from the results of the identification experiment: we selected only the sounds identified with more than 80 % accuracy at levels L2 (type of interaction) and L3 (specific action), for a gate duration of 200 ms. This duration ensured that the sounds were correctly identified and was brief enough for the priming paradigm. There were four different series of stimuli. They were created by looking up some orthographic statistics (number of letters, frequency in English, orthographic neighbor statistics, constrained bigram statistics) of the descriptions L2 (type of interaction) and L3 (specific action) of the 24 sounds (see Table A1 and Table A2, supplemental material), known to potentially influence reaction times in lexical decision tasks (Balota et al. 2004; Grainger 1990). The statistics were collected using the MCWord database (Medler and Binder 2005). Then, we constructed lists of unrelated words and non-words, matching their orthographic statistics to those of the L2 (type of interaction) and L3 (specific action) description sets. Because the descriptions in L3 all have a form radical + ING, half of the unrelated words and non-words also had a +ING suffix. The lists of unrelated words and non-words are reported in Tables A3 and A4 (supplemental material). We carefully chose the unrelated words so that their average orthographic statistics matched those of the related labels. Each series of 24 sounds was split in four parts, and the 6 sounds in each part were paired with either a description from the L2 or L3 sets, an unrelated word or a non-word. The partition of each of the four series was different, and the four series were assigned to four different groups of subjects. This ensured that the 24 sounds were associated with the four kinds of descriptions across subjects, but that no subject heard the same sound twice. In fact, the principle of the design was that every sound was paired with every description level across the four groups, but that the pairing was different for each group of subjects. This design had three purposes. First, its main goal was that every sound was paired with its possible descriptions, an unrelated word, and a non-word across the experiment. This allowed us to compare, for the same sound, the priming of words at different levels of generality. Second, the fact that listeners heard every sound only once ensured that they could not build semantic associations during the experiment that could influence subsequent presentation. Third, splitting the labels across the groups ensured that subjects in each group saw every word only once. For instance, with 2 groups corresponding to the 2 levels of descriptions, some labels would have occurred three times in the group corresponding to level L2 (type of interaction), and only once in the group corresponding to level L3 (specific action), because labels are shared by different sounds at level L2 (“blasting” corresponds to 3 sounds, etc.). In the design borrowed from van Petten and Rheinfelder (1995), every different words and non-words occurred only once for each subject. This design has therefore the great advantage to compare the two levels of description on a fair ground, with no bias potentially due to repeated presentations of some words or sounds. It had, however, the drawback that non-words occurred only 25 % of the time. Responses to non-words may therefore have been affected by the lower rate of presentation. However, we were only interested only in the comparison between words and unrelated words and not in the main priming effect. Data for non-words were therefore excluded from analyses. The resulting stimuli are summarized in Table 2.

Procedure

The listeners were first provided with written instructions explaining the lexical decision task. The experiment consisted of one block of 24 trials. Each trial corresponded to a sound/string of letters pair. The order of the stimuli was randomized for every participant. In each trial, a row of crosses was first presented on the computer display, and the sound was played for 200 ms. The string of letters was then displayed 100 ms after the end of the sound for 100 ms. The listeners answered whether the string of letters formed a word in English by hitting the “c” or “m” keys. The “yes” answer was assigned to the subject’s dominant hand. Before the actual experiment, the listeners performed four training blocks. The training blocks used four different sets of 24 sounds and strings of letters. The training sounds were recordings of cylinders submitted to different actions that did not appear in the main experiment.

Results

We only report the results for the word conditions and omit the non-word conditions because we are only interested in comparing the potential priming of the lexical decision by the preceding sounds (overall, the listeners always responded more slowly and less accurately to non-words). We separately analyzed identification accuracy and the reaction times for the word conditions.

Accuracy of the decision

To further rule out the possibility of a confounded effect of the orthographic statistics on the accuracy scores (due for instance to the particular splitting of the sounds and labels for each group of subject), we first calculated the correlation coefficients between the orthographic statistics and the accuracy scores, averaged for each combination of group and type of label. This resulted in 16 values, except for the word frequency that was unavailable for the non-words (thus, there were 12 values). The resulting coefficients were all not significantly different from zero: frequency of the word in English (r(N = 12) = .01, p = .481), number of letters (r(N = 16) = .01, p = .489), number of orthographic neighbors (r(N = 16) = .13, p = .321), statistics of constrained bigrams (r(N = 16) = .17, p = .076). This indicates that accuracy was not driven by orthographic statistics of the strings of letters.

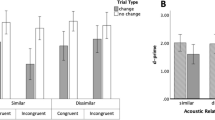

The upper panel of Fig. 2 shows that, overall, listeners were more accurate when there was a semantic relationship between the sounds and the words, and even more accurate when the words described specific actions (L3).

The accuracy scores (averaged for each participant across the sounds for each type of description) were submitted to a repeated-measure ANOVA with the type of label as within-subject factor and the group as a between-subject factor. All statistics are reported after Geisser-Greenhouse correction. The effect of the type of label was significant (F(2, 110) = 13.9, p < .001, η 2 = 27.2 %). The effect of the group (F(3, 55) = 2.290, p = .088, η 2 = 9.6 %) and the interaction between the type of label and the group (F(6, 165) = 1.6, p = .208, η 2 = 6.0 %) were not significant. Post hoc comparisons using the Tukey HSD test indicated that the mean accuracy score the mismatched labels L1 (M = 89.3 %, SD = 13.7 %) was significantly different from the mean accuracy score for labels L2 (type of interaction, p = .001, M = 94.3 %, SD = 9.1 %) and labels L3 (p = .027, specific action M = 98.9 %, SD = 4.22 %). Accuracy for level L3 (specific action) was also better than level L2 (type of interaction p < .01).

Reaction times

Figure 2 shows that lexical decision reaction times were significantly shorter when a word was preceded by a related sound.

To again rule out the possibility of an effect of the orthographic statistics on the accuracy scores, we first calculated the correlation coefficients between the orthographic statistics and the reaction times, using the same procedure as before. The resulting coefficients were all not significantly different from zero: frequency of the word in English (r(N = 12) = −.05, p = .432), number of letters (r(N = 16) = .06, p = .406), number of orthographic neighbors (r(N = 16) = −.11, p = .342), statistics of constrained bigrams (r(N = 16) = .267, p = .158). Again, orthographic statistics did not drive the results.

The reaction times for the correct answers that were not greater than two standard deviations above the mean for each type of label (3.5 % of the data were excluded) were averaged across the six sounds for each type of label and were submitted to a repeated-measure ANOVA. The degrees of freedom were corrected with the Geisser-Greenhouse procedure to account for possible violations of the sphericity assumption. The main effect of the level of description (a within-subject factor) was significant (F(2, 110) = 73.1, p < .000001) and was the experimental factor that accounted for most of the variance (η 2 = 35.5 %). Planned contrasts revealed that the descriptions of the specific actions (L3, M = 531.7 ms, SD = 67.3 ms) resulted in shorter reaction times than the unrelated labels (L1, M = 623.0 ms, SD = 109 ms, p < .000001), but the reaction times for the types of interaction (L2, M = 532.2 ms, SD = 67.6 ms) and the specific actions (L3) were not significantly different (p = 0.984). Retrospective power was estimated to be 1.000 for the levels (for an alpha value of 0.05), and the 95 % confidence interval was estimated to be [−9.6 to −9.8], suggesting that the non-significant difference could not be attributed to a type II error (Howell 2009). This indicates that priming occurred both for levels L2 and L3, and the size of the effect was similar for these two conditions. There was no significant effect of the between-groups factor of the specific subset of stimuli used for each group (F(3, 55) = 0.363, p = .78). There was no significant interaction between the groups and the levels of description (F(6, 110) = 32.28, p = .068).

General discussion

These experiments compared identification time and semantic priming for the different levels within a taxonomy of everyday sounds organized according to the actions producing the sounds. The results reported herein are consistent with the view that there is privileged level of specificity within a psychological hierarchy of action sounds within which identification is easier. In particular, verifying the categories of the sounds in general categories was more difficult than in specific categories.

The results of these experiments suggest that the specific actions in the taxonomy share some of the properties of a basic level of categorization. Sounds at the level of “specific actions” were identified faster at this level than at more general levels, and a ceiling in performance occurred for this level and the more specific ones. The names of the categories were primed by prior presentation of these sounds, and the size of the priming effect was significantly larger for the names of the categories at this level than at a more general level. It is worth noting that this level is distinct from the one that Gaver (1993) called “basic events” which instead maps onto our “types of interaction” level.

It is important to consider what makes this level different from the other ones. It may be that the categories of specific actions were the most homogeneous. The states of matter consisted of three very general categories: sounds made by solid objects, liquids, and gases. These categories were very large, and it is not surprising that the listeners did not have a clear idea of what the sound of a solid object, for instance, might be in general. The types of interaction consisted of categories of physical phenomena. The specific actions consisted of the particular actions. Whereas the categories of interaction types defined different actions in a rather abstract way (e.g. friction is the action of rubbing one object against another), the verbs describing the specific actions also specified how the action was made in a less abstract way. The specification of the properties of the action considerably limits the variety of sounds and objects included in each category. For instance, “scraping” specifies a noisy sound created by rubbing hard rough surfaces. “Squeaking” indicates a shrill sound created by rubbing hard smooth objects. The categories of specific actions not only described more precisely the events that created the sounds, but also formed more homogeneous categories of sounds. A theoretical approach that considers the variance of distribution of the items in a category could probably account for the listener’s poorer performance at identifying that a sound was made by deformation (a more general type of interaction) than by crumpling (a specific action).

The descriptions explicitly specifying the objects involved in the actions, or the manner of the actions, were linguistically different from the other levels: the names of the categories consisted of a second word added to the verbs describing the specific actions. One level added a name specifying the object on which the action was executed, and the other added an adverb specifying how the action was made. Both specifications were somewhat redundant with the meaning of the verb (e.g. water trickling, tapping once). Nevertheless, they resulted in identification times that were different. The categories specifying the object of the actions were identified more slowly than the categories of specific actions, but the difference was not significant. One explanation might be that specifying the object did not fundamentally change the possible range of sounds contained in the categories, because the important properties of the objects constraining the sounds were already specified by the verbs describing the actions. It is for instance possible that a subject would generate the same set of expectations when presented with “dripping” and “water dripping”, or “shooting” and “shooting a gun”. Specifying the object might therefore be considered as redundant.

On the contrary, identification times were slower for categories specifying the manner of the actions. This suggests that this level really was more specific than the action verbs only. In fact, many of the adverbs used to create the categories at this level specified either the force of the speed of the action. Acoustically, this specifies the loudness and the duration of the resulting sounds, and thus, a specific subset of the sounds implied by each category. Perhaps being more specific may not produce a benefit for reaction times if it describes a subordinate level rather than a basic level. An alternative explanation is that introducing object properties into an experimental context focused on actions introduced irrelevant information that slowed down subjects’ reactions.

The new priming effects observed here, for both specific and more general descriptions of actions, have both practical and theoretical implications. At a practical level, it is important to point out that the priming of a lexical decision by the prior presentation of a sound is a subtle effect that is difficult to observe at the behavioral level (many prior observations of the priming effect were based on brain imaging methods). Many previous studies did not consider the fact that a sound can be described at many levels of specificity, nor that the different levels of specificity may result or not in an observable priming effect. Our results show that priming can occur at more than one level of the taxonomy, but the level matters.

At a more theoretical level, semantic priming of a lexical decision is generally accounted for by a spread of activation generated by the prime (Neely 1991). Our results therefore suggest that listening to simple sound events activate lexical and/or semantic representations that may interact with the lexical or semantic representations activated by the names of the categories of types of interactions and specific actions. Because both the names of these categories describe the actions (and in the majority of the cases the gesture) that produced the sounds, this suggests that hearing a sound activates the semantic representations of the actions (and possibly gestures) producing the sounds. This illustration is consistent with several studies showing that the sounds of actions (and in particular the sounds made by human gestures) uniquely activate motor and pre-motor areas. It is also consistent with theories of embodied cognition where there are close links between conceptual memory and sensory and motor systems (Barsalou 2008).

The present study examined a specific taxonomy of sounds motivated by considering how the interactions between objects create physical and acoustic categories of events. It is worth noting that the sounds selected for this experiment clearly conveyed their sound-generating events, and the lexical descriptions focused primarily on the actions, rather than the objects, producing the sounds (although the lexical level that described state of matter could be considered a property of objects). Other taxonomies of sound events are possible (for instance, Schön et al. 2009 used the taxonomy of abstract sounds developed by Schaffer, or sound events can also be organized according to the objects that produced the sounds). Therefore, the basic level found in this study should be understood as a basic level for action sounds. For instance, our results do not rule out the possibility that the level L1 (state of matter) could produce shorter reaction times in a taxonomy of object-related properties. Further work is needed to compare these different organization principles.

References

Adams RB, Janata P (2002) A comparison of neural circuits underlying auditory and visual object categorization. Neuroimage 16:361–377

Aglioti SM, Pazzaglia M (2010) Representing actions through their sound. Exp Brain Res 206(2):141–151

Alaerts K, Swinnen SP, Wenderoth N (2009) Interaction of sound and sight during action perception: evidence for shared modality-dependent action representations. Neuropsychologia 47:2593–2599

Aramaki M, Marie C, Kronland-Martinet R, Ystad S, Besson M (2010) Sound categorization and conceptual priming for nonlinguistic and linguistic sounds. J Cogn Neurosci 22(11):2555–2569

Aziz-Zadeh L, Iacobini M, Zaidel E, Wilson S, Mazziota J (2004) Left hemisphere motor facilitation in response to manual action sounds. Eur J Neurosci 19:2609–2612

Ballas JA (1993) Common factors in the identification of an assortment of brief everyday sounds. J Exp Psychol Hum Percept Perform 19(2):250–267

Balota DA, Cortese MJ, Sergent-Marshall SD, Spieler DH, Yap MJ (2004) Visual word recognition of single-syllable words. J Exp Psychol Gen 133(2):283–316

Barsalou LW (2008) Grounded cognition. Annu Rev Psychol 59:617–645

Beauchamp MS, Argall D, Borduka J, Duyn JH, Martin A (2004) Unraveling multisensory integration: patchy organization within human STS multisensory cortex. Nat Neurosci 7(11):1190–1992

Bidet-Caulet A, Voisin J, Bertrand O, Fonlupt P (2005) Listening to a walking human activates the temporal biological motion area. NeuroImage 28:132–139

Brainard DH (1997) The psychophysics toolbox. Spat Vis 10:433–436

Cabe PA, Pittenger JB (2000) Human sensitivity to acoustic information from vessel filling. J Exp Psychol Hum Percept Perform 26(1):313–324

Carello C, Anderson KL, Kunkler-Peck AJ (1998) Perception of object length by sound. Psychol Sci 9(3):211–214

Castiello U, Giordano BL, Begliomini C, Ansuini C, Grassi M (2010) When ears drive hands: the influence of contact sound on reaching to grasp. PLoS One 5:e12240

Chen JL, Penhune VB, Zatorre RJ (2008) Listening to musical rhythms recruits motor regions of the brain. Cereb Cortex 18(12):2844–2854

Chiu CYP, Schacter DL (1995) Auditory priming for nonverbal information: implicit and explicit memory for environmental sounds. Conscious Cogn 4:440–458

Cummings A, Ceponiene R, Katoma A, Saigin AP, Townsend J, Dick F (2006) Auditory semantic networks for words and natural sounds. Brain Res 115:92–107

De Lucia M, Camen C, Clark S, Murray MM (2009) The role of actions in auditory object discrimination. Neuroimage 48:475–485

Fellbaum C (ed) (1998) WordNet: an electronic lexical database. MIT Press, Cambridge, MA

Galati G, Commiteri G, Spitoni G, Aprile T, Russo FD, Pitzalis S, Pizzamiglio L (2008) A selective representation of the meaning of actions in the auditory mirror system. Neuroimage 40(3):1274–1286

Gaver WW (1993) What do we hear in the world? An ecological approach to auditory event perception. Ecol Psychol 5(1):1–29

Gazzola V, Aziz-Zadeh L, Keysers C (2006) Empathy and the somatotopic auditory mirror system in humans. Curr Biol 16:1824–1829

Giordano BL, McDonnell J, McAdams S (2010) Hearing living symbols and nonliving icons: category specificities in the cognitive processing of environmental sounds. Brain Cogn 73:7–19

Grainger J (1990) Word frequency and neighborhood frequency effects in lexical decisions and naming. J Mem Lang 29:228–244

Grassi M (2005) Do we hear size or sound? Balls dropped on plates. Percept Psychophys 67(2):274–284

Grassi M, Casco C (2009) Audiovisual bounce-inducing effect: attention alone does not explain why the discs are bouncing. J Exp Psychol Hum Percept Perform 35(1):235–243

Grassi M, Casco C (2010) Audiovisual bounce-inducing effect: when sound congruence affects grouping in vision. Atten Percept Psychophys 72:378–386

Grassi M, Darwin CJ (2006) The subjective duration of ramped and damped sounds. Percept Psychophys 68(8):1382–1392

Gygi B, Kidd GR, Watson CS (2004) Spectral-temporal factors in the identification of environmental sounds. J Acoust Soc Am 115(3):1252–1265

Houben MMJ, Kohlrausch A, Hermes DJ (2004) Perception of the size and speed of rolling balls by sound. Speech Commun 43:331–345

Houix O, Lemaitre G, Misdariis N, Susini P, Urdapilleta I (2012) A lexical analysis of environmental sound categories. J Exp Psychol Appl 18(1):52–80

Howell DC (2009) Statistical methods for psychology, vol 7. Wadsworth, Cengage learning

Hyman R (1953) Stimulus information as a determinant of reaction time. J Exp Psychol 45(3):188–196

James TW, Stevenson RA, Kim S, VanDerKlok RM, Harman James K (2011) Shape from sound: evidence for a shape operator in the lateral occipital cortex. Neuropsychologia 49:1807–1815. doi:10.1016/j.neuropsychologia.2011.03.004

Kaplan JT, Iacobani M (2007) Multimodal action representation in human left ventral premotor cortex. Cogn Process 8:103–113

Keller P, Stevens C (2004) Meaning from environmental sounds: types of signal-referent relations and their effect on recognizing auditory icons. J Exp Psychol Appl 10(1):3–12

Keysers C, Kohler E, Ulmità MA, Nanetti L, Fogassi L, Gallese V (2003) Audiovisual neurons and action recognition. Exp Brain Res 153:628–636

Klatzky RL, Pai DK, Krotkov EP (2000) Perception of material from contact sounds. Presence 9(4):399–410

Kunkler-Peck AJ, Turvey MT (2000) Hearing shape. J Exp Psychol Hum Percept Perform 26(1):279–294

Lakatos S, McAdams S, Caussé R (1997) The representation of auditory source characteristics: simple geometric forms. Percept Psychophys 59(8):1180–1190

Lemaitre G, Heller LM (2012) Auditory perception of material is fragile, while action is strikingly robust. J Acoust Soc Am 131(2):1337–1348

Lemaitre G, Houix O, Misdariis N, Susini P (2010) Listener expertise and sound identification influence the categorization of environmental sounds. J Exp Psychol Appl 16(1):16–32

Lemaitre G, Dessein A, Susini P, Aura K (2011) Vocal imitations and the identification of sound events. Ecol Psychol 23:267–307

Lewis JW (2006) Cortical networks related to human use of tools. Neuroscientist 12(3):211–231

Lewis JW, Wightman FL, Brefczynski JA, Phinney RE, Binder JR, DeYoe EA (2004) Human brain regions involved in recognizing environmental sounds. Cereb Cortex 14:1008–1021

Lewis JW, Brefczynski JA, Phinney RE, Janik JJ, DeYoe EA (2005) Distinct cortical pathways for processing tools versus animal sounds. J Neurosci 25(21):5148–5158

Lewis JW, Talkington WJ, Puce A, Engel LR, Frum C (2011) Cortical networks representing object categories and high-level attributes of familiar real-world action sounds. J Cogn Neurosci 23(8):2079–2101

Lewis JW, Talkington WJ, Tallaksen KC, Frum CA (2012) Auditory object salience: human cortical processing of non-biological action sounds and their acoustical signal attributes. Frontiers in systems neuroscience 6:article 27. http://www.ncbi.nlm.nih.gov/pmc/articles/PMC3348722/

Marcell MM, Borella D, Greene M, Kerr E, Rogers S (2000) Confrontation naming of environmental sounds. J Clin Exp Neuropsychol 22(6):830–864

Marcell MM, Maltanos M, Leahy C, Comeaux C (2007) Identifying, rating and remembering environmental sound events. Behav Res Methods 39(3):561–569

Medler D, Binder J (2005) MCWord: an on-line orthographic database of the English language. http://www.neuro.mcw.edu/mcword/

Miller GA (1995) Wordnet: a lexical database for English. Commun ACM 38(11):39–41

Neely JH (1991) Semantic priming effects in visual word recognition: a selective review of current findings and theories. In: Besner D, Humphreys GW (eds) Basic processes in reading: visual word recognition, Chap. 9. Lawrence Erlbaum Associates, Hillsdale, NJ, pp 264–335

Orgs G, Lange K, Dombrowski JH, Heil M (2006) Conceptual priming for environmental sounds: an ERP study. Brain Cogn 62:267–272

Peeters G, Giordano BL, Susini P, Misdariis N, McAdams S (2011) The timbre toolbox: extracting audio descriptors from musical signals. J Acoust Soc Am 130(5):2902

Pizzamiglio L, Aprile T, Spitoni G, Pitzalis S, Bates E, D’Amico S, Di Russo F (2005) Separate neural systems of processing action- or non-action-related sounds. Neuroimage 24:852–861

Rogers TT, McClelland JL (2004) Semantic cognition. A Parallel-Distributed- Processing approach. The MIT press, Cambridge, MA

Rosch E (1978) Principles of categorization. In: Rosch E, Lloyd BB (eds) Cognition and categorization, Chap 2. Lawrence Erlbaum Associates, Hillsdale, NJ, pp 27–48

Rosch E, Mervis CB (1975) Family resemblances: studies in the internal structure of categories. Cogn Psychol 7:573–605

Rosch E, Mervis CB, Gray WD, Johnson DM, Boyes-Braem P (1976) Basic objects in natural categories. Cogn Psychol 8:382–439

Saygın AP, Dick F, Bates E (2005) An on-line task for contrasting auditory processing in the verbal and nonverbal domains and norms for younger and older adults. Behav Res Methods 37(1):99–110

Schön D, Ystad S, Kronland-Martinet R, Besson M (2009) The evocative power of sounds: conceptual priming between words and nonverbal sounds. J Cogn Neurosci 22(5):1026–1035

Sedda A, Monaco S, Bottini G, Goodale MA (2011) Integration of visual and auditory information for hand actions: preliminary evidence for the contribution of natural sounds to grasping. Exp Brain Res 209:365–374

Stuart GP, Jones DM (1995) Priming the identification of environmental sounds. Q J Exp Psychol A Hum Exp Psychol 48(3):741–761

van den Doel K, Pai DK (1998) The sounds of physical shapes. Presence 7(4):382–395

van Petten C, Rheinfelder H (1995) Conceptual relationships between spoken words and environmental sounds: event related brain potential measures. Neuropsychologia 33(4):485–508

Vanderveer NJ (1979) Ecological acoustics: human perception of environmental sounds. PhD thesis, Cornell University

Warren WH, Verbrugge RR (1984) Auditory perception of breaking and bouncing events: a case study in ecological acoustics. J Exp Psychol Hum Percept Perform 10(5):704–712

Author information

Authors and Affiliations

Corresponding author

Electronic supplementary material

Below is the link to the electronic supplementary material.

Rights and permissions

About this article

Cite this article

Lemaitre, G., Heller, L.M. Evidence for a basic level in a taxonomy of everyday action sounds. Exp Brain Res 226, 253–264 (2013). https://doi.org/10.1007/s00221-013-3430-7

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00221-013-3430-7