Abstract

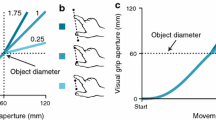

When we reach out to grasp objects, vision plays a major role in the control of our movements. Nevertheless, other sensory modalities contribute to the fine-tuning of our actions. Even olfaction has been shown to play a role in the scaling of movements directed at objects. Much less is known about how auditory information might be used to program grasping movements. The aim of our study was to investigate how the sound of a target object affects the planning of grasping movements in normal right-handed subjects. We performed an experiment in which auditory information could be used to infer the size of targets while the availability of visual information was varied from trial to trial. Classical kinematic parameters (such as grip aperture) were measured to evaluate the influence of auditory information. In addition, an optimal inference model was applied to the data. The scaling of grip aperture indicated that the introduction of sound allowed subjects to infer the size of the object when vision was not available. Moreover, auditory information affected grip aperture even when vision was available. Our findings suggest that the differences in the natural impact sounds of objects of different sizes being placed on a surface can be used to plan grasping movements.

Conflict of interest

The authors declare that they have no conflict of interest.

Cite this article

Sedda, A., Monaco, S., Bottini, G. et al. Integration of visual and auditory information for hand actions: preliminary evidence for the contribution of natural sounds to grasping. Exp Brain Res 209, 365–374 (2011). https://doi.org/10.1007/s00221-011-2559-5

DOI: https://doi.org/10.1007/s00221-011-2559-5