Abstract

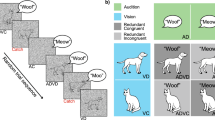

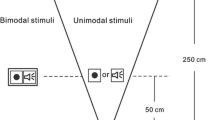

Participants presented with auditory, visual, or bimodal audiovisual stimuli in a speeded discrimination task fail to respond to the auditory component of bimodal targets significantly more often than to the visual component, a phenomenon known as the Colavita visual dominance effect. Given that spatial and temporal factors have recently been shown to modulate the Colavita effect, the aim of the present study was to investigate whether semantic congruency also modulates the effect. In the three experiments reported here, participants were presented with a version of the Colavita task in which the stimulus congruency between the auditory and visual components of the bimodal targets was manipulated. That is, the auditory and visual stimuli could refer to the same or to different objects (Experiments 1 and 2) or audiovisual speech events (Experiment 3). Surprisingly, semantic/stimulus congruency had no effect on the magnitude of the Colavita effect in any of the experiments, although it exerted a significant effect on certain other aspects of participants’ performance. This finding contrasts with the results of other recent studies showing that semantic/stimulus congruency can affect certain multisensory interactions.

Notes

Frick (1995) has argued that one cannot confidently accept the null hypothesis when the P value lies in the range from 0.200 to 0.500, but that a P value greater than 0.500 provides one important criterion for accepting the null hypothesis.

Observed power is the probability of correctly rejecting a false statistical null hypothesis, that is, of avoiding a Type II error. The probability of a Type II error is referred to as β, so power is equal to 1 − β. Thus, while a low P value and a high observed power would provide support for the H1 hypothesis, a P value above 0.500 and a low observed power would be evidence supporting the null hypothesis (Frick 1995).
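The relation between β and power can be illustrated with a minimal sketch. It assumes a two-sided one-sample z-test under a normal approximation, which is a simplification chosen for illustration and not the ANOVA-based observed power reported in the study; the function name `z_test_power` and its parameters are hypothetical.

```python
from statistics import NormalDist


def z_test_power(effect_size: float, n: int, alpha: float = 0.05) -> float:
    """Power (1 - beta) of a two-sided one-sample z-test.

    effect_size is the standardized true mean shift; the normal
    approximation is an assumption made for illustration only.
    """
    std_normal = NormalDist()
    # Rejection threshold for the test statistic under the null hypothesis.
    z_crit = std_normal.inv_cdf(1 - alpha / 2)
    # Mean of the test statistic when the alternative hypothesis is true.
    shift = effect_size * n ** 0.5
    # beta: probability of landing in the acceptance region although H0 is false.
    beta = std_normal.cdf(z_crit - shift) - std_normal.cdf(-z_crit - shift)
    return 1 - beta


if __name__ == "__main__":
    # Power grows with the true effect size for a fixed sample size.
    for d in (0.2, 0.5, 0.8):
        print(d, z_test_power(d, n=14))
```

With a true effect of zero, the function returns the Type I error rate α, which is the floor below which power cannot fall in this setting.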

Fourteen naïve participants took part in this control study. The apparatus, materials, design, and procedure were exactly the same as in Experiment 1 with the sole exception that the experimental stimuli now consisted of pictures and sounds of cats and phones (rather than of cats and dogs as used in Experiment 1). The data from the bimodal trials in which the participants failed to respond to one of the stimuli were analysed using an ANOVA with the factors of Response (Auditory-only or Visual-only) and Target Congruency (Congruent or Incongruent). The analysis of the error data revealed a significant main effect of Response [F(1, 13) = 12.00, P = 0.004], attributable to participants making significantly more visual-only than auditory-only responses (21.3 vs. 8.2% of all bimodal trials, respectively), once again demonstrating a Colavita effect. The analysis of the error data also revealed a significant main effect of Target Congruency [F(1, 13) = 10.64, P = 0.006], attributable to participants making significantly more errors when the auditory and visual components of the bimodal stimuli were congruent (16.4% errors) than when they were incongruent (13.1% errors). Crucially, however, there was no interaction between Response and Target Congruency [F(1, 13) = 1.47, P = 0.247]. Hence, once again, no effect of semantic congruence on the magnitude of the Colavita effect was observed.
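The correspondence between the F statistics and P values reported in this note can be checked with a short sketch. It relies on the identity that an F statistic with one numerator degree of freedom equals a squared t statistic, and it integrates the t density numerically with Simpson's rule so that only the standard library is needed. The function names and the numerical method are assumptions made for illustration, not the procedure used by the authors.

```python
import math


def t_pdf(x: float, df: int) -> float:
    """Density of Student's t distribution with df degrees of freedom."""
    coef = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    return coef * (1 + x * x / df) ** (-(df + 1) / 2)


def p_from_f_1_df(f_value: float, df_error: int, steps: int = 2000) -> float:
    """Two-sided P value for F(1, df_error), using F = t**2.

    steps must be even, as required by Simpson's rule.
    """
    t = math.sqrt(f_value)
    h = t / steps
    total = t_pdf(0.0, df_error) + t_pdf(t, df_error)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df_error)
    # Probability mass between -t and +t (the density is symmetric about 0).
    central_mass = 2 * (h / 3) * total
    return 1 - central_mass


if __name__ == "__main__":
    # F values taken from the control study above, each with 13 error df.
    for f_value in (12.00, 10.64, 1.47):
        print(f_value, round(p_from_f_1_df(f_value, 13), 3))
```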

It should be noted that the task that participants had to perform could also be considered to be an identification task (where participants discriminate which modality a target is presented in). The most appropriate description for the task is ambiguous, as participants were both detecting stimuli in different modalities and discriminating which modality a target was presented in.

Ten naïve participants took part in this control study. The apparatus, materials, design, and procedure were exactly the same as in Experiment 1 with the sole exception that the participants were now instructed to press one key in response to cats and another key in response to dogs. Thus, for the congruent bimodal trials, the participants only had to press one key (a single-response trial). Whenever an incongruent bimodal stimulus (containing both a cat and a dog target) was presented, the participants were explicitly instructed to press both response keys (a two-response trial). The data from the incongruent bimodal trials in which the participants failed to respond to one of the two stimuli were analysed using an ANOVA with the factors of Response (Auditory-only or Visual-only) and Auditory Stimulus (Cat or Dog). The analysis revealed a significant main effect of Response [F(1, 9) = 8.27, P = 0.010], with participants making significantly more visual-only than auditory-only responses (19.2 vs. 9.1% of all bimodal trials, respectively), thus demonstrating a robust Colavita visual dominance effect. None of the other terms in this analysis of the error data reached significance [both Fs < 1, n.s.]. The RT data from those trials in which only a single response was required were analysed using an ANOVA with the factor of Stimulus Type (Auditory, Visual, or Bimodal) and revealed a significant main effect [F(2, 18) = 17.95, P < 0.001]. Participants responded more rapidly to bimodal targets (512 ms) than to either auditory (570 ms; t(9) = 5.59, P < 0.001) or visual targets (539 ms; t(9) = 3.84, P = 0.004), and more rapidly to visual than to auditory targets (t(9) = 2.79, P = 0.021). 
Finally, an ANOVA performed on the bimodal congruent and incongruent RT data with the factor of Semantic Congruency (Congruent or Incongruent) revealed a significant main effect [F(1, 9) = 38.92, P < 0.001], with participants responding significantly more rapidly to congruent (539 ms) than to incongruent targets (712 ms).
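A within-participants ANOVA with a single two-level factor, such as the Semantic Congruency analysis above, is equivalent to a paired t-test, with F(1, n − 1) = t². The sketch below illustrates this equivalence. The reaction times in it are invented solely for the demonstration and are not data from the study, and the function names are hypothetical.

```python
from statistics import mean, stdev


def paired_t(a, b):
    """Paired-samples t statistic for two equal-length lists."""
    diffs = [x - y for x, y in zip(a, b)]
    return mean(diffs) / (stdev(diffs) / len(diffs) ** 0.5)


def rm_anova_f_two_levels(a, b):
    """F(1, n - 1) for a one-way repeated-measures ANOVA with two levels."""
    n = len(a)
    grand = mean(a + b)
    subject_means = [(x + y) / 2 for x, y in zip(a, b)]
    # Variability between the two conditions (1 degree of freedom).
    ss_condition = n * ((mean(a) - grand) ** 2 + (mean(b) - grand) ** 2)
    # Variability between participants, removed from the error term.
    ss_subjects = 2 * sum((m - grand) ** 2 for m in subject_means)
    ss_total = sum((v - grand) ** 2 for v in a + b)
    ss_error = ss_total - ss_condition - ss_subjects
    return ss_condition / (ss_error / (n - 1))


if __name__ == "__main__":
    # Hypothetical mean RTs (ms) for ten participants; NOT the study's data.
    congruent = [530, 512, 561, 498, 547, 520, 575, 540, 533, 555]
    incongruent = [701, 690, 742, 665, 730, 688, 760, 715, 699, 728]
    t = paired_t(incongruent, congruent)
    f = rm_anova_f_two_levels(incongruent, congruent)
    print(t ** 2, f)  # the two values agree up to floating-point error
```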

References

Baylis GC, Simon SL, Baylis LL, Rorden C (2002) Visual extinction with double simultaneous stimulation: what is simultaneous? Neuropsychologia 40:1027–1034

Bertelson P, de Gelder B (2004) The psychology of multimodal perception. In: Spence C, Driver J (eds) Crossmodal space and crossmodal attention. Oxford University Press, Oxford, pp 141–177

Callan DE, Callan AM, Kroos C, Vatikiotis-Bateson E (2001) Multimodal contribution to speech perception revealed by independent component analysis: a single-sweep EEG case study. Cogn Brain Res 10:349–353

Callan DE, Jones JA, Munhall K, Callan AM, Kroos C, Vatikiotis-Bateson E (2003) Neural processes underlying perceptual enhancement by visual speech gestures. Neuroreport 14:2213–2218

Calvert GA, Spence C, Stein BE (eds) (2004) The handbook of multisensory processes. MIT, Cambridge, MA

Colavita FB (1974) Human sensory dominance. Percept Psychophys 16:409–412

Colavita FB, Tomko R, Weisberg D (1976) Visual prepotency and eye orientation. Bull Psychon Soc 8:25–26

Colavita FB, Weisberg D (1979) A further investigation of visual dominance. Percept Psychophys 25:345–347

Driver J, Spence C (2000) Multisensory perception: beyond modularity and convergence. Curr Biol 10:R731–R735

Egeth HE, Sager LC (1977) On the locus of visual dominance. Percept Psychophys 22:77–86

Frick RW (1995) Accepting the null hypothesis. Mem Cognit 23:132–138

Hay JC, Pick HLJ, Ikeda K (1965) Visual capture produced by prism spectacles. Psychon Sci 2:215–216

Heron J, Whitaker D, McGraw PV (2004) Sensory uncertainty governs the extent of audio-visual interaction. Vision Res 44:2875–2884

Howard IP, Templeton WB (1966) Human spatial orientation. Wiley, New York

Johnson TL, Shapiro KL (1989) Attention to auditory and peripheral visual stimuli: effects of arousal and predictability. Acta Psychol 72:233–245

Koppen C, Spence C (2006) Prior entry and the Colavita effect. Poster presented at the 7th meeting of the IMRF. Trinity College, Dublin, 18–21 June [Abstract no. 111]

Koppen C, Spence C (2007a) Seeing the light: exploring the Colavita visual dominance effect. Exp Brain Res 180:737–754

Koppen C, Spence C (2007b) Spatial coincidence modulates the Colavita visual dominance effect. Neurosci Lett 417:107–111

Koppen C, Spence C (2007c) Assessing the role of stimulus probability on the Colavita visual dominance effect. Neurosci Lett 418:266–271

Laurienti PJ, Kraft RA, Maldjian JA, Burdette JH, Wallace MT (2004) Semantic congruence is a critical factor in multisensory behavioral performance. Exp Brain Res 158:405–414

Lavie N (2005) Distracted and confused? Selective attention under load. Trends Cogn Sci 9:75–82

Marcell ME, Borella D, Greene M, Kerr E, Rogers S (2000) Confrontation naming of environmental sounds. J Clin Exp Neuropsychol 22:830–864

McGurk H, MacDonald J (1976) Hearing lips and seeing voices. Nature 264:746–748

McRae K, de Sa VR, Seidenberg MS (1997) On the nature and scope of featural representations of word meaning. J Exp Psychol Gen 126:99–130

Miller JO (1982) Divided attention: evidence for coactivation with redundant signals. Cogn Psychol 14:247–279

Molholm S, Ritter W, Javitt DC, Foxe JJ (2004) Multisensory visual-auditory object recognition in humans: a high-density electrical mapping study. Cereb Cortex 14:452–465

Molholm S, Ritter W, Murray MM, Javitt DC, Schroeder CE, Foxe JJ (2002) Multisensory auditory-visual interactions during early sensory processing in humans: a high-density electrical mapping study. Cogn Brain Res 14:121–134

Morein-Zamir S, Soto-Faraco S, Kingstone A (2003) Auditory capture of vision: examining temporal ventriloquism. Cogn Brain Res 17:154–163

Partan S, Marler P (1999) Communication goes multimodal. Science 283:1272–1273

Quinlan P (2000) The ‘late’ locus of visual dominance. Abstr Psychon Soc 5:64

Radeau M, Bertelson P (1977) Adaptation to auditory-visual discordance and ventriloquism in semirealistic situations. Percept Psychophys 22:137–146

Recanzone GH (2003) Auditory influences on visual temporal rate perception. J Neurophysiol 89:1078–1093

Rosenblum LD, Wuestefeld AP, Anderson KL (1996) Auditory reachability: an affordance approach to the perception of sound source distance. Ecol Psychol 8:1–24

Schneider W, Eschman A, Zuccolotto A (2002a) E-prime user’s guide. Psychology Software Tools, Pittsburgh

Schneider W, Eschman A, Zuccolotto A (2002b) E-prime reference guide. Psychology Software Tools, Pittsburgh

Sekiyama K, Kanno I, Miura S, Sugita Y (2003) Auditory-visual speech perception examined by fMRI and PET. Neurosci Res 47:277–287

Shams L, Kamitani Y, Shimojo S (2000) What you see is what you hear: sound induced visual flashing. Nature 408:788

Shimojo S, Shams L (2001) Sensory modalities are not separate modalities: plasticity and interactions. Curr Opin Neurobiol 11:505–509

Sinnett S, Spence C, Soto-Faraco S (2007) Visual dominance and attention: the Colavita effect revisited. Percept Psychophys

Slutsky DA, Recanzone GH (2001) Temporal and spatial dependency of the ventriloquism effect. Neuroreport 12:7–10

Snodgrass JG, Vanderwart M (1980) A standardized set of 260 pictures: norms for name agreement, image agreement, familiarity, and visual complexity. J Exp Psychol Hum Learn Mem 6:174–215

Spence C (2007) Audiovisual multisensory integration. Acoust Sci Technol 28:61–70

Stein BE, Meredith MA (1993) The merging of the senses. MIT, Cambridge, MA

Taylor KI, Moss HE, Stamatakis EA, Tyler LK (2006) Binding crossmodal object features in perirhinal cortex. Proc Natl Acad Sci USA 103:8239–8244

Tyler LK, Moss HE (2001) Towards a distributed account of conceptual knowledge. Trends Cogn Sci 5:244–252

Vatakis A, Spence C (2007a) Crossmodal binding: evaluating the ‘unity assumption’ using audiovisual speech. Percept Psychophys 69:744–756

Vatakis A, Spence C (2007b) Evaluating the influence of the ‘unity assumption’ for the temporal perception of realistic audiovisual stimuli. Acta Psychol doi:10.1016/j.actpsy.2006.12.002

Vroomen J, Keetels M (2006) The spatial constraint in intersensory pairing: no role in temporal ventriloquism. J Exp Psychol Hum Percept Perform 32:1063–1071

Wallace MT, Roberson GE, Hairston WD, Stein BE, Vaughan JW, Schirillo JA (2004) Unifying multisensory signals across time and space. Exp Brain Res 158:252–258

Watanabe K, Shimojo S (1998) Attentional modulation in perception of visual motion events. Perception 27:1041–1054

Welch RB (1999) Meaning, attention, and the “unity assumption” in the intersensory bias of spatial and temporal perceptions. In: Aschersleben G, Bachmann T, Müsseler J (eds) Cognitive contributions to the perception of spatial and temporal events. Elsevier Science, B.V., Amsterdam, pp 371–387

Welch RB, DuttonHurt LD, Warren DH (1986) Contributions of audition and vision to temporal rate perception. Percept Psychophys 39:294–300

Welch RB, Warren DH (1980) Immediate perceptual response to intersensory discrepancy. Psychol Bull 88:638–667

Welch RB, Warren DH (1986) Intersensory interactions. In: Boff KR, Kaufman L, Thomas JP (eds) Handbook of perception and performance, vol 1. Sensory processes and perception. Wiley, New York, pp 25-1–25-36

Zampini M, Guest S, Shore DI, Spence C (2005) Audio-visual simultaneity judgments. Percept Psychophys 67:531–544

Acknowledgements

C. K. was supported by a Departmental Studentship from the Department of Experimental Psychology, University of Oxford. Correspondence regarding this article should be addressed to C. K., at the Department of Experimental Psychology, University of Oxford, South Parks Road, Oxford, OX1 3UD. E-mail: camille.koppen@psy.ox.ac.uk.

Cite this article

Koppen, C., Alsius, A. & Spence, C. Semantic congruency and the Colavita visual dominance effect. Exp Brain Res 184, 533–546 (2008). https://doi.org/10.1007/s00221-007-1120-z

DOI: https://doi.org/10.1007/s00221-007-1120-z