Abstract

We study independent long-range percolation on \(\mathbb {Z}^d\) for all dimensions d, where the vertices u and v are connected with probability 1 for \(\Vert u-v\Vert _\infty =1\) and with probability \(p(\beta ,\{u,v\})=1-e^{-\beta \int _{u+\left[ 0,1\right) ^d} \int _{v+\left[ 0,1\right) ^d} \frac{1}{\Vert x-y\Vert _2^{2d}}d x d y } \approx \frac{\beta }{\Vert u-v\Vert _2^{2d}}\) for \(\Vert u-v\Vert _\infty \ge 2\). Let \(u \in \mathbb {Z}^d\) be a point with \(\Vert u\Vert _\infty =n\). We show that both the graph distance \(D(\textbf{0},u)\) between the origin \(\textbf{0}\) and u and the diameter of the box \(\{0,\ldots , n\}^d\) grow like \(n^{\theta (\beta )}\), where \(0<\theta (\beta ) < 1\). We also show that the graph distance and the diameter of boxes have the same asymptotic growth when two vertices u, v with \(\Vert u-v\Vert _2 > 1\) are connected with a probability that is close enough to \(p(\beta ,\{u,v\})\). Furthermore, we determine the asymptotic behavior of \(\theta (\beta )\) for large \(\beta \), and we discuss the tail behavior of \(\frac{D(\textbf{0},u)}{\Vert u\Vert _2^{\theta (\beta )}}\).

1 Introduction

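Consider independent long-range bond percolation on \(\mathbb {Z}^d\) where all edges \(\{u,v\}\) with \(\Vert u-v\Vert _\infty =1\) are open and an edge \(\{u,v\}\) with \(\Vert u-v\Vert _\infty \ge 2\) is open with probability

\[ p(\beta ,\{u,v\}) = 1-e^{-\beta \int _{u+\mathcal {C}} \int _{v+\mathcal {C}} \frac{1}{\Vert x-y\Vert ^{2d}} \textrm{d}x \textrm{d}y }, \]
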
where \(\mathcal {C}{:}{=}\left[ 0,1\right) ^d\), \(\beta \ge 0\), and where we use \(\Vert \cdot \Vert \) for the Euclidean norm of a vector. We call the corresponding probability measure \(\mathbb {P}_\beta \) and denote its expectation by \(\mathbb {E}_\beta \). The resulting graph is clearly connected and the graph distance D(u, v) between two points \(u,v\in \mathbb {Z}^d\) satisfies \(D(u,v) \le \Vert u-v \Vert _\infty \). We are interested in the scaling of the typical distance of two points \(u,v \in \mathbb {Z}^d\) and the scaling of the diameter of boxes \(\left\{ 0,\ldots ,N\right\} ^d\). In [16] it is proven that the typical diameter of such a box grows at most polynomially, with a power strictly smaller than 1. More precisely, Coppersmith, Gamarnik, and Sviridenko proved that for all \(\beta >0\) there exists an exponent \(\theta ^\prime = \theta ^\prime (\beta ) < 1 \) such that \(\lim _{N \rightarrow \infty } \mathbb {P}_\beta \left( \text {Diam}\left( \left\{ 0,\ldots ,N\right\} ^d\right) \le N^{\theta ^\prime }\right) = 1\). However, the authors do not give any polynomial lower bound for dimensions \(d\ge 2\). A polynomial lower bound was already conjectured in [7, 11], and a matching lower bound was later proven in one dimension: In [20] Ding and Sly showed that for the connection probability \(p(\beta , \{u,v\})\) given by \(p(\beta , \{u,v\})= \frac{\beta }{|u-v|^2}\wedge 1\) for \(|u-v|\ge 2\) and \(p(\beta , \{u,v\})= 1\) for \(|u-v|= 1\), the typical distance between the two points \(0,n \in \mathbb {N}\) and the diameter of \(\{0, \ldots ,n\}\) both grow like \(n^\theta \) for some \(\theta \in \left( 0,1\right] \), where \(\theta =1\) if and only if \(\beta =0\). More precisely, they proved that

\[ D(0,n) \approx _P n^{\theta } \quad \text {and} \quad \text {Diam}\left( \{0,\ldots ,n\}\right) \approx _P n^{\theta }, \]
where the notation \(A(n)\approx _P B(n)\) means that for all \(\varepsilon >0 \) there exist \(0<c<C<\infty \) such that \(\mathbb {P}\left( cB(n) \le A(n) \le CB(n)\right) > 1-\varepsilon \) for all \(n \in \mathbb {N}\). In this paper, we prove an analogous result for all dimensions.

1.1 Main results

Theorem 1.1

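For all dimensions d and all \(\beta >0\), there exists an exponent \(\theta = \theta (d,\beta ) \in (0,1)\) such that

\[ D(\textbf{0},u) \approx _P \Vert u\Vert ^{\theta (d,\beta )} \]

and

\[ \text {Diam}\left( \left\{ 0,\ldots ,n\right\} ^d\right) \approx _P n^{\theta (d,\beta )}. \]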
As we consider the dimension d as fixed, we also write \(\theta (\beta )\) for \(\theta (d,\beta )\), although \(\theta (d,\beta )\) depends on the dimension. We write \(\textbf{0}\) for the vector with all entries equal to 0 and the notation \(A(u)\approx _P B(u)\) means that for all \(\varepsilon >0 \) there exist \(0<c<C<\infty \) such that \(\mathbb {P}_\beta \left( cB(u) \le A(u) \le CB(u)\right) > 1-\varepsilon \) for all \(u \in \mathbb {Z}^d\). The inclusion probability \(p(\beta ,\{u,v\}) {:}{=}1-e^{-\beta \int _{u+\mathcal {C}} \int _{v+\mathcal {C}} \frac{1}{\Vert x-y\Vert ^{2d}} \textrm{d}x \textrm{d}y }\) is only one possible choice for a function that decays asymptotically like \(\frac{\beta }{\Vert u-v\Vert ^{2d}}\). In Sect. 7, we will show the same results for other choices of such functions. Examples of inclusion probabilities we consider are \(\frac{\beta }{\Vert u-v\Vert ^{2d}}\wedge 1\) and \(1-e^{-\tfrac{\beta }{\Vert u-v\Vert ^{2d}}}\).
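As a small numerical illustration (our own sketch, not part of the paper's argument), in dimension d = 1 the double integral in \(p(\beta ,\{u,v\})\) has a closed form: a direct calculation gives \(\int _0^1\int _k^{k+1}(y-x)^{-2}\textrm{d}y\textrm{d}x = \log \left( \frac{k^2}{k^2-1}\right) \) for an integer gap \(k\ge 2\), so that \(p(\beta ,\{u,v\}) = 1-(1-k^{-2})^\beta \). The Python snippet below compares this with the two alternative choices mentioned above; all function names are hypothetical.

```python
import math


def p_integral_1d(beta: float, k: int) -> float:
    """p(beta, {u, v}) in d = 1 for a gap k = |u - v| >= 2.

    Uses the closed form  int_0^1 int_k^{k+1} (y - x)^(-2) dy dx = -log(1 - 1/k^2),
    so p = 1 - exp(beta * log(1 - 1/k^2)) = 1 - (1 - 1/k^2)^beta.
    expm1/log1p avoid cancellation when k is large.
    """
    return -math.expm1(beta * math.log1p(-1.0 / k**2))


def p_truncated(beta: float, k: int) -> float:
    """Alternative choice beta / k^2, capped at 1."""
    return min(beta / k**2, 1.0)


def p_exponential(beta: float, k: int) -> float:
    """Alternative choice 1 - exp(-beta / k^2)."""
    return -math.expm1(-beta / k**2)


def ratios_to_power_law(beta: float, k: int) -> tuple:
    """Ratio of each choice to the common asymptotic profile beta / k^2."""
    target = beta / k**2
    return tuple(f(beta, k) / target for f in (p_integral_1d, p_truncated, p_exponential))


if __name__ == "__main__":
    for k in (2, 10, 100, 1000):
        print(k, ratios_to_power_law(2.0, k))
```

All three ratios tend to 1 as the gap grows, which is the sense in which these inclusion probabilities are interchangeable; they differ noticeably only for small gaps.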

The exponent \(\theta =\theta (\beta )\) defined in Theorem 1.1 arises through a subadditivity argument (see Sect. 2.2 below) and its precise value is not known to us. However, we determine the asymptotic behavior of the function \(\theta (\beta )\) for large \(\beta \).

Theorem 1.2

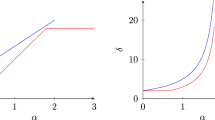

For all dimensions d, there exist constants \(0<c<C<\infty \) such that

\[ \frac{c}{\log (\beta )} \le \theta (\beta ) \le \frac{C}{\log (\beta )} \]

for all \(\beta \ge 2\).

1.2 The continuous model

For \(\beta >0\), the described discrete percolation model has a self-similarity that comes from a coupling with the underlying continuous model, which we now describe for all dimensions. This will also explain our, at first sight complicated, choice of the connection probability. Consider a Poisson point process \(\tilde{\mathcal {E}}\) on \(\mathbb {R}^d \times \mathbb {R}^d\) with intensity \(\frac{\beta }{2 \Vert t-s\Vert _2^{2d}}\). Define the symmetrized version \(\mathcal {E}\) by \(\mathcal {E}{:}{=}\{(t,s) \in \mathbb {R}^d \times \mathbb {R}^d: (s,t) \in \tilde{\mathcal {E}}\} \cup \tilde{\mathcal {E}}\). For \(u,v \in \mathbb {Z}^d\) with \(\Vert u-v\Vert _\infty \ge 1\) we put an edge between u and v if and only if \(\left( \left( u+\mathcal {C}\right) \times \left( v+\mathcal {C}\right) \right) \cap \mathcal {E}\ne \emptyset \), where we use the notation \(\mathcal {C}= \left[ 0,1\right) ^d\). The cardinality of \(\left( \left( u+\mathcal {C}\right) \times \left( v+\mathcal {C}\right) \right) \cap \tilde{\mathcal {E}}\) is a random variable with Poisson distribution and parameter \(\int _{u+\mathcal {C}} \int _{v+\mathcal {C}} \frac{\beta }{2 \Vert t-s\Vert ^{2d}} \textrm{d}s \textrm{d}t\), and the same holds for \(\left( \left( v+\mathcal {C}\right) \times \left( u+\mathcal {C}\right) \right) \cap \tilde{\mathcal {E}}\). Since u and v are not connected if and only if \(\tilde{\mathcal {E}}\) avoids both of these disjoint sets, the properties of Poisson processes show that the probability that \(u \not \sim v\) equals

\[ e^{-2\int _{u+\mathcal {C}} \int _{v+\mathcal {C}} \frac{\beta }{2 \Vert t-s\Vert ^{2d}} \textrm{d}s \textrm{d}t} = e^{-\beta \int _{u+\mathcal {C}} \int _{v+\mathcal {C}} \frac{1}{\Vert t-s\Vert ^{2d}} \textrm{d}s \textrm{d}t}, \]

which is exactly the probability that \(u \not \sim v\) under the measure \(\mathbb {P}_\beta \). Note that for \(\{u,v\}\) with \(\Vert u-v\Vert _\infty =1\) we have \(\int _{u+\mathcal {C}} \int _{v+\mathcal {C}} \frac{\beta }{ \Vert t-s\Vert ^{2d}} \textrm{d}s \textrm{d}t = \infty \). Hence all edges of the form \(\{u,v\}\) with \(\Vert u-v\Vert _\infty =1\) are open. The construction with the Poisson process also implies that the presence of different bonds is independent, and thus the law of the random graph constructed above equals \(\mathbb {P}_\beta \). The chosen inclusion probabilities have several advantages. First, the resulting model is invariant under translations and under reflections of coordinates, i.e., when we change the i-th component \(p_i(x)\) of all \(x\in \mathbb {Z}^d\) to \(-p_i(x)\). Furthermore, the model has the following self-similarity: For a vector \(u= (p_1(u),\ldots ,p_d(u))\in \mathbb {Z}^d\) and \(n\in \mathbb {N}_{>0}\) we define the translated boxes \(V_u^n {:}{=}\prod _{i=1}^{d} \{p_i(u) n, \ldots , (p_i(u)+1)n-1\} = nu + \prod _{i=1}^{d} \{0,\ldots , n-1\} \). Then for all points \(u,v \in \mathbb {Z}^d\), and all \(n\in \mathbb {N}_{>0}\) one has

\[ \mathbb {P}_\beta \left( V_u^n \sim V_v^n\right) = \mathbb {P}_\beta \left( u \sim v\right) , \]
which shows the self-similarity of the model. Also observe that for any \(\alpha \in \mathbb {R}_{> 0}\) the process \(\alpha \tilde{\mathcal {E}} {:}{=}\left\{ (x,y) \in \mathbb {R}^d \times \mathbb {R}^d: \left( \frac{1}{\alpha }x, \frac{1}{\alpha }y \right) \in \tilde{\mathcal {E}} \right\} \) is again a Poisson point process with intensity \(\frac{\beta }{2 \Vert x-y\Vert ^{2d}}\).
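The self-similarity \(\mathbb {P}_\beta \left( V_u^n \sim V_v^n\right) = \mathbb {P}_\beta \left( u \sim v\right) \), which is used again in the proof of Lemma 2.1, can be checked numerically in d = 1. The reason it holds is that the union of the unit cubes \(x+\mathcal {C}\), \(x\in V_u^n\), is \(n(u+\mathcal {C})\), and the substitution \((x,y)\mapsto (nx,ny)\) multiplies \(\textrm{d}x\textrm{d}y\) by \(n^{2d}\) and the integrand \(\Vert x-y\Vert ^{-2d}\) by \(n^{-2d}\). The sketch below (our own; function names hypothetical) sums the unit-interval integrals, using the closed form \(\log \left( \frac{j^2}{j^2-1}\right) \) for two unit intervals at integer gap \(j\ge 2\), and compares the block probability with the single-edge probability.

```python
import math


def unit_pair_integral_1d(j: int) -> float:
    """Integral of (t - s)^(-2) over [x, x+1) x [x+j, x+j+1) for an integer gap j >= 2.

    Closed form: log(j^2 / (j^2 - 1)) = -log(1 - 1/j^2).
    """
    return -math.log1p(-1.0 / (j * j))


def point_connection_prob_1d(beta: float, k: int) -> float:
    """P_beta(u ~ v) for |u - v| = k >= 2 in d = 1."""
    return -math.expm1(-beta * unit_pair_integral_1d(k))


def block_connection_prob_1d(beta: float, n: int, k: int) -> float:
    """P_beta(V_0^n ~ V_k^n) in d = 1 for k >= 2.

    The blocks are connected iff at least one of the independent edges {x, y}
    with x in {0, ..., n-1} and y in {kn, ..., kn+n-1} is open, so the
    exponents of the individual non-connection probabilities add up.
    """
    total = 0.0
    for x in range(n):
        for y in range(k * n, (k + 1) * n):
            total += unit_pair_integral_1d(y - x)  # y - x >= (k - 1) n + 1 >= 2
    return -math.expm1(-beta * total)


if __name__ == "__main__":
    for n in (1, 5, 25):
        print(n, block_connection_prob_1d(1.0, n, 2), point_connection_prob_1d(1.0, 2))
```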

1.3 Notation

We use the notation \(e_i\) for the i-th standard unit vector in \(\mathbb {R}^d\). For a vector \(y \in \mathbb {R}^d\), we write \(p_i(y)\) for the i-th coordinate of y, i.e., \(p_i(y) = \langle e_i, y \rangle \). We also use the notation \(\textbf{0}\) for the vector with all entries equal to 0 and the notation \(\textbf{1}\) for the vector with all entries equal to 1. When we write \(\Vert u\Vert \) we always mean the 2-norm of the vector u. We write \(\mathcal {S}_k\) for the set of points \(\left\{ x \in \mathbb {Z}^d: \Vert x\Vert _\infty = k \right\} \) and \(\mathcal {S}_{\ge k}\) for the set \(\left\{ x \in \mathbb {Z}^d: \Vert x\Vert _\infty \ge k \right\} \). For the closed ball of radius r around \(x \in \mathbb {Z}^d\) in the \(\infty \)-norm we use the notation \(B_r(x)\), i.e., \(B_r(x)=\left\{ y \in \mathbb {Z}^d: \Vert x-y\Vert _\infty \le r \right\} \). For a vector \(u\in \mathbb {Z}^d\) and \(n\in \mathbb {N}\), we write

\[ V_u^n {:}{=}nu + \left\{ 0,\ldots ,n-1\right\} ^d = \prod _{i=1}^{d} \left\{ p_i(u) n, \ldots , (p_i(u)+1)n-1\right\} \]
for the box of side length n shifted by nu. When we want to emphasize that we work on certain subgraphs \(A\subset \mathbb {Z}^d\) we will write \(D_A \left( x,y\right) \) for the graph distance inside the set A, i.e., using only edges with both endpoints inside A. Whenever we write \(\text {Diam}(A)\) for some set \(A\subset \mathbb {Z}^d\) we always mean the inner diameter of this set, i.e., \(\text {Diam}(A) = \max _{x,y \in A} D_A(x,y)\). For a graph (V, E) we think of the percolation configuration as a random element \(\omega \in \{0,1\}^E\), where we say that the edge e exists or is open or present if \(\omega (e)=1\). For \(\omega \in \left\{ 0,1\right\} ^E\) and \(e \in E\), we define the configuration \(\omega ^{e-} \in \{0,1\}^E\) by

\[ \omega ^{e-}(f) = {\left\{ \begin{array}{ll} \omega (f) & \text {if } f \ne e,\\ 0 & \text {if } f = e, \end{array}\right. } \]
so this is the configuration where we deleted the edge e. For \(\omega \in \{0,1\}^E\), we also write \(D(u,v;\omega )\) for the graph distance between u and v in the environment represented by \(\omega \).

1.4 Related work

The scaling of the graph distance, also called chemical distance or hop-count distance, is a central characteristic of a random graph and has also been examined for many different models of percolation, see for example [1, 3, 7, 10,11,12,13, 16, 18,19,20,21, 24, 25, 27, 32]. For all dimensions d, one can also consider the long-range percolation model with connection probability asymptotic to \(\frac{\beta }{\Vert u-v\Vert ^s}\). When varying the parameter s, there are a total of 5 different regimes, with the transitions happening at \(s=d\) and \(s=2d\). The value of the first transition \(s=d\) is very natural, as the resulting random graph is locally finite if and only if \(s>d\). For \(s<d\) the graph distance between two points is at most \(\lceil \frac{d}{d-s} \rceil \) [8], whereas for \(s=d\), the diameter of the box \(\{0,\ldots ,n\}^d\) is of order \(\frac{\log (n)}{\log (\log (n))}\) [16, 37]. In [7, 11,12,13] the authors proved that for \(d<s<2d\) the typical distance between two points of Euclidean distance n grows like \(\log (n)^\Delta \), where \(\Delta ^{-1} = \log _2 \left( \frac{2d}{s}\right) \). The behavior of the typical distance for long-range percolation on \(\mathbb {Z}^d\) also changes at \(s=2d\). The reason why \(s=2d\) is a critical value is that for \(s=2d\) the graph is self-similar, as described in Sect. 1.2. For \(s>2d\) the graph distance grows at least linearly in the Euclidean distance of two points, as proven in [10]. In [20] it is shown that the typical distance for \(d=1, s=2\) grows like \(n^\theta \) for some \(\theta \in (0,1)\). For \(d\ge 2\) and \(s=2d\) the authors in [16] proved a polynomial upper bound on the graph distance, but no lower bound. In this paper, we show a matching polynomial lower bound for all dimensions d, similar to the results of [20] in one dimension. 
For fixed dimension d, further properties of the function \(\beta \mapsto \theta (\beta )\) are considered in the companion paper [4], where it is shown that the exponent is continuous and strictly decreasing in \(\beta \). Together with the fact that \(\theta (0)=1\) and Theorem 1.2, this shows that the long-range percolation model for \(s=2d\) interpolates between linear growth and subpolynomial growth as \(\beta \) goes from 0 to \(+\infty \).

Another line of research is to investigate what happens when one drops the assumption that \(p(\beta ,\{u,v\})=1\) for all nearest-neighbor edges \(\{u,v\}\) and instead assigns i.i.d. random variables to the nearest-neighbor edges. For \(d=1\), there is a change of behavior at \(s=2\). As proven in [2, 35] or [22], an infinite cluster cannot emerge for \(s > 2\), nor for \(s=2, \beta \le 1\), no matter how small \(\mathbb {P}\left( k \not \sim k+1\right) \) is. On the other hand, an infinite cluster can emerge for \(s<2\) and for \(s=2,\beta > 1\) (see [33]). In [2] the authors proved that there is a discontinuity in the percolation density for \(s=2\), contrary to the situation for \(s<2\), as proven in [9, 29]. For models in which an infinite cluster exists, the behavior of the percolation model at and near criticality is also a well-studied question (cf. [5, 6, 9, 14, 17, 29,30,31]). It is currently not known how the typical distance in long-range percolation grows for \(s=2d\) and \(p(\beta ,\{u,v\})<1\) for nearest-neighbor edges \(\{u,v\}\), but we conjecture polynomial growth in the Euclidean distance in this case as well, whenever an infinite cluster exists.

2 Asymptotic Behavior of the Distance Exponent for Large \(\beta \)

In this section, we prove Theorem 1.2. On the way, in Sect. 2.1, we prove several elementary bounds on connection probabilities between points and boxes in the long-range percolation graph, which will also be used in later sections. In Sect. 2.2, we prove a submultiplicative structure of the expected distance between two points, which yields the existence of a distance exponent as well as the inverse logarithmic upper bound in Theorem 1.2. In Sect. 2.3, we show that, typically, vertices inside a box are not connected to more than one far-away box. This is needed in Sect. 2.4 to prove strict positivity of the distance exponent \(\theta (\beta )\) and the lower bound on \(\theta (\beta )\) in Theorem 1.2.

2.1 Bounds on connection probabilities

Lemma 2.1

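For all \(\beta \ge 0\), all \(n\in \mathbb {N}\), and all \(u,v\in \mathbb {Z}^d\) with \(\Vert u-v\Vert _\infty \ge 2\), one has the upper bound

\[ \mathbb {P}_\beta \left( u\sim v\right) = \mathbb {P}_\beta \left( V_u^n \sim V_v^n\right) \le \frac{\beta }{\left( \Vert u-v\Vert _\infty -1\right) ^{2d}}, \qquad (3) \]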
and one has the lower bound

For all \(k\ge 2\) one has

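and for \(m\in \mathbb {N}\), any vertex \(x \in V_\textbf{0}^m\), and a box \(V_w^m\) with \(\Vert w\Vert _\infty \ge 2\) one has

\[ \mathbb {P}_\beta \left( x \sim V_w^m\right) \le \frac{\beta 4^{2d}}{\Vert w\Vert _\infty ^{2d} m^d}. \qquad (6) \]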
Proof

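The equality \(\mathbb {P}_\beta \left( u\sim v\right) = \mathbb {P}_\beta \left( V_u^n\sim V_v^n\right) \) is clear from the discussion about the underlying continuous model in Sect. 1.2. We start with the proof of (3). Applying the inequalities \(1-e^{-x} \le x\) and \(\Vert \cdot \Vert _2 \ge \Vert \cdot \Vert _\infty \), and using that \(\Vert x-y\Vert _\infty \ge \Vert u-v\Vert _\infty -1\) for all \(x\in u+\mathcal {C}\) and \(y \in v+\mathcal {C}\), we get that for two vertices u, v with \(\Vert u-v\Vert _\infty \ge 2\)

\[ \mathbb {P}_\beta \left( u\sim v\right) \le \beta \int _{u+\mathcal {C}} \int _{v+\mathcal {C}} \frac{1}{\Vert x-y\Vert ^{2d}} \textrm{d}x \textrm{d}y \le \beta \int _{u+\mathcal {C}} \int _{v+\mathcal {C}} \frac{1}{\Vert x-y\Vert _\infty ^{2d}} \textrm{d}x \textrm{d}y \le \frac{\beta }{\left( \Vert u-v\Vert _\infty -1\right) ^{2d}}. \qquad (7) \]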
In order to bound the connection probability between u and v from below, first observe that \(\Vert x\Vert _2 \le \Vert x\Vert _1 \le d \Vert x\Vert _\infty \) for all \(x\in \mathbb {R}^d\). Thus we have

and this already gives

as \(1-e^{-x} \ge \frac{x}{2}\wedge \frac{1}{2}\) for all \(x \in \mathbb {R}_{\ge 0}\). So we showed (4).

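For each point \(x\in \mathcal {S}_k = \{z \in \mathbb {Z}^d: \Vert z\Vert _\infty = k\}\), at least one of its coordinates \(p_i(x)\) equals \(-k\) or \(+k\). All other coordinates can be any integer between \(-k\) and \(+k\). As there are 2d choices for the index i together with the sign, and at most \(2k+1\) choices for each of the remaining \(d-1\) coordinates, we can bound the cardinality of the set \(\mathcal {S}_k\) by \(\left| \mathcal {S}_k\right| \le 2d (2k+1)^{d-1}\). In (7) we showed that \(\mathbb {P}_\beta \left( \textbf{0}\sim x \right) \le \frac{\beta }{(\Vert x\Vert _\infty -1)^{2d}}\). This already implies that for \(k \ge 2\)

\[ \sum _{x\in \mathcal {S}_k} \mathbb {P}_\beta \left( \textbf{0}\sim x\right) \le 2d (2k+1)^{d-1} \frac{\beta }{(k-1)^{2d}} \]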
and thus also

which already proves (5). For \(m \in \mathbb {N}\), a vertex \(x \in V_\textbf{0}^m\), and a box \(V_w^m\) with \(\Vert w\Vert _\infty \ge 2\), we have for all \(z \in V_w^m\) that \(\Vert x-z\Vert _\infty \ge (\Vert w\Vert _\infty -1)m\). This implies

which shows (6). \(\quad \square \)
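The cardinality bound \(\left| \mathcal {S}_k\right| \le 2d (2k+1)^{d-1}\) used in this proof can be checked by brute force for small k and d. For \(k\ge 1\) the exact value is \(\left| \mathcal {S}_k\right| = (2k+1)^d-(2k-1)^d\) (the \(\infty \)-ball of radius k minus the ball of radius \(k-1\)), and the mean value theorem turns this into the stated bound. A minimal Python sketch (function names hypothetical):

```python
from itertools import product


def sphere_size(k: int, d: int) -> int:
    """Brute-force |S_k| = #{x in Z^d : ||x||_inf = k}."""
    return sum(
        1
        for x in product(range(-k, k + 1), repeat=d)
        if max(abs(c) for c in x) == k
    )


def sphere_size_exact(k: int, d: int) -> int:
    """(2k+1)^d - (2k-1)^d: the inf-ball of radius k minus the ball of radius k-1 (k >= 1)."""
    return (2 * k + 1) ** d - (2 * k - 1) ** d


def sphere_size_bound(k: int, d: int) -> int:
    """The bound 2d (2k+1)^(d-1) from the proof of Lemma 2.1."""
    return 2 * d * (2 * k + 1) ** (d - 1)


if __name__ == "__main__":
    for d in (1, 2, 3):
        print(d, [(sphere_size(k, d), sphere_size_bound(k, d)) for k in range(1, 5)])
```

In d = 1 the bound is attained (both sides equal 2), so the constant 2d cannot be improved in general.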

We will often condition on the event that two blocks \(V_u^n, V_v^n\) are connected. So if we write X for the number of edges between them, we condition on the event \(X\ge 1\). This conditioning clearly increases the expected number of edges between \(V_u^n\) and \(V_v^n\), but by at most \(+1\), as shown in the next lemma.

Lemma 2.2

Let \(u,v\in \mathbb {Z}^d\) with \(u\ne v\) and let X be the number of edges between the blocks \(V_u^n\) and \(V_v^n\). Then for all \(\beta >0\)

\[ \mathbb {E}_\beta \left[ X \mid X \ge 1\right] \le 1 + \mathbb {E}_\beta \left[ X\right] . \]
Proof

The random variable X is a sum of independent Bernoulli random variables and we prove the statement for all random variables of this type. We use the notation \(X= \sum _{i=1}^{m} X_i\), where \(m\in \mathbb {N}\), and \((X_i)_{i\in \{1,\ldots , m\}}\) are independent Bernoulli random variables. For \(i \in \{1,\ldots ,m\}\), let \(A_i\) be the event that \(X_i = 1\) and \(X_j = 0\) for all \(j \in \{1,\ldots , i-1\}\). As \(\{X\ge 1\}\) implies that there is a first index i such that \(X_i=1\), we get that

\[ \{X \ge 1\} = \bigsqcup _{i=1}^{m} A_i , \]
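where the symbol \(\bigsqcup \) means a disjoint union. On the event \(A_i\), we know that \(X_i=1\) and that all the random variables \(X_j\) with \(j<i\) equal 0, but we have no information about the random variables \(X_j\) with \(j>i\), which are independent of \(A_i\). Thus we get that

\[ \mathbb {E}_\beta \left[ X \mid A_i\right] = 1 + \sum _{j=i+1}^{m} \mathbb {E}_\beta \left[ X_j\right] \le 1 + \mathbb {E}_\beta \left[ X\right] . \]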
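Multiplying by \(\mathbb {P}_\beta \left( A_i\right) \) on both sides of this inequality we get that \(\mathbb {E}_\beta \left[ X \mathbb {1}_{A_i}\right] \le \mathbb {P}_\beta \left( A_i \right) \left( 1+\mathbb {E}_\beta \left[ X\right] \right) \). As the events \((A_i)_{i\in \{1,\ldots ,m\}}\) are disjoint, we finally get that

\[ \mathbb {E}_\beta \left[ X \mid X \ge 1\right] = \frac{\sum _{i=1}^{m} \mathbb {E}_\beta \left[ X \mathbb {1}_{A_i}\right] }{\mathbb {P}_\beta \left( X \ge 1\right) } \le \frac{\sum _{i=1}^{m} \mathbb {P}_\beta \left( A_i\right) \left( 1+\mathbb {E}_\beta \left[ X\right] \right) }{\mathbb {P}_\beta \left( X \ge 1\right) } = 1 + \mathbb {E}_\beta \left[ X\right] . \]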
\(\square \)
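Since Lemma 2.2 holds for every finite sum of independent Bernoulli variables, it can be tested exactly on examples. The sketch below (our own; function names hypothetical) computes the law of \(X=\sum _i X_i\) by dynamic programming and compares \(\mathbb {E}\left[ X \mid X\ge 1\right] = \mathbb {E}\left[ X\right] /\mathbb {P}\left( X\ge 1\right) \) with \(1+\mathbb {E}\left[ X\right] \). The success probabilities used in the check are arbitrary test inputs, not the block connection probabilities of the model.

```python
def bernoulli_sum_pmf(ps):
    """Distribution of X = X_1 + ... + X_m for independent X_i ~ Bernoulli(ps[i])."""
    pmf = [1.0]                          # law of the empty sum
    for p in ps:
        new = [0.0] * (len(pmf) + 1)
        for k, mass in enumerate(pmf):
            new[k] += mass * (1.0 - p)   # X_i = 0
            new[k + 1] += mass * p       # X_i = 1
        pmf = new
    return pmf


def conditional_mean_given_positive(ps):
    """Return (E[X | X >= 1], E[X]); requires P(X >= 1) > 0."""
    pmf = bernoulli_sum_pmf(ps)
    mean = sum(k * mass for k, mass in enumerate(pmf))
    p_pos = 1.0 - pmf[0]
    # X * 1{X >= 1} = X, hence E[X | X >= 1] = E[X] / P(X >= 1).
    return mean / p_pos, mean


if __name__ == "__main__":
    print(conditional_mean_given_positive([0.01] * 100))
```

When \(\mathbb {E}\left[ X\right] \) is small, as for far-apart blocks, the conditional mean is close to 1, so conditioning on a connection adds essentially one edge.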

2.2 Submultiplicativity and the upper bound in Theorem 1.2

In this section, we prove the submultiplicative structure in the model in Lemma 2.3. This allows us to define the distance growth exponent \(\theta (\beta )\) and also helps to prove the upper bound on \(\theta (\beta )\) in Theorem 1.2.

Lemma 2.3

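For all dimensions d and all \(\beta \ge 0\) the sequence

\[ \Lambda (n,\beta ) {:}{=}\max _{u,v\in V_\textbf{0}^n} \mathbb {E}_\beta \left[ D_{V_\textbf{0}^n}(u,v)\right] + 1, \quad n\in \mathbb {N}_{>0}, \]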
is submultiplicative and for all \(\beta \ge 0\)

Proof

We show (9) using a renormalization argument. As before, we define \(V_u^n= \prod _{i=1}^d \left\{ p_i(u) n, \ldots ,(p_i(u)+1)n-1\right\} \). The graph \(G^\prime \) obtained by identifying all the vertices in \(V_u^n\) to one vertex r(u) has the same connection probabilities as the original model. An example of this construction is given in Fig. 1. For \(x,y \in \left\{ 0, \ldots ,mn-1\right\} ^d\), say with \(x \in V_u^n\) and \(y\in V_w^n\), we create a path from x to y as follows. First we consider a shortest path \(\mathcal {P}= \left( r(u_0)=r(u), r(u_1), \ldots , r(u_{l-1}), r(u_l) = r(w) \right) \) from r(u) to r(w) in \(G^\prime \), where \(l=D_{G^\prime }(r(u),r(w))\) is the distance between r(u) and r(w) in the renormalized model. Inside \(V_{u_i}^n\), we first fix two vertices \(z_i\) and \(v_i\) such that \(z_i \sim V_{u_{i-1}}^n\) and \(v_i \sim V_{u_{i+1}}^n\); for \(i=0\) set \(z_0=x\) and for \(i=l\) set \(v_l=y\). In case there are several such vertices \(z_i\) and \(v_i\), we choose the one with smallest coordinates, where we weigh the coordinates in decreasing order (any deterministic rule that does not depend on the environment would work here). For each i, there clearly is a path between \(z_i\) and \(v_i\) that is completely inside \(V_{u_i}^n\). As no information has been revealed up to now about the edges with both endpoints inside \(V_{u_i}^n\), the expected distance between \(v_i\) and \(z_i\) inside \(V_{u_i}^n\) is at most

\[ \max _{a,b\in V_\textbf{0}^n} \mathbb {E}_\beta \left[ D_{V_\textbf{0}^n}(a,b)\right] = \Lambda (n,\beta ) - 1 . \]
Now we glue all these paths together to get a path from x to y. To bound the total distance between x and y, note that we have \(l+1\) sets \(V_{u_i}^n\) in which we need to find a path between two vertices. Additionally, we need to add \(+l\) for the steps that we make from \(V_{u_i}^n\) to \(V_{u_{i+1}}^n\) for \(i=0,\ldots ,l-1\). Thus we get that

\[ D_{V_\textbf{0}^{mn}}(x,y) \le l + \sum _{i=0}^{l} D_{V_{u_i}^n}(z_i,v_i). \]
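Taking expectations on both sides of this inequality yields

\[ \mathbb {E}_\beta \left[ D_{V_\textbf{0}^{mn}}(x,y)\right] \le \mathbb {E}_\beta \left[ l\right] + \mathbb {E}_\beta \left[ l+1\right] \left( \Lambda (n,\beta )-1\right) \le \Lambda (m,\beta ) - 1 + \Lambda (m,\beta ) \left( \Lambda (n,\beta )-1\right) = \Lambda (m,\beta )\Lambda (n,\beta ) - 1. \]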
As \(x,y \in \left\{ 0, \ldots ,nm-1\right\} ^d\) were arbitrary we obtain

\[ \Lambda (mn,\beta ) \le \Lambda (m,\beta )\Lambda (n,\beta ), \]
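and as the sequence is submultiplicative we can define

\[ \theta (\beta ) {:}{=}\lim _{k\rightarrow \infty } \frac{\log \left( \Lambda (2^k,\beta )\right) }{\log (2^k)} = \inf _{k\in \mathbb {N}_{>0}} \frac{\log \left( \Lambda (2^k,\beta )\right) }{\log (2^k)}. \]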
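Actually, this limit exists not just along dyadic points of the form \(2^k\), for \(k\in \mathbb {N}\), but even when taking a limit along the integers, i.e.,

\[ \theta (\beta ) = \lim _{n\rightarrow \infty } \frac{\log \left( \Lambda (n,\beta )\right) }{\log (n)}, \]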
which follows from Lemma 4.1 below. As a next step, we want to show that \(\Lambda (n) \ge n^\theta \) for all n. We do this using a proof by contradiction. So assume the contrary, i.e., that for some \(\beta \ge 0\) there exists a natural number N and a \(c<1\) with \(\Lambda (N,\beta )= c N^{\theta (\beta )}\). Using (10) we get that for every integer k

\[ \Lambda (N^k,\beta ) \le \Lambda (N,\beta )^k = c^k N^{k\theta (\beta )} \]
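and thus

\[ \theta (\beta ) = \lim _{k\rightarrow \infty } \frac{\log \left( \Lambda (N^k,\beta )\right) }{\log (N^k)} \le \lim _{k\rightarrow \infty } \frac{k\log (c) + k\theta (\beta ) \log (N)}{k\log (N)} = \theta (\beta ) + \frac{\log (c)}{\log (N)} < \theta (\beta ), \]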
which is a contradiction. Knowing this already gives us that for all positive numbers K we have

\(\square \)
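For a rough numerical feel for \(\Lambda (n,\beta )\) and \(\theta (\beta )\) (an illustration only, not part of the proof), one can simulate the model in d = 1 on \(V_\textbf{0}^n=\{0,\ldots ,n-1\}\), using the closed form \(p(\beta ,\{u,v\})=1-(1-|u-v|^{-2})^\beta \) of the defining integral in one dimension. The sketch below is our own; function names are hypothetical, and as a simplifying assumption it estimates the expected distance between the two end points \(0\) and \(n-1\) only, rather than the maximum over all pairs that enters \(\Lambda (n,\beta )\). The ratio \(\log (\mathbb {E}[D]+1)/\log (n)\) is then only a crude finite-size proxy for \(\theta (\beta )\).

```python
import math
import random
from collections import deque


def connection_prob_1d(beta: float, k: int) -> float:
    """p(beta, {u, v}) in d = 1 for |u - v| = k >= 2, i.e. 1 - (1 - 1/k^2)^beta."""
    return -math.expm1(beta * math.log1p(-1.0 / (k * k)))


def sample_box_graph(n: int, beta: float, rng: random.Random) -> list:
    """Adjacency lists of one sample of the model restricted to {0, ..., n-1}."""
    adj = [[] for _ in range(n)]
    for u in range(n - 1):              # nearest-neighbour edges are always open
        adj[u].append(u + 1)
        adj[u + 1].append(u)
    for u in range(n):
        for v in range(u + 2, n):       # every longer edge is open independently
            if rng.random() < connection_prob_1d(beta, v - u):
                adj[u].append(v)
                adj[v].append(u)
    return adj


def bfs_distance(adj: list, s: int, t: int) -> int:
    """Graph distance between s and t, computed by breadth-first search."""
    dist = [-1] * len(adj)
    dist[s] = 0
    queue = deque([s])
    while queue:
        x = queue.popleft()
        if x == t:
            break
        for y in adj[x]:
            if dist[y] < 0:
                dist[y] = dist[x] + 1
                queue.append(y)
    return dist[t]


def mean_end_to_end(n: int, beta: float, reps: int, seed: int = 0) -> float:
    """Monte Carlo estimate of E[D_{V_0^n}(0, n - 1)] from `reps` samples."""
    rng = random.Random(seed)
    total = sum(bfs_distance(sample_box_graph(n, beta, rng), 0, n - 1) for _ in range(reps))
    return total / reps


def crude_theta(n: int, beta: float, reps: int, seed: int = 0) -> float:
    """log(E[D] + 1) / log(n): a finite-size proxy for the exponent theta(beta)."""
    return math.log(mean_end_to_end(n, beta, reps, seed) + 1.0) / math.log(n)


if __name__ == "__main__":
    for n in (16, 32, 64, 128):
        print(n, crude_theta(n, beta=1.0, reps=20))
```

Because every sample consumes the same number of uniform random numbers, reusing a seed for two values of \(\beta \) couples the samples monotonically (each edge open for the smaller \(\beta \) is also open for the larger one), so the estimated distance is non-increasing in \(\beta \) for a fixed seed.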

This lemma and its proof already have several interesting applications. First, we emphasize that \(\Lambda (mn,\beta ) \ge \Lambda (n,\beta )\) for all \(m,n \in \mathbb {N}_{>0}\). This holds since, for arbitrary \(x,y \in V_\textbf{0}^n\), the distance \(D_{V_\textbf{0}^{mn}}(u,v)\) between \(u \in V_x^m\) and \(v \in V_y^m\) is at least the distance between r(x) and r(y) in \(G^\prime \). Using the self-similarity and taking expectations we thus get that

\[ \mathbb {E}_\beta \left[ D_{V_\textbf{0}^{mn}}(u,v)\right] \ge \mathbb {E}_\beta \left[ D_{G^\prime }(r(x),r(y))\right] = \mathbb {E}_\beta \left[ D_{V_\textbf{0}^{n}}(x,y)\right] , \]
which shows our claim. For \(n=3\), we have for all \(u,v \in \{0,1,2\}^d\) with \(u\ne v\), and for all \(\beta >0\) that

\[ \mathbb {E}_\beta \left[ D_{V_\textbf{0}^3}(u,v)\right] < 2, \]
and this already implies that \(\Lambda (3) {=}{:}3^{\theta ^\prime } < 3\) for some \(\theta ^\prime = \theta ^\prime (\beta ) < 1\). Inductively, with a renormalization at scale 3, we get that

for all \(k,N \in \mathbb {N}_{>0}\). This inequality already gives the upper bound in expectation for \(s=2d\), which was already observed in [16] with a very similar technique. Next, we do a renormalization at scale \(\root 2d \of {\beta }\) instead of scale 3 in order to get the inverse logarithmic upper bound stated in Theorem 1.2.
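In dimension d = 1 the scale-3 bound can be made explicit. Assuming, as the bounds \(\Lambda (3) < 3\) and \(\Lambda (\root 2d \of {\beta },\beta ) \le (2d)^{2d}+1\) indicate, that \(\Lambda (n,\beta )\) is the largest expected distance in \(V_\textbf{0}^n\) plus one, the only non-trivial pair in \(V_\textbf{0}^3=\{0,1,2\}\) is \((0,2)\), and the edge \(\{0,2\}\) is open with probability \(1-(3/4)^\beta \) (the closed form of the defining integral for a gap of 2 in d = 1). Submultiplicativity then gives \(\theta (\beta ) \le \log \Lambda (3,\beta )/\log 3\). The following sketch (function name hypothetical) evaluates this explicit, far from optimal, bound.

```python
import math


def theta_prime_1d(beta: float) -> float:
    """log(Lambda(3, beta)) / log(3) in dimension d = 1.

    Assumes Lambda(n, beta) = max_{u,v} E[D_{V_0^n}(u, v)] + 1.  In V_0^3 = {0, 1, 2}
    the pairs at distance 1 have D = 1, while D(0, 2) = 1 if the edge {0, 2} is
    open and 2 otherwise.  The edge {0, 2} is open with probability
    p = 1 - (1 - 1/4)^beta (closed form of the defining integral for a gap of 2).
    """
    p = 1.0 - 0.75 ** beta
    lam3 = (2.0 - p) + 1.0   # largest expected distance plus one
    return math.log(lam3) / math.log(3.0)


if __name__ == "__main__":
    for beta in (0.5, 1.0, 2.0, 10.0):
        print(beta, theta_prime_1d(beta))
```

As \(\beta \rightarrow \infty \) this bound only tends to \(\log 2/\log 3\), which is why the renormalization at the growing scale \(\root 2d \of {\beta }\) is needed for the inverse logarithmic bound.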

Proof of the upper bound in Theorem 1.2

We want to show that for each dimension d there exists a constant \(C<\infty \) such that for all \(\beta \ge 2\)

\[ \theta (\beta ) \le \frac{C}{\log (\beta )}. \]
As the connection probability \(\mathbb {P}_\beta \left( u \sim v\right) \) between any two vertices \(u,v\in \mathbb {Z}^d\) is increasing in \(\beta \), the distance exponent \(\theta : \mathbb {R}_{\ge 0} \rightarrow \left[ 0,1\right] \) is non-increasing by the Harris coupling, see for example [28]. Thus it suffices to show the upper bound for \(\beta \) large enough with \(\root 2d \of {\beta } \in \mathbb {N}\). For such a \(\beta \) and all \(u,v \in \{0, \ldots , \root 2d \of {\beta } - 1 \}^d\), we have for all \(y \in u + \mathcal {C}\) and \(x \in v + \mathcal {C}\) that

\[ \Vert x-y\Vert \le d \Vert x-y\Vert _\infty \le d \root 2d \of {\beta } \]
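and this already implies

\[ \int _{u+\mathcal {C}} \int _{v+\mathcal {C}} \frac{1}{\Vert x-y\Vert ^{2d}} \textrm{d}x \textrm{d}y \ge \frac{1}{d^{2d} \beta }. \]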
Inserting this into the definition of \(p(\beta , \{u,v\})\) and using that \(1-e^{-x}\ge \frac{x}{2}\) for all \(x\in [0,1]\), we get, for all \(\beta \) large enough that \(\frac{1}{d^{2d} \beta } \le 1\), that

\[ p(\beta ,\{u,v\}) \ge 1-e^{-\frac{1}{d^{2d}}} \ge \frac{1}{2d^{2d}} \]
for all \(u,v \in \{0,\ldots ,\root 2d \of {\beta }-1\}^d\). Next, we bound the expected graph distance between u and v. We do this by comparing the distance to a geometric random variable. Let \((u=u_0,u_1,\ldots , u_k=v)\) be a deterministic self-avoiding path from u to v inside \(V_{\textbf{0}}^{\root 2d \of {\beta }}\), with \(k \le \root 2d \of {\beta }\) and \(\Vert u_{i}- u_{i-1}\Vert _\infty =1\) for all \(i \in \{1,\ldots ,k\}\). Starting from this, we build a shorter path between u and v as follows. We start at \(u_0=u\). Then for \(i=0,\ldots ,k-1\), if \(u_i \sim v\), we go directly to v; if \(u_i \not \sim v\), we go to \(u_{i+1}\). This gives a path P between u and v, and for \(l\in \{1,\ldots ,k\}\) this path has length at least l if and only if \(u_{i} \not \sim v\) for all \(i\in \{0,\ldots ,l-2\}\). As the connections between v and the different vertices \(u_i\) are independent, we get that

\[ \mathbb {E}_\beta \left[ D_{V_\textbf{0}^{\root 2d \of {\beta }}}(u,v)\right] \le \sum _{l=1}^{k} \mathbb {P}_\beta \left( |P| \ge l\right) = \sum _{l=1}^{k} \prod _{i=0}^{l-2} \mathbb {P}_\beta \left( u_i \not \sim v\right) \le \sum _{l=1}^{\infty } \left( 1-\frac{1}{2d^{2d}}\right) ^{l-1} = 2d^{2d}. \]
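This already implies that \(\Lambda \left( \root 2d \of {\beta }, \beta \right) \le (2d)^{2d} + 1 \le (3d)^{2d}\). Applying the submultiplicativity of \(\Lambda \) iteratively we get that

\[ \theta (\beta ) = \lim _{k\rightarrow \infty } \frac{\log \left( \Lambda \left( \beta ^{\frac{k}{2d}},\beta \right) \right) }{\log \left( \beta ^{\frac{k}{2d}}\right) } \le \lim _{k\rightarrow \infty } \frac{\log \left( (3d)^{2dk}\right) }{\frac{k}{2d}\log (\beta )} = \frac{4d^2 \log (3d)}{\log (\beta )}, \]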
which finishes the proof. \(\quad \square \)
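The geometric comparison in this proof can be made concrete. The sketch below (our own; function names hypothetical) evaluates \(\mathbb {E}[|P|]=\sum _{l=1}^{k}\prod _{i=0}^{l-2}\mathbb {P}_\beta (u_i\not \sim v)\) exactly for given connection probabilities, checks it against the geometric bound \(1/q\) with \(q=\frac{1}{2d^{2d}}\), and evaluates the resulting constant. The explicit value \(C=4d^2\log (3d)\) is our own bookkeeping: it assumes that the bound \(\Lambda (\root 2d \of {\beta },\beta ) \le (3d)^{2d}\) is iterated along the scales \(\beta ^{k/(2d)}\), so that \(\theta (\beta ) \le \log ((3d)^{2d})/\log (\beta ^{1/(2d)})\).

```python
import math


def expected_shortcut_length(ps):
    """Exact E[|P|] for the shortcut path in the proof of the upper bound.

    ps[i] = P(u_i ~ v) for i = 0, ..., k-1, with independent connections.
    |P| >= l  iff  u_0, ..., u_{l-2} are all not adjacent to v, so
    E[|P|] = sum_{l=1}^{k} prod_{i=0}^{l-2} (1 - ps[i]).
    """
    total, survive = 0.0, 1.0
    for i in range(len(ps)):
        total += survive          # survive == P(|P| >= i + 1)
        survive *= 1.0 - ps[i]
    return total


def geometric_bound(d: int) -> float:
    """1/q with q = 1/(2 d^(2d)), the lower bound on p(beta, {u, v}) used above."""
    return 2.0 * d ** (2 * d)


def theta_upper_bound(d: int, beta: float) -> float:
    """C / log(beta) with C = 4 d^2 log(3d), obtained by iterating
    Lambda(beta^(1/(2d)), beta) <= (3d)^(2d) along the scales beta^(k/(2d))."""
    return 4 * d * d * math.log(3 * d) / math.log(beta)


if __name__ == "__main__":
    for d in (1, 2, 3):
        print(d, geometric_bound(d), theta_upper_bound(d, 1e12))
```

The constant grows quickly with d, so the bound is only informative for very large \(\beta \); the point of the argument is the order \(1/\log (\beta )\), not the constant.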

2.3 Spacing between points with long bonds

In this section, we investigate some geometric properties of the cluster inside boxes. Mainly, we want upper bounds on the probability that a vertex is an endpoint of two different long edges. As we will need it later, namely in Sect. 5.1, we prove the statements for pairs x, y with \(\Vert x-y\Vert _\infty \le 1\) instead of only for \(x=y\). This does not cause major difficulties, as for each point \(x \in \mathbb {Z}^d\), there are only \(3^d\) points \(y \in \mathbb {Z}^d\) with \(\Vert x-y\Vert _\infty \le 1\). We start by showing that the probability that two vertices x, y with \(\Vert x-y\Vert _\infty \le 1\) are both connected to far-away boxes is very low.

Lemma 2.4

For blocks \(V_u^m, V_v^m, V_w^m\) with \(\Vert u-v\Vert _\infty , \Vert v-w\Vert _\infty \ge 2\), there exists a constant \(C_d < \infty \) such that for all \(\beta \ge 0\)

Proof

By translational invariance we can assume that \(v=\textbf{0}\), and thus \(\Vert u\Vert _\infty , \Vert w\Vert _\infty \ge 2\). For each \(x \in V_{\textbf{0}}^m\) there are at most \(3^d\) vertices \(y\in V_{\textbf{0}}^m\) with \(\Vert x-y\Vert _\infty \le 1\). For \(x,y \in V_{\textbf{0}}^m\) the probability that \(y \sim V_w^m\) is bounded by \(\frac{\beta 4^{2d}}{\Vert w\Vert _\infty ^{2d}m^d}\), and the probability that \(x\sim V_u^m\) is bounded by \(\frac{\beta 4^{2d}}{\Vert u\Vert _\infty ^{2d}m^d}\), using (6). Thus

which finishes the proof. \(\quad \square \)

Lemma 2.5

For blocks \(V_u^m, V_v^m, V_w^m\) with \( \Vert v-w\Vert _\infty \ge 2\) and \(\Vert u-v\Vert _\infty = 1\), there exists a constant \(C_d < \infty \) such that for all \(\beta \ge 0\)

Proof

By translational invariance we can assume that \(v=\textbf{0}\), and thus \(\Vert u\Vert _\infty = 1, \Vert w\Vert _\infty \ge 2\). For each \(x \in V_{\textbf{0}}^m\) there are at most \(3^d\) vertices \(y\in V_{\textbf{0}}^m\) with \(\Vert x-y\Vert _\infty \le 1\). For each vertex \(y \in V_{\textbf{0}}^m\) the probability that \(y \sim V_w^m\) is bounded by \(\frac{\beta 4^{2d}}{\Vert w\Vert _\infty ^{2d} m^d}\) by (6). Thus

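As \(\Vert u\Vert _\infty =1\) we have \(D_\infty (x,V_u^m) \le m\) for all \(x \in V_{\textbf{0}}^m\), where \(D_\infty \) is the distance with respect to the \(\infty \)-norm. We furthermore have the inequality

\[ \left| \left\{ x \in V_\textbf{0}^m: D_\infty \left( x, V_u^m\right) = k \right\} \right| \le 6^d m^{d-1} \]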
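for all \(k \in \mathbb {N}\). This is clear for \(k>m\), as the relevant set is empty in this case. For \(k \le m\) the set \( \left\{ x \in \mathbb {Z}^d: D_\infty \left( x, V_u^m\right) = k \right\} \) is just the boundary of the box

\[ \left\{ x \in \mathbb {Z}^d: D_\infty \left( x, V_u^m\right) \le k \right\} = mu + \left\{ -k,\ldots ,m-1+k\right\} ^d , \]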
which is a box of side length \(m+2k \le 3m\). Thus the boundary has a cardinality of at most \(2d (3m)^{d-1} \le 6^d m^{d-1}\). Using this observation we get that

Inserting this into (15), we get that

which finishes the proof. \(\quad \square \)
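The counting step in this proof can be checked by brute force for small m and d. The sketch below (our own; function names hypothetical) counts, for a neighbouring block \(V_u^m\) with \(\Vert u\Vert _\infty =1\), how many \(x\in V_\textbf{0}^m\) lie at \(\infty \)-distance exactly k from \(V_u^m\), and compares these counts with \(6^d m^{d-1}\). The distance to a box is computed coordinatewise, since the \(\infty \)-distance from a point to a product of intervals is the maximum of the coordinate distances.

```python
from itertools import product


def linf_dist_to_box(x, lo, hi):
    """Sup-norm distance from an integer point x to the box prod_i {lo_i, ..., hi_i}."""
    return max(max(l - c, c - h, 0) for c, l, h in zip(x, lo, hi))


def level_set_sizes(m, u):
    """counts[k] = |{x in V_0^m : D_inf(x, V_u^m) = k}| for k = 0, ..., m + 1.

    Assumes ||u||_inf = 1, so that every such distance is at most m.
    """
    d = len(u)
    lo = [m * ui for ui in u]
    hi = [m * ui + m - 1 for ui in u]
    counts = [0] * (m + 2)
    for x in product(range(m), repeat=d):
        counts[linf_dist_to_box(x, lo, hi)] += 1
    return counts


if __name__ == "__main__":
    print(level_set_sizes(4, (1, 1)))
```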

Lemma 2.6

Let \(m\in \mathbb {N}, l\in \{1,\ldots , 3^d-1\} \), and let \(v_0,v_1,\ldots , v_{l+1} \in \mathbb {Z}^d\) be distinct with \(\Vert v_{i+1}-v_i\Vert _\infty = 1\) for all \(i\in \{0,\ldots , l\}\), \(\Vert v_{i}-v_0\Vert _\infty = 1\) for all \(i\in \{1,\ldots , l\}\) and \(\Vert v_{l+1}-v_0\Vert _\infty =2\). (An example of such a sequence of points is given in Fig. 2). Then there exists a constant \(C_d<\infty \) such that the two probabilities

are both bounded by \(\frac{C_d \beta \lceil \beta \rceil \log (m)}{m} \) for \(d=1\), respectively by \(\frac{C_d \beta \lceil \beta \rceil }{m} \) for \(d\ge 2\).

Fig. 2 An example of points \(v_0,\ldots ,v_{l+1}\) with \(l=5\) as described in Lemma 2.6: \(\Vert v_i-v_{i+1}\Vert _\infty = 1\) for all \(i\in \{0,\ldots ,5\}\), and \(v_{l+1}\) is the first point with \(\Vert v_{l+1}-v_0\Vert _\infty > 1\).

Proof

By a union bound we have that

The sum \( \sum _{x \in V_{v_i}^m } \mathbb {P}_\beta \left( x\sim V_{v_{i-1}}^m \right) \) was already upper bounded in (16). Using this upper bound, \(l\le 3^d\), and inserting this into the line above we get that

which finishes the proof for the first item in the statement of the lemma. The estimate for the second term works analogously. \(\quad \square \)
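The configurations of Lemma 2.6 (see Fig. 2) arise from a simple pigeonhole observation, which is used again in the proof of the lower bound in Theorem 1.2: along a self-avoiding path with \(\infty \)-norm steps of size 1, the points \(v_1,\ldots ,v_l\) before the first exit from \(B_1(v_0)\) are distinct elements of \(B_1(v_0)\setminus \{v_0\}\), so \(l\le 3^d-1\), and the first exit point satisfies \(\Vert v_{l+1}-v_0\Vert _\infty =2\). A small Python sketch (function names hypothetical) that extracts this prefix and checks the conditions of the lemma:

```python
def linf(a, b):
    """Sup-norm distance between two integer points."""
    return max(abs(x - y) for x, y in zip(a, b))


def first_exit(path):
    """Return l such that path[1..l] lie in B_1(path[0]) and path[l+1] does not.

    Assumes a self-avoiding path with sup-norm steps of size 1 that eventually
    leaves B_1(path[0]); then ||path[l+1] - path[0]||_inf == 2 and l <= 3^d - 1.
    """
    v0 = path[0]
    for i in range(1, len(path)):
        if linf(path[i], v0) > 1:
            return i - 1
    raise ValueError("path never leaves B_1(path[0])")


def has_lemma_2_6_shape(path):
    """Check the conditions of Lemma 2.6 for the prefix path[0..l+1]."""
    d = len(path[0])
    l = first_exit(path)
    prefix = path[: l + 2]
    steps_ok = all(linf(a, b) == 1 for a, b in zip(prefix, prefix[1:]))
    distinct = len(set(prefix)) == len(prefix)
    return steps_ok and distinct and 1 <= l <= 3**d - 1 and linf(prefix[-1], path[0]) == 2


if __name__ == "__main__":
    spiral = [(0, 0), (1, 0), (1, 1), (0, 1), (-1, 1), (-1, 0), (-1, -1), (0, -1), (1, -1), (2, -1)]
    print(first_exit(spiral), has_lemma_2_6_shape(spiral))
```

The spiral in d = 2 attains the extreme case \(l=3^d-1=8\): it visits every point of \(B_1(v_0)\) before leaving.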

2.4 The lower bound in Theorem 1.2

Finally, we develop all the necessary techniques in order to show the lower bound in Theorem 1.2, i.e., that for all dimensions d, there exists a constant \(c>0\) such that \(\theta (\beta ) \ge \frac{c}{\log (\beta )}\) for all \(\beta \ge 2\).

Proof of the lower bound in Theorem 1.2

Inequality (4) and Lemma 2.4 show that for all dimensions d there exists a constant \(C_d < \infty \) such that for all \(\beta \ge 2\) and all u, v, w with \(\Vert u-v\Vert _\infty , \Vert v-w\Vert _\infty \ge 2\)

Analogously, Lemma 2.5 shows that there exists a constant \(C_d < \infty \) such that for all \(\beta \ge 2\) and all u, v, w with \(\Vert u-v\Vert _\infty \ge 2\) and \(\Vert v-w\Vert _\infty =1\)

where we also used that \(\frac{\log (m)}{m} = \mathcal {O} \left( m^{-1/2}\right) \). Lemma 2.6 implies that for every \(l\in \{1,\ldots ,3^d-1\}\) and \(v_0,v_1,\ldots , v_{l+1} \in \mathbb {Z}^d\) distinct with \(\Vert v_{i+1}-v_i\Vert _\infty = 1\) for all \(i\in \{0,\ldots , l\}\), \(\Vert v_{i}-v_0\Vert _\infty = 1\) for all \(i\in \{1,\ldots , l\}\), and \(\Vert v_{l+1}-v_0\Vert _\infty =2\), one has the bound

as a path from \(V_{v_0}\) to \(V_{v_{l+1}}\) in \(l+1 \le 3^d\) steps needs to contain at least one edge \(\{x_i,x_{i+1}\}\) with \(\Vert x_i-x_{i+1}\Vert _\infty \ge \frac{m}{3^d}\) and thus \(x_i \sim x_i+\mathcal {S}_{\ge \frac{m}{6^d}}\) in this case. We will now show that

for \(m \ge \left( 2000 \cdot \lceil \beta \rceil ^3 3^{5d} C_d\right) ^{\left( 3^{4d}\right) }\) and all large enough M. We will see later where this condition on m comes from. To see (20), we use a renormalization. For \(u \in V_{\textbf{0}}^{M}\), we identify the blocks \(V_u^m\) to vertices r(u) and call the resulting graph \(G^\prime \). Then we will prove that

for large enough M. This implies (20), as the random graphs \(G^\prime \) and \(V_{\textbf{0}}^{M}\) have the same distribution, as shown in Sect. 1.2. Now we condition on the graph \(G^\prime \), i.e., we already have the knowledge which blocks of the form \(V_u^m\) are connected in the original graph. Let \(P^\prime = (r(v_0),\ldots , r(v_k))\) be a self-avoiding path in \(G^\prime \) starting at the origin vertex, i.e., \(v_0=\textbf{0}\). Let \(k \ge 3^{d+3}\) and let . For \(j \in \{0,\ldots , l\}\), we call the subsequence \(R_j {:}{=}\left( r(v_{2j3^{d}}), r(v_{2j3^{d}+1}), \ldots , r(v_{(2j+2)3^{d}})\right) \) separated if there does not exist a sequence \(\left( x_i\right) _{i=2j3^d + 1}^{(2j+2) \cdot 3^{d} - 1}\) such that \(x_i \in V_{v_i}^m \text { for all } i \in \{2j3^d + 1,\ldots , (2j+2) 3^{d} - 1 \} \) and

For a given self-avoiding path \(P^\prime \subset G^\prime \) and different values of \(j \in \{0,\ldots ,l\}\), the events that the subsequences \(\left( r(v_{2j3^{d}}), r(v_{2j3^{d}+1}), \ldots , r(v_{(2j+2)3^{d}})\right) \) are separated are independent, and the probability that a specific subsequence \(\Big (r(v_{2j3^{d}}), r(v_{2j3^{d}+1}), \ldots , r(v_{(2j+2)3^{d}})\Big )\) is not separated is bounded by \(\frac{C_d \beta ^2}{m^{1/2}}\), since for every sequence \(\Big (v_{2j3^{d}}, v_{2j3^{d}+1}, \ldots , v_{(2j+2)3^{d}} \Big )\) at least one of the situations of (17), (18), or (19) holds, as we argue below. Here we say that the situation of (17) holds if there exists an index \(i \in \{2j3^d+1,\ldots ,(2j+2)3^d-1\}\) such that \(\Vert v_i-v_{i+1}\Vert _\infty , \Vert v_i-v_{i-1}\Vert _\infty \ge 2\), the situation of (18) holds if there exists an index \(i \in \{2j3^d+1,\ldots ,(2j+2)3^d-1\}\) such that \(\Vert v_i-v_{i+1}\Vert _\infty = 1, \Vert v_i-v_{i-1}\Vert _\infty \ge 2\) or \(\Vert v_i-v_{i-1}\Vert _\infty = 1, \Vert v_i-v_{i+1}\Vert _\infty \ge 2\), and the situation of (19) holds if there exists \(l\in \{1,\ldots ,3^d-1\}\) such that \(\Vert v_{i+1}-v_i\Vert _\infty = 1\) for all \(i\in \{2j3^d,\ldots , 2j3^d+l\}\), \(\Vert v_{i}-v_{2j3^d}\Vert _\infty = 1\) for all \(i\in \{2j3^d+1,\ldots , 2j3^d+l\}\) and \(\Vert v_{2j3^d+l+1}-v_{2j3^d}\Vert _\infty =2\). If none of the situations in (17), (18) holds, then the path makes only nearest-neighbor jumps within the subsequence \(R_j\). However, as \(P^\prime \) is self-avoiding and there are only \(3^d-1\) points \(v \in \mathbb {Z}^d\) with \(\Vert v-v_{2j3^d}\Vert _\infty = 1\), the situation of (19) must occur within the subsequence \(R_j\) for some l. So in total we see that

The reason why we consider separated subsequences is that in a separated subsequence, the walk on the original graph \(V_{\textbf{0}}^{mM}\) needs to take at least one additional step. For a fixed path \(P^\prime \subset G^\prime \) of length k and  we have that


Next, we want to bound the expected degree of vertices in the long-range percolation graph from above. With the bound (3) on the connection probability \(\mathbb {P}_\beta (\textbf{0}\sim u)\), we get that

Let \(\mathcal {P}_k^\prime \) be the set of self-avoiding paths of length k in \(G^\prime \) starting at \(r(\textbf{0})\). By comparison with a Galton-Watson tree, inequality (21) already gives that \(\mathbb {E}_\beta \left[ | \mathcal {P}_k^\prime | \right] \le \left( \lceil \beta \rceil 3^{5d} \right) ^k\). As  , we see that


by the choice of \(m \ge \left( 2000 \cdot 3^{5d} C_d \lceil \beta \rceil ^3 \right) ^{\left( 3^{4d}\right) }\). Next, we want to translate this bound on the probability of certain events to bounds on the expectation of the distances. For this, let \(\mathcal {G}_{k}\) be the event that all self-avoiding paths \(P^\prime \subset G^\prime \) starting at the origin and of length \(\tilde{k} \ge k\) contain at least separated subpaths \(R_j\). With the preceding inequality we directly get \(\mathbb {P}_\beta \left( \mathcal {G}_k\right) \ge 1 - 0.1^k\). On the event \(\mathcal {G}_k\), each path \(P \subset V_{\textbf{0}}^{mM}\) starting at the origin, for which the loop-erased projection on \(G^\prime \) goes through \(\tilde{k}+1\) different blocks of the form \(V_u^m\), needs to have a length of at least . Furthermore, if we have \(D_{G^\prime }\left( r(\textbf{0}), r\left( (M-1)e_1\right) \right) = \tilde{k}\), then every path connecting \(\textbf{0}\) to \((mM-1)e_1\) in the original model \(V_\textbf{0}^{mM}\) goes through at least \(\tilde{k}+1\) different blocks of the form \(V_u^m\), with \(u\in V_\textbf{0}^M\). So we get that

where the last inequality holds for all large enough M, as the probability of the event \(\left\{ D_{V_{\textbf{0}}^M}\left( \textbf{0}, (M-1)e_1 \right) < 3^{d+3} \right\} \) tends to 0 as \(M \rightarrow \infty \). Say that it holds for all \(M \ge m^N\), where \(m= \Big \lceil \left( 2000 \cdot 3^{5d} C_d \lceil \beta \rceil ^3 \right) ^{\left( 3^{4d}\right) } \Big \rceil \). The important property of this choice of m is that m grows only polynomially in \(\beta \). This already implies that

for some small \(c>0\) and all \(\beta \ge 2\). \(\quad \square \)
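The pigeonhole step behind situation (19), namely that a self-avoiding path making only nearest-neighbor jumps stays at \(\ell^\infty\)-distance 1 from its starting point for at most \(3^d-1\) steps and then necessarily reaches distance exactly 2, can be checked by brute force in small dimensions. The following Python sketch is only an illustration (the function names are ours) and covers \(d=1,2\); for \(d=3\) the 26-point shell already makes exhaustive search expensive.

```python
from itertools import product


def linf(a, b):
    """l_infinity distance between two integer points."""
    return max(abs(x - y) for x, y in zip(a, b))


def shell_walk_stats(d):
    """Explore all self-avoiding walks from the origin of Z^d with
    l_inf-nearest-neighbour steps whose points (after the start) all lie at
    l_inf-distance exactly 1 from the origin.

    Returns (largest number of steps spent inside the shell,
             set of distances reached by a step that leaves the shell)."""
    origin = (0,) * d
    moves = [m for m in product((-1, 0, 1), repeat=d) if any(m)]
    longest = 0
    exit_distances = set()

    def dfs(pos, visited, steps):
        nonlocal longest
        longest = max(longest, steps)
        for m in moves:
            nxt = tuple(p + q for p, q in zip(pos, m))
            if nxt in visited:  # self-avoidance: in particular never back to the origin
                continue
            dist = linf(nxt, origin)
            if dist == 1:
                visited.add(nxt)
                dfs(nxt, visited, steps + 1)
                visited.remove(nxt)
            else:
                exit_distances.add(dist)

    dfs(origin, {origin}, 0)
    return longest, exit_distances


for d in (1, 2):
    longest, exits = shell_walk_stats(d)
    print(d, longest, 3**d - 1, exits)
```

In both dimensions the walk leaves the shell after at most \(3^d-1\) steps, and the first exit always lands at distance 2, as used in the argument.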

3 Connected Sets in Graphs

The expected number of open paths in the long-range percolation model of length k starting at \(\textbf{0}\) grows at most like \(\mathbb {E}\left[ \deg (\textbf{0})\right] ^k\), which can easily be proven by a comparison with a Galton-Watson tree. However, it is a priori not clear how the number of connected subsets of \(\mathbb {Z}^d\) containing the origin grows, in particular because the maximal degree of vertices is unbounded. In this section, we prove several results about the structure of connected sets in the long-range percolation graph. Our main goal is to show that, with probability exponentially close to 1 in k, none of the connected sets of size k containing the origin has too many incident edges. First, we need to define what we mean by a connected set. For a graph \(G=(V,E)\) we say that a subset \(Z \subset V\) is connected if the graph \((Z, E^\prime )\) with edge set \(E^\prime = \left\{ \{x,y\} \in E: x,y \in Z \right\} \) is connected. As a first step, we bound the expected number of connected sets of a given size in Galton-Watson trees. This counting of connected sets plays an important role in Sect. 5 below and in the companion paper [4].
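The definition of a connected set, and the fact that connected sets containing a fixed vertex can be grown one vertex at a time, can be illustrated with a short Python sketch. It works on a fixed, deterministic ambient graph given by a neighbour function (our own convention, not the random long-range percolation graph). We use the nearest-neighbour square lattice only because its counts are known: the number of connected sets of size k containing the origin is k times the number of fixed polyominoes of size k, i.e., 1, 4, 18, 76 for k = 1, ..., 4.

```python
from collections import deque


def is_connected_subset(Z, neighbours):
    """True if the subgraph induced by the finite set Z is connected.

    Only edges with both endpoints in Z are used, as in the definition above."""
    Z = set(Z)
    if not Z:
        return False  # convention chosen for this sketch
    start = next(iter(Z))
    seen = {start}
    queue = deque([start])
    while queue:
        x = queue.popleft()
        for y in neighbours(x):
            if y in Z and y not in seen:
                seen.add(y)
                queue.append(y)
    return seen == Z


def connected_sets_containing(origin, k, neighbours):
    """All connected sets of size k that contain `origin`.

    Removing a leaf (other than the origin) of a spanning tree shows that every
    connected set of size s + 1 arises from one of size s by adding a
    neighbouring vertex, so this growth procedure is exhaustive."""
    level = {frozenset([origin])}
    for _ in range(k - 1):
        level = {Z | {y} for Z in level for x in Z for y in neighbours(x) if y not in Z}
    return level


def square_lattice(p):
    """Nearest-neighbour (l_1) neighbours in Z^2."""
    x, y = p
    return [(x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)]


print([len(connected_sets_containing((0, 0), k, square_lattice)) for k in range(1, 5)])
```

The counts grow exponentially in k even for this bounded-degree graph; in the long-range percolation graph the unbounded degrees make such a count non-trivial, which is what the lemmas below address.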

Lemma 3.1

Let \(\mathcal {X}\) be a countable set with a total ordering and a minimal element, let X be a sum of independent Bernoulli-distributed random variables indexed by this set, i.e., \(X=\sum _{i\in \mathcal {X}} X_i\), and let \(\mu \) be the expectation of X. Write \(q(k)=\mathbb {P}(X_k=1)\). Let T be a Galton-Watson tree with offspring distribution \(\mathcal {L}(X)\). We denote the set of all subtrees of T of size k containing the origin by \(\mathcal {T}_k\). Then

Proof

The choice of the set \(\mathcal {X}\) and of the total ordering on it does not influence the outcome, so we work with \(\mathcal {X}=\mathbb {N}\) from here on. We can think of the Galton-Watson tree as a model of independent bond percolation on the graph with vertex set \(L=\bigcup _{n=0}^\infty L_n\), where \(L_n = \mathbb {N}^n\), and with edge set \(S=\left\{ \{v,(v \ m)\}: v \in L, m\in \mathbb {N}\right\} \), where an edge of the form \(\{v,(v \ m)\}\) is open with probability q(m). Note that the graph \(G=(L,S)\) is a tree, so in particular there exists a unique path from the origin \(\emptyset \) to every vertex; this tree is also known as the Ulam-Harris tree. For a vertex \(v \in L\), the number of open edges of the form \(\{v,(v \ m)\}\) has the same law as X, and thus we can identify the open cluster of the root \(\emptyset \) with a Galton-Watson tree with offspring distribution \(\mathcal {L}(X)\). So in particular, the expected number of subtrees of size k of the Galton-Watson tree T containing the origin is the same as the expected number of open connected sets of size k in (L, S) containing \(\emptyset \). For a vertex \(v\in L\), we call the vertices of the form \((v \ m)\) that are connected to v by an open bond the children of v. Conversely, we say that v is the parent of the vertex \((v \ m )\) if \((v \ m)\) is connected to v. For a connected set \(L^\prime \subset L\) of size k containing \(\emptyset \), we now describe an exploration process \(\left( Y_i\right) _{i\in \{1,\ldots ,2k-1\}}\) of it:

0. Start with \(Y_1 = \emptyset \).

1. For \(i=1, \ldots , 2k-2\):

(a) If there exists \(m\in \mathbb {N}\) for which \(\left( Y_i \ m\right) \in L^\prime \) and \(Y_j \ne (Y_i \ m)\) for all \(j< i\), let \(m^\prime \) be the minimal one among those \(m\in \mathbb {N}\) and set \(Y_{i+1} = (Y_i \ m^\prime )\).

(b) If such an m does not exist, let \(Y_{i+1}\) be the parent of \(Y_i\).

An example of this procedure is given in Fig. 3. This exploration process traverses every edge of \(L^\prime \) exactly twice, once in each direction, and starts and ends at the origin of the tree. We say that the exploration process \(Y_i\) goes (one level) down if (a) occurs in the algorithm above, and otherwise we say that it goes (one level) up. We also define a different process \(\left( Y^\prime _i\right) _{i\in \{1,\ldots ,k\}}\), where \(Y^\prime _i\) is the unique point \(Y_l\) such that \(\left| \{Y_1,\ldots , Y_{l-1}\}\right| < i\) and \(\left| \{Y_1,\ldots , Y_{l}\}\right| = i\). So the process \(\left( Y^\prime _i\right) _{i\in \{1,\ldots ,k\}}\) lists the vertices in the order of a depth-first search from left to right in the tree. We can encode all information contained in the subtree \(L^\prime \) by the two sequences \(\left( a_1,\ldots ,a_{2k-2}\right) \in \{u,d\}^{2k-2}\) and \(\left( m_1,\ldots , m_{k-1}\right) \in \mathbb {N}^{k-1}\). The first sequence \(\left( a_1,\ldots ,a_{2k-2}\right) \) encodes whether the process \(Y_i\) goes one level up or down at a given step: \(a_i=u\) if the process goes one level up after \(Y_i\), i.e., if \(Y_{i+1}\) is the parent of \(Y_i\), and \(a_i=d\) otherwise, i.e., if \(Y_{i+1}\) is a child of \(Y_i\). The sequence \(\left( m_1,\ldots , m_{k-1}\right) \) encodes the directions of the downward steps: its i-th coordinate gives the direction when the walk goes down for the i-th time. This happens when it touches the vertex \(Y_{i+1}^\prime \) for the first time. So if v is the parent of \(Y_{i+1}^\prime \), then \(Y_{i+1}^\prime = \left( v \ m_i\right) \).
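The exploration process and its encoding by \((a_1,\ldots ,a_{2k-2})\) and \((m_1,\ldots ,m_{k-1})\) can be sketched in Python as follows. This is a minimal sketch under our own representation choices: a vertex of the Ulam-Harris tree is a tuple of child labels (the root \(\emptyset \) is the empty tuple), a finite subtree is a set of such tuples closed under taking parents, and a recursion replaces the explicit loop of step 1. It always descends to the smallest unexplored child, as in (a), and moves up otherwise, as in (b).

```python
def encode(tree):
    """Return the up/down word (a_1, ..., a_{2k-2}) and the labels
    (m_1, ..., m_{k-1}) of the downward steps of a finite subtree."""
    children = {}
    for v in tree:
        if v:  # every vertex except the root has the parent v[:-1]
            children.setdefault(v[:-1], []).append(v[-1])
    a, m = [], []

    def explore(v):
        for label in sorted(children.get(v, [])):
            a.append("d")        # go down to the smallest unexplored child
            m.append(label)
            explore(v + (label,))
            a.append("u")        # no unexplored child left: go back up

    explore(())
    return a, m


def decode(a, m):
    """Rebuild the subtree from its up/down word and its child labels."""
    tree = {()}
    branch = [()]                # branch[-1] is the current vertex Y_i
    labels = iter(m)
    for step in a:
        if step == "d":
            child = branch[-1] + (next(labels),)
            tree.add(child)
            branch.append(child)
        else:
            branch.pop()
    return tree


T = {(), (1,), (3,), (3, 2), (3, 5)}
a, m = encode(T)
print("".join(a), m)   # dudduduu [1, 3, 2, 5]
```

For this tree with k = 5 vertices the up-and-down word has length 2k - 2 = 8 (it is the same structure as in the example of Fig. 4), and decoding recovers the tree, illustrating that the two sequences determine \(L^\prime \).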

For fixed \(\overset{\rightarrow }{a}\ = \left( a_1,\ldots ,a_{2k-2}\right) \in \{u,d\}^{2k-2}\), we want to bound from above the expected number of subtrees containing the origin with exactly this up-and-down structure. Assume that the exploration process \(Y_i\) visits exactly l children of some vertex \(Y_j^\prime \). Then the expected number of ways to choose these l children among the children of \(Y_j^\prime \) in increasing order is given by

We have this choice for all vertices \(Y_j^\prime \) in the tree. Summing the numbers of children over all vertices gives \(k-1\), as every vertex except the origin \(\emptyset \) is the child of exactly one vertex. Thus the expected number of trees with a specified up-and-down structure can be bounded from above by

Up to now, we considered a fixed up-and-down structure. However, there are at most \(\left| \{u,d\}^{2k-2}\right| = 2^{2k-2}\) possible up-and-down structures \((a_1,\ldots ,a_{2k-2})\) (in fact there are significantly fewer, as there are additional constraints such as \(a_1=d\)). So in total, we get

\(\square \)
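The counting in this proof can be made slightly more explicit. For independent indicators, the expected number of increasing choices of l children is the elementary symmetric polynomial of the values q(m), and \(\sum _{m_1<\cdots <m_l}\prod _{s=1}^{l}q(m_s)=\frac{1}{l!}\sum _{m_1,\ldots ,m_l \text { distinct}}\prod _{s=1}^{l}q(m_s)\le \frac{\mu ^l}{l!}\le \mu ^l\). Moreover, the up-and-down sequences that actually arise from the exploration process are exactly the Dyck words of length \(2k-2\), so their number is the Catalan number \(C_{k-1}=\frac{1}{k}\binom{2k-2}{k-1}\le 4^{k-1}\). The Python sketch below checks both facts numerically for small parameters; the function names are ours and the code only illustrates the counting.

```python
from itertools import product
from math import comb, factorial


def is_exploration_sequence(a):
    """Up/down words of the exploration: never climb above the root, end at the root."""
    depth = 0
    for step in a:
        depth += 1 if step == "d" else -1
        if depth < 0:
            return False
    return depth == 0


def count_structures(k):
    """Brute-force count of up-and-down structures of trees with k vertices."""
    return sum(is_exploration_sequence(a) for a in product("du", repeat=2 * k - 2))


def catalan(n):
    return comb(2 * n, n) // (n + 1)


def increasing_choices(q, l):
    """Sum over m_1 < ... < m_l of q(m_1) * ... * q(m_l), i.e. the elementary
    symmetric polynomial e_l of the values q, by dynamic programming."""
    e = [1.0] + [0.0] * l
    for x in q:
        for j in range(l, 0, -1):  # descending j, so each x is used at most once
            e[j] += e[j - 1] * x
    return e[l]


for k in range(1, 8):
    print(k, count_structures(k), catalan(k - 1), 4 ** (k - 1))

q = [0.5, 0.3, 0.2, 0.1]
mu = sum(q)
print([(increasing_choices(q, l), mu ** l / factorial(l)) for l in range(5)])
```

Taking the product over all vertices and summing over structures, these two facts give a bound of order \((4\mu )^{k-1}\), in line with the estimate \(4^k\mu ^k\) used in the proof of Lemma 3.2 whenever \(4\mu \ge 1\).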

We now want to use the above lemma about the Galton-Watson tree in order to get results about the average degree of connected subsets of the long-range percolation graph. For this, we define the average degree of a finite set \(Z\subset \mathbb {Z}^d\) by

One elementary inequality we will use in the following controls the exponential moments of certain random variables. Assume that \(\left( U_{i}\right) _{i\in \mathbb {N}}\) are independent Bernoulli random variables and \(U= \sum _{i=1}^{\infty } U_i\). Then

and this already implies, by Markov’s inequality, that for any \(C>0\)

Lemma 3.2

Let \(\mathcal{C}\mathcal{S}_k= \mathcal{C}\mathcal{S}_k\left( \mathbb {Z}^d\right) \) be the set of all connected subsets of the long-range percolation graph with vertex set \(\mathbb {Z}^d\) which are of size k and contain the origin \(\textbf{0}\). We write \(\mu _\beta \) for \(\mathbb {E}_\beta \left[ \deg (\textbf{0})\right] \). Then for all \(\beta >0\)

Proof

Consider percolation on the tree \(L = \bigcup _{n=0}^\infty L_n\), where \(L_n = \left( \mathbb {Z}^d \setminus \{\textbf{0}\}\right) ^n\), the edge set is given by \(S=\left\{ \{v,(v \ m)\}: v \in L, m\in \mathbb {Z}^d {\setminus } \{\textbf{0}\} \right\} \), and an edge of the form \(\{v,(v \ m)\}\) is open with probability \(p\left( \beta , \{\textbf{0},m\}\right) \). A total ordering on \(\mathbb {Z}^d \setminus \{\textbf{0}\}\) is obtained from an arbitrary deterministic bijection with \(\mathbb {N}\). From Lemma 3.1, we know that the expected number of open connected sets of size k in L containing the root is bounded by \(4^k \mu _\beta ^k\). We want to project a finite tree \(T\subset L\) of size k down to \(\mathbb {Z}^d\). Recall the notation \(\left( Y_i^\prime \right) _{i\in \{1,\ldots ,k\}}\) for the depth-first search from left to right in the tree. The information contained in the structure of the tree can be represented by the vectors \(\overset{\rightarrow }{a}=(a_1,\ldots ,a_{2k-2}) \in \{u,d\}^{2k-2}\) and \(\overset{\rightarrow }{m}=(m_1,\ldots ,m_{k-1}) \in \left( \mathbb {Z}^d{\setminus }\{\textbf{0}\}\right) ^{k-1}\). We now define a subgraph \(\left( Z(T),E(T)\right) \) of the integer lattice and an exploration process \(\left( X^\prime _i\right) _{i \in \{1,\ldots , k\}}\) as follows:

0. Start with \(X_1^\prime = \textbf{0}, E_1(T)= \emptyset \).

1. For \(i=2,\ldots ,k\): let \(j<i\) be such that \(Y_i^\prime = (Y_j^\prime \ m)\) for some \(m \in \mathbb {Z}^d{\setminus }\{\textbf{0}\}\). Set \(X_i^\prime = X_j^\prime + m\) and \(E_i(T) = E_{i-1}(T) \cup \left\{ \{X_i^\prime , X_j^\prime \}\right\} \).

2. Set \(Z(T)= \bigcup _{i=1}^k \{X_i^\prime \}\) and \(E(T)=E_{k}(T)\).
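The projection in steps 0 to 2 only needs the encoding of T. The following Python sketch computes Z(T) and E(T) and reports whether \(|Z(T)|=k\); the representation (the up-and-down word as a list of "d"/"u", the jumps \(m_i\) as integer tuples) and the function name are our own choices. It reproduces the example of Fig. 4.

```python
def project(a, m, d):
    """Project an encoded tree (up/down word a, jumps m in Z^d minus 0) to Z^d.

    The i-th downward step attaches X'_i = X'_j + m to the current vertex X'_j.
    Returns Z(T), E(T) and whether |Z(T)| = k."""
    origin = (0,) * d
    branch = [origin]     # images X'_j of the vertices on the current branch
    visits = [origin]     # X'_1, ..., X'_k in the order of first visit
    edges = set()
    jumps = iter(m)
    for step in a:
        if step == "d":
            parent = branch[-1]
            jump = next(jumps)
            child = tuple(p + q for p, q in zip(parent, jump))
            visits.append(child)
            edges.add(frozenset((parent, child)))
            branch.append(child)
        else:
            branch.pop()
    Z = set(visits)
    return Z, edges, len(Z) == len(visits)


# Example of Fig. 4 (d = 1): (a_1,...,a_8) = (d,u,d,d,u,d,u,u), m = (-2, 1, -2, 2)
Z, E, ok = project(list("dudduduu"), [(-2,), (1,), (-2,), (2,)], d=1)
print(sorted(z[0] for z in Z), len(E), ok)   # [-2, -1, 0, 1, 3] 4 True
```

For comparison, the word "dudu" with jumps (1, 1) sends two different tree vertices to the same lattice point, so \(|Z(T)|=2<3\).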

A tree T with 5 vertices, \((a_1,\ldots ,a_{8})=(d,u,d,d,u,d,u,u)\), and \((m_1,\ldots ,m_4)=(-2,1,-2,2)\), and its projection on \(\mathbb {Z}\). The vertices with thick boundary \(\{-2,-1,0,1,3\} \subset \mathbb {Z}\) are the set Z(T) and the thick edges between them are the set E(T). Note that (Z(T), E(T)) really is a tree for this example

See Fig. 4 for an example of this projection. The graph \(\left( Z(T),E(T)\right) \) is clearly connected, but it is not necessarily a tree, as there can be \(i\ne j\) with \(X_i^\prime = X_j^\prime \), in which case \(|Z(T)|<k\). We call both the graph \(\left( Z(T), E(T)\right) \) and the tree T admissible if \(|Z(T)|=k\). In this case the \(k-1\) edges in E(T) are distinct, as each of them contains a vertex \(X_i^\prime \) that was not visited before, and thus \(\left( Z(T),E(T)\right) \) is a tree, as every connected graph with k vertices and \(k-1\) edges is a tree. We also write \(\mathcal{T}\mathcal{A}_k\) for the set of admissible trees \(T\subset (L,S)\) of size k. Assume that the graph \(\left( Z(T),E(T)\right) \) is admissible. Then the probability that all edges exist in the random graph equals \(\prod _{i=1}^{k-1}p(\beta , \{\textbf{0},m_i\})\), which is exactly the probability that all edges of the tree T exist inside (L, S). Every connected set \(Z \subset \mathbb {Z}^d\) has a spanning tree. Thus there exists a tree \(T\subset L\) with \(Z=Z(T)\) such that all edges in E(T) exist. This and the result of Lemma 3.1 imply that

For an admissible tree T, the degree of each vertex \(v\in Z(T)\) is the sum of an inside degree and an outside degree, which we will now define. The inside degree \(\deg _{Z(T)}(v)\) of a vertex \(v \in Z(T)\) is defined by

which is just the number of edges in E(T) containing v. Note that for a given admissible tree T, the inside degree is purely deterministic and does not depend on the environment. Also note that, by the handshaking lemma,

where the last equality holds as (Z(T), E(T)) is a tree. Now let us turn to the outside degree \(\deg _{Z(T)^C}(v)\) of a vertex \(v\in Z(T)\), which we define by

The outside degree depends on the random environment \(\omega \) and is a non-constant random variable, contrary to \(\deg _{Z(T)}(v)\). Now we want to get bounds on the random variable \(\sum _{v \in Z(T)} \deg _{Z(T)^C}(v)\). Note that \(\{u,v\} \notin E(T)\) does not imply that \(u\notin Z(T)\), but only that u and v are not neighbors in the graph induced by T. The random variable \(\sum _{v \in Z(T)} \deg _{Z(T)^C}(v)\) is not the sum of independent Bernoulli random variables, as we might count some edges twice. But as one can count every edge at most twice in this sum, one has the bound

where the expression on the right-hand side is a sum of independent Bernoulli random variables with expectation at most \(|Z(T)|\mu _\beta \). So for each admissible tree T we always have

We use the notation

For a given finite admissible tree T, we have that

So far we only obtained this bound for a fixed admissible tree \(T\subset (L,S)\). Recall that every connected set \(Z\in \mathcal{C}\mathcal{S}_k\) has a spanning tree and that there exists a tree \(T\subset (L,S)\) such that \(\left( Z(T),E(T)\right) \) is exactly this spanning tree. As before, \(\mathcal{T}\mathcal{A}_k\) denotes the set of admissible trees \(T\subset (L,S)\) of size k. With the observations above we get that

where we used that \(\mu _\beta \ge 2\) in the last inequality. This holds for long-range percolation with our parameters, as each vertex is always connected to its \(3^d-1\ge 2\) nearest neighbors. The final inequality is exactly the claim of the lemma, which finishes the proof.

\(\square \)
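The elementary exponential-moment inequality stated before Lemma 3.2 can be spelled out as follows. This is one standard way to derive a bound of this type, assuming only that the \(U_i\) are independent Bernoulli random variables with \(\mathbb {E}[U]<\infty \); the constants in the displays omitted above may differ. The first equality uses independence and monotone convergence, the first inequality uses \(1+x\le e^x\), and the second display is Markov's inequality applied to \(e^{U}\).

```latex
\[
\mathbb{E}\big[e^{U}\big]
  = \prod_{i=1}^{\infty}\Big(1+(e-1)\,\mathbb{P}(U_i=1)\Big)
  \le \prod_{i=1}^{\infty}\exp\Big((e-1)\,\mathbb{P}(U_i=1)\Big)
  = e^{(e-1)\,\mathbb{E}[U]}
  \le e^{2\,\mathbb{E}[U]},
\]
\[
\mathbb{P}\big(U\ge C\big)
  = \mathbb{P}\big(e^{U}\ge e^{C}\big)
  \le e^{-C}\,\mathbb{E}\big[e^{U}\big]
  \le e^{2\,\mathbb{E}[U]-C}
  \qquad\text{for all } C>0 .
\]
```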

4 Distances in \(V_{\textbf{0}}^n\)

In this section, we give several bounds on the distribution of the graph distances between points, respectively sets, inside of certain boxes. In Sect. 4.1, we determine several properties of the function \((x,y)\mapsto \mathbb {E}_\beta \left[ D_{V_\textbf{0}^n} (x,y) \right] \). It is intuitively clear that this expression is large when x and y are far apart in Euclidean distance, for example when \(x=\textbf{0}\) and \(y=(n-1)\textbf{1}\). This intuition is made rigorous in Lemma 4.2. In Sect. 4.2, we bound the second moment of random variables of the form \(D_{V_\textbf{0}^n} (x,y)\) from above. Then, in Sect. 4.3, we use these results to bound the distance between certain points and sets in the long-range percolation graph.
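To make the quantity \(\mathbb {E}_\beta \left[ D_{V_\textbf{0}^n} (x,y) \right] \) concrete, here is a small Monte Carlo sketch in \(d=1\), where the connection probability has a closed form: for \(k=|u-v|\ge 2\) one has \(\int _u^{u+1}\int _v^{v+1}(x-y)^{-2}\,\textrm{d}x\,\textrm{d}y=\log \frac{k^2}{k^2-1}\), so \(p(\beta ,\{u,v\})=1-(1-k^{-2})^{\beta }\). The function names and parameters are our own. The code samples every edge of \(V_\textbf{0}^n=\{0,\ldots ,n-1\}\) independently (quadratic work, so only small n are practical) and estimates \(\mathbb {E}_\beta \left[ D_{V_\textbf{0}^n}(0,n-1)\right] \) by breadth-first search.

```python
import random
from collections import deque


def connection_probability(beta, k):
    """p(beta, {u, v}) in d = 1 for |u - v| = k >= 2 (closed form of the double integral)."""
    return 1.0 - (1.0 - 1.0 / k**2) ** beta


def sample_box(n, beta, rng):
    """Adjacency lists of one sample of the model on {0, ..., n-1}."""
    adj = [[] for _ in range(n)]
    for u in range(n):
        for v in range(u + 1, n):
            # nearest-neighbour edges are always open
            p = 1.0 if v - u == 1 else connection_probability(beta, v - u)
            if rng.random() < p:
                adj[u].append(v)
                adj[v].append(u)
    return adj


def graph_distance(adj, source, target):
    """Breadth-first search distance from source to target."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        x = queue.popleft()
        if x == target:
            return dist[x]
        for y in adj[x]:
            if y not in dist:
                dist[y] = dist[x] + 1
                queue.append(y)
    raise ValueError("target not reachable")


def mean_distance(n, beta, samples, seed=0):
    """Monte Carlo estimate of E_beta[D(0, n-1)] on {0, ..., n-1}."""
    rng = random.Random(seed)
    total = 0
    for _ in range(samples):
        total += graph_distance(sample_box(n, beta, rng), 0, n - 1)
    return total / samples


print(mean_distance(200, 1.0, samples=10))
```

For \(\beta =0\) only nearest-neighbor edges are present and the estimate equals \(n-1\) exactly; for \(\beta >0\) the long edges shorten the distance.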

4.1 Graph distances of far away points

From the definition of \(\Lambda (n,\beta )\) in (9) it is not clear which pair u, v maximizes the expected distance, nor how the expected graph distances can be compared for different graphs \(V_\textbf{0}^n\) and \(V_\textbf{0}^{n^\prime }\). In Proposition 4.1, we construct a coupling between the long-range percolation graphs on \(V_{\textbf{0}}^n\) for different n. In Lemma 4.2, we show that, up to a constant factor, the maximum in the definition of \(\Lambda (n,\beta )\) is attained by the pair \(\{\textbf{0}, (n-1)e_1\}\) or \(\{\textbf{0},(n-1)\textbf{1}\}\).

Proposition 4.1

Let \(\beta \ge 0\) and \(n^\prime , n \in \mathbb {N}_{>0}\) with \(n^\prime \le n\). For \(u,v \in V_{\textbf{0}}^n\) define \(u^\prime {:}{=}\lfloor \frac{n^\prime }{n} u \rfloor , v^\prime {:}{=}\lfloor \frac{n^\prime }{n} v \rfloor \), where the rounding operation is componentwise. There exists a coupling of the random graphs with vertex sets \(V_{\textbf{0}}^n\) and \(V_{\textbf{0}}^{n^\prime }\) such that both are distributed according to \(\mathbb {P}_\beta \) and

for all \(u,v \in V_{\textbf{0}}^n\). The same holds true when one considers the graph \(\mathbb {Z}^d\) instead of \(V_{\textbf{0}}^n\) and this also implies that

Proof

We prove the statement via a coupling with the underlying continuous model. As the claim clearly holds for \(\beta =0\) or for \(u=v\), we can assume \(\beta >0 \) and \( u \ne v\) from here on. Let \(\tilde{\mathcal {E}}\) be a Poisson point process on \(\mathbb {R}^d\times \mathbb {R}^d\) with intensity \(\frac{\beta }{2\Vert t-s\Vert ^{2d}}\) and define \(\mathcal {E}= \left\{ (t,s)\in \mathbb {R}^d \times \mathbb {R}^d: (s,t)\in \tilde{\mathcal {E}}\right\} \cup \tilde{\mathcal {E}}\). Recall that this point process has a scaling invariance, namely that for a constant \(\alpha >0\) the set \(\alpha \mathcal {E}\) has exactly the same distribution as \(\mathcal {E}\). We now define a random graph \(G=(V,E)\): for \(u,v \in V_{\textbf{0}}^n {=}{:}V\) we place an edge between u and v if and only if \((u+\mathcal {C}) \times (v + \mathcal {C}) \cap n \mathcal {E}\ne \emptyset \). We have already seen in Sect. 1.2 about the continuous model that this creates a sample of independent long-range percolation where the connection probability between the vertices u and v is given by \(p(\beta ,\{u,v\})=1-e^{-\int _{u+\mathcal {C}} \int _{v+ \mathcal {C}} \frac{\beta }{\Vert t-s\Vert ^{2d}}\textrm{d}t \textrm{d}s}\). We can do the same procedure for \(V^\prime {:}{=}V_{\textbf{0}}^{n^\prime }\) and \(n^\prime \mathcal {E}\) to get a random graph \(G^\prime =(V^\prime , E^\prime )\): we place an edge between two vertices \(u^\prime , v^\prime \in V^\prime \) if and only if \((u^\prime +\mathcal {C}) \times (v^\prime + \mathcal {C}) \cap n^\prime \mathcal {E}\ne \emptyset \). We now claim that for any two vertices \(u,v \in V\) with \(u\ne v\) and \(u^\prime , v^\prime \) defined as above one has \(D_{G^\prime }(u^\prime , v^\prime ) \le 2 D_{G}(u,v)+1\), which already implies (28). Assume that \((x_0=u,x_1,\ldots ,x_l=v)\) is a shortest path between u and v in G, where \(l=D_G(u,v)\). Then for all \(i=1,\ldots ,l\) there are points

In particular one has

for all \(i=2,\ldots ,l\), \(\Vert y(1,0)-u\Vert _\infty < 1\), and \(\Vert y(l,1) - v\Vert _\infty < 1\). For all \(i=1,\ldots , l\) and \(j \in \{0,1\}\) define \(y^\prime (i,j) = \frac{n^\prime }{n} y(i,j)\), which implies \(\left( y^\prime (i,0), y^\prime (i,1) \right) \in n^\prime \mathcal {E}\). With this definition one clearly has

for all \(i=2,\ldots ,l\), \(\Vert y^\prime (1,0) - \frac{n^\prime }{n} u \Vert _\infty <1\), and \(\Vert y^\prime (l,1) - \frac{n^\prime }{n} v \Vert _\infty <1\). So in \(G^\prime \) we can use the path from \(u^\prime \) to \(v^\prime \) that uses all the edges \(\left\{ \lfloor y^\prime (i,0) \rfloor , \lfloor y^\prime (i,1) \rfloor \right\} \), \(i=1,\ldots ,l\). Whenever \(\lfloor y^\prime (i-1,1) \rfloor \ne \lfloor y^\prime (i,0) \rfloor \) (or, at the endpoints, \(u^\prime \ne \lfloor y^\prime (1,0) \rfloor \), respectively \(\lfloor y^\prime (l,1) \rfloor \ne v^\prime \)), we additionally use the nearest-neighbor edge between these two vertices, which exists since \(\Vert y^\prime (i-1,1) - y^\prime (i,0) \Vert _\infty < 1\) implies \(\Vert \lfloor y^\prime (i-1,1) \rfloor - \lfloor y^\prime (i,0) \rfloor \Vert _\infty \le 1\), and analogously at the endpoints. So, compared with the shortest path between u and v in G, the path between \(u^\prime \) and \(v^\prime \) in \(G^\prime \) needs at most one additional step before each of the l long edges and one additional step at the end, which implies that \(D_{G^\prime }(u^\prime ,v^\prime ) \le 2D_G(u,v) + 1\). If one does not restrict to the sets \(V=V_{\textbf{0}}^n\) and \(V^\prime = V_{\textbf{0}}^{n^\prime }\), but works on the graph with vertex set \(\mathbb {Z}^d\) instead, the same proof works. \(\quad \square \)
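The deterministic part of this coupling argument, turning the long edges of a shortest path in G into a walk in \(G^\prime \) with at most \(2l+1\) steps, can be sketched as follows. The input format (a list of pairs \((y(i,0),y(i,1))\) of points of \(n\mathcal {E}\)) and the helper `random_chords`, which produces hypothetical data satisfying \(\Vert y(1,0)-u\Vert _\infty <1\), \(\Vert y(i-1,1)-y(i,0)\Vert _\infty <1\) and \(\Vert y(l,1)-v\Vert _\infty <1\), are our own illustrative assumptions; the Poisson process itself is not simulated.

```python
import math
import random


def floor_vec(x):
    return tuple(math.floor(c) for c in x)


def linf(a, b):
    return max(abs(p - q) for p, q in zip(a, b))


def rescaled_walk(u, v, chords, n, n_prime):
    """Walk from u' to v' in G' built from the chords of a path u -> v in G.

    Each chord is rescaled by n'/n; consecutive rounded points are joined by a
    nearest-neighbour edge (always open) whenever they differ."""
    s = n_prime / n
    walk = [floor_vec([s * c for c in u])]           # u'

    def step_to(w):
        if w != walk[-1]:
            walk.append(w)

    for y0, y1 in chords:
        a = floor_vec([s * c for c in y0])
        b = floor_vec([s * c for c in y1])
        assert linf(a, walk[-1]) <= 1                # nearest-neighbour step (or none)
        step_to(a)
        step_to(b)                                   # long edge {a, b} of G'
    end = floor_vec([s * c for c in v])              # v'
    assert linf(end, walk[-1]) <= 1
    step_to(end)
    return walk


def random_chords(u, l, n, rng):
    """Hypothetical chords: y(i,0) lies in the same unit cube as the previous endpoint."""
    chords, prev = [], u
    for _ in range(l):
        y0 = tuple(c + rng.random() for c in floor_vec(prev))
        y1 = tuple(rng.uniform(0, n) for _ in u)
        chords.append((y0, y1))
        prev = y1
    return chords, floor_vec(prev)                   # v: the cube containing y(l,1)


rng = random.Random(0)
chords, v = random_chords((3, 5), 6, 100, rng)
walk = rescaled_walk((3, 5), v, chords, 100, 37)
print(len(walk) - 1, "<=", 2 * len(chords) + 1)
```

The two assertions inside `rescaled_walk` encode the fact used in the proof that points at \(\ell ^\infty \)-distance less than 1 have roundings at distance at most 1.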

Lemma 4.2

For all \(\beta \ge 0\), \(n \in \mathbb {N}_{>0}\), and \(u,v \in V_{\textbf{0}}^n\), we have

and

This lemma has two interesting implications, which we discuss before turning to the proof.

Remark 4.3

Combining (30) and (31) already implies that for \(\Lambda (n,\beta ) = \max _{u,v \in V_{\textbf{0}}^{n}} \mathbb {E}_\beta \left[ D_{V_\textbf{0}^n} (u,v) \right] +1\) one has

Remark 4.4

For all bounded sets \(K\subset \mathbb {R}_{\ge 0}\) there exists a constant \(\theta ^\star >0\) such that for all \(\beta \in K\) and all M, N large enough one has

Proof

Remark 4.3 together with (20) shows the existence of such a \(\theta ^\star \) along a subsequence of numbers of the form \(M=m^k\). Proposition 4.1 extends the result to all large enough M. \(\quad \square \)

Proof of Lemma 4.2

Using the triangle inequality and linearity of expectation we get for all \(u,v \in V_\textbf{0}^n\) that

and thus, in order to prove (30), it suffices to show that

for all \(v \in V_{\textbf{0}}^n\). By symmetry, we can assume that \(p_1(v) \ge p_2(v)\ge \ldots \ge p_d(v)\). For \(k\in \{0,\ldots ,d\}\), we define the vector \(v(k) \in V_{\textbf{0}}^n\) by

i.e., the first k coordinates of v(k) equal the corresponding coordinates of v and all other coordinates are 0. By the triangle inequality and linearity of expectation we clearly have

So in order to show (32), it suffices to show that

for all \(i \in \{0,\ldots , d-1\}\). For each such index i, the cube

is contained in the cube \(V_{\textbf{0}}^n\). These cubes \(\left( \mathcal {B}_i\right) _{i \in \{0,\ldots , d-1\}}\) are chosen in such a way that the cube \(\mathcal {B}_i \subset V_\textbf{0}^n\), both points v(i) and \(v(i+1)\) are corners of the cube \(\mathcal {B}_i\) and the line-segment connecting v(i) to \(v(i+1)\) is an edge of the cube. This property will then allow us to use the symmetry of the model, together with Proposition 4.1.

See Fig. 5 for an example. Restricting the geodesic to fewer edges can only increase the distance between two points, which implies \(D_{V_{\textbf{0}}^n} (v(i),v(i+1)) \le D_{\mathcal {B}_i} (v(i),v(i+1))\) as \(\mathcal {B}_i \subset V_{\textbf{0}}^n\). As the model is invariant under permuting the coordinates and under the reflections \(e_i \mapsto -e_i\), we get for all \(i\in \{0,\ldots ,d-1\}\)

where we used Proposition 4.1 for the last inequality. This shows (33) and thus finishes the proof of (30). Now let us go to the proof of (31). Define \(y\in \mathbb {Z}^d\) by \(p_1(y)=1, p_i(y) = -1\) for \(i\ge 2\) and define the cube \(\mathcal {B}\) by \(\mathcal {B}=\{n-1,\ldots , 2n-2\} \times \{0,\ldots ,n-1\}^{d-1}\). By the triangle inequality we have

Observe that \((2n-2)e_1 = (n-1)\textbf{1}+ (n-1)y\). The vertices \(\textbf{0}\) and \((n-1)\textbf{1}\) lie on opposite corners of the cube \(V_\textbf{0}^n\), and the vertices \((n-1)\textbf{1}\) and \((2n-2)e_1\) lie on opposite corners of the cube \(\mathcal {B}\). The two cubes \(V_\textbf{0}^n\) and \(\mathcal {B}\) differ only by a translation; in particular, they have the same side length. As the long-range percolation model is invariant under translations and under reflections of any coordinate, the two terms in the sum (34) have the same distribution, which implies that

Using Proposition 4.1, we finally get

which shows (31). \(\quad \square \)

4.2 The second moment bound

The next lemma relates the second moment of the distances to their first moment. We use a technique that was already used in [20], in a slightly different form and for dimension \(d=1\) only. As we need the result uniformly in \(\beta \) in our companion paper [4], we directly prove the uniform statement here. The uniformity does not cause any complications for \(d\ge 2\), but it causes minor technical difficulties for \(d=1\), so we give the proof for \(d=1\) in the appendix. The situation for \(d\ge 2\) is easier, as there are no cut points, in the sense that for all \(u,v \in V_{\textbf{0}}^n\) there exist two internally disjoint paths between u and v. For \(d=1\), and in particular for \(\beta < 1\), such a statement will typically not be true.

Lemma 4.5

For all \(\beta \ge 0\), there exists a constant \(C_\beta <\infty \) such that for all \(n \in \mathbb {N}\), all \(\varepsilon \in \left[ 0,1\right] \) and all \(u,v \in V_{\textbf{0}}^n\)

Proof of Lemma 4.5 for \(d\ge 2\). Fix \(\beta \ge 0\). We will prove that for all \(\varepsilon \in \left[ 0,1\right] \), all \(m,n \in \mathbb {N}\), and all \(u,v \in V_{\textbf{0}}^{mn}\)

Iterating this inequality, one gets for some large enough N that

for all \(k \in \mathbb {N}\). By Remark 4.4 there exists \(\theta ^\star = \theta ^\star (\beta ) > 0\) such that for all \(\varepsilon \in \left[ 0,1\right] \), and all \(m,n \in \mathbb {N}\) large enough one has

Take m large enough so that also \(300\,m^{-\theta ^\star } < \frac{1}{2}\) is satisfied. Inserting this into (37) gives

for large enough N. This shows (35) along the subsequence \(N, mN, m^2N, \ldots \). For general \(n \in \mathbb {N}\), the desired result follows from Proposition 4.1. So we are left with showing (36). For this, we use an elementary observation that was already used in [20]. Assume that \(X_1, \ldots , X_{\tilde{m}}\) are independent non-negative random variables and let \(\tau = \arg \max _{i \in \{1,\ldots , \tilde{m}\}} \left( X_i\right) \). Then

We still need to show inequality (36), i.e., that

Let \(u,v \in V_{\textbf{0}}^{mn}\), say with \(u \in V_x^n, v \in V_y^n\), where \(x,y \in V_{\textbf{0}}^m\). Inequality (36) clearly holds in the case where \(x=y\). For the case \(x \ne y\), let \(x_0=x, x_1, \ldots , x_l = y\) and \(x^\prime _0=x, x^\prime _1, \ldots , x^\prime _{l^{\prime }} = y\) be two self-avoiding, completely disjoint and deterministic paths between x and y inside \(V_{\textbf{0}}^m\) that are of length at most \(m+1\) and use only nearest-neighbor edges, i.e., \(\Vert x_i-x_{i-1}\Vert _\infty =1\) and \(\Vert x_i^\prime - x_{i-1}^\prime \Vert _\infty =1\) for all suitable indices i. By completely disjoint we mean that \(\left\{ x_1,\ldots , x_{l-1}\right\} \cap \left\{ x_1^\prime ,\ldots , x_{l^\prime -1}^\prime \right\} = \emptyset \); the starting point \(x=x_0=x_0^\prime \) and the end point \(y=x_l=x_{l^\prime }^\prime \) necessarily agree by construction. Now we iteratively define sequences \((L_i,R_i)_{i=0}^l\) and \((L_i^\prime ,R_i^\prime )_{i=0}^{l^\prime }\) as follows:

0. Set \(L_0 = u, R_l=v\).

1. For \(i=1,\ldots , l\), choose \(R_{i-1} \in V_{x_{i-1}}^n\) and \(L_i \in V_{x_i}^n\) such that \(\Vert R_{i-1}-L_i\Vert _\infty = 1\).

Analogously, we define \((L_i^\prime ,R_i^\prime )_{i=0}^{l^\prime }\) by

0. Set \(L^\prime _0 = u, R^\prime _{l^\prime }=v\).

1. For \(i=1,\ldots , l^\prime \), choose \(R_{i-1}^\prime \in V_{x_{i-1}^\prime }^n\) and \(L_i^\prime \in V_{x_i^\prime }^n\) such that \(\Vert R^\prime _{i-1}-L^\prime _i\Vert _\infty = 1\).

The graph \(V_\textbf{0}^9\) for \(d=2\). The division into \(3\times 3\)-boxes is marked in gray. In this picture, u, v are the black vertices, \(R_0,L_1,R_1,L_2\) are the starred vertices, and the nearest-neighbor edges \(\{R_0, L_1\}\) and \(\{R_1,L_2\}\) are black and thick. The vertices \(R_0^\prime ,L_1^\prime ,R_1^\prime ,L_2^\prime ,R_2^\prime ,L_3^\prime \) are the vertices with an extra circle and a dot inside; the nearest-neighbor edges \(\{R_0^\prime ,L_1^\prime \}, \{R_1^\prime ,L_2^\prime \}\), and \(\{R_2^\prime ,L_3^\prime \}\) are black and thick.

The choices in step 1 of these algorithms are typically not unique. If there are several possibilities, we always choose the vertices with some deterministic rule that does not depend on the environment. An example of such a construction is given in Fig. 6. The idea behind this construction is that there exists a path that goes from \(L_0\) to \(R_0\) to \(L_1\) to \(R_1\) ... to \(L_l\) to \(R_l\). Furthermore, there also exists a path that goes from \(L_0^\prime \) to \(R_0^\prime \) to \(L_1^\prime \) ... to \(L_{l^\prime }^\prime \) to \(R_{l^\prime }^\prime \). We then compare the lengths of these two paths.

By construction we have \(L_i, R_i \in V_{x_i}^n\) and \(L_i^\prime , R_i^\prime \in V_{x_i^\prime }^n\) for all \(i \in \{0,\ldots , l\}\), respectively \(i \in \{0,\ldots , l^\prime \}\). Define

These are at most \(l-1+l^\prime -1 \le 2m\) random variables, and they are independent, as the boxes \(V_{x_i}^n\), \(i\in \{1,\ldots ,l-1\}\), and \(V_{x_i^\prime }^n\), \(i\in \{1,\ldots ,l^\prime -1\}\), are pairwise disjoint. We order the random variables \(\left\{ X_i: i \in \{1,\ldots ,l-1\}\right\} \cup \left\{ X_i^\prime : i \in \{1,\ldots ,l^\prime -1\}\right\} \) in descending order and call them \(Y_1,Y_2,\ldots , Y_{l+l^\prime -2 }\). The idea for finding a short path between u and v is to avoid the box in which the maximum of the \(Y_i\)'s is attained. Assume that the maximum is one of the \(X_i\)'s, i.e., \(X_i=Y_1\) for some \(i\in \{1,\ldots ,l-1\}\). Then we consider the path that goes from \(L_0^\prime =u\) to \(R_0^\prime \), from there to \(L_1^\prime \), and successively on to \(R_{l^\prime }^\prime =v\). Otherwise, we have \(X_i^\prime = Y_1\) for some \(i \in \{1,\ldots ,l^\prime -1\}\). In this situation, we consider the path that goes from \(L_0 =u\) to \(R_0\), from there to \(L_1\), and successively on to \(R_{l} =v\). In both cases we have constructed a path between u and v. The length of this path is an upper bound on the chemical distance between u and v, and thus we get

where the summand \((m+1)\) arises as one still needs to go from \(R_i\) to \(L_{i+1}\) for all \(i\in \{0,\ldots , l-1\}\), or from \(R_i^\prime \) to \(L_{i+1}^\prime \) for all \(i\in \{0,\ldots , l^\prime -1\}\). But by assumption one has \(l, l^\prime \le m+1\), so one needs at most \(m+1\) additional steps. From (38) we know that

For the distance between \(L_0 \) and \(R_0\) one clearly has

and the same statements hold for \(D_{V_x^n}(L_0^\prime , R_0^\prime ), D_{V_y^n}(L_l, R_l), \) and \( D_{V_y^n}(L_{l^\prime }^\prime , R_{l^\prime }^\prime )\). Using the elementary inequality \(\left( \sum _{i=1}^{6} a_i\right) ^2 \le 36 \sum _{i=1}^{6} a_i^2\) that holds for any six numbers \(a_1,\ldots , a_6 \in \mathbb {R}\) for the term in (39), we get that

which shows (36) and thus finishes the proof. \(\quad \square \)
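The step in which the path avoids the box carrying the largest of the variables \(X_i, X_i^\prime \) can be isolated as follows. Writing \(Z_1,\ldots ,Z_{l+l^\prime -2}\) for all these variables and \(\tau \) for the index of their maximum, the middle part of the chosen path costs at most \(\sum _{i\ne \tau }Z_i\), and since \(Z_i\le Z_\tau \) one has pointwise \(\big (\sum _{i\ne \tau }Z_i\big )^2\le \sum _{i\ne j}Z_iZ_j\) (sum over ordered pairs); by independence, the expectation of the right-hand side is at most \(\big (\sum _i\mathbb {E}[Z_i]\big )^2\). This is one version of the elementary observation borrowed from [20]; we do not claim that it coincides with the omitted display used above. The Python sketch checks the pointwise inequality on random integer data and compares the second moment with this bound by Monte Carlo, for hypothetical exponential variables.

```python
import random


def cost_avoiding_max(X, X_prime):
    """Sum of the box distances along the path that avoids the overall maximum.

    X and X_prime hold the non-negative values X_i and X'_i of the two paths;
    ties may be broken either way."""
    if max(X, default=0) >= max(X_prime, default=0):
        return sum(X_prime)
    return sum(X)


def off_diagonal_sum(Z):
    """Sum over ordered pairs i != j of Z_i * Z_j, i.e. (sum Z)^2 - sum Z_i^2."""
    return sum(Z) ** 2 - sum(z * z for z in Z)


rng = random.Random(0)
means = [1.0, 2.0, 0.5, 1.5, 3.0]   # hypothetical expectations of X_1, X_2, X'_1, X'_2, X'_3
trials = 20000
second_moment = 0.0
for _ in range(trials):
    Z = [rng.expovariate(1 / mu) for mu in means]   # exponential with mean mu
    second_moment += cost_avoiding_max(Z[:2], Z[2:]) ** 2 / trials
print(second_moment, "<=", sum(means) ** 2)
```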

Corollary 4.6

Iterating this technique one can show that for all \(k\in \mathbb {N}\) of the form \(k=2^l\) and for all \(\beta > 0\) there exists a constant \(C_\beta <\infty \) such that for all \(n \in \mathbb {N}\), and all \(u,v \in V_{\textbf{0}}^n\)

Then, one can extend this bound to all \(k\in \mathbb {R}_{\ge 0}\) with Hölder’s inequality.

Proof of Corollary 4.6 for \(d\ge 2\). For \(r> 0\), define the quantity

and assume that \(\Lambda ^r(n,\beta ) \le C \Lambda (n,\beta )^r\) for some constant C and all \(n\in \mathbb {N}\). Using the same notation as in (39) above, we get that for any \(u,v \in V_\textbf{0}^{mn}\), say with \(u\in V_x^n\) and \(v\in V_y^n\),

and thus we also get that

We have that

and from here the same argument as in the proof of Lemma 4.5 shows that \(\Lambda ^{2r}(\beta ,n) \le C(r) \Lambda (\beta ,n)^{2r}\) for some constant \(C(r)< \infty \). Inductively, we thus get that for all \(r=2^k\) with \(k\in \mathbb {N}\), one has \(\Lambda ^r(\beta ,n) \le C(r) \Lambda (\beta ,n)^r\). If \(r>0\) is not of the form \(r=2^k\) for some \(k\in \mathbb {N}\), choose k large enough so that \(r < 2^k\). Then we get that

for some constant C. \(\quad \square \)
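The final step is an application of Jensen's (equivalently, Hölder's) inequality. Here is a sketch. It assumes, hypothetically, that \(\Lambda ^r(\beta ,n)\) is the supremum over the relevant pairs \(u,v\) of \(\mathbb {E}_\beta \left[ D(u,v)^r\right] \), since the defining display is not reproduced here. For \(0<r<2^k\), the map \(t\mapsto t^{r/2^k}\) is concave and increasing on \([0,\infty )\), so

\[
\mathbb {E}_\beta \left[ D(u,v)^r\right] \le \mathbb {E}_\beta \left[ D(u,v)^{2^k}\right] ^{r/2^k}
\quad \Longrightarrow \quad
\Lambda ^r(\beta ,n) \le \Lambda ^{2^k}(\beta ,n)^{r/2^k} \le C(2^k)^{r/2^k}\, \Lambda (\beta ,n)^r ,
\]

so under this reading one may take the constant to be \(C(2^k)^{r/2^k}\).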

4.3 Graph distances between points and boxes

So far, we have only considered distances between two points in a box. In this section, we investigate the distance between certain points and boxes. For \(n\in \mathbb {N}\) and \(0< \iota < \frac{1}{2}\) we define the boxes \(L_\iota ^n {:}{=}\left[ 0,\iota n\right] ^d\) and \(R_\iota ^n {:}{=}\left[ n-1-\iota n, n-1\right] ^d\). These boxes lie in opposite corners of the cube \(V_{\textbf{0}}^n\): \(L_\iota ^n\) lies in the corner containing \(\textbf{0}\), and \(R_\iota ^n\) lies in the corner containing \((n-1)\textbf{1}\). The next lemma deals with the graph distance between these two boxes. A statement similar to Lemma 4.7 for the continuous model in dimension \(d=1\) was already proven in [20], and we follow the same strategy for the proof. Again, we prove the statement uniformly for \(\beta \) in compact intervals, as we will need this uniform version in [4]. The uniformity does not cause any complications here.

Lemma 4.7

For all \(\beta \ge 0\), there exists an \(\iota >0\) such that uniformly over all \(\varepsilon \in \left[ 0,1\right] \) and \(n\in \mathbb {N}\)

and there exists \(c^\star >0\) such that uniformly over all \(\varepsilon \in \left[ 0,1\right] \) and \(n\in \mathbb {N}\)

Proof

The statement clearly holds for small n, so from here on we focus on \(n \in \mathbb {N}\) large enough. Let \(x \in L_\iota ^n\) and \(y \in R_\iota ^n\) be minimizers of \(D_{V_{\textbf{0}}^n}\left( L_\iota ^n, R_\iota ^n \right) \), i.e., \(D_{V_{\textbf{0}}^n}\left( L_\iota ^n, R_\iota ^n \right) = D_{V_{\textbf{0}}^n}\left( x,y \right) \). If the minimizers are not unique, pick two of them according to a deterministic rule that does not depend on the environment. The choice of x and y, as well as the distance \(D_{V_{\textbf{0}}^n}\left( L_\iota ^n, R_\iota ^n \right) \), depends only on edges with at least one endpoint in \(V_{\textbf{0}}^n {\setminus } \left( L_\iota ^n \cup R_\iota ^n\right) \). The distances \(D_{L_\iota ^n} (\textbf{0},x)\) and \(D_{R_\iota ^n} (y,(n-1)\textbf{1})\) depend only on edges with both endpoints in \(L_\iota ^n\) and \(R_\iota ^n\), respectively. Thus we get that

For \(\iota \) small enough and n large enough, we get uniformly over \(\varepsilon \in \left[ 0,1\right] \) that

for some \(\theta ^\prime > 0\) by Remark 4.4. So by Lemma 4.2, respectively Remark 4.3, we can choose \(\iota \) small enough so that uniformly over \(n \in \mathbb {N}\) large enough and \(\varepsilon \in \left[ 0,1\right] \)

and this implies that

which proves (42). For such an \(\iota \), define the event \(\mathcal {A}\) by \(\mathcal {A} = \left\{ D_{V_{\textbf{0}}^n}\left( L_\iota ^n, R_\iota ^n \right) \ge \frac{1}{4} \mathbb {E}_{\beta +\varepsilon } \left[ D_{V_{\textbf{0}}^n}\left( \textbf{0}, (n-1) \textbf{1}\right) \right] \right\} \). By the Cauchy-Schwarz inequality we have

where the last inequality holds for some \(C^\prime < \infty \), by Lemma 4.2 and Lemma 4.5. Solving the previous line of inequalities for \(\mathbb {P}_{\beta + \varepsilon }\left( \mathcal {A} \right) \) shows (43). \(\quad \square \)
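The second-moment step can be made explicit as follows. This is a sketch, not the original display. Write \(Z = D_{V_{\textbf{0}}^n}\left( L_\iota ^n, R_\iota ^n \right) \) and \(\mu = \mathbb {E}_{\beta +\varepsilon }\left[ D_{V_{\textbf{0}}^n}\left( \textbf{0}, (n-1)\textbf{1}\right) \right] \). As a stand-in for (42), assume a bound \(\mathbb {E}_{\beta +\varepsilon }[Z] \ge a\mu \) for some placeholder constant \(a > \frac{1}{4}\). Also assume \(\mathbb {E}_{\beta +\varepsilon }[Z^2] \le C^\prime \mu ^2\). Splitting according to \(\mathcal {A}\) and applying the Cauchy-Schwarz inequality then gives

\[
a\mu \le \mathbb {E}_{\beta +\varepsilon }\left[ Z \mathbf {1}_{\mathcal {A}^c}\right] + \mathbb {E}_{\beta +\varepsilon }\left[ Z \mathbf {1}_{\mathcal {A}}\right]
\le \frac{\mu }{4} + \sqrt{\mathbb {E}_{\beta +\varepsilon }\left[ Z^2\right] \, \mathbb {P}_{\beta +\varepsilon }\left( \mathcal {A}\right) }
\le \frac{\mu }{4} + \sqrt{C^\prime }\, \mu \sqrt{\mathbb {P}_{\beta +\varepsilon }\left( \mathcal {A}\right) },
\]

and hence \(\mathbb {P}_{\beta +\varepsilon }\left( \mathcal {A}\right) \ge \left( a-\frac{1}{4}\right) ^2 / C^\prime \), uniformly in n and \(\varepsilon \).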

Lemma 4.8

For all \(\beta \ge 0\) and all dimensions d, there exists a constant \(c_1>0\) such that uniformly over all \(n\in \mathbb {N}\) and all \(x \in \mathcal {S}_n\)

and the constant \(c_1\) can be chosen such that it depends only on the dimension d and the value \(\iota > 0\) in (42).

Proof

Let \(v \in \mathcal {S}_n\) be one of the minimizers of \(y \mapsto \mathbb {E}_\beta \left[ D_{B_n(\textbf{0})}(\textbf{0},y)\right] \) among all vertices \(y \in \mathcal {S}_n\). By reflection symmetry, we can assume that all coordinates of v are non-negative. With the notation \(e_0=e_d\) we define the vectors \(v_0,\ldots ,v_{d-1}\) by

which are copies of the vector v with cyclically permuted coordinates. By invariance of the model under permutations of the coordinates, we have

for all \(i \in \{0,\ldots , d-1 \}\). Define the vertices \(u_0,\ldots , u_{d}\) by \(u_j = \sum _{i=1}^{j} v_i\). By construction, \(u_0 = \textbf{0}\) and \(u_{d} = \sum _{i=1}^{d} v_i = N \textbf{1}\) for some integer \(N \ge n\): N is the sum of the coordinates of v, which is at least \(\Vert v\Vert _\infty = n\). All balls \(B_n(u_i)\), \(i\in \{0,\ldots , d\}\), are contained in the cube \(\Upsilon =\{-n,\ldots , N+n\}^d\). Thus we have

and by translation invariance we also have for the cube \(\Upsilon _1 = \{0,\ldots , 2n + N\}^d\)

Using the triangle inequality, we see that for all \(k\in \mathbb {N}\) the expected distance between \(n\textbf{1}\) and \((n+kN)\textbf{1}\) inside the cube \(\Upsilon _k = \{0,\ldots , 2n + kN\}^d \) is upper bounded by

But Proposition 4.1 also gives that for \(s=\frac{n}{kN + 2n}\) and \(w_1 = \lfloor s n\textbf{1}\rfloor , w_2 = \lfloor s (n+kN)\textbf{1}\rfloor \)

As \(N\ge n\), for each fixed \(\iota >0\) we can choose k large enough so that \(w_1 \in L_\iota ^n\) and \(w_2 \in R_\iota ^n\), and thus \(\mathbb {E}_\beta \left[ D_{V_{\textbf{0}}^n} (w_1,w_2) \right] \ge \mathbb {E}_\beta \left[ D_{V_{\textbf{0}}^n} (L_\iota ^n, R_\iota ^n) \right] \). Then, by the lower bound (42) on the expected distance between the boxes \(L_\iota ^n\) and \(R_\iota ^n\), we get for such a k that

which finishes the proof, as \(v \in \mathcal {S}_n\) was assumed to minimize the expected distance \(\mathbb {E}_\beta \left[ D_{B_n(\textbf{0})}(\textbf{0},y)\right] \) among all vertices \(y \in \mathcal {S}_n\). \(\quad \square \)
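Two arithmetic facts from this proof can be checked with a short Python sketch. First, the d cyclic permutations of a vector v with non-negative coordinates sum to \(N\textbf{1}\) with \(N \ge \Vert v\Vert _\infty \). Second, since \(N \ge n\) gives \(\frac{n}{kN+2n} \le \frac{1}{k+2}\), one can check that every \(k \ge \max (1, \lceil 1/\iota \rceil - 2)\) places the common coordinates of \(w_1\) and \(w_2\) in \(L_\iota ^n\) and \(R_\iota ^n\), respectively. All helper names are hypothetical. Exact rational arithmetic avoids rounding errors inside the floors.

```python
from fractions import Fraction
from math import ceil, floor
from typing import List, Tuple

Vec = Tuple[int, ...]

def cyclic_shifts(v: Vec) -> List[Vec]:
    """The d cyclic permutations of the coordinates of v, starting with v itself."""
    d = len(v)
    return [tuple(v[(j - i) % d] for j in range(d)) for i in range(d)]

def partial_sums(vs: List[Vec]) -> List[Vec]:
    """u_0 = 0 and u_j = sum of the first j vectors, computed coordinate-wise."""
    u = [tuple(0 for _ in vs[0])]
    for w in vs:
        u.append(tuple(a + b for a, b in zip(u[-1], w)))
    return u

def w_coordinates(n: int, N: int, k: int) -> Tuple[int, int]:
    """Common coordinate of w_1 = floor(s n 1) and w_2 = floor(s (n + kN) 1), with s = n / (kN + 2n)."""
    s = Fraction(n, k * N + 2 * n)
    return floor(s * n), floor(s * (n + k * N))

def in_L(c: int, n: int, iota: Fraction) -> bool:
    return 0 <= c <= iota * n                # coordinate lies in [0, iota n]

def in_R(c: int, n: int, iota: Fraction) -> bool:
    return n - 1 - iota * n <= c <= n - 1    # coordinate lies in [n - 1 - iota n, n - 1]

def sufficient_k(iota: Fraction) -> int:
    """If N >= n, then n / (kN + 2n) <= 1 / (k + 2) <= iota for every k >= this value."""
    return max(1, ceil(1 / iota) - 2)

v = (3, 1, 0)                                # n = ||v||_inf = 3
print(partial_sums(cyclic_shifts(v))[-1])    # (4, 4, 4), i.e. N = 4 >= n
```

The order in which the shifted vectors are summed does not affect the endpoint \(u_d\). Because all increments are non-negative, every \(u_j\) has coordinates in \([0,N]\), which is why the balls \(B_n(u_j)\) fit into \(\Upsilon \).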

Lemma 4.9

For all dimensions d and all \(\beta >0\), there exists an \(\eta \in \left( 0, \frac{1}{2}\right) \) such that uniformly over all \(n\in \mathbb {N}\) and all \(x \in \mathcal {S}_n\)

where \(c_1\) is the constant from (44) and there exists a constant \(c_2\) such that

Furthermore, for each \(\beta \ge 0\) there exists a constant \(c_3 > 0\) such that

uniformly over all \(n \in \mathbb {N}\).

Note that in the above lemma, for \(x\in \mathcal {S}_n\), the box \(B_{\eta n}(x)\) is not completely contained in \(B_n(\textbf{0})\). By the definition of \(D_{B_n(\textbf{0})} \left( \cdot , \cdot \right) \), we only consider the part of it that intersects \(B_n(\textbf{0})\).

Proof

Given the results of Lemma 4.8, the proof of (45) and (46) works in the same way as the proof of Lemma 4.7 and we omit it. Regarding the statement of (47), we will first prove that for \(\eta >0\) small enough

for some constant \(c_4>0\) and uniformly over all \(n\in \mathbb {N}\). For this, we use the FKG inequality; see [28, Section 1.3], or [23, 26] for the original papers. We can cover the set \(\bigcup _{x\in \mathcal {S}_n} B_{\eta n} (x)\) with a number of sets of the form \(B_{\eta n} (x)\) that is bounded uniformly in n. For example, we have

where \(F=\left\{ ne_1 + \sum _{i=2}^{d} k_i \lceil \eta n \rceil e_i: k_i \in \left\{ - \big \lceil \frac{n}{\lceil \eta n \rceil } \big \rceil ,\ldots , \big \lceil \frac{n}{\lceil \eta n \rceil } \big \rceil \right\} \text { for all } i \in \{2,\ldots , d\} \right\} \), and all other faces of the set \(\bigcup _{x\in \mathcal {S}_n} B_{\eta n} (x)\) can be covered in a similar way. Suppose that \(A^\prime _n \subset \mathcal {S}_n\) is a sequence of finite sets with \(\sup _n |A_n^\prime |{=}{:}A^\prime < \infty \) such that

for all \(n\in \mathbb {N}\). So in particular we have

The events \(\left\{ D_{B_n(\textbf{0})} \left( B_{\eta n}(\textbf{0}), B_{\eta n}(x) \right) \ge \frac{c_1}{4} \Lambda (n,\beta )\right\} \) are decreasing for all \(x \in \mathcal {S}_n\), in the sense that they are stable under the deletion of edges. Thus the FKG inequality and (46) imply that

Assume that there is no direct edge from \(\left[ -(n-\eta n), (n-\eta n) \right] ^d\) to \(\mathbb {Z}^d {\setminus } \left[ -n,n\right] ^d\). This event has probability bounded away from 0 uniformly in n, and it is also decreasing. Then any path from \(B_{\eta n}(\textbf{0})\) to \(B_n(\textbf{0})^C\) passes through at least one box \(B_{\eta n }(x) \cap B_n(\textbf{0})\) for some \(x \in \mathcal {S}_n\). So with another application of the FKG inequality we get that

for some \(c_5>0\) and uniformly over all \(n \in \mathbb {N}\). Next, let \(A_n \subset B_n(\textbf{0})\) be a sequence of sets such that \(\bigcup _{x \in A_n} B_{\eta n} (x) = B_n(\textbf{0})\) and \(\sup _n |A_n| {=}{:}\bar{A} < \infty \). Then \(D\left( B_n(\textbf{0}),B_{2n}(\textbf{0})^C\right) < \frac{c_1}{4} \Lambda (n,\beta )\) already implies that there exists a point \(x \in A_n\) such that \( D\left( B_{\eta n}(x), B_n(x)^C\right) < \frac{c_1}{4} \Lambda (n,\beta )\). By another application of the FKG inequality we have

which proves (47). \(\quad \square \)
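The claim that a number of boxes bounded uniformly in n suffices to cover \(\bigcup _{x\in \mathcal {S}_n} B_{\eta n}(x)\) is a counting statement. The following Python sketch checks it numerically for one face. It assumes that the centers in F are spaced \(\lceil \eta n\rceil \) apart in each transverse direction, i.e., of the form \(ne_1 + \sum _{i=2}^d k_i\lceil \eta n\rceil e_i\). It also assumes that \(B_r(x)\) is the \(\Vert \cdot \Vert _\infty \)-ball of radius r. The function names are hypothetical.

```python
from fractions import Fraction
from math import ceil

def transverse_centers(n: int, eta: Fraction) -> list:
    """Assumed reading of F in one transverse direction: k * ceil(eta n) with |k| <= ceil(n / ceil(eta n))."""
    step = ceil(eta * n)
    K = ceil(Fraction(n, step))
    return [k * step for k in range(-K, K + 1)]

def num_face_centers(n: int, eta: Fraction, d: int) -> int:
    """|F| = (2 ceil(n / ceil(eta n)) + 1)^(d - 1)."""
    return len(transverse_centers(n, eta)) ** (d - 1)

def uniform_bound(eta: Fraction, d: int) -> int:
    """ceil(eta n) >= eta n gives ceil(n / ceil(eta n)) <= ceil(1 / eta), independently of n."""
    return (2 * ceil(1 / eta) + 1) ** (d - 1)

def max_gap(n: int, eta: Fraction) -> int:
    """Largest distance from a transverse coordinate y in [-n, n] to the nearest center."""
    centers = transverse_centers(n, eta)
    return max(min(abs(y - c) for c in centers) for y in range(-n, n + 1))

eta = Fraction(1, 10)
print(max(num_face_centers(n, eta, 3) for n in range(1, 400)), uniform_bound(eta, 3))
```

Using `Fraction` instead of floats matters here: for example, `ceil(0.1 * 30)` evaluates to 4 in floating point, not 3.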

Lemma 4.10

For all \(\beta \ge 0\) and all \(\varepsilon >0\), there exist \(0<c<C<\infty \) such that

for all \(n \in \mathbb {N}\).

Similar statements for the continuous model in one dimension were already proven in [20], and we follow a similar strategy here.

Proof

By the inequality \(D\left( \textbf{0},B_n(\textbf{0})^C\right) \le D_{V_\textbf{0}^{n+2}}\left( \textbf{0},(n+1)\textbf{1}\right) \) we get that

Using Markov’s inequality we see that

and thus the probability \(\mathbb {P}_\beta \left( D(\textbf{0},B_n(\textbf{0})^C) \le C\Lambda (n,\beta )\right) \) can be made arbitrarily close to 1 by taking C large enough. We also refer to this case as the upper bound. For the lower bound, the probability \(\mathbb {P}_\beta \left( c \Lambda (n,\beta ) \le D(\textbf{0},B_n(\textbf{0})^C)\right) \) can be made arbitrarily close to 1 for small n by taking c small enough, so from here on we focus on n large enough. Fix \(K, N \in \mathbb {N}_{>1}\) such that the function \(i \mapsto \Lambda \left( K^{2i}N,\beta \right) \) is increasing in i; this is possible by Remark 4.4. We now consider boxes of the form \(B_{K^{2i}N}(\textbf{0})\). The probability of a direct edge from \(B_{K^{2(i-1)} N}(\textbf{0})\) to \(B_{K^{2i}N}(\textbf{0})^C\) equals the probability of a direct edge between \(\textbf{0}\) and \(B_{K^2}(\textbf{0})^C\). By (5), it is bounded by \(\beta 50^d K^{-2}\). Hence, by a union bound over the K values of i, the probability that there is some \(i \in \{1,\ldots , K\}\) for which there is a direct edge from \(B_{K^{2(i-1)} N}(\textbf{0})\) to \(B_{K^{2i}N}(\textbf{0})^C\) is bounded by \(K\cdot \beta 50^d K^{-2} = \beta 50^d K^{-1}\). We denote the complement of this event by \(\mathcal {A}\). On the event \(\mathcal {A}\), there is no edge between \(B_{K^{2(i-1)} N}(\textbf{0})\) and \(B_{K^{2i}N}(\textbf{0})^C\) for any \(i\in \{1,\ldots , K\}\). So each path from \(B_{N}(\textbf{0})\) to \(B_{K^{2K}N}(\textbf{0})^C\) needs to cross, for every i, the distance from \(B_{K^{2(i-1)} N}(\textbf{0})\) to \(B_{2K^{2(i-1)}N}(\textbf{0})^C\). For odd i, these distances are independent. As the function \(i \mapsto \Lambda \left( K^{2i}N,\beta \right) \) is increasing in i, conditioned on the event \(\mathcal {A}\), we have the bound

where the second-to-last inequality follows from the FKG inequality, as events of the form \(\left\{ D(\cdot ,\cdot ) < x\right\} \) are increasing and \(\mathcal {A}\) is decreasing, and where \(c_3\) is the constant from (47). Thus we have that

and this quantity can be made arbitrarily small by a suitable choice of K. To finish the proof, recall that \(\Lambda (N, \beta )\) and \(\Lambda \left( K^{2K} N, \beta \right) \) differ by a multiplicative factor of at most \(K^{2K}\), as

Thus we have

Now, for fixed \(\varepsilon >0\), take K large enough so that \((1-c_3)^{\lfloor \frac{K}{2}\rfloor } + \beta 50^d K^{-1} < \varepsilon \). For \(n \in \mathbb {N}\) large enough with \(n > K^{2K}\), let N be the largest integer for which \(K^{2K}N \le n\). Then \(K^{2K}N \le n < K^{2K}(N+1) \le 2K^{2K}N\), and this also yields, by Proposition 4.1, that

which already implies

\(\square \)
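The parameter choices in this proof are elementary, and the following Python sketch reproduces them: the union bound over the K annuli, the choice of K for a given \(\varepsilon \), and the base scale N. The constants \(\beta \) and \(c_3\) are inputs. The values in the usage line are arbitrary placeholders, not values from the paper, and the helper names are hypothetical.

```python
def union_bound(beta: float, d: int, K: int) -> float:
    """K annuli, each with direct-edge probability at most beta * 50^d * K^(-2): total beta * 50^d / K."""
    return K * beta * 50 ** d / K ** 2

def choose_K(beta: float, d: int, c3: float, eps: float) -> int:
    """Smallest K >= 2 with (1 - c3)^floor(K/2) + beta * 50^d / K < eps (assumes 0 < c3 <= 1, eps > 0)."""
    K = 2
    while (1 - c3) ** (K // 2) + beta * 50 ** d / K >= eps:
        K += 1
    return K

def base_scale(n: int, K: int) -> int:
    """Largest integer N with K^(2K) * N <= n; requires n > K^(2K), so that N >= 1."""
    M = K ** (2 * K)
    if n <= M:
        raise ValueError("need n > K^(2K)")
    return n // M

print(choose_K(beta=1.0, d=1, c3=0.5, eps=0.5))  # placeholder constants
N = base_scale(100, 2)                            # K^(2K) = 16, so N = 6
assert 16 * N <= 100 < 16 * (N + 1) <= 2 * 16 * N
```

The union-bound term \(\beta 50^d K^{-1}\) dominates the choice of K. This is why K, and with it the factor \(K^{2K}\) between \(\Lambda (N,\beta )\) and \(\Lambda (n,\beta )\), can become very large as \(\varepsilon \rightarrow 0\).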

The previous lemma tells us that for fixed \(\beta > 0\) all quantiles of \(D\left( \textbf{0},B_n(\textbf{0})^C\right) \) are of order \(\Lambda (n,\beta )\). We want to prove a similar statement for the quantiles of \(D\left( B_n(\textbf{0}),B_{2n}(\textbf{0})^C\right) \). However, an analogous statement cannot hold, since the probability of a direct edge between \(B_n(\textbf{0})\) and \(B_{2n}(\textbf{0})^C\) is bounded away from 0 uniformly in n. If we condition on the event that there is no such direct edge, however, the statement still holds.

Lemma 4.11

Let \(\mathcal {L}\) be the event that there is no direct edge between \(B_n(\textbf{0})\) and \(B_{2n}(\textbf{0})^C\). For all \(\beta > 0\) and all \(\varepsilon >0\), there exist \(0<c<C<\infty \) such that

for all \(n \in \mathbb {N}\).

Proof

We know that