Abstract

We prove the positivity of Lyapunov exponents for the normal form of a Hopf bifurcation, perturbed by additive white noise, under sufficiently strong shear. This completes a series of related results for simplified situations, which we exploit by studying suitable limits of the shear and noise parameters. The crucial technical ingredient for making this approach rigorous is a result on the continuity of Lyapunov exponents via Furstenberg–Khasminskii formulas.


1 Introduction

The understanding and detection of chaotic properties has been a central theme of dynamical systems theory over the past decades. Particular interest has been devoted to proving positive Lyapunov exponents in nonuniformly hyperbolic regimes [38], as an indicator of chaotic structures. Since such endeavours have turned out to be tremendously difficult for purely deterministic systems, such as the standard map [21], more and more attention has been given to random systems where noise can help to render chaotic features visible [13, 14, 37].

A particular mechanism for creating chaotic attractors under, potentially random, perturbations has been suggested and studied by Wang, Young and co-workers and has become known as shear-induced chaos [26, 27, 34]. The main idea is to perturb limit cycles in the radial direction, where the amplitude of the perturbation depends on the angular coordinates along the limit cycle, such that a shear force in the form of radius-dependent angular velocity can lead to a stretch-and-fold mechanism in combination with overall volume contraction. In the situation of random perturbations, this has contributed to a particular view on stochastic Hopf bifurcation, complementary to previous studies [2, 5].

In more detail, the following model of a Hopf normal form with additive white noise has been studied in [16, 17], also drawing attention from applications to e.g. laser dynamics [35]:

where \(\sigma \ge 0\) is the strength of the noise, \(\alpha \in {\mathbb {R}}\) is a parameter equal to the real part of eigenvalues of the linearization of the vector field at (0, 0), \(b \in {\mathbb {R}}\) represents shear strength, \(a > 0\), \(\beta \in {\mathbb {R}}\), and \((W(t))_{t \in {\mathbb {R}}_{\ge 0}}\) is a 2-dimensional Brownian motion. We will focus on the case \(\alpha > 0\), such that the system without noise (\(\sigma =0\)) possesses a limit cycle with radius \(\sqrt{\alpha a^{-1}}\).

Deville et al. [16] showed that, in the limits of small noise and small shear, the largest Lyapunov exponent \(\lambda (\alpha , \beta , a, b, \sigma )\) for system (1.1) is negative. Doan et al. [17] extended these stability results to parts of the global parameter space and proved that the random attractor for the associated random dynamical system is a singleton, establishing exponentially fast synchronization of almost all trajectories.

Based on numerical investigations, it was conjectured in several works [16, 17, 26, 35] that large enough shear in combination with noise may cause Lyapunov exponents to turn positive, leading to chaotic random dynamical behaviour without synchronization. Wang and Young [34] obtained a proof of shear-induced chaos with deterministic instantaneous periodic driving. Lin and Young [26] introduced a simpler, affine linear SDE model that still retains the important features of (1.1) and exhibits favorable scaling properties of the parameter-dependent (numerically computed) Lyapunov exponents. Using a slight modification of the noise, Engel et al. [19] obtained an analytical proof of positive Lyapunov exponents for this kind of simplified model in cylindrical coordinates. For that, they used a Furstenberg–Khasminskii formula in terms of a function \(\Psi \) (cf. Fig. 1 and Theorem 2.3 below), based on results in [24].

1.1 Main result

The key insight of our work presented here is that the simplified cylinder model in [19] can be found as a large shear, small noise limit for system (1.1). This allows us to finally prove the existence of positive Lyapunov exponents by using the corresponding results in [19] and an argument concerning the continuity of Lyapunov exponents. Hence, the main result can be expressed by associating the limit for \(\lambda (\alpha , \beta , a, \epsilon ^{-1} b, \epsilon \sigma )\) with the explicit Furstenberg–Khasminskii formula in terms of the function \(\Psi \) (see Fig. 1), yielding positive Lyapunov exponents for sufficiently large shear and small noise.

Theorem A

For all \(\alpha , a, \sigma \in {\mathbb {R}}_{> 0}\) and \(\beta , b \in {\mathbb {R}}\), the largest Lyapunov exponent of system (1.1) satisfies

In particular, there is a constant \(C_0 \approx 3.45\) such that

whenever \(b^2\sigma ^2>2C_0\alpha ^2a\) and \(\epsilon >0\) is sufficiently small, depending on \(\alpha , \beta , a, b\) and \(\sigma \).

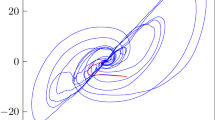

We remark that the situation of positive Lyapunov exponents allows for several conclusions concerning the nature of the random attractor \(\{A(\omega )\}_{\omega \in \Omega }\) (\(\Omega \) denotes the canonical Wiener space here), as established in [17]. In this reference, the authors identified \(A(\omega ) = {{\,\textrm{supp}\,}}(\mu _{\omega })\), where \(\mu _{\omega }\) denote the disintegrations of the invariant measure \(\mu \) for the random dynamical system, corresponding to the stationary measure \(\rho \) for the SDE (1.1). Now, when \(\lambda > 0\), we may deduce that \(\mu _{\omega }\) is atomless almost surely by an extension of results by Baxendale [4, Remark 4.12] to the non-compact setting [18, Theorem 5.1.1]. Furthermore, by applying results on Pesin’s formula for random dynamical systems in \({\mathbb {R}}^d\) [11], one obtains positive metric entropy with respect to the invariant measure \(\mu \) whenever \(\lambda > 0\) (see also [18, Corollary 5.2.10]). The fact that the disintegrations \(\mu _{\omega }\) are SRB measures should follow by a similar extension of the work of Ledrappier and Young [25] to the non-compact state space case (Fig. 2).

Chaotic random attractor for (1.1) with parameters \(\alpha = 1\), \(\beta = 1\), \(a = 1\), \(b = -10\) and \(\sigma = 1\). The deterministic limit cycle \(\left\{ z \in {\mathbb {R}}^2: \Vert z\Vert = \sqrt{\alpha a^{-1}}\right\} \) is shown in blue for reference. The plot was obtained by taking 50,000 samples from the stationary distribution of (1.1) and evolving them numerically using an Euler–Maruyama scheme for a fixed time \(T \approx 500\) with a fixed realization of the noise
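The numerical procedure described in this caption can be sketched as follows. This is a minimal illustration, not the authors' code: the drift in `hopf_drift` is an assumed Cartesian form of the Hopf normal form (chosen so that the noise-free system has a limit cycle of radius \(\sqrt{\alpha a^{-1}}\) and a radius-dependent angular velocity), and the function names, step size and initial cloud are hypothetical. All points are driven by one shared noise realization, as in the caption.

```python
import numpy as np

def hopf_drift(z, alpha, beta, a, b):
    """Assumed drift of the Hopf normal form; z has shape (n, 2)."""
    x, y = z[:, 0], z[:, 1]
    r2 = x * x + y * y
    fx = alpha * x - beta * y - (a * x - b * y) * r2
    fy = beta * x + alpha * y - (b * x + a * y) * r2
    return np.stack([fx, fy], axis=1)

def evolve_ensemble(z0, alpha, beta, a, b, sigma, T, dt, seed=0):
    """Euler-Maruyama for an ensemble of points driven by ONE noise path."""
    rng = np.random.default_rng(seed)
    z = np.array(z0, dtype=float)
    n_steps = int(round(T / dt))
    for _ in range(n_steps):
        # One 2-dimensional Brownian increment, shared by all points.
        dW = rng.normal(0.0, np.sqrt(dt), size=2)
        z = z + hopf_drift(z, alpha, beta, a, b) * dt + sigma * dW
    return z
```

A call such as `evolve_ensemble(cloud, 1.0, 1.0, 1.0, -10.0, 1.0, T=500.0, dt=1e-3)` mimics the parameters of the figure, where `cloud` is an array of shape `(n, 2)`. Drawing `cloud` from the true stationary distribution is not covered by this sketch, and for large \(|b|\) the explicit scheme needs a small step size.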

1.2 Interpretation of the result

Lin and Young [26] also raised quantitative questions about the roles of the shear strength, the contraction rate and the noise strength in creating shear-induced chaos. For the simplified cylinder model these questions were answered in [19]: the top Lyapunov exponent is positive if and only if the quantity

is larger than the constant \(C_0 \approx 3.45\) (cf. Theorem 2.4 below).

In the setting of (1.1), contraction occurs only in radial direction, while the shearing effect occurs in angular direction. Thus we refer to the radial derivative of the angular part of the vector field as shear strength and to the negative radial derivative of the radial part of the vector field as contraction rate. As opposed to the simplified cylinder model, shear strength and contraction rate are not constant and depend on the distance to the origin. However, since our main result Theorem A is concerned with a small noise limit, the relevant shear strength and contraction rates are the ones near the limit cycle of the corresponding deterministic system (\(\sigma = 0\)). Note that the contraction rate at the limit cycle is precisely its non-trivial Floquet-multiplier. The radius of this deterministic limit cycle has another important role. Since the noise is purely additive, it cannot induce chaotic behavior directly. Instead the presence of additive noise creates a phase-amplitude coupling, which in tandem with the amplitude-phase coupling created by the shear leads to chaos (see [26] for a detailed explanation of the mechanism of shear-induced chaos). Thus, the effective noise strength, which describes the strength of the phase-amplitude coupling, is proportional to the curvature of the deterministic limit cycle, which is inversely proportional to its radius. In the parameters of (1.1), shear strength, contraction rate and effective noise strength can be expressed as follows:

Analogously to the results of [19], Theorem A asserts that in the large shear, small noise limit the top Lyapunov exponent is positive if the quantity

is larger than \(C_0\approx 3.45\). In order to obtain such a result, it is crucial for the exponents of \(\epsilon \) in (1.2) to be chosen in such a way that the quantity (1.3) remains constant.
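The role of the scaling can be checked with a few lines of arithmetic. The sketch below assumes that the relevant quantity is the ratio \(b^2\sigma ^2/(2\alpha ^2 a)\), obtained by rearranging the condition \(b^2\sigma ^2>2C_0\alpha ^2a\) of Theorem A; the function names are hypothetical.

```python
C0 = 3.45  # approximate threshold quoted in the text

def theorem_a_ratio(alpha, a, b, sigma):
    """b^2 sigma^2 / (2 alpha^2 a), rearranged from the condition in Theorem A."""
    return (b * sigma) ** 2 / (2.0 * alpha ** 2 * a)

def rescale(b, sigma, eps):
    """Large shear, small noise scaling: b -> b / eps, sigma -> eps * sigma."""
    return b / eps, eps * sigma

# The product b * sigma, and hence the ratio, is unchanged by the rescaling.
base = theorem_a_ratio(1.0, 1.0, -10.0, 1.0)
for eps in (0.5, 0.1, 0.01):
    b_eps, sigma_eps = rescale(-10.0, 1.0, eps)
    print(eps, theorem_a_ratio(1.0, 1.0, b_eps, sigma_eps), base > C0)
```

For the parameters of Fig. 2 (\(\alpha = a = \sigma = 1\), \(b = -10\)) the ratio equals 50, well above \(C_0\).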

1.3 Structure of the paper

The proof of Theorem A is presented in Sect. 3, based on a formal first derivation in Sect. 2. In more detail, Sect. 2.1 recalls the bifurcation from synchronization to chaos, indicated by a change of sign of the largest Lyapunov exponent, in the simplified model in cylindrical coordinates studied in [19]. In Sect. 2.2, we provide a rigorous framework to study model (1.1) as a random dynamical system, also introducing the corresponding Lyapunov exponents. Based on a specific geometric insight, we introduce the crucial, new coordinates for the variational process along trajectories of (1.1) and the change of variables \(b= \epsilon ^{-1} b'\) and \(\sigma = \epsilon \sigma '\), yielding a description of the system that allows for obtaining the simplified model in a formal \(\epsilon \rightarrow 0\) limit.

As a first step towards a rigorous proof, Sect. 3.1 derives SDEs and coefficient estimates for auxiliary processes, parametrizing the projective bundle process, in order to provide Furstenberg–Khasminskii formulas that make the RDS and its linearization associated to (1.1), indexed by \(\epsilon \), comparable to the simplified SDE model. In Sect. 3.2, we establish the unique stationary measures for the respective bundle processes along with concentration bounds and a weak convergence result as \(\epsilon \rightarrow 0\). After showing in Sect. 3.3 the Furstenberg–Khasminskii formula for the limiting process, obtained in the simplified model, we collect the previous description of the bundle processes and their invariant measures to prove, in Sect. 3.4, the limit of the largest Lyapunov exponent as stated in Theorem A. Thereby, we have to use two different coordinate systems for the variational process, one allowing for an arbitrarily close approximation of the deterministic limit cycle and one controlling the polar coordinate singularity at radius \(r=0\).

1.4 Additional relations to other work and outlook

Lately, Breden and Engel [15] proved the existence of positive conditioned Lyapunov exponents, as introduced in [20], for model (1.1) restricted to a bounded domain around the deterministic limit cycle. The setting considers limits of trajectories, conditioned on not having hit the boundary of the domain. The proof involves computer-assistance, using interval arithmetic, for obtaining an approximation of the relevant quasi-ergodic measure in a modified Furstenberg–Khasminskii formula, together with a rigorous error estimate. Naturally, the proof procedure is always based on choosing particular values for the parameters, i.e. in the end one can show the result only for a finite number of parameter combinations.

In recent years, Bedrossian, Blumenthal and Punshon-Smith have embarked on a program to make random dynamical systems theory fruitful for relating chaotic stochastic dynamics with positive Lyapunov exponents to turbulent fluid flow, in particular in the form of Lagrangian chaos and passive scalar turbulence [8, 9]. On a related note, the same authors have developed a new method for obtaining lower bounds for positive Lyapunov exponents, using an identity resembling Fisher information and an adapted hypoelliptic regularity theory [10]. They have applied this method to Euler-like systems, including a stochastically forced version of Lorenz 96, where an energy and volume conserving system is weakly perturbed, in the sense of small scaling, by linear damping and noise. Note that our situation also considers small noise perturbations but, being a dissipative system, strong damping; hence, the mechanism leading to chaos and positive Lyapunov exponents is fundamentally different, requiring a strong shear force interacting with noise and dissipation.

This leads to the general question of whether the reduction of the perturbed normal form (1.1) to the simplified cylinder models in [19, 26], yielding the positivity of Lyapunov exponents, can be extended to a broad, maybe even universal, class of oscillators (van der Pol, FitzHugh–Nagumo etc.). We leave it as an open question for the future to find such a rigorous generalization for the phenomenon of shear-noise induced chaos.

The key method used in the present work is a coordinate change for the variational process which depends on the scaling parameter \(\epsilon \). Such rescalings have been used before in the literature, see e.g. [3, 6, 7, 29]. However, in contrast to the works mentioned, the coordinate change used in the present work is linear only in polar coordinates, leading to a non-linear transformation in Cartesian coordinates. The geometric implications of this will be discussed in Remark 2.8 below. Finally, we would like to mention that, in contrast to noise-induced chaos, there have also been remarkable results on noise-induced order in recent years [28], in particular concerning contracting Lorenz maps [12].

2 Large Shear, Small Noise Limit and Relation to Simplified Model

In this section, we will first give an overview of the simplified model studied by Engel et al. [19], inspired by Lin and Young [26]. We will then provide a formal, non-rigorous derivation of our main result, which shall serve as a guide for the rigorous proof given in the final section.

2.1 A bifurcation in the simplified model

As a slightly modified version of a model studied in [26], Engel et al. [19] have investigated the SDE

where \(({\hat{S}}(t))\) is a real-valued process and \((\hat{\Theta }(t))\) is an \(S^1:= {\mathbb {R}}/(2 \pi {\mathbb {Z}})\)-valued process. Furthermore, \(\hat{\alpha }, \hat{\sigma } \in {\mathbb {R}}_{> 0}\) are positive real parameters, \(\hat{b}\in {\mathbb {R}}\) is a real parameter and \(({\hat{W}}_1(t), {\hat{W}}_2(t))\) are independent Brownian motions. Note that the parameters \(\hat{\alpha }, \hat{b}\) and \(\hat{\sigma }\) have roles that are similar to their respective counterparts \(\alpha , b\) and \(\sigma \) in Eq. (1.1). Based on previous work by Imkeller and Lederer [24], it was shown in [19] that, depending on the values of the parameters \(\hat{\alpha }, \hat{b}\) and \(\hat{\sigma }\), the top Lyapunov exponent for (2.1) can attain both positive and negative values.

We will start by giving a brief overview of the formal setup for studying solutions of (2.1) from a random dynamical systems viewpoint. Due to the Lipschitz-continuity of all terms on the right-hand side of (2.1), this equation generates a differentiable random dynamical system [1, Definition 1.1.3], which can be constructed as follows. Set \(\Omega = {\mathcal {C}}^0({\mathbb {R}}_{\ge 0}, {\mathbb {R}}^2)\), where \({\mathcal {C}}^0({\mathbb {R}}_{\ge 0}, {\mathbb {R}}^2)\) denotes the space of all continuous functions \(\omega : {\mathbb {R}}_{\ge 0}\rightarrow {\mathbb {R}}^2\) satisfying \(\omega (0) = 0\). We equip \(\Omega \) with the compact-open topology and let \({\mathbb {P}}\) be the Wiener measure defined on the Borel-measurable subsets of \(\Omega \). Furthermore we denote by \(\varsigma : {\mathbb {R}}_{\ge 0}\times \Omega \rightarrow \Omega \) the shift action given by

$$\begin{aligned} (\varsigma (t, \omega ))(s):= \omega (s+t) - \omega (t), \qquad s, t \in {\mathbb {R}}_{\ge 0}. \end{aligned}$$

The Brownian motions \({\hat{W}}_{i}\), \(i=1,2\), in (2.1) will be interpreted as random variables defined by

$$\begin{aligned} ({\hat{W}}_1(t), {\hat{W}}_2(t)):= \omega (t). \end{aligned}$$

There exists a stochastic flow map \(\hat{\varphi }: {\mathbb {R}}_{\ge 0}\times \Omega \times ({\mathbb {R}}\times S^1) \rightarrow {\mathbb {R}}\times S^1\) induced by (2.1) which has the following properties.

(i) For each \(({\hat{S}}_0, \hat{\Theta }_0) \in {\mathbb {R}}\times S^1\), the stochastic process \(({\hat{S}}(t), \hat{\Theta }(t))\) defined by

$$\begin{aligned} ({\hat{S}}(t), \hat{\Theta }(t)):= \hat{\varphi }(t, \cdot , {\hat{S}}_0, \hat{\Theta }_0) \end{aligned}$$

is a strong solution to (2.1) with initial condition \(({\hat{S}}(0), \hat{\Theta }(0)) = ({\hat{S}}_0, \hat{\Theta }_0)\).

(ii) After possibly restricting to a \(\varsigma \)-invariant subset \(\tilde{\Omega } \subseteq \Omega \) of full \({\mathbb {P}}\)-measure, the skew-product \((\varsigma , \hat{\varphi })\) forms a continuously differentiable random dynamical system (RDS) in the sense of [1, Definition 1.1.3]. In particular, the cocycle property

$$\begin{aligned} \hat{\varphi }(s+t, \omega , {\hat{S}}_0, \hat{\Theta }_0) = \hat{\varphi }(t, \varsigma (s,\omega ), \hat{\varphi }(s, \omega , {\hat{S}}_0, \hat{\Theta }_0)) \end{aligned}$$ (2.2)

holds for every \(s,t \in {\mathbb {R}}_{\ge 0}\), \(\omega \in \tilde{\Omega }\) and \(({\hat{S}}_0, \hat{\Theta }_0) \in {\mathbb {R}}\times S^1\).

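For simplicity of notation, we will identify \(\tilde{\Omega }\) and \(\Omega \) and will use \(\hat{\varphi }\) to mean its restriction to \(\tilde{\Omega }\). This allows us to define the linearization \(\hat{\Phi }: {\mathbb {R}}_{\ge 0}\times \Omega \times ({\mathbb {R}}\times S^1) \rightarrow {\mathbb {R}}^{2\times 2}\) by

$$\begin{aligned} \hat{\Phi }(t, \omega , {\hat{S}}_0, \hat{\Theta }_0):= D_{({\hat{S}}_0, \hat{\Theta }_0)} \hat{\varphi }(t, \omega , {\hat{S}}_0, \hat{\Theta }_0). \end{aligned}$$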

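The cocycle property of \(\hat{\Phi }\) over the RDS \((\varsigma , \hat{\varphi })\), i.e.

$$\begin{aligned} \hat{\Phi }(s+t, \omega , {\hat{S}}_0, \hat{\Theta }_0) = \hat{\Phi }(t, \varsigma (s,\omega ), \hat{\varphi }(s, \omega , {\hat{S}}_0, \hat{\Theta }_0))\, \hat{\Phi }(s, \omega , {\hat{S}}_0, \hat{\Theta }_0), \end{aligned}$$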

comes as a direct consequence of applying the chain rule to (2.2). By the Furstenberg–Kesten Theorem, the integrability condition of which can be easily verified for our situation, the top Lyapunov exponent \(\hat{\lambda }(\hat{\alpha }, \hat{b}, \hat{\sigma })\) can now be defined as

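A useful tool for studying Lyapunov exponents is the variational process given by

$$\begin{aligned} \begin{pmatrix} {\hat{s}}(t) \\ \hat{\theta }(t) \end{pmatrix}:= \hat{\Phi }(t, \cdot , {\hat{S}}_0, \hat{\Theta }_0) \begin{pmatrix} {\hat{s}}_0 \\ \hat{\theta }_0 \end{pmatrix} \end{aligned}$$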

for some initial condition \(({\hat{s}}_0, \hat{\theta }_0) \ne (0,0)\). This process satisfies the so-called variational equation (see e.g. [1, Theorem 2.3.32]), which in our case takes the form

obtained by differentiating the coefficients of the original SDE (2.1) (Fig. 3).

Typical trajectories (red) for \(({\hat{s}}(t), \hat{\theta }(t))\), \(t \in [0,10]\), starting in \(({\hat{s}}_0, \hat{\theta }_0) = (0,1)\), with parameters \(\hat{\alpha } = \hat{b} = \hat{\sigma } =1\) (a) and \(\hat{\alpha } = \hat{b} =1\), \(\hat{\sigma } = 2\) (b). The blue arrows indicate the drift field and the green arrows the noise field. Note that for (a) we have \(\zeta := \hat{b}^2\hat{\sigma }^2\hat{\alpha }^{-3} = 1 < C_0\), while for (b) we have \(\zeta = 4 > C_0\)

Here, the process \((\hat{W}_3(t))\) is given by

By Lévy’s criterion, see e.g. [30, Theorem IV.3.6], the process \((\hat{W}_3 (t))\) is again a Brownian motion.

Remark 2.1

Note that \((\hat{W}_3(t))\) depends not only on \(\omega \) but also on the initial condition \(({\hat{S}}_0, \hat{\Theta }_0)\) for the original equation (2.1). Thus, the process \(({\hat{s}}(t), \hat{\theta }(t))\) depends not only on its own initial condition \(({\hat{s}}_0, \hat{\theta }_0)\), but also on \(({\hat{S}}_0, \hat{\Theta }_0)\). However, the law of \((\hat{W}_3 (t))\) is always that of a Brownian motion, independently of \(({\hat{S}}_0, \hat{\Theta }_0)\); hence, the law of \(({\hat{s}}(t), \hat{\theta }(t))\) also does not depend on \(({\hat{S}}_0, \hat{\Theta }_0)\) (but of course still on \(({\hat{s}}_0, \hat{\theta }_0)\)).

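It follows from results in [24] that, for any initial condition \(({\hat{s}}_0, \hat{\theta }_0) \ne (0,0)\), we almost surely have

$$\begin{aligned} \hat{\lambda }(\hat{\alpha }, \hat{b}, \hat{\sigma }) = \lim _{t \rightarrow \infty } \frac{1}{t} \log \left\| \begin{pmatrix} {\hat{s}}(t) \\ \hat{\theta }(t) \end{pmatrix} \right\| . \end{aligned}$$ (2.4)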

Thus the Lyapunov exponent \(\hat{\lambda }(\hat{\alpha }, \hat{b}, \hat{\sigma })\) is fully determined by the law of the SDE (2.3).

Remark 2.2

For each \(({\hat{S}}_0, \hat{\Theta }_0) \in {\mathbb {R}}\times S^1\) and almost every \(\omega \in \Omega \) there will still exist a one-dimensional subspace \(V(\omega , {\hat{S}}_0, \hat{\Theta }_0)\subset {\mathbb {R}}^2\) such that

where \(\hat{\lambda }_2(\hat{\alpha }, \hat{b}, \hat{\sigma })\) is the second Lyapunov exponent. However, due to a result from [23] based on Hörmander’s condition, one can show that the distribution of \(V(\cdot , {\hat{S}}_0, \hat{\Theta }_0)\) in the projective space \({\mathbb {R}}{\mathbb {P}}^1\) is atomless. Thus, for each fixed \(({\hat{S}}_0, \hat{\Theta }_0, {\hat{s}}_0, \hat{\theta }_0)\) we have for almost every \(\omega \in \Omega \)

such that the equality (2.4) holds.

It turns out that one can make use of homogeneities of system (2.3) to simplify the analysis of the sign of the top Lyapunov exponent. Substituting \(\hat{\theta }(t) \mapsto \gamma \hat{\theta }(t)\) for some \(\gamma > 0\) gives the identity

It is worth mentioning that this coordinate change is of the type discussed in [29, Section 7]. Similarly, substituting \(t \mapsto \delta t\) gives (cf. [19, Proposition 3.2])

Together, these two identities show that the sign of \(\hat{\lambda }(\hat{\alpha }, \hat{b}, \hat{\sigma })\) will only depend on the value of \(\zeta := \hat{b}^2\hat{\sigma }^2\hat{\alpha }^{-3}\). In [24], Imkeller and Lederer derived a semi-explicit formula for \(\hat{\lambda }(\hat{\alpha }, \hat{b}, \hat{\sigma })\). To give this formula, we first define for each \(\zeta > 0\) a function \(m_\zeta : {\mathbb {R}}_{> 0}\rightarrow {\mathbb {R}}_{\ge 0}\) by

Here \(K_\zeta >0\) is a normalization constant given by

such that \(m_\zeta \) becomes the density of a probability distribution on \({\mathbb {R}}_{> 0}\). Next, we define a function \(\Psi : {\mathbb {R}}_{> 0}\rightarrow {\mathbb {R}}\) by

Now one can give the following formula for the top Lyapunov exponent of the RDS induced by Eq. (2.1).

Theorem 2.3

([24, Theorem 3], see also [19, Theorem 2.1]). For any initial condition \(({\hat{s}}(0), \hat{\theta }(0))\ne (0,0)\) the solution to (2.3) satisfies

In particular, this means that the sign of \(\hat{\lambda }(\hat{\alpha }, \hat{b}, \hat{\sigma })\) is determined by the sign of \(\Psi (\zeta )\), where \(\zeta := \hat{b}^2\hat{\sigma }^2\hat{\alpha }^{-3}\). Engel et al. used this fact to show the following bifurcation result.

Theorem 2.4

[19, Theorem 1.1]. The function \(\Psi : {\mathbb {R}}_{> 0}\rightarrow {\mathbb {R}}\) has a unique zero at \(C_0\approx 3.45\). Furthermore, we have \(\Psi (\zeta ) < 0\) for \(\zeta < C_0\) and \(\Psi (\zeta )>0\) for \(\zeta >C_0\). In particular, the top Lyapunov exponent satisfies

Remark 2.5

Our constant \(C_0 \approx 3.45\) is different from the constant \(c_0 \approx 0.2823\) from [19], but related by \(C_0:= c_0^{-1}\). This is due to a slight reformulation of the statement.
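The bifurcation criterion of Theorem 2.4 reduces to comparing one number with \(C_0\). The following sketch (with hypothetical function names, and using only the rounded value \(C_0 \approx 3.45\) from the text) evaluates \(\zeta = \hat{b}^2\hat{\sigma }^2\hat{\alpha }^{-3}\) and the predicted sign of the top Lyapunov exponent for the two parameter sets of Fig. 3.

```python
C0 = 3.45  # rounded value of the unique zero of Psi, as quoted in the text

def zeta(alpha_hat, b_hat, sigma_hat):
    """zeta = b^2 sigma^2 / alpha^3 for the simplified cylinder model."""
    return b_hat ** 2 * sigma_hat ** 2 / alpha_hat ** 3

def predicted_sign(alpha_hat, b_hat, sigma_hat, c0=C0):
    """+1 if zeta > C0 (chaos), -1 if zeta < C0 (synchronization), 0 otherwise."""
    z = zeta(alpha_hat, b_hat, sigma_hat)
    return (z > c0) - (z < c0)

for params in [(1.0, 1.0, 1.0), (1.0, 1.0, 2.0)]:  # panels (a) and (b) of Fig. 3
    print(params, zeta(*params), predicted_sign(*params))
```

Since only a rounded value of \(C_0\) is used, the output is not reliable for values of \(\zeta \) very close to the threshold.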

2.2 A formal derivation of the large shear, small noise limit

Now we turn our attention again to the Hopf normal form with additive noise, which we will write as

where \(F: {\mathbb {R}}^2 \rightarrow {\mathbb {R}}^2\) is given by

Recall that \(\alpha , a\) and \(\sigma \) are positive real parameters, \(\beta \) and b are real parameters and \((W(t))_{t \in {\mathbb {R}}_{\ge 0}}\) is a 2-dimensional Brownian motion. We will consider the parameters \(\alpha , \beta , a, b\) and \(\sigma \) as fixed for the time being. The formal setup for studying this equation is very similar to the one described in the previous subsection. System (2.6) has already been investigated from a random dynamical systems viewpoint in [16, 17] and we will build on their results. As before, the probability space is given by \(\Omega = {\mathcal {C}}^0({\mathbb {R}}_{\ge 0}, {\mathbb {R}}^2)\), with Wiener measure \({\mathbb {P}}\) and \({\mathbb {P}}\)-invariant time shift denoted by \(\varsigma : {\mathbb {R}}_{\ge 0}\times \Omega \rightarrow \Omega \), again identifying the process (W(t)) with the random variables \(W(t):= \omega (t)\). It has been shown in [17, Theorem A] that (2.6) induces a random dynamical system in the same sense as in the previous subsection, i.e. that there exists a map \(\varphi : {\mathbb {R}}_{\ge 0}\times \Omega \times {\mathbb {R}}^2 \rightarrow {\mathbb {R}}^2\) satisfying the following properties:

(i) For each \(Z_0 \in {\mathbb {R}}^2\), the stochastic process (Z(t)) defined by

$$\begin{aligned} Z(t):= \varphi (t, \cdot , Z_0) \end{aligned}$$

is a strong solution to (2.6) with initial condition \(Z(0)= Z_0\).

(ii) After possibly restricting to a \(\varsigma \)-invariant subset \(\tilde{\Omega } \subseteq \Omega \) of full \({\mathbb {P}}\)-measure, the skew-product \((\varsigma , \varphi )\) forms a continuously differentiable random dynamical system in the sense of [1, Definition 1.1.3]. In particular, the cocycle property

$$\begin{aligned} \varphi (s+t, \omega , Z_0) = \varphi (t, \varsigma (s, \omega ), \varphi (s, \omega , Z_0)) \end{aligned}$$

holds for every \(s,t \in {\mathbb {R}}_{\ge 0}\), \(\omega \in \tilde{\Omega }\) and \(Z_0 \in {\mathbb {R}}^2\).

As before, we define the linearization \(\Phi : {\mathbb {R}}_{\ge 0}\times \Omega \times {\mathbb {R}}^2 \rightarrow {\mathbb {R}}^{2\times 2}\) by

$$\begin{aligned} \Phi (t, \omega , Z_0):= D_{Z_0} \varphi (t, \omega , Z_0), \end{aligned}$$

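which satisfies the identity

$$\begin{aligned} \Phi (s+t, \omega , Z_0) = \Phi (t, \varsigma (s, \omega ), \varphi (s, \omega , Z_0))\, \Phi (s, \omega , Z_0). \end{aligned}$$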

Since the integrability condition of the Furstenberg–Kesten Theorem is verified [17, Proposition 4.1], we can define the top Lyapunov exponent \(\lambda (\alpha , \beta , a, b, \sigma )\) by

Analogously to the previous subsection, we introduce the variational process (Y(t)) defined by

$$\begin{aligned} Y(t):= \Phi (t, \cdot , Z_0) Y_0 \end{aligned}$$

for some initial condition \(Y_0 \ne (0,0)\). The corresponding variational equation is given by the linear random ordinary differential equation

$$\begin{aligned} \frac{\textrm{d}}{\textrm{d}t} Y(t) = DF(Z(t))\, Y(t). \end{aligned}$$

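It was shown in [16] that the top Lyapunov exponent can be expressed by the almost-sure identity

$$\begin{aligned} \lambda (\alpha , \beta , a, b, \sigma ) = \lim _{t \rightarrow \infty } \frac{1}{t} \log \Vert Y(t)\Vert , \end{aligned}$$ (2.8)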

which holds independently of the chosen initial conditions \(Z_0 \in {\mathbb {R}}^2\) and \(Y_0 \in {\mathbb {R}}^2{\setminus } \{0\}\).
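The identification of the top Lyapunov exponent with the asymptotic growth rate of \(\Vert Y(t)\Vert \) suggests a direct numerical estimate. The sketch below is illustrative only: it assumes a particular Cartesian form of the drift F (the same hedged form of the Hopf normal form as is standard in the literature, with hypothetical function names), integrates Z by Euler–Maruyama and Y by explicit Euler, and renormalizes Y at every step to avoid overflow. It gives a finite-time approximation, not the limit.

```python
import numpy as np

def drift_and_jacobian(z, alpha, beta, a, b):
    """Assumed drift F of the Hopf normal form and its Jacobian DF at z."""
    x, y = z
    r2 = x * x + y * y
    F = np.array([alpha * x - beta * y - (a * x - b * y) * r2,
                  beta * x + alpha * y - (b * x + a * y) * r2])
    DF = np.array([
        [alpha - a * r2 - 2 * x * (a * x - b * y),
         -beta + b * r2 - 2 * y * (a * x - b * y)],
        [beta - b * r2 - 2 * x * (b * x + a * y),
         alpha - a * r2 - 2 * y * (b * x + a * y)],
    ])
    return F, DF

def estimate_top_lyapunov(alpha, beta, a, b, sigma, T, dt,
                          z0=(1.0, 0.0), y0=(1.0, 0.0), seed=0):
    """Finite-time estimate of (1/T) log ||Y(T)|| along one noise path."""
    rng = np.random.default_rng(seed)
    z = np.array(z0, dtype=float)
    y = np.array(y0, dtype=float)
    y /= np.linalg.norm(y)
    log_growth = 0.0
    n_steps = int(round(T / dt))
    for _ in range(n_steps):
        F, DF = drift_and_jacobian(z, alpha, beta, a, b)
        y = y + dt * (DF @ y)  # variational equation dY/dt = DF(Z) Y
        z = z + dt * F + sigma * rng.normal(0.0, np.sqrt(dt), size=2)
        norm = np.linalg.norm(y)
        log_growth += np.log(norm)  # accumulate growth, then renormalize
        y /= norm
    return log_growth / (n_steps * dt)
```

A simple sanity check is the noise-free case started at the origin, where the Jacobian is constant with eigenvalues \(\alpha \pm i\beta \) and the estimate should be close to \(\alpha \). For large shear the step size `dt` has to be chosen small, and long horizons `T` are needed before the estimate stabilizes.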

One of the defining features of Eq. (2.6) is the rotational symmetry of the drift term. Thus, it is reasonable to express the system in polar coordinates. Given the solution Z(t) to (2.6), we consider the \({\mathbb {R}}_{\ge 0}\)-valued process (r(t)) and the \({\mathbb {R}}/(2\pi {\mathbb {Z}})\)-valued process \((\phi (t))\) uniquely defined by

$$\begin{aligned} Z(t) = r(t) \begin{pmatrix} \cos \phi (t) \\ \sin \phi (t) \end{pmatrix}. \end{aligned}$$

Proposition 2.6

The processes (r(t)) and \((\phi (t))\) satisfy the Itô-SDEs

Proof

See “Appendix A”. The same derivation can also be found in [16]. \(\square \)

We now introduce two real-valued processes \((W_r(t))\) and \((W_\phi (t))\) uniquely defined by \(W_r(0) = W_\phi (0) = 0\) and the Itô-SDEs

Again, using Lévy’s criterion (see e.g. [30, Theorem IV.3.6]), it can be easily checked that \((W_r(t))\) and \((W_\phi (t))\) are independent Brownian motions. We can now rewrite (2.10) as

We will also conduct a change of coordinates for the variational process (Y(t)). In order to simplify the equation, we express (Y(t)) in an orthonormal basis that is adjusted to the polar representation of Z(t) (see Fig. 4).

Geometric construction of the different coordinates used. The processes (r(t)) and \((\phi (t))\) are the usual polar coordinates for (Z(t)). Formally, the variational process (Y(t)) has values \(Y(t) \in T_{Z(t)}{\mathbb {R}}^2 \simeq {\mathbb {R}}^2\), where \(T{\mathbb {R}}^2\) denotes the (trivial) tangent bundle of \({\mathbb {R}}^2\). Now the pair \((s(t), \vartheta (t))\) expresses the same point in \(T_{Z(t)}{\mathbb {R}}^2\) in a time-dependent orthonormal basis. In particular the process (s(t)) describes radial perturbations of Z(t), while \((\vartheta (t))\) describes angular perturbations of Z(t)

For that purpose, we introduce two real-valued processes (s(t)) and \((\vartheta (t))\) given by

and

Clearly the norm is unchanged, i.e. we have

$$\begin{aligned} \Vert Y(t)\Vert ^2 = s(t)^2 + \vartheta (t)^2 \end{aligned}$$

for all \(t\ge 0\). Due to the rotational symmetry of our original system (2.6), this basis change also simplifies the stochastic differential equations for the variational process.

Proposition 2.7

The processes (s(t)) and \((\vartheta (t))\) satisfy the Stratonovich SDEs

Proof

See “Appendix A”. \(\square \)

Note that the SDE is given in Stratonovich form, which will be used throughout the rest of the paper whenever it fits the given situation best, as is the case here. Recall that we are interested in studying the large shear, small noise limit. To that end, we consider from now on fixed real parameters \(\alpha , \beta , a, b'\) and \(\sigma '\), with \(\alpha , a\) and \(\sigma '\) positive, and set \(b:= \epsilon ^{-1} b'\) and \(\sigma := \epsilon \sigma '\) such that we can study the limit \(\epsilon \rightarrow 0\). The main object of interest for our analysis is the (joint) law of the processes (s(t)) and \((\vartheta (t))\) since this will be crucial to compute the Lyapunov exponent via (2.8). Note that the process \((\phi (t))\) only enters the right-hand side of (2.12) indirectly, through \((W_\phi (t))\). However, by a similar argument to the one made in Remark 2.1, the law of \((s(t), \vartheta (t))\) depends only on their initial values \((s(0), \vartheta (0))\) and the law of (r(t)). Since the equation for (r(t)) in (2.11) also does not depend on \(\phi (t)\), the law of \((s(t), \vartheta (t))\) can be studied via the following “self-contained” system of SDEs.

At the moment, taking a limit \(\epsilon \rightarrow 0\) is, even formally, not possible due to the presence of a term \(\epsilon ^{-1}\) in the last equation. To circumvent this problem, we rescale the process \((\vartheta (t))\) by introducing the real-valued process \((\theta (t))\) as

$$\begin{aligned} \theta (t):= \epsilon \vartheta (t). \end{aligned}$$

This allows us to rewrite (2.13) as

Note that, due to the estimate

which holds for sufficiently small \(\epsilon \), we still have

In Eq. (2.14), we can now set \(\epsilon = 0\), at least formally. This leaves us with

In this singular limit, the equation for r(t) is a purely deterministic ODE, which has a globally exponentially stable equilibrium at \(\hat{r}:= \sqrt{\alpha a^{-1}}\). Since we are only interested in the asymptotic behavior as t tends to infinity, we may furthermore formally set \(r(t) = \hat{r}\), yielding

Note that after setting

Eq. (2.17) has the same form as the variational equation (2.3) studied in Sect. 2.1 (note that Itô and Stratonovich integrals coincide here). This means that the laws of the processes \((s(t), \theta (t))\) evolving under (2.17) and the processes \(({\hat{s}}(t), \hat{\theta }(t))\) evolving under (2.3) are identical. Therefore, by Theorem 2.3, the solutions to (2.17) almost surely satisfy

for all initial conditions \((s(0),\theta (0))\ne (0,0)\). It is thus reasonable to believe that

should hold, which we will in fact prove in the following section.
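The exponential stability of \(\hat{r}\) used in the formal derivation can be checked by a one-line linearization. As an assumption, we take the limiting radial equation to be the noise-free radial part of the normal form, \(\dot{r} = f(r)\) with \(f(r):= \alpha r - a r^3\). Then

$$\begin{aligned} f(\hat{r})&= \hat{r}\left( \alpha - a \hat{r}^2\right) = 0, \\ f'(\hat{r})&= \alpha - 3 a \hat{r}^2 = \alpha - 3\alpha = -2\alpha < 0, \end{aligned}$$

so that, under this assumption, small radial perturbations of \(\hat{r} = \sqrt{\alpha a^{-1}}\) decay at the exponential rate \(2\alpha \). Moreover, \(f(r) > 0\) for \(0< r < \hat{r}\) and \(f(r) < 0\) for \(r > \hat{r}\), which is consistent with attraction of all initial radii \(r(0) > 0\).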

Remark 2.8

The crucial step in this formal derivation is the coordinate change from \((s,\vartheta )\) to \((s,\theta )\). Since this is a non-orthonormal change of coordinates of the tangent bundle \(T{\mathbb {R}}^2\), it may also be interpreted as a change in the metric tensor, and thereby the geometry of the manifold \({\mathbb {R}}^2\). In particular, the change \(\vartheta \mapsto \theta := \epsilon \vartheta \) has the effect of shortening (assuming \(\epsilon <1\)) distances in angular direction, i.e. along circles centered at the origin, while preserving distances in radial direction, i.e. along straight lines through the origin. This precisely corresponds to the geometry of a cone. Now, taking the limit \(\epsilon \rightarrow 0\) has the effect of reducing the angle at the apex of the cone to 0 or, equivalently, sending the apex to infinity. Geometrically, this results in a cylinder, which is precisely the state space for the simplified model (2.1). The singularity at the origin, which arises through the coordinate change for \(\epsilon < 1\), foreshadows an obstacle (cf. Sect. 3.4) that is dealt with in the rigorous proof presented in the remainder of this paper.

3 Proof of Theorem A

The goal of this final section is to rigorously prove the large shear, small noise limit behaviour, derived formally in the previous section. Thereby we will establish the main result, Theorem A. A crucial challenge is that, in general, Lyapunov exponents do not depend continuously on the underlying system (see [33] for a survey). In the case of random systems, however, continuity of Lyapunov exponents can be shown under fairly general conditions, going back to ideas by Young [36]. The main technique is to express the top Lyapunov exponent as an integral against the stationary distribution of an auxiliary process on the projective bundle, by the so-called Furstenberg–Khasminskii formula.

In order to make the dependence on \(\epsilon \) more explicit, we denote the processes \((r(t), s(t), \theta (t))\) by \((r_\epsilon (t), s_\epsilon (t), \theta _\epsilon (t))\). With this notation, we rewrite equation (2.14) as

where the matrix-valued functions \(A^{(i)}: {\mathbb {R}}_{> 0}\times {\mathbb {R}}_{\ge 0}\rightarrow {\mathbb {R}}^{2\times 2}\), \(i=1,2\), are given by

We also rewrite the variational equation (2.3) of model (2.1) as

Remark 3.1

Due to the singularity at \(r = 0\), this process resists treatment by standard results in many cases. The first instance of this appears in Lemma 3.9, where the lack of Lipschitz continuity prohibits the use of classical results. For the final proof, even more explicit estimates in a neighborhood of \(r = 0\) are required. These are obtained in Lemma 3.11.

3.1 Auxiliary processes for Furstenberg–Khasminskii formula

The Furstenberg–Khasminskii formula is a way to express the top Lyapunov exponent via an integral against the stationary distribution of an induced process on the projective bundle. We will use the processes \(\psi \) and \(\Lambda \), introduced generally in the following lemma, to work with such a formula in convenient coordinates.

Lemma 3.2

Let (w(t)) be a semimartingale with values in some open set \(D\subset {\mathbb {R}}\), \(B^{(i)}: D \rightarrow {\mathbb {R}}^{2\times 2}\), \(i=1,2\), some smooth matrix-valued functions and (v(t)) be an \(({\mathbb {R}}^2{\setminus }\{0\})\)-valued stochastic process satisfying the SDE

Consider the processes

Then \((\psi (t))\) and \((\Lambda (t))\) satisfy the SDEs

where the functions \(p_{i}, q_{i}: D \times S^1 \rightarrow {\mathbb {R}}\), \(i=1,2\), are given by

Proof

See “Appendix A”. \(\square \)

Remark 3.3

A similar statement is given in [24, Equations (5) and (6)], with a slightly different parametrization of the projective space \({\mathbb {R}}{\mathbb {P}}^1 \simeq S^1\), leading to different expressions.

In the spirit of Lemma 3.2 we define for each \(\epsilon \ge 0\) an \(({\mathbb {R}}/2\pi {\mathbb {Z}})\)-valued process \((\psi _\epsilon (t))\) and an \({\mathbb {R}}_{> 0}\)-valued process \((\Lambda _\epsilon (t))\) by

as well as an \(({\mathbb {R}}/2\pi {\mathbb {Z}})\)-valued process \((\hat{\psi } (t))\) and an \({\mathbb {R}}_{> 0}\)-valued process \((\hat{\Lambda }(t))\) by

Here, the values of \(\psi _\epsilon \) and \(\hat{\psi }\) should be seen as a parametrization of the projective space \({\mathbb {R}}{\mathbb {P}}^1 \simeq S^1\) by \([-\pi , \pi )\). Indeed, our definition implies

The values of \((t^{-1}\Lambda _\epsilon (t))\) and \((t^{-1}{\hat{\Lambda }}(t))\) are commonly called finite time Lyapunov exponents in the literature. Note that, by (2.16), we have

for each \(\epsilon >0\) and, by (2.18), we have

Recalling (3.1) and (3.2), we introduce the functions \(g_{i}, h_{i}: {\mathbb {R}}_{> 0}\times S^1 \times {\mathbb {R}}_{\ge 0}\rightarrow {\mathbb {R}}\), \(i=1,2\), by

and the functions \(g_3, h_3: {\mathbb {R}}_{> 0}\times S^1 \times {\mathbb {R}}_{\ge 0}\rightarrow {\mathbb {R}}\) by

Proposition 3.4

The processes \((\psi _\epsilon (t))\) and \((\Lambda _\epsilon (t))\) satisfy the SDEs

The processes \((\hat{\psi }(t))\) and \((\hat{\Lambda }(t))\) satisfy the SDEs

Proof

The equations follow directly by applying Lemma 3.2 and the Itô–Stratonovich correction formula. Here, we make use of the fact that the quadratic covariation between the semimartingales \((r_\epsilon (t))\) and \((W_\phi (t))\) vanishes since \((r_\epsilon (t))\) is only driven by the Brownian motion \((W_r(t))\), which is independent of \((W_\phi (t))\). \(\square \)

For technical reasons, we will also have to consider the logarithm of the norm of the variational process in the original coordinates \((s(t),\vartheta (t))\). For that purpose, we rewrite the SDE for (s(t)) and \((\vartheta (t))\) given in (2.13) as

where the matrix-valued functions \(\tilde{A}^{(i)}: {\mathbb {R}}_{> 0}\times {\mathbb {R}}_{> 0}\rightarrow {\mathbb {R}}^{2\times 2}\), \(i=1,2\), are given by

Remark 3.5

Note that while the SDE (3.1) is well-defined for \(\epsilon \ge 0\), the SDE (3.8) is only well-defined for \(\epsilon >0\). This is precisely the motivation for mainly considering the coordinates \((s_\epsilon , \theta _\epsilon )\), rather than \((s_\epsilon , \vartheta _\epsilon )\).

Similarly to our previous definitions, we set

Note that (2.15) implies

for sufficiently small \(\epsilon >0\). In addition, we define the functions \(\tilde{g}_{i}, \tilde{h}_{i}: S^1 \times {\mathbb {R}}_{> 0}\times {\mathbb {R}}_{> 0}\rightarrow {\mathbb {R}}\), \(i=1,2\), by

and a function \(\tilde{g}_3: S^1 \times {\mathbb {R}}_{> 0}\times {\mathbb {R}}_{> 0}\rightarrow {\mathbb {R}}\) by

Remark 3.6

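Note that we have \(\tilde{h}_2(r,\psi ,\epsilon ) = 0\) for all \(r\), \(\psi \) and \(\epsilon \). This holds if and only if \(\tilde{A}^{(2)}(r, \epsilon ) \in \mathfrak {so}(2)\), where \(\mathfrak {so}(2)\) denotes the Lie algebra of the Lie group SO(2), i.e. the space of skew-symmetric \(2\times 2\) matrices, explicitly given by

$$\begin{aligned} \mathfrak {so}(2) = \left\{ \begin{pmatrix} 0 &{} -c \\ c &{} 0 \end{pmatrix} : c \in {\mathbb {R}} \right\} . \end{aligned}$$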
Since \(\tilde{h}_2 = 0\), it is not necessary to define a function \(\tilde{h}_3\).
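The role of \(\mathfrak {so}(2)\) in this remark can be checked in a few lines. The sketch below assumes that the noise coefficient of the logarithmic norm is the quadratic form \(\langle u, Bu\rangle \) on unit vectors \(u\), as is standard for linear Stratonovich equations; it is not the paper's exact expression for \(\tilde{h}_2\), and the name `radial_rate` as well as the use of the plain polar angle are hypothetical choices.

```python
import math

def radial_rate(B, angle):
    """Quadratic form <u, B u> for the unit vector u = (cos(angle), sin(angle))."""
    u = (math.cos(angle), math.sin(angle))
    Bu = (B[0][0] * u[0] + B[0][1] * u[1],
          B[1][0] * u[0] + B[1][1] * u[1])
    return u[0] * Bu[0] + u[1] * Bu[1]

skew = [[0.0, -2.0], [2.0, 0.0]]       # element of so(2)
not_skew = [[1.0, 0.0], [0.0, -1.0]]   # symmetric, trace-free

angles = [2.0 * math.pi * k / 16 for k in range(16)]
print(max(abs(radial_rate(skew, t)) for t in angles))      # vanishes up to rounding
print(max(abs(radial_rate(not_skew, t)) for t in angles))  # equals max |cos(2 t)|
```

Only the symmetric part of \(B\) contributes to the quadratic form, which is why it vanishes identically exactly for skew-symmetric matrices.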

Now, completely analogously to Proposition 3.4, we obtain the following SDEs for \((\tilde{\psi }_\epsilon (t))\) and \((\tilde{\Lambda }_\epsilon (t))\).

Proposition 3.7

The processes \((\tilde{\psi }_\epsilon (t))\) and \((\tilde{\Lambda }_\epsilon (t))\) satisfy the SDEs

The precise shape of the functions \(\tilde{h}_1\) and \(h_{i}, g_{i}, \tilde{g}_{i}\), \(i=1,2,3\), will not be important for the remainder of this section. Instead, we will only use their continuity and certain bounds they satisfy. We will use the notation “\(\bullet \lesssim \circ \)”, meaning “there exists a constant C, possibly depending on \(\alpha , \beta , a, b'\) and \(\sigma '\), but not on \(r, \psi \) or \(\epsilon \), such that \(\bullet \le C \circ \) holds”. The relevant bounds can be formulated in the following way.

Lemma 3.8

The following estimates hold.

(i) \(|h_2(r,\psi , \epsilon )| \lesssim 1+\epsilon ^{-2}r^{-1}.\)

(ii) \(|h_3(r,\psi , \epsilon )|\lesssim 1+r^2+\epsilon ^{-4}r^{-2}.\)

(iii) \(|\tilde{h}_1(r,\psi , \epsilon )|\lesssim 1+\epsilon ^{-1}r^2.\)

Proof

From the definitions (3.2) and (3.9) we get the estimates

Here, it is most convenient to think of \(\Vert \cdot \Vert \) as the maximum norm on \({\mathbb {R}}^{2\times 2}\); however, all statements hold independently of the norm chosen. Plugging this into (3.5) and (3.11), respectively, yields

Finally, we obtain

This finishes the proof. \(\square \)

3.2 Stationary measures

We briefly introduce some notation for the following: Let \({\mathbb {X}}\) be a topological space. We will denote by \({\mathcal {B}}_0({\mathbb {X}})\) the Banach space of bounded measurable functions on \({\mathbb {X}}\) and by \({\mathcal {C}}_0({\mathbb {X}})\) the Banach space of bounded continuous functions on \({\mathbb {X}}\), both equipped with the usual supremum norm. Furthermore, we will consider the set of probability measures \({\mathcal {M}}_1({\mathbb {X}})\) on \({\mathbb {X}}\), and the push-forward \(m_*: {\mathcal {M}}_1({\mathbb {X}}) \rightarrow {\mathcal {M}}_1({\mathbb {Y}})\) associated to some map \(m: {\mathbb {X}} \rightarrow {\mathbb {Y}}\). We say that a sequence of measures \((\nu _n)_{n \in {\mathbb {N}}}\) in \({\mathcal {M}}_1({\mathbb {X}})\) converges weakly to some measure \(\nu \in {\mathcal {M}}_1({\mathbb {X}})\), if for each \(\eta \in {\mathcal {C}}_0({\mathbb {X}})\) we have

The SDE for \((r_\epsilon (t),\psi _\epsilon (t))\) (cf. Eq. (3.6)) induces a Markov process on \({\mathbb {R}}_{> 0}\times S^1\), for each \(\epsilon \ge 0\). We will denote the time-1 transition operator of this Markov process by \({\mathcal {P}}_\epsilon : {\mathcal {B}}_0({\mathbb {R}}_{> 0}\times S^1) \rightarrow {\mathcal {B}}_0({\mathbb {R}}_{> 0}\times S^1)\), i.e.

We denote its dual operator by \({\mathcal {P}}^*_\epsilon : {\mathcal {M}}_1({\mathbb {R}}_{> 0}\times S^1 ) \rightarrow {\mathcal {M}}_1({\mathbb {R}}_{> 0}\times S^1)\). Analogously, we introduce the time-1 transition operator \(\hat{{\mathcal {P}}}: {\mathcal {B}}_0(S^1) \rightarrow {\mathcal {B}}_0(S^1)\) of the Markov process induced by the SDE for \(\hat{\psi }\) (cf. Eq. (3.7)), and its dual \(\hat{{\mathcal {P}}}^*: {\mathcal {M}}_1(S^1) \rightarrow {\mathcal {M}}_1(S^1)\). An operator \({\mathcal {P}}: {\mathcal {B}}_0({\mathbb {X}}) \rightarrow {\mathcal {B}}_0({\mathbb {X}})\) has the Feller property, if it can be restricted to an operator \({\mathcal {P}}: {\mathcal {C}}_0({\mathbb {X}}) \rightarrow {\mathcal {C}}_0({\mathbb {X}})\).

Lemma 3.9

The following hold.

(i) Let \(\eta \in {\mathcal {C}}_0 ( {\mathbb {R}}_{> 0}\times S^1)\). Then the map \({\mathcal {P}}_\bullet \eta : (r, \psi , \epsilon ) \mapsto \left( {\mathcal {P}}_\epsilon \eta \right) (r, \psi )\) is in \({\mathcal {C}}_0({\mathbb {R}}_{> 0}\times S^1 \times {\mathbb {R}}_{\ge 0})\).

(ii) For each \(\epsilon \ge 0\), the operator \({\mathcal {P}}_\epsilon : {\mathcal {B}}_0({\mathbb {R}}_{> 0}\times S^1) \rightarrow {\mathcal {B}}_0({\mathbb {R}}_{> 0}\times S^1)\) has the Feller property.

(iii) The operator \(\hat{{\mathcal {P}}}: {\mathcal {B}}_0(S^1) \rightarrow {\mathcal {B}}_0(S^1)\) has the Feller property.

Proof

(i) The boundedness of \({\mathcal {P}}_\bullet \eta \) follows directly from the boundedness of \(\eta \). Thus, we only have to show continuity. Without loss of generality, we fix some \(\epsilon ^* > 0\) and henceforth only consider \(\epsilon \in [0, \epsilon ^*]\). Next we augment the SDE (3.6) by \(\textrm{d}\epsilon (t) = 0\), i.e. we consider the SDE

on the state space \((r',\psi ', \epsilon ') \in {\mathbb {R}}_{> 0}\times S^1 \times [0, \epsilon ^*]\), where \((W'_1(t), W'_2(t))\) is a two-dimensional Brownian motion. Since \((r'(t))\) has the same law as \((r_{\epsilon _0}(t))\) with \(\epsilon _0 = \epsilon '(0)\), there is almost surely no blow-up in finite time and global (in time) solutions of (3.12) exist. Let

be a solution to the SDE (3.12) with

We want to show that the process (3.13) has a modification which is continuous in \(r_0\), \(\psi _0\) and \(\epsilon _0\). For each \(n \in {\mathbb {N}}\) we define the waiting time \(\tau _n(r_0,\psi _0, \epsilon _0)\) by

and consider the stopped process

Note that we have, for any \(n, m \in {\mathbb {N}}\),

for all \(t \le 1 \wedge \tau _n(r_0,\psi _0, \epsilon _0) \wedge \tau _m(r_0,\psi _0, \epsilon _0)\). The drift and diffusion coefficients of (3.12) are smooth and thus bounded and Lipschitz continuous on the restricted state space \(\Gamma _n:= [n^{-1},n] \times S^1 \times [0, \epsilon ^*]\). Therefore we can use [30, Theorem IX.2.4] to find a modification of (3.14) which is continuous in \(r_0\), \(\psi _0\) and \(\epsilon _0\). Since any two continuous modifications are necessarily indistinguishable, the equality (3.15) does still hold almost surely. Now we can define a modification of (3.13) by

Since the sequence on the right-hand side restricted to \((r_0,\psi _0, \epsilon _0) \in \Gamma _m\) is almost surely eventually constant for each \(m \in {\mathbb {N}}\), this does indeed define a modification of (3.13) which is continuous in \(r_0\), \(\psi _0\) and \(\epsilon _0\).

Note that \((r'_{r_0, \psi _0, \epsilon _0}(t),\psi '_{r_0, \psi _0, \epsilon _0}(t))\) has the same law as the solution \((r_{\epsilon _0}(t),\psi _{\epsilon _0}(t))\) of (3.6) with initial conditions \(r_{\epsilon _0} (0) = r_0\) and \(\psi _{\epsilon _0} (0) = \psi _0\). Thus, we get

and continuity of \({\mathcal {P}}_\bullet \eta \) follows from the dominated convergence theorem.

(ii) Let again \(\eta \in {\mathcal {C}}_0 ( {\mathbb {R}}_{> 0}\times S^1)\). Since by (i) the map \({\mathcal {P}}_\bullet \eta : (r, \psi , \epsilon ) \mapsto \left( {\mathcal {P}}_\epsilon \eta \right) (r, \psi )\) is in \({\mathcal {C}}_0({\mathbb {R}}_{> 0}\times S^1 \times {\mathbb {R}}_{\ge 0})\), we have, in particular, that \({\mathcal {P}}_\epsilon \eta : (r,\psi ) \mapsto \left( {\mathcal {P}}_\epsilon \eta \right) (r, \psi )\) is in \({\mathcal {C}}_0({\mathbb {R}}_{> 0}\times S^1)\) for each \(\epsilon \), implying the Feller property.

(iii) Note that the functions \(g_3({\hat{r}},\cdot , 0), ~g_2({\hat{r}},\cdot , 0): S^1 \rightarrow {\mathbb {R}}\) appearing in Eq. (3.7) are smooth functions defined on a compact domain and thus, in particular, bounded and Lipschitz-continuous. Thus the Feller property of \(\hat{{\mathcal {P}}}\) follows directly from [30, Theorem IX.2.5]. \(\square \)

In the following let \(\mathfrak {p}^{{\mathbb {R}}_{> 0}}: {\mathbb {R}}_{> 0}\times S^1 \rightarrow {\mathbb {R}}_{> 0}\) and \(\mathfrak {p}^{S^1}: {\mathbb {R}}_{> 0}\times S^1 \rightarrow S^1\) denote the projections onto the first and second component respectively. Firstly, we establish the existence and uniqueness of stationary measures for the respective processes.

Proposition 3.10

The following hold.

(i) For each \(\epsilon >0\), there exists a unique measure \(\rho _\epsilon \in {\mathcal {M}}_1({\mathbb {R}}_{> 0}\times S^1)\) with \({\mathcal {P}}_\epsilon ^* \rho _\epsilon = \rho _\epsilon \). Its marginal on the r-coordinate \(\mathfrak {p}_{*}^{{\mathbb {R}}_{> 0}} \rho _\epsilon \in {\mathcal {M}}_1 ({\mathbb {R}}_{> 0})\) has a density \(\xi _\epsilon : {\mathbb {R}}_{> 0}\rightarrow {\mathbb {R}}_{\ge 0}\) with respect to Lebesgue measure given by

$$\begin{aligned} \xi _\epsilon (r) := L_\epsilon r \exp \left( -\frac{a}{2\epsilon ^2\sigma '^2}\left( r^2-\hat{ r}^2\right) ^2\right) , \end{aligned}$$ (3.16)

where \(L_\epsilon > 0\) is a normalization constant given by

$$\begin{aligned} L_\epsilon := \left( \int _0^\infty r \exp \left( -\frac{a}{2\epsilon ^2\sigma '^2}\left( r^2-\hat{ r}^2\right) ^2\right) \textrm{d}r \right) ^{-1} = \frac{2\sqrt{2a}}{\sqrt{\pi }\epsilon \sigma '{\text {erfc}}\left( -\frac{\alpha }{\epsilon \sigma '\sqrt{2a}}\right) }, \end{aligned}$$

with \({\text {erfc}}\) denoting the complementary error function.

(ii) There exists a unique measure \(\hat{\rho } \in {\mathcal {M}}_1 (S^1)\) with \(\hat{{\mathcal {P}}}^* \hat{\rho } = \hat{\rho }.\)

(iii) The measure \(\rho _0 = \delta _{{\hat{r}}} \times \hat{\rho } \in {\mathcal {M}}_1({\mathbb {R}}_{> 0}\times S^1)\) is the unique measure satisfying \({\mathcal {P}}_0^* \rho _0 = \rho _0\).
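The closed-form expression for \(L_\epsilon \) in terms of \({\text {erfc}}\) can be checked numerically: multiplied by the integral of the unnormalized density \(r \exp (-\frac{a}{2\epsilon ^2\sigma '^2}(r^2-{\hat{r}}^2)^2)\), it should give 1, so that \(\xi _\epsilon \) integrates to 1. The following minimal sketch does this with a composite Simpson rule; the parameter values \(\alpha = a = \sigma ' = 1\), the cut-off of the integration domain at \(r = 10\) and all function names are assumptions made for illustration only.

```python
import math

def xi_unnormalized(r, eps, alpha=1.0, a=1.0, sigma=1.0):
    """Density (3.16) without the constant L_eps; r_hat**2 = alpha / a."""
    r_hat_sq = alpha / a
    return r * math.exp(-a / (2.0 * eps**2 * sigma**2) * (r**2 - r_hat_sq) ** 2)

def simpson(f, lo, hi, n=20000):
    """Composite Simpson rule on [lo, hi] with an even number n of subintervals."""
    h = (hi - lo) / n
    total = f(lo) + f(hi)
    for i in range(1, n):
        total += (4.0 if i % 2 else 2.0) * f(lo + i * h)
    return total * h / 3.0

def L_closed_form(eps, alpha=1.0, a=1.0, sigma=1.0):
    """Closed-form normalization constant involving erfc."""
    arg = -alpha / (eps * sigma * math.sqrt(2.0 * a))
    return 2.0 * math.sqrt(2.0 * a) / (math.sqrt(math.pi) * eps * sigma * math.erfc(arg))

for eps in (0.2, 0.5, 1.0):
    mass = simpson(lambda r: xi_unnormalized(r, eps), 0.0, 10.0)
    print(eps, L_closed_form(eps) * mass)  # total mass of xi_eps
```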

Proof

For (i), we will start by establishing existence of a stationary measure. One can easily check that, for each \(\epsilon >0\), the function \(\xi _\epsilon \) satisfies the stationary Fokker-Planck equation

and \(\xi _\epsilon (r)\textrm{d}r \in {\mathcal {M}}_1({\mathbb {R}}_{> 0})\) is thus a stationary distribution for the process \((r_\epsilon (t))\). Next we can consider the family of probability measures \({\mathcal {A}}_\epsilon \subset {\mathcal {M}}_1({\mathbb {R}}_{> 0}\times S^1)\), defined by

Since \(\xi _\epsilon (r)\textrm{d}r\) is stationary, \({\mathcal {A}}_\epsilon \) is \({\mathcal {P}}_\epsilon ^*\) invariant, i.e. we have \({\mathcal {P}}_\epsilon ^*{\mathcal {A}}_\epsilon \subseteq {\mathcal {A}}_\epsilon \). Furthermore, with respect to the topology of weak convergence, \({\mathcal {A}}_\epsilon \) is convex and closed by definition and tight since \(\xi _\epsilon (r)\textrm{d}r\) is tight and \(S^1\) is compact. Starting from an arbitrary \(\rho _\epsilon ^{(0)} \in {\mathcal {A}}_\epsilon \), we can construct a sequence \(\left( \rho _\epsilon ^{(n)}\right) _{n \in {\mathbb {N}}_0}\) by

By invariance and convexity of \({\mathcal {A}}_\epsilon \), we have \(\rho _\epsilon ^{(n)} \in {\mathcal {A}}_\epsilon \) for all \(n \in {\mathbb {N}}_0\). By Prokhorov’s theorem, the sequence has an accumulation point \(\rho _\epsilon \) with respect to the topology of weak convergence, which must lie in \({\mathcal {A}}_\epsilon \) due to closedness. Analogously to the proof of the Krylov–Bogolyubov theorem (see e.g. [22, Theorem 1.10]), we can conclude that \({\mathcal {P}}_\epsilon ^*\rho _\epsilon = \rho _\epsilon \). Uniqueness follows directly from the uniform ellipticity of the associated generator (see e.g. [31, Sect. 3] for details).
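The averaging construction behind this existence argument can be illustrated in a finite-state toy setting. The sketch below assumes that the sequence is formed by Cesàro averages of the iterates of the dual operator, as in the standard Krylov–Bogolyubov argument; the three-state periodic chain and all names are hypothetical and unrelated to the model studied here.

```python
def push_forward(rho, P):
    """Apply the dual of the transition matrix P to the row vector rho."""
    n = len(rho)
    return [sum(rho[i] * P[i][j] for i in range(n)) for j in range(n)]

def cesaro_average(rho0, P, n):
    """Return (1/n) * sum_{k=0}^{n-1} rho0 P^k."""
    avg = [0.0] * len(rho0)
    rho = list(rho0)
    for _ in range(n):
        avg = [x + y / n for x, y in zip(avg, rho)]
        rho = push_forward(rho, P)
    return avg

# Deterministic 3-cycle: the iterates rho0 P^k never converge, but their averages do.
P = [[0.0, 1.0, 0.0],
     [0.0, 0.0, 1.0],
     [1.0, 0.0, 0.0]]
rho0 = [1.0, 0.0, 0.0]

n = 300
nu = cesaro_average(rho0, P, n)
defect = sum(abs(x - y) for x, y in zip(push_forward(nu, P), nu))
print(nu, defect)
```

The invariance defect of the averaged measure is bounded by \(2/n\), since the sum telescopes; this is the mechanism that forces every accumulation point to be stationary.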

For (ii), both existence and uniqueness were already established in [24], by using the Krylov–Bogolyubov theorem for existence and Hörmander’s theorem for uniqueness.

For (iii), it is easy to check that \(\rho _0 = \delta _{{\hat{r}}} \times \hat{\rho }\) satisfies \({\mathcal {P}}_0^* \rho _0 = \rho _0\). Thus it only remains to show that \(\rho _0\) is the unique stationary measure. Suppose we are given a measure \(\rho _0' \in {\mathcal {M}}_1({\mathbb {R}}_{> 0}\times S^1)\) with \({\mathcal {P}}_0^* \rho _0' = \rho _0'\). Since the process \((r_0(t))\) is governed by the ODE

we must have \(\mathfrak {p}_{*}^{{\mathbb {R}}_{> 0}} \rho _0' = \delta _{{\hat{r}}}\). Furthermore, if we set \(r_0(t) = {\hat{r}}\) for all \(t\ge 0\), the process \((\psi _0(t))\) is governed by the same equation as \((\hat{\psi }(t))\). Therefore, it follows from ii) that \(\mathfrak {p}_{*}^{S^1} \rho _0' = \hat{\rho }\) and, thus, \(\rho _0'= \delta _{{\hat{r}}} \times \hat{\rho }= \rho _0\). \(\square \)

We will now prove two lemmas, using properties of the stationary measures established in Proposition 3.10, that will be essential for our proof of Theorem A. The first lemma concerns a concentration bound for the measure \(\xi _\epsilon (r)\textrm{d}r\) for sufficiently small \(\epsilon \).

Lemma 3.11

Let \(f: {\mathbb {R}}_{> 0}\rightarrow {\mathbb {R}}\) be a function that satisfies \(|f(r)| \lesssim 1+r^{-1}+r^k\) for some \(k \in {\mathbb {N}}\). Then \(f\in L^1_{\xi _\epsilon }\), for all \(\epsilon >0\). Furthermore, if there exists an open neighborhood \(U \subset {\mathbb {R}}_{> 0}\) of \({\hat{r}}\) with \(f(r) = 0\) for all \(r\in U\), then the bound

holds for sufficiently small \(\epsilon >0\).

Proof

To show these statements we will make use of the substitution \(p:= r^2\) and will write \(\tilde{f}(p):= f(r) = f(\sqrt{p})\). Firstly, we observe that

where \({\mathcal {N}}({\hat{r}}^2, \epsilon ^2\sigma '^2a^{-1})\) denotes a normal distribution with mean \({\hat{r}}^2\) and variance \(\epsilon ^2\sigma '^2a^{-1}\) and we interpret \(\tilde{f}\) as a function defined on the entire real line by setting \(\tilde{f}(p):= 0\) for \(p \le 0\). Since the complementary error function always takes values larger than 1 for negative arguments, we get the bound

Therefore, in order to show \(f \in L^1_{\xi _\epsilon }\), it is sufficient to show \(\tilde{f} \in L^1_{{\mathcal {N}}({\hat{r}}^2, \epsilon ^2\sigma '^2a^{-1})}\). Suppose f satisfies \(|f(r)| \lesssim 1+r^{-1}+r^k\) and thus equivalently \(|\tilde{f}(p)| \lesssim 1+p^{-\frac{1}{2}}+p^{\frac{k}{2}}\). Then, we have

which implies \(\mathbb {1}_{(0,1]}\tilde{f} \in L^1_{{\text {Leb}}}\) and in particular \(\mathbb {1}_{(0,1]}\tilde{f} \in L^1_{{\mathcal {N}}({\hat{r}}^2, \epsilon ^2\sigma '^2a^{-1})}\). Similarly, we have

which immediately implies \(\mathbb {1}_{(1,\infty )}\tilde{f} \in L^1_{{\mathcal {N}}({\hat{r}}^2, \epsilon ^2\sigma '^2a^{-1})}\). Since we have \(\tilde{f} = \mathbb {1}_{(0,1]}\tilde{f} + \mathbb {1}_{(1,\infty )}\tilde{f}\), we also have \(\tilde{f} \in L^1_{{\mathcal {N}}({\hat{r}}^2, \epsilon ^2\sigma '^2a^{-1})}\), which shows the first part of the lemma.

For the second part, suppose \(f(r) = 0\) for all \(r \in U\), where U is an open neighborhood of \({\hat{r}}\). Then \(\tilde{f}\) will vanish in a neighborhood around \({\hat{r}} ^2\). Let \(0< \delta < {\hat{r}}^2\) be such that \(\tilde{f}(p) = 0\) for all \(p \in ({\hat{r}}^2 -\delta , {\hat{r}}^2 +\delta )\). For \(p \in {\mathbb {R}}_{> 0}{\setminus } ({\hat{r}}^2 -\delta , {\hat{r}}^2 +\delta )\), we have

Now, we can estimate

By the first part of the lemma, we have \(\tilde{f} \in L^1_{{\mathcal {N}}({\hat{r}}^2, \sigma '^2a^{-1})}\) such that the expectation in the last line is finite. Thus, for sufficiently small \(\epsilon >0\), we get the desired bound

This finishes the proof. \(\square \)
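The concentration mechanism behind Lemma 3.11 can be observed numerically. The following sketch uses the substitution \(p = r^2\) from the proof, under which \(\xi _\epsilon (r)\textrm{d}r\) becomes a normal law with mean \({\hat{r}}^2\) and variance \(\epsilon ^2\sigma '^2a^{-1}\) conditioned on \(p>0\), and integrates a test function that vanishes near \({\hat{r}}\). The parameter values \(\alpha = a = \sigma ' = 1\), the neighborhood radius 0.25, the truncation at ten standard deviations and all names are assumptions chosen for illustration; the computation does not reproduce the precise bound of the lemma.

```python
import math

R_HAT = 1.0  # equals sqrt(alpha / a) for the default parameters below

def integral_against_xi(f, eps, alpha=1.0, a=1.0, sigma=1.0, n=20000):
    """Approximate the integral of f(r) * xi_eps(r) dr via p = r**2 (midpoint rule)."""
    mu = alpha / a                     # mean r_hat**2 of the normal law in p
    s = eps * sigma / math.sqrt(a)     # its standard deviation
    h = (mu + 10.0 * s) / n            # grid on (0, mu + 10 s)
    num = den = 0.0
    for i in range(n):
        p = (i + 0.5) * h              # midpoints avoid the singularity at p = 0
        w = math.exp(-((p - mu) ** 2) / (2.0 * s * s))
        num += f(math.sqrt(p)) * w
        den += w
    return num / den

def f(r):
    """Test function with |f| <= 1 + 1/r + r**2 that vanishes near R_HAT."""
    if abs(r - R_HAT) < 0.25:
        return 0.0
    return 1.0 + 1.0 / r + r**2

for eps in (0.4, 0.2, 0.1, 0.05):
    print(eps, integral_against_xi(f, eps))
```

In this example the printed values drop by many orders of magnitude as \(\epsilon \) decreases, reflecting the Gaussian tails of the law in the variable \(p\).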

The second lemma concerns the weak convergence of \(\rho _{\epsilon }\) to \(\rho _0\) which will be crucial for obtaining continuity of the top Lyapunov exponents for \(\epsilon \rightarrow 0\).

Lemma 3.12

The measures \(\rho _\epsilon \) converge weakly in \(\mathcal {M}_1({\mathbb {R}}_{> 0}\times S^1)\) to \(\rho _0 = \delta _{\hat{r}}\times \hat{\rho }\) as \(\epsilon \) tends to zero.

The proof of this statement is inspired by [32, Proposition 5.9].

Proof of Lemma 3.12

By using formula (3.16), we obtain directly that \((\mathfrak {p}_{*}^{{\mathbb {R}}_{> 0}} \rho _\epsilon ) = (\xi _\epsilon (r)\textrm{d}r)\) converges weakly in \({\mathcal {M}}_1({\mathbb {R}}_{> 0})\) to \(\delta _{\hat{r}}\) as \(\epsilon \) tends to zero, i.e. weak convergence in the r-direction. Thus, it is sufficient to show that \((\mathfrak {p}_{*}^{S^1} \rho _\epsilon )\) converges weakly in \({\mathcal {M}}_1 (S^1)\) to \(\hat{\rho }\). The space \({\mathcal {M}}_1 (S^1)\) is compact, by the Banach–Alaoglu theorem, and metrizable. Therefore, \((\mathfrak {p}_{*}^{S^1} \rho _\epsilon )\) converges weakly to \(\hat{\rho }\) if and only if every accumulation point of \((\mathfrak {p}_{*}^{S^1} \rho _\epsilon )\), as \(\epsilon \) tends to zero, is equal to \(\hat{\rho }\).

Suppose now that \(\nu \in {\mathcal {M}}_1(S^1)\) is such an accumulation point and \((\epsilon _n)_{n \in {\mathbb {N}}}\) is chosen such that \(\epsilon _n \rightarrow 0\) and \(\mathfrak {p}_{*}^{S^1}\rho _{\epsilon _n} \rightarrow \nu \) weakly. The latter property is equivalent to \((\rho _{\epsilon _n})\) converging weakly to \(\delta _{\hat{r}} \times \nu \), which in turn also implies that \((\rho _{\epsilon _n} \times \delta _{\epsilon _n})\) converges weakly in \({\mathcal {M}}_1({\mathbb {R}}_{> 0}\times S^1 \times {\mathbb {R}}_{\ge 0})\) to \(\delta _{\hat{r}} \times \nu \times \delta _{0}\). Let \(\eta \in {\mathcal {C}}_0({\mathbb {R}}_{> 0}\times S^1)\) be an arbitrary bounded continuous function. We have

Now we can use Lemma 3.9 i) and the weak convergence \(\rho _{\epsilon _n} \times \delta _{\epsilon _n} \rightarrow \delta _{\hat{r}} \times \nu \times \delta _{0}\) to get

Since \(\eta \in {\mathcal {C}}_0({\mathbb {R}}_{> 0}\times S^1)\) was arbitrary, this implies \(\delta _{\hat{r}} \times \nu = {\mathcal {P}}^*_0 (\delta _{\hat{r}} \times \nu )\). However, by Proposition 3.10 (iii), the measure \(\delta _{\hat{r}}\times \hat{\rho } \) is the unique fixed point of \({\mathcal {P}}_0^*\), so we must have \(\nu = \hat{\rho }\), completing the proof. \(\square \)
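The weak convergence of the radial marginal \(\xi _\epsilon (r)\textrm{d}r\) to \(\delta _{{\hat{r}}}\), which is the first step of this proof, can be illustrated for a single bounded continuous test function. The sketch below again uses the substitution \(p = r^2\); the choice \(\eta (r) = \cos (r)\), the parameter values \(\alpha = a = \sigma ' = 1\) (so that \({\hat{r}} = 1\)), the truncation at ten standard deviations and all names are assumptions made for illustration.

```python
import math

def expect_under_xi(eta, eps, alpha=1.0, a=1.0, sigma=1.0, n=20000):
    """Approximate the integral of eta(r) * xi_eps(r) dr via p = r**2 (midpoint rule)."""
    mu = alpha / a                     # r_hat**2
    s = eps * sigma / math.sqrt(a)     # standard deviation in the variable p
    lo = max(0.0, mu - 10.0 * s)       # the law is supported on p > 0
    hi = mu + 10.0 * s
    h = (hi - lo) / n
    num = den = 0.0
    for i in range(n):
        p = lo + (i + 0.5) * h
        w = math.exp(-((p - mu) ** 2) / (2.0 * s * s))
        num += eta(math.sqrt(p)) * w
        den += w
    return num / den

r_hat = 1.0
for eps in (0.2, 0.1, 0.05, 0.02):
    error = abs(expect_under_xi(math.cos, eps) - math.cos(r_hat))
    print(eps, error)  # distance to the integral against the Dirac mass at r_hat
```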

3.3 Furstenberg–Khasminskii formula for limiting process

Recall from Sect. 2.1 the Lyapunov exponent \(\hat{\lambda }\) (see (2.4)) of (2.1), the function \(\Psi \) given by (2.5) and the change of parameters

For the limiting process on \(S^1\), as given in Proposition 3.4, we can prove the following Furstenberg–Khasminskii formula. A similar formula for a more general situation is already given in [24]. For completeness of our arguments, we provide a self-contained derivation here.

Proposition 3.13

We have

In order to prove this proposition, we require the following lemma.

Lemma 3.14

Suppose (w(t)) is a real-valued semi-martingale satisfying

Then, for (W(t)) denoting some Brownian motion, we have

Proof

Let (w(t)) satisfy the assumption. Define a process (X(t)) by

Clearly (X(t)) is a local martingale with quadratic variation \(\langle X(t) \rangle \) given by

By the Dambis–Dubins–Schwarz theorem (see e.g. [30, Theorem V.1.7]) there exists a Brownian motion \((W_X(t))\) (which is not adapted to the original filtration and possibly even defined on an extension of the probability space), such that

By well-known growth bounds on the Brownian motion, this implies

\(\square \)
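The content of Lemma 3.14 can be observed in a simulation: for a bounded adapted integrand, which satisfies the assumption of the lemma, the stochastic integral divided by \(t\) becomes small for large \(t\). The following sketch uses an Euler discretization of the Itô integral with the hypothetical integrand \(w(t) = \cos (W(t))\); the integrand, step size, time horizon, seed and function name are assumptions chosen for illustration and are not taken from the model.

```python
import math
import random

def averaged_stochastic_integral(n_steps=100_000, dt=0.01, seed=0):
    """Return (1/T) * sum of w(t_k) * (W(t_{k+1}) - W(t_k)) with w = cos(W), T = n_steps * dt."""
    rng = random.Random(seed)
    sd = math.sqrt(dt)
    W = 0.0   # Brownian path
    X = 0.0   # discretized stochastic integral
    for _ in range(n_steps):
        dW = rng.gauss(0.0, sd)
        X += math.cos(W) * dW   # integrand evaluated at the left endpoint (Ito)
        W += dW
    return X / (n_steps * dt)

print(averaged_stochastic_integral())
```

Since the integrand is bounded by 1, the standard deviation of the returned value is at most \(T^{-1/2} \approx 0.03\) for \(T = 1000\), consistent with the decay asserted in the lemma.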

Proof of Proposition 3.13

From Theorem 2.3 we get

Using the SDE representation from Proposition 3.4 yields

Since the function \(h_2({\hat{r}},\cdot , 0)\) is bounded, the process \((h_2({\hat{r}},\hat{\psi }(t'), 0))\) satisfies the assumption of Lemma 3.14 and we obtain

Ergodicity now gives us

since \({\hat{\rho }}\) is the unique stationary distribution of the Markov process \((\hat{\psi }(t))\) by Proposition 3.10 (ii). \(\square \)

3.4 Continuity of Lyapunov exponents

Our goal is to show the limit

For that purpose, we want to find a Furstenberg–Khasminskii formula for the Lyapunov exponent on the left-hand side, similar to the expression for the right-hand side given in Eq. (3.17). While such a formula has already been given in [16, Equation (19)], we encounter a subtle issue here. In order to show (3.18) along the formal outline in Sect. 2.2, we express the variational process in \((s_{\epsilon }, \theta _{\epsilon })\)-coordinates (cf. (3.1)), which are transformed into the projective coordinate \(\psi _{\epsilon }\) (see Proposition 3.4). Analogously to the proof of Proposition 3.13, we can write

Now we would like to use Lemma 3.14 in order to show that the second integral is negligible in the limit. By ergodicity, the assumption of Lemma 3.14 is equivalent to \(h_2(\cdot , \cdot , \epsilon ) \in L^2_{\rho _\epsilon }\), where \(h_2\) is given by (3.5). Since it seems out of reach to make explicit statements about the distribution \(\rho _\epsilon \) beyond the fact that its marginal in the r-direction has density \(\xi _\epsilon \), one is forced to rely on the estimate \(h_2(r, \psi , \epsilon ) \le \sup _{\psi '}h_2(r, \psi ', \epsilon )\), which leads to the bound \(|h_2(r,\psi , \epsilon )| \lesssim 1+\epsilon ^{-2}r^{-1}\) given in Lemma 3.8. This, however, is not sufficient for obtaining the assumption of Lemma 3.14, since we have \((r \mapsto 1+\epsilon ^{-2}r^{-1}) \notin {L^2_{\xi _\epsilon }}\): by (3.16), the density \(\xi _\epsilon (r)\) is proportional to r near the origin, so that \(r^{-2}\xi _\epsilon (r)\) behaves like \(r^{-1}\) and is not integrable at \(r=0\) (even though the function is in \(L^1_{\xi _\epsilon }\), cf. Lemma 3.11). Analogously, for the first integral above, we would hope to have \(h_3(\cdot , \cdot , \epsilon ) \in L^1_{\rho _\epsilon }\) in order to use ergodicity; however, we encounter a very similar problem.

We want to point out two aspects of this issue. Firstly, in both cases the problem arises due to the singularity at \(r=0\). Secondly, neither of these problems has been present in previous works like [16], as they only arise in the rescaled coordinates \((s_\epsilon , \theta _\epsilon ) = (s_\epsilon , \epsilon \vartheta _\epsilon )\) (and their polar representation \((\psi _\epsilon , \Lambda _\epsilon )\)) and are not present in the original coordinates \((s_\epsilon , \vartheta _\epsilon )\) (and their polar representation \((\tilde{\psi }_\epsilon , \tilde{\Lambda }_\epsilon )\), see Proposition 3.7). However, the coordinate rescaling is crucial to obtain the desired limit, as outlined in Sect. 2.2. In order to fix this issue we will introduce coordinates for the variational process (2.7) which behave like \((s_\epsilon , \vartheta _\epsilon )\) whenever \(r_\epsilon \) is close to 0 and behave like \((s_\epsilon , \theta _\epsilon )\) whenever \(r_\epsilon \) is close to \({\hat{r}}\). Thereby we avoid the integrability problem around the singularity at the origin, while having the “correct” coordinate system for the \(\epsilon \rightarrow 0\)-limit for a significant portion of time. We are now ready to finally prove Theorem A.

Proof of Theorem A

Note that Theorem 2.4 already covers the second part of the statement. Thus it only remains to show (1.2).

We start by partitioning the positive real axis into four intervals \(I_1 \cup I_2 \cup I_3 \cup I_4 = {\mathbb {R}}_{> 0}\) defined by

Next, we define a function \(\chi : {\mathbb {R}}_{> 0}\rightarrow [0,1]\) (see Fig. 5) by

and a process \((\Lambda _\epsilon ^*(t))\) by

By (3.4) we have

and thus also

Our goal now is to show

which will complete the proof. By the definition of \((\Lambda ^*_\epsilon (t))\), Propositions 3.4, 3.7 and Eq. (2.11), we have

Here, we have made use of the fact that the quadratic covariations \(\langle r_\epsilon , \Lambda _\epsilon \rangle \) and \(\langle r_\epsilon , \tilde{\Lambda }_\epsilon \rangle \) vanish. In the following, we will determine the corresponding limits for the time averages of \(\text {I}_{\epsilon }(t), \dots , \text {V}_{\epsilon }(t)\) separately. In particular we will show

as well as

Note that together these equations imply (3.19), thereby finishing the proof.

(i) \(\text {I}_{\epsilon }\mathbf {(t)}\): we split the integral into

First we will bound the second summand. Using Lemma 3.8(ii) we get

The function \(f(r):= \mathbb {1}_{I_4}(r) r^2\) satisfies all assumptions of Lemma 3.11. Thus we can use ergodicity to get the following estimate.

Concerning the first summand, we obtain by ergodicity

Since \((I_2\cup I_3)\times S^1\) is a compact domain, the function \(h_3(\cdot , \cdot , \epsilon )\) converges uniformly to \(h_3(\cdot , \cdot , 0)\) as \(\epsilon \rightarrow 0\). Using this fact together with the weak convergence established in Lemma 3.12 and the expression (3.17), we get

(ii) \(\text {II}_{\epsilon }\mathbf {(t)}\): similarly to the argument for the second summand in (i), we first use Lemma 3.8 (iii) and then ergodicity to get

Again, the function \(f(r):= [1-\chi (r)]r^2\) satisfies all the assumptions in Lemma 3.11 and we obtain

(iii) \(\text {III}_{\epsilon } \mathbf {(t)}\): note that the term

is bounded uniformly in \(\epsilon \) for \(\epsilon \in (0,1]\). Together with the bound (3.10), this allows for the estimate

Now we can again use Lemma 3.11 to obtain

(iv) \(\text {IV}_{\epsilon }\mathbf {(t)}\): as a consequence of Lemma 3.8 (i), we have

Thus, in particular, the process \((\chi (r_\epsilon (t)) h_2( r_\epsilon (t), \psi _\epsilon (t),\epsilon ))\) is bounded for every \(\epsilon >0\). Therefore Lemma 3.14 gives

for each \(\epsilon >0.\)

(v) \(\text {V}_{\epsilon }\mathbf {(t)}\): using the estimate (3.10), the process \((\mathbb {1}_{I_2 }(r_\epsilon (t'))(\Lambda _\epsilon (t')-\tilde{\Lambda }_\epsilon (t')))\) is bounded. Hence, similarly to iv), Lemma 3.14 yields

for each \(\epsilon >0.\) \(\square \)

Data Availability

Data sharing not applicable to this article as no datasets were generated or analysed during the current study.

References

1. Arnold, L.: Random Dynamical Systems. Springer Monographs in Mathematics. Springer, Berlin (1998)

2. Arnold, L., Sri Namachchivaya, N., Schenk-Hoppé, K.R.: Toward an understanding of stochastic Hopf bifurcation: a case study. Int. J. Bifurc. Chaos Appl. Sci. Eng. 6(11), 1947–1975 (1996)

3. Auslender, E.I., Mil’shteĭn, G.N.: Asymptotic expansions of the Liapunov index for linear stochastic systems with small noise. J. Appl. Math. Mech. 46(3), 277–283 (1982)

4. Baxendale, P.H.: Statistical equilibrium and two-point motion for a stochastic flow of diffeomorphisms. In: Spatial Stochastic Processes, Volume 19 of Progress in Probability, pp. 189–218. Birkhäuser, Boston (1991)

5. Baxendale, P.H.: A stochastic Hopf bifurcation. Probab. Theory Relat. Fields 99(4), 581–616 (1994)

6. Baxendale, P.H., Goukasian, L.: Lyapunov exponents of nilpotent Itô systems with random coefficients. Stoch. Process. Appl. 95(2), 219–233 (2001)

7. Baxendale, P.H., Goukasian, L.: Lyapunov exponents for small random perturbations of Hamiltonian systems. Ann. Probab. 30(1), 101–134 (2002)

8. Bedrossian, J., Blumenthal, A., Punshon-Smith, S.: The Batchelor spectrum of passive scalar turbulence in stochastic fluid mechanics at fixed Reynolds number. Commun. Pure Appl. Math. 75(6), 1237–1291 (2022)

9. Bedrossian, J., Blumenthal, A., Punshon-Smith, S.: Lagrangian chaos and scalar advection in stochastic fluid mechanics. J. Eur. Math. Soc. (JEMS) 24(6), 1893–1990 (2022)

10. Bedrossian, J., Blumenthal, A., Punshon-Smith, S.: A regularity method for lower bounds on the Lyapunov exponent for stochastic differential equations. Invent. Math. 227(2), 429–516 (2022)

11. Biskamp, M.: Pesin’s formula for random dynamical systems on \(\mathbb{R}^d\). J. Dyn. Differ. Equ. 26(1), 109–142 (2014)

12. Blumenthal, A., Nisoli, I.: Noise induced order for skew-products over a non-uniformly expanding base. Nonlinearity 35(10), 5481–5504 (2022)

13. Blumenthal, A., Xue, J., Young, L.-S.: Lyapunov exponents for random perturbations of some area-preserving maps including the standard map. Ann. Math. (2) 185(1), 285–310 (2017)

14. Blumenthal, A., Xue, J., Young, L.-S.: Lyapunov exponents and correlation decay for random perturbations of some prototypical 2D maps. Commun. Math. Phys. 359(1), 347–373 (2018)

15. Breden, M., Engel, M.: Computer-assisted proof of shear-induced chaos in stochastically perturbed Hopf systems. Ann. Appl. Probab. 33(2), 1052–1094 (2023)

16. Deville, R., Namachchivaya, N., Rapti, Z.: Stability of a stochastic two-dimensional non-Hamiltonian system. SIAM J. Appl. Math. 71(4), 1458–1475 (2011)

17. Doan, T.S., Engel, M., Lamb, J.S.W., Rasmussen, M.: Hopf bifurcation with additive noise. Nonlinearity 31(10), 4567–4601 (2018)

18. Engel, M.: Local phenomena in random dynamical systems: bifurcations, synchronisation, and quasi-stationary dynamics. Ph.D. thesis, Imperial College London (2018)

19. Engel, M., Lamb, J., Rasmussen, M.: Bifurcation analysis of a stochastically driven limit cycle. Commun. Math. Phys. 365(3), 935–942 (2019)

20. Engel, M., Lamb, J.S.W., Rasmussen, M.: Conditioned Lyapunov exponents for random dynamical systems. Trans. Am. Math. Soc. 372(9), 6343–6370 (2019)

21. Gorodetski, A.: On stochastic sea of the standard map. Commun. Math. Phys. 309(1), 155–192 (2012)

22. Hairer, M.: Convergence of Markov processes. https://hairer.org/notes/Convergence.pdf (2021)

23. Imkeller, P.: The smoothness of laws of random flags and Oseledets spaces of linear stochastic differential equations. Potential Anal. 9(4), 321–349 (1998)

24. Imkeller, P., Lederer, C.: Some formulas for Lyapunov exponents and rotation numbers in two dimensions and the stability of the harmonic oscillator and the inverted pendulum. Dyn. Syst. 16(1), 29–61 (2001)

25. Ledrappier, F., Young, L.-S.: Entropy formula for random transformations. Probab. Theory Relat. Fields 80(2), 217–240 (1988)

26. Lin, K.K., Young, L.-S.: Shear-induced chaos. Nonlinearity 21(5), 899–922 (2008)

27. Lu, K., Wang, Q., Young, L.-S.: Strange attractors for periodically forced parabolic equations. Mem. Am. Math. Soc. 224(1054), vi+85 (2013)

28. Nisoli, I.: How does noise induce order? J. Stat. Phys. 190(1), 22–41 (2023)

29. Pinsky, M.A., Wihstutz, V.: Lyapunov exponents of nilpotent Itô systems. Stochastics 25(1), 43–57 (1988)

30. Revuz, D., Yor, M.: Continuous Martingales and Brownian Motion, Volume 293 of Grundlehren der mathematischen Wissenschaften [Fundamental Principles of Mathematical Sciences], 3rd edn. Springer, Berlin (1999)

31. Tantet, A., Chekroun, M.D., Dijkstra, H.A., Neelin, J.D.: Ruelle–Pollicott resonances of stochastic systems in reduced state space. Part II: stochastic Hopf bifurcation. J. Stat. Phys. 179(5–6), 1403–1448 (2020)

32. Viana, M.: Lectures on Lyapunov exponents. Cambridge Studies in Advanced Mathematics, vol. 145. Cambridge University Press, Cambridge (2014)

33. Viana, M.: (Dis)continuity of Lyapunov exponents. Ergod. Theory Dyn. Syst. 40(3), 577–611 (2020)

34. Wang, Q., Young, L.-S.: Strange attractors in periodically-kicked limit cycles and Hopf bifurcations. Commun. Math. Phys. 240, 509–529 (2003)

35. Wieczorek, S.: Stochastic bifurcation in noise-driven lasers and Hopf oscillators. Phys. Rev. E (3) 79(3), 036209, 10 (2009)

36. Young, L.-S.: Random perturbations of matrix cocycles. Ergod. Theory Dyn. Syst. 6(4), 627–637 (1986)

37. Young, L.-S.: Chaotic phenomena in three settings: large, noisy and out of equilibrium. Nonlinearity 21(11), T245–T252 (2008)

38. Young, L.-S.: Mathematical theory of Lyapunov exponents. J. Phys. A 46(25), 254001, 17 (2013)

Acknowledgements

The authors are thankful to the referees for their detailed comments and suggestions. The authors thank the DFG SPP 2298 for supporting their research. Both authors have been additionally supported by Germany’s Excellence Strategy - The Berlin Mathematics Research Center MATH+ (EXC-2046/1, project ID: 390685689), in the case of D.C. via the Berlin Mathematical School and in the case of M.E. via projects AA1-8 and AA1-18. Furthermore, M.E. thanks the DFG CRC 1114 for support.

Funding

Open Access funding enabled and organized by Projekt DEAL.

Additional information

Communicated by M. Hairer.

A Polar Coordinates

This appendix contains the proofs of Propositions 2.6 and 2.7 and of Lemma 3.2.

Proof of Proposition 2.6

First we will derive Stratonovich SDEs for \((r(t))\) and \((\phi (t))\). Note that, by definition,

By the chain rule for Stratonovich SDEs, we have

For \((\phi (t))\) we first show

Now, using the chain rule again, we can compute

It remains to compute the relevant quadratic covariations for the Itô-Stratonovich correction terms. Using the notation \(\langle \cdot , \cdot \rangle \) for the quadratic covariation of two semimartingales, we get

Finally, we can use these to obtain Itô SDEs for \((r(t))\) and \((\phi (t))\). We have

as well as

This finishes the proof. \(\square \)
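As a numerical sanity check on the Itô-Stratonovich correction terms, the following minimal sketch assumes that the noise enters as \(\sigma \) times a standard two-dimensional Brownian motion (an assumption about the displays not reproduced here, though consistent with the additive white noise of the model). Under that assumption, the second-order Itô drift of a smooth function \(f(x,y)\) is \(\tfrac{\sigma ^2}{2}\Delta f\), which equals \(\sigma ^2/(2r)\) for \(r=\sqrt{x^2+y^2}\) and vanishes for the angle. The function names and the sample point are hypothetical and not taken from the paper.

```python
import math

def laplacian(f, x, y, h=1e-3):
    """Five-point finite-difference approximation of f_xx + f_yy at (x, y)."""
    return (f(x + h, y) + f(x - h, y) + f(x, y + h) + f(x, y - h)
            - 4.0 * f(x, y)) / (h * h)

def radius(x, y):
    """Radial coordinate r = sqrt(x^2 + y^2)."""
    return math.hypot(x, y)

def angle(x, y):
    """Angular coordinate phi; evaluated here away from the branch cut of atan2."""
    return math.atan2(y, x)

def ito_corrections(x, y, sigma):
    """Second-order Ito drift terms (sigma^2 / 2) * Laplacian for r and phi.

    Assumes isotropic additive noise sigma * (dW^1, dW^2); this is a
    labelled assumption, not the paper's stated formula.
    """
    half = 0.5 * sigma ** 2
    return half * laplacian(radius, x, y), half * laplacian(angle, x, y)

if __name__ == "__main__":
    x, y, sigma = 0.8, 0.6, 0.5  # hypothetical sample point with r = 1
    corr_r, corr_phi = ito_corrections(x, y, sigma)
    print("radial correction:", corr_r, "vs sigma^2/(2r):", sigma ** 2 / (2 * radius(x, y)))
    print("angular correction:", corr_phi)
```

The check uses only the Laplacian because, for constant isotropic diffusion, the drift of the original system contributes no second-order term; the specific drift of the Hopf normal form is therefore not needed here.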

Proof of Proposition 2.7

Recall that \((s(t))\) and \((\vartheta (t))\) were defined by

and

By the integration by parts formula for Stratonovich integrals, we get

For the sake of readability, we will compute the summands separately. Firstly, we have

Secondly, we obtain

Together this gives

We proceed analogously for \((\vartheta (t))\). Integration by parts gives

Computing the summands separately again we get

as well as

Together this gives

which finishes the proof. \(\square \)
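For reference, the integration by parts formula used in this proof can be stated generically as follows. This is the standard rule for two continuous semimartingales \(X\) and \(Y\) (generic symbols, not the paper's notation), shown next to its Itô counterpart to make clear why no covariation term appears in the Stratonovich form.

```latex
% Stratonovich product rule: same form as the classical Leibniz rule
d(XY) = X \circ dY + Y \circ dX ,
% Ito product rule: an additional covariation term appears
d(XY) = X \, dY + Y \, dX + d\langle X , Y \rangle ,
% the two agree because of the conversion
X \circ dY = X \, dY + \tfrac{1}{2} \, d\langle X , Y \rangle .
```

Adding the conversion for \(X \circ dY\) to the one for \(Y \circ dX\) produces exactly one full covariation term, which recovers the Itô rule.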

Proof of Lemma 3.2

Recall that \((\psi (t))\) and \((\Lambda (t))\) are defined by

For ease of notation, we will simply write \(\psi ,\Lambda , v_1, \dots \) instead of \(\psi (t), \Lambda (t), v_1(t), \dots \) in the following. Note that we have

and

This allows us to derive the identities

and

Applying the chain rule to (A.2) yields

and

This finishes the proof. \(\square \)
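The chain-rule step can be illustrated on a frozen linear system. The sketch below assumes, as the notation suggests but the displays not reproduced here would have to confirm, that \(\psi \) is the angle and \(\Lambda \) the logarithm of the norm of a two-dimensional vector \(v\) solving \(\dot{v} = Av\). With additive noise the linearisation carries no stochastic integral, so the ordinary chain rule applies path by path. The matrix `A` and all function names are hypothetical.

```python
import math

def polar_rates(A, psi):
    """Rates of log-norm and angle for dv/dt = A v with v = exp(Lam) * (cos psi, sin psi).

    Chain rule: dLam/dt = <u, A u> and dpsi/dt = <u_perp, A u>,
    where u = (cos psi, sin psi) and u_perp = (-sin psi, cos psi).
    """
    u = (math.cos(psi), math.sin(psi))
    Au = (A[0][0] * u[0] + A[0][1] * u[1],
          A[1][0] * u[0] + A[1][1] * u[1])
    d_lam = u[0] * Au[0] + u[1] * Au[1]
    d_psi = -u[1] * Au[0] + u[0] * Au[1]
    return d_lam, d_psi

def finite_difference_rates(A, v, h=1e-6):
    """Same rates, estimated from one explicit Euler step v -> v + h * A v."""
    Av = (A[0][0] * v[0] + A[0][1] * v[1],
          A[1][0] * v[0] + A[1][1] * v[1])
    w = (v[0] + h * Av[0], v[1] + h * Av[1])
    lam0 = 0.5 * math.log(v[0] ** 2 + v[1] ** 2)
    lam1 = 0.5 * math.log(w[0] ** 2 + w[1] ** 2)
    psi0 = math.atan2(v[1], v[0])
    psi1 = math.atan2(w[1], w[0])
    return (lam1 - lam0) / h, (psi1 - psi0) / h

if __name__ == "__main__":
    A = [[-1.0, 0.0], [2.0, -1.0]]  # hypothetical contracting matrix with a shear entry
    v = (1.0, 1.0)                  # direction psi = pi / 4
    print(polar_rates(A, math.atan2(v[1], v[0])))
    print(finite_difference_rates(A, v))
```

Both rates depend on \(v\) only through its direction, which is why the angle process can be studied on its own and the growth rate recovered from it afterwards.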

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Chemnitz, D., Engel, M. Positive Lyapunov Exponent in the Hopf Normal Form with Additive Noise. Commun. Math. Phys. 402, 1807–1843 (2023). https://doi.org/10.1007/s00220-023-04764-z

DOI: https://doi.org/10.1007/s00220-023-04764-z