Abstract

We study emergent oscillatory behavior in networks of diffusively coupled nonlinear ordinary differential equations. Starting from a situation where each isolated node possesses a globally attracting equilibrium point, we give, for an arbitrary network configuration, general conditions for the existence of the diffusive coupling of a homogeneous strength which makes the network dynamics chaotic. The method is based on the theory of local bifurcations we develop for diffusively coupled networks. We, in particular, introduce the class of the so-called versatile network configurations and prove that the Taylor coefficients of the reduction to the center manifold for any versatile network can take any given value.


1 Introduction

Coupled dynamical systems play a prominent role in biology [8], chemistry [11], physics and other fields of science [21]. Understanding the emergent dynamics of such systems is a challenging problem depending starkly on the underlying interaction structure [9, 12, 14, 16, 19].

In the early fifties, Turing thought of the emergent oscillatory behavior due to diffusive interaction as a model for morphogenesis [23]. We note that weak coupling of globally stable individual systems cannot alter the stability of the homogeneous regime, which is the globally attracting state. At the same time, no matter what the individual dynamics are, strong diffusive coupling by itself stabilizes the homogeneous regime. Therefore the idea that diffusive coupling of intermediate strength can create non-trivial collective behavior is quite paradoxical. However, in the mid-seventies, Smale [20] proposed an example of diffusion-driven oscillations. He considered two 4th order diffusively coupled differential equations which by themselves have globally asymptotically stable equilibrium points. Once the diffusive interaction is strong enough, the coupled system exhibits oscillatory behavior. Smale posed the problem of finding conditions under which diffusively coupled systems would oscillate.

Tomberg and Yakubovich [22] proposed a solution to this problem for diffusive interaction of two systems with scalar nonlinearity. For networks, Pogromsky, Glad and Nijmeijer [18] showed that diffusion-driven oscillations can result from an Andronov-Hopf bifurcation. Moreover, they presented conditions to ensure the emergence of oscillations for general graphs. While this provides a good picture of the instability leading to periodic oscillations, there is evidence that the diffusive coupling may also lead to chaotic oscillations. Indeed, Kocarev and Janic [10] provided numerical evidence that two isolated Chua circuits having globally stable fixed points may exhibit chaotic behavior when diffusively coupled. Along the same lines, Perlikowski and co-authors [17] investigated numerically the dynamics of rings of unidirectionally coupled Duffing oscillators. Starting from the situation where each oscillator has an exponentially stable equilibrium point, once the oscillators are coupled akin to diffusion the authors found a great variety of phenomena such as rotating waves, the birth of periodic dynamics, as well as chaotic dynamics.

Drubi, Ibanez and Rodriguez [4] studied two diffusively coupled Brusselators. Starting from a situation where the isolated systems have a globally stable fixed point, they proved that the unfolding of the diffusively coupled system can display a homoclinic loop with an invariant set of positive entropy.

In this paper, we provide general conditions for diffusively coupled identical systems to exhibit chaotic oscillations. We describe necessary and sufficient conditions (the so-called skewness condition) on the linearization matrix at an exponentially stable equilibrium point of the isolated system such that for any network of such systems there exists a diffusive coupling matrix such that the network has a nilpotent singularity and thus a nontrivial center manifold. When the network structure satisfies an extra condition, which we call versatility, we show that Taylor coefficients of the vector field on the center manifold are in general position. This allows us to employ the theory of bifurcations of nilpotent singularities due to Arneodo, Coullet, Spiegel and Tresser [2] and Ibanez and Rodríguez [7] and to show that when the isolated system is at least four-dimensional, invariant sets of positive entropy (i.e., chaos) emerge in such networks.

The paper is organized as follows: In Sect. 2, we formulate the main theorem. We introduce basic concepts about graph theory, and introduce the notion of versatility. In Sect. 3, we present examples and constructions of \(\rho \)-versatile graphs and illustrate some of the other concepts appearing in the main theorem. In Sect. 4, we show the existence of a positive-definite coupling matrix D that yields a nilpotent singularity in the network system. In Sect. 5, we discuss the stability of the center manifold. Finally, in Sect. A, we prove that chaotic behavior emerges in the coupled system by investigating the dynamics on the center manifold.

1.1 The model

We consider ordinary differential equations \(\dot{x}=f(x)\) with \(f\in {\mathcal {C}}^{\infty }(U,\mathbb {R}^{n}),\ n\in \mathbb {N}\) for some open set \(U\subset {\mathbb {R}^{n}}\). We assume that f has an exponentially stable fixed point in U; with no loss of generality we put the origin of coordinates to this point. We study a network of such systems coupled together according to a given graph structure by means of a diffusive interaction. Namely, we consider the following equation:

\[ \dot{x}_i = f(x_i) + \alpha \sum_{j=1}^{N} w_{ij} D (x_j - x_i), \qquad i = 1, \dots, N, \tag{1} \]

where \(\alpha >0\) is the coupling strength and \({W} = (w_{ij})\) is the adjacency matrix of the graph, that is, \(w_{ij} = 1\) if nodes i and j are connected and \(w_{ij} = 0\) otherwise. Moreover, D is a positive-definite matrix (that is, \(x^TDx > 0\) for all non-zero vectors x).

The homogeneous regime \(x=0\) persists for every value of the coupling strength \(\alpha \). It keeps its stability for small \(\alpha \) and is, typically, stable at sufficiently large \(\alpha \). However, at intermediate values of the coupling strength, the stability of the homogeneous regime can be lost. Our goal is to investigate the accompanying bifurcations. The difficulty is that the structure of system (1) is quite rigid: all network nodes are the same (are described by the same function f) and the diffusion coupling \(\alpha D\) is the same for any pair of nodes. Therefore, the genericity arguments, standard for the bifurcation theory, cannot be readily applied and must be re-examined.

1.2 Informal statement of main results

Our main goal is to give conditions for the emergence of non-periodic dynamics in system (1). Denote by \(A = Df(0)\) the \(n\times n\) linearization matrix of the individual uncoupled system at zero. Recall that a matrix A is Hurwitz when all its eigenvalues have strictly negative real parts. Our main result can be stated as follows.

Suppose that for some orthogonal basis the Hurwitz matrix A has m positive entries on the diagonal. Then, there exists a positive-definite matrix D such that the linearization of system (1) at the homogeneous equilibrium at zero has a zero eigenvalue of multiplicity at least m for a certain value of the coupling parameter \(\alpha >0\). If the network satisfies a condition we call versatility, for an appropriate choice of the nonlinearity of f, the corresponding center manifold has dimension precisely m and the Taylor coefficients of the restriction of the system on the center manifold can take on any prescribed value.

The last statement means that the bifurcations of the homogeneous state of a versatile network follow the same scenarios as general dynamical systems. Applying the results for triple instability [4, 7] we obtain the following result.

For \(n\geqslant 4\), for any generic 3-parameter family of nonlinearities f and any versatile network graph, one can find a positive-definite matrix D such that the homogeneous state of the coupled system (1) has a triple instability at a certain value of the coupling strength \(\alpha \), leading to chaotic dynamics for a certain region of parameter values.

The condition on the Jacobian of the isolated dynamics can be understood in a geometric sense as follows. We write \(\dot{x} = f(x) = Ax + {\mathcal {O}}(|x|^2)\). We claim that if a nonzero vector \(x_0 \in \mathbb {R}^n\) exists for which \(\langle x_0, Ax_0\rangle > 0\), then there are points arbitrarily close to the origin whose forward orbit has its Euclidean distance to the origin increasing for some time, before coming closer to the (stable) origin again. To see why, consider \(\Vert x(t)\Vert ^2 = \langle x(t), x(t) \rangle \). It follows that \( \frac{d}{dt}\Vert x\Vert ^2 = 2 \langle Ax, x\rangle + {\mathcal {O}}(|x|^3) \), so \(\langle Ax, x\rangle >0\) implies that the norm initially grows along the orbit through any sufficiently small multiple of such an x.
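
The transient growth described above can be observed numerically. The following is a minimal sketch for the linear part only, assuming a hypothetical \(2\times 2\) Hurwitz matrix (it is not taken from this paper) and a hand-written Runge-Kutta integrator; the matrix, the step size and all function names are illustrative choices.

```python
import math

# Hypothetical Hurwitz matrix (eigenvalues -1 and -2), for illustration only.
A = [[-1.0, 10.0],
     [0.0, -2.0]]

def matvec(M, x):
    return [sum(m * c for m, c in zip(row, x)) for row in M]

def inner(x, y):
    return sum(a * b for a, b in zip(x, y))

def norm(x):
    return math.sqrt(inner(x, x))

def rk4_step(M, x, h):
    """One classical Runge-Kutta step for the linear system x' = M x."""
    k1 = matvec(M, x)
    k2 = matvec(M, [xi + 0.5 * h * ki for xi, ki in zip(x, k1)])
    k3 = matvec(M, [xi + 0.5 * h * ki for xi, ki in zip(x, k2)])
    k4 = matvec(M, [xi + h * ki for xi, ki in zip(x, k3)])
    return [xi + h / 6.0 * (a + 2 * b + 2 * c + d)
            for xi, a, b, c, d in zip(x, k1, k2, k3, k4)]

def norm_along_orbit(M, x0, t_end, h=1e-3):
    """Return ||x(t)|| sampled at every step of size h from t = 0 to t_end."""
    x, norms = list(x0), [norm(x0)]
    for _ in range(int(round(t_end / h))):
        x = rk4_step(M, x, h)
        norms.append(norm(x))
    return norms

x0 = [1e-3, 1e-3]                      # a point close to the origin
quad_form = inner(x0, matvec(A, x0))   # <x0, A x0>, positive for this choice
norms = norm_along_orbit(A, x0, t_end=10.0)
peak = max(norms)                      # largest distance reached along the orbit
```

In this example `quad_form` is positive, `peak` exceeds the initial distance `norms[0]`, and the final distance `norms[-1]` is far below it, which is the grow-then-decay behavior claimed for the nonlinear system near the origin.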

The property of versatility holds for graphs with heterogeneous degrees - the simplest example is a star network. In a sense, versatility means that the network is not very symmetric. Given a graph, one verifies whether the versatility property holds by evaluating the eigenvectors of the graph’s Laplacian matrix, so it is an effectively verifiable property.

It is possible that a similar theory can be developed for the Andronov-Hopf bifurcation in diffusively coupled networks (an analysis of diffusion-driven Andronov-Hopf bifurcations was undertaken in [18] but the question of genericity of the restriction of the network system to the center manifold was not addressed there).

We also point out that symmetry is often instrumental in explaining and predicting anomalous behavior in network dynamical systems [1, 14, 15].

The network of just two symmetrically coupled systems has the corresponding graph Laplacian that is not versatile, yet the emergence of chaos via the triple instability has been established in [4] for the system of two diffusively coupled Brusselators. In general, we do not know when the genericity of the Taylor coefficients of the center-manifold reduced vector field would hold if the graph is not versatile or when graph symmetries would impose conditions on the dynamics that forbid the existence of limiting sets of positive entropy.

2 Main Results

We start by introducing the basic concepts involved in the set-up of the problem.

2.1 Graphs

A graph G is an ordered pair (V, E), where V is a non-empty set of vertices and E is a set of edges connecting the vertices. We assume both to be finite and the graph to be undirected. The order of the graph G is \(|V|=N\), its number of vertices, and the size is |E|, its number of edges. We will not consider graphs with self-loops. The degree of a vertex is the number of edges that are connected to it. In terms of the adjacency matrix, the degree \(k_i\) of vertex i is

\[ k_i = \sum_{j=1}^{N} w_{ij} \]

for \(i=1,\dots ,N.\) We also define \({K}={{\,\textrm{diag}\,}}\{k_{1},\dots ,k_{N}\}\) to be the diagonal matrix of vertex degrees.

We only consider undirected graphs G, meaning that a vertex i is connected with a vertex j if and only if j is connected with i. Thus, the adjacency matrix W is a symmetric matrix. In this context there is another important matrix related to the graph G, namely the well-known discrete Laplacian matrix \(L_{G}\). It is defined by:

\[ L_{G} := K - W, \]

so that each entry \(l_{ij}\) of \(L_{G}\) can be written as

\[ l_{ij} = \delta _{ij} k_{i} - w_{ij}, \]

where \(\delta _{ij}\) is Kronecker’s delta. The matrix \(L_{G}\) provides us with important information about connectivity and synchronization of the network. It follows from the Gershgorin disk theorem [5] that \(L_{G}\) is positive semi-definite and thus its eigenvalues can be ordered as

\[ 0 = \lambda _1 \le \lambda _2 \le \dots \le \lambda _N, \]

and let \(\{v_{1},\dots ,v_{N}\}\) be the corresponding eigenvectors. We assume the network is connected. This implies that the eigenvalue \(\lambda _1 = 0\) is simple.

We are interested in a well-behaved class of graphs G whose structure induces a special property of the associated Laplacian matrix. This property will be the existence of an eigenvector where the sum of certain coordinate powers is non-vanishing, which corresponds to a simple eigenvalue of \(L_{G}\). To this end, we define:

Definition 1

(\(\rho \)-versatile graphs). Let \(G=(V,E)\) be a graph and \(\rho \in \mathbb {N}\) a positive integer. We say that G is \(\rho \)-versatile for the eigenvalue-eigenvector pair \((\lambda ,v)\) with \(\lambda >0\), if the Laplacian matrix \(L_{G}\) has a simple eigenvalue \(\lambda \) with corresponding eigenvector \(v=(\nu _{1},\dots ,\nu _{N})\), satisfying

Note that any eigenvector \(v=(\nu _{1},\dots ,\nu _{N})\) for a non-zero eigenvalue necessarily satisfies \(\sum _{i=1}^{N}\nu _{i} = 0\). This is because v is orthogonal to the eigenvector \((1, \dots , 1)\) for the eigenvalue 0.
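
To illustrate these notions, the following sketch builds the Laplacian of a star graph, checks an explicit eigenvector for its largest eigenvalue, and evaluates a few sums of coordinate powers. It assumes a star with \(N=5\) nodes whose hub is node 0, for which the eigenvalue N is simple. The helper names are hypothetical, and the range of powers is only illustrative; the precise condition is the one displayed in Definition 1.

```python
def star_adjacency(n_nodes):
    """Adjacency matrix W of a star graph; node 0 is the hub."""
    W = [[0] * n_nodes for _ in range(n_nodes)]
    for j in range(1, n_nodes):
        W[0][j] = W[j][0] = 1
    return W

def laplacian(W):
    """L_G = K - W, with K the diagonal matrix of vertex degrees."""
    n = len(W)
    degrees = [sum(row) for row in W]
    return [[(degrees[i] if i == j else 0) - W[i][j] for j in range(n)]
            for i in range(n)]

def matvec(M, x):
    return [sum(m * c for m, c in zip(row, x)) for row in M]

N = 5
L = laplacian(star_adjacency(N))

# Candidate eigenvector for the eigenvalue N of the star graph.
v = [N - 1] + [-1] * (N - 1)
Lv = matvec(L, v)
is_eigenvector = all(lv == N * c for lv, c in zip(Lv, v))

# Sums of coordinate powers: the first one vanishes because v is
# orthogonal to (1, ..., 1); the higher ones do not vanish here.
power_sums = {k: sum(c ** k for c in v) for k in range(1, 5)}
```

For this eigenvector the first power sum is zero, as it must be, while the sums of squares, cubes and fourth powers are all non-zero.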

2.2 Parametrizations

We show that a system of diffusively coupled stable systems can display a wide variety of dynamical behavior, including the onset of chaos. As the coupling strength \(\alpha \) increases, a non-trivial center manifold can emerge with no general restrictions on the Taylor coefficients of the reduced dynamics.

Note that we may alternatively write Equation (1) in terms of the Laplacian:

\[ \dot{x}_i = f(x_i) - \alpha \sum_{j=1}^{N} l_{ij} D x_j, \qquad i = 1, \dots, N. \tag{5} \]

Let \(X:=col(x_{1},\dots ,x_{N})\) denote the vector formed by stacking \(x_{i}\)’s in a single column vector. In the same way we define \(F(X):=col(f(x_{1}),\dots ,f(x_{N}))\). We obtain the compact form for equations (1) and (5) given by

\[ \dot{X} = F(X) - \alpha (L_{G} \otimes D) X, \]

where \(\otimes \) stands for the Kronecker product. In order to analyze systems of the form (1), we allow f to depend on a parameter \(\varepsilon \) taking values in some open neighborhood of the origin \(\Omega \subseteq \mathbb {R}^d\). For simplicity, we assume the fixed point at the origin persists:

\[ f(0; \varepsilon ) = 0 \quad \text{for all } \varepsilon \in \Omega . \]

We assume the origin to be exponentially stable for \(\varepsilon = 0\), from which stability follows for sufficiently small \(\varepsilon \) as well. Note that the non-linear diagonal map F now depends on the parameter \(\varepsilon \) as well.
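
The agreement between the node-wise coupling term \(\alpha \sum _j w_{ij}D(x_j - x_i)\) and its compact form \(-\alpha (L_G \otimes D)X\) can be checked directly. The sketch below does this for a hypothetical path graph on three nodes with two-dimensional node states; the graph, the matrix D, the states and the function names are all illustrative assumptions.

```python
def matvec(M, x):
    return [sum(m * c for m, c in zip(row, x)) for row in M]

def kron(A, B):
    """Kronecker product of two matrices given as lists of rows."""
    return [[a * b for a in row_a for b in row_b]
            for row_a in A for row_b in B]

def laplacian(W):
    """L_G = K - W, with K the diagonal matrix of vertex degrees."""
    n = len(W)
    degrees = [sum(row) for row in W]
    return [[(degrees[i] if i == j else 0) - W[i][j] for j in range(n)]
            for i in range(n)]

def coupling_nodewise(W, D, xs, alpha):
    """alpha * sum_j w_ij D (x_j - x_i) for every node i, stacked in one vector."""
    out = []
    for i, xi in enumerate(xs):
        acc = [0.0] * len(xi)
        for j, xj in enumerate(xs):
            Dd = matvec(D, [b - a for a, b in zip(xi, xj)])
            acc = [s + W[i][j] * d for s, d in zip(acc, Dd)]
        out.extend(alpha * s for s in acc)
    return out

def coupling_kron(W, D, xs, alpha):
    """-alpha * (L_G kron D) X with X the stacked vector of node states."""
    X = [c for x in xs for c in x]
    return [-alpha * s for s in matvec(kron(laplacian(W), D), X)]

# Hypothetical data: path graph 0 - 1 - 2, two-dimensional node states.
W = [[0, 1, 0],
     [1, 0, 1],
     [0, 1, 0]]
D = [[2.0, 1.0],
     [0.0, 1.0]]   # x^T D x = 2 x1^2 + x1 x2 + x2^2 > 0 for x != 0
xs = [[1.0, -2.0], [0.5, 3.0], [-1.5, 0.25]]
alpha = 0.7

nodewise = coupling_nodewise(W, D, xs, alpha)
compact = coupling_kron(W, D, xs, alpha)
max_gap = max(abs(a - b) for a, b in zip(nodewise, compact))
```

Up to rounding, `max_gap` is zero, so the two expressions for the coupling term coincide on this example.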

We start with our working definition of center manifold reduction.

Definition 2

Let

\[ \dot{x} = H(x; \varepsilon ) \tag{8} \]

be a family of vector fields on \(\mathbb {R}^n\), parameterized by a variable \(\varepsilon \) in an open neighborhood of the origin \(\Omega \subseteq \mathbb {R}^d\). Assume that \(H(0;\varepsilon ) = 0\) for all \(\varepsilon \in \Omega \), and denote by \({\mathcal {E}}^c \subseteq \mathbb {R}^n\) the center subspace of the Jacobian \(D_xH(0;0)\) in the direction of \(\mathbb {R}^n\). A (local) parameterized center manifold of the system (8) is a (local) center manifold of the unparameterized system \(\tilde{H}\) on \(\mathbb {R}^n \times \Omega \), given by

\[ \tilde{H}(x, \varepsilon ) := (H(x; \varepsilon ), 0) \tag{9} \]

for \(x \in \mathbb {R}^n\) and \(\varepsilon \in \Omega \). We say that the parameterized center manifold is of dimension \(\dim ({\mathcal {E}}^c)\), and is parameterized by d variables. Under the assumptions on H, the center subspace of \(\tilde{H}\) at the origin is equal to \({\mathcal {E}}^c \times \mathbb {R}^d\). We can show that the dynamics on the center manifold of Equation (9) is conjugate to that of a locally defined system

\[ \dot{x}_c = R(x_c; \varepsilon ), \qquad \dot{\varepsilon } = 0 \]

on \({\mathcal {E}}^c \times \Omega \), where the conjugation respects the constant-\(\varepsilon \) fibers. The map R satisfies \(R(0; \varepsilon ) = 0\) for all \(\varepsilon \) for which this local expression is defined, and we have \(D_{x_c}R(0;0) = D_xH(0;0)|_{{\mathcal {E}}^c}: {\mathcal {E}}^c \rightarrow {\mathcal {E}}^c\). We will refer to \(R: {\mathcal {E}}^c \times \Omega \rightarrow {\mathcal {E}}^c\) as a parameterized reduced vector field of H.

In the definition above, the constant and linear terms of the parameterized reduced vector field R are given. Motivated by this, we will write \(H^{[2,\rho ]}\) for any map H to denote the non-constant, non-linear terms in the Taylor expansion around the origin of H, up to terms of order \(\rho \). In other words, we have

\[ H(x) = H(0) + DH(0)x + H^{[2,\rho ]}(x) + {\mathcal {O}}(|x|^{\rho +1}). \]

Given vector spaces W and \(W'\), we will use \({\mathcal {P}}_2^l(W;W')\) to denote the linear space of polynomial maps from W to \(W'\) with terms of degree 2 through l. It follows that \(H^{[2,l]} \in {\mathcal {P}}_2^l(W;W')\) for \(H: W \rightarrow W'.\)

We are interested in the situation where the domain of H involves some parameter space \(\Omega \), in which case \(H^{[2,\rho ]}\) involves all non-constant, non-linear terms up to order \(\rho \) in both types of variables (parameter and phase space). For instance, if H is a map from \(\mathbb {R} \times \Omega \) to \(\mathbb {R}\) with \(\Omega \subseteq \mathbb {R}\), then \(H^{[2,3]}(x; \varepsilon )\) involves the terms

\[ a_{1}x^{2} + a_{2}x\varepsilon + a_{3}\varepsilon ^{2} + a_{4}x^{3} + a_{5}x^{2}\varepsilon + a_{6}x\varepsilon ^{2} + a_{7}\varepsilon ^{3}, \]

with some constants \(a_{i}\). Note that a condition on H might put restraints on \(H^{[2,\rho ]}\) as well. For instance, if \(H(0;\varepsilon ) = 0\) for all \(\varepsilon \in \Omega \), then \(H^{[2,3]}(x; \varepsilon )\) does not involve the terms \(\varepsilon ^2\) and \(\varepsilon ^3.\)

2.3 Main theorems

We now formulate the main theorem, along with an important corollary.

Theorem 3

(Main Theorem). For any \(\alpha \ge 0\), consider the \(\varepsilon \)-family of network dynamical systems given by

\[ \dot{X} = F(X; \varepsilon ) - \alpha (L_{G} \otimes D) X. \tag{11} \]

Denote by \(A = D_xf(0;0)\) the Jacobian of the isolated dynamics. If there exist m mutually orthogonal vectors \(x_1,\dots ,x_m\) such that \(\langle x_i, Ax_i \rangle > 0\), then there exists a positive-definite matrix D together with a number \(\alpha ^*>0\) such that the system of Equation (11) has a local parameterized center manifold of dimension at least m for \(\alpha = \alpha ^*\).

Suppose that the graph G is \(\rho \)-versatile for the pair \((\lambda ,v)\). After an arbitrarily small perturbation to A if needed, there exists a positive-definite matrix D and a number \(\alpha ^*>0\) such that the following holds:

1. The system of Equation (11) has a local parameterized center manifold of dimension exactly m for \(\alpha = \alpha ^*\).

2. Denote by \(R: {\mathcal {E}}^c \times \Omega \rightarrow {\mathcal {E}}^c\) the corresponding parameterized reduced vector field, then \(R(0; \varepsilon ) = 0\) for all \(\varepsilon \in \Omega \) and \(D_xR(0;0): {\mathcal {E}}^c \rightarrow {\mathcal {E}}^c\) is nilpotent.

3. The higher order terms \(R^{[2,\rho ]}\) can take on any value in \({\mathcal {P}}_2^\rho ({\mathcal {E}}^c \times \Omega ; {\mathcal {E}}^c)\) (subject to \(R^{[2,\rho ]}(0; \varepsilon ) = 0\)) as \(f^{[2,\rho ]}\) is varied (subject to \(f^{[2,\rho ]}(0; \varepsilon ) = 0\)).

The above result guarantees the existence of the center manifold and the reduced vector field. When the dimension of the isolated dynamics is at least 4, the reduced vector field can exhibit invariant sets of positive entropy as the following result shows.

Corollary 4

(Chaos). Assume the conditions of Theorem 3 to hold for \(m=3\) and \(\rho = 2\). Then, in a generic 3-parameter system we have the emergence of chaos through the formation of a Shilnikov loop on the center manifold. In particular, chaos can form this way in a system of 4-dimensional nodes coupled diffusively in a network.

In the Appendix, we show that the conditions of Theorem 3 are natural, by constructing multiple classes of networks that are \(\rho \)-versatile for any \(\rho \in \mathbb {N}\), as well as by giving examples of matrices A that satisfy the conditions of the theorem. In Subsect. A.1, we present a geometric way of constructing \(\rho \)-versatile graphs, by means of the so-called complement graph. In Subsect. A.2, we then show using direct estimates that star graphs satisfy \(\rho \)-versatility. Finally, in Subsect. A.3, we present examples of matrices that satisfy the conditions of Theorem 3. In particular, we will see that being Hurwitz is no obstruction.

3 Proof of Main Theorem

In this section we present the proof of Theorem 3. We start by analyzing the linearized system in Subsect. 3.1, after which we perform center manifold reduction and have a detailed look at the reduced vector field in Subsect. 3.2.

3.1 Linearization

In this subsection we investigate the linear part of the system

\[ \dot{X} = F(X; \varepsilon ) - \alpha (L_{G} \otimes D) X \tag{12} \]

from Theorem 3. Writing \(A \in \mathbb {R}^{n \times n}\) for the Jacobian of f at the origin, we see that the linearization of Equation (12) at the origin is given by

\[ X \mapsto ({{\,\textrm{Id}\,}}_N \otimes A - \alpha L_{G} \otimes D) X. \tag{13} \]

An important observation is the following: if v is an eigenvector of \(L_{G}\) with eigenvalue \(\lambda \in \mathbb {R}\), then the linearization above sends a vector \(v \otimes x\) with \(x \in \mathbb {R}^n\) to

\[ v \otimes Ax - \alpha \lambda \, v \otimes Dx = v \otimes (A - \alpha \lambda D)x. \tag{14} \]

It follows that the space

\[ v \otimes \mathbb {R}^n := \{ v \otimes x \mid x \in \mathbb {R}^n \} \]

is kept invariant by the linear map of Equation (13). We claim that \(v \otimes \mathbb {R}^n\) is in fact a linear subspace.

Equation (14) tells us that the linearization (13) restricted to \(v \otimes \mathbb {R}^n\) is conjugate to \(A-\alpha \lambda D:\mathbb {R}^n \rightarrow \mathbb {R}^n\).
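
The invariance of \(v \otimes \mathbb {R}^n\) can be verified on a concrete example. The sketch below assumes a star graph on four nodes with Laplacian eigenpair \(\lambda = 4\), \(v = (3,-1,-1,-1)\), together with hypothetical \(2\times 2\) matrices A and D; it compares the image of \(v \otimes x\) under \({{\,\textrm{Id}\,}}_N \otimes A - \alpha L_G \otimes D\) with \(v \otimes (A - \alpha \lambda D)x\). All names and numerical values are illustrative.

```python
def matvec(M, x):
    return [sum(m * c for m, c in zip(row, x)) for row in M]

def kron(A, B):
    """Kronecker product of two matrices given as lists of rows."""
    return [[a * b for a in row_a for b in row_b]
            for row_a in A for row_b in B]

def kron_vec(v, x):
    """Kronecker product v (x) x of two vectors."""
    return [a * b for a in v for b in x]

# Laplacian of a star graph on four nodes (hub = node 0).
L = [[3, -1, -1, -1],
     [-1, 1, 0, 0],
     [-1, 0, 1, 0],
     [-1, 0, 0, 1]]
v, lam = [3, -1, -1, -1], 4          # L v = 4 v

# Hypothetical node data: A is Hurwitz (trace -2, determinant 27),
# D satisfies x^T D x > 0 for x != 0.
A = [[1.0, 6.0], [-5.0, -3.0]]
D = [[2.0, 1.0], [0.0, 1.0]]
alpha = 0.5

identity = [[1 if i == j else 0 for j in range(4)] for i in range(4)]
J = [[a - alpha * d for a, d in zip(row_a, row_d)]
     for row_a, row_d in zip(kron(identity, A), kron(L, D))]

x = [0.3, -1.2]
lhs = matvec(J, kron_vec(v, x))                   # J (v (x) x)
B = [[A[i][j] - alpha * lam * D[i][j] for j in range(2)] for i in range(2)]
rhs = kron_vec(v, matvec(B, x))                   # v (x) (A - alpha*lam*D) x
gap = max(abs(a - b) for a, b in zip(lhs, rhs))
```

Here `gap` vanishes up to rounding, in agreement with Equation (14).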

Recall that eigenvectors \(v_p\) and \(v_q\) of \(L_G\) corresponding to distinct eigenvalues can be chosen to be orthonormal. Finally, we write

\[ V_p := v_p \otimes \mathbb {R}^n, \qquad p = 1, \dots , N, \]

for the corresponding linear subspaces of \(\mathbb {R}^N \otimes \mathbb {R}^n\). We thus have a direct sum decomposition

\[ \mathbb {R}^N \otimes \mathbb {R}^n = \bigoplus _{p=1}^{N} V_p, \]

where each component is respected by the linearization (13), with the restriction to \(V_p\) conjugate to \(A-\alpha \lambda _p D:\mathbb {R}^n \rightarrow \mathbb {R}^n\). We see that the spectrum of the linearization (13) is given by the union of the spectra of the maps \(A-\alpha \lambda _p D\), with a straightforward relation between the respective algebraic and geometric multiplicities.

This observation motivates the main result of this subsection, Proposition 6 below.

In what follows, we denote by \(\mathbb {M}_{n}(\mathbb {R})\) the space of n by n matrices over the field \(\mathbb {R}\). We write \(\langle x,y\rangle :=x^{T}y\) for the Euclidean inner product between vectors \(x,y \in \mathbb {R}^n\).

We state a technical lemma. Suppose we are given a block matrix

\[ M = \begin{pmatrix} M_{11} & M_{12} \\ M_{21} & M_{22} \end{pmatrix} \]

with blocks \(M_{11}, \dots , M_{22}\). If \(M_{22}\) is invertible then we may form the Schur complement of M, given by

\[ M/M_{22} := M_{11} - M_{12} M_{22}^{-1} M_{21}. \]

This expression has various useful properties. We are interested in the situation where M is symmetric, so that \(M_{12}^T = M_{21}\), \(M_{11}^T = M_{11}\) and \(M_{22}^T = M_{22}\). In that case the matrix M is positive-definite if and only if both \(M_{22}\) and \(M/{M_{22}}\) are positive-definite. Using this result, we may prove:

Lemma 5

Let

\[ D = \begin{pmatrix} A_{11} & A_{12} \\ A_{21} & A_{22} \end{pmatrix} \]

be a block matrix and assume \(A_{22}=c {{\,\textrm{Id}\,}}\) for some scalar \(c \in \mathbb {R}_{>0}\). Suppose furthermore that \(A_{11}\) is positive-definite. Then, for c sufficiently large the matrix D is positive-definite as well.

Proof

Clearly D is positive-definite if and only if the symmetric matrix

\[ H := D + D^T = \begin{pmatrix} H_{11} & H_{12} \\ H_{12}^T & H_{22} \end{pmatrix} \]

is positive-definite. We set \(H_{22}:= 2c {{\,\textrm{Id}\,}}\), \(H_{12}:= A_{12}+A_{21}^T\) and \(H_{11}:= A_{11}+A_{11}^T\), the third of which is positive-definite as \(A_{11}\) is. As \(H_{22} = 2c {{\,\textrm{Id}\,}}\) is invertible with inverse \(1/(2c){{\,\textrm{Id}\,}}\), we may form the Schur complement

\[ H/H_{22} = H_{11} - H_{12} H_{22}^{-1} H_{12}^T = H_{11} - \frac{1}{2c} H_{12} H_{12}^T. \]

As \(H_{22}\) is positive-definite, it follows from the above discussion that H is positive-definite if and only if \(H/H_{22}\) is. However, as \(c \rightarrow \infty \) we have \(H/H_{22} \rightarrow H_{11}\), so for \(c>0\) large enough \(H/H_{22}\) is a small perturbation of the positive-definite matrix \(H_{11}\) and is therefore positive-definite itself. This shows that D is likewise positive-definite for large enough c. \(\blacksquare \)
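
The statement of Lemma 5 can be illustrated numerically. The sketch below assumes a hypothetical \(3\times 3\) block matrix with a \(2\times 2\) positive-definite block \(A_{11}\) and a \(1\times 1\) block \(A_{22} = c\); positive-definiteness of the (non-symmetric) matrix is tested by attempting a Cholesky factorization of its symmetric part. The blocks and function names are illustrative assumptions.

```python
import math

def is_positive_definite(M):
    """Check x^T M x > 0 for all x != 0 via Cholesky on the symmetric part of M."""
    n = len(M)
    S = [[0.5 * (M[i][j] + M[j][i]) for j in range(n)] for i in range(n)]
    C = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = S[i][j] - sum(C[i][k] * C[j][k] for k in range(j))
            if i == j:
                if s <= 0.0:
                    return False       # a non-positive pivot: not positive-definite
                C[i][i] = math.sqrt(s)
            else:
                C[i][j] = s / C[j][j]
    return True

def block_matrix(A11, A12, A21, c):
    """Assemble [[A11, A12], [A21, c * Id]] from lists of rows."""
    k = len(A21)
    top = [row11 + row12 for row11, row12 in zip(A11, A12)]
    bottom = [A21[i] + [c if i == j else 0.0 for j in range(k)]
              for i in range(k)]
    return top + bottom

# Hypothetical blocks: A11 is positive-definite (its symmetric part is Id).
A11 = [[1.0, 2.0], [-2.0, 1.0]]
A12 = [[3.0], [0.0]]
A21 = [[0.0, 1.0]]

small_c = is_positive_definite(block_matrix(A11, A12, A21, c=1.0))
large_c = is_positive_definite(block_matrix(A11, A12, A21, c=3.0))
```

For these blocks the Schur complement \(H/H_{22}\) has eigenvalues 2 and \(2 - 5/c\), so the assembled matrix is positive-definite exactly when \(c > 2.5\); accordingly `small_c` is `False` and `large_c` is `True`.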

Proposition 6

Let \(A\in \mathbb {M}_{n}(\mathbb {R})\) be a matrix. There exist m mutually orthogonal vectors \(x_{1},\dots ,x_{m}\) such that

\[ \langle x_{i}, Ax_{i} \rangle > 0 \quad \text{for all } i = 1, \dots , m, \tag{23} \]

if and only if there exists a positive-definite matrix D such that \(A-D\) has at least m zero eigenvalues, counted with algebraic multiplicity.

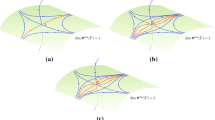

Figure 1 shows an illustration of Proposition 6.

Remark 7

Note that any Hurwitz matrix A has a negative trace, as this number equals the sum of its eigenvalues. It follows that Equation (23) can then only hold when \(m<n\), where n is the size of A. In this case, if our goal is to find a 3-dimensional center manifold for the network, we need 3 zero eigenvalues and so we must have at least \(n=4\).

Remark 8

The number of zero eigenvalues for \(A-D\) is directly connected with the number of mutually orthogonal vectors for Equation (23). Moreover, the positive-definite matrix D that we construct is in general not unique. Hence, if we have m such orthogonal vectors, then for each \(k \le m\) we might construct a different matrix D such that \(A-D\) has k zero eigenvalues.

Fig. 1. An illustration of Proposition 6. Panel (a) shows the eigenvalues of a particular matrix A, which might be the linear part of the isolated dynamics f at the origin. The matrix A has 6 eigenvalues, all strictly in the left half of the complex plane. The existence of \(m=3\) mutually orthogonal vectors satisfying (23) ensures that a positive-definite matrix D exists such that \(A-D\) has a 3-dimensional generalized kernel. In other words, the subtraction of D has moved 3 eigenvalues to the origin, see panel (b).

Proof of Proposition 6

Suppose first that we have m mutually orthogonal vectors \(x_{i}\) such that for each \(i=1,\dots ,m\) we have

Note that we then also have

for all i. We may re-scale the \(x_i\) by any non-zero factor, so that we will now assume without loss of generality that \(\Vert x_i \Vert = 1\) for all i. We start by constructing an auxiliary upper-diagonal \((m\times m)\)-matrix P as follows:

where each entry \(p_{i,j}\) is defined by the rule:

We construct D by first defining it on the mutually orthogonal vectors \(x_{1},\dots ,x_{m}\) as:

Note that \((A-D)x_1 = 0\), whereas \((A-D)x_2 \in {{\,\textrm{span}\,}}(x_1)\), \((A-D)x_3 \in {{\,\textrm{span}\,}}(x_1, x_2)\) and so forth. This shows that the restriction of \(A-D\) to \({{\,\textrm{span}\,}}(x_1, \dots , x_m)\) is nilpotent. Equation (26) can be rewritten as

with \(X=(x_{1} \cdots x_{m})\) the \((n\times m)\)-matrix with columns given by the vectors \(x_1, \dots , x_m\).

To complete our construction of D, we let \(y_{m+1},\dots , y_{n} \in \mathbb {R}^n\) be mutually orthogonal vectors of norm 1 such that \(y_{k}\perp x_{i}\) for all \(i=1,\dots ,m\) and \(k=m+1,\dots , n\). We define D on \({{\,\textrm{span}\,}}(y_{m+1}, \dots , y_n )\) by simply setting \(Dy_{k}=c y_{k}\) for all k and some constant \(c >0\) that will be determined later.

To show that c can be chosen such that D is positive-definite, let \(z \in \mathbb {R}^n\) be any non-zero vector and write

where \({Y}=(y_{m+1}\cdots y_{n})\) is the \((n\times (n-m))\)-matrix with columns the vectors \(y_{k}\), and where \(a \in \mathbb {R}^m, b \in \mathbb {R}^{n-m}\) express the components of z with respect to the basis \(\{x_1, \dots , x_m, y_{m+1}, \dots , y_n\}\). Note that we have

by construction. We calculate

where in the third step we have used Equation (27), and where we make use of the identities in (28). We see that D is positive-definite if the same holds for the matrix

Next, we claim that the \((m\times m)\)-matrix \({X}^{T}A{X}-P\) is positive-definite. Indeed, by definition of P we have

which is a diagonal matrix and positive-definite by the hypothesis (23). We may thus apply Lemma 5 to \(\tilde{D}\), so that for \(c>0\) sufficiently large \(\tilde{D}\) and D are indeed positive-definite.

Conversely, suppose there exists a positive-definite matrix D such that

We will prove that m mutually orthogonal vectors \(x_1, \dots , x_m\) exist satisfying

By assumption, we may choose m linearly independent vectors \(y_{1},\dots ,y_{m}\) such that

where \(\iota _i \in \{0,1\}\) for all \(i>1\). Next, we apply the Gram-Schmidt orthonormalization process to the vectors \(y_i\). That is, we set

where each coefficient is given by

It follows that \(\langle x_{i},x_{j} \rangle = 0\) whenever \(i\ne j\). Moreover, we see from Equation (32) that we may write

for some coefficients \(\beta _{i,j}, \beta '_{i,j} \in \mathbb {R}\). We therefore have \((A-D)x_1 = 0\), and for \(2 \le i \le m\) we find

for certain \(\gamma _{i,j} \in \mathbb {R}\). By orthogonality of the \(x_i\) we get

for all \(i=2,\dots ,m.\) Finally, it follows that

which completes the proof. \(\blacksquare \)
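
The first half of the proof is constructive and can be sketched in code. The example below makes several assumptions that should be read as such: the vectors \(x_i\) and \(y_k\) are taken to be standard basis vectors, the matrix A is a hypothetical \(3\times 3\) Hurwitz matrix with \(m=2\) positive diagonal entries, and the entries of P are chosen as \(p_{i,j} = \langle x_i, Ax_j\rangle + \langle x_j, Ax_i\rangle \) for \(i<j\) and zero otherwise, which is the choice that makes the symmetric part of \(X^TAX - P\) diagonal. The function names are illustrative.

```python
import math

def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

def is_positive_definite(M):
    """Check x^T M x > 0 for all x != 0 via Cholesky on the symmetric part of M."""
    n = len(M)
    S = [[0.5 * (M[i][j] + M[j][i]) for j in range(n)] for i in range(n)]
    C = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = S[i][j] - sum(C[i][k] * C[j][k] for k in range(j))
            if i == j:
                if s <= 0.0:
                    return False
                C[i][i] = math.sqrt(s)
            else:
                C[i][j] = s / C[j][j]
    return True

def is_hurwitz_3x3(A):
    """Routh-Hurwitz test for det(l Id - A) = l^3 + a2 l^2 + a1 l + a0."""
    a2 = -(A[0][0] + A[1][1] + A[2][2])
    a1 = sum(A[i][i] * A[j][j] - A[i][j] * A[j][i]
             for i in range(3) for j in range(i + 1, 3))
    det = (A[0][0] * (A[1][1] * A[2][2] - A[1][2] * A[2][1])
           - A[0][1] * (A[1][0] * A[2][2] - A[1][2] * A[2][0])
           + A[0][2] * (A[1][0] * A[2][1] - A[1][1] * A[2][0]))
    return a2 > 0 and -det > 0 and a2 * a1 > -det

def build_D(A, m, c):
    """Construct D with x_i = e_i for i < m and y_k = e_k for k >= m.

    Columns j < m:  D e_j = A e_j - sum_{i<j} p_ij e_i with
                    p_ij = A[i][j] + A[j][i] (assumption of this sketch).
    Columns k >= m: D e_k = c e_k.
    """
    n = len(A)
    D = [[0.0] * n for _ in range(n)]
    for j in range(m):
        for i in range(n):
            D[i][j] = A[i][j]
        for i in range(j):
            D[i][j] -= A[i][j] + A[j][i]
    for k in range(m, n):
        D[k][k] = c
    return D

# Hypothetical Hurwitz matrix with two positive diagonal entries.
A = [[1.0, 6.0, 0.0],
     [-5.0, 1.0, 5.0],
     [0.0, -5.0, -5.0]]
m = 2
D = build_D(A, m, c=10.0)
N = [[A[i][j] - D[i][j] for j in range(3)] for i in range(3)]   # A - D
N_pow = matmul(N, N)                                            # (A - D)^m
nilpotent_on_span = all(abs(N_pow[i][j]) < 1e-12
                        for i in range(3) for j in range(m))
```

In this example \(A - D\) is upper triangular with diagonal \((0, 0, -15)\), so it has a zero eigenvalue of algebraic multiplicity 2 and one stable eigenvalue. The matrix D is positive-definite for \(c = 10\) but not for \(c = 5\), which reflects the need to take c sufficiently large.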

Corollary 9

The proof of Proposition 6 tells us that the remaining eigenvalues of \(A-D\) may be assumed to have (large) negative real parts.

Proof

If m mutually orthogonal vectors \(x_1, \dots , x_m\) exist such that

then a positive-definite matrix D is constructed such that the restriction of \(A-D\) to \({{\,\textrm{span}\,}}(x_1, \dots , x_m)\) is nilpotent. In particular, \(A-D\) maps the space \({{\,\textrm{span}\,}}(x_1, \dots , x_m)\) into itself. It follows that the remaining eigenvalues of \(A-D\) are given by those of the ‘other’ diagonal block \(P_U(A-D)|_U: U \rightarrow U\), where U is some complement to \({{\,\textrm{span}\,}}(x_1, \dots , x_m)\) and \(P_U\) is the projection onto U along \({{\,\textrm{span}\,}}(x_1, \dots , x_m)\). If we choose \(U = {{\,\textrm{span}\,}}(y_{m+1}, \dots , y_n)\) as in the proof of Proposition 6, then we see that \(P_U(A-D)|_U= P_UA|_U - c{{\,\textrm{Id}\,}}_U\). Choosing \(c>0\) large enough then ensures that the remaining eigenvalues of \(A-D\) are stable. \(\blacksquare \)

Corollary 10

It follows from the proof of Proposition 6 that \(A-D\) can generically be assumed to have a one-dimensional kernel. In other words, whereas the algebraic multiplicity of the eigenvalue 0 is m, its geometric multiplicity is generically equal to 1.

Proof

To see why, assume \(c>0\) is large enough so that \(A-D\) has a generalized kernel of dimension precisely m, see Corollary 9. From the proof of Proposition 6 we see that the restriction of \(A-D\) to its generalized kernel is conjugate to P. It follows that the dimension of the kernel of \(A-D\) is equal to 1 if

This may be assumed to hold after a perturbation of the form

for some arbitrarily small \(\varepsilon _1, \dots , \varepsilon _{m-1}>0\), if necessary. As a result, the matrix \(A-D\) has a single Jordan block of size m for the eigenvalue 0. \(\blacksquare \)

Example 1 below shows that the condition (23) imposed on A does not exclude Hurwitz matrices. This might seem surprising, as for any eigenvector x corresponding to a real eigenvalue \(\lambda < 0\) we have \(x^TAx = \lambda \Vert x\Vert ^2 < 0\). Moreover, it holds that any positive-definite matrix D has only eigenvalues with positive real part, see Lemma 11 below. This result is well-known, but included here for completeness.

Lemma 11

Let \(D \in \mathbb {M}_{n}(\mathbb {R})\) be a real positive-definite matrix (though not necessarily symmetric). That is, assume we have \(x^TDx > 0\) for all non-zero \(x \in \mathbb {R}^n\). Then, any eigenvalue of D has positive real part.

Proof

Let \(\lambda \) be an eigenvalue of D with corresponding eigenvector x. We write \(\lambda = \xi + i\zeta \) and \(x = u+iv\) for their decomposition into real and imaginary parts. On the one hand, we find

On the other, we have

Comparing the real parts of equations (38) and (39), we conclude that

where we use that u and v cannot both be zero. Hence, we see that indeed \(\xi > 0\). \(\blacksquare \)
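
A small example illustrates Lemma 11 for a non-symmetric matrix. The sketch below assumes a hypothetical \(2\times 2\) matrix D with \(x^TDx = x_1^2 + x_2^2\) and computes its eigenvalues from the trace and determinant; the matrix, the sample vectors and the function names are illustrative.

```python
import cmath

def eigenvalues_2x2(M):
    """Roots of l^2 - tr(M) l + det(M) = 0."""
    tr = M[0][0] + M[1][1]
    det = M[0][0] * M[1][1] - M[0][1] * M[1][0]
    disc = cmath.sqrt(tr * tr - 4 * det)
    return (tr + disc) / 2, (tr - disc) / 2

def quad_form(M, x):
    """The quadratic form x^T M x."""
    return sum(x[i] * M[i][j] * x[j] for i in range(2) for j in range(2))

# Hypothetical non-symmetric matrix with x^T D x = x1^2 + x2^2.
D = [[1.0, -3.0],
     [3.0, 1.0]]

eigs = eigenvalues_2x2(D)
samples = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [1.0, -1.0], [-2.0, 0.5]]
forms = [quad_form(D, x) for x in samples]
```

The eigenvalues are the complex pair \(1 \pm 3i\), with positive real part as the lemma predicts, and the quadratic form is positive on every sample vector.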

Example 1

Consider the \((4 \times 4)\) matrix:

The matrix A is Hurwitz and Equation (23) holds for \(m=3\) with \(e_{1}=(1,0,0,0)^T,e_{2}=(0,1,0,0)^T\) and \(e_{3}=(0,0,1,0)^T\). We may determine the upper-diagonal \((3\times 3)\)-matrix P from the proof of Proposition 6 by calculating

where

It follows that \(p_{1,2}=p_{1,3}=p_{2,3}=0\), which implies we have \(P=0\). As in the proof of Proposition 6, we first define \(D\in \mathbb {M}_{4}(\mathbb {R})\) on \({{\,\textrm{span}\,}}(e_1, e_2, e_3) = \{x \in \mathbb {R}^4 \mid x_4 = 0\}\) by setting:

Hence, D agrees with A in the first three columns. To complete our construction of D, we have to choose a non-zero vector u such that \(u\perp e_{i}\) for \(i=1,2,3\), and set \(Du=cu\) for some \(c>0\). To this end, we set \(u=e_{4}\), so that D becomes:

It follows that

which has a zero eigenvalue with geometric multiplicity 3 and a negative eigenvalue \((-17.94-c)\) equal to its trace. Moreover, by Lemma 5, D is positive-definite for large enough \(c>0.\) Indeed, in this case, we found numerically that any \(c\ge 9.24\) suffices.

Example 2

The matrix

is Hurwitz, but symmetric. Thus, there are no vectors \(x \in \mathbb {R}^4\) such that \(\langle x,Ax\rangle >0.\)

To control a bifurcation in the system (12), we need to rule out additional eigenvalues lying on the imaginary axis. Recall that the eigenvalues of the linearization (13) are given by those of \(A -\alpha \lambda _pD\) with \(\lambda _p \ge 0\) an eigenvalue of \(L_G\). Lemma 12 below shows that generically only one of the matrices \(A -\alpha \lambda _pD\) is non-hyperbolic. In what follows we denote by \(\Vert \cdot \Vert \) the operator norm induced by the Euclidean norm on \(\mathbb {R}^n.\)

Lemma 12

Let \(A,D\in \mathbb {M}_{n}(\mathbb {R})\) be two given matrices with D positive-definite, and let \(\alpha ^* \in \mathbb {R}\) be a positive scalar. We furthermore assume \(\{\lambda _1, \dots , \lambda _K\}\) is a set of real numbers and consider the matrices \(A - \alpha ^*\lambda _iD\) for \(i \in \{1, \dots , K\}\). Given any \(\varepsilon > 0\), there exist a matrix \(\tilde{A}\) and a positive-definite matrix \(\tilde{D}\) such that \(\Vert A - \tilde{A}\Vert , \Vert D - \tilde{D}\Vert < \varepsilon \) and \(\tilde{A} - \alpha ^*\lambda _K\tilde{D} = A - \alpha ^*\lambda _KD\). Moreover, for \(i \in \{1, \dots , K-1\}\) the matrix \(\tilde{A} - \alpha ^*\lambda _i\tilde{D}\) has a purely hyperbolic spectrum (i.e. no eigenvalues on the imaginary axis).

Remark 13

From \(\Vert A - \tilde{A}\Vert , \Vert D - \tilde{D}\Vert < \varepsilon \) we get

\[ \Vert ({A} - \alpha ^*\lambda _i{D}) - (\tilde{A} - \alpha ^*\lambda _i\tilde{D})\Vert \le \Vert A - \tilde{A}\Vert + \alpha ^*|\lambda _i|\, \Vert D - \tilde{D}\Vert < (1 + \alpha ^*|\lambda _i|)\varepsilon , \]

so that we may arrange for \(\tilde{A} - \alpha ^*\lambda _i\tilde{D}\) to be arbitrarily close to the original \({A} - \alpha ^*\lambda _i{D}\) for all i. Moreover, if A is hyperbolic then for \(\varepsilon \) small enough so is \(\tilde{A}\), with the same number of stable and unstable eigenvalues. In particular, \(\tilde{A}\) may be assumed Hurwitz if A is.

Proof of Lemma 12

Let \(\delta \not = 0\) be given and set

Note that the symmetric parts of \(\tilde{D}_{\delta }\) and D differ by \(\frac{\delta }{\alpha ^* \lambda _K} {{\,\textrm{Id}\,}}\) as well, so that \(\tilde{D}_{\delta }\) remains positive-definite for \(|\delta |\) small enough. It is also clear that

A direct calculation shows that

for all \(i \in \{1, \dots , K\}\). It follows that we have \(\tilde{A}_{\delta } - \alpha ^*\lambda _K\tilde{D}_{\delta } = {A} - \alpha ^*\lambda _K{D}.\) For \(i \not = K\) we see that \(\tilde{A}_{\delta } - \alpha ^*\lambda _i\tilde{D}_{\delta }\) differs from \({A} - \alpha ^*\lambda _i{D}\) by a non-zero scalar multiple of the identity. It follows that for \(\delta \not = 0\) small enough all the matrices \(\tilde{A}_{\delta } - \alpha ^*\lambda _i\tilde{D}_{\delta }\) for \(i \in \{1, \dots , K-1\}\) have their eigenvalues away from the imaginary axis. Setting \(\tilde{A}:= \tilde{A}_{\delta }\) and \(\tilde{D}:= \tilde{D}_{\delta }\) with \(\delta = \delta (\varepsilon )\) small enough then finishes the proof. \(\blacksquare \)
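The perturbation used in this proof can be checked numerically. The sketch below assumes the choice \(\tilde{A}_{\delta } = A + \delta {{\,\textrm{Id}\,}}\) and \(\tilde{D}_{\delta } = D + \frac{\delta }{\alpha ^* \lambda _K} {{\,\textrm{Id}\,}}\), which is one reading consistent with the statements above (the sign and scaling of \(\delta \) are an assumption). The matrices, the values of \(\alpha ^*\), \(\lambda _i\) and \(\delta \), and all helper names are hypothetical.

```python
def shift(M, s):
    """Return M + s*Id for a square matrix M given as nested lists."""
    n = len(M)
    return [[M[i][j] + (s if i == j else 0.0) for j in range(n)]
            for i in range(n)]


def combine(A, D, coeff):
    """Return A - coeff*D entrywise."""
    n = len(A)
    return [[A[i][j] - coeff * D[i][j] for j in range(n)] for i in range(n)]


def max_abs_diff(M1, M2):
    """Largest entrywise difference between two matrices of equal size."""
    return max(abs(a - b) for r1, r2 in zip(M1, M2) for a, b in zip(r1, r2))


# Hypothetical data (illustration only).
ALPHA_STAR = 0.5
LAMBDAS = [1.0, 4.0]            # the last entry plays the role of lambda_K
DELTA = 0.01
A = [[-1.0, 2.0], [0.0, -3.0]]
D = [[2.0, 1.0], [-1.0, 1.0]]   # symmetric part diag(2, 1): positive-definite

lam_K = LAMBDAS[-1]
A_tilde = shift(A, DELTA)                          # assumed form of A_delta
D_tilde = shift(D, DELTA / (ALPHA_STAR * lam_K))   # assumed form of D_delta


def shift_amount(lam):
    """Scalar s with A_tilde - a*lam*D_tilde = (A - a*lam*D) + s*Id."""
    return DELTA * (1.0 - lam / lam_K)


residuals = []
for lam in LAMBDAS:
    perturbed = combine(A_tilde, D_tilde, ALPHA_STAR * lam)
    expected = shift(combine(A, D, ALPHA_STAR * lam), shift_amount(lam))
    residuals.append(max_abs_diff(perturbed, expected))
```

Under this assumed choice the shift vanishes exactly for \(\lambda _K\) and is a non-zero multiple of the identity for every other \(\lambda _i\), which is the mechanism the proof relies on.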

3.2 Center manifold reduction

Let us now assume A, D and \(\alpha ^*\) are given such that for a particular eigenvalue \(\lambda >0\) of \(L_G\) the matrix \(A-\alpha ^*\lambda D\) has an m-dimensional center subspace. We moreover assume \(\lambda \) is simple and, motivated by Lemma 12, that the matrices \(A-\alpha ^*\kappa D\) are hyperbolic for any other eigenvalue \(\kappa \) of \(L_G\). It follows that the linearization

of (13) has an m-dimensional center subspace as well.

In what follows, we write \(\hat{I}_{s}\) for the indices of all remaining eigenvalues of \(L_G\) except the index s. In other words, writing \(0 = \lambda _1 < \lambda _2 \le \dots \le \lambda _N\) for the eigenvalues of \(L_G\), we have \(\lambda = \lambda _s\) for some \(s \in \{2, \dots , N\}\) and we set \(\hat{I}_{s} = \{1, \dots , N\}{\setminus } \{s\}\). We will likewise fix an orthonormal set of eigenvectors \(v_1, \dots , v_N\) for \(L_G\) and simply write \(v =v_s\) for the eigenvector corresponding to our fixed eigenvalue \(\lambda = \lambda _s\). Arguably the most natural situation is given by \(s = N\), corresponding to the situation where \(\alpha \) is increased until the eigenvalues of \(A-\alpha \lambda _N D\) first hit the imaginary axis for \(\alpha = \alpha ^*\). However, we will not need this assumption here.

Next, given a vector \(u \in \mathbb {R}^N\) we denote by \(\phi _u: \mathbb {R}^N \rightarrow \mathbb {R}^N\) the linear map defined by

Note that \(\phi _u\) is a projection if \(\Vert u\Vert =1\). Finally, we write \(E^c, E^h \subseteq \mathbb {R}^n\) for the center- and hyperbolic subspaces of \(A-\alpha ^*\lambda D\), respectively. It follows that

and we denote the projections onto the first and second component by \(\pi ^c\) and \(\pi ^h = {{\,\textrm{Id}\,}}_n - \pi ^c\), respectively. Likewise, we denote the center- and hyperbolic subspaces of the map (45) by \({\mathcal {E}}^c, {\mathcal {E}}^h \subseteq \mathbb {R}^N \otimes \mathbb {R}^n\). Their projections are denoted by \(\Pi ^c\) and \(\Pi ^h\). The following lemma establishes some important relations between the aforementioned maps and spaces.

Lemma 14

The spaces \({\mathcal {E}}^c\) and \(E^c\) are related by

and we have

Moreover, it holds that

Proof

The identities (48) and (49) follow directly from the fact that the linear map (45) sends a vector \(v_i \otimes x\) to \(v_i \otimes (A-\alpha ^*\lambda _iD)(x)\) for all \(i \in \{1, \dots , N\}\) and \(x \in \mathbb {R}^n\). To show that \(\Pi ^c\) is indeed given by \(\phi _v \otimes \pi ^c\), we have to show that the latter vanishes on \({\mathcal {E}}^h\) and restricts to the identity on \({\mathcal {E}}^c\). To this end, note that for all \(i \in \hat{I}_{s}\) and \(x \in \mathbb {R}^n\) we have

Given \(x_h \in E^h\) and \(x_c \in E^c\), we find

so that indeed \((\phi _v \otimes \pi ^c)|_{{\mathcal {E}}^h} = 0\) and \((\phi _v \otimes \pi ^c)|_{{\mathcal {E}}^c} = {{\,\textrm{Id}\,}}_{{\mathcal {E}}^c}\). This completes the proof. \(\blacksquare \)
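The block structure underlying Lemma 14 can be illustrated on a small example. The sketch below assumes that the linearization (45) has the Kronecker form \({{\,\textrm{Id}\,}}_N \otimes A - \alpha ^* L_G \otimes D\), which is consistent with the action on \(v_i \otimes x\) used in the proof. The graph (a single edge between two nodes), the matrices A and D, and the helper names are hypothetical.

```python
def kron(P, Q):
    """Kronecker product of two matrices given as nested lists."""
    return [[p * q for p in prow for q in qrow] for prow in P for qrow in Q]


def kron_vec(v, x):
    """Kronecker (tensor) product v (x) x of two vectors."""
    return [vi * xk for vi in v for xk in x]


def matvec(M, x):
    """Matrix-vector product."""
    return [sum(m * xj for m, xj in zip(row, x)) for row in M]


def combine(M1, M2, coeff):
    """Return M1 - coeff*M2 entrywise."""
    return [[a - coeff * b for a, b in zip(r1, r2)] for r1, r2 in zip(M1, M2)]


# Hypothetical data (illustration only).
ALPHA_STAR = 0.5
A = [[-1.0, 2.0], [0.0, -3.0]]
D = [[2.0, 1.0], [-1.0, 1.0]]
L_G = [[1.0, -1.0], [-1.0, 1.0]]       # Laplacian of two nodes joined by an edge
IDENTITY = [[1.0, 0.0], [0.0, 1.0]]

# Assumed Kronecker form of the linearization on R^N (x) R^n.
J = combine(kron(IDENTITY, A), kron(L_G, D), ALPHA_STAR)

# Eigenpairs of L_G (eigenvectors left unnormalised for readability).
eigenpairs = [(0.0, [1.0, 1.0]), (2.0, [1.0, -1.0])]
x = [1.0, 2.0]


def block_action_error(lam, v):
    """Compare J(v (x) x) with v (x) (A - alpha* lam D)x."""
    lhs = matvec(J, kron_vec(v, x))
    rhs = kron_vec(v, matvec(combine(A, D, ALPHA_STAR * lam), x))
    return max(abs(a - b) for a, b in zip(lhs, rhs))


errors = [block_action_error(lam, v) for lam, v in eigenpairs]
```

Both errors vanish, so each eigenvector of \(L_G\) singles out an invariant block on which the linearization acts as \(A-\alpha ^*\lambda _iD\).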

Next, we investigate the dynamics on a center manifold of the system

which will lead to a proof of Theorem 3.

Recall that center manifold theory predicts a locally defined map \(\Psi : {\mathcal {E}}^c \times \Omega \rightarrow {\mathcal {E}}^h\) whose graph \(M^c\) is invariant for the system (53) and locally contains all bounded solutions. The map \(\Psi \) satisfies \(\Psi (0;0) = 0\) and \(D\Psi (0;0) = 0\). In fact, as we assume \(F(0;\varepsilon ) = 0\) for all \(\varepsilon \in \Omega \), it follows that \((0;\varepsilon ) \in M^c\), as these are bounded solutions. This shows that \(\Psi (0;\varepsilon ) = 0\) for all \(\varepsilon \in \Omega \).

In light of Lemma 14, we may write

for certain maps \(\psi : E^c \times \Omega \rightarrow E^h\) and \(\psi _i: E^c \times \Omega \rightarrow \mathbb {R}^n\). We then likewise have \(\psi (0;\varepsilon ) = 0, D\psi (0;0) = 0\) and \(\psi _i(0;\varepsilon ) = 0, D\psi _i(0;0) = 0\) for all \(\varepsilon \in \Omega \) and \(i \in \hat{I}_{s}\).

The dynamics on the center manifold \(M^c\) is conjugate to that of a vector field on \({\mathcal {E}}^c \times \Omega \) given by

where we write

for the vector field on the right hand side of (53), with \(X_c \in {\mathcal {E}}^c\), \(X_h \in {\mathcal {E}}^h\) and \(\varepsilon \in \Omega \). We further conjugate to a vector field R on \(E^c \times \Omega \) by setting

In order to describe R, we first introduce some useful notation. Given \(X \in \mathbb {R}^N \otimes \mathbb {R}^n\), we may write

with \(e_1, \dots , e_N\) the canonical basis of \(\mathbb {R}^N\) and for some unique vectors \(x_p \in \mathbb {R}^n\). In general, given \(p \in \{1, \dots , N\}\) we will denote by \(x_p \in \mathbb {R}^n\) the pth component of X as in the decomposition (58). Recall that \(v = (\nu _1,\dots ,\nu _N)\) is the eigenvector associated with \(\lambda \). Using this notation, we have the following result.

Proposition 15

Denote by \(h: \mathbb {R}^n \times \Omega \rightarrow \mathbb {R}^n\) the non-linear part of f. That is, we have \(f(x;\varepsilon ) = Ax + h(x;\varepsilon )\). The reduced vector field R is given explicitly by

for \(x_c \in E^c\) and \(\varepsilon \in \Omega \).

Proof

We write \(S(X; \varepsilon ) = S(X_c, X_h; \varepsilon )= JX + H(X; \varepsilon )\) with \(J = D_X S(0;0)\) and where H denotes higher order terms. It follows that

We start by focusing on the first term. As J sends \({\mathcal {E}}^c\) to \({\mathcal {E}}^c\) and \({\mathcal {E}}^h\) to \({\mathcal {E}}^h\), we conclude that \(\Pi ^c J = J \Pi ^c\). We therefore find

Writing \(X_c = v \otimes x_c\) and using Expression (13) for J, we conclude that the linear part of \(\tilde{R}\) is given by

We next focus on the second term in Equation (60). Note that we have

Now, by Lemma 14 it follows that we may write

We therefore find

Next, we have \((X_c)_p = (v \otimes x_c)_p = \nu _p x_c \), so that we find

Combining equations (62) and (66), we arrive at

Finally, from Equation (57) we get

which completes the proof. \(\blacksquare \)

To further investigate the Taylor expansion of \(R(x_c; \varepsilon )\), we need to know more about how the coefficients of \(\Psi : {\mathcal {E}}^c \times \Omega \rightarrow {\mathcal {E}}^h\) depend on those of F.

To this end, let us consider for a moment the general situation where S is some vector field on \(\mathbb {R}^k\) (in our case \(k = nN\)) satisfying \(S(X) = JX + H(X)\) for some \(H: \mathbb {R}^k \rightarrow \mathbb {R}^k\) satisfying \(H(0)= 0\), \(DH(0) = 0\). We furthermore let \(\hat{{\mathcal {E}}}^c\) and \(\hat{{\mathcal {E}}}^h\) denote the center- and hyperbolic subspaces of J, respectively, and write \(\Pi ^c, \Pi ^h\) for the corresponding projections. Suppose \(\Psi : \hat{{\mathcal {E}}}^c \rightarrow \hat{{\mathcal {E}}}^h\) is a locally defined map whose graph is a center manifold \(M^c\) for the system \(\dot{X} = S(X)\). Recall that we have \(\Psi (0) = 0\) and \(D\Psi (0) = 0\). Moreover, as \(M^c\) is a flow-invariant manifold, we see that \(S|_{M^c}\) takes values in the tangent bundle of \(M^c\). This can be used to iteratively solve for the higher order coefficients of an expansion of \(\Psi \) around 0.

More precisely, the tangent space at \(X_c + \Psi (X_c) \in M^c\) is given by all vectors of the form \((V_c, D\Psi (X_c)V_c) \in \hat{{\mathcal {E}}}^c \oplus \hat{{\mathcal {E}}}^h\), with \(V_c \in \hat{{\mathcal {E}}}^c\). Invariance under the flow of S then translates to the identity

for \(X_c\) in some open neighborhood of the origin in \(\hat{{\mathcal {E}}}^c\). Equation (69) can be used to show that \(D\Psi (0) = 0\). It can also be rearranged to

As \(\Psi \) only has terms of degree 2 and higher, the same holds for both sides of Equation (70), which depend on \(\Psi \) and H. More generally, using \(\Psi ^{\rho }\) to denote the terms of order \(\rho \ge 2\) in the Taylor expansion of \(\Psi \) around the origin, Equation (70) is readily seen to imply for each \(\rho \)

for some homogeneous polynomial \(P_{\rho }\) of order \(\rho \). Moreover, \(P_{\rho }\) depends only on \(\Psi ^{2}(X_c) \dots , \Psi ^{\rho -1}(X_c)\) and on the Taylor expansion of H up to order \(\rho \). It can be shown that for fixed J and \(P_{\rho }\), Equation (71) has a unique solution \(\Psi ^{\rho }\) in the form of a homogeneous polynomial of order \(\rho \), see [24]. As a result, we get the following important observation:

Lemma 16

We may iteratively solve for the terms \(\Psi ^{\rho }\) using expression (71). Moreover, for fixed linear part J, the terms of order \(\rho \) and less of \(\Psi \) are fully determined by the terms of order \(\rho \) and less of H.

We return to our main setting where \(R(x_c;\varepsilon )\) is the reduced vector field of the system (53) as described in Proposition 15. Note that the presence of a parameter \(\varepsilon \) means that the center subspace \(\hat{{\mathcal {E}}}^c\) in the observations for general vector fields above is now given by \({\mathcal {E}}^c \times \Omega \).

Lemma 17

Let \(\rho > 1\) be given, and suppose the vector \(v = (\nu _1, \dots , \nu _N) \in \mathbb {R}^N\) satisfies

Then the reduced vector field \(R(x_c;\varepsilon )\) as described in Proposition 15 can have any Taylor expansion around 0 of order 2 to \(\rho \), subject to \(R(0;\varepsilon ) = 0\), if no conditions are put on the nonlinear part of f other than \(f(0;\varepsilon ) = 0\) and sufficient smoothness.

Proof

From Proposition 15 we know that

As we have \(\Psi (0;\varepsilon ) = 0\) and \(h(0;\varepsilon ) = 0\), we conclude that likewise \(R(0; \varepsilon ) = 0\) for all \(\varepsilon \in \Omega \). In particular, we see that \(D_{\varepsilon }R(0;0) = 0\), whereas Equation (73) tells us that \(D_{x_c}R(0;0) = (A-\alpha ^*\lambda D)|_{E^c}\).

As a warm-up, we start by investigating the second order terms of R. To this end, we write

where \(Q_{1,1}\) is linear in both components and \(Q_{2,0}\) is a quadratic form. It follows that

where we use that \(\Psi (v \otimes x_c;\varepsilon )\) has no constant or linear terms in \((x_c; \varepsilon )\). From Equation (74) we obtain

As we assume \(\sum _{p=1}^N \nu _p^3 \not = 0\), we see that the second order Taylor coefficients of \(R(x_c; \varepsilon )\) can be chosen freely (except for the \({\mathcal {O}}(|\varepsilon |^2)\) term).

Now suppose we are given a polynomial map \(P: E^c \times \Omega \rightarrow E^c\) of degree \(\rho \) satisfying \(DP(0) = ((A-\alpha ^*\lambda D)|_{E^c};0)\) and \(P(0;\varepsilon ) = 0\) for all \(\varepsilon \). We will prove by induction that we may choose the terms in the Taylor expansion of h up to order \(\rho \) in the variables \(x_c\) and \(\varepsilon \) such that the Taylor expansion up to order \(\rho \) of R agrees with P. To this end, suppose some choice of h gives agreement between P and the Taylor expansion of R up to order \(2\le k < \rho \). By the foregoing, this can be arranged for \(k=2\).

We start by remarking that a change to h that does not influence its Taylor expansion up to order k does not change the Taylor expansion of \(\Psi \) up to order k. This follows directly from Lemma 16. As a result, such a change does not influence the Taylor expansion of R up to order k either. We write

for an order \(k+1\) change to h, where each component of \(Q_{i,j}: \mathbb {R}^n \times \Omega \rightarrow \mathbb {R}^n\) is a homogeneous polynomial of degree i in \(x_c\) and degree j in \(\varepsilon \). The \((k+1)\)-order terms of R in \((x_c; \varepsilon )\) are given by the \((k+1)\)-order terms of

As both h and \(\Psi \) have no constant and linear terms, we see that the \((k+1)\)-order terms of R are also given by those of

where \(\Psi ^{k}\) denotes the terms of \(\Psi \) up to order k. As we have previously argued, \(\Psi ^{k}\) is independent of the additional terms \(Q_{i,k+1-i}\). Hence, we may write the order \(k+1\) terms in Expression (77) as

where \(W(x_c; \varepsilon )\) denotes the order \(k+1\) terms of

As \(\sum _{p=1}^{N} \nu _p^{j} \not = 0\) for all \(j \in \{2, \dots , \rho +1\}\), we see that the order \(k+1\) terms of R may be freely chosen. In other words, we may arrange for the Taylor expansion up to order \(k+1\) of R to agree with that of P up to order \(k+1\). This completes the proof by induction. \(\blacksquare \)
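The power-sum condition used in this proof is easy to test for a given eigenvector. The following sketch assumes the condition reads \(\sum _{p=1}^{N} \nu _p^{j} \not = 0\) for all \(j \in \{2, \dots , \rho +1\}\), as in the last step above. The two example eigenvectors (for a star graph on three nodes and for a single edge) and the function names are hypothetical illustrations.

```python
import math


def power_sums(v, rho):
    """Return {j: sum_p v_p**j} for j = 2, ..., rho + 1."""
    return {j: sum(c ** j for c in v) for j in range(2, rho + 2)}


def satisfies_power_sum_condition(v, rho, tol=1e-12):
    """True if every power sum of order 2, ..., rho + 1 is non-zero."""
    return all(abs(s) > tol for s in power_sums(v, rho).values())


# Star graph on three nodes (hub first): the Laplacian
# [[2, -1, -1], [-1, 1, 0], [-1, 0, 1]] has largest eigenvalue 3
# with eigenvector (2, -1, -1), normalised here to unit length.
star_vector = [c / math.sqrt(6.0) for c in (2.0, -1.0, -1.0)]

# Two nodes joined by an edge: the eigenvector (1, -1) is antisymmetric,
# so every odd power sum vanishes.
edge_vector = [c / math.sqrt(2.0) for c in (1.0, -1.0)]

star_ok = satisfies_power_sum_condition(star_vector, rho=2)
edge_ok = satisfies_power_sum_condition(edge_vector, rho=2)
```

The antisymmetric eigenvector fails the test already at the cubic sum, which indicates why symmetric spectra of eigenvector entries obstruct the free choice of quadratic terms in R.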

Proof of Theorem 3

If there exist m mutually orthogonal vectors \(x_1,\dots ,x_m\) such that \(\langle x_i, Ax_i \rangle > 0\), then Proposition 6 guarantees the existence of a positive-definite matrix D such that \(A-D\) has a center subspace of dimension m or higher. Given any non-zero eigenvalue \(\lambda \) of \(L_G\), we may set \(\alpha ^* = 1/\lambda \) and conclude that \(A-\alpha ^*\lambda D\) has a center subspace of dimension at least m. As the eigenvalues of the linearization of (11) around the origin are given by those of the maps \(A-\alpha \lambda D\) for \(\lambda \) an eigenvalue of \(L_G\), we see that the system (11) has a local parameterized center manifold of dimension at least m for some choices of D and \(\alpha = \alpha ^*\).

If the graph G is \(\rho \)-versatile for the pair \((\lambda , v)\), then a choice of D as above together with \(\alpha ^* = 1/\lambda \) guarantees \(A- D\) has a center subspace of dimension at least m. By Corollary 9 we may assume this center subspace to be of dimension precisely m. Moreover, by Lemma 12 we may assume \(A-\alpha ^*\lambda _i D\) to have a hyperbolic spectrum for all other eigenvalues \(\lambda _i \not = \lambda \) of \(L_G\), after an arbitrarily small perturbation to A and D if necessary. It follows that the system (11) has a local parameterized center manifold of dimension exactly m. We argue in the proof of Lemma 17 that \(R(0;\varepsilon ) = 0\) for all \(\varepsilon \), and that \(DR(0;0) = ((A-\alpha ^*\lambda D)|_{E^c};0)\). This latter map is nilpotent by the statement of Proposition 6. Finally, Lemma 17 shows that any Taylor expansion can be realized for R up to order \(\rho \), subject to the aforementioned restrictions. \(\blacksquare \)

4 Stability of the Center Manifold

In this section we investigate the stability of the center manifold of the full network system. We know that the spectrum of the linearization of this system is fully understood if we know the spectrum of the matrices \(A-\alpha ^*\lambda _i D\) for \(\lambda _i\) an eigenvalue of \(L_G\). Proposition 6 gives conditions on A that guarantee the existence of a positive-definite matrix D such that \(A-\alpha ^*\lambda D\) has an m-dimensional generalized kernel for some fixed eigenvalue \(\lambda >0\) of \(L_G\). Moreover, by Corollary 9 we may assume that the non-zero eigenvalues of \(A-\alpha ^*\lambda D\) have negative real parts. Lemma 12 in turn shows that, after a small perturbation of A and D if necessary, we may assume \(A-\alpha ^* \lambda _{i} D\) to have a hyperbolic spectrum for all remaining eigenvalues \(\lambda _i \not = \lambda \) of \(L_G\). Thus, if the matrices \(A-\alpha ^*\lambda _i D\) for these remaining eigenvalues are all Hurwitz, then the m-dimensional center manifold of Theorem 3 may be assumed stable.

This seems most reasonable to expect when \(\lambda \) is the (simple) largest eigenvalue of \(L_G\), as the matrices \(A-\alpha ^*\lambda _i D\) for the remaining eigenvalues of \(L_G\) then “lie between” the Hurwitz matrix A and the non-invertible matrix \(A-\alpha ^*\lambda D\). More precisely, suppose D is scaled such that \(A-D = A-\alpha ^*\lambda D\). If we let \(\alpha \) vary from 0 to \(\alpha ^* = 1/\lambda \), then for each eigenvalue \(\lambda _i\) of \(L_G\), the matrix \(A-\alpha \lambda _i D\) is of the form \(A-\beta D\) for some \(\beta \) in [0, 1]. Let us therefore denote by \(\beta \mapsto \gamma _i(\beta )\) for \(i \in \{1, \dots , n\}\) a number of curves through the complex plane capturing the eigenvalues of \(A-\beta D\). As \(\alpha \) varies from 0 to \(\alpha ^* = 1/\lambda \), the eigenvalues of each \(A-\alpha \lambda _i D\) traverse the curves \(\gamma _i\), with the “front runners” given by those of \(A-\alpha \lambda D\). In contrast, for \(\lambda _1 = 0\) the eigenvalues of \(A-\alpha \lambda _1 D\) of course remain at \(\gamma _i(0)\). When \(\alpha = \alpha ^*\) is reached, the eigenvalues of \(A-\alpha ^*\lambda _i D\) end up in different places on the curves \(\gamma _i\). Hence, if the situation is as in Fig. 2, where each \(\gamma _i\) hits the imaginary axis only for \(\beta = 1\), or not at all, then we are guaranteed that each of the matrices \(A-\alpha ^*\lambda _i D\) is Hurwitz for \(\lambda _i \not = \lambda \). The center manifold is then stable.

Of course \(\beta = 1\) may not be the first value for which a curve \(\gamma _i\) hits the imaginary axis, see Fig. 3. Note that, if the matrix \(A- \beta D\) indeed has a non-trivial center subspace for some value \(\beta \in (0,1)\), then a bifurcation is expected to occur as \(\alpha \) is increased, before it hits \(\alpha ^*\).

Fig. 2 Sketch of a situation where the m-dimensional center manifold of Theorem 3 may be assumed stable. Depicted are the eigenvalues of \(A-\beta D\) as \(\beta \) is varied. Colored dots denote starting points where \(\beta =0,\) the blue and red dashed paths form a conjugate pair of complex eigenvalues and the green and orange dashed paths are real eigenvalues. The arrows indicate how the eigenvalues evolve as \(\beta \) increases to 1. Three of them go to the origin, whereas one moves away from it. None of them touches the imaginary axis before \(\beta =1.\)

Fig. 3 Numerically computed behavior of three of the four eigenvalues of the family of matrices from Example 3. As \(\beta \) increases from 0 to 1, three eigenvalues move to the origin, whereas a fourth stays to the left of the imaginary axis. For some value of \(\beta \in (0,1)\), two complex conjugate eigenvalues already cross the imaginary axis away from the origin. Likewise, a real eigenvalue crosses the origin for some \(\beta \in (0,1)\). Data was simulated using Octave.

The next example shows that some of the eigenvalues of \(A-\beta D\) might cross the imaginary axis before a high-dimensional kernel emerges at \(\beta =1\), see Fig. 3.

Example 3

We consider the matrices A and D constructed in Example 1. Here we choose \(c=21\) in order to guarantee that D is a positive-definite matrix. We therefore have the family of matrices:

parameterized by the real number \(\beta \in [0,1].\) We are interested in the behavior of the eigenvalues as \(\beta \) is varied. We know that for \(\beta =0\) we have the Hurwitz matrix A, so that all eigenvalues have negative real parts. We would like to know whether all eigenvalues of \(A-\beta D\) have negative real part for all \(\beta \in (0,1).\) However, for \(\beta =\frac{1}{2}\) there are three eigenvalues with positive real part. By continuity of the eigenvalues, this means that each of these has crossed the imaginary axis for some \(\beta <\frac{1}{2}.\) Only after this, for \(\beta =1\), do we have the bifurcation studied in the previous section, due to the appearance of a triple zero eigenvalue. Figure 3 shows the numerically computed behavior of these three eigenvalue-branches.
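The two scenarios (no crossing before \(\beta = 1\), versus an early crossing) already occur in dimension two. The sketch below uses a hypothetical \((2 \times 2)\) toy pair, not the matrices of Example 3: the Hurwitz matrix A has rows \((1, 3)\) and \((-3, -2)\), and D agrees with A on \(e_1\) and satisfies \(De_2 = ce_2\), so that D is positive-definite for \(c > 9/4\) and \(A - D\) has a zero eigenvalue. For this toy pair the determinant of \(A-\beta D\) equals \((1-\beta )(7-\beta c)\). All names and numerical values are assumptions made for illustration.

```python
def family(beta, c):
    """A - beta*D for the toy pair A = [[1, 3], [-3, -2]], D = [[1, 0], [-3, c]]."""
    return [[1.0 - beta, 3.0],
            [-3.0 + 3.0 * beta, -2.0 - beta * c]]


def is_hurwitz_2x2(M):
    """A real 2x2 matrix is Hurwitz iff its trace is negative and its determinant positive."""
    tr = M[0][0] + M[1][1]
    det = M[0][0] * M[1][1] - M[0][1] * M[1][0]
    return tr < 0 and det > 0


def first_loss_of_stability(c, steps=1000):
    """Smallest grid value of beta in [0, 1] at which A - beta*D is not Hurwitz."""
    for k in range(steps + 1):
        beta = k / steps
        if not is_hurwitz_2x2(family(beta, c)):
            return beta
    return None


# c = 3: the family stays Hurwitz until the kernel appears at beta = 1.
late_crossing = first_loss_of_stability(3.0)

# c = 21: an eigenvalue crosses the imaginary axis near beta = 1/3,
# well before the kernel appears at beta = 1.
early_crossing = first_loss_of_stability(21.0)
```

In this toy family a larger value of c moves the first crossing to smaller \(\beta \), so the choice of the free constant in the construction of D influences whether the resulting center manifold can be stable.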

If we are in the situation of Fig. 3, then the center manifold can still be stable. This occurs when the largest eigenvalue \(\mu \) of \(L_{G}\) is significantly larger than all other eigenvalues. In that case, we have

for all eigenvalues \(\lambda \) of \(L_{G}\) unequal to \(\mu \). For small enough values of \(\lambda / \mu \) the matrix \(A-(\lambda / \mu ) D\) is therefore still Hurwitz. As it turns out, this can be achieved in the situation explored in Subsect. A.2. More precisely, we have the following result.

Proposition 18

Let \(r < C\) be positive integers and suppose G is a connected graph with at least two nodes, consisting of one node of degree C and with all other nodes of degree at most r. Let \(\mu \) and \(\kappa \) denote the largest and second-largest eigenvalue of the Laplacian \(L_G\), respectively. Then the value \(\kappa / \mu \) goes to zero as C/r goes to infinity, uniformly in all graphs G satisfying the above conditions.

Proof

See Appendix. \(\blacksquare \)
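A simple family illustrating Proposition 18 is the star graph, where a hub of degree C is joined to C leaves of degree \(r = 1\). Its Laplacian spectrum is known to be \(\{0, 1, \dots , 1, C+1\}\), so that \(\kappa / \mu = 1/(C+1)\). The sketch below builds this Laplacian and verifies the relevant eigenpairs directly. The function names are hypothetical, and the star graph is only one example of the graphs covered by the proposition.

```python
def star_laplacian(num_leaves):
    """Laplacian of the star graph: node 0 is the hub, nodes 1..num_leaves are leaves."""
    n = num_leaves + 1
    L = [[0.0] * n for _ in range(n)]
    L[0][0] = float(num_leaves)
    for k in range(1, n):
        L[0][k] = L[k][0] = -1.0
        L[k][k] = 1.0
    return L


def matvec(M, x):
    """Matrix-vector product."""
    return [sum(m * xj for m, xj in zip(row, x)) for row in M]


def is_eigenpair(M, lam, x, tol=1e-9):
    """Check M x = lam x entrywise up to a tolerance."""
    Mx = matvec(M, x)
    return all(abs(Mx[i] - lam * x[i]) <= tol for i in range(len(x)))


def star_ratio(num_leaves):
    """Return kappa/mu for the star graph after verifying the eigenpairs used."""
    n = num_leaves + 1
    L = star_laplacian(num_leaves)
    # Largest eigenvalue mu = C + 1 with eigenvector (C, -1, ..., -1).
    top = [float(num_leaves)] + [-1.0] * num_leaves
    assert is_eigenpair(L, float(n), top)
    # Eigenvalue 1 (multiplicity C - 1): differences of two leaves.
    for k in range(2, n):
        w = [0.0] * n
        w[1], w[k] = 1.0, -1.0
        assert is_eigenpair(L, 1.0, w)
    return 1.0 / n


ratios = [star_ratio(C) for C in (2, 4, 9, 99)]
```

The ratio decreases like \(1/(C+1)\) as the hub degree grows, in line with the limit stated in the proposition.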

5 Bifurcations in Diffusively Coupled Stable Systems

Using our results so far, we show what bifurcations to expect in diffusively coupled stable systems in 1, 2 or 3 bifurcation parameters. Note that Theorem 3 tells us that the dynamics on the center manifold is conjugate to that of a reduced vector field \(R: \mathbb {R}^m \times \Omega \rightarrow \mathbb {R}^m\), satisfying \(R(0; \varepsilon ) = 0\) for all \(\varepsilon \in \Omega \). By Corollary 10 we may furthermore assume the linearization \(D_xR(0;0)\) to be nilpotent with a one-dimensional kernel. Other than that, no restrictions apply to the Taylor expansion of R.

Assuming that an m-parameter bifurcation can generically generate an m-dimensional generalized kernel, we consider in each case m-parameter bifurcations for a system on \(\mathbb {R}^m\). In Subsect. 5.1 we briefly investigate the cases \(m=1\) and \(m=2\). Our main result is presented in Subsect. 5.2, where we show the emergence of chaos for \(m=3\). In most cases, the main difficulty lies in adapting existing results on generic unfoldings to the setting where \(R(0; \varepsilon ) = 0\) for all \(\varepsilon \in \Omega \).

5.1 One and two parameters

Motivated by our results so far, we describe the generic m-parameter bifurcations for systems R on \(\mathbb {R}^m\), where \(m = 1,2\), subject to the condition \(R(0;\varepsilon ) = 0\) for all \(\varepsilon \in \Omega \). In each case we assume a nilpotent Jacobian with a one-dimensional kernel. We start with the case \(m=1\).

Remark 19

(The case \(m = 1\)) A map \(R: \mathbb {R} \times \mathbb {R} \rightarrow \mathbb {R}\) satisfying \(R(0;\varepsilon ) = 0\) for all \(\varepsilon \in \mathbb {R}\) and \(D_xR(0;0) = 0\) has the general form

Under the generic assumption that \(a,b \not = 0\), we find a transcritical bifurcation. Returning to the setting of our network system, this corresponds to a loss of stability of the fully synchronous solution.
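The exchange of stability in the transcritical scenario can be made concrete on a truncated model. The sketch below assumes the leading-order form \(R(x;\varepsilon ) = a\varepsilon x + bx^2\), with all higher order terms dropped. The coefficient values and function names are hypothetical.

```python
def reduced_field(x, eps, a=1.0, b=-1.0):
    """Truncated reduced vector field a*eps*x + b*x^2 (higher order terms dropped)."""
    return a * eps * x + b * x * x


def nontrivial_equilibrium(eps, a=1.0, b=-1.0):
    """Second branch of equilibria x = -a*eps/b, which meets x = 0 at eps = 0."""
    return -a * eps / b


def linearization(x, eps, a=1.0, b=-1.0):
    """Derivative of the truncated field with respect to x."""
    return a * eps + 2.0 * b * x


# Growth rates at the two equilibria, on either side of the bifurcation.
rates = {}
for eps in (-0.1, 0.1):
    x_star = nontrivial_equilibrium(eps)
    rates[eps] = (linearization(0.0, eps), linearization(x_star, eps))
```

For this truncation the growth rate is \(a\varepsilon \) at the origin and \(-a\varepsilon \) on the second branch, so the two equilibria exchange stability as \(\varepsilon \) passes through zero.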

Remark 20

(The case \(m = 2\)). Consider first a two-parameter vector field \(R: \mathbb {R}^2 \times \mathbb {R}^2 \rightarrow \mathbb {R}^2\) satisfying \(R(0;0) = 0\) and with non-zero nilpotent Jacobian \(D_xR(0;0)\). Such a system generically displays a Bogdanov-Takens bifurcation. However, in this bifurcation scenario there are parameter values for which there is no fixed point. Hence, if we impose the additional condition \(R(0;\varepsilon ) = 0\) for all \(\varepsilon \in \mathbb {R}^2\), then another (generic) bifurcation scenario has to occur. This latter situation is worked out in [6]. The corresponding generic bifurcation involves multiple fixed points, heteroclinic as well as homoclinic connections, and periodic orbits. One striking feature is the presence of a homoclinic orbit from the origin, which is approached as a limit of stable periodic solutions. In our network setting, such a periodic solution means a cyclic time-evolution of the system from full synchrony to less synchrony and back. The time at which the system is indistinguishably close to full synchrony can moreover be made arbitrarily long.

5.2 Three parameters: chaotic behavior

In this section we will prove Corollary 4, which allows us to conclude that chaotic behavior occurs in diffusively coupled stable systems. To this end, we will apply the theory developed so far. Before this, we give some background on the mechanism by which chaos arises.

We expect to find chaos in the network through the existence of a Shilnikov homoclinic orbit on a three-dimensional center manifold.

The Shilnikov configuration can be seen as a combination of linear and nonlinear behavior involving a saddle fixed point. A two-dimensional stable manifold attracts trajectories exponentially fast to the fixed point, where the eigenvalues of the linearization are \(\lambda _{1,2}=-\alpha \pm i\beta \) with \(\alpha >0\) and \(\beta \not = 0\). Transversal to this there is a one-dimensional unstable manifold that repels trajectories, with real eigenvalue \(\gamma >0.\) The Shilnikov homoclinic orbit emerges from the re-injection of the one-dimensional unstable manifold into the two-dimensional stable manifold, see Fig. 4. Of course this re-injection is a consequence of nonlinear terms. L. P. Shilnikov proved that if \(\gamma >\alpha \), there are countably many saddle periodic orbits in a neighborhood of the homoclinic orbit. The proof consists of showing topological equivalence between a Poincaré map and the shift map on two symbols. Chaotic behavior is understood here in the sense of Robert L. Devaney for deterministic systems: sensitive dependence on initial conditions, topological transitivity and dense periodic points.
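The eigenvalue condition in Shilnikov's result is straightforward to encode. The sketch below only tests the local condition at the fixed point, namely eigenvalues \(-\alpha \pm i\beta \) and \(\gamma \) with \(\alpha > 0\), \(\beta \not = 0\) and \(\gamma > \alpha \). It says nothing about the existence of the homoclinic orbit itself, which is a global property. The function name and sample values are hypothetical.

```python
def shilnikov_eigenvalue_condition(alpha, beta, gamma):
    """True if the eigenvalues -alpha +/- i*beta and gamma describe a
    saddle-focus whose expansion rate gamma exceeds the contraction rate alpha."""
    is_saddle_focus = alpha > 0 and beta != 0 and gamma > 0
    return is_saddle_focus and gamma > alpha


# Expansion dominates contraction: the condition of Shilnikov's theorem holds.
condition_holds = shilnikov_eigenvalue_condition(alpha=0.5, beta=2.0, gamma=1.0)

# Contraction dominates expansion: the condition fails.
condition_fails = shilnikov_eigenvalue_condition(alpha=1.5, beta=2.0, gamma=1.0)
```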

We next give a brief summary of results contained in the paper [7]. The authors studied the three parameter unfolding of nonlinear vector fields on \(\mathbb {R}^3\) with linear part conjugate to a nilpotent singularity of codimension three. After making several coordinate changes, the following normal form is presented:

where the parameters are given by \(\tau =(\lambda ,\nu ,\kappa ).\) The parameter \(\kappa \) is introduced by means of a blow-up technique, and the term

denotes the nilpotent singularity of codimension three on \(\mathbb {R}^{3}\). Equation (80) has two hyperbolic fixed points for \(\lambda >0\) and \(\nu =0\), namely \(p_{1}=(-\sqrt{2\lambda },0,0)\) with local behavior given by a two-dimensional stable and one-dimensional unstable manifold and \(p_{2}=(+\sqrt{2\lambda },0,0)\) with local behavior given by a two-dimensional unstable and one-dimensional stable manifold. Knowing there is a solution x(t) for a specific positive parameter \(\lambda =\lambda ^{*}\) such that \(x(t)\rightarrow p_{1}\) as \(t\rightarrow -\infty \) and \(x(t)\rightarrow p_{2}\) as \(t\rightarrow +\infty \), the authors proved analytically the existence of another solution, also for the parameter \(\lambda ^{*},\) connecting both two-dimensional stable and unstable manifolds and thus forming another heteroclinic orbit. Theorem 4.1 of [7] states that in any neighborhood of the parameter \(\tau _{0}=(\lambda _{0},\nu _{0},\kappa _{0})=(\lambda ^{*},0,0)\), where the heteroclinic orbits exist, there are parameters \(\tau =(\lambda ,\nu ,\kappa )\) such that the heteroclinic orbit breaks and a Shilnikov homoclinic orbit appears.

For completeness, we state Theorem 4.1 below in a slightly altered form.

Theorem 21

(Theorem 4.1 of [7]). In every neighborhood of the parameter \(\tau _{0}=(\lambda _{0},\nu _{0},\kappa _{0})=(\lambda ^{*},0,0)\) there exist parameter values \(\tau =(\lambda ,\nu ,\kappa )\) such that the equation

has a homoclinic orbit given by the intersection of the two-dimensional stable and one-dimensional unstable invariant manifolds at the hyperbolic fixed point \(p_{1}.\)

As was the case for \(m=2\), we cannot immediately use this existing result, as the parameter dependent systems on the center manifold of our network ODE satisfy \(R(0;\varepsilon ) = 0\) for all \(\varepsilon \in \mathbb {R}^3\). It remains to show that with this existing restriction, we may still reduce our system to the family given by Equation (80). This then proves Corollary 4 as a consequence of Theorem 3.

We therefore start with a parameterized vector field \(R: \mathbb {R}^3 \times \mathbb {R}^3 \rightarrow \mathbb {R}^3\) satisfying \(R(0;\varepsilon ) = 0\) for all \(\varepsilon \in \mathbb {R}^3\). After a linear coordinate change, we may assume the Jacobian \(D_xR(0;0)\) to be given by

We thus get the system

with \(x=(x_1, x_2, x_3)\), and where \(h_{1},h_{2},h_{3}\) are the higher order (nonlinear) terms of \(R(x;\varepsilon )\). Note that we have \(h_{1}(0;\varepsilon )=h_{2}(0;\varepsilon )=h_{3}(0;\varepsilon )=0\) for all \(\varepsilon \in \Omega \), and \(Dh_{1}(0;0)=Dh_{2}(0;0)=Dh_{3}(0;0)=0\).

To bring our system into the form (80), we will proceed as in the paper [7]. The first step is to get rid of the nonlinear terms \(h_{1}\) and \(h_{2}\) by means of a coordinate transformation.

To eliminate \(h_{1}\) we consider the following change of coordinates

Applying it to (81), we get

We therefore get the new system

with \(y = (y_1, y_2, y_3)\), and where \(\tilde{h}_{2}\) and \(\tilde{h}_{3}\) are uniquely defined by the relations

Note that \(\tilde{h}_{2}\) and \(\tilde{h}_{3}\) again have vanishing linear terms, and moreover satisfy \(\tilde{h}_{2}(0;\varepsilon ) = \tilde{h}_{3}(0;\varepsilon ) = 0\) for all \(\varepsilon \).

To eliminate \(\tilde{h}_2\) we consider the change of coordinates

Applying it to (83), we obtain

We thus get the new system

with \(z = (z_1, z_2, z_3)\), and where \(\hat{h}_{3}(z;\varepsilon )\) is locally defined by

Note that \(\hat{h}_{3}\) again has no linear terms and satisfies \(\hat{h}_{3}(0;\varepsilon ) = 0\) for all \(\varepsilon \). Moreover, in the case \(h_1 = h_2 = 0\) we would find \(x=y=z\) and \(h_3 = \hat{h}_3\), which shows that no other restrictions apply to \(\hat{h}_3\). Writing

for some locally defined \({\mathcal {E}}_i: \mathbb {R}^3 \rightarrow \mathbb {R}\), we therefore see that generically we may redefine \(\varepsilon = (\varepsilon _1, \varepsilon _2, \varepsilon _3)\) so that

It follows that we may write

for some coefficients \(a_1, \dots , a_6 \in \mathbb {R}\). We will assume that \(a_{1}\ne 0,\) so that the system (91) locally has two branches of steady states: \(z(\varepsilon ) = (0,0,0)\) and \(z(\varepsilon ) = (\tilde{z}_1(\varepsilon ),0,0)\).

A straightforward calculation shows that

Motivated by this, we perform the change of coordinates:

Applying this to (91), we get

We have left the remainder term \({\mathcal {O}}(|z|^3+|\varepsilon ||z|^2)\) as is, which will benefit us later. Rearranging terms, we get the new system

Similar to the paper [7], we now introduce a blow-up parameter \(\kappa \in \mathbb {R}\) and write

Note that we get

so that we may write \(\Vert z\Vert = {\mathcal {O}}(\kappa ^3)\). Applying it to Equation (95), we get

where furthermore

Summarizing, we find

We next focus on the parameter \(\gamma _{2}\). We assume from here on out that \(\gamma _{2}<0\) and perform the following change of coordinates

where

Applying it to (98), we get

We thus get the new system

Finally, we make the following change of coordinates:

This gives

Setting \(\lambda := \frac{\gamma _{1}^2r^6}{2}\) and \(\nu := \gamma _{3}r\), we arrive at the vector field

from Theorem 21. Note that \(\lambda = \frac{\gamma _{1}^2r^6}{2}\) is necessarily non-negative. However, this may be assumed in the setting of Theorem 21, as \(\lambda ^{*}>0\). This theorem thus predicts chaos in the setting of our coupled cell system, provided \(m=3\) and the network in question is at least 2-versatile.

References

Antoneli, F., Dias, A.P.S., Paiva, R.C.: Hopf bifurcation in coupled cell networks with interior symmetries. SIAM J. Appl. Dyn. Syst. 7(1), 220–248 (2008)

Arneodo, A., Coullet, P.H., Spiegel, E.A., Tresser, C.: Asymptotic chaos. Physica D 14(3), 327–347 (1985)

DeVille, L., Nijholt, E.: Circulant type formulas for the eigenvalues of linear network maps. Linear Algebra Appl. 610, 379–439 (2021)

Drubi, F., Ibáñez, S., Rodríguez, J.A.: Coupling leads to chaos. J. Differ. Equ. 239(2), 371–385 (2007)

Gerschgorin, S.: Über die Abgrenzung der Eigenwerte einer Matrix. Izvestija Akademii Nauk SSSR, Serija Matematika 7(3), 749–754 (1931)

Hirschberg, P., Knobloch, E.: An unfolding of the Takens-Bogdanov singularity. Q. Appl. Math. 49(2), 281–287 (1991)

Ibáñez, S., Rodríguez, J.A.: Shil’nikov configurations in any generic unfolding of the nilpotent singularity of codimension three on R3. J. Differ. Equ. 208(1), 147–175 (2005)

Izhikevich, E.M.: Dynamical Systems in Neuroscience. MIT Press (2007)

Keller, G., Liverani, C.: Uniqueness of the SRB measure for piecewise expanding weakly coupled map lattices in any dimension. Commun. Math. Phys. 262(1), 33–50 (2006)

Kocarev, L.M., Janjic, P.A.: On Turing instability in two diffusely coupled systems. IEEE Trans. Circuits and Syst. I: Fundamental Theory and Appl. 42(10), 779–784 (1995)

Kuramoto, Y.: Chemical Oscillations, Waves, and Turbulence. Courier Corporation (2003)

Li, Z., Xia, C.: Turing instability and Hopf bifurcation in cellular neural networks. Int. J. Bifurc. Chaos 31(08), 2150143 (2021)

Mohar, B.: The Laplacian spectrum of graphs. In: Alavi, Y., Chartrand, G., Oellermann, O.R. (eds.) Graph Theory, Combinatorics, and Applications, vol. 2, pp. 871–898 (1991)

Nijholt, E., Rink, B., Sanders, J.: Center manifolds of coupled cell networks. SIAM Rev. 61(1), 121–155 (2019)

Nijholt, E., Rink, B., Schwenker, S.: Quiver representations and dimension reduction in dynamical systems. SIAM J. Appl. Dyn. Syst. 19(4), 2428–2468 (2020)

Pereira, T., van Strien, S., Tanzi, M.: Heterogeneously coupled maps: hub dynamics and emergence across connectivity layers. J. Eur. Math. Soc. 22(7), 2183–2252 (2020)

Perlikowski, P., Yanchuk, S., Wolfrum, M., Stefanski, A., Mosiolek, P., Kapitaniak, T.: Routes to complex dynamics in a ring of unidirectionally coupled systems. Chaos: An Interdisciplin. J. Nonlinear Sci. 20(1), 013111 (2010)

Pogromsky, A., Glad, T., Nijmeijer, H.: On diffusion driven oscillations in coupled dynamical systems. Int. J. Bifurc. Chaos 9(04), 629–644 (1999)

Ricard, M.R., Mischler, S.: Turing instabilities at Hopf bifurcation. J. Nonlinear Sci. 19(5), 467–496 (2009)

Smale, S.: A mathematical model of two cells via Turing’s equation. In: The Hopf Bifurcation and Its Applications, pp. 354–367. Springer (1976)

Stankovski, T., Pereira, T., McClintock, P.V.E., Stefanovska, A.: Coupling functions: universal insights into dynamical interaction mechanisms. Rev. Mod. Phys. 89(4), 045001 (2017)

Tomberg, E.A., Yakubovich, V.A.: Self-oscillatory conditions in nonlinear systems. Siberian Math. J. 30(4), 180–194 (1989)

Turing, A.M.: The chemical basis of morphogenesis. Bull. Math. Biol. 52(1), 153–197 (1990)

Wimmer, H.K.: The equation \((g(x))_x Ax - Bg(x) = h(x)\). J. Math. Anal. Appl. 67(1), 198–204 (1979)

Acknowledgements

We thank Edmilson Roque and Jeroen Lamb for enlightening discussions. TP was supported in part by FAPESP Cemeai Grant No. 2013/07375-0 and is a Newton Advanced Fellow of the Royal Society (NAF\R1\180236). EN was supported by FAPESP grant 2020/01100-2. TP and EN were partially supported by Serrapilheira Institute (Grant No. Serra-1709-16124). FCQ was supported by CAPES. DT was supported by Leverhulme Trust grant RPG-2021-072.

Additional information

Communicated by C. Liverani.

Appendix: Examples

A.1 Versatile graphs by means of the complement graph

We next introduce a method for generating \(\rho \)-versatile graphs, for any \(\rho \in \mathbb {N}\). Our construction involves the definition of the complement graph, given below.

Definition 22

Given an undirected graph G, we define the complement graph \(G^{\circ }\) as the graph obtained from G by leaving out all existing edges and adding all edges between distinct vertices that were not there in G.

Since our graphs have no self-loops, we have \(G^{\circ \circ } = G.\)

Theorem 23

Let G be a graph consisting of precisely two disconnected components of different orders. Then \(G^{\circ }\) is a connected graph whose Laplacian has a simple largest eigenvalue, and a corresponding eigenvector v satisfies \(\sum _{i=1}^{|G^{\circ }|} v_i^\ell \not = 0\) for all integers \(\ell >1.\) Moreover, suppose the two disconnected components of G have s and t vertices, respectively. Then the largest eigenvalue of the Laplacian of \(G^{\circ }\) is equal to \(s+t\) and a corresponding eigenvector is given by

Here the entries are ordered so that the vertices of the first component of G (which has s vertices) are enumerated first, after which those of the second component of G (which has t vertices) are listed.

Proof

The proof uses a result that relates the eigenvalues and eigenvectors of the Laplacian of a graph to those of the Laplacian of its complement graph. This result is known, but incorporated here for completeness. Suppose the two components of G have \(s\ne 0\) and \(t\ne 0\) vertices, where \(s\ne t\) and \(|G|=t+s=N\).

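Let \(\lambda _1 \le \lambda _2 \le \dots \le \lambda _N\) denote the eigenvalues of the Laplacian \(L_{G}\) of G, with pairwise orthogonal eigenvectors \(v_1, \dots , v_N\). Recalling that the dimension of the kernel of \(L_{G}\) equals the number of connected components of G, we see that \(\lambda _{2}=\lambda _{1} = 0\). Thus,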

where we grouped the entries according to the connected components, we see that we may choose

which we assume from here on out. Note that indeed \(v_1 \perp v_2\). Let \(L_{G^{\circ }}\) be the Laplacian matrix associated to the complement graph \(G^{\circ }\). We note that we have the identity

\[ L_{G} + L_{G^{\circ }} = N I_N - E, \tag{108} \]

where \(I_N\) is the \(N \times N\) identity matrix and E is the \(N \times N\) matrix all of whose entries equal 1. As we have \(v_1 \perp v_{i}\) for all \(i=2,\dots ,N\), and each row of E is a multiple of \(v_1^{T}\), it follows that \(Ev_i = 0\) for all \(i=2,\dots ,N\). From Equation (108) we get

\[ L_{G^{\circ }} = N I_N - E - L_{G}, \]

and evaluating at the eigenvectors \(v_{i}\) for \(i=2,\dots ,N\) gives

\[ L_{G^{\circ }} v_i = N v_i - E v_i - L_{G} v_i = (N - \lambda _i) v_i. \]

Thus, for each \(i=2,\dots ,N\) we find that \((N-\lambda _{i})\) is an eigenvalue of \(L_{G^{\circ }}\), with a corresponding eigenvector given by \(v_i\). As we also have \(L_{G^{\circ }}v_1 = 0\), we see that the spectrum of \(L_{G^{\circ }}\) is given by

\[ {{\,\textrm{Spec}\,}}(L_{G^{\circ }}) = \{0,\, N-\lambda _2,\, N-\lambda _3,\, \dots ,\, N-\lambda _N\}. \]

The largest eigenvalue of \(L_{G^{\circ }}\) is therefore equal to \(N-\lambda _2 = N-0 = N = s+t\), with an eigenvector given by

Since \(\lambda _2 = 0 < \lambda _3\), we have \(N-\lambda _i < N\) for all \(i \ge 3\), so that the eigenvalue N is simple. Using that \(st\ne 0\) and \(s\ne t\), we find for all \(\ell >1\)

Finally, we argue that \(G^{\circ }\) is a connected graph. Indeed, if \(x,y\in G\) are in different connected components, then they share an edge in \(G^{\circ }\) by definition of this latter graph. If on the other hand x and y are in the same component of G, then in \(G^{\circ }\) they both share an edge with some node z from the other component of G. This completes the proof. \(\blacksquare \)
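The statement of Theorem 23 can be checked numerically on a small instance. The following is a minimal sketch, assuming NumPy is available; the graph (a single edge together with a path on three vertices), the 0-based vertex labels and the helper names `laplacian` and `complement_laplacian` are hypothetical choices made for illustration only. The complement Laplacian is built from the relation \(L_{G} + L_{G^{\circ }} = N I_N - E\) used in the proof.

```python
import numpy as np

def laplacian(n, edges):
    """Laplacian of an undirected simple graph on vertices 0..n-1."""
    L = np.zeros((n, n))
    for i, j in edges:
        L[i, j] -= 1.0
        L[j, i] -= 1.0
        L[i, i] += 1.0
        L[j, j] += 1.0
    return L

def complement_laplacian(L):
    """Laplacian of the complement graph, via L_G + L_Gc = N*I - E."""
    n = L.shape[0]
    return n * np.eye(n) - np.ones((n, n)) - L

# Assumed example: component {0, 1} (s = 2) and path 2-3-4 (t = 3).
s, t = 2, 3
L_G = laplacian(s + t, [(0, 1), (2, 3), (3, 4)])
L_Gc = complement_laplacian(L_G)

# eigh returns the eigenvalues of a symmetric matrix in ascending order.
eigenvalues, eigenvectors = np.linalg.eigh(L_Gc)
largest = eigenvalues[-1]
v = eigenvectors[:, -1]  # unit eigenvector for the largest eigenvalue

# Power sums of the entries of v for l = 2, ..., 7.
power_sums = [float(np.sum(v ** ell)) for ell in range(2, 8)]
```

For this choice of G the computed spectrum is \(\{5,4,3,2,0\}\), the largest eigenvalue equals \(s+t=5\), and none of the computed power sums vanishes. A finite numerical check of this kind only illustrates the theorem; it does not replace the argument for all \(\ell >1\).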

Example 4

Let \(G=(V,E)\) be the undirected graph with \(V=\{1,2,3\}\) and two disconnected components of different orders \(s=1\) and \(t=2\), shown in Fig. 5. Then \(G^{\circ }\) is a connected, non-regular graph with

We have \({{\,\textrm{Spec}\,}}(L_{G^{\circ }})=\{3,1,0\}\) with simple largest eigenvalue \(\lambda =s+t=3\), whose corresponding eigenvector \(v=(1,1,-2)\) satisfies \(\sum _{i=1}^{3}v_{i}^{\ell }\ne 0\) for all \(\ell >1.\)

Example 5

Let \(G=(V,E)\) be the undirected graph with \(V=\{1,2,3,4,5\}\) and two disconnected components of different orders \(s=2\) and \(t=3,\) shown in Fig. 6. Then \(G^{\circ }\) is a connected graph with

Here \({{\,\textrm{Spec}\,}}(L_{G^{\circ }})=\{5,4,3,2,0\}\) with simple largest eigenvalue \(\lambda =s+t=5\). Its corresponding eigenvector \(v=(2,2,2,-3,-3)\) satisfies \(\sum _{i=1}^{5}v_{i}^{\ell }\ne 0\) for all \(\ell >1.\)

Example 6 below shows that the standard star graphs are \(\rho \)-versatile for any \(\rho > 0\). These graphs consist of a single hub-node connected to all other nodes, which in turn have degree 1, shown in Fig. 7. We will explore the \(\rho \)-versatility of more general star graphs in Subsect. A.2.

Example 6

(Star graphs). Let \(G=(V,E)\) be the undirected graph with \(V=\{1,\dots ,N+1\}\), \(N\ge 2\), and two disconnected components of order \(s=1\) and \(t=N,\) shown in Fig. 7. If the larger component of G is complete, then \(G^{\circ }\) is a connected graph, namely the star whose hub is the isolated vertex of G. Labelling the hub as vertex 1, its Laplacian matrix is given by

\[ L_{G^{\circ }} = \begin{pmatrix} N & -1 & -1 & \cdots & -1 \\ -1 & 1 & 0 & \cdots & 0 \\ -1 & 0 & 1 & \cdots & 0 \\ \vdots & \vdots & \vdots & \ddots & \vdots \\ -1 & 0 & 0 & \cdots & 1 \end{pmatrix}. \]

The spectrum \({{\,\textrm{Spec}\,}}(L_{G^{\circ }})=\{N+1,1,\dots ,1,0\}\) has one simple and largest eigenvalue \(\lambda =s+t=N+1.\) The corresponding eigenvector is given by \(v=(N,-1,\dots ,-1)\), which satisfies \(\sum _{i=1}^{N+1}v_{i}^{\ell } = N^{\ell } + N(-1)^{\ell }\ne 0\) for all powers \(\ell >1\). We can generate more examples of graphs \(G^{\circ }\) with the same simple largest eigenvalue \(\lambda \) and with corresponding eigenvector \(v=(N,-1,\dots ,-1)\), by allowing the larger component of G to be merely connected, instead of complete.
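The star-graph computation can be verified in exact integer arithmetic. The sketch below assumes the hub is labelled as vertex 0 and uses the hub-first vector \((N,-1,\dots ,-1)\), which is orthogonal to \(\textbf{1}\); the function names `star_laplacian`, `mat_vec` and `check_star` are hypothetical helpers introduced only for this illustration, and the power sums are tested up to a finite, arbitrarily chosen exponent.

```python
def star_laplacian(n_leaves):
    """Laplacian of the star graph: hub is vertex 0, leaves are 1..n_leaves."""
    size = n_leaves + 1
    L = [[0] * size for _ in range(size)]
    L[0][0] = n_leaves
    for leaf in range(1, size):
        L[0][leaf] = -1
        L[leaf][0] = -1
        L[leaf][leaf] = 1
    return L

def mat_vec(L, v):
    """Exact integer matrix-vector product."""
    return [sum(a * x for a, x in zip(row, v)) for row in L]

def check_star(n_leaves, max_power=12):
    """True if v = (N, -1, ..., -1) is an eigenvector for N + 1 and all
    power sums with exponents 2..max_power are nonzero."""
    v = [n_leaves] + [-1] * n_leaves
    expected = [(n_leaves + 1) * x for x in v]
    is_eigenvector = mat_vec(star_laplacian(n_leaves), v) == expected
    sums_nonzero = all(
        sum(x ** ell for x in v) != 0 for ell in range(2, max_power + 1)
    )
    return is_eigenvector and sums_nonzero

# Check the stars with N = 2, ..., 8 leaves.
results = {n: check_star(n) for n in range(2, 9)}
```

The degenerate case \(N=1\) fails the check: there \(s=t=1\), the vector is \((1,-1)\), and its odd power sums vanish, in line with the requirement that the two components have different orders.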

In what follows we turn to negative examples. The first of them shows us the importance of starting with connected components of different order, whereas the second one shows us what goes wrong if we start with more than 2 components.

Example 7

Let \(G=(V,E)\) be the undirected graph with \(V=\{1,2,3,4\}\) and two disconnected components, this time of the same order \(s=t=2\), shown in Fig. 8. Then \(G^{\circ }\) is a connected graph with

Here \({{\,\textrm{Spec}\,}}(L_{G^{\circ }})=\{4,2,2,0\}\) with simple largest eigenvalue \(\lambda =4.\) However, there are no eigenvectors satisfying \(\sum _{i=1}^{4}v_{i}^{\ell }\ne 0\) for all \(\ell >1,\) except multiples of \(\textbf{1}=(1,1,1,1).\) The eigenvectors for the other eigenvalues satisfy \(\sum _{i=1}^{4}v_i^{\ell }= 0\) whenever \(\ell \) is odd. This example indicates that symmetry can be an obstruction for \(\rho \)-versatility.
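The vanishing of the odd power sums can be made explicit. The worked computation below assumes that the vertices are labelled so that the components of G are \(\{1,2\}\) and \(\{3,4\}\); this labelling is an assumption made for illustration and may differ from the one used in Fig. 8. The symbols a, b and c denote arbitrary real coefficients.

```latex
\[
L_{G^{\circ}} =
\begin{pmatrix}
 2 &  0 & -1 & -1\\
 0 &  2 & -1 & -1\\
-1 & -1 &  2 &  0\\
-1 & -1 &  0 &  2
\end{pmatrix},
\qquad
\begin{array}{ll}
\lambda = 4: & v = c\,(1,1,-1,-1),\\[2pt]
\lambda = 2: & v = (a,-a,b,-b).
\end{array}
\]
\[
\text{For odd } \ell:\qquad
c^{\ell}\,(1+1-1-1) = 0,
\qquad
a^{\ell} - a^{\ell} + b^{\ell} - b^{\ell} = 0.
\]
```

In both eigenspaces the entries come in pairs of opposite sign, so every odd power sum cancels, whatever the values of a, b and c.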

Example 8

Let \(G=(V,E)\) be the undirected graph with \(V=\{1,2,3,4,5,6\}\) and three disconnected components of order \(s=1,\) \(t=2\) and \(r=3,\) shown in Fig. 9. Then \(G^{\circ }\) is a connected graph with

We have \({{\,\textrm{Spec}\,}}(L_{G^{\circ }})=\{6,6,5,4,3,0\}\), so the largest eigenvalue \(\lambda _{1,2}=6\) is not simple. Nevertheless, two corresponding eigenvectors are given by \((-1,-1,-1,0,0,3)\) and \((-2,-2,-2,3,3,0)\), which both satisfy \(\sum _{i=1}^{6}v_{i}^{\ell }\ne 0\) for all powers \(\ell >1.\)
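One way to see what the loss of simplicity costs is to look at other vectors in the same eigenspace. The sketch below works in exact integer arithmetic and assumes that G consists of the path 0-1-2, the edge 3-4 and the isolated vertex 5 (0-based labels); the shape of the order-3 component and the helper names are assumptions made for illustration. It checks that the two eigenvectors listed above, and their sum, are eigenvectors for the eigenvalue 6, and then computes odd power sums of that sum.

```python
def complement_laplacian(n, edges):
    """Integer Laplacian of the complement of the graph (0..n-1, edges)."""
    present = {frozenset(e) for e in edges}
    L = [[0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i != j and frozenset((i, j)) not in present:
                L[i][j] = -1   # i and j are adjacent in the complement
                L[i][i] += 1   # degree of i in the complement
    return L

def mat_vec(L, v):
    """Exact integer matrix-vector product."""
    return [sum(a * x for a, x in zip(row, v)) for row in L]

def is_eigenvector(L, v, eigenvalue):
    return mat_vec(L, v) == [eigenvalue * x for x in v]

# Assumed G: path 0-1-2, edge 3-4, isolated vertex 5.
L = complement_laplacian(6, [(0, 1), (1, 2), (3, 4)])

u = [-1, -1, -1, 0, 0, 3]
w = [-2, -2, -2, 3, 3, 0]
combo = [a + b for a, b in zip(u, w)]  # (-3, -3, -3, 3, 3, 3)

all_eigen = all(is_eigenvector(L, x, 6) for x in (u, w, combo))
odd_power_sums = [sum(c ** ell for c in combo) for ell in (3, 5, 7)]
```

Under these assumptions the sum of the two eigenvectors is again an eigenvector for the eigenvalue 6, but all of its odd power sums are zero. The power-sum condition therefore depends on which vector is picked from the two-dimensional eigenspace, which is not an issue when the largest eigenvalue is simple.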

Fig. 8 (caption): G consists of two disconnected components, both of order 2, and the complement \(G^{\circ }\) is connected. The Laplacian matrix \(L_{G^{\circ }}\) has a simple largest eigenvalue. However, there are no eigenvectors giving the \(\rho \)-versatility condition for \(\rho > 1\), except for multiples of \(\textbf{1}\).

A.2 Versatile graphs by means of the degree distribution

We next investigate another pathway to \(\rho \)-versatility, namely by looking at the degree distribution of the nodes in the network. To this end, we will prove:

Proposition 24

Let \(r<C\) be two positive integers and suppose \(G = (V,E)\) is a graph consisting of one node of degree C and N nodes of degree at most r, where \(N \ge 1\). If

then the largest eigenvalue of \(L_{G}\) is simple and every corresponding eigenvector v satisfies