Abstract

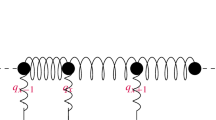

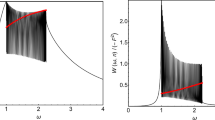

We investigate the properties of a harmonic chain in contact with a thermal bath at one end and subjected, at its other end, to a periodic force. The particles also undergo a random velocity reversal action, which results in a finite heat conductivity of the system. We prove the approach of the system to a time periodic state and compute the heat current, equal to the time averaged work done on the system, in that state. This work approaches a finite positive value as the length of the chain increases. Rescaling space, the strength and/or the period of the force leads to a macroscopic temperature profile corresponding to the stationary solution of a continuum heat equation with Dirichlet-Neumann boundary conditions.

References

Basile, G., Olla, S., Spohn, H.: Energy transport in stochastically perturbed lattice dynamics. Arch. Ration. Mech. Anal. 195(1), 171–203 (2010)

Bernardin, C., Kannan, V., Lebowitz, J.L., Lukkarinen, J.: Nonequilibrium stationary states of harmonic chains with bulk noises. Eur. Phys. J. B 84, 685–689 (2011)

Bernardin, C., Olla, S.: Transport properties of a chain of anharmonic oscillators with random flip of velocities. J. Stat. Phys. 145, 1224–1255 (2011). https://doi.org/10.1007/s10955-011-0385-6

Bolsterli, M., Rich, M., Visscher, W.M.: Simulation of nonharmonic interactions in a crystal by self-consistent reservoirs. Phys. Rev. A 4, 1086–1088 (1970)

Bonetto, F., Lebowitz, J.L., Lukkarinen, J.: Fourier’s law for a harmonic crystal with self-consistent stochastic reservoirs. J. Stat. Phys. 116 (2004)

Gihman, I.I., Skorohod, A.V.: The theory of stochastic processes. III. Translated from the Russian by Samuel Kotz. With an appendix containing corrections to Volumes I and II. Grundlehren der Mathematischen Wissenschaften, 232. Springer-Verlag, Berlin-New York, (1979)

Jara, M., Komorowski, T., Olla, S.: Superdiffusion of energy in a chain of harmonic oscillators with noise. Comm. Math. Phys. 339(2), 407–453 (2015)

Khasminskii, R.: Stochastic Stability of Differential Equations, Stochastic Modelling and Applied Probability 66, Springer

Komorowski, T., Lebowitz, J. L., Olla, S.: Heat flow in a periodically forced, thermostatted chain—with internet supplement, Available at arXiv:2205.03839

Komorowski, T., Lebowitz, J. L., Olla, S.: Heat flow in a periodically forced, thermostatted chain II, (2022) arXiv:2209.12923, to appear in J. Stat. Phys

Komorowski, T., Lebowitz, J.L., Olla, S., Simon, M.: On the Conversion of Work into Heat: Microscopic Models and Macroscopic Equations, (2022), arXiv:2212.00093, to appear in Ensaios Matemáticos for the volume dedicated to Errico Presutti 80th birthday

Komorowski, T., Olla, S., Simon, M.: An open microscopic model of heat conduction: evolution and non-equilibrium stationary states. Commun. Math. Sci. 18(3), 751–780 (2020). https://doi.org/10.4310/CMS.2020.v18.n3.a8

Koushik, R.: Green’s function on lattices, available at arXiv:1409.7806

Lebowitz, J.L., Bergmann, P.G.: Irreversible Gibbsian ensembles. Ann. Phys. 1(1), 1–23 (1957). https://doi.org/10.1016/0003-4916(57)90002-7

Lukkarinen, J.: Thermalization in harmonic particle chains with velocity flips. J. Stat. Phys. 155, 1143–1177 (2014). https://doi.org/10.1007/s10955-014-0930-1

Rich, M., Visscher, W.M.: Disordered harmonic chain with self-consistent reservoirs. Phys. Rev. B 11, 2164–2170 (1975)

Rieder, Z., Lebowitz, J.L., Lieb, E.: Properties of harmonic crystal in a stationary non-equilibrium state. J. Math. Phys. 8, 1073–1078 (1967)

Additional information

Communicated by H. Spohn

We warmly thank David Huse for very stimulating discussions on the subject. The work of J.L.L. was supported in part by the A.F.O.S.R. He thanks the Institute for Advanced Studies for its hospitality. T.K. acknowledges the support of the NCN grant 2020/37/B/ST1/00426. S.O. has been partially supported by the ANR-15-CE40-0020-01 grant LSD.

Appendices

Appendix A. The proof of Theorem 1.1

Since the result does not depend on the scaling factor \(n^a\) multiplying the force \({\mathcal F}(t)\), nor on the period \(\theta _n\), we assume that \(a=0\) and \(\theta _n=\theta \). Given a Borel probability measure \(\mu \) on \({\mathbb R}^{2(n+1)}\) (see (1.1)) we denote by \(\big ({\textbf{q}}_{\mu }(t), {\textbf{p}}_{\mu }(t)\big )\) the solution of (1.3)–(1.4) such that \(\big ({\textbf{q}}_{\mu }(0), {\textbf{p}}_{\mu }(0)\big )\) is distributed according to \(\mu \). Denote then by \(\big (\overline{{\textbf{q}}}_{\mu }(t), \overline{{\textbf{p}}}_{\mu }(t)\big )\) and \(C_{\mu }(t)\) the vector of averages and the matrix of the mixed second moments of the solution, respectively. They are defined by formulas (2.1) and a \(2\times 2\) block matrix

Each block is an \((n+1)\times (n+1)\) matrix

and \(C^{(p,q)}_{\mu } (t)=[C^{(q,p)}_{\mu } (t)]^T\), where the initial state is taken to be \(\mu \).

By a calculation similar to the one in (6.15), their evolution is described by the system of linear differential equations with periodic forcing

where

and

Here \(\textrm{e}_{p,n+1}\) and \(\Sigma _2\) are defined in (2.4) and (6.10) respectively. Suppose that we are given a vector \({\overline{X}}\in {\mathbb R}^{2(n+1)}\) and a symmetric non-negative definite \(2(n+1)\times 2(n+1)\) matrix \(S\geqslant {\overline{X}}\otimes {\overline{X}}\). Then, equations (A.1) describe the evolution of the first two moments of the solution of (1.3)–(1.4) whose initial distribution is a random vector with the first two moments given by \({\overline{X}}\) and S, respectively.

A.1. The existence and uniqueness of the periodic mean and second moment

In the first step we show the existence of a periodic solution of (A.1) that corresponds to the mean and covariance of a certain probability evolution.

Proposition A.1

There exists a unique vector \(\overline{\textbf{X}}_\textrm{per}=(\overline{\textbf{q}}_{\textrm{per}}, \overline{\textbf{p}}_{\textrm{per}} ) \in {\mathbb R}^{2(n+1)}\) and a non-negative symmetric matrix \(C_\textrm{per}\geqslant \overline{\textbf{X}}_{\textrm{per}}\otimes \overline{\textbf{X}}_{\textrm{per}}\) such that the solution of (A.1) with

satisfies

In addition, we have

The remaining part of this section is devoted to the proof of this result.

A.1.1. The existence of the periodic first moment

Let

Thanks to Proposition 2.1 the vector \((\overline{\textbf{q}}, \overline{\textbf{p}} )\) is well defined. One can easily check that the solution of the first equation of (A.1) starting from the vector is given by

and is therefore \(\theta \)-periodic. In fact, thanks to Proposition 2.1 the periodic solution has to be unique. Since the coordinates of \(\overline{\textbf{X}}(t)\) satisfy the first equation of (A.1) we conclude that the matrix \(\overline{\textbf{X}}_2(t):=\overline{\textbf{X}}(t)\otimes \overline{\textbf{X}}(t)\) satisfies

and it is given by the formula

A.1.2. The existence of the periodic second moment

Now we are going to establish the existence of a periodic second moment. Suppose that C(t) is a periodic solution of the second equation of (A.1). Using the argument made in the proof of Proposition 6.1 we can conclude that it satisfies the equation

where the matrix \(\Sigma _2\) is defined by (6.10), \(\textbf{c}_{2}(s)\) relates to C(s) via (A.2) and F(s) is defined by (A.3), using \((\overline{\textbf{q}}(t), \overline{\textbf{p}}(t) )\) instead of \((\overline{\textbf{q}}_\mu (t), \overline{\textbf{p}}_\mu (t) )\). Conversely, any periodic symmetric matrix valued function C(t) satisfying (A.9) is a periodic solution to the second equation of (A.1).

For \(x,x',y=0,\ldots ,n\) define

Consider the following linear mapping: \({\mathcal L}:[C(\mathbb T_\theta )]^{n+1}\rightarrow [C(\mathbb T_\theta )]^{n+1}\), where \(\mathbb T_\theta :=\theta \mathbb T\) is the torus of size \(\theta \), that assigns to a given vector of \(\theta \)-periodic functions \(\textbf{T}(s)=[T_{0}(s),\ldots ,T_n(s)]\) a vector valued function

where

Here

Obviously, from (A.10), we have \({\mathfrak {G}}_{x,y}(s)\geqslant 0\). Note also that although \( g_{x,x',y}(\cdot )\) need not be \(\theta \)-periodic the functions \({\mathfrak {G}}_x\textbf{T}(t)\), \(x=0,\ldots ,n\) are \(\theta \)-periodic. In addition, if C(t) satisfies (A.9), then

where for a given \(\textbf{T}^T=(T_0,\ldots ,T_n)\in {\mathbb R}^{n+1}\)

Conversely, by finding a solution \(\textbf{c}_{2}\) of (A.14) one can define then a \(\theta \)-periodic function C(t) by the right hand side of (A.9). The entries of the function corresponding to \(C_{x,x}\), \(x=n+1,\ldots ,2n+1\) coincide with the coordinates of the vector \(\textbf{c}_{2}\), by virtue of (A.14). Thus, the function C(t) solves equation (A.9). We have reduced therefore the problem of finding a periodic solution to the second equation of (A.1) to solving equation (A.14).

A.1.3. Solution of (A.14)

Let

It is a closed subset of \(\Big (C(\mathbb T_\theta )\Big )^{n+1}\), equipped with the norm

Consider the mapping

Using the notation of (A.12) and (A.14) we have

Comparing (6.12) with (A.9), after time averaging over a period, it is easy to identify

defined by (7.2). The matrix \([M_{x,y}]_{x,y=0}^n\) is symmetric, bi-stochastic (as can be easily seen from (7.2)). It also follows immediately that

and, as a consequence, \({\mathfrak {T}}\big ({\mathcal C}_+\big )\subset {\mathcal C}_+\). Furthermore, we claim that \(M_{x,y}>0\) for all \(x,y=0,\ldots ,n\). Indeed, a simple calculation, using (2.3) and (2.5), yields

The poles of the meromorphic functions appearing in (A.18) are given by

Suppose that \(M_{x,y}=0\) for some x, y. From (A.16) we conclude then that

which in turn would imply that \(\Big [e^{-As}\Big ]_{x+n+1,y+n+1}\equiv 0\) for all \(s\geqslant 0\), thus also

As a result, we conclude that \( \psi _j(x)\psi _j(y)=0\), for all \(j=0,\ldots ,n\), which is impossible.

We shall show that the mapping \({\mathfrak {T}}\) has a unique fixed point in \({\mathcal C}_+\) by proving that the mapping is a contraction in the norm \(|\!\Vert \cdot \Vert \!|\). Indeed, for \(\textbf{T}_j^T=[T_{j,0},T_{j,1},\ldots ,T_{j,n}]\), \(j=1,2\), we have

Therefore

where

We have proven that \( \Vert {\mathfrak {T}}(\textbf{T}_1) -{\mathfrak {T}} (\textbf{T}_2)\Vert _\infty \leqslant \rho \Vert \textbf{T}_1-\textbf{T}_2\Vert _{\infty }\) and the existence of a unique fixed point follows. This ends the proof of Proposition A.1. \(\square \)
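The contraction argument above can be illustrated in a finite-dimensional toy setting. The sketch below is not the operator \({\mathfrak {T}}\) of the proof: it assumes a hypothetical affine map \(\textbf{T}\mapsto \rho M\textbf{T}+b\) on \({\mathbb R}^{n+1}\), where `M` is a made-up symmetric bi-stochastic matrix with positive entries, and `rho` and `b` are arbitrary illustrative choices. It only shows that Picard iteration from two different starting points reaches the same fixed point when the map contracts the sup norm by a factor \(\rho <1\).

```python
def make_bistochastic(n):
    """Hypothetical symmetric bi-stochastic (n+1)x(n+1) matrix with positive entries."""
    size = n + 1
    off = 1.0 / (2 * size)                    # small positive off-diagonal weight
    M = [[off] * size for _ in range(size)]
    for x in range(size):
        M[x][x] = 1.0 - off * (size - 1)      # every row and column sums to 1
    return M


def apply_map(M, rho, b, T):
    """One application of the assumed affine map T -> rho * M T + b."""
    size = len(T)
    return [rho * sum(M[x][y] * T[y] for y in range(size)) + b[x]
            for x in range(size)]


def sup_dist(u, v):
    """Sup-norm distance between two vectors."""
    return max(abs(a - c) for a, c in zip(u, v))


def iterate(M, rho, b, T0, steps):
    """Picard iteration: apply the map `steps` times starting from T0."""
    T = list(T0)
    for _ in range(steps):
        T = apply_map(M, rho, b, T)
    return T


n, rho = 4, 0.8                               # illustrative values only
M = make_bistochastic(n)
b = [1.0 + x for x in range(n + 1)]
T1 = iterate(M, rho, b, [0.0] * (n + 1), 200)
T2 = iterate(M, rho, b, [100.0] * (n + 1), 200)
```

Because the rows of `M` sum to one and its entries are non-negative, each application shrinks sup-norm distances by at least the factor `rho`, which is the mechanism behind the uniqueness of the fixed point.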

A.2. The end of the proof of Theorem 1.1

Suppose now that \(\nu \) is a probability law whose first and second moments are \(\theta \)-periodic, e.g. it could be a Gaussian distribution with the mean and the second moment given by \(\textbf{P}_{\textrm{per}}\) and \(C_{\textrm{per}}\), respectively. Denote by

the evolution family of transition probability operators corresponding to the dynamics described by (1.3)–(1.4). Consider the event \( E:=[N_x({\theta })=0,\,x=1,\ldots ,n]. \) We have \({\mathbb P}[E]>0\). Suppose that the dynamics starts at \((\textbf{q}, \textbf{p})\). Then, for any \(F\geqslant 0\) we can write

where \({\mathcal Q}_{s,t}\) is the transition probability operator for the non-homogeneous Ornstein-Uhlenbeck dynamics that corresponds to the generator \( {\mathcal {G}}_t^{(g)} = {\mathcal {A}}_t + 2 \gamma S_{-} \), see (1.7) and (1.8). Using the hypoellipticity of \({\mathcal {G}}_t^{(g)}\), see [9, Section A.3], one can prove that there exist strictly positive transition probability density kernels \(\rho _{s,t}\) corresponding to \({\mathcal Q}_{s,t}\), see [9, Section A.2] for more details. Thanks to (A.21) we conclude that

where \(c_*:={\mathbb P}[E]\). Then, \(\nu _{0,t}:=\nu {\mathcal P}_{0,t}\) describes the law of \((\textbf{q}(t), \textbf{p}(t) )\) with the prescribed initial data. Thanks to Proposition A.1 we can see that the total energy \({\mathcal H}(t):=\sum _{x=0}^n{\mathcal E}_x(t)\) (see (1.2)) is a Lyapunov function for the above system, since \({\mathbb E}{\mathcal H}(t)\) is \(\theta \)-periodic. The above implies that the family of laws \(\{\nu _{0,t},\,t\geqslant 0\}\) is tight in \({\mathbb R}^{2(n+1)}\). Thus, also the family \(\mu _N:=N^{-1}\int _0^{N\theta }\nu _{0,s}\textrm{d}s\) is tight. Suppose that \(\mu _\infty \) is its limiting measure, i.e. there exists a sequence \(N'\rightarrow +\infty \) such that \(\mu _{N'}\rightarrow \mu _\infty \), in the topology of weak convergence. Since \({\mathcal P}_{s,t}\) has the Feller property one can easily conclude that \(\mu _\infty {\mathcal P}_{0,\theta }=\mu _\infty \). Hence \(\mu _s^P:=\mu _\infty {\mathcal P}_{0,s}\) , \(s\in [0,+\infty )\) is a periodic stationary state.

Suppose that \(\mu (\textrm{d}\textbf{q}, \textrm{d}\textbf{p})=f (\textbf{q}, \textbf{p}) \textrm{d}\textbf{q} \textrm{d}\textbf{p}\), where f is a \(C^\infty \) smooth probability density. One can show, using the regularity theory of stochastic differential equations, that \(\mu {\mathcal P}_{0,\theta }\) is absolutely continuous w.r.t. the Lebesgue measure and its density is also \(C^\infty \) smooth, see e.g. [6, Corollary III.3.4, p. 303]. This allows us to conclude further that \(\mu {\mathcal P}_{0,\theta }\) is absolutely continuous, provided that \(\mu \) is absolutely continuous. We shall denote by \({\mathcal P}_{0,\theta }\) the corresponding operator induced on \(L^1({\mathbb R}^{2(n+1)})\). The operator \({\mathcal Q}_{0,\theta }\) corresponding to the Gaussian dynamics transforms \(\mu _\infty \) into an absolutely continuous measure. Thanks to (A.22) we conclude that

Therefore the singular part of \(\mu _\infty \) has mass at most \(1-c_*\). Since \({\mathcal P}_{0,\theta }\) transforms the space of absolutely continuous measures into itself, both the singular and absolutely continuous parts of \(\mu _\infty \), after normalization, become invariant under \({\mathcal P}_{0,\theta }\). Iterating this procedure we conclude, after m steps, that the singular part has mass at most \((1-c_*)^m\), which eventually leads to the conclusion that the measure \(\mu _\infty \) is absolutely continuous. The respective density is positive, due to (A.22). This ends the proof of Theorem 1.1. \(\square \)

Appendix B. Green Functions Convergence

Recall that \(G_{\omega _0}\) and \(G^n_{\omega _0}\) are the Green’s functions corresponding to \(\omega _0^2-\Delta \) and \(\omega _0^2-\Delta _{\textrm{N}}\), where \(\Delta \) is the free lattice laplacian on \({\mathbb {Z}}\) and \(\Delta _{\textrm{N}}\) is the Neumann discrete laplacian on \(\{0,1,\dots , n\}\), see Sects. 2.3 and 2.4, respectively.

B.1. Estimates on oscillating sums

Define \(\chi _n(x)\) as the \(n+1\)-periodic extension of \(\chi _n(x):=(1+x)\wedge (n+2-x)\), \(x\in [0,n+1]\). Suppose that \(\Phi :{\mathbb R}^2\rightarrow {\mathbb {C}}\) is a \(\theta ,\theta '\)-periodic function in each variable respectively. Denote

for \(x,x'\in {\mathbb Z}\).
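As a small illustration of the weight \(\chi _n\) defined above, the following sketch evaluates the \((n+1)\)-periodic extension of \((1+x)\wedge (n+2-x)\). The function name `chi_n` and its argument order are choices made here for illustration, not notation from the paper.

```python
def chi_n(x, n):
    """(n+1)-periodic extension of min(1 + x, n + 2 - x) defined on [0, n+1]."""
    period = n + 1
    r = x % period            # representative of x in [0, period)
    return min(1 + r, n + 2 - r)
```

The two endpoint values agree, since \((1+0)\wedge (n+2)=1\) and \((n+2)\wedge 1=1\), so the periodic extension is well defined.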

Lemma B.1

Suppose that \(\Phi \) is of \(C^k\)-class for some \(k\geqslant 1\). Then, there exists C such that

Proof

To simplify the notation we suppose that \(\theta =\theta '=1\). Summation by parts yields

Since \(\Phi \) is of \(C^1\) class

for some constant \(C>0\). In addition, there exists \(c>0\) such that

for \(x,x'\in {\mathbb Z},\,n\geqslant 1\). Thus, there exists \(C>0\) such that

Iterating this argument in the regularity degree k of \(\Phi \) we conclude (B.1). \(\square \)
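The summation by parts step used in the proof is an instance of Abel's identity. Since the specific sum of Lemma B.1 is not reproduced here, the sketch below only checks the generic identity \(\sum _{k=0}^{N-1}a_k(b_{k+1}-b_k)=a_Nb_N-a_0b_0-\sum _{k=0}^{N-1}(a_{k+1}-a_k)b_{k+1}\) numerically; the sequences `a` (samples of a smooth periodic function) and `b` (an oscillating exponential) are arbitrary illustrative choices.

```python
import cmath
import math


def abel_lhs(a, b):
    """Left-hand side: sum of a_k (b_{k+1} - b_k) for k = 0..N-1."""
    N = len(a) - 1
    return sum(a[k] * (b[k + 1] - b[k]) for k in range(N))


def abel_rhs(a, b):
    """Right-hand side: boundary term minus sum of (a_{k+1} - a_k) b_{k+1}."""
    N = len(a) - 1
    boundary = a[N] * b[N] - a[0] * b[0]
    return boundary - sum((a[k + 1] - a[k]) * b[k + 1] for k in range(N))


N = 64
# Samples of a smooth 1-periodic function (illustrative choice).
a = [math.cos(2 * math.pi * k / N) + 0.5 * math.sin(4 * math.pi * k / N)
     for k in range(N + 1)]
# Oscillating factor with an arbitrary integer frequency.
b = [cmath.exp(2j * math.pi * 3 * k / N) for k in range(N + 1)]
```

The identity moves the discrete difference from the oscillating factor onto the smooth one, whose increments are of order \(1/N\) when the function is \(C^1\); this is what makes the iteration in the regularity degree possible.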

B.2. Application

An application concerns the approximation of the Green’s function \(G_{\omega _0}\) by \(G_{\omega _0}^n\) along the diagonal.

Lemma B.2

We have

Here for some constant \(C>0\) we have

Proof

Using the definition of the Green’s function (2.13) (with \(\ell =0\)) and formulas (2.14) we obtain

where

As a result we write \(G_{\omega _0}^n(y,y)\) in the form (B.2), with

Estimate (B.3) is then a consequence of Lemma B.1. \(\square \)

Appendix C. Proofs of Lemmas 9.2, 9.3, 9.4 and 9.5

C.1. Proof of Lemma 9.2

For \(m\in {\mathbb Z}\) and \(g=(g_0,\ldots ,g_n)\in {\mathbb R}^{n+1}\) define

where \(\Sigma _2\) is defined in (6.10). Note that

Arguing similarly as in the proof of (6.16) we get

where \(\alpha _m:=\pi m/\theta \). Denote

Following the same manipulations as those leading to (6.20) we obtain

and \( {\widetilde{F}}_{j,j'}=\sum _{x=0}^n\psi _j(x)\psi _{j'}(x)g_x. \) Solving the above system using the procedure used to deal with (6.20) we obtain

with

Note that \(\Theta _0(c,c')=\Theta (c,c')\) defined in (6.24). From (C.1) we conclude that

As in (7.5), for any sequence \((f_x)\in {\mathbb {C}}^{n+1}\) we can write

We have

Thus \( \lim _{m\rightarrow +\infty }\Big (1- \Theta _m(c,c')\Big )=1. \) On the other hand, if \(m\not =0\), an easy calculation shows that \(1- \Theta _m(c,c')=0\) implies that \( (c-c')^2=8\alpha ^2_m(\alpha ^2_m+\gamma ^2)\) and \(\quad c+c'=2\alpha ^2_m\), where \(\alpha _m=\pi m/\theta \). But this would clearly lead to a contradiction, as then we would have \(|c-c'|>\sqrt{2}(c+c')\), which is clearly impossible (remember that \(c,c'>0\)). Hence, there exists \( {\mathfrak {C}}_*>0\) such that

This ends the proof of (9.9). \(\square \)
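The contradiction invoked in the proof above can be spelled out as follows, using only the two relations \((c-c')^2=8\alpha ^2_m(\alpha ^2_m+\gamma ^2)\) and \(c+c'=2\alpha ^2_m\) stated there:

\[
(c-c')^2 = 8\alpha _m^2(\alpha _m^2+\gamma ^2)\geqslant 8\alpha _m^4 = 2\,(2\alpha _m^2)^2 = 2\,(c+c')^2 ,
\]

so that \(|c-c'|\geqslant \sqrt{2}\,(c+c')\), with strict inequality when \(\gamma >0\) and \(m\not =0\). On the other hand, \(c,c'>0\) forces \(|c-c'|<c+c'\), which is incompatible with the previous bound.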

C.2. Proof of Lemma 9.3

Using (C.5) we obtain

Applying elementary trigonometric identities we conclude that

Therefore we can write

where \(O_m\left( \frac{1}{n}\right) \leqslant \frac{C}{n}\) for some constant \(C>0\), independent of n and m, and

We claim that there exists \(C>0\), independent of n and m, such that \({\mathfrak {V}}_{m}(u,u')\leqslant C\) for all \(u,u'\in [0,\omega _0^2+4]\). Indeed, as can be seen directly from (C.4), we have \(\lim _{m\rightarrow +\infty }\Theta _m(c,c')=0\) uniformly in \(c,c'\in [0,\omega _0^2+4]\). On the other hand the function \({\mathbb R}\times [0,\omega _0^2+4]^2\ni (m,c,c')\rightarrow \Theta _m(c,c')\) is bounded on compact sets. Otherwise, there would exist \((m,c,c')\in {\mathbb R}\times [0,\omega _0^2+4]^2\) such that

An easy calculation gives \(c+c'= 2\alpha ^2_m\) and \( (c-c')^2=8(\alpha ^2_m+\gamma ^2) \alpha ^2_m,\) where \(\alpha _m=\pi m/\theta \). This leads to a contradiction, as then \(|c-c'|>\sqrt{2}(c+c')\) (but both \(c,c'>0\)). Thus the conclusion of the lemma follows. \(\square \)

C.3. Proof of Lemma 9.4

From (A.18) we obtain

where (cf (6.18))

In the case \(\mu _j= \gamma ^2\) (then \(\lambda _{j,\pm }=\gamma \), cf (A.19)) we have \( E_j(t):=(1-\gamma t)e^{-\gamma t} . \) Using (A.6) we obtain therefore

From (C.9) we conclude that there exists \({\mathfrak {p}}_*>0\) such that

Estimate (9.11) is then a straightforward consequence of (C.10) and (4.11). \(\square \)
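The degenerate case \(\mu _j=\gamma ^2\) in the proof above, where \(E_j(t)=(1-\gamma t)e^{-\gamma t}\), can be checked numerically as a limit of the non-degenerate case. Formula (6.18) is not reproduced here, so the sketch assumes, purely for illustration, the standard damped-oscillator form \(E(t)=\big (\lambda _+e^{-\lambda _+t}-\lambda _-e^{-\lambda _-t}\big )/(\lambda _+-\lambda _-)\) with real \(\lambda _\pm =\gamma \pm \varepsilon \); the function names are hypothetical.

```python
import math


def E_assumed(lam_plus, lam_minus, t):
    """Assumed non-degenerate form (NOT taken from (6.18)):
    divided difference of f(lam) = lam * exp(-lam * t)."""
    def f(lam):
        return lam * math.exp(-lam * t)
    return (f(lam_plus) - f(lam_minus)) / (lam_plus - lam_minus)


def E_degenerate(gamma, t):
    """Degenerate case stated in the text: (1 - gamma t) exp(-gamma t)."""
    return (1.0 - gamma * t) * math.exp(-gamma * t)


gamma, eps = 0.7, 1e-4        # illustrative values
times = [0.0, 0.5, 1.0, 3.0]
gaps = [abs(E_assumed(gamma + eps, gamma - eps, t) - E_degenerate(gamma, t))
        for t in times]
```

Under this assumption the degenerate expression is the derivative of \(\lambda e^{-\lambda t}\) in \(\lambda \) at \(\lambda =\gamma \), which is why the two values in `gaps` differ only by terms of order \(\varepsilon ^2\).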

C.4. Proof of Lemma 9.5

Multiplying both sides of (9.4) by \(V_x(t)\) and averaging over time we get

Summing up over x we obtain

Using (7.4) and the Cauchy-Schwarz inequality we obtain in particular that

for some \(C>0\) independent of n. The last estimate follows from (9.11). To finish the proof note that from (7.2) we have

where the equality holds up to a term of order O(1/n). \(\square \)

About this article

Cite this article

Komorowski, T., Lebowitz, J.L. & Olla, S. Heat Flow in a Periodically Forced, Thermostatted Chain. Commun. Math. Phys. 400, 2181–2225 (2023). https://doi.org/10.1007/s00220-023-04654-4

DOI: https://doi.org/10.1007/s00220-023-04654-4