Abstract

We prove here that the height function associated to non-simple exclusion processes with arbitrary jump-length converges to the solution of the Kardar–Parisi–Zhang SPDE under suitable scaling and renormalization. This extends the work of Dembo and Tsai (Commun Math Phys 341(1):219–261, 2016) to arbitrary jump-length, and that of Goncalves and Jara (Stoch Process Appl 127(12):4029–4052, 2017) to the non-stationary regime. Thus we answer a "Big Picture Question" from the AIM workshop on KPZ. We also expand the almost empty set of non-integrable and non-stationary particle systems for which weak KPZ universality is proven. As in Dembo and Tsai (2016), we use an approximate microscopic Cole-Hopf transform. We also develop tools to analyze local statistics of the particle system, using local equilibrium and the work of Goncalves and Jara (2017). Local equilibrium is established via the one-block step of Guo et al. (Commun Math Phys 118:31, 1988), adapted to path-space/dynamic statistics.


1 Introduction

The Kardar–Parisi–Zhang SPDE, which we call the KPZ equation, is an SPDE whose statistics are conjectured to be universal among a large class of rough dynamic interfaces. Examples include fluctuations of burning fronts, growing bacterial colonies, and crack formation. In the physics literature this was shown non-rigorously in [19], but a mathematical proof is a difficult open problem. To begin a precise discussion, we write down the KPZ equation with an effective diffusivity \(\alpha \in {\mathbb {R}}_{>0}\) and an effective asymmetry \(\alpha '\in {\mathbb {R}}\):

The \(\xi \)-term is a Gaussian space-time white noise with delta-covariance \(\textbf{E}\xi _{T,X}\xi _{S,Y}=\delta _{T-S}\delta _{X-Y}\). The KPZ equation is a singular SPDE for two reasons: the roughness of the \(\xi \)-term, and the nonlinear dependence on the slope \(\partial _{X}\mathfrak {h}\). To motivate the universality problem of interest here, from both a mathematical and a physical perspective, we give the following interpretation of "singular".

-

Consider a growth model \(\widetilde{\mathfrak {h}}\) given by the solution of a version of (1.1) in which the quadratic function of the slope is replaced by an arbitrary nonlinear function. To get the KPZ equation from the \(\widetilde{\mathfrak {h}}\)-equation, we may Taylor expand this nonlinear function in the slope \(\partial _{X}\widetilde{\mathfrak {h}}\). The first two terms, which are constant and linear in \(\partial _{X}\widetilde{\mathfrak {h}}\), can be removed by elementary transformations. The leading-order term that remains is the quadratic in (1.1). This argument may hold water at a heuristic level, but it ultimately gives an incorrect effective asymmetry \(\alpha '\) because of the singular nature of (1.1). Dealing with this singular equation rigorously is a major goal of [15], which was later generalized into the theory of regularity structures in [16]. A rigorous version of this universality heuristic, via regularity structures, is carried out in [18].

-

We emphasize a limitation of [18], where regularity structures were successfully used to confirm universality of the KPZ equation for SPDE growth models: that argument requires the noise in these SPDEs to be space-time white noise. This allows one to build solutions of these SPDEs from explicit Gaussian objects. The work [17] extends universality to more general continuum noises, and [11, 23] extend universality via regularity structures to a few types of semi-discrete noise. However, these do not include the noises relevant for non-simple exclusion processes. In particular, using regularity structures to prove universality for general particle systems with a genuinely discrete flavor remains open.

The previous bullet points illustrate difficulties and interesting aspects of the proposed universality of the KPZ equation. Before we introduce the particle system of interest in this paper, the rest of this introduction proceeds as follows. First we introduce a notion of solution to KPZ which allows us to avoid dealing with the singular features of the KPZ Eq. (1.1). Then we list results on convergence to KPZ for special integrable or solvable particle systems. We conclude with progress beyond solvable models and the contributions of this paper. Additional background is discussed later in this introduction.

To deal with the singular nature of the KPZ equation, we do not use the regularity structures of [16]. Instead we employ the following Cole-Hopf transform/solution from [2]. First, for \(\alpha \in {\mathbb {R}}_{>0}\) and \(\lambda \in {\mathbb {R}}\) we introduce the stochastic heat equation:

-

We specialize to \(\lambda =\alpha '\alpha ^{-1}\) and define the solution of the KPZ Eq. (1.1) as \(\mathfrak {h}\overset{\bullet }{=}-\lambda ^{-1}\log \mathfrak {Z}\). This log-transform is well-defined for the following reasons. First, (1.2) admits a continuous solution via Itô calculus for its Duhamel form. Second, for positive initial data this solution remains positive with probability 1; see [24]. We will also call the stochastic heat Eq. (1.2) the "SHE".

-

We must also include an infinite renormalization/counter-term in \(\mathfrak {h}\) to handle singular aspects of KPZ; see Remark 1.4.
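To see heuristically why the transform \(\mathfrak {h}=-\lambda ^{-1}\log \mathfrak {Z}\) produces a KPZ-type equation, and where the infinite counter-term comes from, here is a formal computation. It rests on two assumptions of ours, since the display for (1.2) is not reproduced here. First, the SHE is normalized as \(\partial _{T}\mathfrak {Z}=\frac{\alpha }{2}\partial _{X}^{2}\mathfrak {Z}+\lambda \alpha ^{1/2}\mathfrak {Z}\xi \). Second, \(\lambda \ne 0\). The Itô correction involves the divergent quantity \(\delta _{0}(0)=+\infty \).

```latex
% Formal Itô computation; \delta_0(0) = +\infty is the divergence.
\begin{aligned}
\partial_T \mathfrak{Z} &= \tfrac{\alpha}{2}\partial_X^2\mathfrak{Z} + \lambda\alpha^{1/2}\mathfrak{Z}\,\xi
  \qquad\text{(assumed normalization of the SHE)},\\
\partial_T \log\mathfrak{Z} &= \tfrac{\alpha}{2}\Big(\partial_X^2\log\mathfrak{Z} + |\partial_X\log\mathfrak{Z}|^2\Big)
  + \lambda\alpha^{1/2}\xi - \tfrac{\lambda^2\alpha}{2}\,\delta_0(0),\\
\mathfrak{h} = -\lambda^{-1}\log\mathfrak{Z}\ \Longrightarrow\
\partial_T \mathfrak{h} &= \tfrac{\alpha}{2}\partial_X^2\mathfrak{h} - \tfrac{\alpha'}{2}|\partial_X\mathfrak{h}|^2
  - \alpha^{1/2}\xi + \tfrac{\alpha'}{2}\,\delta_0(0), \qquad \alpha' = \lambda\alpha.
\end{aligned}
```

Since \(-\xi \) has the same law as \(\xi \), the last line is a KPZ equation with diffusivity \(\alpha \) and asymmetry \(\alpha '\). The exception is the divergent constant \(\frac{\alpha '}{2}\delta _{0}(0)\), which is the kind of infinite renormalization referred to above.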

In [2] the authors show that the height function associated to the nearest-neighbor ASEP ("asymmetric simple exclusion process") converges to the Cole-Hopf solution of KPZ. Since then a number of similar results have been obtained, for example in [6, 8, 9]. The key input for these papers is that the height function of the microscopic particle system exhibits an algebraic duality: the exponential of the height function satisfies an exact microscopic version of the stochastic heat equation. Duality therefore provides a route to the KPZ scaling limit for microscopic height functions that requires only stochastic analysis of the SHE. It thus avoids directly addressing the singular features of the KPZ equation; recall that addressing these singular aspects for SPDEs in the context of particle systems is open. However, duality is an indication of integrability or solvability, and integrable models are rare among all particle systems. Universality of the KPZ equation is therefore unlikely to be resolved with ideas based solely on integrability.

In a step outside the set of integrable models, the authors of [10] confirm the universality of the KPZ equation for the height functions associated to a non-simple generalization of the ASEP model in [2]. Roughly speaking, the exponential of the height function solves a microscopic version of the SHE as in [2], but with additional error terms. In [10] the authors assume the maximal jump-length in the non-simple ASEP-generalization is at most 3. In that case these error terms can be addressed via standard ideas from hydrodynamic limits. In a nutshell, the contribution of this paper is to handle these errors for arbitrary jump-lengths.

Our approach analyzes these errors with a homogenization strategy based on the first step of a general "Boltzmann-Gibbs principle", originally introduced in [4]; we cite [12] for a refined version of this principle. The Boltzmann-Gibbs principle is generally accessible for systems started from invariant measures, but for general non-stationary initial measures it becomes a difficult problem. One version of the Boltzmann-Gibbs principle was in fact established in [5] for non-equilibrium initial measures. However, the proofs in [5] do not apply in this article because of stochastic analytic difficulties for the SHE that come from the singular features of the KPZ equation. Thus, we introduce another mechanism to access parts of a non-equilibrium Boltzmann-Gibbs principle. It is based on adapting the classical one-block scheme of [14] to path-space/dynamic statistics of the particle system. This additionally gives another general, probabilistic homogenization tool for establishing KPZ equation scaling limits for particle systems.

To reiterate, our first main goal is to extend [10] to arbitrary maximal jump-length, answering, in large part, one "Big Picture Problem" from the AIM workshop on KPZ. The second is to develop general tools for non-equilibrium particle systems.

1.1 The model

The particle system we focus on here generalizes the non-simple exclusion process studied in [10] by allowing arbitrary-length steps in the particle random walk; we cite [10] for this entire subsection. Its generator is given below.

-

Using the spin-notation of [10], for any sub-lattice \(\mathfrak {I} \subseteq {\mathbb {Z}}\) define \(\Omega _{\mathfrak {I}} = \{\pm 1\}^{\mathfrak {I}}\). Under the mapping \(\mathfrak {I} \mapsto \Omega _{\mathfrak {I}}\), any containment \(\mathfrak {I}\subseteq \mathfrak {I}'\) induces the canonical projection \(\Omega _{\mathfrak {I}'} \rightarrow \Omega _{\mathfrak {I}}\) given by

$$\begin{aligned} \Pi _{\mathfrak {I}'\rightarrow \mathfrak {I}}: \Omega _{\mathfrak {I}'} \rightarrow \Omega _{\mathfrak {I}}, \quad (\eta _{x})_{x \in \mathfrak {I}'} \mapsto (\eta _{x})_{x \in \mathfrak {I}}. \end{aligned} \tag{1.3}$$

We abuse notation and denote \(\Pi _{\mathfrak {I}} \overset{\bullet }{=} \Pi _{\mathfrak {I}' \rightarrow \mathfrak {I}}\) for any \(\mathfrak {I} \subseteq \mathfrak {I}' \subseteq {\mathbb {Z}}\). We adopt the physical interpretation that for any \(\eta \in \Omega _{{\mathbb {Z}}}\), the value \(\eta _{x} = 1\) indicates the presence of a particle located at \(x \in {\mathbb {Z}}\) and that \(\eta _{x} = -1\) indicates the absence of a particle.

-

We introduce a maximal jump-length \(\mathfrak {m}\in {\mathbb {Z}}_{>0}\cup \{+\infty \}\) and two sets of coefficients/speeds; note the ellipticity condition \(\alpha _{1} > 0\) below:

$$\begin{aligned} \textrm{A} \ = \ \left\{ \alpha _1,\ldots ,\alpha _{\mathfrak {m}} \in {\mathbb {R}}_{\geqslant 0}: \alpha _{1} > 0, \ {{\sum }}_{k = 1}^{\mathfrak {m}} \alpha _k = 1\right\} , \quad \Gamma \ = \ \left\{ \gamma _k \in {\mathbb {R}}\right\} _{k = 1,\ldots ,\mathfrak {m}}. \end{aligned} \tag{1.4}$$

For any pair of sites \(x,y \in {\mathbb {Z}}\), we denote by \(\mathfrak {S}_{x,y}\) the generator of a speed-1 symmetric exclusion process on the bond \(\{x,y\}\). We specify the generator \(\mathfrak {S}^{N,!!}\) of our dynamic, for \(N \in {\mathbb {Z}}_{>0}\) large, via its action on a generic functional \(\varphi : \Omega _{{\mathbb {Z}}} \rightarrow {\mathbb {R}}\):

$$\begin{aligned} \mathfrak {S}^{N,!!}\varphi (\eta )&\overset{\bullet }{=} \ N^{2} {{\sum }}_{k = 1}^{\mathfrak {m}} \alpha _{k} {{\sum }}_{x \in {\mathbb {Z}}} \left( 1 + \frac{1}{2}N^{-\frac{1}{2}}\gamma _{k}\textbf{1}_{\eta _{x}=-1}\textbf{1}_{\eta _{x+k}=1}\right. \\&\quad \left. - \frac{1}{2}N^{-\frac{1}{2}}\gamma _{k}\textbf{1}_{\eta _{x}=1}\textbf{1}_{\eta _{x+k}=-1}\right) \mathfrak {S}_{x,x+k}\varphi (\eta ). \end{aligned} \tag{1.5}$$

We denote by \(\eta _{T}\) the particle configuration observed after time-T evolution under the \(\mathfrak {S}^{N,!!}\) dynamic. Throughout this paper, each superscript ! on an operator denotes an additional scaling factor of N.
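To make the jump rates in (1.5) concrete, here is a minimal sketch that lists the rate of every nontrivial swap for a given configuration. Two simplifications are ours. First, it uses a ring \({\mathbb {Z}}/L{\mathbb {Z}}\) instead of \({\mathbb {Z}}\), with \(L>2\mathfrak {m}\) so that distinct pairs \((x,k)\) give distinct bonds. Second, the function name `bond_rates` is hypothetical. Rates are nonnegative once N is large enough that \(\frac{1}{2}N^{-1/2}|\gamma _{k}|\leqslant 1\).

```python
import math


def bond_rates(eta, alphas, gammas, N):
    """Swap rates of the generator (1.5), sketched on a ring Z/LZ.

    eta    : list of spins in {+1, -1}; +1 = particle, -1 = hole
    alphas : alphas[k-1] = alpha_k for k = 1..m (symmetric speeds)
    gammas : gammas[k-1] = gamma_k (asymmetry coefficients)
    N      : scaling parameter
    Returns {(x, k): rate} for the exchange of eta_x and eta_{x+k}.
    Bonds carrying equal spins are skipped: swapping them changes nothing.
    """
    L, m = len(eta), len(alphas)
    # On a ring, distinct (x, k) give distinct bonds {x, x+k} only if L > 2m.
    assert L > 2 * m, "ring too short for maximal jump-length m"
    rates = {}
    for k, (a, g) in enumerate(zip(alphas, gammas), start=1):
        for x in range(L):
            left, right = eta[x], eta[(x + k) % L]
            if left == right:
                continue
            if left == -1:
                # hole at x, particle at x+k: leftward jump of length k
                factor = 1 + 0.5 * g / math.sqrt(N)
            else:
                # particle at x, hole at x+k: rightward jump of length k
                factor = 1 - 0.5 * g / math.sqrt(N)
            rates[(x, k)] = N**2 * a * factor
    return rates
```

For example, take a ring of six sites with a single particle at site 0, \(\alpha _{1}=1\), \(\gamma _{1}=2\) and \(N=4\). The particle jumps left at rate 24 and right at rate 8, so \(\gamma _{k}>0\) biases motion to the left.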

Definition 1.1

For any time \(T \in {\mathbb {R}}_{\geqslant 0}\), define \(\mathfrak {h}^{N}_{T,0}\) to be 2 times the net flux of particles across the bond \(\{0,1\}\), with leftward-traveling particles counting as positive flux. We also define the following height function from [10]:

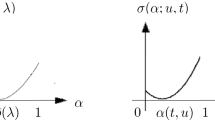
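Here is a sketch of the height function of Definition 1.1 on a finite window. It assumes the profile is built from the increments \(\mathfrak {h}^{N}_{T,x}-\mathfrak {h}^{N}_{T,x-1}=\eta _{T,x}\), anchored at \(\mathfrak {h}^{N}_{T,0}\). This is our reading of the convention in [10], and the function name `height_profile` is hypothetical. It also gives a consistency check with the flux convention: a leftward jump across \(\{0,1\}\) raises \(\mathfrak {h}^{N}_{T,0}\) by 2 and leaves the rest of the profile unchanged.

```python
def height_profile(eta, h0):
    """Height function of Definition 1.1 on a finite window.

    eta : dict {x: eta_x} of spins in {+1, -1} on a window of sites containing 0
    h0  : h_{T,0}, twice the net leftward flux of particles across {0, 1}
    Returns {x: h_{T,x}}, built from the increments h_{T,x} - h_{T,x-1} = eta_x.
    """
    h = {0: h0}
    x = 1
    while x in eta:  # x > 0:  h_x = h_0 + sum_{y=1}^{x} eta_y
        h[x] = h[x - 1] + eta[x]
        x += 1
    x = 0
    while x in eta:  # x < 0:  h_x = h_0 - sum_{y=x+1}^{0} eta_y
        h[x - 1] = h[x] - eta[x]
        x -= 1
    return h
```

For instance, compare the configuration \(\{\eta _{-1},\eta _{0},\eta _{1},\eta _{2}\}=\{1,-1,1,-1\}\) with \(\mathfrak {h}_{0}=0\) against the configuration reached when the particle at site 1 jumps to site 0 (so \(\mathfrak {h}_{0}=2\)). The two profiles differ only at \(x=0\).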

In the large-N limit and under appropriate renormalization, the height function \(\mathfrak {h}^{N}\) converges to the solution of the KPZ equation; this is the main theorem of the current paper. To make this scaling limit precise, we first identify the effective diffusivity \(\alpha \in {\mathbb {R}}_{>0}\) and the effective asymmetry \(\alpha '\in {\mathbb {R}}\). These determine the limiting KPZ equation for \(\mathfrak {h}^{N}\), and also how to define the corresponding microscopic Cole-Hopf transform. Indeed, recall from our discussion of the SHE above that defining the Cole-Hopf transform for the limiting KPZ equation requires knowing the ratio \(\alpha '\alpha ^{-1}\in {\mathbb {R}}\). We do not perform the calculation here; we refer instead to the KPZ scaling theory calculation in [10] immediately after (1.8) therein. Ultimately, the effective diffusivity and asymmetry are those of the particle random walk.

Definition 1.2

We define \(\alpha \overset{\bullet }{=} {{\sum }}_{k=1}^{\mathfrak {m}}k^{2}\alpha _{k}\) and \(\alpha '\overset{\bullet }{=}{{\sum }}_{k=1}^{\mathfrak {m}}k\alpha _{k}\gamma _{k}\). We also define \(\lambda \overset{\bullet }{=}\alpha '\alpha ^{-1}\) following notation in [10].
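A short sketch of Definition 1.2, together with a sanity check on why \(\alpha =\sum _{k}k^{2}\alpha _{k}\) is the right diffusivity. The check uses the long-range difference operator \(\Delta _{k}\varphi _{x}=\varphi _{x+k}+\varphi _{x-k}-2\varphi _{x}\), which appears later in Sect. 2. For a smooth profile \(\varphi (x/N)\), Taylor expansion gives \(N^{2}\sum _{k}\alpha _{k}\Delta _{k}\varphi \approx \alpha \varphi ''\). The function names are ours, and the check uses \(\alpha _{k}\) rather than any corrected coefficients.

```python
import math


def effective_coefficients(alphas, gammas):
    """alpha, alpha' and lambda of Definition 1.2 (alphas[k-1] = alpha_k)."""
    assert alphas[0] > 0 and abs(sum(alphas) - 1) < 1e-12  # constraints in (1.4)
    alpha = sum(k**2 * a for k, a in enumerate(alphas, start=1))
    alpha_prime = sum(k * a * g for k, (a, g) in enumerate(zip(alphas, gammas), start=1))
    return alpha, alpha_prime, alpha_prime / alpha


def rescaled_laplacian(phi, X, N, alphas):
    """N^2 * sum_k alpha_k * Delta_k applied to x -> phi(x / N), evaluated at x = N X.

    Delta_k psi_x = psi_{x+k} + psi_{x-k} - 2 psi_x. By Taylor expansion the
    result is alpha * phi''(X) + O(N^{-2}) with alpha = sum_k k^2 alpha_k.
    """
    x = N * X
    total = 0.0
    for k, a in enumerate(alphas, start=1):
        total += a * (phi((x + k) / N) + phi((x - k) / N) - 2 * phi(x / N))
    return N**2 * total
```

For example, with speeds \((0.5,0.3,0.2)\) one gets \(\alpha =3.5\). Applying `rescaled_laplacian` to \(\sin \) at \(N=1000\) then agrees with \(-3.5\sin X\) up to an error of order \(N^{-2}\).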

Definition 1.3

Define the microscopic Cole-Hopf transform \(\mathfrak {Z}^{N}\) by the following analog of the Cole-Hopf transform for the continuum SHE/KPZ equation. Here the growth speed \(\mathfrak {v}_{N} \in {\mathbb {R}}\) is defined in (1.29) of [10] for arbitrary \(\mathfrak {m}\):

We will realize \(\mathfrak {h}^{N}\) and \(\mathfrak {Z}^{N}\) as functions on \({\mathbb {R}}_{\geqslant 0}\times {\mathbb {R}}\) via piecewise linear interpolation of their values on \({\mathbb {R}}_{\geqslant 0}\times {\mathbb {Z}}\).

Remark 1.4

Technically, \(\mathfrak {Z}^{N}\) is the microscopic Cole-Hopf transform of the height function \(\mathfrak {h}^{N}\), renormalized by a counter-term of speed \(N\mathfrak {v}_{N}\gg 1\). This renormalization/counter-term is a microscopic indication of the singular features of the KPZ equation.

1.2 Main theorem

The primary result of the paper is a scaling limit for \(\mathfrak {Z}^{N}\) under a large class of initial probability measures, defined as follows. This class of initial measures is also considered in [2] and [10], for example.

Definition 1.5

We say a probability measure \(\mu _{0,N}\) on \(\Omega _{{\mathbb {Z}}}\) is near-stationary if the following moment bounds hold with respect to \(\mu _{0,N}\), uniformly in \(N\in {\mathbb {Z}}_{>0}\), for any \(p \in {\mathbb {Z}}_{\geqslant 1}\) and \(0 \leqslant \vartheta <\frac{1}{2}\):

Moreover, we require \(\mathfrak {Z}_{0,NX}^{N} \rightarrow _{N\rightarrow \infty } \mathfrak {Z}_{0,X}\) locally uniformly for some continuous initial data \(\mathfrak {Z}_{0,\bullet }:{\mathbb {R}}\rightarrow {\mathbb {R}}_{\geqslant 0}\).

Before presenting the main result, we introduce a few assumptions, which we package as one. The first is a finite maximal jump-length that does not depend on \(N\in {\mathbb {Z}}_{>0}\). We comment below on how this constraint may be relaxed. Doing so only requires technical adjustments in a few parts of the paper, so we keep the assumption to make the paper more readable. The second assumption is more serious. It asserts that the speeds of the asymmetric jumps in the particle random walk approximately satisfy a linear constraint from [10]; we borrow this constraint from [10] and improve on it. In the spirit of universality, the constraint should not be necessary, and it would be interesting to remove it.

Assumption 1.6

The maximal jump-length \(\mathfrak {m}\) is uniformly bounded and independent of \(N\in {\mathbb {Z}}_{>0}\). Moreover, we assume the a priori estimate \(\sup _{k=1,\ldots ,\mathfrak {m}}\alpha _{k}|\gamma _{k}-\bar{\gamma }_{k}| \lesssim N^{-1/2}\). Here the "specialized" speeds \(\bar{\gamma }_{k}\) of asymmetric jumps are given by the following formula:

In the statement of our main result below, we use the Skorokhod space \(\textbf{D}_{1}\overset{\bullet }{=}\textbf{D}([0,1],\textbf{C}(\mathbb {K}))\) of càdlàg paths. These paths take values in the Banach space \(\textbf{C}(\mathbb {K})\) of continuous functions on an arbitrary but fixed compact set \(\mathbb {K}\subseteq {\mathbb {R}}\). We cite [3] for details.

Theorem 1.7

Under any near-stationary initial data, the space-time process \(\mathfrak {Z}_{T,NX}^{N}\) is tight in the large-N limit with respect to the Skorokhod topology on \(\textbf{D}_{1}\). Every limit point is the solution of the SHE with the parameters \(\alpha ,\lambda \in {\mathbb {R}}\) defined earlier and initial data \(\mathfrak {Z}_{0,\bullet }\).

Before we proceed, we make a few brief remarks.

-

There is nothing special about time 1. It may be replaced by any fixed positive time. We also allow \(\mathbb {K}\) to be any compact set.

-

In [10], and even in [2] and related works, the class of near-stationary initial probability measures considered allows the a priori estimates in Definition 1.5 to grow in an exponential-linear fashion. We do not allow for that here. However, our methods still apply to this larger set of initial data. The differences are almost cosmetic and depend only on diffusive tails of a discretization of the classical Gaussian heat kernel, as in [10]. We restrict to the class of initial data in Definition 1.5, without exponential growth, only to make this paper more readable.

-

We have assumed a finite maximal jump-length for the particle random walk. We can actually remove this assumption, following the outline below, if the sequences \(\{\alpha _{k}\}_{k\in {\mathbb {Z}}_{>0}}\) and \(\{\alpha _{k}\gamma _{k}\}_{k\in {\mathbb {Z}}_{>0}}\) have all moments as measures on \({\mathbb {Z}}_{>0}\). Take any \(\varepsilon \in {\mathbb {R}}_{>0}\) as small as we want but independent of \(N\in {\mathbb {Z}}_{>0}\). Because both sequences admit all moments, the total speed of jumps of length more than \(N^{\varepsilon }\) is at most \(\kappa _{C}N^{-C}\) for any \(C\in {\mathbb {R}}_{>0}\). Roughly speaking, we can therefore ignore all jumps of length more than \(N^{\varepsilon }\) and take \(\mathfrak {m}=N^{\varepsilon }\). This is almost the situation of finite maximal jump-length, except that \(\mathfrak {m}=N^{\varepsilon }\) still grows in \(N\in {\mathbb {Z}}_{>0}\), albeit slowly. It turns out that \(\mathfrak {m}=N^{\varepsilon }\) only affects estimates which are power-savings in \(N\in {\mathbb {Z}}_{>0}\), and their dependence on \(\mathfrak {m}=N^{\varepsilon }\) is polynomial. Thus the resulting extra factors, such as \(N^{10\varepsilon }\), are negligible.
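The tail bound in the last remark is a Markov-type moment estimate. We read "has all moments" as \(\sum _{k}k^{p}\alpha _{k}<\infty \) for every \(p\geqslant 1\); this interpretation is ours. A sketch of the one-line argument follows. It is written for \(\alpha _{k}\) and is identical for \(|\alpha _{k}\gamma _{k}|\), taking \(p=\lceil C\varepsilon ^{-1}\rceil \) for the fixed \(\varepsilon \).

```latex
% Uses k / N^{\varepsilon} > 1 on the range of summation and \varepsilon p \geqslant C.
\sum_{k > N^{\varepsilon}} \alpha_{k}
\ \leqslant\ \sum_{k > N^{\varepsilon}} \Big(\frac{k}{N^{\varepsilon}}\Big)^{p} \alpha_{k}
\ \leqslant\ N^{-\varepsilon p} \sum_{k \geqslant 1} k^{p} \alpha_{k}
\ \leqslant\ \kappa_{C}\, N^{-C},
\qquad \kappa_{C} \overset{\bullet}{=} \sum_{k \geqslant 1} k^{p}\alpha_{k}.
```

With this choice of p, the constant \(\kappa _{C}\) also depends on the fixed \(\varepsilon \), which is harmless since \(\varepsilon \) does not depend on N.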

1.3 Narrow-wedge initial measure

The analysis in this article can be adjusted to treat the exclusion processes considered herein with a different initial probability measure on particle configurations. Specifically, the initial measure need not be near-stationary or anything close to it; it can instead be the narrow-wedge initial measure/configuration. This initial measure gives rise to a microscopic Cole-Hopf transform whose large-N limit is a Dirac point mass; for a detailed discussion, see [1] and [7].

Because of the distributional nature of Dirac point masses, the adjustments needed to treat these exclusion processes with narrow-wedge initial measure are nontrivial and technical. They require changes to the hydrodynamic-limit input of this paper, not just to the stochastic analytic inputs as was the case for [10]. Adding these would require several detailed technical arguments, so we defer the extension to narrow-wedge initial measure to a separate paper.

1.4 Background

We have already mentioned the open problem of extending the theory of regularity structures beyond the stochastic PDEs in [16] and [18] to height functions of interacting particle systems. We now discuss a different approach to weak KPZ universality, known as the theory of energy solutions. This approach was thoroughly explored in [12] by Goncalves and Jara. The approach via energy solutions is built around a nonlinear martingale problem for the KPZ equation or its avatar, the stochastic Burgers equation. Much of the previous literature on hydrodynamic limits and their fluctuations for interacting particle systems was also based on martingale problems. This nonlinear martingale problem was therefore engineered to fit into the "same framework" and to confirm the universality of (1.1) for many non-integrable interacting particle systems. In particular, it does not use the Cole-Hopf transform and instead directly makes sense of the singular features of KPZ. The theory of energy solutions, however, requires the model to be at, or very close to, an invariant measure in order to make sense of these singular features. In particular, it applies only to stationary interacting particle systems. Much of the interest in the current work, by contrast, is proving a KPZ scaling limit for non-stationary interacting particle systems.

1.5 Organization

We will not be able to discuss the actual content of this paper until the end of Sect. 2. At that point we will have set up an approximate microscopic version of the SHE for \(\mathfrak {Z}^{N}\). For now we give the following high-level outline.

-

In Sect. 2, we re-develop the framework in Sect. 2 of [10]. The strategy for the proof of Theorem 1.7 is also given.

-

In Sect. 3, we establish local equilibrium via entropy production in infinite-volume along with equilibrium calculations.

-

In Sect. 4, we introduce compactification of the \(\mathfrak {Z}^{N}\)-dynamics. This is effectively done by heat kernel estimates.

-

In Sect. 5, we develop our main technical contribution which we call a dynamic variation of the one-block strategy.

-

In Sect. 6, we establish preliminary time-regularity estimates for the microscopic Cole-Hopf transform \(\mathfrak {Z}^{N}\).

-

In Sect. 7, we use the dynamical one-block strategy and time-regularity in a multiscale analysis to get a "key" estimate.

-

In Sect. 8, we prove Theorem 1.7 for near-stationary data using the above "key" estimate.

In the “Appendix” we record auxiliary heat kernel and martingale estimates based on Proposition A.1/Corollary A.2 and Lemma 3.1 in [10]. We also include a list of notation in the “Appendix” for consultation while reading this paper. Lastly, this paper is long because we try to explain the ideas in it and to clarify each of its many main points. In particular, we try to leave no seemingly abstract estimate/construction without a word about why it is helpful or what it is doing. We also often explain proofs of various results to clarify the technical details, which adds to the length of the paper as well.

1.6 Comments on notation

The “Appendix” contains an index of notation used often in this paper. Here we record a few of the most commonly used pieces of notation from it.

-

A "universal" constant is one that depends on nothing beyond possibly fixed data of the particle system, for example speeds \(\{\alpha _{k}\}_{k=1}^{\mathfrak {m}}\). When we refer to a constant as "arbitrarily/sufficiently small but universal", we mean arbitrarily/sufficiently small depending only on a uniformly bounded number of universal constants. The same is true for "arbitrarily/sufficiently large but universal" constants except the word "small" is replaced by "large". The reader is invited to take "arbitrarily/sufficiently small but universal" constants to be \(999^{-999}\) and to take "arbitrarily/sufficiently large but universal" constants to be \(999^{999}\).

-

Provided any \(a,b\in {\mathbb {R}}\), we define the discretized interval \(\llbracket a,b\rrbracket =[a,b]\cap {\mathbb {Z}}\).

-

For any finite set I, let \(\kappa _{I}\in {\mathbb {R}}\) be a constant depending only on I. We also define a normalized sum \(\widetilde{{{\sum }}}_{i\in I} \overset{\bullet }{=} |I|^{-1}{{\sum }}_{i\in I}\).

-

The "microscopic time-scale" is order \(N^{-2}\) and the "macroscopic time-scale" is order 1. The "mesoscopic time-scales" are any time-scales that are between these two time-scales. Similarly the "microscopic length-scale" is order 1 and the "macroscopic length-scale" is order N. The "mesoscopic length-scales" are between these two length-scales.

-

Script font will be used for operators. Fraktur font will be used for anything related to PDEs, the particle system, or anything related to details/analysis of the particle system (such as time-shifts, time-scales for averages, functionals, and subsets in \({\mathbb {Z}}\)).

-

Finally, starting in Sect. 6, the subscript "\(\textrm{st}\)" for random times \(\mathfrak {t}_{\textrm{st}}\in {\mathbb {R}}_{\geqslant 0}\) stands for "stopping time".

2 Approximate Microscopic Stochastic Heat Equation

We recap the framework developed in [10], with adjustments tailored to our analysis in this paper. We then outline how to overcome the obstacles, discussed in [10], that limit the maximal jump-length there. We will use the invariant probability measures for the relevant exclusion processes from Definition 3.1, namely canonical and grand-canonical ensembles.

2.1 Quantities of interest

The goal of the current section is to write down an approximate microscopic SHE as a stochastic equation for the dynamics of the microscopic Cole-Hopf transform. To do so we must first identify functionals/coefficients for which we can conduct some probabilistic analysis. First we introduce some notation.

Definition 2.1

For any \(\mathfrak {f}:\Omega _{{\mathbb {Z}}}\rightarrow {\mathbb {R}}\), define its support as the smallest subset \(\mathfrak {I}\subseteq {\mathbb {Z}}\) so that \(\mathfrak {f}\) depends only on \(\eta _{x}\) for \(x\in \mathfrak {I}\).

Definition 2.2

For \(X\in {\mathbb {Z}}\), define \(\tau _{X}:\Omega _{{\mathbb {Z}}}\rightarrow \Omega _{{\mathbb {Z}}}\) to shift a configuration so that \((\tau _{X}\eta )_{Z}=\eta _{Z+X}\) for all \((\eta ,Z)\in \Omega _{{\mathbb {Z}}}\times {\mathbb {Z}}\).

We define three classes of functionals below. The first is a generalization of "weakly vanishing" terms in [10].

Definition 2.3

A functional \(\mathfrak {w}: {\mathbb {R}}_{\geqslant 0} \times {\mathbb {Z}}\times \Omega _{{\mathbb {Z}}} \rightarrow {\mathbb {R}}\) is weakly vanishing if \(|\mathfrak {w}| \lesssim N^{-\beta }\) for \(\beta \in {\mathbb {R}}_{>0}\) universal, or if:

-

For all \((T,X,\eta ) \in {\mathbb {R}}_{\geqslant 0} \times {\mathbb {Z}}\times \Omega _{{\mathbb {Z}}}\), we have \(\mathfrak {w}_{T,X}(\eta ) = \mathfrak {w}_{0,0}(\tau _{-X}\eta _{T})\) for a "reference functional" \(\mathfrak {w}_{0,0}: \Omega _{{\mathbb {Z}}} \rightarrow {\mathbb {R}}\).

-

We have \(\textbf{E}^{\mu _{0,{\mathbb {Z}}}} \mathfrak {w}_{0,0} = 0\), where \(\mu _{0,{\mathbb {Z}}}\) is the product Bernoulli measure on \(\Omega _{{\mathbb {Z}}}\) defined by \(\textbf{E}^{\mu _{0,{\mathbb {Z}}}}\eta _{x}=0\) for all \(x \in {\mathbb {Z}}\).

-

We have the deterministic bound \(|\mathfrak {w}_{0,0}| \lesssim 1\) uniformly in \(N \in {\mathbb {Z}}_{>0}\) and \(\eta \in \Omega _{{\mathbb {Z}}}\) with a universal implied constant.

-

The support of \(\mathfrak {w}_{0,0}\) is uniformly bounded, so that it is contained in an interval of length independent of \(N\in {\mathbb {Z}}_{>0}\).
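To make the mean-zero condition in the second bullet point concrete, here is a sketch that computes expectations of local functionals exactly by enumerating the spins on their support. It assumes a product measure with independent \(\pm 1\) spins of common mean \(\varrho \), where \(\varrho =0\) recovers \(\mu _{0,{\mathbb {Z}}}\). The function name `product_expectation` is ours. For example, \(\mathfrak {w}_{0,0}(\eta )=\eta _{0}\eta _{1}\) is bounded, has support \(\{0,1\}\), and has mean zero under \(\mu _{0,{\mathbb {Z}}}\), so it satisfies the conditions listed above.

```python
from itertools import product


def product_expectation(f, support, rho=0.0):
    """Exact expectation of a local functional under a product measure.

    f       : function of a dict {x: eta_x}, depending only on sites in `support`
    support : finite list of sites
    rho     : common mean E eta_x; rho = 0 is the measure mu_{0,Z} of Definition 2.3
    """
    p_plus = (1 + rho) / 2  # P(eta_x = +1), chosen so that E eta_x = rho
    total = 0.0
    for spins in product((1, -1), repeat=len(support)):
        weight = 1.0
        for s in spins:
            weight *= p_plus if s == 1 else 1 - p_plus
        total += weight * f(dict(zip(support, spins)))
    return total
```

At density \(\varrho \ne 0\) the same functional has mean \(\varrho ^{2}\). This is why weakly vanishing terms are negligible only because the global \(\eta \)-density is close to 0.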

At the level of hydrodynamic limits, weakly vanishing terms are negligible because near-stationary initial measures imply that the global \(\eta \)-density is roughly 0. This is the defining property of weakly vanishing terms in [10]. The one-block and two-blocks steps in the proof of Lemma 2.5 in [10] also apply to weakly vanishing terms as defined above. We return to this in the proof of Theorem 1.7. For now we note that weakly vanishing terms pose no difficulties that were not already treated in [10].

Definition 2.4

A functional \(\mathfrak {g}: {\mathbb {R}}_{\geqslant 0} \times {\mathbb {Z}}\times \Omega _{{\mathbb {Z}}} \rightarrow {\mathbb {R}}\) is a pseudo-gradient if the following conditions are satisfied:

-

For all \((T,X,\eta ) \in {\mathbb {R}}_{\geqslant 0} \times {\mathbb {Z}}\times \Omega _{{\mathbb {Z}}}\), we have \(\mathfrak {g}_{T,X}(\eta ) = \mathfrak {g}_{0,0}(\tau _{-X}\eta _{T})\) with reference functional \(\mathfrak {g}_{0,0}:\Omega _{{\mathbb {Z}}}\rightarrow {\mathbb {R}}\).

-

We have the deterministic bound \(|\mathfrak {g}_{0,0}| \lesssim 1\) uniformly in \(N \in {\mathbb {Z}}_{>0}\) and \(\eta \in \Omega _{{\mathbb {Z}}}\). The support of \(\mathfrak {g}_{0,0}\) is uniformly bounded.

-

We have \(\textbf{E}^{\mu _{\varrho ,\mathfrak {I}}^{\textrm{can}}} \mathfrak {g}_{0,0} = 0\) for any canonical ensemble parameter \(\varrho \in {\mathbb {R}}\) and set \(\mathfrak {I} \subseteq {\mathbb {Z}}\) containing the support of \(\mathfrak {g}_{0,0}\).

Suppose \(\mathfrak {g}_{0,0}\) is a discrete gradient, so that \(\mathfrak {g}_{0,0} = \tau _{-\mathfrak {j}}\mathfrak {f}_{0,0} - \mathfrak {f}_{0,0}\). Here \(\mathfrak {f}_{0,0}:\Omega _{{\mathbb {Z}}}\rightarrow {\mathbb {R}}\) is a local functional satisfying the required uniform boundedness and support conditions, and \(\mathfrak {j}\in {\mathbb {Z}}\) is uniformly bounded. Then \(\mathfrak {g}_{0,0}\) is the reference functional for a pseudo-gradient. Indeed, uniform boundedness of \(\mathfrak {j}\in {\mathbb {Z}}\), of \(\mathfrak {f}_{0,0}\), and of the support of \(\mathfrak {f}_{0,0}\) guarantees the second bullet point for \(\mathfrak {g}_{0,0}\). For the third bullet point, it suffices to note that canonical ensembles are "invariant under shifts". That is, on any subset containing the supports of \(\tau _{-\mathfrak {j}}\mathfrak {f}_{0,0}\) and \(\mathfrak {f}_{0,0}\), these two functionals are equal in law under any canonical ensemble.

As a non-gradient example of a pseudo-gradient, take the cubic nonlinearity in Proposition 2.3 of [10] when the maximal jump-length is \(\mathfrak {m}\geqslant 4\). Evaluate this cubic functional at the particle system at \((0,0) \in {\mathbb {R}}_{\geqslant 0}\times {\mathbb {Z}}\). It then satisfies the estimates required of the reference functional in the second bullet point of the definition of pseudo-gradients above. This can be checked directly from the structure of the cubic nonlinearity. It is a sum of a uniformly bounded, \(\mathfrak {m}\)-dependent number of differences of cubic monomials, with spins contained in a neighborhood of length-scale at most \(10\mathfrak {m}\). To justify the more interesting vanishing-in-expectation requirement in the third bullet point, observe that canonical ensembles are invariant under swapping spins at deterministic points. Take the term \(\eta _{1}\eta _{2}\eta _{3}-\eta _{-1}\eta _{-2}\eta _{-3}\), for example. Under any canonical ensemble on a set containing \(\{\pm 1,\pm 2,\pm 3\}\), we may replace \(\eta _{-1}\eta _{-2}\eta _{-3} \mapsto \eta _{1}\eta _{2}\eta _{3}\) at the level of expectations.
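The vanishing-in-expectation condition can be checked by brute force on small windows. The sketch below assumes that the canonical ensemble on \(\mathfrak {I}\) is the uniform measure on configurations in \(\Omega _{\mathfrak {I}}\) with a fixed number of particles, equivalently a fixed spin average \(\varrho \). This is the standard choice for exclusion processes, but Definition 3.1 is not reproduced here, and all function names are ours. The sketch checks three examples:

- the gradient example with \(\mathfrak {f}_{0,0}=\eta _{0}\eta _{1}\) and \(\mathfrak {j}=1\);
- the cubic difference above;
- a product with a disjointly supported spin, in the spirit of Definition 2.5 below.

It also shows that \(\eta _{0}\) is weakly vanishing but is not a pseudo-gradient.

```python
from itertools import combinations


def canonical_expectation(f, sites, n_particles):
    """E f under the uniform measure on {+1,-1}^sites with exactly
    n_particles spins equal to +1 (our assumed canonical ensemble)."""
    sites = list(sites)
    configs = list(combinations(sites, n_particles))
    total = 0.0
    for occupied in configs:
        occ = set(occupied)
        total += f({x: (1 if x in occ else -1) for x in sites})
    return total / len(configs)


def shift(f, j):
    """tau_{-j} f, i.e. eta -> f(tau_{-j} eta) with (tau_{-j} eta)_z = eta_{z-j}."""
    return lambda eta: f({z + j: s for z, s in eta.items()})


def has_zero_canonical_means(g, sites, tol=1e-12):
    """True if E g = 0 under the canonical ensemble on `sites` for every particle number."""
    return all(abs(canonical_expectation(g, sites, n)) < tol
               for n in range(len(sites) + 1))


def f0(eta):
    return eta[0] * eta[1]


def g_grad(eta):
    # tau_{-1} f0 - f0 = eta_{-1} eta_0 - eta_0 eta_1
    return shift(f0, 1)(eta) - f0(eta)


def g_cubic(eta):
    # the cubic difference discussed in the text
    return eta[1] * eta[2] * eta[3] - eta[-1] * eta[-2] * eta[-3]


def g_tilde(eta):
    # pseudo-gradient times a spin outside its support
    return g_grad(eta) * eta[3]


WINDOW = list(range(-3, 4))  # contains the supports of all functionals above
```

Under this assumption, `has_zero_canonical_means` returns `True` for `g_grad`, `g_cubic` and `g_tilde` on `WINDOW`. It returns `False` for \(\eta _{0}\), whose canonical mean at three sites with two particles is \(1/3\).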

Definition 2.5

A functional \(\widetilde{\mathfrak {g}}: {\mathbb {R}}_{\geqslant 0} \times {\mathbb {Z}}\times \Omega _{{\mathbb {Z}}} \rightarrow {\mathbb {R}}\) is said to have a pseudo-gradient factor if it is uniformly bounded and we have a factorization of functionals \(\widetilde{\mathfrak {g}} = \mathfrak {g}\cdot \mathfrak {f}\) such that the following constraints are satisfied:

-

We have \(\mathfrak {f}_{T,X}(\eta ) = \mathfrak {f}_{0,0}(\tau _{-X}\eta _{T})\). The support of \(\mathfrak {f}_{0,0}\) is bounded but may be N-dependent. We still require \(|\mathfrak {f}_{0,0}| \lesssim 1\).

-

The factor \(\mathfrak {g}\) is a pseudo-gradient, and the \(\eta \)-wise supports of \(\mathfrak {g}_{0,0}\) and \(\mathfrak {f}_{0,0}\) are disjoint subsets in \({\mathbb {Z}}\).

-

The factor \(\mathfrak {f}_{0,0}\) is an average of terms that are each a product of \(\eta \)-spins times uniformly bounded/deterministic constants.

Since the supports of the pseudo-gradient factor \(\mathfrak {g}\) and of \(\mathfrak {f}\) are disjoint, the \(\widetilde{\mathfrak {g}}\)-functionals above are also pseudo-gradients: conditioning a canonical ensemble on the spins in the support of \(\mathfrak {f}\) leaves a canonical ensemble on the remaining sites, under which \(\mathfrak {g}\) vanishes in expectation. However, the support of \(\mathfrak {f}_{0,0}\) is allowed to grow with \(N\in {\mathbb {Z}}_{>0}\), so the same is true of \(\widetilde{\mathfrak {g}}\). Our analysis of pseudo-gradients deteriorates as the length-scale of their support grows, so we cannot efficiently study these \(\widetilde{\mathfrak {g}}\)-terms via the same ideas. The point of introducing this class of functionals is to highlight that we will need to probe their pseudo-gradient factor, which has uniformly bounded support.
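The disjoint-support argument can be checked in the same brute-force way. The sketch assumes spins in \(\{\pm 1\}\) and models a canonical ensemble as the uniform measure on configurations of a finite window with fixed spin sum. It takes \(\mathfrak{g}=\eta_1\eta_2\eta_3-\eta_{-1}\eta_{-2}\eta_{-3}\) and a hypothetical spin-product factor \(\mathfrak{f}=\eta_5\eta_6\), and shows that the product vanishes in expectation for every particle number even though \(\mathfrak{f}\) alone does not.

```python
from fractions import Fraction
from itertools import product

def canonical_expectation(func, sites, total):
    """Exact expectation under the uniform measure on {-1, +1}^sites with spin sum `total`."""
    values = [func(dict(zip(sites, spins)))
              for spins in product((-1, 1), repeat=len(sites))
              if sum(spins) == total]
    return Fraction(sum(values), len(values))

def g(eta):
    # pseudo-gradient factor with support {+-1, +-2, +-3}
    return eta[1] * eta[2] * eta[3] - eta[-1] * eta[-2] * eta[-3]

def f(eta):
    # hypothetical spin-product factor with disjoint support {5, 6}
    return eta[5] * eta[6]

sites = list(range(-3, 7))  # ten sites containing both supports
for total in range(-len(sites), len(sites) + 1, 2):
    assert canonical_expectation(lambda eta: g(eta) * f(eta), sites, total) == 0

# The cancellation comes from g: the factor f alone need not vanish in expectation.
assert canonical_expectation(f, sites, 0) == Fraction(-1, 9)
```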

2.2 Approximate SHE

Dynamics of the microscopic Cole-Hopf transform will be driven by the following heat operators.

Definition 2.6

Let \(\mathfrak {P}^{N}\) be the heat kernel with \(\mathfrak {P}_{S,S,x,y}^{N} = \textbf{1}_{x=y}\) solving the semi-discrete parabolic equation below where the operator \(\mathscr {L}^{!!}\), which is also defined below, acts on the backwards spatial variable of the heat kernel:

The \(\mathscr {L}^{!!}\) operator is the discrete-type Laplacian defined below in which \(\widetilde{\alpha }_{k} = \alpha _{k} + \mathscr {O}(N^{-1})\) are defined in Lemma 1.2 of [10]:

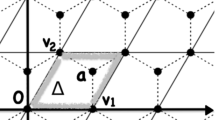

We introduced \(\Delta _{k}\varphi _{x} \overset{\bullet }{=} \varphi _{x+k}+\varphi _{x-k}-2\varphi _{x}\) and \(\Delta _{k}^{!!} \overset{\bullet }{=} N^{2}\Delta _{k}\) given any function \(\varphi : {\mathbb {Z}}\rightarrow {\mathbb {R}}\). Additionally for \((T,x) \in {\mathbb {R}}_{\geqslant 0}\times {\mathbb {Z}}\), we define space-time and spatial heat/convolution-operators acting on space-time test functions \(\varphi : {\mathbb {R}}_{\geqslant 0} \times {\mathbb {Z}}\rightarrow {\mathbb {R}}\):

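We occasionally use convexity of the spatial heat operator. Since \(\mathfrak {P}^{N}\) is a probability kernel in the spatial variable, Jensen's inequality (together with the triangle inequality for the norm) gives, for any norm \(\Vert \cdot \Vert \) and \(p\in {\mathbb {R}}_{\geqslant 1}\), \(\Vert \mathfrak {P}_{T,x}^{N,\textbf{X}}\varphi \Vert ^{p} \leqslant \mathfrak {P}_{T,x}^{N}\Vert \varphi \Vert ^{p}\).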
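The convexity bound is Jensen's inequality for a probability kernel. The following sketch checks it numerically. The only assumption it uses about the heat kernel is that its weights are nonnegative and sum to 1, so random probability weights stand in for it, and it uses the absolute value as the norm.

```python
import random

def apply_kernel(weights, values):
    """Average `values` against nonnegative `weights` summing to 1."""
    return sum(w * v for w, v in zip(weights, values))

random.seed(0)
for _ in range(1000):
    n = random.randint(1, 10)
    raw = [random.random() + 1e-6 for _ in range(n)]
    total = sum(raw)
    weights = [r / total for r in raw]          # stands in for the heat kernel
    phi = [random.uniform(-5.0, 5.0) for _ in range(n)]
    p = random.uniform(1.0, 4.0)
    lhs = abs(apply_kernel(weights, phi)) ** p
    rhs = apply_kernel(weights, [abs(v) ** p for v in phi])
    assert lhs <= rhs + 1e-9                    # Jensen: |P phi|^p <= P |phi|^p
```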

The main result of this section is the following SDE-type equation for the microscopic Cole-Hopf transform. By SDE-type, we mean a stochastic equation driven by the discrete noise \(\xi ^{N}\). The following borrows largely from Sect. 2 of [10].

Proposition 2.7

Consider \(\varepsilon _{X,1} \in {\mathbb {R}}_{>0}\) arbitrarily small but universal and \(\beta _{X} \overset{\bullet }{=} \frac{1}{3}+\varepsilon _{X,1}\). We have, with notation defined after,

The first \(\Phi ^{N,2}\)-term contains the "pseudo-gradient content" in the \(\mathfrak {Z}^{N}\)-equation. We use notation to be defined after:

Meanwhile the \(\Phi ^{N,3}\)-term contains the "weakly-vanishing content" in the \(\mathfrak {Z}^{N}\)-equation for which we also use notation defined after:

-

The martingale integrator \(\textrm{d}\xi ^{N}\) is defined in (2.4) of [10]; its definition generalizes in a straightforward fashion to any maximal jump-length.

-

We define a spatial-average "operator" where \(\mathfrak {g}_{T,x} = \mathfrak {g}_{0,0}(\tau _{-x}\eta _{T})\) is a pseudo-gradient whose support has size at most \(5\mathfrak {m}\):

$$\begin{aligned} \mathscr {A}_{N^{\beta _{X}}}^{\textbf{X},-}(\mathfrak {g}_{T,x}) \ {}&\overset{\bullet }{=} \ \widetilde{{{\sum }}}_{\mathfrak {l}=1,\ldots ,N^{\beta _{X}}}\tau _{-7\mathfrak {l}\mathfrak {m}}\mathfrak {g}_{T,x}. \end{aligned}\tag{2.8}$$

The summands in the \(\mathscr {A}^{\textbf{X},-}(\mathfrak {g})\)-term have disjoint supports, since the spatial shifts are by multiples of \(7\mathfrak {m}\) while the support of \(\mathfrak {g}_{0,0}\) has size at most \(5\mathfrak {m}\). The superscript "\(\textbf{X},-\)" emphasizes that this is a spatial average in the negative spatial direction. The support of \(\mathscr {A}^{\textbf{X},-}(\mathfrak {g})\) has size \(\mathscr {O}(N^{\beta _{X}})\).

-

The terms \(\widetilde{\mathfrak {g}}^{\mathfrak {l}}_{T,x} = \widetilde{\mathfrak {g}}^{\mathfrak {l}}_{0,0}(\tau _{-x}\eta _{T})\) have support of length \(\mathscr {O}(N^{\beta _{X}})\). Each \(\widetilde{\mathfrak {g}}^{\mathfrak {l}}\)-term admits a pseudo-gradient factor.

-

The terms \(\mathfrak {b}^{\mathfrak {l}}\) are uniformly bounded. The term \(\mathfrak {w}\) is the sum of a uniformly bounded number of weakly vanishing terms.

-

The terms \(\mathfrak {w}^{k}\) for \(|k|\leqslant 2\mathfrak {m}\) are weakly vanishing, and the deterministic coefficients \(c_{k} \in {\mathbb {R}}\) are uniformly bounded.

-

Provided \(k \in {\mathbb {Z}}\), define the discrete gradient \(\nabla _{k}\varphi _{x} \overset{\bullet }{=} \varphi _{x+k} - \varphi _{x}\) for any \(\varphi : {\mathbb {Z}}\rightarrow {\mathbb {R}}\) and its continuum rescaling \(\nabla _{k}^{!} \overset{\bullet }{=} N\nabla _{k}\).
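The discrete operators above can be checked directly. The sketch below implements \(\nabla_k\) and \(\Delta_k\) on functions \(\mathbb{Z}\to\mathbb{R}\) and checks the identity \(\Delta_k=\nabla_k+\nabla_{-k}\). On the sampled profile \(\varphi_x=(x/N)^2\), it also checks that \(\nabla_k^{!}=N\nabla_k\) and \(\Delta_k^{!!}=N^2\Delta_k\) act like \(k\partial_X\) and \(k^2\partial_X^2\). The function names and test profiles are hypothetical.

```python
from fractions import Fraction

def grad(phi, k):
    """Discrete gradient: (nabla_k phi)(x) = phi(x + k) - phi(x)."""
    return lambda x: phi(x + k) - phi(x)

def laplacian(phi, k):
    """Discrete Laplacian: (Delta_k phi)(x) = phi(x + k) + phi(x - k) - 2 phi(x)."""
    return lambda x: phi(x + k) + phi(x - k) - 2 * phi(x)

# Identity Delta_k = nabla_k + nabla_{-k}, checked on an integer-valued function.
phi = lambda x: x ** 3 - 2 * x
for k in range(1, 6):
    for x in range(-20, 21):
        assert laplacian(phi, k)(x) == grad(phi, k)(x) + grad(phi, -k)(x)

# Rescaled operators on the sampled profile phi_x = (x / N)^2, i.e. Phi(X) = X^2.
N = 100
profile = lambda x: Fraction(x, N) ** 2
for k in range(1, 6):
    for x in range(-50, 51):
        X = Fraction(x, N)
        # N * nabla_k = k * Phi'(X) plus an O(k^2 / N) correction
        assert N * grad(profile, k)(x) == k * 2 * X + Fraction(k * k, N)
        # N^2 * Delta_k = k^2 * Phi''(X) exactly for a quadratic profile
        assert N ** 2 * laplacian(profile, k)(x) == k * k * 2
```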

Remark 2.8

The multiples \(5\mathfrak {m}\) and \(7\mathfrak {m}\) can be replaced with any uniformly bounded multiples without changing our analysis of (2.5a). All we need is that \(\mathscr {A}^{\textbf{X},-}\) in Proposition 2.7 is an average of pseudo-gradients with disjoint supports.
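The disjoint-support requirement only needs the spacing of the shifts to be at least the size of the support. The sketch below places a hypothetical support of size \(5\mathfrak{m}\) at \(\{0,\ldots,5\mathfrak{m}-1\}\), translates it by \(-7\mathfrak{l}\mathfrak{m}\) as in (2.8), and confirms three things: the translates are pairwise disjoint, a spacing of exactly \(5\mathfrak{m}\) still works, and a spacing below the support size produces overlaps.

```python
def shifted_supports(base_support, step, n_terms):
    """Supports of the translates of a functional by -step * l, for l = 1, ..., n_terms."""
    return [{z - step * l for z in base_support} for l in range(1, n_terms + 1)]

def pairwise_disjoint(sets):
    seen = set()
    for s in sets:
        if seen & s:
            return False
        seen |= s
    return True

for m in range(1, 6):
    base = set(range(5 * m))                  # hypothetical support of size 5m
    supports = shifted_supports(base, 7 * m, 50)
    assert pairwise_disjoint(supports)        # spacing 7m: disjoint supports
    assert len(set().union(*supports)) == 5 * m * 50
    assert pairwise_disjoint(shifted_supports(base, 5 * m, 50))          # spacing = support size
    assert not pairwise_disjoint(shifted_supports(base, 5 * m - 1, 50))  # spacing too small
```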

Remark 2.9

With respect to any canonical ensemble, pseudo-gradients are fluctuations, so averaging them in \(\mathscr {A}^{\textbf{X},-}\) above provides better cancellation as the length-scale of averaging increases. The error in replacing the pseudo-gradient \(\mathfrak {g}\) by a spatial average grows with the length-scale of spatial averaging; this is where the second term in \(\Phi ^{N,2}\) comes from, as we will see in the proof of Proposition 2.7. Lastly, cancellation at canonical ensembles will eventually give cancellation out of equilibrium.

To prove Proposition 2.7 we need an elementary calculation relating asymmetry to "specialized" speeds in Assumption 1.6.

Lemma 2.10

We have the exact identity \({{\sum }}_{k=1}^{\mathfrak {m}} k \alpha _{k}\gamma _{k} = {{\sum }}_{k=1}^{\mathfrak {m}}k\alpha _{k}\bar{\gamma }_{k}\).

Proof of Proposition 2.7

The SDE-type equation (2.5a) is derived almost entirely from Proposition 2.3 of [10], together with the derivation of the \(\mathfrak {Z}^{N}\)-dynamics in [10] that precedes Proposition 2.3. We provide only the extra ingredients, namely additional spatial averaging and a reorganization via Taylor expansion of the exponential formula for the microscopic Cole-Hopf transform, similar to the proof of Proposition 2.3 in [10]. We give these additional ingredients below, starting with notation.

-

Since we only shift by multiples of \(\mathfrak {m}\), we define \(\mathfrak {f}_{\mathfrak {k}}^{\mathfrak {m}}\overset{\bullet }{=}\tau _{\mathfrak {k}\mathfrak {m}}\mathfrak {f}_{T,x}\) for any \(\mathfrak {k}\in {\mathbb {Z}}\) and \(\mathfrak {f}:\Omega _{{\mathbb {Z}}}\rightarrow {\mathbb {R}}\). We also define \(\nabla _{\mathfrak {k}}^{\mathfrak {m}}\overset{\bullet }{=}\nabla _{\mathfrak {k}\mathfrak {m}}\).

-

We also declare that all functionals, including the microscopic Cole-Hopf transform, are evaluated at (T, x).

Proposition 2.3 of [10] gives the desired result but with cubic nonlinearities in place of the \((\bar{\mathfrak {Z}}^{N})^{-1}\Phi ^{N,2}\)-terms, up to one further difference, concerning quadratic polynomials in spins, that we address at the end of this proof. The cubic nonlinearities have support contained in \(\llbracket -\mathfrak {m},\mathfrak {m}\rrbracket \subseteq {\mathbb {Z}}\). We will replace this cubic nonlinearity with its spatial average on the length-scale \(N^{\beta _{X}}\). The error terms, which come from Taylor expansion, are the remaining terms in \(\Phi ^{N,2}\) together with additional weakly vanishing terms.

The cubic nonlinearities in Proposition 2.3 of [10] are pseudo-gradients since canonical ensembles are permutation-invariant. If \(\mathfrak {c}\) denotes the contribution of these nonlinearities, an elementary discrete product (Leibniz) rule gives the following identities, in which \(\widetilde{\mathfrak {c}}\overset{\bullet }{=}\mathfrak {c}_{-2}^{\mathfrak {m}}\); we explain them further afterwards:

The second identity (2.10) follows by applying the same discrete product/Leibniz rule used to obtain (2.9) to the first term on the RHS of (2.9), with \(-2\) replaced by \(-7\mathfrak {l}\). We now match each term in (2.9) and (2.10) to either a weakly vanishing term contributing to \(\Phi ^{N,3}\) or one of the terms in \(\Phi ^{N,2}\), at least after averaging (2.10) over \(\mathfrak {l}\in \llbracket 1,N^{\beta _{X}}\rrbracket \).
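In its generic form, the discrete product rule reads \(\nabla_k(fg)_x = f_x\nabla_k g_x + g_x\nabla_k f_x + \nabla_k f_x\nabla_k g_x = f_{x+k}\nabla_k g_x + g_x\nabla_k f_x\). The sketch below checks only this generic identity, on arbitrary integer-valued test functions (a hypothetical choice). The specific grouping of terms into (2.9) and (2.10) is as described in the text.

```python
def grad(phi, k):
    """Discrete gradient: (nabla_k phi)(x) = phi(x + k) - phi(x)."""
    return lambda x: phi(x + k) - phi(x)

f = lambda x: (3 * x * x + 1) % 7 - 3     # arbitrary integer-valued test functions
g = lambda x: (5 * x + 2) % 11 - 5
fg = lambda x: f(x) * g(x)

for k in (-14, -7, -2, 1, 2, 7):
    for x in range(-30, 31):
        symmetric_form = (f(x) * grad(g, k)(x) + g(x) * grad(f, k)(x)
                          + grad(f, k)(x) * grad(g, k)(x))
        shifted_form = f(x + k) * grad(g, k)(x) + g(x) * grad(f, k)(x)
        assert grad(fg, k)(x) == symmetric_form == shifted_form
```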

-

Up to uniformly bounded error terms of order \(N^{-1/2}\), the second term on the RHS of (2.9) is the product of linear polynomials in the spins and the cubic polynomial \(\mathfrak {c}\), all supported in a neighborhood of size at most \(5\mathfrak {m}\). We emphasize there is no factor \(N^{1/2}\) in this product. This product also has no constant term, because multiplying the cubic polynomial by a linear polynomial cannot cancel spin-factors down to a constant. Being a polynomial without constant term in spins supported in a set of size at most \(5\mathfrak {m}\), it is weakly vanishing, since any polynomial of spins without constant term vanishes in expectation with respect to \(\mu _{0,{\mathbb {Z}}}\).

-

The final term from the RHS of (2.9) has the form of a gradient term in \(\Phi ^{N,3}\) for \(\mathfrak {k}=-2\mathfrak {m}\) since it is an unscaled gradient acting on the product of a uniformly bounded functional and \(\mathfrak {Z}^{N}\) which is then multiplied by the \(N^{1/2}\)-factor.

-

We move to the first three terms in (2.10). Defining \(\mathfrak {b}^{\mathfrak {l}} \overset{\bullet }{=} \widetilde{\mathfrak {c}}\) turns the third term in (2.10) into a gradient-term in \(\Phi ^{N,2}\).

-

As \(\mathfrak {c}\) is a pseudo-gradient so is \(\widetilde{\mathfrak {c}}=\mathfrak {c}_{-2}^{\mathfrak {m}}\). Thus the first term in (2.10) is the first term in \(\Phi ^{N,2}\) after we average over \(\mathfrak {l}\in \llbracket 1,N^{\beta _{X}}\rrbracket \).

-

Analysis of the cubic nonlinearity \(\mathfrak {c}\) in Proposition 2.3 of [10] now amounts to computing the second term in (2.10). For this we compute the gradient of the microscopic Cole-Hopf transform by Taylor expansion of its exponential formula in terms of \(\eta \)-spins. Taylor expansion in this fashion is done in the proof of Proposition 2.3 in [10] for example. The result of such Taylor expansion gives a representation of the second term in (2.10) that we describe as follows. First we emphasize the support of the \(\widetilde{\mathfrak {c}}_{-7\mathfrak {l}}^{\mathfrak {m}}\)-factor in the second term in (2.10) is contained strictly to the left of \(x-7\mathfrak {l}\mathfrak {m}\). Taylor expansion gives

$$\begin{aligned} N^{\frac{1}{2}}\widetilde{\mathfrak {c}}_{-7\mathfrak {l}}^{\mathfrak {m}}\left( \nabla _{-7\mathfrak {l}}^{\mathfrak {m}}\mathfrak {Z}^{N}\right) \ {}&= \ {\sum }_{\mathfrak {k}=1}^{\infty } N^{-\frac{1}{2}\mathfrak {k}+\frac{1}{2}}\lambda ^{\mathfrak {k}}(\mathfrak {k}!)^{-1}\left( \widetilde{\mathfrak {c}}_{-7\mathfrak {l}}^{\mathfrak {m}}\left( \nabla _{-7\mathfrak {l}}^{\mathfrak {m}}\mathfrak {h}^{N}\right) ^{\mathfrak {k}}\right) \end{aligned}\tag{2.11}$$

$$\begin{aligned}&= \ {\sum }_{\mathfrak {k}=1}^{\infty } N^{-\frac{1}{2}\mathfrak {k}+\beta _{X}\mathfrak {k}+\frac{1}{2}}\lambda ^{\mathfrak {k}}(\mathfrak {k}!)^{-1}\left( \widetilde{\mathfrak {c}}_{-7\mathfrak {l}}^{\mathfrak {m}}\left( N^{-\beta _{X}}\nabla _{-7\mathfrak {l}}^{\mathfrak {m}}\mathfrak {h}^{N}\right) ^{\mathfrak {k}}\right) . \end{aligned}\tag{2.12}$$

Equation (2.12) may be interpreted as the Taylor series for \(\mathfrak {Z}^{N}\), recalling that \(\mathfrak {Z}^{N}\) is the exponential of \(\mathfrak {h}^{N}\). We now observe that the \(\mathfrak {h}^{N}\)-gradient is a linear polynomial in \(\eta \)-spins contained in a neighborhood of length at most \(\kappa _{\mathfrak {m}}N^{\beta _{X}}\) strictly to the right of \(x-7\mathfrak {l}\mathfrak {m}\), and thus disjoint from the support of \(\widetilde{\mathfrak {c}}^{\mathfrak {m}}_{-7\mathfrak {l}}\). Consequently, for each sum-index \(\mathfrak {k}\in {\mathbb {Z}}_{\geqslant 1}\), the corresponding summand in (2.12) is a functional with pseudo-gradient factor \(\widetilde{\mathfrak {c}}^{\mathfrak {m}}_{-7\mathfrak {l}}\). Indeed, the remaining functional-factor \(N^{-\beta _{X}}\nabla _{-7\mathfrak {l}}^{\mathfrak {m}}\mathfrak {h}^{N}\) is uniformly bounded if \(|\mathfrak {l}|\lesssim N^{\beta _{X}}\), since the \(\eta \)-spins are uniformly bounded.
Additionally, the prefactor in the \(\mathfrak {k}\)-summand is at most \(N^{\beta _{X}}N^{-(\mathfrak {k}-1)/2+(\mathfrak {k}-1)\beta _{X}}\) times uniformly bounded factors. Thus the infinite series (2.12) is summable since \(\beta _{X}<\frac{1}{2}\), so it is a functional with pseudo-gradient factor \(\widetilde{\mathfrak {c}}_{-7\mathfrak {l}}^{\mathfrak {m}}\), scaled by \(N^{\beta _{X}}\). After averaging (2.12) over \(\mathfrak {l}\in \llbracket 1,N^{\beta _{X}}\rrbracket \), we may match the second term in (2.10) to the second term in \(\Phi ^{N,2}\). Alternatively, instead of absorbing the entire infinite series into the second term in \(\Phi ^{N,2}\), we may truncate it at \(\mathfrak {k}=4\). The summands with \(\mathfrak {k}\geqslant 5\) have prefactors at most \(N^{5\beta _{X}-2}=N^{-1/3+5\varepsilon _{X,1}}\) times uniformly bounded factors, so the truncation error vanishes uniformly in the large-N limit.
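The exponent bookkeeping for (2.12) can be verified with exact rational arithmetic. The sketch below checks three things: that the \(\mathfrak{k}\)-th prefactor exponent \(-\mathfrak{k}/2+\beta_X\mathfrak{k}+1/2\) equals \(\beta_X+(\mathfrak{k}-1)(\beta_X-1/2)\), that successive prefactors shrink by \(N^{\beta_X-1/2}\), and that the first discarded term after truncation at \(\mathfrak{k}=4\) has a negative exponent. The value of \(\varepsilon_{X,1}\) is a hypothetical choice.

```python
from fractions import Fraction

def prefactor_exponent(k, beta):
    """Exponent of N in the k-th prefactor N^{-k/2 + beta k + 1/2} of (2.12)."""
    return Fraction(-k, 2) + beta * k + Fraction(1, 2)

eps_X1 = Fraction(1, 100)            # hypothetical value of eps_{X,1}
beta_X = Fraction(1, 3) + eps_X1

for k in range(1, 50):
    # N^{beta_X} N^{-(k-1)/2 + (k-1) beta_X}, as claimed in the text
    assert prefactor_exponent(k, beta_X) == beta_X + (k - 1) * (beta_X - Fraction(1, 2))

# Consecutive prefactors differ by N^{beta_X - 1/2}, which is small since beta_X < 1/2.
assert beta_X - Fraction(1, 2) < 0

# Truncating at k = 4: the largest discarded prefactor has exponent -1/3 + 5 eps_X1 < 0.
tail_exponent = prefactor_exponent(5, beta_X)
assert tail_exponent == Fraction(-1, 3) + 5 * eps_X1
assert tail_exponent < 0
```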

We now return to the last difference between Proposition 2.3 of [10] and Proposition 2.7, concerning quadratic polynomials in \(\eta \)-spins, which we noted at the beginning of this proof. In Proposition 2.3 of [10] this quadratic polynomial was absorbed as a weakly vanishing term. However, because we only impose the weaker Assumption 1.6, we must treat it differently here. First we introduce some notation to define this quadratic polynomial.

-

Define the set \(\mathfrak {I}_{x,k}\overset{\bullet }{=}\{(z_{1},z_{2})\in {\mathbb {Z}}^{2}: z_{1}\leqslant x<z_{2}, \ z_{2}-z_{1}=k\}\) of length-k neighborhoods of x, which has exactly k elements, and define \(\alpha _{k}\widetilde{\gamma }_{k}\overset{\bullet }{=}\alpha _{k}\gamma _{k}-\alpha _{k}\bar{\gamma }_{k} = \mathscr {O}(N^{-1/2})\).

This quadratic polynomial is the sum, over \(k\in \llbracket 1,\mathfrak {m}\rrbracket \) and over \(\mathfrak {I}_{x,k}\), of the terms below:

Observe that the \(\Phi _{1;}\)-term is a pseudo-gradient because \(\eta \)-spins are exchangeable with respect to any canonical ensemble; this was also the justification for the cubic nonlinearity in Proposition 2.3 of [10] being a pseudo-gradient. In particular, after we sum over the two finite sets \(k\in \llbracket 1,\mathfrak {m}\rrbracket \) and \(\mathfrak {I}_{x,k}\), we get another pseudo-gradient, since pseudo-gradients are closed under uniformly bounded linear combinations. Thus we may apply to the sum of \(\Phi _{1;}\)-terms the same decomposition and expansions that we used for the cubic nonlinearity in Proposition 2.3 of [10]. We emphasize that Assumption 1.6 implies \(\Phi _{1;}\) is of order \(N^{1/2}\), like the aforementioned cubic nonlinearity. Meanwhile, the \(\Phi _{2;k}\)-terms are all independent of the \(\mathfrak {I}_{x,k}\)-variables. Therefore, since \(\mathfrak {I}_{x,k}\) has exactly k elements, summing over \(\mathfrak {I}_{x,k}\) produces from \(\Phi _{2;k}\) the factor \(k\alpha _{k}\widetilde{\gamma }_{k}\) times a k-independent factor. Summing over \(k\in \llbracket 1,\mathfrak {m}\rrbracket \) and applying Lemma 2.10, which gives \({{\sum }}_{k=1}^{\mathfrak {m}}k\alpha _{k}\widetilde{\gamma }_{k}=0\), shows that the \(\Phi _{2;k}\)-terms on the far RHS of (2.13) contribute zero to the \(\mathfrak {Z}^{N}\)-stochastic equation. \(\square \)
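The counting step behind the factor \(k\alpha_k\widetilde{\gamma}_k\) is that \(\mathfrak{I}_{x,k}\), read as a set of pairs \((z_1,z_2)\), has exactly \(k\) elements. The sketch below enumerates \(\mathfrak{I}_{x,k}\) directly and by brute force, and then checks that summing a pair-independent coefficient over \(\mathfrak{I}_{x,k}\) and \(k\) gives \(\sum_k k c_k\). The coefficient values are hypothetical. The final cancellation then needs Lemma 2.10, which this code does not verify.

```python
def neighborhood_pairs(x, k):
    """I_{x,k}: pairs (z1, z2) of integers with z1 <= x < z2 and z2 - z1 = k."""
    return [(z1, z1 + k) for z1 in range(x - k + 1, x + 1)]

def brute_force_pairs(x, k):
    window = range(x - 3 * k, x + 3 * k)
    return [(z1, z2) for z1 in window for z2 in window
            if z1 <= x < z2 and z2 - z1 == k]

for k in range(1, 9):
    for x in range(-5, 6):
        pairs = neighborhood_pairs(x, k)
        assert pairs == brute_force_pairs(x, k)
        assert len(pairs) == k   # a pair-independent term picks up a factor k

# Summing a pair-independent coefficient c_k over I_{x,k} and then over k gives sum_k k c_k.
coefficients = {1: 3, 2: -1, 3: 4}   # hypothetical stand-ins for alpha_k tilde-gamma_k
x = 0
total = sum(coefficients[k] for k in coefficients for _ in neighborhood_pairs(x, k))
assert total == sum(k * c for k, c in coefficients.items())
```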

Proof of Lemma 2.10

By definition of \(\lambda \in {\mathbb {R}}\), it suffices to prove \({\sum }_{k = 1}^{\mathfrak {m}} k \alpha _{k} \bar{\gamma }_{k} = \lambda {\sum }_{k = 1}^{\mathfrak {m}} k^{2} \alpha _{k}\). By definition of \(\bar{\gamma }_{k}\),

We rewrite both double sums on the far RHS by collecting the coefficient of each \(\alpha _{k}\) with \(k \in {\mathbb {Z}}_{>0}\). In the first double sum, the term \(k \alpha _{k}\) appears exactly k times for each \(k \in {\mathbb {Z}}_{>0}\). In the second double sum, we obtain one copy of \(j\alpha _{k}\) for each \(j \in \llbracket 1, k \rrbracket \). Combining these two observations with elementary calculations gives

Combining the previous two displays completes the proof. \(\square \)

2.3 Strategy

We now discuss the "proof" of Theorem 1.7. The results presented here are not exactly those we prove in this paper. For technical reasons, which we discuss at the end of this strategy discussion, we instead prove slightly adapted quantitative versions of them. For this reason, all results discussed here are "pseudo-results".

The proof of Theorem 1.7 is built on the following key result that effectively says we can forget about the \(\Phi ^{N,2}\)-contribution in the stochastic equation for \(\mathfrak {Z}^{N}\) from Proposition 2.7. We discuss its importance and implications afterwards.

Pseudo-Proposition 2.11

Define a norm \(\Vert \varphi \Vert _{\mathfrak {t};\mathbb {X}}\overset{\bullet }{=}\sup _{0\leqslant \mathfrak {s}\leqslant \mathfrak {t}}\sup _{x\in \mathbb {X}}|\varphi _{\mathfrak {s},x}|\) for any \(\mathfrak {t}\in {\mathbb {R}}_{\geqslant 0}\) and \(\mathbb {X}\subseteq {\mathbb {R}}\). We get the convergence in probability \(\Vert \mathfrak {P}^{N}(\Phi ^{N,2})\Vert _{1;{\mathbb {Z}}} \rightarrow _{N\rightarrow \infty } 0\) where \(\Phi ^{N,2}\) is defined in the statement of Proposition 2.7.

By a standard linear argument, it suffices to analyze the process \(\mathfrak {Y}^{N}\) that solves the same stochastic equation as \(\mathfrak {Z}^{N}\) from Proposition 2.7, but without the \(\Phi ^{N,2}\)-term, with every factor of \(\mathfrak {Z}^{N}\) replaced by \(\mathfrak {Y}^{N}\), and with the same initial data. More precisely, it remains to show that \(\mathfrak {Y}^{N}\) converges to the solution of the SHE. At this point, apart from the exact microscopic version of the SHE given by the first two terms in the stochastic equation for \(\mathfrak {Y}^{N}\), only weakly vanishing data remain. Thus the SHE scaling limit for \(\mathfrak {Y}^{N}\) can be computed using the same ideas from hydrodynamic limits that were used in the proof of Theorem 1.1 in [10]. There is a caveat to this that is ultimately negligible; see the end of the proof of Proposition 8.3.

We discuss elements of the would-be-proof of Pseudo-Proposition 2.11 below. For simplicity, let us assume that the gradient term in \(\Phi ^{N,2}\) is equal to 0; to control such a gradient term, we use a simple summation-by-parts argument and apply regularity estimates for the heat kernel in the heat operator \(\mathfrak {P}^{N}\), as in [10]. Let us also assume that the order-\(N^{\beta _{X}}\) term in \(\Phi ^{N,2}\) in Proposition 2.7 is equal to 0. It turns out that every step we require to control this \(N^{\beta _{X}}\)-term is also needed to control the leading-order \(N^{1/2}\)-term in \(\Phi ^{N,2}\) anyway. The first step to control the order-\(N^{1/2}\) term in \(\Phi ^{N,2}\) is to introduce a cutoff.
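Summation by parts moves a discrete gradient from the test function onto the kernel: \(\sum_x P_x\nabla_k\varphi_x=\sum_x(\nabla_{-k}P)_x\varphi_x\). After this step, heat kernel regularity estimates apply to \(\nabla_{-k}P\). The sketch below checks the identity on a discrete torus, which avoids boundary terms; on \(\mathbb{Z}\) the same identity holds for finitely supported sequences. This setting is an assumption of the sketch, and random integer data stand in for the heat kernel.

```python
import random

def grad_periodic(a, k):
    """(nabla_k a)_x = a_{x+k} - a_x on the discrete torus Z / nZ."""
    n = len(a)
    return [a[(x + k) % n] - a[x] for x in range(n)]

random.seed(2)
for _ in range(200):
    n = random.randint(1, 40)
    k = random.randint(-10, 10)
    kernel = [random.randint(-9, 9) for _ in range(n)]   # stands in for the heat kernel
    phi = [random.randint(-9, 9) for _ in range(n)]
    lhs = sum(p * d for p, d in zip(kernel, grad_periodic(phi, k)))
    rhs = sum(d * v for d, v in zip(grad_periodic(kernel, -k), phi))
    assert lhs == rhs   # sum_x P_x nabla_k phi_x = sum_x (nabla_{-k} P)_x phi_x
```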

Pseudo-Proposition 2.12

Define the cutoff spatial average \(\mathscr {C}^{\textbf{X},-}\) to be equal to \( \mathscr {A}^{\textbf{X},-}_{N^{\beta _{X}}}(\mathfrak {g})\) from \(\Phi ^{N,2}\) in Proposition 2.7 on the event that this spatial average is at most \(N^{\varepsilon -\beta _{X}/2}\) in absolute value, and define \(\mathscr {C}^{\textbf{X},-}\) to be 0 on the complementary event. We have, in probability,

It turns out that such a step will be unnecessary for the order \(N^{\beta _{X}}\)-term in \(\Phi ^{N,2}\) from Proposition 2.7 just because it is lower-order. We will explain both the would-be-proof and utility of the previous Pseudo-Proposition 2.12 in future subsections. The key second step towards the would-be-proof of Pseudo-Proposition 2.11 is the following replacement-by-time-average.

Pseudo-Proposition 2.13

Given \(\mathfrak {t}\in {\mathbb {R}}_{>0}\) let us define \(\mathscr {A}^{\mathfrak {t};\textbf{T},+}_{S,y} \overset{\bullet }{=} \mathfrak {t}^{-1}\int _{0}^{\mathfrak {t}} \mathscr {C}^{\textbf{X},-}_{S+\mathfrak {r},y} \textrm{d}\mathfrak {r}\) and for \(\mathfrak {t}=0\) we instead define \(\mathscr {A}^{\mathfrak {t};\textbf{T},+} = \mathscr {C}^{\textbf{X},-}\). In words \(\mathscr {A}^{\mathfrak {t};\textbf{T},+}\) is the time-average of \(\mathscr {C}^{\textbf{X},-}\) with respect to time-scale \(\mathfrak {t}\in {\mathbb {R}}_{\geqslant 0}\) in the "positive time-direction".

Defining \(\mathfrak {t}_{\max }\overset{\bullet }{=}N^{-1}\), we have the convergence in probability

The conclusion of Pseudo-Proposition 2.13 is replacement of the spatial-average-with-cutoff \(\mathscr {C}^{\textbf{X},-}\) defined in the statement of Pseudo-Proposition 2.12 with its time-average on time-scale \(\mathfrak {t}_{\max }=N^{-1}\). To take advantage of such replacement we would need to estimate the time-average \(\mathscr {A}^{\mathfrak {t}_{\max };\textbf{T},+}\). Ultimately, we would get the following.

Pseudo-Proposition 2.14

Admit the setting of Pseudo-Proposition 2.13. We have the convergence in probability

Pseudo-Proposition 2.11 would then follow from the triangle inequality for the norm \(\Vert \cdot \Vert _{1;{\mathbb {Z}}}\). We now explain the would-be-proofs of Pseudo-Proposition 2.12, Pseudo-Proposition 2.13, and Pseudo-Proposition 2.14.

2.4 Strategy-local equilibrium

Before we start the would-be-proofs for Pseudo-Proposition 2.12, Pseudo-Proposition 2.13, and Pseudo-Proposition 2.14, we first introduce key invariant measure calculations. The first is a large-deviations-type estimate which we establish in Lemma 3.13 and Corollary 3.14, and the second is the Kipnis-Varadhan "Brownian" inequality which we establish in Lemma 3.10, Lemma 3.11, and Lemma 3.12.

Lemma 2.15

Suppose the particle system starts at any "canonical ensemble" invariant measure so that the measure on particle configurations on any finite subset of \({\mathbb {Z}}\) is a convex combination of canonical ensembles in Definition 3.1 on that subset. We have

Lemma 2.16

Suppose the particle system starts at a canonical ensemble invariant measure as in Lemma 2.15. For any \(\mathfrak {t}\in {\mathbb {R}}_{\geqslant 0}\),

The proofs of Lemma 2.15 and Lemma 2.16 depend heavily on invariant measures. However, we do not need these bounds pointwise in space-time, but only after the law of the particle system is averaged against the heat kernel in space-time. This is because of the "heat-operator-integrated" structure of the proposed estimates in Pseudo-Proposition 2.12, Pseudo-Proposition 2.13, and Pseudo-Proposition 2.14. By [14], in this averaged sense the law of the particle system is close to a convex combination of canonical ensemble invariant measures, at least on mesoscopic space-time scales. The statistics addressed in Lemma 2.15 and Lemma 2.16 are mesoscopic. Thus we expect Lemma 2.15 and Lemma 2.16 to still hold beyond canonical ensemble initial measures once the absolute values therein are integrated against the heat kernel.

However, there are technical but important obstructions to actually implementing this local equilibrium idea, which concern how local equilibrium works in detail. We will refer to them in the would-be-proof of Pseudo-Proposition 2.14.

-

Following [14], we reduce to local equilibrium using entropy production estimates. Because the asymmetry in the model is not sufficiently weak, and because the exclusion process evolves on the infinite lattice \({\mathbb {Z}}\), establishing entropy production estimates in our setting is noticeably more difficult; Sect. 3 addresses this.

-

We reduce to local equilibrium by comparing the averaged law of the particle system to an invariant measure at the level of relative entropy, using, for example, the entropy inequality in Appendix 1.8 of [21], which connects relative entropy to large deviations. We also require the log-Sobolev inequality (LSI) for the symmetric simple exclusion process established in [25], which controls relative entropy by the Dirichlet form, in order to use the entropy production estimates from the first bullet point. The constant in the LSI of [25] is quadratic in the length-scale on which we want to reduce to local equilibrium. To reduce to local equilibrium on larger length-scales, we therefore need either better entropy production or better control, at the level of large deviations, of the functional we are estimating in expectation.
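In its standard form, the entropy inequality reads \(\textbf{E}_{\mu}f\leqslant\gamma^{-1}\big(H(\mu|\nu)+\log\textbf{E}_{\nu}e^{\gamma f}\big)\) for every \(\gamma>0\). The first term is handled by entropy production and the LSI. The second is a large-deviations quantity under the invariant measure \(\nu\). The normalization used in [21] may differ. The sketch below checks this standard form on random finite state spaces. It also checks that equality holds for the tilted measure \(\mu\propto\nu e^{\gamma f}\), which is why sharper large-deviations control under \(\nu\) translates into sharper bounds.

```python
import math
import random

def relative_entropy(mu, nu):
    """H(mu | nu) = sum_i mu_i log(mu_i / nu_i) for probability vectors with nu_i > 0."""
    return sum(m * math.log(m / n) for m, n in zip(mu, nu) if m > 0)

def entropy_bound(mu, nu, f, gamma):
    """Right-hand side gamma^{-1} (H(mu | nu) + log E_nu[exp(gamma f)])."""
    log_mgf = math.log(sum(n * math.exp(gamma * v) for n, v in zip(nu, f)))
    return (relative_entropy(mu, nu) + log_mgf) / gamma

def normalize(weights):
    total = sum(weights)
    return [w / total for w in weights]

random.seed(3)
for _ in range(500):
    size = random.randint(2, 12)
    nu = normalize([random.random() + 1e-3 for _ in range(size)])
    mu = normalize([random.random() + 1e-9 for _ in range(size)])
    f = [random.uniform(-1.0, 1.0) for _ in range(size)]
    gamma = random.uniform(0.1, 5.0)
    expectation = sum(m * v for m, v in zip(mu, f))
    assert expectation <= entropy_bound(mu, nu, f, gamma) + 1e-12

# Equality holds for the tilted measure mu proportional to nu * exp(gamma f).
tilted = normalize([n * math.exp(gamma * v) for n, v in zip(nu, f)])
assert math.isclose(sum(m * v for m, v in zip(tilted, f)),
                    entropy_bound(tilted, nu, f, gamma), rel_tol=1e-9, abs_tol=1e-12)
```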

2.5 Pseudo-Proposition 2.12

Assume, for simplicity, that Lemma 2.15 holds without expectation and that the \(\mathfrak {Z}^{N}\)-process is uniformly bounded, as it should resemble the SHE solution. For invariant measure initial data, Lemma 2.15 then leaves nothing to do. For general initial data, since the statistic estimated in Lemma 2.15 is mesoscopic, we can conclude by reducing to local equilibrium.
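A simplified model shows why the cutoff scale \(N^{\varepsilon-\beta_X/2}\) is natural. The sketch assumes product Bernoulli measure on \(\pm 1\) spins with mean zero, rather than canonical ensembles. In this model, \(\mathscr{A}^{\textbf{X},-}\) averages \(L\) translates, with disjoint supports, of \(\mathfrak{g}=\eta_1\eta_2\eta_3-\eta_{-1}\eta_{-2}\eta_{-3}\), and \(L\) plays the role of \(N^{\beta_X}\). Each translate equals \(2X\), where \(X\in\{-1,0,1\}\) has probabilities \(1/4,1/2,1/4\). The average is therefore \(2(B-L)/L\) with \(B\sim\mathrm{Binomial}(2L,1/2)\), of typical size \(\sqrt{2/L}\). The sketch computes exactly the probability that the average exceeds \(L^{-1/2+\delta}\), for a hypothetical \(\delta\), and shows that it decays quickly in \(L\).

```python
from math import comb

def tail_probability(L, threshold):
    """Exact P(|A_L| > threshold) for A_L = 2 (B - L) / L with B ~ Binomial(2L, 1/2)."""
    hits = sum(comb(2 * L, b) for b in range(2 * L + 1)
               if abs(2 * (b - L) / L) > threshold)
    return hits / 4 ** L

delta = 0.25                                  # hypothetical exponent gap
sizes = [16, 64, 256, 1024]
tails = [tail_probability(L, L ** (-0.5 + delta)) for L in sizes]
# The exceedance probability decays quickly as the averaging length L grows.
assert all(a > b for a, b in zip(tails, tails[1:]))
assert tails[-1] < 1e-3
```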

2.6 Pseudo-Proposition 2.13

We use the fast-variable/slow-variable idea from stochastic homogenization. It suggests that on the time-scale on which we want to replace \(\mathscr {C}^{\textbf{X},-}\) by its time-average, the heat kernel and the \(\mathfrak {Z}^{N}\)-process both exhibit little variation. The error from such a replacement-by-time-average is thus controlled by the time-regularity of the heat kernel times \(\mathscr {C}^{\textbf{X},-}\), plus the time-regularity of the \(\mathfrak {Z}^{N}\)-process times the size of \(\mathscr {C}^{\textbf{X},-}\). The heat kernel is smooth with a sufficiently integrable short-time singularity. On the other hand, we expect \(\mathfrak {Z}^{N}\) to have the time-regularity of the SHE solution, namely Hölder regularity of exponent \((\frac{1}{4})^{-}\). Thus the time-regularity of \(\mathfrak {Z}^{N}\) is worse than that of the heat kernel, so the dominant error is the time-regularity of \(\mathfrak {Z}^{N}\) times \(\mathscr {C}^{\textbf{X},-}\). Recalling the a priori bound for \(\mathscr {C}^{\textbf{X},-}\) built into its definition in Pseudo-Proposition 2.12, we therefore expect the resulting error on any time-scale \(\mathfrak {t}\in {\mathbb {R}}_{\geqslant 0}\) to be roughly of order:

Recall \(\beta _{X}=1/3+\varepsilon _{X,1}\) from the statement of Proposition 2.7. Pseudo-Proposition 2.13 proposes that we take \(\mathfrak {t}=\mathfrak {t}_{\max }=N^{-1}\), for which the RHS of the estimate (2.21) blows up. To remedy this, we appeal to the following multiscale procedure. Roughly, we first replace \(\mathscr {C}^{\textbf{X},-}\) by its time-average on a short time-scale, and then apply Lemma 2.16 and local equilibrium to boost the a priori bound for the time-averaged statistic. For simplicity, we first assume that Lemma 2.16 holds without expectation.

-

The bound (2.21) tells us we can pick the time-scale \(\mathfrak {t}=\mathfrak {t}_{1,1} = N^{-2+\varepsilon _{1}}\) for \(\varepsilon _{1}\in {\mathbb {R}}_{>0}\) arbitrarily small but still universal, so we first replace \(\mathscr {C}^{\textbf{X},-}\) with its time-average \(\mathscr {A}^{\mathfrak {t}_{1,1};\textbf{T},+}\) with respect to this preliminary time-scale \(\mathfrak {t}_{1,1}=N^{-2+\varepsilon _{1}}\).

-

Let us now replace the time-average \(\mathscr {A}^{\mathfrak {t}_{1,1};\textbf{T},+}\) on the time-scale \(\mathfrak {t}_{1,1}=N^{-2+\varepsilon _{1}}\) with the time-average \(\mathscr {A}^{\mathfrak {t}_{1,2};\textbf{T},+}\) on the time-scale \(\mathfrak {t}_{1,2}=N^{\varepsilon _{1}}\mathfrak {t}_{1,1}\) inside the heat operator, where it is integrated against both the heat kernel and the \(\mathfrak {Z}^{N}\)-process. To this end, we first observe that \(\mathscr {A}^{\mathfrak {t}_{1,2};\textbf{T},+}\) is essentially a time-average of \(\mathscr {A}^{\mathfrak {t}_{1,1};\textbf{T},+}\) on the larger time-scale \(\mathfrak {t}_{1,2}\). Therefore, the error is given by (2.21) as before, but with \(\mathscr {A}^{\mathfrak {t}_{1,1};\textbf{T},+}\) in place of \(\mathscr {C}^{\textbf{X},-}\) and with \(\mathfrak {t}=\mathfrak {t}_{1,2}=N^{\varepsilon _{1}}\mathfrak {t}_{1,1}\). Lemma 2.16 gives the following bound for the error in this second "replacement-by-time-average" step, which vanishes in the large-N limit:

$$\begin{aligned} N^{1/2}\Vert \mathscr {A}^{\mathfrak {t}_{1,1};\textbf{T},+}\Vert _{\infty }\mathfrak {t}_{1,2}^{1/4}&\lesssim \ N^{-1/2-\beta _{X}/2+\varepsilon }\mathfrak {t}_{1,1}^{-1/2}\mathfrak {t}_{1,2}^{1/4} \ \lesssim \ N^{-2/3+\varepsilon }\mathfrak {t}_{1,1}^{-1/4}\mathfrak {t}_{1,2}^{1/4} \cdot \mathfrak {t}_{1,1}^{-1/4} \\&\lesssim \ N^{-2/3+\varepsilon +\varepsilon _{1}}\mathfrak {t}_{1,1}^{-1/4} \\&\lesssim \ N^{-1/6+\varepsilon +\varepsilon _{1}}. \end{aligned}\tag{2.22}$$

-

The rest of the multiscale procedure then replaces \(\mathscr {A}^{\mathfrak {t}_{1,n};\textbf{T},+}\) with \(\mathscr {A}^{\mathfrak {t}_{1,n+1};\textbf{T},+}\), where \(\mathfrak {t}_{1,n+1}=N^{\varepsilon _{1}}\mathfrak {t}_{1,n}\), until we arrive at the final time-scale \(\mathfrak {t}_{\max }=N^{-1}\). Since \(\mathfrak {t}_{1,1}=N^{-2+\varepsilon _{1}}\), we have \(\mathfrak {t}_{1,n}=N^{-2+n\varepsilon _{1}}\), so we only require an \(\varepsilon _{1}\)-dependent number of steps (roughly \(\varepsilon _{1}^{-1}\)) to reach \(\mathfrak {t}_{\max }=N^{-1}\).
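The exponent bookkeeping in this multiscale procedure can be traced with exact rational arithmetic. The sketch rests on two assumptions read off from the text. First, one replacement-by-time-average step on time-scale \(\mathfrak{t}\) costs \(N^{1/2}\cdot(\text{a priori bound})\cdot\mathfrak{t}^{1/4}\), as in the first expression in (2.22). Second, Lemma 2.16, taken to hold without expectation, bounds a time-average on scale \(\mathfrak{t}\) by \(N^{-1-\beta_X/2+\varepsilon}\mathfrak{t}^{-1/2}\), consistent with the first inequality in (2.22). The values of \(\varepsilon_{X,1}\), \(\varepsilon\), and \(\varepsilon_1\) are hypothetical.

```python
from fractions import Fraction as F

eps_X1, eps, eps1 = F(1, 100), F(1, 100), F(1, 10)   # hypothetical small exponents
beta = F(1, 3) + eps_X1                               # beta_X from Proposition 2.7

def replacement_error_exponent(bound_exponent, t_exponent):
    """Exponent of N in N^{1/2} * (a priori bound) * t^{1/4}, where t = N^{t_exponent}."""
    return F(1, 2) + bound_exponent + F(1, 4) * t_exponent

def lemma_2_16_bound_exponent(t_exponent):
    """Assumed form N^{-1 - beta/2 + eps} t^{-1/2} of the Lemma 2.16 bound."""
    return -1 - beta / 2 + eps - F(1, 2) * t_exponent

cutoff = -beta / 2 + eps                   # a priori bound for C^{X,-}
# Direct replacement on the time-scale t_max = N^{-1}: the error blows up.
assert replacement_error_exponent(cutoff, -1) > 0
# First replacement, on t_{1,1} = N^{-2 + eps1}: the error vanishes.
assert replacement_error_exponent(cutoff, -2 + eps1) < 0

# Later steps replace the average on t_{1,n} = N^{-2 + n eps1} by the one on t_{1,n+1}.
n_steps = int((1 - eps1) / eps1)
errors = []
for n in range(1, n_steps + 1):
    t_n = -2 + n * eps1
    errors.append(replacement_error_exponent(lemma_2_16_bound_exponent(t_n), t_n + eps1))
assert all(e < 0 for e in errors)
assert -2 + (n_steps + 1) * eps1 == -1     # the last step lands on t_max = N^{-1}
```

Under these assumptions, the \(n\)-th step has error exponent \(-\beta_X/2+\varepsilon+(1-n)\varepsilon_1/4\). This decreases in \(n\), so the first step, estimated in (2.22), is the worst one.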

2.7 Pseudo-Proposition 2.14

Lemma 2.16 with \(\mathfrak {t}=\mathfrak {t}_{\max }=N^{-1}\) yields \(N^{1/2}|\mathscr {A}^{\mathfrak {t}_{\max };\textbf{T},+}| \lesssim N^{-\beta _{X}/2+\varepsilon }\), so the proposed bound in Pseudo-Proposition 2.14 holds if the exclusion process starts at a canonical ensemble invariant measure. Since \(\mathscr {A}^{\mathfrak {t}_{\max };\textbf{T},+}\) is a mesoscopic statistic, we would then like to reduce to local equilibrium. However, there is a serious obstruction that cannot be easily circumvented. To explain it, observe that for longer time-scales \(\mathfrak {t}\in {\mathbb {R}}_{\geqslant 0}\), the statistic \(\mathscr {A}^{\mathfrak {t};\textbf{T},+}\) depends on spins in a larger, \(\mathfrak {t}\)-dependent block. The cost of local equilibrium grows with the size of this block; for example, the constant in the LSI for exclusion processes from [25] grows with the length-scale of the set on which the exclusion process lives. For the time-scale \(\mathfrak {t}_{\max }=N^{-1}\), the associated length-scale is too large for us to apply local equilibrium. We resolve this via the following multiscale procedure, which makes local equilibrium accessible by gradually boosting a priori large-deviations estimates for time-averages. Recall that better large-deviations estimates help with local equilibrium.

-

The issue with local equilibrium was that the time-scale \(\mathfrak {t}_{\max }=N^{-1}\) was too long. With this in mind, we first consider shorter time-scales. We write \(\mathscr {A}^{\mathfrak {t}_{\max };\textbf{T},+}\) as an average of time-shifted time-averages on a time-scale \(0<\mathfrak {t}_{2,1}\leqslant \mathfrak {t}_{\max }\) to be determined:

$$\begin{aligned} \mathscr {A}^{\mathfrak {t}_{\max };\textbf{T},+}_{S,y} \ {}&= \ \widetilde{\sum }_{\mathfrak {l}=0}^{\mathfrak {t}_{\max }\mathfrak {t}_{2,1}^{-1}-1}\mathscr {A}^{\mathfrak {t}_{2,1};\textbf{T},+}_{S+\mathfrak {l}\mathfrak {t}_{2,1},y}. \end{aligned}\tag{2.23}$$

The identity (2.23) holds because averaging on a large time-scale is the same as averaging on a smaller time-scale and then averaging all of the small-scale averages together. If \(\mathfrak {t}_{2,1}\in {\mathbb {R}}_{>0}\) is a short enough time-scale, we may analyze every summand on the RHS of (2.23) using Lemma 2.16 and a reduction to local equilibrium. However, if we choose \(\mathfrak {t}_{2,1}\in {\mathbb {R}}_{>0}\) too small, our estimates for the summands on the RHS of (2.23) will not vanish in the large-N limit once multiplied by the additional factor \(N^{1/2}\) in the proposed estimate of Pseudo-Proposition 2.14. Because we cannot pick \(\mathfrak {t}_{2,1}\in {\mathbb {R}}_{>0}\) large enough to get a vanishing estimate for these summands while still being able to employ local equilibrium, we instead proceed as follows, with motivation given shortly. Take \(\beta _{2,1} = \frac{1}{2}\beta _{X}-\varepsilon +\varepsilon _{2}\); building on (2.23), we introduce a cutoff:

$$\begin{aligned} \mathscr {A}^{\mathfrak {t}_{\max };\textbf{T},+}_{S,y} \ &= \ \widetilde{\sum }_{\mathfrak {l}=0}^{\mathfrak {t}_{\max }\mathfrak {t}_{2,1}^{-1}-1}\mathscr {A}^{\mathfrak {t}_{2,1};\textbf{T},+}_{S+\mathfrak {l}\mathfrak {t}_{2,1},y} \\ &= \ \widetilde{\sum }_{\mathfrak {l}=0}^{\mathfrak {t}_{\max }\mathfrak {t}_{2,1}^{-1}-1}\mathscr {A}^{\mathfrak {t}_{2,1};\textbf{T},+}_{S+\mathfrak {l}\mathfrak {t}_{2,1},y}\textbf{1}_{|\mathscr {A}^{\mathfrak {t}_{2,1};\textbf{T},+}_{S+\mathfrak {l}\mathfrak {t}_{2,1},y}|\lesssim N^{-\beta _{2,1}}} \end{aligned} \tag{2.24}$$

$$\begin{aligned} &\quad + \ \widetilde{\sum }_{\mathfrak {l}=0}^{\mathfrak {t}_{\max }\mathfrak {t}_{2,1}^{-1}-1}\mathscr {A}^{\mathfrak {t}_{2,1};\textbf{T},+}_{S+\mathfrak {l}\mathfrak {t}_{2,1},y}\textbf{1}_{|\mathscr {A}^{\mathfrak {t}_{2,1};\textbf{T},+}_{S+\mathfrak {l}\mathfrak {t}_{2,1},y}|\gtrsim N^{-\beta _{2,1}}}. \end{aligned} \tag{2.25}$$

The first sum on the far RHS of (2.25) gives each \(\mathscr {A}^{\mathfrak {t}_{2,1};\textbf{T},+}\)-summand therein an improved a priori upper bound of \(N^{-\beta _{2,1}}\). This is \(N^{-\varepsilon _{2}}\) better than the a priori bound of \(N^{-\beta _{X}/2+\varepsilon }\) for \(\mathscr {A}^{\mathfrak {t}_{2,1};\textbf{T},+}\) inherited from the \(\mathscr {C}^{\textbf{X},-}\) it averages, since \(N^{-\beta _{2,1}} = N^{-\varepsilon _{2}}N^{-\beta _{X}/2+\varepsilon }\) by the choice of \(\beta _{2,1}\). For the second sum on the far RHS of (2.25), we pick \(\mathfrak {t}_{2,1}\in {\mathbb {R}}_{>0}\) small enough to apply local equilibrium but large enough that the summands are negligible with high probability with respect to canonical ensembles. This negligibility comes from Lemma 2.16: if \(\mathfrak {t}_{2,1}\in {\mathbb {R}}_{>0}\) is sufficiently large, then the event \(|\mathscr {A}^{\mathfrak {t}_{2,1};\textbf{T},+}|\gtrsim N^{-\beta _{2,1}}\) has low probability.
We emphasize that this step has a more modest goal: we do not try to estimate \(\mathscr {A}^{\mathfrak {t}_{2,1};\textbf{T},+}\) by \(N^{-1/2}\), but only by \(N^{-\beta _{2,1}}\). Because this estimate is less sharp, we may choose a shorter time-scale \(0<\mathfrak {t}_{2,1}\leqslant \mathfrak {t}_{\max }\) that achieves it and on which we can still apply local equilibrium.

-

After the previous step, we are left with the first sum on the far RHS of (2.25). This is an average of time-averages \(\mathscr {A}^{\mathfrak {t}_{2,1};\textbf{T},+}\) with an a priori upper-bound cutoff of \(N^{-\beta _{2,1}}\), which improves on the \(N^{-\beta _{X}/2+\varepsilon }\)-cutoff on \(\mathscr {C}^{\textbf{X},-}\) by a factor of \(N^{-\varepsilon _{2}}\). Since each of these time-averages carries the same cutoff \(N^{-\beta _{2,1}}\), we can group these time-averages on time-scale \(\mathfrak {t}_{2,1}\in {\mathbb {R}}_{>0}\) into \(\mathfrak {t}_{\max }\mathfrak {t}_{2,2}^{-1}\) many time-averages on a to-be-determined time-scale \(\mathfrak {t}_{2,2}\) that is larger than \(\mathfrak {t}_{2,1}\). Each of these scale-\(\mathfrak {t}_{2,2}\) averages inherits the a priori upper-bound cutoff of \(N^{-\beta _{2,1}}\), since it averages terms with this cutoff. Thus

$$\begin{aligned} &\widetilde{\sum }_{\mathfrak {l}=0}^{\mathfrak {t}_{\max }\mathfrak {t}_{2,1}^{-1}-1}\mathscr {A}^{\mathfrak {t}_{2,1};\textbf{T},+}_{S+\mathfrak {l}\mathfrak {t}_{2,1},y}\textbf{1}_{|\mathscr {A}^{\mathfrak {t}_{2,1};\textbf{T},+}_{S+\mathfrak {l}\mathfrak {t}_{2,1},y}|\lesssim N^{-\beta _{2,1}}} \\ &\quad \approx \ \widetilde{\sum }_{\mathfrak {l}=0}^{\mathfrak {t}_{\max }\mathfrak {t}_{2,2}^{-1}-1}\mathscr {A}^{\mathfrak {t}_{2,2};\textbf{T},+}_{S+\mathfrak {l}\mathfrak {t}_{2,2},y}\textbf{1}_{|\mathscr {A}^{\mathfrak {t}_{2,2};\textbf{T},+}_{S+\mathfrak {l}\mathfrak {t}_{2,2},y}|\lesssim N^{-\beta _{2,1}}}. \end{aligned} \tag{2.26}$$

After unfolding definitions, the error terms in the approximation (2.26) turn out to look sufficiently like the second sum on the far RHS of (2.25). More precisely, they are scale-\(\mathfrak {t}_{2,1}\) time-averages with both upper and lower bound cutoffs. We then pick \(\beta _{2,2} = \beta _{2,1} + \varepsilon _{2}\) and improve our a priori \(N^{-\beta _{2,1}}\)-bound as in (2.25):

$$\begin{aligned}&\widetilde{\sum }_{\mathfrak {l}=0}^{\mathfrak {t}_{\max }\mathfrak {t}_{2,2}^{-1}-1}\mathscr {A}^{\mathfrak {t}_{2,2};\textbf{T},+}_{S+\mathfrak {l}\mathfrak {t}_{2,2},y}\textbf{1}_{|\mathscr {A}^{\mathfrak {t}_{2,2};\textbf{T},+}_{S+\mathfrak {l}\mathfrak {t}_{2,2},y}|\lesssim N^{-\beta _{2,1}}} = \ \widetilde{\sum }_{\mathfrak {l}=0}^{\mathfrak {t}_{\max }\mathfrak {t}_{2,2}^{-1}-1}\mathscr {A}^{\mathfrak {t}_{2,2};\textbf{T},+}_{S+\mathfrak {l}\mathfrak {t}_{2,2},y}\textbf{1}_{|\mathscr {A}^{\mathfrak {t}_{2,2};\textbf{T},+}_{S+\mathfrak {l}\mathfrak {t}_{2,2},y}|\lesssim N^{-\beta _{2,2}}} \\&\quad +\widetilde{\sum }_{\mathfrak {l}=0}^{\mathfrak {t}_{\max }\mathfrak {t}_{2,2}^{-1}-1}\mathscr {A}^{\mathfrak {t}_{2,2};\textbf{T},+}_{S+\mathfrak {l}\mathfrak {t}_{2,2},y}\textbf{1}_{N^{-\beta _{2,2}}\lesssim |\mathscr {A}^{\mathfrak {t}_{2,2};\textbf{T},+}_{S+\mathfrak {l}\mathfrak {t}_{2,2},y}|\lesssim N^{-\beta _{2,1}}}. \end{aligned} \tag{2.27}$$

Again, if we pick \(\mathfrak {t}_{2,2}\in {\mathbb {R}}_{>0}\) sufficiently large but not much larger than \(\mathfrak {t}_{2,1}\in {\mathbb {R}}_{>0}\), then Lemma 2.16 and local equilibrium show that the second sum on the RHS of (2.27) is negligible with high probability, by the same reasoning we used to control the second sum on the far RHS of (2.25). We now explain why we can reduce to local equilibrium on this larger time-scale \(\mathfrak {t}_{2,2}\in {\mathbb {R}}_{>0}\). The indicator functions attached to the summands in the second sum on the RHS of (2.27) come with a priori upper bounds of \(N^{-\beta _{2,1}}\), which are \(N^{-\varepsilon _{2}}\) better than our a priori upper bounds for the summands in the second sum on the far RHS of (2.25). These a priori upper bounds are deterministic, so they hold trivially at the level of large deviations.
Because we have the improved a priori upper bound \(N^{-\beta _{2,1}}\) for the \(\mathscr {A}^{\mathfrak {t}_{2,2};\textbf{T},+}\)-functional that we estimate in expectation, and because \(\mathfrak {t}_{2,2}\in {\mathbb {R}}_{>0}\) is only slightly larger than the previous time-scale \(\mathfrak {t}_{2,1}\in {\mathbb {R}}_{>0}\), we can reduce bounds for scale-\(\mathfrak {t}_{2,2}\) data to local equilibrium. We emphasize that the error terms in the second sum on the RHS of (2.27) are time-averages whose a priori upper and lower bound cutoffs differ by a factor of \(N^{\varepsilon _{2}}\).

-

We iterate these improvements of the time-scale and of the a priori upper bound until we arrive at the a priori upper bound \(N^{-1/2-\varepsilon }\). Because the a priori upper bound improves by a factor of \(N^{-\varepsilon _{2}}\) at every step, we only need an \(\varepsilon _{2}\)-dependent number of iterations, at most of order \(\varepsilon _{2}^{-1}\) and independent of N. All error terms in this scheme are time-averages whose a priori upper and lower bound cutoffs differ by a factor of \(N^{\varepsilon _{2}}\). Moreover, every time-scale on which we perform a time-average is at most the scale \(\mathfrak {t}_{\max }\) from Pseudo-Proposition 2.13.
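The bookkeeping in this multiscale scheme can be checked on a toy model. The sketch below is a hypothetical discrete illustration, not the construction used in the paper: time-averages are replaced by means of consecutive samples of a path, the cutoffs \(\lesssim \)/\(\gtrsim \) by sharp thresholds, and the ratios \(\mathfrak {t}_{\max }\mathfrak {t}_{2,1}^{-1}\) and \(\mathfrak {t}_{2,2}\mathfrak {t}_{2,1}^{-1}\) are assumed to be integers. It checks three things. First, the averaging identity (2.23) holds exactly. Second, in the grouping step (2.26), "truncate then average" and "average then truncate" agree on every group whose members all satisfy the cutoff, so the discrepancy only comes from groups that contain a larger term. Third, assuming the exponents keep increasing as \(\beta _{2,k}=\beta _{2,1}+(k-1)\varepsilon _{2}\) (an extrapolation from \(\beta _{2,2}=\beta _{2,1}+\varepsilon _{2}\)), the number of steps needed to reach \(\frac{1}{2}+\varepsilon \) depends on \(\beta _{X},\varepsilon ,\varepsilon _{2}\) but not on N. All function names and numerical values are hypothetical.

```python
from fractions import Fraction
import random

def mean(xs):
    """Exact average of a non-empty list of Fractions."""
    return sum(xs, Fraction(0)) / len(xs)

def window_averages(path, start, total, width):
    """Averages of `path` over consecutive windows of `width` samples that cover
    path[start:start + total]; `total` must be a multiple of `width`."""
    if total % width != 0:
        raise ValueError("total must be a multiple of width")
    return [mean(path[start + l * width:start + (l + 1) * width])
            for l in range(total // width)]

def truncate(a, cutoff):
    """a * 1_{|a| <= cutoff}, with a sharp threshold."""
    return a if abs(a) <= cutoff else Fraction(0)

def grouping_discrepancies(sub_avgs, ratio, cutoff):
    """Split the short-scale averages into groups of `ratio` consecutive terms and
    return, per group, (truncate then average) minus (average then truncate)."""
    groups = [sub_avgs[i:i + ratio] for i in range(0, len(sub_avgs), ratio)]
    diffs = [mean([truncate(a, cutoff) for a in g]) - truncate(mean(g), cutoff)
             for g in groups]
    return groups, diffs

def cutoff_ladder(beta_x, eps, eps_2):
    """Exponents beta_{2,k} = beta_{2,1} + (k - 1) * eps_2, where
    beta_{2,1} = beta_x / 2 - eps + eps_2, up to the first one >= 1/2 + eps.
    Linear growth in k is an assumption, not taken from the paper."""
    if eps_2 <= 0:
        raise ValueError("eps_2 must be positive")
    ladder = [beta_x / 2 - eps + eps_2]
    while ladder[-1] < 0.5 + eps:
        ladder.append(ladder[0] + len(ladder) * eps_2)
    return ladder

random.seed(0)
path = [Fraction(random.randint(-100, 100), 100) for _ in range(960)]
t_max, t_21, ratio = 960, 20, 4   # toy t_{2,2} = ratio * t_{2,1} = 80 samples

# (2.23): one long average equals the average of the short-window averages.
long_avg = mean(path[:t_max])
sub_avgs = window_averages(path, 0, t_max, t_21)
print(long_avg == mean(sub_avgs))  # True

# (2.26): grouping truncated short-scale averages into longer-scale averages.
cutoff = Fraction(1, 5)
groups, diffs = grouping_discrepancies(sub_avgs, ratio, cutoff)
print(all(d == 0 for g, d in zip(groups, diffs)
          if all(abs(a) <= cutoff for a in g)))  # True

# Number of cutoff improvements for beta_X = 0.5, eps = 0.01, eps_2 = 0.05.
print(len(cutoff_ladder(0.5, 0.01, 0.05)))  # 6
```

Decreasing `eps_2` lengthens the ladder (for instance, `cutoff_ladder(0.5, 0.01, 0.01)` has many more entries), but each step then only moves the cutoff by the smaller factor \(N^{\varepsilon _{2}}\), which mirrors why each reduction to local equilibrium in the scheme stays within reach.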

2.8 Technical comments

We first explain in what sense Pseudo-Propositions 2.12, 2.13, and 2.14 are pseudo-results.

-

For technical reasons, it will be convenient to "compactify" the microscopic Cole-Hopf transform \(\mathfrak {Z}^{N}\). The current microscopic Cole-Hopf transform solves a stochastic equation on the infinite set \({\mathbb {R}}_{\geqslant 0}\times {\mathbb {Z}}\). We will replace it by the solution of the same stochastic equation, "periodized in space" onto \({\mathbb {R}}_{\geqslant 0}\times \mathbb {T}_{N}\), since the \(\Vert \cdot \Vert _{1;\mathbb {T}_{N}}\)-norm is easier to work with than the \(\Vert \cdot \Vert _{1;{\mathbb {Z}}}\)-norm. Here \(\mathbb {T}_{N}\subseteq {\mathbb {Z}}\) is a torus that is much larger than the macroscopic length-scale N, so this replacement should not change any scaling limits.

-

In view of the previous bullet point, we will only prove estimates for \(\Phi ^{N,2}\) with respect to \(\Vert \cdot \Vert _{1;\mathbb {T}_{N}}\)-norms and not \(\Vert \cdot \Vert _{1;{\mathbb {Z}}}\)-norms.

-

The version of Pseudo-Proposition 2.11 that we actually prove differs at a technical level. In particular, we take a different, but morally similar, value of \(\mathfrak {t}_{\max }\) than the one in Pseudo-Proposition 2.13.

-

The stochastic equation for \(\mathfrak {Z}^{N}\) in Proposition 2.7 is multiplicative in \(\mathfrak {Z}^{N}\), so it is not enough to study only local functionals of the particle system; we must also control \(\Vert \mathfrak {Z}^{N}\Vert _{1;\mathbb {T}_{N}}\). We treat this technical point with a continuity argument from PDE. Roughly speaking, the argument shows that control on the initial data \(\mathfrak {Z}_{0,\bullet }^{N}\) propagates in time, given control on local functionals.

Let us now give an outline of the rest of this paper.

-

In Sect. 3 we develop the reduction to local equilibrium and give invariant-measure estimates. In Sect. 4 we "compactify" \({\mathbb {Z}}\rightarrow \mathbb {T}_{N}\).

-

In Sect. 5, we prove the spatial-average and time-average estimates used in the sketched proofs of Pseudo-Propositions 2.12, 2.13, and 2.14. We do this via local equilibrium and a "dynamical one-block step", that is, a dynamic version of the one-block step in [14].

-

In Sect. 6 we establish time-regularity estimates for \(\mathfrak {Z}^{N}\) using mostly standard ideas, such as those in [10].

-

In Sect. 7 we establish a strong version of Pseudo-Proposition 2.11, essentially following the strategy outlined above. In Sect. 8 we use this strong estimate to replace \(\Phi ^{N,2}\) by 0 with high probability, and we then follow the arguments in [10].

For a possibly clearer reading of this paper, we suggest the following.

-

Sections 3, 5, 6, and 7 are all technical. The reader may first skim these sections and take their results for granted, then read the paper in its written order to get a "black-box" proof of Theorem 1.7. For the technical aspects behind the main ingredients in Sects. 3, 5, 6, and 7, we suggest reading Sect. 7 first, then Sects. 3 and 5, and then Sect. 6. In each of Sects. 3, 5, 6, and 7, we present the proofs of the main results first and defer the proofs of technical manipulations and ingredients to the end of the section.

3 Local Equilibrium Estimates

This section collects preliminary tools from hydrodynamic limits. We organize it as follows.

-

We first introduce the key invariant measures for the relevant exclusion processes. These are the canonical and grand-canonical ensembles that we used to define the three classes of functionals at the beginning of Sect. 2.

-

We then turn to entropy production in Proposition 3.6, which we use to perform the "reduction to local equilibrium" in Lemma 3.8.

-

Finally, we turn to invariant-measure calculations that will be important after the reduction to local equilibrium. These tools are given in Lemmas 3.10, 3.11, 3.12, and 3.13 and in Corollary 3.14.

Definition 3.1

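For \(\mathfrak {I} \subseteq {\mathbb {Z}}\) and \(\varrho \in {\mathbb {R}}\), let the canonical ensemble/measure \(\mu _{\varrho ,\mathfrak {I}}^{\textrm{can}}\) be the uniform measure on the hyperplane

$$\begin{aligned} \Big \{\eta \in \{\pm 1\}^{\mathfrak {I}}: \ |\mathfrak {I}|^{-1}\sum _{x\in \mathfrak {I}}\eta _{x} = \varrho \Big \}. \end{aligned} \tag{3.1}$$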

We define the grand-canonical ensemble/measure \(\mu _{\varrho ,\mathfrak {I}}\) as the product measure on \(\Omega _{\mathfrak {I}} = \{\pm 1\}^{\mathfrak {I}}\) such that \(\textbf{E}\eta _{x} = \varrho \) for all \(x\in \mathfrak {I}\). We note that in our conventions \(\varrho \in {\mathbb {R}}\) denotes spin density, not particle density.
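For concreteness, here is a minimal sampling sketch of both ensembles on a finite block. It assumes \(-1\leqslant \varrho \leqslant 1\) and that the hyperplane in Definition 3.1 consists of the configurations with \(\sum _{x\in \mathfrak {I}}\eta _{x}=\varrho |\mathfrak {I}|\). Under \(\mu _{\varrho ,\mathfrak {I}}\), each spin is independently \(+1\) with probability \((1+\varrho )/2\), which is the unique choice giving \(\textbf{E}\eta _{x}=\varrho \). Under the canonical ensemble, uniformly shuffling a fixed multiset of spins gives the uniform measure on the hyperplane, because every arrangement arises from the same number of permutations. The function names are hypothetical.

```python
from fractions import Fraction
import random

def sample_grand_canonical(size, rho, rng):
    """Product measure on {+1, -1}^size with E eta_x = rho at every site."""
    if not -1 <= rho <= 1:
        raise ValueError("need -1 <= rho <= 1")
    p_plus = (1 + rho) / 2  # P(eta_x = +1), so E eta_x = p_plus - (1 - p_plus) = rho
    return [1 if rng.random() < p_plus else -1 for _ in range(size)]

def sample_canonical(size, rho, rng):
    """Uniform measure on {eta in {+1, -1}^size : sum_x eta_x = rho * size}.
    Pass rho as a Fraction (or int) so the hyperplane condition is checked exactly."""
    plus = (size + Fraction(rho) * size) / 2  # number of +1 spins on the hyperplane
    if plus.denominator != 1 or not 0 <= plus <= size:
        raise ValueError("the hyperplane is empty for this (size, rho)")
    spins = [1] * int(plus) + [-1] * (size - int(plus))
    rng.shuffle(spins)  # uniform permutation -> uniform over configurations with this spin sum
    return spins

rng = random.Random(7)
eta = sample_canonical(20, Fraction(1, 5), rng)
print(sum(eta))  # 4, i.e. rho * |I| with rho = 1/5 and |I| = 20
```

The canonical sampler enforces the spin sum exactly, while the grand-canonical sampler only matches \(\varrho \) on average; this is the usual distinction between the two ensembles.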

Definition 3.2

Consider any subset \(\mathfrak {I} \subseteq {\mathbb {Z}}\) and parameter \(\varrho \in {\mathbb {R}}\), and suppose that \(\mathfrak {f}:\Omega _{\mathfrak {I}}\rightarrow {\mathbb {R}}_{\geqslant 0}\) is a probability density with respect to either the canonical ensemble \(\mu _{\varrho ,\mathfrak {I}}^{\textrm{can}}\) or the grand-canonical ensemble \(\mu _{\varrho ,\mathfrak {I}}\) depending on the context below.

-

The relative entropies with respect to \(\mu _{\varrho ,\mathfrak {I}}^{\textrm{can}}\) and \(\mu _{\varrho ,\mathfrak {I}}\), respectively, are the functionals

$$\begin{aligned} \mathfrak {H}_{\varrho ,\mathfrak {I}}^{\textrm{can}}(\mathfrak {f}) \ \overset{\bullet }{=} \ \textbf{E}^{\mu _{\varrho ,\mathfrak {I}}^{\textrm{can}}}\mathfrak {f}\log \mathfrak {f} \quad \textrm{and} \quad \mathfrak {H}_{\varrho ,\mathfrak {I}}(\mathfrak {f}) \ \overset{\bullet }{=} \ \textbf{E}^{\mu _{\varrho ,\mathfrak {I}}}\mathfrak {f}\log \mathfrak {f}. \end{aligned} \tag{3.2}$$

-

The Dirichlet forms with respect to \(\mu _{\varrho ,\mathfrak {I}}^{\textrm{can}}\) and \(\mu _{\varrho ,\mathfrak {I}}\), respectively, are defined as follows, where we recall that \(\mathfrak {S}_{x,y}\) denotes the generator of the symmetric exclusion process with speed 1 on the bond \(\{x,y\}\subseteq {\mathbb {Z}}\). Recall the set \(\textrm{A}\) of symmetric speeds \(\alpha _{\bullet }\):