Abstract

We consider continuous-time random walks on a random locally finite subset of \(\mathbb {R}^d\) with random symmetric jump probability rates. The jump range can be unbounded. We assume some second-moment conditions and that the above randomness is left invariant by the action of the group \(\mathbb {G}=\mathbb {R}^d\) or \(\mathbb {G}=\mathbb {Z}^d\). We then add a site-exclusion interaction, thus making the particle system a simple exclusion process. We show that, for almost all environments, under diffusive space–time rescaling the system exhibits a hydrodynamic limit in path space. The hydrodynamic equation is non-random and governed by the effective homogenized matrix D of the single random walk, which can be degenerate. The above result covers a very large family of models including e.g. simple exclusion processes built from random conductance models on \(\mathbb {Z}^d\) and on crystal lattices (possibly with long conductances), Mott variable range hopping, simple random walks on Delaunay triangulations, random walks on supercritical percolation clusters.

1 Introduction

The simple exclusion process is a fundamental interacting particle system obtained by adding a site-exclusion interaction to multiple random walks [26]. We assume here that particles lie on a random locally finite subset of \({\mathbb R} ^d\) (a simple point process) and allow the jump probability rates to be random as well, but symmetric (i.e. they do not depend on the orientation of the jump). We require that the law of the environment is stationary and ergodic w.r.t. the action of a group \({\mathbb G} \) of \({\mathbb R} ^d\)-translations, \({\mathbb G} \) being the full group of translations or a subgroup isomorphic to \({\mathbb Z} ^d\). Under weak second moment assumptions on the jump rates and a percolation assumption assuring the existence of the process, we then prove for almost all environments that the simple exclusion process admits a hydrodynamic limit (HL) in path space with hydrodynamic equation \(\partial _t \rho = \nabla \cdot ( D \nabla \rho )\), D being the non-random effective homogenized matrix associated to a single random walk (D can also be degenerate). The above result (stated in Theorem 4.1 in Sect. 4) covers a very large class of simple exclusion processes in symmetric random environments, e.g. those obtained by adding a site-exclusion interaction to random walks on \(\mathbb {Z}^d\) and on general crystal lattices with random (possibly arbitrarily long) conductances, to random walks performing a Mott variable range hopping, to simple random walks on Delaunay triangulations [18] or on supercritical percolation clusters (in Sect. 5 we discuss some examples). In Sect. 2 we provide a brief presentation of our class of models and our main result, without insisting on technicalities (faced in the subsequent sections). We discuss below how the present work relates to the existing literature, the strategy we have followed and the most original aspects of our contribution.

Given a realization of the environment the resulting simple exclusion process is non-gradient. The usual derivation of the HL for non-gradient interacting particle systems based on the method introduced by Varadhan and further developed by Quastel (cf. [26, 34, 39]) is very technical. It becomes even harder in the disordered case (cf. [14, 35]). On the other hand, for disordered simple exclusion processes with symmetric jump rates one can try to avoid the non-gradient machinery by exploiting some duality property between the particle system and the single random walk and some averaging property of the single random walk. This was first realized by Nagy in [33] for the simple exclusion process on \({\mathbb Z} \) with symmetric random jump rates. Nagy’s analysis had two main ingredients: a representation of the exclusion process in terms of compensated Poisson processes and the Markov semigroup of the random walk (see [33, Eq. (12), (13)]) and a quenched CLT for the random walk uniformly in the starting point (see [33, Theorem 1]). Nagy’s representation (coming from duality) has been further generalized in [9, 10], and in [10] we showed that Nagy’s second ingredient can be replaced by a suitable homogenization result of the \(L^2\)-Markov semigroup of the random walk. The advantage comes from the fact that homogenization requires much weaker assumptions than quenched CLTs (moreover, it is also more natural from a physical viewpoint: the light bulb turns on because of the motion of many electrons and not of a single one). One advantage of the approach based on Nagy’s representation and homogenization is that one can prove the HL without proving the uniqueness of the weak solution of the Cauchy problem associated to the hydrodynamic limit. On the other hand, one gets the HL at a fixed macroscopic time (in the form usually stated e.g. in [26]) but not in path space.

To gain the HL in path space, one has to prove the tightness of the empirical measure. This has been achieved in [22] by developing the method of the corrected empirical measure (initially introduced in [24]). This method again relies on duality and on a homogenization property of the resolvent of the random walk. Once tightness has been proved, one can proceed in two ways. If a uniqueness result for the Cauchy problem is available, one can try to push further the analysis of the corrected empirical measure and characterize all limit points of the empirical measures as in [22]. Otherwise, one can try to extend Nagy’s representation and use homogenization (or some averaging, in general) to get the HL for a fixed time, avoiding results of uniqueness. This has proved useful e.g. for the subdiffusive system considered in [16], where a quenched CLT for varying and converging initial points was used instead of homogenization.

Of course, the above strategies have been developed in specific contexts and not in full generality. The applications to other models require some work, already in the choice of the right function spaces and topologies. In our proof we use the corrected empirical measure and homogenization to prove tightness. To proceed we present two independent routes: by proving uniqueness for the Cauchy problem in weak form we characterize the limit points of the empirical measure, continuing to work with the corrected one; alternatively, in “Appendix C” we prove Nagy’s representation in our context and use homogenization to get the HL at a fixed time.

We now comment on how our result differs from the previous contributions concerning the diffusive HL of simple exclusion processes in symmetric environments. The main novelty is the huge class of models for which the HL has been proved. In particular, (i) we go beyond the lattice (\({\mathbb Z} ^d\) or toroidal) structure and deal with a very broad range of random environments including geometrically amorphous ones (think e.g. of a simple exclusion process on a Poisson point process), (ii) our assumptions on the jump rates are minimal and given by 2nd moment assumptions plus a percolation assumption for Harris’ percolation argument, (iii) we remove ellipticity conditions on the jump rates and treat also the case of degenerate effective homogenized matrix D, (iv) the jump range can be unbounded. Concerning Item (i) we point out that to gain such a generality we have used the theory of \({\mathbb G} \)-stationary random measures, where \({\mathbb G} ={\mathbb R} ^d\), \({\mathbb Z} ^d\) (cf. [20, 21, 25]), in order to fix our general setting in Sect. 3. This also allows us to describe the ergodic properties of the environment in terms of the Palm distribution. To achieve the HL in great generality we needed the same generality for the homogenization results. This part, which is also of independent interest, has been presented in the companion work [12], where our homogenization analysis is based on 2-scale convergence. Although [12] has been preliminary to the present work, here we have kept the presentation self-contained.

For completeness, we point out that Theorem 4.1 includes also as very special cases the HL in [10, 33, 36] (for the part concerning non-dynamical random environments in [36]). We recall that in [36] the authors prove the HL for the random conductance model on \({\mathbb Z} ^d\) with possibly time-dependent random conductances in a given interval [a, b], with \(0<a<b<+\infty \). Finally, we point out that for reversible but not symmetric jump rates the homogenization results in [12] for a single random walk still hold, but the duality properties of the simple exclusion process fail. An explicit example is given by the simple exclusion process with site disorder treated in [14, 35]. In general, for reversible but not symmetric jump rates, the hydrodynamic limit is expected to be described by the non-linear equation \(\partial _t \rho = \nabla \cdot (D(\rho ) \nabla \rho )\) with a density-dependent diffusion matrix \(D(\rho )\). As rigorously proved in [35, Theorem 1] in the case of site-disorder, D(0) is expected to coincide with the effective homogenized matrix D associated to a single random walk.

Outline of the paper. In Sect. 2 we give a non-technical presentation of setting and results. In Sect. 3 we present more precisely our setting and basic assumptions for the single random walk. In Sect. 4 we state our HL (see Theorem 4.1). In Sect. 5 we discuss some examples. In Sect. 6 we recall the homogenization results from [12] used in the proof of Theorem 4.1. In Sect. 7 we present the graphical construction of the simple exclusion process and analyze its Markov semigroup. In Sect. 8 we collect some results concerning duality. In Sect. 9 we recall some properties of the space \(\mathcal M\) of Radon measures on \({\mathbb R} ^d\) and of the Skorohod space \(D([0,T],\mathcal M)\) and show the uniqueness of the weak solution of the Cauchy problem. In Sect. 10 we study the family of typical environments, for which the HL will be proved. In Sect. 11 we prove Theorem 4.1. In “Appendix A” we present a model satisfying all our assumptions for which the effective homogenized matrix D is nonzero but degenerate. “Appendix B” concerns the proof of Proposition 7.4. In “Appendix C” we give an independent proof of the HL for fixed times by proving Nagy’s representation in our context and by using homogenization.

2 Overview

In this section we give a brief presentation of our context and results postponing a detailed discussion to Sects. 3 and 4. Not surprisingly, this story starts with a probability space \((\Omega , \mathcal F, \mathcal P)\). Here are the other characters: the group \({\mathbb G} \) acting on the probability space and acting by translations on \({\mathbb R} ^d\), a simple point process and a family of jump probability rates.

The group \({\mathbb G} \) can be \({\mathbb R} ^d\) or \({\mathbb Z} ^d\) (the former endowed with the Euclidean distance, the latter with the discrete topology). \({\mathbb G} \) is a measurable space endowed with the Borel \(\sigma \)-algebra and it acts on \((\Omega , \mathcal F, \mathcal P)\) by a family of maps \((\theta _g)_{g\in {\mathbb G} }\), with \(\theta _g :\Omega \rightarrow \Omega \), such that

The group \({\mathbb G} \) also acts on the space \({\mathbb R} ^d\) by translations. We denote its action by \((\tau _g)_{g\in {\mathbb G} }\), where \(\tau _g:{\mathbb R} ^d\rightarrow {\mathbb R} ^d\) is given by

$$\begin{aligned} \tau _g x= x+ g_1 v_1 + \cdots + g_d v_d , \qquad g=(g_1, \dots , g_d)\in {\mathbb G} , \end{aligned}$$(2)

for a fixed basis \(v_1, \dots , v_d\) of \({\mathbb R} ^d\). For many applications, \(\tau _g x= x+g\). When dealing with processes on general lattices (as e.g. the triangular or hexagonal lattice on \({\mathbb R} ^2\)), the general form (2) is more suited (see Sect. 5).

We assume to have a simple point process on \({\mathbb R} ^d\) defined on our probability space. In particular, to each \(\omega \in \Omega \) we associate a locally finite subset \({\hat{\omega }} \subset {\mathbb R} ^d\) by a measurable map \(\Omega \ni \omega \rightarrow {\hat{\omega }} \in \mathcal N\). Above, \(\mathcal N\) is the measurable space of locally finite subsets of \({\mathbb R} ^d\) with \(\sigma \)-algebra generated by the sets \(\{ |{\hat{\omega }} \cap A|=n\}\), where \(A\subset {\mathbb R} ^d\) is Borel and \(n \in {\mathbb N} \) (cf. [5]). As discussed in [5] one can introduce a metric d on \(\mathcal N\) such that the above \(\sigma \)-algebra equals the Borel \(\sigma \)-algebra.

Finally, we fix a measurable function

symmetric in x, y: \(c_{x,y}(\omega )= c_{y,x}(\omega )\). As it will be clear below, only the value of \( c_{x,y}(\omega )\) with \(x\not =y\) in \( {\hat{\omega }}\) will be relevant. Hence, without loss of generality, we take

All the above objects are related by \({\mathbb G} \)-invariance. As detailed in Sect. 3, we assume that \(\mathcal P\) is stationary and ergodic for the action \((\theta _g)_{g\in {\mathbb G} }\). We recall that stationarity means that \(\mathcal P\circ \theta _g^{-1}=\mathcal P\) for all \(g\in {\mathbb G} \), while ergodicity means that \(\mathcal P(A)\in \{0,1\}\) for all translation invariant sets \(A \in {\mathcal F}\), i.e. such that \(\theta _g A= A\) for all \(g\in {\mathbb G} \) (we can identify \({\mathbb G} \) with a subset of Euclidean translations by (2), thus motivating our terminology). We also assume that, for \(\mathcal P\)-a.a. \(\omega \in \Omega \) and for all \(g\in {\mathbb G} \), it holds

$$\begin{aligned}&\widehat{\theta _g\omega } =\tau _{-g}( {\hat{\omega }} ) , \end{aligned}$$(5)$$\begin{aligned}&c_{x,y} (\theta _g\omega )= c_{\tau _g x, \tau _g y} (\omega ) \qquad \forall x,y \in {\mathbb R} ^d . \end{aligned}$$(6)

The minus sign in (5) could appear ugly, but indeed if one identifies \({\hat{\omega }}\) with the counting measure \(\mu _\omega (A):= \sharp ( {\hat{\omega }} \cap A)\), one would restate (5) as \(\mu _{\theta _g\omega } (A)= \mu _\omega ( \tau _g A)\) for all \(A\subset {\mathbb R} ^d\) Borel.
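The restatement of (5) in terms of counting measures can be checked in one line. The sketch below assumes that (5) reads \(\widehat{\theta _g\omega } =\tau _{-g}( {\hat{\omega }} )\), as in (21), and uses only that \(\tau _g\) is a bijection of \({\mathbb R} ^d\) with inverse \(\tau _{-g}\), so that \(x\in \tau _{-g}({\hat{\omega }})\cap A\) if and only if \(\tau _g x \in {\hat{\omega }} \cap \tau _g A\):

$$\begin{aligned} \mu _{\theta _g\omega }(A)= \sharp \bigl ( \widehat{\theta _g\omega } \cap A\bigr ) = \sharp \bigl ( \tau _{-g}( {\hat{\omega }}) \cap A\bigr ) = \sharp \bigl ( {\hat{\omega }} \cap \tau _g A\bigr ) = \mu _\omega (\tau _g A) . \end{aligned}$$

In words, under this reading the point configuration of \(\theta _g\omega \) seen in the window A is the point configuration of \(\omega \) seen in the translated window \(\tau _g A\).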

Given the environment \(\omega \), we will introduce by the standard graphical construction the simple exclusion process on \(\hat{\omega }\) with probability rate \(c_{x,y}(\omega )\) for a jump between x and y when the exclusion constraint is satisfied. As discussed in Sect. 7 this simple exclusion process is a Feller process whose Markov semigroup on \(C(\{0,1\}^{{\hat{\omega }}})\) has infinitesimal generator \(\mathcal L_\omega \) acting on local functions as

Above and in what follows, \(\{0,1\}^{{\hat{\omega }}}\) is endowed with the product topology and \(C(\{0,1\}^{{\hat{\omega }}})\) denotes the space of continuous functions on \(\{0,1\}^{{\hat{\omega }}}\) endowed with the uniform topology. We recall that a function f on \(\{0,1\}^{{\hat{\omega }}}\) is called local if \(f(\eta )\) depends on \(\eta \) only through \(\eta (x)\) with x varying among a finite set. The configuration \(\eta ^{x,y}\) is obtained from \(\eta \) by exchanging the occupation variables at x and y, i.e.

$$\begin{aligned} \eta ^{x,y}(z):= {\left\{ \begin{array}{ll} \eta (y) &{} \text {if } z=x ,\\ \eta (x) &{} \text {if } z=y ,\\ \eta (z) &{} \text {otherwise} . \end{array}\right. } \end{aligned}$$

The generator \(\mathcal L_\omega \) given in (7) can be thought of as an exchange operator:

When the starting configuration is given by a single particle, the dynamics reduces to a random walk in random environment, denoted by \(X_t^\omega \). In Sects. 3 and 4 we will fix basic assumptions assuring the existence of the above processes for all times for \(\mathcal P\)-a.a. \(\omega \).
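For orientation, the following sketch indicates why a symmetric exclusion generator can be read as an exchange operator. It assumes that \(\mathcal L_\omega \) has the standard jump form for exclusion dynamics (first line below) and that f is a local function for which the sums converge; both are assumptions made for this illustration. Grouping the ordered pairs (x, y) and (y, x), and using \(c_{x,y}(\omega )=c_{y,x}(\omega )\), \(c_{x,x}(\omega )=0\) and \(\eta ^{x,y}=\eta ^{y,x}\), one would get

$$\begin{aligned} \mathcal L_\omega f(\eta )&= \sum _{x\in {\hat{\omega }}} \sum _{y\in {\hat{\omega }}} c_{x,y}(\omega ) \,\eta (x) \bigl (1-\eta (y)\bigr ) \bigl [ f(\eta ^{x,y})-f(\eta )\bigr ] \\&= \sum _{\{x,y\}\subset {\hat{\omega }}} c_{x,y}(\omega ) \bigl [ \eta (x) (1-\eta (y)) + \eta (y)(1-\eta (x)) \bigr ] \bigl [ f(\eta ^{x,y})-f(\eta )\bigr ] \\&= \sum _{\{x,y\}\subset {\hat{\omega }}} c_{x,y}(\omega ) \bigl [ f(\eta ^{x,y})-f(\eta )\bigr ] . \end{aligned}$$

The last step uses that \(\eta (x) (1-\eta (y)) + \eta (y)(1-\eta (x))\) equals 1 if \(\eta (x)\not =\eta (y)\) and 0 otherwise, while \(\eta ^{x,y}=\eta \) whenever \(\eta (x)=\eta (y)\), so the terms with equal occupation variables vanish in both expressions.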

We can now present the content of our Theorem 4.1 (see Sect. 4), in which we show that, under suitable weak assumptions, for \(\mathcal P\)-a.a. environments \(\omega \) the above simple exclusion process admits a hydrodynamic limit under diffusive rescaling. More precisely, for \(\mathcal P\)-a.a. \(\omega \) the following holds. Fix an initial macroscopic profile given by a Borel function \(\rho _0 :{\mathbb R} ^d \rightarrow [0,1]\). Suppose that for any \(\varepsilon >0\) the simple exclusion process starts with an initial distribution \(\mathfrak {m}_\varepsilon \) such that

Call \({\mathbb P} _{ \omega ,\mathfrak {m}_\varepsilon }^\varepsilon \) the law of the exclusion process on \( {\hat{\omega }}\) with initial distribution \(\mathfrak {m}_\varepsilon \) and generator \(\varepsilon ^{-2} \mathcal L_ \omega \). Then for all \(T>0\) one has

where \(\rho :{\mathbb R} ^d\times [0,\infty )\rightarrow {\mathbb R} \) is given by \(\rho (x ,t ): =P_t \rho _0(x) \), \((P_t)_{t\ge 0}\) being the Markov semigroup on bounded measurable functions of the Brownian motion with diffusion matrix 2D.
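As an illustration of the limiting profile, consider the case where D is non-degenerate (an assumption made only for this example) and read "diffusion matrix 2D" as meaning that the Brownian motion has covariance matrix 2tD at time t. Then \(P_t\) has an explicit Gaussian kernel and \(\rho \) solves the hydrodynamic equation \(\partial _t \rho = \nabla \cdot ( D \nabla \rho )\) with initial datum \(\rho _0\):

$$\begin{aligned} \rho (x,t)&= \int _{{\mathbb R} ^d} \rho _0(y)\, p_t(x-y)\, dy , \qquad p_t(z)= \frac{1}{(4\pi t)^{d/2} \sqrt{\det D}} \exp \Bigl ( - \frac{ z\cdot D^{-1} z}{4t} \Bigr ) , \\ \partial _t \rho&= \nabla \cdot ( D \nabla \rho ) = \sum _{i,j=1}^d D_{i,j}\, \partial _{x_i}\partial _{x_j} \rho , \qquad t>0 . \end{aligned}$$

For \(d=1\) this reduces to the heat kernel \(p_t(z)= (4\pi D t)^{-1/2} \exp \{ - z^2/(4Dt)\}\). When D is degenerate the kernel above is not available: the Brownian motion then moves only along the range of D and \(\rho _0\) is not smoothed in the directions of the kernel of D.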

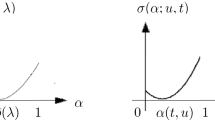

Above D is the so called effective homogenized matrix. D is a \(d\times d\) symmetric non-negative matrix, admitting a variational characterization in terms of the Palm distribution \(\mathcal P_0\) associated to \(\mathcal P\) (cf. Definition 3.3). D is related to the homogenization properties of the diffusively rescaled random walk \(\varepsilon X^\omega _{ \varepsilon ^{-2} t} \) on \(\varepsilon {\hat{\omega }}\) as discussed in [12]. Some of these properties are collected in Proposition 6.1.

3 Basic assumptions and homogenization

In this section we describe our setting and our basic assumptions for the single random walk \(X_t^\omega \) (hence the site-exclusion interaction does not appear here). The context is the same as in [12], with the simplification that the jump rates are symmetric, hence the counting measure on \({\hat{\omega }}\) is reversible for \(X_t^\omega \).

We first fix some basic notation. We denote by \(e_1, \dots , e_d\) the canonical basis of \({\mathbb R} ^d\), by \(\ell (A)\) the Lebesgue measure of the Borel set \(A\subset {\mathbb R} ^d\), by \(a \cdot b\) the standard scalar product of \(a,b\in {\mathbb R} ^d\). Given a topological space W, without further mention, W will be thought of as a measurable space endowed with the \(\sigma \)-algebra \(\mathcal B(W) \) of its Borel subsets. \(\mathcal N\) is the space of locally finite subsets \(\{x_i\}\) of \({\mathbb R} ^d\). \(\mathcal N\) is endowed with a metric such that the Borel \(\sigma \)-algebra \(\mathcal B(\mathcal N)\) is generated by the sets \(\{ |{\hat{\omega }} \cap A|=n\}\), where \(A\in \mathcal B( {\mathbb R} ^d)\) and \(n \in {\mathbb N} \) (cf. [5]).

Recall that \({\mathbb G} \) acts on the probability space \((\Omega , \mathcal F, \mathcal P)\) by \((\theta _g)_{g\in {\mathbb G} }\) [see (1)] and that \(\mathcal P\) is assumed to be stationary and ergodic for this action. Moreover, \({\mathbb G} \) acts on \({\mathbb R} ^d\) by \((\tau _g)_{g\in {\mathbb G} }\), where [cf. (2)]

$$\begin{aligned} \tau _g x=x+ V g \qquad \forall x\in {\mathbb R} ^d , \; g\in {\mathbb G} . \end{aligned}$$(10)

Above, V is the matrix with columns given by the basis vectors \(v_1,v_2, \dots , v_d\), fixed once and for all.

We set

Given \(x\in {\mathbb R} ^d\), the \({\mathbb G} \)-orbit of x is defined as the set \(\{\tau _g x\,:\, g\in {\mathbb G} \}\).

If \({\mathbb G} ={\mathbb R} ^d\), then the \({\mathbb G} \)-orbit of the origin of \({\mathbb R} ^d\) equals \({\mathbb R} ^d\). In this case we introduce the function \(g: {\mathbb R} ^d \rightarrow {\mathbb G} \) as follows:

Simply, for each \(x\in {\mathbb R} ^d\), \(g(x)=V^{-1}x\). When \(V={\mathbb I} \) (as in many applications), we have \(\tau _g x=x+g\) and therefore \(g(x)=x\).

If \({\mathbb G} ={\mathbb Z} ^d\), \(\Delta \) is a set of \({\mathbb G} \)-orbit representatives for the action \((\tau _g)_{g\in {\mathbb G} }\). We introduce the functions \(\beta : {\mathbb R} ^d \rightarrow \Delta \) and \(g: {\mathbb R} ^d \rightarrow {\mathbb G} \) as follows:

Hence, given \(x\in {\mathbb R} ^d\), \(\bar{x}\) denotes the unique element of \(\Delta \) such that x and \(\bar{x}\) are in the same \({\mathbb G} \)-orbit, and g(x) denotes the unique element in \({\mathbb G} \) such that \(x= \tau _{g(x)} \bar{x}\).
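For \({\mathbb G} ={\mathbb Z} ^d\) the two maps can be made explicit once a set of representatives is chosen. The sketch below assumes \(\tau _g x=x+Vg\) and takes \(\Delta \) to be the half-open cell \(\{ Vt \,:\, t\in [0,1)^d\}\); this choice is an assumption made for illustration, consistent with \(\Delta =[0,1)^d\) when \(V={\mathbb I} \). Writing \(\lfloor \cdot \rfloor \) for the componentwise integer part, the relation \(x= \bar{x} + V g(x)\) with \(V^{-1}\bar{x} \in [0,1)^d\) and \(g(x)\in {\mathbb Z} ^d\) forces

$$\begin{aligned} g(x)= \lfloor V^{-1} x \rfloor , \qquad \beta (x)=\bar{x}= x- V \lfloor V^{-1} x \rfloor . \end{aligned}$$

For instance, for \(d=1\) and \(V=1\) one gets \(g(2.7)=2\), \(\beta (2.7)=0.7\) and \(g(-0.3)=-1\), \(\beta (-0.3)=0.7\), so 2.7 and \(-0.3\) lie in the same \({\mathbb G} \)-orbit.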

3.1 An example with \({\mathbb G} ={\mathbb Z} ^d\) and \(V\not ={\mathbb I} \)

Although we will discuss several examples in Sect. 5, our mathematical objects for \({\mathbb G} ={\mathbb Z} ^d\) and \(V\not ={\mathbb I} \) could appear very abstract at first sight. To have something concrete in mind to refer to below, we present an example related to the random walk and the simple exclusion process on the infinite cluster of the supercritical site Bernoulli percolation on the hexagonal lattice (see Sect. 5 for a further discussion). Consider the hexagonal lattice graph \(\mathcal L=(\mathcal V,\mathcal E)\) in \({\mathbb R} ^2\), partially drawn in Fig. 1. \(\mathcal V\) and \(\mathcal E\) denote respectively the vertex set and the edge set. The vectors \(v_1\), \(v_2\) in Fig. 1 form a fundamental basis for the hexagonal lattice.

We take \(\Omega :=\{0,1\}^\mathcal V\) endowed with the product topology and with the Bernoulli product probability measure \(\mathcal P\) with supercritical parameter p. We set \({\mathbb G} :={\mathbb Z} ^2\). The action \((\theta _g )_{g\in {\mathbb Z} ^2}\) is given by \(\theta _{(g_1,g_2)} \omega = ( \omega _{x-g_1 v_1 -g_2 v_2} ) _{x\in \mathcal V}\) if \(\omega =(\omega _x) _{x\in \mathcal V}\) (note that \(v_1,v_2\) are 2d vectors and not coordinates, while \((g_1,g_2)\in {\mathbb Z} ^2\)). Trivially, \(\mathcal P\) is stationary and ergodic for this action. The action of \({\mathbb Z} ^2\) on \({\mathbb R} ^2\) is given by the translations \(\tau _{(g_1,g_2)}x:= x+g_1 v_1 +g_2 v_2\). Note that \(V=[v_1|v_2]\).

The cell \(\Delta \) in (11) is here the fundamental cell of the lattice \(\mathcal L\) given by the parallelogram with ticked border in Fig. 1 (one has to remove the upper and right edges). Indeed, \(\mathcal V=\cup _{g\in {\mathbb Z} ^d} \tau _g \{0,a\}\) and \(\{0,a\}=\mathcal V\cap \Delta \). Then the map \(\beta : {\mathbb R} ^2 \rightarrow \Delta \) in (13) is the map \(\beta (x): =\bar{x} \) where \(\bar{x} \) is the unique element of \(\Delta \) such that \(x =\bar{x} \text { mod } {\mathbb Z} v_1+{\mathbb Z} v_2\). Moreover, the map \(g:{\mathbb R} ^2 \rightarrow {\mathbb Z} ^2\) in (13) assigns to x the only element \(g=(g_1,g_2) \in {\mathbb Z} ^2\) such that \(x\in \tau _g \Delta = \Delta + g_1 v_1 +g_2 v_2\).

We now describe the simple point process \({\hat{\omega }}\). As p is supercritical, for \(\mathcal P\)-a.a. \(\omega \) the set \(\{x\in \mathcal V\,:\, \omega _x=1\}\) has a unique infinite connected component \(\mathcal C(\omega )\) inside the lattice \(\mathcal L\). We set \({\hat{\omega }}:= \mathcal C(\omega )\). To extend this definition to all \(\omega \), we set \(\mathcal C(\omega ):=\emptyset \) if \(\omega \) does not have a unique infinite connected component.

3.2 Palm distribution

We recall that we have a simple point process on \({\mathbb R} ^d\) defined on our probability space \((\Omega , \mathcal F,\mathcal P)\). This means that to each \(\omega \in \Omega \) we associate a locally finite subset \({\hat{\omega }} \subset {\mathbb R} ^d\) by a measurable map \(\Omega \ni \omega \rightarrow {\hat{\omega }} \in \mathcal N\). We now recall the definition of Palm distribution \(\mathcal P_0\) associated to our simple point process by distinguishing between two main cases and a special subcase. For a more detailed discussion we refer to [12] and references therein. We remark that our treatment reduces to the one in [5] when \({\mathbb G} ={\mathbb R} ^d\), \(\Omega =\mathcal N\), \({\hat{\omega }}=\omega \), \(V={\mathbb I} \) (i.e. \(\tau _g x=x+g\)) and \(\theta _g \omega := \tau _{-g} \omega =\omega -g\). When \({\mathbb G} ={\mathbb R} ^d\) and in the special discrete case treated below, the Palm distribution \(\mathcal P_0\) can be thought of as the probability measure \(\mathcal P\) conditioned to the event \(\{0\in {\hat{\omega }}\}\). For the special discrete case see (18) below, while for \({\mathbb G} ={\mathbb R} ^d\) some care is required as the above event has zero \(\mathcal P\)-probability (see [5, 40] for more details). We will write \({\mathbb E} [\cdot ]\) and \({\mathbb E} _0[\cdot ]\) for the expectation w.r.t. \(\mathcal P\) and \(\mathcal P_0\), respectively.

\(\bullet \) Case \({\mathbb G} ={\mathbb R} ^d\). The intensity of the simple point process \(\hat{\omega }\) is defined as

We will assume that \(m\in (0,+\infty )\). By the \({\mathbb G} \)-stationarity of \(\mathcal P\) we have \(m \ell (B)={\mathbb E} \left[ \sharp \left( {\hat{\omega }} \cap B\right) \right] \) for any \(B\in \mathcal B({\mathbb R} ^d)\). Then the Palm distribution \(\mathcal P_0\) is the probability measure on \((\Omega ,\mathcal F)\) such that, for any \(U\in \mathcal B({\mathbb R} ^d)\) with \(0<\ell (U)<\infty \) (\(\ell (U)\) is the Lebesgue measure of U),

One can check that \(\mathcal P_0\) has support inside the set \( \Omega _0:=\{\omega \in \Omega \,:\, 0\in {\hat{\omega }}\}\).

\(\bullet \) Case \({\mathbb G} ={\mathbb Z} ^d\). The intensity of the simple point process \({\hat{\omega }}\) is defined as

By the \({\mathbb G} \)-stationarity of \(\mathcal P\), \(m \ell (B)={\mathbb E} \left[ {\hat{\omega }}\left( B \right) \right] \) for any \(B\in \mathcal B({\mathbb R} ^d)\) which is a union of translated cells \(\tau _g \Delta \) with \(g\in {\mathbb G} \). We will assume that \(m\in (0,+\infty )\). Then the Palm distribution \(\mathcal P_0\) is the probability measure on \(\left( \Omega \times \Delta ,\mathcal F\otimes \mathcal B(\Delta )\right) \) such that

\(\mathcal P_0\) has support inside \(\Omega _0:=\{(\omega , x)\in \Omega \times \Delta \,:\,x\in {\hat{\omega }}\}\).

Note that in the Example of Sect. 3.1, the set \({\hat{\omega }} \cap \Delta \) equals \(\{0,a\}\cap \mathcal C(\omega )\), a being as in Fig. 1. Moreover, \(\Omega _0= \{ (\omega , 0)\,:\, \omega \in \Omega , \;0 \in \mathcal C(\omega )\} \cup \{ (\omega , a)\,:\, \omega \in \Omega , \;a \in \mathcal C(\omega )\} \).

\(\bullet \) Special discrete case: \({\mathbb G} ={\mathbb Z} ^d\), \(V={\mathbb I} \) and \({\hat{\omega }} \subset {\mathbb Z} ^d\) \(\forall \omega \in \Omega \) [see (10)]. This is a subcase of the previous one and in what follows we will call it simply special discrete case. Due to its relevance in discrete probability, we discuss it separately, pointing out some simplifications. As \(V={\mathbb I} \) we have \(\Delta =[0,1)^d\). In particular (see the case \({\mathbb G} ={\mathbb Z} ^d\)) \(\mathcal P_0\) is concentrated on \(\{ \omega \in \Omega :0\in {\hat{\omega }} \}\times \{0\}\). Hence we can think of \(\mathcal P_0\) simply as a probability measure concentrated on the set \(\Omega _0:=\{ \omega \in \Omega :0\in {\hat{\omega }}\}\). Formulas (16) and (17) then read

$$\begin{aligned} m = \mathcal P( 0\in {\hat{\omega }}) , \qquad \mathcal P_0 (A)= \mathcal P( A\,|\, 0\in {\hat{\omega }}) \quad \forall A\in \mathcal F. \end{aligned}$$(18)

In what follows, when treating the special discrete case, we will use the above identifications without explicit mention.

3.3 Basic assumptions

Recall that the jump probability rates are given by the measurable function \(c_{x,y}(\omega )\) in (3), which is symmetric in x, y (i.e. \(c_{x,y}(\omega )= c_{y,x}(\omega )\)) and recall our convention (4). We also define

We define the functions \(\lambda _k:\Omega _0 \rightarrow [0,+\infty ]\) (for \(k=0,2\)) as follows:

For \({\mathbb G} ={\mathbb R} ^d\) and in the special discrete case, \(\lambda _0(\omega )=c_0(\omega )\) for all \(\omega \in \Omega _0\).

We collect below all our assumptions leading to homogenization of the massive Poisson equation of the diffusively rescaled random walk (some of them have already been mentioned in Sect. 2). We will not recall here the homogenization results obtained in [12], as this is not necessary. On the other hand, we will collect some of their consequences in Proposition 6.1 in Sect. 6, since they are used in the proof of Theorem 4.1.

Assumptions for homogenization:

(A1) \(\mathcal P\) is stationary and ergodic w.r.t. the action \((\theta _g)_{g\in {\mathbb G} }\) of the group \({\mathbb G} \);

(A2) the intensity m of the simple point process \(\hat{\omega }\) is finite and positive [cf. (14), (16) and (18)];

(A3) the \(\omega \)’s in \(\Omega \) such that \( \theta _g\omega \not = \theta _{g'} \omega \) for all \( g\not =g'\) in \({\mathbb G} \) form a measurable set of \(\mathcal P\)-probability 1;

(A4) the \(\omega \)’s in \(\Omega \) such that, for all \(g\in {\mathbb G} \) and \(x,y \in {\mathbb R} ^d\),

$$\begin{aligned}&\widehat{\theta _g\omega } =\tau _{-g}( {\hat{\omega }} ) , \end{aligned}$$(21)$$\begin{aligned}&c_{x,y} (\theta _g\omega )= c_{\tau _g x, \tau _g y} (\omega ) , \end{aligned}$$(22)

form a measurable set of \(\mathcal P\)-probability 1;

(A5) for \(\mathcal P\)-a.a. \(\omega \in \Omega \), for all \(x,y\in {\mathbb R} ^d\) it holds

$$\begin{aligned} c_{x,y}(\omega ) = c_{y,x}(\omega )\,; \end{aligned}$$(23)

(A6) for \(\mathcal P\)-a.a. \(\omega \in \Omega \), given any \(x,y \in {\hat{\omega }}\) there exists a path \(x=x_0\), \(x_1\),\( \ldots , x_{n-1}, x_n =y\) such that \(x_i \in {\hat{\omega }}\) and \(c_{x_i, x_{i+1}}(\omega ) >0\) for all \(i=0,1, \ldots , n-1\);

(A7) \(\lambda _0 , \lambda _2 \in L^1(\mathcal P_0)\);

(A8) \(L^2(\mathcal P_0)\) is separable.

The above assumptions imply that \(\mathcal P\)-a.s. the random walk \(X_t^\omega \) on \({\hat{\omega }}\) introduced in Sect. 2 is well defined for all times \(t\ge 0\) (recall that a set \(A\subset \Omega \) is called translation invariant if \(\theta _g A=A\) for all \(g\in {\mathbb G} \)):

Lemma 3.1

[12, Lemma 3.5] There exists a translation invariant measurable set \(\mathcal A\subset \Omega \) with \(\mathcal P(\mathcal A)=1\) such that, for all \(\omega \in \mathcal A\), (i) \(c_x(\omega )\in (0,+\infty )\) for all \(x \in {\hat{\omega }}\) [cf. (19)], (ii) the continuous-time Markov chain on \({\hat{\omega }}\) starting at any \(x_0\in {\hat{\omega }}\), with waiting time parameter \(c_x(\omega )\) at \(x\in {\hat{\omega }}\) and with probability \(c_{x,y}(\omega )/c_x(\omega ) \) for a jump from x to y, is non-explosive.

In Sect. 4 we will make an additional assumption [called Assumption (SEP)] assuring that the simple exclusion process introduced via the universal graphical construction is well defined for all times [see (7) for its generator on local functions]. Hence, by viewing the random walk \(X_t^\omega \) as a simple exclusion process with only one particle, Assumption (SEP) also guarantees the well-definedness of \(X_t^\omega \).

We now report some other comments on the above assumptions (A1),...,(A8) taken from [12, Section 2.4] (where more details are provided). By Zero-Infinity Dichotomy (see [5, Proposition 10.1.IV]) and Assumptions (A1) and (A2), for \(\mathcal P\)-a.a. \(\omega \) the set \({\hat{\omega }}\) is infinite. (A3) is a rather superfluous assumption as one can add some randomness by enlarging \(\Omega \) to assure (A3). The assumption of measurability in (A3) and (A4) is always satisfied for \({\mathbb G} ={\mathbb Z} ^d\) by (4) (as discussed in [12, Section 2.4], one can even weaken this requirement). Considering the random walk \(X_t^\omega \), (A5) and (A6) correspond \(\mathcal P\)-a.s. to reversibility of the counting measure and to irreducibility. Finally, we point out that, by [3, Theorem 4.13], (A8) is fulfilled if \((\Omega _0,\mathcal F_0,\mathcal P_0)\) is a separable measure space where \(\mathcal F_0:=\{A\cap \Omega _0\,:\, A\in \mathcal F\}\) (i.e. there is a countable family \(\mathcal G\subset \mathcal F_0\) such that the \(\sigma \)-algebra \(\mathcal F_0\) is generated by \(\mathcal G\)). For example, if \(\Omega _0\) is a separable metric space and \(\mathcal F_0= \mathcal B(\Omega _0)\) [which is valid if \(\Omega \) is a separable metric space and \(\mathcal F= \mathcal B(\Omega )\)] then (cf. [3, p. 98]) \((\Omega _0,\mathcal F_0,\mathcal P_0)\) is a separable measure space and (A8) is valid.

We now explain why the Palm distribution \(\mathcal P_0\) will play a crucial role in the hydrodynamic limit of the simple exclusion process. \(\mathcal P_0\) is indeed the natural object to express the ergodic property of the environment when dealing with observables keeping track also of the local microscopic details of the environment. This is formalized by the following result which will be frequently used below (cf. [11, Appendix B], [12, Proposition 3.1] and recall that \({\mathbb E} _0\) denotes the expectation w.r.t. \(\mathcal P_0\)):

Proposition 3.2

Let \(f: \Omega _0\rightarrow {\mathbb R} \) be a measurable function with \(\Vert f\Vert _{L^1(\mathcal P_0)}<\infty \). Then there exists a translation invariant measurable subset \(\mathcal A[f]\subset \Omega \) such that \(\mathcal P(\mathcal A[f])=1\) and such that, for any \(\omega \in \mathcal A[f]\) and any \(\varphi \in C_c ({\mathbb R} ^d)\), it holds

where \(\mu ^\varepsilon _\omega := \sum _{x\in {\hat{\omega }}} \varepsilon ^d \delta _{\varepsilon x}\).

We point out that the above proposition implies that \(m=\lim _{\ell \uparrow \infty } \sharp ( {\hat{\omega }} \cap [-\ell ,\ell ]^d)/(2\ell )^d \) \(\mathcal P\)-a.s.
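As a numerical illustration of the last identity, the following sketch estimates the intensity m by counting points in a growing window. The choice of a one-dimensional homogeneous Poisson point process and the helper names `sample_ppp_1d` and `empirical_density` are assumptions made for this example only.

```python
import random


def sample_ppp_1d(intensity, half_width, rng):
    """Sample a homogeneous Poisson point process on [-half_width, half_width].

    Assumption for illustration: d = 1, so the points can be generated
    from i.i.d. exponential gaps with mean 1 / intensity.
    """
    points = []
    x = -half_width + rng.expovariate(intensity)
    while x <= half_width:
        points.append(x)
        x += rng.expovariate(intensity)
    return points


def empirical_density(points, ell):
    """Return #(points in [-ell, ell]) / (2 * ell)."""
    count = sum(1 for x in points if -ell <= x <= ell)
    return count / (2.0 * ell)


rng = random.Random(0)
omega_hat = sample_ppp_1d(intensity=2.0, half_width=5000.0, rng=rng)
for ell in (10.0, 100.0, 1000.0, 5000.0):
    # The printed ratios should approach the intensity m = 2 as ell grows.
    print(ell, empirical_density(omega_hat, ell))
```

For small windows the ratio fluctuates noticeably; only the limit as the window grows is deterministic.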

We can now also introduce the effective homogenized matrix D, defined in terms of the Palm distribution:

Definition 3.3

We define the effective homogenized matrix D as the unique \(d\times d\) symmetric matrix such that:

\(\bullet \) Case \({\mathbb G} ={\mathbb R} ^d\) and special discrete case

for any \(a\in {\mathbb R} ^d\), where \(\nabla f (\omega , x) := f(\theta _{g(x)} \omega ) - f(\omega )\).

\(\bullet \) Case \({\mathbb G} ={\mathbb Z} ^d\)

for any \(a\in {\mathbb R} ^d\), where \(\nabla f (\omega , x,y-x) := f(\theta _{g(y)} \omega , \beta (y) ) - f(\omega ,x)\) [recall (13)].

We give some comments on the above definition of D. Firstly, it is well posed due to (A7). We also point out that the effective homogenized matrix D, which is defined by a variational formula, can be computed explicitly essentially only in dimension \(d=1\) with positive conductances \(c_{x,y}(\omega )\) only between nearest-neighbor points x, y of \({\hat{\omega }}\) (see e.g. [2] and [4, Eq. (4.22)]). On the other hand, in recent years numerical approximation methods for D have been developed in quantitative stochastic homogenization theory (see e.g. [7]).

Under Assumptions (A1),...,(A8) the random walk \(X_t^\omega \) satisfies a weak form of the central limit theorem where 2D equals the asymptotic diffusion matrix (cf. [12, Theorem 4.4]). Since the position of the random walk can be thought of as an antisymmetric additive functional of the environment viewed from the particle, D has the same structure as a Green–Kubo formula (cf. [4, 27, 29, 30, 38] and references therein).

Finally we introduce an additional assumption assuring a weak form of convergence for the \(L^2\)-Markov semigroup and the \(L^2\)-resolvent associated to the random walk \(X_t^\omega \) as discussed in Sect. 6 [recall definition (11) of \(\Delta \)].

Additional assumption for semigroup and resolvent convergence:

-

(A9)

At least one of the following conditions is satisfied:

-

(i)

for \(\mathcal P\)-a.a. \(\omega \) \(\exists C(\omega )>0\) such that

$$\begin{aligned} \sharp ( {\hat{\omega }} \cap \tau _k \Delta ) \le C(\omega ) \text { for all } k\in {\mathbb Z} ^d ; \end{aligned}$$(27)

-

(ii)

at the cost of enlarging the probability space \(\Omega \), one can define random variables \((N_k) _{k\in {\mathbb Z} ^d}\) with \( \sharp ( {\hat{\omega }} \cap \tau _k \Delta ) \le N_k\) and such that, for some \(C_0\ge 0\), it holds

$$\begin{aligned}&\sup _{k \in {\mathbb Z} ^d} {\mathbb E} [N_k]<+\infty ,\qquad \sup _{k \in {\mathbb Z} ^d}{\mathbb E} \left[ N_k^2\right] <+\infty , \end{aligned}$$(28)

$$\begin{aligned}&|\text {Cov}\,(N_k, N_{k'})| \le C_0 |k-k'| ^{-1} \qquad \forall k\not = k'\text { in }{\mathbb Z} ^d . \end{aligned}$$(29)

Remark 3.4

If one sets \(N_k:= \sharp ( {\hat{\omega }} \cap \tau _k \Delta ) \) for \(k\in {\mathbb Z} ^d\), then to check Condition (ii) in (A9) it is enough to check that \({\mathbb E} [N_0^2]<+\infty \) and (29) [due to (A1) and (A2)]. As discussed in [12, Remark 4.3], when \({\mathbb G} ={\mathbb R} ^d\), in (A9) one can replace the cells \(\{\tau _k \Delta \}_{k \in {\mathbb Z} ^d}\) by the cells of any lattice partition of \({\mathbb R} ^d\).

4 Hydrodynamic limit

Given \(\omega \in \Omega \) we consider the simple exclusion process on \(\hat{\omega }\) with particle exchange probability rate \(c_{x,y}(\omega )\). To have a well defined process for all times \(t\ge 0\), \(\mathcal P\)-a.s., we will use in Sect. 7 Harris’ percolation argument [6]. To this aim, we define

Then, given \(\omega \), we associate to each unordered pair \(\{x,y\}\in \mathcal E_\omega \) a Poisson process \(( N_{x,y}(t))_{t\ge 0}\) with intensity \(c_{x,y}(\omega )\), such that the \(N_{x,y}(\cdot )\)’s are independent processes when varying the pair \(\{x,y\}\). The random object \(( N_{x,y}(\cdot ) )_{\{x,y\}\in \mathcal E_\omega }\) takes values in the product space \( D({\mathbb R} _+, {\mathbb N} )^{\mathcal E_\omega }\), \( D({\mathbb R} _+, {\mathbb N} )\) being endowed with the standard Skorohod topology. In what follows, we denote by \(\mathcal K= ( \mathcal K_{x,y}(\cdot ) )_{\{x,y\}\in \mathcal E_\omega }\) a generic element of \( D({\mathbb R} _+, {\mathbb N} )^{\mathcal E_\omega }\). Moreover, we denote by \({\mathbb P} _\omega \) the law on \( D({\mathbb R} _+, {\mathbb N} )^{\mathcal E_\omega }\) of \(( N_{x,y}(\cdot ) )_{\{x,y\}\in \mathcal E_\omega }\).

In this section we add the following assumption (we call it “SEP” for “simple exclusion process” as the assumption is introduced to assure the existence of the simple exclusion process):

Assumption (SEP). For \(\mathcal P\)-a.a. \(\omega \) there exists \(t_0=t_0(\omega )>0\) such that for \({\mathbb P} _\omega \)-a.a. \(\mathcal K\) the undirected graph \(\mathcal G_{t_0}(\omega ,\mathcal K)\) with vertex set \({\hat{\omega }}\) and edges

$$\begin{aligned} \big \{ \{x,y\}\in \mathcal E_\omega \,:\, \mathcal K_{x,y}(t_0)\ge 1 \big \} \end{aligned}$$

has only connected components with finite cardinality.

In Sect. 7 we discuss the universal graphical construction of the exclusion process on \({\hat{\omega }}\) under Assumption (SEP). For \(\mathcal P\)-a.a. \(\omega \) the resulting process is a Feller process and the infinitesimal generator \(\mathcal L_\omega \) acts on local functions as in (7) and (9) (see Proposition 7.4).

We denote by \(\mathcal M\) the space of Radon measures on \({\mathbb R} ^d\) endowed with the vague topology and we denote by \(D([0,T], \mathcal M)\) the Skorohod space of càdlàg paths from [0, T] to \(\mathcal M\) endowed with the Skorohod metric (see Sect. 9 for details). For each \(\varepsilon >0\) we consider the map

$$\begin{aligned} \pi ^\varepsilon _\omega : \{0,1\}^{{\hat{\omega }}} \ni \eta \mapsto \pi ^\varepsilon _\omega [\eta ] := \varepsilon ^d \sum _{x\in {\hat{\omega }}} \eta (x) \delta _{\varepsilon x} \in \mathcal M. \end{aligned}$$

Above, \(\pi ^\varepsilon _\omega [\eta ]\) is the so-called empirical measure associated with \(\eta \). Given a path \(\eta _\cdot = (\eta _s )_{0 \le s \le T}\) and given \(t\in [0,T]\), we define \(\pi ^\varepsilon _{\omega ,t} [ \eta _\cdot ]:= \pi ^\varepsilon _\omega [ \eta _t ]\).
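To make the empirical measure concrete, the sketch below integrates a test function \(\varphi \) against \(\pi ^\varepsilon _\omega [\eta ]\), i.e. it evaluates \(\varepsilon ^d \sum _{x} \varphi (\varepsilon x) \eta (x)\). The setting (\(d=1\), \({\hat{\omega }}={\mathbb Z} \), a tent function as \(\varphi \)) and the function names are assumptions chosen only for illustration.

```python
def empirical_measure_integral(eta, phi, eps):
    """Integrate phi against pi^eps[eta] = eps * sum_x eta(x) * delta_{eps x} (d = 1).

    eta: dict mapping the sites of omega_hat (numbers) to 0 or 1.
    phi: test function with compact support.
    """
    return eps * sum(phi(eps * x) * occ for x, occ in eta.items())


def tent(x):
    """Continuous test function supported on [-1, 1], with integral 1."""
    return max(0.0, 1.0 - abs(x))


eps = 0.01
# Assumption for illustration: omega_hat = Z restricted to a window that
# covers the support of phi after rescaling.
sites = range(-200, 201)
eta_full = {k: 1 for k in sites}                      # profile rho_0 = 1
eta_half = {k: 1 if k % 2 == 0 else 0 for k in sites}  # profile rho_0 = 1/2

print(empirical_measure_integral(eta_full, tent, eps))
print(empirical_measure_integral(eta_half, tent, eps))
```

The two configurations differ microscopically at every other site, yet the empirical measure only sees their macroscopic densities 1 and 1/2.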

In what follows, given \(\varepsilon >0\) and a probability measure \(\mathfrak {m} \) on \(\{0,1\}^{ {\hat{\omega }}}\), we denote by \({\mathbb P} ^\varepsilon _{\omega , \mathfrak {m} }\) the law of the diffusively rescaled exclusion process on \( {\hat{\omega }}\) with generator \(\varepsilon ^{-2} \mathcal L_\omega \) and initial distribution \(\mathfrak {m} \). Note that the time T is fixed and does not appear in the notation.

We denote by \((B_t)_{t\ge 0}\) the Brownian motion on \({\mathbb R} ^d\) with diffusion matrix given by 2D, D being the effective homogenized matrix (see Definition 3.3). As D is symmetric we can fix an orthonormal basis \(\mathfrak {e}_1\),...,\( \mathfrak {e}_d\) of eigenvectors of D, such that \(\mathfrak {e}_1\),...,\( \mathfrak {e}_{d_*}\) have positive eigenvalues, while the other basis vectors have zero eigenvalue. Then the Brownian motion \((B_t)_{t\ge 0}\) is not degenerate when projected on \(\text {span}(\mathfrak {e}_1, \dots , \mathfrak {e}_{d_*})\), while no motion is present along \(\text {span}(\mathfrak {e}_{d_*+1}, \dots , \mathfrak {e}_{d})\). Given a bounded function \(f:{\mathbb R} ^d\rightarrow {\mathbb R} \) we set \(P_t f(x):=E\left[ f(x+B_t)\right] \).

Theorem 4.1

Suppose that Assumptions (A1),...,(A9) and Assumption (SEP) are satisfied. Then there exists a translation invariant measurable set \(\Omega _\mathrm{typ}\subset \Omega \) with \(\mathcal P(\Omega _\mathrm{typ})=1\), such that for any \(\omega \in \Omega _\mathrm{typ}\) the simple exclusion process is well defined for any initial distribution and exhibits the following hydrodynamic behavior.

Let \(\rho _0: {\mathbb R} ^d \rightarrow [0,1]\) be a measurable function and let \(\rho :{\mathbb R} ^d\times [0,\infty )\rightarrow [0,1]\) be the function \(\rho (x,t):= P_t \rho _0 (x)\). Let \(\{\mathfrak {m}_\varepsilon \}_{\varepsilon >0}\) be an \(\varepsilon \)-parametrized family of probability measures on \(\{0,1\}^{\hat{\omega }}\) such that the random empirical measure \(\pi _\omega ^\varepsilon [\eta ] \) in \(\mathcal M\), with \(\eta \) sampled according to \(\mathfrak {m}_\varepsilon \), converges in probability to \(\rho _0(x)dx\) inside \(\mathcal M\). In other words, we suppose that, for all \(\delta >0\) and \(\varphi \in C_c({\mathbb R} ^d)\), it holds

$$\begin{aligned} \lim _{\varepsilon \downarrow 0} \mathfrak {m}_\varepsilon \Big ( \Big | \varepsilon ^d \sum _{x \in {\hat{\omega }}} \varphi (\varepsilon x) \eta (x) - \int _{{\mathbb R} ^d} \varphi (x) \rho _0(x) dx\Big | >\delta \Big )=0. \end{aligned}$$

Then:

-

(i)

For all \(T>0\), as \(\varepsilon \downarrow 0\) the random path \(( \pi ^\varepsilon _{\omega ,t} [ \eta _\cdot ])_{0\le t \le T} \) in \(D ( [0,T], \mathcal M)\), with \(\eta _\cdot =( \eta _t )_{0\le t \le T} \) sampled according to \({\mathbb P} ^\varepsilon _{\omega , \mathfrak {m}_\varepsilon }\), converges in probability to the deterministic path \((\rho (x,t) dx )_{0\le t \le T}\).

-

(ii)

For all \(T>0\), \(\varphi \in C_c({\mathbb R} ^d)\) and \( \delta >0\), it holds

$$\begin{aligned} \lim _{\varepsilon \downarrow 0} {\mathbb P} ^\varepsilon _{ \omega ,\mathfrak {m}_\varepsilon } \Big (\sup _{0\le t \le T}\Big | \varepsilon ^d \sum _{x \in {\hat{\omega }}} \varphi (\varepsilon x) \eta _t (x) - \int _{{\mathbb R} ^d} \varphi (x) \rho (x,t) dx\Big | >\delta \Big )=0. \end{aligned}$$(32)

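The proof of the above theorem is given in Sect. 11 (Sect. 11.2 can be replaced by “Appendix C”; the two approaches are alternatives). The function \(\rho (x,t)= P_t \rho _0 (x)\) is the unique weak solution of the Cauchy system

$$\begin{aligned} {\left\{ \begin{array}{l} \partial _t \rho = \nabla \cdot ( D \nabla \rho ) ,\\ \rho (x,0)=\rho _0(x) \end{array}\right. } \end{aligned}$$

in the sense specified by Lemma 9.3 in Sect. 11.2.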
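For a concrete instance of \(\rho (x,t)=P_t\rho _0(x)\), take \(d=1\) and the step profile \(\rho _0=\mathbb {1}_{(-\infty ,0)}\). Since \(B_t\) is a centered Gaussian with variance 2Dt, one gets \(\rho (x,t)=\tfrac{1}{2}\,\mathrm{erfc}\bigl (x/(2\sqrt{Dt})\bigr )\) when \(D>0\), while for \(D=0\) the profile does not move. The sketch below evaluates this formula; the step profile and the name `rho_step` are assumptions made for the example.

```python
import math


def rho_step(x, t, D):
    """rho(x, t) = P_t rho_0(x) for rho_0 = indicator of (-inf, 0), in d = 1.

    B_t is a centered Gaussian with variance 2 * D * t, hence
    rho(x, t) = P(x + B_t < 0) = 0.5 * erfc(x / (2 * sqrt(D * t))).
    """
    if D == 0.0 or t == 0.0:
        # Degenerate case: no motion, the profile stays equal to rho_0.
        return 1.0 if x < 0 else 0.0
    return 0.5 * math.erfc(x / (2.0 * math.sqrt(D * t)))


for x in (-2.0, -1.0, 0.0, 1.0, 2.0):
    print(x, rho_step(x, t=1.0, D=0.5), rho_step(x, t=1.0, D=0.0))
```

The degenerate branch mirrors the fact that Theorem 4.1 does not require D to be positive definite.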

Remark 4.2

Theorem 4.1 remains valid if Assumption (SEP) is replaced by any other assumption leading to Proposition 7.4 below. Indeed, the latter contains all the properties used in the proof provided in Sect. 11. See also Remark 6.2 for what concerns modifications to Assumption (A9).

Remark 4.3

The assumptions of Theorem 4.1 do not include that the effective homogenized matrix D is strictly positive definite. Checking this property can be a non-trivial task (see the discussion on the non-degeneracy of D in [12, Introduction and Section 5]). For an example of degenerate and nonzero D see “Appendix A”.

5 Some applications

There are plenty of examples to which Theorem 4.1 can be applied. We discuss here four main classes. The application of Theorem 4.1 to the simple exclusion process with random jump rates on the Delaunay triangulation is discussed in [18].

5.1 Nearest-neighbor random conductance model on \({\mathbb Z} ^d\), \(d\ge 1\)

We take \({\mathbb G} :={\mathbb Z} ^d\) acting on \({\mathbb R} ^d\) by standard translations, i.e. \(\tau _g x=x+ g\). Let \({\mathbb E} ^d\) be the set of unoriented edges of \({\mathbb Z} ^d\) and endow \(\Omega :=(0,+\infty )^{{\mathbb E} ^d}\) with the product topology. Given \(\omega \in \Omega \), we write \(\omega _{x,y}\) for the component of \(\omega \) associated to the edge \(\{x,y\}\in {\mathbb E} ^d\). The action \((\theta _x)_{x\in {\mathbb Z} ^d}\) is the standard one: \((\theta _x \omega ) _{a,b}:= \omega _{a+x,b+x}\). We set \({\hat{\omega }} := {\mathbb Z} ^d\), hence the exclusion process lives on \({\mathbb Z} ^d\). We define \(c_{x,y}(\omega ):=\omega _{x,y}\) if \(\{x,y\}\in {\mathbb E} ^d\) and \(c_{x,y}(\omega ):=0\) otherwise. It is simple to check that Assumptions (A1),..., (A9) are satisfied whenever \(\mathcal P\) is stationary and ergodic, \(\mathcal P\) satisfies (A3) (which is a rather superfluous assumption, as already commented) and \({\mathbb E} [\omega _{x,y}]<+\infty \) for all \(\{x,y\}\in {\mathbb E} ^d\). When \(d=1\), D can be explicitly computed and one gets \(D= 1/ {\mathbb E} [1/c_{0,1}(\omega )]\in [0,+\infty )\) (apply [2, Proposition 4.1 and Exercise 4.3] or use the characterization of D as a.s. limit (for \(n\rightarrow +\infty \)) of 2n times the effective conductivity under unit potential of the 1d resistor network with node set \([-n,n]\cap {\mathbb Z} \) and with nearest-neighbors conductances \(c_{x,y}(\omega )\) [13]). For \(d\ge 2\) the variational problem in (25) leading to D does not have an explicit solution.
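The one-dimensional formula \(D= 1/ {\mathbb E} [1/c_{0,1}(\omega )]\) can be checked numerically. The sketch below compares the harmonic-mean expression with the resistor-network characterization (number of edges times the effective conductance of resistors in series). The i.i.d. uniform law on [1, 2] for the conductances and the function names are assumptions for illustration; for that law \({\mathbb E} [1/c_{0,1}]=\ln 2\).

```python
import math
import random


def harmonic_mean_D(conductances):
    """Empirical version of D = 1 / E[1 / c_{0,1}]."""
    n = len(conductances)
    return 1.0 / (sum(1.0 / c for c in conductances) / n)


def series_network_D(conductances):
    """(Number of edges) times the effective conductance of the edges in series.

    For node set [-n, n] the network has 2n edges, and resistances
    1 / c add up along the line.
    """
    n_edges = len(conductances)
    total_resistance = sum(1.0 / c for c in conductances)
    return n_edges / total_resistance


rng = random.Random(0)
sample = [rng.uniform(1.0, 2.0) for _ in range(100000)]
print(harmonic_mean_D(sample), series_network_D(sample), 1.0 / math.log(2.0))
```

Both functions compute the same quantity by construction, which is exactly why the two characterizations of D agree in dimension one.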

Below, given \(k>0\), we say that the random conductances \(\omega _{x,y}\) are k-dependent if, given \(A,B\subset {\mathbb Z} ^d\) with Euclidean distance between A and B larger than k, the random fields

are independent (see [23, page 178] for a similar definition).

Proposition 5.1

Assumption (SEP) is satisfied if at least one of the following conditions is satisfied:

-

(i)

\(\mathcal P\)-a.s. there exists a constant \(C(\omega )\) such that \(\omega _{x,y}\le C(\omega )\) for all \(\{x,y\}\in {\mathbb E} ^d\);

-

(ii)

under \(\mathcal P\) the random conductances \(\omega _{x,y}\) are independent;

-

(iii)

under \(\mathcal P\) the random conductances \(\omega _{x,y}\) are k-dependent with \(k>0\).

We note that, by ergodicity, in Item (i) one could just restrict to a non-random upper bound C. Item (ii) is a special case of Item (iii).

Proof

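We start with Item (i). As \({\mathbb P} _\omega ( \mathcal K_{x,y}(t_0)>0)= 1-e^{ - \omega _{x,y} t_0}\le 1-e^{ - C (\omega ) t_0}\), it is enough to take \(t_0\) small to have \(1-e^{ - C (\omega ) t_0}< p_c\), \(p_c>0\) being the critical probability for the Bernoulli bond percolation on \({\mathbb Z} ^d\). Indeed, given \(\omega \), the edges of \(\mathcal G_{t_0}(\omega ,\mathcal K)\) are then independent and each of them is present with probability smaller than \(p_c\), so that \(\mathcal G_{t_0}(\omega ,\mathcal K)\) is stochastically dominated by a subcritical Bernoulli bond percolation and \({\mathbb P} _\omega \)-a.s. has only connected components with finite cardinality.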

Let us consider Items (ii) and (iii). We present an argument valid for all \(d\ge 1\) (but for \(d=1\) one can give easily a more direct proof). By \({\mathbb Z} ^d\)-stationarity the distribution of \(\omega _{x,y}\) depends only on the axis parallel to the edge \(\{x,y\}\). To simplify the notation we suppose that the conductances are identically distributed with common distribution \(\nu \) (otherwise one has just to deal with a finite family of distributions \(\nu _1,\nu _2,\dots , \nu _d\) in the stochastic domination below). We observe that, for any \(C_0>0\), the graph \(\mathcal G_{t_0} (\omega ,\mathcal K)\) described in Assumption (SEP) is contained in the graph \(\mathcal G'_{t_0}(\omega ,\mathcal K)\) with edges \(\{x,y\}\in {\mathbb E} ^d\) such that

$$\begin{aligned} \omega _{x,y}> C_0 \qquad \text {or} \qquad \mathcal K_{x,y}(t_0)\ge 1 . \end{aligned}$$

Given \(e\in {\mathbb E} ^d\) we set \(Y_e(\omega ,\mathcal K):=1 \) if e is present in \(\mathcal G'_{t_0}(\omega ,\mathcal K)\), otherwise we set \(Y_e(\omega ,\mathcal K):=0\). We define \(\alpha (C_0):= \nu \left( (C_0 ,+\infty ) \right) \). Then, under \( {\mathbb P} := \int d\mathcal P(\omega ) {\mathbb P} _\omega \), the random field \(Y=(Y_e)_{e\in {\mathbb E} ^d}\) is stationary, satisfies \({\mathbb P} ( Y_e=1)\le \alpha (C_0) + (1-\alpha (C_0))( 1-e^{ - C _0 t_0})\) and is given by independent r.v.’s under (ii) and by k-dependent r.v.’s under (iii). Hence, having fixed \(p_*\in (0,p_c)\), we can first choose \(C_0\) large and afterwards \(t_0\) small to have \({\mathbb P} ( Y_e=1)\le p_*\). In case (ii) we conclude that \({\mathbb P} \)-a.s. Y does not percolate. In case (iii), similarly to [23, Theorem (7.65)] (exchange the roles of 0 and 1 there), by taking \(p_*\) small enough we get that the random field Y is stochastically dominated by a subcritical Bernoulli bond percolation (i.e. of parameter smaller than \(p_c\)) and therefore \({\mathbb P} \)-a.s. Y does not percolate. Hence, in both cases (ii) and (iii), by suitably choosing \(C_0,t_0\), the graph \(\mathcal G'_{t_0}(\omega ,\mathcal K)\) has only connected components with finite cardinality \({\mathbb P} \)-a.s. (i.e. for \(\mathcal P\)-a.a. \(\omega \) and for \({\mathbb P} _\omega \)-a.a. \(\mathcal K\)). The same then must hold for \(\mathcal G_{t_0}(\omega ,\mathcal K)\subset \mathcal G'_{t_0}(\omega ,\mathcal K)\). \(\square \)
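The two choices of \(t_0\) made in the proof can be traced numerically. The sketch below computes the admissible time for bounded conductances (Item (i)) and then follows the order "first \(C_0\) large, then \(t_0\) small" for unbounded ones. The exponential law of parameter 1 for \(\nu \), the target \(p_*=0.1\), the value \(p_c=1/2\) (bond percolation on \({\mathbb Z} ^2\)) and all function names are assumptions for illustration.

```python
import math


def t0_for_bounded_conductances(C, p_c):
    """Threshold t with 1 - exp(-C * t) = p_c; any t0 below it works in Item (i)."""
    return -math.log(1.0 - p_c) / C


def open_edge_bound(alpha_C0, C0, t0):
    """Bound alpha(C0) + (1 - alpha(C0)) * (1 - exp(-C0 * t0)) on P(Y_e = 1)."""
    return alpha_C0 + (1.0 - alpha_C0) * (1.0 - math.exp(-C0 * t0))


def choose_C0_t0(tail, p_star):
    """Pick C0 with tail(C0) <= p_star / 2, then t0 with 1 - exp(-C0 * t0) = p_star / 2.

    tail(C0) stands for nu((C0, +infinity)). With these choices the bound
    above is at most p_star / 2 + p_star / 2 = p_star.
    """
    C0 = 1.0
    while tail(C0) > p_star / 2.0:
        C0 *= 2.0
    t0 = -math.log(1.0 - p_star / 2.0) / C0
    return C0, t0


def exp_tail(c):
    """Tail of the exponential law of parameter 1 (illustrative choice of nu)."""
    return math.exp(-c)


print(t0_for_bounded_conductances(C=1.0, p_c=0.5))
C0, t0 = choose_C0_t0(exp_tail, p_star=0.1)
print(C0, t0, open_edge_bound(exp_tail(C0), C0, t0))
```

Note that \(t_0\) shrinks as \(C_0\) grows, which is why the order of the two choices matters.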

5.2 Nearest-neighbor random conductance models on a generic crystal lattice

We consider a generic crystal lattice \(\mathcal L=(\mathcal V,\mathcal E)\) in \({\mathbb R} ^d\), \(d\ge 1\), as follows. We fix a basis \(v_1,\ldots , v_d\) of \({\mathbb R} ^d\), write V for the matrix with columns \(v_1,\ldots ,v_d\) and write \(\Delta \) for the d-dimensional cell (11). Given \(g\in {\mathbb G} :={\mathbb Z} ^d\), we denote by \(\tau _g\) the translation (10), i.e. \(\tau _g x = x+ V g \). We fix a finite set \(\mathcal A\subset \Delta \). Then the vertex set \(\mathcal V\) of the crystal lattice is given by \(\sqcup _{g\in {\mathbb G} } ( \tau _g \mathcal A)\). The edge set \(\mathcal E\) has to be a family of unoriented pairs of vertices \(\{x,y\}\) with \(x\not =y\) in \(\mathcal V\), such that \(\tau _g \mathcal E= \mathcal E\) for all \(g\in {\mathbb G} \). In particular, the crystal lattice \(\mathcal L=(\mathcal V,\mathcal E)\) is left invariant by the action \( (\tau _g )_{g\in {\mathbb G} }\) on \({\mathbb R} ^d\). As an example consider the hexagonal lattice \(\mathcal L=(\mathcal V,\mathcal E)\) in \({\mathbb R} ^2\) (cf. Sect. 3.1). Then \(\mathcal A=\{0, a\}\) (see Fig. 1).

We take \(\Omega := (0,+\infty )^{\mathcal E}\) endowed with the product topology and set \(\omega _{x,y}:=\omega _{\{x,y\}}\). The action of \((\theta _g)_{g\in {\mathbb G} }\) on \(\Omega \) is given by \( \theta _g \omega : =(\omega _{x-Vg,y-Vg }\,:\, \{x,y\}\in \mathcal E) \) if \( \omega =(\omega _{x,y}\,:\,\{x,y\}\in \mathcal E)\). For any \(\omega \in \Omega \), we set \({\hat{\omega }} := \mathcal V\), hence our simple exclusion process lives on \(\mathcal V\). The set \(\Omega _0\) introduced after (17) equals \(\Omega \times \mathcal A\) and, by (16), \(m \ell (\Delta )= |\mathcal A| \). Hence [see (17)] \(\mathcal P_0(d\omega ,dx)= \mathcal P(d\omega ) \otimes \mathrm{Av}_{u\in \mathcal A} \delta _u(dx) \), where \(\mathrm{Av}\) denotes the arithmetic average and \(\delta _u\) is the Dirac measure at u.

We set \(c_{x,y}(\omega ):= \omega _{x,y}\) if \(\{x,y\}\in \mathcal E\) and \(c_{x,y}(\omega ):=0\) otherwise. If \(\mathcal P\) satisfies (A1), (A2), (A3) and the crystal lattice is connected, then all assumptions (A1),...,(A9) are satisfied if \(\sum _{y\in \mathcal V}\sum _{u \in \mathcal A} {\mathbb E} [ \omega _{u,y}]|y-u|^2<+\infty \). If the crystal lattice is locally finite (i.e. vertices have finite degree), then the above moment bound is equivalent to the bound \({\mathbb E} [\omega _{x,y}]<+\infty \) for \(\{x,y\}\in \mathcal E\) (by \({\mathbb G} \)-stationarity and local finiteness, we have just a finite family of bounds).

For locally finite crystal lattices, by reasoning as done for the lattice \({\mathbb Z} ^d\), we get that Assumption (SEP) is satisfied if the conductances \(\omega _{x,y}\) are uniformly bounded or if the conductances \(\omega _{x,y}\) are independent or k-dependent under \(\mathcal P\).

5.3 Simple exclusion processes on marked simple point processes

We take \({\mathbb G} := {\mathbb R} ^d\) (\(d\ge 1\)) acting on \({\mathbb R} ^d\) by standard translations (\(\tau _g x=x+ g\)). \(\Omega \) is given by the space of marked counting measures with marks in \({\mathbb R} \) [5], hence \((\Omega ,\mathcal F,\mathcal P)\) describes a marked simple point process [5]. By identifying \(\omega \) with its support, we have \(\omega =\{(x_i,E_i)\}\) where \(E_i\in {\mathbb R} \) and the set \(\{x_i\}\) is locally finite. The action \(\theta _x\) on \(\Omega \) is given by \(\theta _x \omega :=\{ (x_i-x, E_i)\}\) if \(\omega =\{(x_i, E_i)\}\). Our simple point process is obtained by setting \({\hat{\omega }} =\{x_i\}\) when \(\omega =\{(x_i,E_i)\}\). Writing \(E_x\) for the mark of the point \(x\in {\hat{\omega }}\), we take

$$\begin{aligned} c_{x,y}(\omega ):= \exp \{ - |x-y| - u(E_x,E_y) \} , \qquad x\not = y \text { in } {\hat{\omega }}, \end{aligned}$$(34)

where \(u:{\mathbb R} ^2 \rightarrow {\mathbb R} \) is a symmetric measurable function bounded from below. We point out that the Mott random walk, used to model Mott variable range hopping in amorphous solids (see e.g. [15, 17] and references therein), is the random walk with jump rates \(c_{x,y}(\omega )\) as above, with \(u(E_x,E_y)=|E_x-E_y|+|E_x|+|E_y|\).

Suppose that \(\mathcal P\) satisfies (A1),(A2) and (A3). Then \(\mathcal P_0\) is simply the standard Palm distribution associated to the marked simple point process with law \(\mathcal P\) [5]. Assumptions (A4), (A5), (A6) are automatically satisfied. As the above space \(\Omega \) is Polish (see [5]) and \(\Omega _0=\{\omega \,:\, 0 \in {\hat{\omega }}\} \) is a Borel subset of \(\Omega \), \(\Omega _0\) is separable and therefore (A8) is satisfied. As proven in [12, Section 5.4], (A7) is implied by the bound \({\mathbb E} \bigl [ |{\hat{\omega }} \cap [0,1]^d | ^{2} \bigr ]<+\infty \). Assumption (A9) is verified in numerous examples of marked simple point processes, including the Poisson point process (PPP) with intensity \(m\in (0,+\infty )\). Assumption (SEP) is of percolation nature. We show its validity for PPP’s. Moreover, since one can consider as well other jump rates \(c_{x,y}(\omega )\) for a random walk on a marked simple point process, we state our percolation result in a more general form.

Proposition 5.2

Suppose that under \(\mathcal P\) the random set \(\{x_i\}\) is a PPP with intensity \(m\in (0,+\infty )\). Take jump rates \(c_{x,y}(\omega )\) satisfying (22) in (A4) and (23) in (A5). Suppose that, for \(\mathcal P\)-a.a. \(\omega \), \(c_{x,y}(\omega ) \le g(|x-y|)\) for any \(x,y \in {\hat{\omega }}\), where g(r) is a fixed bounded function such that the map \( x \mapsto g(|x|) \) belongs to \(L^1({\mathbb R} ^d, dx)\) [for example take \(g(r)={C}e^{-r}\) for (34)]. Then Assumption (SEP) is satisfied.

Proof

Note that \({\mathbb P} _\omega ( \mathcal K_{x,y}(t) \ge 1)= 1- e^{-c_{x,y}(\omega )t} \le 1- \exp \{-g(|x-y|) t\}\le C_1 g(|x-y|) t\) for some fixed \(C_1>0\) if we take \(t\le 1\) (since g is bounded). We restrict to t small enough such that \(C_1\Vert g\Vert _\infty t <1\) and \(t\le 1\). Consider the random connection model [31] on a PPP with intensity m where an edge between \(x\not =y\) is created with probability \(C_1 g(|x-y|) t \). Due to the independence of the Poisson processes \(N_{x,y}(\cdot )'s\) given \(\omega \), one can couple the above random connection model with the field \((\omega , \mathcal K)\) with law \({\mathbb P} :=\int d \mathcal P(\omega ){\mathbb P} _\omega \) in a way that the graph in the random connection model contains the graph \(\mathcal G_{t}(\omega ,\mathcal K)\). We choose \(t=t_0\) small enough to have \( m C_1 t_0 \int _{{\mathbb R} ^d} dx g(|x|) <1\). The above bound and the branching process argument in the proof of [31, Theorem 6.1] [cf. (6.3) there] imply that a.s. the random connection model has only connected components with finite cardinality. Hence the same must hold for \(\mathcal G_{t_0}(\omega ,\mathcal K)\) \(\square \)
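The smallness conditions on \(t_0\) in the above proof are explicit for \(g(r)=Ce^{-r}\), since \(\int _{{\mathbb R} ^d} e^{-|x|}dx = |S^{d-1}|\,\Gamma (d)\). The sketch below evaluates this integral and the resulting threshold for \(t_0\). It takes \(C_1=1\), which is admissible because \(1-e^{-u}\le u\) for \(u\ge 0\); this choice and the function names are assumptions made for the example.

```python
import math


def integral_exp_minus_norm(d):
    """Integral over R^d of exp(-|x|) dx, i.e. |S^{d-1}| * Gamma(d)."""
    sphere_area = 2.0 * math.pi ** (d / 2.0) / math.gamma(d / 2.0)
    return sphere_area * math.gamma(d)


def t0_threshold(m, C, d, C1=1.0):
    """Upper threshold for t0 when g(r) = C * exp(-r).

    The proof requires t0 <= 1, C1 * sup(g) * t0 < 1 and
    m * C1 * t0 * integral of g < 1; any t0 strictly below the returned
    value satisfies all three conditions.
    """
    integral_g = C * integral_exp_minus_norm(d)
    return min(1.0, 1.0 / (C1 * C), 1.0 / (m * C1 * integral_g))


for d in (1, 2, 3):
    print(d, integral_exp_minus_norm(d), t0_threshold(m=1.0, C=1.0, d=d))
```

The threshold decreases with the dimension because the integral of g, hence the mean number of neighbors in the random connection model, grows.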

5.4 Simple exclusion processes on infinite clusters

For completeness we give an example associated to the random geometric structure introduced in Sect. 3.1. Recall that there \(\mathcal L=(\mathcal V,\mathcal E)\) is the hexagonal lattice, \(\Omega =\{0,1\}^{\mathcal V}\), \(\mathcal P\) is a Bernoulli site percolation, \({\hat{\omega }} = \mathcal C(\omega )\) is the unique infinite percolation cluster inside \(\mathcal L\) \(\mathcal P\)-a.s. We consider the simple exclusion process on \(\mathcal C(\omega )\) with \(c_{x,y}(\omega )=1\) if \(x,y \in \mathcal C(\omega )\) and \(\{x,y\}\in \mathcal E\). Then it is trivial to check that Assumptions (A1),\(\dots \),(A9) and (SEP) are all satisfied.

We now explain how Theorem 4.1 improves the hydrodynamic result given by [10, Theorem 2.2]. We take \({\mathbb G} :={\mathbb Z} ^d\) and \(V:={\mathbb I} \) and define \({\mathbb E} ^d\) as in Example 5.1. We take \(\Omega :=[0,+\infty )^{{\mathbb E} ^d}\) with the product topology. The action \((\theta _x)_{x\in {\mathbb Z} ^d}\) is the standard one as in Example 5.1. Let \(\mathcal P\) be a probability measure on \(\Omega \) stationary, ergodic and fulfilling (A3) for the above action. We assume that for \(\mathcal P\)-a.a. \(\omega \) there exists a unique infinite connected component \(\mathcal C(\omega )\subset {\mathbb Z} ^d\) in the graph given by the edges \(\{x,y\}\) in \({\mathbb E} ^d\) with positive \(\omega _{x,y}:=\omega _{\{x,y\}}\). We set \({\hat{\omega }}:=\mathcal C(\omega )\), \(c_{x,y}(\omega ):=\omega _{x,y}\) if \(\{x,y\}\) is an edge of \(\mathcal C(\omega )\) and \(c_{x,y}(\omega ):=0\) otherwise and assume that \({\mathbb E} [c_{0,e_i}]<+\infty \) for \(i=1,2,\dots , d\). Then all Assumptions (A1),...,(A9) are satisfied. If at least one of the Items (i), (ii), (iii) in Proposition 5.1 is satisfied, then Assumption (SEP) is satisfied too (by the arguments in the proof of Proposition 5.1) and Theorem 4.1 applies, implying the hydrodynamic limit in path space. This result is stronger than [10, Theorem 2.2], since in [10] \(c_{x,y}(\omega )\) has to be bounded uniformly in x, y and \(\omega \), D has to be strictly positive definite and the hydrodynamic limit is for a fixed time.

6 Random walk semigroup and resolvent convergence by homogenization

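In this section we recall the main results from [12] which will be used in the proof of Theorem 4.1. As in Proposition 3.2 we introduce the atomic measure

$$\begin{aligned} \mu ^\varepsilon _\omega := \sum _{x\in {\hat{\omega }}} \varepsilon ^d \delta _{\varepsilon x}. \end{aligned}$$(35)

We also introduce the set [recall (19)]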

$$\begin{aligned} \Omega _1:=\{\omega \in \Omega \,:\, c_x(\omega )<+\infty \;\; \forall x \in {\hat{\omega }}\}. \end{aligned}$$(36)

As explained in [12, Section 3.3], the set \(\Omega _1\) is translation invariant and satisfies \(\mathcal P(\Omega _1)=1\). Let us fix \(\omega \in \Omega _1\). We call \({C_\mathrm{loc}}(\varepsilon {\hat{\omega }})\) the space of local functions \(f : \varepsilon {\hat{\omega }} \rightarrow {\mathbb R} \) (here local means that f has finite support, i.e. f is zero outside a finite set).

We define

and introduce the bilinear form

with domain \(\mathcal D_\omega ^\varepsilon \). Since \(\omega \in \Omega _1\) it holds \({C_\mathrm{loc}} (\varepsilon {\hat{\omega }}) \subset \mathcal D_\omega ^\varepsilon \), as explained in [12, Section 3.3]. We call \(\mathcal D_{\omega ,*}^\varepsilon \) the closure of \({C_\mathrm{loc}} (\varepsilon {\hat{\omega }})\) w.r.t. the norm \(\Vert f\Vert _{L^2(\mu ^\varepsilon _\omega )} + \mathcal E_\omega ^\varepsilon (f,f)^{1/2}\). Then, as stated in [19, Example 1.2.5], the bilinear form \( \mathcal E_\omega ^\varepsilon \) restricted to \(\mathcal D_{\omega ,*}^\varepsilon \) is a regular Dirichlet form. In particular, there exists a unique nonpositive self-adjoint operator \({\mathbb L} ^\varepsilon _\omega \) in \(L^2(\mu ^\varepsilon _\omega )\) such that \(\mathcal D_{\omega ,*}^\varepsilon \) equals the domain of \(\sqrt{-{{\mathbb L} }^\varepsilon _\omega }\) and \( \mathcal E_\omega ^\varepsilon (f,f) = \Vert \sqrt{-{\mathbb L} ^\varepsilon _\omega } f\Vert ^2_{L^2(\mu ^\varepsilon _\omega )}\) for any \(f\in \mathcal D_{\omega ,*}^\varepsilon \) (see [19, Theorem 1.3.1]). Due to [19, Lemma 1.3.2 and Exercise 4.4.1], \({\mathbb L} ^\varepsilon _\omega \) is the infinitesimal generator of the strongly continuous Markov semigroup \((P^\varepsilon _{\omega ,t} )_{t \ge 0}\) on \(L^2(\mu ^\varepsilon _\omega )\) associated to the random walk \((\varepsilon X^\omega _{ \varepsilon ^{-2} t} )_{t\ge 0}\) on \(\varepsilon {\hat{\omega }}\) defined in terms of holding times and jump probabilities (see Lemma 3.1). Hence, \(P^\varepsilon _{\omega ,t} f(x) = E_x\bigl [ f(\varepsilon X^\omega _{ \varepsilon ^{-2} t}) \bigr ]\) for \(f\in L^2(\mu ^\varepsilon _\omega )\) and \(x\in \varepsilon {\hat{\omega }}\), \(E_x\) denoting the expectation when the random walk starts at x. 
For completeness, although not used below, we report that (using that \(\omega \in \Omega _1\)) one can check that \({C_\mathrm{loc}} (\varepsilon {\hat{\omega }})\subset \mathcal D( {\mathbb L} ^\varepsilon _\omega )\) and that \({\mathbb L} ^\varepsilon _\omega f(\varepsilon x) = {\varepsilon ^{-2}}\sum _{y \in {\hat{\omega }}} c_{x,y}(\omega ) \left( f(\varepsilon y)-f(\varepsilon x) \right) \) for all \(x \in {\hat{\omega }},\; \forall f\in {C_\mathrm{loc}}(\varepsilon {\hat{\omega }})\) (the series in the r.h.s. is well defined being absolutely convergent).
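The generator formula above can be linked to the quadratic form by a short computation. The display below is a sketch for \(f\in {C_\mathrm{loc}}(\varepsilon {\hat{\omega }})\): the explicit expression for \(\mathcal E^\varepsilon _\omega (f,f)\) is an assumption reconstructed from the generator formula and from the symmetry \(c_{x,y}(\omega )=c_{y,x}(\omega )\), not a quotation from [12].

```latex
\begin{aligned}
\mathcal E^\varepsilon_\omega(f,f)
 &= \langle f, -\mathbb L^\varepsilon_\omega f\rangle_{L^2(\mu^\varepsilon_\omega)}
  = \varepsilon^{d}\sum_{x\in\hat\omega} f(\varepsilon x)\,
    \varepsilon^{-2}\sum_{y\in\hat\omega} c_{x,y}(\omega)\bigl(f(\varepsilon x)-f(\varepsilon y)\bigr)\\
 &= \frac{\varepsilon^{d-2}}{2}\sum_{x\in\hat\omega}\sum_{y\in\hat\omega}
    c_{x,y}(\omega)\bigl(f(\varepsilon y)-f(\varepsilon x)\bigr)^2 .
\end{aligned}
```

The last step symmetrizes the double sum by exchanging the roles of x and y, which is legitimate since the series converges absolutely for local f and \(\omega \in \Omega _1\).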

We recall that we write \(( P_t )_{t \ge 0} \) for the Markov semigroup associated to the Brownian motion \((B_t)_{t\ge 0}\) on \({\mathbb R} ^d\) with diffusion matrix 2D given in Definition 3.3 (strictly speaking it would be natural here to refer to the semigroup on \(L^2(mdx)\) but \(P_t\) will be applied below to bounded functions, hence one can keep the same definition of \(P_t\) as for Theorem 4.1). Given \(\lambda >0\) we write \(R^\varepsilon _{\omega ,\lambda }: L^2(\mu ^\varepsilon _\omega )\rightarrow L^2(\mu ^\varepsilon _\omega ) \) for the resolvent associated to the random walk \(\varepsilon X^\omega _{ \varepsilon ^{-2} t} \), i.e. \(R^\varepsilon _{\omega ,\lambda }:= (\lambda -{\mathbb L} ^\varepsilon _\omega )^{-1} =\int _0^\infty e^{- \lambda s} P^\varepsilon _{\omega ,s} ds \). We write \( R_\lambda : L^2(m dx) \rightarrow L^2(m dx)\) for the resolvent associated to the above Brownian motion \((B_t)_{t\ge 0}\).

Proposition 6.1

[12, Theorem 4.4] Let Assumptions (A1),...,(A9) be satisfied. Then there exists a translation invariant measurable set \(\Omega _\sharp \subset \Omega \) with \(\mathcal P( \Omega _\sharp )=1\) such that for any \( \omega \in \Omega _\sharp \), any \(f \in C_c({\mathbb R} ^d)\), \(\lambda >0\), \(t\ge 0\) it holds:

Remark 6.2

As stated in [12, Remark 4.5] Assumption (A9) is used in [12] only to prove for \(\mathcal P\)-a.a. \(\omega \) that

Hence, in Theorem 4.1 one could replace (A9) by any other condition leading to the above property (39) \(\mathcal P\)-a.s.

For later use, we also point out that the \(\omega \)’s satisfying (39) form a translation invariant measurable set.

7 Graphical construction of the simple exclusion process

Let \(t_0=t_0(\omega )\) be as in Assumption (SEP) in Sect. 4. Recall definition (30) of \(\mathcal E_\omega \).

Definition 7.1

(Property \((P_r)\)) Given \(r\in {\mathbb N} \) we say that the pair \((\omega , \mathcal K)\in \Omega \times D({\mathbb R} _+,{\mathbb N} )^{\mathcal E_\omega }\) satisfies property \((P_r)\) if the undirected graph \(\mathcal G^r_{t_0}(\omega ,\mathcal K)\) with vertex set \({\hat{\omega }}\) and edge set \(\{ \{x,y\} \in \mathcal E_\omega \,:\, \mathcal K_{x,y}((r+1) t_0)> \mathcal K_{x,y} (r t_0) \}\) has only connected components with finite cardinality.

Recall definition (19) of \(c_x(\omega )\).

Definition 7.2

(Set \(\tilde{\Omega }\)) The set \(\tilde{\Omega }\) is given by the elements \(\omega \in \Omega \) such that \(c_x (\omega )<+\infty \;\forall x \in {\hat{\omega }}\) and such that the properties in Assumptions (A4) and (A5) are fulfilled [namely, (21), (22), (23) hold for all x, y, g].

As already pointed out in Sect. 6, the set \(\Omega _1\) defined in (36) is a translation invariant set and \(\mathcal P(\Omega _1)=1\). It is trivial to check that the same holds for \(\tilde{\Omega }\subset \Omega _1\).

Definition 7.3

(Sets \({\mathbb K} _\omega \), \(\Omega _*\)) Fixed \(\omega \in \Omega \), \({\mathbb K} _\omega \) is the set given by the elements \(\mathcal K\in D({\mathbb R} _+,{\mathbb N} )^{\mathcal E_\omega }\) such that

-

(i)

\((\omega ,\mathcal K)\) satisfies property \((P_r)\) for all \(r\in {\mathbb N} \);

-

(ii)

the jump time sets \(\{ t>0\,: \mathcal K_{x,y}(t-)\not = \mathcal K_{x,y} (t) \}\) are disjoint as \(\{x,y\}\) varies among \(\mathcal E_\omega \);

-

(iii)

\(\mathcal K_x(t):=\sum _{y: \{x,y\}\in \mathcal E_\omega } \mathcal K_{x,y}(t) <+\infty \) for all \(x\in {\hat{\omega }}\) and \(t\ge 0\).

We define \(\Omega _*\) as the set of \(\omega \in \tilde{\Omega }\) such that \({\mathbb P} _\omega ( {\mathbb K} _\omega )=1\).

Since \(\mathcal P(\tilde{\Omega })=1\) and by the loss of memory of the Poisson point process, we have that \(\mathcal P(\Omega _*)=1\). It is simple to check that \(\Omega _*\) is translation invariant.

Also for later use, we now recall the graphical construction of the simple exclusion process. To this aim it is convenient to think of the simple exclusion process as an exchange process.

Let us fix \(\omega \in \Omega _*\) and \(\mathcal K\in {\mathbb K} _\omega \). Given a particle configuration \(\xi \in \{0,1\}^{{\hat{\omega }}}\) we now define a deterministic trajectory \((\eta ^\xi _t[\mathcal K])_{t \ge 0 }\) in \(D({\mathbb R} _+,\{0,1\}^{{\hat{\omega }}} )\) and starting at \(\xi \) by an iterative procedure. We set \(\eta ^\xi _0[ \mathcal K]:=\xi \). Suppose that the deterministic trajectory has been defined up to time \(r t_0\), \(r\in {\mathbb N} \) (note that for \(r=0\) this follows from our definition of \(\eta ^\xi _0[ \mathcal K]\)). As \(\mathcal K\in {\mathbb K} _\omega \) all connected components of \(\mathcal G^r_{t_0}(\omega ,\mathcal K)\) have finite cardinality. Let \(\mathcal C\) be such a connected component and let

\[ \bigl\{ s \,:\, \mathcal K_{x,y}(s)= \mathcal K_{x,y}(s-)+1,\ \{x,y\}\in \mathcal E_\omega ,\ x,y\in \mathcal C,\ r t_0< s \le (r+1) t_0 \bigr\} = \{ s_1< s_2< \cdots < s_k\}\,. \]

As \(\mathcal K\in {\mathbb K} _\omega \), the l.h.s. is indeed a finite set. The local evolution \(\eta ^\xi _t[ \mathcal K](z) \) with \(z \in \mathcal C\) and \(r t_0 < t \le (r+1) t_0\) is described as follows. Start with \(\eta ^\xi _{rt_0}[ \mathcal K]\) as configuration at time \(r t_0\) in \(\mathcal C\). At time \(s_1\) exchange the values of \(\eta (x)\) and \(\eta (y)\) if \(\mathcal K_{x,y}(s_1)= \mathcal K_{x,y}(s_1-)+1\) and \(\{x,y\}\) is an edge in \(\mathcal C\) (there is exactly one such edge as \(\mathcal K\in {\mathbb K} _\omega \)). Repeat the same operation, in order, at times \(s_2,s_3, \dots , s_k\). Then move to another connected component of \(\mathcal G_{t_0}^r(\omega ,\mathcal K)\) and repeat the above construction, and so on. As the connected components are disjoint, the resulting path does not depend on the order in which we choose the connected components in the above algorithm. This procedure defines \( (\eta ^\xi _t[\mathcal K])_{ r t_0< t \le (r+1) t_0}\). Starting with \(r=0\) and progressively increasing r by 1 we get the trajectory \( (\eta ^\xi _t[\mathcal K])_{t\ge 0}\).
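
The iterative procedure above can be illustrated by a minimal Python sketch on a finite vertex set. It is only a toy version under stated assumptions: the names `components` and `evolve` are hypothetical, the jump times of each edge are given as explicit lists rather than sampled from Poisson processes, and finiteness of the connected components is automatic because the vertex set is finite.

```python
from collections import defaultdict


def components(vertices, edges):
    """Connected components of the undirected graph (vertices, edges)."""
    adj = defaultdict(set)
    for x, y in edges:
        adj[x].add(y)
        adj[y].add(x)
    seen, comps = set(), []
    for v in vertices:
        if v in seen:
            continue
        seen.add(v)
        stack, comp = [v], set()
        while stack:
            a = stack.pop()
            comp.add(a)
            for b in adj[a]:
                if b not in seen:
                    seen.add(b)
                    stack.append(b)
        comps.append(comp)
    return comps


def evolve(xi, jump_times, t0, rounds):
    """Run the exchange dynamics up to time rounds * t0.

    xi         : dict site -> 0/1 (initial configuration)
    jump_times : dict frozenset({x, y}) -> list of jump times of that edge
                 (assumed pairwise distinct across edges, as in item (ii))
    """
    eta = dict(xi)
    for r in range(rounds):
        lo, hi = r * t0, (r + 1) * t0
        # Edges whose counter jumps in the window (r t0, (r+1) t0].
        active = {}
        for e, ts in jump_times.items():
            window = [s for s in ts if lo < s <= hi]
            if window:
                active[e] = window
        # Treat each connected component of the active graph separately.
        for comp in components(list(eta), [tuple(e) for e in active]):
            events = [(s, e) for e, ts in active.items() if e <= comp for s in ts]
            events.sort(key=lambda p: p[0])  # times s_1 < s_2 < ... < s_k
            for _, e in events:
                x, y = tuple(e)
                eta[x], eta[y] = eta[y], eta[x]  # exchange occupation values
    return eta


xi = {0: 1, 1: 0, 2: 0, 3: 1}
jump_times = {
    frozenset({0, 1}): [0.3],
    frozenset({1, 2}): [0.7, 1.2],
    frozenset({2, 3}): [1.5],
}
final = evolve(xi, jump_times, t0=1.0, rounds=2)
```

In this toy run the window \((0,1]\) has the component \(\{0,1,2\}\) and the isolated site 3, while the window \((1,2]\) has the component \(\{1,2,3\}\) and the isolated site 0; the number of particles is conserved by construction.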

We recall that \(C(\{0,1\}^{{\hat{\omega }}})\) is the space of continuous functions on \(\{0,1\}^{{\hat{\omega }}}\) endowed with the uniform topology. Given \(\omega \in \Omega _*\) we consider the probability space \(( {\mathbb K} _\omega , {\mathbb P} _\omega )\), and write \({\mathbb E} _\omega \) for the associated expectation. We set

\[ S(t) f(\xi ) := {\mathbb E} _\omega \bigl [ f\bigl ( \eta ^\xi _t[\mathcal K]\bigr ) \bigr ]\,, \qquad f\in C(\{0,1\}^{{\hat{\omega }}})\,,\; t\ge 0\,. \]

Proposition 7.4

Take \(\omega \in \Omega _*\) and fix \(\xi \in \{0,1\}^{{\hat{\omega }}}\). Then the random trajectory \(\bigl ( \eta ^\xi _t [\mathcal K]\bigr )_{t\ge 0}\) with \(\mathcal K\) sampled in the probability space \(( {\mathbb K} _\omega , {\mathbb P} _\omega )\) belongs to the Skorohod space \(D( {\mathbb R} _+, \{0,1\}^{{\hat{\omega }}})\) and it starts at \(\xi \). It describes a Feller process, called simple exclusion process. In particular, \(( S(t) )_{t\ge 0}\) is a Markov semigroup on \(C(\{0,1\}^{{\hat{\omega }}})\). Moreover, the domain of its infinitesimal generator \(\mathcal L_\omega \) contains the family of local functions and for any local function f the function \(\mathcal L_\omega f\) is given by the right hand sides of (7) and (9), which are absolutely convergent series in \(C(\{0,1\}^{{\hat{\omega }}})\).

The above proposition can be derived by the standard arguments used for the graphical construction of the SEP, which are usually presented under the assumption of finite range jumps (see e.g. [37, Section 2.1]). The only exception is the derivation of the identities (7) and (9) for local functions, which requires extra care because the jump range may be unbounded. We refer to “Appendix B” for the proof of (7) and (9).

8 Duality

In order to prove the tightness of the empirical measure by means of the corrected empirical one, we need to deal with non local functions on \(\{0,1\}^{{\hat{\omega }}}\). In what follows we collect the extended results concerning \(\mathcal L_\omega \) and Dynkin martingales, which will be used in Sect. 11.1. Recall (19).

Throughout this section we restrict to \(\omega \in \Omega _*\) (cf. Definition 7.3).

Definition 8.1

Given a function \(u : \varepsilon {\hat{\omega }} \rightarrow {\mathbb R} \) such that \(\sum _{x\in {\hat{\omega }}} c_x (\omega ) |u(\varepsilon x) |<+\infty \), we define \(\tilde{{\mathbb L} }^\varepsilon _\omega u(x) :=\varepsilon ^{-2} \sum _{y\in \hat{\omega }} c_{x,y} (\omega ) ( u(\varepsilon y) - u(\varepsilon x) )\).

By symmetry of the jump rates we have

Hence, if \(\sum _{x\in {\hat{\omega }} } c_x (\omega ) |u(\varepsilon x) |<+\infty \), the series defining \(\tilde{\mathbb L} ^\varepsilon _\omega u(x)\) is absolutely convergent for any \(x\in {\hat{\omega }}\).
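
For the reader's convenience, the absolute convergence can be spelled out as a short worked bound. This is only a sketch, assuming that definition (19) reads \(c_x(\omega )=\sum _{y\in {\hat{\omega }}} c_{x,y}(\omega )\) (as suggested by its use later in this section) and using only the symmetry \(c_{x,y}(\omega )=c_{y,x}(\omega )\) together with Tonelli's theorem:

```latex
\begin{align*}
\sum_{x\in\hat\omega} \bigl| \tilde{\mathbb L}^\varepsilon_\omega u(x) \bigr|
 &\le \varepsilon^{-2} \sum_{x\in\hat\omega}\sum_{y\in\hat\omega}
      c_{x,y}(\omega)\,\bigl( |u(\varepsilon y)| + |u(\varepsilon x)| \bigr) \\
 &= \varepsilon^{-2} \sum_{y\in\hat\omega} |u(\varepsilon y)| \sum_{x\in\hat\omega} c_{y,x}(\omega)
  + \varepsilon^{-2} \sum_{x\in\hat\omega} |u(\varepsilon x)| \sum_{y\in\hat\omega} c_{x,y}(\omega) \\
 &= 2\,\varepsilon^{-2} \sum_{x\in\hat\omega} c_x(\omega)\, |u(\varepsilon x)| .
\end{align*}
```

In particular each single series \(\sum _{y} c_{x,y}(\omega ) | u(\varepsilon y)-u(\varepsilon x)|\) is finite whenever the right hand side is.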

In what follows, to simplify the notation, we write \(\pi ^\varepsilon _\omega (u)\), \(\pi ^\varepsilon _{\omega ,t}(u)\) for the integral of u w.r.t. \(\pi ^\varepsilon _\omega [\eta ]\), \(\pi ^\varepsilon _{\omega ,t}[\eta ]\), respectively. Recall that \({\mathbb L} ^\varepsilon _\omega \) is the Markov generator in \(L^2(\mu ^\varepsilon _\omega )\) of the random walk \((\varepsilon X_{\varepsilon ^{-2} t}^\omega )_{t\ge 0}\) (see Sect. 6). Recall that \(\mathcal L_\omega \) is the Markov generator of the simple exclusion process in the function space \(C(\{0,1\}^{{\hat{\omega }}})\) of continuous functions on \(\{0,1\}^{\hat{\omega }}\) endowed with the uniform topology (see Proposition 7.4). We now state two lemmas which will be crucial when dealing with the corrected empirical measure. We postpone their proofs to the end of the section.

Lemma 8.2

(Duality) Suppose that \(u : \varepsilon {\hat{\omega }} \rightarrow {\mathbb R} \) satisfies

\[ \sum _{x\in {\hat{\omega }}} |u(\varepsilon x) |<+\infty \quad \text {and}\quad \sum _{x\in {\hat{\omega }}} c_x (\omega ) |u(\varepsilon x) |<+\infty \,. \qquad (41) \]

Then \( \pi ^{\varepsilon } _{\omega }(u) =\varepsilon ^d\sum _{x\in {\hat{\omega }} } u(\varepsilon x) \eta (x)\) is an absolutely convergent series in \( C(\{0,1\}^{{\hat{\omega }}})\). It belongs to the domain \(\mathcal D(\mathcal L_\omega ) \subset C(\{0,1\}^{{\hat{\omega }}})\) of \(\mathcal L_\omega \) and

the r.h.s. of (42) being an absolutely convergent series in \(C(\{0,1\}^{{\hat{\omega }}})\). If, in addition to (41), it holds \(u \in \mathcal D( {\mathbb L} ^\varepsilon _\omega )\subset L^2(\mu ^\varepsilon _\omega )\), then \({\mathbb L} ^\varepsilon _\omega u = \tilde{\mathbb L} ^\varepsilon _\omega u \) and in particular we have the duality relation

Let \(u:\varepsilon {\hat{\omega }} \rightarrow {\mathbb R} \) be a function satisfying (41). As, by Lemma 8.2, \(\pi ^{\varepsilon } _{\omega }(u) \in \mathcal D(\mathcal L_\omega )\), we can introduce on the Skorohod space \(D\bigl ({\mathbb R} _+, \{0,1\}^{{\hat{\omega }}}\bigr )\) the Dynkin martingale \((\mathcal M^\varepsilon _{\omega ,t})_{t\ge 0}\) given by (see e.g. [26, Appendix 1] or [37, Section 3.2])

\((\mathcal M^\varepsilon _{\omega ,t})_{t\ge 0}\) is a square integrable martingale w.r.t. the filtered probability space \(\left( D\bigl ({\mathbb R} _+, \{0,1\}^{{\hat{\omega }}}\bigr ), {\mathbb P} ^\varepsilon _{\omega ,\mathfrak {n}_\varepsilon }, (\mathcal F_t)_{t\ge 0}\right) \), \(\mathfrak {n}_\varepsilon \) being an arbitrary initial distribution and \(\mathcal F_t\) being the \(\sigma \)-field generated by \(\{\eta _s:0\le s\le t\}\). Square integrability follows from the property that \(\Vert \mathcal M^\varepsilon _{\omega ,t} \Vert _\infty <+\infty \), as the same holds for all addenda in the r.h.s. of (44) (see Lemma 8.2).

Lemma 8.3

Let \(u:\varepsilon {\hat{\omega }} \rightarrow {\mathbb R} \) be a function satisfying (41). Suppose in addition that \( \sum _{x\in {\hat{\omega }}} c_x(\omega ) u(\varepsilon x )^2 <+\infty \). Then the sharp bracket process of \(\mathcal M^\varepsilon _{\omega ,t}\) is given by \(\langle \mathcal M^\varepsilon _{\omega }\rangle _t= \int _0 ^t B^\varepsilon _{\omega } (\eta _s)ds \), where

Note that the bound \(\sum _{x\in {\hat{\omega }}} c_x(\omega ) u(\varepsilon x )^2 <+\infty \) implies that the r.h.s. of (45) is an absolutely convergent series of functions in \(C(\{0,1\}^{{\hat{\omega }}})\). For later use, we recall that \(\langle \mathcal M^\varepsilon _{\omega }\rangle _t\) can be characterized as the unique predictable increasing process such that \((\mathcal M^\varepsilon _{\omega ,t})^2- \langle \mathcal M^\varepsilon _{\omega }\rangle _t\) is a martingale [28, Theorem 8.24].

Remark 8.4

In the proof of Theorem 4.1 (see Sect. 11.1) we will apply the above Lemmas 8.2 and 8.3 just to functions u of the form \(R^\varepsilon _{\omega ,\lambda } \psi \) for suitable functions \(\psi \in C_c ({\mathbb R} ^d)\), where \(R^\varepsilon _{\omega ,\lambda } \psi \) is the resolvent introduced in Sect. 6.

Proof of Lemma 8.2

As \(\sum _{x\in {\hat{\omega }} } |u(\varepsilon x) | <+\infty \), it is simple to check that the series defining \(\pi ^{\varepsilon } _{\omega }(u)\) is indeed an absolutely convergent series of continuous functions w.r.t. the uniform norm. The same holds for the series corresponding to the r.h.s. of (42). Indeed, by (40), \( \sum _{x\in {\hat{\omega }}} \Vert \eta (x) \tilde{\mathbb L} ^\varepsilon _\omega u (\varepsilon x) \Vert _\infty \le 2 \varepsilon ^{-2} \sum _{x\in {\hat{\omega }} } c_x(\omega ) |u(\varepsilon x) | <+\infty \).

When the function u is local, also the map \(\eta \mapsto \pi ^\varepsilon _\omega (u)\) is local. By locality and Proposition 7.4, this map belongs to \(\mathcal D(\mathcal L_\omega ) \). In the case of local u, (42) follows from easy computations by (9). We now treat the general case. Given \(n\in {\mathbb N} \), we define \(u_n(\varepsilon x ):= u(\varepsilon x ){\mathbbm {1}}( |\varepsilon x | \le n) \). As observed above, \(\pi ^{\varepsilon } _{\omega }(u_n)\) is a local function on \(\{0,1\}^{{\hat{\omega }}}\) belonging to \(\mathcal D(\mathcal L_\omega )\) and (42) holds with \(u_n\) instead of u. We claim that

Since \(\mathcal L_\omega \), being a Markov generator, is a closed operator, (42) with \(u_n\) instead of u, (46) and (47) imply that \( \pi ^{\varepsilon } _{\omega }(u)\in \mathcal D(\mathcal L_\omega )\) and that (42) holds. To prove (46) and (47) it is enough to bound the uniform norms appearing there by, respectively, \(\varepsilon ^d\sum _{x\in {\hat{\omega }}: |\varepsilon x|>n}| u(\varepsilon x) |\) and \(2 \varepsilon ^{-2}\sum _{x\in {\hat{\omega }}: |\varepsilon x|>n} c_x(\omega ) |u(\varepsilon x) |\) and use (41). This concludes the proof of (42).

It remains to show that \({\mathbb L} ^\varepsilon _\omega u = \tilde{\mathbb L} ^\varepsilon _\omega u \) if \(u \in \mathcal D( {\mathbb L} ^\varepsilon _\omega )\subset L^2(\mu ^\varepsilon _\omega )\) in addition to (41). Given a function \(f\in C(\{0,1\}^{{\hat{\omega }}})\) we write \(S(t) f (\eta ):={\mathbb E} _\eta [ f(\eta _t) ]\) for the Markov semigroup associated to the simple exclusion process (without any time rescaling). Then (42) can be read as

Given \(x_0 \in {\hat{\omega }}\) we take \(\eta \) corresponding to a single particle located at \(x_0\). Then \(\left( S(t) \pi ^{\varepsilon } _{\omega }(u)\right) (\eta )= \varepsilon ^d E_{x_0} [ u ( \varepsilon X^{\omega }_t) ]\) and (48) implies that \(\frac{d}{dt} E_{x_0} [ u ( \varepsilon X^{\omega }_{\varepsilon ^{-2}t}) ]_{|t=0}= \tilde{\mathbb L} ^\varepsilon _\omega u (\varepsilon x_0)\). On the other hand, we know that \(u \in \mathcal D({\mathbb L} ^\varepsilon _\omega )\). Hence

which implies that \(\frac{d}{dt} E_{x_0} [ u ( \varepsilon X^{\omega }_{\varepsilon ^{-2}t}) ]_{|t=0}= {\mathbb L} ^\varepsilon _\omega u (\varepsilon x_0)\). Comparing the two expressions for the derivative, we conclude that \({\mathbb L} ^\varepsilon _\omega u (\varepsilon x_0)=\tilde{\mathbb L} ^\varepsilon _\omega u (\varepsilon x_0)\). \(\square \)
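
The algebra behind the duality can be checked by brute force on a small finite graph. The following Python sketch is purely illustrative and rests on assumptions not taken from the text: it uses the standard exchange form of the exclusion generator, \(\mathcal L f(\eta )=\sum _{\{x,y\}} c_{x,y}\,[f(\eta ^{x,y})-f(\eta )]\), it drops the factors \(\varepsilon ^d\) and \(\varepsilon ^{-2}\), and the rates `c`, the function `u` and all helper names are hypothetical.

```python
from itertools import product


def exclusion_generator(f, eta, c):
    """(L f)(eta) = sum over edges {x,y} of c[x,y] * (f(eta^{x,y}) - f(eta))."""
    total = 0.0
    for (x, y), rate in c.items():
        swapped = list(eta)
        swapped[x], swapped[y] = swapped[y], swapped[x]  # exchange eta(x), eta(y)
        total += rate * (f(tuple(swapped)) - f(eta))
    return total


def walk_generator(u, x, c):
    """(L u)(x) = sum_y c_{x,y} (u(y) - u(x)) for symmetric rates c."""
    total = 0.0
    for (a, b), rate in c.items():
        if a == x:
            total += rate * (u[b] - u[x])
        elif b == x:
            total += rate * (u[a] - u[x])
    return total


# Symmetric rates on 4 sites, stored once per undirected edge (toy values).
c = {(0, 1): 1.0, (1, 2): 0.5, (0, 3): 2.0, (2, 3): 0.25}
u = [0.3, -1.0, 2.0, 0.7]
n_sites = len(u)


def pi_u(eta):
    """Unscaled analogue of pi(u): sum_x u(x) eta(x)."""
    return sum(u[x] * eta[x] for x in range(n_sites))


def max_gap():
    """Largest discrepancy between L(pi(u)) and sum_x eta(x) (L u)(x)."""
    gaps = []
    for eta in product((0, 1), repeat=n_sites):
        lhs = exclusion_generator(pi_u, eta, c)
        rhs = sum(eta[x] * walk_generator(u, x, c) for x in range(n_sites))
        gaps.append(abs(lhs - rhs))
    return max(gaps)
```

Calling `max_gap()` compares the two sides over all \(2^4\) configurations; the identity holds exactly because \(f(\eta ^{x,y})-f(\eta )=(u(x)-u(y))(\eta (y)-\eta (x))\) for \(f=\sum _x u(x)\eta (x)\), and symmetry of the rates lets one reorganize the sum site by site.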

Proof of Lemma 8.3

For u local both \(\pi ^\varepsilon _\omega (u)\) and its square belong to \(\mathcal D(\mathcal L_\omega )\) being local functions of \(\eta \). Then the statement in the lemma can be checked by simple computations due to (9), Lemma 8.2 and [26, Lemma 5.1, Appendix 1] (equivalently, [37, Exercise 3.1 and Lemma 8.3]). For the computation of the sharp bracket process we just comment that, by using the symmetry of \(c_{x,y}(\omega )\), one easily gets

We now move to the general case. For simplicity of notation we write \(\mathcal M_t\), \(B(\eta )\) instead of \(\mathcal M^\varepsilon _{\omega ,t}\), \(B^\varepsilon _\omega (\eta )\). Similarly, we define \(\mathcal M_{n,t} \) and \(B_n(\eta )\) as in (44) and (45) with u replaced by \(u_n\), \(u_n(\varepsilon x):= u(\varepsilon x) \mathbbm {1}(|\varepsilon x| \le n)\). Note that \(\lim _{n \rightarrow +\infty }\sup _{t\in [0,T]} \Vert \mathcal M_t - \mathcal M_{n,t}\Vert _\infty =0\) for any \(T>0\) [see (42), (46) and (47)]. Hence, by the characterization of the sharp bracket process recalled after Lemma 8.3 and by our results for the local case (applied to \(u_n\)), to get (45) it is enough to show that \(\lim _{n\rightarrow \infty } \Vert B_n(\eta )-B(\eta ) \Vert _\infty =0\). To this aim it is enough to show that \(\sum _{x\in {\hat{\omega }}} \sum _{y \in {\hat{\omega }}} c_{x,y}(\omega )\bigl [ u_n(\varepsilon x)- u_n (\varepsilon y)\bigr ]^2\) converges, as \(n\rightarrow \infty \), to the analogous expression with u instead of \(u_n\). This follows from the dominated convergence theorem, by dominating \(\bigl [ u_n(\varepsilon x)- u_n (\varepsilon y)\bigr ]^2\) with \(2 u(\varepsilon x)^2 + 2 u(\varepsilon y)^2\) and by using that \(\sum _{x\in {\hat{\omega }}} \sum _{y \in {\hat{\omega }}} c_{x,y}(\omega )\bigl [ u(\varepsilon x)^2 + u(\varepsilon y)^2\bigr ]=2 \sum _{x\in {\hat{\omega }}} c_x(\omega ) u(\varepsilon x)^2<+\infty \). \(\square \)
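
The domination used in the last step can be written out explicitly. The following display is just a worked version of that step, relying only on the elementary inequality \((a-b)^2\le 2a^2+2b^2\) and on \(|u_n|\le |u|\):

```latex
\begin{align*}
\bigl[ u_n(\varepsilon x) - u_n(\varepsilon y) \bigr]^2
  &\le 2\,u_n(\varepsilon x)^2 + 2\,u_n(\varepsilon y)^2
   \le 2\,u(\varepsilon x)^2 + 2\,u(\varepsilon y)^2 , \\
\lim_{n\to\infty} \bigl[ u_n(\varepsilon x) - u_n(\varepsilon y) \bigr]^2
  &= \bigl[ u(\varepsilon x) - u(\varepsilon y) \bigr]^2
  \qquad \text{for all } x,y\in\hat\omega ,
\end{align*}
```

the pointwise limit holding because \(u_n(\varepsilon x)=u(\varepsilon x)\) as soon as \(n\ge |\varepsilon x|\).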

9 Space \(\mathcal M\) of Radon measures and Skorohod space \(D([0,T], \mathcal M)\)

Given a measure \(\mu \) on \({\mathbb R} ^d\) and a real function G on \({\mathbb R} ^d\), we will denote by \(\mu (G)\) the integral \(\int d\mu (x) G(x)\). We denote by \(\mathcal M\) the space of Radon measures on \({\mathbb R} ^d\), i.e. locally bounded Borel measures on \({\mathbb R} ^d\). \(\mathcal M\) is endowed with the vague topology, for which \(\mu _n \rightarrow \mu \) if and only if \(\mu _n(f) \rightarrow \mu (f)\) for all \(f\in C_c({\mathbb R} ^d)\). This topology can be defined through a metric, that we now recall also for later use (for more details, see e.g. [37, Appendix A.10]). To this aim we set \(B_r:=\{x\in {\mathbb R} ^d: |x| \le r\}\). For each \(\ell \in {\mathbb N} \) we choose a sequence of functions \((\varphi _{\ell , n})_{n \ge 0}\) such that (see Footnote 2)

-

(i)

\(\varphi _{\ell , n} \in C^\infty _c ({\mathbb R} ^d)\) and \(\varphi _{\ell , n}\) is supported on \(B_{\ell +1}\);

-

(ii)