Abstract

We analyse the eigenvectors of the adjacency matrix of a critical Erdős–Rényi graph \({\mathbb {G}}(N,d/N)\), where d is of order \(\log N\). We show that its spectrum splits into two phases: a delocalized phase in the middle of the spectrum, where the eigenvectors are completely delocalized, and a semilocalized phase near the edges of the spectrum, where the eigenvectors are essentially localized on a small number of vertices. In the semilocalized phase the mass of an eigenvector is concentrated in a small number of disjoint balls centred around resonant vertices, in each of which it is a radial exponentially decaying function. The transition between the phases is sharp and is manifested in a discontinuity in the localization exponent \(\gamma (\varvec{\mathrm {w}})\) of an eigenvector \(\varvec{\mathrm {w}}\), defined through \(\Vert \varvec{\mathrm {w}} \Vert _\infty ^2 / \Vert \varvec{\mathrm {w}} \Vert _2^2 = N^{-\gamma (\varvec{\mathrm {w}})}\). Our results remain valid throughout the optimal regime \(\sqrt{\log N} \ll d \leqslant O(\log N)\).


1 Introduction

1.1 Overview

Let A be the adjacency matrix of a graph with vertex set \([N]\!=\! \{1, \dots , N\}\). We are interested in the geometric structure of the eigenvectors of A, in particular their spatial localization. An \(\ell ^2\)-normalized eigenvector \(\varvec{\mathrm {w}} = (w_x)_{x \in [N]}\) gives rise to a probability measure \(\sum _{x \in [N]} w_x^2 \delta _x\) on the set of vertices. Informally, \(\varvec{\mathrm {w}}\) is delocalized if its mass is approximately uniformly distributed throughout [N], and localized if its mass is essentially concentrated in a small number of vertices.

There are several ways of quantifying spatial localization. One is the notion of concentration of mass, sometimes referred to as scarring [49], which states that there is some set \({{\mathcal {B}}} \subset [N]\) of small cardinality and a small \(\varepsilon > 0\) such that \(\sum _{x \in {{\mathcal {B}}}} w_x^2 = 1 - \varepsilon \). In this case, it is also of interest to characterize the geometric structure of the vertex set \({{\mathcal {B}}}\) and of the eigenvector \(\varvec{\mathrm {w}}\) restricted to \({{\mathcal {B}}}\). Another convenient quantifier of spatial localization is the \(\ell ^p\)-norm \(\Vert \varvec{\mathrm {w}} \Vert _p\) for \(2 \leqslant p \leqslant \infty \). It has the following interpretation: if the mass of \(\varvec{\mathrm {w}}\) is uniformly distributed over some set \({{\mathcal {B}}} \subset [N]\) then \(\Vert \varvec{\mathrm {w}} \Vert _p^2 = |{{\mathcal {B}}} |^{-1 + 2/p}\). Focusing on the \(\ell ^\infty \)-norm for definiteness, we define the localization exponent \(\gamma (\varvec{\mathrm {w}})\) of a normalized vector \(\varvec{\mathrm {w}}\) through

\[ \Vert \varvec{\mathrm {w}} \Vert _\infty ^2 = N^{-\gamma (\varvec{\mathrm {w}})}. \tag{1.1} \]

Since \(N^{-1} \leqslant \Vert \varvec{\mathrm {w}} \Vert _\infty ^2 \leqslant 1\) for a normalized \(\varvec{\mathrm {w}}\), we have \(0 \leqslant \gamma (\varvec{\mathrm {w}}) \leqslant 1\), and \(\gamma (\varvec{\mathrm {w}}) = 0\) corresponds to localization at a single vertex while \(\gamma (\varvec{\mathrm {w}}) = 1\) corresponds to complete delocalization.
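As a minimal sketch of this definition, assuming \(\gamma (\varvec{\mathrm {w}})\) is defined by \(\Vert \varvec{\mathrm {w}} \Vert _\infty ^2 / \Vert \varvec{\mathrm {w}} \Vert _2^2 = N^{-\gamma (\varvec{\mathrm {w}})}\) (the normalization giving the range \(0 \leqslant \gamma \leqslant 1\) stated above), the plain Python below computes \(\gamma \) for vectors spread uniformly over a set \({{\mathcal {B}}}\) and checks the interpretation \(\Vert \varvec{\mathrm {w}} \Vert _p^2 = |{{\mathcal {B}}} |^{-1 + 2/p}\). The helper names `lp_norm`, `localization_exponent` and `uniform_on` are our own.

```python
import math

def lp_norm(w, p):
    """l^p norm of a real vector; p = math.inf gives the max norm."""
    if p == math.inf:
        return max(abs(x) for x in w)
    return sum(abs(x) ** p for x in w) ** (1.0 / p)

def localization_exponent(w):
    """gamma(w) defined by ||w||_inf^2 / ||w||_2^2 = N^{-gamma(w)}, with N = len(w)."""
    n = len(w)
    ratio = lp_norm(w, math.inf) ** 2 / lp_norm(w, 2) ** 2
    return math.log(1.0 / ratio) / math.log(n)

def uniform_on(n, size):
    """Normalized vector with equal mass on the first `size` of n coordinates."""
    return [1.0 / math.sqrt(size)] * size + [0.0] * (n - size)

N = 10_000
for size in (1, 100, N):  # localized, intermediate, fully delocalized
    w = uniform_on(N, size)
    print(size, round(localization_exponent(w), 6), round(lp_norm(w, 4) ** 2, 6))
```

A vector uniform over \(|{{\mathcal {B}}} | = N^s\) vertices has \(\gamma = s\), so the three cases correspond to \(s = 0, 1/2, 1\).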

In this paper we address the question of spatial localization for the random Erdős–Rényi graph \({\mathbb {G}}(N,d/N)\). We consider the limit \(N \rightarrow \infty \) with \(d \equiv d_N\). It is well known that \({\mathbb {G}}(N,d/N)\) undergoes a dramatic change in behaviour at the critical scale \(d \asymp \log N\), which is the scale at and below which the vertex degrees do not concentrate. Thus, for \(d \gg \log N\), with high probability all degrees are approximately equal and the graph is homogeneous. On the other hand, for \(d \lesssim \log N\), the degrees do not concentrate and the graph becomes highly inhomogeneous: it contains for instance hubs of exceptionally large degree, leaves, and isolated vertices. As long as \(d > 1\), the graph has with high probability a unique giant component, and we shall always restrict our attention to it.

Here we propose the Erdős–Rényi graph at criticality as a simple and natural model on which to address the question of spatial localization of eigenvectors. It has the following attributes.

(i) Its graph structure provides an intrinsic and nontrivial notion of distance.

(ii) Its spectrum splits into a delocalized phase and a semilocalized phase. The transition between the phases is sharp, in the sense of a discontinuity in the localization exponent.

(iii) Both phases are amenable to rigorous analysis.

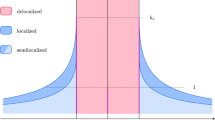

Our results are summarized in the phase diagram of Fig. 1, which is expressed in terms of the parameter b parametrizing \(d = b \log N\) on the critical scale and the eigenvalue \(\lambda \) of \(A / \sqrt{d}\) associated with the eigenvector \(\varvec{\mathrm {w}}\). To the best of our knowledge, the phase coexistence for the critical Erdős–Rényi graph established in this paper had not previously been analysed, even in the physics literature.

Fig. 1. The phase diagram of the adjacency matrix \(A / \sqrt{d}\) of the Erdős–Rényi graph \({\mathbb {G}}(N,d/N)\) at criticality, where \(d = b \log N\) with b fixed. The horizontal axis records the location in the spectrum and the vertical axis the sparseness parameter b. The spectrum is confined to the coloured region. In the red region the eigenvectors are delocalized while in the blue region they are semilocalized. The grey regions have width o(1) and are not analysed in this paper. For \(b > b_*\) the spectrum is asymptotically contained in \([-2,2]\) and the semilocalized phase does not exist. For \(b < b_*\) a semilocalized phase emerges in the region \((-\lambda _{\max }(b), -2) \cup (2, \lambda _{\max }(b))\) for some explicit \(\lambda _{\max }(b) > 2\).

Throughout the following, we always exclude the largest eigenvalue of A, its Perron–Frobenius eigenvalue, which is an outlier separated from the rest of the spectrum. The delocalized phase is characterized by a localization exponent asymptotically equal to 1. It exists for all fixed \(b > 0\) and consists asymptotically of energies in \((-2,0) \cup (0,2)\). The semilocalized phase is characterized by a localization exponent asymptotically less than 1. It exists only when \(b < b_*\), where

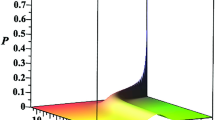

It consists asymptotically of energies in \((-\lambda _{\max }(b), -2) \cup (2, \lambda _{\max }(b))\), where \(\lambda _{\max }(b) > 2\) is an explicit function of b (see (1.14) below). The density of states at energy \(\lambda \in {\mathbb {R}}\) is equal to \(N^{\rho _b(\lambda ) + o(1)}\), where \(\rho _b\) is an explicit exponent defined in (1.14) below and illustrated in Fig. 2. It has a discontinuity at 2 (and similarly at \(-2\)), jumping from \(\rho _b(2^-) = 1\) to \(\rho _b(2^+) = 1 - b / b_*\). The localization exponent \(\gamma (\varvec{\mathrm {w}})\) from (1.1) of an eigenvector \(\varvec{\mathrm {w}}\) with associated eigenvalue \(\lambda \) satisfies with high probability

\[ \begin{aligned} \gamma (\varvec{\mathrm {w}}) &= 1 + o(1) &&\text {if } \lambda \in (-2,0) \cup (0,2), \\ \gamma (\varvec{\mathrm {w}}) &\leqslant \rho _b(\lambda ) + o(1) &&\text {if } \lambda \in (-\lambda _{\max }(b), -2) \cup (2, \lambda _{\max }(b)). \end{aligned} \]

This establishes a discontinuity, in the limit \(N \rightarrow \infty \), in the localization exponent \(\gamma (\varvec{\mathrm {w}})\) as a function of \(\lambda \) at the energies \(\pm 2\). See Fig. 2 for an illustration; we also refer to Appendix A.1 for a simulation depicting the behaviour of \(\Vert \varvec{\mathrm {w}} \Vert _\infty \) throughout the spectrum. Moreover, in the semilocalized phase scarring occurs in the sense that a fraction \(1 - o(1)\) of the mass of the eigenvectors is supported in a set of at most \(N^{\rho _b(\lambda ) + o(1)}\) vertices.

Fig. 2. The behaviour of the exponents \(\rho _b\) and \(\gamma \) as functions of the energy \(\lambda \). The dark blue curve is the exponent \(\rho _b(\lambda )\) characterizing the density of states \(N^{\rho _b(\lambda ) + o(1)}\) of the matrix \(A / \sqrt{d}\) at energy \(\lambda \). The entire blue region (light and dark blue) is the asymptotically allowed region of the localization exponent \(\gamma (\varvec{\mathrm {w}})\) of an eigenvector of \(A / \sqrt{d}\) as a function of the associated eigenvalue \(\lambda \). Here \(d = b \log N\) with \(b = 1\) and \(\lambda _{\max }(b) \approx 2.0737\). We only plot a neighbourhood of the threshold energy 2. The discontinuity at 2 of \(\rho _b\) is from \(\rho _b(2^-) = 1\) to \(\rho _b(2^+) = 1 - b / b_* = 2 - 2 \log 2\).

The eigenvalues in the semilocalized phase were analysed in [10], where it was proved that they arise precisely from vertices x of abnormally large degree, \(D_x \geqslant 2 d\). More precisely, it was proved in [10] that each vertex x with \(D_x \geqslant 2 d\) gives rise to two eigenvalues of \(A / \sqrt{d}\) near \(\pm \Lambda (D_x / d)\), where \(\Lambda (\alpha ) :=\frac{\alpha }{\sqrt{\alpha -1}}\). The same result for the O(1) largest degree vertices was independently proved in [54] by a different method. We refer also to [14, 15] for an analysis in the supercritical and subcritical phases.
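The predicted outlier locations can be computed directly from the degrees. The sketch below, in plain Python with hypothetical helper names, evaluates \(\Lambda \), its inverse on \([2,\infty )\) (using the explicit formula \(\Lambda ^{-1}(\lambda ) = \frac{\lambda ^2}{2}(1 + \sqrt{1 - 4/\lambda ^2})\) given in Sect. 1.2), the normalized degrees \(D_x/d\), and the heuristic locations \(\pm \Lambda (D_x/d)\) for vertices with \(D_x > 2d\), listed in order of decreasing degree. It only computes these predictions; it does not diagonalize A.

```python
import math

def Lambda(alpha):
    """Lambda(alpha) = alpha / sqrt(alpha - 1), a bijection of [2, inf) onto [2, inf)."""
    if alpha < 2:
        raise ValueError("Lambda is only used for alpha >= 2")
    return alpha / math.sqrt(alpha - 1)

def Lambda_inv(lam):
    """Inverse of Lambda: the root alpha >= 2 of alpha^2 - lam^2 alpha + lam^2 = 0."""
    lam = abs(lam)
    if lam < 2:
        raise ValueError("Lambda_inv is only defined for |lam| >= 2")
    return lam ** 2 / 2 * (1 + math.sqrt(1 - 4 / lam ** 2))

def normalized_degrees(adj, d):
    """alpha_x = D_x / d, where D_x = len(adj[x]) is the degree of vertex x."""
    return [len(nbrs) / d for nbrs in adj]

def predicted_outliers(alpha):
    """Pairs (x, Lambda(alpha_x)) for vertices with alpha_x > 2, ordered by a
    permutation sigma that makes alpha_{sigma(i)} nonincreasing. Each such x is
    expected to produce eigenvalues of A / sqrt(d) near +Lambda and -Lambda."""
    sigma = sorted(range(len(alpha)), key=lambda x: alpha[x], reverse=True)
    return [(x, Lambda(alpha[x])) for x in sigma if alpha[x] > 2]

# Toy graph chosen only to exercise the functions: hub 0 joined to 9 leaves.
star = [list(range(1, 10))] + [[0] for _ in range(9)]
print(predicted_outliers(normalized_degrees(star, d=3)))
```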

In the current paper, we prove that the eigenvector \(\varvec{\mathrm {w}}\) associated with an eigenvalue \(\lambda \) in the semilocalized phase is highly concentrated around resonant vertices at energy \(\lambda \), which are defined as the vertices x such that \(\Lambda (D_x/d)\) is close to \(\lambda \). For this reason, we also call the resonant vertices localization centres. With high probability, and after a small pruning of the graph, all balls \(B_r(x)\) of a certain radius \(r \gg 1\) around the resonant vertices are disjoint, and within any such ball \(B_r(x)\) the eigenvector \(\varvec{\mathrm {w}}\) is an approximately radial exponentially decaying function. The number of resonant vertices at energy \(\lambda \) is comparable to the density of states, \(N^{\rho _b(\lambda ) + o(1)}\), which is much less than N. See Fig. 3 for a schematic illustration of the mass distribution of \(\varvec{\mathrm {w}}\).

Fig. 3. A schematic representation of the geometric structure of a typical eigenvector in the semilocalized phase. The giant component of the graph is depicted in pale blue. The eigenvector’s mass (depicted in dark blue) is concentrated in a small number of disjoint balls centred around resonant vertices (drawn in white), and within each ball the mass decays exponentially in the radius. The mass outside the balls is an asymptotically vanishing proportion of the total mass.

The behaviour of the critical Erdős–Rényi graph described above has some similarities to, but also differences from, that of the Anderson model [11]. The Anderson model on \({\mathbb {Z}}^n\) with \(n \geqslant 3\) is conjectured to exhibit a metal-insulator, or delocalization-localization, transition: for weak enough disorder, the spectrum splits into a delocalized phase in the middle of the spectrum and a localized phase near the spectral edges. See e.g. [8, Figure 1.2] for a phase diagram of its conjectured behaviour. So far, only the localized phase of the Anderson model has been understood rigorously, in the landmark works [4, 39] as well as in many subsequent developments. The phase diagram for the Anderson model bears some similarity to that of Fig. 1, in which one can interpret 1/b as the disorder strength, since smaller values of b lead to stronger inhomogeneities in the graph.

As is apparent from the proofs in [4, 39], in the localized phase the local structure of an eigenvector of the Anderson model is similar to that of the critical Erdős–Rényi graph described above: it decays exponentially around well-separated localization centres associated with resonances near the energy \(\lambda \) of the eigenvector. The localization centres arise from exceptionally large local averages of the potential. The phenomenon of localization can be heuristically understood using the following well-known rule of thumb: one expects localization around a single localization centre if the level spacing is much larger than the tunnelling amplitude between localization centres. This rule arises from perturbation theory around the block diagonal model in which the complement of the balls \(B_r(x)\) around the localization centres is set to zero. On a very elementary level, it is illustrated by the matrix \(H(t) = \bigl ( {\begin{matrix}0 & t\\ t & 1\end{matrix}}\bigr )\), whose eigenvectors are localized for \(t = 0\), remain essentially localized for \(t \ll 1\), where perturbation theory around H(0) is valid, and become delocalized for \(t \gtrsim 1\), where perturbation theory around H(0) fails.
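The elementary example H(t) can be checked numerically. The sketch below (plain Python; the helper names are our own) computes the eigenvectors of H(t) by a Jacobi rotation and reports their largest squared entry, which is 1 for a vector localized on one site and 1/2 for a vector spread evenly over both sites.

```python
import math

def eigvecs_2x2(a, t, c):
    """Orthonormal eigenvectors of the real symmetric matrix [[a, t], [t, c]].

    Uses the Jacobi rotation angle theta = atan2(2t, a - c) / 2, which also
    handles the diagonal case t = 0 without special-casing.
    """
    theta = 0.5 * math.atan2(2.0 * t, a - c)
    v1 = (math.cos(theta), math.sin(theta))
    v2 = (-math.sin(theta), math.cos(theta))
    return v1, v2

def max_mass(v):
    """Largest squared entry: 1 = all mass on one site, 1/2 = evenly spread."""
    return max(x * x for x in v)

def localization_of_H(t):
    """max_mass of an eigenvector of H(t) = [[0, t], [t, 1]]; both eigenvectors agree."""
    v1, _ = eigvecs_2x2(0.0, t, 1.0)
    return max_mass(v1)

for t in (0.0, 0.01, 0.1, 1.0, 10.0):
    print(f"t = {t:>5}: max_x w_x^2 = {localization_of_H(t):.4f}")
```

For this matrix the printed quantity equals \(\frac{1}{2}(1 + 1/\sqrt{1 + 4t^2})\), which is \(1 - t^2 + O(t^4)\) for \(t \ll 1\) and tends to 1/2 as \(t \rightarrow \infty \), in line with the three regimes described above.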

More precisely, it is a general heuristic that the tunnelling amplitude decays exponentially in the distance between the localization centres [25]. Denoting by \(\beta (\lambda ) > 1\) the rate of exponential decay at energy \(\lambda \), the rule of thumb hence reads

\[ \varepsilon (\lambda ) \gg \beta (\lambda )^{-L}, \tag{1.3} \]

where L is the distance between the localization centres and \(\varepsilon (\lambda )\) the level spacing at energy \(\lambda \). For the Anderson model restricted to a finite cube of \({\mathbb {Z}}^n\) with side length \(N^{1/n}\), the level spacing \(\varepsilon (\lambda )\) is of order \(N^{-1}\) (see [57] and [8, Chapter 4]) whereas the diameter of the graph is of order \(N^{1/n}\). Hence, the rule of thumb (1.3) becomes

\[ N^{-1} \gg \beta (\lambda )^{-N^{1/n}}, \]

which is satisfied and one therefore expects localization. For the critical Erdős–Rényi graph, the level spacing \(\varepsilon (\lambda )\) is \(N^{-\rho (\lambda )+o(1)}\) but the diameter of the giant component is only of order \(\frac{\log N}{\log d}\). Hence, the rule of thumb (1.3) becomes

\[ N^{-\rho (\lambda ) + o(1)} \gg \beta (\lambda )^{-\frac{\log N}{\log d}} = N^{-\frac{\log \beta (\lambda )}{\log d}}, \]

which is never satisfied because \(\frac{\log \beta (\lambda )}{\log d}\rightarrow 0\) as \(N \rightarrow \infty \). Thus, the rule of thumb (1.3) is satisfied in the localized phase of the Anderson model but not in the semilocalized phase of the critical Erdős–Rényi graph. The underlying reason behind this difference is that the diameter of the Anderson model is polynomial in N, while the diameter of the critical Erdős–Rényi graph is logarithmic in N. Thus, the critical Erdős–Rényi graph is far more connected than the Anderson model; this property tends to push it more towards the delocalized behaviour of mean-field systems. As noted above, another important difference between the localized phase of the Anderson model and the semilocalized phase of the critical Erdős–Rényi graph is that the density of states is of order N in the former and a fractional power of N in the latter.
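The two comparisons above reduce to a few lines of arithmetic. The sketch works with logarithms to avoid underflow, ignores all constants and \(o(1)\) corrections, and uses arbitrary illustrative values \(\beta (\lambda ) = 1.5\), \(\rho (\lambda ) = 1/2\), \(n = 3\) and \(d = \log N\); none of these values comes from the text, and the function names are our own.

```python
import math

def log_gap_anderson(N, n, beta):
    """log(level spacing) - log(tunnelling amplitude) in the Anderson heuristic.

    Level spacing ~ N^{-1}; amplitude ~ beta^{-L} with L ~ N^{1/n}, the diameter
    of a cube of side N^{1/n}. A positive value means the rule of thumb holds.
    """
    return -math.log(N) + N ** (1.0 / n) * math.log(beta)

def log_gap_erdos_renyi(N, d, beta, rho):
    """Same quantity for the critical ER heuristic.

    Level spacing ~ N^{-rho}; amplitude ~ beta^{-L} with L ~ log N / log d.
    """
    return -rho * math.log(N) + (math.log(N) / math.log(d)) * math.log(beta)

beta, rho, n = 1.5, 0.5, 3  # illustrative values only (assumption)
for k in (6, 12, 24):
    N = 10.0 ** k
    d = math.log(N)  # critical scale d = b log N with b = 1
    print(k, round(log_gap_anderson(N, n, beta), 1), round(log_gap_erdos_renyi(N, d, beta, rho), 1))
```

The Anderson gap grows like \(N^{1/n}\), while the Erdős–Rényi gap stays negative because \(\log \beta (\lambda ) / \log d \rightarrow 0\).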

Up to now we have focused on the Erdős–Rényi graph on the critical scale \(d \asymp \log N\). It is natural to ask whether this assumption can be relaxed without changing its behaviour. The question of the upper bound on d is simple: as explained above, there is no semilocalized phase for \(d > b_* \log N\), and the delocalized phase is completely understood up to \(d \leqslant N/2\), thanks to Theorem 1.8 below and [35, 42]. The lower bound is more subtle. In fact, it turns out that all of our results remain valid throughout the regime

\[ \sqrt{\log N} \ll d \leqslant O(\log N). \tag{1.4} \]

The lower bound \(\sqrt{\log N}\) is optimal in the sense that below it both phases are disrupted and the phase diagram from Fig. 1 no longer holds. Indeed, for \(d \lesssim \sqrt{\log N}\) a new family of localized states, associated with so-called tuning forks at the periphery of the graph, appears throughout the delocalized and semilocalized phases. We refer to Sect. 1.5 below for more details.

Previously, strong delocalization with localization exponent \(\gamma (\varvec{\mathrm {w}}) = 1 + o(1)\) has been established for many mean-field models, such as Wigner matrices [1, 34,35,36,37], supercritical Erdős–Rényi graphs [35, 42], and random regular graphs [12, 13]. All of these models are homogeneous and only have a delocalized phase.

Although a rigorous understanding of the metal-insulator transition for the Anderson model is still elusive, some progress has been made for random band matrices. Random band matrices [23, 40, 47, 58] constitute an attractive model interpolating between the Anderson model and mean-field Wigner matrices. They retain the n-dimensional structure of the Anderson model but have proved somewhat more amenable to rigorous analysis. They are conjectured [40] to have a phase diagram similar to that of the Anderson model in dimensions \(n \geqslant 3\). As for the Anderson model, dimensions \(n > 1\) have so far seen little progress, but for \(n = 1\) much has been understood both in the localized [48, 50] and the delocalized [20,21,22, 28,29,30,31,32,33, 43, 51, 52, 59] phases. A simplification of band matrices is the ultrametric ensemble [41], where the Euclidean metric of \({\mathbb {Z}}^n\) is replaced with an ultrametric arising from a tree structure. For this model, a phase transition was rigorously established in [56].

Another modification of the n-dimensional Anderson model is the Anderson model on the Bethe lattice, an infinite regular tree corresponding to the case \(n = \infty \). For it, the existence of a delocalized phase was shown in [5, 38, 44]. In [6, 7] it was shown that for unbounded random potentials the delocalized phase exists for arbitrarily weak disorder. It extends beyond the spectrum of the unperturbed adjacency matrix into the so-called Lifschitz tails, where the density of states is very small. The authors showed that, through the mechanism of resonant delocalization, the exponentially decaying tunnelling amplitudes between localization centres are counterbalanced by an exponentially large number of possible channels through which tunnelling can occur, so that the rule of thumb (1.3) for localization is violated. As a consequence, the eigenvectors are delocalized across many resonant localization centres. We remark that this analysis was made possible by the absence of cycles on the Bethe lattice. In contrast, the global geometry of the critical Erdős–Rényi graph is fundamentally different from that of the Bethe lattice (through the existence of a very large number of long cycles), which has a defining impact on the nature of the delocalization-semilocalization transition summarized in Fig. 1.

Transitions in the localization behaviour of eigenvectors have also been analysed in several mean-field type models. In [45, 46] the authors considered the sum of a Wigner matrix and a diagonal matrix with independent random entries with a large enough variance. They showed that the eigenvectors in the bulk are delocalized while near the edge they are partially localized at a single site. Their partially localized phase can be understood heuristically as a rigorous (and highly nontrivial) verification of the rule of thumb for localization, where the perturbation takes place around the diagonal matrix. Heavy-tailed Wigner matrices, or Lévy matrices, whose entries have \(\alpha \)-stable laws for \(0< \alpha < 2\), were proposed in [24] as a simple model that exhibits a transition in the localization of its eigenvectors; we refer to [3] for a summary of the predictions from [24, 53]. In [18, 19] it was proved that for energies in a compact interval around the origin, eigenvectors are weakly delocalized, and for \(0< \alpha < 2/3\) for energies far enough from the origin, eigenvectors are weakly localized. In [3], full delocalization was proved in a compact interval around the origin, and the authors even established GOE local eigenvalue statistics in the same spectral region. In [2], the law of the eigenvector components of Lévy matrices was computed.

Conventions Throughout the following, every quantity that is not explicitly constant depends on the fundamental parameter N. We almost always omit this dependence from our notation. We use C to denote a generic positive universal constant, and write \(X = O(Y)\) to mean \(|X | \leqslant C Y\). For \(X,Y > 0\) we write \(X \asymp Y\) if \(X = O(Y)\) and \(Y = O(X)\). We write \(X \ll Y\) or \(X = o(Y)\) to mean \(\lim _{N \rightarrow \infty } X/Y = 0\). A vector is normalized if its \(\ell ^2\)-norm is one.

1.2 Results—the semilocalized phase

Let \({\mathbb {G}} = {\mathbb {G}}(N,d/N)\) be the Erdős–Rényi graph with vertex set \([N] :=\{1, \ldots , N\}\) and edge probability d/N for \(0 \leqslant d \leqslant N\). Let \(A = (A_{xy})_{x,y \in [N]} \in \{0,1\}^{N\times N}\) be the adjacency matrix of \({\mathbb {G}}\). Thus, \(A =A^*\), \(A_{xx}=0\) for all \(x \in [N]\), and \(( A_{xy} :x < y)\) are independent \({\text {Bernoulli}}(d/N)\) random variables.
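A direct way to sample \({\mathbb {G}}(N,d/N)\) is to flip one independent Bernoulli(d/N) coin per pair \(x < y\), which is exactly the definition above. The sketch below (the function name is hypothetical) stores A as adjacency lists, i.e. the nonzero pattern of the symmetric 0/1 matrix; its \(O(N^2)\) loop is chosen for clarity and is only practical for moderate N.

```python
import random

def sample_erdos_renyi(N, d, seed=None):
    """Sample G(N, d/N) on vertices 0..N-1 as adjacency lists.

    Each pair x < y is joined independently with probability d/N, so the
    adjacency matrix is symmetric with zero diagonal (no self-loops).
    """
    rng = random.Random(seed)
    p = d / N
    adj = [[] for _ in range(N)]
    for x in range(N):
        for y in range(x + 1, N):
            if rng.random() < p:  # A_xy = A_yx = 1
                adj[x].append(y)
                adj[y].append(x)
    return adj

adj = sample_erdos_renyi(1000, 8, seed=0)
print("average degree:", sum(len(nbrs) for nbrs in adj) / len(adj))
```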

The entrywise nonnegative matrix \(A/\sqrt{d}\) has a trivial Perron–Frobenius eigenvalue, which is its largest eigenvalue. In the following we only consider the other eigenvalues, which we call nontrivial. In the regime \(d \gg \sqrt{\log N/\log \log N}\), which we always assume in this paper, the trivial eigenvalue is located at \(\sqrt{d} (1 + o(1))\), and it is separated from the nontrivial ones with high probability; see [14]. Moreover, without loss of generality in this subsection we always assume that \(d \leqslant 3 \log N\), for otherwise the semilocalized phase does not exist (see Sect. 1.1).

For \(x \in [N]\) we define the normalized degree of x as

\[ \alpha _x :=\frac{D_x}{d}, \qquad D_x :=\sum _{y \in [N]} A_{xy}. \tag{1.5} \]

In Theorem 1.7 below we show that the nontrivial eigenvalues of \(A / \sqrt{d}\) outside the interval \([-2,2]\) are in two-to-one correspondence with vertices with normalized degree greater than 2: each vertex x with \(\alpha _x > 2\) gives rise to two eigenvalues of \(A / \sqrt{d}\) located with high probability near \(\pm \Lambda (\alpha _x)\), where we define the bijective function \(\Lambda :[2,\infty ) \rightarrow [2,\infty )\) through

\[ \Lambda (\alpha ) :=\frac{\alpha }{\sqrt{\alpha - 1}}. \tag{1.6} \]

Our main result in the semilocalized phase is about the eigenvectors associated with these eigenvalues. To state it, we need the following notions.

Definition 1.1

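Let \(\lambda >2\) and \(0 < \delta \leqslant \lambda - 2\). We define the set of resonant vertices at energy \(\lambda \) through

\[ {{\mathcal {W}}}_{\lambda ,\delta } :=\bigl \{x \in [N] :\alpha _x \geqslant 2, \, |\lambda - \Lambda (\alpha _x) | \leqslant \delta \bigr \}. \tag{1.7} \]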

We denote by \(B_r(x)\) the ball around the vertex x of radius r for the graph distance in \({\mathbb {G}}\). Define

all of our results will hold provided \(c > 0\) is chosen to be a small enough universal constant. The quantity \(r_\star \) will play the role of a maximal radius for balls around localization centres.
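The set of resonant vertices can be extracted from the normalized degrees in one pass. The sketch assumes \({{\mathcal {W}}}_{\lambda ,\delta }\) has the form \(\{x :\alpha _x \geqslant 2,\ |\lambda - \Lambda (\alpha _x) | \leqslant \delta \}\), which matches the description in Sect. 1.1 of resonant vertices as those x with \(\Lambda (D_x/d)\) close to \(\lambda \); the function name and the toy degrees are hypothetical.

```python
import math

def Lambda(alpha):
    """Lambda(alpha) = alpha / sqrt(alpha - 1) for alpha >= 2."""
    return alpha / math.sqrt(alpha - 1)

def resonant_vertices(alpha, lam, delta):
    """Assumed form of W_{lam, delta}: vertices x with alpha_x >= 2 and
    |lam - Lambda(alpha_x)| <= delta, for lam > 2 and 0 < delta <= lam - 2."""
    if not (lam > 2 and 0 < delta <= lam - 2):
        raise ValueError("need lam > 2 and 0 < delta <= lam - 2")
    # The alpha_x >= 2 test runs first, so Lambda is never evaluated below 2.
    return {x for x, a in enumerate(alpha) if a >= 2 and abs(lam - Lambda(a)) <= delta}

alpha = [1.0, 2.5, 3.0, 3.05, 5.0]  # toy normalized degrees
print(sorted(resonant_vertices(alpha, lam=2.125, delta=0.01)))
```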

We introduce the basic control parameters

which under our assumptions will always be small (see Remark 1.5 below). We now state our main result in the semilocalized phase.

Theorem 1.2

(Semilocalized phase). For any \(\nu > 0\) there exists a constant \({{\mathcal {C}}}\) such that the following holds. Suppose that

Let \(\varvec{\mathrm {w}}\) be a normalized eigenvector of \(A/\sqrt{d}\) with nontrivial eigenvalue \(\lambda \geqslant 2+{{\mathcal {C}}} \xi ^{1/2}\). Let \(0<\delta \leqslant (\lambda -2)/2\). Then for each \(x \in {{\mathcal {W}}}_{\lambda , \delta }\) there exists a normalized vector \(\varvec{\mathrm {v}}(x)\), supported in \(B_{r_\star }(x)\), such that the supports of \(\varvec{\mathrm {v}}(x)\) and \(\varvec{\mathrm {v}}(y)\) are disjoint for \(x \ne y\), and

with probability at least \(1 - {{\mathcal {C}}} N^{-\nu }\). Moreover, \(\varvec{\mathrm {v}}(x)\) decays exponentially around x in the sense that for any \(r \geqslant 0\) we have

Remark 1.3

An analogous result holds for negative eigenvalues \(-\lambda \leqslant -2 - {{\mathcal {C}}} \xi ^{1/2}\), with a different vector \(\varvec{\mathrm {v}}(x)\). See Theorem 3.4 and Remark 3.5 below for a precise statement.

Remark 1.4

The upper bound \(d \leqslant 3 \log N\) in (1.10) is made for convenience and without loss of generality, because if \(d > 3 \log N\) then, as explained in Sect. 1.1, with high probability the semilocalized phase does not exist, i.e. eigenvalues satisfying the conditions of Theorem 1.2 do not exist.

Theorem 1.2 implies that \(\varvec{\mathrm {w}}\) is almost entirely concentrated in the balls around the resonant vertices, and in each such ball \(B_{r_\star }(x)\), \(x \in {{\mathcal {W}}}_{\lambda ,\delta }\), the vector \(\varvec{\mathrm {w}}\) is almost collinear to the vector \(\varvec{\mathrm {v}}(x)\). Thus, \(\varvec{\mathrm {v}}(x)\) has the interpretation of the localization profile around the localization centre x. Since it has exponential decay, we deduce immediately from Theorem 1.2 that the radius \(r_\star \) can be made smaller at the expense of worse error terms. In fact, in Definition 3.2 and Theorem 3.4 below, we give an explicit definition of \(\varvec{\mathrm {v}}(x)\), which shows that it is radial in the sense that its value at a vertex y depends only on the distance between x and y, in which it is an exponentially decaying function. To ensure that the supports of the vectors \(\varvec{\mathrm {v}}(x)\) for different x do not overlap, \(\varvec{\mathrm {v}}(x)\) is in fact defined as the restriction of a radial function around x to a subgraph of \({\mathbb {G}}\), the pruned graph, which differs from \({\mathbb {G}}\) by only a small number of edges and whose balls of radius \(r_\star \) around the vertices of \({{\mathcal {W}}}_{\lambda ,\delta }\) are disjoint (see Proposition 3.1 below). For positive eigenvalues, the entries of \(\varvec{\mathrm {v}}(x)\) are nonnegative, while for negative eigenvalues its entries carry a sign that alternates in the distance to x. The set of resonant vertices \(\mathcal W_{\lambda ,\delta }\) is a small fraction of the whole vertex set [N]; its size is analysed in Lemma A.12 below.

Remark 1.5

Note that, by the lower bounds imposed on d and \(\lambda \) in Theorem 1.2, we always have \(\xi , \xi _{\lambda - 2} \leqslant 1/ {{\mathcal {C}}}\).

Using the exponential decay of the localization profiles, it is easy to deduce from Theorem 1.2 that a positive proportion of the eigenvector mass concentrates at the resonant vertices.

Corollary 1.6

Under the assumptions of Theorem 1.2 we have

with probability at least \(1 - {{\mathcal {C}}} N^{-\nu }\).

Next, we state a rigidity result on the eigenvalue locations in the semilocalized phase. It generalizes [10, Corollary 2.3] by improving the error bound and extending it to the full regime (1.4) of d, below which it must fail (see Sect. 1.5 below). Its proof is a byproduct of the proof of our main result in the semilocalized phase, Theorem 1.2. We denote the ordered eigenvalues of a Hermitian matrix \(M\in {\mathbb {C}}^{N\times N}\) by \(\lambda _1(M) \geqslant \lambda _2(M) \geqslant \cdots \geqslant \lambda _N(M)\). We only consider the nontrivial eigenvalues of \(A / \sqrt{d}\), i.e. \(\lambda _i(A / \sqrt{d})\) with \(2 \leqslant i \leqslant N\). For the following statements we order the normalized degrees by choosing a (random) permutation \(\sigma \in S_N\) such that \(i \mapsto \alpha _{\sigma (i)}\) is nonincreasing.

Theorem 1.7

(Eigenvalue locations in semilocalized phase). For any \(\nu > 0\) there exists a constant \({{\mathcal {C}}}\) such that the following holds. Suppose that (1.10) holds. Let

Then with probability at least \(1 - {{\mathcal {C}}} N^{-\nu }\), for all \(1\leqslant i\leqslant |{{\mathcal {U}}}|\) we have

and for all \(|{{\mathcal {U}}} | + 2 \leqslant i \leqslant N - |{{\mathcal {U}}} |\) we have

We remark that the upper bound on d from (1.10), which is necessary for the existence of a semilocalized phase, can be relaxed in Theorem 1.7 to obtain an estimate on \(\max _{2 \leqslant i \leqslant N} |\lambda _i(A / \sqrt{d}) |\) in the supercritical regime \(d \geqslant 3 \log N\), which is sharper than the one in [10]. The proof is the same and we do not pursue this direction here.

We conclude this subsection with a discussion of the counting function of the normalized degrees, which we use to estimate the number of resonant vertices (1.7). For \(b \geqslant 0\) and \(\alpha \geqslant 2\) define the exponent

\[ \theta _b(\alpha ) :=\bigl [1 - b(\alpha \log \alpha - \alpha + 1)\bigr ]_+ . \]

Define \(\alpha _{\max }(b) :=\inf \{\alpha \geqslant 2 :\theta _b(\alpha ) = 0\}\). Thus, \(\theta _b\) is a nonincreasing function that is nonzero on \([2, \alpha _{\max }(b))\). Moreover, \(\theta _b(2) = [1 - b/b_*]_+\), so that \(\alpha _{\max }(b) > 2\) if and only if \(b < b_*\). From Lemma A.9 below it is easy to deduce that if \(d \gg 1\) then \(\alpha _{\sigma (1)} = \alpha _{\max }(d/\log N) + O(\zeta / d)\) with probability at least \(1 - o(1)\) for any \(\zeta \gg 1\). Thus, \(\alpha _{\max }(d/\log N)\) has the interpretation of the deterministic location of the largest normalized degree. See Fig. 4 for a plot of \(\theta _b\).
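The exponent \(\theta _b\) and the threshold \(\alpha _{\max }(b)\) can be evaluated numerically. The sketch assumes \(\theta _b(\alpha ) = [1 - b(\alpha \log \alpha - \alpha + 1)]_+\), the Poisson large-deviation exponent for the degrees, and \(b_* = 1/(2 \log 2 - 1)\); these assumed forms reproduce \(\theta _b(2) = [1 - b/b_*]_+\) stated above and the value \(1 - b/b_* = 2 - 2 \log 2\) for \(b = 1\) quoted in Fig. 2. Since \(\theta _b\) is nonincreasing on \([2,\infty )\), bisection locates \(\alpha _{\max }(b)\). The names `B_STAR`, `theta` and `alpha_max` are our own.

```python
import math

B_STAR = 1 / (2 * math.log(2) - 1)  # assumed value of b_*, approximately 2.589

def theta(b, alpha):
    """Assumed form theta_b(alpha) = [1 - b (alpha log alpha - alpha + 1)]_+ for alpha >= 2."""
    return max(0.0, 1 - b * (alpha * math.log(alpha) - alpha + 1))

def alpha_max(b, tol=1e-12):
    """inf{alpha >= 2 : theta_b(alpha) = 0}, by bisection; theta_b is nonincreasing on [2, inf)."""
    if b <= 0:
        return math.inf  # theta_b >= 1 for b <= 0, so it never vanishes
    if theta(b, 2.0) == 0.0:
        return 2.0  # b >= b_*: theta_b(2) = 0, so alpha_max(b) = 2
    lo, hi = 2.0, 4.0
    while theta(b, hi) > 0.0:  # bracket the zero: theta_b(lo) > 0 = theta_b(hi)
        lo, hi = hi, 2 * hi
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if theta(b, mid) > 0.0:
            lo = mid
        else:
            hi = mid
    return hi

print(B_STAR, alpha_max(1.0), alpha_max(3.0))
```

For \(b = 1\) the assumed form gives \(\alpha _{\max }(1) = e\), since \(\alpha \log \alpha - \alpha + 1 = 1\) exactly when \(\alpha = e\).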

In Appendix A.4 below, we obtain estimates on the density of the normalized degrees \((\alpha _x)_{x \in [N]}\) and combine them with Theorem 1.2 to deduce a lower bound on the \(\ell ^p\)-norm of eigenvectors in the semilocalized phase. The precise statements are given in Lemma A.12 and Corollary A.13, which provide quantitative error bounds throughout the regime (1.10). Here, for simplicity, we summarize them in qualitative form in the critical regime \(d \asymp \log N\). For \(b < b_*\) we abbreviate

\[ \lambda _{\max }(b) :=\Lambda (\alpha _{\max }(b)), \qquad \rho _b(\lambda ) :={\left\{ \begin{array}{ll} 1 & \text {if } |\lambda | < 2, \\ \theta _b(\Lambda ^{-1}(|\lambda |)) & \text {if } |\lambda | \geqslant 2, \end{array}\right. } \tag{1.14} \]

where \(\Lambda ^{-1}(\lambda ) = \frac{\lambda ^2}{2}(1 + \sqrt{1 - 4/\lambda ^2})\) for \(|\lambda | \geqslant 2\). Let \(d = b \log N\) with some constant \(b < b_*\), and suppose that \(2 + \kappa \leqslant \lambda \leqslant \lambda _{\max }(b) - \kappa \) for some constant \(\kappa > 0\). Then Lemma A.12 (ii) implies (choosing \(1/d \ll \delta \ll 1\))

\[ |{{\mathcal {W}}}_{\lambda ,\delta } | = N^{\rho _b(\lambda ) + o(1)} \tag{1.15} \]

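with probability \(1 - o(1)\). From (1.15) and Theorem 1.2 we obtain, for any \(2 \leqslant p \leqslant \infty \),

\[ \Vert \varvec{\mathrm {w}} \Vert _p^2 \geqslant N^{(2/p - 1) \rho _b(\lambda ) + o(1)} \tag{1.16} \]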

with probability \(1 - o(1)\) (see Corollary A.13 below). In other words, the localization exponent \(\gamma (\varvec{\mathrm {w}})\) from (1.1) satisfies \(\gamma (\varvec{\mathrm {w}}) \leqslant \rho _b(\lambda ) + o(1)\). See Fig. 2 for an illustration of the bound (1.16) for \(p = \infty \). We remark that the exponent \(\rho _b(\lambda )\) also describes the density of states at energy \(\lambda \): under the above assumptions on b and \(\lambda \), for any interval I containing \(\lambda \) and satisfying \(\xi \ll |I | \ll 1\), the number of eigenvalues in I is equal to \(N^{\rho _b(\lambda ) + o(1)} |I |\) with probability \(1 - o(1)\), as can be seen from Lemma A.12 (i) and Theorem 1.7.
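The exponents \(\rho _b(\lambda )\) and \(\lambda _{\max }(b)\) can be tabulated numerically. This sketch assumes \(\lambda _{\max }(b) = \Lambda (\alpha _{\max }(b))\), \(\rho _b(\lambda ) = \theta _b(\Lambda ^{-1}(|\lambda |))\) for \(|\lambda | \geqslant 2\) and \(\rho _b(\lambda ) = 1\) for \(|\lambda | < 2\), with the assumed Poisson large-deviation form \(\theta _b(\alpha ) = [1 - b(\alpha \log \alpha - \alpha + 1)]_+\). With \(b = 1\) it reproduces \(\lambda _{\max }(1) \approx 2.0737\) and \(\rho _1(2^+) = 2 - 2 \log 2\) from the caption of Fig. 2, which is a consistency check on these assumptions rather than a derivation. All function names are our own.

```python
import math

def Lambda(alpha):
    """Lambda(alpha) = alpha / sqrt(alpha - 1) on [2, inf)."""
    return alpha / math.sqrt(alpha - 1)

def Lambda_inv(lam):
    """Inverse of Lambda on [2, inf), using the explicit formula from the text."""
    return lam ** 2 / 2 * (1 + math.sqrt(1 - 4 / lam ** 2))

def theta(b, alpha):
    """Assumed form theta_b(alpha) = [1 - b (alpha log alpha - alpha + 1)]_+."""
    return max(0.0, 1 - b * (alpha * math.log(alpha) - alpha + 1))

def lambda_max(b):
    """Assumed lambda_max(b) = Lambda(alpha_max(b)), alpha_max found by bisection."""
    if b <= 0:
        raise ValueError("need b > 0")
    lo, hi = 2.0, 2.0
    while theta(b, hi) > 0.0:  # grow hi until theta_b vanishes there
        lo, hi = hi, 2 * hi
    for _ in range(200):  # more than enough halvings for double precision
        mid = (lo + hi) / 2
        if theta(b, mid) > 0.0:
            lo = mid
        else:
            hi = mid
    return Lambda(hi)

def rho(b, lam):
    """Assumed rho_b(lam): 1 for |lam| < 2, theta_b(Lambda^{-1}(|lam|)) for |lam| >= 2."""
    lam = abs(lam)
    return 1.0 if lam < 2 else theta(b, Lambda_inv(lam))

b = 1.0
print(round(lambda_max(b), 4), round(rho(b, 2.0), 4), rho(b, 1.99))
```

The jump of \(\rho _b\) at \(\lambda = 2\), from 1 to \(1 - b/b_*\), is visible directly by evaluating `rho` on either side of 2.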

1.3 Results—the delocalized phase

Let A be the adjacency matrix of \({\mathbb {G}}(N,d/N)\), as in Sect. 1.2. For \(0< \kappa < 1/2\) define the spectral region

\[ {{\mathcal {S}}}_\kappa :=[-2 + \kappa , -\kappa ] \cup [\kappa , 2 - \kappa ]. \tag{1.17} \]

Theorem 1.8

(Delocalized phase). For any \(\nu >0\) and \(\kappa >0\) there exists a constant \({{\mathcal {C}}} > 0\) such that the following holds. Suppose that

Let \(\varvec{\mathrm {w}}\) be a normalized eigenvector of \(A / \sqrt{d}\) with eigenvalue \(\lambda \in {{\mathcal {S}}}_\kappa \). Then

with probability at least \(1 - \mathcal CN^{-\nu }\).

In the delocalized phase, i.e. in \({{\mathcal {S}}}_\kappa \), we also show that the spectral measure of \(A / \sqrt{d}\) at any vertex x is well approximated by the spectral measure at the root of \({\mathbb {T}}_{d\alpha _x,d}\), the infinite rooted \((d\alpha _x,d)\)-regular tree, whose root has \(d \alpha _x\) children and all other vertices have d children. This approximation is a local law, valid for intervals containing down to \(N^\kappa \) eigenvalues. See Remark 4.4 as well as Remark 4.3 and Appendix A.2 below for details.

Remark 1.9

In [42] it is shown that (1.19) holds with probability at least \(1 - \mathcal CN^{-\nu }\) for all eigenvectors provided that

This shows that the upper bound in (1.18) is in fact not restrictive.

Remark 1.10

(Optimality of (1.18) and (1.20)). Both lower bounds in (1.18) and (1.20) are optimal (up to the value of \({{\mathcal {C}}}\)), in the sense that delocalization fails in each case if these lower bounds are relaxed. See Sect. 1.5 below.

We note that the domain \({{\mathcal {S}}}_\kappa \) is optimal, up to the choice of \(\kappa > 0\). Indeed, as explained in Sect. 1.5 below, delocalization fails in the neighbourhood of the origin, owing to a proliferation of highly localized tuning fork states. Similarly, we expect delocalization to fail in the neighbourhoods of \(\pm 2\), where the masses of the eigenvectors become concentrated on vertices x with normalized degrees \(\alpha _x\) close to 2. The neighbourhoods of \(0, \pm 2\) are also singled out as the regions where the self-consistent equation used to prove Theorem 1.8 (see Lemma 4.16) becomes unstable. This instability is directly related to the appearance of singularities in the spectral measure of the tree \({\mathbb {T}}_{d \alpha _x,d}\) (see (4.11) and Fig. 8 for an illustration). The singularity near 0 occurs when \(\alpha _x\) is close to 0, and the singularities near \(\pm 2\) when \(\alpha _x\) is close to 2. See Fig. 10 for a simulation that demonstrates numerically the failure of delocalization outside of \({{\mathcal {S}}}_\kappa \).

1.4 Extension to general sparse random matrices

Our results, Theorems 1.2, 1.7, and 1.8, hold also for the following family of sparse Wigner matrices. Let \(A = (A_{xy})\) be the adjacency matrix of \({\mathbb {G}}(N,d/N)\) as above and \(W=(W_{xy})\) be an independent Wigner matrix with bounded entries. That is, W is Hermitian and its upper triangular entries \((W_{xy} :x \leqslant y)\) are independent complex-valued random variables with mean zero and variance one, \({\mathbb {E}}|W_{xy} |^2 = 1\), and \(|W_{xy} | \leqslant K\) almost surely for some constant K. Then we define the sparse Wigner matrix \(M = (M_{xy})\) as the Hadamard product of A and W, with entries \(M_{xy} :=A_{xy} W_{xy}\). Since the entries of \(M / \sqrt{d}\) are centred, it does not have a trivial eigenvalue like \(A / \sqrt{d}\).
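A minimal NumPy sketch of this construction, assuming real Rademacher entries (\(\pm 1\) with probability 1/2 each) for W: these are centred, have variance one and satisfy \(|W_{xy}| \leqslant K\) with K = 1. The function name `sparse_wigner` and the parameters N = 500, d = 8 are illustrative choices, not taken from the paper. The last two lines compare the trivial (largest) eigenvalue of A with the spectral radius of M, which has no such outlier.

```python
import numpy as np


def sparse_wigner(N, d, rng):
    """Sample A ~ G(N, d/N), a symmetric Rademacher matrix W, and M = A * W."""
    # Upper-triangular Bernoulli(d/N) indicators without self-loops, then symmetrize.
    upper = np.triu(rng.random((N, N)) < d / N, k=1)
    A = (upper | upper.T).astype(float)
    # Independent +-1 entries on and above the diagonal: mean 0, variance 1, |W_xy| <= 1.
    signs = rng.choice([-1.0, 1.0], size=(N, N))
    W = np.triu(signs) + np.triu(signs, k=1).T
    # Hadamard (entrywise) product: M_xy = A_xy * W_xy.
    return A, W, A * W


rng = np.random.default_rng(0)
A, W, M = sparse_wigner(500, 8.0, rng)
top_A = np.linalg.eigvalsh(A)[-1]                 # trivial eigenvalue of A, of order d
top_M = np.max(np.abs(np.linalg.eigvalsh(M)))     # no corresponding outlier for M
```

Rademacher entries are simply the most elementary bounded choice; any centred, unit-variance, bounded distribution fits the definition above.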

Theorem 1.11

Let \(M = (M_{xy})_{x,y \in [N]}\) be a sparse Wigner matrix. Define

Theorems 1.2 and 1.8 hold with (1.21) if A is replaced with M, and Theorem 1.7 holds with (1.21) if \(\lambda _{i + 1}(A/\sqrt{d})\), \(\lambda _{N-i+1}(A/\sqrt{d})\), and \(\lambda _i(A/\sqrt{d})\) are replaced with \(\lambda _{i}(M / \sqrt{d})\), \(\lambda _{N-i+1}(M / \sqrt{d})\), and \(\lambda _i(M / \sqrt{d})\), respectively. Here, the constants \({{\mathcal {C}}}\) depend on K in addition to \(\nu \) and \(\kappa \).

The modifications to the proofs of Theorems 1.2 and 1.7 required to establish Theorem 1.11 are minor and follow along the lines of [10, Section 10]. The modification to the proof of Theorem 1.8 is trivial, since the assumptions of the general Theorem 4.2 below include the sparse Wigner matrix M. We also remark that, with some extra work, one can relax the boundedness assumption on the entries of W, which we shall however not do here.

1.5 The limits of sparseness and the scale \(d \asymp \sqrt{\log N}\)

We conclude this section with a discussion on how sparse \({\mathbb {G}}\) can be for our results to remain valid. We show that all of our results—Theorems 1.2, 1.7, and 1.8—are wrong below the regime (1.4), i.e. if d is smaller than order \(\sqrt{\log N}\). Thus, our sparseness assumptions—the lower bounds on d from (1.10) and (1.18)—are optimal (up to the factor \(\log \log N\) in (1.10) and the factor \({{\mathcal {C}}}\) in (1.18)). The fundamental reason for this change of behaviour will turn out to be that the ratio \(|S_2(x) | / |S_1(x) |\) concentrates if and only if \(d \gg \sqrt{\log N}\), where \(S_i(x)\) denotes the sphere in \({\mathbb {G}}\) of radius i around x. This can be easily made precise with a well-known tuning fork construction, detailed below.

In the critical and subcritical regime \(1 \ll d = O(\log N)\), the graph \({\mathbb {G}}\) is in general not connected, but with probability \(1 - o(1)\) it has a unique giant component \({\mathbb {G}}_{\mathrm {giant}}\) with at least \(N (1 - \mathrm {e}^{- d/4})\) vertices (see Corollary A.15 below). Moreover, the spectrum of \(A / \sqrt{d}\) restricted to the complement of the giant component is contained in the \(O\bigl (\frac{\sqrt{\log N}}{d}\bigr )\)-neighbourhood of the origin (see Corollary A.16 below). Since we always assume \(d \geqslant {{\mathcal {C}}} \sqrt{\log N}\) and we only consider eigenvalues in \({\mathbb {R}}\setminus [-\kappa ,\kappa ]\), we conclude that all of our results listed above only pertain to the eigenvalues and eigenvectors of the giant component.

For \(D = 0,1,2,\dots \) we introduce a star tuning fork of degree D rooted in \({\mathbb {G}}_{\mathrm {giant}}\), or D-tuning fork for short, which is obtained by taking two stars with central degree D and connecting their hubs to a common base vertex in \({\mathbb {G}}_{\mathrm {giant}}\). We refer to Fig. 5 for an illustration and Definition A.17 below for a precise definition.

It is not hard to see that every D-tuning fork gives rise to two eigenvalues \(\pm \sqrt{D/d}\) of \(A / \sqrt{d}\) restricted to \({\mathbb {G}}_{\mathrm {giant}}\), whose associated eigenvectors are supported on the stars (see Lemma A.18 below). We denote by \(\Sigma :=\{\pm \sqrt{D/d} :\text {a } D\text {-tuning fork exists}\}\) the spectrum of \(A / \sqrt{d}\) restricted to \({\mathbb {G}}_{\mathrm {giant}}\) generated by the tuning forks. Any eigenvector associated with an eigenvalue \(\pm \sqrt{D/d} \in \Sigma \) is localized on precisely \(2D + 2\) vertices. Thus, D-tuning forks provide a simple way of constructing localized states. Note that this is a very basic form of concentration of mass, supported at the periphery of the graph on special graph structures, and is unrelated to the much more subtle concentration in the semilocalized phase described in Sect. 1.2.
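The eigenvalue claim can be checked directly: on a star with hub value \(\pm \sqrt{D}\) and leaf values 1, A acts as multiplication by \(\pm \sqrt{D}\), and taking opposite signs on the two stars makes the two contributions at the common base vertex cancel. The NumPy sketch below attaches a D-tuning fork with \(D \geqslant 1\) to a 6-cycle, a hypothetical stand-in for \({\mathbb {G}}_{\mathrm {giant}}\), and verifies the eigenvalue equation for \(A/\sqrt{d}\) and the support size \(2D + 2\). The helper names are illustrative.

```python
import numpy as np


def cycle_with_tuning_fork(n_base, D):
    """Adjacency matrix of a cycle on n_base vertices (hypothetical stand-in for the
    giant component) with a D-tuning fork rooted at vertex 0, plus hub/leaf indices."""
    n = n_base + 2 + 2 * D
    A = np.zeros((n, n))

    def add_edge(u, v):
        A[u, v] = A[v, u] = 1.0

    for i in range(n_base):                      # the base cycle
        add_edge(i, (i + 1) % n_base)
    hubs = [n_base, n_base + 1]
    leaves = [list(range(n_base + 2 + k * D, n_base + 2 + (k + 1) * D)) for k in range(2)]
    for hub, star in zip(hubs, leaves):
        add_edge(0, hub)                         # both hubs attach to the base vertex 0
        for leaf in star:
            add_edge(hub, leaf)                  # star with central degree D
    return A, hubs, leaves


def tuning_fork_vector(n, hubs, leaves, D, sign):
    """Unit vector with A v = sign * sqrt(D) v (requires D >= 1): hub sign*sqrt(D)
    and leaves 1 on the first star, the negative of this on the second star."""
    v = np.zeros(n)
    for s, hub, star in zip((1.0, -1.0), hubs, leaves):
        v[hub] = s * sign * np.sqrt(D)
        v[star] = s
    return v / np.linalg.norm(v)


d, D = 4.0, 3
A, hubs, leaves = cycle_with_tuning_fork(6, D)
results = {}
for sign in (1, -1):
    v = tuning_fork_vector(A.shape[0], hubs, leaves, D, sign)
    residual = np.linalg.norm(A @ v / np.sqrt(d) - sign * np.sqrt(D / d) * v)
    results[sign] = (residual, np.count_nonzero(v))   # residual and support size
```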

For \(d > 0\) and \(D \in {\mathbb {N}}\) we now estimate the number of D-tuning forks in \({\mathbb {G}}(N,d/N)\), which we denote by F(d, D). The following result is proved in Appendix A.6.

Lemma 1.12

(Number of D-tuning forks). Suppose that \(1 \ll d = b \log N = O(\log N)\) and \(0 \leqslant D \ll \log N / \log \log N\). Then \(F(d,D) = N^{1 - 2b - 2b D + o(1)}\) with probability \(1 - o(1)\).

Defining \(D_* :=\frac{\log N}{2d} - 1\), we immediately deduce the following result.

Corollary 1.13

For any constant \(\varepsilon > 0\) with probability \(1 - o(1)\) the following holds. If \(D_* \leqslant -\varepsilon \) then \(\Sigma = \emptyset \). If \(D_* \geqslant \varepsilon \) then \(\Sigma = \{\pm \sqrt{D/d} :D \in {\mathbb {N}}, D \leqslant D_* (1 + o(1))\}\).
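The arithmetic behind Corollary 1.13 can be checked in a few lines. This Python sketch keeps only leading-order exponents (every o(1) term is dropped) and uses illustrative helper names. Writing \(b = d/\log N\), the exponent of Lemma 1.12 vanishes exactly at \(D = D_*\), since \(1 - 2b(1 + D_*) = 1 - b \log N / d = 0\), so tuning forks exist for \(D < D_*\) and not beyond.

```python
import math


def d_star(log_N, d):
    """D_* = log N / (2d) - 1."""
    return log_N / (2.0 * d) - 1.0


def fork_count_exponent(log_N, d, D):
    """Leading exponent 1 - 2b - 2bD of F(d, D) in Lemma 1.12, with b = d / log N."""
    b = d / log_N
    return 1.0 - 2.0 * b - 2.0 * b * D


def predicted_sigma(log_N, d):
    """Leading-order version of Corollary 1.13: {±sqrt(D/d) : D in N, D <= D_*},
    ignoring the factor (1 + o(1))."""
    Ds = d_star(log_N, d)
    if Ds < 0:
        return []
    return sorted({s * math.sqrt(D / d) for D in range(math.floor(Ds) + 1) for s in (1, -1)})


log_N = math.log(10**6)
d = 2.0
```

For these values \(D_* \approx 2.45\), so the sketch predicts tuning-fork eigenvalues only for D = 0, 1, 2.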

We deduce that if \(d \leqslant (1/2 - \varepsilon ) \log N\) then \(\Sigma \ne \emptyset \), and hence the delocalization of all eigenvectors from Remark 1.9 fails. Consequently, the lower bound (1.20) is optimal up to the value of \({{\mathcal {C}}}\).

Similarly, for \(d \gg \sqrt{\log N}\) the set \(\Sigma \) is in general nonempty, but we always have \(\Sigma \subset [-\kappa , \kappa ]\) for any fixed \(\kappa > 0\), so that eigenvalues from \(\Sigma \) do not interfere with the statements of Theorems 1.2, 1.7, and 1.8. On the other hand, if \(d = \sqrt{\log N} / t\) for constant t, we find that \(\Sigma \) is asymptotically dense in the interval \([-t/\sqrt{2}, t / \sqrt{2}]\). Since the conclusions of Theorems 1.2, 1.7, and 1.8 are obviously wrong for any eigenvalue from \(\Sigma \), they must all be wrong for large enough t. This shows that the lower bounds on d from (1.10) and (1.18) are optimal (up to the factor \(\log \log N\) in (1.10) and the factor \({{\mathcal {C}}}\) in (1.18)).
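At leading order the largest tuning-fork eigenvalue is \(\sqrt{D_*/d}\), and for \(d = \sqrt{\log N}/t\) one has exactly \(D_*/d = t^2/2 - t/\sqrt{\log N}\), which tends to \(t^2/2\). The Python sketch below evaluates this edge for a very large (illustrative) value of \(\log N\), once at the critical scale and once for \(d \gg \sqrt{\log N}\); o(1) corrections to \(D_*\) are ignored.

```python
import math


def sigma_edge(log_N, d):
    """Leading-order largest tuning-fork eigenvalue sqrt(D_*/d), D_* = log N/(2d) - 1."""
    Ds = log_N / (2.0 * d) - 1.0
    return math.sqrt(Ds / d) if Ds > 0 else 0.0


log_N = 1e8                      # a very large N, so that the asymptotics are visible
t = 2.0
edge_critical = sigma_edge(log_N, math.sqrt(log_N) / t)   # d = sqrt(log N) / t
edge_dense = sigma_edge(log_N, log_N ** 0.75)             # d >> sqrt(log N)
```

The first value is close to \(t/\sqrt{2}\), while the second is already below 0.01, in line with \(\Sigma \subset [-\kappa ,\kappa ]\).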

In fact, the emergence of the tuning fork eigenvalues of order one and the failure of all of our proofs have the same underlying root cause, which singles out the scale \(d \asymp \sqrt{\log N}\) as the scale below which the concentration of the ratio

\(\frac{|S_2(x) |}{|S_1(x) |} = d \, (1 + o(1))\)   (1.22)

fails for vertices x satisfying \(D_x \asymp d\). Clearly, to have a D-tuning fork with \(D \asymp d\), (1.22) has to fail at the hubs of the stars. Moreover, (1.22) enters our proofs of both the semilocalized and the delocalized phase in a crucial way. For the former, it is linked to the validity of the local approximation by the \((D_x,d)\)-regular tree from Appendix A.2, which also underlies the construction of the localization profile vectors (see e.g. (3.35) below). For the latter, in the language of Definition 4.6 below, it is linked to the property that most neighbours of any vertex are typical (see Proposition 4.8 (ii) below).

2 Basic Definitions and Overview of Proofs

In this preliminary section we introduce some basic notations and definitions that are used throughout the paper, and give an overview of the proofs of Theorems 1.2 (semilocalized phase) and 1.8 (delocalized phase). These proofs are unrelated and, thus, explained separately. For simplicity, in this overview we only consider qualitative error terms of the form o(1), although all of our estimates are in fact quantitative.

2.1 Basic definitions

We write \({\mathbb {N}}= \{0,1,2,\dots \}\). We set \([n] :=\{1, \ldots , n\}\) for any \(n \in {\mathbb {N}}^*\) and \([0] :=\emptyset \). We write \(|X |\) for the cardinality of a finite set X. We use \(\mathbb {1}_{\Omega }\) as the symbol for the indicator function of the event \(\Omega \).

Vectors in \({\mathbb {R}}^N\) are denoted by boldface lowercase Latin letters like \(\varvec{\mathrm {u}}\), \(\varvec{\mathrm {v}}\) and \(\varvec{\mathrm {w}}\). We use the notation \(\varvec{\mathrm {v}} = (v_x)_{x \in [N]} \in {\mathbb {R}}^N\) for the entries of a vector. We denote by \({{\,\mathrm{supp}\,}}\varvec{\mathrm {v}} :=\{x \in [N] :v_x \ne 0\}\) the support of a vector \(\varvec{\mathrm {v}}\). We denote by \(\langle \varvec{\mathrm {u}} , \varvec{\mathrm {v}} \rangle :=\sum _{x \in [N]} u_x v_x\) the Euclidean scalar product on \({\mathbb {R}}^N\) and by \(\Vert \varvec{\mathrm {v}} \Vert :=\sqrt{\langle \varvec{\mathrm {v}} , \varvec{\mathrm {v}} \rangle }\) the induced Euclidean norm. For a matrix \(M \in {\mathbb {R}}^{N \times N}\), \(\Vert M \Vert \) is its operator norm induced by the Euclidean norm on \({\mathbb {R}}^N\). For any \(x \in [N]\), we define the standard basis vector \(\varvec{\mathrm {1}}_x :=(\delta _{xy})_{y \in [N]} \in {\mathbb {R}}^N\). To any subset \(S \subset [N]\) we assign the vector \(\varvec{\mathrm {1}}_S\in {\mathbb {R}}^N\) given by \(\varvec{\mathrm {1}}_S :=\sum _{x \in S} \varvec{\mathrm {1}}_x\). In particular, \(\varvec{\mathrm {1}}_{\{ x\}} = \varvec{\mathrm {1}}_x\).

We use blackboard bold letters to denote graphs. Let \({\mathbb {H}} = (V({\mathbb {H}}), E({\mathbb {H}}))\) be a (simple, undirected) graph on the vertex set \(V({\mathbb {H}}) = [N]\). We often identify a graph \({\mathbb {H}}\) with its set of edges \(E({\mathbb {H}})\). We denote by \(A^{{\mathbb {H}}} \in \{0,1\}^{N \times N}\) the adjacency matrix of \({\mathbb {H}}\). For \(r \in {\mathbb {N}}\) and \(x \in [N]\), we denote by \(B_r^{{\mathbb {H}}}(x)\) the closed ball of radius r around x in the graph \({\mathbb {H}}\), i.e. the set of vertices at distance (with respect to \({\mathbb {H}}\)) at most r from the vertex x. We denote the sphere of radius r around the vertex x by \(S_r^{{\mathbb {H}}}(x) :=B_r^{{\mathbb {H}}}(x) \setminus B_{r - 1}^{{\mathbb {H}}}(x)\). We denote by \(D_x^{{\mathbb {H}}}\) the degree of the vertex x in the graph \({\mathbb {H}}\). For any subset \(V \subset [N]\), we denote by \({\mathbb {H}} \vert _V\) the subgraph induced by \({\mathbb {H}}\) on V. If \({\mathbb {H}}\) is a subgraph of \({\mathbb {G}}\) then we denote by \({\mathbb {G}} \setminus {\mathbb {H}}\) the graph on [N] with edge set \(E({\mathbb {G}}) \setminus E({\mathbb {H}})\). In the above definitions, if the graph \({\mathbb {H}}\) is the Erdős–Rényi graph \({\mathbb {G}}\), we systematically omit the superscript \({\mathbb {G}}\).
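The ball and sphere notation translates directly into a breadth-first search. A minimal Python sketch, assuming the graph is stored as an adjacency list (a representation chosen here for illustration only); for a simple graph, \(|S_1(x)| = D_x\).

```python
from collections import deque


def spheres(adj, x, r):
    """[S_0(x), ..., S_r(x)] for a graph given as an adjacency list
    (dict: vertex -> neighbours), computed by breadth-first search."""
    dist = {x: 0}
    queue = deque([x])
    while queue:
        u = queue.popleft()
        if dist[u] == r:
            continue                     # vertices at distance r are not expanded
        for w in adj[u]:
            if w not in dist:
                dist[w] = dist[u] + 1
                queue.append(w)
    out = [set() for _ in range(r + 1)]
    for v, k in dist.items():
        out[k].add(v)
    return out


def ball(adj, x, r):
    """Closed ball B_r(x), the union of S_0(x), ..., S_r(x)."""
    return set().union(*spheres(adj, x, r))


path = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2, 4], 4: [3]}   # the path 0 - 1 - 2 - 3 - 4
```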

The following notion of very high probability is a convenient shorthand used throughout the paper. It simplifies considerably the probabilistic statements of the kind that appear in Theorems 1.2, 1.7, and 1.8. It also introduces two special symbols, \(\nu \) and \({{\mathcal {C}}}\), which appear throughout the rest of the paper.

Definition 2.1

Let \(\Xi \equiv \Xi _{N,\nu }\) be a family of events parametrized by \(N \in {\mathbb {N}}\) and \(\nu > 0\). We say that \(\Xi \) holds with very high probability if for every \(\nu > 0\) there exists \({\mathcal {C}}\equiv {\mathcal {C}}_\nu \) such that

\({\mathbb {P}}(\Xi _{N,\nu }) \geqslant 1 - {{\mathcal {C}}}_\nu N^{-\nu }\)

for all \(N \in {\mathbb {N}}\).

Convention 2.2

In statements that hold with very high probability, we use the special symbol \({{\mathcal {C}}} \equiv {{\mathcal {C}}}_\nu \) to denote a generic positive constant depending on \(\nu \) such that the statement holds with probability at least \(1 - {{\mathcal {C}}}_\nu N^{-\nu }\) provided \({{\mathcal {C}}}_\nu \) is chosen large enough. Thus, the bound \(|X | \leqslant {\mathcal {C}}Y\) with very high probability means that, for each \(\nu >0\), there is a constant \({\mathcal {C}}_\nu >0\), depending on \(\nu \), such that

\({\mathbb {P}}\bigl (|X | \leqslant {\mathcal {C}}_\nu Y\bigr ) \geqslant 1 - {\mathcal {C}}_\nu N^{-\nu }\)

for all \(N \in {\mathbb {N}}\). Here, X and Y are allowed to depend on N. We also write \(X = {{\mathcal {O}}}(Y)\) to mean \(|X | \leqslant {{\mathcal {C}}} Y\).

We remark that the notion of very high probability from Definition 2.1 survives a union bound involving \(N^{O(1)}\) events. We shall tacitly use this fact throughout the paper. Moreover, throughout the paper, the constant \({{\mathcal {C}}} \equiv {{\mathcal {C}}}_\nu \) in the assumptions (1.10) and (1.18) is always assumed to be large enough.
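To spell out the union-bound remark, suppose (notation introduced only for this illustration) that \(\Xi ^{(1)}, \dots , \Xi ^{(K)}\) with \(K \leqslant N^k\), k a fixed constant, each hold with very high probability, and assume that the constants \({{\mathcal {C}}}_\nu \) can be chosen uniformly in i. Given \(\nu > 0\), apply Definition 2.1 to each event at level \(\nu + k\):

```latex
\[
  \mathbb{P}\Bigl(\,\bigcap_{i=1}^{K} \Xi^{(i)}\Bigr)
  \;\geqslant\; 1 - \sum_{i=1}^{K} \mathbb{P}\bigl((\Xi^{(i)})^{c}\bigr)
  \;\geqslant\; 1 - N^{k}\,\mathcal{C}_{\nu+k}\,N^{-(\nu+k)}
  \;=\; 1 - \mathcal{C}_{\nu+k}\,N^{-\nu}.
\]
```

Hence the intersection again holds with very high probability, with \({{\mathcal {C}}}_\nu \) replaced by \({{\mathcal {C}}}_{\nu + k}\).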

2.2 Overview of proof in semilocalized phase

The starting point of the proof of Theorem 1.2 is the following simple observation. Suppose that M is a Hermitian matrix with eigenvalue \(\lambda \) and associated eigenvector \(\varvec{\mathrm {w}}\). Let \(\Pi \) be an orthogonal projection and write \(\overline{\Pi } :=I - \Pi \). If \(\lambda \) is not an eigenvalue of \(\overline{\Pi } M \overline{\Pi }\) then from \((M - \lambda ) \varvec{\mathrm {w}} = 0\) we deduce

\(\overline{\Pi } \varvec{\mathrm {w}} = (\lambda - \overline{\Pi } M \overline{\Pi })^{-1} \, \overline{\Pi } M \Pi \varvec{\mathrm {w}}.\)   (2.1)

If \(\Pi \) is an eigenprojection of M whose range contains the eigenspace of \(\lambda \) (for instance \(\Pi = \varvec{\mathrm {w}} \varvec{\mathrm {w}}^*\) if \(\lambda \) is simple) then clearly both sides of (2.1) vanish. The basic idea of our proof is to apply an approximate version of this observation to \(M = A / \sqrt{d}\), by choosing \(\Pi \) appropriately, and showing that the left-hand side of (2.1) is small by estimating the right-hand side.
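Identity (2.1) holds for any orthogonal projection \(\Pi \), not only for approximate spectral projections: applying \(\overline{\Pi }\) to \((M - \lambda )\varvec{\mathrm {w}} = 0\) and splitting \(\varvec{\mathrm {w}} = \Pi \varvec{\mathrm {w}} + \overline{\Pi } \varvec{\mathrm {w}}\) gives \((\overline{\Pi } M \overline{\Pi } - \lambda ) \overline{\Pi } \varvec{\mathrm {w}} = - \overline{\Pi } M \Pi \varvec{\mathrm {w}}\). A quick NumPy check, with a random symmetric matrix and a random three-dimensional projection (all choices illustrative):

```python
import numpy as np

rng = np.random.default_rng(1)
n = 8
X = rng.standard_normal((n, n))
M = (X + X.T) / 2                          # a real symmetric (Hermitian) matrix
evals, evecs = np.linalg.eigh(M)
lam, w = evals[-1], evecs[:, -1]           # eigenpair: (M - lam) w = 0

Q, _ = np.linalg.qr(rng.standard_normal((n, 3)))
Pi = Q @ Q.T                               # orthogonal projection onto a random subspace
Pibar = np.eye(n) - Pi

compressed = Pibar @ M @ Pibar
gap = np.min(np.abs(np.linalg.eigvalsh(compressed) - lam))   # must be > 0
lhs = Pibar @ w
rhs = np.linalg.solve(lam * np.eye(n) - compressed, Pibar @ M @ Pi @ w)
```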

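In fact, we choose

\(\Pi :=\sum _{x \in {{\mathcal {W}}}_{\lambda ,\delta }} \varvec{\mathrm {v}}(x) \varvec{\mathrm {v}}(x)^*,\)   (2.2)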

where \({{\mathcal {W}}}_{\lambda ,\delta }\) is the set (1.7) of resonant vertices at energy \(\lambda \), and \(\varvec{\mathrm {v}}(x)\) is the exponentially decaying localization profile from Theorem 1.2. The proof then consists of two main ingredients:

(a) \(\Vert \overline{\Pi } M \Pi \Vert = o(1)\);

(b) \(\overline{\Pi } M \overline{\Pi }\) has a spectral gap around \(\lambda \).

Informally, (a) states that \(\Pi \) is close to a spectral projection of M, as \(\overline{\Pi } \!\,M \Pi = [M,\Pi ] \Pi \) quantifies the noncommutativity of M and \(\Pi \) on the range of \(\Pi \). Similarly, (b) states that \(\Pi \) projects roughly onto an eigenspace of M of energies near \(\lambda \). Plugging (a) and (b) into (2.1) yields an estimate on \(\Vert \overline{\Pi } \!\,\varvec{\mathrm {w}} \Vert \) from which Theorem 1.2 follows easily. Thus, the main work of the proof is to establish the properties (a) and (b) for the specific choice of \(\Pi \) from (2.2).
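Taking norms in (2.1) turns (a) and (b) into the quantitative estimate \(\Vert \overline{\Pi } \varvec{\mathrm {w}} \Vert \leqslant \Vert \overline{\Pi } M \Pi \Vert / \mathrm {dist}(\lambda , \mathrm {spec}(\overline{\Pi } M \overline{\Pi }))\) for a normalized \(\varvec{\mathrm {w}}\). The NumPy sketch below compares both sides; a random symmetric matrix and a slightly perturbed top eigenvector stand in for \(A/\sqrt{d}\) and the localization profiles, an illustrative assumption only.

```python
import numpy as np

rng = np.random.default_rng(2)
n = 50
X = rng.standard_normal((n, n))
M = (X + X.T) / np.sqrt(2 * n)               # symmetric, spectrum roughly in [-2, 2]
evals, evecs = np.linalg.eigh(M)
lam, w = evals[-1], evecs[:, -1]

u = w + 1e-2 * rng.standard_normal(n)        # a perturbed eigenvector
u /= np.linalg.norm(u)
Pi = np.outer(u, u)                          # approximate spectral projection
Pibar = np.eye(n) - Pi

offdiag = np.linalg.norm(Pibar @ M @ Pi, 2)                            # quantity in (a)
gap = np.min(np.abs(np.linalg.eigvalsh(Pibar @ M @ Pibar) - lam))      # quantity in (b)
leak = np.linalg.norm(Pibar @ w)             # ||Pibar w||, the quantity to be bounded
bound = offdiag / gap
```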

The construction of the localization profile \(\varvec{\mathrm {v}}(x)\) uses the pruned graph \({\mathbb {G}}_\tau \) from [10], a subgraph of \({\mathbb {G}}\) depending on a threshold \(\tau > 1\), which differs from \({\mathbb {G}}\) by only a small number of edges and whose balls of radius \(r_\star \) around the vertices of \({{\mathcal {V}}}_\tau :=\{x :\alpha _x \geqslant \tau \}\) are disjoint (see Proposition 3.1 below). Now we define the vector \(\varvec{\mathrm {v}}(x) :=\varvec{\mathrm {v}}^\tau _+(x)\), where, for \(\sigma = \pm \) and \(\tau > 1\),

The motivation behind this choice is explained in Appendix A.2: with high probability, the \(r_\star \)-neighbourhood of x in \({\mathbb {G}}_\tau \) looks roughly like that of the root of the infinite regular tree \({\mathbb {T}}_{D_x, d}\), whose root has \(D_x\) children and all of whose other vertices have d children. The adjacency matrix of \({\mathbb {T}}_{D_x, d}\) has the exact eigenvalues \(\pm \sqrt{d} \Lambda (\alpha _x)\) with the corresponding eigenvectors given by (2.3) with \({\mathbb {G}}_\tau \) replaced with \({\mathbb {T}}_{D_x, d}\).
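In the radial basis \(\varvec{\mathrm {1}}_{S_i}/\Vert \varvec{\mathrm {1}}_{S_i} \Vert \), the matrix \(A/\sqrt{d}\) of \({\mathbb {T}}_{\alpha d, d}\) is tridiagonal with zero diagonal, entry \(\sqrt{\alpha }\) between the root and \(S_1\), and entry 1 between \(S_i\) and \(S_{i+1}\) for \(i \geqslant 1\), because \(|S_1| = \alpha d\) and \(|S_{i+1}| = d |S_i|\). Solving the resulting recursion gives, for \(\alpha > 2\), the eigenvalue \(\alpha /\sqrt{\alpha - 1}\) with radial amplitudes \(u_0 = \sqrt{(\alpha -1)/\alpha }\, u_1\) and \(u_{i+1} = u_i/\sqrt{\alpha - 1}\) for \(i \geqslant 1\), i.e. exponential decay. Here we take \(\Lambda (\alpha ) = \alpha /\sqrt{\alpha - 1}\) as an assumption, since the general definition of \(\Lambda \) is not part of this section. The NumPy sketch truncates the tree at radius R.

```python
import numpy as np


def radial_tree_matrix(alpha, R):
    """A/sqrt(d) for T_{alpha d, d} restricted to the radial vectors
    1_{S_i}/||1_{S_i}||, i = 0, ..., R (tree truncated at radius R)."""
    Z = np.zeros((R + 1, R + 1))
    Z[0, 1] = Z[1, 0] = np.sqrt(alpha)     # root <-> S_1: sqrt(|S_1| / (d |S_0|))
    for i in range(1, R):
        Z[i, i + 1] = Z[i + 1, i] = 1.0    # S_i <-> S_{i+1}: sqrt(|S_{i+1}| / (d |S_i|))
    return Z


def Lambda(alpha):
    """Closed form alpha / sqrt(alpha - 1), assumed here for alpha > 2."""
    return alpha / np.sqrt(alpha - 1.0)


alpha, R = 4.0, 60
evals, evecs = np.linalg.eigh(radial_tree_matrix(alpha, R))
top, u = evals[-1], evecs[:, -1]           # top eigenpair; u holds the radial amplitudes
```

Since the truncation error is of order \((\alpha - 1)^{-R}\), the top eigenvalue agrees with \(\Lambda (\alpha )\) to machine precision, and the spectrum is symmetric because the tree is bipartite.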

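The central idea of our proof is the introduction of a block diagonal approximation of the pruned graph. Define the orthogonal projections

\(\Pi ^\tau :=\sum _{x \in {{\mathcal {V}}}_{2 + o(1)}} \sum _{\sigma = \pm } \varvec{\mathrm {v}}^\tau _\sigma (x) \varvec{\mathrm {v}}^\tau _\sigma (x)^*, \qquad \overline{\Pi }^\tau :=I - \Pi ^\tau .\)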

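The range of \(\Pi \) from (2.2) is a subspace of the range of \(\Pi ^\tau \), i.e. \(\Pi \Pi ^\tau = \Pi \). The interpretation of \(\Pi ^\tau \) is the orthogonal projection onto all localization profiles around vertices x with normalized degree at least \(2 + o(1)\), which is precisely the set of vertices around which one can define an exponentially decaying localization profile. Now we define the block diagonal approximation of the pruned graph as

\(\widehat{H}^\tau :=\sum _{x \in {{\mathcal {V}}}_{2 + o(1)}} \sum _{\sigma = \pm } \sigma \Lambda (\alpha _x) \varvec{\mathrm {v}}^\tau _\sigma (x) \varvec{\mathrm {v}}^\tau _\sigma (x)^* + \overline{\Pi }^\tau H^\tau \overline{\Pi }^\tau ;\)
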
here we defined the centred and scaled adjacency matrix \(H^\tau :=A^{{\mathbb {G}}_\tau } / \sqrt{d} - E^\tau \), where \(E^\tau \) is a suitably chosen matrix that is close to \({\mathbb {E}}A^{{\mathbb {G}}} / \sqrt{d}\) and preserves the locality of \(A^{{\mathbb {G}}_\tau }\) in balls around the vertices of \({{\mathcal {V}}}_\tau \). In the subspace spanned by the localization profiles \(\{\varvec{\mathrm {v}}^\tau _\sigma (x) :\sigma = \pm , x \in {{\mathcal {V}}}_{2 + o(1)}\}\), \(\widehat{H}^\tau \) is diagonal with eigenvalues \(\sigma \Lambda (\alpha _x)\). In the orthogonal complement, it is equal to \(H^\tau \). The off-diagonal blocks are zero. The main work of our proof consists in an analysis of \(\widehat{H}^\tau \).
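The block structure of \(\widehat{H}^\tau \) can be reproduced for any symmetric matrix H and any orthonormal family \(\varvec{\mathrm {v}}_k\) with prescribed values \(\lambda _k\). In the NumPy sketch below (names and data are illustrative), the \(\varvec{\mathrm {v}}_k\) are slightly perturbed eigenvectors of a random H rather than localization profiles. It checks that the off-diagonal blocks vanish, that each \(\varvec{\mathrm {v}}_k\) is an exact eigenvector of the approximation, and that the approximation error is small when the \(\varvec{\mathrm {v}}_k\) are good approximate eigenvectors, which mirrors the role of Proposition 3.9 in proving (c).

```python
import numpy as np


def block_diagonal_approx(H, V, lams):
    """H_hat = sum_k lams[k] v_k v_k^T + Pibar H Pibar with Pibar = I - V V^T
    (the columns v_k of V are assumed orthonormal)."""
    Pi = V @ V.T
    Pibar = np.eye(H.shape[0]) - Pi
    return V @ np.diag(lams) @ V.T + Pibar @ H @ Pibar, Pi, Pibar


rng = np.random.default_rng(3)
n = 40
X = rng.standard_normal((n, n))
H = (X + X.T) / np.sqrt(2 * n)
_, evecs = np.linalg.eigh(H)
# Two approximate eigenvectors (perturbed top eigenvectors), re-orthonormalized.
V, _ = np.linalg.qr(evecs[:, -2:] + 1e-4 * rng.standard_normal((n, 2)))
lams = np.diag(V.T @ H @ V)                # Rayleigh quotients as the diagonal values
H_hat, Pi, Pibar = block_diagonal_approx(H, V, lams)
```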

In terms of \(\widehat{H}^\tau \), abbreviating \(H :=(A^{{\mathbb {G}}} - {\mathbb {E}}A^{{\mathbb {G}}}) / \sqrt{d}\), the problem of showing (a) and (b) reduces to showing

(c) \(\Vert H - \widehat{H}^\tau \Vert = o(1)\),

(d) \(\Vert \overline{\Pi }^\tau H^\tau \overline{\Pi }^\tau \Vert \leqslant 2 + o(1)\).

Indeed, ignoring minor issues pertaining to the centring \({\mathbb {E}}A^{{\mathbb {G}}}\), we replace \(M = A^{{\mathbb {G}}} / \sqrt{d}\) with H in (a) and (b). Then (a) follows immediately from (c), since \(\Vert \overline{\Pi } H \Pi \Vert = \Vert \overline{\Pi } \widehat{H}^\tau \Pi \Vert + o(1) = o(1)\), as \(\overline{\Pi } \widehat{H}^\tau \Pi = 0\) by the block structure of \(\widehat{H}^\tau \) and the relation \(\Pi ^\tau \Pi = \Pi \). To show (b), we note that the \(\Pi ^\tau \)-block of \(\widehat{H}^\tau \), \(\Pi ^\tau \widehat{H}^\tau \Pi ^\tau = \sum _{x \in {{\mathcal {V}}}_{2 + o(1)}} \sum _{\sigma = \pm } \sigma \Lambda (\alpha _x) \varvec{\mathrm {v}}^\tau _\sigma (x) \varvec{\mathrm {v}}^\tau _\sigma (x)^*\), trivially has a spectral gap: \(\overline{\Pi } \Pi ^\tau \widehat{H}^\tau \Pi ^\tau \overline{\Pi }\) has no eigenvalues in the \(\delta \)-neighbourhood of \(\lambda \), simply because the projection \(\overline{\Pi }\) removes the projections \(\varvec{\mathrm {v}}^\tau _\sigma (x) \varvec{\mathrm {v}}^\tau _\sigma (x)^*\) with eigenvalues \(\sigma \Lambda (\alpha _x)\) in the \(\delta \)-neighbourhood of \(\lambda \). Moreover, the \(\overline{\Pi }^\tau \)-block also has such a spectral gap by (d) and \(\lambda > 2 + o(1)\). Hence, by (c), we deduce the desired spectral gap (b).

Thus, what remains is the proof of (c) and (d). To prove (c), we prove \(\Vert H - H^\tau \Vert = o(1)\) and \(\Vert H^\tau - \widehat{H}^\tau \Vert = o(1)\). The bound \(\Vert H - H^\tau \Vert = o(1)\) follows from a detailed analysis of the graph \({\mathbb {G}} \setminus {\mathbb {G}}_\tau \) removed from \({\mathbb {G}}\) to obtain the pruned graph \({\mathbb {G}}_\tau \), which we decompose as a union of a graph of small maximal degree and a forest, to which standard estimates of adjacency matrices of graphs can be applied (see Lemma 3.8 below). To prove \(\Vert H^\tau - \widehat{H}^\tau \Vert = o(1)\), we first prove that \(\varvec{\mathrm {v}}^\tau _\sigma (x)\) is an approximate eigenvector of \(H^\tau \) with approximate eigenvalue \(\sigma \Lambda (\alpha _x)\) (see Proposition 3.9 below). Then we deduce \(\Vert H^\tau - \widehat{H}^\tau \Vert = o(1)\) using that the balls \(B_{2r_\star }(x)\), \(x \in {{\mathcal {V}}}_{2 + o(1)}\), are disjoint and the locality of the operator \(H^\tau \) (see Lemma 3.11 below). Thus we obtain (c).

Finally, we sketch the proof of (d). The starting point is an observation going back to [10, 15]: from an estimate on the spectral radius of the nonbacktracking matrix associated with H from [15] and an Ihara–Bass-type formula relating the spectra of H and its nonbacktracking matrix from [15], we obtain the quadratic form inequality \(|H | \leqslant I + Q + o(1)\) with very high probability, where \(Q = {{\,\mathrm{diag}\,}}(\alpha _x :x \in [N])\), \(|H |\) is the absolute value of the Hermitian matrix H, and o(1) is in the sense of operator norm (see Proposition 3.13 below). Using (c), we deduce the inequality

To estimate \(\Vert \overline{\Pi } \!\,^\tau H^\tau \overline{\Pi } \!\,^\tau \Vert \), we take a normalized eigenvector \(\varvec{\mathrm {w}}\) of \(\overline{\Pi } \!\,^\tau H^\tau \overline{\Pi } \!\,^\tau \) with maximal eigenvalue \(\lambda > 0\). Thus, \(\varvec{\mathrm {w}} \perp \varvec{\mathrm {v}}^\tau _\pm (x)\) for all \(x \in {{\mathcal {V}}}_{2 + o(1)}\). We estimate \(\overline{\Pi } \!\,^\tau H^\tau \overline{\Pi } \!\,^\tau \) from above (an analogous argument yields an estimate from below) using (2.5) to get

Choosing \(\tau = 1 + o(1)\), we see that (d) follows provided that we can show that

\(\sum _{x \in {{\mathcal {V}}}_\tau } w_x^2 = o(1/\log N),\)   (2.7)

since \(\max _x \alpha _x \leqslant {{\mathcal {C}}} \log N\) with very high probability.
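The inequality \(|H | \leqslant I + Q + o(1)\) used above rests on an Ihara–Bass-type formula from [15] linking H to its nonbacktracking matrix. That formula is a variant for the centred matrix and is not reproduced here. As an illustration of the mechanism, the NumPy sketch below checks the classical Ihara–Bass identity \(\det (I - tB) = (1 - t^2)^{m - n} \det (I - tA + t^2(\mathrm {Deg} - I))\) for the adjacency matrix A of a small graph with n vertices and m edges, where B is the (Hashimoto) nonbacktracking matrix on the 2m directed edges and Deg is the diagonal degree matrix. The graph (a triangle and a 4-cycle sharing the edge {0, 1}) and the values of t are arbitrary.

```python
import numpy as np


def nonbacktracking(A):
    """Hashimoto nonbacktracking matrix on the directed edges of a simple graph:
    B[(u->v), (v->w)] = 1 if w != u, and 0 otherwise."""
    n = A.shape[0]
    edges = [(u, v) for u in range(n) for v in range(n) if A[u, v]]
    index = {e: k for k, e in enumerate(edges)}
    B = np.zeros((len(edges), len(edges)))
    for u, v in edges:
        for w in range(n):
            if A[v, w] and w != u:
                B[index[(u, v)], index[(v, w)]] = 1.0
    return B


def ihara_bass_sides(A, t):
    """Both sides of det(I - tB) = (1 - t^2)^(m - n) det(I - tA + t^2 (Deg - I))."""
    n = A.shape[0]
    B = nonbacktracking(A)
    m = B.shape[0] // 2                        # number of undirected edges
    Deg = np.diag(A.sum(axis=1))               # diagonal degree matrix
    lhs = np.linalg.det(np.eye(2 * m) - t * B)
    rhs = (1.0 - t**2) ** (m - n) * np.linalg.det(np.eye(n) - t * A + t**2 * (Deg - np.eye(n)))
    return lhs, rhs


A = np.zeros((5, 5))
for a, b in [(0, 1), (1, 2), (2, 0), (0, 3), (3, 4), (4, 1)]:
    A[a, b] = A[b, a] = 1.0
```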

The estimate (2.7) is a delocalization bound, in the vertex set \({{\mathcal {V}}}_\tau \), for any eigenvector \(\varvec{\mathrm {w}}\) of \(\widehat{H}^\tau \) that is orthogonal to \(\varvec{\mathrm {v}}_\pm ^\tau (x)\) for all \(x \in {{\mathcal {V}}}_{2 + o(1)}\) and whose associated eigenvalue is larger than \(2 \tau + o(1)\). It crucially relies on the assumption that \(\varvec{\mathrm {w}} \perp \varvec{\mathrm {v}}_\pm ^\tau (x)\) for all \(x \in {{\mathcal {V}}}_{2 + o(1)}\), without which it is false (see Proposition 3.14 below). The underlying principle behind its proof is the same as that of the Combes–Thomas estimate [25]: the Green function \(((\lambda - Z)^{-1})_{ij}\) of a local operator Z at a spectral parameter \(\lambda \) separated from the spectrum of Z decays exponentially in the distance between i and j, at a rate that increases with the distance from \(\lambda \) to the spectrum of Z. We in fact use a radial form of a Combes–Thomas estimate, where Z is the tridiagonalization of a local restriction of \(\widehat{H}^\tau \) around a vertex \(x \in {{\mathcal {V}}}_\tau \) (see Appendix A.2) and i, j index radii of concentric spheres. The key observation is that, by the orthogonality assumption on \(\varvec{\mathrm {w}}\), the Green function \(((\lambda - Z)^{-1})_{i r_\star }\), \(0 \leqslant i < r_\star \), and the eigenvector components in the radial basis \(u_i\), \(0 \leqslant i < r_\star \), satisfy the same linear difference equation. Thus we obtain exponential decay for the components \(u_i\), which yields \(u_0^2 \leqslant o(1/\log N) \sum _{i = 0}^{r_\star } u_i^2\). Going back to the original vertex basis, this implies that \(w_x^2 \leqslant o(1/\log N) \Vert \varvec{\mathrm {w}}|_{B_{2r_\star }^{{\mathbb {G}}_\tau }(x)}\Vert ^2\) for all \(x \in {{\mathcal {V}}}_\tau \), from which (2.7) follows since the balls \(B_{2r_\star }^{{\mathbb {G}}_\tau }(x)\), \(x \in {{\mathcal {V}}}_\tau \), are disjoint.
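A minimal illustration of the Combes–Thomas principle, using the free tridiagonal operator Z with unit off-diagonal entries (spectrum in [−2, 2]) as a stand-in for the tridiagonalized operator of the text; this choice is an assumption made for simplicity. For \(\lambda > 2\), the row \(g_j = ((\lambda - Z)^{-1})_{0j}\) satisfies \(\lambda g_j = g_{j-1} + g_{j+1}\) for \(j \geqslant 1\), the same three-term recursion as a radial eigenvector, so up to exponentially small boundary corrections \(g_j = q^{j+1}\) with \(q + 1/q = \lambda \) and \(0< q < 1\). The farther \(\lambda \) lies from the spectrum, the smaller q and the faster the decay.

```python
import numpy as np


def green_row(lam, n=200, length=15):
    """|((lam - Z)^{-1})_{0j}| for j < length, where Z is the free tridiagonal
    matrix with unit off-diagonal entries (spectrum inside [-2, 2]) and lam > 2."""
    Z = np.diag(np.ones(n - 1), 1) + np.diag(np.ones(n - 1), -1)
    G = np.linalg.inv(lam * np.eye(n) - Z)
    return np.abs(G[0, :length])


decay = {}
for lam in (2.5, 2.1):
    row = green_row(lam)
    q = (lam - np.sqrt(lam**2 - 4.0)) / 2.0    # root of q + 1/q = lam with 0 < q < 1
    decay[lam] = (row[1:] / row[:-1], q)       # successive ratios G_{0,j+1}/G_{0,j}, and q
```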

2.3 Overview of proof in delocalized phase

The delocalization result of Theorem 1.8 is an immediate consequence of a local law for the matrix \(A / \sqrt{d}\), which controls the entries of the Green function

\(G(z) :=(A / \sqrt{d} - z)^{-1}, \qquad z \in {\mathbb {C}}, \ {{\,\mathrm{Im}\,}}z > 0,\)

in the form of high-probability estimates, for spectral scales \({{\,\mathrm{Im}\,}}z\) down to the optimal scale 1/N, which is the typical eigenvalue spacing. Such a local law was first established for \(d \gg (\log N)^6\) in [35] and extended down to \(d \geqslant {{\mathcal {C}}} \log N\) in [42]. In both of these works, the diagonal entries of G are close to the Stieltjes transform of the semicircle law. In contrast, in the regime (1.4) the diagonal entry \(G_{xx}\) is close to the Stieltjes transform of the spectral measure at the root of an infinite \((D_x,d)\)-regular tree. Hence, \(G_{xx}\) does not concentrate around a deterministic quantity.

The basic approach of the proof is the same as for any local law: derive an approximate self-consistent equation with very high probability, solve it using a stability analysis, and perform a bootstrapping from large to small values of \({{\,\mathrm{Im}\,}}z\). For a set \(T \subset [N]\) denote by \(A^{(T)}\) the adjacency matrix of the graph \({\mathbb {G}}\) where the vertices of T (and all incident edges) have been removed, and denote by \(G^{(T)} = \bigl (A^{(T)} / \sqrt{d} - z\bigr )^{-1}\) the associated Green function. In order to understand the emergence of the self-consistent equation, it is instructive to consider the toy situation where, for a given vertex x, all neighbours \(S_1(x)\) are in different connected components of \(A^{(x)}\). This is for instance the case if \({\mathbb {G}}\) is a tree. On the global scale, where \({{\,\mathrm{Im}\,}}z\) is large enough, this assumption is in fact valid to a good approximation, since the neighbourhood of x is with high probability a tree. Then a simple application of Schur’s complement formula and the resolvent identity yields

Thus, on the global scale, using that G is bounded, we obtain the self-consistent equation

with very high probability.
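On a tree, the Schur complement formula mentioned above gives an exact identity. With \(H = A/\sqrt{d}\) (zero diagonal), \(1/G_{xx} = -z - \sum _{y,y'} H_{xy} G^{(x)}_{yy'} H_{y'x}\), and the terms with \(y \ne y'\) vanish because distinct neighbours of x lie in different components once x is removed, leaving \(1/G_{xx} = -z - \frac{1}{d} \sum _{y \in S_1(x)} G^{(x)}_{yy}\). A NumPy check on a small, arbitrarily chosen tree:

```python
import numpy as np

# A small tree (illustrative): vertex 0 has neighbours 1, 2, 3; vertex 1 has
# further neighbours 4, 5; vertex 3 has a further neighbour 6.
edges = [(0, 1), (0, 2), (0, 3), (1, 4), (1, 5), (3, 6)]
n, d, z = 7, 2.0, 0.3 + 0.5j
A = np.zeros((n, n))
for u, v in edges:
    A[u, v] = A[v, u] = 1.0

G = np.linalg.inv(A / np.sqrt(d) - z * np.eye(n))             # G = (A/sqrt(d) - z)^{-1}

x = 0
keep = [v for v in range(n) if v != x]                         # remove x and its edges
Gx = np.linalg.inv(A[np.ix_(keep, keep)] / np.sqrt(d) - z * np.eye(n - 1))   # G^{(x)}
pos = {v: k for k, v in enumerate(keep)}
neighbours = [int(y) for y in np.flatnonzero(A[x])]            # S_1(x)

lhs = 1.0 / G[x, x]
rhs = -z - sum(Gx[pos[y], pos[y]] for y in neighbours) / d     # local average over S_1(x)
cross = max(abs(Gx[pos[a], pos[b]]) for a in neighbours for b in neighbours if a != b)
```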

It is instructive to solve the self-consistent equation (2.9) in the family \((G_{xx})_{x \in [N]}\) on the global scale. To that end, we introduce the notion of typical vertices, which is roughly the set \({{\mathcal {T}}} = \{x \in [N] :\alpha _x = 1 + o(1)\}\). (In fact, as explained below, the actual definition for local scales has to be different; see (2.12) below.) A simple argument shows that with very high probability most neighbours of any vertex are typical. With this definition, we can try to solve (2.9) on the global scale as follows. From the boundedness of G we obtain a self-consistent equation for the vector \((G_{xx})_{x \in {{\mathcal {T}}}}\) that reads

It is not hard to see that the equation (2.10) has a unique solution, which satisfies \(G_{xx} = m + o(1)\) for all \(x \in {{\mathcal {T}}}\). Here m is the Stieltjes transform of the semicircle law, which satisfies \(m = \frac{1}{-z - m}\). Plugging this solution back into (2.9) and using that most neighbours of any vertex are typical shows that for \(x \notin {{\mathcal {T}}}\) we have \(G_{xx} = m_{\alpha _x} + o(1)\), where \(m_\alpha :=\frac{1}{-z - \alpha m}\). One readily finds (see Appendix A.2 below) that \(m_{\alpha _x}\) is the Stieltjes transform of the spectral measure of the infinite \((D_x,d)\)-regular tree at the root.
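Both functions are easy to evaluate. The relation \(m = 1/(-z - m)\) is the quadratic \(m^2 + zm + 1 = 0\), whose two roots have product 1; for \({{\,\mathrm{Im}\,}}z > 0\) exactly one of them has positive imaginary part, and that one is the Stieltjes transform \(\int \rho (x)/(x - z) \, \mathrm {d}x\) of the semicircle density \(\rho (x) = \sqrt{4 - x^2}/(2\pi )\). A NumPy sketch (helper names illustrative), with a midpoint-rule cross-check of the integral:

```python
import numpy as np


def m_semicircle(z):
    """Stieltjes transform of the semicircle law at z with Im z > 0: the root of
    m^2 + z m + 1 = 0 (equivalently m = 1/(-z - m)) with Im m > 0."""
    roots = np.roots([1.0, z, 1.0])
    return roots[np.argmax(roots.imag)]


def m_alpha(z, alpha):
    """m_alpha = 1/(-z - alpha m), with m = m_semicircle(z)."""
    return 1.0 / (-z - alpha * m_semicircle(z))


z = 0.5 + 0.1j
m = m_semicircle(z)
# Midpoint rule for the integral of rho(x)/(x - z), rho(x) = sqrt(4 - x^2)/(2 pi).
K = 200_000
h = 4.0 / K
xs = -2.0 + (np.arange(K) + 0.5) * h
m_quadrature = np.sum(np.sqrt(4.0 - xs**2) / (2 * np.pi * (xs - z))) * h
```

Note that \(m_1 = m\), consistent with typical vertices having \(\alpha _x \approx 1\).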

The first main difficulty of the proof is to provide a derivation of identities of the form (2.8) (and hence a self-consistent equation of the form (2.9)) on the local scale \({{\,\mathrm{Im}\,}}z \ll 1\). We emphasize that the above derivation of (2.8) is completely wrong on the local scale. Unlike on the global scale, on the local scale the behaviour of the Green function is not governed by the local geometry of the graph, and long cycles contribute to G in an essential way. In particular, eigenvector delocalization, which follows from the local law, is a global property of the graph and cannot be addressed using local arguments; it is in fact wrong outside of the region \(\mathcal S_\kappa \), although the above derivation is insensitive to the real part of z.

We address this difficulty by replacing the identities (2.8) with the following argument, which ultimately provides an a posteriori justification of approximate versions of (2.8) with very high probability, provided we are in the region \({{\mathcal {S}}}_\kappa \). We make an a priori assumption that the entries of G are bounded with very high probability; we propagate this assumption from large to small scales using a standard bootstrapping argument and the uniform boundedness of the density of the spectral measure associated with \(m_\alpha \). It is precisely this uniform boundedness requirement that imposes the restriction to \({{\mathcal {S}}}_\kappa \) in our local law (as explained in Remark 1.10, this restriction is necessary). The key tool that replaces the simpleminded approximation (2.8) is a series of large deviation estimates for sparse random vectors proved in [42], which, as it turns out, are effective for the full optimal regime (1.4). Thus, under the bootstrapping assumption that the entries of G are bounded, we obtain (2.8) (and hence also (2.9)), with some additional error terms, with very high probability.

The second main difficulty of the proof is that, on the local scale and for sparse graphs, the self-consistent equation (2.10), which can be derived from (2.9) as explained above, is not stable enough to be solved in \((G_{xx})_{x \in {{\mathcal {T}}}}\). This problem stems from the sparseness of the graphs that we are considering, and does not appear in random matrix theory for denser (or even heavy-tailed) matrices. Indeed, the stability estimates of (2.10) carry a logarithmic factor, which is usually of no concern in random matrix theory but is deadly for the sparse regime of this paper. This is a major obstacle and in fact ultimately dooms the self-consistent equation (2.10). To explain the issue, write the sum in (2.10) as \(\sum _y S_{xy} G_{yy}\), where S is the \({{\mathcal {T}}} \times {{\mathcal {T}}}\) matrix \(S_{xy} = \frac{1}{d} A_{xy}\). Writing \(G_{xx} = m + \varepsilon _x\), plugging it into (2.10), and expanding to first order in \(\varepsilon _x\), we obtain, using the definition of m, that \(\varepsilon _x = -m^2 ((I - m^2 S)^{-1} \zeta )_x\). Thus, in order to deduce smallness of \(\varepsilon _x\) from the smallness of \(\zeta _x\), we need an estimate on the norm \(\Vert (I - m^2 S)^{-1} \Vert _{\infty \rightarrow \infty }\). In Appendix A.10 below we show that for typical S, \({{\,\mathrm{Re}\,}}z \in {{\mathcal {S}}}_\kappa \), and small enough \({{\,\mathrm{Im}\,}}z\), we have

for some universal constant C and some constant \(C_\kappa \) depending on \(\kappa \). In our context, where \(\zeta _x\) is small but much larger than the reciprocal of the lower bound of (2.11), such a logarithmic factor is not affordable.
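For contrast with (2.11), consider an unstructured S with constant entries 1/N, an illustrative choice mimicking the row normalization \(\sum _y S_{xy} \approx 1\) (it is not the paper's construction). Then S is a projection, \((I - m^2 S)^{-1} = I + \frac{m^2}{1 - m^2} S\), and its \(\infty \rightarrow \infty \) norm is at most \(1 + |m |^2 / |1 - m^2 |\), independently of N. The NumPy sketch checks the exact closed form of the maximal absolute row sum for two values of N.

```python
import numpy as np


def m_semicircle(z):
    """Root of m^2 + z m + 1 = 0 with Im m > 0 (Stieltjes transform of the semicircle law)."""
    roots = np.roots([1.0, z, 1.0])
    return roots[np.argmax(roots.imag)]


def inf_norm_of_inverse(S, z):
    """||(I - m^2 S)^{-1}||_{inf -> inf}, i.e. the maximal absolute row sum."""
    m = m_semicircle(z)
    R = np.linalg.inv(np.eye(S.shape[0]) - m**2 * S)
    return np.max(np.abs(R).sum(axis=1))


z = 0.5 + 1e-3j                       # Re z inside the bulk, small Im z
c = m_semicircle(z) ** 2
a = c / (1 - c)
norms = {}
for N in (50, 400):
    S = np.full((N, N), 1.0 / N)      # unstructured S: constant entries, rows summing to 1
    closed_form = abs(1 + a / N) + (N - 1) * abs(a) / N
    norms[N] = (inf_norm_of_inverse(S, z), closed_form)
```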

To address this difficulty, we avoid passing by the form (2.10) altogether, as it is doomed by (2.11). The underlying cause for the instability of (2.10) is the inhomogeneous local structure of the matrix S, which is a multiple of the adjacency matrix of a sparse graph. Thus, the solution is to derive a self-consistent equation of the form (2.10) but with an unstructured S, which has constant entries. The basic intuition is to replace the local average \(\frac{1}{d} \sum _{y \in S_1(x)} G_{yy}^{(x)}\) in the first identity of (2.8) with the global average \(\frac{1}{N} \sum _{y \ne x} G_{yy}^{(x)}\). Of course, in general these two are not close, but we can include their closeness into the definition of a typical vertex. Thus, we define the set of typical vertices as

The main work of the proof is then to prove the following facts with very high probability.

(a) Most vertices are typical.

(b) Most neighbours of any vertex are typical.

With (a) and (b) at hand, we explain how to conclude the proof. Using (a) and the approximate version of (2.8) established above, we deduce the self-consistent equation for typical vertices,

which, unlike (2.10), is stable (see Lemma 4.19 below) and can be easily solved to show that \(G_{xx} = m + o(1) = m_{\alpha _x} + o(1)\) for all \(x \in {{\mathcal {T}}}\). Moreover, if \(x \notin {{\mathcal {T}}}\) then we obtain from (2.8) and (b) that

where we used that \(G_{yy} = m + o(1)\) for \(y \in {{\mathcal {T}}}\). This shows that \(G_{xx} = m_{\alpha _x} + o(1)\) for all \(x \in [N]\) with very high probability, and hence concludes the proof.

What remains, therefore, is the proof of (a) and (b); see Proposition 4.8 below for a precise statement. Using the bootstrapping assumption of boundedness of the entries of G, it is not hard to estimate the probability \({\mathbb {P}}(x \in {{\mathcal {T}}})\), which we prove to be \(1 - o(1)\), although \(\{x \in {{\mathcal {T}}}\}\) does not hold with very high probability (this characterizes the critical and subcritical regimes). Now if the events \(\{x \in {{\mathcal {T}}}\}\), \(x \in [N]\), were all independent, it would then be a simple matter to deduce (a) and (b).

The most troublesome source of dependence among the events \(\{x \in {{\mathcal {T}}}\}\), \(x \in [N]\), is the Green function \(G_{yy}^{(x)}\) in the definition of \({{\mathcal {T}}}\). Thus, the main difficulty of the proof is a decoupling argument that yields a bound on the probability \({\mathbb {P}}(T \subset {{\mathcal {T}}}^c)\) that decays in the size of T. This decay persists only up to a threshold in the size of T, beyond which the correlations among the different events kick in. In fact, we essentially prove that

see Lemma 4.12. Choosing T as large as possible, \(|T | = o(d)\), we find that the first term on the right-hand side of (2.13) is bounded by \(N^{-\nu }\) provided that \(o(1) d^2 \geqslant \nu \log N\), which corresponds precisely to the optimal lower bound in (1.18). Using (2.13), we may deduce (a) and (b).
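The arithmetic behind this threshold can be made explicit. In the following computation, which is our own, \(\varepsilon _N \to 0\) stands for the o(1) factor and we write \(d = \omega _N \sqrt{\log N}\):

$$\begin{aligned} \varepsilon _N d^2 \geqslant \nu \log N \quad \iff \quad \varepsilon _N \omega _N^2 \geqslant \nu . \end{aligned}$$

If \(\omega _N \to \infty \), the choice \(\varepsilon _N :=\nu / \omega _N\) tends to zero and satisfies \(\varepsilon _N \omega _N^2 = \nu \omega _N \geqslant \nu \) as soon as \(\omega _N \geqslant 1\). If instead \(\omega _N\) stays bounded, then \(\varepsilon _N \omega _N^2 \to 0\) for every \(\varepsilon _N \to 0\), and the condition fails for large N. Hence an o(1) factor is admissible precisely when \(d \gg \sqrt{\log N}\).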

To prove (2.13), we need to decouple the events \(\{x \in {{\mathcal {T}}}\}\), \(x \in T\). We do so by replacing the Green functions \(G^{(x)}\) in the definition of \({{\mathcal {T}}}\) by \(G^{(T)}\), after which the corresponding events are essentially independent. The error that we incur depends on the difference \(G^{(T)}_{yy} - G_{yy}\), which we must show to be small with very high probability under the bootstrapping assumption that the entries of G are bounded. For T of fixed size, this follows easily from standard resolvent identities. However, for our purposes it is crucial that T can have size up to o(d), which requires a more careful quantitative analysis. As it turns out, \(G^{(T)}_{yy} - G_{yy}\) is small only up to \(|T | = o(d)\), which is precisely what we need to reach the optimal scale \(d \gg \sqrt{\log N}\) from (1.4).
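The following numerical sketch is our own illustration of the standard resolvent identity that removes one vertex at a time, \(G^{(k)}_{ij} = G_{ij} - G_{ik} G_{kj} / G_{kk}\) for \(i, j \ne k\), and checks that iterating it over a set T reproduces \(G^{(T)}\). It assumes NumPy, a dense sample of \({\mathbb {G}}(N,d/N)\) with the arbitrary parameters N = 200, d = 6, z = 0.5 + 0.1i, and, for simplicity, the uncentred matrix \(A/\sqrt{d}\) (the identity holds for any symmetric matrix). The helper names `resolvent` and `schur_remove` are hypothetical.

```python
import numpy as np

def resolvent(H, z, removed=()):
    """Return (H - z)^{-1} after deleting the rows and columns listed in `removed`."""
    removed = set(removed)
    keep = [i for i in range(H.shape[0]) if i not in removed]
    Hk = H[np.ix_(keep, keep)]
    return np.linalg.inv(Hk - z * np.eye(len(keep)))

def schur_remove(G, k):
    """Delete index k from a resolvent G using the identity
    G^{(k)}_{ij} = G_{ij} - G_{ik} G_{kj} / G_{kk} for i, j != k."""
    mask = np.arange(G.shape[0]) != k
    Gk = G - np.outer(G[:, k], G[k, :]) / G[k, k]
    return Gk[np.ix_(mask, mask)]

# Illustrative sample of G(N, d/N); N, d and z are arbitrary choices.
rng = np.random.default_rng(0)
N, d, z = 200, 6.0, 0.5 + 0.1j
A = np.triu((rng.random((N, N)) < d / N).astype(float), k=1)
A = A + A.T                       # symmetric adjacency matrix, no loops
H = A / np.sqrt(d)                # uncentred for simplicity

G = resolvent(H, z)
T = [7, 3, 0]                     # removed in decreasing order, so the indices
GT = G                            # still to be removed never shift
for k in T:
    GT = schur_remove(GT, k)
GT_direct = resolvent(H, z, removed=T)

# Largest change |G^{(T)}_{yy} - G_{yy}| over the surviving vertices y.
keep = [i for i in range(N) if i not in T]
diag_change = np.max(np.abs(np.diag(GT_direct) - np.diag(G)[keep]))
print(np.max(np.abs(GT - GT_direct)), diag_change)
```

Since \(\mathrm{Im}\, z > 0\), every diagonal entry \(G_{kk}\) has positive imaginary part, so the division is always well defined. The sketch only verifies the exact identity; the quantitative control of \(G^{(T)}_{yy} - G_{yy}\) for \(|T|\) up to o(d) is the genuinely delicate part of the argument.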

3 The Semilocalized Phase

In this section we prove the results of Sect. 1.2, namely Theorems 1.2 and 1.7.

3.1 The pruned graph and proof of Theorem 1.2

The balls \((B_r(x))_{x \in {{\mathcal {W}}}_{\lambda , \delta }}\) in Theorem 1.2 are in general not disjoint. For its proof, and in order to give a precise definition of the vector \(\varvec{\mathrm {v}}(x)\) in Theorem 1.2, we need to make these balls disjoint by pruning the graph \({\mathbb {G}}\). This is an important ingredient of the proof, and it will also allow us to state a more precise version of Theorem 1.2, namely Theorem 3.4 below. This pruning was previously introduced in [10]. It is performed by cutting edges from \({\mathbb {G}}\) in such a way that the balls \((B_r(x))_{x \in {{\mathcal {W}}}_{\lambda , \delta }}\) become disjoint for an appropriate radius, \(r = 2 r_\star \); the cuts are placed carefully so as to keep the number of removed edges small. This ensures that the pruned graph is close to the original graph in an appropriate sense. The pruned graph \({\mathbb {G}}_\tau \) depends on a parameter \(\tau > 1\), and its construction is the subject of the following proposition.

To state it, we introduce the following notation. For a subgraph \({\mathbb {G}}_\tau \) of \({\mathbb {G}}\) we abbreviate

Moreover, we define the set of vertices with large degrees

Proposition 3.1

(Existence of pruned graph). Let \(1 + \xi ^{1/2} \leqslant \tau \leqslant 2\) and \(d \leqslant 3 \log N\). There exists a subgraph \({\mathbb {G}}_\tau \) of \({\mathbb {G}}\) with the following properties.

(i) Any path in \({\mathbb {G}}_\tau \) connecting two different vertices in \({{\mathcal {V}}}_\tau \) has length at least \(4 r_{\star } +1\). In particular, the balls \((B_{2 r_{\star }}^{\tau }(x))_{x \in {{\mathcal {V}}}_\tau }\) are disjoint.

(ii) The induced subgraph \({\mathbb {G}}_\tau |_{B_{2 r_{\star }}^{\tau }(x)}\) is a tree for each \(x \in {{\mathcal {V}}}_\tau \).

(iii) For each edge in \({\mathbb {G}}\setminus {\mathbb {G}}_\tau \), there is at least one vertex in \({{\mathcal {V}}}_\tau \) incident to it.

(iv) For each \(x \in {{\mathcal {V}}}_\tau \) and each \(i \in {\mathbb {N}}\) satisfying \(1 \leqslant i \leqslant 2 r_{\star }\) we have \(S_i^{\tau }(x) \subset S_i(x)\).

(v) The degrees induced on [N] by \({\mathbb {G}}\setminus {\mathbb {G}}_\tau \) are bounded according to

$$\begin{aligned} \max _{x \in [N]} D_x^{{\mathbb {G}} \setminus {\mathbb {G}}_\tau } \leqslant {{\mathcal {C}}} \frac{\log N}{(\tau -1)^2d} \end{aligned}\tag{3.1}$$

with very high probability.

(vi) Suppose that \(\sqrt{\log N} \leqslant d\). For each \(x \in {{\mathcal {V}}}_\tau \) and all \(2 \leqslant i \leqslant 2 r_\star \), the bound

$$\begin{aligned} |S_{i}(x)\setminus S_{i}^{\tau }(x)|\leqslant {\mathcal {C}}\frac{\log N}{(\tau -1)^2}d^{i-2} \end{aligned}\tag{3.2}$$

holds with very high probability.

The proof of Proposition 3.1 is postponed to the end of this section, in Sect. 3.5 below. It is essentially [10, Lemma 7.2], the main difference being that (vi) is considerably sharper than its counterpart, [10, Lemma 7.2 (vii)]; this stronger bound is essential to cover the full optimal regime (1.4) (see Sect. 1.5). As a guide for the reader’s intuition, we recall the main idea of the pruning. First, for every \(x \in {{\mathcal {V}}}_\tau \), we make the \(2 r_\star \)-neighbourhood of x a tree by removing appropriate edges incident to x. Second, we take all paths of length less than \(4 r_\star + 1\) connecting different vertices in \({{\mathcal {V}}}_\tau \), and remove all of their edges incident to any vertex in \({{\mathcal {V}}}_\tau \). Note that only edges incident to vertices in \({{\mathcal {V}}}_\tau \) are removed. This informal description already explains properties (i)–(iv). Properties (v) and (vi) are probabilistic in nature, and express that with very high probability the pruning has a small impact on the graph. See also Lemma 3.8 below for a statement in terms of operator norms of the adjacency matrices. For the detailed algorithm, we refer to the proof of [10, Lemma 7.2].
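The second step of the informal description can be sketched in code. The following is a deliberately naive greedy variant written for illustration only, not the algorithm of [10]: it repeatedly finds a vertex of \({{\mathcal {V}}}_\tau \) (the hypothetical argument `special`) within distance \(4 r_\star \) of another one and cuts the edge of a shortest connecting path at its endpoint. It therefore only targets properties (i) and (iii); it does not enforce the tree property (ii), and it may cut more edges than the careful construction, so it says nothing about the bounds (v) and (vi). Graphs are assumed to be given as a dict mapping each vertex to a set of neighbours; the function names are hypothetical.

```python
from collections import deque

def bfs_tree(adj, source, max_dist):
    """BFS from `source` up to distance `max_dist`; maps vertex -> parent."""
    parent = {source: None}
    dist = {source: 0}
    queue = deque([source])
    while queue:
        u = queue.popleft()
        if dist[u] == max_dist:
            continue
        for w in adj[u]:
            if w not in parent:
                parent[w] = u
                dist[w] = dist[u] + 1
                queue.append(w)
    return parent

def naive_prune(adj, special, r):
    """Greedy simplification of the pruning idea (NOT the algorithm of [10]):
    cut edges until every path between distinct vertices of `special` has
    length >= 4r + 1. Every cut edge is incident to a vertex of `special`."""
    adj = {u: set(nbrs) for u, nbrs in adj.items()}  # leave the input untouched
    special_set = set(special)
    removed = []
    changed = True
    while changed:
        changed = False
        for x in special:
            parent = bfs_tree(adj, x, 4 * r)
            # BFS order, so close[0] is a nearest other special vertex.
            close = [y for y in parent if y != x and y in special_set]
            if not close:
                continue
            # Walk back along a shortest path from y to x; cut its edge at x.
            v = close[0]
            while parent[v] != x:
                v = parent[v]
            adj[x].discard(v)
            adj[v].discard(x)
            removed.append((x, v))
            changed = True
    return adj, removed
```

For example, on the path 0 - 1 - 2 - 3 - 4 with `special = [0, 4]` and `r = 1`, the two special vertices are at distance 4 = 4r, so the single edge (0, 1) is cut; on a path of length 5 nothing is removed. Each pass removes at least one edge, so the loop terminates.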

Using the pruned graph \({\mathbb {G}}_\tau \), we can give a more precise formulation of Theorem 1.2, where the localization profile vector \(\varvec{\mathrm {v}}(x)\) from Theorem 1.2 is explicit. For its statement, we introduce the set of vertices

around which a localization profile can be defined.

Definition 3.2

(Localization profile). Let \(1 + \xi ^{1/2} \leqslant \tau \leqslant 2\) and \({\mathbb {G}}_\tau \) be the pruned graph from Proposition 3.1. For \(x \in {{\mathcal {V}}}\) we introduce positive weights \(u_0(x), u_1(x), \dots , u_{r_\star }(x)\) as follows. Set \(u_0(x) > 0\) and define, for \(i = 1, \dots , r_\star - 1\),

For \(\sigma = \pm \) we define the radial vector

and choose \(u_0(x) > 0\) such that \(\varvec{\mathrm {v}}^\tau _\sigma (x)\) is normalized.

Remark 3.3

The family \((\varvec{\mathrm {v}}_\sigma ^\tau (x) :x \in {{\mathcal {V}}}, \,\sigma = \pm )\) is orthonormal. Indeed, if \(x,y \in {{\mathcal {V}}}\) are distinct, then by Proposition 3.1 (i) the vectors \(\varvec{\mathrm {v}}^\tau _{\sigma }(x)\) and \(\varvec{\mathrm {v}}^\tau _{{\tilde{\sigma }}}(y)\) are orthogonal for any \(\sigma , {\tilde{\sigma }} = \pm \) because they are supported on disjoint sets of vertices. Moreover, \(\varvec{\mathrm {v}}^\tau _+(x)\) and \(\varvec{\mathrm {v}}^\tau _-(x)\) are orthogonal by the choice of \(u_{r_\star }(x)\) from (3.4), as can be seen by a simple computation.
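To make Definition 3.2 and Remark 3.3 concrete, here is a sketch of our own computation on the idealized tree (root with \(\alpha d\) children, every other vertex with d children), not a transcription of (3.4). It assumes that \(\varvec{\mathrm {v}}^\tau _\sigma (x) = \sum _i \sigma ^i u_i(x) \varvec{\mathrm {1}}_{S_i(x)} / |S_i(x)|^{1/2}\) and that \(\Lambda (\alpha ) = \alpha / \sqrt{\alpha - 1}\). Under these assumptions the weights follow the tree eigenvector recursion for \(1 \leqslant i \leqslant r_\star - 1\), and the orthogonality of \(\varvec{\mathrm {v}}_+\) and \(\varvec{\mathrm {v}}_-\), i.e. \(\sum _i (-1)^i u_i^2 = 0\), fixes the last weight. The function names are hypothetical.

```python
import math

def Lambda(alpha):
    """Assumed form Lambda(alpha) = alpha / sqrt(alpha - 1) for alpha >= 2."""
    return alpha / math.sqrt(alpha - 1)

def radial_weights(alpha, r):
    """Normalized weights u_0, ..., u_r on the spheres S_0, ..., S_r of an
    idealized tree whose root has alpha*d children and whose other vertices
    have d children (assumptions: alpha > 2, r >= 3)."""
    if alpha <= 2 or r < 3:
        raise ValueError("need alpha > 2 and r >= 3")
    # Tree eigenvector recursion for i = 1, ..., r - 1 (starting from u_0 = 1).
    u = [1.0] + [math.sqrt(alpha) * (alpha - 1) ** (-i / 2) for i in range(1, r)]
    # The partial sums of (-1)^i u_i^2 telescope to (-1)^(r-1) (alpha-1)^(-(r-1)),
    # so orthogonality of v_+ and v_- (sum_i (-1)^i u_i^2 = 0) fixes u_r as:
    u.append((alpha - 1) ** (-(r - 1) / 2))
    norm = math.sqrt(sum(x * x for x in u))
    return [x / norm for x in u]

def radial_residual(alpha, u, lam):
    """Max |(H u)_i - lam * u_i| over 0 <= i <= r - 2 for the radial chain
    H s_0 = sqrt(alpha) s_1, H s_1 = sqrt(alpha) s_0 + s_2,
    H s_i = s_{i-1} + s_{i+1} (i >= 2), with s_i the normalized sphere indicators.
    The equation at i = r - 1 is excluded because u_r is set by orthogonality."""
    r = len(u) - 1
    res = [abs(math.sqrt(alpha) * u[1] - lam * u[0]),
           abs(math.sqrt(alpha) * u[0] + u[2] - lam * u[1])]
    res += [abs(u[i - 1] + u[i + 1] - lam * u[i]) for i in range(2, r - 1)]
    return max(res)

u = radial_weights(3.0, 30)
```

For \(\alpha = 3\) the weights decay by the factor \(1/\sqrt{2}\) per sphere, the interior eigenvalue equations hold with eigenvalue \(\Lambda (3) = 3/\sqrt{2}\), and \(u_0^2\) is close to 1/4.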

The following result is a more precise version of Theorem 1.2, in which \(\varvec{\mathrm {v}}(x)\) is identified as \(\varvec{\mathrm {v}}_+^\tau (x)\) from (3.5). It easily implies Theorem 1.2, and the rest of this section is devoted to its proof.

Theorem 3.4

The following holds with very high probability. Suppose that d satisfies (1.10). Let \(\varvec{\mathrm {w}}\) be a normalized eigenvector of \(A/\sqrt{d}\) with nontrivial eigenvalue \(\lambda \geqslant 2+ {{\mathcal {C}}} \xi ^{1/2}\). Choose \(0<\delta \leqslant (\lambda -2)/2\) and set \(\tau :=1 + ((\lambda -2)/8\wedge 1)\). Then

Remark 3.5

An analogous result holds for negative eigenvalues \(-\lambda \), where \(\lambda \) is as in Theorem 3.4 and \(\varvec{\mathrm {v}}_+^\tau (x)\) in (3.6) is replaced with \(\varvec{\mathrm {v}}_-^\tau (x)\).

For the motivation behind Definition 3.2, we refer to the discussion in Sect. 2.2 and Appendix A.2. As explained there, if \({\mathbb {G}}_\tau \) is sufficiently close to the infinite tree \({\mathbb {T}}_{D_x, d}\) in a ball of radius \(r_\star \) around x, and if \(r_\star \) is large enough for \(u_{r_\star }(x)\) to be very small, we expect (3.5) to be an approximate eigenvector of A. This turns out to be true; see Proposition 3.9 below. That \(r_\star \) is indeed large enough is easy to see: the definition of \(r_\star \) in (1.8) and the bound \(\xi \geqslant 1/d\) imply that, for \(\alpha _x\geqslant 2+ C (\log d)^2 / \sqrt{\log N}\), we have

This means that the last element of the sequence \((u_i(x))_{i=0}^{r_\star }\) is bounded by \(\xi \). Note that the lower bound on \(\alpha _x\) imposed above always holds for \(x \in {{\mathcal {V}}}\), since, by (1.10),

Fig. 6. An illustration of the three sets of vertices of increasing size that enter into the proof of Theorem 3.4. Each vertex x is plotted as a dot at its normalized degree \(\alpha _x\). The largest set is \({{\mathcal {V}}}_{\tau }\) from Proposition 3.1, where \(1 + \xi ^{1/2} \leqslant \tau \leqslant 2\); it is used to define the pruned graph \({\mathbb {G}}_\tau \). The intermediate set is \({{\mathcal {V}}} \equiv {{\mathcal {V}}}_{2 + \xi ^{1/4}}\) from (3.3); it is the set of vertices for which we can define the localization profile vector \(\varvec{\mathrm {v}}(x)\) that decays exponentially around x. The smallest set \({{\mathcal {W}}}_{\lambda ,\delta } = \Lambda ^{-1}([\lambda - \delta , \lambda + \delta ])\) is the set of resonant vertices at energy \(\lambda \).

As a guide to the reader, Fig. 6 summarizes the three main sets of vertices used in the proof of Theorem 3.4. We conclude this subsection by proving Theorem 1.2 and Corollary 1.6 using Theorem 3.4.

Proof of Theorem 1.2

The first claim follows immediately from Theorem 3.4, with \(\varvec{\mathrm {v}}(x) = \varvec{\mathrm {v}}^\tau _+(x)\). To verify the claim about the exponential decay of \(\varvec{\mathrm {v}}\), we note that the graph distance in \({\mathbb {G}}\) is bounded by the graph distance in \({\mathbb {G}}_\tau \), which implies

from which the claim easily follows using the definition (3.4). \(\quad \square \)

Proof of Corollary 1.6

We decompose \(\varvec{\mathrm {w}} = \sum _{x \in {{\mathcal {W}}}_{\lambda , \delta }} \langle \varvec{\mathrm {v}}_+^\tau (x) , \varvec{\mathrm {w}} \rangle \, \varvec{\mathrm {v}}_+^\tau (x) + \varvec{\mathrm {e}}\), where \(\varvec{\mathrm {e}}\) is orthogonal to \({{\,\mathrm{Span}\,}}\{\varvec{\mathrm {v}}_+^\tau (x) :x \in {{\mathcal {W}}}_{\lambda , \delta }\}\). By Theorem 3.4 we have \(\Vert \varvec{\mathrm {e}} \Vert \leqslant \frac{{{\mathcal {C}}} (\xi +\xi _{\tau -1})}{\delta }\) and

Moreover, since \(\lambda - \delta \geqslant 2 \geqslant \tau \), we have \(\mathcal W_{\lambda ,\delta } \subset {{\mathcal {V}}}_\tau \), so that Proposition 3.1 (i) implies \((\varvec{\mathrm {v}}^\tau _+(x))_y = \delta _{xy} u_0(x)\) for \(x,y \in {{\mathcal {W}}}_{\lambda , \delta }\). Thus we have

Since \(u_0(y)\) was chosen such that \(\varvec{\mathrm {v}}_+^\tau (y) \) is normalized, we find

Let \(\alpha :=\Lambda ^{-1}(\lambda ) \geqslant 2\), where \(\Lambda ^{-1}\) denotes the inverse of \(\Lambda \) restricted to \([2, \infty )\). Since \(|\Lambda (\alpha _y)-\lambda |\leqslant \delta \) for \(y\in {{\mathcal {W}}}_{\lambda , \delta }\), we obtain

where we used that \(\lambda \pm \delta - 2 \asymp \lambda - 2\). Since \(\frac{\mathrm {d}}{\mathrm {d}\alpha } \frac{\alpha - 2}{2 (\alpha - 1)} = \frac{1}{2(\alpha - 1)^2} \asymp \lambda ^{-4}\), we find

where we used (3.7) and the upper bound on \(\delta \) in the last step. By an elementary computation,

and the claim hence follows by recalling (3.7) and plugging (3.9) and (3.11) into (3.10). \(\quad \square \)
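The function \(\frac{\alpha - 2}{2 (\alpha - 1)}\) appearing above has a simple heuristic origin, recorded here as our own consistency check rather than as part of the proof. On the idealized infinite tree whose root has \(\alpha d\) children and whose other vertices have d children, the radial weights of the eigenvector with eigenvalue \(\alpha / \sqrt{\alpha - 1}\) (which we assume is the form of \(\Lambda (\alpha )\)) satisfy \(u_i = \sqrt{\alpha } (\alpha - 1)^{-i/2} u_0\) for \(i \geqslant 1\), so that for \(\alpha > 2\) normalization gives

$$\begin{aligned} 1 = \sum _{i \geqslant 0} u_i^2 = u_0^2 \biggl (1 + \alpha \sum _{i \geqslant 1} (\alpha - 1)^{-i}\biggr ) = u_0^2 \biggl (1 + \frac{\alpha }{\alpha - 2}\biggr ) = u_0^2 \, \frac{2 (\alpha - 1)}{\alpha - 2} . \end{aligned}$$

Thus \(u_0^2 = \frac{\alpha - 2}{2 (\alpha - 1)}\). Moreover, for \(\alpha \geqslant 2\) one has \(\sqrt{\alpha } \leqslant \alpha / \sqrt{\alpha - 1} \leqslant \sqrt{2 \alpha }\), so that \(\alpha \asymp \Lambda (\alpha )^2\) and hence \((\alpha - 1)^{-2} \asymp \Lambda (\alpha )^{-4}\), consistent with the comparison \(\frac{1}{2(\alpha - 1)^2} \asymp \lambda ^{-4}\) used in the proof.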

3.2 Block diagonal approximation of pruned graph and proof of Theorems 3.4 and 1.7

We now introduce the adjacency matrix of \({\mathbb {G}}_\tau \) and a suitably defined centred version. Then we define a block diagonal approximation of this matrix, called \(\widehat{H}^\tau \) in (3.16) below, which is the central construction of our proof.

Definition 3.6

Let \(A^\tau \) be the adjacency matrix of \({\mathbb {G}}_\tau \). Let \(H :=\underline{A} \!\, / \sqrt{d}\) and \(H^\tau :=\underline{A} \!\,^\tau / \sqrt{d}\), where

and \(\chi ^\tau \) is the orthogonal projection onto \({{\,\mathrm{Span}\,}}\{ \varvec{\mathrm {1}}_y :y \notin \bigcup _{x \in {{\mathcal {V}}}_\tau } B_{2 r_\star }^\tau (x)\}\).

The definition of \(\underline{A} \!\,^\tau \) is chosen so that (i) \(\underline{A} \!\,^\tau \) is close to \(\underline{A} \!\,\) provided that \(A^\tau \) is close to A, since the kernel of \(\chi ^\tau \) has a relatively low dimension, and (ii) when restricted to vertices at distance at most \(2 r_\star \) from \(\mathcal V_\tau \), the matrix \(\underline{A} \!\,^\tau \) coincides with \(A^\tau \). In fact, property (i) is made precise by the simple estimate

with very high probability (see [10, Eq. (8.17)] for details). Property (ii) means that \(\underline{A} \!\,^\tau \) inherits the locality of the matrix A: applying \(\underline{A} \!\,^\tau \) to a vector supported in a small enough neighbourhood of \(\mathcal V_\tau \) again yields a vector localized in space. This property will play a crucial role in the proof, and it can be formalized as follows.

Remark 3.7

Let \(i + j \leqslant 2 r_\star \). Then for any \(x \in {{\mathcal {V}}}_\tau \) and vector \(\varvec{\mathrm {v}}\) we have