Abstract

We investigate the long-term behavior of a random walker evolving on top of the simple symmetric exclusion process (SSEP) at equilibrium, in dimension one. At each jump, the random walker is subject to a drift that depends on whether it is sitting on top of a particle or a hole, so that its asymptotic behavior is expected to depend on the density \(\rho \in [0, 1]\) of the underlying SSEP. Our first result is a law of large numbers (LLN) for the random walker for all densities \(\rho \) except for at most two values \(\rho _-, \rho _+ \in [0, 1]\). The asymptotic speed we obtain in our LLN is a monotone function of \(\rho \). Also, \(\rho _-\) and \(\rho _+\) are characterized as the two points at which the speed may jump to (or from) zero. Furthermore, for all densities at which the random walk has a non-zero speed, we prove that it satisfies a functional central limit theorem (CLT). In the special case where the density is 1/2 and the jump distributions on an empty site and on an occupied site are symmetric to each other, we prove an LLN with zero limiting speed. We also prove similar LLN and CLT results for a different environment, given by a family of independent simple symmetric random walks in equilibrium.



1 Introduction

Over the last decades, the study of the long-term behavior of the position of a particle subject to the influence of a random environment has received great attention from the physics and mathematics communities. In this context, one is usually interested in proving the existence of a well-defined limiting speed for the particle and, once such a speed is known to exist, in characterizing its fluctuations around the average position.

The random environment can be either static or dynamic depending on whether it is kept fixed or evolves stochastically after the initial configuration is sampled from a given distribution.

For one-dimensional static random environments, since the pioneering work of Solomon [Sol75], criteria for recurrence or transience, laws of large numbers, central limit theorems, anomalous fluctuation regimes and large deviations have been obtained, see for instance [Sol75, KKS75, Sin82]. For higher-dimensional static environments, important progress has also been achieved, although our knowledge is still modest compared to the one-dimensional setting (see for instance [Szn04, BDR14] among many others). A number of important questions remain open and much is still to be understood. We refer the reader to [HMZ12, Szn04] and, more recently, [DR14] for very good reviews on the topic.

The research on random walks on dynamic random environments (RWDRE) was initiated more recently and a number of works have been dedicated to these models, proving laws of large numbers (LLN), central limit theorems (CLT) and deviation bounds. We provide a short historical background of these works in Sect. 1.1.

Several of the techniques developed for RWDRE focus on environments satisfying either fast or uniform mixing conditions, see for example [CZ04, RV13, BHT18]. From a physical perspective, whenever the environment mixes fast, one expects the random walk to present diffusive behavior.

Another important class of RWDRE that has received much attention consists of walks evolving on top of conservative particle systems such as the simple symmetric exclusion process, see [HS15, BR16, HHSST15] and Sect. 1.1 for a discussion. To the best of our knowledge, all these works have focused on ballistic and perturbative regimes, as we explain in Sect. 1.1. In this context, the random walker overtakes the particles of the environment, which allows a renewal structure to be established. As a consequence, the behavior of the random walker in these regimes is also characterized by Gaussian fluctuations and a CLT.

However, it is not clear whether this diffusive behavior persists over the whole range of parameters of the model. In fact, in [AT12], simulations indicate that, when the random walk has zero speed, it can display non-diffusive fluctuations due to the long-term memory of the environment along the time direction. In [Huv18], diffusivity and trapping effects are predicted both from theoretical physics arguments and from simulations. However, rigorously determining the asymptotic behavior of this model remains a fascinating open problem in mathematics.

The existence of long-range dependencies not only brings a set of interesting challenges from the mathematical perspective but, more importantly, also raises the possibility of finding non-diffusive behavior and other physically relevant phenomena in the critical zero-speed regime. This is a major motivation for further investigation, both at the critical value and in its neighborhood. Let us now describe the setting we consider and present the advances we obtain on this problem.

In this work we consider one-dimensional random walks on top of conservative particle systems starting at equilibrium with density \(\rho > 0\). Although some of the techniques we develop may, in principle, be adapted to other models, we will focus on the case where the environment is either the simple symmetric exclusion process (SSEP), whose law will be denoted \(\mathbf {P}^\rho _{EP}\), or a system of independent random walks (PCRW) starting from a Poisson product measure, whose law will be denoted \(\mathbf {P}^\rho _{RW}\). We postpone the mathematical construction of the environments to Sect. 2.1, where we also make precise the meaning of the density \(\rho \) in each case.

Once one of these environments is fixed, we define the evolution of the random walk as follows. Fix two parameters \(p_\circ \) and \(p_\bullet \) in [0, 1] with \(p_\circ \le p_\bullet \). The random walk starts at the origin and jumps in discrete time. At the moment of a jump, it inspects the environment exactly at the site where it lies. If the site is vacant, the random walk jumps to the right with probability \(p_{\circ }\) and to the left with probability \(1-p_{\circ }\). If the site is occupied, the random walk jumps to the right with probability \(p_{\bullet }\) and to the left with probability \(1-p_{\bullet }\). We denote by \((X_n)_{n\in \mathbb {N}}\) the trajectory of the random walk. We revisit the definition of the random walk in Sect. 2.2, and a useful graphical construction is provided in Sect. 3.1.

We will write \(\mathbb {P}^{\rho }\) for the joint law of the environment and the random walker when the density of the environment is \(\rho \), often called the “annealed law”. The details on the construction of this measure are given in Sect. 2.

The main contribution of this paper is to develop a technique that allows one to prove LLN and CLT for random walks on a class of dynamical random environments that includes the simple symmetric exclusion process (SSEP) and the Poisson cloud of independent simple symmetric random walkers (PCRW).

For these two specific models, we use lateral space–time mixing bounds together with a decoupling inequality involving small changes in the density (sprinkling) in order to prove a LLN, i.e. the existence of an asymptotic speed, for all densities \(\rho \in (0,1)\) except at most two values denoted \(\rho _-\) and \(\rho _+\). We will provide a characterization of these two possible exceptional densities. Moreover, we are able to prove a CLT for all densities for which the speed exists and is non-zero. This is the content of our main result, Theorem 2.1. A simplified version of it is stated below.

Theorem 1.1

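There exists a deterministic non-decreasing function \(v:[0,1] \rightarrow \mathbb {R}\) and two points \(0\le \rho _- \le \rho _+ \le 1\) such that, for every \(\rho \in (0,1) \setminus \{\rho _-,\rho _+\}\),

\[ \lim_{n\to\infty} \frac{X_n}{n} = v(\rho), \qquad \mathbb{P}^{\rho}\text{-almost surely}. \tag{1.1} \]

Moreover, for every \(\rho \not \in [\rho _-, \rho _+]\), we have that \(v(\rho ) \ne 0\) and the process

\[ \left( \frac{X_{\lfloor nt\rfloor} - \lfloor nt\rfloor\, v(\rho)}{\sqrt{n}} \right)_{t\ge 0} \]

converges in law to a non-degenerate Brownian motion.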

Theorem 1.1 gives partial answers to Conjectures 3.1 and 3.2 of [AT12] and to open problems stated in [HKS14, San14]. Implicit formulas for \(\rho _-\) and \(\rho _+\) can be found in Theorem 2.1. It is natural to expect that \(\rho _-\) and \(\rho _+\) coincide, but whether they do is an interesting open problem: a priori they could be different, which would indicate a transient regime with zero speed, reminiscent of random walks in (static) random environments. Also, we are currently unable to determine whether anomalous fluctuations take place for some values of \(\rho \) inside the interval \([\rho _-,\rho _+]\).

In the interesting case where the random walk evolves on the simple symmetric exclusion process with density \(\rho =1/2\) and \(p_\bullet =1-p_\circ \), the speed exists and is equal to 0, as stated in Theorem 2.2. As far as we are aware, whether a law of large numbers holds in this symmetric zero-speed case was still an open question.

The choice to study the speed of the walk as a function of the density \(\rho \) of the environment may seem arbitrary, and one could instead study it as a function of another parameter, for instance the rate of the underlying particle system. This was done in [HS15], where the authors established the very interesting phenomenon that, under some drift condition, when the rate is very low, the walk essentially sees a frozen environment and roughly behaves like a random walk in random environment (with the difference that no zero-speed regime seems to hold), whereas when the rate is very high, the walker essentially sees a fresh environment at each step and thus behaves in a Markovian way. We cannot translate our result to the speed seen as a function of the rate. The crucial step where we need to vary the density is the use of sprinkling in order to obtain decoupling inequalities (see Proposition 7.1). It is not at all clear whether an analogous property would hold for the speed as a function of the rate since, to start with, there is no clear reason why the speed should be a monotone function of the rate.

1.1 Related work

The first works dedicated to the study of RWDRE focused on the case where the underlying medium exhibits fast mixing. A broad range of such conditions has been considered, such as time independence [BMP97, BMP00], strong mixing conditions [CZ04, AHR11, CZ05], exponential mixing rates [HS14, Bet18, MV15, KO05, ABF17] and fast decay of covariances [BHT18]. In all the above circumstances, one expects the random walk to exhibit Gaussian fluctuations and to satisfy a functional central limit theorem.

There have been developments on random walks on top of conservative particle systems, such as the SSEP [ASV13, HS15] or the PCRW [BR16, HKS14, HHSST15, BHSST19, BHSST17]. Most of the results therein hold in regimes that are perturbative in some parameter: the density of the environment [HKS14, HHSST15, BHT18, BHSST19, BHSST17], the rate of evolution of the environment [HS15] or the local drift experienced by the random walk [ASV13]. By perturbative in some parameter, we mean that this parameter has to be taken sufficiently close to an extreme value. The main idea is that, knowing that the random walk is ballistic in the limiting case, one tries to prove that it remains ballistic as the parameters of the model approach the limiting values. From the ballistic behavior, LLN and CLT are usually obtained with renewal techniques.

In [AT12], a (continuous-time) random walk on the SSEP was studied by means of simulations. There the authors investigated the limiting behavior as a function of three parameters: the density \(\rho \) of the SSEP, the rate \(\gamma \) of the SSEP and the local drift \(p_\bullet \) of the random walk on occupied sites. They restrict themselves to the case where the local drift on vacant sites satisfies \(p_\circ = 1-p_\bullet \).

Based on their data, they conjecture that the LLN should hold for every possible value of \(\rho \). They also conjecture that it is possible to tune the parameters in order to produce regimes in which the fluctuations of the walker around its limiting speed scale super- or sub-diffusively. This phenomenon should be regarded as a manifestation of the strong space–time correlations of the environment, which allow, for instance, the existence of traps that survive long enough to be relevant in the long-term behavior. They also leave as an interesting open question to determine whether there are regimes where the walk can be transient with zero speed, which would be reminiscent of similar phenomena that take place for random walks in static random environments.

To the best of our knowledge, the conjectures and open questions presented in [AT12] concerning the behavior of the random walker at or near the zero-speed regime have remained largely open and deserve to be further investigated. We also refer the reader to [Huv18] for a non-rigorous investigation of the possible trapping mechanisms.

1.2 Overview of the proof

In Sect. 3, we provide a sequence of statements that build the key steps towards the proofs of our main results, Theorems 2.1 and 2.2. Here, we give a rough description of the overall strategy and of the tools we use.

The first step, carried out in Sect. 3.1, is a graphical construction of the process, which will be very useful to exhibit some monotonicity properties. From the graphical construction, we obtain a two-dimensional space–time picture on which we define a collection of coupled random walks started from each point of space and time. We observe events in boxes, which are simply finite regions in this space–time landscape.

Most of our proofs are based on renormalization schemes. The idea is to observe some events on larger and larger boxes and to prove that, if some bad event happens at some scale, then similar events happen in two different boxes at a smaller scale. If these two boxes were independent, then one could obtain (given an initial estimate) that the probability of the bad event decays exponentially fast in the size of the box. Nevertheless, in our case, the boxes are not independent, as the dynamical nature of the environment creates time and space dependencies. The key observation is the following: as the particles of the environment move diffusively, if the space separation between the boxes is at least D and the time separation is not larger than \(D^2\), then we should be able to prove that these boxes are essentially independent, see Proposition 4.1. This is what we call lateral decoupling, referring to the relative positions of the boxes in the space–time landscape.
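To see why splitting a bad event into two bad events at a smaller scale yields fast decay, consider the idealized recursion \(p_{k+1} = C\,p_k^2\) that would follow if the two smaller boxes were exactly independent. The Python sketch below iterates it; the constant `C`, the scale ratio `ell` and the initial scale `L0` are hypothetical, and the actual scheme also has to absorb the decoupling error terms of Proposition 4.1, which this toy ignores.

```python
import math


def iterate_bad_probability(p0: float, C: float, k_max: int) -> list[float]:
    """Iterate the idealized recursion p_{k+1} = C * p_k**2 (C is hypothetical)."""
    probs = [p0]
    for _ in range(k_max):
        probs.append(C * probs[-1] ** 2)
    return probs


def closed_form(p0: float, C: float, k: int) -> float:
    """Exact solution of the recursion: p_k = (C * p0) ** (2 ** k) / C."""
    return (C * p0) ** (2 ** k) / C


def decay_in_box_size(p0: float, C: float, L: float, L0: float, ell: float) -> float:
    """Same bound written in terms of the box size L = L0 * ell**k.

    Since 2**k = (L / L0) ** (log 2 / log ell), the bound is a stretched
    exponential in L, and a genuine exponential when ell = 2.
    """
    exponent = (L / L0) ** (math.log(2) / math.log(ell))
    return (C * p0) ** exponent / C


if __name__ == "__main__":
    p0, C, ell, L0 = 0.01, 10.0, 4.0, 100.0  # C * p0 < 1 plays the role of the initial estimate
    for k, p in enumerate(iterate_bad_probability(p0, C, 5)):
        print(f"scale {k}: box size {L0 * ell**k:>8.0f}, p_k = {p:.3e}")
```

The initial estimate \(C\,p_0<1\) is essential: once it holds, the bound collapses doubly exponentially in the scale index, while for \(C\,p_0\ge 1\) the recursion gives nothing.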

There are two quantities that are important in our analysis: \(v_+(\rho )\) and \(v_-(\rho )\), defined in (3.19) and (3.22). These upper and lower speeds are deterministic and well-defined for every value of \(\rho \). Roughly speaking, the probability to move at speeds larger than \(v_+(\rho )\) should go to zero along a subsequence (and similarly for the probability to go slower than \(v_-(\rho )\)).

We then need to prove two facts in order to conclude the existence of the speed:

(1) the probability to go faster than \(v_+(\rho )\) or slower than \(v_-(\rho )\) over a time t actually decreases fast enough;

(2) \(v_+(\rho )=v_-(\rho )\), which we define as being our candidate \(v(\rho )\) for the speed.

These two points would indeed imply (1.1), proving the existence of a limiting speed for the walker. Nevertheless, due to the nature of the lateral decoupling, we are only able to decouple nicely events on space–time boxes that are well separated in space. For instance, two boxes with the same space location but different time locations will not decouple nicely. For this reason, in Lemma 3.2 and Theorem 3.4, we are only able to prove the following:

(1’) the probability to go faster than \(\max (v_+(\rho ),0)\) or slower than \(\min (v_-(\rho ),0)\) over a time t actually decreases fast enough;

(2’) \(v_+(\rho )=v_-(\rho )\), which we define as being our candidate \(v(\rho )\) for the speed.

These points are proved using renormalization and lateral decoupling, and they are not quite enough to obtain the existence of the speed. However, note that they already imply the existence of the speed if \(v(\rho ):=v_+(\rho ) = v_-(\rho )=0\).

We still need to prove that if, for instance, \(v(\rho ):=v_+(\rho )=v_-(\rho )>0\), then the probability to go slower than \(v(\rho )\) decays sufficiently fast. Note that this is not guaranteed by (1’) alone.

For this purpose, we first consider the density \(\rho _+\), for which we know that \(v(\rho _++\varepsilon )>0\) for every \(\varepsilon >0\), and we prove that, for any environment with density \(\rho >\rho _++\varepsilon \), the probability to go slower than \(\tfrac{1}{2} v(\rho _++\varepsilon )\) decays fast, see Proposition 3.6. This provides a linear lower bound for the displacement of the random walker; that is, the random walker moves ballistically. A similar argument can be employed in the case \(v(\rho ) = v_+(\rho ) = v_-(\rho ) <0\).

Once we have obtained ballisticity, we conclude in Sect. 8 the existence of the speed (and the CLT) using regeneration structures developed in [HS15] for the SSEP and in [HHSST15] for the PCRW.

We want to emphasize that most of the techniques we develop here are not too sensitive to the particularities of the underlying environment, nor to the fact that the random walker evolves in discrete time rather than in continuous time. Indeed, regarding the environment, one of the properties that we require is monotonicity, meaning roughly that two copies with different densities \(\rho _1 < \rho _2\) can be coupled so that the former always has fewer particles per site than the latter. Another important requirement on the environment is that it satisfies the lateral decoupling inequality explained above. Both monotonicity and lateral decoupling should hold for other examples of environments. In addition, we make use of a decoupling technique called sprinkling (see Sect. 7). This holds for the specific environments that we work with, namely independent random walks and the exclusion process. A similar decoupling inequality has been proved for the zero-range process, see [BT18], but it has not been established in greater generality. Nevertheless, we believe that it should be possible to prove it for a broad class of conservative monotonic environments.

The last block of our proof relies on a regeneration structure that is currently only available for the particular environments considered in [HS15, HHSST15]. We believe that these regeneration structures could be adapted to the case of a continuous-time walk with no major issue. The question of whether it is possible to obtain a regeneration structure based only on non-microscopic properties of the environment (e.g. lateral decoupling) is more delicate.

Roughly speaking, there are various processes that should be physically equivalent to the one we study and should behave similarly. However, several of the steps we have taken in our analysis are very specific to the models in question.

To summarize, we believe that one could adapt our strategy to other models as long as the environment is monotonic (increasing the density is equivalent to adding particles), fulfills sprinkling and lateral decoupling (or some slightly modified version of it) and allows the construction of a regeneration structure.

Finally, let us point out that this model and similar ones have been studied in dimension 2 and above, see for instance [BHSST19, SS18]. A fair amount of our proof only applies in dimension 1, in particular the proof of item (2) above. This is because our arguments rely on the fact that the space–time trajectories of two random walks starting at the same time, but at different space positions, cannot cross each other: if they ever intersect, they actually merge. Of course, this does not hold in higher dimensions or if we allow long-range jumps of the walker.
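The non-crossing property can be illustrated with a hypothetical shared-randomness coupling: every space–time point \((x,n)\) carries one uniform variable that is used by every walker standing there, in the spirit of (but not identical to) the graphical construction of Sect. 3.1. The Python sketch below uses a toy environment of i.i.d. occupations per space–time point instead of the SSEP; the ordering argument is pathwise and does not depend on that choice. Walkers start at the same time at positions of the same parity, so their distances stay even and two neighbors can only meet, never jump over each other.

```python
import random


def coupled_walks(starts: list[int], n_steps: int, p_bullet: float, p_circ: float,
                  rho: float, seed: int = 0) -> list[list[int]]:
    """Run walkers from `starts` using one shared uniform per space-time point."""
    rng = random.Random(seed)
    env: dict[tuple[int, int], bool] = {}     # toy environment value at (x, n)
    unif: dict[tuple[int, int], float] = {}   # uniform shared by all walkers at (x, n)
    paths = [[x] for x in starts]
    for n in range(n_steps):
        for path in paths:
            x = path[-1]
            if (x, n) not in unif:
                unif[(x, n)] = rng.random()
                env[(x, n)] = rng.random() < rho   # i.i.d. occupation (assumption)
            p = p_bullet if env[(x, n)] else p_circ
            # Walkers on the same site at the same time make the same move: they merge.
            path.append(x + 1 if unif[(x, n)] < p else x - 1)
    return paths


if __name__ == "__main__":
    paths = coupled_walks([0, 2, 6, 10], 500, 0.7, 0.3, 0.5, seed=1)
    print([path[-1] for path in paths])   # final positions remain ordered
```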

2 Mathematical Setting and Main Results

2.1 Environments

In this section, we will give the mathematical construction of two dynamic random environments that we consider: The simple symmetric exclusion process (SSEP) and the Poisson cloud of simple random walks (PCRW). The starting configuration will be distributed so that the environment is in equilibrium, that is, the environmental process is stationary in time.

Our particle systems will start in equilibrium with a distribution parametrized by a value \(\rho \in (0,1)\). As it will become clear below, if \(\lambda \) denotes the expected number of particles per site then we will have \(\rho = \lambda \) for the SSEP, while we will have \(\rho = 1 - \hbox {e}^{-\lambda }\) for the PCRW. For a fixed \(\rho \), we will denote \(\mathbf {P}^{\rho }_{EP}\) and \(\mathbf {P}^{\rho }_{RW}\) the law of a SSEP and a PCRW with density parameter \(\rho \), respectively. We also write \(\mathbf {E}^\rho _{EP}\), \(\mathbf {E}^\rho _{RW}\) for the corresponding expectations. We may drop the superscript \(\rho \) and/or the subscripts EP and RW when there is no risk of confusion.
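Since the walker only checks whether its current site is occupied, in both environments \(\rho \) is exactly the probability that a given site holds at least one particle: \(\mathbf{P}(\eta_0(x)=1)=\lambda\) for the SSEP, and \(\mathbf{P}(\eta_0(x)\ge 1)=1-\hbox {e}^{-\lambda}\) for a Poisson(\(\lambda\)) variable in the PCRW. A small Python sketch of the conversion (function names are hypothetical):

```python
import math


def lambda_from_rho(rho: float, env: str) -> float:
    """Expected number of particles per site for density parameter rho."""
    if not 0.0 < rho < 1.0:
        raise ValueError("rho must lie in (0, 1)")
    if env == "EP":
        return rho                    # SSEP: at most one particle per site
    if env == "RW":
        return -math.log1p(-rho)      # PCRW: rho = 1 - exp(-lambda)
    raise ValueError("env must be 'EP' or 'RW'")


def occupation_probability(lam: float, env: str) -> float:
    """Probability that a given site holds at least one particle."""
    if env == "EP":
        return lam
    if env == "RW":
        return -math.expm1(-lam)      # 1 - exp(-lambda) for a Poisson(lambda) count
    raise ValueError("env must be 'EP' or 'RW'")
```

For instance, `lambda_from_rho(0.5, "RW")` equals \(\ln 2\): a PCRW in which half of the sites are occupied carries on average about 0.69 particles per site. `log1p` and `expm1` are used to keep precision for small \(\rho \).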

2.1.1 Definition of the SSEP

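The SSEP with density \(\rho \in (0,1)\) is a stochastic process

\[ \eta^\rho = \left(\eta^\rho_t(x)\right)_{x\in\mathbb{Z},\, t\ge 0} \in \{0,1\}^{\mathbb{Z}\times\mathbb{R}_+} \]

whose graphical construction we outline below.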

But let us first give an informal description of the process. At time \(t=0\), we decide independently for each site whether it holds a particle by tossing a coin with success probability \(\rho \). Then, each particle attempts to jump to each of its two neighboring sites at rate \(\gamma >0\). The jump is only performed if the target site does not contain a particle.

Mathematically, we start by fixing \(\left( \eta ^\rho _0(x),x\in \mathbb {Z}\right) \), a collection of i.i.d. Bernoulli random variables with mean \(\rho \), that is, \(\eta ^\rho _0(x)\) is equal to 1 with probability \(\rho \) and to 0 with probability \(1-\rho \), independently over \(x \in \mathbb {Z}\). This represents the initial configuration for the SSEP with density \(\rho \).

In order to define the evolution of this process, to each unit edge \(\{x,x+1\}\) of \(\mathbb {Z}\), we associate a real-valued Poisson point process \((T^x_i)_{i\ge 0}\) with rate \(\gamma \), independently over \(x\in \mathbb {Z}\). The SSEP is defined as follows.

If, for some \(t>0\), \(x\in \mathbb {Z}\) and \(i\ge 0\), we have \(T^x_i=t\), then

\[ \eta^\rho_t(x) = \eta^\rho_{t^-}(x+1), \qquad \eta^\rho_t(x+1) = \eta^\rho_{t^-}(x), \qquad \eta^\rho_t(y) = \eta^\rho_{t^-}(y) \ \text{ for } y \notin \{x,x+1\}, \]

while the configuration does not change between arrival times.

In words, at each arrival of the Poisson point process \((T^x_i)_{i\ge 0}\), the sites x and \(x+1\) exchange their occupation. The construction implies that \(\eta ^\rho _t(x) \in \{0,1\}\) for every \(x \in \mathbb {Z}\) and \(t \ge 0\). When \(\eta ^\rho _t(x) = 1\), we say that there is a particle on site x at time t. When \(\eta ^\rho _t(x)=0\), we say that there is a hole on site x at time t.

We will denote by \(\mathbf {P}^{\rho }_{EP}\) the law of \(\eta ^{\rho }\) as an element of \(\mathcal {D}(\mathbb {R}_+, \{0,1\}^{\mathbb {Z}})\), the standard space of càdlàg trajectories in \(\{0,1\}^{\mathbb {Z}}\). It is a classical fact that the Bernoulli product distribution with parameter \(\rho \) is an invariant measure for this process. Hence, under \(\mathbf {P}^{\rho }_{EP}\), and for each fixed time \(t\ge 0\), \((\eta ^\rho _{t}(x))_{x\in \mathbb {Z}}\) is a collection of i.i.d. Bernoulli random variables with parameter \(\rho \). We also write \(\mathbf {E}^\rho _{EP}\) for the corresponding expectations.

Note that the collections of i.i.d. random variables \(\left( \eta ^\rho _0(x),x\in \mathbb {Z}\right) \) for different parameters \(\rho \in (0,1)\) can be coupled in such a way that \(\eta ^\rho _0(x) \ge \eta _0^{\rho '}(x)\) whenever \(\rho ' < \rho \). The graphical construction presented above preserves this property for every \(t >0\).
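The monotone coupling and its preservation by the stirring dynamics can be checked numerically. The Python sketch below works on a finite ring \(\mathbb{Z}/N\mathbb{Z}\) (an assumption; the paper works on \(\mathbb{Z}\)), where the superposition of the N edge clocks of rate \(\gamma \) is a single Poisson clock of rate \(N\gamma \) ringing at a uniformly chosen edge. Both configurations are built from the same uniforms and receive the same swaps, so the order \(\eta^{\rho'}\le \eta^{\rho}\) can never be broken.

```python
import random


def coupled_ssep(n_sites: int, rho_lo: float, rho_hi: float, gamma: float,
                 t_max: float, seed: int = 0) -> tuple[list[int], list[int]]:
    """Run two coupled SSEPs (rho_lo <= rho_hi) on a ring up to time t_max."""
    rng = random.Random(seed)
    # Shared uniforms: site x is occupied at density rho iff u[x] < rho.
    u = [rng.random() for _ in range(n_sites)]
    eta_lo = [1 if ux < rho_lo else 0 for ux in u]
    eta_hi = [1 if ux < rho_hi else 0 for ux in u]
    t = 0.0
    while True:
        # Next ring of the superposed edge clocks (total rate gamma * n_sites).
        t += rng.expovariate(gamma * n_sites)
        if t > t_max:
            break
        x = rng.randrange(n_sites)
        y = (x + 1) % n_sites
        # Stirring: exchange the occupations of x and x + 1 in BOTH systems.
        for eta in (eta_lo, eta_hi):
            eta[x], eta[y] = eta[y], eta[x]
    return eta_lo, eta_hi
```

Calling it with `t_max=0.0` returns the initial configurations, which is convenient for checking that particle numbers are conserved.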

Finally, note that \(\eta ^\rho \) also depends on \(\gamma \), but as this parameter is fixed throughout the paper, we keep this dependence implicit.

2.1.2 Definition of PCRW

The PCRW with density parameter \(\rho \in (0,1)\) is a stochastic process

\[ \eta^\rho = \left(\eta^\rho_t(x)\right)_{x\in\mathbb{Z},\, t\ge 0} \]

defined in terms of a collection of independent random walks on \(\mathbb {Z}\), as described below.

Fix \(\rho \in (0,1)\) and let \(\lambda =-\ln (1-\rho )\in \mathbb {R}_+\). Now let \(\left( \eta ^\rho _0(x),x\in \mathbb {Z}\right) \) be an i.i.d. collection of Poisson random variables with parameter \(\lambda \). Independently, for every \(x\in \mathbb {Z}\), we let \((Z_t^{x,i},t\ge 0)_{1\le i\le \eta ^\rho _0(x)}\) be a collection of lazy, discrete-time, simple random walks started at x that evolve independently, jumping at each time unit by \(-1,0,1\) with probabilities \((1-q)/2,q,(1-q)/2\), respectively, for some \(q\in (0,1)\). Note that, for a given x, the collection is empty on the event that \(\eta ^\rho _0(x)=0\). Moreover, note that the random walks are indexed by a continuous time parameter \(t \ge 0\), so that their trajectories are in \(\mathcal {D}(\mathbb {R}_+, \mathbb {Z})\), even though the jumps can only occur at integer times.

We define the number of walkers at a given time \(t \ge 0\) and position \(y \in \mathbb {Z}\) as

\[ \eta^\rho_t(y) = \sum_{x\in\mathbb{Z}} \sum_{i=1}^{\eta^\rho_0(x)} \mathbf{1}\{Z^{x,i}_t = y\}. \]

Notice that \(\eta ^\rho _t(x) \in \mathbb {N}\). When \(\eta ^\rho _t(x) = j\) for some integer \(j \ge 1\), we say that there are j particles on site x at time t. When \(\eta ^\rho _t(x) = 0\), we say that there is no particle on site x at time t.

We will denote \(\mathbf {P}^{\rho }_{RW}\) the law of \(\eta ^\rho \) defined on an appropriate probability space. We also write \(\mathbf {E}^\rho _{RW}\) for the corresponding expectations. It is well-known that, for any \(\lambda >0\), the Poisson product distribution with parameter \(\lambda \) is an invariant measure for this process. Hence, under \(\mathbf {P}^{\rho }_{RW}\), and for each fixed \(t\ge 0\), \((\eta ^\rho _{t}(x))_{x\in \mathbb {Z}}\) is a collection of independent Poisson random variables with parameter \(\lambda \).

Notice that it is possible to couple the collections of i.i.d. Poisson random variables \(\left( \eta ^\rho _0(x),x\in \mathbb {Z}\right) \) for different parameters \(\rho \in (0,1)\) in such a way that \(\eta ^\rho _0(x) \ge \eta _0^{\rho '}(x)\) for every \(x\in \mathbb {Z}\) whenever \(\rho ' < \rho \). The dynamics defined above preserves this property for every \(t>0\).
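A Python sketch of the PCRW and of the coupling across densities, again on a finite ring (an assumption). It relies on the superposition property of Poisson variables: Poisson(\(\lambda'\)) plus an independent Poisson(\(\lambda-\lambda'\)) is Poisson(\(\lambda\)), so the system at the larger density is obtained by adding extra walkers, and occupation numbers are computed by counting walkers per site as in the definition of \(\eta^\rho_t(y)\). The helper `poisson` (Knuth's multiplication method) and the other names are hypothetical.

```python
import math
import random


def poisson(rng: random.Random, lam: float) -> int:
    """Sample Poisson(lam) by Knuth's multiplication method (fine for small lam)."""
    threshold = math.exp(-lam)
    k, prod = 0, rng.random()
    while prod > threshold:
        k += 1
        prod *= rng.random()
    return k


def coupled_pcrw(n_sites: int, rho_lo: float, rho_hi: float, q: float,
                 n_steps: int, seed: int = 0) -> list[tuple[list[int], list[int]]]:
    """Coupled PCRWs on a ring; returns (eta_lo, eta_hi) at times 0, ..., n_steps."""
    rng = random.Random(seed)
    lam_lo = -math.log1p(-rho_lo)          # lambda = -ln(1 - rho)
    lam_hi = -math.log1p(-rho_hi)
    walkers = []                           # entries [position, belongs_to_low_system]
    for x in range(n_sites):
        walkers += [[x, True] for _ in range(poisson(rng, lam_lo))]
        # Extra walkers: Poisson(lam_lo) + Poisson(lam_hi - lam_lo) = Poisson(lam_hi).
        walkers += [[x, False] for _ in range(poisson(rng, lam_hi - lam_lo))]
    history = []
    for _ in range(n_steps + 1):
        # eta_t(y) = number of walkers at y, counted separately for each system.
        eta_lo, eta_hi = [0] * n_sites, [0] * n_sites
        for pos, low in walkers:
            eta_hi[pos] += 1
            if low:
                eta_lo[pos] += 1
        history.append((eta_lo, eta_hi))
        # Lazy step: -1, 0, +1 with probabilities (1-q)/2, q, (1-q)/2.
        for w in walkers:
            r = rng.random()
            if r < (1 - q) / 2:
                w[0] = (w[0] - 1) % n_sites
            elif r >= (1 + q) / 2:
                w[0] = (w[0] + 1) % n_sites
    return history
```

Because every walker of the low-density system is also a walker of the high-density one, the pointwise order \(\eta^{\rho'}_t\le \eta^{\rho}_t\) holds at all times by construction.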

Remark 1

One should note that the environment we define here may not be the most natural one. Indeed, it would a priori be simpler to let particles perform independent continuous-time simple random walks instead of discrete-time lazy random walks. This choice was made in order to use previously proved results that have been established for the discrete-time case only. In fact, all the proofs we present here would work almost verbatim in the continuous case, with the exception of the final step of the paper, where we use the regeneration structure and the results of [HHSST15]. Nevertheless, as mentioned in [HHSST15], we believe that it is possible to adapt these results to the continuous case (see [BR16] for similar statements in a different context). To do so, it seems that one should simply replace the fact that one step is taken every unit of time by large deviation estimates for the number of steps per unit of time taken by a continuous-time random walk. This was done, for instance, in [BHT18].

2.2 The random walker

In this section, we define a discrete-time random walker X evolving on the SSEP or on the PCRW.

We fix two transition probabilities \(p_\bullet ,p_\circ \in (0,1)\). Let \(\eta ^\rho \) be distributed under either \(\mathbf {P}^\rho _{EP}\) or \(\mathbf {P}^\rho _{RW}\). Conditioned on \(\eta ^\rho =\eta \), we define \((X_n)_{n\ge 0}\) such that \(X_0=0\) a.s. and, for \(n\ge 0\),

- if \(X_n = x\) and \(\eta _n(x) > 0\), then \(X_{n+1}=x +1\) with probability \(p_{\bullet }\) and \(X_{n+1}=x -1\) with probability \(1 - p_{\bullet }\);
- if \(X_n=x\) and \(\eta _n(x) = 0\), then \(X_{n+1}=x +1\) with probability \(p_{\circ }\) and \(X_{n+1}=x -1\) with probability \(1 - p_\circ \).
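The quenched dynamics can be simulated directly. The Python sketch below runs a finite-ring approximation of the SSEP (an assumption: the ring has \(2n+1\) sites, so the positions in \([-n,n]\) reachable by the walker are distinct modulo the ring size; this is not the SSEP on \(\mathbb{Z}\)). At each integer time n, the walker reads \(\eta_n(X_n)\) and jumps, and the environment then evolves by stirring during \((n, n+1]\). The function name and defaults are hypothetical; it is meant for experimenting with \(X_n/n\), not as a substitute for the theorems.

```python
import random


def walk_on_ssep(n_steps: int, rho: float, p_bullet: float, p_circ: float,
                 gamma: float = 1.0, seed: int = 0) -> list[int]:
    """Simulate X_0, ..., X_n on a ring approximation of the SSEP."""
    rng = random.Random(seed)
    n_sites = 2 * n_steps + 1                 # positions in [-n, n] are distinct mod n_sites
    eta = [1 if rng.random() < rho else 0 for _ in range(n_sites)]  # Bernoulli(rho) start
    total_rate = gamma * n_sites              # superposition of the edge clocks
    next_swap = rng.expovariate(total_rate)
    x = 0
    path = [x]
    for n in range(n_steps):
        # At integer time n the walker inspects eta_n(X_n) only.
        p = p_bullet if eta[x % n_sites] else p_circ
        x += 1 if rng.random() < p else -1
        path.append(x)
        # Let the environment evolve during (n, n + 1] by stirring.
        while next_swap <= n + 1:
            e = rng.randrange(n_sites)
            f = (e + 1) % n_sites
            eta[e], eta[f] = eta[f], eta[e]
            next_swap += rng.expovariate(total_rate)
    return path
```

For example, `path = walk_on_ssep(500, 0.5, 0.8, 0.2)` followed by `path[-1] / 500` gives one empirical sample of \(X_n/n\) in the symmetric setting of Theorem 2.2.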

We will denote by \(P^\eta _{p_\bullet ,p_\circ }\), or simply \(P^\eta \), the quenched law of X, i.e. the law of X in a fixed environment \(\eta ^\rho =\eta \). We will denote by \(\mathbb {P}^\rho _{p_\bullet ,p_\circ }\), or simply \(\mathbb {P}^\rho \), the annealed law of the walk, in other words, the semi-direct product \(\mathbf {P}_{EP}^\rho \times P^\eta \) or \(\mathbf {P}_{RW}^\rho \times P^\eta \), under which the environment is sampled first and the walk then evolves according to \(P^\eta \).
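At time 0 the annealed law can be computed by hand: in both environments the origin is occupied with probability \(\rho \), so \(\mathbb{E}^\rho[X_1] = \rho(2p_\bullet-1)+(1-\rho)(2p_\circ-1)\). The Python sketch below checks this first-step formula by sampling first the environment at the origin and then the quenched step (function names are hypothetical). Later steps are not as simple, because the environment seen by the walker depends on its past trajectory.

```python
import random


def annealed_first_step_drift(rho: float, p_bullet: float, p_circ: float) -> float:
    """Exact E[X_1] under the annealed law: the origin is occupied w.p. rho."""
    return rho * (2 * p_bullet - 1) + (1 - rho) * (2 * p_circ - 1)


def monte_carlo_first_step(rho: float, p_bullet: float, p_circ: float,
                           n_samples: int, seed: int = 0) -> float:
    """Estimate E[X_1] by sampling the environment, then the quenched step."""
    rng = random.Random(seed)
    total = 0
    for _ in range(n_samples):
        occupied = rng.random() < rho              # environment at the origin
        p = p_bullet if occupied else p_circ       # quenched jump probability
        total += 1 if rng.random() < p else -1
    return total / n_samples
```

With \(\rho=1/2\) and \(p_\bullet=1-p_\circ\), the first-step drift vanishes, in line with the symmetry discussed after Theorem 2.2.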

Remark 2

In Sect. 3.1, we will give an alternative definition of X through a graphical construction which will couple realizations with different starting positions and will be very useful in the course of the proofs.

Note that either \(p_\bullet \ge p_\circ \) or \(1-p_\bullet \ge 1-p_\circ \) must hold. Hence, since reflecting the integer line replaces \(p_\bullet \) and \(p_\circ \) by \(1-p_\bullet \) and \(1-p_\circ \), we may assume without loss of generality throughout the paper that

\[ p_\circ \le p_\bullet . \tag{2.5} \]

Although there is no loss of generality in imposing this assumption, we will make some statements that rely on it. If (2.5) does not hold, then the symmetric statements hold.

2.3 Main theorems

In this section we provide the precise statements of our main results.

Theorem 2.1

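Consider the environment \(\eta ^\rho \) with density \(\rho \in (0,1)\) distributed under either the measure \(\mathbf {P}^\rho _{EP}\) or \(\mathbf {P}^\rho _{RW}\) and assume that (2.5) holds. There exists a deterministic non-decreasing function \(v:(0,1) \rightarrow \mathbb {R}\) such that

\[ \lim_{n\to\infty} \frac{X_n}{n} = v(\rho), \qquad \mathbb{P}^{\rho}\text{-almost surely}, \tag{2.6} \]

for every \(\rho \in (0,1)\setminus \{\rho _-,\rho _+\}\), where

\[ \rho_- := \sup\{\rho\in(0,1) : v(\rho) < 0\} \quad\text{and}\quad \rho_+ := \inf\{\rho\in(0,1) : v(\rho) > 0\}. \tag{2.7} \]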

If \(v(\rho _-)=0\), resp. \(v(\rho _+)=0\), then (2.6) holds for \(\rho =\rho _-\), resp. for \(\rho =\rho _+\).

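Moreover, for every \(\rho \not \in [\rho _-, \rho _+]\), we have a functional central limit theorem for \(X_n\) under \(\mathbb {P}^\rho \), that is

\[ \left( \frac{X_{\lfloor nt\rfloor} - \lfloor nt\rfloor\, v(\rho)}{\sqrt{n}} \right)_{t\ge 0} \xrightarrow[n\to\infty]{\text{(law)}} \left(B_t\right)_{t\ge 0}, \]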

where \(\left( B_t\right) _{t\ge 0}\) is a non-degenerate Brownian motion and where the convergence in law holds in the Skorohod topology.

In (2.7) we use the convention that \(\inf {\emptyset } = 1\) and \(\sup {\emptyset }=0\).
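Reading (2.7) as \(\rho_- = \sup\{\rho : v(\rho)<0\}\) and \(\rho_+ = \inf\{\rho : v(\rho)>0\}\), together with the conventions just stated, the two exceptional densities can be read off any tabulation of a non-decreasing speed. The Python sketch below does this on a grid; the speed values are purely illustrative, and a finite grid only approximates the supremum and the infimum.

```python
def critical_densities(rhos: list[float], speeds: list[float]) -> tuple[float, float]:
    """Grid version of rho_- = sup{v < 0} and rho_+ = inf{v > 0}."""
    negative = [r for r, v in zip(rhos, speeds) if v < 0]
    positive = [r for r, v in zip(rhos, speeds) if v > 0]
    rho_minus = max(negative) if negative else 0.0   # convention: sup of empty set = 0
    rho_plus = min(positive) if positive else 1.0    # convention: inf of empty set = 1
    return rho_minus, rho_plus


if __name__ == "__main__":
    grid = [i / 10 for i in range(1, 10)]
    toy_speeds = [-0.4, -0.2, 0.0, 0.0, 0.0, 0.0, 0.0, 0.1, 0.3]  # illustrative, non-decreasing
    print(critical_densities(grid, toy_speeds))  # prints (0.2, 0.8)
```

In this toy table the densities strictly between \(\rho_-\) and \(\rho_+\) carry zero speed; there Theorem 2.1 still provides the LLN, but no CLT.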

Remark 3

We believe that the speed \(v(\cdot )\) is continuous on \((0,\rho _-)\cup (\rho _+,1)\). Nevertheless, to prove so, one should adapt the definitions of regeneration times introduced in [HHSST15] and in [HS15]. Instead, in Sect. 8, we choose to use the renewal structure from these references as they appear there. Although we believe that the required modifications do not constitute a big conceptual step, they seem quite technical to implement. Indeed, one would actually need to control how the first regeneration time changes when the density is slightly increased, and prove that the difference is integrable. To do so, one should couple two processes at slightly different densities and compare their first regeneration times. We do not know how to do it with the current definitions of the first regeneration time, however it seems clear that these definitions may be modified so that, when the two densities are close enough, then the coupled processes share the same regeneration time. This would easily imply continuity. We choose not to do it here, since it would require long and technical adaptations.

Note that, in the previous theorem, we claim neither that \(\rho _- \ne \rho _+\) nor that \(\rho _-\) and \(\rho _+\) are necessarily discontinuity points of v. If v happens to be continuous at these points (which we strongly expect to be true), then, since v is non-decreasing, continuity forces \(v(\rho _-)=v(\rho _+)=0\), and we have a law of large numbers for every density \(\rho \). It is indeed an interesting question whether there are examples of environments for which v is discontinuous. For instance, Mott variable-range hopping is studied in [FGS18], where Example 2, p. 7, provides an environment and a walk such that the speed, as a function of a certain parameter, jumps from 0 to some positive value. Let us roughly describe it: the environment is a point process on the real line whose points are randomly spaced, according to some density \(1/\gamma \). On this environment, one can define a random walk which, on the one hand, is more likely to jump to points that are closer to it and, on the other hand, has a bias (denoted \(\lambda \) in [FGS18]) to the right. In [FGS18], it is proved that this walk is transient to the right with a speed that jumps from 0 to a positive value at density \(1/\gamma =1/(2-\lambda )\). Discontinuities of the speed of the walk with respect to the density have also been observed in [BHSST17, FS18].

We are currently unable to study the fluctuations of the random walker for densities in \([\rho _-, \rho _+]\), and the order of fluctuations is still a controversial issue in the literature, see [AT12, Huv18]. It is a very interesting open problem to determine whether the random walk is diffusive or not in this regime. Besides, one may ask whether we can have \(\rho _-<\rho _+\). This question seems to be related to another open problem: the existence of a transient regime with zero speed. This phenomenon is known to occur for random walks in one-dimensional static random environments, due to the presence of traps in the environment that delay the evolution of the walk. For dynamic environments, it is not clear whether such traps can actually play a relevant role, as they may vanish too quickly. However, if \(\rho _-<\rho _+\), this would suggest that such a regime does exist: there would possibly be a value \(\rho _0\in (\rho _-,\rho _+)\) of the density at which the walk is recurrent, while for densities \(\rho \in (\rho _0,\rho _+)\) the walk would be transient with zero speed.

We now move to the symmetric case on the SSEP.

Theorem 2.2

Consider the random walk \((X_n)_n\) on the simple symmetric exclusion process. If \(p_\bullet = 1-p_\circ \) and \(\rho =1/2\), then \(X_n/n\rightarrow 0\), \(\mathbb {P}^\rho \)-almost surely.

Note that, by symmetry, if the speed exists then it must be zero. However, proving Theorem 2.2 is not a trivial task. Intuitively, one may think that proving it requires controlling the trajectory of a walk which comes back very often to its starting position and thus interacts with the same particles of the environment a large number of times. These interactions would create long-range time dependencies in the trajectory and complicate the analysis. Our proof circumvents this issue: we instead show that trajectories that go far away at positive speed are very unlikely.

Remark 4

In [San14], the author proves a linear lower bound for the random walk on the exclusion process which is strictly larger than \(2p_{\circ }-1\), provided that \(p_\bullet >p_\circ \). Thus, one could almost conclude that, for the random walk on the exclusion process with \(p_{\bullet }>1/2\) and \(p_\circ =1/2\), the law of large numbers (and the CLT) holds with a positive speed for any density \(\rho >0\). The reason we cannot directly state this is that [San14] deals with a continuous-time random walk, whereas we work with a discrete-time random walk. Nevertheless, the proof of [San14] may be adapted to our case. Even though this seems to be a natural result, there is, to the best of our knowledge, no simple proof of this fact (or even of transience), and the current proof relies on an elaborate multiscale analysis developed by Kesten and Sidoravicius [KS14].

3 Graphical Construction and Backbone of the Proof

The first goal of this section is to give a graphical construction of a family of coupled random walks, which we will use extensively throughout the paper. The second goal is to state the main intermediate results that lead to the proof of our main results, Theorems 2.1 and 2.2. The graphical construction that we define in Sect. 3.1 and some of the events that we define in Sect. 3.2 may seem over-complicated. The reason is that we want to adopt the following perspective on the process: instead of studying just the trajectory of one random walk, we see the process as a family of coupled trajectories, where a trajectory can be started from any point in the continuous space–time \(\mathbb {R}\times \mathbb {R_+}\). This will emphasize some monotonicity properties, which are particularly useful for carrying out the renormalization procedure in Sect. 5 and for the proof of Theorem 3.4 in Sect. 6.

We denote by \(c_0, c_1, \ldots \) and \(k_0, k_1, \ldots \) positive numbers whose values are fixed at their first appearance in the text. These constants may depend on the law of the environment and of the walk. When a constant depends on other parameters, we indicate this at its first appearance; for instance, \(c_i(v,\rho )\) is a constant whose value depends on v and \(\rho \). In later appearances, we may omit some of the dependencies and simply write \(c_i\) or \(c_i(v)\), for example. We also use the following notation: for any \(w=(x,t)\in \mathbb {R}^2\), we let \(\pi _1(w):=x\) and \(\pi _2(w):=t\) denote its space and time coordinates, respectively.

For two real numbers \(s,t\in \mathbb {R}\), we denote by \(s\wedge t\) and \(s\vee t\) the minimum and the maximum of s and t, respectively. For the rest of the paper, unless otherwise stated, we assume that the law of the environment is either \(\mathbf {P}^\rho _{EP}\) or \(\mathbf {P}^\rho _{RW}\), with \(\rho \in (0,1)\), and, without loss of generality, we fix \(0<p_\circ \le p_\bullet <1\) (so that (2.5) holds).

Remark 5

In our proofs, we could allow for \(p_\circ =0\) or \(p_\bullet =1\), but we rule this out in order to use the renewal structure from [HHSST15] and [HS15], where this assumption is required.

3.1 Coupled continuous space–time random walks

Here we use a graphical construction in order to define a family of coupled continuous space–time random walks \((X^w_t,w\in {\mathbb {R}^2},t\ge 0)\). We now informally state the properties of this coupling that will be useful later on.

Each random walk \(X^w :=(X^w_t)_{t \ge 0}\) will satisfy \(X^w_0=\pi _1(w)\) almost surely. Furthermore, \(X^{w'}\) and \(X^w\) coalesce if they ever meet, that is, if \(X^{w'}_{t'}=X^w_t\) for some \(w,w'\in {\mathbb {R}^2}\) and \(t,t'\ge 0\) with \(\pi _2(w')+t'=\pi _2(w)+t\), then \(X^{w'}_{t'+s}=X^w_{t+s}\) for all \(s\ge 0\). Moreover, \((X^{(0,0)}_n)_{n\in \mathbb {N}}\) has the same law as our random walker \((X_n)_{n\in \mathbb {N}}\).

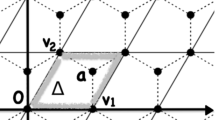

Fix a value \(\rho \in (0,1)\) and a realization of the environment \(\eta ^\rho \). Note that, as \((X_n)_{n\in \mathbb {N}}\) is assumed to be a nearest-neighbor random walk, \(X_{2n}\in 2\mathbb {Z}\) and \(X_{2n+1}\in \left( 1+2\mathbb {Z}\right) \), for every \(n\ge 0\). Define the discrete lattice \(\mathbb {L}_d := 2\mathbb {Z}^2 \cup \big ((1,1)+2\mathbb {Z}^2\big )\),

where the sum in the RHS stands for the shift of the lattice \(2\mathbb {Z}^2\) by the vector (1, 1). We will define the random walks \(X^w\) first on this lattice before interpolating them to the whole plane. For that, we let \(\left( U_w\right) _{w\in \mathbb {L}_d}\) be a collection of i.i.d. uniform random variables on [0, 1]. For any \(w=(x,n)\in \mathbb {L}_d\), we set \(X^w_0=x\) and define \(X^w_1\) in the following manner:

For any integer \(m\ge 1\), we define by induction

This defines the coupled family \((X^w_n,w\in \mathbb {L}_d,n\in \mathbb {N})\). Note that \((X^w_n,\pi _2(w)+n)_{n\in \mathbb {N}}\) evolves on \(\mathbb {L}_d\).
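The discrete construction can be illustrated by a short simulation. The sketch below is only an illustration under stated assumptions: since the display defining \(X^w_1\) is not reproduced here, we assume that the walk at \((x,n)\in \mathbb {L}_d\) steps to \(x+1\) if \(U_{(x,n)}<p_\bullet \) when x is occupied at time n (resp. \(U_{(x,n)}<p_\circ \) when x is empty) and to \(x-1\) otherwise; the SSEP is simulated on a finite ring with rate-\(\gamma \) stirring clocks and read at integer times. The helper names (`simulate_ssep`, `coupled_walk`) are hypothetical. Because every walk visiting a space–time point uses the same uniform variable there, walks started at the same time never cross and coalesce once they meet, in line with (3.13).

```python
import random


def simulate_ssep(n_sites, rho, gamma, t_max, rng):
    """SSEP on a ring of n_sites sites, started from Bernoulli(rho) product
    measure; each edge (x, x+1) carries a rate-gamma swap clock.
    Returns snapshots[n] = configuration at integer time n, 0 <= n <= t_max."""
    eta = [1 if rng.random() < rho else 0 for _ in range(n_sites)]
    snapshots = [eta[:]]
    t, next_time = 0.0, 1
    while next_time <= t_max:
        t += rng.expovariate(gamma * n_sites)  # next ring among all edge clocks
        while next_time <= t_max and next_time <= t:
            snapshots.append(eta[:])  # configuration just before this swap
            next_time += 1
        x = rng.randrange(n_sites)
        y = (x + 1) % n_sites
        eta[x], eta[y] = eta[y], eta[x]
    return snapshots


def coupled_walk(start, n_steps, snapshots, uniforms, p_occ, p_empty, rng):
    """Walk started at start = (x, n) in L_d (x + n even). The uniform U_(x, n)
    is drawn once and shared by every walk visiting (x, n)."""
    x, n = start
    assert (x + n) % 2 == 0, "starting point must lie on L_d"
    path = [x]
    for _ in range(n_steps):
        if (x, n) not in uniforms:
            uniforms[(x, n)] = rng.random()
        eta = snapshots[n]
        p_right = p_occ if eta[x % len(eta)] else p_empty  # assumed jump rule
        x = x + 1 if uniforms[(x, n)] < p_right else x - 1
        n += 1
        path.append(x)
    return path


rng = random.Random(2024)
T = 60
snaps = simulate_ssep(n_sites=200, rho=0.5, gamma=1.0, t_max=T, rng=rng)
uniforms = {}
paths = [coupled_walk((x0, 0), T, snaps, uniforms, 0.7, 0.4, rng)
         for x0 in range(-20, 21, 2)]
# Walks started at the same time stay ordered: they can meet, but never cross.
for left, right in zip(paths, paths[1:]):
    assert all(a <= b for a, b in zip(left, right))
```

Ordering follows from parity: two walks started at the same time on \(\mathbb {L}_d\) are at even distance, so one step can reduce a gap of 2 to 0 but never make it negative, and walks at the same point use the same uniform.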

Having defined the random walks on \(\mathbb {L}_d\), we now extend their definition to all possible starting points in \(\mathbb {R}^2\). We first extend it to a continuous version \(\mathbb {L}\) of \(\mathbb {L}_d\), defined as follows

see Fig. 1.

For \(t\in \mathbb {R}^+\) and \(w\in \mathbb {L}_d\), we define

This defines the coupled family \((X^w_t,w\in \mathbb {L}_d,t\in \mathbb {R}_+)\). Note that \((X^w_t,\pi _2(w)+t)_{t\in \mathbb {R}_+}\) evolves on \(\mathbb {L}\).

From a starting point \(w\in \mathbb {L}\setminus \mathbb {L}_d\), intuitively speaking, we let \(X_t^w\) follow the only path such that \((X_t^w, \pi _2(w)+t)\) remains on \(\mathbb {L}\) until it hits \(\mathbb {L}_d\), after which it follows the rule given by (3.6). More precisely, given \(w \in \mathbb {L} \setminus \mathbb {L}_d\), for any \(s>0\), note that \(w+s\big ((-1)^{k},1\big )\in \mathbb {L}\) where \(k=k(w)=\lfloor \pi _1(w)\rfloor +\lfloor \pi _2(w)\rfloor \) and define

Then we define

It remains to construct the random walks starting from points \(w=(x,t)\in \mathbb {R}^2 \setminus \mathbb {L}\). The idea is very simple: the trajectory is such that \((X^w_t,\pi _2(w)+t)\) moves up along the direction (0, 1) until it hits \(\mathbb {L}\) and, from this point on, follows the corresponding trajectory in \(\mathbb {L}\) as defined in (3.8).

More precisely, consider \(w=(x,t)\in \mathbb {R}^2\) and let

Then we define

Equations (3.6), (3.8) and (3.10) define the coupled family \((X^w_t,w\in \mathbb {R}^2,t\in \mathbb {R}_+)\), such that the points \((X^w_t, \pi _2(w)+t)\) always remain on \(\mathbb {L}\), after the first time they hit \(\mathbb {L}\).

It is important for us that, for any \(w\in \mathbb {R}^2\) and for any \(t\ge s\ge 0\), we have

Remark 6

It should be noted that the law of \((X_t^w)_{t\ge 0}\), for \(w\in \mathbb {R}^2\), is not invariant under shifts of w. Nevertheless, the processes \((X_t^w)_{t\ge 0}\), \((X_t^{w+(1,1)})_{t\ge 0}\) and \((X_t^{w+(-1,1)})_{t\ge 0}\) have the same distribution. Therefore, the law of the collection \(\{(X_t^w)_{t\ge 0} :w\in \mathbb {R}^2\}\) is fully determined by the law of \(\{(X_t^w)_{t\ge 0} :w\in \mathcal {L}_1\}\), where

Finally, we will keep the notation \(P^\eta _{p_\bullet ,p_\circ }\) and \(\mathbb {P}^\rho _{p_\bullet ,p_\circ }\) or simply \(P^\eta \) and \(\mathbb {P}^\rho \), for the quenched and annealed joint laws of the family of random walks \((X^w_t,t\ge 0,w\in \mathbb {R}^2)\), respectively.

The result below states a useful monotonicity property for the collection of random walks defined above; an illustration is given in Fig. 2.

Proposition 3.1

For every \(\rho \in (0,1)\), every \(z,z'\in \mathbb {R}^2\) with \(\pi _1(z')\le \pi _1(z)\) and \(\pi _2(z)= \pi _2(z')\), we have that, almost surely,

In fact, for every \(z,z'\in \mathbb {R}^2\) such that \(\pi _1(z')\le \pi _1(z)-\left| \pi _2(z')-\pi _2(z)\right| \),

Fig. 2 Proposition 3.1 states that any trajectory started in the turquoise quadrant, e.g. from \(z'\) or \(z''\), stays to the left of the trajectory started at z

Proof

First, we prove (3.13). This is a simple consequence of the fact that the two walks \(X^{z}\) and \(X^{z'}\) evolve in continuous time and space and, as they start at points z and \(z'\) with the same time coordinate (\(\pi _2(z)= \pi _2(z')\)), they cannot cross each other without being at the same position, i.e. either \(X^{z'}_{t}< X^{z}_{t}\) for all \(t\ge 0\), or there exists \(t_0\) such that \(X^{z'}_{t_0}= X^{z}_{t_0}\) and then, by construction, \(X^{z'}_{t_0+s}= X^{z}_{t_0+s}\) for all \(s\ge 0\).

Second, we prove (3.14). Assume first that \(\pi _2(z')\ge \pi _2(z)\) and \(\pi _1(z')\le \pi _1(z)-\left( \pi _2(z')-\pi _2(z)\right) \). By (3.11), we have that

The conclusion then follows from (3.13).

Similarly, assume that \(\pi _2(z')\le \pi _2(z)\) and \(\pi _1(z')\le \pi _1(z)-\left( \pi _2(z)-\pi _2(z')\right) \). By (3.11), we have that

The conclusion again follows from (3.13). \(\square \)

3.2 Structure of the proof

In this section, we state the main propositions that lead to the proof of Theorems 2.1 and 2.2.

Before presenting some technical definitions that we will need, let us explain in simple words the main ingredients in the proof. The exposition may seem a bit convoluted, but it actually follows a few simple steps:

1. We define two good candidates for the value of the speed \(v(\rho )\) appearing in Theorem 2.1. These are the quantities \(v_+(\rho )\) and \(v_-(\rho )\) defined in (3.19) and (3.22), which are, in some sense, the extreme speeds that the walk can attain. They are useful because they are well-defined for every \(\rho \) and, although their definitions are very implicit and do not provide quantitative values, they become central objects in our proofs as we develop our renormalization procedure.

2. In Lemma 3.2, we prove that the probability that the random walk moves faster than \(v_+(\rho )\vee 0\), or slower than \(v_-(\rho )\wedge 0\), over a given time interval decays fast in the length of this interval. This is a quantitative result involving the values \(v_+(\rho )\) and \(v_-(\rho )\). The appearance of the maximum and minimum with 0 is due to the use of renormalization and of the lateral decoupling techniques mentioned in Sect. 1.2.

3. In Theorem 3.4, we prove that \(v_+(\rho )=v_-(\rho )\). Their common value is what we define to be \(v(\rho )\). At this point we have proved the following facts. First, if \(v(\rho )=0\), then we already have a law of large numbers with zero speed (see Theorem 3.5). Second, if \(v(\rho )>0\), then the normalized position \(X_n/n\) of the random walk asymptotically stays between 0 and \(v(\rho )\). The case \(v(\rho )<0\) is similar.

4. The last step is Proposition 3.6. It states that, assuming \(v(\rho )>0\), there exists \(\delta >0\) such that the normalized position \(X_n/n\) is greater than \(\delta \) with high probability. This does not directly imply the existence of a speed (i.e. the convergence of \(X_n/n\)), but it implies that the random walk moves away from its starting point at a linear pace. As the particles in the environment move diffusively (much slower than at a linear pace), one can conclude that the environment around the random walk refreshes quite often, which hints at the existence of a renewal structure around the position of the walker. We indeed use available results [HS15, HHSST15] on the existence of regeneration times, which imply the law of large numbers and the central limit theorem.

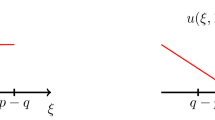

Let us now move to the technical definitions. We start by defining the important event \(A_{H, w}(v)\) which, intuitively speaking, indicates that a random walk starting at w has, over a time interval of length H, an average speed higher than v, as depicted in Fig. 3. More precisely, for any \(w \in \mathbb {R}^2\), any \(H \in \mathbb {R}_+\) and any \(v \in \mathbb {R}\), let

In several places in the paper, we will bound the probability of \( A_{H,w}(v)\). It should be noted that the probability of this event depends on \(w\in \mathbb {R}^2\), because neither \( A_{H,w}(v)\) nor the law of \(X^w\) is invariant under a shift of w by an arbitrary vector of \(\mathbb {R}^2\) (although both are invariant under shifts in the lattice \(\mathbb {L}_d\)). Nevertheless, by Remark 6, it is enough to consider \(w\in \mathcal {L}_1\) (defined in (3.12)).

We can now safely define

When there is no risk of confusion, we will write \( p_H(v)= p_H(v, \rho )= p_H(v, \rho , p_\bullet , p_\circ )\).

Fig. 3 An illustration of the event \(A_{H, 0}(v)\). Starting from the point \(y \in \big ([0,H)\times \{0\}\big ) \cap \mathbb {L}\), the walker attains an average speed larger than v during the time interval [0, H]. Picture taken from [BHT18]

The following quantity is always well-defined and is key to our proofs of the main results.

Again, when there is no risk of confusion, we will write \( v_+=v_+(\rho )= v_+(\rho , p_\bullet , p_\circ )\). This quantity could be called the upper speed of X. Indeed, for any \(v>v_+\), it is unlikely that \(X_t \ge vt\) along a growing sequence of times t. On the other hand, if \(v < v_+\), then \(X_t \ge vt\) with probability bounded away from 0.

Similarly, we define, for \(w\in \mathbb {R}^2\), \(v\in \mathbb {R}\) and \(H\in \mathbb {R}_+\),

as well as

We also define the lower speed of X as

When there is no risk of confusion, we will write \( \tilde{p}_H(v)= \tilde{p}_H(v, \rho )= \tilde{p}_H(v, \rho , p_\bullet , p_\circ )\) and \( v_-=v_-(\rho )= v_-(\rho , p_\bullet , p_\circ )\).

Note that, as (2.5) is assumed, we have that, for \(0\le p_\circ \le p_\bullet \le 1\), the functions \(\rho \mapsto v_+(\rho ,p_\bullet ,p_\circ )\) and \(\rho \mapsto v_-(\rho ,p_\bullet ,p_\circ )\) are non-decreasing.
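The monotonicity in \(\rho \) can be seen through a standard coupling, sketched below as an illustration only (it is not the argument behind (2.5)). We assume a one-step rule of the following type: the walk at \((x,n)\) steps right iff a uniform variable attached to \((x,n)\), shared by all walks, is below \(p_\bullet \) on an occupied site, resp. below \(p_\circ \) on an empty one. Environments at several densities are built from the same initial uniforms and the same stirring clocks, so they stay ordered site by site; with \(p_\circ \le p_\bullet \), the walk in a denser environment is then never to the left of the walk in a sparser one. The function names are hypothetical.

```python
import random


def coupled_ssep(n_sites, rhos, gamma, t_max, rng):
    """One SSEP per density in `rhos` on a ring, all driven by the same initial
    uniforms and the same stirring clocks, hence ordered site by site.
    Returns snaps[n][i] = configuration of density rhos[i] at integer time n."""
    init = [rng.random() for _ in range(n_sites)]
    etas = [[1 if u < rho else 0 for u in init] for rho in rhos]
    snaps = [[eta[:] for eta in etas]]
    t, next_time = 0.0, 1
    while next_time <= t_max:
        t += rng.expovariate(gamma * n_sites)
        while next_time <= t_max and next_time <= t:
            snaps.append([eta[:] for eta in etas])
            next_time += 1
        x = rng.randrange(n_sites)
        y = (x + 1) % n_sites
        for eta in etas:  # the same swap is applied to every environment
            eta[x], eta[y] = eta[y], eta[x]
    return snaps


def walk_from_origin(env_index, n_steps, snaps, uniforms, p_occ, p_empty, rng):
    """Walk from (0, 0) in environment env_index, using shared uniforms."""
    x, path = 0, [0]
    for n in range(n_steps):
        if (x, n) not in uniforms:
            uniforms[(x, n)] = rng.random()
        eta = snaps[n][env_index]
        p_right = p_occ if eta[x % len(eta)] else p_empty  # assumed jump rule
        x += 1 if uniforms[(x, n)] < p_right else -1
        path.append(x)
    return path


rng = random.Random(7)
T = 80
rhos = [0.2, 0.5, 0.8]
snaps = coupled_ssep(300, rhos, 1.0, T, rng)
uniforms = {}
paths = [walk_from_origin(i, T, snaps, uniforms, 0.8, 0.3, rng)
         for i in range(len(rhos))]
# Denser environment => walk weakly to the right, at every time.
for low, high in zip(paths, paths[1:]):
    assert all(a <= b for a, b in zip(low, high))
```

The ordering is pathwise: at a common point the denser walk sees a right-jump probability at least as large and uses the same uniform, and walks at different points are at even distance, so they cannot cross in one step.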

Let us emphasize that

by (3.11), but it is a priori not guaranteed that \(v_-\le v_+\). As we will see, this is in fact one consequence of the next lemma, see Corollary 3.3.

Roughly speaking, the next lemma states that the probability that the random walk deviates above \(v_+(\rho )\vee 0\) or below \(v_-(\rho )\wedge 0\) over large time scales decays very fast.

Lemma 3.2

For every \(\epsilon > 0\), there exists a constant \(c_{0}=c_{0}(\epsilon , \rho )\) such that

for every \(H\ge 1\).

Remark 7

We will present only the proof of the first inequality of (3.24), involving \(v_+\). Nevertheless, a symmetric argument can be used to prove the second inequality.

The next result, whose proof is given in Sect. 5, is a simple consequence of Lemma 3.2 and will be important in the rest of the paper.

Corollary 3.3

We have that \(v_-(\rho )\le v_+(\rho )\), for every \(\rho \in (0,1)\).

The next result shows that the two quantities \(v_+\) and \(v_-\) coincide, and thus identifies the candidate for the speed appearing in the LLN.

Theorem 3.4

We have that, for any \(\rho \in (0,1)\),

Having Theorem 3.4 at hand, we can define

Combining Lemma 3.2 with Theorem 3.4 we can derive some immediate conclusions:

Theorem 3.5

Assume that for some \(\rho \), we have \(v(\rho )=0\). Then

Proof of Theorem 3.5

This is a direct consequence of Lemma 3.2, Theorem 3.4 and Borel–Cantelli Lemma. \(\square \)

Furthermore, by Theorem 3.4 and by definition of \(v_+(\rho )\) and \(v_-(\rho )\), if the speed exists, then it has to be equal to \(v(\rho )\). Hence, \(v(\rho )\) is going to be the limiting speed appearing in Theorem 2.1. But, as one can see, Lemma 3.2 does not allow us to conclude the existence of the speed, or the CLT, for \(v(\rho )>0\) (or for \(v(\rho )<0\)). For example, when \(v(\rho ) > 0\), we know that it is very unlikely that the random walker will exceed speed \(v(\rho )\). But it is not yet clear whether it can move slower than \(v(\rho )\).

In order to prove Theorem 2.1, we will first use sprinkling in order to prove the following ballisticity result, which requires using results from [HHSST15] and [HS15]. Recall the definition of \(\rho _+\) and \(\rho _-\) in (2.7).

Proposition 3.6

For any \(\epsilon >0\), we have that \(v(\rho _++\epsilon )>0\) and \(v(\rho _--\epsilon )<0\) and, for any \(\rho >\rho _++2\epsilon \) and \(\tilde{\rho }<\rho _--2\epsilon \) there exist constants \(c_{1}(\epsilon )\) and \(c_{2}(\epsilon )\) such that

for all \(H\ge 1\).

Proposition 3.6 is weaker than what one would expect, but it is actually enough to conclude the LLN and CLT of Theorem 2.1, using results from [HS15] and [HHSST15], which construct a renewal structure for the random walk on SSEP and on PCRW, respectively.

4 Lateral Decoupling

In this section, we provide a very important property of the annealed law of the walk: if one observes the family of continuous space–time random walks defined in Sect. 3.1 in two disjoint 2-dimensional boxes, at a space distance that is large compared to the square root of their time length, then events in these two boxes are essentially independent. Let us state this fact precisely.

As in Fig. 4, fix \(y_1, y_2\in \mathbb {R}^2\) such that \(\pi _1(y_1)\le \pi _1(y_2)\) and \(\pi _2(y_1)=\pi _2(y_2)\). For \(H\ge 1\), let \(B_1=y_1+[-H,0]\times [0,H]\) and \(B_2=y_2+[0,H]\times [0,H]\), and define the distance \(d(B_{1},B_{2}):=|\pi _1(y_1)-\pi _1(y_2)|\). Our objective in the next proposition is to bound the dependence between what happens inside \(B_1\) and inside \(B_2\).

We say that a function \(f :\mathcal {D}(\mathbb {R}_+, S^{\mathbb {Z}}) \rightarrow \mathbb {R}\) is supported on a box \(B_y^H\) if it is measurable with respect to \(\sigma \big (\{\eta ^\rho _t(x) :(x,t) \in B_y^H \cap (\mathbb {Z}\times \mathbb {R}_+)\}\big )\).

Proposition 4.1

Consider the environment with law \(\mathbf {P}^\rho _{EP}\) or \(\mathbf {P}^\rho _{RW},\) with some density \(\rho \in (0,1)\). Let \(H\ge 1\) and \(y_1, y_2\in \mathbb {R}^2\) be such that \(\pi _2(y_1)=\pi _2(y_2)\), and such that

Let \(B_1=y_1+[-H,0]\times [0,H]\) and \(B_2=y_2+[0,H]\times [0,H]\). For any non-negative functions \(f_1\) and \(f_2\), with \(\Vert f_1\Vert _\infty ,\Vert f_2\Vert _\infty \le 1\), supported respectively on \(B_1\) and \(B_2\), we have that

for some constant \(c_{3}=c_{3}(\rho )\).

Proof

The idea of the proof is that there exists an event A such that \(A^c\) has very small probability and \(\mathbb {E}[f_1f_2\mathbf {1}_A]\le \mathbb {E}[f_1] \mathbb {E}[f_2]+2\mathbb {P}(A^c)\). In this case, as \(f_1,f_2\ge 0\) and \(\Vert f_1\Vert _\infty ,\Vert f_2\Vert _\infty \le 1\), one has that \(\mathrm{Cov}_\rho (f_1,f_2)\le 3\mathbb {P}(A^c)\). Roughly speaking, A will be the event that no particle visits both boxes \(B_1\) and \(B_2\). The proof is slightly different for SSEP and for PCRW, so we treat the two cases separately.
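For completeness, the reduction from the covariance to \(\mathbb {P}(A^c)\) described above is the following one-line computation; it only uses the assumed bound on \(\mathbb {E}[f_1f_2\mathbf {1}_A]\) and \(0\le f_1f_2\le 1\).

```latex
\mathbb{E}[f_1f_2]
  = \mathbb{E}[f_1f_2\mathbf{1}_A] + \mathbb{E}[f_1f_2\mathbf{1}_{A^c}]
  \le \big(\mathbb{E}[f_1]\,\mathbb{E}[f_2] + 2\,\mathbb{P}(A^c)\big) + \mathbb{P}(A^c),
\qquad\text{hence}\qquad
\mathrm{Cov}_\rho(f_1,f_2) = \mathbb{E}[f_1f_2] - \mathbb{E}[f_1]\,\mathbb{E}[f_2] \le 3\,\mathbb{P}(A^c).
```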

Case I: The PCRW.

As the particles move independently for PCRW, it is clear that, on the event that no particle started at time \(\pi _2(y_1)\) to the right of \(\pi _1(y_1)+H^{3/4}/4\) enters \(B_1\) and no particle started at time \(\pi _2(y_2)=\pi _2(y_1)\) to the left of \(\pi _1(y_2)-H^{3/4}/4\) enters \(B_2\), the variables \(f_1\) and \(f_2\) behave independently, as can be shown by a simple coupling argument. Let us briefly outline a possible coupling, denoting by A the event described above. Let \(\eta ^\rho \) be the environment associated with the PCRW, as described in Sect. 2.1.2. Then, we have that \(f_1=f_1(\eta ^\rho )\) and \(f_2=f_2(\eta ^\rho )\). Let us define \(\eta ^1\) (resp. \(\eta ^2\)) as the environment consisting of a collection of independent random walks, as in PCRW, started at time \({\pi _2(y_1)}\) with \(\eta ^1_{\pi _2(y_1)}(x)=\eta ^\rho _{\pi _2(y_1)}(x)\) (resp. \(\eta ^2_{\pi _2(y_1)}(x)=\eta ^\rho _{\pi _2(y_1)}(x)\)) if \(x\le \pi _1(y_1)+H^{3/4}/4\) (resp. \(x\ge \pi _1(y_2)-H^{3/4}/4\)) and \(\eta ^1_{\pi _2(y_1)}(x)=0\) otherwise (resp. \(\eta ^2_{\pi _2(y_1)}(x)=0\) otherwise). Let us then define \(\tilde{f}_1=f_1(\eta ^1)\) and \(\tilde{f}_2=f_2(\eta ^2)\). In words, \(\tilde{f}_1\) (resp. \(\tilde{f}_2\)) is defined as \(f_1\) (resp. \(f_2\)) on an environment matching \(\eta ^\rho \), except that the particles to the right of \(\pi _1(y_1)+H^{3/4}/4\) (resp. to the left of \(\pi _1(y_2)-H^{3/4}/4\)) at time \(\pi _2(y_1)\) are erased. The functions \(\tilde{f}_1\) and \(\tilde{f}_2\) are clearly independent and are respectively equal to \(f_1\) and \(f_2\) on the event A. Hence, using that \(\Vert f_1\Vert _\infty ,\Vert f_2\Vert _\infty \le 1\) and \(f_1,f_2\ge 0\), one has that

Moreover, under \(\mathbf {P}^\rho _{RW}\), \(\eta ^\rho _0(x)=k\) with probability \(\hbox {e}^{-\lambda }\lambda ^k/(k!)\), where we recall that \(\lambda =-\ln (1-\rho )\). Hence (by Azuma’s inequality for instance), we obtain, for \(H\ge 1\),

This proves the result, choosing \(c_{3}\) properly.
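The choice \(\lambda =-\ln (1-\rho )\) is what makes \(\rho \) the density of occupied sites in the PCRW: a site is empty with probability \(\hbox {e}^{-\lambda }=1-\rho \). The short Python check below illustrates this; the sampler uses Knuth's classical multiplication method for Poisson variables, chosen here only for self-containment, and the function names are hypothetical.

```python
import math
import random


def poisson_intensity(rho):
    """lambda = -ln(1 - rho): Poisson mean of the number of particles per site."""
    return -math.log(1.0 - rho)


def prob_occupied(rho):
    """P(eta_0(x) >= 1) = 1 - P(eta_0(x) = 0) = 1 - exp(-lambda)."""
    return 1.0 - math.exp(-poisson_intensity(rho))


def sample_poisson(lam, rng):
    """Knuth's method: count extra uniforms until their product drops below e^-lam."""
    threshold, k, prod = math.exp(-lam), 0, rng.random()
    while prod > threshold:
        k += 1
        prod *= rng.random()
    return k


rng = random.Random(3)
for rho in (0.1, 0.5, 0.9):
    samples = [sample_poisson(poisson_intensity(rho), rng) for _ in range(20000)]
    empirical = sum(s >= 1 for s in samples) / len(samples)
    print(rho, prob_occupied(rho), round(empirical, 3))
```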

Case II: The SSEP.

In this case, the decoupling is slightly more complicated to justify, but one can do so by a coupling argument that we outline here. Consider the following construction of the environment from time \(\pi _2(y_1)=\pi _2(y_2)\) on. We will use two independent environments that we will couple with the actual environment at a relevant stopping time.

Recall the construction from Sect. 2.1.1. For simplicity, let us assume \(\pi _2(y_1)=\pi _2(y_2)=0\) and \(\pi _1(y_1)=0\). Let \(\eta ^{(\ell )}\) (resp. \(\eta ^{(r)}\)) be the following environment: for \(x< H^{3/4}/4\) (resp. \(x> \pi _1(y_2)-H^{3/4}/4\)), let \(\eta ^{(\ell )}_0(x)\) (resp. \(\eta ^{(r)}_0(x)\)) be i.i.d. Bernoulli random variables with mean \(\rho \). For \(x\ge H^{3/4}/4\) (resp. \(x\le \pi _1(y_2)-H^{3/4}/4\)), let \(\eta ^{(\ell )}_0(x)=g\) (resp. \(\eta ^{(r)}_0(x)=g\)), where g represents an undetermined state (if 0 denotes a white particle and 1 a black particle, then g can be thought of as a green particle).

Now, for every \(x<H^{3/4}/2\) (resp. \(x\ge H^{3/4}/2\)), let \((T^{\ell ,x}_i)\) (resp. \((T^{r,x}_i)\)) be independent Poisson point processes with rate \(\gamma \). As in Sect. 2.1.1, at times \((T^{\ell ,x}_i)\) or \((T^{r,x}_i)\), the occupations of sites x and \(x+1\) are exchanged. Let us denote by \(\mathbf{P}^{(\ell )}\) and \(\mathbf{P}^{(r)}\) the laws of \(\eta ^{(\ell )}\) and \(\eta ^{(r)}\). Besides, we define \(\eta ^{(\ell )}\) and \(\eta ^{(r)}\) on a common probability space with measure \(\widetilde{\mathbf{P}}\), under which they are independent.

Let us now define two stopping times. Let \(S_1\) be the first time a green particle of \(\eta ^{(\ell )}\) enters \(B_1\) or a particle started to the left of \(H^{3/4}/4\) moves to the right of \(H^{3/4}/2\), that is,

Similarly, define

Also, define \(S=S_1\wedge S_2\). Let us now define \(\eta ^\rho \) under \(\widetilde{\mathbf{P}}\), so that its marginal on \(B_1\cup B_2\) corresponds to its law under \(\mathbf{P}^\rho \). For any \(t<S\), we let \(\eta ^\rho (x)=\eta ^{(\ell )}(x)\) for any \(x\le 0\), and \(\eta ^\rho (x)=\eta ^{(r)}(x)\) for any \(x\ge \pi _1(y_2)\). At time S, each green particle takes the value of an independent Bernoulli random variable with parameter \(\rho \) and, after this time, the process continues as a usual SSEP, as described in Sect. 2.1.1.

It is not difficult to see that, under \(\widetilde{\mathbf{P}}\), the environment \(\eta ^{\rho }\) on \(B_1\cup B_2\) has the same law as \(\eta ^{\rho }\) under \(\mathbf{P}^\rho \), on \(B_1\cup B_2\). Hence, \(f_1\) and \(f_2\) have the same law under \(\widetilde{\mathbf{P}}\) and \(\mathbf{P}^\rho \). Finally, one should note that, on the event \(\{S>H\}\), \(f_1\) only depends on \(\eta ^{(\ell )}\) and \(f_2\) only depends on \(\eta ^{(r)}\), and thus they are conditionally independent.

Using the previous argument and the definition of S, and denoting by Y a continuous-time simple random walk with rate \(\gamma \), we have that, since \(\Vert f_1\Vert _\infty \le 1\) and \(\Vert f_2\Vert _\infty \le 1\),

where we used estimates similar to those above. This proves the result, choosing \(c_{3}\) properly.

\(\square \)

5 Upper and Lower Deviations of the Speed

This section is devoted to the proof of Lemma 3.2 and Corollary 3.3. We prove Lemma 3.2 for \(v_+\) only, but exactly the same proof, with symmetric arguments, holds for \(v_-\).

5.1 Proof of corollaries

We start by showing how Lemma 3.2 implies Corollary 3.3.

Proof of Corollary 3.3

First note that, by the definition of \(v_+(\rho )\) and \(v_-(\rho )\), we have that, for any \(\epsilon >0\),

Note also that, for any \(v_1,v_2\in \mathbb {R}\) such that \(v_1<v_2\) and any \(H>0\),

We start by showing that either \(v_+(\rho )\ge 0\) or \(v_-(\rho ) \le 0\). Indeed, assume by contradiction that \(v_+(\rho )<0\) and \(v_-(\rho )>0\), and fix any \(\epsilon \in (0,v_-(\rho )/4)\). Lemma 3.2 implies that, for \(H \in \mathbb {N}\) large enough,

Since \(\epsilon <v_-(\rho )-\epsilon \), we obtain from (5.1) and (5.3) that \(p_{H_i^-}(\epsilon , \rho ) + \tilde{p}_{H_i^-}(v_-(\rho ) - \epsilon , \rho ) < 1\), as soon as i is large enough. This contradicts (5.2).

Now consider the case \(v_+(\rho )\ge 0\). Assume, by contradiction, that \(v_-(\rho )>v_+(\rho )\). Fix \(\epsilon \in (0,(v_-(\rho )-v_+(\rho ))/4)\) so that \(v_+(\rho )+\epsilon <v_-(\rho )-\epsilon \). By Lemma 3.2, for any \(H\in \mathbb {N}\) large enough, \(p_{H}(v_+(\rho )+\epsilon ,\rho )<1/2\). Thus, as soon as i is large enough, we obtain from (5.1) that \(p_{H_i^-}(v_+(\rho ) + \epsilon ,\rho ) + \tilde{p}_{H_i^-}(v_-(\rho ) - \epsilon ,\rho ) < 1\), which contradicts (5.2) once more. Thus, \(v_+(\rho )\ge 0\) implies \(v_-(\rho )\le v_+(\rho )\).

By a symmetric argument, \(v_-(\rho )\le 0\) implies \(v_-(\rho )\le v_+(\rho )\). This completes the proof that \(v_-(\rho )\le v_+(\rho )\). \(\square \)

Now, we prove that Theorems 3.4 and 3.5 imply Theorem 2.2, which states that the random walk on the exclusion process with density 1/2 has zero speed when \(p_\circ =1-p_\bullet \).

Proof of Theorem 2.2

Note that the law of the exclusion process with \(\rho =1/2\) is invariant under flipping colors \(\bullet \leftrightarrow \circ \). Thus, for any \(p, q \in [0,1]\) we have \(\mathbb {P}^{1/2}_{p, q} = \mathbb {P}^{1/2}_{q, p}\) which implies

Furthermore, in the particular case \(q = 1-p\), we have, for any \(\rho \ge 0\), any \(y\in \mathbb {L}\) and any Borel set \(A \subset \mathbb {R}\),

In particular,

Therefore, still assuming that \(q = 1-p\), we get

Combining (5.7) and (5.4) we get that whenever \(p_\circ = 1-p_\bullet \),

and thus, by Theorem 3.4, we have that \(v_+(1/2,p_\bullet , p_\circ )=v_-(1/2,p_\bullet , p_\circ )=0\). We can then conclude using Theorem 3.5. \(\square \)
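The symmetry used in this proof can also be checked pathwise. In the sketch below (an illustration, not part of the proof), we assume a one-step rule of the following type: the walk at \((x,n)\) steps right iff \(U(x,n)<p_\bullet \) on an occupied site, resp. \(U(x,n)<p_\circ \) on an empty one. If \(p_\circ =1-p_\bullet \), flipping colours and reflecting space, \(\tilde{\eta }(x,n)=1-\eta (-x,n)\), together with \(\tilde{U}(x,n)=1-U(-x,n)\), turns the trajectory X started at the origin into \(-X\) (outside the null event where some uniform equals \(p_\bullet \) or \(p_\circ \)). At \(\rho =1/2\), \(\tilde{\eta }\) has the same law as the SSEP and \(\tilde{U}\) the same law as U, which is why X and \(-X\) have the same annealed law. The environment below is an arbitrary 0/1 field, since the identity holds for every realization; all names are hypothetical.

```python
import random


def lazy_field(sampler):
    """Space-time field (x, n) -> value, sampled once per point and cached."""
    cache = {}

    def field(x, n):
        if (x, n) not in cache:
            cache[(x, n)] = sampler()
        return cache[(x, n)]

    return field


def walk(env, unif, p_occ, p_empty, n_steps):
    """Walk from (0, 0): step right iff unif(x, n) < p(env(x, n)) (assumed rule)."""
    x, path = 0, [0]
    for n in range(n_steps):
        p_right = p_occ if env(x, n) == 1 else p_empty
        x += 1 if unif(x, n) < p_right else -1
        path.append(x)
    return path


rng = random.Random(11)
eta = lazy_field(lambda: rng.randint(0, 1))  # any 0/1 environment works here
U = lazy_field(rng.random)
p_occ = 0.75
p_empty = 1 - p_occ  # the symmetric case p_o = 1 - p_b


def flipped_eta(x, n):
    return 1 - eta(-x, n)  # colour flip composed with the reflection x -> -x


def flipped_U(x, n):
    return 1 - U(-x, n)


X = walk(eta, U, p_occ, p_empty, 200)
Y = walk(flipped_eta, flipped_U, p_occ, p_empty, 200)
assert Y == [-x for x in X]
```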

5.2 Scales and boxes

In this section, we define some scales and boxes on \(\mathbb {R}^2\) that we will use in several renormalization procedures throughout the paper. In the following, we define sequences of scales that grow like \(L_{k+1}\sim L_k^{5/4}\); it should be noted that the exponent 5/4 is arbitrary and any exponent between 1 and 2 seems to work similarly (this one simply seems more convenient to us).
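To get a feel for these scales, the sketch below uses a hypothetical recursion of the announced type, \(\ell _k=\lfloor L_k^{1/4}\rfloor \) and \(L_{k+1}=\ell _kL_k\) (so that \(L_{k+1}\le L_k^{5/4}\)); the recursion defined next in the text may differ in its details, and the initial value \(L_0\) is arbitrary here. The sketch checks exactly, in integer arithmetic, the inequality \((hL_{k+1})^{3/4}\le hL_k\) that allows Proposition 4.1 to be applied in the proof of Proposition 5.1, and it finds the first scale with \(\ell _{k}\ge (6/v_{\min })^2\), the condition defining \(k_1(v_{\min })\).

```python
import math


def scales(L0, n):
    """Hypothetical scales: ell_k = floor(L_k^(1/4)) and L_{k+1} = ell_k * L_k."""
    Ls, ells = [L0], []
    for _ in range(n):
        ell = math.isqrt(math.isqrt(Ls[-1]))  # exact floor of the 4th root
        ells.append(ell)
        Ls.append(ell * Ls[-1])
    return Ls, ells


def decoupling_ok(L_k, L_next, h=1):
    """Exact check of (h L_{k+1})^(3/4) <= h L_k, i.e. (h L_{k+1})^3 <= (h L_k)^4."""
    return (h * L_next) ** 3 <= (h * L_k) ** 4


def first_good_scale(ells, v_min):
    """Smallest k with ell_k >= (6 / v_min)^2, or None if no listed scale works."""
    threshold = (6.0 / v_min) ** 2
    for k, ell in enumerate(ells):
        if ell >= threshold:
            return k
    return None


Ls, ells = scales(10**4, 8)
assert all(decoupling_ok(a, b, h) for a, b in zip(Ls, Ls[1:]) for h in (1, 5, 100))
print(first_good_scale(ells, v_min=0.1))
```

Integer arithmetic is used because the scales grow too fast for floating point after a few steps.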

Define recursively

There exists \(c_{4} > 0\) such that

For \(L \ge 1\) and \(h \ge 1\), define

and, for \(w \in \mathbb {R}^2\),

It will be convenient to define the following set of indices:

For each \(m\in M^h_k\) of the form \(m=(h,k,w)\) and \(v\in \mathbb {R}\) we write

Note that, by (3.11), for each \(m=(h,k,w) \in M_k^h\), a random walk starting at \(I_m\) stays inside \(B_m\). We also define the horizontal distance between \(m = (h,k,(x,t))\) and \(m' = (h,k,(x',t'))\) in \(M_k^h\) as

Later on, for \(m\in M^h_{k+1}\), we will want to tile the box \(B_m\) with boxes \(B_{m'}\) with \(m'\in M^h_k\). For this purpose, we define, for \(m\in M^h_{k+1}\) with \(m=(h,k+1,(z,t))\),

Note that

5.3 A recursive inequality

The following proposition will be used several times in the paper and is the basis of our renormalization argument.

It is important to notice that \(v_{\min }\) and \(v_{\max }\) in the next proposition are free parameters that we choose in different ways throughout the text. In particular, they are not related to \(v_-\) and \(v_+\) introduced earlier.

Proposition 5.1

Fix \(0<v_{\min }<v_{\max }\le 2\). Let \(k_1 = k_1(v_{\min })\) be such that \(\ell _{k_1}\ge (6/v_{\min })^2\). For any \(k\ge k_1\) and for all \(h\ge 1\), we have that

The above proposition relates the probability of a speed-up event at scale \(k + 1\) to the probability of similar events at scale k. Before proving Proposition 5.1, we prove the following deterministic lemma.

Lemma 5.2

Fix \(0< v_{\min } < v_{\max } \le 2\). Let \(k_1 = k_1(v_{\min })\) be such that \(\ell _{k_1}\ge ( 6/v_{\min })^2\). For any \(k\ge k_1\), for all \(h\ge 1\) and for all \(m\in M^h_{k+1}\), we have that at least one of the following events happens:

a) There exists \(m'\in C_m\) such that \(A_{m'}(v_{\max })\) occurs;

b) There exist \(m',m''\in C_m\) such that \(d_s(m',m'')\ge 4hL_k\) and such that the event \(A_{m'}(v_{\min })\cap A_{m''}(v_{\min })\) occurs;

c) \({A_{m}\big (v_{\min }+\frac{v_{\max }-v_{\min }}{\sqrt{\ell _k}}\big )}^c\) occurs.

Proof

Assume that items a) and b) do not hold. Define

For \(y\in I_m\), let \(m_0,m_1\in \mathcal {B}\) be the first and last indices of \(\mathcal {B}\) such that \(B_{m_0}\) and \(B_{m_1}\), respectively, are visited by \(\big ( X^y_t, 0 \le t \le hL_{k+1} \big )\). More precisely, let \(0\le i_0\le i_1\le \ell _k-1\) be such that \(X^y_{j_0hL_k}\in I_{m_0}\), \(X^y_{j_1hL_k}\in I_{m_1}\) with \(m_0=(h,k,(i_0,j_0)L_k)\), \(m_1=(h,k,(i_1,j_1)L_k)\), but

We need to consider two cases.

Case 1: assume \(j_1+1-j_0<\sqrt{\ell _k}\).

As the event a) does not occur, \(X^y_\cdot \) moves at speed at most \(v_{\max }\) between times \(j_0hL_k\) and \((j_1+1)hL_k\). Moreover, by definition of \(\mathcal {B}\), \(j_0\) and \(j_1\), \(X^y_\cdot \) moves at speed at most \(v_{\min }\) before time \(j_0 h L_k\) and after time \((j_1 + 1) h L_k\). Therefore, we have that

This implies that, in this case, \(A^c_{m}\left( v_{\min }+\frac{v_{\max }-v_{\min }}{\sqrt{\ell _k}}\right) \) occurs, i.e. item c).
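The arithmetic behind Case 1 is worth spelling out. Heuristically (ignoring the boundary conventions in the definition of \(A_m\), and assuming, as the range \(0\le j\le \ell _k-1\) of time indices suggests, that \(hL_{k+1}=\ell _k\,hL_k\)), the walk spends \(n:=j_1+1-j_0<\sqrt{\ell _k}\) blocks of duration \(hL_k\) at speed at most \(v_{\max }\), and the remaining time, of total length \((\ell _k-n)hL_k\), at average speed at most \(v_{\min }\), so that

```latex
X^y_{hL_{k+1}} - X^y_0
  \le \big(n\,v_{\max} + (\ell_k - n)\,v_{\min}\big)\,hL_k
  = hL_{k+1}\Big(v_{\min} + (v_{\max}-v_{\min})\,\frac{n}{\ell_k}\Big)
  < hL_{k+1}\Big(v_{\min} + \frac{v_{\max}-v_{\min}}{\sqrt{\ell_k}}\Big),
```

where the last step uses \(n<\sqrt{\ell _k}\) and \(v_{\max }>v_{\min }\). This is exactly the threshold appearing in item c).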

Case 2: assume \(j_1+1-j_0\ge \sqrt{\ell _k}\).

Again, we will use that \(X^y_\cdot \) moves at speed at most \(v_{\min }\) before time \(j_0hL_k\) and after time \((j_1+1)hL_k\). Moreover, recall that \(X^y_{j_0hL_k}\ge i_0hL_k\) and \(X^y_{(j_1+1)hL_k}\le (i_1+2)hL_k\) and note that, as the event described in b) does not occur, we have that \(|i_0-i_1|\le 4\). This yields, as a) does not occur and \(6/\sqrt{\ell _k}<v_{\min }\),

This implies again that \(A^c_{m}\left( v_{\min }+\frac{v_{\max }-v_{\min }}{\sqrt{\ell _k}}\right) \) occurs, concluding the proof. \(\square \)
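A similar bookkeeping sketch illustrates Case 2. It assumes (our illustration, not the omitted display) \(\ell_k\) time blocks of length \(hL_k\) and ignores boundary terms. Between times \(j_0hL_k\) and \((j_1+1)hL_k\) the walk advances at most \((i_1+2-i_0)\le 6\) spatial blocks. During the remaining \(\ell_k-n\) blocks it moves at speed at most \(v_{\min}\). Since \(n\ge \sqrt{\ell_k}\) and \(6/\sqrt{\ell_k}<v_{\min}\), we have \(nv_{\min}>6\), so the average speed stays strictly below \(v_{\min}\). The function name is hypothetical.

```python
import math


def case2_average_speed_bound(ell: int, n_mid: int, v_min: float, spatial_blocks: int = 6) -> float:
    """Upper bound on the average speed over `ell` time blocks (units of h L_k)
    when the walk spends `n_mid` blocks between the times j_0 h L_k and
    (j_1 + 1) h L_k, advancing at most spatial_blocks >= i_1 + 2 - i_0 blocks
    there, and moves at speed at most v_min during the other blocks.

    Boundary terms are ignored; this is illustrative bookkeeping only.
    """
    if not 0 <= n_mid <= ell:
        raise ValueError("n_mid must lie in [0, ell]")
    displacement = (ell - n_mid) * v_min + spatial_blocks
    return displacement / ell


if __name__ == "__main__":
    ell, v_min = 400, 0.5              # 6 / sqrt(400) = 0.3 < v_min
    n_mid = math.isqrt(ell)            # smallest n_mid allowed in Case 2
    print(case2_average_speed_bound(ell, n_mid, v_min) < v_min)  # True
```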

We are now ready to prove Proposition 5.1.

Proof of Proposition 5.1

Given \(m \in M_{k+1}^h\), we use Lemma 5.2 to obtain the following inclusion,

where the last union runs only over the set of pairs \((m',m'')\) in \(C_m\) such that \(d_s(m',m'') \ge 4hL_k\).

We now have to bound the probability of the left-hand side event. Let \(m'=(h,k,(i'hL_k,t')),m''=(h,k,(i''hL_k,t''))\in C_m\), with \(i'\le i''\), such that \(d_s(m',m'')\ge 4hL_k\), i.e. \(i''-i'\ge 4\). The events \(A_{m'}(v_{\min })\) and \(A_{m''}(v_{\min })\) are respectively supported on the boxes \(((i'+2)hL_k,0)+[-hL_{k+1},0]\times [0,hL_{k+1}]\) and \(((i''-1)hL_k,0)+[0,hL_{k+1}] \times [0,hL_{k+1}]\).

As \((i''-i'-3)hL_{k}\ge hL_k\ge (hL_{k+1})^{3/4}\), we can apply Proposition 4.1. Recalling that \(|C_m|=3\ell _k^2\), we have that

This concludes the proof of the proposition. \(\square \)
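To make the geometry of the decoupling step concrete, the sketch below does two things. First, it computes the horizontal gap, in units of \(hL_k\), between the two supports listed in the proof. This gap is \(i''-i'-3\ge 1\). Second, it enumerates the separated pairs to which the union bound applies. The shape of \(C_m\) as \(3\ell_k\) spatial indices times \(\ell_k\) time indices is our assumption; it is consistent with \(|C_m|=3\ell_k^2\), but it is not stated here. Only the crude count \(|C_m|^2=9\ell_k^4\) of pairs matters for such a union bound.

```python
from itertools import product


def support_gap(i_left: int, i_right: int) -> int:
    """Horizontal gap, in units of h L_k, between the right edge (i' + 2) of the
    support of A_{m'}(v_min) and the left edge (i'' - 1) of the support of
    A_{m''}(v_min), for spatial indices i' = i_left <= i'' = i_right."""
    return (i_right - 1) - (i_left + 2)


def separated_pairs(ell: int, min_sep: int = 4):
    """Pairs (m', m'') of a hypothetical index set
    C_m = {0, ..., 3*ell - 1} x {0, ..., ell - 1} (space index, time index)
    whose spatial indices satisfy i'' - i' >= min_sep.

    The grid shape is our assumption; it matches |C_m| = 3 * ell**2."""
    children = list(product(range(3 * ell), range(ell)))
    return [(a, b) for a, b in product(children, repeat=2) if b[0] - a[0] >= min_sep]


if __name__ == "__main__":
    ell = 4
    pairs = separated_pairs(ell)
    print(min(support_gap(a[0], b[0]) for a, b in pairs))  # 1
    print(len(pairs) <= (3 * ell**2) ** 2)                  # True
```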

5.4 Bound on \(p_H(v)\)

We will first prove the following result, which establishes a strong decay for the probability that the walk goes faster than \((v_+\vee 0)\) along a particular subsequence of times. Once we establish this result, we will simply need to interpolate it to any value \(H \ge 1\).

Lemma 5.3

For all \(v>(v_+\vee 0)\) there exist \(c_{5} = c_{5}(v) \ge 1\) and \(k_2=k_2(v) \ge 1\) such that for every \(k \ge k_2\)

From now on, we fix \(v>(v_+\vee 0)\). Recall the definition of \(\ell _k\) below (5.9) and let \(k_3 = k_3(v)\) be such that, for all \(k\ge k_3\),

This exact choice for the constant \(k_3\) will become clear during the proof of Lemma 5.3, but for now it suffices to observe that it is well-defined because \(\ell _k\) grows super-exponentially fast. Let us define the following sequence of speeds:

We have that

Figure: The sequence of velocities \(v_k\) as defined in (5.27).

Recall the definition of \(C_m\) below (5.16). We are now ready to conclude the proof of Lemma 5.3.

Proof of Lemma 5.3

Observe first that \(2 > (5/4)^{3/2} \approx 1.4\), so that we can choose \(k_2 \ge k_3\) such that, for any \(k\ge k_2\),

where \(c_{3}=c_{3}(\rho )\) is defined in Proposition 4.1. Since \(v_{k_2} > v_+\), we have

Therefore, we fix \(c_{5}(v)\ge 1\) for which

Now we can iteratively use Proposition 5.1, for every \(k\ge k_2\), by choosing \(v_{\min }=v_k\) and \(v_{\max }=2\). In particular, \(p_{c_{5}L_k}(v_{\max })=0\) and, by (5.26),

Therefore, we can obtain the statement of the lemma through induction, by simply observing that for all \(k\ge k_2\),

where we used (5.29), the fact that \(c_{5}\ge 1\) and \(L_k^{3/4}\ge L_k^{1/2}\) for \(k\ge 0\). \(\square \)
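The numerical comparison used at the beginning of the proof of Lemma 5.3 is elementary:

```latex
\left(\tfrac{5}{4}\right)^{3/2} = \frac{5\sqrt{5}}{8} \approx \frac{5 \times 2.2361}{8} \approx 1.398 < 2 .
```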

5.5 Proof of Lemma 3.2

With Lemma 5.3 at hand, we just need an interpolation argument to establish Lemma 3.2. Let \(v=(v_+\vee 0)+\epsilon \), \(v' = ((v_+\vee 0) + v)/2\) and let \(c_{5}(v')\) and \(k_2(v')\) be as in Lemma 5.3. For \(H \ge 1\) let us define \(\bar{k}\) as being the integer that satisfies:

Let us first assume that H is sufficiently large so that \(\bar{k} \ge k_2\), that

Therefore, we can apply Lemma 5.3 to conclude that

Now, in order to bound \(p_H(v)\), we start by fixing some \(w \in \mathbb {R}^2\) and paving the box \(B^1_H( w)\) with boxes \(B_{m}\), \(m\in M^{c_{5}}_{\bar{k}}\), of the form \(m=(c_{5},\bar{k},w+(xc_{5}L_{\bar{k}}, yc_{5}L_{\bar{k}}))\), where \(-\lceil H/c_{5}L_{\bar{k}}\rceil \le x\le \lceil 2H/c_{5}L_{\bar{k}}\rceil \) and \(0\le y \le \lceil H/c_{5}L_{\bar{k}}\rceil \) are integers. Let us denote by M the set of such indices. Note that

An important observation at this point is that, on the event \(\cap _{m\in M} (A_{m}(v'))^{\mathsf {c}}\), for any \(y \in I^1_H( w)\) the displacement of \(X^y\) up to time \({\lfloor H/c_{5} L_{\bar{k}} \rfloor c_{5} L_{\bar{k}}}\) can be bounded by

where we used that \(A_m(v')\) does not occur for any \(m \in M\) and that each point \(X^y_{j c_{5} L_{\bar{k}}}\) belongs to \(I_{m}\) for some \(m \in M\). Besides, we have that

Therefore, by the Lipschitz condition (3.11) and (5.38), on the event \(\cap _{m\in M} (A_{m}(v'))^{\mathsf {c}}\), for any \(y \in I^1_H( w)\),

Thus, using (5.35) and (5.37), we obtain

The conclusion of Lemma 3.2 now follows by taking the supremum over all \(w \in [0,1) \times \{0\}\) and then properly choosing the constant \(c_{0}\) in order to accommodate small values of H.

This finishes the proof of Lemma 3.2.
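For concreteness, the following sketch transcribes the index set M used in the paving above. It writes \(a = c_5L_{\bar k}\) for the side length of the boxes; the function names are ours. Counting the stated ranges of x and y gives \(|M| = (\lceil 2H/a\rceil + \lceil H/a\rceil + 1)(\lceil H/a\rceil + 1)\), which is of order \((H/a)^2\). The last helper computes the time \(H-\lfloor H/a\rfloor a\in[0,a)\) that is not covered by whole boxes. Over this leftover time the displacement is controlled by the Lipschitz condition (3.11), as in the argument above.

```python
import math


def paving_indices(H: float, a: float) -> list[tuple[int, int]]:
    """Integer pairs (x, y) with -ceil(H/a) <= x <= ceil(2H/a) and
    0 <= y <= ceil(H/a); the pair (x, y) labels the box with corner
    w + (x * a, y * a), where a = c_5 * L_kbar."""
    n_left = math.ceil(H / a)
    n_right = math.ceil(2 * H / a)
    return [(x, y) for x in range(-n_left, n_right + 1) for y in range(n_left + 1)]


def paving_size(H: float, a: float) -> int:
    """Closed-form count of the indices returned by paving_indices."""
    n = math.ceil(H / a)
    return (math.ceil(2 * H / a) + n + 1) * (n + 1)


def leftover_time(H: float, a: float) -> float:
    """Time H - floor(H/a) * a not covered by whole boxes; it lies in [0, a)."""
    return H - math.floor(H / a) * a


if __name__ == "__main__":
    H, a = 100.0, 7.0
    print(len(paving_indices(H, a)) == paving_size(H, a))  # True
```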

6 Proof of Theorem 3.4

As discussed above, we want to show that \(v_+ = v_-\). Assume by contradiction that \(v_+>v_-\). Then either \(v_+>0\) or \(v_-<0\). We treat the case \(v_+>0\); the other case is handled analogously by symmetry.

Let us define

Note that \(\delta \in (0, 1/2]\), since we argue by contradiction and assume that \(v_+ > v_-\).

The goal of this section is to prove the following proposition which, as we show below, immediately implies Theorem 3.4.

Proposition 6.1

Assume that \(\delta \), defined in (6.1), is positive. There exist \(k_4(c_{3})\), \(c_{7}(v_+,v_-,k)=c_{7}(k)\ge 1\) and \(c_{6}(\delta , k_4)\) such that, for all \(k\ge k_4\), for all \(h\ge c_{6}\), for all \(m\in M^h_k\),

Proof of Theorem 3.4

Proposition 6.1 implies that there exists \(\epsilon >0\) independent of H such that \(\liminf _{H\rightarrow \infty } p_H(v_+-\epsilon )=0\), which contradicts the definition of \(v_+\). This contradiction shows that \(v_+=v_-\), which proves Theorem 3.4. \(\square \)

6.1 Trapped points

The first step of the proof is to introduce the notion of traps. Intuitively speaking, by the definitions of \(v_-\) and \(v_+\), we know that the random walker has a reasonable probability of attaining speeds close to both of these values. However, every time the random walker reaches an average speed close to \(v_-\), it becomes harder for it to attain an average speed close to \(v_+\), especially since it is very unlikely to run much faster than \(v_+\) at any moment. This motivates the definition below.

Definition 6.2

Given \(K \ge 1\) and \(\delta \) as in (6.1), we say that a point \(w \in \mathbb {R}^2\) is K-trapped if there exists some \(y \in \big (w + [\delta K, 2 \delta K] \times \{0\} \big ) \cap \mathbb {L}\) such that

Note that this definition applies to points \(w \in \mathbb {R}^2\) that do not necessarily belong to \(\mathbb {L}\). \(\square \)

As we mentioned above, the existence of a trap will introduce a delay for the random walker. In fact, by monotonicity, if w is K-trapped, then for every \(w' \in \big ( w + [0, \delta K] \times \{0\} \big ) \), we have

where y is any point in \(\big (w + [\delta K, 2 \delta K] \times \{0\} \big )\) satisfying (6.3).

Our next step is to show that the probability of finding a trap is uniformly bounded away from zero.

Lemma 6.3

There exist constants \(c_{8}(v_+,v_-) > 0\) and \(c_{9} (v_+,v_-)> 4/\delta \), such that

Proof

Since \(v_-+\delta >v_-\), the definition of \(v_-\) implies the positivity of the following constant:

In particular, there exists \(c_{9}>8/\delta \) such that

If we had a supremum over \(w\in \mathbb {R}^2\) in (6.5), we would be done. However, we have an infimum in (6.5), so that the proof requires a few more steps.

Recall the definition of \(\mathcal {L}_1\) in (3.12). Let us prove that if

then

Assume that (6.8) holds and fix \(y\in \mathcal {L}_1\). Assume first that \(\pi _2(y)\le \pi _2(z)\). Define \(y'=(\pi _1(z')-(\pi _2(z)-\pi _2(y)),\pi _2(y))\). Thus \(\pi _2(y')\le \pi _2(z)\) and \(\pi _1(y')\le \pi _1(z')-(\pi _2(z')-\pi _2(y'))\). Hence, by Proposition 3.1, we have that, for \(K\ge 2\),

By (3.11) and using that \(|\pi _2(z')-\pi _2(y')|\le 2\), we have that

Moreover, as \(z,y\in \mathcal {L}_1\) and \(\pi _1(y')=\pi _1(z')-(\pi _2(z)-\pi _2(y))\), we have that \(\delta K\le \pi _1(y')\le 2\delta K\).

If \(\pi _2(y)\ge \pi _2(z)\), similar arguments apply by defining \(y'=(\pi _1(z')-(\pi _2(y)-\pi _2(z)),\pi _2(y))\).

Then, for \(K > c_{9}\), let \(\tilde{K}=K-8/\delta \), so that we have, using translation invariance,

By Remark 6, the infimum over \(\mathcal {L}_1\) is equal to the infimum over \(\mathbb {R}^2\), and we can thus conclude. \(\square \)

Let us describe how we intend to employ the previous lemma, which is largely inspired by Lemma 5.2 in [BHT18]. The basic idea is that if a point is trapped, then a walk started from there will be delayed. If the probability that a point is trapped were very high, the set of delayed points would resemble a (dependent) supercritical percolation cluster. In such a scenario, any random walker would be delayed over large distances and would not be able to attain an average speed close to \(v_+\) at any time scale. This would ultimately contradict the definition of \(v_+\).

Nevertheless, Lemma 6.3 does not guarantee that the probability that a point is trapped is high. For this reason, we will use here the notion of threatened points introduced in [BHT18]. Intuitively speaking, we will say that a point is threatened if there exists at least one trapped point lying along a line segment with slope \(v_+\) starting from this point, see Definition 6.4 and Fig. 8.

We will then prove two key results: a point is threatened with very high probability (see Lemma 6.6) and a random walk starting at a threatened point is delayed with very high probability (see Lemmas 6.5 and 6.8).

6.2 Threatened points

Definition 6.4

Given \(\delta \) as in (6.1), \(K \ge 1\) and some integer \(r \ge 1\), we say that a point \(w \in \mathbb {L}\) is (K, r)-threatened if \(w + j K (v_+, 1)\) is K-trapped for some \(j = 0, \dots , r-1\).
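Definition 6.4 is a finite search along the space-time segment \(w + jK(v_+,1)\), \(j=0,\dots ,r-1\). The sketch below transcribes it under one assumption: it relies on a hypothetical oracle `is_trapped` that decides K-trappedness (Definition 6.2) at the fixed scale K. The paper does not provide such a function; the toy oracle in the usage lines is purely illustrative.

```python
from typing import Callable, Optional, Tuple

Point = Tuple[float, float]  # (space, time)


def threatening_index(
    w: Point,
    K: float,
    r: int,
    v_plus: float,
    is_trapped: Callable[[Point], bool],
) -> Optional[int]:
    """Smallest j in {0, ..., r-1} such that w + j*K*(v_plus, 1) is K-trapped,
    or None if w is not (K, r)-threatened.

    `is_trapped` is a hypothetical oracle standing in for Definition 6.2.
    """
    for j in range(r):
        candidate = (w[0] + j * K * v_plus, w[1] + j * K)
        if is_trapped(candidate):
            return j
    return None


def is_threatened(w: Point, K: float, r: int, v_plus: float,
                  is_trapped: Callable[[Point], bool]) -> bool:
    """True if w is (K, r)-threatened with respect to the given oracle."""
    return threatening_index(w, K, r, v_plus, is_trapped) is not None


if __name__ == "__main__":
    # Toy oracle (purely illustrative): every point at time >= 20 is "trapped".
    toy = lambda p: p[1] >= 20
    print(threatening_index((0.0, 0.0), K=10.0, r=3, v_plus=0.5, is_trapped=toy))  # 2
    print(is_threatened((0.0, 0.0), K=10.0, r=2, v_plus=0.5, is_trapped=toy))      # False
```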

Fig. 8: The point w in \(y +[-\delta K/4,0]\times \{0\}\) is (K, r)-threatened, since \(w + j_o K (v_+, 1)\) is K-trapped. Picture taken from [BHT18].

As we show below, a random walker starting at a threatened point will most likely suffer a delay, similarly to what we saw for trapped points. See Fig. 8 for an illustration. We first state and prove Lemma 6.5, which is purely deterministic.

Lemma 6.5

For any positive integer r and any real number \(K\ge c_{9}\), if we start the walker at some \(y \in \mathbb {L}\) and there exists \(w\in (y+[-\delta K/4,0] \times \{0\})\) such that

then either

1. “the walker runs faster than \(v_+\) for some time interval of length K”, that is,

$$\begin{aligned} X_{(j + 1)K}^y - X_{jK}^y \ge \Big ( v_+ + \frac{\delta }{2 r} \Big )K \quad \text { for some } j = 0, \dots , r - 1, \end{aligned}\tag{6.13}$$

2. or else “it will be delayed”, that is,

$$\begin{aligned} X^y_{rK} - \pi _1(y) \le \Big ( v_+ - \frac{\delta }{2 r} \Big ) r K. \end{aligned}\tag{6.14}$$
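The two alternatives of Lemma 6.5 are simple conditions on the positions \(X^y_{jK}\), \(j=0,\dots ,r\). The sketch below (function name hypothetical) checks them for a given path. It also shows why the threatened-point hypothesis is needed: a path moving at constant speed exactly \(v_+\) satisfies neither (6.13) nor (6.14).

```python
from typing import Sequence, Tuple


def lemma_6_5_alternatives(
    x: Sequence[float], y1: float, K: float, r: int, v_plus: float, delta: float
) -> Tuple[bool, bool]:
    """Check which alternatives of Lemma 6.5 hold for a given path.

    x[j] stands for X^y_{jK}, j = 0, ..., r, and y1 for pi_1(y).
    Returns (runs_fast, delayed): whether (6.13), resp. (6.14), holds.
    """
    if len(x) != r + 1:
        raise ValueError("expected positions at times 0, K, ..., rK")
    eps = delta / (2 * r)
    runs_fast = any(x[j + 1] - x[j] >= (v_plus + eps) * K for j in range(r))
    delayed = x[r] - y1 <= (v_plus - eps) * r * K
    return runs_fast, delayed


if __name__ == "__main__":
    K, r, v_plus, delta = 10.0, 4, 0.5, 0.2
    # Constant speed exactly v_+: neither alternative holds.
    straight = [j * v_plus * K for j in range(r + 1)]
    print(lemma_6_5_alternatives(straight, 0.0, K, r, v_plus, delta))  # (False, False)
```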

Proof

Fix \(r \ge 1\) and \(K\ge 1\). Assume that the point \(w\in (y+[-\delta K/4,0]\times \{0\})\) is (K, r)-threatened. Thus, for some \(j_o \in \{ 0, \dots , r - 1\}\),

or, in other words, there exists a point

such that

Fix such a point \(y'\) and notice from (6.16) that,

We now assume that (6.13) does not hold and bound the horizontal displacement of the random walk in three steps: before time \(j_o K\), between times \(j_o K\) and \((j_o + 1) K\) and from time \((j_o + 1) K\) to time rK.

By (6.18), \(Y^y_{j_o K}\) lies to the left of \(y'\) and, by monotonicity, (6.17) and (6.18), we have that

Now applying once more the assumption that (6.13) does not hold, for \(j = j_o, \ldots , r-1\), we can bound the overall displacement of the random walk up to time rK:

showing that (6.14) holds and thus proving the result. \(\square \)

We have seen that threatened points most likely cause a delay to the walk, just like traps do. The advantage of introducing the concept of threats, however, is that threatened points are much more likely to occur than traps, as the next lemma shows.

Lemma 6.6

(Threatened points). There exist \(c_{11} = c_{11}(v_+,v_-,c_{3})\) and \(c_{10}(v_+,v_-)\) such that, for any \(r \ge 1\) and for any \(K\ge c_{10}(v_+,v_-)\),

Proof

First, we prove a statement for \(r=3^j\) for all integers \(j \ge 3\). Let us define

Note that if the event \(\{y \text { is not }(K,3^{j+1})\text {-threatened}\}\) occurs for some \(j\ge 3\), then the two following events both occur:

These events are respectively supported on

We want to apply Proposition 4.1. For this purpose, we require that \((3^jv_+ -2-2\delta )K\ge (3^{j + 1 }K)^{3/4}\) by choosing \( k_5(v_+)\ge 3\) such that \(3^j v_+ -2-2\delta >3^jv_+/2\) for all \(j\ge k_5\), and by choosing \(c_{10}(v_+,v_-)\ge c_{9}\) (defined in Lemma 6.3) such that \(v_+K^{1/4}\ge 2\) for all \(K\ge c_{10}\).
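The two choices above do give the required separation. The computation uses only \(3^jv_+-2-2\delta >3^jv_+/2\), \(v_+K^{1/4}\ge 2\) and \(j\ge 3\), so that \(3^{j/4}\ge 3^{3/4}\):

```latex
\begin{aligned}
(3^j v_+ - 2 - 2\delta)K
  &> \frac{v_+}{2}\, 3^j K
   = \frac{v_+ K^{1/4}}{2}\; 3^{j/4}\, 3^{3j/4} K^{3/4} \\
  &\ge 3^{3/4}\, 3^{3j/4} K^{3/4}
   = \big(3^{j+1} K\big)^{3/4}.
\end{aligned}
```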

Define the constant \(\widetilde{c_{8}}=1-(1-c_{8})^{1/9}\), where \(c_{8}\) is defined in Lemma 6.3, and let \(c_{13}(v_+,v_-,c_{3})\) be such that

Now, note that, for all \(K\ge c_{10}\), by Lemma 6.3 we have \(q^K_{c_{13}+3}\le 1-c_{8}\le (1-\widetilde{c_{8}})^{3^2}\). Assume that, for some \(j \ge k_5\), \(q^K_{c_{13}+j}\le (1-\widetilde{c_{8}})^{j^2}\). Then, one has