Abstract

We obtain large gap asymptotics for Airy kernel Fredholm determinants with any number m of discontinuities. These m-point determinants are generating functions for the Airy point process and encode probabilistic information about eigenvalues near soft edges in random matrix ensembles. Our main result is that the m-point determinants can be expressed asymptotically as the product of m 1-point determinants, multiplied by an explicit constant pre-factor which can be interpreted in terms of the covariance of the counting function of the process.

1 Introduction

Airy kernel Fredholm determinants The Airy point process or Airy ensemble [39, 42] is one of the most important universal point processes arising in random matrix ensembles and other repulsive particle systems. It describes, among other things, the eigenvalues near soft edges in a wide class of ensembles of large random matrices [16, 21, 22, 25, 40], the largest parts of random partitions or Young diagrams with respect to the Plancherel measure [5, 13], and the transition between liquid and frozen regions in random tilings [32]. It is a determinantal point process, which means that its correlation functions can be expressed as determinants involving a correlation kernel, which characterizes the process. This correlation kernel is given in terms of the Airy function by

$$\begin{aligned} K^{\mathrm{Ai}}(u,v)=\frac{\mathrm{Ai}(u)\mathrm{Ai}'(v)-\mathrm{Ai}'(u)\mathrm{Ai}(v)}{u-v}. \end{aligned}\tag{1.1}$$

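Let us write \(N_A\) for the number of points in the process which are contained in the set \(A\subset \mathbb {R}\), let \(A_1,\ldots , A_m\) be disjoint subsets of \(\mathbb {R}\), with \(m\in \mathbb {N}_{>0}\), and let \(s_1,\ldots , s_m\in \mathbb {C}\). Then, the general theory of determinantal point processes [11, 33, 42] implies that

$$\begin{aligned} \mathbb {E}\Big (\prod _{j=1}^m s_j^{N_{A_j}}\Big )=\det \Big (1-\chi _{\cup _j A_j}\sum _{j=1}^m (1-s_j)\mathcal {K}^{\mathrm{Ai}}\chi _{A_j}\Big ), \end{aligned}\tag{1.2}$$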

where the right hand side of this identity denotes the Fredholm determinant of the operator \(\chi _{\cup _j A_j}\sum _{j=1}^m (1 - s_j) \mathcal {K}^{\mathrm{Ai}} \chi _{A_j}\), with \(\mathcal {K}^{\mathrm{Ai}}\) the integral operator associated to the Airy kernel and \(\chi _A\) the projection operator from \(L^2(\mathbb {R})\) to \(L^2(A)\). The integral kernel operator \(\mathcal {K}^{\mathrm{Ai}}\) is trace-class when acting on bounded real intervals or on unbounded intervals of the form \((x,+\,\infty )\). Note that, when \(s_j=0\) for \(j\in \mathcal {K} \subset \{1,\ldots ,m\}\), the left-hand-side of (1.2) should be interpreted as

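In what follows, we take the special choice of subsets

$$\begin{aligned} A_j=(x_j,x_{j-1}),\quad j=1,\ldots ,m,\qquad \text {with } +\infty =x_0>x_1>x_2>\cdots >x_m, \end{aligned}$$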

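we restrict to \(s_1,\ldots , s_m\in [0,1]\), and we study the function

$$\begin{aligned} F(\vec {x};\vec {s})=\det \Big (1-\chi _{(x_m,+\infty )}\sum _{j=1}^m (1-s_j)\mathcal {K}^{\mathrm{Ai}}\chi _{(x_j,x_{j-1})}\Big ). \end{aligned}$$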

The case \(m=1\) corresponds to the Tracy–Widom distribution [43], which can be expressed in terms of the Hastings–McLeod [29] (if \(s_{1} = 0\)) or Ablowitz–Segur [1] (if \(s_{1}\in (0,1)\)) solutions of the Painlevé II equation. It follows directly from (1.2) that F(x; 0) is the probability distribution of the largest particle in the Airy point process. The function F(x; s) for \(s\in (0,1)\) is the probability distribution of the largest particle in the thinned Airy point process, which is obtained by removing each particle independently with probability s. Such thinned processes were introduced in random matrix theory by Bohigas and Pato [9, 10] and rigorously studied for the sine process in [15] and for the Airy point process in [14]. For \(m \ge 1\), \(F(\vec {x};\vec {s})\) is the probability to observe a gap on \((x_{m},+\,\infty )\) in the piecewise constant thinned Airy point process, where each particle on \((x_{j},x_{j-1})\) is removed with probability \(s_{j}\) (see [18] for a similar situation, with more details provided). It was shown recently that the m-point determinants \(F(\vec {x};\vec {s})\) for \(m>1\) can be expressed identically in terms of solutions to systems of coupled Painlevé II equations [19, 44], which are special cases of integro-differential generalizations of the Painlevé II equations which are connected to the KPZ equation [2, 20]. We refer the reader to [19] for an overview of other probabilistic quantities that can be expressed in terms of \(F(\vec {x};\vec {s})\) with \(m>1\).
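For readers who wish to experiment, \(F(\vec {x};\vec {s})\) can be evaluated numerically by discretizing the Fredholm determinant with Gauss–Legendre quadrature (a Nyström-type method in the spirit of Bornemann; this numerical approach plays no role in the proofs). The Python sketch below is a minimal illustration under stated assumptions: it relies on SciPy's `scipy.special.airy`, replaces \(x_0=+\infty \) by a hypothetical cut-off `upper=12` where the Airy kernel is negligible, uses a separate Gauss–Legendre rule on each interval \((x_j,x_{j-1})\) so that the jumps of the piecewise constant factor fall on panel boundaries, and evaluates the diagonal of the Airy kernel through its limit \(\mathrm{Ai}'(u)^2-u\,\mathrm{Ai}(u)^2\). The function names are ours.

```python
import numpy as np
from scipy.special import airy


def airy_kernel_matrix(t):
    """Matrix K^Ai(t_i, t_j) for distinct nodes t; diagonal via the limit formula."""
    ai, aip, _, _ = airy(t)
    num = np.outer(ai, aip) - np.outer(aip, ai)
    diff = t[:, None] - t[None, :]
    np.fill_diagonal(diff, 1.0)                  # avoid 0/0; diagonal overwritten below
    K = num / diff
    np.fill_diagonal(K, aip**2 - t * ai**2)      # K(u, u) = Ai'(u)^2 - u Ai(u)^2
    return K


def F(x, s, upper=12.0, n_per_panel=60):
    """Approximate F(x; s) for x_1 > x_2 > ... > x_m and s_j in [0, 1].

    The operator 1 - sum_j (1 - s_j) K chi_{(x_j, x_{j-1})} is discretised with a
    Gauss-Legendre rule on every panel (x_j, x_{j-1}); x_0 = +inf is replaced by
    the (hypothetical) cut-off `upper`.
    """
    x = np.atleast_1d(np.asarray(x, dtype=float))
    s = np.atleast_1d(np.asarray(s, dtype=float))
    edges = np.concatenate(([upper], x))
    if x.size != s.size or np.any(np.diff(edges) >= 0):
        raise ValueError("need len(x) == len(s) and upper > x_1 > ... > x_m")
    gx, gw = np.polynomial.legendre.leggauss(n_per_panel)
    t, w, f = [], [], []
    for j in range(x.size):
        b, a = edges[j], edges[j + 1]            # panel A_{j+1} = (x_{j+1}, x_j)
        t.append(0.5 * (b - a) * gx + 0.5 * (b + a))
        w.append(0.5 * (b - a) * gw)
        f.append(np.full(n_per_panel, 1.0 - s[j]))
    t, w, f = np.concatenate(t), np.concatenate(w), np.concatenate(f)
    sw = np.sqrt(w)
    # Nystrom matrix of the kernel K(u, v) (1 - s(v)), conjugated by diag(sqrt(w))
    A = np.eye(t.size) - sw[:, None] * airy_kernel_matrix(t) * (f * sw)[None, :]
    return np.linalg.det(A)
```

For instance, `F([-2.0], [0.0])` approximates the Tracy–Widom distribution \(F(-2;0)\), and a call with two equal parameters, such as `F([-1.0, -3.0], [0.3, 0.3])`, should agree with the one-point value `F([-3.0], [0.3])`, which is a convenient sanity check of the panel construction.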

Large gap asymptotics Since \(F(\vec {x};\vec {s})\) is a transcendental function, it is natural to try to approximate it for large values of the components of \(\vec {x}\). Generally speaking, the asymptotics as components of \(\vec {x}\) tend to \(+\,\infty \) are relatively easy to understand and can be deduced directly from asymptotics for the kernel, but the asymptotics as components of \(\vec {x}\) tend to \(-\infty \) are much more challenging. The problem of finding such large gap asymptotics for universal random matrix distributions has a rich history; for an overview, see e.g. [35] and [26]. In general, it is particularly challenging to compute the multiplicative constant arising in large gap expansions explicitly. In the case \(m=1\) with \(s=0\), it was proved in [4, 23] that

where \(\zeta '\) denotes the derivative of the Riemann zeta function. Tracy and Widom had already obtained this expansion in [43], but without rigorously proving the value \(2^{\frac{1}{24}}e^{\zeta '(-1)}\) of the multiplicative constant. For \(m=1\) with \(s> 0\), it is notationally convenient to write \(s=e^{-2\pi i\beta }\) with \(\beta \in i\mathbb {R}\), and it was proved only recently by Bothner and Buckingham [14] that

where G is Barnes’ G-function, confirming a conjecture from [8]. The error term in (1.5) is uniform for \(\beta \) in compact subsets of the imaginary line.
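As a rough numerical sanity check of (1.4) and (1.5), not part of the argument of the paper, one can compare a quadrature value of \(\log F(x;s)\) with the leading terms of these expansions. The sketch assumes the explicit forms \(\log F(x;0)\approx -\frac{|x|^3}{12}-\frac{1}{8}\log |x|+\log \big (2^{\frac{1}{24}}e^{\zeta '(-1)}\big )\) and, for \(s=e^{-v}\in (0,1)\) (so that \(\beta =-\frac{iv}{2\pi }\)), \(\log F(x;s)\approx -\frac{2v}{3\pi }|x|^{3/2}+\frac{v^2}{4\pi ^2}\log (8|x|^{3/2})+\log \big (G(1+\beta )G(1-\beta )\big )\), as in Bothner and Buckingham [14]. The Barnes G-factor is evaluated through the Maclaurin series of \(\log G(1+z)\) (DLMF 5.17.3), which converges for \(|\beta |<1\); the value \(\zeta '(-1)\approx -0.1654211437\) is hard-coded, and the cut-off `upper` and node count `n` are illustrative choices.

```python
import numpy as np
from scipy.special import airy, zeta

ZETA_PRIME_M1 = -0.16542114370045092   # zeta'(-1) = 1/12 - log(Glaisher-Kinkelin constant)


def log_F1(x, s, upper=12.0, n=200):
    """log det(1 - (1 - s) K^Ai chi_{(x, upper)}) via a Gauss-Legendre Nystrom rule."""
    gx, gw = np.polynomial.legendre.leggauss(n)
    t = 0.5 * (upper - x) * gx + 0.5 * (upper + x)
    w = 0.5 * (upper - x) * gw
    ai, aip, _, _ = airy(t)
    diff = t[:, None] - t[None, :]
    np.fill_diagonal(diff, 1.0)                       # avoid 0/0; diagonal set below
    K = (np.outer(ai, aip) - np.outer(aip, ai)) / diff
    np.fill_diagonal(K, aip**2 - t * ai**2)
    sw = np.sqrt(w)
    sign, logdet = np.linalg.slogdet(np.eye(n) - (1.0 - s) * sw[:, None] * K * sw[None, :])
    return logdet


def tracy_widom_tail(x):
    """Leading terms of log F(x; 0) as x -> -inf, cf. (1.4)."""
    ax = abs(x)
    return -ax**3 / 12 - np.log(ax) / 8 + np.log(2) / 24 + ZETA_PRIME_M1


def log_barnes_pair(b2, kmax=60):
    """log G(1+beta) + log G(1-beta) from the Maclaurin series, with b2 = beta^2."""
    total = -(1.0 + np.euler_gamma) * b2
    for k in range(3, kmax, 2):                        # only odd k survive the symmetrisation
        total -= 2.0 * zeta(k) * b2 ** ((k + 1) // 2) / (k + 1)
    return total


def thinned_tail(x, s):
    """Leading terms of log F(x; s), s = e^{-v} in (0, 1), as x -> -inf, cf. (1.5)."""
    v = -np.log(s)
    b2 = -v**2 / (4 * np.pi**2)                        # beta = -i v / (2 pi), beta^2 < 0
    ax = abs(x)
    return (-2 * v * ax**1.5 / (3 * np.pi) + v**2 / (4 * np.pi**2) * np.log(8 * ax**1.5)
            + log_barnes_pair(b2))
```

For example, `log_F1(-6.0, 0.0) - tracy_widom_tail(-6.0)` and `log_F1(-14.0, 0.2) - thinned_tail(-14.0, 0.2)` should both be small, in line with the vanishing error terms. We stop at moderate \(|x|\) because for \(s=0\) the determinant becomes extremely small and double precision eventually limits the relative accuracy.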

We generalize these asymptotics to general values of m, for \(s_2,\ldots , s_m\in (0,1]\), and \(s_1\in [0,1]\), and show that they exhibit an elegant multiplicative structure. To see this, we need to make a change of variables \(\vec {s}\mapsto \vec {\beta }\), by defining \(\beta _j\in i\mathbb {R}\) as follows. If \(s_1>0\), we define \(\vec {\beta }=(\beta _1,\ldots , \beta _m)\) by

and if \(s_1=0\), we define \(\vec {\beta }_0=(\beta _2,\ldots , \beta _m)\) with \(\beta _2,\ldots , \beta _m\) again defined by (1.6). We then denote, if \(s_1>0\),

and if \(s_1=0\),

where \(\mathbb {E}'\) denotes the expectation associated to the law of the particles \(\lambda _1\ge \lambda _2\ge \cdots \) conditioned on the event \(\lambda _1 \le x_1\).

Main result for \(s_1>0\). We express the asymptotics for the m-point determinant \(E(\vec {x};\vec {\beta })\) in two different but equivalent ways. First, we write them as the product of the determinants \(E(x_j;\beta _j)\) with only one singularity (for which asymptotics are given in (1.5)), multiplied by an explicit pre-factor which is bounded in the relevant limit. Secondly, we write them in a more explicit manner.

Theorem 1.1

Let \(m\in \mathbb {N}_{>0}\), and let \(\vec {x}=(x_1,\ldots , x_m)\) be of the form \(\vec {x}=r\vec {\tau }\) with \(\vec {\tau }=(\tau _1,\ldots , \tau _m)\) and \(0>\tau _1>\tau _2>\cdots >\tau _m\). For any \(\beta _1,\ldots , \beta _m\in i\mathbb {R}\), we have the asymptotics

as \(r\rightarrow +\infty \), where \(\Sigma (\tau _k,\tau _j)\) is given by

The error term is uniformly small for \(\beta _1,\beta _2,\ldots , \beta _m\) in compact subsets of \(i\mathbb {R}\), and for \(\tau _1,\ldots , \tau _m\) such that \(\tau _1<-\delta \) and \(\min _{1\le k\le m-1}\{\tau _k-\tau _{k+1}\}>\delta \) for some \(\delta >0\). Equivalently,

as \(r\rightarrow +\infty \), with

Remark 1

We observe that \(\Sigma (r\tau _k,r\tau _j)=\Sigma (\tau _k,\tau _j)\), hence we could also write \(\Sigma (x_k,x_j)\) in (1.9) such that the right hand side would only involve \(\vec {x}\). We prefer to write \(\Sigma (\tau _k,\tau _j)\) to emphasize that it does not depend on the large parameter r.

Remark 2

The above asymptotics have similarities with the asymptotics for Hankel determinants with m Fisher–Hartwig singularities studied in [17]. This is quite natural, since the Fredholm determinants \(E(\vec {x};\vec {\beta })\) and \(E_0(\vec {x};\vec {\beta }_0)\) can be obtained as scaling limits of such Hankel determinants. However, the asymptotics from [17] were not proved in such scaling limits and cannot be used directly to prove Theorem 1.1. An alternative approach to prove Theorem 1.1 could consist of extending the results from [17] to the relevant scaling limits. This was in fact the approach used in [23] to prove (1.4) in the case \(m=1\), but it is not at all obvious how to generalize this method to general m. Instead, we develop a more direct method to prove Theorem 1.1 which uses differential identities for the Fredholm determinants \(F(\vec {x};\vec {s})\) with respect to the parameter \(s_m\) together with the known asymptotics for \(m=1\). Our approach also allows us to compute the r-independent prefactor \(e^{-4\pi ^{2}\sum _{1\le k<j\le m}\beta _j\beta _k \Sigma (\tau _k,\tau _j)}\) in a direct way.
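The mechanism described in Remark 2, integrating a differential identity in \(s_m\) starting from a configuration with one discontinuity fewer, can be mimicked at the discretized level. The Python sketch below only illustrates this mechanism and is not the method of the paper, which evaluates the identity through a RH problem. It uses Jacobi's formula \(\partial _{s_m}\log \det (1-\mathcal {K})=-\mathrm {tr}\big ((1-\mathcal {K})^{-1}\partial _{s_m}\mathcal {K}\big )\) on a Nyström discretization, together with the observation that for \(s_m=1\) the interval \((x_m,x_{m-1})\) drops out, so that \(F\) reduces to the \((m-1)\)-point determinant. The cut-off `upper`, the node counts and all helper names are our own assumptions.

```python
import numpy as np
from scipy.special import airy


def nystrom_setup(x, upper=12.0, n=50):
    """Symmetrised Airy-kernel matrix on panels (x_j, x_{j-1}), with x_0 := upper."""
    edges = np.concatenate(([upper], np.atleast_1d(np.asarray(x, dtype=float))))
    gx, gw = np.polynomial.legendre.leggauss(n)
    t, w, piece = [], [], []
    for j in range(len(edges) - 1):
        b, a = edges[j], edges[j + 1]
        t.append(0.5 * (b - a) * gx + 0.5 * (b + a))
        w.append(0.5 * (b - a) * gw)
        piece.append(np.full(n, j))                # panel label j <-> interval A_{j+1}
    t, w, piece = np.concatenate(t), np.concatenate(w), np.concatenate(piece)
    ai, aip, _, _ = airy(t)
    diff = t[:, None] - t[None, :]
    np.fill_diagonal(diff, 1.0)                    # avoid 0/0; diagonal set below
    K = (np.outer(ai, aip) - np.outer(aip, ai)) / diff
    np.fill_diagonal(K, aip**2 - t * ai**2)
    sw = np.sqrt(w)
    return sw[:, None] * K * sw[None, :], piece


def system_matrix(Ksym, piece, s):
    """Discretisation of 1 - sum_j (1 - s_j) K chi_{A_j}."""
    f = 1.0 - np.asarray(s, dtype=float)[piece]    # value 1 - s_j on panel A_j
    return np.eye(piece.size) - Ksym * f[None, :]


def log_F(x, s, **kw):
    Ksym, piece = nystrom_setup(x, **kw)
    sign, logdet = np.linalg.slogdet(system_matrix(Ksym, piece, s))
    return logdet


def dlogF_dsm(x, s, **kw):
    """d/ds_m log F from Jacobi's formula, d log det A = tr(A^{-1} dA)."""
    Ksym, piece = nystrom_setup(x, **kw)
    A = system_matrix(Ksym, piece, s)
    m = np.atleast_1d(x).size
    dA = Ksym * (piece == m - 1)[None, :]          # dA/ds_m = K chi_{A_m} (discretised)
    return np.trace(np.linalg.solve(A, dA))


def integrated_log_ratio(x, s, n_sigma=20, **kw):
    """-int_{s_m}^{1} d/dsigma log F(x; s_1, ..., s_{m-1}, sigma) dsigma."""
    s = np.asarray(s, dtype=float)
    gx, gw = np.polynomial.legendre.leggauss(n_sigma)
    sig = 0.5 * (1.0 - s[-1]) * gx + 0.5 * (1.0 + s[-1])
    vals = [dlogF_dsm(x, np.append(s[:-1], sg), **kw) for sg in sig]
    return -0.5 * (1.0 - s[-1]) * np.dot(gw, vals)
```

Here `integrated_log_ratio` integrates the discretized identity over \(\sigma \in [s_m,1]\) with a Gauss–Legendre rule, and should reproduce \(\log F(\vec {x};\vec {s})-\log F\big ((x_1,\ldots ,x_{m-1});(s_1,\ldots ,s_{m-1})\big )\) up to quadrature error.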

Average, variance, and covariance in the Airy point process Let us give a more probabilistic interpretation to this result. For \(m=1\), we recall that \(E(x;\beta )=\mathbb {E} e^{-2\pi i\beta N_{(x,+\,\infty )}}\), and we note that, as \(\beta \rightarrow 0\),

Comparing this to the small \(\beta \) expansion of the right hand side of (1.11), we see that the average and variance of \(N_{(x,+\infty )}\) behave as \(x\rightarrow -\infty \) like \(\mu (x)\) and \(\sigma ^2(x)\). More precisely, by expanding the Barnes’ G-functions (see [38, formula 5.17.3]), we obtain

where \(\gamma _E\) is Euler’s constant, and asymptotics for higher order moments can be obtained similarly. At least the leading order terms in the above are in fact well known, see e.g. [6, 28, 41]. For \(m=2\), (1.9) implies that

If we expand the above for small \(\beta \) (note that our result holds uniformly for \(\beta \in i\mathbb {R}\) small), we recover the logarithmic covariance structure of the process \(N_{(x,+\infty )}\) (see e.g. [11, 12, 34]), namely we then see that the covariance of \(N_{(x_1,+\infty )}\) and \(N_{(x_2,+\infty )}\) converges as \(r\rightarrow \infty \) to \(\Sigma (\tau _1,\tau _2)\). Note in particular that \(\Sigma (\tau _1,\tau _2)\) blows up like a logarithm as \(\tau _1-\tau _2\rightarrow 0\), and that such log-correlations are common for processes arising in random matrix theory and related fields. We also infer that, given \(0>\tau _1>\tau _2\),

as \(r\rightarrow +\infty \).
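These probabilistic statements can be probed numerically, because for a determinantal process the first two moments of counting functions are explicit: \(\mathbb {E}N_A=\int _A K^{\mathrm{Ai}}(t,t)\,dt\) and \(\mathrm {Cov}(N_A,N_B)=\int _{A\cap B}K^{\mathrm{Ai}}(t,t)\,dt-\int _A\int _B K^{\mathrm{Ai}}(u,v)^2\,du\,dv\). The Python sketch below (our illustration, with a hypothetical cut-off `upper` and Gauss–Legendre panels) evaluates these quantities and compares them with the classical leading-order mean \(\frac{2}{3\pi }|x|^{3/2}\) and with \(\frac{1}{2\pi ^2}\big (\frac{3}{2}\log |x|+1+\gamma _E+3\log 2\big )\), which is what a second-order expansion of (1.5) in \(\beta \) gives by our own computation, using \(\log G(1+\beta )+\log G(1-\beta )=-(1+\gamma _E)\beta ^2+\mathcal {O}(\beta ^4)\). It also returns the full covariance matrix, whose off-diagonal entries can be compared with \(\Sigma (\tau _1,\tau _2)\) for \(\vec {x}=r\vec {\tau }\) as r grows. The closed form in `exact_mean` comes from the antiderivative of \(\mathrm{Ai}'(t)^2-t\,\mathrm{Ai}(t)^2\).

```python
import numpy as np
from scipy.special import airy


def panels(breaks, upper=12.0, n=200):
    """Gauss-Legendre nodes and weights on panels upper > b_1 > b_2 > ... (breaks sorted)."""
    edges = np.concatenate(([upper], np.sort(np.asarray(breaks, dtype=float))[::-1]))
    gx, gw = np.polynomial.legendre.leggauss(n)
    t = np.concatenate([0.5 * (b - a) * gx + 0.5 * (b + a) for b, a in zip(edges[:-1], edges[1:])])
    w = np.concatenate([0.5 * (b - a) * gw for b, a in zip(edges[:-1], edges[1:])])
    return t, w


def counting_moments(xs, **kw):
    """Means and covariance matrix of N_{(x_a, +inf)}, a = 1, ..., len(xs)."""
    xs = np.asarray(xs, dtype=float)
    t, w = panels(xs, **kw)
    ai, aip, _, _ = airy(t)
    diff = t[:, None] - t[None, :]
    np.fill_diagonal(diff, 1.0)                        # avoid 0/0; diagonal set below
    K = (np.outer(ai, aip) - np.outer(aip, ai)) / diff
    np.fill_diagonal(K, aip**2 - t * ai**2)
    ind = (t[None, :] > xs[:, None]).astype(float)     # row a: indicator of (x_a, upper)
    dens = w * np.diag(K)                              # weighted one-point density
    mean = ind @ dens
    W2 = w[:, None] * K**2 * w[None, :]
    # Cov(N_A, N_B) = int_{A cap B} K(t,t) dt - int_A int_B K(u,v)^2 du dv
    cov = (ind * dens) @ ind.T - ind @ W2 @ ind.T
    return mean, cov


def exact_mean(x):
    """int_x^inf K(t,t) dt = (2 x^2 Ai^2 - 2 x Ai'^2 - Ai Ai') / 3 at the point x."""
    ai, aip, _, _ = airy(x)
    return (2 * x**2 * ai**2 - 2 * x * aip**2 - ai * aip) / 3


def mu(x):
    """Leading-order mean of N_{(x, +inf)} as x -> -inf."""
    return 2 * abs(x) ** 1.5 / (3 * np.pi)


def sigma2(x):
    """Variance predicted by the second-order expansion of (1.5) in beta (our computation)."""
    return (1.5 * np.log(abs(x)) + 1 + np.euler_gamma + 3 * np.log(2)) / (2 * np.pi**2)
```

For instance, `counting_moments([-5.0, -20.0])` corresponds to \(\vec {\tau }=(-1,-4)\) with \(r=5\); repeating the computation for larger r shows how the covariance entry settles, at the price of more quadrature nodes.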

We also mention that asymptotics for the first and second exponential moments \(\mathbb {E} e^{-2\pi i\beta N_{(x,+\infty )}}\) and \(\mathbb {E} e^{-2\pi i\beta N_{(x_1,+\infty )}-2\pi i\beta N_{(x_2,+\infty )}}\) of counting functions are generally important in the theory of multiplicative chaos, see e.g. [3, 7, 37], which allows one to give a precise meaning to limits of random measures like \(\frac{e^{-2\pi i\beta N_{(x,+\infty )}}}{\mathbb {E} e^{-2\pi i\beta N_{(x,+\infty )}}}\), and which provides efficient tools for obtaining global rigidity estimates and statistics of extreme values of the counting function.

Main result for \(s_1=0\). The asymptotics for the determinants \(F(\vec {x};\vec {s})\) are more complicated if one or more of the parameters \(s_j\) vanish. If \(s_j=0\) for some \(j>1\), we expect asymptotics involving elliptic \(\theta \)-functions in analogy to [14], but we do not investigate this situation here. The case where the parameter \(s_1\) associated to the rightmost interval \((x_1,+\infty )\) vanishes is somewhat simpler, and we obtain asymptotics for \(E_0(\vec {x};\vec {\beta }_0)=F(\vec {x};\vec {s})/F(x_{1};0)\) in this case. We first express the asymptotics for \(E_0(\vec {x};\vec {\beta }_0)\) in terms of a Fredholm determinant of the form \(E(\vec {y};\vec {\beta }_0)\) with \(m-1\) jump discontinuities, for which asymptotics are given in Theorem 1.1. Secondly, we give an explicit asymptotic expansion for \(E_0(\vec {x};\vec {\beta }_0)\).

Theorem 1.2

Let \(m\in \mathbb {N}_{>0}\), let \(\vec {x}=(x_1,\ldots , x_m)\) be of the form \(\vec {x}=r\vec {\tau }\) with \(\vec {\tau }=(\tau _1,\ldots , \tau _m)\) and \(0>\tau _1>\tau _2>\cdots >\tau _m\), and define \(\vec {y}=(y_2,\ldots , y_m)\) by \(y_j=x_j-x_1\). For any \(\beta _2,\ldots , \beta _m\in i\mathbb {R}\), we have as \(r\rightarrow +\infty \),

The error term is uniformly small for \(\beta _2,\ldots , \beta _m\) in compact subsets of \(i\mathbb {R}\), and for \(\tau _1,\ldots , \tau _m\) such that \(\tau _1<-\delta \) and \(\min _{1\le k\le m-1}\{\tau _k-\tau _{k+1}\}>\delta \) for some \(\delta >0\).

Equivalently,

as \(r\rightarrow +\infty \), with

Remark 3

We can again give a probabilistic interpretation to this result. In a similar way as explained in the case \(s_1>0\), we can expand the above result for \(m=2\) as \(\beta _2\rightarrow 0\) to conclude that the mean and variance of the random counting function \(N'_{(x_2,x_1)}\), conditioned on the event \(\lambda _1\le x_1\), behave, in the asymptotic scaling of Theorem 1.2, like \(\mu _0(x)\) and \(\sigma _0^2(x)\). Doing the same for \(m=3\) implies that the covariance of \(N_{(x_2,x_1)}'\) and \(N_{(x_3,x_1)}'\) converges to \(\Sigma _0(\tau _2, \tau _3)\).

Remark 4

Another probabilistic interpretation can be given through the thinned Airy point process, which is obtained by removing each particle in the Airy point process independently with probability \(s=e^{-2\pi i\beta }\), \(s\in (0,1)\). We write \(\mu _1^{(s)}\) for the largest particle in this thinned process. It is natural to ask what information a thinned configuration gives about the parent configuration. For instance, suppose that we know that \(\mu _1^{(s)}\) is smaller than a certain value \(x_2\); what is then the probability that the largest overall particle \(\lambda _1=\mu _1^{(0)}\) is smaller than \(x_1\)? For \(x_1>x_2\), the joint probability of the events \(\mu _1^{(s)}<x_2\) and \(\lambda _1<x_1\) is given by (see [19, Section 2])

If we set \(0>x_1=r\tau _1>x_2=r\tau _2\) and let \(r\rightarrow +\infty \), Theorem 1.2 implies that

or equivalently,

This describes the tail behavior of the joint distribution of the largest particle of the Airy point process and the largest particle of the associated thinned process.
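The joint probability in Remark 4 lends itself to a direct numerical illustration. Unwinding the definitions, the event \(\{\mu _1^{(s)}<x_2,\ \lambda _1<x_1\}\) means that there is no particle in \((x_1,+\infty )\) and that every particle in \((x_2,x_1)\) is removed, so by (1.2) its probability is the two-point determinant \(F((x_1,x_2);(0,s))\); this restatement is ours and should be compared with [19, Section 2]. The Python sketch below evaluates it with a Gauss–Legendre Nyström discretization; the cut-off `upper`, the node count `n` and all function names are illustrative assumptions.

```python
import numpy as np
from scipy.special import airy


def F(x, s, upper=12.0, n=80):
    """Nystrom approximation of F(x; s) with panels (x_j, x_{j-1}), x_0 := upper."""
    edges = np.concatenate(([upper], np.atleast_1d(np.asarray(x, dtype=float))))
    gx, gw = np.polynomial.legendre.leggauss(n)
    t = np.concatenate([0.5 * (b - a) * gx + 0.5 * (b + a) for b, a in zip(edges[:-1], edges[1:])])
    w = np.concatenate([0.5 * (b - a) * gw for b, a in zip(edges[:-1], edges[1:])])
    f = np.repeat(1.0 - np.asarray(s, dtype=float), n)   # value 1 - s_j on panel j
    ai, aip, _, _ = airy(t)
    diff = t[:, None] - t[None, :]
    np.fill_diagonal(diff, 1.0)                            # avoid 0/0; diagonal set below
    K = (np.outer(ai, aip) - np.outer(aip, ai)) / diff
    np.fill_diagonal(K, aip**2 - t * ai**2)
    sw = np.sqrt(w)
    return np.linalg.det(np.eye(t.size) - sw[:, None] * K * (f * sw)[None, :])


def joint_thinned(x1, x2, s):
    """P(lambda_1 < x1, mu_1^{(s)} < x2) for x1 > x2: no particle above x1 and
    every particle in (x2, x1) removed, i.e. F((x1, x2); (0, s))."""
    return F([x1, x2], [0.0, s])


def conditional_thinned(x1, x2, s):
    """P(mu_1^{(s)} < x2 | lambda_1 < x1), the ratio F((x1, x2); (0, s)) / F(x1; 0)."""
    return joint_thinned(x1, x2, s) / F([x1], [0.0])
```

The two limiting cases give quick consistency checks: for \(s=1\) (no thinning constraint) the joint probability reduces to \(F(x_1;0)\), and for \(s=0\) it reduces to \(F(x_2;0)\).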

Outline In Sect. 2, we will derive a suitable differential identity, which expresses the logarithmic partial derivative of \(F(\vec {x};\vec {s})\) with respect to \(s_m\) in terms of a Riemann-Hilbert (RH) problem. In Sect. 3, we will perform an asymptotic analysis of the RH problem to obtain asymptotics for the differential identity as \(r\rightarrow +\infty \) in the case where \(s_1= 0\). This will allow us to integrate the differential identity asymptotically and to prove Theorem 1.2 in Sect. 4. In Sect. 5 and in Sect. 6, we do a similar analysis, but now in the case \(s_1>0\) to prove Theorem 1.1.

2 Differential Identity for F

Deformation theory of Fredholm determinants In this section, we will obtain an identity for the logarithmic derivative of \(F(\vec {x};\vec {s})\) with respect to \(s_m\), which will be the starting point of our proofs of Theorems 1.1 and 1.2. To do this, we follow a general procedure known as the Its-Izergin-Korepin-Slavnov method [30], which applies to integral operators of integrable type, that is, operators whose kernel can be written in the form \(K(x,y)=\frac{f^T(x)g(y)}{x-y}\), where f(x) and g(y) are column vectors satisfying \(f^T(x)g(x)=0\). The operator \(\mathcal {K}_{\vec {x},\vec {s}}\) defined by

is of this type, since we can take

Using the general theory of integral operators, if \(s_m\ne 0\), we have

where \(\mathcal {R}_{\vec {x},\vec {s}}\) is the resolvent operator defined by

and where \(R_{\vec {x},\vec {s}}\) is the associated kernel. Using the Its-Izergin-Korepin-Slavnov method, it was shown in [19, proof of Proposition 1] that the resolvent kernel \(R_{\vec {x},\vec {s}}(\xi ;\xi )\) can be expressed in terms of a RH problem. For \(\xi \in (x_m,x_{m-1})\), we have

where \(\Psi (\zeta )\) is the solution, depending on parameters \(x, \vec {y}=(y_1,\ldots , y_{m-1}), \vec {s}=(s_1,\ldots , s_{m})\), to the following RH problem. The relevant values of the components \(y_j\) of \(\vec {y}\) are given as \(y_j=x_j-x_m > 0\) for all \(j=1,\ldots , m-1\), and the relevant value of x is \(x=x_m\).

2.1 RH problem for \(\Psi \)

- (a)

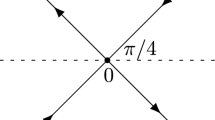

\(\Psi : \mathbb {C}\backslash \Gamma \rightarrow \mathbb {C}^{2\times 2}\) is analytic, with

$$\begin{aligned} \Gamma =\mathbb {R}\cup e^{\pm \frac{2\pi i}{3}} (0,+\infty ) \end{aligned}\tag{2.3}$$

and \(\Gamma \) oriented as in Fig. 1.

- (b)

\(\Psi (\zeta )\) has continuous boundary values as \(\zeta \in \Gamma \backslash \{y_1,\ldots , y_m\}\) is approached from the left (\(+\) side) or from the right (− side) and they are related by

$$\begin{aligned} \left\{ \begin{array}{ll} \Psi _+(\zeta ) = \Psi _-(\zeta ) \begin{pmatrix} 1 &{}\quad 0 \\ 1 &{}\quad 1 \end{pmatrix} &{}\quad \text {for } \zeta \in e^{\pm \frac{2\pi i}{3}} (0,+\infty ), \\ \Psi _+(\zeta ) = \Psi _-(\zeta ) \begin{pmatrix} 0 &{}\quad 1 \\ -1 &{}\quad 0 \end{pmatrix} &{}\quad \text {for } \zeta \in (-\infty ,0), \\ \Psi _+(\zeta ) = \Psi _-(\zeta ) \begin{pmatrix} 1 &{}\quad s_j \\ 0 &{}\quad 1 \end{pmatrix}&\quad \text {for } \zeta \in (y_{j}, y_{j-1}), j=1,\ldots , m, \end{array}\right. \end{aligned}$$where we write \(y_m=0\) and \(y_{0} = + \infty \).

- (c)

As \(\zeta \rightarrow \infty \), there exist matrices \(\Psi _1,\Psi _2\) depending on \(x,\vec {y},\vec {s}\) but not on \(\zeta \) such that \(\Psi \) has the asymptotic behavior

$$\begin{aligned} \Psi (\zeta ) = \left( I + \Psi _1\zeta ^{-1}+\Psi _2\zeta ^{-2} +{\mathcal {O}}(\zeta ^{-3})\right) \zeta ^{\frac{1}{4} \sigma _3} M^{-1} e^{-(\frac{2}{3}\zeta ^{3/2} + x\zeta ^{1/2}) \sigma _3}, \end{aligned}\tag{2.4}$$

where \(M = (I + i \sigma _1) / \sqrt{2}\), \(\sigma _1 = \begin{pmatrix} 0 &{}\quad 1 \\ 1 &{}\quad 0 \end{pmatrix}\) and \(\sigma _3 = \begin{pmatrix} 1 &{}\quad 0 \\ 0 &{}\quad -1 \end{pmatrix}\), and where principal branches of \(\zeta ^{3/2}\) and \(\zeta ^{1/2}\) are taken.

- (d)

\(\Psi (\zeta ) = \mathcal {O}( \log (\zeta -y_j) )\) as \(\zeta \rightarrow y_j\), \(j = 1, \ldots , m\).

We can conclude from this result that

From here on, we could try to obtain asymptotics for \(\Psi \) with \(\vec {y}\) replaced by \(r\vec {y}\) as \(r\rightarrow +\infty \). However, we can simplify the right-hand side of the above identity and evaluate the integral explicitly. To do this, we follow ideas similar to those of [14, Section 3].
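For completeness, we recall why \(\det \Psi \equiv 1\), which lies behind the identity \(\det G_j\equiv 1\) used below; the following is the routine argument, summarized by us from conditions (a)–(d) of the RH problem for \(\Psi \):

$$\begin{aligned} &\det \begin{pmatrix} 1 &{}\quad 0 \\ 1 &{}\quad 1 \end{pmatrix}=\det \begin{pmatrix} 0 &{}\quad 1 \\ -1 &{}\quad 0 \end{pmatrix}=\det \begin{pmatrix} 1 &{}\quad s_j \\ 0 &{}\quad 1 \end{pmatrix}=1 \quad \Longrightarrow \quad (\det \Psi )_+=(\det \Psi )_- \ \text {on } \Gamma \backslash \{y_1,\ldots ,y_m\},\\ &\det M=\tfrac{1}{2}\det \begin{pmatrix} 1 &{}\quad i \\ i &{}\quad 1 \end{pmatrix}=1,\quad \det \zeta ^{\frac{1}{4}\sigma _3}=\det e^{-(\frac{2}{3}\zeta ^{3/2}+x\zeta ^{1/2})\sigma _3}=1 \quad \Longrightarrow \quad \det \Psi (\zeta )=1+{\mathcal {O}}(\zeta ^{-1}),\ \zeta \rightarrow \infty ,\\ &\det \Psi (\zeta )={\mathcal {O}}\big (\log ^2(\zeta -y_j)\big ),\ \zeta \rightarrow y_j \quad \Longrightarrow \quad \text {the isolated singularities at } y_j \text { are removable}. \end{aligned}$$

Hence \(\det \Psi \) extends to an entire function tending to 1 at infinity, and \(\det \Psi \equiv 1\) by Liouville's theorem.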

Lax pair identities We know from [19, Section 3] that \(\Psi \) satisfies a Lax pair. More precisely, if we define

then we have the differential equation

where A is traceless and takes the form

for some matrices \(A_j\) independent of \(\zeta \), and where \(\sigma _{+} = \begin{pmatrix} 0 &{}\quad 1 \\ 0 &{}\quad 0 \end{pmatrix}.\) Therefore, we have

and we can use the relation \(-i \partial _{x} \Psi _{1,21} + \Psi _{1,21}^{2} = 2 \Psi _{1,11}\) (see [19, (3.20)]) to see that \(\widehat{A}\) takes the form

where the matrices \(\widehat{A}_{j}(x)\) are independent of \(\zeta \) and have zero trace. It follows that

Let us define \(\widehat{F}(\zeta )=\partial _{s_m}(\Psi (\zeta )) \ \Psi ^{-1}(\zeta )\). From the RH problem for \(\Psi \), \(\widehat{F}\) satisfies the following RH problem (recall that \(y_{m} = 0\)):

- (a)

\(\widehat{F}\) is analytic on \(\mathbb {C}{\setminus } [0,y_{m-1}]\).

- (b)

The jumps are given by

$$\begin{aligned} \widehat{F}_{+}(\zeta ) = \widehat{F}_{-}(\zeta ) + \Psi _{-}(\zeta ) \sigma _{+} \Psi _{-}^{-1}(\zeta ), \qquad \zeta \in (0,y_{m-1}). \end{aligned}\tag{2.10}$$

- (c)

As \(\zeta \rightarrow \zeta _{\star } \in \{0,y_{m-1}\}\), we have \(\widehat{F}(\zeta ) = {\mathcal {O}}\big (\log (\zeta - \zeta _{\star })\big )\).

As \(\zeta \rightarrow \infty \), we have

$$\begin{aligned} \widehat{F}(\zeta ) = \frac{\partial _{s_{m}}\Psi _{1}}{\zeta } +\frac{\partial _{s_{m}}\Psi _{2} - \partial _{s_{m}} (\Psi _{1}) \Psi _{1}}{\zeta ^{2}} + {\mathcal {O}}(\zeta ^{-3}). \end{aligned}\tag{2.11}$$
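The jump (2.10) and the expansion (2.11) follow from short computations, which we spell out since similar manipulations recur below. On \((0,y_{m-1})=(y_m,y_{m-1})\) we have \(\Psi _+=\Psi _-J\) with \(J=\begin{pmatrix} 1 &{}\quad s_m \\ 0 &{}\quad 1 \end{pmatrix}\), while all other jump matrices of \(\Psi \) are independent of \(s_m\), so that \(\widehat{F}\) has no jump there. Since \(\partial _{s_m}J=\sigma _+\) and \(\sigma _+J^{-1}=\sigma _+\),

$$\begin{aligned} \widehat{F}_{+}=\partial _{s_m}(\Psi _{-}J)\,J^{-1}\Psi _{-}^{-1}=\widehat{F}_{-}+\Psi _{-}(\partial _{s_m}J)J^{-1}\Psi _{-}^{-1}=\widehat{F}_{-}+\Psi _{-}\sigma _{+}\Psi _{-}^{-1}. \end{aligned}$$

For (2.11), the factor \(\zeta ^{\frac{1}{4}\sigma _3}M^{-1}e^{-(\frac{2}{3}\zeta ^{3/2}+x\zeta ^{1/2})\sigma _3}\) in (2.4) does not depend on \(s_m\) and cancels in \(\widehat{F}\); assuming, as usual, that (2.4) may be differentiated term by term in \(s_m\), we get

$$\begin{aligned} \widehat{F}(\zeta )=\Big (\frac{\partial _{s_m}\Psi _1}{\zeta }+\frac{\partial _{s_m}\Psi _2}{\zeta ^2}+{\mathcal {O}}(\zeta ^{-3})\Big )\Big (I-\frac{\Psi _1}{\zeta }+{\mathcal {O}}(\zeta ^{-2})\Big )=\frac{\partial _{s_m}\Psi _1}{\zeta }+\frac{\partial _{s_m}\Psi _2-\partial _{s_m}(\Psi _1)\Psi _1}{\zeta ^2}+{\mathcal {O}}(\zeta ^{-3}). \end{aligned}$$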

Thus, by Cauchy’s formula, we have

Expanding the right-hand-side of (2.12) as \(\zeta \rightarrow \infty \), and comparing it with (2.11), we obtain the identities

Following again [19], see in particular formula (3.15) in that paper, we can express \(\Psi \) in a neighborhood of \(y_j\) as

for \(0<\arg (\zeta -y_j)<\frac{2\pi }{3}\) and with \(G_j\) analytic at \(y_j\). This implies that

for \(j=1,\ldots , m\), where we set \(s_{m+1}=1\), and also that

Using (2.13)–(2.14), (2.16) (in particular the fact that \(\det \widehat{A}_j=0\)) and (2.17)–(2.19) while substituting (2.9) into (2.5), we obtain

The above sum can be simplified using the fact that \(\det G_{j} \equiv 1\), and we finally get

where \(s_{m+1}=1\). The only quantities appearing on the right-hand side are \(\Psi _1,\Psi _{2,21}\) and \(G_j\). In the next sections, we will derive asymptotics for these quantities as \(\vec {x}=r\vec {\tau }\) with \(r\rightarrow +\infty \).

3 Asymptotic Analysis of RH Problem for \(\Psi \) with \(s_{1}=0\)

We now scale our parameters by setting \(\vec {x}=r\vec {\tau }\), \(\vec {y}=r\vec {\eta }\), with \(\eta _j=\tau _j-\tau _m\). We assume that \(0>\tau _1>\cdots >\tau _m\). The goal of this section is to obtain asymptotics for \(\Psi \) as \(r\rightarrow +\infty \). This will also lead us to large r asymptotics for the differential identity (2.20). In this section, we deal with the case \(s_{1} = 0\). The general strategy in this section has many similarities with the analysis in [17], needed in the study of Hankel determinants with several Fisher–Hartwig singularities.

3.1 Re-scaling of the RH problem

Define the function \(T(\lambda )=T(\lambda ;\vec {\eta }, \tau _m, \vec {s})\) as follows,

The asymptotics (2.4) of \(\Psi \) then imply after a straightforward calculation that T behaves as

as \(\lambda \rightarrow \infty \), where the principal branches of the roots are chosen. The entries of \(T_1\) and \(T_2\) are related to those of \(\Psi _1\) and \(\Psi _2\) in (2.4): we have

where

The singularities in the \(\lambda \)-plane are now located at the (non-positive) points \(\lambda _j=\eta _j-\eta _1=\tau _j-\tau _1\), \(j=1,\ldots ,m\).

3.2 Normalization with g-function and opening of lenses

In order to normalize the RH problem at \(\infty \), in view of (3.2), we define the g-function by

once more with principal branches of the roots. Also, around each interval \((\lambda _{j},\lambda _{j-1})\), \(j = 2,\ldots ,m\), we will split the jump contour into three parts; this procedure is generally called the opening of the lenses. Let us consider lens-shaped contours \(\gamma _{j,+}\) and \(\gamma _{j,-}\), lying in the upper and lower half plane respectively, as shown in Fig. 2. Let us also write \(\Omega _{j,+}\) (resp. \(\Omega _{j,-}\)) for the region inside the lenses around \((\lambda _{j},\lambda _{j-1})\) in the upper half plane (resp. in the lower half plane). Then we define S by

In order to derive RH conditions for S, we need to use the RH problem for \(\Psi \), the definitions (3.1) of T and (3.5) of S, and the fact that \(g_{+}(\lambda ) + g_{-}(\lambda ) = 0\) for \(\lambda \in (-\infty ,0)\). This allows us to conclude that S satisfies the following RH problem.

3.2.1 RH problem for S

- (a)

\(S : \mathbb {C}\backslash \Gamma _{S} \rightarrow \mathbb {C}^{2\times 2}\) is analytic, with

$$\begin{aligned} \Gamma _{S}=(-\infty ,0]\cup \big (\lambda _{m}+e^{\pm \frac{2\pi i}{3}} (0,+\infty )\big )\cup \gamma _{+}\cup \gamma _{-}, \qquad \gamma _{\pm } = \bigcup _{j=2}^{m} \gamma _{j,\pm }, \end{aligned}\tag{3.6}$$

and \(\Gamma _{S}\) oriented as in Fig. 2.

- (b)

The jumps for S are given by

$$\begin{aligned} S_{+}(\lambda )= & {} S_{-}(\lambda )\begin{pmatrix} 0 &{}\quad s_{j} \\ -s_{j}^{-1} &{}\quad 0 \end{pmatrix}, \quad \lambda \in (\lambda _{j}, \lambda _{j-1}), \, j = 2,\ldots ,m+1, \\ S_{+}(\lambda )= & {} S_{-}(\lambda )\begin{pmatrix} 1 &{}\quad 0 \\ s_{j}^{-1}e^{-2r^{3/2}g(\lambda )} &{}\quad 1 \end{pmatrix}, \qquad \lambda \in \gamma _{j,+} \cup \gamma _{j,-}, \, j = 2,\ldots ,m, \\ S_{+}(\lambda )= & {} S_{-}(\lambda )\begin{pmatrix} 1 &{}\quad 0 \\ e^{-2r^{3/2}g(\lambda )} &{}\quad 1 \end{pmatrix}, \quad \lambda \in \lambda _{m} +e^{\pm \frac{2\pi i}{3}} (0,+\infty ), \end{aligned}$$where \(\lambda _{m+1} = - \infty \) and \(s_{m+1} = 1\).

- (c)

As \(\lambda \rightarrow \infty \), we have

$$\begin{aligned} S(\lambda ) = \left( I + \frac{T_1}{\lambda } +\frac{T_2}{\lambda ^2}+ \mathcal {O}\left( \frac{1}{\lambda ^3}\right) \right) \lambda ^{\frac{1}{4} \sigma _3} M^{-1}. \end{aligned}\tag{3.7}$$

- (d)

\(S(\lambda ) = \mathcal {O}( \log (\lambda -\lambda _j) )\) as \(\lambda \rightarrow \lambda _j\), \(j = 1, \ldots , m\).

Let us now take a closer look at the jump matrices on the lenses \(\gamma _{j,\pm }\). By (3.4), we have

Since \(\eta _{1}+\tau _{m} = \tau _{1} < 0\), we have

It follows that the jumps for S are exponentially close to I as \(r \rightarrow + \infty \) on the lenses, and on \(\lambda _{m} + e^{\pm \frac{2\pi i}{3}}(0,+\infty )\). This convergence is uniform outside neighborhoods of \(\lambda _{1},\ldots ,\lambda _{m}\), but is not uniform as \(r \rightarrow + \infty \) and simultaneously \(\lambda \rightarrow \lambda _{j}\), \(j \in \{1,\ldots ,m\}\).

3.3 Global parametrix

We will now construct approximations to S for large r, which will turn out later to be valid in different regions of the complex plane. We need to distinguish between neighborhoods of each of the singularities \(\lambda _1,\ldots , \lambda _m\) and the remaining part of the complex plane. We call the approximation to S away from the singularities the global parametrix. To construct it, we ignore the jump matrices near \(\lambda _1,\ldots , \lambda _m\) and the exponentially small entries in the jumps as \(r\rightarrow +\infty \) on the lenses \(\gamma _{j,\pm }\). In other words, we aim to find a solution to the following RH problem.

3.3.1 RH problem for \(P^{(\infty )}\)

- (a)

\(P^{(\infty )} : \mathbb {C}\backslash (-\infty ,0] \rightarrow \mathbb {C}^{2\times 2}\) is analytic.

- (b)

The jumps for \(P^{(\infty )}\) are given by

$$\begin{aligned} P^{(\infty )}_{+}(\lambda ) = P^{(\infty )}_{-}(\lambda )\begin{pmatrix} 0 &{} s_{j} \\ -s_{j}^{-1} &{} 0 \end{pmatrix}, \qquad \lambda \in (\lambda _{j}, \lambda _{j-1}), \, j = 2,\ldots ,m+1. \end{aligned}$$

- (c)

As \(\lambda \rightarrow \infty \), we have

$$\begin{aligned} P^{(\infty )}(\lambda ) = \left( I + \frac{P^{(\infty )}_1}{\lambda } +\frac{P^{(\infty )}_2}{\lambda ^2} + \mathcal {O}\left( \frac{1}{\lambda ^3}\right) \right) \lambda ^{\frac{1}{4} \sigma _3} M^{-1}. \end{aligned}\tag{3.9}$$

The solution to this RH problem is not unique unless we specify its local behavior as \(\lambda \rightarrow 0\) and as \(\lambda \rightarrow \lambda _j\). We will construct a solution \(P^{(\infty )}\) which is bounded as \(\lambda \rightarrow \lambda _j\) for \(j=2,\ldots , m\), and which is \({\mathcal {O}}(\lambda ^{-\frac{1}{4}})\) as \(\lambda \rightarrow 0\). We take it of the form

with D a function depending on the \(\lambda _j\)’s and \(\vec {s}\), and where we define \(d_1\) below. In order to satisfy the above RH conditions, we need to take

For later use, let us now take a closer look at the asymptotics of \(P^{(\infty )}\) as \(\lambda \rightarrow \infty \) and as \(\lambda \rightarrow \lambda _j\). For any \(k \in \mathbb {N}_{>0}\), as \(\lambda \rightarrow \infty \) we have,

where

and this also defines the value of \(d_1\) in (3.10). A long but direct computation shows that

To study the local behavior of \(P^{(\infty )}\) near \(\lambda _j\), it is convenient to use a different representation of D, namely

where

From this representation, it is straightforward to derive the following expansions. As \(\lambda \rightarrow \lambda _{j}\), \(j \in \{2,\ldots ,m\}\), \(\mathfrak {I}\lambda > 0\), we have

As \(\lambda \rightarrow \lambda _{j-1}\), \(j \in \{3,\ldots ,m\}\), \(\mathfrak {I}\lambda >0\), we have

For \(j \in \{2,\ldots ,m\}\), as \(\lambda \rightarrow \lambda _{k}\), \(k\in \{2,\ldots ,m\}\), \(k \ne j,j-1\), \(\mathfrak {I}\lambda > 0\), we have

Note that \(T_{j,k} \ne T_{k,j}\) for \(j \ne k\) and \(T_{j,k}>0\) for all j, k. From the above expansions, we obtain, as \(\lambda \rightarrow \lambda _{j}\), \(\mathfrak {I}\lambda > 0\), \(j \in \{2,\ldots ,m\}\), that

where \(\beta _1,\ldots , \beta _m\) are as in (1.6). The first two terms in the expansion of \(D(\lambda )\) as \(\lambda \rightarrow \lambda _{1} = 0\) are given by

where

The above expressions simplify if we write them in terms of \(\beta _2,\ldots , \beta _m\) defined by (1.6). For all \(\ell \in \{0,1,2,\ldots \}\), we have

We also have the identity

which will turn out to be useful later on.

3.4 Local parametrices

As a local approximation to S in the vicinity of \(\lambda _{j}\), \(j= 1,\ldots ,m\), we construct a function \(P^{(\lambda _{j})}\) in a fixed disk \(\mathcal {D}_{\lambda _{j}}\) around \(\lambda _{j}\), chosen small enough that the disks do not intersect or touch each other. This function should satisfy the same jump relations as S inside the disk, and it should match with the global parametrix on the boundary of the disk. More precisely, we require the matching condition

uniformly for \(\lambda \in \partial \mathcal {D}_{\lambda _{j}}\). The construction near \(\lambda _1\) is different from the ones near \(\lambda _2,\ldots , \lambda _m\).

3.4.1 Local parametrices around \(\lambda _{j}\), \(j = 2,\ldots ,m\).

For \(j \in \{2,\ldots ,m\}\), \(P^{(\lambda _{j})}\) can be constructed in terms of Whittaker’s confluent hypergeometric functions. This type of construction is well understood and relies on the solution \(\Phi _\mathrm{HG}(z)\) to a model RH problem, which we recall in “Appendix A.3” for the convenience of the reader. For more details about it, we refer to [17, 27, 31]. Let us first consider the function

defined in terms of the g-function (3.4). This is a conformal map from \(\mathcal {D}_{\lambda _{j}}\) to a neighborhood of 0, which maps \(\mathbb {R}\cap \mathcal {D}_{\lambda _{j}}\) to a part of the imaginary axis. As \(\lambda \rightarrow \lambda _{j}\), the expansion of \(f_{\lambda _j}\) is given by

Moreover, we need all parts of the jump contour \(\Gamma _S\cap \mathcal {D}_{\lambda _{j}}\) to be mapped onto the jump contour \(\Gamma \) for \(\Phi _\mathrm{HG}\), see Fig. 6. We can achieve this by choosing \(\Gamma _2,\Gamma _3,\Gamma _5, \Gamma _6\) in such a way that \(f_{\lambda _j}\) maps the parts of the lenses \(\gamma _{j,+}, \gamma _{j,-}, \gamma _{j+1,+}, \gamma _{j+1,-}\) inside \(\mathcal {D}_{\lambda _{j}}\) to parts of the respective jump contours \(\Gamma _2, \Gamma _6, \Gamma _3\), \(\Gamma _5\) for \(\Phi _\mathrm{HG}\) in the z-plane.

We can construct a suitable local parametrix \(P^{(\lambda _{j})}\) in the form

If \(E_{\lambda _j}\) is analytic in \(\mathcal {D}_{\lambda _{j}}\), then it follows from the RH conditions for \(\Phi _\mathrm{HG}\) and the construction of \(f_{\lambda _j}\) that \(P^{(\lambda _{j})}\) satisfies exactly the same jump conditions as S on \(\Gamma _S\cap \mathcal {D}_{\lambda _{j}}\). In order to satisfy the matching condition (3.23), we are forced to define \(E_{\lambda _{j}}\) by

Using the asymptotics of \(\Phi _\mathrm{HG}\) at infinity given in (A.13), we can strengthen the matching condition (3.23) to

as \(r \rightarrow + \infty \), uniformly for \(\lambda \in \partial \mathcal {D}_{\lambda _{j}}\), where \(\Phi _{\mathrm {HG},1}\) is a matrix specified in (A.14). Also, a direct computation shows that

where

3.4.2 Local parametrix around \(\lambda _{1} = 0\).

For the local parametrix \(P^{(0)}\) near 0, we need to use a different model RH problem whose solution \(\Phi _\mathrm{Be}(z)\) can be expressed in terms of Bessel functions. We recall this construction in “Appendix A.2”, and refer to [36] for more details. Similarly as for the local parametrices from the previous section, we first need to construct a suitable conformal map which maps the jump contour \(\Gamma _S\cap \mathcal {D}_0\) in the \(\lambda \)-plane to a part of the jump contour \(\Sigma _{\mathrm {Be}}\) for \(\Phi _\mathrm{Be}\) in the z-plane. This map is given by

and it is straightforward to check that it indeed maps \(\mathcal {D}_{0}\) conformally to a neighborhood of 0. Its expansion as \(\lambda \rightarrow 0\) is given by

We can choose the lenses \(\gamma _{2,\pm }\) in such a way that \(f_0\) maps them to the jump contours \(e^{\pm \frac{2\pi i}{3}}\mathbb {R}^+\) for \(\Phi _\mathrm{Be}\).

If we take \(P^{(0)}\) of the form

with \(E_{0}\) analytic in \(\mathcal {D}_{0}\), then it is straightforward to verify that \(P^{(0)}\) satisfies the same jump relations as S in \(\mathcal {D}_0\). In addition to that, if we let

then matching condition (3.23) also holds. It can be refined using the asymptotics for \(\Phi _\mathrm{Be}\) given in (A.7): we have

as \(r \rightarrow +\infty \), uniformly for \(\lambda \in \partial \mathcal {D}_{0}\). Also, a direct computation using (3.19) and (3.32) yields

3.5 Small norm problem

Now that the parametrices \(P^{(\lambda _j)}\) and \(P^{(\infty )}\) have been constructed, it remains to show that they indeed approximate S as \(r\rightarrow +\infty \). To that end, we define

Since the local parametrices were constructed so that they satisfy the same jump conditions as S, R has no jumps inside the disks \(\mathcal {D}_{\lambda _1},\ldots , \mathcal {D}_{\lambda _m}\) and is therefore analytic there. Outside the local disks, the jump matrices for S on the lips of the lenses are exponentially close to I as \(r\rightarrow +\infty \), and hence so are the jump matrices for R. On the boundaries of the disks, the jump matrices for R are close to I with an error of order \({\mathcal {O}}(r^{-3/2})\), by the matching conditions (3.35) and (3.28). This error is moreover uniform in \(\vec {\tau }\) as long as the \(\tau _j\)’s remain bounded away from each other and from 0, and uniform for \(\beta _j\), \(j=2,\ldots , m\), in a compact subset of \(i\mathbb {R}\). By standard theory for RH problems [21], it follows that R exists for sufficiently large r and that it has the asymptotics

as \(r\rightarrow +\infty \), uniformly for \(\lambda \in \mathbb {C}{\setminus } \Gamma _{R}\), where

is the jump contour for the RH problem for R, and with the same uniformity in \(\vec {\tau }\) and \(\beta _2,\ldots , \beta _m\) as explained above. The remaining part of this section is dedicated to computing \(R^{(1)}(\lambda )\) explicitly for \(\lambda \in \mathbb {C}{\setminus } \bigcup _{j=1}^{m}\mathcal {D}_{\lambda _{j}}\) and for \(\lambda =0\). Let us take the clockwise orientation on the boundaries of the disks, and let us write \(J_{R}(\lambda )=R_-^{-1}(\lambda )R_+(\lambda )\) for the jump matrix of R as \(\lambda \in \Gamma _R\). Since R satisfies the equation

and since \(J_R\) has the expansion

as \(r \rightarrow +\infty \), uniformly for \(\lambda \in \bigcup _{j=1}^{m}\partial \mathcal {D}_{\lambda _{j}}\), while \(J_R-I\) is exponentially small elsewhere on \(\Gamma _R\), we obtain that \(R^{(1)}\) can be written as

If \(\lambda \in \mathbb {C}{\setminus } \bigcup _{j=1}^{m}\mathcal {D}_{\lambda _{j}}\), by a direct residue calculation, we have

Similarly, by (3.28)–(3.30) and (A.13), for \(j \in \{2,\ldots ,m\}\), we have

where

We will also need asymptotics for R(0). By a residue calculation, we obtain

The above residue at 0 is more involved to compute, but after a careful calculation we obtain

In addition to asymptotics for R, we will also need asymptotics for \(\partial _{s_m}R\). For this, we note that \(\partial _{s_m}R(\lambda )\) tends to 0 at infinity, that it is analytic in \(\mathbb {C}{\setminus }\Gamma _R\), and that it satisfies the jump relation

This implies the integral equation

Next, we observe that \(\partial _{s_m}J_R(\xi ) =\partial _{s_m}J_R^{(1)}(\xi )r^{-3/2}+\mathcal {O}(r^{-3}\log r)\) as \(r\rightarrow +\infty \), where the extra logarithm in the error term is due to the fact that \(\partial _{s_m}|\lambda _j|^{\beta _j} =\mathcal {O}(\log r)\). Standard techniques [24] then allow one to deduce from the integral equation that

as \(r\rightarrow +\infty \).

4 Integration of the Differential Identity

The differential identity (2.20) can be written as

where

and, by (2.15),

where we set \(s_{m+1}=1\) as before.

4.1 Asymptotics for \(A_{\vec {\tau }, \vec {s}}(r)\)

For \(|\lambda |\) large, more precisely outside the disks \(\mathcal {D}_{\lambda _j}\), \(j=1,\ldots , m\) and outside the lens-shaped regions, we have

by (3.37). As \(\lambda \rightarrow \infty \), we can write

for some matrices \(R_1, R_2\) which may depend on r and the other parameters of the RH problem, but not on \(\lambda \). Thus, by (3.7) and (3.9), we have

Using (3.38) and the above expressions, we obtain

as \(r\rightarrow +\infty \), where \(R_{1}^{(1)}\) and \(R_{2}^{(1)}\) are defined through the expansion

After a long computation with several cancellations using (3.3), we obtain that \(A_{\vec {\tau }, \vec {s}}(r)\) has large r asymptotics given by

Using (1.6), (3.14) and (3.41)–(3.45), we can rewrite this more explicitly as

where we recall the definition (3.13) of \(d_1=d_1(\vec {s})\) and \(d_2=d_2(\vec {s})\).

4.2 Asymptotics for \(B_{\vec {\tau }, \vec {s}}^{(j)}(r)\) with \(j\ne 1\)

Now we focus on \(\Psi (\zeta )\) with \(\zeta \) near \(y_j\). Inverting the transformations (3.37) and (3.5), and using the definition (3.26) of the local parametrix \(P^{(\lambda _{j})}\), we obtain that for z outside the lenses and inside \(\mathcal {D}_{\lambda _{j}}\), \(j \in \{2,\ldots ,m\}\),

By (3.1), we have

with

Evaluation of \(B_{\vec {\tau }, \vec {s}}^{(j,3)}(r)\). The last term \(B_{\vec {\tau }, \vec {s}}^{(j,3)}(r)\) is the easiest to evaluate asymptotically as \(r\rightarrow +\infty \). By (3.38) and (3.46), we have that

Moreover, from (3.29), since \(\beta _{j} \in i \mathbb {R}\), we know that \(E_{\lambda _j}(\lambda _j)=\mathcal {O}(1)\). Using also the fact that \(\Phi _\mathrm{HG}(0;\beta _j)\) is independent of r, we obtain that

Evaluation of \(B_{\vec {\tau }, \vec {s}}^{(j,1)}(r)\). To compute \(B_{\vec {\tau }, \vec {s}}^{(j,1)}(r)\), we need the explicit expressions for the entries in the first column of \(\Phi _\mathrm{HG}\) given in (A.19). Together with (1.6), this implies that

Using also the \(\Gamma \) function relations

we obtain

for \(m\ge 3\); for \(m=2\), the formula is correct only if we set \(\beta _{1}=0\), which we do here and in the remainder of this section, so that the first term vanishes.

Evaluation of \(B_{\vec {\tau }, \vec {s}}^{(j,2)}(r)\). We use (3.29) and obtain

By (3.43), we get

By (4.9), (4.8), and (4.7), we obtain

4.3 Asymptotics for \(B_{\vec {\tau }, \vec {s}}^{(j)}(r)\) with \(j= 1\)

For \(j=1\), we have near \(\lambda _1=0\) that

By (3.1), we have

with

Since \(\Phi _\mathrm{Be}(0)\) is independent of \(s_m\), we have \(B_{\vec {\tau }, \vec {s}}^{(1,1)}(r)=0\). For \(B_{\vec {\tau }, \vec {s}}^{(1,2)}(r)\), we use the explicit expressions for the entries in the first column of \(\Phi _\mathrm{Be}\) given in (A.11) and (3.36) to obtain

The computation of \(B_{\vec {\tau }, \vec {s}}^{(1,3)}(r)\) is more involved. Using (3.38) and (3.46), we have

Now we use again (A.11) and (3.36) together with (3.44) in order to conclude that

as \(r\rightarrow +\infty \). Substituting (4.13) and (4.14) into (4.12), we obtain

as \(r\rightarrow +\infty \).

4.4 Asymptotics for the differential identity

We now substitute (4.4), (4.10), and (4.15) into (4.1) and obtain after a straightforward calculation in which we use (3.25),

as \(r\rightarrow +\infty \), where we recall that \(\beta _1=0\) if \(m=2\). Now we note that

by (3.13). Next, by (3.30), we have

We substitute this in (4.16) and integrate in \(s_m\). For the integration, we recall the relation (1.6) between \(\vec {\beta }\) and \(\vec {s}\), and we note that letting the integration variable \(s_m'=e^{-2\pi i\beta _m'}\) go from 1 to \(s_m=e^{-2\pi i\beta _m}\) amounts to letting \(\beta _m'\) go from 0 to \(-\frac{\log s_m}{2\pi i}\) and, unless \(m=2\), simultaneously letting \(\beta _{m-1}'\) go from \(\hat{\beta }_{m-1}:=-\frac{\log s_{m-1}}{2\pi i}\) to \(\beta _{m-1}=\frac{\log s_{m}}{2\pi i}-\frac{\log s_{m-1}}{2\pi i}\). If \(m=2\), we set \(\hat{\beta }_{1}=\beta _{1}=0\). We then obtain, also using (3.25) and writing \(\vec {s}_0:=(s_1,\ldots , s_{m-1},1)\),

as \(r\rightarrow +\infty \). Now we use the following identity for the remaining integrals in terms of Barnes’ G-function (which can be obtained from an integration by part of [38, formula 5.17.4], see also [17, equations (5.24) and (5.25)]),

Noting that \(\lambda _j=\tau _j-\tau _1\) and \(-\beta _{m} =\beta _{m-1}-\hat{\beta }_{m-1}\), we find after a straightforward calculation that

as \(r\rightarrow +\infty \), uniformly in \(\vec {\tau }\) as long as the \(\tau _j\)’s remain bounded away from each other and from 0, and uniformly for \(\beta _2,\ldots , \beta _{m}\) in a compact subset of \(i\mathbb {R}\).
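The Barnes G-function identity used above is obtained from [38, formula 5.17.4], which we read as \(\log G(z+1) = \frac{z}{2}\log (2\pi ) - \frac{z(z+1)}{2} + z\log \Gamma (z+1) - \int _0^z \log \Gamma (t+1)\,dt\). The Python sketch below is only a numerical sanity check of this starting formula at nonnegative integers \(z=n\), where \(G(n+1)=0!\,1!\cdots (n-1)!\). It does not reproduce the integrated form used in the proof. The helper names and the Simpson-rule quadrature are our own illustrative choices.

```python
import math


def log_barnes_g_at_integer(n: int) -> float:
    """log G(n + 1) for an integer n >= 0, using G(n + 1) = 0! * 1! * ... * (n - 1)!."""
    return sum(math.lgamma(k + 1) for k in range(n))


def simpson(f, a: float, b: float, n: int = 2000) -> float:
    """Composite Simpson rule on [a, b] with an even number n of subintervals."""
    if n % 2:
        n += 1
    h = (b - a) / n
    total = f(a) + f(b)
    for i in range(1, n):
        total += (4 if i % 2 else 2) * f(a + i * h)
    return total * h / 3


def log_barnes_g_dlmf(z: float) -> float:
    """Right-hand side of DLMF 5.17.4 for real z >= 0, with the integral computed numerically."""
    integral = simpson(lambda t: math.lgamma(t + 1), 0.0, z)
    return (0.5 * z * math.log(2 * math.pi)
            - 0.5 * z * (z + 1)
            + z * math.lgamma(z + 1)
            - integral)


if __name__ == "__main__":
    for n in range(6):
        print(n, log_barnes_g_at_integer(n), log_barnes_g_dlmf(n))
```

The two columns should agree up to quadrature error. For example, at n = 4 both approximate \(\log 12\), since G(5) = 12.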

4.5 Proof of Theorem 1.2

We now prove Theorem 1.2 by induction on m. For \(m=1\), the result (1.4) is proved in [14]. Assume that the result holds for up to \(m-1\) discontinuities. We can then evaluate \(F(r\vec {\tau };\vec {s}_0)\) asymptotically, since it is an Airy kernel Fredholm determinant with only \(m-1\) discontinuities. After another straightforward calculation, this yields the large r asymptotics, uniform in \(\vec {\tau }\) and \(\beta _2,\ldots , \beta _m\),

where

This implies the explicit form (1.14) of the asymptotics for \(E_0(r\vec {\tau };\vec {\beta }_0)=F(r\vec {\tau }; \vec {s})/F(r\tau _1;0)\). The recursive form (1.9) of the asymptotics follows directly by relying on (1.4) and (1.11). Note that we prove (1.11) independently in the next section.

5 Asymptotic Analysis of RH Problem for \(\Psi \) with \(s_{1} > 0\)

We now analyze the RH problem for \(\Psi \) asymptotically in the case where \(s_1> 0\). Although the general strategy is the same as in the case \(s_1=0\) (see Sect. 3), several modifications are needed. The most important ones are a different g-function, and a local Airy parametrix in place of the local Bessel parametrix needed for \(s_1=0\). We again write \(\vec {x}=r\vec {\tau }\) and \(\vec {y}=r\vec {\eta }\), with \(\eta _j=\tau _j-\tau _m\).

5.1 Re-scaling of the RH problem

We define T, in a slightly different manner than in (3.1), as follows,

As in the case \(s_1=0\), because of the triangular pre-factor above, we have

as \(\lambda \rightarrow \infty \), but with modified expressions for the entries of \(T_1\) and \(T_2\):

The singularities of T now lie at the negative points \(\lambda _j=\tau _{j}\), \(j = 1,\ldots ,m\).

5.2 Normalization with g-function and opening of lenses

Instead of the g-function defined in (3.4), we can now use the simpler function \(-\frac{2}{3} \lambda ^{3/2}\) with principal branch of \(\lambda ^{3/2}\), and define

where \(\Omega _{j,\pm }\) are lens-shaped regions around \((\lambda _{j},\lambda _{j-1})\) as before, but where we note that the index j now starts at \(j=1\) instead of at \(j=2\), and where we define \(\lambda _{0} := 0\), see Fig. 3 for an illustration of these regions. Note that \(\lambda _{0}\) is not a singular point of the RH problem for T, but since \(\mathfrak {R}\lambda ^{3/2} = 0\) on \((-\infty ,0)\), it plays a role in the asymptotic analysis for S. S satisfies the following RH problem.

5.2.1 RH problem for S

- (a)

\(S : \mathbb {C}\backslash \Gamma _{S} \rightarrow \mathbb {C}^{2\times 2}\) is analytic, with

$$\begin{aligned} \Gamma _{S}=\mathbb {R}\cup \big (\lambda _{m} +e^{\pm \frac{2\pi i}{3}} (0,+\infty )\big ) \cup \gamma _{+}\cup \gamma _{-}, \quad \gamma _{\pm } =\bigcup _{j=1}^{m} \gamma _{j,\pm }, \end{aligned}\tag{5.8}$$

and with \(\Gamma _{S}\) oriented as in Fig. 3.

- (b)

The jumps for S are given by

$$\begin{aligned} S_{+}(\lambda )= & {} S_{-}(\lambda )\begin{pmatrix} 0 &{}\quad s_{j} \\ -s_{j}^{-1} &{}\quad 0 \end{pmatrix}, \quad \lambda \in (\lambda _{j},\lambda _{j-1}), \, j = 1,\ldots ,m+1, \\ S_{+}(\lambda )= & {} S_{-}(\lambda )\begin{pmatrix} 1 &{}\quad 0 \\ s_{j}^{-1}e^{\frac{4}{3}(r\lambda )^{3/2}} &{}\quad 1 \end{pmatrix}, \quad \lambda \in \gamma _{j,+} \cup \gamma _{j,-}, \, j = 1,\ldots ,m, \\ S_{+}(\lambda )= & {} S_{-}(\lambda )\begin{pmatrix} 1 &{}\quad 0 \\ e^{\frac{4}{3}(r\lambda )^{3/2}} &{}\quad 1 \end{pmatrix}, \quad \lambda \in \lambda _{m} +e^{\pm \frac{2\pi i}{3}} (0,+\infty ), \\ S_{+}(\lambda )= & {} S_{-}(\lambda ) \begin{pmatrix} 1 &{}\quad s_{1}e^{-\frac{4}{3}(r\lambda )^{3/2}} \\ 0 &{}\quad 1 \end{pmatrix}, \qquad \lambda \in (0, + \infty ), \end{aligned}$$

where we set \(\lambda _{m+1}:=-\infty \) and \(\lambda _{0} := 0\).

- (c)

As \(\lambda \rightarrow \infty \), we have

$$\begin{aligned} S(\lambda ) = \left( I + \frac{T_1}{\lambda } +\frac{T_2}{\lambda ^2}+ \mathcal {O}\left( \frac{1}{\lambda ^3}\right) \right) \lambda ^{\frac{1}{4} \sigma _3} M^{-1}. \end{aligned}\tag{5.9}$$

- (d)

\(S(\lambda ) = \mathcal {O}( \log (\lambda -\lambda _j) )\) as \(\lambda \rightarrow \lambda _j\), \(j = 1, \ldots , m\), and \(S(\lambda ) = {\mathcal {O}}(1)\) as \(\lambda \rightarrow 0\).

Inspecting the sign of the real part of \(\lambda ^{3/2}\) on the different parts of the jump contour, we observe that the jumps for S are exponentially close to I as \(r \rightarrow + \infty \) on the lenses, and also on the rays \(\lambda _{m} + e^{\pm \frac{2\pi i}{3}}(0,+\infty )\). This convergence is uniform outside neighborhoods of \(\lambda _0,\lambda _{1},\ldots ,\lambda _{m}\), but breaks down as we let \(\lambda \rightarrow \lambda _{j}\), \(j \in \{0,1,\ldots ,m\}\).
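The observation above can be made concrete. On the lenses and on the rays \(\lambda _m+e^{\pm \frac{2\pi i}{3}}(0,+\infty )\), the only non-trivial entry of the jump matrix is \(e^{\frac{4}{3}(r\lambda )^{3/2}}\), up to the r-independent factor \(s_j^{-1}\). Its modulus is \(e^{\frac{4}{3}r^{3/2}\mathfrak {R}\lambda ^{3/2}}\). The Python sketch below evaluates the logarithm of this modulus at a few sample points. The configuration \(\lambda _1=-1\), \(\lambda _2=-2.5\) (so m = 2) and the sample points, which stand in for points on the lenses, are hypothetical choices and do not reproduce the contours of Fig. 3. Python's complex power uses the principal branch of the logarithm, which matches the principal branch of \(\lambda ^{3/2}\) chosen above.

```python
import cmath

# Hypothetical configuration for illustration only: m = 2 with
# lambda_0 = 0, lambda_1 = tau_1 = -1.0, lambda_2 = tau_2 = -2.5.
LAMBDA_M = -2.5

# Points off the real axis near (lambda_1, lambda_0) and (lambda_2, lambda_1),
# standing in for points on the lenses gamma_{j,+} and gamma_{j,-}.
LENS_POINTS = [-0.5 + 0.1j, -0.5 - 0.1j, -1.75 + 0.3j, -1.75 - 0.3j]

# Points on the rays lambda_m + e^{+-2 pi i/3} (0, +infinity).
RAY_POINTS = [
    LAMBDA_M + t * cmath.exp(sign * 2j * cmath.pi / 3)
    for t in (0.5, 1.0, 5.0)
    for sign in (1, -1)
]


def log_modulus_lower_jump_entry(lam: complex, r: float) -> float:
    """log|exp((4/3)(r lam)^{3/2})| = Re((4/3)(r lam)^{3/2}), principal branch.

    This is the (2,1) entry of the lens and ray jumps, without the factor s_j^{-1}.
    """
    return (4.0 / 3.0 * (r * lam) ** 1.5).real


def log_modulus_upper_jump_entry(lam: float, r: float) -> float:
    """log|exp(-(4/3)(r lam)^{3/2})| for lam > 0 (jump of S on (0, +infinity), without s_1)."""
    return -4.0 / 3.0 * (r * lam) ** 1.5


if __name__ == "__main__":
    for lam in LENS_POINTS + RAY_POINTS:
        values = [log_modulus_lower_jump_entry(lam, r) for r in (1.0, 10.0, 100.0)]
        print(f"{lam:.3f}", [f"{v:.2f}" for v in values])
```

At every sample point the exponent is negative and scales like \(r^{3/2}\). Close to \(\lambda _0,\ldots ,\lambda _m\), \(\mathfrak {R}\lambda ^{3/2}\) tends to 0, which is why the convergence is not uniform there.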

5.3 Global parametrix

The RH problem for the global parametrix is as follows.

5.3.1 RH problem for \(P^{(\infty )}\)

- (a)

\(P^{(\infty )} : \mathbb {C}\backslash (-\infty ,0] \rightarrow \mathbb {C}^{2\times 2}\) is analytic.

- (b)

The jumps for \(P^{(\infty )}\) are given by

$$\begin{aligned} P^{(\infty )}_{+}(\lambda ) = P^{(\infty )}_{-}(\lambda )\begin{pmatrix} 0 &{}\quad s_{j} \\ -s_{j}^{-1} &{}\quad 0 \end{pmatrix}, \quad \lambda \in (\lambda _{j},\lambda _{j-1}), \, j = 1,\ldots ,m+1. \end{aligned}$$

- (c)

As \(\lambda \rightarrow \infty \), we have

$$\begin{aligned} P^{(\infty )}(\lambda ) = \left( I + \frac{P^{(\infty )}_1}{\lambda } +\frac{P^{(\infty )}_2}{\lambda ^2} + \mathcal {O}\left( \frac{1}{\lambda ^3}\right) \right) \lambda ^{\frac{1}{4} \sigma _3} M^{-1}. \end{aligned}\tag{5.10}$$

As \(\lambda \rightarrow 0\), we have \(P^{(\infty )}(\lambda ) ={\mathcal {O}}(\lambda ^{-\frac{1}{4}})\). As \(\lambda \rightarrow \lambda _{j}\) with \(j \in \{1,\ldots ,m\}\), we have \(P^{(\infty )}(\lambda ) = {\mathcal {O}}(1)\).

This RH problem is of the same form as the one in the case \(s_1=0\), but with an extra jump on the interval \((\lambda _1,\lambda _0)\). We can construct \(P^{(\infty )}\) in a similar way as before, by setting

with

We emphasize that the sum in the above expression now starts at \(j=1\). For any positive integer k, as \(\lambda \rightarrow \infty \) we have

where

This defines the value of \(d_1\) in (5.11), and with these values of \(d_1, d_2\), the expressions (3.14) for \(P_1^{(\infty )}\) and \(P_2^{(\infty )}\) remain valid. As before, we can also write D as

This expression allows us, in a similar way as in Sect. 3, to expand \(D(\lambda )\) as \(\lambda \rightarrow \lambda _{j}\), \(\mathfrak {I}\lambda > 0\), \(j \in \{1,\ldots ,m\}\), and to show that

with \(T_{k,j}\) as in (3.17) and the equations just above (3.17) (which are now defined for \(k,j \ge 1\)). The first two terms in the expansion of \(D(\lambda )\) as \(\lambda \rightarrow \lambda _{0}=0\) are given by

where

Note again, for later use, that for all \(\ell \in \{0,1,2,\ldots \}\), we can rewrite \(d_{\ell }\) in terms of the \(\beta _{j}\)’s as follows,

and that

5.4 Local parametrices

The local parametrix around \(\lambda _{j}\), \(j \in \{0,\ldots ,m\}\), denoted by \(P^{(\lambda _{j})}\), should satisfy the same jumps as S in a fixed (but sufficiently small) disk \(\mathcal {D}_{\lambda _{j}}\) around \(\lambda _{j}\). Furthermore, we require that

uniformly for \(\lambda \in \partial \mathcal {D}_{\lambda _{j}}\).

5.4.1 Local parametrices around \(\lambda _{j}\), \(j = 1,\ldots ,m\).

For \(j \in \{1,\ldots ,m\}\), \(P^{(\lambda _{j})}\) can again be explicitly expressed in terms of confluent hypergeometric functions. The construction is the same as in Sect. 3, with the only difference being that \(f_{\lambda _j}\) is now defined as

where the principal branch of \((-\lambda )^{3/2}\) is chosen. This is a conformal map from \(\mathcal {D}_{\lambda _{j}}\) to a neighborhood of 0, satisfies \(f_{\lambda _{j}}(\mathbb {R}\cap \mathcal {D}_{\lambda _{j}})\subset i \mathbb {R}\), and its expansion as \(\lambda \rightarrow \lambda _{j}\) is given by

As in Sect. 3.4.1, we define

where \(\Phi _{\mathrm {HG}}\) is the solution of the confluent hypergeometric model RH problem presented in “Appendix A.3”, with parameter \(\beta =\beta _{j}\). The function \(E_{\lambda _{j}}\) is analytic inside \(\mathcal {D}_{\lambda _{j}}\) and is given by

We will need a more detailed matching condition than (5.20), which we can obtain from (A.13):

as \(r \rightarrow + \infty \) uniformly for \(\lambda \in \partial \mathcal {D}_{\lambda _{j}}\). Moreover, we note for later use that

with

5.4.2 Local parametrix around \(\lambda _{0} = 0\).

The local parametrix \(P^{(0)}\) can be explicitly expressed in terms of the Airy function. Such a construction is fairly standard, see e.g. [21, 22]. We can take \(P^{(0)}\) of the form

for \(\lambda \) in a sufficiently small disk \(\mathcal {D}_0\) around 0, where \(\Phi _{\mathrm {Ai}}\) is the solution of the Airy model RH problem presented in “Appendix A.1”. The function \(E_{0}\) is analytic inside \(\mathcal {D}_{0}\) and is given by

A refined version of the matching condition (5.20) can be derived from (A.2): one shows that

as \(r \rightarrow +\infty \), uniformly for \(\lambda \in \partial \mathcal {D}_{0}\), where \(\Phi _{\mathrm {Ai},1}\) is given in (A.2). An explicit expression for \(E_0(0)\) is given by

5.5 Small norm problem

As in Sect. 3.5, we define R as

and we can conclude in the same way as in Sect. 3.5 that (3.38) and (3.46) hold, uniformly for \(\beta _1,\beta _2,\ldots , \beta _m\) in compact subsets of \(i\mathbb {R}\), and for \(\tau _1,\ldots , \tau _m\) such that \(\tau _1<-\delta \) and \(\min _{1\le k\le m-1}\{\tau _k-\tau _{k+1}\}>\delta \) for some \(\delta >0\), with

where \(J_R\) is the jump matrix for R and \(J_R^{(1)}\) is defined by (3.39).

A difference with Sect. 3.5 is that \(J_R^{(1)}\) now has a double pole at \(\lambda =0\), by (5.30). At the other singularities \(\lambda _j\), it has a simple pole as before. If \(\lambda \in \mathbb {C}{\setminus } \bigcup _{j=0}^{m}\mathcal {D}_{\lambda _{j}}\), a residue calculation yields

From (5.30), we deduce

and

By (5.25)–(5.27), for \(j \in \{1,\ldots ,m\}\), we have

where

6 Integration of the Differential Identity for \(s_{1} > 0\)

As in Sect. 4, (2.20) yields

with

where we set \(s_{m+1}=1\). We assume in what follows that \(m\ge 2\).

For the computation of \(A_{\vec {\tau }, \vec {s}}(r)\), we start from the expansion (4.3), which continues to hold for \(s_1>0\), but now with \(P_1^{(\infty )}\) and \(P_2^{(\infty )}\) as in Sect. 5 (i.e. defined by (3.14) but with \(d_1, d_2\) given by (5.18)), and with \(R_{1}^{(1)}\) and \(R_{2}^{(1)}\) defined through the expansion

corresponding to the function \(R^{(1)}\) from Sect. 5, given in (5.33).

Using (3.14), (3.38), (3.46), (5.3)–(5.6), (5.22) and (5.34), we obtain after a long computation the following explicit large r expansion

For the terms \(B_{\vec {\tau }, \vec {s}}^{(j)}(r)\), we proceed as before by splitting this term in the same way as in (4.6). We can carry out the same analysis as in Sect. 4 for each of the terms. We note that the terms corresponding to \(j=1\) can now be computed in the same way as the terms \(j=2,\ldots , m\). This gives, analogously to (4.10),

as \(r\rightarrow +\infty \).

Summing up (6.3) and (6.4) and using the expressions (5.22) for \(c_{\lambda _{j}}\) and (5.18) for \(d_0\), we obtain the large r asymptotics

uniformly for \(\beta _1,\beta _2,\ldots , \beta _m\) in compact subsets of \(i\mathbb {R}\), and for \(\tau _1,\ldots , \tau _m\) such that \(\tau _1<-\delta \) and \(\min _{1\le k\le m-1}\{\tau _k-\tau _{k+1}\}>\delta \) for some \(\delta >0\). Next, we observe that (5.27) implies the identity

Using this identity, the fact that \(\lambda _j=\tau _j\), and (1.6), we find after a straightforward calculation that, as \(r\rightarrow +\infty \), uniformly in \(\vec {\tau }\) and \(\vec {\beta }\),

We are now ready to integrate this in \(s_m\). Recall that we need to integrate \(s_m'=e^{-2\pi i\beta _m'}\) from 1 to \(s_m=e^{-2\pi i\beta _m}\), which means that we let \(\beta _m'\) go from 0 to \(-\frac{\log s_m}{2\pi i}\), and at the same time \(\beta _{m-1}'\) go from \(\hat{\beta }_{m-1}:=-\frac{\log s_{m-1}}{2\pi i}\) to \(\beta _{m-1}=\frac{\log s_{m}}{2\pi i}-\frac{\log s_{m-1}}{2\pi i}\). We then obtain, using (4.19) and (5.18), and writing \(\vec {s}_0:=(s_1,\ldots , s_{m-1},1)\),

as \(r\rightarrow +\infty \), where \(\mu (x)\) is as in Theorem 1.1.
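The change of variables used in the \(s_m\)-integration can be checked mechanically. The Python sketch below assumes that (1.6) reads \(e^{-2\pi i\beta _j}=s_j/s_{j+1}\) with \(s_{m+1}=1\), that is, \(\beta _j=\frac{\log s_{j+1}-\log s_j}{2\pi i}\). This assumed form reproduces the endpoint values of \(\beta _m'\), \(\beta _{m-1}'\) and \(\hat{\beta }_{m-1}\) quoted above. It also reproduces the relation \(-\beta _m=\beta _{m-1}-\hat{\beta }_{m-1}\) used in Sect. 4. For positive \(s_j\), as in this section, each \(\beta _j\) is purely imaginary. The function names are hypothetical.

```python
import cmath
import math


def betas_from_s(s):
    """Return [beta_1, ..., beta_m] with beta_j = (log s_{j+1} - log s_j) / (2 pi i).

    Assumed form of (1.6): e^{-2 pi i beta_j} = s_j / s_{j+1}, with s_{m+1} = 1.
    """
    padded = list(s) + [1.0]
    return [
        (cmath.log(padded[j + 1]) - cmath.log(padded[j])) / (2j * math.pi)
        for j in range(len(s))
    ]


def sm_integration_endpoints(s):
    """Values of (beta_{m-1}, beta_m) at s_m' = 1 and at s_m' = s_m (requires m >= 2)."""
    s = list(s)
    start = betas_from_s(s[:-1] + [1.0])  # the vector s_0 = (s_1, ..., s_{m-1}, 1)
    end = betas_from_s(s)
    return (start[-2], start[-1]), (end[-2], end[-1])


if __name__ == "__main__":
    s = [0.3, 2.0, 0.7]  # hypothetical positive values, m = 3
    (beta_hat, beta_m_start), (beta_m1, beta_m) = sm_integration_endpoints(s)
    print(beta_hat, beta_m_start, beta_m1, beta_m)
```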

We can now conclude the proof of Theorem 1.1 by induction on m. For \(m=1\), we have (1.5). Assuming that the result (1.11) holds for \(m-1\) singularities, we know the asymptotics for \(F(r\vec {\tau };\vec {s}_0)=E(r\tau _1,\ldots , r\tau _{m-1};\beta _1,\ldots ,\beta _{m-2}, \hat{\beta }_{m-1})\). Substituting these asymptotics in (6.8) and using (5.19), we obtain

with

From this expansion, it is straightforward to derive (1.11). The expansion (1.9) follows from (1.5) after another straightforward calculation. This concludes the proof of Theorem 1.1.

References

[1] Ablowitz, M.J., Segur, H.: Asymptotic solutions of the Korteweg-de Vries equation. Stud. Appl. Math. 57(1), 13–44 (1976/1977)

[2] Amir, G., Corwin, I., Quastel, J.: Probability distribution of the free energy of the continuum directed random polymer in \(1 + 1\) dimensions. Commun. Pure Appl. Math. 64, 466–537 (2011)

[3] Arguin, L.-P., Belius, D., Bourgade, P.: Maximum of the Characteristic Polynomial of Random Unitary Matrices. Commun. Math. Phys. 349, 703–751 (2017)

[4] Baik, J., Buckingham, R., Di Franco, J.: Asymptotics of Tracy-Widom distributions and the total integral of a Painlevé II function. Commun. Math. Phys. 280, 463–497 (2008)

[5] Baik, J., Deift, P., Rains, E.: A Fredholm determinant identity and the convergence of moments for random Young tableaux. Commun. Math. Phys. 223(3), 627–672 (2001)

[6] Basor, E., Widom, H.: Determinants of Airy operators and applications to random matrices. J. Stat. Phys. 96(1–2), 1–20 (1999)

[7] Berestycki, N., Webb, C., Wong, M.D.: Random Hermitian matrices and gaussian multiplicative chaos. Probab. Theory Relat. Fields 172, 103–189 (2018)

[8] Bogatskiy, A., Claeys, T., Its, A.: Hankel determinant and orthogonal polynomials for a Gaussian weight with a discontinuity at the edge. Commun. Math. Phys. 347(1), 127–162 (2016)

[9] Bohigas, O., Pato, M.P.: Missing levels in correlated spectra. Phys. Lett. B 595, 171–176 (2004)

[10] Bohigas, O., Pato, M.P.: Randomly incomplete spectra and intermediate statistics. Phys. Rev. E 74(3), 036212 (2006)

[11] Borodin, A.: Determinantal point processes. In: Oxford Handbook of Random Matrix Theory, pp. 231–249. Oxford University Press, New York (2011)

[12] Borodin, A., Ferrari, P.: Anisotropic growth of random surfaces in \(2+1\) dimensions. Commun. Math. Phys. 325, 603–684 (2014)

[13] Borodin, A., Okounkov, A., Olshanski, G.: Asymptotics of Plancherel measures for symmetric groups. J. Am. Math. Soc. 13(3), 481–515 (2000)

[14] Bothner, T., Buckingham, R.: Large deformations of the Tracy–Widom distribution I. Non-oscillatory asymptotics. Commun. Math. Phys. 359, 223–263 (2018)

[15] Bothner, T., Deift, P., Its, A., Krasovsky, I.: On the asymptotic behavior of a log gas in the bulk scaling limit in the presence of a varying external potential I. Commun. Math. Phys. 337, 1397–1463 (2015)

[16] Bourgade, P., Erdős, L., Yau, H.-T.: Edge universality of beta ensembles. Commun. Math. Phys. 332, 261–353 (2014)

[17] Charlier, C.: Asymptotics of Hankel determinants with a one-cut regular potential and Fisher–Hartwig singularities. Int. Math. Res. Not. rny009, https://doi.org/10.1093/imrn/rny009

[18] Charlier, C.: Large gap asymptotics in the piecewise thinned Bessel point process, preprint

[19] Claeys, T., Doeraene, A.: The generating function for the Airy point process and a system of coupled Painlevé II equations. Stud. Appl. Math. 140(4), 403–437 (2018)

[20] Corwin, I., Ghosal, P.: Lower tail of the KPZ equation, arXiv:1802.03273

[21] Deift, P.: Orthogonal Polynomials and Random Matrices: A Riemann–Hilbert Approach. American Mathematical Society, Providence (1999)

[22] Deift, P., Gioev, D.: Universality at the edge of the spectrum for unitary, orthogonal and symplectic ensembles of random matrices. Commun. Pure Appl. Math. 60, 867–910 (2007)

[23] Deift, P., Its, A., Krasovsky, I.: Asymptotics for the Airy-kernel determinant. Commun. Math. Phys. 278, 643–678 (2008)

[24] Deift, P., Kriecherbauer, T., McLaughlin, K., Venakides, S., Zhou, X.: Strong asymptotics of orthogonal polynomials with respect to exponential weights. Commun. Pure Appl. Math. 52, 1491–1552 (1999)

[25] Forrester, P.J.: The spectrum edge of random matrix ensembles. Nuclear Phys. B 402, 709–728 (1993)

[26] Forrester, P.J.: Asymptotics of spacing distributions 50 years later. MSRI Publ. 65, 199–222 (2014)

[27] Foulquie Moreno, A., Martinez-Finkelshtein, A., Sousa, V.L.: Asymptotics of orthogonal polynomials for a weight with a jump on [\(-1, 1\)]. Constr. Approx. 33, 219–263 (2011)

[28] Hagg, J.: Gaussian fluctuations in some determinantal processes. Ph.D. Thesis, 2007, ISBN 978-91-7178-603-6, http://kth.diva-portal.org/smash/get/diva2:11902/FULLTEXT01.pdf

[29] Hastings, S.P., McLeod, J.B.: A boundary value problem associated with the second Painlevé transcendent and the Korteweg-de Vries equation. Arch. Ration. Mech. Anal. 73, 31–51 (1980)

[30] Its, A., Izergin, A.G., Korepin, V.E., Slavnov, N.A.: Differential equations for quantum correlation functions. In: Proceedings of the Conference on Yang–Baxter Equations, Conformal Invariance and Integrability in Statistical Mechanics and Field Theory, vol. 4, pp. 1003–1037 (1990)

[31] Its, A., Krasovsky, I.: Hankel determinant and orthogonal polynomials for the Gaussian weight with a jump. Contemp. Math. 458, 215–247 (2008)

[32] Johansson, K.: The arctic circle boundary and the Airy process. Ann. Prob. 33(1), 1–30 (2005)

[33] Johansson, K.: Random matrices and determinantal processes. In: Mathematical Statistical Physics, pp. 1–55. Elsevier, Amsterdam (2006)

[34] Krajenbrink, A., Le Doussal, P., Prolhac, S.: Systematic time expansion for the Kardar-Parisi-Zhang equation, linear statistics of the GUE at the edge and trapped fermions. Nucl. Phys. B 936, 239–305 (2018)

[35] Krasovsky, I.: Large gap asymptotics for random matrices. In: “New Trends in Mathematical Physics”, XVth International Congress on Mathematical Physics, Springer, Berlin (2009)

[36] Kuijlaars, A.B.J., McLaughlin, K.T.-R., Van Assche, W., Vanlessen, M.: The Riemann–Hilbert approach to strong asymptotics for orthogonal polynomials on \([-1,1]\). Adv. Math. 188, 337–398 (2004)

[37] Lambert, G., Ostrovsky, D., Simm, N.: Subcritical multiplicative chaos for regularized counting statistics from random matrix theory. Commun. Math. Phys. 360(1), 1–54 (2018)

[38] Olver, F.W.J., Lozier, D.W., Boisvert, R.F., Clark, C.W.: NIST Handbook of Mathematical Functions. Cambridge University Press, Cambridge (2010)

[39] Praehofer, M., Spohn, H.: Scale invariance of the PNG droplet and the Airy process. J. Stat. Phys. 108, 1076–1106 (2002)

[40] Soshnikov, A.: Universality at the edge of the spectrum in Wigner random matrices. Commun. Math. Phys. 207, 697–733 (1999)

[41] Soshnikov, A.: Gaussian fluctuation for the number of particles in Airy, Bessel, sine, and other determinantal random point fields. J. Stat. Phys. 100, 491–522 (2000)

[42] Soshnikov, A.: Determinantal random point fields. Russ. Math. Surv. 55(5), 923–975 (2000)

[43] Tracy, C., Widom, H.: Level-spacing distributions and the Airy kernel. Commun. Math. Phys. 159, 151–174 (1994)

[44] Xu, S.-X., Dai, D.: Tracy–Widom distributions in critical unitary random matrix ensembles and the coupled Painlevé II system. Commun. Math. Phys. (2018). https://doi.org/10.1007/s00220-018-3257-y

Acknowledgements

Open access funding provided by Royal Institute of Technology. C.C. was supported by the Swedish Research Council, Grant No. 2015-05430. T.C. was supported by the Fonds de la Recherche Scientifique-FNRS under EOS Project O013018F.

Additional information

Communicated by P. Deift

A Model RH Problems

In this section, we recall three well-known RH problems: (1) the Airy model RH problem, whose solution is denoted by \(\Phi _{\mathrm {Ai}}\); (2) the Bessel model RH problem, whose solution is denoted by \(\Phi _{\mathrm {Be}}\); and (3) the confluent hypergeometric model RH problem, which depends on a parameter \(\beta \in i \mathbb {R}\) and whose solution is denoted by \(\Phi _{\mathrm {HG}}(\cdot ) =\Phi _{\mathrm {HG}}(\cdot ;\beta )\).

A.1 Airy model RH problem

- (a)

\(\Phi _{\mathrm {Ai}} : \mathbb {C} {\setminus } \Sigma _{A} \rightarrow \mathbb {C}^{2 \times 2}\) is analytic, and \(\Sigma _{A}\) is shown in Fig. 4.

- (b)

\(\Phi _{\mathrm {Ai}}\) has the jump relations

$$\begin{aligned} \Phi _{\mathrm {Ai},+}(z)= & {} \Phi _{\mathrm {Ai},-}(z) \begin{pmatrix} 0 &{}\quad 1 \\ -1 &{}\quad 0 \end{pmatrix}, \quad \text{ on } \mathbb {R}^{-}, \\ \Phi _{\mathrm {Ai},+}(z)= & {} \Phi _{\mathrm {Ai},-}(z) \begin{pmatrix} 1 &{}\quad 1 \\ 0 &{}\quad 1 \end{pmatrix}, \quad \quad \text{ on } \mathbb {R}^{+}, \\ \Phi _{\mathrm {Ai},+}(z)= & {} \Phi _{\mathrm {Ai},-}(z) \begin{pmatrix} 1 &{}\quad 0 \\ 1 &{}\quad 1 \end{pmatrix}, \quad \quad \text{ on } e^{ \frac{2\pi i}{3} } \mathbb {R}^{+} , \\ \Phi _{\mathrm {Ai},+}(z)= & {} \Phi _{\mathrm {Ai},-}(z) \begin{pmatrix} 1 &{}\quad 0 \\ 1 &{}\quad 1 \end{pmatrix}, \quad \quad \text{ on } e^{ -\frac{2\pi i}{3} } \mathbb {R}^{+} . \end{aligned}\tag{A.1}$$

- (c)

As \(z \rightarrow \infty \), \(z \notin \Sigma _{A}\), we have

$$\begin{aligned} \Phi _{\mathrm {Ai}}(z) = z^{-\frac{\sigma _{3}}{4}}M \left( I + \frac{\Phi _{\mathrm {Ai,1}}}{z^{3/2}} + {\mathcal {O}}(z^{-3}) \right) e^{-\frac{2}{3}z^{3/2}\sigma _{3}}, \quad \Phi _{\mathrm {Ai,1}} = \frac{1}{8}\begin{pmatrix} \frac{1}{6} &{}\quad i \\ i &{}\quad -\frac{1}{6} \end{pmatrix}. \end{aligned}\tag{A.2}$$

As \(z \rightarrow 0\), we have

$$\begin{aligned} \Phi _{\mathrm {Ai}}(z) = {\mathcal {O}}(1). \end{aligned}\tag{A.3}$$
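As a consistency check on the constant \(\Phi _{\mathrm {Ai},1}\) in (A.2), one can compare the first column of (A.2) with the classical expansions \(\mathrm {Ai}(z)\sim \frac{e^{-\zeta }}{2\sqrt{\pi }z^{1/4}}(1-\frac{u_1}{\zeta }+\cdots )\) and \(\mathrm {Ai}'(z)\sim -\frac{z^{1/4}e^{-\zeta }}{2\sqrt{\pi }}(1-\frac{v_1}{\zeta }+\cdots )\). Here \(\zeta =\frac{2}{3}z^{3/2}\), \(u_1=\frac{5}{72}\) and \(v_1=-\frac{7}{72}\) [38, §9.7]. The Python sketch below makes two assumptions, neither of which is restated in this section, so the check is indicative only:

- \(M=\frac{1}{\sqrt{2}}\begin{pmatrix} 1 & i\\ i & 1\end{pmatrix}\);
- the first column of \(\Phi _{\mathrm {Ai}}\) is proportional to \((\mathrm {Ai}(z), -i\mathrm {Ai}'(z))^T\) near the positive real axis.

Under these assumptions, matching the \(z^{-3/2}\) terms gives \(\Phi _{11}+i\Phi _{21}=-\frac{3}{2}u_1\) and \(\Phi _{11}-i\Phi _{21}=-\frac{3}{2}v_1\).

```python
from fractions import Fraction

# First coefficients of the Airy asymptotic series (DLMF 9.7, k = 1).
U1 = Fraction(5, 72)
V1 = Fraction(-7, 72)
# 1/zeta = (3/2) z^{-3/2} for zeta = (2/3) z^{3/2}.
ONE_OVER_ZETA = Fraction(3, 2)


def phi_ai1_first_column():
    """Return (Phi_11, Im Phi_21) predicted by matching the z^{-3/2} terms.

    Assumes Phi_11 + i Phi_21 = -(3/2) u_1 and Phi_11 - i Phi_21 = -(3/2) v_1,
    with Phi_11 real and Phi_21 purely imaginary.
    """
    plus = -ONE_OVER_ZETA * U1    # Phi_11 + i Phi_21
    minus = -ONE_OVER_ZETA * V1   # Phi_11 - i Phi_21
    phi11 = (plus + minus) / 2
    i_times_phi21 = (plus - minus) / 2   # equals i * Phi_21, a real number
    im_phi21 = -i_times_phi21            # since Phi_21 = i * im_phi21
    return phi11, im_phi21


if __name__ == "__main__":
    phi11, im_phi21 = phi_ai1_first_column()
    print(phi11, im_phi21)
```

The script prints `1/48 1/8`, that is, \(\Phi _{11}=\frac{1}{8}\cdot \frac{1}{6}\) and \(\Phi _{21}=\frac{i}{8}\), in agreement with (A.2).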

The Airy model RH problem was introduced and solved in [24] (see in particular [24, equation (7.30)]). We have

with \(\omega = e^{\frac{2\pi i}{3}}\), Ai the Airy function and

A.2 Bessel model RH problem

- (a)

\(\Phi _{\mathrm {Be}} : \mathbb {C} {\setminus } \Sigma _{\mathrm {Be}} \rightarrow \mathbb {C}^{2\times 2}\) is analytic, where \(\Sigma _{\mathrm {Be}}\) is shown in Fig. 5.

- (b)

\(\Phi _{\mathrm {Be}}\) satisfies the jump conditions

$$\begin{aligned} \Phi _{\mathrm {Be},+}(z)= & {} \Phi _{\mathrm {Be},-}(z) \begin{pmatrix} 0 &{}\quad 1 \\ -1 &{}\quad 0 \end{pmatrix}, \quad z \in \mathbb {R}^{-}, \\ \Phi _{\mathrm {Be},+}(z)= & {} \Phi _{\mathrm {Be},-}(z) \begin{pmatrix} 1 &{}\quad 0 \\ 1 &{}\quad 1 \end{pmatrix}, \quad \quad z \in e^{ \frac{2\pi i}{3} } \mathbb {R}^{+}, \\ \Phi _{\mathrm {Be},+}(z)= & {} \Phi _{\mathrm {Be},-}(z) \begin{pmatrix} 1 &{}\quad 0 \\ 1 &{}\quad 1 \end{pmatrix}, \quad \quad z \in e^{ -\frac{2\pi i}{3} } \mathbb {R}^{+}. \end{aligned}\tag{A.6}$$

- (c)

As \(z \rightarrow \infty \), \(z \notin \Sigma _{\mathrm {Be}}\), we have

$$\begin{aligned} \Phi _{\mathrm {Be}}(z)= & {} ( 2\pi z^{\frac{1}{2}} )^{-\frac{\sigma _{3}}{2}}M\left( I+ \frac{\Phi _{\mathrm {Be},1}}{z^{1/2}} + {\mathcal {O}}(z^{-1}) \right) e^{2 z^{\frac{1}{2}}\sigma _{3}}, \end{aligned}$$(A.7)

where \(\Phi _{\mathrm {Be},1} = \frac{1}{16}\begin{pmatrix} -1 &{}\quad -2i \\ -2i &{}\quad 1 \end{pmatrix}\).

- (d)

As z tends to 0, the behavior of \(\Phi _{\mathrm {Be}}(z)\) is

$$\begin{aligned} \Phi _{\mathrm {Be}}(z) = \left\{ \begin{array}{l l} \begin{pmatrix} {\mathcal {O}}(1) &{}\quad {\mathcal {O}}(\log z) \\ {\mathcal {O}}(1) &{}\quad {\mathcal {O}}(\log z) \end{pmatrix}, &{}\quad |\arg z|< \frac{2\pi }{3}, \\ \begin{pmatrix} {\mathcal {O}}(\log z) &{}\quad {\mathcal {O}}(\log z) \\ {\mathcal {O}}(\log z) &{}\quad {\mathcal {O}}(\log z) \end{pmatrix},&\quad \frac{2\pi }{3}< |\arg z| < \pi . \end{array} \right. \end{aligned}$$(A.8)
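The jumps in (A.1) and (A.6) can be compared through their cyclic products around the origin. Assume that \(\mathbb {R}^{\pm}\) are oriented from left to right and the rays \(e^{\pm \frac{2\pi i}{3}}\mathbb {R}^{+}\) towards the origin (the contour figures are not reproduced here; for the Airy problem this is the orientation under which the four jumps are compatible). Then the product of the jumps on \(e^{-\frac{2\pi i}{3}}\mathbb {R}^{+}\), \(\mathbb {R}^{-}\) and \(e^{\frac{2\pi i}{3}}\mathbb {R}^{+}\) equals the Airy jump on \(\mathbb {R}^{+}\), so \(\Phi _{\mathrm {Ai}}\) has trivial monodromy at 0, in line with (A.3). The Bessel problem has no jump on \(\mathbb {R}^{+}\), and the same product is a nontrivial unipotent matrix, which is consistent with the logarithmic entries in (A.8). A short NumPy sketch, under the stated orientation assumption:

```python
import numpy as np

# Jump matrices shared by the Airy (A.1) and Bessel (A.6) model problems.
J_NEG_REAL = np.array([[0, 1], [-1, 0]])  # on R^-
J_OBLIQUE = np.array([[1, 0], [1, 1]])    # on e^{2 pi i/3} R^+ and on e^{-2 pi i/3} R^+
J_POS_REAL = np.array([[1, 1], [0, 1]])   # on R^+ (Airy problem only)

# Assumed orientation: R^- left to right, oblique rays towards the origin.
# Crossing the rays at angles 2pi/3, pi and -2pi/3 in turn, the values of Phi
# in 0 < arg z < 2pi/3 equal those in -2pi/3 < arg z < 0 times this product.
cyclic = J_OBLIQUE @ J_NEG_REAL @ J_OBLIQUE

# Airy: the product must coincide with the jump on R^+ (trivial monodromy).
airy_consistent = np.array_equal(cyclic, J_POS_REAL)

# Bessel: no jump on R^+, so the product itself is the monodromy around 0.
print(airy_consistent)   # True
print(cyclic.tolist())   # [[1, 1], [0, 1]]
```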

This RH problem was introduced and solved in [36]. Its unique solution is given by

where \(H_{0}^{(1)}\) and \(H_{0}^{(2)}\) are the Hankel functions of the first and second kind, and \(I_0\) and \(K_0\) are the modified Bessel functions of the first and second kind.

By [38, Section 10.30(i)], as \(z \rightarrow 0\) we have

Therefore, as \(z \rightarrow 0\) from the sector \(|\arg z|<\frac{2\pi }{3}\), we have

where \(*\) denotes entries whose values are unimportant for us.

A.3 Confluent hypergeometric model RH problem

- (a)

\(\Phi _{\mathrm {HG}} : \mathbb {C} {\setminus } \Sigma _{\mathrm {HG}} \rightarrow \mathbb {C}^{2 \times 2}\) is analytic, where \(\Sigma _{\mathrm {HG}}\) is shown in Fig. 6.

- (b)

For \(z \in \Gamma _{k}\) (see Fig. 6), \(k = 1,\ldots ,6\), \(\Phi _{\mathrm {HG}}\) has the jump relations

$$\begin{aligned} \Phi _{\mathrm {HG},+}(z) = \Phi _{\mathrm {HG},-}(z)J_{k}, \end{aligned}$$(A.12)

where

$$\begin{aligned}&J_{1} = \begin{pmatrix} 0 &{}\quad e^{-i\pi \beta } \\ -e^{i\pi \beta } &{}\quad 0 \end{pmatrix}, \quad J_{4} = \begin{pmatrix} 0 &{}\quad e^{i\pi \beta } \\ -e^{-i\pi \beta } &{}\quad 0 \end{pmatrix}, \\&J_{2} = \begin{pmatrix} 1 &{}\quad 0 \\ e^{i\pi \beta } &{}\quad 1 \end{pmatrix}, \quad J_{3} = \begin{pmatrix} 1 &{}\quad 0 \\ e^{-i\pi \beta } &{}\quad 1 \end{pmatrix}, \quad J_{5} = \begin{pmatrix} 1 &{}\quad 0 \\ e^{-i\pi \beta } &{}\quad 1 \end{pmatrix}, \quad J_{6} = \begin{pmatrix} 1 &{}\quad 0 \\ e^{i\pi \beta } &{}\quad 1 \end{pmatrix}. \end{aligned}$$

- (c)

As \(z \rightarrow \infty \), \(z \notin \Sigma _{\mathrm {HG}}\), we have

$$\begin{aligned} \Phi _{\mathrm {HG}}(z)= & {} \left( I + \frac{\Phi _{\mathrm {HG},1}(\beta )}{z} + {\mathcal {O}}(z^{-2}) \right) z^{-\beta \sigma _{3}}e^{-\frac{z}{2}\sigma _{3}}\nonumber \\&\times \left\{ \begin{array}{l l} \displaystyle e^{i\pi \beta \sigma _{3}}, &{}\quad \displaystyle \frac{\pi }{2}< \arg z< \frac{3\pi }{2}, \\ \begin{pmatrix} 0 &{}\quad -1 \\ 1 &{}\quad 0 \end{pmatrix},&\quad \displaystyle -\frac{\pi }{2}< \arg z < \frac{\pi }{2}, \end{array} \right. \end{aligned}$$(A.13)

where

$$\begin{aligned} \Phi _{\mathrm {HG},1}(\beta ) = \beta ^{2} \begin{pmatrix} -1 &{}\quad \tau (\beta ) \\ - \tau (-\beta ) &{}\quad 1 \end{pmatrix}, \qquad \tau (\beta ) = \frac{- \Gamma \left( -\beta \right) }{\Gamma \left( \beta + 1 \right) }. \end{aligned}$$(A.14)

In (A.13), \(z^{\beta } = |z|^{\beta }e^{i\beta \arg z}\) with \(\arg z \in (-\frac{\pi }{2},\frac{3\pi }{2})\).

- (d)

As \(z \rightarrow 0\), we have

$$\begin{aligned} \Phi _{\mathrm {HG}}(z) = \left\{ \begin{array}{l l} \begin{pmatrix} {\mathcal {O}}(1) &{}\quad {\mathcal {O}}(\log z) \\ {\mathcal {O}}(1) &{}\quad {\mathcal {O}}(\log z) \end{pmatrix}, &{}\quad \text{ if } z \in II \cup V, \\ \begin{pmatrix} {\mathcal {O}}(\log z) &{}\quad {\mathcal {O}}(\log z) \\ {\mathcal {O}}(\log z) &{}\quad {\mathcal {O}}(\log z) \end{pmatrix},&\quad \text{ if } z \in I\cup III \cup IV \cup VI. \end{array} \right. \end{aligned}$$(A.15)
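The power \(z^{\beta }\) in (A.13) is not the principal branch: its argument is taken in \((-\frac{\pi }{2},\frac{3\pi }{2})\), so the branch cut lies along the negative imaginary axis, and the branch differs from the principal one exactly in the open third quadrant \(-\pi < \arg z < -\frac{\pi}{2}\) (principal argument). A minimal sketch of this branch using only the Python standard library; `arg_hg` and `power_hg` are hypothetical helper names, not taken from the paper:

```python
import cmath
import math


def arg_hg(z: complex) -> float:
    """Return arg z in the open interval (-pi/2, 3*pi/2), the branch used in (A.13)."""
    if z == 0:
        raise ValueError("z**beta is not defined at z = 0")
    theta = cmath.phase(z)  # principal argument, in (-pi, pi]
    if theta == -math.pi / 2:
        raise ValueError("z lies on the branch cut arg z = -pi/2")
    if theta < -math.pi / 2:
        theta += 2 * math.pi  # third quadrant: shift into (pi, 3*pi/2)
    return theta


def power_hg(z: complex, beta: complex) -> complex:
    """z**beta = |z|**beta * exp(i * beta * arg z), with arg z taken from arg_hg."""
    return abs(z) ** beta * cmath.exp(1j * beta * arg_hg(z))


z = -1 - 0.1j  # a point in the third quadrant
print(power_hg(z, 0.5), cmath.sqrt(z))  # equal up to sign
```

For \(\beta = \frac{1}{2}\) and this third-quadrant point, the sketch returns \(-\sqrt{z}\), whereas `cmath.sqrt` (principal branch) returns \(+\sqrt{z}\); in the upper half-plane the two agree.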

This RH problem was introduced and solved in [31]. Consider the matrix

where G and H are related to the Whittaker functions:

The solution \(\Phi _{\mathrm {HG}}\) is given by

We can now use classical expansions as \(z\rightarrow 0\) for the Whittaker functions, see [38, Section 13.14 (iii)], to conclude that, as \(z\rightarrow 0\) from sector II, we have

where the stars denote entries whose values are unimportant for us. This implies that

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Charlier, C., Claeys, T. Large Gap Asymptotics for Airy Kernel Determinants with Discontinuities. Commun. Math. Phys. 375, 1299–1339 (2020). https://doi.org/10.1007/s00220-019-03538-w
