Abstract

In this article we show that, at criticality, a loop ensemble of interfaces in the FK Ising model converges, as the lattice mesh tends to zero, to a unique conformally invariant scaling limit. The discrete loop ensemble is described by a canonical tree glued from the interfaces, which is then shown to converge to a tree of branching SLEs. The loop ensemble contains unboundedly many loops, and hence our result describes the joint law of infinitely many loops in terms of SLE-type processes; the result gives the full scaling limit of the FK Ising model in the sense of random geometry of the interfaces. Other results in this article are the convergence of the exploration process of the loop ensemble (or the branch of the exploration tree) to \(\hbox {SLE}(\kappa ,\kappa -6)\), \(\kappa =16/3\), and the convergence of a generalization of this process for 4 marked points to \(\hbox {SLE}[\kappa ,Z]\), \(\kappa =16/3\), where Z refers to a partition function. The latter process cannot be written as an \(\hbox {SLE}(\kappa ,\rho _1,\rho _2,\ldots )\) process, the most commonly considered generalization of SLE.



1 Introduction

1.1 The setup informally

The Ising model is one of the most studied lattice models in statistical physics. The Ising model and the Potts models generalizing it have percolation-type representations called Fortuin–Kasteleyn random cluster models (FK models). Given a graph, the Ising model assigns probabilities to configurations of \(\pm 1\) spins on the vertices of the graph, and the random cluster model assigns probabilities to configurations of open/closed edges. The FK model is obtained from the percolation model (which gives weight p to each open edge and \(1-p\) to each closed edge) by an additional weight factor q for each connected cluster of open edges. We will consider the FK Ising model, i.e., the FK model whose parameter value q corresponds to the Ising model, on a square lattice: the usual square lattice \(\mathbb {Z}^2\), rotated by \(45^\circ \) for convenience. In Fig. 1a it is the lattice formed by the centers of the black squares.
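To make the reweighting concrete, here is a minimal sketch (not taken from the article) that computes the unnormalized random-cluster weight \(p^{o(\omega )}(1-p)^{c(\omega )}q^{k(\omega )}\) of an edge configuration \(\omega \) on a small finite graph, where o, c and k count open edges, closed edges and open clusters (isolated vertices count as clusters). The graph encoding and the function names are illustrative assumptions, and only free boundary conditions are handled.

```python
from typing import Dict, Hashable, List, Set, Tuple

Vertex = Hashable
Edge = Tuple[Vertex, Vertex]


def count_clusters(vertices: List[Vertex], open_edges: List[Edge]) -> int:
    """Number of connected components of (vertices, open_edges), via union-find."""
    parent: Dict[Vertex, Vertex] = {v: v for v in vertices}

    def find(v: Vertex) -> Vertex:
        # Path halving keeps the union-find trees shallow.
        while parent[v] != v:
            parent[v] = parent[parent[v]]
            v = parent[v]
        return v

    components = len(vertices)  # every vertex starts as its own cluster
    for u, v in open_edges:
        ru, rv = find(u), find(v)
        if ru != rv:
            parent[ru] = rv
            components -= 1
    return components


def fk_weight(vertices: List[Vertex], edges: List[Edge], open_set: Set[Edge],
              p: float, q: float) -> float:
    """Unnormalized FK weight p^o (1-p)^c q^k (free boundary conditions)."""
    open_edges = [e for e in edges if e in open_set]
    o = len(open_edges)
    c = len(edges) - o
    k = count_clusters(vertices, open_edges)
    return p ** o * (1 - p) ** c * q ** k


# Triangle: all edges open gives one cluster, so the weight is p^3 * q.
V = [0, 1, 2]
E = [(0, 1), (1, 2), (0, 2)]
print(fk_weight(V, E, set(E), p=0.5, q=2.0))  # 0.25
```

Setting q = 1 removes the cluster factor and recovers the percolation weight \(p^{o}(1-p)^{c}\).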

The square lattices we are considering are \(\mathbb {L}^\bullet \), formed by the centers of the black squares (two vertices are connected by an edge if the corresponding squares touch at a corner), \(\mathbb {L}^\circ \), formed by the centers of the white squares (with edges defined as for \(\mathbb {L}^\bullet \)), and \(\mathbb {L}^\diamond \), formed by the vertices and edges of the black (or equivalently white) squares. We will also consider the square–octagon lattice \(\mathbb {L}^\spadesuit \) (the infinite graph formed by the vertices and edges of the squares and octagons in the picture), which we see as a modification of \(\mathbb {L}^\diamond \).

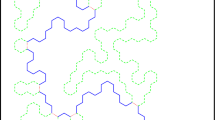

We will consider a bounded simply connected subgraph of the square lattice; this definition will be made precise later. An FK configuration in the graph is illustrated in Fig. 2a and its dual configuration in Fig. 2b. The dual configuration is defined on the dual lattice, formed by the centers of the white squares in Fig. 1a, with the rule that a dual edge (the unique edge of the dual lattice crossing a given primal edge) is open in the dual configuration if and only if the primal edge is closed in the configuration. The so-called loop representation of the FK model is defined on the so-called medial lattice, shown in Fig. 1a and formed by the corners of the squares, by taking the inner and outer boundaries of all the connected components in a configuration of edges. The loop representation can be seen as a dense collection of simple loops once the possible double vertices are resolved by the modification illustrated in Fig. 1b or Fig. 2.

The duality of the FK configurations described above gives a mapping between the set of configurations with wired boundary conditions and the set of configurations with free boundary conditions, defined on the graph and on its dual, respectively. One can check that under this involution of FK configurations the parameter values p and q are mapped to \(p^*\) and \(q^*=q\), where \(p^*\) is given by

$$\begin{aligned} \frac{p^*}{1-p^*} = \frac{q(1-p)}{p}. \end{aligned}$$

In this article we will consider the critical point of the model, which is the self-dual value of p, that is, the value \(p=p_c\) for which \(p=p^*\). For the FK Ising model, \(q=2\) and the critical parameter is \(p_c= \frac{\sqrt{2}}{1+\sqrt{2}}\). Since this is the self-dual point, the FK models in Fig. 2a, b have the same parameter values; the only difference is in the boundary conditions. Consequently, for the critical parameter the resulting loop configurations have the same law in both setups, either with wired boundary conditions on the primal graph or with free boundary conditions on the dual graph.
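The value of \(p_c\) can be checked numerically. The sketch below assumes the standard FK duality relation \(p^*/(1-p^*) = q(1-p)/p\), equivalently \(p^* = q(1-p)/(q(1-p)+p)\), and verifies that \(p_c=\sqrt{q}/(1+\sqrt{q})\) is its fixed point; the function names are illustrative only.

```python
import math


def dual_parameter(p: float, q: float) -> float:
    """FK duality map p -> p*, defined by p*/(1-p*) = q(1-p)/p."""
    a = q * (1.0 - p)
    return a / (a + p)


def self_dual_point(q: float) -> float:
    """Fixed point of the duality map, i.e. p/(1-p) = sqrt(q)."""
    s = math.sqrt(q)
    return s / (1.0 + s)


p_c = self_dual_point(2.0)
print(p_c)  # sqrt(2)/(1+sqrt(2)), approximately 0.5858
assert math.isclose(dual_parameter(p_c, 2.0), p_c)
# The duality map is an involution: (p*)* = p.
assert math.isclose(dual_parameter(dual_parameter(0.3, 2.0), 2.0), 0.3)
```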

For boundary conditions other than the wired and free ones, the correspondence of boundary conditions is slightly more complicated.

1.1.1 The role of the critical parameter.

The parameter is chosen to be critical for several reasons. First of all, it is expected that only for this value of the parameter is the scaling limit non-trivial. For other values, we get either zero or one macroscopic loop, and all the other loops are microscopic and vanish in the scaling limit. The only macroscopic loop is rather uninteresting, as it follows the boundary closely: its distance from the boundary vanishes in the scaling limit. In contrast, for the critical parameter the scaling limit consists of a countably infinite number of loops, as we will show.

The second reason for selecting this value of the parameter is more technical. For this value, the observable defined in Sect. 4.1 satisfies a relation which we can interpret as a discrete version of the Cauchy–Riemann equations. This makes it possible to pass to the limit and to recover in the limit a holomorphic function solving a boundary value problem.

The third reason for the choice of the critical parameter is that at criticality we expect that the loop collection will have a conformal symmetry. This is already suggested by the existence of the holomorphic observable.

In fact, discrete holomorphicity and related techniques allow us to control the scaling limit well, to identify it and to show its conformal invariance. This can be seen as the most important reason to consider a system at criticality.

1.2 The exploration tree and the main results

1.2.1 The exploration tree.

Figure 3 illustrates the construction of the exploration tree of a loop configuration. The (chordal) exploration tree connects the fixed root vertex to every other boundary point. The branch from the root to a fixed target vertex is constructed as follows:

(1) To initialize the process, cut open the loop next to the root vertex and start the process at that location on that loop.

(2) Explore the current loop in the clockwise direction until either the target point is reached or a point is reached at which it is clear that the target cannot be reached along the current loop (meaning that the current location of the exploration is disconnected from the target in the discrete slit domain obtained by removing the explored part from the original domain).

(3) If the target was reached, stop and return (as the result of the algorithm) the path concatenated from the subpaths of loops in the order in which they were explored.

(4) If the target was not reached yet, cut open the loop next to the current location, jump to that loop and go to Step (2).

The construction depends on the direction of exploration, which was chosen in (2) to be clockwise. Instead of a deterministic choice, independent coin flips could be used to decide whether to follow each loop in the clockwise or counterclockwise direction; this would lead to a different process. In this article we use the above construction, which suits our purposes well: after all, the main goal is to show convergence of the loop collection, and the above construction agrees well with our observable.

1.2.2 Main result.

The main theorem of this article is the following result. For its formulation, call a discrete domain admissible if it is simply connected and bounded and its boundary consists of a chain of black octagons and small squares as in Fig. 2a. More generally, introduce a lattice mesh by scaling the lattices (\(\mathbb {L}^\bullet , \mathbb {L}^\circ , \mathbb {L}^\diamond , \mathbb {L}^\spadesuit \) etc.) by a factor \(\delta >0\), consider discrete admissible domains with lattice mesh \(\delta \), and use the notation \(\Omega ^{(\delta )}\) to refer explicitly to such a domain. A sequence of simply connected domains \(\Omega _n\) (say \(\Omega _n=\Omega ^{(\delta _n)}\)) converges to a domain \(\Omega \) in the Carathéodory sense with respect to a point \(w_0\in \Omega \) if \(w_0\in \Omega _n\) for all n and the conformal onto maps \(\psi _n: \mathbb {D}\rightarrow \Omega _n\) with \(\psi _n(0)=w_0\) and \(\psi _n^{\prime }(0)>0\) converge uniformly on compact subsets of \(\mathbb {D}\) to a conformal onto map \(\psi : \mathbb {D}\rightarrow \Omega \) with \(\psi (0)=w_0\) and \(\psi ^{\prime }(0)>0\). Notice that then the inverse maps \(\phi _n = \psi ^{-1}_n\) converge to \(\phi = \psi ^{-1}\) uniformly on any compact subset K of \(\Omega \) (K belongs to the domain of \(\phi _n\) for large n).

Theorem 1.1

Let \(\Omega _{\delta _n}\) be a sequence of admissible domains that converges to a domain \(\Omega \) in Carathéodory sense with respect to some fixed \(w_0\in \Omega \) and let \(\Theta _{\partial ,\delta _n}\) be the random collection of loops which is the collection of all loops of the loop representation of the FK Ising model on \(\Omega _{\delta _n}\) that intersect the boundary (the boundary touching loops). Let \(\phi _n\) be a conformal map that sends \(\Omega _n\) onto the unit disc and \(w_0\) to 0. Then as \(n\rightarrow \infty \), the sequence of random loop collections \(\phi _n(\Theta _{\partial ,\delta _n})\) converges weakly to a limit \(\Theta \) whose law is independent of the choices of \(\Omega , \delta _n, \Omega _{\delta _n}, w_0\) and \(\phi _n\), and hence the law is invariant under all conformal isomorphisms of \(\mathbb {D}\).

Moreover, the law of \(\Theta \) is that of the boundary touching loops of \(\hbox {CLE}(\kappa )\) with \(\kappa =16/3\), and it is given by the image of the \(\hbox {SLE}(\kappa ,\kappa -6)\) exploration tree with \(\kappa =16/3\) under a tree-to-loops mapping which inverts the construction in Sect. 1.2.1 and is explained in more detail in Sect. 2.3 (including the definitions needed for understanding this theorem and making the statements more precise).

Remark 1.2

In this article we will prove Theorem 1.1 only in the case that \(\Omega \) is smooth and that each of its discrete approximations has a boundary which is close to the boundary of \(\Omega \), in the sense that their distance is bounded by a uniform constant times the lattice step of the approximation. These assumptions exclude the cases where the boundary forms long fjords, which gives us better estimates for the harmonic measure. The general case follows from the sequel [15] of this article on the radial exploration tree of the FK Ising model. These restrictions are technical and they are not needed, for instance, for the convergence in the so-called 4-point case (Sect. 4.4.1 and other arguments leading to the convergence of the interface to \(\hbox {SLE}[\kappa ,Z]\) in Sect. 5.5).

The present article aims to provide clear details for the basic proof techniques, which include the regularity properties of trees, the derivation of the martingale observables and the corresponding martingale characterization in the boundary-touching-loop setting. In principle, one should be able to deduce the complete picture by iterating this construction inside the holes that appear after removing the boundary touching loops, the main difficulty being the fractal boundary of these holes. Instead, in the sequel [15] we build the complete tree towards interior points and thus do not have to deal with fractal boundaries. This requires working with a more complicated observable, and the proof in the current article better explains what follows in the sequel.

Our result in the 4-point setting is interesting in its own right. In a follow-up paper [16], we use it to show that the interface conditioned on an internal arc pattern converges towards so-called hypergeometric SLE.

A sample branch of the FK Ising exploration tree is illustrated in Fig. 4.

A sample configuration of the critical FK Ising model on a rectangular domain with free boundary conditions. Components with only one vertex are not shown. The colors distinguish different components. Notice that in this particular sample, the large orange loop happens to come fairly close to the boundary without disconnecting the small loops to its right. Thus the exploration path turns and explores those boundary touching loops inside the “fjord”.

1.3 Previous results on conformally invariant scaling limits of random curves and loops

So far, convergence of a single discrete interface to \(\hbox {SLE}(\kappa )\) has been established for only a few models: \(\kappa =2\) and \(\kappa =8\) [17], \(\kappa =3\) and \(\kappa =\frac{16}{3}\) [8], \(\kappa =4\) [21, 22] and \(\kappa =6\) [24, 25]. However, the framework for the full scaling limit, including all interfaces, is less developed: besides the present article and our subsequent work [15], it is available only for \(\kappa =3\) [4] and \(\kappa =6\) [6]. A similar result for a collection of random curves is the convergence of the free arc ensemble of the Ising model in [3].

1.4 Organization of the article

We will give further definitions in Sect. 2. In Sect. 3 we explore the regularity and tightness properties of the loop configurations and the exploration trees based on crossing estimates. This gives a priori knowledge needed in the main argument. In Sect. 4 we define the holomorphic observable and show its convergence. In Sect. 5 we combine these tools and extract information from the observable so that we can characterize the scaling limit and prove the main theorem in Sect. 6.

2 The Setup and More Details of the Main Result

2.1 Graph theoretical notations and setup

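In this article the lattice \(\mathbb {L}^\bullet \) is the square lattice \(\mathbb {Z}^2\) rotated by \(\pi /4\), \(\mathbb {L}^\circ \) is its dual lattice, which is also a square lattice, and \(\mathbb {L}^\diamond \) is their (common) medial lattice. More specifically, we define the three lattices \(G=(V(G),E(G))\), where \(G= \mathbb {L}^\bullet ,\mathbb {L}^\circ ,\mathbb {L}^\diamond \), as

$$\begin{aligned} V(\mathbb {L}^\bullet )&= \{(i,j)\in \mathbb {Z}^2 \,:\, i+j \text { even}\},&E(\mathbb {L}^\bullet )&= \{\{v,w\} \,:\, v,w\in V(\mathbb {L}^\bullet ),\ |v-w|=\sqrt{2}\},\\ V(\mathbb {L}^\circ )&= \{(i,j)\in \mathbb {Z}^2 \,:\, i+j \text { odd}\},&E(\mathbb {L}^\circ )&= \{\{v,w\} \,:\, v,w\in V(\mathbb {L}^\circ ),\ |v-w|=\sqrt{2}\},\\ V(\mathbb {L}^\diamond )&= \{(i+\tfrac{1}{2},j+\tfrac{1}{2}) \,:\, i,j\in \mathbb {Z}\},&E(\mathbb {L}^\diamond )&= \{\{v,w\} \,:\, v,w\in V(\mathbb {L}^\diamond ),\ |v-w|=1\}. \end{aligned}$$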

Notice that sites of \(\mathbb {L}^\diamond \) are the midpoints of the edges of \(\mathbb {L}^\bullet \) and \(\mathbb {L}^\circ \).
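The midpoint property can be checked mechanically in a finite box. This is a sketch under the assumption that \(V(\mathbb {L}^\bullet )\) and \(V(\mathbb {L}^\circ )\) are the points of \(\mathbb {Z}^2\) with even and odd coordinate sum, joined by diagonal edges of length \(\sqrt{2}\), and that \(V(\mathbb {L}^\diamond )=(\mathbb {Z}+\tfrac{1}{2})^2\); these coordinates are an illustrative choice, and the helper names are hypothetical.

```python
from fractions import Fraction
from itertools import product


def diagonal_lattice_edges(parity: int, n: int) -> set:
    """Edges {v, w}, |v - w| = sqrt(2), of {(i, j): i + j = parity mod 2} in [-n, n]^2."""
    verts = {(i, j) for i, j in product(range(-n, n + 1), repeat=2) if (i + j) % 2 == parity}
    edges = set()
    for i, j in verts:
        # Only the two diagonal directions with di = +1, so each edge is stored once.
        for di, dj in ((1, 1), (1, -1)):
            w = (i + di, j + dj)
            if w in verts:
                edges.add(((i, j), w))
    return edges


def midpoint(edge):
    (a, b), (c, d) = edge
    return (Fraction(a + c, 2), Fraction(b + d, 2))


black_mid = [midpoint(e) for e in diagonal_lattice_edges(0, 6)]  # assumed L^bullet
white_mid = [midpoint(e) for e in diagonal_lattice_edges(1, 6)]  # assumed L^circ
# Assumed medial sites (i + 1/2, j + 1/2), taken well inside the box.
medial = [(Fraction(2 * i + 1, 2), Fraction(2 * j + 1, 2))
          for i, j in product(range(-4, 4), repeat=2)]

# Each medial site is the midpoint of exactly one black and one white edge.
for s in medial:
    assert black_mid.count(s) == 1 and white_mid.count(s) == 1
# Conversely, every edge midpoint has half-integer coordinates.
assert all(x.denominator == 2 and y.denominator == 2 for x, y in black_mid + white_mid)
```

The check reflects that at each site \((i+\tfrac{1}{2},j+\tfrac{1}{2})\) one diagonal of the unit square joins two black vertices and the other joins two white vertices.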

We call the vertices and edges of \(\mathbb {L}^\bullet \) black and the vertices and edges of \(\mathbb {L}^\circ \) white. Correspondingly, the faces of \(\mathbb {L}^\diamond \) are colored black or white depending on whether the center of the face belongs to \(V( \mathbb {L}^\bullet )\) or \(V( \mathbb {L}^\circ )\).

The directed version \(\mathbb {L}^\diamond _\rightarrow \) is defined by setting \(V(\mathbb {L}^\diamond _\rightarrow )=V(\mathbb {L}^\diamond )\) and orienting the edges around any black face in the counter-clockwise direction.

The modified medial lattice \(\mathbb {L}^\spadesuit \), which is a square–octagon lattice, is obtained from \(\mathbb {L}^\diamond \) by replacing each site by a small square. See Fig. 1b. The oriented lattice \(\mathbb {L}^\spadesuit _\rightarrow \) is obtained from \(\mathbb {L}^\spadesuit \) by orienting the edges around black and white octagonal faces in counter-clockwise and clockwise directions, respectively.

Definition 2.1

A simply connected, non-empty, bounded domain \(\Omega \) is said to be a wired \(\mathbb {L}^\spadesuit _\rightarrow \)-domain (or admissible domain) if \(\partial \Omega \), oriented in the counter-clockwise direction, is a path in \(\mathbb {L}^\spadesuit _\rightarrow \).

See Fig. 5 for an example of such a domain. The wired \(\mathbb {L}^\spadesuit _\rightarrow \)-domains are in one-to-one correspondence with the non-empty finite subgraphs of \(\mathbb {L}^\bullet \) which are simply connected, i.e., graphs that have a unique unbounded face and whose other faces are unit-size squares.

2.2 FK Ising model: notations and setup for the full scaling limit

Let G be a simply connected subgraph of the square lattice \(\mathbb {L}^\bullet \). Consider the random cluster measure \(\mu =\mu _{p,q}^1\) of G with wired boundary conditions in the special case of the critical FK Ising model, that is, when \(q=2\) and \(p = \sqrt{2}/(1 + \sqrt{2})\). Its dual model is again a critical FK Ising model, now with free boundary conditions on the dual graph \(G^\circ \) of G, which is a (simply connected) subgraph of \(\mathbb {L}^\circ \). The loop representation consists of the loops which form the boundaries between the open clusters and the dual open clusters, and it is defined as a collection of loops on the corresponding subgraph \(G^\spadesuit \) of the modified medial lattice \(\mathbb {L}^\spadesuit \). The loop collection satisfies the properties of the following definition. See also Fig. 2 for an illustration of the common loop representation shared by the random cluster model and its dual model.

Let us call an (unordered) collection of loops \(\mathcal {L}=(L_j)_{j =1 ,\ldots N}\) on \(G^\spadesuit \) a dense collection of non-intersecting loops (DCNIL) if

- each \(L_j \subset G^\spadesuit \) is a simple loop,
- \(L_j\) and \(L_k\) are vertex-disjoint when \(j \ne k\), and
- for every edge \(e \in E^\diamond \) there is a loop \(L_j\) that visits e. Here we use that \(E^\diamond \) is naturally a subset of \(E^\spadesuit \).
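The three conditions can be checked mechanically. The sketch below is a hypothetical encoding, not the article's: \(G^\spadesuit \) is abstracted as an undirected graph given by its edge list, the distinguished edge set \(E^\diamond \) is passed as `required_edges`, each loop is a cyclic list of vertices, and orientations are ignored.

```python
from typing import FrozenSet, Hashable, Iterable, List, Sequence, Set, Tuple

Vertex = Hashable


def loop_edges(loop: Sequence[Vertex]) -> Set[FrozenSet[Vertex]]:
    """Undirected edges traversed by the cyclic vertex sequence `loop`."""
    n = len(loop)
    return {frozenset((loop[i], loop[(i + 1) % n])) for i in range(n)}


def is_dcnil(loops: List[Sequence[Vertex]],
             graph_edges: Iterable[Tuple[Vertex, Vertex]],
             required_edges: Iterable[Tuple[Vertex, Vertex]]) -> bool:
    graph = {frozenset(e) for e in graph_edges}
    required = {frozenset(e) for e in required_edges}
    used: Set[Vertex] = set()
    covered: Set[FrozenSet[Vertex]] = set()
    for loop in loops:
        vs = set(loop)
        # (i) a simple loop of the graph: >= 3 distinct vertices, consecutive ones adjacent
        if len(loop) < 3 or len(vs) != len(loop) or not loop_edges(loop) <= graph:
            return False
        # (ii) vertex-disjoint from the loops checked so far
        if used & vs:
            return False
        used |= vs
        covered |= loop_edges(loop)
    # (iii) every required edge is visited by some loop
    return required <= covered


E = [("a", "b"), ("b", "c"), ("c", "d"), ("d", "a")]
print(is_dcnil([["a", "b", "c", "d"]], E, E))  # True
```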

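Let the collection of all the loops in the loop representation be \(\Theta =(\theta _j)_{j =1 ,\ldots N}\). Then the support of \(\Theta \) is exactly the set of DCNIL collections, and for any DCNIL collection C of loops

$$\begin{aligned} \mathbb {P}(\Theta = C) = \frac{1}{Z} \sqrt{2}^{\,\# C}, \end{aligned}$$

where \(\# C\) is the number of loops in C and Z is the partition function that normalizes the probability measure.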

We consider two boundaries of the domain: the boundary of the domain itself and a second one, shifted by one lattice step from the first towards the interior of the domain. Both are simple loops on the lattice which satisfy the same parity condition as the loops of the random cluster loop representation (on each side, the octagons have a uniform color). More specifically:

- \(\partial G^\spadesuit \) is the boundary of the domain in the usual topological sense. Call it the external boundary.
- \(\partial _1 G^\spadesuit \) is the outermost (simple) loop that can be drawn in \(G^\spadesuit \). In other words, it is the outermost loop of the empty random cluster configuration with wired boundary conditions. Call it the internal boundary. We say that \(\partial _1 G^\spadesuit \) touches the boundary everywhere and that a loop touches the boundary if it intersects \(\partial _1 G^\spadesuit \). Notice that if a loop and \(\partial _1 G^\spadesuit \) intersect, then they share an edge (which is an edge shared by two octagons).

Define the collection of boundary touching loops, \(\Theta _\partial \subset \Theta \), to be simply the set of loops which intersect the internal boundary \(\partial _1 G^\spadesuit \).

Recall the Carathéodory convergence from Sect. 1.2.2. Let \(\Omega \) be a bounded simply connected domain in the plane, take a sequence \(\delta _n \searrow 0\) as \(n \rightarrow \infty \), and take a sequence of simply connected graphs \(G_{\delta _n}^\bullet \subset \delta _n \mathbb {L}^\bullet \) which approximate \(\Omega \) in the following sense: if \(\Omega _{\delta _n}\) denotes the bounded component of \(\mathbb {C}{\setminus } \partial G_{\delta _n}^\spadesuit \), then \(\Omega _{\delta _n}\) converges to \(\Omega \) in the Carathéodory sense (with respect to any interior point of \(\Omega \)). Fix any \(w_0\in \Omega \) and let \(\phi _{\delta _n}: \Omega _{\delta _n} \rightarrow \mathbb {D}\) be conformal transformations normalized in the usual way using \(w_0\), that is,

$$\begin{aligned} \phi _{\delta _n}(w_0) = 0 \quad \text {and} \quad \phi _{\delta _n}^{\prime }(w_0) > 0. \end{aligned}$$

Let \(\Theta _{\partial ,\delta _n}\) be the collection of boundary touching loops in \(\Omega _{\delta _n}\) and set \(\tilde{\Theta }_{\partial ,\delta _n} = \phi _{\delta _n} (\Theta _{\partial ,\delta _n})\).

Let us rephrase here the first half of Theorem 1.1.

Theorem 1.1 (a)

(Conformal invariance of \(\Theta _\partial \)). As \(n \rightarrow \infty \), \(\tilde{\Theta }_{\partial ,\delta _n}\) converges weakly to a random collection \(\Theta \) of loops in \(\mathbb {D}\). The law of \(\Theta \) is independent of \(\Omega , \delta _n, \Omega _{\delta _n}\) and \(w_0\).

Remark 2.2

Notice that the independence of the law of \(\Theta \) from \(\Omega , \delta _n, \Omega _{\delta _n}\) and \(w_0\) implies that \(\Theta \) is invariant under all conformal automorphisms (Möbius transformations) of \(\mathbb {D}\). The rotational invariance requires a separate argument using the correspondence between the exploration tree and the loop collection and the fact that we are free to choose the root of the exploration. See Sect. 6.

2.3 The exploration tree of FK Ising model

To simplify the notation, we use \(\partial \Omega \) and \(\partial _1 \Omega \) to denote \(\partial G^\spadesuit \) and \(\partial _1 G^\spadesuit \). Recall that \(\partial \Omega \) and \(\partial _1 \Omega \) are simple loops on \(\mathbb {L}^\spadesuit _\rightarrow \) and that \(\Theta _\partial \) is the set of loops in \(\Theta \) that intersect \(\partial _1 \Omega \). Next we explain the construction of the exploration tree of \(\Theta _\partial \). The branches of the tree will be simple paths from a root edge to a directed edge of \(\partial _1 \Omega \). More specifically, let \(V_{\mathrm{target}}\) be the vertex set of \(\partial _1 \Omega \) and for each \(v \in V_{\mathrm{target}}\), let \(f_v\) be the edge of \(\partial _1 \Omega \) arriving at v.

We assume that the root vertex \(v_{\mathrm{root}}\in V_{\partial }\) of the exploration is fixed. For any \(w \in V_{\mathrm{target}}\), we are going to construct a simple path \(T_w=T_{v_{\mathrm{root}},w}\) which starts from the inward pointing edge of \(v_{\mathrm{root}}\) and ends with the edge \(f_w\) of w. We will call the mapping from the loop collections to the trees the “loops-to-tree map.”

We describe next the algorithm of Sect. 1.2.1 when the target point is on the boundary. Consider a loop collection \(\mathcal {L}=(L_j)_{j \in J}\) where J is some finite index set, and assume that \(\mathcal {L}\) satisfies the properties of DCNIL. The index \(j \in J\) shouldn’t be confused with the concrete sequence \(L_1,\ldots ,L_n\) chosen below. Here we consider \(L_j\) as a path in \(\mathbb {L}^\diamond \) and lift it to \(\mathbb {L}^\spadesuit \) when needed.

(1) Set \(e_0\) to be the inward pointing edge of \(\partial _1 \Omega \) at \(v_{\mathrm{root}}\) and \(f_{\mathrm{end}}=f_w\), that is, the edge of \(\partial _1 \Omega \) arriving at w. Denote by \(F_w\) the set of edges in \(\partial _1 \Omega \) that lie between \(f_{\mathrm{end}}\) and \(e_0\), including \(f_{\mathrm{end}}\).

(2) Set \(L_1\) to be the loop going through \(e_0\). Find the first edge of \(L_1\) after \(e_0\), in the orientation of \(L_1\) (recall that all the loops are oriented in the clockwise direction), that lies in \(F_w\). Call it \(f_1\), and call the part of \(L_1\) between \(e_0\) and \(f_1\), not including \(f_1\), \(L_1^\text {T}\). Notice that \(L_1\) goes through \(f_{\mathrm{end}}\) if and only if \(f_1 = f_{\mathrm{end}}\). Notice also that if \(f_1 \ne f_{\mathrm{end}}\), then \(f_1\) is the first edge that takes the loop to a component of the domain that is no longer “visible” to \(f_{\mathrm{end}}\).

(3) Suppose that \(f_n\) and \(L_1^\text {T}, L_2^\text {T}, \ldots , L_n^\text {T}\) are known. If \(f_n = f_{\mathrm{end}}\), stop and return the concatenation of \(L_1^\text {T}, L_2^\text {T}, \ldots , L_n^\text {T}\) and \(f_n\) as the result \(T_w\). Otherwise take the inward pointing edge \(e_n\) next to \(f_n\) (starting at the endpoint of \(f_n\)) and the loop \(L_{n+1}\) passing through \(e_n\). Find the first edge of \(L_{n+1}\) after \(e_n\), in the orientation of \(L_{n+1}\), that lies in \(F_w\). Call it \(f_{n+1}\), and call the part of \(L_{n+1}\) between \(e_n\) and \(f_{n+1}\), not including \(f_{n+1}\), \(L_{n+1}^\text {T}\). Repeat (3).
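The control flow of Steps (1)–(3) can be sketched in Python. This is a purely combinatorial sketch under simplifying assumptions: each loop is given as the cyclic list of its edges in its own orientation, the set \(F_w\) is given explicitly, and the geometric rule "take the inward pointing edge next to \(f_n\)" is supplied as a hypothetical lookup table `inward_next`. None of these names come from the article, and the lattice geometry itself is not modelled.

```python
from typing import Dict, Hashable, List, Sequence, Set

Edge = Hashable


def explore_branch(loops: Sequence[Sequence[Edge]], e0: Edge, f_end: Edge,
                   F_w: Set[Edge], inward_next: Dict[Edge, Edge]) -> List[Edge]:
    """Steps (1)-(3): follow loops in their orientation, stopping at edges of F_w."""
    # Locate each edge on its (unique) loop: edge -> (loop index, position).
    where = {e: (i, j) for i, loop in enumerate(loops) for j, e in enumerate(loop)}
    total_edges = len(where)
    path: List[Edge] = []
    e = e0
    while True:
        i, j = where[e]
        loop = loops[i]
        k = j
        # Collect L_n^T: from the starting edge up to, not including, the first edge in F_w.
        while True:
            path.append(loop[k])
            k = (k + 1) % len(loop)
            if loop[k] in F_w:
                break
            if k == j:
                raise ValueError("loop never reaches F_w")
        f = loop[k]
        if f == f_end:
            path.append(f)  # T_w ends with f_end
            return path
        if len(path) > total_edges:
            raise ValueError("path is not simple; inconsistent input")
        e = inward_next[f]  # the inward pointing edge e_n next to f_n


loops = [["a", "b", "c", "x"], ["d", "e", "t"]]
print(explore_branch(loops, e0="a", f_end="t", F_w={"x", "t"}, inward_next={"x": "d"}))
# ['a', 'b', 'c', 'd', 'e', 't']
```

In the toy input, the first loop leaves the visible part at the edge `x` of \(F_w\), so the sketch jumps to the loop through the (hypothetical) inward edge `d`, which then reaches \(f_{\mathrm{end}}=\) `t`.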

The result of the algorithm \(T_w\) is called the exploration process from \(v_{\mathrm{root}}\) to w. The collection \(\mathcal {T}= (T_w)_{w \in V_{\mathrm{target}}}\) where \(V_{\mathrm{target}}=V_\partial \) is called the exploration tree of the loop collection \(\mathcal {L}\) rooted at \(v_{\mathrm{root}}\).

The following result is immediate from the definition of the exploration tree. By this result, two branches coincide until the first time the exploration disconnects their target points, and after that they explore disjoint regions; this will later imply the independence of these processes for the FK Ising exploration tree.

Proposition 2.3

(Target independence of exploration tree). Suppose that \(v_{\mathrm{root}}, w, w^{\prime }\) are vertices in \(V_\partial \) in counterclockwise order, not necessarily distinct. Let \(F_{w,w^{\prime }}\) be the set of edges of \(\partial _1 \Omega \) that lie between the outward pointing edges of w and \(w^{\prime }\) in the counterclockwise direction, including the edge at \(w^{\prime }\) but excluding the edge at w. Then \(T_w\) and \(T_{w^{\prime }}\) are equal up until the first edge lying in \(F_{w,w^{\prime }}\).

Next we will construct the inverse of the loops-to-tree map which we will call a “tree-to-loops map.” The structure of the tree \(\mathcal {T}\) is the following: the branches of \(\mathcal {T}\) are simple and follow the rule of leaving white squares on their right and black on their left. The branching occurs in a subset of vertex set of \(\partial _1 \Omega \). There is a one-to-one correspondence between branching points of \(\mathcal {T}\) and the boundary touching loops of \(\mathcal {L}\): the point on the loop, which lies on the boundary and is the closest one to the root if we move clockwise along the boundary, is a branching point and every branching point has this property for some loop. See also Fig. 6. Suppose that at a branching point w the incoming edges are \(e_1\) and \(e_2\) and the outgoing edges are \(f_1\) and \(f_2\) and they are in the order \(e_1,f_2,e_2,f_1\) clockwise and that the exploration process enters w through \(e_1\). Then necessarily each of the pairs \(e_1, f_1\) and \(e_2, f_2\) lie on the same loop of \(\mathcal {L}\) and these two loops are different. Also it follows that \(f_1\) and \(e_2\) are on \(\partial _1 \Omega \) while \(f_2\) and \(e_1\) are not. It follows that the last edge of \(T_w\) is \(e_2\) and that the part of \(T_w\) between \(f_2\) and \(e_2\) is exactly the loop of \(\mathcal {L}\) that touches the boundary at \(f_w=e_2\). Doing the same thing for every branching point defines a mapping from a suitable set of trees onto the set of loop collections of boundary touching loops of DCNIL. This mapping inverts the construction of the exploration tree and we summarize it in the following lemma.

Lemma 2.4

The mapping from the collection of boundary touching loops \(\mathcal {L}_\partial \) to the chordal exploration tree \(\mathcal {T}\) is a bijection.

Similar constructions work in the continuous setting. See [23] for the construction of the \(\hbox {SLE}(\kappa ,\kappa -6)\) exploration tree and the construction for recovering the loops.

Let us repeat here the second half of Theorem 1.1.

Theorem 1.1 (b)

The law of \(\Theta \) is given by the image of the \(\hbox {SLE}(\kappa ,\kappa -6)\) exploration tree with \(\kappa =16/3\) under the above tree-to-loops mapping.

3 Tightness of Trees and Loop Collections

In this section, we establish a priori bounds for trees and loop ensembles. The setting is relatively general, although we only apply it here to the FK Ising exploration tree of the boundary touching loops.

3.1 A probability bound on multiple crossings by the tree

An approach to establish compactness properties of sequences of probability measures based on probability bounds of multiple crossings of annuli by random curves was set up in [14] extending the results of [1]. Below we use that type of result for the FK Ising exploration tree. We start from the essential definitions.

For any fixed measurable space \((\mathcal {S},\mathcal {F})\), we call a random variable X tight over a collection \(\Sigma _0\) of probability measures on \((\mathcal {S},\mathcal {F})\) if for each \(\varepsilon >0\) there exists a constant \(M>0\) such that \(\mathbb {P}(|X|<M)>1-\varepsilon \) for all \(\mathbb {P}\in \Sigma _0\).
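As a toy illustration of the definition (unrelated to the FK Ising model), consider the family of exponential laws \(\mathrm {Exp}(\lambda )\) with \(\lambda \ge \lambda _{\min }>0\). A single constant \(M = 2\log (1/\varepsilon )/\lambda _{\min }\) works for the whole family, because \(\mathbb {P}(X\ge M) = e^{-\lambda M} \le \varepsilon ^2 < \varepsilon \). The family and the helper names are hypothetical choices for illustration.

```python
import math


def tightness_bound(eps: float, lam_min: float) -> float:
    """A constant M with P(|X| < M) > 1 - eps for every X ~ Exp(lam), lam >= lam_min."""
    if not (0 < eps < 1 and lam_min > 0):
        raise ValueError("need 0 < eps < 1 and lam_min > 0")
    return 2.0 * math.log(1.0 / eps) / lam_min


def prob_below(M: float, lam: float) -> float:
    """P(X < M) for X ~ Exp(lam)."""
    return 1.0 - math.exp(-lam * M)


eps, lam_min = 0.01, 0.5
M = tightness_bound(eps, lam_min)
# The same M works uniformly over the family.
assert all(prob_below(M, lam) > 1 - eps for lam in (0.5, 1.0, 10.0, 1e3))
```

The lower bound \(\lambda _{\min }>0\) is essential: over all \(\lambda >0\), no single M works, since \(\mathbb {P}(X<M)\rightarrow 0\) as \(\lambda \rightarrow 0\).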

A crossing of an annulus \(A(z_0,r,R)=\{z \in \mathbb {C}\,:\, r<|z-z_0|<R\}\) is a closed segment of a curve that intersects both connected components of \(\mathbb {C}{\setminus } A(z_0,r,R)\) and a minimal crossing doesn’t contain any genuine subcrossings.
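For a curve sampled at finitely many points, the minimal crossings of \(A(z_0,r,R)\) can be counted by recording which complementary component, \(\{|z-z_0|\le r\}\) or \(\{|z-z_0|\ge R\}\), the curve visited last; every switch corresponds to one minimal crossing. The helper below is a hypothetical sketch: it inspects only the sample points, so a crossing made and undone between two samples is missed.

```python
from typing import Sequence


def count_crossings(path: Sequence[complex], z0: complex, r: float, R: float) -> int:
    """Number of minimal crossings of A(z0, r, R) by a sampled curve."""
    if not 0 < r < R:
        raise ValueError("need 0 < r < R")
    last = None  # last complementary component visited: "in" or "out"
    crossings = 0
    for z in path:
        d = abs(z - z0)
        side = "in" if d <= r else "out" if d >= R else None
        if side is None:
            continue  # sample lies inside the annulus itself
        if last is not None and side != last:
            crossings += 1
        last = side
    return crossings


print(count_crossings([0, 3, 0, 3], 0, 1.0, 2.0))  # 3
```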

Recall the general setup of [14]: we are given a collection \((\phi ,\mathbb {P}) \in \Sigma \) where the conformal map \(\phi \) contains also the information about its domain of definition \((\Omega ,v_{\mathrm{root}},w_0)=(\Omega (\phi ),v_{\mathrm{root}}(\phi ),w_0(\phi ))\) through the requirements

and \(\mathbb {P}\) is the probability law of FK Ising model on the discrete domain \(\Omega \) and in particular gives the distribution of the FK Ising exploration tree. Given the collection \(\Sigma \) of pairs \((\phi ,\mathbb {P})\) we define the collection \(\Sigma _\mathbb {D}= \{\phi \mathbb {P}\,:\, (\phi ,\mathbb {P})\in \Sigma \}\) where \(\phi \mathbb {P}\) is the pushforward measure defined by \((\phi \mathbb {P}) (E) = \mathbb {P}(\phi ^{-1}(E))\).

Theorem 3.1

The following claim holds for the collection of the probability laws of FK Ising exploration trees:

- for any \(\Delta >0\), there exist \(n \in \mathbb {N}\) and \(K>0\) such that

$$\begin{aligned} \mathbb {P}( \text {at least } n \text { disjoint segments of } \mathcal {T}\text { cross } A(z_0,r,R)) \le K \left( \frac{r}{R} \right) ^\Delta \end{aligned} \tag{7}$$

for all \(\mathbb {P}\in \Sigma _\mathbb {D}\), for all \(z_0 \in \mathbb {C}\) and for all \(R>r>0\).

Moreover, there exist positive numbers \(\alpha , \alpha ^{\prime }>0\) such that the following claims hold:

- if for each \(r>0\), \(M_r\) is the minimum of all m such that each \(T\in \mathcal {T}\) can be split into m segments of diameter less than or equal to r, then there exists a random variable \(K(\mathcal {T})\) such that K is a tight random variable for the family \(\Sigma _\mathbb {D}\) and

$$\begin{aligned} M_r \le K(\mathcal {T}) \, r^{-\frac{1}{\alpha }} \end{aligned}$$

for all \(r>0\);

- all branches of \(\mathcal {T}\) can be jointly parametrized so that they are all \(\alpha ^{\prime }\)-Hölder continuous and the Hölder norm can be bounded by a random variable \(K^{\prime }(\mathcal {T})\) such that \(K^{\prime }\) is a tight random variable for the family \(\Sigma _\mathbb {D}\).
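For a single curve given as a polyline, the quantity \(M_r\) can be bounded from above by greedy splitting at vertices. In this hypothetical sketch, each piece is extended as far as possible while its diameter stays at most r. The diameter of a polyline piece equals the largest distance between its vertices, and it can only grow when a piece is extended, so the greedy rule is optimal among splittings at vertices; splitting at interior points can do better, hence the result is only an upper bound for \(M_r\) of that curve.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def _diameter(pts: Sequence[Point]) -> float:
    """Largest distance between two vertices (= diameter of the polyline piece)."""
    return max((math.dist(p, q) for i, p in enumerate(pts) for q in pts[i + 1:]), default=0.0)


def split_count(points: Sequence[Point], r: float) -> int:
    """Greedy number of consecutive pieces of diameter <= r, split at vertices."""
    if r <= 0:
        raise ValueError("need r > 0")
    n = len(points)
    count, start = 0, 0
    while start < n - 1:
        end = start + 1
        if math.dist(points[start], points[end]) > r:
            raise ValueError("sampling too coarse for this r; refine the polyline")
        # Extend the current piece while its diameter stays at most r.
        while end + 1 < n and _diameter(points[start:end + 2]) <= r:
            end += 1
        count += 1
        start = end  # consecutive pieces share an endpoint
    return max(count, 1)


line = [(float(x), 0.0) for x in range(5)]
print(split_count(line, 2.0))  # 2
```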

Each of the claims has its own applications below, although they are closely related; see [1].

Proof

We need only verify the first claim; the two other claims follow from it by results of [1]. More specifically, the second claim follows from the reformulated statement presented at the beginning of the proof of Theorem 1.1 of [1], and the third claim follows from Theorem 1.1 of [1]. Since the left-hand side of the inequality in the first claim is non-increasing in n, it suffices to verify the inequality (7) for \(\Delta = \Delta _{n_i}\) and \(n=n_i\), where \(n_i\) is an increasing sequence of natural numbers and \(\Delta _{n_i}\) is a sequence of positive real numbers tending to infinity.

Let \(A=A(z_0,r,R)\) be an annulus with \(z_0 \in \mathbb {D}\). Since either \(B(z_0,\sqrt{rR}) \subset \mathbb {D}\) or \(B(z_0,\sqrt{rR}) \cap \partial \mathbb {D}\ne \emptyset \), we can choose either \(r_1= \sqrt{rR}\), \(R_1=R\) or \(r_1=r\), \(R_1= \sqrt{rR}\) so that for \(A_1 = A(z_0,r_1,R_1)\) either \(A_1 \subset \mathbb {D}\) or \(B(z_0,r_1) \cap \partial \mathbb {D}\ne \emptyset \). For this \(A_1\) and for \(C>1\) large enough, apply the estimate of conformal distortion given by Lemma A.1 or Lemma A.2, depending on the case, to show that for any \(m=1,2,\ldots ,\lfloor \log \left( \frac{R}{r}\right) \rfloor \) there exists \(\tilde{A}_m = A(z_m,r_m,2 r_m)\) such that the image under \(\phi ^{-1}\) of any crossing of \(A(z_0,C^{m-1} r, C^m r)\) is a crossing of \(\tilde{A}_m\).

By Lemmas B.1 and B.2 in Appendix B and the results of [7] (in particular, Lemma 5.7) applied to crossings of \(\tilde{A}_m\), it follows that for each \(\varepsilon >0\) there is n such that \(\mathbb {P}(\text {at least } n \text { disjoint segments of } \mathcal {T}\text { cross } \tilde{A}_m) < \varepsilon \). Thus (7) holds for this n with the constants \(K = \varepsilon ^{-1}\) and \(\Delta = \log \frac{1}{\varepsilon }\). Here the constant \(\Delta \) tends to \(\infty \) as \(n\rightarrow \infty \) (i.e. as \(\varepsilon \) tends to zero), and the estimates are uniform over all \(\mathbb {P}\in \Sigma _\mathbb {D}\) and all annuli \(A(z_0,r,R)\) with \(R>r\). \(\quad \square \)
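The constants \(K=\varepsilon ^{-1}\) and \(\Delta =\log \frac{1}{\varepsilon }\) come from the elementary inequality \(\varepsilon ^{\lfloor \log (R/r)\rfloor } \le \varepsilon ^{\log (R/r)-1} = \varepsilon ^{-1} (r/R)^{\log (1/\varepsilon )}\), valid for \(0<\varepsilon <1\) with log the natural logarithm. The short check below is only a sketch of this bookkeeping. It assumes (our reading, not stated explicitly in the proof) that the bound \(\varepsilon \) can be applied in each of the \(\lfloor \log (R/r)\rfloor \) nested annuli so that the probabilities multiply; the function names are hypothetical.

```python
import math


def iterated_bound(eps, r, R):
    # Assumed per-annulus bound eps, multiplied over floor(log(R / r)) nested annuli.
    return eps ** math.floor(math.log(R / r))


def power_law_bound(eps, r, R):
    # Right-hand side of (7) with K = 1 / eps and Delta = log(1 / eps).
    return (1.0 / eps) * (r / R) ** math.log(1.0 / eps)
```

For instance, for \(\varepsilon =0.1\) and \(R/r=1000\) the iterated bound is \(10^{-6}\), while the power-law bound is about \(1.2\cdot 10^{-6}\).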

3.2 The crossing property of trees

Let \(\gamma _k\), \(k=0,\ldots ,N-1\), be the branches of the collection \(\mathcal {T}= ( T_x)\) listed in some (random) order, and suppose that each random curve \(\gamma _k\) is parametrized by [0, 1]. The chosen permutation specifies the order in which the curves are explored. More specifically, set \(\underline{\gamma }(t) = \gamma _k(t-k)\) for \(t \in [k,k+1)\). We call \(\underline{\gamma }\) an explored collection of branches.
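The concatenation \(\underline{\gamma }\) can be written down directly. In the following minimal sketch, each curve is a hypothetical Python callable on [0, 1] returning a planar point, and the endpoint \(t=N\) is assigned to the last curve; the name `explored_collection` is ours.

```python
from typing import Callable, Sequence, Tuple

Point = Tuple[float, float]
Curve = Callable[[float], Point]


def explored_collection(curves: Sequence[Curve]) -> Callable[[float], Point]:
    """Concatenate gamma_0, ..., gamma_{N-1}, each parametrized by [0, 1], into
    underline-gamma on [0, N]: underline-gamma(t) = gamma_k(t - k) for t in [k, k + 1)."""
    n = len(curves)

    def gamma(t: float) -> Point:
        if not 0.0 <= t <= n:
            raise ValueError("t must lie in [0, N]")
        k = min(int(t), n - 1)  # t = N is assigned to the end of the last curve
        return curves[k](t - k)

    return gamma
```

Note that \(\underline{\gamma }\) is in general only piecewise continuous: for an exploration tree each \(\gamma _{k+1}\) starts again at \(v_{\mathrm{root}}\), so \(\underline{\gamma }\) jumps back to the root at integer times.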

For a given domain \(\Omega \) and for a given simple (random) curve \(\gamma \) on \(\Omega \), we set \(\Omega _\tau = \Omega {\setminus } \gamma [0,\tau ]\) for each (random) time \(\tau \). Similarly, for a given domain \(\Omega \) and for the given finite collection of curves \(\gamma _k\) on \(\Omega \), we set \(\underline{\Omega }_\tau = \Omega {\setminus } \underline{\gamma }[0,\tau ]\) for each (random) time \(\tau \).

We call \(\Omega _\tau \) or \(\underline{\Omega }_\tau \) the domain at time \(\tau \).

The following definition generalizes Definition 2.3 from [14]. This definition is needed in order to recognize those crossing events which have low probability.

Definition 3.2

For a given domain \((\Omega ,v_{\mathrm{root}})\) and for a given order of exploration (which defines \(\underline{\gamma }\)) of curves \(\gamma _x\), \(x \in V_{\mathrm{target}}\), where each curve \(\gamma _x\) is contained in \(\overline{\Omega }\), starting from \(v_{\mathrm{root}}\) and ending at a point x in the set \(V_{\mathrm{target}}\), define for any annulus \(A = A(z_0,r,R)\), for every (random) time \(\tau \in [0,N]\) and \(x \in V_{\mathrm{target}}\), \(A^{\mathrm{u},x}_\tau = \emptyset \) if \(\partial B(z_0,r) \cap \partial \underline{\Omega }_\tau = \emptyset \) and

otherwise. Define also

and set \(A^{\mathrm{u}}_\tau = \bigcap _{x \in V_{\mathrm{target}}}A^{\mathrm{u},x}_\tau \) and \(A^{\mathrm{f}}_\tau = \bigcup _{x \in V_{\mathrm{target}}} A^{\mathrm{f},x}_\tau \). We say that \(A^{\mathrm{u},x}_\tau \) is avoidable for \(\gamma _x\) and \(A^{\mathrm{u}}_\tau \) is avoidable for all (branches). We say that \(A^{\mathrm{f},x}_\tau \) is unavoidable for \(\gamma _x\) and \(A^{\mathrm{f}}_\tau \) is unavoidable for at least one (branch). Here and in what follows we only consider allowed lattice paths when we talk about connectedness.

Remark 3.3

Note that \(A^{\mathrm{u}}_\tau \cap A^{\mathrm{f}}_\tau = \emptyset \). This follows from \(A^{\mathrm{u},x}_\tau \cap A^{\mathrm{f},x}_\tau = \emptyset \) which holds by definition.

Recall that \(\partial \Omega \) is the boundary of \(\Omega \) and \(\partial _1 \Omega \) is the internal boundary of \(\Omega \), that is, the outermost of all (simple) lattice paths contained in \(\Omega \). A point on \(\partial _1 \Omega \) is a branching point of the tree \(\mathcal {T}\) if it is the last common point of two branches. In that case the edge of the primal lattice passing through the point has to be open in the random cluster configuration. See also Fig. 7.

The region between the large dashed circular arcs is a quarter of an annulus. A crossing of the annulus by the exploration path is drawn in green, and the boundaries of the domain \(\underline{\Omega }_\tau \) are drawn in black, oriented according to the orientation of the medial lattice. As indicated by the figure, if there are transversal open and dual-open paths in the annulus, then the crossing has to go near the left- and right-hand boundaries of the annular sector. Notice that at the branching point on the right-hand side the drawn branch jumps from one loop to another, whereas on the left-hand side it keeps following the loop explored at that time.

Next we write down an estimate in the form of a hypothesis analogous to the ones presented in Section 2 of [14]. The estimate is sufficient for the desired compactness properties of the exploration tree. In fact, we present two equivalent conditions here. As we will see later, conditions of this type behave well under conformal maps, again analogously to [14]. These conditions will be verified for the FK Ising exploration tree below.

Condition G1

Let \(\Sigma \) be as above. If there exists \(C >1\) such that for any \((\phi ,\mathbb {P}) \in \Sigma \), for any stopping time \(0 \le \tau \le N\) and for any annulus \(A=A(z_0,r,R)\) where \(0 < C \, r \le R\), it holds that

then the family \(\Sigma \) is said to satisfy a geometric joint unforced–forced crossing bound. Call the event above \(E^{\mathrm{u,f}}\).

See Fig. 8 for more information about different types of branching points.

Condition G2

The family \(\Sigma \) is said to satisfy a geometric joint unforced–forced crossing power-law bound if there exist \(K >0\) and \(\Delta >0\) such that for any \((\phi ,\mathbb {P}) \in \Sigma \), for any stopping time \(0 \le \tau \le N\) and for any annulus \(A=A(z_0,r,R)\) where \(0 < r \le R\),

Here LHS is the left-hand side of (10).

We will use Condition G1, or equivalently Condition G2, as a hypothesis in the theorems below. We start by verifying these conditions for the exploration tree of the critical FK Ising model.

Theorem 3.4

If \(\Sigma \) is the collection of pairs \((\phi ,\mathbb {P})\), where \(\phi \) satisfies the properties given in (6) and its domain of definition \(\Omega (\phi )\) is a discrete domain with some lattice mesh, and \(\mathbb {P}\) is the law of the critical FK Ising model exploration tree \(\mathcal {T}\) on \(U(\phi )\), then \(\Sigma \) satisfies Conditions G1 and G2.

Proof

The theorem can be proved in the same way as the corresponding result for a single interface in the FK Ising model, which was presented in Section 4.1 of [14]. However, the exploration tree requires stronger crossing estimates than a single interface. Fortunately, such estimates were established in Theorem 1.1 of [7]. The full argument goes as follows:

-

As in [14], we bound uniformly from above the probability that the interface crosses an annular sector. Such a bound can be achieved by giving a uniform lower bound for the probability of open or dual-open paths of edges of the random cluster model in the transversal direction. By symmetry we may suppose that we consider open crossings.

-

By arguments similar to those in Section 4.1 of [14], we can use the FKG inequality to reduce the problem to the question of an open crossing of a topological quadrilateral. See also Figures 12 and 13 in [14]. We can move the wired boundary where the crossing starts and introduce a free boundary along the two sides which are parallel to the possible open crossing. However, we cannot move the free boundary or replace it by a wired boundary if the boundary condition at the endpoint of the possible open crossing is indeed free. Thus we need to consider a general topological quadrilateral and not just a regular one (of an archetypal shape), which was enough in [14].

-

As indicated by the considerations in Fig. 9b, after the FKG transformation we end up with wired-free-free-free or wired-free-wired-free boundary conditions. (That is, we introduce new boundary along the boundaries of the annulus of the opposite “color” to the dashed crossing of the quadrilateral, in the sense that if we consider an open crossing the new boundary is free, and if the crossing is dual-open the new boundary is wired. Then we use duality as stated above.)

-

Then we use the crossing estimates of [7] to deduce a uniform lower bound. By Theorem 1.1 of [7], the lower bound (as well as the upper bound) is uniform and depends only on the extremal length of the topological quadrilateral.

The upper bound for the existence of a crossing can be improved to the form \(C \left( \frac{r}{R} \right) ^\alpha \) using an argument similar to that of Proposition 2.6 of [14], namely by considering a family of disjoint concentric annuli in each of which an upper bound of the form \(1-\varepsilon \) holds. \(\quad \square \)
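The passage from a per-annulus bound \(1-\varepsilon \) to a power law is elementary bookkeeping, recorded here as a sketch. We assume (our reading of the argument, not a statement quoted from [14]) that \(A(z_0,r,R)\) contains the \(\lfloor \log _\rho (R/r) \rfloor \) disjoint concentric annuli \(A(z_0,\rho ^{k-1}r,\rho ^k r)\) for some fixed ratio \(\rho >1\), and that the bound \(1-\varepsilon \) can be applied successively in each of them, so that the probabilities multiply. Using \(\lfloor y \rfloor \ge y-1\) and \(1-\varepsilon <1\),

$$\begin{aligned} (1-\varepsilon )^{\lfloor \log _\rho (R/r) \rfloor } \le (1-\varepsilon )^{\log _\rho (R/r) - 1} = \frac{1}{1-\varepsilon } \exp \left( - \frac{\log \frac{1}{1-\varepsilon }}{\log \rho } \, \log \frac{R}{r} \right) = \frac{1}{1-\varepsilon } \left( \frac{r}{R} \right) ^\alpha , \qquad \alpha = \frac{\log \frac{1}{1-\varepsilon }}{\log \rho } , \end{aligned}$$

so under these assumptions the constant C in the statement can be taken to be \((1-\varepsilon )^{-1}\).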

As shown in [14, Proposition 2.6], bounds of this type behave well under conformal maps: we have uniform control on how the constants in Conditions G1 and G2 change if we transform the random objects conformally from one domain to another.

Recall that, given a collection \(\Sigma \) of pairs \((\phi ,\mathbb {P})\), we define the collection \(\Sigma _\mathbb {D}= \{\phi \mathbb {P}\,:\, (\phi ,\mathbb {P})\in \Sigma \}\), where \(\phi \mathbb {P}\) is the pushforward measure defined by \((\phi \mathbb {P}) (E) = \mathbb {P}(\phi ^{-1}(E))\).

Theorem 3.5

If \(\Sigma \) is as in Theorem 3.4, then \(\Sigma _\mathbb {D}\) satisfies Conditions G1 and G2.

3.3 Uniform approximation by finite subtrees

3.3.1 Topology on trees.

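Let \(\mathrm {d}_{\mathrm{curve}}\) be the curve distance (defined as the infimum, over all reparametrizations, of the supremum norm of the difference). We define the space of trees as the space of closed subsets of the space of curves and endow this space with the Hausdorff metric. More explicitly, if \((T_x)_{x \in S}\) and \((\hat{T}_{\hat{x}})_{\hat{x} \in \hat{S}}\) are trees, then the distance between them is given by

$$\begin{aligned} \max \Big \{ \sup _{x \in S} \inf _{\hat{x} \in \hat{S}} \mathrm {d}_{\mathrm{curve}}(T_x,\hat{T}_{\hat{x}}) ,\; \sup _{\hat{x} \in \hat{S}} \inf _{x \in S} \mathrm {d}_{\mathrm{curve}}(T_x,\hat{T}_{\hat{x}}) \Big \} . \end{aligned}$$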
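For finite collections of polygonal curves this metric can be approximated numerically. The curve distance \(\mathrm {d}_{\mathrm{curve}}\) is the Fréchet distance, and the discrete Fréchet distance of Eiter and Mannila (a swapped-in standard technique, not something used in the paper) is an upper bound for it on polylines. The sketch below assumes trees are given as non-empty lists of polylines; the function names are hypothetical.

```python
import math


def discrete_frechet(p, q):
    """Discrete Frechet distance (Eiter-Mannila) between polylines p and q,
    given as lists of (x, y) vertices; an upper bound for d_curve."""
    n, m = len(p), len(q)
    ca = [[0.0] * m for _ in range(n)]
    for i in range(n):
        for j in range(m):
            d = math.dist(p[i], q[j])
            if i == 0 and j == 0:
                ca[i][j] = d
            elif i == 0:
                ca[i][j] = max(ca[0][j - 1], d)
            elif j == 0:
                ca[i][j] = max(ca[i - 1][0], d)
            else:
                ca[i][j] = max(min(ca[i - 1][j], ca[i - 1][j - 1], ca[i][j - 1]), d)
    return ca[n - 1][m - 1]


def tree_distance(tree_a, tree_b, curve_dist=discrete_frechet):
    """Hausdorff distance between two finite, non-empty collections of curves."""
    a_to_b = max(min(curve_dist(s, t) for t in tree_b) for s in tree_a)
    b_to_a = max(min(curve_dist(s, t) for s in tree_a) for t in tree_b)
    return max(a_to_b, b_to_a)
```

Both one-sided terms are needed: adding a far-away branch to a tree leaves the first term unchanged but increases the second.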

3.3.2 Uniform approximation by finite subtrees.

Let us divide the boundary of the unit disc into a finite number of connected arcs \(I_k\), \(k=1,\ldots ,N\), which are disjoint, except possibly at their end points. Let \(x^\pm _k\) be the end points of \(\overline{I_k}\). Suppose that \(-1 = \phi (v_{\mathrm{root}})\) is an end point. Naturally it is then an end point of two arcs.

Let \(\mathcal {I} = \{ I_k \,:\, k=1,\ldots ,N \}\) and denote the maximum of the diameters of \(I_k\) by \(m(\mathcal {I})\) and \(\{ x^\pm _k \,:\, k=1,\ldots ,N\}\) by \(\hat{\mathcal {S}}^\mathbb {D}\). Consider now the random tree \(\mathcal {T}_\delta = (T_{v_{\mathrm{root}},x})_x\) whose law is given by \(\mathbb {P}_\delta \). The finite subtree \(\mathcal {T}_\delta (\mathcal {I})\) is defined by the following steps:

-

first take a discrete approximation \(\hat{\mathcal {S}}_\delta \) of the set of points \(\phi ^{-1}(\hat{\mathcal {S}}^\mathbb {D})\) on the vertex set of \(\partial _1 \Omega _\delta \).

-

then consider the finite subtree \(\hat{\mathcal {T}}_\delta = (T_{v_{\mathrm{root}},x})_{x \in \hat{\mathcal {S}}_\delta }\)

-

finally set \(\mathcal {S}_\delta \) to be the union of \(\hat{\mathcal {S}}_\delta \) and all the branching points (in the sense of the definition in Sect. 3.2) of \(\hat{\mathcal {T}}_\delta \), and denote \((T_{v_{\mathrm{root}},x})_{x \in \mathcal {S}_\delta }\) by \(\mathcal {T}_\delta (\mathcal {I})\). Here, for a branching point x, \(T_{v_{\mathrm{root}},x}\) is defined as the subpath of a branch of \(\hat{\mathcal {T}}_\delta \) through x that starts from \(v_{\mathrm{root}}\) and terminates at x.

In other words, we take the subtree corresponding to \(\hat{\mathcal {S}}_\delta \) and augment it by adding all its branching points, together with the branches ending at those branching points. We call \(\mathcal {T}_\delta (\mathcal {I})\) the finite subtree corresponding to \(\mathcal {I}\); the name reflects the fact that it is finite in a uniform way over the family of probability laws and domains of definition.
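The augmentation step can be illustrated on a discrete tree. In this minimal sketch, each branch is a hypothetical tuple of vertex labels from the root to its target, and we assume (as for the exploration tree) that two branches coincide up to their last common point and do not meet again afterwards, so that the last common point is the end of their common prefix.

```python
from itertools import combinations


def common_prefix_length(a, b):
    """Number of initial vertices shared by two branches."""
    n = 0
    for u, v in zip(a, b):
        if u != v:
            break
        n += 1
    return n


def finite_subtree(branches, targets):
    """Subtree spanned by `targets`, augmented with its branching points.

    branches: dict mapping a target vertex to the tuple of vertices of its
    branch, from the root to the target. Returns a dict from each target or
    branching point to the corresponding (sub)branch.
    """
    subtree = {x: branches[x] for x in targets}
    for x, y in combinations(targets, 2):
        k = common_prefix_length(branches[x], branches[y])
        if k == 0:
            continue  # no common point; impossible if all branches start at the root
        b = branches[x][k - 1]  # last common point of the two branches
        subtree.setdefault(b, branches[x][:k])
    return subtree
```

For example, with branches `("r","p","q","a")`, `("r","p","q","b")` and `("r","p","c")`, the augmented subtree contains the three targets together with the branching points `"q"` and `"p"`.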

Denote the image of \(\mathcal {T}_\delta (\mathcal {I})\) under \(\phi \) by \(\mathcal {T}^\mathbb {D}_\delta (\mathcal {I})\).

Theorem 3.6

For each \(R>0\)

as \(m(\mathcal {I}) \rightarrow 0\) where the supremum is taken over \(\delta >0\).

Remark 3.7

In fact, the supremum in the claim can be taken over all shapes \(\Omega \), since the probability bounds on which the proof is based hold for any shape.

The events which are shown to have low probability in the proof of Theorem 3.6. The two thick arrows form the initial segment of the exploration, which visits the boundary arc \(x_- x_+\) for the first time at \(x_0\) and then exits to distance R / 2. The thin arrows indicate the events whose probabilities are estimated.

Proof

Let \(\varepsilon >0\) and suppose that K and \(\alpha \) are positive numbers such that \(M_r \le K r^{-\frac{1}{\alpha }}\) for all \(r>0\) with probability greater than \(1-\varepsilon \) for any \(\mathbb {P}_\delta \). Such constants exist by Theorem 3.1.

Let \(x \in V_{\mathrm{target}}\). Take k such that \(x \in I_k\). Set \(x_\pm = x^\pm _k\). We will show that with high probability \(T_{v_{\mathrm{root}},x}\) is close to either \(T_{v_{\mathrm{root}},x_-}\), \(T_{v_{\mathrm{root}},x_+}\) or some \(T_{v_{\mathrm{root}},\tilde{x}}\) where \(\tilde{x}\) is a branch point (in the sense of the definition in Sect. 3.2) on the arc \(x_- x_+\). We will show that with high probability this happens for all x simultaneously.

Fix \(R>0\). We will study under which circumstances

is larger than R or smaller than or equal to R. We can assume that \(m(\mathcal {I})\) is less than R / 10, say. Then the diameter of \(x_-x_+\) is at most R / 10.

First we notice that if \(x_0\) is the first branching point on \(I_k\), then the branches to \(x,x_-\) and \(x_+\) all coincide until they reach \(x_0\).

We will consider two complementary cases: either \(x_0 \in x_-x\) or \(x_0 \in x x_+\).

If \(x_0 \in x_-x\), the branches to x and \(x_+\) continue to coincide after \(x_0\), at least for some time. Suppose that \(\min \{\mathrm {d}(T_{v_{\mathrm{root}},x}, T_{v_{\mathrm{root}},x_0}),\mathrm {d}(T_{v_{\mathrm{root}},x}, T_{v_{\mathrm{root}},x_+})\}>R\). Then in particular the branch that we follow to continue towards \(x_-\) and \(x_0\) has to reach distance 3R / 4 from \(x_-x_+\) without making a branch point (i.e. a visit to the boundary) in \(x v_{\mathrm{root}}\). It therefore has to contain a subpath which has diameter at least R / 2, starts at the boundary and is otherwise disjoint from the boundary. Let \(\mathcal {J}\) be the collection of subpaths with this property. Then by the bound on \(M_r\) we get \(\#\mathcal {J} \le K (R/2)^{-\frac{1}{\alpha }}\) (see Lemma 2.2 in [1] for the relationship between the maximal number of disjoint segments and the minimal number of segments needed to cover a path).

If we happen to reach distance 3R / 4 from \(x_-x_+\) without making a branch point, then in order for \(\mathrm {d}(T_{v_{\mathrm{root}},x}, T_{v_{\mathrm{root}},x_+})>R\) to hold, the path needs to come close to \(x_- x_+\) again and branch on \(x x_+\), which it will certainly do. But while doing so, one of the following has to hold (Fig. 10):

-

the returning path doesn’t branch on \(x_+ v_{\mathrm{root}}\) before branching in \(x x_+\) and then the path towards \(x_+\) reaches distance at least 3R / 4 from \(x_-x_+\) before reaching \(x_+\)

-

or the returning path branches in \(x x_+\) and then the path towards x reaches distance 3R / 4 from \(x_-x_+\) before reaching x.

By the conditions presented in the previous section, the probability of each of these events can be made less than \(\varepsilon \) by choosing the arc \(x_-x_+\) short enough.

Now there are \(\#\mathcal {J}\) subpaths where this can happen. Hence the total probability is at most

which can be made arbitrarily small. This argument can be made more formal by introducing stopping times \(\tau _n\) such that \(\tau _0=0\) and, for \(n \ge 1\), \(\tau _n\) is the smallest \(t> \tau _{n-1}\) for which there exists \(s \in [\tau _{n-1},t)\) such that the exploration at time s is on the boundary and the exploration on (s, t) is disjoint from the boundary and has diameter at least R / 2. If \(E_n\) denotes the event above (described in the two bullets) for the nth such subpath, then the inequality (15) follows simply from a union bound for \(\bigcup _n E_n\).
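The stopping times \(\tau _n\) can be computed for a sampled exploration path. In the following minimal sketch, the path is a list of planar points with a hypothetical boolean list `on_boundary`. Only the most recent boundary visit s before t can satisfy the condition on (s, t), so it suffices to track that visit. For a continuous path, the segments on (s, t) and on [s, t] have the same diameter, so the sketch uses the closed segment.

```python
import math


def excursion_stopping_times(path, on_boundary, R):
    """Return [tau_0 = 0, tau_1, ...] for a sampled exploration path.

    tau_n is the first index t > tau_{n-1} such that, for the last boundary
    visit s in [tau_{n-1}, t), the segment path[s..t] has diameter >= R / 2
    (it avoids the boundary strictly between s and t by the choice of s).
    """
    taus = [0]
    s = None    # most recent boundary visit at or after taus[-1]
    diam = 0.0  # diameter of path[s..t-1]
    for t in range(len(path)):
        if t > taus[-1] and s is not None:
            diam = max(diam, max(math.dist(path[t], path[u]) for u in range(s, t)))
            if diam >= R / 2:
                taus.append(t)
                s, diam = (t, 0.0) if on_boundary[t] else (None, 0.0)
                continue
        if on_boundary[t]:
            s, diam = t, 0.0
    return taus
```

The number of long excursions used in the union bound is then `len(taus) - 1`.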

The other case \(x_0 \in x x_+\) is similar. \(\quad \square \)

3.4 Precompactness of the loops and recovering them from the tree

3.4.1 Topology for loops.

Let \(\mathbb {T}\) be the unit circle. We consider a loop to be a continuous function on \(\mathbb {T}\), considered modulo orientation-preserving reparametrizations of \(\mathbb {T}\). The metric on loops is defined in a similar fashion as for curves:

$$\begin{aligned} \mathrm {d}_{\mathrm{loop}}(\gamma ,\hat{\gamma }) = \inf \Big \{ \sup _{t \in \mathbb {T}} |f(t) - \hat{f}(t)| \,:\, f\in \gamma ,\ \hat{f} \in \hat{\gamma } \Big \} \end{aligned}$$

where we use the notation \(f\in \gamma \) to denote that f is a parametrization of the (unparametrized) loop \(\gamma \). The space of loops endowed with \(\mathrm {d}_{\mathrm{loop}}\) is a complete and separable metric space.
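The role of the orientation in \(\mathrm {d}_{\mathrm{loop}}\) can be seen in a crude numerical proxy. The following sketch (hypothetical names, not a method from the paper) takes two polygonal loops sampled at the same number of points, listed in their orientation, and optimizes only over cyclic shifts of the starting point. This is a restricted family of orientation-preserving reparametrizations, so the result is an upper bound for \(\mathrm {d}_{\mathrm{loop}}\) between the polygonal loops.

```python
import math


def loop_distance_proxy(f, g):
    """Upper bound for d_loop between two polygonal loops with the same number of samples.

    f, g: lists of (x, y) points listed in the orientation of each loop. Only
    cyclic shifts of the starting point are optimized over.
    """
    n = len(f)
    if len(g) != n:
        raise ValueError("loops must be sampled at the same number of points")
    return min(
        max(math.dist(f[i], g[(i + k) % n]) for i in range(n))
        for k in range(n)
    )
```

Shifting the starting point of a unit square gives distance 0, whereas reversing its orientation gives the value 1.0 in this proxy, reflecting that \(\mathrm {d}_{\mathrm{loop}}\) distinguishes a loop from its reversal.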

A loop collection \(\Theta \) is a closed subset of the space of loops. On the space of loop collections we use the Hausdorff distance. Denote that metric by \(\mathrm {d}_{\mathrm{LE}}\), where LE stands for loop ensemble.

The random loop configuration we are considering is the collection of FK Ising loops which we orient in clockwise direction.

3.4.2 Precompactness of the loops.

Theorem 3.8

The family of probability laws of \(\Theta \) is tight in the metric space of loop collections.

Proof

The claim follows directly from Lemma 2.4 and the tightness of the trees. Namely, observe that there exists a constant \(\alpha >0\) and a tight random variable \(K>0\) such that the minimum number of segments needed to cover any branch in the tree with segments of diameter at most R is bounded by \(K \,R^{-\alpha }\). By the bijection between loop collections and trees, each loop is a subpath of a branch of the tree and thus we find that the minimum number of segments needed to cover any loop in the loop collection with segments of diameter at most R is bounded by \(K \,R^{-\alpha }\). \(\quad \square \)

3.5 Uniform approximation of loops by the finite subtrees

By Lemma 2.4 it is possible to reconstruct the loops from the full tree. And by Theorem 3.6 we can approximate the full tree by finite subtrees. How do we recover loops approximately from the finite subtrees? Can we do it in a uniform manner?

Consider a loop \(\gamma \in \Theta \). We divide it into arcs which are excursions from the boundary to the boundary, otherwise disjoint from the boundary. Since the loop is oriented clockwise, exactly one of the arcs goes away from \(v_{\mathrm{root}}\) in the counterclockwise orientation of the boundary, and the rest of them go towards \(v_{\mathrm{root}}\). The former is the top arc \(\gamma ^\text {T}\) of the loop \(\gamma \) and the latter are the bottom arcs. See Fig. 6. The concatenation of the bottom arcs is denoted by \(\gamma ^\text {B}\).
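The splitting into a top arc and bottom arcs is easy to express once boundary points are encoded by a coordinate. In the following sketch, each boundary visit of the loop is recorded by its counterclockwise position measured from \(v_{\mathrm{root}}\) (an encoding we assume for illustration), and the visits are listed cyclically in the order the loop traverses them. An arc then "goes away from the root" exactly when the position increases. Since all other arcs decrease, the top arc runs from the smallest position to the largest, that is, from \(x_\text {L}(\gamma )\) to \(x_\text {R}(\gamma )\) in the notation of Theorem 3.10.

```python
def split_top_and_bottom(positions):
    """Split a loop's boundary excursions into its top arc and bottom arcs.

    positions: counterclockwise boundary positions (measured from the root) of
    the loop's boundary visits, in traversal order, read cyclically.
    Returns (top_arc, bottom_arcs), each arc a pair (start, end).
    """
    m = len(positions)
    arcs = [(positions[i], positions[(i + 1) % m]) for i in range(m)]
    up = [a for a in arcs if a[1] > a[0]]
    if len(up) != 1:
        raise ValueError("expected exactly one arc moving away from the root")
    top = up[0]
    k = arcs.index(top)
    bottom = arcs[k + 1:] + arcs[:k]  # bottom arcs in the order they follow the top arc
    return top, bottom
```

For the visits at positions 1.0, 2.0, 1.5, 1.2 the top arc is (1.0, 2.0) and the bottom arcs are (2.0, 1.5), (1.5, 1.2), (1.2, 1.0), independently of which visit the list starts from.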

Suppose that the loop does not intersect a fixed neighborhood of \(v_{\mathrm{root}}^\mathbb {D}\). Then the diameter of the entire loop is bounded by a uniform constant (depending on the chosen neighborhood) times the diameter of the top arc, and vice versa. Hence, by Theorem 3.6, if the loop has diameter greater than a fixed number, then with high probability its top arc is traced by the finite subtree once \(m(\mathcal {I})\) is small enough.

The bottom arcs are also traced, from right to left, until the last branch point to the points defining the finite subtree. Again by Theorem 3.6, the part of the loop which remains undiscovered has small diameter. Hence if we define the approximate loop to have just a straight line segment in its place, the loop and its approximation are close in the metric \(\mathrm {d}_{\mathrm{loop}}\).

So we define the finite-subtree approximation \(\Theta _\delta \) of the random loop collection \(\Theta \) to be the collection of all those discovered top arcs concatenated with their discovered bottom arcs and the linear segment needed to close the loop. By Theorem 3.6, we have the following result.

Theorem 3.9

For each \(R>0\)

as \(m(\mathcal {I}) \rightarrow 0\).

3.6 Some a priori properties of the loop ensembles

The following theorem gathers some technical estimates needed below.

Theorem 3.10

The family of critical FK Ising loop ensemble measures \((\mathbb {P}_\delta )_{\delta >0}\) satisfies the following properties:

-

(a)

(finite number of big loops) For each \(R>0\),

$$\begin{aligned} \sup _{\delta >0} \mathbb {P}_\delta \left( \# \left\{ \gamma \,:\, {{\,\mathrm{diam}\,}}(\gamma ) \ge R \right\} \; \ge N \right) = o(1) \end{aligned} \tag{18}$$
as \(N \rightarrow \infty \).

-

(b)

(small loops and branching points are dense on the boundary) There exists \(\Delta >0\) such that for each \(x \in \partial \mathbb {D}\) and \(R>0\)

$$\begin{aligned} \sup _{\delta >0} \mathbb {P}_\delta \left( \sup \left\{ {{\,\mathrm{diam}\,}}(\gamma ) \,:\, \gamma \cap B(x,r) \ne \emptyset \right\} \; \ge R \right) = \mathcal {O}\left( \left( \frac{r}{R} \right) ^\Delta \right) \end{aligned} \tag{19}$$
as \(r \rightarrow 0\). Consequently, for all \(c >1\)

$$\begin{aligned} \mathbb {P}_\delta \left( \inf \left\{ {{\,\mathrm{diam}\,}}(\gamma ) \,:\, \gamma \cap B(x,c \delta ) \ne \emptyset \right\} \; \ge R \right) = \mathcal {O}\left( \left( \frac{\delta }{R} \right) ^\Delta \right) \end{aligned} \tag{20}$$
for all \(\delta >0\) and \(R> c \delta \), and thus for any \(\beta \in (0,1)\), any \(r>0\) and any partition of the boundary of the domain into connected sets \(I_j\) of diameter at least r, it holds that

$$\begin{aligned} \mathbb {P}_\delta \left( \forall j, \; I_j \text { is touched by a loop with diameter at most } \delta ^\beta \right) = 1 - \mathcal {O}\left( \delta ^{\Delta (1-\beta )} \right) \end{aligned} \tag{21}$$
as \(\delta \rightarrow 0\).

-

(c)

(big loops have positive support on the boundary and they touch the boundary infinitely often around the extremal points) For any \(R>0\)

$$\begin{aligned} \sup _{\delta >0} \mathbb {P}_\delta \left( \exists \gamma \text { s.t. } {{\,\mathrm{diam}\,}}(\gamma ) \ge R \text { and } |x_\text {L}(\gamma ) - x_\text {R}(\gamma )| < r \right) = o(1) \end{aligned} \tag{22}$$
as \(r \rightarrow 0\). Here \(x_\text {L}(\gamma )\) and \(x_\text {R}(\gamma )\) are the leftmost and the rightmost points of \(\gamma \) along the boundary of the domain (as seen from the root). We call the boundary arc \(x_\text {L}(\gamma )x_\text {R}(\gamma )\) the support of \(\gamma \) on the boundary. Furthermore, for any constant \(0<\eta <1\) there exist a sequence \(\delta _0(m)>0\) and a constant \(\lambda >0\) such that, for \(x(\gamma )=x_\text {L}(\gamma )\) as well as for \(x(\gamma )=x_\text {R}(\gamma )\),

$$\begin{aligned} \sup _{\delta \in (0, \delta _0(m))} \mathbb {P}_\delta \left( \begin{gathered} \exists \gamma \text { s.t. } {{\,\mathrm{diam}\,}}(\gamma ) \ge R, |x_\text {L}(\gamma ) - x_\text {R}(\gamma )| \ge r \,\text {and}\; \\ \# \{ n=1,2,\ldots ,m \,:\, \gamma \text { touches the boundary} \\ \text {in } A(x(\gamma ),\eta ^n \, r, \eta ^{n-1} \, r) \} \le \lambda m \end{gathered} \right) = o(1) \end{aligned} \tag{23}$$
as \(m \rightarrow \infty \).

-

(d)

(big loops are not pinched)

$$\begin{aligned} \sup _{\delta >0} \mathbb {P}_\delta \left( \begin{gathered} \exists \gamma \text { s.t. } \gamma ^\text {T}= \gamma _1^\text {T}\sqcup \gamma _2^\text {T}, \, \gamma _1^\text {T}\cap \gamma _2^\text {T}= \{x\}\\ {{\,\mathrm{diam}\,}}(\gamma _k^\text {T}) \ge R \text { for } k=1,2 \text { and } {{\,\mathrm{dist}\,}}(x, \partial \mathbb {D}) < r \\ \end{gathered} \right) = o(1) \end{aligned} \tag{24}$$
and

$$\begin{aligned} \sup _{\delta >0} \mathbb {P}_\delta \left( \begin{gathered} \exists \gamma \text { and } \gamma ^{\prime } \subset \gamma ^\text {B}\text { s.t. } \gamma ^{\prime } = \gamma _1^{\prime } \sqcup \gamma _2^{\prime }, \, \gamma _1^{\prime } \cap \gamma _2^{\prime } = \{x\}\\ \gamma ^{\prime } \cap \partial _1 \Omega =\emptyset , \; {{\,\mathrm{diam}\,}}(\gamma _k^{\prime }) \ge R \text { for } k=1,2 \text { and } {{\,\mathrm{dist}\,}}(x, \partial \mathbb {D}) < r \\ \end{gathered} \right) = o(1) \end{aligned} \tag{25}$$
as \(r \rightarrow 0\).

-

(e)

(big loops are distinct)

$$\begin{aligned} \sup _{\delta >0} \mathbb {P}_\delta \left( \exists \gamma \ne \tilde{\gamma } \text { s.t. } {{\,\mathrm{diam}\,}}(\gamma ),{{\,\mathrm{diam}\,}}(\tilde{\gamma }) \ge R \text { and } \mathrm {d}_{\mathrm{loop}}(\gamma ,\tilde{\gamma }) < r \right) = o(1) \end{aligned} \tag{26}$$
as \(r \rightarrow 0\).

Proof

The bound (18) follows from Theorem 3.6. The bound (19) follows directly from the crossing bound for annuli centered at x, and the bound (20) merely rephrases the previous bound, specialized to \(r= c\delta \). For the bound (21), take \(x_j\) to be any point on \(I_j\), say, not too close to the endpoints of \(I_j\), use the bound (20) with \(x=x_j\) and \(R=\delta ^\beta \), and sum over j to get an upper bound for the union of events of the type in (20); the required bound is obtained by passing to the complement.
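The counting behind (21) can be spelled out as follows. This is a sketch under two assumptions of ours that the proof leaves implicit: the implicit constant in (20) is uniform in x, and the event in (21) can fail at \(I_j\) only if the event of (20) occurs at \(x=x_j\) with \(R=\delta ^\beta \); call the latter event \(E_j\). Since the sets \(I_j\) partition the unit circle and each has length at least its diameter r, there are at most \(2\pi /r\) of them. For \(\delta \) small enough that \(\delta ^\beta > c\delta \),

$$\begin{aligned} 1-\mathbb {P}_\delta \left( \forall j, \; I_j \text { is touched by a loop with diameter at most } \delta ^\beta \right) \le \sum _j \mathbb {P}_\delta (E_j) \le \frac{2\pi }{r} \, \mathcal {O}\left( \left( \frac{\delta }{\delta ^\beta } \right) ^\Delta \right) = \mathcal {O}\left( \delta ^{\Delta (1-\beta )} \right) , \end{aligned}$$

where r is kept fixed as \(\delta \rightarrow 0\).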

The bound (23) is shown to hold by considering the domain \(U {\setminus } \underline{\gamma }[0,\tau ]\), where \(\tau \) is a stopping time at which the top arc of a big loop \(\gamma \) reaches its endpoint \(x(\gamma )\), and by using the crossing bound for annuli centered at \(x(\gamma )\).

The proofs of (22), (24) and (25) are similar. The exploration process discovers, as seen from the root, the top arc of any loop before its bottom arcs. As noted before, the diameter of the top arc is comparable to the diameter of the whole loop. Hence it is enough to work with loops whose top arc has diameter greater than R.

For (22), stop the process when the current arc exits the ball of radius R / 4 around the starting point \(z_0\) of the arc. By the crossing estimate, with high probability the rest of the exploration process makes a branching point in the annulus \(A(z_0,r,R/4)\) before finishing the loop at \(z_0\) (namely, the estimate (11) applied in \(A(z_0,r,R/4)\) implies that the path touches both sides of the slit domain boundary inside the annulus before reaching distance r from \(z_0\)). This implies that the event (22) does not occur for that loop. These segments of diameter R / 4 in the exploration process are necessarily disjoint (they start on the boundary and remain disjoint from the boundary afterwards), and hence their number is a tight random variable by Theorem 3.1. The bound (22) follows by a simple union bound.
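The "simple union bound" used here (and again for (24)) can be made explicit. As a sketch, let \(N_\delta \) denote the number of disjoint segments of diameter R / 4 described above, and let \(F_k\) be the event that the kth such segment exists and the corresponding loop closes up without a branching point in \(A(z_0,r,R/4)\). Assume, as the argument suggests, that \(\mathbb {P}_\delta (F_k) \le \eta (r)\) uniformly in k and \(\delta \), with \(\eta (r) \rightarrow 0\) as \(r \rightarrow 0\). Then for every n,

$$\begin{aligned} \mathbb {P}_\delta \Big ( \bigcup _{k} F_k \Big ) \le \mathbb {P}_\delta (N_\delta > n) + \sum _{k=1}^{n} \mathbb {P}_\delta (F_k) \le \sup _{\delta >0} \mathbb {P}_\delta (N_\delta > n) + n\, \eta (r) . \end{aligned}$$

By the tightness of \(N_\delta \) (Theorem 3.1), the first term is small for n large, uniformly in \(\delta \); for that fixed n, the second term tends to zero as \(r \rightarrow 0\).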

For (24), stop the process when the diameter of the current arc is at least R and its tip lies within distance r from the boundary, and let \(z_0\) be the boundary point closest to the tip. These arcs of diameter R are necessarily disjoint: they start from the boundary and remain disjoint from the boundary afterwards. With high probability, the rest of the exploration makes a branching point before it exits the ball of radius R centered at \(z_0\). The bound (24) follows by a simple union bound.

The proof of (25) is very similar and we omit the details.

For the bound (26), let \(\gamma _1\) and \(\gamma _2\) be two distinct loops of diameter larger than R. Let S be the set of points of \(\gamma _2\), let \(\gamma _1^\text {T}\) be the top arc of \(\gamma _1\) and let \(\gamma _1^\text {B}\) be the rest of \(\gamma _1\). Without loss of generality, we can assume that \(\gamma _1^\text {T}\) separates S from \(\gamma _1^\text {B}\) in \(\mathbb {D}\); otherwise we exchange the roles of \(\gamma _1\) and \(\gamma _2\). Suppose that \(\mathrm {d}_{\mathrm{loop}}(\gamma _1,\gamma _2) < r\). We claim that then the Hausdorff distance between \(\gamma _1^\text {T}\) and \(\gamma _1^\text {B}\) is less than 2r. First, the distance from any point of \(\gamma _1^\text {B}\) to \(\gamma _1^\text {T}\) is less than r. This follows when we notice that any line segment connecting \(\gamma _1^\text {B}\) to S intersects \(\gamma _1^\text {T}\). For topological reasons, since \(\gamma _1\) and \(\gamma _2\) are oriented and \(\mathrm {d}_{\mathrm{loop}}\) uses this orientation, it also holds that the distance from any point of \(\gamma _1^\text {T}\) to \(\gamma _1^\text {B}\) is less than 2r. Namely, any point of \(\gamma _1^\text {T}\) has a point of \(\gamma _2\) in its r-neighborhood, and that point has distance less than r to \(\gamma _1^\text {B}\). We can now use the exploration process to explore \(\gamma _1^\text {T}\). Take a point \(z_0 \in \gamma _1^\text {T}\) that has distance at least \(\sqrt{rR}\) to the boundary. Such a point exists by (22) and (25). Now, by the crossing property applied in \(A(z_0,r,\sqrt{rR})\) at the stopping time when the exploration has fully explored \(\gamma _1^\text {T}\), with high probability the exploration of \(\gamma _1^\text {B}\) does not come close to \(z_0\). \(\quad \square \)

3.6.1 Some consequences.

In the discrete setting we are given a tree–loop ensemble pair. The tree and the loop ensemble are in one-to-one correspondence, as explained earlier. Recall that, given the loop ensemble, the tree is recovered by the exploration process, which follows the loops in the counterclockwise direction and jumps to the next loop at boundary points where the loop being followed turns away from the target point. Recall also that the loops are recovered from the tree by noticing that the leftmost point of a loop corresponds to a branching point of the tree and the rest of the loop is the continuation of the branch to the point just right of that branching point.

Consider any random tree–loop ensemble pair which is a subsequential weak limit of the tree–loop ensemble pairs of the FK Ising model. Since the discrete loop collections have a finite number of big loops, with the uniform bound (18), the loop collection is almost surely at most countable also in the limit (use the Portmanteau theorem for the closed event that there are at most n loops of diameter strictly greater than R). By the properties of the loop ensemble given in Theorem 3.10, the limiting pair and the process of taking the limit have the following properties:

-

the loops are distinguishable in the sense that there is no sequence of pairs of distinct loops that would converge to the same loop.

-

Each loop consists of a single top arc which is disjoint from the boundary except at the endpoints and a non-empty collection of bottom arcs. In particular, the endpoints of the top arc (the leftmost and rightmost points of the loop) are different.

From these properties we can prove the following result. The second assertion basically means that there is a way to reconstruct the loops from the tree also in the limit.

Theorem 3.11

Let \((\Theta ^\mathbb {D},\mathcal {T}^\mathbb {D})\) be the almost sure limit of \((\Theta _{\delta _n}^\mathbb {D},\mathcal {T}_{\delta _n}^\mathbb {D})\) as \(n \rightarrow \infty \). Write \(\Theta ^\mathbb {D}=(\theta _j)_{j \in J}\) and \(\Theta _{\delta _n}^\mathbb {D}= (\theta _{n,j})_{j \in J}\) (with possible repetitions) such that almost surely for all \(j \in J\), \(\theta _{n,j}\) converges to \(\theta _j\) as \(n \rightarrow \infty \), and then set \(x_{n,j}\) to be the target point of the branch of \(\mathcal {T}_{\delta _n}^\mathbb {D}\) that corresponds to \(\theta _{n,j}\) in the above bijection (described at the beginning of Sect. 3.6.1). Then

- Almost surely, all \(x_{n,j}\) converge to some points \(x_{j}\) as \(n\rightarrow \infty \), and all the branches \(T_{x_{n,j}^+}\) converge to some branches, denoted by \(T_{x_{j}^+}\), as \(n\rightarrow \infty \). Furthermore, the points \(x_{j}\) are distinct and form a dense subset of \(\partial \mathbb {D}\), and \(\mathcal {T}\) is the closure of \((T_{x_{j}^+})_{j \in J}\).

- On the other hand, \((T_{x_{j}^+})_{j \in J}\) is characterized as the subset of \(\mathcal {T}\) consisting of all the branches of \(\mathcal {T}\) that have a doublepoint on the boundary. Furthermore, that doublepoint is unique, and it is the target point (that is, the endpoint) of the branch.

- Any loop \(\theta _j\) can be reconstructed from \((T_{x_{j}^+})_{j \in J}\) as follows. The leftmost point of \(\theta _j \cap \partial \mathbb {D}\) (the point closest to the root in the counterclockwise direction) is one of the doublepoints \(x^+_j\), and the loop \(\theta _j\) is the part of \(T_{x_{j}^+}\) between its first and last visits to \(x^+_j\).
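On a discretized branch, the reconstruction rule in the last item reduces to a slicing operation. The sketch below makes several assumptions. It assumes that a branch is given as a finite list of lattice points and that a "visit" is an exact coincidence with the double point. The function name `extract_loop` is hypothetical and is not part of the paper's construction.

```python
from typing import List, Sequence, Tuple

Point = Tuple[int, int]


def extract_loop(branch: Sequence[Point], x: Point) -> List[Point]:
    """Return the part of a discretized branch between its first and last
    visits to the boundary point x (both visits included).

    Hypothetical helper: a visit means exact equality with x.
    """
    visits = [k for k, p in enumerate(branch) if p == x]
    if len(visits) < 2:
        raise ValueError("x must be a double point of the branch")
    return list(branch[visits[0]:visits[-1] + 1])


# Toy branch whose endpoint (its target point) is a double point.
branch = [(0, 0), (1, 0), (1, 1), (0, 1), (1, 0)]
loop = extract_loop(branch, (1, 0))
print(loop)
```

In this toy example the double point is also the endpoint of the branch, as the characterization above requires. The last visit is therefore the final entry of the list.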

3.7 Precompactness of branches and description as Loewner evolutions

3.7.1 Precompactness of a single branch.

We now know that the sequence of exploration trees is tight in the space of curve collections, which is endowed with the topology of the Hausdorff distance on compact subsets of the space of curves. This allows us to extract from any subsequence a further convergent subsequence. However, it turns out that we need stronger tools to characterize the limit. Below we review the results of [14] that we will use.

The hypothesis of [14] is similar to the conditions in Sect. 3.2. It is shown in [14] that, once this hypothesis holds for a sequence of random curves, the sequence is tight in the topology of the space of curves. Furthermore, such a sequence is also tight in the topology of uniform convergence of the driving terms of Loewner evolutions. The correspondence between curves and Loewner evolutions is uniform enough that, if a sequence converges in both of these topologies, the two limits have to be the same.

The hypothesis of [14] for the FK Ising branch has already been established, since it can be seen as a special case of Theorem 3.4.

Theorem 3.12

(Kemppainen–Smirnov, [14]). Under the hypothesis of [14], a sequence of probability laws \(\mathbb {P}_n\) of random curves in \(\mathbb {H}\) has the following properties. For each \(\varepsilon >0\), there exists an event K such that \(\inf _n \mathbb {P}_n(K) \ge 1 - \varepsilon \), and the capacity-parametrized curves in \(K \cap \{\gamma \text { simple}\}\) satisfy three conditions: they form an equicontinuous family, their driving processes form an equicontinuous family, and \(|\gamma (t)| \rightarrow \infty \) as \(t \rightarrow \infty \) uniformly. Moreover, the driving processes on the event \(K \cap \{\gamma \text { simple}\}\) are \(\beta \)-Hölder continuous with a bounded Hölder constant for any \(\beta \in (0, \frac{1}{2})\).

In addition to [14], see also Section 6.3 in [13] for this type of argument in the case of site percolation.
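The Hölder statement in Theorem 3.12 can be illustrated numerically. This is not part of the argument in [14]. The sketch computes the largest Hölder quotient of a sampled driving function on a grid. Two test functions are assumptions made for illustration: a deterministic \(\sqrt{t}\), and a seeded Brownian path scaled as \(\sqrt{\kappa } B_t\) with \(\kappa =16/3\), which is the model SLE driving function. On a finite grid the quotient is always finite, so this only shows the quantity that the theorem bounds uniformly.

```python
import math
import random
from typing import Sequence


def holder_constant(times: Sequence[float], values: Sequence[float], beta: float) -> float:
    """Largest ratio |W(t) - W(s)| / |t - s|**beta over all pairs of samples."""
    best = 0.0
    for i in range(len(times)):
        for j in range(i + 1, len(times)):
            dt = abs(times[j] - times[i])
            if dt > 0:
                best = max(best, abs(values[j] - values[i]) / dt ** beta)
    return best


# Deterministic check: W(t) = sqrt(t) is 1/2-Hoelder on [0, 1] with constant 1.
ts = [k / 200 for k in range(201)]
c_sqrt = holder_constant(ts, [math.sqrt(t) for t in ts], beta=0.5)

# Seeded sample of sqrt(kappa) * B_t with kappa = 16/3 on the same grid.
kappa = 16 / 3
rng = random.Random(0)
w, path = 0.0, [0.0]
for _ in range(200):
    w += math.sqrt(kappa) * rng.gauss(0.0, math.sqrt(1 / 200))
    path.append(w)
c_bm = holder_constant(ts, path, beta=0.4)
print(c_sqrt, c_bm)
```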

3.7.2 Precompactness of finite subtrees.

For a fixed finite number of curves, it is straightforward to generalize Theorem 3.12. In fact, the conclusions of Theorem 3.12 hold for any of the finite subtrees considered in Sect. 3.5.

In the rest of the paper we use these tools to characterize the scaling limits of finite subtrees of the exploration tree. Once the uniqueness of the subsequential scaling limits of these objects is established, Theorem 1.1 follows from tightness and from the approximation result, Theorem 3.9.

4 Preholomorphic Martingale Observable

4.1 The setup for the observable

It is natural to generalize the setup of the previous section to domains of the type illustrated in Fig. 11.

Generalized setting for the chordal exploration tree. We add an external arc from b to a with the following interpretation. The observable contains an indicator factor for the curve starting from c and a complex factor depending on the winding of that curve. If that curve goes to b, we continue to follow it through the external arc to a and from there to d. On the other hand, if the curve starting from c ends directly at d, then the curve connecting a to b is counted as a loop, giving an additional weight \(\sqrt{2}\) to the configuration. The arc \(bc \subset V_{\partial ,1}\) is marked with \(*\)’s. Notice that in a general domain, different boundary arcs can share common parts longer than one lattice step, as near b. In fact, we need to consider domains in this generality if we wish to explore the interfaces starting at the marked points and to condition the corresponding observables on the information from the progressing exploration.

Henceforth we consider \(G^\spadesuit \) with four special boundary vertices a, b, c, d. The boundary edges at a and c point inwards and those at b and d point outwards, when considered as edges of the directed graph \(G^\spadesuit _\rightarrow \subset \mathbb {L}^\spadesuit _\rightarrow \). Now a and d play the roles of \(v_{\text {root}}\) and w, respectively, in the construction of the exploration tree of the previous section. The arcs ab and cd have white boundary (\(\mathbb {L}^\circ \) wired), and the arcs bc and da have black boundary (\(\mathbb {L}^\bullet \) wired).

To get a random cluster measure on \(G^\spadesuit \) that is consistent with the all-wired boundary conditions and the exploration process in Sect. 2, we have to count the wired arcs bc and da as belonging to the same cluster. This corresponds to the external arc configuration where a and b are connected by an arc and c and d are connected by an arc. We denote such an external arc pattern by \((a \smile b, c \smile d)\). Later we will denote internal arc patterns by \((a \frown b, c \frown d)\), etc.

In fact, we choose not to draw the external arc \(c \smile d\). The reason is that this arc is not used in the definition of the observable. Moreover, for every loop configuration, the weights obtained with and without \(c \smile d\) differ by the same factor \(\sqrt{2}\). Thus omitting the arc does not change the probability distribution.
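The claim that dropping \(c \smile d\) rescales every weight by the same factor can be checked by counting the closed loops formed by internal and external arcs on the four marked points. The sketch below does this for both internal patterns. It models only the arcs between a, b, c, d and ignores the interior loops, whose number is the same in both counts. The helper `count_loops` and the pattern labels are hypothetical.

```python
import math
from collections import defaultdict
from typing import Dict, List, Tuple

Arc = Tuple[str, str]


def count_loops(arcs: List[Arc]) -> int:
    """Count closed loops formed by arcs joining the marked points.

    Every marked point has degree at most 2, so a connected component is a
    closed loop exactly when all of its points have degree 2.
    """
    adj: Dict[str, List[str]] = defaultdict(list)
    for u, v in arcs:
        adj[u].append(v)
        adj[v].append(u)
    seen, loops = set(), 0
    for start in list(adj):
        if start in seen:
            continue
        stack, component = [start], []
        seen.add(start)
        while stack:  # depth-first search over one connected component
            u = stack.pop()
            component.append(u)
            for w in adj[u]:
                if w not in seen:
                    seen.add(w)
                    stack.append(w)
        if all(len(adj[u]) == 2 for u in component):
            loops += 1
    return loops


INTERNAL = {"ab|cd": [("a", "b"), ("c", "d")], "ad|cb": [("a", "d"), ("c", "b")]}
WITH_CD = [("a", "b"), ("c", "d")]  # external arcs a~b and c~d
WITHOUT_CD = [("a", "b")]           # external arc a~b only

for name, internal in INTERNAL.items():
    extra = count_loops(internal + WITH_CD) - count_loops(internal + WITHOUT_CD)
    # Both patterns lose exactly one loop, i.e. one factor sqrt(2).
    print(name, extra, math.sqrt(2) ** extra)
```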

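The configuration on \(G^\spadesuit \) is \((\gamma _1,\gamma _2,l_i \,:\, i=1,2,\ldots N_{\mathrm{loops}})\), where \(\gamma _1\) and \(\gamma _2\) are the paths starting from a and c, respectively, and the \(l_i\) are the loops. Define

$$\begin{aligned} \hat{\gamma } = {\left\{ \begin{array}{ll} \gamma _2 & \text {if } \gamma _2 \text { ends at } d, \\ \gamma _2 \sqcup \alpha \sqcup \gamma _1 & \text {if } \gamma _2 \text { ends at } b, \end{array}\right. } \end{aligned}$$ (27)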

where \(\alpha \) is the planar curve that realizes the exterior arc \(a \smile b\) as in Fig. 11, and \(\sqcup \) denotes the concatenation of paths. The first case in (27) is the internal arc pattern \((a \frown b, c \frown d)\) and the second is \((a \frown d, c \frown b)\).

For a sequence of domains \(G_\delta ^\spadesuit \subset \delta \mathbb {L}^\spadesuit \) with marked boundary vertices \(a_\delta ,b_\delta ,c_\delta ,d_\delta \), define the observable as

for any \(e \in E(G^\diamond _\delta ) \subset E(G^\spadesuit _\delta )\). Here \(W(d_\delta ,e)\) is the winding from the boundary edge of \(d_\delta \) to e along the reversal of \(\hat{\gamma }\), and \(\theta _\delta \) (satisfying \(|\theta _\delta |=1\)) is a constant whose value we specify later. Notice that \(f_\delta \) does not depend on the choice of \(\alpha \), since the winding is well defined modulo \(4 \pi \).
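The remark about invariance modulo \(4\pi \) concerns a half-angle phase factor. The sketch below assumes two things. First, the winding of a lattice path is its total turning angle. Second, the observable involves a factor of the form \(e^{-\frac{i}{2}W}\); the same conclusion holds for \(e^{+\frac{i}{2}W}\), and the exact sign convention in the definition of \(f_\delta \) is not reproduced here. Under these assumptions, shifting W by \(4\pi \) leaves the factor unchanged, while a shift by \(2\pi \) only flips its sign. The function name `winding` is hypothetical.

```python
import cmath
import math
from typing import Sequence


def winding(directions: Sequence[complex]) -> float:
    """Total turning angle of a lattice path given its unit step directions.

    Each turn contributes arg(e_{k+1} / e_k), a signed angle in [-pi, pi].
    """
    return sum(cmath.phase(d2 / d1) for d1, d2 in zip(directions, directions[1:]))


# One counterclockwise lap around a square: four left turns of pi/2.
W = winding([1, 1j, -1, -1j, 1])

phase = cmath.exp(-0.5j * W)
phase_4pi = cmath.exp(-0.5j * (W + 4 * math.pi))  # same factor
phase_2pi = cmath.exp(-0.5j * (W + 2 * math.pi))  # opposite sign
print(W, phase, phase_4pi, phase_2pi)
```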

4.2 Preholomorphicity of the observable

Let \(\lambda =e^{-i\frac{\pi }{4}}\). Associate to each edge e of the modified medial lattice one of the following four lines through the origin \(\mathbb {R}, i \mathbb {R}, \lambda \mathbb {R}, \overline{\lambda } \mathbb {R}\) as in Fig. 12. Denote this line by l(e).

Spin preholomorphicity. Choose the constant \(\theta _\delta \) in the definition of \(f_\delta \) so that the value of \(f_\delta \) at the edge e belongs to the line l(e). Then \(\theta _\delta \in \{\pm 1, \pm i, \pm \lambda , \pm \overline{\lambda } \}\). The sign mostly plays no role, but in some situations it has to be chosen consistently. The observable \(f_\delta \) satisfies the relation

$$\begin{aligned} f_\delta (e_N) + f_\delta (e_S) = f_\delta (e_W) + f_\delta (e_E) \end{aligned}$$ (29)

for every vertex v of the medial graph, whose four neighboring edges in counterclockwise order are called \(e_N,e_W,e_S,e_E\). The relation is verified using the same involution among the loop configurations as in [26].

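Therefore, using (29), we can define \(f_\delta (v) \mathrel {\mathop :}= f_\delta (e_W) + f_\delta (e_E)=f_\delta (e_S) + f_\delta (e_N)\). For any neighboring vertices v and w, this function satisfies the identity

$$\begin{aligned} {{\,\mathrm{Proj}\,}}_e f_\delta (v) = {{\,\mathrm{Proj}\,}}_e f_\delta (w) \end{aligned}$$ (30)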

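where e is the edge between v and w, and \({{\,\mathrm{Proj}\,}}_e\) is the orthogonal projection to the line l(e). That is, if \(l(e)=\eta _e \mathbb {R}\), where \(\eta _e\) is a complex number with unit modulus, then

$$\begin{aligned} {{\,\mathrm{Proj}\,}}_e z = \eta _e \, \mathrm{Re}\,(\overline{\eta _e} z) = \frac{z + \eta _e^2 \overline{z}}{2}, \quad z \in \mathbb {C}. \end{aligned}$$ (31)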

Since \(f_\delta \) on \(V(G^\diamond _\delta )\) satisfies the relation (30), we call it spin-preholomorphic (or strongly preholomorphic). Spin-preholomorphic functions satisfy a discrete version of the Cauchy–Riemann equations [26].
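The orthogonal projection \({{\,\mathrm{Proj}\,}}_e\) onto \(\eta _e \mathbb {R}\) has the elementary closed forms \(\eta _e \, \mathrm{Re}(\overline{\eta _e} z) = (z + \eta _e^2 \overline{z})/2\). The sketch below checks these forms on the four lines \(\mathbb {R}, i \mathbb {R}, \lambda \mathbb {R}, \overline{\lambda } \mathbb {R}\). It also includes a one-edge test of a relation of the type (30). That test assumes (30) has the standard strong-holomorphicity form \({{\,\mathrm{Proj}\,}}_e f(v) = {{\,\mathrm{Proj}\,}}_e f(w)\). The function names and the sample value z are hypothetical.

```python
import cmath
import math


def proj(z: complex, eta: complex) -> complex:
    """Orthogonal projection of z onto the line eta * R, assuming |eta| = 1."""
    return eta * (eta.conjugate() * z).real


def proj_closed_form(z: complex, eta: complex) -> complex:
    """Equivalent formula (z + eta**2 * conj(z)) / 2."""
    return (z + eta ** 2 * z.conjugate()) / 2


def projections_agree(fv: complex, fw: complex, eta: complex, tol: float = 1e-12) -> bool:
    """One-edge check of Proj_e f(v) == Proj_e f(w) (assumed form of (30))."""
    return abs(proj(fv, eta) - proj(fw, eta)) < tol


lam = cmath.exp(-1j * math.pi / 4)
etas = [1 + 0j, 1j, lam, lam.conjugate()]  # lines R, iR, lambda R, conj(lambda) R
z = 0.3 - 1.7j
for eta in etas:
    print(eta, proj(z, eta), proj_closed_form(z, eta))
```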

The associated lines \(\mathbb {R}, i \mathbb {R}, \lambda \mathbb {R}, \overline{\lambda } \mathbb {R}\) around the two different types of vertices of \(\mathbb {L}^\diamond \). Note that for a directed edge \(e \in \mathbb {L}^\diamond _\rightarrow \), the line in the complex plane is \(\sqrt{\overline{e}} \, \mathbb {R}\), where e is interpreted as a complex unit vector in its direction.
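The rule \(l(e) = \sqrt{\overline{e}} \, \mathbb {R}\) from the caption can be evaluated directly. The sketch assumes that directed medial edges point in the four axis directions \(\pm 1, \pm i\), which is what the rule requires in order to land on exactly these four lines. The choice of square root does not matter, because \(\pm \sqrt{\overline{e}}\) span the same line. The names `LINES` and `line_of` are hypothetical.

```python
import cmath
import math

LAMBDA = cmath.exp(-1j * math.pi / 4)
# Direction vectors (up to sign) of the four lines.
LINES = {"R": 1 + 0j, "iR": 1j, "lambda R": LAMBDA, "conj(lambda) R": LAMBDA.conjugate()}


def line_of(e: complex) -> str:
    """Return which of the four lines contains sqrt(conj(e)) for a unit step e."""
    eta = cmath.sqrt(complex(e).conjugate())
    for name, u in LINES.items():
        # eta lies on u * R iff eta / u is real; the sign of the root is irrelevant.
        if abs((eta / u).imag) < 1e-12:
            return name
    raise ValueError(f"direction {e!r} is not mapped to one of the four lines")


for step in (1, 1j, -1, -1j):
    print(step, "->", line_of(step))
```

The four axis directions are sent to four different lines. Consequently the line attached to an edge depends on its orientation, and not only on its undirected position.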

4.3 Martingale property of the observable

Let \(\gamma = T_{a,d}\), where \(T_{a,d}\) is the branch of the exploration tree constructed in Sect. 2.3. We parametrize \(\gamma \) by lattice steps. At integer times, \(\gamma \) is at the head of an oriented edge (in \(\mathbb {L}^\spadesuit _\rightarrow \)) between a black and a white octagon. At half-integer times, it is at the tail of such an edge. Note that the step between the times \(t=k-1/2\) and \(t=k\), \(k =1,2,\ldots \), is deterministic given the information up to time \(t = k-1/2\). Hence at most one bit of information is generated between two consecutive integer times, namely whether the curve turns left or right at an “intersection”. Even this choice may be predetermined if we are visiting a boundary vertex or a vertex that has already been visited.

Denote the loop configuration by \(\omega \). In the four-marked-point setting, it consists of two arcs and a number of loops. We note that the pair \((\gamma [0,t],\omega )\) can be sampled in two different ways, which essentially describe the conditional distribution of one given the other:

(1) In the first option, we first sample \(\omega \). Then \(\gamma [0,t]\) is a deterministic function of \(\omega \), as explained above.

(2) In the second option, we first sample \(\gamma [0,t]\) (or rather, we keep the sample of \(\gamma [0,t]\) from the previous construction). Then we (re-)sample a configuration \(\omega ^{\prime }\) in two steps. The prime only serves to avoid confusion with \(\omega \) defined above, and \(\omega ^{\prime }\) can be renamed \(\omega \) at the end. First, we sample \(\omega ''\) in the complement of \(\gamma [0,t]\) using the boundary condition given by \(\gamma [0,t]\). Then, for each visit of \(\gamma [0,t]\) to the arc \(bc \subset V_{\partial ,1}\), we flip an independent coin \(\zeta _v \in \{0,1\}\), \(v \in bc\), such that

$$\begin{aligned} \mathbb {P}(\zeta _v=0) = \frac{1}{1 + \sqrt{2}} , \quad \mathbb {P}(\zeta _v=1) = \frac{\sqrt{2}}{1 + \sqrt{2}} \end{aligned}$$ (32)

Finally, for each visited \(v \in bc\) with \(\zeta _v=1\), we open the edge \(e_v \in E(\mathbb {L}^\bullet )\) in \(\omega ^{\prime \prime } \cup \gamma [0,t]\), and we call the resulting configuration \(\omega ^{\prime }\). Notice that the numbers on the right-hand sides of (32) are \(1-p_c\) and \(p_c\), respectively. The number \(1-p_c\) is the weight of the configuration where the edge at v is closed relative to the configuration where the edge is open.
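The identification of the two probabilities in (32) with \(1-p_c\) and \(p_c\) can be sanity-checked numerically. The check uses the critical point \(p_c = \sqrt{q}/(1+\sqrt{q})\) of the random cluster model on the square lattice, evaluated at \(q=2\). The script only confirms the arithmetic. It also shows that \(p_c = 2-\sqrt{2}\) and that the odds \(\mathbb {P}(\zeta _v=1)/\mathbb {P}(\zeta _v=0)\) equal \(\sqrt{2}\).

```python
import math

q = 2                                        # FK Ising: cluster weight q = 2
p_c = math.sqrt(q) / (1 + math.sqrt(q))      # self-dual critical point on the square lattice

p_zeta0 = 1 / (1 + math.sqrt(2))             # P(zeta_v = 0) in (32)
p_zeta1 = math.sqrt(2) / (1 + math.sqrt(2))  # P(zeta_v = 1) in (32)

print(p_c, p_zeta0, p_zeta1, p_zeta1 / p_zeta0)
```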

See Table 1 for details about the equivalence of these two constructions of \((\gamma [0,t],\omega )\). In Table 1 the configurations are grouped in pairs that differ only in the state of the edge at v. By construction, the right column occurs for \(\gamma \) surely, since the left column leads to branching at v. The relative weights of the \(\mathbb {L}^\bullet \)-edge at v being open or closed do not depend on the rest of the configuration. They are calculated in the table and give (32); in other words, the relative weight within each pair of configurations is a constant. Notice also that the arc bc matters only here. When the branch hits ab or da (or even cd, if we continue the process all the way up to d), it continues to follow the same “loop”. Thus a loop-to-loop jump can only occur on bc.

For a non-negative integer t, let \(G_t^\spadesuit \) be the slit graph obtained by removing \(\gamma [0,t]\), and let \(a_t\) be the edge \(\gamma ([t-1/2,t])\).

Next we decorate the boundary arc \(bc \subset V_{\partial ,1} (G^\diamond )\) with i.i.d. random variables \((\xi _v)\), \(\xi _v \in \{-1,1\}\), indexed by the vertices v on bc, such that

Let \((\mathcal {F}_t)_{t \in \mathbb {R}_+}\) be the filtration such that the \(\sigma \)-algebra \(\mathcal {F}_t\) is generated by \(\gamma [0,t]\) and \(\xi _v\) for any \(v \in \gamma [0,t-1] \cap bc\).

Notice that we can couple \(\zeta _v\) and \(\xi _v\) in such a way that

We interpret \(\zeta _v\) so that \(\zeta _v = 1\) if and only if we jump at v from one boundary-touching loop to the neighboring one. We interpret \(\xi _v\) so that \(\xi _v=1\) when there is no jump, while \(\xi _v\) is a fair coin flip when there is a jump.
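Under this interpretation, the marginal law of \(\xi _v\) is fixed by (32). The sketch below computes it under one assumption: \(\zeta _v\) and \(\xi _v\) are coupled exactly as described in words above, with no jump forcing \(\xi _v=1\) and a jump giving a fair \(\pm 1\) coin. The displayed coupling itself is not reproduced here. The values below, \(\mathbb {P}(\xi _v=1)=1/\sqrt{2}\), \(\mathbb {P}(\xi _v=-1)=1-1/\sqrt{2}\) and \(\mathbb {E}\,\xi _v = \sqrt{2}-1\), follow from that description.

```python
import math

SQRT2 = math.sqrt(2)
p_jump = SQRT2 / (1 + SQRT2)  # P(zeta_v = 1), from (32)
p_stay = 1 / (1 + SQRT2)      # P(zeta_v = 0), from (32)

# Assumed coupling: no jump -> xi_v = 1; jump -> xi_v is a fair coin in {-1, 1}.
joint = {
    (0, 1): p_stay,
    (1, 1): p_jump / 2,
    (1, -1): p_jump / 2,
}
p_xi_plus = sum(p for (_, x), p in joint.items() if x == 1)
p_xi_minus = sum(p for (_, x), p in joint.items() if x == -1)
mean_xi = p_xi_plus - p_xi_minus
print(p_xi_plus, p_xi_minus, mean_xi)
```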

Set for any integer t

where we can either take \(b_t=b\) or let \(b_t\) be the rightmost point visible from cd. Using the rightmost visible point means that, at every branching event, we move \(b_t\) to the place where the next loop is cut open (more specifically, to the edge which points towards that vertex). That is, \(M_t^+\) is the probability that a is connected to d by the interface (internal arc) in the slit domain. By the proof of the next result, it can be interpreted as a conditional probability in the original domain. Lemmas 4.1 and 4.2 below are central to the proof of the main theorem, because the law of the exploration process is determined by the martingale property of these quantities.
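A quantity such as \(M_t^+\) is a martingale because of a general fact: the conditional probabilities of a fixed event along a filtration form a (Doob) martingale. The toy below checks the identity \(\mathbb {E}[M_{t+1} \mid \mathcal {F}_t] = M_t\) exactly, by enumeration. It uses N fair coin flips and the event of at least K heads. These are hypothetical choices unrelated to the FK Ising model, and the toy says nothing about the specific domain Markov argument used for \(M_t^+\).

```python
from fractions import Fraction
from itertools import product

N, K = 4, 2  # toy: N fair coin flips, event A = {at least K heads}


def cond_prob(prefix: tuple) -> Fraction:
    """P(A | the first len(prefix) flips equal prefix), by exhaustive enumeration."""
    rest = N - len(prefix)
    hits = sum(1 for tail in product((0, 1), repeat=rest) if sum(prefix) + sum(tail) >= K)
    return Fraction(hits, 2 ** rest)


def is_martingale() -> bool:
    """Check that M_t = cond_prob(first t flips) satisfies E[M_{t+1} | F_t] = M_t."""
    for t in range(N):
        for prefix in product((0, 1), repeat=t):
            average = (cond_prob(prefix + (0,)) + cond_prob(prefix + (1,))) / 2
            if average != cond_prob(prefix):
                return False
    return True


print(cond_prob(()), is_martingale())
```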

Lemma 4.1