Abstract

In this paper we consider the iteratively regularized Gauss–Newton method (IRGNM) in its classical Tikhonov version as well as two further—Ivanov type and Morozov type—versions. In these two alternative versions, regularization is achieved by imposing bounds on the solution or by minimizing some regularization functional under a constraint on the data misfit, respectively. We do so in a general Banach space setting and under a tangential cone condition, while convergence (without source conditions, thus without rates) has so far only been proven under stronger restrictions on the nonlinearity of the operator and/or on the spaces. Moreover, we provide a convergence result for the discretized problem with an appropriate control on the error and show how to provide the required error bounds by goal oriented weighted dual residual estimators. The results are illustrated for an inverse source problem for a nonlinear elliptic boundary value problem, for the cases of a measure valued and of an \(L^\infty \) source. For the latter, we also provide numerical results with the Ivanov type IRGNM.


1 Introduction

In this paper we consider a nonlinear ill-posed operator equation

$$\begin{aligned} F(x)=y, \end{aligned}$$(1)

where the possibly nonlinear operator \(F:\mathcal {D}(F)\subseteq X \rightarrow Y\) with domain \(\mathcal {D}(F)\) maps between real Banach spaces X and Y. We are interested in the ill-posed situation, i.e., F fails to be continuously invertible, and the data are contaminated with noise, thus regularization has to be applied (see, e.g., [8, 27], and references therein).

Throughout this paper we will assume that an exact solution \(x^\dagger \in \mathcal {D}(F)\) of (1) exists, i.e., \(F(x^\dagger )=y\), and that the noise level \(\delta \) in the (deterministic) estimate

$$\begin{aligned} \Vert y-y^\delta \Vert \le \delta \end{aligned}$$(2)

is known.

In part, we will also refer to the formulation of the inverse problem as a system of model and observation equations

$$\begin{aligned} A(x,u)=0 \quad \text{in } W^*, \end{aligned}$$(3)

$$\begin{aligned} C(u)=y \quad \text{in } Y. \end{aligned}$$(4)

Here \(A:X\times V\rightarrow W^*\) and \(C:V\rightarrow Y\) are the model and observation operator, so that with the parameter-to-state map \(S:X\rightarrow V\) satisfying \(A(x,S(x))=0\) and \(F=C\circ S\), (1) is equivalent to the all-at-once formulation (3), (4).

Newton type methods for the solution of nonlinear ill-posed problems (1) have been extensively studied in Hilbert spaces (see, e.g., [2, 20] and the references therein) and more recently also in a Banach space setting. In particular, the iteratively regularized Gauss–Newton method [1] can be generalized to a Banach space setting by calculating iterates \(x_{k+1}^{\delta }\) in a Tikhonov type variational form as

$$\begin{aligned} x_{k+1}^\delta \in \mathrm{argmin}_{x\in \mathcal {C}}\, \Vert F'(x_k^\delta )(x-x_k^\delta )+F(x_k^\delta )-y^\delta \Vert ^p+\alpha _k\mathcal {R}(x), \end{aligned}$$(5)

see, e.g., [11, 16, 17, 21, 28] where \(p\in [1,\infty )\), \((\alpha _k)_{k\in \mathbb {N}}\) is a sequence of regularization parameters, and \(\mathcal {R}\) is some nonnegative regularization functional. Alternatively, one might introduce regularization by imposing some bound \(\rho _k\) on the norm of x, or, again, generally, on a regularization functional of x

$$\begin{aligned} x_{k+1}^\delta \in \mathrm{argmin}_{x\in \mathcal {C}}\, \Vert F'(x_k^\delta )(x-x_k^\delta )+F(x_k^\delta )-y^\delta \Vert \quad \text{such that } \mathcal {R}(x)\le \rho _k, \end{aligned}$$(6)

which corresponds to Ivanov regularization or the method of quasi solutions, see, e.g., [7, 13,14,15, 22, 24, 26]. A third way of incorporating regularization in a Newton type iteration is Morozov regularization, also called the method of the residuals, see, e.g., [9, 22, 23]

$$\begin{aligned} x_{k+1}^\delta \in \mathrm{argmin}_{x\in \mathcal {C}}\, \mathcal {R}(x) \quad \text{such that } \Vert F'(x_k^\delta )(x-x_k^\delta )+F(x_k^\delta )-y^\delta \Vert \le \sigma \Vert F(x_k^\delta )-y^\delta \Vert \end{aligned}$$(7)

for some \(\sigma \in (0,1)\), where the choice of the bound in the inequality constraint is very much inspired by the inexact Newton type regularization parameter choice in [10].

We restrict ourselves to the norm in Y as a measure of the data misfit, but the analysis could as well be extended to more general functionals \(\mathcal {S}\) satisfying certain conditions, as e.g., in [11, 28]. Here \(\mathcal {C}\) is a set (possibly chosen with convenient properties for carrying out the minimization) containing \(x^\dagger \) and being contained in \(\mathcal {D}(F)\), such that F satisfies additional conditions on \(\mathcal {C}\) see (8), (10) below. If F is defined on all of X, then the minimization problem (5) can be posed in an unconstrained way \(\mathcal {C}=X\).

As a restriction on the nonlinearity of the forward operator F we impose the tangential cone condition

$$\begin{aligned} \Vert F(\tilde{x})-F(x)-F'(x)(\tilde{x}-x)\Vert \le c_{tc}\Vert F(\tilde{x})-F(x)\Vert \quad \text{for all } x,\tilde{x}\in \mathcal {B}_R \end{aligned}$$(8)

(also called Scherzer condition, cf. [25]) for some constant \(c_{tc}<1/3\). Here, for any \(r>0\),

is a sublevel set of the regularization functional and R will be specified in the convergence result Theorem 1.

Note that the convergence conditions imposed in [11, 16, 17, 21, 28] in the situation without source condition, namely local invariance of the range of \(F'(x)^*\), are slightly stronger, since this adjoint range invariance is sufficient for (8). However, most probably the gap is not very large, as in those application examples where (8) has been verified, the proof of (8) is actually often done via adjoint range invariance. In (5), (6), (7), the bounded linear operator \(F'(x)\) is not necessarily a Gâteaux or Fréchet derivative of F, but just some local linearization (in the sense of (8)), satisfying additionally the weak closedness condition

Here, \(\mathcal {T}_X\) and \(\mathcal {T}_Y\) are topologies on X and Y (e.g., just the weak or weak* topologies) such that bounded sets in Y are \(\mathcal {T}_Y\)-compact and the norm in Y is \(\mathcal {T}_Y\)-lower semicontinuous.

The remainder of this paper is organized as follows. In Sect. 2 we state and prove convergence results in the continuous and discretized setting. Section 3 shows how to actually obtain the required discretization error estimates by a goal oriented weighted dual residual approach and Sect. 4 illustrates the theoretical findings by an inverse source problem for a nonlinear PDE. In Sect. 5 we provide some numerical results for this model problem and Sect. 6 concludes with some remarks.

2 Convergence

In this section we will study convergence of the IRGNM iterates first of all in a continuous setting, then in the situation of having discretized for computational purposes.

The regularization parameters \(\alpha _k\), \(\rho _k\), \(\sigma \) are chosen a priori

(note that \(({\textstyle \frac{2c_{tc}}{1-c_{tc}}})^p<1\) for \(c_{tc}<1/3\)),

and

with \(\tau \) as in (14), and the iteration is stopped according to the discrepancy principle

$$\begin{aligned} k_*=k_*(\delta ,y^\delta )=\min \{k\in \mathbb {N}_0\, :\, \Vert F(x_k^\delta )-y^\delta \Vert \le \tau \delta \} \end{aligned}$$(14)

with some fixed \(\tau >1\) chosen sufficiently large but independent of \(\delta \).
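
To make the interplay of the a priori parameter choice and the discrepancy principle concrete, the following is a minimal sketch of the Tikhonov version for a scalar equation, assuming \(p=2\), \(\mathcal {R}(x)=(x-x_0)^2\) and a geometric choice \(\alpha _k=\alpha _0\theta ^k\). The function name, the test operator \(F(x)=x+x^3\) and all numerical constants are illustrative assumptions and are not taken from the paper.

```python
def irgnm_tikhonov_scalar(F, dF, y_delta, delta, x0,
                          alpha0=1.0, theta=0.5, tau=2.0, max_iter=100):
    """Scalar IRGNM-Tikhonov sketch with p = 2 and R(x) = (x - x0)**2.

    alpha_k = alpha0 * theta**k is an assumed geometric a priori choice.
    The loop stops by the discrepancy principle |F(x_k) - y_delta| <= tau*delta.
    Returns the final iterate and the stopping index.
    """
    x = x0
    for k in range(max_iter):
        residual = F(x) - y_delta
        if abs(residual) <= tau * delta:      # discrepancy principle
            return x, k
        a = dF(x)                             # linearization F'(x_k)
        b = a * x - residual                  # linearized residual is a*z - b
        alpha = alpha0 * theta ** k
        # Closed-form minimizer over z of (a*z - b)**2 + alpha*(z - x0)**2
        x = (a * b + alpha * x0) / (a * a + alpha)
    return x, max_iter


# Hypothetical test problem: exact solution 1.0, exact data y = 2.0
F = lambda x: x + x ** 3
dF = lambda x: 1.0 + 3.0 * x ** 2
delta = 1e-3
x_stop, k_stop = irgnm_tikhonov_scalar(F, dF, 2.0 + delta, delta, x0=0.5)
print(k_stop, x_stop)
```

Rerunning the example with smaller values of `delta` gives a rough impression of how the stopping index grows as the noise level decreases.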

Theorem 1

Let \(\mathcal {R}:X\rightarrow [0,\infty ]\) be proper, convex and \(\mathcal {T}_X\) lower semicontinuous with \(\mathcal {R}(x^\dagger )<\infty \) and let, for all \(r\in [\mathcal {R}(x^\dagger ),\infty )\) in case of (5), or for all \(r\in [\mathcal {R}(x^\dagger ),\rho ]\) in case of (6), or for \(r=\mathcal {R}(x^\dagger )\) in case of (7), the sublevel set (9) be compact with respect to the topology \(\mathcal {T}_X\) on X.

Moreover, let F satisfy (8), (10).

Finally, let the family of data \((y^\delta )_{\delta >0}\) satisfy (2).

(i) Then for fixed \(\delta \), \(y^\delta \), the iterates according to (5)–(7) are well-defined and satisfy

$$\begin{aligned} x_k^\delta \in \mathcal {B}_R \text{ with } R \, \left\{ \begin{array}{l} \text{defined by (23), (19), (20) in case of (5)}\\ =\rho \text{ in case of (6)}\\ =\mathcal {R}(x^\dagger ) \text{ in case of (7)} \end{array}\right. \end{aligned}$$(15)

for all \(k\le k_*(\delta ,y^\delta )\), where \(k_*(\delta ,y^\delta )\) denotes the stopping index according to the discrepancy principle (14) with \(\tau \) sufficiently large, and this stopping index is finite.

(ii) Moreover, for all three methods we have \(\mathcal {T}_X\)-subsequential convergence as \(\delta \rightarrow 0\), i.e., \((x^\delta _{k_*(\delta ,y^\delta )})_{\delta >0}\) has a \(\mathcal {T}_X\)-convergent subsequence and the limit of every \(\mathcal {T}_X\)-convergent subsequence solves (1). If the solution \(x^\dagger \) of (1) is unique in \(\mathcal {B}_R\), then \(x^\delta _{k_*(\delta ,y^\delta )}{\mathop {\longrightarrow }\limits ^{\mathcal {T}_X}}x^\dagger \) as \(\delta \rightarrow 0\).

(iii) Additionally, \(k_*\) satisfies the asymptotics \(k_*=\mathcal {O}(\log (1/\delta ))\).

Proof

Existence of minimizers \(x_{k+1}^\delta \) of (5)–(7) for fixed k, \(x_k^\delta \) and \(y^\delta \) follows by the direct method of calculus of variations: In all three cases, the cost functional

is bounded from below and the admissible set

is nonempty (for (6) this follows from \(\rho _k\ge \mathcal {R}(x^\dagger )\) and for (7) from (8), (14) and (13), see (16) below). Hence, there exists a minimizing sequence \((x^l)_{l\in \mathbb {N}}\subseteq X^{\mathrm{ad}}\cap \mathcal {B}_r\) for

with bounded linearized residuals \(\Vert F'(x_k^\delta )(x^l-x_k^\delta )+F(x_k^\delta )-y^\delta \Vert \le s\) for

and \(\lim _{l\rightarrow \infty }J_k(x^l)=\inf _{x\in X^{\mathrm{ad}}} J_k(x)\). By \(\mathcal {T}_X\)-compactness of \(\mathcal {B}_r\), the sequence \((x^l)_{l\in \mathbb {N}}\) has a \(\mathcal {T}_X\)-convergent subsequence \((x^{l_m})_{m\in \mathbb {N}}\) with limit \(\bar{x}\in \mathcal {B}_r\). Moreover, \(\mathcal {T}_Y\)-compactness of norm bounded sets in Y together with \(\mathcal {T}_X\)-\(\mathcal {T}_Y\)-closedness of \(F'(x_k^\delta )\) and \(\mathcal {T}_Y\)-lower semicontinuity of the norm in Y imply that in all three cases \(J_k(\bar{x})\le \liminf _{m\rightarrow \infty }J_k(x^{l_m})=\inf _{x\in X^{\mathrm{ad}}} J_k(x)\) and \(\bar{x}\in X^{\mathrm{ad}}\), hence \(\bar{x}\) is a minimizer.

Note that (ii) follows from (i) by standard arguments and our assumption on \(\mathcal {T}_X\)-compactness of \(\mathcal {B}_R\). Thus it remains to prove (i) and (iii) for the three versions (5), (6), (7) of the IRGNM.

For this purpose we are going to show that for every \(\delta >0\), there exists \(k_*=k_*(\delta ,y^\delta )\) such that \(k_* \sim \log (1/\delta )\), and the stopping criterion according to the discrepancy principle \(\Vert F(x^\delta _{k_*(\delta ,y^\delta )})-y^\delta \Vert \le \tau \delta \) is satisfied. For (5), we also need to show that \(\mathcal {R}(x_k^\delta )\le R\) for \(k\le k_*(\delta ,y^\delta )\), whereas in (6) this automatically holds by (12). The same holds true for (7): If \(x_k^\delta \in \mathcal {B}_R\), then by (8), (14) and (13) we have

so \(x^\dagger \) is admissible, hence \(\mathcal {R}(x_{k+1}^\delta )\le \mathcal {R}(x^\dagger )\), i.e., \(x_{k+1}^\delta \in \mathcal {B}_R\).

We start with the Tikhonov version (5) and carry out an induction proof of the following statement: For all \(k\in \{0,\ldots ,k_*(\delta ,y^\delta )\}\)

where

for some fixed small \(\gamma \in (0,1)\). We will require \(\frac{q}{\theta }<1\), which by definition of q (19) is achievable for \(\gamma >0\) sufficiently small, due to \(\theta >({\textstyle \frac{2c_{tc}}{1-c_{tc}}})^p\), cf. (11). By Lemma 2 (see the “Appendix”) the right hand side estimate in (17) implies

Using the minimality of \(x_{k+1}^\delta \) and (2), (8) together with \(x^\dagger ,x_k^\delta \in \mathcal {B}_R\), we have

Using this and again (14) in (22) yields

On the other hand, since we have established \(x_{k+1}^\delta \in \mathcal {B}_R\), we can apply (8) to the left hand side of (22) to obtain

see also [11, Lemma 5.2] and [21, proof of Theorem 3].

To handle the power p we make use of the following inequalities that can be proven by solving extremal value problems, see the “Appendix”

for all \(a,b > 0,\) \(p \ge 1\) and \(\gamma , \epsilon \in (0,1)\), where for the right hand inequality to hold, additionally \(a\ge b\) is needed.

Hence, in case \((1-c_{tc})\Vert F(x_{k+1}^\delta )-y^\delta \Vert \ge c_{tc}\Vert F(x_k^\delta )-y^\delta \Vert \) the following general estimate holds

for \(\gamma , \epsilon \in (0,1).\)

So in order for this recursion to yield geometric decay of \(\Vert F(x_k^\delta )-y^\delta \Vert \), we need to ensure

for a proper choice of \(\epsilon ,\gamma \in (0,1)\). To obtain the largest possible (and therefore least restrictive) bound on \(c_{tc}\), we rewrite the requirement above as

as can be found out by evaluating the derivative of \(\phi \)

Thus from now on we set \(\epsilon =\frac{1}{2}\) and assume that \(\gamma >0\) is sufficiently small so that (26) holds with \(\epsilon =\frac{1}{2}\), i.e., (19). Then, using (11), estimate (25) can be written as

which we first of all regard as a recursive estimate for \(d_k\).

To derive a similar estimate also in the complementary case \((1-c_{tc})\Vert F(x_{k+1}^\delta )-y^\delta \Vert < c_{tc}\Vert F(x_k^\delta )-y^\delta \Vert \), we use the fact that, for \(d_k\) as in (18), this inequality just means

and, using (22) and the left hand part of (24),

hence after addition we again get (27) (even with a slightly smaller value of \(q:=(1+2^{p-1}(1+\gamma )^{p-1})(\frac{c_{tc}}{1-c_{tc}})^{p}\)).

Thus in both cases, using Lemma 2 we can conclude that

This finishes the induction proof of (17) for all \(k\in \{0,\ldots ,k_*(\delta ,y^\delta )\}\).

We next show that the discrepancy stopping criterion from (14), i.e., \(d_{k_*} \le \tilde{\tau }\delta ^p\) for \(\tilde{\tau }=2^{1-p}(1-c_{tc})^p\tau ^p\), will be satisfied after finitely many, namely \(O(\log (1/\delta ))\), steps. For this purpose, note that \(\tilde{\tau }>\frac{C}{1-q}\), provided \(\tau \) is chosen sufficiently large, which we assume to be done. Thus, indeed, using (11), (21), we have

where the right hand side falls below \(\tilde{\tau }\delta ^p\) as soon as

Thus we get the upper estimate \(k_{*}(\delta ,y^\delta )\le \bar{k}(\delta )=O(\log (1/\delta ))\).
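
The passage from a recursive estimate with contraction factor below one to a logarithmic bound on the stopping index can be spelled out for a generic scalar recursion. In the following sketch the sequence \(a_k\) and the constants \(q\), \(C\), \(a_0\) are placeholders chosen for illustration; they are not the exact quantities of the proof, and for the Tikhonov version one would read \(\delta ^p\) in place of \(\delta \).

$$\begin{aligned} a_{k+1}\le q\,a_k+C\delta ,\quad q\in (0,1) \quad \Longrightarrow \quad a_k\le q^k a_0+C\delta \sum _{j=0}^{k-1}q^j\le q^k a_0+\frac{C}{1-q}\,\delta . \end{aligned}$$

If \(\tilde{\tau }>\frac{C}{1-q}\), then \(a_k\le \tilde{\tau }\delta \) holds as soon as \(q^k a_0\le (\tilde{\tau }-\frac{C}{1-q})\delta \), that is, as soon as

$$\begin{aligned} k\ge \frac{\log \bigl (a_0\big /\bigl ((\tilde{\tau }-\frac{C}{1-q})\delta \bigr )\bigr )}{\log (1/q)}, \end{aligned}$$

and this threshold grows like \(\log (1/\delta )\) as \(\delta \rightarrow 0\).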

For the Ivanov version (6), it only remains to show finiteness of the stopping index, as boundedness of the \(\mathcal {R}\) values by \(R=\rho \) holds by definition. Applying the minimality argument with \(x^\dagger \) being admissible [cf. (12)] to (6) leads to the special case \(p=1\), \(\alpha _k=0\) in (25)

Our notation becomes

which gives

and by induction, one can conclude

where the right hand side is smaller than \(\tilde{\tau }\delta \) (with \(\tilde{\tau }=(1-c_{tc})\tau \)) for all

so that we can again conclude \(k_{*}(\delta ,y^\delta )\le \bar{k}(\delta )=O(\log (1/\delta ))\).

Finally we consider (7), where boundedness of the \(\mathcal {R}\) values by \(R=\mathcal {R}(x^\dagger )\) holds by minimality and the fact that \(x^\dagger \) is admissible, cf. (16). Geometric decay of the residuals follows by the estimate

and (13), i.e.,

with

so that similarly to above we end up with a logarithmic estimate for \(k_*\). \(\square \)

Remark 1

Convergence of \(\mathcal {R}(x_{k_*(\delta ,y^\delta )}^\delta )\) to \(\mathcal {R}(x^\dagger )\) as \(\delta \rightarrow 0\) holds along the \(\mathcal {T}_X\) convergent subsequence according to Theorem 1 (ii), first of all for the Morozov and the Ivanov version of the IRGNM, with the choice \(\rho =\mathcal {R}(x^\dagger )\) for the latter, since in both cases \(\mathcal {R}(x_{k_*(\delta ,y^\delta )}^\delta )\le \mathcal {R}(x^\dagger )\) holds for all \(\delta \) and \(\mathcal {R}\) is \(\mathcal {T}_X\) lower semicontinuous. The same holds true also for the Tikhonov version with the alternative choice of \(\alpha _k\) such that

for some constants \(\underline{\sigma }\), \(\overline{\sigma }\) satisfying \(c_{tc}+\frac{1+c_{tc}}{\tau }<\underline{\sigma }<\overline{\sigma }<1\) in place of (11), as can be seen directly from (22). If \(\mathcal {R}\) is defined by the norm on a space with the Kadets-Klee property, and \(\mathcal {T}_X\) is the weak topology of this space, then this implies norm convergence of \(x_{k_*(\delta ,y^\delta )}^\delta \) to \(x^\dagger \) along the same subsequence.

Remark 2

The fact that \(x_k^\delta \) stays in \(\mathcal {B}_R\) [cf. (15)] is crucial for the applicability of the tangential cone condition (8) at these iterates. If the functional \(\mathcal {R}\) quantifies some distance to an a priori guess \(x_0\) (e.g., \(\mathcal {R}(x)=\Vert x-x_0\Vert ^q\) for some norm \(\Vert \cdot \Vert \) and some \(q>0\)), then \(x\in \mathcal {B}_R\) with small R means closeness of x to \(x_0\) in a certain sense. Thus, the smaller R is, the easier it becomes to satisfy (8) with some \(c_{tc}<\frac{1}{3}\). On the other hand, making R according to (15) small requires closeness of \(x^\dagger \) to \(x_0\). Thus we deal with local convergence, as is typical for Newton type methods.

Now we consider the appearance of discretization errors in the numerical solution of (5), (6) arising from restriction of the minimization to finite dimensional subspaces \(X^k_h\) and leading to discretized iterates \(x_{k,h}^\delta \) and an approximate version \(F^k_h\) of the forward operator i.e., we consider the discretized version of Tikhonov-IRGNM (5)

of Ivanov-IRGNM (6)

and of Morozov-IRGNM (7)

respectively. Moreover, also in the discrepancy principle, the residual is replaced by its actually computable discretized version

We define the auxiliary continuous iterates

and

respectively in order to be able to use minimality, i.e., compare with the continuous exact solution \(x^\dagger \). For an illustration we refer to [18, Figure 1].

First of all, we assess how large the discretization errors can be allowed to still enable convergence. Later on, in Sect. 3, we will describe how to really obtain such estimates a posteriori and to achieve the prescribed accuracy by adaptive discretization.

Corollary 1

Let the assumptions of Theorem 1 be satisfied and assume that the discretization error estimates

(note that no absolute value is needed in (38), (40); moreover, (40) is only needed for (5) and (7)) hold with

for all \(k\le k_*(\delta ,y^\delta )\) and constants \(c_\eta ,c_\xi >0\) sufficiently small, \(\bar{\zeta }>0\).

Then the assertions of Theorem 1 remain valid for \(x^\delta _{k_*(\delta ,y^\delta ),h}\) in place of \(x^\delta _{k_*(\delta ,y^\delta )}\) with (34) in place of (14) and (42) in place of (23).

Proof

For the Tikhonov version (31), in order to inductively estimate \(\mathcal {R}(x_{k+1,h}^\delta )\), given \(x_k^\delta \in \mathcal {B}_R\), note that from (43) with \(k+1\) replaced by k, we get like in (23) that

where

for \(\gamma , \tilde{\gamma }, c_\eta \in (0,1)\), which are chosen small enough so that \(q<\theta \). As before, from the minimality of \(x_{k+1}^\delta \) and (2), (8) as well as \(x^\dagger \in \mathcal {D}(F)\), we have

Hence, with the same technique as in the proof of Theorem 1, using (24) with \(\epsilon =\frac{1}{2}\), we have

using (41). From this, by induction we conclude

Hence, by (39), (41), we have the following estimate

where the right hand side falls below \(\tau \delta \) as soon as

for \(\tilde{\tau }= 2^{1-p}(1-c_{tc})^p(\tau (1-c_\xi ))^p\). Note that \(\tilde{\tau } > \frac{C}{1-q} \), provided \(\tau \) is chosen sufficiently large, which we assume to be done. That is, we have shown that the discrepancy stopping criterion from (34) will be satisfied after finitely many, namely \(O(\log (1/\delta ))\), steps.

On the other hand, the continuous discrepancy at the iterate defined by the discretized discrepancy principle (34) by (39), (41) satisfies

To estimate \(\mathcal {R}(x^\delta _{k_*(\delta ,y^\delta ),h})\), note that according to our notation, from (43), we get, like in (23), that for all \(k\in \{1,\ldots ,k_*(\delta ,y^\delta )\}\)

Now we show finiteness of the stopping index for the discretized Ivanov-IRGNM (32). By minimality of \(x_{k+1}^\delta \) and (38), for this problem we have

which with

by induction, (39) and (41) gives

where the right hand side is smaller than \(\tau \delta \) for all

with \(\tilde{\tau }=(1-c_{tc})\tau (1-c_\xi )\), so that we can again conclude \(k_{*}(\delta ,y^\delta )\le \bar{k}(\delta )=O(\log (1/\delta ))\).

It remains to show finiteness of the stopping index for the discretized Morozov-IRGNM (33). By minimality of \(x_{k+1}^\delta \) we have (30) with \(x_k^\delta \) replaced by \(x_{k,h}^\delta \), thus the inequalities (38) and (41) yield

then, by (39) and induction

where

and the right hand side of (44) falls below \(\tau \delta \) for all

where \(\tilde{\tau }=\tau (1-c_\xi )\), and we can again conclude \(k_{*}(\delta ,y^\delta )\le \bar{k}(\delta )=O(\log (1/\delta ))\).

Boundedness of the \(\mathcal {R}\) values for (33) by \(\mathcal {R}(x^\dagger )+\bar{\zeta }\) follows like in the proof of Theorem 1 together with (40), (41). \(\square \)

3 Error estimators for adaptive discretization

The error estimators \(\eta _k\), \(\xi _k\) and \(\zeta _k\) can be quantified, e.g., by means of a goal oriented dual weighted residual (DWR) approach [3], applied to the minimization problems

(note that the last constraint is added in order to enable computation of \(I_2^k\) below)

and

which are equivalent to (5), (6), and (7), respectively, with

as quantities of interest [where \(I_3^k\) is only needed for (5) and (7)]. We assume that \(C,\mathcal {R}\) and the norms can be evaluated without discretization error, so the discretized versions of \(I_i^k\) only arise due to discreteness of the arguments. Indeed, it is easy to see that the left hand sides of (38) and (39) can be bounded (at least approximately) by combinations of \(I_1^k\) and \(I_2^k\), using the triangle inequality:

where we will neglect \(R_\eta ^{k+1}=\Vert F_h^{k+1}(x_{k+1,h}^{\delta })-y^\delta \Vert -\Vert F_h^k(x_{k+1,h}^{\delta })-y^\delta \Vert \).

It is important to note that \(I_{1,h}^{k+1}\) is not equal to \(I_{2,h}^k\), see [18].

The computation of the a posteriori error estimators \(\eta _k, \xi _k, \zeta _k\) is done as in [18]. These error estimators can be used within the following adaptive algorithm for error control and mesh refinement: We start on a coarse mesh, solve the discretized optimization problem and evaluate the error estimator. Thereafter, we refine the current mesh using local information obtained from the error estimator, reducing the error with respect to the quantity of interest. This procedure is iterated until the value of the error estimator is below the given tolerance (41), cf. [3].
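
The solve, estimate, mark, refine cycle described above can be sketched on a toy problem. The example below is only a stand-in under stated assumptions: the "quantity of interest" is the integral of a function on a 1D mesh, and the local indicator is the difference between the trapezoidal value on a cell and on its bisection. It is not a DWR estimator, and the function name `adaptive_refine` and the marking fraction are hypothetical choices.

```python
import math


def adaptive_refine(f, a, b, tol, max_cycles=60, fraction=0.3):
    """Toy solve-estimate-mark-refine loop on a 1D mesh (not a DWR estimator).

    Returns the computed value, the final mesh and the final error estimate.
    """
    mesh = [a, b]
    for _ in range(max_cycles):
        cells = list(zip(mesh[:-1], mesh[1:]))
        # "Solve": trapezoidal rule per cell on the current mesh
        coarse = [0.5 * (r - l) * (f(l) + f(r)) for l, r in cells]
        # Reference values: trapezoidal rule on each bisected cell
        fine = [0.25 * (r - l) * (f(l) + 2.0 * f(0.5 * (l + r)) + f(r))
                for l, r in cells]
        # "Estimate": cellwise indicators and their sum
        indicators = [abs(c - g) for c, g in zip(coarse, fine)]
        estimate = sum(indicators)
        value = sum(fine)
        if estimate <= tol:
            break
        # "Mark": the given fraction of cells with the largest indicators
        n_mark = max(1, int(fraction * len(cells)))
        order = sorted(range(len(cells)), key=lambda i: indicators[i],
                       reverse=True)
        marked = set(order[:n_mark])
        # "Refine": bisect the marked cells only
        new_mesh = [mesh[0]]
        for i, (l, r) in enumerate(cells):
            if i in marked:
                new_mesh.append(0.5 * (l + r))
            new_mesh.append(r)
        mesh = new_mesh
    return value, mesh, estimate


value, mesh, estimate = adaptive_refine(math.exp, 0.0, 1.0, tol=1e-4)
print(len(mesh) - 1, value, estimate)
```

In the setting of this section, the tolerance would be replaced by the iteration-dependent requirements (41), and the indicators by the cellwise contributions of the estimators \(\eta _k\), \(\xi _k\), \(\zeta _k\).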

In this case, all the variables \(x,v,u,\tilde{u}\) are subject to a new discretization. For better readability we will partially omit the iteration index k and the discretization index h. The previous iterate \(x_k^\delta \) is fixed and not subject to a new discretization.

Consider now the cost functional for (45)

and define the Lagrangian functional

neglecting for simplicity (cf. Remark 2) the constraints defined by \(\mathcal {C}\). The first-order necessary optimality conditions for (45) are given by stationarity for the Lagrangian L. Setting \(z=(x,v,u,\tilde{u},\lambda ,\tilde{\mu },\mu )\), they read

and for the discretized problem,

To derive a posteriori error estimators for the error with respect to the quantities of interest (\(I_1, I_2, I_3\)), we introduce auxiliary functionals \(M_i\):

Let \(\tilde{z}=(z,\bar{z}) \in \tilde{Z} = Z \times Z\) and \(\tilde{z}_h=(z_h,\bar{z}_h) \in \tilde{Z}_h = Z_h \times Z_h\) be continuous and discrete stationary points of \(M_i\) satisfying

respectively. Then, \(z, z_h\) are continuous and discrete stationary points of L and there holds \(I_i(z)=M_i(\tilde{z}), i = 1,2,3\). Thus the z part, as computed already during the numerical solution of the minimization problem (45) (or (46)), remains fixed for all \(i\in \{1,2,3\}\). Moreover, after computing the discrete stationary point \(z_h\) for L (e.g., by applying Newton’s method), it requires only one more Newton step to compute the \(\bar{z}\) coordinate of the stationary point for \(M_i\) from

According to [3], there holds

with a remainder term R of order \(O(\Vert \tilde{z}-\tilde{z}_h\Vert ^3)\) that is therefore neglected. Thus we use

where \(\pi _h\) is an operator defined such that \((\pi _h\tilde{z}_{i,h} - \tilde{z}_{i,h})\) approximates the interpolation error as in [18], typically defined by local averaging, to define the estimators \(\eta _k\), \(\xi _k\), \(\zeta _k\) according to the rule

cf. (48), (49). The estimators obtained by this procedure can be used to trigger local mesh refinement until the requirements (41) are met cf. [3].

Explicitly, for \(p=2\) (for simplicity) such a stationary point \(z=(x,v,u,\tilde{u},\lambda ,\tilde{\mu })\) can be computed by solving the following system of equations (analogously for the discrete stationary point of L)

Note that (58) is decoupled from the other equations and that if \(A^{'}_u(x,u)^*\) is injective, Eq. (54) implies \(\mu =0\).

Summarizing, since we have a convex minimization problem, after solving a nonlinear system of seven equations to find the minimizer, we need only one more Newton step to compute the error estimators to check whether we need a refinement on the mesh or not.

Regarding the problem (46) related to the Ivanov-IRGNM, we have the Lagrangian functional (50) with the cost functional defined by

and the indicator functional \(I_{(-\infty ,0]}(\mathcal {R}(x)-\rho )\) takes the role of a regularization functional. The resulting optimality system is the same as above, cf. (52)-(58), just with (52) replaced by

Similarly for (47) for Morozov-IRGNM, with the cost function

we end up with an optimality system by setting \(\alpha _k=1\) and replacing (53), (55), (56), (57) in (52)–(58) by

respectively.

Note that the bound on \(I_2\) only appears, via (51), in connection with the assumption \(\eta _k \le c_\eta \Vert F_h^k(x_{k,h}^{\delta })-y^\delta \Vert \) for \(k \le k_*(\delta ,y^\delta )\) in (41). This may be satisfied in practice without refining explicitly with respect to \(\eta _k\), but simply by refining with respect to the other error estimators \(\xi _k\) (and \(\zeta _k\) in the Tikhonov or Morozov case). The fact that \(I^k_{1,h}\) and \(I_{2,h}^{k-1}\) only differ in the discretization level motivates the assumption that for small h, we have \(I^k_{1,h} \approx I_{2,h}^{k-1}\) and \(\eta _{k-1} \approx \xi _k\). Therefore, the algorithm used in actual computations will be built neglecting \(I_2\) and hence skipping the constraint \(\langle A(x,u),w\rangle _{W^*,W}=0, \;\forall w \in W\) in (45), (46), (47), which implies a corresponding modification of the Lagrangian (50). The resulting optimality system for \(p=2\) in the Tikhonov case is given by

Note that Eq. (66) is decoupled from the others. Therefore, the strategy is to solve (66) for \(\tilde{u}\) first, then solve the linear system (62), (63), (65) for \((x,v,\lambda )\), and finally compute \(\tilde{\mu }\) via the linear equation (64). Here, the system (62), (63), (65) can be interpreted as the optimality conditions for the following problem

For the Ivanov case, we have to solve (63)–(66) with

in place of (62), hence again (66) is decoupled from the other equations, (64) is linear with respect to \(\tilde{\mu }\), once \((x,v,\lambda )\) has been computed, and the remaining system for \((x,v,\lambda )\) can be interpreted as the optimality conditions for the following problem

The Morozov case requires solution of (62) (with \(\alpha _k=1\)), (65), (66), (60), (61). Thus again, we first solve (66) for \(\tilde{u}\), then the system (62) (with \(\alpha _k=1\)), (65), (60), which is the first order optimality condition for

with Lagrange multiplier \(\lambda \) for the equality constraint, and finally the (now possibly nonlinear) inclusion (61) for \(\tilde{\mu }\).

Remark 3

Since DWR estimators are based on residuals which are computed in the optimization process, the additional costs for estimation are very low, which makes this approach attractive for our purposes. However, although these error estimators are known to work efficiently in practice (see [3]), they are not reliable, i.e., the conditions \(I^k_i(z)-I^k_i(z_h) \le \epsilon _i^k\), \(i=1,2,3\) cannot be guaranteed in a strict sense in the computations, since we neglect the remainder term R and use an approximation for \(\tilde{z}-\hat{z}_h\). As our analysis in Theorem 1 is kept rather general, it is not restricted to DWR estimators and would also work with different (e.g., reliable) error estimators.

4 Model examples

We present a model example to illustrate the abstract setting from the previous section. Consider the following inverse source problem for a semilinear elliptic PDE, where the model and observation equations are given by

where \(\chi _{\omega _c}\) denotes the extension by zero of a function on \(\omega _c\) to a function on all of \(\varOmega \). We first of all consider Tikhonov regularization and, aiming for a sparsely supported source, therefore use the space of Radon measures \(\mathcal {M}(\omega _c)\) as a preimage space X. Thus we define the operators \(A: \mathcal {M}(\omega _c)\times W^{1,q^{'}}_0 (\varOmega ) \longrightarrow W^{-1,q} (\varOmega )\), \(A(x,u) = - \varDelta u + \kappa u^3 -\chi _{\omega _c}x\), \(\kappa \in \mathbb {R}\) and the injection \(C:W^{1,q^{'}}_0(\varOmega )\longrightarrow L^2(\omega _o)=Y\), \(q > d\), where \(\varOmega \) is a bounded domain in \(\mathbb {R}^d\) with \(d=2\) or 3, with Lipschitz boundary \(\partial \varOmega \) and \(\omega _c, \omega _o \subset \varOmega \) are the control domain and the observation domain, respectively.

A monotonicity argument yields well posedness of the above semilinear boundary value problem, i.e., well-definedness of \(u\in W^{1,q^{'}}_0(\varOmega )\) as a solution to the elliptic boundary value problem (68), (69), as long as we can guarantee that \(u^3\in W^{-1,q} (\varOmega )\) for any \(u\in W^{1,q^{'}}_0(\varOmega )\), i.e., the embeddings \(W^{1,q^{'}}_0(\varOmega )\rightarrow L^{3r}(\varOmega )\) and \(L^r(\varOmega )\rightarrow W^{-1,q} (\varOmega )\) are continuous for some \(r\in [1,\infty ]\), which (by duality) is the case iff \(W^{1,q^{'}}_0(\varOmega )\) embeds continuously both into \(L^{3r}(\varOmega )\) and \(L^{r'}(\varOmega )\). By Sobolev’s Embedding Theorem, this boils down to the inequalities

which by elementary computations turns out to be equivalent to

where the left hand side is larger than one and the denominator on the right hand side is positive due to the fact that for \(d\ge 2\) we have \(q>d\ge d'=\frac{d}{d-1}\). Taking the extremal bounds for \(q>d\)—note that the lower bound is increasing and the upper bound is decreasing with q—in (72) we get

Thus, as a by-product, we get that for any \(t\in [1,\bar{t})\) there exists \(q>d\) such that \(W^{1,q^{'}}_0(\varOmega )\) continuously embeds into \(L^t\), with

For the regularization functional \(\mathcal {R}(x)=\Vert x\Vert _{\mathcal {M}(\omega _c)}\), the IRGNM-Tikhonov minimization step is given by (ignoring h in the notation)

Here and below \(\int _{\varOmega }\ d\varOmega \) and \(\int _{\omega _c}\ dx\) denote the integrals with respect to the Lebesgue measure and with respect to the measure \(x\), respectively.

Therefore, to compute this Gauss–Newton step, one first needs to solve the nonlinear equation

for \(\tilde{u}=u_k^\delta \), then solve the following optimality system with respect to \((x,v,\lambda )\) (written in a strong formulation)

which can be interpreted as the optimality system for the minimization problem

with Lagrange multiplier \(\lambda \) for the equality constraint, and finally, compute \(\tilde{\mu }\) by solving

For carrying out the IRGNM iteration, \(\tilde{\mu }\) is not required, but we need it for evaluating the error estimators.

For the Ivanov case, we consider the same model and observation equations (68), (69), (70) but now we intend to regularize by imposing \(L^\infty \) bounds and thus use the slightly different function space setting, \(A: L^\infty (\omega _c)\times H^{1}_0 (\varOmega ) \longrightarrow H^{-1} (\varOmega )\), \(A(x,u) = - \varDelta u + \kappa u^3 -x\), \(\kappa \in \mathbb {R}\) and the injection \(C: H^1_0(\varOmega )\longrightarrow L^2(\omega _o)\).

The IRGNM-Ivanov minimization step with the regularization functional \(\mathcal {R}(x)=\Vert x\Vert _{L^\infty (\omega _c)}\) is given by

For the Gauss–Newton step, one needs to first solve the nonlinear equation (74) for \(\tilde{u}=u_k^\delta \), and then solve the following optimality system with respect to \((x,v,\lambda )\)

which can be interpreted as the optimality system for the minimization problem

with Lagrange multiplier \(\lambda \) for the equality constraint. Finally, \(\tilde{\mu }\) is computed from (76).

For the IRGNM-Morozov case, using for simplicity the regularization functional \(\mathcal {R}(x)=\frac{1}{2}\Vert x\Vert _{L^2(\omega _c)}^2\), and leaving the rest of the setting as in the IRGNM-Ivanov case, the step is defined by

So again we first solve (74) for \(\tilde{u}=u_k^\delta \), then the minimization problem

or actually its first order optimality system

for \((x_{k+1}^\delta ,v_{k}^\delta ,\phi ,\lambda )\), and finally,

for \(\tilde{\mu }\).

For numerically efficient methods to solve the minimization problems (75) and (77) we refer to, e.g., [4,5,6] and the references therein.

We finally check the tangential cone condition in case \(\omega _o=\varOmega \) and, for simplicity, also \(\omega _c=\varOmega \), in both settings

(where we will have to restrict ourselves to \(d=2\)) and

For this purpose, we use the fact that with the notation \(F(\tilde{x})=\tilde{u}\vert _{\omega _o}\), \(F(x)=u\vert _{\omega _o}\), \(F(\tilde{x})-F(x)=v\vert _{\omega _o}\) and \(F(\tilde{x})-F(x)-F'(x)(\tilde{x}-x)=w\vert _{\omega _o}\), the functions \(v,w\in W^{1,q^{'}}_0(\varOmega )\) satisfy the homogeneous Dirichlet boundary value problems for the equations

Using an Aubin–Nitsche type duality trick, we can estimate the \(L^2\) norm of w via the adjoint state \(p\in W_0^{1,n}(\varOmega )\), which solves

with homogeneous Dirichlet boundary conditions, so that by Hölder’s inequality

where we aim at choosing \(m\in [4,\infty ]\), \(n\in [1,\infty ]\) such that indeed

and the embeddings \(V\rightarrow L^m(\varOmega )\), \(W^{1,n}(\varOmega )\rightarrow L^{\frac{2m}{m-4}}(\varOmega )\), \(L^2(\varOmega )\rightarrow W^{-1,n}(\varOmega )\) are continuous. If we succeed in doing so, we can bound \(\tilde{\tilde{C}} \kappa \Vert \tilde{u}+2u\Vert _{L^m(\varOmega )} \Vert v\Vert _{L^m(\varOmega )}\) by some constant \(c_{tc}\), which will be small provided \(\Vert \tilde{x}-x\Vert _X\) and hence \(\Vert v\Vert _{L^m(\varOmega )}\) is small. Thus, the numbers n, m are limited by the requirements

\(L^2(\varOmega )\subseteq W^{-1,n}(\varOmega )\), i.e., by duality,

and the fact that \(\kappa u^2 p\in L^o(\varOmega )\) should be contained in \(W^{-1,n'}(\varOmega )\) for \(u\in V\subseteq L^t(\varOmega )\), and \(p\in W^{1,n}(\varOmega )\), which via Hölder’s inequality in

and duality leads to the requirements

In case \(V=W_0^{1,q'}(\varOmega )\) with \(q>d\) and \(d=3\), (78) will not work out, since according to (73), m cannot be chosen larger than or equal to four.

In case \(V=W_0^{1,q'}(\varOmega )\) with \(q>d\) and \(d=2\), we can choose, e.g., \(t=m=n=6\), \(o=2\) to satisfy (78), (79), (80) as well as \(t,m<\bar{t}\) as in (73).

The same choice is possible in case \(V=H_0^1(\varOmega )\) with \(d\in \{2,3\}\).

5 Numerical tests

In this section, we provide some numerical illustration of the IRGNM Ivanov method applied to the example from Sect. 4, i.e., each Newton step consists of solving (74) and subsequently (77). For the numerical solution of (74) we apply a damped Newton iteration to the equation \(\varPhi (\tilde{u})=0\) where

which is stopped as soon as \(\Vert \varPhi (\tilde{u}^l)\Vert _{H^{-1}(\varOmega )}\) has been reduced by a factor of \(10^{-4}\). The sources \(x\) and states u are discretized by piecewise linear finite elements; hence, after elimination of the state via the linear equality constraint, (77) becomes a box constrained quadratic program for the discretized version of \(x\), which we solve with the method from [12] using the Matlab code mkr_box provided to us by Philipp Hungerländer, Alpen-Adria Universität Klagenfurt. All implementations have been done in Matlab.
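The damped Newton iteration with the relative stopping rule can be sketched as follows. This is only a minimal illustration under several assumptions that are not taken from the paper: \(\varPhi (u)=-\varDelta u+\kappa u^3-x\) is assumed to be the residual of the state equation, a 1-d finite difference Laplacian with homogeneous Dirichlet conditions replaces the 2-d finite element discretization, the Euclidean norm replaces the \(H^{-1}(\varOmega )\) norm, \(\kappa \ge 0\) is assumed, and the damping is plain step halving until the residual decreases. The function name `damped_newton` and the source values in the usage lines are hypothetical.

```python
import numpy as np

def damped_newton(x, kappa, h, rel_tol=1e-4, max_iter=100):
    """Solve -u'' + kappa*u^3 = x on a uniform 1-d grid, u = 0 on the boundary.

    Returns the state u, the final residual norm and the initial residual norm.
    """
    n = x.size
    # Finite difference Laplacian (assumption: stands in for the FE stiffness matrix)
    lap = (2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)) / h**2

    def phi(u):
        return lap @ u + kappa * u**3 - x

    u = np.zeros(n)
    r = phi(u)
    r0 = np.linalg.norm(r)
    for _ in range(max_iter):
        # Stop once the residual is reduced by the factor rel_tol
        if np.linalg.norm(r) <= rel_tol * r0:
            break
        jac = lap + np.diag(3.0 * kappa * u**2)  # derivative of phi at u
        step = np.linalg.solve(jac, -r)
        t = 1.0
        u_new = u + step
        r_new = phi(u_new)
        # Damping: halve the step length until the residual norm decreases
        while not (np.linalg.norm(r_new) < np.linalg.norm(r)) and t > 1e-8:
            t *= 0.5
            u_new = u + t * step
            r_new = phi(u_new)
        u, r = u_new, r_new
    return u, np.linalg.norm(r), r0

# Usage with a hypothetical piecewise constant source on (-1, 1)
n = 63
h = 2.0 / (n + 1)
grid = -1.0 + h * np.arange(1, n + 1)
x = np.where(np.abs(grid + 0.4) < 0.2, 10.0, -10.0)
u, res, res0 = damped_newton(x, kappa=1.0, h=h)
```

Starting from \(u=0\), the full Newton step typically overshoots because of the cubic term, so the step halving is what keeps the residual decreasing in the first iterations.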

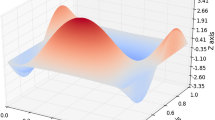

We performed test computations on a 2-d domain \(\omega _o=\omega _c=\varOmega =(-1,1)^2\), on a regular computational finite element grid consisting of \(2\cdot N\cdot N\) triangles, with \(N=32\). We first of all consider \(\kappa =1\) (below we will also show results with \(\kappa =100\)) and the piecewise constant exact source function

where B is the ball of radius 0.2 around \((-0.4,-0.3)\), cf. Fig. 1, and correspondingly set \(\rho =10\). In order to avoid an inverse crime, we generated the synthetic data on a finer grid and, after projection of \(u_{ex}\) onto the computational grid, we added normally distributed random noise of levels \(\delta \in \{0.001, 0.01, 0.1\}\) to obtain synthetic data \(y^\delta \).

In all tests we start with the constant function with value zero for \(x_0\). Moreover, we always set \(\tau =1.1\). According to our convergence result Theorem 1 with \(\mathcal {R}=\Vert \cdot \Vert _{L^\infty (\varOmega )}\), we can expect weak* convergence in \(L^\infty (\varOmega )\) here. Thus we computed the errors in certain spots within the two homogeneous regions and on their interface,

cf. Fig. 1, more precisely, on \(\frac{1}{N}\times \frac{1}{N}\) squares located at these spots, corresponding to the piecewise constant \(L^1\) functions with these supports, in order to test weak* \(L^\infty \) convergence by way of example. Additionally we computed \(L^1\) errors.
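The two error measures can be sketched as follows: testing weak* convergence against the indicator function of a small square amounts to evaluating \(\left| \int _Q (x_{rec}-x_{ex})\, d\varOmega \right| \), while the \(L^1\) error integrates the absolute difference over \(\varOmega =(-1,1)^2\). The sketch below is an illustration only. It assumes that both functions are available as vectorized callables and uses a midpoint quadrature rule; the function names, the spot location, and the source values inside and outside B are hypothetical and not taken from the paper.

```python
import numpy as np

def square_functional_error(f_rec, f_ex, center, side, m=8):
    """|integral over the square Q of (f_rec - f_ex)|, midpoint rule with m*m cells."""
    cx, cy = center
    s = (np.arange(m) + 0.5) / m - 0.5          # midpoints in (-1/2, 1/2)
    X, Y = np.meshgrid(cx + side * s, cy + side * s, indexing="ij")
    weight = (side / m) ** 2                      # area of one quadrature cell
    return abs(np.sum(f_rec(X, Y) - f_ex(X, Y)) * weight)

def l1_error(f_rec, f_ex, n=256):
    """L^1 error on (-1,1)^2, midpoint rule with n*n cells."""
    s = -1.0 + (np.arange(n) + 0.5) * 2.0 / n
    X, Y = np.meshgrid(s, s, indexing="ij")
    return np.sum(np.abs(f_rec(X, Y) - f_ex(X, Y))) * (2.0 / n) ** 2

# Hypothetical piecewise constant source: one value in the ball B, another outside
def f_ex(X, Y):
    return np.where((X + 0.4) ** 2 + (Y + 0.3) ** 2 < 0.2 ** 2, 10.0, -10.0)

# Hypothetical "reconstruction": the exact source shifted by a constant
def f_rec(X, Y):
    return f_ex(X, Y) + 1.0

N = 32
spot_err = square_functional_error(f_rec, f_ex, center=(-0.4, -0.3), side=1.0 / N)
global_err = l1_error(f_rec, f_ex)
```

For the constant shift used here the spot error equals the area of the square and the \(L^1\) error equals the area of \(\varOmega \), which makes the example easy to check by hand.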

Table 1 provides an illustration of convergence as \(\delta \) decreases. For this purpose, we performed five runs on each noise level for each example and list the average errors.

In Fig. 2 we plot the reconstructions for \(\kappa =1\) and \(\kappa =100\). For \(\kappa =1\), the noise levels \(\delta \in \{0.01, 0.0333, 0.0667, 0.1\}\) correspond to a percentage of \(p\in \{5.6, 18.5, 37.1, 55.6\}\) of the \(L^2\) deviation of the exact state from the background state \(u_0=-10^{1/3}\). In case of \(\kappa =100\), where the background state is \(u_0=-0.1^{1/3}\), the corresponding percentages are \(p\in \{17.9,59.7,119.4,179.2\}\). For an illustration of the noisy data as compared to the exact ones, see Figs. 3 and 4. Indeed, the box constraints make it possible to cope with relatively large noise levels, even in the rather nonlinear regime with \(\kappa =100\).

6 Conclusions and remarks

In this paper we have studied convergence of the Tikhonov type, the Ivanov type, and the Morozov type IRGNM with a stopping rule of discrepancy principle type. To the best of our knowledge, the Ivanov and Morozov IRGNMs have not been studied so far, and for all three (Tikhonov, Ivanov, and Morozov type) IRGNMs, existing convergence results without source conditions use stronger assumptions than the tangential cone condition used here. We also consider discretized versions of the methods and provide discretization error bounds that still guarantee convergence. Moreover, we discuss goal oriented dual weighted residual error estimators that can be used in an adaptive discretization scheme for controlling these discretization error bounds. An inverse source problem for a nonlinear elliptic boundary value problem illustrates our theoretical findings in the special situations of measure valued and \(L^\infty \) sources. We also provide some computational results with the Ivanov IRGNM for the case of an \(L^\infty \) source. Numerical implementations and tests for a measure valued source, together with adaptive discretization, are the subject of ongoing work, based on the approaches from [4,5,6, 18, 19]. Future research in this context will be concerned with convergence rate results for the Ivanov and Morozov IRGNMs under source conditions.

References

Bakushinsky, A.B.: The problem of the convergence of the iteratively regularized Gauss–Newton method. Comput. Math. Math. Phys. 32, 1353–1359 (1992)

Bakushinsky, A.B., Kokurin, M.: Iterative Methods for Approximate Solution of Inverse Problems. Kluwer, Dordrecht (2004)

Becker, R., Vexler, B.: Mesh refinement and numerical sensitivity analysis for parameter calibration of partial differential equations. J. Comput. Phys. 206, 95–110 (2005)

Clason, C., Kunisch, K.: A duality-based approach to elliptic control problems in non-reflexive Banach spaces. ESAIM Control Optim. Calc. Var. 17(1), 243–266 (2011)

Clason, C., Kunisch, K.: A measure space approach to optimal source placement. Comput. Optim. Appl. 53(1), 155–171 (2012)

Casas, E., Clason, C., Kunisch, K.: Approximation of elliptic control problems in measure spaces with sparse solutions. SIAM J. Control Optim. 50(4), 1735–1752 (2012)

Dombrovskaja, I., Ivanov, V.K.: On the theory of certain linear equations in abstract spaces. Sib. Mat. Z. 6, 499–508 (1965)

Engl, H., Hanke, M., Neubauer, A.: Regularization of Inverse Problems. Kluwer, Dordrecht (1996)

Grasmair, M., Haltmeier, M., Scherzer, O.: The residual method for regularizing ill-posed problems. Appl. Math. Comput. 218, 2693–2710 (2011)

Hanke, M.: A regularization Levenberg–Marquardt scheme, with applications to inverse groundwater filtration problems. Inverse Prob. 13, 79–95 (1997)

Hohage, T., Werner, F.: Iteratively regularized Newton-type methods for general data misfit functionals and applications to Poisson data. Numer. Math. 123, 745–779 (2013)

Hungerländer, P., Rendl, F.: A feasible active set method for strictly convex problems with simple bounds. SIAM J. Opt. 25, 1633–1659 (2015)

Ivanov, V.K.: On linear problems which are not well-posed. Dokl. Akad. Nauk SSSR 145, 270–272 (1962)

Ivanov, V.K.: On ill-posed problems. Mat. Sb. (N.S.) 61(103), 211–223 (1963)

Ivanov, V.K., Vasin, V.V., Tanana, V.P.: Theory of Linear Ill-Posed Problems and Its Applications, Inverse and Ill-Posed Problems Series, VSP (2002)

Jin, Q., Zhong, M.: On the iteratively regularized Gauss–Newton method in Banach spaces with applications to parameter identification problems. Numer. Math. 124, 647–683 (2013)

Kaltenbacher, B., Hofmann, B.: Convergence rates for the iteratively regularized Gauss–Newton method in Banach spaces. Inverse Prob. 26, 035007 (2010)

Kaltenbacher, B., Kirchner, A., Veljović, S.: Goal oriented adaptivity in the IRGNM for parameter identification in PDEs: I. Reduced formulation. Inverse Prob. 30, 045001 (2014)

Kaltenbacher, B., Kirchner, A., Vexler, B.: Goal oriented adaptivity in the IRGNM for parameter identification in PDEs II: all-at once formulations. Inverse Prob. 30, 045002 (2014)

Kaltenbacher, B., Neubauer, A., Scherzer, O.: Iterative Regularization Methods for Nonlinear Ill-Posed Problems. Walter de Gruyter, Berlin (2008)

Kaltenbacher, B., Schöpfer, F., Schuster, T.: Convergence of some iterative methods for the regularization of nonlinear ill-posed problems in Banach spaces. Inverse Prob. 25, 065003 (2009)

Lorenz, D., Worliczek, N.: Necessary conditions for variational regularization schemes. Inverse Prob. 29, 075016 (2013)

Morozov, V.A.: Choice of parameter for the solution of functional equations by the regularization method. Dokl. Akad. Nauk SSSR 175, 1225–1228 (1967)

Neubauer, A., Ramlau, R.: On convergence rates for quasi-solutions of ill-posed problems. ETNA 41, 81–92 (2014)

Scherzer, O.: Convergence criteria of iterative methods based on Landweber iteration for solving nonlinear problems. J. Math. Anal. Appl. 194, 911–933 (1995)

Seidman, T.I., Vogel, C.R.: Well posedness and convergence of some regularisation methods for non-linear ill posed problems. Inverse Prob. 5, 227–238 (1989)

Tikhonov, A.N., Arsenin, V.Y.: Solutions of Ill-Posed Problems. Wiley, New York (1977)

Werner, F.: On convergence rates for iteratively regularized Newton-type methods under a Lipschitz-type nonlinearity condition. J. Inverse Ill Posed Probl. 23, 75–84 (2015)

Acknowledgements

Open access funding provided by University of Klagenfurt. The authors wish to thank Philipp Hungerländer, Alpen-Adria Universität Klagenfurt, for providing us with the Matlab code based on the method from [12]. Moreover, the authors gratefully acknowledge financial support by the Austrian Science Fund FWF under the grants I2271 “Regularization and Discretization of Inverse Problems for PDEs in Banach Spaces” and P30054 “Solving Inverse Problems without Forward Operators” as well as partial support by the Karl Popper Kolleg “Modeling-Simulation-Optimization”, funded by the Alpen-Adria-Universität Klagenfurt and by the Carinthian Economic Promotion Fund (KWF).

Moreover, we wish to thank both reviewers for fruitful comments leading to an improved version of the manuscript.

Additional information

Supported by the Austrian Science Fund FWF under the Grant I2271 “Regularization and Discretization of Inverse Problems for PDEs in Banach Spaces”.

Appendix

Lemma 1

For all \(a,b > 0,\) \(p \ge 1\) and \(\gamma , \epsilon \in (0,1)\)

and, if additionally \(a\ge b\), also

Proof

The estimate in (82) can be done by solving the following extremal value problems

where

since for any \(\gamma ,\epsilon \in (0,1)\),

with \(x:=b/a\), \(a,b>0\) is equivalent to (24).

Solving for \(C_\gamma \), we have

which means that

so defining \(C_\gamma :=\left( \frac{1+\gamma }{\gamma }\right) ^{p-1}\) and writing the resulting inequality in terms of a and b we have the desired formula.

The formula in (83) is derived analogously. \(\square \)
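As a numerical sanity check of the first estimate, the following sketch assumes that (82) has the form \((a+b)^p\le (1+\gamma )^{p-1}a^p+C_\gamma b^p\) with \(C_\gamma =\left( \frac{1+\gamma }{\gamma }\right) ^{p-1}\) as defined in the proof. This form is an assumption made for illustration; it is consistent with the constant above and follows from convexity of \(t\mapsto t^p\), with equality at \(b/a=\gamma \). The function name `lemma1_gap` is hypothetical.

```python
import numpy as np

def lemma1_gap(a, b, p, gamma):
    """Right hand side minus left hand side of the assumed form of the estimate."""
    c_gamma = ((1.0 + gamma) / gamma) ** (p - 1.0)
    return (1.0 + gamma) ** (p - 1.0) * a**p + c_gamma * b**p - (a + b) ** p

# Random samples with a, b > 0, p >= 1 and gamma in (0, 1)
rng = np.random.default_rng(0)
a = rng.uniform(0.01, 10.0, 10000)
b = rng.uniform(0.01, 10.0, 10000)
p = rng.uniform(1.0, 4.0, 10000)
gamma = rng.uniform(0.01, 0.99, 10000)
gaps = lemma1_gap(a, b, p, gamma)

# Smallest gap relative to the size of the left hand side (rounding aside, nonnegative)
min_relative_gap = np.min(gaps / (a + b) ** p)

# Equality case b/a = gamma, here with p = 2
equality_gap = lemma1_gap(1.0, 0.5, 2.0, 0.5)
```

Such a check does not replace the proof, but it confirms that the constant \(C_\gamma \) cannot be decreased at \(x=b/a=\gamma \), where the gap vanishes.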

Lemma 2

Let \(k\in \mathbb {N}\), \((d_j)_{1\le j\le k+1}\subseteq [0,\infty )\), \((\mathcal {R}_j)_{1\le j\le k+1}\subseteq [0,\infty )\), \(\alpha _0,\mathcal {R}^\dagger ,c,q\not =\theta \in (0,\infty )\). Then

implies that

Proof

We first show by induction that for all \(l\in \{0,\ldots ,k\}\)

Indeed, for \(l=0\), (86) is just (84) with \(j=k\). Suppose that (86) holds for l, then using (84) with \(j=k-(l+1)\), we obtain the formula for \(l+1\)

and the induction proof is complete.

Hence, setting \(l=k\) in (86) and using the geometric series formula, we get the assertion (85).

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Kaltenbacher, B., Previatti de Souza, M.L. Convergence and adaptive discretization of the IRGNM Tikhonov and the IRGNM Ivanov method under a tangential cone condition in Banach space. Numer. Math. 140, 449–478 (2018). https://doi.org/10.1007/s00211-018-0971-5

DOI: https://doi.org/10.1007/s00211-018-0971-5