Abstract

We study the front of the solution to the F-KPP equation with randomized non-linearity. Under suitable assumptions on the randomness including spatial mixing behavior and boundedness, we show that the front of the solution lags at most logarithmically in time behind the front of the solution of the corresponding linearized equation, i.e. the parabolic Anderson model. This can be interpreted as a partial generalization of Bramson’s findings (Bramson in Commun Pure Appl Math 31(5):531–581, 1978) for the homogeneous setting. Partially building on this result and its derivation, we establish functional central limit theorems for the fronts of the solutions to both equations.

1 Introduction and Main Results

1.1 The classical F-KPP equation

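The F-KPP equation is the initial value problem given by

$$\begin{aligned} \partial _t w(t,x)&= \frac{1}{2} \partial _x^2 w(t,x) + w(t,x)\big (1-w(t,x)\big ), \quad (t,x)\in (0,\infty )\times \mathbb {R}, \\ w(0,x)&= w_0(x), \quad x\in \mathbb {R}, \end{aligned}$$ (1.1)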

with initial condition \(w_0: \mathbb {R}\rightarrow [0,1].\) Its investigation has a long history, with seminal results dating back to Fisher [13] and Kolmogorov–Petrovskii–Piskunov [24]. Their research was motivated by pioneering work in genetics, where the equation was used to model a randomly mating diploid population living in a one-dimensional habitat. Further applications can be found in chemical combustion theory and flame propagation; see [1, 12], as well as [37] and references therein.

In [24] it was shown that for reasonably general non-linearities (see (SC) at the beginning of Sect. 1.4 for further details) and initial conditions of Heaviside type \(w_0=\mathbb {1}_{(-\infty ,0]}\), the solution to (1.1) approaches a traveling wave \(g:\mathbb {R}\rightarrow [0,1]\). That is, there exists a function \(m: [0,\infty ) \rightarrow \mathbb {R}\), generally referred to as the (position of the) front or breakpoint, such that

$$\begin{aligned} w(t,m(t)+x) \longrightarrow g(x) \quad \text {uniformly in } x\in \mathbb {R}\text { as } t\rightarrow \infty . \end{aligned}$$

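The limit g is known (see [24, Theorems 14 and 17]) to solve the differential equation

$$\begin{aligned} \frac{1}{2} g''(x) + \sqrt{2}\, g'(x) + g(x)\big (1-g(x)\big ) = 0, \quad x\in \mathbb {R}, \end{aligned}$$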

and it is unique up to spatial translations. In particular, in this case the first order asymptotics \( m(t)/t {\longrightarrow }_{t\rightarrow \infty } \sqrt{2}\) has been derived. A couple of decades later, Bramson in his seminal work [8] improved this result by computing the second order correction up to additive constants. More precisely, he showed that for each \(\varepsilon \in (0,1),\) the choice \(m(t)=m^\varepsilon (t):=\sup \{x\in \mathbb {R}: w(t,x)=\varepsilon \}\) fulfills

$$\begin{aligned} m(t) = \sqrt{2}\, t - \frac{3}{2\sqrt{2}} \ln t + \mathcal {O}(1) \quad \text {as } t\rightarrow \infty . \end{aligned}$$ (1.2)

Later on, Bramson [7, Theorem 3 (p. 141)] extended this result to more general initial conditions; roughly speaking, he required the initial condition \(w_0(x)\) to have a sufficiently fast exponential decay for \(x\rightarrow \infty \) and to be non-vanishing for \(x\rightarrow -\infty \). One of the main tools employed in the proof was the McKean representation of the solution to (1.1) in terms of expectations of branching Brownian motion, see [20] and [27]. Another important ingredient was the comparison of the solution of (1.1) to the solution of its linearized version

$$\begin{aligned} \partial _t u(t,x) = \frac{1}{2} \partial _x^2 u(t,x) + u(t,x), \quad (t,x)\in (0,\infty )\times \mathbb {R}. \end{aligned}$$ (1.3)

Indeed, since m(t) describes the front of the solution where w is small and hence \(1-w \approx 1,\) the heuristic is that m(t) is in some sense well-approximated by the front of the solution to (1.3). More precisely, for \(\overline{m}(t):=\sup \{x\in \mathbb {R}: u(t,x)=\varepsilon \}\) and Heaviside-type initial condition \(w_0=\mathbb {1}_{(-\infty ,0]}\), standard Gaussian computations entail

$$\begin{aligned} \overline{m}(t) = \sqrt{2}\, t - \frac{1}{2\sqrt{2}} \ln t + \mathcal {O}(1) \quad \text {as } t\rightarrow \infty . \end{aligned}$$ (1.4)

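In combination with (1.2), this results in a respective logarithmic backlog of the two fronts in the sense that

$$\begin{aligned} \overline{m}(t) - m(t) = \frac{1}{\sqrt{2}} \ln t + \mathcal {O}(1) \quad \text {as } t\rightarrow \infty . \end{aligned}$$ (1.5)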

The main goal of this article is to investigate the effect of introducing a random potential in the non-linearity of (1.1) as well as in its linearization (1.3), on the logarithmic backlog derived in (1.5) (cf. Theorem 1.5). Taking advantage of this result we will also derive functional central limit theorems for the fronts of the respective solutions to the randomized equations, see Theorem 1.4 and Corollary 1.6 below, which can be interpreted as analogues to homogeneous-case results (1.4) and (1.2).
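The "standard Gaussian computations" behind the front of the linearized equation can be checked numerically. The following is a minimal sketch, assuming the linearization reads \(\partial _t u = \frac{1}{2}\partial _x^2 u + u\) with Heaviside initial condition, so that \(u(t,x) = e^{t}\, P(N(0,t)\geqq x)\). The helper names `log_u`, `linear_front` and `residual` are hypothetical and not taken from the article. The sketch locates the level-\(\varepsilon \) front by bisection and subtracts \(\sqrt{2}\,t - \frac{1}{2\sqrt{2}}\ln t\); the remainder should stay bounded as t grows.

```python
import math

# Largest erfc argument we allow, so that math.erfc does not underflow to 0.0.
MAX_ARG = 26.0


def log_u(t, x):
    """log u(t, x) for u(t, x) = e^t * P(N(0, t) >= x) (assumed normalization)."""
    return t + math.log(0.5 * math.erfc(x / math.sqrt(2.0 * t)))


def linear_front(t, eps=0.5):
    """Bisection for the x > 0 with u(t, x) = eps; x -> u(t, x) is decreasing."""
    lo = 0.0
    hi = min(2.0 * t, MAX_ARG * math.sqrt(2.0 * t))
    target = math.log(eps)
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if log_u(t, mid) > target:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def residual(t, eps=0.5):
    """Front position minus sqrt(2) t - ln(t) / (2 sqrt(2))."""
    guess = math.sqrt(2.0) * t - math.log(t) / (2.0 * math.sqrt(2.0))
    return linear_front(t, eps) - guess


if __name__ == "__main__":
    for t in (50.0, 100.0, 200.0, 400.0):
        print(t, residual(t))
```

Because the Gaussian tail is evaluated through `math.erfc`, the sketch is only meant for times up to a few hundred; beyond that the tail underflows and a log-domain tail approximation would be needed.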

1.2 The randomized F-KPP equation and the parabolic Anderson model

Already in Fisher’s seminal paper [13], where he investigated the setting (1.1) of homogeneous branching rates, it was observed that a more realistic model would be obtained by considering spatially heterogeneous rates of the transformation of recessive to advantageous alleles. This, alongside a mathematical interest, is our guiding motivation to consider the setting of random \(\xi \). Replacing the term \(w(1-w)\) of (1.1) by a more general non-linearity F(w) fulfilling suitable standard conditions (see (SC) below), and overriding the notation u and w from the homogeneous setting of the previous section, we then arrive at the equation

$$\begin{aligned} \partial _t w(t,x)&= \frac{1}{2} \partial _x^2 w(t,x) + \xi (x)\, F(w(t,x)), \quad (t,x)\in (0,\infty )\times \mathbb {R}, \\ w(0,x)&= w_0(x), \quad x\in \mathbb {R}, \end{aligned}$$ (F-KPP)

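as well as its linearized version, the parabolic Anderson model

$$\begin{aligned} \partial _t u(t,x)&= \frac{1}{2} \partial _x^2 u(t,x) + \xi (x)\, u(t,x), \quad (t,x)\in (0,\infty )\times \mathbb {R}, \\ u(0,x)&= u_0(x), \quad x\in \mathbb {R}. \end{aligned}$$ (PAM)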

Here, the stochastic process \(\xi :\mathbb {R}\times \Omega \rightarrow (0,\infty )\) models the random medium; most of the time we will keep the dependence on \(\Omega \) implicit, and write \(\xi \) instead of \(\xi (\cdot , \omega )\) as is common in probability theory. For the case \(F(w)=w(1-w)\) and degenerate \(\xi \equiv 1\), these two equations yield the special cases (1.1) and (1.3), respectively. It has long been known, see e.g. Freidlin [14, Theorem 7.6.1], that under suitable assumptions there exists \(v_0>0\), such that the solution w(t, x) to (F-KPP) converges to 0 (resp. 1), uniformly for all \(x\geqq \overline{v}t\) with \(\overline{v}>v_0\) (resp. for all \(x\leqq \underline{v}t\) with \(\underline{v}<v_0\)), as t tends to infinity. In a similar way, this result can be shown to hold for the solution to (PAM) with the same \(v_0\) as well, showing that the speeds or velocities of both fronts, the one of the solution to (F-KPP) as well as the one of the solution to (PAM), coincide. Consequently, as in the homogeneous case, the question of second order corrections arises naturally.

1.3 Summary of results

In order to address this question and to be able to summarize our results, we introduce some notation. Let \(\varepsilon \in (0,1)\) and \(a>0\). Furthermore, write \(u=u^{\xi ,u_0}\) for the solution to (PAM) with initial condition \(u_0,\) and \(w=w^{\xi ,F,w_0}\) for the solution to (F-KPP) with initial condition \(w_0\). As in the previous section, the fronts of the respective solutions, denoted by

are of special interest. Since we will from now on focus on the heterogeneous setting, we override the notation from Sect. 1.1, where it was used to denote the fronts in the homogeneous case, and define

Our findings are motivated by the respective results of (1.4), (1.2), and (1.5) for the homogeneous case, which provide information about the position of the fronts of the solutions to the respective equations, and thus their respective backlog as well. Under suitable assumptions, our results can be summarized verbally in the following two statements:

(a) There exist a constant \(C \in (0,\infty )\) and a \(\mathbb {P}\)-a.s. finite random time \(\mathcal {T}(\omega )\) such that for all \(t \geqq \mathcal {T}(\omega ),\)

$$\begin{aligned} \overline{m}(t) - m(t) \leqq C \ln t; \end{aligned}$$ (1.8)

see Theorem 1.5 below.

(b) After centering and diffusive rescaling, the stochastic processes \([0,\infty ) \ni t \rightarrow \overline{m}(t)\) and \([0,\infty ) \ni t \rightarrow m(t)\) satisfy invariance principles; see Theorem 1.4 and Corollary 1.6 below.

As is shown in the companion article [10, Theorems 2.3 and 2.4], in a certain sense there is a logarithmic lower bound for \(\overline{m}(t) - m(t) \) corresponding to (1.8) as well; cf. also Sect. 1.7 below for further discussion.

1.4 Further notation

In order to be able to precisely formulate the previously summarized results, we have to introduce some further notation. We start with introducing the standard conditions for the non-linearity, i.e., F in (F-KPP) has to fulfill the following:

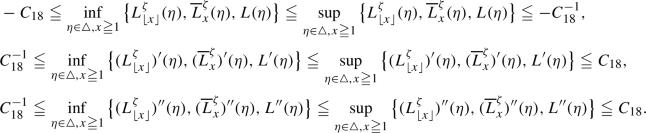

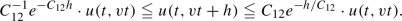

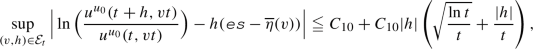

see Fig. 1 for an illustration. Note that the last condition in (SC) is essentially a \(C^{1,1}\)-condition on F at 0. Among other things, the conditions in (SC) are used in a sandwiching argument that allows us to deduce the desired result (1.8) not only for non-linearities F which have the form of a probability generating function, see (PROB) below, but also for all F fulfilling (SC). The sandwiching argument is inspired by the proof of [8, Theorem 2], where the author investigates the homogeneous case \(\xi \equiv \text {const}\).

Fig. 1 Sketches of functions fulfilling (SC), all of which are dominated by the identity function

We will now specify the classes of initial conditions under consideration for both, (F-KPP) and (PAM). For this purpose, we fix \(\delta '\in (0,1)\) and \(C'>1,\) and require an initial condition \(u_0\) of (PAM) to fulfill

Our results also hold for initial conditions that decay sufficiently fast at infinity and grow towards minus infinity with sufficiently small exponential rate; i.e., the condition (PAM-INI) can be relaxed to

where \(C \in (0,\infty )\) is a large enough constant. However, in order to avoid further technical complications we stick to the above set of initial conditions.

In addition, let us introduce a tail condition for the initial condition of (F-KPP), which is the same as the one for the case \(\xi \equiv 1\) stated in [7, (1.17)]. For this purpose, we fix \(N,N'>0\), and require \(w_0\) as in (F-KPP) to fulfill

Denote by \(\mathcal {S}^1\) the class of functions \(f:\mathbb {R}\rightarrow [0,\infty )\) which are pointwise limits of increasing sequences of continuous functions, and let

which will be the classes of initial conditions under consideration. An emblematic example which is contained in both, \(\mathcal {I}_{\text {F-KPP}}\) and \(\mathcal {I}_{\text {PAM}},\) is the function \( \mathbb {1}_{(-\infty ,0]}\) of Heaviside type.

We will assume \(\xi =(\xi (x))_{x\in \mathbb {R}}\) to be a stochastic process on a probability space \((\Omega ,\mathcal {F},\mathbb {P})\) having locally Hölder continuous paths, i.e., there exists \(\alpha =\alpha (\xi )>0\) such that for every interval \(I\subset \mathbb {R}\) there exists \(C=C(\xi ,I)>0\), such that

and such that the following conditions are fulfilled:

- \(\xi \) is uniformly bounded away from 0 and \(\infty \), i.e., there exist constants \(0<\texttt {ei}\leqq \texttt {es}<\infty \) such that \(\mathbb {P}\)-a.s.,

$$\begin{aligned} \texttt {ei}\leqq \xi (x)\leqq \texttt {es}\quad \text { for all }x\in \mathbb {R}; \end{aligned}$$ (BDD)

- \(\xi \) is stationary, i.e. for every \(h\in \mathbb {R}\) we have

$$\begin{aligned} (\xi (x))_{x\in \mathbb {R}} \overset{d}{=}\ (\xi (x+h))_{x\in \mathbb {R}}; \end{aligned}$$ (STAT)

- \(\xi \) fulfills a \(\psi \)-mixing condition: Let \(\mathcal {F}_x:=\sigma (\xi (z):z\leqq x)\) and \(\mathcal {F}^y:=\sigma (\xi (z):z\geqq y)\), \(x,y\in \mathbb {R}\) and assume that there is a continuous, non-increasing function \(\psi :[0,\infty )\rightarrow [0,\infty )\), such that for all \(j,k\in \mathbb {Z}\) with \(j\leqq k\) as well as \(X\in \mathcal {L}^1(\Omega ,\mathcal {F}_j,\mathbb {P})\) and \(Y\in \mathcal {L}^1(\Omega ,\mathcal {F}^k,\mathbb {P})\) we have

$$\begin{aligned} \big | \mathbb {E}\big [X-\mathbb {E}[X] \, |\, \mathcal {F}^{k}\big ]\big |&\leqq \mathbb {E}[|X|]\cdot \psi (k-j), \nonumber \\ \big | \mathbb {E}\big [Y-\mathbb {E}[Y] \, |\, \mathcal {F}_j\big ]\big |&\leqq \mathbb {E}[|Y|]\cdot \psi ({k-j}), \\ \sum _{k=1}^\infty&\psi (k)<\infty . \nonumber \end{aligned}$$ (MIX)

Note that (MIX) implies the ergodicity of \(\xi \) with respect to the shift operator \(\theta _y\) acting via \(\xi (\cdot )\circ \theta _y=\xi (\cdot +y)\), \(y\in \mathbb {R}\).

Summarizing, we arrive at the following standing assumptions:

We will provide here two prototypical examples for a suitable potential \(\xi \) satisfying the conditions of (Standing assumptions).

Example 1.1

(a)

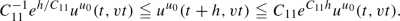

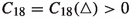

The Ornstein–Uhlenbeck process \((Y_x)\), \(x \in \mathbb {R},\) is an important stochastic process which is stationary, Markovian, and Gaussian. It can be written as

$$\begin{aligned} Y_x = e^{-x}B_{e^{2x}}, \text { for }\quad x \in \mathbb {R},\quad \text { where }\quad (B_t), t \in [0,\infty ),\quad \text {is a Brownian motion;} \end{aligned}$$ (1.9)

see Fig. 2 for a realization. For \(0<\varepsilon<M < \infty \) we now consider the potential \(\xi (x) = (\varepsilon \vee Y_x) \wedge M,\) \(x \in \mathbb {R},\) and note that it satisfies (BDD) by definition. It furthermore fulfills (STAT), since the process \((Y_x),\) \(x \in \mathbb {R}\), is stationary. We also note that for any \(\gamma \in (0,1/2)\) the local \(\gamma \)-Hölder continuity of \((Y_x)\) follows from (1.9) in combination with the respective Hölder continuity of Brownian motion (see [28, Corollary 1.20]); hence, (HÖL) holds true. Property (MIX) is somewhat harder to establish. One can for instance do so by taking advantage of the fact that the Ornstein–Uhlenbeck process can also be characterized as the solution to the stochastic differential equation

$$\begin{aligned} \textrm{d}Y_x = -Y_x\, \textrm{d}x + \sqrt{2} \, \textrm{d}B_x, \end{aligned}$$ (1.10)

and then use the fact that the Ornstein–Uhlenbeck process started from a single point converges to its stationary distribution sufficiently fast (cf. [21, Example 6.8]) as well as the Markov property of the process. The details of the proof are slightly technical and omitted at this point. See [21, 28] for further details on the Ornstein–Uhlenbeck process.

(b)

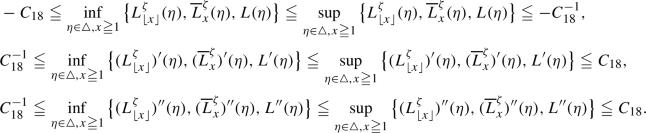

This example is instructive in the sense that not only does it fulfill these conditions, but at the same time it serves as an example in [10] to demonstrate that the transition front of the solution to (F-KPP) can grow logarithmically in time along subsequences in case \(\frac{{\texttt {es}}}{{\texttt {ei}}} > 2\); see [10] for further details. We choose \(0< {\texttt {ei}}<{\texttt {es}}< \infty \) and let \(\chi :[0,\infty ) \rightarrow [0,1]\) be a continuous non-increasing function with \(\chi (x)=1\) for \(x\leqq 1\) and \(\chi (x)=0\) for \(x\geqq 2\). Furthermore, let \(\omega = (\omega ^i)_{i\in \mathbb Z}\) be a Poisson point process on \(\mathbb R\) with homogeneous intensity one. We then define

$$\begin{aligned} \xi (x):={\texttt {ei}}+ ({\texttt {es}}-{\texttt {ei}})\cdot \sup \{ \chi (|x-\omega ^i|):i\in \mathbb Z\}. \end{aligned}$$

Observe that by construction the potential \(\xi \) satisfies (HÖL). Furthermore, \(\xi (x)\in [{\texttt {ei}},{\texttt {es}}]\) for all \(x\in \mathbb {R}\), \(\xi (x)={\texttt {ei}}\) if \(|x-\omega ^i|>2\) for all i, and \(\xi (x)={\texttt {es}}\) if there exists \(\omega ^i\) such that \(|x-\omega ^i|\leqq 1\). Also, using the properties of the Poisson point process, it is not hard to show that \(\xi \) fulfills (BDD), (STAT) and (MIX). See Fig. 3 for an illustration of this potential, which highlights the fact that the potential has logarithmically in time long stretches where it equals \({\texttt {ei}}\) that are adjacent to comparably long stretches where it equals \({\texttt {es}};\) cf. [10, Lemma 5.3] for precise statements.
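Both example potentials can be sampled on a grid. The following is a minimal sketch under stated assumptions: the Ornstein–Uhlenbeck process is advanced with its exact Gaussian transition (mean \(e^{-h}Y_x\), variance \(1-e^{-2h}\) over a step of length h, as follows from (1.10)), and the cutoff \(\chi \) is taken to be piecewise linear, which is only one admissible choice. The function names are hypothetical and not taken from the article.

```python
import math
import random


def truncated_ou_potential(xs, eps, M, rng):
    """Sample (eps v Y_x) ^ M on the increasing grid xs, Y a stationary OU process."""
    y = rng.gauss(0.0, 1.0)  # stationary law of Y is N(0, 1)
    values = [min(max(y, eps), M)]
    for x_prev, x_next in zip(xs, xs[1:]):
        rho = math.exp(-(x_next - x_prev))
        # exact transition of dY = -Y dx + sqrt(2) dB over one grid step
        y = rho * y + math.sqrt(1.0 - rho * rho) * rng.gauss(0.0, 1.0)
        values.append(min(max(y, eps), M))
    return values


def chi(r):
    """Assumed cutoff: 1 on [0, 1], linear on [1, 2], 0 on [2, infinity)."""
    return min(1.0, max(0.0, 2.0 - r))


def poisson_potential(xs, ei, es, rng):
    """Sample ei + (es - ei) * sup_i chi(|x - omega^i|) on the increasing grid xs."""
    left, right = xs[0] - 2.0, xs[-1] + 2.0  # points farther away cannot matter
    points = []
    p = left + rng.expovariate(1.0)  # rate-one Poisson points via exponential gaps
    while p <= right:
        points.append(p)
        p += rng.expovariate(1.0)
    return [
        ei + (es - ei) * max((chi(abs(x - q)) for q in points), default=0.0)
        for x in xs
    ]


if __name__ == "__main__":
    rng = random.Random(0)
    grid = [0.1 * i for i in range(50)]
    print(truncated_ou_potential(grid, 0.5, 2.0, rng)[:5])
    print(poisson_potential(grid, 1.0, 3.0, rng)[:5])
```

By construction, both samples respect the bounds in (BDD); the sketch does not attempt to verify (MIX).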

Since we allow for non-smooth initial conditions in both equations, (F-KPP) and (PAM), we briefly comment on the notion of solution in this setting. We call a function w a generalized solution to (F-KPP) if it satisfies

where \(E_x\) is the corresponding expectation of the probability measure \(P_x\), under which \((B_t)_{t\geqq 0}\) is a standard Brownian motion starting in \(x\in \mathbb {R}\). This equation can be interpreted as a mild formulation of (F-KPP) and one can show (see e.g. [14, (1.4), p. 354, and (a), p. 355]) that every classical solution to (F-KPP) also is a generalized one. Generalized solutions can be shown to exist under weak assumptions, and, vice versa, in many instances they turn out to be classical solutions indeed, see Proposition A.9 in Appendix A.

Remark 1.2

A useful observation is that for initial conditions in \(\mathcal {I}_{\text {F-KPP}}\), generalized solutions can be approximated by classical solutions. That is, if \((w_0^{(n)})_{n\in \mathbb {N}}\subset \mathcal {I}_{\text {F-KPP}}\) is a sequence of continuous functions which increase pointwise to \(w_0\), then by Corollary A.11 the corresponding sequence of (by Proposition A.9 classical) solutions \((w^{(n)})_{n\in \mathbb {N}}=(w^{w_0^{(n)}})_{n\in \mathbb {N}}\) to (F-KPP) is also monotone and thus the limit \(w(t,x):=\lim _{n\rightarrow \infty } w^{{(n)}}(t,x)\) exists for all \((t,x)\in [0,\infty )\times \mathbb {R}\). Dominated convergence and the fact that \(w^{(n)}\) also is a generalized solution then imply

I.e., w is the generalized solution to (F-KPP) with initial condition \(w_0\).

A similar concept can be introduced for the solution to (PAM) as well. That is, for \(u_0\in \mathcal {I}_{\text {PAM}}\), we call a function

a generalized solution to (PAM). Note that this is an explicit expression of the solution in terms of a Brownian path, while in (1.11) the solution is only given implicitly. Furthermore, if \(u_0\) is continuous, then by [21, Remark 4.4.4], there exists a unique solution u to (PAM) which fulfills \(u\in C^{1,2}((0,\infty )\times \mathbb {R})\) and \( u(0,\cdot )=u_0.\) As a consequence of these observations, in the following, we will always consider generalized solutions.
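The explicit Brownian representation lends itself to simulation. The following is a minimal Monte Carlo sketch, assuming that the Feynman–Kac representation takes the standard form \(u(t,x) = E_x\big [\exp \big (\int _0^t \xi (B_s)\,\textrm{d}s\big )\, u_0(B_t)\big ]\). The function name and the left-endpoint Riemann sum for the time integral are illustrative choices, not the article's construction.

```python
import math
import random


def feynman_kac_mc(xi, u0, t, x, n_paths=20000, n_steps=20, seed=0):
    """Monte Carlo estimate of E_x[exp(int_0^t xi(B_s) ds) * u0(B_t)]."""
    rng = random.Random(seed)
    dt = t / n_steps
    sd = math.sqrt(dt)
    total = 0.0
    for _ in range(n_paths):
        b = x
        integral = 0.0
        for _ in range(n_steps):
            integral += xi(b) * dt          # left-endpoint Riemann sum
            b += sd * rng.gauss(0.0, 1.0)   # exact Brownian increment
        total += math.exp(integral) * u0(b)
    return total / n_paths


if __name__ == "__main__":
    heaviside = lambda y: 1.0 if y <= 0.0 else 0.0
    # Sanity check with xi == 1, where u(t, x) = e^t * P(N(x, t) <= 0) is explicit.
    estimate = feynman_kac_mc(lambda y: 1.0, heaviside, t=1.0, x=0.5)
    exact = math.e * 0.5 * math.erfc(0.5 / math.sqrt(2.0))
    print(estimate, exact)
```

For a constant potential the exponential weight is deterministic, so the only error is the sampling error of the indicator; for a genuinely random \(\xi \) the time discretization adds a bias that shrinks with `n_steps`.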

1.5 The linearized equation

As already mentioned in the first section, we expect that investigating the solution to (PAM) might also provide some insight into the solution to (F-KPP). Therefore, starting with the first order of the front as a function of time, it turns out to be useful to consider the so-called Lyapunov exponent

$$\begin{aligned} \Lambda (v):=\lim _{t\rightarrow \infty } \frac{1}{t} \ln u(t,vt), \quad v\in \mathbb {R}. \end{aligned}$$

Due to Proposition A.3, the Lyapunov exponent exists \(\mathbb {P}\)-a.s. for all \(v\in \mathbb {R}\), is non-random, and, as a consequence of Corollary 3.10, does not depend on the initial condition in \(\mathcal {I}_{\text {PAM}}\) under consideration. Furthermore, the function \([0,\infty )\ni v\mapsto \Lambda (v)\) is concave, tends to \(-\infty \) as \(v\rightarrow \infty \) and \(\Lambda (0)=\texttt {es}\), where \(\texttt {es}\) is defined in (BDD). \(\Lambda (v)\) describes the asymptotic exponential growth of the solution in the linear regime with speed v. By Proposition A.3, there exists a unique \(v_0>0\) such that

$$\begin{aligned} \Lambda (v_0)=0, \end{aligned}$$

which we will call velocity or speed of the solution to (PAM). Using the properties of the Lyapunov exponent, we immediately infer that the first order asymptotics of \(\overline{m}\) satisfy, \(\mathbb {P}\text {-a.s.},\)

$$\begin{aligned} \lim _{t\rightarrow \infty } \frac{\overline{m}(t)}{t} = v_0. \end{aligned}$$ (1.14)

It will turn out that our methods work if we require \(v_0\) to be strictly larger than some “critical” value \(v_c\), defined in Lemma 2.4 (d). Roughly speaking, the condition \(v>v_c\) allows for the application of change-of-measure techniques; more precisely, it allows us to find a suitable additive tilting parameter in the exponent of the Feynman–Kac representation (1.12), which depends on v and makes the solution u(t, x) to (PAM) amenable to the investigation by standard tools for values \(x\approx vt\) and large t. Hence, we will work under the assumption

$$\begin{aligned} v_0>v_c \end{aligned}$$ (VEL)

from now on. As will be shown in Sect. 4.4, this assumption is fulfilled for a rich class of potentials \(\xi .\)
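In the degenerate case of a constant potential \(\xi \equiv c\), the Lyapunov exponent and the velocity can be written down explicitly, which gives a quick sanity check of the definitions. The following is a minimal sketch, assuming \(\Lambda (v)=\lim _{t\rightarrow \infty } t^{-1}\ln u(t,vt)\) and Heaviside initial condition, so that \(u(t,vt)=e^{ct}P(N(0,t)\geqq vt)\), \(\Lambda (v)=c-v^2/2\) for \(v\geqq 0\), and \(v_0=\sqrt{2c}\). All function names are hypothetical.

```python
import math


def lyapunov_constant(v, c=1.0):
    """Closed-form Lyapunov exponent c - v^2 / 2 for xi == c and v >= 0."""
    return c - 0.5 * v * v


def finite_time_exponent(t, v, c=1.0):
    """(1/t) ln u(t, vt) for xi == c, using u(t, vt) = e^{ct} P(N(0, t) >= vt)."""
    return c + math.log(0.5 * math.erfc(v * math.sqrt(t / 2.0))) / t


def speed(lyapunov, lo=0.0, hi=10.0, iters=100):
    """Bisection for the zero of a decreasing function with lyapunov(lo) > 0 > lyapunov(hi)."""
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if lyapunov(mid) > 0.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


if __name__ == "__main__":
    print(speed(lambda v: lyapunov_constant(v, 1.0)), math.sqrt(2.0))
    print(finite_time_exponent(200.0, 1.0), lyapunov_constant(1.0))
```

The finite-time quantity approaches \(\Lambda (v)\) only at rate \(\ln t / t\), which is the same logarithmic correction that appears in the position of the front.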

We start with investigating the fluctuations of the function \(t \mapsto \ln u(t,vt)\) around \(t\Lambda (v)\) for values v in a neighborhood of \(v_0\), which are interesting in their own right. To this end, on the space \(C([0,\infty ))\) of continuous functions from \([0,\infty )\) to \(\mathbb {R},\) we define the metric

where we write \(\Vert f-g\Vert _j:=\sup _{x\in [0,j]}|f(x)-g(x)|.\) This makes \((C([0,\infty )),\rho )\) a complete separable metric space.

Theorem 1.3

Let (VEL) be fulfilled, \(u_0\in \mathcal {I}_{\text {PAM }},\) and \(u=u^{\xi ,u_0}\) be the corresponding solution to (PAM). Furthermore, let \(V\subset (v_c,\infty )\) be a compact interval such that \(v_0\in \text {int }(V)\). Then for each \(v\in V\), as \(n\rightarrow \infty \) the sequence of random variables \((nv)^{-1/2}\big ( \ln u(n,vn)-n\Lambda (v) \big ),\) \(n \in \mathbb {N},\) converges in \(\mathbb {P}\)-distribution to a centered Gaussian random variable with variance \(\sigma _v^2\in [0, \infty )\), where \(\sigma _v^2\) is defined in (3.1). If \(\sigma _v^2>0\), the sequence of processes

converges as \(n\rightarrow \infty \) in \(\mathbb {P}\)-distribution to a standard Brownian motion in the sense of weak convergence of measures on \(C([0,\infty ))\) endowed with the metric \(\rho \) from (1.15).

Note that in the above one should replace \([0,\infty )\) by \((0,\infty )\) for \(u_0\) such that \([0,\infty ) \ni t \mapsto u(t,vt)\) is not continuous in 0 (the latter might e.g. be the case for \(u_0\) of Heaviside type). In combination with perturbation estimates for u, we will use this result in order to infer an invariance principle for the front of the solution to (PAM). Note that since the function \(t\mapsto \overline{m}_t\) may be discontinuous, we consider convergence in the Skorohod space \(D([0,\infty ))\) in the following result; details on the latter can for example be found in [11].

Theorem 1.4

Let (VEL) be fulfilled, \(u_0\in \mathcal {I}_{\text {PAM }}\) and \(a>0\). Then for \(n \rightarrow \infty ,\) the sequence \(n^{-1/2}\big ( \overline{m}^{\xi ,u_0,a}(n)-v_0n \big ),\) \(n \in \mathbb {N},\) converges in \(\mathbb {P}\)-distribution to a centered Gaussian random variable with variance \(\widetilde{\sigma }_{v_0}^2\in [0,\infty )\), where \(\widetilde{\sigma }_{v_0}^2\) is defined in (3.58). If \(\widetilde{\sigma }_{v_0}^2>0\), the sequence of processes

converges as \(n\rightarrow \infty \) in \(\mathbb {P}\)-distribution to a standard Brownian motion in the Skorohod space \(D([0,\infty ))\).

We underline that in the above theorems the case \(\sigma _v^2=0\) (resp. \(\widetilde{\sigma }_{v_0}^2=0\)) is allowed and leads to a degenerate limit of the corresponding sequences. This can be excluded, e.g. if the finite-dimensional projections of the stochastic process \(\xi =(\xi (x))_{x\in \mathbb {R}}\) are associated (see e.g. [32] or [31] for definitions and results). In this case the covariances in (3.1) are nonnegative and \(\sigma _v^2>0\) follows. Nolen [29, Proposition 2.1] provides an example of a potential, which is generated by an i.i.d. sequence of random variables and thus associated.

1.6 The non-linear equation

Coming back to the original equation of interest, it is natural to ask whether we can obtain results for (F-KPP) which are in some sense counterparts to those derived in the preceding section for (PAM). For the solution to (F-KPP) it is known (see e.g. [14, §7.6]) that the first order of the position of the front is linear as well and moves with the same velocity as the front of (PAM). Indeed, by Freidlin [14, Theorem 7.6.1] we have that \(\mathbb {P}\text {-a.s.},\)

As announced in Sect. 1.3, the next result, which is one of the main results of the paper, states that the fronts of (F-KPP) and (PAM) are at most logarithmically far apart.

Theorem 1.5

Let (VEL) be fulfilled. Then for each F fulfilling (SC) there exists a constant  such that the following holds: For all \(a>0\), \(\varepsilon \in (0,1)\), \(u_0\in \mathcal {I}_{\text {PAM }}\) and \(w_0\in \mathcal {I}_{\text {F-KPP }},\) there exists a non-random \(C=C(\varepsilon ,a,u_0,w_0)>0\) and a \(\mathbb {P}\text {-a.s.}\) finite random time \(\mathcal {T}=\mathcal {T}(\xi ,\varepsilon ,a,u_0,w_0)\geqq 0\), such that

Moreover, for \(w_0=u_0\) and \(a\leqq \varepsilon \), the left inequality in (1.16) can be replaced by \(0 \leqq \overline{m}^{\xi ,u_0,a}(t) - m^{\xi ,F,w_0,\varepsilon }(t) \) for all \(t\geqq 0\).
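The ordering of the two fronts for \(w_0=u_0\) and \(a\leqq \varepsilon \) can be observed in a crude numerical experiment. The following is a minimal sketch, assuming the equations read \(\partial _t w = \frac{1}{2}\partial _x^2 w + \xi (x)\,w(1-w)\) and \(\partial _t u = \frac{1}{2}\partial _x^2 u + \xi (x)\,u\). It uses an explicit finite-difference scheme with reflecting boundaries and a toy potential (i.i.d. uniform values at integer sites, linearly interpolated) that merely stands in for a potential satisfying the standing assumptions. Fronts are read off as the largest grid point where the solution is at least the given level. All names and parameters are hypothetical.

```python
import math
import random


def step(f, xi, lam, dt, linear):
    """One explicit Euler step for f_t = f_xx / 2 + xi * f (linear) or xi * f * (1 - f)."""
    n = len(f)
    new = [0.0] * n
    for i in range(n):
        left = f[i - 1] if i > 0 else f[i]        # reflecting boundary
        right = f[i + 1] if i < n - 1 else f[i]   # reflecting boundary
        growth = f[i] if linear else f[i] * (1.0 - f[i])
        new[i] = f[i] + lam * (left - 2.0 * f[i] + right) + dt * xi[i] * growth
    return new


def front(xs, f, level):
    """Largest grid point at which f is at least `level`."""
    return max(x for x, v in zip(xs, f) if v >= level)


def simulate_fronts(t_end=8.0, dx=0.2, dt=0.02, x_min=-10.0, x_max=40.0,
                    ei=1.0, es=2.0, a=0.5, eps=0.5, seed=1):
    rng = random.Random(seed)
    n = int(round((x_max - x_min) / dx)) + 1
    xs = [x_min + i * dx for i in range(n)]
    # Toy potential: i.i.d. uniform values at integer sites, linearly interpolated.
    k0 = math.floor(x_min) - 1
    sites = [rng.uniform(ei, es) for _ in range(math.floor(x_max) - k0 + 2)]

    def xi_at(x):
        k = math.floor(x)
        frac = x - k
        return (1.0 - frac) * sites[k - k0] + frac * sites[k - k0 + 1]

    xi = [xi_at(x) for x in xs]
    u = [1.0 if x <= 0.0 else 0.0 for x in xs]   # Heaviside initial condition
    w = list(u)                                   # same initial condition
    lam = dt / (2.0 * dx * dx)
    assert lam <= 0.5, "explicit scheme needs dt <= dx^2"
    for _ in range(int(round(t_end / dt))):
        u = step(u, xi, lam, dt, linear=True)
        w = step(w, xi, lam, dt, linear=False)
    return front(xs, u, a), front(xs, w, eps)


if __name__ == "__main__":
    m_bar, m = simulate_fronts()
    print("front of PAM:", m_bar, "front of F-KPP:", m, "gap:", m_bar - m)
```

For this step-size choice the scheme is monotone, so the discrete solutions inherit the comparison \(u\geqq w\) and the gap is non-negative on the grid. The time horizon is far too short to say anything about the logarithmic growth of the gap.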

Furthermore, combining Theorems 1.4 and 1.5, we can deduce an invariance principle for the front of (F-KPP) as well.

Corollary 1.6

Let (VEL) be fulfilled, let F fulfill (SC), and let \(w_0\in \mathcal {I}_{\text {F-KPP}}\) and \(\varepsilon \in (0,1)\). Then as \(n \rightarrow \infty ,\) the sequence \(n^{-1/2}\big ( m^{\xi ,F,w_0,\varepsilon }(n)-v_0n \big ),\) \(n \in \mathbb {N},\) converges in \(\mathbb {P}\)-distribution to a centered Gaussian random variable with variance \(\widetilde{\sigma }_{v_0}^2\in [0,\infty )\), where \(\widetilde{\sigma }_{v_0}^2\) is defined in (3.58). If \(\widetilde{\sigma }_{v_0}^2>0\), the sequence of processes

converges as \(n\rightarrow \infty \) in \(\mathbb {P}\)-distribution to a standard Brownian motion in the Skorohod space \(D([0,\infty ))\).

1.7 Discussion and previous results

As already alluded to above, in the homogeneous case (1.1) of constant potential the front is well understood by now. This is indeed the case to a much wider extent than illustrated in the Introduction; see e.g. [6] and references therein for further details. The heterogeneous setting (F-KPP) of random potential and the properties of its solution have also been investigated. Specifically, under fairly general assumptions, the existence and characterization of the propagation speed (i.e., the linear order \(\lim _{t \rightarrow \infty } m(t)/t\) of the position of the front) have been derived by Freidlin and Gärtner, see e.g. [15] as well as [14, Chapter VII], using large deviation principles. Incidentally, the Feynman–Kac formula (see Proposition 2.3 below), which characterizes the solution to the linearization (PAM), has also played an important role in the derivation.

Second order corrections for the position have been investigated by Nolen [29]. Also making the detour along (PAM), he examines (F-KPP) for a potential \(\xi \) under similar assumptions, but requires the (random) initial conditions to satisfy

Here, \(\mathcal {C}_1(\xi )\) and \(\mathcal {C}_2(\xi )\) are positive random variables, and \(g=g^{\xi ,\gamma }\) is a solution to

for \(\gamma >\overline{\gamma }\), for a certain \(\overline{\gamma }>0.\) For technical reasons, he additionally requires \(\gamma <\gamma ^*\), i.e., the initial condition \(w_0(x)\) must not decay too fast as x tends to infinity. The technical assumption (1.17) thus entails being in the supercritical regime, which corresponds to waves that move faster than the minimal speed. As his main result, Nolen obtains a (functional) central limit theorem for m(t) in this case as well, see [29, Theorem 1.4]. In this terminology, our set of initial conditions corresponds to the critical regime, and Corollary 1.6 (for the critical regime) also suggests that the randomness in Nolen’s central limit theorem is already coming from the environment, and does not necessarily require the randomness of the initial condition.

Furthermore, in [30] a corresponding invariance principle for the front has been derived in the case where the non-linearity in (F-KPP) is either ignition type or bistable.

In [18], on the other hand, for the case of periodic instead of random \(\xi ,\) the authors have investigated the respective logarithmic correction, corresponding to (1.2) in the homogeneous setting; here, the authors have been able to characterize the constant in front of the logarithmic correction as a certain minimizer.

In our main Theorem 1.5, we establish a corresponding logarithmic upper bound for the difference (1.5), also in the setting of a random environment. However, our methods are currently too coarse to identify a sharp prefactor. Nevertheless, it is a natural question whether the logarithmic upper bound we derive at least captures the correct order. Indeed, in some sense, a partial positive answer to this question is provided in the companion article [10]. There, the authors show that there exist an increasing sequence \((t_n)\) of times with \(t_n \in (0,\infty )\) and \(\lim _{n \rightarrow \infty } t_n = \infty \), as well as a sequence \((x_n)\) of reals with \(\overline{m}^{\frac{1}{2}}(t_n) - x_n \geqq c \ln t_n\), such that for all \(n \in \mathbb {N}\) one has

see the discussion around [10, (2.11)] for further details.

As explained above, there is a profound connection between the PDEs we consider and branching Brownian motion, which is more involved than in the setting of constant \(\xi .\) Indeed, related results for branching random walk in random environment (BRWRE) have been recently derived in [9]. In this source the authors analyze the distribution of the maximal particle of a branching random walk in random environment, which itself is closely related to a discrete-space version of (F-KPP). In particular, a corresponding logarithmic upper bound on the distance of the expected position of the maximal particle and the median of the distribution of the maximal particle is given. Furthermore, an invariance principle for the median of the position of the maximal particle of BRWRE is derived. It is therefore no surprise that, on the one hand, principal techniques we employ in this paper are generalizations and adaptations of respective discrete space analogues from [9]. On the other hand, we work under weaker independence assumptions and softer requirements on the non-linearity which we only require to be contained in (SC).

Recall that directly before Theorem 1.3 we have introduced our assumption (VEL), which reads \(v_0 >v_c;\) from a technical point of view, this will be necessary for our change of measure argument to work. It is not hard to show that for a rich class of potentials, this condition is satisfied indeed, cf. Sect. 4.4. What is more, however, in Proposition 4.10 below we also obtain a more profound understanding of the scope of the methods employed. I.e., there exist potentials \(\xi \) fulfilling (Standing assumptions), but such that \(v_0<v_c\) holds true. Therefore, it remains an open and interesting question to understand the behavior of the solutions to the above partial differential equations in case condition (VEL) is not fulfilled.

1.8 Strategy of the proof

Our proofs will be essentially based on techniques from probability theory. One of the principal tools for our investigations is given by the probabilistic representation of solutions to the partial differential equations (PAM) and (F-KPP) through branching Brownian motion in the random (branching) environment \(\xi ;\) the details will be discussed in Sect. 2.1. Further key roles in the proofs of Theorems 1.3 and 1.4 are played by the tilting of probability measures, concentration estimates, as well as perturbation estimates for the solution to (PAM). In the proof of Theorem 1.5 in particular, a modified second moment method will be fundamental. We now provide some further details on the proofs of the main results.

1.8.1 Proof of Theorem 1.3

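Considering the initial condition \(u_0 = \mathbb {1}_{(-\infty ,0]}\) for the sake of exposition, the Feynman–Kac formula (1.12) supplies us with

$$\begin{aligned} u(t,vt) = E_{vt}\Big [ \exp \Big \{ \int _0^t \xi (B_s)\, \textrm{d}s \Big \} ;\, B_t\leqq 0 \Big ], \end{aligned}$$ (1.19)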

where \(v>0\) stands for velocity. While in probabilistic terms, this expression can be interpreted as the expected number of particles of a branching Brownian motion—starting in vt and with branching rate \(\xi \)—to the left of the origin at time t, we will, after normalization, rather interpret it as a Brownian motion in the random potential \(\xi .\) In this vein, if \(v > v_c,\) then the expectation in (1.19) can be investigated by a random change of measure which depends on the potential \(\xi \), cf. Sect. 2.2, in particular Lemma 2.4. Under this perturbed probability measure, the typical behavior of the Brownian motion in the random potential \(\xi \) (which then has a drift towards the left) started in \(vt\) is to arrive in 0 approximately at time t. Since furthermore the first hitting time of the origin by this Brownian motion is in fact concentrated around t (the respective results can be found in Sect. 3.2), one can take advantage of a resulting renewal structure via independent—but not identically distributed—random variables, which is formulated in terms of empirical Legendre transforms. For a suitably centered and rescaled version of these, one can then show a functional central limit theorem by the use of martingale methods, see Proposition 3.1. Again using Proposition 3.5, one can then infer the desired functional central limit theorem stated in Theorem 1.3; the details are implemented in Sect. 3.3.

1.8.2 Proof of Theorem 1.4

The proof of this result boils down to understanding the behavior of the position of the front \(\overline{m}(t)\) of the solution to (PAM) in dependence on the time t. As already observed in (1.14), to first order it is not hard to observe that the position of the front of the wave is given by \(v_0t.\) Hence, the challenge is to establish the normally distributed second order correction (in functional form). For the purpose of the proof, we can take advantage of the understanding obtained along the proof of Theorem 1.3. More precisely, using perturbation estimates for the solution to (PAM) in time and space which are derived in Sects. 3.4 and 3.5, the functional central limit theorem for the suitably centered and rescaled version of the sequence \([0,\infty ) \ni t \mapsto \ln u(nt,vnt),\) \(n \in \mathbb {N},\) obtained in Theorem 1.3 can be transferred to the functional central limit theorem for the suitably centered and rescaled version of \([0,\infty ) \ni t \mapsto \overline{m}(nt),\) \(n \in \mathbb {N},\) which is the content of Theorem 1.4; see Sect. 3.7 for further details.
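To make the first order statement concrete in the simplest possible situation, the following Python sketch treats the homogeneous toy case \(\xi \equiv c\). This is an assumption made purely for illustration and is not the random setting of this article. In that case the Feynman–Kac formula with \(u_0=\mathbb {1}_{(-\infty ,0]}\) reduces to \(u(t,x)=e^{ct}\,\Phi (-x/\sqrt{t})\), with \(\Phi \) the standard normal distribution function, and the position at which u equals a fixed level can be found by bisection. The function names `log_u` and `front` and the level 1/2 are hypothetical choices.

```python
import math

def log_u(t, x, c=1.0):
    """log of u(t, x) = exp(c t) * P_x(B_t <= 0) for the constant potential xi = c."""
    # P_x(B_t <= 0) = Phi(-x / sqrt(t)) = 0.5 * erfc(x / sqrt(2 t))
    return c * t + math.log(0.5 * math.erfc(x / math.sqrt(2.0 * t)))

def front(t, c=1.0, level=0.5):
    """Position x >= 0 with u(t, x) = level, by bisection (u is decreasing in x)."""
    lo, hi = 0.0, 1.5 * math.sqrt(2.0 * c) * t
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if log_u(t, mid, c) > math.log(level):
            lo = mid   # u is still above the level, so the front lies further right
        else:
            hi = mid
    return 0.5 * (lo + hi)

t = 200.0
ratio = front(t) / t   # should be close to sqrt(2 c) for large t
```

In this toy case the ratio approaches \(\sqrt{2c}\) from below, the gap being of order \(\ln (t)/t\); the random setting replaces this deterministic picture by the fluctuations described in Theorem 1.4.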

1.8.3 Proof of Theorem 1.5

A key difficulty in the proofs stems from the fact that the accuracy up to which we want to understand the behavior of the solutions is logarithmic in time, and hence much smaller than the typical fluctuations of the fronts of the solutions to (PAM) and (F-KPP).

In probabilistic terms, the solution w(t, x) of (F-KPP) with initial condition \(w(0,\cdot ) = \mathbb {1}_{(-\infty ,0]}\) corresponds to the probability that the leftmost particle of the branching Brownian motion in the random environment \(\xi \) is to the left of the origin at time t. Consequently, proving the statement of Theorem 1.5 amounts to showing that if one starts at most logarithmically to the left of \(\overline{m}(t),\) the probability of actually seeing a particle at or to the left of the origin at time t is bounded from below by \(\varepsilon .\) In order to do so, we take advantage of a second moment method, see (4.3), to obtain a lower bound on the probability to observe a particle in the branching Brownian motion in the random environment \(\xi \) to the left of the origin at time t. As in the homogeneous setting, such an approach is bound to fail when considering all particles in the process; indeed, in this case the second moments would be prohibitively large in comparison to the square of the first moment. Hence, one has to restrict to so-called “leading particles” known from branching Brownian motion in homogeneous branching potential. This notion has to be adapted here in a careful manner so as to cater for the necessities of our random branching potential \(\xi .\) As in the homogeneous case, the remaining steps then boil down to finding good upper bounds for the second moment of leading particles (see Lemma 4.4 in Sect. 4.2) as well as a good lower bound on the first moment of leading particles, cf. Lemma 4.1 in Sect. 4.1; the latter is the harder part in our context. These findings then constitute the main steps in proving Theorem 1.5, the implementation of which is found in Sect. 4.3.

1.9 Notational conventions

We will frequently use sums of real-indexed quantities \(A_x\), \(x\in \mathbb {R}\). In this case, we write

where \(\sum _{i=1}^0:=0\). This notion remains consistent if we also allow for additive constants \(b\in \mathbb {R},\) i.e.

Finally, we set

A prime example is the quantity \(A_{x}= \ln E_x\big [ e^{\int _0^{H_{\lceil x\rceil -1}} (\xi (B_s)-\texttt {es}) \textrm{d}s} \big ]\), where \(H_y:=\inf \{t\geqq 0:B_t=y\}\). Indeed, by the strong Markov property we have \(\ln E_x\big [ e^{\int _0^{H_{0}} (\xi (B_s)-\texttt {es}) \textrm{d}s} \big ]=\sum _{i=1}^{\lfloor x\rfloor }A_i + A_x\) for all \(x\in [0,\infty )\setminus \mathbb {N}_0\).

Furthermore, we will often use positive finite constants \(c_1,c_2,\ldots \) in the proofs. This numbering is consistent within any of the proofs, and it is reset after each proof. On the other hand, \(C_1,C_2,\ldots \) will be used to denote positive finite constants that are fixed throughout the article, and they will oftentimes depend on each other. Other constants like \(c,C, \varepsilon ,\delta \) etc. in the proofs are used to compare certain quantities and are also reset after each proof.

The structure of this article is as follows. In Sect. 2 we will introduce various tools which play a seminal role in our investigations. In particular, these comprise the connections between the partial differential equations under consideration and branching processes, change of measure techniques, as well as concentration inequalities. Taking advantage of these results and further exact large deviation estimates, Sect. 3 first leads to a proof of Theorem 1.3. After developing perturbation results in space and time for the solution to (PAM), Theorem 1.3 will play a crucial role for the proof of Theorem 1.4 as well. The main goal of Sect. 4 then is to show Theorem 1.5, i.e., that the front of the solution to the non-linear equation (F-KPP) lags at most logarithmically in time behind the front of the solution to the linear equation (PAM).

2 Some Technical Tools

In this section we will introduce some further tools that will be helpful in the proof of the main results.

2.1 Connection to branching processes

We define a branching Brownian motion in random environment (BBMRE) as follows: Conditionally on the realization of \(\xi \) and for fixed \(x\in \mathbb {R}\), consider an initial particle starting at a point x and moving as a standard Brownian motion \((B_t)_{t\geqq 0}\) on \(\mathbb {R}\). While at site y, the particle dies at rate \(\xi (y)\). More precisely, for an exponentially distributed random variable S with parameter one, independent of everything else, the first particle dies at time \(\inf \big \{t\geqq 0: S<\int _0^t\xi (B_s)\textrm{d}s \big \}\). When the initial particle dies, it gives birth to k new particles with probability \(p_k\), \(k\in \mathbb {N}\) [see (PROB) below for the precise assumptions on the \(p_k\)]. The new particle(s) start their evolution at the site where their parent particle had died, and they evolve independently of everything else and according to the same stochastic behavior as their parent. Note that on the one hand we assume \(p_0=0\), so genealogies do not die out at any finite time. On the other hand, we allow \(p_1>0,\) i.e., it is possible to die and give birth to one descendant. This implies that a particle at site y branches into more than one particle with rate \(\xi (y)(1-p_1)\). We denote the corresponding probability measure by \(\texttt {P}_x^\xi \) and write \(\texttt {E}_{x}^\xi \) for the respective expectation. By N(t) we denote the set of particles alive at time t. For \(\nu \in N(t)\) we let \((X_s^\nu )_{s\in [0,t]}\) be the spatial trajectory of the genealogy of ancestral particles of \(\nu \) up to time t. For a Borel set \(A\subset \mathbb {R}\) and \(y \in \mathbb {R}\) we define
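The dynamics just described can be sketched numerically. The following Python snippet is a minimal time-discretized approximation, not an exact simulation: in each step of length `dt` a particle performs a Gaussian increment and dies with probability \(1-e^{-\xi (y)\,\texttt{dt}}\), in which case it is replaced by k offspring with probability \(p_k\). The function name `simulate_bbmre`, the sample environment and the sample offspring law are hypothetical choices made for illustration.

```python
import math
import random

def simulate_bbmre(xi, p, x0=0.0, t_max=1.0, dt=1e-3, seed=0):
    """Euler-type sketch of branching Brownian motion with local rate xi.

    xi : function R -> [0, inf), a fixed realization of the environment
    p  : dict {k: p_k} with k >= 1 (so that p_0 = 0)
    Returns the list of particle positions at time t_max.
    """
    rng = random.Random(seed)
    ks, weights = zip(*sorted(p.items()))
    particles = [x0]
    for _ in range(int(round(t_max / dt))):
        nxt = []
        for y in particles:
            # Brownian increment over a time step of length dt
            y_new = y + rng.gauss(0.0, math.sqrt(dt))
            # the particle dies in this step with probability 1 - exp(-xi(y) dt)
            if rng.random() < 1.0 - math.exp(-xi(y) * dt):
                k = rng.choices(ks, weights=weights)[0]
                # offspring start (approximately) where the parent died
                nxt.extend([y_new] * k)
            else:
                nxt.append(y_new)
        particles = nxt
    return particles

# sample run: bounded, strictly positive environment and mean offspring number 2
positions = simulate_bbmre(xi=lambda y: 1.0 + 0.5 * math.sin(y),
                           p={1: 0.25, 2: 0.5, 3: 0.25})
```

Since \(p_0=0\), the number of particles never decreases along a run, in line with the remark that genealogies do not die out.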

i.e., the set of particles which are in A at time t, and the number of particles to the left of y at time t.

Let us now introduce a set of functions, formulated in terms of the probability generating function of the sequence \((p_k)_{k\in \mathbb {N}}\) as above. More precisely, assume \((p_k)_{k\in \mathbb {N}}\) and \(F=F^{(p_k)_{k\in \mathbb {N}}}\) to fulfill

Note that such F always fulfills (SC) and is smooth and strictly concave on (0, 1). Note also that the assumption \(\sum _{k=1}^\infty kp_k = 2\) means that a dying particle is replaced by two particles on average, so that the expected number of particles grows at local rate \(\xi \). A fundamental result is the connection between the solution to (F-KPP) and the expected number of particles of the branching process. This is sometimes referred to as the McKean representation. The respective result in the homogeneous setting can be found in [20] as well as [27].

Proposition 2.1

Let \(w_0\in \mathcal {I}_{\text {F-KPP}}\), \(\xi \) satisfy (HÖL), and F fulfill (PROB). Then \(\mathbb {P}\)-a.s. the solution to (F-KPP) is given by

For the proof see Sect. A.5.

Remark 2.2

We will frequently apply the latter result to the function \(w_0=\mathbb {1}_{(-\infty ,0]}\), resulting in

Furthermore, we will need the so-called many-to-few lemma, which breaks down the moments of the branching process to a functional of the Brownian paths. For our purposes, it suffices to state it up to second moments (many-to-one and many-to-two formula).

Proposition 2.3

Let \((p_k)_{k\in \mathbb {N}}\) fulfill (PROB) and let \(\varphi _1\), \(\varphi _2: \, [0,\infty ) \rightarrow [-\infty ,\infty ]\) be càdlàg functions satisfying \(\varphi _1\leqq \varphi _2\). Then the first and second moments of the number of particles in N(t) whose genealogy stays between \(\varphi _1\) and \(\varphi _2\) in the time interval [0, t] are given by

and

respectively.

The proof of the first identity follows from [19, Section 4.1]. The second identity can be shown by using [19, Lemma 1], conditioning on the first splitting of the so-called “spines”, similarly to the proof of [16, (2.6)] for \(n=2\) there. Indeed, one has to consider branching Brownian motion instead of branching random walk and replace binary branching by general branching. Then the expectation of the quantity in display [16, (2.15)] turns into the second summand in (FK-2), because the first splitting rate of the two “spine particles” at site y is \((m_2-2)\xi (y)\).

As a consequence of Proposition 2.3, the solution to (PAM) can be expressed by a functional of the branching process, i.e. we have that the solution to (PAM) is given by

As a special case for \(u_0=\mathbb {1}_{(-\infty ,0]}\), this turns into

2.2 Change of measure

As a consequence of, among others, the previous display, it will be important to obtain a profound understanding of Brownian motion in the potential \(\xi .\) For this purpose, we introduce a change of measure which makes it typical for the Brownian motion in the Feynman–Kac formula started at tv, some \(v \ne 0,\) to be close to the origin at time t. This perturbed Brownian motion in the random potential \(\xi \) will then be amenable to an investigation through a certain regeneration structure, as we will show in Sect. 3. More precisely, let \((\xi (x))_{x\in \mathbb {R}}\) be as in (BDD) and define the shifted potential

Then \(\mathbb {P}\)-a.s.,

We will oftentimes write

for the first hitting times and their pairwise differences. The above shift of \(\xi \) gives rise to a change of measure which will play a crucial role in the following. For \(x,y \in \mathbb {R}\) as well as \(\eta \leqq 0\) define the probability measures \(P_{x,y}^{\zeta ,\eta }\) via

with normalizing constant

where \(P_x\) and \(E_x\), \(x\in \mathbb {R}\), are defined below (1.12). For \(A\in \sigma \big (B_{t\wedge H_{x-y}}:t\geqq 0\big )\), using the strong Markov property at time \(H_{x-y}\), we infer that \(P_{x,y}^{\zeta ,\eta }(A)=P_{x,y'}^{\zeta ,\eta }(A)\) for all \(y'\geqq y\). Thus, by the classical Kolmogorov’s extension theorem (see e.g. [36, Theorem 2.4.3]),

We write \(E_x^{\zeta ,\eta }\) for the corresponding expectation and introduce the logarithmic moment generating functions

where we recall the notation introduced in Sect. 1.9, and where the last equality is due to the strong Markov property. In addition, set

Due to (2.3), for any \(\eta \leqq 0\) the quantities above are well-defined, and it is easy to check that in this case and under (BDD), the expressions defined in (2.7)–(2.8) are finite. We have the following useful properties.

Lemma 2.4

(a) The function \((-\infty ,0)\ni \eta \mapsto L(\eta )\) is infinitely differentiable and its derivative \(L'(\eta )\) is positive and monotonically strictly increasing.

(b) We have \(\mathbb {P}\text {-a.s.}\) that

$$\begin{aligned} \lim _{x\rightarrow \infty } \overline{L}_{x}^\zeta (\eta ) = L(\eta )\quad \text {for all }\eta \leqq 0. \end{aligned}$$(2.9)

(c) \(L'(\eta )\downarrow 0\) as \(\eta \downarrow -\infty .\)

(d) For every \(v>v_c:=\frac{1}{L'(0-)},\) where \(\frac{1}{+\infty }:=0\) and where we call \(v_c\) critical velocity, there exists a

$$\begin{aligned} \text { unique solution}\quad \overline{\eta }(v)<0\quad \text {to the equation } L'( \overline{\eta }(v) ) = \frac{1}{v}. \end{aligned}$$(2.10)

\(\overline{\eta }(v)\) can be characterized as the unique maximizer to \((-\infty ,0]\ni \eta \mapsto \frac{\eta }{v} - L(\eta ) \), i.e.

$$\begin{aligned} \sup _{\eta \leqq 0} \Big ( \frac{\eta }{v} - L(\eta ) \Big )= \frac{\overline{\eta }(v)}{v} - L\big ( \overline{\eta }(v) \big ). \end{aligned}$$(2.11)

The function \((v_c,\infty )\ni v\mapsto \overline{\eta }(v)\) is continuously differentiable and strictly decreasing.
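As a sanity check of parts (a), (c) and (d), one may look at a homogeneous toy case. The sketch below assumes \(\zeta \equiv 0\) and takes \(L(\eta )=\ln E_1[e^{\eta H_0}]=-\sqrt{-2\eta }\) for \(\eta \leqq 0\); this closed form is an illustrative assumption and is not the random setting of the lemma. Then \(L'(\eta )=1/\sqrt{-2\eta }\) is positive, strictly increasing and tends to 0 as \(\eta \downarrow -\infty \), we have \(L'(0-)=+\infty \) and hence \(v_c=0\), and the equation \(L'(\overline{\eta }(v))=1/v\) has the solution \(\overline{\eta }(v)=-v^2/2\). The function names are hypothetical.

```python
import math

def L(eta):
    """Toy log-moment generating function L(eta) = -sqrt(-2 eta), eta <= 0."""
    return -math.sqrt(-2.0 * eta)

def L_prime(eta):
    """Derivative L'(eta) = 1 / sqrt(-2 eta); positive and increasing on (-inf, 0)."""
    return 1.0 / math.sqrt(-2.0 * eta)

def eta_bar(v, lo=-1e6, hi=-1e-12):
    """Solve L'(eta) = 1/v by bisection; L' is increasing, so the root is unique."""
    for _ in range(200):
        mid = 0.5 * (lo + hi)
        if L_prime(mid) < 1.0 / v:
            lo = mid   # derivative still too small, move to the right
        else:
            hi = mid
    return 0.5 * (lo + hi)

v = 1.5
eta = eta_bar(v)                    # closed form in this toy case: -v**2 / 2
legendre_value = eta / v - L(eta)   # closed form in this toy case: v / 2
```

The value `legendre_value` is the supremum appearing in (2.11) for this toy choice of L; in the random setting the same bisection idea applies to any strictly increasing derivative.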

Proof of Lemma 2.4

(a) This follows from Lemma A.1.

(b) By [14, Theorem 7.5.1], for every \(\eta \leqq 0\) we get \(\mathbb {P}\)-a.s. that \( \lim _{x\rightarrow \infty } \overline{L}^\zeta _x(\eta ) = \mathbb {E}\big [ L_1^\zeta (\eta )\, |\, \mathcal {F}^\zeta _\text {inv} \big ],\) where \( \mathcal {F}^\zeta _\text {inv}\) is the \(\sigma \)-algebra of all \(\mathbb {P}\)-invariant sets. Due to our standing assumptions, the family \(\zeta (x),\) \(x \in \mathbb {R},\) is mixing and thus ergodic. Thus, \( \mathcal {F}^\zeta _\text {inv}\) is \(\mathbb {P}\)-trivial, i.e., \(\mathbb {E}\big [ L_1^\zeta (\eta )\, |\, \mathcal {F}^\zeta _\text {inv} \big ]=L(\eta )\). By continuity of the functions \(\overline{L}^\zeta _x\) and L, the statement follows.

(c) We note that L is strictly increasing and strictly convex on \((-\infty , 0)\) by (a), and that

$$\begin{aligned}L(\eta )\geqq \mathbb {E}\Big [ \ln E_1\big [ e^{-(\texttt {es}-\texttt {ei}-\eta )H_0} \big ] \Big ]= -\sqrt{2(\texttt {es}-\texttt {ei}-\eta )}\quad \text {for all }\eta \leqq 0, \end{aligned}$$

where the equality is due to [4, (2.0.1), p. 204]. Thus, we infer that its derivative \(L'(\eta )\) must tend to 0 as \(\eta \rightarrow -\infty \).

(d) Using that \(1/v_c>1/v\) (where \(\frac{1}{0}:=+\infty \)) and the fact that \(L'\) is strictly increasing and continuous with \(L'(\eta )\downarrow 0\) for \(\eta \downarrow -\infty \), we can find a unique \(\overline{\eta }(v)<0\) such that \(L'(\overline{\eta }(v))=1/v\), giving (2.10). Display (2.11) is a direct consequence of (2.10) and standard properties of the Legendre transformations of strictly convex functions. In order to show the remaining part, we observe that since \(L'\) is strictly increasing and smooth on \((-\infty ,0)\), it has a strictly increasing inverse function \((L')^{-1}\), which is differentiable on \((0,1/v_c)\). By (2.10), for \(v>v_c\) we have \(\overline{\eta }(v)=(L')^{-1}(1/v).\) Hence, using the formula for the derivative of the inverse function we get that

$$\begin{aligned} \overline{\eta }'(v)=-\frac{1}{v^2}\cdot \frac{1}{L''(\overline{\eta }(v))}.\end{aligned}$$

Since the right-hand side of the latter display is continuous in v and negative, we can conclude.
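The Laplace transform identity \(E_1[e^{-\lambda H_0}]=e^{-\sqrt{2\lambda }}\), \(\lambda \geqq 0\), used in part (c) can be checked numerically. The following Python sketch relies on the fact that, by the reflection principle, \(H_0\) under \(P_1\) has the same law as \(1/Z^2\) for a standard normal Z, and approximates the resulting integral with the trapezoidal rule. The function name, the truncation point `z_max` and the grid size `n` are hypothetical choices.

```python
import math

def laplace_hitting_time(lam, z_max=12.0, n=20000):
    """Approximate E_1[exp(-lam * H_0)], using H_0 = 1 / Z**2 in law, Z ~ N(0, 1)."""
    h = z_max / n
    total = 0.0
    for i in range(1, n + 1):           # the integrand vanishes at z = 0
        z = i * h
        w = 0.5 if i == n else 1.0      # trapezoidal end-point weight
        total += w * math.exp(-lam / z**2 - 0.5 * z**2)
    # factor 2 accounts for the symmetry of the normal density
    return 2.0 * h * total / math.sqrt(2.0 * math.pi)

approx = laplace_hitting_time(1.0)
exact = math.exp(-math.sqrt(2.0))
```

With \(\lambda =\texttt {es}-\texttt {ei}-\eta \) this is exactly the quantity whose logarithm gives the lower bound \(-\sqrt{2(\texttt {es}-\texttt {ei}-\eta )}\) in the proof of (c).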

We use the standard notation \( L^*: \, \mathbb {R}\rightarrow (-\infty , \infty ]\) to denote the Legendre transformation

of L. Lemma 2.4 entails that

In the next part of this section, we are interested in the existence and the properties of a suitable tilting parameter \(\eta _x^\zeta (v)\) such that

holds true (setting \(\eta _x^\zeta (v):=0\) if no such parameter exists). For \(\eta _x^\zeta (v)\) fulfilling (2.13) we observe that under \(P_x^{\zeta ,\eta _x^{\zeta }(v)}\), the Brownian motion is tilted to have time-averaged velocity v until it reaches the origin. More precisely, in Lemma 2.5 we will show that for suitable v and x large enough, a tilting parameter as postulated in (2.13) actually exists. Furthermore, we will show that the random parameter \(\eta _x^\zeta (v)\) concentrates around the deterministic quantity \(\overline{\eta }(v)\) defined in (2.10). The last result is a perturbation estimate for \(\eta _x^\zeta (v)\) in x, cf. Lemma 2.7.

2.3 Concentration inequalities

We have the following result regarding the existence, negativity, and concentration properties of the postulated parameter \(\eta _x^\zeta (v)\).

Lemma 2.5

(a) For every \(v>v_c\) there exists a finite random variable \(\mathcal {N}=\mathcal {N}(v)\) such that for all \(x\geqq \mathcal {N}\) the solution \(\eta _x^\zeta (v)<0\) to (2.13) exists.

(b)

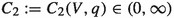

For each \(q\in \mathbb {N}\) and each compact interval \(V\subset (v_c,\infty )\), there exists

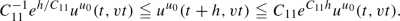

such that

(2.14)

Proof

We recall that due to Lemma A.1, the tilting parameter \(\eta _x^\zeta (v)\) can alternatively be characterized as the unique solution \(\eta _x^\zeta (v) \in (-\infty ,0)\) to

if the solution exists, and \(\eta _x^\zeta (v)=0\) otherwise. We start with noting that Part (a) directly follows from Part (b). Indeed, let \(A_n\), \(n\in \mathbb {N}\), be the event in the probability on the left-hand side of (2.14). Then \(\sum _n \mathbb {P}(A_n)<\infty \) for \(q\geqq 2\). By the first Borel–Cantelli lemma, \(\mathbb {P}\)-a.s. only finitely many of the \(A_n\) occur. In combination with the fact that \(\overline{\eta }(v) <0\), cf. (2.10), this implies that \(\mathbb {P}\)-a.s., the value of \(\eta _x^\zeta (v)\) can only vanish for \(x>0\) small enough. In particular, we deduce the existence of a \(\mathbb {P}\)-a.s. finite random variable \(\mathcal N\) as postulated.

Hence, it remains to show (2.14). For this purpose, in the following lemma we investigate the fluctuations of the functions through which the parameters \(\eta _x^\zeta (v)\) and \(\overline{\eta }(v)\) are implicitly defined; we will then infer the desired bounds on the fluctuations of the parameters themselves through perturbation estimates for these functions.

Lemma 2.6

For every compact interval \(\triangle \subset (-\infty ,0)\) and each \(q \in \mathbb {N},\) there exists a constant such that

In order not to hinder the flow of reading, we postpone the proof of this auxiliary result to the end of the proof of Lemma 2.5 and finish the proof of Lemma 2.5 (b) first. Let \(q\in \mathbb {N}\) and \(V\subset (v_c,\infty )\) be a compact interval. By Lemma A.1, for each compact \(\triangle \subset (-\infty ,0)\) we have \(\mathbb {P}\)-a.s.,

Therefore, and because the function \(V\ni v\mapsto \overline{\eta }(v)\) is strictly decreasing by Lemma 2.4, it is possible to find \(N=N(V)\in \mathbb {N}\) and a compact interval \(\triangle =\triangle (N,V) \subset (-\infty ,0),\) where for notational convenience we write

such that, using standard calculus for sets,

Let \(n\geqq N\) and assume that the complement of the event on the left-hand side of (2.16),

occurs. On this event, for all \(v\in V\) and all \(x\in [n,n+1),\)

and thus, due to the strict monotonicity of \((\overline{L}_x^\zeta )'\) as well as its continuity implied by (2.17), there exists a unique \(\eta _x^\zeta (v)\in \triangle \) such that \(\big (\overline{L}_x^\zeta \big )'(\eta _x^\zeta (v))=1/v\). Due to (2.20), still assuming (2.19), we have

Thus, for \(n\geqq N\) and a suitable choice of the constant, the probability in (2.14) is upper bounded by the right-hand side of (2.16), which finishes the proof.

\(\square \)

It remains to prove Lemma 2.6.

Proof of Lemma 2.6

Applying the strong Markov property at time \(H_{\lfloor x\rfloor },\) we get

Furthermore,

by (A.1) and Lemma A.1(b), and thus also

for all \(x\geqq 1\) and all \(\eta \in \triangle \), \(\mathbb {P}\)-a.s. As a consequence, we get that for all \(x\geqq 1,\)

It is therefore enough to prove

For each \(\eta \in \triangle \), the sequence \(((L_i^\zeta )'(\eta )-L'(\eta ))_{i\in \mathbb {Z}}\) is a family of stationary, centered and bounded random variables. Furthermore, they fulfill the exponential mixing condition (A.11) due to Lemma A.2. Due to \(\sigma ((L_i^\zeta )'(\eta ):i\geqq k)\subset \sigma (\xi (x):x\geqq k-1)\) and (MIX), setting \(Y_i:=(L_i^\zeta )'(\eta )-L'(\eta )\), the left-hand side in (A.15) is bounded from above by some constant \(c_1>0\), uniformly in i. Then setting \(m_i:=c_1\), condition (A.15) is fulfilled and we can apply the Hoeffding-type inequality from Corollary A.5 to infer the existence of \(c_2>0\) such that

Let \(\triangle _n := (\triangle \cap \frac{1}{n} \mathbb {Z})\cup \{\eta _*,\eta ^*\}\), recalling the notation of (2.18). Because \(|\triangle \cap \frac{1}{n} \mathbb {Z}|\leqq n\cdot \text {diam}(\triangle )+1\), taking advantage of the previous display we infer

Combining the \(\mathbb {P}\)-a.s. estimate from Lemma A.1(b) with the mean value theorem, we obtain

and thus we find a constant such that (2.21) and hence (2.16) hold true.

In what comes below, many results will implicitly depend on the choice of the compact intervals V and \(\triangle ,\) which have already occurred before. Thus, in order to avoid ambiguity and due to assumption (VEL), we will now

Furthermore, due to Lemma 2.5, there exists a \(\mathbb {P}\)-a.s. finite random variable such that

We write

for the Legendre transformation of the weighted averages. We also recall that \(\eta _x^\zeta (v)=0\) if there is no solution \(\eta _x^\zeta (v)\in \triangle \) to (2.15); note that this can only happen on \(\mathcal {H}_x^c\).

In order to show an invariance principle for the Legendre transformation \(( \overline{L}_x^\zeta )^*\) in the following section, we now derive a perturbation result on the tilting parameter \(\eta _x^\zeta (v)\) in x.

Lemma 2.7

There exists a constant such that \(\mathbb {P}\)-a.s., for all \(x \in (0,\infty )\) large enough, uniformly in \(v\in V\) and \(0\leqq h\leqq x\),

Proof

By Lemma 2.5 we can choose x large enough such that \(\eta _y^\zeta (v)\in \triangle \) for all \(y\geqq x\) and all \(v\in V\). For \(h=0\), the statement is obvious. For \(0<h\leqq x\), it suffices to show that there exists \(c_1>0\) such that

Indeed, using (2.15) we can write

for some \(\widetilde{\eta }\in \triangle \) between \(\eta _{x}^\zeta (v)\) and \(\eta _{x+h}^\zeta (v)\). Using the \(\mathbb {P}\)-a.s. estimate from the second display in (2.17), inequality (2.24) is a direct consequence of (2.25) for a suitable choice of the constant. To prove (2.25), recall that for all \(\eta \in \triangle \), \(x\geqq 1,\) and \(0< h\leqq x,\) by the strong Markov property applied at time \(H_x,\)

Finally, recall the \(\mathbb {P}\)-a.s. inequality (A.1) and the constant provided for it by Lemma A.1. By exactly the same argument used for the proof of the latter inequality [see proof of (A.1)], one can show that the corresponding inequality needed here also holds \(\mathbb {P}\)-a.s. with the same constant. (2.25) now follows for a suitable choice of the constant.

3 Functional Central Limit Theorems, Large Deviations and Perturbation Results for the PAM

The principal objective of this section is to establish our first main results, i.e. the functional central limit theorems stated in Theorems 1.3 and 1.4. In order to prepare for this, we start with proving a functional central limit theorem for a suitably centered and rescaled version of the empirical Legendre transforms in Proposition 3.1 of Sect. 3.1. In Sect. 3.2 we then show how this limit theorem can be transferred to an auxiliary quantity \(Y_v^\approx ,\) see Proposition 3.5. In combination with concentration results (referred to as exact large deviations in probability theory) also obtained in Proposition 3.5, we can then deduce that the auxiliary quantity \(Y_v^\approx \) provides a good description of the (Feynman–Kac representation of the) solution to (PAM), see also Corollary 3.8. In Sect. 3.3 we then put these findings together to prove Theorem 1.3.

We next obtain perturbation results for the solution to (PAM) in Sects. 3.4 and 3.5. In Sect. 3.7, using the approximation results established in Sect. 3.6 in combination with Theorem 1.3, we can then transfer the latter functional central limit theorem to a functional central limit theorem for the position of the front of the solution to (PAM), hence completing the proof of Theorem 1.4.

3.1 A first functional central limit theorem

We start with a functional central limit theorem for a centered and rescaled version of the empirical Legendre transforms.

For this purpose let

We start with observing that \(\sigma _v^2\in [0,\infty )\) for all \(v\in V\). Indeed, \((\widetilde{L}_i)_{i\in \mathbb {N}}\), where \(\widetilde{L}_i:=L_i^{\zeta }(\overline{\eta }(v))-\mathbb {E}[L_i^{\zeta }(\overline{\eta }(v))]\), is a sequence of bounded (see Lemma A.1), centered and mixing (see Lemma A.2) random variables, giving

where the last inequality is due to uniform boundedness of \(\widetilde{L}_i\) in i, (A.9) and the summability criterion in (MIX). Thus, \(\sigma _v^2\) is well-defined and finite. Furthermore, the non-negativity \(\sigma _v^2\geqq 0\) is due to (3.2) and [34, Lemma 1.1].
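To illustrate how such a variance arises for dependent sequences, the following Python sketch computes, for a toy stationary sequence, both the normalized variance of the partial sums and the standard long-run variance \(\gamma (0)+2\sum _{k\geqq 1}\gamma (k)\), where \(\gamma \) denotes the autocovariance. We assume here that (3.1) is of this standard form; the toy sequence \(X_i=e_i+e_{i+1}\) with i.i.d. centered \(e_i\) of unit variance and all function names are hypothetical and unrelated to the \(\widetilde{L}_i\) of the article.

```python
def autocov(k):
    """Autocovariance of the toy sequence X_i = e_i + e_{i+1}, e_i i.i.d., variance 1."""
    k = abs(k)
    return 2.0 if k == 0 else (1.0 if k == 1 else 0.0)

def var_partial_sum(n):
    """Exact Var(X_1 + ... + X_n) as the sum of Cov(X_i, X_j) over all pairs (i, j)."""
    return sum(autocov(i - j) for i in range(n) for j in range(n))

# long-run variance gamma(0) + 2 * sum_{k >= 1} gamma(k); only k = 1 contributes here
long_run_variance = autocov(0) + 2.0 * sum(autocov(k) for k in range(1, 10))

# normalized variances of partial sums approach the long-run variance
ratios = [var_partial_sum(n) / n for n in (10, 100, 1000)]
```

Replacing the toy sequence by \(X_i=e_i-e_{i+1}\) gives \(\gamma (1)=-1\) and hence long-run variance 0, which shows why the degenerate case \(\sigma _v^2=0\) has to be treated separately.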

We now introduce the process \(W^v_x(t)\) of empirical Legendre transformations

set \(W_0^v(t)=W_x^v(0)=0\) for \(t,x>0\), \(v\in V\), and obtain a first functional central limit theorem for it.

Proposition 3.1

For every \(v \in V\), \(W_n^v(1)\) converges in \(\mathbb {P}\)-distribution to a centered Gaussian random variable with variance \(\sigma _v^2\geqq 0\). If \(\sigma _v^2>0\), the sequence of processes

converges in \(\mathbb {P}\)-distribution to a standard Brownian motion in the sense of weak convergence of measures on \(C([0,\infty )),\) endowed with the topology induced by the metric \(\rho \) from (1.15).

Proof

It is sufficient to show the claim if \((W^v_n(t))_{t\in [0,\infty )}\) is replaced by \((W_n^v(t) \cdot \mathbb {1}_{\mathcal {H}_{nt}})_{t \in [0,\infty )},\) \(n \in \mathbb {N},\) with \(\mathcal H_{nt}\) as defined in (2.23), since the \(\mathbb {P}\)-probability of \(\mathcal H_{nt}\) tends to 1 for \(n\rightarrow \infty \) by Lemma 2.5. In the notation of (3.1), setting

on \(\mathcal {H}_{nt}\) we have

Thus, we can rewrite the relevant term as a sum of three differences

where we note that the third summand vanishes. Indeed, we have

where the last equality is due to (2.11) and the definition of the Legendre transform. The proof is completed by the use of Lemmas 3.2 and 3.3 below: Lemma 3.2 shows that the second summand of (3.5) exhibits the postulated diffusive behavior, whereas Lemma 3.3 shows that the first summand is negligible in that scaling.

Lemma 3.2

For every \(v\in V\) and \(t>0\), the sequence of random variables \(\frac{1}{\sqrt{n}}\Big (S_{nt}^{\zeta ,v}(\overline{\eta }(v)) - \mathbb {E}\big [ S_{nt}^{\zeta ,v}(\overline{\eta }(v)) \big ] \Big ),\) \(n \in \mathbb {N},\) converges in \(\mathbb {P}\)-distribution to a centered Gaussian random variable with variance \(\sigma _v^2\geqq 0\). If \(\sigma _v^2>0\), the sequence of processes

converges in \(\mathbb {P}\)-distribution to a standard Brownian motion in the sense of weak convergence of measures on \(C([0,\infty )),\) endowed with the topology induced by the metric \(\rho \) from (1.15).

Proof

Let \(\widetilde{L}_i:=L_i^{\zeta }(\overline{\eta }(v))-\mathbb {E}[L_i^{\zeta }(\overline{\eta }(v))]\), \(\widetilde{V}_i:=V_i^{\zeta }(\overline{\eta }(v))-\mathbb {E}[V_i^{\zeta }(\overline{\eta }(v))]\) and \(M\in \mathbb {N}\). Further set \(\widetilde{L}_i^{(M)}:=\sum _{j=1+(i-1)M}^{iM}\widetilde{L}_j\). Then \((\widetilde{L}_i^{(M)})_{i\in \mathbb {Z}}\) is a sequence of centered, stationary and (by Lemma A.1) bounded random variables. To show the central limit theorem on C([0, M]), we will use the method of martingale approximation from [17], which is summarized as a theorem by Nolen in [29, Section 2.3] and turns out to be applicable in our situation. That is, we have to make sure that condition [29, (2.36)] is fulfilled. Indeed, replacing \(\mathcal {F}^j\) in (A.13) by \(\mathcal {F}_k\) and noting that quantity A in (A.13) is \(\mathcal {F}_k\)-measurable we get

giving the convergence of the first series in [29, (2.36)]. Furthermore, using that \(\widetilde{L}_k^{(M)}\) is \(\mathcal {F}^{(k-1)M}\)-measurable and bounded, also recalling (MIX), we get

Because the series in (3.1) is absolutely convergent, by [34, Lemma 1.1] and [29, (2.37)] we have \(\lim _{n\rightarrow \infty }\frac{1}{n} \mathbb {E}\big [\big (\sum _{k=1}^n\widetilde{L}_k^{(M)}\big )^2\big ]=M\cdot \lim _{n\rightarrow \infty }\frac{1}{Mn} \mathbb {E}\big [\big (\sum _{k=1}^{Mn}\widetilde{L}_k\big )^2\big ]=M\cdot \sigma _v^2\in [0,\infty )\). Furthermore, if \(\sigma _v^2>0\), [29, Theorem 2.1] entails that the sequence of processes

converges in \(\mathbb {P}\)-distribution to a standard Brownian motion \((B_t)_{t\in [0,1]}\) in the sense of weak convergence of measures on C([0, 1]) with the topology induced by the uniform metric. Then by definition, the above convergence also holds true for \((\widetilde{V}_i)_{i\geqq 1}\) instead of \((\widetilde{L}_i)_{i\geqq 1}\). Furthermore, we have the uniform bound

Consequently, the sequence \([0,M]\ni t\mapsto \frac{1}{\sigma _v\sqrt{n}}\Big (S_{nt}^{\zeta ,v}(\overline{\eta }(v)) - \mathbb {E}\big [ S_{nt}^{\zeta ,v}(\overline{\eta }(v)) \big ] \Big )\) has the same weak limit as \(\big (\sqrt{M}\cdot X_n^{(M)}(t/M)\big )_{t\in [0,M]}\), \(n\in \mathbb {N}\), which converges to \(\big (\sqrt{M}\cdot B(t/M)\big )_{t\in [0,M]}\) and the latter process is a standard Brownian motion on [0, M]. Because \(M\in \mathbb {N}\) was arbitrary, Whitt [38, Theorem 5] gives weak convergence on \(C([0,\infty ))\).

To show that the first summand in (3.5) is asymptotically negligible, we use the following result.

Lemma 3.3

There exists a constant such that for every \(v\in V\) and \(M>0\),

Proof

There exists a \(\mathbb {P}\)-a.s. finite time, defined before (2.23), such that for all x beyond this time and all \(v\in V\) we have \(\eta _x^\zeta (v)\in \triangle \). Furthermore, by Lemma A.1, \(S_{x}^{\zeta ,v}\) is infinitely differentiable on \((-\infty ,0)\), so for all such x there exists \(\widetilde{\eta }_x^\zeta (v) \in [\overline{\eta }(v) \wedge \eta _{x}^\zeta (v), \overline{\eta }(v) \vee \eta _{x}^\zeta (v)]\) such that

Due to (2.15), \((S_x^{\zeta ,v})'(\eta _x^{\zeta }(v))=0\) and by Lemma A.1 we have \(\sup _{\eta \in \triangle }\sup _{x\geqq 1}\big |(S_x^{\zeta ,v})''(\eta )\big |/x\leqq c_1\). By (2.14) and the first Borel–Cantelli lemma, there exists a finite random variable \(\mathcal {N}_2\) such that for \(x\geqq \mathcal {N}_2\) the complement of the event on the left-hand side of (2.14) occurs, hence

and thus

with a suitable constant. Finally, we have

where in the last inequality we used that \(\mathbb {P}\)-a.s., every summand in the definition of \(S_n^{\zeta ,\eta }\) is uniformly bounded by \(c_2\). The \(\mathbb {P}\)-a.s. finiteness of  gives the claim.
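
The Taylor step in the proof above can be written out as follows. This is a hedged sketch under the assumption that \(\triangle \) is an interval, so that the intermediate point \(\widetilde{\eta }_x^\zeta (v)\) also lies in \(\triangle \). It uses only \((S_x^{\zeta ,v})'(\eta _x^{\zeta }(v))=0\) and the bound \(\sup _{\eta \in \triangle }\sup _{x\geqq 1}\big |(S_x^{\zeta ,v})''(\eta )\big |/x\leqq c_1\) quoted there, and it need not agree term by term with the displays of the original proof.

```latex
S_x^{\zeta,v}\big(\overline{\eta}(v)\big) - S_x^{\zeta,v}\big(\eta_x^\zeta(v)\big)
  = \tfrac{1}{2}\,(S_x^{\zeta,v})''\big(\widetilde{\eta}_x^\zeta(v)\big)\,
    \big(\overline{\eta}(v)-\eta_x^\zeta(v)\big)^2,
\qquad
\Big| S_x^{\zeta,v}\big(\overline{\eta}(v)\big) - S_x^{\zeta,v}\big(\eta_x^\zeta(v)\big) \Big|
  \leqq \frac{c_1 x}{2}\,\big(\overline{\eta}(v)-\eta_x^\zeta(v)\big)^2 .
```

The first-order term vanishes because \(\eta _x^\zeta (v)\) is a critical point of \(S_x^{\zeta ,v}\), so any bound on \(|\overline{\eta }(v)-\eta _x^\zeta (v)|\), such as the one supplied by (2.14), controls the difference quadratically.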


As a by-product of the proof above, we obtain an approximation of \(W_x^v\) by a centered stationary sequence.

Corollary 3.4

For every \(v\in V\) and all t such that  we have


Proof

By the definition of \(W^v_x(t)\) and \(S_x^{\zeta ,v}(\eta )\) from (3.3) and (3.4), as well as the definition in (2.12) for the corresponding Legendre transformations, we have

Then we can conclude using (3.6).

3.2 An exact large deviation result for auxiliary processes

The main result of this subsection is Proposition 3.5, where we show—cf. (3.10)—that the probability for the perturbed Brownian motion in shifted potential to hit the origin at time x/v for the first time exhibits certain concentration properties. These concentration properties then allow us to investigate \(Y_v^\approx \) instead of \(Y_v,\) see (3.7) and (3.9) below. The virtue of \(Y_v^\approx \) is that we will be able to employ Proposition 3.1 to deduce a functional central limit theorem for the process induced by it, see also (3.22) below. This again will be beneficial since \(Y_v^\approx \) provides a good description of the (Feynman–Kac representation of the) solution to (PAM), see Corollary 3.8 below.

For \(x\geqq 0\) and \(v>0\) we introduce

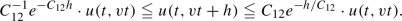

where \(K>0\) is a constant, defined in (3.17) below. For \(v\in V\) and \(x\geqq 1\) we define the random quantity

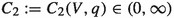

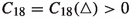

Furthermore, by Lemma A.1, there exists some \(C_{18}>1\) such that  for all \(x\geqq 1\). Thus, there is some constant  such that

We now prove the following result.

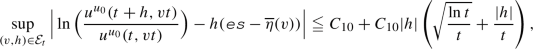

Proposition 3.5

Let V be as in (2.22), \(\sigma _v\) defined by (3.1) and \(W^v_x(t)\) as in (3.3), \(v\in V\), and \(K>0\) be such that (3.17) holds. Then there exists a constant  , such that for all \(v\in V\) and all \(x\geqq 1\), on \(\mathcal {H}_x\) we have


Furthermore, for all \(v\in V\) and all \(x\geqq 1\), on \(\mathcal {H}_{x}\) we have

and the sequence \(n^{-1/2}\big ( \ln Y^\approx _v(n)-nL^*(1/v) \big )\), \(n\in \mathbb {N}\), converges to a centered Gaussian random variable with variance \(\sigma _v^2\in [0,\infty )\), where \(\sigma _v^2\) is defined in (3.1). If furthermore \(\sigma _v^2>0\), then the sequence of processes

converges in \(\mathbb {P}\)-distribution to standard Brownian motion in \(C([0,\infty ))\).

Proof

We start by proving (3.9); for this purpose, let \(x\geqq 1\) be such that \(\mathcal {H}_{\lfloor x\rfloor }\) occurs. Write \(\eta :=\eta _{x}^\zeta (v)\) and \(\sigma :=\sigma _x^\zeta (v),\) and recall the notation introduced in (2.4), i.e. \(\tau _i=H_i-H_{i-1}\), \(i=1,\ldots ,\lceil x\rceil -1\), and set \(\tau _x:= H_{\lceil x\rceil - 1} - H_x,\) \(x \in \mathbb {R}\backslash \mathbb {Z}\) (which is consistent with the definition in (2.4) for integer x), to define \(\widehat{\tau }_{i}:={\widehat{\tau }}^{(x)}_{i}:=\tau _{i} - E_x^{\zeta ,\eta }\left[ \tau _{i}\right] \). Then \(\sum _{i=1}^x E_x^{\zeta ,\eta }\left[ \tau _{i} \right] =E_x^{\zeta ,\eta }\left[ H_{0} \right] =\frac{x}{v}\). We now rewrite

Analogously, we get

We define \(\mu _x^{\zeta ,v}\) as the distribution of \(\frac{\eta }{\sigma }\sum _{i=1}^x\widehat{\tau }_i\) under \(P_x^{\zeta ,\eta }\). Then

and

Using Lemma 3.6 below, the integrals on the right-hand side of (3.13) and (3.14), multiplied by \(\sigma \), are bounded from below and above by positive constants. Display (3.9) now follows from the definition of \(W_x^v,\) and (3.10) is a direct consequence of (3.13)–(3.16). The last two statements are a consequence of (3.9), (3.10), \(W_{nt}^v(1)=\frac{1}{\sqrt{t}} W_n^v(t)\) and Proposition 3.1.

To complete the previous proof, it remains to prove the following.

Lemma 3.6

Under the conditions of Proposition 3.5, there exists a constant  such that for all \(v\in V\) and \(x\geqq 1\), on \(\mathcal {H}_x,\)


and

with \(\mu _x^{\zeta ,v}\) as in the proof of Proposition 3.5.

Proof

We write \(n:=\lceil x\rceil \) and recall that under \(P_x^{\zeta ,\eta }\), the sequence \(\Big (\sqrt{n}\frac{\eta _x^\zeta (v)}{\sigma _x^\zeta (v)}\widehat{\tau }_i\Big )_{i=1,\ldots ,\lfloor x\rfloor ,x}\) is a sequence of independent, centered random variables. Thus, on \(\mathcal {H}_x\) we obtain

Additionally, the \(\widehat{\tau }_i\), \(i \in \mathbb {N},\) have uniform exponential moments. Thus, the conditions of [3, Theorem 13.3] are fulfilled and an application of [3, (13.43)] yields

where the supremum is taken over all Borel-measurable convex subsets of \(\mathbb {R}\), \(\Phi \) denotes the standard Gaussian measure on \(\mathbb {R}\) and \(c_1\) only depends on the uniform bound of the exponential moments of the \(\widehat{\tau }_i,\) \(i \in \mathbb {N}.\) Without loss of generality, we will assume \(c_1>4\). Then, due to (3.8), by denoting \(\mathcal {C}:=\big [0,-K\eta _{x}^\zeta (v)/{\sigma _x^\zeta (v)}\big ]\) we can choose \(K>0\) large enough, so that

We thus get

Because the integrand in (3.15) is bounded away from 0 and infinity on the respective interval of integration (uniformly in \(n \in \mathbb {N}\)), (3.15) is a direct consequence of (3.8) and (3.18). For (3.16), we split the integral into a sum:

where we recall the notation from (2.18). The lower bound in (3.16) can be obtained by noting that

Analogously to (3.18), choosing  large enough, the last expression is bounded from below by  . Combining this with (3.8), we finally arrive at (3.16).

We are now in the position to prove the following result.

Lemma 3.7

Under the conditions of Proposition 3.5, for each \(\delta >0\) there exists a constant  such that for all \(v\in V\), \(t>0\), on \(\mathcal {H}_{vt}\) we have


Proof

The second inequality is obvious. Since \(\{B_{t}\leqq 0\}\subset \{ H_0\leqq t \}\) and \(\zeta \leqq 0\), we get  by Proposition 3.5 and thus the last inequality in (3.19) is obtained. Therefore, it remains to show the first inequality. For this purpose, define the random function \(p(s):=E_0\big [ e^{\int _0^s\zeta (B_r)\textrm{d}r};B_s\in [-\delta ,0] \big ]\) which almost surely is bounded from below by a deterministic constant \(c_1(K,\delta )>0\) for all \(s\in [0,K]\). Using the strong Markov property at \(H_0\), we finally get


and the claim follows by choosing  .


Plugging the relation \(\xi (x)=\zeta (x)+\texttt {es}\), \(x\in \mathbb {R}\), into Lemma 3.7 immediately supplies us with the following corollary.

Corollary 3.8

Let  be as in Lemma 3.7. Then for all \(v\in V\), \(t>0\), on \(\mathcal {H}_{vt}\) we have


Using the Feynman–Kac formula (1.12), Lemma 3.7 also directly entails the following result; recall that \(u^{u_0}\) denotes the solution to (PAM) with initial condition \(u_0\in \mathcal {I}_{\text {PAM}}\).

Corollary 3.9

Let  be as in Lemma 3.7. Then for all \(v\in V\), \(t>0\), on \(\mathcal {H}_{vt}\) we have


The previous results are fundamental for proving perturbation statements in the next section, which themselves will allow us to analyze path probabilities of the branching process.

3.3 Proof of Theorem 1.3

The previous findings already enable us to prove our first main result.

Proof of Theorem 1.3

We first assume \(\sigma _v^2>0\) and consider the case \(u_0=\mathbb {1}_{(-\infty ,0]}\) to show the second part of the claim, i.e. that the sequence of processes

converges in \(\mathbb {P}\)-distribution to standard Brownian motion. Because \([0,\infty ) \ni t\mapsto \ln u(t,vt)\) can be discontinuous only at 0 (in which case we replace \([0,\infty )\) by \((0,\infty )\) in (3.20)), we cover both cases (continuous and discontinuous at 0) in a unified setting by showing the invariance principle for a sequence of auxiliary processes \((X_n^v(t))_{t\geqq 0}\), \(n\in \mathbb {N}\), where for every \(n\in \mathbb {N}\) and \(t\geqq \frac{1}{n},\) the term \(X_n^v(t)\) is the same as in (3.20), whereas for \(t\in [0,1/n]\) the term \(\ln u(nt,vnt)\) in (3.20) is replaced by \((1-nt)\ln u(0,0) + nt \ln u(1,v)\), making \((X_n^v(t))_{t\geqq 0}\) continuous. Because the difference of the processes in (3.20) and \((X_n^v (t))_{t\geqq 0}\) converges uniformly to zero as \(n\rightarrow \infty \), convergence of the processes in (3.20) to a standard Brownian motion is equivalent to the convergence of the processes \((X_n^v (t))_{t\geqq 0}\), \(n\in \mathbb {N}\), to a standard Brownian motion in \(C([0,\infty ))\) (or \(C((0,\infty ))\) in case of a discontinuity at 0) with topology induced by the metric \(\rho \) from (1.15).

By Proposition 3.5 and Corollary 3.8, on \(\mathcal {H}_{nvt}\) (recall the notation from (2.23)) we have

Recall that a sequence of processes \(t\mapsto A_n(t)\), \(n\in \mathbb {N}\), converges in \(\mathbb {P}\)-distribution to standard Brownian motion if and only if for each \(c>0\) the sequence \(t\mapsto c^{-1} A_n(c^2 t)\), \(n\in \mathbb {N}\), converges in \(\mathbb {P}\)-distribution to a standard Brownian motion. Applying this to (3.11), the sequence of processes

converges in \(\mathbb {P}\)-distribution to a standard Brownian motion. Further, by the second line in (3.21),

holds. Consequently, if we can prove that

the claim follows from (3.22). To prove (3.23), we set \(n=1\) in (3.21) and note that \(\frac{W_{vt}^v(1)}{\sqrt{t}}\mathop {\longrightarrow }\limits _{t\rightarrow \infty }0\) \(\mathbb {P}\)-a.s. for all \(v\in V\), because \(W_n(1)\) converges in \(\mathbb {P}\)-distribution to a centered normally distributed random variable by Proposition 3.1. Using (3.25), (3.21) and (3.8), we get (3.23).

It remains to show the claim for arbitrary \(u_0\in \mathcal {I}_{\text {PAM}}\). Recall that there exist \(\delta '\in (0,1)\) and \(C'>1\), such that \(\delta ' \mathbb {1}_{[-\delta ',0]}(x) \leqq u_0(x)\leqq C' \mathbb {1}_{(-\infty ,0]}(x)\) for all \(x\in \mathbb {R}\). Therefore, using Corollary 3.9 we have

where we used that the solution to (PAM) is linear in its initial condition. Thus, the convergence of (3.20) for arbitrary initial condition \(u_0\in \mathcal {I}_{\text {PAM}}\) follows from the convergence with initial condition \(\mathbb {1}_{(-\infty ,0]}\). This gives the second part of Theorem 1.3.
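
The comparison used in this last step can be sketched as follows. This is a hedged illustration, assuming (as the Feynman–Kac representation suggests) that the solution to (PAM) is monotone as well as linear in its initial condition; the notation \(u^{f}\) for the solution started from \(f\) follows the convention recalled before Corollary 3.9, and the display need not coincide with (3.24).

```latex
\delta'\, u^{\mathbb{1}_{[-\delta',0]}}(t,x)
  \;\leqq\; u^{u_0}(t,x)
  \;\leqq\; C'\, u^{\mathbb{1}_{(-\infty,0]}}(t,x),
\qquad\text{hence}\qquad
\ln \delta' + \ln u^{\mathbb{1}_{[-\delta',0]}}(t,x)
  \;\leqq\; \ln u^{u_0}(t,x)
  \;\leqq\; \ln C' + \ln u^{\mathbb{1}_{(-\infty,0]}}(t,x).
```

The additive constants \(\ln \delta '\) and \(\ln C'\) do not depend on \(t\) or \(x\), so they vanish after division by \(\sqrt{n}\); what remains is to compare the two indicator initial conditions, which is where Corollary 3.9 enters.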

It remains to show that \((nv)^{-1/2}\big ( \ln u(n,vn)-n\Lambda (v) \big )\) converges in \(\mathbb {P}\)-distribution to a Gaussian random variable. For \(u_0=\mathbb {1}_{(-\infty ,0]},\) this is a direct consequence of (3.21) for \(t=1\), (3.23) and the second part of Proposition 3.5. For general \(u_0,\) the claim follows from (3.24).

In view of Corollary 3.9, Proposition A.3 and (3.23), the Lyapunov exponent \(\Lambda \), defined in (1.13), determines the exponential decay (growth, resp.) for solutions to (PAM) for arbitrary initial conditions \(u_0\in \mathcal {I}_{\text {PAM}}\) (and not only for those with compact support).

Corollary 3.10

For all \(v\geqq 0\) and all \(u_0\in \mathcal {I}_{\text {PAM}}\) we have that \(\mathbb {P}\)-a.s.,

Furthermore, \(\Lambda \) is linear on \([0,v_c]\) and strictly concave on \((v_c,\infty ),\) and the convergence in (3.25) holds uniformly on any compact interval \(K\subset [0,\infty )\).

Proof