Abstract

In this paper, we study properties of a series–parallel system. The component lifetimes may be dependent and non-identically distributed (DNID) discrete random variables. We consider the number of failed components upon system failure. We derive the probability mass function and the expected value of this quantity. In addition, we find the conditional probabilities corresponding to this variate given some partial information about the system failure. We also provide a numerical example to demonstrate the theoretical results.



1 Introduction

Coherent systems are used to model mathematically sophisticated technical devices composed of simple components. According to Barlow and Proschan (1975), a structure consisting of n components is called a coherent system if it has no irrelevant components (a component is irrelevant if the state of the system does not depend on whether or not that component is working) and the system is monotone in every component (that is, replacing a failed component by a working one cannot cause a working system to fail).

The reliability properties of coherent systems have been studied extensively in recent years (see, e.g., Kelkinnama and Asadi 2019; Eryilmaz and Pekalp 2020; Li and Li 2020; Pitzen and Burkschat 2020; Roy and Gupta 2020; Navarro et al. 2021; Kelkinnama and Eryilmaz 2023). Most known results concern systems whose component lifetimes have absolutely continuous distributions. Under a continuity assumption the reliability analysis is easier, because ties between component failures occur with probability zero. However, discrete models arise frequently, for example, when component lifetimes represent the number of times a component is switched on and off before it fails, or when components can fail only at the moments of shocks that occur in discrete time. For more details and recent advances on the reliability of coherent systems composed of components with discrete lifetimes, we refer the reader to Weiss (1962), Young (1970), Tank and Eryilmaz (2015), Dembińska (2018), Dembińska and Goroncy (2020), Dembińska and Jasiński (2021), Jasiński (2021b), Dembińska et al. (2021) and Dembińska and Eryilmaz (2021). The standard monograph on this topic is Unnikrishnan Nair et al. (2018).

The number of failed components in a coherent system under various conditions has attracted considerable interest. Asadi and Berred (2012) investigated this quantity in a coherent system at time t, given that the system is still working, when the component lifetimes are independent and identically distributed (IID) absolutely continuous random variables (rvs). Jasiński (2021a) extended their results by dropping the continuity and IID assumptions. Hashemi and Asadi (2020) considered this rv under two different criteria and then applied the results to optimal corrective and preventive maintenance of coherent systems with IID component lifetimes. The number of failed components in a failed or operating k-out-of-n system consisting of multiple types of components was studied by Eryilmaz (2018), and in a working coherent system of arbitrary structure by Jasiński (2022). The k-out-of-n system, \(k=1,\ldots ,n\), is a coherent system that works as long as at least k of its n components work. Under the assumption that the component lifetimes of a k-out-of-n system are discretely distributed, Davies and Dembińska (2019) studied the number of failed components upon system failure. This quantity is useful for keeping the system in optimal working condition: if its distribution is known, we can better plan how many components will need to be repaired or replaced when the system fails. Dembińska and Eryilmaz (2021) considered this quantity for series–parallel systems, that is, systems composed of disjoint parallel modules connected in series. They focused on the number of failed components in each module at the time of system failure when the component lifetimes are discretely distributed. They assumed that all components are independent and that each module consists of identical components, while different modules have different types of components.
Among future research problems, they proposed extending their results to dependent components. In this paper, we address this case. Using the minimal cut sets of the system, we consider a series–parallel system whose component lifetimes are DNID discrete rvs. In Sect. 2, we derive the exact distribution of the rv X(T), the number of failed components in a series–parallel system at the moment the system fails. We then use it to determine the conditional probabilities of X(T) given partial information about the system failure. In Sect. 3, we provide a numerical example illustrating the theoretical results of Sect. 2.

Throughout the paper we use the following notation. We write \({\text {I}}(\cdot )\) for the indicator function, that is, \({\text {I}}(A) = 1\) if the event A occurs and \({\text {I}}(A) = 0\) otherwise; thus \({\text {I}}(\cdot )\) takes values in the two-element set \(\{0,1\}\), interpreted as false and true. For \(A=\{a_{1},a_{2},\ldots ,a_{|A|}\}\) with \(1\le a_{1}<a_{2}<\ldots < a_{|A|}\le n\), \({\mathcal {P}}^{A}\) denotes the set of all permutations \((j_{1},j_{2},\ldots ,j_{|A|})\) of \((a_{1},a_{2},\ldots ,a_{|A|})\). Moreover, \({\mathcal {P}}^{A}_{s}\), \(s=0,\ldots ,|A|\), and \({\mathcal {P}}^{A}_{s,r}\), \(0\le s<r\le |A|\), stand for the subsets of \({\mathcal {P}}^{A}\) consisting only of permutations satisfying

\[j_{1}<j_{2}<\ldots <j_{s}\quad \text {and}\quad j_{s+1}<j_{s+2}<\ldots <j_{|A|}\]

and

\[j_{1}<\ldots <j_{s},\quad j_{s+1}<\ldots <j_{r}\quad \text {and}\quad j_{r+1}<\ldots <j_{|A|},\]

respectively. It is understood that \({\mathcal {P}}^{A}_{0,r}={\mathcal {P}}^{A}_{r}\) and \({\mathcal {P}}^{A}_{s,|A|}={\mathcal {P}}^{A}_{s}\). We also use the conventions \(\bigcap _{i\in {\emptyset }}A_{i}=\varOmega \) and \(\prod _{i\in {\emptyset }}a_{i}=1\), that is, an empty intersection equals the whole sample space and an empty product equals 1.
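The index sets \({\mathcal {P}}^{A}_{s}\) and \({\mathcal {P}}^{A}_{s,r}\) can be enumerated by brute force for small A. The Python sketch below assumes that a permutation belongs to \({\mathcal {P}}^{A}_{s}\) when its first s entries and its last \(|A|-s\) entries each form an increasing sequence, and to \({\mathcal {P}}^{A}_{s,r}\) when the blocks of positions \(1,\ldots ,s\), \(s+1,\ldots ,r\) and \(r+1,\ldots ,|A|\) are each increasing; the helper names are hypothetical. Under this reading, \(|{\mathcal {P}}^{A}_{s}|=\binom{|A|}{s}\) and \(|{\mathcal {P}}^{A}_{s,r}|=|A|!/(s!(r-s)!(|A|-r)!)\), the binomial and multinomial coefficients that reappear in the counting arguments of Sect. 2, and the conventions \({\mathcal {P}}^{A}_{0,r}={\mathcal {P}}^{A}_{r}\) and \({\mathcal {P}}^{A}_{s,|A|}={\mathcal {P}}^{A}_{s}\) hold automatically.

```python
from itertools import permutations
from math import comb, factorial
from typing import List, Sequence, Tuple


def is_increasing(block: Sequence[int]) -> bool:
    """True if the entries of `block` are strictly increasing (vacuously true if empty)."""
    return all(x < y for x, y in zip(block, block[1:]))


def perms_s(A: Sequence[int], s: int) -> List[Tuple[int, ...]]:
    """Permutations of A that are increasing on positions 1..s and on positions s+1..|A|."""
    return [p for p in permutations(sorted(A))
            if is_increasing(p[:s]) and is_increasing(p[s:])]


def perms_s_r(A: Sequence[int], s: int, r: int) -> List[Tuple[int, ...]]:
    """Permutations of A that are increasing on positions 1..s, s+1..r and r+1..|A|."""
    return [p for p in permutations(sorted(A))
            if is_increasing(p[:s]) and is_increasing(p[s:r]) and is_increasing(p[r:])]


A = [2, 3, 5, 7]
k = len(A)
for s in range(k + 1):
    assert len(perms_s(A, s)) == comb(k, s)
for s in range(k):
    for r in range(s + 1, k + 1):
        expected = factorial(k) // (factorial(s) * factorial(r - s) * factorial(k - r))
        assert len(perms_s_r(A, s, r)) == expected
# The conventions P_{0,r} = P_r and P_{s,|A|} = P_s.
assert set(perms_s_r(A, 0, 2)) == set(perms_s(A, 2))
assert set(perms_s_r(A, 1, k)) == set(perms_s(A, 1))
```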

2 Main result

Consider a coherent system composed of n components numbered \(1,\ldots ,n\), and denote by T the system lifetime. The discrete component lifetimes \(T_{1},\ldots ,T_{n}\) are allowed to be DNID rvs with cumulative distribution functions (cdfs) \(F_{i}(t)=P(T_{i}\le t)\), \(i=1,\ldots ,n,\) supported on finite or infinite subsets of the set of non-negative integers. Then \(p_{i}(t)=P(T_{i}=t)\) is the probability mass function (pmf) corresponding to \(F_{i}\); we also write \(F_{i}(t^{-})=P(T_{i}<t)\) and \({\overline{F}}_{i}(t)=1-F_{i}(t)\). Finally, \(T_{1:n}\le \ldots \le T_{n:n}\) denote the order statistics of \(T_{1},\ldots ,T_{n}\).

Let X(T) denote the number of failed components at the moment of system failure. Our aim is to find the exact distribution of the rv X(T). To this end we use the minimal cut sets of the system. A set \(C\subset \{1,\ldots ,n\}\) is a cut set of a coherent system if the failure of all components with indices in C guarantees the failure of the system. A cut set is minimal if none of its proper subsets is a cut set. The system lifetime can then be represented as

\[T=\min _{1\le j\le s}\,\max _{i\in C_{j}}T_{i}, \tag{1}\]

where \(C_{1},\ldots ,C_{s}\) are the minimal cut sets; see Navarro et al. (2007, p. 176). In other words, the system works if at least one component in every minimal cut set works. Equivalently, the system fails if all components in at least one minimal cut set fail.

We assume that the minimal cut sets are pairwise disjoint, which implies \(\big |\bigcup _{j=1}^{s}C_{j}\big |=\sum _{j=1}^{s}|C_{j}|\). Each minimal cut set can then be treated as a parallel module, and these s disjoint modules are connected in series; the systems with this structure are precisely the series–parallel systems. Observe that when one module fails, the other modules can keep working unaffected, so repairing the failed system only requires repairing the failed module. This structure allows the operator to use resources more efficiently and to avoid unexpectedly high costs.
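Representation (1) and the definition of X(T) can be evaluated directly for one realization of the component lifetimes. The Python sketch below takes X(T) to be the number of components with \(T_{i}\le T\), so that, with discrete lifetimes, components failing at the same instant as the system are counted; the function names are hypothetical and components are indexed from 0 rather than 1.

```python
from typing import Sequence, Set


def system_lifetime(lifetimes: Sequence[int], cut_sets: Sequence[Set[int]]) -> int:
    """Representation (1): minimum over minimal cut sets of the largest lifetime in each set."""
    return min(max(lifetimes[i] for i in cut) for cut in cut_sets)


def failed_at_system_failure(lifetimes: Sequence[int], cut_sets: Sequence[Set[int]]) -> int:
    """X(T): number of components whose lifetime does not exceed the system lifetime T."""
    t = system_lifetime(lifetimes, cut_sets)
    return sum(1 for life in lifetimes if life <= t)


# Series-parallel system with disjoint minimal cut sets {0} and {1, 2} (0-based indices).
cuts = [{0}, {1, 2}]
print(system_lifetime([5, 2, 3], cuts))           # prints 3: module {1, 2} fails at time 3
print(failed_at_system_failure([5, 2, 3], cuts))  # prints 2: components 1 and 2 have failed
print(failed_at_system_failure([2, 2, 4], cuts))  # prints 2: tie at time 2, components 0 and 1
```

The last call shows why ties matter in the discrete setting: component 1 fails in the same period as the system and is included in X(T).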

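For brevity, let \({\tilde{m}}=\min \{|C_{1}|,\ldots ,|C_{s}|\}\). Since at the moment of system failure all components of at least one minimal cut set have failed, \({\tilde{m}}\le X(T)\le n\). We start by finding the probabilities of the events \(\{X(T)=w,T=t\}\), \(w={\tilde{m}},\ldots ,n\), \(t\in {\mathbb {N}}\).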

Lemma 1

Consider a coherent system composed of n components whose minimal cut sets are pairwise disjoint. Assume that the discrete component lifetimes \(T_{1},\ldots ,T_{n}\) are DNID rvs and let \(t\in {\mathbb {N}}\). Then for any \(w={\tilde{m}},\ldots ,n\), we have

where \(C_{z_{1}},\ldots ,C_{z_{s-j}}\) are the minimal cut sets that are not contained in the union \(\bigcup _{l=1}^{j}C_{k_{l}}\), and \(P\left( A_{r_{1},v_{1},\ldots ,r_{s-j},v_{s-j}}^{w,t;m_{1},\ldots ,m_{j}}\right) \) can be expressed as follows

with \(h^{(k_{i})}\!=\!\left( h_{1}^{(k_{i})},\ldots ,h_{|C_{k_{i}}|}^{(k_{i})}\right) \), \(i\!=1,\ldots ,j\), \(h^{(z_{i})}\!=\!\left( h_{1}^{(z_{i})},\ldots ,h_{|C_{z_{i}}|}^{(z_{i})}\right) \), \(i\!=1,\ldots ,s-j\), and

Proof

By the representation (1), for \(w\!={\tilde{m}},\ldots ,n\), we obtain

Further, the above union can also be rewritten as a union of pairwise disjoint events

where \(C_{z_{1}},\ldots ,C_{z_{s-j}}\) are the minimal cut sets that are not contained in \(\bigcup _{l=1}^{j}C_{k_{l}}\). The union is taken only over nonempty events, that is, over \(j=1,\ldots ,s\) and \(1\le k_{1}<\ldots <k_{j}\le s\) satisfying \(w\ge \sum _{l=1}^{j}\big |C_{k_{l}}\big |\). Hence

Observe that

where

Because the events \(A_{r_{1},v_{1},\ldots ,r_{s-j},v_{s-j}}^{w,t;m_{1},\ldots ,m_{j}}\), \(m_{1}\!=\!1,\!\ldots \!,|C_{k_{1}}|\),\(\ldots \),\(m_{j}\!=\!1,\!\ldots \!,|C_{k_{j}}|\), \(r_{1}\!=\!0,\!\ldots \!,|C_{z_{1}}|\!-\!1\), \(v_{1}\!=\!0,\!\ldots \!,|C_{z_{1}}|\!-\!r_{1}-1\), \(\ldots \) \(r_{s-j}\!=\!0,\!\ldots \!,|C_{z_{s-j}}|\!-\!1\), \(v_{s-j}\!=\!0,\!\ldots \!,|C_{z_{s-j}}|\!-\!r_{s-j}-1\), are pairwise disjoint, we get

But

where \(h^{(k_{i})}=\left( h_{1}^{(k_{i})},\ldots ,h_{|C_{k_{i}}|}^{(k_{i})}\right) \), \(i=1,\ldots ,j\), \(h^{(z_{i})}=\left( h_{1}^{(z_{i})},\ldots ,h_{|C_{z_{i}}|}^{(z_{i})}\right) \), \(i=1,\ldots ,s-j\), and

which completes the proof. \(\square \)
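For small systems whose components are independent with finite support (the setting of Corollary 2), the probabilities \(P(X(T)=w,T=t)\) of Lemma 1 can be cross-checked by brute-force enumeration of all lifetime vectors. The Python sketch below is such a check, not an implementation of formula (2); the function name and the dictionary-based pmf format are assumptions. It also sums over t as in Theorem 1 and computes the mean as in Corollary 3.

```python
from collections import defaultdict
from itertools import product
from typing import Dict, Sequence, Set, Tuple


def joint_x_t_pmf(pmfs: Sequence[Dict[int, float]],
                  cut_sets: Sequence[Set[int]]) -> Dict[Tuple[int, int], float]:
    """Return {(w, t): P(X(T)=w, T=t)} for independent components.

    pmfs[i] maps each support point of T_i to its probability (finite support assumed).
    """
    result: Dict[Tuple[int, int], float] = defaultdict(float)
    for combo in product(*(list(p.items()) for p in pmfs)):
        lifetimes = [value for value, _ in combo]
        prob = 1.0
        for _, p_i in combo:                 # independence: multiply marginal masses
            prob *= p_i
        t = min(max(lifetimes[i] for i in cut) for cut in cut_sets)  # representation (1)
        w = sum(1 for life in lifetimes if life <= t)                # X(T)
        result[(w, t)] += prob
    return dict(result)


# IID components uniform on {1, 2}; minimal cut sets {0} and {1, 2} (0-based indices).
uniform = {1: 0.5, 2: 0.5}
joint = joint_x_t_pmf([uniform, uniform, uniform], [{0}, {1, 2}])

marginal: Dict[int, float] = defaultdict(float)
for (w, t), prob in joint.items():
    marginal[w] += prob                      # Theorem 1: sum over t
mean = sum(w * prob for w, prob in marginal.items())  # Corollary 3
print(dict(marginal), mean)
```

In this toy case the eight lifetime vectors are equally likely, and listing them by hand gives \(P(X(T)=1)=1/8\), \(P(X(T)=2)=3/8\), \(P(X(T)=3)=1/2\) and \(E(X(T))=19/8\), which the enumeration reproduces. The cost grows like the product of the support sizes, so this is only a check for small examples.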

In Example 1 given below, we demonstrate the application of Lemma 1.

Example 1

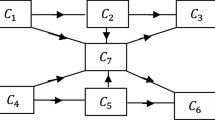

Consider a series–parallel system with the lifetime

\[T=\min \{T_{1},\max \{T_{2},T_{3}\}\}. \tag{4}\]

This system has two disjoint minimal cut sets connected in series, namely \(C_{1}=\{1\}\) and \(C_{2}=\{2,3\}\). We assume that components can fail only at discrete time points, so the lifetimes \(T_{1},T_{2},T_{3}\) are discretely distributed rvs. Suppose that the system operates in cycles and that in each cycle the ith component is exposed to an individual shock, which it survives with probability \(p_{i}\in (0,1)\), \(i=1,2,3\). Moreover, in each cycle there is a common shock to all three components: with probability \(\theta \in (0,1)\) all of them survive it, and with probability \(1-\theta \) none of them does. The survivals of different shocks are independent of one another and across cycles. A failed component remains inoperative forever.

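Let the rvs \(T_{i}\), \(i=1,2,3\), denote the number of cycles up to and including the failure of the ith component. Under the above assumptions, they are DNID rvs with the joint survival function

\[P(T_{1}>t_{1},T_{2}>t_{2},T_{3}>t_{3})=p_{1}^{t_{1}}\,p_{2}^{t_{2}}\,p_{3}^{t_{3}}\,\theta ^{\max \{t_{1},t_{2},t_{3}\}},\qquad t_{1},t_{2},t_{3}=0,1,2,\ldots , \tag{5}\]

see Esary and Marshall (1973) for more details.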
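For the shock model of Example 1, the joint pmf can be recovered from the joint survival function by discrete inclusion–exclusion, \(P(T_{1}=t_{1},T_{2}=t_{2},T_{3}=t_{3})=\sum _{\delta \in \{0,1\}^{3}}(-1)^{\delta _{1}+\delta _{2}+\delta _{3}}P(T_{1}>t_{1}-1+\delta _{1},T_{2}>t_{2}-1+\delta _{2},T_{3}>t_{3}-1+\delta _{3})\), after which \(P(X(T)=w,T=t)\) follows by enumeration. The Python sketch below is a numerical cross-check, not the closed-form route via Lemma 1. It assumes the survival function \(p_{1}^{t_{1}}p_{2}^{t_{2}}p_{3}^{t_{3}}\theta ^{\max \{t_{1},t_{2},t_{3}\}}\) implied by the shock description above, and it truncates every lifetime at a hypothetical horizon, so the probability that some \(T_{i}\) exceeds the horizon is ignored. Function names are hypothetical.

```python
from collections import defaultdict
from itertools import product
from typing import Dict, Tuple


def survival(t1: int, t2: int, t3: int, p1: float, p2: float, p3: float, theta: float) -> float:
    """Joint survival P(T1>t1, T2>t2, T3>t3) for the shock model of Example 1."""
    return p1**t1 * p2**t2 * p3**t3 * theta**max(t1, t2, t3)


def joint_pmf(t1: int, t2: int, t3: int, p1: float, p2: float, p3: float, theta: float) -> float:
    """P(T1=t1, T2=t2, T3=t3) obtained from the survival function by inclusion-exclusion."""
    total = 0.0
    for d1, d2, d3 in product((0, 1), repeat=3):
        sign = (-1) ** (d1 + d2 + d3)
        total += sign * survival(t1 - 1 + d1, t2 - 1 + d2, t3 - 1 + d3, p1, p2, p3, theta)
    return total


def x_t_table(p1: float, p2: float, p3: float, theta: float,
              horizon: int = 40) -> Dict[Tuple[int, int], float]:
    """Approximate {(w, t): P(X(T)=w, T=t)} for T = min(T1, max(T2, T3)), lifetimes cut at `horizon`."""
    table: Dict[Tuple[int, int], float] = defaultdict(float)
    for t1, t2, t3 in product(range(1, horizon + 1), repeat=3):
        t = min(t1, max(t2, t3))                            # lifetime (4)
        w = sum(1 for life in (t1, t2, t3) if life <= t)    # X(T), ties included
        table[(w, t)] += joint_pmf(t1, t2, t3, p1, p2, p3, theta)
    return dict(table)
```

Ties have positive probability here: for instance, all three components fail in the first cycle with probability \((1-\theta )+\theta (1-p_{1})(1-p_{2})(1-p_{3})\), which is 0.248 for \(p_{1}=0.5\), \(p_{2}=0.6\), \(p_{3}=0.7\), \(\theta =0.8\). With these values and the default horizon, the table's total mass differs from 1 by less than \(10^{-6}\), and its entries with \(t>2\) add up to \(P(T>2)=\theta ^{2}p_{1}^{2}(p_{2}^{2}+p_{3}^{2}-p_{2}^{2}p_{3}^{2})=0.107776\), which follows directly from (4) and the survival function.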

Combining (5) with Lemma 1, we obtain

Further, we immediately obtain

which leads to

Finally, observe that for \(t=1,2,\ldots \)

which implies

The expressions obtained in Example 1 raise the natural question of whether closed forms are available in specific situations. If the dependence structure of \(T_{1},\ldots ,T_{n}\) is known, the formula given in Lemma 1 can be simplified. For example, we can consider the case when \(T_{1},\ldots ,T_{n}\) are exchangeable, that is, for any permutation \((h_{1},\ldots ,h_{n})\) of \((1,\ldots ,n)\), the random vector \((T_{h_{1}},\ldots ,T_{h_{n}})\) has the same distribution as \((T_{1},\ldots ,T_{n})\). In practice, this means that the component lifetimes are identically distributed but may affect one another within the system. If \(p_{1}=p_{2}=p_{3}\) in Example 1, i.e., each component survives its individual shock in a single cycle with the same probability, then \(T_{1},T_{2},T_{3}\) are exchangeable. We can also consider series–parallel systems in which \(T_{1},\ldots ,T_{n}\) are independent. In these particular cases, Lemma 1 takes the following closed forms.

Corollary 1

Under the assumptions of Lemma 1, if moreover \(T_{1},\ldots ,T_{n}\) are exchangeable, then the probability of the event \(\{X(T)=w,T=t\}\) is given by (2) with

where \(\left( {\begin{array}{c}a\\ b,c\end{array}}\right) =\frac{a!}{b!c!(a-b-c)!}\), and the quantities \(^{h^{(k_{1})},\ldots ,h^{(k_{j})}}_{h^{(z_{1})},\ldots ,h^{(z_{s-j})}}B_{r_{1},v_{1},\ldots ,r_{s-j},v_{s-j}}^{t;m_{1},\ldots ,m_{j}}\) defined in (3) do not depend on the permutations \(h^{(k_{1})},\ldots ,h^{(k_{j})}\), \(h^{(z_{1})},\ldots ,h^{(z_{s-j})}\). Hence it is natural to choose the simplest possible values, e.g., \(h^{(k_{1})}=\left( 1,\ldots ,|C_{k_{1}}|\right) \), \(\ldots \), \(h^{(k_{j})}=\left( \sum _{l=1}^{j-1}|C_{k_{l}}|+1, \ldots , \sum _{l=1}^{j}|C_{k_{l}}|\right) \) and \(h^{(z_{1})}=\left( 1,\ldots ,|C_{z_{1}}|\right) \), \(\ldots \), \(h^{(z_{s-j})}=\left( \sum _{l=1}^{s-j-1}|C_{z_{l}}|+1,\ldots ,\sum _{l=1}^{s-j}|C_{z_{l}}|\right) \).

Corollary 2

Under the assumptions of Lemma 1, if moreover \(T_{1},\ldots ,T_{n}\) are independent such that \(T_{i}\) has cdf \(F_{i}\) and pmf \(p_{i}\), \(i=1,\ldots ,n\), then the probability of the event \(\{X(T)=w,T=t\}\) is given by (2) with

In the model of IID component lifetimes, the probability (6) has the form

Using Lemma 1, we can now determine the probability mass function of X(T), the number of failed components upon system failure.

Theorem 1

Under the assumptions of Lemma 1, for any \(w={\tilde{m}},\ldots ,n\), we have

\[P(X(T)=w)=\sum _{t=1}^{\infty }P(X(T)=w,T=t), \tag{7}\]

where the probabilities \(P(X(T)=w,T=t)\), \(t\in {\mathbb {N}}\), can be computed using formula (2).

The expected value E(X(T)) gives the mean number of failed components at the time of the series–parallel system failure.

Corollary 3

Applying Theorem 1, E(X(T)) can be computed as follows:

\[E(X(T))=\sum _{w={\tilde{m}}}^{n}w\,P(X(T)=w)=\sum _{w={\tilde{m}}}^{n}\sum _{t=1}^{\infty }w\,P(X(T)=w,T=t). \tag{8}\]

We now consider two situations in which partial information about the system failure time is available. First, assume that the series–parallel system is known to have failed at time \(T=t\). Under this condition, we compute the conditional pmf of X(T), i.e., \(P(X(T)=w|T=t)\), \(w={\tilde{m}},\ldots ,n\). Second, suppose that the series–parallel system is known to be still working at time t, and compute the corresponding conditional probabilities \(P(X(T)=w|T>t)\), \(w={\tilde{m}},\ldots ,n\). To establish these conditional probabilities, we first recall results on the survival (reliability) function of the system lifetime T.

Under the assumption that \(T_{1},\ldots ,T_{n}\) are DNID discrete rvs, Dembińska and Goroncy (2020, (4.3)) used representation (1) to derive the following expression for the survival function of T:

If we know the dependence structure between \(T_{1},\ldots ,T_{n}\), then the expression (9) can be simplified in particular cases. Thus

where

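Using representation (1) and the fact that the minimal cut sets are pairwise disjoint, we can also obtain considerably simpler forms of (10) in the independent and IID cases (cf. the derivation of formula (3) in Dembińska and Eryilmaz (2021)). Since the system works at time t if and only if every module still contains a working component, and the component lifetimes are independent, it follows that

\[P(T>t)=\prod _{i=1}^{s}\Big (1-\prod _{l=1}^{|C_{i}|}F_{h_{l}^{(i)}}(t)\Big )\ \ \text {(independent case)},\qquad P(T>t)=\prod _{i=1}^{s}\big (1-F_{1}^{|C_{i}|}(t)\big )\ \ \text {(IID case)}, \tag{11}\]

where \(h_{1}^{(i)},\ldots ,h_{|C_{i}|}^{(i)}\) are the indices of the component lifetimes in the minimal cut set \(C_{i}\), \(i=1,\ldots ,s\).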
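For independent components, the factorized survival function of a series–parallel system with disjoint minimal cut sets takes only a few lines of code. The Python sketch below assumes \(P(T>t)=\prod _{i=1}^{s}\big (1-\prod _{l=1}^{|C_{i}|}F_{h_{l}^{(i)}}(t)\big )\), with the IID special case \(\prod _{i=1}^{s}(1-F_{1}^{|C_{i}|}(t))\); the function names and the geometric-type example cdf are hypothetical.

```python
from math import prod
from typing import Callable, Sequence, Set


def survival_independent(t: int, cdfs: Sequence[Callable[[int], float]],
                         cut_sets: Sequence[Set[int]]) -> float:
    """P(T > t): product over modules of (1 - P(all components in the module failed by t))."""
    return prod(1.0 - prod(cdfs[i](t) for i in cut) for cut in cut_sets)


def survival_iid(t: int, cdf: Callable[[int], float], cut_sizes: Sequence[int]) -> float:
    """IID special case: P(T > t) = prod_i (1 - F(t) ** |C_i|)."""
    return prod(1.0 - cdf(t) ** size for size in cut_sizes)


def geometric_cdf(t: int, q: float = 0.7) -> float:
    """Hypothetical example cdf on {1, 2, ...} with P(T_i > t) = q ** t."""
    return 1.0 - q ** t


cuts = [{0}, {1, 2}]                       # minimal cut sets {1}, {2, 3}, 0-based
print(survival_independent(2, [geometric_cdf] * 3, cuts))
print(survival_iid(2, geometric_cdf, [1, 2]))
```

Both calls evaluate \(0.49\,(1-0.51^{2})\approx 0.3626\) for the lifetime (4), since \({\overline{F}}(2)=0.7^{2}=0.49\). Passing cdfs as callables keeps the sketch agnostic about the support, finite or infinite.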

Theorem 2

Let the assumptions of Lemma 1 hold and let \(t\in {\mathbb {N}}\) be such that \(P(T=t)>0\). Then, for \(w={\tilde{m}},\ldots ,n\),

\[P(X(T)=w|T=t)=\frac{P(X(T)=w,T=t)}{P(T=t)}, \tag{12}\]

where the probabilities \(P\left( X(T)=w,T=t\right) \) and \(P(T=t)=P(T>t-1)-P(T>t)\) can be obtained from (2) and (9), respectively.

Theorem 3

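Under the assumptions of Theorem 2 with “\(P(T=t)>0\)” replaced by “\(P(T>t)>0\)”, we have, for \(w={\tilde{m}},\ldots ,n\),

\[P(X(T)=w|T>t)=\frac{\sum _{u=t+1}^{\infty }P(X(T)=w,T=u)}{P(T>t)}, \tag{13}\]

where again the probabilities \(P\left( X(T)=w,T=u\right) \) and \(P(T>t)\) are given by (2) and (9), respectively.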
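Once a table of the joint probabilities \(P(X(T)=w,T=t)\) is available, for example from (2) or from a numerical enumeration, Theorems 2 and 3 amount to normalizing slices of that table. The Python sketch below (hypothetical function names, table keyed by (w, t)) takes the denominators \(P(T=t)\) and \(P(T>t)\) from the table itself; if the table is truncated, these denominators are approximations, whereas the theorems use the exact values from (9).

```python
from typing import Dict, Tuple

Table = Dict[Tuple[int, int], float]   # {(w, t): P(X(T)=w, T=t)}


def given_failure_at(table: Table, t: int) -> Dict[int, float]:
    """Theorem 2: P(X(T)=w | T=t) = P(X(T)=w, T=t) / P(T=t)."""
    slice_t = {w: prob for (w, u), prob in table.items() if u == t}
    p_t = sum(slice_t.values())
    if p_t <= 0:
        raise ValueError("P(T = t) must be positive")
    return {w: prob / p_t for w, prob in slice_t.items()}


def given_survival_past(table: Table, t: int) -> Dict[int, float]:
    """Theorem 3: P(X(T)=w | T>t) = sum_{u>t} P(X(T)=w, T=u) / P(T>t)."""
    tail: Dict[int, float] = {}
    for (w, u), prob in table.items():
        if u > t:
            tail[w] = tail.get(w, 0.0) + prob
    p_tail = sum(tail.values())
    if p_tail <= 0:
        raise ValueError("P(T > t) must be positive")
    return {w: prob / p_tail for w, prob in tail.items()}


# Joint table for lifetime (4) with IID components uniform on {1, 2}.
table: Table = {(3, 1): 0.125, (2, 1): 0.375, (1, 1): 0.125, (3, 2): 0.375}
print(given_failure_at(table, 1))     # conditional pmf of X(T) given T = 1
print(given_survival_past(table, 1))  # conditional pmf of X(T) given T > 1
```

The toy table follows from listing the eight equally likely lifetime vectors. Here \(P(T=1)=5/8\), so \(P(X(T)=w|T=1)\) equals 1/5, 3/5 and 1/5 for \(w=1,2,3\), while given \(T>1\) all three components have failed with probability 1.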

Corollary 4

Combining Corollaries 1 and 2 with (10) (or, alternatively, with (11)), we obtain simplified forms of (7), (12) and (13) when \(T_{1},\ldots ,T_{n}\) are exchangeable, independent but not necessarily identically distributed, or IID rvs.

In practice, the probabilities in (7) and (13), and consequently the expectation in (8), are difficult to compute with software when the support of \(T_{i}\) is infinite: the sums \(\sum _{t=1}^{\infty }\) in (7) and \(\sum _{u=t+1}^{\infty }\) in (13) may contain infinitely many positive summands, which cannot all be added numerically. We propose a truncation method to overcome this problem. We first fix the desired accuracy \(a>0\) and then decide how many terms of the infinite sum must be included to achieve it. More precisely, to compute the probability (7), we split the infinite series into two parts,

\[P(X(T)=w)=\sum _{t=1}^{t_{0}}P(X(T)=w,T=t)+\sum _{t=t_{0}+1}^{\infty }P(X(T)=w,T=t),\]

where \(t_{0}\) is chosen so that

\[\sum _{t=t_{0}+1}^{\infty }P(X(T)=w,T=t)\le a. \tag{14}\]

Thus the approximation \(P\left( X(T)=w\right) \approx \sum _{t=1}^{t_{0}}P(X(T)=w,T=t)\) has an error not greater than a. We now look for \(t_{0}\) satisfying (14). Since \(\sum _{t=1}^{\infty }p_{i}(t)=1\), \(i=1,\ldots ,n\), it is easily seen that for any \(\varepsilon >0\) there exists \(t_{0}\) such that

\[\max _{1\le i\le n}{\overline{F}}_{i}(t_{0})=\max _{1\le i\le n}\sum _{t=t_{0}+1}^{\infty }p_{i}(t)\le \varepsilon .\]

By Lemma 1,

Since \(\sum \nolimits _{m_{l}=1}^{|C_{k_{l}}|}\!\!\left( {\begin{array}{c}|C_{k_{l}}|\\ m_{l}\end{array}}\right) \!=\!2^{|C_{k_{l}}|}\!-\!1\), \(l\!=\!1,\ldots ,j\), and \(\sum \nolimits _{r_{l}=0}^{|C_{z_{l}}|-1}\sum \nolimits _{v_{l}=0}^{|C_{z_{l}}|-r_{l}-1}\!\!\!\left( {\begin{array}{c}|C_{z_{l}}|\\ r_{l},v_{l}\end{array}}\right) \!=\!3^{|C_{z_{l}}|}-\!2^{|C_{z_{l}}|}\), \(l=1,\ldots ,s-j\), we obtain

We conclude from (15) that

where

Hence, if \(\varepsilon \le \frac{a}{n\,{\tilde{a}}}\), then \(\sum _{t=t_{0}+1}^{\infty }P(X(T)=w,T=t)\le a\). We have thus proved the following proposition.

Proposition 1

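Let \(T_{1},\ldots ,T_{n}\) be DNID discrete rvs. If \(t_{0}\) satisfies the condition

\[\max _{1\le i\le n}{\overline{F}}_{i}(t_{0})\le \frac{a}{n\,{\tilde{a}}} \tag{18}\]

with \({\tilde{a}}\) defined in (17), then the approximate formula

\[P(X(T)=w)\approx \sum _{t=1}^{t_{0}}P(X(T)=w,T=t) \tag{19}\]

gives an error not greater than a.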
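Proposition 1 reduces the choice of \(t_{0}\) to a bound on the tails \({\overline{F}}_{i}(t_{0})\) of the marginal distributions, with threshold \(\varepsilon =a/(n\,{\tilde{a}})\) as in the argument above. Since each \({\overline{F}}_{i}\) is non-increasing, the smallest admissible \(t_{0}\) can be found by a linear search. In the Python sketch below the threshold is passed in directly, because \({\tilde{a}}\) from (17) has to be computed separately; the function name, the search limit and the example tails are assumptions.

```python
from math import ceil, log
from typing import Callable, Sequence


def smallest_t0(tails: Sequence[Callable[[int], float]], eps: float, t_max: int = 10**6) -> int:
    """Smallest t0 with max_i P(T_i > t0) <= eps, searching t0 = 0, 1, ..., t_max."""
    for t0 in range(t_max + 1):
        if max(tail(t0) for tail in tails) <= eps:
            return t0
    raise ValueError("no admissible t0 up to t_max")


# Hypothetical geometric-type tails P(T_i > t) = q_i ** t.
tails = [lambda t, q=q: q ** t for q in (0.6, 0.7, 0.8)]
print(smallest_t0(tails, eps=1e-5))          # 52, driven by the slowest tail q = 0.8
# For tails of the form q ** t there is a closed form: ceil(log(eps) / log(max q)).
print(ceil(log(1e-5) / log(0.8)))            # 52
```

Because the search stops at the first admissible value and the tails are monotone, condition (18) then holds for every larger \(t_{0}\) as well.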

In particular cases, that is when \(T_{1},\ldots ,T_{n}\) are exchangeable or IID rvs, we can use a weaker condition than (18).

Proposition 2

If \(T_{1},\ldots ,T_{n}\) are exchangeable or IID rvs and \(t_{0}\) satisfies the condition

with \({\tilde{a}}\) defined in (17), then we can use the approximate formula given in (19).

Proof

Observe that if \(T_{1},\ldots ,T_{n}\) are exchangeable or IID rvs, then \(\max _{i\in \{1,\ldots ,n\}}{\overline{F}}_{i}(t_{0})\) in (18) can be replaced by \({\overline{F}}_{1}(t_{0})\), and \(\max _{i\in \{1,\ldots ,n\}}p_{i}(t)\) and \((p_{1}(t)+\ldots +p_{n}(t))\) in (16) can be replaced by \(p_{1}(t)\). Then, using inequality (16), we obtain the following estimate

which is precisely the assertion of Proposition 2. \(\square \)
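The two counting identities used in the bounds preceding Proposition 1 are easy to confirm numerically. The first is the binomial theorem with the empty subset removed; the second holds because the multinomial coefficients \(\binom{k}{r,v}\) with \(r+v\le k-1\) count the assignments of k labelled items to three groups in which the third group is nonempty, i.e., all \(3^{k}\) assignments minus the \(2^{k}\) with an empty third group. A short Python check (the helper name is hypothetical):

```python
from math import comb, factorial


def multinomial(k: int, r: int, v: int) -> int:
    """k! / (r! v! (k - r - v)!), the coefficient written (k choose r, v) in the text."""
    return factorial(k) // (factorial(r) * factorial(v) * factorial(k - r - v))


for k in range(1, 11):
    # sum_{m=1}^{k} C(k, m) = 2^k - 1
    assert sum(comb(k, m) for m in range(1, k + 1)) == 2**k - 1
    # sum_{r=0}^{k-1} sum_{v=0}^{k-r-1} (k choose r, v) = 3^k - 2^k
    assert sum(multinomial(k, r, v) for r in range(k) for v in range(k - r)) == 3**k - 2**k
print("both identities hold for k = 1, ..., 10")
```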

As with \(P(X(T)=w)\), the conditional probability \(P(X(T)=w|T>t)\) from Theorem 3 can be computed numerically using the approximation \(P(X(T)=w|T>t)\approx \sum \nolimits _{u=t+1}^{t_{0}}P(X(T)=w,T=u)/P(T>t)\), where \(t_{0}\) is chosen so that the approximation error does not exceed a fixed accuracy \(a>0\). To find \(t_{0}\), we can proceed as in the proof of Proposition 1; it suffices to replace a in (18) by \(aP(T>t)\).

Finally, we compute numerically the mean number of failed components at the moment of system failure, i.e., E(X(T)) given in (8), with an error not greater than \(a>0\). Suppose that the maximal admissible errors in computing the individual probabilities \(P\left( X(T)=w\right) \), \(w={\tilde{m}},\ldots ,n\), are all equal, and denote their common value by \(a_{max}\). Then the error in computing E(X(T)) is not greater than \(\sum _{w={\tilde{m}}}^{n}w\,a_{max}=\frac{({\tilde{m}}+n)(n-{\tilde{m}}+1)}{2}a_{max}\). Hence, taking \(a_{max}=\frac{2a}{({\tilde{m}}+n)(n-{\tilde{m}}+1)}\) guarantees that E(X(T)) is approximated with an error not greater than a.
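The error budget above is simple arithmetic; with exact rational numbers one can confirm that the per-probability allowance \(a_{max}\) spends the total budget a exactly. The Python sketch below (hypothetical function name) uses the setting of Sect. 3, where \({\tilde{m}}=1\), \(n=3\) and \(a=0.00005\).

```python
from fractions import Fraction


def per_probability_error(a: Fraction, m_tilde: int, n: int) -> Fraction:
    """a_max = 2a / ((m_tilde + n)(n - m_tilde + 1)), the allowance for each P(X(T)=w)."""
    return 2 * a / ((m_tilde + n) * (n - m_tilde + 1))


a = Fraction(5, 100000)            # a = 0.00005
m_tilde, n = 1, 3                  # minimal cut sets {1} and {2, 3}
a_max = per_probability_error(a, m_tilde, n)
total = sum(w * a_max for w in range(m_tilde, n + 1))
print(a_max, total)                # 1/120000 1/20000
```

Using `Fraction` avoids floating-point rounding, so the check that the weighted errors add up to a is exact.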

Proposition 3

Under the assumptions of Lemma 1, E(X(T)) given in (8) can be computed with an error not greater than \(a>0\), by the use of the approximate formula (19) for the probabilities \(P(X(T)=w)\), \(w={\tilde{m}},\ldots ,n\), with \(t_{0}\) chosen so that the condition in (18) holds with \(a>0\) replaced by \(a_{max}=\frac{2a}{({\tilde{m}}+n)(n-{\tilde{m}}+1)}\).

3 Numerical examples

In this section we present an example illustrating the theoretical results obtained in Sect. 2. In particular, we demonstrate the application of Corollary 4 and, within it, of Proposition 2. Applying Proposition 2 requires fixing a level of accuracy; for all tables in the following example we take \(a=0.00005\).

Example 2

Consider a series–parallel system with the lifetime given in (4), where \(T_{1},T_{2},T_{3}\) are assumed to be IID rvs such that \(T_{1}\) has a geometric distribution geo(p), where \(p\in (0,1)\) and

Consequently, for \(t=1,2,\ldots ,\)

Since the system has two disjoint minimal cut sets connected in series, \(C_{1}=\{1\}\) and \(C_{2}=\{2,3\}\), we compute numerically the probabilities \(P(X(T)=w)\), \(w=1,2,3\), for various p. Combining Lemma 1 with (20) and (21), we obtain

To obtain the desired results, it now suffices to apply Corollary 4 (and Proposition 2). The probabilities \(P(X(T)=w)\), \(w=1,2,3\), for various \(p\in (0,1)\), are presented in Table 1. Underneath each probability, in brackets, we give the corresponding value of \(t_{0}\), i.e., the number of summands sufficient to achieve the fixed accuracy. Next, we present some conditional probabilities obtained using Corollary 4 (and Proposition 2). For selected values of t, Table 2 gives the conditional probabilities that exactly w, \(w=1,2,3,\) components had failed at the moment of system failure, given that the system failed at time t, i.e., \(P(X(T)=w|T=t)\). Similarly, for selected values of t, Table 3 gives the conditional probabilities that w, \(w=1,2,3,\) components had failed at the moment of system failure, given that the system survived beyond time t, i.e., \(P(X(T)=w|T>t)\); for the accuracy \(a=0.00005\), the values of \(t_{0}\) are again given in brackets (see Proposition 2 and the comments following it). In Fig. 1, the conditional probabilities \(P(X(T)=w|T=t)\) and \(P(X(T)=w|T>t)\), \(w=1,2,3\), are plotted as functions of \(t=1,2,\ldots ,30\). The conditional probabilities \(P(X(T)=2|T=t)\), \(P(X(T)=3|T=t)\), \(P(X(T)=2|T>t)\) and \(P(X(T)=3|T>t)\) increase in \(t=1,2,\ldots ,30\), whereas \(P(X(T)=1|T=t)\) and \(P(X(T)=1|T>t)\) decrease.
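For the particular structure (4) with IID lifetimes, a direct case analysis at the system failure time gives \(P(X(T)=1,T=t)=p_{1}(t){\overline{F}}_{1}(t)^{2}\), \(P(X(T)=2,T=t)=2p_{1}(t)F_{1}(t){\overline{F}}_{1}(t)+{\overline{F}}_{1}(t)\big (F_{1}(t)^{2}-F_{1}(t-1)^{2}\big )\) and \(P(X(T)=3,T=t)=p_{1}(t)\big (F_{1}(t)^{2}-F_{1}(t-1)^{2}\big )\). This is an elementary derivation for this system only, offered as a cross-check of Lemma 1 and Corollary 2, not as the computation behind the tables. Since the exact parameterization of geo(p) in (20) is not reproduced here, the Python sketch below assumes lifetimes on \(\{1,2,\ldots \}\) with \(P(T_{1}>t)=q^{t}\) for a per-cycle survival probability q (a hypothetical name), and truncates the sum (7) at a user-chosen \(t_{0}\).

```python
from typing import Callable, Dict


def joint_example2(t: int, cdf: Callable[[int], float]) -> Dict[int, float]:
    """P(X(T)=w, T=t), w = 1, 2, 3, for T = min(T1, max(T2, T3)) with IID lifetimes."""
    F, F_prev = cdf(t), cdf(t - 1)
    pmf_t = F - F_prev                  # P(T1 = t)
    surv = 1.0 - F                      # P(T1 > t)
    max_at_t = F**2 - F_prev**2         # P(max(T2, T3) = t)
    return {
        1: pmf_t * surv**2,                          # T1 = t, T2 > t, T3 > t
        2: 2 * pmf_t * F * surv + surv * max_at_t,   # T1 = t, exactly one of T2, T3 <= t;
                                                     # or max(T2, T3) = t < T1
        3: pmf_t * max_at_t,                         # T1 = t = max(T2, T3)
    }


def marginal_example2(q: float, t0: int) -> Dict[int, float]:
    """Truncated (7): sum_{t=1}^{t0} P(X(T)=w, T=t), assuming P(T1 > t) = q ** t on {1, 2, ...}."""
    def cdf(t: int) -> float:
        return 1.0 - q ** t

    totals = {1: 0.0, 2: 0.0, 3: 0.0}
    for t in range(1, t0 + 1):
        for w, prob in joint_example2(t, cdf).items():
            totals[w] += prob
    return totals


print(marginal_example2(q=0.8, t0=200))
```

Summing the first case over t gives the closed form \(P(X(T)=1)=(1-q)q^{2}/(1-q^{3})\), about 0.2623 for \(q=0.8\), which the truncated sum reproduces; the three probabilities add up to 1 up to the neglected tail.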

Table 4 gives the expected number of failed components at the moment of system failure, obtained from formula (8) and Proposition 3.

Fig. 1 Conditional probabilities \(P(X(T)=w|T=t)\) and \(P(X(T)=w|T>t)\), \(w=1,2,3\), for the coherent system with the lifetime (4) as functions of \(t=1,2,\ldots ,30\) when \(p=0.2\)

4 Summary and conclusions

In this paper, we have considered series–parallel systems, which are of considerable practical importance. They can be used to model, e.g., coal feeding systems and port oil transportation systems; see Levitin and Amari (2009) and Kołowrocki (2003) for more details. The number of failed components in such a system upon its failure is important in optimal system design and maintenance planning. We have analyzed systems whose component lifetimes are discretely distributed. Our results are related to those obtained by Davies and Dembińska (2019), who considered k-out-of-n systems, and by Dembińska and Eryilmaz (2021), who examined series–parallel systems with independent component lifetimes. The latter authors, however, studied the number of failed components in each module rather than the total number of failed components at the time of failure of a series–parallel system.

The number of failed components in an arbitrary coherent system at the moment of system failure is left for future research. It would also be of interest to study this quantity for multi-state series–parallel systems.

References

Asadi M, Berred A (2012) On the number of failed components in a coherent operating system. Statist Probab Lett 82:2156–2163

Barlow RE, Proschan F (1975) Statistical Theory of Reliability and Life Testing: Probability Models. Holt, Rinehart and Winston

Davies K, Dembińska A (2019) On the number of failed components in a \(k\)-out-of-\(n\) system upon system failure when the lifetimes are discretely distributed. Reliab Eng Syst Saf 188:47–61

Dembińska A (2018) On reliability analysis of \(k\)-out-of-\(n\) systems consisting of heterogeneous components with discrete lifetimes. IEEE Trans Reliab 67:1071–1083

Dembińska A, Eryilmaz S (2021) Discrete time series-parallel system and its optimal configuration. Reliab Eng Syst Saf 215:107832

Dembińska A, Goroncy A (2020) Moments of order statistics from DNID discrete random variables with application in reliability. J Comput Appl Math 371:112703

Dembińska A, Jasiński K (2021) Maximum likelihood estimators based on discrete component lifetimes of a k-out-of-n system. TEST 30:407–428

Dembińska A, Nikolov NI, Stoimenova E (2021) Reliability properties of \(k\)-out-of-\(n\) systems with one cold standby unit. J Comput Appl Math 388:113289

Eryilmaz S (2018) The number of failed components in a \(k\)-out-of-\(n\) system consisting of multiple types of components. Reliab Eng Syst Saf 175:246–250

Eryilmaz S, Pekalp MH (2020) On optimal age replacement policy for a class of coherent systems. J Comput Appl Math 377:112888

Esary JD, Marshall AW (1973) Multivariate geometric distributions generated by a cumulative damage process. Technical Report 55#Y73041A. Naval Postgraduate School, Monterey, California

Hashemi M, Asadi M (2020) On component failure systems with applications to maintenance strategies. Adv Appl Probab 52:1197–1223

Jasiński K (2021a) The number of failed components in a coherent working system when the lifetimes are discretely distributed. Metrika 84:1081–1094

Jasiński K (2021b) Some conditional reliability properties of k-out-of-n system composed of different types of components with discrete independent lifetimes. Metrika 84:1241–1251

Jasiński K (2022) On the number of failed components in a coherent system consisting of multiple types of components. J Comput Appl Math 410:114189

Kelkinnama M, Asadi M (2019) Stochastic and ageing properties of coherent systems with dependent identically distributed components. Statist Papers 60:805–821

Kelkinnama M, Eryilmaz S (2023) Some reliability measures and maintenance policies for a coherent system composed of different types of components. Metrika 86:57–82

Kołowrocki K (2003) Asymptotic approach to reliability evaluation of large multistate systems with application to piping transportation. Int J Press Vessels Pip 80:59–73

Levitin G, Amari SV (2009) Optimal load distribution in series-parallel systems. Reliab Eng Syst Saf 94:254–260

Li Ch, Li X (2020) Weak aging properties for coherent systems with statistically dependent component lifetimes. Naval Res Logist 67:559–572

Navarro J, Ruiz JM, Sandoval CJ (2007) Properties of coherent systems with dependent components. Commun Statist Theor Meth 36:175–191

Navarro J, Rychlik T, Spizzichino F (2021) Conditions on marginals and copula of component lifetimes for signature representation of system lifetime. Fuzzy Sets Syst 415:99–117

Pitzen S, Burkschat M (2020) Representations of component importance for coherent systems with exchangeable components. J Appl Probab 57:385–406

Roy A, Gupta N (2020) Reliability of a coherent system equipped with two cold standby components. Metrika 83:677–697

Tank F, Eryilmaz S (2015) The distributions of sum, minima and maxima of generalized geometric random variables. Statist Papers 56:1191–1203

Unnikrishnan Nair N, Sankaran PG, Balakrishnan N (2018) Reliability modelling and analysis in discrete time. Academic Press

Weiss G (1962) On certain redundant systems which operate at discrete times. Technometrics 4:169–174

Young D (1970) The order statistics of the negative binomial distribution. Biometrika 57:181–186

Acknowledgements

I would like to express my sincere thanks to the associate editor and two anonymous referees for their constructive comments and suggestions which improved the presentation of the paper.


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Jasiński, K. On the number of failed components in a series–parallel system upon system failure when the lifetimes are DNID discrete random variables. Metrika 87, 183–200 (2024). https://doi.org/10.1007/s00184-023-00909-1


DOI: https://doi.org/10.1007/s00184-023-00909-1

Keywords

- Reliability theory

- Series–parallel system

- Discrete lifetime distribution

- Order statistics

- Disjoint modules