Abstract

We introduce a new quadratic asymmetric error correction model that comprehensively accounts for both sign and size asymmetries. We also propose a test protocol that allows us to rigorously identify different sources of long-run nonlinearity, namely quadratic nonlinearity, size asymmetry and sign asymmetry. We use a nonparametric residual recursive bootstrap technique to compute p-values for the long-run tests. Simulation results confirm the consistency of our proposed estimator in finite samples and show that the bootstrapped tests have reasonably good size and power properties. Although our estimation of Okun's Law for the USA confirms previous findings on the direction of the sign asymmetry, it reveals that the magnitude of the impact of economic downturns on unemployment decreases faster than the impact of upturns. Forecasting results show that our new model outperforms the NARDL model.



1 Introduction

It is well established that macroeconomic variables are likely to exhibit nonlinear and asymmetric behavior, meaning that their fluctuations differ across phases of the business cycle. Keynes (1936) suggested that negative economic shocks usually have a sharper impact on the economy than positive shocks. Indeed, there is considerable empirical evidence that nonlinearity and asymmetry are key features of macroeconomic dynamics (e.g., Neftçi 1984; Holly and Stannett 1995; Ramsey and Rothman 1996; Andreano and Savio 2002; Webber 2000; Lee 2000; Virén 2001; Granger and Yoon 2002; Schorderet 2001, 2003; Shin et al. 2014). For instance, it is commonly argued in the exchange rate literature that exchange rate pass-through (ERPT) into import prices (or domestic inflation) is asymmetric: the impact of an exchange rate appreciation on prices differs significantly from the impact of a depreciation (Shintani et al. 2013; Yanamandra 2015; Brun-Aguerre et al. 2012, 2017; Baharumshah et al. 2017). These findings suggest that the symmetry and linearity assumptions used to derive classical economic models can be restrictive and may lead to biased inference and poor forecasts.

Besides being nonlinear, macroeconomic data are generally non-stationary. In other words, variables do not usually exhibit mean-reverting behavior in the long run, and their variance grows over time (Rapach 2002). This creates serious statistical problems, since the conventional asymptotic distributions of the coefficient estimates no longer apply. To tackle this problem, the seminal work of Granger (1981) introduced the concept of cointegration, which describes a long-run equilibrium relationship that may exist between two or more non-stationary time series. Granger (1983) later proved that two cointegrated series can be represented by a linear error correction model (ECM). Subsequently, a body of work has developed nonlinear cointegration models based on nonlinear extensions of the ECM. One attractive form of nonlinear error correction model (NLECM) is the threshold error correction model (TECM) introduced by Balke and Fomby (1997). Lo and Zivot (2001) extended the TECM to a multivariate threshold cointegration model, and Hansen and Seo (2002) improved it by examining the case of an unknown cointegrating vector in a complete multivariate threshold model. One major drawback of the TECM is the finite number of possible cointegrating regimes. To address this issue, several researchers have proposed generalized versions of the TECM. For instance, Choi and Saikkonen (2004) provided estimation and testing procedures for the smooth transition regression error correction model (STECM). Psaradakis et al. (2004) developed a Markov-switching error correction model (MSECM) in which deviations from the long-run equilibrium are characterized by different rates of adjustment. This class of ECM has the advantage of being more suitable in situations where the regime change is induced by a sudden shock to the economy.
To obtain a more general nonlinear cointegration framework, Park and Phillips (1999, 2001) started from a nonlinearly transformed version of a vector AR and developed an asymptotic theory for stochastic processes generated by nonlinear transformations of integrated time series. They showed that the convergence rate of the sample function may be faster or slower than in a linear cointegrating regression, depending on the nonlinear transformation function. The pioneering work of Park and Phillips (1999, 2001) sparked researchers' interest in nonlinear cointegration analysis. In particular, several parametric, nonparametric and semiparametric cointegration models have been proposed to estimate nonlinear, nonstationary relationships (Karlsen et al. 2007; Cai et al. 2009; Wang and Phillips 2009a, b; Chan and Wang 2015; Linton and Wang 2008, 2016; Phillips et al. 2017; Wang et al. 2018; Dong and Gao 2018; Hu et al. 2019).

Other studies have considered asymmetric cointegration by decomposing the variables into positive and negative partial sums (see, for example, Schorderet 2001). For instance, Webber (2000) adopted the partial sum decomposition technique to investigate the long-run rate of exchange rate pass-through into import prices, and Lee (2000) and Virén (2001) analyzed asymmetries in Okun's Law. More recently, Shin et al. (2014) developed a framework that decomposes the time series into positive and negative partial sums to model long-run and short-run asymmetries within a single cointegrating vector. The main advantage of this nonlinear ARDL (NARDL) model is that it provides a flexible and simple econometric framework that accounts for both long- and short-run asymmetries. Although NARDL has been widely and successfully applied to various macroeconomic topics, it still relies on relatively restrictive assumptions about nonlinearity. In fact, NARDL models implicitly assume that any nonlinearity in the impact of the explanatory variable, \(x\), on the dependent variable, \(y\), is explained exclusively by sign asymmetry. However, it is reasonable to expect the magnitude of these impacts to vary over time, as it could depend on the level of \(x\) (long run) and/or on the size of the shock, \(\Delta x\) (short run). To illustrate, in the exchange rate pass-through literature many studies report solid evidence that pass-through depends on the size of the exchange rate shock (small vs. large) as well as on its sign (depreciation vs. appreciation).

To bridge this gap, we propose a new quadratic asymmetric error correction model (QAECM) that comprehensively accounts for two types of asymmetry: size and sign. To do so, we extend the model of Shin et al. (2014) by including the quadratic terms of the partial sum processes of both positive and negative shocks in the standard ECM. The proposed model accounts for sign and size asymmetries in both the short and long runs. To the best of our knowledge, we are the first to adopt such a methodology.

It is true that TECM, MSECM and STECM can, to some extent, account for some forms of nonlinearity; however, these models have several limitations. First, most of them ignore sign asymmetry and continue to assume symmetric impacts of positive and negative changes. Second, in the TECM and the MSECM, the size effect is modeled discretely: the number of times the impact of the explanatory variables can change is restricted to the number of regimes or thresholds. Our QAECM can therefore be considered a continuous version of these models, in which the number of thresholds or regimes is infinite. Third, although the QAECM is less restrictive than the above-mentioned models, it requires the estimation of significantly fewer coefficients. Finally, while the STECM allows for nonlinear smooth transitions in the cointegrating relationship, it does not account for any nonlinearity related to the size effect.

The QAECM could potentially provide an explanation for the mixed empirical results on the direction of the sign asymmetry usually reported in the macroeconomic literature.Footnote 1 In fact, the direction of the sign asymmetry could simply depend on the size of the economic shock and/or the level of the explanatory variable.

We also propose a test protocol that allows us to rigorously identify and assess different sources of long-run nonlinearity, namely quadratic nonlinearity, size asymmetry and sign asymmetry. First, the protocol tests for the presence of any nonlinear quadratic impact of the explanatory variable (the long-run quadratic impact (LQI) test). Second, we run the long-run quadratic decomposition (LQD) test to investigate whether this quadratic effect is transmitted exclusively through the overall level of \(x\) or through \({x}^{-}\), \({x}^{+}\), or any combination of these. Third, based on the previous test, we run a size asymmetry test (the long-run quadratic asymmetry (LQA) test) to check whether the quadratic impact of the positive partial sum, \({({x}^{+})}^{2}\), is statistically different from the quadratic impact of the negative partial sum, \({({x}^{-})}^{2}\).

Finally, we run the long-run sign asymmetry (LSA) test to investigate whether the overall long-run impact of \({x}^{+}\) differs from the impact of \({x}^{-}\). Note that multiple LSA tests are needed, because the overall long-run impact of \({x}^{+}\) (or \({x}^{-}\)) is not stable over time and can depend on the levels of \({x}^{+}\) and \({x}^{-}\). In other words, because of the potential quadratic effect, the direction of the long-run sign asymmetry in the QAECM may change with the levels of both \({x}^{+}\) and \({x}^{-}\).

Given the potential non-stationarity of the regressors and the complex dependence structure between the positive and negative partial sums (\({x}_{t}^{+}, {x}_{t}^{-}\) and \({\left({x}_{t}^{+}\right)}^{2}, {\left({x}_{t}^{-}\right)}^{2}\)), deriving the asymptotic distributions of these tests is not trivial; hence, we do not provide a formal proof of their consistency.

Another contribution of this paper is a nonparametric residual recursive bootstrap technique for computing consistent p-values for the different long-run tests.Footnote 2 Given that the variables in the error correction term are usually non-stationary, relying on conventional asymptotic theory would be inappropriate (McNown et al. 2018). Our methodology differs from the standard pairwise bootstrap technique used by Shin et al. (2014) in two main respects. First, whereas Shin et al. (2014) used the residuals of the unrestricted model to generate the bootstrap samples, we use the residuals of the model estimated under the null hypothesis, which is more in line with the basic principles of bootstrap testing. Second, instead of the fixed-regressor bootstrap used by Shin et al. (2014), in which the lagged dependent variable among the regressors is treated as exogenous, we use an iterative bootstrap in which the lagged dependent variable is generated recursively. A major drawback of the fixed-regressor bootstrap is that the bootstrap samples are not generated in a way that is fully consistent with the null hypothesis: the bootstrapped \({y}_{t}\) vector is obtained from the observed \({y}_{t-1}\) vector (actual data), yet the corresponding bootstrap test statistic is computed from a different regressor matrix that includes the bootstrapped \({y}_{t-1}\). Admittedly, the recursive bootstrap can be inefficient in finite samples when dealing with non-stationary regressors in a standard AR equation (MacKinnon 2009), since in this case the empirical distribution of the test statistic depends on the non-exogenous, non-stationary regressor matrix. However, in the context of the ECM, all regressors are stationary when the variables are cointegrated (i.e., when the null hypothesis of no cointegration is rejected).Footnote 3 Hence, recursive bootstrapping is more appealing than fixed-regressor bootstrapping in our setting.

To assess the finite-sample power and size of the bootstrap tests, we conduct Monte Carlo simulations. The Monte Carlo exercise is crucial in our case because our nonlinear transformation function is nonhomogeneous, non-integrable and non-smooth, and thus does not fall within any of the classes of functions considered in Park and Phillips (1999, 2001), Chan and Wang (2015) and Hu et al. (2019). The results show that our estimator is consistent, with relatively low bias even in small samples. We also consider a novel parametrization of the data generating process that allows us to generate unbalanced samples in which, for instance, the simulated data exhibit more positive changes than negative ones. In this case, we find that the power of the tests falls sharply, particularly when the sample size is small. Since many macroeconomic time series are indeed unbalanced in terms of positive versus negative changes, a failure to reject the null hypothesis of the long-run tests should be interpreted with caution, particularly in small samples. This may also be true for other NLECMs.

To illustrate our proposed estimation technique, we use the QAECM to estimate Okun's Law using US data over the period February 1982–November 2003. Overall, our results are consistent with previous empirical findings that the response of unemployment to a decrease in output is stronger than its response to an increase in output (Rothman 1998; Lee 2000; Virén 2001; Palley 1993; Cuaresma 2003; Silvapulle et al. 2004; Holmes and Silverstone 2006; Owyang and Sekhposyan 2012). However, our estimation results reveal several new findings related to the presence of quadratic effects in the long-run relationship between the unemployment and output gaps. According to our results, the negative impact of economic upturns on unemployment decreases in magnitude as cumulative economic upturns increase. Similarly, the positive effect of economic downturns on unemployment also decreases as cumulative economic downturns increase. Furthermore, we find that the magnitude of the impact of economic downturns on unemployment decreases faster than that of economic upturns.

In particular, the LSA test results suggest that, for similarly low levels of \({x}_{t-1}^{+}\) and \({x}_{t-1}^{-}\), the positive impact of economic downturns on unemployment is larger in magnitude than the negative impact of economic upturns. However, as cumulative economic shocks increase, these effects are attenuated by the size effect (LQI test). The LQA test shows that the decrease in the magnitude of the impact of economic downturns is more pronounced than that of economic upturns.

It is worth noting, however, that since cumulative economic upturns in the USA are generally much larger than cumulative economic downturns, the effect of negative economic shocks on unemployment would typically be steeper than the effect of economic upturns, unless the US economy enters a long-lasting depression.

Finally, to validate our proposed methodology, we compare the forecasting performance of our model with that of the NARDL and ARDL models. The results show that the QAECM performs better in predicting the impact of negative economic shocks on unemployment during an economic depression. This suggests that the impact of negative economic shocks on unemployment is usually underestimated by classical econometric techniques that do not account for size nonlinearity (e.g., standard NARDL models).

The paper is organized as follows. Section 2 presents the basic nonlinear ECM advanced by Shin et al. (2014). Section 3 introduces the QAECM and develops the associated testing procedures for both the short and long runs (including the bootstrap methodology). In Sect. 4, we conduct Monte Carlo simulations to investigate the finite-sample properties of our new estimator. In Sect. 5, we report our estimation results and validate our model by comparing its forecasting performance with those of the standard NARDL and classical ARDL models. Finally, Sect. 6 offers some concluding remarks.

2 The nonlinear ECM

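The linear ECM developed by Pesaran et al. (2001) is widely used in empirical studies to examine cointegrating relationships among variables. It takes the following form:

$$\Delta y_{t}=\alpha_{0}+\rho y_{t-1}+\theta x_{t-1}+\sum_{i=1}^{p-1}\varphi_{1,i}\Delta y_{t-i}+\sum_{i=0}^{q-1}\delta_{2,i}\Delta x_{t-i}+\mu_{t}\tag{1}$$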

where \({\alpha }_{0}\) is an intercept, \({\mu }_{t}\) is an independently and identically distributed stochastic process, \(\rho \) and \(\theta \) are the long-run coefficients, \({\varphi }_{1, i}\) and \({\delta }_{2, i}\) are the short-run coefficients, and p and q are the optimal lags on the first-differenced variables, selected by an information criterion such as the Schwarz or Akaike information criterion. The null hypothesis of no cointegration among the variables in Eq. (1) is \({H}_{0}: \rho = \theta = 0\). However, there is considerable evidence that this relationship may be nonlinear and asymmetric, in which case estimating Eq. (1) leads to misleading estimates of the short-run and/or long-run impacts. Several econometric approaches have been introduced to capture nonlinear relationships among variables, for instance, the STECM (Kapetanios et al. 2006), the MSECM (Psaradakis et al. 2004) and the TECM (Balke and Fomby 1997).

More recently, Shin et al. (2014) proposed a modified version of the ECM by assuming that \({x}_{t}\) has asymmetric impacts on \({y}_{t}\). The asymmetry is introduced by decomposing \({x}_{t}\) into its positive and negative partial sums. The nonlinear asymmetric long-run regression can then be expressed as:

$$y_{t}=\theta^{+}x_{t}^{+}+\theta^{-}x_{t}^{-}+u_{t}\tag{2}$$

where \({\theta }^{+}\) is the long-run coefficient associated with positive changes in \({x}_{t}\) and \({\theta }^{-}\) is the long-run coefficient associated with negative changes in \({x}_{t}\). Here, \({x}_{t}\) is decomposed as:

$$x_{t}=x_{0}+x_{t}^{+}+x_{t}^{-}\tag{3}$$

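where \({x}_{0}\) is the initial value, and \({x}_{t}^{+}\) and \({x}_{t}^{-}\) are the partial sum processes of positive and negative changes in \({x}_{t}\), defined as:

$$x_{t}^{+}=\sum_{j=1}^{t}\Delta x_{j}^{+}=\sum_{j=1}^{t}\max\left(\Delta x_{j},0\right)\tag{4}$$

and

$$x_{t}^{-}=\sum_{j=1}^{t}\Delta x_{j}^{-}=\sum_{j=1}^{t}\min\left(\Delta x_{j},0\right)\tag{5}$$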

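Shin et al. (2014) showed that substituting Eq. (3) into the ECM of Eq. (1) yields the following nonlinear asymmetric ECM:

$$\Delta y_{t}=\rho y_{t-1}+\beta^{+}x_{t-1}^{+}+\beta^{-}x_{t-1}^{-}+\sum_{i=1}^{p-1}\varphi_{i}\Delta y_{t-i}+\sum_{i=0}^{q-1}\left(\delta_{i}^{+}\Delta x_{t-i}^{+}+\delta_{i}^{-}\Delta x_{t-i}^{-}\right)+u_{t}\tag{6}$$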

where \({\theta }^{+}= -\frac{{\beta }^{+}}{\rho }\) and \({\theta }^{-}= -\frac{{\beta }^{-}}{\rho }.\)
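The partial sum decomposition of \({x}_{t}\) into \({x}_{0}\), \({x}_{t}^{+}\) and \({x}_{t}^{-}\) is straightforward to compute. The NumPy sketch below is our own illustration (the function name `partial_sums` and the toy series are hypothetical); it indexes the first observation as \(t=0\), so both partial sums start at zero, and checks the identity \({x}_{t}={x}_{0}+{x}_{t}^{+}+{x}_{t}^{-}\).

```python
import numpy as np


def partial_sums(x):
    """Return (x_plus, x_minus) such that x_t = x_0 + x_plus_t + x_minus_t."""
    x = np.asarray(x, dtype=float)
    dx = np.diff(x)
    # cumulative sum of positive changes, starting at 0 for t = 0
    x_plus = np.concatenate(([0.0], np.cumsum(np.maximum(dx, 0.0))))
    # cumulative sum of negative changes (always <= 0), starting at 0 for t = 0
    x_minus = np.concatenate(([0.0], np.cumsum(np.minimum(dx, 0.0))))
    return x_plus, x_minus


x = np.array([1.0, 2.0, 1.5, 3.0, 2.0])  # toy series
x_plus, x_minus = partial_sums(x)
print(x_plus, x_minus)
print(np.allclose(x[0] + x_plus + x_minus, x))  # decomposition identity
```

Because each increment is assigned to exactly one of the two sums, \({x}_{t}^{+}\) is non-decreasing and \({x}_{t}^{-}\) is non-increasing.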

3 The quadratic asymmetric error correction model

3.1 The theoretical model

Our model starts from the ECM of Eq. (6) and adds the quadratic terms \({({x}_{t})}^{2}\) and \({(\Delta {x}_{t})}^{2}\). Given that \(x\) is decomposed into positive and negative cumulative changes, Eq. (6) can be extended to a more general ECM that captures sign asymmetry, quadratic effects and quadratic asymmetry in both the long and short runs. The QAECM can be written asFootnote 4:

One might think that the potential quadratic impacts of \({x}_{t-1}^{+}\) and \({x}_{t-1}^{-}\) could be captured by simply including \({\left({x}_{t-1}^{+}\right)}^{2}\) and \({\left({x}_{t-1}^{-}\right)}^{2}\). However, since \({\left({x}_{t-1}\right)}^{2}={\left({x}_{t-1}^{+}+{x}_{t-1}^{-}\right)}^{2}={\left({x}_{t-1}^{+}\right)}^{2}+{\left({x}_{t-1}^{-}\right)}^{2}+2{x}_{t-1}^{+}{x}_{t-1}^{-}\), it is important to include the cross-interaction term \({x}_{t-1}^{+}{x}_{t-1}^{-}\).Footnote 5 For instance, if \(\tau \) = 0, the potential quadratic impact of \({x}_{t-1}\) is transmitted exclusively through \({x}_{t-1}^{+}\) and \({x}_{t-1}^{-}\) separately. In this case, the quadratic impact of \({x}_{t-1}^{+}\) (\({x}_{t-1}^{-}\)) depends neither on the overall level of \({x}_{t-1}\) nor on the level of \({x}_{t-1}^{-}\) (\({x}_{t-1}^{+}\)), but only on its own level.

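Since, at every date, at least one of \({\Delta x}_{t-i}^{+}\) and \({\Delta x}_{t-i}^{-}\) is zero, their cross-product vanishes for all \(t\), and Eq. (7) simplifies to:

$$\begin{aligned}\Delta y_{t}&=\rho y_{t-1}+\beta^{+}x_{t-1}^{+}+\beta^{-}x_{t-1}^{-}+\gamma^{+}\left(x_{t-1}^{+}\right)^{2}+\gamma^{-}\left(x_{t-1}^{-}\right)^{2}+\tau x_{t-1}^{+}x_{t-1}^{-}+\sum_{i=1}^{p-1}\varphi_{i}\Delta y_{t-i}\\&\quad+\sum_{i=0}^{q-1}\left(\delta_{i}^{+}\Delta x_{t-i}^{+}+\delta_{i}^{-}\Delta x_{t-i}^{-}\right)+\sum_{i=0}^{q-1}\left(\pi_{i}^{+}\left(\Delta x_{t-i}^{+}\right)^{2}+\pi_{i}^{-}\left(\Delta x_{t-i}^{-}\right)^{2}\right)+u_{t}\\&=\rho\,\omega_{t-1}+\sum_{i=1}^{p-1}\varphi_{i}\Delta y_{t-i}+\sum_{i=0}^{q-1}\left(\delta_{i}^{+}\Delta x_{t-i}^{+}+\delta_{i}^{-}\Delta x_{t-i}^{-}\right)+\sum_{i=0}^{q-1}\left(\pi_{i}^{+}\left(\Delta x_{t-i}^{+}\right)^{2}+\pi_{i}^{-}\left(\Delta x_{t-i}^{-}\right)^{2}\right)+u_{t}\end{aligned}\tag{8}$$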

where \(\rho \) is the adjustment coefficient and \({\omega }_{t}={y}_{t}-{\theta }^{+}{x}_{t}^{+}-{\theta }^{-}{x}_{t}^{-}-{\sigma }^{+}{\left({x}_{t}^{+}\right)}^{2}-{\sigma }^{-} {\left({x}_{t}^{-}\right)}^{2}-\vartheta {x}_{t}^{+}{x}_{t}^{-}\) is the nonlinear error correction term, where \({\theta }^{+}=-\frac{{\beta }^{+}}{\rho }\), \({\theta }^{-}=-\frac{{\beta }^{-}}{\rho }\), \({\sigma }^{+}=-\frac{{\gamma }^{+}}{\rho }\), \({\sigma }^{-}=-\frac{{\gamma }^{-}}{\rho }\), \(\vartheta =-\frac{\tau }{\rho }\) are the long-run asymmetric coefficients.
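To make the mapping concrete, the NumPy sketch below converts ECM coefficients \((\rho, {\beta }^{\pm}, {\gamma }^{\pm}, \tau )\) into the long-run coefficients \({\theta }^{\pm}\), \({\sigma }^{\pm}\) and \(\vartheta \) defined above, and evaluates the error correction term \({\omega }_{t}\). The coefficient values, toy data and function names are illustrative assumptions, not estimates from the paper; the final check confirms that \(\rho \,{\omega }_{t}\) reproduces the level terms \(\rho {y}_{t}+{\beta }^{+}{x}_{t}^{+}+\dots +\tau {x}_{t}^{+}{x}_{t}^{-}\).

```python
import numpy as np


def long_run_coefficients(rho, beta_p, beta_n, gamma_p, gamma_n, tau):
    """Map ECM coefficients to long-run coefficients (requires rho != 0)."""
    if rho == 0:
        raise ValueError("rho = 0: no error correction, long-run coefficients undefined")
    return {
        "theta_p": -beta_p / rho, "theta_n": -beta_n / rho,
        "sigma_p": -gamma_p / rho, "sigma_n": -gamma_n / rho,
        "vartheta": -tau / rho,
    }


def error_correction_term(y, xp, xn, lr):
    """omega_t = y - th+ x+ - th- x- - s+ (x+)^2 - s- (x-)^2 - vartheta x+ x-."""
    return (y - lr["theta_p"] * xp - lr["theta_n"] * xn
            - lr["sigma_p"] * xp ** 2 - lr["sigma_n"] * xn ** 2
            - lr["vartheta"] * xp * xn)


# Illustrative (made-up) ECM estimates and data
rho, beta_p, beta_n, gamma_p, gamma_n, tau = -0.5, 0.25, 0.5, 0.1, -0.2, 0.05
lr = long_run_coefficients(rho, beta_p, beta_n, gamma_p, gamma_n, tau)
y = np.array([1.0, 1.4, 0.9, 2.1])
xp = np.array([0.0, 1.0, 1.0, 2.5])
xn = np.array([0.0, 0.0, -0.5, -0.5])
omega = error_correction_term(y, xp, xn, lr)

# rho * omega should equal the level (long-run) block of the ECM
levels_block = (rho * y + beta_p * xp + beta_n * xn + gamma_p * xp ** 2
                + gamma_n * xn ** 2 + tau * xp * xn)
print(lr)
print(np.allclose(rho * omega, levels_block))
```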

3.2 The bootstrapping technique

Given that the regressors in the error correction term are usually non-stationary, conventional asymptotic inference may be invalid. To overcome this issue, one can either generate critical values by stochastic simulation or use bootstrap techniques (Li and Maddala 1997; Chang and Park 2003; Singh 1981; Beran 1988; Palm et al. 2010; McNown et al. 2018). Given that the former approach can suffer from test mis-sizing and is less practical (see Shin et al. 2014), we compute the tests' p-values using a recursive residual bootstrap technique.

In order to compute bootstrap p-value, we run the following algorithm:

1. Estimate the unrestricted model (H1) and compute the test statistic (tc).

2. Estimate the restricted model (H0) and store the estimated coefficients, denoted with a hat (e.g., \(\widehat{\rho }\), \({\widehat{\beta }}^{+}\), \({\widehat{\beta }}^{-}\)), and the residuals \(\widehat{u}\).

3. Rescale the residuals of the H0 model, following Davidson and MacKinnon (1999), so that they have the correct variance: \(\ddot{u}={\left(\frac{T}{T-k}\right)}^{1/2}\widehat{u}\), where \(k\) is the number of regressors.

4. Run the following loop B times (B is the number of bootstrap samples)Footnote 6:

   4.1 Resample the rescaled residuals, \({u}^{*}\sim \mathrm{EDF}(\ddot{u})\), where EDF is the empirical distribution function that assigns probability \(1/T\) to each element of \(\ddot{u}\).

   4.2 Using the resampled residuals \({u}^{*}\), generate the bth bootstrap sample recursively as follows (for illustration, H0: \(\tau =0\)):

   4.2.1
   $$\Delta \widehat{y}_{t}=\widehat{\rho }\,\widehat{y}_{t-1}+{\widehat{\beta }}^{+}x_{t-1}^{+}+{\widehat{\beta }}^{-}x_{t-1}^{-}+{\widehat{\gamma }}^{+}\left(x_{t-1}^{+}\right)^{2}+{\widehat{\gamma }}^{-}\left(x_{t-1}^{-}\right)^{2}+\sum_{i=1}^{p-1}\widehat{\varphi }_{i}\,\Delta \widehat{y}_{t-i}+\sum_{i=0}^{q-1}\left({\widehat{\delta }}_{i}^{+}\Delta x_{t-i}^{+}+{\widehat{\delta }}_{i}^{-}\Delta x_{t-i}^{-}\right)+\sum_{i=0}^{q-1}\left({\widehat{\pi }}_{i}^{+}\left(\Delta x_{t-i}^{+}\right)^{2}+{\widehat{\pi }}_{i}^{-}\left(\Delta x_{t-i}^{-}\right)^{2}\right)+u_{t}^{*}$$

   4.2.2
   $$\widehat{y}_{t}=\begin{cases}\widehat{y}_{t-1}+\Delta \widehat{y}_{t}, & t\ge m\\ y_{t}, & t<m\end{cases}\qquad \text{for } t=1,\dots ,T,\ \text{where } m=\max \left(p,q\right)+1$$

   We believe that it would be inconsistent to use the same fitted values of the restricted model H0 for all bootstrap samples, as is common in the NARDL literature (fixed-regressor bootstrapping). \({\Delta y}_{t-i}\) and \({y}_{t-i}\) should not be treated as exogenous explanatory variables (like \({\Delta x}_{t-i}\) and \({x}_{t-i}\)) when generating the bootstrap samples. Their values are not constant and must be updated (to \({\Delta \widehat{y}}_{t-i}\) and \({\widehat{y}}_{t-i}\)) each time a new bootstrap sample is generated.

   4.3 Compute the bootstrap test statistic (tc_b) from the bth bootstrap sample.

5. Compute the bootstrap p-value as \(\frac{S}{B}\), where \(S\) is the number of bootstrap samples for which tc_b \(>\) tc, in the case of Wald tests.Footnote 7
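The algorithm above can be sketched in Python with NumPy for a deliberately simplified case. This is our own illustration, not the authors' code: it assumes no lagged \(\Delta y\) terms (p = 1, so only the observed initial value seeds the recursion), computes the Wald-type statistic in its F form from restricted and unrestricted sums of squared residuals, and rescales the residuals using the number of usable observations n in place of T. The function and argument names (`f_stat`, `recursive_bootstrap_pvalue`, `drop`) are hypothetical, and the data come from a made-up DGP.

```python
import numpy as np


def ols(X, dy):
    """Least-squares fit of dy on X; returns (coefficients, residuals)."""
    coef, *_ = np.linalg.lstsq(X, dy, rcond=None)
    return coef, dy - X @ coef


def f_stat(y, Z, drop):
    """F-form Wald statistic for H0: coefficients on the Z columns in `drop` are zero.

    Delta y_t is regressed on y_{t-1} and the exogenous columns of Z
    (row t-1 of Z holds the regressors dated t); no lagged Delta y terms.
    """
    dy = np.diff(y)
    keep = [j for j in range(Z.shape[1]) if j not in drop]
    X_u = np.column_stack([y[:-1], Z])
    X_r = np.column_stack([y[:-1], Z[:, keep]])
    ssr_u = np.sum(ols(X_u, dy)[1] ** 2)
    ssr_r = np.sum(ols(X_r, dy)[1] ** 2)
    n, k = X_u.shape
    return ((ssr_r - ssr_u) / len(drop)) / (ssr_u / (n - k))


def recursive_bootstrap_pvalue(y, Z, drop, B=199, seed=0):
    """Recursive residual bootstrap p-value for the restriction in `drop`."""
    rng = np.random.default_rng(seed)
    tc = f_stat(y, Z, drop)                                   # step 1
    keep = [j for j in range(Z.shape[1]) if j not in drop]
    coef_r, u_hat = ols(np.column_stack([y[:-1], Z[:, keep]]), np.diff(y))  # step 2
    n, k = len(u_hat), len(coef_r)
    u_ddot = np.sqrt(n / (n - k)) * u_hat                     # step 3
    rho_hat, exog_fit = coef_r[0], Z[:, keep] @ coef_r[1:]
    exceed = 0
    for _ in range(B):                                        # step 4
        u_star = rng.choice(u_ddot, size=n, replace=True)     # step 4.1
        y_star = np.empty_like(y)
        y_star[0] = y[0]                                      # observed initial value
        for t in range(1, len(y)):                            # step 4.2: uses bootstrapped y_{t-1}
            y_star[t] = (1.0 + rho_hat) * y_star[t - 1] + exog_fit[t - 1] + u_star[t - 1]
        if f_stat(y_star, Z, drop) > tc:                      # step 4.3
            exceed += 1
    return tc, exceed / B                                     # step 5: S / B


# Illustrative data from a made-up QAECM-type DGP (not the paper's design)
rng = np.random.default_rng(1)
T = 200
dx = rng.normal(size=T - 1)
dxp, dxn = np.maximum(dx, 0.0), np.minimum(dx, 0.0)
xp = np.concatenate(([0.0], np.cumsum(dxp)))
xn = np.concatenate(([0.0], np.cumsum(dxn)))
# Columns: x+_{t-1}, x-_{t-1}, (x+_{t-1})^2, (x-_{t-1})^2, x+_{t-1} x-_{t-1}, dx+_t, dx-_t
Z = np.column_stack([xp[:-1], xn[:-1], xp[:-1] ** 2, xn[:-1] ** 2,
                     xp[:-1] * xn[:-1], dxp, dxn])
e = rng.normal(size=T - 1)
y = np.zeros(T)
for t in range(1, T):
    target = 0.5 * xp[t - 1] + 1.0 * xn[t - 1] + 0.01 * xp[t - 1] ** 2 - 0.01 * xn[t - 1] ** 2
    y[t] = y[t - 1] - 0.5 * (y[t - 1] - target) + 2.0 * dxp[t - 1] + 0.5 * dxn[t - 1] + e[t - 1]

tc_short, p_short = recursive_bootstrap_pvalue(y, Z, drop={5})  # true coefficient 2.0
tc_cross, p_cross = recursive_bootstrap_pvalue(y, Z, drop={4})  # true coefficient 0
print(f"short-run dx+: tc={tc_short:.1f}, p={p_short:.3f}")
print(f"cross term:    tc={tc_cross:.2f}, p={p_cross:.3f}")
```

In this example, column 5 (\({\Delta x}_{t}^{+}\)) has a large true coefficient, so its bootstrap p-value should be close to zero, whereas the cross term in column 4 has a true coefficient of zero. The design choice that distinguishes the recursive scheme from a fixed-regressor bootstrap sits in the inner loop: each bootstrap \({y}_{t}\) is built from the bootstrapped \({y}_{t-1}\), not the observed one.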

3.3 Cointegration and nonlinearity tests

3.3.1 Cointegration tests

To test for the existence of a nonlinear long-run relationship between the variables, we use two tests: the tBDM test and the Fpss test. The tBDM test has the null hypothesis \(\rho =0\), whereas the Fpss test considers the joint null hypothesis \(\rho ={\theta }^{+}={\theta }^{-}={\sigma }^{+}={\sigma }^{-}=\vartheta =0\). Both null hypotheses indicate the absence of any long-run relationship among \({y}_{t}\), \({x}_{t}^{+}\), \({x}_{t}^{-}\), \({\left({x}_{t}^{+}\right)}^{2}\), \({\left({x}_{t}^{-}\right)}^{2}\) and \({x}_{t}^{+}{x}_{t}^{-}\).

3.3.2 Long-run nonlinearity (size) tests

The nonlinear size effect can be potentially transmitted through different interrelated channels. In order to identify and investigate those channels, we propose the following test protocolFootnote 8:

i. The LQI test

We first test for the presence of any long-run quadratic effect of \(x\), with H0: \({\sigma }^{+}={\sigma }^{-}=\vartheta =0\) (Wald test).

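Under H0, the restricted model is:

$$\Delta y_{t}=\rho y_{t-1}+\beta^{+}x_{t-1}^{+}+\beta^{-}x_{t-1}^{-}+\sum_{i=1}^{p-1}\varphi_{i}\Delta y_{t-i}+\sum_{i=0}^{q-1}\left(\delta_{i}^{+}\Delta x_{t-i}^{+}+\delta_{i}^{-}\Delta x_{t-i}^{-}\right)+\sum_{i=0}^{q-1}\left(\pi_{i}^{+}\left(\Delta x_{t-i}^{+}\right)^{2}+\pi_{i}^{-}\left(\Delta x_{t-i}^{-}\right)^{2}\right)+u_{t}$$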

If we do not reject H0, no further long-run size test is required, given the absence of any long-run quadratic effect. However, if we reject H0, we run the next test.

ii. The LQD test

We test whether the quadratic impact confirmed by the LQI test is transmitted exclusively via the overall level of \(x\) or via \({x}^{-}\), \({x}^{+}\) or any combination of these, with H0: \({\sigma }^{+}={\sigma }^{-}=\frac{1}{2}\vartheta \)Footnote 9 (Wald test).

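Under H0, the restricted model is:

$$\Delta y_{t}=\rho y_{t-1}+\beta^{+}x_{t-1}^{+}+\beta^{-}x_{t-1}^{-}+\gamma\left(x_{t-1}^{+}+x_{t-1}^{-}\right)^{2}+\sum_{i=1}^{p-1}\varphi_{i}\Delta y_{t-i}+\sum_{i=0}^{q-1}\left(\delta_{i}^{+}\Delta x_{t-i}^{+}+\delta_{i}^{-}\Delta x_{t-i}^{-}\right)+\sum_{i=0}^{q-1}\left(\pi_{i}^{+}\left(\Delta x_{t-i}^{+}\right)^{2}+\pi_{i}^{-}\left(\Delta x_{t-i}^{-}\right)^{2}\right)+u_{t}$$

where \(\gamma ={\gamma }^{+}={\gamma }^{-}=\tau /2\).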

If H0 is not rejected, we conclude that the size effect is transmitted exclusively through the overall level of \({x}_{t}\).Footnote 10 Otherwise, we can confirm that the levels of \({x}^{+}\) and \({x}^{-}\) matter for the size effect.
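The following short derivation (our own, using only the long-run quadratic terms of \({\omega }_{t}\) and writing \(\sigma \) for the common value under H0) shows why the LQD null corresponds to a quadratic effect of the overall level of \(x\):

$$\left.\sigma^{+}\left(x_{t}^{+}\right)^{2}+\sigma^{-}\left(x_{t}^{-}\right)^{2}+\vartheta x_{t}^{+}x_{t}^{-}\right|_{\sigma^{+}=\sigma^{-}=\sigma,\ \vartheta=2\sigma}=\sigma\left[\left(x_{t}^{+}\right)^{2}+2x_{t}^{+}x_{t}^{-}+\left(x_{t}^{-}\right)^{2}\right]=\sigma\left(x_{t}^{+}+x_{t}^{-}\right)^{2}=\sigma\left(x_{t}-x_{0}\right)^{2}$$

The last equality uses Eq. (3). Under H0, the quadratic component therefore depends only on \({x}_{t}\), not on how \({x}_{t}\) splits into \({x}_{t}^{+}\) and \({x}_{t}^{-}\).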

iii. The LQA test

We run this test only if the H0 of the LQD test is rejected, since it would be unnecessary to test for quadratic asymmetry if the quadratic impact is transmitted exclusively through the overall level of \(x\). Here, H0: \({\sigma }^{+}=-{\sigma }^{-}\) (Wald test).

Note that the negative sign on \({\sigma }^{-}\) is required for the test to be consistent. Because \({x}^{-}\le 0\), an increase in \({x}^{-}\) decreases \({({x}^{-})}^{2}\), unlike \({x}^{+}\) and \({({x}^{+})}^{2}\), which move in the same direction. We explain this further below.

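Under H0, the restricted model is:

$$\Delta y_{t}=\rho y_{t-1}+\beta^{+}x_{t-1}^{+}+\beta^{-}x_{t-1}^{-}+\gamma^{+}\left[\left(x_{t-1}^{+}\right)^{2}-\left(x_{t-1}^{-}\right)^{2}\right]+\tau x_{t-1}^{+}x_{t-1}^{-}+\sum_{i=1}^{p-1}\varphi_{i}\Delta y_{t-i}+\sum_{i=0}^{q-1}\left(\delta_{i}^{+}\Delta x_{t-i}^{+}+\delta_{i}^{-}\Delta x_{t-i}^{-}\right)+\sum_{i=0}^{q-1}\left(\pi_{i}^{+}\left(\Delta x_{t-i}^{+}\right)^{2}+\pi_{i}^{-}\left(\Delta x_{t-i}^{-}\right)^{2}\right)+u_{t}$$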

If H0 is not rejected, we conclude that the size effect is symmetric: when \({x}^{+}\) and \({x}^{-}\) have the same magnitude, the quadratic effect is identical. For instance, if the size effect is positive (amplifying), the impact of \({x}^{+}\) on \(y\) increases when \({x}^{+}\) goes up, and the impact of \({x}^{-}\) increases when \({x}^{-}\) goes down (i.e., when the magnitude of \({x}^{-}\) increases). For this effect to be symmetric, the rate of increase must also be the same in both cases (\({\sigma }^{+}=-{\sigma }^{-}\)).
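The role of the negative sign can be made explicit with a short derivation of our own, in which \({m}_{t}=-{x}_{t}^{-}\ge 0\) is introduced as notation for the magnitude of the cumulative negative changes. Differentiating the long-run relationship implied by \({\omega }_{t}\), the marginal effects of \({x}_{t}^{+}\) and \({x}_{t}^{-}\) change with the size of the corresponding cumulative shock at the rates

$$\frac{\partial}{\partial x_{t}^{+}}\left(\frac{\partial y_{t}}{\partial x_{t}^{+}}\right)=\frac{\partial}{\partial x_{t}^{+}}\left(\theta^{+}+2\sigma^{+}x_{t}^{+}+\vartheta x_{t}^{-}\right)=2\sigma^{+},\qquad\frac{\partial}{\partial m_{t}}\left(\frac{\partial y_{t}}{\partial x_{t}^{-}}\right)=\frac{\partial}{\partial m_{t}}\left(\theta^{-}-2\sigma^{-}m_{t}+\vartheta x_{t}^{+}\right)=-2\sigma^{-}$$

A symmetric size effect requires these two rates to coincide, \(2{\sigma }^{+}=-2{\sigma }^{-}\), which is exactly the LQA null \({\sigma }^{+}=-{\sigma }^{-}\).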

3.3.3 The LSA test

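Unlike the classical NARDL model, in which the impact of \({x}^{+}\) (or \({x}^{-}\)) on \(y\) is constant and equal to \({\theta }^{+}\) (\({\theta }^{-}\)), in our nonlinear ECM this impact varies and can depend on the levels of \({x}^{+}\) and \({x}^{-}\). Indeed, the derivatives of \(y\) with respect to \({x}^{+}\) and \({x}^{-}\) are:

$$\frac{\partial y_{t}}{\partial x_{t}^{+}}=\theta^{+}+2\sigma^{+}x_{t}^{+}+\vartheta x_{t}^{-},\qquad\frac{\partial y_{t}}{\partial x_{t}^{-}}=\theta^{-}+2\sigma^{-}x_{t}^{-}+\vartheta x_{t}^{+}$$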

Thus, the LSA test is:

H0: \({\theta }^{+}+{2\sigma }^{+}{x}_{t}^{+}+\vartheta {x}_{t}^{-}={\theta }^{-}+{2\sigma }^{-}{x}_{t}^{-}+\vartheta {x}_{t}^{+}\) (Wald test)

Under H0, the restricted model is estimated by constrained least squares.Footnote 11 Since the null hypothesis depends on \({x}_{t}^{+}\) and \({x}_{t}^{-}\), we have to run multiple tests for different levels of these variables. Depending on how we define sign symmetry, we can run two different sets of tests. We can use the observed values of \({x}_{t}^{+}\) and \({x}_{t}^{-}\) to test for sign asymmetry at each observation. Alternatively, we can test for sign asymmetry assuming that \({x}_{t}^{+}\) and \({x}_{t}^{-}\) have the same magnitude. The latter procedure is consistent with the following definition of sign symmetry: when \({x}^{+}\) and \({x}^{-}\) have the same magnitude, the impact of an increase in \({x}^{+}\) on \(y\) is equal to the impact of an increase in \({x}^{-}\) on \(y\).

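In this case, setting \({x}_{t}^{+}=\overline{x}\) and \({x}_{t}^{-}=-\overline{x}\), H0 simplifies to:

$$H_{0}:\ \theta^{+}+2\sigma^{+}\overline{x}-\vartheta\overline{x}=\theta^{-}-2\sigma^{-}\overline{x}+\vartheta\overline{x}$$

where \(\overline{x}={x}_{t}^{+}=-{x}_{t}^{-}\ge 0\).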

We run one LSA test for each value of \(\overline{x}\). The range of \(\overline{x}\) values should be consistent with the empirical data.
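Because the LSA null is stated in terms of long-run coefficients, it helps to note (our own algebra) that, for \(\rho \ne 0\), multiplying both sides of the equal-magnitude null by \(-\rho \) turns it into a restriction that is linear in the ECM coefficients: \(({\beta }^{+}-{\beta }^{-})+2({\gamma }^{+}+{\gamma }^{-})\overline{x}-2\tau \overline{x}=0\). The NumPy sketch below builds that restriction for a grid of \(\overline{x}\) values and computes a conventional single-restriction Wald statistic. The coefficient ordering, the covariance matrix and all numbers are hypothetical; in the paper's procedure the p-value for each grid point would come from the bootstrap rather than from chi-square tables.

```python
import numpy as np

# Hypothetical ordering of the ECM coefficient vector b:
# b = [rho, beta_p, beta_n, gamma_p, gamma_n, tau, <short-run coefficients...>]
IDX = {"beta_p": 1, "beta_n": 2, "gamma_p": 3, "gamma_n": 4, "tau": 5}


def lsa_restriction(xbar, k):
    """Row vector R such that R @ b = 0 is the LSA null at x+ = xbar, x- = -xbar."""
    R = np.zeros(k)
    R[IDX["beta_p"]], R[IDX["beta_n"]] = 1.0, -1.0          # beta+ - beta-
    R[IDX["gamma_p"]] = R[IDX["gamma_n"]] = 2.0 * xbar      # + 2 (gamma+ + gamma-) xbar
    R[IDX["tau"]] = -2.0 * xbar                             # - 2 tau xbar
    return R


def lsa_wald(b, V, xbar):
    """Wald statistic for the single linear restriction R(xbar) @ b = 0."""
    R = lsa_restriction(xbar, len(b))
    gap = R @ b
    return gap ** 2 / (R @ V @ R)


# Illustrative numbers only (not estimates from the paper)
b = np.array([-0.5, 0.3, 0.1, -0.02, 0.01, 0.0])
V = np.diag([0.01, 0.004, 0.004, 1e-5, 1e-5, 1e-5])
grid = np.linspace(0.0, 10.0, 6)   # should span the observed range of x+ and |x-|
stats = [lsa_wald(b, V, xb) for xb in grid]
for xb, s in zip(grid, stats):
    print(f"xbar={xb:4.1f}  Wald={s:.3f}")
```

Testing on the ECM coefficients avoids the nonlinear ratios \(-\beta /\rho \), which are undefined when \(\rho =0\).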

3.3.4 The short-run nonlinearity (size) asymmetry test Footnote 12

Since \(\Delta {x}^{+}\) and \(\Delta {x}^{-}\) cannot both be nonzero at the same time, any potential short-run quadratic effect must be transmitted through \({(\Delta {x}^{+})}^{2}\) and/or \({(\Delta {x}^{-})}^{2}\). Hence, we do not need to follow the long-run test protocol; we directly test H0: \({\pi }_{i}^{+}=-{\pi }_{i}^{-}\), for \(i=0,\dots ,q-1\).

3.3.5 Short-run sign asymmetry tests

Following Shin et al. (2014), the short-run sign symmetry restrictions can take either of two forms:

- Single test: H0: \({\delta }_{i}^{+}+2{\pi }_{i}^{+}{\Delta x}_{t-i}^{+}={\delta }_{i}^{-}+2{\pi }_{i}^{-}{\Delta x}_{t-i}^{-}\), for \(i=0,\dots ,q-1\);
- Additive test: H0: \(\sum_{i=0}^{q-1}({\delta }_{i}^{+}+2{\pi }_{i}^{+}{\Delta x}_{t-i}^{+})=\sum_{i=0}^{q-1}({\delta }_{i}^{-}+2{\pi }_{i}^{-}{\Delta x}_{t-i}^{-})\).

In line with the definition of sign asymmetry adopted for the LSA tests, the short-run sign asymmetry tests simplify to:

- Single test: H0: \({\delta }_{i}^{+}+2{\pi }_{i}^{+}\overline{\Delta x}={\delta }_{i}^{-}-2{\pi }_{i}^{-}\overline{\Delta x}\), for \(i=0,\dots ,q-1\);
- Additive test: H0: \(\sum_{i=0}^{q-1}({\delta }_{i}^{+}+2{\pi }_{i}^{+}\overline{\Delta x})=\sum_{i=0}^{q-1}({\delta }_{i}^{-}-2{\pi }_{i}^{-}\overline{\Delta x})\),

where \(\overline{\Delta x}={\Delta x}_{t-i}^{+}=-{\Delta x}_{t-i}^{-}\). The range of \(\overline{\Delta x}\) should be consistent with the empirical data.

4 Finite sample performance: Monte Carlo simulations

In this section, we perform a simulation study to examine the finite-sample size and power of the bootstrap tests, where we generate data under the alternative hypotheses (unrestricted model).

We then report the average bias and the standard errors of each estimate, and we compute the power of the different bootstrap tests. To do so, we adopt the following data generating process (DGP):

with \({\Delta x}_{t}={\varrho }_{t}\), where \(({\varepsilon }_{t}, {\varrho }_{t})\) follows a bivariate normal distribution \(N\left(\left(\begin{array}{c}0\\ a\end{array}\right), \left(\begin{array}{cc}1& w\\ w& 1\end{array}\right)\right)\). Unlike Shin et al. (2015), we allow \({\Delta x}_{t}\) to have a nonzero mean, \(a\), which affects the variability of \({x}^{+}\) relative to \({x}^{-}\). For instance, if \(a>0\), positive changes in \(x\) are more frequent than negative changes. This allows us to investigate the impact of an unbalanced (positive vs. negative) dataset on the finite-sample properties of our estimator.Footnote 13 We also need to take into account the systematic bias in \({\delta }^{+}\) and \({\delta }^{-}\) introduced by \(w\): because \({\varepsilon }_{t}\) is correlated with \({\Delta x}_{t}\), the estimates of the coefficients of \({\Delta x}_{t}^{+}\) and \({\Delta x}_{t}^{-}\) would be equal to \({\delta }^{+}+w\) and \({\delta }^{-}+w\), respectively.
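The effect of the mean \(a\) on the balance between positive and negative changes can be seen directly from the innovations. The NumPy sketch below is our own illustration of the bivariate normal draw described above (the function name, the sample length and \({x}_{0}=0\) are assumptions); it reports the share of positive changes, the end values of the two partial sums and the sample correlation between \({\varepsilon }_{t}\) and \({\Delta x}_{t}\).

```python
import numpy as np


def simulate_innovations(T, a, w, seed=0):
    """Draw (eps_t, varrho_t) ~ N((0, a), [[1, w], [w, 1]]) and set Delta x_t = varrho_t."""
    rng = np.random.default_rng(seed)
    draws = rng.multivariate_normal([0.0, a], [[1.0, w], [w, 1.0]], size=T)
    eps, varrho = draws[:, 0], draws[:, 1]
    x = np.concatenate(([0.0], np.cumsum(varrho)))  # x_0 = 0 (assumption)
    return eps, x


share_positive = {}
for a in (0.0, 1.0):
    eps, x = simulate_innovations(T=2000, a=a, w=0.5)
    dx = np.diff(x)
    share_positive[a] = np.mean(dx > 0)
    x_plus_T = np.sum(np.maximum(dx, 0.0))   # x_T^+
    x_minus_T = np.sum(np.minimum(dx, 0.0))  # x_T^-
    print(f"a={a}: share of positive changes={share_positive[a]:.3f}, "
          f"x_T+={x_plus_T:.1f}, x_T-={x_minus_T:.1f}, "
          f"corr(eps, dx)={np.corrcoef(eps, dx)[0, 1]:.2f}")
```

With \(a=1\), roughly 84% of the changes are positive (the standard normal probability of exceeding \(-1\)), so \({x}^{+}\) accumulates far more variation than \({x}^{-}\), which is the imbalance behind the larger standard errors of the negative-side coefficients.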

To explore a wide variety of parametrization combinations, we choose the arbitrary values \(c=0\), \({\theta }^{+}={\delta }^{+}=0.5\) and \({\sigma }^{+}={\pi }^{+}=1\), and we define \({\theta }^{-}={\theta }^{+}+{\Delta }_{\theta }\), \({\delta }^{-}={\delta }^{+}+{\Delta }_{\delta }\), \({\sigma }^{-}=-{\sigma }^{+}-{\Delta }_{\sigma }\),Footnote 14 \({\pi }^{-}=-{\pi }^{+}-{\Delta }_{\pi }\), and \(\vartheta ={\Delta }_{\vartheta }\). After trying several combinations, we found that the bias of the estimates, their standard errors and the power of the tests are mainly affected by \({\Delta }_{\sigma }\), \(a\) and T.Footnote 15 We therefore fix \({\Delta }_{\theta }\) and \({\Delta }_{\delta }\) at 0.5, \({\Delta }_{\pi }\) at 1, \(w\) at 0.5 and \({\Delta }_{\vartheta }\) at 0.

In Table 1, we report the bias and standard errors for different combinations of \(\Delta_{\sigma}\in\{0.5, 1, 9\}\), \(a\in\{0, 1\}\) and \(T\in\{100, 200, 500\}\), using 3000 replications. The bias of all estimated parameters is relatively small and is not significantly affected by these parameters. As expected, the standard errors of the estimates decrease as the sample size \(T\) increases. Interestingly, when \(a=1\), the standard errors of \(\theta^{-}\), \(\delta^{-}\), \(\sigma^{-}\) and \(\pi^{-}\) become considerably larger than those of their counterparts \(\theta^{+}\), \(\delta^{+}\), \(\sigma^{+}\) and \(\pi^{+}\). This is explained by the higher variability of \(\Delta x_{t}^{+}\) and \(x_{t}^{+}\) compared with \(\Delta x_{t}^{-}\) and \(x_{t}^{-}\) when \(a>0\).
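The mechanism behind the larger standard errors of the negative-side coefficients when \(a=1\) can be reproduced with a deliberately simplified Monte Carlo. The sketch below is an assumption-laden stand-in for the full experiment, not the authors' design: it regresses \(\Delta y_t\) only on a constant, \(\Delta x_t^{+}\) and \(\Delta x_t^{-}\) (no error correction term, no quadratic terms), uses \(\delta^{+}=0.5\), \(\delta^{-}=1\) and \(w=0.5\) from the text, and measures bias against the shifted values \(\delta^{\pm}+w\). The function name `mc_short_run` and the 500 replications are illustrative choices.

```python
import numpy as np

def mc_short_run(T, a, w=0.5, delta_pos=0.5, delta_neg=1.0, reps=500, seed=0):
    """Bias and standard deviation of OLS estimates of the Delta x^+ and Delta x^- slopes."""
    rng = np.random.default_rng(seed)
    cov = np.array([[1.0, w], [w, 1.0]])
    est = np.empty((reps, 2))
    for r in range(reps):
        eps, dx = rng.multivariate_normal([0.0, a], cov, size=T).T
        dx_pos, dx_neg = np.maximum(dx, 0.0), np.minimum(dx, 0.0)
        dy = delta_pos * dx_pos + delta_neg * dx_neg + eps
        X = np.column_stack([np.ones(T), dx_pos, dx_neg])  # constant absorbs -w * a
        coef, *_ = np.linalg.lstsq(X, dy, rcond=None)
        est[r] = coef[1:]
    target = np.array([delta_pos + w, delta_neg + w])  # values shifted by w
    return est.mean(axis=0) - target, est.std(axis=0, ddof=1)

bias1, se1 = mc_short_run(T=100, a=1.0)
print("a=1: bias", bias1.round(3), "s.e.", se1.round(3))
```

With \(a=1\), only about 16% of the draws of \(\Delta x_t\) are negative, so \(\Delta x_t^{-}\) carries much less variation and its coefficient is estimated far less precisely, while the bias stays close to zero.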

In Table 2, we report the power of the six cointegration and long-run nonlinearity tests described in Sect. 3.3.Footnote 16 We use the bootstrap technique (Sect. 3.2) to compute the power of tBDM, Fpss, LQI, LQD, LQA and LSA.Footnote 17 Notably, the power of the cointegration tests tBDM and Fpss and of the long-run Wald tests LQI and LQD is always 1, regardless of the sample size \(T\) and the values of \(\Delta_{\sigma}\) and \(a\). However, the powers of the quadratic asymmetry test (LQA) and the sign asymmetry test (LSA) are significantly lower, in particular for small sample sizes, small values of \(\Delta_{\sigma}\) and \(a\ne 0\). For instance, the powers of LQA and LSA are as low as 0.095 and 0.137, respectively, when \(\Delta_{\sigma}=0.5\), \(T=100\) and \(a=1\). It is reasonable to expect the powers of the long-run asymmetry tests (LQA and LSA) to increase with \(\Delta_{\sigma}\), as the latter determines the distance between the null and the alternative hypotheses of the size and sign asymmetry tests. Interestingly, \(a\) seems to be the most influential parameter affecting the powers of LQA and LSA. Indeed, when the simulated samples are balanced, with almost equal proportions of positive and negative \(\Delta x\) (\(a=0\)), the lowest power we report is 0.73, which is reasonable compared with the case where \(a=1\). Hence, a failure of the bootstrap LQA and LSA tests to reject the null hypothesis should be interpreted with caution when the dataset is unbalanced and the sample is relatively small, in particular when the difference between the estimated coefficients \(\widehat{\sigma}^{+}\) and \(\widehat{\sigma}^{-}\) is not considerably large.
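Each power in Table 2 is a rejection frequency: the share of simulated samples, generated under the alternative, whose bootstrap p-value falls below the 5% level. A minimal sketch of both steps follows, assuming the usual bootstrap p-value \(S/B\), where \(S\) is taken here to be the number of bootstrap statistics exceeding the sample statistic (our reading of the notes), with the two-sided rule \(2\min(S/B, 1-S/B)\) given there. The function names are hypothetical.

```python
import numpy as np

def bootstrap_pvalue(stat, boot_stats, two_sided=False):
    """Bootstrap p-value S/B, with S = number of bootstrap statistics above `stat`."""
    boot_stats = np.asarray(boot_stats, dtype=float)
    share = np.count_nonzero(boot_stats > stat) / boot_stats.size  # S / B
    if two_sided:
        return 2.0 * min(share, 1.0 - share)  # equal-tailed two-sided p-value
    return share

def empirical_power(pvalues, level=0.05):
    """Share of Monte Carlo replications in which the test rejects at `level`."""
    return float(np.mean(np.asarray(pvalues, dtype=float) < level))

# Toy usage: B = 9999 bootstrap draws of a chi-square(1) statistic
rng = np.random.default_rng(1)
boot = rng.chisquare(df=1, size=9999)
print(bootstrap_pvalue(6.0, boot))
```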

5 Empirical application: Okun’s law

Okun’s Law describes the inverse relationship between unemployment and output. It is considered one of the most important macroeconomic concepts at both the theoretical and empirical levels. According to Okun’s (1962) estimates, an extra 1% of real gross national product is associated with a 0.3 percentage point reduction in the unemployment rate, which implies a negative relationship between unemployment and output. This relationship has attracted the attention of researchers, given its robust empirical regularity and its complementarity with other macroeconomic theoretical models. Indeed, the aggregate supply function can be straightforwardly derived by including Okun’s Law in the standard Phillips curve (Mankiw 2015).

Many empirical studies have been conducted to provide evidence on the linkage between output and unemployment as predicted by Okun’s Law (e.g., Harris and Silverstone 2001; Zanin and Marra 2012; Zanin 2014; Fernald et al. 2017; Daly et al. 2018).

Although most of these studies confirm the negative relationship between unemployment and output, the results reveal significant variation in Okun coefficients across countries and over time.

Most of these studies assume that the impact of output on unemployment is symmetric, ignoring the potential asymmetry that can characterize the relationship between output and unemployment (Virén 2001; Cuaresma 2003; Silvapulle et al. 2004; Huang and Chang 2005; Shin et al. 2014; Koutroulis et al. 2016). The argument that Okun’s Law can exhibit asymmetric behavior is closely related to the business cycle: output has a different impact on unemployment during economic upturns than during economic downturns. In the case of the USA, several empirical studies have shown that the impacts of downturns are stronger than the impacts of upturns. For instance, Neftçi (1984) provides evidence suggesting that the unemployment rate in the USA increases much more strongly during recessions than it declines during expansions. Using US data, Rothman (1991) and Brunner (1997) confirmed the same behavior in unemployment’s response to output. In the same vein, Palley (1993), Cuaresma (2003) and Silvapulle et al. (2004) showed that the Okun coefficient is higher during recessions than during expansions. More recently, Cazes et al. (2013) showed that the impact of output on unemployment is not stable and is larger during downswings than during upswings. Likewise, Pereira (2013) provided evidence indicating that the Okun relationship is asymmetric, with a stronger relationship during recessions. Belaire-Franch and Peiró (2015) also found evidence of asymmetric behavior between the unemployment rate and changes in the output gap.

Understanding the different sources of asymmetry in the relationship between unemployment and output is fundamental for policymakers. However, the abovementioned studies ignore the potential nonlinearity in the impact of output on the unemployment rate related to the size of the economic shock and/or to the overall level of the output gap. Estimating Okun’s Law with our new QAECM, which takes both sign and size asymmetry into account, can therefore shed new light on the interpretation of the Okun coefficients and allow for better forecasts of unemployment rates. In what follows, we try to answer the following questions:

- Q1: Does the size of a change in the output gap matter for the impact on unemployment (short run)?
- Q2: Does the level of the output gap matter (long run)?
- Q3: If so, are these effects symmetric (positive vs. negative)?

5.1 Nonlinear ARDL estimation

We estimate the following NARDL model:

where \(u\) is the US unemployment rate and \(y\) is the US industrial output index. The data span the period from February 1982 to November 2003 and are collected from the OECD's Main Economic Indicators.

Table 3 reports the NARDL estimation results of Eq. (15), which serve as a benchmark for the QAECM estimation that follows. Both cointegration tests (the tBDM and FPSS statistics) reject the null hypothesis, which confirms the existence of a long-run relationship between unemployment and the output gap. Moreover, the Wald tests reject the null hypothesis of long-run symmetry. In line with Shin et al. (2014), our results show that economic downturns have a larger impact on unemployment than economic upturns.

5.2 Quadratic asymmetric ECM estimation

The corresponding QAECM for estimating Okun’s Law is:

where \({\xi }_{t}={u}_{t}-{\theta }^{+}{y}_{t}^{+}-{\theta }^{-}{y}_{t}^{-}-{\sigma }^{+}{\left({y}_{t}^{+}\right)}^{2}-{\sigma }^{-} {\left({y}_{t}^{-}\right)}^{2}-\vartheta {y}_{t}^{+}{y}_{t}^{-}\) is the nonlinear error correction term.
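Given estimates of the long-run coefficients, the nonlinear error correction term can be computed directly. Below is a minimal NumPy sketch with hypothetical function names; the helper `long_run` assumes the usual NARDL convention in which the ECM contains \(\rho u_{t-1}+\beta^{\pm}y_{t-1}^{\pm}+\gamma^{\pm}(y_{t-1}^{\pm})^{2}\), so that \(\theta^{\pm}=-\beta^{\pm}/\rho\) and \(\sigma^{\pm}=-\gamma^{\pm}/\rho\).

```python
import numpy as np

def long_run(coef, rho):
    """Long-run coefficient implied by an ECM coefficient: -coef / rho (assumed convention)."""
    return -coef / rho

def nonlinear_ect(u, y_pos, y_neg, theta_pos, theta_neg, sigma_pos, sigma_neg, vartheta=0.0):
    """xi_t = u_t - th+ y+ - th- y- - s+ (y+)^2 - s- (y-)^2 - vartheta y+ y-."""
    u, y_pos, y_neg = (np.asarray(v, dtype=float) for v in (u, y_pos, y_neg))
    return (u
            - theta_pos * y_pos - theta_neg * y_neg
            - sigma_pos * y_pos ** 2 - sigma_neg * y_neg ** 2
            - vartheta * y_pos * y_neg)

# Toy usage with made-up values (y- is a sum of negative changes, hence <= 0)
print(nonlinear_ect([1.0, 2.0], [0.0, 1.0], [0.0, -1.0], -0.5, -1.0, 0.1, -0.2))
```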

Following Shin et al. (2014), we use the general-to-specific approach to select the final QAECM lags.Footnote 18

The estimation results of the selected QAECM are reported in Table 4, with the cointegration test results in its bottom rows. Both the tBDM and FPSS statistics reject the null hypothesis of no cointegration at the 5% significance level. The LQI test (28.22) confirms that the size effect is significant. Hence, the impact of \(y_{t-1}\) on \(\Delta u_{t}\) is not constant and depends on the size of \(y_{t-1}\) (through \(y^{+}\) and \(y^{-}\)). Based on the LQD test, one could think that the quadratic effect might be transmitted exclusively through the overall size of \(y_{t-1}\). However, this could be a statistical consequence of the very large variance of the estimates of the last two coefficients (on \((y^{-})^{2}\) and \(y^{+}y^{-}\)). Indeed, individual t-tests on the three quadratic coefficients with bootstrap p-values show that \(\sigma^{+}\) is significant, whereas \(\sigma^{-}\) and \(\vartheta\) are not (Table 4). However, we cannot drop these last two coefficients simultaneously, as the Wald test of H0: \(\sigma^{-}=\vartheta=0\) rejects the null hypothesis at the 2% level. We therefore choose to drop \(y^{+}y^{-}\).Footnote 19 Table 5 presents the estimation results of the final selected QAECM.
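The joint test of \(\sigma^{-}=\vartheta=0\) uses the standard Wald form \(W=(R\hat{b}-r)'(R\hat{V}R')^{-1}(R\hat{b}-r)\). The example below uses made-up numbers to illustrate how two individually insignificant coefficients can be jointly significant when their estimates are strongly correlated, as can happen with highly collinear regressors. It is only a sketch (the function name is hypothetical); in the paper the long-run statistics are compared with their bootstrap distribution rather than a \(\chi^{2}\) table.

```python
import numpy as np

def wald_stat(b, V, R, r=None):
    """W = (R b - r)' (R V R')^{-1} (R b - r) for linear restrictions R b = r."""
    b = np.asarray(b, dtype=float)
    V = np.asarray(V, dtype=float)
    R = np.atleast_2d(np.asarray(R, dtype=float))
    r = np.zeros(R.shape[0]) if r is None else np.asarray(r, dtype=float)
    diff = R @ b - r
    return float(diff @ np.linalg.solve(R @ V @ R.T, diff))

# Made-up estimates of (sigma-, vartheta) with unit standard errors and correlation -0.98
b = [1.0, 1.0]
V = [[1.0, -0.98], [-0.98, 1.0]]
t_stats = np.asarray(b) / np.sqrt(np.diag(V))  # individual t-statistics: 1.0 each
W = wald_stat(b, V, np.eye(2))                 # joint H0: sigma- = vartheta = 0
print(t_stats, W)
```

Neither t-statistic is significant, yet \(W=100\): the sum of the two coefficients is precisely estimated even though each one separately is not.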

As expected, the LQI test confirms the significance of the size effect in the impact of output on unemployment (Wald = 39.4, p-value = 0).Footnote 20

The positive value of \(\sigma^{+}\) (1.61) means that the negative impact of economic upturns on unemployment, as predicted by Okun’s Law, decreases in magnitude as cumulative economic upturns increase. Similarly, the negative value of \(\sigma^{-}\) (\(-7.01\)) implies that the positive effect of economic downturns on unemployment decreases as cumulative economic downturns increase.Footnote 21

Hence, for both types of cumulative shocks (upturns and downturns), size has an attenuating effect on the overall impact of the output gap on unemployment. These findings are consistent with basic economic theory. Unemployment cannot decrease at the same rate after successive economic upturns, particularly as it approaches the natural rate of unemployment. Similarly, the unemployment rate cannot keep increasing at the same speed after successive negative economic shocks, since policymakers (the Federal Reserve and the federal government in the US case) would adopt policies to boost the economy during persistent recessions (e.g., expansionary monetary policy, labor market restructuring and increased spending on government projects such as the 2009 Economic Stimulus Program).

The LQA test reveals significant asymmetry in the long-run quadratic impact of positive and negative output gaps on unemployment (Wald = 5.49, p-value = 0.04). This means that the magnitude of the impact of economic downturns on unemployment decreases faster than the impact of economic upturns. We will return to this finding later when we discuss the results of the sign asymmetry tests.
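The attenuation rates behind these statements follow from differentiating the long-run relation implied by the error correction term (with \(\vartheta=0\)). The derivation below uses only the reported \(\sigma^{\pm}\); the \(\theta^{\pm}\) estimates are not restated here, so the rates are read off the slopes, assuming each effect keeps its Okun sign over the relevant range, and \(s=-y^{-}\ge 0\) denotes the size of cumulative downturns.

```latex
\begin{aligned}
u &= \theta^{+}y^{+}+\theta^{-}y^{-}+\sigma^{+}\left(y^{+}\right)^{2}+\sigma^{-}\left(y^{-}\right)^{2},\\
\frac{\partial u}{\partial y^{+}} &= \theta^{+}+2\sigma^{+}y^{+} = \theta^{+}+3.22\,y^{+},\\
\frac{\mathrm{d}u}{\mathrm{d}s} &= -\theta^{-}-2\sigma^{-}y^{-} = -\theta^{-}-14.02\,s,
\qquad s=-y^{-}\ge 0 .
\end{aligned}
```

With \(\theta^{+}<0\), the magnitude of the upturn effect falls by 3.22 per unit of cumulative upturns, while the downturn effect \(-\theta^{-}>0\) falls by 14.02 per unit of cumulative downturns, roughly four times faster.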

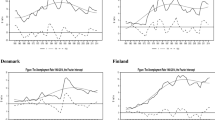

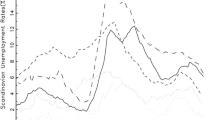

Given that, in our final selected QAECM, the potential quadratic impact of \(y_{t}\) is transmitted to \(u_{t}\) exclusively through \((y_{t}^{+})^{2}\) and \((y_{t}^{-})^{2}\) (\(\vartheta=0\)), the LSA test simplifies to H0: \(\theta^{+}+2\sigma^{+}\overline{y}=\theta^{-}-2\sigma^{-}\overline{y}\). In Fig. 1, we report the bootstrap p-values of the LSA tests for different levels of \(\overline{y}\), chosen to be consistent with their empirical counterparts: \(\overline{y}\) ranges from 0 to 0.6.Footnote 22 For relatively low values of \(\overline{y}\), the overall impact of positive cumulative economic shocks is significantly different from the overall impact of negative shocks. However, as \(\overline{y}\) increases, the impact of economic upturns converges to the impact of economic downturns. To investigate the long-run sign asymmetry in depth and identify its direction, Fig. 2 shows the simulated overall impacts of economic upturns and downturns based on our estimation results. For low cumulative shock levels, the magnitude of the impact of an economic downturn on unemployment is significantly larger than that of an economic upturn. However, this asymmetry starts to vanish as the cumulative shock levels increase, and the two impacts converge to relatively similar magnitudes.
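Because the LSA null is linear in \((\theta^{+},\theta^{-},\sigma^{+},\sigma^{-})\) for each fixed \(\overline{y}\), scanning \(\overline{y}\) over \([0, 0.6]\) amounts to testing a family of restriction vectors. The sketch below (hypothetical names; the parameter order is an assumption) builds those vectors and evaluates the implied gap between the upturn and downturn effects; each row would then feed a Wald statistic with a bootstrap p-value, which is not reproduced here.

```python
import numpy as np

# Assumed parameter order: (theta_pos, theta_neg, sigma_pos, sigma_neg)
def lsa_restriction(y_bar):
    """Row R with R @ params = (theta+ + 2 sigma+ y_bar) - (theta- - 2 sigma- y_bar)."""
    return np.array([1.0, -1.0, 2.0 * y_bar, 2.0 * y_bar])

def lsa_gap(params, y_bar):
    """Upturn effect at y+ = y_bar minus downturn effect at y- = -y_bar."""
    return float(lsa_restriction(y_bar) @ np.asarray(params, dtype=float))

y_grid = np.linspace(0.0, 0.6, 13)  # 0, 0.05, ..., 0.6
R_rows = np.vstack([lsa_restriction(yb) for yb in y_grid])

# With the reported sigma+ = 1.61 and sigma- = -7.01, the gap moves with y_bar at rate
# 2 (sigma+ + sigma-) = -10.8; the theta terms only shift its level (set to 0 here).
slope = lsa_gap([0.0, 0.0, 1.61, -7.01], 1.0)
print(R_rows.shape, slope)
```

If \(\theta^{+}-\theta^{-}>0\), as the larger downturn effect at low \(\overline{y}\) suggests, a negative slope means the gap shrinks as \(\overline{y}\) grows, in line with the convergence described above.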

To summarize all these findings, we can conclude that for similar low magnitudes of \({y}_{t-1}^{+}\) and \({y}_{t-1}^{-}\), the positive impact of cyclical downturns on unemployment is larger in magnitude than the negative impact of cyclical upturns. However, as the cumulative economic shocks increase, these impacts are attenuated by the quadratic (size) effect. Given that the decrease in the magnitude of the economic downturns’ impact is more pronounced than that of the economic upturns (size effect asymmetry), the asymmetry in the impact of \({y}_{t-1}^{+}\) and \({y}_{t-1}^{-}\) on unemployment gradually vanishes as the magnitudes of cumulative economic upturns and downturns increase.

In this regard, it is worth mentioning that cumulative economic upturns in the USA are generally much larger than cumulative economic downturns. Therefore, we can expect the impact of recessions on unemployment to be typically larger than the impact of cyclical upturns, unless the US economy goes through successive severe recessions. This is in line with the empirical findings of previous studies (Schorderet 2003; McKay and Reis 2008; Shin et al. 2014).

According to the short-run estimation results, both positive and negative economic shocks have significant effects on unemployment (\(\delta_{0}^{+}=-9.55\); \(\delta_{0}^{-}=-8.29\)). This may be explained by the high job mobility that characterizes the short-run dynamics of the US labor market. Most US private sector workers are “employed at will,” which means that both employers and employees are free to terminate the contract at any time and for almost any reason. Overall, the US labor market is less regulated than those of other countries (e.g., in Europe), with lower union density and weaker employment protection legislation (Delacroix 2003). It is thus reasonable to expect these short-run coefficients to be smaller in magnitude for European countries.

The short-run asymmetry tests consist of both size and sign asymmetry tests (single and additive). The Wald test (2.85) for short-run size asymmetry (H0: \(\pi_{0}^{+}=0\)) rejects the null hypothesis at the 10% significance level. Thus, as the magnitude of a contemporaneous positive economic shock increases, its impact on unemployment is amplified. Large economic shocks are more likely than relatively small shocks to have a significant impact on firms’ expectations of future economic growth.Footnote 23
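Since the notes state that the short-run tests use theoretical p-values, the 10% rejection can be checked against the asymptotic \(\chi^{2}(1)\) distribution of a single-restriction Wald statistic. A short standard-library sketch (the function name is hypothetical), using the identity \(P(\chi^{2}_{1}>W)=\operatorname{erfc}(\sqrt{W/2})\):

```python
import math

def chi2_1_pvalue(wald):
    """Upper-tail p-value of a chi-square(1) statistic: P(Z**2 > W) = erfc(sqrt(W / 2))."""
    return math.erfc(math.sqrt(wald / 2.0))

p_size = chi2_1_pvalue(2.85)  # short-run size asymmetry test, H0: pi_0^+ = 0
print(f"p-value = {p_size:.4f}")
```

The resulting p-value lies between 0.05 and 0.10, consistent with rejection at the 10% level but not at the 5% level.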

The bootstrap p-values of the single (H0: \(\delta_{0}^{+}+2\pi_{0}^{+}\overline{\Delta x}=\delta_{0}^{-}\)) and additive short-run sign asymmetry tests are reported in Figs. 3 and 4. Overall, the null hypothesis cannot be rejected at conventional significance levels (up to 10%).Footnote 24 It is, however, important to note that for exceptionally large economic shocks, the bootstrap p-values decrease to around 10–11%, implying a relatively weak short-run sign asymmetry in favor of larger impacts of positive economic shocks (due to the size effect of positive shocks).

In general, regardless of labor market regulations, firms usually make decisions about firing and hiring workers based on their expectations of future economic performance. Hence, following a negative economic shock, massive layoffs are unlikely in the short run unless the shock was preceded by successive economic downturns. This may explain the absence of short-run sign asymmetry in the impacts of cyclical shocks, in contrast to the long-run case.

5.3 Forecasting validation

To validate the credibility and adequacy of our model, we compare its forecasting performance with those of the standard NARDL and the classical ARDL models. We choose an estimation period from February 1982 to September 2001 and a forecast window from October 2001 to February 2003.Footnote 25 This choice of forecast window is motivated by several reasons. First, according to our main finding, negative economic shocks are expected to have a significantly larger impact on unemployment than what is usually implied by NARDL and ARDL models. To validate this claim, we choose a forecast window during a major economic downturn in the USA, namely the 2001 recession. Second, as stated by Montgomery et al. (1998), forecasting unemployment in contractionary periods seems to be more valued than during expansionary periods, given its social and political implications. One could argue that the forecast window should start at the beginning of the 2001 US recession, namely in March 2001. However, given the relatively limited number of observations of economic downturns (compared with economic upturns), we include 6 months of the US recession (March to September 2001) in our estimation period in order to have more balanced estimation data. Moreover, a forecast window starting just after the 9/11 attacks (a major negative economic shock) provides a convenient test of whether the standard NARDL underestimates the impact of negative economic shocks, as we claim.

Our forecasting test consists of generating three series of predicted unemployment rates from the ARDL, NARDL and QAECM. For instance, we generate the predicted values from the QAECM recursively as follows:

- \(\Delta\widehat{u}_{t^{*}+s}=\widehat{\rho}\,\widehat{u}_{t^{*}+s-1}+\widehat{\beta}^{+}y_{t^{*}+s-1}^{+}+\widehat{\beta}^{-}y_{t^{*}+s-1}^{-}+\widehat{\gamma}^{+}\left(y_{t^{*}+s-1}^{+}\right)^{2}+\widehat{\gamma}^{-}\left(y_{t^{*}+s-1}^{-}\right)^{2}+\sum_{i=1}^{p-1}\widehat{\varphi}_{i}\,\Delta\widehat{u}_{t^{*}+s-i}+\sum_{i=0}^{q-1}\left(\widehat{\delta}_{i}^{+}\Delta y_{t^{*}+s-i}^{+}+\widehat{\delta}_{i}^{-}\Delta y_{t^{*}+s-i}^{-}\right)+\sum_{i=0}^{q-1}\left(\widehat{\pi}_{i}^{+}\left(\Delta y_{t^{*}+s-i}^{+}\right)^{2}+\widehat{\pi}_{i}^{-}\left(\Delta y_{t^{*}+s-i}^{-}\right)^{2}\right)\),
- \(\widehat{u}_{t^{*}+s}=\widehat{u}_{t^{*}+s-1}+\Delta\widehat{u}_{t^{*}+s}\), for \(s=1,\dots,T-t^{*}\),

where \(T\) is the end date of the forecast window, \(t^{*}\) is the end date of the estimation period, \(s\) is the forecast horizon (\(s\)-step-ahead out-of-sample forecast), the hatted coefficients are the QAECM estimates obtained from the estimation-period data, and \(\widehat{u}_{t^{*}+s}\) (\(\Delta\widehat{u}_{t^{*}+s}\)) are the forecasted unemployment rates in levels (in first differences). For dates up to \(t^{*}\), the observed values of \(u\) and \(\Delta u\) are used.
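The recursion above can be sketched as follows. This is a minimal NumPy illustration, not the authors' implementation: the function name, the parameter-dictionary keys and the 0-based indexing are assumptions. The output partial sums \(y^{\pm}\) and their changes are taken as observed over the forecast window (the recursion uses \(\Delta y_{t^{*}+s}^{\pm}\)), and observed values of \(u\) and \(\Delta u\) are used for dates up to \(t^{*}\).

```python
import numpy as np

def forecast_qaecm(u, y_pos, y_neg, dy_pos, dy_neg, t_star, horizon, prm):
    """Recursive QAECM forecasts u_hat[t_star+1 .. t_star+horizon] (0-based dates).

    u: observed unemployment up to at least t_star; y_*/dy_*: observed partial sums of
    output and their changes, covering t_star + horizon. Requires t_star >= p - 1.
    prm keys (hypothetical): rho, beta_pos, beta_neg, gamma_pos, gamma_neg, and lists
    phi (phi_1..phi_{p-1}), delta_pos/delta_neg and pi_pos/pi_neg (lags 0..q-1).
    """
    u_hat = [float(v) for v in u[: t_star + 1]]  # observed levels up to t*
    du_hat = [np.nan] + list(np.diff(u_hat))     # observed changes up to t*
    for s in range(1, horizon + 1):
        t = t_star + s
        du = (prm["rho"] * u_hat[t - 1]
              + prm["beta_pos"] * y_pos[t - 1] + prm["beta_neg"] * y_neg[t - 1]
              + prm["gamma_pos"] * y_pos[t - 1] ** 2 + prm["gamma_neg"] * y_neg[t - 1] ** 2)
        # Autoregressive terms: observed changes for dates <= t*, forecasts afterwards
        for i, phi_i in enumerate(prm.get("phi", []), start=1):
            du += phi_i * du_hat[t - i]
        # Short-run linear terms in the output changes, lags 0..q-1
        for i, (d_p, d_n) in enumerate(zip(prm.get("delta_pos", []), prm.get("delta_neg", []))):
            du += d_p * dy_pos[t - i] + d_n * dy_neg[t - i]
        # Short-run quadratic (size) terms, lags 0..q-1
        for i, (p_p, p_n) in enumerate(zip(prm.get("pi_pos", []), prm.get("pi_neg", []))):
            du += p_p * dy_pos[t - i] ** 2 + p_n * dy_neg[t - i] ** 2
        du_hat.append(du)
        u_hat.append(u_hat[t - 1] + du)
    return np.array(u_hat[t_star + 1:])
```

Because the recursion conditions on the realized output path over the forecast window, these are forecasts of unemployment given observed output rather than fully unconditional forecasts.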

Then, we calculate the deviations of these predicted series from the actual data. From the prediction errors, we report five accuracy measures, namely, the Mean Square Error (MSE), the Root-Mean-Square Error (RMSE), the Mean Absolute Error (MAE), the Median Absolute Error (MdAE) and the Mean Absolute Percentage Error (MAPE):
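The formulas for these measures are not reproduced in this version of the text. The sketch below uses their standard definitions, with errors \(e_t=u_t-\widehat{u}_t\) and MAPE expressed in percent; both conventions are assumptions, and the function name is hypothetical.

```python
import numpy as np

def accuracy_measures(actual, forecast):
    """Standard forecast accuracy measures from errors e_t = actual_t - forecast_t."""
    actual = np.asarray(actual, dtype=float)
    e = actual - np.asarray(forecast, dtype=float)
    mse = float(np.mean(e ** 2))
    return {
        "MSE": mse,
        "RMSE": float(np.sqrt(mse)),
        "MAE": float(np.mean(np.abs(e))),
        "MdAE": float(np.median(np.abs(e))),
        "MAPE": float(100.0 * np.mean(np.abs(e / actual))),  # percent; actual values must be nonzero
    }

# Toy usage with made-up unemployment rates and forecasts
print(accuracy_measures([5.0, 5.5, 6.0, 6.0], [5.0, 5.0, 6.5, 5.0]))
```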

As Table 6 shows, all five accuracy measures of the QAECM forecasts are clearly lower than those of the NARDL and ARDL models. To determine whether the differences in accuracy between the competing models are statistically significant, we report the Diebold and Mariano (1995) test statistics in Table 7.Footnote 26 The results confirm that the QAECM has a superior forecasting performance over both NARDL and ARDL.
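For reference, a minimal version of the Diebold and Mariano (1995) statistic is sketched below. The loss function (squared error), the horizon `h` used for the long-run variance and the standard normal reference distribution are assumptions, since the text does not state them, and no small-sample correction is applied. The function name is hypothetical.

```python
import math
import numpy as np

def diebold_mariano(e1, e2, h=1):
    """Diebold-Mariano statistic for equal accuracy under squared-error loss.

    d_t = e1_t**2 - e2_t**2; the long-run variance of d uses autocovariances up to
    lag h - 1 (rectangular weights), so it can be non-positive for h > 1 in small samples.
    Returns the statistic and a two-sided p-value from the standard normal distribution.
    """
    d = np.asarray(e1, dtype=float) ** 2 - np.asarray(e2, dtype=float) ** 2
    n = d.size
    dc = d - d.mean()
    lrv = np.dot(dc, dc) / n
    for k in range(1, h):
        lrv += 2.0 * np.dot(dc[k:], dc[:-k]) / n
    stat = d.mean() / math.sqrt(lrv / n)
    p_value = math.erfc(abs(stat) / math.sqrt(2.0))  # 2 * (1 - Phi(|stat|))
    return float(stat), p_value

# Made-up errors: the first model's errors are larger, so the statistic is positive
stat, p = diebold_mariano([1.0, 2.0, 3.0, 4.0], [0.0, 0.0, 0.0, 0.0])
print(stat, p)
```

A positive statistic indicates larger losses for the first set of forecasts; swapping the two error series flips its sign.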

Fig. 5 shows that, as expected, the NARDL underestimates the magnitude of the impact of major economic shocks on unemployment rates, whereas our QAECM seems to be more successful in predicting these effects. Hence, the relative impact of an economic downturn on US unemployment appears to be much larger than what is usually implied by empirical studies in which the size asymmetry (nonlinearity) is ignored.

6 Conclusion

Although many macroeconomic variables exhibit size-dependent relationships, most econometric models restrict nonlinearity to sign asymmetry (e.g., NARDL) and continue to assume a combination of linear (stable) relationships. To bridge this gap, we propose a new QAECM that comprehensively accounts for sign and size asymmetries, in both the long and the short run. Since our QAECM can be derived from a standard NARDL, it can be considered an extended version of NARDL in which the size impact is captured by the squares of the positive and negative partial sums. We also introduce a battery of long-run tests (a testing protocol) that allows one to investigate and identify different sources of nonlinearity. To obtain consistent statistical inference for the long-run tests, we propose a nonparametric residual recursive bootstrap algorithm. We believe that, in an ECM context, our proposed bootstrap technique is more appealing than the fixed-regressor bootstrap, which is widely used in empirical studies involving NARDL. The Okun’s Law estimation results confirm that unemployment’s response to a decrease in output is usually stronger than its response to an increase in output, as is commonly reported in the empirical literature on Okun’s Law. However, we find evidence of a size effect in the relationship between the output gap and unemployment: the long-run impact of economic upturns (downturns) on unemployment is attenuated when cumulative economic upturns (downturns) increase. Moreover, our long-run size asymmetry test reveals that the size effect is more pronounced for economic downturns. Finally, to validate the credibility of the QAECM, we compare its forecasting performance with those of the NARDL and ARDL models. The forecasting results show that the QAECM provides significantly better predictions of the impact of output gaps on unemployment, particularly during recessions.
Given its flexibility, our proposed QAECM can be applied to a wide range of other macroeconomic topics.

Notes

It is well known that when the test statistic is not an exact pivot (asymptotically pivotal or even nonpivotal), the bootstrap p-values are still asymptotically consistent (Davidson and MacKinnon 1999). The bootstrap technique allows one to estimate the unknown true DGP under the null hypothesis by resampling and then to obtain asymptotically consistent p-values.

The right-hand side of a typical ECM includes first differenced variables and an error correction term, which are stationary.

We can also derive the same ECM by starting from a standard ARDL equation including \((x_{t-1})^{2}\). However, we need to drop all the interactive short-run terms: \(\Delta x_{t-i}^{+}\Delta x_{t-j}^{-}\) for \(i\ne j\), and \(x_{t-1}^{\pm}\Delta x_{t-i}^{\pm}\) for all \(i\).

Given that \({x}_{t-1}^{+}{x}_{t-1}^{-}=\frac{1}{2}\left[{\left({x}_{t-1}\right)}^{2}-{\left({x}_{t-1}^{+}\right)}^{2}-{\left({x}_{t-1}^{-}\right)}^{2}\right]\), we could have equivalently included \({x}_{t-1}^{2}\) instead of \({x}_{t-1}^{+}{x}_{t-1}^{-}\). Ultimately, the quadratic effect could be transmitted through \({x}_{t-1}^{2}\), \({{ x}_{t-1}^{+}}^{2}\) and/or \({{x}_{t-1}^{-}}^{2}\) or any combination of these.

We chose B = 9999 so that \(\kappa (B+1)\) would be an integer, where \(\kappa \) is the level of the test (Davidson and MacKinnon 1999).

For two-sided tests (t-test), \(p=2\min\left(\frac{S}{B}, 1-\frac{S}{B}\right)\).

The p-values of these tests are obtained via bootstrapping.

Under H0, \(\omega_{t}=y_{t}-\theta^{+}x_{t}^{+}-\theta^{-}x_{t}^{-}-\sigma\left(x_{t}^{+}\right)^{2}-\sigma\left(x_{t}^{-}\right)^{2}-2\sigma x_{t}^{+}x_{t}^{-}\).

These coefficients must individually be significant. Otherwise, we should drop the insignificant variables.

To compute the constrained least squares estimates, we used the MATLAB function “fmincon”.

For short-run tests, we use the theoretical p-values and hence we do not write down the corresponding restricted models.

For instance, economic growth indicators usually exhibit more positive than negative changes.

As we earlier explained, the symmetry of the quadratic effect implies that \({\sigma }^{-}={-\sigma }^{+}.\)

Obviously, the test power is expected to be positively correlated with the distance between the true parameters and their counterparts under the null hypotheses. However, our sensitivity analysis of the simulation outcomes reveals that \(\Delta_{\sigma}\) seems to be the most influential difference measure affecting the test powers.

The size of all tests (not reported here) is very close to 5% regardless of the parameterization of the DGP under the null hypotheses, which is consistent with Shin et al. (2014). The bootstrap test rejects the null hypothesis when the test statistic falls outside the 95% interval of its empirical distribution under H0, which is approximated using the bootstrap samples generated under the null hypothesis. Hence, when the simulated samples are also generated under the same null hypothesis, it is not surprising that the probability of rejecting the null hypothesis is asymptotically equal to the significance level of the bootstrap test (5%).

Without loss of generality, we report the power of the LSA test for \(\overline{x}=\frac{1}{T}\sum_{t=1}^{T}x_{t}\).

In the first step, we drop all the insignificant variables. However, we keep \(d{y}_{t-i}^{2}\) if \(d{y}_{t-i}\) is significant. In the second step, we run the nonlinear ECM, then drop all the insignificant variables.

\({y}^{-}\) and \({y}^{+}{y}^{-}\) are highly correlated (0.98).

Obviously, the quadratic decomposition test in this case is equivalent to the quadratic impact test (H0: \(\sigma^{+}=\sigma^{-}=0\)), given that \(\vartheta\) is assumed to be equal to zero.

The impact of an increase in economic downturns on unemployment is \(\frac{\mathrm{d}u}{\mathrm{d}(-y^{-})}=-\theta^{-}-2\sigma^{-}y_{t-1}^{-}\).

For instance, if \(\overline{\mathrm{y} }\)= 0.1, the test would consist of testing whether the magnitude of the impact of an economic upturn on unemployment when \({\mathrm{y}}_{\mathrm{t}-1}^{+}=0.1\) is significantly different from the magnitude of the impact of an economic downturn when \({\mathrm{y}}_{\mathrm{t}-1}^{-}=-0.1\).

It is important to note that the absence of a short-run size impact of negative economic shocks could be due to the limited number of observations with negative economic shocks, particularly those of large magnitude.

The unemployment rate started to decrease in March 2003.

All reported p-values are equal to 0.

References

Andreano MS, Savio G (2002) Further evidence on business cycle asymmetries in G7 countries. Appl Econ 34(7):895–904

Bachmeier LJ, Griffin JM (2003) New evidence on asymmetric gasoline price responses. Rev Econ Stat 85:772–776

Baharumshah AZ, Sirag A, Soon SV (2017) Asymmetric exchange rate pass-through in an emerging market economy: the case of Mexico. Res Int Bus Financ 41:247–259

Balke NS, Fomby TB (1997) Threshold cointegration. Int Econ Rev 38:627–645

Belaire-Franch J, Peiró A (2015) Asymmetry in the relationship between unemployment and the business cycle. Empir Econ 48(2):683–697

Beran R (1988) Prepivoting test statistics: a bootstrap view of asymptotic refinements. J Am Stat Assoc 83:687–697. https://doi.org/10.1080/01621459.1988.10478649

Brunner AD (1997) On the dynamic properties of asymmetric models of real GNP. Rev Econ Stat 79(2):321–326

Brun-Aguerre R, Fuertes AM, Phylaktis K (2012) Country and time variation in import pass-through: Macro versus micro factors. J Int Money Financ 31(4):818–844

Brun-Aguerre R, Fuertes AM, Greenwood-Nimmo M (2017) Heads I win; tails you lose: asymmetry in exchange rate pass-through into import prices. J R Stat Soc A Stat Soc 180(2):587–612

Campa JM, González-Mínguez JM, Sebastiá-Barriel M (2008) Non-linear adjustment of import prices in the European Union. CIF-Center for International Finance Working Paper No. DI-734-E

Cai Z, Li Q, Park JY (2009) Functional-coefficient models for nonstationary time series data. J Econom 148(2):101–113

Cazes S, Verick S, Al Hussami F (2013) Why did unemployment respond so differently to the global financial crisis across countries? Insights from Okun’s Law. IZA J Labor Policy 2(1):1–18

Chan N, Wang Q (2015) Nonlinear regressions with nonstationary time series. J Econom 185(1):182–195

Chang Y, Park JY (2003) A sieve bootstrap for the test of a unit root. J Time Ser Anal 24:379–400. https://doi.org/10.1111/1467-9892.00312

Choi I, Saikkonen P (2004) Testing linearity in cointegrating smooth transition regressions. Econom J 7(2):341–365

Choudhri EU, Hakura DS (2015) The exchange rate pass-through to import and export prices: The role of nominal rigidities and currency choice. J Int Money Financ 51:1–25

Cuaresma JC (2003) Okun’s Law revisited. Oxf Bull Econ Stat 65(4):439–451

Daly MC, Fernald JG, Jordà Ò, Nechio F (2018) Shocks and adjustments: the view through Okun’s macroscope. Mimeo (June 3 version). Federal Reserve Bank of San Francisco, San Francisco

Davidson R, MacKinnon JG (1999) The size distortion of bootstrap tests. Econom Theory 15(3):361–376

Delacroix A (2003) Transitions into unemployment and the nature of firing costs. Rev Econ Dyn 6:651–671

Dickey DA, Fuller WA (1979) Distribution of the estimators for autoregressive time series with a unit root. J Am Stat Assoc 74:427–431

Diebold FX, Mariano RS (1995) Comparing predictive accuracy. J Bus Econ Stat 13:253–263

Dong C, Linton O (2018) Additive nonparametric models with time variable and both stationary and nonstationary regressors. J Econom 207(1):212–236

Fernald JG, Hall RE, Stock JH, Watson MW (2017) The disappointing recovery of output after 2009. Brook Pap Econ Act 48(1):1–81

Granger CWJ (1981) Some properties of time series data and their use in econometric model specification. J Econom 16:121–130

Granger CWJ (1983) Co-integrated variables and error-correcting models. Unpublished UCSD Discussion Paper 83-13

Granger CWJ, Yoon G (2002) Hidden cointegration. Mimeo. University of California, San Diego

Hansen BE, Seo B (2002) Testing for two-regime threshold cointegration in vector error-correction models. J Econom 110(2):293–318

Harris R, Silverstone B (2001) Testing for asymmetry in Okun’s Law: cross country comparison. Econom Bull 5:1–13

Holly S, Stannett M (1995) Are there asymmetries in UK consumption? A time series analysis. Appl Econ 27(8):767–772

Holmes MJ, Silverstone B (2006) Okun’s Law, asymmetries and jobless recoveries in the United States: a Markov-switching approach. Econ Lett 92(2):293–299

Hu Z, Phillips PCB, Wang Q (2019) Nonlinear cointegrating power function regression with endogeneity. Working paper

Huang HC, Chang YK (2005) Investigating Okun’s Law by the structural break with threshold approach: evidence from Canada. Manch Sch 73(5):599–611

Kapetanios G, Shin Y, Snell A (2006) Testing for cointegration in nonlinear smooth transition error correction models. Econom Theory 22:279–303

Karlsen HA, Myklebust T, Tjøstheim D (2007) Nonparametric estimation in a nonlinear cointegration type model. Ann Stat 35(1):252–299

Keynes JM (1936) The general theory of employment, interest and money. Macmillan, London

Koutroulis A, Panagopoulos Y, Tsouma E (2016) Asymmetry in the response of unemployment to output changes in Greece: evidence from hidden co-integration. J Econ Asymmetries 13:81–88

Kwiatkowski D, Phillips PCB, Schmidt P, Shin Y (1992) Testing the null hypothesis of stationarity against the alternative of a unit root. J Econom 54:159–178

Lee J (2000) The robustness of Okun’s Law: evidence from OECD countries. J Macroecon 22(2):331–356

Li H, Maddala GS (1997) Bootstrapping cointegration regressions. J Econom 80:297–318

Linton O, Sperlich S, Van Keilegom I (2008) Estimation of a semiparametric transformation model. Ann Stat 36(2):686–718

Linton O, Wang Q (2016) Nonparametric transformation regression with nonstationary data. Econom Theory 32(1):1–29

Lo MC, Zivot E (2001) Threshold cointegration and nonlinear adjustment to the law of one price. Macroecon Dyn 5(4):533

MacKinnon JG (2009) Bootstrap hypothesis testing. In: Belsley D, Kontoghiorghes E (eds) Handbook of computational econometrics, vol 6. Wiley, Hoboken

McKay A, Reis R (2008) The brevity and violence of contractions and expansions. J Monet Econ 55(4):738–751

McNown R, Sam CY, Goh SK (2018) Bootstrapping the autoregressive distributed lag test for cointegration. Appl Econ 50(13):1509–1521

Mankiw NG (2015) Macroeconomics. Worth Publishers, Duffield

Montgomery AL, Zarnowitz V, Tsay RS, Tiao GC (1998) Forecasting the US unemployment rate. J Am Stat Assoc 93(442):478–493

Neftçi SN (1984) Are economic time series asymmetric over the business cycle? J Polit Econ 92:307–328

Okun AM (1962) Potential GNP: its measurement and significance. Am Stat Assoc Proc Bus Econ Stat Sect 1962:98–104

Owyang MT, Sekhposyan T (2012) Okun’s Law of the business cycle: was the great recession all that different? Federal Reserve Bank St Louis Rev 94:399–418

Palley TI (1993) Okun’s Law and the asymmetric and changing cyclical behaviour of the USA economy. Int Rev Appl Econ 7(2):144–162

Palm FC, Smeekes S, Urbain J-P (2010) A sieve bootstrap test for cointegration in a conditional error correction model. Econom Theory 26:647–681

Park JY, Phillips PCB (1999) Asymptotics for nonlinear transformations of integrated time series. Econom Theory 15:269–298

Park JY, Phillips PCB (2001) Nonlinear regressions with integrated time series. Econometrica 69:117–161

Pereira RM (2013) Okun’s law across the business cycle and during the great recession: a Markov switching analysis. College of William and Mary working paper no 139

Pesaran MH, Shin Y, Smith RJ (2001) Bounds testing approaches to the analysis of level relationships. J Appl Econom 16:289–326

Phillips PCB, Hansen BE (1990) Statistical inference in instrumental variables regression with I(1) processes. Rev Econ Stud 57:99–125

Phillips PCB, Li D, Gao J (2017) Estimating smooth structural change in cointegration models. J Econom 196(1):180–195

Psaradakis Z, Sola M, Spagnolo F (2004) On Markov error-correction models with an application to stock prices and dividends. J Appl Econom 19:69–88

Ramsey JB, Rothman P (1996) Time irreversibility and business cycle asymmetry. J Money Credit Bank 28(1):1–21

Rapach DE (2002) Are real GDP levels nonstationary? Evidence from panel data tests. South Econ J 68:473–495

Rothman P (1991) Further evidence on the asymmetric behavior of unemployment rates over the business cycle. J Macroecon 13(2):291–298

Rothman P (1998) Forecasting asymmetric unemployment rates. Rev Econ Stat 80(1):164–168

Schorderet Y (2001) Revisiting Okun’s Law: an hysteretic perspective. Mimeo. University of California, San Diego

Schorderet Y (2003) Asymmetric cointegration. Working Paper, Department of Econometrics, University of Geneva

Shin Y, Yu B, Greenwood-Nimmo M (2014) Modelling asymmetric cointegration and dynamic multipliers in a nonlinear ARDL framework. In: Festschrift in honor of Peter Schmidt. Springer, New York, NY, pp 281–314

Shintani M, Terada-Hagiwara A, Yabu T (2013) Exchange rate pass-through and inflation: a nonlinear time series analysis. J Int Money Financ 32:512–527

Silvapulle P, Moosa IA, Silvapulle MJ (2004) Asymmetry in Okun’s law. Can J Econ/Revue canadienne d’économique 37(2):353–374

Singh K (1981) On the asymptotic accuracy of Efron’s bootstrap. Ann Stat 9:1187–1195. https://doi.org/10.1214/aos/1176345636

Virén M (2001) The Okun curve is non-linear. Econ Lett 70:253–257

Wang Q, Phillips PCB (2009a) Asymptotic theory for local time density estimation and nonparametric cointegrating regression. Econom Theory 25:710–738

Wang Q, Phillips PCB (2009b) Structural nonparametric cointegrating regression. Econometrica 77(6):1901–1948

Wang Q, Wu D, Zhu K (2018) Model checks for nonlinear cointegrating regression. J Econom 207(2):261–284

Webber AG (2000) Newton’s gravity law and import prices in the Asia Pacific. Jpn World Econ 12:71–87

Yanamandra V (2015) Exchange rate changes and inflation in India: what is the extent of exchange rate pass-through to imports? Econ Anal Policy 47:57–68

Zanin L (2014) On Okun’s law in OECD countries: an analysis by age cohorts. Econ Lett 125:243–248. https://doi.org/10.1016/j.econlet.2014.08.030

Zanin L, Marra G (2012) Rolling regression versus time-varying coefficient modelling: an empirical investigation of the Okun’s Law in some Euro area countries. Bull Econ Res 64(1):91–108. https://doi.org/10.1111/j.1467-8586.2010.00376.x

Acknowledgements

Open Access funding provided by the Qatar National Library.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Mnasri, A., Mrabet, Z. & Alsamara, M. A new quadratic asymmetric error correction model: does size matter? Empir Econ 65, 33–64 (2023). https://doi.org/10.1007/s00181-022-02323-4

DOI: https://doi.org/10.1007/s00181-022-02323-4

Keywords

- Error correction model

- Quadratic nonlinearity

- Asymmetry

- Bootstrap

- Okun’s law

- Monte Carlo simulations

- Forecast