Abstract

A significant challenge in statistical process monitoring (SPM) is to find exact and closed-form expressions (CFEs) (i.e. formed with constants, variables and a finite set of essential functions connected by arithmetic operations and function composition) for the run-length properties such as the average run-length (\(ARL\)), the standard deviation of the run-length (\(SDRL\)), and the percentiles of the run-length (\(PRL\)) of nonparametric monitoring schemes. Most of the properties of these schemes are usually evaluated using simulation techniques. Although simulation techniques are helpful when the expression for the run-length is complicated, their shortfall is that they require a high number of replications to reach reasonably accurate answers. Consequently, they take too much computational time compared to other methods, such as the Markov chain method or integration techniques, and even with many replications, the results are always affected by simulation error and may result in an inaccurate estimation. In this paper, closed-form expressions of the run-length properties for the nonparametric double sampling precedence monitoring scheme are derived and used to evaluate its ability to detect shifts in the location parameter. The computational times of the run-length properties for the CFE and the simulation approach are compared under different scenarios. It is found that the proposed approach requires less computational time compared to the simulation approach. Moreover, once derived, CFEs have the added advantage of ease of implementation, cutting off on complex convergence techniques. CFE's can also easily be built into mathematical software for ease of computation and may be recalled for further work.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Monitoring schemes are evaluated using metrics based on the number of samples required to detect abnormalities in the process; see, e.g., Montgomery (2020). The distribution of the number of samples before detecting the first out-of-control (OOC) signal is called the run-length distribution. Many researchers have proposed different methods for the computation of the properties of the run-length distribution; see, e.g., Chakraborti et al. (2009) and Teoh et al. (2016). When it is difficult to find the run-length distribution (as is the case with some nonparametric schemes such as the Mann–Whitney, or the Wilcoxon rank sum monitoring scheme, the cumulative sum (CUSUM), exponentially weighted moving average (EWMA), generally weighted moving average (GWMA), and homogeneously weighted moving average (HWMA) types monitoring schemes), researchers have suggested the use of simulation techniques; see, e.g., Letshedi et al. (2021) and Qiu (2018). For some early use of simulations in statistical process monitoring (SPM), readers are referred to Pignatiello and Runger (1990) and Fu and Hu (1999) to cite a few. The advantage of simulation techniques is that regardless of the complexity of the run-length distribution, there is always a way to compute the properties of the run-length distribution using Monte Carlo simulation; see, e.g., Qiu (2018). However, the drawback of simulation techniques is that, in general, they require many iterations (i.e. simulation runs) and it takes a long time to compute the run-length properties of a monitoring scheme; see, e.g., Silberschatz et al. (2009). Moreover, regardless of the number of replications, the results are negatively affected by the simulation error, which is dependent on the number of simulations; see, e.g., Oberkampf et al. (2002). However, closed-form computational methods such as Markov chain approaches have the advantage of reducing the computational time and error. The Markov chain approach has often been used to study or derive closed-form expressions of the run-length distribution of many proposed monitoring schemes, including the CUSUM and EWMA schemes. However, for the Markov chain, the point of departure is approximating the problem, thereafter obtaining an exact solution to the problem; see, e.g., Champ and Rigdon (1991), Areepong and Sukparungsee (2013), and Sukparungsee and Areepong (2016). Weiß (2011) advocates for the extension of the use of the Markov chain approach for calculating other performance measures like the expected conditional delay, the steady-state ARL, or the worst-case ARL. Chakraborti et al. (2004) further studied the median monitoring scheme by Janacek and Meikle (1997) and derived closed-form expressions for the run-length distribution. Chakraborti et al. (2009) derived the exact run-length distributions of the precedence scheme with runs-rules using conditioning and some results from the theory of runs and scans; see Balakrishnan and Koutras (2011). Malela-Majika et al. (2021, 2022) used the Markov chain approach to derive closed-form expressions of the run-length characteristics of the precedence schemes to investigate their abilities to detect shifts in the location process parameter. The percentiles of the run-length distribution for both the optimal average run-length (ARL)-based and median run-length (MRL)-based for the double sampling \(\overline{X }\) schemes with estimated process parameters were investigated by Teoh et al. (2016). The authors reported that the percentiles of their monitoring scheme changes with the Phase I and II sample sizes and optimal shift, even though the same value of the nominal in-control \(ARL \;({ARL}_{0})\) and in-control \(MRL \;({MRL}_{0})\) is attained. Apart from simulation and exact closed-form expressions, authors have also used numerical procedures using integral equations (see, e.g., Crowder (1987)), run-length generating functions (see, e.g., Shmueli and Cohen (2003)), and discrete-time Markov chains (see, e.g., Zantek (2008) and Li et al. (2014)) to study the run-length properties.

It is important to note that for simplicity, this paper focuses on the double sampling precedence scheme for the median as it has a symmetric distribution and hence symmetric control limits. However, the precedence scheme belongs to a general class of distribution-free schemes where any order statistics (including the sample median) can be used as a charting statistic. A key advantage of distribution-free charts is that one does not need to assume any particular underlying process distribution and the in-control probability calculations remain valid for all continuous distributions; see Chakraborti et al. (2004). Thus, distribution-free charts are said to be in-control robust, where the term "robustness" highlights the ability of any statistical procedure under ideal and non-ideal condition; see e.g., Balakrishnan et al. (2006, p. 7299) and Human et al. (2011). To facilitate the investigation of the performance of the double sampling precedence scheme introduced by Malela-Majika et al. (2021), this paper introduces closed-form expressions for the run-length properties and the average sample size (\(ASS\)) using calculus. The expression of the Stage 2 probability of the in-control process is derived using mathematical statistics techniques. The implementation is simplified by making use of already existing integral functions (like the incomplete Beta function) in mathematical software like Mathcad, Matlab, R, etc. The computational times of the run-length properties of the double sampling scheme using the proposed method and simulation techniques are also compared.

The remainder of this paper is organised as follows: Sect. 2 presents a brief background of the double sampling precedence scheme as well as its operation. Section 3 introduces the different closed-form expressions of the run-length properties and the \(ASS\); these expressions are the key contribution of this paper. The in-control and out-of-control performances of the double sampling precedence scheme are investigated in Sect. 4. Moreover, a comparative study of the computational time is presented in Sect. 4. The application and implementation of the double sampling (DS) precedence monitoring scheme are given in Sect. 5 through an example. Section 6 gives the concluding remarks and suggested future research ideas.

2 Double sampling precedence scheme

2.1 Preliminaries and notations

The DS precedence scheme was first introduced by Malela-Majika et al. (2021). Let \({X}_{i}\), \(i=\) 1,2, …\(m\), where \(m>\) 1 be a sequence of \(m\) Phase I observations from the in-control (IC) process with an unknown cumulative distribution function (c.d.f.) denoted as \({F}_{X}(x)\) and let \({Y}_{tk},t=\) 1,2, …; \(k=\) 1,2, …, \(n\), where \(n>1\) be a \({t}^{th}\) Phase II test sample of size \(n\) with c.d.f. \({G}_{Y}(y)\). Assume that when the process is IC, \({F}_{X}\left(x\right)={G}_{Y}(x)\). Otherwise, the process is said to be out-of-control then \({F}_{X}\left(x\right)={G}_{Y}(x+\delta )\) where \(\delta \in {\mathbb{R}}/\{0\}\) is the change or shift in the location parameter. Note that the test samples \({Y}_{tk}\) are assumed to be independent and identically distributed (i.i.d.) of one another and of the reference sample \(X\). Then, the proposed DS precedence monitoring scheme is a two-stage precedence scheme that is divided into five charting regions defined as follows and illustrated in Fig. 1:

-

Charting regions in Stage 1

\(A=(-\infty ,{X}_{({a}_{2}:m)}]\cup [{X}_{({b}_{2}:m)},+\infty )\), \(B=({X}_{({a}_{2}:m)},{X}_{({a}_{1}:m)}]\cup [{X}_{({b}_{1}:m)},{X}_{({b}_{2}:m)})\) and \(C=({X}_{({a}_{1}:m)},{X}_{({b}_{1}:m)})\),

-

Charting regions in Stage 2

\(D=(-\infty ,{X}_{({c}_{1}:m)}]\cup [{X}_{({c}_{2}:m)},+\infty )\) and \(E=({X}_{({c}_{1}:m)},{X}_{({c}_{2}:m)})\),

where \({X}_{({\ell}:m)}\) represents the \({{\ell}}^{th},\) where \({\ell}\in \{{a}_{2}, {a}_{1}, {b}_{1},{b}_{2},{c}_{1}, {c}_{2}\}\), order statistic of the Phase I reference sample of size \(m\), and \({\ell}\) represents the position of the order statistic in the reference sample and it is also referred to as the charting constant(s).

The choices of the charting constants i.e., \(1\le {a}_{2}<\) \({a}_{1}<\) \({b}_{1}<\) \({b}_{2}\le m\) and \({1\le c}_{1}<\) \({c}_{2}\le m\), of the DS precedence scheme are selected such that in Stage 1, \({a}_{2}=m-{b}_{2}+1\), \({a}_{1}=m-{b}_{1}+1\) and in Stage 2, \({c}_{1}=m-{c}_{2}+1\). This relationship between the aforementioned parameters is a result of symmetrically placed control limits. Note that the Stage 1 charting constants i.e., \({a}_{2}\), \({a}_{1}\), \({b}_{1}\) and \({b}_{2}\), must be selected such that the attained IC \(ASS\) value is equal to some specific values denoted as \({ASS}_{0}\) in this paper; while the Stage 2 charting constants i.e., \({c}_{1}\) and \({c}_{2}\), are selected such that the attained IC \(ARL\) is equal to some pre-specified nominal \(ARL\) values denoted as \({ARL}_{0}\). Thus, Stage 1’s outer lower and upper control limits (denoted as \(OLCL\) and \(OUCL\)) and inner lower and upper control limits (denoted as \(ILCL\) and \(IUCL\)) as well as the Stage 2’s \(LCL\) and \(UCL\) of the DS precedence scheme are estimated from the IC Phase I reference sample of size \(m\) as follows:

\(\widehat{OLCL}={X}_{({a}_{2}:m)}\), \(\widehat{ILCL}={X}_{({a}_{1}:m)}\), \(\widehat{IUCL}={X}_{({b}_{1}:m)}\), \(\widehat{OUCL}={X}_{({b}_{2}:m)}\), \(\widehat{LCL}={X}_{({c}_{1}:m)}\) and \(\widehat{UCL}={X}_{({c}_{2}:m)}\).

In Phase II, at each sampling time, a sample of size \(n\) is collected i.e. \({Y}_{tk}\) (\(k=\) 1, 2, …, \(n\)), and split into two subsamples, i.e. \({Y}_{1tp}\) and \({Y}_{2tq}\) (\(p=\) 1, 2, …, \({n}_{1}\) and \(q=\) 1, 2, …, \({n}_{2}\)), of sizes \({n}_{1}\) and \({n}_{2}\), respectively. Note that, \({n}_{2}+{n}_{1}=n\) and we assume that \({n}_{2}>{n}_{1}\).

There are practical advantages to using a two-stage or a sequential sampling approach, and they include the following:

-

Early detection: Sequential sampling allows for the early detection of issues or anomalies in a process. By taking smaller samples at each point and continuously evaluating the data, one can identify problems as soon as they emerge rather than waiting until the end of a large batch.

-

Cost efficiency: It can be more cost-effective to take smaller sequential samples rather than one large sample at each point. Large samples can be expensive and time-consuming to collect and analyse, whereas smaller samples reduce resource requirements.

-

Resource allocation: Sequential sampling allows one to allocate resources more efficiently. If one identifies a problem early, one can focus resources on investigating and addressing that specific issue rather than collecting and analysing a full large sample.

-

Reduced waste: If a process is going out-of-control or is producing non-conforming products, taking a large sample at each point may result in a significant amount of waste. Sequential sampling helps minimise waste by detecting issues promptly.

-

Real-time control: It enables real-time process control and adjustment. With sequential sampling, one can make immediate adjustments to the process if deviations are detected, ensuring product quality and process stability.

-

Improved productivity: Continuous monitoring through sequential sampling can lead to improved productivity as it reduces the chances of producing defective products or processes.

2.2 Phase II operation of the DS precedence scheme

Assume the Phase I analysis is completed, and the estimated control limits for Phase II (based on the Phase I sample) are available. Thus, the Phase II operation procedure of the DS precedence scheme is as follows:

-

1.

Take a sample of size \({n}_{1}\) and compute \({Y}_{(j:{n}_{1})}\) at the \({t}^{th}\) sampling time point of the first sample. For simplicity, in this paper, it is assumed that \({n}_{1}\) is odd i.e., \({n}_{1}=2r+1\) (where \(r\) is a positive integer) so that \(j=r+1\) corresponds to the unique test sample median of the \({Y}_{1tp}\) sample in Stage 1.

-

2.

If \({Y}_{(j:{n}_{1})}\in C\), the process is considered to be IC.

-

3.

If \({Y}_{(j:{n}_{1})}\in A\), the process is said to be OOC.

-

4.

If \({Y}_{(j:{n}_{1})}\in B\), take a second sample of size \({n}_{2}\).

-

5.

At the \({t}^{th}\) sampling timepoint, compute the plotting statistic of the combined sample, \({Y}_{(h:n)}\). We assume that \({n}_{2}\) is even so that \({n=n}_{1}+{n}_{2}\) gives an odd number i.e., \(n=2s+1\) where \(s\) is a positive integer, so that \(h=s+1\) corresponds to the unique test sample median.

-

6.

The process is declared OOC at Stage 2, if \({Y}_{(h:n)}\in D\), i.e. \({Y}_{(h:n)}\ge UCL\) or \({Y}_{(h:n)}\le LCL\). Otherwise, the process is said to be IC.

3 Run-length properties of the double sampling precedence monitoring scheme

First, we focus on the Stage 1 and Stage 2 conditional probabilities. The conditional probabilities that at Stage 1 the statistic \({Y}_{(j:{n}_{1})}\) plots in region \(A\), \(B\) and \(C\) are defined by

and

respectively, where \(I(.,.,.)\) denotes the incomplete beta function and \({GF}^{-1}\left(.\right)\) is the conversion function (see Malela-Majika (2022)), and \({U}_{\left({a}_{1}:m\right)}\) and \({U}_{\left({a}_{2}:m\right)}\) represent the \({a}_{1}^{th}\) and \({a}_{2}^{th}\) order statistics of a sample of size \(m\) from the Uniform (0,1) distribution.

The conditional probability that the process is IC is given by

where \({p}_{01}\) and \({p}_{02}\) are the conditional probabilities that the process is IC at Stage 1 and Stage 2, respectively, with

and

These probabilities are conditional with regard to the Phase I order statistics. The derivations of the expression of \({p}_{02}\) is provided in the Appendix. Then, the expressions of the unconditional \(ARL\), standard deviation of the run-length (\(SDRL\)) and \(ASS\) values at each sampling time are given by

and

where \({f}_{ab}(s,t)=\frac{m!}{\left(a-1\right)! \left(b-a-1\right)!\left(m-b\right)!}{t}^{a-1}{(t-s)}^{b-a-1}(1-t{)}^{m-b}\) is the joint probability density function (pdf) of the \({a}^{th}\) and \({b}^{th}\) order statistics in a random sample of size m from the Uniform (0,1) distribution.

The average number of observations to signal (ANOS) is given by

Let \(R\) be the conditional run-length of a scheme. Then, the \({(100\rho )}^{th}\) percentile (with \(0<\rho <1\)) of the run-length distribution (denoted as \({{\ell}}_{\rho }\)) is given by

Hence, the conditional and unconditional c.d.f. of the DS precedence monitoring scheme are defined by

where \({\ell}\in \){1, 2, …} and \({p}_{0}\) is defined in Eq. (2). Henceforth, in this paper, the \({(100\rho )}^{th}\) percentile of the unconditional run-length distribution will be denoted as \({P}_{\rho }\). For instance, the 50th percentile (i.e. the median) of the run-length distribution is denoted as \({P}_{0.5}\).

Note that when the process is IC, the conversion function \({GF}^{-1}\left(q\right)=q\) (where \(q\) represents values \(u\) and \(s\) taken by the uniform random variable \(U\) and \(S\)). However, when the process is out-of-control, \({GF}^{-1}\left(q\right)\ne q\). For more details on how to derive the out-of-control expressions of \({GF}^{-1}\left(q\right)\) for different distributions, readers are referred to Malela-Majika et al. (2021).

Remark

It is important to note that conditional performance measures are conditional on the IC Phase I dataset and, more specifically, the order statistics used to estimate the Phase II control limits. Because the actual underlying distribution is assumed to be unknown, the Phase II control limits are then estimated as shown in Sect. 2. The ‘hat-notation’ indicates point estimators. These point estimates of the control limits introduce additional uncertainty and variability, which must be accounted for. Therefore, the average performance over all possible values of the order statistics is obtained by calculating the unconditional performance measures.

4 Performance analysis of the double sampling precedence monitoring scheme

4.1 IC performances

In this subsection, we study the behaviour of the DS precedence scheme when the process is assumed to be IC. Balakrishnan et al. (2006, p. 7299) defined a robust statistical procedure as a procedure that performs well under ideal conditions (i.e., the condition under which it is designed and proposed) and under departures from the ideal. However, the terms robust and distribution-free are often confused in the literature. Distribution-free schemes have the same IC run-length distribution for all continuous distributions and, in some cases, only for all symmetric continuous distributions (e.g., schemes based on the signed-rank statistic). Thus, by definition, a distribution-free statistic or monitoring scheme is robust, but a robust monitoring scheme, like the EWMA scheme for small values of the smoothing parameter (see Randles and Wolfe (1979) and Human et al. (2011)), is not distribution-free. One of the advantages of a distribution-free (or nonparametric) scheme is that the IC properties like the IC \(ARL\) do not depend on the underlying process distribution. The expectation in this paper is that for all continuous distributions, the proposed scheme will yield the same IC run-length characteristics. In this paper, to check the IC robustness of the DS precedence scheme we investigated its IC run-length properties under the normal, Student’s t, gamma, double exponential and Weibull distributions. These distributions were chosen to check the effects of symmetry, skewness, light and heavy tails on the performance of the DS precedence monitoring scheme. Table 1 presents the charting constants along with the corresponding attained \(ASS\), \(ARL\) and \(SDRL\) values when \(\delta =\) 0, \(m\in \){50,100,500}, \({n}_{1}\in \){5,7} and \({n}_{2}\in \){6,8,12} for pre-specified \({ASS}_{0}=\) 6 and 10, and nominal \({ARL}_{0}=\) 250 and 300. Note that the Stage 1 charting constants \({a}_{2},{a}_{1},{b}_{1}\) and \({b}_{2}\) are determined such that the attained IC \(ASS\) is satisfactorily close to the pre-specified \({ASS}_{0}\) value, and the Stage 2 charting constants \({c}_{1}\) and \({c}_{2}\) are determined such that the attained IC \(ARL\) is close or equal to the nominal \({ARL}_{0}\) value. Since Eq. (4c) can be expressed in terms of two charting constants through the relationship \({a}_{2}=m-{b}_{2}+1\) and \({a}_{1}=m-{b}_{1}+1\), one can use numerical integration and root finding programs in software such as Mathcad to determine the charting constants. For instance, for \(m=\) 100, (\({n}_{1}\), \({n}_{2}\)) = (5,6) so that \(j=\) 3 and \(h=\) 6, and for a pre-specified \({ASS}_{0}=\) 6 and a nominal \({ARL}_{0}=\) 250, using Eq. (4c) and the constraint IC \(ASS\) = \({ASS}_{0}\), it is found that \({b}_{1}=\) 58 and \({b}_{2}=\) 64, and \({a}_{2}=m-{b}_{2}+1=\) 37 and \({a}_{1}=m-{b}_{1}+1=\) 43, is the only combination of Stage 1 charting constants that satisfies the constraint IC \(ASS=\) 6. Then, at Stage 2, it is found that \({c}_{1}=\) 15 (with \({c}_{2}=m-{c}_{1}+1=\) 86) so that the DS precedence scheme with charting constants (\({a}_{2},{a}_{1},{b}_{1},{b}_{2},{c}_{1},{c}_{2}\)) = (37,43,58,64,15,86) yields an attained IC \(ARL=\) 271.2.

From the results displayed in Table 1, we can observe that an increase in \(m\) (i.e. Phase I sample size), results in smaller \(SDRL\) values. However, the IC \(ARL\) values converge toward the desired nominal \({ARL}_{0}\) value. This implies a stable performance of the proposed DS precedence scheme for large Phase I sample sizes. In terms of the 50th percentile (i.e. \({P}_{0.5}\)), when \(m=\) 100, (\({n}_{1},{n}_{2}\)) = (5,6) and \({ASS}_{0}=\) 6, there is 50% chance that the proposed DS precedence scheme gives a signal on the 113th sample in the prospective phase when \(\delta =\) 0 for \({ARL}_{0}=\) 321.9. The results tabulated below are thus indicating that the IC run-length properties of the DS precedence scheme are the same for all continuous distributions considered in this paper, and the DS precedence scheme is then considered to be IC robust. In the next subsection, the OOC performance is investigated across the distributions considered in this paper.

4.2 OOC performance

Since the DS precedence scheme is IC robust, we can now proceed to investigate its sensitivity under different probability distributions. Tables 2 and 3 present the OOC performance of the proposed DS precedence scheme when \(m\in \){50,100,500}, \({n}_{1}=\) 5, \({n}_{2}\in \){6,8} and \({ASS}_{0}\in \){6,10} under the \(N(\mathrm{0,1})\), \(t(5)\), \(G(\mathrm{3,1})\), \(DE\left(\mathrm{0,1}\right)\) and \(WE(\mathrm{2,1})\) distributions. The findings in Tables 2 and 3 can be summarised as follows:

-

For large values of \(m\), the DS precedence scheme performs better under symmetric distributions regardless of the magnitude of the shift and the Phase I sample sizes. in the location parameter. This is highlighted across all combinations of (\({n}_{1}\),\({n}_{2}\), \({ASS}_{0}\)).

-

An increase in the value of \({ASS}_{0}\) keeping \({n}_{1} and\) \({n}_{2}\) fixed will improve the performance of the DS precedence scheme.

-

An increase in the Stage 1 sample size, \({n}_{1}\), keeping \({ASS}_{0} \mathrm{and }{n}_{2}\) fixed does not improve the sensitivity of the DS precedence scheme. However, an increase in the Stage 2 sample size, \({n}_{2}\), results in a better OOC performance as it is expected based on the design. Thus, the larger \({n}_{1}\) and/or \({n}_{2}\), the better the performance of the DS precedence scheme.

-

Under the \(t\)(5) distribution, the DS precedence scheme performs better across all combinations of (\({n}_{1}\),\({n}_{2}\), \({ASS}_{0})\) compared to the other distributions considered except for small shifts. Following the \(t\)(5) distribution, the DS precedence scheme performs better under the symmetric distributions and, under heavy-tailed distributions, it performs relatively worse. That is, the DS precedence scheme performs better under the \(N(\mathrm{0,1})\), \(DE\left(\mathrm{0,1}\right)\) and \(WE(\mathrm{2,1})\) distributions, and in this order.

-

The DS precedence scheme performs worse, especially for small shifts under positively skewed distributions, see for instance the \(G(\mathrm{3,1})\) results in both Table 2 and 3. In addition, the proposed scheme is \(ARL-\) biased under the G(3,1) distribution for small Phase I sample sizes. As the Phase I sample size increases, the DS precedence scheme becomes \(ARL-\) unbiased.

4.3 Comparative analysis

4.3.1 Attained \({\varvec{A}}{\varvec{R}}{\varvec{L}}\) values using simulation and closed-form expression

In this subsection, the results found using simulation and closed-form expression (CFE) approaches are compared in terms of the \(ARL\) profile when \({ASS}_{0}=\) 5, \(\left({n}_{1},{n}_{2}\right)=\) (5,6), \(m\in \) {100, 500} for \({ARL}_{0}=\) 360. In this comparison, we used 105 replications to implement the simulation approach. To quantify the difference between the two approaches the absolute percentage difference, denoted as \({\%Diff}_{ARL}\), is computed as follows:

where \({ARL}_{sim}\) and \({ARL}_{CFE}\) represent the attained \(ARL\) value computed using simulation and CFE, respectively.

From Table 4, the difference between the results found using the two approaches varies between 0 and 4.15% which is considerably small. The smaller the shifts, the larger the difference between the two approaches. In addition, the percentage difference between the two approaches under the N(0,1) distribution is smaller than the ones under non-normal distributions. This is due to the fact that the DS precedence scheme performs better under symmetric distributions than it does under heavy-tailed distributions. The larger the Phase I sample size, the smaller the difference between the results found using the two approaches.

4.3.2 Simulation versus closed-form expression computational time comparison

In this subsection, we present a head-to-head comparison between the time (in seconds) taken to compute the \(ARL\) value for a specific shift using simulation and the proposed CFE approaches when \({ASS}_{0}=\) 5, \({(n}_{1},{n}_{2})=\) (5,6), \(m\in \) {50, 100, 500} under the N(0,1), t(5), G(3,1), DE(0,1) and WE(2,1) distributions for \({ARL}_{0}=\) 320 using SAS®9.4 and Mathcad 8 prime, respectively. Note that when the process is IC, the computational time, denoted as \({\tau }_{c}\), depends on the time required to determine the six charting constants and the computation of the IC \(ARL\) value. However, when the process is OOC (\(\delta \ne \) 0), the charting constants found when the process was deemed to be IC, are used to compute the OOC \(ARL\).

In addition, to quantify the difference between the time taken to compute the \(ARL\) value using simulation and the proposed CFE, we define the absolute percentage difference in computational time, \({\%Diff}_{{\tau }_{c}}\), of the two approaches as follows:

where \({\tau }_{c}^{(sim)}\) and \({\tau }_{c}^{(CFE)}\) represent the amount of time taken to compute the \(ARL\) value for a specific shift using simulation and CFE, respectively. Note that the absolute percentage difference for the amount of time taken to compute other run-length characteristics can be defined and computed in a similar way.

Using Mathcad 8 prime, it can be noticed that the computational time of the IC \(ARL\) values using CFE varies between 47 and 56 sec depending on the sample sizes. The results from Table 5 reveals that the percentage difference between the computational time of the two approaches varies between 0 and 92.56% with the CFE approach having an upper hand i.e. it requires less time to compute the \(ARL\) value as compared to the simulation approach. This means one can save up to 92.56% of the time spent computing the \(ARL\) when using the proposed CFE instead of simulation. As the magnitude of the shift increases, \({\tau }_{c}\) decreases. The \({\%Diff}_{{\tau }_{c}}\) values are smaller under the normal distribution compared to non-normal distributions. This is because the simulation under the normal distribution takes less time as compared to the simulations under non-normal distributions. Astivia (2020) highlights how simulating from non-normal distributions may come with issues of estimation and mathematical tractability, and many more. However, the computational time, if it were to be studied for rnorm (i.e., the R function that generates random variates having a specified normal distribution), would outperform other non-normal distributions, especially for large simulations.

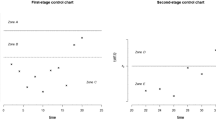

Note that for a large Phase I sample size, for both simulation and CFE approaches, the amount of time taken to compute the \(ARL\) value for a specific shift is larger than the one for small Phase I sample sizes (see Fig. 2a-d). Figure 3 compares the computational times between the Monte Carlo simulation approach and the use of the CFE in the computation of the 95th percentile points (e.g. \({P}_{0.95}\)) of the run-length distribution. Before we discuss the results displayed in this figure, one should know that all the characteristics of the run-length distribution can be computed using one specific procedure (e.g., PROC UNIVARIATE in SAS®IML9.4). In other words, the amount of time taken to compute the \(ARL\) will then be the same as that of computing the percentiles. Note that \({P}_{0.95}\) is computed using the CFE defined in Eq. (7b). Figure 3a–d show that for small shifts, the high percentile points are computed faster using CFE as compared to simulations. However, for moderate and large shifts, the computational time is almost the same for both methods. Figure 4a–b show that for small shifts the required amount of time is significantly reduced for small Phase I sample sizes. However, for moderate and large shifts the amount of time is significantly reduced for large Phase I sample sizes.

5 Illustrative example

In this section, real-world data from iron ore mining are used to illustrate the application and implementation of the DS precedence scheme in a froth flotation process (see Mukherjee et al. (2019)). Froth flotation is often used to improve the iron concentration of low-grade iron ores. Low-grade iron ores contain high concentration of impurities such as silicon dioxide (known as silica), phosphorus and alumina containing minerals, which are undesired. Froth flotation helps to remove the main impurity.

This example is implemented in two phases, namely Phase I and Phase II. The Phase I regime is used to estimate the control limits of the IC process. In Phase II, the control limits found in Phase I are used to monitor the percentage of silica that is present on each iron ore sample at the end of the flotation process.

5.1 Phase I analysis

The data from the flotation plant were collected between 01 June 2017 and 31 July 2017. In this example, 67 master samples, each of size \(n=\) 9 (i.e. 603 observations) were considered and the null hypothesis of the Shapiro–Wilk test for normality was rejected with \(p-value=2 \times {10}^{-16}\), which implies that the data are clearly not normally distributed. Each master sample was divided into two samples of sizes \({n}_{1}=\) 3 and \({n}_{2}=\) 6 to be used in Stages 1 and 2, respectively. The first step in the implementation of the DS precedence scheme is to find the charting constants that yield an attained \({ARL}_{0}\) closer to 370. Thus, it was found that when \(m=\) 603, \(\left({n}_{1},{n}_{2}\right)=\) (3, 6) and \({ASS}_{0}=\) 5, the combination of the charting constants \(\left({a}_{2},{a}_{1},{b}_{1},{b}_{2}, {c}_{1},{c}_{2}\right)=\) (162, 238, 366, 442, 24, 580) corresponding to the control limits \(OLCL=\) 1.38, \(ILCL=\) 1.58, \(IUCL=\) 1.90, \(OUCL=\) 2.20, \(LCL=\) 1.05 and \(UCL=\) 4.55, respectively, so that the DS precedence monitoring scheme yields an attained \({ARL}_{0}=\) 354.88. The second step is to construct the DS precedence scheme using the control limits found in Step 1 and plot the charting statistics. This Phase I analysis detected 31 samples that plotted OOC which were subsequently removed from the process (this plot is not shown in this paper to conserve space). Since the Phase I sample is not IC, then the control limits must be revised using the reduced Phase I sample. Thus, after removing the 31 samples (i.e. 279 observations), the remaining data were analysed again. It was found that when \(m=\) 324, \(\left({n}_{1},{n}_{2}\right)=\) (3, 6) and \({ASS}_{0}=\) 5, the combination of the charting constants \(\left({a}_{2},{a}_{1},{b}_{1},{b}_{2}, {c}_{1},{c}_{2}\right)=\) (43, 97, 228, 282, 15, 310) corresponding to the control limits \(OLCL=\) 1.35, \(ILCL=\) 1.57, \(IUCL=\) 2.11, \(OUCL=\) 2.71, \(LCL=\) 1.22 and \(UCL=\) 4.04, respectively, so that the DS precedence scheme yields an attained \({ARL}_{0}=\) 341.98. Figure 5 shows that the revised Phase I sample is now IC as in Stage 1 the charting statistics plot either in region B or C, and in Stage 2 they all plot IC (see Fig. 5). Since the Phase I sample is now considered to be IC, the percentage of the silica can be monitored continuously in Phase II.

5.2 Phase II analysis

In Phase II, the revised control limits found in Phase I are used to monitor the percentage of silica. The Phase II data contain 43 master samples of size 9 divided such that \({n}_{1}=\) 3 and \({n}_{2}=\) 6. Figure 6 shows that the charting statistic of the fifth sample plotted beyond the \(OUCL\). Therefore, the DS precedence scheme will give a signal on the fifth sample (see Fig. 6).

In practice, the advantage of the proposed approach over the simulation approach is due to the computation of the control limits in Phase I. It takes a smaller amount of time to compute the control limits of the DS precedence scheme using CFE than using simulations. However, when it comes to monitor the process in Phase II both approaches take the same amount of time.

6 Conclusion

This paper introduced a new closed-form expression (CFE) approach to compute the characteristics of the run-length distribution of the DS precedence scheme. It is found that the CFE approach considerably reduces the amount of time taken to compute the run-length properties of the DS precedence monitoring scheme. Moreover, the run-length characteristics found using the proposed CFE approach are noticeably closer to the ones found using simulation. However, quality operators who are willing to use order statistics monitoring schemes are recommended to use the proposed approach instead of simulations. The reason behind this is that to reach more accurate results, one must use a very large number of replications which will consequently increase the amount of time needed to compute the properties of the monitoring scheme. In future, researchers are recommended to derive more CFE for other types of schemes such as nonparametric EWMA and CUSUM schemes using other order statistics such as the exceedance, minimum, and maximum statistics.

References

Areepong Y, Sukparungsee S (2013) Closed form formulas of average run length of moving average control chart for nonconforming for zero-inflated process. Far East J Math Sci (FJMS) 75(2):385–400

Astivia OLO (2020) Issues, problems and potential solutions when simulating continuous, non-normal data in the social sciences. Meta-Psychology. https://doi.org/10.15626/MP.2019.2117

Balakrishnan N, Koutras MV (2011) Runs and scans with applications. Wiley, New-York

Balakrishnan N, Read CB, Vidakovic B (2006) Encyclopedia of statistical sciences, 2nd edn. Wiley, New-York

Chakraborti S, Van Der Laan P, Van de Wiel MA (2004) A class of distribution-free control charts. J R Stat Soc Ser C Appl Stat 53(3):443–462

Chakraborti S, Eryilmaz S, Human SW (2009) A Phase II nonparametric control chart based on precedence statistics with runs-type signaling rules. Comput Stat Data Anal 53:1054–1065

Champ CW, Rigdon SE (1991) A comparison of the Markov chain and the integral equation approaches for evaluating the run length distribution of quality control charts. Commun Stat Simul Comput 20(1):191–204

Crowder SV (1987) A simple method for studying run–length distributions of exponentially weighted moving average charts. Technometrics 29(4):401–407

Fu MC, Hu J-Q (1999) Efficient design and sensitivity analysis of control charts using Monte Carlo simulation. Manag Sci 45(3):395–413

Human SW, Kritzinger P, Chakraborti S (2011) Robustness of the EWMA control chart for individual observations. J Appl Stat 38(10):2071–2087

Janacek GJ, Meikle SE (1997) Control charts based on medians. J Qual Technol 46(1):19–31

Letshedi TI, Malela-Majika J-C, Castagliola P, Shongwe SC (2021) Distribution-free triple EWMA control chart for monitoring the process location using the Wilcoxon rank-sum statistic with fast initial response feature. Qual Reliab Eng Int 37(5):1996–2013

Li Z, Zou C, Gong Z, Wang Z (2014) The computation of average run length and average time to signal: an overview. J Stat Comput Simul 84(8):1779–1802

Malela-Majika J-C (2022) Nonparametric precedence chart with repetitive sampling. Stat. https://doi.org/10.1002/sta4.512

Malela-Majika J-C, Shongwe SC, Aslam M, Chong ZL, Rapoo EM (2021) Distribution-free double-sampling precedence monitoring scheme to detect unknown shifts in the location parameter. Qual Reliab Eng Int 37(8):3580–3599

Malela-Majika J-C, Shongwe SC, Castagliola P (2022) One-sided precedence monitoring schemes for unknown shift sizes using generalized 2-of-(h+1) and w-of-w improved runs-rules. Commun Stat Theory Methods 51(9):2803–2837

Montgomery D (2020) Introduction to statistical quality control, 8th edn. Wiley, New York

Mukherjee A, Chong ZL, Khoo MBC (2019) Comparisons of some distribution-free CUSUM and EWMA schemes and their applications in monitoring impurity in mining process flotation. Comput Ind Eng 137:106059. https://doi.org/10.1016/j.cie.2019.106059

Oberkampf WL, Deland SM, Rutherford BM, Diegert KV, Alvin KF (2002) Error and uncertainty in modeling and simulation. Reliab Eng Syst Saf 75:333–357

Pignatiello JJ Jr, Runger GC (1990) Comparisons of multivariate CUSUM charts. J Qual Technol 22(3):173–186

Qiu P (2018) Some perspectives on nonparametric statistical process control. J Qual Technol 50(1):49–65

Randles R, Wolfe D (1979) Introduction to the theory of nonparametric statistics. Wiley, New York

Shmueli G, Cohen A (2003) Run-Length distribution for control charts with runs and scans rules. Commun Stat Theory Methods 32(2):475–495

Silberschatz A, Gagne G, Galvin PG (2009) Operating system concepts, chapter 5, 8th edn. Wiley, New York

Sukparungsee S, Areepong Y (2016) Approximation average run lengths of zero-inflated binomial GWMA chart with Markov chain approach. Far East Journal of Mathematical Sciences (FJMS) 99(3):418–423

Teoh WL, Fun MS, Teh SY, Khoo MBC, Yeong WC (2016) Exact run length distribution of the double sampling chart with estimated process parameters. S Afr J Ind Eng 27(1):20–31

Weiß CH (2011) The Markov chain approach for performance evaluation of control charts–a tutorial. Process control: problems, techniques and applications. Nova Science Publishers, Inc., New York, pp 205–228

Zantek PF (2008) A Markov-chain method for computing the run-length distribution of the self-starting cumulative sum scheme. J Stat Comput Simul 78(5):463–473

Acknowledgment

The second author thanks the South African National Research Foundation (NRF) for their support under the grant (RA210125583099) and the Research Development Programme at the University of Pretoria, Department of Research and Innovation (DRI).

Funding

Open access funding provided by University of Pretoria.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Appendix

This Appendix provides the derivations of the in-control probability at Stage 2 denoted as \({p}_{02}\). It is known that

where

Then, because of symmetry:

Therefore,

Now

Define

Then

Let \(\left\{{Y}_{11},{Y}_{12},\dots ,{Y}_{1{n}_{1}}\right\}\) be the random variables obtained at the first sample. Let \(\left\{{Y}_{21},{Y}_{22},\dots ,{Y}_{2{n}_{2}}\right\}\) be the random variables obtained at the second sample. Finally, let \(\left\{{Y}_{1},{Y}_{2},\dots ,{Y}_{n}\right\}\) be the \(n={n}_{1}+{n}_{2}\) random variables obtained through combining the first Stage of \({n}_{1}\) observations and the second Stage of \({n}_{2}\) observations.

Then, we can consider three scenarios:

-

1.

When \({l}_{3} < {l}_{4}\), we have:

$$L\left({l}_{1},{l}_{2},{l}_{3},{l}_{4}\right)= Prob\left( {Y}_{\left({l}_{1}:{n}_{1}\right)}\le {X}_{\left({l}_{3}:m\right)}, {Y}_{\left({l}_{2}:n\right)}\le {X}_{\left({l}_{4}:m\right)}\right)={\int }_{-\infty }^{\infty }{{\int }_{{x}_{1}}^{\infty } Prob\left( {Y}_{\left({l}_{1}:{n}_{1}\right)}\le {x}_{1}, {Y}_{\left({l}_{2}:n\right)}\le {x}_{2}\right)\frac{m!}{\left({l}_{3}-1\right)!\left({l}_{4}-{l}_{3}-1\right)!\left(m-{l}_{4}\right)!}F({x}_{1})}^{{l}_{3}-1}\times {(F\left({x}_{2}\right)-F({x}_{1}))}^{{l}_{4}-{l}_{3}-1}{(1-F\left({x}_{2}\right))}^{m-{l}_{4}}f\left({x}_{1}\right)f\left({x}_{2}\right)d{x}_{1}d{x}_{2}.$$$${\text{Now}}$$$$Prob\left( {Y}_{\left({l}_{1}:{n}_{1}\right)}\le {x}_{1}, {Y}_{\left({l}_{2}:n\right)}\le {x}_{2}\right)=Prob\left(\mathrm{at least }{l}_{1} \mathrm{of }{Y}_{11},{Y}_{12},\dots ,{Y}_{1{n}_{1}} \le {x}_{1},{Y}_{\left({l}_{2}:n\right)}<{x}_{2}\right)=\sum_{{i}_{1}={l}_{1} }^{{n}_{1} }Prob\left(\mathrm{exactly }{i}_{1} \mathrm{of }{Y}_{11},{Y}_{12},\dots ,{Y}_{1{n}_{1}} \le {x}_{1},{Y}_{\left({l}_{2}:n\right)}<{x}_{2}\right)=\sum_{{i}_{1}={l}_{1} }^{{n}_{1} }Prob\left({Y}_{\left({l}_{2}:n\right)}<{x}_{2}|\mathrm{exactly }{i}_{1} \mathrm{of }{Y}_{11},{Y}_{12},\dots ,{Y}_{1{n}_{1}} \le {x}_{1}\right)\times Prob\left(\mathrm{exactly }{i}_{1} \mathrm{of }{Y}_{11},{Y}_{12},\dots ,{Y}_{1{n}_{1}} \le {x}_{1}\right)=\sum_{{i}_{1}={l}_{1} }^{{n}_{1} }Prob\left(\mathrm{at least }{l}_{2} \mathrm{of }{Y}_{1},{Y}_{2},\dots ,{Y}_{n} \le {x}_{2}|\mathrm{exactly }{i}_{1} \mathrm{of }{Y}_{11},{Y}_{12},\dots ,{Y}_{1{n}_{1}} \le {x}_{1}\right)\times Prob\left(\mathrm{exactly }{i}_{1} \mathrm{of }{Y}_{11},{Y}_{12},\dots ,{Y}_{1{n}_{1}} \le {x}_{1}\right).$$(A2)For a given \({i}_{1}\left({l}_{1}{\le i}_{1}\le {n}_{1}\right)\), let \({Y}_{11}^{*}, {Y}_{12}^{*},\dots , {Y}_{1({n}_{1}-{i}_{1})}^{*}\) represent the random variables among \({Y}_{11},{Y}_{12},\dots ,{Y}_{1{n}_{1}}\) which are obtained after deleting the \({i}_{1}\) observations. Then:

$$Prob\left( {Y}_{\left({l}_{1}:{n}_{1}\right)}\le {x}_{1}, {Y}_{\left({l}_{2}:n\right)}\le {x}_{2}\right)=\sum_{{i}_{1}={l}_{1} }^{{n}_{1} }Prob\left(\mathrm{at least }{l}_{2}^{*} \mathrm{among }{Y}_{11}^{*}, {Y}_{12}^{*},\dots , {Y}_{1\left({n}_{1}-{i}_{1}\right)}^{*} \mathrm{and }{Y}_{21},{Y}_{22},\dots ,{Y}_{2{n}_{2}} \le {x}_{2}\right)\times Prob\left(\mathrm{exactly }{i}_{1} \mathrm{of }{Y}_{11},{Y}_{12},\dots ,{Y}_{1{n}_{1}} \le {x}_{1}\right), \left( {l}_{2}^{*}={\text{max}}\left\{0,\left({l}_{2}-{i}_{1}\right)\right\}\right)=\sum_{{i}_{1}={l}_{1} }^{{n}_{1} }\sum_{i={l}_{2}^{*}}^{n-{i}_{1}}Prob\left(\mathrm{exactly }i \mathrm{among }{Y}_{11}^{*}, {Y}_{12}^{*},\dots , {Y}_{1\left({n}_{1}-{i}_{1}\right)}^{*} \mathrm{and }{Y}_{21},{Y}_{22},\dots ,{Y}_{2{n}_{2}} \le {x}_{2}\right)\times Prob\left(\mathrm{exactly }{i}_{1} \mathrm{of }{Y}_{11},{Y}_{12},\dots ,{Y}_{1{n}_{1}} \le {x}_{1}\right)=\sum_{{i}_{1}={l}_{1} }^{{n}_{1} }\sum_{i={l}_{2}^{*}}^{n-{i}_{1}}\sum_{{i}_{1}^{*}={\text{max}}\left\{0,i-{n}_{2}\right\}}^{{\text{min}}\left\{{n}_{1}-{i}_{1},i-{i}_{1}\right\}}Prob(\mathrm{exactly }{i}_{1}^{*} \mathrm{among }{Y}_{11}^{*}, {Y}_{12}^{*},\dots , {Y}_{1\left({n}_{1}-{i}_{1}\right)}^{*} \le {x}_{2} \mathrm{and exactly }\left(i- {i}_{1}^{*}\right)\mathrm{ among }{Y}_{21},{Y}_{22},\dots ,{Y}_{2{n}_{2}} \le {x}_{2})\times Prob\left(\mathrm{exactly }{i}_{1} \mathrm{of }{Y}_{11},{Y}_{12},\dots ,{Y}_{1{n}_{1}} \le {x}_{1}\right)$$$$=\sum_{{i}_{1}={l}_{1} }^{{n}_{1} }\sum_{i={l}_{2}^{*}}^{n-{i}_{1}}\sum_{{i}_{1}^{*}=\;{\text{max}}\;\left\{0,i-{n}_{2}\right\}}^{{\text{min}}\;\left\{{n}_{1}-{i}_{1},i-{i}_{1}\right\}}Prob\left(\mathrm{exactly }\;{i}_{1}^{*} \;\mathrm{among }\;{Y}_{11}^{*}, {Y}_{12}^{*},\dots , {Y}_{1\left({n}_{1}-{i}_{1}\right)}^{*} \le {x}_{2}\right)\times Prob(\mathrm{ exactly }\;\left(i- {i}_{1}^{*}\right)\;\mathrm{ among }\;{Y}_{21},{Y}_{22},\dots ,{Y}_{2{n}_{2}} \le {x}_{2})\times Prob\left(\mathrm{exactly }\;{i}_{1}\; \mathrm{of }\;{Y}_{11},{Y}_{12},\dots ,{Y}_{1{n}_{1}} \le {x}_{1}\right)$$$$=\sum_{{i}_{1}={l}_{1} }^{{n}_{1} }\sum_{i={l}_{2}^{*}}^{n-{i}_{1}}\sum_{{i}_{1}^{*}={\text{max}}\left\{0,i-{n}_{2}\right\}}^{{\text{min}}\left\{{n}_{1}-{i}_{1},i-{i}_{1}\right\}}\left\{\left.\left(\begin{array}{c}{n}_{1}-{i}_{1}\\ {i}_{1}^{*}\end{array}\right){F\left({x}_{2}\right)}^{{i}_{1}^{*}}{\left(1-F\left({x}_{2}\right)\right)}^{{n}_{1}-{i}_{1}-{i}_{1}^{*}}\right\}\right.\quad\times \left\{\left.\left(\begin{array}{c}{n}_{2}\\ i- {i}_{1}^{*}\end{array}\right){F\left({x}_{2}\right)}^{i- {i}_{1}^{*}}{\left(1-F\left({x}_{2}\right)\right)}^{{n}_{2}-{i}_{1}-{i}_{1}^{*}}\right\}\right.\quad \times \left\{\left.\left(\begin{array}{c}{n}_{1}\\ {i}_{1}\end{array}\right){F\left({x}_{1}\right)}^{{i}_{1}}{(1-F\left({x}_{1}\right))}^{{n}_{1}-{i}_{1}}\right\}\right.$$$$=\sum_{{i}_{1}={l}_{1} }^{{n}_{1} }\sum_{i={l}_{2}^{*}}^{n-{i}_{1}}\sum_{{i}_{1}^{*}={\text{max}}\left\{0,i-{n}_{2}\right\}}^{{\text{min}}\left\{{n}_{1}-{i}_{1},i-{i}_{1}\right\}}\left\{\left.\left(\begin{array}{c}{n}_{1}-{i}_{1}\\ {i}_{1}^{*}\end{array}\right)\left(\begin{array}{c}{n}_{2}\\ i- {i}_{1}^{*}\end{array}\right){F\left({x}_{2}\right)}^{i}{\left(1-F\left({x}_{2}\right)\right)}^{n-i-{i}_{1}}\right\}\right.\quad\times \left\{\left.\left(\begin{array}{c}{n}_{1}\\ {i}_{1}\end{array}\right){F\left({x}_{1}\right)}^{{i}_{1}}{(1-F\left({x}_{1}\right))}^{{n}_{1}-{i}_{1}}\right\}\right. .$$ -

2.

When \({l}_{3}= {l}_{4}\), it follows that

$$L\left({l}_{1},{l}_{2},{l}_{3},{l}_{4}\right)=L\left({l}_{1},{l}_{2},{l}_{3},{l}_{3}\right)= Prob\left( {Y}_{\left({l}_{1}:{n}_{1}\right)}\le {X}_{\left({l}_{3}:m\right)}, {Y}_{\left({l}_{2}:n\right)}\le {X}_{\left({l}_{3}:m\right)}\right)={\int }_{-\infty }^{\infty } Prob\left( {Y}_{\left({l}_{1}:{n}_{1}\right)}\le {x}_{1}, {Y}_{\left({l}_{2}:n\right)}\le {x}_{1}\right)\frac{m!}{\left({l}_{3}-1\right)!\left(m-{l}_{3}\right)!}{{F\left({x}_{1}\right)}^{{l}_{3}-1}(1-F\left({x}_{1}\right))}^{m-{l}_{3}}f\left({x}_{1}\right)d{x}_{1}.$$Now,

$$Prob\left( {Y}_{\left({l}_{1}:{n}_{1}\right)}\le {x}_{1}, {Y}_{\left({l}_{2}:n\right)}\le {x}_{1}\right)=Prob\left(\mathrm{at\; least }\;{l}_{1}\; \mathrm{of }\;{Y}_{11},{Y}_{12},\dots ,{Y}_{1{n}_{1}} \le {x}_{1},{Y}_{\left({l}_{2}:n\right)}<{x}_{1}\right)=\sum_{{i}_{1}={l}_{1} }^{{n}_{1} }Prob\left(\mathrm{exactly }{i}_{1} \mathrm{of }{Y}_{11},{Y}_{12},\dots ,{Y}_{1{n}_{1}} \le {x}_{1},{Y}_{\left({l}_{2}:n\right)}<{x}_{1}\right)=\sum_{{i}_{1}={l}_{1} }^{{n}_{1} }Prob\left({Y}_{\left({l}_{2}:n\right)}<{x}_{1}|\mathrm{exactly }\;{i}_{1}\; \mathrm{of }\;{Y}_{11},{Y}_{12},\dots ,{Y}_{1{n}_{1}} \le {x}_{1}\right) \times Prob\left(\mathrm{exactly }\;{i}_{1}\; \mathrm{of }\;{Y}_{11},{Y}_{12},\dots ,{Y}_{1{n}_{1}} \le {x}_{1}\right)=\sum_{{i}_{1}={l}_{1} }^{{n}_{1} }Prob\left(\mathrm{at least }{l}_{2}\; \mathrm{of }\;{Y}_{1},{Y}_{2},\dots ,{Y}_{n} \le {x}_{1}|\;\mathrm{exactly }\;{i}_{1}\; \mathrm{of }\;{Y}_{11},{Y}_{12},\dots ,{Y}_{1{n}_{1}} \le {x}_{1}\right) \times Prob\left(\mathrm{exactly }\;{i}_{1}\; \mathrm{of }\;{Y}_{11},{Y}_{12},\dots ,{Y}_{1{n}_{1}} \le {x}_{1}\right)=\sum_{{i}_{1}={l}_{1} }^{{n}_{1} }\sum_{{i}_{2}={l}_{2}^{*}}^{{n}_{2}}Prob\left(\mathrm{exactly }\;{i}_{2}\; \mathrm{of }\;{Y}_{21},{Y}_{22},\dots ,{Y}_{1{n}_{2}} \le {x}_{2}\right)\times Prob\left(\mathrm{exactly }\;{i}_{1}\; \mathrm{of }\;{Y}_{11},{Y}_{12},\dots ,{Y}_{1{n}_{1}} \le {x}_{1}\right)=\sum_{{i}_{1}={l}_{1} }^{{n}_{1} }\sum_{{i}_{2}={l}_{2}^{*}}^{{n}_{2}}\left\{\left.\left(\begin{array}{c}{n}_{2}\\ i\end{array}\right){F\left({x}_{2}\right)}^{{i}_{2}}{\left(1-F\left({x}_{2}\right)\right)}^{{n}_{2}-{i}_{2}}\right\}\right.\times \left\{\left.\left(\begin{array}{c}{n}_{1}\\ {i}_{1}\end{array}\right){F\left({x}_{1}\right)}^{{i}_{1}}{(1-F\left({x}_{1}\right))}^{{n}_{1}-{i}_{1}}\right\}\right..$$ -

3.

When \({l}_{3}> {l}_{4}\), From (\({\text{A}}.2\)) we have

$$L\left({l}_{1},{l}_{2},{l}_{3},{l}_{4}\right) Prob\left( {Y}_{\left({l}_{1}:{n}_{1}\right)}\le {X}_{\left({l}_{3}:m\right)}, {Y}_{\left({l}_{2}:n\right)}\le {X}_{\left({l}_{4}:m\right)}\right)$$$$={\int }_{-\infty }^{\infty }{{\int }_{{x}_{1}}^{\infty } Prob\left( {Y}_{\left({l}_{1}:{n}_{1}\right)}\le {x}_{1}, {Y}_{\left({l}_{2}:n\right)}\le {x}_{2}\right)\frac{m!}{\left({l}_{3}-1\right)!\left({l}_{4}-{l}_{3}-1\right)!\left(m-{l}_{4}\right)!}F({x}_{1})}^{{l}_{3}-1}\times {(F\left({x}_{2}\right)-F({x}_{1}))}^{{l}_{4}-{l}_{3}-1}{(1-F\left({x}_{2}\right))}^{m-{l}_{4}}f\left({x}_{1}\right)f\left({x}_{2}\right)d{x}_{1}d{x}_{2}.$$From (A2) it follows that,

$$Prob\left( {Y}_{\left({l}_{1}:{n}_{1}\right)}\le {x}_{1}, {Y}_{\left({l}_{2}:n\right)}\le {x}_{2}\right)=\sum_{{i}_{1}={l}_{1} }^{{n}_{1} }Prob\left(\mathrm{at least }{l}_{2} \mathrm{of }{Y}_{1},{Y}_{2},\dots ,{Y}_{n} \le {x}_{2}|\;\mathrm{exactly }\;{i}_{1}\; \mathrm{of }\;{Y}_{11},{Y}_{12},\dots ,{Y}_{1{n}_{1}} \le {x}_{1}\right) \times Prob\left(\mathrm{exactly }\;{i}_{1}\; \mathrm{of }\;{Y}_{11},{Y}_{12},\dots ,{Y}_{1{n}_{1}} \le {x}_{1}\right)$$For a given \({i}_{1}\left({l}_{1}{\le i}_{1}\le {n}_{1}\right)\), let \({Y}_{11}^{*}, {Y}_{12}^{*},\dots , {Y}_{1{i}_{1}}^{*}\) represent the random variables among \({Y}_{11},{Y}_{12},\dots ,{Y}_{1{n}_{1}}\) which are \(\le {x}_{1}\). Then:

$$ \begin{aligned} Prob\left( {Y_{{\left( {l_{1} :n_{1} } \right)}} \le x_{1} ,Y_{{\left( {l_{2} :n} \right)}} \le x_{2} } \right) = & \sum\limits_{{i_{1} = l_{1} }}^{{n_{1} }} {\sum\limits_{{i_{1}^{*} = 0}}^{{i_{1} }} P } rob\left( {{\text{at}}\;{\text{least}}\;i_{1}^{*} \;{\text{among}}\;Y_{{11}}^{*} ,Y_{{12}}^{*} , \ldots ,Y_{{1i_{1}^{*} }}^{*} {\text{and}}\;{\text{at}}\;{\text{least}}\;i_{2}^{*} \;{\text{among}}\;Y_{{21}} ,Y_{{22}} , \ldots ,Y_{{2n_{2} }} \le x_{2} } \right) \times Prob\left( {{\text{exactly}}\;i_{1} \;{\text{of}}\;Y_{{11}} ,Y_{{12}} , \ldots ,Y_{{1n_{1} }} \le x_{1} } \right),\left( {l_{2}^{*} = {\text{max}}\left\{ {0,\left( {l_{2} - i_{1}^{*} } \right)} \right\}} \right) = \sum\limits_{{i_{1} = l_{1} }}^{{n_{1} }} {\sum\limits_{{i_{1}^{*} = 0}}^{{i_{1} }} {\sum\limits_{{i_{2}^{*} = l_{2} }}^{{n_{2} }} P } } rob\left( {{\text{at}}\;{\text{least}}\;i_{1}^{*} \;{\text{among}}\;Y_{{11}}^{*} ,Y_{{12}}^{*} , \ldots ,Y_{{1i_{1}^{*} }}^{*} {\text{and}}\;{\text{at}}\;{\text{least}}\;i_{2}^{*} \;{\text{among}}\;Y_{{21}} ,Y_{{22}} , \ldots ,Y_{{2n_{2} }} \le x_{2} } \right) \times Prob\left( {{\text{exactly}}\;i_{1} \;{\text{of}}\;Y_{{11}} ,Y_{{12}} , \ldots ,Y_{{1n_{1} }} \le x_{1} } \right) \\ = & \sum\limits_{{i_{1} = l_{1} }}^{{n_{1} }} {\sum\limits_{{i_{1}^{*} = 0}}^{{i_{1} }} {\sum\limits_{{i_{2}^{*} = l_{2} }}^{{n_{2} }} {\left\{ {\left. {\left( {\begin{array}{*{20}c} {i_{1} } \\ {i_{1}^{*} } \\ \end{array} } \right)F\left( {x_{2} } \right)^{{i_{1}^{*} }} \left( {1 - F\left( {x_{2} } \right)} \right)^{{i_{1} - i_{1}^{*} }} } \right\}} \right.} } } \quad \times \left\{ {\left. {\left( {\begin{array}{*{20}c} {{\text{n}}_{{\text{2}}} } \\ {{\text{i}}_{{\text{2}}}^{{\text{*}}} } \\ \end{array} } \right){\text{F}}\left( {{\text{x}}_{{\text{2}}} } \right)^{{{\text{i}}_{{\text{2}}}^{{\text{*}}} }} \left( {{\text{1 - F}}\left( {{\text{x}}_{{\text{2}}} } \right)} \right)^{{{\text{n}}_{{\text{2}}} {\text{ - i}}_{{\text{2}}}^{{\text{*}}} }} } \right\}} \right.\quad \times \left\{ {\left. {\left( {\begin{array}{*{20}c} {{\text{n}}_{{\text{1}}} } \\ {{\text{i}}_{{\text{1}}} } \\ \end{array} } \right){\text{F}}\left( {{\text{x}}_{{\text{1}}} } \right)^{{{\text{i}}_{{\text{1}}} }} ({\text{1 - F}}\left( {{\text{x}}_{{\text{1}}} } \right))^{{{\text{n}}_{{\text{1}}} {\text{ - i}}_{{\text{1}}} }} } \right\}} \right. \\ \end{aligned} $$

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Magagula, Z., Malela-Majika, JC., Human, S.W. et al. Closed-form expressions of the run-length distribution of the nonparametric double sampling precedence monitoring scheme. Comput Stat (2024). https://doi.org/10.1007/s00180-024-01488-z

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s00180-024-01488-z