Abstract

Manufacturing industries require a right-first-time paradigm to remain competitive. Variation simulation (VS) is a key tool for predicting the variation of the final shape of flexible assemblies, helping to reduce defects and waste. VS models involving compliant sheet-metal parts commonly integrate physics-based simulation with statistical approaches (usually Monte Carlo simulation). Although VS is increasingly used as the backbone of synthesis techniques for (stochastic) optimization of assembly systems, its main roadblock is the high computational cost caused by time-intensive simulations and high-dimensional design spaces. Driven by the need to reduce computation time, this paper presents an innovative real-time physics-based VS model of assembly systems with compliant sheet-metal parts. The proposed methodology involves a non-intrusive reduced-order model (niROM), empowered by a novel adaptive sampling procedure for dataset generation and a cross-validation-based optimized radial basis function (RBF) formulation for interpolation. Demonstrated through two case studies, (i) a remote laser welding operation to predict mechanical distortions, with two input parameters, and (ii) the assembly of an aircraft vertical stabilizer, with five input parameters, the methodology achieves accurate real-time results, with up to a 43% improvement in accuracy compared to traditional sampling techniques. The findings highlight the critical influence of the sampling strategy and of the number of input parameters on ROM accuracy. The best results are obtained by combining adaptive sampling with optimized RBF interpolation, which additionally frees the user from choosing the interpolation settings. This study opens new avenues in variation simulation and dimensional/quality monitoring by narrowing the gap between advanced CAE solvers and VS models with real-time physics-based simulations.


1 Introduction

Metallic sheets find extensive use in industries such as automotive and aerospace due to their availability and suitability for forming, cutting, and fastening [1] at relatively low cost. However, sheet-metal assembly processes are strongly affected by stochastic uncertainties, mainly related to part geometry variations [2], which influence the final shape of the product and cause both aesthetic and functional issues. Moreover, the intrinsic flexibility of the parts themselves, especially in processes involving welding operations that cause thermal distortions, introduces additional geometric variability [3]. Predicting the generation and propagation of variations is therefore a fundamental step towards validation and certification at the early design stage.

The application of variation simulation (VS) tools provides significant benefits by preventing out-of-tolerance variations during the assembly of both rigid and compliant parts while accounting for stochastic error propagation. VS methods have long been used to accelerate right-first-time strategies and the digitalization of manufacturing processes [4]. For compliant parts, which are prevalent in both the automotive and aerospace industries, most VS techniques couple physics-based simulation with statistical approaches. For example, finite element analysis (FEA) combined with Monte Carlo simulation (MCS) was introduced in the pioneering work of Liu and Hu [5] and is still widely used today [6]. VS models can be divided into (1) VS-based analysis and (2) VS-based synthesis. The former (1) aims at determining the effect of input parameters on output performance indicators under known design constraints; the latter (2) addresses the more complex inverse problem, covering topics such as stochastic optimization or parametric/sensitivity analyses.

VS-based analysis has been addressed by several studies, including the analysis of variation propagation during the assembly of aircraft panels [7] and in multi-stage assembly systems [8]. VS-based synthesis techniques can help manage waste and defects during production by enabling early design optimization and in-process quality improvement. However, VS-based synthesis has received little attention because of its computational cost.

In general, VS synthesis procedures are extremely time-consuming, as they involve large assemblies and non-linearities such as contacts, large displacements, or non-linear material behaviour. Moreover, typical industrial applications suffer from the so-called curse of dimensionality of the parameter space: the number of required physics-based evaluations increases exponentially with the dimensionality of the design space. For instance, in the automotive industry, the number of input parameters (which may concern the statistical variability of both product and process) can easily exceed 100 for body-in-white (BIW) assemblies [9]. Under these conditions, finding the global (stochastic) optimum in such a high-dimensional space may become computationally prohibitive, even with powerful resources such as cloud-based high-performance computing (HPC) [10].

Several authors have faced this obstacle. Xing [11] developed a VS-based synthesis algorithm to optimize the fixture layout of an inner hood assembly, which found the global best solution in 1687 h. Aderiani et al. [12] developed a methodology for fixture layout optimization and tested it on two single-station cases, requiring 270 h in total. In a more recent work [13], they employed a genetic algorithm (GA)-based synthesis approach to maximize geometric quality and compared different design strategies considering part and/or process variations. For each GA iteration, 1000 Monte Carlo simulations were required to incorporate stochastic variability for design robustness. Sinha et al. [14] proposed an innovative deep learning-based closed-loop framework to support in-process quality improvement. However, tens of thousands of physics-based simulations were required to train their deep neural network (DNN) to study the sensitivity to only 5 movable clamps.

Therefore, the main limitation of VS-based studies is the heavy computational burden caused by both time-intensive simulations and high-dimensional design spaces. Finding the right balance between accuracy and computational efficiency remains an open topic:

- Model accuracy depends on the completeness of the digital model itself and on its capability to emulate the inherent physical processes as they happen in the real process.
- Computational efficiency is addressed by improving the efficiency of either the physics-based kernel [15] or the decision-making kernel (i.e. population-based heuristics such as GA).

Based on the above considerations, implementing numerical methods that accelerate physics-based simulation runs, ideally achieving real-time responses, is a pivotal step for VS. Instant decision-making based on the physical response of the system's digital counterpart could increase industry's willingness to adopt VS-driven tools to boost assembly efficiency, reducing faulty items and waste and leading to significant reductions in manufacturing costs and energy waste. To this end, various strategies have been proposed in the literature, such as high-performance physics-based kernels, parallel computing, response surface methods (RSM), reduced-order modelling (ROM), and machine learning (ML). Often in conjunction with other strategies, both parallel computing [16] and high-performance physics-based kernels [15] have demonstrated their ability to speed up simulations. The former parallelizes the computation over different instances of the input parameters, while the latter permits a coarser mesh of the computational domain while retaining the same level of accuracy. Nevertheless, these approaches may not be sufficient, particularly for large multi-stage assemblies with many parameters to control.

Many approaches rely on the response surface methodology (RSM), combined with a suitable design of experiments (DoE), to solve optimization problems (surrogate-based optimization [17]). For decades, several attempts have been made to tackle VS-based synthesis problems with RSM. In an early application, RSM was used to optimize the fixture configuration for a cross-member assembly of a BIW [18]. Gerbino et al. [19] aimed at minimizing the gaps during an aircraft wing assembly by optimizing the clamping operations. A similar case study was adopted in [20], where a different approach to include stochastic part variability (based on polynomial chaos expansion) was presented. However, RSM approaches have two main limitations: (1) they cannot explain the cause-effect mechanisms among variables, since they do not embed the physics of the problem; and (2) they only optimize pre-defined quality indicators, such as a few control points defined on the part/assembly. Consequently, extending the analysis to new control points requires recomputing the model.

Reduced-order modelling (ROM) has proven successful in reducing computation times in a wide variety of fields, including computational fluid dynamics [21], dynamic systems [22], and structural mechanics [23]. Originally developed for control system theory [24], ROM methods are appealing for their ability to reduce the dimensionality of large models. These methods can be roughly divided into intrusive (iROM) and non-intrusive (niROM). Intrusive techniques have been widely used to accelerate computations in several applications, achieving good time reductions with an acceptable loss of accuracy [23, 25]. However, intrusive ROM often requires complex theoretical formulations to be implemented in the source code; such modifications, besides being intricate, become unfeasible if the source code is not accessible. This is particularly true of commercial software, where the physics-based kernel is embedded and not always accessible. These issues can be overcome by several robust non-intrusive ROM techniques recently developed in various fields.

niROM techniques do not require any code modification, as they rely on a limited training dataset, i.e. solutions of the full-order model (FOM), from which a restricted number of modes that best represent the solution of the complete problem are extracted. Since these modes encapsulate the most important patterns in the field space and retain information from the physical model, ROM methods are considered more attractive than RSM. Among niROM methods, a popular technique for identifying the modes of the system is Proper Orthogonal Decomposition (POD) [26], which is often combined with radial basis functions (RBF) [27] for interpolation in the reduced space because of their ability to deal with large parameter spaces. POD decomposes the data matrix into mutually orthogonal spatial modes, which are analogous to the principal components obtained through principal component analysis (PCA) [26]. POD has been used for structural problems with contact non-linearities [25, 28], flow dynamics in gas reservoirs [29], and heat transfer problems [30]. Recently, Russo et al. [25] developed a POD-based ROM methodology for VS of assembly systems, with both intrusive and non-intrusive approaches. The authors concluded that, with the computational kernel they used, the intrusive method, although more accurate, was much slower and not suitable for integration with commercial software. Other niROM techniques differ in how the dimensionality reduction is accomplished and how the surrogate model in the reduced space is constructed. Li et al. [31] combined POD with Gaussian process regression to incorporate the influence of two parameters on fluid/structure interaction problems. Kang et al. [32] developed a multi-fidelity niROM for CFD simulations based on Kriging and artificial neural network algorithms.
In the CFD field, a brief review of niROM strategies can be found in [33], where the authors mainly focused on differences in dimensionality reduction, surrogate models, and sampling strategies.

In addition to data-driven niROMs, there are also approaches based on machine/deep learning (ML/DL). These include studies on non-linear mechanical analyses using ML techniques to develop reduced surrogate models [34] and DL-based methodologies [35] for non-linear dynamical systems. Although these approaches offer accurate solutions while significantly reducing the computational cost, ML/DL techniques are limited by the difficulty of setting their numerous hyper-parameters [36] and by the large datasets needed to achieve accurate results [37].

From all the above considerations, non-intrusive ROM methods emerge as the most suitable choice for real-time VS: (i) they have proven effective in providing accurate real-time approximations of physics-based simulations; (ii) they do not require modification of the physics-based computational kernel, so they can work with virtually any commercial software; (iii) unlike RSM, they embed physical information of the model; and (iv) they require relatively small datasets compared to ML and DL. However, despite the many niROM applications found in the literature, methodologies integrating ROM within VS frameworks remain little investigated. Coupling ROM with VS raises difficulties related to parameter space sampling and the selection of interpolation methods. Indeed, as inferred from the literature [25, 28], niROM accuracy strictly depends on the training strategy adopted for dataset generation, as well as on the surrogate model used for reconstruction in the reduced space. On the one hand, given the complex nature of the physics-based models frequently managed by VS, which can be highly time-consuming to solve, the sampling procedure should be optimized to run as few configurations as possible while still capturing the hidden behaviour of the full-order model. Adaptive sampling strategies have been extensively studied to overcome the challenge of determining the appropriate number of training samples and their positions in the parameter space [17, 38]. On the other hand, choosing the right surrogate model and fine-tuning it is not a trivial task and can drastically affect model accuracy. For example, RBF interpolation is often combined with ROM due to its ability to handle scattered data and multidimensional design spaces. However, determining the optimal kernel and shape parameter is still an open topic, as discussed in [28, 29]. A comprehensive review of these aspects can be found in [17].

1.1 Purpose and outline of the article

Motivated by the above, the present work proposes an innovative iterative methodology to accelerate the transition towards real-time physics-based variation simulation of assembly systems with compliant sheet-metal parts. To the best of the authors' knowledge, this is the first time a niROM approach has been developed for VS using both adaptive sampling strategies and optimized RBF interpolation. Building upon and extending a previous study [25], this work makes three key contributions: (1) integration of niROM with adaptive sampling to unlock real-time physics-based VS of sheet-metal parts; (2) implementation of a cross-validation-based procedure for optimizing the RBF interpolation of the reduced dataset; and (3) introduction of an adaptive sampling procedure that joins two complementary sampling strategies, for both reduced basis and interpolation improvement. This adaptive procedure automatically switches between the two strategies based on the rotation of the modes, extending previous work in the literature [39, 40]. To demonstrate the effectiveness of the methodology, two case studies are presented: the thermal analysis of a remote laser welding operation and the assembly process of an aircraft vertical stabilizer. The objective of this paper is to explore ROMs with the ultimate aim of achieving real-time prediction capability. The reference benchmark is therefore the full-order (high-fidelity) model, and experimental validation is out of the scope of this paper. This approach represents a significant contribution to the field of VS. It can be applied to any application involving parametrically dependent physics-based simulation and can be used with any software serving as the physics-based engine.

The paper is organized as follows: Sect. 2 formulates the VS problem; Sect. 3 describes the tools used and the proposed methodology; Sect. 4 presents two VS case studies along with results and discussion; Sect. 5 concludes the paper and outlines opportunities for future developments. Mathematical details of the adaptive sampling techniques are reported in Appendix A.

2 Problem formulation

VS models, schematically illustrated in Fig. 1, aim to determine the impact of input parameters, \(\boldsymbol\mu\), on output performance indicators, \(\boldsymbol u\), while respecting given design constraints, \(\boldsymbol D\boldsymbol C\). Input parameters define the design space, which is typically high-dimensional and may involve stochastic variability related to parts and the manufacturing process (e.g. manufacturing errors, positioning/clamping variations) as well as deterministic control parameters (e.g. size and position of clamps, fastening settings such as laser welding velocity and power). The output performance indicators usually correspond to key features measured on the final assembly (such as part-to-part gaps, residual stresses, and spring-back deviations) and/or the overall geometric quality according to GD&T-ASME or GPS/ISO tolerance specifications. Variation propagation during an assembly process can be conceptually represented by the mechanistic formulation in Eq. (1):

\[ \boldsymbol u = f\left(\boldsymbol\mu\right) \quad \text{subject to} \quad \boldsymbol D\boldsymbol C \tag{1} \]

It is important to recognize that the accuracy of a VS model is strictly tied to the physics-based solver embedded in \(f\), which emulates the real system. Model accuracy depends on the completeness of the digital model itself and on its capability to simulate physical processes as they happen in the real process. However, increasing simulation complexity results in higher computational time. In the context of sheet-metal assemblies, the model should consider part compliance and contact modelling [25], as well as local and global mechanical distortions due to thermal phenomena during welding operations. Together, these considerations give rise to so-called “multi-physics” problems, which involve the interaction of multiple physical processes. Furthermore, as the dimensionality of the design space increases, the number of simulations grows exponentially (curse of dimensionality). Since typical VS applications involve a large set of input parameters, finding the optimum assembly configuration may require days, or even weeks, of computation. This is especially true for processes involving sequential operations across multiple stages. Such “multi-stage” assembly systems are common in sheet-metal assembly, which often includes different fastening operations, such as riveting and welding. As a result, the simulation of multiple physical phenomena, including but not limited to structural and thermal modelling, becomes a critical aspect, and the need for such comprehensive simulations significantly increases the computational demand of these multi-physics problems.

Therefore, the methodology proposed in the next section addresses the challenge of reducing the computational cost of solving VS models by integrating non-intrusive techniques for accurate real-time simulations.

3 Proposed methodology

3.1 Main framework

The proposed methodology is based on data-driven non-intrusive reduced-order modelling (niROM). In this approach, the high-fidelity solver is exploited only in a preliminary stage to generate the training dataset. Then, a real-time black box is built, mapping the input (parameter) space to the output (reduced) solution space. This approach enables the user to compute new (previously uncomputed) solutions outside the computer-aided engineering (CAE) software adopted during the training stage.

The methodology’s framework is illustrated in Fig. 2 with a straightforward beam model featuring two degrees of freedom and two variable parameters. Two main stages, detailed below, can be identified: the OFF-line (iterative) training and the ON-line deployment for new parameter instances.

OFF-line stage (training). The solutions (snapshots) of several full-order models are computed, and a restricted number of orthogonal modes that best describe the solution of the complete problem are extracted from the full space and collected in the reduced basis \(\boldsymbol\Psi\). The high-dimensional problem can then be projected onto the smaller subspace defined by \(\boldsymbol\Psi\), reducing the dimensionality of the dataset and the computational cost.

Step (1) Problem definition and starting dataset

This first step defines the full-order model (FOM), i.e. the numerical problem under investigation, and provides the initial dataset used as a starting point by the iterative procedure. Both input and output spaces are defined here, along with their dimensionalities \({N}_{I}\) and \({N}_{O}\), respectively. In the example of Fig. 2, the load magnitude and the cross-sectional area of the left beam are the two variable parameters that define the input space, while the two deflections of the beam ends define the output space; therefore, \({N}_{I}=2\) and \({N}_{O}=2\). A starting dataset, consisting of input–output pairs, is then computed. In general, determining the size of the initial dataset, \({N}_{S0}\), remains an open topic [38]. However, the implemented adaptive strategies possess space-filling properties and work regardless of the size of the initial design of experiments. Therefore, \({N}_{S0}\) can be set to a small value, e.g. between \(2{N}_{I}\) and \(10{N}_{I}\).
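The sketch below shows one way to generate such a small starting dataset: a Latin hypercube design with \({N}_{S0}=5{N}_{I}\) points, i.e. inside the suggested range. The choice of LHS, the factor 5, the function name `initial_lhs`, and the parameter ranges are illustrative assumptions, not the authors' exact setup.

```python
import numpy as np

def initial_lhs(bounds, factor=5, seed=0):
    """Latin hypercube design with N_S0 = factor * N_I samples (hypothetical helper).

    bounds: one (low, high) pair per input parameter, so len(bounds) = N_I.
    """
    bounds = np.asarray(bounds, dtype=float)
    n_inputs = bounds.shape[0]
    n_samples = factor * n_inputs
    rng = np.random.default_rng(seed)
    # Each column is an independent permutation of the strata 0..n_samples-1,
    # so every parameter range is split into n_samples bins with one point per bin
    strata = np.column_stack([rng.permutation(n_samples) for _ in range(n_inputs)])
    # Place each point uniformly at random inside its bin of the unit hypercube
    unit = (strata + rng.random((n_samples, n_inputs))) / n_samples
    # Scale from [0, 1] to the physical parameter ranges
    return bounds[:, 0] + unit * (bounds[:, 1] - bounds[:, 0])

# Fig. 2-like setting: N_I = 2 (load magnitude, cross-sectional area); ranges are hypothetical
mu0 = initial_lhs([(100.0, 500.0), (1e-4, 4e-4)], factor=5)
print(mu0.shape)  # (10, 2)
```

Each FOM run at a row of `mu0` then yields one input–output pair of the starting dataset.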

Step (2) Parameter space, \(\boldsymbol\mu^{\left(d\right)}\)

The \({N}_{I}\)-dimensional parameter (input) space is adaptively sampled with \({N}_{d}\) new points chosen by the adaptive sampling procedure detailed in the next section. The total number of samples, \({N}_{S}\), increases as the iterative stage runs.

Step (3) Generate snapshots, \({\boldsymbol u}_{f}^{\left(d\right)}\) (full-order model)

The physics-based model is then solved for each new sample in the parameter space, generating the so-called snapshots of the system, \({\boldsymbol u}_f\in\mathbb{R}^{N_O}\). These new snapshots are added to the snapshot matrix, defined as

\[ {\boldsymbol Y}_{Snap}=\left[{\boldsymbol u}_f^{\left(1\right)},\dots,{\boldsymbol u}_f^{\left(N_S\right)}\right]\in\mathbb{R}^{N_O\times N_S} \tag{2} \]

where \({N}_{S}={N}_{S,old}+{N}_{d}\) is the number of snapshots.
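As a minimal sketch of step (3), each new sample is solved and appended as a column, so the matrix grows from \(N_{S,old}\) to \(N_{S,old}+N_d\) columns. The function `toy_fom` is a hypothetical stand-in for the physics-based solver (any CAE run returning \(N_O\) outputs would take its place); `add_snapshots` is likewise an illustrative name.

```python
import numpy as np

N_O = 50
X = np.linspace(0.0, 1.0, N_O)  # hypothetical output locations (e.g. mesh nodes)

def toy_fom(mu):
    """Hypothetical stand-in for the physics-based solver: returns N_O outputs."""
    load, area = mu
    return load / area * X**2 * (3.0 - X) + 0.1 * load**2 * np.sin(np.pi * X)

def add_snapshots(Y_snap, new_mu):
    """Step (3): solve the FOM at each new sample and append the snapshots as columns."""
    new_cols = np.column_stack([toy_fom(mu) for mu in new_mu])
    return new_cols if Y_snap is None else np.hstack([Y_snap, new_cols])

Y_snap = add_snapshots(None, [(1.0, 2.0), (2.0, 1.0), (1.5, 1.5), (0.5, 1.0)])  # N_S0 = 4
Y_snap = add_snapshots(Y_snap, [(1.2, 0.8), (1.8, 1.2)])                         # N_d = 2
print(Y_snap.shape)  # (50, 6)
```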

Step (4) Generate the field space and compute the reduced basis, \(\boldsymbol\Psi\)

The snapshots can be thought of as points in the \({N}_{O}\)-dimensional field space. Since they may occupy only a portion of the full space, it is possible to consider a subspace of reduced dimensionality on which the solutions lie. One way to identify such a reduced space is to compute a reduced basis \(\boldsymbol\Psi=\left[{\boldsymbol\psi}_1,\dots,{\boldsymbol\psi}_R\right]\in\mathbb{R}^{N_O\times R}\) by extracting a restricted number, \(R\), of orthogonal modes, \({\boldsymbol\psi}_i\), from the snapshot matrix; these modes represent the most significant patterns hidden in the field space. For the sake of clarity, in Fig. 2 the reduced basis is composed of only one mode, \(\boldsymbol\psi\). POD is a well-known technique for extracting the spatial (orthogonal) modes. POD ensures the optimality of the reduced basis by retaining the modes corresponding to the largest singular values of \({\boldsymbol Y}_{Snap}\), thereby capturing the most significant variations in the data [26].
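POD can be sketched with a thin singular value decomposition of \({\boldsymbol Y}_{Snap}\): the left singular vectors are the orthonormal modes, ordered by decreasing singular value. How \(R\) is chosen is not stated in this section, so the energy-based truncation below (keep enough modes to retain 99.99% of \(\sum\sigma_k^2\)) is an assumed rule, and `pod_basis` is a hypothetical name.

```python
import numpy as np

def pod_basis(Y_snap, energy=0.9999):
    """POD of the snapshot matrix via the thin SVD (hypothetical helper).

    Returns the reduced basis Psi (N_O x R) and all singular values. R is the
    smallest number of modes whose squared singular values retain the requested
    fraction of the total snapshot energy (an assumed truncation rule).
    """
    U, s, _ = np.linalg.svd(Y_snap, full_matrices=False)
    cumulative = np.cumsum(s**2) / np.sum(s**2)
    R = min(int(np.searchsorted(cumulative, energy)) + 1, len(s))
    return U[:, :R], s

# Toy snapshots built from two spatial patterns, so the basis should need R = 2
x = np.linspace(0.0, 1.0, 100)
coeffs = np.random.default_rng(1).normal(size=(2, 8))   # N_S = 8 snapshots
Y_snap = np.outer(np.sin(np.pi * x), coeffs[0]) + np.outer(x**2, coeffs[1])
Psi, s = pod_basis(Y_snap)
print(Psi.shape)  # (100, 2)
```

The SVD of the snapshot matrix is used here because it yields the modes and the singular values in one call and avoids forming the \(N_O\times N_O\) covariance matrix.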

Step (5) Forward projection of snapshots

\(\boldsymbol\Psi\) is used to reduce the dimensionality of the system from \({N}_{O}\) to \(R\ll {N}_{O}\). The snapshots are projected onto the reduced basis, obtaining the so-called amplitude matrix:

\[ \boldsymbol\Psi^{T}{\boldsymbol Y}_{Snap}=\left[{\boldsymbol u}_r^{\left(1\right)},\dots,{\boldsymbol u}_r^{\left(N_S\right)}\right]\in\mathbb{R}^{R\times N_S} \tag{3} \]

which collects the reduced variables, \({\boldsymbol u}_r\in\mathbb{R}^R\), also called ROM coefficients. In Fig. 2, the dimensionality is reduced from \({N}_{O}=2\) to \(R=1\).

Step (6) Compute interpolation in the hybrid space

A surrogate model mapping the input parameter space (\(\boldsymbol\mu\)) to the output reduced space (\({\boldsymbol u}_r\left(\boldsymbol\mu\right)\)) can be obtained by interpolating the reduced dataset in the hybrid space. Since the OFF-line stage is iterative, the surrogate model (represented as a surface in step 6 of Fig. 2) changes as new snapshots are computed. In Fig. 2 (step 6), the grey surface represents the interpolated model at the previous iteration, before adding \(\boldsymbol\mu^{\left(d\right)}\). In this work, a shape-factor-free radial basis function (RBF) interpolation method [27] is used for this step.
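A minimal RBF interpolant for the reduced dataset is sketched below. This section does not name the shape-factor-free kernel, so the linear kernel \(\varphi(r)=r\) is an assumed stand-in: it has no shape factor, and its interpolation matrix (the Euclidean distance matrix) is nonsingular for distinct points, so no polynomial augmentation is needed. The helper names are hypothetical.

```python
import numpy as np

def pairwise_distances(a, b):
    """Euclidean distances between the rows of a (n x N_I) and b (m x N_I)."""
    return np.linalg.norm(a[:, None, :] - b[None, :, :], axis=-1)

def fit_rbf(mu_train, U_r):
    """Fit one RBF interpolant per ROM coefficient (all share the same kernel matrix).

    mu_train: N_S x N_I samples; U_r: R x N_S amplitude matrix.
    Kernel (assumed): linear phi(r) = r, which has no shape factor.
    """
    Phi = pairwise_distances(mu_train, mu_train)
    return np.linalg.solve(Phi, U_r.T)          # N_S x R weights

def eval_rbf(mu_train, weights, mu_new):
    """Interpolated reduced solution(s) u_r at new parameter points (one row each)."""
    mu_new = np.atleast_2d(np.asarray(mu_new, dtype=float))
    return pairwise_distances(mu_new, mu_train) @ weights

# Toy reduced dataset: N_I = 2 inputs, N_S = 5 samples, R = 2 coefficients
mu_train = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, 0.5]])
U_r = np.vstack([mu_train[:, 0] + mu_train[:, 1] ** 2, np.sin(mu_train[:, 0])])
W = fit_rbf(mu_train, U_r)
u_r_new = eval_rbf(mu_train, W, [0.25, 0.75])   # shape (1, 2)
```

Because all \(R\) coefficients share the same sample locations, a single linear solve with \(R\) right-hand sides fits every interpolant at once.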

Step (7) Convergence criterion fulfilled?

Steps (2)–(6) are performed iteratively until a convergence criterion is fulfilled. Depending on the application, the stopping criterion may be based on time constraints, an accuracy goal, the improvement between successive iterations, or the total number of evaluations [38].
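The four stopping criteria listed above can be combined in a single check evaluated at the end of each iteration. The function name, the accuracy indicator (e.g. a mean LOOCV error), and all thresholds below are hypothetical choices for illustration.

```python
def converged(n_evals, errors, elapsed_s=0.0, max_evals=200, time_budget_s=None,
              target=None, rel_tol=1e-3):
    """Hypothetical stopping rule for the OFF-line loop (steps 2-6).

    errors: history of an accuracy indicator, one value per iteration
    (e.g. a mean LOOCV error); all thresholds are illustrative assumptions.
    """
    if n_evals >= max_evals:                                       # evaluation budget
        return True
    if time_budget_s is not None and elapsed_s >= time_budget_s:  # time constraint
        return True
    if target is not None and errors and errors[-1] <= target:    # accuracy goal
        return True
    if len(errors) >= 2 and errors[-2] > 0:                        # stagnation
        return abs(errors[-2] - errors[-1]) / errors[-2] < rel_tol
    return False

print(converged(10, [1.0, 0.5]))  # False: still improving by 50% per iteration
```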

Step (8) CV-based optimized interpolation

A procedure based on cross-validation (CV) has been implemented to fine-tune the interpolation by acting on the so-called hyper-parameters, i.e. configuration settings that are not learnt from the data (e.g. the shape factors of the RBFs). This procedure is detailed in Sect. 3.3.
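The idea of CV-based tuning can be sketched as a grid search: for each candidate shape factor, every sample is left out in turn, the interpolant is refitted on the others, and the prediction error at the left-out sample is recorded. The Gaussian kernel \(\varphi(r)=e^{-(\varepsilon r)^2}\), the RMS error metric, the candidate grid, and the brute-force refitting are all assumptions; the authors' actual procedure is the one described in Sect. 3.3.

```python
import numpy as np

def gaussian_matrix(a, b, eps):
    """Gaussian RBF matrix exp(-(eps * r)^2) between the rows of a and b."""
    r = np.linalg.norm(a[:, None, :] - b[None, :, :], axis=-1)
    return np.exp(-(eps * r) ** 2)

def loocv_error(mu, f, eps):
    """Root-mean-square leave-one-out error of a Gaussian RBF interpolant."""
    errors = []
    for j in range(len(mu)):
        keep = np.arange(len(mu)) != j
        w = np.linalg.solve(gaussian_matrix(mu[keep], mu[keep], eps), f[keep])
        pred = gaussian_matrix(mu[j:j + 1], mu[keep], eps) @ w
        errors.append(pred[0] - f[j])
    return float(np.sqrt(np.mean(np.square(errors))))

def tune_shape_factor(mu, f, candidates):
    """Return the candidate shape factor with the smallest LOOCV error, plus all scores."""
    scores = {eps: loocv_error(mu, f, eps) for eps in candidates}
    return min(scores, key=scores.get), scores

# One reduced element sampled at 9 points of a 1-D parameter space (toy data)
mu = np.linspace(0.0, 1.0, 9)[:, None]
f = np.sin(2.0 * np.pi * mu[:, 0])
best_eps, scores = tune_shape_factor(mu, f, [2.0, 4.0, 8.0, 16.0])
```

Refitting \(N_S\) times is affordable here because only the small reduced dataset is involved, never the FOM.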

ON-line stage (deployment). This stage is intended to provide real-time approximated solutions of the full-order model for new parameter instances that have not been computed during the previous OFF-line training.

Step (9) New parameter instance, \({\boldsymbol\mu }^{\left(\boldsymbol w\right)}\), and step (10) compute interpolation, \(\boldsymbol u_r^{\left(\boldsymbol w\right)}\)

Since the procedure is non-intrusive, the physics-based solver is no longer needed. For each new point in the parameter space, \(\boldsymbol\mu^{\left(w\right)}\) (step 9), the reduced solution, \({\boldsymbol u}_r\left(\boldsymbol\mu^{\left(w\right)}\right)\), can be computed instantaneously (step 10) by interpolating the previously generated dataset of reduced dimensionality.

Step (11) Backward projection and approximated solution, \(\boldsymbol u_{\boldsymbol f}^{\left(\boldsymbol w\right)}\)

Finally, the solution of the full model, \({\boldsymbol u}_f\left(\boldsymbol\mu^{\left(w\right)}\right)\), is approximated by back-projecting the reduced solution onto the full space. Mathematically, the backward projection reads:

\[ {\boldsymbol u}_f\left(\boldsymbol\mu^{\left(w\right)}\right)\approx\boldsymbol\Psi\,{\boldsymbol u}_r\left(\boldsymbol\mu^{\left(w\right)}\right)=\sum_{i=1}^{R}{u}_{r,i}\left(\boldsymbol\mu^{\left(w\right)}\right){\boldsymbol\psi}_i \tag{4} \]

meaning that the approximated solution is obtained as a weighted sum of the \(R\) orthogonal modes, \({\boldsymbol\psi}_i\), using as weights the \(R\) interpolated ROM coefficients, \({u}_{r,i}\), i.e. the entries of the reduced solution vector.
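The ON-line steps (9)–(11) reduce to a distance evaluation, a small matrix–vector product, and the back-projection of Eq. (4). The sketch below first builds toy OFF-line products for a hypothetical field \({\boldsymbol u}_f(\mu)=\mu\sin(\pi x)+\mu^2x\); the linear RBF kernel is again an assumed stand-in for the paper's interpolation, and `online_predict` is a hypothetical name.

```python
import numpy as np

def online_predict(mu_new, mu_train, weights, Psi):
    """ON-line stage: new parameter instance -> approximate full-order solution."""
    # Step (10): interpolate the R ROM coefficients with a linear-kernel RBF (assumed)
    d = np.linalg.norm(mu_train - np.asarray(mu_new, dtype=float), axis=1)
    u_r = d @ weights                      # shape (R,)
    # Step (11): back-projection, u_f ~ sum_i u_{r,i} psi_i  (Eq. 4)
    return Psi @ u_r                       # shape (N_O,)

# OFF-line products for a hypothetical toy field u_f(mu) = mu*sin(pi x) + mu^2 * x
x = np.linspace(0.0, 1.0, 40)
mu_train = np.linspace(0.0, 1.0, 6)[:, None]             # N_S x N_I, N_I = 1
Y_snap = np.column_stack([m * np.sin(np.pi * x) + m**2 * x for m in mu_train[:, 0]])
U, s, _ = np.linalg.svd(Y_snap, full_matrices=False)
Psi = U[:, :2]                                           # R = 2 modes
A = Psi.T @ Y_snap                                       # amplitude matrix
D = np.linalg.norm(mu_train[:, None, :] - mu_train[None, :, :], axis=-1)
weights = np.linalg.solve(D, A.T)                        # N_S x R RBF weights

# Step (9): a parameter instance never computed with the FOM
u_new = online_predict([0.45], mu_train, weights, Psi)
```

No FOM call occurs in `online_predict`; its cost depends only on \(N_S\), \(R\), and \(N_O\), which is what makes real-time evaluation possible.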

While this methodology may be used to generate new solutions within any optimization framework, neither the optimization technique nor stochastic analyses such as uncertainty quantification (UQ) are within the scope of this work. The following sections provide details of the adaptive sampling procedure implemented in this work and of the CV-based procedure for optimized RBF interpolation.

3.2 Adaptive sampling procedure

Classic DoE methods, generally referred to as a priori or one-shot DoE, can be generated using various space-filling techniques such as full factorial, random, Latin hypercube sampling (LHS), or Latinized Centroidal Voronoi Tessellation (LCVT) [41]. However, these approaches do not allow the optimal number of samples and their positions in the design space to be selected a priori. Iterative methods that randomly add new points do not solve this problem efficiently, as their non-optimality may lead to under- or over-sampling.
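To make the sample-count issue of one-shot designs concrete, the sketch below enumerates a full factorial design, whose size is \(\text{levels}^{N_I}\). Three levels per parameter and unit ranges are arbitrary illustrative choices; \(N_I=2\) and \(N_I=5\) match the number of inputs in the two case studies, and \(N_I=100\) the BIW threshold mentioned in the introduction.

```python
from itertools import product

def full_factorial(bounds, levels):
    """All combinations of `levels` equally spaced values of each parameter."""
    axes = [[lo + k * (hi - lo) / (levels - 1) for k in range(levels)]
            for lo, hi in bounds]
    return list(product(*axes))

# Unit parameter ranges with 3 levels per parameter (illustrative choice)
grid_2 = full_factorial([(0.0, 1.0)] * 2, levels=3)   # N_I = 2
grid_5 = full_factorial([(0.0, 1.0)] * 5, levels=3)   # N_I = 5
print(len(grid_2), len(grid_5))   # 9 243
print(3 ** 100 > 10 ** 47)        # True: N_I = 100 is already out of reach
```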

To overcome these challenges, the present study introduces a novel iterative procedure based on a more efficient adaptive sampling. Adaptive techniques strategically add samples to capture local details of interesting regions and/or global trends [38]. These approaches iteratively search for new points within the input space based on “prior knowledge” of the underlying problem. In this context, prior knowledge refers to existing information that can be extracted from the previously computed simulation results stored in the snapshot matrix. To clarify, consider Eq. (4), which represents the back-projection of the reduced solution (Fig. 2, step (11)). The approximation accuracy of the full solution, \({\boldsymbol u}_f\), depends on two key components: (i) the reduced basis, \(\boldsymbol\Psi\), which defines the reduced space and gathers the most significant patterns hidden in the field space (Fig. 2, step (4)), and (ii) the reduced solution, \({\boldsymbol u}_r\) (Fig. 2, step (6)), which collects the ROM coefficients needed to linearly combine the modes. Both quantities, in turn, depend on the snapshot matrix, \({\boldsymbol Y}_{Snap}\).

Building on this understanding, the proposed methodology involves an adaptive sampling process that selects new samples based on information extracted from \(\boldsymbol\Psi\) and \({\boldsymbol u}_r\). The iterative procedure is built on two adaptive techniques originally presented by Guenot et al. [39]: basis improvement (BI) and interpolation improvement (II). While built upon existing techniques, this procedure represents a significant advancement: its innovation lies in how it leverages the information processed by BI and II, joining their complementary aspects. Guenot et al. [39] only presented the two strategies, analysing their main features and focusing on their numerous hyper-parameters (namely, the number of modes/coefficients to improve and the number of starting samples), while a more recent work [40] presented a first combined approach, using BI and II in two separate stages but leaving the switching step (a hyper-parameter) quantitatively undetermined. In contrast, the present research introduces a novel automatic switching strategy between basis improvement and interpolation improvement.

To provide a comprehensive understanding of the switching procedure developed in this study, it is necessary to explain how the two techniques work. Although these techniques have been discussed in prior work, understanding them conceptually is crucial given their novel use in the proposed procedure. Both techniques are based on leave-one-out cross-validation (LOOCV) and have a meaningful graphical interpretation, schematically illustrated in Fig. 3a and b, respectively. They aim to achieve two specific goals:

1. Basis improvement (BI, Fig. 3a) aims at maximizing the information collected in the reduced basis, i.e. capturing as many informative hidden patterns from the field space as possible, which represent the model's spatial modes. In this case, the prior knowledge is related to the sensitivity of the modes to the already available samples. Spatial modes algebraically represent vectors in the \({N}_{O}\)-dimensional field space (i.e. the principal components [26]), and their directions are dictated by the positions of the samples in the field space itself (refer to step 1 in Fig. 3a). Adding samples generally causes these vectors to rotate. Larger rotations indicate that better patterns have been recognized in the space, meaning that more informative spatial modes have been collected in the basis. Therefore, BI seeks candidate samples with a high impact on mode rotation and directs the sampling towards their vicinity. To identify the sample with the greatest influence on the rotation of the modes, samples are removed one by one according to the LOOCV process (refer to step 2), and the sensitivity of each mode to each removed sample is quantified by the resulting rotation angle. Finally, a new sample, \(\boldsymbol\mu^d\), is added in the neighbourhood of the point that causes the largest rotation of the modes (refer to step 3). Specifically, the candidate sample is chosen to maximize a score that also considers the minimum distance from previous samples to ensure space filling. This adaptive process is exemplified in Fig. 3a, where the reduced basis is composed of a single mode, \(\boldsymbol\psi\). The angle \({\vartheta }^{-j}\) is the angle between \(\boldsymbol\psi\) and the rotated mode \(\boldsymbol\psi^{-j}\) obtained by removing sample \(\boldsymbol\mu^j\). In the figure, sample (1) has the strongest influence on the basis rotation, so the additional point, \(\boldsymbol\mu^d\), is sampled in the red area surrounding sample (1).

-

2.

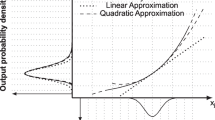

Interpolation improvement (II, Fig. 3b) aims to enhance the interpolation of the reduced dataset within the hybrid space (refer to step 1 in the figure). In this context, the prior knowledge is associated with the alterations in the reduced elements that occur due to the inclusion of a new sample. These alterations can be quantitatively assessed by calculating the interpolation errors in the samples. LOOCV approach (refer to step 2) enables the computation of the interpolation error in each sample. This is done by determining the difference between the actual and approximated values of the function when the sample is excluded. Consequently, the sensitivity of each reduced element to each data site can be quantitatively evaluated by sequentially removing the samples using the LOOCV approach. A high interpolation error resulting from the removal of the j-th sample indicates that the sample exerts a significant influence on the interpolated reduced variable. The function may not be adequately approximated in its vicinity due to local non-linearities. Therefore, the II strategically targets areas of the input space where the errors obtained with LOOCV are high, directing the sampling towards these zones. The objective is to introduce new samples where the interpolation error is larger. Ultimately, the new point is chosen in the vicinity of the most impactful sample (refer to step 3), that is, the data site where the reduced elements exhibit the most non-linear behaviour. Similar to the basis improvement strategy, in this case too, the new sample is selected to maximize a score that also takes into account the minimum distance with previous samples to ensure space filling. Figure 3b graphically illustrates the process for a generic reduced element, denoted by \({u}_{r,i}\), which depends on a single input parameter, \(\mu\). Step 2 demonstrates how, during the LOOCV, the removal of a sample alters the interpolated function. 
In the figure, the sample (\({N}_{S}\)) has the most substantial influence on the interpolation error; hence, the additional point, denoted by \({\mu }^{d}\), is sampled in the red area surrounding the sample (\({N}_{S}\)). In the third step, a more accurate approximation of \({u}_{r,i}\) is obtained, which was evidently not well approximated earlier.
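To make the BI sensitivity analysis concrete, the following is a minimal NumPy sketch of step 2 of Fig. 3a, not the authors' MATLAB implementation. It assumes that the reduced basis is obtained by SVD of the snapshot matrix (as described in Sect. 4.1), compares the i-th mode before and after each removal by index (which can fail when two singular values are nearly equal), takes the absolute value of the dot product to discard the arbitrary sign of singular vectors, and aggregates the rotations over modes with a simple maximum. The function names and this aggregation are hypothetical, and the space-filling score used to place \(\boldsymbol\mu^d\) is not reproduced.

```python
import numpy as np


def pod_modes(Y, R):
    """First R POD modes (left singular vectors) of the N_O x N_S snapshot matrix Y."""
    U, _, _ = np.linalg.svd(Y, full_matrices=False)
    return U[:, :R]


def mode_angle_deg(psi_a, psi_b):
    """Angle in degrees between two unit-norm modes; abs() discards the arbitrary SVD sign."""
    c = abs(float(np.dot(psi_a, psi_b)))
    return float(np.degrees(np.arccos(min(c, 1.0))))


def bi_loocv_rotations(Y, R):
    """Rotation theta^{-j} of each of the first R modes when sample j is left out (N_S x R)."""
    n_s = Y.shape[1]
    Psi = pod_modes(Y, R)
    angles = np.zeros((n_s, R))
    for j in range(n_s):
        # Leave sample j out and rebuild the reduced basis from the remaining snapshots
        Psi_mj = pod_modes(np.delete(Y, j, axis=1), R)
        for i in range(R):
            # Mode i is compared with mode i: assumes the mode ordering does not swap
            angles[j, i] = mode_angle_deg(Psi[:, i], Psi_mj[:, i])
    return angles


def most_influential_sample(Y, R):
    """Index of the sample whose removal rotates the basis most (max over modes, hypothetical)."""
    return int(np.argmax(bi_loocv_rotations(Y, R).max(axis=1)))
```

The candidate \(\boldsymbol\mu^d\) would then be placed in the neighbourhood of the returned sample. Note that `R` must not exceed \(N_S-1\), since each leave-one-out basis is built from \(N_S-1\) snapshots.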

Schematic representation of a adaptive sampling for basis improvement (BI) and b adaptive sampling for interpolation improvement (II). The superscript -j indicates that sample \(\boldsymbol\mu^j\) has been removed. The area where the additional point will be sampled is highlighted in red. c Pseudo code for the adaptive sampling procedure: switching criterion between BI and II

Appendix A (Sects. A.1 and A.2) details the mathematical formulation of both techniques, following [39].
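The II sensitivity of step 2 in Fig. 3b can be sketched in the same spirit. The brute-force NumPy fragment below refits the interpolant once per left-out sample for a single reduced element \(u_{r,i}\); it assumes a Gaussian RBF written as \(\exp(-(s\,r)^2)\) with a hypothetical, fixed shape factor `s`, and the function names are illustrative rather than taken from the formulation in Appendix A.

```python
import numpy as np


def gaussian(r, s):
    """Gaussian RBF with shape factor s (one of several conventions in use)."""
    return np.exp(-(s * r) ** 2)


def dist(X, Z):
    """Pairwise Euclidean distances between the rows of X (n x N_I) and Z (m x N_I)."""
    return np.linalg.norm(X[:, None, :] - Z[None, :, :], axis=-1)


def rbf_predict(mu_tr, u_tr, mu_ev, s):
    """Fit an RBF interpolant to (mu_tr, u_tr) and evaluate it at mu_ev."""
    w = np.linalg.solve(gaussian(dist(mu_tr, mu_tr), s), u_tr)
    return gaussian(dist(mu_ev, mu_tr), s) @ w


def ii_loocv_errors(mu, u_ri, s):
    """|u_ri(mu^j) - value of the interpolant built without sample j| for every sample j."""
    n_s = mu.shape[0]
    errs = np.empty(n_s)
    for j in range(n_s):
        keep = np.arange(n_s) != j          # leave sample j out
        pred = rbf_predict(mu[keep], u_ri[keep], mu[j:j + 1], s)
        errs[j] = abs(u_ri[j] - pred[0])
    return errs
```

The sample with the largest entry of `ii_loocv_errors` plays the role of sample (\(N_S\)) in Fig. 3b. Refitting \(N_S\) times is wasteful; Sect. 3.3 relies on Rippa's formula [42] to obtain the same errors without refitting.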

As anticipated, this study introduces a novel adaptive method. Combining the two complementary techniques in a fully automated manner, the new adaptive procedure incorporates a switching criterion between BI and II to further improve the efficiency of the sampling process. The strategy is grounded on the stabilization of the modes' rotation. As shown in Fig. 3a, step 2, adding new points in the field space can alter the orientation of the modes. The rotation, \({\vartheta }_{i}\), of the i-th mode, \({\boldsymbol\psi}_i\), due to the addition of the new sample, \(\boldsymbol\mu^d\), at the k-th iteration of the iterative OFF-line stage, can be expressed as

\[{\vartheta }_{i}=\arccos\left(\boldsymbol\psi_i^{(k-1)}\bullet\boldsymbol\psi_i^{(k)}\right)\]

Here, the dot product is denoted by (\(\bullet\)). These values can be tracked throughout the iterative process. Substantial rotations indicate that the spatial modes have not yet stabilized; in that case, the BI technique is the most appropriate for adaptive sampling, because it steers the sampling towards zones of the input space that most affect the rotation of the modes. Note that, if the reduced basis is not stabilized, the II approach may be sub-optimal, since the reduced variables are not stabilized either: they are the projections of the snapshots onto the reduced basis (Eq. (3)). Owing to the modes' rotations, the non-linearities may therefore shift to other locations in later iterations, nullifying the samples obtained with II. Once the reduced basis stabilizes, however, the BI technique becomes less useful because, based on the available information, no unexplored regions of the output space remain. At this point, the adaptive procedure should switch to the II strategy and add new samples to enhance the interpolation of the ROM coefficients, \({u}_{r,i}\). Nevertheless, the new samples may reveal areas of the output space that were previously inaccessible due to insufficient information, so that higher rotations are recorded once again. In that case, the BI technique should be reintroduced in place of II.

The proposed switching criterion is based on this logic and is implemented by Pseudo Algorithm 1 (Fig. 3c). It relies on a threshold rotation, \({\vartheta }_{Th}\), of the monitored modes: if the first \({R}_{BI}\le R\) modes, i.e. the most informative ones, are stabilized, meaning that their observed rotation has remained below the threshold for three consecutive iterations, the II technique replaces BI; otherwise, the procedure switches back to BI. In this manner, the procedure automatically alternates between BI and II based on the stabilization of the first \({R}_{BI}\) modes, where \({R}_{BI}\) is user-defined; a sensitivity analysis of this parameter is reported in Appendix A.3. The threshold value, \({\vartheta }_{Th}\), is set to 20° in this study. With a smaller value, the procedure might focus on restricted zones of the field space, whereas allowing larger rotations might let the reduced solutions vary excessively, making the samples obtained with the II technique less effective.
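The switching rule can be sketched as a small function operating on the history of these rotations. This is a hedged reading of Pseudo Algorithm 1 (Fig. 3c), which is not reproduced here: it assumes that "stabilized" means every one of the first \(R_{BI}\) modes rotated less than \(\vartheta_{Th}\) in each of the last three iterations, and that BI is used by default until three iterations are available. The defaults `theta_th=20.0` and `n_stable=3` mirror the values stated above; the function name and data layout are hypothetical.

```python
def choose_strategy(rotation_history, theta_th=20.0, n_stable=3):
    """Return "II" if the first R_BI modes rotated less than theta_th (degrees) in each of
    the last n_stable iterations, otherwise "BI".

    rotation_history: one entry per completed iteration, each a sequence holding the
    rotations of the first R_BI modes at that iteration.
    """
    if len(rotation_history) < n_stable:
        return "BI"  # not enough history yet: keep improving the basis
    recent = rotation_history[-n_stable:]
    stable = all(max(rotations) < theta_th for rotations in recent)
    return "II" if stable else "BI"
```

Because the decision is re-evaluated at every iteration, a single large rotation after some II iterations sends the procedure back to BI, which is the alternating behaviour described above.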

This study therefore contributes an automatic switching strategy that alternates between the II and BI techniques based on the stabilization of the modes' rotation, improving the efficiency and effectiveness of the adaptive sampling process and, in turn, the accuracy that the ROM can reach with a given number of samples.

3.3 CV-based optimized radial basis function interpolation procedure

The framework presented in Sect. 3.1 involves the dimensionality reduction of the previously generated snapshots (step 5 of Fig. 2), after which the reduced dataset is interpolated in the hybrid space (step 6). These steps are repeated iteratively. At the end of the iterative procedure, an optimization stage for the interpolation is introduced (step 8). This cross-validation-based optimization refines the interpolation by fine-tuning its hyper-parameters, which, in this work, are the interpolation kernel functions, namely the radial basis functions (RBFs), and their shape factors.

RBFs are often combined with ROMs to interpolate reduced datasets [28, 29], owing to their low implementation effort and computational efficiency. RBF interpolation is a multidimensional method that works with scattered data in parameter spaces of any dimension and with adjustable smoothness [27]. The mathematical formulation of the interpolation is reported in Eq. (5):

\[{\boldsymbol A}_{Snap}=\boldsymbol B\,\boldsymbol G\tag{5}\]

where \({\boldsymbol A}_{Snap}\) is the amplitude matrix defined in Eq. (3), \(\boldsymbol B\in\mathbb{R}^{R\times N_S}\) is the matrix gathering the unknown interpolation coefficients, and \(\boldsymbol G\in\mathbb{R}^{N_S\times N_S}\) is the (symmetric) matrix of interpolation functions whose generic element is \(\boldsymbol G\left(k,j\right)=f\left({\vert\vert\boldsymbol\mu^{\left(j\right)}-\boldsymbol\mu^{\left(k\right)}\vert\vert},\sigma\right)\), where \(f\) and \(\sigma\) are the arbitrary RBF and shape factor, respectively. Frequently used RBFs are reported in Table 1.
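As an illustration of Eq. (5), the following NumPy sketch builds \(\boldsymbol G\) from the pairwise distances between the \(N_S\) samples and solves \(\boldsymbol A_{Snap}=\boldsymbol B\boldsymbol G\) for \(\boldsymbol B\). The kernel list is a common selection and not necessarily the content of Table 1; the shape factor is applied as \(f(s\,r)\), which is only one of several conventions in use; and no polynomial term is appended, which is why kernels such as the cubic spline are left out (they generally need one to guarantee a solvable system). Function names are hypothetical.

```python
import numpy as np

# A few commonly used kernels; s is the shape factor (ignored by the linear kernel)
RBFS = {
    "gaussian": lambda r, s: np.exp(-(s * r) ** 2),
    "multiquadric": lambda r, s: np.sqrt(1.0 + (s * r) ** 2),
    "inverse_multiquadric": lambda r, s: 1.0 / np.sqrt(1.0 + (s * r) ** 2),
    "linear": lambda r, s: r,
}


def distance_matrix(X, Z):
    """Pairwise Euclidean distances between the rows of X and Z."""
    return np.linalg.norm(X[:, None, :] - Z[None, :, :], axis=-1)


def fit_rbf(mu, A_snap, kind="gaussian", sigma=1.0):
    """Solve A_snap = B G for B. mu: N_S x N_I samples, A_snap: R x N_S reduced dataset."""
    G = RBFS[kind](distance_matrix(mu, mu), sigma)
    # G is symmetric, so B G = A_snap  <=>  G B^T = A_snap^T
    return np.linalg.solve(G, A_snap.T).T


def predict_rbf(mu, B, mu_new, kind="gaussian", sigma=1.0):
    """Reduced coefficients at new inputs (R x N_new): column m is B g(mu_new[m])."""
    g = RBFS[kind](distance_matrix(mu, mu_new), sigma)  # N_S x N_new kernel values
    return B @ g
```

Solving the transposed system avoids forming \(\boldsymbol G^{-1}\) explicitly; a prediction at a new input \(\boldsymbol\mu^*\) is then \(\boldsymbol B\,\boldsymbol g(\boldsymbol\mu^*)\), with \(\boldsymbol g\) collecting the kernel values between \(\boldsymbol\mu^*\) and the samples.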

Note that the rows of \({\boldsymbol A}_{Snap}\) have a meaningful interpretation: the \(\text{i-th}\) row is the set of \({N}_{S}\) points of the reduced element \({u}_{r,i}\) in the hybrid space \(\left(u_{r,i},\boldsymbol\mu\right)\). Formulation (5) may therefore lead to sub-optimal results, because all the reduced elements are interpolated with the same kernel and shape factor. In most papers, all the elements, \({u}_{r,i}\), are interpolated with the same RBF, and the shape factor is either set according to formulas and suggestions found in the literature [28], avoided by choosing a shape-factor-free RBF (linear [29] or cubic [25] spline), or not reported at all [21].

Herein, we propose a slightly different formulation in which each reduced element is interpolated individually in the hybrid space:

\[{\boldsymbol A}_{Snap}\left(i,:\right)={\boldsymbol b}_i\,{\boldsymbol G}_i,\quad i=1,\dots,R\tag{6}\]

where \({\boldsymbol b}_i\in\mathbb{R}^{1\times N_S}\) is the vector of unknown interpolation coefficients for the \(\text{i-th}\) reduced element and \({\boldsymbol G}_i\in\mathbb{R}^{N_S\times N_S}\) has the same meaning as above. In this way, a different RBF, with its own shape factor, i.e. \({\boldsymbol G}_i\left(k,j\right)=f_i\left({\vert\vert\boldsymbol\mu^{\left(j\right)}-\boldsymbol\mu^{\left(k\right)}\vert\vert},\sigma_i\right)\), can be used for each row of \({\boldsymbol A}_{Snap}\), independently of the others.

To choose the optimal RBF settings for each reduced element \({u}_{r,i}\), the procedure shown in Fig. 4 has been implemented. For each reduced element (step 1), the strategy uses CV (step 2) to compute the predictor error function, \(CV\ Error\) (step 3), i.e. the \({L}_{2}\)-norm of the vector of CV errors, which serves as a proxy for the interpolation error. It then selects the kernel configuration that minimizes this error (step 4). Several predictors may be derived depending on the CV technique; in this paper, LOOCV is used because the error vector can be computed cost-efficiently with Rippa's formula [42].
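A compact sketch of the per-element selection of Fig. 4 follows. It uses Rippa's formula [42], by which the LOOCV error at sample \(k\) of an interpolant with coefficients \(\boldsymbol c=\boldsymbol G^{-1}\boldsymbol y\) is \(e_k=c_k/(\boldsymbol G^{-1})_{kk}\), so that the whole error vector costs one matrix inversion instead of \(N_S\) refits. The candidate kernels, the \(f(s\,r)\) shape-factor convention, the grid search over shape factors, and the function names are assumptions for illustration; the optimizer actually used in this study is not specified here.

```python
import numpy as np

RBFS = {
    "gaussian": lambda r, s: np.exp(-(s * r) ** 2),
    "multiquadric": lambda r, s: np.sqrt(1.0 + (s * r) ** 2),
    "inverse_multiquadric": lambda r, s: 1.0 / np.sqrt(1.0 + (s * r) ** 2),
}


def distance_matrix(X, Z):
    """Pairwise Euclidean distances between the rows of X and Z."""
    return np.linalg.norm(X[:, None, :] - Z[None, :, :], axis=-1)


def rippa_loocv_errors(G, y):
    """LOOCV errors y_k - s^(k)(mu_k) without refitting (Rippa): e_k = c_k / (G^-1)_kk."""
    G_inv = np.linalg.inv(G)
    c = G_inv @ y
    return c / np.diag(G_inv)


def select_rbf_per_element(mu, A_snap, shape_factors):
    """For each reduced element (row of A_snap), return (CV error, kernel, shape factor)
    minimizing the L2-norm of the LOOCV error vector."""
    D = distance_matrix(mu, mu)
    choices = []
    for a_i in A_snap:
        best = None
        for kind, f in RBFS.items():
            for s in shape_factors:
                err = float(np.linalg.norm(rippa_loocv_errors(f(D, s), a_i)))
                if best is None or err < best[0]:
                    best = (err, kind, s)
        choices.append(best)
    return choices
```

Each entry of the returned list holds the CV error, the kernel, and the shape factor chosen for one reduced element, i.e. the pair \((f_i,\sigma_i)\) that defines \(\boldsymbol G_i\). An explicit inverse is acceptable at the small \(N_S\) typical of ROM training, but it loses accuracy for very flat kernels, for which \(\boldsymbol G\) is ill-conditioned.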

4 Case study

4.1 Implementation

The niROM technique implemented in this paper relies on the POD [26] to extract the modes needed for the dimensionality reduction. The POD method was chosen for its ease of implementation and accuracy of results, which make it particularly suitable for our purpose compared with other techniques. With the POD method, the extraction of orthogonal modes is delegated to the singular value decomposition (SVD) of the snapshot matrix \({\boldsymbol Y}_{Snap}=\boldsymbol U\boldsymbol\Sigma\boldsymbol V^T\), and the reduced basis is constructed by extracting the first \(R\) left singular vectors, \(\boldsymbol\Psi=\boldsymbol U\left(:,1:R\right)\), named the POD modes of the system, based on the decay of the singular values. Note that the POD method can also be used in an intrusive fashion, in combination with the Galerkin projection of the constitutive equations [22]. Recently, Russo et al. [25] applied the POD-Galerkin method to FE-based variation simulation of sheet-metal assembly systems.
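The POD step can be sketched in a few lines of NumPy. The truncation rule below, i.e. the smallest \(R\) whose cumulative squared singular values reach a prescribed fraction of the total energy, is one common way of basing \(R\) on the decay of the singular values and is an assumption here, as are the function names; the projection and reconstruction follow the description of the reduced variables as projections of the snapshots onto the reduced basis, recombined linearly to approximate a solution.

```python
import numpy as np


def pod_basis(Y_snap, energy=0.95):
    """Reduced basis Psi = U[:, :R], with R the smallest number of modes whose cumulative
    squared singular values reach the requested energy fraction (assumed criterion)."""
    U, S, _ = np.linalg.svd(Y_snap, full_matrices=False)
    cum = np.cumsum(S ** 2) / np.sum(S ** 2)
    R = int(np.searchsorted(cum, energy)) + 1   # first index with cum >= energy, plus one
    return U[:, :R], S


def project(Psi, Y_snap):
    """Amplitude matrix: reduced coefficients of each snapshot (R x N_S)."""
    return Psi.T @ Y_snap


def reconstruct(Psi, u_r):
    """Full-order approximation as a linear combination of the modes."""
    return Psi @ u_r
```

In this sketch, raising `energy` towards 1 retains more modes; the 95% information level quoted for case study 1 would correspond to `energy=0.95` only if the squared-singular-value definition matches the one used in this study.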

The entire framework was implemented in MATLAB®, and the physics-based variation simulations were computed with the in-house code based on the variation response method (VRM) [15, 43]. The analyses were run on a laptop with 12 GB of RAM and a quad-core CPU with a maximum turbo frequency of 3.60 GHz. Two case studies were used to test the methodology: variation simulation of (1) a remote laser welding process (Sects. 4.2 and 4.3) and (2) the assembly process of a portion of an aircraft vertical stabilizer (Sects. 4.4 and 4.5).

A testing set of \({N}_{new}\) new parameter instances was used to test the ROM surrogate in both cases. Performance was quantified by two indicators: (1) the mean normalized root mean square error (NRMSE, Eq. (7)) between the true (FOM) and approximated (ROM) solutions, \({\boldsymbol u}_{f,true}\) and \({\boldsymbol u}_{f,apprx}\), respectively, averaged over the testing set; and (2) the speedup ratio, which measures the computational efficiency as the ratio between the times taken by the full-order model and by the ROM to solve the testing set:

\[\text{Speedup}=\frac{t_{FOM}}{t_{ROM}}\tag{8}\]
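The two indicators can be computed as follows. The normalization of each RMSE by the range of the true solution is an assumption made for this sketch, since other NRMSE definitions normalize by the mean or by the norm of the solution; the speedup is the ratio of the full-order to the reduced-order solution times for the whole testing set. Function names are hypothetical.

```python
import numpy as np


def nrmse(u_true, u_apprx):
    """NRMSE (%) of one test instance, normalized by the range of the true solution (assumed)."""
    rmse = np.sqrt(np.mean((u_true - u_apprx) ** 2))
    return 100.0 * rmse / (np.max(u_true) - np.min(u_true))


def mean_nrmse(U_true, U_apprx):
    """Average NRMSE over the N_new test instances stored as columns of N_O x N_new arrays."""
    return float(np.mean([nrmse(U_true[:, m], U_apprx[:, m]) for m in range(U_true.shape[1])]))


def speedup(t_fom, t_rom):
    """Ratio between the FOM and ROM times needed to solve the same testing set."""
    return t_fom / t_rom
```

With the timings of Sect. 4.3, `speedup(4950.0, 0.03)` gives approximately 165,000, and with those of Sect. 4.5, `speedup(7550.0, 0.12)` gives about 62,917.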

4.2 Description of case study 1: analysis of the thermal history during a laser welding operation

Following step 1 of Fig. 2, this section defines both the input and output spaces of the first test case.

The case study (Fig. 5a) investigates the thermal history of an aluminium sheet during a laser welding operation. It is motivated by the need to model and predict the mechanical distortions, and the related assembly non-conformities, induced by thermal loads such as laser welding operations. The sheet is clamped by two steel blocks acting as heat sinks [44]. The full process is shown in Fig. 5b at four different time steps: the laser beam moves along the y-direction to generate a straight weld seam, and the operation ends with a 0.2-s cooling phase. The model is discretized with an unstructured 2D grid, with a denser mesh near the welding zone, resulting in a dimensionality of the (output) full-order space of \({N}_{O} = 3464\).

The input space comprises \({N}_{I}=2\) variable parameters (Table 2), namely the y-position of heat sink 2 and the power of the laser beam. The output is the temperature field, \(\boldsymbol T\), of the plate at the end of the welding process, i.e. \({\boldsymbol u}_f=\boldsymbol T\left(t_{aftercooling}\right)\in\mathbb{R}^{N_O}\). The thermal conduction problem, combined with the convective heat exchange with the air at a temperature \({T}_{air}=293\,K\), is solved by the VRM-FEM thermal solver based on the backward Euler method with a fixed time step of \(0.05\,s\). The full model therefore has to be solved at each time step, generating as many solutions as there are steps. However, in this study, only the solution at the end of the cooling phase is collected as a snapshot for each instance of the process parameters. If a reduced model approximating the temperature field during the entire welding process were needed, time could be treated as an additional variable parameter and all the solutions at the various time steps collected as snapshots, but this is beyond the purpose of this study. For further information, see [21, 22, 31].

4.3 Results of case study 1

Settings of the OFF-line iterative training are reported in Table 3, where \({R}_{BI}\) is the number of modes to be stabilized by the basis improvement adaptive strategy, described in Sect. 3.2 and detailed in Appendix A.2. The sensitivity analysis of the ROM surrogate to the hyper-parameter \({R}_{BI}\) is reported in Appendix A.3 to keep the focus of this section on its main objective. The performance metrics (Eqs. (7) and (8)) are computed on a testing set of high-fidelity solutions for a \(15\times 15\) sample grid \(\left({N}_{new}=225\right)\) of the input space.

The following results show that the accuracy of the ROM hinges on three crucial elements: (i) the number of extracted modes, which define the reduced space onto which the snapshots are projected; (ii) the number and position of the sample/snapshot (i.e. input/output) pairs; and (iii) the interpolation applied to the dataset once projected into the reduced space.

Regarding point (i), Fig. 6 illustrates the impact of the number, \(R\), of extracted modes on the assessed ROM surrogate (at the end of the iterative OFF-line stage). The mean error reaches a plateau after only a few modes are added; notably, more than 95% of the information of the system is captured by retaining only ten modes. This is explained by the trend of the singular values associated with the various modes, which confirms that the first modes represent the most important patterns hidden in the field space. Consequently, the corresponding ROM coefficients exhibit large variability ranges (bottom of Fig. 6, corresponding to step 10 of Fig. 2), reflecting the large contribution of these modes to the linear combination (Eq. (4)) used to approximate uncomputed solutions. Conversely, the less important modes are associated with ROM variables with smaller variability ranges, which carry progressively smaller weights in the linear combination.

Impact of the no. of extracted modes, R, on the ROM surrogate. Top: Sensitivity to R of singular values and mean NRMSE. Middle: Some extracted modes. Bottom (corresponding to step 10 of Fig. 2): Some reduced variables (related to the shown modes)

Regarding point (ii), the next results focus on the sampling strategy used to guide the simulation runs. The proposed iterative procedure is compared here with two more classic, one-shot approaches to training the ROM surrogate, i.e. random and LHS sampling (50 samples in total), with shape-factor-free RBF interpolation. Figure 7 reports the first comparison in terms of sample distribution in the input space and the resulting approximation errors. As for the sample distribution (Fig. 7a), the random technique is clearly affected by over- and under-sampling. This phenomenon is partially mitigated by the pseudo-random LHS technique, but the sample positions are still not optimal. The iterative strategy proposed here, instead, relies on adaptive techniques that automatically detect where to sample new points, resulting in a point distribution within the input space that is not random but guided by the specific problem. Consequently, the new runs are used more efficiently and, as clearly depicted in Fig. 7b, the iterative ROM reaches the accuracy of the random and LHS strategies with only 28 and 32 samples, respectively. Furthermore, with the same total number of samples (50), the iterative approach yields a much narrower band of errors than the other two (Fig. 7c), reaching a mean NRMSE of 1.106%, an improvement of 43% and 28% with respect to the random and LHS strategies, respectively.
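For reference, the one-shot baselines can be reproduced in a few lines. The NumPy sketch below draws \(n\) Latin hypercube samples by splitting each parameter range into \(n\) equal strata and hitting each stratum exactly once, with a random position inside it, alongside plain uniform random sampling. The function names and the bounds in the usage example are hypothetical placeholders rather than the ranges of Table 2, and library implementations (e.g. MATLAB's `lhsdesign`) may add refinements such as maximin optimization.

```python
import numpy as np


def latin_hypercube(n, bounds, rng=None):
    """n LHS samples in the box given by bounds (N_I x 2 array of [low, high])."""
    rng = np.random.default_rng(rng)
    bounds = np.asarray(bounds, dtype=float)
    d = bounds.shape[0]
    # One random permutation of the n strata per parameter ...
    strata = np.column_stack([rng.permutation(n) for _ in range(d)])
    # ... plus a uniform jitter inside each stratum, mapped to [0, 1)
    unit = (strata + rng.random((n, d))) / n
    return bounds[:, 0] + unit * (bounds[:, 1] - bounds[:, 0])


def random_sampling(n, bounds, rng=None):
    """Plain uniform random sampling over the same box, for comparison."""
    rng = np.random.default_rng(rng)
    bounds = np.asarray(bounds, dtype=float)
    return bounds[:, 0] + rng.random((n, bounds.shape[0])) * (bounds[:, 1] - bounds[:, 0])
```

For instance, `latin_hypercube(50, [[0.0, 10.0], [500.0, 1500.0]], rng=0)` returns a 50 × 2 array in which each column visits every one of the 50 strata exactly once; this stratification limits, but does not remove, the clustering that affects plain random sampling.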

As for the interpolation (iii), Fig. 8 reports the impact of the CV-based optimization procedure for interpolation, highlighting the differences in mean NRMSE with respect to shape-factor-free RBFs for the three sampling strategies. The best results are obtained with the proposed methodology (Fig. 2); the automatically chosen RBFs, along with their optimized shape factors, are reported for the first 10 ROM variables. These results confirm that the procedure presented in Sect. 3.3 is a useful tool to automatically boost the predictive capabilities compared with the faster and more straightforward interpolation with linear or cubic splines, which needs no fine-tuning. For completeness, Fig. 9 displays a further comparison between the iterative procedure and the two one-shot techniques: it shows the thermal fields obtained for two test samples for which the proposed methodology strongly outperforms the other two, both in terms of overall error and fidelity in reconstructing the patterns.

This section concludes with a consideration of the computational time needed to run the 225 testing solutions: the full model took approximately 4950 s, whereas the reduced model required only 0.03 s, i.e. a speedup ratio of 165,000. This outcome was expected given the non-intrusive nature of the methodology: once trained, the ROM only evaluates the interpolants and a linear combination of modes. The price to pay is the snapshot generation, as discussed in Sect. 4.6.

4.4 Description of case study 2: aircraft vertical stabilizer

The second test case, depicted in Fig. 10, models the assembly process of a portion of the vertical stabilizer of a commercial aircraft [25]. Compared with the first, this case study is intended to show the applicability of the methodology to (i) a different branch of physics, (ii) a multi-stage process, and (iii) a higher number of parameters. The adopted material is aluminium (Young's modulus 70 GPa, Poisson's ratio 0.3), and the FEM model is discretized with \(\mathrm{20,497}\) shell elements (6 dofs/node) and \({N}_{O}=\mathrm{128,644}\) degrees of freedom (Fig. 10b). For a given instance of the input parameters, the components are assembled following the three-stage process illustrated in Fig. 11. Stage (1): positioning of the rigid parts (rib, rib-posts, and clips), with the rib-posts placed in a non-nominal position. Stage (2): positioning of the flexible parts (left- and right-hand panels). The skin panels are non-ideal, and their shape errors are generated within the VRM variational engine by the Morphing Mesh Procedure (MMP, originally developed in [45]), in which the FE mesh is morphed according to the deviation of some points (named control points), producing a smooth local deformation of the surface within an influence hull (Fig. 12a). Stage (3): simulation of the contact interaction between parts.

Modelling of input deviations: a Morphing Mesh Procedure (MMP, [45]) for modelling the shape error of the skins and b positioning error of rib-posts

Table 4 reports the input parameters (\({N}_{I}=5\)), while the output performance indicator is the deformation field (along the x, y, and z axes) at each node of the full model, i.e. \(\boldsymbol u={\boldsymbol u}_f\in\mathbb{R}^{N_O}\). Note that the variable parameters concern both product variations (shape errors of the skins, modelled through control point deviations) and process variations (rib-post rotations).

4.5 Results of case study 2

Settings of the OFF-line stage are reported in Table 5. The testing set consists of \({N}_{new}=200\) new parameter instances generated with LHS, and the performance is quantified by the two indicators defined in Eqs. (7) and (8).

As for case study 1, Fig. 13 shows the impact of the number, \(R\), of extracted modes on the assessed ROM surrogate (at the end of the iterative OFF-line stage). Consistent with the previous findings, only a limited number of significant modes is needed to reach the plateau of the ROM approximation error. However, in this case, at least R = 20 modes are required to capture 90% of the information within the field space and to reach the error plateau, which is a crucial difference between the two case studies. Indeed, the variability ranges of the ROM coefficients (i.e. the weights of the modes in the linear combination of Eq. (4)), displayed at the bottom of Fig. 13, decrease more slowly than in the first test case (Fig. 6). This behaviour is linked to the higher number of input parameters and their more pronounced non-linear effect on the output solution.

In this context, the proposed iterative training proves particularly impactful, as it optimizes the sample positions within the higher-dimensional input space. This is confirmed by the results obtained with the proposed adaptive sampling-based ROM, which outperform those achieved with the one-shot LHS, as illustrated in Figs. 13 and 14. Specifically, Fig. 14 presents the y-displacement map for two distinct test samples, clearly showing the superiority of the proposed approach over the LHS method in terms of both the overall error and the accuracy of the deformation pattern reconstruction. Furthermore, Fig. 15 shows the sensitivity of the mean NRMSE to the number of variable parameters, indicating that the adaptive sampling preserves its effectiveness as the number of variable parameters increases.

Finally, as in case study 1, the reduced model provides a real-time response: solving the 200 testing instances took approximately 0.12 s, whereas the full model took 7550 s, resulting in a speedup ratio of about 62,917.

4.6 Discussion about the computational cost of the OFF-line training

The findings confirm that the ROM accuracy is strictly related to the number of pre-computed solutions, while only a few modes are necessary to embed the main patterns hidden in the snapshot matrix (Figs. 6 and 13). The information gathered in the reduced basis and the interpolation quality can be improved by increasing the number of points and optimizing their position in the parameter space. That said, the adaptive techniques adopted here improved the capability of the reduced model while adding less than 1% to the computational cost. However, while the time required by the II method remains nearly constant as the number of samples increases, the BI method may become burdensome (Fig. 16), which limits its practical applicability to the early training stage. Indeed, the technique needs to perform \({N}_{S}\) SVDs of an \({N}_{S}\times {N}_{S}\) matrix (see [39] for further information), and the computational burden grows rapidly beyond a few hundred samples. Nevertheless, the use of the BI strategy is automatically limited to the first stage of the iterative training, i.e. during the stabilization of the \({R}_{BI}\) modes.
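One plausible reading of this cost statement is the method of snapshots: the POD modes can be obtained from the \(N_S\times N_S\) correlation matrix \(\boldsymbol Y_{Snap}^T\boldsymbol Y_{Snap}\) instead of the much larger snapshot matrix, and the BI leave-one-out loop repeats such a decomposition once per sample. Whether [39] uses exactly this formulation is an assumption; the NumPy sketch below, whose function name is hypothetical, only shows that the two routes yield the same modes up to sign.

```python
import numpy as np


def pod_modes_via_snapshots(Y, R):
    """First R POD modes of Y (N_O x N_S) from the N_S x N_S correlation matrix Y^T Y."""
    C = Y.T @ Y                            # small N_S x N_S matrix
    lam, V = np.linalg.eigh(C)             # eigenvalues in ascending order
    order = np.argsort(lam)[::-1][:R]      # indices of the R largest eigenvalues
    sigma = np.sqrt(lam[order])            # singular values of Y (assumes R <= rank(Y))
    modes = (Y @ V[:, order]) / sigma      # psi_i = Y v_i / sigma_i, unit norm
    return modes, sigma
```

Each decomposition of an \(N_S\times N_S\) matrix costs \(O(N_S^3)\) operations, so the \(N_S\) decompositions of one BI step scale as \(O(N_S^4)\): doubling the number of samples makes a BI step roughly sixteen times more expensive, which is consistent with the rapid growth described above.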

Regarding the computational costs, the speedup ratio achieved in both case studies is extremely high, but the training process is the price to pay to obtain real-time simulations. Although ROMs can be beneficial, the time and effort required to train them might not always be worthwhile, particularly if achieving the desired accuracy requires a large number of simulations: if too many training simulations are needed, the efficiency gained by using the ROM could be compromised. Nevertheless, VS-based techniques usually require a huge number of runs, especially when stochastic variabilities are accounted for to ensure design robustness. In these scenarios, it is still beneficial to perform dozens of simulations to train the ROM and then exploit it as a real-time solver. This concept is illustrated in Fig. 17, where the lines representing the time taken by the ROM have a negligible slope because, once trained, the ROM provides real-time results. Finally, we strongly recommend the proposed ROM approach whenever dealing with costly physics-based simulations and many parameters, regardless of the software used as the physics engine. Unlike the approaches proposed in the literature, the combination of adaptive sampling and optimum interpolation proposed here has proven useful for automatically increasing the accuracy of the ROM while keeping the number of training samples limited, and it disengages the user from the choice of the interpolation settings.
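A rough break-even estimate makes the trade-off of Fig. 17 explicit. Assuming that every training run costs as much as a full-order test run, \(t_{FOM}\), that each ROM evaluation costs \(t_{ROM}\), and neglecting the overhead of the adaptive sampling and of the interpolation optimization (below 1%, as noted above), training on \(N_S\) snapshots pays off after \(N_{eval}\) evaluations when

\[
N_S\,t_{FOM}+N_{eval}\,t_{ROM}<N_{eval}\,t_{FOM}\quad\Longleftrightarrow\quad N_{eval}>\frac{N_S\,t_{FOM}}{t_{FOM}-t_{ROM}}\approx N_S\qquad\left(t_{ROM}\ll t_{FOM}\right)
\]

With the per-run times implied by case study 1, \(t_{FOM}\approx 4950/225=22\) s and \(t_{ROM}\approx 0.03/225\approx 1.3\times10^{-4}\) s, and \(N_S=50\), the threshold is \(N_{eval}>1100/21.99987\approx 50.0003\): from about 51 evaluations onwards, the trained ROM is cheaper than the full model. This is an illustrative estimate under the stated assumptions.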

5 Conclusions

This work has addressed the challenge of achieving real-time physics-based modelling for the variation simulation of sheet-metal parts. The proposed methodology embraces a non-intrusive reduced-order model (niROM)–based iterative procedure, empowered by two complementary adaptive techniques. These techniques guide the sampling process with the aim of improving both the interpolation in the reduced space (interpolation improvement, II) and the reduced basis (basis improvement, BI). The interpolation approach automatically selects the optimum RBF and shape factor for each reduced element individually. The methodology was tested on two case studies: a thermal analysis of a remote laser welding operation with two input parameters (mean normalized root mean square error below 1.11%) and the assembly process of an aircraft vertical stabilizer with five input parameters (mean normalized root mean square error below 6.1%), reaching real-time results in both cases. The overall outcomes indicate that the accuracy of the ROM is dictated by the sampling strategy adopted to determine the number and position of the samples in the parameter space, as well as by the number of input parameters. In this perspective, the adaptive strategies have proven extremely useful to automatically detect the most influential zones of the parameter space, computing new snapshots until the desired degree of accuracy is reached (computed with reference to a testing set). The effectiveness of the adaptive sampling procedure has been demonstrated through comparisons with strategies that rely on a priori (one-shot) sampling, such as random and Latin hypercube sampling, with up to a 43% improvement in the accuracy of the ROM surrogate. In addition, the proposed cross-validation-based optimization of the RBF interpolation, besides improving the accuracy, disengages the user from the choice of the interpolation settings, especially the value of the shape factor.

As a final remark, the findings of this study are restricted to responses of the reduced model within the variability range of the input parameters. No attempt was made to investigate the accuracy outside these intervals (out-of-range), since interpolation methods, including RBF-based ones, are well known not to provide accurate results outside the sampling ranges. Moreover, although the methodology can be used regardless of the type and number of input parameters, it is applicable only as long as the parameters do not affect the connectivity of the nodes of the numerical mesh. Integration with adaptive/changing mesh techniques is currently under investigation.

Notwithstanding these limitations, the present study unlocks new prospects in the field of variation simulation and dimensional/quality monitoring by narrowing the gap between any advanced computer-aided engineering solver (including commercial ones) and VS models with real-time physics-based simulations. In fact, the niROM-based methodology proposed here can be applied to any application involving parametrically dependent models, regardless of the software adopted as the physics-based engine. The authors are convinced that further investigation is needed in the field of reduced-order modelling, examining hybrid approaches with physics-informed machine learning for out-of-range evaluations in real time.

Abbreviations

- \({\boldsymbol A}_{Snap}\) :

-

Amplitude matrix

- B :

-

Matrix of interpolation coefficients

- BI :

-

Basis improvement

- CAE :

-

Computer-aided engineering

- CV :

-

Cross-validation

- DC :

-

Design constraints

- DL :

-

Deep learning

- FEM :

-

Finite element analysis

- FOM: Full-order model
- G: Matrix of interpolation functions (RBF)
- II: Interpolation improvement
- iROM: Intrusive reduced-order model
- LHS: Latin hypercube sampling
- LOOCV: Leave-one-out cross-validation
- MCS: Monte Carlo simulation
- ML: Machine learning
- NRMSE: Normalized root mean square error
- \({N}_{d}\): Number of new samples
- \({N}_{O}\): Dimensionality of output space (full-order space)
- niROM: Non-intrusive reduced-order model
- \({N}_{I}\): Dimensionality of input space (number of variable parameters)
- \({N}_{S0}\): Number of starting samples/snapshots
- \({N}_{S}\): Number of samples/snapshots
- PCA: Principal component analysis
- POD: Proper orthogonal decomposition
- \(R\): Number of retained modes (dimensionality of reduced space)
- RBF: Radial basis function
- \({R}_{BI}\): Number of modes to stabilize
- RMSE: Root mean square error
- ROM: Reduced-order model
- RSM: Response surface method
- SVD: Singular value decomposition
- \(\mathbf{Y}_{snap}\): Snapshot matrix
- \(\boldsymbol u\): Output performance indicator
- \({\boldsymbol u}_f\): Solution of the full model
- \({\boldsymbol u}_r\): Solution of the reduced model
- \({u}_{r,i}\): i-th ROM variable/coefficient
- VRM: Variation response method
- VS: Variation simulation
- \(\boldsymbol\mu\): Input parameter
- \(\sigma\): Shape factor of RBF
- \(\Psi\): Reduced basis

- \({\boldsymbol\psi}_i\): i-th spatial mode

References

Bond D, Suzuki FA, Scalice RK (2020) Sheet metal joining process selector. J Braz Soc Mech Sci Eng 42. https://doi.org/10.1007/s40430-020-02310-9

Hultman H, Cedergren S, Söderberg R, Wärmefjord K (2020) Identification of variation sources for high precision fabrication in a digital twin context. In: ASME Int Mech Eng Congress Expo, Proceedings (IMECE). https://doi.org/10.1115/IMECE2020-23358

Kumar T, Kiran DV, Arora N (2021) Sheet metal joining and distortion measurement of aluminium alloy and steel in cold wire GTAW process. In: Materials Today: Proceedings. https://doi.org/10.1016/j.matpr.2020.12.038

Ceglarek D, Colledani M, Váncza J et al (2015) Rapid deployment of remote laser welding processes in automotive assembly systems. CIRP Ann 64:389–394. https://doi.org/10.1016/j.cirp.2015.04.119

Charles Liu S, Jack Hu S (1997) Variation simulation for deformable sheet metal assemblies using finite element methods. J Manuf Sci Eng, Transactions of the ASME 119. https://doi.org/10.1115/1.2831115

Tong X, Yu J, Zhang H et al (2023) Compliant assembly variation analysis of composite structures using the Monte Carlo method with consideration of stress-stiffening effects. Arch Appl Mech. https://doi.org/10.1007/s00419-023-02479-0

Liu X, An L, Wang Z, et al (2019) Assembly variation analysis of aircraft panels under part-to-part locating scheme. Int J Aerosp Eng. https://doi.org/10.1155/2019/9563596

Xu C, Luo C, Zhou Y, Zhang G (2020) Variation simulation of multi-station flexible assembly based on finite element method. In: IEEE Int. Conf. Ind. Mechatron. Autom., ICMA. https://doi.org/10.1109/ICMA49215.2020.9233551

Thiruppathi R, Selvam G, Kannan MG, et al (2021) Optimization of body-in-white weld parameters for DP590 and EDD material combination. In: SAE Technical Paper. https://doi.org/10.4271/2021-28-0215

Vavilala VS (2020) Combining high-performance hardware, cloud computing, and deep learning frameworks to accelerate physical simulations: probing the Hopfield network. Eur J Phys 41. https://doi.org/10.1088/1361-6404/ab7027

Xing YF (2017) Fixture layout design of sheet metal parts based on global optimization algorithms. J Manuf Sci Eng Transactions of the ASME 139. https://doi.org/10.1115/1.4037106

Rezaei Aderiani A, Wärmefjord K, Söderberg R, et al (2020) Optimal design of fixture layouts for compliant sheet metal assemblies. Int J Adv Manuf Technol 110. https://doi.org/10.1007/s00170-020-05954-y

Rezaei Aderiani A, Wärmefjord K, Söderberg R (2021) Evaluating different strategies to achieve the highest geometric quality in self-adjusting smart assembly lines. Robot Comput Integr Manuf 71:102164. https://doi.org/10.1016/j.rcim.2021.102164

Sinha S, Glorieux E, Franciosa P, Ceglarek D (2019) 3D convolutional neural networks to estimate assembly process parameters using 3D point-clouds. In: Stella E (ed) Multimodal sensing: technologies and applications. SPIE, pp 89–101. https://doi.org/10.1117/12.2526062

Franciosa P, Palit A, Gerbino S, Ceglarek D (2019) A novel hybrid shell element formulation (QUAD+ and TRIA+): a benchmarking and comparative study. Finite Elem Anal Des 166. https://doi.org/10.1016/j.finel.2019.103319

Yan S, Zhou Z, Dinavahi V (2018) Large-scale nonlinear device-level power electronic circuit simulation on massively parallel graphics processing architectures. IEEE Trans Power Electron 33:4660–4678. https://doi.org/10.1109/TPEL.2017.2725239

Khatouri H, Benamara T, Breitkopf P, Demange J (2022) Metamodeling techniques for CPU-intensive simulation-based design optimization: a survey. Adv Model Simul Eng Sci 9:1. https://doi.org/10.1186/s40323-022-00214-y

Li B, Shui BW, Lau KJ (2002) Fixture configuration design for sheet metal assembly with laser welding: a case study. Int J Adv Manuf Technol 19:501–509. https://doi.org/10.1007/s001700200053

Gerbino S, Franciosa P, Patalano S (2015) Parametric variational analysis of compliant sheet metal assemblies with shell elements. In: Procedia CIRP. https://doi.org/10.1016/j.procir.2015.06.077

Franciosa P, Gerbino S, Ceglarek D (2016) Fixture capability optimisation for early-stage design of assembly system with compliant parts using nested polynomial chaos expansion. In: Procedia CIRP. https://doi.org/10.1016/j.procir.2015.12.101

Georgaka S, Stabile G, Star K, et al (2020) A hybrid reduced order method for modelling turbulent heat transfer problems. Comput Fluids 208. https://doi.org/10.1016/j.compfluid.2020.104615

Pfaller MR, Cruz Varona M, Lang J, et al (2020) Using parametric model order reduction for inverse analysis of large nonlinear cardiac simulations. Int J Numer Method Biomed Eng 36. https://doi.org/10.1002/cnm.3320

Zhang L, Zhang Y, van Keulen F (2023) Topology optimization of geometrically nonlinear structures using reduced-order modeling. Comput Methods Appl Mech Eng 416:116371. https://doi.org/10.1016/j.cma.2023.116371

Lall S, Marsden JE, Glavaški S (2002) A subspace approach to balanced truncation for model reduction of nonlinear control systems. Intl J Robust Nonlinear Control 12. https://doi.org/10.1002/rnc.657

Russo MB, Greco A, Gerbino S, Franciosa P (2023) Towards real-time physics-based variation simulation of assembly systems with compliant sheet-metal parts based on reduced-order models. In: Lecture Notes in Mechanical Engineering. https://doi.org/10.1007/978-3-031-15928-2_48

Chatterjee A (2000) An introduction to the proper orthogonal decomposition. Curr Sci 78. https://www.jstor.org/stable/24103957

Buhmann MD, Levesley J (2004) Radial basis functions: theory and implementations. Math Comput 73. https://doi.org/10.1017/CBO9780511543241

Nguyen MN, Kim HG (2022) An efficient PODI method for real-time simulation of indenter contact problems using RBF interpolation and contact domain decomposition. Comput Methods Appl Mech Eng 388. https://doi.org/10.1016/j.cma.2021.114215

Samuel JS, Muggeridge AH (2022) Fast modelling of gas reservoir performance with proper orthogonal decomposition based autoencoder and radial basis function non-intrusive reduced order models. J Pet Sci Eng 211. https://doi.org/10.1016/j.petrol.2021.110011

Sun X, Pan X, Choi J il (2021) Non-intrusive framework of reduced-order modeling based on proper orthogonal decomposition and polynomial chaos expansion. J Comput Appl Math 390. https://doi.org/10.1016/j.cam.2020.113372

Li T, Pan T, Zhou X, et al (2024) Non-intrusive reduced-order modeling based on parametrized proper orthogonal decomposition. Energies (Basel) 17. https://doi.org/10.3390/en17010146

Kang H, Tian Z, Chen G et al (2022) Investigation on the nonintrusive multi-fidelity reduced-order modeling for PWR rod bundles. Nucl Eng Technol 54:1825–1834. https://doi.org/10.1016/j.net.2021.10.036

Yu J, Yan C, Guo M (2019) Non-intrusive reduced-order modeling for fluid problems: a brief review. Proc Inst Mech Eng G J Aerosp Eng 233:5896–5912. https://doi.org/10.1177/0954410019890721

Shah A, Rimoli JJ (2022) Smart parts: data-driven model order reduction for nonlinear mechanical assemblies. Finite Elem Anal Des 200:103682. https://doi.org/10.1016/j.finel.2021.103682

Gao H, Wang J-X, Zahr MJ (2020) Non-intrusive model reduction of large-scale, nonlinear dynamical systems using deep learning. Physica D 412:132614. https://doi.org/10.1016/j.physd.2020.132614

Fang Z-Y, Xiao Z-W, Tsai C-W (2020) An effective multi-swarm algorithm for optimizing hyperparameters of DNN. In: Proceedings of the 2020 ACM International Conference on Intelligent Computing and its Emerging Applications. ACM, New York, NY, USA, pp 1–6. https://doi.org/10.1145/3440943.3444722

Li W, Bazant MZ, Zhu J (2021) A physics-guided neural network framework for elastic plates: comparison of governing equations-based and energy-based approaches. Comput Methods Appl Mech Eng 383:113933. https://doi.org/10.1016/j.cma.2021.113933

Fuhg JN, Fau A, Nackenhorst U (2021) State-of-the-art and comparative review of adaptive sampling methods for Kriging. Arch Comput Methods Eng 28. https://doi.org/10.1007/s11831-020-09474-6

Guénot M, Lepot I, Sainvitu C, et al (2013) Adaptive sampling strategies for non-intrusive POD-based surrogates. Eng Comput (Swansea, Wales) 30. https://doi.org/10.1108/02644401311329352

Wang J, Du X, Martins JRRA (2021) Novel adaptive sampling algorithm for POD-based non-intrusive reduced order model. In: AIAA Aviation and aeronautics forum and exposition, AIAA AVIATION Forum 2021. https://doi.org/10.2514/6.2021-3051

Saka Y, Gunzburger M, Burkardt J (2007) Latinized, improved LHS, and CVT point sets in hypercubes. Int J Numer Anal Model 4.

Rippa S (1999) An algorithm for selecting a good value for the parameter c in radial basis function interpolation. Adv Comput Math 11. https://doi.org/10.1023/a:1018975909870

Franciosa P, et al (2016) VRM simulation toolkit. Available online: http://www2.warwick.ac.uk/fac/sci/wmg/research/manufacturing/downloads/

Babu PD, Gouthaman P, Marimuthu P (2019) Effect of heat sink and cooling mediums on ferrite austenite ratio and distortion in laser welding of duplex stainless steel 2205. Chin J Mech Eng-En 32. https://doi.org/10.1186/s10033-019-0363-5

Franciosa P, Gerbino S, Patalano S (2011) Simulation of variational compliant assemblies with shape errors based on morphing mesh approach. Int J Adv Manuf Technol 53. https://doi.org/10.1007/s00170-010-2839-4

Acknowledgements

This study was partially supported by the project F-Mobility (POR Campania FESR 2014/2020 - ASSE I - OT1, O.S. 1.2, AZIONE 1.2.2).

Funding

Open access funding provided by Università degli Studi della Campania Luigi Vanvitelli within the CRUI-CARE Agreement.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

Appendix A. Adaptive sampling strategies

The proposed ROM-based surrogate, already discussed in Sect. 3.1, relies on the two adaptive sampling techniques schematically described in Sect. 3.2. The main steps of these two strategies, initially proposed by Guénot et al. [39], are reported in Pseudo Algorithm 2. At each iteration of the training stage (Fig. 2), the input space is densely sampled with \({N}_{C}\) candidates \({\boldsymbol v}_{j}\), and a new sample is chosen among them based on a refinement score (RS), defined as the product of two factors: a local (exploitation) contribution, which is a function of the already computed data sites \(\boldsymbol\mu\), and a global (exploration) contribution, which depends on the Euclidean distance \(d(\boldsymbol v,\boldsymbol\mu)\) between the candidates and the previous samples. This is a typical approach adopted in a wide range of applications [38]. In [39], the authors presented two complementary strategies that differ in how the local contribution is computed. Specifically, the two adaptive techniques, graphically described in Fig. 3, pursue two distinct goals: the first one (BI) seeks data sites that most affect the quality of the reduced basis, while the second one (II) aims to improve the interpolation quality of the reduced variables. The following two subsections provide additional mathematical details on the two strategies, expanding on the outline given in Sect. 3.2 and focusing on the main differences between previous works and the present implementation.

Pseudo Algorithm 2: adaptive sampling.
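As a rough illustration of the refinement-score selection described above, the following Python/NumPy sketch combines an II-style local term with a distance-based global term. It is not the authors' implementation, nor the exact formulation of [39]. The following are all assumptions made for illustration:

- a Gaussian kernel with a fixed shape factor \(\sigma\), rather than a cross-validation-optimized one;
- inputs normalized to the unit hypercube;
- max-normalization of the LOOCV errors, followed by summation over the ROM variables;
- the rule that each candidate inherits the local term of its nearest existing sample.

Every function name below is hypothetical.

```python
import numpy as np


def gaussian_rbf(r, sigma):
    """Gaussian radial basis function; sigma acts as the RBF shape factor."""
    return np.exp(-(r / sigma) ** 2)


def rbf_fit_predict(x_train, y_train, x_eval, sigma):
    """Fit an RBF interpolant to (x_train, y_train) and evaluate it at x_eval.

    y_train may be 1-D (one ROM variable) or 2-D (one column per ROM variable).
    """
    r_train = np.linalg.norm(x_train[:, None, :] - x_train[None, :, :], axis=-1)
    g = gaussian_rbf(r_train, sigma)          # matrix of interpolation functions
    b = np.linalg.solve(g, y_train)           # interpolation coefficients
    r_eval = np.linalg.norm(x_eval[:, None, :] - x_train[None, :, :], axis=-1)
    return gaussian_rbf(r_eval, sigma) @ b


def loocv_local_term(mu, u_r, sigma):
    """Hypothetical II-style local term: one LOOCV error indicator per sample.

    mu:  (N_S, N_I) samples already computed with the full-order model
    u_r: (N_S, R)   reduced (ROM) coefficients at those samples
    """
    n_s = u_r.shape[0]
    err = np.zeros_like(u_r, dtype=float)
    for j in range(n_s):
        keep = np.arange(n_s) != j            # leave sample mu_j out
        pred = rbf_fit_predict(mu[keep], u_r[keep], mu[j:j + 1], sigma)[0]
        err[j] = np.abs(u_r[j] - pred)        # LOOCV error of each ROM variable
    # Assumed normalization: divide each ROM variable by its largest error,
    # then sum over ROM variables to obtain one score per sample.
    err /= np.maximum(err.max(axis=0, keepdims=True), 1e-12)
    return err.sum(axis=1)


def select_next_sample(mu, u_r, sigma, n_candidates=2000, seed=None):
    """Return the candidate v_j with the largest refinement score RS = local * global."""
    rng = np.random.default_rng(seed)
    cand = rng.random((n_candidates, mu.shape[1]))   # candidates in [0, 1]^N_I
    d = np.linalg.norm(cand[:, None, :] - mu[None, :, :], axis=-1)
    global_term = d.min(axis=1)                      # exploration: distance to nearest sample
    local_term = loocv_local_term(mu, u_r, sigma)[d.argmin(axis=1)]  # exploitation
    rs = local_term * global_term
    return cand[np.argmax(rs)]


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    mu = rng.random((12, 2))                          # N_S = 12 samples, N_I = 2
    u_r = np.column_stack([np.sin(3 * mu[:, 0]), mu[:, 0] * mu[:, 1]])  # toy R = 2
    print(select_next_sample(mu, u_r, sigma=0.3, seed=1))
```

Because the two terms are multiplied, a candidate scores high only if it is both far from the existing samples and close to a sample whose removal degrades the interpolation. This reflects the exploitation/exploration trade-off behind the refinement score.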

Appendix A.1. Local contribution based on interpolation improvement (II)

This quantity is related to the influence that each sample has on the interpolation of the ROM coefficients (defined in Eqs. (3) and (6)). LOOCV is used to evaluate the interpolation error at each removed sample. The generic coefficient \({\widetilde{u}}_{r,i}^{-j}\) is built on the correspondence:

that is, the interpolation is performed on the entire dataset except the sample \({\boldsymbol\mu}_j\), as depicted in Fig. 3b, step 2. The superscript \(-j\) indicates that the sample \({\boldsymbol\mu}_j\) has been removed (following the notation used in [39]). Then, the influence factor of the sample \({\boldsymbol\mu}_j\) on the ROM variable \({u}_{r,i}\) is computed as

and normalized