Abstract

Virtual assembly has become a popular trend in recent years and is used for various purposes, including selective assembly and adaptive tooling. Monte Carlo approaches based on Finite Element Method (FEM) simulations are commonly used for production applications. However, during the design phase, when testing different configurations and design options, a variational method is more suitable. This paper aims to test different implementations of the Method of System Moments applied to the second-order tolerance analysis method when actual distributions, which are non-centered and non-normal, are used as input for the simulation. The study reveals that the simulation results can significantly vary depending on the simulation settings in some cases. As a result, a series of best practices are highlighted to improve the accuracy and reliability of the simulation outcomes.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

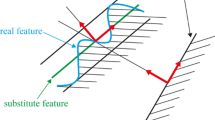

The need for a high volume of interchangeable parts in the modern industrial context first required the formalization of nominal dimensions and tolerances [1, 2]. Globalization and offshore outsourcing added complexity to tolerancing management, considering factors such as delivery time and assessing the quality of inbound batches. Alternatively, information, such as measuring reports, can be shared in real time by the supplier and used as a starting point for an assembly simulation. If the measured data is shared in its raw format (e.g., cloud point), it may be used to generate a “skin model” or non-ideal surface model [3, 4] of the manufactured parts, which can be used for the assembly simulation [4,5,6,7]. Statistical metrics could be shared instead (e.g., mean, standard deviation, cp, and cpk), and in this case, the smallest tolerance zone that fits the measured data, natural tolerance, could be defined. These fitted tolerance zones may be used for stack-up tolerance analysis [8, 9].

To perform tolerance analysis, many different models can be found in the literature. The vector-loop approach uses a kinematic model to represent the product variability [10, 11]. The variational approach represents the deviations from nominal and assembly constraints using a set of mathematical equations [12]. The matrix approach uses a matrix to describe the small displacement of a feature in its tolerance zone or the gaps between parts [13]. An approach based on the Jacobian uses virtual joints associated with functional elements to simulate small displacements in terms of translations and rotations around a point of interest. Functional elements are collected in pairs creating a kinematic chain, both internal pairs, to describe part variation, and kinematic pairs, describing the link between parts in contact, are possible [14, 15]. The torsor approach assumes that the feature deviation within its tolerance zone can be described using small linear and angular dispersions that are described using the small displacement torsor [16, 17]. The approach based on Jacobian is used in conjunction with the torsor approach in the unified Jacobian-torsor model, the first one is used for tolerance propagation, and the second is used to describe features variability [18]. The use of Tolerance-Map®, or T-Map®, to describe the multiple degrees of freedom controlled by each tolerance zone brings an approach that allows deriving stack-up equations: the Minkowski sum is used to combine different T-Maps to find the result on a target feature [19].

Based on these models, different commercial CAT (computer-aided tolerancing) software solutions were developed, e.g., 3DCS by Dimensional Control Systems®, CETOL 6 sigma by Sigmetrix, and VisVSA by Siemens®.

In the context of the vector-loop model, different methods for solving non-linear tolerance analysis are possible, such as the linearized method, system moments, quadrature, reliability index, Taguchi method, and Monte Carlo simulation. A review of these methods can be found in Kenneth and Spencer [11].

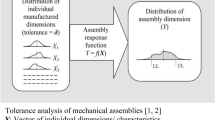

Gao et al. [20] compared the DLM (direct linearized method), a generalization of the vector-loop model, and Monte Carlo Simulation, finding that the DLM is accurate in determining the assembly variations, but it is not accurate in predicting assembly rejects when highly non-linear constraints are in place, as mean shifts and skewed output distribution result from non-linearities. The SOTA (second-order tolerance analysis) method was developed to combine the advantages of the Monte Carlo method with the computation speed associated with the DLM. The SOTA uses the Method of System Moments (MSM) [21,22,23] to link the output statistical moments, and both first- and second-order models were developed. It proved to meet the expectation with a rejection estimation comparable to the Monte Carlo using 106 samples with a computational effort five orders of magnitude less [24].

The benefits of a second-order model for tolerance analysis in the design phase were already discussed in the literature, as it can simulate highly non-linear assembly functions with accuracy comparable to the ones obtained with the Monte Carlo simulation [24].

When highly non-normal input (i.e., non-normal distributions), such as the ones that may result from actual production batches, the behavior of the variational method (as the SOTA method) is not documented. The Monte Carlo simulation still represents an approach that can simulate such conditions by discretizing all the distributions into a finite number of samples that are combined by sampling the output distribution. A large number of samples are required to guarantee accuracy, resulting in high computational time. For this reason, the use of a variational method may be convenient.

The MSM, as presented in [23], allows two variations: a linear and a second-order approach, depending on the number of terms included in the Taylor series that approximate the assembly function. Another key aspect that needs to be considered is the expansion pole for the series, which can be chosen in different positions: nominal, tolerance midpoint, and input distribution mean.

This paper aims to compare different settings for MSM implemented in the SOTA method for statistical assembly simulation and/or partial assembly simulation, e.g., virtual assembly with parts including “as-designed” tolerances and others with “as-produced” natural tolerances. The partial assembly simulation represents a formal methodology to quantify the rejection rate when a batch is accepted by derogation. In the context of this work on virtual assembly simulation, reference is made to the tolerance stack-up, which allows the estimation of geometric variations in the final assembly. This estimation is based on the allowable variations assigned to each part’s functional features (tolerances).

2 Method of System Moments

2.1 Linear model limits

The scaled statistical moments of the output distribution using a linear model are found with Eqs. (1), (2), (3), and (4).

\({\overline{u} }_{i}\) is the value that the output (\({U}_{i}\)) assumes when all the input variables \({x}_{j}\) are set to the values where the sensitivities are quantified (expansion pole).

The sensitivities of the output (\({U}_{i}\)) with respect to the input variable (\({x}_{j}\)) are represented by

\({{\gamma }_{2}}_{j}\) is the excess kurtosis (\({{\gamma }_{2}}_{j}={\beta }_{{2}_{j}}-3\)) that represents the difference in kurtosis between the given distribution and a normal one. All the steps to get to the last set of equations are not displayed for the sake of brevity (Appendix).

From Eq. (1), the mean of the output distribution depends only on the expansion point chosen for the Taylor series. From Fig. 1, it is possible to see that the mean value of the output depends on the shape of the functional equation that remaps the input distribution. Therefore, it can be assumed that both the functional equation derivatives and the input distribution parameters influence the mean of the output.

From Eq. (2), the variance of the output depends on the slope of the functional equation and the variance of the input. At the same time, it is well known that equation \({\mu }_{2}({U}_{i})=E({{U}_{i}}^{2})-{\left[E({U}_{i})\right]}^{2}=E({{U}_{i}}^{2})\) is always valid, being one of the possible definitions given to the variance. Since we have already discussed that the value of the mean depends on the full shape of the functional equation and the input distribution shape, it can be derived that the variance depends on these parameters too (higher-order derivatives of the functional equation and higher statistical moments) being the expected value for the square of the output distribution.

From Eq. (3), it is possible to see that the skewness of the output distribution depends only on the skewness of the distribution of the inputs: if the skewness of all the input distributions is null, the skewness of the output distribution is automatically null. This is proven wrong by looking at Fig. 1 in which it can be seen that in the case of a non-linear functional equation, the output distribution is asymmetric even if the input is normal. It is possible to state that the skewness of the output distribution is a function of the higher-order derivatives of the functional equation of the system.

From Eq. (4), it is possible to see that the output statistical distribution departs from normality (\({\beta }_{2}=3\)) only if the input distributions are non-normal. In other words, it can be said that the excess kurtosis depends only on the excess kurtosis of the input variables. It is not possible to arrive at a proper conclusion from Fig. 1, since it is not possible to quantify the kurtosis for the output distribution that is displayed in the figure. A complete study on second-order equations is needed to determine which parameters the kurtosis depends on.

2.2 Second-order model contributions

Using a second-order model equations from (1) to (4) becomes really complex [21,22,23]. The full second-order model, if the first four output’s statistical moments are desired, requires knowing the first eight statistical moments of the input distributions [23] as follows.

The scaled statistical moments of the output distribution are a function of the latter, but it is not possible to determine if they are also dependent on all the statistical moments involved in the \(E({{U}_{i}}^{n})\) definitions. It cannot be excluded that the contributions of higher statistical moments compensate each other.

The following considerations will be based on what is possible to graphically determine from Fig. 1 for a single-input/single-output system.

By looking at Fig. 1, the shape of the statistical distribution stretches if it is mediated by a second-order functional equation: the model depends on the value of the functional equation while the mean of the output shifts from it. The deformation of the statistical distribution will be greater if the input distribution is more spread: the shift of the mean from the mode depends both on the second-order derivatives and the standard deviation of the input variables.

The effect of the second-order derivatives stretches the bell toward the positive direction and compresses it toward the negative direction. Therefore, it cannot be detected a clear direct influence on the output standard deviation by the second-order derivatives. At the same time, it can be assumed that the standard deviation depends both on the skewness and kurtosis of the input distribution: the input distribution statistical moments can accentuate or mitigate the deformation impressed by the functional equation second-order derivatives. For example, if the input distribution in Fig. 1 had a negative skewness, it means that the probability on the area that extends the output probability toward the positive direction decreases with the effect of decreasing the spread of the output distribution.

The skewness depends on the second-order derivative of the functional equation and the standard deviation since they deform the shape of the distribution. It is also possible to assume that the asymmetry of the output distribution depends on the skewness and kurtosis interaction with second-order derivatives since it was already discussed that they can increase or decrease the deformation of the output distribution. There is no graphical way to determine in which way the 5th or 6th statistical moments affect the distribution shape since there is no graphical interpretation for them.

For the kurtosis, it is possible to repeat the same considerations made for the skewness.

3 Case study

Among commercial software, CETOL 6 sigma implements the SOTA method. One of the options of the software is the possibility to custom set different statistical distributions as input. Three different statistical distributions are supported: the uniform, the Gaussian (a.k.a. normal), and the SMLambda (Standard Moment Lambda) distributions [25]. While the normal and the uniform distributions are widely known, the SMLambda distribution is defined by four values: mean (\(\overline{x }\) or µ), standard deviation (σ), skewness (\({\beta }_{1}\)), and kurtosis (\({\beta }_{2}\)). This distribution describes non-symmetrical and non-normal distributions (see Fig. 2), but it comes with some limitations on skewness and kurtosis (Eq. (5)) [25]. The general expression for this distribution can be found in Ramberg et al. [26].

The software allows for both first-order and second-order tolerance analysis. However, the more detailed description provided by the second-order analysis comes with a higher computational cost. For a problem with n variables, more n2 values need to be computed.

Both types of analysis allow the software to choose the central point of the expansion for the Taylor series: CAD nominal values, distribution mean, or tolerance midpoint. When tolerances are symmetrical with respect to the nominal values and the statistical distributions are symmetrical, these three points coincide [25].

Once measurements have been performed and the statistical moments are evaluated, it is possible to find values outside the limit given by the SMLambda distribution (Eq. (5)). However, strategies to find the best fitting SMLambda distribution to fit experimental data go beyond the aims of this work.

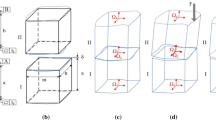

3.1 The assembly

The “Seat Latch” assembly, which is one of the training models in CETOL6sigma, was chosen as a case study. Real statistics were simulated for the “Side plate” part.

A virtual batch of 16 geometries was created using CAE process simulation: the nominal geometry was imported into MAGMASOFT, and the results for the metal casting simulation, obtained by changing process parameters, were exported as.stl files. The number of samples was chosen to obtain a discrete distribution with enough samples for fitting with a continuous distribution, as illustrated in Fig. 4. In an actual industrial application, as many samples as possible should be used to obtain a representative continuous distribution. Since the SML distribution is based on distribution moments and the higher moments are increasingly influenced by outliers, using too few samples can result in an overestimation of kurtosis and skewness in particular. For the sake of the case study, a simulated batch of 16 samples is considered a proper trade-off to obtain realistic non-normal distributions.

The samples with random deformations in size, shape, and orientation were inspected in GOM Inspect, where each feature’s real size (if applicable) and the deviations from nominal, in terms of translations and rotations, were exported (Fig. 3). These data were then converted into deviations expressed in the same reference system used in CETOL6sigma. For each dimensional variable, the first four scaled statistical moments about the mean were computed.

When the skewness and kurtosis values were out of acceptable limits, the closest admissible values were used to fit the SMLambda distribution to the real data.

The moments were then used in the CETOL6sigma model to simulate the real distribution’s impact on tolerance stack-up. Two functional dimensions (outputs), “X-POS” and “Y-POS,” were selected for display. These dimensions describe the position, with respect to the side plate, of the hole where the cables that engage the mechanism are joint. The output statistical distribution was then recorded after performing four kinds of analysis among the six available:

-

Linear analysis centered on the tolerance midpoint

-

Linear analysis centered on the mean of the input distribution

-

Second-order analysis centered on the tolerance midpoint

-

Second-order analysis centered on the mean of the input distribution

The analysis in which the pole of the Taylor expansion is set on the CAD nominal was not considered, as it was coincident with the pole centered on the nominal value, since no asymmetric tolerances were present in the model.

4 Results

The simulated real distributions are significantly away from normality and could only be described by an SMLambda distribution (Fig. 4). The computed statistical moments are used as input variables.

4.1 Critical dimension “Y-POS”

Figure 5 shows the results of the output statistical distribution for the linear and second-order analyses centered on the tolerance midpoint.

The output distribution is considerably different in the two cases: the mean shifts by \(-0.5216 {\text{mm}}\), (\(13.07\%\) of the tolerance width), resulting in a different percentage of parts within tolerance (yield), \(99.99\%\) for the linear analysis and \(78.45\%\) for the second-order analysis.

The results obtained by centering the Taylor expansion with the mean values of the input variables can be seen in Fig. 6.

A smaller difference in the mean can be noted (\(1.25\%\) of the tolerance width), and the acceptability rate is now comparable (\(72.78\%\) for the linear and \(72.40\%\) for the second-order analysis). Nonetheless, it is noteworthy that the skewness changes sign.

The differences when changing the expansion pole for the linear analysis can be noted by comparing Fig. 5a and Fig. 6a. The mean value shifts by \(14.16\%\) of the tolerance width, and the standard deviation shifts by \(6.60\%\) of the tolerance width, resulting in the yield given by the analysis centered with the input distribution means being less than \(1\mathrm{\%}\) away from second-order analysis yields (centered with the input distributions means).

Analyzing Fig. 5b and Fig. 6b, the differences in the output distribution for a second-order analysis when the expansion point is changed can be seen. The distribution shape is similar for both cases (negative skew), with the mean shifting by \(2.38\%\) of the tolerance width. As a result, the acceptability rate goes from \(78.44\) to \(72.40\%\).

For this critical dimension, the output distribution is heavily influenced by the types of analyses performed. As can be seen in Fig. 7, the distribution obtained with the linear analysis centered on the tolerance midpoint is far away from the others.

4.2 Critical dimension “X-POS”

Considering now the critical dimension “X-POS,” a significantly different behavior can be noted. In Fig. 8, the four outputs are almost overlapped, meaning that this critical dimension is not influenced by the type of analysis.

Overall, the acceptability rate ranges from \(82.35\) to \(87.17\%\), the mean maximum difference is 5.12% of the tolerance width, and the standard deviation maximum difference is \(1.65\%\) of the tolerance width.

5 Discussion

The analysis of the Method of System Moments showed that the linear model neglects many contributions to the final output, potentially leading to unreliable results. The simulated geometrical variation of the assembly cannot be deemed reliable.

5.1 The mean shift

It was shown that the mean of the output depends on both the curvature of the functional equation and the shapes of the input distributions. However, the linear model assumes that the mean of the output depends solely on the expansion pole, leading to a mean shift that can significantly impact the yield. This mean shift and its effect on yield due to the functional equation curvature were already discussed [20]. Additionally, the shape (skewness and kurtosis) of the input also has a similar impact, as it can either amplify or mitigate the mean shift caused by the functional equation curvature. However, in a tolerance stack-up with multiple inputs, each individual input shift may partially compensate for each other.

This effect was clearly observed in the case study. The critical dimension “Y-POS” exhibited a significant mean shift, while the “X-POS” showed almost no mean shift. Notably, both dimensions pertain to the same feature but in different directions. Hence, the same assembly can behave differently in terms of critical dimensions based on their specific directions.

5.2 Critical dimension “Y-POS”

The critical dimension “Y-POS” is chosen for further discussion regarding the influence of different types of analysis, as the other dimension (X-POS) has been found to be not influenced.

Among the four outputs, the one obtained with a linear analysis and the expansion pole centered on the tolerance midpoint is significantly different from the other three (Fig. 7). This indicates that the choice of the expansion pole is crucial when using a linear model. However, when the expansion pole is set to the input distribution mean, the results of the linear model are nearly overlapped with the second-order results. It is important to note that the skewness changes sign when the second-order model is applied, indicating that the linear model fails to accurately determine the output distribution shape. Nevertheless, if the expansion pole is chosen carefully, the yield result can be close to the second-order results.

When comparing the second-order results, it can be observed that the differences are small when the expansion point is changed. This can be explained by the fact that the second-order model considers both the mean shift due to the functional equation curvature and the input mean. As a result, the choice of the expansion point has less impact on the output distribution shape in the second-order analysis compared to the linear analysis.

5.3 Partial assembly simulation

As postulated in the introduction, utilizing real statistics derived from actual production batches can constitute a formal methodology for acceptance by derogation. In the case study, real statistics were obtained for a single part related to the assembly. By conducting the assembly simulation (tolerance stack-up), it is possible to statistically evaluate the effect of the actual batch on the final assembly.

In the event of a production batch being out of specifications, the influence on the final assembly quality can be determined, and the quantity of scrap can be quantified for further considerations. An economic evaluation of the losses arising from the use of the batch can be quantified, enabling a judicious decision on whether to accept or reject the batch.

Alternatively, performing the simulation using real statistics allows for the redesign of a part in the assembly, optimizing its allowable variability (tolerances) to adapt to the existing parts already in production.

6 Conclusions

The paper aimed to compare different settings for MSM implemented in the SOTA method for statistical assembly simulation and/or partial assembly simulation using actual statistics as inputs, thereby conducting virtual simulation analysis. The software CETOL6sigma was used as a benchmark since it allows the use of such inputs.

Different behaviors were observed for different critical dimensions (outputs). Considering the critical dimension that presented significant differences, it is shown that different types of analysis lead to different results, particularly for yields, in the presence of non-linear inputs. The full second-order tolerance analysis was already proven to give better results in the presence of highly non-linear functional equations. In our study case, the assembly has a functional equation close to linearity, but highly non-linear inputs were used. This allowed testing the Method of System Moments with a non-linear (i.e., non-normal) input distribution without interference from the assembly non-linearities. In the case of a non-linear assembly equation, it adds another layer of uncertainty that can lead to even larger discrepancies between the linear and second-order simulations.

The linear model centered on the nominal value simply cannot adequately represent reality. The result for this analysis gives an acceptability rate estimation that is completely different from the result of the second-order analysis. At the same time, the linear model centered on the input distribution means gives a result in line with the second-order analysis. Therefore, it can be concluded that, in the case of highly non-linear input distributions, the expansion pole for the Taylor series should be selected as the input distribution mean.

The following best practice can be extrapolated:

-

For non-linear assemblies, e.g., when rotations are predominant, the second-order analysis should be used.

-

For non-symmetric tolerance zones, the expansion point should be set on the tolerance midpoint or input distribution means.

-

For non-normal input distributions, the second-order analysis should be used.

-

When using real statistics, the second-order analysis centered on input distribution means should be used.

These best practices assume general validity since they come from the theoretical analysis of the interaction between the assembly equation with the input distribution (Sect. 2). The case study, in particular, enabled highlighting the effect of non-normal and non-centered input distributions, confirming the best practices. It also shows that different behaviors can occur along different directions, even for the same features. Therefore, in general, it is always advisable to check the linearity assumption by performing a second-order analysis. The check should be performed for all critical dimensions in the model since the behavior of each dimension can be different.

What is presented in this paper is relevant to rigid assemblies. It is noteworthy that assemblies composed of deformable parts are not negligible in the industry. The assembly simulation with flexible parts is a non-trivial task. Methodologies to simulate the constrained state of a deformable part are available in the literature, e.g., the Method of Influence Coefficient [27]. Deformable parts are often constrained hyperstatically, allowing an increase in the rigidity of the component to a “rigid-like” state. The SOTA method, used in this study, requires an “isostatic” assembly to define an explicit assembly equation. A possible solution may be found in the distinction between the free state or “as-manufactured” state and the “as-assembled” state, as presented in [28, 29]. The “as-assembled” state can then be considered as a rigid part. Real statistics shall then be derived by the simulated constrained state or by measuring the part using appropriate functional fixturing. This solution shall be appropriately tested and validated.

References

Mills B (1988) Variation analysis applied to assembly simulation. Assem Autom 8:41–44. https://doi.org/10.1108/eb004233

Ma S, Hu T, Xiong Z (2021) Precision assembly simulation of skin model shapes accounting for contact deformation and geometric deviations for statistical tolerance analysis method. Int J Precis Eng Manuf 22:975–989. https://doi.org/10.1007/s12541-021-00505-1

ISO International Organization for Standardization (2011) ISO 17450–1:2011 - Geometrical product specifications (GPS) - General concepts - Model for geometrical specification and verification. iTeh Inc., Newark, DE

Anwer N, Ballu A, Mathieu L (2013) The skin model, a comprehensive geometric model for engineering design. CIRP Ann 62:143–146. https://doi.org/10.1016/J.CIRP.2013.03.078

Yan X, Ballu A (2018) Tolerance analysis using skin model shapes and linear complementarity conditions. J Manuf Syst 48:140–156. https://doi.org/10.1016/J.JMSY.2018.07.005

Corrado A, Polini W (2018) FEA integration in the tolerance analysis using skin model shapes. Procedia CIRP 75:285–290. https://doi.org/10.1016/J.PROCIR.2018.04.055

Corrado A, Polini W (2017) Manufacturing signature in variational and vector-loop models for tolerance analysis of rigid parts. Int J Adv Manuf Technol 88:2153–2161. https://doi.org/10.1007/s00170-016-8947-z

Marziale M, Polini W (2011) Review of variational models for tolerance analysis of an assembly. Proc Inst Mech Eng Part B J Eng Manuf 225:305–318. https://doi.org/10.1177/2041297510394107

Corrado A, Polini W (2020) Comparison among different tools for tolerance analysis of rigid assemblies. Int J Comput Appl Technol 62:36. https://doi.org/10.1504/IJCAT.2020.103915

Chase KW, Gao J, Magleby S (1995) General 2-d tolerance analysis of mechanical assemblies with small kinematic adjustments. J Des Manuf 5:263–274

Gao J, Chase KW, Magleby SP (1998) Generalized 3-D tolerance analysis of mechanical assemblies with small kinematic adjustments. IIE Trans 30(4):367–377. https://doi.org/10.1023/A:1007451225222

Gupta S, Turner JU (1993) Variational solid modeling for tolerance analysis. IEEE Comput Graph Appl 13:64–74. https://doi.org/10.1109/38.210493

Desrochers A, Rivière A (1997) A matrix approach to the representation of tolerance zones and clearances. Int J Adv Manuf Technol 13:630–636. https://doi.org/10.1007/BF01350821

Lafond P, Laperriere L (1999) Jacobian-based modeling of dispersions affecting pre-defined functional requirements of mechanical assemblies. In: Proceedings of the 1999 IEEE International Symposium on Assembly and Task Planning (ISATP'99). IEEE, Porto, Portugal. https://doi.org/10.1109/ISATP.1999.782929

Polini W, Corrado A (2016) Geometric tolerance analysis through Jacobian model for rigid assemblies with translational deviations. Assem Autom 36:72–79. https://doi.org/10.1108/AA-11-2015-088

Peng H, Peng Z (2020) Using small displacement torsor to simulate the machining processes for 3D tolerance transfer. IOP Conf Ser Mater Sci Eng 831. https://doi.org/10.1088/1757-899X/831/1/012017

Bourdet P, Mathieu L, Lartigue C, Ballu A (1996) The concept of the small displacement torsor in metrology. Ser Adv Math Appl Sci 40:110–122

Desrochers A, Ghie W, Laperrie’re L (2003) Application of a unified Jacobian—torsor model for tolerance analysis. J Comput Inf Sci Eng 3:2–14. https://doi.org/10.1115/1.1573235

Davidson JK, Mujezinovic’ A, Shah JJ (2002) A new mathematical model for geometric tolerances as applied to round faces. J Mech Des 124:609–622. https://doi.org/10.1115/1.1497362

Gao J, Chase KW, Magleby SP (1995) Comparison of assembly tolerance analysis by the direct linearization and modified Monte Carlo simulation methods. Am Soc Mech Eng Des Eng Div 82:353–360

Cox ND (1979) Tolerance analysis by computer. J Qual Technol 11:80–87. https://doi.org/10.1080/00224065.1979.11980884

Shapiro SS, Gross AJ (1981) Statistical modeling techniques. Marcel Delcker Inc, New York

Cox ND (1986) Volume 11: How to perform statistical tolerance analysis. American Society for Quality Control, Milwaukee, WI

Glancy CG, Chase KW (1999) A second-order method for assembly tolerance analysis. In: Vol. 1 25th Des Autom Conf, American Society of Mechanical Engineers 977–984. https://doi.org/10.1115/DETC99/DAC-8707

Sigmetrix (2014) CETOL6sigma. User reference manual

Ramberg JS, Dudewicz EJ, Tadikamalla PR, Mykytka EF (1979) A probability distribution and its uses in fitting data. Technometrics 21:201–214. https://doi.org/10.1080/00401706.1979.10489750

Liu SC, Hu SJ (1997) Variation simulation for deformable sheet metal assemblies using finite element methods. J Manuf Sci Eng 119:368–374. https://doi.org/10.1115/1.2831115

Maltauro M, Passarotto G, Concheri G, Meneghello R (2023) Bridging the gap between design and manufacturing specifications for non-rigid parts using the influence coefficient method. Int J Adv Manuf Technol 127:579–597. https://doi.org/10.1007/s00170-023-11480-4

Maltauro M, Meneghello R, Concheri G, Pellegrini D, Viero M, Bisognin G (2023) A case study on the correlation between functional and manufacturing specifications for a large injection moulded part. in: Gerbino S, Lanzotti A, Martorelli M, Mirálbes Buil R, Rizzi C, Roucoules L (Eds.), Adv Mech Des Eng Manuf IV - Proc Int Jt Conf Mech Des Eng Adv Manuf JCM 2022, June 1–3, 2022, Ischia, Italy, Springer International Publishing, Cham, pp. 1268–1278. https://doi.org/10.1007/978-3-031-15928-2_111

Funding

Open access funding provided by Università degli Studi di Padova within the CRUI-CARE Agreement.

Author information

Authors and Affiliations

Contributions

Conceptualization, M.M.; methodology, M.M.; validation, M.M.; formal analysis, M.M.; investigation, M.M.; resources, G.C. and R.M.; data curation, M.M.; writing—original draft preparation, M.M.; writing—review and editing, M.M., G.C., and R.M.; visualization, M.M.; supervision, G.C. and R.M.; project administration, G.C. and R.M.; funding acquisition, G.C. and R.M.

Corresponding author

Ethics declarations

Consent for publication

All authors have read and agreed to the published version of the manuscript.

Conflict of interest

The authors declare no competing interests.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Appendix

The four statistical raw moments, for the output, can be written as follows for a linear analysis [23, 25].

where \({b}_{j}\) represent the sensibility of the output \({U}_{i}\) with respect to the input variable \({x}_{j}\):

The raw moments are used to find the central moments:

where \(\overline{u }\) is the value that the output (\({U}_{i}\)) assumes when all the input variables \({x}_{j}\) are set to the values where the sensitivities are quantified (expansion pole).

By combining together these equations, it is possible to find the scaled moments of the output distribution.

where \({{\gamma }_{2}}_{j}\) is the excess kurtosis (\({{\gamma }_{2}}_{j}={\beta }_{2}-3\)) that represent the difference in kurtosis between the given distribution and a normal one.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Maltauro, M., Meneghello, R. & Concheri, G. A second-order tolerance analysis approach to statistical virtual assembly for rigid parts. Int J Adv Manuf Technol 131, 437–446 (2024). https://doi.org/10.1007/s00170-024-13153-2

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00170-024-13153-2