Abstract

The development of models for the coefficient of friction is difficult due to many factors influencing its value and many tribological phenomena that accompany contact between metals (i.e., flattening, ploughing, adhesion), the influence of which also depends on the friction conditions. Therefore, developing an analytical model of friction is difficult. In this article, the CatBoost machine learning algorithm, newly developed by Yandex researchers and engineers, is used for modelling and parameter identification of friction coefficients for three grades of deep-drawing quality steel sheets. Experimental tests to determine the friction coefficient were carried out using the strip drawing method with the use of a specially designed tribological device. Lubrication conditions, normal force, and the surface roughness of countersample surfaces were used as input parameters. The friction tests were conducted in dry friction and lubricated conditions with three grades of oils with a wide range of viscosities. Different transfer functions and various training algorithms were tested to build the optimal structure of the artificial neural networks. An analytical equation based on the parameters that were being investigated was created to calculate the COF of each material. Different methods of partitioning weight were employed for the expected COF to assess the relative importance (RI) and individual feature’s relevance. A Shapley decision plot, which uses cumulative Shapley additive explanations (SHAP) values, was used to depict models for predicting COF. CatBoost was able to predict the coefficient of friction with R2 values between 0.9547 and 0.9693 as an average for the training and testing dataset, depending on the grade of steel sheet. When considering all the materials that were tested, it was discovered that the Levenberg–Marquardt training algorithm performed the best in predicting the coefficient of friction.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

In the processes of sheet metal forming (SMF), the friction phenomenon is an inevitable phenomenon if there is metallic contact between two mating surfaces of the tool and the workpiece [1, 2]. Moreover, the phenomena related to friction, such as the ploughing of workpiece material by the surface asperities of the tool and adhesion and flattening of the surface asperities of the workpiece, change during the friction process [3]. In terms of surface roughness, the tool surface plays a key role because the hardness of the tool surface is greater than that of the deformed sheet [4, 5]. One of the effective methods of reducing friction in SMF is the use of lubricants of different consistencies (i.e., solid lubricants, fluids) and viscosities, as well as the use of special protective coatings that reduce the coefficient of friction (COF) [6, 7]. Due to the complexity of the friction phenomenon depending on the friction conditions (dry friction, lubrication, normal pressure), process conditions (temperature, humidity, sliding speed), and surface properties (i.e., frictional anisotropy, surface roughness of the tool and workpiece, tool coating, tool material, tool and sheet material strength, tool hardness), developing a mathematical model based on analytical methods is extremely difficult [8,9,10].

The basic tool for stochastic data analysis is linear and multiple regression; however, due to the complexity of the description of the friction phenomenon, it is necessary to use multiple regression. The main goal of multiple regression is to quantify the relationship between multiple independent variables and the dependent variable [11]. Mathematical models based on statistical methods should have such a level of complexity that they can reliably describe the phenomena occurring during the friction process [12, 13]. When selecting the factors affecting the COF, one should select those factors that significantly affect friction and are independent of each other at the same time. The condition of the significance of the influence of individual factors can be verified at a later stage of building the model, inter alia, by means of a posteriori elimination. The significance level is the assumed error probability when assessing the significance of the parameter. Usually, the significance level is set at α = 0.05. Variance as the mean square deviation of the value of a random variable from its mean value is a measure of the dispersion of possible variable values and is an indispensable tool for testing the significance of the entire regression equation [14].

Within the wide application of regression analysis in tribology, particular attention should be paid to determining the value of the COF [15, 16] and to determining the effect of the load and the friction track on the wear rate [17]. The regression model proposed by Jurkovic et al. [18] took into account the influence of two factors, i.e., lubrication conditions and sheet deformation, on the value of the COF at the die edge. Experimental verification of the analytical model of friction in the draw bead that has been developed based on the multiple regression method has confirmed the possibility of using it to determine the resistance of the sheet passing through the draw bead in SMF [19]. Research on the frictional wear of materials used for vehicle brake pads has shown a very good applicability of the artificial neural network (ANN) method to regression, taking into account more than 20 input variables [20]. However, the number of explained variables in the model is not a determinant of its quality.

ANNs are a tool of soft computing that enables the construction of linear and nonlinear models which solve complex classification and regression tasks [21]. The structure of the network and the essence of the operation is a reflection of the biological brain. ANNs consist of a set of interconnected elements called neurons that process information delivered to the input of the network based on the idea of parallel processing. The architecture of the ANN includes, in addition to the input and output layers, one or more hidden layers. In most analyses, one hidden layer is sufficient to obtain satisfactory results [22]. As a result of the training process, ANNs can acquire the ability to predict output signals based on the sequence of input signals and the corresponding output signals. The analysis of the possibility of using ANNs in tribological studies was carried out by Grymek et al. [23], pointing to the possibilities of using this technique in the analysis of tribological problems. According to the authors, the main error affecting the reduction of the quality of ANN predictions is the insufficient amount of training data used in the training process.

In the last two decades, the areas of successful incorporation of artificial intelligence generally and ANNs, in particular, have constantly been expanding in tribology research on brake performance [24, 25], wheel and rail wear [26], the surface roughness of extra deep drawing (EDD) steel [27], predicting of component hardness [28], surface roughness [29], and formability [30] in incremental sheet forming, friction in metal forming [31], and tool wear [32]. Increasing the accuracy of the ANN model was achieved by optimising the vector of explanatory variables with the use of genetic algorithms [31]. Rapetto et al. [33] studied the influence of surface roughness on real area of contact in rough contact by using a neural network. The ANN was able to prove the correlation between the roughness parameters and the real area of contact. A literature review on the use of ANNs in tribological applications was carried out by Frangu and Ripa [34]. The authors proved that ANNs are a useful tool during the design stage as well as the running stage. A review of computer techniques in modelling tribological effects is presented by Trzos [35]. As the review shows, the dissemination and wide use of scientific research in the area of tribology are limited due to the specific conditions of these processes but rather due to the lack of properly organised data storage systems that would enable a wider group of users to exchange data in the field of common research areas. Yin et al. [36] introduced the concept of tribo-informatics. In their paper, guided by the framework of tribo-informatics, they reviewed the application of tribo-informatic methods in tribology. ANNs, k-nearest neighbour, support vector machine, and random forest (RF) methods are those most commonly used by tribo-informatic practitioners. Bhaumik et al. [37] designed a new lubricant with multiple friction modifiers using ANNs and a genetic algorithm which has been used for optimisation using the ANN models as the objective function. Experimental data generated by a pin-on-disc tribometer was used to train the ANNs. Pantić et al. [38] presented the possibility of applying ANNs to solve complex nonlinear problems and to identify the tribological characteristics of dental glass ceramics. An ANN model was used to describe the influence of input tribological parameters on COF and the rate of material wear. Gyurova and Friedrich [39] explored the potential for using ANNs for the prediction of the sliding friction properties of polyphenylene sulphide matrix composites based on the results of pin-on-disk sliding wear tests. It was found that the trained ANNs possessed enough capability to generalise to predict input data that were different from the original training dataset. The results of the research by Bhaumik and Kamaraj [40] showed that ANN and multi-criteria decision-making approaches can be used in the analysis of a blend of biodegradable lubricants in investigating its tribological properties and in reducing the number of experiments required to design the desired lubricant. Nasir et al. [41] applied a multilayer perceptron (MLP) to predict the COF of a glass fibre–reinforced thermosetting polyester. It was found that the tan-sigmoid transfer function exhibited the best performance for the work currently being carried out. Otero et al. [42] tested several different ANNs for predicting the lubricated friction coefficient in thermal-elastohydrodynamic contacts. It was concluded that properly trained MLPs are able to give an excellent prediction with a high level of correlation between calculations and experimental data. A perspective of the use of artificial intelligence in tribology has been provided by Rosenkranz et al. [43]. Analysis of the literature showed that ANNs and techniques that combined statistics and machine learning are suitable for highly complex, nonlinear applied problems, which makes them particularly interesting for various fields of tribology. Artificial intelligence and machine learning, which is a sub-domain of computer science, provide opportunities to explore the complex processes in tribological systems and to classify their behaviour in an efficient or even real-time way [43, 44]. Examples of the application of ANNs in tribological studies were presented, and important features of the data-driven modelling paradigm were discussed by Argatov [45].

The condition of a weak correlation of explanatory variables with each other is particularly important when building regression models. However, in the case of neural models, correlating the variables with each other has a much smaller negative impact on the quality of the model due to the natural ability of ANNs to weaken the influence of some inputs by minimising the weighting factors of neurons. The above-detailed issues, as well as the lack of well-defined requirements of the deep drawing COF and the absence of referent predictional mathematical models, have motivated the authors to examine the investigation and prediction of the COF. This article uses the CatBoost machine learning algorithm newly developed by Yandex researchers and engineers as an open-source library for gradient boosting on decision trees. Furthermore, multilayer perceptron (MLP) structures were developed to predict COF values. The effectiveness of this algorithm was verified on the basis of predictive models of the COF of three grades of deep-drawing quality steel sheets. The most important parameters of the friction process, i.e., the surface roughness of countersamples, normal force value, and lubrication conditions, were selected as input variables. Instead of constructing, running, and evaluating a new ANN model each time, analytical equations for the prediction of COF values were retrieved from the best model. As a result, a new method was developed; one of the novelties and emerging findings of this research is to develop a neural network structure based on training and transfer functions with different conditions to extract the equation that predicts the COF from the best model that was implemented for the three different conditions. To the authors’ knowledge, such an experimental process and predictions have not been tested or described in the literature. Furthermore, as an aim and novelty in the scope of this paper, a joint partitioning weight of the neural network was adopted to assess the relative importance (RI) of COF parameters on the output. According to the review work by Marian and Tremmel [44], the current showstopper in ANN modelling is still the lack of availability of sufficient and comparable datasets as well as the handling of uncertainties regarding test conditions and deviations. So, in this respect, the underlying databases and the corresponding models have been presented in appendices.

2 Materials and methods

2.1 Materials

Deep-drawing quality steel sheets commonly used in the automotive industry were used as test materials. According to PN-87/H-92143 [46], the drawability categories of these sheets are as follows:

-

B (very deep drawing sheets),

-

SB (sheets for very difficult drawpieces),

-

SSB (sheets for particularly difficult drawpieces).

According to the international standard EN 10,130:2009 [47], the drawability categories B, SB, and SSB are equivalents of the drawability of the sheets DC03, DC04, and DC05, respectively.

2.2 Experimental setup

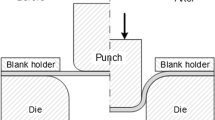

A specially designed tester (Fig. 1) mounted in a typical uniaxial tensile testing machine was used to carry out the strip drawing test. This test is commonly used to modelling the friction conditions in the flange area of drawpiece in the sheet metal forming. The body of the device is mounted in the lower holder of the testing machine. The upper end of a strip specimen was mounted in the upper holder of the testing machine, crimped between two countersamples. Cylindrical countersamples with a radius of 20 mm were made of 145Cr6 cold-work tool steel. The countersamples were locked with fixing pins to prevent rotation. The left countersample was attached non-movably to the body of the testing device, while the right countersample was attached to a horizontal sleeve which could move horizontally in the body of the device. During the test, the lower holder of the uniaxial tensile testing machine with the mounted friction device did not move. Only the upper holder of the testing machine could move, causing the sheet metal strip to be pulled. Specimens for the friction test, approximately 200-mm long and 20-mm wide, were cut from the sheet metals along their rolling direction.

Normal force (thrust force) FN was set by means of a spring, the working length of which was changed using a set screw. The normal force value was changed in the range of 30–210 N with an increment of 30 N. The tangential force (friction force) FT was recorded by the measuring system of a uniaxial tensile testing machine. Both forces were acquired with the same frequency. After correlating the force values in the Excel program, the average values of COF were determined using the formula:

Four sets of countersamples with surface roughness Sa = 0.44 µm, Sa = 0.72 µm, Sa = 1.57 µm, and Sa = 2.36 µm were used in the tests. Contrary to the roughness of the countersamples, the sheets were selected in such a way that their surface roughness was similar, allowing for independent checking of the COF results for the sheets with varying drawability properties. The average surface roughness of the sheets Sa is equal to Sa = 1.46 µm (for B sheet), 1.38 µm (SB), and 1.29 µm (SSB). The COF values determined in the strip drawing test varied between about 0.12 and 0.38, depending on the friction conditions. However, these values are only valid in the flange area of the workpiece. Therefore, the strip drawing test is commonly used to model the friction conditions in the flange area of the drawpiece in the sheet metal forming.

The purpose of using countersamples with such roughnesses was to provide training data with a wide range of variability for training neural networks. The friction tests were carried out under conditions of dry friction and with lubrication of the sheet surface with typical oils used in the metal forming industry [48, 49]: machine oil L-AN46 (Orlen Oil), gear oil Systrans Z Longlife SAE75W-80 (Castrol), and Magnatec engine oil SAE5W-40. The viscosities of the oils provided by the manufacturers were as follows: gear oil SAE75W-85—55 mm2/s, machine oil LAN-46—43.9 mm2/s, and engine oil SAE5W-40 C3—81 mm2/s. Before starting the tests, the surfaces of the samples and countersamples were degreased with acetone. In the tests performed under lubrication conditions, a layer of machine oil was applied to the sample on both sides. The lubricant was distributed uniformly on the surface of the samples at 2 g/m2 [50]. The sample was pulled through the countersample system with a constant top handle speed of 5 mm/s. Experimental matrix of friction tests is shown in Table 1.

2.3 CatBoost machine learning algorithm

CatBoost [51] is a high-performance open-source software for boosting the decision tree gradient. Yandex researchers and engineers created the CatBoost machine learning algorithm which incorporates numerous features. One of the key characteristics is the ability to use categorical data (non-numeric components) without the need for pre-processing. In addition, converting such data to integers via encoding is not required [52].

On top of this, CatBoost makes accurate predictions with default values, eliminating the need to modify parameters [53]. CatBoost creates 1000 trees by default, fully symmetrical binary trees with six in-depth, and two leaves. The learning rate is calculated automatically based on the training dataset’s attributes and the number of iterations. The learning rate chosen automatically should be close to ideal. For faster training, the number of iterations can be reduced, but the learning rate must be increased.

2.4 Multilayer perceptron

Net topology is the arrangement or structure of network elements and their connections, inputs, and outputs. The number of input and output layers and the transfer functions between these levels and the number of neurons in each layer can be used to describe the topology of an ANN [54]. The input and output layers of an ANN are separated by at least one hidden layer. Several neurons are present in each layer of the net. The number of input variables equals the number of input neurons, and the number of outputs associated with each input equals the number of output neurons. These neurons in the layers allow for the backward and forward transfer of weight between the layers based on the transfer function or the so-called activation function [55]. The current study uses a backpropagation learning approach. Werbos proposed the MLP idea in 1974, and Rumelhart, McClelland, and Hinton proposed it in 1986 [56]. The multilayer perceptron is defined as follows in Eq. (2):

where \(y\) is the output and \(x\) is input, \({\mathrm{w}}_{\mathrm{i}}\) are the weights, and \(b\) is the bias [57].

MLP structures were developed using MATLAB R2022a [58] to predict COF values. According to the purpose of this study, each net structure had one hidden layer with ten neurons connecting to the input and output layers, as shown in Fig. 2. Inputs have been obtained and target datasets using actual measured data from the experiments of three different materials have been adopted. The inputs have three neurons: Ra_roll (µm), force (N), friction conditions: dry and using oil, the COF values are the outputs. In the range of conditions using oil, three different oils have been selected: OrlenOil L-AN46 machine oil, Castrol Syntrans Z Longlife SAE75W-80 gear oil, and Castrol Magnatec SAE5W-40 C3 engine oil. The values of COF for all analysed friction cnditions are listed in Tables 2, 3, 4, and 5.

The other important parameters used for training in this study are a learning rate of 0.01, a performance target of 0.0001, and 1000 epochs, as shown in Fig. 3. Different training and transfer functions (see Tables 6 and 7) were tried and trained to identify the optimal model.

Figure 4 depicts the training flowchart in the model that was generated and the testing procedure utilising test data. During the running process, three primary conditions make decisions. The model and any variables with low condition limits are saved in the first loop, which is blue. After the first condition occurs, the second red loop is activated and stops. The second loop locates the training and variables, compares them to variables saved from prior training, and repeats the process until 1000 iterations have been completed. Green arrows indicate shared step loops.

The optimisation strategy is used in neural networks to tune and identify a set of network weights for constructing a suitable prediction map. Various optimisation algorithms, often known as training functions, exist. The training function is an algorithm for teaching the network to recognise and map a particular input to a specific output. Many factors influence the training function, including the trained dataset, weights, and biases, and the performance target. Selecting a suitable training function for the network is one problem in building good, rapid, and genuinely accurate predictions. Keeping this in mind, ten distinct forms of learning algorithms were used to map outputs to inputs in current MLP nets in the scope of this work. Table 6 lists the associated training functions.

The sums of each node are weighted in machine learning, and the accumulated weights are processed through an activation function “transfer function,” which computes the output of each layer by using the summed weights that enter that layer. Setting correct transfer functions is a difficult undertaking that depends on various elements, the most important of which is network structure. There are many functions that are commonly used for pattern recognition; different transfer functions were used to increase prediction accuracy in this study. Table 7 summarises all the transfer function algorithms employed in this work, along with their corresponding equations.

The structures and models constructed and trained as part of this study to produce COF values as network outputs used actual experimental values as input data. Data must be split into different subsets, such as training, validation, and testing datasets. In fact, splitting the dataset into training and testing subsets considerably impacts prediction accuracy and training performance [59]. Incorrect subgroups have a negative impact on benchmark performance. Shahin [60] asserted that the splitting ratio of the dataset has no obvious relationship, while Zhang et al. [59] said that it is one of the significant issues. As of now, there is no general solution accessible. Most researchers divided the datasets into lines with varying ratios of subgroups based on their surveys. The most widely used ratios for teaching and assessment are 90 to 10%, 80 to 20%, or 70 to 30%. The optimal prediction was produced as part of a training run implemented for this paper which divided the actual data (112 samples) by 80% vs. 20% related to the input data. The data were randomly divided into training and testing sets; the training dataset was partitioned into validation and test subsets to ensure the model learned all data samples. Ninety percent of the training dataset was used for training, 5% for validation, and 5% for testing. The final testing dataset (20%) did not contain the training dataset. It is worth mentioning that conditions (dry or oil) were put in binary form and encoded using ordinal encoding. The so-called early stopping strategy enhanced generalisation in the MLP network developed to predict COF values in this study. All supervised network generation functions, including backpropagation networks, use the early stopping strategy as a default method. The training dataset is divided into three subsets: training, validation, and testing (Fig. 5).

Validating performance at each stage tries to fit the results and ensure that they conform with the pre-set goal at the desired level. Validation necessitates that performance meets or exceeds the goals set for the model. Figure 6 shows the matching results of training, validation, and test, which together and overall indicate the performance of the model once trained.

Several validation metrics are available, but selecting the right one is critical for evaluating a predictive model. This study examined and verified various training and transferring algorithms to evaluate the agreement between the actual and predicted values. Selecting the appropriate validation metric is critical and difficult for evaluating outcomes and eliminating errors. All models trained and tested in this study were compared based on their evaluation by using the relevant metrics to assess findings regarding test performance.

3 Results and discussion

3.1 ANN modelling

The best findings resulting from applying various models to forecast the COF have been summarised for Catboost and MLP, with the other detailed analyses presented in the Appendix. Tables 8, 9, and 10 show the values of several validation metrics used to check the performance of Catboost methods when materials B, SB, and SSB are utilised. The statistical parameters listed in these tables are as follows: ME, mean error; MAE, mean absolute error; MSE, mean square error; RMSE, root mean square error; MRE, mean relative error; SD, standard deviation; SEM, standard error mean. R2 and adj. R2 are used to evaluate the models and structures in question, as an R2 value near 1 indicates good performance. A situation where the MAE and RMSE values are close to 0 indicates that the model is performing well. Despite this, there are considerable differences in error distribution due to the significant variance between RMSE and MAE values. If MAE is more stable, RMSE is more vulnerable to error. Standard error mean SEM was used to validate the distribution of prediction values, where SEM is the standard deviation of the sampling distribution of the sample mean. In other words, sample mean variance is inversely proportional to sample size, and SEM is the SD of the original sample size over the square root of the sample size.

CatBoost is a competent tool that can predict various targets with default parameters. In this study, Catboost was able to predict the COF for material B with R2 values of 0.9668 and 0.9281 for training and testing, respectively, as well as R2 values of 0.9699 and 0.8426 for material SB and 0.9768 for training vis a vis 0.9224 for testing when predicting the COF for material SSB.

Figure 7 for material B, Fig. 8 for material SB, and Fig. 9 for materials SSB are the summary plot prediction of COF which highlights each feature’s relevance to the other features’ effects in the same row. The idea of Shapley additive explanations (SHAP) was to calculate the Shapley values for each feature of the sample as proposed by Lundberg and Lee [61], and its interpretation has been influenced by different methodologies [62,63,64]. Each point on the summary graphic indicates a feature’s Shapley value. The feature’s level is determined by axis Y, and Shapley values are displayed on axis X. The colour of the plots indicates the importance of the trait from low to high. The features are ordered by importance. The Shapley’s values represent the relative distribution of predictions among the features. It is worth noting that identical values can contribute to different outputs depending on the values of other features in the same row.

On axis X, every data point in each dataset feature is represented as a single SHAP value, and each feature is represented on axis Y. The value of the feature is shown by the bar colour: red indicates high values, and blue shows low values. Grey points represent categorical inputs. The right-hand values have a “positive” effect on the output, whereas the left-hand values have a “negative” effect. Positive and negative are simply directional phrases that refer to how the model’s output is influenced. However, they reflect the model’s performance. For example, in the third row of Fig. 7, the most distant left point for force has a low value for the force feature in the third row. This low force has a − 2 effect on COF as a model outcome. Without it, the predictive model would have anticipated a value of 2 or higher. Similarly, the lack of the rightmost bluepoint of the force with a value of 2 predicts a COF of − 2 less. The most effective features are revealed by extending the data point further.

A SHAP decision plot, which uses cumulative SHAP values, was used to depict COF prediction models. Each line on the graph represents a single model prediction. All of the COF calculations are plotted in Figs. 10, 11, and 12.

As a result, this analysis demonstrates that each process condition is distinct and that various factors interact and have varying effects on individual results. Aside from cases of “dry” friction, conditions using different oils show variable COF. The same can be said for various forces and the Ra roll.

In other words, each value is represented individually; for example, Fig. 13 shows the total positive features values, Fig. 14 shows the total negative features values, and Fig. 15 shows combined positive and negative features values.

It is good to predict the results and expectations of the outcome of the experimental process before starting actual new experimental work using ANN techniques. Nevertheless, the ANN process requires the building of different structures and models, and most importantly, the prediction process cannot be carried out without previous actual data apart from the time of running. Predicting is a practical, cost-reducing, and effective method, but what if researchers want to predict new parameters without historical data? Instead of constructing, running, and evaluating a new ANN model each time, analytical equations for COF prediction were retrieved from the best model. As a result, a new method was developed; one of the novelties and emerging findings of this research is to develop a neural network structure based on training and transfer functions with different conditions to extract an equation for the prediction of COF from the best model that was implemented for the three different conditions. These equations can be used directly to predict the results by adding new parameters selected. Thus, Tables 12, 13, and 14 in the Appendix show the values of different validation metrics used to verify performance when predicting the COF for materials B, SB, and SSB, respectively, in various models.

It is critical to distinguish between test and training errors. The data used to train the model is utilised to calculate training errors, while the test error is derived using a whole dataset unknown to the model. The R2 value of the training dataset reflects variance within the trained samples as a result of the model, and the R2 value of the testing dataset indicates the model’s predictive quality. Tables 12, 13, and 14 in the Appendix show that many models developed can predict the COF efficiently and accurately. However, When the R2 of the testing was compared between the algorithms, it was discovered that the Levenberg–Marquardt (LM)—Trainlm performed the best as a training function in predicting the COF for all the materials (B, SB, and SSB). Positive saturating linear—Satlin was the best as a transfer function to predict the COF for material B with R2 values equal to 0.970 for training and 0.966 for testing. The radial basis transfer function produced the best prediction of the COF for the other two materials (SB and SSB), which shows the highest R2 value as a model performance. As a result, Table 11 shows the results of various validation metrics used to evaluate the best algorithms for predicting the COF for materials B, SB, and SSB.

Equations (3) and (10) are satlin and radbas transfer functions. Equation (4) is the COF prediction equation for metal B before weights and biases, and Eq. (5) is the transfer function variable. Equation (5) into Eq. (4) produces Eq. (6). Equation (7) substitutes weights and biases, and Eq. (8) describes the outcomes. Equation (9) for predicting the COF of material B using the best-performing ANN network’s constant weights and biases. Equations (11) and (12) predict COF for metals SB and SSB before adding weights and biases. Consequently, Eqs. (13) and (14) were created for materials SB and SSB, requiring constant weights and biases from the best-performing ANN network. The ANN network weights and biases retrieved served as a single input weight (IW) and the layered weight (LW). The IW represents the connection between the inputs and the hidden layer, while the LW represents the connection between the hidden and output layers, and b1/b2 are the biases for each layer. Tables 15, 16, and 17 in the Appendix contain b1, b2, IW, and LW from the best trained ANN model for the COF for materials B, SB, and SSB, respectively. These equations can predict the COF with accuracies of 0.966, 0.974, and 0.981 as R2 values for materials B, SB, and SSB. Table 18 summarises the actual and predicted values of the COF (training and testing sets) for materials B, SB, and SSB.

Material B:

Material SB and material SSB:

Material SB:

Material SSB:

3.2 Contribution analysis of input variables

A feature’s contribution and the calculation of the contributions of the features that have been implemented to reach the prediction amounts to presenting the input variables and how they affect the output, which is the COF. A feature’s importance, variable importance, or relative importance refers to the analysis of the contribution of input variables to the corresponding outputs. On the other hand, this study shows changes in the averages of predictions when the feature value changes, indicating the relative relevance of each feature in the model driving a prediction. When variables with lower relative importance (RI) values substitute high RI input variables, the outcomes are significantly different [54, 65, 66]. Garson [67], most squares [68], and connection weights [69] are some of the methods for calculating the importance of a feature. The importance of a feature calculated on the basis of these methods is established based on the connection weights of neurons and is depicted in Eqs. (7), (13), and (14), respectively, for materials B, SB, and SSB.

Figure 16, 17, and 18 indicate significant parameters impacting the COF in terms of relative importance and weight analysis, which explains how each feature contributes to and influences the model. This technique estimates each feature’s contribution to each row of the dataset. Based on weights and biases for determining the contributions of input variables impacting output (COF), all approaches reveal that changes in Ra_roll and condition (dry or using oil) significantly affect the COF for the three types of materials, with minimal variance. Furthermore, nominal force is always ranked at the bottom of the importance list because it has an almost negligible impact. The average relative importance of different input variables on COF of all materials is shown in Fig. 19.

4 Conclusions

In this article, the CatBoost machine learning algorithm developed by Yandex researchers and engineers is used for modelling and parameter identification of the coefficient of friction for three grades of deep-drawing quality steel sheets. A Shapley’s decision plot, which uses cumulative Shapley additive explanations (SHAP) values, was used to depict the COF prediction models. One of the emerging findings of this research is the value of developing a neural network structure based on training and transfer functions with different conditions to extract the equation for the prediction of COF from the best model that was implemented for the three different conditions. The following conclusions can be drawn from the research.

-

1.

CatBoost was able to predict the coefficient of friction with R2 values between 0.9668 and 0.9774 for the training dataset, between 0.8818 and 0.9324 for the testing dataset, and between 0.9574 and 0.9693 as an average for the training and testing dataset, depending on the grade of steel sheet.

-

2.

It was discovered that, for all the materials tested, the Levenberg–Marquardt training algorithm performed the best in predicting the coefficient of friction.

-

3.

As a result, this analysis demonstrates that each process condition is distinct and that various factors interact and have varying effects on individual results. Aside from cases of dry friction, conditions with different oils show a variable COF. The same can be said for various normal forces and the surface roughness of the countersamples.

-

4.

The radial basis transfer function produced the best prediction of the COF for two materials SB and SSB. The positive saturating linear—Satlin transfer function provided the best COF prediction for material B with R2 values greater than 0.96.

-

5.

All approaches reveal that changes in the surface roughness of the countersamples and conditions (dry or using oil) significantly affect the COF for three types of materials, with minimal variance. Moreover, nominal force always has the almost negligible impact.

Data availability

The data presented in this study are available on request from the corresponding author.

References

Dou S, Xia J (2019) Analysis of sheet metal forming (stamping process): a study of the variable friction coefficient on 5052 aluminum alloy. Metals (Basel) 9(8):853. https://doi.org/10.3390/met9080853

Dou S, Wang X, Xia J, Wilson L (2020) Analysis of sheet metal forming (warm stamping process): a study of the variable friction coefficient on 6111 aluminum alloy. Metals (Basel) 10(9):1189. https://doi.org/10.3390/met10091189

Trzepieciński T, Lemu HG (2014) “Frictional conditions of AA5251 aluminium alloy sheets using drawbead simulator tests and numerical methods. Strojniški Vestn–J Mech Eng 60(1):51–60. https://doi.org/10.5545/sv-jme.2013.1310

Sigvant M et al (2019) Friction in sheet metal forming: influence of surface roughness and strain rate on sheet metal forming simulation results. Procedia Manuf 29:512–519. https://doi.org/10.1016/j.promfg.2019.02.169

Zabala A et al (2021) The interaction between the sheet/tool surface texture and the friction/galling behaviour on aluminium deep drawing operations. Metals (Basel) 11(6):979. https://doi.org/10.3390/met11060979

Hol J, Wiebenga JH, Carleer B (2017) Friction and lubrication modelling in sheet metal forming: Influence of lubrication amount, tool roughness and sheet coating on product quality. J Phys Conf Ser 896:012026. https://doi.org/10.1088/1742-6596/896/1/012026

Sigvant M et al (2018) Friction in sheet metal forming simulations: modelling of new sheet metal coatings and lubricants. IOP Conf Ser Mater Sci Eng 418:012093. https://doi.org/10.1088/1757-899X/418/1/012093

Podulka P (2021) The effect of surface topography feature size density and distribution on the results of a data processing and parameters calculation with a comparison of regular methods. Materials (Basel) 14(15):4077. https://doi.org/10.3390/ma14154077

Trzepieciński T, Gelgele HL (2011) Investigation of anisotropy problems in sheet metal forming using finite element method. Int J Mater Form 4(4):357–369. https://doi.org/10.1007/s12289-010-0994-7

Trzepieciński T (2010) 3D elasto-plastic FEM analysis of the sheet drawing of anisotropic steel sheet. Arch Civ Mech Eng 10(4):95–106. https://doi.org/10.1016/S1644-9665(12)60035-1

Uyanık GK, Güler N (2013) A study on multiple linear regression analysis. Procedia - Soc Behav Sci 106:234–240. https://doi.org/10.1016/j.sbspro.2013.12.027

Tanaka H, Lee H (1998) Interval regression analysis by quadratic programming approach. IEEE Trans Fuzzy Syst 6(4):473–481. https://doi.org/10.1109/91.728436

Daoud JI (2017) Multicollinearity and regression analysis. J Phys Conf Ser 949:012009. https://doi.org/10.1088/1742-6596/949/1/012009

Kanyongo GY, Certo J, Launcelot BI (2006) Using regression analysis to establish the relationship between home environment and reading achievement: a case of Zimbabwe. Int Educ J 7(5):632–641

Matuszak A (2000) Factors influencing friction in steel sheet forming. J Mater Process Technol 106(1–3):250–253. https://doi.org/10.1016/S0924-0136(00)00625-7

Matuszak A, Gładysz K (2001) Definiowanie warunków tarcia podczas symulacji komputerowej procesów tłoczenia blach. Przegląd Mech 60:31–35

Dasgupta R, Thakur R, Govindrajan B (2002) Regression analysis of factors affecting high stress abrasive wear behavior. Pract Fail Anal 2(2):65–68. https://doi.org/10.1007/BF02715422

Jurkovic M, Jurkovic Z, Buljan S (2006) The tribological state test in metal forming processes using experiment and modelling,. J Achiev Mater Manuf Eng 18(1–2):383–386. [Online]. Available: http://ww.journalamme.org/papers_amme06/1152.pdf

Keum YT, Kim JH, Ghoo BY (2001) Expert drawbead models for finite element analysis of sheet metal forming processes. Int J Solids Struct 38(30–31):5335–5353. https://doi.org/10.1016/S0020-7683(00)00342-5

Aleksendrić D (2010) Neural network prediction of brake friction materials wear. Wear 268(1–2):117–125. https://doi.org/10.1016/j.wear.2009.07.006

Ibrahim D (2016) An overview of soft computing. Procedia Comput Sci 102:34–38. https://doi.org/10.1016/j.procs.2016.09.366

Kartam N, Flood I, and Garrett JH (1997) Artificial neural networks for civil engineers: fundamentals and applications

Grymek S, Druet K, Łubiński J (2002) Perspektywy obliczeń neuronowych w inżynierii łożyskowania. Tribologia 33(1(181)):227–237

Aleksendrić D, Barton DC (2009) Neural network prediction of disc brake performance. Tribol Int 42(7):1074–1080. https://doi.org/10.1016/j.triboint.2009.03.005

Bao J, Minming T, Zhu Z, Yin Y (2012) Intelligent tribological forecasting model and system for disc brake. 2012 24th Chin Cont Decision Conf (CCDC) 3870–3874. https://doi.org/10.1109/CCDC.2012.6243100

Shebani A, Iwnicki S (2018) Prediction of wheel and rail wear under different contact conditions using artificial neural networks. Wear 406–407:173–184. https://doi.org/10.1016/j.wear.2018.01.007

Kurra S, Hifzur Rahman N, Regalla SP, Gupta AK (2015) Modeling and optimization of surface roughness in single point incremental forming process. J Mater Res Technol 4(3):304–313. https://doi.org/10.1016/j.jmrt.2015.01.003

Najm SM, Paniti I, Trzepieciński T, Nama SA, Viharos ZJ, Jacso A (2021) Parametric effects of single point incremental forming on hardness of AA1100 aluminium alloy sheets. Materials (Basel) 14(23):7263. https://doi.org/10.3390/ma14237263

Najm SM, Paniti I (2021) Predict the effects of forming tool characteristics on surface roughness of aluminum foil components formed by SPIF using ANN and SVR. Int J Precis Eng Manuf 22(1):13–26. https://doi.org/10.1007/s12541-020-00434-5

Najm SM, Paniti I (2021) Artificial neural network for modeling and investigating the effects of forming tool characteristics on the accuracy and formability of thin aluminum alloy blanks when using SPIF. Int J Adv Manuf Technol 114(9–10):2591–2615. https://doi.org/10.1007/s00170-021-06712-4

Trzepieciński T, LemuGelgele H (2012) Application of genetic algorithm for optimization of neural networks for selected tribological test. Acta Mech Slovaca 16(2):54–60. https://doi.org/10.21496/ams.2012.019

Quiza R, Figueira L, Paulo Davim J (2008) Comparing statistical models and artificial neural networks on predicting the tool wear in hard machining D2 AISI steel. Int J Adv Manuf Technol 37(7–8):641–648. https://doi.org/10.1007/s00170-007-0999-7

Rapetto MP, Almqvist A, Larsson R, Lugt PM (2009) On the influence of surface roughness on real area of contact in normal, dry, friction free, rough contact by using a neural network. Wear 266(5–6):592–595. https://doi.org/10.1016/j.wear.2008.04.059

Frangu L, Rîpă M (2001) Artificial neural networks applications in tribology – a survey.

Trzos M (2007) Tendencje rozwojowe w modelowaniu zjawisk i procesów tribologicznych. Zagadnienia Eksploat Masz 151(3):73–88

Yin N, Xing Z, He K and Zhang Z (2022) Tribo-informatics approaches in tribology research: a review. Friction https://doi.org/10.1007/s40544-022-0596-7

Bhaumik S, Pathak SD, Dey S, Datta S (2019) Artificial intelligence based design of multiple friction modifiers dispersed castor oil and evaluating its tribological properties. Tribol Int 140:105813. https://doi.org/10.1016/j.triboint.2019.06.006

Pantić M et al (2018) Application of artificial neural network in biotribological research of dental glass ceramic. Tribol Ind 40(4):692–701. https://doi.org/10.24874/ti.2018.40.04.15

Gyurova LA, Friedrich K (2011) Artificial neural networks for predicting sliding friction and wear properties of polyphenylene sulfide composites. Tribol Int 44(5):603–609. https://doi.org/10.1016/j.triboint.2010.12.011

Bhaumik S, Kamaraj M (2021) Artificial neural network and multi-criterion decision making approach of designing a blend of biodegradable lubricants and investigating its tribological properties. Proc Inst Mech Eng Part J J Eng Tribol 235(8):1575–1589. https://doi.org/10.1177/1350650120965754

Nasir T, Yousif BF, McWilliam S, Salih ND, Hui LT (2010) An artificial neural network for prediction of the friction coefficient of multi-layer polymeric composites in three different orientations. Proc Inst Mech Eng Part C J Mech Eng Sci 224(2):419–429. https://doi.org/10.1243/09544062JMES1677

Echávarri Otero J et al (2014) Artificial neural network approach to predict the lubricated friction coefficient. Lubr Sci 26(3):141–162. https://doi.org/10.1002/ls.1238

Rosenkranz A, Marian M, Profito FJ, Aragon N, Shah R (2020) The use of artificial intelligence in tribology—a perspective. Lubricants 9(1):2. https://doi.org/10.3390/lubricants9010002

Marian M, Tremmel S (2021) Current trends and applications of machine learning in tribology—a review. Lubricants 9(9):86. https://doi.org/10.3390/lubricants9090086

Argatov I (2019) Artificial Neural Networks (ANNs) as a novel modeling technique in tribology. Front Mech Eng 5:30

PN–87/H–92143 (1987) Blachy i taśmy stalowe dla przemysłu motoryzacyjnego. Polski Komitet Normalizacyjny Warszawa

EN 10130 (2009) Cold rolled low carbon steel flat products for cold forming - technical delivery conditions. European Committee for Standardization Geneva

Trzepieciński T (2020) Tribological performance of environmentally friendly bio-degradable lubricants based on a combination of boric acid and bio-based oils. Materials (Basel) 13(17):3892. https://doi.org/10.3390/ma13173892

Szpunar M, Trzepieciński T, Żaba K, Ostrowski R, Zwolak M (2021) Effect of lubricant type on the friction behaviours and surface topography in metal forming of Ti-6Al-4V titanium alloy sheets. Materials (Basel) 14(13):3721. https://doi.org/10.3390/ma14133721

Trzepieciński T, Fejkiel R (2017) On the influence of deformation of deep drawing quality steel sheet on surface topography and friction. Tribol Int 115:78–88. https://doi.org/10.1016/j.triboint.2017.05.007

Prokhorenkova L, Gusev G, Vorobev A, Dorogush AV, Gulin A (2018) Catboost: unbiased boosting with categorical features. Adv Neural Inf Process Syst 2018(Section 4):6638–6648

Dorogush AV, Ershov V, Gulin A (2018) CatBoost: gradient boosting with categorical features support. 1–7 [Online]. Available: http://arxiv.org/abs/1810.11363

Ibragimov B and Gusev G (2019) Minimal variance sampling in stochastic gradient boosting. Adv Neural Inf Process Syst 32

Nabipour M, Keshavarz P (2017) Modeling surface tension of pure refrigerants using feed-forward back-propagation neural networks. Int J Refrig 75:217–227. https://doi.org/10.1016/j.ijrefrig.2016.12.011

Beale MH, Hagan MT, Demuth HB (2013) Neural Network Toolbox TM user ’ s guide R2013b. MathworksInc

Riedmiller PM “Machine learning : multi layer perceptrons,” Albert-Ludwigs-University Freibg. AG Maschinelles Lernen [Online]. Available: http://ml.informatik.uni-freiburg.de/_media/documents/teaching/ss09/ml/mlps.pdf

Principe J, Euliano NR, Lefebvre WC (1997) Neural and adaptive systems: fundamentals through simulation: multilayer perceptrons. Neural Adapt Syst Fundam Through Simulation© 1–108.https://doi.org/10.1002/ejoc.201200111.

Beale MH, Hagan MT, Demuth HB (2020) Deep Learning Toolbox TM user ’ s guide how to contact MathWorks.

Zhang G, Eddy Patuwo B, Hu MY (1998) Forecasting with artificial neural networks:: the state of the art. Int J Forecast 14(1):35–62. [Online]. Available: https://econpapers.repec.org/RePEc:eee:intfor:v:14:y:1998:i:1:p:35-62

Shahin M, Maier HR, Jaksa MB (2000) Evolutionary data division methods for developing artificial neural network models in geotechnical engineering Evolutionary data division methods for developing artificial neural network models in geotechnical engineering by M A Shahin M B Jaksa Departmen.

Lundberg SM, Lee S-I (2017) A unified approach to interpreting model predictions. Adv Neural Inform Process Syst 30 [Online]. Available: https://proceedings.neurips.cc/paper/2017/file/8a20a8621978632d76c43dfd28b67767-Paper.pdf

Štrumbelj E, Kononenko I (2014) Explaining prediction models and individual predictions with feature contributions. Knowl Inf Syst 41(3):647–665. https://doi.org/10.1007/s10115-013-0679-x

Bach S, Binder A, Montavon G, Klauschen F, Müller K-R, Samek W (2015) On pixel-wise explanations for non-linear classifier decisions by layer-wise relevance propagation. PLoS ONE 10(7):e0130140. https://doi.org/10.1371/journal.pone.0130140

Ribeiro MT, Singh S, Guestrin C (2016) "Why should i trust you?", Proc 22nd ACM SIGKDD Int Conf Knowledge Discov Data Mining 1135–1144. https://doi.org/10.1145/2939672.2939778.

Vatankhah E, Semnani D, Prabhakaran MP, Tadayon M, Razavi S, Ramakrishna S (2014) Artificial neural network for modeling the elastic modulus of electrospun polycaprolactone/gelatin scaffolds. Acta Biomater 10(2):709–721. https://doi.org/10.1016/j.actbio.2013.09.015

Rezakazemi M, Razavi S, Mohammadi T, Nazari AG (2011) Simulation and determination of optimum conditions of pervaporative dehydration of isopropanol process using synthesized PVA–APTEOS/TEOS nanocomposite membranes by means of expert systems. J Memb Sci 379(1–2):224–232. https://doi.org/10.1016/j.memsci.2011.05.070

Garson GD (1991) Interpreting neural-network connection weights. AI Expert 6(4):46–51

Ibrahim OM (2013) A comparison of methods for assessing the relative importance of input variables in artificial neural networks. J Appl Sci Res 9(11):5692–5700

Olden JD, Jackson DA (2002) Illuminating the ‘black box’: a randomization approach for understanding variable contributions in artificial neural networks. Ecol Modell 154(1–2):135–150. https://doi.org/10.1016/S0304-3800(02)00064-9

Acknowledgements

The artificial intelligence–related research reported in this paper and carried out at BME by the first author has been partially supported through the National Laboratory of Artificial Intelligence funded by the NRDIO under the auspices of the Ministry for Innovation and Technology.

Funding

Open access funding provided by Budapest University of Technology and Economics.

Author information

Authors and Affiliations

Contributions

Conceptualisation, T.T.; methodology, S.M.N. and T.T; software, S.M.N.; validation, S.M.N., T.T. and M.K.; formal analysis, T.T. and M.K.; investigation, S.M.N. and T.T.; data curation, S.M.N., T.T. and M.K.; writing—original draft preparation, S.M.N. and T.T; writing—review and editing, S.M.N. and T.T. All authors have read and agreed to the published version of the manuscript.

Corresponding authors

Ethics declarations

Ethics approval

Not applicable.

Consent to participate

Not applicable.

Conflict of interest

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendix

Appendix

Table 12

Table 13

Table 14

Table 15

Table 16

Table 17

Table 18

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Najm, S.M., Trzepieciński, T. & Kowalik, M. Modelling and parameter identification of coefficient of friction for deep-drawing quality steel sheets using the CatBoost machine learning algorithm and neural networks. Int J Adv Manuf Technol 124, 2229–2259 (2023). https://doi.org/10.1007/s00170-022-10544-1

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00170-022-10544-1