Abstract

The improvement of industrial grinding processes is driven by the objective of reducing process time and costs while maintaining the required workpiece quality characteristics. One of several limiting factors is grinding burn. Established techniques for detecting workpiece burn are often applied only to selected parts and can be time-consuming. This study presents a new approach for grinding burn detection that is applied to each ground part under near-production conditions. Based on the in-process measurement of acoustic emission, spindle electric current, and power signals, time-frequency transforms are conducted to derive almost 900 statistical features as an input for machine learning algorithms. Using genetic programming, an optimized combination of feature selector and classifier is determined to detect grinding burn. The approach achieves a high classification accuracy of about 99% for the binary problem and more than 98% for the multi-class detection case.


1 Introduction

In industrial manufacturing, grinding is often the final production step and must fulfill high demands on precision and surface integrity. At the same time, the economic productivity of the grinding process has to be ensured by maximizing tool life and shortening the process time. Furthermore, the environmental impact of the manufacturing process is gaining importance, which motivates efforts to reduce the usage of resources. In general, these three aspects conflict, as increasing the production speed results in higher wear of the grinding tool, a higher energy input per unit time, and a larger number of scrap parts.

As almost all of the introduced energy is converted into heat, the high temperatures can cause undesirable effects on the surface integrity. These microstructural changes comprise phase transformation, residual stresses, and reduced fatigue strength. According to Wegener [1], these workpiece damages are referred to as grinding burn, regardless of their extent, visibility, and depth. Therefore, it is necessary to identify possible deteriorations of the surface integrity to guarantee the quality of the machined components.

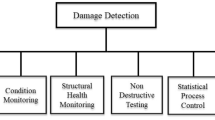

There are several methods for the detection of grinding burn on the machined workpiece, categorized by He et al. [2] into beforehand prediction and post-mortem detection methods. The latter include time-intensive procedures like metallographic or residual stress testing. Following Wegener [1], nital etching and Barkhausen noise analysis (BNA) are predominantly applied in industrial practice. In contrast, beforehand methods include the modeling of forces, temperatures, and power. Additionally, process monitoring approaches are applied to realize an in situ detection of thermal damage.

In research areas, acoustic emission (AE) signals are quite common for condition monitoring of grinding processes [3,4,5]. Multiple studies investigate effects like chatter vibrations [6], spindle damage [7], wheel wear monitoring [8], and the detection of grinding burn.

In the following, an approach for the in situ detection of grinding burn using machine learning is proposed. To this end, an experimental procedure to generate controlled grinding burn is developed and a comprehensive dataset is ground using high material removal rates. After applying various time-frequency transforms and extracting statistical features, the final feature matrix comprises 391 experiments and 878 features. Based on this feature matrix, the combination of feature selection and machine learning methods is optimized to maximize the classification accuracy for both binary and multi-class prediction. In addition, the selected and most relevant features are studied to evaluate the benefits of the different sensor types and signal processing methods.

2 State of the art

In recent years, many researchers have studied machine learning approaches for grinding burn detection. Even though they all pursued the same objective, each of them contributed different procedures and techniques. The distinctive characteristics can be found in the sensor system, signal processing methods, feature engineering and feature selection/extraction techniques, and the applied classifier, as summarized in Table 1.

In the very early stages, Eda et al. [9] already studied the relationship between AE signals and grinding burn of different degrees. A general rise in AE amplitude was found as grinding burn progressed. Furthermore, the fast Fourier transform (FFT) was used to conclude that the characteristic frequency band in the AE spectrum lies between 100 and 300 kHz. Saxler [10] and Webster et al. [23] confirmed the findings and highlighted the importance of the AE root mean square (RMS) feature. Further statistical features were studied by Wang et al. [11], who concluded that the band power, kurtosis, skewness, and autoregressive coefficients of the AE signal correlate well with the occurrence of grinding burn. Kwak and Ha [12] and de Aguiar et al. [13] implemented a multi-sensor method by including the tool power signal in the data acquisition. Neto et al. [14] also applied a multi-sensor method with AE and vibration signals. All of them used an artificial neural network (ANN) for the two-class classification problem and achieved classification accuracies between 95 and 98.3%. The works presented so far use either time-domain or frequency-domain methods for grinding burn detection. However, these approaches are limited since they do not consider both domains at the same time.

On the assumption that purely time-domain or frequency-domain methods lack robustness, Liu et al. [15] used the wavelet packet transform (WPT) to create AE signal features. In contrast to other works, they provoked grinding burn by laser irradiation and demonstrated that the spectral density distribution of the AE magnitudes shifted from lower to higher frequencies when heat was induced [15]. Chen et al. [16] used a similar experimental setup but applied the short-time Fourier transform (STFT); nonetheless, they made similar observations. Griffin and Chen [6] studied grinding burn and grinding chatter with AE, power, vibration, and force signals, whereby grinding burn was generated by adjusting process parameters. Their observation that the characteristic frequency bands might extend beyond 500 kHz coincides to some extent with the results of Liu et al. [15], but it should be considered that both studies use the same typical aerospace workpiece material, an iron-nickel alloy. The characteristic frequency bands may therefore differ from those emitted by other materials, for example steels used in the automotive industry. Griffin and Chen used independent component analysis (ICA) for automatic feature selection and combined it with a tree-based genetic programming algorithm [6]. In a later study, Griffin [17] obtained even better results with the classification and regression tree algorithm, for which 99 to 100% classification accuracy is reported. Lajmert [18] introduced the Hilbert Huang transform (HHT) as a signal processing method to quantify grinding burn for machined hardened steel workpieces. In further work, Lajmert et al. [19] used AE, vibration, and force sensors, focusing on automatic feature selection methods.
They created many statistical features after applying the HHT, reduced the number of features with principal component analysis (PCA), and established a minimum set of relevant features with decision tree algorithms [19]. Since the empirical mode decomposition (EMD) suffers from the mode-mixing phenomenon, Yang et al. [20] adopted the ensemble empirical mode decomposition (EEMD) method, which promises more robustness and better results. Similar to other works, a frequency shift to higher frequency bands was observed in the range of 150 to 450 kHz [20]. Furthermore, Yang et al. [20] proposed, unlike Liu et al. [15], that the frequency range of 150 to 450 kHz correlates with grinding burn, even though they also used laser irradiation to generate grinding burn. The main difference is that Yang et al. [20] used the medium carbon steel AISI 1045 in their experiments instead of the iron-nickel-based alloy. Hence, the prominent frequency bands for grinding burn might depend on the workpiece material. Guo et al. [21] applied the EEMD as well as the WPT for signal processing and used a two-stage feature selection algorithm to determine the most meaningful features. A stacked sparse autoencoder network (SSAE) was deployed to classify between burn and no burn and achieved 97.5% classification accuracy [21]. Another method for frequency mode decomposition is the variational mode decomposition (VMD), which was first introduced for grinding burn detection by Gao et al. [22]. This method was motivated by the assumption that grinding burn is associated with the frequency distribution of the AE signal. Four degrees of grinding burn (no burn, slight burn, medium burn, and severe burn) were generated on the metallic workpiece surface by laser irradiation with four different temperature ranges [22].

In the presented works on grinding burn detection, many different methods have been proposed. Purely time-domain and frequency-domain methods were used in the very early stages to determine relevant features and characteristic frequency bands for grinding burn detection. Especially in the last decade, time-frequency-domain methods have become more popular since they contain more information and enable non-stationary signal analysis. However, after signal transformation, automatic feature selection algorithms are rarely used for optimal feature subset selection. Furthermore, it was noticed that different workpiece materials exhibit considerably different frequency content during machining. The presented works show very good classification accuracies; however, most of them do not differentiate between different degrees of grinding burn and use a small database with little to no process variation. In addition, all of the described works either use a grinding wheel with aluminum oxide grains or induce workpiece burn through laser irradiation.

In contrast to other works, this paper uses a metal-bonded grinding wheel with cBN grains and produces four different burn states by emulating the wear progress. Furthermore, the proposed approach applies modern signal transformation methods to multiple sensor signals. Next, the generated statistical features are reduced by feature selection methods that automatically identify the most suitable features. Finally, the multi-sensor approach and the large amount of experimental data under varying process conditions enable multi-class prediction, which is capable of distinguishing between different degrees of grinding burn.

3 Theoretical methods

The theoretical foundations of this study are introduced in the following. Subsequently, the problem-specific application and implementation are presented in Section 4.5.

3.1 Signal processing

The most popular transformation techniques that are considered as preprocessing steps for machine learning (ML) are briefly introduced in the following. Table 2 gives a comparative overview of the Fourier, wavelet, and Hilbert transforms, covering the differences most relevant to this work.

The FFT transforms any time-domain signal x(t) into the frequency domain by correlating it with periodic sinusoidal functions. Due to this assumption of periodicity, the FFT loses the time information and is therefore not suited for non-stationary signals. This limitation gave rise to the STFT which, according to Isermann [25], applies a window function that captures the frequency content over time by shifting it by a time constant τ. However, due to the time-frequency uncertainty principle, the STFT cannot provide an exact time-frequency representation of the signal [14].
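The windowing idea behind the STFT can be sketched in a few lines. The following is a minimal illustration using NumPy only; the function name `stft_magnitude`, the Hann window, the window length, and the synthetic two-tone test signal are assumptions made for this example and are not taken from the study.

```python
import numpy as np

def stft_magnitude(x, fs, win_len=256, hop=128):
    """Magnitude STFT with a Hann window; returns (freqs, times, |X|)."""
    window = np.hanning(win_len)
    n_frames = 1 + (len(x) - win_len) // hop
    # Slice the signal into overlapping, windowed frames.
    frames = np.stack([x[i * hop:i * hop + win_len] * window
                       for i in range(n_frames)])
    spec = np.abs(np.fft.rfft(frames, axis=1)).T        # shape: (freq, time)
    freqs = np.fft.rfftfreq(win_len, d=1.0 / fs)
    times = (np.arange(n_frames) * hop + win_len / 2) / fs
    return freqs, times, spec

# Synthetic non-stationary signal: 150 kHz in the first half, 400 kHz afterwards.
fs = 2_000_000                                           # 2 MHz sampling rate
t = np.arange(20_000) / fs
x = np.where(t < t[-1] / 2,
             np.sin(2 * np.pi * 150e3 * t),
             np.sin(2 * np.pi * 400e3 * t))

freqs, times, spec = stft_magnitude(x, fs)
dominant = freqs[spec.argmax(axis=0)]                    # dominant frequency per frame
```

A plain FFT of `x` would show both tones without indicating when each occurs, whereas `dominant` changes over the frames. The frequency resolution is fixed at `fs / win_len`, which illustrates the trade-off imposed by the uncertainty principle: a longer window sharpens the frequency axis but blurs the time axis.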

To overcome this drawback, the wavelet transform was developed, which uses a group of mother wavelets Ψ(t) showing sharp and oscillating behavior. The wavelet is typically chosen according to its similarity to the original signal [25]. Once a mother wavelet is chosen, it can be time-scaled (adjusting the frequency resolution) by the factor a and time-shifted (adjusting the time resolution) by τ. According to [25], dilation and translation of a mother wavelet Ψ(t) lead to Eq. 1.

Hence, the wavelet transform provides an independent modulation of time and frequency resolution [15]. The continuous wavelet transform (CWT) of the signal x(t) is then described as follows [25]:
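In the common textbook form, which is assumed here to correspond to Eq. 1 and to the CWT definition referenced above, the scaled and shifted wavelet and the transform can be written as follows. The normalization factor \(1/\sqrt{a}\) and the complex conjugate \(\Psi^{*}\) follow the usual convention and may differ in detail from the notation in [25].

```latex
\begin{align}
  \Psi_{a,\tau}(t) &= \frac{1}{\sqrt{a}}\,\Psi\!\left(\frac{t-\tau}{a}\right), \qquad a > 0 \\
  \mathrm{CWT}(a,\tau) &= \int_{-\infty}^{\infty} x(t)\,\Psi^{*}_{a,\tau}(t)\,\mathrm{d}t
\end{align}
```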

The wavelet theory can practically be used in combination with high-pass and low-pass filters to decompose a signal into different frequency bands. The wavelet packet transform (WPT) decomposes the signal into scaling (approximations) and wavelet (details) coefficients by convolution of the signal. Thus, the high-pass and low-pass filter impulse responses lead to a symmetric tree-structured filter bank [14].
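The tree-structured filter bank can be illustrated with the simplest possible wavelet. The sketch below uses the Haar wavelet and NumPy only; the choice of wavelet, the decomposition level, and the helper names `haar_split` and `wavelet_packet` are assumptions for illustration and do not reflect the configuration used in this study.

```python
import numpy as np

def haar_split(x):
    """One filter-bank stage: low-pass (approximation) and high-pass (detail)."""
    x = x[: len(x) // 2 * 2]                  # drop a trailing odd sample
    even, odd = x[0::2], x[1::2]
    approx = (even + odd) / np.sqrt(2.0)      # low-pass filter + downsampling
    detail = (even - odd) / np.sqrt(2.0)      # high-pass filter + downsampling
    return approx, detail

def wavelet_packet(x, level):
    """Full binary tree; returns the 2**level leaf nodes in filter (natural) order."""
    nodes = [np.asarray(x, dtype=float)]
    for _ in range(level):
        # Unlike the plain wavelet transform, BOTH branches are split again.
        nodes = [band for node in nodes for band in haar_split(node)]
    return nodes

rng = np.random.default_rng(0)
signal = rng.standard_normal(1024)
leaves = wavelet_packet(signal, level=3)
band_energy = np.array([np.sum(c ** 2) for c in leaves])
```

Because the Haar filters are orthonormal, the energies in `band_energy` sum to the energy of the input signal, so the relative energy per node can serve directly as a feature. Note that the leaves are returned in filter order, which is not the same as ascending frequency order.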

The Hilbert transform (HT), introduced by Hahn [26], handles nonstationary and nonlinear narrow-band signals and is defined as

where P describes the Cauchy principal value [27]. The HT describes a 90° phase shift of x(t), forming a complex conjugate pair of x(t) and H(t), which then defines an analytic signal Z(t) such that

where A(t) describes the instantaneous amplitude and Φ(t) the instantaneous phase [27, 28]. The instantaneous frequency can then be derived as follows.
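In the notation commonly used for the HHT, and assumed here to match the three relations referenced in this subsection, the Hilbert transform, the analytic signal, and the instantaneous frequency read as follows. The symbol \(\omega(t)\) for the instantaneous (angular) frequency is introduced for this sketch.

```latex
\begin{align}
  H(t) &= \frac{1}{\pi}\, P \int_{-\infty}^{\infty} \frac{x(\tau)}{t-\tau}\,\mathrm{d}\tau \\
  Z(t) &= x(t) + i\,H(t) = A(t)\,e^{i\Phi(t)}, \qquad
  A(t) = \sqrt{x(t)^{2} + H(t)^{2}}, \quad
  \Phi(t) = \arctan\frac{H(t)}{x(t)} \\
  \omega(t) &= \frac{\mathrm{d}\Phi(t)}{\mathrm{d}t}
\end{align}
```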

For the HT to be effective, however, the analyzed data x(t) must satisfy some restrictions, e.g., it needs to be a narrow-band signal [27]. This is because the instantaneous frequency is a single scalar value at each point in time and is thus not able to describe more than one oscillatory mode at any given time [27]. For this reason, Huang et al. [27] introduced the empirical mode decomposition (EMD), which decomposes a wide-band signal into its narrow-band frequency components, called intrinsic mode functions (IMFs). The HT is then applied to each of the decomposed components. The combination is known as the Hilbert Huang transform (HHT). As an improvement to the EMD, Wu and Huang [29] published the ensemble empirical mode decomposition (EEMD) as a truly noise-assisted data analysis method. This method builds an ensemble of signals with added white noise and takes the average as the final result to overcome the mode-mixing problem, which turned out to be a major drawback of the EMD. As a more recent approach to decompose a signal into frequency bands, the variational mode decomposition (VMD) was proposed by Zosso and Dragomiretskiy [30]. The VMD is an entirely non-recursive decomposition technique that extracts the modes concurrently. In contrast to the EMD or EEMD, it is mathematically well founded as a constrained variational problem, which assumes each mode to be mostly compact around its center frequency [30].
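For a narrow-band component such as a single IMF, the instantaneous amplitude and frequency can be computed numerically from the analytic signal. The following sketch builds the analytic signal with the FFT using NumPy only; the helper name `analytic_signal` and the 200 kHz test tone are assumptions for illustration.

```python
import numpy as np

def analytic_signal(x):
    """Analytic signal Z = x + iH, built by suppressing negative frequencies."""
    n = len(x)
    spectrum = np.fft.fft(x)
    gain = np.zeros(n)
    gain[0] = 1.0                              # keep the DC component once
    if n % 2 == 0:
        gain[n // 2] = 1.0                     # keep the Nyquist component once
        gain[1:n // 2] = 2.0                   # double the positive frequencies
    else:
        gain[1:(n + 1) // 2] = 2.0
    return np.fft.ifft(spectrum * gain)

fs = 2_000_000
t = np.arange(4000) / fs
x = np.cos(2 * np.pi * 200e3 * t)              # narrow-band test tone at 200 kHz

z = analytic_signal(x)
amplitude = np.abs(z)                           # instantaneous amplitude A(t)
phase = np.unwrap(np.angle(z))                  # instantaneous phase Phi(t)
inst_freq = np.diff(phase) * fs / (2 * np.pi)   # instantaneous frequency in Hz
```

For this single tone, `inst_freq` is constant at 200 kHz and `amplitude` equals one. If two tones were superimposed, the same computation would return a single oscillating frequency trace that describes neither of them, which is the limitation that motivates the prior decomposition into IMFs.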

3.2 Feature engineering and data preprocessing

High-frequency sensor data typically have a low density of information, regardless of whether they are represented in the time or frequency domain. One way to condense the information is feature engineering, whereby statistical features are computed to represent the data as a collection of scalar values without losing much information. After applying the signal transformation methods previously introduced, features based on time, frequency, and time-frequency information are calculated. According to Grimmett [31], the features usually include the central moments from statistics: mean, standard deviation (std), skewness (skew), and kurtosis (kurt). Especially for AE signals, additional features such as the root mean square (rms), the median (med), the peak values (p2rm and p2p), and the spectral entropy (spen) can be considered [10, 22, 23, 32]. An overview of the features with their corresponding equations used for fault detection and diagnosis was given by de Aguiar et al. [13], Sharma and Parey [33], Teti et al. [34], and Guo et al. [21].
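The listed features can be computed from any one-dimensional signal or coefficient series. The sketch below uses NumPy only and standard textbook definitions; reading `p2rm` as the peak-to-RMS ratio and computing the spectral entropy from the normalized periodogram are assumptions, since the exact equations are given in the cited references rather than here.

```python
import numpy as np

def statistical_features(x):
    """Scalar features of a 1-D series (population moments, no bias correction)."""
    x = np.asarray(x, dtype=float)
    mean, std = x.mean(), x.std()
    centered = x - mean
    rms = np.sqrt(np.mean(x ** 2))
    return {
        "mean": mean,
        "std": std,
        "skew": np.mean(centered ** 3) / std ** 3,
        "kurt": np.mean(centered ** 4) / std ** 4,
        "rms": rms,
        "med": np.median(x),
        "p2p": x.max() - x.min(),              # peak-to-peak value
        "p2rm": np.max(np.abs(x)) / rms,       # assumed meaning: peak-to-RMS ratio
    }

def spectral_entropy(x):
    """Shannon entropy (in bits) of the normalized power spectrum."""
    psd = np.abs(np.fft.rfft(x)) ** 2
    p = psd / psd.sum()
    p = p[p > 0]                                # avoid log2(0)
    return -np.sum(p * np.log2(p))

# Example: ten full periods of a unit sine wave.
n = np.arange(1000)
sine = np.sin(2 * np.pi * 10 * n / 1000)
features = statistical_features(sine)
features["spen"] = spectral_entropy(sine)
```

For the sine wave, the RMS is \(1/\sqrt{2}\), the kurtosis is 1.5, and the spectral entropy is close to zero because all power sits in a single frequency bin; a broadband signal would yield a much larger entropy.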

In this work, the abovementioned features are used to build the feature matrix \(\textbf {F}=(f_{i,j}) \in \mathbb {R}^{m\times n}\) with m experimental observations and n features. The absolute values \(|f_{i,j}|\) strongly depend on the selected unit of the features. Because of these different scales and the varying signs, features with large values would outweigh those with small values, for example when a distance-based classifier is used. As a consequence, standardization (z-score normalization) is conducted to preprocess the data [35], whereby the feature matrix F is scaled column by column. Following Guyon et al. [36], this step typically results in a better performance of the machine learning method applied afterwards.
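Column-wise standardization can be sketched as follows, using NumPy only. The small matrix `F` and the function name `standardize` are hypothetical and serve only to show the effect of differing feature scales.

```python
import numpy as np

def standardize(F):
    """Z-score each column of F; returns the scaled matrix and the statistics used."""
    mu = F.mean(axis=0)
    sigma = F.std(axis=0)
    sigma = np.where(sigma == 0, 1.0, sigma)   # keep constant columns finite
    return (F - mu) / sigma, mu, sigma

# Hypothetical feature matrix: 3 observations, 3 features on very different scales.
F = np.array([[1.0, 2000.0, -0.5],
              [2.0, 1000.0,  0.5],
              [3.0, 3000.0,  0.0]])
Z, mu, sigma = standardize(F)
```

After scaling, every column has zero mean and unit standard deviation, so no feature dominates a Euclidean distance merely because of its unit. In a train/test setting, `mu` and `sigma` would typically be estimated on the training data only and reused for new observations.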

3.3 Feature selection

Not all constructed features are necessarily useful for the classification task, since some may be redundant or even irrelevant. Bolón-Canedo et al. [37] describe the process of feature selection as a problem of finding the most informative and compact subset of features. The main difference from feature extraction methods like the principal component analysis (PCA) is that no new features are calculated and only a subset of the full feature matrix containing the most relevant dimensions is selected [37]. According to Bolón-Canedo et al. [37] and Pilnenskiy and Smetannikov [38], feature selection methods are divided into filter, wrapper, and embedded methods.

Filter methods are mainly based on univariate or multivariate statistical tests. In this work, the Fisher’s exact test (SelectPercentile) and the family-wise error rate (SelectFwe) implemented in the Python package scikit-learn [39] are applied. Wrapper methods treat a classification algorithm as a black box to evaluate the performance of the selected feature subset iteratively. One of the most common wrapper methods is recursive feature elimination (RFE, implemented in scikit-learn [39]), whereby the number of selected features is reduced recursively using the feature importance of the internal tree-based method.
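The three selectors named above can be exercised on synthetic data as sketched below. The dataset from `make_classification`, the score function `f_classif` (the scikit-learn default for both filter classes), the percentile, the significance level, and the `ExtraTreesClassifier` used inside RFE are all assumptions for illustration; the settings actually used in this study are not stated here.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import ExtraTreesClassifier
from sklearn.feature_selection import RFE, SelectFwe, SelectPercentile, f_classif

# Synthetic stand-in for the feature matrix: 200 observations, 40 features.
X, y = make_classification(n_samples=200, n_features=40, n_informative=5,
                           n_redundant=5, random_state=0)

# Filter methods: one univariate test per feature, independent of any classifier.
keep_10pct = SelectPercentile(score_func=f_classif, percentile=10).fit(X, y)
keep_fwe = SelectFwe(score_func=f_classif, alpha=0.05).fit(X, y)

# Wrapper method: repeatedly fit a tree ensemble and drop the 4 least important
# features until 8 remain.
rfe = RFE(ExtraTreesClassifier(n_estimators=50, random_state=0),
          n_features_to_select=8, step=4).fit(X, y)

X_filtered = keep_10pct.transform(X)          # top 10% of the features by score
X_wrapped = rfe.transform(X)                  # 8 features chosen by RFE
fwe_mask = keep_fwe.get_support()             # boolean mask over all 40 features
```

The filter selectors only need one pass over the data, whereas RFE refits the ensemble at every elimination step, which reflects the difference in computational cost between the two families.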

Following Bolón-Canedo et al. [37], filter methods are usually computationally less expensive and generalize well. In contrast, wrapper methods give better classification results but also tend to overfit and have high computational costs [37]. Due to their strong dependency on the applied classifier and their high computational costs [37], embedded methods are not used in this work.

3.4 Classification

Supervised learning is a subtask of machine learning, where an algorithm learns a function that maps a given dataset to a target, the so-called label. In the training step, the applied method learns the key characteristics of the dataset to determine the target value. Afterwards, the algorithm could predict the label of a new observation due to its ability of generalization [40]. Within supervised learning, one typically distinguishes between regression and classification tasks. In regression tasks, the label is a continuous value, whereas in classification, an object from a finite categorical set is predicted [40].

There is a broad range of machine learning algorithms; however, this study focuses on classification methods suitable for the detection of grinding burn. In the state of the art, mainly neural network approaches are applied. To improve the classification performance, this study assesses a distance-based and a kernel-based classifier as well as different ensemble methods based on decision trees.

3.4.1 Distance-based methods

The most common distance-based classification method is the k-nearest neighbor (kNN) algorithm, which is also implemented in scikit-learn [39]. In this method, each training tuple represents a point in n-dimensional space. The label of a new data point is predicted from the class labels of its k nearest neighbors. To determine the distances, any metric can be applied; predominantly, the Euclidean distance \(\left \Vert .\right \Vert _{2}\) is selected. According to [35], the optimal number of neighbors k has to be determined experimentally. This choice can have a crucial impact on the overall classification performance (see Section 3.4.4). Furthermore, the kNN algorithm is often classed as a lazy learner, as no real learning is involved [40].
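The prediction rule can be written out directly. The following sketch implements a majority vote over the k nearest neighbors with NumPy only; the function name `knn_predict` and the toy data are hypothetical, and the study itself relies on the scikit-learn implementation.

```python
import numpy as np

def knn_predict(X_train, y_train, X_new, k=3):
    """Predict integer class labels by majority vote among the k nearest points."""
    # Pairwise Euclidean distances, shape (n_new, n_train).
    dists = np.linalg.norm(X_new[:, None, :] - X_train[None, :, :], axis=2)
    nearest = np.argsort(dists, axis=1)[:, :k]          # indices of the k closest
    votes = y_train[nearest]
    return np.array([np.bincount(row).argmax() for row in votes])

# Two well-separated clusters with labels 0 and 1.
X_train = np.array([[0.0, 0.0], [0.1, 0.2], [0.2, 0.1],
                    [1.0, 1.0], [0.9, 1.1], [1.1, 0.9]])
y_train = np.array([0, 0, 0, 1, 1, 1])
X_new = np.array([[0.05, 0.1], [1.0, 0.95]])

pred = knn_predict(X_train, y_train, X_new, k=3)
```

There is no training step: the training data are simply stored, and all computation happens at prediction time, which is why the method is called lazy. Since the vote depends entirely on distances, it benefits directly from the standardization described in Section 3.2.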

3.4.2 Kernel-based methods

Kernel-based machine learning methods transform the dataset into a higher-dimensional space using kernel functions. With this so-called kernel trick, the data is expected to become linearly separable so that classification is possible. The support vector machine (SVM) is the most frequently used kernel method, whereby a separating hyperplane that maximizes the margin between two classes is calculated [40]. For the SVM, linear or radial basis function (RBF) kernels are most commonly applied, whereby the choice of the hyperparameters C and γ is critical to the classification performance. In this study, the Python implementation SVC from the package scikit-learn [39] is used.

3.4.3 Tree-based ensemble methods

In general, only one learning algorithm is applied to solve a given problem. Using ensemble methods, a set of machine learning algorithms mainly of an identical type is trained for the same problem. According to Zhou [41], this combination makes the generalization ability often much stronger than applying a single method.

In many applications, a decision tree, a non-parametric supervised machine learning method, is selected as base learner. In classification tasks, a decision tree learns to predict the label by adapting binary splitting rules inferred from the given dataset. At each node, the split that minimizes the so-called impurity function (Gini or entropy) is searched for [40]. The split with the highest information content is selected and the procedure is repeated iteratively. At each leaf node of the decision tree, a prediction for the unknown class label is given [40]. However, decision trees tend to overfit, and selecting the split with minimal impurity in each step does not necessarily result in the global optimum [40]. To overcome these disadvantages, ensemble methods combine multiple decision trees by bagging, boosting, or stacking.

For bagging, the original dataset is randomly resampled with replacement to obtain a new dataset (called bag) for each base learner. Each model is trained independently in parallel and the predictions are averaged over all base learners [41, 42]. The most common representatives for bagging are random forests, where multiple decision trees are combined to decrease the variance and improve the overall classification performance. In extremely randomized trees, an improvement of random forests, the computation of the splits is further randomized, which results in a slightly lower computational time [43]. Both RandomForestClassifier and ExtraTreesClassifier are provided by the Python package scikit-learn [39].
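Both ensemble classifiers share the same scikit-learn interface, so they can be compared with a few lines. The synthetic four-class dataset, the number of trees, and the 5-fold cross-validation below are assumptions chosen for illustration only.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import ExtraTreesClassifier, RandomForestClassifier
from sklearn.model_selection import cross_val_score

# Synthetic four-class problem standing in for the labeled feature matrix.
X, y = make_classification(n_samples=300, n_features=20, n_informative=6,
                           n_classes=4, n_clusters_per_class=1, random_state=0)

models = {
    # Each tree is trained on a bootstrap sample (bag) of the data.
    "random forest": RandomForestClassifier(n_estimators=100, random_state=0),
    # Split thresholds are drawn at random; note that scikit-learn's default
    # here is bootstrap=False, i.e. each tree sees the full training set.
    "extra trees": ExtraTreesClassifier(n_estimators=100, random_state=0),
}

scores = {name: cross_val_score(model, X, y, cv=5).mean()
          for name, model in models.items()}
```

The dictionary `scores` holds the mean cross-validated accuracy per model. Which of the two performs better depends on the dataset, so no ranking should be read into this synthetic example.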

Boosting mainly differs in the learning procedure, as it takes place in a sequential way. Each observation is assigned a weight, whereby after the base learner is trained, the weights of the misclassified data are increased. Thus, the further training process focuses on them. The final prediction is computed by a weighted average over all previous learners [41].
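The reweighting step can be made concrete with the classic AdaBoost update for two classes. This is a sketch of one well-known boosting variant, chosen here as an assumption; the text above does not specify which boosting algorithm is meant, and the function name `boosting_round` is hypothetical.

```python
import numpy as np

def boosting_round(weights, y_true, y_pred):
    """One AdaBoost-style update: returns the learner weight and new sample weights."""
    miss = y_true != y_pred
    err = np.sum(weights[miss]) / np.sum(weights)        # weighted error rate
    alpha = 0.5 * np.log((1.0 - err) / err)              # influence of this learner
    # Increase the weights of misclassified samples, decrease the others.
    new_w = weights * np.exp(np.where(miss, alpha, -alpha))
    return alpha, new_w / new_w.sum()

y_true = np.array([1, 1, -1, -1, 1])
y_pred = np.array([1, -1, -1, -1, 1])                    # one mistake at index 1
w0 = np.full(5, 0.2)                                     # uniform initial weights

alpha, w1 = boosting_round(w0, y_true, y_pred)
```

After the update, the single misclassified sample carries half of the total weight, so the next base learner is pushed to classify it correctly. The returned `alpha` is the weight this learner would receive in the final weighted vote.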

3.4.4 Hyperparameter search

Each machine learning method has a varying number of hyperparameters, e.g., the number of neighbors for the k-nearest neighbors algorithm. These model parameters are not known a priori, but their choice affects the performance significantly. There are four common approaches to optimize the hyperparameters and select the optimal model: grid search, random search, Bayesian optimization, and evolutionary algorithms (EA).

According to Le et al. [44], the advantages of EA over the other approaches arise in the lower computational power needed, the flexibility in building multi-level tasks, and the large search space of methods and hyperparameters. The tree-based pipeline optimization tool (TPOT), developed by Le et al. [44], uses genetic programming to determine an optimal pipeline and is also implemented in Python. In this work, the term pipeline comprises the combination of one feature selection method and one classification algorithm. In the optimization process, a wide variety of different pipelines and their hyperparameters are evaluated with the objective to maximize the final classification performance.
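The notion of a pipeline and its joint optimization can be illustrated with scikit-learn alone. The sketch below deliberately swaps in an exhaustive grid search for the genetic programming used by TPOT, so it shows the search space rather than the search strategy of this study; the dataset, the candidate classifiers, and all hyperparameter values are assumptions.

```python
from sklearn.datasets import make_classification
from sklearn.feature_selection import SelectPercentile, f_classif
from sklearn.model_selection import GridSearchCV
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

X, y = make_classification(n_samples=200, n_features=30, n_informative=5,
                           random_state=0)

# One pipeline = scaling + one feature selector + one classifier.
pipe = Pipeline([
    ("scale", StandardScaler()),
    ("select", SelectPercentile(score_func=f_classif)),
    ("clf", SVC()),
])

# Each dict is one family of pipelines; the "clf" entry swaps the classifier itself.
param_grid = [
    {"select__percentile": [10, 30], "clf": [SVC(kernel="rbf")],
     "clf__C": [1, 10], "clf__gamma": ["scale", 0.01]},
    {"select__percentile": [10, 30], "clf": [KNeighborsClassifier()],
     "clf__n_neighbors": [3, 5, 7]},
]

search = GridSearchCV(pipe, param_grid, cv=5, scoring="balanced_accuracy")
search.fit(X, y)
best_pipeline, best_cv_score = search.best_estimator_, search.best_score_
```

A grid search evaluates every listed combination, which becomes infeasible as more selectors, classifiers, and hyperparameters are added. An evolutionary search instead evolves a population of candidate pipelines and only evaluates the combinations it generates.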

3.4.5 Metric and scoring

The quality of the prediction given by the machine learning algorithms is quantified by metrics. In general, the applied metric or metrics should be selected specifically for the problem. In grinding burn classification, mainly the accuracy has been evaluated so far, which describes the number of correctly classified samples divided by the total number of samples. To ensure comparability with other works, the accuracy is also used in this study. Additionally, the balanced accuracy is calculated, which is the average of the recall obtained for each class and is suited for imbalanced datasets. Both metrics can be evaluated during training and testing of the pipeline. In this work, cross-validation (CV) is used during the training process to avoid overfitting; hence, the CV score is the average performance over all cross-validation folds.
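The difference between the two metrics becomes visible on an imbalanced example. The sketch below uses NumPy only; the label vectors are hypothetical and the class names are placeholders.

```python
import numpy as np

def accuracy(y_true, y_pred):
    """Share of correctly classified samples."""
    return np.mean(y_true == y_pred)

def balanced_accuracy(y_true, y_pred):
    """Mean of the per-class recall values."""
    recalls = [np.mean(y_pred[y_true == c] == c) for c in np.unique(y_true)]
    return np.mean(recalls)

# Hypothetical imbalanced example: eight samples of class A, one each of B and E.
y_true = np.array(["A"] * 8 + ["B", "E"])
y_pred = np.array(["A"] * 8 + ["A", "E"])       # the single B sample is missed

acc = accuracy(y_true, y_pred)
bal_acc = balanced_accuracy(y_true, y_pred)
```

Here the accuracy is 0.9, although one of the three classes is never detected. The balanced accuracy drops to 2/3 because the recall of class B is zero, which makes it the more informative metric when some burn classes are rare.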

4 Material and method

4.1 Experimental setup

The grinding experiments are conducted on an external cylindrical grinding machine. For all experiments, the same multi-layered metal-bonded cBN grinding wheel with a metallic wheel hub is used, featuring a grit size of 150 to 180 μm and a concentration of 150% (manufacturer’s designation B181-C150-M-3V1-V15). The maximal tool diameter ds measures 373.9 mm and the grinding wheel width bs of the straight profile is 7 mm, featuring an inclination of 15° to compensate for the tilt of the tool spindle holder. In this study, C45E (ISO EN 10269) is used as workpiece material. This quenched and tempered steel has a carbon content of 0.45% and a low content of phosphorus and sulfur. It is hardened inductively to about 740 HV1 up to a hardening depth of approximately 2 mm. The initial diameter of the prepared workpieces dw measures 28 mm.

The workpiece is clamped radially and axially between a jaw chuck and a grinding mandrel. The grinding wheel speed vs varies from 80 to 100 m/s and the workpiece rotates synchronously with nw = 250 min⁻¹. A single experiment comprises one plunge grinding process with a constant radial infeed velocity vfr, in which 1 mm of the workpiece material is removed in the radial direction. In all experiments, a mineral oil–based cutting fluid of type Wisura AKS 12 is deployed with a constant volume flow of 75 l/min. The applied process parameters are summarized in Table 3.

For the development of a robust classification algorithm, the process parameters of the recurring plunge grinding experiments have to be varied. The specific material removal rate

describes the amount of material, which is removed per unit width of the grinding wheel within a certain time interval [45]. Another measure to compare different sets of process parameters is the quotient of the equivalent chip size hcu and the geometrical contact length lg, which is defined according to Klocke [45] as follows:

Thereby, fr describes the depth of cut during one workpiece revolution, vw the circumferential velocity of the workpiece, and deq the equivalent diameter.
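For external cylindrical plunge grinding, the two quantities are commonly written as follows. This is a reconstruction under stated assumptions: the relations use the standard definitions of the equivalent chip thickness \(h_{cu} = f_r v_w / v_s\) and the geometrical contact length \(l_g = \sqrt{f_r d_{eq}}\), and may differ in form from the equations in [45].

```latex
\begin{align}
  Q^{\prime}_{w} &= \pi\, d_{w}\, v_{fr} \\
  \frac{h_{cu}}{l_{g}} &= \frac{f_{r}\, v_{w}}{v_{s}\,\sqrt{f_{r}\, d_{eq}}}
                        = \frac{v_{w}}{v_{s}}\sqrt{\frac{f_{r}}{d_{eq}}},
  \qquad f_{r} = \frac{v_{fr}}{n_{w}}, \quad
  v_{w} = \pi\, d_{w}\, n_{w}, \quad
  d_{eq} = \frac{d_{w}\, d_{s}}{d_{w} + d_{s}}
\end{align}
```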

As visualized in Fig. 1, each workpiece comprises 14 grinding positions. The corresponding process parameters are listed in Table 4. For the first four sets of process parameters, the radial infeed velocity is increased stepwise. As a result, the specific material removal rate \(Q^{\prime }_{w}\) and the dimensionless value hcu/lg both increase. For the last two positions 1 + 2, the grinding wheel speed vs is decreased; to keep the quotient hcu/lg at the same level, the radial infeed velocity is decreased as well.
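The dependence of both quantities on the process parameters can be checked numerically. The sketch below assumes the standard relations for external cylindrical plunge grinding, namely Q'w = π·dw·vfr and hcu/lg = (vw/vs)·sqrt(fr/deq); the function names and the reference infeed velocity are hypothetical and are not values from Table 4.

```python
import math

D_W = 28.0      # workpiece diameter d_w in mm (Section 4.1)
D_S = 373.9     # grinding wheel diameter d_s in mm (Section 4.1)
N_W = 250.0     # workpiece speed n_w in 1/min (Section 4.1)

def removal_rate(v_fr):
    """Specific material removal rate Q'_w in mm^3/(mm s); v_fr in mm/min."""
    return math.pi * D_W * v_fr / 60.0

def chip_ratio(v_fr, v_s):
    """Dimensionless quotient h_cu / l_g; v_fr in mm/min, v_s in m/s."""
    f_r = v_fr / N_W                              # depth of cut per revolution, mm
    v_w = math.pi * D_W * N_W / 60.0 / 1000.0     # workpiece surface speed, m/s
    d_eq = D_W * D_S / (D_W + D_S)                # equivalent diameter, mm
    return v_w / v_s * math.sqrt(f_r / d_eq)

# The quotient scales with sqrt(v_fr) / v_s. Lowering v_s from 100 to 80 m/s
# therefore requires scaling v_fr by (80 / 100)**2 to keep the quotient unchanged.
v_fr_ref = 10.0                                   # hypothetical infeed velocity, mm/min
v_fr_scaled = v_fr_ref * (80.0 / 100.0) ** 2
```

Under these assumptions, raising the infeed velocity at constant wheel speed increases both `removal_rate` and `chip_ratio`, while reducing the wheel speed only leaves the quotient unchanged if the infeed velocity is reduced as well.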

Fig. 1 Numbered workpiece after performing the 14 plunge grinding experiments according to Table 4

4.2 Signal acquisition

To obtain a signal-based grinding burn detection method, the grinding machine is equipped with several system monitoring components. As visualized in Fig. 2, two different AE sensors with different positions are used for signal acquisition. In addition, the current and the power signal are recorded.

The AE sensors capture acoustic waves, which can be described as transient waves that propagate through a solid material [46]. According to Chen et al. [16], AE sensors are easy to mount, even on rotating parts, at relatively low cost. Since the grinding zone is the critical region, the sensor is typically placed as close as possible to it [47]. For this purpose, one rotating AE sensor (Marposs, type M), in the following called AE-S, is mounted at the tool spindle center and rotates with the grinding wheel. The position at the spindle center is close to the grinding source and provides a constant distance to it, independent of the grinding position. Another AE sensor (Kistler, type 8152B), abbreviated as AE-T, is placed on the tailstock to capture the waves that originate from the workpiece. Since the distance from this sensor to the grinding source depends on the grinding position, it was verified beforehand that the varying distance has no significant impact on the signal characteristics. Both AE signals are preprocessed by the Kistler Piezotron coupler of type 5125B, which provides built-in functionalities such as signal amplification and different low-pass and high-pass filters designed as plug-in modules. The amplification is set to 10 dB and a band-pass filter of 100 to 500 kHz is deployed. The data acquisition system is realized with a National Instruments CompactDAQ system and organized in LabVIEW. The digitizer works with a sampling rate of 2 MHz for each AE signal.

For the measurement of the tool spindle current, Chauvin Arnoux current clamps of type MN39 are installed on each electrical wire to acquire the 3-phase current components (Iu, Iv, Iw). The current components are then digitized with a sampling rate of 100 kHz. In the next step, RMS transformation is applied in LabVIEW to convert them into the equivalent direct current Irms.

The electrical tool spindle power Pel is measured with a sampling rate of 80 kHz using the true power meter Artis MU-3 system.

4.3 Controlled generation of grinding burn

In this study, a touch dressing procedure is applied to the metal-bonded wheel to generate blunt grains and provoke controlled grinding burn. For the dressing process, a CVD-diamond profile roller is used, rotating in the opposite direction to the grinding wheel. In each dressing cycle, the infeed aed is about 3 μm and is divided into three single runs of 1 μm each. To check whether the grinding wheel is already in contact with the dresser, the signal of the AE sensor mounted on the grinding wheel spindle is used. All dressing parameters are summarized in Table 5.

To emulate the wear of the grinding wheel, the following procedure is proposed. The unused grinding wheel is mounted on the tool spindle. In a pretreatment step prior to the actual experiment, a complete workpiece is ground with a low infeed velocity (vfr = 5 mm/min) in order to achieve a quasi-stationary wear state of the tool by removing loosely bound grains with a high grain protrusion. Afterwards, the reference set without touch dressing is ground, which includes six workpieces. Next, the first touch dressing cycle is performed and about 3 μm are removed from the grains bonded on the grinding wheel. Then, three workpieces are machined to observe the effects of the first touch dressing cycle. This procedure is repeated until all stages of grinding burn, including rehardening, are reached. Based on experience, this state is achieved after a total of 15 to 18 touch dressing strokes.

4.4 Detection of grinding burn using nital etching

As introduced, nital etching and Barkhausen noise analysis (BNA) are the predominant methods in industrial application [1]. According to Wegener and Baumgart [1] and He et al. [2], the latter is non-destructive and fast, but requires a complex calibration process due to the strong dependency on local material differences. In contrast to BNA, nital etching exploits the discoloration of thermally damaged steels after a chemical reaction. The test procedure requires difficult handling of the workpieces, and the interpretation of the discoloration is very subjective [1, 2]. Despite these disadvantages, nital etching is the most reliable detection method in industry and is standardized in ISO 14104: Surface temper etch inspection after grinding [48].

Accordingly, nital etching is used in this study following the type 2 etching procedure from ISO 14104 [48], which is recommended for low-alloy steels. It has been found that the cleaning and drying procedure before each acid step is important to obtain well-interpretable results. Finally, the class code from ISO 14104 [48] is used to classify the different stages of discoloration. The four classes range from no grinding burn (class A), through light and heavy tempering (classes B and D), to rehardening (class E). In this study, no additional classification has been carried out with regard to the percentage of surface area affected. A visualization is given in Fig. 3.

4.5 Implementation

The implementation of a method for the detection of grinding burn proposed in this study requires multiple substeps. In the following, the implementation is divided into two separate procedures.

The first procedure comprises the steps from the grinding process to the final prediction and is visualized in Fig. 4. The data acquisition is started when the implemented CNC trigger is activated a few seconds before the grinding wheel comes into contact with the workpiece. All analog signals are first amplified and filtered before they are converted into digital signals, and the raw data is saved on a local hard drive using LabView. Using a MATLAB script, the four sensor signals are imported and cut to the relevant time period during grinding (grinding detection and time reduction). Thus, all signals have a uniform length of 0.5 s, independent of the actual process time. Next, the signal components outside the frequency band of 100 to 500 kHz are attenuated (digital filtering). Afterwards, the time-frequency transforms introduced in Section 3.1, including PSD, STFT, WPT, EEMD, and VMD, are applied to the preprocessed signals. Based on both time and frequency information, the statistical features listed in Section 3.2 are calculated. Finally, one feature vector contains all relevant information of the performed experiment and is stored in the aggregated feature matrix. In this work, all workpieces are tested for grinding burn using the nital etching procedure introduced previously, so each feature vector receives its corresponding label. If a pretrained machine learning pipeline is available, a prediction of the grinding burn class can be provided to the user.
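The time reduction and digital filtering steps can be sketched in Python as follows. This is not the MATLAB script used in the study: the filter type is not stated in the text, so a simple FFT-domain brick-wall band-pass is swapped in for illustration, and the sampling rate, the detected start index, and the function names are assumptions.

```python
import numpy as np

def cut_to_grinding(signal, fs, start_idx, duration_s=0.5):
    """Return a fixed-length segment starting at the detected grinding start."""
    n = int(round(duration_s * fs))
    segment = signal[start_idx:start_idx + n]
    if len(segment) < n:
        raise ValueError("signal too short for the requested segment")
    return segment

def fft_bandpass(signal, fs, f_low=100e3, f_high=500e3):
    """Zero all spectral components outside [f_low, f_high] (brick-wall sketch)."""
    spectrum = np.fft.rfft(signal)
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / fs)
    spectrum[(freqs < f_low) | (freqs > f_high)] = 0.0
    return np.fft.irfft(spectrum, n=len(signal))

# Hypothetical AE-like signal: 50 kHz (out of band) plus 200 kHz (in band)
fs = 2_000_000  # assumed sampling rate, not taken from the text
t = np.arange(fs) / fs  # exactly one second of samples
raw = np.sin(2 * np.pi * 50e3 * t) + 0.5 * np.sin(2 * np.pi * 200e3 * t)

# Assume grinding contact was detected 0.2 s after the trigger
segment = cut_to_grinding(raw, fs, start_idx=int(0.2 * fs))
filtered = fft_bandpass(segment, fs)
```

A brick-wall filter is easy to verify but can ring on real, non-periodic signals; a conventional IIR or FIR band-pass would be the more typical choice in practice.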

To obtain a trained machine learning pipeline, the procedure described above is repeated several times covering multiple wear states of the grinding wheel and as a consequence all of the four grinding burn classes (following Section 4.3). Hence, a high-dimensional feature matrix and the corresponding class labels obtained through nital etching are collected. In the second procedure visualized in Fig. 5, the machine learning part is implemented in Python based on the feature matrix.

First, a subset of the feature matrix is selected, which comprises either all features or a sensor-specific selection. For datasets with a strong class imbalance, a resampling strategy is recommended to improve the training process. After resampling or, in most cases, directly after the selection of the sensor subset, the experiments of the feature matrix are divided into a training and a testing dataset (ratio 0.85/0.15). As a last preprocessing step, the feature matrix subset is standardized according to Section 3.2. Using only the training dataset, multiple feature selection and classification methods are preselected and their corresponding hyperparameter bounds are set according to Sections 3.3 and 3.4. As introduced in Section 3.4.4, genetic programming is applied to select the best combination of feature selector and classifier with the optimal set of hyperparameters. In this study, the objective of the hyperparameter optimization is to maximize the mean classification accuracy averaged over ten cross-validation folds. To this end, an initial population of 100 pipelines is selected, trained, and evaluated using the stated metric. A new generation is built by copying (crossover rate of 0.2) or adapting (mutation rate of 0.8) the best pipelines of the previous generation. The algorithm terminates if the best CV score has not improved over five consecutive generations or if the maximum number of 50 generations is reached. Using the best pipeline from the hyperparameter optimization, different test scores are calculated on the previously separated test dataset. In addition, a robustness analysis covering 100 randomly generated splits into training and test datasets is performed. Finally, the procedure is repeated for each sensor subset.
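The termination rule of the evolutionary search can be written down compactly. The sketch below is one possible reading of the criterion (stop when the last five generations brought no improvement over the best score seen before them, or when 50 generations have been evaluated); the function name and the list-based bookkeeping are hypothetical and not taken from the implementation used in the study.

```python
def should_stop(best_scores, patience=5, max_generations=50):
    """Decide whether the search should stop.

    best_scores[i] is the best mean CV accuracy found in generation i.
    """
    n = len(best_scores)
    if n >= max_generations:
        return True  # generation budget exhausted
    if n <= patience:
        return False  # not enough history to detect stagnation
    # Best score reached before the most recent `patience` generations
    reference = max(best_scores[:-patience])
    # Stop if none of the recent generations beat that reference
    return max(best_scores[-patience:]) <= reference

# Hypothetical score histories
stagnating = [0.90, 0.95, 0.95, 0.95, 0.95, 0.95, 0.95]
improving = [0.90, 0.91, 0.92, 0.93, 0.94, 0.95]
```

With these histories, `should_stop(stagnating)` signals termination, while `should_stop(improving)` lets the search continue.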

5 Results and discussion

So far, an approach to find a well-performing combination between feature selection and classification algorithm for the in situ detection of grinding burn has been introduced. In the following, the results are presented and discussed.

5.1 Dataset

In this study, the feature matrix comprises a total of 391 experiments with 878 features each. After nital etching, each experiment is matched with its corresponding grinding burn class. The distribution of the experimental observations over the four classes is visualized in Fig. 6: the classes with light burn (B) and rehardening (E) contain more experimental datapoints than the classes with no burn (A) and severe burn (D). As introduced in Section 4.5, the feature matrix is randomly separated into a training and a testing dataset, ensuring that both contain observations of all four classes.

To estimate the impact of each sensor on the final classification result, the steps presented in Fig. 5 are performed for each sensor subset separately resulting in four feature matrices Fi. Hence, the dataset FAE−T contains only features extracted from the signals of the AE sensor mounted on the tailstock. The subsets FAE−S and FIrms comprise the features of the AE sensor in the spindle center and the features based on the current measurement, respectively. From the power signal, only one feature is extracted; thus, it is added to the FIrms subset. Finally, the fourth dataset Fall = F includes all of the 878 features.

5.2 Two-class prediction

In two-class prediction, the machine learning algorithm is trained to differentiate experiments with grinding burn from those without. Therefore, the labels are changed to burn and no burn, where burn comprises the classes B, D, and E, and no burn only class A. Consequently, the observations with grinding burn are over-represented compared to the no burn experiments. To improve the classification ability, a resampling method is applied to equalize the class distribution. The best final performance is achieved using the synthetic minority oversampling technique (SMOTE) introduced by Chawla et al. [49]. As a consequence, the training dataset size increases to 390 and the testing dataset size to 69. The distribution of the labels for the two-class problem is visualized in Fig. 7.
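The core idea of SMOTE, creating synthetic minority samples by interpolating between a minority sample and one of its nearest minority-class neighbours, can be sketched in a few lines. This is a simplified illustration and not the implementation used in the study; the function name, the neighbour count k = 5, and the random data are assumptions.

```python
import numpy as np

def smote_like(X_min, n_new, k=5, seed=0):
    """Generate n_new synthetic samples from minority-class samples X_min."""
    rng = np.random.default_rng(seed)
    X_min = np.asarray(X_min, dtype=float)
    n = len(X_min)
    k = min(k, n - 1)
    # Pairwise Euclidean distances within the minority class
    dists = np.linalg.norm(X_min[:, None, :] - X_min[None, :, :], axis=2)
    np.fill_diagonal(dists, np.inf)  # a sample is not its own neighbour
    neighbours = np.argsort(dists, axis=1)[:, :k]
    # Pick a base sample and one of its k nearest neighbours at random
    base = rng.integers(0, n, size=n_new)
    picked = neighbours[base, rng.integers(0, k, size=n_new)]
    # Interpolate at a random position on the connecting line segment
    gap = rng.random((n_new, 1))
    return X_min[base] + gap * (X_min[picked] - X_min[base])

# Hypothetical minority class: 20 observations with 4 features
X_min = np.random.default_rng(1).normal(size=(20, 4))
synthetic = smote_like(X_min, n_new=30)
```

Because every synthetic point lies on a segment between two existing minority samples, the oversampled class stays within the region already covered by the measured data.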

Using the procedure of Fig. 5, an optimized pipeline containing a feature selection and a machine learning algorithm is obtained for each feature matrix Fi. The final pipelines and their mean cross-validation and testing score are summarized in Table 6. In addition, Fig. 8 displays a confusion matrix for each of the four feature matrices, calculated with a fixed random state. Furthermore, these pipelines are analyzed using 100 random splits of the initial dataset. The resulting boxplots presented in Fig. 9 show the statistical deviations of the cross-validation scores during training on the left and the test accuracy on the right side.

For each sensor subset, the classification algorithm predicts the occurrence of grinding burn with a very high performance of over 94%, considering both the accuracy and the balanced accuracy. Furthermore, the cross-validation score and the testing score agree closely, which suggests that neither over- nor underfitting occurs. Additionally, the CV scores have a very low standard deviation and are symmetrically distributed, which indicates a low impact of the train/test split. In general, a high level of generalization capability is established for the classification of grinding burn.
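The difference between the two metrics can be made concrete with a small example. Accuracy is the share of correct predictions overall, whereas balanced accuracy is the mean of the per-class recalls and therefore weights each class equally regardless of its size. The confusion matrix below is hypothetical and only serves to show the calculation.

```python
import numpy as np

def accuracy_and_balanced_accuracy(cm):
    """Compute both metrics from a confusion matrix.

    Rows are true classes, columns are predicted classes.
    """
    cm = np.asarray(cm, dtype=float)
    accuracy = np.trace(cm) / cm.sum()
    recall_per_class = np.diag(cm) / cm.sum(axis=1)
    return accuracy, recall_per_class.mean()

# Hypothetical imbalanced two-class result (no burn, burn)
cm = [[9, 1],
      [2, 88]]
acc, bal_acc = accuracy_and_balanced_accuracy(cm)
```

In this example the balanced accuracy is lower than the accuracy because the small class has the weaker recall; close agreement of the two metrics indicates that no class is systematically neglected.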

Going further into detail, the complete feature matrix containing the features from all sensors performs best, with a mean accuracy of over 99% when applying a kNN classifier to 87 selected features. This performance is slightly reduced when only features from one individual sensor are used. For the FAE−S dataset, an RFE (using a decision tree as estimator) is combined with an SVM (using an RBF kernel), which results in a decrease of the mean accuracy by less than 1%. Using FAE−T and FIrms, the performance is reduced to 95 to 97%. Consequently, a reliable classification of grinding burn is possible using only features from one of the three sensor subsets. In addition, the multi-sensor approach performs even better than comparable methods from the state of the art.
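A pipeline of the type found for the FAE−S dataset (RFE with a decision tree estimator followed by an RBF-kernel SVM) can be sketched with scikit-learn as follows. The data are synthetic, and the number of selected features, the RFE step size, and all other hyperparameters are placeholders rather than the optimized values from Table 6. Placing the scaler inside the pipeline is a design choice made here so that it is fitted on the training folds only.

```python
from sklearn.datasets import make_classification
from sklearn.feature_selection import RFE
from sklearn.model_selection import cross_val_score, train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for a sensor-specific feature matrix (not the study data)
X, y = make_classification(n_samples=200, n_features=40, n_informative=8,
                           random_state=0)

# 85/15 split as described in the text; stratification is an assumption
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.15, stratify=y, random_state=0)

pipeline = make_pipeline(
    StandardScaler(),
    RFE(DecisionTreeClassifier(random_state=0), n_features_to_select=10, step=5),
    SVC(kernel="rbf"),
)

# Mean accuracy over ten cross-validation folds on the training data only
cv_scores = cross_val_score(pipeline, X_train, y_train, cv=10, scoring="accuracy")

# Final fit and evaluation on the held-out test data
pipeline.fit(X_train, y_train)
test_accuracy = pipeline.score(X_test, y_test)
n_selected = int(pipeline.named_steps["rfe"].support_.sum())
```

Comparing `cv_scores.mean()` with `test_accuracy` mirrors the comparison of CV score and testing score made in the text.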

A deeper analysis of the misclassified observations has shown that one experiment is misclassified in each test run, independently of the selected split. This indicates that this experiment is affected by an error in the grinding process, data acquisition, signal processing, feature extraction, or in the labeling of the etched workpiece. Removing this observation from the feature matrix would increase the overall performance towards 100%.

5.3 Multi-class prediction

For multi-class prediction, the classification algorithm is trained to distinguish between the four classes of grinding burn according to ISO 14104 [48]. As visualized in Fig. 6, the class imbalance of the multi-class task is very low compared to the two-class problem. Consequently, no resampling method is applied.

The final pipelines with their scores are listed in Table 7 and the confusion matrix for a fixed random state is shown in Fig. 10. Furthermore, the boxplots in Fig. 11 visualize the statistical deviation of the training and testing accuracy.

Analyzing the complete feature matrix Fall, the obtained pipeline consists of a kNN classifier and gives a high accuracy of about 98%. The low standard deviation and the good agreement between the accuracy and the balanced accuracy indicate a robust algorithm. In contrast to the two-class problem, the performance of the sensor subsets in multi-class prediction drops significantly. Focusing on the dataset of the AE sensor in the spindle center, the RFE feature selector chooses 140 features using an extra trees classifier as estimator. The applied kNN algorithm predicts the four classes with an accuracy of only about 92%. Using different random states for the train/test split, the standard deviation increases to over 3%, especially for the evaluation on the testing subset. Consequently, a robust classification result using only this sensor cannot be expected. These results are also valid for the extra trees classifier applied to FIrms, where grinding burn is predicted with a mean accuracy of 88%. For both of them, a high accordance between the CV score and the testing score as well as between the accuracy and the balanced accuracy is evident. Considering the confusion matrix, the misclassified experiments are mainly shifted by one class. This could be caused by minimal deviations in certain feature values.

In contrast, the features of FAE−T only achieve a classification accuracy lower than 80%, whereby the balanced accuracy is marginally better. The standard deviation of both metrics is very high; thus, the prediction on this dataset is not reliable. It can be assumed that the low information content of this AE signal is related to the sensor location on the workpiece tailstock. The transmission quality of the acoustic emission is presumably reduced by the several mechanical transfer elements located between the source of the AE and the sensor. In addition, eigenfrequencies or structural vibration could influence the generalization ability of the AE sensor. Furthermore, the classes B, D, and E become difficult to distinguish in the confusion matrix; in particular, the decision boundary between the classes D and E is not built correctly. For the above reasons, the dataset obtained from the AE sensor on the tailstock is not suitable for multi-class prediction.

Similar to the two-class case, two experimental observations are misclassified in most of the test runs, independently of the selected split. Hence, the classification performance could be slightly improved by removing these observations. As expected, the overall performance for all datasets of the multi-class prediction is inferior to the results obtained from the two-class task. Therefore, a reliable multi-class prediction is only possible using all four signals in a multi-sensor approach.

5.4 Feature analysis

Finally, the selected and most important features for the classification results are analyzed. For this purpose, the number of selected features is shown in Tables 6 and 7 for each pipeline. At first, the number of selected features from each sensor for the Fall dataset is examined, again averaged over 100 random states. As depicted in Fig. 12b, for multi-class prediction, features of all sensors are selected almost equally often. This indicates again that all sensors are relevant for the classification and no sensor can be omitted. In contrast, for two-class prediction (see Fig. 12a), predominantly features from the AE sensor in the spindle center are selected. In addition, the single power feature is selected for each random state. Hence, these results confirm the evaluation of the sensor-specific subsets: one sensor is sufficient to differentiate between burn and no burn, whereas multiple sensors are needed to classify multiple burn states.

In Fig. 13, the selected features are visualized with regard to the applied signal transformation methods, where TD represents the time-domain. The amount of features in each category is widely varying, e.g. the PSD comprises 585 features and the STFT only 9. To overcome this inequality during feature analysis, the number of selected features is related to the number of features in the specific category (green bars in Fig. 13). From all of the six time-frequency representations, features are selected, so each category contributes to the classification task.
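The normalization described above amounts to dividing the number of selected features by the category size. In the sketch below, the category sizes for PSD and STFT are taken from the text, while the selected counts are hypothetical and serve only to show why absolute counts alone would be misleading.

```python
def relative_selection(selected_counts, category_sizes):
    """Fraction of each category's features that was selected."""
    return {name: selected_counts[name] / category_sizes[name]
            for name in category_sizes}

category_sizes = {"PSD": 585, "STFT": 9}   # sizes stated in the text
selected_counts = {"PSD": 60, "STFT": 6}   # hypothetical selection result

relative = relative_selection(selected_counts, category_sizes)
```

Here PSD contributes ten times more selected features in absolute terms, yet a much larger share of the STFT category is selected.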

Going further into detail, the selected features for the subset FAE−T are analyzed. Although features from all signal processing methods are picked for multi-class prediction, the STFT and WPT features are chosen most frequently relative to the total number of features in the respective category. In two-class prediction, only features from WPT, PSD, and EEMD are selected. For both problems, many features are chosen from the PSD domain, whereby no characteristic frequency band is identified within 100 to 500 kHz. Hence, the AE signal contains much relevant information in the frequency domain, but for multi-class prediction, the classifier is not able to learn the characteristics of grinding burn.

Next, the feature matrix FAE−S is analyzed. Among the 140 features selected during multi-class prediction, more than 70% of the features in each of the STFT, time-domain, WPT, and EEMD categories are chosen. In contrast, two-class prediction focuses mainly on EEMD and VMD features. These differences in feature selection might be due to the different estimators inside the RFE method. Although features from all frequencies are selected, two main frequency bands from 180 to 220 kHz and from 100 to 140 kHz are identified. This indicates that more than one characteristic frequency band correlates with the creation of grinding burn.

Assessing the Irms-dataset, the feature selector mainly relies on the time-domain information. In addition, for multi-class prediction, the WPT features and, for two-class prediction, the STFT features are selected frequently. Going further into detail, the selected Irms-frequency features for multi-class prediction are mainly located below 4kHz as well as between 16 and 20kHz and at about 32kHz. Besides the analysis of the feature selection, the most important features during classification can be evaluated for tree-based machine learning methods. Due to the known information content of each split, the mean decrease in impurity (MDI) can be interpreted as feature importance [42]. Following Rebala et al. [40], the more a feature contributes to decrease the impurity, the more important the feature is for the overall classification. In Fig. 14, the MDI values of the 10 most contributing features during classification using an extra trees classifier are visualized.
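With scikit-learn, the MDI values of a fitted extra trees classifier are exposed through the `feature_importances_` attribute, so a ranking such as the one in Fig. 14 can be obtained as sketched below. The data are synthetic and the number of trees is a placeholder, so the resulting ranking has no relation to the features of the study.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import ExtraTreesClassifier

# Synthetic four-class stand-in for a feature matrix (not the study data)
X, y = make_classification(n_samples=300, n_features=30, n_informative=6,
                           n_classes=4, n_clusters_per_class=1, random_state=0)

model = ExtraTreesClassifier(n_estimators=200, random_state=0).fit(X, y)

# Impurity-based importances (MDI), normalized to sum to one
mdi = model.feature_importances_

# Column indices of the ten features with the highest MDI, best first
top10 = np.argsort(mdi)[::-1][:10]
```

Impurity-based importances are computed on the training data and are known to favour features with many distinct values, so they are best read as a relative ranking.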

The abovementioned frequency clusters and the importance of the time-domain features are confirmed by the MDI values. In contrast to feature selection, none of the WPT features is listed among the top ten MDI values. This indicates that, for the Irms dataset, the feature selector and the classifier are not perfectly aligned with each other and more features can be omitted. Furthermore, the formation of frequency clusters might indicate that a shift of these feature values leads to another level of workpiece damage.

It can be concluded that all of the sensors and signal transformation methods are necessary to achieve the high classification accuracy for the detection of grinding burn. Nonetheless, the calculation time of the signal transformation methods varies strongly, from a few milliseconds for PSD to many minutes for VMD. Therefore, future work should analyze whether the classification result deteriorates when the time-consuming features from VMD and EEMD are omitted.

6 Conclusion

A new method for the in-process detection of grinding burn using machine learning is presented. First, an experimental procedure to generate controlled grinding burn using touch dressing with a low dressing depth is proposed, which enables the systematic creation of the four classes of grinding burn (according to ISO 14104 [48]). The touch dressing procedure makes it possible to approximately emulate the wear progress of the abrasive grains in a controlled manner. Due to the broad variation of the radial infeed velocity and the grinding wheel speed, a classification method can be derived that is independent of the selected process parameters and also valid for high specific material removal rates. For each experiment, different time-frequency transforms are applied to acoustic emission, spindle electric current, and spindle power signals to calculate statistical features. Optimized pipelines, consisting of a feature selection and a classification method, are created for the sensor-specific feature subsets using genetic programming for hyperparameter optimization. The results show a high classification accuracy for both two-class and multi-class prediction, whereby the applied multi-sensor approach gives better results than taking only features from one sensor. This work outperforms state-of-the-art approaches by obtaining a mean classification accuracy of 98.6% for multi-class prediction and 99% for two-class prediction. Moreover, the post-mortem detection of grinding burn using nital etching can be replaced by the in situ method presented in this work. As a consequence, in industrial manufacturing, all of the produced parts can be checked and the costly inspection process is replaced by an intelligent algorithm with a high accuracy.

In future work, the robustness of the proposed method can be improved further by extending the experiments to different grain sizes, bond types, and workpiece materials. Furthermore, the training of the ML algorithm is based on the results of the nital test, so the subjectivity in the class labels cannot be avoided. Therefore, in the future, other post-mortem detection methods such as hardness testing can be applied to selected workpieces to improve the quality of the labels and, as a consequence, the robustness of the algorithm. In this work, the hyperparameter optimization using genetic programming requires approximately 240 CPU hours for each pipeline, parallelized on six cores. To reduce the computation time without losing classification performance, the use of GPUs or more efficient methods for hyperparameter optimization is needed in the future. In addition, new machine learning algorithms and feature selection methods can be explored further to strive for an error-free detection of grinding burn.

References

Wegener K, Baumgart C (2018) Grinding burn. In: CIRP Encyclopedia of production engineering, pp 800–806. Springer Berlin

He B, Wei C, Ding S, Shi Z (2019) A survey of methods for detecting metallic grinding burn. Measurement 134:426–439. https://doi.org/10.1016/j.measurement.2018.10.093

Gholizadeh S, Leman Z, Baharudin BT (2015) A review of the application of acoustic emission technique in engineering. Struct Eng Mechan 54:1075–1095. https://doi.org/10.12989/sem.2015.54.6.1075

Lee DE, Hwang I, Valente CMO, Oliveira JFG, Dornfeld DA (2006) Precision manufacturing process monitoring with acoustic emission. Int J Mach Tools Manuf 46(2):176–188. https://doi.org/10.1016/j.ijmachtools.2005.04.001

Terchi A, Au YHJ (2001) Acoustic emission signal processing. Measurement and Control 34(8):240–244. https://doi.org/10.1177/002029400103400804

Griffin JM, Chen X (2009) Multiple classification of the acoustic emission signals extracted during burn and chatter anomalies using genetic programming. Int J Adv Manufact Technol 45(11-12):1152. https://doi.org/10.1007/s00170-009-2026-7

Kim BS, Lee SH, Lee MG, Ni J, Song JY, Lee CW (2007) A comparative study on damage detection in speed-up and coast-down process of grinding spindle-typed rotor-bearing system. J Mater Process Technol 187-188:30–36. https://doi.org/10.1016/j.jmatprotec.2006.11.222. 3rd International Conference on Advanced Forming and Die Manufacturing Technology

Aguiar PR, Cruz CED, Paula WCF, Bianchi EC (2008) Predicting surface roughness in grinding using neural networks. In: Aramburo J, Trevino AR (eds) Advances in Robotics, Automation and Control. Rijeka. https://doi.org/10.5772/5535

Eda H, Kishi K, Usiu N, Kakino Y, Fujiwara A (1983) In-process detection of grinding burn by means of utilizing acoustic emission. J Japan Soc Precis Eng 49(9):1257–1262. https://doi.org/10.2493/jjspe1933.49.1257

Saxler W (1997) Erkennung von Schleifbrand durch Schallemissionsanalyse. Ph.D. Thesis, RWTH-Aachen, Duesseldorf

Wang Z, Willett P, DeAguiar PR, Webster J (2001) Neural network detection of grinding burn from acoustic emission. Int J Mach Tools Manuf 41(2):283–309. https://doi.org/10.1016/S0890-6955(00)00057-2

Kwak J-S, Ha M-K (2004) Neural network approach for diagnosis of grinding operation by acoustic emission and power signals. J Mater Process Technol 147(1):65–71. https://doi.org/10.1016/j.jmatprotec.2003.11.016

de Aguiar PR, Bianchi EC, Canarim RC (2012) Monitoring of grinding burn by acoustic emission. In: Acoustic emission, 2nd edn, IntechOpen, pp 341–364. https://doi.org/10.5772/31339

Neto RFG, Marchi M, Martins CHR, de Aguiar PR, Bianchi EC (2014) Monitoring of grinding burn by ae and vibration signals. In: ICAART

Liu Q, Chen X, Gindy N (2005) Fuzzy pattern recognition of ae signals for grinding burn. Int J Mach Tools Manuf 45(7):811–818. https://doi.org/10.1016/j.ijmachtools.2004.11.002

Chen X, Mohamed A, Oluwajobi A (2012) Investigation of AE features in grinding. J Phys Conf Ser 364:012090. https://doi.org/10.1088/1742-6596/364/1/012090

Griffin JM (2015) Traceability of acoustic emission measurements for micro and macro grinding phenomena—characteristics and identification through classification of micro mechanics with regression to burn using signal analysis. Int J Adv Manufact Technol 81(9-12):1463–1474

Lajmert P (2010) An application of hilbert-huang transform and principal component analysis for diagnostics of cylindrical plunge grinding process. J Machine Eng 10(1):39–49

Lajmert P, Sikora M, Kruszynski B, Ostrowski D (2018) Application of principal component analysis and decision trees in diagnostics of cylindrical plunge grinding process. In: Hamrol A, Ciszak O, Legutko S, Jurczyk M (eds) Advances in manufacturing, pp 707–716. Springer International Publishing, Cham

Yang Z, Wu H, Yu Z, Huang Y (2014) A non-destructive surface burn detection method for ferrous metals based on acoustic emission and ensemble empirical mode decomposition: from laser simulation to grinding process. Meas Sci Technol 25(3):035602. https://doi.org/10.1088/0957-0233/25/3/035602

Guo W, Li B, Shen S, Zhou Q (2019) An intelligent grinding burn detection system based on two-stage feature selection and stacked sparse autoencoder. Int J Adv Manufact Technol 103(5):2837–2847. https://doi.org/10.1007/s00170-019-03748-5

Gao Z, Wang X, Lin J, Liao Y (2017) Online evaluation of metal burn degrees based on acoustic emission and variational mode decomposition. Measurement 103:302–310. https://doi.org/10.1016/j.measurement.2017.02.049

Webster J, Marinescu I, Bennett R, Lindsay R (1994) Acoustic emission for process control and monitoring of surface integrity during grinding. CIRP Ann 43(1):299–304. https://doi.org/10.1016/S0007-8506(07)62218-5

Huang N (2005) Introduction to the hilbert-huang transform and its related mathematical problems. Interdiscip Math, 5. https://doi.org/10.1142/5862

Isermann R (2006) Fault diagnosis systems an introduction from fault detection to fault tolerance. Springer

Hahn SL (1996) Hilbert transforms in signal processing. Artech house publishers

Huang N, Shen Z, Long SR, Wu MLC, Shih HH, Zheng Q, Yen NC, Tung C-C, Liu HH (1998) The empirical mode decomposition and the hilbert spectrum for nonlinear and non-stationary time series analysis. Proceedings of the Royal Society of London. Series A: Mathematical, Physical and Engineering Sciences 454:903–995. https://doi.org/10.1098/rspa.1998.0193

Quek ST, Tua PS, Wang Q (2003) Detecting anomalies in beams and plate based on the hilbert–huang transform of real signals. Smart Mater Struct 12(3):447–460. https://doi.org/10.1088/0964-1726/12/3/316

Wu Z, Huang N (2009) Ensemble empirical mode decomposition: a noise-assisted data analysis method. Advances in Adaptive Data Analysis 1:1–41. https://doi.org/10.1142/S1793536909000047

Zosso D, Dragomiretskiy K (2013) Variational mode decomposition. IEEE Trans Signal Process, 62. https://doi.org/10.1109/TSP.2013.2288675

Grimmett G (2001) Probability and random processes. Oxford University Press, Oxford

Tönshoff HK, Friemuth T, Becker JC (2002) Process monitoring in grinding. CIRP Ann 51(2):551–571. https://doi.org/10.1016/S0007-8506(07)61700-4

Sharma V, Parey A (2016) A review of gear fault diagnosis using various condition indicators. Procedia Eng 144:253–263. https://doi.org/10.1016/j.proeng.2016.05.131. International Conference on Vibration Problems 2015

Teti R, Jemielniak K, O’Donnell GE, Dornfeld D (2010) Advanced monitoring of machining operations. CIRP Ann Manuf Technol 59:717–739. https://doi.org/10.1016/j.cirp.2010.05.010

Han J, Kamber M, Pei J (2012) Data mining: concepts and techniques, 3rd edn. Morgan Kaufmann Publishers, Waltham, Mass.

Guyon I, Gunn S, Nikravesh M, Zadeh LA (2006) Feature extraction: Foundations and applications (studies in fuzziness and soft computing). Springer, Berlin

Bolón-Canedo V, Sánchez-Maroño N, Alonso-Betanzos A (2015) Feature selection for high-dimensional data. Springer, Berlin

Pilnenskiy N, Smetannikov I (2020) Feature selection algorithms as one of the python data analytical tools. Future Internet 12(3):54

Pedregosa F, Varoquaux G, Gramfort A, Michel V, Thirion B, Grisel O, Blondel M, Prettenhofer P, Weiss R, Dubourg V, Vanderplas J, Passos A, Cournapeau D, Brucher M, Perrot M, Duchesnay É (2011) Scikit-learn: Machine learning in python. J Mach Learn Res 12(85):2825–2830

Rebala G, Ravi A, Churiwala S (2019) An introduction to machine learning. Springer, Cham

Zhou Z-H (2012) Ensemble methods - foundations and algorithms, 1st edn. CRC-Press, Boca Raton

Breiman L (2001) Random forests. Mach Learn 45:5–32. https://doi.org/10.1023/A:1010933404324

Geurts P, Ernst D, Wehenkel L (2006) Extremely randomized trees. Mach Learn 63(1):3–42. https://doi.org/10.1007/s10994-006-6226-1

Le TT, Fu W, Moore JH (2020) Scaling tree-based automated machine learning to biomedical big data with a feature set selector. Bioinformatics 36(1):250–256

Klocke F (2017) Fertigungsverfahren 2: Zerspanung mit geometrisch unbestimmter schneide. Springer, Berlin Heidelberg

Nazarchuk Z, Skalskyi V, Serhiyenko O (2017) Acoustic emission. Springer, Berlin

Jemielniak K (2001) Some aspects of acoustic emission signal pre-processing. J Mater Process Technol 109:242–247. https://doi.org/10.1016/S0924-0136(00)00805-0

ISO14104:2017(E) (2017) Gears — surface temper etch inspection after grinding, chemical method. Standard, International Organization for Standardization

Chawla NV, Bowyer KW, Hall LO, Kegelmeyer WP (2002) Smote: synthetic minority over-sampling technique. J Artif Intell Res 16:321–357

Funding

Open Access funding provided by ETH Zurich.

Ethics declarations

Competing Interests

On parts of this work a patent was filed.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Sauter, E., Sarikaya, E., Winter, M. et al. In-process detection of grinding burn using machine learning. Int J Adv Manuf Technol 115, 2281–2297 (2021). https://doi.org/10.1007/s00170-021-06896-9

DOI: https://doi.org/10.1007/s00170-021-06896-9