Abstract

Laminated composite structures have a distinct inherent potential for optimization due to their tailorability and their associated complex failure mechanisms that makes intuitive design remarkably difficult. Optimization of such is a maturing technology with many criteria and manufacturing constraints having been successfully demonstrated. An approach for high-cycle fatigue is however yet to be developed in a gradient-based context. Thus, the objective of this work is to introduce a novel framework that allows for effective high-cycle fatigue optimization of laminated composite structures.Offset is taken in the Discrete Material and Thickness Optimization parametrization, which allows for simultaneous material and thickness selection for each layer that constitute a laminate. The fatigue analysis approach is based on accumulating damage from all variable-amplitude cycles in an arbitrary spectrum. As high-cycle fatigue behavior is highly nonlinear, it is difficult to handle in optimization. To stabilize the problem, damage is scaled using an inverse P-mean norm formulation that reduces the nonlinearity and provides an accurate measure of the damage. These scaled damages are then aggregated using P-norm functions to reduce the number of constraints. This is convenient, as it allows sensitivities to be efficiently calculated using analytical adjoint design sensitivity analysis. The effectiveness of this approach will be demonstrated on both benchmark examples and a more complicated main spar structure.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Fiber-reinforced polymer laminated composites are becoming increasingly popular for industrial applications. A laminate consists of two or, most commonly, more layers of fibrous orthotropic material with various orientations of fiber in each layer. The possibility of orienting fibers in the direction of the critical loads allows for efficient use of material which is desirable for minimizing weight and costs. Commonly used materials are Glass or Carbon Fiber Reinforced Polymers (GFRP/CFRP) as these achieve particular high stiffness- and strength-to-weight ratios compared to traditional engineering materials, such as steel or aluminum, and therefore have particular prevalence in weight-critical structures such as wind turbine blades (Thomsen 2009; Mishnaevsky et al. 2017) or various aerospace applications (Mangalgiri 1999; Ostergaard et al. 2011).

The design with layered materials is difficult due to the generally anisotropic behavior of laminates. The composition of laminated composites, i.e. fibers embedded in the polymer matrix, results in complex failure phenomena that can occur in either fiber, matrix, or in their interface. Traditional trial-and-error design approaches therefore often need many iterations, if relying on pure intuition of the engineer, and will typically result in sub-optimal designs. This calls for the application of structural optimization techniques, such that the large design freedom can be effectively utilized while ensuring the discussed complexities are accounted for.

Structural optimization of laminated composites has been an active research topic for many years, and as a result many approaches have been developed for the purpose, see Nikbakt et al. (2018) and Xu et al. (2018) for recent reviews. As evident from the review papers, zero-order methods remain quite popular in optimization of laminated composites. This is likely due to these methods being excellent at finding the global optimum of a problem. Another substantial motivation is that the straightforward formulation of laminate optimization problems involve some sort of discrete variables, which can be handled directly by these methods. However, these methods are computationally expensive to such a degree, that they can only be applied to solve problems with a few design variables, see Sigmund (2011) for further discussion of the topic.

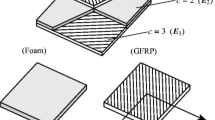

This paper will exclusively consider gradient-based methods that allow solving large-scale optimization problems, and there are many approaches to parametrization that are compatible with such a framework. For laminated composites, typical parametrizations include thickness (Pedersen 1991; Forcier and Joncas 2012; Buckney et al. 2012; Sjølund and Lund 2018) and fiber angles (Pedersen 1989; Boddeti et al. 2020). On the other hand, the currently most popular parametrization adopted in structural optimization is topology optimization, see Bendsøe and Kikuchi (1988), Bendsøe (1989), and Bendsøe and Sigmund (2003), due its potential for generating non-intuitive designs and thus significantly reducing material use. A subcategory of the field is multi-material topology optimization, which is particularly relevant in the context of composites, where several materials typically are used. Here, the objective is to place the best material with respect to some criteria instead of choosing whether to place material or not. The approach was originally introduced in Sigmund and Torquato (1997) and Gibiansky and Sigmund (2000) to design composite materials for extreme thermal expansion and for achieving an extreme bulk modulus respectively. Their approach considered at maximum three candidate materials (two isotropic and one void), and this is insufficient for complex laminated composite structures, which can consist of many different materials that further can be oriented differently in each respective layer. To have a parametrization suitable for optimization of general laminated composite structures, multi-material topology optimization has been generalized in Discrete Material Optimization (DMO) (Stegmann and Lund 2005; Lund and Stegmann 2005), where choice of material, orientation of fibers and stacking sequence are all included as design variables. This is carried out by selecting materials from a pool of discrete candidates. A typical example is GFRP with fibers oriented as \(0^{\circ }\), \(-45^{\circ }\), \(45^{\circ }\) and \(90^{\circ }\) which constitute four candidates.

Similar to topology optimization, the straightforward formulation of the DMO problem is an integer programming problem. Typically, this problem is solved by relaxing the design variables, making them continuous, and then applying penalization to achieve a discrete design. Hence, the candidate variables are also treated as continuous with selection of a discrete material being carried out through penalization of intermediate values. In the original DMO papers, a self-balancing scheme is used to perform penalization and ensure choice of a single candidate material. Later, Hvejsel and Lund (2011) generalized the well-known SIMP (Bendsøe 1989; Zhou and Rozvany 1991) and RAMP (Stolpe and Svanberg 2001a) schemes to multi-material optimization, which exhibit improved penalizability in comparison to the self-balancing schemes. As the self-balancing feature is removed, the approach relies on linear constraints to ensure only a single material candidate is chosen for each layer. This approach therefore works the best with optimization techniques suited for handling many linear constraints, in particular as other important manufacturing constraints are also formulated as linear constraints. Alternative interpolation methods have been proposed which primarily aim to reduce the number of design variables in the problem, see (Bruyneel 2011; Bruyneel et al. 2011; Gao et al. 2012; Kiyono et al. 2017; Hozič et al. 2021).

In Sørensen and Lund (2013) and Sørensen et al. (2014) DMO was extended to also include layer thickness as a design variable. This extended approach is termed Discrete Material and Thickness Optimization (DMTO). Parametrization of thickness is done using a density variable, which is also interpolated and penalized. This density design variable is associated to either each individual layer of each element or to material domains, which can span multiple layers and elements. As such, it becomes possible to design general laminates by simultaneously considering material choice and thickness through DMTO. Various criteria have been developed for and demonstrated with optimization of laminated composites using DMTO. An approach not yet developed for gradient-based discrete material optimization is one treating fatigue. Compared to other criteria, fatigue is particularly tedious due to its non-linear nature, and that calculations must be carried out over a load spectrum, which significantly increases computational requirements. However, with the use of modern optimization techniques, it is indeed feasible to carry out fatigue optimization in this context and achieve good solutions.

This paper will thus extend the state of the art within gradient-based optimization of laminated composite structures by demonstrating how variable-amplitude high-cycle fatigue loading can be included. The approach will be demonstrated with the DMO/DMTO approach allowing for the design of general laminate structures. Fatigue analysis will be based on well-known methods that are commonly applied in industry. The approach will be demonstrated on both benchmark examples and on a simplified main spar structure to demonstrate industrial application. Note, a preliminary outline of the content of the paper is given in Hermansen and Lund (2022) by the authors, however it contains no details necessary for implementing the method and reproducing the results, which is given in this present work.

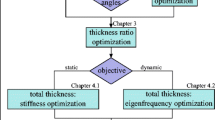

The remaining paper is structured according to the following. In Sect. 2 the DMO/DMTO parametrization is presented with associated manufacturing constraints necessary to achieve manufacturable designs. Section 3 will present the fatigue-analysis-related topics, including spectrum quantification, mean stress correction, determining cycles to failure, and damage calculations. This is followed by a description of the optimization techniques applied in Sect. 4, treating methods for stabilization of the fatigue optimization problem, dealing with stress-singularities, and damage function formulation. The design sensitivity analysis is carried out in this section, determining the general analytical adjoint gradient with partial derivatives listed in Appendix A. This is followed by a description of the optimization approach with the numerous techniques adopted that enable achieving good solutions. Section 5 will demonstrate results attained by fatigue optimization. This section will also include a discussion of the model, methods and results. Finally, Sect. 6 will give concluding remarks.

2 Discrete Material and Thickness Optimization

Discrete Material and Thickness Optimization (DMTO) (Sørensen and Lund 2013; Sørensen et al. 2014) allows simultaneous selection of material, fiber orientation, stacking sequence, and thickness of laminated composites. Material selection is based on choosing the best performing material from a pool of candidates defined for each layer l in the model. A design variable \(x^{(p,l,c)}\) is defined which governs this selection of material, see Eq. (1)

Here, p denotes a patch that consists of one or more elements.

The motivation for introducing such patches is to regularize the problem for manufacturing perspectives and to lower computational effort. If having an element-wise association of design variables, the optimal solution of the problem will depend on the discretization chosen, see Cheng and Olhoff (1981). By predefining the areas where design variables are to pertain, a solution is also defined for the problem. This also directly relates to the manufacturing of the structure. Laminated composite structures are typically manufactured by laying out rolls of material, that spans larger sections of the structure, and it is therefore desirable for such regions to share design variables. As an additional outcome, a substantial reduction in the number of design variables is achieved through this parametrization.

The thickness is parametrized by density design variables \(\rho ^{(d,l)}\), that indicate if a given layer should be present in the optimized laminate or not. These variables are often decoupled from the material patches, as it may be desirable to have different thicknesses of layers in the same material patch. Instead these are grouped in independent geometry domains d. Thus, the density design variables are formulated as shown in Eq. (2).

The described parametrization using patches and material domains is illustrated in Fig. 1.

The DMTO patch parametrization illustrated on a structure with five layered elements. Material candidates are associated to patches and thickness variables to domains. In this case, material candidates patches span the same layer of all elements, while thickness domains are created for each layer of every element

The material selection and thickness parametrization as presented is an integer programming problem. However, this formulation is undesirable as such problems are difficult and expensive to solve. Instead, the design variables are treated as continuous and are driven towards discreteness by penalizing intermediate values. In this work, the multi-phase version of the Rational Approximation of Material Parameters (RAMP) scheme (Stolpe and Svanberg 2001a; Hvejsel and Lund 2011) is applied for penalization. The RAMP scheme is preferred over the popular Solid Isotropic Material with Penalization (SIMP) approach due to its inherent property of having non-zero gradient when design variables are zero. Using RAMP, weighting functions are defined for both the candidate material and density variables as shown in Eqs. (3) and (4) respectively.

Here, \(q_x\) is the penalization factor for the candidate material design variables x and \(q_\rho\) for the density design variables \(\rho\). To make intermediate stiffness economical, the penalization exponents must be larger than one. Commonly, \(q_x = q_\rho\) is applied to give equal weight to the two types of design variables, and this is also the case in this work.

The weighting functions enter through the constitutive matrices. To only necessitate solution of a single finite element problem per iteration (Stegmann and Lund 2005), the constitutive matrix \(\tilde{{\varvec{C}}}\) is defined as a weighted sum of the candidate material constitutive matrices \({\varvec{C}}\), see Eq. (5).

Here, \(N_{\text{c}}\) is the number of candidate materials.

Completing the parametrization is the inclusion of manufacturing constraints. For the candidate material variables, linear constraints are formulated to ensure the choice of a single candidate material, see Eq. (6).

Note that thickness is parametrized through the constitutive matrix in Eq. (5), which is a strategy adapted from density-based topology optimization, see Sørensen and Lund (2013). The downside of such parametrization is that it allows intermediate voids. As intermediate voids are unmanufacturable in laminated structures, they should not be present in the optimized design. To enforce material below non-zero density domains, i.e. that material is only removed from the top, constraints are applied to the thickness variation during optimization, see Eq. (7).

However, these constraints result in the formation of density bands, i.e. a domain-wise gradient of intermediate density that is unaffected by penalization, see Fig. 2. This problem is solved by using special move limits for the density during optimization. These are given in Eq. (8) following Sørensen and Lund (2013).

Here, T is a threshold parameter which dictates the amount of density that must be present in the underlying layers. This concept is illustrated in Fig. 3.

Furthermore, constraints are formulated on the allowable number of plies to drop at a time between adjacent layers. Such constraints are typically used in laminate design to reduce stress concentrations at ply-drop locations (Mukherjee and Varughese 2001). The constraints are formulated in Eq. (9).

Here, S is the number of plies that are allowed to drop and \(N_{\text{l}}\) is the number of layers.

This leads to the formulation of a generic DMTO problem. The problem is stated in nested analysis and design form, such that it is assumed that the state equations are solved implicitly with the optimization functions. As such, the optimization problem takes the form given in Eq. (10).

Here, f is the objective function, g is a constraint and \(g_{\text{lim}}\) is the right-hand side of the constraint.

3 Fatigue analysis

Fatigue failure in composites is complex and thus no universal model exists to reliable predict the failure. This is primarily due to laminates with varying layups responding differently to the same load thereby exhibiting different failure mechanisms. Furthermore, fatigue damage is progressive, which is typically characterized by initial void formulation leading to transverse matrix cracking, which propagates until final failure. Predicting the progressive fatigue failure response requires models with computational expense too high for use in structural optimization. As such the fatigue evaluation approach presented in this section assumes that stiffness does not degrade during the life time of the structure.

The analysis is carried out by first breaking down the variable-amplitude spectrum using rainflow counting, returning a set of scaling factors for mean and amplitude loads. These are used to determine corresponding mean and amplitude stress, which are subsequently used to calculate a mean-stress-corrected equivalent amplitude stress using a constant life diagram. This stress is comparable with the material S-N curves and is thus used to determine the number of cycles to failure for each combination of mean and amplitude loads. Finally, the damage values from each combination are summed using the linear Palmgren-Miner rule. The described fatigue approach is often termed the traditional approach to fatigue analysis, see Sutherland (1999) and Nijssen (2006) for more details. It is commonly applied in industry, e.g. for wind turbine blade design, where its use is prescribed by design standards, see DNV-GL (2015), which motivates its adaptation to optimization.

3.1 Load spectrum quantification

Fatigue-critical structures are typically subject to complex variable-amplitude spectra with many oscillations in load. To perform fatigue evaluation, such spectra must be quantified by use of an appropriate counting algorithm. One popular approach is rainflow counting (Matsuishi and Endo 1968), which is also adopted for this work. This approach is also recommended for use with laminated composites as a result of the studies in Passipoularidis and Philippidis (2009). The rainflow approach discretizes the continuous spectrum to a sequence of peaks and valleys, such that it is characterized by a time-dependent set of scaling factors \({\varvec{c}}\). The fatigue stress state \({\varvec{\sigma }}^{(t)}\) at a time step t is then determined by scaling the reference stress state \(\hat{{\varvec{\sigma }}}\) with the load scaling factor \(c^{(t)}\), see Eq. (11).

This scaling factor is later broken down to amplitude and mean components when calculating the respective stresses. A section of a load spectrum with associated amplitude and mean stress scaling components is illustrated in Fig. 4.

In this work, it is assumed that the loads applied are mutually proportional. Proportionality occurs when the loads are applied in sync which causes the orientation of principal stress to be constant through the entire load history. In the general case where loading is applied non-proportionally, rainflow counting has to be repeated for every evaluation point in each element and for each design iteration, which significantly increases the computationally expense. Thus, the proportionality assumption infers that only a single rainflow counting is needed, and from this the damaging stress state is calculated by application of the same set of scaling factors throughout optimization. For an approach to efficient computation of non-proportional stress states in the context of topology optimization of metals, see Zhang et al. (2019).

The amount of combinations of mean and amplitude is typically reduced to increase computational efficiency by binning these within determined intervals. For instance, load combinations with mean and amplitude scaling factors in the interval \(c_{\text{a}}, c_{\text{m}} \in [0;0.1]\) can be placed in a single bin where \(c_{\text{a}}=c_{\text{m}}=0.05\) is assigned to represent these loads. Binning however lowers accuracy of the analysis and the degree hereof depends on the chosen bin discretization.

3.2 Damage evaluation

The fatigue evaluation takes offset in linear static stress analysis. First, the linear static finite element problem is solved, see Eq. (12).

Here, \({\varvec{K}}\) is the global stiffness matrix, \({{\mathbf{U}}}\) is the global displacement vector, and \({\varvec{F}}\) is the equivalent nodal load vector. From this, element-wise structural strains \({\varvec{\varepsilon }}_{xy}\) and stresses \({\varvec{\sigma }}_{xy}\) are calculated, see Eq. (13).

Here, \({\varvec{B}}^{(e,l,m)}\) is the strain-displacement matrix, \({\varvec{u}}^{(e)}\) is the displacement vector, \({\varvec{C}}^{(e,l)}\) is the constitutive matrix, \({\varvec{\varepsilon }}_{xy}^{(e,l,m)}\) is the strain vector in structural coordinates (x, y, z), and \({\varvec{\sigma }}_{xy}^{(e,l,m)}\) is the stress vector in structural coordinates for layer l of each element e. The position of calculation in the layered element is indicated by m, which in this work is exclusively at the top (\(m=1\)) and bottom (\(m=2\)) of each layer. To evaluate damage of the material, the stress or strain is rotated to material coordinates (1, 2), see Fig. 5.

In this work a stress-based approach is used and as such, the stress is rotated to material coordinates using the appropriate transformation matrix \({\varvec{T}}\), see Eq. (14).

Here, \({\varvec{\sigma }}_{12}\) is stress in material coordinates and \({\varvec{\sigma }}_{xy}\) is stress in the structural coordinates. Note, that if a structure is being designed for failure in the low-cycle fatigue regime, a strain-based approach is more appropriate for capturing plastic deformation effects prone to occur in this case.

The material stress is then scaled with the amplitude and mean scaling factors (\(c_{\text{a}}\) and \(c_{\text{m}}\)) determined from rainflow counting, resulting in amplitude stress \(\sigma _{\text{a}}\) and mean stress \(\sigma _{\text{m}}\) components for each load combination i, see Eqs. (15) and (16).

These components are then combined to an equivalent amplitude stress, which is comparable to the material S-N curves. Many equivalent stress models exist, and the choice of such depends on the particular case. For engineering metals, the modified Goodman expression is widely used as it is considered conservative for such materials, in particular if neglecting the beneficial effect of positive mean stress.

For composite materials, alternating compressive stresses are damaging and are therefore not negligible. In some cases, the Goodman correction has been shown to underpredict the influence of mean stress on damage due to the different failure mechanisms exhibited in tension and compression (Vassilopoulos and Keller 2011). Moreover, it is not sufficient to perform mean stress correction based on an isotropic equivalent stress criterion such as the signed von Mises or Sines methods that are available for metals. This is due to the orthotropic behavior of fiber-reinforced polymers and, as such, the damaging stress is normally determined for each stress component (Nijssen 2006). As a result, an S-N curve is necessary for each damaging stress component.

Despite its inadequacy, the Goodman correction is commonly used in industry due to its simplicity, and in particular, that only one S-N curve needs to be determined for each stress component z. The Goodman expression is given in Eq. (17).

Here, \(\sigma _{\text{eqv}}\) is the equivalent amplitude stress and \(\sigma _{\text {US}}\) is the ultimate strength (either compressive UCS or tensile UTS). Mean stress correction with modified Goodman is illustrated in a Constant Life Diagram (CLD) in Fig. 6.

The R-ratio R is used to characterize the nature of each cycle in the spectrum. It is determined from the ratio of minimum stress \(\sigma _{\text{min}}\) to maximum stress \(\sigma _{\text{max}}\), see Eq. (18).

With the modified Goodman expression, the correction is made with the fully-reversed S-N curve, that is defined at \(R=-1\). It should be noted, that in an optimization context the Goodman correction is non-differentiable at this point. Therefore, if such a cycle exists in the spectrum, it should be offset with a small value to avoid problems.

To address the inadequacy of the Goodman criterion, multiple S-N curves can be included in the analysis. This gives a good evaluation of load combinations in different areas of the CLD, that represent different loading conditions in the spectrum, i.e. tension-tension, tension-compression or compression-compression combinations. However, no expression exists to calculate the cycles to failure by correcting between two S-N curves. As such, the S-N curves are included by interpolation between available data at different the R-ratios.

In this work, linear interpolation will be applied, see Philippidis and Vassilopoulos (2004). This is a simple way to carry out the interpolation, however Vassilopoulos et al. (2010a) concludes that this method seems to perform quite well compared to more complicated interpolation schemes. An improved piece-wise non-linear formulation has since been presented in Vassilopoulos et al. (2010b), and this can replace the piece-wise linear formulation if increased accuracy is required. For other methods, see Vassilopoulos et al. (2010a).

Explicit expressions for calculating stress amplitude at any R-ratio have been derived in Philippidis and Vassilopoulos (2004), and these are used in the following to derive S-N data at a given load combination. The expressions are included here, however not derived. For the full derivation see Vassilopoulos and Keller (2011). The expressions use the R-ratio at the particular load combination, which is defined in Eq. (18). Commonly, data for stress ratios of \(R = 10\) and \(R = 0.1\) are used to represent the different loading conditions described, which yields a CLD as illustrated in Fig. 7.

Taking offset in the Goodman formulation, with mean correction based on ultimate strengths, the interpolation of data in the interval \(R_1< R < 1\) is formulated as shown in Eq. (19).

Here, UTS is the tensile strength of the material and r is the slope of a radial line in the CLD, which represents the interpolated S-N curve. This slope is defined in Eq. (20).

If the stress ratio is in the interval of \(R_2 = -1< R< R_1 < 0\), the interpolation yields the expression in Eq. (21).

Next, if the stress ratio is in the interval of \(R_2=-1< R < R_3\), the interpolation yields the expression in Eq. (22).

Finally if \(R> R_3 > 1\), the interpolation yields Eq. (23).

Here, UCS is the compressive strength of the material.

To apply these equations, an interpolation is performed first at \(N=1\). At this point, the fatigue strength parameter \(\sigma _{\text{f}}\), which is the y-intercept, can be derived. Subsequent interpolations are performed at \(N \ne 1\), from which the S-N curve slope b is derivable by regression. This yields all necessary parameters at the particular load combination for determining fatigue life for the particular load combination.

Note, that this process infers that much data is generated, as in principle, the fatigue spectrum can consist of entirely unique load combinations, each requiring an S-N curve. To reduce the amount of data, it is chosen to pre sample a number of S-N curves before performing the fatigue calculation. Because of the proportional loading assumption, sampling only once before optimization is needed. Each load combination will then use the S-N curve derived at the closest R-ratio.

To investigate the impact of choosing an S-N curve, that is not derived exactly at the R-ratio of the load combination, a sensitivity analysis has been carried out. The analysis indicated that R becomes particularly sensitive when reaching the negative abscissa, i.e. in the interval \(R \in [0,2]\). At this point, the S-N parameters change rapidly with small changes in R-ratio, indicating that more samples could be useful in this area. Nevertheless, through numerical testing it was determined that generating 20 sampling points between each reference point yielded a reasonable approximation to the fatigue damage computed by interpolating at each R-ratio.

Note that inclusion of more S-N curves increases the amount of intersecting points of straight lines, see Fig. 7, which are non differentiable, and action should therefore be taken to avoid presence of values in the transition between two S-N curves in the CLD. This problem is entirely avoidable, if instead using a continuous representation of the CLD, see Vassilopoulos et al. (2010a) for such models and a comparison of their accuracy.

After deriving the equivalent amplitude stress by an appropriate CLD method, cycles to failure is calculated for each stress component using an S-N curve expression. Many S-N representations exist with various levels of accuracy and different amounts of material characterization requirements, see e.g. Nijssen (2006). The Basquin expression, which is a linear curve in a log-log plot, is commonly applied fit to the high-cycle material behavior and is also adopted here. From the Basquin expression, cycles to failure N is determined as shown in Eq. (24).

Here, \(\sigma _{\text{f}}^{(z)}\) is the fatigue failure strength and \(b^{(z)}\) is the Basquin exponent, that represents the material fatigue strength degradation, for each stress component z. Note that, in case of using CLD interpolation, these parameters are derived from the interpolation and are used to calculate cycles to failure along with the amplitude stress at the particular R-ratio of every load cycle i.

Finally, damage from each individual load combination has to be accumulated. A variety of methods also exists for this purpose. In this work, the well-known linear Palmgren-Miner rule is adopted due to its simplicity and widespread usage, see Eq. (25).

Here, D is the damage, \(n^{(i)}\) is the number of cycles at a given level, \(N_{loads}\) is the number of unique cycles in the spectrum, \(D_{\text{lim}}\) is the damage fraction limit and \(c_{\text{L}}\) is a scaling factor which can be used to scale the load if the load spectrum consists of repeated blocks. The use of this scaling factor can make fatigue calculation more efficient, as it can be performed on only a single block smaller than the full load spectrum. In this work, \(D_{\text{lim}} = 1\) is always used for simplicity, however this value can be reduced if a higher margin of safety is desired.

4 Optimization approach

Finite-element-based fatigue analysis results in many local damage values. This infers that the optimization problem consists of many local functions that must be minimized or constrained. It is desirable to reduce this amount to be make the optimization problem easier to solve, and a method for this purpose will be presented in the following. Moreover, fatigue damage as defined has inherent difficulties, which require reformulation of the problem in order to stabilize the optimization and be able to achieve a good solution. These difficulties are namely singular optima in the design domain, and the exponential dependence of the fatigue damage on stress that makes it difficult for the problem to converge. Techniques are presented in this section to also solve these problems. A fatigue optimization function is then formulated and its gradients are derived. This is followed by a presentation of the optimization framework with several techniques to improve convergence.

4.1 Singularities in stress-based optimization

It is well known that stress-based criteria suffer from the presence of singular optima, see Sved and Ginos (1968), Kirsch (1990), and Rozvany and Birker (1994). Solving the singularity problem is typically done by relaxing the design space. Popular methods for relaxation include the \(\varepsilon\)-approach of Cheng and Guo (1997) and the qp-method of Bruggi (2008). Here, the qp-method is adopted, as it allows for further penalization of intermediate density besides resolving the singular optima problem. It entails artificially increasing the stress of intermediate density for the optimizer, thus making such uneconomical in the design. For the RAMP interpolation, see Eqs. (3) and (4), this is achieved by using \(q < 0\).

Interpolation has to be done to both stress and damage, and it is possible to add penalization to both measures. In this work, relaxation is applied to only the damage, meaning that stress is interpolated using linear functions. Penalization and relaxation functions are illustrated in Fig. 8. The actual values used for the exponent in the various penalty functions are presented when demonstrating the approach in Sect. 5. The candidate damage \(\bar{D}\) is then calculated by multiplying the corresponding weighting function \(w_{\text{D}}\) to relax the problem, see Eq. (26).

4.2 Damage scaling

Fatigue life, as evaluated in Eq. (24), is exponentially dependent on the stress. This non-linear formulation is difficult to handle in an optimization context, as relatively small changes in stress can yield large changes in cycles to failure. Stabilizing the measure is therefore needed to reliably achieve a solution which is introduced by scaling the damage. Various approaches have been formulated for this purpose, see Olesen et al. (2021) for a comparison. In this work, the inverse P-mean scaling also presented in Olesen et al. (2021) is used, see Eq. (27).

Here, \({\varvec{D}}_{\text{s}}\) is the scaled damage array, \(P_{\text{D}}\) controls the accuracy of the P-mean norm approximation, s is a scaling exponent, and \(c_{\text{D}}\) is a weighting factor which determines the accuracy between the scaled measure and the true damage.

The damage measure for different values of \(c_{\text{D}}\) is illustrated in Fig. 9. As illustrated, when \(c_{\text{D}} \rightarrow 1\), the high non-linearity is effectively suppressed. However it is also evident that the scaled damage measure overestimates the true damage when it is less than one. This is problematic when solving damage-constrained problems, since this will be the case for many local damages, and as a consequence, the design may be overdimensioned in some areas. Preferably, a damage-constrained problem is to be solved using \(c_{\text{D}} \ne 1\), as a better general approximation of the true damage is achieved. Numerical investigations however indicate, that convergence is best when using \(c_{\text{D}} = 1\), which is due to it suppressing the nonlinearity the most. Thus, inverse P-mean scaling is well-suited for application with a continuation approach on the factor \(c_{\text{D}}\), such that good convergence behavior is prioritized in the beginning of the optimization and then gradually changed to more accurately estimate the true damage, thus only allowing the expression to become more nonlinear, once the optimizer is close to an optimum.

Various approaches for how to scale the different damage measures have been tested, herein particularly scaling the candidate values before performing the summation in Eq. (28). This way would be convenient if a single S-N curve is used, as the damage may be scaled using the associated Basquin exponent b, see Eq. (24). An unfortunate feature of this scaling is that infeasible true damage can be scaled to become feasible. This behavior is undesirable as the optimizer can converge to solutions that have feasible scaled damage, but infeasible true damage. Furthermore, when using multiple S-N curves with CLD interpolation, a single scaling factor s must be chosen to scale the damage anyway. As such, an appropriate value of s is applied for the examples based on numerical studies, see also Lee et al. (2015) and Zhang et al. (2019).

Scaling of the interpolated damage and aggregated damage has also been tested. However, common for both is that performing the scaling after damage interpolation seems to negate the penalization effect and, as a result, it was not possible to solve the examples shown in Sect. 5 and achieve a discrete high-quality solution using these approaches. They were therefore abandoned going forward.

Finally, the scaled candidate damages are summed and weighted, using the density weighting function, yielding Eq. (28).

Here, \(\tilde{D}_{\text{s}}\) is the interpolated scaled damage used in optimization and \(v_{\text{D}}\) is the thickness weighting factor with an appropriate exponent for damage penalization according to Fig. 8.

4.3 Damage aggregation

Damage calculation in Eq. (28) results in \(N_{\text{e}} \times N_{\text{l}} \times 2 \times N_{\text{z}}\) functions in the optimization problem, where \(N_{\text{e}}\) is the number of elements, \(N_{\text{l}}\) is the number of layers, and \(N_{\text{z}}\) is the number of stress components considered in the model (i.e. six for a general 3D stress state). This causes the optimization problem of most finite element models to become inherently large scale, from which it is difficult to achieve a good solution efficiently using mathematical programming methods. To solve this problem, the local damage measures are aggregated to reduce the number of functions in the optimization problem. The aggregation is carried out using a P-norm function as shown in Eq. (29).

Here, \(D_{\text{PN}}\) is the P-norm approximation of the maximum damage and P is an exponent governing the accuracy of the approximation. To achieve adequate accuracy in the global measure, P has to be a contextually large number which can make the expression substantially nonlinear and this makes achieving convergence tedious. To avoid this, the P-norm aggregation is coupled with adaptive constraint scaling (Le et al. 2010), which normalizes the measure with a factor \(c_{\text{ACS}}\) based on information from previous iterations. Computational details regarding \(c_{\text{ACS}}\) can be found in Oest and Lund (2017).

It should be emphasized that the method relies on using the \(\max\)-operator, which makes the function non-differentiable. The degree of non-differentiability is however reduced since the scaling stabilizes during optimization and thus converges along with the problem. The approach is also well-documented to work on both stress and fatigue problems, see e.g. Le et al. (2010), Lund (2018), and Zhang et al. (2019). It is however undesirable to have a significantly small adaptive constraint scaling factor value as it increases the influence of the non-differentiability, when solving the problem. This can occur if trying to aggregate many values with a significant span of magnitudes using a smaller P-exponent in the P-norm function. To alleviate this problem, a local aggregation strategy (Le et al. 2010; París et al. 2010) is adopted, where the aggregation is made in multiple smaller areas of the model. This works particularly well when combined with the patch parametrization approach, as aggregation conveniently can be carried out in each patch, see e.g. Lund (2018).

4.4 Design sensitivity analysis

Design Sensitivity Analysis (DSA) is the process of deriving and computing sensitivities of the problem functions. Available DSA approaches are characterized as numerical (finite difference approximations), analytical, or semi-analytical where the two are combined. The analytical approaches are preferable as they are the most computationally efficient. However, two analytical approaches are available, direct differentiation and the adjoint approach, and the choice between these depends entirely on the problem to solve. The adjoint DSA method is in particular appropriate for problems with few constraints, and many design variables, as it requires solution of an equation per criterion. Such is the case in this work due to the use of P-norm functions that drastically reduce the number of structural constraints to significantly less than the number of design variables.

The adjoint sensitivities are thus derived in the following, which underlines its strength for said problems. Omitting many intermediate partial derivatives, the derivative of the damage function with respect to an arbitrary design variable \(x^{(j)}\) is found by application of the chain rule, see Eq. (30).

Reference is made to Appendix A for the expanded derivative and derivation of the omitted explicit terms.

The adjoint vector is then formulated from the derivative of the governing equation of Eq. (12), resulting in Eq. (31).

It is assumed that the nodal load vector is independent of the design variables and its derivative is zero as a result. It is therefore omitted in the following expressions. The partial derivative of displacement with respect to design variables is then isolated, see Eq. (32).

Inserted into the full function derivative yields Eq. (33).

Directly solving this equation is the direct DSA approach, which implies solving it for each design variable. This is demanding for problems with many design variables, which is the case for those treated in this work. For efficiently computing gradients of many design variables, it is convenient to define an adjoint vector \({\varvec{\lambda }}\) as shown in Eq. (34).

As \({\varvec{K}}\) is symmetric it is unaltered by transposition. Thus by inverting and transposing \({\varvec{K}}\) a set of linear equations is achieved, see Eq. (35).

This linear set of equations is solved efficiently by reusing the factored stiffness matrix from the finite element analysis. Thus, only this extra single set of equations has to be solved in order to compute the sensitivities of all design variables. Inserting \({\varvec{\lambda }}\) into Eq. (33) yields the resulting adjoint sensitivity expression, see Eq. (36).

4.5 Optimization techniques

DMTO problems are notoriously difficult to solve due to the design space being non-convex, and the inclusion of many linear manufacturing constraints. The typical solution strategy for these problems is application of a mathematical programming algorithm, which is tunable by a set of parameters. One framework, that has been demonstrated to handle difficult non-linear structural optimization problems well, is the Method of Moving Asymptotes (MMA) (Svanberg 1987). However, for the problems considered presently MMA is not particularly appropriate as it is only able to handle inequality constraints and works the best with few constraints. This is particularly problematic due to the reliance on the linear constraints of Eq. (6) and the necessity of other manufacturing constraints to achieve usable designs.

Another widely used framework is sequential programming, either implemented as Sequential Linear Programming (SLP) and Sequential Quadratic Programming (SQP). In this work, an SLP-based framework is adopted. This choice is made based on the conclusions of the study in Sørensen and Lund (2013) that showed that the applied SLP approaches in general outperformed the considered SQP approaches for DMTO problems. Additionally, good results for strength problems was later demonstrated in Lund (2018) using this SLP approach.

The SLP framework makes use of a merit function, see Sørensen et al. (2014). The purpose of the merit function is to ensure unconditional infeasibility of the structural constraints and to penalize such by an auxillary term in the objective function, see Nocedal and Wright (2006) for more details. Additionally, external move limits are applied on each design variables. These prevent too large design changes between iterations which would otherwise cause convergence to a poor optimum or potentially divergence. This is particularly important for highly non-linear problems such as the fatigue problem. These are applied adaptively, which is necessary to achieve convergence with SLP frameworks, as otherwise, the optimizer will not settle on an optimum.

Because of the non-linearity involved, the problem is likely to become significantly infeasible during the optimization due to the linear approximation not accurately representing the fatigue function at a particular point. To not cause a need for excessive feasibility restoration during optimization, an SLP filter is introduced. Different filters exist, however the one applied in this work is based on that of Fletcher et al. (1999), see also Sørensen and Lund (2015b). The SLP filter checks the quality of a design, i.e. if the objective function is less than in the previous point or if constraint violations have been reduced. When either of these condition are met, the design is placed in the filter, and if a design is better on both criteria than a previous solution stored in the filter, the old design is discarded. If a design does not pass the filter, the external move limits are uniformly reduced, and the problem is solved again. This process is repeated until a new point is accepted, or no better point can be found by the optimizer. By allowing only feasible designs in the SLP-filter convergence may take a significant amount of time, or the optimizer may get stuck prematurely. Some slack is introduced by accepting designs that are infeasible up to a set limit \(u_{\text{lim}}\). This limit used in this work is \(u_{\text{lim}}=0.01\), and is chosen based on numerical experiments.

The original SLP filter formulation only discards designs that are worse on both parameters than those in the filter, i.e. that both objective and constraint violations are larger. However, in the formulation adopted here, the acceptance criterion is modified so that emphasis is placed on ensuring feasibility. Thus, designs that have violations that exceed a preset limit is always discarded. Moreover note that assessment of a design should be carried out on the optimization functions to ensure consistency, i.e. the linearized function in the SLP framework. However, since this might be a poor representation of the actual fatigue damage, the true damages will be used, when comparing a design to the entries in the SLP filter. Although the global-convergence property of the original filter is lost, it has been observed to work well in practice.

Despite all the introduced techniques, a good solution is not necessarily found through their use. This is because of the problem being remarkably non-convex with high probability of ending up in a poor local minimum. To guide the optimizer to a good solution, a continuation approach is applied where the penalization is gradually increased (or decreased in case of considering the stress-relaxation parameter). The basic idea is that the design domain is convex when using linear interpolation (i.e. no penalization) for compliance problems (Bendsøe and Sigmund 2003), and less non-convex for more complex problem formulations, thus allowing the optimizer to find a good local optimum as a reference. Then, exponents are subsequently changed allowing a better starting point for the optimizer to solve a more non-convex problem. This is repeated for a number of appropriate steps, until arriving at adequate penalization.

Continuation is however a heuristic approach, and one can not expect to follow the global trajectory when changing the interpolation exponents, see Stolpe and Svanberg (2001b). In Lund (2018) it was demonstrated how application of continuation to both the penalization and relaxation exponents resulted in the global solution for some benchmark examples. Nevertheless, it is necessary to fine-tune how the exponents are updated for each problem. The continuation strategy adopted for each example in this work is detailed in Sect. 5.

Furthermore, measures of non discreteness are defined to quantify the (non) discreteness of the solution. A measure of density non discreteness \(M_{\text{dnd}}\) (Sigmund 2007) is associated to the thickness variables, and is given in Eq. (37).

Here, \(V^{(e,l)}\) is the volume of the layer l and element e and \(N_{\text{d}}\) is the number of material domains with associated thickness design variables. Note that the summation indicates that every element e is summed for their associated domain d. A measure of candidate non discreteness \(M_{\text{cnd}}\) (Sørensen et al. 2014) is also defined, see Eq. (38).

These measures are used to assess the quality of a solution, when presenting the examples in the following section. They yield 100% for the most non discreteness possible, i.e. design variables = 0.5, and 0% for 0/1 discrete designs.

5 Numerical examples

This section demonstrates fatigue optimization of various examples. The examples include two academic benchmark examples where the global optimum is known, demonstrating the DMO and DMTO methods respectively, a more challenging damage-constrained problem, and finally a simplified industrial main spar example. The finite element models demonstrated in this section use equivalent single layer (ESL) shell elements, see e.g. Reddy (2003) for details. All examples use the 9-noded isoparametric layered shell element formulation to avoid locking issues. Note that, the methods presented in the following are equivalently applicable to more general 3D models.

The materials applied are uni-directional (UD) GFRP, GFRP cross-ply (CP), rotated 45\(^{\circ }\) (this is termed biax), and Rohacell WF110 foam. Static and fatigue strength properties of these are given in tables 1 and 2. Material fatigue properties are not widely available for laminated composites, due to the extensive experimental campaigns required for S-N curve determination. The fatigue properties for the fiber materials used in this work is based on the OPTIDAT Nijssen et al. (2006) database. However, no data was available for the cross-ply laminates for all directions and R-values in this database. For these materials, the properties are derived from a scaling between the static strength values and available fatigue data. The foam data is based on that given in Zenkert and Burman (2009, 2011). As no data is available for \(R \ne -1\), mean stress correction is only carried out using a bilinear Goodman correction.

In Table 3 the continuation parameters are given. Continuation is applied on both the inverse P-mean function scaling variable and for design variable penalization. The P-mean function is used only for the third and fourth optimization problems, as only they are fatigue-constrained problems. In damage-minimization problems, which is treated in the first two examples, it is not important if the damage is accurately represented, as long as minimizing the approximated value also minimizes the true maximum damage. The number of iterations, until a continuation step is made, is stated in the following for each example as the strategy used varies between them. Finally, to solve the linear programming problems, the Sparse Nonlinear OPTimizer (SNOPT) by Gill et al. (2005) has been applied with default settings for all problems. Convergence of the problems is defined as a relative change in design variables between iterations of \(0.1\%\).

5.1 Example 1: DMO of single-layer clamped plate

This first example demonstrates fatigue optimization with the DMO parametrization. The problem concerns finding the optimal fiber distribution in a single-layered clamped plate subject to distributed pressure load \(p = {1} \, \text{MPa}\), see Fig. 10.

The objective is to minimize the P-norm aggregated scaled fatigue damage. It is a standard benchmark example where the optimal fiber distribution for stiffness and strength problems are known to be symmetric - this is also the case for fatigue. The optimization problem is stated in conventional form in Eq. (39).

The mesh consists of 32x32 shell finite elements. The material used in the model is GFRP with four DMO candidates having different fiber orientations of \(-45^{\circ }\), \(0^{\circ }\), \(45^{\circ }\) and \(90^{\circ }\). These are all associated \(\varvec{x}^{(k=0)} = 0.25\) as a starting guess. A fatigue load spectrum of \(10^6\) cycles has been applied for a zero-based constant amplitude loading, i.e. \(R=0\) for all load combinations. Only the S-N curve at \(R=-1\) is used for the fatigue evaluation, and as such the bilinear Goodman expression, see Eq. (17), is applied for mean stress correction. An 8x8 patch parametrization is used, such that each patch contains a 4x4 square of elements to which the same candidate design variable is assigned. Note that this parametrization does not ensure fiber continuity between neighboring patches and is therefore not appropriate for generating a manufacturing-ready design. The purpose of the parametrization is to be able to verify that the global optimum of the mathematical problem has been found.

Each damage component is scaled using \(s=-0.05\) and \(c_{\text{D}} = 1\) in the inverse P-norm mean function of Eq. (27). The P factor used in the P-norm, see Eq. (29), and inverse P-mean functions is chosen as 8. Penalization exponents vary according to Table 3 and is changed after every 15\(^{th}\) iteration. Additionally, adaptive external move limits are applied, which are capped at 10%.

The resulting fiber and damage distributions of \(D_{1}\) and \(D_{2}\) are illustrated in Fig. 11. Note that, shear stress is not particularly prevalent in this example, and the corresponding damages are therefore not illustrated. The maximum true damage is \(D = 3.7 \times 10^{-6}\) and is found at the bottom position of the shell element in the 2-direction.

Resulting fiber and damage distribution of \(\mathbb {P}_1\) for the given parameters. This result is also the global optimum, which has been verified by an exhaustive search. The increased gradient of damage around hot spots are observed for the scaled measure when compared to the true damage. As a consequence, damage does not change uncontrollably in between iterations

Remark that the solution is the global optimum, however, achieving a global optimum requires numerical studies to select the appropriate parameters, i.e. the continuation scheme for the interpolation exponents, P value in the P-norm approximation, scaling factor s, the external move limit cap etc. It should in general not be expected to reach the global optimum for fatigue optimization problems. The design achieved is also noticeably similar to the strength-optimized design achieved in Lund (2018) for the same parametrization, which is expected, since the stress and strain distributions used to compute both static and fatigue failure are the same. A slight difference is observed, which may be a result of a different failure mode becoming increasingly critical as the strength degrades during fatigue loading. The transverse strength is associated with the smallest S-N exponent and therefore degrades faster than the longitudinal and shear strengths, which may lead to e.g. \(\pm 45^\circ\)-plies becoming favorable in transversely loaded areas over \(90^\circ\)-plies.

Using \(c_{\text{D}} = 1\) the formulation linearizes the damage, which can be observed from the increased gradient in drop-off of scaled damage around the most damages areas. The true damage is more localized around certain hot-spot areas. The maximum damage has been evaluated post optimization, where the design variables have been enforced to zero or one. However note, that the measure of candidate non discreteness is \(M_{\text{cnd}} = 0.016\%\) when the convergence criteria had been met, i.e. an almost fully discrete design has been achieved. This causes a slight increase in the maximum damage, which can be seen if carefully considering the convergence plot in Fig. 12.

It is observed from Fig. 12 that the optimization oscillates a bit in the beginning of the optimization. This is attributable to the combination of the non-linear fatigue equations and the rather large 10% external move limit cap. It is possible to stabilize the convergence by capping the move limits at e.g. 5% however, this will be at the cost of more iterations to solve the problem. The problem may also be solved using \(c_{\text{D}} \ne 1\), however this slows convergence, and, as mentioned, accurately representing the damage is not important in damage-minimization problems. As such, it is not recommended for this problem.

The problem was also solved using SIMP interpolation with a minimum on the design variables of \(x_{\text{min}} = 10^{-6}\) to avoid a singular stiffness matrix. Similar results are achievable using SIMP, but fine tuning of the parameters is likewise necessary. It can not be expected that a given set of parameters will yield the same solution for a SIMP-penalized problem as that achieved for a RAMP-penalized problem.

5.2 Example 2: DMTO of a layered cantilever plate

This next example demonstrate fatigue optimization with the DMTO parametrization. The problem considered is a cantilever shell with a concentrated transverse load of \(P = 10N\) at the edge, see Fig. 13. The problem is to minimize the P-norm of the scaled damage subject to a mass constraint of 60% of the original mass, see Eq. (40).

Here, Manufacturing constraints is used to denote candidate summation constraints of Eq. (6), the thickness-drop constraints of Eq. (7) and ply-drop constraints of Eq. (9). This problem is also a benchmark example, where the expected solution for the fiber orientation is that all fibers are in the longitudinal direction and the thickness being distributed in a staircase-like manner. The model is meshed with 5x1 elements with ten layers each. Observe that using shell finite elements, a staircase-like geometry does not create stress singularities, which causes its occurrence in the final design.

The load spectrum considered in this example is fully reversed and of variable amplitude. 103 points are generated using the random_number() function, and this is scaled by a factor \(c_{\text{L}} = 10^4\), see Eq. (25). Bilinear Goodman is used for mean correction with respect to the S-N curve at \(R=-1\), similarly to the previous example.

Candidate materials are GFRP with orientations \(-45^{\circ }\), \(0^{\circ }\), \(45^{\circ }\) and \(90^{\circ }\), which are all assigned a starting value of \(\varvec{x}^{(k=0)} = 0.25\). The density variables are initially \(\varvec{\rho} ^{(k=0)} = 1\) in all layers. Candidate design variables patches are assigned to each layer, such that the material chosen must be continuous in the longitudinal direction of the structure, which is typically necessary for manufacturability. The density variable domains are associated to each layer of every element, except for the bottom layer where the design variable is shared for all elements. This variable is fixed at one, ensuring presence of a bottom layer in the plate. For the through-the-thickness density change constraints of Eq. (8), a threshold value of \(T = 0.1\) is used, and for the ply-drop constraints of Eq. (9) \(S = 1\), i.e. a single ply is allowed to drop at a time.

The continuation approach adopted here is changing the exponents every 20\(^{th}\) iteration according to Table 3. As in the previous example, the scaling factor in the inverse P-mean is \(c_{\text{D}} = 1\), as damage minimization is considered, and \(s=-0.1\) is used. In the P-norm damage aggregate and inverse p-mean function, P and \(P_{\text{D}}\) are selected as 8. Furthermore, adaptively updated external move limits are used with a cap at 10%.

Solving the problem results in the expected solution with all \(0^\circ\)-oriented plies and a staircase-like thickness distribution as shown in Fig. 14. The optimizer converged after 34 iterations with a measure of non discreteness for both candidate and density of \(M_{\text{cnd}} = M_{\text{dnd}} = 0.0\%\). At the point of convergence, the continuation had not been completed.

Damage distributions are illustrated in Fig. 15. As illustrated, more gradient of damage is again achieved for the scaled measure compared to the true damage. The maximum damage is found in the longitudinal direction at the bottom of the first layer. Damages are in general quite low for this example, indicating that this structure is able to carry significantly more load. There is a slight difference in the damage values and distribution between top and bottom due to the different tensile and compressive strengths of the material, when performing mean stress correction.

5.3 Example 3: Fatigue-constrained DMTO of a cantilever plate

In this example, a fatigue-constrained mass-minimization problem is solved with the DMTO parametrization. The problem is similar to that solved in the previous subsection, however 20 elements and 20 layers are used instead to make it more challenging. The load has also been adjusted to comply with the change in structure. The problem is illustrated in Fig. 16. The optimization problem is given in Eq. (41)

Note, that \(\tilde{m}\) indicates the merit reformulation of the mass objective function. The same load spectrum as the previous example is used, i.e. \(10^3\) randomly generated points that are scaled by \(10^4\). Here, mean correction is carried out using CLD interpolation with 20 sampled S-N curves between the three available, given in Table 2.

A right-hand side of 0.99 is chosen for this problem. The motivation for this is to keep the damage measure stable when modifying the inverse P-mean scaling factor \(c_{\text{D}}\) during continuation. A damage measure that is only slightly infeasible, when a modification is made to \(c_{\text{D}}\), can cause a vast increase in damage thereby making it difficult to restore feasibility or, in some cases, not possible entirely. Infeasibility is prevented entirely using the SLP limit of \(u_{\text{lim}} = 0.01\), guaranteeing stability during the chosen scheme.

As in the previous example, \(-45^{\circ }\), \(0^{\circ }\), \(45^{\circ }\) and \(90^{\circ }\) oriented GFRP are used as candidate materials and these are assigned to patches that span all elements in each layer. Density variables are assigned to every layer in every element, except for the bottom layer, which shares design variable in all elements. The starting guess is \(\varvec{x}^{(k=0)} = 0.25\) for the candidate design variables and \(\varvec{\rho} ^{(k=0)} = 1\) for the density variables. For the manufacturing constraints, a threshold value of \(T = 0.2\) is used in Eq. (8), and \(S = 2\) for Eq. (9).

Since this example involves a damage constraint, the true damage has to be accurately approximated during the optimization, else the final design will be overdimensioned. To demonstrate the effect of this, three problems with different strategies for updating the scaling factor \(c_{\text{D}}\) are solved. With offset in Table 3, a problem is solved with the shown strategy, ending up with \(c_{\text{D}} = 0.1\). The other two problems follow the strategy until \(c_{\text{D}} = 0.5\) and \(c_{\text{D}} = 1\) respectively. The scaling factor is updated every 80\(^{th}\) iteration, while the penalization exponents are updated every 30\(^{th}\). For the inverse P-mean function, an exponent of \(P_{\text{D}} = 8\) is used for all problems, and for the P-norm function, an exponent of \(P = 12\) is used. Additionally, the scaling factor used is \(s=-0.1\).

Adaptive external move limits are also used here with a cap at 5%. These are coupled with the SLP filter to achieve a good solution. An allowable infeasible limit of \(u_{\text{lim}} = 0.01\) is used. The strategy applied is to accept the design with the most amount of infeasibility, within the tolerance \(u_{\text{lim}}\), at the end of optimization and to ensure feasibility, when the optimization is finished, the right-hand side of the constraint is reduced to 0.99, see Eq. (41).

Convergence of the problems are shown in Fig. 17. As is evident, convergence takes significantly longer for this problems compared to the previous. It can also be observed, that the best designs are achieved by using \(c_{\text{D}} \ne 1\). However, by doing so the formulation becomes more non-linear as \(c_{\text{D}} = 0\) is approached, see Fig. 9, which consequently increases the number of iterations to convergence. As such, the trade-off on accuracy and efficiency in solving the problem is important to consider, when selecting values for the inverse P-mean function. Notwithstanding, DMTO is strongest in the early design phases, as the design typically needs adjustment for additional manufacturing considerations and more computationally expensive analyses, thus a good trade off may be to keep \(c_{\text{D}}=1\) in most problems.

Convergence of the three problems. As seen on the figure, there is a change in trajectory when \(c_{\text{D}}\) changes from 1. The descent of the mass function where \(c_{\text{D}}\) is changed levels out, such that it takes more iterations to reach the same mass from this point. As such it is clear that the best convergence is achieved using this setting, however it is at the cost of damage accuracy

The resulting designs and damage distributions (maximum damage component) are shown in Fig. 18. In every solution, UD fiber is chosen for all layers with a material non discreteness of \(0.0\%\), and this is selected early in the optimization. The difference between the solution is the thickness distribution and density discreteness. Here it is interesting to note, that the problem solved with \(c_{\text{D}} = 1\) is most discrete, having a density non discreteness of \(M_{\text{dnd}} = 0.6\%\). The optimizations ending with \(c_{\text{D}} = 0.5\) and \(c_{\text{D}} = 0.1\) finish with \(M_{\text{dnd}} = 1.7\%\) and \(M_{\text{dnd}} = 1.2\%\) respectively. However, layers were removed in both these designs that were not removed otherwise, indicating that these solutions are not the same as that achieved with \(c_{\text{D}}=1\), but with more intermediate density.

It was also attempted to solve the problem using \(c_{\text{D}} \ne 1\) initially. All tests did however prematurely converge to a design with unsatisfactory amounts of intermediate values for primarily the candidate materials. As such, to reach the best optimum, a continuation scheme applied to \(c_{\text{D}}\) seems to yields the best results. Nevertheless, it is important to underline, that decent designs can be achieved efficiently using exclusively \(c_{\text{D}} = 1\).

The use of the SLP filter approach is necessitated to solve this problem. Due to the significant non-linearity of the fatigue functions, the function is observed to oscillate significantly above the constraint limit. This makes it difficult to converge when coupled with the continuation approach, which may cause the mean stress to exceed e.g. the ultimate strength if performing mean stress correction with the modified Goodman expression of Eq. (17). The SLP filter will reject solutions that are significantly outside the constraint limit, thus keeping the optimizations stable as observed in Fig. 17. As is also observable from this figure is that adjusting the constraint and SLP filter limits to be less than one keeps the damage stable when performing continuation on \(c_{\text{D}}\).

5.4 Example 4: Multi-criteria DMTO of a wind turbine blade main spar

The final example demonstrate inclusion of fatigue constraints in a multi-criteria optimization of a 14 m simplified industrial main spar of a wind turbine blade. The geometry of the spar is shown in Fig. 19. It is clamped at its root (where the cross section is circular), and is loaded with an experimentally-based force equivalent to 164.7 kN, see Overgaard et al. (2010), distributed at the tip of the spar on the webs. The finite element model consists of 1,652 9-node shell elements with 20 layers each that are 0.0025 m thick, and the outer geometry is used as reference for shell offset. The structure is parametrized in 120 patches for thickness, and 8 longitudinal patches for material, which are highlighted on Fig. 19. Three candidate materials are used: 0\(^{\circ }\) UD GFRP, biax GFRP and the Rohacell WF110 foam material. In total, the number of design variables in the problem is 2,880.

The example has been solved using additional structural criteria, which besides fatigue damage are tip displacement and linear buckling. These criteria are necessary to take into account to realize this type of structure. For details on the implementation of these criteria see Sørensen et al. (2014). The optimization problem is given in Eq. (42).

Here, \(g_\lambda\) is the buckling constraint, \(g_U\) is the displacement constraint and \(g_{\text{D}}\) is the damage constraint, with the latter also including adaptive constraint scaling. Similarly to the previous example, a limit of 0.99 is used for the fatigue constraint.

The fatigue analysis is carried out using a random spectrum of 103 points generated from the random_number() intrinsic Fortran function. This loading is scaled using \(c_{\text{L}} = 10^4\) in Eq. (25). The analysis is carried out using CLD interpolation between the three S-N curves given in Table 2 for the UD and biax materials. The interpolated S-N curves are formed pre analysis and then stored for each non-zero entry in the rainflow matrix.

For fatigue evaluation in the foam, only a single S-N curve is used with modified Goodman to take into account mean stress effects. Also note, that the component-wise approach presented in the paper is also applied for evaluating damage in the foam. It may however yield more accurate results to apply an isotropic multi-axial fatigue criterion for evaluation of the damaging stress. As such, the approach for the foam would be equivalent to that applied in Oest and Lund (2017) for metal materials.

Fatigue evaluation results in 396,480 damage values. These are aggregated using a patch-wise P-norm strategy, where damage values are aggregated for each thickness domain which is assigned its own designated constraint. The problem therefore involve 120 damage constraints. Because the adaptive constraint scaling method is dependent on the P-value chosen, a rather large value of \(P = 36\) is used to get a very accurate measure of the maximum damage for the many function values. Note that this is a rather large value, however it is chosen based on extensive numerical experimentation that indicated stable performance using this factor.

The scaling exponent in the inverse P-mean function is chosen as \(s = -0.1\), and \(P_{\text{D}}\) is chosen as 8. The weighting factor \(c_{\text{D}}\) is changed according to Table 3, the continuation of this parameter starts after 200 iterations (or until convergence has been achieved), and is updated after 35 iterations. Note that the continuation is applied slowly and the reason for this is primarily to keep the stress from rapid increase, when changing exponents in the weighting functions. If the stress exceed the ultimate strength of the material, it is not possible to carry out mean stress correction, causing the optimization to stop.

The candidate design variables are initialized with equal weighting of \({\varvec{x}}^{(k=0)} = 0.33\), and the density design variables are initialized as \({\varvec{\rho }}^{(k=0)} = 1\). Additionally, a threshold of \(T=0.1\) is used for the thickness move limits and the ply-drop constraints use \(S=1\). Finally, adaptive external move limits are capped at 5%.

The optimization converges in 245 iterations, and the convergence behavior is illustrated in Fig. 20. At the first continuation step, the fatigue constraint becomes significantly infeasible. As the damage constraint is formulated using the inverse P-mean method, and at this point \(c_{\text{D}} = 1\), the true damage is immense. However, as observed, the combination of the optimizer and the scaling handles this well. After this point, the convergence is more stable, particularly evident at the final penalization step applied at the 144\(^{th}\) iteration, which is barely visible on the convergence plot.

The optimized layup is illustrated in Fig. 21 and the distribution of maximum damage components in the spar is shown in Fig. 22. The discreteness of the design is at \(M_{\text{cnd}} = 0.325\%\) and \(M_{\text{dnd}} = 0.973\%\), thus an almost fully discrete design is achieved. The locations of intermediate density is observable in Fig. 21. The optimized layup is evidently a UD sandwich construction, which is expected as a result of only including a flap-wise load case. In a realistic scenario, the structure will be subject to loads from multiple directions and an optimization under such case will yield a significantly different layup. However, such designs are not intuitive as opposed to the attained layup, which simplifies the post-optimality analysis, the present discussion of the results, and assessment of the overall approach.

Candidate material choice and thickness distribution of the eight longitudinal patches. Each square represents a circumferential patch assigned a density thickness variable. The number of layers in each of these patches is given on top of the stack of layers. Therefore, if colors are not strictly red or blue, the candidate choice of the layer is not fully discrete, and likewise, if the number of layers is not an integer, the top layer is of intermediate density. Note also that layers are offset from the top, such that the outermost layer is at zero thickness, which is illustrated in cross-section centered in the figure

Most UD material is placed in the spar caps to add the necessary stiffness and strength for all criteria. A single UD layer is also placed in layer 17 at the top region, however this is not significantly damaged and its placement is therefore attributed to a local minimum. However, if realizing the design, a fiber-reinforced material should be placed at the innermost layer anyway to protect the core material. Such is not enforced in the present approach and the design is therefore not directly manufacturable. Nevertheless, the achieved layup does give a vastly improved point of departure for the designer, that should be able to acquire a valid high-performing design through relatively few manual modifications. The alternative parametrization introduced in Sjølund et al. (2018, 2019) specifically addresses optimization of sandwich constructions by associating the density variable to the layer thickness instead of the constitutive matrix. For now, fatigue optimization in the context of this parametrization is left for future work.

In the transition regions, UD is preferred at both inner- and outermost layers. The outermost layers (i.e. at zero thickness) provides both stiffness to the displacement and buckling criteria, and fatigue strength to resist the damage. In the innermost layers in-plane shear damage is critical, therefore warranting the placement of UD here. For the webs, a UD layer is placed around the bottom and top on each side. The main purpose of the UD layer is to carry in-plane shear loading in these regions, and as such the position in the layer is arbitrary. It is observed that the shear damage is most critical in innermost UD layers (layer 18 in the left web and 19 in the right). As such, a single layer may be sufficient to carry the load in the web regions. However, as with the spar caps, these layers would be moved to the very top and bottom to form a sandwich for manufacturing purposes.

It is interesting to note, that biax is not selected as a material in the either the webs or transition regions as this material should be superior in in-plane shear loading. The reason for this could simply be attributed to reaching a local minimum, however, as the material properties are based on interpolated values, and not true measured values, this may also skew the result.

Distribution of the maximum damage component in the spar. The critical component in the spar cap is \(D^{(1)}\), while at the tip in the web and transition regions it is \(D^{(12)}\). The sensitivity of fatigue damage is particularly evident in this plot, where it is observed, that the damage is not symmetrically distributed in the spar cap due to the slight difference in layup of the transition regions

5.5 Discussion

Fatigue of laminated composites is complex, and for some cases, the model employed in the present is not entirely adequate. Laminated composite structures are inherently multi-axial due to the material anisotropy, which results in stress-interaction effects that may potentially accelerate damage. The influence of these stress interaction effects on fatigue, however, differ significantly depending on the imposed loads, and the type of stress interaction, see Quaresimin et al. (2010). The approach employed in this work neglects stress interaction, and is therefore only appropriate for structures with a smaller degree of multi-axial stress states. In fact, the fatigue evaluation approach is similar to using a max stress/strain approach for static failure evaluation. The approach is thus appropriate for structures similar to those demonstrated in Example 2, 3 and 4 where the structure is primarily damaged by a single stress component in most areas. Moreover, this approach is commonly used for structures with stress-interaction effects coupled with appropriate safety factors and reduction of \(D_{\text{lim}}\) in Eq. (25), due to computational expense of existing multi-axial assessment methods. Furthermore, the choice of criterion is often based on the particular case at hand. A review of these methods can be found in Passipoularidis and Brøndsted (2010). The issue with these criteria, particularly for optimization purposes, is that they are formulated based on fractions of the stress to the fatigue strength of which the latter is determined from the material S-N curve. This makes the expression a general non-linear equation with no explicit solution. Solving such equations as part of an optimization problem, significantly increases the computational expense. The appropriateness of multi-axial criteria in general has also been questioned in recent research (Talreja 2023).