Abstract

This paper presents a new FE-based, stress-related topology optimization approach for finding bending-dominated flexible designs. The approach requires neither knowledge of an output displacement or force nor a detailed mounting position. The newly developed objective function makes use of the varying stress distribution in the cross section of flexible structures, so each element of the design space must be evaluated with respect to its stress state. The method prefers elements experiencing a bending or shear load over elements that are mainly subjected to membrane stresses. To determine the stress state of the elements, we use the principal stresses at the Gauss points. To demonstrate the feasibility of the new topology optimization approach, three academic examples are presented and discussed. For these examples, the developed sensitivity-based algorithm finds usable flexible design concepts with a nearly discrete 0 − 1 density distribution.


1 Introduction

For three decades, topology optimization has been a fast-developing branch of research. Today, it is a well-established tool in many industrial sectors such as the automotive and aircraft industries. The basic idea of this structural optimization method is to find a suitable material distribution within a predefined design space.

Since the publication of the fundamental homogenization approach of Bendsøe and Kikuchi (1988), the topology optimization of continuum structures has been an active research area. One year later, Bendsøe published a second strategy, which uses the so-called Solid Isotropic Material with Penalization (SIMP) (Bendsøe and Kikuchi 1988) and is also applied in the present paper. Thus, all further explanations have to be considered in the context of the finite element method (FEM) in combination with the SIMP material law. In this approach, the elasticity tensor E of a finite element is obtained by multiplying the original elasticity tensor E0 of the element by the penalized design variable x:

\[ \mathbf{E} = x^{p} \, \mathbf{E}_{0} \qquad (1) \]

Here, the design variable is a dimensionless ratio (E/E0) which can be varied continuously between a lower bound \(x^{l}\) and an upper bound \(x^{u}\). It represents an artificial density of the element. In the SIMP material model, finite elements with x ≈ 0 represent a void region and finite elements with x = 1 a solid region. The dimensionless stiffness ratio may be penalized by the exponent p in (1). Choosing the penalty coefficient p greater than 1 should result in a more discrete design. Ideally, an optimized structure consists of a clear 0 − 1 density distribution. For more details and a general discussion, see Bendsøe and Sigmund (2003), Eschenauer and Olhoff (2001), and Rozvany (2009).
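The effect of the penalty exponent can be illustrated with a few numbers. The following minimal sketch assumes a scalar Young's modulus instead of the full elasticity tensor; the function name `simp_modulus` and the default lower bound are hypothetical and only serve the illustration.

```python
def simp_modulus(x, e0, p=3.0, x_min=0.01):
    """Penalized modulus E = x**p * E0 for an artificial density x (scalar sketch)."""
    if not x_min <= x <= 1.0:
        raise ValueError("density must lie in [x_min, 1]")
    return x ** p * e0


E0 = 210000.0  # N/mm^2, steel value used in the examples of this paper

# An intermediate density of 0.5 becomes less and less stiff as p grows,
# which makes intermediate densities inefficient for the optimizer.
for p in (1.0, 2.0, 3.0):
    print(p, simp_modulus(0.5, E0, p))
```

For p = 1 the half-dense element keeps half of the stiffness, whereas for p = 3 only one eighth remains, which is why larger exponents push the design towards a 0 − 1 distribution.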

Finding a stiff structure within a design space for a given volume limit is the most common task in topology optimization. According to Soto (2001), this optimization target accounts for more than 80% of the optimization tasks in industry. Often, the simplest objective function for linear elastic problems is used, namely the minimization of the compliance, which is equivalent to the maximization of the global stiffness.

During the last 20 years, the interest in finding flexible designs by structural topology optimization has grown rapidly (Eschenauer and Olhoff 2001; Soto 2001). According to Lee (2011), two research fields focus on the benefit of flexibility. The first is the design of compliant mechanisms (CMs) and the second is the design of energy-absorbing structures.

In contrast to conventional rigid-body mechanisms, CMs have no joints, hinges, or slides, but are elastic single-piece or jointless parts. Motion, force, or energy is transferred by elastic deformation of the flexible structural members (Eschenauer and Olhoff 2001; Sigmund 1997). In this way, it is possible to reduce the number of parts. Gallego and Herder (2009) give a detailed overview of the synthesis strategies developed for finding CMs. There, the existing methods are divided into three branches of development (Fig. 1): the kinematic, the building block, and the structural optimization-based approaches. The third branch includes all topology, shape, and size optimization methods and focuses on the design of the mechanism. Generally, all methods of this branch face the same conflict during the optimization: finding a conceptual monolithic structure which works as a mechanism as well as a structure (Zhu et al. 2019). Rigidity and flexibility are two conflicting optimization goals which must be taken into account simultaneously. Commonly, considering both goals in one optimization leads to a multi-objective problem.

Fig. 1 Synthesis of compliant mechanisms (figure based on Gallego and Herder (2009))

With the maximization of a mechanical advantage (MA), Sigmund (1997) presents a possible objective function formulation for finding an optimal mechanism. The MA represents a predefined ratio of input and output forces. By optimizing a classical micro-gripping mechanism, Sigmund demonstrates the applicability of this idea. Often, the rigidity of the mechanism is achieved by a minimization of the strain energy and the flexibility by a deflection maximization, as presented by Frecker et al. (1997), where a ratio of mutual potential energy (MPE) (Shield and Prager 1970) and strain energy (SE) is maximized. A weighted linear multi-objective formulation of minimization of SE and maximization of MPE is published by Ananthasuresh et al. (1994). Lin et al. (2010) combine the SIMP material law and the multi-objective formulation of Ananthasuresh with physical programming (PP) (Messac 1996) for finding an optimal jointless mechanism. In their paper, it is demonstrated that this approach is very effective with respect to multi-objective optimization. Lee and Gea (2014) present a similar approach but use a global effective strain for minimization instead of strain energy, which leads to optimized CMs without regions with localized high strains due to one-node-connected hinges as is the case for the classical compliance minimization approach. Other possibilities for avoiding the point flexure drawback are the usage of morphological close-open and open-close density filters (Schevenels and Sigmund 2016) or the control of the stress level with an effective stress constraint formulation (De Leon et al. 2015).

The second field of research where flexibility is a priority is the design of energy-absorbing structures. Such structures have the capacity not only to absorb energy but also to provide stiffness for protection. Consequently, energy-absorbing designs are important in the wide field of transportation to ensure safety of humans in crash situations. A classical example is a frame of a car. In general, the overall optimization goal is to find designs with high crashworthiness. Similar to CMs, the challenge is to optimize stiffness and flexibility at the same time. This is further complicated by the circumstance that many effects resulting from dynamics, nonlinearity of material, and geometry or contacts have to be taken into account during optimization. For more details, see Lee (2011), Mayer et al. (1996), and Ortmann and Schumacher (2013).

The main purpose of our paper is the presentation of a new approach enabling the user to find flexible structures without any knowledge about the structure’s behavior at the mounting points or their positions (see Figs. 2 and 12). A stress criterion based on principal stress differences is used to distinguish between elements subjected to bending and elements subjected to membrane loading. Our aim is to accelerate the design process of flexible designs by providing a better start design for further optimizations, where only the conditions at the load application point are known.

In the literature, stress-related formulations are often used as constraints for controlling the stress level during the optimization. Duysinx and Bendsøe (1998) presented an ε-relaxation technique which allows the handling of local stress constraints for the topology optimization of continuum structures. Holmberg et al. (2013) present an effective approach based on a design space clustering technique in combination with a modified p-norm for controlling the stress level during the optimization. The use of a stress-based formulation as objective function is rarer (Arnout et al. 2012; Yang and Chen 1996). Yang and Chen (1996) present a topology optimization process which controls the global stress level more effectively by transferring the local stress problem into a global one.

Instead of controlling the stress concentrations during the topology optimization, we use our new stress-based formulation to evaluate finite elements with respect to their type of stress for finding conceptual designs with a dominating state of bending stress. The paper is organized as follows: In Section 2, the motivation and the general new idea will be shown. Section 3 presents the new stress-related objective function and the sensitivity analysis. In Section 4, the feasibility of the new approach is demonstrated for two examples. Finally, Section 5 presents the conclusions of this paper.

2 Motivation and idea

2.1 Motivation

Starting a design process for a new flexible casing part in combination with the question about a good mounting position for the given static structure often leads to unusable solutions, especially using a classical topology optimization objective like compliance (CP) (Lee 2011).

To demonstrate this difficulty, a two-dimensional cantilever beam problem is used (see Fig. 2). The optimization model has the dimensions a × b (with a = 30 mm and b = 10 mm) and is discretized with an average element edge length of 0.25 mm (4800 linear plane stress continuum elements). The structure is made of steel (Young’s modulus E = 210000 N/mm², Poisson’s ratio ν = 0.3) and has a thickness of 1 mm. The cantilever beam is fixed at the left-hand side and loaded by a single point force of 50 N at the right-hand side.
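The stated element count follows directly from the model dimensions and the edge length. The short check below assumes a perfectly regular mesh of square elements, which the term "average element edge length" does not strictly guarantee; `element_count` is a hypothetical helper.

```python
def element_count(width, height, edge):
    """Elements per direction and in total for a regular mesh of square elements."""
    nx = round(width / edge)
    ny = round(height / edge)
    return nx, ny, nx * ny


# cantilever beam: 30 mm x 10 mm with 0.25 mm element edge length
print(element_count(30.0, 10.0, 0.25))  # (120, 40, 4800)
```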

Since the aim is to find a flexible structure, the compliance (CP) is maximized subject to a volume fraction constraint of vf = 0.15. Figure 3 shows that this task leads to an unusable (trivial) solution with an objective function value of \(f_{CP}^{*} = 1.12 \times 10^{8}\) N mm, since no load path exists between the load application point and the mounting area (the displacement at point A tends to \(\infty \)).

Applying the strategies for finding a general CM design (see Section 1) requires the knowledge about the positions and properties of the boundary conditions in the form of input/output forces or displacements for the description of the mechanism. Especially, the output information is important for most of the CM optimization algorithms (Gallego and Herder 2009; Zhu et al. 2019). Hence, the optimization problem of determining a bending dominated part with an unknown mounting position, described at the beginning, cannot be solved with a simple CM approach.

2.2 Properties of flexible structures

In contrast to stiff structures, flexible structures are able to reduce reaction forces and moments in case of prescribed displacements or decouple the movement of two parts. Such designs are characterized by a varying stress distribution in the structure as shown exemplarily in Fig. 4a for the well-known cantilever beam. A second example is the helical spring in Fig. 4b where a torsional moment reacts in the wire due to the applied forces and causes linearly distributed shear stresses. Both examples illustrate that the available material is no longer used in an optimal way as is the case for stiff structures with a constant stress distribution in their cross section. The fact that flexible parts consist of areas with a varying stress distribution is the basis of the new approach which is presented in the following section.

3 New approach for finding flexible structures

In this section, a new objective function for finding flexible structures with the help of topology optimization is presented first. Afterwards, the calculation of the sensitivities is shown, since a gradient-based algorithm is applied for the optimization process. The constrained optimization problems presented below are solved with the Augmented Lagrange Multiplier (ALM) method (Vanderplaats 1984). It should be noted that the new approach is currently implemented only for two-dimensional optimization problems.
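For readers unfamiliar with the ALM method, the following sketch shows the textbook form of the loop for a single equality constraint. It is not the implementation of the software used in this paper: the toy problem, the fixed penalty parameter `r`, the plain gradient descent in the inner loop, and all names are assumptions made for illustration.

```python
def alm_minimize(grad_f, h, grad_h, x0, r=10.0, outer=20, inner=200, step=0.04):
    """Textbook augmented Lagrangian loop for min f(x) subject to h(x) = 0."""
    x = list(x0)
    lam = 0.0
    for _ in range(outer):
        # inner loop: gradient descent on L = f + lam * h + (r / 2) * h**2
        for _ in range(inner):
            s = lam + r * h(x)
            gf, gh = grad_f(x), grad_h(x)
            x = [xi - step * (gfi + s * ghi) for xi, gfi, ghi in zip(x, gf, gh)]
        lam += r * h(x)  # multiplier update after each inner minimization
    return x, lam


# toy problem: point on the line x0 + x1 = 1 that is closest to (2, 1)
grad_f = lambda x: [2.0 * (x[0] - 2.0), 2.0 * (x[1] - 1.0)]
h = lambda x: x[0] + x[1] - 1.0
grad_h = lambda x: [1.0, 1.0]

x_opt, lam_opt = alm_minimize(grad_f, h, grad_h, [0.0, 0.0])
print(x_opt, lam_opt)  # close to [1, 0] and 2
```

The loop only ever minimizes, which is why a quantity that should be maximized has to enter the objective with a negative sign.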

3.1 Stress-related objective function

The basic idea of our approach is to make use of the varying stress distribution in the cross section of flexible structures. Therefore, each finite element in the design space must be evaluated with regard to its stress state. The new dominant bending stress (DBS) objective function reads as

\[ f_{DBS} = -\sum_{i=0}^{N_{ds}} \frac{\sigma_{ben_i}}{\sigma_{rms_i}} \cdot \frac{x_i^{p}}{x_i^{q}} \cdot w_i \qquad (2) \]

with \(\sum_{i=0}^{N_{ds}}\) being a summation over all finite elements of the design space, \(\sigma_{ben_i}\) the bending stress of the i-th element, \(\sigma_{rms_i}\) the root mean square of the Gauss point stress values, \(x_i\) the density factor, q a coefficient (q < p) which depends on the SIMP material penalty exponent p, and \(w_i\) a weighting factor for the i-th element. The bending stresses are defined as

with \({\sum }_{l=1}^{m} {\sum }_{g=l+1}^{m}\) being a summation over all Gauss point pairs for the principal stresses σ1 and σ2 of an element (applied sorting: |σ1| > |σ2|). Thus, the defined bending stress σben is always greater than or equal to 0. The stress state of a finite element can be classified by means of σben (membrane stress state for σben = 0, bending stress state for σben > 0). The variable σrms in (2) is defined as

with \({\sum }_{l=1}^{m}\) being a summation over all m Gauss points of a finite element. By dividing σben by σrms, the bending stress is normalized, so that highly stressed elements are not preferred over elements with a lower stress level during the optimization. Simply put, the new approach prefers elements subjected to bending or shear over elements subjected to tensile or compressive stresses. The minus sign in (2) results from the optimization method used (ALM method (Vanderplaats 1984)), which can only search for minima.
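The classification idea can be made concrete with a small sketch. The exact expressions for σben and σrms are not reproduced here, so the two functions below are assumed stand-ins: the bending measure sums the absolute differences of the sorted principal stresses over all Gauss point pairs, and the RMS measure is taken over both principal stresses of all m Gauss points. They only share the stated properties (σben = 0 for a uniform stress state, σben > 0 otherwise).

```python
import itertools
import math


def sort_principal(s_a, s_b):
    """Order a pair of principal stresses so that |sigma_1| >= |sigma_2|."""
    return (s_a, s_b) if abs(s_a) >= abs(s_b) else (s_b, s_a)


def sigma_ben(gauss_principal):
    """ASSUMED bending measure: summed absolute principal stress differences
    over all Gauss point pairs (zero for a uniform stress state)."""
    pts = [sort_principal(a, b) for a, b in gauss_principal]
    return sum(abs(p[0] - g[0]) + abs(p[1] - g[1])
               for p, g in itertools.combinations(pts, 2))


def sigma_rms(gauss_principal):
    """ASSUMED RMS measure over the principal stresses of all m Gauss points."""
    m = len(gauss_principal)
    return math.sqrt(sum(a * a + b * b for a, b in gauss_principal) / m)


# four Gauss points each, principal stresses in N/mm^2 (made-up values)
membrane = [(100.0, 0.0)] * 4                                      # uniform tension
bending = [(-50.0, 0.0), (-50.0, 0.0), (50.0, 0.0), (50.0, 0.0)]  # sign change

for name, state in (("membrane", membrane), ("bending", bending)):
    print(name, sigma_ben(state) / sigma_rms(state))
```

With these stand-ins, the uniformly stressed element yields a ratio of zero although its stress level is higher, whereas the element with a stress gradient yields a positive ratio, which is the behavior the normalization is meant to produce.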

The second term of the objective function, \({{x^{p}_{i}}}/{{x^{q}_{i}}}\), is a relaxation factor which is necessary to avoid an overestimation of the stresses in low-density elements (Bruggi 2008). As a result, the effect of the so-called singularity phenomenon (elements with a density tending to 0 tend to finite stress values (Duysinx and Bendsøe 1998; Yang and Chen 1996)) on the optimization result is reduced. Moreover, abrupt changes of the gradient field from iteration to iteration are avoided. Thus, the algorithm is able to find a usable solution with a nearly discrete density distribution.
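How strongly the relaxation factor damps low-density elements can be seen from a few numbers. The sketch below assumes that the objective has the form of a negative sum over the stress ratio times \(x_i^{p-q}\) times the weighting factor, as described in the text; the function `f_dbs` and the input values are hypothetical.

```python
def f_dbs(ratio, x, w=None, p=2.0, q=0.5):
    """ASSUMED objective: f = -sum_i ratio_i * x_i**(p - q) * w_i,
    where ratio_i stands for sigma_ben_i / sigma_rms_i."""
    if w is None:
        w = [1.0] * len(x)
    return -sum(r_i * x_i ** (p - q) * w_i for r_i, x_i, w_i in zip(ratio, x, w))


# relaxation factor x**(p - q) for p = 2 and q = 0.5
for x_i in (0.01, 0.19, 1.0):
    print(x_i, x_i ** (2.0 - 0.5))

# two elements with the same stress ratio: the near-void one hardly contributes
print(f_dbs([1.0, 1.0], [1.0, 0.01]))
```

Because p − q is positive, an element at the lower density bound contributes about three orders of magnitude less than a solid element with the same stress ratio.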

The term wi in (2) represents a dimensionless weighting factor for reducing the overestimation of elements in regions of stress singularities (Huang and Labossiere 2002) as well as the overestimation of elements with extremely small stress values. This factor is based on the density function of a trapezoidal distribution (van Dorp and Kotz 2003), determined only once per iteration (iter), and can take values between 0 and 1 (see Fig. 5). The z values required for the case-by-case analysis for computing wi are calculated as

with \(\overline {\sigma }_{rms}\) being the arithmetical mean value of all finite elements of the design space (\(\overline {\sigma }_{rms} = {1}/{N_{ds}} \cdot {\sum }_{i=0}^{N_{ds}} \sigma _{rms_{\scriptstyle i}}\)). On this basis, the four limits (A ≤ B ≤ C ≤ D) are defined as

with α and β being factors which influence the z-distance between the points A and B as well as C and D (initial values are α0 = β0 = 1.0). Thereby, the limits B and C are defined in such a way that the overestimated values of the fraction \({\sigma _{ben_{\scriptstyle i}}}/{\sigma _{rms_{\scriptstyle i}}}\) in case of stress singularities or extremely small stresses are filtered out. During the optimization process, the filter effect becomes smaller since α and β are changed such that A = B and C = D after a certain number of iterations which leads to wi = 1 for all elements. Consequently, the weighting factor which is constant during each iteration influences the optimization process just at the beginning and improves the robustness of the new approach in terms of its applicability.
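The shape of such a trapezoidal weighting can be sketched as follows. The limits in the example are made-up numbers rather than the limits A to D of the paper, and `trapezoid_weight` is a hypothetical helper that is scaled to a plateau value of 1.

```python
def trapezoid_weight(z, a, b, c, d):
    """Weight in [0, 1]: 0 outside [a, d], 1 on [b, c], linear ramps in between."""
    if not a <= b <= c <= d:
        raise ValueError("limits must satisfy a <= b <= c <= d")
    if z < a or z > d:
        return 0.0
    if z < b:
        return (z - a) / (b - a)  # rising ramp between a and b
    if z > c:
        return (d - z) / (d - c)  # falling ramp between c and d
    return 1.0


# early iterations: ramps filter extremely small and extremely large z values
print([trapezoid_weight(z, 0.0, 1.0, 2.0, 3.0) for z in (0.5, 1.5, 2.5, 4.0)])

# later iterations: ramps collapsed (a == b and c == d), weight 1 inside [a, d]
print([trapezoid_weight(z, 0.0, 0.0, 3.0, 3.0) for z in (0.0, 1.5, 3.0)])
```

Once the ramps have collapsed, every element whose z value lies within the outer limits receives the full weight, so the factor no longer influences the objective.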

The effect of the pq-relaxation in combination with the trapezoidal filter is explained in more detail in Section 4.1 on the basis of an example.

In Table 1, finite element models subjected to pure tension and pure bending are evaluated with respect to the terms used in (2). Following from this, we can conclude that generally the objective function value fDBS increases for a bigger patch of elements subjected to bending.

3.2 Sensitivity-based optimization algorithm

For implementing the approach in a sensitivity-based algorithm, the derivative of (2) is necessary. Based on the calculated gradients, the used optimization algorithm, the Augmented Lagrange Multiplier method, defines the new search direction in every iteration (Firl 2010). The state of the art of sensitivity analysis for topology optimization (large number of design variables and few response functions) is the adjoint formulation which has a high numerical efficiency (Kirsch 1993). The objective function fDBS(x,u(x)) depends not only directly on the design variables x but also indirectly via the displacements u. By using the product rule we get for the derivative:

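The derivatives \({\mathrm {d} \sigma _{ben_{i}}}/{\mathrm {d} x_{j}}\) and \({\mathrm {d} \sigma _{rms_{i}}}/{\mathrm {d} x_{j}}\) with respect to \(x_j\) are calculated with the help of the chain rule (Firl 2010):

\[ \begin{aligned} \frac{\mathrm{d}\sigma_{ben_i}}{\mathrm{d}x_j} &= \frac{\partial \sigma_{ben_i}}{\partial x_j} + \left(\frac{\partial \sigma_{ben_i}}{\partial \mathbf{u}}\right)^{T} \frac{\partial \mathbf{u}}{\partial x_j}, \\ \frac{\mathrm{d}\sigma_{rms_i}}{\mathrm{d}x_j} &= \frac{\partial \sigma_{rms_i}}{\partial x_j} + \left(\frac{\partial \sigma_{rms_i}}{\partial \mathbf{u}}\right)^{T} \frac{\partial \mathbf{u}}{\partial x_j} \end{aligned} \qquad (8) \]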

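The term \(\partial \mathbf{u}/\partial x_j\) can be determined from Ku = f, with K being the global stiffness matrix and f being the vector of external forces, by differentiation with regard to the design variable:

\[ \frac{\partial \mathbf{u}}{\partial x_j} = \mathbf{K}^{-1} \left( \frac{\partial \mathbf{f}}{\partial x_j} - \frac{\partial \mathbf{K}}{\partial x_j} \mathbf{u} \right) \qquad (9) \]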

The expression in the parentheses of (9) can be summarized to the pseudo load vector f∗. In most cases, the derivative of f is a zero vector since these forces are independent of the design variable. However, if a dependency exists, this term is approximated by a forward finite difference. The determination of \(\partial \mathbf{K}/\partial x_j\) in a topology optimization using the SIMP material model is done by summing up the derivatives of the element stiffness matrices \(\mathbf{K}_e\) as

\[ \frac{\partial \mathbf{K}}{\partial x_j} = \sum_{e} \frac{\partial \mathbf{K}_e}{\partial x_j} \qquad (10) \]

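By combining (8), (9), and (10), the derivatives \({\mathrm {d}\sigma _{ben_{i}}}/{\mathrm {d} x_{j}}\) and \({\mathrm {d} \sigma _{rms_{i}}}/{\mathrm {d} x_{j}}\) can be computed as

\[ \begin{aligned} \frac{\mathrm{d}\sigma_{ben_i}}{\mathrm{d}x_j} &= \frac{\partial \sigma_{ben_i}}{\partial x_j} + \left(\frac{\partial \sigma_{ben_i}}{\partial \mathbf{u}}\right)^{T} \mathbf{K}^{-1} \left( \frac{\partial \mathbf{f}}{\partial x_j} - \frac{\partial \mathbf{K}}{\partial x_j} \mathbf{u} \right), \\ \frac{\mathrm{d}\sigma_{rms_i}}{\mathrm{d}x_j} &= \frac{\partial \sigma_{rms_i}}{\partial x_j} + \left(\frac{\partial \sigma_{rms_i}}{\partial \mathbf{u}}\right)^{T} \mathbf{K}^{-1} \left( \frac{\partial \mathbf{f}}{\partial x_j} - \frac{\partial \mathbf{K}}{\partial x_j} \mathbf{u} \right) \end{aligned} \qquad (11) \]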

The results of the matrix vector products \(\left ({\partial \sigma _{ben_{i}}}/{\partial \mathbf {u}}\right )^{T} \mathbf {K}^{-1}\) and \(\left ({\partial \sigma _{rms_{i}}}/{\partial \mathbf {u}}\right )^{T} \mathbf {K}^{-1}\) in (11) are the adjoint variable vectors \(\mathbf {\lambda }_{ben}^{T}\) and \(\mathbf {\lambda }_{rms}^{T}\). These variables must be calculated only once for every response function f of the optimization per iteration. By inserting this adjoint approach in (7), the absolute derivative of the objective function (2) which is used for the implementation results in:

For computing the adjoint variable vectors λben and λrms in (12), both partial derivatives \({\partial \sigma _{ben_{i}}}/{\partial \mathbf {u}}\) and \({\partial \sigma _{rms_{i}}}/{\partial \mathbf {u}}\) are replaced by a central finite difference approximation. However, the partial derivatives \({\partial \sigma _{ben_{i}}}/{\partial x_{j}}\) and \({\partial \sigma _{rms_{i}}}/{\partial x_{j}}\) are approximated by forward finite differences because stresses are much more sensitive to variations of the displacements than to variations of the density factor. This semi-analytical procedure is applied because the FE software used is not able to provide the necessary derivatives of the stresses with respect to density or displacement.
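The adjoint procedure can be checked on a toy problem. The sketch below is not the FE implementation of this paper: it assumes a fixed-free chain of three springs with SIMP-scaled stiffness, a design-independent load, and a hypothetical response g(x, u) = x_1 (u_1 − u_0)² that depends on the design variables both directly and via the displacements. For brevity, the partial derivatives are written analytically instead of by finite differences, and the result is compared with a central finite difference of the complete response.

```python
import numpy as np

K0, P = 1000.0, 3.0  # assumed base spring stiffness and penalty exponent


def element_pattern(n, e, value):
    """Contribution of spring e (between node e-1, or the wall, and node e)."""
    m = np.zeros((n, n))
    m[e, e] += value
    if e > 0:
        m[e - 1, e - 1] += value
        m[e - 1, e] -= value
        m[e, e - 1] -= value
    return m


def assemble(x):
    """Global stiffness K(x) as a sum of SIMP-scaled element matrices."""
    return sum(element_pattern(len(x), e, x[e] ** P * K0) for e in range(len(x)))


def dK_dxj(x, j):
    """Only element j depends on x_j, so the sum reduces to one term."""
    return element_pattern(len(x), j, P * x[j] ** (P - 1.0) * K0)


def response(x, u):
    """Hypothetical response with explicit and implicit dependence on x."""
    return x[1] * (u[1] - u[0]) ** 2


def adjoint_gradient(x, f):
    u = np.linalg.solve(assemble(x), f)
    dg_du = np.zeros_like(u)
    dg_du[1] = 2.0 * x[1] * (u[1] - u[0])
    dg_du[0] = -dg_du[1]
    dg_dx = np.zeros_like(x)
    dg_dx[1] = (u[1] - u[0]) ** 2
    lam = np.linalg.solve(assemble(x), dg_du)  # one adjoint solve (K symmetric)
    # total derivative: explicit part plus lam^T * pseudo load (df/dx_j = 0)
    return np.array([dg_dx[j] - lam @ (dK_dxj(x, j) @ u) for j in range(len(x))])


def fd_gradient(x, f, h=1e-6):
    grad = np.zeros_like(x)
    for j in range(len(x)):
        xp, xm = x.copy(), x.copy()
        xp[j] += h
        xm[j] -= h
        gp = response(xp, np.linalg.solve(assemble(xp), f))
        gm = response(xm, np.linalg.solve(assemble(xm), f))
        grad[j] = (gp - gm) / (2.0 * h)
    return grad


x = np.array([0.5, 0.6, 0.7])
f = np.array([0.0, 0.0, 10.0])  # tip load
print(adjoint_gradient(x, f))
print(fd_gradient(x, f))
```

The adjoint variant needs a single additional linear solve per response, independent of the number of design variables, whereas the finite difference reference needs two solves per design variable.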

For demonstrating the functionality of this discrete adjoint sensitivity analysis, the cantilever beam model of Section 2.1 is used. The optimization task for maximizing the flexibility can be formulated as

with f representing the external load vector, u the global displacement vector, K the global stiffness matrix of the system which depends on the vector of design variables, vi the volume of the i-th finite element, and \({x^{l}_{i}}\) the lower density boundary. The resulting optimized structure is shown in Fig. 6 (objective value \(f_{DBS}^{*} = -0.0881\)).

Within 63 iterations, the algorithm finds a closed load path with an almost discrete 0 − 1 density distribution. The resulting von Mises stress distribution in Fig. 6b clearly illustrates that the optimized beam has a varying stress distribution in thickness direction.

4 Further examples

In this section, two further examples, a cube loaded by a pair of compressive forces and the well-known L-bracket, demonstrate the feasibility of the new stress-related approach for the topology optimization of flexible structures. Both models are discretized with fully integrated linear plane stress continuum elements. Moreover, all optimizations are performed with a linear elastic isotropic material (Young’s modulus E = 210000 N/mm², Poisson’s ratio ν = 0.3) of thickness 1 mm.

4.1 Cube

In the first example, a cube which is loaded by a compressive force is studied. The geometry of the problem is presented in Fig. 7. This optimization model has the dimensions a × a (a = 20 mm). All degrees of freedom at the edge of the left non-design space are fixed, and forces with a total value of 25 N are applied at the edge of the right non-design space. An average element edge length of 0.25 mm results in 6400 linear plane stress continuum elements. During the optimization, the artificial density of each design element may vary between \(x^{l}\) = 0.01 (lower limit) and \(x^{u}\) = 1.0 (upper limit). Furthermore, a linear gradient filter with an influence radius of 0.75 mm is used to overcome the checkerboard effect and to obtain a more discrete 0 − 1 density distribution (Bendsøe and Sigmund 2003; Eschenauer and Olhoff 2001; Masching 2016). The optimization is performed with a constant penalty exponent of p = 2, a coefficient of q = 0.5, and an initial artificial density of \(x_i\) = 0.19 for all elements of the design space.
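The smoothing effect of such a filter on a checkerboard pattern can be illustrated as follows. The filter formula is not given here, so the sketch assumes the common variant in which a per-element field is replaced by a weighted average of its neighbors with linearly decaying weights w = max(0, radius − distance); the function `linear_filter` and the regular grid are assumptions.

```python
import numpy as np


def linear_filter(field, edge, radius):
    """Weighted average on a regular grid with weights max(0, radius - distance)."""
    ny, nx = field.shape
    reach = int(np.floor(radius / edge))
    out = np.zeros_like(field, dtype=float)
    wsum = np.zeros_like(field, dtype=float)
    for dy in range(-reach, reach + 1):
        for dx in range(-reach, reach + 1):
            w = radius - edge * np.hypot(dx, dy)
            if w <= 0.0:
                continue
            # source window (shifted by dy, dx) and matching target window
            ys = slice(max(0, dy), min(ny, ny + dy))
            yt = slice(max(0, -dy), min(ny, ny - dy))
            xs = slice(max(0, dx), min(nx, nx + dx))
            xt = slice(max(0, -dx), min(nx, nx - dx))
            out[yt, xt] += w * field[ys, xs]
            wsum[yt, xt] += w
    return out / wsum


# checkerboard of alternating 0/1 values, filtered with the cube settings
checker = (np.indices((20, 20)).sum(axis=0) % 2).astype(float)
smoothed = linear_filter(checker, edge=0.25, radius=0.75)
inner = smoothed[5:-5, 5:-5]
print(inner.min(), inner.max())
```

Away from the boundary, the alternating pattern is averaged to values close to 0.5, while a constant field is left unchanged by the normalization with the weight sum.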

The optimization task is defined as

After 85 iterations, the algorithm develops a ring-shaped structure as shown in Fig. 8 (\(f_{DBS}^{*} = -0.1106\)). The stress plot illustrates the dominant bending stress behavior in the structure. Obviously, the new approach is able to make use of the required properties of flexible structures. Moreover, the graph in Fig. 9 shows the stability of the algorithm by its smooth history of the objective function value.

In addition, a mesh study is carried out with this example. Therefore, the optimization model in Fig. 7 is discretized once with an average element edge length of 0.5 mm and once with an average element edge length of 1.0 mm. The results in Fig. 10 show that the optimized structure does not depend on the discretization of the model but the resulting objective function values differ. These different values can be explained with Table 1 since a finer mesh leads to bigger objective function values.

The optimization results shown in Fig. 8 as well as the stable optimization behavior result from the used combination of the pq-relaxation and the dimensionless weighting factor wi which is based on the described trapezoidal filter (Section 3.1). Both methods are necessary in our approach because the gradients in areas with very small stresses or stress singularities would otherwise be overestimated due to the first term of the objective function (2). Since \(\sigma _{rms_{\scriptstyle i}}\) may have very small values like in the four corner regions of the cube, the quotient \({\sigma _{ben_{\scriptstyle i}}}/{\sigma _{rms_{\scriptstyle i}}}\) and therefore the gradient may become huge which results in an aggregation of material (see Fig. 11). In a notch region as in the L-Bracket example in Section 4.2, the stress gradient is very high due to the singularity of the geometry which causes the same effect of material aggregation. By this, the density in these corner regions of the models would increase in every iteration, which reduces the influence of the pq-relaxation.

To overcome these so-called corner effects, the trapezoidal filter is implemented which damps out the artificial aggregations at the beginning of the optimization process. Thus, no material is aggregated in these described areas at the beginning. Later, when the influence of the trapezoidal filter is reduced, the pq-relaxation takes over this part and prevents the aggregation of material because of the small densities in the corner regions.

Moreover, the stress-based optimization approach has the capability of avoiding trivial solutions with infinite displacements where no closed path of material between mounting region and load application area exists because such a result would not be an optimal solution in the sense of our objective. This can be simply shown for a single element subjected to some load. In this case, the objective reads

\[ f_{DBS} = -\frac{\sigma_{ben_i}}{\sigma_{rms_i}} \cdot \frac{x_i^{p}}{x_i^{q}} \qquad (15) \]

if we neglect the weighting factor for reasons of simplicity. Since p > q always holds and the stresses are always positive and never become 0, the objective function value decreases as the density \(x_i\) increases. This can also be shown for the cube example. The objective function value at the end of the optimization process is −0.1106 because all high-density elements of the design carry load and thus develop significant bending stresses. In contrast, a converged structure without a closed load path would always result in an objective function value close to 0, since the low-density elements do not contribute to the fDBS value due to the pq-relaxation. The equality constraint on the volume fraction enforces a specific number of elements with a density close to 1.0. In classical trivial solutions (cf. Fig. 3), these elements are arranged as artificial material lumps in the design space. Such elements do not contribute to the objective function value either, because these lumps only perform rigid-body motions and thus do not experience any bending load.

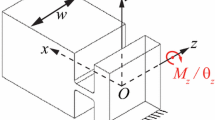

4.2 L-bracket

The L-bracket is a widely studied example in the research field of topology optimization. The model in Fig. 12 has the dimensions a × a (a = 100 mm) with a b × b (b = 60 mm) square cutout. All degrees of freedom at the upper edge are fixed and a single load of F = 10 N is applied at the right corner. The model is discretized with an average element edge length of 1 mm (6400 linear plane stress continuum elements). For a smoother result, the same type of linear gradient filter as for the cube is used, here with an influence radius of 4 mm, which corresponds to four times the average element edge length. The initial density value for all design elements is 0.14, and a penalty exponent of p = 2 and a coefficient of q = 0.5 are applied. The optimization task is defined as

Within 94 iterations, the algorithm finds a single arm structure with a dominating state of bending stress, which is shown in Fig. 13 (\(f_{DBS}^{*} = -0.0746\)). A solution with an almost discrete 0 − 1 density distribution and an existing load path between the load application point and the support structure is obtained. Also, in this example, the stable behavior of the algorithm can be recognized by the smooth course of the objective function which is shown in Fig. 14.

Additionally, the influence of two optimization parameters, the volume fraction (P1), and the radius of the linear gradient filter (P2) on the resulting structure should be studied. Therefore, the optimization task (16) with a gradient filter radius of 4 mm is used as a reference. Firstly, the value of P1 is varied between 0.115 and 0.165. In comparison to the reference design in Fig. 13a, it is observed that the variation of P1 only changes the thickness of the flexible arm structure (see Fig. 15).

For the second study, a constant volume fraction of vf = 0.14 is used. Now, the influence radius of the linear gradient filter is varied. The effect on the optimized structure due to the applied radius sizes of 3.0 mm and 5.0 mm is illustrated in Fig. 16 and shows almost no influence in comparison to the reference solution of Fig. 13a. As a result, both studies confirm a robust behavior for variations of the selected optimization parameters within the defined ranges.

Finally, an extension of the non-design space at the upper edge of the L-bracket model shows the usability of this stress-based approach for finding a new mounting position. The optimization task (16) is used again with the same linear gradient filter settings. After 98 iterations, the algorithm develops a flexible arm structure (\(f_{DBS}^{*} = -0.0678\)), but in contrast to the reference solution (see Fig. 13), a different mounting position is chosen by the algorithm. A comparison of the compliance values of both optimization results shows that the mounting point in Fig. 17b leads to a more flexible design (\(f_{CP}^{*} = 33.39\) N mm) than that in Fig. 13a (\(f_{CP}^{*} = 12.43\) N mm).
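To put the two compliance values into perspective, they can be converted into a displacement at the load application point. The following arithmetic is only a sketch: it assumes that the compliance is defined as \(f_{CP} = \mathbf{f}^{T}\mathbf{u}\) without a factor of 1/2 and that the single load of F = 10 N from task (16) is the only external force, so that \(u = f_{CP}/F\) is the displacement in load direction.

```latex
\[
u_{17b} = \frac{33.39\ \text{N mm}}{10\ \text{N}} = 3.339\ \text{mm}, \qquad
u_{13a} = \frac{12.43\ \text{N mm}}{10\ \text{N}} = 1.243\ \text{mm}, \qquad
\frac{f_{CP,17b}^{*}}{f_{CP,13a}^{*}} = \frac{33.39}{12.43} \approx 2.69
\]
```

Under these assumptions, the design with the new mounting position deflects roughly 2.7 times as much under the same load. The ratio itself does not depend on the assumed compliance convention.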

5 Conclusions

A new methodology for finding conceptual designs of flexible parts by means of topology optimization has been presented and investigated. It requires no knowledge about input-to-output force ratios, displacements, or mounting positions. A sensitivity-based algorithm has been successfully implemented in a commercial optimization software. Academic examples illustrate that this new approach could be a useful tool for finding a better initial design for the development process of a flexible part. Furthermore, two studies have shown that the method is robust against variations of the optimization parameters within the investigated ranges. It should be noted that the current version supports only two-dimensional examples with plane stress continuum elements which have at least four Gauss points, but an extension to three dimensions and different finite element formulations is possible.

References

Ananthasuresh GK, Kota S, Kikuchi N (1994) Strategies for systematic synthesis of compliant MEMS. ASME Winter Annual Meeting 1994, DSC 55-2:677–686

Arnout S, Firl M, Bletzinger K-U (2012) Parameter free shape and thickness optimisation considering stress response. Struct Multidisc Optim 45:801–814. https://doi.org/10.1007/s00158-011-0742-8

Bendsøe MP, Kikuchi N (1988) Generating optimal topologies in structural design using a homogenization method. Comp Meth Appl Mech Engrg 71:197–224. https://doi.org/10.1016/0045-7825(88)90086-2

Bendsøe MP, Sigmund O (2003) Topology Optimization: Theory, methods and applications. Springer, Berlin/Heidelberg

Bruggi M (2008) On an alternative approach to stress constraints relaxation in topology optimization. Struct Multidisc Optim 36:125–141. https://doi.org/10.1007/s00158-007-0203-6

De Leon DM, Alexandersen J, Fonseca JSO, Sigmund O (2015) Stress-constrained topology optimization for compliant mechanism design. Struct Multidisc Optim 52:929–943. https://doi.org/10.1007/s00158-015-1279-z

Duysinx P, Bendsøe MP (1998) Topology optimization of continuum structures with local stress constraints. Int J Numer Meth Engng 43:1453–1478. https://doi.org/10.1002/(SICI)1097-0207

Eschenauer H, Olhoff N (2001) Topology optimization of continuum structures: A review*. Appl Mech Rev 54:331–390. https://doi.org/10.1115/1.1388075

Firl M (2010) Optimal shape design of shell structures, PhD thesis, Chair of structural analysis, Technische Universität München

Frecker MI, Ananthasuresh GK, Nishiwaki S, Kikuchi N, Kota S (1997) Topological synthesis of compliant mechanisms using multi-criteria optimization. J Mech Des 119(2):238–245. https://doi.org/10.1115/1.2826242

Gallego AJ, Herder J (2009) Synthesis methods in compliant mechanisms: an overview. Proc ASME IDETC-CIE2009 7:193–214. https://doi.org/10.1115/DETC2009-86845

Holmberg E, Torstenfelt B, Klarbring A (2013) Stress constrained topology optimization. Struct Multidisc Optim 48:33–47. https://doi.org/10.1007/s00158-012-0880-7

Huang C-S, Labossiere P (2002) Stress singularities, stress intensities and fracture initiation at sharp reentrant corners in elastic plates in bending. Damage and Fracture Mechanics VII, 35–44

Kirsch U (1993) Structural optimization. Springer

Lee E (2011) Strain based topology optimization method. PhD thesis, The State University of New Jersey

Lee E, Gea CH (2014) A strain based topology optimization method for compliant mechanism design. Struct Multidisc Optim 49:199–207. https://doi.org/10.1007/s00158-013-0971-0

Lin J, Luo Z, Tong L (2010) A new multi-objective programming scheme for topology optimization of compliant mechanisms. Struct Multidisc Optim 40:241–255. https://doi.org/10.1007/s00158-008-0355-z

Masching H (2016) Parameter free optimization of shape adaptive shell structures. PhD thesis, Chair of structural analysis, Technische Universität München

Mayer R, Kikuchi N, Scott RA (1996) Application of topological optimization techniques to structural crashworthiness. Int J Numer Methods Eng 39:1383–1403. https://doi.org/10.1002/(SICI)1097-0207

Messac A (1996) Physical programming: effective optimization for design. AIAA J 34(1):149–158. https://doi.org/10.2514/3.13035

Ortmann C, Schumacher A (2013) Graph and heuristic based topology optimization of crash loaded structures. Struct Multidisc Optim 47:839–854. https://doi.org/10.1007/s00158-012-0872-7

Rozvany GIN (2009) A critical review of established methods of structural topology optimization. Struct Multidisc Optim 37:217–237. https://doi.org/10.1007/s00158-007-0217-0

Schevenels M, Sigmund O (2016) On the implementation and effectiveness of morphological close-open and open-close filters for topology optimization. Struct Multidisc Optim 54:15–21. https://doi.org/10.1007/s00158-015-1393-y

Shield RT, Prager W (1970) Optimal structural design for given deflection. J Appl Math Phys 21:513–523. https://doi.org/10.1007/BF01587681

Sigmund O (1997) On the design of compliant mechanisms using topology optimization. Mechanics Based Design of Structures and Machines 25(4):493–524. https://doi.org/10.1080/08905459708945415

Soto CA (2001) Structural topology optimization: from minimizing compliance to maximizing energy absorption. Int J Veh Des 25. https://doi.org/10.1504/IJVD.2001.001913

van Dorp JR, Kotz S (2003) Generalized trapezoidal distributions. Metrika 58:85–97. https://doi.org/10.1007/s001840200230

Vanderplaats GN (1984) Numerical optimization techniques for engineering design. McGraw Hill

Yang RJ, Chen CJ (1996) Stress-based topology optimization. Struct Optim 12:98–105. https://doi.org/10.1007/BF01196941

Zhu B, Zhang X, Zhang H, Liang J, Zang H, Li H, Wang R (2019) Design of compliant mechanisms using continuum topology optimization: a review. Mech Mach Theory 143:103622. https://doi.org/10.1016/j.mechmachtheory.2019.103622

Acknowledgements

The authors would like to thank FEMopt Studios GmbH for providing a free academic software license and for their support.

Funding

Open Access funding enabled and organized by Projekt DEAL. This publication is based on the work within the research project KEPLER which is funded by the ProFIT program of the federal state of Brandenburg and the European Regional Development Fund (Project 80171294).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Replication results

All results presented in this work can be reproduced with the optimization software XCARAT in combination with the program's open Python script interface. The required equations can be found in Section 3. Our source code cannot be published because it is implemented in commercial software.

Additional information

Responsible Editor: Ole Sigmund

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Noack, M., Kühhorn, A., Kober, M. et al. A new stress-based topology optimization approach for finding flexible structures. Struct Multidisc Optim 64, 1997–2007 (2021). https://doi.org/10.1007/s00158-021-02960-w

DOI: https://doi.org/10.1007/s00158-021-02960-w
