Abstract

We use the framework of permuton processes to show that large deviations of the interchange process are controlled by the Dirichlet energy. This establishes a rigorous connection between processes of permutations and one-dimensional incompressible Euler equations. While our large deviation upper bound is valid in general, the lower bound applies to processes corresponding to incompressible flows, studied in this context by Brenier. These results imply the Archimedean limit for relaxed sorting networks and allow us to asymptotically count such networks.


1 Introduction

In this paper we investigate the large deviation principle for a model of random permutations called the one-dimensional interchange process. The process can be roughly described as follows. We put N particles, labelled from 1 to N, on a line \(\{1,\ldots , N\}\) and at each time step perform the following procedure: an edge is chosen at random and adjacent particles are swapped. By comparing the particles’ initial positions with their positions after given time t we obtain a random permutation from the symmetric group \(\mathcal {S}_N\) on N elements.

The interchange process on the interval (whose discrete time analog is known as the adjacent transposition shuffle) and on more general graphs has attracted considerable attention in probability theory, for example with regard to the analysis of mixing times. It is natural to ask whether, after proper rescaling and as \(N \rightarrow \infty \), the permutations obtained in the interchange process converge in distribution to an appropriately defined limiting process.

Such limits have been recently studied ([HKM+13, RVV19]) under the name of permutons and permuton processes. These notions have been inspired by the theory of graph limits ([Lov12]), where the analogous notion of a graphon as a limit of dense graphs appears. A permuton is a Borel probability measure on \([0,1]^2\) with uniform marginals on each coordinate. A sequence of permutations \(\sigma ^N \in \mathcal {S}_N\) is said to converge to a permuton \(\mu \) as \(N \rightarrow \infty \) if the corresponding empirical measures

\[ \mu _{\sigma ^N} = \frac{1}{N} \sum _{i=1}^{N} \delta _{\left( \frac{i}{N}, \frac{\sigma ^N(i)}{N} \right) } \]

converge weakly to \(\mu \). A permuton process is a stochastic process \(X = (X_{t}, 0 \le t \le T)\) taking values in [0, 1], with continuous sample paths and having uniform marginals at each time \(t \in [0,T]\). A permutation-valued path, such as a sample from the interchange process, is said to converge to X if the trajectory of a randomly chosen particle converges in distribution to X.

Depending on the time scale considered, one observes different asymptotic structure in the permutations arising from the interchange process. If the average number of all swaps is greater than \(\sim N^3 \log N\), the process will be close to its stationary distribution ([Ald83, Lac16]), which is the uniform distribution on \(\mathcal {S}_N\). For \(\sim N^3\) swaps each particle has displacement of order N and the whole process converges, in the sense of permuton processes, to a Brownian motion on [0, 1] ([RV17]).

Here we will be interested in yet shorter time scales, corresponding to \(\sim N^{2+\varepsilon }\) swaps for fixed \(\varepsilon \in (0,1)\). In this scaling each particle has displacement \(\ll N\), so the resulting permutations will be close to the identity permutation. Nevertheless, in the spirit of large deviation theory one can still ask questions about rare events, for example “what is the probability that starting from the identity permutation we are close to a fixed permuton after time t?” or, more generally, “what is the probability that the interchange process behaves like a given permuton process X?”. We expect such probabilities to decay exponentially in \(N^\gamma \) for some \(\gamma > 0\), with the decay rate given by a rate function on the space of permuton processes.

The large deviation principle we obtain in this paper can be informally summarized as follows: for a class of permuton processes solving a natural energy minimization problem, the probability \(\mathbb {P}(A)\) that the interchange process is close in distribution to a process X satisfies asymptotically

\[ \mathbb {P}(A) \approx \exp \left( - N^{\gamma } I(X) \right) , \tag{1} \]

where \(\gamma = 2 - \varepsilon \) and I(X) is the energy of X, defined as the expected Dirichlet energy of a path sampled from X. Apart from a purely probabilistic interest, the result is relevant to two other seemingly unrelated subjects, namely the study of Euler equations in fluid dynamics and the study of sorting networks in combinatorics.

Let us first state the energy minimization problem in question, which is as follows: given a permuton \(\mu \), find

\[ \inf _{X} I(X), \tag{2} \]

where the infimum is over all permuton processes X such that \((X_0, X_T)\) has distribution \(\mu \). As it happens, such energy-minimizing processes have been considered in fluid dynamics in the study of incompressible Euler equations, under the name of generalized incompressible flows. This connection is discussed in more detail in Sect. 2.2. Very roughly speaking, Euler equations in a domain \(D \subseteq \mathbb {R}^d\) describe motion of fluid particles whose trajectories satisfy the equation

\[ x''(t) = - \nabla p(t, x(t)) \tag{3} \]

for some function p called the pressure. The incompressibility constraint means that the flow defined by the equation has to be volume-preserving. Classical, smooth solutions to Euler equations correspond to flows which are diffeomorphisms of D. Generalized incompressible flows are a stochastic variant of such solutions in which each particle can choose its initial velocity independently from a given probability distribution.

It turns out that, under additional regularity assumptions, such generalized solutions to the Euler equations (3) for \(D = [0,1]\) correspond exactly to permuton processes solving the energy minimization problem (2) for some permuton \(\mu \). Our large deviation result (1) is valid precisely for such energy-minimizing permuton processes (again, under certain regularity assumptions).

As it happens, the original motivation for our work came from a different direction, namely from the study of sorting networks in combinatorics. This connection is explained in more detail below. Using our large deviation principle (1), we are able to prove novel results on a variant of the model we call relaxed sorting networks. Thus the large deviation principle presented in this paper provides a rather unexpected link between problems in combinatorics (sorting networks) and fluid dynamics (incompressible Euler equations), along with a quite general framework for analyzing permuton processes which we hope will find further applications.

Main results. Let us now state our main results more formally, still with complete definitions and discussion of assumptions deferred until Sects. 2.1 and 3. Let \(\mathcal {D}= \mathcal {D}([0,T], [0,1])\) be the space of càdlàg paths from [0, T] to [0, 1] and let \(\mathcal {M}(\mathcal {D})\) be the space of Borel probability measures on \(\mathcal {D}\). Let \(\mathcal {P}\subseteq \mathcal {M}(\mathcal {D})\) denote the space of permuton processes and their approximations by permutation-valued processes. For \(\pi \in \mathcal {M}(\mathcal {D})\) by \(I(\pi )\) we will denote the expected Dirichlet energy of the process X whose distribution is \(\pi \).

Let \(\eta ^N\) denote the interchange process in continuous time on the interval \(\{1, \ldots , N\}\), sped up by \(N^{\alpha }\) for some \(\alpha \in (1,2)\). Let \(\gamma = 3 - \alpha \). We have the following large deviation principle.

Theorem A

(Large deviation lower bound). Let \(\mathbb {P}^{N}\) be the law of the interchange process \(\eta ^N\) and let \(\mu ^{\eta ^{N}} \in \mathcal {M}(\mathcal {D})\) be the empirical distribution of its trajectories. Let \(\pi \) be a permuton process which is a generalized solution to the Euler equations (19). Provided \(\pi \) satisfies Assumptions (3.1), for any open set \(\mathcal {O}\subseteq \mathcal {P}\) such that \(\pi \in \mathcal {O}\) we have

\[ \liminf _{N \rightarrow \infty } N^{-\gamma } \log \mathbb {P}^{N} \left( \mu ^{\eta ^{N}} \in \mathcal {O} \right) \ge - I(\pi ). \]

Theorem B

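(Large deviation upper bound). Let \(\mathbb {P}^{N}\) be the law of the interchange process \(\eta ^{N}\) and let \(\mu ^{\eta ^{N}} \in \mathcal {M}(\mathcal {D})\) be the empirical distribution of its trajectories. For any closed set \({\mathcal {C}} \subseteq \mathcal {P}\) we have

\[ \limsup _{N \rightarrow \infty } N^{-\gamma } \log \mathbb {P}^{N} \left( \mu ^{\eta ^{N}} \in {\mathcal {C}} \right) \le - \inf _{\pi \in {\mathcal {C}}} I(\pi ). \]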

The results are referred to as respectively Theorems 7.3 and 8.4 in the following sections. Here the large deviation upper bound is valid for all permuton processes, without any additional assumptions. On the other hand, in the proof of the lower bound we exploit rather heavily the special structure possessed by generalized solutions to Euler equations. We expect the lower bound to hold for arbitrary permuton processes as well, since one can locally approximate any permuton process by energy minimizers. However, for our techniques to apply one would need to understand in more detail regularity of the associated velocity distributions and pressure functions, which falls outside the scope of our work.

The reader may notice that the rate function, which is the energy \(I(\pi )\), is similar to the one appearing in the analysis of large deviations for independent random walks. In fact, the crux of our proofs lies in proving that particles in the interchange process and its perturbations are in a certain sense almost independent. The main techniques used here come from the field of interacting particle systems. A comprehensive introduction to the subject can be found in [KL99]. The novelty in our approach is in applying tools usually used to study hydrodynamic limits to a setting which is in some respects more involved, since the limiting objects we consider, permuton processes, are stochastic processes instead of deterministic objects like solutions of PDEs appearing, for example, for exclusion processes.

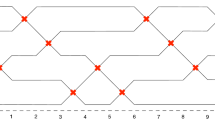

Sorting networks and the sine curve process. The large deviation bounds can be applied to obtain results on a model related to sorting networks. A sorting network on N elements is a sequence of \(M = \left( {\begin{array}{c}N\\ 2\end{array}}\right) \) transpositions \((\tau _{1}\), \(\tau _{2}\), \(\ldots \), \(\tau _{M})\) such that each \(\tau _{i}\) is a transposition of adjacent elements and \(\tau _{M} \circ \ldots \circ \tau _{1} = \textrm{rev}_{N}\), where \(\textrm{rev}_{N} = (N \,\ldots \,2 \, 1)\) denotes the reverse permutation. It is easy to see that any sequence of adjacent transpositions giving the reverse permutation must have length at least \(\left( {\begin{array}{c}N\\ 2\end{array}}\right) \), hence sorting networks can be thought of as shortest paths joining the identity permutation and the reverse permutation in the Cayley graph of \(\mathcal {S}_{N}\) generated by adjacent transpositions.

A random sorting network is obtained by sampling a sorting network uniformly at random among all sorting networks on N elements. Let us work in continuous time, assuming each transposition \(\tau _i\) happens at time \(\frac{i}{M+1}\). It was conjectured in [AHRV07] and recently proved in [Dau22] that the trajectory of a randomly chosen particle in a random sorting network has a remarkable limiting behavior as \(N \rightarrow \infty \), namely it converges in the sense of permuton processes to a deterministic limit, which is the sine curve process described below.

Here it will be more natural to consider the square \([-1,1]^2\) and processes with values in \([-1,1]\) instead of [0, 1] (with the obvious changes in the notion of a permuton and a permuton process which we leave implicit). The Archimedean law is the measure on \([-1,1]^2\) obtained by projecting the normalized surface area of a 2-dimensional half-sphere to the plane or, equivalently, the measure supported inside the unit disk \(\{x^2 + y^2 \le 1\}\) whose density is given by \(1 /(2\pi \sqrt{1 - x^2 - y^2}) \, dx \, dy\). Observe that thanks to the well-known plank property each strip \([a,b] \times [-1,1]\) has measure proportional to \(b-a\), hence the Archimedean law defines a permuton.

The sine curve process is the permuton process \({\mathcal {A}} = ({\mathcal {A}}_{t}, 0 \le t \le 1)\) with the following distribution: we sample (X, Y) from the Archimedean law and then follow the path

\[ {\mathcal {A}}_{t} = X \cos (\pi t) + Y \sin (\pi t). \]

One can directly check that \({\mathcal {A}}_t\) has uniform distribution on \([-1,1]\) at each time t, hence \({\mathcal {A}}_t\) indeed defines a permuton process. Observe that \(({\mathcal {A}}_{0}, {\mathcal {A}}_{0}) = (X,X)\) and \(({\mathcal {A}}_{0}, {\mathcal {A}}_{1}) = (X, -X)\), thus the sine curve process defines a path between the identity permuton and the reverse permuton.

An equivalent way of describing the sine curve process consists of choosing a pair \((R, \theta )\) at random, where the angle \(\theta \) is uniform on \([0,2\pi ]\) and, independently, R has density \(r / \sqrt{1-r^2} \, dr\) on [0, 1] (so that the pair \((R, \theta )\) has joint density \(r / (2 \pi \sqrt{1-r^2}) \, dr \, d\theta \)), and following the path \({\mathcal {A}}_t = R \cos (\pi t + \theta )\). Thus the trajectories of this process are sine curves with random initial phase and amplitude: the path of a random particle is determined by its initial position X and velocity V, given by \((X,V) = (R \cos \theta , -\pi R \sin \theta )\).

Recall now the energy minimization problem (18). The sine curve process is the unique minimizer of energy among all permuton processes joining the identity to the reverse permuton ([Bre89], see also [RVV19]), with the minimal energy equal to \(I({\mathcal {A}}) = \frac{\pi ^2}{6}\). It is one of the few examples where the solution to the problem (18) can be explicitly calculated for a target permuton \(\mu \). It also seems to play a special role in constructing generalized incompressible flows which are non-unique solutions to the energy minimization problem in dimensions greater than one, see, e.g., [BFS09].
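As a numerical sanity check of the value \(\frac{\pi ^2}{6}\), one can sample the sine curve process directly. The following Python sketch is an illustration only and not part of the argument. It assumes that the energy of a path \(\gamma \) is \(\frac{1}{2}\int _0^1 |\dot{\gamma }(t)|^2 \, dt\), and it samples the amplitude as \(R = \sqrt{1 - U^2}\) with U uniform on [0, 1], which is our reading of the polar form of the Archimedean law; all function names are hypothetical.

```python
import math
import random


def sample_sine_curve(rng):
    """Sample (R, theta) for the path A_t = R * cos(pi * t + theta)."""
    theta = rng.uniform(0.0, 2.0 * math.pi)
    # Assumption: if U is uniform on [0, 1], then R = sqrt(1 - U^2) has
    # density r / sqrt(1 - r^2) on [0, 1] (polar form of the Archimedean law).
    u = rng.random()
    return math.sqrt(1.0 - u * u), theta


def position(r, theta, t):
    """Position at time t of the path with amplitude r and phase theta."""
    return r * math.cos(math.pi * t + theta)


def path_energy(r):
    """(1/2) * int_0^1 (pi * r * sin(pi * t + theta))^2 dt = pi^2 r^2 / 4.

    The value does not depend on theta because sin^2 is integrated over a
    full period.
    """
    return math.pi ** 2 * r * r / 4.0


def estimate(n_samples=20000, t=0.3, seed=0):
    """Monte Carlo estimates of E[A_t], E[A_t^2] and the expected energy."""
    rng = random.Random(seed)
    samples = [sample_sine_curve(rng) for _ in range(n_samples)]
    xs = [position(r, th, t) for r, th in samples]
    mean = sum(xs) / n_samples
    second_moment = sum(x * x for x in xs) / n_samples
    energy = sum(path_energy(r) for r, _ in samples) / n_samples
    return mean, second_moment, energy
```

Under these conventions `estimate()` should return a mean close to 0 and a second moment close to 1/3 (the values for the uniform distribution on \([-1,1]\)), and an energy close to \(\pi ^2/6 \approx 1.645\), up to Monte Carlo error.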

The sine curve process is a generalized solution to Euler equations with the pressure function \(p(x) = \frac{x^2}{2}\), which unsurprisingly leads to each particle satisfying the harmonic oscillator equation \(x'' = -x\). The reader may check that the sine curve process satisfies the Assumptions (3.1) (with the velocity distribution being time-independent), thus providing a non-trivial and explicit example for which our large deviation bounds hold. To the best of our knowledge the connection between sorting networks on the one hand and Euler equations on the other hand was first observed in the literature in [Dau22].

Let us now describe the results on relaxed sorting networks. Fix \(\delta > 0\) and \(N \ge 1\). We define a \(\delta \)-relaxed sorting network of length M on N elements to be a sequence of M adjacent transpositions \((\tau _1, \ldots , \tau _M)\) such that the permutation \(\sigma _M = \tau _M \circ \ldots \circ \tau _1\) is \(\delta \)-close to the reverse permutation \(\textrm{rev} = (N \, \ldots \, 2 \, 1)\) in the Wasserstein distance on the space \(\mathcal {M}([0,1]^2)\) of Borel probability measures on \([0,1]^2\) (see Sect. 2.1 for the definition). For fixed \(\kappa \in (0,1)\) we define a random \(\delta \)-relaxed sorting network on N elements by choosing M from a Poisson distribution with mean \(\lfloor \frac{1}{2} N^{1 + \kappa }(N-1) \rfloor \) and then sampling a \(\delta \)-relaxed sorting network of length M uniformly at random.

Our first result is that the analog of the sorting network conjecture holds for relaxed sorting networks, that is, in a random relaxed sorting network the trajectory of a random particle is with high probability close in distribution to the sine curve process. Precisely, we have the following.

Theorem 1.1

Fix \(\kappa \in (0,1)\) and let \(\pi ^{N}_{\delta }\) denote the empirical distribution of the permutation process (as defined in (5)) associated to a random \(\delta \)-relaxed sorting network on N elements. Let \(\pi _{{\mathcal {A}}}\) denote the distribution of the sine curve process. Given any \(\varepsilon > 0\) we have for all sufficiently small \(\delta > 0\)

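\[ \lim _{N \rightarrow \infty } \mathbb {P} \left( \pi ^{N}_{\delta } \in B(\pi _{{\mathcal {A}}}, \varepsilon ) \right) = 1, \]

where \(B(\pi _{{\mathcal {A}}}, \varepsilon )\) is the \(\varepsilon \)-ball in the Wasserstein distance on \(\mathcal {P}\).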

Here for consistency of notation we assume that the sine curve process is rescaled so that it is supported on [0, 1] rather than \([-1,1]\).

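The second result is more combinatorial and concerns the problem of enumerating sorting networks. A remarkable formula due to Stanley ([Sta84]) says that the number of all sorting networks on N elements is equal to

\[ \frac{\left( {\begin{array}{c}N\\ 2\end{array}}\right) !}{1^{N-1} \, 3^{N-2} \, 5^{N-3} \cdots (2N-3)^{1}}, \]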

which is asymptotic to \(\exp \left\{ \frac{N^2}{2}\log N + (\frac{1}{4} - \log 2)N^2 + O(N \log N) \right\} \).
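For small N the count can be checked directly. The sketch below is an illustration with hypothetical function names. It evaluates Stanley's product formula, \(\left( {\begin{array}{c}N\\ 2\end{array}}\right) ! / (1^{N-1} 3^{N-2} \cdots (2N-3)^{1})\), and compares it with a count of shortest paths from the identity to the reverse permutation, using the fact that every step of such a path must create a new inversion.

```python
from functools import lru_cache
from math import comb, factorial


def stanley_count(n):
    """Number of sorting networks on n elements via Stanley's formula."""
    denom = 1
    for k in range(1, n):
        # factors 1^(n-1) * 3^(n-2) * ... * (2n-3)^1
        denom *= (2 * k - 1) ** (n - k)
    return factorial(comb(n, 2)) // denom


def brute_force_count(n):
    """Count shortest adjacent-transposition paths from identity to reverse."""
    target = tuple(range(n, 0, -1))

    @lru_cache(maxsize=None)
    def paths(perm):
        if perm == target:
            return 1
        total = 0
        for i in range(n - 1):
            # Only swaps that create a new inversion can lie on a shortest path.
            if perm[i] < perm[i + 1]:
                nxt = list(perm)
                nxt[i], nxt[i + 1] = nxt[i + 1], nxt[i]
                total += paths(tuple(nxt))
        return total

    return paths(tuple(range(1, n + 1)))
```

Both functions give 2 for N = 3 and 16 for N = 4. The memoized recursion visits each permutation at most once, so it is practical only for small N.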

For relaxed sorting networks we have the following asymptotic estimate.

Theorem 1.2

For any \(\kappa \in (0,1)\) let \({\mathcal {S}}^{N}_{\kappa , \delta }\) be the number of \(\delta \)-relaxed sorting networks on N elements of length \(M = \lfloor \frac{1}{2} N^{1+\kappa }(N-1)\rfloor \). We have

where \(\varepsilon ^{N}_{\delta }\) satisfies \(\lim \limits _{\delta \rightarrow 0} \lim \limits _{N \rightarrow \infty } \varepsilon ^{N}_{\delta } = 0\).

The asymptotics is analogous to that of Stanley’s formula – the first term in the exponent corresponds simply to the number of all paths of required length, and, crucially, the factor \(\frac{\pi ^2}{6}\) corresponds to the energy of the sine curve process.

The proofs of Theorems 1.1 and 1.2 are given in Sect. 9. It would be an interesting problem to obtain analogous results for relaxed sorting networks reaching exactly the reverse permutation, not only being \(\delta \)-close in the permuton topology. This case is not covered by the results of this paper, since the set of permuton processes reaching exactly the reverse permuton is not open, hence the lower bound of Theorem A does not apply.

2 Preliminaries

2.1 Permutons and stochastic processes.

Permutons. Consider the space \(\mathcal {M}([0,1]^2)\) of all Borel probability measures on the unit square \([0,1]^2\), endowed with the weak topology. A permuton is a probability measure \(\mu \in \mathcal {M}([0,1]^2)\) with uniform marginals. In other words, \(\mu \) is the joint distribution of a pair of random variables (X, Y), with X, Y taking values in [0, 1] and having marginal distribution \(X, Y \sim {\mathcal {U}}[0,1]\). We will sometimes call the pair (X, Y) itself a permuton if there is no risk of ambiguity. A few simple examples of permutons are the identity permuton (X, X), the uniform permuton (the distribution of two independent copies of X, which is the uniform measure on the square) or the reverse permuton \((X, 1 - X)\).

Permutons can be thought of as continuous limits of permutations in the following sense. Let \(\mathcal {S}_{N}\) be the symmetric group on N elements and let \(\sigma \in \mathcal {S}_{N}\). We associate to \(\sigma \) its empirical measure

\[ \mu _{\sigma } = \frac{1}{N} \sum _{i=1}^{N} \delta _{\left( \frac{i}{N}, \frac{\sigma (i)}{N} \right) }, \tag{4} \]

which is an element of \(\mathcal {M}([0,1]^2)\). By a slight abuse of terminology we will sometimes identify \(\sigma \) with \(\mu _\sigma \). Since every such measure has uniform marginals on \(\left\{ \frac{1}{N}, \frac{2}{N}, \ldots , 1 \right\} \), it is not difficult to see that if a sequence of empirical measures converges weakly, the limiting measure will be a permuton. Conversely, every permuton can be realized as a limit of finite permutations, in the sense of weak convergence of empirical measures (see [HKM+13]). We will consider \(\mathcal {M}([0,1]^2)\) endowed with the Wasserstein distance corresponding to the Euclidean metric on \([0,1]^2\), under which the distance of measures \(\mu \) and \(\nu \) is given by

where the infimum is over all couplings of (X, Y) and \((X',Y')\) such that \((X,Y) \sim \mu \), \((X',Y') \sim \nu \).

The path space \(\mathcal {D}\) and stochastic processes. A natural setting for analyzing trajectories of particles in random permutation sequences is to consider \(\mathcal {D}= \mathcal {D}([0,T], [0,1])\), the space of all càdlàg paths from [0, T] to [0, 1]. We endow it with the standard Skorokhod topology, metrized by a metric \(\rho \) under which \(\mathcal {D}\) is separable and complete. By \(\mathcal {M}(\mathcal {D})\) we will denote the space of all Borel probability measures on \(\mathcal {D}\), endowed with the weak topology. It will be convenient to metrize \(\mathcal {M}(\mathcal {D})\) by the Wasserstein distance, under which the distance between measures \(\mu \) and \(\nu \) is given by

where the infimum is over all couplings (X, Y) such that \(X \sim \mu \), \(Y \sim \nu \). We will also make use of the Wasserstein distance associated to the supremum norm, given by

where \(\left\| \cdot \right\| _{sup}\) is the supremum norm on \(\mathcal {D}\) and again the infimum is over all couplings (X, Y) as above.

Given two times \(0 \le s \le t \le T\) and a stochastic process \(X = (X_{t}, 0 \le t \le T)\) with distribution \(\mu \in \mathcal {M}(\mathcal {D})\), by \(\mu _{s, t} \in \mathcal {M}([0,1]^2)\) we will denote the distribution of the marginal \((X_{s}, X_{t})\). Note that the projection \(\mu \mapsto \mu _{s, t}\) is continuous as a map from \(\mathcal {M}(\mathcal {D})\) to \(\mathcal {M}([0,1]^2)\) as long as paths \(X \sim \mu \) sampled from \(\mu \) have almost surely no jumps at times s and t. We will sometimes implicitly identify the stochastic process with its distribution when there is no risk of misunderstanding.

Permutation processes and permuton processes. Consider a permutation-valued path \(\eta ^{N} = (\eta ^{N}_{t}, 0 \le t \le T)\), with \(\eta ^{N}_{t}\) taking values in the symmetric group \({\mathcal {S}}_{N}\). We will always assume that \(\eta ^N\) is càdlàg as a map from [0, T] to \(\mathcal {S}_N\). Let \(\eta ^{N}(i) = \left( \eta ^{N}_{t}(i), 0 \le t \le T \right) \) be the trajectory of i under \(\eta ^{N}\) and let \(X^{\eta ^{N}}(i) = \frac{1}{N}\eta ^{N}(i)\) be the rescaled trajectory. We define the empirical measure

\[ \mu ^{\eta ^{N}} = \frac{1}{N} \sum _{i=1}^{N} \delta _{X^{\eta ^{N}}(i)}, \tag{5} \]

where \(\delta _{X^{\eta ^{N}}(i)}\) is the delta measure concentrated on the trajectory \(X^{\eta ^{N}}(i)\).

The associated permutation process \(X^{\eta ^{N}} = (X^{\eta ^{N}}_{t}, 0 \le t \le T)\) is obtained by choosing \(i = 1, \ldots , N\) uniformly at random and following the path \(X^{\eta ^{N}}(i)\). In other words, \(X^{\eta ^{N}}\) is a random path with values in [0, 1] whose distribution is \(\mu ^{\eta ^{N}} \in \mathcal {M}(\mathcal {D})\). If \(\eta ^N\) is fixed, the only randomness here comes from the random choice of the particle i. Note that at each time t the marginal distribution of \(X_{t}^{\eta ^{N}}\) is uniform on \(\left\{ \frac{1}{N}, \frac{2}{N}, \ldots , 1 \right\} \).

A permuton process is a stochastic process \(X = (X_{t}, 0 \le t \le T)\) taking values in [0, 1], with continuous sample paths and such that for every \(t \in [0,T]\) the marginal \(X_{t}\) is uniformly distributed on [0, 1]. The name is justified by observing that if \(\pi \) is the distribution of X, then for any fixed \(s,t \in [0,T]\) the joint distribution \(\pi _{s,t} \in \mathcal {M}([0,1]^2)\) of \((X_{s}, X_{t})\) defines a permuton. As explained in the next subsection, permuton processes arise naturally as limits of permutation processes defined above.

Since every permutation process has marginals uniform on \(\left\{ \frac{1}{N}, \frac{2}{N}, \ldots , 1 \right\} \), we will call it an approximate permuton process. By \(\mathcal {P}\) we will denote the space of all permuton processes and approximate permuton processes, treated as a subspace of \(\mathcal {M}(\mathcal {D})\) (with the same topology and the metric \(d_{{\mathcal {W}}}\)).

Random permutation and permuton processes. A random permuton process is a permuton process chosen from some probability distribution on the space of all permuton processes, i.e., a random variable X, defined on a probability space \(\Omega \), such that \(X(\omega )\) is a permuton process for every \(\omega \in \Omega \). By identifying the random variable with its distribution we can also think of a random permuton process as a random element of \(\mathcal {M}(\mathcal {P})\). In this setting, with weak topology on \(\mathcal {M}(\mathcal {P})\), one can consider convergence in distribution of random permuton processes \(X_{n}\) to a (possibly also random) permuton process X.

One can prove (see [RVV19]) that if a sequence of random permutation processes \(X^{\eta ^N}\) converges in distribution, then the limit is a permuton process (in general also random). Of particular interest will be sequences of random permutation-valued paths \(\eta ^{N}\) (coming for example from the interchange process) such that the corresponding permutation processes \(X^{\eta ^N}\) converge in distribution to a deterministic permuton process (for example the sine curve process described in the Introduction).

For any random permuton process X we define its associated random particle process \({\bar{X}} = \mathbb {E}_{\omega } X(\omega )\), which is a process with a deterministic distribution, obtained by first sampling a permuton process \(X(\omega )\) and then sampling a random path according to \(X(\omega )\).

To elucidate the difference between random and deterministic permuton processes, consider a random permuton process X and its associated random particle process \({\bar{X}}\). If we sample an outcome \(X(\omega )\) and then a path from \(X(\omega )\), then obviously the distribution of paths will be the same as for \({\bar{X}}\). However, consider now sampling an outcome \(X(\omega )\) and then sampling independently two paths from \(X(\omega )\). The distribution of a pair of paths obtained in this way will not in general be the same as the distribution of two independent copies sampled from \({\bar{X}}\), since the paths might be correlated within the outcome \(X(\omega )\). The following general lemma will be useful later for showing that limits of certain random permutation processes are in fact deterministic ([RV17, Lemma 3]):

Lemma 2.1

Let K be a compact metric space and let \(\mu \) be a random probability measure on K, i.e., a random variable with values in \(\mathcal {M}(K)\). Let X and Y be two independent samples from an outcome of \(\mu \) and let Z be a sample from an outcome of an independent copy of \(\mu \). If (X, Y), as a \(K^2\)-valued random variable, has the same distribution as (X, Z), then \(\mu \) is in fact deterministic, i.e., there exists \(\nu \in \mathcal {M}(K)\) such that \(\mu = \nu \) almost surely.

Energy. Here we introduce several related notions of energy for paths, permutations, permutons and permuton processes.

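Given a path \(\gamma : [0,T] \rightarrow [0,1]\) and a finite partition \(\Pi = \{ 0 = t_{0}< t_{1}< \ldots < t_{k} = T \}\) we define the energy of \(\gamma \) with respect to \(\Pi \) as

\[ \mathcal {E}^{\Pi }(\gamma ) = \frac{1}{2} \sum _{i=1}^{k} \frac{|\gamma (t_{i}) - \gamma (t_{i-1})|^2}{t_{i} - t_{i-1}} \]

and the energy of \(\gamma \) as

\[ \mathcal {E}(\gamma ) = \sup _{\Pi } \mathcal {E}^{\Pi }(\gamma ), \]

where the supremum is over all finite partitions \(\Pi = \{ 0 = t_{0}< t_{1}< \ldots < t_{k} = T \}\). For a path which is not absolutely continuous the supremum is equal to \(+\infty \). If a path \(\gamma \) is differentiable, its energy is equal to

\[ \mathcal {E}(\gamma ) = \frac{1}{2} \int _{0}^{T} |\dot{\gamma }(t)|^2 \, dt. \]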

For a permutation \(\sigma \in {\mathcal {S}}_N\) we define its energy as

Likewise, for a permuton \(\mu \in \mathcal {M}([0,1]^2)\) its energy is defined by

where the pair (X, Y) has distribution \(\mu \). If \(\mu = \mu _{\sigma }\) is the empirical measure of a permutation \(\sigma \in {\mathcal {S}}_N\), defined by (4), then we have \(I(\mu _{\sigma }) = I(\sigma )\). Note also that \(I = I(\mu )\) is a continuous function of \(\mu \) in the weak topology on \(\mathcal {M}([0,1]^2)\).

Finally, we define the energy of a permuton process \(\pi \) as

\[ I(\pi ) = \mathbb {E}_{\gamma \sim \pi } \mathcal {E}(\gamma ), \]

where the expectation is over paths \(\gamma \) sampled from \(\pi \). We can extend this definition to any process \(\pi \in \mathcal {M}(\mathcal {D})\) by adopting the convention that \(I(\pi ) = + \infty \) if paths sampled from \(\pi \) are not absolutely continuous almost surely. The function I will turn out to correspond to the rate function in large deviation bounds for random permuton processes. It can be checked that I is lower semicontinuous (in the weak topology on \(\mathcal {P}\)) and its level sets \(\{ \pi \in \mathcal {P}: I(\pi ) \le C\}\) are compact.

We will also use the notation

\[ I^{\Pi }(\pi ) = \mathbb {E}_{\gamma \sim \pi } \mathcal {E}^{\Pi }(\gamma ) \]

to denote the approximation of energy of \(\pi \) associated to the finite partition \(\Pi \). The following lemma will be useful in characterizing the large deviation rate function in terms of these approximations.

Lemma 2.2

For any process \(\pi \in \mathcal {M}(\mathcal {D})\) we have

\[ I(\pi ) = \sup _{\Pi } I^{\Pi }(\pi ), \]

where the supremum is taken over all finite partitions \(\Pi = \{ 0 = t_{0}< t_{1}< \ldots < t_{k} = T \}\).

Proof

Let \(\Pi _n = \left\{ 0< \frac{1}{2^n}< \frac{2}{2^n}< \ldots < 1\right\} \), \(n=0,1,2,\ldots \), be the sequence of dyadic partitions of [0, 1]. It is elementary to show that if a path \(\gamma \) is continuous, then \(\mathcal {E}(\gamma ) = \lim \limits _{n \rightarrow \infty } \mathcal {E}^{\Pi _n}(\gamma )\). Note that if \(\Pi '\) is a refinement of \(\Pi \), then we have \(\mathcal {E}^{\Pi }(\gamma ) \le \mathcal {E}^{\Pi '}(\gamma )\), thus \(\mathcal {E}^{\Pi _n}(\gamma ) \rightarrow \mathcal {E}(\gamma )\) monotonically as \(n \rightarrow \infty \). Now we apply the monotone convergence theorem to get the same convergence for the expectations \(\mathbb {E}_{\gamma \sim \pi }\mathcal {E}^{\Pi _n}(\gamma )\). \(\square \)
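The monotone convergence along dyadic partitions can be observed numerically. The following sketch assumes that the energy with respect to a partition is the discrete Dirichlet energy \(\frac{1}{2}\sum _i |\gamma (t_i) - \gamma (t_{i-1})|^2 / (t_i - t_{i-1})\); the factor \(\frac{1}{2}\) is an assumption on our part about the normalization, and the function names are hypothetical. It evaluates this quantity for the test path \(\gamma (t) = t^2\) on [0, 1], whose energy is then \(\frac{1}{2}\int _0^1 (2t)^2 \, dt = \frac{2}{3}\).

```python
def partition_energy(gamma, times):
    """(1/2) * sum over the partition of (increment of gamma)^2 / (time step)."""
    total = 0.0
    for s, t in zip(times, times[1:]):
        total += (gamma(t) - gamma(s)) ** 2 / (t - s)
    return 0.5 * total


def dyadic_partition(n, T=1.0):
    """Partition of [0, T] into 2^n intervals of equal length."""
    m = 2 ** n
    return [T * i / m for i in range(m + 1)]


def dyadic_energies(gamma, levels, T=1.0):
    """Energies along the dyadic partitions Pi_0, ..., Pi_levels."""
    return [partition_energy(gamma, dyadic_partition(n, T))
            for n in range(levels + 1)]
```

For \(\gamma (t) = t^2\) the list `dyadic_energies(lambda t: t * t, 10)` starts at 0.5, is nondecreasing, and approaches 2/3, in line with the refinement inequality used in the proof.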

The interchange process. The interchange process on the interval \(\{1, \ldots , N\}\) is a Markov process in continuous time defined in the following way. Consider particles labelled from 1 to N on a line with N vertices. Each edge has an independent exponential clock that rings at rate 1. Whenever a clock rings, the particles at the endpoints of the corresponding edge swap places. By comparing the initial position of each particle with its position after time t we obtain a random permutation of \(\{ 1, \ldots ,N\}\).
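The dynamics just described can be simulated directly. The following Python sketch is a minimal illustration, not the construction used in the proofs. It relies on the fact that the superposition of the \(N-1\) independent clocks rings at the total rate \((N-1)\) times the rate of a single clock, and that the edge which rings is then uniformly distributed. The function name and the `rate` parameter (which must be positive and could be set to a sped-up rate) are hypothetical.

```python
import random


def interchange_process(n, t_max, rate=1.0, seed=None):
    """Simulate the interchange process on {1, ..., n} up to time t_max.

    Returns (positions, n_swaps), where positions[i - 1] is the site occupied
    at time t_max by the particle that started at site i.
    """
    rng = random.Random(seed)
    occupant = list(range(1, n + 1))  # occupant[x - 1] = label of particle at site x
    total_rate = rate * (n - 1)       # superposition of the n - 1 edge clocks
    t, n_swaps = 0.0, 0
    while n > 1:
        t += rng.expovariate(total_rate)  # waiting time until the next ring
        if t > t_max:
            break
        x = rng.randrange(n - 1)          # edge {x + 1, x + 2}, chosen uniformly
        occupant[x], occupant[x + 1] = occupant[x + 1], occupant[x]
        n_swaps += 1
    positions = [0] * n
    for site, label in enumerate(occupant, start=1):
        positions[label - 1] = site
    return positions, n_swaps
```

For example, `interchange_process(50, 5.0, seed=1)` returns a permutation of \(\{1, \ldots , 50\}\) together with the number of swaps performed; the parity of the permutation always agrees with the parity of the number of swaps.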

Formally, we define the state space of the process as consisting of permutations \(\eta \in {\mathcal {S}}_N\), with the notation \( \eta = (x_1, \ldots , x_N)\) indicating that the particle with label i is at the position \(x_{i}\), or in other words, \(x_i = \eta (i)\). The dynamics is given by the generator

where \(\eta ^{x, x+1}\) is the configuration \(\eta \) with particles at locations x and \(x+1\) swapped and \(\alpha \in (1,2)\) is a fixed parameter (introduced so that we will be able to consider the limit \(N \rightarrow \infty \)). Since we will also be considering variants of this process with modified rates, we will often refer to the process with generator \(\mathcal {L}\) as the unbiased interchange process.

The interchange process defines a probability distribution on permutation-valued paths \(\eta ^N = (\eta ^N_t, 0 \le t \le T)\) for any \(T \ge 0\). Consider now the permutation process \(X^{\eta ^N}\) associated to \(\eta ^N\), that is, sample \(\eta ^N\) according to the interchange process, pick a particle uniformly at random and follow its trajectory in \(\eta ^N\). The distribution \(\mu ^{\eta ^N}\) of \(X^{\eta ^N}\), defined by (5), is then a random element of \(\mathcal {M}(\mathcal {D})\).

The position of a random particle in the interchange process will be distributed as the stationary simple random walk (in continuous time) on the line \(\{1, \ldots , N\}\). If we look at timescales much shorter than \(N^2\), typically each particle will have distance o(N) from its origin, so the permutation obtained at time t such that \(tN^{\alpha } \ll N^{2}\) will be close (in the sense of permutons) to the identity permutation. As mentioned in the introduction, we will be interested in large deviation bounds for rare events such as seeing a nontrivial permutation after a short time.

2.2 Euler equations and generalized incompressible flows.

Let us now discuss the connection to fluid dynamics and incompressible flows (the discussion here follows [AF09] and [BFS09]). The Euler equations describe the motion of an incompressible fluid in a domain \(D \subseteq \mathbb {R}^d\) in terms of its velocity field u(t, x), which is assumed to be divergence-free. The evolution of u is given in terms of the pressure field p

where the second equation encodes the incompressibility constraint and the third equation means that u is parallel to the boundary \(\partial D\).
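In standard notation, with n denoting the outward unit normal to \(\partial D\) (a symbol introduced here only for this display), the system in question takes the familiar form

\[
\begin{cases}
\partial _t u + (u \cdot \nabla ) u = - \nabla p &amp; \text{in } [0,T] \times D, \\
\textrm{div}\, u = 0 &amp; \text{in } [0,T] \times D, \\
u \cdot n = 0 &amp; \text{on } [0,T] \times \partial D.
\end{cases}
\]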

Assuming u is smooth, the trajectory g(t, x) of a fluid particle initially at position x is obtained by solving the equation

Since u is assumed to be divergence-free, the flow map \(\Phi ^{t}_{g} : D \rightarrow D\) given by \(\Phi ^{t}_{g}(x) = g(t,x)\) is a measure-preserving diffeomorphism of D for each \(t \in [0,T]\). This means that \((\Phi ^{t}_{g})_{*} \mu _D = \mu _D\), where from now on by \(f_{*}\) we denote the pushforward map on measures, associated to f, and \(\mu _D\) is the Lebesgue measure inside D. Denoting by \(\textrm{SDiff}(D)\) the space of all measure-preserving diffeomorphisms of D, we can rewrite the Euler equations in terms of g

Arnold proposed an interpretation according to which the equation above can be viewed as a geodesic equation on \(\textrm{SDiff}(D)\). Thus one can look for solutions to (13) by considering the variational problem

among all paths \(g(t, \cdot ) : [0,T] \rightarrow \textrm{SDiff}(D)\) such that \(g(0, \cdot ) = f\), \(g(T, \cdot ) = h\) for some prescribed \(f, h \in \textrm{SDiff}(D)\) (by right invariance without loss of generality f can be assumed to be the identity). The pressure p then arises as a Lagrange multiplier coming from the incompressibility constraint.
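The passage from the velocity field to trajectories can be made explicit as follows. This is a sketch: the normalization \(\frac{1}{2}\) of the action is an assumption, and the labels (13) and (14) are attached according to how they are used in the text. The trajectory solves \(\partial _t g(t,x) = u(t, g(t,x))\) with \(g(0,x) = x\), and differentiating once more in t gives

\[
\partial _{tt} g(t,x) = \left( \partial _t u + (u \cdot \nabla ) u \right) (t, g(t,x)) = - \nabla p(t, g(t,x)),
\]

which together with \(g(0,x) = x\) and \(g(t, \cdot ) \in \textrm{SDiff}(D)\) is the form of the Euler equations referred to as (13). The variational problem (14) then consists in minimizing the action

\[
\frac{1}{2} \int \limits _{0}^{T} \int \limits _{D} \left| \partial _t g(t,x) \right| ^2 \, dx \, dt .
\]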

Shnirelman proved ([Shn87]) that in dimensions \(d \ge 3\) the infimum in this minimization problem is not attained in general and in dimension \(d=2\) there exist diffeomorphisms \(h = g(T, \cdot )\) which cannot be connected to the identity map by a path with finite action. This motivated Brenier ([Bre89]) to consider the following relaxation of this problem. With C(D) denoting the space of continuous paths from [0, T] to D and \(\mathcal {M}(C(D))\) the set of probability measures on C(D), the variational problem is

over all \(\pi \in \mathcal {M}(C(D))\) satisfying the constraints

where \(\pi _{0,T}\), \(\pi _t\) denote the marginals of \(\pi \) at times respectively 0, T and at time t.
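Written out, and again under the assumption that the action is normalized by \(\frac{1}{2}\), the relaxed problem (15) asks to minimize

\[
\int \limits _{C(D)} \left( \frac{1}{2} \int \limits _{0}^{T} |\dot{\gamma }(t)|^2 \, dt \right) d\pi (\gamma )
\]

subject to the constraints (16), namely

\[
\pi _{0,T} = (id, h)_{*} \mu _D, \qquad \pi _t = \mu _D \quad \text{for every } t \in [0,T].
\]

The first constraint fixes the joint law of the endpoints of a path, and the second one is the incompressibility condition.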

Following Brenier, a probability measure \(\pi \in \mathcal {M}(C(D))\) satisfying constraints (16) is called a generalized incompressible flow between the identity id and h. To see that indeed (15) is a relaxation of (14), note that any sufficiently regular path \(g(t, \cdot ) : [0,T] \rightarrow \textrm{SDiff}(D)\), for example corresponding to a solution of (13), induces a generalized incompressible flow given by \(\pi = (\Phi _g)_{*} \mu _D\), where as before \(\Phi _g(x) = g(\cdot , x)\). As evidenced by the sine curve process mentioned in the introduction, the converse is false – trajectories of particles sampled from a generalized flow can cross each other or split at a later time when starting from the same position, which is not possible for classical, smooth flows. We refer the reader to [Bre08] for an interesting discussion of physical relevance of this phenomenon.

The problem admits a natural further relaxation in which the target map is “non-deterministic”, in the sense that we have \(\pi _{0,T} = \mu \) with \(\mu \) being an arbitrary probability measure supported on \(D \times D\) and having uniform marginals on each coordinate, not necessarily of the form \(\mu = (id, h)_{*}\mu _{D}\) for some map h. From now on whenever we refer to problem (15) or generalized incompressible flows we will be always considering this more general variant.

The connection between the generalized problem (15) and the original Euler equations (13) is provided by a theorem due to Ambrosio and Figalli ([AF09]), with earlier weaker results by Brenier ([Bre99]). Roughly speaking, they showed that given a measure \(\mu \) with uniform marginals there exists a pressure function p(t, x) such that the following holds – one can replace the problem of minimizing the functional (15) over incompressible flows satisfying \(\pi _{0,T} = \mu \) by an easier problem in which the incompressibility constraint is dropped, provided one adds to the functional a Lagrange multiplier given by p. We refer the reader to [AF09, Section 6] for a precise formulation and further results on regularity of p.

In particular, if \(\pi \) is optimal for (15) and the corresponding pressure p is smooth enough, their result implies that almost every path \(\gamma \) sampled from \(\pi \) minimizes the functional

In that case the equation \(\ddot{g}(t,x) = - \nabla p(t, g(t,x))\) from (13) is nothing but the Euler-Lagrange equation for extremal points of the functional (17). We can therefore, at least under some regularity assumptions on p, think of generalized incompressible flows as solutions to (13) in which instead of having a diffeomorphism we assume random initial conditions for each particle.
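The Euler-Lagrange computation behind this remark is short. Assuming that the functional (17) has the usual form of kinetic energy minus pressure,

\[
\gamma \mapsto \int \limits _{0}^{T} \left( \frac{1}{2} |\dot{\gamma }(t)|^2 - p(t, \gamma (t)) \right) dt ,
\]

a perturbation \(\gamma + \varepsilon h\) with \(h(0) = h(T) = 0\) gives, after differentiating in \(\varepsilon \) at \(\varepsilon = 0\) and integrating by parts,

\[
\int \limits _{0}^{T} \left( \dot{\gamma }(t) \cdot \dot{h}(t) - \nabla p(t, \gamma (t)) \cdot h(t) \right) dt = - \int \limits _{0}^{T} \left( \ddot{\gamma }(t) + \nabla p(t, \gamma (t)) \right) \cdot h(t) \, dt = 0 .
\]

Since h is arbitrary, this yields \(\ddot{\gamma }(t) = - \nabla p(t, \gamma (t))\).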

From now on let us restrict the discussion to \(D = [0,1]\), which will be most directly relevant to the results of this paper. In this case the original problem (14) is somewhat uninteresting, since the only measure-preserving diffeomorphisms of [0, 1] are \(f(x) = x\) and \(f(x) = 1 - x\). However, the relaxed problem (15) is non-trivial and indeed for the target map \(h(x) = 1 - x\) and \(T = 1\) the unique optimal solution is given by the sine curve process.
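The incompressibility of the sine curve process can be checked numerically. The sketch below rests on an assumption about how the process is built: we take (X, Y) to be the first two coordinates of a uniform point on the unit sphere in \(\mathbb {R}^3\), follow the path \(X \cos (\pi t) + Y \sin (\pi t)\) on \([-1,1]\), and rescale it affinely to [0, 1]. The function names are hypothetical.

```python
import math
import random

def sample_sine_curve_paths(n_paths, rng):
    """Sample (X, Y) as the first two coordinates of a uniform point on the
    unit sphere in R^3 (an assumption about how the process is built)."""
    pairs = []
    for _ in range(n_paths):
        g = [rng.gauss(0.0, 1.0) for _ in range(3)]
        norm = math.sqrt(sum(c * c for c in g))
        pairs.append((g[0] / norm, g[1] / norm))
    return pairs

def position(pair, t):
    """Position in [0, 1] at time t of the path X cos(pi t) + Y sin(pi t),
    rescaled affinely from [-1, 1]."""
    x, y = pair
    return 0.5 * (1.0 + x * math.cos(math.pi * t) + y * math.sin(math.pi * t))

rng = random.Random(1)
paths = sample_sine_curve_paths(20000, rng)

def moments(t):
    """Empirical mean and variance of the position at time t."""
    values = [position(p, t) for p in paths]
    mean = sum(values) / len(values)
    var = sum((v - mean) ** 2 for v in values) / len(values)
    return mean, var

# The uniform distribution on [0, 1] has mean 1/2 and variance 1/12.
for t in (0.0, 0.3, 0.5, 1.0):
    print(t, moments(t))
```

At time \(T = 1\) every path ends at \(1 - x\) when started from x, which is the target map \(h(x) = 1 - x\), while at intermediate times the empirical moments stay close to those of the uniform distribution.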

In this setting, the reader may recognize that generalized incompressible flows are in fact the same objects as permuton processes. The term measure-preserving plans is used in [AF09] for what we call permutons. The functional minimized in (15) is the energy \(I(\pi )\) of a permuton process, defined in (10). In this language the optimization problem we are interested in can be rephrased as follows:

where the infimum is over all permuton processes \(\pi \in \mathcal {P}\) satisfying \(\pi _{0,T} = \mu \) for a given permuton \(\mu \in \mathcal {M}([0,1]^2)\).

Generalized solutions to Euler equations. We will say that a permuton process \(\pi \) is a generalized solution to Euler equations if there exists a function \(p : [0,T] \times [0,1] \rightarrow \mathbb {R}\), differentiable in the second variable, such that almost every path \(x : [0,T] \rightarrow [0,1]\) sampled from \(\pi \) satisfies the equation

for \(t \in [0,T]\). This is of course equivalent to \(x''(t) = - \partial _x p (t, x(t))\).
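Written as a first order system in position and velocity, which is the form we understand (19) to take, the equation reads

\[
x'(t) = v(t), \qquad v'(t) = - \partial _x p(t, x(t)).
\]

As an illustration, and under the assumption that the sine curve process is parametrized on \([-1,1]\) by paths of the form \(x(t) = a \cos (\pi t) + b \sin (\pi t)\), each such path satisfies \(x''(t) = - \pi ^2 x(t)\), so the process is a generalized solution with the time-independent pressure \(p(x) = \frac{\pi ^2}{2} x^2\).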

By the remarks above, if \(\pi \) minimizes the energy in (18) and the associated pressure p is smooth enough, then \(\pi \) is always a generalized solution to Euler equations. However, this is only a necessary condition – for a discussion of corresponding sufficient conditions see [BFS09].

2.3 Proof outline and structure of the paper.

Let us now give a brief outline of the proof strategy for Theorems A and B. For the lower bound, given a process X we construct a perturbation of the interchange process (defined by introducing asymmetric jump rates based on (19)) for which a law of large numbers holds, namely, the distribution of the path of a random particle converges to a deterministic limit (which is the distribution of X). The large deviation principle is then proved by estimating the Radon–Nikodym derivative between the biased process and the original one.

The key property which makes this construction possible is that the process X satisfies a second order ODE given by (19), so its trajectories are fully specified by the particle’s position and velocity (the latter chosen initially from a mean zero distribution). The biased process is then constructed by endowing each particle with an additional parameter keeping track of its velocity, but we perform an additional change of variables, working not with the velocity itself but with a variable we call color. The advantage of this is that the uniform distribution of colors is stationary when the jump rates are properly chosen, which will greatly facilitate the analysis. An additional technical difficulty arises if the velocity distribution of X is time-dependent or not regular enough near the boundary, in which case we first approximate X by a process with a sufficiently regular and piecewise time-homogeneous velocity distribution.

To prove the law of large numbers we need to show that in the biased interchange process particles’ trajectories behave approximately like independent samples from X. This requires proving that their velocities remain uncorrelated when averaged over time and is accomplished by means of a local mixing result called the one block estimate. It is here that we rely on stationarity of the uniform distribution of colors in the biased process and the fact that X has velocity zero on average.

The strategy for proving the upper bound is somewhat simpler. We consider a family of exponential martingales similar to the one employed in analyzing independent random walks and use the one block estimate to show that the particles’ velocities are typically nonnegatively correlated. This enables us to prove the large deviation upper bound for compact sets and the extension to closed sets is done by proving exponential tightness.

Structure of the paper. The rest of the paper is structured as follows. In Sect. 3 we introduce the change of variables needed to define the process with colors and prove the approximation result for X mentioned above (Proposition 3.7). In Sect. 4 we define the biased interchange process and derive the conditions on its rates which guarantee stationarity. Section 5 contains the proof of the law of large numbers for the biased interchange process (Theorem 5.1). In Sect. 6 we prove two variants of the one block estimate – one needed for the large deviation upper bound (Lemma 6.2) and a more involved one needed for the proof of the law of large numbers (Lemma 5.4). In Sect. 7 these pieces are then used to prove the large deviation lower bound (Theorem 7.3). Section 8 is devoted to the proof of the large deviation upper bound (Theorem 8.4) and is independent of the previous sections (apart from the use of Lemma 6.2). Finally, in Sect. 9 we prove Theorem 1.1 and Theorem 1.2 on relaxed sorting networks.

3 ODEs and Generalized Solutions to Euler Equations

Regularity assumptions and properties of generalized solutions. Suppose \(\pi \) is a generalized solution to the Euler equations (19) and let X be a process with distribution \(\pi \). For the proof of the large deviation lower bound we will need to impose additional regularity assumptions on \(\pi \). For \(t \in [0,T]\) let \(\mu _t\) denote the joint distribution of \((x(t), x'(t))\) when x is sampled according to \(\pi \). In particular, \(\mu _0\) is the joint distribution of the initial conditions of the ODE (19). If \(\Phi ^{t,s}(x,v)\) denotes the solution x(s) of (19) satisfying \((x(t), v(t)) = (x, v)\), then \(\mu _t = \Phi ^{0,t}_{*}\mu _0\).

We will assume that each \(\mu _t\) has a density \(\rho _t(x,v)\) with respect to the Lebesgue measure on \([0,1] \times \mathbb {R}\). For \(x \in [0,1]\) and \(t \in [0,T]\) let \(\mu _{t,x}\) denote the conditional distribution of v, given x, at time t. In addition we assume that for \(x=0\) or 1 the distribution \(\mu _{t,x}\) is a delta mass at 0, as otherwise the process X cannot stay confined to [0, 1] and have mean velocity zero everywhere (see the discussion of incompressibility below).

Let \(F_{t,x}\) denote the cumulative distribution function of \(\mu _{t,x}\) and let \(V_{t}(x, \cdot ) : [0,1] \rightarrow \mathbb {R}\) be the quantile function of \(\mu _{t,x}\), defined for \(x \in [0,1]\) and \(\phi \in (0,1]\) by

and \(V_t(x, 0) = \inf \left\{ v \in \mathbb {R}\, | \, F_{t,x}(v) > 0 \right\} .\) In particular for \(x=0,1\) we have \(V_{t}(x,\phi ) = 0\).
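In the standard convention, which we assume is the one intended here, the quantile function is the generalized inverse of the distribution function,

\[
V_t(x, \phi ) = \inf \left\{ v \in \mathbb {R}\, | \, F_{t,x}(v) \ge \phi \right\} , \qquad \phi \in (0,1].
\]

This is consistent with the boundary case: if \(\mu _{t,x}\) is a delta mass at 0, then \(F_{t,x}(v) = 0\) for \(v < 0\) and \(F_{t,x}(v) = 1\) for \(v \ge 0\), so both infima are equal to 0.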

Assumption 3.1

Throughout the paper, we will assume that for a generalized solution to Euler equations \(\pi \) the following properties are satisfied:

(1) the pressure function \((t,x) \mapsto p(t,x)\) in (19) is measurable in t and differentiable in x, with the derivative \(\partial _x p(t,x)\) Lipschitz continuous in x (with the Lipschitz constant uniform in t);

(2) there exists a compact set \(K \subseteq [0,1] \times \mathbb {R}\) such that for each \(t \in [0,T]\) the density \(\rho _t\) is supported in K;

(3) for \(t \in [0,T], x \in [0,1]\) the support of \(\mu _{t,x}\) is a connected interval in \(\mathbb {R}\);

(4) the density \(\rho _t\) is continuously differentiable in t, x and v for each \(t \in [0,T]\) and x, v in the interior of the support of \(\rho _t\).

Let us comment on the relevance of these assumptions. Assumption (1) will guarantee uniqueness of solutions to (19). Assumption (2) implies that the velocity of a particle moving along a path sampled from \(\pi \) stays uniformly bounded in time. Assumption (3) implies that for any \(x \in (0,1)\) and \(\phi \in [0,1]\) we have \(F_{t,x}(V_{t}(x, \phi )) = \phi \), i.e., \(V_t(x, \cdot )\) is the inverse function of \(F_{t,x}\). Assumptions (3) and (4) imply that \(V_{t}(x,\phi )\) is a continuous function of t, x, \(\phi \) and it is continuously differentiable in all variables for \(x \in (0,1)\).

Note that for \(V_t(x,\phi )\) to be differentiable at \(\phi = 0,1\), the distribution function \(F_{t,x}\) necessarily has to be non-differentiable at corresponding v such that \(F_{t,x}(v) = \phi \). This is why we can require the density \(\rho _t\) to be smooth only in the interior of its support and not at the boundary.

From now on we assume that \(\pi \) is a fixed generalized solution to Euler equations, satisfying Assumption 3.1. Almost every path \(x : [0,T] \rightarrow [0,1]\) sampled from \(\pi \) satisfies the ODE

Note that since \(\pi \) is a permuton process, each measure \(\mu _t\) satisfies the incompressibility condition, meaning that its projection onto the first coordinate is equal to the uniform measure on [0, 1]. This is equivalent to the property that for any test function \(f : [0,1] \rightarrow \mathbb {R}\) we have

An important consequence of the incompressibility assumption is that under \(\mu _t\) the velocity has mean zero at each x, that is, we have the following

Lemma 3.2

For any \(t \in [0,T]\) and \(x \in [0,1]\) we have

Proof

Consider any test function \(f : [0,1] \rightarrow \mathbb {R}\) and write

By incompressibility the integral above is always equal to \(\int \limits _{0}^{1} f(x) \, dx \), in particular does not depend on time. On the other hand its derivative with respect to s is

Since \(\Phi ^{t,t+s}(x,v)\vert _{s=0} = x\) and \(\frac{d\Phi ^{t,t+s}}{ds}(x,v)\vert _{s=0} = v\), by evaluating the derivative at \(s = 0\) we arrive at \(\int \limits f'(x) v \, d\mu _{t}(x,v) = 0\). Since \(\int g(x,v) \, d\mu _t(x,v) = \int g(x,v) \, d\mu _{t,x}(v) dx\) for any measurable g and f was an arbitrary test function, the claim of the lemma holds for almost every x. Since we have assumed that \(\mu _{t}\) has a continuous density, the claim in fact holds for all x, which ends the proof. \(\square \)
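For the reader's convenience we record the chain of identities used in this argument; the displays are a reconstruction consistent with the proof and should be read as a sketch. The claim of Lemma 3.2 is

\[
\int \limits _{\mathbb {R}} v \, d\mu _{t,x}(v) = 0 .
\]

Since \(\mu _{t+s}\) is the pushforward of \(\mu _t\) under the flow, incompressibility gives

\[
\int f\left( \Phi ^{t,t+s}(x,v) \right) d\mu _t(x,v) = \int f(x) \, d\mu _{t+s}(x,v) = \int \limits _{0}^{1} f(x) \, dx ,
\]

and differentiating the left-hand side in s at \(s = 0\) yields

\[
0 = \int f'(x) \, v \, d\mu _t(x,v) = \int \limits _{0}^{1} f'(x) \left( \int \limits _{\mathbb {R}} v \, d\mu _{t,x}(v) \right) dx .
\]

As \(f'\) ranges over all smooth functions on [0, 1] when f does, the inner integral vanishes for almost every x.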

We will also make use of an explicit evolution equation that the densities \(\rho _t\) have to satisfy. This is the content of the following lemma.

Lemma 3.3

For any \(t \in [0,T]\) and x, v in the interior of the support of \(\rho _t\) we have

Proof

Let \(f : [0,1] \times \mathbb {R}\rightarrow \mathbb {R}\) be any test function and consider the integral

On the one hand, its derivative with respect to s is equal to

Since \(\Phi ^{t,t+s}(x,v)\) is a solution to (20), we have \(\frac{d\Phi ^{t,t+s}}{ds}(x,v)\big \vert _{s=0} = v\) and \(\frac{d^2\Phi ^{t,t+s}}{ds^2}(x,v)\big \vert _{s=0} = -\partial _x p(t, x)\), which gives us

Performing integration by parts with respect to x for the first term and with respect to v for the second term gives (noting that f has compact support so the boundary terms vanish)

On the other hand, we have

so

and thus

Since the test function f was arbitrary, the equation from the statement of the lemma must hold for every t, x, v as assumed. \(\square \)
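In explicit form, the evolution equation of Lemma 3.3 is the transport (Liouville) equation for the flow \(x' = v\), \(v' = - \partial _x p(t,x)\). The following is a sketch reconstructed from the steps of the proof:

\[
\partial _t \rho _t(x,v) + v \, \partial _x \rho _t(x,v) - \partial _x p(t,x) \, \partial _v \rho _t(x,v) = 0 .
\]

Indeed, differentiating \(\int f \, \rho _{t+s} \, dx \, dv\) in s at \(s=0\) along the flow and integrating by parts gives

\[
\int \left( v \, \partial _x f - \partial _x p \, \partial _v f \right) \rho _t \, dx \, dv = - \int f \left( v \, \partial _x \rho _t - \partial _x p \, \partial _v \rho _t \right) dx \, dv ,
\]

while differentiating under the integral sign gives \(\int f \, \partial _t \rho _t \, dx \, dv\). Equating the two expressions for every test function f yields the equation above.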

The colored trajectory process. Let \(X = (X_t, 0 \le t \le T)\) be the permuton process with distribution \(\pi \). For the large deviation lower bound we will need to construct a suitable interacting particle system in which the behavior of a random particle mimics that of the permuton process X. A crucial ingredient will be a property analogous to Lemma 3.2, i.e., having velocity distribution whose mean is locally zero. Instead of working with velocity v, whose distribution \(\rho _t(x,v)\) at a given site x may change in time, it will be more convenient to perform a change of variables and use another variable \(\phi \), which we call color, whose distribution will be invariant in time.

Recall that under Assumption 3.1 the distribution function \(F_{t,x}(\cdot )\) and the quantile function \(V_{t}(x, \cdot )\) are related by

for any \(t \in [0,T]\), \(x \in (0,1)\), \(\phi \in [0,1]\), \(v \in \textrm{supp}\, \mu _{t,x}\).

The reason for introducing the variable \(\phi \) is the following elementary property – if \(\phi \) is sampled from the uniform distribution on [0, 1], then \(V_t(x, \phi )\) is distributed according to \(\mu _{t,x}\). Thus instead of working with (x, v) variables in the ODE (20), where the distribution of v evolves in time, we can set up an ODE for x and \(\phi \) such that the joint distribution of \((x, \phi )\) will be uniform on \([0,1]^2\) at each time. The velocity v and its distribution can then be recovered via the equation \(v = V_t(x, \phi )\).
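The elementary property above can be made concrete on an example. The sketch below assumes the sine curve process in the coordinate \(x \in (-1,1)\), for which the conditional velocity distribution at x is (as far as we can tell) an arcsine law on \([-\pi \sqrt{1-x^2}, \pi \sqrt{1-x^2}]\); the function names are hypothetical. It checks that the quantile function inverts the distribution function and that the velocity averaged over uniformly distributed colors vanishes, in agreement with Lemma 3.2.

```python
import math

def velocity_cdf(x, v):
    """Distribution function F_x(v) of the velocity at position x in (-1, 1):
    an arcsine law with scale pi * sqrt(1 - x^2) (assumed form)."""
    r = math.pi * math.sqrt(1.0 - x * x)
    if v <= -r:
        return 0.0
    if v >= r:
        return 1.0
    return 0.5 + math.asin(v / r) / math.pi

def velocity_quantile(x, phi):
    """Quantile function V(x, phi), the inverse of velocity_cdf(x, .)."""
    return -math.pi * math.sqrt(1.0 - x * x) * math.cos(math.pi * phi)

def mean_velocity(x, n=1000):
    """Midpoint-rule approximation of the integral of V(x, phi) over phi in [0, 1]."""
    return sum(velocity_quantile(x, (k + 0.5) / n) for k in range(n)) / n

for x in (-0.5, 0.0, 0.7):
    phi = 0.3
    print(x, velocity_cdf(x, velocity_quantile(x, phi)), mean_velocity(x))
```

Sampling \(\phi \) uniformly from [0, 1] and applying `velocity_quantile` is then the usual inverse transform sampling of the velocity.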

Let (x(t), v(t)) be a solution to (20) such that \(x(t) \ne 0,1\) and let

Let us derive the ODE that \((x(t), \phi (t))\) satisfies. Since (x(t), v(t)) is a solution of (20), we have

Lemma 3.3 implies that

which gives

and upon integrating by parts in the last integral we obtain

Now, differentiating (21) with respect to x and \(\phi \) gives

Also by (21) we have \(v(t) = V_t(x(t), \phi (t))\), so a change of variables \(w = V_t(x(t), \psi )\) in (22) yields

where \(R_t(x,\phi ) = - \int \limits _{0}^{\phi } \frac{\partial V_t}{\partial x}(x, \psi ) \, d\psi \).

Thus we have shown that \((x(t), \phi (t))\) satisfies the ODE

If \(x(t) \ne 0,1\), this equation is equivalent to (20), i.e., \((x(t), \phi (t))\) is a solution of (23) with initial conditions \((x(0), \phi (0)) = (x_0, \phi _0)\) if and only if (x(t), v(t)) is a solution of (20) with initial conditions \((x(0), v(0))= (x_0, V_0(x_0, \phi _0))\). We also note that Lemma 3.2 expressed in terms of \((x, \phi )\) variables states that for each \(t \in [0,T]\) and \(x \in [0,1]\) we have

From now on we work exclusively with (23). We will need to make two approximations necessary for the interacting particle system analysis later on. One is necessitated by the fact that the function \(V_t(x, \phi )\) might not be smooth with respect to x at the boundaries \(x = 0,1\) (this happens, for example, for the sine curve process). We will therefore replace the function by its smooth approximation in a \(\beta \)-neighborhood of the boundary and in the end take \(\beta \rightarrow 0\). The other approximation consists in dividing the time interval [0, T] into intervals of length \(\delta \) and approximating \(V_t(x, \phi )\) for given \(x, \phi \) with a piecewise-constant function of t. This will enable us to give a simple stationarity condition for the corresponding interacting particle system and in the end take \(\delta \rightarrow 0\) as well.
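The equivalence between the position-color system and the position-velocity system can be tested numerically on an example. The sketch below assumes the sine curve process in the coordinate \(x \in (-1,1)\), with \(V(x, \phi ) = - \pi \sqrt{1 - x^2} \cos (\pi \phi )\) and \(R(x, \phi ) = - \int _0^{\phi } \partial _x V(x, \psi ) \, d\psi \) computed in closed form; these formulas and all function names are assumptions made for illustration. It integrates \(x' = V\), \(\phi ' = R\) with a Runge-Kutta scheme and compares the result with the explicit solution of \(x'' = - \pi ^2 x\).

```python
import math

def V(x, phi):
    """Velocity as a function of position and color (assumed closed form)."""
    return -math.pi * math.sqrt(1.0 - x * x) * math.cos(math.pi * phi)

def R(x, phi):
    """R(x, phi) = -int_0^phi dV/dx(x, psi) dpsi, evaluated in closed form."""
    return -x / math.sqrt(1.0 - x * x) * math.sin(math.pi * phi)

def rk4_step(x, phi, h):
    """One classical Runge-Kutta step for the system x' = V, phi' = R."""
    k1 = (V(x, phi), R(x, phi))
    k2 = (V(x + 0.5 * h * k1[0], phi + 0.5 * h * k1[1]),
          R(x + 0.5 * h * k1[0], phi + 0.5 * h * k1[1]))
    k3 = (V(x + 0.5 * h * k2[0], phi + 0.5 * h * k2[1]),
          R(x + 0.5 * h * k2[0], phi + 0.5 * h * k2[1]))
    k4 = (V(x + h * k3[0], phi + h * k3[1]),
          R(x + h * k3[0], phi + h * k3[1]))
    x_new = x + h / 6.0 * (k1[0] + 2.0 * k2[0] + 2.0 * k3[0] + k4[0])
    phi_new = phi + h / 6.0 * (k1[1] + 2.0 * k2[1] + 2.0 * k3[1] + k4[1])
    return x_new, phi_new

def integrate(x0, phi0, t_end, steps):
    """Integrate the position-color system from time 0 to t_end."""
    x, phi = x0, phi0
    h = t_end / steps
    for _ in range(steps):
        x, phi = rk4_step(x, phi, h)
    return x, phi

def exact_position(x0, phi0, t):
    """Solution of x'' = -pi^2 x with x(0) = x0 and x'(0) = V(x0, phi0)."""
    v0 = V(x0, phi0)
    return x0 * math.cos(math.pi * t) + v0 / math.pi * math.sin(math.pi * t)

x1, phi1 = integrate(0.3, 0.2, 1.0, 2000)
print(x1, exact_position(0.3, 0.2, 1.0), phi1)
```

The initial condition is chosen so that the trajectory stays away from the endpoints, where R is singular in this example; this singularity is precisely the lack of smoothness at the boundary that the \(\beta \)-approximation is designed to remove.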

Let \(\beta \in (0,\frac{1}{4})\) and let \(V_{t}^{\beta }(x, \phi )\) be a function with the following properties

(a) \(V_{t}^{\beta }(x, \phi )\) is continuously differentiable for every \(t \in [0,T]\), \(x \in [0,1]\), \(\phi \in [0,1]\),

(b) \(V_{t}^{\beta }(x, \phi ) = V_{t}(x, \phi )\) for \(x \in \left[ \beta , 1 - \beta \right] \) and \(V_{t}^{\beta }(0,\phi ) = V_{t}^{\beta }(1,\phi ) = 0\),

(c) for each \(x \in [0,1]\) we have \(\int \limits _{0}^{1} V_{t}^{\beta }(x, \psi ) \, d\psi = 0\),

(d) \(|V_{t}^{\beta }(x, \phi )| \le |V_{t}(x, \phi )| + 1\),

(e) we have \(\lim \limits _{\beta \rightarrow 0} \int \limits _{0}^{1}\int \limits _{0}^{1} |V_{t}^{\beta }(x, \phi )|^2 \, dx \, d\phi = \int \limits _{0}^{1}\int \limits _{0}^{1} |V_{t}(x, \phi )|^2 \, dx \, d\phi \).

The existence of such a function \(V_{t}^{\beta }\) is proved at the end of this section. By \((x^{\beta }(t), \phi ^{\beta }(t))\) we will denote the solution to the ODE

Take any \(\delta > 0\) (to simplify notation we will assume that T is an integer multiple of \(\delta \); this will not influence the argument in any substantial way) and consider a partition \(0 = t_0< t_1< \ldots < t_M = T\) of [0, T] into \(M = \frac{T}{\delta }\) intervals of length \(\delta \), with \(t_k = k \delta \). Let \(V^{\beta , \delta }(t, x,\phi )\) be the piecewise-constant in time approximation of \(V^{\beta }_{t}(x, \phi )\), defined by

We can now define the piecewise-stationary process which will be our main tool in subsequent arguments. Consider the ODE

where

Solutions to (27) exist and are unique as usual for any initial conditions, provided we interpret \((y'(t), \phi '(t))\) above as right-handed derivatives at \(t = 0, t_1, t_2, \ldots , t_{M-1}\) (we adopt this convention from now on).
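To fix ideas, the objects entering (27) can be written out as follows. This is a sketch consistent with the surrounding text, in which the form of \(R^{\beta , \delta }\) is assumed by analogy with \(R_t\). The velocity field is frozen at the left endpoint of each time interval,

\[
V^{\beta , \delta }(t, x, \phi ) = V^{\beta }_{t_k}(x, \phi ) \quad \text{for } t \in [t_k, t_{k+1}),
\]

and the system reads

\[
y'(t) = V^{\beta , \delta }(t, y(t), \phi (t)), \qquad \phi '(t) = R^{\beta , \delta }(t, y(t), \phi (t)), \qquad R^{\beta , \delta }(t, y, \phi ) = - \int \limits _{0}^{\phi } \frac{\partial V^{\beta , \delta }}{\partial y}(t, y, \psi ) \, d\psi .
\]

On each interval \([t_k, t_{k+1})\) the right-hand side does not depend on t, which is the sense in which the resulting process is piecewise stationary.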

Let \(P^{\beta , \delta } = \left( (X^{\beta , \delta }_{t}, \Phi ^{\beta , \delta }_{t}), 0 \le t \le T \right) \) be the stochastic process with values in \([0,1]^2\) with the following distribution: choose \((X^{\beta , \delta }_{0}, \Phi ^{\beta , \delta }_{0})\) uniformly at random from \([0,1]^2\) and then take \((X^{\beta , \delta }_{t}, \Phi ^{\beta , \delta }_{t}) = (y(t), \phi (t))\), where \((y, \phi )\) is the solution of the system (27) with initial conditions given by \((y(0), \phi (0)) = (X^{\beta , \delta }_{0}, \Phi ^{\beta , \delta }_{0})\). We will call this process the colored trajectory process associated to (27).

We also define the process \(P^{\beta } = \left( (X^{\beta }_{t}, \Phi ^{\beta }_{t}), 0 \le t \le T \right) \), which is obtained in the same way as \(P^{\beta , \delta }\) except that we follow solutions to (25) instead of (27), i.e., make no piecewise approximation in time of \(V_{t}^{\beta }\).

The key property of the process \(P^{\beta , \delta }\) is the following

Lemma 3.4

For each \(t \in [0,T]\) the distribution of \((X^{\beta , \delta }_t, \Phi ^{\beta , \delta }_t)\) is uniform on \([0,1]^2\).

Proof

First we show that the process stays confined to \([0,1]^2\). Because of uniqueness of solutions to (27) it is enough to show that if a solution starts in the interior of \([0,1]^2\), it never reaches the boundary, or, equivalently, that if a solution is at the boundary at some t, it is actually at the boundary for all \(s \in [0,T]\). If \(y(t) = 0\) or 1 for any t, then \(y'(t) = 0\), since \(V^{\beta , \delta }(t, 0,\phi ) = V^{\beta , \delta }(t, 1,\phi ) = 0\) for any \(\phi \). By uniqueness of solutions we then have \(y(t) \equiv 0\) or 1. If \(\phi (t) = 0\) for any t, then \(R^{\beta , \delta }(t, y, 0) = 0\) regardless of y, so as before \(\phi '(t) = 0\) and \(\phi (t) \equiv 0\). Finally, if \(\phi (t) = 1\), then using the property (c) of the function \(V^{\beta }_{t}(x, \phi )\) we have

so as before \(\phi '(t) = 0\) and \(\phi (t) \equiv 1\).

Now we observe that the form of \(V^{\beta , \delta }\) and \(R^{\beta , \delta }\) in (27) implies that the vector field \((V^{\beta , \delta }(t,\cdot ,\cdot ),R^{\beta , \delta }(t,\cdot ,\cdot ))\) is divergence-free at each t, so by Liouville’s theorem the uniform measure on \([0,1]^2\) is invariant for the corresponding flow map. \(\square \)
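The two computations used in this proof are one-line consequences of the way \(R^{\beta , \delta }\) is built from \(V^{\beta , \delta }\). Assuming, by analogy with \(R_t\), that \(R^{\beta , \delta }(t, y, \phi ) = - \int _0^{\phi } \frac{\partial V^{\beta , \delta }}{\partial y}(t, y, \psi ) \, d\psi \), the divergence of the vector field is

\[
\frac{\partial V^{\beta , \delta }}{\partial y}(t, y, \phi ) + \frac{\partial R^{\beta , \delta }}{\partial \phi }(t, y, \phi ) = \frac{\partial V^{\beta , \delta }}{\partial y}(t, y, \phi ) - \frac{\partial V^{\beta , \delta }}{\partial y}(t, y, \phi ) = 0 ,
\]

and at the upper boundary \(\phi = 1\) property (c) gives

\[
R^{\beta , \delta }(t, y, 1) = - \frac{\partial }{\partial y} \int \limits _{0}^{1} V^{\beta , \delta }(t, y, \psi ) \, d\psi = 0 .
\]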

In particular, the process \(X^{\beta , \delta } = (X^{\beta , \delta }_t, 0 \le t \le T)\) is a permuton process. Crucially, we can couple it to the process X in a natural way. Consider \((x_0, \phi _0)\) chosen uniformly at random from \([0,1]^2\) and take \((x(0), v(0)) = (x_0, V_{0}(x_0, \phi _0))\), resp. \((y(0), \phi (0)) = (x_0, \phi _0)\), as initial conditions for (23), resp. (27). By definition of \(V_0(x, \phi )\), the pair (x(0), v(0)) has distribution given by \(\mu _0\), so indeed the pair of solutions (x(t), y(t)) corresponding to the initial conditions above defines a coupling of X and \(X^{\beta , \delta }\). From now on X and \(X^{\beta , \delta }\) are always assumed to be coupled in this way.

It is readily seen that the statements above also hold for \(P^{\beta }\) instead of \(P^{\beta , \delta }\), hence with a slight abuse of notation we can allow \(\delta = 0\) and write \(P^{\beta , 0} = P^{\beta }\), \(X^{\beta ,0} = X^{\beta }\) etc.

Our goal in the remainder of this section is to show that, as \(\beta , \delta \rightarrow 0\), the processes X and \(X^{\beta , \delta }\) typically stay close to each other and have approximately the same Dirichlet energy, so in the probabilistic part of the arguments it will be enough to work with the process \((X^{\beta , \delta }, \Phi ^{\beta , \delta })\), which is more convenient thanks to piecewise stationarity.

First we prove a simple lemma, showing that \(X^{\beta }\) is unlikely to ever be close to the boundary (so that approximation of X with \(X^{\beta }\) is meaningful as \(\beta \rightarrow 0\)).

Lemma 3.5

Let \(\mathbb {P}\) denote the law of the process \(X^{\beta }\). Let

We have

Proof

We will prove that \(X^{\beta }_{t} \notin [0, \beta ]\) with high probability as \(\beta \rightarrow 0\) (the proof for \([1-\beta , 1]\) is analogous). Suppose that y is a solution of (27) with initial condition \(y(0) \notin [0, 2\beta ]\) and that \(y(t) \in [0, \beta ]\) for some \(t \in [0,T]\). Then there exists a time interval \([s,s']\) such that \(y(s) = 2\beta \), \(y(s') = \beta \) and \(y(u) \in [\beta , 2 \beta ]\) for every \(u \in [s,s']\). Without loss of generality we can assume that \([s,s'] \subseteq [t_k, t_{k+1})\) for some k (the other case is easily dealt with by further subdividing \([\beta , 2 \beta ]\) into two equal subintervals and repeating the argument for each of them). By the mean value theorem

for some \(w \in [s,s']\). For \(x \in [\beta , 2\beta ]\) we have \(V^{\beta }(w,x,\phi ) = V_{t_k}(x,\phi )\), so \(y'(w) = V_{t_k}(y(w), \phi (w))\). Since \(|y(w)| \le 2\beta \) and \(V_{t_k}(x,\phi )\) is continuous at \(x=0\), we have \(|y'(w)| \le f(\beta )\) for some function f (depending only on V) satisfying \(\lim \limits _{\beta \rightarrow 0} f(\beta ) = 0\). As \(|y(s) - y(s')| = \beta \), altogether this implies that \(s'- s \ge \frac{\beta }{f(\beta )}\), i.e., if the process \(X^{\beta }\) starts outside \([0, 2\beta ]\), it has to spend time at least \(\frac{\beta }{f(\beta )}\) before it reaches \([0, \beta ]\). Thus

Taking expectation yields

Since \(X^{\beta }\) is a permuton process, \(X^{\beta }_s\) has uniform distribution for each s, which gives

Together with the inequality above this implies

Since \(X^{\beta }_{0}\) has uniform distribution, we have \(\mathbb {P}(X^{\beta }_{0} \in [0, 2\beta ]) = 2\beta \). Thus

Since \(f(\beta ) \rightarrow 0\) as \(\beta \rightarrow 0\), the claim is proved. \(\square \)
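In outline, and as a sketch reconstructed from the steps of the proof rather than a verbatim restatement, the estimate runs as follows. Let A denote the event that \(X^{\beta }_0 \notin [0, 2\beta ]\) and \(X^{\beta }_t \in [0, \beta ]\) for some \(t \in [0,T]\) (the symbol A is introduced here only for this display). On A the process spends time at least \(\frac{\beta }{f(\beta )}\) in \([\beta , 2\beta ]\), so

\[
\frac{\beta }{f(\beta )} \, \mathbb {P}(A) \le \mathbb {E} \int \limits _{0}^{T} \mathbb {1}_{\{ X^{\beta }_s \in [\beta , 2\beta ] \}} \, ds = \int \limits _{0}^{T} \mathbb {P}\left( X^{\beta }_s \in [\beta , 2\beta ] \right) ds = T \beta ,
\]

which gives \(\mathbb {P}(A) \le T f(\beta )\). Adding the probability of starting in \([0, 2\beta ]\) we obtain

\[
\mathbb {P}\left( X^{\beta }_t \in [0, \beta ] \text{ for some } t \in [0,T] \right) \le 2\beta + T f(\beta ).
\]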

Proposition 3.6

Fix \(\beta \in (0, \frac{1}{4})\) and \((x_0, \phi _0) \in [0,1]^2\). Let \((x^{\beta }(t), \phi ^{\beta }(t))\), resp. \((x^{\beta , \delta }(t), \phi ^{\beta , \delta }(t))\), be the solution to (25), resp. (27), with initial conditions \((x_0, \phi _0)\). We have

Proof

The statement follows from continuous dependence of solutions to an ODE on parameters, see e.g., [CL55, Theorem 4.2]. Denoting \(V^{\beta , 0}(t, y, \phi ) = V^{\beta }_t(y, \phi )\), \(R^{\beta , 0}(t, y, \phi ) = R^{\beta }_t(y, \phi )\), we only need to check that for \(f(t, y, \phi , \delta ) = V^{\beta , \delta }(t,y,\phi )\), \(g(t, y, \phi , \delta ) = R^{\beta , \delta }(t, y, \phi )\) we have

(1) \(f(\cdot , y, \phi , \delta )\) and \(g(\cdot , y, \phi , \delta )\) are measurable on [0, T],

(2) for any fixed \(t \in [0,T]\) and \(\delta > 0\) the functions \(f(t,\cdot ,\cdot ,\delta )\) and \(g(t,\cdot ,\cdot ,\delta )\) are continuous in \((y, \phi )\),

(3) for any fixed \(t \in [0,T]\) the functions \(f(t,\cdot ,\cdot ,\cdot )\) and \(g(t,\cdot ,\cdot ,\cdot )\) are continuous in \((y,\phi ,\delta )\) at \(\delta = 0\),

(4) \(f(t,y,\phi ,\delta )\), \(g(t,y,\phi ,\delta )\) are uniformly bounded.

Properties (1), (2) and (4) follow directly from our regularity assumptions about \(V^{\beta , \delta }(t,y,\phi )\) (in case of \(R^{\beta , \delta }(t,y,\phi )\) we use continuity of \(\frac{\partial V^{\beta , \delta }}{\partial y}(t, y, \phi )\)). Property (3) follows from pointwise convergence \(f(t,y,\phi ,\delta ) \xrightarrow {\delta \rightarrow 0} f(t,y,\phi ,0)\) and equicontinuity of \(\{f(t,y,\phi ,\delta )\}_{\delta \ge 0}\) in \((y, \phi )\), which in turn follows from uniform continuity of \(V^{\beta }_{t}(y, \phi )\) in t, y and \(\phi \). The argument for \(g(t,y,\phi , \delta )\) is analogous (again, using uniform continuity of \(\frac{\partial V^{\beta , \delta }}{\partial y}(t, y, \phi )\)). \(\square \)

Now we can prove the main result of this section, which states that the trajectories of the process X and its energy can be approximated by those of the process \(X^{\beta , \delta }\).

Proposition 3.7

Let \(\pi \in \mathcal {M}(\mathcal {D})\) be the distribution of the process X and let \(\pi ^{\beta , \delta } \in \mathcal {M}(\mathcal {D})\) be the distribution of the process \(X^{\beta , \delta }\). Then we have

where \(d_{{\mathcal {W}}}^{sup}\) is the Wasserstein distance associated to the supremum norm on \(\mathcal {D}\).

Furthermore,

where \(I(\mu )\) is the energy of the process \(\mu \) defined in (10).

Proof

For the first convergence it is enough to show that \(\mathbb {E}\left\| X - X^{\beta , \delta } \right\| _{sup} \rightarrow 0\) in the coupling between X and \(X^{\beta , \delta }\) considered before. We have

Let \(B^{\beta }\) be the event from the statement of Lemma 3.5. Since the supremum norm is bounded by 1, we have

By Lemma 3.5 the first term is o(1) as \(\beta \rightarrow 0\). Since \(V^{\beta }_{t}(x, \phi ) = V_{t}(x, \phi )\) if \(x \in [\beta , 1-\beta ]\), on the event \((B^{\beta })^c\) we have \(X^{\beta } = X\), so the second term above is equal to 0. As for \(\mathbb {E}\left\| X^{\beta } - X^{\beta , \delta } \right\| _{sup}\), by Proposition 3.6 for fixed \(\beta > 0\) we have with probability one \(\left\| X^{\beta } - X^{\beta , \delta } \right\| _{sup} \rightarrow 0\) as \(\delta \rightarrow 0\), which together with the estimate on \(\mathbb {E}\left\| X - X^\beta \right\| _{sup}\) proves the first claim of the proposition.

As for the energy, let \(\pi ^\beta \) denote the distribution of the process \(X^\beta \), with X, \(X^\beta \) and \(X^{\beta , \delta }\) coupled as before. Since

it is enough to show that \(\lim \limits _{\delta \rightarrow 0} I(\pi ^{\beta , \delta }) = I(\pi ^{\beta })\) and \(\lim \limits _{\beta \rightarrow 0} I(\pi ^{\beta }) = I(\pi )\). We have

For fixed \(t \in [0,T]\), by Lemma 3.4 the pair \((X^{\beta , \delta }(t), \Phi ^{\beta , \delta }(t))\) has uniform distribution on \([0,1] \times [0,1]\), and moving the expectation inside the integral we obtain

The analogous formula is valid for \(I(\pi )\) as well. Now, for fixed \(\beta > 0\) we have \(V^{\beta , \delta }(t,x,\phi ) \xrightarrow {\delta \rightarrow 0} V^{\beta }_t(x,\phi )\) and \(V^{\beta , \delta }(t,x,\phi )\) is uniformly bounded in t, x and \(\phi \), independently of \(\delta \), which by dominated convergence implies the convergence of the integrals above as well. Thus \(\lim \limits _{\delta \rightarrow 0} I(\pi ^{\beta , \delta }) = I(\pi ^{\beta })\). The convergence \(\lim \limits _{\beta \rightarrow 0} I(\pi ^{\beta }) = I(\pi )\) follows directly from properties (d) and (e) of \(V^{\beta }_{t}(x, \phi )\) and dominated convergence.

\(\square \)

Construction of \(V_{t}^{\beta }\). We will construct the desired modification of \(V_t(x, \phi )\) for \(x \in [0,\beta ]\); the construction for \(x \in [1-\beta ,1]\) is analogous. Fix \(t \in [0,T]\). Let

Consider \(\beta ' < \beta / 2\) to be fixed later and let f be a smooth approximation of a step function which has values in [0, 1], is equal to 0 on \([0, \beta - 2 \beta ']\), equal to 1 on \([\beta - \beta ', \beta ]\) and is increasing on \([\beta - 2\beta ', \beta - \beta ']\). In particular we have \(f(0) = 0\), \(f(\beta ) = 1\) and \(f'(\beta ) = 0\).
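One concrete choice of such an f, given here only as an illustration (the auxiliary functions h and g are not part of the original construction), uses the standard smooth transition function. Let \(h(s) = e^{-1/s}\) for \(s > 0\) and \(h(s) = 0\) for \(s \le 0\), and set

\[ g(s) = \frac{h(s)}{h(s) + h(1-s)}, \qquad f(x) = g\left( \frac{x - (\beta - 2\beta ')}{\beta '} \right) . \]

The denominator never vanishes, so g is smooth, equal to 0 for \(s \le 0\), equal to 1 for \(s \ge 1\) and strictly increasing on [0, 1]. Consequently f vanishes on \([0, \beta - 2\beta ']\), equals 1 on \([\beta - \beta ', \beta ]\) and increases on \([\beta - 2\beta ', \beta - \beta ']\). Since f is constant in a neighborhood of \(x = \beta \), we also get \(f'(\beta ) = 0\).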

Let us now take

and

We will check that \(V^{\beta }_{t}(x, \phi )\) indeed satisfies the desired properties.

Let us first check that the property (c) is satisfied for \(x \in [0, \beta ]\). We have

thanks to (24).

Property (b) follows directly from \(f(0) = 0\). As for (a), for \(x \in [0,\beta )\) continuous differentiability of \(V^{\beta }_t(x, \phi )\) follows from continuous differentiability of f(x). At \(x = \beta \) we have

and \(f(\beta ) = 1\), so \({\widetilde{V}}_t(x, \phi )\) is continuous at \(x=\beta \). Likewise,

Since \(f(\beta ) = 1\) and \(f'(\beta ) = 0\), we have \(\frac{\partial {\widetilde{V}}_t}{\partial x}(\beta , \phi ) = \frac{\partial V_t}{\partial x} (\beta , \phi )\). As the functions in the formula above are continuously differentiable at \(x=\beta \), \(V^{\beta }_t(x, \phi )\) is continuously differentiable at \(x=\beta \) as well.

To see that property (d) is satisfied, we note that by continuity of \(V_t(x, \phi )\) and \(\frac{\partial V_t}{\partial x}(x, \phi )\) for \(x \ne 0,1\) we can take \(\beta '\) in the definition of f(x) above to be arbitrarily small (depending on \(V_t\), \(\frac{\partial V_t}{\partial x}\) and \(\beta \)) so that on \([\beta - 2 \beta ', \beta ]\) the function \({\widetilde{V}}_t(x, \phi )\) is less than \(|V_t(\beta , \phi )| + 1\) in absolute value. Since on \([0, \beta - 2\beta ']\) we have \(V_{t}^{\beta }(x, \phi ) = 0\), the desired bound on \(|V_{t}^{\beta }(x, \phi )|\) follows.

Finally, to prove that property (e) holds it is enough to show that

as \(\beta \rightarrow 0\), since \(V_{t}^{\beta }(x, \phi ) = V_{t}(x, \phi )\) for \(x \notin [0,\beta ]\). The claim follows immediately from property (d), since the integrand is bounded independently of \(\beta \).

4 The Biased Interchange Process and Stationarity

The biased interchange process. For the sake of proving a large deviation lower bound, we will need to perturb the interchange process to obtain dynamics which typically exhibits (otherwise rare) behavior of a fixed permuton process. Let us introduce the biased interchange process. Its configuration space E consists of sequences \(\eta = \left( (x_{i}, \phi _{i})\right) _{i=1}^{N}\), where as before \((x_1, \ldots , x_N)\) is a permutation of \(\{1, \ldots , N\}\) and \(\phi _{i}\) has N possible values, \(1, \ldots , N\). Here \(x_{i}\) will be the position of the particle with label i and \(\phi _{i}\) will be its color.

By a slight abuse of notation we will write \(\eta ^{-1}(x)\) to denote the label (number) of the particle at position x in configuration \(\eta \) (so that \(\eta ^{-1}(x_{i}) = i\)). For a position x we will often write \(\phi _{x}\) as a shorthand for \(\phi _{\eta ^{-1}(x)}\) (positions will always be denoted by x or y and labels by i, so there is no risk of ambiguity). In this way we can treat any configuration \(\eta \) as a function which assigns to each site x a pair \((\eta ^{-1}(x), \phi _x)\), the label and the color of the particle present at x.

The configuration at time t will be denoted by \(\eta ^{N}_{t}\) (or simply \(\eta _{t}\)), and likewise by \(x_{i}(\eta ^{N}_{t})\) and \(\phi _{i}(\eta ^{N}_{t})\) we denote the position and the color of the particle number i at time t. We will use notation \(X_{i}(\eta ^{N}_{t}) = \frac{1}{N}x_{i}(\eta ^{N}_{t})\), \(\Phi _{i}(\eta ^{N}_{t}) = \frac{1}{N} \phi _{i}(\eta ^{N}_{t})\) for the rescaled positions and colors. By the same convention as above \(\Phi _x(\eta ^{N}_{t})\) will denote the rescaled color of the particle at site x at time t.

Let \(\varepsilon = N^{1-\alpha }\), with the same \(\alpha \in (1,2)\) as in (12). Suppose we are given functions \(v, r : [0,T] \times \{1, \ldots , N\} \times \{1, \ldots , N\} \rightarrow \mathbb {R}\). The dynamics of the corresponding biased interchange process is defined by the (time-inhomogeneous) generator

Here \(\eta ^{x, x+1}\) is the configuration \(\eta \) with particles at locations x and \(x+1\) swapped, and \(\eta ^{y, \pm }\) is the configuration \(\eta \) with \(\phi _{y}\) changed by \(\pm 1\) (with the convention that \(\eta ^{y,+} = \eta ^{y}\) if \(\phi _y = N\) and likewise \(\eta ^{y,-} = \eta ^{y}\) if \(\phi _y = 1\)). We will often use the abbreviated notation \(v_{x}(t,\eta ) = v(t,x, \phi _{x}(\eta ))\) (with the convention \(v_{0}(t,\eta ) = v_{N+1}(t,\eta ) = 0\)).

In other words, at each time neighboring particles make a swap at rate close to 1, with bias proportional to the difference of their velocities \(v(t,x,\phi _{x})\), and each particle independently changes its color by \(\pm 1\), also at rate close to 1 with bias proportional to \(\pm r(t,x, \phi _{x})\). The parameter \(\varepsilon \) has been chosen so that we expect particles to have displacement of order N at macroscopic times.
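The choice of \(\varepsilon \) can be motivated by a heuristic scaling count. Assume, as the reference to (12) suggests, that jumps in the generator are attempted at an overall rate of order \(N^{\alpha }\) (this prefactor is an assumption here, since it is not restated in this section). A bias of relative size \(\varepsilon v\) in the swap rates then produces a drift, in lattice units per unit of macroscopic time, of order

\[ N^{\alpha } \cdot \varepsilon \cdot v = N^{\alpha } \cdot N^{1-\alpha } \cdot v = N v , \]

that is, of order v for the rescaled position \(X_i = x_i / N\). Under the same assumption, the unbiased part of the dynamics displaces a particle by roughly \(\sqrt{N^{\alpha }} = N^{\alpha /2}\) sites, which is o(N) precisely because \(\alpha < 2\).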

Since the interchange process is a pure jump Markov process, for each particle its rescaled position \(X_{i}(\eta ^{N})\) and color \(\Phi _{i}(\eta ^{N})\) will be càdlàg paths from [0, T] to [0, 1] and thus elements of \(\mathcal {D}\). In the same way we can consider the joint trajectory \(P_{i}(\eta ^{N}) = (X_{i}(\eta ^{N}), \Phi _{i}(\eta ^{N}))\) as an element of \(\widetilde{\mathcal {D}}= \mathcal {D}([0,T], [0,1]^2)\), the space of càdlàg paths from [0, T] to \([0,1]^2\) (equipped with the Skorokhod topology). By \(\mathcal {M}(\widetilde{\mathcal {D}})\) we will denote the space of Borel probability measures on \(\widetilde{\mathcal {D}}\), endowed with the weak topology, and by a slight abuse of notation the corresponding Wasserstein distance will be denoted by \(d_{{\mathcal {W}}}\), as for \(\mathcal {M}(\mathcal {D})\).

If \(\eta ^{N}\) is the trajectory of the biased interchange process, then by analogy with the permutation process \(X^{\eta ^{N}}\) we can define the colored permutation process \(P^{\eta ^{N}} = (X^{\eta ^{N}}, \Phi ^{\eta ^{N}})\), obtained by choosing a particle i uniformly at random and following the path \((X_{i}(\eta ^{N}_{t}), \Phi _{i}(\eta ^{N}_{t}))\). Thus we keep track of both the position and the color of a random particle. Since \(\eta ^N\) is random, the distribution \(\nu ^{\eta ^N}\) of \(P^{\eta ^{N}}\), given by

\[ \nu ^{\eta ^{N}} = \frac{1}{N} \sum _{i=1}^{N} \delta _{P_{i}(\eta ^{N})} , \]

is a random element of \(\mathcal {M}(\widetilde{\mathcal {D}})\).

Stationarity conditions. Let us now connect the discussion of the interchange process with deterministic permuton processes and generalized solutions to Euler equations considered in Sect. 3. Recall the colored trajectory process \(P^{\beta , \delta } = (X^{\beta , \delta }, \Phi ^{\beta , \delta })\) defined in Sect. 3. From now on we consider \(\beta \in (0, \frac{1}{4})\) and \(\delta > 0\) to be fixed and we suppress them in the notation, writing \(X = X^{\beta , \delta }\), \(\Phi = \Phi ^{\beta , \delta }\), \(V(t, x, \phi ) = V^{\beta , \delta }(t, x, \phi )\), \(R(t, x, \phi ) = R^{\beta , \delta }(t, x, \phi )\). Note that this X should not be confused with the actual generalized solution to Euler equations, which was also denoted by X but does not appear in this and the following sections except in Theorem 7.3.

Our goal is to set up a biased interchange process so that typically trajectories of particles will behave like trajectories of the process X. We would also like to preserve the stationarity of the uniform distribution of colors, which will greatly facilitate parts of the argument. To find the correct rates \(v(t, x, \phi )\) and \(r(t, x, \phi )\) in (28), recall that by definition the trajectories of the colored trajectory process \(P = (X, \Phi )\) satisfy the equation

with the functions V and R satisfying

for \(F(t,X,\Phi ) = \int \limits _{0}^{\Phi } V(t,X,\psi ) \, d\psi \). Note that \(F(t,X,0) = 0\) and \(F(t,X,1)=0\), where the latter equality follows from property (c) of \(V^{\beta }_{t}(x,\phi )\) (and thus of \(V = V^{\beta ,\delta }\)).
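To see why this structure is natural, suppose that (29) and (30) have the Hamiltonian form below. The second relation for R, including its sign, is an assumption made for this sketch; the relation for V is forced by the definition of F.

\[ \frac{dX}{dt} = V(t, X, \Phi ), \quad \frac{d\Phi }{dt} = R(t, X, \Phi ), \qquad V = \frac{\partial F}{\partial \Phi }, \quad R = -\frac{\partial F}{\partial X} . \]

Under this assumption the vector field (V, R) is divergence-free,

\[ \frac{\partial V}{\partial X} + \frac{\partial R}{\partial \Phi } = \frac{\partial ^2 F}{\partial X \, \partial \Phi } - \frac{\partial ^2 F}{\partial \Phi \, \partial X} = 0 , \]

which is the differential form of the statement that the flow preserves the uniform distribution on \([0,1]^2\). Moreover, since \(F(t,X,0) = F(t,X,1) = 0\) for every X, differentiating in X gives \(R(t,X,0) = R(t,X,1) = 0\), so colors cannot leave [0, 1].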

It is clear that v and r should be chosen so that approximately we have \(v(t,x, \phi ) \approx V\left( t,\frac{x}{N} , \frac{\phi }{N}\right) \), \(r(t,x, \phi ) \approx R\left( t,\frac{x}{N} , \frac{\phi }{N}\right) \). To analyze the stationarity condition, consider the uniform distribution on configurations of the biased interchange process, i.e., a distribution in which the labelling of particles is a uniformly random permutation and each particle has a uniformly random color, chosen independently from \(\{1, \ldots , N\}\) for each of them. We want to find a condition on the rates \(v(t,x, \phi )\) and \(r(t,x, \phi )\) such that this measure will be invariant for the dynamics of \({\widetilde{\mathcal {L}}}_t\).

Note that since \(V(t,X,\Phi )\), \(R(t,X,\Phi )\) are piecewise-constant as functions of t, the dynamics induced by \({\widetilde{\mathcal {L}}}_t\) is time-homogeneous on each interval \([t_k, t_{k+1})\) from the definition (26) of V. Thus the stationarity condition for the uniform measure is that for each state (i.e., each configuration \(\eta \)) the sums of outgoing and incoming jump rates have to be equal. We write down this condition as follows. For any given configuration \(\eta \), with particle at location x having color \(\phi _{x} = \phi _{x}(\eta )\), there are the following possible outgoing jumps:

- for some \(x \in \{ 1, \ldots , N - 1\}\) the particles at locations x and \(x+1\) swap, at rate \(1 + \varepsilon \left[ v (t,x, \phi _{x}) - v (t,x+1, \phi _{x+1}) \right] \);

- for some \(x \in \{1, \ldots , N\}\) the particle at x changes its color from \(\phi _x\) to \(\phi _x \pm 1\), at rate \(1 \pm \varepsilon r (t,x, \phi _{x})\);

and incoming jumps:

- for some \(x \in \{ 1, \ldots , N - 1\}\) the particles at locations x and \(x+1\) swap, at rate \(1 + \varepsilon \left[ v (t,x, \phi _{x+1}) - v (t,x+1, \phi _{x}) \right] \);

- for some \(x \in \{1, \ldots , N\}\) the particle at x changes its color from \(\phi _x \pm 1\) to \(\phi _x\), at rate \(1 \mp \varepsilon r (t,x, \phi _{x} \pm 1)\).

Thus the condition on the sums of jump rates is

where we adopt the convention \(r(t,x,0) = r(t,x,N+1) = 0\). This implies

Since we would like this equation to be satisfied for any configuration, regardless of the choice of \(\phi _x\) for each x, we want each term in the sum and each of the boundary terms to vanish. This gives us a set of equations

which have to be satisfied for every \(\phi = 1, \ldots , N\).
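As a sketch of how the interior equations arise, one can write the balance condition directly from the jump rates listed above. Here t is suppressed, any common time-scaling prefactor is ignored, and the colors are taken to be interior, \(1< \phi _x < N\); these simplifications are made only for this illustration.

\[ \sum _{x=1}^{N-1} \left( 1 + \varepsilon \left[ v(x, \phi _x) - v(x+1, \phi _{x+1}) \right] \right) + \sum _{x=1}^{N} \sum _{\pm } \left( 1 \pm \varepsilon r(x, \phi _x) \right) = \sum _{x=1}^{N-1} \left( 1 + \varepsilon \left[ v(x, \phi _{x+1}) - v(x+1, \phi _x) \right] \right) + \sum _{x=1}^{N} \sum _{\pm } \left( 1 \mp \varepsilon r(x, \phi _x \pm 1) \right) . \]

The constants cancel and the outgoing color terms sum to 2 for each x, which leaves

\[ \sum _{x=1}^{N-1} \left[ v(x, \phi _x) + v(x+1, \phi _x) - v(x, \phi _{x+1}) - v(x+1, \phi _{x+1}) \right] = \sum _{x=1}^{N} \left[ r(x, \phi _x - 1) - r(x, \phi _x + 1) \right] . \]

Collecting the terms that contain a fixed \(\phi _x\) with \(2 \le x \le N-1\) gives the interior condition

\[ v(x+1, \phi ) - v(x-1, \phi ) = r(x, \phi - 1) - r(x, \phi + 1) , \]

a centered-difference analog of the incompressibility relation \(\partial _X V + \partial _\Phi R = 0\) that one expects for a flow preserving the uniform distribution. The terms with \(x = 1\) and \(x = N\) produce separate boundary equations, which are not reproduced in this sketch.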

Let us consider the function \(f(t,x, \phi )\) defined for \(x \in \{0, \ldots , N+1\}\), \(\phi \in \{1, \ldots , N\}\) by

where F is the function appearing in (30). It is straightforward to check that the rates given by

solve the equations for stationarity, given by (31), for any \(x, \phi \in \{1, \ldots , N\}\).

Note that with this choice of rates we have for any \(x, \phi \in \{1, \ldots , N\}\)

uniformly in x, \(\phi \) and t, because of smoothness of \(F(t, X, \Phi )\) in X and \(\Phi \) variables. In particular the rates v and r are uniformly bounded for all N.

From now on we will always assume that the biased interchange process has rates \(v(t,x, \phi )\) and \(r(t,x, \phi )\) given by (32) and is started from the uniform distribution (which by the discussion above is stationary). The properties of v and r which will be relevant to our analysis are that they are bounded, that they are approximately equal to some smooth functions V, R, that the corresponding dynamics has the uniform measure as the stationary distribution and, crucially, that in stationarity the velocities are independent and have mean zero. This last property, which should be thought of as the particle system analog of Lemma 3.2, is conveniently summarized in the following proposition.

Proposition 4.1

Let \(\phi _{x}\), \(x=1, \ldots , N\), be independent and uniformly distributed on \(\{1, \ldots , N\}\). Then for each \(t \in [0,T]\) the random variables \(v(t,x, \phi _x)\), \(x=1, \ldots , N\), are independent and for each x we have

\[ \mathbb {E} \, v(t, x, \phi _x) = 0 . \]

Proof

Under the uniform distribution of \(\phi _x\) we have

which by definition of v is equal to

Recalling the definition of F below (30), the right-hand side is equal to 0. \(\square \)
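The continuum counterpart of this computation uses only the definition of F given below (30) and the boundary values \(F(t,X,0) = F(t,X,1) = 0\):

\[ \int _{0}^{1} V(t, X, \psi ) \, d\psi = F(t, X, 1) - F(t, X, 0) = 0 . \]

Thus a particle at position X with a uniformly distributed color has mean velocity zero. The discrete statement of Proposition 4.1 can be read as the lattice version of this identity, with the average over \(\phi \in \{1, \ldots , N\}\) in place of the integral.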

5 Law of Large Numbers

Throughout this section \({\widetilde{\mathbb {P}}}^{N}\) will denote the probability law of the biased interchange process on N particles, started in stationarity, associated to the equation (29) (with all the assumptions from the previous section). To simplify notation we will usually write \(\eta = \eta ^{N}\). Whenever we use \(o(\cdot )\) or \(O(\cdot )\) asymptotic notation the implicit constants will depend only on the rates v, r and possibly on T.

Let \(P = (X, \Phi )\) be the colored trajectory process associated to Eq. (29) and let \(P^{\eta ^{N}}\) be the colored permutation process defined in Sect. 4. Let us denote the distributions of P and \(P^{\eta ^{N}}\) respectively by \(\nu \) and \(\nu ^{\eta ^{N}}\), with \(\nu , \nu ^{\eta ^{N}} \in \mathcal {M}(\widetilde{\mathcal {D}})\). We will prove the following theorem.

Theorem 5.1

Let \(\eta ^{N}\) be the trajectory of the biased interchange process. The measures \(\nu ^{\eta ^{N}}\) converge in distribution, as random elements of \(\mathcal {M}(\widetilde{\mathcal {D}})\), to the deterministic measure \(\nu \) as \(N \rightarrow \infty \).

In other words, the random processes \(P^{\eta ^{N}}\) converge in distribution to the process P whose distribution is deterministic. The theorem above can be thought of as a law of large numbers for random permuton processes and it will be useful for establishing the large deviation lower bound.

Remark 5.2

Since the limiting measure \(\nu \) is deterministic and supported on continuous trajectories, Theorem 5.1 implies that the convergence \(\nu ^{\eta ^N} \rightarrow \nu \) in fact holds in a stronger sense, namely in probability when \(\mathcal {M}(\widetilde{\mathcal {D}})\) is endowed with the Wasserstein distance \(d_{{\mathcal {W}}}^{sup}\) associated to the supremum norm on \(\widetilde{\mathcal {D}}\).