Abstract

This work proposes an algorithm for feedback ANC that does not require a prior secondary path model and usually remains stable after fast secondary path changes, as do other algorithms proposed for feedforward ANC. This is achieved by using a recursive least squares (RLS) algorithm to model the secondary path and the primary noise with an autoregressive moving average (ARMA) model. The resulting model allows future values of the primary noise to be predicted. Finally, the predicted primary noise values are filtered by a non-causal inverse of the secondary path model to generate the anti-noise signal. Simulation results attest to the validity of the algorithm in reducing narrowband noise.


1 Introduction

Active noise and vibration control (ANVC), or simply active noise control (ANC) [11, 12, 15, 17, 23, 24, 33, 42], is a class of methods that reduce noise and vibration by generating an anti-noise signal with a phase opposite to that of the original noise, so that the two interfere destructively and the noise level is reduced. ANC works best at low frequencies, where passive techniques are less effective, and it can be used together with them. It has found applications in many fields, such as noise reduction in cars [40], homes [8], and headphones [10, 25, 39], and even the vibration of airplane tails [35].

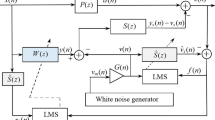

ANC methods can be divided into two categories [23]: feedforward ANC and feedback ANC. In feedforward ANC, a reference sensor captures a reference signal early in the noise path. A controller then uses this signal to generate an anti-noise signal that drives an actuator to reduce the noise. The residual noise is measured by an error sensor and is also used by the controller when generating the anti-noise. In feedback ANC, there is no reference sensor, and the anti-noise signal is generated using only the error sensor signal. This work focuses on feedback ANC since, in many applications, it may be difficult to obtain a good reference signal. Feedback ANC has been extensively applied, for instance, to ANC headphones [22, 41, 43, 44] and to many other systems [21, 35].

Most feedforward and feedback ANC algorithms (like the filtered-x least mean squares (FxLMS) algorithm [32]) require a model of the secondary path. This model may be obtained offline, before the ANC controller starts to reduce noise, or online, during the operation of the ANC controller. Online secondary path modeling allows the ANC system to cope with slow changes in the secondary path. However, only a few algorithms can cope with fast changes. Eriksson [13] first proposed online secondary path modeling using a small auxiliary white noise signal as the input to an adaptive filter. Later, the modeling was improved by using an additional adaptive filter to remove the primary noise from the secondary path modeling error signal [5, 45]. The auxiliary noise power can be scheduled so that the ratio of residual noise to auxiliary noise at the error sensor remains constant [9], and the auxiliary noise can be turned off after convergence [2, 26] and back on after the secondary path changes. Finally, auxiliary noise can also be used to model the path from the anti-noise transducer to the reference sensor (feedback path) [1].

In feedforward ANC, the secondary path can also be modeled without the auxiliary noise by the simultaneous equations method [14] or the overall modeling algorithm (OMA) [23]. However, the secondary path model of the OMA algorithm may be incorrect [6, 23, 27]. Nevertheless, even with an incorrect secondary path model, the resulting overall model (primary and secondary path) can be used to determine the optimum controller as in the mirror-modified FxLMS (MMFxLMS) algorithm [27, 28].

There is very little work on online secondary path modeling for feedback ANC. When auxiliary noise is used, the same algorithms as in feedforward ANC can be applied [18, 43]. Unfortunately, to the authors' knowledge, there is no work on online secondary path modeling for feedback ANC without auxiliary noise. Moreover, the anti-noise signal and the (unknown) disturbance signal are usually highly correlated, which makes it difficult to use the former to obtain an accurate secondary path model.

This work proposes to use a variant of adaptive model predictive control (MPC) [30, 38] to determine the anti-noise signal. In MPC, future values of the plant output (the ANC error signal) are predicted using a model and driven close to a predetermined signal (zero in ANC) by carefully selecting the present and future values of the plant input (the anti-noise signal). The predictions account for the effect that present inputs have on future outputs. The ANC primary noise signal is the MPC disturbance. However, unlike in standard MPC applications, in ANC the plant plus disturbance model is unknown and must be estimated, making this an adaptive MPC application [30, 38], an area that has yet to receive much attention. Nevertheless, the model is linear, which allows for simpler solutions, as shown in this work. ANC also falls within the more general fields of adaptive control [4] and stochastic adaptive control [7], which in general require complex solutions with caution and probing (a dual controller) [20]. Adaptive MPC applied to ANC has been presented in [34] and [31]. This work builds on these two papers but presents a different approach with lower computational complexity.

Regarding notation, vectors are denoted by boldface lowercase letters, such as \(\textbf{u}\). Given a signal u(n), \(\textbf{u}(n)\) is the vector of its most recent samples, \(\textbf{u}(n)=[u(n) \dots u(n-N+1)]^\textrm{T}\), where the vector length N is determined from context. The operator \(*\) denotes convolution.

2 Proposed Algorithm

In feedback ANC, the residual noise signal e(n) is the sum of the primary noise signal d(n) (disturbance) and the anti-noise signal u(n) after passing through the secondary path (or plant) with impulse response s(n):

\[ e(n) = d(n) + s(n) * u(n). \tag{1} \]

In the proposed algorithm, d(n) is modeled by an order \(N_\textrm{d}\) autoregressive (AR) model:

\[ d(n) = -\textbf{a}_\textrm{dx}^\textrm{T}\textbf{d}(n-1) + \tilde{d}(n), \tag{2} \]

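or \(\textbf{a}_\textrm{d}^\textrm{T}(n) \textbf{d}(n)=\tilde{d}(n)\), where \(\tilde{d}(n)\) is a white noise process and \(\textbf{a}_\textrm{d}=[1, \textbf{a}_\textrm{dx}^\textrm{T}]^\textrm{T}\). The signal \(\tilde{d}(n)\) is assumed to have small power, which makes d(n) predictable; this is the case, for instance, for narrowband disturbances. The secondary path is modeled by an autoregressive with exogenous input (ARX) model, so that the secondary path output \(y(n) = s(n) * u(n)\) (the anti-noise at the error sensor) is also given by

\[ \textbf{a}_\textrm{s}^\textrm{T}\textbf{y}(n) = \textbf{b}_\textrm{s}^\textrm{T}\textbf{u}(n), \tag{3} \]

with the first element of \(\textbf{a}_\textrm{s}\) equal to one,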

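assuming the model coefficients are constant or slowly varying. Taking the z-transform [36] of (1), with \(a_\textrm{d}(z)d(z)=\tilde{d}(z)\) and \(a_\textrm{s}(z)y(z)=b_\textrm{s}(z)u(z)\), results in

\[ a_\textrm{s}(z)\,a_\textrm{d}(z)\,e(z) = b_\textrm{s}(z)\,a_\textrm{d}(z)\,u(z) + a_\textrm{s}(z)\,\tilde{d}(z), \tag{4} \]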

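and taking the inverse z-transform results in

\[ \textbf{a}^\textrm{T}\textbf{e}(n) = \textbf{b}^\textrm{T}\textbf{u}(n) + \textbf{a}_\textrm{s}^\textrm{T}\tilde{\textbf{d}}(n), \tag{5} \]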

where \(\textbf{a}= \textbf{a}_\textrm{s}* \textbf{a}_\textrm{d}\) and \(\textbf{b}= \textbf{b}_\textrm{s}* \textbf{a}_\textrm{d}\).
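As a quick numerical sanity check of the combined model \(\textbf{a}^\textrm{T}\textbf{e}(n) = \textbf{b}^\textrm{T}\textbf{u}(n) + \textbf{a}_\textrm{s}^\textrm{T}\tilde{\textbf{d}}(n)\), the Python sketch below (NumPy only) synthesizes a noise-driven AR(2) oscillator d(n) and a second-order plant, and checks that filtering e(n) by \(a(z)=a_\textrm{s}(z)a_\textrm{d}(z)\) equals the right-hand side term by term. The plant coefficients, oscillator frequency and signal lengths are arbitrary illustrative assumptions, and `iir_filter` is a small hypothetical helper implementing a direct-form difference equation with zero initial state.

```python
import numpy as np


def iir_filter(b, a, x):
    """Causal filter y = (b(z)/a(z)) x with zero initial state (direct form)."""
    b = np.asarray(b, dtype=float)
    a = np.asarray(a, dtype=float)
    y = np.zeros(len(x))
    for n in range(len(x)):
        acc = sum(b[k] * x[n - k] for k in range(len(b)) if n >= k)
        acc -= sum(a[k] * y[n - k] for k in range(1, len(a)) if n >= k)
        y[n] = acc / a[0]
    return y


rng = np.random.default_rng(0)
n_samples = 500
w0 = 2 * np.pi * 0.1                          # oscillator at 0.1 f_s (assumed)
a_d = np.array([1.0, -2 * np.cos(w0), 1.0])   # AR(2) with poles on the unit circle
a_s = np.array([1.0, -0.5, 0.2])              # hypothetical plant denominator
b_s = np.array([0.0, 1.0, 0.4])               # hypothetical plant numerator (1-sample delay)

d_tilde = 0.01 * rng.standard_normal(n_samples)  # small-power driving noise
u = rng.standard_normal(n_samples)               # plant input (anti-noise)

d = iir_filter([1.0], a_d, d_tilde)   # a_d^T d(n) = d~(n)
y = iir_filter(b_s, a_s, u)           # a_s^T y(n) = b_s^T u(n)
e = d + y                             # residual noise at the error sensor

a = np.convolve(a_s, a_d)             # a = a_s * a_d
b = np.convolve(b_s, a_d)             # b = b_s * a_d
lhs = iir_filter(a, [1.0], e)                                     # a^T e(n)
rhs = iir_filter(b, [1.0], u) + iir_filter(a_s, [1.0], d_tilde)   # b^T u(n) + a_s^T d~(n)
print(np.max(np.abs(lhs - rhs)))      # round-off level only
```

With zero initial conditions the identity holds exactly, so the printed difference is at floating-point round-off level.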

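Estimates of \(\textbf{a}\) and \(\textbf{b}\), denoted \(\hat{\textbf{a}}\) and \(\hat{\textbf{b}}\), can be obtained by minimizing the exponentially weighted sum of squared residual noise estimation errors \(\xi (n)=\sum _{i=0}^{\infty } j^2(n-i) \lambda ^i\), where \(0<\lambda\le 1\) is the forgetting factor and

\[ j(n) = \hat{\textbf{a}}^\textrm{T}\textbf{e}(n) - \hat{\textbf{b}}^\textrm{T}\textbf{u}(n) = e(n) + \hat{\textbf{a}}_\textrm{x}^\textrm{T}\textbf{e}(n-1) - \hat{\textbf{b}}^\textrm{T}\textbf{u}(n), \tag{6} \]

with \(\hat{\textbf{a}}=[1, \hat{\textbf{a}}_\textrm{x}^\textrm{T}]^\textrm{T}\).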

These estimates can be obtained using the RLS algorithm [19, 31]. The vectors \(\hat{\textbf{b}}\) and \(\hat{\textbf{a}}_\textrm{x}\) have lengths \(N+1\) and N, respectively, resulting in an order-N model. For small \(\tilde{\textbf{d}}(n)\) (as with narrowband disturbances), persistent excitation, and N large enough, the minimization results in \(\hat{\textbf{a}}=\textbf{a}\) and \(\hat{\textbf{b}}=\textbf{b}\). The resulting model has canceling poles and zeros at the zeros of \(a_\textrm{d}(z)\). These correspond to uncontrollable states that, in the original model, are excited only by \(\tilde{d}(n)\) and that, in the new model, may be described by a zero-input filter.
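The estimation step can be sketched with a textbook exponentially weighted RLS recursion on the regressor \([-e(n-1) \dots -e(n-N), u(n) \dots u(n-N)]\), whose a priori error is j(n). The Python sketch below is an illustration under assumptions (zero initial parameters, an initial inverse correlation matrix \(I/\delta\) with a hypothetical \(\delta=10^{-4}\), and \(\lambda=0.999\)); it is not the author's code from [29]. In the usage example the disturbance is a pure sinusoid (\(\tilde{d}(n)=0\)) and the plant is a hypothetical second-order ARX model, so with N = 4 the estimates should approach \(\textbf{a}_\textrm{s}*\textbf{a}_\textrm{d}\) and \(\textbf{b}_\textrm{s}*\textbf{a}_\textrm{d}\).

```python
import numpy as np


def iir_filter(b, a, x):
    """Causal filter y = (b(z)/a(z)) x with zero initial state (direct form)."""
    b = np.asarray(b, dtype=float)
    a = np.asarray(a, dtype=float)
    y = np.zeros(len(x))
    for n in range(len(x)):
        acc = sum(b[k] * x[n - k] for k in range(len(b)) if n >= k)
        acc -= sum(a[k] * y[n - k] for k in range(1, len(a)) if n >= k)
        y[n] = acc / a[0]
    return y


def rls_arx(e, u, N, lam=0.999, delta=1e-4):
    """Exponentially weighted RLS estimate of a_x (length N) and b (length N+1).

    Minimizes sum_i lam^i j(n-i)^2 with j(n) = e(n) + a_x^T e(n-1) - b^T u(n).
    """
    theta = np.zeros(2 * N + 1)               # stacked parameters [a_x; b]
    P = np.eye(2 * N + 1) / delta             # inverse correlation matrix estimate
    for n in range(N, len(e)):
        phi = np.concatenate([-e[n - N:n][::-1],       # -e(n-1), ..., -e(n-N)
                              u[n - N:n + 1][::-1]])   # u(n), ..., u(n-N)
        P_phi = P @ phi
        k = P_phi / (lam + phi @ P_phi)       # gain vector
        j = e[n] - theta @ phi                # a priori estimation error j(n)
        theta = theta + k * j
        P = (P - np.outer(k, P_phi)) / lam
    return theta[:N], theta[N:]


rng = np.random.default_rng(1)
n_samples = 2000
w0 = 2 * np.pi * 0.1
a_d = np.array([1.0, -2 * np.cos(w0), 1.0])
a_s = np.array([1.0, -0.5, 0.2])              # hypothetical plant
b_s = np.array([0.3, 1.0, 0.4])
u = rng.standard_normal(n_samples)            # persistently exciting input
d = np.sin(w0 * np.arange(n_samples))         # narrowband disturbance, d~(n) = 0
e = d + iir_filter(b_s, a_s, u)

a_x_hat, b_hat = rls_arx(e, u, N=4)
a_true = np.convolve(a_s, a_d)                # [1, a_x] expected
b_true = np.convolve(b_s, a_d)
```

Because the sinusoid satisfies its AR(2) recursion exactly, the data obey the order-4 difference equation without error, which is why the estimates should converge to the convolved coefficients rather than to the plant coefficients themselves.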

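This model is the least-squares (LS) one-step-ahead predictor of e(n) given u(n), and it can be used to predict future values of e(n). Replacing the LS predictor with the minimum mean-square error (MMSE) predictor, since the two are equivalent for stationary signals and large M, and noting that the MMSE predictor is given by the conditional expected value [3], leads to the following. Using measurements up to time n, the predicted residual noise at time \(n+1\) is

\[ \hat{e}(n+1,n) = -\hat{\textbf{a}}_\textrm{x}^\textrm{T}\textbf{e}(n) + \hat{\textbf{b}}^\textrm{T}\textbf{u}(n+1). \tag{7} \]

The predicted residual noise at time \(n+2\) is

\[ \hat{e}(n+2,n) = -\hat{a}_1\hat{e}(n+1,n) - \sum_{k=2}^{N}\hat{a}_k\, e(n+2-k) + \hat{\textbf{b}}^\textrm{T}\textbf{u}(n+2), \tag{8} \]

where \(\hat{a}_k\) is the kth element of \(\hat{\textbf{a}}_\textrm{x}\), and so on. Thus, \(\hat{e}(n+i,n)\) can be obtained for a given u(n) simply by iterating the difference equation of the estimated model, namely

\[ \hat{e}(n+i,n) = -\hat{\textbf{a}}_\textrm{x}^\textrm{T}\hat{\textbf{e}}(n+i-1,n) + \hat{\textbf{b}}^\textrm{T}\textbf{u}(n+i), \quad i>0, \tag{9} \]

where

\[ \hat{\textbf{e}}(n+i-1,n) = [\hat{e}(n+i-1,n) \dots \hat{e}(n+i-N,n)]^\textrm{T}, \qquad \hat{e}(k,n) = e(k) \ \textrm{for} \ k \le n. \tag{10} \]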

Note, however, that the model formed by \(\hat{\textbf{a}}_\textrm{x}\) and \(\hat{\textbf{b}}\) is the LS predictor of e(n) and may not be the best predictor of \(e(n+i)\), even for \(i=1\). In particular, the model may be tuned to the components present in past values of u(n), which may differ from those in \(u(n+i)\), especially after fast changes. Regardless, this work uses this predictor in the remainder of the text.
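Iterating the estimated difference equation, with earlier predictions standing in for the not-yet-observed samples of e(n), can be sketched as follows. This is an illustrative Python version under assumed conventions: histories are stored oldest first, at least N past samples of e and u are supplied, and the planned future inputs u(n+1), u(n+2), ... are given explicitly; `predict_ahead` is a hypothetical name. The usage example predicts a sampled 200 Hz sinusoid (at \(f_\textrm{s}=2000\) Hz), which an AR(2) model with poles on the unit circle continues exactly.

```python
import numpy as np


def predict_ahead(a_x, b, e_hist, u_hist, u_future):
    """Iterate e^(n+i,n) = -a_x^T e^(n+i-1,n) + b^T u(n+i) for i = 1..len(u_future).

    e_hist:   at least N past residual-noise samples, oldest first, ending at e(n)
    u_hist:   at least N past inputs, oldest first, ending at u(n)
    u_future: planned inputs u(n+1), u(n+2), ...
    """
    a_x = np.asarray(a_x, dtype=float)
    b = np.asarray(b, dtype=float)
    N = len(a_x)
    e_buf = list(e_hist)                          # extended with the predictions
    u_all = np.concatenate([u_hist, u_future])
    preds = np.zeros(len(u_future))
    for i in range(len(u_future)):
        e_vec = np.array(e_buf[-N:][::-1])        # newest first: e^(n+i), ..., e^(n+i-N+1)
        end = len(u_hist) + i + 1                 # one past the index of u(n+i+1)
        u_vec = u_all[end - len(b):end][::-1]     # u(n+i+1), ..., u(n+i+1-N)
        preds[i] = -a_x @ e_vec + b @ u_vec
        e_buf.append(preds[i])                    # prediction replaces the unknown sample
    return preds


w0 = 2 * np.pi * 200 / 2000                       # 200 Hz at f_s = 2000 Hz
s = np.sin(w0 * np.arange(40))
pred = predict_ahead([-2 * np.cos(w0), 1.0], np.zeros(3),
                     e_hist=s[:10], u_hist=np.zeros(2), u_future=np.zeros(30))
print(np.max(np.abs(pred - s[10:])))              # round-off level only
```

The same routine handles nonzero inputs: the \(\hat{\textbf{b}}^\textrm{T}\textbf{u}(n+i)\) term simply adds the contribution of the planned anti-noise samples at each step.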

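Let \(u(n) = u_0(n)+u_1(n)\), where \(u_0\) contains the past (already applied) inputs and \(u_1\) the future ones:

\[ u_0(n+i) = \begin{cases} u(n+i), & i \le 0, \\ 0, & i > 0, \end{cases} \tag{11} \]

and

\[ u_1(n+i) = \begin{cases} 0, & i \le 0, \\ u(n+i), & i > 0. \end{cases} \tag{12} \]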

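Then, since (9) describes a linear IIR filter [36],

\[ \hat{e}(n+i,n) = \hat{e}_0(n+i,n) + \sum_{k=1}^{i}\hat{s}(i-k)\,u_1(n+k), \tag{13} \]

where \(i>0\), \(\hat{s}(i)\) is the impulse response of the IIR filter, and the signal \(\hat{e}_0(n+i,n)\) can be obtained by iterating the difference equation

\[ \hat{e}_0(n+i,n) = -\hat{\textbf{a}}_\textrm{x}^\textrm{T}\hat{\textbf{e}}_0(n+i-1,n) + \hat{\textbf{b}}^\textrm{T}\textbf{u}_0(n+i), \tag{14} \]

with \(\hat{e}_0(n+i,n)=e(n+i)\) for \(i\le 0\). Finally, let

\[ e_0(n+i) = \begin{cases} e(n+i), & i \le 0, \\ 0, & i > 0. \end{cases} \tag{15} \]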

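Since the goal is to drive \(\hat{e}(n+i,n)\) to \(e_0(n+i)\), keeping past values and driving future values to zero, \(u_1(n+i)\) should be

\[ u_1(n+i) = -\sum_{k=-\infty}^{\infty}\hat{s}^{-1}(k)\left[\hat{e}_0(n+i-k,n) - e_0(n+i-k)\right], \tag{16} \]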

where \(\hat{s}^{-1}(n)\) is the impulse response of the inverse of the IIR filter in (9). Let \(\hat{s}(z)=\hat{b}(z)/\hat{a}(z)\) and \(\hat{s}^{-1}(z)=1/\hat{s}(z)\) be the z-transforms of \(\hat{s}(n)\) and \(\hat{s}^{-1}(n)\); then \(\hat{s}^{-1}(n)\) can be obtained by the inverse z-transform of \(\hat{s}^{-1}(z)\). To obtain a finite-energy signal (and a stable filter), the region of convergence (ROC) must include the unit circle, which in general (whenever \(\hat{b}(z)\) has zeros outside the unit circle) results in a non-causal signal (and filter). This non-causal filter can be implemented because \(\hat{e}_0(n+i,n)\) is known for both past and future times. Finally, \(u(n+1)\) is set to \(u_1(n+1)\) and applied to the plant at time \(n+1\).
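Because the estimated model is linear, the prediction splits into the free response \(\hat{e}_0(n+i,n)\), driven only by the past inputs \(u_0\), plus the zero-state response \(\hat{s}*u_1\) to the future inputs. A short numerical check of this split is sketched below. It repeats the iterate-the-difference-equation predictor so that the block is self-contained (`predict_ahead` is a hypothetical name), and the model coefficients and signals are arbitrary assumed values.

```python
import numpy as np


def predict_ahead(a_x, b, e_hist, u_hist, u_future):
    """Iterate e^(n+i,n) = -a_x^T e^(n+i-1,n) + b^T u(n+i); histories oldest first."""
    a_x, b = np.asarray(a_x, dtype=float), np.asarray(b, dtype=float)
    N = len(a_x)
    e_buf = list(e_hist)
    u_all = np.concatenate([u_hist, u_future])
    preds = np.zeros(len(u_future))
    for i in range(len(u_future)):
        e_vec = np.array(e_buf[-N:][::-1])        # newest first
        end = len(u_hist) + i + 1
        u_vec = u_all[end - len(b):end][::-1]     # u(n+i+1), ..., u(n+i+1-N)
        preds[i] = -a_x @ e_vec + b @ u_vec
        e_buf.append(preds[i])
    return preds


rng = np.random.default_rng(2)
N, horizon = 3, 20
a_x = np.array([-0.9, 0.4, -0.1])          # hypothetical stable model (poles at 0.5, 0.2 +/- 0.4j)
b = np.array([0.0, 1.0, -0.6, 0.2])        # length N + 1
e_hist = rng.standard_normal(N)            # e(n-N+1), ..., e(n)
u_hist = rng.standard_normal(N)            # u_0: u(n-N+1), ..., u(n)
u1 = rng.standard_normal(horizon)          # u_1(n+1), ..., u_1(n+horizon)

full = predict_ahead(a_x, b, e_hist, u_hist, u1)                   # e^(n+i,n)
free = predict_ahead(a_x, b, e_hist, u_hist, np.zeros(horizon))    # e^_0(n+i,n)
impulse = np.zeros(horizon)
impulse[0] = 1.0
s_hat = predict_ahead(a_x, b, np.zeros(N), np.zeros(N), impulse)   # s^(0), s^(1), ...
forced = np.convolve(s_hat, u1)[:horizon]                           # sum_k s^(i-k) u_1(n+k)
print(np.max(np.abs(full - (free + forced))))                       # round-off level only
```

This superposition is what allows \(u_1\) to be obtained by inverse filtering the difference between the free response and its target.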

In the proposed algorithm, the non-causal filter \(\hat{s}^{-1}(z)=\hat{a}(z)/\hat{b}(z)\) is implemented by numerically computing the roots \(z_i\) of \(\hat{b}(z)\) and then forming \(\hat{b}_\textrm{st}(z)\) from the roots with \(|z_i |< \alpha \) (inside or close to the unit circle), with \(\alpha \) slightly greater than one, and \(\hat{b}_\textrm{ut}(z)\) from the roots with \(|z_i |\ge \alpha \) (outside the unit circle). Choosing \(\alpha >1\) ensures that canceling poles and zeros on the unit circle (which form the oscillators that predict sinusoidal signals) remain in the same filtering operation. Then \(\hat{e}_0(n+i,n)-e_0(n+i)\) is first filtered by \(1/\hat{b}_\textrm{ut}(z)\), flipping (time reversing, \(x(-n)\)) all the signals so that this filter is stable, and then by \(\hat{a}(z)/\hat{b}_\textrm{st}(z)\). All the signals extend from \(n-L+1\) to \(n+L\) (length 2L), with L large enough that the filter transients are negligible at time \(n+1\). The source code for the proposed algorithm is available in [29].
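The root-splitting step can be sketched in Python as follows. This is an illustration, not the code in [29]; it assumes \(\hat{b}_0\neq 0\), a hypothetical default \(\alpha=1.01\), and an input that is (close to) zero outside the window. It relies on the identity \(\hat{b}_\textrm{ut}(1/z)=z^{M}\tilde{b}_\textrm{ut}(z)\), where \(\tilde{b}_\textrm{ut}\) has the reversed coefficient vector and roots \(1/z_i\) inside the unit circle: the anticausal part therefore becomes a stable causal recursion on the time-reversed signal followed by an M-sample delay. `noncausal_inverse` and `iir_filter` are hypothetical names.

```python
import numpy as np


def iir_filter(b, a, x):
    """Causal filter y = (b(z)/a(z)) x with zero initial state (direct form)."""
    b = np.asarray(b, dtype=float)
    a = np.asarray(a, dtype=float)
    y = np.zeros(len(x))
    for n in range(len(x)):
        acc = sum(b[k] * x[n - k] for k in range(len(b)) if n >= k)
        acc -= sum(a[k] * y[n - k] for k in range(1, len(a)) if n >= k)
        y[n] = acc / a[0]
    return y


def noncausal_inverse(a_hat, b_hat, x, alpha=1.01):
    """Apply a_hat(z)/b_hat(z) with the ROC containing the unit circle (assumes b_hat[0] != 0)."""
    roots = np.roots(b_hat)
    # Monic factors in z^-1 form: b_hat = b_hat[0] * b_st * b_ut.
    b_st = np.atleast_1d(np.real(np.poly(roots[np.abs(roots) < alpha])))
    b_ut = np.atleast_1d(np.real(np.poly(roots[np.abs(roots) >= alpha])))
    M = len(b_ut) - 1
    # Anticausal 1/b_ut: flip, filter causally with reversed coefficients
    # (roots 1/z_i, inside the unit circle), delay by M samples, flip back.
    w = iir_filter([1.0], b_ut[::-1], np.asarray(x, dtype=float)[::-1])
    y = np.concatenate([np.zeros(M), w[:len(w) - M]])[::-1]
    # Causal, stable remainder a_hat(z)/b_st(z), plus the leading gain of b_hat.
    return iir_filter(a_hat, b_st, y) / b_hat[0]


rng = np.random.default_rng(3)
a_hat = np.array([1.0, -0.9, 0.2])
b_hat = np.array([2.0, -5.0, 2.0])         # zeros at 0.5 and 2 (non-minimum phase)
x = np.zeros(200)
x[90:110] = rng.standard_normal(20)        # compact support, well inside the window
u = noncausal_inverse(a_hat, b_hat, x)
# Check: b_hat * u should reproduce a_hat * x, i.e. (b_hat/a_hat) u = x.
check = np.convolve(b_hat, u)[:200] - np.convolve(a_hat, x)[:200]
print(np.max(np.abs(check)))
```

A causal inverse of this \(\hat{b}(z)\) would grow without bound because of the zero at 2; the split keeps both recursions stable, and the residual in `check` stays at round-off level because the window is long compared with the decay of both parts.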

3 Computational Complexity

The computational complexity of the proposed algorithm is approximately (for large N and L) \(32N^2\) for the system identification component, \(\frac{4}{3}N^3\) for computing the roots of \(\hat{b}(z)\) as the eigenvalues of the companion matrix [16, 37], and \(16LN\) for filtering and prediction. This results in a total of \(32N^2+\frac{4}{3}N^3+16LN\) operations per sample. For comparison, Mohseni's algorithm [31] has a computational complexity of \(L_\textrm{h}N^2 + (8/3+6)L_\textrm{h}^3\), where \(L_\textrm{h}\) is the horizon length. With the parameters used in the simulation section (\(f_\textrm{s}=2000\) Hz, \(N=16\), \(L=64\), \(L_\textrm{h}=64\)), this gives 60 MFLOPS for the proposed algorithm and 4577 MFLOPS for Mohseni's algorithm.
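The quoted MFLOPS figures follow from multiplying the per-sample counts by \(f_\textrm{s}\). The short Python check below simply evaluates the two formulas from the text; reading them as floating-point operations per sample is an interpretation that reproduces the quoted numbers, and the function names are hypothetical.

```python
def proposed_flops(N, L):
    """Approximate operations per sample of the proposed algorithm (large N and L)."""
    return 32 * N**2 + 4 / 3 * N**3 + 16 * L * N


def mohseni_flops(N, L_h):
    """Approximate operations per sample of Mohseni's algorithm [31]."""
    return L_h * N**2 + (8 / 3 + 6) * L_h**3


fs, N, L, L_h = 2000, 16, 64, 64
proposed_mflops = proposed_flops(N, L) * fs / 1e6
mohseni_mflops = mohseni_flops(N, L_h) * fs / 1e6
print(f"proposed: {proposed_mflops:.0f} MFLOPS, Mohseni: {mohseni_mflops:.0f} MFLOPS")
# prints "proposed: 60 MFLOPS, Mohseni: 4577 MFLOPS"
```

The cubic term dominates Mohseni's count (about 99% of it for these values), whereas for the proposed algorithm the \(16LN\) filtering term is the largest contribution.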

The computational complexity could be reduced further by noting that \(\hat{e}_0(z,n)-e_0(z) = g(z)/\hat{a}(z)\), where g(z) is an order-N polynomial determined by the initial conditions of \(\hat{e}_0(n+i,n)\), so that the z-transform of \(u_1(n+i)\) is \(-g(z)/\hat{b}(z)\); this is not discussed further in this work.

4 Simulation Results

This section presents a set of simulation results. In the simulations, the sampling frequency is \(f_\textrm{s}=2000\) Hz. The plant is an order \(N_\textrm{x}=6\) IIR filter. The plant coefficients \(\textbf{a}_\textrm{s}\) and \(\textbf{b}_\textrm{s}\) are initially set to Gaussian random values with zero mean and variances \(1/N_\textrm{x}^2\) and one, respectively, with the first coefficient of \(\textbf{a}_\textrm{s}\) equal to one. The coefficients change during the simulation according to a random walk model (\(\textbf{a}_\textrm{s}(n+1)=\textbf{a}_\textrm{s}(n)+\textbf{r}_\textrm{a}(n)\), \(\textbf{b}_\textrm{s}(n+1)=\textbf{b}_\textrm{s}(n)+\textbf{r}_\textrm{b}(n)\)), where the state noises \(\textbf{r}_\textrm{a}\) and \(\textbf{r}_\textrm{b}\) consist of independent white noise signals with variance \(q_\textrm{r}(n)\). The plant changes fast from 2 to 3 s, with \(q_\textrm{r}(n)= 1/f_\textrm{s}\), and slowly for the remaining time, with \(q_\textrm{r}(n) = 1/(400 f_\textrm{s})\). The filter poles and zeros are kept at a distance greater than \(\beta =0.1\) from the unit circle at all times. Three sinusoidal signals with frequencies of 200, 400, and 600 Hz and amplitudes of 0.5, 1.3, and 0.3, plus white background noise with variance \(q_\textrm{v}=0.01\), are added at the plant output. The charts show the results of 100 simulations of each algorithm. The anti-noise signal saturates at 10 and \(-10\). Before ANC is started, at 0.5 s, the plant input is set to a unit-power white noise signal while the model identification component of the algorithm runs. The percentile charts show the 10%, 25%, 50%, 75%, 90%, and 99% percentiles of the residual noise level at each time, giving some information about its probability density function (PDF). The algorithm parameters are listed in Table 1. They are based on the values given in the corresponding papers and tuned by trial and error.
In Carini's algorithm, the step size \(\mu \) was scaled by the factor \(\mu _\textrm{SC}\) to improve stability.
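One possible way to generate the simulated disturbance and the randomly drifting plant is sketched below in Python. It is only a sketch under stated assumptions: the sinusoid phases are taken as zero, and the distance-to-unit-circle constraint is enforced by rejecting any initial draw or random-walk step that would bring a pole or zero within \(\beta\) of the unit circle. The text does not specify how the constraint is implemented, so [29] may do it differently; all function names are hypothetical.

```python
import numpy as np

FS = 2000          # sampling frequency (Hz)
NX = 6             # plant order
BETA = 0.1         # minimum distance of poles and zeros from the unit circle


def margin_ok(a_s, b_s, beta=BETA):
    """True if every pole and zero is farther than beta from the unit circle."""
    roots = np.concatenate([np.roots(a_s), np.roots(b_s)])
    return bool(np.all(np.abs(np.abs(roots) - 1.0) > beta))


def initial_plant(rng):
    """Draw a_s ~ N(0, 1/NX^2) (first coefficient 1) and b_s ~ N(0, 1) until the margin holds."""
    while True:
        a_s = rng.normal(0.0, 1.0 / NX, NX + 1)
        a_s[0] = 1.0
        b_s = rng.normal(0.0, 1.0, NX + 1)
        if margin_ok(a_s, b_s):
            return a_s, b_s


def random_walk_step(a_s, b_s, q_r, rng):
    """One random-walk update with state-noise variance q_r (assumed rejection rule)."""
    a_new = a_s + rng.normal(0.0, np.sqrt(q_r), a_s.shape)
    a_new[0] = 1.0
    b_new = b_s + rng.normal(0.0, np.sqrt(q_r), b_s.shape)
    return (a_new, b_new) if margin_ok(a_new, b_new) else (a_s, b_s)


def disturbance(n_samples, rng, q_v=0.01):
    """200/400/600 Hz tones (amplitudes 0.5, 1.3, 0.3; zero phase assumed) plus white noise."""
    t = np.arange(n_samples) / FS
    tones = sum(amp * np.sin(2 * np.pi * f * t)
                for f, amp in [(200, 0.5), (400, 1.3), (600, 0.3)])
    return tones + rng.normal(0.0, np.sqrt(q_v), n_samples)


rng = np.random.default_rng(0)
a_s, b_s = initial_plant(rng)
for _ in range(2 * FS, 3 * FS):              # fast changes between 2 s and 3 s
    a_s, b_s = random_walk_step(a_s, b_s, 1.0 / FS, rng)
d = disturbance(10 * FS, rng)
print(np.mean(d**2))   # close to 0.125 + 0.845 + 0.045 + 0.01 = 1.025
```

The expected disturbance power is the sum of the tone powers \(A^2/2\) plus \(q_\textrm{v}\), which is a convenient check that the amplitudes and noise variance are wired correctly.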

Figure 1 shows the residual noise power versus time for three typical simulations of the proposed algorithm. As can be seen, the residual noise quickly settles to the minimum value after ANC is started, rises while the plant changes fast, and settles to the minimum again, as desired. However, some runs show narrow spikes and even divergence (not shown). Figure 2 shows the percentile chart for the 100 simulations. As can be seen, performance is not always as good as in Fig. 1: the residual noise power takes large values in less than 10% of the simulations. Narrow spikes contribute to a rise in the percentile curves, although the spikes themselves are not visible on the plots. The very high 99th-percentile curve is primarily due to divergence in some simulations. Nevertheless, the residual noise power is low (at each time instant) in more than 90% of the simulations. This is confirmed by the histogram of the residual noise power at the end of the simulation, shown in Fig. 3: in 10 out of 100 simulations, the final residual noise power is considerably larger than the minimum value (including 6 cases of divergence), while in the remaining 90 it is close to the optimum.
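Percentile charts of this kind can be computed by estimating the residual noise power of each run over time and then taking percentiles across runs at each time instant. The Python sketch below assumes a one-pole smoothing of \(e^2(n)\) with a hypothetical forgetting factor of 0.99, since the text does not state how the power is estimated; the input array is placeholder random data, not simulation results.

```python
import numpy as np


def residual_power_db(e, forget=0.99):
    """Smoothed residual-noise power in dB for each run (rows = runs, columns = time).

    The one-pole average p(n) = forget * p(n-1) + (1 - forget) * e(n)^2 is an
    assumed smoothing choice.
    """
    p = np.zeros_like(e, dtype=float)
    acc = np.zeros(e.shape[0])
    for n in range(e.shape[1]):
        acc = forget * acc + (1 - forget) * e[:, n] ** 2
        p[:, n] = acc
    return 10 * np.log10(p + 1e-12)            # small floor avoids log10(0)


def percentile_curves(power_db, levels=(10, 25, 50, 75, 90, 99)):
    """Percentiles of the residual noise level across runs at each time instant."""
    return np.percentile(power_db, levels, axis=0)


rng = np.random.default_rng(0)
e = 0.1 * rng.standard_normal((100, 4000))     # placeholder: 100 runs x 2 s at 2 kHz
curves = percentile_curves(residual_power_db(e))
print(curves.shape)                            # (6, 4000)
```

Each row of `curves` is one percentile curve; a few diverging runs raise the 99th-percentile row while leaving the median nearly unchanged, which is the behavior discussed above.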

Figures 4 and 5 show percentile plots of the residual noise power versus time for Carini's [9] and Lopes' [26] algorithms when used in feedback ANC. In this case, the reference signal is the estimated primary noise signal [23], that is, the error signal minus the anti-noise signal filtered by the plant estimate. These algorithms have very low computational complexity and deal well with slow plant changes, but they are intended for feedforward (not feedback) ANC systems. Consequently, their performance is poor and considerably worse than that of the proposed algorithm, as can be seen by comparing, for instance, the 90th-percentile curves.
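The reference used for the feedforward algorithms in this comparison can be sketched as follows: the error signal minus the anti-noise filtered by the plant estimate, an internal-model reconstruction of the primary noise. The plant, the signals, and the function names in this Python sketch are hypothetical; with a perfect plant estimate the reconstruction returns d(n), whereas in practice the estimate comes from online modeling and is imperfect.

```python
import numpy as np


def iir_filter(b, a, x):
    """Causal filter y = (b(z)/a(z)) x with zero initial state (direct form)."""
    b = np.asarray(b, dtype=float)
    a = np.asarray(a, dtype=float)
    y = np.zeros(len(x))
    for n in range(len(x)):
        acc = sum(b[k] * x[n - k] for k in range(len(b)) if n >= k)
        acc -= sum(a[k] * y[n - k] for k in range(1, len(a)) if n >= k)
        y[n] = acc / a[0]
    return y


def estimated_primary_noise(e, u, b_hat, a_hat):
    """Reference x^(n) = e(n) - (s^ * u)(n), with s^ = b_hat/a_hat the plant estimate."""
    return np.asarray(e, dtype=float) - iir_filter(b_hat, a_hat, u)


rng = np.random.default_rng(0)
a_s = np.array([1.0, -0.5, 0.2])               # hypothetical plant
b_s = np.array([0.0, 1.0, 0.4])
u = rng.standard_normal(300)                   # anti-noise
d = np.sin(2 * np.pi * 0.1 * np.arange(300))   # primary noise
e = d + iir_filter(b_s, a_s, u)                # error signal, as in (1)
x_hat = estimated_primary_noise(e, u, b_s, a_s)   # perfect plant estimate
print(np.max(np.abs(x_hat - d)))               # round-off level only
```

Any plant-estimate error leaks a filtered copy of the anti-noise into the reference, which is one reason feedforward algorithms adapted this way can struggle after fast plant changes.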

Figure 6 shows the same results for Mohseni's algorithm [31]. Its performance is much better than that of Carini's and Lopes' algorithms and, in fact, also better than that of the proposed algorithm, but at the expense of a much higher computational complexity. However, the residual noise is still high in at least 1% of the simulations (at each time n), as seen from the 99th-percentile curve. Also, performance degrades with time, as seen from the 90th-percentile curve.

5 Conclusion

This work presents an algorithm for narrowband feedback ANC systems that improves on the performance of state-of-the-art algorithms when dealing with fast secondary path changes, while keeping a moderate computational complexity. However, performance is not as good as desired in every case. The algorithm uses an estimated model to predict the primary noise and the same model to obtain a non-causal inverse of the secondary path, which filters the predicted noise to compute the anti-noise signal.

Data Availability

Data sharing is not applicable to this article as no datasets were generated or analyzed during the current study.

References

S. Ahmed, M.T. Akhtar, Gain scheduling of auxiliary noise and variable step-size for online acoustic feedback cancellation in narrow-band active noise control systems. IEEE/ACM Trans. Audio Speech Lang. Process. 25(2), 333–343 (2016)

S. Ahmed, M.T. Akhtar, X. Zhang, Robust auxiliary-noise-power scheduling in active noise control systems with online secondary path modeling. IEEE Trans. Audio Speech Lang. Process. 21(4), 749–761 (2013)

B.D. Anderson, J.B. Moore, Optimal filtering. Courier Corporation (2012)

K.J. Åström, B. Wittenmark, Adaptive control. Courier Corporation (2013)

C. Bao, P. Sas, H.V. Brussel, Adaptive active control of noise in 3-d reverberant enclosures. J. Sound Vib. 161, 501–514 (1993)

C. Bao, P. Sas, H.V. Brussel, Comparison of two on-line identification algorithms for active noise control. Proc. Recent Advances in Active Control of Sound Vibration, pp. 38–51 (1993)

Y. Bar-Shalom, Stochastic dynamic programming: caution and probing. IEEE Trans. Autom. Control 26(5), 1184–1195 (1981)

B. Lam, W.S. Gan, Active acoustic windows: towards a quieter home. IEEE Potent. 35(1), 11–18 (2016)

A. Carini, S. Malatini, Optimal variable step-size NLMS algorithms with auxiliary noise power scheduling for feedforward active noise control. IEEE Trans. Audio Speech Lang. Process. 16(8), 1383–1395 (2008)

C.Y. Chang, A. Siswanto, C.Y. Ho, T.K. Yeh, Y.R. Chen, S.M. Kuo, Listening in a noisy environment: integration of active noise control in audio products. IEEE Consum. Electron. Mag. 5(4), 34–43 (2016)

S. Elliott, Down with noise. IEEE Spectrum, pp. 54–61 (1999)

S. Elliott, Signal Processing for Active Control (Academic Press, London, 2001)

L.J. Eriksson, M.C. Allie, Use of random noise for on-line transducer modeling in an adaptive active attenuation system. J. Acoust. Soc. Am. 85(2), 797–802 (1989)

K. Fujii, J. Ohga, Method to update the coefficients of the secondary path filter under active noise control. Signal Process. 81(2), 381–387 (2001)

C. Fuller, S. Elliott, P. Nelson, Active Control of Vibration (Academic Press, London, 1996)

G.H. Golub, C.F. Van Loan, Matrix Computations, 3rd edn. (Johns Hopkins University Press, Baltimore, 1996)

C.H. Hansen, Active Control of Noise and Vibration (CRC Press, Boca Raton, 2013)

H. Hassanpour, P. Davari, An efficient online secondary path estimation for feedback active noise control systems. Digit. Signal Process. 19(2), 241–249 (2009)

S. Haykin, Adaptive Filter Theory (Prentice-Hall, London, 1996)

T.A.N. Heirung, B.E. Ydstie, B. Foss, Dual adaptive model predictive control. Automatica 80, 340–348 (2017)

S.M. Kuo, X. Kong, W.S. Gan, Applications of adaptive feedback active noise control system. IEEE Trans. Control Syst. Technol. 11(2), 216–220 (2003)

S.M. Kuo, S. Mitra, W.S. Gan, Active noise control system for headphone applications. IEEE Trans. Control Syst. Technol. 14(2), 331–335 (2006)

S.M. Kuo, D.R. Morgan, Active Noise Control Systems, Algorithms and DSP Implementations (Wiley, Hoboken, 1996)

S.M. Kuo, D.R. Morgan, Active noise control: a tutorial review. Proc. IEEE 87(6), 943–973 (1999)

S. Liebich, J. Fabry, P. Jax, P. Vary, Acoustic path database for ANC in-ear headphone development, in Proceedings of the 23rd International Congress on Acoustics (Universitätsbibliothek der RWTH Aachen, 2019), p. 4326–4333

P.A. Lopes, J.A. Gerald, Auxiliary noise power scheduling algorithm for active noise control with online secondary path modeling and sudden changes. IEEE Signal Process. Lett. 22(10), 1590–1594 (2015)

P.A. Lopes, J.A. Gerald, Frequency domain analysis of the mirror-modified filtered-x least mean squares algorithm with low ambient noise. Int. J. Adapt. Control Signal Process. 35(7), 1370–1387 (2021)

P.A. Lopes, J.A. Gerald, M.S. Piedade, The MMFxLMS algorithm for active noise control with on-line secondary path modelling. Digit. Signal Process. 60, 75–80 (2017)

P.A.C. Lopes, Source code for the predict and invert feedback ANVC algorithm. https://github.com/paclopes/Predict-and-Invert-FANVC

D.Q. Mayne, Model predictive control: recent developments and future promise. Automatica 50(12), 2967–2986 (2014)

N. Mohseni, T.W. Nguyen, S.A.U. Islam, I.V. Kolmanovsky, D.S. Bernstein, Active noise control for harmonic and broadband disturbances using RLS-based model predictive control. American Control Conference (ACC), (IEEE, 2020), p. 1393–1398

D. Morgan, An analysis of multiple correlation cancellation loops with a filter in the auxiliary path. IEEE Trans. Acoust. Speech Signal Process. 28(4), 454–467 (1980)

P. Nelson, S. Elliott, Active Control of Sound (Academic Press, London, 1996)

T.W. Nguyen, S.A.U. Islam, A.L. Bruce, A. Goel, D.S. Bernstein, I.V. Kolmanovsky, Output-feedback RLS-based model predictive control, in 2020 American Control Conference (ACC) (IEEE, 2020), p. 2395–2400

W. Niu, C. Zou, B. Li, W. Wang, Adaptive vibration suppression of time-varying structures with enhanced FxLMS algorithm. Mech. Syst. Signal Process. 118, 93–107 (2019)

A.V. Oppenheim, R.W. Schafer, Discrete-Time Signal Processing (Prentice Hall, Upper Saddle River, 1999)

W.H. Press, S.A. Teukolsky, W.T. Vetterling, B.P. Flannery, Numerical Recipes 3rd Edition: The Art of Scientific Computing (Cambridge University Press, Cambridge, 2007)

J.B. Rawlings, D.Q. Mayne, M. Diehl, Model Predictive Control: Theory, Computation, and Design, vol. 2 (Nob Hill Publishing, Madison, 2017)

P. Rivera Benois, P. Nowak, E. Gerat, M. Salman, U. Zölzer, Improving the performance of an active noise cancelling headphones prototype, in INTER-NOISE and NOISE-CON Congress and Conference Proceedings, vol. 259 (Institute of Noise Control Engineering, 2019), p. 889–900

P.N. Samarasinghe, W. Zhang, T.D. Abhayapala, Recent advances in active noise control inside automobile cabins: toward quieter cars. IEEE Signal Process. Mag. 33(6), 61–73 (2016)

T. Schumacher, H. Krüger, M. Jeub, P. Vary, C. Beaugeant, Active noise control in headsets: A new approach for broadband feedback ANC, in 2011 IEEE International conference on acoustics, speech and signal processing (ICASSP) (IEEE, 2011), p. 417–420

S.D. Snyder, Active Noise Control Primer (Springer, New York, 2012)

H.S. Vu, K.H. Chen, A high-performance feedback FxLMS active noise cancellation VLSI circuit design for in-ear headphones. Circuits Syst. Signal Process. 36(7), 2767–2785 (2017)

L. Wu, X. Qiu, Y. Guo, A generalized leaky FxLMS algorithm for tuning the waterbed effect of feedback active noise control systems. Mech. Syst. Signal Process. 106, 13–23 (2018)

M. Zhang, H. Lan, W. Ser, Cross-updated active noise control system with online secondary path modeling. IEEE Trans. Speech Audio Process. 9(5), 598–602 (2001)

Acknowledgements

This work was supported by national funds through Fundação para a Ciência e a Tecnologia (FCT) with reference UIDB/50021/2020.

Funding

Open access funding provided by FCT|FCCN (b-on).


Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Lopes, P.A.C. The Predict and Invert Feedback Active Noise and Vibration Control Algorithm. Circuits Syst Signal Process 42, 7640–7650 (2023). https://doi.org/10.1007/s00034-023-02471-7


DOI: https://doi.org/10.1007/s00034-023-02471-7