Abstract

In this paper, we investigate the inviscid limit \(\nu \rightarrow 0\) for time quasi-periodic solutions of the incompressible Navier–Stokes equations on the two-dimensional torus \({\mathbb {T}}^2\), with a small time quasi-periodic external force. More precisely, we construct solutions of the forced Navier–Stokes equation, bifurcating from a given time quasi-periodic solution of the incompressible Euler equations and admitting a vanishing viscosity limit to the latter, uniformly for all times and independently of the size of the external perturbation. Our proof is based on the construction of an approximate solution, up to an error of order \(O(\nu ^2)\), and on a fixed point argument starting from this approximate solution. A fundamental step is to prove the invertibility of the linearized Navier–Stokes operator at a quasi-periodic solution of the Euler equation, with smallness conditions and estimates which are uniform with respect to the viscosity parameter. To the best of our knowledge, this is the first positive result for the inviscid limit problem that is global and uniform in time, and it is the first KAM result in the framework of singular limit problems.

1 Introduction

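In this paper, we consider the two-dimensional Navier–Stokes equations for an incompressible fluid on the two-dimensional torus \({\mathbb {T}}^2\), \({\mathbb {T}}:= {\mathbb {R}}/ 2 \pi {\mathbb {Z}}\), with a small time quasi-periodic forcing term

$$\begin{aligned} {\left\{ \begin{array}{l} \partial _t U + U \cdot \nabla U - \nu \Delta U + \nabla p = \varepsilon f(\omega t, x), \\ {\textrm{div}}\, U = 0, \end{array}\right. } \end{aligned} \tag{1.1}$$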

where \(\varepsilon \in (0, 1)\) is a small parameter, \(\omega \in {\mathbb {R}}^d\) is a Diophantine \(d\)-dimensional vector, \(\nu > 0\) is the viscosity parameter, the external force \(f\) belongs to \({{\mathcal {C}}}^q({\mathbb {T}}^d \times {\mathbb {T}}^2, {\mathbb {R}}^2)\) for some integer \(q > 0\) large enough, \(U = (U_1, U_2): {\mathbb {R}}\times {\mathbb {T}}^2 \rightarrow {\mathbb {R}}^2\) is the velocity field, and \(p: {\mathbb {R}}\times {\mathbb {T}}^2 \rightarrow {\mathbb {R}}\) is the pressure. The main purpose of this paper is to investigate the inviscid limit of the Navier–Stokes equation from the perspective of KAM (Kolmogorov–Arnold–Moser) theory for PDEs, which in a broad sense is the theory of the existence and stability of periodic, quasi-periodic and almost periodic solutions of partial differential equations. For the forced Euler equations, namely (1.1) with \(\nu =0\), quasi-periodic solutions have been constructed in Baldi and Montalto [4]. We now give the informal statement of our result.

Informal Theorem

Let \((U_{e}(t,x),p_e(t,x))= (\breve{U}_{e}(\varphi ,x),\breve{p}_{e}(\varphi ,x))|_{\varphi =\omega t}\), \(\varphi \in {\mathbb {T}}^d\), be a quasi-periodic solution of (1.1) with \(\nu =0\) constructed in [4]. Then, there exists a quasi-periodic solution \((U_{\nu }(t,x),p_{\nu }(t,x))= (\breve{U}_{\nu }(\varphi ,x),\breve{p}_{\nu }(\varphi ,x))|_{\varphi =\omega t}\) of (1.1) bifurcating from the Euler solution \((U_{e}(t,x),p_e(t,x))\) with respect to the viscosity parameter \(\nu \rightarrow 0\), with rate of convergence \(O(\nu )\) uniform in \(\varepsilon \ll 1\) and uniform for all times \(t\in {\mathbb {R}}\).

As a consequence of our result, we obtain families of initial data for which the corresponding global quasi-periodic solutions of the Navier–Stokes equations converge to the ones of the Euler equation with a rate of convergence \(O(\nu )\), uniformly in time. The main difficulty is that this is a singular perturbation problem, namely there is a small parameter in front of the highest order derivative. To the best of our knowledge, this is both the first result in which one exhibits non-trivial, time-dependent solutions of the Navier–Stokes equations converging globally and uniformly in time to the ones of the Euler equation in the vanishing viscosity limit \(\nu \rightarrow 0\) and the first KAM result in the context of singular limit problems for PDEs.

The zero-viscosity limit of the incompressible Navier–Stokes equations in bounded domains is one of the most challenging problems in Fluid Mechanics. The first results for smooth initial data (\(H^s\) with \(s \gg 0\) large enough) have been proved by Kato [33, 34], Swann [43], Constantin [15] and Masmoudi [38] in the Euclidean domain \({\mathbb {R}}^n\) or in the periodic box \({\mathbb {T}}^n\), \(n=2,3\). For instance, it is proved in [38] that, if the initial velocity field \(u_0 \in H^s({\mathbb {T}}^n)\), \(s > n/2 + 1\), then the corresponding solutions \(u_\nu (t, x)\) of Navier–Stokes and u(t, x) of Euler, defined on \([0, T] \times {\mathbb {T}}^n\), satisfy

It is immediate to notice that the latter estimate holds only on finite time intervals and is not uniform in time, with the estimate of the difference \(u_\nu (t) - u(t)\) eventually diverging as \(t \rightarrow + \infty \). For \(n=2\), this kind of result has been proved in low regularity by Chemin [13] and Seis [42], with rates of convergence in \(L^2\). We also mention similar results for non-smooth vorticity. In particular, the inviscid limit of the Navier–Stokes equation has been addressed in the case of vortex patches in Constantin and Wu [18, 19], Abidi and Danchin [1] and Masmoudi [38], with low Besov type regularity in space. In these results one typically gets a bound only in \(L^2\) of the form

In the case of non-smooth vorticity, the inviscid limit has been investigated by using a Lagrangian stochastic approach in Constantin, Drivas and Elgindi [16], with initial vorticity \(\omega _0 \in L^\infty ({\mathbb {T}}^2)\), and in Ciampa, Crippa and Spirito [14], where the initial vorticity \(\omega _0 \in L^p({\mathbb {T}}^2)\), \(p \in (1, + \infty )\). When the domain has an actual boundary, the zero-viscosity limit is closely related to the validity of the Prandtl equation for the formation of boundary layers. For completeness of the exposition, we mention the work of Sammartino and Caflisch [40, 41] and recent results by Maekawa [37], Constantin, Kukavica and Vicol [17] and Gérard-Varet, Lacave, Nguyen and Rousset [25], with references therein. The inviscid limit has been also investigated in other physical models for complex fluids, see for instance [12] for the 2D incompressible viscoelasticity system.

Our approach is different and it is based on KAM (Kolmogorov–Arnold–Moser) and Normal Form methods for Partial Differential Equations. This field started in the Nineties, with the pioneering papers of Bourgain [11], Craig and Wayne [20], Kuksin [35], Wayne [44]. We refer to the recent review article [6] for a complete list of references on this topic. In recent years, new techniques have been developed in order to study periodic and quasi-periodic solutions for PDEs arising from fluid dynamics. For the two-dimensional water waves equations, we mention Iooss, Plotnikov and Toland [30] for periodic standing waves, [2, 10] for quasi-periodic standing waves and [7, 8, 24] for quasi-periodic traveling wave solutions.

We also recall that the challenging problem of constructing quasi-periodic solutions for the three-dimensional water waves equations is still open. Partial results have been obtained by Iooss and Plotnikov, who proved existence of symmetric and asymmetric diamond waves (bi-periodic waves stationary in a moving frame) in [31, 32]. Very recently, KAM techniques have been successfully applied also to the contour dynamics of vortex patches in active scalar equations. The existence of time quasi-periodic solutions has been proved in Berti, Hassainia and Masmoudi [9] for vortex patches of the Euler equations close to Kirchhoff ellipses, in Hmidi and Roulley [29] for the quasi-geostrophic shallow-water equations, in Hassainia, Hmidi and Masmoudi [27] and Gómez-Serrano, Ionescu and Park [26] for generalized surface quasi-geostrophic equations, and in Hassainia and Roulley [28] for vortex patches of the Euler equations close to Rankine vortices in the unit disk. All the aforementioned results concern two-dimensional problems. Quasi-periodic solutions for the 3D Euler equations with time quasi-periodic external force have been constructed in [4], and this was extended in [39] to the Navier–Stokes equations in arbitrary dimension, without dealing with the zero-viscosity limit. The result of the present paper also closes the gap between these two works in dimension two.

1.1 Main Result

We now state precisely our main result. We look for time quasi-periodic solutions of (1.1), oscillating with time frequency \(\omega \). In particular, we look for solutions which are small perturbations of constant velocity fields \(\zeta \in {\mathbb {R}}^2\), namely solutions of the form

$$\begin{aligned} U(t, x) = \zeta + u(\varphi , x)|_{\varphi = \omega t}, \end{aligned}$$

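where the new unknown velocity field \(u: {\mathbb {T}}^d \times {\mathbb {T}}^2 \rightarrow {\mathbb {R}}^2\) is a function of \((\varphi ,x)\in {\mathbb {T}}^d\times {\mathbb {T}}^2\). Plugging this ansatz into the equation, one is led to solve

$$\begin{aligned} {\left\{ \begin{array}{l} \omega \cdot \partial _\varphi u + \zeta \cdot \nabla u + u \cdot \nabla u - \nu \Delta u + \nabla p = \varepsilon f(\varphi , x), \\ {\textrm{div}}\, u = 0, \end{array}\right. } \end{aligned} \tag{1.2}$$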

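with \(p: {\mathbb {T}}^d \times {\mathbb {T}}^2 \rightarrow {\mathbb {R}}\) and \(\omega \cdot \partial _\varphi := \sum _{i = 1}^d \omega _i \partial _{\varphi _i}\). According to [4], we shall assume that the forcing term \(f\) is odd with respect to \((\varphi , x)\), that is

$$\begin{aligned} f(\varphi , x) = - f(- \varphi , - x) \quad \text {for all } (\varphi , x) \in {\mathbb {T}}^d \times {\mathbb {T}}^2. \end{aligned} \tag{1.3}$$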

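It is convenient to work in the well-known vorticity formulation. We define the scalar vorticity \(v(\varphi , x)\) as

$$\begin{aligned} v := \nabla \times u := \partial _{x_1} u_2 - \partial _{x_2} u_1. \end{aligned}$$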

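Hence, rescaling the variable \(v \mapsto \sqrt{\varepsilon } v\) and the small parameter \(\varepsilon \mapsto \varepsilon ^2\), Eq. (1.2) is equivalent to

$$\begin{aligned} \omega \cdot \partial _\varphi v + \zeta \cdot \nabla v - \nu \Delta v + \varepsilon \big ( u \cdot \nabla v - F(\varphi , x) \big ) = 0, \qquad u = \nabla _\perp \big [ (-\Delta )^{-1} v \big ], \qquad F := \nabla \times f, \end{aligned} \tag{1.4}$$

where \(\nabla _\perp := (\partial _{x_2}, - \partial _{x_1})\) and \((-\Delta )^{-1}\) is the inverse of the Laplacian, namely the Fourier multiplier with symbol \(|\xi |^{-2}\) for \(\xi \in {\mathbb {Z}}^2\), \(\xi \ne 0\). Since \(\int _{{\mathbb {T}}^2} v(\cdot , x)\, \textrm{d} x\) is a first integral (that is, a conserved quantity), we shall restrict to the space of zero average in \(x\). Then, the pressure is recovered, once the velocity field is known, by the formula \(p = \Delta ^{- 1} \big [ \varepsilon \, {\textrm{div}} f(\omega t, x) - {\textrm{div}}( u \cdot \nabla u ) \big ]\).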
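The pressure formula can be checked by taking the divergence of the momentum equation. The following is only a sketch: it assumes that the momentum equation, written in the variables \((\varphi , x)\), has the standard form \(\omega \cdot \partial _\varphi u + \zeta \cdot \nabla u + u \cdot \nabla u - \nu \Delta u + \nabla p = \varepsilon f\) with \({\textrm{div}}\, u = 0\).

$$\begin{aligned} {\textrm{div}} \big ( \omega \cdot \partial _\varphi u + \zeta \cdot \nabla u - \nu \Delta u \big )&= \big ( \omega \cdot \partial _\varphi + \zeta \cdot \nabla - \nu \Delta \big ) \, {\textrm{div}}\, u = 0, \\ \Delta p&= \varepsilon \, {\textrm{div}} f - {\textrm{div}} ( u \cdot \nabla u ). \end{aligned}$$

The first line uses that constant-coefficient operators commute with the divergence. Inverting the Laplacian on functions with zero average in \(x\) then gives the stated expression for \(p\), which is determined up to an additive function of \(\varphi \) alone.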

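For any real \(s \ge 0\), we consider the Sobolev spaces \( H^s = H^s({\mathbb {T}}^{d + 2}) \) of real scalar and vector-valued functions of \((\varphi ,x)\), defined in (2.1), and the Sobolev space of functions with zero space average, defined by

$$\begin{aligned} H^s_0 := \Big \{ u \in H^s : \int _{{\mathbb {T}}^2} u(\varphi , x)\, \textrm{d} x = 0 \Big \}. \end{aligned}$$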

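Furthermore, we introduce the subspaces of \(L^2\) of the even and odd functions in \((\varphi ,x)\), respectively:

$$\begin{aligned} X := \big \{ v \in L^2 : v(- \varphi , - x) = v(\varphi , x) \big \}, \qquad Y := \big \{ v \in L^2 : v(- \varphi , - x) = - v(\varphi , x) \big \}. \end{aligned} \tag{1.5}$$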

We first state the result concerning the existence of quasi-periodic solutions of the Euler equation \((\nu = 0)\) for most values of the parameters \((\omega ,\zeta )\) in a fixed bounded open set \(\Omega \subset {\mathbb {R}}^d\times {\mathbb {R}}^2\), proved in [4]. The statement is slightly modified for the purposes of this paper.

Theorem 1.1

(Baldi–Montalto [4]). There exists \({{\overline{S}}}:= {{\overline{S}}}(d) > 0\) such that, for any \(S \ge {{\overline{S}}}(d)\), there exists \(q:= q(S) > 0\) such that, for every forcing term \(f \in {{\mathcal {C}}}^q({\mathbb {T}}^d \times {\mathbb {T}}^2, {\mathbb {R}}^2)\) satisfying (1.3), there exist \(\varepsilon _0:= \varepsilon _0(f, S, d) \in (0, 1)\) and \(C:= C(f, S, d) > 0\) such that, for every \(\varepsilon \in (0, \varepsilon _0)\), the following holds. There exist a \({{\mathcal {C}}}^1\) map

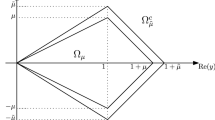

and a Borel set \(\Omega _\varepsilon \subset \Omega \) of asymptotically full Lebesgue measure, i.e., \(\lim _{\varepsilon \rightarrow 0} |\Omega {\setminus } \Omega _\varepsilon | = 0\), such that, for any \(\lambda = (\omega , \zeta ) \in \Omega _\varepsilon \), the function \(v_e(\cdot ; \lambda )\) is a quasi-periodic solution of the Euler equation

Moreover, there exists a constant \(\mathtt a:= \mathtt a(d) \in (0, 1)\) such that \(\sup _{\lambda \in {\mathbb {R}}^{d + 2}} \Vert v_e(\cdot ; \lambda ) \Vert _S \le C \varepsilon ^{\mathtt a}\) and, for any \(i = 1, \ldots , d + 2\), \(\sup _{\lambda \in {\mathbb {R}}^{d + 2}} \Vert \partial _{\lambda _i} v_e(\cdot ; \lambda ) \Vert _S \le C \varepsilon ^{\mathtt a}\).

We are now ready to state the main result of this paper. Roughly speaking, we will prove that for any value of the viscosity parameter \(\nu > 0\) and for \(\varepsilon \ll 1\) small enough, independent of the viscosity parameter, the Navier–Stokes Eq. (1.4) admits a quasi-periodic solution \(v_\nu (\varphi , x)\) for most of the parameters \(\lambda = (\omega , \zeta )\) such that \(\Vert v_\nu - v_e \Vert _s = O(\nu )\). This implies that \(v_\nu (\omega t, x)\) converges strongly to \(v_e(\omega t, x)\), uniformly in \((t, x) \in {\mathbb {R}}\times {\mathbb {T}}^2\), with a rate of convergence \(\sup _{(t, x) \in {\mathbb {R}}\times {\mathbb {T}}^2}|v_\nu (\omega t, x) - v_e(\omega t, x)| \lesssim \nu \). To the best of our knowledge, this is the first case in which the inviscid limit is uniform in time for non-trivial, time-dependent solutions. We now give the precise statement of our main theorem.

Theorem 1.2

(Singular KAM for 2D Navier–Stokes in the inviscid limit). There exist \({{\overline{s}}}:= {{\overline{s}}}(d)\) and \( {{\overline{\mu }}}:= {{\overline{\mu }}}(d) > 0\) such that, for any \(s \ge {{\overline{s}}}(d)\), there exists \(q:= q(s) > 0\) such that, for every forcing term \(f \in {{\mathcal {C}}}^q({\mathbb {T}}^d \times {\mathbb {T}}^2, {\mathbb {R}}^2)\) satisfying (1.3), there exist \(\varepsilon _0:= \varepsilon _0(f, s, d) \in (0, 1)\) and \(C:= C(f, s, d) > 0\) such that, for every \(\varepsilon \in (0, \varepsilon _0)\) and for any value of the viscosity parameter \(\nu > 0\), the following holds. Let \(v_e(\cdot ; \lambda ) \in H^{s + {{\overline{\mu }}}}_0({\mathbb {T}}^{d + 2}) \cap Y\), \(\lambda \in \Omega _\varepsilon \) be the family of solutions of the Euler equation provided by Theorem 1.1. Then, there exists a Borel set \({{\mathcal {O}}}_\varepsilon \subseteq \Omega _\varepsilon \), satisfying \(\lim _{\varepsilon \rightarrow 0} |{{\mathcal {O}}}_\varepsilon | = |\Omega |\) such that, for any \(\lambda = (\omega , \zeta ) \in {{\mathcal {O}}}_\varepsilon \), there exists a unique quasi-periodic solution \(v_\nu (\cdot ; \lambda ) \in H^s_0({\mathbb {T}}^{d + 2})\), \(\lambda \in {{\mathcal {O}}}_\varepsilon \), of the Navier–Stokes equation

satisfying the estimate

As a consequence, for any value of the parameter \(\lambda \in {{\mathcal {O}}}_\varepsilon \), the quasi-periodic solutions \(v_\nu \) of the Navier–Stokes equation converge to the ones of the Euler equation \(v_e\) in \(H^s_0({\mathbb {T}}^{d + 2})\) in the limit \(\nu \rightarrow 0\).

From the latter theorem we shall deduce the following corollary which provides a family of quasi-periodic solutions of Navier–Stokes equation converging to solutions of the Euler equation with rate of convergence \(O(\nu )\) and uniformly for all times. The result is a direct consequence of the Sobolev embeddings.
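A sketch of the embedding step, for the case without derivatives: it assumes \(s > (d+2)/2\), so that \(H^s({\mathbb {T}}^{d + 2})\) embeds in \(L^\infty ({\mathbb {T}}^{d + 2})\), and it uses the \(O(\nu )\) bound in \(H^s\) described before Theorem 1.2. Since every point \((\omega t, x)\) lies in \({\mathbb {T}}^{d + 2}\), one has

$$\begin{aligned} \sup _{(t, x) \in {\mathbb {R}}\times {\mathbb {T}}^2} |v_\nu (\omega t, x) - v_e(\omega t, x)| \le \sup _{(\varphi , x) \in {\mathbb {T}}^{d + 2}} |v_\nu (\varphi , x) - v_e(\varphi , x)| \lesssim _s \Vert v_\nu - v_e \Vert _s \lesssim \nu . \end{aligned}$$

Derivatives are handled in the same way, because \(\partial _t \big [ v(\omega t, x) \big ] = (\omega \cdot \partial _\varphi v)(\omega t, x)\), so each time derivative costs one derivative in \(\varphi \).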

Corollary 1.3

(Uniform rate of convergence for the inviscid limit). Assume the same hypotheses of Theorem 1.2 and let \(s \ge s_0\) large enough, \(v_e \in H^{s + {{\overline{\mu }}}}_0({\mathbb {T}}^{d + 2})\), \(v_\nu \in H^s_0({\mathbb {T}}^{d + 2})\) and, for \(\omega \in {{\mathcal {O}}}_\varepsilon \), let \(v_{\nu }^\omega (t, x):= v_\nu (\omega t, x)\), \(v_{ e}^\omega (t, x):= v_e(\omega t, x)\) be defined for any \((t, x) \in {\mathbb {R}}\times {\mathbb {T}}^2\). Then, for any \(\alpha \in {\mathbb {N}}\), \(\beta \in {\mathbb {N}}^2\) with \(|\alpha | + |\beta | \le s - (\lfloor \frac{d+2}{2}\rfloor +1)\), one has

Let us make some remarks on the result.

(1) Vanishing viscosity solutions of the Cauchy problem. The time quasi-periodic solutions in Theorem 1.2 are slight perturbations of constant velocity fields \(\zeta \in {\mathbb {R}}^2\) with frequency vector \(\omega \in {\mathbb {R}}^d\) induced by the perturbative forcing term \(f(\omega t,x)\). Since they exist only for most values of the parameters \((\omega ,\zeta )\), we obtain equivalently that the Cauchy problem associated with (1.4) (and hence with (1.1)) admits a subset of small amplitude initial data of relatively large measure, with elements evolving for all time in a possibly larger but still bounded neighborhood in the Sobolev topology, and whose flows exhibit a uniform vanishing viscosity limit to solutions of the Cauchy problem for the Euler equations with the same initial data.

(2) The role of the forcing term. It is worth noting that the time quasi-periodic external forcing term \(F(\omega t,x)\) in (1.1) is independent of the viscosity parameter \(\nu >0\). Its presence ensures the existence of the time quasi-periodic Euler solution \(v_e\) in Theorem 1.1, while the construction of the viscous correction \(v_{\nu }-v_{e}\) does not rely explicitly on it: if one is able to exhibit time quasi-periodic solutions close to constant velocity fields for the free 2D Euler equation, namely (1.4) with \(\nu =0\) and \(F\equiv 0\), then the ones for the Navier–Stokes equation follow immediately by our scheme. To our knowledge, the only result on the existence of time quasi-periodic flows for the free Euler equations on \({\mathbb {T}}^2\) is given by Crouseilles and Faou [21] (extended recently to the 3D case by Enciso, Peralta-Salas and Torres de Lizaur [22]), where the solutions are sought based on a prescribed stationary shear flow, locally constant around finitely many points, and propagate in time in the direction orthogonal to the shear flow. Due to the nature of their solutions, the non-resonant frequencies are prescribed as well and therefore no small divisor issues are involved.

1.2 Strategy and Main Ideas of the Proof

In order to prove Theorem 1.2, we have to construct a solution of the Navier–Stokes Eq. (1.4) which is a correction of order \(O(\nu )\) of the solution \(v_e\) of the Euler equation (provided in Theorem 1.1 of [4]). Roughly speaking, the difficult point is the following. There are two smallness parameters which are \(\varepsilon \), the size of the Euler solution, and \(\nu \), the size of the viscosity. If one tries to construct small solutions of the Navier–Stokes equation by using a standard fixed point argument, one immediately notes that a smallness condition of the form \(\varepsilon \nu ^{- 1} \ll 1\) is needed and clearly this is not enough to pass to the inviscid limit as \(\nu \rightarrow 0\). The key point is to have a smallness condition on \(\varepsilon \) which is independent of \(\nu \) in such a way that one can pass to the limit as the viscosity \(\nu \rightarrow 0\). We can summarize the construction into three main steps:

(1) Analysis of the linearized Navier–Stokes equation at the Euler solution and estimates for the inverse operators;

(2) Construction of the first order approximation for the viscous solution up to errors of order \(O(\nu ^2)\);

(3) A fixed point argument around the approximated viscous solution leading to the desired full solution of the Navier–Stokes equation.
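To see where the condition \(\varepsilon \nu ^{- 1} \ll 1\) of the standard fixed point argument comes from, here is a heuristic sketch. It assumes that the vorticity equation can be written as \({{\mathcal {L}}}_0 v = \varepsilon (F - u \cdot \nabla v)\) with \({{\mathcal {L}}}_0 := \omega \cdot \partial _\varphi + \zeta \cdot \nabla - \nu \Delta \) and \(u\) the divergence-free velocity generated by \(v\); the names \({{\mathcal {L}}}_0\) and \({{\mathcal {T}}}\) are introduced only for this illustration.

$$\begin{aligned} {{\mathcal {L}}}_0 \, e^{{{\textrm{i}}}(\ell \cdot \varphi + j \cdot x)}&= \big ( {{\textrm{i}}}(\omega \cdot \ell + \zeta \cdot j) + \nu |j|^2 \big ) e^{{{\textrm{i}}}(\ell \cdot \varphi + j \cdot x)}, \qquad \big | {{\textrm{i}}}(\omega \cdot \ell + \zeta \cdot j) + \nu |j|^2 \big | \ge \nu |j|^2, \\ {{\mathcal {T}}}(v)&:= \varepsilon \, {{\mathcal {L}}}_0^{- 1} \big ( F - u \cdot \nabla v \big ), \qquad \Vert {{\mathcal {T}}}(v) - {{\mathcal {T}}}(w) \Vert _s \lesssim _s \varepsilon \nu ^{- 1} \big ( \Vert v \Vert _s + \Vert w \Vert _s \big ) \Vert v - w \Vert _s . \end{aligned}$$

The inverse \({{\mathcal {L}}}_0^{- 1}\) gains two space derivatives, which absorbs the derivative in the nonlinearity, but at the price of a factor \(\nu ^{- 1}\). A contraction on a ball of fixed radius therefore requires \(\varepsilon \nu ^{- 1} \ll 1\), which degenerates as \(\nu \rightarrow 0\).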

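Inversion of the linearized operator at the Euler solution. The essential ingredient is to analyze the linearized Navier–Stokes operator at the Euler solution, namely one has to linearize (1.4) at the Euler solution with vorticity \(v_e(\varphi , x)\) and velocity \(u_e(\varphi , x)\). This leads to the study of a linear operator of the form

$$\begin{aligned} {{\mathcal {L}}}_\nu := {{\mathcal {L}}}_e - \nu \Delta , \qquad {{\mathcal {L}}}_e := \omega \cdot \partial _\varphi + \big ( \zeta + \varepsilon u_e(\varphi , x) \big ) \cdot \nabla + \varepsilon {{\mathcal {R}}}(\varphi ), \end{aligned} \tag{1.6}$$

where \({{\mathcal {R}}}(\varphi ): h \mapsto \nabla v_e \cdot \nabla _\perp (-\Delta )^{-1} h\), with \(\nabla _\perp (-\Delta )^{-1} h\) the velocity field generated by the vorticity \(h\), is a pseudo-differential operator of order \(- 1\). Note that the linear operator \({{\mathcal {L}}}_e\) is obtained by linearizing the Euler equation at the solution with vorticity \(v_e(\varphi , x)\). If one tries to implement a naive approach by directly using Neumann series to invert the linear operator \({{\mathcal {L}}}_\nu \), one has to require that \(\varepsilon \nu ^{- 1} \ll 1\), which is not enough to pass to the limit as \(\nu \rightarrow 0\). To overcome this issue, we first implement the normal form procedure developed in [4, 5] to reduce the Euler operator \({\mathcal {L}}_{e}\) to a diagonal, constant coefficients one, generating an unbounded correction to the viscous term \(-\nu \Delta \) of size \(O(\varepsilon \nu )\). More precisely, for most values of the parameters \((\omega , \zeta )\) and for \(\varepsilon \ll 1\) small enough and independent of \(\nu \), we construct a bounded, invertible transformation \(\Phi : H^s_0 \rightarrow H^s_0\) such that

$$\begin{aligned} {{\mathcal {L}}}_{\infty , \nu } := \Phi ^{- 1} {{\mathcal {L}}}_\nu \Phi = {{\mathcal {D}}}_\infty - \nu \Delta + {{\mathcal {R}}}_{\infty , \nu }, \end{aligned} \tag{1.7}$$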

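where \({{\mathcal {D}}}_\infty \) and \({{\mathcal {R}}}_{\infty , \nu }\) have the following properties. \({{\mathcal {D}}}_\infty \) is a diagonal operator of the form

$$\begin{aligned} {{\mathcal {D}}}_\infty := {\textrm{diag}}_{(\ell , j) \in {\mathbb {Z}}^d \times ({\mathbb {Z}}^2 {\setminus } \{ 0 \})} \, \mu _\infty (\ell , j), \qquad \mu _\infty (\ell , j) := {{\textrm{i}}}\big ( \omega \cdot \ell + \zeta \cdot j + r_j^\infty \big ). \end{aligned} \tag{1.8}$$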

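The remainder term \({{\mathcal {R}}}_{\infty , \nu }\) is an unbounded operator of order two and it satisfies an estimate of the form

$$\begin{aligned} \Vert (- \Delta )^{- 1} {{\mathcal {R}}}_{\infty , \nu } \Vert _{{{\mathcal {B}}}(H^s)} \lesssim _s \varepsilon \nu , \end{aligned} \tag{1.9}$$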

where we denote by \({{\mathcal {B}}}(H^s)\) the space of bounded linear operators on \(H^s\). The estimate (1.9) is the key ingredient to invert the operator \({{\mathcal {L}}}_{\infty , \nu }\) in (1.7) with a smallness condition on \(\varepsilon \) uniform with respect to the viscosity parameter \(\nu > 0\). It is also crucial to exploit the reversibility structure, which is a consequence of the fact that the solutions \(v_e(\varphi , x)\) of the Euler equation are odd with respect to \((\varphi , x)\). This ensures that, for any \(\ell \in {\mathbb {Z}}^d\), \(j \in {\mathbb {Z}}^2 {\setminus } \{ 0 \}\), the eigenvalues \(\mu _\infty (\ell , j)\) of the diagonal operator \({{\mathcal {D}}}_\infty \) in (1.8) are purely imaginary (namely, the corrections \(r_j^\infty \) are real). An important consequence is that the diagonal operator \({{\mathcal {D}}}_\infty - \nu \Delta \) is invertible and gains two space derivatives with an estimate for its inverse of order \(O(\nu ^{- 1})\). Indeed, the eigenvalues of \({{\mathcal {D}}}_\infty - \nu \Delta \) are \({{\textrm{i}}}(\omega \cdot \ell + \zeta \cdot j + r_j^\infty ) + \nu |j|^2\), with \( (\ell , j) \in {\mathbb {Z}}^d \times ({\mathbb {Z}}^2 {\setminus } \{ 0 \})\), and, since \(\omega \cdot \ell + \zeta \cdot j + r_j^\infty \) is real, one gets a lower bound

$$\begin{aligned} \big | {{\textrm{i}}}(\omega \cdot \ell + \zeta \cdot j + r_j^\infty ) + \nu |j|^2 \big | \ge \nu |j|^2, \end{aligned} \tag{1.10}$$

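implying that \({{\mathcal {D}}}_\infty - \nu \Delta \) is invertible with an inverse which gains two space derivatives, namely

$$\begin{aligned} \Vert ({{\mathcal {D}}}_\infty - \nu \Delta )^{- 1} (- \Delta ) \Vert _{{{\mathcal {B}}}(H^s)} \le \nu ^{- 1}. \end{aligned}$$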

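Thus, on the one hand, \(({{\mathcal {D}}}_\infty - \nu \Delta )^{- 1}\) gains two space derivatives, compensating the loss of two space derivatives of the remainder \({{\mathcal {R}}}_{\infty , \nu }\). On the other hand, the norm of \(({{\mathcal {D}}}_\infty - \nu \Delta )^{- 1}\) blows up like \(\nu ^{- 1}\) as \(\nu \rightarrow 0\), but this is compensated by the fact that \({{\mathcal {R}}}_{\infty , \nu }\) is of order \(O(\varepsilon \nu )\). Therefore, recalling (1.9), one gets a bound

$$\begin{aligned} \Vert ({{\mathcal {D}}}_\infty - \nu \Delta )^{- 1} {{\mathcal {R}}}_{\infty , \nu } \Vert _{{{\mathcal {B}}}(H^s)} \le \Vert ({{\mathcal {D}}}_\infty - \nu \Delta )^{- 1} (- \Delta ) \Vert _{{{\mathcal {B}}}(H^s)} \, \Vert (- \Delta )^{- 1} {{\mathcal {R}}}_{\infty , \nu } \Vert _{{{\mathcal {B}}}(H^s)} \lesssim _s \nu ^{- 1} \, \varepsilon \nu = \varepsilon . \end{aligned}$$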

Hence, by Neumann series, for \(\varepsilon \ll 1\) small enough and independent of \(\nu \), the operator \({{\mathcal {L}}}_{\infty , \nu }\) is invertible and gains two space derivatives, with estimate \(\Vert {{\mathcal {L}}}_{\infty , \nu }^{- 1} (- \Delta ) \Vert _{{{\mathcal {B}}}(H^s)} \lesssim _s \nu ^{- 1}\). By (1.7), we deduce that \({{\mathcal {L}}}_\nu \) is invertible as well and satisfies, for \(\varepsilon \ll 1\) and for any \(\nu > 0\),

First order approximation for the viscosity quasi-periodic solution and fixed point argument. Once we know how to invert the operators \({\mathcal {L}}_{\nu }\) and \({\mathcal {L}}_{e}\), we are ready to construct quasi-periodic solutions of the Navier–Stokes equation converging to the Euler solution \(u_e\) as \(\nu \rightarrow 0\). First, we define an approximate solution \(v_{app} = v_e + \nu v_1\) which solves Eq. (1.4) up to order \(O(\nu ^2)\). By making a formal expansion with respect to the viscosity parameter \(\nu \), we ask \(v_{e}\) to solve the equation at the zeroth order \(O(\nu ^0)\), namely the Euler equation, whose existence is provided by Theorem 1.1, and \(v_1\) to solve the linear equation at the first order \(O(\nu )\), that is \({\mathcal {L}}_{e} v_1 = \Delta v_{e}\). This procedure leads to a loss of regularity due to the presence of small divisors, appearing in the inversion of the linearized Euler operator \({{\mathcal {L}}}_e\) in (1.6), which satisfies an estimate of the form \(\Vert {{\mathcal {L}}}_e^{- 1} h \Vert _s \lesssim _s \Vert h \Vert _{s + \tau }\) for some \(\tau \gg 0\) large enough. However, this is not a problem in our scheme since the loss occurs only twice: first, in the construction of the quasi-periodic solution \(v_e\), which has already been dealt with in Theorem 1.1; second, in the definition of \(v_1\). We overcome this issue by requiring \(v_e\) to be sufficiently regular. The final step to prove Theorem 1.2 is to implement a fixed point argument for constructing solutions of the form \(v = v_e + \nu v_1 + \psi \), where the quasi-periodic correction \(\psi \) lies in the ball \(\Vert \psi \Vert _s \le \nu \). It is crucial here that \(v_e + \nu v_1\) is an approximate solution up to order \(O(\nu ^2)\): indeed, the fixed point iteration requires inverting the linearized operator at the Euler solution \({\mathcal {L}}_{\nu }\), which has a bound of order \(O(\nu ^{- 1})\) (recall (1.11)), and in this way the new term ends up being of order \(O(\nu )\) as desired. The good news is that, at this stage, no small divisors are involved and, consequently, there is no loss of derivatives, which would otherwise have made the fixed point argument inapplicable.
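The order-by-order expansion can be made explicit. The sketch below assumes that (1.4) has the form \({\mathcal {F}}_{\nu }(v) := \omega \cdot \partial _\varphi v + \zeta \cdot \nabla v - \nu \Delta v + \varepsilon ( u \cdot \nabla v - F ) = 0\), where the velocity \(u\) depends linearly on the vorticity \(v\); the velocities \(u_e\) and \(u_1\) generated by \(v_e\) and \(v_1\) are notation for this illustration.

$$\begin{aligned} {\mathcal {F}}_{\nu }(v_e + \nu v_1)&= \big [ \omega \cdot \partial _\varphi v_e + \zeta \cdot \nabla v_e + \varepsilon ( u_e \cdot \nabla v_e - F ) \big ] \\&\quad + \nu \big [ \omega \cdot \partial _\varphi v_1 + \zeta \cdot \nabla v_1 + \varepsilon ( u_e \cdot \nabla v_1 + u_1 \cdot \nabla v_e ) - \Delta v_e \big ] \\&\quad + \nu ^2 \big [ \varepsilon \, u_1 \cdot \nabla v_1 - \Delta v_1 \big ]. \end{aligned}$$

The first bracket vanishes because \(v_e\) solves the Euler equation. The second bracket equals \({\mathcal {L}}_{e} v_1 - \Delta v_e\) and vanishes by the choice of \(v_1\). The residual is therefore \(\nu ^2 ( \varepsilon \, u_1 \cdot \nabla v_1 - \Delta v_1 )\), and applying an inverse of size \(O(\nu ^{- 1})\) to it produces a term of size \(O(\nu )\), consistent with the radius of the ball for \(\psi \).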

2D vs. 3D. It is worth concluding this introduction by making some comments on the 3D case, which is not covered by the method developed in this paper. In the present paper, we construct global in time quasi-periodic solutions for the two-dimensional Navier–Stokes equations converging uniformly in time to global quasi-periodic solutions of the two-dimensional forced Euler equation. The three-dimensional case is much harder. The biggest obstacle is that the reversible structure is not enough to deduce that the spectrum of the linearized Euler operator after the KAM reducibility scheme is purely imaginary. Indeed, as in [4], the reduced Euler operator \({{{\mathcal {D}}}}_\infty \) is a \(3 \times 3\) block diagonal operator \({{{\mathcal {D}}}}_\infty = {\textrm{diag}}_{j \in {\mathbb {Z}}^3 {\setminus } \{ 0 \}} D_\infty (j)\) where the \(3 \times 3\) matrix \(D_\infty (j)\) has the form \(D_\infty (j) = {{\textrm{i}}}\zeta \cdot j {\textrm{Id}} + \varepsilon R_\infty (j)\) for \(j \in {\mathbb {Z}}^3 \setminus \{ 0 \}\). This block matrix could have eigenvalues \(\mu _1(j), \mu _2(j), \mu _3(j)\) of the form \(\mu _i(j) = {{\textrm{i}}}\zeta \cdot j + \varepsilon r_i(j)\), \(i = 1,2,3\), with real part different from zero, in particular with \({\textrm{Re}}(r_i(j)) \ne 0\) for some \(i = 1,2,3\). This seems to be an obstruction to getting a lower bound like (1.10) with a gain of two space derivatives, which holds uniformly in \(\varepsilon \) and for any value of the viscosity parameter. More precisely, one gets a lower bound on the eigenvalues of the form

$$\begin{aligned} \big | {{\textrm{i}}}(\omega \cdot \ell + \zeta \cdot j) + \varepsilon r_i(j) + \nu |j|^2 \big | \ge \big | \nu |j|^2 + \varepsilon \, {\textrm{Re}}(r_i(j)) \big |, \qquad i = 1, 2, 3. \end{aligned}$$

It is therefore not clear how to bound the latter quantity from below by \(C \nu |j|^2\) without linking \(\varepsilon \) and \(\nu \), which prevents passing to the limit as \(\nu \rightarrow 0\) (independently of \(\varepsilon \)).

Outline of the paper. The rest of this paper is organized as follows. In Sect. 2 we introduce the functional setting and some general lemmata that we will employ in the other sections. In Sect. 3 we formulate the nonlinear functional \({\mathcal {F}}_{\nu }\) in (3.1), whose zeroes correspond to quasi-periodic solutions of Eq. (1.4), together with the linearized operators that we have to study. In Sect. 4 we implement the normal form method on the linearized Euler and Navier–Stokes operators \({\mathcal {L}}_{e}, {\mathcal {L}}_\nu \) in (1.6): first, we regularize to constant coefficients the highest and lower orders, in Sects. 4.1 and 4.2, up to sufficiently smoothing orders; then, in Sects. 4.3–4.4 we prove the full KAM reducibility scheme. We shall prove that the normal form transformations conjugate the linearized Navier–Stokes operator to a diagonal one plus a remainder which is unbounded of order two and has size \(O(\varepsilon \nu )\). This normal form procedure is uniform with respect to the viscosity parameter since it requires a smallness condition on \(\varepsilon \) which is independent of the viscosity \(\nu > 0\). Then, in Sect. 5 we show the invertibility of the operator \({\mathcal {L}}_{\nu }\) (and also \({\mathcal {L}}_e\)) that will be used in Sect. 6 for the construction of the first order approximate solution and in Sect. 7 for the fixed point argument. Finally, the proof of Theorem 1.2 is provided in Sect. 8, together with the measure estimates proved in Sect. 8.1.

2 Norms and Linear Operators

In this section we collect some general definitions and properties concerning norms and matrix representation of operators which are used in the whole paper.

Notations. In the whole paper, the notation \( A \lesssim _{s, m} B \) means that \(A \le C(s, m) B\) for some constant \(C(s, m) > 0\) depending on the Sobolev index s and a generic constant m. We always omit to write the dependence on d, which is the number of frequencies, and \(\tau \), which is the constant appearing in the non-resonance conditions (see for instance (4.32)). We often write \(u = \textrm{even}(\varphi , x)\) if \(u \in X\) and \(u = {\textrm{odd}}(\varphi , x)\) if \(u \in Y\) (recall (1.5)). For a given Banach space Z, we recall that \({\mathcal {B}}(Z)\) denotes the space of bounded operators from Z into itself.

2.1 Function Spaces

Let \(a: {\mathbb {T}}^d \times {\mathbb {T}}^2 \rightarrow {\mathbb {C}}\), \(a = a(\varphi ,x)\), be a function. Then, for \(s \in {\mathbb {R}}\), its Sobolev norm \(\Vert a \Vert _s\) is defined as

where \({{\widehat{a}}}(\ell ,j)\) (which are scalars, or vectors, or matrices) are the Fourier coefficients of \(a(\varphi ,x)\), namely

We denote, for \(E = {\mathbb {C}}^n\) or \({\mathbb {R}}^n\),

In the paper we use Sobolev norms for (real or complex, scalar- or vector- or matrix-valued) functions \(u( \varphi , x; \omega , \zeta )\), \((\varphi ,x) \in {\mathbb {T}}^d \times {\mathbb {T}}^2\), being Lipschitz continuous with respect to the parameters \(\lambda :=(\omega ,\zeta ) \in {\mathbb {R}}^{d+2}\). We fix

once and for all, and define the weighted Sobolev norms in the following way.

Definition 2.1

(Weighted Sobolev norms). Let \(\gamma \in (0,1]\), \(\Lambda \subseteq {\mathbb {R}}^{d + 2}\) and \(s \ge s_0\). Given a function \(u: \Lambda \rightarrow H^s({\mathbb {T}}^d \times {\mathbb {T}}^2)\), \(\lambda \mapsto u(\lambda ) = u(\varphi ,x; \lambda )\) that is Lipschitz continuous with respect to \(\lambda \), we define its weighted Sobolev norm by

where

For \(u\) independent of \((\varphi ,x)\), we simply denote by \(| u |^{{\textrm{Lip}}(\gamma )}:= | u|^{\sup } + \gamma \, | u|^{\textrm{lip}} \).

For any \(N>0\), we define the smoothing operators (Fourier truncation)

Lemma 2.2

(Smoothing). The smoothing operators \(\Pi _N, \Pi _N^\perp \) satisfy the smoothing estimates

Lemma 2.3

(Product). For all \( s \ge s_0\),

2.2 Matrix Representation of Linear Operators

Let \({{\mathcal {R}}}: L^2({\mathbb {T}}^2) \rightarrow L^2({\mathbb {T}}^2)\) be a linear operator. Such an operator can be represented as

where, for \(j, j' \in {\mathbb {Z}}^2\), the matrix element \({{\mathcal {R}}}_j^{j'}\) is defined by

We also consider smooth \(\varphi \)-dependent families of linear operators \({\mathbb {T}}^d \rightarrow {{\mathcal {B}}} (L^2({\mathbb {T}}^2))\), \(\varphi \mapsto {{\mathcal {R}}}(\varphi )\), which we write in Fourier series with respect to \(\varphi \) as

According to (2.8), for any \(\ell \in {\mathbb {Z}}^d\), the linear operator \(\widehat{{\mathcal {R}}}(\ell ) \in {{\mathcal {B}}} (L^2({\mathbb {T}}^2))\) is identified with the matrix \((\widehat{{\mathcal {R}}}(\ell )_j^{j'})_{j, j' \in {\mathbb {Z}}^2}\). A map \({\mathbb {T}}^d \rightarrow {{\mathcal {B}}} (L^2({\mathbb {T}}^2))\), \(\varphi \mapsto {{\mathcal {R}}}(\varphi )\) can be also regarded as a linear operator \(L^2({\mathbb {T}}^{d + 2}) \rightarrow L^2({\mathbb {T}}^{d + 2})\) by

If the operator \({{\mathcal {R}}}\) is invariant on the space of functions with zero average in x, we identify \({{\mathcal {R}}}\) with the matrix

Definition 2.4

(Diagonal operators) Let \({{\mathcal {R}}}\) be a linear operator as in (2.7)–(2.10). We define \({{\mathcal {D}}}_{{\mathcal {R}}}\) as the operator defined by

In particular, we say that \({\mathcal {R}}\) is a diagonal operator if \({\mathcal {R}}\equiv {\mathcal {D}}_{{\mathcal {R}}}\).

For the purpose of the Normal form method for the linearized operator in Sect. 4, it is convenient to introduce the following norms that take into account the order and the off-diagonal decay of the matrix elements representing any linear operator on \(L^2({\mathbb {T}}^{d+2})\).

Definition 2.5

(Matrix decay norm and the class \({{\mathcal {O}}}{{\mathcal {P}}}{{\mathcal {M}}}^m_s\)). Let \(m \in {\mathbb {R}}\), \(s \ge s_0\) and \({\mathcal {R}}\) be an operator represented by the matrix in (2.10). We say that \({{\mathcal {R}}}\) belongs to the class \({{\mathcal {O}}}{{\mathcal {P}}}{{\mathcal {M}}}^m_s\) if

If the operator \({\mathcal {R}}= {\mathcal {R}}(\lambda )\) is Lipschitz with respect to the parameter \(\lambda \in \Lambda \subseteq {\mathbb {R}}^{d+2}\), we define

Directly from the latter definition, it follows that

We now state some standard properties of the decay norms that are needed for the reducibility scheme of Sect. 4.3. If \(a \in H^s\), \(s \ge s_0\), then the multiplication operator \({{\mathcal {M}}}_a: u \mapsto a u\) satisfies

Lemma 2.6

(i) Let \(s \ge s_0\) and \({{\mathcal {R}}} \in {{\mathcal {O}}}{{\mathcal {P}}}{{\mathcal {M}}}^0_s\). If \(\Vert u \Vert _s^{{\textrm{Lip}}(\gamma )} < \infty \), then

$$\begin{aligned} \Vert {{\mathcal {R}}} u \Vert _s^{{\textrm{Lip}}(\gamma )} \lesssim _{s} |{{\mathcal {R}}}|_{0, s}^{{\textrm{Lip}}(\gamma )} \Vert u \Vert _s^{{\textrm{Lip}}(\gamma )}. \end{aligned}$$

(ii) Let \(s \ge s_0\), \(m, m' \in {\mathbb {R}}\), and let \({{\mathcal {R}}} \in {{\mathcal {O}}}{{\mathcal {P}}}{{\mathcal {M}}}^m_s\), \({{\mathcal {Q}}} \in {{\mathcal {O}}}{{\mathcal {P}}}{{\mathcal {M}}}^{m'}_{s + |m|}\). Then \({{\mathcal {R}}} {{\mathcal {Q}}} \in {{\mathcal {O}}}{{\mathcal {P}}}{{\mathcal {M}}}^{m + m'}_s\) and

$$\begin{aligned} |{{\mathcal {R}}}{{\mathcal {Q}}}|_{m + m', s}^{{\textrm{Lip}}(\gamma )} \lesssim _{s, m} |{{\mathcal {R}}}|_{m, s}^{{\textrm{Lip}}(\gamma )} |{{\mathcal {Q}}}|_{m', s_0 + |m|}^{{\textrm{Lip}}(\gamma )} + |{{\mathcal {R}}}|_{m, s_0}^{{\textrm{Lip}}(\gamma )} |{{\mathcal {Q}}}|_{m', s + |m|}^{{\textrm{Lip}}(\gamma )}. \end{aligned}$$

(iii) Let \(s \ge s_0\) and \({{\mathcal {R}}} \in {{\mathcal {O}}}{{\mathcal {P}}}{{\mathcal {M}}}^0_s\). Then, for any integer \(n \ge 1\), \({{\mathcal {R}}}^n \in {{\mathcal {O}}}{{\mathcal {P}}}{{\mathcal {M}}}^0_s\) and there exist constants \(C(s_0),C(s) > 0\), independent of n, such that

$$\begin{aligned} \begin{aligned} |{{\mathcal {R}}}^n|_{0, s_0}^{{\textrm{Lip}}(\gamma )}&\le C(s_0)^{n - 1} \big (|{{\mathcal {R}}}|_{0, s_0}^{{\textrm{Lip}}(\gamma )}\big )^{n}, \\ |{{\mathcal {R}}}^n|_{0, s}^{{\textrm{Lip}}(\gamma )}&\le n\,C(s)^{n - 1} \big (C(s_0)|{{\mathcal {R}}}|_{0, s_0}^{{\textrm{Lip}}(\gamma )}\big )^{n - 1} |{{\mathcal {R}}}|_{0, s}^{{\textrm{Lip}}(\gamma )}. \end{aligned} \end{aligned}$$

(iv) Let \(s \ge s_0\), \(m \ge 0\) and \({{\mathcal {R}}} \in {{\mathcal {O}}}{{\mathcal {P}}}{{\mathcal {M}}}^{- m}_s\). Then there exists \(\delta (s) \in (0, 1)\) small enough such that, if \(|{{\mathcal {R}}}|_{- m, s_0}^{{\textrm{Lip}}(\gamma )} \le \delta (s)\), then the map \(\Phi = {\textrm{Id}} + {{\mathcal {R}}}\) is invertible and the inverse satisfies the estimate

$$\begin{aligned} |\Phi ^{- 1} - {\textrm{Id}}|_{- m, s}^{{\textrm{Lip}}(\gamma )} \lesssim _{s, m} |{{\mathcal {R}}}|_{- m, s}^{{\textrm{Lip}}(\gamma )}. \end{aligned}$$

(v) Let \(s \ge s_0\), \(m \in {\mathbb {R}}\) and \({{\mathcal {R}}} \in {{\mathcal {O}}}{{\mathcal {P}}}{{\mathcal {M}}}^m_s\). Let \({{\mathcal {D}}}_{{\mathcal {R}}}\) be the diagonal operator as in Definition 2.4. Then \({{\mathcal {D}}}_{{\mathcal {R}}} \in {{\mathcal {O}}}{{\mathcal {P}}}{{\mathcal {M}}}^m_s\) and \(|{{\mathcal {D}}}_{{\mathcal {R}}}|_{m, s}^{{\textrm{Lip}}(\gamma )} \lesssim |{{\mathcal {R}}}|_{m, s}^{{\textrm{Lip}}(\gamma )}\). As a consequence,

$$\begin{aligned} | \widehat{{\mathcal {R}}}_j^j(0) |^{{\textrm{Lip}}(\gamma )} \lesssim \langle j \rangle ^m|{{\mathcal {R}}}|_{m, s_0}^{{\textrm{Lip}}(\gamma )}. \end{aligned}$$

Proof

(i), (ii) The proofs of the first two items use similar arguments. We only prove item (ii). We start by assuming that both \({\mathcal {R}}\) and \({\mathcal {Q}}\) do not depend on the parameter \(\lambda \). The matrix elements for the composition operator \({\mathcal {R}}{\mathcal {Q}}\) follow the rule

Using that \(\langle \ell , j - j' \rangle ^s \lesssim _s \langle \ell - k, j - i \rangle ^s + \langle k, i - j' \rangle ^s\), one gets

where

We start by estimating (A). By the elementary inequality \( \langle i \rangle ^m\langle j' \rangle ^{- m} \lesssim _m \langle j' - i \rangle ^{|m|} \), the Cauchy–Schwarz inequality and the convergence of the series \(\sum _{k \in {\mathbb {Z}}^d, i \in {\mathbb {Z}}^2} \langle k, i - j'\rangle ^{- 2 s_0} = C(s_0)<\infty \), one has

By similar arguments, one gets \((B) \lesssim _m |{{\mathcal {Q}}}|_{m', s + |m|}^2 |{{\mathcal {R}}}|_{m, s_0}^2 \) and hence the claimed estimate follows by taking the supremum over \(j' \in {\mathbb {Z}}^2\) in (2.14). If we reintroduce the dependence on the parameter \(\lambda \), the estimate for the Lipschitz seminorm follows as usual by taking two parameters \(\lambda _1, \lambda _2\) and writing \({{\mathcal {R}}}(\lambda _1) {{\mathcal {Q}}}(\lambda _1) - {{\mathcal {R}}}(\lambda _2) {{\mathcal {Q}}}(\lambda _2) = ({{\mathcal {R}}}(\lambda _1) - {{\mathcal {R}}}(\lambda _2)) {{\mathcal {Q}}}(\lambda _1) + {{\mathcal {R}}}(\lambda _2) ({{\mathcal {Q}}}(\lambda _1) - {{\mathcal {Q}}}(\lambda _2))\).

(iii) The claim follows by an induction argument and item (ii).

(iv) The claim follows by a Neumann series argument, together with item (iii).

(v) The claims are a direct consequence of the definition of the matrix decay norm in Definition 2.5. \(\square \)
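The Neumann series argument behind item (iv) can be illustrated in a finite-dimensional setting. The following Python sketch is only a model: it assumes that the matrix decay norm is replaced by the max-row-sum norm of a small square matrix (another submultiplicative norm), and the helper names `matmul`, `row_sum_norm` and `neumann_inverse` are hypothetical. It sums \(\sum _{k \ge 0} (- {{\mathcal {R}}})^k\) and compares \(\Phi ^{- 1} - {\textrm{Id}}\) with the geometric-series bound.

```python
# Finite-dimensional model of the Neumann series argument for Phi = Id + R.
# Assumption: the max-row-sum norm stands in for the matrix decay norm.

def matmul(A, B):
    """Product of two square matrices given as lists of rows."""
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def identity(n):
    return [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]

def row_sum_norm(A):
    """Max-row-sum norm, which satisfies |AB| <= |A| |B|."""
    return max(sum(abs(x) for x in row) for row in A)

def neumann_inverse(R, terms=200):
    """Approximate (Id + R)^{-1} by the partial sum of sum_k (-R)^k."""
    if row_sum_norm(R) >= 1.0:
        raise ValueError("smallness condition |R| < 1 violated")
    n = len(R)
    minus_R = [[-x for x in row] for row in R]
    total, power = identity(n), identity(n)
    for _ in range(terms):
        power = matmul(power, minus_R)  # power = (-R)^k
        total = [[total[i][j] + power[i][j] for j in range(n)] for i in range(n)]
    return total

R = [[0.10, -0.05], [0.02, 0.08]]  # illustrative small perturbation
n = len(R)
Id = identity(n)
Phi = [[Id[i][j] + R[i][j] for j in range(n)] for i in range(n)]
Phi_inv = neumann_inverse(R)
defect = [[Phi_inv[i][j] - Id[i][j] for j in range(n)] for i in range(n)]
print(row_sum_norm(defect), row_sum_norm(R) / (1.0 - row_sum_norm(R)))
```

In this model the defect \(\Phi ^{- 1} - {\textrm{Id}}\) is controlled by \(|{{\mathcal {R}}}| / (1 - |{{\mathcal {R}}}|)\), which mirrors the linear dependence on \(|{{\mathcal {R}}}|\) in the estimate of item (iv).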

We recall the definition of the set of the Diophantine vectors in a bounded, measurable set \(\Lambda \subset {\mathbb {R}}^{d + 2}\). Given \(\gamma , \tau > 0\), we define

where \(|(\ell ,j)|:=|\ell |+|j|\) for any \(\ell \in {\mathbb {Z}}^d\), \(j\in {\mathbb {Z}}^2\).

Lemma 2.7

(Homological equation). Let \(\Lambda \ni \lambda =(\omega ,\zeta )\mapsto {{\mathcal {R}}}(\lambda )\) be a Lipschitz family of linear operators in \({{\mathcal {O}}}{{\mathcal {P}}}{{\mathcal {M}}}^m_{s + 2 \tau + 1}\). Then, for any \(\lambda \in \Lambda (\gamma , \tau )\), there exists a solution \(\Psi = \Psi (\lambda ) \in {{\mathcal {O}}}{{\mathcal {P}}}{{\mathcal {M}}}^m_{s}\) of the equation

satisfying the estimate \(|\Psi |_{m, s}^{{\textrm{Lip}}(\gamma )} \lesssim \gamma ^{- 1} |{{\mathcal {R}}}|_{m, s + 2 \tau + 1}^{{\textrm{Lip}}(\gamma )}\). Moreover, if \({{\mathcal {R}}}\) is invariant on the space of zero average functions, then \(\Psi \) is also invariant on the space of zero average functions.

Proof

By the matrix representation (2.9), (2.10), Eq. (2.16) is equivalent to

for any \((\ell , j, j') \in {\mathbb {Z}}^d \times {\mathbb {Z}}^2 \times {\mathbb {Z}}^2\) with \((\ell , j, j') \ne (0, j, j)\). We then define \(\Psi \) as

Since \(\lambda = (\omega , \zeta ) \in \Lambda (\gamma , \tau )\), by (2.15), one has that

The latter estimate, together with the definition (2.11), implies that

We prove now the Lipschitz estimate. Let \(\lambda _1, \lambda _2 \in \Lambda (\gamma , \tau )\). A direct computation shows that

Hence by recalling (2.11), (2.12) one obtains that

The estimates (2.17), (2.18) imply the claimed bound \(|\Psi |_{m, s}^{{\textrm{Lip}}(\gamma )} \lesssim \gamma ^{- 1} |{{\mathcal {R}}}|_{m, s + 2 \tau + 1}^{{\textrm{Lip}}(\gamma )}\). \(\square \)
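The mechanism of the proof (division of each Fourier coefficient by a small divisor, controlled by the Diophantine condition) can be sketched on a scalar model problem. The Python snippet below is an illustration under stated assumptions, not the operator equation of the lemma: it solves \(\omega \cdot \partial _\varphi \psi + r = \langle r \rangle \) on \({\mathbb {T}}^2\) coefficient by coefficient, with no spatial index, and the frequency vector, the constants `gamma`, `tau` and the function name `solve_homological` are hypothetical choices.

```python
import math

def solve_homological(r_hat, omega, gamma, tau):
    """Solve i (omega . l) psi_hat(l) + r_hat(l) = 0 for every mode l != 0.

    r_hat maps integer tuples l to complex Fourier coefficients.
    Raises ValueError if a mode violates |omega . l| >= gamma / |l|^tau,
    where |l| is the sum of the absolute values of the entries of l.
    """
    psi_hat = {}
    for l, coeff in r_hat.items():
        if all(c == 0 for c in l):
            continue  # the average stays on the right-hand side
        divisor = sum(w * c for w, c in zip(omega, l))
        size = sum(abs(c) for c in l)
        if abs(divisor) < gamma / size ** tau:
            raise ValueError(f"mode {l} violates the Diophantine condition")
        psi_hat[l] = -coeff / (1j * divisor)
    return psi_hat

# Illustrative data (assumptions): golden-mean frequency vector, a few modes.
omega = (1.0, (1.0 + math.sqrt(5.0)) / 2.0)
gamma, tau = 0.5, 1.0
r_hat = {(0, 0): 0.3, (1, 0): 1.0 + 0.5j, (-2, 1): 0.2j,
         (3, -2): -0.7, (-5, 3): 0.1}
psi_hat = solve_homological(r_hat, omega, gamma, tau)
for l in sorted(psi_hat):
    print(l, abs(psi_hat[l]), abs(r_hat[l]))
```

Each coefficient of the solution is larger than the corresponding datum by at most the factor \(\gamma ^{- 1} |\ell |^{\tau }\), which is the scalar counterpart of the loss of derivatives and of the factor \(\gamma ^{- 1}\) in the estimate of Lemma 2.7.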

For \(N > 0\), we define the operators \(\Pi _N {{\mathcal {R}}}\) and \(\Pi _N^\perp {\mathcal {R}}\) by means of their matrix representation as follows:

Lemma 2.8

For all \(s, \alpha \ge 0\), \(m \in {\mathbb {R}}\), one has \(|\Pi _N {{\mathcal {R}}}|_{m, s + \alpha }^{{\textrm{Lip}}(\gamma )} \le N^\alpha |{{\mathcal {R}}}|_{m, s}^{{\textrm{Lip}}(\gamma )}\) and \(|\Pi _N^\bot {{\mathcal {R}}}|_{m, s}^{{\textrm{Lip}}(\gamma )} \le N^{- \alpha } |{{\mathcal {R}}}|_{m, s + \alpha }^{{\textrm{Lip}}(\gamma )}\).

Proof

The claims follow directly from (2.11) and (2.19). \(\square \)
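For the reader's convenience, the second bound can be unpacked as follows. This is a sketch under assumptions on the form of the definitions: we take the decay norm to weight the matrix entries by \(\langle \ell , j - j' \rangle ^{s}\) with a supremum over \(j'\), as suggested by the proof of Lemma 2.6, and we take \(\Pi _N^\bot {{\mathcal {R}}}\) to retain exactly the entries with \(\langle \ell , j - j' \rangle > N\). On the retained entries one has \(\langle \ell , j - j' \rangle ^{2 s} \le N^{- 2 \alpha } \langle \ell , j - j' \rangle ^{2 (s + \alpha )}\), hence

$$\begin{aligned} |\Pi _N^\bot {{\mathcal {R}}}|_{m, s}^2&= \sup _{j' \in {\mathbb {Z}}^2} \langle j' \rangle ^{- 2 m} \sum _{\langle \ell , j - j' \rangle > N} \langle \ell , j - j' \rangle ^{2 s} |\widehat{{\mathcal {R}}}_j^{j'}(\ell )|^2 \\&\le N^{- 2 \alpha } \sup _{j' \in {\mathbb {Z}}^2} \langle j' \rangle ^{- 2 m} \sum _{\ell , j} \langle \ell , j - j' \rangle ^{2 (s + \alpha )} |\widehat{{\mathcal {R}}}_j^{j'}(\ell )|^2 = N^{- 2 \alpha } |{{\mathcal {R}}}|_{m, s + \alpha }^2. \end{aligned}$$

The bound for \(\Pi _N {{\mathcal {R}}}\) follows in the same way from \(\langle \ell , j - j' \rangle ^{2 (s + \alpha )} \le N^{2 \alpha } \langle \ell , j - j' \rangle ^{2 s}\) on the complementary set of indices, and the Lipschitz estimates are obtained by applying the same inequalities to \({{\mathcal {R}}}(\lambda _1) - {{\mathcal {R}}}(\lambda _2)\).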

We also define the projection \(\Pi _0\) on the space of zero average functions as

In particular, for any \(m, s \ge 0\),

We finally mention the elementary properties of the Laplacian operator \(- \Delta \) and its inverse \((- \Delta )^{- 1}\) acting on functions with zero average in x:

By Definition 2.5, one easily verifies, for any \(s\ge 0\),

2.3 Real and Reversible Operators

We recall the notation introduced in (1.5), that is, for any function \(u(\varphi ,x)\), we write \(u \in X\) when \(u = \text {even}(\varphi ,x)\) and \(u \in Y\) when \(u = \text {odd}(\varphi ,x)\).

Definition 2.9

(i) We say that a linear operator \(\Phi \) is reversible if \(\Phi : X \rightarrow Y\) and \(\Phi : Y \rightarrow X\). We say that \(\Phi \) is reversibility preserving if \(\Phi : X \rightarrow X\) and \(\Phi : Y \rightarrow Y\).

(ii) We say that an operator \(\Phi : L^2({\mathbb {T}}^2) \rightarrow L^2({\mathbb {T}}^2)\) is real if \(\Phi (u)\) is real valued for any u real valued.

It is convenient to reformulate real and reversibility properties of linear operators in terms of their matrix representations.

Lemma 2.10

A linear operator \({{\mathcal {R}}}\) is:

(i) real if and only if \(\widehat{{\mathcal {R}}}_{j}^{j'}(\ell ) = \overline{\widehat{{\mathcal {R}}}_{- j}^{- j'}(- \ell )}\) for all \(\ell \in {\mathbb {Z}}^d\), \(j, j' \in {\mathbb {Z}}^2\);

(ii) reversible if and only if \(\widehat{{\mathcal {R}}}_j^{j'}(\ell ) = - \widehat{{\mathcal {R}}}_{- j}^{- j'}(- \ell )\) for all \(\ell \in {\mathbb {Z}}^d\), \(j, j' \in {\mathbb {Z}}^2\);

(iii) reversibility preserving if and only if \(\widehat{{\mathcal {R}}}_j^{j'}(\ell ) = \widehat{{\mathcal {R}}}_{- j}^{- j'}(- \ell )\) for all \(\ell \in {\mathbb {Z}}^d\), \(j, j' \in {\mathbb {Z}}^2\).

3 The Nonlinear Functional and the Linearized Navier–Stokes Operator at the Euler Solution

We shall show the existence of solutions of (1.4) by finding zeroes of the nonlinear operator \( {{\mathcal {F}}}_\nu : H^{s + 2}_0({\mathbb {T}}^{d + 2}) \rightarrow H^s_0({\mathbb {T}}^{d + 2}) \) defined by

with \(\nabla _\bot \) as in (1.4), and, without loss of generality, \(F = \nabla \times f\) has zero average in space, namely

We consider parameters \((\omega , \zeta )\) in a bounded open set \(\Omega \subset {\mathbb {R}}^d \times {\mathbb {R}}^2\); we will use such parameters along the proof in order to impose appropriate non-resonance conditions.

In this section and Sect. 4 we assume the following ansatz, which is implied by Theorem 1.1: there exists \(S \gg 0\) large enough such that \(v_e(\cdot ; \lambda ) \in H^{S}_0({\mathbb {T}}^d \times {\mathbb {T}}^2)\), \(\lambda \in \Omega _\varepsilon \), is a solution of the Euler equation satisfying

where \({{\overline{S}}}:= {{\overline{S}}}(d)\) is the minimal regularity threshold for the existence of quasi-periodic solutions of the Euler equation provided by Theorem 1.1. We want to study the linearized operator \({{\mathcal {L}} }_\nu := {\mathrm d}{{\mathcal {F}}}_\nu (v_e)\) at the solution \(v_e\) of the Euler equation, where \({\mathcal {F}}_\nu (v)\) is defined in (3.1). The linearized operator has the form

where \(a(\varphi ,x)\) is the function defined by

with \(\nabla _\bot \) as in (1.4) and \({{\mathcal {R}}}(\varphi )\) is a pseudo-differential operator of order \(- 1\), given by

Using that \({\textrm{div}} (\nabla _\bot h) = 0\) for any h, the operators \(a \cdot \nabla \), \({{\mathcal {R}}}\), \({{\mathcal {L}}}_\nu \) and \({{\mathcal {L}}}_e\) leave invariant the subspace of zero average functions, with

implying that

We always work on the space of zero average functions and we shall preserve this invariance along the whole paper.

The goal of the next two sections is to invert the linearized Navier–Stokes operator \({\mathcal {L}}_\nu \), obtained by linearizing the nonlinear functional \({\mathcal {F}}_\nu (v)\) in (3.1) at any quasi-periodic solution \(v_e(\varphi ,x)|_{\varphi =\omega t}\) provided by Theorem 1.1, under a smallness condition on \(\varepsilon \) that is independent of the viscosity parameter \(\nu > 0\). This is achieved in two steps. First, we fully reduce the linearized Euler operator \({\mathcal {L}}_{e}\) in (3.3) to a constant coefficient, diagonal operator. This is done in Sect. 4, in the spirit of [4, 5], by combining a reduction to constant coefficients up to an arbitrarily regularizing remainder with a KAM reducibility scheme. We check step by step that this normal form procedure, when applied to the full operator \({\mathcal {L}}_{\nu }\) in (3.3), only perturbs the unbounded viscous term \(-\nu \Delta \) by an unbounded pseudo-differential operator of order two that “gains smallness”, namely it is of size \(O(\nu \varepsilon )\), see (5.1)–(5.2). Second, in Sect. 5, we use this normal form procedure to infer the invertibility of the operator \({\mathcal {L}}_{\nu }\) uniformly with respect to the viscosity parameter, namely by imposing a smallness condition on \(\varepsilon \) that is independent of \(\nu \). The inverse of the linearized Navier–Stokes operator is bounded from \(H^s_0\) to \(H^{s+2}_0\), that is, it gains two space derivatives, and it has size \(O(\nu ^{- 1})\) (see Proposition 5.4), whereas the inverse of the linearized Euler operator \({{{\mathcal {L}}}}_e\) loses \(\tau \) derivatives, due to the small divisors (see Proposition 5.5). The invertibility of the linearized Euler operator \({{{\mathcal {L}}}}_e\) is used to construct the approximate solution in Sect. 6 and the invertibility of the linearized Navier–Stokes operator \({{{\mathcal {L}}}}_\nu \) is used to implement the fixed point argument of Sect. 7.

4 Normal Form Reduction of the Operator \({\mathcal {L}}_{\nu }\)

In this section we reduce the operator \({\mathcal {L}}_{\nu }\) in (3.3) to a constant coefficient, diagonal operator, up to an unbounded remainder of order two which is of size \(O(\varepsilon \nu )\). First, in Sect. 4.1, we deal with the conjugation of the transport operator, which is the highest order term in the operator \({\mathcal {L}}_{e}\). In Sect. 4.2, the lower order terms obtained after this conjugation are reduced to constant coefficients, up to a remainder that is arbitrarily smoothing in the matrix decay norm and up to an unbounded remainder of order two and size \(O(\varepsilon \nu )\). Then, in Sects. 4.3–4.4 we perform the full KAM reducibility for the regularized version of the operator \({\mathcal {L}}_{e}\). In particular, in Sect. 4.3 the n-th iterative step of the reduction is performed and in Sect. 4.4 the convergence of the scheme is proved via Nash–Moser estimates, which overcome the loss of derivatives coming from the small divisors. The linearized Navier–Stokes operator is then reduced to a diagonal operator plus an unbounded operator of order two and size \(O(\varepsilon \nu )\) in (5.1)–(5.2). This is the starting point for its inversion in Sect. 5.

From now on, the parameters \(\gamma \in (0,1)\) and \(\tau >0\), characterizing the set \(\Lambda (\gamma ,\tau )\) in (2.15) of the Diophantine frequencies in a given measurable set \(\Lambda \), are considered as fixed: \(\tau \) is chosen in (8.2) and \(\gamma \) at the end of Sect. 8, see (8.6). Therefore we do not recall them each time. Moreover, from now on, we denote by \(DC(\gamma , \tau )\) the set of Diophantine frequencies in \(\Omega _\varepsilon \), where the set \(\Omega _\varepsilon \) is provided in Theorem 1.1, namely \(\Lambda (\gamma , \tau )\) with \(\Lambda = \Omega _\varepsilon \). We repeat the definition for the reader's convenience:

4.1 Reduction of the Highest Order Term

First, we state the proposition that allows us to reduce to constant coefficients the highest order operator

where we recall, by (3.4), that \(\Pi _0 a = 0 \) and \( {\textrm{div}}(a) = 0 \). The result has been proved in Proposition 4.1 in [4] (see also [23] for a more general result of this kind). We restate it with the obvious adaptations to our case and we refer to [4] for the proof.

Proposition 4.1

(Straightening of the transport operator \({\mathcal {T}}\)). There exists \(\sigma := \sigma (\tau , d) > 0\) large enough such that for any \(S > s_0 + \sigma \) there exists \(\delta := \delta (S, \tau , d) \in (0, 1)\) small enough such that if (3.2) holds and

are fulfilled, then the following holds. There exists an invertible diffeomorphism \({\mathbb {T}}^2 \rightarrow {\mathbb {T}}^2\), \(x \mapsto x + \alpha (\varphi , x; \omega , \zeta )\) with inverse \(y \mapsto y + \breve{\alpha }(\varphi , y; \omega , \zeta )\), defined for all \((\omega , \zeta ) \in DC(\gamma , \tau )\), with the set given in (4.1), satisfying, for any \(s_0\le s \le S - \sigma \),

such that, by defining

one gets the conjugation

Furthermore, \(\alpha ,\breve{\alpha }\) are \(\text {odd}(\varphi ,x)\) and the maps \({{\mathcal {A}}}, {{\mathcal {A}}}^{- 1}\) are reversibility preserving, satisfying the estimates

We remark that the assumptions of Proposition 4.1 are satisfied by the ansatz (3.2) and by the choice of the parameter \(\gamma >0\) at the end of Sect. 8 in (8.6). In particular, the smallness condition (4.2) becomes \(\varepsilon \gamma ^{-1}= \varepsilon ^{1-\frac{\mathtt a}{2}} \ll 1\), which is satisfied since \(\mathtt a \in (0,1)\) and \(\varepsilon \) is sufficiently small.

In order to study the conjugation of the operator \({{\mathcal {L}}}_{\nu }: H^{s+2}_0 \rightarrow H^{s}_0\) in (3.3) under the transformation \({\mathcal {A}}\), we need the following auxiliary Lemma.

Lemma 4.2

Let \(S > s_0 + \sigma + 2\) (where \(\sigma \) is the constant appearing in Proposition 4.1). Then there exists \(\delta := \delta (S, \tau , d) \in (0, 1)\) small enough such that if (3.2), (4.2) are fulfilled, the following hold:

(i) Let \({{\mathcal {A}}}_\bot := \Pi _0^\bot {{\mathcal {A}}} \Pi _0^\bot \). Then, for any \(s_0\le s\le S - \sigma \), the operator \({{\mathcal {A}}}_\bot : H^s_0 \rightarrow H^s_0\) is invertible with bounded inverse given by \({{\mathcal {A}}}_\bot ^{- 1} = \Pi _0^\bot {{\mathcal {A}}}^{- 1} \Pi _0^\bot : H^s_0 \rightarrow H^s_0\);

(ii) Let \(s_0\le s\le S - \sigma - 1\), \(a (\cdot ; \lambda ) \in H^{s + 1}({\mathbb {T}}^{d + 2})\) and let \({{\mathcal {R}}}_a\) be the linear operator defined by

$$\begin{aligned} {{\mathcal {R}}}_a: h(\varphi , x) \mapsto \nabla a(\varphi , x) \cdot \nabla ^\bot h(\varphi , x). \end{aligned}$$

Then \({{\mathcal {A}}}_\bot ^{- 1} {{\mathcal {R}}}_a {{\mathcal {A}}}_\bot \in {{\mathcal {O}}}{{\mathcal {P}}}{{\mathcal {M}}}^1_s\) and \(|{{\mathcal {A}}}_\bot ^{- 1} {{\mathcal {R}}}_a {{\mathcal {A}}}_\bot |_{1, s}^{{\textrm{Lip}}(\gamma )} \lesssim _s \Vert a \Vert _{s + 1}^{{\textrm{Lip}}(\gamma )}\);

(iii) For any \(s_0\le s\le S- \sigma - 2\), the operator \({{\mathcal {P}}}_\Delta := {{\mathcal {A}}}_\bot ^{-1} (- \Delta ) {{\mathcal {A}}}_\bot =- \Delta +{\mathcal {R}}_{\Delta }: H^{s+2}_0 \rightarrow H_{0}^{s}\) is in \({{\mathcal {O}}{{\mathcal {P}}}{{\mathcal {M}}}}^2_s\), with estimates

$$\begin{aligned} |{\mathcal {R}}_{\Delta }|_{2,s}^{{{\textrm{Lip}}(\gamma )}} \lesssim _s \varepsilon \gamma ^{- 1}. \end{aligned} \tag{4.6}$$

(iv) For any \(s_0\le s \le S- \sigma - 2\), the operator \({{\mathcal {P}}}_\Delta \) is invertible, with inverse of the form

$$\begin{aligned} {{\mathcal {P}}}_\Delta ^{- 1} = {{\mathcal {A}}}_\bot ^{- 1} (- \Delta )^{- 1}{{\mathcal {A}}}_\bot \in {{\mathcal {O}}}{{\mathcal {P}}}{{\mathcal {M}}}_s^{- 2} \end{aligned}$$

satisfying the estimates

$$\begin{aligned} |{{\mathcal {P}}}_\Delta ^{- 1}|_{- 2, s}^{{\textrm{Lip}}(\gamma )} \lesssim _{s} 1. \end{aligned}$$

Proof

Proof of (i). For any \(u \in H^s({\mathbb {T}}^{d + 2})\), we split \(u = \Pi _0^\bot u + \Pi _0 u\), where \(\Pi _0^\bot u \in H^s_0 = H^s_0({\mathbb {T}}^{d + 2})\) and \(\Pi _0 u \in H^s_\varphi := H^s({\mathbb {T}}^d)\). Since \({{\mathcal {A}}}\) is an operator of the form (4.4), one has that \({{\mathcal {A}}} h = h\) if \(h(\varphi )\) does not depend on x. This implies that \({{\mathcal {A}}} \Pi _0 = \Pi _0\). Similarly, one can show that \({{\mathcal {A}}}^{- 1} \Pi _0 = \Pi _0\) and therefore

We now show that the operator \(\Pi _0^\bot {{\mathcal {A}}}^{- 1} \Pi _0^\bot : H^s_0 \rightarrow H^s_0\) is the inverse of the operator \({\mathcal {A}}_\perp :=\Pi _0^\bot {{\mathcal {A}}} \Pi _0^\bot : H^s_0 \rightarrow H^s_0\). By (4.7), one has

and similarly \((\Pi _0^\bot {{\mathcal {A}}} \Pi _0^\bot ) (\Pi _0^\bot {{\mathcal {A}}}^{- 1} \Pi _0^\bot )=\Pi _0^\perp \). The claimed statement then follows.
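For completeness, the first of the two identities can be written out using only the relations \({{\mathcal {A}}} \Pi _0 = \Pi _0\), \({{\mathcal {A}}}^{- 1} \Pi _0 = \Pi _0\) established above, together with \(\Pi _0^\bot = {\textrm{Id}} - \Pi _0\), \((\Pi _0^\bot )^2 = \Pi _0^\bot \) and \(\Pi _0^\bot \Pi _0 = 0\). The following is a sketch of that computation:

$$\begin{aligned} (\Pi _0^\bot {{\mathcal {A}}}^{- 1} \Pi _0^\bot ) (\Pi _0^\bot {{\mathcal {A}}} \Pi _0^\bot )&= \Pi _0^\bot {{\mathcal {A}}}^{- 1} ({\textrm{Id}} - \Pi _0) {{\mathcal {A}}} \Pi _0^\bot \\&= \Pi _0^\bot {{\mathcal {A}}}^{- 1} {{\mathcal {A}}} \Pi _0^\bot - \Pi _0^\bot ({{\mathcal {A}}}^{- 1} \Pi _0) {{\mathcal {A}}} \Pi _0^\bot \\&= \Pi _0^\bot - \Pi _0^\bot \Pi _0 {{\mathcal {A}}} \Pi _0^\bot = \Pi _0^\bot . \end{aligned}$$

The identity with the two factors exchanged is obtained in the same way, with the roles of \({{\mathcal {A}}}\) and \({{\mathcal {A}}}^{- 1}\) interchanged.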

Proof of (ii). First, we note that, given a function \(h(\varphi , x)\) and integrating by parts

since \({\textrm{div}}(\nabla ^\bot h) = 0\) for any function h. Moreover it is easy to see that \({{\mathcal {R}}}_a \Pi _0 = 0\). This implies that the linear operator \({{\mathcal {R}}}_a\) is invariant on the space of zero average functions. Then, using also item (i), one has that

where \({{\mathcal {M}}}_{\nabla a}\) denotes the multiplication operator by \(\nabla a\). A direct calculation shows that \({{\mathcal {A}}}^{- 1}{{\mathcal {M}}}_{\nabla a} {{\mathcal {A}}} = {{\mathcal {M}}}_g\), where \(g (\varphi , y):= \nabla a (\varphi , y + \breve{\alpha }(\varphi , y)) = \{{{\mathcal {A}}}^{- 1} \nabla a\}(\varphi , y)\). The estimates (2.13), (4.5) imply that

Moreover, one computes \({{\mathcal {A}}}^{- 1} \partial _{x_i} {{\mathcal {A}}} \,[h]= \partial _{y_i} h + {{\mathcal {A}}}^{- 1}[\partial _{x_i} \alpha ] \cdot \nabla h \), for \(i=1,2\). Using that \(|\partial _{x_1}|_{1, s}, |\partial _{x_2}|_{1, s} \le 1\) and by applying the estimates (2.13), (4.3), (4.5), (4.2), one obtains that

Using the trivial fact that \(|\Pi _0^\bot |_{0, s} \le 1\), the formula (4.8), the estimates (4.9), (4.10), together with the composition Lemma 2.6-(ii), imply the claimed bound.
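The formula for \({{\mathcal {A}}}^{- 1} \partial _{x_i} {{\mathcal {A}}}\) used in the proof of (ii) is an instance of the chain rule. As a sketch, assume that \({{\mathcal {A}}}\) acts as the composition \({{\mathcal {A}}} h(\varphi , x) = h(\varphi , x + \alpha (\varphi , x))\), consistently with the diffeomorphism of Proposition 4.1, and write \(\alpha = (\alpha _1, \alpha _2)\). Then

$$\begin{aligned} \partial _{x_i} \big ( {{\mathcal {A}}} h \big )(\varphi , x)&= (\partial _{y_i} h)(\varphi , x + \alpha (\varphi , x)) + \sum _{k = 1}^{2} \partial _{x_i} \alpha _k(\varphi , x) \, (\partial _{y_k} h)(\varphi , x + \alpha (\varphi , x)) \\&= {{\mathcal {A}}} [\partial _{y_i} h] + \partial _{x_i} \alpha \cdot {{\mathcal {A}}} [\nabla h]. \end{aligned}$$

Since \({{\mathcal {A}}}^{- 1}\) is also a composition operator, it is multiplicative, so that applying it to both sides gives \({{\mathcal {A}}}^{- 1} \partial _{x_i} {{\mathcal {A}}} \,[h] = \partial _{y_i} h + {{\mathcal {A}}}^{- 1}[\partial _{x_i} \alpha ] \cdot \nabla h\).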

Proof of (iii). Since \(\Pi _0 \Delta = \Delta \Pi _0 = 0\), one computes

and hence, a direct calculation shows that

where

By Lemma 2.3 and the estimate (4.3), we have, for any \(s_0\le s \le S- \sigma - 2\)

By (4.11), Lemma 2.3, estimates (2.13), (2.20), (4.12), Lemma 2.6-(ii) together with the trivial fact that \(|\partial _{x_j}|_{1, s}\,,\, |\partial _{x_i x_j}|_{2, s} \lesssim 1\), we conclude that \({\mathcal {R}}_{\Delta }\) satisfies the claimed bound.

Proof of (iv). We write

where \({\textrm{Id}}_0\) is the identity on the space of the \(L^2\) zero average functions. By Lemma 2.6-(ii), estimates (2.21), (4.6), one obtains that \(|(- \Delta )^{- 1} {{\mathcal {R}}}_\Delta |_{0, s}^{{\textrm{Lip}}(\gamma )} \lesssim _s \varepsilon \gamma ^{- 1}\). Hence, by the smallness condition in (4.2) and by Lemma 2.6-(iv), one gets that \( {\textrm{Id}}_0 + (- \Delta )^{- 1} {{\mathcal {R}}}_\Delta : H^s_0 \rightarrow H^s_0 \) is invertible, with \(|\big ( {\textrm{Id}}_0 + (- \Delta )^{- 1} {{\mathcal {R}}}_\Delta \big )^{- 1}|_{0, s}^{{\textrm{Lip}}(\gamma )} \lesssim _s 1 \). The claimed statement then follows since we have

using again Lemma 2.6-(ii). \(\square \)
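The structure of the argument for item (iv) can be summarized by the following factorization, which is a sketch on the space of zero average functions, where \(- \Delta \) is invertible:

$$\begin{aligned} {{\mathcal {P}}}_\Delta = - \Delta + {{\mathcal {R}}}_\Delta = (- \Delta ) \big ( {\textrm{Id}}_0 + (- \Delta )^{- 1} {{\mathcal {R}}}_\Delta \big ), \qquad {{\mathcal {P}}}_\Delta ^{- 1} = \big ( {\textrm{Id}}_0 + (- \Delta )^{- 1} {{\mathcal {R}}}_\Delta \big )^{- 1} (- \Delta )^{- 1}. \end{aligned}$$

The first factor of the inverse has order 0 and \((- \Delta )^{- 1}\) has order \(- 2\). Assuming that \(|(- \Delta )^{- 1}|_{- 2, s} \lesssim 1\), as the reference to (2.21) suggests, Lemma 2.6-(ii) with \(m = 0\), \(m' = - 2\) yields

$$\begin{aligned} |{{\mathcal {P}}}_\Delta ^{- 1}|_{- 2, s}^{{\textrm{Lip}}(\gamma )} \lesssim _s \big | \big ( {\textrm{Id}}_0 + (- \Delta )^{- 1} {{\mathcal {R}}}_\Delta \big )^{- 1} \big |_{0, s}^{{\textrm{Lip}}(\gamma )} |(- \Delta )^{- 1}|_{- 2, s_0}^{{\textrm{Lip}}(\gamma )} + \big | \big ( {\textrm{Id}}_0 + (- \Delta )^{- 1} {{\mathcal {R}}}_\Delta \big )^{- 1} \big |_{0, s_0}^{{\textrm{Lip}}(\gamma )} |(- \Delta )^{- 1}|_{- 2, s}^{{\textrm{Lip}}(\gamma )} \lesssim _s 1. \end{aligned}$$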

We conclude this section by conjugating the whole operator \({{\mathcal {L}}}_\nu \) defined in (3.3) by means of the map \({{\mathcal {A}}}\) constructed in Proposition 4.1.

Proposition 4.3

Let \( S > s_0 + \sigma + 2\) (where \(\sigma \) is the constant appearing in Proposition 4.1). Then there exists \(\delta := \delta (S, \tau , d) \in (0, 1)\) small enough such that, if (3.2) and (4.2) are fulfilled, the following holds. For any \((\omega , \zeta ) \in DC(\gamma , \tau )\) defined in (4.1), one has

where the map \({\mathcal {A}}_\perp \) is defined as in Proposition 4.1 and Lemma 4.2-(i), whereas, for any \(s_0 \le s \le S - \sigma - 2\), the operators \({{\mathcal {R}}}^{(1)} \in {{\mathcal {O}}}{{\mathcal {P}}}{{\mathcal {M}}}^{- 1}_{s}\) and \({{\mathcal {R}}}^{(1)}_\nu \in {{\mathcal {O}}}{{\mathcal {P}}}{{\mathcal {M}}}^2_s\) satisfy the estimates

Moreover, the operators \({{\mathcal {L}}}^{(1)}_e\) and \({{\mathcal {R}}}^{(1)}\) are real and reversible and \({{\mathcal {R}}}^{(1)}, {{\mathcal {R}}}_\nu ^{(1)}\) leave invariant the space of functions with zero average in x.

Proof

By recalling (3.3) and by Lemma 4.2-(i), one gets \({{\mathcal {L}}}^{(1)}_\nu = {{\mathcal {L}}}_e^{(1)} - \nu {{\mathcal {A}}}_\bot ^{- 1} \Delta {{\mathcal {A}}}_\bot \), where, by (3.6),

By Proposition 4.1, using the formula (3.3), one has that

By (3.5), one writes, according to the notation of Lemma 4.2-(ii),

Therefore,

Then, by Lemma 4.2-(ii), (iv), Lemma 2.6-(ii), the ansatz (3.2) and \(\varepsilon \gamma ^{- 1} \le \delta < 1\), one gets the claimed bound (4.14) for \({{\mathcal {R}}}^{(1)}\). By Lemma 4.2-(iii), one has \(- \nu {{\mathcal {A}}}_\bot ^{- 1} \Delta {{\mathcal {A}}}_\bot = - \nu \Delta + {{\mathcal {R}}}_\nu ^{(1)}\), with \({{\mathcal {R}}}_\nu ^{(1)}:= \nu \Pi _0^{\bot } \big ( \Delta - {{\mathcal {A}}}^{- 1} \Delta {{\mathcal {A}}} \big ) \Pi _0^\bot \) satisfying the estimate \( |{{\mathcal {R}}}_\nu ^{(1)}|_{2, s}^{{\textrm{Lip}}(\gamma )} \lesssim _s \varepsilon \gamma ^{- 1} \nu \), which is the second estimate in (4.14). Moreover, since \({{\mathcal {A}}}, {{\mathcal {A}}}^{- 1}\) are real and reversibility preserving and \({{\mathcal {R}}}\) is real and reversible, the operators \({{\mathcal {L}}}_e^{(1)}, {{\mathcal {R}}}^{(1)}\) are real and reversible. This concludes the proof. \(\square \)

4.2 Reduction to Constant Coefficients of the Lower Order Terms

In this section we diagonalize the operator \({{\mathcal {L}}}^{(1)}_\nu \) in (4.13) up to a remainder of size \(O(\varepsilon )\) and arbitrarily smoothing matrix decay and up to an unbounded operator of order 2 of size \(O(\varepsilon \gamma ^{- 1} \nu )\). More precisely, we prove the following Proposition.

Proposition 4.4

Let \(M\in {\mathbb {N}}\) be fixed. There exists \(\sigma _{M - 1}:= \sigma _{M - 1}( \tau , d) > \sigma + 2\) large enough (where \(\sigma \) is the constant appearing in Proposition 4.1) such that for any \(S > s_0 + \sigma _{M - 1}\) there exists \(\delta := \delta (S, M, \tau , d) \in (0, 1)\) small enough such that, if (3.2), (4.2) are fulfilled, the following holds. For any \((\omega , \zeta ) \in DC(\gamma , \tau )\) defined in (4.1), there exists a real and reversibility preserving, invertible map \({{\mathcal {B}}}\) satisfying, for any \(s_0\le s\le S-\sigma _{M - 1}\),

such that

where \({{\mathcal {Q}}} \in {{\mathcal {O}}}{{\mathcal {P}}}{{\mathcal {M}}}^{- 1}_{s}\) is a diagonal operator, \({{\mathcal {R}}}^{(2)}\) belongs to \({{\mathcal {O}}}{{\mathcal {P}}}{{\mathcal {M}}}^{- M}_{s}\) and \({{\mathcal {R}}}^{(2)}_\nu \in {{\mathcal {O}}}{{\mathcal {P}}}{{\mathcal {M}}}^2_{s}\). Moreover, for any \(s_0\le s \le S-\sigma _{M - 1}\),

The operators \({{\mathcal {Q}}}\), \({{\mathcal {R}}}^{(2)}\) are real, reversible and \({{\mathcal {Q}}}\), \({{\mathcal {R}}}^{(2)}, {{\mathcal {R}}}^{(2)}_\nu \) leave invariant the space of functions with zero average in x.

Proposition 4.4 follows from the following iterative lemma on the operator obtained by neglecting the viscosity term \(- \nu \Delta + {{\mathcal {R}}}^{(1)}_\nu \) in (4.13); namely, in the next lemma, we only consider

Lemma 4.5

Let \(M \in {\mathbb {N}}\). There exist \(0< \sigma _0< \sigma _1< \ldots < \sigma _{M - 1}\) large enough such that for any \(S > s_0 + \sigma _{M - 1}\) there exists \(\delta := \delta (S, \tau , d) \in (0, 1)\) small enough such that, if (3.2), (4.2) are fulfilled, the following holds. For any \(n = 0, \ldots , M -1 \) and any \((\omega , \zeta ) \in DC(\gamma , \tau )\), there exists a real, reversibility preserving, invertible map \({{\mathcal {T}}}_n\) satisfying, for any \(s_0 \le s \le S-\sigma _{n}\)

and, for any \(n = 1, \ldots , M - 1\) and for any \((\omega , \zeta ) \in DC(\gamma , \tau )\),

where \({{\mathcal {L}}}^{(1)}_n\) has the form

where \({{\mathcal {Z}}}_n \in {{\mathcal {O}}}{{\mathcal {P}}}{{\mathcal {M}}}^{- 1}_{s}\) is diagonal, \({{\mathcal {R}}}_n^{(1)} \in {{\mathcal {O}}}{{\mathcal {P}}}{{\mathcal {M}}}^{- (n + 1) }_{s}\) and they satisfy

The operators \({{\mathcal {L}}}_n^{(1)}, {{\mathcal {Z}}}_n\) and \( {{\mathcal {R}}}_n^{(1)}\) are real, reversible and leave invariant the space of zero average functions in x.

Proof

We prove the lemma arguing by induction. For \(n = 0\) the desired properties follow by Proposition 4.3, by defining \({{\mathcal {L}}}^{(1)}_0\) as in (4.18), \({{\mathcal {Z}}}_0 = 0\), \({{\mathcal {R}}}^{(1)}_0:= {{\mathcal {R}}}^{(1)}\) and \(\sigma _0 = \sigma \) given in Proposition 4.3.

We assume that the claimed statement holds for some \(n \in \{0, \ldots , M - 2\}\) and we prove it at the step \(n+1\). Let us consider a transformation \({{\mathcal {T}}}_{n+1} = {\textrm{Id}} +{{\mathcal {K}}}_{n+1}\) where \({{\mathcal {K}}}_{n+1} \) is an operator of order \(- (n+1)\) which has to be determined. One computes

By the induction hypothesis \({{\mathcal {R}}}_n^{(1)} \in {{\mathcal {O}}}{{\mathcal {P}}}{{\mathcal {M}}}_{s}^{- (n + 1)}\), with \(|{{\mathcal {R}}}_n^{(1)} |_{- (n + 1), s} \lesssim _{s,n+1} \varepsilon \) for any \(s_0\le s \le S- \sigma _{n}\). Therefore, by Lemma 2.7, there exists \({{\mathcal {K}}}_{n + 1} \in {{\mathcal {O}}}{{\mathcal {P}}}{{\mathcal {M}}}_{s}^{- (n + 1)}\) solving the homological equation

with \({{\mathcal {D}}}_{{{\mathcal {R}}}_n^{(1)}}\) as in Definition 2.4, satisfying, for any \(s_0\le s \le S-\sigma _{n + 1}\) (for an arbitrary \(\sigma _{n + 1} > \sigma _n + 2 \tau + 1\)),

By Lemma 2.6-(iv), we obtain that \({{\mathcal {T}}}_{n + 1} = {\textrm{Id}} + {{\mathcal {K}}}_{n + 1}\) is invertible with inverse \({{\mathcal {T}}}_{n + 1}^{- 1}\) satisfying the estimate, for \(s_0\le s\le S-\sigma _{n + 1}\),

Hence the estimate (4.19) at the step \(n + 1\) holds. By (4.23), (4.24) we get, for any \((\omega , \zeta ) \in DC(\gamma , \tau )\), the conjugation (4.20) at the step \(n + 1\) where \({{\mathcal {L}}}^{(1)}_{n + 1}\) has the form (4.21), with

By Lemma 2.6-(v), since \({{\mathcal {R}}}_n^{(1)}\) and \({{\mathcal {Z}}}_n\) satisfy (4.22), we deduce that \({{\mathcal {Z}}}_{n + 1} \in {{\mathcal {O}}}{{\mathcal {P}}}{{\mathcal {M}}}_{s}^{- 1}\), with estimates \(|{{\mathcal {Z}}}_{n + 1}|_{- 1,s}^{{\textrm{Lip}}(\gamma )} \lesssim _{s,n+1} \varepsilon \). Moreover, by (4.25), (4.26), Lemma 2.6-(ii) and the condition in (3.2), we obtain that \({{\mathcal {R}}}_{n + 1}^{(1)} \in {{\mathcal {O}}}{{\mathcal {P}}}{{\mathcal {M}}}_{s}^{- (n + 2)}\), with \(|{{\mathcal {R}}}_{n + 1}^{(1)}|_{- (n + 2), s}^{{\textrm{Lip}}(\gamma )} \lesssim _{s,n+1} \varepsilon \) for any \(s_0\le s\le S-\sigma _{n + 1}\), by fixing \(\sigma _{n+1}:= \sigma _{n} + 2 \tau + 1 + n + 1\). This concludes the induction argument and therefore the claimed statement is proved. \(\square \)
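The algebra of one normal form step (conjugation by \({\textrm{Id}} + {{\mathcal {K}}}\), with \({{\mathcal {K}}}\) solving a homological equation) can be sketched on a \(2 \times 2\) matrix. The Python snippet below is a finite-dimensional model under stated assumptions, not the scheme of the lemma: the unperturbed operator is replaced by a diagonal matrix with distinct entries, the gain is measured in size rather than in order, and the function name `normal_form_step` and the numerical data are hypothetical.

```python
def normal_form_step(d, R):
    """One normal form step for the 2x2 matrix L = diag(d) + R.

    K solves [diag(d), K] + R = diag(R), that is
    K[j][k] = -R[j][k] / (d[j] - d[k]) for j != k and K[j][j] = 0.
    Returns T^{-1} L T with T = Id + K.
    """
    k12 = -R[0][1] / (d[0] - d[1])
    k21 = -R[1][0] / (d[1] - d[0])
    T = [[1.0, k12], [k21, 1.0]]
    det = 1.0 - k12 * k21  # determinant of T
    T_inv = [[1.0 / det, -k12 / det], [-k21 / det, 1.0 / det]]
    L = [[d[0] + R[0][0], R[0][1]], [R[1][0], d[1] + R[1][1]]]

    def mul(A, B):
        return [[sum(A[i][k] * B[k][j] for k in range(2)) for j in range(2)]
                for i in range(2)]

    return mul(T_inv, mul(L, T))

eps = 1e-3                      # illustrative size of the perturbation
d = (1.0, 3.0)                  # distinct diagonal entries (no resonance)
R = [[0.5 * eps, 1.0 * eps], [-2.0 * eps, 0.25 * eps]]
L_new = normal_form_step(d, R)
print(L_new[0][1], L_new[1][0])  # off-diagonal entries after the step
```

In this model the off-diagonal entries drop from size \(\varepsilon \) to size \(\varepsilon ^2\), while the diagonal is corrected by the diagonal part of the perturbation up to \(O(\varepsilon ^2)\). This is the finite-dimensional counterpart of replacing \({{\mathcal {R}}}_n^{(1)}\) by \({{\mathcal {D}}}_{{{\mathcal {R}}}_n^{(1)}}\) plus a better remainder.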

Proof of Proposition 4.4

By (4.13), (4.18), Lemma 4.5 and by defining

one obtains that

To deduce the claimed properties of \({{\mathcal {Q}}}\) and \({{\mathcal {R}}}^{(2)}\), it suffices to apply Lemma 4.5 for \(n = M - 1\). The estimate (4.15) then follows by the estimate (4.19) on \({{\mathcal {T}}}_n\), \(n = 1, \ldots , M - 1\), using the composition property stated in Lemma 2.6-(ii). We now study the conjugation \({{\mathcal {B}}}^{- 1}( - \nu \Delta + {{\mathcal {R}}}_\nu ^{(1)}){{\mathcal {B}}}\). One has

where

By applying again Lemma 2.6-(ii) and the estimates (4.14), (4.15) (using also that \(|\Delta |_{2, s} \le 1\) for any s), one gets that \({{\mathcal {R}}}_\nu ^{(2)}\) satisfies (4.17) for any \(s_0\le s\le S-\sigma _{M - 1}\). The proof of the claimed statement is then concluded. \(\square \)

4.3 KAM Reducibility

In this section we perform the KAM reducibility scheme for the operator \({{\mathcal {L}}}^{(2)}_e\), obtained from the operator \({{\mathcal {L}}}^{(2)}_\nu \) in (4.16) by neglecting the small viscosity term \(- \nu \Delta + {{\mathcal {R}}}_{ \nu }^{(2)}\). More precisely, we consider the operator

where the diagonal operator \({\mathcal {Q}}\) and the smoothing operator \({\mathcal {R}}^{(2)}\) are as in Proposition 4.4. Given \(\tau , N_0 > 0\), we fix the constants

where \([4\tau ]\) is the integer part of \(4\tau \) and M, \(\sigma _{M - 1}\) are introduced in Proposition 4.4.

By Proposition 4.4, replacing s by \(s + \beta \) in (4.17) and having \({{\mathcal {Q}}} = {\textrm{diag}}_{j \in {\mathbb {Z}}^2 {\setminus } \{ 0 \}} q_0(j)\) diagonal, one gets the initialization conditions for the KAM reducibility, for any \(s_0\le s\le S-\Sigma (\beta )\),

Proposition 4.6

(Reducibility). Let \(S > s_0 + \Sigma (\beta )\). There exist \(N_0:= N_0(S, \tau , d) > 0\) large enough and \(\delta := \delta (S, \tau , d) \in (0, 1)\) small enough such that, if (3.2) holds and

then the following statements hold for any integer \(n \ge 0\).

\(\mathbf{(S1)}_n\) There exists a real and reversible operator

defined for any \(\lambda \in \Lambda _n^\gamma \), where we define \(\Lambda _0^\gamma := DC(\gamma , \tau )\) for \(n=0\) and, for \(n \ge 1\),

For any \(j \in {\mathbb {Z}}^2 \setminus \{ 0 \}\), the eigenvalues \(\mu _{n}(j)= \mu _{n}(j;\lambda )\) are purely imaginary and satisfy the conditions

and the estimates

The operator \({{\mathcal {R}}}_n\) is real and reversible, satisfying, for any \(s_0\le s\le S-\Sigma (\beta )\),

for some constant \(C_* (s) = C_*(s, \tau ) > 0\).

When \(n \ge 1\), there exists an invertible, real and reversibility preserving map \(\Phi _{n -1} = {\textrm{Id}} + \Psi _{n - 1}\), such that, for any \(\lambda = (\omega , \zeta ) \in \Lambda _n^\gamma \),

Moreover, for any \(s_0\le s\le S-\Sigma (\beta )\), the map \(\Psi _{n - 1}: H^s_0 \rightarrow H^s_0\) satisfies

for some constant \(C(s,\beta )>0\).

\(\mathbf{(S2)}_n\) For all \( j \in {\mathbb {Z}}^2 \setminus \{ 0 \}\), there exists a Lipschitz extension of the eigenvalues \(\mu _n(j;\,\cdot \,):\Lambda _n^\gamma \rightarrow {{\textrm{i}}}\, {\mathbb {R}}\) to the set \(DC(\gamma , \tau )\), denoted by \( {{\widetilde{\mu }}}_n(j;\,\cdot \,): DC(\gamma , \tau ) \rightarrow {{\textrm{i}}}\,{\mathbb {R}}\), satisfying, for \(n \ge 1\),

Proof

Proof of \(\mathbf{(S1)}_0,\mathbf{(S2)}_0\). The claimed properties follow directly from Proposition 4.4, recalling (4.27), (4.29) and the definition of \(\Lambda _0^\gamma := DC(\gamma , \tau )\).

Proof of \(\mathbf{(S1)}_{n+1}\). By induction, we assume the claimed properties \(\mathbf{(S1)}_n\), \(\mathbf{(S2)}_n\) hold for some \(n \ge 0\) and we prove them at the step \(n + 1\). Let \(\Phi _n = {\textrm{Id}} + \Psi _n\) where \(\Psi _n\) is an operator to be determined. We compute

where \({{\mathcal {D}}}_n:= \zeta \cdot \nabla + {{\mathcal {Q}}}_n\) and the projectors \(\Pi _N\), \(\Pi _N^\bot \) are defined in (2.19). Our purpose is to find a map \(\Psi _n\) solving the homological equation

where \({{\mathcal {D}}}_{{{\mathcal {R}}}_n}\) is the diagonal operator as per Definition 2.4. By (2.9) and (4.31), the homological equation (4.41) is equivalent to

for \(\ell \in {\mathbb {Z}}^d\), \(j, j' \in {\mathbb {Z}}^2 {\setminus } \{ 0 \}\). Therefore, we define the linear operator \(\Psi _{n}\) by

which is the solution of (4.42).
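
The algebra behind the homological equation can be sketched as follows. Since the displays are not reproduced above, we assume here that \({{\mathcal {L}}}_n = \omega \cdot \partial _\varphi + {{\mathcal {D}}}_n + {{\mathcal {R}}}_n\) with \({{\mathcal {D}}}_n = {\textrm{diag}}_{j} \, \mu _n(j)\), that \(\omega \cdot \partial _\varphi \Psi _n\) denotes the operator whose coefficients are differentiated in \(\varphi \), and that \(\widehat{{\mathcal {A}}}(\ell )_j^{j'}\) denotes the matrix entry of an operator \({{\mathcal {A}}}\) at the time frequency \(\ell \). The index convention and the sign of the solution are assumptions, chosen to match the divisor \({{\textrm{i}}}\, \omega \cdot \ell + \mu (j) - \mu (j')\) appearing in the proof of Lemma 4.7.

```latex
\begin{aligned}
{\mathcal L}_n \Phi_n
 &= \Phi_n \big( \omega \cdot \partial_\varphi + {\mathcal D}_n \big)
  + \big( \omega \cdot \partial_\varphi \Psi_n + [ {\mathcal D}_n , \Psi_n ] + \Pi_N {\mathcal R}_n \big)
  + \Pi_N^\bot {\mathcal R}_n + {\mathcal R}_n \Psi_n , \\
\big( \omega \cdot \partial_\varphi \Psi_n + [ {\mathcal D}_n , \Psi_n ] \big)^{\wedge}(\ell)_j^{j'}
 &= \big( {\rm i}\, \omega \cdot \ell + \mu_n(j) - \mu_n(j') \big) \, \widehat{\Psi}_n(\ell)_j^{j'} , \\
\widehat{\Psi}_n(\ell)_j^{j'}
 &= - \frac{\widehat{\mathcal R}_n(\ell)_j^{j'}}{{\rm i}\, \omega \cdot \ell + \mu_n(j) - \mu_n(j')}
 \quad \text{if } (\ell, j, j') \ne (0, j, j) \text{ and } \langle \ell , j - j' \rangle \le N ,
 \qquad \widehat{\Psi}_n(\ell)_j^{j'} = 0 \ \text{otherwise.}
\end{aligned}
```

With this choice the bracket in the first line reduces to the diagonal part \({{\mathcal {D}}}_{{{\mathcal {R}}}_n}\) of \({{\mathcal {R}}}_n\), so that only the terms \(\Pi _N^\bot {{\mathcal {R}}}_n\), \({{\mathcal {R}}}_n \Psi _n\) and \(\Psi _n {{\mathcal {D}}}_{{{\mathcal {R}}}_n}\) contribute to the new remainder.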

Lemma 4.7

The operator \(\Psi _n\) in (4.43), defined for any \((\omega , \zeta ) \in \Lambda _{n + 1}^\gamma \), satisfies, for any \(s_0\le s\le S-\Sigma (\beta )\),

where \(\tau _1>1\) is given in (4.28). Moreover, \(\Psi _n\) is real and reversibility preserving.

Proof

To simplify notation, in this proof we drop the index n. Since \(\lambda = (\omega , \zeta ) \in \Lambda _{n + 1}^\gamma \) (see (4.32)), one immediately gets the estimate

For any \(\lambda _1 = (\omega _1, \zeta _1), \lambda _2 = (\omega _2, \zeta _2) \in \Lambda _{n + 1}^\gamma \), we define \(\delta _{\ell j j'}:={{\textrm{i}}}\, \omega \cdot \ell +\mu (j) - \mu (j')\). By (4.34), (4.32) one has

The latter estimate (recall (4.43)) implies also that

Using that \( \langle \ell , j - j' \rangle \le N \) and the elementary chain of inequalities \( |j| \lesssim |j - j'| + |j'| \lesssim N + |j'| \lesssim N |j'|\), the estimates (4.45), (4.46) take the form

Since \(M > 4 \tau \) by (4.28), recalling Definition 2.5, the latter estimates imply that

and similarly, using also that \(\langle \ell , j - j' \rangle ^{M} \lesssim N^M\),

Hence, we conclude the claimed bounds in (4.44). Finally, since \({{\mathcal {R}}}\) is real and reversible, by Lemma 2.10 and the properties (4.33) for \(\mu (j)\), we deduce that \(\Psi \) is real and reversibility preserving. \(\square \)
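
The elementary chain of inequalities invoked in the proof above can be written with explicit constants. The sketch assumes \(N \ge 1\) and, with the usual conventions for the bracket, \(|j - j'| \le \langle \ell , j - j' \rangle \le N\); it otherwise uses only that \(j' \in {\mathbb {Z}}^2 \setminus \{ 0 \}\), so that \(|j'| \ge 1\).

```latex
|j| \le |j - j'| + |j'| \le N + |j'| \le N |j'| + N |j'| = 2 N |j'| ,
\qquad \text{hence} \qquad
|j|^{\tau} \le (2N)^{\tau} |j'|^{\tau} \quad \text{for any } \tau > 0 .
```

This is how powers of \(|j|\) produced by the small divisors can be traded for powers of \(N\) and of \(|j'|\).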

By Lemma 4.7 and the estimate in (4.36), we obtain, for any \(s_0\le s\le S-\Sigma (\beta )\),

which are the estimates (4.38) at the step \(n + 1\). By (4.28) and by the smallness condition (4.30), one has, for any \(s_0\le s\le S-\Sigma (\beta )\) and for \(N_0>0\) large enough,

Therefore, by Lemma 2.6-(iv), \(\Phi _n = {\textrm{Id}} + \Psi _n\) is invertible and

Then, we define

All the operators in (4.50) are defined for any \(\lambda = (\omega ,\zeta ) \in \Lambda _{n + 1}^\gamma \). Since \(\Psi _n, \Phi _n, \Phi _n^{- 1}\) are real and reversibility preserving and \({{\mathcal {D}}}_n, {{\mathcal {R}}}_n\) are real and reversible operators, one gets that \({{\mathcal {D}}}_{n + 1}\), \({{\mathcal {R}}}_{n + 1}\) are real and reversible operators. Moreover, by (4.40), (4.41), for \((\omega ,\zeta ) \in \Lambda ^\gamma _{n+1}\) one has the identity \(\Phi _n^{-1} {\mathcal {L}}_n \Phi _n = {\mathcal {L}}_{n+1}\), which is (4.37) at the step \(n+1\). By Definition 2.4 applied to \({{\mathcal {D}}}_{{{\mathcal {R}}}_n}\), one has that

The reality and the reversibility of \({{\mathcal {D}}}_{n + 1}\) and \({{\mathcal {Q}}}_{n + 1}\) imply that (4.33) is verified at the step \(n + 1\). Moreover, by Lemma 2.6-(v)

Then, the estimate (4.36) implies (4.35) at the step \(n + 1\). The estimate (4.34) at the step \(n + 1\) follows, as usual, by a telescoping argument, using the fact that \(\sum _{n \ge 0} N_{n - 1}^{- \alpha }\) is convergent since \(\alpha > 0\) (see (4.28)). Now we prove the estimates (4.36) at the step \(n + 1\). By (4.50), estimates (4.47), (4.48), (4.49), Lemma 2.6-(ii), (v) and Lemma 2.8, we get, for any \(s_0\le s \le S-\Sigma (\beta )\),

By the induction estimate (4.36), the definition of the constants in (4.28) and the smallness condition in (4.30), taking \(N_0 = N_0(S, \tau )> 0\) large enough, we obtain the estimates (4.36) at the step \(n + 1\).

Proof of \(\mathbf{(S2)}_{n + 1}\). It remains to construct a Lipschitz extension for the eigenvalues \(\mu _{n + 1}(j,\,\cdot \,): \Lambda _{n + 1}^\gamma \rightarrow {{\textrm{i}}}\,{\mathbb {R}}\). By the induction hypothesis, there exists a Lipschitz extension of \(\mu _n(j,\lambda )\), denoted by \({{\widetilde{\mu }}}_{n}(j,\lambda )\) to the whole set \(DC(\gamma , \tau )\) that satisfies \(\mathbf{(S2)}_n\). By (4.51), we have \(\mu _{n + 1}(j) = \mu _n(j) + r_n(j)\) where \(r_n(j)=r_n(j,\lambda ):= \widehat{{\mathcal {R}}}_n(0;\lambda )_j^j\) satisfies \(|r_n(j)|^{{\textrm{Lip}}(\gamma )} \lesssim N_{n - 1}^{- \alpha } |j|^{- M} \varepsilon \). By the reversibility and the reality of \({{\mathcal {R}}}_n\), we have \(r_n(j) = - r_n(- j)=\overline{r_n(-j)} \), implying that \(r_n(j) \in {{\textrm{i}}}\, {\mathbb {R}}\). Hence by the Kirszbraun Theorem (see Lemma M.5 [36]) there exists a Lipschitz extension \({{\widetilde{r}}}_n(j,\,\cdot \,): DC(\gamma , \tau ) \rightarrow {{\textrm{i}}}{\mathbb {R}}\) of \(r_n(j,\,\cdot \,): \Lambda _{n + 1}^\gamma \rightarrow {{\textrm{i}}}\,{\mathbb {R}}\) satisfying \(|{{\widetilde{r}}}_n(j)|^{{\textrm{Lip}}(\gamma )} \lesssim |r_n(j)|^{{\textrm{Lip}}(\gamma )} \lesssim N_{n - 1}^{- \alpha } |j|^{- M} \varepsilon \). The claimed statement then follows by defining \({{\widetilde{\mu }}}_{n + 1}(j):= {{\widetilde{\mu }}}_n(j) + {{\widetilde{r}}}_n(j)\). \(\square \)
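
The step from reality and reversibility to \(r_n(j) \in {{\textrm{i}}}\, {\mathbb {R}}\) can be spelled out as a short computation, using only the two identities \(r_n(j) = - r_n(- j)\) and \(r_n(j) = \overline{r_n(-j)}\) stated in the proof above:

```latex
r_n(-j) = - r_n(j)
\ \Longrightarrow\
r_n(j) = \overline{r_n(-j)} = \overline{- r_n(j)} = - \overline{r_n(j)}
\ \Longrightarrow\
{\rm Re}\, r_n(j) = \tfrac12 \big( r_n(j) + \overline{r_n(j)} \big) = 0 .
```

Consequently the update \(\mu _{n + 1}(j) = \mu _n(j) + r_n(j)\) preserves the property that the eigenvalues are purely imaginary.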

4.4 KAM Reducibility: Convergence

In this section we prove that the KAM reducibility scheme for the operator \({\mathcal {L}}_{e}^{(2)}\), whose iterative step is described in Proposition 4.6, is convergent under the smallness condition (4.30), with the final operator being diagonal with purely imaginary eigenvalues.

Lemma 4.8

For any \(j \in {\mathbb {Z}}^2 \setminus \{ 0 \}\), the sequence \(\{ {{\widetilde{\mu }}}_n(j)= {{\textrm{i}}}\, \zeta \cdot j + q_n(j) \}_{n\in {\mathbb {N}}}\) converges to some limit

satisfying the following estimates

Proof

By Proposition 4.6-\(\mathbf{(S2)}_n\), we have that the sequence \(\{{{\widetilde{\mu }}}_n(j,\lambda )\}_{n\in {\mathbb {N}}}\subset {{\textrm{i}}}\,{\mathbb {R}}\) is Cauchy on the closed set \(DC(\gamma ,\tau )\), therefore it is convergent for any \( \lambda \in DC(\gamma ,\tau )\). The estimate (4.52) follows by a telescoping argument with the estimate (4.39). \(\square \)
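
The telescoping argument can be sketched as follows, using only the relation \({{\widetilde{\mu }}}_{k + 1}(j) = {{\widetilde{\mu }}}_k(j) + {{\widetilde{r}}}_k(j)\) and the bound \(|{{\widetilde{r}}}_k(j)|^{{\textrm{Lip}}(\gamma )} \lesssim N_{k - 1}^{- \alpha } |j|^{- M} \varepsilon \) from the proof of \(\mathbf{(S2)}_{n + 1}\). The last inequality relies on the growth of the scales \(N_k\) fixed in (4.28), which is not reproduced here; a super-exponential law such as \(N_k = N_0^{\chi ^k}\) with \(\chi > 1\) and \(N_{-1}:= 1\) is an assumption, typical of KAM schemes, under which the tail of the series is dominated by its first term.

```latex
\begin{aligned}
\mu_\infty(j) - \widetilde{\mu}_n(j)
 &= \sum_{k \ge n} \big( \widetilde{\mu}_{k+1}(j) - \widetilde{\mu}_k(j) \big)
 = \sum_{k \ge n} \widetilde{r}_k(j) , \\
\big| \mu_\infty(j) - \widetilde{\mu}_n(j) \big|^{{\rm Lip}(\gamma)}
 &\le \sum_{k \ge n} \big| \widetilde{r}_k(j) \big|^{{\rm Lip}(\gamma)}
 \lesssim \varepsilon \, |j|^{-M} \sum_{k \ge n} N_{k-1}^{-\alpha}
 \lesssim N_{n-1}^{-\alpha} \, |j|^{-M} \varepsilon .
\end{aligned}
```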

We define the set \(\Lambda _\infty ^\gamma \) of the non-resonance conditions for the final eigenvalues as

Lemma 4.9

We have \(\Lambda _\infty ^\gamma \subseteq \cap _{n \ge 0} \, \Lambda _n^\gamma \).

Proof

We prove by induction that \(\Lambda _\infty ^\gamma \subseteq \Lambda _n^\gamma \) for any integer \(n \ge 0\). The statement is trivial for \(n=0\), since \(\Lambda _0^\gamma := DC(\gamma ,\tau )\) (see Proposition 4.6). We now assume by induction that \(\Lambda _\infty ^\gamma \subseteq \Lambda _n^\gamma \) for some \(n \ge 0\) and we show that \(\Lambda _\infty ^\gamma \subseteq \Lambda _{n + 1}^\gamma \). Let \(\lambda \in \Lambda _\infty ^\gamma \), \(\ell \in {\mathbb {Z}}^d\), \(j, j' \in {\mathbb {Z}}^2 {\setminus } \{ 0 \}\), with \((\ell , j, j') \ne (0, j, j)\) and \(|\ell |, |j - j'| \le N_n\). By (4.52), (4.53), we compute

for some positive constant \(C>0\), provided

Using that \( |\ell |, |j - j'| \le N_n\) and the chain of inequalities

we deduce that, for some \(C_0>0\) and recalling that \(M > 4 \tau \) by (4.28),

Therefore, (4.54) is verified provided \(C C_0 N_n^{2 \tau } \varepsilon \gamma ^{- 1} \le 1\,.\) The latter inequality is implied by the smallness condition (4.30). We conclude that \(\lambda = (\omega , \zeta ) \in \Lambda _{n + 1}^\gamma \). \(\square \)
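
The mechanism of the proof above is a triangle inequality, which can be sketched in the following hedged form. Since the definitions of \(\Lambda _{n + 1}^\gamma \) and \(\Lambda _\infty ^\gamma \) are not reproduced here, we assume that they impose lower bounds of the form \(\gamma / d(\ell , j, j')\) and \(2 \gamma / d(\ell , j, j')\) respectively, where \(d(\ell , j, j') > 0\) is a hypothetical placeholder for the weight in the non-resonance conditions; the factor 2 is likewise an assumption.

```latex
\begin{aligned}
\big| {\rm i}\, \omega \cdot \ell + \mu_n(j) - \mu_n(j') \big|
 &\ge \big| {\rm i}\, \omega \cdot \ell + \mu_\infty(j) - \mu_\infty(j') \big|
  - \big| \mu_\infty(j) - \mu_n(j) \big| - \big| \mu_\infty(j') - \mu_n(j') \big| \\
 &\ge \frac{2 \gamma}{d(\ell, j, j')}
  - C \, \varepsilon \, N_{n-1}^{-\alpha} \big( |j|^{-M} + |j'|^{-M} \big)
 \ \ge\ \frac{\gamma}{d(\ell, j, j')} ,
\end{aligned}
\qquad \text{provided} \quad
C \, \varepsilon \, N_{n-1}^{-\alpha} \big( |j|^{-M} + |j'|^{-M} \big) \, d(\ell, j, j') \le \gamma .
```

Here we used that \(\lambda \in \Lambda _\infty ^\gamma \subseteq \Lambda _n^\gamma \) by the induction hypothesis, so that \({{\widetilde{\mu }}}_n(j) = \mu _n(j)\) and the estimates of Lemma 4.8 apply.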

Now we define the sequence of invertible maps

Proposition 4.10

Let \(S > s_0 + \Sigma (\beta )\). There exists \(\delta := \delta (S, \tau , d) > 0\) such that, if (3.2), (4.30) are verified, then the following holds. For any \(\lambda = (\omega , \zeta ) \in \Lambda _\infty ^\gamma \), the sequence \(({{\widetilde{\Phi }}}_n)_{n\in {\mathbb {N}}}\) converges in norm \(| \cdot |_{0, s}^{{\textrm{Lip}}(\gamma )}\) to an invertible map \(\Phi _\infty \), satisfying, for any \(s_0\le s\le S-\Sigma (\beta )\),

The operators \(\Phi _\infty ^{\pm 1}: H^s_0 \rightarrow H^s_0\) are real and reversibility preserving. Moreover, for any \(\lambda \in \Lambda _\infty ^\gamma \), one has

where the operator \({{\mathcal {L}}}_e^{(2)}\) is given in (4.16)–(4.27) and the final eigenvalues \(\mu _\infty (j)\) are given in Lemma 4.8.

Proof

The existence of the invertible maps \(\Phi _\infty ^{\pm 1}\) and the estimates (4.56) follow by (4.38), (4.55), arguing as in Corollary 4.1 in [3]. By (4.55), Lemma 4.9 and Proposition 4.6, one has \({{\widetilde{\Phi }}}_n^{- 1} {{\mathcal {L}}}_0 {{\widetilde{\Phi }}}_n = \omega \cdot \partial _\varphi + {{\mathcal {D}}}_n + {{\mathcal {R}}}_n\) for all \(n \ge 0\). The claimed statement then follows by passing to the limit as \(n \rightarrow \infty \), by using (4.36), (4.56) and Lemma 4.8. \(\square \)

5 Inversion of the Linearized Navier–Stokes Operator \({\mathcal {L}}_\nu \)

The main purpose of this section is to prove the invertibility of the operators \({\mathcal {L}}_\nu \) in (3.3), for any value of the viscosity \(\nu > 0\) with a smallness condition on \(\varepsilon \) which is independent of \(\nu \). We also prove the invertibility of \({\mathcal {L}}_{e}\), which we shall use to construct an approximate solution up to order \(O(\nu ^2)\) in Sect. 6. We use the normal form reduction implemented in Sect. 4. First, we recollect all the terms. By (4.16) and by Proposition 4.10, for any \(\lambda \in \Lambda _\infty ^\gamma \), the operator \({\mathcal {L}}^{(2)}_\nu \) in (4.16) is conjugated to

By the estimates (4.17), (4.56), using that \(|\Delta |_{2, s} \le 1\), for any \(s > s_0\) and for \(S > s_0 + \Sigma (\beta ) + 2\), there exists \(\delta := \delta (S, \tau , d) \in (0, 1)\) such that if (3.2), (4.2) hold, by applying Lemma 2.6-(ii), one gets

Lemma 5.1

(Inversion of \({{\mathcal {L}}}_\nu ^{(\infty )}\)). For any \(S > s_0 + \Sigma (\beta ) + 4\), there exists \(\delta := \delta (S, \tau , d) \in (0,1)\) small enough such that, if (3.2) holds and \(\varepsilon \gamma ^{- 1} \le \delta \), the operator \({{\mathcal {L}}}_\nu ^{(\infty )}\) is invertible for any \(\nu > 0\) with bounded inverse \(({{\mathcal {L}}}_\nu ^{(\infty )})^{- 1} \in {{\mathcal {B}}}(H^s_0)\) for any \(s_0\le s\le S-\Sigma (\beta ) - 4\). Moreover, the inverse operator \(({\mathcal {L}}_{\nu }^{(\infty )})^{-1}\) is smoothing of order two, that is \(({{\mathcal {L}}}_\nu ^{(\infty )})^{- 1} (- \Delta ) \in {{\mathcal {B}}}(H^s_0)\), with \(\Vert ({{\mathcal {L}}}_\nu ^{(\infty )})^{- 1} (- \Delta )\Vert _{{{\mathcal {B}}}(H^s_0)} \lesssim _s \nu ^{- 1}\).
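
The origin of the factor \(\nu ^{- 1}\) can be illustrated on the diagonal part alone. As a hedged sketch, assume that the leading part of \({{\mathcal {L}}}_\nu ^{(\infty )}\) acts on the exponentials \(e^{{{\textrm{i}}}(\ell \cdot \varphi + j \cdot x)}\) as multiplication by \({{\textrm{i}}}\, \omega \cdot \ell + \mu _\infty (j) + \nu |j|^2\); this form is an assumption, since the display defining \({{\mathcal {L}}}_\nu ^{(\infty )}\) is not reproduced here. Because \(\mu _\infty (j) \in {{\textrm{i}}}\, {\mathbb {R}}\) by Lemma 4.8 and \(|j| \ge 1\), one has:

```latex
\begin{aligned}
\big| {\rm i}\, \omega \cdot \ell + \mu_\infty(j) + \nu |j|^2 \big|
 &\ge {\rm Re} \big( {\rm i}\, \omega \cdot \ell + \mu_\infty(j) + \nu |j|^2 \big)
 = \nu |j|^2 \ge \nu > 0 , \\
\frac{|j|^2}{\big| {\rm i}\, \omega \cdot \ell + \mu_\infty(j) + \nu |j|^2 \big|}
 &\le \frac{1}{\nu}
 \qquad \text{for all } \ell \in {\mathbb Z}^d , \ j \in {\mathbb Z}^2 \setminus \{ 0 \} .
\end{aligned}
```

In this simplified picture no small divisor appears, and the diagonal inverse composed with \(- \Delta \) is bounded by \(\nu ^{- 1}\), in agreement with the bound stated in the lemma. How the remainder is absorbed uniformly in \(\nu \) is the content of the proof and is not addressed by this sketch.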

Proof