Abstract

We introduce a space-inhomogeneous generalization of the dynamics on interlacing arrays considered by Borodin and Ferrari (Commun Math Phys 325:603–684, 2014). We show that for a certain class of initial conditions the point process associated with the dynamics has determinantal correlation functions, and we calculate explicitly, in the form of a double contour integral, the correlation kernel for one of the most classical initial conditions, the densely packed. En route to proving this, we obtain some results of independent interest on non-intersecting general pure-birth chains, that generalize the Charlier process, the discrete analogue of Dyson’s Brownian motion. Finally, these dynamics provide a coupling between the inhomogeneous versions of the TAZRP and PushTASEP particle systems which appear as projections on the left and right edges of the array, respectively.

1 Introduction

1.1 Informal Introduction

The study of stochastic dynamics, in both discrete and continuous time, on interlacing arrays has seen an enormous amount of activity in the past two decades; see, for example, [1,2,3,4, 7, 8, 10,11,12, 15,16,17, 52, 53]. These dynamics can equivalently be viewed as growth of random surfaces, see [2, 7, 12], or as random fields of Young diagrams, see [20, 21]. Currently there are arguably three main approaches to constructing dynamics on interlacing arrays with some underlying integrability (see Footnote 1).

The one that we will be concerned with in this contribution is due to Borodin and Ferrari [7] (see Footnote 2, and also the independent related work of Warren and Windridge [53] and Warren’s Brownian analogue of the dynamics [52]), based on some ideas from [23]. This is the simplest of the three approaches to describe (see Definition 1.2 for a precise description) and, in some sense (see [20]), the one with “maximal noise”. Many of the ideas and results from the important paper [7] have either directly generated, or have been made use of in, a very large body of work; see, for example, [3,4,5, 8, 10,11,12, 15,16,17, 22, 24, 49] for further developments and closely related problems. The other two approaches can be concisely described as follows: one of them (historically the first of the three) is based on the combinatorial algorithm of the RSK correspondence, see [29, 40,41,42], and the other, which has begun to develop very recently, is based on the Yang–Baxter equation; see [20, 21].

Now, in the past few years, there has been considerable interest in constructing new integrable models in inhomogeneous space or adding spatial inhomogeneities, in a natural way, to existing models while preserving the integrability; see [2, 18, 26, 27, 32]. In this paper we do exactly that for the original (continuous time) dynamics of Borodin and Ferrari (see Definition 1.2). We show that for a certain class of initial conditions the point process associated with the dynamics has determinantal correlation functions. We then calculate explicitly, in the form of a double contour integral, the correlation kernel for one of the most classical initial conditions, the densely packed. This allows one to address questions regarding asymptotics and it would be interesting to return to this in future work. Here, our focus is on developing the stochastic integrability aspects of the model.

Finally, the projections on the edges of the interlacing array give two Markovian interacting particle systems of independent interest; see Remark 1.3 for more details. On the left edge, we get the inhomogeneous TAZRP (totally asymmetric zero range process) or Boson particle system, see [4, 17, 51], and on the right edge, we get the inhomogeneous PushTASEP, see [6, 7, 16], which is also studied in detail in the independent work of Leonid Petrov [46], which uses different methods (see Footnote 3).

In the next subsection, we give the necessary background in order to introduce the model and state our main results precisely.

1.2 Background and Main Result

We define the discrete Weyl chamber with nonnegative coordinates:

\[ {\mathbb {W}}^N=\left\{ (x_1,\dots ,x_N)\in {\mathbb {Z}}_+^N: x_1<x_2<\dots <x_N\right\} , \]

where \({\mathbb {Z}}_+=\{0,1,2,\dots \}\).

We think of the coordinates \(x_i\) as positions of particles and will use this terminology throughout; see Fig. 1 for an illustration. We say that \(y \in {\mathbb {W}}^N\) and \(x \in {\mathbb {W}}^{N+1}\) interlace and write \(y\prec x\) if:

\[ x_1\le y_1<x_2\le y_2<\dots <x_N\le y_N<x_{N+1}. \]

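We define the set of Gelfand–Tsetlin patterns (interlacing arrays) of length N by (see Footnote 4):

\[ \mathsf {GT}_N=\left\{ \left( x^{(1)},\dots ,x^{(N)}\right) : x^{(n)}\in {\mathbb {W}}^n,\ x^{(n)}\prec x^{(n+1)} \text{ for } 1\le n\le N-1\right\} . \]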

The basic data in this paper are a rate function

\[ \lambda :{\mathbb {Z}}_+\rightarrow (0,\infty ), \]

which we think of as the spatial inhomogeneity of the environment. It governs how fast or slow particles jump when at a certain position. We enforce the following assumption throughout the paper.

Definition 1.1

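(Assumption (UB)). We assume that the rate function \(\lambda :{\mathbb {Z}}_+\rightarrow (0,\infty )\) is uniformly bounded away from 0 and \(\infty \):

\[ 0<\inf _{x\in {\mathbb {Z}}_+}\lambda (x)\le \sup _{x\in {\mathbb {Z}}_+}\lambda (x)\le M<\infty . \]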

We now introduce the inhomogeneous space push-block dynamics in \(\mathsf {GT}_N\). This is the continuous-time Markov jump process in \(\mathsf {GT}_N\) described as follows:

Definition 1.2

(Borodin–Ferrari inhomogeneous space push-block dynamics). Let \(\lambda (\cdot )\) satisfy (UB). Let \({\mathsf {M}}_N\) be the initial distribution (possibly deterministic) of particles on \(\mathsf {GT}_{N}\). We now describe Markov dynamics in \(\mathsf {GT}_N\) denoted by \(\left( {\mathsf {X}}_N(t;{\mathsf {M}}_N);t\ge 0\right) =\left( \left( {\mathsf {X}}^1(t),\dots ,{\mathsf {X}}^N(t)\right) ;t\ge 0\right) \) where the projection on the \(k^{th}\) level is given by \(\left( {\mathsf {X}}^k(t);t\ge 0\right) =\left( \left( {\mathsf {X}}^{k}_1(t),{\mathsf {X}}^{k}_2(t),\dots ,{\mathsf {X}}^{k}_k(t)\right) ;t\ge 0\right) \).

Each particle has an independent exponential clock of rate \(\lambda (\star )\) depending on its current position \(\star \in {\mathbb {Z}}_+\) for jumping to the right by one to site \(\star +1\). The particles interact as follows; see Fig. 1 for an illustration: If the clock of particle \({\mathsf {X}}_k^{n}\) rings first, then it will attempt to jump to the right by one.

(Blocking) In case \({\mathsf {X}}_k^{n-1}={\mathsf {X}}_k^{n}\), the jump is blocked (since a move would break the interlacing; lower level particles can be thought of as heavier).

(Pushing) Otherwise, it moves by one to the right, possibly triggering instantaneously some pushing moves to maintain the interlacing. Namely, if the interlacing is no longer preserved with the particle labeled \({\mathsf {X}}_{k+1}^{n+1}\), then \({\mathsf {X}}_{k+1}^{n+1}\) also moves instantaneously to the right by one, and this pushing is propagated (instantaneously) to higher levels, if needed.

Fig. 1 A configuration of particles in \(\mathsf {GT}_4\). If the clock of the particle labeled \({\mathsf {X}}_1^{3}\) rings, which happens at rate \(\lambda (\star +1)\), then the move is blocked since interlacing with \({\mathsf {X}}_1^{2}\) would be violated. On the other hand, if the clock of the particle \({\mathsf {X}}_2^{2}\) rings, which happens at rate \(\lambda (\star +3)\), then it jumps to the right by one and instantaneously pushes both \({\mathsf {X}}_3^{3}\) and \({\mathsf {X}}_4^{4}\) to the right by one as well, for otherwise the interlacing would break.
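The blocking and pushing rules of Definition 1.2 can be sketched as a small Gillespie-type simulation. This is only an illustration under stated assumptions: levels and particle indices are 0-indexed, the interlacing convention is taken to be \(x_k\le y_k<x_{k+1}\), the densely packed array is taken to have level \(n\) at sites \(0,1,\dots ,n-1\), and the names `ring`, `simulate`, `is_interlacing` and `densely_packed` are hypothetical helpers, not notation from the paper.

```python
import random

def densely_packed(n_levels):
    """Assumed densely packed array: level n (1-indexed) sits at 0, 1, ..., n-1."""
    return [list(range(n)) for n in range(1, n_levels + 1)]

def is_interlacing(array):
    """Check x_k <= y_k < x_{k+1} between every pair of consecutive levels."""
    for lower, upper in zip(array, array[1:]):
        for k, y in enumerate(lower):
            if not (upper[k] <= y < upper[k + 1]):
                return False
    return True

def ring(array, n, k):
    """The clock of particle k on level n rings (0-indexed); update in place."""
    # Blocking: the particle with the same index one level down is at the same site.
    if k < n and array[n - 1][k] == array[n][k]:
        return
    array[n][k] += 1
    # Pushing: propagate along the diagonal (n+1, k+1), (n+2, k+2), ... while needed.
    while n + 1 < len(array) and array[n + 1][k + 1] == array[n][k]:
        n, k = n + 1, k + 1
        array[n][k] += 1

def simulate(n_levels, lam, t_max, rng):
    """Run the dynamics from the densely packed array up to time t_max."""
    array = densely_packed(n_levels)
    t = 0.0
    while True:
        particles = [(n, k) for n, row in enumerate(array) for k in range(len(row))]
        rates = [lam(array[n][k]) for n, k in particles]  # rate depends on position
        t += rng.expovariate(sum(rates))
        if t > t_max:
            return array
        n, k = rng.choices(particles, weights=rates)[0]
        ring(array, n, k)
```

In this sketch the first entries of the rows form the left edge and the last entries form the right edge, so the two particle systems of Remark 1.3 can be read off from the returned array.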

Remark 1.3

(Inhomogeneous Boson and PushTASEP). It is easy to see that the particle systems at the left \(\left( \left( {\mathsf {X}}^{1}_1(t),{\mathsf {X}}^{2}_1(t),\dots ,{\mathsf {X}}^{N}_1(t)\right) ;t\ge 0\right) \) and right \(\left( \left( {\mathsf {X}}^{1}_1(t),{\mathsf {X}}^{2}_2(t),\dots ,{\mathsf {X}}^{N}_N(t)\right) ;t\ge 0\right) \) edge, respectively, in the \(\mathsf {GT}_N\)-valued dynamics of Definition 1.2 enjoy an autonomous Markovian evolution.

The left edge process is called the inhomogeneous TAZRP (totally asymmetric zero range process) or Boson particle system; see [4, 17, 51]. In particular, in [51] a contour integral expression (for a q-deformation of the model) is obtained for its transition probabilities. The fact that, as we shall also see in the sequel, the distribution of particles at fixed time \(t\ge 0\) is a marginal of a determinantal point process (with explicit kernel) is essentially contained in the results of [32] (which makes use of different methods).

The right edge particle system is called inhomogeneous PushTASEP, see [6, 7] and also [16] for a q-deformation of the homogeneous case. The fact that this particle system has an underlying determinantal structure is new, but is also obtained in the independent work of Petrov [46] that uses different methods.

Remark 1.4

(A generalization of the model). Borodin and Ferrari in fact considered a more general model where all particles at level k jump at rate \(\beta _k\) (independent of their position). Using the techniques developed in this paper, we can study the following model that allows for level inhomogeneities as well.Footnote 5 Let \(\{\alpha _i \}_{i\ge 1}\) be a sequence of numbers such that:

The dynamics are as in Definition 1.2 with the modification that each of the particles at level k jumps at rate \(\alpha _k+\lambda (\star )\) depending on its position \(\star \). Since our main motivation in this work is the introduction of spatial inhomogeneities we will only consider the level homogeneous case of Definition 1.2 in detail. However, in the sequence of Remarks 2.21, 2.24, 3.3, 3.10 we indicate the essential modifications required at each stage of the argument to study the more general model.

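Observe that, for any \(n\le N\), the process described in Definition 1.2 restricted to \(\mathsf {GT}_n\) is an autonomous Markov process. We consider the natural projections \(\mathsf {GT}_{N+1}\rightarrow \mathsf {GT}_N\), \(\left( x^{(1)},\dots ,x^{(N+1)}\right) \mapsto \left( x^{(1)},\dots ,x^{(N)}\right) \), forgetting the top row \(x^{(N+1)}\), and we write \(\mathsf {GT}_{\infty }\) for the corresponding projective limit, consisting of infinite Gelfand–Tsetlin patterns. We say that \(\{{\mathsf {M}}_N\}_{N\ge 1}\) is a consistent sequence of distributions on \(\{\mathsf {GT}_N \}_{N\ge 1}\) if, for every \(N\ge 1\), the image of \({\mathsf {M}}_{N+1}\) under this projection is \({\mathsf {M}}_N\).

Suppose we are given such a consistent sequence of distributions \(\{{\mathsf {M}}_N\}_{N\ge 1}\). Then, by construction, since the projections on any sub-pattern are autonomous, the processes \(\left( {\mathsf {X}}_N\left( t;{\mathsf {M}}_N\right) ;t\ge 0\right) _{N\ge 1}\) are consistent as well: for every \(N\ge 1\), the projection of \({\mathsf {X}}_{N+1}\left( \cdot ;{\mathsf {M}}_{N+1}\right) \) onto its first N levels has the same law as \({\mathsf {X}}_N\left( \cdot ;{\mathsf {M}}_N\right) \). We can therefore correctly define \(\left( {\mathsf {X}}_\infty \left( t;\{{\mathsf {M}}_N\}_{N\ge 1}\right) ;t\ge 0\right) \), the corresponding process on \(\mathsf {GT}_{\infty }\).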

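Now, we will be mainly concerned with the so-called densely packed initial conditions \(\{{\mathsf {M}}_N^{\mathsf {dp}}\}_{N\ge 1}\) defined as follows:

\[ {\mathsf {M}}_N^{\mathsf {dp}}=\delta _{\left( (0)\prec (0,1)\prec \cdots \prec (0,1,\dots ,N-1)\right) }. \]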

Clearly \(\{{\mathsf {M}}_N^{\mathsf {dp}}\}_{N\ge 1}\) is a consistent sequence of distributions. We simply write \(\left( {\mathsf {X}}_\infty (t);t\ge 0\right) \) for the corresponding process on \(\mathsf {GT}_{\infty }\).

Observe that, \({\mathsf {X}}_\infty (t)\) for any \(t\ge 0\) gives rise to a random point process on \({\mathbb {N}}\times {\mathbb {Z}}_+\) which we denote by \({\mathsf {P}}^t_{\infty }\). We will use the notation \(z=(n,x)\) to denote the location of a particle, with n being the level/height/vertical position while x is the horizontal position. Finally, it will be convenient to introduce the following functions, which will play a key role in the subsequent analysis.

Definition 1.5

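For \(x \in {\mathbb {Z}}_+\), we define:

\[ \psi _x(w)=\psi _x(w;\lambda )=\prod _{k=0}^{x}\frac{\lambda (k)}{\lambda (k)-w},\qquad p_x(w)=p_x(w;\lambda )=\prod _{k=0}^{x-1}\frac{\lambda (k)-w}{\lambda (k)}. \]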

Remark 1.6

Clearly \(\psi _x(w;\lambda )=1/ p_{x+1}(w;\lambda )\), but it is preferable to think of them as two distinct families of functions. Observe that \(p_x(w)\) is a polynomial of degree x and \(p_x(0)=1\).

We have then arrived at our main result.

Theorem 1.7

Let \(\lambda :{\mathbb {Z}}_+\rightarrow (0,\infty )\) satisfy \((\mathsf {UB})\). Consider the point process \({\mathsf {P}}^t_{\infty }\) on \({\mathbb {N}}\times {\mathbb {Z}}_+\) obtained from running the dynamics of Definition 1.2 for time \(t\ge 0\) starting from the densely packed initial condition. Then for all \(t\ge 0\), \({\mathsf {P}}^t_{\infty }\) has determinantal correlation functions. Namely, for any \(k\ge 1\) and distinct points \(z_1=(n_1,x_1),\dots ,z_k=(n_k,x_k)\in {\mathbb {N}}\times {\mathbb {Z}}_+\):

\[ {\mathbb {P}}\left( \text{there exist particles of } {\mathsf {P}}^t_{\infty } \text{ at locations } z_1,\dots ,z_k\right) =\det \left[ {\mathsf {K}}_t(n_i,x_i;n_j,x_j)\right] _{i,j=1}^k, \]

where the correlation kernel \({\mathsf {K}}_{t}(\cdot ,\cdot ;\cdot ,\cdot )\) is explicitly given by:

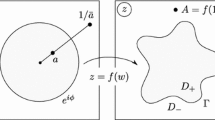

where \({\mathsf {C}}_{\lambda }\) is a counterclockwise contour encircling 0 and the points \(\{\lambda (x) \}_{x\ge 0}\), while \({\mathsf {C}}_0\) is a small counterclockwise contour around 0 as in Fig. 2.

Remark 1.8

In the homogeneous case, \(\lambda (\cdot )\equiv 1\), the correlation kernel in Theorem 1.7 above reduces to a kernel from [7] (there are a number of different kernels in [7] which give rise to equivalent determinantal point processes). To see this, it is most convenient to compare the expression for the kernel in Proposition 4.2 of [7] with the formulae from Sect. 3 herein (in particular, see the expressions in display (4.4) in [7] and Lemmas 3.4, 3.5 and display (35) in this paper).

1.3 Intermediate Results and Strategy of Proof

The proof of Theorem 1.7 essentially splits into two parts that we now elaborate on. The first part shows that under certain initial conditions \({\mathsf {M}}_N\) on \(\mathsf {GT}_N\) that we call Gibbs, of which the densely packed one is a special case, the distribution of \({\mathsf {X}}_N\left( t;{\mathsf {M}}_N\right) \) for any \(t\ge 0\) is explicit (and again of Gibbs form); see Proposition 2.22. By applying an extension of the famous Eynard–Mehta theorem [28] to interlacing particle systems, see [9, 19], it then follows by a fairly standard argument (see Sect. 3.1) that under some (rather general) Gibbs initial conditions the point process associated with \({\mathsf {X}}_N\left( t;{\mathsf {M}}_N\right) \) has determinantal correlation functions (with a not yet explicit kernel).

A key role in the argument for this first part is played by a remarkable block determinant kernel, given in Definition 2.5, that we call the two-level Karlin–McGregor formula (for the original, single-level, Karlin–McGregor formula, see [31]). This terminology is due to the fact that, as we see in Sect. 2 and in particular Theorem 2.20, this provides a coupling between two Karlin–McGregor semigroups so that the corresponding processes interlace.

An instance of such a formula, in the context of Brownian motions, was first discovered by Warren in [52]. Later it was understood that it can be further developed to include general one-dimensional diffusions and birth and death chains, and this was achieved in [1, 2], respectively. Part of our motivation for this paper was to enlarge the class of models for which such an underlying structure has been shown to exist (see Footnote 6).

We note that a crucial role in the previous works [1, 2] was played by the notion of Siegmund duality, see [48] (also [34, 35] for other uses of duality in integrable probability), alongside reversibility, and the absence of both in the present setting is an important conceptual difference (see Footnote 7). However, as it turns out, an analogue of this duality suitable for our purposes does exist and is proven in Lemma 2.2.

Moreover, in Sect. 2, we prove some results on general non-intersecting pure-birth chains that generalize the Charlier process [7, 33], the discrete analogue of Dyson’s Brownian motion [25]. In particular, we construct harmonic functions (and also more general eigenfunctions) that are given as determinants with explicit entries.

Finally, we should mention that all of these formulae have in some sense their origin in the study of coalescing stochastic flows of diffusions and Markov chains; see [37]. Recently, a general abstract theory has been developed for constructing couplings between intertwined Markov processes based on random maps and coalescing flows, see [36]. It would be interesting to understand to what extent this is related to the present constructions and more generally to analogous couplings in integrable probability.

The second part of the proof involves the solution of a certain biorthogonalization problem which gives the explicit form of the correlation kernel \({\mathsf {K}}_t\) and is performed in Sect. 3. An important difference from the works [7, 9, 12, 13], where corresponding biorthogonalization problems were analyzed, is that the functions involved in the current problem are not translationally invariant in the spatial variable. This is where we make use of the functions \(\{\psi _x \}_{x\in {\mathbb {Z}}_+}\) and \(\{p_x\}_{x\in {\mathbb {Z}}_+}\) which arise in the spectral theory of a general pure-birth chain (see display (8) for the spectral expansion of the transition kernel of the chain in terms of them). These both provide intuition and make most of the (otherwise quite tedious) computations rather neat; see, in particular, the sequence of Lemmas 3.4, 3.5, 3.6, 3.7, 3.8 and their proofs.

2 Inhomogeneous Space Push-Block Dynamics

2.1 The One-Dimensional Chain

We define the forward and backwards discrete derivatives

We define the following pure-birth chain, which is the basic building block of our construction: this is a continuous-time Markov chain on \({\mathbb {Z}}_+\) which, when at site x, jumps to site \(x+1\) with rate \(\lambda (x)\). Its generator is then given by (the subscript indicates the variable on which it is acting):

\[ {\mathsf {L}}=\lambda (x)\nabla ^+_x,\qquad \text{that is,}\quad \left[ {\mathsf {L}}f\right] (x)=\lambda (x)\left( f(x+1)-f(x)\right) . \]

Due to (UB), non-explosiveness, and thus existence and uniqueness of the pure-birth process, are immediate (simply compare with a Poisson process with constant rate M). We write \(\left( \mathrm{e}^{t{\mathsf {L}}};t\ge 0\right) \) for the corresponding Markov semigroup and, abusing notation, we also write \(\mathrm{e}^{t{\mathsf {L}}}(x,y)\) for its transition density, namely the probability that a Markov chain with generator \({\mathsf {L}}\) goes from site x to y in time t. This is the unique solution to both the Kolmogorov backwards and forwards equations; see Section 2.6 of [39]. The backwards equation, which we make use of here, reads as follows, for \(t>0,x,y \in {\mathbb {Z}}_+\):

\[ \frac{\mathrm{d}}{\mathrm{d}t}\mathrm{e}^{t{\mathsf {L}}}(x,y)={\mathsf {L}}_x\mathrm{e}^{t{\mathsf {L}}}(x,y),\qquad \mathrm{e}^{t{\mathsf {L}}}(x,y)\big |_{t=0}={\mathbf {1}}(x=y). \]

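It is an elementary probabilistic argument that \(\mathrm{e}^{t{\mathsf {L}}}(x,y)\) is explicit; see Sect. 2 of [51] (or alternatively simply check that the expression below solves the Kolmogorov equation). We have the following spectral expansion for it; see display (2.1a) of [51]:

\[ \mathrm{e}^{t{\mathsf {L}}}(x,y)=-\frac{1}{\lambda (y)}\frac{1}{2\pi \mathrm{i}}\oint _{{\mathsf {C}}_{\lambda }}\psi _y(w)p_x(w)\mathrm{e}^{-tw}\,\mathrm{d}w. \]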

Remark 2.1

Here we can simply pick any counterclockwise contour which encircles the points \(\{\lambda (x)\}_{x\ge 0}\) and not necessarily 0 as well. The fact that \({\mathsf {C}}_{\lambda }\) encircles 0 will be useful in the computation of the correlation kernel later on.
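A contour-integral representation of this kind can be checked numerically. The following is a minimal sketch under explicit assumptions: it takes \(p_x(w)=\prod _{k<x}(\lambda (k)-w)/\lambda (k)\) and \(\psi _x(w)=\prod _{k\le x}\lambda (k)/(\lambda (k)-w)\) (forms consistent with Remark 1.6), takes the expansion in the normalisation \(-\lambda (y)^{-1}(2\pi \mathrm{i})^{-1}\oint \psi _y p_x \mathrm{e}^{-tw}\mathrm{d}w\), and uses a circle centred at 0 as the contour. The function names and the trapezoidal discretisation are illustrative choices, not taken from the paper.

```python
import cmath
import math

def p(x, w, lam):
    """Assumed form: polynomial of degree x in w with p(x, 0) = 1."""
    out = 1.0
    for k in range(x):
        out *= (lam(k) - w) / lam(k)
    return out

def psi(x, w, lam):
    """Assumed form: psi_x = 1 / p_{x+1}, with poles at lam(0), ..., lam(x)."""
    return 1.0 / p(x + 1, w, lam)

def transition_via_contour(x, y, t, lam, radius, nodes=400):
    """Trapezoidal rule on the circle |w| = radius, which must enclose all lam(k), k <= y."""
    total = 0.0
    for j in range(nodes):
        w = radius * cmath.exp(2j * math.pi * j / nodes)
        dw = 1j * w * (2 * math.pi / nodes)  # derivative of the parametrisation
        total += psi(y, w, lam) * p(x, w, lam) * cmath.exp(-t * w) * dw
    return (-total / (2j * math.pi * lam(y))).real
```

For \(y=x\) the result should match \(\mathrm{e}^{-\lambda (x)t}\), and for \(y<x\) the integrand is entire, so the integral vanishes, in line with the chain only moving to the right.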

Throughout the paper, we use the notation \({\mathbf {1}}_{[\![ 0,y]\!]}(\cdot )\) for the indicator function of the set \(\{0,1,2,\dots ,y \}\). We have the following key relation for the transition density \(\mathrm{e}^{t{\mathsf {L}}}(x,y)\).

Lemma 2.2

Let \(x,y \in {\mathbb {Z}}_+\) and \(t\ge 0\); we have:

Proof

We let

and show that this solves the Kolmogorov backwards equation. By uniqueness, the statement follows. The \(t=0\) initial condition follows from:

Finally,

as required. \(\square \)
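A duality relation of this type can be sanity-checked numerically. The sketch below computes the transition probabilities of the pure-birth chain by uniformization (comparison with a Poisson clock of constant rate) and then tests the identity \(\mathrm{e}^{t{\mathsf {L}}}(x,y)=-\frac{\lambda (x)}{\lambda (y)}\nabla ^+_x\mathrm{e}^{t{\mathsf {L}}}{\mathbf {1}}_{[\![ 0,y]\!]}(x)\). This particular normalisation is an assumption of the sketch (it can be derived by comparing Laplace transforms in t) and may differ in form from the statement of Lemma 2.2; the function names are hypothetical.

```python
import math

def transition_matrix(lam, t, size, terms=200):
    """Approximate e^{tL}(x, y) for 0 <= x, y < size by uniformization."""
    rates = [lam(x) for x in range(size)]
    big_m = max(rates)  # constant rate dominating all jump rates on the window
    result = [[0.0] * size for _ in range(size)]
    power = [[float(i == j) for j in range(size)] for i in range(size)]  # Q^0
    weight = math.exp(-big_m * t)  # Poisson(big_m * t) weight of n = 0
    for n in range(terms):
        for i in range(size):
            for j in range(size):
                result[i][j] += weight * power[i][j]
        # Multiply by Q = I + L / big_m; mass leaving the window is dropped,
        # which does not affect entries inside it since the chain only moves right.
        nxt = [[0.0] * size for _ in range(size)]
        for i in range(size):
            for k in range(i, size):
                jump = rates[k] / big_m
                nxt[i][k] += power[i][k] * (1.0 - jump)
                if k + 1 < size:
                    nxt[i][k + 1] += power[i][k] * jump
        power = nxt
        weight *= big_m * t / (n + 1)
    return result

def duality_gap(lam, t, size):
    """Largest violation of the assumed identity over the truncated window."""
    probs = transition_matrix(lam, t, size)
    cdf = [[sum(row[: y + 1]) for y in range(size)] for row in probs]
    gap = 0.0
    for x in range(size - 1):
        for y in range(size):
            rhs = -(lam(x) / lam(y)) * (cdf[x + 1][y] - cdf[x][y])
            gap = max(gap, abs(probs[x][y] - rhs))
    return gap
```

In the homogeneous case \(\lambda \equiv 1\) the matrix reduces to Poisson probabilities, which gives an independent check of the uniformization step.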

Remark 2.3

It is also possible to prove Lemma 2.2 using the explicit spectral expansion (8), the argument is similar to the one in the proof of Lemma 3.4.

2.2 Two-Level Dynamics

We begin with a classical definition due to Karlin and McGregor [31].

Definition 2.4

The Karlin–McGregor sub-Markov semigroup on \({\mathbb {W}}^N\) associated with a pure-birth chain with generator \({\mathsf {L}}\) is defined by its transition density given by, for \(t\ge 0, x,y \in {\mathbb {W}}^N\):

\[ {\mathcal {P}}_t^N(x,y)=\det \left( \mathrm{e}^{t{\mathsf {L}}}(x_i,y_j)\right) _{i,j=1}^N. \]

This semigroup has the following probabilistic interpretation: it corresponds to N independent copies of a chain with generator \({\mathsf {L}}\) killed when they intersect, see [30, 31]. A version of this semigroup conditioned on non-intersection will govern the dynamics of the projections on single levels of our interlacing arrays; see Theorem 2.20 and Proposition 2.22 below.
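The Karlin–McGregor determinant is easy to evaluate for small N. The following sketch does so in the homogeneous case \(\lambda \equiv 1\), where the one-particle transition density is the Poisson kernel, and sums it over a truncated chamber to illustrate the sub-Markov property. The helper names, the permutation-expansion determinant and the truncation `cutoff` are illustrative assumptions, not part of the paper.

```python
import math
from itertools import combinations, permutations

def poisson_kernel(t, x, y):
    """Transition density of a rate-one Poisson process (lambda identically 1)."""
    if y < x:
        return 0.0
    return math.exp(-t) * t ** (y - x) / math.factorial(y - x)

def det(matrix):
    """Determinant by permutation expansion; adequate for very small matrices."""
    n = len(matrix)
    total = 0.0
    for perm in permutations(range(n)):
        inversions = sum(perm[i] > perm[j] for i in range(n) for j in range(i + 1, n))
        term = -1.0 if inversions % 2 else 1.0
        for i in range(n):
            term *= matrix[i][perm[i]]
        total += term
    return total

def karlin_mcgregor(t, x, y, kernel=poisson_kernel):
    """det( kernel(t, x_i, y_j) ) for strictly increasing tuples x and y."""
    return det([[kernel(t, xi, yj) for yj in y] for xi in x])

def surviving_mass(t, x, cutoff):
    """Sum of the Karlin-McGregor density over y in the chamber with y_N < cutoff."""
    return sum(karlin_mcgregor(t, x, y) for y in combinations(range(cutoff), len(x)))
```

For a single particle the mass is (up to truncation) one, while for two or more particles it is strictly smaller, the deficit being the probability that some pair has met by time t.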

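We now move on to study two-level dynamics. We first consider the space of pairs (y, x) which interlace:

\[ {\mathbb {W}}^{N,N+1}=\left\{ (y,x): x\in {\mathbb {W}}^{N+1},\ y\in {\mathbb {W}}^{N},\ y\prec x\right\} . \]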

We have the following key definition that we call the two-level Karlin–McGregor formula, since as we shall see in the sequel this provides a coupling for two Karlin–McGregor semigroups, so that the corresponding processes interlace.

Definition 2.5

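For \((y,x),(y',x')\in {\mathbb {W}}^{N,N+1}\) and \(t\ge 0\), define \( {\mathsf {U}}_t^{N,N+1}\left[ (y,x),(y',x')\right] \) by the following \((2N+1)\times (2N+1)\) block matrix determinant:

\[ {\mathsf {U}}_t^{N,N+1}\left[ (y,x),(y',x')\right] =\det \begin{pmatrix} {\mathsf {A}}_t(x,x') &amp; {\mathsf {B}}_t(x,y')\\ {\mathsf {C}}_t(y,x') &amp; {\mathsf {D}}_t(y,y') \end{pmatrix}, \]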

where the matrices \({\mathsf {A}}_t,{\mathsf {B}}_t, {\mathsf {C}}_t, {\mathsf {D}}_t\) of sizes \((N+1)\times (N+1)\), \((N+1)\times N\), \(N\times (N+1)\) and \(N\times N\), respectively, are given by:

Observe that the equivalence of the two representations for \({\mathsf {A}}_t\) is by definition while for \({\mathsf {D}}_t\) is due to Lemma 2.2.

We begin our study of \({\mathsf {U}}_t\) by proving some of its basic properties:

Lemma 2.6

For all \(t\ge 0\), the kernel \({\mathsf {U}}_t^{N,N+1}\) satisfies: \({\mathsf {U}}_t^{N,N+1}\left[ (y,x),(y',x')\right] \ge 0\), for \((y,x),(y',x')\in {\mathbb {W}}^{N,N+1}\) and \({\mathsf {U}}_t^{N,N+1}{\mathbf {1}}\le {\mathbf {1}}\).

Proof

We prove positivity first. A direct verification from Definition 2.5 appears to be hard (although it would be interesting to have one). Instead, we give a simple probabilistic argument.

Let \(\left( ({\mathcal {S}}_t(x_1),\dots ,{\mathcal {S}}_t(x_m));t\ge 0\right) \) be a system of m independent chains with generator \({\mathsf {L}}\), starting from \((x_1,\dots ,x_m) \in {\mathbb {W}}^m\), and which coalesce and move together once any two of them meet. We denote their law by \({\mathbb {P}}\). Let \(z,z' \in {\mathbb {W}}^m\) and \(t\ge 0\). Then, we have:

The claim is a consequence of the Karlin–McGregor formula; see Proposition 2.5 in [2] for a proof in a completely analogous setting. Observe that (10) allows us to give the following probabilistic representation for \({\mathsf {U}}_t^{N,N+1}\) (by applying discrete derivatives to the RHS of (10), we match with the expression from Definition 2.5):

Positivity of \({\mathsf {U}}_t^{N,N+1}\) is then a consequence of the fact that the events

are increasing both as the variables \(y_i\) decrease and as the variables \(x'_j\) increase.

Finally, we need to prove that for any \((y,x) \in {\mathbb {W}}^{N,N+1}\) and \(t\ge 0\):

We claim that for any \((y,x) \in {\mathbb {W}}^{N,N+1}\) and \(t\ge 0\):

Since \(\left( {\mathcal {P}}_t^N;t\ge 0\right) \) is sub-Markov, the statement of the lemma follows. Now, in order to prove the claim, we take the sum \(\sum _{\{x': (y',x') \in {\mathbb {W}}^{N,N+1} \}}^{}\) in the explicit form of the kernel from Definition 2.5 and use multilinearity of the determinant. Then, the claim follows from the relations below:

and simple row–column operations. \(\square \)

We now introduce the inhomogeneous two-level dynamics:

Definition 2.7

(Two-level inhomogeneous push-block dynamics). This is the continuous-time Markov chain \(\left( \left( Y(t),X(t)\right) ;t\ge 0\right) \) in \({\mathbb {W}}^{N,N+1}\), with possibly finite lifetime, described as follows. Each of the \(2N+1\) particles evolves as an independent chain with generator \({\mathsf {L}}\) subject to the following interactions. The Y-particles evolve autonomously. When a potential move by the X-particles would break the interlacing, it is blocked; see Fig. 3. If instead a potential move by the Y-particles would break the interlacing, then the corresponding X-particle is pushed to the right by one; see Fig. 4. The Markov chain is killed when two Y-particles collide, at the stopping time:

\[ \inf \left\{ t\ge 0: Y_i(t)=Y_{i+1}(t) \text{ for some } 1\le i\le N-1\right\} . \]

Proposition 2.8

The block determinant kernel \({\mathsf {U}}_t^{N,N+1}\) forms the transition density for the dynamics in Definition 2.7.

Proof

We show that \({\mathsf {U}}_t^{N,N+1}\) solves the Kolmogorov backward equation corresponding to the dynamics in Definition 2.7. Uniqueness in the class of substochastic matrices (of which \({\mathsf {U}}_t^{N,N+1}\) is a member by Lemma 2.6) follows by a generic argument presented in a completely analogous setting in Sect. 3 in [2]; see also [15].

First, observe that we have the \(t=0\) initial condition:

This follows directly from the form of \( {\mathsf {U}}_t^{N,N+1}\left[ (y,x),(y',x')\right] \), by noting that as \(t \downarrow 0\), the diagonal entries converge to \({\mathbf {1}} \left( x_i=x_i'\right) ,{\mathbf {1}}\left( y_i=y_i'\right) \), while all other contributions to the determinant vanish.

Moreover, observe that we have the Dirichlet boundary conditions when two Y-coordinates coincide:

Moving on, note that (here we are abusing notation slightly by using the same notation for both the matrices and their scalar entries) we have the following, for any \(x,x'\in {\mathbb {Z}}_+\) fixed and \(t>0\):

To see the relation for \({\mathsf {C}}_t\) observe that:

We define \(\mathsf {int}\left( {\mathbb {W}}^{N,N+1}\right) \) to be the set of all pairs \((y,x)\in {\mathbb {W}}^{N,N+1}\) which still belong to \({\mathbb {W}}^{N,N+1}\) when any one of the x or y coordinates is increased by 1, namely the pairs \((y,x)\in {\mathbb {W}}^{N,N+1}\) such that \((y,x)+({\mathsf {e}}_i,0),(y,x)+(0,{\mathsf {e}}_i)\in {\mathbb {W}}^{N,N+1}\), with \({\mathsf {e}}_i\) being the unit vector in the i-th coordinate. Observe that in \(\mathsf {int}\left( {\mathbb {W}}^{N,N+1}\right) \) each of the coordinates evolves as an independent chain with generator \({\mathsf {L}}\), and these chains do not interact. Then, by the multilinearity of the determinant and relations (12) and (13), we obtain:

It remains to deal with the interactions. We will only consider one blocking and one pushing case, as all others are entirely analogous. First, the blocking case with \(x_1=y_1=x\). In order to ease notation and also make the gist of the simple argument transparent, we further restrict our attention to the rows containing \(x_1,y_1\). In fact, it is not hard to see that it suffices to consider the \(2\times 2\) matrix determinant given by, with \(x',y'\in {\mathbb {Z}}_+\) fixed:

By taking the \(\frac{\mathrm{d}}{\mathrm{d}t}\)-derivative of the determinant, we obtain using (12) and (13):

On the other hand, what we would like to have according to the dynamics in Definition 2.7 is simply the following:

We thus must show that:

which corresponds to particle \(x_1\) being blocked when \(x_1=y_1\) and \(x_1\) tries to jump (see the configuration in Fig. 3). In order to obtain (14), we shall work on the RHS. We multiply the second row by \(-\lambda (x)^{-1}\) and add it to the first row to obtain

and analogously for the second column, which then gives us the LHS of (14) as desired.

Similarly, we consider a pushing move with \(y_1=x,x_2=x+1\) and \(x',y'\in {\mathbb {Z}}_+\) fixed (see the configuration in Fig. 4):

We calculate using the relations (12) and (13):

From the dynamics in Definition 2.7, we need to have the following:

Hence, we need to show:

which follows from (14) after relabeling \(x \rightarrow x+1\). \(\square \)

We now need a couple of definitions whose purpose will be clear shortly.

Definition 2.9

We define the positive kernel \(\Lambda _{N}^{N+1}\) from \({\mathbb {W}}^{N+1}\) to \({\mathbb {W}}^N\) by its density (with respect to counting measure):

Abusing notation, we can also view \(\Lambda _N^{N+1}\) as a kernel from \({\mathbb {W}}^{N+1}\) to \({\mathbb {W}}^{N,N+1}\), in which case we write \(\Lambda _N^{N+1}(x,(y,z))=\Lambda _N^{N+1}(x,y)\). Observe that this is supported on elements \((y,z)\in {\mathbb {W}}^{N,N+1}\) such that \(z\equiv x\).

Definition 2.10

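For any \(N\ge 1\) and \(x \in {\mathbb {W}}^N\), define the functions \({\mathfrak {h}}_N\left( x\right) ={\mathfrak {h}}_N\left( x;\lambda \right) \) recursively by \({\mathfrak {h}}_1(x)\equiv 1\) and

\[ {\mathfrak {h}}_{N+1}(x)=\left[ \Lambda _N^{N+1}{\mathfrak {h}}_N\right] (x),\qquad x\in {\mathbb {W}}^{N+1}. \]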

The functions \({\mathfrak {h}}_N\) can in fact be written as determinants whose entries are defined recursively:

Lemma 2.11

Let \(N\ge 1\) and \(x \in {\mathbb {W}}^N\). Then,

where the functions \({\mathfrak {I}}_i\) are defined by, for \(x\in {\mathbb {Z}}_+\):

Proof

Direct computation by induction using multilinearity of the determinant. \(\square \)

Remark 2.12

Observe that for \(\lambda (\cdot )\equiv 1\) we have, for \(x \in {\mathbb {W}}^N\):

\[ {\mathfrak {h}}_N(x)=\prod _{1\le i<j\le N}\frac{x_j-x_i}{j-i}. \]

This is the harmonic function associated with N independent Poisson processes (i.e., with \({\mathsf {L}}=\nabla ^+\)) killed when they intersect, see [7, 33, 40, 41].
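In the homogeneous case this can be illustrated by brute force. The sketch below assumes that, for \(\lambda \equiv 1\), the recursion of Definition 2.10 amounts to \({\mathfrak {h}}_{N+1}(x)=\sum _{y\prec x}{\mathfrak {h}}_N(y)\) with the interlacing convention \(x_i\le y_i<x_{i+1}\), so that \({\mathfrak {h}}_N(x)\) counts interlacing arrays with top row x, and compares the count with the Vandermonde-type product \(\prod _{i<j}(x_j-x_i)/(j-i)\). Both the reading of the recursion and the function names are assumptions made for illustration.

```python
from fractions import Fraction
from functools import lru_cache
from itertools import product

@lru_cache(maxsize=None)
def h_count(x):
    """Number of interlacing arrays with top row x (a strictly increasing tuple)."""
    if len(x) == 1:
        return 1
    # y_i ranges over x_i, ..., x_{i+1} - 1; such y is automatically strictly increasing.
    ranges = [range(x[i], x[i + 1]) for i in range(len(x) - 1)]
    return sum(h_count(y) for y in product(*ranges))

def vandermonde_ratio(x):
    """prod_{i<j} (x_j - x_i) / (j - i), computed exactly."""
    out = Fraction(1)
    for j in range(len(x)):
        for i in range(j):
            out *= Fraction(x[j] - x[i], j - i)
    return out
```

For instance, for the top row \((0,1,3)\) the admissible second rows are \((0,1)\) and \((0,2)\), contributing \(1+2=3\), which agrees with \((1\cdot 3\cdot 2)/(1\cdot 2\cdot 1)=3\).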

Remark 2.13

Lemma 2.11 implies that the sequence of functions \(\{{\mathfrak {I}}_{i}(\cdot ;\lambda )\}_{i\ge 1}\) forms a (discrete) extended complete Chebyshev system on \({\mathbb {Z}}_+\), see [30]. On the real line and under certain assumptions, such systems have been classified and are characterized through a recurrence like (15) (with integrals instead of sums), see [30].

Remark 2.14

It is possible to express the entries of the determinant representation for \({\mathfrak {h}}_N(x;\lambda )\) in terms of contour integrals as we shall see in Sect. 3. This is essential in order to perform the computation of the correlation kernel.

Now, we let \(\Pi _N^{N+1}\) be the operator induced by the projection on the y-coordinates. More precisely, for a function f on \({\mathbb {W}}^N\), the function \(\Pi _N^{N+1}f\) on \({\mathbb {W}}^{N,N+1}\) is defined by \(\left[ \Pi _N^{N+1}f\right] (y,x)=f(y)\). Then, we have:

Proposition 2.15

For \(t \ge 0\), we have the following equalities of positive kernels,

Proof

This computation is implicit in the proof of Lemma 2.6. \(\square \)

Similarly, we have:

Proposition 2.16

For \(t\ge 0\), we have the equalities of positive kernels,

Proof

We take the sum \(\sum _{\{y:(y,x)\in {\mathbb {W}}^{N,N+1} \}}^{}\) in the explicit form of the kernels and use multilinearity of the determinant. Then, the statement follows from the relations below

and simple row–column operations. \(\square \)

Propositions 2.15 and 2.16 above readily imply the following two results:

Proposition 2.17

For \(t\ge 0\), we have:

Proof

Combine Propositions 2.15 and 2.16, noting that \(\Lambda _N^{N+1}\Pi _N^{N+1}\equiv \Lambda _N^{N+1}\). \(\square \)

Proposition 2.18

The function \({\mathfrak {h}}_N(x)={\mathfrak {h}}_N(x;\lambda )\) is a positive harmonic function for the semigroup \(\left( {\mathcal {P}}_t^N;t\ge 0\right) \). Moreover, the function \({\mathfrak {h}}_{(N,N+1)}((y,x);\lambda )\) defined by \({\mathfrak {h}}_{(N,N+1)}((y,x);\lambda )={\mathfrak {h}}_{N}(y;\lambda )\) is a positive harmonic function for the semigroup \(\left( {\mathsf {U}}_t^{N,N+1};t\ge 0\right) \).

Proof

Inductively apply Propositions 2.17 and 2.16, respectively. \(\square \)

In order to proceed, we require a general abstract definition. For a possibly sub-Markov semigroup \(\left( {\mathsf {P}}(t);t \ge 0\right) \) having a strictly positive eigenfunction \({\mathsf {h}}\) with eigenvalue \(\mathrm{e}^{{\mathsf {c}}t}\) (i.e., \({\mathsf {P}}(t){\mathsf {h}}=\mathrm{e}^{{\mathsf {c}}t}{\mathsf {h}}\)) we define its Doob h-transform by \(\left( \mathrm{e}^{-{\mathsf {c}}t}{\mathsf {h}}^{-1}\circ {\mathsf {P}}(t)\circ {\mathsf {h}};t\ge 0\right) \). We note that this is an honest Markovian semigroup. Thus, Proposition 2.18 allows us to correctly define the Doob h-transformed versions of the semigroups and kernels above:
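The effect of a Doob h-transform is easiest to see on a finite matrix. The following toy sketch uses a single sub-stochastic matrix in place of the semigroup at a fixed time, so `eigenvalue` plays the role of \(\mathrm{e}^{{\mathsf {c}}t}\); the matrix, the eigenfunction and the function names are made-up illustrations and are unrelated to the kernels of this paper.

```python
from fractions import Fraction

def is_eigenfunction(P, h, eigenvalue):
    """Check P h = eigenvalue * h entrywise."""
    n = len(P)
    return all(sum(P[i][j] * h[j] for j in range(n)) == eigenvalue * h[i] for i in range(n))

def doob_transform(P, h, eigenvalue):
    """Q(i, j) = P(i, j) * h(j) / (eigenvalue * h(i)); requires h strictly positive."""
    n = len(P)
    return [[P[i][j] * h[j] / (eigenvalue * h[i]) for j in range(n)] for i in range(n)]

# A sub-stochastic matrix (rows sum to 3/5 and 2/5) with positive eigenfunction h = (2, 1).
P = [[Fraction(2, 5), Fraction(1, 5)], [Fraction(1, 10), Fraction(3, 10)]]
h = [Fraction(2), Fraction(1)]
Q = doob_transform(P, h, Fraction(1, 2))
```

Here every row of `Q` sums to one, which is the finite-dimensional counterpart of the statement that the transformed semigroup is honestly Markovian.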

Note that, by their very definition, all of these are now Markovian. Moreover, as we have done previously, we can also view \({\mathfrak {L}}_N^{N+1}\) as a Markov kernel from \({\mathbb {W}}^N\) to \({\mathbb {W}}^{N,N+1}\). We observe that for the distinguished special case \(\lambda (\cdot )\equiv 1\), \(\left( {\mathfrak {P}}_t^{N};t\ge 0\right) \) is the semigroup of the well-known Charlier process (see [7, 33, 40, 41]), the discrete analogue of Dyson’s Brownian motion [25]. With all these preliminaries in place, we have:

Proposition 2.19

For \(t\ge 0\), we have the intertwining relations between Markov semigroups:

Proof

These relations are straightforward consequences of Propositions 2.15, 2.16 and 2.17, respectively. \(\square \)
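To make the Doob h-transform concrete in the distinguished case \(\lambda (\cdot )\equiv 1\), the following minimal Python sketch checks the classical fact behind the Charlier process (see [33]): the Vandermonde determinant is harmonic for N independent rate-1 Poisson walks, and the h-transformed jump rates are nonnegative, vanish for a jump that would cause a collision, and sum to N. The identification of \({\mathfrak {h}}_N\) with the Vandermonde determinant for \(\lambda (\cdot )\equiv 1\) is an assumption of this sketch, and the helper names (`vandermonde`, `shifted`, `generator_applied`, `transformed_rates`) are hypothetical.

```python
from fractions import Fraction
from itertools import combinations


def vandermonde(x):
    """Product of (x_j - x_i) over all pairs i < j."""
    result = 1
    for i, j in combinations(range(len(x)), 2):
        result *= x[j] - x[i]
    return result


def shifted(x, i):
    """Configuration x with the i-th particle moved one step to the right."""
    return x[:i] + (x[i] + 1,) + x[i + 1:]


def generator_applied(h, x):
    """Generator of N independent rate-1 Poisson walks applied to h at x."""
    return sum(h(shifted(x, i)) - h(x) for i in range(len(x)))


def transformed_rates(x):
    """Jump rates h(x + e_i) / h(x) of the h-transformed chain (assumed h = Vandermonde)."""
    hx = vandermonde(x)
    return [Fraction(vandermonde(shifted(x, i)), hx) for i in range(len(x))]


x = (0, 1, 4, 6)
print(generator_applied(vandermonde, x))  # harmonicity: this difference vanishes
print(transformed_rates(x))               # first rate is zero: the jump 0 -> 1 would collide
```

Exact rational arithmetic is used so that harmonicity is checked as an identity rather than up to rounding.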

Observe that the h-transform by \({\mathfrak {h}}_{(N,N+1)}(y,x)={\mathfrak {h}}_N(y)\) conditions the Y-particles to never collide, and the process with semigroup \(\left( {\mathfrak {U}}_t^{N,N+1};t\ge 0\right) \) has infinite lifetime. Under this change of measure, the evolution of the Y-particles is autonomous with semigroup \(\left( {\mathfrak {P}}_t^N;t\ge 0\right) \), while the X-particles evolve as \(N+1\) independent chains with generator \({\mathsf {L}}\), interacting with the Y-particles through the same push-block dynamics of Definition 2.7. We now arrive at the main result of this section.

Theorem 2.20

Consider a Markov process \(\left( \left( Y(t),X(t)\right) ;t\ge 0\right) \) in \({\mathbb {W}}^{N,N+1}\) with semigroup \(\left( {\mathfrak {U}}_t^{N,N+1};t \ge 0\right) \). Let \({\mathfrak {M}}^{N+1}\) be a probability measure on \({\mathbb {W}}^{N+1}\). Assume \(\left( \left( Y(t),X(t)\right) ;t\ge 0\right) \) is initialized according to the probability measure with density \({\mathfrak {M}}^{N+1}(x){\mathfrak {L}}_N^{N+1}(x,y)\) on \({\mathbb {W}}^{N,N+1}\). Then, the projection on the X-particles is distributed as a Markov process with semigroup \(\left( {\mathfrak {P}}_t^{N+1};t \ge 0\right) \) and initial condition \({\mathfrak {M}}^{N+1}\). Moreover, for any fixed time \(T\ge 0\), the conditional distribution of \(Y(T)\) given \(X(T)\) satisfies:

Proof

Let \({\mathsf {S}}\) be the operator induced by the projection on the x-coordinates:

(we do not indicate dependence on N). Observe that,

Then, the first statement of the theorem, by virtue of the intertwining relation (23), is an application of the theory of Markov functions due to Rogers and Pitman, see Theorem 2 in [47] (applied to the function s above). Finally, for the conditional law statement (25), see Remark (ii) following Theorem 2 of [47]. \(\square \)
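The Rogers–Pitman criterion used in this proof can be illustrated on a toy example. The sketch below is not the paper's semigroups: it uses a hypothetical three-state chain `P`, a kernel `LAM` supported on the fibres of a projection onto two states, and a candidate projected chain `Q`, all chosen for illustration. It checks the intertwining relation `LAM P = Q LAM` and then confirms that, started from `LAM`, the projected path probabilities agree with those of the Markov chain `Q`, even though `P` is not lumpable in the classical sense (states 1 and 2 jump to the first block with different probabilities).

```python
from fractions import Fraction as F
from itertools import product

# Fine chain on states {0, 1, 2}; the projection sends 0 to block 0 and 1, 2 to block 1.
P = [[F(1, 2), F(1, 4), F(1, 4)],
     [F(1, 2), F(1, 2), F(0)],
     [F(1, 6), F(1, 6), F(2, 3)]]
# Markov kernel from blocks to fine states, supported on the fibres of the projection.
LAM = [[F(1), F(0), F(0)],
       [F(0), F(1, 2), F(1, 2)]]
# Candidate transition matrix of the projected chain.
Q = [[F(1, 2), F(1, 2)],
     [F(1, 3), F(2, 3)]]
FIBRES = [[0], [1, 2]]


def matmul(a, b):
    """Exact product of two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def projected_path_probability(path):
    """Probability that the projected fine chain follows `path`, started from LAM[path[0]]."""
    weights = LAM[path[0]][:]
    for block in path[1:]:
        moved = [sum(weights[i] * P[i][j] for i in range(3)) for j in range(3)]
        weights = [moved[j] if j in FIBRES[block] else F(0) for j in range(3)]
    return sum(weights)


def markov_path_probability(path):
    """Probability of `path` under the chain Q started at path[0]."""
    prob = F(1)
    for s, t in zip(path, path[1:]):
        prob *= Q[s][t]
    return prob


print(matmul(LAM, P) == matmul(Q, LAM))
print(all(projected_path_probability(p) == markov_path_probability(p)
          for p in product(range(2), repeat=4)))
```

In the setting of Theorem 2.20, the role of `LAM` is played by \({\mathfrak {L}}_N^{N+1}\) and the projection is onto the X-particles.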

Remark 2.21

Assume we are in the setting of Remark 1.4. Let \({\mathsf {L}}^1\) and \({\mathsf {L}}^2\) be two pure-birth chain generators:

We observe that the strictly positive eigenfunction \({\mathsf {h}}_{\beta _1}^{\beta _2}\) of \({\mathsf {L}}^2\) (with eigenvalue \(\beta _1-\beta _2\)) defined by:

Doob h-transforms \({\mathsf {L}}^2\) to \({\mathsf {L}}^1\):

We define, for \(n\ge 1\):

By an inductive argument, making use of Proposition 2.17, we can show that \({\mathsf {h}}_{n}^{(\alpha _1,\dots ,\alpha _n)}\) is a strictly positive eigenfunction of \(\left( {\mathsf {P}}_t^{(n,\alpha _n)};t\ge 0\right) \). Thus, we can consider the Doob h-transformed versions \({\mathsf {P}}_t^{(n,\alpha _n),{\mathsf {h}}_n^{(\alpha _1,\dots ,\alpha _n)}}\) and \(\mathsf {\Lambda }_n^{(n+1,\alpha _{n+1}),{\mathsf {h}}_n^{(\alpha _1,\dots ,\alpha _n)}}\). Then, all of the results above have natural extensions involving these quantities (whose precise statements we omit) to the level inhomogeneous setting.

2.3 Consistent Multilevel Dynamics

We have the following multilevel extension of the results of the preceding subsection.

Proposition 2.22

Let \(\left( {\mathfrak {P}}_{t}^{k};t \ge 0\right) \) and \({\mathfrak {L}}^{k}_{k-1}\) denote the semigroups and Markov kernels defined in (19) and (20) above and let \({\mathfrak {M}}^N(\cdot )\) be a probability measure on \({\mathbb {W}}^N\). Define the following Gibbs probability measure \({\mathsf {M}}_N\) on \(\mathsf {GT}_N\) with density:

Consider the process \(\left( {\mathsf {X}}_N\left( t;{\mathsf {M}}_N\right) ;t\ge 0\right) =\left( \left( {\mathsf {X}}^1\left( t\right) ,{\mathsf {X}}^2\left( t\right) , \dots ,{\mathsf {X}}^N\left( t\right) \right) ;t\ge 0\right) \) in Definition 1.2. Then, for \(1\le k \le N\), the projection on the k-th level \(\left( {\mathsf {X}}^{k}(t);t \ge 0\right) \) is distributed as a Markov process evolving according to \(\left( {\mathfrak {P}}_t^{k};t \ge 0\right) \). Moreover, for any fixed \(T\ge 0\), the law of \(\left( {\mathsf {X}}^1(T),\dots , {\mathsf {X}}^{N}(T)\right) \) is given by the evolved Gibbs measure on \(\mathsf {GT}_N\) :

Proof

The proof is by induction. For \(N=2\), this is Theorem 2.20. Assume the result is true for \(N-1\); we prove it for \(N\). We first observe that the induced measure on \(\mathsf {GT}_{N-1}\)

is again Gibbs. Then, from the induction hypothesis, \(\left( {\mathsf {X}}^{N-1}(t);t \ge 0\right) \) is a Markov process with semigroup \(\left( {\mathfrak {P}}^{N-1}_t;t \ge 0\right) \). Moreover, the joint dynamics of \(\left( {\mathsf {X}}^{N-1}(t),{\mathsf {X}}^N(t);t \ge 0\right) \) are those considered in Theorem 2.20 (with semigroup \({\mathfrak {U}}_t^{N-1,N}\)), and thus, by the aforementioned result, we obtain that \(\left( {\mathsf {X}}^N(t);t \ge 0\right) \) is distributed as a Markov process with semigroup \(\left( {\mathfrak {P}}^{N}_t;t \ge 0\right) \). Furthermore, by the same theorem, for fixed \(T\ge 0\), the conditional law of \({\mathsf {X}}^{N-1}(T)\) given \({\mathsf {X}}^{N}(T)\) is \({\mathfrak {L}}^{N}_{N-1}\left( {\mathsf {X}}^N(T),\cdot \right) \). Hence, since the distribution of \({\mathsf {X}}^N(T)\) has density \(\left[ {\mathfrak {M}}^N{\mathfrak {P}}^{N}_T\right] (\cdot )\), we get by the induction hypothesis that, for fixed \(T\ge 0\), the distribution of \(\left( {\mathsf {X}}^1(T),\dots , {\mathsf {X}}^{N}(T)\right) \) is given by (27), as desired. \(\square \)

We observe that the densely packed initial condition \({\mathsf {M}}_N^{\mathsf {dp}}\) is clearly Gibbs. We close this subsection with a couple of remarks on generalizations of this result.

Remark 2.23

It is also possible, by a simple extension of the argument above, to consider the distribution of \(\left( {\mathsf {X}}^1(T_1),{\mathsf {X}}^2(T_2),\dots , {\mathsf {X}}^{N}(T_N)\right) \) at distinct times \((T_1,\dots ,T_N)\) satisfying \(T_N\le T_{N-1}\le \cdots \le T_1\). This corresponds to space-like distributions in the language of growth models; see [6, 7, 9].

Remark 2.24

In the setting of the level-inhomogeneous model described in Remark 1.4 (with the notations of Remark 2.21), the statement of the corresponding proposition (and its proof) is completely analogous with \({\mathfrak {P}}_t^{k}\) replaced by \({\mathsf {P}}_t^{(k,\alpha _k),{\mathsf {h}}_k^{(\alpha _1,\dots ,\alpha _k)}}\) and \({\mathfrak {L}}_{k-1}^k\) replaced by \(\mathsf {\Lambda }_{k-1}^{(k,\alpha _k),{\mathsf {h}}_k^{(\alpha _1,\dots ,\alpha _k)}}\).

2.4 Inhomogeneous Gelfand–Tsetlin Graph and Plancherel Measure

This subsection is independent of the rest of the paper and can be skipped. However, it provides some further insight into the constructions of the present work and how they fit into a wider framework. We begin with some notation. Let

denote the discrete chamber without the nonnegativity restriction. The definitions of interlacing in this setting and of \(\tilde{{\mathbb {W}}}^{N,N+1}\) are also completely analogous. (We simply drop nonnegativity.)

Definition 2.25

We consider a graded graph \(\mathsf {\Gamma }=\mathsf {\Gamma }_{\lambda }\) with vertex set \(\uplus _{N\ge 1}\tilde{{\mathbb {W}}}^N\). Two vertices \(x\in \tilde{{\mathbb {W}}}^{N+1}\) and \(y\in \tilde{{\mathbb {W}}}^N\) are connected by an edge if and only if they interlace. For all \(N\ge 1\) we assign a weight/multiplicity, denoted by \(\mathsf {mult}_{\lambda }(y,x)\), to each edge \((y,x) \in \tilde{{\mathbb {W}}}^{N,N+1}\), and more generally to all pairs \((y,x)\in \tilde{{\mathbb {W}}}^N\times \tilde{{\mathbb {W}}}^{N+1}\):

The distinguished case \(\mathsf {\Gamma }_1\) with \(\lambda (\cdot )\equiv 1\) is the Gelfand–Tsetlin graph,Footnote 8 see [14, 50]. This describes the branching of irreducible representations of the chain of unitary groups, see [14, 50]. We propose to call the more general case \(\mathsf {\Gamma }_{\lambda }\) defined above the inhomogeneous Gelfand–Tsetlin graph. (In fact, it is a family of graphs, one for each function \(\lambda \)).

We now define the dimension \(\mathsf {dim}^{\lambda }_N(x)\) of a vertex \(x \in \tilde{{\mathbb {W}}}^N\), inductively by:

We can associate a family of Markov kernels \(\{\Lambda _{N+1\rightarrow N}\}_{N\ge 1}\) from \(\tilde{{\mathbb {W}}}^{N+1}\) to \(\tilde{{\mathbb {W}}}^N\) to the graph \(\mathsf {\Gamma }_{\lambda }\) given by:

Observe that, by the very definitions, when restricting to the positive chambers \({\mathbb {W}}^N\) (namely considering the subgraph \(\mathsf {\Gamma }_{\lambda }^+=\uplus _{N\ge 1} {\mathbb {W}}^N\)) we have:

We say that a sequence of probability measures \(\{ \mu _N\}_{N\ge 1}\) on \(\{\tilde{{\mathbb {W}}}^N \}_{N\ge 1}\) is consistent if:

The extremal points of the convex set of consistent probability measures form the boundary of the graph \(\mathsf {\Gamma }_{\lambda }\). In the homogeneous case \(\lambda (\cdot )\equiv 1\), the boundary of the Gelfand–Tsetlin graph \(\mathsf {\Gamma }_1\) has been determined explicitly and is in bijection (see [14, 45, 50] for more details and precise statements) with the infinite-dimensional space \(\Omega \):

and we also write:

The extremal consistent sequence of probability measures \(\big \{{\mathcal {M}}_{\gamma ^+}^N \big \}_{N\ge 1}\) corresponding to \(\gamma ^+\ge 0\) with all the other parameters on \(\Omega \) identically equal to zero is called the Plancherel measureFootnote 9 for the infinite-dimensional unitary group; see [13]. The connection to the present paper is through the following, see [7]

where the right-hand side is defined for \(\lambda (\cdot )\equiv 1\). Now, due to observation (28) and the fact that \({\mathfrak {P}}_{\gamma ^+}^N\left( (0,1,\dots ,N-1),\cdot \right) \) is supported on \({\mathbb {W}}^N\) the following is an immediate consequence of the intertwining relation (24) from Proposition 2.19:

Proposition 2.26

Let the function \(\lambda \) be fixed satisfying (\(\mathsf {UB}\)). Consider the graph \(\mathsf {\Gamma }_{\lambda }\) and for all \(N\ge 1\) the semigroups \(\left( {\mathfrak {P}}_t^{N};t\ge 0\right) \) associated with the function \(\lambda \). Then, for each \(\gamma ^+\ge 0\) the sequence of probability measures \(\big \{{\mathfrak {P}}_{\gamma ^+}^N\left( (0,1,\dots ,N-1),\cdot \right) \big \}_{N\ge 1}\) is consistent for \(\mathsf {\Gamma }_{\lambda }\).

Thus, the sequence \(\big \{{\mathfrak {P}}_{\gamma ^+}^N\left( (0,1,\dots ,N-1),\cdot \right) \big \}_{N\ge 1}\) can be viewed as the analogue of the Plancherel measure for the more general graphs \(\mathsf {\Gamma }_{\lambda }\). It would be interesting to understand whether this sequence is actually extremal for \(\mathsf {\Gamma }_{\lambda }\) for general \(\lambda \). A more ambitious question would be whether there exists a complete classification of extremal consistent measures for \(\Gamma _{\lambda }\), in analogy to the case of the Gelfand–Tsetlin graph \(\mathsf {\Gamma }_1\).
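For the homogeneous Gelfand–Tsetlin graph \(\mathsf {\Gamma }_1\), the inductive definition of the dimension and the associated Markov kernels can be made concrete. The following Python sketch assumes that all edge multiplicities equal 1 when \(\lambda (\cdot )\equiv 1\), and uses the interlacing convention \(x_1\le y_1<x_2\le \cdots \le y_N<x_{N+1}\); both are assumptions of this sketch, as are the function names. It computes the dimension recursively, compares it with the Weyl dimension formula \(\prod _{i<j}(x_j-x_i)/(j-i)\), and builds one row of the kernel as the ratio of dimensions.

```python
from fractions import Fraction
from functools import lru_cache
from itertools import combinations, product


def interlacing_below(x):
    """All y with x_1 <= y_1 < x_2 <= y_2 < ... <= y_N < x_{N+1}."""
    ranges = [range(x[i], x[i + 1]) for i in range(len(x) - 1)]
    return [tuple(y) for y in product(*ranges)]


@lru_cache(maxsize=None)
def dim(x):
    """Number of paths from level 1 to the vertex x (all multiplicities assumed equal to 1)."""
    if len(x) <= 1:
        return 1
    return sum(dim(y) for y in interlacing_below(x))


def weyl(x):
    """Weyl dimension formula in strictly increasing coordinates."""
    value = Fraction(1)
    for i, j in combinations(range(len(x)), 2):
        value *= Fraction(x[j] - x[i], j - i)
    return value


def link(x):
    """Row of the kernel from level N+1 to level N at x, as a dict y -> probability."""
    total = dim(x)
    return {y: Fraction(dim(y), total) for y in interlacing_below(x)}


x = (-2, 0, 3)
print(dim(x), weyl(x))
print(sum(link(x).values()))
```

Negative coordinates are allowed here, in line with the chambers \(\tilde{{\mathbb {W}}}^N\) that drop the nonnegativity restriction.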

Remark 2.27

Analogous constructions exist for the level-inhomogeneous generalization of the Gelfand–Tsetlin graph; cf. Remarks 1.4, 2.21, 2.24.

3 Determinantal Structure and Computation of the Kernel

3.1 Eynard–Mehta Theorem and Determinantal Correlations

We will make use of one of the many variants of the famous Eynard–Mehta Theorem [28], and in particular a generalization to measures on interlacing particle systems; see [9, 19]. More precisely, we will use Lemma 3.4 of [9]. For the convenience of the reader and to set up some notation, we reproduce it here:

Proposition 3.1

Assume we have a (possibly signed) measure on \(\{x_i^n, n=1,\dots , N, i=1,\dots ,n\}\) given in the form:

where \(x_{n+1}^{n}\) are some “virtual” variables, which we also denote by \(\mathsf {virt}\), and \(Z_N\) is a nonzero normalization constant. Then, the correlation functions are determinantal. To write down the kernel, we need some notation. Define,

where \((a*b)(x,y)=\sum _{z \in {\mathbb {Z}}}^{}a(x,z)b(z,y)\). Also, define for \(1\le n <N\):

Set \(\phi (x_1^0,x)=1\). Then, the functions

are linearly independent and generate the n-dimensional space \(V_n\). For each \(1\le n \le N\), we define a set of functions \(\{\Phi _j^n(x), j=0,\dots ,n-1 \}\) determined by the following two properties:

The functions \(\{\Phi _j^n(x), j=0,\dots ,n-1 \}\) span \(V_n\).

For \(0\le i,j \le n-1\), we have:

$$\begin{aligned} \sum _{x}^{}\Psi _i^n(x)\Phi _j^n(x)={\mathbf {1}}(i=j). \end{aligned}$$

Finally, assume that \(\phi _n(x_{n+1}^n,x)=c_n\Phi _0^{(n+1)}(x)\) for some \(c_n\ne 0\), \(n=1,\dots ,N-1\). Then, the kernel takes the simple form:

From Proposition 2.22, with the notation \(\triangle _N=(0,1,\dots ,N-1)\), we get that \(\mathsf {Law}\left[ {\mathsf {X}}_N(t;{\mathsf {M}}_{N}^{\mathsf {dp}})\right] \) for fixed time \(t\ge 0\) is given by:

Using the spectral expansion (8) of \(\mathrm{e}^{t{\mathsf {L}}}(x,y)\) and row operations (recall that \(p_x(w)\) is a polynomial of degree x in w), we can rewrite the display above as follows, for a (different) nonzero constant \({\mathsf {Z}}_N\):

where the functions \(\{ \Psi _{N-i}^N(\cdot )\}_{i=1}^N\) (we suppress dependence on the time variable t since it is fixed) are given by:

Moreover, we note that it is possible (see, for example, [52]) to write the indicator function for interlacing as a determinant, for \(y \in {\mathbb {W}}^{n-1}, x \in {\mathbb {W}}^{n}\):

Thus, we can write the measure above in a form that is within the scope of Proposition 3.1:

with,

In particular, this implies determinantal correlations. The explicit computation of the kernel is performed in the next section.
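The determinantal form of the interlacing indicator can be verified by brute force for small sizes. The Python sketch below uses one possible convention, which is an assumption of this sketch and may differ from the paper's display by the ordering of rows or an overall sign: the kernel is \({\mathbf {1}}(x_j\le y_i)\), the virtual variable contributes a final row of ones, and interlacing means \(x_1\le y_1<x_2\le \cdots \le y_{n-1}<x_n\). The function names are hypothetical.

```python
from itertools import combinations, permutations


def det(matrix):
    """Exact integer determinant via the Leibniz formula (adequate for small sizes)."""
    n = len(matrix)
    total = 0
    for perm in permutations(range(n)):
        inversions = sum(1 for i in range(n) for j in range(i + 1, n) if perm[i] > perm[j])
        term = -1 if inversions % 2 else 1
        for row, col in enumerate(perm):
            term *= matrix[row][col]
        total += term
    return total


def interlaces(y, x):
    """True when x_1 <= y_1 < x_2 <= y_2 < ... <= y_{n-1} < x_n."""
    return all(x[i] <= y[i] < x[i + 1] for i in range(len(y)))


def interlacing_determinant(y, x):
    """Determinant of [1(x_j <= y_i)] with a last row of ones for the virtual variable."""
    n = len(x)
    rows = [[1 if x[j] <= y[i] else 0 for j in range(n)] for i in range(n - 1)]
    rows.append([1] * n)
    return det(rows)


def check(n, size):
    """Compare determinant and indicator over all strictly increasing x, y in range(size)."""
    return all(interlacing_determinant(y, x) == int(interlaces(y, x))
               for x in combinations(range(size), n)
               for y in combinations(range(size), n - 1))


print(check(3, 6))
```

The reason the identity holds is that each row is a run of ones followed by zeros, with run lengths nondecreasing in the row index; the determinant is 1 exactly when the i-th run has length i, which is the interlacing condition, and 0 otherwise.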

Remark 3.2

A computation completely analogous to the one above shows that, starting from any deterministic initial condition \({\mathfrak {M}}^N(\cdot )=\delta _{(z_1,\dots ,z_N)}(\cdot )\) for the top level, the evolved Gibbs measure on \(\mathsf {GT}_N\) has determinantal correlations. A possible choice (this is clearly not unique, as we can use linear combinations) of the functions \(\Psi \) is as follows (the functions \(\phi \) are as before):

The explicit computation of the correlation kernel is an interesting open problem.

Remark 3.3

In the level-inhomogeneous setting, analogous computations, making use of Remark 2.24 show the existence of determinantal correlations for Gibbs measures with deterministic initial conditions for the top level \({\mathfrak {M}}^N(\cdot )=\delta _{(z_1,\dots ,z_N)}(\cdot )\). A possible choice of the functions \(\Psi \) and \(\phi _k\) is as follows:

3.2 Computation of the Correlation Kernel

Our aim now is to solve the biorthogonalization problem given in Proposition 3.1 and obtain concise contour integral expressions for the families of functions appearing therein. This is achieved in the following sequence of lemmas. First, in order to ease notation, since we are in the level-homogeneous case, we define:

Lemma 3.4

For \(1\le n \le N\), we have:

Lemma 3.5

For \(1\le k\le N\), we have:

Lemma 3.6

For \(1\le k \le N\), we have:

We define a family of functions \(\Phi _{\cdot }^{\cdot }(\cdot )\) on \({\mathbb {Z}}_+\), for \(1\le n \le N\) (again we suppress dependence on the variable t since it is fixed):

Lemma 3.7

The functions \(\Phi \) are biorthogonal to the \(\Psi \)’s. More precisely, for any \(1\le n \le N\)

for \(0\le i,j \le n-1\).

Lemma 3.8

The functions \(\{ \Phi _j^n(\cdot ); j=0,\dots ,n-1\}\) span the space (see Proposition 3.1):

for \(1\le n \le N\).

Finally, it is clear that we have the following.

Lemma 3.9

For \(n=1,\dots ,N-1\):

Assuming the auxiliary results above, we first prove Theorem 1.7. The proofs of these results are given afterward.

Proof of Theorem 1.7

Making use of Proposition 3.1 (by virtue of the preceding auxiliary lemmas), we get that:

The term \(\phi ^{(n_2-n_1)}(x_1,x_2)\) is given by, from Lemma 3.5:

It then remains to simplify the sum:

\(\square \)

Proof of Lemma 3.4

It suffices to show that:

It is in fact equivalent to prove that:

For any \(R>1\), we consider a counterclockwise contour \({\mathsf {C}}_{\lambda }^{\ge R}\) that contains 0 and \(\{\lambda (x)\}_{x\ge 0}\) and for which the following uniform bound holds:

Such a contour exists because of assumption \((\mathsf {UB})\); we can simply take a very large circle.

Clearly, the left-hand side of display (37) is equal to (since we can deform the contour \({\mathsf {C}}_{\lambda }\) to \({\mathsf {C}}_{\lambda }^{\ge R}\) without crossing any poles):

We now claim that uniformly for \(w \in {\mathsf {C}}_{\lambda }^{\ge R}\):

Assuming this, display (37) follows immediately, and thus so does the statement of the lemma.

Now, after a simple relabeling (more precisely by writing \({\mathsf {a}}_i=\lambda (y+i+1)\)) in order to establish the claim it suffices to prove the following result. Let \(\{{\mathsf {a}}_i \}_{i\ge 0}\) be a sequence of numbers in [s, M] and let \({\mathsf {C}}_{{\mathsf {a}}}^{\ge R}\) be the contour defined above. Then, uniformly for \(w \in {\mathsf {C}}_{{\mathsf {a}}}^{\ge R}\) we have:

Observe that, if all the \(\{{\mathsf {a}}_i \}_{i\ge 0}\) are equal, this is just a geometric series. We claim that we have the following key identity for finite k:

This is a consequence (by induction) of the trivial to check equality:

Since the contour \({\mathsf {C}}_{{\mathsf {a}}}^{\ge R}\) was chosen so that for any \(k\ge 1\):

with \(R>1\), the result readily follows. \(\square \)
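The mechanism of this proof, a finite telescoping identity whose remainder decays geometrically on a large contour, can be checked numerically. The Python sketch below uses one identity of this type, written in a notation that is an assumption of this sketch and not necessarily the normalization of the paper's displays: with \(P_k=\prod _{i<k}{\mathsf {a}}_i/({\mathsf {a}}_i-w)\), one has \(\sum _{k=0}^{K}P_k/({\mathsf {a}}_k-w)=(P_{K+1}-1)/w\), which follows by induction from \(P_{k+1}-P_k=wP_k/({\mathsf {a}}_k-w)\). The sequence `a`, the point `w` and the function name are hypothetical.

```python
from fractions import Fraction


def partial_sum(a, w, K):
    """Return (sum_{k=0}^{K} P_k / (a_k - w), P_{K+1}) with P_k = prod_{i<k} a_i / (a_i - w)."""
    total = Fraction(0)
    running_product = Fraction(1)  # equals P_k at the start of iteration k
    for k in range(K + 1):
        total += running_product / (a[k] - w)
        running_product *= Fraction(a[k]) / (a[k] - w)
    return total, running_product


# A bounded sequence with values in [1/2, 3/2] and a point far from it on the real axis.
a = [Fraction(n % 3 + 1, 2) for n in range(40)]
w = Fraction(-50)

total, tail = partial_sum(a, w, 39)
print(total == (tail - 1) / w)   # finite telescoping identity, exact
print(float(total), float(-1 / w))
```

When all the \({\mathsf {a}}_i\) are equal, the sum is a geometric series; in general, each factor \({\mathsf {a}}_i/({\mathsf {a}}_i-w)\) is small for w far from the sequence, so the remainder \(P_{K+1}/w\) vanishes as K grows.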

Proof of Lemma 3.5

We prove this by induction on k. For the base case \(k=1\), it suffices to observe that:

For the inductive step, we first compute:

We can then deform the contour \({\mathsf {C}}_{\lambda }\) to \({\mathsf {C}}_{\lambda }^{\ge R}\) as in the proof of Lemma 3.4 and use (39) to conclude. \(\square \)

Proof of Lemma 3.6

We make use of Lemma 3.5 and apply the same arguments as in the proof of Lemma 3.4 to compute:

\(\square \)

Proof of Lemma 3.7

We first write using the explicit expression:

We claim that for any \(l\in {\mathbb {Z}}_+\), uniformly for \(u \in {\mathfrak {K}}\), where \({\mathfrak {K}}\) is an arbitrary compact neighborhood of the origin, we have:

Then, (40) becomes

In order to establish the claim, it is equivalent (by taking finite linear combinations, since \(p_x(\cdot )\) is a polynomial of degree x) to prove that for any \(l\in {\mathbb {Z}}_+\), uniformly for \(u\in {\mathfrak {K}}\) (an arbitrary compact neighborhood of the origin):

Now, observe that for fixed u, the function \(x\mapsto p_x(u)\) is an eigenfunction, with eigenvalue \(-u\), of the generator \({\mathsf {L}}\):

Thus, for u fixed, we have:

Now, in order to show that the convergence is uniform for \(u \in {\mathfrak {K}}\), we proceed as follows. We first estimate:

On the other hand, making use of the spectral expansion (8), for any \(l\in {\mathbb {Z}}_+\), we have the following bound, where R can be picked arbitrarily large:

Here C denotes a generic constant independent of x (we suppress dependence of C on l). We pick R large enough so that:

Then, using the Weierstrass M-test, since for all \(x \in {\mathbb {Z}}_+\)

we get that, for any \(l \in {\mathbb {Z}}_+\):

as required. \(\square \)
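The eigenfunction relation used in this proof can be checked directly. The sketch below assumes the forms \(p_x(u)=\prod _{k=0}^{x-1}\left( 1-u/\lambda (k)\right) \) and \(({\mathsf {L}}f)(x)=\lambda (x)\left( f(x+1)-f(x)\right) \), which are consistent with the text (a polynomial of degree x, a pure-birth generator, eigenvalue \(-u\)) but whose defining displays are not reproduced in this section. The rate function `rate` is a hypothetical bounded choice.

```python
from fractions import Fraction


def rate(x):
    """Hypothetical bounded, strictly positive jump-rate function lambda(x)."""
    return Fraction(x % 4 + 1, 2)


def p(x, u):
    """Assumed form p_x(u) = prod_{k=0}^{x-1} (1 - u / lambda(k)), of degree x in u."""
    value = Fraction(1)
    for k in range(x):
        value *= 1 - Fraction(u) / rate(k)
    return value


def generator(f, x):
    """Pure-birth generator: (L f)(x) = lambda(x) * (f(x + 1) - f(x))."""
    return rate(x) * (f(x + 1) - f(x))


u = Fraction(3, 7)
# Residual of the eigenfunction equation L p_.(u) = -u p_.(u) at each site x.
residuals = [generator(lambda z: p(z, u), x) + u * p(x, u) for x in range(12)]
print(all(r == 0 for r in residuals))
```

The check reduces to the one-line computation \(\lambda (x)\left( p_{x+1}(u)-p_x(u)\right) =\lambda (x)p_x(u)\left( -u/\lambda (x)\right) =-up_x(u)\).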

Proof of Lemma 3.8

Let \(1\le n \le N\). Using the Cauchy integral formula, we see that, for \(1\le k \le n\):

On the other hand, again using the Cauchy integral formula, we have for \(j=0,\dots ,n-1\):

with \(c_j^j\ne 0\). Thus, it is immediate that

as desired. \(\square \)

Remark 3.10

The computation of the correlation kernel in the level-inhomogeneous setting is more complicated and notationally cumbersome and we do not pursue the details here. We simply record the key ingredient for computing the iterated convolutions as contour integrals. This is the analogue of (and in fact follows from) display (39):

holding uniformly for w on some large contour \({\mathsf {C}}_{\lambda ,\alpha _n,\alpha _{n+1}}^{\ge R}\).

Notes

Thus the name “Borodin–Ferrari push-block dynamics” that we use throughout the paper.

The definition of interlacing, and thus of Gelfand–Tsetlin patterns, is slightly different to the one used in [7]; more precisely the positions of inequalities \(\le \) and strict inequalities < are swapped. Clearly the two conventions are equivalent (after a simple shift).

We believe (but do not have a rigorous argument) that this is the most general model of continuous-time Borodin–Ferrari dynamics (namely the particle interactions being as in Definition 1.2) with determinantal correlations and which can be treated with the methods developed here. It is plausible however that if one considers discrete time dynamics that there exists an even more general model in inhomogeneous space involving geometric or Bernoulli jumps, as is the case in the homogeneous setting; see [7].

Currently, this class has been shown to include essentially all examples of Borodin–Ferrari-type dynamics in continuous time with determinantal correlations studied in the literature (both in continuous and discrete space; see [1, 2], respectively). We expect such a formula to exist in the fully discrete setting (both time and space) as well and we plan to investigate this in future work. In this case, the Borodin–Ferrari dynamics give rise to shuffling algorithms for sampling random boxed plane partitions [10, 11] or domino tilings [8, 38].

In particular the statements of the results look different to the ones in [1, 2]. More precisely, in [1, 2], we obtain couplings between a Karlin–McGregor semigroup associated with a diffusion (or birth and death chain) and the one associated with (a Doob transform of) its Siegmund dual (which is in general different to the original process, except for a smaller sub-class of self-dual ones). In the present paper, the couplings are between two Karlin–McGregor semigroups associated with a general pure-birth chain (and Doob transforms thereof); see Sect. 2 for more details.

The Gelfand–Tsetlin graph vertex set is commonly defined in terms of signatures \(\mathsf {Sign}_N=\{\nu =(\nu _1,\dots ,\nu _N) \in {\mathbb {Z}}^N:\nu _1\ge \nu _2\ge \dots \ge \nu _N \}\) for which there is a corresponding notion of interlacing which then gives the edge set. The two definitions are equivalent since there is a natural bijection between \(\mathsf {Sign}_N\) and \({\mathbb {W}}^N\) under which interlacing in terms of signatures becomes interlacing in terms of elements of the \({\mathbb {W}}^N\)’s.

More generally the measure where both parameters \((\gamma ^+,\gamma ^-)\) can be positive is also called Plancherel.

References

Assiotis, T., O’Connell, N., Warren, J.: Interlacing diffusions. In: Donati-Martin, C., Lejay, A., Rouault, A. (eds.) Séminaire de Probabilités L, pp. 301–380. Springer, Switzerland (2019)

Assiotis, T.: Random surface growth and Karlin–McGregor polynomials. Electron. J. Probab. 23(106), 81 (2018)

Borodin, A.: Schur dynamics of the Schur processes. Adv. Math. 228(4), 2268–2291 (2011)

Borodin, A., Corwin, I.: Macdonald processes. Probab. Theory Relat. Fields 158, 225–400 (2014)

Borodin, A., Corwin, I., Ferrari, P.: Anisotropic (2+1) d growth and Gaussian limits of q-Whittaker processes. Probab. Theory Relat. Fields 172(1–2), 245–321 (2018)

Borodin, A., Ferrari, P.: Large time asymptotics of growth models on space-like paths I: PushASEP. Electron. J. Probab. 13(50), 1380–1418 (2008)

Borodin, A., Ferrari, P.: Anisotropic growth of random surfaces in 2 + 1 dimensions. Commun. Math. Phys. 325, 603–684 (2014)

Borodin, A., Ferrari, P.: Random tilings and Markov chains for interlacing particles. Markov Process. Relat. Fields 24(3), 419–451 (2018)

Borodin, A., Ferrari, P., Prähofer, M., Sasamoto, T.: Fluctuation properties of the TASEP with periodic initial configuration. J. Stat. Phys. 129(5–6), 1055–1080 (2007)

Borodin, A., Gorin, V.: Shuffling algorithm for boxed plane partitions. Adv. Math. 220(6), 1739–1770 (2009)

Borodin, A., Gorin, V., Rains, E.: q-Distributions on boxed plane partitions. Sel. Math. 16(4), 731–789 (2010)

Borodin, A., Kuan, J.: Random surface growth with a wall and Plancherel measures for \(O(\infty )\). Commun. Pure Appl. Math. 63, 831–894 (2010)

Borodin, A., Kuan, J.: Asymptotics of Plancherel measures for the infinite-dimensional unitary group. Adv. Math. 219(3), 804–931 (2008)

Borodin, A., Olshanski, G.: The boundary of the Gelfand–Tsetlin graph: a new approach. Adv. Math. 230, 1738–1779 (2012)

Borodin, A., Olshanski, G.: Markov processes on the path space of the Gelfand–Tsetlin graph and on its boundary. J. Funct. Anal. 263, 248–303 (2012)

Borodin, A., Petrov, L.: Nearest neighbor Markov dynamics on Macdonald processes. Adv. Math. 300, 71–155 (2016)

Borodin, A., Petrov, L.: Higher spin six vertex model and symmetric rational functions. Sel. Math. (N.S) 24(2), 751–874 (2018)

Borodin, A., Petrov, L.: Inhomogeneous exponential jump model. Probab. Theory Relat. Fields 172(1–2), 323–385 (2018)

Borodin, A., Rains, E.: Eynard–Mehta theorem, Schur process, and their Pfaffian analogs. J. Stat. Phys. 121(3–4), 291–317 (2005)

Bufetov, A., Petrov, L.: Yang-Baxter field for spin Hall-Littlewood symmetric functions. Forum Math. Sigma 7, e39 (2019)

Bufetov, A., Mucciconi, M., Petrov, L.: Yang–Baxter random fields and stochastic vertex models. Adv. Math. (2019). arXiv:1905.06815 (to appear)

Chhita, S., Ferrari, P.: A combinatorial identity for the speed of growth in an anisotropic KPZ model. Annales Institut Henri Poincaré D 4(4), 453–477 (2017)

Diaconis, P., Fill, J.A.: Strong stationary times via a new form of duality. Ann. Probab. 18(4), 1483–1522 (1990)

Duits, M.: Gaussian free field in an interlacing particle system with two jump rates. Commun. Pure Appl. Math. 66(4), 600–643 (2013)

Dyson, F.: A Brownian-motion model for the eigenvalues of a random matrix. J. Math. Phys. 3, 1191 (1962)

Emrah, E.: Limit shapes for inhomogeneous corner growth models with exponential and geometric weights. Electron. Commun. Probab. 42, 16 (2016)

Emrah, E.: Limit shape and fluctuations for exactly solvable inhomogeneous corner growth models. PhD Thesis University of Wisconsin at Madison (2016)

Eynard, B., Mehta, M.L.: Matrices coupled in a chain. I. Eigenvalue correlations. J. Phys. A Math. Theor. 31, 4449–4456 (1998)

Johansson, K.: Shape fluctuations and random matrices. Commun. Math. Phys. 209(2), 437–476 (2000)

Karlin, S.: Total Positivity, vol. 1. Stanford University Press, Palo Alto (1968)

Karlin, S., McGregor, J.: Coincidence properties of birth and death processes. Pac. J. Math. 9(4), 1109–1140 (1959)

Knizel, A., Petrov, L., Saenz, A.: Generalizations of TASEP in discrete and continuous inhomogeneous space. Commun. Math. Phys. 372(3), 797–864 (2019)

König, W., O’Connell, N., Roch, S.: Non-colliding random walks, tandem queues, and discrete orthogonal polynomial ensembles. Electron. J. Probab. 7(5), 24 (2002)

Kuan, J.: A multi-species ASEP(q, j) and q-TAZRP with stochastic duality. Int. Math. Res. Not. 2018(17), 5378–5416 (2018)

Kuan, J.: An algebraic construction of duality functions for the stochastic \({\cal{U}}_q\left(A_n^{(1)}\right)\) vertex models and its degenerations. Commun. Math. Phys. 359(1), 121–187 (2018)

Miclo, L.: On the construction of set-valued dual processes. https://hal.archives-ouvertes.fr/hal-01911989 (2018). Accessed 4 Jan 2020

Le Jan, Y., Raimond, O.: Flows, coalescence and noise. Ann. Probab. 32(2), 1247–1315 (2004)

Nordenstam, E.: On the shuffling algorithm for domino tilings. Electron. J. Probab. 15(3), 75–95 (2010)

Norris, J.R.: Markov Chains. Cambridge University Press, Cambridge (1997)

O’Connell, N.: A path-transformation for random walks and the Robinson–Schensted correspondence. Trans. Am. Math. Soc. 355, 3669–3697 (2003)

O’Connell, N.: Conditioned random walks and the RSK correspondence. J. Phys. A Math. Theor. 36, 3049 (2003)

O’Connell, N.: Directed polymers and the quantum Toda lattice. Ann. Probab. 40(2), 437–458 (2012)

Okounkov, A.: Infinite wedge and random partitions. Sel. Math. 7, 57 (2001)

Okounkov, A., Reshetikhin, N.: Correlation function of Schur process with application to local geometry of a random 3-dimensional Young diagram. J. Am. Math. Soc. 16, 581–603 (2003)

Petrov, L.: The boundary of the Gelfand–Tsetlin graph: new proof of Borodin–Olshanski formula, and its q-analogue. Mosc. Math. J. 14(1), 121–160 (2014)

Petrov, L.: PushTASEP in inhomogeneous space. (2019). arXiv:1910.08994

Rogers, L.C.G., Pitman, J.: Markov functions. Ann. Probab. 9(4), 573–582 (1981)

Siegmund, D.: The equivalence of absorbing and reflecting barrier problems for stochastically monotone Markov processes. Ann. Probab. 4(6), 914–924 (1976)

Toninelli, F.L.: A (2+1)-dimensional growth process with explicit stationary measures. Ann. Probab. 45(5), 2899–2940 (2017)

Vershik, A.M., Kerov, S.V.: Characters and factor representations of the infinite unitary group. Dokl. Akad. Nauk SSSR 267(2), 272–276 (1982). (in Russian); English Translation: Soviet Math. Dokl. 26, 570–574 (1982)

Wang, D., Waugh, D.: The transition probability of the q-TAZRP (q-Bosons) with inhomogeneous jump rates. SIGMA 12, 037 (2016)

Warren, J.: Dyson’s Brownian motions, intertwining and interlacing. Electron. J. Probab. 12, 573–590 (2007)

Warren, J., Windridge, P.: Some examples of dynamics for Gelfand–Tsetlin patterns. Electron. J. Probab. 14, 1745–1769 (2009)

Acknowledgements

I would like to thank Maurice Duits, Patrik Ferrari, and Jon Warren for some early discussions which motivated the problems studied in this paper. Moreover, I am very grateful to Leonid Petrov for sending me his preprint and for some interesting questions and remarks. I would also like to thank Alexei Borodin for some very interesting suggestions and pointers to the literature. Finally, I am very grateful to an anonymous referee for a careful reading of the paper and some very useful suggestions and remarks which have improved the presentation. The research described here was supported by ERC Advanced Grant 740900 (LogCorRM).

Additional information

Communicated by Vadim Gorin.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Assiotis, T. Determinantal Structures in Space-Inhomogeneous Dynamics on Interlacing Arrays. Ann. Henri Poincaré 21, 909–940 (2020). https://doi.org/10.1007/s00023-019-00881-5
