Abstract

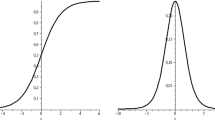

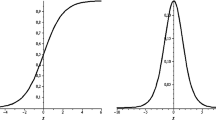

In this paper, for neural network (NN) operators activated by sigmoidal functions, we establish quantitative estimates that are directly connected with the asymptotic behavior of their activation functions. We cover both the discrete and the Kantorovich type versions of NN operators, in the univariate as well as the multivariate setting, since the proofs in the multivariate case can be conveniently reduced to those in the univariate case. These quantitative estimates are obtained for continuous functions by means of the well-known modulus of continuity. Further, for the Kantorovich version of the NN operators, we also provide quantitative estimates for \(L^p\) functions with respect to the \(L^p\) norm, using the well-known Peetre K-functionals. At the end of the paper, some examples of sigmoidal functions are presented, and the case of NN operators activated by the rectified linear unit (ReLU) function is also considered. Finally, numerical examples in both the univariate and multivariate cases are provided to show the approximation performance of all the above operators.
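To make the objects in the abstract concrete, here is a minimal univariate sketch. It assumes the construction that is standard in the sigmoidal NN-operator literature listed below (e.g. Costarelli and Spigler): the density \(\phi_\sigma(x) = [\sigma(x+1) - \sigma(x-1)]/2\), the discrete operator as a \(\phi_\sigma\)-weighted mean of the samples \(f(k/n)\), and the Kantorovich operator with each sample replaced by the local mean \(n\int_{k/n}^{(k+1)/n} f(u)\,du\). The function names, the midpoint quadrature, the grid-based estimate of the modulus of continuity, the test function \(|x - 1/2|\), and the ReLU-built ramp \(\sigma(x) = \mathrm{ReLU}(x + 1/2) - \mathrm{ReLU}(x - 1/2)\) are illustrative choices, not taken from the paper, and the code does not reproduce the paper's estimates or constants.

```python
import math
from typing import Callable

Func = Callable[[float], float]


def logistic(x: float) -> float:
    """Logistic sigmoidal function 1 / (1 + exp(-x)), evaluated stably."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def relu(x: float) -> float:
    return max(0.0, x)


def ramp(x: float) -> float:
    """Ramp sigmoidal function built from two ReLUs (illustrative choice):
    0 for x <= -1/2, x + 1/2 on [-1/2, 1/2], and 1 for x >= 1/2."""
    return relu(x + 0.5) - relu(x - 0.5)


def phi(sigma: Func, x: float) -> float:
    """Assumed bell-shaped density phi_sigma(x) = (sigma(x + 1) - sigma(x - 1)) / 2."""
    return 0.5 * (sigma(x + 1.0) - sigma(x - 1.0))


def nn_operator(f: Func, sigma: Func, n: int, x: float,
                a: float = 0.0, b: float = 1.0) -> float:
    """Discrete NN operator on [a, b]: phi-weighted mean of the samples f(k/n)."""
    ks = range(math.ceil(n * a), math.floor(n * b) + 1)
    w = [phi(sigma, n * x - k) for k in ks]
    return sum(f(k / n) * wk for k, wk in zip(ks, w)) / sum(w)


def kantorovich_operator(f: Func, sigma: Func, n: int, x: float,
                         a: float = 0.0, b: float = 1.0, quad: int = 8) -> float:
    """Kantorovich NN operator: f(k/n) is replaced by the local mean
    n * integral of f over [k/n, (k+1)/n], approximated by a midpoint rule."""
    ks = range(math.ceil(n * a), math.floor(n * b))
    h = 1.0 / (n * quad)
    means = [sum(f(k / n + (j + 0.5) * h) for j in range(quad)) / quad for k in ks]
    w = [phi(sigma, n * x - k) for k in ks]
    return sum(m * wk for m, wk in zip(means, w)) / sum(w)


def modulus_of_continuity(f: Func, delta: float, a: float = 0.0,
                          b: float = 1.0, m: int = 1000) -> float:
    """Grid estimate of omega(f, delta) = sup |f(x) - f(y)| over |x - y| <= delta."""
    h = (b - a) / m
    vals = [f(a + i * h) for i in range(m + 1)]
    steps = int(delta / h + 1e-9)  # largest grid offset with |x - y| <= delta
    return max((abs(vals[i] - vals[j])
                for i in range(m + 1)
                for j in range(i + 1, min(m, i + steps) + 1)), default=0.0)


def sup_error(op, f: Func, sigma: Func, n: int, m: int = 200) -> float:
    """Max |op(f)(x) - f(x)| over m + 1 equispaced points of [0, 1]."""
    return max(abs(op(f, sigma, n, i / m) - f(i / m)) for i in range(m + 1))


if __name__ == "__main__":
    f = lambda u: abs(u - 0.5)  # continuous test function (illustrative)
    for name, sigma in (("logistic", logistic), ("ReLU ramp", ramp)):
        for n in (10, 50, 100):
            e_d = sup_error(nn_operator, f, sigma, n)
            e_k = sup_error(kantorovich_operator, f, sigma, n)
            w = modulus_of_continuity(f, 1 / n)
            print(f"{name:9s} n={n:3d}  discrete={e_d:.4f}  "
                  f"Kantorovich={e_k:.4f}  omega(f,1/n)={w:.4f}")
```

Running the script prints, for each activation and each \(n\), the sup-norm errors of both operators next to a grid estimate of \(\omega(f, 1/n)\), so the decay of the error can be compared with the modulus of continuity. Normalizing by the sum of the weights makes both operators reproduce constants exactly, which is why they behave well near the endpoints of the interval.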

Notes

Theorem 2.1 of [22] is stated for \(\alpha \ge 2\), but its proof also works for \(1 < \alpha \le 2\).

References

Anastassiou, G.A.: Rate of convergence of some neural network operators to the univariate case. J. Math. Anal. Appl. 212, 237–262 (1997)

Anastassiou, G.A.: Multivariate hyperbolic tangent neural network approximation. Comput. Math. Appl. 61(4), 809–821 (2011)

Anastassiou, G.A.: Multivariate sigmoidal neural network approximation. Neural Netw. 24, 378–386 (2011)

Anastassiou, G.A.: Intelligent Systems II: Complete Approximation by Neural Network Operators, Studies in Computational Intelligence, vol. 608. Springer, Cham (2016)

Anastassiou, G.A.: Multivariate approximation with rates by perturbed Kantorovich-Shilkret neural network operators. Sarajevo J. Math. 15(28)(1), 97–112 (2019)

Bajpeyi, S., Kumar, A.S.: Approximation by exponential sampling type neural network operators. Anal. Math. Phys. 11(3), Paper No. 108 (2021)

Cantarini, M., Coroianu, L., Costarelli, D., Gal, S.G., Vinti, G.: Inverse result of approximation for the max-product neural network operators of the Kantorovich type and their saturation order. Mathematics 10, Paper No. 63 (2022)

Cantarini, M., Costarelli, D., Vinti, G.: Asymptotic expansion for neural network operators of the Kantorovich type and high order of approximation. Mediterr. J. Math. 18(2), Paper No. 66 (2021)

Cao, F., Chen, Z.: The approximation operators with sigmoidal functions. Comput. Math. Appl. 58(4), 758–765 (2009)

Cao, F., Chen, Z.: The construction and approximation of a class of neural networks operators with ramp functions. J. Comput. Anal. Appl. 14(1), 101–112 (2012)

Cardaliaguet, P., Euvrard, G.: Approximation of a function and its derivative with a neural network. Neural Netw. 5(2), 207–220 (1992)

Cheang, G.H.L.: Approximation with neural networks activated by ramp sigmoids. J. Approx. Theory 162, 1450–1465 (2010)

Coroianu, L., Costarelli, D., Gal, S.G., Vinti, G.: The max-product generalized sampling operators: convergence and quantitative estimates. Appl. Math. Comput. 355, 173–183 (2019)

Coroianu, L., Costarelli, D., Gal, S.G., Vinti, G.: Approximation by max-product sampling Kantorovich operators with generalized kernels. Anal. Appl. 19, 219–244 (2021)

Costarelli, D., Sambucini, A.R.: Approximation results in Orlicz spaces for sequences of Kantorovich max-product neural network operators. Results Math. 73(1), 15 (2018). https://doi.org/10.1007/s00025-018-0799-4

Costarelli, D., Sambucini, A.R., Vinti, G.: Convergence in Orlicz spaces by means of the multivariate max-product neural network operators of the Kantorovich type and applications. Neural Comput. Appl. 31, 5069–5078 (2019)

Costarelli, D., Spigler, R.: Approximation results for neural network operators activated by sigmoidal functions. Neural Netw. 44, 101–106 (2013)

Costarelli, D., Spigler, R.: Multivariate neural network operators with sigmoidal activation functions. Neural Netw. 48, 72–77 (2013)

Costarelli, D., Spigler, R.: Convergence of a family of neural network operators of the Kantorovich type. J. Approx. Theory 185, 80–90 (2014)

Costarelli, D., Vinti, G.: Max-product neural network and quasi-interpolation operators activated by sigmoidal functions. J. Approx. Theory 209, 1–22 (2016)

Costarelli, D., Vinti, G.: Saturation classes for max-product neural network operators activated by sigmoidal functions. Results Math. 72(3), 1555–1569 (2017)

Costarelli, D., Vinti, G.: Quantitative estimates involving K-functionals for neural network type operators. Appl. Anal. 98(15), 2639–2647 (2019)

Cybenko, G.: Approximation by superpositions of a sigmoidal function. Math. Control Signals Syst. 2, 303–314 (1989)

Dell’Accio, F., Di Tommaso, F.: On the hexagonal Shepard method. Appl. Numer. Math. 150, 51–64 (2020)

Goebbels, S.: On sharpness of error bounds for univariate approximation by single hidden layer feedforward neural networks. Results Math. 75, Paper No. 109 (2020)

Hahm, N., Hong, B.I.: A note on neural network approximation with a sigmoidal function. Appl. Math. Sci. 10(42), 2075–2085 (2016)

Hanin, B.: Universal function approximation by deep neural nets with bounded width and ReLU activations. Mathematics 7(10), 992 (2019)

Kadak, U.: Fractional type multivariate neural network operators. Math. Methods Appl. Sci. (2021). https://doi.org/10.1002/mma.7460

Kainen, P.C., Kurková, V., Vogt, A.: Approximative compactness of linear combinations of characteristic functions. J. Approx. Theory 257, Paper No. 105435 (2020)

Kurková, V., Sanguineti, M.: Classification by sparse neural networks. IEEE Trans. Neural Netw. Learn. Syst. 30(9), 2746–2754 (2019)

Qian, Y., Yu, D.: Rates of approximation by neural network interpolation operators. Appl. Math. Comput. 418, Paper No. 126781 (2022)

Schmidhuber, J.: Deep learning in neural networks: an overview. Neural Netw. 61, 85–117 (2015)

Turkun, C., Duman, O.: Modified neural network operators and their convergence properties with summability methods. Rev. R. Acad. Cienc. Exactas Fís. Nat. Ser. A Mat. RACSAM 114(3), Paper No. 132 (2020)

Yarotsky, D.: Error bounds for approximations with deep ReLU networks. Neural Netw. 94, 103–114 (2017)

Zhou, D.X.: Universality of deep convolutional neural networks. Appl. Comput. Harmon. Anal. 48(2), 787–794 (2020)

Zoppoli, R., Sanguineti, M., Gnecco, G., Parisini, T.: Neural Approximations for Optimal Control and Decision. Communications and Control Engineering. Springer, Cham (2020)

Acknowledgements

The contribution of Lucian Coroianu was supported by a grant awarded by the University of Oradea, titled “Approximation and optimization methods with applications”. D. Costarelli is a member of the Gruppo Nazionale per l’Analisi Matematica, la Probabilità e le loro Applicazioni (GNAMPA) of the Istituto Nazionale di Alta Matematica (INdAM), of the network RITA (Research ITalian network on Approximation), and of the UMI (Unione Matematica Italiana) group T.A.A. (Teoria dell’Approssimazione e Applicazioni). Moreover, he has been partially supported by the 2020 GNAMPA-INdAM Project “Analisi reale, teoria della misura ed approssimazione per la ricostruzione di immagini” (“Real analysis, measure theory and approximation for image reconstruction”).

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Coroianu, L., Costarelli, D. & Kadak, U. Quantitative Estimates for Neural Network Operators Implied by the Asymptotic Behaviour of the Sigmoidal Activation Functions. Mediterr. J. Math. 19, 211 (2022). https://doi.org/10.1007/s00009-022-02138-8

DOI: https://doi.org/10.1007/s00009-022-02138-8