Abstract

Impulsive choice is preference for a smaller-sooner (SS) outcome over a larger-later (LL) outcome when LL choices result in greater reinforcement maximization. Delay discounting is a model of impulsive choice that describes the decaying value of a reinforcer over time, with impulsive choice evident when the empirical choice-delay function is steep. Steep discounting is correlated with multiple diseases and disorders. Thus, understanding the processes underlying impulsive choice is a popular topic for investigation. Experimental research has explored the conditions that moderate impulsive choice, and quantitative models of impulsive choice have been developed that elegantly represent the underlying processes. This review spotlights experimental research in impulsive choice covering human and nonhuman animals across the domains of learning, motivation, and cognition. Contemporary models of delay discounting designed to explain the underlying mechanisms of impulsive choice are discussed. These models focus on potential candidate mechanisms, which include perception, delay and/or reinforcer sensitivity, reinforcement maximization, motivation, and cognitive systems. Although the models collectively explain multiple mechanistic phenomena, there are several cognitive processes, such as attention and working memory, that are overlooked. Future research and model development should focus on bridging the gap between quantitative models and empirical phenomena.

Introduction

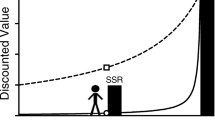

Impulsivity is a multifaceted construct that can be summarized as engaging in actions without foresight (Winstanley et al., 2006). Researchers have operationalized impulsivity into three dimensions: impulsive choice, impulsive responding, and impulsive personality. Impulsive choice is characterized by the preference for a smaller reinforcer available sooner (SS, “impulsive option”) over a larger reinforcer available later (LL, “self-controlled option”). The LL is the self-controlled option because it typically provides more reinforcement over time (i.e., optimal choice). Impulsive responding refers to the failure to suppress or withhold an action in the presence of certain stimuli. Impulsive personality, or trait impulsivity, measures persistent and stable aspects of personality primarily through self-report assessments (Reynolds et al., 2006). Although points of overlap exist, these dimensions are largely considered to be mechanistically distinct from one another (MacKillop et al., 2016). This review discusses learning, motivational, and cognitive mechanisms of impulsive decision-making in both humans and nonhuman animals. We also review a wide array of mathematical models developed to predict impulsive choice and discuss the areas of disconnect between empirical research and theory development with the goal of motivating future research.

An essential facet of impulsive choice is that individuals devalue temporally distant reinforcers (Madden & Bickel, 2010), a phenomenon known as delay discounting. Delay discounting reflects the level of impatience, or unwillingness to wait for larger delayed outcomes, and has been proposed as a key underlying mechanism that drives impulsive choice (Mazur, 1987). Impulsive choice has received considerable attention because of its relationship with important health outcomes. For example, individuals with higher discounting rates have a higher prevalence of substance use (Amlung et al., 2017; Bickel et al., 1999; MacKillop et al., 2011), obesity (Jarmolowicz et al., 2014; Weller et al., 2008), attention-deficit/hyperactivity disorder (ADHD; Marx et al., 2021; Patros et al., 2016), and gambling disorders (Grecucci et al., 2014). As a result of the breadth of application, impulsive choice has been proposed as a trans-disease process (Bickel et al., 2019; Bickel & Mueller, 2009).
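
As a rough illustration of how a discounting rate translates into choice, the sketch below uses the hyperbolic form V = A / (1 + kD) commonly associated with Mazur (1987), where A is the reinforcer amount, D is the delay, and k is a discounting-rate parameter. The function name, the option values, and the two k values are assumptions chosen for illustration; they are not estimates from any study cited here.

```python
def hyperbolic_value(amount, delay, k):
    """Discounted value under the hyperbolic form V = A / (1 + k * D)."""
    return amount / (1.0 + k * delay)

# Hypothetical options: SS = 1 pellet after 10 s, LL = 2 pellets after 30 s.
ss = (1.0, 10.0)
ll = (2.0, 30.0)

for k in (0.01, 0.5):  # illustrative shallow vs. steep discounting rates
    v_ss = hyperbolic_value(*ss, k)
    v_ll = hyperbolic_value(*ll, k)
    choice = "LL" if v_ll > v_ss else "SS"
    print(f"k={k}: V_SS={v_ss:.3f}, V_LL={v_ll:.3f}, prefers {choice}")
```

With the shallow rate the larger-later option retains more value, whereas with the steep rate the preference reverses toward the smaller-sooner option, which is the pattern described as impulsive choice.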

Laboratory procedures have been developed to assess impulsive choice in both humans and animals. The SS and LL options are usually presented as a concurrent discrete choice so that once individuals choose one option, the remaining option is removed. The key feature of impulsive choice tasks is that individuals must weigh both the reinforcer amount and delay to reinforcement when determining the value of each option. The tasks are typically constructed so that LL choices maximize reinforcement earning over time. In these circumstances, individuals that prefer the SS are considered impulsive.

In tasks with animals, different pairings of amounts and delays are offered, and the animal can choose an option by making a specific response. The outcomes (delay and amount) are learned through experience. For example, rats may choose between pressing two levers, with one lever providing one food pellet after 10 s and another providing two food pellets after 30 s. Most impulsive choice tasks manipulate the amount or delay associated with the SS or LL. Systematic procedures employ choice parameters where the delay or magnitude of reinforcer(s) for the SS or LL option changes systematically between each session (e.g., Green & Estle, 2003). Alternatively, the delay or magnitude of the SS or LL may change systematically within each session (e.g., Evenden & Ryan, 1996). For systematic procedures, the proportion of LL choices is often the index of self-control (or impulsive choice). Finally, adjusting procedures change the delay or magnitude of the SS or LL based on recent previous choices in a titrating fashion. For example, repeated choices of the LL delay may lead to an adjustment to make that option less attractive (e.g., LL delay increases or magnitude decreases). Alternatively, preference for the SS may lead to an adjustment to make the alternative LL option more attractive (e.g., LL delay decreases, or magnitude increases). Adjustments of LL (or SS) delay or magnitude continue until achieving an indifference point, where either option is selected equally often (Mazur, 1987, 1988). In the adjusting procedure, the duration or magnitude of the adjusting option associated with the indifference point is an index of self-control.
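
The titration logic of an adjusting procedure can be sketched as follows. This is a minimal simulation under loud assumptions: the chooser is hypothetical, values options with a hyperbolic rule, and always picks the higher-valued option, whereas real subjects choose probabilistically and published procedures typically adjust across blocks of trials. All names and parameter values are illustrative.

```python
def hyperbolic_value(amount, delay, k):
    """Hypothetical valuation rule: V = A / (1 + k * D)."""
    return amount / (1.0 + k * delay)

def titrate_ll_delay(k, ss=(1.0, 10.0), ll_amount=2.0,
                     start_delay=10.0, step=1.0, n_trials=200):
    """Adjust the LL delay trial by trial and return the delay history."""
    ll_delay = start_delay
    history = []
    for _ in range(n_trials):
        history.append(ll_delay)
        chose_ll = hyperbolic_value(ll_amount, ll_delay, k) > hyperbolic_value(*ss, k)
        if chose_ll:
            ll_delay += step                      # LL chosen: make LL less attractive
        else:
            ll_delay = max(0.0, ll_delay - step)  # SS chosen: make LL more attractive
    return history

history = titrate_ll_delay(k=0.1)
# Average the final trials, where the adjusted delay oscillates near indifference.
indifference = sum(history[-20:]) / 20
print(f"Estimated indifference delay: {indifference:.1f} s")
```

For these illustrative parameters the two options have equal hyperbolic value at an LL delay of 30 s, so the titrated delay should settle within one step of that value. A longer indifference delay would indicate greater self-control.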

Assessments of impulsive choice in humans utilize similar methods but often rely on hypothetical reinforcers and delays. For example, the Monetary Choice Questionnaire (MCQ; Kirby et al., 1999) delivers a fixed set of questions with specific amount/delay pairings. Adjusting tasks are delivered similar to animal tasks but with hypothetical delays and outcomes (Frye et al., 2016). Some studies make outcomes quasi-experiential by giving participants a randomly selected single outcome from their choices during the study (e.g., Rotella et al., 2019). In experiential discounting tasks, participants experience actual delays and/or magnitudes (Reynolds & Schiffbauer, 2004; Smits et al., 2013; Steele et al., 2019) that may better approximate tasks used in animals.

Steele et al. (2019) investigated whether experiencing real versus hypothetical delays and reinforcers influenced preferences. The SS and LL options delivered 1–5 mini M&Ms after 5–30 s with the LL always involving a longer delay and larger amount. The delays and amounts could be hypothetical or real. In the real conditions, the participants received the M&Ms and had to wait for the delay. Steele et al. found that there was no difference in performance with real versus hypothetical M&Ms. However, in a condition where real delays were experienced initially followed by hypothetical delays (both paired with hypothetical M&Ms), participants increased their sensitivity to delays in the hypothetical delay task as a result of experience with the real delays. In addition, choices from the MCQ did not significantly correlate with performance on the experiential task, consistent with other reports (Reynolds et al., 2006; Reynolds & Schiffbauer, 2004). One factor that may explain the poor correlation across hypothetical and experiential tasks is that different combinations of factors and behaviors can produce similar choice patterns. It is possible that hypothetical discounting better reflects choice intentions, whereas experiential discounting may better reflect actual choices. This suggests the importance of measuring specific choice mechanisms rather than simply measuring choice behavior, an issue that is discussed in the following section.

An alternative approach for measuring experiential choices in humans is the delayed gratification procedure. Delayed gratification tasks present two options (SS or LL) in succession so that choosing the SS during an initial delay forfeits access to the LL reinforcer that would otherwise be available later. This contrasts with the standard impulsive choice task in that there is no upfront commitment. One notable study that measured individuals’ ability to delay gratification is the “marshmallow task,” where preschool-aged children were told they could have one small but immediate reinforcer now, or two small reinforcers if they chose to wait for a specified time (Mischel et al., 1972).

The delayed gratification procedure has also been used in nonhuman animals by incorporating a defection response into an impulsive choice task that allows for switching to SS following an initial choice of the LL (Haynes & Odum, 2022). Reynolds et al. (2002) compared impulsive choice and delayed gratification procedures in rats with an adjusting amount procedure with the addition of a defection response opportunity. In both groups, if the rats chose the SS (by making a nose-poke response), then they received an immediate small reward, and if they chose the LL, they received a larger delayed reward. During the LL delay, the SS nose-poke response remained available. For the rats in the impulsive choice task, SS responses during the LL delay were recorded but had no consequence. In contrast, rats in the delayed gratification task could defect by making an SS nose-poke response at any time during the LL delay to receive the immediate smaller reward. There were no significant differences in the discounting functions between the tasks, suggesting that the two experiential procedures may measure similar processes in rats. Göllner et al. (2018) also found a correlation between impulsive choices and delayed gratification in humans, further supporting the claim that the tasks are measuring similar processes.

The above tasks can be used for measuring choice mechanisms and for modeling choices. The impulsive choice function generated by these procedures is likely a product of multiple empirical relationships that can be accounted for by a combination of several processes. Impulsive choice researchers have achieved an emerging understanding of the components that explain impulsive choice, but research on how these factors interact is lacking. Further experimentation is necessary to fill in the gaps. In addition, models designed to encapsulate the relevant factors are necessary. In addition to their predictive power, models can provide an organizational structure for interpreting research findings. In the absence of such a structure to explain impulsive decision-making, and the myriad of factors underlying it, researchers are only able to contribute to an ever-growing catalog of effects. Given the importance of both empirical and theoretical contributions to a unified understanding of impulsive choice, we review both elements in the current paper.

This review is broad but not comprehensive. The impulsive choice literature is vast with an “impulsive choice” search in Google Scholar producing 9,460 results and “delay discounting” producing 26,600 results (as of September 2022). Instead, this paper focuses on exemplar studies (i.e., recent, seminal, and/or highly cited) that cover the mechanisms that are relevant to both human and animal research on impulsive choice. The primary goal is to synthesize the cognitive, motivational, and attentional processes involved in impulsive choice, review the contemporary theoretical models, and highlight future directions for research that can propel the field forward.

Learning, motivational, and cognitive factors underlying impulsive decision-making

This section highlights experimental research investigating the mechanisms underlying impulsive decision-making. The consideration of learning factors will focus on learning history and impulsive-choice training procedures to provide clues about the mechanisms of impulsive choice. The discussion of cognitive and motivational factors is grouped because influential frameworks of substance use disorders often explain impulsive decision-making as an imbalance between dysregulation of the executive control system and a hyperactive motivational system (Bechara et al., 2019). Experimentation testing these frameworks can reveal the underlying mechanisms involved in impulsive decision-making. The section on motivational factors will focus on how reinforcer quantity, quality, and incentive motivation affect impulsive choice. Finally, we focus on the underlying cognitive processes that are recruited to affect impulsive decision-making, including working memory, attention, and perceptual processes (especially timing processes). This section will highlight the empirical foundation that influences models of impulsive choice, which will be discussed subsequently.

Learning factors

Learning factors relate to the organisms’ behavioral adaptation to the environment based on experiences. In this section, we focus on how impulsive decision-making is affected by learning the local choice contingencies (i.e., what the SS and LL options provide in terms of reinforcers and delays), the context and framing of the choice options (i.e., how future choices are affected by prior choices/outcomes and how presenting the choices successively or concurrently affects behavior), and the broader choice contingencies (i.e., does preference track reinforcement maximization or not).

Learning the local contingencies: Amounts, delays, and immediacy

The most elemental learning process in an impulsive choice environment involves understanding the delay and amounts associated with each choice. Correlational research indicates that rats and humans who time delays better also show greater self-control (Baumann & Odum, 2012; Brocas et al., 2018; Darcheville et al., 1992; Marshall et al., 2014; McClure et al., 2014; Moreira et al., 2016; Navarick, 1998; Paasche et al., 2019; Smith et al., 2015; Stam et al., 2020; van den Broek et al., 1992; Wittmann & Paulus, 2008). In addition, rats that display superior amount discrimination also show better self-control (Marshall & Kirkpatrick, 2016; Experiment 1). These results support a basic assumption that more self-controlled choices should follow from a better understanding of the choice outcomes.

Given the observation of correlations in timing ability and amount discrimination with impulsive choice, it is reasonable to assume that training those abilities could improve self-control. Training designed to improve self-control has been useful in determining what learning experiences, and associated processes, underlie impulsive choice. Smith et al. (2015) trained rats on different schedules of reinforcement designed to promote timing of the SS and LL delays followed by impulsive choice assessment. Training occurred on the same levers as the choice assessment to promote transfer across tasks. The DRL-Delay delivered training with a differential reinforcement of low-rate schedule where the rats had to withhold responding for a fixed delay. The FI-Delay involved rats responding after a fixed interval elapsed. The VI-Delay required rats to respond after a variable interval, with the delay varying across trials according to a uniform schedule. All three procedures increased LL choices coupled with improved timing precision of the SS and LL delays. Timing precision was indexed by reduced variability in responding on a peak interval procedure. In this procedure, rats received nonreinforced trials that extended beyond the usual time of reinforcement and response rates peaked at the anticipated time of reinforcer delivery. The location of the peak is an index of timing accuracy and the variability of the peak distribution indexes timing precision. Previous research has shown that rats can time delays in DRL, FI, and uniformly distributed VI schedules (Church et al., 1998; Pizzo et al., 2009). It appears that temporal learning during the training with the three schedules may have transferred to the choice task.
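
The distinction between timing accuracy (peak location) and timing precision (peak spread) can be illustrated with a small sketch. The response times below are invented for illustration, and summarizing pooled response times with a mean and standard deviation is a simplifying assumption; published analyses often fit a curve to response-rate functions instead.

```python
import statistics

def peak_summary(response_times):
    """Summarize pooled response times (s) from nonreinforced peak trials."""
    location = statistics.mean(response_times)  # proxy for timing accuracy
    spread = statistics.stdev(response_times)   # proxy for timing precision
    return location, spread

# Hypothetical response times (s) around a 30-s target delay.
before_training = [12, 20, 25, 29, 31, 36, 41, 48]
after_training = [24, 27, 29, 30, 31, 32, 34, 36]

loc_before, spread_before = peak_summary(before_training)
loc_after, spread_after = peak_summary(after_training)
print(f"Before: peak at {loc_before:.1f} s, spread {spread_before:.1f} s")
print(f"After:  peak at {loc_after:.1f} s, spread {spread_after:.1f} s")
```

In this made-up example the peak location is near the target in both cases, while the spread narrows after training, which corresponds to improved precision without a change in accuracy.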

Similarly, Peterson and Kirkpatrick (2016) trained rats on a VI-Delay procedure with uniformly distributed variable delays compared with a No-Delay control group. The VI-Delay group showed greater LL choices. In addition, an individual differences analysis revealed that the rats in the VI-Delay group (but not the control) with the highest self-control showed the best timing in a temporal discrimination task. Overall, these studies suggest that training-induced improvements in self-control are linked to improved timing in peak interval (Smith et al., 2015) and temporal discrimination (Peterson & Kirkpatrick, 2016) procedures. However, Rung et al. (2018) failed to find a relationship between temporal discrimination and LL choices and Fox et al. (2019) did not find a relationship between peak interval timing and LL choices; these inconsistencies are discussed later.

An alternative training approach involved training rats to discriminate the SS and LL amounts, which increased LL choices along with increasing amount discrimination ability (Marshall & Kirkpatrick, 2016; Experiment 2). Specifically, rats were trained to discriminate 1 versus 2 pellets and 1 versus 4 pellets, whereas the control group only chose between 2 versus 2 pellets. The rats in both groups were then tested to determine their ability to discriminate between 1 versus 2, 2 versus 3, 3 versus 4, and 4 versus 5 pellets. Rats in the training, but not the control, group showed improved discrimination performance coupled with a numerical distance effect (i.e., 1 vs. 2 was easier to discriminate than 4 vs. 5), which suggests that numerical processing was selectively improved for the training group. In addition, across rats, there was a positive correlation between improvement in self-control (pre- vs. post-training) and amount discrimination accuracy for the training group but not the control (i.e., reminiscent of what Peterson & Kirkpatrick, 2016, observed with the discrimination of delays). Collectively, these studies revealed that refined training to learn the larger/smaller and later/sooner contingencies involved in the choice procedure resulted in more self-controlled choices.

Although research in rodents using delay and amount training techniques appears promising, the generality of these studies to humans needs further investigation. Self-control is increased in humans that are exposed to delays (Binder et al., 2000; Dixon et al., 1998; Dixon et al., 2003; Fisher et al., 2000; Neef et al., 2001; Schweitzer & Sulzer-Azaroff, 1988; Vessells et al., 2018; Young et al., 2011; Young et al., 2013), but it is unclear whether these benefits are the result of learning about the delays and/or amounts or occur through other mechanisms (see delay tolerance below).

Comparisons, contrasts, and carryover effects

Learning the amounts and delays of the options is important, but the question remains: what is learned through experiences with SS and LL outcomes? This section covers generalization of different experiences with SS and LL outcomes that can produce subsequent effects on choice. When given two options, the most basic assumption is that the value of one amount–delay trade-off (e.g., LL option) is compared with the value of the alternative (e.g., SS option). Given that there are moderate positive cross-task correlations between impulsive choice methods in rodents (Craig et al., 2014; Peterson et al., 2015), it may be assumed that a common learning process occurs during experience with any impulsive choice procedure (i.e., trait effects; Odum, 2011). Be that as it may, procedural decisions for training and assessing impulsive choice may bias choices, and this reflects the importance of learning- and context-based effects.

Training procedures designed to influence choices sometimes reveal that rats do not always simply learn the smaller/larger and sooner/later contingencies of the impulsive choice task. In comparison to FI-Delay and VI-Delay training, experiments that utilized a No-Delay control group trained the rats to differentiate SS and LL amounts associated with each lever (e.g., FR 2 for 1 SS or 2 LL pellets). In several studies, the No-Delay experience has not shown any significant changes in LL choices (Bailey et al., 2018; Panfil et al., 2020; Stuebing et al., 2018). This outcome is somewhat counterintuitive because the No-Delay procedure effectively trains the rats to expect 2 pellets from the LL lever and 1 pellet from the SS lever, but that experience does not promote the choice of 2 pellets over 1 pellet. If the rats learn simple associations between the SS and LL levers and their respective amounts during training, then the No-Delay training should increase LL choices. The absence of this outcome suggests that preference for the LL and SS options as a result of training does not reflect some composite associative value that generalizes to the choice procedure.

Training procedures have shown that different ways of introducing SS and LL options may lead to different preferences in choice. Marshall and Kirkpatrick (2016) found that amount discrimination training improved self-control, so it could be assumed that the No-Delay training could do the same. The No-Delay training procedures typically expose rats to SS and LL options successively and this may impede the ability to discriminate amounts. Consistent with this idea, Marshall et al. (2014) failed to find a relationship between impulsive choice and amount discrimination accuracy with a procedure that trained amount–lever associations across successive blocks. However, Marshall and Kirkpatrick (2016) observed a relationship between amount discrimination and impulsive choice after training with concurrently presented amounts. Concurrent discrimination training might be a necessary condition to observe reinforcer-amount training effects on LL choices.

Although concurrent training may promote amount discrimination, it is unknown whether it is a necessary condition for delay-based training. Outside of the impulsive choice paradigm it has been shown that pigeons only learn the temporal value of different delay-correlated cues when they are trained simultaneously with other delays in the same session, rather than successively across blocks of sessions, using the concurrent chains procedure (Grace & Hucks, 2013). The effects of delay-correlated cues were described in terms of their conditioned reinforcement value in concurrent chains research, but these effects can easily be interpreted in terms of how the context modifies the way that delayed reinforcement is learned. The impulsive choice tasks described previously are functionally concurrent chains schedules with a choice initial-link (fixed-ratio, FR, 1) chained to a delay-to-reinforcement terminal-link (FI or fixed-time, FT). The concurrent chains schedules described in this section utilize a VI initial link choice schedule where responses can be allocated to both options and the first response that completes the VI schedule on either of the options registers as the selected choice. The significance of the VI initial link choice period (vs. FR 1) is that it provides an added delay context that precedes a signaled terminal-link delay.

Grace and Savastano (2000) trained pigeons simultaneously on two different concurrent chains components that differed on initial-link schedules (VI 20 s for the “short” initial-link component and VI 100 s for the “long” initial-link component). Those components had the same terminal-link delays (VI 10-s delay, VI 20-s delay), but different terminal-link key light colors depending on the component (e.g., VI 10-s for the short component might be green, whereas the VI 10-s for the long component might be red). Then the pigeons’ preferences were probed with the green VI 10 from the short component compared with the red VI 10 from the long component. The pigeons had equivalent preference for the VI 10-s schedules. This outcome would be expected assuming that the pigeons learned that the average terminal link delays were the same and if the length of the initial link context was ignored. However, O’Daly et al. (2005) trained pigeons successively on two different multiple chains components that differed on initial-link schedules (VI 10 s for the “short” initial-link component and VI 100 s for the “long” initial-link component), but shared the same FT 30-s terminal link that differed in terms of key light color based upon the associated component (e.g., red FT 30-s schedule following VI 10-s, green FT 30-s schedule following VI 100-s). The pigeons’ preference between red versus green FT 30-s terminal links was probed, and the pigeons favored the terminal link signal associated with the VI 100-s initial link (e.g., green). This outcome would be expected if the pigeons learned the duration of the initial-link and terminal-link components and the signaled FT delay represented the time remaining within the component (consistent with the delay reduction hypothesis; e.g., Fantino et al., 1993). The 30-s delay following the (average) 100-s delay would indicate “most of the waiting for food has passed” in the context of the component, and the 30-s delay following a 10-s delay would indicate “most of the waiting for food is still to come.”
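
The arithmetic behind this interpretation can be made explicit. The sketch below computes the proportion of a component's total expected wait that has already elapsed when the terminal-link stimulus appears, using the average initial-link durations and the FT 30-s terminal link described for O’Daly et al. (2005). Treating this proportion as an index of relative stimulus value is a simplified reading of the delay reduction idea, and the function name is an assumption.

```python
def proportion_of_wait_elapsed(initial_link_s, terminal_link_s):
    """Fraction of the total expected time to food already elapsed at terminal-link onset."""
    total = initial_link_s + terminal_link_s
    return initial_link_s / total

long_component = proportion_of_wait_elapsed(100.0, 30.0)  # VI 100 s then FT 30 s
short_component = proportion_of_wait_elapsed(10.0, 30.0)  # VI 10 s then FT 30 s

print(f"Long initial link:  {long_component:.2f} of the wait has passed")
print(f"Short initial link: {short_component:.2f} of the wait has passed")
```

Under this reading, the same 30-s signal marks roughly three quarters of the wait as complete in the long component but only one quarter in the short component, which is in line with the reported preference for the signal paired with the VI 100-s initial link.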

Overall, the results of Marshall et al. (2014) and Marshall and Kirkpatrick (2016) show that concurrently training different reinforcer amounts may be necessary to produce accurate amount discrimination. On the other hand, the results of Grace and Savastano (2000) in comparison with O’Daly et al. (2005) show that concurrent training of different delays leads them to be compared based upon their absolute times, whereas successive training of delays leads them to be compared based upon whether they signal that food is temporally nearer or farther in the overall context. Previous FI-Delay training has used successive procedures to produce improvements in timing and self-control. Future FI-Delay training using concurrent procedures might produce relatively greater improvements in timing and self-control.

Learning-based procedures often increase preference for the choice option that provides more reinforcers, but procedures can also affect choice by increasing preference for the option that provides reinforcers sooner. T. R. Smith et al. (2022; Experiment 2) compared FI-Delay training between groups where the SS option involved either a short delay (5-s) or a long delay (10-s SS) with 30-s LL delay training delivered to both groups. The group experiencing the 5-s SS delay showed greater delay discounting in comparison to the group experiencing the 10-s SS delay training. These results were interpreted in terms of the rats experiencing the 10-s SS delay being trained to tolerate the aversiveness of waiting (i.e., delay tolerance, a mechanism discussed later), but both groups experienced 30-s LL delay training that has been shown to increase LL choices (Fox et al., 2019; only trained LL delays). The attractive dimension of the SS delay is that it is “short”; the 5-s SS delay group perhaps learned to attend to that dimension because of training, whereas the 10-s SS delay group attended to the attractive “larger” reward dimension of the LL.

Bailey et al. (2021) also reported training effects indicating that exposure to short delays can increase preference for that option, in this case the LL option with short VI delays. A VI-Delay group received training with a Weibull distribution of delays with different mean and shape parameters to produce increasing, decreasing, or constant hazard functions with mean VI durations for the SS and LL levers of 10 s and 30 s, respectively. The decreasing hazard function delivered many short delays offset by a few very long delays, whereas the constant hazard function had an exponential distribution, and the increasing hazard function closely approximated a uniform distribution. Compared with the increasing and constant hazard functions, the decreasing hazard function VI produced the greatest improvement in self-control. The frequent short delays to the LL outcome during training might have increased the LL value (similar to what the 5-s SS delay did in T. R. Smith et al., 2022), but the occasional long delay might have increased LL delay tolerance.
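
The link between the Weibull shape parameter and the direction of the hazard function can be sketched as follows. The shape values here are illustrative assumptions, not the parameters used by Bailey et al. (2021); only the 30-s mean for the LL lever comes from the description above. The sketch relies on two standard Weibull identities: the mean equals scale × Γ(1 + 1/shape), and the hazard is h(t) = (shape/scale)(t/scale)^(shape − 1).

```python
import math

def weibull_scale_for_mean(mean, shape):
    """Scale parameter that yields the requested mean for a given shape."""
    return mean / math.gamma(1.0 + 1.0 / shape)

def weibull_hazard(t, shape, scale):
    """Hazard h(t) = (shape / scale) * (t / scale) ** (shape - 1), for t > 0."""
    return (shape / scale) * (t / scale) ** (shape - 1.0)

hazards = {}
# Illustrative shapes: < 1 decreasing, = 1 constant (exponential), > 1 increasing.
for label, shape in (("decreasing", 0.5), ("constant", 1.0), ("increasing", 3.0)):
    scale = weibull_scale_for_mean(30.0, shape)  # hold the mean delay at 30 s
    early = weibull_hazard(5.0, shape, scale)
    late = weibull_hazard(60.0, shape, scale)
    hazards[label] = (early, late)
    print(f"{label}: h(5 s)={early:.4f}, h(60 s)={late:.4f}")
```

A shape below 1 concentrates probability on short delays with a long tail, which is the "many short delays offset by a few very long delays" pattern; the standard-library function `random.weibullvariate(scale, shape)` could be used to draw example delays from each distribution.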

To summarize, what animals learn about delay and amount contingencies in a choice procedure depends on how the animals are introduced to those contingencies. These dynamics, however, have been studied using animals that learned the contingencies in a highly controlled environment. It is unclear whether these dynamics would apply to human decision-making. We will return to this issue below (see Attention section) when discussing framing effects on impulsive decision-making and will discuss the extent to which the studies in this section with rats and pigeons may bias attention in similar ways to framing effects. This provides a possible connection across species.

Learning the global contingencies: Optimal preference for reinforcement maximization

In an impulsive choice procedure, the LL option is the “optimal choice” defined in terms of maximizing reinforcer outcomes over time, thus making it the self-controlled choice. Humans can adopt an optimal strategy under the constraints of a delay discounting task (Schweighofer et al., 2006) and animals’ behavior often is adaptive to the environment and sometimes approximates the optimal solution in a variety of experimental preparations (Fantino & Abarca, 1985; Stevens & Stephens, 2010). For example, Schuweiler et al. (2021) found that rats could optimally delay gratification in a choice and diminishing returns procedure, which offered a choice between a progressive interval (PI) and an FI. The PI delay started at 0 s and increased by 1 s for each successive PI choice, and the FI option was always a 10-s delay. Choosing the FI option reset the PI delay for the next trial to 0 s. The optimal response pattern to maximize reinforcers was to choose the PI until reaching 4 s and then switch to the FI option to reset the PI to 0 s. This would keep the rat routinely encountering low PI delays. The rats in Schuweiler et al. (2021) on average switched at the 4-s delay—demonstrating precision in learning the optimal strategy.
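
The optimal switch point in this kind of diminishing returns task can be checked with a short calculation. The sketch assumes that only the programmed delays consume time (no ITI, consumption time, or response latency), which is a simplification of the actual procedure, and the function name is hypothetical. Each cycle consists of n PI choices, with delays of 0, 1, ..., n − 1 s, followed by one FI 10-s choice that resets the PI.

```python
def reinforcers_per_second(n_pi_choices, fi_delay=10.0, pi_step=1.0):
    """Reinforcement rate for a cycle of n PI choices followed by one FI choice."""
    pi_time = sum(i * pi_step for i in range(n_pi_choices))  # 0 + 1 + ... + (n - 1)
    total_time = pi_time + fi_delay
    total_reinforcers = n_pi_choices + 1  # one per PI choice plus one for the FI
    return total_reinforcers / total_time

rates = {n: reinforcers_per_second(n) for n in range(0, 9)}
best_n = max(rates, key=rates.get)
switch_delay = best_n * 1.0  # PI delay on offer at the moment of switching to the FI

for n, rate in rates.items():
    print(f"{n} PI choices per cycle: {rate:.4f} reinforcers/s")
print(f"Best cycle: {best_n} PI choices, switching when the PI reaches {switch_delay:.0f} s")
```

Under these simplifying assumptions, the rate peaks at four PI choices per cycle (5 reinforcers in 16 s), so the computed optimum coincides with switching to the FI when the PI delay reaches 4 s.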

Given that rats can optimize in the diminishing returns task, one might anticipate that they would adopt the optimal strategy in impulsive choice procedures. Typically, in impulsive choice procedures, the LL results in greater reinforcement maximization because the intertrial interval (ITI), the time between food delivery and the next choice trial, represents an opportunity cost in a limited-time session. For example, even if the SS offers 1 pellet after 10 s and the LL offers 3 pellets after 30 s, a common 60-s ITI following both choices ensures the LL option will have a greater payoff when considering trial and ITI time together. Self-control is often touted as the optimal choice, but humans and animals almost never exclusively prefer the LL option, which is why impulsive decision-making is such a ubiquitous problem. If animals are insensitive to the ITI and fail to see the “big picture” in terms of the LL option maximizing reinforcement in the long run, then that might partly explain why optimal self-controlled solutions are uncommonly observed.
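
The role of the ITI in the example above can be verified with simple arithmetic. The sketch computes pellets per second over the full trial cycle (delay plus ITI) for the 1-pellet/10-s and 3-pellet/30-s options; the function name is an assumption, and time spent responding or consuming food is ignored.

```python
def pellets_per_second(amount, delay_s, iti_s):
    """Overall reinforcement rate across one trial cycle (delay + ITI)."""
    return amount / (delay_s + iti_s)

for iti in (0.0, 60.0):
    ss_rate = pellets_per_second(1, 10.0, iti)
    ll_rate = pellets_per_second(3, 30.0, iti)
    print(f"ITI={iti:.0f} s: SS={ss_rate:.4f}, LL={ll_rate:.4f} pellets/s")
```

Without an ITI the two options pay the same 0.1 pellets per second, whereas with a 60-s ITI the LL yields 3 pellets per 90 s against 1 pellet per 70 s for the SS, so exclusive LL choice earns more than twice as much food per unit time.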

Studies examining sensitivity to the ITI have found mixed results. Smethells and Reilly (2015) demonstrated that rats in a condition with a 6-s LL delay coupled with a 10-s ITI chose the SS option, providing immediate reinforcement, more often compared with a group with a 45-s ITI. The 10-s ITI imposed a low opportunity cost for choosing the SS and the rats appeared to be sensitive to that contingency. However, sensitivity to the ITI may be the exception rather than the rule. Blanchard et al. (2013) demonstrated that rhesus monkeys showed poor sensitivity to ITI durations unless the ITI was made salient with signaling. Sjoberg et al. (2021) reported that increasing the length of the ITI for the LL choice had no effect on impulsive choices in rats, and attempts to make the ITI more salient with audio cues did not improve sensitivity. Pigeons also generally showed poor sensitivity to ITIs (Logue et al., 1985). It is curious that rats (Schuweiler et al., 2021) and pigeons (Hackenberg & Hineline, 1992) generally show optimal response patterns in a diminishing returns contingency, and yet when similar delays are packaged into an impulsive choice procedure, preference often deviates from optimality.

The ITI is not the only extraneous variable that could affect impulsive choices. Low opportunity costs induced by using reinforcer postponement, rather than reinforcer waiting, can also affect choice behavior (Paglieri, 2013). In illustrating the effects of reinforcer postponement, Addessi et al. (2021) showed that when a new trial occurred immediately after a choice, but before delayed reinforcers from the previous trial were delivered, capuchin monkeys made more LL choices. This is because the LL delay imposed a smaller opportunity cost if a new choice trial could present itself concurrently with the LL delay from the previous trial. In other words, the monkeys were not waiting for the LL reinforcer; they were postponing the LL reinforcer to be delivered later while they engaged in other reinforcing activities. Although animals may often ignore the ITI, the SS and LL delays might represent salient opportunity costs that animals do not ignore. On the other hand, the hypothetical impulsive choice tasks that humans receive inherently imply reinforcement postponement because participants will not assume that a “$27 in 2 weeks” choice commits them to captively wait in a laboratory room for those weeks (Paglieri, 2013). Thus, choosing the LL does not necessitate an opportunity cost for other reinforcing activities in the hypothetical choice paradigm.

Reinforcer bundling is another extraneous contingency that affects choice outside of delay/amount contingencies (Ashe & Wilson, 2020). In bundling, a single choice results in a series of delayed outcomes that occur in succession, and this has the effect of increasing LL choices. For example, the SS option may offer $100 immediately and another $100 after 2 weeks, and the LL option may offer $200 after 2 weeks and another $200 after 4 weeks. Bundling effects have been observed in humans (e.g., Kirby & Guastello, 2001) and rats (e.g., Stein et al., 2013b). Stein et al. (2013b) demonstrated greater LL choices in a group of rats that experienced a bundle of 9 SS or LL delayed reinforcer events (followed by a single ITI), compared with a no-bundle group whose ITI length was set to equate the rate of reinforcement between the two groups. Additionally, rats in the bundled group later made more LL choices in a standard impulsive choice task. Thus, the bundling experience resulted in learning that transferred across tasks. Stein and Madden (2021) proposed that bundling increases LL choices by allowing the sum of the values of discounted LL reinforcers in the bundle to be compared against the sum of the values of discounted SS reinforcers in the bundle (which includes delays between each subsequent SS reinforcer delivery). The first SS reinforcer in the bundle might have greater value than the LL reinforcer, but each successive SS reinforcer may have lower value than the LL; thus, the sum of these reinforcer values will drive preference for the LL. The process underlying bundling effects might explain why the optimal response strategy is observed in the diminishing returns procedure. The PI option is often selected several times consecutively before switching to the FI option to reset the PI delays. 
If each discrete choice for the PI option is framed as a “bundle,” then the discounted value of each PI reinforcer in the series of choices might summate to compare against the value of the delayed FI reward. Future research is necessary to explore this hypothesis.
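The summed-value account of bundling can be illustrated numerically. The sketch below assumes a simple hyperbolic discounting function, V = A / (1 + kD), and a hypothetical discounting rate (k = 0.08 per day) chosen only to show the effect; neither is taken from Stein and Madden (2021). It reuses the monetary amounts from the example above.

```python
def hyperbolic_value(amount, delay_days, k):
    """Discounted value under an assumed hyperbolic form: A / (1 + k * D)."""
    return amount / (1.0 + k * delay_days)


def bundle_value(outcomes, k):
    """Sum of the discounted values of every (amount, delay) pair in a bundle."""
    return sum(hyperbolic_value(amount, delay, k) for amount, delay in outcomes)


K = 0.08  # hypothetical discounting rate per day

# Single outcomes: $100 now versus $200 in 2 weeks
single_ss = bundle_value([(100, 0)], K)
single_ll = bundle_value([(200, 14)], K)

# Bundled outcomes, as in the example in the text
bundled_ss = bundle_value([(100, 0), (100, 14)], K)
bundled_ll = bundle_value([(200, 14), (200, 28)], K)

print("single:", "SS" if single_ss > single_ll else "LL")
print("bundled:", "SS" if bundled_ss > bundled_ll else "LL")
```

With this value of k, the single immediate $100 is worth more than the discounted $200, yet the summed value of the LL bundle exceeds that of the SS bundle. Other values of k can leave preference unchanged, so the reversal is a property of this parameter choice rather than a general guarantee.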

To summarize, animals can adapt their choices to find an optimal response solution to maximize reinforcers. However, the standard impulsive choice contingencies often lead to suboptimal choices. This might in part be because the prototypical impulsive choice situation is not designed to highlight the global reinforcement context. The learning effects on choice behavior highlight many possible mechanisms that may determine impulsive choice and those may independently affect choices in complex ways. The following section will discuss mechanisms of impulsive choice from a more conceptual theory-driven perspective.

Motivational and cognitive factors

Learning implicitly includes motivational and cognitive factors that may interact with impulsive choice. Motivational factors relate to influences of reinforcers and aversive stimuli/punishers in affecting choices. Within the framework of impulsive decision-making between larger/later and smaller/sooner options, the reinforcers often refer to the quantity or quality of the larger/smaller outcomes, whereas the aversive stimuli refer to the duration of the later/sooner delays. Motivation also relates to the conditions that modify the subjective value of reinforcers across time (reinforcer deprivation being the prototypical example). Collectively, the value of a consequence in a given deprivation state could be considered the “utility” of that consequence. Learning does not occur uniformly between individuals or within the same individual across all situations. Cognitive factors that functionally describe how experiences translate into adaptations in behavior are also important. The key cognitive processes that are relevant to impulsive decision-making are delay and amount perception, attention, and working memory. Learning necessitates perceptual contact with, and attention to, the relevant environmental features for the organism to encounter the prevailing reinforcement contingencies. Learning also requires adequate working memory to associate current environmental conditions with past outcomes to accurately plan prospectively and optimize future outcomes.

Impulsive versus self-controlled decision-making is often framed as a competition between a motivational system and a cognitive (executive) system, respectively (Bechara et al., 2019). The motivational system is driven by the short-sighted pursuit of immediate attractive reinforcers at the expense of long-term outcomes. The cognitive system strives to achieve optimal outcomes that take long-term reinforcers into consideration, foregoing any immediate reinforcer that is in competition with the long-term goals. The competing neurobehavioral decision systems (CNDS; Koffarnus et al., 2013) theory posits two neurobehavioral processes responsible for impulsive decisions and executive decisions. Brain regions associated with the (bottom-up) impulsive system include the amygdala and striatum (i.e., dopamine mediated wanting system associated with habitual reinforcer seeking and cravings). Brain regions associated with (top-down) executive control systems include the orbitofrontal cortex, prefrontal cortex, and anterior cingulate cortex (i.e., anticipation, foresight/planning, value processing in decision-making, etc.). The insular system (i.e., sensory processing, salience detecting, information integration, etc.; Gogolla, 2017) is proposed to modulate how deprivation and stress can produce an imbalance allowing hyperactivity in impulsive systems to override hypoactivity in executive systems (Bechara et al., 2019). The motivational and cognitive factors discussed in this section may fit within the CNDS framework.

Aversion to delays and temptation by immediacy

Delay aversion is a common explanatory framework that describes impulsive choices as avoidance of the aversive properties involved in waiting for a delayed reinforcer. The delayed gratification paradigm can evaluate delay aversion where waiting for the LL is challenging in the face of temptation from the immediate SS (Mischel et al., 1989; Watts et al., 2018). For example, individuals with ADHD are often considered to be delay averse (Solanto et al., 2001; Sonuga-Barke et al., 1992) and often fail to wait for the LL (Rapport et al., 1986). They also show greater avoidance of a cue that was associated with the delay, and this is interpreted as an aversion to the delay that the cue represents (Van Dessel et al., 2018). Van Dessel et al. (2018) also reported increased activity in the amygdala and dorsolateral prefrontal cortex, which are linked to negative affect and avoidance behavior, in delay-avoidant individuals. These results are further supported by Mies et al. (2018), who found that higher amygdala activity was correlated with self-reports of delay aversion and more impulsive choices. As in humans, research with rodents has shown that impulsive rats will respond to turn off cues associated with longer delays (Peck et al., 2019). Delay aversion as an explanation of impulsive choice is a construct that is supported by behavioral data (cue avoidance responses), subjective reports, and brain measures (activity in regions associated with aversive affect).

Preclinical research has further suggested that there might be a causal link between delay aversion and impulsive choice as exposure to delays increases self-controlled choices in humans and animals (e.g., Rung et al., 2019; Smith et al., 2019). Stein et al. (2013a) required rats to repeatedly respond for delayed reinforcement (using a fixed-time, FT, schedule) on a training lever and subsequently tested those rats’ impulsive choices using a separate pair of choice levers. Compared with rats that were trained to respond for immediate reinforcement (FR 1), the delay exposure rats made more LL choices. This effect has been replicated repeatedly using the procedure described above (Peck et al., 2019; Renda & Madden, 2016; Renda et al., 2018, 2021; Rung et al., 2018; Stein et al., 2015). Peck et al. (2019) found that exposure to delays improved self-control and decreased avoidance of a delay-associated cue. This finding suggests that the delay exposure training may have improved self-control by reducing aversion to the LL delay, as evidenced by reduced responses to escape from delay-correlated stimuli. Improving delay tolerance through training was reported in Fox et al. (2019; Experiment 2) with rats exposed to FI-Delay training and assessed on an impulsive choice and peak interval procedure. Unlike A. P. Smith et al. (2015), where training involved SS and LL forced-choice trials, the training in Fox et al. delivered LL and SS amounts from both of the choice levers (randomly) after an LL delay. Fox et al. (2019) reported that their version of the FI-Delay training improved self-control, but they did not observe any improvements in peak interval timing. The different training procedures in Fox et al. (2019) allowed experience with the LL delay to occasionally lead to SS reinforcer amounts on the SS lever and this might have led to poor generalization of timing information acquired in training to the choice task. 
These results suggest that the improvement in self-control was driven by improvements in delay tolerance.

Delay tolerance suggests that LL delays resulting from self-controlled choices are not aversive. Alternatively, an immediacy preference suggests that reinforcer promptness associated with the SS choice is attractive. Fox et al. (2019; Experiment 2) found that the FI-Delay training improved delay tolerance, but No-Delay training increased SS preferences. The No-Delay training involved immediate access to pellets using an FR 2 contingency with the SS and LL reinforcer amounts presented randomly on each lever (like the FI-Delay training). Fox (2021) replicated this effect of the No-Delay training. Collectively, these studies support the conclusion that FI-Delay exposure may promote self-control by increasing delay tolerance, whereas a No-Delay exposure may increase impulsive choice by increasing an immediacy preference. The No-Delay training in these studies differs procedurally from previous No-Delay tasks (Bailey et al., 2018; Panfil et al., 2020; Stuebing et al., 2018) in the delivery of random SS and LL reinforcer amounts. Those previous studies did not report any change in LL choices. It is possible that the random reinforcer amount deliveries may have increased attention to the immediacy of the delays, a possibility that necessitates further research.

Preference for immediacy has also been studied by increasing the SS delay, rather than the LL delay, resulting in an increased preference for the LL (Bailey et al., 2018; Mazur & Biondi, 2009; Rodriguez & Logue, 1988). Experiments that eliminate immediacy by using a precommitment contingency can also increase LL choices (Rachlin & Green, 1972). The precommitment opportunity was presented as an initial link choice between a later terminal link offering a free choice between SS and LL options or a terminal link only offering a forced-choice LL option. When the pigeons chose to proceed to the free-choice terminal link, they favored the SS option, but overall, the pigeons preferred to commit to the forced-choice LL option in the initial link. Thus, if the SS option was unavailable to tempt immediate reinforcer delivery in the initial link, then the pigeons showed more self-controlled choices by favoring a terminal link that led to a forced-choice LL option.
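One common way to formalize why removing immediacy can favor the LL is hyperbolic discounting, which this passage does not itself invoke. The sketch below is offered only as an illustration under that assumption: the discounting function, the rate k, and the amounts and delays are all hypothetical and are not the values used by Rachlin and Green (1972).

```python
def hyperbolic_value(amount, delay_s, k):
    """Discounted value under an assumed hyperbolic form: A / (1 + k * D)."""
    return amount / (1.0 + k * delay_s)


def preferred_option(ss, ll, added_delay_s, k):
    """Return the option with the higher value when a common delay is added to both.

    ss and ll are (amount, delay_s) pairs; added_delay_s stands in for the
    time separating an initial-link choice from the terminal link.
    """
    ss_value = hyperbolic_value(ss[0], ss[1] + added_delay_s, k)
    ll_value = hyperbolic_value(ll[0], ll[1] + added_delay_s, k)
    return "LL" if ll_value > ss_value else "SS"


K = 1.0          # hypothetical discounting rate per second
SS = (2.0, 0.0)  # hypothetical smaller amount, available immediately
LL = (4.0, 4.0)  # hypothetical larger amount after a 4-s delay

print(preferred_option(SS, LL, 0.0, K))   # choice made at the terminal link
print(preferred_option(SS, LL, 10.0, K))  # choice made 10 s earlier
```

With these illustrative values the SS is preferred when it is immediately available, whereas the LL is preferred when the same choice is made 10 s in advance, which parallels the pattern of precommitment described above.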

Jackson and Hackenberg (1996) also demonstrated that eliminating reinforcer immediacy increased self-control by using token-reinforcement procedures. In this procedure responding did not directly lead to food, but rather illuminated LED lights that were accumulated across trials and later exchanged for food. Pigeons chose between an LL option of 3 LEDs after 6 s or an SS option of 1 LED available immediately. Under conditions where LEDs were traded for food immediately the pigeons showed an SS preference (similar to when they responded for food directly). However, if the opportunity to trade accumulated LEDs for food was delayed for LL and SS choices, then the pigeons were more likely to choose the LL option. Similar to what was observed with Rachlin and Green (1972), once the temptation of an immediately consumable reinforcer was removed, the pigeons demonstrated better self-control.

Collectively, self-control can be increased by reducing LL delays, increasing tolerance to LL delays, increasing SS delays, or preventing immediate access to SS outcomes. Preference for immediacy and aversion to delays are both significant factors in modulating impulsive choice.

Reinforcer valuation

Reinforcer valuation refers to the motivation to obtain a given reinforcer based on how intrinsically valuable an outcome is to an individual (i.e., its utility). In addition, motivational states can modulate the value of a reinforcer across situations and over time. For example, food deprivation will increase food value until the hunger state is satisfied. The quantity and quality of the “larger” and “smaller” reinforcers determine how reinforcer value affects impulsive decision-making; this section focuses on qualitative reinforcer differences.

Madden et al. (1997) found that opioid-dependent individuals were more impulsive when choosing between monetary SS and LL rewards in a hypothetical choice task, but they were even more impulsive when the reinforcer was hypothetical heroin, a commodity that is highly valued by individuals with an opioid dependency. Odum et al. (2020) reviewed the effects of qualitatively different reinforcers on impulsive choice and explored why some reinforcers are discounted at higher rates. For example, nonmonetary outcomes lose value with delays more steeply than monetary outcomes. They concluded that the discounting of qualitatively different reinforcers was determined by the perceived future preference for a reinforcer (i.e., does the individual anticipate that they would want it later) and the utility of a future reinforcer (i.e., would the future value of the reinforcer be lost). But those conclusions are limited to human participants. In animals, preference between the SS and LL options does not appear to vary between conditions where quantitatively (e.g., 10 vs. 30 pellets) and qualitatively different reinforcers were offered (e.g., sucrose vs. cellulose pellets; Calvert et al., 2010). It therefore seems that any consumable reinforcer may produce the same rate of delay discounting. However, this conclusion needs to be considered with caution because reinforcer quality was identical on the SS and LL options (same-reinforcer tasks) in Calvert et al. (2010). Using qualitatively different reinforcers (e.g., sucrose on SS, cellulose on LL) might have produced different results.

Evaluating impulsive choice using cross-reinforcer tasks where the SS and LL offer different types of reinforcers rather than different quantities is insightful, but is uncommon in impulsive choice assessments (Pritschmann et al., 2021). Bickel et al. (2011) looked at cross-reinforcer impulsive choice between hypothetical money and cocaine using participants meeting clinical criteria for stimulant use disorder. The cocaine-SS and money-LL group showed high degrees of self-control favoring the delayed money option, suggesting that immediate cocaine did not compete strongly against delayed money. The money-SS and cocaine-LL group showed the strongest rate of discounting, presumably because a delayed consumable reinforcer loses much of its anticipated value (Odum et al., 2020). They included same-reinforcer groups and reported that the LL choices were highest with money, and greater SS choices were found with the cocaine, consistent with steeper discounting of nonmonetary reinforcers. The use of hypothetical cocaine as a reinforcer might partially explain these effects because the individuals may have lacked motivation for that reinforcer while completing that task. However, providing a real cocaine reinforcer is not feasible in most human research studies.

Animal studies have assessed choices with different types of consumable reinforcers (Huskinson et al., 2015, 2016). Huskinson et al. (2015) reported that when rhesus monkeys were offered a food-LL and a cocaine-SS, they discounted the food-LL more steeply compared with a condition when food was available for both options. This suggests that impulsive choices may increase when a highly valued consumable reinforcer is attached to the SS option. However, Huskinson et al. (2016) presented the opposite cross-reinforcer options with cocaine-LL and food-SS. They reported that monkeys preferred the cocaine-LL with stronger preferences when higher doses of cocaine were available. Thus, with real outcomes the higher-valued cocaine reinforcer dominated preferences. The difference between the results in monkeys and humans might reflect the use of experiential versus hypothetical outcomes. Regardless, the use of cross-reinforcers in impulsive choice tasks reveals novel dynamics that invite further research. For instance, the utility of qualitatively different reinforcers can interact in ways that affect the value of each reinforcer. Some cross-reinforcers interact in a way where consumption of one reinforcer increases the value of the alternative; these are known as complementary cross-reinforcer interactions (e.g., consumption of salt may increase thirst and increase the consumption of a beverage). Other cross-reinforcers interact in a way where consumption of one reinforcer decreases the value of the alternative; these are known as substitutable cross-reinforcer interactions (e.g., consumption of water may decrease thirst and decrease the consumption of alternative beverages). Cross-reinforcer relationships are “independent” when they do not interact.

The economic demand procedure (Hursh & Roma, 2016) is a useful method to assess the value of a reinforcer and evaluate how cross-reinforcers interact. Procedurally, reinforcer value is evaluated by having individuals pay some cost (e.g., effort, hypothetical money) to obtain the reinforcer where the unit price (cost per amount) of the reinforcer is varied across conditions and the amount of the reinforcer obtained is measured. The reinforcer value is indexed by the elasticity of demand, the degree to which reinforcer consumption drops when the unit price is increased. Shallow decreases in consumption are termed inelastic demand and this is an index of high reinforcer value relative to elastic demand where consumption drops steeply with increases in price. In everyday terms, inelastic demand is associated with necessities that individuals would pay almost any price to obtain (e.g., food, water) and elastic demand is associated with luxuries that can be forgone if the costs are too high or reinforcer value offered is too low (e.g., entertainment). Cross-reinforcer interactions can be evaluated when the unit price of one target reinforcer is varied while the cost of a qualitatively different alternative is held constant. For substitutable relationships, the consumption of the alternative would increase with increased price for the target reinforcer, whereas for complementary reinforcers, the consumption of the alternative would decrease with the increase in price of the target reinforcer. These cross-reinforcer relationships underscore the point that the utility of a reinforcer in a context is determined by what other reinforcers are present. Understanding these interactions is useful in substance abuse research where reinforcer overevaluation can become maladaptive.
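The cross-reinforcer classification described above can be sketched as a simple slope test. This is a minimal illustration, not the analysis used in the demand literature: the log-log slope estimate, the tolerance for calling a relationship independent, and all data values are hypothetical, and consumption must be greater than zero for the logarithm to be defined.

```python
import math


def loglog_slope(prices, consumption):
    """Least-squares slope of log(consumption) regressed on log(price)."""
    xs = [math.log(p) for p in prices]
    ys = [math.log(c) for c in consumption]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    numerator = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    denominator = sum((x - mean_x) ** 2 for x in xs)
    return numerator / denominator


def classify_cross_reinforcer(target_prices, alternative_consumption, tolerance=0.05):
    """Classify the alternative by how its consumption tracks the target's unit price.

    The tolerance that separates "independent" from the other categories
    is an arbitrary illustrative choice.
    """
    slope = loglog_slope(target_prices, alternative_consumption)
    if slope > tolerance:
        return "substitutable"   # alternative consumption rises with target price
    if slope < -tolerance:
        return "complementary"   # alternative consumption falls with target price
    return "independent"


target_prices = [1, 2, 4, 8]  # hypothetical unit prices of the target reinforcer

print(classify_cross_reinforcer(target_prices, [10, 13, 17, 22]))
print(classify_cross_reinforcer(target_prices, [10, 8, 6, 4]))
print(classify_cross_reinforcer(target_prices, [10, 10, 10, 10]))
```

The three hypothetical data sets illustrate the substitutable, complementary, and independent cases, respectively.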

In the extreme, overevaluation occurs when a reinforcer is excessively consumed or sought out at the expense of other outcomes. As previously discussed, conditions like substance use disorders are associated with impulsive choice, but prediction of these conditions is improved when jointly factoring impulsive choice and reinforcer valuation assessments. The observation that high impulsive choice and high reinforcer valuation (assessed using the demand procedures) are associated with maladaptive substance use behaviors has been termed reinforcer pathology (Bickel et al., 2014, 2020). Reinforcer pathology is linked to unhealthy behaviors associated with alcohol (Lemley et al., 2016; Stancato et al., 2020), cannabis (Aston et al., 2016; reinforcer value and discounting were associated with different THC use outcomes), body mass index (Epstein et al., 2014), caloric intake (Rollins et al., 2010), unsafe sexual behaviors (Harsin et al., 2021), and relapse from smoking cessation (García-Pérez et al., 2022). The observation that impulsive choice and reinforcer value often covary in individuals suggests that they may share a common underlying motivational mechanism. Interventions designed to treat maladaptive reinforcer-driven behavior should therefore be assessed for their ability to reduce impulsive choice and the value of the problem reinforcer, be it drugs, food, gambling, or sex.

Interventions such as episodic future thinking (EFT) can affect both impulsive choice and reinforcer value. EFT requires individuals to vividly imagine a future event and this has been shown to reduce impulsive choice (Peters & Büchel, 2010) along with decreased excessive valuation for alcohol (Bulley & Gullo, 2017), nicotine (Stein et al., 2018), and palatable foods (Sze et al., 2017). Alternatively, participants that receive scenarios of stressful situations, such as income constraints (Mellis et al., 2018) or hurricane losses (Snider et al., 2020), are more likely to show increased impulsivity and (food) reinforcer valuation. Collectively, this supports the reinforcer pathology framework and demonstrates that impulsive choice and reinforcer valuation are mechanistically associated. These results also support the CNDS proposal that impulsive behaviors emerge from the motivational system and are associated with heightened reinforcer valuation (Bechara et al., 2019).

Motivating operations refer to the conditions that temporarily modulate the value of a reinforcer across time (Edwards et al., 2019; Michael, 1993). The most basic example of a motivating operation is deprivation. For example, food-deprived animals experience hunger, water-deprived animals experience thirst, and drug-dependent animals in abstinence experience withdrawal. However, other conditions can serve as motivating operations. For example, salt intake results in thirst, advertisements for palatable food and drugs can trigger cravings, and stressful environments motivate avoidance behavior. Downey et al. (2022) provide a thorough review of the effects of deprivation on impulsive decision-making that does not need to be fully recounted here. However, there are several key proposals that highlight how motivational processes may affect impulsive choice. In humans, it has been generally concluded from the results of a variety of studies that sleep or nicotine deprivation has no effect on impulsive choice, whereas deprivation of opioids or financial resources increases impulsive choice (Downey et al., 2022). In animals, mild deprivation does not seem to affect impulsive choice in food-deprived pigeons (Oliveira et al., 2013) or water-deprived rats (Richards et al., 1997). However, opioid-dependent rats show greater impulsive choices during deprivation-induced withdrawal (Harvey-Lewis & Franklin, 2014). Collectively, there are mixed results about whether deprivation impacts impulsive decision-making. However, from prevailing trends, it is possible that mild deprivation (or other low-stress conditions) would have negligible effects while stressful deprivation (e.g., withdrawal) may increase impulsive choice.

Attention

Attention is a perceptual and cognitive concept that is broad and difficult to define precisely (Hommel et al., 2019). For the present purposes we operationalize attention as the degree to which reinforcement contingencies and stimulus cues in the environment affect impulsive choice behavior.

The importance of attention can be observed in studies where conditions lead to poor learning. As discussed earlier, delay training increased self-control corresponding with improvements in timing in some studies (Peterson & Kirkpatrick, 2016; Smith et al., 2015), but not others (Rung et al., 2018). A prominent procedural difference that may explain differences between the studies is the contingency employed at the end of the delay. Smith et al. (2015) used response-initiated FI schedules requiring the rats to make a response after the delay to collect the reinforcer during training and impulsive choice tasks. On the other hand, Rung et al. (2018) used response-initiated FT schedules where reinforcement was delivered automatically after the delay. The FI response requirements promote active attention to the delay, whereas the FT schedules in the choice procedure might not require sufficient attention to delays, and this might have led to the absence of an effect in the timing task. In another, previously discussed example, Marshall and Kirkpatrick (2016) reported that rats learned to discriminate reinforcer amounts with a concurrent training procedure (e.g., choosing between different pellet amounts in a trial), but not in a successive training procedure (e.g., responding for different pellet amounts on a lever across blocks of sessions; Marshall et al., 2014). This benefit of learning during concurrent training might be mediated by the procedure requiring attention and comparison between the two options. The successive procedure does not easily permit such comparisons between the two options. Poor attention may explain why some procedural differences produce limited effects on learning.

Refocusing attention is a proposed method to help individuals with impulsive decision-making and associated maladaptive behavior, like substance use disorder (Ashe et al., 2015). Mischel and Ebbesen (1970) and Mischel et al. (1972) investigated the impact of attention in the delayed gratification task in children. Conditions that encouraged attention to the outcome, such as thinking of the reinforcer or making the reinforcer visible, decreased delay of gratification. On the other hand, conditions that distracted the children from the reinforcer, such as thinking of something fun, improved delay of gratification for the LL option. Evans and Beran (2007) reported that chimpanzees engaging in self-distraction activities were better able to wait for a larger accumulation of reinforcers in a modified delay of gratification task. This finding demonstrates that attentional focus can be a relevant mechanism for self-control in animals, who are not simply following verbal instructions or obeying potential demand characteristics, as may be the case with human participants. Overall, this demonstrates that shifting attention away from a tempting SS option can increase the ability to wait for an LL option.

Just as distraction can shift attention away from the choice situation, attention can also be shifted toward different aspects of the choice situation by using stimulus cues. As discussed above, ITIs often do not affect impulsive decision-making in rats (e.g., Sjoberg et al., 2021), but Pearson et al. (2010) reported that signaling the ITI increased LL choices in rhesus macaques. This signaling effectively drew attention to the ITI and increased reinforcement maximizing. The results from Peck et al. (2019), where rats avoided delay-correlated cues, indicate that cue lights associated with a choice appear to represent the aversive dimension of the delay rather than the reinforcing dimension of the food. Attention to the delay-associated cue may condition the delays to represent the aversive aspects of waiting.

Studies have also shown that cues associated with delays in the terminal link within concurrent chains can represent the delay to food (Grace & Savastano, 2000) or time left waiting for food (from transition between initial link and terminal link; O'Daly et al., 2006) based on concurrent or successive value training, respectively. These contrasting results might be best understood in terms of how the training focuses attention on learning what the delay represents. Using short SS delays in training increased impulsive choices (Smith et al., 2022), and this might be due to biasing attention towards short SS delays (i.e., rats learned the appeal of a short SS delay). The way animals experience the SS and LL options (outside of a choice procedure) may bias learning by training the animals to attend to different aspects of the impulsive choice contingencies. Overall, whether delays represent the aversive aspects of waiting, the value of the outcome, the time that has already passed during a delay, or the time left waiting may depend upon how these aspects were learned. The relevant mechanism underlying this learning might be attention to the contingencies.

The contingencies of reinforcement or cues in an initial link of an impulsive choice procedure can affect choices and these effects relate to attentional framing. Calvert et al. (2011) also demonstrated that cues can affect impulsive choices by signaling parts of the delay. They assessed impulsive choice in a study with a common delay added to the SS and LL options so that the SS option did not produce immediate reinforcer delivery. In comparison to a control condition with no common delay, unsignaled common delays increased impulsivity and signaled common delays decreased impulsivity. The reason for this signaling effect is unclear, but it may have manipulated attention to the contingencies where the unsignaled delay was associated with a long delay that was aversive (e.g., Peck et al., 2019). In contrast, the signaled condition may have reframed the contingencies so that the experience with the LL option was not as subjectively long in comparison to the SS option. To compare with humans, Green et al. (2005) added a common delay to hypothetical SS and LL choices and found more LL choices as a result. Thus, pigeons showed sensitivity to the contingencies similar to humans if the task was framed in a way that highlighted the common delay between the SS and LL.

Attention can also be manipulated in experiments without the explicit use of stimulus cues. As described previously, Rachlin and Green (1972) demonstrated that pigeons committed to the LL option if given the opportunity in an initial link. In a similar experiment, Siegel and Rachlin (1995) found that an FR 31 response requirement, on either the SS or LL initial link leading up to the choice, increased LL choices compared with an FR 1 response requirement. The pigeons could switch options during the FR 31 and only the last response counted as the choice, which led to the SS or LL delay and subsequent reinforcer delivery. Under this contingency, pigeons tended to respond on the LL option early and rarely switched to the SS option. Monterosso and Ainslie (1999) suggested that the pigeons’ attention was focused on the LL at the start of the trial in the FR 31 condition because the initial link distance from the outcome produced an LL bias and the response contingency helped maintain attention on the LL for the remaining 30 responses. The self-control promoting effects of precommitment in Rachlin and Green (1972) can emerge when the initial link delay involves a response contingency that captures attention and guides the pigeon to the terminal choice associated with that option, often the LL due to an LL preference at the precommitment stage of decision-making.

Framing effects in the impulsive choice literature with humans might be understood in terms of attentional control. Instructions to human participants can reframe tasks to shift attention, as observed with outcome framing and date framing. Hypothetical choice tasks typically ask participants, “Would you prefer $9 now or $18 in 14 days?” The explicit-zero framing asks participants, “Would you prefer $9 now and $0 in 14 days or $0 now and $18 in 14 days?” Radu et al. (2011) conducted a series of experiments comparing explicit-zero and implicit-zero (i.e., standard) question formats. They tested preferences for future outcomes in one group and satisfaction from past outcomes (e.g., “$9 an hour ago” or “$18 fourteen days ago”) in another group. They reported fewer impulsive choices with explicit zero for both the future and past outcome groups. They explained these results in terms of temporal attention, where myopic temporal horizons (for future and past outcomes) can at least partially account for impulsive choice without needing to appeal to temptation. The focus on temporal attention as a causal mechanism in Radu et al. (2011) shares some parallels with animal research where FI-Delay training improves the timing of FI delays and leads to more LL choices (Smith et al., 2015), which may expand the temporal horizons.

Another form of framing is explicit date framing, where choice questions indicate the date when the outcome would be delivered (e.g., $15 on 7/22/22 instead of $15 in 7 days). Read et al. (2005) found that date framing increased LL choices. This may have occurred because date framing does not explicitly highlight the delay dimension and may promote attention to the amount dimension. Naudé et al. (2018) found that improvements in self-control from date framing were more likely to be observed with highly impulsive individuals. These results suggest that date framing shifts attention away from the delay dimension. If impulsivity is driven by delay aversion, then this attentional shift would disproportionately affect choices of impulsive individuals.

Attention is also implicated in studies designed to bias time perspectives. Future time perspective (FTP) measures the degree to which individuals think about the future and consider future consequences. Greater degrees of FTP are associated with greater self-control and healthful behaviors (Daugherty & Brase, 2010). Göllner et al. (2018) assessed the relationship between FTP and both impulsive choice and delayed gratification measures and found that LL choices and success in delaying gratification were correlated with a longer FTP time horizon. Thus, temporal attention is a mechanism that may explain impulsive choice and, subsequently, why EFT is successful in promoting self-control.

More recent versions of reinforcer pathology theory include a temporal window or time horizon as the target for interventions (Bickel et al., 2020). An individual’s short time horizon may potentially be linked to poor time perception, inattention to the future, and/or inability to make well-informed temporal choices. Short time horizons could be a possible cause of impulsive choice. If so, then training procedures designed to improve temporal horizons should improve self-control. As previously mentioned, EFT reduces impulsive choice and lowers reinforcer valuation in humans by having them vividly imagine future events (Peters & Büchel, 2010). The act of vividly imagining the future is correlated with activity in the anterior cingulate cortex (ACC, related to attention; Davis et al., 2000) and hippocampus (processes temporal information relating to episodic memories; Umbach et al., 2020). Attention is implicated in EFT in two main ways. First, it increases self-control when implemented during a choice trial, when attention would most likely influence decision-making. Second, focusing attention on episodic recent events can increase impulsive choices (Rung & Madden, 2019), demonstrating that the mechanism of attention on choice can work in both directions. Reinforcer pathology links high impulsive choice, high reinforcer valuation, and short time horizons as key aspects predictive of maladaptive behavior. The ability of EFT to increase time horizons and reduce impulsive choice and reinforcer valuation suggests that temporal attention is a target mechanism for positive behavioral change.

Working memory

Working memory is the ability to maintain goal-relevant information despite interference from competing or irrelevant information. Shamosh et al. (2008) reported that working memory and intelligence are negatively correlated with impulsive choice (replicated in Bobova et al., 2009), and that this relationship is partly explained by activity in the anterior prefrontal cortex (functionally associated with prospective planning; Ramnani & Owen, 2004). This correlation implies that working memory may be another target mechanism mediating impulsive choice.

To test this hypothesis, Bickel et al. (2011) gave stimulant users working memory training using digit-span and word recall tasks and reported improved self-control. Jimura et al. (2018) used fMRI while participants made impulsive choices and completed a working memory exercise. They reported that activation in the anterior prefrontal cortex and the dorsolateral prefrontal cortex was correlated with difficult working memory trials (i.e., high cognitive loads) and with difficult impulsive choice trials (i.e., where both options have similar subjective values) when the self-controlled option was chosen. This suggests that the neurobiological networks associated with challenging cognitive tasks also participate in difficult choices. Snider et al. (2018) used working memory training in addition to EFT to increase self-control in individuals with alcohol use disorder coupled with high baseline impulsive choices. Working memory training coupled with EFT improved self-control. Individuals also showed improvements in a working memory transfer task that was procedurally distinct from the training task, confirming that the training improved working memory in general. Working memory training may support EFT by improving the generation of the vividly imagined stimuli that improve self-control. Overall, working memory training has had some promising results in individuals high in impulsivity, but some working memory training studies have failed to improve self-control (Hendershot et al., 2018; Wanmaker et al., 2018). Future research is needed to better understand the mechanisms that working memory training targets when improving self-control.

In preclinical models, working memory has also been assessed in rodents as a possible process underlying impulsive choice. Renda et al. (2014) found that rats with better working memory accuracy, assessed using a delayed match-to-position task, made more LL choices. However, training working memory using the same procedure did not increase LL choices in impulsive rats (Renda et al., 2015). It is possible that alternative working memory training procedures may produce effects on self-control, but this remains to be tested. Overall, in both rats and humans, there is evidence indicating a relationship between working memory and self-control, but more research is needed to determine whether working memory training can reliably improve self-control.

Perception, discrimination, and timing

Impulsive decision-making involves the discrimination between the LL and SS contingencies. Perceptual processes are involved in translating the objective stimulus properties (delays and reinforcer amounts) into subjective representations. Meck and Church (1983) demonstrated that amount discrimination (i.e., counting) and time discrimination (i.e., waiting) both displayed a psychophysical function where the point of subjective equivalence (judged to be the midpoint between two stimulus values) was located at the geometric mean. This is consistent with the psychophysical principles of perception that are explained by Weber’s law. As already discussed, self-control is correlated with accurate discrimination between reinforcer amounts (Marshall & Kirkpatrick, 2016), accurate discrimination between delays (Baumann & Odum, 2012; McClure et al., 2014), and accurate expectation of delayed reinforcer delivery (Marshall et al., 2014). Collectively, this demonstrates that variance in self-controlled choices can be explained by variance in an accurate representation of the choice options.
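The geometric-mean result can be made concrete with a small numerical sketch. The anchor values below are illustrative assumptions, not data from any study cited here. The point is only that a point of subjective equivalence at the geometric mean sits at equal ratios from the two anchors, which is the pattern expected if discriminability depends on proportional differences, as in Weber's law, and not on absolute differences.

```python
import math

def geometric_mean(short, long):
    """Geometric mean of two positive stimulus values (durations or counts)."""
    return math.sqrt(short * long)

def arithmetic_mean(short, long):
    """Arithmetic mean, shown only for comparison."""
    return (short + long) / 2.0

# Hypothetical anchor values chosen for illustration (e.g., seconds or counts).
short_anchor, long_anchor = 2.0, 8.0

pse_geometric = geometric_mean(short_anchor, long_anchor)    # 4.0
pse_arithmetic = arithmetic_mean(short_anchor, long_anchor)  # 5.0

# At the geometric mean, the ratio to each anchor is the same (2:1 here),
# so the midpoint is equally discriminable from both anchors on a ratio scale.
ratio_to_short = pse_geometric / short_anchor
ratio_to_long = long_anchor / pse_geometric

print(pse_geometric, pse_arithmetic, ratio_to_short, ratio_to_long)
```

With these assumed anchors, the geometric mean (4) lies below the arithmetic mean (5), so a subjective midpoint at the geometric mean implies that the scale is compressed toward the shorter or smaller value.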

Distorted timing processes, in particular, have received considerable attention in explaining impulsive choice (Bailey et al., 2018; Baumann & Odum, 2012; Berlin et al., 2004; Kim & Zauberman, 2009; Marshall et al., 2014; McGuire & Kable, 2012, 2013; Noreika et al., 2013; Reynolds & Schiffbauer, 2004; Rubia et al., 2009; Smith et al., 2015; Wittmann & Paulus, 2008; Zauberman et al., 2009). Additionally, impulsive choice and timing dysfunctions are associated with substance use disorders, with distorted timing being proposed as a mechanistic link mediating the relationship between self-control and substance use (Paasche et al., 2019). Timing distortions may affect impulsive choice via overestimations of delays, thus making the LL option less attractive, or imprecise estimation of delays may lead to uncertainty in temporal anticipation and difficulty predicting events in time. Baumann and Odum (2012) demonstrated that human impulsive choice was associated with overestimation of delays, and McGuire and Kable (2012, 2013) reported that individuals overestimating delays also were less likely to wait for delayed reinforcers. Temporal imprecision has also been associated with impulsive choice in rats (Marshall et al., 2014; McClure et al., 2014; Peterson & Kirkpatrick, 2016; Smith et al., 2015). To summarize, self-controlled choices are associated with accurate psychophysical representations of choice amounts and delays. Accurate perception of delays might be particularly important in driving self-control.
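One way to see how delay overestimation could make the LL option less attractive is a minimal sketch that feeds a distorted delay into a simple hyperbolic value function, V = A / (1 + kD). Everything specific here is an assumption made for illustration: the multiplicative form of the overestimation (a single `gain` applied to both delays), the discounting rate `k`, and the amounts and delays. It is not a model proposed in the studies cited above.

```python
def hyperbolic_value(amount, delay, k):
    """Subjective value under simple hyperbolic discounting: A / (1 + k * D)."""
    return amount / (1.0 + k * delay)

def perceived_delay(objective_delay, gain):
    """Assumed timing distortion: perceived delay is a multiple of the real delay.
    gain = 1.0 is accurate timing; gain > 1.0 is overestimation."""
    return gain * objective_delay

# Hypothetical parameters, chosen only for illustration.
K = 0.05
SS = (1.0, 5.0)    # (amount, delay) for the smaller-sooner option
LL = (2.0, 20.0)   # (amount, delay) for the larger-later option

def prefers_ll(gain):
    """True if the LL option has the higher subjective value at this timing gain."""
    v_ss = hyperbolic_value(SS[0], perceived_delay(SS[1], gain), K)
    v_ll = hyperbolic_value(LL[0], perceived_delay(LL[1], gain), K)
    return v_ll > v_ss

print(prefers_ll(1.0))  # accurate timing
print(prefers_ll(3.0))  # delays perceived as three times longer
```

Under these assumed values, an accurate timer values the LL option more highly, whereas a timer that perceives every delay as three times longer values the SS option more highly, even though amounts and the discounting rate are unchanged. The reversal arises because the same proportional overestimation adds more perceived waiting time to the longer delay.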

Much of the research reviewed in this section included both humans and animals and shows overlapping mechanisms in learning, motivational, and cognitive effects related to impulsive choice. Species differences are sometimes obvious. For example, rats and pigeons cannot read a series of questionnaire items and indicate their preference for a hypothetical offer, nor can they be tasked to vividly imagine a future outcome. Future comparative research needs to explore the ability of animal models to inform the mechanisms of impulsive choice broadly, and the extent to which those mechanisms can be translated across species. Each factor discussed above generates numerous hypotheses and predictions. The foundational theoretical models that have historically described delay discounting have often failed to account for these additional factors, and the following section on models of impulsive decision-making highlights this point. Researchers should consider how cognition, motivation, attention, and working memory intersect when investigating the mechanisms of impulsive decision-making.

Models of impulsive decision-making

A wide range of mathematical formulations have been proposed to explain behavior in impulsive choice tasks, but the breadth of models has not necessarily led to new insights into the mechanisms of impulsive choice. Here, we focus on the models that are most pertinent to the cognitive processes discussed in the previous section and point to strengths and weaknesses of the models in helping to interpret empirical data. We present an analysis of 16 models that are grouped into four different families. All models presented have a fundamental focus on predicting subjective value as a function of reinforcer amount and delay. Throughout this section, we differentiate the models based on whether they are better suited to predict human and/or animal data. We do not include a discussion of models that are designed to predict decision heuristics in hypothetical choice situations only (Marzilli Ericson et al., 2015), nor do we include drift diffusion models that are designed to predict reaction time distributions and/or describe evidence accumulation in place of subjective value (Amasino et al., 2019; Peters & D’Esposito, 2020). Finally, the models here assume that choice behavior follows reinforcer value in a straightforward way. We do not include discussion of separate decision rules (e.g., softmax) that could affect choice behavior (e.g., Rodriguez et al., 2014). Following a discussion of individual models, we evaluate the models overall and then discuss their relation to the empirical results described in the previous section.

Foundational models

Exponential versus hyperbolic discounting