Abstract

Image denoising approaches based on partial differential equation models have attracted considerable attention in image processing because of their high performance. Nonlinear anisotropic diffusion equations, in particular the Perona–Malik model, are powerful tools that improve image quality by removing noise while preserving details and edges. In this paper, we propose an accurate local meshless algorithm for solving the time-fractional Perona–Malik model, whose adjustable fractional order makes the diffusion process easier to control than in the classical model. To handle the complexity of the problem, the compactly supported radial basis function method is combined with an operator splitting technique, which converts the time-fractional partial differential equation into sparse linear algebraic systems that standard solvers can handle. The classical and fractional models are compared using several metrics to demonstrate the effectiveness of the proposed scheme. The numerical experiments indicate that the method is suitable for denoising digital images and that the fractional derivative improves the model's ability to remove noise.


1 Introduction

Nowadays, images are used extensively in scientific studies, and they must be of good quality to be useful [1, 2]. Image denoising therefore plays an important role in image processing and computer vision. Partial differential equations (PDEs) are widely used in image processing tasks such as filtering, restoration, segmentation, edge enhancement and detection [3], and especially denoising. PDE models that have been applied to image and signal denoising include the diffusion equation, geometric curvature equations, and total variation (TV) flow [4, 5]. Among diffusion-based models, nonlinear anisotropic diffusion enhances image quality by removing noise while preserving details and edges [6]. In this paper, we focus on the time-fractional Perona–Malik model (FPMM), whose time derivative is the Caputo fractional derivative, denoted by \({}_{0}^{C}\mathcal{D}_{t}^{\alpha}\). FPMM is defined as follows:
\[
\begin{cases}
{}_{0}^{C}\mathcal{D}_{t}^{\alpha}u(x,y,t)=\operatorname{div}\bigl(g(\Vert \nabla (G_{\sigma}*u)(x,y,t)\Vert )\,\nabla u(x,y,t)\bigr), & (x,y)\in \Omega ,\ t\in I,\\
\dfrac{\partial u}{\partial \mathbf{n}}(x,y,t)=0, & (x,y)\in \partial \Omega ,\ t\in I,\\
u(x,y,0)=u_{0}(x,y), & (x,y)\in \Omega ,
\end{cases}
\tag{1.1}
\]

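where \(\Omega \subseteq \mathbb{R}^{2}\) is the image domain, \(I=(0,T)\) is the scale (time) interval, α is the order of the time-fractional derivative, \(u_{0}\) is the noisy input image, g is the diffusion coefficient and \(G_{\sigma}\) the Gaussian kernel described below, and \(\mathbf{n}\) is the unit outward normal to the boundary ∂Ω. The Caputo fractional derivative of order α is defined as
\[
{}_{0}^{C}\mathcal{D}_{t}^{\alpha}u(x,y,t)=\frac{1}{\Gamma (1-\alpha )}\int _{0}^{t}(t-s)^{-\alpha}\,\frac{\partial u(x,y,s)}{\partial s}\,ds,\qquad 0<\alpha <1,\qquad {}_{0}^{C}\mathcal{D}_{t}^{1}u=\frac{\partial u}{\partial t},
\]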

in which the Gamma function \(\Gamma (z)=\int _{0}^{\infty}e^{-s}s^{z-1}\,ds \), \(z>0\), has the following property:
\[
\Gamma (z+1)=z\,\Gamma (z),\qquad z>0.
\]
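As a worked illustration of the Caputo definition and of the recurrence \(\Gamma (z+1)=z\Gamma (z)\), the Caputo derivative of a power function \(t^{\beta}\) (\(\beta >0\), \(0<\alpha <1\)) can be evaluated in closed form; the second step substitutes \(s=t\tau \), and the third uses the Beta integral \(B(a,b)=\Gamma (a)\Gamma (b)/\Gamma (a+b)\):

```latex
\begin{aligned}
{}_{0}^{C}\mathcal{D}_{t}^{\alpha}t^{\beta}
&=\frac{1}{\Gamma(1-\alpha)}\int_{0}^{t}(t-s)^{-\alpha}\,\beta s^{\beta-1}\,ds
 =\frac{\beta\,t^{\beta-\alpha}}{\Gamma(1-\alpha)}\int_{0}^{1}(1-\tau)^{-\alpha}\tau^{\beta-1}\,d\tau\\
&=\frac{\beta\,t^{\beta-\alpha}}{\Gamma(1-\alpha)}\cdot\frac{\Gamma(\beta)\,\Gamma(1-\alpha)}{\Gamma(\beta+1-\alpha)}
 =\frac{\Gamma(\beta+1)}{\Gamma(\beta+1-\alpha)}\,t^{\beta-\alpha}.
\end{aligned}
```

For example, \(\beta =2\) gives \(2t^{2-\alpha}/\Gamma (3-\alpha )\), which tends to the classical derivative \(2t\) as \(\alpha \to 1\).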

Nonlinear anisotropic diffusion filtering has been widely and successfully used in image processing [7–9]. Koenderink [10] showed that the solution of the heat equation with a noisy image as initial condition coincides, at each scale, with the convolution of that image with a Gaussian function. Indeed, this denoising process can be described by the solution of the following linear diffusion equation:
\[
\frac{\partial u}{\partial t}(x,y,t)=\Delta u(x,y,t),\quad (x,y)\in \mathbb{R}^{2},\ t>0,\qquad u(x,y,0)=u_{0}(x,y).
\]

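The solution of this equation is given by the following convolution integral:
\[
u(x,y,t)=(G_{t}*u_{0})(x,y)=\int _{\mathbb{R}^{2}}G_{t}(x-\xi ,y-\eta )\,u_{0}(\xi ,\eta )\,d\xi \,d\eta ,\qquad G_{t}(x,y)=\frac{1}{4\pi t}\exp \Bigl(-\frac{x^{2}+y^{2}}{4t}\Bigr),
\]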

where \(G_{t}\) is the Gaussian function with standard deviation \(\sigma =\sqrt{2t}\). In this model, \(u(x,y,0)\) is the initial noisy image, whose noise is reduced as t increases. However, this model also blurs the edges, which leads to the loss of essential image information. To overcome this problem, Perona and Malik proposed in 1990 a nonlinear diffusion model, called the Perona–Malik model (PMM) [11]. They fed the gradient of the evolving image \(u(x,y,t)\) back into the diffusion process and introduced the following anisotropic diffusion equation with a zero Neumann boundary condition:
\[
\begin{cases}
\dfrac{\partial u}{\partial t}=\operatorname{div}\bigl(g(\Vert \nabla u\Vert )\,\nabla u\bigr), & (x,y)\in \Omega ,\ t\in I,\\
\dfrac{\partial u}{\partial \mathbf{n}}=0, & (x,y)\in \partial \Omega ,\ t\in I,\\
u(x,y,0)=u_{0}(x,y), & (x,y)\in \Omega ,
\end{cases}
\]

where \(g(\cdot )\), called the diffusion coefficient, is a smooth decreasing function of \(\Vert \nabla u(x,y,t)\Vert \) that controls the diffusion strength. It is chosen to be equal to 1 where the gradient vanishes (inside homogeneous regions) and to tend to zero near edges, where the gradient is large. A typically used diffusion coefficient is [12]

where K is an edge-detection threshold parameter that determines how much diffusion takes place.
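To make the roles of g and K concrete, here is a minimal explicit finite-difference sketch of the classical (integer-order) Perona–Malik flow on a pixel grid. It assumes the coefficient \(g(s)=1/(1+(s/K)^{2})\), one of the two choices proposed by Perona and Malik, since the coefficient of [12] is not reproduced here; the four-neighbour scheme, the step size `dt`, and the function names are illustrative and are not the method of this paper. With a very large K, g is essentially 1 and the update reduces to linear heat diffusion, which gives a direct comparison of edge preservation.

```python
import numpy as np

def g_pm(s, K):
    """Assumed edge-stopping function g(s) = 1 / (1 + (s/K)^2) (one classical choice)."""
    return 1.0 / (1.0 + (s / K) ** 2)

def perona_malik_step(u, K, dt=0.2):
    """One explicit step of the classical (alpha = 1) Perona-Malik flow on a unit grid.

    Four-neighbour differences; edge padding makes the boundary differences zero
    (zero-flux / Neumann condition). Stable for dt <= 0.25 because 0 < g <= 1.
    """
    up = np.pad(u, 1, mode="edge")
    c = up[1:-1, 1:-1]
    diffs = (up[:-2, 1:-1] - c, up[2:, 1:-1] - c,   # north, south
             up[1:-1, 2:] - c, up[1:-1, :-2] - c)   # east, west
    flux = sum(g_pm(np.abs(d), K) * d for d in diffs)
    return u + dt * flux

rng = np.random.default_rng(0)
clean = np.zeros((32, 32))
clean[:, 16:] = 1.0                                 # vertical step edge
noisy = clean + rng.normal(0.0, 0.05, clean.shape)

u_pm, u_lin = noisy.copy(), noisy.copy()
for _ in range(50):
    u_pm = perona_malik_step(u_pm, K=0.1)           # edge-preserving flow
    u_lin = perona_malik_step(u_lin, K=1e6)         # g ~ 1: linear heat flow

def edge_contrast(u):
    """Mean jump across the edge, measured between columns 14 and 17."""
    return u[:, 17].mean() - u[:, 14].mean()

print(f"edge contrast  PM: {edge_contrast(u_pm):.3f}  linear: {edge_contrast(u_lin):.3f}")
```

Because each pairwise flux appears with opposite signs for the two neighbouring pixels, the scheme conserves the mean intensity, just as the zero Neumann condition does for the continuous model.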

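However, PMM is ill-posed, since it can act as a degenerate, backward diffusion equation where the gradient is large. In practice, when the signal-to-noise ratio (SNR) is very low, the noise produces large gradients that the model treats as edges, so much of the noise is retained. Catté et al. therefore proposed a regularized PMM to overcome this drawback [13]. This model is defined as follows:
\[
\begin{cases}
\dfrac{\partial u}{\partial t}=\operatorname{div}\bigl(g(\Vert \nabla (G_{\sigma}*u)\Vert )\,\nabla u\bigr), & (x,y)\in \Omega ,\ t\in I,\\
\dfrac{\partial u}{\partial \mathbf{n}}=0, & (x,y)\in \partial \Omega ,\ t\in I,\\
u(x,y,0)=u_{0}(x,y), & (x,y)\in \Omega ,
\end{cases}
\]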

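where \(G_{\sigma} \in C^{\infty}(\mathbb{R}^{2})\) is the Gaussian kernel with variance σ, and we have
\[
(G_{\sigma}*u)(x,y,t)=\int _{\mathbb{R}^{2}}G_{\sigma}(x-\xi ,y-\eta )\,\tilde{u}(\xi ,\eta ,t)\,d\xi \,d\eta ,
\]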

in which ∗ denotes the standard convolution and ũ is the extension of u to \(\mathbb{R}^{2}\) obtained by periodic reflection across the boundary of Ω.
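The regularization only changes where the gradient inside g is evaluated. The following is a minimal sketch under stated assumptions: unit pixel spacing, the coefficient \(g(s)=1/(1+(s/K)^{2})\), a truncated Gaussian parametrized by its standard deviation `sigma_std` in pixels (the text parametrizes \(G_{\sigma}\) by its variance), and NumPy's `"symmetric"` padding as a stand-in for the reflective extension ũ. The function names are hypothetical.

```python
import numpy as np

def gaussian_smooth_reflect(u, sigma_std, truncate=4.0):
    """Approximate G_sigma * u~, with u~ the mirror extension of u across the border.

    sigma_std is the standard deviation in pixels; the kernel is truncated at
    `truncate` standard deviations and normalized to unit sum.
    """
    r = max(1, int(truncate * sigma_std + 0.5))
    x = np.arange(-r, r + 1, dtype=float)
    k = np.exp(-0.5 * (x / sigma_std) ** 2)
    k /= k.sum()
    up = np.pad(u, r, mode="symmetric")                              # mirror extension
    up = np.apply_along_axis(np.convolve, 1, up, k, mode="valid")    # smooth rows
    return np.apply_along_axis(np.convolve, 0, up, k, mode="valid")  # smooth columns

def regularized_coefficient(u, K, sigma_std):
    """g(|grad(G_sigma * u)|) with the assumed g(s) = 1 / (1 + (s/K)^2)."""
    gy, gx = np.gradient(gaussian_smooth_reflect(u, sigma_std))     # unit spacing
    return 1.0 / (1.0 + (np.hypot(gx, gy) / K) ** 2)

step = np.zeros((32, 32))
step[:, 16:] = 1.0
coef = regularized_coefficient(step, K=0.1, sigma_std=1.0)
print(coef[0, 12:20].round(3))   # small near the edge, equal to 1 far from it
```

Since the gradient is taken after smoothing, isolated noisy pixels produce much smaller gradients than a genuine edge, which is what keeps the regularized model from treating noise as edges.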

PMM is a powerful model that has been used in nonlinear data filtering, image restoration, noise removal, and edge detection [11, 13, 14]; for instance, it has been applied in optical coherence tomography [15] and radiographic image processing [16]. Solving PMM numerically is nevertheless challenging, and several approaches have been developed to simulate its solution. In [17], domain decomposition combined with the finite difference method was proposed to solve nonlinear problems in image denoising. Kamranian et al. developed a meshfree finite point method for PMM [12]. In 2020, Gu proposed a finite element approach for the Perona–Malik and You–Kaveh models and compared the results with those of the finite difference method [4]. Hjouji et al. proposed a mixed finite element method for a bivariate PMM [18], and Sidi Ammi and Jamiai applied finite difference and Legendre spectral methods to a time-fractional diffusion–convection equation with an application to image processing [3].

Fractional integrals and derivatives generalize their integer-order counterparts and are used to model phenomena in many fields of science [3, 19–22]; accordingly, many numerical methods have been developed for solving fractional differential equations [23–28]. Fractional calculus has a long history in mathematics [29, 30], and various definitions of the fractional derivative have been proposed, including those of Grünwald–Letnikov [31], Marchaud [31], Riemann–Liouville [31, 32], Caputo [33, 34], and Riesz [35, 36], among others [37–39]. Recently, fractional calculus has also been used in image processing tasks such as image restoration, segmentation, texture enhancement, edge detection, image encryption, and image denoising to improve their performance [19, 40–42].

FPMM extends the classical model by taking the time derivative in the Caputo sense, which broadens the model's applicability. For \(\alpha =1\), the classical model is recovered. Compared with the classical model, the fractional model has an extra parameter α, which adds flexibility and can improve its performance.

In recent decades, meshless algorithms have become powerful numerical techniques for approximating solutions of complex differential equations. Unlike mesh-based methods, meshless methods work only with a scattered set of collocation points and require no connectivity information about the domain of the problem, which can considerably reduce the computational cost compared with methods that need mesh generation [43]. In addition, meshless methods based on radial basis functions (RBFs) offer spectral convergence for smooth functions, even on complex geometries, are easy to implement, and are suitable for high-dimensional problems [44]. RBFs can be divided into two categories, namely global and local. Global RBFs (e.g., Gaussian and multiquadric) have a serious drawback: they lead to dense and ill-conditioned coefficient matrices [45–47]. In contrast, compactly supported radial basis functions (CS–RBFs), such as Wendland's functions, vanish outside a local subdomain [48–51]. The resulting coefficient matrices are sparse and better conditioned, which makes CS–RBF methods more stable, although they are generally less accurate than global RBFs. CS–RBFs were introduced by Wu and Wendland for scattered data interpolation and have since been used widely in numerical simulations [52].

Since FPMM is applied to images, the computational domain is usually large (one node per pixel), which makes the numerical simulation difficult. In the current paper, FPMM is split by the operator splitting (OS) method into two partial differential equations, one for each spatial direction. The CS–RBF approach is then applied to the resulting PDEs, so that the subproblems reduce to small linear algebraic systems that can be solved efficiently by a standard algorithm such as LU factorization. Finally, we evaluate the proposed method on several image denoising examples. In summary, we propose a numerical method based on OS and CS–RBF schemes for removing noise with FPMM. The approach has several advantages. First, FPMM has a fractional-order derivative that improves the model's ability to remove noise compared with the classical PMM. Second, the proposed approach converts a complex nonlinear problem into sets of simple linear systems, which substantially decreases the computational cost. Third, because a local RBF method is used, the resulting linear systems are sparse and better conditioned than those of global approaches, so they can be solved by standard solvers. Moreover, we report numerical results obtained with a suitable value of α for several test cases and compare them with PMM using several metrics; these results confirm the accuracy and effectiveness of the proposed algorithm.

The paper is organized as follows. In Sect. 2, the ingredients of the proposed algorithm, namely the discretization of the fractional derivative, the OS method, and the CS–RBF collocation approach, are briefly described. The numerical results obtained by the proposed method are presented and discussed in Sect. 3, and concluding remarks are given in Sect. 4.

2 Proposed approach

In this section, the Trotter OS scheme [53] is applied to Eq. (1.1) to split it into simpler PDEs. Then, the time-fractional derivative is discretized by a backward Euler approach. Finally, the resulting equations are solved by the CS–RBF method.

2.1 Temporal discretization

In this part, we apply a divide-and-conquer approach which is known as the OS method, to divide Eq. (1.1) into two subproblems in separate directions. Then, the Caputo time derivative is discretized in each subproblem.

2.1.1 Trotter splitting scheme

First, to reduce the complexity of problem (1.1), a first-order OS method, the Trotter splitting approach, is employed so that FPMM is solved along separate directions. This reduces the size of the resulting matrices and thus substantially reduces the computational complexity. The time interval I is divided into M equal steps by the points \(t_{0},t_{1},\dots ,t_{M}\), where \(t_{k}=k\Delta t\), \(k=0,1,\dots ,M\), and \(\Delta t=\frac{T}{M}\). Equation (1.1) can then be written as follows:
\[
{}_{0}^{C}\mathcal{D}_{t}^{\alpha}u=\mathcal{L}_{x}(u)+\mathcal{L}_{y}(u),
\]

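where \(\mathcal{L}_{x}\) and \(\mathcal{L}_{y}\) are the following suboperators acting on u:
\[
\mathcal{L}_{x}(u)=\frac{\partial}{\partial x}\Bigl(g(\Vert \nabla (G_{\sigma}*u)\Vert )\,\frac{\partial u}{\partial x}\Bigr),\qquad
\mathcal{L}_{y}(u)=\frac{\partial}{\partial y}\Bigl(g(\Vert \nabla (G_{\sigma}*u)\Vert )\,\frac{\partial u}{\partial y}\Bigr).
\]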

By the Trotter splitting method, the approximate solution \(u(x,y,t_{k+1})\), written simply as \(u^{k+1}\), is obtained as \(u^{k+1}=[\mathcal{S}_{x}^{\Delta t} \mathcal{S}_{y}^{\Delta t}]u^{k}\), in which \(\mathcal{S}_{x}^{\Delta t}\) and \(\mathcal{S}_{y}^{\Delta t}\) denote the one-step solution operators of the subproblems \({}_{0}^{C}\mathcal{D}_{t}^{\alpha}u^{*}=\mathcal{L}_{x}(u^{*})\) and \({}_{0}^{C}\mathcal{D}_{t}^{\alpha}u^{**}=\mathcal{L}_{y}(u^{**})\), respectively: the x-subproblem is advanced first to obtain \(u^{*^{k+1}}\), which then serves as input to the y-subproblem. Finally, we set \(u^{k+1}=u^{**^{k+1}}\). Interested readers can see [43, 54–56] for more details about OS methods.
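The first-order accuracy of Trotter (Lie) splitting can be observed on a linear model problem. The sketch below makes simplifying assumptions: two small symmetric, generically non-commuting matrices `a` and `b` stand in for \(\mathcal{L}_{x}\) and \(\mathcal{L}_{y}\), the time derivative is of integer order, and the exact sub-flows are computed through an eigendecomposition. Halving Δt should roughly halve the splitting error.

```python
import numpy as np

def expm_sym(m, t):
    """exp(t*m) for a symmetric matrix m, via its eigendecomposition."""
    w, v = np.linalg.eigh(m)
    return (v * np.exp(t * w)) @ v.T

def lie_trotter_error(a, b, u0, t_end, n_steps):
    """Error of u^{k+1} = exp(dt B) exp(dt A) u^k against exp(t_end (A + B)) u0."""
    dt = t_end / n_steps
    step = expm_sym(b, dt) @ expm_sym(a, dt)      # first the A-flow, then the B-flow
    u = u0.copy()
    for _ in range(n_steps):
        u = step @ u
    return np.linalg.norm(u - expm_sym(a + b, t_end) @ u0)

rng = np.random.default_rng(1)
a = rng.standard_normal((4, 4))
a = -(a @ a.T) / 4.0                              # symmetric, negative semidefinite
b = rng.standard_normal((4, 4))
b = -(b @ b.T) / 4.0                              # generically does not commute with a
u0 = rng.standard_normal(4)

e_coarse = lie_trotter_error(a, b, u0, 1.0, 200)
e_fine = lie_trotter_error(a, b, u0, 1.0, 400)
print(f"error ratio when halving dt: {e_coarse / e_fine:.3f}")   # about 2 for first order
```

If the two operators commuted, the splitting would be exact; the observed error comes entirely from their commutator.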

Next, a suitable scheme is needed to discretize the Caputo time derivative operator \({}_{0}^{C}\mathcal{D}_{t}^{\alpha}\) in the subproblems.

2.1.2 Discretization of Caputo derivative

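At each time step \(t_{k+1}\), \(k=0,1,\dots ,M-1\), the time-fractional derivative is approximated by the backward Euler approach as follows:
\[
{}_{0}^{C}\mathcal{D}_{t}^{\alpha}u(x,y,t_{k+1})=\frac{1}{\Gamma (1-\alpha )}\sum _{j=0}^{k}\int _{t_{j}}^{t_{j+1}}\frac{\partial u(x,y,s)}{\partial s}\,(t_{k+1}-s)^{-\alpha}\,ds\approx \frac{1}{\Gamma (1-\alpha )}\sum _{j=0}^{k}\frac{u(x,y,t_{j+1})-u(x,y,t_{j})}{\Delta t}\int _{t_{j}}^{t_{j+1}}(t_{k+1}-s)^{-\alpha}\,ds.
\]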

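So, we have
\[
{}_{0}^{C}\mathcal{D}_{t}^{\alpha}u(x,y,t_{k+1})=\frac{1}{\Gamma (2-\alpha )(\Delta t)^{\alpha}}\sum _{j=0}^{k}\bigl[u(x,y,t_{k+1-j})-u(x,y,t_{k-j})\bigr]\bigl[(j+1)^{1-\alpha}-j^{1-\alpha}\bigr]+R_{k+1},
\]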

in which \(R_{k+1}\) is the truncation error and we have

where \(\mathcal{C}\) is a constant that depends only on u. Based on Lemma 3.1 in [57] and the fact that \(0.88<\Gamma (2-\alpha )\leq 1\) for all \(\alpha \in [0,1]\) (the minimum of Γ on \([1,2]\) is approximately 0.886), it can be concluded that

So

Moreover, from Eq. (2.4), the following equations can be computed:

The discrete fractional differential operator \(D^{\alpha}_{t}\) is as follows:

Thus, we can write

So \(D^{\alpha}_{t}\) is an approximation to \({}_{0}^{C}\mathcal{D}_{t}^{\alpha}\), and we have

For the sake of simplicity, we define \(\mathcal{C}_{\Delta t}^{\alpha}= \frac{1}{\Gamma (2-\alpha )(\Delta t)^{\alpha}}\), \(\mathcal{A}_{j}^{\alpha}=(j+1)^{1-\alpha}-j^{1-\alpha}\), and \(u(x,y,t_{k})=u^{k}\).
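A short derivation from these definitions illustrates two properties of the scheme: letting \(\alpha \to 1\) recovers the classical backward Euler quotient, in line with FPMM reducing to PMM for \(\alpha =1\), while for \(0<\alpha <1\) the mean value theorem shows that the weights are positive and decreasing, so every past step contributes to the current one:

```latex
\begin{aligned}
&\lim_{\alpha\to 1^{-}}\mathcal{C}_{\Delta t}^{\alpha}=\frac{1}{\Gamma(1)\,\Delta t}=\frac{1}{\Delta t},\qquad
\lim_{\alpha\to 1^{-}}\mathcal{A}_{0}^{\alpha}=1,\qquad
\lim_{\alpha\to 1^{-}}\mathcal{A}_{j}^{\alpha}=1-1=0\quad (j\ge 1),\\
&\Longrightarrow\quad \mathcal{C}_{\Delta t}^{\alpha}\sum_{j=0}^{k}\mathcal{A}_{j}^{\alpha}\bigl(u^{k+1-j}-u^{k-j}\bigr)\ \longrightarrow\ \frac{u^{k+1}-u^{k}}{\Delta t};\\
&0<\alpha<1:\quad \mathcal{A}_{j}^{\alpha}=(1-\alpha)\,\xi_{j}^{-\alpha}\ \text{for some}\ \xi_{j}\in(j,j+1),\quad\text{so}\quad
1=\mathcal{A}_{0}^{\alpha}>\mathcal{A}_{1}^{\alpha}>\mathcal{A}_{2}^{\alpha}>\cdots>0.
\end{aligned}
```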

Remark 2.1

From Theorems 3.1 and 3.2 in [57], it can be deduced that the proposed temporal discretization scheme is unconditionally stable (i.e., stable for every \(\Delta t>0\)) and globally accurate of order \(2-\alpha \) for \(0<\alpha <1\).
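The \((2-\alpha )\)-order accuracy can be observed numerically. The following minimal sketch assumes the test function \(u(t)=t^{2}\), whose Caputo derivative is \(2t^{2-\alpha}/\Gamma (3-\alpha )\); it evaluates \(\mathcal{C}_{\Delta t}^{\alpha}\sum _{j}\mathcal{A}_{j}^{\alpha}(u^{k+1-j}-u^{k-j})\) at \(t=1\) for two step sizes and estimates the observed order. It probes only the truncation error of the time discretization, and the function names are illustrative.

```python
import math
import numpy as np

def l1_weights(k, alpha):
    """A_j^alpha = (j+1)^(1-alpha) - j^(1-alpha), j = 0..k (valid for 0 < alpha < 1)."""
    j = np.arange(k + 1, dtype=float)
    return (j + 1) ** (1 - alpha) - j ** (1 - alpha)

def caputo_l1(u_hist, dt, alpha):
    """Approximate the Caputo derivative at t_{k+1} from samples u^0, ..., u^{k+1}:
    C * sum_j A_j (u^{k+1-j} - u^{k-j}),  C = 1 / (Gamma(2-alpha) dt^alpha)."""
    k = len(u_hist) - 2
    incr = np.diff(u_hist)[::-1]        # [u^{k+1}-u^k, u^k-u^{k-1}, ..., u^1-u^0]
    c = 1.0 / (math.gamma(2.0 - alpha) * dt**alpha)
    return c * np.dot(l1_weights(k, alpha), incr)

def l1_error(n_steps, alpha, t_end=1.0):
    """|approximation - exact| at t_end for u(t) = t^2."""
    t = np.linspace(0.0, t_end, n_steps + 1)
    exact = 2.0 * t_end ** (2.0 - alpha) / math.gamma(3.0 - alpha)
    return abs(caputo_l1(t**2, t_end / n_steps, alpha) - exact)

alpha = 0.5
e1, e2 = l1_error(200, alpha), l1_error(400, alpha)
print(f"observed order {math.log2(e1 / e2):.3f} (theory: {2 - alpha})")
```

Note that the weight vector has one entry per past step, so evaluating the derivative at step k costs O(k) operations; this memory is the main extra cost of the fractional model compared with PMM.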

Now, by using Eq. (2.11), the subproblems are discretized along the temporal dimension as follows:

2.2 Spatial discretization

Before discretizing problems (2.12) and (2.13) in space, we consider the convolution terms \(G_{\sigma}*u^{k}\) and \(G_{\sigma}*u^{*^{k}}\). Since convolution with a Gaussian kernel is equivalent to solving the linear heat equation up to a time determined by the kernel width, as recalled in the Introduction, each of these terms can be replaced by the solution of a heat equation with initial condition \(u^{k}\) (respectively, \(u^{*^{k}}\)). These heat equations are solved by the OS method combined with the Crank–Nicolson and CS–RBF approaches. Their solutions, which replace the convolution terms in Eqs. (2.12) and (2.13), are denoted by \(u^{c}\) and \(u^{*^{c}}\), respectively.
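The idea of computing the Gaussian-smoothed image through a heat flow can be sketched with finite differences in place of the CS–RBF discretization. The sketch assumes a uniform grid with spacing `h`, zero-flux ends, and the convention \(\partial u/\partial t=\Delta u\), under which smoothing with a Gaussian of standard deviation s corresponds to heat-flow time \(t=s^{2}/2\). Each Crank–Nicolson sweep acts along one direction, mirroring the splitting idea; all function names are hypothetical.

```python
import numpy as np

def neumann_laplacian(n, h):
    """1D second-difference matrix with zero-flux ends (rows and columns sum to zero)."""
    lap = (np.diag(-2.0 * np.ones(n)) + np.diag(np.ones(n - 1), 1)
           + np.diag(np.ones(n - 1), -1))
    lap[0, 0] = lap[-1, -1] = -1.0
    return lap / h**2

def cn_propagator(n, h, dt):
    """Crank-Nicolson step matrix (I - dt/2 L)^{-1} (I + dt/2 L)."""
    lap = neumann_laplacian(n, h)
    eye = np.eye(n)
    return np.linalg.solve(eye - 0.5 * dt * lap, eye + 0.5 * dt * lap)

def heat_smooth_2d(u, s, h=1.0, n_steps=10):
    """Approximate Gaussian smoothing (std s) by split heat flow up to t = s^2 / 2."""
    dt = 0.5 * s**2 / n_steps
    px = cn_propagator(u.shape[1], h, dt)   # acts along rows (x-direction)
    py = cn_propagator(u.shape[0], h, dt)   # acts along columns (y-direction)
    for _ in range(n_steps):
        u = u @ px.T                        # x-sweep
        u = py @ u                          # y-sweep
    return u

# 1D check: a unit mass at x = 0 should spread with variance 2t.
h = 0.1
x = (np.arange(201) - 100) * h
delta = np.zeros_like(x)
delta[100] = 1.0 / h
p = cn_propagator(x.size, h, 0.05)
u = delta
for _ in range(10):                         # t = 0.5, so 2t = 1
    u = p @ u
variance = (x**2 * u).sum() / u.sum()
print(f"variance {variance:.6f} (expected 1)")
```

Because the x- and y-operators of the heat equation commute, applying the one-dimensional propagators in sequence remains a consistent approximation of the two-dimensional heat flow.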

Substituting the solutions of the heat equations, Eqs. (2.12) and (2.13) can be written in the following forms:

Once \(u^{c}\) and \(u^{*^{c}}\) are obtained, the functions \(g(\Vert \nabla u^{c}\Vert )\) and \(g(\Vert \nabla u^{*^{c}}\Vert )\) are computed as follows:

Moreover, \(\frac{\partial g(\Vert \nabla u^{c}\Vert )}{\partial x}\) and \(\frac{\partial g(\Vert \nabla u^{*^{c}}\Vert )}{\partial y}\) can be obtained by the chain rule.

Now, the CS–RBF method is applied to Eqs. (2.14) and (2.15), which has two main benefits. First, since no mesh is needed to construct the shape functions, the CS–RBF scheme is truly meshless, and its coefficient matrix is well-conditioned, so the resulting systems can be solved easily by common methods. Second, local RBFs such as Wendland's CS–RBFs have no shape parameter (only a support size) and are more stable than global ones.

In the first step of the space discretization, the nodal points over the domain Ω are selected; they are the same at every time step \(t_{k}\). We use the pixels of the image, i.e., \(X\times Y\) with \(X=\lbrace x_{1},\dots ,x_{N_{x}}\rbrace \) and \(Y=\lbrace y_{1},\dots ,y_{N_{y}}\rbrace \), as the collocation nodes on the boundary and in the interior of the domain. To approximate \(u^{k}\) for all \(k\geq 0\), \(u^{0}\) is computed by interpolating the noisy image, and \(u^{k}\) for \(k>0\) is then obtained by solving the discretized subproblems with the CS–RBF scheme. CS–RBFs are radial, i.e., symmetric about their center points, in the sense of the following definition:

Definition 2.2

([45])

A function \(\phi :\mathbb{R}^{s}\rightarrow \mathbb{R}\) is called radial provided there exists a univariate function \(\varphi :[0,\infty )\rightarrow \mathbb{R}\) such that
\[
\phi (x)=\varphi (\Vert x\Vert ),\qquad x\in \mathbb{R}^{s},
\]

and \(\Vert \cdot \Vert \) is some norm on \(\mathbb{R}^{s}\), usually the Euclidean norm.

Among the many available radial basis functions, this paper uses Wendland's compactly supported radial basis functions (WCS–RBFs) with \(C^{2}\) smoothness, because they contain no shape parameter, which makes them easy to use. These functions are defined as follows:
\[
\phi _{i}(x)=(1-\delta r_{i})^{4}_{+}\,(4\delta r_{i}+1),
\]

in which \(r_{i}=\Vert x-x_{i}\Vert \) is the distance from node \(x_{i}\) to x, and \(\delta >0\) is a scaling parameter that determines the support of \(\phi _{i}\) (a ball of radius \(1/\delta \) centered at \(x_{i}\)). Here, \((1-\delta r_{i})^{4}_{+}\) equals \((1-\delta r_{i})^{4}\) for \(0\leq \delta r_{i} <1\) and zero otherwise.
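For reference, the standard \(C^{2}\) Wendland function \((1-\delta r)^{4}_{+}(4\delta r+1)\) and the first and second derivatives needed to collocate a second-order operator along one direction can be coded directly. This is a minimal one-dimensional sketch that assumes nodes on \([0,1]\) (the coordinate scaling is an assumption) and derives the x-derivatives by the chain rule; it also builds the interpolation matrix \(\phi (\vert x_{i}-x_{j}\vert )\) to show that it is sparse and can be solved directly. The function names are illustrative.

```python
import numpy as np

def wendland_c2(r, delta):
    """phi(r) = (1 - delta r)_+^4 (4 delta r + 1); support radius 1/delta."""
    q = np.clip(1.0 - delta * r, 0.0, None)
    return q**4 * (4.0 * delta * r + 1.0)

def wendland_c2_dx(x, c, delta):
    """d/dx phi(|x - c|) = -20 delta^2 (x - c) (1 - delta r)_+^3."""
    r = np.abs(x - c)
    q = np.clip(1.0 - delta * r, 0.0, None)
    return -20.0 * delta**2 * (x - c) * q**3

def wendland_c2_dxx(x, c, delta):
    """d^2/dx^2 phi(|x - c|) = 20 delta^2 (1 - delta r)_+^2 (4 delta r - 1)."""
    r = np.abs(x - c)
    q = np.clip(1.0 - delta * r, 0.0, None)
    return 20.0 * delta**2 * q**2 * (4.0 * delta * r - 1.0)

nodes = np.linspace(0.0, 1.0, 101)
delta = 5.0                                        # support radius 0.2
dist = np.abs(nodes[:, None] - nodes[None, :])
phi = wendland_c2(dist, delta)                     # Phi_ij = phi(|x_i - x_j|)
density = np.count_nonzero(phi) / phi.size
f = np.cos(np.pi * nodes)
lam = np.linalg.solve(phi, f)                      # interpolation coefficients
print(f"nonzero fraction {density:.2f}, condition number {np.linalg.cond(phi):.1e}")
```

This Wendland function is positive definite in up to three dimensions, so the interpolation matrix is symmetric positive definite for distinct nodes; a larger δ shrinks the support and makes the matrix sparser.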

To approximate functions \(u^{k}\), \(u^{*^{k+1}}\), and \(u^{**^{k+1}}\) in Ω over center points \((x_{i},y_{j})\), \(i=1,2,\dots ,N_{x}\), \(j=1,2,\dots ,N_{y}\), the CS–RBF interpolation approximations \(\hat{u}^{k}\), \(\hat{u}^{*^{k+1}}\), and \(\hat{u}^{**^{k+1}}\) are defined as

in which \(\phi _{i}\) and \(\phi _{j}\) are RBFs centered at the nodes \(\lbrace x_{i}\rbrace _{i=1}^{N_{x}}\) and \(\lbrace y_{j}\rbrace _{j=1}^{N_{y}}\), respectively, and \(\lambda _{ij}\) and \(\gamma _{ij}\) are unknown coefficients to be determined.

Substituting the approximation (2.19) into Eq. (2.14), which is associated with the x-direction, and collocating at all nodes in Ω yields the following matrix form of the discrete equations:

where \(\Lambda ^{k+1}_{j}= [ \lambda _{1j}^{k+1},\lambda _{2j}^{k+1}, \dots ,\lambda _{N_{x}j}^{k+1} ]^{T}\), and \(\mathbf{A}_{j}\) and \(\mathbf{B}_{j}\) are an \(N_{x} \times N_{x}\) matrix and an \(N_{x} \times 1\) vector, respectively, defined as

where

\(\mathbf{H}_{x}\) is an \(N_{x}\times N_{x}\) matrix whose first and last rows coincide with the first and last rows of \(\Upsilon _{x}\) and whose other rows are zero. Moreover,

which are known coefficients computed at previous time steps. Hence, at each time step there are \(N_{y}\) linear systems, each of \(N_{x}\) algebraic equations, which are well-posed and can be solved by a standard approach such as LU factorization. Solving these systems gives the coefficient vectors \(\Lambda _{j}^{k+1}\), and the approximation \(\hat{u}^{*^{k+1}}\) is computed as follows:

in which

Applying the same procedure to Eq. (2.15) yields the following system:

where \(\Gamma ^{k+1}_{i}= [ \gamma _{i1}^{k+1},\gamma _{i2}^{k+1},\dots , \gamma _{iN_{y}}^{k+1} ]^{T}\), and \(\mathbf{A}_{i}\) and \(\mathbf{B}_{i}\) are an \(N_{y} \times N_{y}\) matrix and an \(N_{y} \times 1\) vector, respectively, defined as follows:

The vector \(\overline{\Lambda}^{m}_{i}\) is obtained by multiplying \(\Phi ^{-1}\) by the mth column of \((\mathbf{U}^{*^{k}})^{T}\); \(\Phi _{y}\), \(\Upsilon _{y}\), and \(\Psi _{y}\) are \(N_{y}\times N_{y}\) matrices constructed in the same way as \(\Phi _{x}\), \(\Upsilon _{x}\), and \(\Psi _{x}\), respectively, using the nodes \(\lbrace y_{1},y_{2},\dots ,y_{N_{y}}\rbrace \). Moreover, \(\mathbf{H}_{y}\) is an \(N_{y} \times N_{y}\) matrix, analogous to \(\mathbf{H}_{x}\), whose first and last rows are equal to the first and last rows of \(\Upsilon _{y}\); \(\tilde{\mathbf{G}}^{i}\) and \(\tilde{\mathbf{G}}_{y}^{i}\) have the following structures:

By solving the \(N_{x}\) systems (2.25), \(\hat{u}^{**^{k+1}}\) is calculated as

in which

Finally, \(u^{k+1}(x_{i},y_{j})\), \(i=1,2,\dots ,N_{x}\), \(j=1,2,\ldots ,N_{y}\) are obtained from \((\mathbf{U}^{**^{k+1}} )^{T}\).
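Each time step therefore requires many small independent solves: \(N_{y}\) systems of size \(N_{x}\times N_{x}\) for the x-sweep and \(N_{x}\) systems of size \(N_{y}\times N_{y}\) for the y-sweep. The following minimal sketch shows how such a family can be handled in one batched call, using random, diagonally dominant stand-in matrices rather than the actual \(\mathbf{A}_{j}\) and \(\mathbf{B}_{j}\), which are not reproduced here. `numpy.linalg.solve` factorizes each matrix in the stack with LAPACK's LU-based `gesv` routine; since the CS–RBF matrices are sparse, a sparse LU factorization (for example SciPy's `scipy.sparse.linalg.splu`) would be the natural choice for large images.

```python
import numpy as np

def solve_line_systems(a_stack, b_stack):
    """Solve A_j x_j = b_j for every j in one batched call.

    a_stack: (n_lines, n, n); b_stack: (n_lines, n). The extra trailing axis on b
    marks each right-hand side as an n x 1 matrix, which is unambiguous in all
    recent NumPy versions.
    """
    return np.linalg.solve(a_stack, b_stack[..., None])[..., 0]

n_y, n_x = 64, 48
rng = np.random.default_rng(2)
# stand-in matrices, one per image row, made diagonally dominant so they are nonsingular
a_stack = rng.standard_normal((n_y, n_x, n_x)) + 2.0 * n_x * np.eye(n_x)
b_stack = rng.standard_normal((n_y, n_x))

coeffs = solve_line_systems(a_stack, b_stack)     # row j holds the coefficients of line j
residual = np.einsum("jik,jk->ji", a_stack, coeffs) - b_stack
print(coeffs.shape, float(np.abs(residual).max()))
```

The same function serves the y-sweep after transposing the roles of rows and columns.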

We summarize the proposed approach in Algorithm 1.
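As a rough, self-contained illustration of the overall loop (L1 time stepping, Trotter splitting into an x-sweep followed by a y-sweep, and a lagged diffusion coefficient), the following hypothetical `fpmm_denoise_sketch` uses several substitutions that should be read as assumptions rather than the authors' implementation: second-order finite differences instead of CS–RBF collocation, a truncated Gaussian filter with mirrored borders instead of the heat-equation substitute for \(G_{\sigma}*u\), the coefficient \(g(s)=1/(1+(s/K)^{2})\), the memory term placed in the x-sweep only, and illustrative parameter values. It requires \(0<\alpha <1\).

```python
import math
import numpy as np

def l1_weights(n, alpha):
    """A_j = (j+1)^(1-alpha) - j^(1-alpha), j = 0..n-1 (0 < alpha < 1)."""
    j = np.arange(n, dtype=float)
    return (j + 1) ** (1 - alpha) - j ** (1 - alpha)

def gauss_reflect(u, s):
    """Gaussian smoothing (std s pixels) with a mirror extension at the borders."""
    r = max(1, int(4 * s + 0.5))
    x = np.arange(-r, r + 1, dtype=float)
    k = np.exp(-0.5 * (x / s) ** 2)
    k /= k.sum()
    up = np.pad(u, r, mode="symmetric")
    up = np.apply_along_axis(np.convolve, 1, up, k, mode="valid")
    return np.apply_along_axis(np.convolve, 0, up, k, mode="valid")

def sweep(rhs, g, c):
    """Solve (c I - d/dx(g d/dx)) w = rhs along the last axis; zero-flux ends, unit spacing."""
    m, n = rhs.shape
    gf = 0.5 * (g[:, 1:] + g[:, :-1])               # coefficients at cell faces
    idx = np.arange(n - 1)
    mat = np.zeros((m, n, n))
    mat[:, idx, idx + 1] = -gf
    mat[:, idx + 1, idx] = -gf
    diag = np.full((m, n), c)
    diag[:, :-1] += gf
    diag[:, 1:] += gf
    mat[:, np.arange(n), np.arange(n)] = diag
    return np.linalg.solve(mat, rhs[..., None])[..., 0]

def fpmm_denoise_sketch(u0, alpha=0.9, dt=0.1, n_steps=20, K=0.1, s=1.0):
    c = 1.0 / (math.gamma(2.0 - alpha) * dt**alpha)    # C_{dt}^alpha
    a = l1_weights(n_steps, alpha)
    hist = [np.asarray(u0, dtype=float)]               # u^0, u^1, ..., u^k
    for k in range(n_steps):
        # memory term sum_{j=1}^{k} A_j (u^{k+1-j} - u^{k-j}), used in the x-sweep only
        mem = sum(a[j] * (hist[k + 1 - j] - hist[k - j]) for j in range(1, k + 1))
        gy, gx = np.gradient(gauss_reflect(hist[k], s))
        g = 1.0 / (1.0 + (np.hypot(gx, gy) / K) ** 2)  # lagged coefficient (assumed form)
        w = sweep(c * (a[0] * hist[k] - mem), g, c * a[0])   # x-direction
        v = sweep((c * a[0] * w).T, g.T, c * a[0]).T        # y-direction
        hist.append(v)
    return hist[-1]

rng = np.random.default_rng(4)
clean = np.zeros((32, 32))
clean[:, 16:] = 1.0
noisy = clean + rng.normal(0.0, 0.05, clean.shape)
out = fpmm_denoise_sketch(noisy)
print(f"flat-region std: {noisy[:, :8].std():.4f} -> {out[:, :8].std():.4f}")
```

Each sweep is conservative and the memory term only redistributes mass among past iterates, so the mean intensity is preserved; because the weights decrease, the right-hand side of the x-sweep is a convex combination of all previous iterates.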

3 Numerical experiments

This section evaluates the performance of the proposed algorithm on several examples: a \(512\times 512\) image (Lena) and two \(256\times 256\) images (Cameraman and Raccoon). We use the signal-to-noise ratio (SNR), peak signal-to-noise ratio (PSNR), structural similarity index measure (SSIM), and mean squared error (MSE) to measure the denoising performance of FPMM and PMM. These metrics are defined as follows:

in which u is the uncorrupted image, κ is its maximum intensity value, û is the reconstructed image, and \(M \times N\) is the size of the image. Moreover, \(\mu _{u}\) and \(\mu _{\hat{u}}\) are the means of the pixel values of the two images, \(\sigma _{u}^{2}\) and \(\sigma _{\hat{u}}^{2}\) are their variances, and \(\sigma _{u\hat{u}}\) is the covariance of u and û. The coefficients \(c_{1}\) and \(c_{2}\) are defined as follows:
\[
c_{1}=(k_{1}L)^{2},\qquad c_{2}=(k_{2}L)^{2},
\]

where \(k_{1}, k_{2}\ll 1\) are constant coefficients and L is the dynamic range of pixel values.
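These quantities can be computed in a few lines. The following minimal sketch makes several assumptions: images are stored as floating-point arrays, κ is taken as the maximum of the clean image, SSIM is evaluated globally from whole-image means, variances, and covariance as described above (rather than averaged over sliding windows), \(k_{1}=0.01\) and \(k_{2}=0.03\) are the commonly used defaults, and SNR is taken as \(10\log _{10}(\sum u^{2}/\sum (u-\hat{u})^{2})\). The paper's exact SNR formula is not reproduced here, so that last definition in particular is an assumption.

```python
import numpy as np

def mse(u, u_hat):
    return float(np.mean((u - u_hat) ** 2))

def psnr(u, u_hat):
    kappa = u.max()                         # maximum intensity of the clean image
    return float(10.0 * np.log10(kappa**2 / mse(u, u_hat)))

def snr(u, u_hat):
    # assumed definition: clean-signal energy over error energy, in dB
    return float(10.0 * np.log10(np.sum(u**2) / np.sum((u - u_hat) ** 2)))

def ssim_global(u, u_hat, dyn_range=1.0, k1=0.01, k2=0.03):
    """Single-window SSIM from whole-image statistics."""
    c1, c2 = (k1 * dyn_range) ** 2, (k2 * dyn_range) ** 2
    mu_u, mu_h = u.mean(), u_hat.mean()
    var_u, var_h = u.var(), u_hat.var()
    cov = np.mean((u - mu_u) * (u_hat - mu_h))
    return float(((2 * mu_u * mu_h + c1) * (2 * cov + c2))
                 / ((mu_u**2 + mu_h**2 + c1) * (var_u + var_h + c2)))

rng = np.random.default_rng(3)
clean = rng.uniform(0.2, 0.8, (64, 64))
noisy = np.clip(clean + rng.normal(0.0, 0.05, clean.shape), 0.0, 1.0)
print(f"MSE {mse(clean, noisy):.5f}  PSNR {psnr(clean, noisy):.2f} dB  "
      f"SNR {snr(clean, noisy):.2f} dB  SSIM {ssim_global(clean, noisy):.3f}")
```

Library implementations such as scikit-image's `structural_similarity` use local windows by default, so their SSIM values will generally differ from this global variant.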

To assess the validity of the proposed method, different kinds of noise, namely Gaussian, Poisson, and speckle noise, are added to the images, and the denoising results of FPMM and PMM are shown for each example. The value of α in FPMM is selected according to the SNR and PSNR indicators, and the denoising process is stopped when the PSNR reaches its maximum. All computations were carried out in MATLAB R2017a on a computer with an Intel Core i7 processor and 16 GB of RAM.

3.1 Test case 1

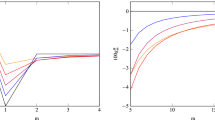

As the first example, we consider the Lena image of size \(512 \times 512\) pixels. We set \(\Delta t=10^{-3}\) and \(\delta =5\). With this value of δ, the coefficient matrices are well-posed, with condition numbers of about \(10^{7}\). According to Fig. 1, \(\alpha =0.9\) is a good choice for this example. Figure 2 shows the results of FPMM with \(\alpha =0.9\) and PMM for different types of input noise. This figure suggests that FPMM generally performs better than the classical model and is applicable to all three types of noise. Table 1 reports the results for different Gaussian noise variances; the advantage of FPMM over PMM appears to grow as the noise variance increases.

Test case 1: (a) the original image Lena, (b) noisy image with Gaussian noise (variance 0.02), (c) denoised image of the Gaussian noise by FPMM (\(\alpha =0.9\)), (d) denoised image of the Gaussian noise by PMM, (e) noisy image with Poisson noise, (f) denoised image of the Poisson noise by FPMM (\(\alpha =0.9\)), (g) denoised image of the Poisson noise by PMM, (h) noisy image with speckle noise, (i) denoised image of the speckle noise by FPMM (\(\alpha =0.9\)), and (j) denoised image of the speckle noise by PMM

3.2 Test case 2

In this case, we consider the Cameraman image of size \(256 \times 256\). The parameters in this example are \(\Delta t=10^{-3}\), \(\delta =5\), and \(\alpha =0.9\). Figure 3 shows the effect of α on the accuracy of the denoising process; according to this figure, \(\alpha =0.9\) is a suitable choice for the order of the fractional derivative. Figure 4 depicts the results of denoising by FPMM (\(\alpha =0.9\)) and PMM for Gaussian (variance 0.02), Poisson, and speckle noise. In addition, Table 2 lists the values of the performance metrics for FPMM and PMM for different Gaussian noise variances. These results indicate that FPMM outperforms PMM and that the fractional derivative allows images to be denoised more effectively.

Test case 2: (a) the original image of a cameraman, (b) noisy image with Gaussian noise (variance 0.02), (c) denoised image of the Gaussian noise by FPMM (\(\alpha =0.9\)), (d) denoised image of the Gaussian noise by PMM, (e) noisy image with Poisson noise, (f) denoised image of the Poisson noise by FPMM (\(\alpha =0.9\)), (g) denoised image of the Poisson noise by PMM, (h) noisy image with speckle noise, (i) denoised image of the speckle noise by FPMM (\(\alpha =0.9\)), and (j) denoised image of the speckle noise by PMM

3.3 Test case 3

In the last example, the Raccoon image of size \(256\times 256\) is considered. The numerical approach is applied with \(\Delta t=10^{-2}\), \(\alpha =0.8\), and \(\delta =5\). As in the previous examples, \(\alpha =0.8\) is selected based on the PSNR and SNR values in Fig. 5. Table 3 compares the results of FPMM and PMM. Both models remove the noise; however, FPMM is considerably better at higher noise variances. For instance, for variance 0.01, the MSE of PMM is lower than that of FPMM, but as the noise intensity increases, FPMM attains the lower MSE. Figure 6 shows the performance of FPMM (with \(\alpha =0.8\)) and PMM in removing various kinds of noise from the Raccoon image. Overall, FPMM with \(\alpha =0.8\) outperforms PMM in this test case, especially at higher noise levels.

Test case 3: (a) the original image Raccoon, (b) noisy image with Gaussian noise (variance 0.02), (c) denoised image of the Gaussian noise by FPMM (\(\alpha =0.8\)), (d) denoised image of the Gaussian noise by PMM, (e) noisy image with Poisson noise, (f) denoised image of the Poisson noise by FPMM (\(\alpha =0.8\)), (g) denoised image of the Poisson noise by PMM, (h) noisy image with speckle noise, (i) denoised image of the speckle noise by FPMM (\(\alpha =0.8\)), and (j) denoised image of the speckle noise by PMM

4 Conclusion

In this study, we proposed a practical and accurate numerical approach, based on the combination of Trotter splitting and compactly supported radial basis function methods, for the time-fractional Perona–Malik model (FPMM), an effective model for image denoising. The operator splitting approach allowed us to divide the main problem, posed on a large domain, into simpler subproblems, significantly decreasing the computational cost. With the proposed scheme, the model was reduced to sparse and well-posed linear algebraic systems, which could be solved by classical algorithms such as LU factorization. Various test cases were examined using several metrics, namely SNR, PSNR, MSE, and SSIM, to demonstrate the efficiency and accuracy of the proposed method. We showed that, with an appropriate choice of the fractional derivative order α, FPMM can outperform the classical model. In this paper, α was selected to maximize the PSNR and SNR values.

Availability of data and materials

Data sharing is not applicable to this article, as no new datasets were generated during the current study.

References

Lotfi, Y., Parand, K.: Anti-aliasing of gray-scale/color/outline images: looking through the lens of numerical approaches for PDE-based models. Comput. Math. Appl. 113(1), 130–147 (2020)

Singh, A., Agarwal, P., Chand, M.: Image encryption and analysis using dynamic AES. In: 2019 5th International Conference on Optimization and Applications (ICOA) (2019)

Sidi Ammi, M.R., Jamiai, I.: Finite difference and Legendre spectral method for a time-fractional diffusion–convection equation for image restoration. Discrete Contin. Dyn. Syst., Ser. A 11(1), 103–117 (2020)

Gu, Y.: Finite element numerical approximation for two image denoising models. Circuits Syst. Signal Process. 39, 2042–2062 (2020)

Rudin, L.I., Osher, S., Fatemi, E.: Nonlinear total variation based noise removal algorithms. Phys. D: Nonlinear Phenom. 60(1–4), 259–268 (1992)

Deng, L., Zhu, H., Yang, Z., Li, Y.: Hessian matrix-based fourth-order anisotropic diffusion filter for image denoising. Opt. Laser Technol. 110, 184–190 (2019)

Abdallah, M.B., Malek, J., Azar, A.T., Belmabrouk, H., Monreal, J.E., Krissian, K.: Adaptive noise-reducing anisotropic diffusion filter. Neural Comput. Appl. 27(5), 1273–1320 (2016)

Li, Y., Ding, Y., Li, T.: Nonlinear diffusion filtering for peak-preserving smoothing of a spectrum signal. Chemom. Intell. Lab. Syst. 159, 157–165 (2016)

Barbu, T.: Robust anisotropic diffusion scheme for image noise removal. Proc. Comput. Sci. 35, 522–530 (2014)

Koenderink, J.J.: The structure of images. Biol. Cybern. 50, 363–370 (1984)

Perona, P., Malik, J.: Scale-space and edge detection using anisotropic diffusion. IEEE Trans. Pattern Anal. Mach. Intell. 12, 629–639 (1990)

Kamranian, M., Dehghan, M., Tatari, M.: An image denoising approach based on a meshfree method and the domain decomposition technique. Eng. Anal. Bound. Elem. 39, 101–110 (2014)

Catté, F., Lions, P.-L., Morel, J.-M., Coll, T.: Image selective smoothing and edge detection by nonlinear diffusion. SIAM J. Numer. Anal. 29, 182–193 (1992)

Handlovicova, A., Mikula, K., Sgallari, F.: Variational numerical methods for solving nonlinear diffusion equations arising in image processing. J. Vis. Commun. Image Represent. 13(1–2), 217–273 (2002)

Salinas, H.M., Fernandez, D.C.: Comparison of PDE-based nonlinear diffusion approaches for image enhancement and denoising in optical coherence tomography. IEEE Trans. Med. Imaging 26(6), 761–771 (2007)

Shangguan, H., Zhang, X., Cui, X., Liu, Y., Zhang, Q., Gui, Z.: Sinogram restoration for low-dose X-ray computed tomography using regularized Perona–Malik equation with intuitionistic fuzzy entropy. Signal Image Video Process. 13, 1511–1519 (2019)

Firsov, D., Lui, S.: Domain decomposition methods in image denoising using Gaussian curvature. J. Comput. Appl. Math. 193(2), 460–473 (2006)

Hjouji, A., Jourhmane, M., EL-Mekkaoui, J., Es-sabry, M.: Mixed finite element approximation for bivariate Perona–Malik model arising in 2D and 3D image denoising. 3D Res. 9, 36 (2018)

Jun, Z., Wei, Z., Xiao, L.: Adaptive fractional-order multi-scale method for image denoising. J. Math. Imaging Vis. 43, 39–49 (2012)

Momani, S.: An algorithm for solving the fractional convection–diffusion equation with nonlinear source term. Commun. Nonlinear Sci. Numer. Simul. 12(7), 1283–1290 (2007)

Agarwal, P., Baleanu, D., Chen, Y.Q., Momani, S., Machado, J.A.T. (eds.): Fractional Calculus, ICFDA 2018, Amman, Jordan. Springer, Singapore (2019)

Agarwal, P., Ramadan, M.A., Regeh, A.A.M., Hadhoud, A.R.: A fractional-order mathematical model for analyzing the pandemic trend of COVID-19. Math. Methods Appl. Sci. 45(8), 4625–4642 (2022)

Jafari, H., Momani, S.: Solving fractional diffusion and wave equations by modified homotopy perturbation method. Phys. Lett. A 370(5–6), 388–396 (2007)

Chu, Y.-M., Shah, N.A., Agarwal, P., Chung, J.D.: Analysis of fractional multi-dimensional Navier–Stokes equation. Adv. Differ. Equ. 2021, 91 (2021)

Sunarto, A., Agarwal, P., Sulaiman, J., Chew, J.V.L.: Computational approach via half-sweep and preconditioned AOR for fractional diffusion. Intell. Autom. Soft Comput. 31(2), 1173–1184 (2022)

Sunarto, A., Agarwal, P., Sulaiman, J., Chew, J.V.L., Aruchunan, E.: Iterative method for solving one-dimensional fractional mathematical physics model via quarter-sweep and PAOR. Adv. Differ. Equ. 2021, 147 (2021)

Al-Smadi, M., Momani, S., Djeddi, N., El-Ajou, A., Al-Zhour, Z.: Adaptation of reproducing kernel method in solving Atangana–Baleanu fractional Bratu model. Int. J. Dyn. Control (2022). https://doi.org/10.1007/s40435-022-00961-1

Hasan, S., Al-Smadi, M., Dutta, H., Momani, S., Hadid, S.: Multi-step reproducing kernel algorithm for solving Caputo–Fabrizio fractional stiff models arising in electric circuits. Soft Comput. 26, 3713–3727 (2022)

Miller, K.S., Ross, B.: An Introduction to the Fractional Calculus and Fractional Differential Equations, 1st edn. Wiley, New York (1993)

Ross, B.: A brief history and exposition of the fundamental theory of fractional calculus. In: Fractional Calculus and Its Applications. Springer, Berlin (1975)

Hilfer, R.: Applications of Fractional Calculus in Physics. World Scientific, Singapore (2000)

Blank, L.: Numerical Treatment of Differential Equations of Fractional Order, Manchester Center for Computational Mathematics. University of Manchester, Manchester (1996)

Caputo, M.: Linear model of dissipation whose Q is almost frequency independent. Geophys. J. R. Astron. Soc. 13, 529–539 (1967)

Caputo, M.: Elasticita e Dissipazione. Zanichelli, Bologna (1969)

Podlubny, I.: Fractional Differential Equations: An Introduction to Fractional Derivatives, Fractional Differential Equations, to Methods of Their Solution and Some of Their Applications, vol. 198. Elsevier, Amsterdam (1998)

Bu, W., Tang, Y., Wu, Y., Yang, J.: Crank–Nicolson ADI Galerkin finite element method for two-dimensional fractional Fitzhugh–Nagumo monodomain model. Appl. Math. Comput. 257, 355–364 (2015)

Atangana, A., Baleanu, D.: New fractional derivatives with nonlocal and non-singular kernel: theory and application to heat transfer model. Therm. Sci. 20, 763–769 (2016)

Karaagac, B.: Analysis of the cable equation with non-local and non-singular kernel fractional derivative. Eur. Phys. J. Plus 133(2), 54 (2018)

Atangana, A., Gómez-Aguilar, J.F.: A new derivative with normal distribution kernel: theory, methods and applications. Phys. A, Stat. Mech. Appl. 476, 1–14 (2017)

Ghamisi, P., Couceiro, M.S., Benediktsson, J.A., Ferreira, N.M.F.: An efficient method for segmentation of images based on fractional calculus and natural selection. Expert Syst. Appl. 39, 12407–12417 (2012)

Yin, X., Zhou, S., Jon, A.B., Siddique, M.A.: Fractional nonlinear anisotropic diffusion with p-Laplace variation method for image restoration. Multimed. Tools Appl. 75, 4505–4526 (2016)

Pu, Y.-F., Zhou, J.-L., Yuan, X.: Fractional differential mask: a fractional differential-based approach for multiscale texture enhancement. IEEE Trans. Image Process. 19, 491–511 (2010)

Hemami, M., Rad, J.A., Parand, K.: The use of space-splitting RBF-FD technique to simulate the controlled synchronization of neural networks arising from brain activity modeling in epileptic seizures. J. Comput. Sci. 42, 101090 (2020)

Safdari-Vaighani, A., Larsson, E., Heryudono, A.: Radial basis function methods for the Rosenau equation and other higher order PDEs. J. Sci. Comput. 75, 84–93 (2018)

Fasshauer, G.E.: Meshfree Approximation Methods with MATLAB, vol. 6. World Scientific, Singapore (2007)

Rad, J.A., Kazem, S., Parand, K.: A numerical solution of the nonlinear controlled Duffing oscillator by radial basis functions. Comput. Math. Appl. 64, 2049–2065 (2012)

Rad, J.A., Höök, L.J., Larsson, E., von Sydow, L.: Forward deterministic pricing of options using Gaussian radial basis functions. J. Comput. Sci. 24, 209–217 (2018)

Wendland, H.: Scattered Data Approximation. Cambridge University Press, New York (2005)

Rad, J.A., Parand, K., Abbasbandy, S.: Local weak form meshless techniques based on the radial point interpolation (RPI) method and local boundary integral equation (LBIE) method to evaluate European and American options. Commun. Nonlinear Sci. Numer. Simul. 22(1–3), 1178–1200 (2015)

Hemami, M., Parand, K., Rad, J.A.: Numerical simulation of reaction–diffusion neural dynamics models and their synchronization/desynchronization: application to epileptic seizures. Comput. Math. Appl. 78(11), 3644–3677 (2019)

Hemami, M., Rad, J.A., Parand, K.: Phase distribution control of neural oscillator populations using local radial basis function meshfree technique with application in epileptic seizures: a numerical simulation approach. Commun. Nonlinear Sci. Numer. Simul. 103, 105961 (2021)

Wendland, H.: Piecewise polynomial, positive definite and compactly supported radial functions of minimal degree. Adv. Comput. Math. 4(1), 389–396 (1995)

Moayeri, M.M., Rad, J.A., Parand, K.: Dynamical behavior of reaction–diffusion neural networks and their synchronization arising in modeling epileptic seizure: a numerical simulation study. Comput. Math. Appl. 80(8), 1887–1927 (2020)

Holden, H., Karlsen, K.H., Lie, K.A., Risebro, N.H.: Splitting Methods for Partial Differential Equations with Rough Solutions: Analysis and Matlab Programs. Eur. Math. Soc., Zurich (2010)

Hellander, A., Lawson, M.J., Drawert, B., Petzold, L.: Local error estimates for adaptive simulation of the reaction–diffusion master equation via operator splitting. J. Comput. Phys. 266, 89–100 (2014)

Alonso-Mallo, I., Cano, B., Reguera, N.: Avoiding order reduction when integrating reaction–diffusion boundary value problems with exponential splitting methods. J. Comput. Appl. Math. 357, 228–250 (2019)

Lin, Y., Xu, C.: Finite difference/spectral approximations for the time-fractional diffusion equation. J. Comput. Phys. 225(2), 1533–1552 (2007)

Acknowledgements

The authors are very grateful to the reviewer for carefully reading this paper and for their comments and suggestions, which have improved the quality of the paper.

Funding

Not applicable.

Author information


Contributions

JM: Software, Validation, Formal analysis, Conceptualization, Methodology, Writing—Original Draft, Supervision. BHS-M: Investigation, Writing—Review Editing. All authors read and approved the final manuscript.


Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Mazloum, J., Hadian Siahkal-Mahalle, B. A time-splitting local meshfree approach for time-fractional anisotropic diffusion equation: application in image denoising. Adv Cont Discr Mod 2022, 56 (2022). https://doi.org/10.1186/s13662-022-03728-2


DOI: https://doi.org/10.1186/s13662-022-03728-2