Abstract

Solutions of systems of linear fractional differential equations of incommensurate orders are considered, and analytic expressions for the solutions are given by using the Laplace transform and multi-variable Mittag–Leffler functions of matrix arguments. We verify the result against numeric solutions for an example. The results show that Mittag–Leffler functions are important tools for the analysis of fractional systems. The analytic solutions obtained are easy to program and can be approximated with symbolic computation software such as MATHEMATICA.


1 Introduction

Fractional calculus studies the various ways of defining integrals and derivatives of arbitrary order, generalizing the classical integration operator \(Jf(t)=\int_{0}^{t} f(\tau){\,d\tau} \) and differentiation operator \(D f(t)=\frac{d}{dt}\,f(t)\). Common definitions include the Grünwald–Letnikov fractional integral and derivative, the Riemann–Liouville fractional integral and derivative, and the Caputo fractional derivative [1–9].

Fractional calculus has been found to describe memory phenomena and hereditary properties of various materials and processes [2–7, 10]. In recent decades, it has been applied in many fields of science and engineering, including viscoelasticity theory, non-Newtonian flow, damping materials [4, 7, 11, 12], anomalous diffusion [13–16], control and optimization theory [17–19], and financial modeling [20, 21].

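For the scalar function \(f(t)\) on \(a< t<+\infty\), the Riemann–Liouville fractional integral of order β is defined as

\[ {}_{a}J_{t}^{\beta}f(t)=\frac{1}{\Gamma(\beta)}\int_{a}^{t}(t-\tau)^{\beta-1}f(\tau)\,d\tau \tag{1} \]

for \(\beta>0\), and \({}_{a}J_{t}^{0}f(t)=f(t)\) for \(\beta=0\). For \(m-1<\alpha\leq m\), \(m\in\mathbf{N}\), the Riemann–Liouville and Caputo fractional derivatives of order α have the forms

\[ {}_{a}^{R}D_{t}^{\alpha}f(t)=\frac{d^{m}}{dt^{m}}\,{}_{a}J_{t}^{m-\alpha}f(t) \tag{2} \]

and

\[ {}_{a}D_{t}^{\alpha}f(t)={}_{a}J_{t}^{m-\alpha}f^{(m)}(t), \tag{3} \]

respectively.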

Scalar fractional differential equations, including the existence, uniqueness and stability of their solutions and analytic and numeric solution methods, have been studied by many scholars [3–9, 13, 15, 17, 22–27]. In particular, new numerical schemes have been designed [9, 23, 24, 28], and Lie symmetry analysis and conservation laws for fractional evolution equations have been systematically investigated [29–32]. The solutions of many fractional differential equations involve an important class of special functions, the Mittag–Leffler functions (AMS 2000 Mathematics Subject Classification 33E12). The Mittag–Leffler function with two parameters is defined by the series expansion [5, 33]

\[ E_{\lambda,\rho}(z)=\sum_{k=0}^{\infty}\frac{z^{k}}{\Gamma(\lambda k+\rho)},\qquad\lambda>0,\ \rho>0, \tag{4} \]

where \(\Gamma(\cdot)\) is Euler’s gamma function. The special case \(\lambda=\rho=1\) reduces to the exponential function, \(E_{1,1}(z)=e^{z}\). We note that Mittag–Leffler functions have also been used to define new fractional derivatives [34, 35].
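As a quick numerical illustration of definition (4), the following Python sketch sums the two-parameter series directly. The function name `mittag_leffler` and its stopping rule are our own choices, not part of the paper; it assumes real \(z\), \(\lambda>0\), \(\rho>0\) and moderate \(|z|\), since for large negative \(z\) the alternating series loses accuracy to cancellation.

```python
import math


def mittag_leffler(z: float, lam: float, rho: float = 1.0,
                   tol: float = 1e-16, max_terms: int = 1000) -> float:
    """Truncated series E_{lam,rho}(z) = sum_{k>=0} z^k / Gamma(lam*k + rho).

    Assumes real z, lam > 0, rho > 0. Term magnitudes are formed in log space
    so Gamma(lam*k + rho) never overflows on its own.
    """
    if z == 0.0:
        return 1.0 / math.gamma(rho)
    log_abs_z = math.log(abs(z))
    total, prev = 0.0, math.inf
    for k in range(max_terms):
        # |term_k| = |z|^k / Gamma(lam*k + rho); Gamma is positive for positive arguments
        mag = math.exp(k * log_abs_z - math.lgamma(lam * k + rho))
        term = -mag if (z < 0 and k % 2 == 1) else mag
        total += term
        # log|term_k| is concave in k (lgamma is convex), so once the terms
        # start shrinking they keep shrinking and it is safe to stop
        if mag <= prev and mag < tol * max(1.0, abs(total)):
            break
        prev = mag
    return total
```

For example, `mittag_leffler(1.0, 1.0)` reproduces \(e\), and `mittag_leffler(-1.0, 2.0)` reproduces \(\cos 1\), because \(E_{2,1}(-x^{2})=\cos x\).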

The Laplace transform is an effective tool for the analysis of linear fractional differential equations. For a function \(f(t)\) it is defined as

\[ \tilde{f}(s)=\mathcal{L}[f(t)]=\int_{0}^{\infty}e^{-st}f(t)\,dt. \tag{5} \]

The Laplace transforms of the fractional integral and the Caputo fractional derivative are

\[ \mathcal{L}\bigl[{}_{0}J_{t}^{\beta}f(t)\bigr]=s^{-\beta}\tilde{f}(s) \tag{6} \]

and

\[ \mathcal{L}\bigl[{}_{0}D_{t}^{\alpha}f(t)\bigr]=s^{\alpha}\tilde{f}(s)-\sum_{k=0}^{m-1}s^{\alpha-k-1}f^{(k)}(0),\qquad m-1<\alpha\leq m. \tag{7} \]

Atanackovic and Stankovic [36] introduced a system of fractional differential equations into the analysis of the lateral motion of an elastic column fixed at one end and loaded at the other. Daftardar-Gejji and Babakhani [37] and Deng et al. [38] studied the existence, uniqueness and stability of solutions of systems of linear fractional differential equations with constant coefficients. Other references include [39–41]. In this literature, solutions of fractional differential systems were given for the case of commensurate orders.

Incommensurate fractional order linear systems were considered in [42, 43]. Odibat [42] used the Laplace transform and the Mittag–Leffler function with two parameters, but the orders were limited to rational numbers. Daftardar-Gejji and Jafari [43] derived solutions using the Adomian decomposition method.

In this paper, we focus on the system of linear fractional differential equations of incommensurate orders and give analytic expressions for the solutions by using the Laplace transform and multi-variable Mittag–Leffler functions of matrix arguments. We verify the result with numeric solutions of an example.

In the sequel, we will use the Caputo fractional derivatives and denote \({}_{0} D_{t}^{\alpha}f(t) \) by \(D_{t}^{\alpha}f(t) \) for short.

2 A generalization of the Mittag–Leffler function

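We introduce an n-variable Mittag–Leffler function with \(n+1\) parameters as

\[ E_{(\alpha_{1},\alpha_{2},\dots,\alpha_{n}),\beta}(t_{1},t_{2},\dots,t_{n})=\sum_{k=0}^{\infty}\sum_{\substack{k_{1}+k_{2}+\cdots+k_{n}=k\\ k_{1},\dots,k_{n}\geq0}}\binom{k}{k_{1},k_{2},\dots,k_{n}}\frac{t_{1}^{k_{1}}t_{2}^{k_{2}}\cdots t_{n}^{k_{n}}}{\Gamma(\beta+\alpha_{1}k_{1}+\alpha_{2}k_{2}+\cdots+\alpha_{n}k_{n})}, \tag{8} \]

where \(\alpha_{i}\) for \(i=1,2,\dots,n\) and β are positive constants, and \(\binom{k}{k_{1},k_{2},\dots,k_{n}}=\frac{k!}{k_{1}!\,k_{2}!\cdots k_{n}!}\) is the multinomial coefficient.

The explicit form of the first several terms on the right-hand side is

\[ E_{(\alpha_{1},\dots,\alpha_{n}),\beta}(t_{1},\dots,t_{n})=\frac{1}{\Gamma(\beta)}+\sum_{i=1}^{n}\frac{t_{i}}{\Gamma(\beta+\alpha_{i})}+\sum_{i=1}^{n}\frac{t_{i}^{2}}{\Gamma(\beta+2\alpha_{i})}+\sum_{1\leq i<j\leq n}\frac{2t_{i}t_{j}}{\Gamma(\beta+\alpha_{i}+\alpha_{j})}+\cdots. \tag{9} \]

The special case of a single variable, i.e. \(n=1\), reduces to the Mittag–Leffler function with two parameters:

\[ E_{(\alpha),\beta}(t)=\sum_{k=0}^{\infty}\frac{t^{k}}{\Gamma(\alpha k+\beta)}=E_{\alpha,\beta}(t), \tag{10} \]

where we write the parameter and the argument as α and t instead of \(\alpha_{1}\) and \(t_{1}\).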

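The two-variable case, i.e. \(n=2\), is

\[ E_{(\alpha_{1},\alpha_{2}),\beta}(t_{1},t_{2})=\sum_{k=0}^{\infty}\sum_{j=0}^{k}\binom{k}{j}\frac{t_{1}^{j}\,t_{2}^{k-j}}{\Gamma(\beta+\alpha_{1}j+\alpha_{2}(k-j))}. \tag{11} \]

The series on the right-hand side of Eq. (8) is absolutely convergent for \((t_{1},t_{2},\dots,t_{n}) \in\mathbf{R}^{n}\). Indeed, we may suppose, without loss of generality, that \(\alpha_{1}=\min\{\alpha_{1},\alpha_{2},\dots,\alpha_{n}\}\). Then, for \(k_{1}+k_{2}+\cdots+k_{n}=k\), we have

\[ \beta+\alpha_{1}k_{1}+\alpha_{2}k_{2}+\cdots+\alpha_{n}k_{n}\geq\beta+\alpha_{1}k, \]

and for large enough k, \(\beta+\alpha_{1}k\) exceeds the point \(x_{0}\approx1.4616\) to the right of which Γ is increasing. So it follows that

\[ \Gamma(\beta+\alpha_{1}k_{1}+\alpha_{2}k_{2}+\cdots+\alpha_{n}k_{n})\geq\Gamma(\beta+\alpha_{1}k). \]

We estimate the general term of the series in Eq. (8) as

\[ \left|\binom{k}{k_{1},\dots,k_{n}}\frac{t_{1}^{k_{1}}\cdots t_{n}^{k_{n}}}{\Gamma(\beta+\alpha_{1}k_{1}+\cdots+\alpha_{n}k_{n})}\right|\leq\binom{k}{k_{1},\dots,k_{n}}\frac{|t_{1}|^{k_{1}}\cdots|t_{n}|^{k_{n}}}{\Gamma(\beta+\alpha_{1}k)}. \]

By the multinomial theorem, the dominant series

\[ \sum_{k=0}^{\infty}\sum_{k_{1}+\cdots+k_{n}=k}\binom{k}{k_{1},\dots,k_{n}}\frac{|t_{1}|^{k_{1}}\cdots|t_{n}|^{k_{n}}}{\Gamma(\beta+\alpha_{1}k)}=\sum_{k=0}^{\infty}\frac{(|t_{1}|+|t_{2}|+\cdots+|t_{n}|)^{k}}{\Gamma(\alpha_{1}k+\beta)} \]

converges to the Mittag–Leffler function \(E_{\alpha_{1}, \beta }(|t_{1}|+|t_{2}|+\cdots+|t_{n}|)\).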
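The step "for large enough k" relies on Γ being increasing to the right of its minimum at \(x_{0}\approx1.4616\). The Python sketch below, with hypothetical helper names, finds the smallest k with \(\beta+\alpha_{1}k\geq x_{0}\) and checks the bound \(\Gamma(\beta+\alpha_{1}k_{1}+\cdots+\alpha_{n}k_{n})\geq\Gamma(\beta+\alpha_{1}k)\) over all compositions of k; for small k the bound can fail, which is why the restriction is needed.

```python
import math
from itertools import product

# Abscissa of the minimum of Gamma on (0, inf); Gamma increases to its right.
GAMMA_MIN_X = 1.4616321449683623


def compositions(k, n):
    """All tuples (k_1, ..., k_n) of non-negative integers summing to k."""
    for head in product(range(k + 1), repeat=n - 1):
        s = sum(head)
        if s <= k:
            yield head + (k - s,)


def first_monotone_k(alphas, beta):
    """Smallest k with beta + min(alphas) * k >= GAMMA_MIN_X."""
    a1 = min(alphas)
    return max(0, math.ceil((GAMMA_MIN_X - beta) / a1))


def bound_holds(alphas, beta, k):
    """Check Gamma(beta + sum_i alpha_i k_i) >= Gamma(beta + alpha_min * k) for every composition of k."""
    # lgamma preserves the ordering of Gamma for positive arguments (Gamma > 0 there)
    rhs = math.lgamma(beta + min(alphas) * k)
    return all(
        math.lgamma(beta + sum(a * ki for a, ki in zip(alphas, ks))) >= rhs - 1e-12
        for ks in compositions(k, len(alphas))
    )
```

With \((\alpha_{1},\alpha_{2},\alpha_{3})=(0.3,0.7,0.9)\) and \(\beta=0.5\), the threshold is \(k=4\); for \((0.3,0.9)\), \(\beta=0.5\) and \(k=1\) the bound fails, since \(\Gamma(1.4)<\Gamma(0.8)\).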

If the parameters in definition (8) satisfy \(\alpha _{1}=\alpha _{2}=\cdots=\alpha_{n}=\alpha\), then, by the multinomial theorem, the n-variable Mittag–Leffler function in Eq. (8) also reduces to the Mittag–Leffler function with two parameters:

\[ E_{(\alpha,\alpha,\dots,\alpha),\beta}(t_{1},t_{2},\dots,t_{n})=E_{\alpha,\beta}(t_{1}+t_{2}+\cdots+t_{n}). \tag{12} \]

In particular, if \(\alpha_{1}=\alpha_{2}=\cdots=\alpha_{n}=\beta=1\), the n-variable Mittag–Leffler function in Eq. (8) reduces to the exponential function

\[ E_{(1,1,\dots,1),1}(t_{1},t_{2},\dots,t_{n})=e^{t_{1}+t_{2}+\cdots+t_{n}}. \tag{13} \]

We remark that other multi-variable Mittag–Leffler functions have been presented, e.g. in [43–46], but they differ from the version introduced in this paper.
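The sketch below evaluates a truncation of the n-variable series, assuming Eq. (8) has the multinomial form \(\sum_{k}\sum_{k_{1}+\cdots+k_{n}=k}\binom{k}{k_{1},\dots,k_{n}}t_{1}^{k_{1}}\cdots t_{n}^{k_{n}}/\Gamma(\beta+\alpha_{1}k_{1}+\cdots+\alpha_{n}k_{n})\), which is the form implied by the reductions to \(E_{\alpha,\beta}(t_{1}+\cdots+t_{n})\) and \(e^{t_{1}+\cdots+t_{n}}\) stated above. Function names are illustrative, and the brute-force enumeration of compositions is only practical for small n and moderate \(|t_{i}|\).

```python
import math
from itertools import product


def compositions(k, n):
    """All tuples (k_1, ..., k_n) of non-negative integers summing to k."""
    for head in product(range(k + 1), repeat=n - 1):
        s = sum(head)
        if s <= k:
            yield head + (k - s,)


def multinomial(k, ks):
    """k! / (k_1! k_2! ... k_n!), computed exactly with integers."""
    out = math.factorial(k)
    for ki in ks:
        out //= math.factorial(ki)
    return out


def ml_multivariate(ts, alphas, beta, num_terms=60):
    """Truncation (k < num_terms) of the assumed multinomial form of Eq. (8)."""
    total = 0.0
    for k in range(num_terms):
        for ks in compositions(k, len(ts)):
            num = float(multinomial(k, ks))
            for t, ki in zip(ts, ks):
                num *= t ** ki
            arg = beta + sum(a * ki for a, ki in zip(alphas, ks))
            total += num / math.gamma(arg)
    return total
```

With equal orders, `ml_multivariate((0.3, -0.5, 0.4), (0.6, 0.6, 0.6), 1.2)` agrees with the two-parameter value \(E_{0.6,1.2}(0.2)\), as Eq. (12) predicts.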

3 Solution of system of fractional differential equations

We consider the following system of fractional differential equations:

\[ D_{t}^{\alpha_{i}}y_{i}(t)=\sum_{k=1}^{n}a_{ik}\,y_{k}(t)+f_{i}(t),\quad i=1,2,\dots,n, \tag{14} \]

where \(a_{ik} \) are constants, not all zero, \(f_{i}(t)\) are specified functions, \(D_{t}^{\alpha_{i}}\) are the Caputo fractional derivative operators with \(0<\alpha_{i}\leq1\), and \(y_{i}(t)\) are unknown functions with the specified initial values \(y_{i}(0)\).

We suppose that each \({f}_{i}(t)\) is locally integrable on the interval \(0< t<+\infty\) and that its Laplace transform exists. Equation (14) may be written in matrix form as

\[ \mathbf{D}\mathbf{y}=\mathbf{A}\mathbf{y}+\mathbf{f}(t), \tag{15} \]

where \(\mathbf{D}\) is the diagonal matrix of fractional derivative operators

\[ \mathbf{D}=\operatorname{diag}\bigl(D_{t}^{\alpha_{1}},D_{t}^{\alpha_{2}},\dots,D_{t}^{\alpha_{n}}\bigr), \tag{16} \]

\(\mathbf{A}=(a_{ij})_{n\times n}\) is a non-zero coefficient matrix, \(\mathbf{f}(t)=(f_{1}(t),f_{2}(t),\dots,f_{n}(t))^{T}\), and \(\mathbf{y}=\mathbf{y}(t)=(y_{1}(t),y_{2}(t),\ldots,y_{n}(t))^{T}\).

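Applying the Laplace transform to Eq. (14) with respect to t, we obtain

\[ s^{\alpha_{i}}\tilde{y}_{i}(s)-s^{\alpha_{i}-1}y_{i}(0)=\sum_{k=1}^{n}a_{ik}\,\tilde{y}_{k}(s)+\tilde{f}_{i}(s),\quad i=1,2,\dots,n, \tag{17} \]

where \(\operatorname{Re}(s)>c>0\); the constant c is determined by the derivation below and can be taken as the right-hand side of inequality (21). In matrix form, Eq. (17) is

\[ \boldsymbol{\Lambda}\tilde{\mathbf{y}}(s)-s^{-1}\boldsymbol{\Lambda}\mathbf{y}(0)=\mathbf{A}\tilde{\mathbf{y}}(s)+\tilde{\mathbf{f}}(s), \tag{18} \]

where Λ denotes the diagonal matrix \(\boldsymbol {\Lambda}= \operatorname{diag}(s^{\alpha_{1}},s^{\alpha_{2}},\dots ,s^{\alpha_{n}})\). We rewrite Eq. (18) as

\[ (\boldsymbol{\Lambda}-\mathbf{A})\tilde{\mathbf{y}}(s)=\tilde{\mathbf{f}}(s)+s^{-1}\boldsymbol{\Lambda}\mathbf{y}(0). \tag{19} \]

Left multiplication by the inverse matrix \(\boldsymbol{\Lambda }^{-1}=\operatorname{diag}(s^{-\alpha_{1}},s^{-\alpha_{2}},\dots ,s^{-\alpha _{n}})\) leads to

\[ (\mathbf{I}-\boldsymbol{\Lambda}^{-1}\mathbf{A})\tilde{\mathbf{y}}(s)=\boldsymbol{\Lambda}^{-1}\tilde{\mathbf{f}}(s)+s^{-1}\mathbf{y}(0), \tag{20} \]

where I is the identity matrix of order n.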

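Let

\[ \operatorname{Re}(s)>\max_{1\leq i\leq n}\Bigl(2\sum_{k=1}^{n}|a_{ik}|\Bigr)^{1/\alpha_{i}}. \tag{21} \]

Then the matrix \(({\mathbf{I}-\Lambda^{-1} A})\) is invertible, as we now show.

Indeed, since \(|s^{\alpha_{i}}|=|s|^{\alpha_{i}}\geq(\operatorname{Re}(s))^{\alpha_{i}}\), Eq. (21) gives

\[ |s^{-\alpha_{i}}|\sum_{k=1}^{n}|a_{ik}|<\frac{1}{2},\quad i=1,2,\dots,n, \tag{22} \]

that is, the following holds:

\[ |1-a_{ii}s^{-\alpha_{i}}|\geq1-|a_{ii}|\,|s^{-\alpha_{i}}|>\frac{1}{2},\qquad\sum_{k\neq i}|a_{ik}s^{-\alpha_{i}}|<\frac{1}{2}. \tag{23} \]

Thus the absolute values of the diagonal entries of the matrix \(({\mathbf{I}-\Lambda^{-1} A})\) are greater than \(1/2\), while the sum of the absolute values of the off-diagonal entries in the ith row is less than \(1/2\). By the Gershgorin circle theorem, every eigenvalue of \(({\mathbf{I}-\Lambda^{-1} A})\) lies in a disc whose center has modulus greater than \(1/2\) and whose radius is less than \(1/2\), so no eigenvalue is zero. Therefore, the matrix \(({\mathbf{I}-\Lambda^{-1} A})\) is invertible.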
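The invertibility argument can be checked numerically. This Python sketch assumes inequality (21) takes the form \(\operatorname{Re}(s)>\max_{i}(2\sum_{k}|a_{ik}|)^{1/\alpha_{i}}\), which is what yields the two bounds of \(1/2\) used above. It computes that abscissa for a sample matrix and verifies the row-wise Gershgorin conditions for \(\mathbf{I}-\boldsymbol{\Lambda}^{-1}\mathbf{A}\) at points to its right; helper names and sample data are illustrative only.

```python
def abscissa_bound(A, alphas):
    """Assumed right-hand side of (21): max_i (2 * sum_k |a_ik|) ** (1 / alpha_i)."""
    return max((2.0 * sum(abs(a) for a in row)) ** (1.0 / al)
               for row, al in zip(A, alphas))


def gershgorin_ok(A, alphas, s):
    """Row test for M = I - Lambda^{-1} A at complex s: |M_ii| > 1/2 and off-diagonal row sum < 1/2."""
    n = len(A)
    for i in range(n):
        # principal branch of s^(-alpha_i); row i of Lambda^{-1} A is w * (row i of A)
        w = complex(s) ** (-alphas[i])
        diag = abs(1.0 - w * A[i][i])
        off = sum(abs(w * A[i][k]) for k in range(n) if k != i)
        if not (diag > 0.5 and off < 0.5):
            return False
    return True
```

For the sample matrix used in the check, the bound is \(7^{2.5}\approx129.6\), coming from the row with \(\alpha_{1}=0.4\); at \(s=1\) the first diagonal entry of \(\mathbf{I}-\boldsymbol{\Lambda}^{-1}\mathbf{A}\) vanishes and the test correctly fails.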

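From Eq. (20), we solve for \(\tilde{\mathbf{y}}(s)\) as

\[ \tilde{\mathbf{y}}(s)=s^{-1}(\mathbf{I}-\boldsymbol{\Lambda}^{-1}\mathbf{A})^{-1}\mathbf{y}(0)+(\mathbf{I}-\boldsymbol{\Lambda}^{-1}\mathbf{A})^{-1}\boldsymbol{\Lambda}^{-1}\tilde{\mathbf{f}}(s). \tag{24} \]

The inverse Laplace transform yields

\[ \mathbf{y}(t)=\mathbf{G}(t)\,\mathbf{y}(0)+\mathbf{Q}(t)*\mathbf{f}(t), \tag{25} \]

where we introduce two matrix functions

\[ \mathbf{G}(t)=\mathcal{L}^{-1}\bigl[s^{-1}(\mathbf{I}-\boldsymbol{\Lambda}^{-1}\mathbf{A})^{-1}\bigr], \tag{26} \]

\[ \mathbf{Q}(t)=\mathcal{L}^{-1}\bigl[(\mathbf{I}-\boldsymbol{\Lambda}^{-1}\mathbf{A})^{-1}\boldsymbol{\Lambda}^{-1}\bigr], \tag{27} \]

and the convolution is defined as

\[ \mathbf{Q}(t)*\mathbf{f}(t)=\int_{0}^{t}\mathbf{Q}(t-\tau)\,\mathbf{f}(\tau)\,d\tau. \tag{28} \]

First we consider the inverse Laplace transform of \(({\mathbf {I}-\Lambda^{-1} A})^{-1}\). We use the two matrix decompositions

\[ \mathbf{A}=\mathbf{A}_{1}+\mathbf{A}_{2}+\cdots+\mathbf{A}_{n} \tag{29} \]

and

\[ \boldsymbol{\Lambda}^{-1}\mathbf{A}=s^{-\alpha_{1}}\mathbf{A}_{1}+s^{-\alpha_{2}}\mathbf{A}_{2}+\cdots+s^{-\alpha_{n}}\mathbf{A}_{n}, \tag{30} \]

where \(\mathbf{A}_{i}\) denotes the matrix obtained from A by replacing every entry outside the ith row with zero. So the ith rows of the matrices A and \(\mathbf{A}_{i}\) are identical. Since, by (22), every absolute row sum of \(\boldsymbol{\Lambda}^{-1}\mathbf{A}\) is less than \(1/2\), the Neumann series converges, and we have the following expression:

\[ (\mathbf{I}-\boldsymbol{\Lambda}^{-1}\mathbf{A})^{-1}=\sum_{k=0}^{\infty}\bigl(s^{-\alpha_{1}}\mathbf{A}_{1}+s^{-\alpha_{2}}\mathbf{A}_{2}+\cdots+s^{-\alpha_{n}}\mathbf{A}_{n}\bigr)^{k}. \tag{31} \]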
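Because the matrices \(\mathbf{A}_{i}\) generally do not commute, the powers in this expansion must be kept as ordered products. The following Python sketch (hypothetical names, sample data of our choosing) builds the \(\mathbf{A}_{i}\), forms \(\boldsymbol{\Lambda}^{-1}\mathbf{A}=\sum_{i}s^{-\alpha_{i}}\mathbf{A}_{i}\) at a point s satisfying the row-sum bound, and confirms that a truncated Neumann series inverts \(\mathbf{I}-\boldsymbol{\Lambda}^{-1}\mathbf{A}\).

```python
def split_rows(A):
    """A_i keeps row i of A and zeros every other row, so A = A_1 + ... + A_n."""
    n = len(A)
    return [[list(A[r]) if r == i else [0.0] * n for r in range(n)] for i in range(n)]


def matmul(X, Y):
    """Plain list-of-lists matrix product."""
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]


def neumann_inverse(A, alphas, s, terms=80):
    """Return (S, M) with M = sum_i s^(-alpha_i) A_i = Lambda^{-1} A and S = sum_{k<=terms} M^k.

    S approximates (I - M)^{-1} when every absolute row sum of M is below 1.
    """
    n = len(A)
    M = [[0j] * n for _ in range(n)]
    for i, Ai in enumerate(split_rows(A)):
        w = complex(s) ** (-alphas[i])
        for r in range(n):
            for c in range(n):
                M[r][c] += w * Ai[r][c]
    S = [[1.0 + 0j if r == c else 0j for c in range(n)] for r in range(n)]
    P = [row[:] for row in S]
    for _ in range(terms):
        P = matmul(M, P)  # P = M^k, kept as an ordered product
        S = [[S[r][c] + P[r][c] for c in range(n)] for r in range(n)]
    return S, M
```

Since the row sums of \(\boldsymbol{\Lambda}^{-1}\mathbf{A}\) are below \(1/2\), the residual \(\mathbf{I}-(\mathbf{I}-\boldsymbol{\Lambda}^{-1}\mathbf{A})S\) shrinks at least like \(2^{-k}\), so 80 terms are far more than enough in double precision.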
Calculating the inverse Laplace transformation term by term we have

where \(\delta(t)\) is the Dirac delta function.

Further we have the following result for the matrix \(\mathbf{G}(t)\):

We lift the generalized Mittag–Leffler function in Eq. (8) to a matrix function and use it to express the matrix \(\mathbf{G}(t)\) as

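To calculate the inverse Laplace transform in Eq. (27), we decompose the matrix \({\boldsymbol{\Lambda}^{-1}}\) as

\[ \boldsymbol{\Lambda}^{-1}=s^{-\alpha_{1}}\mathbf{I}_{1}+s^{-\alpha_{2}}\mathbf{I}_{2}+\cdots+s^{-\alpha_{n}}\mathbf{I}_{n}, \tag{35} \]

where \(\mathbf{I}_{j}\) is formed from the identity matrix I in the same way as \(\mathbf{A}_{j}\) is formed from A, i.e., its only nonzero entry is a 1 in position \((j,j)\). We derive the expression for the matrix \(\mathbf{Q}(t)\) as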

An equivalent expression is

Using Eq. (36), \(\mathbf{Q}(t)\) can be written in terms of the generalized Mittag–Leffler function of matrix arguments as

Thus the analytic solution is given by Eqs. (25), (34) and (38) in terms of the generalized Mittag–Leffler function of matrix arguments. In practical computation, we can truncate the series in Eqs. (33) and (37) to obtain analytic approximate solutions:

\[ \mathbf{y}^{[m]}(t)=\mathbf{G}^{[m]}(t)\,\mathbf{y}(0)+\mathbf{Q}^{[m]}(t)*\mathbf{f}(t), \tag{39} \]

where \(\mathbf{G}^{[m]}(t) \) and \(\mathbf{Q}^{[m]}(t)\) are the truncations of the series in Eqs. (33) and (37) to their first m terms:

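One way to organize the series for \(\mathbf{G}(t)\) and \(\mathbf{Q}(t)\), derived here from the Neumann expansion and not necessarily the arrangement used in Eqs. (33) and (37), is to group the ordered products by the multi-index \(\kappa=(k_{1},\dots,k_{n})\) counting how often each \(\mathbf{A}_{i}\) occurs. Writing \(C_{\kappa}\) for the sum of all such products, \(C_{0}=\mathbf{I}\) and \(C_{\kappa}=\sum_{i:k_{i}>0}\mathbf{A}_{i}C_{\kappa-e_{i}}\), and term-by-term inversion gives \(\mathbf{G}(t)=\sum_{\kappa}C_{\kappa}t^{\kappa\cdot\alpha}/\Gamma(1+\kappa\cdot\alpha)\) and \(\mathbf{Q}(t)=\sum_{\kappa}\sum_{j}C_{\kappa}\mathbf{I}_{j}t^{\kappa\cdot\alpha+\alpha_{j}-1}/\Gamma(\kappa\cdot\alpha+\alpha_{j})\). The Python sketch below truncates both sums to \(|\kappa|<m\), which is our reading of "the first m terms"; all names are hypothetical.

```python
import math


def coefficient_matrices(A, m):
    """C_kappa for every multi-index kappa with |kappa| < m.

    Uses C_0 = I and C_kappa = sum_{i: kappa_i > 0} A_i C_{kappa - e_i}, where A_i keeps
    only row i of A, so A_i @ X is zero except in row i, which equals (row i of A) @ X.
    """
    n = len(A)
    zero = (0,) * n
    C = {zero: [[1.0 if r == c else 0.0 for c in range(n)] for r in range(n)]}
    level = [zero]
    for _ in range(1, m):
        nxt = {}
        for kappa in level:
            X = C[kappa]
            for i in range(n):
                up = kappa[:i] + (kappa[i] + 1,) + kappa[i + 1:]
                mat = nxt.setdefault(up, [[0.0] * n for _ in range(n)])
                for c in range(n):
                    mat[i][c] += sum(A[i][k] * X[k][c] for k in range(n))
        C.update(nxt)
        level = list(nxt)
    return C


def g_trunc(A, alphas, t, m):
    """G^[m](t) = sum_{|kappa|<m} C_kappa t^g / Gamma(1 + g), with g = kappa . alphas."""
    n = len(A)
    G = [[0.0] * n for _ in range(n)]
    for kappa, Ck in coefficient_matrices(A, m).items():
        g = sum(a * k for a, k in zip(alphas, kappa))
        w = t ** g / math.gamma(1.0 + g)
        for r in range(n):
            for c in range(n):
                G[r][c] += Ck[r][c] * w
    return G


def q_trunc(A, alphas, t, m):
    """Q^[m](t): column j of C_kappa (i.e. C_kappa I_j) gets t^(g+alpha_j-1) / Gamma(g+alpha_j); needs t > 0."""
    n = len(A)
    Q = [[0.0] * n for _ in range(n)]
    for kappa, Ck in coefficient_matrices(A, m).items():
        g = sum(a * k for a, k in zip(alphas, kappa))
        for c in range(n):
            w = t ** (g + alphas[c] - 1.0) / math.gamma(g + alphas[c])
            for r in range(n):
                Q[r][c] += Ck[r][c] * w
    return Q
```

For a diagonal A the off-diagonal products vanish and the diagonal entries of \(\mathbf{G}^{[m]}(t)\) reduce to scalar values \(E_{\alpha_{i},1}(a_{ii}t^{\alpha_{i}})\); with equal orders the sum over \(|\kappa|=k\) collapses to \(\mathbf{A}^{k}\).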
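For the special case \(\alpha_{1}=\alpha_{2}=\cdots=\alpha_{n}=\alpha\), the two matrices \(\mathbf{G}(t)\) and \(\mathbf{Q}(t)\) simplify to

\[ \mathbf{G}(t)=E_{\alpha,1}(\mathbf{A}t^{\alpha})=\sum_{k=0}^{\infty}\frac{\mathbf{A}^{k}t^{\alpha k}}{\Gamma(\alpha k+1)} \tag{42} \]

and

\[ \mathbf{Q}(t)=t^{\alpha-1}E_{\alpha,\alpha}(\mathbf{A}t^{\alpha})=\sum_{k=0}^{\infty}\frac{\mathbf{A}^{k}t^{\alpha k+\alpha-1}}{\Gamma(\alpha k+\alpha)}. \tag{43} \]

These agree with the results in [39].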
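In the commensurate case the truncated series can be summed with ordinary matrix powers. The Python sketch below (illustrative name `ml_matrix`) evaluates \(t^{\beta-1}E_{\alpha,\beta}(\mathbf{A}t^{\alpha})\), so \(\beta=1\) gives \(\mathbf{G}(t)\) and \(\beta=\alpha\) gives \(\mathbf{Q}(t)\); with \(\alpha=1\) it reduces to the matrix exponential \(e^{\mathbf{A}t}\), which gives an easy check.

```python
import math


def ml_matrix(A, alpha, beta, t, terms=60):
    """t^(beta-1) E_{alpha,beta}(A t^alpha), summed to `terms` terms (needs t > 0 when beta < 1)."""
    n = len(A)
    out = [[0.0] * n for _ in range(n)]
    P = [[1.0 if r == c else 0.0 for c in range(n)] for r in range(n)]  # A^0
    for k in range(terms):
        w = t ** (alpha * k + beta - 1.0) / math.gamma(alpha * k + beta)
        for r in range(n):
            for c in range(n):
                out[r][c] += P[r][c] * w
        # advance P from A^k to A^(k+1)
        P = [[sum(P[r][j] * A[j][c] for j in range(n)) for c in range(n)] for r in range(n)]
    return out
```

For the rotation generator \(\mathbf{A}=\begin{pmatrix}0&1\\-1&0\end{pmatrix}\) and \(\alpha=\beta=1\), the result is \(\begin{pmatrix}\cos t&\sin t\\-\sin t&\cos t\end{pmatrix}\); for the scalar case \(a=-1\), \(\alpha=1/2\), \(t=1\), it gives \(e\,\operatorname{erfc}(1)\).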

4 Comparison with numeric solutions

We fix \(T>0\) and a positive integer N, and consider the numeric solutions on the interval \([0,T]\) with step size \(h=T/N\). Denote the nodes by \(t_{i}=i h\), \(i=0,1,\ldots,N\). Let \(x_{i}\), \(y_{j,i}\), \(f_{j,i}\) denote, respectively, the values of \(x(t)\), \(y_{j}(t)\), \(f_{j}(t)\) at \(t=t_{i}\), where \(x(t)\) is a generic scalar function.

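In the L1 algorithm given by Oldham and Spanier [1], the Caputo fractional derivative of order \(0<\alpha<1\) at \(t=t_{i}\) is discretized by replacing \(x'(\tau)\) on each subinterval with a difference quotient:

\[ D_{t}^{\alpha}x(t_{i})=\frac{1}{\Gamma(1-\alpha)}\sum_{j=1}^{i}\int_{t_{j-1}}^{t_{j}}\frac{x'(\tau)}{(t_{i}-\tau)^{\alpha}}\,d\tau\approx\frac{1}{\Gamma(1-\alpha)}\sum_{j=1}^{i}\frac{x_{j}-x_{j-1}}{h}\int_{t_{j-1}}^{t_{j}}\frac{d\tau}{(t_{i}-\tau)^{\alpha}}. \]

Calculating the integration leads to

\[ D_{t}^{\alpha}x(t_{i})\approx\frac{h^{-\alpha}}{\Gamma(2-\alpha)}\sum_{j=1}^{i}\bigl[(i-j+1)^{1-\alpha}-(i-j)^{1-\alpha}\bigr](x_{j}-x_{j-1}). \]

Regrouping the terms on the right-hand side, we have the approximation

\[ D_{t}^{\alpha}x(t_{i})\approx\frac{h^{-\alpha}}{\Gamma(2-\alpha)}\Bigl[x_{i}-\sum_{j=1}^{i-1}(b_{i-j-1}-b_{i-j})\,x_{j}-b_{i-1}\,x_{0}\Bigr], \]

where

\[ b_{k}=(k+1)^{1-\alpha}-k^{1-\alpha},\qquad k=0,1,2,\dots. \]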
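The regrouped L1 formula translates directly into code. This Python sketch (our own names) uses \(b_{0}=1\), which also makes \(\alpha=1\) reduce to the backward difference \((x_{i}-x_{i-1})/h\). Because the L1 rule replaces \(x'\) by a difference quotient on each subinterval, it is exact for linear functions: for \(x(t)=t\) it returns \(t_{i}^{1-\alpha}/\Gamma(2-\alpha)\) up to rounding.

```python
import math


def l1_weights(alpha, i):
    """b_k = (k+1)^(1-alpha) - k^(1-alpha) for k = 0, ..., i-1, with b_0 = 1."""
    return [1.0] + [(k + 1) ** (1.0 - alpha) - k ** (1.0 - alpha) for k in range(1, i)]


def l1_caputo(x, alpha, h, i):
    """Regrouped L1 approximation of the Caputo derivative D^alpha x at t_i = i*h, from x[0..i]."""
    b = l1_weights(alpha, i)
    acc = x[i] - b[i - 1] * x[0]
    for j in range(1, i):
        acc -= (b[i - j - 1] - b[i - j]) * x[j]
    return h ** (-alpha) / math.gamma(2.0 - alpha) * acc
```

For smooth functions such as \(x(t)=t^{2}\) the error is small but nonzero, consistent with the L1 rule being a first-order piecewise-linear approximation of \(x'\).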
Now we evaluate the system of fractional differential equations (15) at \(t=t_{i}\), \(i=1,2,\dots,N\):

where \(\mathbf{y}_{i}=(y_{1,i},y_{2,i},\dots,y_{n,i})^{T}\), \(\mathbf{f}_{i}=(f_{1,i}, f_{2,i},\dots, f_{n,i})^{T}\) and

Substituting the discrete form of the fractional derivatives in Eq. (45), we obtain

where

We rewrite Eq. (48) as

For a sufficiently small step size h, the diagonal entries of the matrix \(\boldsymbol{\Omega }-\mathbf{A}\) are large enough to guarantee its invertibility by the Gershgorin circle theorem. Thus we obtain the scheme for the numeric solutions as

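The following Python sketch implements an implicit L1 scheme of this type under our own assumptions: each component uses its own order \(\alpha_{j}\), \(\boldsymbol{\Omega}=\operatorname{diag}(h^{-\alpha_{j}}/\Gamma(2-\alpha_{j}))\), and at each step the linear system \((\boldsymbol{\Omega}-\mathbf{A})\mathbf{y}_{i}=\mathbf{f}_{i}+(\text{history terms})\) is solved by Gaussian elimination. It may differ in detail from the scheme (52) used for Fig. 1, and all names are hypothetical.

```python
import math


def solve_linear(M, rhs):
    """Gaussian elimination with partial pivoting for a small dense system M x = rhs."""
    n = len(M)
    a = [list(M[r]) + [rhs[r]] for r in range(n)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[piv] = a[piv], a[col]
        for r in range(col + 1, n):
            fac = a[r][col] / a[col][col]
            for c in range(col, n + 1):
                a[r][c] -= fac * a[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (a[r][n] - sum(a[r][c] * x[c] for c in range(r + 1, n))) / a[r][r]
    return x


def l1_system(A, alphas, f, y0, T, N):
    """Implicit L1 scheme for D^{alpha_j} y_j = sum_k a_jk y_k + f_j(t), 0 < alpha_j <= 1.

    Returns [y_0, y_1, ..., y_N]; f(t) must return a list of length n.
    """
    n, h = len(A), T / N
    omega = [h ** (-a) / math.gamma(2.0 - a) for a in alphas]
    # b[j][k] = (k+1)^(1-alpha_j) - k^(1-alpha_j), with b_0 = 1 so alpha_j = 1 gives backward Euler
    b = [[1.0] + [(k + 1) ** (1.0 - a) - k ** (1.0 - a) for k in range(1, N)] for a in alphas]
    # Omega - A is the same at every step
    lhs = [[(omega[r] if r == c else 0.0) - A[r][c] for c in range(n)] for r in range(n)]
    ys = [list(y0)]
    for i in range(1, N + 1):
        rhs = list(f(i * h))
        for j in range(n):
            hist = b[j][i - 1] * ys[0][j]
            for p in range(1, i):
                hist += (b[j][i - p - 1] - b[j][i - p]) * ys[p][j]
            rhs[j] += omega[j] * hist
        ys.append(solve_linear(lhs, rhs))
    return ys
```

Setting all \(\alpha_{j}=1\) recovers the backward Euler method, and for \(y_{j}(t)=c_{j}t\) with the matching forcing term the scheme reproduces the exact solution, since the L1 rule is exact for linear functions.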
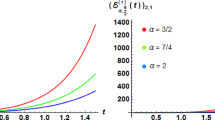

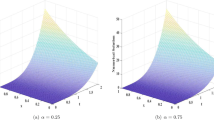

Finally, we compare the analytic approximate solutions with the numeric solutions for the system of fractional differential equations

subject to the initial conditions

From Eqs. (39), (40) and (41), the analytic approximate solutions \(y^{[14]}_{1}(t)\), \(y^{[14]}_{2}(t)\) and \(y^{[14]}_{3}(t)\) are calculated using the 14-term truncations \(\mathbf{G}^{[14]}(t) \) and \(\mathbf{Q}^{[14]}(t)\). The numeric solutions \(y_{1,i}\), \(y_{2,i}\) and \(y_{3,i}\) for \(i=1,2,\dots,40\) with step size \(h=0.02\) (that is, on the interval \([0,0.8]\)) are obtained using the scheme (52). We implemented both algorithms in MATHEMATICA 8. In Fig. 1, the solid, dot-dash and dashed lines represent the analytic approximate solutions \(y^{[14]}_{1}(t)\), \(y^{[14]}_{2}(t)\) and \(y^{[14]}_{3}(t)\), respectively, and the dotted lines denote the numeric solutions \(y_{1,i}\), \(y_{2,i}\) and \(y_{3,i}\). The agreement between the two solutions verifies the effectiveness of the proposed analytic method.

5 Conclusions

Solutions of systems of linear fractional differential equations of incommensurate orders are investigated in this paper. First, we introduce an n-variable Mittag–Leffler function with \(n+1\) parameters. Then we derive analytic expressions for the solutions of the fractional system by using the Laplace transform and multi-variable Mittag–Leffler functions of matrix arguments. Finally, we verify the analytic result against numeric solutions for an example, where the numeric solutions are obtained by generalizing the L1 algorithm to the fractional system.

We generated the plots of the analytic approximate solutions and the numeric solutions with MATHEMATICA 8. The series solutions converge on the entire interval \(0< t<+\infty\), are easy to program, and can be approximated with any symbolic computation software.

References

Oldham, K.B., Spanier, J.: The Fractional Calculus. Academic, New York (1974)

Ross, B.: A brief history and exposition of the fundamental theory of fractional calculus. In: Ross, B. (ed.) Fractional Calculus and Its Applications. Lecture Notes in Mathematics, vol. 457, pp. 1–36. Springer, Berlin (1975)

Miller, K.S., Ross, B.: An Introduction to the Fractional Calculus and Fractional Differential Equations. Wiley, New York (1993)

Carpinteri, A., Mainardi, F. (eds.): Fractals and Fractional Calculus in Continuum Mechanics. Springer, Wien (1997)

Podlubny, I.: Fractional Differential Equations. Academic, San Diego (1999)

Kilbas, A.A., Srivastava, H.M., Trujillo, J.J.: Theory and Applications of Fractional Differential Equations. Elsevier, Amsterdam (2006)

Mainardi, F.: Fractional Calculus and Waves in Linear Viscoelasticity. Imperial College, London (2010)

Diethelm, K.: The Analysis of Fractional Differential Equations. Springer, Berlin (2010)

Băleanu, D., Diethelm, K., Scalas, E., Trujillo, J.J.: Fractional Calculus Models and Numerical Methods. Series on Complexity, Nonlinearity and Chaos. World Scientific, Boston (2012)

Baleanu, D., Jajarmi, A., Asad, J.H., Blaszczyk, T.: The motion of a bead sliding on a wire in fractional sense. Acta Phys. Pol. A 131, 1561–1564 (2017)

Mainardi, F., Spada, G.: Creep, relaxation and viscosity properties for basic fractional models in rheology. Eur. Phys. J. Spec. Top. 193, 133–160 (2011)

Li, M.: Three classes of fractional oscillators. Symmetry 10, 40–91 (2018)

Mainardi, F.: Fractional relaxation-oscillation and fractional diffusion-wave phenomena. Chaos Solitons Fractals 7, 1461–1477 (1996)

Metzler, R., Klafter, J.: The random walk’s guide to anomalous diffusion: a fractional dynamics approach. Phys. Rep. 339, 1–77 (2000)

Duan, J.S.: Time- and space-fractional partial differential equations. J. Math. Phys. 46, 13504–13511 (2005)

Wu, G.C., Baleanu, D., Zeng, S.D., Deng, Z.G.: Discrete fractional diffusion equation. Nonlinear Dyn. 80, 281–286 (2015)

Monje, C.A., Chen, Y.Q., Vinagre, B.M., Xue, D., Feliu, V.: Fractional-Order Systems and Controls, Fundamentals and Applications. Springer, London (2010)

Baleanu, D., Jajarmi, A., Hajipour, M.: A new formulation of the fractional optimal control problems involving Mittag–Leffler nonsingular kernel. J. Optim. Theory Appl. 175, 718–737 (2017)

Jajarmi, A., Hajipour, M., Mohammadzadeh, E., Baleanu, D.: A new approach for the nonlinear fractional optimal control problems with external persistent disturbances. J. Franklin Inst. 355, 3938–3967 (2018)

Jajarmi, A., Hajipour, M., Baleanu, D.: New aspects of the adaptive synchronization and hyperchaos suppression of a financial model. Chaos Solitons Fractals 99, 285–296 (2017)

Duan, J.S., Lu, L., Chen, L., An, Y.L.: Fractional model and solution for the Black–Scholes equation. Math. Methods Appl. Sci. 41, 697–704 (2018)

Li, M., Lim, S.C., Chen, S.: Exact solution of impulse response to a class of fractional oscillators and its stability. Math. Probl. Eng. 2011, 657839 (2011)

Li, C., Zeng, F.: Numerical Methods for Fractional Calculus. CRC Press, Boca Raton (2015)

Jafari, H., Khalique, C.M., Ramezani, M., Tajadodi, H.: Numerical solution of fractional differential equations by using fractional B-spline. Cent. Eur. J. Phys. 11, 1372–1376 (2013)

Wu, G.C., Baleanu, D., Xie, H.P., Chen, F.L.: Chaos synchronization of fractional chaotic maps based on the stability condition. Physica A 460, 374–383 (2016)

Machado, J.A.T., Baleanu, D., Luo, A.C.J. (eds.): Discontinuity and Complexity in Nonlinear Physical Systems. Springer, Cham (2014)

Cao, W., Xu, Y., Zheng, Z.: Existence results for a class of generalized fractional boundary value problems. Adv. Differ. Equ. 348, 14 (2017)

Hajipour, M., Jajarmi, A., Baleanu, D.: An efficient nonstandard finite difference scheme for a class of fractional chaotic systems. J. Comput. Nonlinear Dyn. 13, 021013 (2017)

Baleanu, D., Inc, M., Yusuf, A., Aliyu, A.I.: Lie symmetry analysis, exact solutions and conservation laws for the time fractional Caudrey–Dodd–Gibbon–Sawada–Kotera equation. Commun. Nonlinear Sci. Numer. Simul. 59, 222–234 (2018)

Baleanu, D., Inc, M., Yusuf, A., Aliyu, A.I.: Space-time fractional Rosenou–Haynam equation: Lie symmetry analysis, explicit solutions and conservation laws. Adv. Differ. Equ. 2018, 46 (2018)

Baleanu, D., Inc, M., Yusuf, A., Aliyu, A.I.: Time fractional third-order evolution equation: symmetry analysis, explicit solutions, and conservation laws. J. Comput. Nonlinear Dyn. 13, 021011 (2018)

Inc, M., Yusuf, A., Aliyu, A.I., Baleanu, D.: Lie symmetry analysis, explicit solutions and conservation laws for the space-time fractional nonlinear evolution equations. Physica A 496, 371–383 (2018)

Mainardi, F., Gorenflo, R.: On Mittag–Leffler-type functions in fractional evolution processes. J. Comput. Appl. Math. 118, 283–299 (2000)

Caputo, M., Fabrizio, M.: A new definition of fractional derivative without singular kernel. Prog. Fract. Differ. Appl. 1, 73–85 (2015)

Baleanu, D., Fernandez, A.: On some new properties of fractional derivatives with Mittag–Leffler kernel. Commun. Nonlinear Sci. Numer. Simul. 59, 444–462 (2018)

Atanackovic, T.M., Stankovic, B.: On a system of differential equations with fractional derivatives arising in rod theory. J. Phys. A 37, 1241–1250 (2004)

Daftardar-Gejji, V., Babakhani, A.: Analysis of a system of fractional differential equations. J. Math. Anal. Appl. 293, 511–522 (2004)

Deng, W., Li, C., Lü, J.: Stability analysis of linear fractional differential system with multiple time delays. Nonlinear Dyn. 48, 409–416 (2007)

Duan, J.S., Chaolu, T., Sun, J.: Solution for system of linear fractional differential equations with constant coefficients. J. Math. 29, 599–603 (2009)

Duan, J.S., Fu, S.Z., Wang, Z.: Solution of linear system of fractional differential equations. Pac. J. Appl. Math. 5, 93–106 (2013)

Charef, A., Boucherma, D.: Analytical solution of the linear fractional system of commensurate order. Comput. Math. Appl. 62, 4415–4428 (2011)

Odibat, Z.M.: Analytic study on linear systems of fractional differential equations. Comput. Math. Appl. 59, 1171–1183 (2010)

Daftardar-Gejji, V., Jafari, H.: Adomian decomposition: a tool for solving a system of fractional differential equations. J. Math. Anal. Appl. 301, 508–518 (2005)

Gaboury, S., Özarslan, M.A.: Singular integral equation involving a multivariable analog of Mittag–Leffler function. Adv. Differ. Equ. 2014, 252 (2014)

Jaimini, B.B., Gupta, J.: On certain fractional differential equations involving generalized multivariable Mittag–Leffler function. Note Mat. 32, 141–156 (2012)

Parmar, R.K., Luo, M., Raina, R.K.: On a multivariable class of Mittag–Leffler type functions. J. Appl. Anal. Comput. 6, 981–999 (2016)

Funding

This work was supported by the National Natural Science Foundation of China (No. 11772203).

Author information


Contributions

All authors read and approved the final manuscript.


Ethics declarations

Competing interests

The author declares no competing interests.


Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Duan, J. A generalization of the Mittag–Leffler function and solution of system of fractional differential equations. Adv Differ Equ 2018, 239 (2018). https://doi.org/10.1186/s13662-018-1693-9


DOI: https://doi.org/10.1186/s13662-018-1693-9